Abstract

This study aimed to develop an artificial intelligence model that can detect mesiodens on panoramic radiographs of various dentition groups. Panoramic radiographs of 612 patients were used for training. A convolutional neural network (CNN) model based on YOLOv3 was developed for detecting mesiodens. Model performance was evaluated for three dentition groups (primary, mixed, and permanent dentition), both internally (130 images) and externally (118 images), using a multi-center dataset. To investigate the effect of image preprocessing, contrast-limited adaptive histogram equalization (CLAHE) was applied to the original images. With the original images, the accuracy was 96.2% on the internal test dataset and 89.8% on the external test dataset. For the primary, mixed, and permanent dentition, the accuracy on the internal test dataset was 96.7%, 97.5%, and 93.3%, respectively, and the accuracy on the external test dataset was 86.7%, 95.3%, and 86.7%, respectively. The CLAHE images yielded less accurate results than the original images in both test datasets. The proposed model showed good performance on the internal and external test datasets and has potential for clinical use in detecting mesiodens on panoramic radiographs of all dentition types. CLAHE preprocessing had a negligible effect on model performance.

Subject terms: Dental diseases, Dentistry, Panoramic radiography, Mathematics and computing

Introduction

Mesiodens is a supernumerary tooth located in the anterior maxilla, and it is the most common type of supernumerary tooth1. An impacted mesiodens can affect the succeeding or adjacent permanent teeth, causing delayed eruption, rotation, displacement, crowding, diastema, and root resorption2. Odontogenic cysts involving mesiodens may also develop and interfere with implant placement and orthodontic tooth movement3.

Panoramic radiography is a widely used imaging modality for diagnosis and treatment planning in dentistry4–6. However, the geometry of panoramic radiography introduces disadvantages such as image blurring, distortion, low resolution, and superimposition of additional structures, and these factors make it difficult to diagnose lesions accurately7–10. The anterior regions of the maxilla and mandible are especially difficult to assess because of the thin image layer of the device, the overlapping of the vertebrae11, and the air space between the tongue and the palate12. The degree of overlap and blurring depends on the device and the patient's position. As a result, mesiodens is often missed on panoramic radiographs, particularly by clinicians with little experience or when many images must be read in a short time.

Because mesiodens may cause a variety of complications, its diagnosis is important. An accurate diagnosis allows timely removal of mesiodens and reduces the risk of complications. This risk is especially high in primary and mixed dentition because the mesiodens overlaps the developing succeeding tooth germ. An automatic artificial intelligence (AI) diagnostic model for panoramic radiography would therefore be helpful for dental clinicians. However, only one study has focused on the automated detection of mesiodens, and it was limited to permanent dentition13. Image preprocessing has been widely applied in medical image-based deep learning studies14–16. Contrast-limited adaptive histogram equalization (CLAHE) has often been used in studies using panoramic radiography17,18, but no previous study has compared model performance between original images and CLAHE images.

This study aimed to develop an AI model to automatically detect mesiodens on panoramic radiography for primary, mixed, and permanent dentition groups. The performance of the model was validated internally and externally with multi-center test data, and the effect of preprocessing was investigated.

Materials and methods

Subjects

This study was approved by the Institutional Review Board of Yonsei University Dental Hospital (No. 2-2021-0043) and was conducted in accordance with ethical regulations and guidelines. The requirement for informed consent was waived since this was a retrospective study and all data in this study were used after anonymization.

The AI model was trained and validated using the panoramic radiographs of 612 patients with an impacted mesiodens in the anterior maxillary region who visited Yonsei University Dental Hospital between July 2017 and January 2021. The panoramic images were acquired with two types of equipment: RAYSCAN Alpha (Ray Co., Ltd., Hwaseong-si, Korea) and PaX-i3D Green (Vatech Co., Ltd., Hwaseong-si, Korea). The images were divided into three groups according to the stage of tooth development: primary dentition (3–6 years), mixed dentition (7–13 years), and permanent dentition (14 years and older). Table 1 shows the detailed distribution of the training and validation datasets. Because mesiodens is more difficult to diagnose in primary and mixed dentition than in permanent dentition, the panoramic radiographs were collected mainly from patients with primary and mixed dentition.

Table 1.

Number of panoramic radiographs with mesiodens in the training and validation datasets.

| Group | Training | Validation | Total |
|---|---|---|---|
| Primary dentition | 267 | 29 | 296 |
| Mixed dentition | 186 | 21 | 207 |
| Permanent dentition | 98 | 11 | 109 |
| Total | 551 | 61 | 612 |

The test dataset consisted of internal data from Yonsei University Dental Hospital and external data from Gangnam Severance Hospital, acquired with ProMax (Planmeca Inc., Helsinki, Finland). The internal test dataset consisted of 65 images with mesiodens and 65 images without mesiodens, and the external test dataset consisted of 58 images with mesiodens and 60 images without mesiodens. Table 2 shows the composition of the test datasets. All panoramic images were selected from patients who had undergone cone-beam computed tomography, which was used to confirm the presence of mesiodens.

Table 2.

Number of panoramic radiographs in the internal and external test datasets.

| Group | Internal test: with mesiodens | Internal test: without mesiodens | External test: with mesiodens | External test: without mesiodens |
|---|---|---|---|---|
| Primary dentition | 30 | 30 | 10 | 20 |
| Mixed dentition | 20 | 20 | 23 | 20 |
| Permanent dentition | 15 | 15 | 25 | 20 |
| Total | 65 | 65 | 58 | 60 |

Image preparation and preprocessing

Panoramic radiographs were downloaded in bitmap format with a matrix size of approximately 2996 (width) × 1502 (height) pixels. As preprocessing, the CLAHE method was applied to all original images. CLAHE divides the image into contextual regions (tiles) of approximately equal size and equalizes the histogram of each region. A clip limit restricts the contrast amplification, and neighboring regions are interpolated to avoid visible tile boundaries19. CLAHE has been found to improve image quality compared with other histogram equalization methods, and it has been widely used in deep learning studies based on medical images20–23. The experiments using the original images and CLAHE images were implemented in Windows 10 with the TensorFlow 1.16.0 framework on an NVIDIA GPU (TITAN RTX).
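The CLAHE steps described above can be sketched in NumPy as follows. This is a minimal illustration, not the study's implementation. The paper does not report its CLAHE library or parameters, so the clip limit of 2.0 and the 8 × 8 tile grid are assumed illustrative values. The clipped histogram excess is also redistributed in a single uniform pass. Each tile receives its own look-up table, and every pixel is mapped by bilinear interpolation between the look-up tables of the four nearest tile centers.

```python
import numpy as np


def clahe(img: np.ndarray, clip_limit: float = 2.0, grid: tuple = (8, 8)) -> np.ndarray:
    """Contrast-limited adaptive histogram equalization of a 2-D uint8 image.

    clip_limit and grid are assumed illustrative values, not the study's settings.
    """
    if img.ndim != 2 or img.dtype != np.uint8:
        raise ValueError("expected a 2-D uint8 grayscale image")
    h, w = img.shape
    ty, tx = grid
    # Pad by reflection so the image splits into equal-sized tiles.
    ph, pw = (-h) % ty, (-w) % tx
    padded = np.pad(img, ((0, ph), (0, pw)), mode="reflect")
    th, tw = padded.shape[0] // ty, padded.shape[1] // tx
    n = th * tw
    limit = max(clip_limit * n / 256.0, 1.0)  # per-bin clip height

    # One contrast-limited look-up table (LUT) per tile.
    luts = np.empty((ty, tx, 256), dtype=np.float64)
    for i in range(ty):
        for j in range(tx):
            tile = padded[i * th:(i + 1) * th, j * tw:(j + 1) * tw]
            hist = np.bincount(tile.ravel(), minlength=256).astype(np.float64)
            # Clip the histogram and spread the excess uniformly over all bins.
            excess = np.maximum(hist - limit, 0.0).sum()
            hist = np.minimum(hist, limit) + excess / 256.0
            cdf = np.cumsum(hist)
            luts[i, j] = cdf * 255.0 / cdf[-1]

    # Position of each pixel relative to the tile centres (in tile units).
    gy = (np.arange(h) + 0.5) / th - 0.5
    gx = (np.arange(w) + 0.5) / tw - 0.5
    y0f, x0f = np.floor(gy), np.floor(gx)
    wy = (gy - y0f)[:, None]
    wx = (gx - x0f)[None, :]
    y0 = np.clip(y0f, 0, ty - 1).astype(int)[:, None]
    y1 = np.clip(y0f + 1, 0, ty - 1).astype(int)[:, None]
    x0 = np.clip(x0f, 0, tx - 1).astype(int)[None, :]
    x1 = np.clip(x0f + 1, 0, tx - 1).astype(int)[None, :]

    # Bilinear interpolation between the four neighbouring tile LUTs.
    v = img
    out = ((1 - wy) * (1 - wx) * luts[y0, x0, v]
           + (1 - wy) * wx * luts[y0, x1, v]
           + wy * (1 - wx) * luts[y1, x0, v]
           + wy * wx * luts[y1, x1, v])
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)
```

In practice, OpenCV provides the same technique through `cv2.createCLAHE(clipLimit=2.0, tileGridSize=(8, 8)).apply(gray)` for single-channel 8-bit images. The hand-written version above only makes each step of the method explicit.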

Development and evaluation of the model

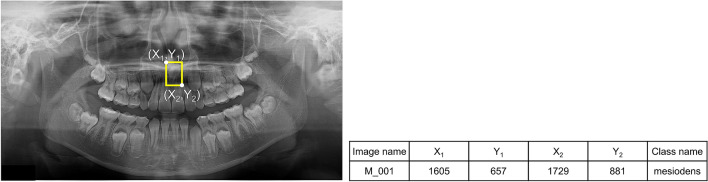

We developed a model based on YOLOv3 for detecting mesiodens. YOLO is a representative single-stage deep learning (DL) detection algorithm that has shown accuracy comparable to other detection algorithms at a much higher speed24. Model training required information on the training dataset (i.e., the location and class name of each ground-truth object). To establish the gold standard, an oral radiologist with over 20 years of experience annotated each mesiodens with a rectangular region of interest (ROI) enclosing only the mesiodens. The annotation was performed with the graphical image annotation tool LabelImg (version 1.8.4, available at https://github.com/tzutalin/labelImg). From each annotation, the coordinates of the upper-left (X1, Y1) and lower-right (X2, Y2) corners of the ROI were extracted, together with the class name "mesiodens" (Fig. 1). This information was used in the model training process.

Figure 1.

The oral radiologist annotated the mesiodens with a yellow rectangular box. The coordinates of the upper-left (X1, Y1) and lower-right (X2, Y2) corners were determined, and the class name was extracted as "mesiodens."
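LabelImg can save annotations as Pascal VOC XML files. The sketch below assumes that output format. It extracts the corner coordinates (X1, Y1, X2, Y2) and the class name described above, then writes them as one text line per image in the `path x1,y1,x2,y2,class_id` layout used by several Keras YOLOv3 ports. The paper does not state which annotation format its training code consumed, so `parse_labelimg_voc`, `to_annotation_line`, the line layout, and the example coordinates are all hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import List, Sequence, Tuple

# (x1, y1, x2, y2, class_name): upper-left and lower-right corners in pixels.
Annotation = Tuple[int, int, int, int, str]


def parse_labelimg_voc(xml_text: str) -> List[Annotation]:
    """Read every <object> box from a Pascal VOC XML file (assumed LabelImg output)."""
    root = ET.fromstring(xml_text)
    boxes: List[Annotation] = []
    for obj in root.iter("object"):
        name = obj.findtext("name", default="").strip()
        bb = obj.find("bndbox")
        if bb is None:
            continue
        # VOC stores the corners as xmin/ymin (upper left) and xmax/ymax (lower right).
        x1, y1, x2, y2 = (int(float(bb.findtext(tag)))
                          for tag in ("xmin", "ymin", "xmax", "ymax"))
        boxes.append((x1, y1, x2, y2, name))
    return boxes


def to_annotation_line(image_path: str, boxes: Sequence[Annotation],
                       classes: Sequence[str]) -> str:
    """Hypothetical 'path x1,y1,x2,y2,class_id ...' training line."""
    parts = [image_path]
    for x1, y1, x2, y2, name in boxes:
        parts.append(f"{x1},{y1},{x2},{y2},{classes.index(name)}")
    return " ".join(parts)


# Hypothetical annotation for one radiograph (coordinates are made up).
example = """<annotation>
  <filename>patient_001.bmp</filename>
  <object>
    <name>mesiodens</name>
    <bndbox><xmin>1402</xmin><ymin>598</ymin><xmax>1521</xmax><ymax>760</ymax></bndbox>
  </object>
</annotation>"""

if __name__ == "__main__":
    print(to_annotation_line("patient_001.bmp", parse_labelimg_voc(example), ["mesiodens"]))
    # patient_001.bmp 1402,598,1521,760,0
```

The task has a single class, so every box maps to class index 0. The class list is kept as a parameter only so that the same line format would also serve a multi-class setting.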

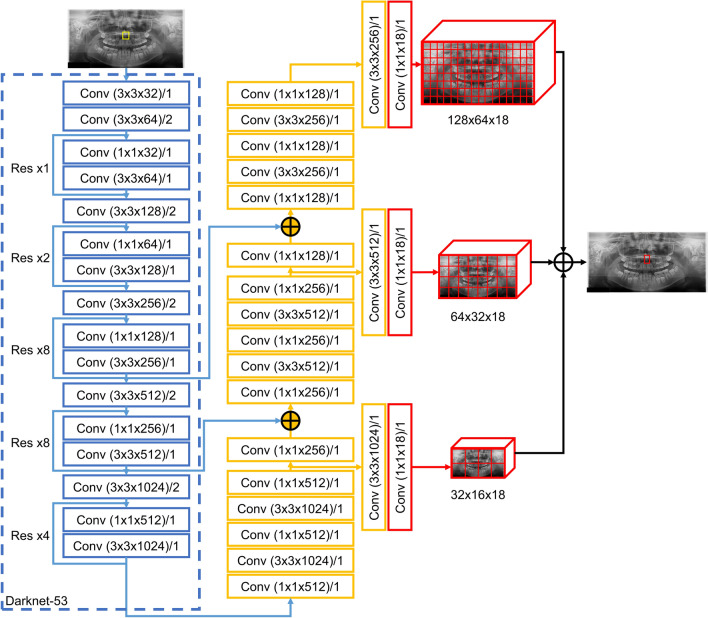

The panoramic images were resized to 512 (width) × 256 (height) pixels and input to the backbone (Darknet-53 architecture), which consists of 53 convolutional layers, each followed by batch normalization. The leaky rectified linear unit (leaky ReLU) was used as the activation function. The network outputs three feature maps at different resolutions, and mesiodens was detected automatically at each of these three scales. When the model detected mesiodens, it output the image with a red box marking the detected area. If no mesiodens was detected, it output the input panoramic image without a box (Fig. 2). A detection was considered correct when the intersection over union (IoU) between the detected box and the ground-truth box was 0.5 or higher25. Training was initialized with the weights of a YOLO model pre-trained on the COCO dataset24, and the model was then trained on our dataset for 300 epochs. During the first 150 epochs, only the weights of the last three layers were trained. During the next 150 epochs, the weights of the entire network were trained.

Figure 2.

Overall architecture of the proposed model from YOLOv3. Res, residual network; Conv, convolutional network.
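Two quantities from the model description can be made concrete in code. The first is the mapping of ROI coordinates from the original radiograph (about 2996 × 1502 pixels) to the 512 × 256 network input. The second is the IoU ≥ 0.5 criterion used to decide whether a detection is correct. The sketch assumes a plain resize without aspect-preserving padding and corner-format boxes. `grid_shapes` uses the standard YOLOv3 output strides of 32, 16, and 8 to show the sizes of the three feature maps for a 512 × 256 input. All helper names are illustrative.

```python
from typing import List, Tuple

# Corner-format box: (x1, y1, x2, y2) = upper-left and lower-right corners.
Box = Tuple[float, float, float, float]


def rescale_box(box: Box, src_wh: Tuple[int, int], dst_wh: Tuple[int, int]) -> Box:
    """Map a box from the original image size to the resized network input.

    Assumes a plain (anisotropic) resize without letterbox padding.
    """
    sx = dst_wh[0] / src_wh[0]
    sy = dst_wh[1] / src_wh[1]
    x1, y1, x2, y2 = box
    return (x1 * sx, y1 * sy, x2 * sx, y2 * sy)


def grid_shapes(width: int, height: int, strides=(32, 16, 8)) -> List[Tuple[int, int]]:
    """(columns, rows) of the three YOLOv3 output feature maps."""
    return [(width // s, height // s) for s in strides]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two corner-format boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_correct_detection(pred: Box, truth: Box, threshold: float = 0.5) -> bool:
    """A detection counts as correct when IoU >= threshold (0.5 in the study)."""
    return iou(pred, truth) >= threshold


if __name__ == "__main__":
    print(grid_shapes(512, 256))  # [(16, 8), (32, 16), (64, 32)]
    # Hypothetical boxes: the prediction is shifted by half a box width.
    print(round(iou((0, 0, 10, 10), (5, 0, 15, 10)), 3))  # 0.333
```

A prediction shifted by half its own width therefore overlaps the ground truth with an IoU of only about 0.33 and would be scored as incorrect. This shows how strict the 0.5 threshold is for small targets such as a mesiodens.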

Using the original images, the detection performance of the proposed model was evaluated in terms of accuracy, sensitivity, and specificity on the internal and external test datasets. The results obtained with and without CLAHE preprocessing were then compared for the primary, mixed, and permanent dentition groups.
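The three metrics reported in the Results follow from image-level confusion counts. The sketch below assumes one particular counting rule, because the text does not state it. An image with mesiodens counts as a true positive when the mesiodens is correctly detected (IoU ≥ 0.5). An image without mesiodens counts as a true negative when no box is output. The example counts are back-calculated from the test-set sizes in Table 2 and the percentages in Table 3. For example, 95.4% of the 65 internal positives gives 62 true positives.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Counts:
    """Image-level confusion counts (assumed counting rule, see text)."""
    tp: int  # images with mesiodens, mesiodens detected with IoU >= 0.5
    fn: int  # images with mesiodens, mesiodens missed
    tn: int  # images without mesiodens, no box output
    fp: int  # images without mesiodens, a box output


def metrics(c: Counts) -> dict:
    """Accuracy, sensitivity, and specificity in percent, rounded to 0.1."""
    total = c.tp + c.fn + c.tn + c.fp
    return {
        "accuracy": round(100 * (c.tp + c.tn) / total, 1),
        "sensitivity": round(100 * c.tp / (c.tp + c.fn), 1),
        "specificity": round(100 * c.tn / (c.tn + c.fp), 1),
    }


# Counts back-calculated from Tables 2 and 3 (original images, all dentition groups).
internal = Counts(tp=62, fn=3, tn=63, fp=2)
external = Counts(tp=51, fn=7, tn=55, fp=5)

if __name__ == "__main__":
    print(metrics(internal))  # {'accuracy': 96.2, 'sensitivity': 95.4, 'specificity': 96.9}
    print(metrics(external))  # {'accuracy': 89.8, 'sensitivity': 87.9, 'specificity': 91.7}
```

Sensitivity and specificity are computed on the positive and negative images separately. Accuracy pools both, so it depends on the case mix. The internal test set is balanced (65/65), but the external set is not (58/60), and the external subgroups are more unbalanced still (for example, 10/20 in primary dentition).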

Results

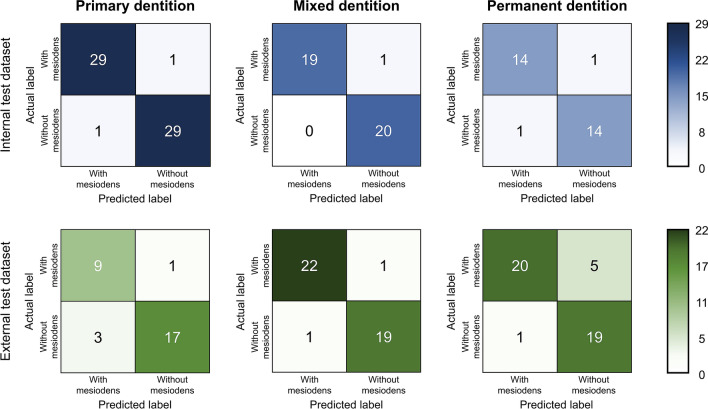

Table 3 shows the accuracy, sensitivity and specificity of the model using internal and external test datasets for the original images. The accuracy of the internal test dataset was 96.2% and that of the external test dataset was 89.8%. For the primary, mixed, and permanent dentition, the accuracy of the internal test dataset was 96.7%, 97.5%, and 93.3%, respectively, and the accuracy of the external test dataset was 86.7%, 95.3%, and 86.7%, respectively.

Table 3.

Accuracy, sensitivity, and specificity of the model (%).

| Group | Internal accuracy | Internal sensitivity | Internal specificity | External accuracy | External sensitivity | External specificity |
|---|---|---|---|---|---|---|
| Primary | 96.7 | 96.7 | 96.7 | 86.7 | 90.0 | 85.0 |
| Mixed | 97.5 | 95.0 | 100.0 | 95.3 | 95.7 | 95.0 |
| Permanent | 93.3 | 93.3 | 93.3 | 86.7 | 80.0 | 95.0 |
| Total | 96.2 | 95.4 | 96.9 | 89.8 | 87.9 | 91.7 |

Confusion matrices of the model using the internal and external test datasets according to the dentition group are shown in Fig. 3.

Figure 3.

Confusion matrices of the internal and external test dataset for the primary, mixed, and permanent dentition groups.

Table 4 shows the accuracy, sensitivity, and specificity of the model on the internal and external test datasets with and without CLAHE preprocessing. The accuracy and specificity obtained with the original images were higher than those obtained with the CLAHE images for both the internal and external test datasets.

Table 4.

Accuracy, sensitivity, and specificity of the model according to CLAHE preprocessing (%).

| Metric | Internal test: original image | Internal test: CLAHE image | External test: original image | External test: CLAHE image |
|---|---|---|---|---|
| Accuracy | 96.2 | 93.1 | 89.8 | 88.1 |
| Sensitivity | 95.4 | 95.4 | 87.9 | 86.2 |
| Specificity | 96.9 | 90.8 | 91.7 | 90.0 |

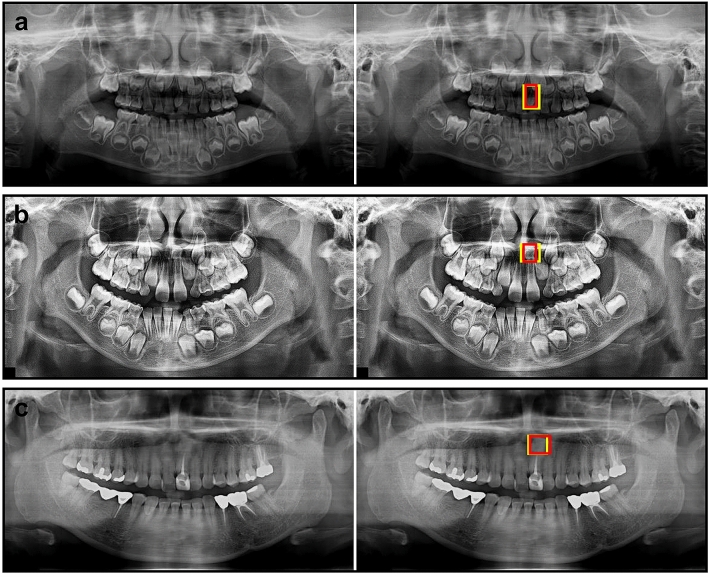

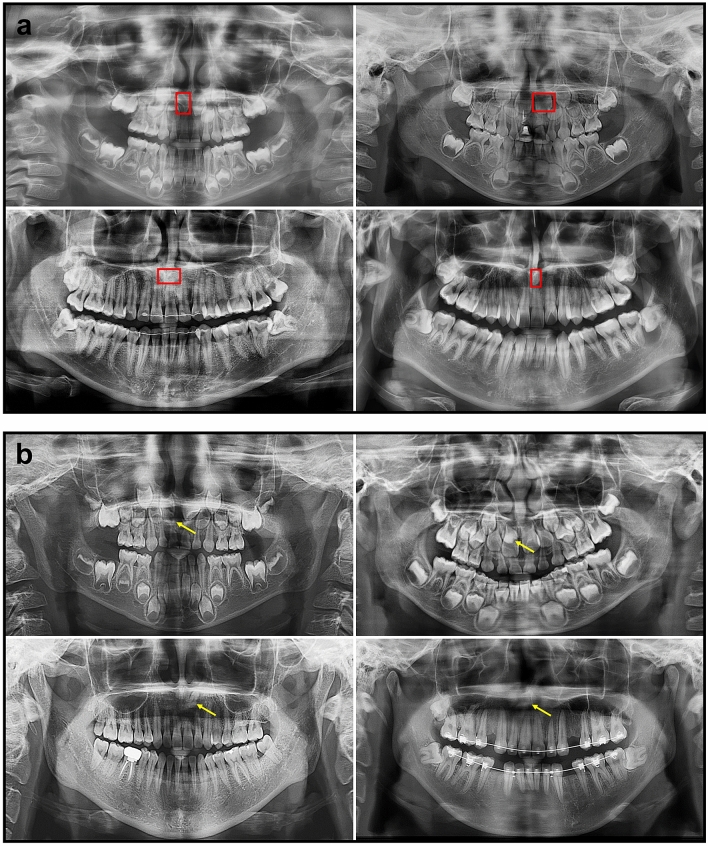

Figure 4 shows examples of mesiodens correctly detected by the developed model. False-positive and false-negative cases are presented in Fig. 5. In the incorrect cases, the model confused mesiodens with the succeeding tooth germ, the anterior nasal spine, or the ala of the nose.

Figure 4.

Examples of correctly detected mesiodens in primary dentition (a), mixed dentition (b), and permanent dentition (c). The left panel shows the input image and the right panel shows the output image. The mesiodens annotated by the radiologist is shown as the yellow box and the automatically detected mesiodens is shown as the red box.

Figure 5.

Examples of false-positive cases (a) and false-negative cases (b). Red boxes denote the incorrectly detected regions, while yellow arrows show undetected mesiodens.

Discussion

AI research has been conducted in a diverse range of fields, and numerous studies have also been conducted in the dental field. DL algorithms with convolutional neural networks (CNNs) have received considerable attention and have been used for segmentation, classification, and detection with panoramic radiographs17,21,26. Many studies have applied these techniques to orthodontic diagnoses, root canal treatment, tooth extraction, and the diagnosis of lesions27,28. The most common application has been for classifying cysts and tumors of the jaw17,29,30.

Recently, various algorithms such as the YOLO algorithm, deformable parts model, and R-CNN algorithm have been introduced and found to effectively detect cancer, nodules, fractures, or other lesions on medical images31–34. The YOLO algorithm was first proposed by Redmon et al.24, and it has been applied in the dental field to detect various diseases on panoramic radiographs17,29,35,36. Yang et al.35 detected cysts and tumors of the jaw using YOLOv2 and obtained a precision of 0.707 and recall of 0.680. Kwon et al.17 developed a YOLOv3 model that showed high performance, with sensitivity values of 91.4%, 82.8%, 98.4%, and 71.7% for dentigerous cysts, periapical cysts, odontogenic keratocysts, and ameloblastomas, respectively. Son et al.36 developed a model to detect mandibular fractures using YOLOv4.

Early AI research tended to use images obtained from a single device at one institution37–39. This made the resulting models difficult to generalize, and their performance deteriorated when they were applied in actual clinical practice. Assessing the real-world clinical performance of a DL-based model requires external validation using data collected at institutions other than the one that provided the training data40. Multi-center research is especially important for panoramic radiography because the thickness of the image layer differs between types of equipment, and blurring and overlap vary with the equipment and the patient's position. We trained and validated the model using images obtained with two types of equipment. We then tested it with images obtained from different devices at the internal and external institutions to confirm its generalizability. On the internal test dataset, the accuracy, sensitivity, and specificity of the developed model all exceeded 95%. Performance on the external test dataset was only slightly lower, with values of 89.8%, 87.9%, and 91.7%, respectively. Previous studies that performed external testing generally showed similar trends. One DL study diagnosed mandibular condylar fractures using panoramic radiographs from two hospitals. When the training and test datasets came from the same hospital, the accuracy was 80.4% and 81% for the two hospitals, respectively. When they came from different hospitals, the accuracy decreased to 59.0% and 60%21. This drop in performance is thought to result from differences between the training panoramic radiographs and the external panoramic radiographs (e.g., in image noise and brightness), as noted in other research41.

Because of the geometric configuration of panoramic radiography, the maxillary anterior region is the most blurred, and diseases or mesiodens in this area are often missed. Kuwada et al.13 developed DL models (AlexNet, VGG-16, and DetectNet) and compared their performance. A limitation of that study is that it included only permanent dentition, used panoramic radiographs from a single institution, and performed no external testing. In contrast, our study developed a model using the YOLOv3 algorithm and compared its performance with and without preprocessing. Furthermore, our study included all dentition groups (primary, mixed, and permanent dentition) and tested the model internally and externally using multi-center data. The proposed model showed high internal accuracy (over 93%) in primary and mixed dentition as well as in permanent dentition. On the external test dataset, its overall accuracy, sensitivity, and specificity all exceeded 87%. CLAHE preprocessing was also applied to investigate whether preprocessing improved model performance. Rahman et al.42 studied five image enhancement techniques, including CLAHE, for detecting COVID-19 on chest X-rays, and all of them showed very reliable performance. Some studies have applied CLAHE to panoramic radiography17,18. In the present study, the original images without CLAHE yielded higher accuracy, sensitivity, and specificity than the CLAHE images on both the internal and external test datasets. The only exception was internal sensitivity, which was identical (95.4%). Therefore, CLAHE preprocessing did not improve the performance of the model and had only a negligible effect. Preprocessing should therefore be chosen carefully during model development.

Our study has limitations: the sample size was small, and cases with multiple mesiodens were not included. Although collecting external data on this topic is currently very challenging, further research using imaging data from more centers and devices is expected to improve the performance of the model.

Conclusion

The developed CNN model for fully automatic detection of mesiodens showed high performance in multi-center tests for all types of dentition, including primary, mixed, and permanent dentition. The developed model has the potential to help dental clinicians diagnose mesiodens on panoramic radiographs.

Author contributions

S.H. proposed the ideas; K.J., J.K. and S.H. collected data; E.H. designed the deep learning model; K.J. and Y.K. analyzed and interpreted data; E.H., K.J., Y.K. and S.H. critically reviewed the content; and E.H., K.J. and S.H. drafted and critically revised the article.

Funding

This work was funded by a National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIT) (No. 2019R1A2C1007508).

Data availability

The data generated and analyzed during the current study are not publicly available due to privacy laws and policies in Korea, but are available from the corresponding author on reasonable request.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors contributed equally: Eun-Gyu Ha and Kug Jin Jeon.

References

1. Van Buggenhout G, Bailleul-Forestier I. Mesiodens. Eur. J. Med. Genet. 2008;51:178–181. doi: 10.1016/j.ejmg.2007.12.006.

2. Betts A, Camilleri GE. A review of 47 cases of unerupted maxillary incisors. Int. J. Paediatr. Dent. 1999;9:285–292. doi: 10.1111/j.1365-263X.1999.00147.x.

3. Khambete N, Kumar R, Risbud M, Kale L, Sodhi S. Dentigerous cyst associated with an impacted mesiodens: Report of 2 cases. Imaging Sci. Dent. 2012;42:255–260. doi: 10.5624/isd.2012.42.4.255.

4. Barrett AP, Waters BE, Griffiths CJ. A critical evaluation of panoramic radiography as a screening procedure in dental practice. Oral Surg. Oral Med. Oral Pathol. 1984;57:673–677. doi: 10.1016/0030-4220(84)90292-5.

5. Rushton VE, Horner K. The use of panoramic radiology in dental practice. J. Dent. 1996;24:185–201. doi: 10.1016/0300-5712(95)00055-0.

6. Choi JW. Assessment of panoramic radiography as a national oral examination tool: Review of the literature. Imaging Sci. Dent. 2011;41:1–6. doi: 10.5624/isd.2011.41.1.1.

7. Akkaya N, Kansu O, Kansu H, Cagirankaya LB, Arslan U. Comparing the accuracy of panoramic and intraoral radiography in the diagnosis of proximal caries. Dentomaxillofac. Radiol. 2006;35:170–174. doi: 10.1259/dmfr/26750940.

8. Flint DJ, Paunovich E, Moore WS, Wofford DT, Hermesch CB. A diagnostic comparison of panoramic and intraoral radiographs. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. Endod. 1998;85:731–735. doi: 10.1016/S1079-2104(98)90043-9.

9. Akarslan ZZ, Akdevelioğlu M, Güngör K, Erten H. A comparison of the diagnostic accuracy of bitewing, periapical, unfiltered and filtered digital panoramic images for approximal caries detection in posterior teeth. Dentomaxillofac. Radiol. 2008;37:458–463. doi: 10.1259/dmfr/84698143.

10. Jeon KJ, et al. Application of panoramic radiography with a multilayer imaging program for detecting proximal caries: A preliminary clinical study. Dentomaxillofac. Radiol. 2020;49:20190467. doi: 10.1259/dmfr.20190467.

11. Anthonappa RP, King NM, Rabie AB, Mallineni SK. Reliability of panoramic radiographs for identifying supernumerary teeth in children. Int. J. Paediatr. Dent. 2012;22:37–43. doi: 10.1111/j.1365-263X.2011.01155.x.

12. Mallya SM, Lam EWN. White and Pharoah's Oral Radiology: Principles and Interpretation. Elsevier; 2018.

13. Kuwada C, et al. Deep learning systems for detecting and classifying the presence of impacted supernumerary teeth in the maxillary incisor region on panoramic radiographs. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2020;130:464–469. doi: 10.1016/j.oooo.2020.04.813.

14. Lin GM, et al. Transforming retinal photographs to entropy images in deep learning to improve automated detection for diabetic retinopathy. J. Ophthalmol. 2018;2018:1–6. doi: 10.1155/2018/2159702.

15. Shakeel PM, Burhanuddin MA, Desa MI. Lung cancer detection from CT image using improved profuse clustering and deep learning instantaneously trained neural networks. Measurement. 2019;145:702–712. doi: 10.1016/j.measurement.2019.05.027.

16. Lu HC, Loh EW, Huang SC. The classification of mammogram using convolutional neural network with specific image preprocessing for breast cancer detection. In: 2019 2nd International Conference on Artificial Intelligence and Big Data (ICAIBD). IEEE; 2019. p. 9–12.

17. Kwon O, et al. Automatic diagnosis for cysts and tumors of both jaws on panoramic radiographs using a deep convolution neural network. Dentomaxillofac. Radiol. 2020;49:20200185. doi: 10.1259/dmfr.20200185.

18. Poedjiastoeti W, Suebnukarn S. Application of convolutional neural network in the diagnosis of jaw tumors. Healthc. Inform. Res. 2018;24:236–241. doi: 10.4258/hir.2018.24.3.236.

19. Reza AM. Realization of the contrast limited adaptive histogram equalization (CLAHE) for real-time image enhancement. J. VLSI Signal Process. Syst. Signal Image Video Technol. 2004;38:35–44. doi: 10.1023/B:VLSI.0000028532.53893.82.

20. Hassan R, Kasim S, Jafery WWC, Shah ZA. Image enhancement technique at different distance for iris recognition. Int. J. Adv. Sci. Eng. Inf. Technol. 2017;7:1510. doi: 10.18517/ijaseit.7.4-2.3392.

21. Nishiyama M, et al. Performance of deep learning models constructed using panoramic radiographs from two hospitals to diagnose fractures of the mandibular condyle. Dentomaxillofac. Radiol. 2021;50:20200611. doi: 10.1259/dmfr.20200611.

22. Saiz FA, Barandiaran I. COVID-19 detection in chest X-ray images using a deep learning approach. Int. J. Interact. Multim. Artif. Intell. 2020;6:1–4.

23. Al-Antari MA, Al-Masni MA, Choi M-T, Han S-M, Kim T-S. A fully integrated computer-aided diagnosis system for digital X-ray mammograms via deep learning detection, segmentation, and classification. Int. J. Med. Inform. 2018;117:44–54. doi: 10.1016/j.ijmedinf.2018.06.003.

24. Redmon J, Farhadi A. YOLOv3: An incremental improvement. arXiv preprint. 2018. http://arxiv.org/abs/1804.02767.

25. Everingham M, Van Gool L, Williams CK, Winn J, Zisserman A. The pascal visual object classes (VOC) challenge. Int. J. Comput. Vision. 2010;88:303–338. doi: 10.1007/s11263-009-0275-4.

26. Lee JH, Han SS, Kim YH, Lee C, Kim I. Application of a fully deep convolutional neural network to the automation of tooth segmentation on panoramic radiographs. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2020;129:635–642. doi: 10.1016/j.oooo.2019.11.007.

27. Hung K, Montalvao C, Tanaka R, Kawai T, Bornstein MM. The use and performance of artificial intelligence applications in dental and maxillofacial radiology: A systematic review. Dentomaxillofac. Radiol. 2020;49:20190107. doi: 10.1259/dmfr.20190107.

28. Khanagar SB, et al. Developments, application, and performance of artificial intelligence in dentistry—A systematic review. J. Dent. Sci. 2021;16:508–522. doi: 10.1016/j.jds.2020.06.019.

29. Ariji Y, et al. Automatic detection and classification of radiolucent lesions in the mandible on panoramic radiographs using a deep learning object detection technique. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2019;128:424–430. doi: 10.1016/j.oooo.2019.05.014.

30. Lee A, et al. Deep learning neural networks to differentiate Stafne’s bone cavity from pathological radiolucent lesions of the mandible in heterogeneous panoramic radiography. PLoS ONE. 2021;16:e0254997. doi: 10.1371/journal.pone.0254997.

31. Pons G, Martí R, Ganau S, Sentís M, Martí J. Computerized detection of breast lesions using deformable part models in ultrasound images. Ultrasound Med. Biol. 2014;40:2252–2264. doi: 10.1016/j.ultrasmedbio.2014.03.005.

32. Zhang M, et al. Deep-learning detection of cancer metastases to the brain on MRI. J. Magn. Reson. Imaging. 2020;52:1227–1236. doi: 10.1002/jmri.27129.

33. Tolba MF. Deep learning in breast cancer detection and classification. In: Proceedings of the International Conference on Artificial Intelligence and Computer Vision (AICV2020). Vol. 1153. Springer Nature; 2020. p. 322.

34. Liu C, Hu SC, Wang C, Lafata K, Yin FF. Automatic detection of pulmonary nodules on CT images with YOLOv3: Development and evaluation using simulated and patient data. Quant. Imaging Med. Surg. 2020;10:1917–1929. doi: 10.21037/qims-19-883.

35. Yang H, et al. Deep learning for automated detection of cyst and tumors of the jaw in panoramic radiographs. J. Clin. Med. 2020;9:1839. doi: 10.3390/jcm9061839.

36. Son DM, Yoon YA, Kwon HJ, An CH, Lee SH. Automatic detection of mandibular fractures in panoramic radiographs using deep learning. Diagnostics. 2021;11:933. doi: 10.3390/diagnostics11060933.

37. Hiraiwa T, et al. A deep-learning artificial intelligence system for assessment of root morphology of the mandibular first molar on panoramic radiography. Dentomaxillofac. Radiol. 2019;48:20180218. doi: 10.1259/dmfr.20180218.

38. Tuzoff DV, et al. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofac. Radiol. 2019;48:20180051. doi: 10.1259/dmfr.20180051.

39. Scholl RJ, Kellett HM, Neumann DP, Lurie AG. Cysts and cystic lesions of the mandible: Clinical and radiologic-histopathologic review. Radiographics. 1999;19:1107–1124. doi: 10.1148/radiographics.19.5.g99se021107.

40. Kim DW, Jang HY, Kim KW, Shin Y, Park SH. Design characteristics of studies reporting the performance of artificial intelligence algorithms for diagnostic analysis of medical images: Results from recently published papers. Korean J. Radiol. 2019;20:405–410. doi: 10.3348/kjr.2019.0025.

41. Ran AR, et al. Detection of glaucomatous optic neuropathy with spectral-domain optical coherence tomography: A retrospective training and validation deep-learning analysis. Lancet Digit. Health. 2019;1:e172–e182. doi: 10.1016/S2589-7500(19)30085-8.

42. Rahman T, et al. Exploring the effect of image enhancement techniques on COVID-19 detection using chest X-ray images. Comput. Biol. Med. 2021;132:104319. doi: 10.1016/j.compbiomed.2021.104319.
