Abstract

This study is informed by two research gaps. First, the Machine Learning (ML) techniques of Artificial Intelligence (AI) have the potential to help separate information from misinformation, but this capability has yet to be empirically verified in the context of COVID-19. Second, although older adults can be particularly susceptible to the virus as well as to its online infodemic, their information processing behaviour amid the pandemic remains poorly understood. This study therefore explores how ML techniques (Study 1) and humans, particularly older adults (Study 2), process the online infodemic regarding COVID-19 prevention and cure. Study 1 employed ML techniques to classify information and misinformation, achieving a classification accuracy of 86.7% with the Decision Tree classifier and 86.67% with the Convolutional Neural Network model. Study 2 then investigated older adults' information processing behaviour during the COVID-19 infodemic, using some of the posts from Study 1. Twenty older adults were interviewed. They were more willing to trust traditional media than new media, and they were often left confused about the veracity of online content related to COVID-19 prevention and cure. Overall, the paper breaks new ground by highlighting how humans' information processing differs from how algorithms operate. It offers fresh insights into how older adults, a vulnerable demographic segment, interact with online information and misinformation during a pandemic. Methodologically, the paper brings together two very disparate paradigms, ML techniques and interview data analyzed using thematic analysis and concepts drawn from grounded theory, to enrich the scholarly understanding of human interaction with cutting-edge technologies.

Keywords: AI, Machine learning techniques, COVID-19 pandemic, Older adult, Interview, Information-misinformation

1. Introduction

When the internet was introduced to daily life, it was meant to offer immensely diverse knowledge and information (Ratchford et al., 2001). However, the internet has also fostered the growth of ignorance in various forms and guises, labelled using terms such as fake news, disinformation and misinformation. This study specifically uses the term ‘misinformation’. Access to the internet is now often access to resources that reinforce biases, ignorance, prejudgments and absurdity. Parallel to a right to information, some researchers argue that there is a right to ignorance (Froehlich, 2017).

Meanwhile, the COVID-19 pandemic has exposed several difficulties with the present global health care system. A societal concern for healthcare organizations and the World Health Organization (WHO) has been the spread of online misinformation that can exacerbate the impact of the pandemic (Ali, 2020). Almost 90% of Internet users seek online health information as one of the first tasks after experiencing a health concern (Chua & Banerjee, 2017). Therefore, for COVID-19, about which little a priori information and knowledge exist, individuals are likely to turn to online sources.

However, when searching for such information on the internet and social media, one is faced with an avalanche of information referred to as an ‘infodemic’, a mixture of facts and hoaxes that are difficult to separate from one another (WHO, 2020a). If a hoax related to COVID-19 prevention and cure is mistaken for a fact, there could be serious ramifications for people's health and well-being. Understandably, healthcare organizations and public health authorities are keen to ensure that people are not deceived by COVID-19-related misinformation circulating online. This is reflected in their propensity to publish misinformation-exposing posts on their social media channels (Raamkumar et al., 2020).

Social media, also known as online social networks (OSNs), have now emerged as contemporary ways to reach the consumer market. Artificial Intelligence (AI), traditionally referring to an artificial creation of human-like intelligence that can learn, reason, plan, perceive, or process natural language (Russell & Norvig, 2009), is closely associated with social media. It is “an area of computer science that aims to decipher data from the natural world often using cognitive technologies designed to understand and complete tasks that humans have taken on in the past” (Ball, 2018, para. 4). The adoption of AI, a cutting-edge technology, has been propelled to an unprecedented level in the wake of the pandemic. With regard to health, AI-enabled mobile applications are now widely used for infection detection and contact tracing (Fong et al., 2020). AI's ML techniques can also play a crucial role in tackling the infodemic: research has shown that ML techniques can help separate information from misinformation (Katsaros et al., 2019; Kinsora et al., 2017; Shu et al., 2017; Tacchini et al., 2017). However, despite the hype and enthusiasm around AI and social media, there is still a lack of understanding of how consumers interact and engage with these technologies (Ameen et al., 2020; Capatina et al., 2020; Rai, 2020; Wesche & Sonderegger, 2019). The extent to which algorithms can help detect misinformation amid information related to COVID-19 is therefore worth investigating.

Older adults constitute a consumer demographic group that is particularly susceptible to COVID-19 (WHO, 2020b). Among older adults, the disease causes pneumonia and symptoms such as fever, cough and shortness of breath (Adler, 2020), and older patients usually place the greatest pressure on healthcare systems (WHO, 2020b). Moreover, ceteris paribus, older adults can also be susceptible to the ‘infodemic’. They are less confident than younger individuals in tackling the challenges of the online setting (Xie et al., 2021). Hence, older adults are more willing to trust the traditional media than the content that AI curates for them on social media (Media Insight Project, 2018). Still, they often end up becoming victims of online misinformation (Guess et al., 2019; Seo et al., 2021).

To protect this segment of the population from misinformation about COVID-19 prevention and cure, health care organizations require a systematic understanding not only of the ‘infodemic’, but also of how older adults respond to it. The literature has shed little light on either issue. To fill this gap, the aim of this study is to explore and understand how AI's ML techniques (Study 1) and older adults (Study 2) process the infodemic regarding COVID-19 prevention and cure.

With this overarching research aim, the study has two objectives. First, it investigates the extent to which algorithms can distinguish between information and misinformation related to COVID-19 prevention and cure (Study 1). For this purpose, a supervised ML framework was developed to classify facts and hoaxes.

Second, the study investigates older adults’ information processing behaviour in the face of the COVID-19 infodemic (Study 2). Informed by the results of Study 1 along with the theoretical lenses of misinformation, information processing and trust, 20 older adults were interviewed to understand how they had been coping with the infodemic associated with COVID-19 prevention and cure in their daily lives.

This study is important and timely for several reasons. First, the WHO declared that, besides finding preventions and cures for the pandemic, it was also concerned about the online infodemic. By addressing the infodemic problem from both computational and behavioural perspectives, the study represents a timely endeavour in the wake of the COVID-19 outbreak. Second, the study introduces a machine learning framework to classify information and misinformation related to COVID-19 prevention and cure. As will be shown later, the classification performance was generally promising. Third, the study offers fresh insights into how older adults, a vulnerable consumer group, interact with information and misinformation during a pandemic. The findings can help health organizations better reach this vulnerable group when creating awareness.

The rest of this study proceeds as follows. Section 2 reviews the literature on AI, misinformation (particularly healthcare misinformation), older adults’ ICT adoption, and trust. Section 3 describes the research methods for both Study 1 and Study 2. Sections 4 and 5 present the findings of Study 1 and Study 2, respectively. Section 6 discusses the findings, and Section 7 concludes the study.

2. Literature review

2.1. AI and misinformation

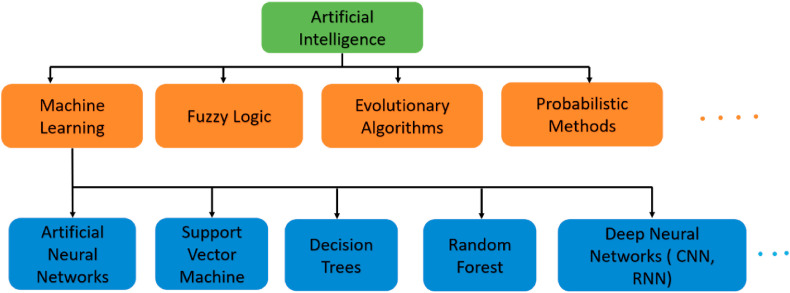

AI is increasingly becoming the veiled decision-maker of the present era (Dharaiya, 2020; Fong et al., 2020). Nonetheless, defining AI is problematic, as researchers apply the term according to the situation and context in question (e.g., McCarthy, 2007; Minsky, 1968; Hernández-Orallo, 2017). One problem in defining and understanding the nature of AI lies in its associated paradigms, or components of intelligence, which include Machine Learning, Deep Learning and Neural Networks (Wooldridge, 2018). Fig. 1 illustrates some of these component paradigms and their relationships under the umbrella term of AI.

Fig. 1.

AI Paradigms (not exhaustive).

In this study, AI's definition includes the use of machines to scan large numbers of internet and OSN-related web pages quickly and efficiently. Human beings cannot complete these activities as rapidly and effectively as machines, because the machines are algorithmically trained for them, which expedites the tasks. Research has also found that AI is a key component of the popular OSNs that people use daily (Hernández-Orallo, 2017; Russell & Norvig, 2009).

Many of AI's most impressive capabilities are powered by machine learning, a subset of AI that enables trained machine systems to make accurate predictions based on large sets of data (Kaliyar, 2018; Tacchini et al., 2017). There is evidence that machine learning can help address the problem of online misinformation (Katsaros et al., 2019; Kinsora et al., 2017; Wooldridge, 2018).

The proliferation of the internet and OSNs has transformed cyberspace into a storehouse of online falsehood, which is often described using a variety of terms such as fake news, disinformation and misinformation. According to the UK Parliament (2018), the term fake news is ‘bandied around’ without any agreed definition. It also has a political flavour (Vosoughi et al., 2018) and is thus avoided in the rest of this study. False online information is called disinformation when shared deliberately but misinformation when propagated inadvertently (Pal & Banerjee, 2019). The lines between the two are often blurred, as one seldom has insight into people's motivations at the point when they share false information. Therefore, this study uses ‘misinformation’ as an umbrella term for false information swirling online.

Misinformation accelerates propaganda, creates anxiety, induces fear, and sways public opinion, thereby having adverse societal impacts (Bradshaw & Howard, 2018; Subramanian, 2017). A particularly worrying trait of misinformation is that it spreads “farther, faster, deeper, and more broadly” than information (Vosoughi et al., 2018, p. 1146). Moreover, humans are seldom able to detect misinformation (Dunbar et al., 2017; Levine, 2014). Misinformation has been shown to be detrimental in domains ranging from the stock market (Bollen et al., 2011) and natural disasters (Gupta et al., 2013) to terror attacks (Starbird et al., 2014), politics (Berinsky, 2017) and healthcare (Wang et al., 2019).

Studying healthcare misinformation related to disease prevention and cure is particularly necessary for COVID-19, about which little prior information and knowledge exist. Owing to online channels, people now have free access to abundant, but often questionable, healthcare information (Adams, 2010). Virtual communities draw on this information repository regularly (Frost & Massagli, 2008; Temkar, 2015). For healthcare organizations, it is disconcerting that patients, as well as other vulnerable people, may act on online messages long before consulting medical professionals (van Uden-Kraan et al., 2010). When people turn to social media for knowledge and information instead of seeking professional advice, this can not only impair their healthcare decision-making but also erode society's willingness to approach health authorities in general (Hou & Shim, 2010; van Uden-Kraan et al., 2010). Therefore, in recent years, scholars have been examining the management of health-related misinformation with a renewed sense of urgency (for a review, see Wang et al., 2019). Joining this academic discourse, the current study specifically focuses on how AI can be used to separate information from misinformation about COVID-19 prevention and cure.

2.2. Tackling healthcare misinformation during crises

Healthcare misinformation is often fuelled during crisis situations such as disease outbreaks. In the past, the world has witnessed outbreaks of Ebola and SARS, both of which gave rise to a fair share of healthcare misinformation (Fung et al., 2016; Ma, 2008). However, few crises in recent history approach the magnitude of the COVID-19 pandemic, with so many countries affected simultaneously within a short period of time. The infodemic engendered by a pandemic of such scale makes it difficult for people to find reliable guidance about COVID-19 prevention and cure (WHO, 2020a).

Moreover, as most of the globe has been enduring lockdown, people's likelihood of being exposed to, and in turn overwhelmed by, the infodemic is high. Worryingly, by early 2020, posts from authoritative sources such as the WHO and the US Centers for Disease Control and Prevention (CDC) had cumulatively achieved only some hundred thousand engagements, considerably eclipsed by hoax and conspiracy theory sites, which amassed over 52 million (Mian & Khan, 2020). These figures suggest that healthcare organizations have their work cut out to protect the public from misinformation about COVID-19 prevention and cure.

Broadly speaking, healthcare organizations can tackle the problem using a two-step process. The first step involves detection, which seeks to identify misinformation from the pool of online content. On the scholarly front, a common technique in this vein is to employ AI-powered machine learning algorithms to classify online messages as either information or misinformation. Traditionally, these algorithms take as their input an array of features based on common linguistic properties of authentic and fictitious information (Chua & Banerjee, 2016; Katsaros et al., 2019; Kinsora et al., 2017; Shu et al., 2017; Tacchini et al., 2017). However, as content style is ever evolving, a predetermined set of linguistic features may not always help in the detection. Therefore, more sophisticated algorithms leverage deep learning that automatically learns nuances in patterns between information and misinformation without an a priori feature set (Kaliyar, 2018). In addition, there are platforms such as Hoaxy that monitor misinformation using network analysis and visualisation (Shao et al., 2018). Furthermore, independent third-party platforms such as Snopes.com also help detect misinformation.
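To make “an array of features based on common linguistic properties” concrete, the following is a minimal sketch of a hand-crafted feature extractor of the kind such classifiers consume. The cue lists and feature names are hypothetical illustrations chosen for this example; they are not the features used in the cited studies or in Study 1.

```python
import re

# Hypothetical cue lists, for illustration only; not a validated lexicon.
HEDGES = {"may", "might", "could", "reportedly", "allegedly"}
CERTAINTY = {"cure", "cures", "guaranteed", "proven", "definitely"}


def linguistic_features(text: str) -> dict[str, float]:
    """Map a post to a small, fixed vector of hand-crafted linguistic cues."""
    tokens = re.findall(r"[A-Za-z']+", text)
    lowered = [t.lower() for t in tokens]
    n = max(len(tokens), 1)  # avoid division by zero on empty posts
    return {
        "word_count": float(len(tokens)),
        "exclamations": float(text.count("!")),
        # share of multi-letter words written entirely in capitals
        "all_caps_ratio": sum(t.isupper() and len(t) > 1 for t in tokens) / n,
        "hedge_ratio": sum(t in HEDGES for t in lowered) / n,
        "certainty_ratio": sum(t in CERTAINTY for t in lowered) / n,
        "has_number": float(bool(re.search(r"\d", text))),
    }


print(linguistic_features("Garlic CURES the virus!! Proven by doctors"))
```

Because the feature set is fixed in advance, a new writing style that none of these cues capture goes unnoticed, which is the limitation that motivates the deep learning approaches mentioned above.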

Once misinformation is detected, the second step involves correction that seeks to expose and refute the false claim. Healthcare organizations can develop corrective messages to combat misinformation (Pal et al., 2020; Tanaka et al., 2013). WHO, for example, maintains a repository of corrective messages debunking COVID-19 misinformation. Public Health England has also been posting misinformation-correcting messages on its social media channels (Raamkumar et al., 2020).

Despite such efforts from healthcare organizations, the literature is silent on several pertinent questions. When people come across dubious information, how do they judge its veracity? How do they process corrective messages? How do they engage with the social media posts of health organizations, if at all, and why? To what extent do healthcare organizations’ efforts to create awareness about misinformation actually work? This study attempts to answer these questions in the context of COVID-19 by focusing on older adults, an under-investigated yet important segment of the population.

2.3. Older adults’ ICT adoption and online information behavior

Older adults are an extremely diverse group varying considerably in their abilities, skills and experiences, which makes it particularly challenging to generalize their needs and life conditions (Östlund et al., 2015). Hence, they are often categorized (Whitford, 1998) as pre-seniors (aged 50–64 years), young-old (aged 65–74 years), and old-old (aged 75–85 years).
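The age categories above amount to a simple banding rule. A minimal sketch is given below; the function name and the fallback label are our own. Note that the bands as stated end at 85, so an older participant (for example, the 87-year-old participant D1 in Table 1) needs an explicit rule, which here is simply flagged rather than assigned to a band.

```python
def age_band(age: int) -> str:
    """Map an age in years to the older-adult bands attributed to Whitford (1998)."""
    if 50 <= age <= 64:
        return "pre-senior"
    if 65 <= age <= 74:
        return "young-old"
    if 75 <= age <= 85:
        return "old-old"
    # Ages below 50 or above 85 fall outside the bands as stated.
    return "outside bands"


print([age_band(a) for a in (50, 67, 80, 87)])
```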

For older adults, ICT adoption is a double-edged sword. On the one hand, the use of technology promotes independent living (Chumbler et al., 2004). On the other hand, it fosters a digital divide, that is, a gap in the adoption of digital information and communication tools between those who are tech-savvy and those who are tech-apprehensive (Choudrie & Vyas, 2014; Choudrie et al., 2020). The literature has consistently documented older adults as being relatively less confident in technology use (Magsamen-Conrad et al., 2015; Wagner et al., 2010; Yoon et al., 2020). Even when they are willing to use technology, they are thwarted by previous experiences of discomfort, a perceived lack of support, and the perceived low usefulness of technology. These factors ultimately inhibit their technology adoption (Peine et al., 2015; Selwyn et al., 2003) and can leave them digitally and socially excluded, lonely and isolated.

In the wake of the COVID-19 lockdown, as face-to-face communication shrank abruptly, older adults were reportedly forced to rely on online channels for information seeking, even though doing so lay outside their comfort zone (Xie et al., 2021). Amid such uncertainty, investigating how they decide what to trust is crucial to understanding their ability to discern information from misinformation while navigating the infodemic.

2.4. Trust and older adults

There exist various definitions of trust. For instance, trust has been conceptualized as an institutional construct by sociologists or economists, a personal trait by personality theorists, and a willingness to be vulnerable by social psychologists (Beldad et al., 2010; Lewicki & Bunker, 1996). According to Flavián et al. (2006), trust consists of three user perceptions, namely, honesty, benevolence, and competence of the trust target. As a cognitive (thinking) construct, trust can be split into two different types (Anderson & Narus, 1990; Doney & Cannon, 1997; Panteli & Sockalingam, 2005). The first type is dispositional trust which is mainly motivated by faith in humanity (McKnight et al., 1998). A second type of trust is conditional trust that is usually found in the initial period of a relationship when there are no cues for distrust (Panteli & Sockalingam, 2005).

Despite the lack of consensus in definitions, scholars unanimously highlight the necessity to study trust under situations of uncertainty (Beldad et al., 2010; Corritore et al., 2003; Racherla et al., 2012). Trust issues are critical for web-based systems including e-commerce and online shopping (Gefen et al., 2003; Golbeck & Hendler, 2006; Stewart, 2006). More pertinently, research has started to shed light on trust in the realm of health-related online information (Chua et al., 2016; Sillence et al., 2004, 2007). However, little is known about how trust-related decision-making unfolds, especially from the perspective of older adults. Clearly, there is a need for qualitative research to offer a deeper understanding of this phenomenon.

In this vein, two competing possibilities present themselves. First, older adults might be overly suspicious about the veracity of all online content and thus choose to ignore it altogether. Instead, they could rely on advice from healthcare organizations, governments and the traditional media, and thereby avoid being susceptible to the infodemic (Magsamen-Conrad et al., 2015; Seo et al., 2021). Second, they could take all online content at face value, thereby becoming vulnerable to the infodemic (McKnight et al., 2002; Riegelsberger et al., 2005).

To capture the continuum between these two extremes, this study defines trust as older adults’ willingness to treat the infodemic with a sense of relative security that it contains information but little misinformation. Older adults are likely to be more comfortable with, and hence pay more attention to, traditional media vis-à-vis new media (Magsamen-Conrad et al., 2015; Seo et al., 2021; Xie et al., 2021). Yet the pandemic has made it difficult for the public to resist the temptation to go online and search for information on COVID-19 prevention and cure. Therefore, how older adults have been coping with the COVID-19 infodemic is worth exploring.

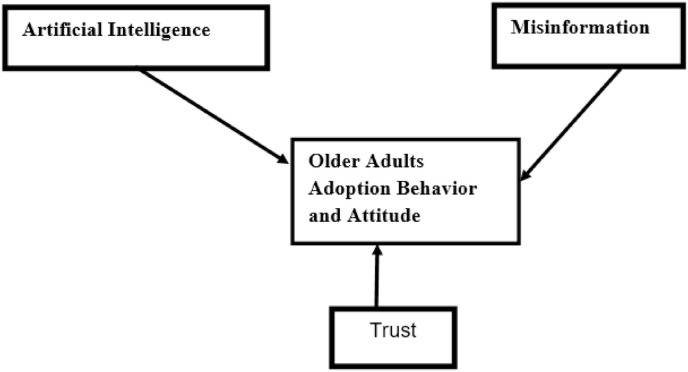

2.5. Outcome of the literature review

The outcome of the literature review is two-fold. First, even though AI has the potential to help separate information from misinformation, this potential has yet to be empirically verified in the context of COVID-19. Second, while older adults can be particularly susceptible to the virus as well as its infodemic, their information processing behaviour amid the pandemic remains poorly understood.

Fig. 2 illustrates the relationships among the various themes of this study, which inform the qualitative Study 2.

Fig. 2.

Linking the themes of this study.

To address these research gaps, a mixed-methods study was conducted. Study 1 constitutes the quantitative component, while Study 2 is qualitative.

3. Research methodology

3.1. Study 1

Data Collection. For this quantitative study, true and false claims related to COVID-19 prevention and cure were collected between February and April 2020. These claims came primarily from three sources: fact-checking websites, news portals, and the websites of health organizations. A variety of fact-checking websites, such as Factly, FactCheck, HealthFeedback, SMHoaxSlayer and Snopes, were consulted. News portals such as The Guardian, The New York Times, The Huffington Post, BBC News, Washington Times and The Independent were also leveraged. For further data triangulation, data were also drawn from the websites of public health bodies such as the Centers for Disease Control and Prevention (CDC), the National Health Service (NHS), the State Administration of Traditional Chinese Medicine, and the WHO.

Because the phenomenon under investigation was contemporary, no labelled ground-truth dataset of true and false claims existed, and the data were therefore collated manually. While the sampling was non-random and self-selected, data collection was confined to claims that had been verified as either true or false by one or more of the authoritative sources listed above. Claims whose veracity was still under investigation at the point of data collection were excluded. Thereafter, a standard data cleaning and pre-processing procedure was used to remove punctuation, special characters and extraneous white space. Standard stop words (excluding negation words) were also removed manually.
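The cleaning steps described above can be sketched as follows. This is an automated approximation of what the study did partly by hand; the small stop-word list is a placeholder assumption (the study's exact list is not given), and lowercasing is our own addition so that stop words match regardless of case. Negation words are subtracted from the stop-word list because dropping “not” can invert a claim.

```python
import re

# Placeholder stop-word list (an assumption); the study's exact list is not given.
BASE_STOP_WORDS = {"a", "an", "the", "is", "are", "of", "to", "and", "in", "it",
                   "by", "for", "no", "not", "never", "nor"}
# Negation words are retained because they can flip the meaning of a claim.
NEGATIONS = {"no", "not", "never", "nor"}
STOP_WORDS = BASE_STOP_WORDS - NEGATIONS


def preprocess(text: str) -> list[str]:
    """Remove punctuation, special characters and extra white space, then stop words."""
    text = re.sub(r"[^A-Za-z0-9\s]", " ", text)  # punctuation and special characters
    text = re.sub(r"\s+", " ", text).strip()     # collapse runs of white space
    tokens = text.lower().split()                # lowercasing is our assumption
    return [t for t in tokens if t not in STOP_WORDS]


print(preprocess("Drinking hot water does NOT cure COVID-19!!"))
```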

The manual process eventually yielded a sample of 143 claims: 61 true claims (information) and 82 false claims (misinformation). For each entry, the dataset includes not only the claim itself but also the successive investigations carried out by the authoritative sources.
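Because the two classes are imbalanced, a useful reference point (our addition, not reported in the study) is the accuracy of a trivial classifier that labels every claim as misinformation:

$$
\text{majority-class accuracy} = \frac{82}{143} \approx 57.3\%
$$

The testing accuracies reported in Table 2 can be read against this baseline.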

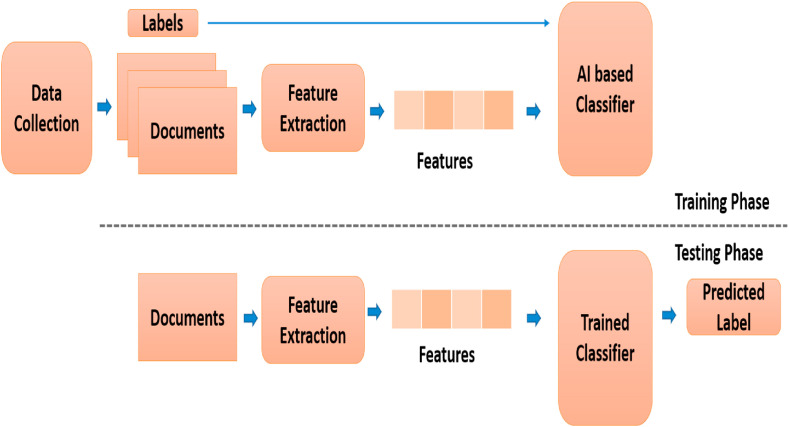

Data Analysis. Fig. 3 depicts the AI-enabled framework developed for classifying COVID-19 information and misinformation. After the manual data collection, five fields were finalized to develop and train the model: (1) Claim (the textual claim), (2) Text (a detailed explanation of the claim in the article), (3) Investigation (text describing the investigation of the claim by a fact-checking site or another authoritative body), (4) Claim Supporting Agency Presence (whether the authoritative body that carried out the investigation is explicitly stated), and (5) Label (the ground truth, either true or false).

Fig. 3.

Data processing and classification.
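One way to represent a row with the five fields listed above is sketched below. The class and field names are hypothetical, and concatenating the three text fields into a single document is our assumption about how they might be fed to a text classifier; the study does not specify how the fields were combined. The label is kept separate from the inputs so that it cannot leak into the features.

```python
from dataclasses import dataclass


@dataclass
class ClaimRecord:
    """One dataset row; field names are hypothetical labels for the five fields."""
    claim: str            # (1) the textual claim
    text: str             # (2) detailed explanation of the claim in the article
    investigation: str    # (3) investigation text from a fact-checker or authority
    agency_present: bool  # (4) is the investigating body explicitly stated?
    label: bool           # (5) ground truth: True = information, False = misinformation


def to_model_input(r: ClaimRecord) -> tuple[str, int]:
    """Join the three text fields (an assumption) and append the binary agency flag."""
    document = " ".join([r.claim, r.text, r.investigation])
    return document, int(r.agency_present)


row = ClaimRecord("5G spreads the virus", "Posts claim ...", "No evidence found ...",
                  agency_present=True, label=False)
features, target = to_model_input(row), row.label
```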

Thereafter, the 143 data points were divided such that 90% were used for classifier training and the remaining 10% for model testing. The study used four traditional machine learning algorithms, namely Support Vector Machine (SVM), Decision Tree (DT), Random Forest (RF) and Stochastic Gradient Descent (SGD), along with two deep learning models: Long Short-Term Memory (LSTM) and Convolutional Neural Network (CNN). Classification performance was measured using precision, recall, F1-measure, sensitivity, specificity and accuracy (Gadekallu et al., 2020; Roy et al., 2020; Schültze et al., 2008).
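With only 143 claims, the rounding of a 90/10 split matters. The sketch below rounds the test size up, which is the convention scikit-learn's `train_test_split` uses for a fractional `test_size`; the seed and the unstratified shuffle are our assumptions. This yields 128 training and 15 test claims, which is consistent with the accuracies in Table 2 (for example, 86.67% = 13/15 on the test set and 89.06% = 114/128 in training), although the study does not state these counts.

```python
import math
import random


def split_90_10(items, test_fraction=0.1, seed=42):
    """Shuffle and hold out ceil(test_fraction * n) items for testing.

    Rounding the test size up mirrors scikit-learn's convention; the seed and the
    unstratified shuffle are assumptions made for this sketch.
    """
    items = list(items)
    random.Random(seed).shuffle(items)
    n_test = math.ceil(test_fraction * len(items))
    return items[n_test:], items[:n_test]  # (train, test)


train, test = split_90_10(range(143))
print(len(train), len(test))  # 128 15
```

A practical consequence is that each misclassified test claim moves testing accuracy by 1/15, or about 6.7 percentage points.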

3.2. Study 2

Data Collection. Following Study 1, the purpose of Study 2 was to acquire a richer and deeper understanding of online information and misinformation from the experiences of older adults. For this purpose, a qualitative approach was used that employed the data collection technique of in-depth, semi-structured interviews.

Specifically, 20 older adults from various sectors and cultures were interviewed (see Table 1). A non-random sampling approach combining convenience, purposive and snowball sampling was used. Data were collected between June 1 and July 15, 2020. Because of the lockdown, interviews were conducted on Zoom and Microsoft Teams, which participants could easily access, instead of face to face.

Table 1.

Details of the participants in Study 2.

| No | Age (years) | Gender | Education | Occupation | Years of internet use | Code |
|---|---|---|---|---|---|---|
| 1 | 50 | Male | Postgraduate | Academic | 25 | A1 |
| 2 | 53 | Male | Postgraduate | Academic | 25 | B1 |
| 3 | 74 | Male | Postgraduate | Retired, self-employed | 25 | C1 |
| 4 | 87 | Female | Vocational skills certificate | Retired, self-employed music teacher | 12 | D1 |
| 5 | 52 | Female | A levels, B-tech | General Practitioner's Practice Manager | 25 | E1 |
| 6 | 52 | Female | Postgraduate | Academic | 25 | F1 |
| 7 | 54 | Male | Postgraduate | Academic | 25 | G1 |
| 8 | 60 | Male | Professional qualification | Accountant | 25 | H1 |
| 9 | 64 | Female | College for ballet dance training | Manager in IT department | 20 | J1 |
| 10 | 53 | Female | Undergraduate degree | Macmillan Nurse | 21 | K1 |
| 11 | 55 | Male | Postgraduate | Academic | 30 | L1 |
| 12 | 65 | Male | Undergraduate degree, professional qualification | Retired (previously accountant) | 25 | M1 |
| 13 | 56 | Female | Postgraduate | Project Manager | 25 | N1 |
| 14 | 57 | Male | Postgraduate | IT Project Manager | 19 | P1 |
| 15 | 72 | Female | Undergraduate | Retired (previously primary school classroom assistant) | 8 | Q1 |
| 16 | 63 | Female | Postgraduate-Practice Manager | Complementary therapist | 25 | R1 |
| 17 | 51 | Female | Professional qualification | Accountant | 25 | S1 |
| 18 | 60 | Female | Postgraduate | Business Analyst | 25 | T1 |
| 19 | 56 | Female | Undergraduate, Postgraduate diplomas | Semi-retired | 25 | U1 |
| 20 | 67 | Male | Postgraduate | Academic | 30 | V1 |

For participant recruitment, snowball sampling was used: contact was made with non-government organizations such as Age UK Hertfordshire, public-sector departments for elderly services, and other such organizations. The selection criteria were older adults aged 50 years and above with regular internet use experience. Participants were recruited through word of mouth and through invitations extended by the charity organization. The sample size was not known a priori; before data collection, it was estimated that between 12 and 40 participants would be needed (Saunders & Townsend, 2016). Interviews continued beyond 12 because additional participants provided supplementary and substantial perspectives, and to ensure the theoretical saturation of the empirical data.

Data Analysis. The open-ended interviews commenced after ethics clearance had been obtained. The questions were informed by theoretical constructs drawn from previous studies of trust and of older adults’ information behaviour amid crises. During the interview, participants were also shown items of information and misinformation identified in Study 1 and asked to comment on their accuracy, which allowed triangulation. They were also asked to explain how they decided whether to trust the relayed content.

Each interview lasted between 1 and 1.5 h and was recorded. The collection of data was based on the view that “[w]hat we call our data are really our own constructions of other people's constructions of what they and their compatriots are up to” (Geertz, 1973). The principal author conducted the interviews and, after analysing the transcripts, discussed the results with another member of the writing team to avoid bias. Secondary data (e.g., websites of various health care organizations and OSNs) and field notes were also used. Collecting these various data ensured triangulation and cross-checking (Orlikowski, 1993).

The analysis was conducted using a deductive approach, beginning with a preliminary examination of the data that assisted the coding procedure. The coding technique was based on the classic (or Glaserian) grounded theory methodology (Glaser & Strauss, 1967). The coding scheme was derived from the extant literature, primarily from conceptualizations of trust and of older adults’ information processing behaviour. This coding technique was chosen over others because it offered a robust and systematic instrument for coding that did not restrict the researcher to preconceived codes and categories, while offering a tangible method for building relationships between them.

Open coding commenced by identifying as many codes as possible. In many instances, concepts emerged that were not suggested by the literature; these were placed into newly created codes for further examination. Open codes were then grouped together to develop the study's core categories. This formed the stage of selective coding, in which several open codes were grouped into subcategories that were variants of each other, or dimensions and properties of a core category (Urquhart, 2012). This process identified the core categories, i.e., misinformation, trust, and older adults’ information processing behaviour, allowing us to scale up the analysis.

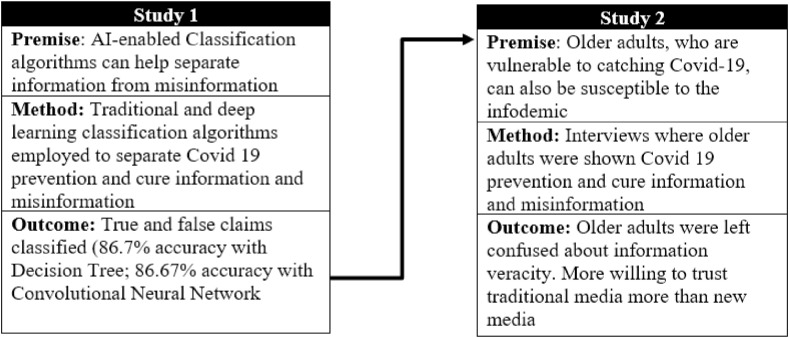

Next, following Walsham (1995), three levels of analysis were formed: ‘first-order data’, referring to the interviewees' constructions; ‘key ideas’, capturing the essential meaning extracted from each quotation; and ‘second-order concepts’, containing our own constructions based on our analysis and the extant literature. Finally, as we developed the study's chains of evidence, the analysis began revealing the relationships among the core categories. This entailed extracting representative vignettes from the empirical material to support the arguments. Fig. 4 shows the link between the two studies.

Fig. 4. The link between Study 1 and Study 2.

4. Findings of Study 1

This study sought to classify information and misinformation related to COVID-19 prevention and cure. Table 2 presents the classification performance. The Decision Tree (DT) and Random Forest (RF) classifiers achieved a testing accuracy of 86.7%, and the Convolutional Neural Network (CNN) model 86.67%, the highest among the algorithms tested. When classifying with the CNN, a strict threshold was applied to the SoftMax output to avoid false negatives, that is, to ensure that no misinformation was classified as true information. Because of this stringent threshold, some true posts were classified as misinformation, which is consistent with the CNN's 100% sensitivity and lower specificity (66.67%) in Table 2. This trade-off was accepted so that users would not be misled by the ML model. The performance is in line with previous applications of classification to comparable or smaller samples, where only limited data points are available for training (Barz & Denzler, 2020; Codella et al., 2015, pp. 118–126; Liu & An, 2020).
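The paper does not report the threshold value or how the two classes were encoded, so the Python sketch below only illustrates one way such a stringent SoftMax rule can work. It assumes a two-class output ordered as (true information, misinformation) and a hypothetical threshold of 0.9 (`true_threshold`, `TRUE_INFO` and `classify` are illustrative names): a post is accepted as true only when its SoftMax probability for that class clears the threshold, and everything else is flagged as misinformation.

```python
import math

# Assumed class order (not stated in the paper): index 0 = true information,
# index 1 = misinformation.
TRUE_INFO = 0


def softmax(logits):
    """Numerically stable SoftMax over a sequence of raw scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits, true_threshold=0.9):
    """Accept a post as true only when P(true) clears a strict threshold.

    true_threshold is a hypothetical value; setting it to 0.5 recovers the
    ordinary argmax rule for two classes. Raising it turns borderline 'true'
    predictions into misinformation flags, trading specificity for
    sensitivity (fewer false negatives on the misinformation class).
    """
    probs = softmax(logits)
    return "true" if probs[TRUE_INFO] >= true_threshold else "misinformation"


print(classify([2.0, 0.0]))                      # P(true) ~ 0.88 -> misinformation
print(classify([2.0, 0.0], true_threshold=0.5))  # plain argmax -> true
print(classify([4.0, 0.0]))                      # P(true) ~ 0.98 -> true
```

The first call shows the intended effect: a post that an argmax rule would accept as true (P(true) ≈ 0.88) is flagged under the stricter rule, which is how a threshold of this kind can push sensitivity up at the cost of specificity.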

Table 2. Performance (all values in %) in classifying information and misinformation.

| Algorithm | Training accuracy | Precision | Recall | F1 | Sensitivity | Specificity | Testing accuracy |
|---|---|---|---|---|---|---|---|
| SVM | 84.37 | 81.07 | 80 | 80.18 | 77.78 | 83.33 | 80 |
| DT | 94 | 86.66 | 86.66 | 86.66 | 88.89 | 83.33 | 86.7 |
| RF | 91 | 86.66 | 86.66 | 86.66 | 88.89 | 83.33 | 86.7 |
| SGD | 89.1 | 80 | 80 | 79.61 | 88.89 | 66.67 | 80 |
| LSTM | 77.34 | 73.33 | 73.33 | 73.33 | 77.77 | 66.66 | 73.33 |
| CNN | 89.06 | 89.1 | 86.7 | 86 | 100 | 66.67 | 86.67 |
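Table 2 does not report confusion matrices, but its percentages are arithmetically consistent with a test set of 15 posts (9 misinformation, 6 true) and with precision, recall and F1 averaged over the two classes weighted by class size. Both the split and the averaging scheme are our reconstruction, not something the paper states. The Python sketch below, resting on that assumption and treating misinformation as the positive class, shows how every column except training accuracy can be derived from four confusion-matrix counts. It also shows why weighted recall coincides with testing accuracy. `Confusion` and `metrics` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    """Confusion-matrix counts, treating misinformation as the positive class."""
    tp: int  # misinformation correctly flagged
    fn: int  # misinformation accepted as true
    tn: int  # true information correctly accepted
    fp: int  # true information wrongly flagged as misinformation


def _ratio(num: float, den: float) -> float:
    """Division that returns 0.0 instead of raising on an empty denominator."""
    return num / den if den else 0.0


def metrics(c: Confusion) -> dict:
    """Return Table 2-style metrics (in %), averaging per-class scores by support."""
    pos, neg = c.tp + c.fn, c.tn + c.fp
    n = pos + neg

    def weighted(pos_score: float, neg_score: float) -> float:
        return 100 * (pos * pos_score + neg * neg_score) / n

    sensitivity = _ratio(c.tp, pos)  # recall on misinformation
    specificity = _ratio(c.tn, neg)  # recall on true information
    return {
        "precision": weighted(_ratio(c.tp, c.tp + c.fp), _ratio(c.tn, c.tn + c.fn)),
        "recall": weighted(sensitivity, specificity),  # always equals accuracy
        "f1": weighted(_ratio(2 * c.tp, 2 * c.tp + c.fp + c.fn),
                       _ratio(2 * c.tn, 2 * c.tn + c.fn + c.fp)),
        "sensitivity": 100 * sensitivity,
        "specificity": 100 * specificity,
        "accuracy": 100 * _ratio(c.tp + c.tn, n),
    }


# Counts reconstructed (not reported) under the assumed 9/6 test split.
for name, counts in {"SVM": Confusion(7, 2, 5, 1),
                     "DT": Confusion(8, 1, 5, 1),
                     "CNN": Confusion(9, 0, 4, 2)}.items():
    print(name, {k: round(v, 2) for k, v in metrics(counts).items()})
```

Under these assumed counts, the printed values agree with the SVM, DT and CNN rows up to rounding (e.g., CNN precision 89.09 against the reported 89.1). This suggests how the CNN's stricter threshold, which converts two true posts into misinformation flags in this reconstruction, produces 100% sensitivity alongside 66.67% specificity.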

The dataset contained several claimed causes and cures of COVID-19. Common claimed causes included 5G, dairy products, and China waging biological warfare. Common claimed cures included drinking lukewarm water with lemon slices, gargling, eating root vegetables, and taking vitamins. There was also an emphasis on medicines such as hydroxychloroquine.

A clear difference between the true and the false claims was that the true posts were linked to authoritative sources (e.g., the WHO) and usually had some scientific backing. By contrast, the false claims could be linked neither to authoritative sources nor to scientific evidence; indeed, authoritative sources had to intervene to debunk them categorically with counterevidence. Among the OSNs, several of the misinformation claims circulated widely on Facebook but were relatively less conspicuous on Twitter.

5. Findings of Study 2

5.1. AI, ICT adoption, and trust

To triangulate the findings of Study 1, Study 2 explored how older adults deal with the infodemic regarding COVID-19 prevention and cure. Participants were asked how they had been handling the online COVID-19 infodemic and, during the interviews, were asked to view and comment on the true and false claims that had been classified in Study 1.

At this point, several disparities between algorithms and humans emerged. When participants viewed posts that were unfamiliar to them, they either identified them as false or said that, being unaware of them, they could not comment on their credibility. This applied even to content from authoritative websites such as that of the WHO.

Participants were shown the WHO's COVID-19 myth busters page,1 which contained several corrective messages debunking misinformation. When they viewed the page, they were largely unsure about its veracity, and many deemed it false. Upon further probing, they commented that it was too colorful and contained pictures, which they did not associate with an intergovernmental organization like the WHO.

The researcher remained silent while participants scrolled through the page and tried to judge its authenticity. Once they had finished, the researcher told them that the page was genuine. This surprised many participants: “This does not look like a page that a UN type of organization would have. I would have expected it to have fewer pictures and less content than it presently has.” (M1). “No, that cannot be. The things that they have there are all known, what is so new about the items from WHO?” said Q1.

Sources from other countries were mostly taken with a pinch of salt. For example, on viewing a page from China Daily,2 B1 indicated that the page did not look credible because its right-hand column contained the editor's picks. B1 also said that he was unlikely to read such a page, as he relied on classic news websites or their apps: “I would seek information from a third party; for example, I would look at BBC, Reuters, Guardian and such” (B1). A page containing misinformation from the New York Post,3 a well-known source, perplexed A1: “I did not expect this to be a false story because this is New York Post. It's well known” (A1).

To determine whether participants could identify true or false claims on OSNs/social media, a Facebook post was also shown.4 The initial reaction of most participants was that it was false, but as they probed further, they became increasingly uncertain.

These activities confirmed that older adults were not always sure of posts’ veracity. This caused much confusion, which in turn could lead participants to miss out on important information. By contrast, a trained algorithm applies the same learned decision rule to every post and is not subject to such confusion. Human participants, on the other hand, have diverse ways of identifying true and false posts, which may be shaped by their previous information processing behaviour. That is, if someone regularly visits a certain website and becomes familiar with it, they will judge its content according to their preconceptions.

We also found that, for human participants, website features such as spelling, images, advertisements, and pictures of famous individuals such as celebrities were pertinent in forming decisions. This is not the case with AI, whose results depend on the training it was given.

5.2. Classic versus innovative communication channels

Regarding how older adults processed information during the COVID-19 period, we found a reliance on classic communication channels such as radio, personal networks, their General Practitioner (GP), friends and family. Many participants found that the televised 5 o'clock evening bulletins offered the most up-to-date information on COVID-19 prevention and cure. They also said that they now missed these bulletins, which they felt had conveyed important information such as the lockdown measures in place, COVID-19 statistics and the pandemic's global impacts, and the number of deaths over the previous 24 h.

For recent, up-to-date information on COVID-19, many participants also suggested that the government should set up a central website dedicated to COVID-19 content. Such a website would focus only on COVID-19 issues and would not return as many results to go through as the central GOV.UK website. Many participants referred to GOV.UK, but because it is a central site for all government matters, it led to information overload. Participants felt that, if a dedicated COVID-19 website were implemented, it should serve as the first port of call for COVID-19 related matters.

The interviews also revealed that many participants viewed OSNs as a source of misinformation; consequently, they either had no OSN account or, if they did, screened the content. As one participant commented: “OSN leads to too much volume that makes it difficult to filter the right and wrong information. For me, emails, texts are the best ways. However, if texts and emails are from an unknown source, I will delete it” (V1). V1 also revealed that the only OSN he trusted was LinkedIn, because it is an OSN for professionals. V1 also used WhatsApp, mostly for professional purposes, to keep in touch with international academic partners, but also with friends and family in their countries of origin. Some participants felt that OSNs offered little value and in fact led to problems: “I was hacked in my Facebook account, and suffered a financial loss, so I do not see the value of the OSN,” said D1.

5.3. Regimes of control and regimes of work

From the interviews, a serendipitous finding emerged. During the early COVID-19 period, government lockdowns prevented individuals from attending their workplaces, so many people, including older adults, worked from home. This was the situation of many of the older adults in our sample (i.e., the young-old, pre-senior and old-old). This was a novel finding because older adults are generally expected to have retired and to pursue other interests, with the home a place of enjoyment and solace. However, in our study, except for the old-old participant who was teaching small children to play the keyboard, all the older adults were employed or were entrepreneurs; as such, an organizational perspective emerged.

This led us to consider the rules, operational regimes and regulations within organizations that pertain to regimes of work and control (Kallinikos & Hasselbladh, 2009). Regimes of control are “formal templates for structuring and monitoring the collective contributions of people in organizations, irrespective of the nature and particular character of that contribution” (Kallinikos & Hasselbladh, 2009, p. 269). By contrast, regimes of work are made up of “technological solutions, forms of knowledge, skill profiles, and administrative methods” (Kallinikos & Hasselbladh, 2009, p. 267).

In this sense, regimes of control relate to the diffusion of formal organizations and shape the criteria of relevance for work regimes. They are thus associated with the aims and priorities of particular groups (e.g., managers). Regimes of work, on the other hand, are more than the goals of a collective or an individual; they are the result of intentionally designed structures and task processes (Kallinikos & Hasselbladh, 2009). Hence, within the work environment, one acts according to one's training and in line with the particular work regime. Actions align with work routines and standard procedures, and behavioural aspects blend together in such a way that it becomes difficult to distinguish one from the other (Kallinikos, 2006).

In our study, all the older adults described following their usual work routines, and information about COVID-19 prevention and cure that arrived during work was not attended to immediately. Instead, participants mentioned setting such information aside and continuing with their routine: “I paid attention to other sources such as, the official letter from the Prime Minister and the booklet about COVID-19, but not information that was sent to me from OSNs” (N1).

We also found that, because individuals usually followed a schedule (generally work-based), information about COVID-19 was not attended to straight away, particularly if it came from unknown sources. Instead, it was attended to at particular times of the day, e.g., in the morning, in the afternoon, or in the evening as individuals were logging out of their workplace systems.

6. Discussion

The aim of this study was to explore and understand how AI's ML techniques (Study 1) and older adults (Study 2) process the infodemic regarding COVID-19 prevention and cure. To this end, a mixed methods design was adopted. This yielded several findings that merit discussion in light of the literature.

Firstly, this study shows that humans, particularly older adults (a consumer group that has not been researched extensively), cannot process information ‘algorithmically’ to separate hoaxes from facts. Building on previous computational research (Katsaros et al., 2019; Kinsora et al., 2017; Shu et al., 2017; Tacchini et al., 2017), Study 1 shows that information and misinformation related to COVID-19 prevention and cure can be classified with a reasonable level of accuracy. This promising result notwithstanding, older adults were often left confused about the veracity of such online content. This is consistent with the well-established finding in the literature that humans are not adept at detecting deception (Dunbar et al., 2017; Levine, 2014). Extending prior research, this study offers a possible reason for this phenomenon: unlike algorithms, humans do not make veracity decisions by examining the content alone. They are also influenced by their prior assumptions, predispositions and previous online experiences, as well as by a suite of heuristics related to website features. This could be why their perceptions of authenticity were not always in harmony with actual authenticity.

Secondly, the study demonstrates how older adults, a vulnerable population segment who lie at the wrong end of the digital divide, process information and misinformation during a pandemic. Information and misinformation about COVID-19 prevention and cure were transmitted through various channels. For instance, news websites offered pandemic updates, general practitioners sent letters to their patients with underlying conditions, the Prime Minister sent a letter and a booklet about COVID-19, and OSNs transmitted information about COVID-19. Corroborating earlier works (Magsamen-Conrad et al., 2015; Seo et al., 2021; Xie et al., 2021), older adults were found to be more inclined to rely on traditional media than on new media. This is a positive finding, because their reliance on classic communication channels and authoritative sources rendered them relatively immune to online misinformation. It also has important implications for the younger generation, who tend to explore OSNs at the expense of professional advice and hence may be more easily misled by misinformation. It is ironic that older adults, who are expected to be less confident in ICT adoption, seem more immune to online misinformation than the youth, who are believed to be more tech-savvy. Older adults also appeared less likely to be overwhelmed by online information overload.

Thirdly, the study demonstrates that corrective messages with an attractive presentation may not be considered convincing by older adults. Here, the literature on the differences between misinformation and rebuttals is relevant. There seems to be an implicit assumption that misinformation is more sensational than corrective messages, and hence that the former goes viral more easily than the latter (Chua & Banerjee, 2016, 2017; Pal et al., 2020; Tanaka et al., 2013). Consequently, corrective messages are thought to need to be more attractive and persuasive to compete with misinformation. However, this study challenges this implicit assumption by showing that when corrective messages come with attractive pictures,1 older adults may treat them as if they were misinformation. As M1 summarized, “This does not look like a page that a UN type of organization would have. I would have expected it to have fewer pictures and less content than it presently has.”

7. Conclusions

7.1. Implications for theory and practice

This study makes important theoretical contributions to the literature on the digital divide, older adults’ information processing, AI and misinformation. Set in the context of COVID-19, it goes beyond the AI and misinformation research undertaken within the AI community by adopting a mixed methods approach. Study 1 began by collecting claims verified to be either true or false through a rigorous fact-checking process. A classification framework was developed that included both traditional machine learning algorithms and deep learning, and the classification performance was generally promising. Next, Study 2, using semi-structured interviews with a sample of older adults, revealed that individual prejudices lead to biases.

Taken together, Kozyrkov's (2019) view that bias arises not from AI algorithms but from individuals appears to hold true. By combining the computational and behavioural paradigms, the study offers a more holistic understanding of the digital divide and of human interaction with cutting-edge technologies than previous works (e.g., Choudrie & Vyas, 2014; Sarker et al., 2011). Additionally, it extends socio-technical research in the COVID-19 era by examining the role of a vulnerable demographic group in implementation outcomes. The study highlights that trust is important for older adults as a consumer group, but that this trust is placed much more in classic communication channels such as TV and radio, or in the government and general practitioners, than in contemporary OSN platforms such as Facebook, Twitter or Instagram.

The study has implications for research during the COVID-19 period, as the pandemic has given rise to new directions of inquiry. Previous COVID-19 studies on the theme of computers in human behaviour include Li et al. (2020), who examined YouTube videos for their usability and reliability using the novel COVID-19 Specific Score (CSS), modified DISCERN (mDISCERN) and modified JAMA (mJAMA) scores. The fact-checking dataset in Study 1 showed that some of the false claims circulated on YouTube, yet the interviews in Study 2 revealed that older adults viewed YouTube as a source of knowledge and information. Brennen et al. (2020) identified some of the main types, sources and claims of COVID-19 misinformation by combining a systematic content analysis of fact-checked claims about the virus and the pandemic with social media data indicating the scale and scope of engagement. The emphasis of this study was on the linguistic nuances of the claims. However, the interviews showed that older adults mostly did not attend to nuances of English language use to separate misinformation from information; the only exceptions were participants with computer science or information systems backgrounds.

The study also has practical relevance on several fronts. For one, it achieved a classification accuracy of 86.7% with the Decision Tree classifier, and 86.67% with the Convolutional Neural Network model. Despite the promising classification performance, misinformation was found to be widely prevalent on Facebook. Therefore, the extent to which OSNs employ AI to periodically weed out misinformation remains unclear.

In addition, older adults were found to pay more attention to traditional media than to new media when seeking information. Therefore, to reach this segment of the population, healthcare organizations and public authorities are recommended to invest in mass media messages rather than OSNs, which may be more appropriate for raising awareness among the youth. They also need to rethink their strategy for developing corrective messages: augmenting such messages with attractive pictures may not be the most appropriate approach.

Finally, the finding that older adults are relatively immune to online misinformation has significance for the youth. The younger generation may want to take a leaf out of the older generation's book in terms of how to take online information with a pinch of salt and rely on official sources. While the use of the internet, social media and OSN has its value, the youth needs to be cautious when it comes to acting upon health-related online content.

7.2. Key lessons learnt

In sum, the following key lessons can be learnt from this study. First, ML techniques can distinguish between information and misinformation about COVID-19 prevention and cure with reasonable accuracy. Second, humans cannot process information ‘algorithmically’ to separate information from misinformation. Third, older adults are often left confused about the veracity of COVID-19 information available on OSNs. Fourth, older adults prefer traditional media to digital media for COVID-19 information seeking, and hence are relatively immune to online misinformation. Fifth, corrective messages with an attractive presentation may not always be perceived as convincing.

Overall, the study contributes in several ways. Contextualised in COVID-19, it breaks new ground by highlighting how humans’ information processing differs from the ways that algorithms operate. It offers fresh insights into how during a pandemic, older adults—a vulnerable demographic segment—interact with online information and misinformation. On the methodological front, the study represents an intersection of two very disparate paradigms; namely, machine learning algorithms and interview data analyzed using thematic analysis and concepts drawn from grounded theory to enrich the scholarly understanding of human interaction with cutting-edge technologies.

7.3. Limitations and future research directions

The findings of this study should be viewed in light of the following limitations. In Study 1, the dataset was small. Future research is recommended to acquire a larger database of information and misinformation. Beyond supervised classification, unsupervised clustering algorithms could also be applied to the COVID-19 infodemic to identify the topics and sub-topics that have been creating a buzz on social media and OSNs.

Study 2 relied solely on older adults who were competent in using digital platforms such as Zoom and Teams. This could be a source of bias in the sample, and hence in the responses obtained. Nonetheless, given that the interviews were conducted during the lockdown period in the UK, it was not feasible to reach older adults who could not be contacted through virtual platforms. Future research needs to incorporate the views of a more diverse set of older adults, including but not limited to doctors, businessmen, shopkeepers, social activists, religious leaders, government officials, and even unemployed individuals. This would also increase the sample size.

In addition, the study reveals a conundrum that scholars need to address going forward. On the one hand, if corrective messages that debunk misinformation are plain and simple, they may not easily go viral on social media and will therefore lose out to misinformation. On the other hand, if corrective messages contain attractive pictures,1 they may be perceived as unconvincing; this study found this to be the case, at least for older adults. This study therefore hopes to ignite a body of research on the ideal characteristics of online misinformation-debunking messages.

Credit author statement

Choudrie, Jyoti: Conceptualisation, writing both the original draft and revised versions, Qualitative data collection, analysis, referencing, correspondence, reviewing and writing, project management and administration. Banerjee, Snehasish: Formatting of the versions; Writing - Original Draft, Writing - Review & Editing, analysis of the qualitative approach; visualisation and co-managing the project and administration. Kotecha, Ketan; Walambe, Rahee: Conceptualisation of the Artificial intelligence part; Supervision, data collection, writing the Artificial intelligence parts, managing the quantitative approach (Artificial Intelligence part) and administration from India. Karende, Hema; Ameta, Juhi: Data collection of Artificial intelligence and answering questions about artificial intelligence.

Footnotes

1. https://www.who.int/emergencies/diseases/novel-coronavirus-2019/advice-for-public/myth-busters. Viewed: November 15, 2020.

2. https://covid-19.chinadaily.com.cn/a/202003/17/WS5e702f52a31012821727fa19.html. Viewed: November 15, 2020.

3. https://nypost.com/2020/02/22/dont-buy-chinas-story-the-coronavirus-may-have-leaked-from-a-lab/. Viewed: November 15, 2020.

4. https://www.facebook.com/mohan.kashira/posts/2544522515654318. Viewed: November 15, 2020.

References

- Adams S.A. Revisiting the online health information reliability debate in the wake of “web 2.0”: An inter-disciplinary literature and website review. International Journal of Medical Informatics. 2010;79(6):391–400. doi: 10.1016/j.ijmedinf.2010.01.006.

- Adler S.E. Why coronaviruses hit older adults hardest. 2020. https://www.aarp.org/health/conditions-treatments/info-2020/coronavirus-severe-seniors.html (retrieved July 20, 2020).

- Ali I. The COVID-19 pandemic: Making sense of rumor and fear. Medical Anthropology. 2020;39(5):376–379. doi: 10.1080/01459740.2020.1745481.

- Ameen N., Tarhini A., Shah M., Hosany S. Call for papers: Consumer interaction with cutting-edge technologies – special issue in Computers in Human Behavior. 2020. https://www.journals.elsevier.com/computers-in-human-behavior/call-for-papers/consumer-interaction-with-cutting-edge-technologies (retrieved July 29, 2020).

- Anderson J.C., Narus J.A. A model of distributor firm and manufacturer firm working partnerships. Journal of Marketing. 1990;54(1):42–58.

- Ball B.E. How artificial intelligence is changing social media marketing. 2018. http://www.prepare1.com/how-artificial-intelligence-is-changing-social-media-marketing-research/ (retrieved July 20, 2020).

- Barz B., Denzler J. Deep learning on small datasets without pre-training using cosine loss. In: IEEE Winter Conference on Applications of Computer Vision. 2020. pp. 1371–1380.

- Beldad A., De Jong M., Steehouder M. How shall I trust the faceless and the intangible? A literature review on the antecedents of online trust. Computers in Human Behavior. 2010;26(5):857–869.

- Berinsky A.J. Rumors and health care reform: Experiments in political misinformation. British Journal of Political Science. 2017;47(2):241–262.

- Bollen J., Mao H., Zeng X. Twitter mood predicts the stock market. Journal of Computational Science. 2011;2(1):1–8.

- Brennen J.S., Simon F., Howard P.N., Nielsen R.K. Types, sources, and claims of COVID-19 misinformation. 2020, April 7. https://reutersinstitute.politics.ox.ac.uk/types-sources-and-claims-covid-19-misinformation (retrieved July 30, 2020).

- Capatina A., Kachour M., Lichy J., Micu A., Micu A.E., Codignola F. Matching the future capabilities of an artificial intelligence-based software for social media marketing with potential users' expectations. Technological Forecasting and Social Change. 2020;151.

- Choudrie J., Pheeraphuttranghkoon S., Davari S. The digital divide and older adult population adoption, use and diffusion of mobile phones: A quantitative study. Information Systems Frontiers. 2020;22(3):673–695.

- Choudrie J., Vyas A. Silver surfers adopting and using Facebook? A quantitative study of Hertfordshire, UK applied to organizational and social change. Technological Forecasting and Social Change. 2014;89:293–305.

- Chua A., Banerjee S. Linguistic predictors of rumor veracity on the Internet. In: Proceedings of the International MultiConference of Engineers and Computer Scientists. IAENG; 2016. pp. 387–391.

- Chua A., Banerjee S. To share or not to share: The role of epistemic belief in online health rumors. International Journal of Medical Informatics. 2017;108:36–41. doi: 10.1016/j.ijmedinf.2017.08.010.

- Chua A., Banerjee S., Guan A.H., Xian L.J., Peng P. Intention to trust and share health-related online rumors: Studying the role of risk propensity. In: SAI Computing Conference. IEEE; 2016. pp. 1136–1139.

- Chumbler N.R., Mann W.C., Wu S., Schmid A., Kobb R. The association of home-telehealth use and care coordination with improvement of functional and cognitive functioning in frail elderly men. Telemedicine Journal and e-Health. 2004;10(2):129–137. doi: 10.1089/tmj.2004.10.129.

- Codella N., Cai J., Abedini M., Garnavi R., Halpern A., Smith J.R. Deep learning, sparse coding, and SVM for melanoma recognition in dermoscopy images. In: International Workshop on Machine Learning in Medical Imaging. Springer; Cham: 2015. pp. 118–126.

- Corritore C.L., Kracher B., Wiedenbeck S. On-line trust: Concepts, evolving themes, a model. International Journal of Human-Computer Studies. 2003;58(6):737–758.

- Dharaiya D. What cutting edge technology can we expect in 2020? 2020. https://becominghuman.ai/what-cutting-edge-technology-can-we-expect-in-2020-754c68532d95 (retrieved April 4, 2020).

- Doney P.M., Cannon J.P. An examination of the nature of trust in buyer-seller relationships. Journal of Marketing. 1997;61:35–51.

- Dunbar N.E., Jensen M.L., Harvell-Bowman L.A., Kelley K.M., Burgoon J.K. The viability of using rapid judgments as a method of deception detection. Communication Methods and Measures. 2017;11(2):121–136.

- Flavián C., Guinalíu M., Gurrea R. The role played by perceived usability, satisfaction and consumer trust on website loyalty. Information & Management. 2006;43(1):1–14.

- Fong S.J., Dey N., Chaki J. AI-enabled technologies that fight the coronavirus outbreak. In: Artificial intelligence for coronavirus outbreak. Springer; Singapore: 2020. pp. 23–45.

- Froehlich T.J. A not-so-brief account of current information ethics: The ethics of ignorance, missing information, misinformation, disinformation and other forms of deception or incompetence. BiD: textos universitaris de biblioteconomia i documentació. 2017. http://bid.ub.edu/pdf/39/en/froehlich.pdf

- Frost J., Massagli M. Social uses of personal health information within PatientsLikeMe, an online patient community: What can happen when patients have access to one another's data. Journal of Medical Internet Research. 2008;10(3):e15. doi: 10.2196/jmir.1053.

- Fung I.C.H., Fu K.W., Chan C.H., Chan B.S.B., Cheung C.N., Abraham T., Tse Z.T.H. Social media's initial reaction to information and misinformation on Ebola, August 2014: Facts and rumors. Public Health Reports. 2016;131(3):461–473. doi: 10.1177/003335491613100312.

- Gadekallu T.R., Khare N., Bhattacharya S., Singh S., Reddy Maddikunta P.K., Ra I.H., Alazab M. Early detection of diabetic retinopathy using PCA-firefly based deep learning model. Electronics. 2020;9(2):274. doi: 10.3390/electronics9020274.

- Gefen D., Karahanna E., Straub D.W. Trust and TAM in online shopping: An integrated model. MIS Quarterly. 2003;27(1):51–90.

- Glaser B.G., Strauss A.L. The discovery of grounded theory: Strategies for qualitative research. Aldine; Chicago, IL: 1967.

- Golbeck J., Hendler J. Inferring binary trust relationships in web-based social networks. ACM Transactions on Internet Technology. 2006;6(4):497–529. [Google Scholar]

- Guess A., Nagler J., Tucker J. Less than you think: Prevalence and predictors of fake news dissemination on Facebook. Science Advances. 2019;5(1) doi: 10.1126/sciadv.aau4586. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gupta A., Lamba H., Kumaraguru P., Joshi A. Proceedings of the international conference on world wide web. 2013. Faking sandy: Characterizing and identifying fake images on twitter during hurricane sandy. [Google Scholar]

- Hernández-Orallo J. Evaluation in artificial intelligence: From task-oriented to ability-oriented measurement. Artificial Intelligence Review. 2017;48(3):397–447. [Google Scholar]

- Hou J., Shim M. The role of provider–patient communication and trust in online sources in Internet use for health-related activities. Journal of Health Communication. 2010;15:186–199. doi: 10.1080/10810730.2010.522691. [DOI] [PubMed] [Google Scholar]

- Kaliyar R.K. Fake news detection using a deep neural network. In: Proceedings of the international conference on computing communication and automation. IEEE; 2018. pp. 1–7.

- Kallinikos J. The consequences of information: Institutional implications of technological change. Edward Elgar Publishing; 2006.

- Kallinikos J., Hasselbladh H. Work, control and computation: Rethinking the legacy of neo-institutionalism. In: Institutions and ideology. Vol. 27. Emerald Group Publishing Limited; 2009. pp. 257–282.

- Katsaros D., Stavropoulos G., Papakostas D. Which machine learning paradigm for fake news detection? In: Proceedings of the international conference on web intelligence. IEEE; 2019. pp. 383–387.

- Kinsora A., Barron K., Mei Q., Vydiswaran V.V. Creating a labeled dataset for medical misinformation in health forums. Paper presented at the 2017 IEEE international conference on healthcare informatics (ICHI); 2017.

- Kozyrkov C. What is AI bias? 2019. https://towardsdatascience.com/what-is-ai-bias-6606a3bcb814. Retrieved July 29, 2020.

- Levine T.R. Truth-default theory (TDT): A theory of human deception and deception detection. Journal of Language and Social Psychology. 2014;33(4):378–392.

- Lewicki R., Bunker B. Developing and maintaining trust in work relationships. In: Kramer R.M., Tyler T.R., editors. Trust in organizations: Frontiers of theory and research. Sage; Thousand Oaks, CA: 1996. pp. 114–139.

- Li H.Y., Bailey A., Huynh D., et al. YouTube as a source of information on COVID-19: A pandemic of misinformation? BMJ Global Health. 2020;5. doi: 10.1136/bmjgh-2020-002604.

- Liu J.E., An F.P. Image classification algorithm based on deep learning-kernel function. Scientific Programming. 2020.

- Ma R. Spread of SARS and war-related rumors through new media in China. Communication Quarterly. 2008;56(4):376–391.

- Magsamen-Conrad K., Upadhyaya S., Joa C.Y., Dowd J. Bridging the divide: Using UTAUT to predict multigenerational tablet adoption practices. Computers in Human Behavior. 2015;50:186–196. doi: 10.1016/j.chb.2015.03.032.

- McKnight D.H., Choudhury V., Kacmar C. Developing and validating trust measures for e-commerce: An integrative typology. Information Systems Research. 2002;13(3):334–359.

- Media Insight Project. How younger and older Americans understand and interact with news. 2018. https://www.americanpressinstitute.org/publications/reports/survey-research/ages-understand-news/. Retrieved July 20, 2020.

- Mian A., Khan S. Coronavirus: The spread of misinformation. BMC Medicine. 2020;18(1):1–2. doi: 10.1186/s12916-020-01556-3.

- Minsky M.L. Semantic information processing. MIT Press; 1968.

- Orlikowski W.J. CASE tools as organizational change: Investigating incremental and radical changes in systems development. MIS Quarterly. 1993;17(3):309–340.

- Östlund B., Olander E., Jonsson O., Frennert S. STS-inspired design to meet the challenges of modern aging. Welfare technology as a tool to promote user driven innovations or another way to keep older users hostage? Technological Forecasting and Social Change. 2015;93:82–90.

- Pal A., Banerjee S. Understanding online falsehood from the perspective of social problem. In: Handbook of research on deception, fake news, and misinformation online. IGI Global; 2019. pp. 1–17.

- Pal A., Chua A., Goh D. How do users respond to online rumor rebuttals? Computers in Human Behavior. 2020;106:106243.

- Panteli N., Sockalingam S. Trust and conflict within virtual inter-organisational alliances: A framework for facilitating knowledge sharing. Decision Support Systems. 2005;39(4):599–617.

- Peine A., Faulkner A., Jæger B., Moors E. Science, technology and the ‘grand challenge’ of ageing: Understanding the socio-material constitution of later life. Technological Forecasting and Social Change. 2015;93:1–19.

- Raamkumar A.S., Tan S.G., Wee H.L. Measuring the outreach efforts of public health authorities and the public response on Facebook during the COVID-19 pandemic in early 2020: Cross-country comparison. Journal of Medical Internet Research. 2020;22(5):e19334. doi: 10.2196/19334.

- Racherla P., Mandviwalla M., Connolly D.J. Factors affecting consumers' trust in online product reviews. Journal of Consumer Behaviour. 2012;11(2):94–104.

- Rai A. Explainable AI: From black box to glass box. Journal of the Academy of Marketing Science. 2020;48(1):137–141.

- Ratchford B.T., Talukdar D., Lee M.S. A model of consumer choice of the Internet as an information source. International Journal of Electronic Commerce. 2001;5(3):7–21.

- Riegelsberger J., Sasse M.A., McCarthy J.D. The mechanics of trust: A framework for research and design. International Journal of Human-Computer Studies. 2005;62(3):381–422.

- Roy P.K., Singh J.P., Banerjee S. Deep learning to filter SMS spam. Future Generation Computer Systems. 2020;102:524–533.

- Russell S.J., Norvig P. Artificial intelligence: A modern approach. 3rd ed. Prentice Hall; Upper Saddle River, NJ: 2009.

- Sarker S., Ahuja M., Sarker S., Kirkeby S. The role of communication and trust in global virtual teams: A social network perspective. Journal of Management Information Systems. 2011;28(1):273–310.

- Saunders M.N., Townsend K. Reporting and justifying the number of interview participants in organization and workplace research. British Journal of Management. 2016;27(4):836–852.

- Schütze H., Manning C.D., Raghavan P. Introduction to information retrieval. Cambridge University Press; 2008.

- Selwyn N., Gorard S., Furlong J., Madden L. Older adults' use of information and communications technology in everyday life. Ageing and Society. 2003;23:561–582.

- Seo H., Blomberg M., Altschwager D., Vu H.T. Vulnerable populations and misinformation: A mixed-methods approach to underserved older adults' online information assessment. New Media & Society. 2021.

- Shao C., Hui P.-M., Wang L., Jiang X., Flammini A., Menczer F., Ciampaglia G.L. Anatomy of an online misinformation network. PLoS One. 2018;13(4):e0196087. doi: 10.1371/journal.pone.0196087.

- Shu K., Sliva A., Wang S., Tang J., Liu H. Fake news detection on social media: A data mining perspective. ACM SIGKDD Explorations Newsletter. 2017;19(1):22–36.

- Sillence E., Briggs P., Fishwick L., Harris P. Trust and mistrust of online health sites. In: Proceedings of the SIGCHI conference on human factors in computing systems. ACM; 2004. pp. 663–670.

- Sillence E., Briggs P., Harris P.R., Fishwick L. How do patients evaluate and make use of online health information? Social Science & Medicine. 2007;64(9):1853–1862. doi: 10.1016/j.socscimed.2007.01.012.

- Starbird K., Maddock J., Orand M., Achterman P., Mason R.M. Rumors, false flags, and digital vigilantes: Misinformation on Twitter after the 2013 Boston Marathon bombing. In: iConference 2014 Proceedings; 2014.

- Stewart K.J. How hypertext links influence consumer perceptions to build and degrade trust online. Journal of Management Information Systems. 2006;23(1):183–210.

- Tacchini E., Ballarin G., Della Vedova M.L., Moret S., de Alfaro L. Some like it hoax: Automated fake news detection in social networks. arXiv preprint arXiv:1704.07506; 2017.

- Tanaka Y., Sakamoto Y., Matsuka T. Toward a social-technological system that inactivates false rumors through the critical thinking of crowds. In: Proceedings of the Hawaii international conference on system sciences. IEEE; New York, NY: 2013. pp. 649–658.

- Temkar P. Clinical operations generation next… the age of technology and outsourcing. Perspectives in Clinical Research. 2015;6(4):175–178. doi: 10.4103/2229-3485.167098.

- van Uden-Kraan C.F., Drossaert C.H., Taal E., Smit W.M., Seydel E.R., van de Laar M.A. Experiences and attitudes of Dutch rheumatologists and oncologists with regard to their patients' health-related Internet use. Clinical Rheumatology. 2010;29(11):1229–1236. doi: 10.1007/s10067-010-1435-1.

- UK Parliament. Disinformation and ‘fake news’: Interim report contents. 2018, July 29. https://publications.parliament.uk/pa/cm201719/cmselect/cmcumeds/363/36311.htm. Retrieved July 9, 2020.

- Urquhart C. Grounded theory for qualitative research: A practical guide. Sage; 2012.

- Vosoughi S., Roy D., Aral S. The spread of true and false news online. Science. 2018;359(6380):1146–1151. doi: 10.1126/science.aap9559.

- Wagner N., Hassanein K., Head M. Computer use by older adults: A multi-disciplinary review. Computers in Human Behavior. 2010;26(5):870–882.

- Walsham G. Interpretive case studies in IS research: Nature and method. European Journal of Information Systems. 1995;4(2):74–81.

- Wang Y., McKee M., Torbica A., Stuckler D. Systematic literature review on the spread of health-related misinformation on social media. Social Science & Medicine. 2019;240:112552. doi: 10.1016/j.socscimed.2019.112552.

- Wesche J.S., Sonderegger A. When computers take the lead: The automation of leadership. Computers in Human Behavior. 2019;101:197–209.

- Whitford M. Market in motion. Hotel and Motel Management. 1998;213(7):41–43.

- Xie B., He D., Mercer T., Wang Y., Wu D., et al. Global health crises are also information crises: A call to action. Journal of the Association for Information Science and Technology. 2021. doi: 10.1002/asi.24357.

- WHO. Novel coronavirus (2019-nCoV) situation report – 13. 2020. https://www.who.int/docs/default-source/coronaviruse/situation-reports/20200202-sitrep-13-ncov-v3.pdf. Retrieved April 4, 2020.

- WHO. Statement – older people are at highest risk from COVID-19, but all must act to prevent community spread. World Health Organization – Regional Office for Europe; 2020. http://www.euro.who.int/en/health-topics/health-emergencies/coronavirus-covid-19/statements/statement-older-people-are-at-highest-risk-from-covid-19,-but-all-must-act-to-prevent-community-spread. Retrieved June 12, 2020.

- Wooldridge M. Artificial intelligence. Penguin Random House; UK: 2018.

- Yoon H., Jang Y., Vaughan P.W., Garcia M. Older adults' Internet use for health information: Digital divide by race/ethnicity and socioeconomic status. Journal of Applied Gerontology. 2020;39(1):105–110. doi: 10.1177/0733464818770772.