Abstract

Background

More so than in face-to-face counseling, users of online text-based services might drop out of a session before establishing a clear closure or expressing the intention to leave. Such premature departure may be indicative of heightened risk or dissatisfaction with the service or counselor. However, there is no systematic way to identify this understudied phenomenon.

Purpose

This study has two objectives. First, we developed a set of rules and used logic-based pattern matching techniques to systematically identify premature departures in an online text-based counseling service. Second, we validated the importance of premature departure by examining its association with user satisfaction. We hypothesized that the users who rated the session as less helpful were more likely to have departed prematurely.

Method

We developed and tested a classification model using a sample of 575 human-annotated sessions from an online text-based counseling platform. We used 80% of the dataset to train and develop the model and 20% of the dataset to evaluate the model performance. We further applied the model to the full dataset (34,821 sessions). We compared user satisfaction between premature departure and completed sessions based on data from a post-session survey.

Results

The resulting model achieved F1 scores of 97% and 92% in detecting premature departure cases in the training and test sets, respectively, suggesting it is highly consistent with the judgment of human coders. When applied to the full dataset, the model classified 15,150 (43.5%) sessions as premature departure and the remaining 19,671 (56.5%) as completed sessions. Users in completed sessions (15.2%) were more likely to fill out the post-chat survey than users in premature departure sessions (4.0%). Premature departure was significantly associated with lower perceived helpfulness and effectiveness in distress reduction.

Conclusions

The model is the first that systematically and accurately identifies premature departure in online text-based counseling. It can be readily modified and transferred to other contexts for the purpose of risk mitigation and service evaluation and improvement.

Keywords: E-counseling, Text-based counseling, Dropouts, Pattern matching, Text matching

Highlights

- A model derived from heuristics-based rules and logic-based pattern matching techniques that identifies premature departure in online text-based counseling was developed and tested.
- The model achieved high accuracy vis-à-vis human annotation in making the binary judgement of whether or not a chat ended prematurely.
- Premature departure was significantly associated with lower perceived helpfulness and effectiveness in distress reduction evaluated by the service users.
- The proposed model has a relatively high level of transparency and reproducibility. It can be easily understood, and readily modified and transferred to other similar contexts.

1. Introduction

The demand for professional mental health services has been rapidly increasing (Lipson et al., 2019). Online text-based counseling is an emerging mode of service that provides support through text, such as instant messages, SMS, social networking sites, and email (Hoermann et al., 2017). Some examples of large-scale, nationwide services of this nature include Crisis Text Line in the US, Lifeline Australia, and Kids Help Phone in Canada. Despite their relatively short history, their reach is rapidly growing. For example, Crisis Text Line has accumulated over 5.7 million text conversations since 2013 (Crisis Text Line, 2021), and Lifeline Australia annually completes over 90,000 text-based conversations (Lifeline, 2021).

Online text-based counseling has the advantages of ease of access and anonymity. It has great potential to provide timely emotional support to those in need (Hoermann et al., 2017; Reynolds et al., 2013; Yip et al., 2020). It is particularly apt for those, especially youth, who might have limited access to or are less willing to use traditional face-to-face services. However, the convenience and anonymity of online services also make it challenging to gauge users' risk and experience and to estimate the effectiveness of the service. The low response rates in post-service evaluation (Fukkink and Hermanns, 2009) and the infeasibility of conducting randomized trials in these contexts (Mathieu et al., 2021) warrant the development of alternative means to assess the effectiveness of online services (Rickwood et al., 2019).

Online text-based counseling can be categorized into asynchronous and synchronous types. Asynchronous services (e.g., emails, forums) have time delays or lags between each message; an exchange between the user and counselor might span one or a few days. In this format, an immediate response is not typically expected. On the other hand, synchronous services are those in which the user and the counselor are engaging in more-or-less real-time exchanges. The user typically initiates the chat, and the counselor is expected to engage with the user soon after.

Synchronous text-based counseling sessions may not have a set end-time or duration; users may depart at any time without a clear indication of closure. User premature departure is common (Szlyk et al., 2020) and has potentially important implications. An abrupt ending can signify heightened symptom severity and risk. It can also be caused by the user's dissatisfaction with the service. Because non-text feedback is largely unavailable in text-based counseling, it is difficult to ascertain when the user has departed and why. Thus, accurately identifying instances of premature departure and their causes can help such online services improve user experience and potentially service quality, for example by developing good practices that reduce the occurrence of premature departure. Despite its importance, to date, only one published work has documented this phenomenon (Nesmith, 2018). To explain premature departure, we first need to be able to identify it accurately. This paper aimed to help fill this gap by developing and validating a systematic method of identifying such cases in historical chat data.

1.1. Importance of identifying and understanding premature departure

We defined premature departure (footnote 1) in synchronous text-based counseling sessions as when the user stops replying to the counselor without either 1) a clear ending in the conversation (e.g., words such as “bye”, “thank you”) or 2) an expression of an intention to leave. In contrast, a completed session is one in which there is a clear ending, the user has indicated an intention to leave (e.g., “I will go offline”), or the counselor has suggested an ending to the session while the user is still responsive.

Proper closure is desirable in a counseling session, whether online or face-to-face. In traditional counseling, dropout often refers to incidents when the user unilaterally stops attending planned sessions (Wierzbicki and Pekarik, 1993). Children and youth who drop out tend to have more complex problems and show poorer outcomes in the long run (Connell et al., 2008; Reis and Brown, 1999; Saxon et al., 2010). Dropouts have been shown to indicate dissatisfaction or lack of perceived progress (de Haan et al., 2013); mental health care professionals are encouraged to minimize them (Barrett et al., 2008; O'Keeffe et al., 2019; Windle et al., 2020). Ending a face-to-face session in the middle of it, however, is rare and largely undocumented. As more online text-based counseling services emerge, understanding premature departure as a type of termination is increasingly relevant. Dropouts in this context can serve as an important form of user feedback (Rickwood et al., 2019).

Premature departure is common in synchronous online text-based counseling. For example, the US Crisis Text Line classifies sessions prematurely ended as “dropped” or “incomplete conversations” (Szlyk et al., 2020). However, to date, only one empirical study examined termination in this type of counseling. In an analysis of 49 text-based crisis counseling transcripts, Nesmith (2018) categorized themes of crisis chat endings based on whether users agreed to a plan or showed indications of the chat being helpful to them. Most notably documented were “positive closings,” where the user used optimistic language and explicitly expressed satisfaction, and the session ended with mutual agreement. This type of ending accounted for 65% of the sampled chats. The classification “abrupt ending” was given when the user ended the chat abruptly without providing an explanation, an action plan, or any indication of whether they found the session to be helpful (14%). The author noted that the reason for abrupt ending is inevitably uncertain; possible reasons include the user being busy, or not wanting to continue the conversation and therefore leaving suddenly. Lastly, “vague/ambiguous” ending refers to those where the closing was ambiguous, and the user's response to a safety plan was noncommittal (4%). The author further found that more positive closings were observed (76%) when the users were not in a crisis, or when they were seeking specific information or other straightforward requests. The above observations suggest that “positive” completed chats with a mutual closure are the preferred outcome in text-based counseling, whereas “abrupt” premature departures may indicate dissatisfaction with at least some aspect of the session.

Because premature departure may signify user dissatisfaction (Nesmith, 2018), it has important practical implications on service evaluation and development. The evaluation of online text-based counseling services has relied on self-report measures (Fukkink and Hermanns, 2009). However, the response rate of post-chat surveys is typically low, e.g., 1.4% in Fukkink and Hermanns (2009); 9% in Rickwood et al. (2019). Furthermore, research on response biases in user satisfaction suggests a positive association between satisfaction and response rate (Mazor et al., 2002); the post-chat survey data may thus be positively biased. As suggested by previous research (Rickwood et al., 2019), dropout or premature departure might be an alternative, non-self-report approach to assessing user dissatisfaction.

Before we can treat premature departure as a proxy for user dissatisfaction and use it as an outcome through which to evaluate a service, the phenomenon needs to be properly captured, described, and understood, and its implications empirically examined. In this study, we aimed to develop a systematic method to classify prematurely ended counseling sessions. We also quantitatively investigated their implications on the outcomes of the session.

This study has two objectives. First, we aimed to develop a set of rules that identify premature departure systematically. Once the rules were developed, we aimed to use logic-based pattern matching techniques to accurately identify instances of premature departure across a larger sample. Even with a clear definition, identifying premature departure can be complex. The challenge is to distinguish key patterns and develop language rules that capture them accurately. To tackle this problem, we propose a model combining logic rules and pattern matching methodology. To the best of our knowledge, this study is the first attempt to develop an algorithm to detect premature departure on a synchronous online text-based counseling platform.

Second, as a means to validate the importance of premature departure, we examined its association with user satisfaction. We hypothesized that sessions which the user rated to be less helpful were more likely to have ended prematurely. To test this hypothesis, we analyzed the user's post-chat questionnaires using two outcomes. We first compared the proportion of users from premature and completed chats who filled in the post-chat questionnaire. Based on research on the relationship between user satisfaction and response bias (Mazor et al., 2002), we hypothesized the two were associated and predicted that the users who prematurely departed were less likely to have completed the post-chat questionnaire. Then, we compared the user satisfaction outcomes between those who prematurely departed and those who did not. We hypothesized that there was a relationship between the two; that users' satisfaction was lower among those who left prematurely. The results can inform us whether premature departure can serve as a key indicator of user satisfaction in online text-based counseling.

2. Method

2.1. Data

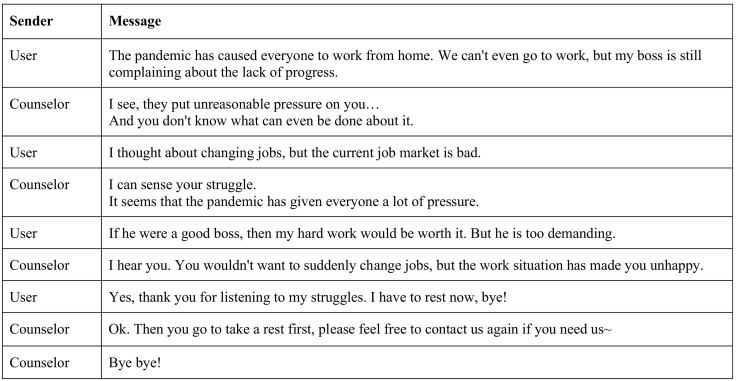

This study analyzed anonymous session data from the Hong Kong Jockey Club Open Up, a 24/7 online text-based counseling service designed specifically to cater to youngsters experiencing emotional distress (Yip et al., 2020). Users of Open Up chat anonymously, without charge, with professional counselors and trained volunteers via the service's web portal, SMS, WhatsApp, Facebook messenger, or WeChat. Fig. 1 shows the English translation of a fictitious conversation (based on real chats) between a user and a counselor; the Cantonese version can be found in Fig. A.1 in Appendix A.

Fig. 1.

An ending excerpt of a fictive “completed” session.

We obtained all the valid session transcripts from Open Up from the year 2020, i.e., from Jan 1, 2020, to Dec 31, 2020. The dataset contains 34,821 valid sessions. A valid session was defined as a conversation in which the user was successfully connected to a counselor and had more than four exchanges. In this study, one exchange is defined as each switch in speaker (e.g., from the user to the counselor). On average, each valid session lasted for 54 min (SD = 45 min). As an illustration, the excerpt in Fig. 1 contains seven exchanges. In the dataset, on average, each session consists of 40 exchanges (SD = 30) between a counselor and a user.

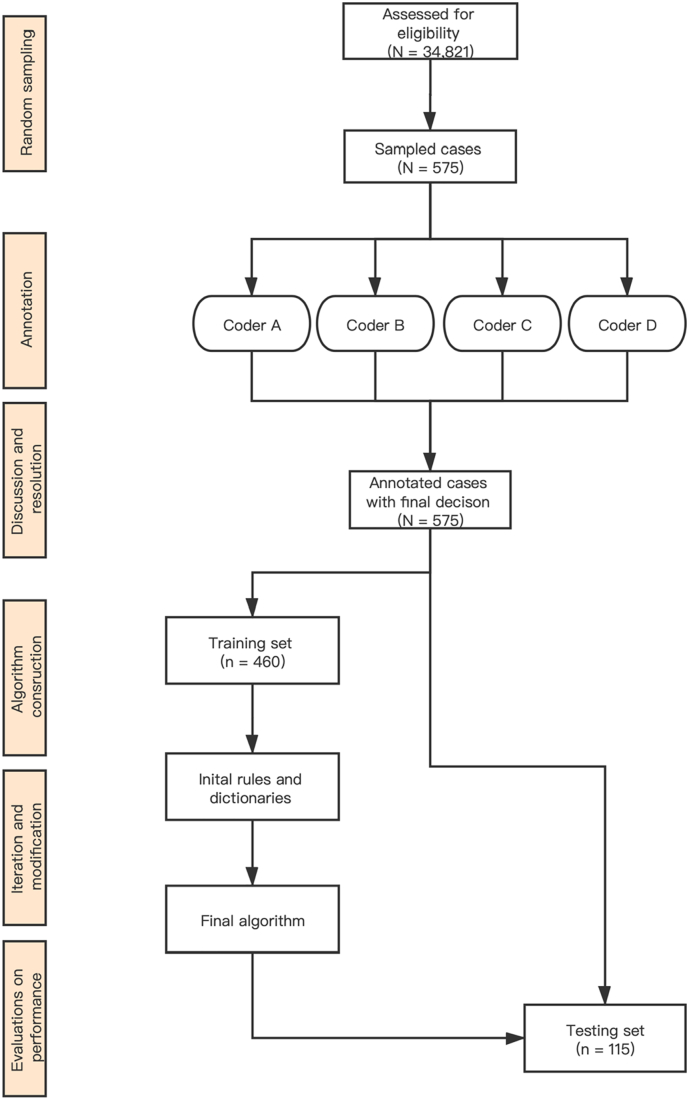

In this study, we randomly sampled 575 valid sessions. Each unique user (based on IP address) was sampled only once. That is, to the best of our knowledge, each of the 575 sessions involved a different user. We split the sample into a training set (80%) and a test set (20%). The training set consisted of 460 sessions and the test set of 115 sessions.
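The sampling and splitting step described above can be sketched as follows. This is a minimal illustration rather than the authors' code: the field names `session_id` and `user_key` (standing in for the IP-based user identifier), the fixed seed, and the toy data are all assumptions made for the example.

```python
import random


def sample_and_split(sessions, n_sample=575, train_frac=0.8, seed=0):
    """Randomly sample sessions (one per user), then split into train/test.

    `sessions` is a list of dicts with the hypothetical keys
    'session_id' and 'user_key'.
    """
    rng = random.Random(seed)
    shuffled = sessions[:]          # copy so the caller's list is untouched
    rng.shuffle(shuffled)

    seen_users = set()
    sample = []
    for session in shuffled:
        if session["user_key"] in seen_users:
            continue                # keep at most one session per user
        seen_users.add(session["user_key"])
        sample.append(session)
        if len(sample) == n_sample:
            break

    n_train = round(len(sample) * train_frac)
    return sample[:n_train], sample[n_train:]


# Toy data: 3000 sessions from 1500 hypothetical users.
sessions = [{"session_id": i, "user_key": f"user-{i % 1500}"} for i in range(3000)]
train, test = sample_and_split(sessions)
print(len(train), len(test))
```

With 575 sampled sessions and an 80/20 split, the two parts have 460 and 115 sessions, matching the sizes reported above.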

2.2. Operationalization of premature departure

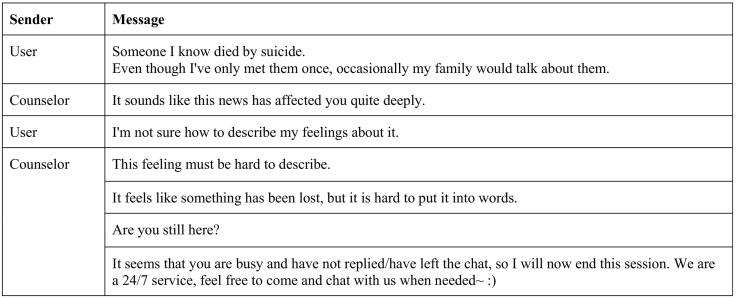

Premature departure was operationalized as a user ceasing to reply to the counselor when there was neither a clear ending in the conversation nor an indication from the user that they would leave. In this definition, whether a session is complete or not is determined from the user's, as opposed to the counselor's, messages and perspective. An example is provided in Fig. 2; the Cantonese version can be found in Fig. A.2.

Fig. 2.

An ending of a fictive “premature departure” session.

In practice, there is no a priori agreement between the user and the counselor on when and how a session should end. As Open Up is a free and anonymous service, users could leave at any time during the conversation without explanation. Therefore, premature departure is mainly determined by the users and can come in many forms, much like how text conversations end in real life. However, the counselor may suggest ending the session under some circumstances, such as when the user has not replied for a long time or when significant progress has been made during the session. In those situations, counselors will use standard phrases to propose termination.

2.3. Coding process

Four trained coders (FC, CT, JF, EC) annotated the 575 randomly sampled sessions. All coders have relevant higher education (i.e., at least a bachelor's degree) and work experience in psychology, counseling, and/or social work. Each session was coded as either “completed” or “premature departure” based on their judgment vis-à-vis the pre-determined definition. The coding aimed to capture the ending features and signifiers from both users and counselors, including expressions such as “bye”, “thank you” and “I am feeling better, thanks”, as well as an intention to leave. For each session, the coders also marked the key phrases, rules, or reasons that indicated or justified their binary decisions. Each session was coded independently by two coders. High inter-rater reliability was achieved (Krippendorff's alpha = 95.9%) (Hayes and Krippendorff, 2007). Disagreements were discussed and resolved among all four coders (Fig. 3).

Fig. 3.

Flow diagram of the progress through coding, algorithm construction, and evaluation.
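For readers who wish to compute an inter-rater statistic of the kind reported in Section 2.3, the following is a minimal sketch of Krippendorff's alpha for nominal labels with two coders and no missing values. It is not the authors' implementation; the function name and the toy labels are assumptions made for illustration.

```python
from collections import Counter


def krippendorff_alpha_nominal(coder_a, coder_b):
    """Krippendorff's alpha for nominal data, two coders, no missing values."""
    if len(coder_a) != len(coder_b):
        raise ValueError("Both coders must label the same sessions.")

    value_counts = Counter()   # how often each label was used, over both coders
    disagreements = 0          # off-diagonal mass of the coincidence matrix
    for a, b in zip(coder_a, coder_b):
        value_counts[a] += 1
        value_counts[b] += 1
        if a != b:
            disagreements += 2  # ordered pairs (a, b) and (b, a)

    n = sum(value_counts.values())  # total number of pairable values
    expected = sum(
        value_counts[c] * value_counts[k]
        for c in value_counts
        for k in value_counts
        if c != k
    )
    if expected == 0:
        return float("nan")     # only one label was ever used: alpha is undefined
    return 1.0 - (n - 1) * disagreements / expected


# Toy labels for ten sessions (0 = completed, 1 = premature departure).
coder_1 = [0, 1, 0, 0, 0, 0, 0, 0, 1, 0]
coder_2 = [1, 1, 1, 0, 0, 1, 0, 0, 0, 0]
alpha = krippendorff_alpha_nominal(coder_1, coder_2)
print(round(alpha, 3))
```

The toy labels are deliberately noisy to show a low value; identical label lists that use both categories give an alpha of 1.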

2.4. Construction of coding rules

After coding the training set (n = 460), the four coders discussed the typical examples, common rules, and helpful tips to identify the premature departure and completed cases. When a rule was identified and formulated (details below), it was tested and validated manually in the coding sample to see whether it accurately classified the session, or if examples counter to the rule were found. If so, the rule was revised or removed through discussion by the coding team. This process was repeated until a qualitatively valid model was agreed upon. As a result, a logic-based pattern matching algorithm was developed based on the training set, which was further validated on the test set (n = 115).

2.5. Premature departure classification algorithm

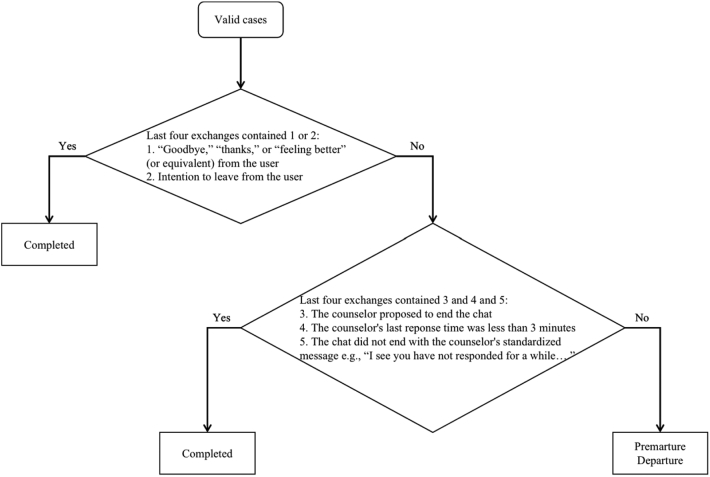

We adopted IF-THEN statements to formulate our logic rules. The proposed algorithm has two layers of rules. Each layer contains a condition and a conclusion. As premature departure by definition describes the ending of a session, it is not surprising that we found that whether a chat ending was premature or complete could be determined by the content of the last four message exchanges. Therefore, the last four exchanges of the session were extracted as the focus for analysis and further rule development. In the first layer, in accordance with the a priori definition, the most determining rules were:

1. There was a proper goodbye or thanks signifier in the last four exchanges from the user; or
2. The user mentioned their intention to leave.

If the user mentioned any keywords and phrases that satisfied either 1 or 2, the session was treated as a completed case. Otherwise, the second layer rules were activated.

The second layer rules were designed to check whether the counselor, in the last four exchanges, proposed to end the chat. The reason behind this rule is that there were scenarios where users would not stop chatting unless the counselor proposed to end the session. In our analysis, we found that counselors would propose to end the session when they have been chatting with the user for a while and there was no suicide risk or increased suicide risk indicated during the chat. Under such circumstances, the counselor would proactively propose to end the session and suggest the user rest and digest the material covered in the session. The user can re-enter the service as a fresh session if needed. Therefore, if the counselor proposed to end the chat when the user was still active online, the session was classified as a completed case. The rule in this layer contains three sub-conditions, including:

3. The counselor proposed to end the chat proactively in the last four exchanges;
4. The counselor's last response time to the user was less than 3 min (footnote 2);
5. The chat did not end with the counselor's standardized message, e.g., “I see you have not responded for a while…”

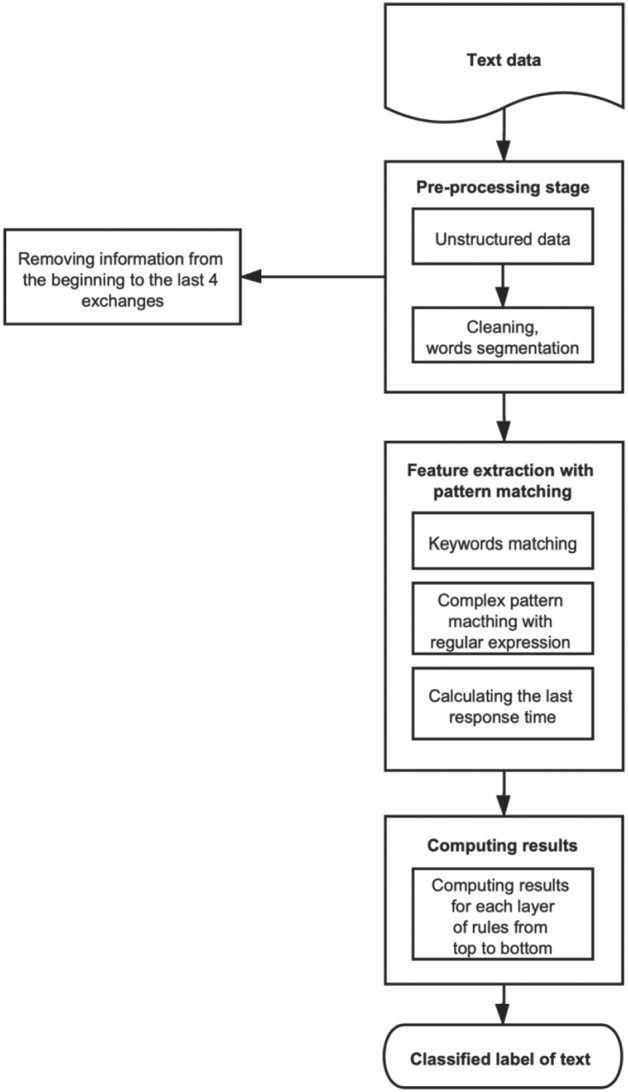

Conditions 4 and 5 were used to confirm that the user was still active online when the counselor proposed to end the chat. If conditions 3, 4, and 5 were met at the same time, the session was treated as a completed case; the remaining sessions were categorized as premature departure. Fig. 4 presents the logical flow chart that depicts the rules. We also tested an alternative model as a comparison (Fig. B.1, Appendix B) with additional rules suggested by coders from the initial coding. We compared the two models' performance (Table B.2) and chose the one with the higher F1 score as the proposed model.

Fig. 4.

The architecture of the premature departure detection framework.
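The two-layer logic described in Section 2.5 can be sketched in a few lines. This is an illustrative reading of the rules, not the authors' implementation: the English phrase lists are hypothetical stand-ins for the Cantonese expressions in Appendix C, the `Message` type and function names are invented for the example, and naive substring matching is used in place of the segmentation-based matching described in Section 2.6.

```python
from dataclasses import dataclass


@dataclass
class Message:
    sender: str   # "user" or "counselor"
    text: str


# Hypothetical English stand-ins for the expression lists in Appendix C.
CLOSING_SIGNIFIERS = ("bye", "thank you", "thanks")
LEAVE_INTENTIONS = ("i will go offline", "i have to go", "going to sleep")
COUNSELOR_END_PROPOSALS = ("shall we end here", "let's end our chat")
NO_RESPONSE_NOTICE = "i see you have not responded for a while"


def contains_any(text, phrases):
    lowered = text.lower()
    return any(phrase in lowered for phrase in phrases)


def classify(last_four, counselor_last_response_min):
    """Label a session from the messages in its last four exchanges."""
    user_texts = [m.text for m in last_four if m.sender == "user"]
    counselor_texts = [m.text for m in last_four if m.sender == "counselor"]

    # Layer 1 (conditions 1 and 2): the user closed the chat or said they would leave.
    for text in user_texts:
        if contains_any(text, CLOSING_SIGNIFIERS) or contains_any(text, LEAVE_INTENTIONS):
            return "completed"

    # Layer 2 (conditions 3, 4 and 5 must all hold).
    proposed_end = any(contains_any(t, COUNSELOR_END_PROPOSALS) for t in counselor_texts)
    responded_quickly = counselor_last_response_min < 3
    ended_with_notice = (
        bool(last_four)
        and last_four[-1].sender == "counselor"
        and NO_RESPONSE_NOTICE in last_four[-1].text.lower()
    )
    if proposed_end and responded_quickly and not ended_with_notice:
        return "completed"
    return "premature departure"


ending = [
    Message("counselor", "How are you feeling now?"),
    Message("user", "A bit better. Thank you, bye!"),
]
print(classify(ending, counselor_last_response_min=1.0))
```

Because the rules are plain conditionals over phrase lists, adapting the sketch to another service would mostly mean replacing the lists and the response-time threshold.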

2.6. Pattern matching with text

Pattern matching with text refers to the task of finding a static pattern in a text (Amir et al., 2007). Different variants of pattern matching algorithms have been widely applied to medical text (e.g., Menger et al., 2018) and social media text data (e.g., Hung et al., 2016). In this study, pattern matching was used to automate the proposed algorithm and apply it to the 575 sampled cases. The algorithm scanned through the last four exchanges between users and counselors to check the status of different patterns. Fig. C.1 in Appendix C lists the expressions and conditions used in the algorithm shown in Fig. 4, and Fig. C.2 reports the additional expressions and conditions for the alternative model.

Fig. 5 presents the workflow and details of implementing the proposed algorithm. Four steps were required to implement the algorithm for detecting premature departure on a large-scale dataset. First, the last four message exchanges, alongside the timestamps and sender information, were extracted for pattern matching. Data preprocessing was then carried out: punctuation and extra spaces were removed, and sentences were segmented into a list of keywords and phrases with the help of jieba (footnote 3). To better fit the local cultural and linguistic context, colloquial words and local slang were added to the jieba dictionary to optimize the word segmentation. Second, keyword and complex string matching and the calculation of the last response time were carried out on the last four message exchanges. Third, we gathered the results from each layer of the rules, from top to bottom, to check whether the classification conditions were met. Last, the binary classification of premature departure (i.e., “Yes” or “No”) was computed according to the logical flow of the algorithm presented in Fig. 4 and the alternative model.

Fig. 5.

Diagram depicting the implementation of the proposed algorithm.
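The extraction and preprocessing step of this workflow might look roughly like the sketch below. Several choices here are assumptions rather than details from the paper: a run of consecutive messages from one sender is treated as one turn, the final four turns stand in for "the last four exchanges", and the counselor's last response time is taken as the gap between the counselor's last reply and the user message immediately before it. The word segmentation step with jieba is left out to keep the example dependency-free.

```python
import re
from datetime import datetime
from itertools import groupby


def normalize(text):
    """Replace punctuation with spaces, collapse whitespace, and lowercase."""
    no_punct = re.sub(r"[^\w\s]", " ", text)   # \w also matches CJK characters
    return re.sub(r"\s+", " ", no_punct).strip().lower()


def last_turns(messages, n_turns=4):
    """Return the messages that make up the final `n_turns` speaker turns."""
    turns = [list(group) for _, group in groupby(messages, key=lambda m: m["sender"])]
    return [m for turn in turns[-n_turns:] for m in turn]


def counselor_last_response_minutes(messages):
    """Minutes between the last user message that got a reply and that reply."""
    pairs = zip(reversed(messages[:-1]), reversed(messages[1:]))
    for previous, current in pairs:
        if current["sender"] == "counselor" and previous["sender"] == "user":
            return (current["time"] - previous["time"]).total_seconds() / 60
    return None   # the counselor never replied directly to a user message


def at(hhmmss):
    return datetime.strptime("2020-01-01 " + hhmmss, "%Y-%m-%d %H:%M:%S")


chat = [
    {"sender": "counselor", "time": at("21:58:00"), "text": "How are you feeling now?"},
    {"sender": "user", "time": at("22:00:00"), "text": "I feel a bit better."},
    {"sender": "counselor", "time": at("22:01:30"), "text": "Glad to hear that!"},
    {"sender": "counselor", "time": at("22:01:50"), "text": "Shall we end here for today?"},
    {"sender": "user", "time": at("22:03:00"), "text": "OK,  thank you!!"},
    {"sender": "counselor", "time": at("22:03:40"), "text": "Take care."},
]

tail = last_turns(chat)
cleaned = [normalize(m["text"]) for m in tail]
gap = counselor_last_response_minutes(tail)
print(cleaned, gap)
```

The cleaned strings and the response-time value are the inputs that a rule-based classifier such as the one in Fig. 4 would then consume.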

2.7. Model evaluation

Standard metrics of pattern recognition and classification, including precision, recall, and F1 score, were used for evaluating the model performance (Sebastiani, 2002; Sokolova and Lapalme, 2009). In our classification task, we defined premature departure cases as the positive class and completed cases as the negative class. We used the F1 score of the positive class as the desired metric to represent the overall model performance because, in online emotional support counseling, premature departure is presumably undesirable and thus practically more important to flag. The weighted F1 score is also presented as a reference. Where:

True positive (TP) is an outcome where the model correctly predicts the positive class;

True negative (TN) is an outcome where the model correctly predicts the negative class;

False positive (FP) is an outcome where the model incorrectly predicts the positive class; and

False negative (FN) is an outcome where the model incorrectly predicts the negative class.

Precision refers to the proportion of correctly classified positive cases among the classified positive cases ($\text{Precision} = \frac{TP}{TP + FP}$). Recall (equivalent to sensitivity) refers to the proportion of correctly classified positive cases among all positive cases ($\text{Recall} = \frac{TP}{TP + FN}$).

The F1 score is the harmonic mean of precision and recall ($F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$), and the weighted F1 score is a variant of the F1 score which calculates metrics for each label and finds their average weighted by support (the number of true instances for each label): $F_{\text{weighted\_average}} = \frac{C_1 \times F1_{\text{positive\_cases}} + C_2 \times F1_{\text{negative\_cases}}}{C_1 + C_2}$, where $F1_{\text{positive\_cases}}$ and $F1_{\text{negative\_cases}}$ are the F1 scores for premature departure cases and completed cases, and $C_1$ and $C_2$ are the numbers of instances in premature departure cases and completed cases.
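As a worked illustration of these metrics, the sketch below computes precision, recall, F1, and the weighted F1 score from confusion-matrix counts. The counts used (TP = 49, FP = 5, FN = 3, TN = 58) are not reported in the paper; they are an assumption chosen because they are consistent with the rounded test-set figures in Table 1.

```python
def precision_recall_f1(hits, false_alarms, misses):
    """Precision, recall and F1 for one class from its confusion counts."""
    precision = hits / (hits + false_alarms)
    recall = hits / (hits + misses)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


def weighted_f1(f1_pos, n_pos, f1_neg, n_neg):
    """Average of the per-class F1 scores, weighted by class support."""
    return (n_pos * f1_pos + n_neg * f1_neg) / (n_pos + n_neg)


# Assumed counts (positive class = premature departure); not taken from the paper.
tp, fp, fn, tn = 49, 5, 3, 58

p_pos, r_pos, f1_pos = precision_recall_f1(tp, fp, fn)
# For the negative class the roles swap: TN are the hits, FN the false alarms,
# and FP the misses.
p_neg, r_neg, f1_neg = precision_recall_f1(tn, fn, fp)

f1_weighted = weighted_f1(f1_pos, tp + fn, f1_neg, tn + fp)
print(round(f1_pos, 2), round(f1_neg, 2), round(f1_weighted, 2))
```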

2.8. User satisfaction and service outcomes

Upon exiting the service, Open Up users were automatically invited to fill out a post-service survey. Among the 34,821 valid sessions, the survey was filled out in 3598 (10.3%). Three questions were used to indicate distress reduction, service outcome and user satisfaction. For the first two questions, “How do you feel now compared to before the conversation?” and “How much do you think this service has helped you?”, participants responded using a 5-point Likert scale, ranging from 1 = very bad and not helpful at all, to 5 = very good and very helpful. For the third question, “Would you recommend our service to a friend in need?”, the respondents answered yes, no, or not sure. In our analyses, we only compared the yes and no responses. Sample characteristics and user satisfaction in the premature departure and completed groups were compared using the chi-square test and t-test.

3. Results

3.1. Model effectiveness

The identification model achieved high precision and high recall for both completed cases and premature departure cases on the training set (Table 1), indicating that the proposed algorithm can accurately replicate the decisions of the human coders on premature departure classification. The model also achieved a 92% F1 score for premature departure cases in the test set. This suggests that the model is robust and applicable to new data.

Table 1.

The performance of the premature departure classification algorithm on the training set and the test set.

| Case label | Training set: n | Training set: Precision | Training set: Recall | Training set: F1 score | Test set: n | Test set: Precision | Test set: Recall | Test set: F1 score |
|---|---|---|---|---|---|---|---|---|
| Completed | 264 | 0.97 | 0.98 | 0.98 | 63 | 0.95 | 0.92 | 0.94 |
| Premature departure | 196 | 0.97 | 0.96 | 0.97 | 52 | 0.91 | 0.94 | 0.92 |
| Total | 460 | | | 0.97ᵃ | 115 | | | 0.93ᵃ |

ᵃ Weighted F1 score.

3.2. Validation of premature departure with service outcomes

We applied the algorithm to all 34,821 sessions from the dataset. The model classified 15,150 (43.5%) sessions as premature departure, while the remaining 19,671 (56.5%) were classified as completed sessions. Table 2 reports the characteristics of completed cases and premature departure cases, and their comparison. The premature departure cases were shorter in length compared to completed cases in terms of words per message, number of messages, number of exchanges, and durations in minutes.

Table 2.

Characteristics of completed case and premature departure cases.

| Characteristics | Completed, n = 19,671 (56.5%) | Premature departure, n = 15,150 (43.5%) | p-value |
|---|---|---|---|
| Mean duration (min:s (SD)) | 59:53 (38:01) | 41:39 (33:02) | <.001 |
| Mean number of exchanges (SD) | 49.4 (33.4) | 25.5 (25.3) | <.001 |
| Mean number of messages (SD) | 87.3 (62.0) | 45.1 (45.2) | <.001 |
| Mean number of words per message (SD) | 8.8 (10.9) | 8.3 (10.7) | <.001 |

Table 3 reports the relationships between premature departure and willingness to fill the post-chat survey. Only 604 (4.0%) users from the sessions classified as premature departure filled out the post-chat survey, whereas 2994 (15.2%) users in completed sessions did so. Chi-square test revealed that completed cases were more likely to fill the post-chat survey than premature departure cases, χ2(1, N = 34,821) = 1165.68, p < .001 (Table 3).

Table 3.

Cross tabulation of premature departure and post-chat survey filling.

| Post-chat survey filled | Completed (%ᵃ) | Premature departure (%ᵃ) | All | Pearson chi-square | p-value | Cramer's phi |
|---|---|---|---|---|---|---|
| Yes | 2994 (15.2%) | 604 (4.0%) | 3598 | 1165.68 | <.001 | 0.18 |
| No | 16,677 (84.8%) | 14,546 (96.0%) | 31,223 | | | |
| Total | 19,671 | 15,150 | 34,821 | | | |

ᵃ Percentage conditional on total predicted cases.
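The chi-square statistic and effect size in Table 3 can be checked from the four cell counts. The sketch below uses the standard Pearson chi-square for a 2 × 2 table without continuity correction; whether the authors applied a correction is not stated, so that choice is an assumption, and the function name is illustrative.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    phi = math.sqrt(chi2 / n)                 # effect size for a 2 x 2 table
    p_value = math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square with 1 df
    return chi2, phi, p_value


# Rows: survey filled (yes / no); columns: completed / premature departure.
chi2, phi, p_value = chi_square_2x2(2994, 604, 16677, 14546)
print(round(chi2, 2), round(phi, 2), p_value < 0.001)
```

Under these assumptions the computed statistic agrees closely with the reported value of 1165.68 and the reported phi of 0.18.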

Table 4 summarizes the results of comparisons between premature departure sessions and completed sessions on user satisfaction. The results showed that satisfaction among completed cases was significantly higher than premature departure cases across all three indicators. The effect size of differences between the two groups ranged from small to large.

Table 4.

User satisfaction from the post-session survey.

| Category | Case label | n | Mean | SD | SE | 95% CI lower | 95% CI upper | p-value | Cohen's d |
|---|---|---|---|---|---|---|---|---|---|
| “How do you feel now compared to before the conversation?” | Completed | 2949 | 4.11 | 0.78 | 0.01 | 4.08 | 4.14 | <.001 | −0.83 |
| | Premature departure | 590 | 3.41 | 1.14 | 0.05 | 3.31 | 3.50 | | |
| “How much do you think this service has helped you?” | Completed | 2907 | 3.72 | 0.98 | 0.02 | 3.68 | 3.76 | <.001 | −0.79 |
| | Premature departure | 577 | 2.89 | 1.29 | 0.05 | 2.79 | 3.00 | | |

| Category | Case label | n | Yes | No | p-value | Cramer's phi |
|---|---|---|---|---|---|---|
| “Would you recommend our service to a friend in need?” | Completed | 2129 | 2034 | 95 | <.001 | 0.26 |
| | Premature departure | 350 | 268 | 82 | | |
| | Total | 2479 | 2302 | 177 | | |
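The effect sizes in Table 4 can be approximated from the reported summary statistics. The sketch below assumes Cohen's d was computed with a pooled standard deviation and with the premature departure group minus the completed group (hence the negative sign); neither detail is stated in the text. Because the inputs are rounded means and SDs, the results only approximate the reported values.

```python
import math


def cohens_d(mean1, sd1, n1, mean2, sd2, n2):
    """Cohen's d for two independent groups using the pooled standard deviation."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    return (mean1 - mean2) / math.sqrt(pooled_var)


# Premature departure group first, completed group second (values from Table 4).
d_feel = cohens_d(3.41, 1.14, 590, 4.11, 0.78, 2949)
d_help = cohens_d(2.89, 1.29, 577, 3.72, 0.98, 2907)
print(round(d_feel, 2), round(d_help, 2))
```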

4. Discussion

Abrupt ending in online text-based counseling sessions is an understudied phenomenon with important implications. To our knowledge, this is the first attempt to define and systematically detect users' premature departure. In this study, we proposed and validated a model that combines logical rules and pattern matching techniques to classify premature departure using a large database of transcripts from an online text-based counseling service. The optimal model achieved 97% and 92% F1 score in detecting premature departure cases on the training and test sets, respectively. This level of accuracy affords good confidence that premature departure can be retroactively detected using an algorithm. Furthermore, the performance of the proposed model also provides a benchmark for similar classification tasks in other comparable services. The post-service survey data suggest premature departure tends to be associated with lower user satisfaction. Thus, our model provides a means to capture premature departure and, in turn, understand user experience and potentially mitigate risk, and evaluate and improve service quality.

Given its service volume and the inherent ambiguity of the user's presence, it is not feasible for frontline counselors of online text-based counseling to annotate each session for premature departure in real-time. The proposed model can assist counselors or program evaluators in making the assessment and potentially help them identify reasons for premature departure. Such classification can be useful for various downstream tasks, such as service evaluation. Premature departure might, for example, signify user dissatisfaction, which is not easily discernible due to the service's anonymity, ease of access (and exit), and text-based nature. Scholars have proposed alternative methods of service evaluation, such as the retroactive coding of user experience. For example, Mokkenstorm et al. (2017) adapted the Crisis Call Outcome Rating Scale, which includes items on user expression of gratitude or being understood by the counselor (Bonneson and Hartsough, 1987). However, this method can be labor-intensive, as human coders would need to assess multiple aspects of each session. Given the current result, premature departure can be detected using a simple logic decision model. We have also shown that it can be automated with a high level of accuracy. This model marks a significant pioneering contribution in the clear classification of online counseling session endings.

This study has significant theoretical and practical implications. Theoretically, this study furthers the definition and operationalization of the ubiquitous premature departure phenomenon in text-based counseling services. While the literature on treatment termination and adherence is sizable, it does not directly inform premature departure in the online text-based context because 1) there are often no set appointments or session durations, 2) the user may be multitasking, and 3) there are fewer non-verbal cues. These contextual differences pose additional challenges in understanding when and why a service user might depart from a session without a clear closure or even signaling the exit. Informed by previous work on treatment termination and our current study, we maintain that premature departure is a behavior that potentially reflects and conveys valuable information about the user's experience with, and attitudes towards, the service. Practically, in addition to self-report outcomes (e.g., post-chat survey) and in-text explicit expression of satisfaction, premature departure can be treated as an outcome variable from which the user's impression of the service or risk level can be inferred. As such, it can be a starting point for exploring factors that influence users' experience. Researchers can, for example, use this outcome as a means to develop strategies that would help improve the quality of the session by bringing about a proper closure.

Also, as premature departure was found in our study to reflect users' experience of the service, it can be used to help improve the service. For example, services can strive to develop and adopt good practices that aim to reduce the rate of premature departures and, by extension, improve the effectiveness of the service.

Model simplicity and accuracy are both desirable in classification, but they usually do not go hand in hand, so a tradeoff between them is often needed. The optimal model depends on the aim and characteristics of the specific task. In some complex and scattered contexts, such as detecting suicide risk in an online counseling service, complex models may capture more information or latent features than simple models. Xu et al. (2021), for example, showed that knowledge-aware neural networks can adequately detect users who are at risk and outperformed baseline Natural Language Processing models. However, in simple and general contexts such as comparing the similarity between two text documents (Arts et al., 2018) or sentiment analysis of product reviews (Indhuja and Raj, 2014), simple methods such as logic-based rules and text matching can also achieve satisfactory results. In our preliminary investigation of premature departure, we found that prematurely departed cases display very distinct patterns and logic. This led us to choose and test the simple method. The current results support this observation and the hypothesis derived from it.

Other notable contributions of the proposed model are its transparency and reproducibility. One of the major concerns with deep learning or complex machine learning algorithms is that they are usually a “black box”, which renders them not only difficult to comprehend and optimize (Watson et al., 2019) but also hard to replicate (Toh et al., 2019). To tackle these problems, this study adopted a rule-based approach, which has a relatively high level of interpretability. Because the rules can be easily understood, the model can be readily modified and transferred to other similar contexts. That is, one can modify the layers of rules or enrich the dictionary of expressions and apply the model to the targeted context.
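To illustrate what modifying the layers of rules or enriching the dictionary of expressions might look like in practice, the following is a minimal sketch of a rule-based ending classifier. It is not the model developed in this study: the English closing expressions, the `Message` structure, the function names, and the simplified two-rule logic over the last few messages are all hypothetical and serve only to show the general shape of such an approach.

```python
from dataclasses import dataclass
from typing import Sequence

# Hypothetical dictionary of closing expressions (assumption). A real
# dictionary would be compiled from annotated sessions in the service's
# own language and could be enriched when the model is transferred.
CLOSING_EXPRESSIONS = ("bye", "goodbye", "thank you", "thanks", "good night")


@dataclass
class Message:
    sender: str       # "user" or "counselor"
    text: str
    timestamp: float  # seconds since the session started


def contains_closing(text: str) -> bool:
    """Dictionary layer: check for any closing expression (substring match)."""
    lowered = text.lower()
    return any(expr in lowered for expr in CLOSING_EXPRESSIONS)


def classify_ending(messages: Sequence[Message], window: int = 4) -> str:
    """Label a session ending using illustrative rules only.

    Rule 1: if the user sent a closing expression within the last
            `window` messages, label the session "completed".
    Rule 2: otherwise, label it "premature departure".
    """
    tail = messages[-window:]
    for msg in tail:
        if msg.sender == "user" and contains_closing(msg.text):
            return "completed"
    return "premature departure"
```

Under these assumptions, transferring the sketch to another service would amount to replacing `CLOSING_EXPRESSIONS` with a locally compiled dictionary and adding or reordering rules inside `classify_ending`, while every decision remains traceable to an explicit rule.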

Further research can consider the following directions. First, in our study, we used the last four exchanges to build the model. There is a possibility that premature departure is a cumulative process with earlier signifiers. Using the full session may provide further insights on the topic. Second, while we opted for a simple solution to the identified classification problem, more complex methods with sophisticated text transformation and feature extraction, such as word embedding, and complex model architectures, such as deep learning algorithms, might be more appropriate in other contexts or when the classification problem warrants them. Third, building on our current model, future studies can investigate the association between premature departure and other variables to help explain and predict factors that may influence different session endings and, by extension, session efficacy or quality. Fourth, this study used a dataset from a synchronous service, but premature departure might also be relevant and important to asynchronous services. Future studies can consider modifying our model to fit such contexts and test its efficacy.

This study has several limitations. First, the model was developed primarily on spoken Cantonese linguistic patterns. Whether the model can also be effective in other languages needs further testing. Nonetheless, the proposed methodology itself is likely applicable to other text-based services and can be easily extended or transferred to other languages and cultural contexts. Other online health providers can readily build and test their own models based on ours. Second, the data came from a service that was designed for youth and young adults aged 11 to 35 years. Whether the proposed model can work well for other age groups with different communication styles and norms warrants further exploration. Third, given the anonymous accessibility of the platform, there are many possible external factors, such as an unstable internet connection or sudden urgent matters, that may account for a portion of premature departures. We asserted and tested that at least a portion of premature departures is due to internal factors, such as risk or the service failing to meet the user's needs. This should be further investigated by future studies. Finally, although we found that those who prematurely departed were also less satisfied with the service, further work is needed to verify this relationship due to the low response rate in the post-service questionnaire, which, as reviewed above, is not uncommon in the evaluation of online services.

5. Conclusion

In online text-based counseling services, the lack of a predetermined session duration is both an advantage, for its flexibility, and a limitation, for the possibility of abrupt termination. Our study offers a novel logic-based pattern matching decision model to help accurately detect cases of premature departure, which in turn was found to be negatively associated with user satisfaction and outcomes. Because a prematurely departed user might be at heightened risk, our model is a step towards systematically and cost-effectively identifying such incidents. The proposed method is transparent and highly reproducible, and it can be readily modified and transferred to other similar contexts. A better understanding of premature departure can help to improve the efficiency and effectiveness of text-based counseling services. This study presents an important first step in that direction.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

The research is supported by the Hong Kong Jockey Club Charities Trust with the partnership of five organizations (The Boys' and Girls' Clubs Association of Hong Kong, Caritas Hong Kong, Hong Kong Children and Youth Services, The Hong Kong Federation of Youth Groups, and St. James' Settlement).

Funding

This work was funded by the Hong Kong Jockey Club Charities Trust (2021-0174), the strategic theme-based research support by the University of Hong Kong, a CRF grant (C7151-20G) and the Humanities and Social Science Prestigious Fellowship.

We coined this term to underscore that 1) we are focusing on endings that are caused by the user's departure, as opposed to the counselor's (hence “departure”), 2) the ending is one without a closure, but it need not be sudden (hence “premature” and not “abrupt”).

The most widely used Chinese word segmentation module. URL: https://github.com/fxsjy/jieba.

Supplementary materials to this article can be found online at https://doi.org/10.1016/j.invent.2021.100486.

A slow response may trigger the departure of users; this condition is used to ensure that the user was still online when the counselor's message was sent. For this parameter, we tested 1 to 5 min and found that 3 min was the optimal threshold interval in terms of model performance as measured by the weighted average F1 score.
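The threshold selection described in this note can be sketched as a small parameter sweep scored by the weighted average F1. The code below is an illustration under stated assumptions, not the procedure used in the study: `weighted_f1` implements the standard support-weighted mean of per-class F1 scores, while `best_threshold` and the `classify` callable it receives are hypothetical names introduced here.

```python
from collections import Counter
from typing import Callable, Dict, Iterable, Sequence, Tuple


def weighted_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Per-class F1, averaged with weights equal to each class's support."""
    support = Counter(y_true)
    total = len(y_true)
    score = 0.0
    for label, n in support.items():
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = n - tp
        denom = 2 * tp + fp + fn
        f1 = 2 * tp / denom if denom else 0.0
        score += (n / total) * f1
    return score


def best_threshold(
    candidates: Iterable[float],
    classify: Callable[[object, float], str],  # hypothetical classifier
    sessions: Sequence[object],
    y_true: Sequence[str],
) -> Tuple[float, Dict[float, float]]:
    """Score each candidate threshold and return the best one with all scores."""
    scores = {
        minutes: weighted_f1(y_true, [classify(s, minutes) for s in sessions])
        for minutes in candidates
    }
    best = max(scores, key=scores.get)  # first maximum wins on ties
    return best, scores
```

A caller would pass the candidate intervals (for example, 1 to 5 min), a classifier that accepts a session and a threshold, and the human-annotated labels. Ties are resolved in favor of the first candidate, which is a design choice of this sketch rather than something stated in the study.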

Contributor Information

Christian S. Chan, Email: shaunlyn@hku.hk.

Paul S.F. Yip, Email: sfpyip@hku.hk.

References

- Amir A., Landau G.M., Lewenstein M., Sokol D. Dynamic text and static pattern matching. ACM Trans. Algorithms. 2007;3(2) doi: 10.1145/1240233.1240242.

- Arts S., Cassiman B., Gomez J.C. Text matching to measure patent similarity. Strateg. Manag. J. 2018;39(1):62–84. doi: 10.1002/smj.2699.

- Barrett M.S., Chua W.J., Crits-Christoph P., Gibbons M.B., Casiano D., Thompson D. Early withdrawal from mental health treatment: implications for psychotherapy practice. Psychotherapy (Chic) 2008;45(2):247–267. doi: 10.1037/0033-3204.45.2.247.

- Bonneson M.E., Hartsough D.M. Development of the crisis call outcome rating scale. J. Consult. Clin. Psychol. 1987;55(4):612–614. doi: 10.1037/0022-006X.55.4.612.

- Connell J., Barkham M., Mellor-Clark J. The effectiveness of UK student counselling services: an analysis using the CORE system. Br. J. Guid. Couns. 2008;36(1):1–18. doi: 10.1080/03069880701715655.

- Crisis Text Line. Crisis trends. 2021. Retrieved from https://crisistrends.org/#visualizations

- de Haan A.M., Boon A.E., de Jong J.T., Hoeve M., Vermeiren R.R. A meta-analytic review on treatment dropout in child and adolescent outpatient mental health care. Clin. Psychol. Rev. 2013;33(5):698–711. doi: 10.1016/j.cpr.2013.04.005.

- Fukkink R.G., Hermanns J.M. Children's experiences with chat support and telephone support. J. Child Psychol. Psychiatry. 2009;50(6):759–766. doi: 10.1111/j.1469-7610.2008.02024.x.

- Hayes A.F., Krippendorff K. Answering the call for a standard reliability measure for coding data. Commun. Methods Meas. 2007;1(1):77–89. doi: 10.1080/19312450709336664.

- Hoermann S., McCabe K.L., Milne D.N., Calvo R.A. Application of synchronous text-based dialogue Systems in Mental Health Interventions: systematic review. J. Med. Internet Res. 2017;19(8) doi: 10.2196/jmir.7023.

- Hung B.W.K., Jayasumana A.P., Bandara V.W. Detecting Radicalization Trajectories Using Graph Pattern Matching Algorithms. IEEE International Conference on Intelligence and Security Informatics: Cybersecurity and Big Data. 2016. pp. 313–315.

- Indhuja K., Raj P.C.R. Fuzzy Logic Based Sentiment Analysis of Product Review Documents. 2014 First International Conference on Computational Systems and Communications (Iccsc) 2014. pp. 18–22.

- Lifeline. Impact report. 2021. Retrieved from https://www.lifeline.org.au/media/i42pflqj/lifeline_impact_report_autumn_2021_web.pdf

- Lipson S.K., Lattie E.G., Eisenberg D. Increased rates of mental health service utilization by U.S. College students: 10-year population-level trends (2007-2017) Psychiatr. Serv. 2019;70(1):60–63. doi: 10.1176/appi.ps.201800332.

- Mathieu S.L., Uddin R., Brady M., Batchelor S., Ross V., Spence S.H., Watling D., Kõlves K. Systematic review: the state of research into youth helplines. J. Am. Acad. Child Adolesc. Psychiatry. 2021;60(10):1190–1233. doi: 10.1016/j.jaac.2020.12.028.

- Mazor K.M., Clauser B.E., Field T., Yood R.A., Gurwitz J.H. A demonstration of the impact of response bias on the results of patient satisfaction surveys. Health Serv. Res. 2002;37(5):1403–1417. doi: 10.1111/1475-6773.11194.

- Menger V., Scheepers F., van Wijk L.M., Spruit M. DEDUCE: a pattern matching method for automatic de-identification of Dutch medical text. Telematics Inform. 2018;35(4):727–736. doi: 10.1016/j.tele.2017.08.002.

- Mokkenstorm J.K., Eikelenboom M., Huisman A., Wiebenga J., Gilissen R., Kerkhof A., Smit J.H. Evaluation of the 113Online suicide prevention crisis chat service: outcomes, helper behaviors and comparison to telephone hotlines. Suicide Life Threat. Behav. 2017;47(3):282–296. doi: 10.1111/sltb.12286.

- Nesmith A. Reaching young people through texting-based crisis counseling. Adv. Soc. Work. 2018;18(4):1147–1164. doi: 10.18060/21590.

- O'Keeffe S., Martin P., Target M., Midgley N. 'I just stopped Going': a mixed methods investigation into types of therapy dropout in adolescents with depression. Front. Psychol. 2019;10 doi: 10.3389/fpsyg.2019.00075.

- Reis B.F., Brown L.G. Reducing psychotherapy dropouts: maximizing perspective convergence in the psychotherapy dyad. Psychotherapy. 1999;36(2):123–136. doi: 10.1037/h0087822.

- Reynolds D.J., Jr., Stiles W.B., Bailer A.J., Hughes M.R. Impact of exchanges and client-therapist alliance in online-text psychotherapy. Cyberpsychol. Behav. Soc. Netw. 2013;16(5):370–377. doi: 10.1089/cyber.2012.0195.

- Rickwood D., Wallace A., Kennedy V., O'Sullivan S., Telford N., Leicester S. Young people's satisfaction with the online mental health service eheadspace: development and implementation of a service satisfaction measure. JMIR Ment. Health. 2019;6(4) doi: 10.2196/12169.

- Saxon D., Ricketts T., Heywood J. Who drops-out? Do measures of risk to self and to others predict unplanned endings in primary care counselling? Couns. Psychother. Res. 2010;10(1):13–21. doi: 10.1080/14733140902914604.

- Sebastiani F. Machine learning in automated text categorization. ACM Comput. Surv. 2002;34(1):1–47. doi: 10.1145/505282.505283.

- Sokolova M., Lapalme G. A systematic analysis of performance measures for classification tasks. Inf. Process. Manag. 2009;45(4):427–437. doi: 10.1016/j.ipm.2009.03.002.

- Szlyk H.S., Roth K.B., Garcia-Perdomo V. Engagement with crisis text line among subgroups of users who reported suicidality. Psychiatr. Serv. 2020;71(4):319–327. doi: 10.1176/appi.ps.201900149.

- Toh T.S., Dondelinger F., Wang D. Looking beyond the hype: applied AI and machine learning in translational medicine. EBioMedicine. 2019;47:607–615. doi: 10.1016/j.ebiom.2019.08.027.

- Watson D.S., Krutzinna J., Bruce I.N., Griffiths C.E., McInnes I.B., Barnes M.R., Floridi L. Clinical applications of machine learning algorithms: beyond the black box. BMJ. 2019;364 doi: 10.1136/bmj.l886.

- Wierzbicki M., Pekarik G. A meta-analysis of psychotherapy dropout. Prof. Psychol. Res. Pract. 1993;24 doi: 10.1037/0735-7028.24.2.190.

- Windle E., Tee H., Sabitova A., Jovanovic N., Priebe S., Carr C. Association of Patient Treatment Preference with Dropout and Clinical Outcomes in adult psychosocial mental health interventions: a systematic review and meta-analysis. JAMA Psychiatry. 2020;77(3):294–302. doi: 10.1001/jamapsychiatry.2019.3750.

- Xu Z., Xu Y., Cheung F., Cheng M., Lung D., Law Y.W., Yip P.S. Detecting suicide risk using knowledge-aware natural language processing and counseling service data. Soc. Sci. Med. 2021;283 doi: 10.1016/j.socscimed.2021.114176.

- Yip P., Chan W.L., Cheng Q., Chow S., Hsu S.M., Law Y.W., Yeung T.K. A 24-hour online youth emotional support: opportunities and challenges. Lancet Reg. Health West Pac. 2020;4 doi: 10.1016/j.lanwpc.2020.100047.
