Abstract

Certain facial features provide useful information for recognition of facial expressions. In two experiments, we investigated whether foveating informative features of briefly presented expressions improves recognition accuracy and whether these features are targeted reflexively when not foveated. Angry, fearful, surprised, and sad or disgusted expressions were presented briefly at locations which would ensure foveation of specific features. Foveating the mouth of fearful, surprised and disgusted expressions improved emotion recognition compared to foveating an eye or cheek or the central brow. Foveating the brow led to equivocal results in anger recognition across the two experiments, which might be due to the different combinations of emotions used. There was no consistent evidence suggesting that reflexive first saccades targeted emotion-relevant features; instead, they targeted the feature closest to initial fixation. In a third experiment, angry, fearful, surprised and disgusted expressions were presented for 5 seconds. Duration of task-related fixations in the eyes, brow, nose and mouth regions was modulated by the presented expression. Moreover, longer fixation at the mouth positively correlated with anger and disgust accuracy both when these expressions were freely viewed (Experiment 2b) and when briefly presented at the mouth (Experiment 2a). Finally, an overall preference to fixate the mouth across all expressions correlated positively with anger and disgust accuracy. These findings suggest that foveal processing of informative features contributes functionally to emotion recognition, that these features are not automatically sought out when not foveated, and that facial emotion recognition performance is related to idiosyncratic gaze behaviour.

Introduction

Foveal processing of emotion-informative facial features

In this study, we investigate the differential contributions of foveal and extrafoveal visual processing of facial features to the identification of facially expressed emotions [see also e.g., 1]. The fovea, a small region of the retina that corresponds to the central 1.7° of the visual field [2], is preferentially specialized for processing fine spatial detail. With increasing eccentricity from the fovea, there is a decline in both visual acuity (i.e., the spatial resolving capacity of the visual system) and contrast sensitivity (i.e., the ability to detect differences in contrast) [3,4]. Extrafoveal (or more broadly, peripheral) vision also differs qualitatively from central vision, receiving different processing and optimized for different tasks [4,5]. At normal interpersonal distances of approximately 0.45 to 2.1 m [6] not all features of one person’s face can fall within another person’s fovea at once [7]; at a distance of around 0.7 m, for example, the size of the foveated region would encompass most of one eye. Thus, at such interpersonal distances, detailed vision of another’s face requires multiple fixations, bringing different features onto the fovea. Features falling outside the fovea nevertheless receive some visual processing, perhaps determining the next fixation location and even contributing directly to the extraction of socially relevant information, such as identity and emotion.

Many aspects of face perception, including the recognition of emotional expressions, rely on a combination of part- or feature-based and configural or holistic processing [8,9]. Although configural or holistic processing appears to contribute more to face perception at normal interpersonal distances than at larger distances [10–12, though see 13], the extraction of information from individual facial features nevertheless helps underpin a range of face perception abilities, including facial emotion recognition, even when the face subtends a visual angle equivalent to a real face viewed at a normal interpersonal distance. Findings from studies that involved presenting observers with face images filtered with randomly located Gaussian apertures or “Bubbles” whose size and corresponding spatial frequency content varied have shown that certain facial features are more informative than others for recognition and discrimination of each basic emotional expression from the others [14–17]. For anger, for example, the brow region is informative, especially at mid-to-high spatial frequencies (15–60 cycles per image, where the face takes up most of the image); for fear, the eye region is informative, especially at high spatial frequencies (60–120 cycles per image), and the mouth at lower spatial frequencies (3.8–15 cycles per image); for disgust, the mouth and sides of the nose are most informative, especially at the middle to the highest spatial frequencies (15–120 cycles per image). The relative contribution to emotion classification performance of these emotion-informative regions and of the spatial frequency information at those regions can vary depending on the combination of emotions used in the task, however [16,17].

In a previous study, we showed that emotion classification performance varies according to which emotion-informative facial feature is fixated [1]. In that study, we used a slightly modified version of a ‘brief-fixation paradigm’ developed by Gamer and Büchel [18], in which faces were presented for 80 ms, a time insufficient for a saccade, at a spatial position that guaranteed that a given feature–the left or right eye, the left or right cheek, the central brow, or mouth–fell at the fovea. In one experiment, participants classified angry, fearful, happy and emotionally neutral faces, and in another experiment a different group of participants classified angry, fearful, surprised and neutral faces. Across both experiments, observers were more accurate and faster at discriminating angry expressions when the brow was projected to their fovea than when one or other cheek or eye (but not mouth) was, an effect that was principally associated with a reduction in the misclassifications of anger as emotionally neutral. This finding is consistent with the importance of mid-to-high spatial-frequency information from the brow in allowing observers to distinguish angry expressions from expressions of other basic emotions [14–17]. Yet, in the first experiment, performance in classifying fear and happiness was not influenced by whether the most informative features (eyes and mouth, respectively) were projected foveally or extrafoveally. In the second experiment, observers more accurately classified fearful and surprised expressions when the mouth was projected to the fovea, effects that were principally associated with reductions in the number of confusions between these two emotions. 
This enhanced ability to distinguish between fearful and surprised expressions when the mouth was fixated is consistent with previous work showing that the mouth distinguishes fearful from surprised as well as from neutral and angry expressions, whereas the eyes and brow do not distinguish between prototypical fearful and surprised expressions [14,15,19,20].

In the context of the brief-fixation paradigm, while the effect of enforced fixation on a facial feature informs us about whether foveal processing of that feature is beneficial for recognition of target expressions, the subsequent saccades might inform us about what extrafoveal information is being sought out following initial fixation on a facial feature. Previous research using the brief-fixation paradigm revealed that there was a higher proportion of reflexive first saccades upwards from fixation on the mouth than downwards from fixation on the eyes or on a midpoint between the eyes and that this effect was modified by the viewed emotion [18,21–26]. In these experiments, observers classified either angry, fearful, happy and neutral faces, or just fearful, happy and neutral faces, which were presented for 150 ms. Reflexive first saccades were defined as the first saccades from enforced fixation on the face that occurred within 1 s of face offset. The greater propensity for observers to saccade upwards from fixation on the mouth than downwards from fixation on or between the eyes tended to be evident for fearful, neutral and angry faces and markedly reduced or absent for happy faces. Might these saccades, which were presumably triggered in response to the face, be targeting the informative facial features of the expression (e.g., the eyes of the fearful expressions) when not initially fixated? That is the implication in at least some of these previous studies, in which the authors summarize the upward saccades from the mouth as ‘toward the eyes’ and the downward saccades from the eyes as ‘toward the mouth’, and even sometimes claim that their findings show that people reflexively saccade toward diagnostic emotional facial features [see especially 18,22,26]. The suggestion that the appearance of emotion-informative facial features outside foveal vision can trigger reflexive eye-movements towards these features gains some support from a study by Bodenschatz et al. 
[27]: when primed with fearful faces, observers fixated the eye region of a subsequently presented neutral face more quickly and dwelled on this region for longer, whereas when primed with a happy face, compared to a sad prime, they fixated the mouth region more quickly and dwelled on this region for longer.

Yet, in the context of the brief-fixation paradigm, our previous study [1] did not find any evidence to indicate that reflexive first saccades preferentially target emotion-distinguishing facial features. Although we replicated the key finding of more upward saccades from enforced fixation on the mouth than downward saccades from enforced fixation on the eyes, the modulation of this effect by the expressed emotion was evident in our first experiment, in which angry, fearful, happy and neutral faces were used (the effect was evident for angry and neutral faces, less so for fearful faces, and not at all for happy faces), but not in our second experiment, in which angry, fearful, surprised and neutral faces were used. Moreover, in an attempt to provide a more spatially precise measure of the extent to which reflexive first saccades target diagnostic features, we calculated a saccade path measure by projecting the vector of each first saccade on to the vectors from the enforced fixation location to each of the other facial locations of interest, normalized for the length of the target vector (given that the target locations vary in distance from a given fixation location). Using this measure, we did not find any support for the hypothesis that observers’ first saccades from initial fixation on the face will seek out emotion-distinguishing features. Instead, we found that reflexive first saccades tended towards the left and centre of the face, which might reflect one or more of (a) a centre-of-gravity effect, that is, a strong tendency for first saccades to be to the geometric center of scenes or configurations [e.g., 28–31], including faces [e.g., 32], (b) the left visual field/right hemisphere advantage in emotion perception [e.g., 33–35] and (c) the strong tendency for first saccades onto a face to target a location below the eyes, just to the left of face centre, which is also the optimal initial fixation point for determining a face’s emotional expression [36].

In the first two of three experiments reported in the present paper, we extend our previous work by using the same brief-fixation method to investigate whether fixating an informative facial feature improves emotion recognition over fixating non-informative facial features, here using different combinations of emotion: angry, fearful, surprised and sad faces in Experiment 1 and angry, fearful, surprised and disgusted faces in Experiment 2a. We aimed particularly to answer the following questions: (1) Would fixation on the brow of angry faces improve emotion classification accuracy even when ‘neutral’ was no longer a response option or stimulus condition? (2) Would the enhanced ability to distinguish between fearful and surprised expressions when fixating the mouth still be evident when those expressions are presented along with different combinations of emotions? (3) Would fixation on the brow or mouth enhance classification accuracy for sad expressions, relative to fixation on an eye or cheek, given that observers rely on medium-to-high spatial frequency information at both these regions to classify sad faces [14–17]? (4) Would fixation on the mouth enhance classification accuracy for disgusted expressions, relative to fixation on the brow, an eye or cheek, given that observers rely on medium-to-high spatial frequency information on and around the mouth to classify disgusted faces [14–17]? We also here extend our previous work [1] by examining the direction and paths of reflexive first saccades triggered by the onset of the briefly presented face, to further test the hypothesis that those saccades target emotion-informative facial features. Although we found no support for this hypothesis in our previous study, additional tests of this hypothesis with different combinations of emotions are warranted.

In the last of the three experiments reported in this paper (Experiment 2b), we presented faces for a longer duration, thus allowing observers to freely fixate multiple locations on the face. Our aims were to investigate whether different facial expressions elicit specific fixation patterns under task instructions to classify the expression and whether these fixation patterns are related to emotion classification performance. A small number of eye-tracking studies indicate that emotion-informative facial features modulate eye movements when observers view images of facial expressions [26,37–40]. However, these studies either did not require participants to classify the facially expressed emotion or, when they did, the relationship between the amount of time spent fixating informative features and emotion recognition performance was not examined. These studies therefore do not address whether the increased time spent fixating an informative facial feature contributes to the accuracy of facial expression recognition, except for one recent study [41], which found that when the observers misclassified expressions, they explored regions of the face that supported the recognition of the mistaken expression.

Nonetheless, there is some, albeit limited, evidence that fixation of emotion-distinguishing features whilst free-viewing faces aids emotion recognition. Notably, a selective impairment in recognizing fear from faces associated with bilateral amygdala damage is the result of a failure to saccade spontaneously to and thus fixate the eye region [42], a region that is informative for fear [15,17]. Remarkably, instructing the patient with bilateral amygdala damage to fixate the eyes restored fear recognition performance to normal levels [42]. Recognition of negatively-valenced emotions (i.e., fear, anger, sadness), especially fear, was shown to be impaired in individuals with Autism Spectrum Disorders (ASDs) and the impairment is linked to a decreased preference to fixate or saccade to the eye region of the facial expressions [25,43]. Another study with neurotypical participants found that accuracy in detecting emotional expressions was predicted by participants’ fixation patterns, particularly with fixations to the eyes and, to a lesser extent, the nose and mouth, though mostly for subtle rather than strong expressions [44]. These studies suggest that fixating on the informative facial features aids successful emotion recognition and failure to do so leads to impairments in emotion recognition. Yet it remains unclear the extent to which this reflects an emotion-specific pattern (i.e., more or longer fixations for one feature for a particular emotion and for a different feature for a different emotion) as opposed to a pattern reflecting the relative importance of particular facial features irrespective of the emotion. Moreover, to our knowledge, existing evidence does not support an alternative hypothesis, namely, that because observers are more efficient at extracting task-relevant information from emotion-distinguishing facial features they fixate those features less.

Experiment 1

In this experiment, we used the same modified version of Gamer and Büchel’s [18] brief-fixation paradigm used by Atkinson and Smithson [1], here with angry, fearful, surprised and sad expressions, to test two hypotheses: (1) A single fixation on an emotion-distinguishing facial feature will enhance emotion identification performance compared to when another part of the face is fixated (and thus the emotion-informative feature is projected to extrafoveal retina). (2) Under these task conditions, observers’ reflexive first saccades from initial fixation on the face will preferentially target emotion-distinguishing facial features when those features are not already fixated.

Based on previous work showing that the brow is informative for anger recognition [14–17] and the mouth for distinguishing between fear and surprise [14,15,20], and in line with our previous findings [1], we predicted that enforced fixation on (1) the central brow would improve emotion recognition for anger compared to other initial fixation locations, and (2) the mouth would improve emotion recognition accuracy for fearful and surprised expressions. Based on previous research showing that the brow and mouth are informative for the recognition of sadness [14–16], we also predicted that (3) enforced fixation on the brow and mouth would improve accuracy for sad expressions, relative to fixation on a cheek and either eye. The cheeks were chosen as fixation locations to be relatively uninformative regions for the chosen expressions.

To test the second hypothesis, that reflexive first saccades would target emotion-informative facial features, we used a measure of saccade direction that mapped the direction of the reflexive saccades onto six possible saccade paths leading to the target facial features. If the reflexive saccades do indeed target emotion-informative facial features, then the paths of those saccades would be expected to be more similar to the paths leading to the relevant informative features than to the paths leading to the less-informative features. On the other hand, the direction of the reflexive saccades might instead reflect a central or a leftward bias, or both, as we have found previously [1].

Method

Participants

Thirty-three participants took part (31 female; mean age = 20.7 years, age range = 18–31). All participants were undergraduate Psychology students and had normal or corrected-to-normal vision. All participants gave written consent to take part in the experiment and were awarded participant pool credit for their participation. The study was approved by the Durham University Psychology Department Ethics Sub-committee.

Materials

Twenty-four facial identities (12 males, 12 females) were chosen from the Radboud Faces Database [45]. All faces were of White adults with full frontal pose and gaze. Angry, fearful, surprised and sad expressions from each identity were used, giving a total of 96 images. The images used were spatially aligned, as detailed in [45]. The images were presented in colour and were cropped from their original size to 384 (width) × 576 (height) pixels, so that the face took up more of the image than in the original image set. Each image therefore subtended 14.9 (w) × 22.3 (h) degrees of visual angle at a viewing distance of 57 cm on a 1024 × 768 resolution screen.

Design

A within-subjects design was used, with Expression (anger, fear, surprise and sadness) and Fixation Location (eyes, brow, cheeks, and mouth) as repeated-measures variables. There were 4 blocks of 96 trials. Over the course of 4 blocks, participants were presented with each emotion at each of the 4 initial fixation locations 24 times (once for each identity), with the eye and the cheek fixation locations selected equally (12 times) on the left and right. The stimuli were randomly ordered across the 4 blocks, with a new random order for each participant.
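The trial counts in this design can be checked with a short sketch. It is illustrative only: the function name `build_trials`, the dictionary keys, and the random assignment of identities to the left or right side are assumptions, since the text states only that each side was used 12 times per emotion.

```python
import random
from itertools import product

EXPRESSIONS = ["anger", "fear", "surprise", "sadness"]
LOCATIONS = ["eyes", "brow", "cheeks", "mouth"]
N_IDENTITIES = 24
N_BLOCKS = 4


def build_trials(seed=None):
    """Return N_BLOCKS lists of trials covering every expression x location x identity."""
    rng = random.Random(seed)
    trials = []
    for expression, location in product(EXPRESSIONS, LOCATIONS):
        if location in ("eyes", "cheeks"):
            # Lateralised locations: 12 identities on the left, 12 on the right.
            # Which identity gets which side is an assumption (random here).
            sides = ["left", "right"] * (N_IDENTITIES // 2)
            rng.shuffle(sides)
        else:
            sides = [None] * N_IDENTITIES
        for identity, side in enumerate(sides):
            trials.append({"expression": expression, "location": location,
                           "side": side, "identity": identity})
    rng.shuffle(trials)  # new random order per participant
    block_size = len(trials) // N_BLOCKS
    return [trials[i * block_size:(i + 1) * block_size] for i in range(N_BLOCKS)]


blocks = build_trials(seed=1)
print([len(block) for block in blocks])
```

With 4 expressions, 4 fixation locations and 24 identities this gives 384 trials, which divides into the 4 blocks of 96 described above.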

Apparatus and procedure

The experiment was executed and controlled using the Matlab® programming language with the Psychophysics Toolbox extensions (Brainard, 1997; Pelli, 1997; Kleiner et al., 2007). To control stimulus presentation and to measure gaze behaviour, we used an EyeLink 1000 desktop-mounted eye-tracker (SR Research, Mississauga, ON, Canada). Each stimulus block was started with the default nine-point calibration and validation sequences. Recording was binocular, but only data from the left eye were analysed, since it was the left-eye signal that started each trial in a gaze-contingent manner. Default criteria for fixations, blinks, and saccades implemented in the EyeLink system were used.

Each trial started with a fixation cross located at one of 25 possible locations on the screen. This was to make both the exact screen location of the fixation cross and the to-be-fixated facial feature unpredictable. These 25 possible locations for the fixation cross were at 0, 25, or 50 pixels left or right and up or down from the centre of the screen. These fixation-cross positions were randomly ordered across trials. Following the presentation of the fixation cross at one of these randomly selected screen locations, a face image was presented so that one of the facial features of interest was aligned with the location of that fixation cross. Faces were presented in a gaze-contingent manner on each trial: the participant needed to fixate within 30 pixels (1.16 degrees of visual angle) of the fixation cross for 6 consecutive eye-tracking samples, following which a face showing one of the four target expressions was presented. The facial features of interest (which henceforth we refer to as ‘fixation locations’) were the left or right eye, the central brow, the centre of the mouth, and locations on the left and right cheeks. These initial fixation locations can be seen in Fig 1. The face was presented for 82.4 ms (7 monitor refreshes) on a monitor with an 85 Hz refresh rate. Following the face presentation, the participant pressed a key on a QWERTY keyboard to indicate their answer. The row of number keys near the top of the keyboard was used, with 4 for anger, 5 for fear, 8 for surprise and 9 for sadness. The keys were labelled A, F, Su and Sa from left to right and the order of these keys remained the same for each participant. Participants pressed the A and F keys with the left hand and the Su and Sa keys with the right hand. This configuration was chosen to optimize the reach of the participants to the keyboard from either side of the chinrest. Participants were asked to memorize the keys so as not to look down towards the keyboard during the experiment. A valid response needed to be registered for the next trial to begin.
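The geometry and timing of this procedure can be summarised in a minimal sketch. It is not the experiment code (which was written in Matlab with the Psychophysics Toolbox); the function names, the screen centre of (512, 384) implied by a 1024 × 768 display, and the treatment of the 30-pixel limit as inclusive are assumptions.

```python
import math
from itertools import product

OFFSETS_PX = (-50, -25, 0, 25, 50)  # 0, 25 or 50 px either side of centre
RADIUS_PX = 30                      # fixation tolerance around the cross
REQUIRED_SAMPLES = 6                # consecutive in-tolerance gaze samples
REFRESH_HZ = 85
N_FRAMES = 7


def cross_locations(centre=(512, 384)):
    """All possible fixation-cross positions (5 x 5 grid around the centre)."""
    return [(centre[0] + dx, centre[1] + dy)
            for dx, dy in product(OFFSETS_PX, repeat=2)]


def fixation_acquired(samples, cross):
    """Index of the gaze sample that completes the required run, or None."""
    run = 0
    for i, (x, y) in enumerate(samples):
        if math.hypot(x - cross[0], y - cross[1]) <= RADIUS_PX:
            run += 1
            if run >= REQUIRED_SAMPLES:
                return i
        else:
            run = 0  # the run must be consecutive, so restart the count
    return None


def face_duration_ms():
    """Nominal presentation time for N_FRAMES refreshes at REFRESH_HZ."""
    return 1000 * N_FRAMES / REFRESH_HZ


print(len(cross_locations()), round(face_duration_ms(), 1))
```

The grid yields the 25 cross positions, and 7 refreshes at 85 Hz give the nominal 82.4 ms presentation time stated above.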

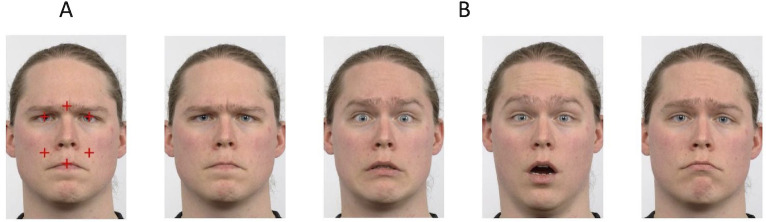

Fig 1. Initial fixation locations and example facial expressions used.

(A) An example face image used in the experiments (from the Radboud Faces Database [45]), overlaid, for illustrative purposes, with red (dark grey) crosses to mark the possible enforced fixation locations. (B) Example images of each expression used in Experiment 1 (left to right: anger, fear, surprise, sadness). The face images are republished in slightly adapted form from the Radboud Faces Database [45] under a CC BY license, with permission from Dr Gijsbert Bijlstra, Radboud University.

Data analysis

Our main analyses consisted of repeated measures ANOVAs and planned comparison t-tests. Where the assumption of sphericity was violated, the Greenhouse-Geisser corrected F-value is reported. Significant interactions were followed up by simple main effects analyses (repeated measures). Significant main effects were followed up with pairwise comparisons. Planned comparisons (one-tailed paired samples t-tests) were conducted to test the hypotheses relating to the fixation location specific to each expression, which led to three comparisons for each expression, i.e., for anger: brow > eyes, brow > mouth, brow > cheeks; and for fear, surprise and disgust: mouth > eyes, mouth > brow, mouth > cheeks. All planned and pairwise comparisons were corrected for multiple comparisons using the Bonferroni-Holm method (uncorrected p-values are reported). For ANOVAs, we report both the partial eta squared (ηp2) and generalized eta squared (ηG2) measures of effect size [46–48]; for the partial eta squared values we also report 90% confidence intervals (CIs) calculated using code extracted from the ‘ci.pvaf’ function in the MBESS package (ver. 4.6.0) for R [49,50]. For t-tests, we report Cohen’s dz effect size with 95% CIs, as output by JASP. The data are summarised in rain-cloud plots [51] using Matlab code from https://github.com/RainCloudPlots/, supplemented with error bars indicating the 95% CIs calculated in JASP (ver 0.10.2) using bias-corrected accelerated bootstrapping with 1000 samples.

Emotion classification accuracy. Trials with reaction times shorter than 200 ms were disregarded as automatic responses not reflecting a genuine perceptual response. For this experiment, there were no such outliers. Unbiased hit rates were calculated using the formula supplied by Wagner [52]. The “unbiased hit rate” (Hu) accounts for response biases in classification experiments with multiple response options [52]. Hu for each participant is calculated as the squared frequency of correct responses for a target emotion in a particular condition divided by the product of the number of stimuli in that condition representing this emotion and the overall frequency that that emotion category is chosen for that condition. Hu ranges from 0 to 1, with 1 indicating that all stimuli in a given condition representing a particular emotion have been correctly identified and that that emotion label has never been falsely selected for a different emotion.

Prior to analysis, the unbiased hit rates were arcsine square-root transformed in order to better approximate a normal distribution of the accuracy data. Emotion recognition accuracy was analysed by a 4 × 4 repeated measures ANOVA with emotion and initial fixation location as factors. Unless otherwise stated, the data from the left and right sides of the face (when the fixation location was an eye or cheek) were collapsed for the analyses.
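As a worked illustration of the unbiased hit rate and the subsequent transform, consider the sketch below. The function names and the example counts are hypothetical, and returning 0 when a response label was never used is an assumed convention (Hu is otherwise undefined in that case).

```python
import math


def unbiased_hit_rate(n_correct, n_stimuli, n_responses):
    """Hu = correct^2 / (stimuli of that emotion * uses of that response label)."""
    if n_responses == 0:
        return 0.0  # assumed convention: label never chosen, so no hits either
    return n_correct ** 2 / (n_stimuli * n_responses)


def arcsine_sqrt(p):
    """Arcsine square-root transform of a proportion in [0, 1]."""
    return math.asin(math.sqrt(p))


# Hypothetical cell: 24 fearful faces, 18 correctly labelled 'fear',
# and 'fear' chosen 20 times in total in that condition.
hu = unbiased_hit_rate(n_correct=18, n_stimuli=24, n_responses=20)
print(hu, arcsine_sqrt(hu))
```

In this hypothetical cell the raw hit rate would be 18/24 = .75, whereas Hu is 324/480 = .675, because two of the 20 'fear' responses were false alarms.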

Eye-movement analysis: Reflexive saccade selection criteria. Reflexive saccades were defined as saccades that were triggered by onset of a face image and executed following face offset. Accordingly, reflexive saccades used for the reported analyses were chosen as those that happened within the 82.4 ms to 1000 ms window after face onset [similar to e.g., 18,26]. In other words, the reflexive saccades used were the ones that were executed within 1000 ms after face offset. All reflexive saccades that had amplitudes smaller than 0.5 degrees were disregarded. Finally, of all the saccades that complied with these criteria, only the first saccade was used. After this data reduction, participants who had reflexive first saccades on fewer than 20% of the total trials per block (i.e., 20% of 96) in any one of the 4 blocks were removed from further analysis. This threshold was chosen to strike a balance between having enough trials per condition and not wanting to exclude the data for too many participants. All saccade direction related analyses were carried out on this set of data.
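These selection criteria can be expressed as a short filter. The sketch below is illustrative and rests on several assumptions: saccades are timed by their onset relative to face onset, the window is taken as 82.4 to 1000 ms after face onset with inclusive bounds, and the `Saccade` class and function names are hypothetical.

```python
from dataclasses import dataclass

FACE_DURATION_MS = 82.4    # face offset, relative to face onset
WINDOW_END_MS = 1000.0     # assumed upper bound, relative to face onset
MIN_AMPLITUDE_DEG = 0.5
MIN_PROPORTION = 0.20
TRIALS_PER_BLOCK = 96


@dataclass
class Saccade:
    onset_ms: float        # saccade onset relative to face onset (assumption)
    amplitude_deg: float


def first_reflexive_saccade(saccades):
    """Earliest saccade in the time window with sufficient amplitude, or None."""
    eligible = [s for s in saccades
                if FACE_DURATION_MS <= s.onset_ms <= WINDOW_END_MS
                and s.amplitude_deg >= MIN_AMPLITUDE_DEG]
    return min(eligible, key=lambda s: s.onset_ms) if eligible else None


def keep_participant(valid_trials_per_block):
    """Retain a participant only if every block reaches the 20% criterion."""
    threshold = MIN_PROPORTION * TRIALS_PER_BLOCK
    return all(n >= threshold for n in valid_trials_per_block)


trial = [Saccade(50, 2.0), Saccade(150, 0.3), Saccade(300, 1.2), Saccade(600, 3.0)]
print(first_reflexive_saccade(trial))
print(keep_participant([20, 25, 30, 40]), keep_participant([19, 25, 30, 40]))
```

Since 20% of 96 is 19.2, a participant needs reflexive first saccades on at least 20 trials in each block to be retained under this reading.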

Eye-movement analysis: Proportions up vs. down. These analyses are reported in the Supplementary Results and Discussion in S1 File, to allow comparison with previous studies [18,21–26].

Eye-movement analysis: Saccade paths. To estimate the paths of the reflexive saccades, six vectors were plotted from the starting coordinates of each saccade to the coordinates of the six possible initial fixation locations. These make up the saccade path vectors. Then, the dot products of the reflexive saccade vector and the six possible saccade path vectors were calculated and normalised to the magnitude of the saccade path vectors. This measure represents the similarity between the reflexive saccade path and the possible saccade path vectors. Identical vectors would produce a value of 1, whereas a saccade in exactly the same direction as a possible saccade path to a target but overshooting that target by 50% would produce a value of 1.5. Negative saccade path values, on the other hand, indicate a saccade that is going in the opposite direction of the possible saccade path. Trials in which more than 3 of the 5 normalized saccade path values (excluding the trajectory from the initial fixation location to itself) had absolute values greater than 1.5 were classed as outliers. This effectively excluded saccades that ended beyond the edge of the face. Such outliers accounted for 0.85% of the recorded measures and were excluded from the analyses.
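A minimal sketch of the saccade path measure follows. Dividing the dot product by the squared length of the path vector is an inference from the worked values given above (1 for identical vectors, 1.5 for a 50% overshoot) rather than a formula stated in the text, and the function names are hypothetical.

```python
def normalised_path_value(start, end, target):
    """Projection of the saccade (start -> end) onto the path (start -> target),
    expressed as a fraction of the path length."""
    sx, sy = end[0] - start[0], end[1] - start[1]
    px, py = target[0] - start[0], target[1] - start[1]
    norm_sq = px * px + py * py
    if norm_sq == 0:
        raise ValueError("target coincides with the saccade start point")
    return (sx * px + sy * py) / norm_sq


def is_outlier(path_values, limit=1.5, max_extreme=3):
    """True if more than max_extreme of the path values exceed limit in size."""
    return sum(abs(v) > limit for v in path_values) > max_extreme


start, target = (0.0, 0.0), (10.0, 0.0)
print(normalised_path_value(start, (10.0, 0.0), target))   # saccade lands on target
print(normalised_path_value(start, (15.0, 0.0), target))   # 50% overshoot
print(normalised_path_value(start, (-5.0, 0.0), target))   # opposite direction
```

A saccade perpendicular to a given path yields 0 on this measure, so values near 1 for one target and near 0 or below for the others indicate a saccade directed at that target.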

Results

Emotion classification accuracy

The unbiased hit rates are summarized in Fig 2. There was a main effect of emotion, F(1.66, 53.06) = 17.02, p < .001, ηp2 = .35, 90% CI [.17 .47], ηG2 = .1, reflecting worse accuracy for fear compared to the other emotions (all uncorrected ps < .001). A main effect of fixation location, F(3, 96) = 3.43, p = .02, ηp2 = .1, 90% CI [.01 .18], ηG2 = .02, reflected higher recognition accuracy for fixation on the mouth (M = 0.722, SD = 0.114) compared to the cheeks (M = 0.685, SD = 0.116), p = .006, dz = 0.51 (Bonferroni-Holm adjusted α = .0083) and eyes (M = 0.685, SD = 0.116), p = .009, dz = 0.48 (Bonferroni-Holm adjusted α = .01). Importantly, there was a significant interaction between these factors, F(5.49, 175.63) = 3.68, p = .003, ηp2 = .1, 90% CI [.02 .15], ηG2 = .02, which we followed up with simple main effects analyses.

Fig 2. Emotion classification accuracy (mean unbiased hit rates) as a function of emotion category and fixation location in Experiment 1.

Red circles indicate the mean value across participants and error bars indicate the 95% CIs (see Methods). The raincloud plot combines an illustration of data distribution (the ‘cloud’) with jittered individual participant means (the ‘rain’) for each condition [51].

There was a main effect of fixation location for angry expressions, F(3,96) = 3.14, p = .029, ηp2 = .09, 90% CI [.005 .17], ηG2 = .04. Planned comparisons revealed that anger recognition accuracy with fixation at the brow (M = 0.755, SD = 0.112) was higher compared to fixation at the cheeks (M = 0.7, SD = 0.132), t(32) = 2.45, p = .01, dz = 0.43, 95% CI [0.12 ∞] (Bonferroni-Holm adjusted α = .0167). The full pairwise comparisons additionally revealed that fixation on the mouth (M = 0.754, SD = 0.127) led to significantly higher anger accuracy compared to fixation on the cheeks, t(32) = 2.92, p = .006, dz = 0.51, 95% CI [0.14 0.87] (Bonferroni-Holm adjusted α = .0083).

There was a main effect of fixation location for fearful faces, F(3, 96) = 3.83, p = .012, ηp2 = .11, 90% CI [.01 .19], ηG2 = .03. Planned comparisons showed that fixation on the mouth (M = 0.658, SD = 0.185) led to improved fear recognition compared both to the eyes (M = 0.59, SD = 0.141), t(32) = 2.98, p = .003, dz = 0.52, 95% CI [0.21 ∞] and the brow (M = 0.604, SD = 0.171), t(32) = 2.55, p = .008, dz = 0.44, 95% CI [0.14 ∞]. Fear recognition accuracy was also greater with fixation on the mouth than on the cheeks (M = 0.616, SD = 0.192), though this effect did not survive correction for multiple comparisons, t(32) = 2.04, p = .025, dz = 0.36, 95% CI [0.06 ∞]. The full pairwise comparisons did not reveal any additional differences between the fixation locations.

There was also a main effect of fixation location for surprised faces, F(3, 96) = 5.78, p = .001, ηp2 = .15, 90% CI [.04 .24], ηG2 = .05. Planned comparisons confirmed higher accuracy for surprise with fixation at the mouth (M = 0.746, SD = 0.129) compared to an eye (M = 0.664, SD = 0.132), t(32) = 4.28, p < .001, dz = 0.75, 95% CI [0.42 ∞] and the brow (M = 0.691, SD = 0.14), t(32) = 3.19, p = .002, dz = 0.56, 95% CI [0.24 ∞]. There was no difference in accuracy between fixation at the mouth and fixation at a cheek (M = 0.709, SD = 0.154), t(32) = 1.51, p = .071. The full pairwise comparisons did not reveal any additional differences between the fixation locations. Finally, there was no effect of initial fixation location for sad expressions (F < 1, p > .4).

To further investigate the effect of fixation location, we also examined the confusion matrices to identify whether there were systematic misclassifications of the target expressions as another emotion (see Table 1). Anger was most often misclassified as sadness, but this misclassification was reduced when angry expressions were presented with initial fixation at the brow. Fearful expressions tended to be misclassified as surprised and this misclassification was reduced when the fearful expression was presented with initial fixation at the mouth.

Table 1. Confusion matrices for Experiment 1 (brief fixation paradigm).

| | Anger | Fear | Surprise | Sadness | Anger | Fear | Surprise | Sadness |
|---|---|---|---|---|---|---|---|---|
| | Eyes | | | | Brow | | | |
| Anger | 84.22 | 2.90 | 1.64 | 11.24 | 86.74 | 2.15 | 1.77 | 9.34 |
| Fear | 3.66 | 70.45 | 22.98 | 2.90 | 3.41 | 69.07 | 23.23 | 4.29 |
| Surprise | 1.14 | 10.48 | 86.24 | 2.15 | 1.26 | 7.95 | 88.89 | 1.89 |
| Sadness | 9.22 | 1.64 | 2.90 | 86.24 | 9.34 | 1.39 | 2.53 | 86.74 |
| | Cheeks | | | | Mouth | | | |
| Anger | 82.32 | 2.53 | 1.01 | 14.14 | 85.98 | 1.52 | 0.25 | 12.25 |
| Fear | 4.17 | 71.46 | 20.58 | 3.79 | 2.78 | 74.87 | 16.79 | 5.56 |
| Surprise | 1.64 | 9.22 | 87.88 | 1.26 | 1.39 | 8.59 | 89.14 | 0.88 |
| Sadness | 10.48 | 1.64 | 1.64 | 86.24 | 9.47 | 1.39 | 1.89 | 87.25 |

One confusion matrix is shown for each of the 4 initial fixation locations (eyes, brow, cheeks, mouth). The row labels indicate the presented expression and the column labels indicate the participant responses. The data are the percentage of trials on which each emotion category was given as the response to the presented expression. Percentages reported in bold represent the most prevalent confusions for each expression.
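As a minimal illustration of how such a matrix can be tabulated, the sketch below row-normalises response counts so that each presented expression sums to 100%. The trial list is hypothetical and the function name is illustrative; this is not the analysis code used in the study.

```python
from collections import Counter

EMOTIONS = ["anger", "fear", "surprise", "sadness"]


def confusion_percentages(trials):
    """Return {presented: {response: percentage}}; each non-empty row sums to 100."""
    counts = Counter(trials)  # (presented, response) -> number of trials
    matrix = {}
    for presented in EMOTIONS:
        row_total = sum(counts[(presented, r)] for r in EMOTIONS)
        matrix[presented] = {
            r: (100.0 * counts[(presented, r)] / row_total if row_total else 0.0)
            for r in EMOTIONS
        }
    return matrix


# Hypothetical trials for one fixation location (not the study's data).
trials = (
    [("fear", "fear")] * 7
    + [("fear", "surprise")] * 2
    + [("fear", "anger")] * 1
    + [("anger", "anger")] * 9
    + [("anger", "sadness")] * 1
)
matrix = confusion_percentages(trials)
print(matrix["fear"])
```

In this toy example, 20% of fearful trials are classified as surprise, which is the kind of off-diagonal entry highlighted in Table 1.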

Eye movement analysis

We conducted analyses of saccade direction using the saccade path measure described in Data Analysis to compare the paths of reflexive saccades to possible saccade paths targeting one of the other initial fixation locations. We first analysed the saccade path measures collapsed across fixation location and then for each fixation location separately. If reflexive first saccades target emotion-distinguishing features, then we would expect those saccades to be directed more strongly towards the brow and possibly also the mouth for angry and sad faces, and more strongly towards the mouth for fearful and surprised faces.

Reflexive saccade data from three participants were removed from the analysis of eye-movement data since they did not meet the criteria for inclusion. The following analyses were therefore conducted on the remaining 30 participants. On average, for these 30 participants, 70% (range: 32%-98%) of all trials included a reflexive saccade.

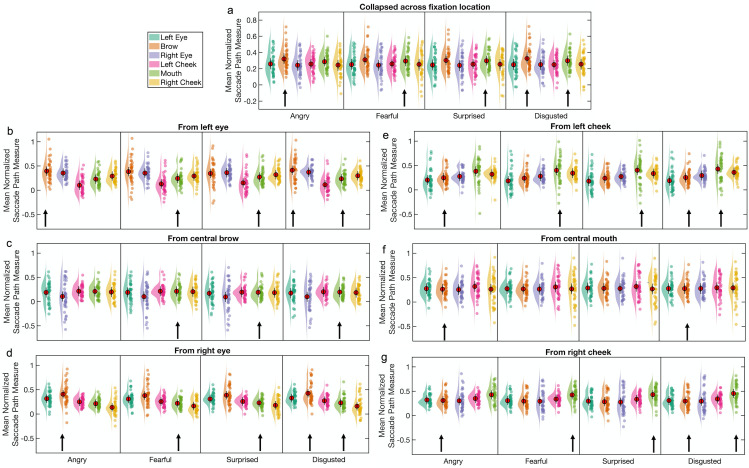

Saccade path analysis: Collapsed across fixation location. The mean saccade paths of the first saccades were calculated for each emotion and collapsed across all the initial fixation locations. A visualisation of these data can be seen in Fig 3A. A 4 × 6 repeated measures ANOVA was used to compare the mean saccade paths towards each of the six possible saccade targets for each expression. A main effect of target location, F(1.82, 52.82) = 8.71, p < .001, ηp2 = .23, 90% CI [.07 .36], ηG2 = .13, was modified by an interaction with emotion, F(7.39, 214.44) = 4.76, p < .001, ηp2 = .14, 90% CI [.05 .19], ηG2 = .007. There was a negligible main effect of emotion (F = 1.58, p = .2). Simple main effects analyses revealed differences in the mean normalized saccade path values between target locations for all 4 emotions (all Fs > 6.5, ps < .001) and between emotions for the 3 upper-face target locations (left eye, brow, right eye; Fs > 3.9, ps < .015) but not for the 3 lower-face target locations (left cheek, mouth, right cheek; Fs < 2.5, ps > .05). Pairwise comparisons to follow up the main effect of target location revealed that reflexive first saccades were more strongly in the direction of the brow (M = 0.303, SD = 0.111) than of any of the other 5 target locations: left eye (M = 0.244, SD = 0.117), t(29) = 3.87, p < .001, dz = 0.71, 95% CI [0.3 1.1]; right eye (M = 0.185, SD = 0.134), t(29) = 6.17, p < .001, dz = 1.13, 95% CI [0.66 1.58]; left cheek (M = 0.206, SD = 0.098), t(29) = 5.22, p < .001, dz = 0.95, 95% CI [0.51 1.38]; mouth (M = 0.208, SD = 0.101), t(29) = 4.13, p < .001, dz = 0.76, 95% CI [0.34 1.16]; right cheek (M = 0.167, SD = 0.124), t(29) = 6.12, p < .001, dz = 1.12, 95% CI [0.65 1.57]. 
This finding that saccades were more strongly in the direction of the brow than of any of the other 5 target locations was evident for all 4 emotions, as revealed by examining pairwise comparisons for each emotion separately, though after correction for multiple comparisons, this was not the case for the brow compared to the mouth and left eye of fearful faces (respectively, t = 2.94, p = .006, adjusted α = .0046, and t = 2.8, p = .009, adjusted α = .0056; all other ts > 3.5, ps ≤ .001). Collapsed across emotion, reflexive saccades were also more strongly in the direction of the left eye than of the left cheek, t(29) = 3.72, p < .001, dz = 0.68, 95% CI [0.28 1.07], an effect that was also evident for each emotion when examined separately, but only for angry and surprised faces after correction for multiple comparisons (respectively, t = 4.68, p < .001, adjusted α = .0046, and t = 3.15, p = .004, adjusted α = .005; all other ts < 3.2, ps > .003).

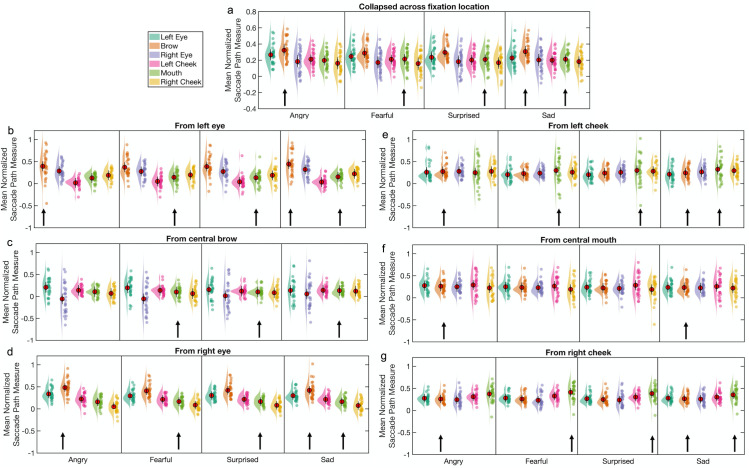

Fig 3. Mean normalized saccade paths as a function of facial expression and target location for Experiment 1.

The normalized saccade path is a measure of the directional strength of the reflexive first saccades (executed after face offset) towards target locations of interest, in this case (a) to 6 target locations, collapsed across initial fixation location (N = 30), and from (b) the left eye, (c) the brow, (d) the right eye, (e) the left cheek, (f) the mouth, and (g) the right cheek, to the remaining 5 regions of interest (N = 27). Red circles indicate the mean value across participants and error bars indicate the 95% CIs (see Methods). The raincloud plot combines an illustration of data distribution (the ‘cloud’) with jittered individual participant means (the ‘rain’) for each condition [51]. Arrows indicate the most emotion-informative (‘diagnostic’) facial features for each emotion.
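The exact definition of the saccade path measure is given in Data Analysis and is not repeated here. Purely as an illustration of what a normalized directional-strength measure can look like, the sketch below assumes the measure is the projection of the saccade vector onto the vector from the starting fixation to a target, divided by the distance to that target. This definition, the function name and the coordinates are all assumptions made for the example.

```python
def normalized_saccade_path(start, end, target):
    """Projection of the saccade onto the start->target vector, scaled by target distance.

    Returns 1.0 for a saccade that covers the full distance towards the target,
    0.0 for a saccade orthogonal to the target direction, and a negative value
    for a saccade directed away from the target. (Assumed definition.)
    """
    sx, sy = end[0] - start[0], end[1] - start[1]
    tx, ty = target[0] - start[0], target[1] - start[1]
    target_len_sq = tx * tx + ty * ty
    if target_len_sq == 0:
        raise ValueError("target coincides with the starting fixation")
    return (sx * tx + sy * ty) / target_len_sq


# Hypothetical coordinates in degrees of visual angle.
fixation = (0.0, 0.0)
target = (4.0, 0.0)
halfway = normalized_saccade_path(fixation, (2.0, 0.0), target)
orthogonal = normalized_saccade_path(fixation, (0.0, 3.0), target)
away = normalized_saccade_path(fixation, (-1.0, 0.0), target)
print(halfway, orthogonal, away)
```

A measure of this form can take negative values, as some of the means reported for saccades from the brow do.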

Another set of simple main effects analyses, comparing the mean normalized saccade path values between emotions for the brow and mouth target locations separately, revealed an effect of emotion for the brow target location only, F(2.33, 67.49) = 7.08, p < .001, ηp2 = .2, 90% CI [.06 .31], ηG2 = .01 (other F = 1.17, p = .32). Pairwise comparisons revealed that reflexive first saccades were more strongly directed towards the brow for angry faces, t(29) = 4.27, p < .001, dz = 0.78, 95% CI [0.36 1.18], and sad faces, t(29) = 2.82, p = .009, dz = 0.51, 95% CI [0.13 0.89], than for fearful faces.

Saccade path analysis: From fixation on individual features. Next, we conducted separate 4 × 5 repeated measures ANOVAs for each of the 6 fixation locations, with emotion and saccade target as factors. The data are summarized in Fig 3B–3G. Note that the data for a further 3 participants had to be excluded from these analyses, due to missing data in one or more cells of the design matrices; thus N = 27 for these analyses.

For saccades starting from fixation at the left eye, there was a significant effect of target location, F(1.07, 27.84) = 54.47, p < .001, ηp2 = .68, 90% CI [.48 .77], ηG2 = .35. All pairwise comparisons were significant (see S1 Table). Reflexive first saccades were most strongly directed towards the brow (M = 0.399, SD = 0.233), followed by the right eye (M = 0.289, SD = 0.162), right cheek (M = 0.199, SD = 0.127), mouth (M = 0.14, SD = 0.112) and left cheek (M = 0.037, SD = 0.099). This effect was not modified by an interaction with emotion (F = 1.21, p = .3), contrary to what would be expected if reflexive first saccades targeted emotion-distinguishing features. There was a negligible effect of emotion (F = 1.64, p = .21).

For saccades starting from fixation at the right eye, there was a significant effect of target location, F(1.15, 29.79) = 108.78, p < .001, ηp2 = .81, 90% CI [.68 .86], ηG2 = .48, but no effect of emotion (F < 1, p > .4). All pairwise comparisons for the effect of target location were significant (see S1 Table). Reflexive first saccades were most strongly directed towards the brow (M = 0.433, SD = 0.162), followed by the left eye (M = 0.309, SD = 0.117), left cheek (M = 0.217, SD = 0.098), mouth (M = 0.163, SD = 0.094) and right cheek (M = 0.073, SD = 0.106). In contrast to the results for saccades from the left eye, the effect of target location for saccades from the right eye was modified by an interaction with emotion, F(3.48, 90.56) = 3.75, p = .01, ηp2 = .13, 90% CI [.02 .21], ηG2 = .014. Simple main effects analyses showed that the effect of target location was significant for all 4 emotions (Fs > 48.0, ps < .001). Pairwise comparisons showed the same pattern for all 4 emotions as for the main effect (brow > left eye > left cheek > mouth > right cheek). Interestingly, pairwise comparisons across emotions for the brow target location revealed that reflexive first saccades from fixation at the right eye were directed more strongly towards the brow for angry faces (M = 0.483, SD = 0.178) than for fearful (M = 0.408, SD = 0.157), t(26) = 3.98, p < .001, dz = 0.77, 95% CI [0.33 1.19], surprised (M = 0.419, SD = 0.166), t(26) = 3.12, p = .004, dz = 0.6, 95% CI [0.19 1.01], and sad faces (M = 0.42, SD = 0.195), t(26) = 2.87, p = .008, dz = 0.55, 95% CI [0.14 0.95].

For saccades starting from fixation at the brow, there was a marginal effect of target location, F(1.05, 27.16) = 4.09, p = .052, ηp2 = .14, 90% CI [NA .33], ηG2 = .09, and a negligible effect of emotion (F = 1.8, p = .15). Numerically, reflexive first saccades were more strongly directed towards the left eye (M = 0.173, SD = 0.204) and left cheek (M = 0.135, SD = 0.094) than towards the mouth (M = 0.11, SD = 0.096), right cheek (M = 0.084, SD = 0.129) and right eye (M = -0.01, SD = 0.306), but none of the pairwise comparisons was significant after correction for multiple comparisons (all uncorrected ps ≥ .02, dzs ≤ 0.47). Nonetheless, the effect of target location for saccades from the brow was modified by an interaction with emotion, F(3.3, 85.88) = 5.25, p = .002, ηp2 = .17, 90% CI [.04 .26], ηG2 = .017. Simple main effects analyses showed an effect of target location for angry faces, F(4) = 6.96, p < .001, and fearful faces, F(4) = 6.68, p < .001, a marginal effect for surprised faces, F(4) = 2.39, p = .056, and no effect for sad faces, F(4) = 0.83, p = .5. Reflexive first saccades from the brow of angry faces were more strongly in the direction of the mouth (M = 0.107, SD = 0.103) than of the right cheek (M = 0.069, SD = 0.138), t(26) = 3.13, p = .004, dz = 0.6, 95% CI [0.19 1.01] (Bonferroni-Holm adjusted α = .005), and marginally more strongly towards the left cheek (M = 0.141, SD = 0.098) than towards the right cheek, t(26) = 3.02, p = .006, dz = 0.58, 95% CI [0.17 0.99] (Bonferroni-Holm adjusted α = .0056) and the mouth, t(26) = 2.9, p = .007, dz = 0.58, 95% CI [0.15 0.96] (Bonferroni-Holm adjusted α = .0063). 
Reflexive first saccades from the brow of fearful faces were more strongly in the direction of the three lower-face features than of the right eye (M = -0.055, SD = 0.316): mouth (M = 0.101, SD = 0.116), t(26) = 3.24, p = .003, dz = 0.62, 95% CI [0.21 1.03]; right cheek (M = 0.064, SD = 0.162), t(26) = 3.27, p = .003, dz = 0.63, 95% CI [0.21 1.04]; left cheek (M = 0.137, SD = 0.107), t(26) = 3.17, p = .004, dz = 0.62, 95% CI [0.19 1.02].

For saccades from fixation at the mouth, there was no main effect of target location or of emotion on the directional strength of those saccades, nor an effect of the interaction (all Fs < 1.6, ps > .2).

For saccades from fixation at the left cheek, there was no main effect of target location or of emotion on the directional strength of those saccades (both Fs < 1.2, ps > .3). The effect of the interaction was small and not statistically significant, F(2.71, 70.56) = 2.29, p = .091, ηp2 = .08, 90% CI [NA .17], ηG2 = .009.

For saccades from fixation at the right cheek, there was a significant main effect of target location, F(1.06, 27.43) = 10.1, p = .003, ηp2 = .28, 90% CI [.07 .46], ηG2 = .13, but no main effect of emotion (F < 1, p > .6). Reflexive first saccades from the right cheek were most strongly directed towards the mouth (M = 0.38, SD = 0.165) followed by the left cheek (M = 0.311, SD = 0.103), left eye (M = 0.275, SD = 0.094), brow (M = 0.258, SD = 0.102), and right eye (M = 0.242, SD = 0.131). The results of the pairwise comparisons can be seen in S1 Table. There was a marginal effect of the interaction between emotion and target location, F(2.9, 75.4) = 2.69, p = .054, ηp2 = .09, 90% CI [NA .18], ηG2 = .006. Simple main effects analyses revealed effects of target location for all 4 emotions (all Fs > 5.5, ps < .001). Pairwise comparisons to follow up these simple main effects are reported in S2 Table. For angry faces, reflexive first saccades from the right cheek were directed more strongly towards the mouth (M = 0.373, SD = 0.179) compared to the left cheek (M = 0.31, SD = 0.109) and left eye (M = 0.275, SD = 0.095). For fearful faces, the most notable results were that reflexive first saccades from the right cheek were directed more strongly towards the mouth (M = 0.411, SD = 0.184) than towards all other target locations: right eye (M = 0.231, SD = 0.147), brow (M = 0.258, SD = 0.11), left eye (M = 0.28, SD = 0.1), and left cheek (M = 0.326, SD = 0.111). Similarly, for surprised faces, the most notable results were that reflexive first saccades were more strongly directed towards the mouth (M = 0.383, SD = 0.169) compared to all other target locations: right eye (M = 0.235, SD = 0.164), brow (M = 0.248, SD = 0.124), left eye (M = 0.265, SD = 0.105), and left cheek (M = 0.304, SD = 0.103).
A similar pattern of results was also evident for sad faces, with the saccades more strongly in the direction of the mouth (M = 0.354, SD = 0.164) than of the right eye (M = 0.257, SD = 0.124), brow (M = 0.266, SD = 0.107), left eye (M = 0.279, SD = 0.11) and left cheek (M = 0.303, SD = 0.12); however, none of the pairwise comparisons was significant after correction for multiple comparisons (all uncorrected ps ≥ .012, dzs ≤ 0.52; Bonferroni-Holm adjusted α = .005).

Discussion

Using a combination of angry, fearful, surprised and sad expressions in a brief-fixation paradigm, we aimed to investigate whether initially fixating an informative facial feature contributes to emotion recognition and whether informative facial features are sought out when they are not initially fixated. We found an interaction between expression and initial fixation location on recognition accuracy. Initially fixating on the central brow of angry faces led to greater recognition accuracy compared to initially fixating on a cheek, as expected and as found by Atkinson and Smithson [1], though not compared to fixation on an eye or the mouth; indeed, fixation on the mouth also elicited greater anger recognition accuracy than fixation on a cheek. The greater accuracy for anger with fixation at the brow was associated with a reduction in the misclassifications of anger as sadness; this is akin to the reduction in the misclassifications of anger as neutral found by Atkinson and Smithson [1], who used neutral but not sad faces. For fearful and surprised facial expressions, fixation on the central mouth led to better recognition, compared to fixation on an eye or the brow, which was associated with a reduction in the misclassifications between these expressions, as expected and as found by Atkinson and Smithson [1]. No effect of initial fixation location was found for sad faces.

Using our saccade path measure, we found that, when the data were collapsed across fixation location, reflexive first saccades were more strongly in the direction of the brow than of most or all the other locations of interest (left and right eyes, left and right cheeks, mouth). If reflexive first saccades target emotion-informative facial features, then we would have expected to find this result for angry faces and perhaps sad faces but not for fearful and surprised faces. Yet the brow had the largest normalized saccade path values for all 4 emotions. Moreover, if reflexive first saccades target emotion-informative facial features, then we would also have expected to find larger normalized saccade path values for the mouth than for other target locations for fearful and surprised and perhaps also sad faces, but no such effects were evident. Nonetheless, comparing the normalized saccade path values for the brow across emotions revealed that reflexive first saccades were more strongly directed towards the brow for angry faces and sad faces than for fearful faces, which hints at some small role for the emotion-informative nature of certain facial features in attracting saccades.

Analyses of the saccade path measures for the separate fixation locations revealed that the tendency for reflexive first saccades to be in the direction of the brow was evident only for saccades leaving the left and right eyes. Moreover, saccades leaving either the left or right eye were more strongly in the direction of the opposite eye than of the lower-face locations (mouth and cheeks). These findings help explain why there were proportionately fewer saccades downwards from the eyes compared to upwards from the mouth. The tendency for saccades to be directed towards the brow was not modified by the emotional expression when those saccades were initiated from the left eye, but it was for saccades initiated from the right eye. Reflexive first saccades from fixation at the right eye were directed more strongly towards the brow for angry faces than for fearful, surprised and sad faces. This is consistent with the hypothesis that emotion-informative facial features attract saccades, though note that, for saccades from the right eye as with saccades from the left eye, the brow was the location with the largest saccade path measures for all 4 emotions, not just for anger.

For reflexive first saccades from the brow itself, the saccade path measures were small with large variability, yet for angry faces, these values indicated a tendency for the direction of those saccades to be more towards the left cheek than the mouth and right cheek and more towards the mouth than the right cheek. This is reminiscent of Atkinson and Smithson’s [1] finding of a bias towards the left and center of the face for downward saccades from the brow for angry, fearful, happy and neutral faces in their Experiment 1 and for angry and (less clearly) for fearful faces, but not for surprised or neutral faces, in their Experiment 2. For fearful faces in the present experiment, we found that there was a tendency for the saccades to be more in the direction of all 3 lower-face features than of the right (but not left) eye.

The other main notable finding from the saccade path analyses was that reflexive first saccades from the right cheek of fearful and surprised faces were directed more strongly towards the mouth than towards all other target locations. Although this might in part reflect a tendency for those saccades to target the closest facial feature to the fixated location, it is also consistent with the hypothesis that emotion-informative facial features (in this case, the mouth) attract saccades.

Experiment 2

In Experiment 2a we aimed to address the same research questions as for Experiment 1, this time using a different combination of facial expressions. Those questions were: (1) Does a single fixation on an emotion-distinguishing facial feature enhance emotion-recognition accuracy? (2) Do reflexive first saccades from initial fixation on the face target emotion-distinguishing features? We replicated Experiment 1 using a new combination of facial expressions (angry, fearful, surprised and disgusted, with disgusted replacing sad) for two reasons. First, we wanted to replicate our results relating to anger, fear and surprise in the context of a different combination of emotions. Second, we wanted to test whether enforcing fixation on the mouth would enhance the recognition of disgust and whether reflexive saccades are more strongly directed to the mouth of disgusted faces, given previous research indicating that mid-to-high spatial frequency information at the mouth and the neighbouring wrinkled nose region is informative for the recognition of disgust [14–17]. Furthermore, previous research suggests that observers spend more time looking at the mouth regions of disgusted facial expressions (more specifically, the upper lip in [40]). Previous research additionally indicates high confusion rates between disgust and anger, with disgusted expressions especially likely to be misclassified as angry [53–57]. Jack et al. [56] found that this misclassification is resolved when the upper lip raiser action unit is activated in the expression dynamics. Therefore, we hypothesized higher emotion recognition accuracy for disgusted faces and reduced misclassifications when the mouth is foveated.

In Experiment 2b we addressed two additional research questions: (3) Do observers, when required to classify facially expressed emotions, spend more time fixating the emotion-distinguishing facial features compared to less informative features? (4) Is the time spent fixating the emotion-distinguishing facial features related to accuracy in classifying the relevant emotion? Experiment 2b was the same as Experiment 2a with the principal exception that the face stimuli were displayed for considerably longer (5 s rather than 82 ms), thus allowing the participants to freely view the faces.

Methods

Participants

Forty participants took part in the brief-fixation paradigm (Experiment 2a; female = 31, male = 9; mean age = 21.9 years, age range = 19–42) and of these 40, 39 participants also completed the long-presentation paradigm (Experiment 2b; female = 30, male = 9; mean age = 22 years, age range = 19–42). All participants were undergraduate or postgraduate students in Psychology and had normal or corrected-to-normal vision. All participants gave written consent to take part and undergraduate participants received participant pool credit (postgraduate participants did not receive any compensation for their time). The study was approved by the Durham University Psychology Department Ethics Sub-committee.

Materials

For Experiment 2a (brief fixation), the face stimuli were identical to those of Experiment 1 except that faces with disgusted expressions replaced the sad faces (for the same identities) from the same face database (i.e., 96 images, comprising 24 identities × 4 emotions: anger, fear, surprise, disgust). For Experiment 2b (long presentation free-viewing), a subset of the face image set used in the brief-fixation experiment was used, such that there were 12 facial identities (6 males, 6 females), each presented in each of 4 expressions (angry, fearful, surprised and disgusted) leading to a total of 48 images.

Design and procedure

The design and procedure of Experiment 2a were identical to those of Experiment 1. Experiment 2b had the same design and procedure except that there were 4 blocks of 48 (rather than 96) trials and each face was presented for 5 s (rather than for 82.4 ms). Thus, over the 4 blocks (total trials = 192) of Experiment 2b, each of the 48 images (12 identities × 4 emotions) was presented once at each of the 4 initial fixation locations (eyes, brow, cheeks, mouth), with the eye and the cheek fixation locations selected equally on the left and right. Within each block, faces were presented at each of the 4 initial fixation locations 12 times (3 per emotion). The order of image presentation was randomised for each participant within each block. Participants were asked to press the relevant keyboard button as soon as they were confident what emotion was shown on the face and the face image remained on the screen for 5 s regardless of when they made their response. The order of Experiments 2a and 2b was counterbalanced for each participant so that half of the participants completed the brief-fixation paradigm first and long-presentation paradigm later and the other half did the opposite. The average delay between the two sessions for all participants was 3.2 days.
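The trial counts for Experiment 2b can be verified with a short sketch. It assumes a Latin-square rotation for assigning images to fixation locations across blocks; the text states only the resulting counts, not the assignment rule, so the rotation is a hypothetical choice. Left/right selection for the eye and cheek locations and the within-block randomisation are omitted.

```python
from collections import Counter
from itertools import product

EMOTIONS = ["angry", "fearful", "surprised", "disgusted"]
LOCATIONS = ["eyes", "brow", "cheeks", "mouth"]
N_IDENTITIES = 12
N_BLOCKS = 4


def build_blocks():
    """Give every image one fixation location per block via a rotation (assumed rule)."""
    blocks = []
    for block in range(N_BLOCKS):
        trials = [
            (identity, emotion, LOCATIONS[(identity + block) % len(LOCATIONS)])
            for identity, emotion in product(range(N_IDENTITIES), EMOTIONS)
        ]
        blocks.append(trials)
    return blocks


blocks = build_blocks()
all_trials = [trial for block in blocks for trial in block]
# Number of trials per (emotion, location) cell within each block.
per_block_counts = [Counter((emo, loc) for _, emo, loc in block) for block in blocks]
print(len(all_trials), [len(block) for block in blocks])
```

With this rule, each of the 48 images appears once per block and once at each fixation location overall, and each block contains 12 trials per location with 3 per emotion, matching the counts described above.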

Data analysis

The data analysis procedures for Experiment 2a were the same as for Experiment 1. For both Experiments 2a and 2b, unbiased hit rates were calculated following the removal of trials with RTs < 200 ms. For Experiment 2a this led to the removal of 0.02% of all trials, and for Experiment 2b, the removal of 0.01% of all trials.
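For readers unfamiliar with the measure, the sketch below shows one way to compute an unbiased hit rate and the arcsine square-root transform applied before the ANOVAs. It assumes the widely used definition of the unbiased hit rate (squared hits divided by the product of the number of presentations of an emotion and the number of times that emotion was given as a response); the counts are hypothetical and are taken to be tallied after the RT exclusion.

```python
import math


def unbiased_hit_rate(confusion, emotion):
    """Hu = hits**2 / (times presented * times chosen); assumed definition."""
    hits = confusion[emotion][emotion]
    n_presented = sum(confusion[emotion].values())
    n_chosen = sum(row[emotion] for row in confusion.values())
    if n_presented == 0 or n_chosen == 0:
        return 0.0
    return hits * hits / (n_presented * n_chosen)


def arcsine_sqrt(p):
    """Arcsine square-root transform of a proportion in [0, 1]."""
    return math.asin(math.sqrt(p))


# Hypothetical counts for one participant: rows = presented, columns = response.
confusion = {
    "anger": {"anger": 8, "disgust": 2},
    "disgust": {"anger": 4, "disgust": 6},
}
hu_anger = unbiased_hit_rate(confusion, "anger")      # 64 / (10 * 12)
hu_disgust = unbiased_hit_rate(confusion, "disgust")  # 36 / (10 * 8)
print(hu_anger, hu_disgust, arcsine_sqrt(hu_anger))
```

In this example, anger has the higher raw hit rate (8 of 10 versus 6 of 10), but its unbiased hit rate is pulled down because "anger" was also chosen for 4 disgusted faces.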

For Experiment 2b, we also calculated for each participant the mean total fixation duration for each of 4 regions of interest (ROIs) per emotion, that is, for each participant we summed the durations of all fixations in each ROI per trial, up to the point at which the participant pressed the response button (or, in the very small number of trials where participants responded after face offset, for the full 5 s face duration), and then calculated the average of these total fixation durations for each emotion for each participant. This allowed us to assess task-related fixations to facial regions of interest as a function of the displayed emotion and the relationship between those task-related fixations and emotion classification performance. (An alternative analysis, reported in the Supplementary Results and Discussion in S1 File, used the percentage of fixation times, relative to the total fixation duration on the image, rather than sum of fixation times. This controls for any differences in image viewing times across conditions and participants. The results of this alternative analysis were very similar to those reported here for the total fixation duration.)
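The total fixation duration measure described above can be sketched as follows. The data layout (tuples of onset, offset and ROI label relative to face onset) and the truncation of a fixation that spans the button press are assumptions made for illustration; the text does not specify how such fixations were handled.

```python
from collections import defaultdict

ROIS = ("eyes", "brow", "nose", "mouth")


def total_fixation_durations(fixations, response_time_ms, face_duration_ms=5000):
    """Sum fixation durations (ms) per ROI for one trial, up to the button press.

    If the response came after face offset, the full face duration is used.
    Fixations spanning the cut-off are truncated at it (an assumption).
    """
    cutoff = min(response_time_ms, face_duration_ms)
    totals = {roi: 0.0 for roi in ROIS}
    for onset, offset, roi in fixations:
        if roi not in totals or onset >= cutoff:
            continue  # outside the analysed ROIs, or after the response
        totals[roi] += min(offset, cutoff) - onset
    return totals


def mean_by_emotion(trials):
    """Average per-trial totals within each emotion for one participant."""
    sums = defaultdict(lambda: {roi: 0.0 for roi in ROIS})
    counts = defaultdict(int)
    for emotion, totals in trials:
        counts[emotion] += 1
        for roi in ROIS:
            sums[emotion][roi] += totals[roi]
    return {emo: {roi: sums[emo][roi] / counts[emo] for roi in ROIS} for emo in sums}


# Hypothetical trial: response at 1000 ms; the final fixation is on a non-analysed region.
fixations = [(0, 300, "eyes"), (320, 700, "mouth"), (720, 1500, "eyes"), (1520, 1800, "cheek")]
totals = total_fixation_durations(fixations, response_time_ms=1000)
means = mean_by_emotion([("anger", totals), ("anger", {"eyes": 420.0, "brow": 0.0, "nose": 100.0, "mouth": 380.0})])
print(totals, means["anger"]["eyes"])
```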

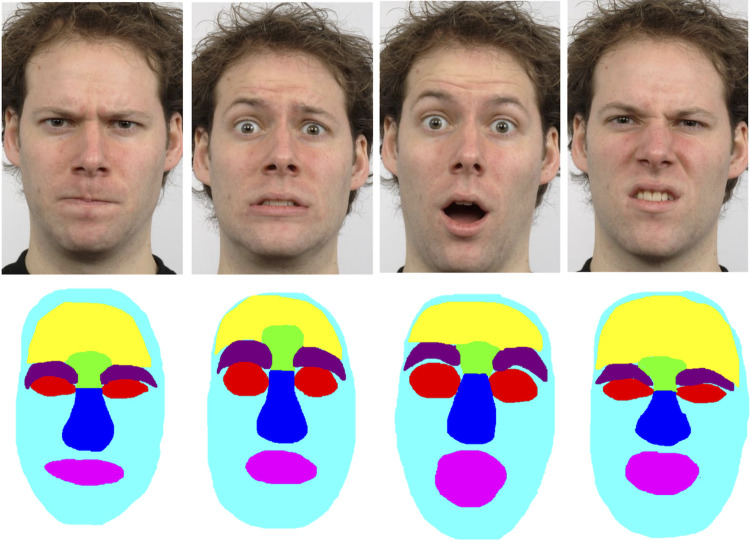

The ROIs were the eyes (combining left and right eyes into a single ROI), brow, nose and mouth. These ROIs were drawn freehand, similar to some previous studies [e.g., 32,40,58], using a bespoke C++ programme (see Fig 4). This allowed us to delineate more precisely the shape and size of each ROI as compared to an alternative strategy of delineating rectangular ROIs [e.g., 37]; importantly, it also allowed for changes in the size of ROIs across emotions. Consequently, the average size of the ROIs varied across ROIs and across emotions, as can be seen in Table 2. The average size of each ROI in degrees of visual angle is presented in Table 3. The typical average accuracy of the EyeLink 1000 system is 0.5°, which is much smaller than the sizes of our chosen ROIs, giving us confidence that any fixation falling within these ROIs will be captured accurately by the eye tracker. Several previous studies have controlled for variation in the size of ROIs by normalising the fixation measures relative to the areas of the relevant ROIs, to account for the possibility that larger ROIs would acquire more fixations [32,58–60]. This assumption that larger ROIs acquire more fixations has, however, been called into question [61], and is not supported by either our own data or those of some other groups using face stimuli [e.g., 40]. Our data show that, averaged across emotions and initial fixation locations, the eyes received the most fixations per trial (M = 3.4, SD = 1.9), as compared to the nose (M = 3.0, SD = 1.3), mouth (M = 1.2, SD = 0.8) and brow (M = 0.4, SD = 0.3), and yet the eyes ROI was the third largest of the 4 ROIs on average.
Moreover, variations in the sizes of each ROI across facial identities for a given emotion did not correlate with the number of fixations to those identity- and emotion-specific ROIs, with only one exception: the size of disgusted eyes across facial identities positively correlated with the mean number of fixations in those disgusted eyes, r = .884, p < .001 (all other ps > .05). Note that, as with the fixation duration data, these frequency data refer to fixations between face onset and the participant’s button press (or, in the very small number of cases where participants responded after face offset, until the 5 s face offset). We therefore report and analyse the raw (i.e., non-normalised) fixation data.

Fig 4. Examples of facial expression images for Experiments 2a and 2b and corresponding ROIs.

From left to right: anger, fear, surprise, disgust. The size of each ROI is dependent on the underlying expressions and the shape of the facial feature; the forehead (yellow), eyebrows (deep purple) and rest of the face (cyan) regions were not included in the analysis of total fixation duration. The face images are republished in slightly adapted form from the Radboud Faces Database [45] under a CC BY license, with permission from Dr Gijsbert Bijlstra, Radboud University.

Table 2. Mean sizes (pixels²) of individually drawn ROIs.

| | Eyes | Brow | Nose | Mouth |
|---|---|---|---|---|
| Angry | 5949.8 | 4637.6 | 7716.2 | 6001.2 |
| Fearful | 8623.2 | 5304.9 | 8920.2 | 8286.8 |
| Surprised | 6774.7 | 4169.9 | 7667.8 | 8933.8 |
| Disgusted | 8545.5 | 4495.5 | 9067.4 | 9175.8 |

Table 3. The average sizes of each ROI in degrees of visual angle (mean of maximum horizontal × maximum vertical) for each expression.

| | Left Eye | Brow | Right Eye | Nose | Mouth |
|---|---|---|---|---|---|
| Anger | 3.56 × 1.57 | 3.56 × 2.85 | 3.54 × 1.59 | 3.59 × 4.42 | 5.80 × 2.06 |
| Fear | 3.5 × 2.40 | 3.45 × 3.23 | 3.35 × 2.38 | 3.67 × 4.90 | 5.75 × 2.83 |
| Disgusted | 3.61 × 1.80 | 3.72 × 2.59 | 3.64 × 1.79 | 3.88 × 4.07 | 4.96 × 3.26 |
| Surprised | 3.49 × 2.41 | 3.36 × 2.72 | 3.42 × 2.31 | 3.60 × 5.10 | 4.76 × 3.72 |

Results

Experiment 2a: Brief-fixation paradigm

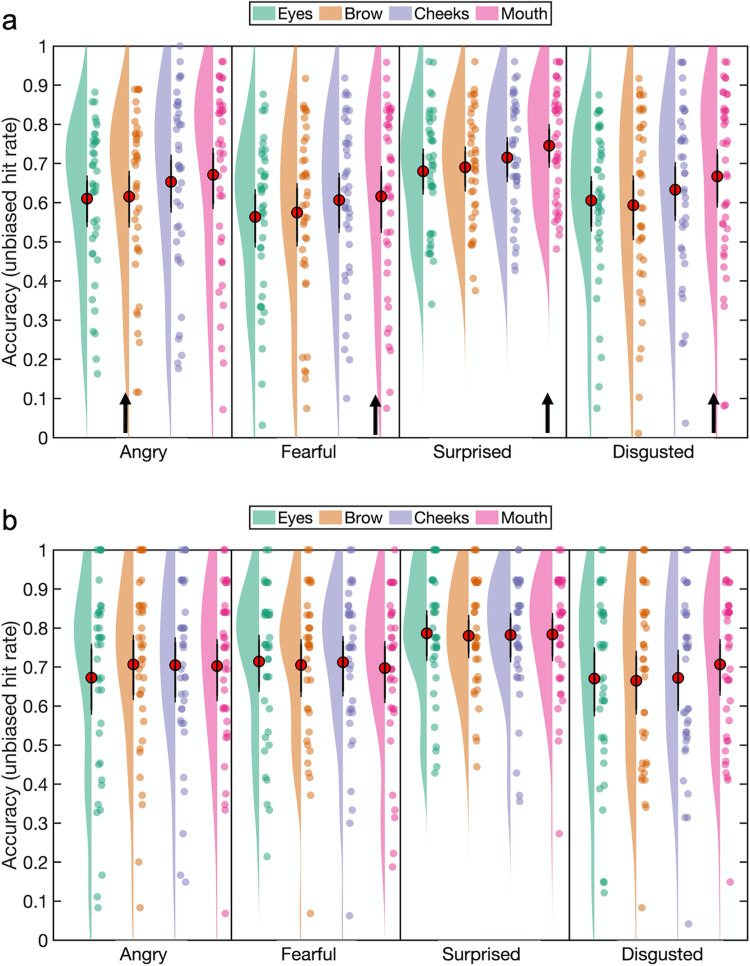

Emotion classification accuracy. The unbiased hit rates are summarized in Fig 5A. An ANOVA on the arcsine square-root transformed unbiased hit rates revealed a main effect of emotion, F(1.63, 63.54) = 6.96, p = .003, ηp2 = .15, 90% CI [.03 .27], ηG2 = .04. Emotion recognition accuracy for surprised expressions was significantly higher than the accuracy for fearful expressions (p < .001). There was also a main effect of fixation location, F(3, 117) = 10.34, p = .003, ηp2 = .21, 90% CI [.1 .3], ηG2 = .02. Fixating on the mouth region led to higher emotion recognition accuracy compared to fixating on the eyes and the brow (both ps < .001) but there was no difference between the mouth and the cheek (p = .52). The interaction between emotion and initial fixation failed to reach significance (F < 1, p > .8).

Fig 5. Emotion recognition accuracy (mean unbiased hit rates) as a function of emotion category and fixation location.

Emotion recognition accuracy indexed by mean unbiased hit rates for the brief fixation paradigm in Experiment 2a (a) and the free viewing paradigm in Experiment 2b (b). Red circles indicate the mean value across participants and error bars indicate the 95% CIs (see Methods). The raincloud plot combines an illustration of data distribution (the ‘cloud’) with jittered individual participant means (the ‘rain’) for each condition [51]. Arrows indicate the most emotion-informative (‘diagnostic’) facial features for each emotion.

To further investigate our hypotheses relating to each expression separately, planned comparisons were carried out. This resulted in 3 one-tailed paired samples t-tests for each expression (minimum Bonferroni-Holm adjusted α = .017).
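The Bonferroni-Holm thresholds referred to here follow from the standard step-down procedure: the p-values are sorted in ascending order and the k-th smallest of m is compared against α/(m − k + 1), stopping at the first non-significant test. A minimal sketch, with illustrative p-values rather than values from a specific test, is:

```python
def holm_thresholds(p_values, alpha=0.05):
    """Return (p, adjusted_alpha, reject) for each test, in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    results = [None] * m
    still_rejecting = True
    for rank, idx in enumerate(order):
        threshold = alpha / (m - rank)  # alpha/m, alpha/(m-1), ..., alpha
        reject = still_rejecting and p_values[idx] <= threshold
        still_rejecting = reject  # once one test fails, all larger p-values fail
        results[idx] = (p_values[idx], threshold, reject)
    return results


# Illustrative p-values for 3 planned comparisons.
outcome = holm_thresholds([0.028, 0.007, 0.40])
for p, threshold, reject in outcome:
    print(f"p = {p:.3f}, adjusted alpha = {threshold:.4f}, reject = {reject}")
```

For 3 comparisons the thresholds are .0167, .025 and .05, so the minimum adjusted α is .017 after rounding.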

Contrary to our prediction, classification accuracy for anger was not enhanced with fixation at the brow (M = 0.615, SD = 0.215) relative to any of the other 3 fixation locations: cheek (M = 0.653, SD = 0.23), mouth (M = 0.671, SD = 0.237) and eyes (M = 0.61, SD = 0.191) (ps > .3). Indeed, a full set of pairwise comparisons revealed greater accuracy for anger with fixation on the mouth or a cheek than on the brow: mouth > brow, t(39) = -3.09, p = .004, dz = -0.49, 95% CI [-0.81 -0.16]; cheek > brow, t(39) = -2.53, p = .016, dz = -0.4, 95% CI [-0.72 -0.08]. Anger classification accuracy was also greater with fixation on the mouth than on an eye, t(39) = 3.78, p < .001, dz = 0.6, 95% CI [0.26 0.93], and with fixation on a cheek than on an eye, W(39) = 565, p = .005, dz = 0.53, 95% CI [0.22 0.74].

We expected that enforced fixation on the mouth would improve emotion recognition for fear, surprise and disgust. Accuracy for fear was significantly higher with fixation at the mouth (M = 0.616, SD = 0.236) than with fixation on an eye (M = 0.563, SD = 0.201), t(39) = 2.57, p = .007, dz = 0.41, 95% CI [0.13 ∞]. It was also higher than with fixation at the brow (M = 0.575, SD = 0.222), though only marginally so after correction for multiple comparisons, t(39) = 1.98, p = .028, dz = 0.31, 95% CI [0.04 ∞] (Bonferroni-Holm adjusted α = .025), but not higher than with fixation on a cheek (M = 0.607, SD = 0.209), t < 1, p > .3, dz = 0.08.
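The adjusted α values quoted for the planned comparisons (.017 and .025) follow from the Bonferroni-Holm step-down rule applied to 3 tests at α = .05. The sketch below illustrates that rule; it is not the authors' code, the function names are illustrative, and the third p-value is a placeholder because the text reports only p > .3 for the mouth versus cheek comparison.

```python
def holm_thresholds(alpha: float, n_tests: int) -> list[float]:
    """Thresholds for the ordered p-values: alpha / n, alpha / (n - 1), ..., alpha."""
    return [alpha / (n_tests - rank) for rank in range(n_tests)]


def holm_reject(p_values: list[float], alpha: float = 0.05) -> list[bool]:
    """Apply the Holm step-down procedure; stop at the first non-significant test."""
    order = sorted(range(len(p_values)), key=lambda i: p_values[i])
    thresholds = holm_thresholds(alpha, len(p_values))
    reject = [False] * len(p_values)
    for rank, index in enumerate(order):
        if p_values[index] > thresholds[rank]:
            break  # all larger p-values are also retained
        reject[index] = True
    return reject


thresholds = [round(t, 3) for t in holm_thresholds(0.05, 3)]
# Fear, mouth vs eye / brow / cheek; 0.3 is a placeholder for the reported "p > .3".
fear_decisions = holm_reject([0.007, 0.028, 0.3])
print(thresholds, fear_decisions)
```

Under these assumptions only the mouth versus eye comparison survives correction, since p = .028 exceeds the second threshold of .025.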

Similar results were found for surprised faces: Recognition accuracy was higher with fixation at the mouth (M = 0.745, SD = 0.142) compared to the eyes (M = 0.68, SD = 0.152), t(39) = 3.77, p < .001, dz = 0.6, 95% CI [0.31 ∞], brow (M = 0.69, SD = 0.143), t(39) = 3.33, p < .001, dz = 0.53, 95% CI [0.25 ∞], and cheeks (M = 0.715, SD = 0.145), t(39) = 1.94, p = .03, dz = 0.31, 95% CI [0.04 ∞].

Similar results were also found for disgust faces: Recognition accuracy was higher with fixation at the mouth (M = 0.667, SD = 0.226) compared to the eyes (M = 0.605, SD = 0.197), t(39) = 2.98, p = .002, dz = 0.47, 95% CI [0.19 ∞], brow (M = 0.593, SD = 0.235), t(39) = 3.55, p < .001, dz = 0.56, 95% CI [0.4228 ∞], and cheeks (M = 0.633, SD = 0.223), t(39) = 1.97, p = .028, dz = 0.31, 95% CI [0.04 ∞].
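For paired-samples t-tests, the standardized effect size dz equals the t statistic divided by the square root of the number of pairs. The short sketch below applies this standard relation to some of the t values reported above, assuming 40 participants as implied by t(39); the function name is illustrative.

```python
import math


def cohens_dz(t_value: float, n_pairs: int) -> float:
    """Cohen's dz for a paired-samples t-test: t / sqrt(number of pairs)."""
    return t_value / math.sqrt(n_pairs)


N_PAIRS = 40  # t(39) implies 40 paired observations

dz_surprise_eyes = cohens_dz(3.77, N_PAIRS)  # surprise: mouth vs eyes
dz_disgust_eyes = cohens_dz(2.98, N_PAIRS)   # disgust: mouth vs eyes
dz_disgust_brow = cohens_dz(3.55, N_PAIRS)   # disgust: mouth vs brow

print(round(dz_surprise_eyes, 2), round(dz_disgust_eyes, 2), round(dz_disgust_brow, 2))
```

The results round to the dz values given in the text (0.6, 0.47 and 0.56).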

To investigate whether there were any systematic misclassifications between the target expressions, we computed confusion matrices as seen in Table 4. Expressions of disgust were most often misclassified as anger, but this was greatly reduced with enforced brief fixation on the mouth. Fearful expressions were often misclassified as surprised, but this was reduced with enforced brief fixation on one of the lower facial features, i.e., a cheek or the mouth.

Table 4. Confusion matrices for Experiment 2a (brief fixation paradigm).

| | Anger | Fear | Surprise | Disgust | Anger | Fear | Surprise | Disgust |
|---|---|---|---|---|---|---|---|---|
| | Eyes | | | | Brow | | | |
| Anger | 79.58 | 6.77 | 1.15 | 12.50 | 80.83 | 5.00 | 2.40 | 11.77 |
| Fear | 3.02 | 69.69 | 22.08 | 5.21 | 3.23 | 68.33 | 23.96 | 4.48 |
| Surprise | 1.25 | 11.26 | 85.09 | 2.40 | 0.94 | 8.54 | 88.23 | 2.29 |
| Disgust | 23.88 | 0.83 | 1.67 | 73.62 | 26.15 | 1.35 | 2.08 | 70.42 |
| | Cheeks | | | | Mouth | | | |
| Anger | 81.46 | 5.31 | 1.77 | 11.46 | 81.04 | 6.46 | 0.83 | 11.67 |
| Fear | 2.40 | 71.98 | 19.58 | 6.04 | 2.71 | 72.19 | 18.23 | 6.88 |
| Surprise | 0.63 | 9.49 | 88.01 | 1.88 | 0.94 | 7.60 | 90.10 | 1.35 |
| Disgust | 20.83 | 0.94 | 2.71 | 75.52 | 16.88 | 1.15 | 2.81 | 79.17 |

One confusion matrix is shown for each of the 4 initial fixation locations (eyes, brow, cheeks, mouth). The row labels indicate the presented expression and the column labels indicate the participant responses. The data are the % of trials each emotion category was given as the response to the presented expression. %s reported in bold represent the most prevalent confusions for each expression.
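To illustrate how a confusion matrix relates to the accuracy measure analysed above, the sketch below computes unbiased hit rates and their arcsine square-root transform from the Eyes panel of Table 4. It assumes the standard definition of the unbiased hit rate (squared hits divided by the product of the row and column totals). Because it uses group-level percentages rather than per-participant counts, its output is illustrative only and is not expected to match the reported means; the function names are ours.

```python
import math

LABELS = ["anger", "fear", "surprise", "disgust"]

# Eyes panel of Table 4: rows = presented expression, columns = response (% of trials).
EYES = [
    [79.58, 6.77, 1.15, 12.50],
    [3.02, 69.69, 22.08, 5.21],
    [1.25, 11.26, 85.09, 2.40],
    [23.88, 0.83, 1.67, 73.62],
]


def unbiased_hit_rates(matrix: list[list[float]]) -> list[float]:
    """Hu for each category: hits squared / (row total * column total)."""
    size = len(matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[r][c] for r in range(size)) for c in range(size)]
    return [matrix[i][i] ** 2 / (row_totals[i] * col_totals[i]) for i in range(size)]


def arcsine_sqrt(proportion: float) -> float:
    """Variance-stabilising transform applied to proportions before the ANOVA."""
    return math.asin(math.sqrt(proportion))


hu = unbiased_hit_rates(EYES)
for label, value in zip(LABELS, hu):
    print(f"{label}: Hu = {value:.3f}, transformed = {arcsine_sqrt(value):.3f}")
```

The column totals in the denominator penalise over-used response categories: frequent "anger" responses to disgusted faces, for example, lower the unbiased hit rate for anger even when most angry faces are classified correctly.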

Eye movement analysis. As for Experiment 1, we next examined whether reflexive first saccades targeted expression-informative facial features, using the saccade path analysis described in the Data Analysis section. We first report an analysis with the data collapsed across fixation location, followed by separate analyses for each fixation location.

Reflexive saccade data from two participants were removed from the analysis of eye-movement data since they did not meet the criteria for inclusion (see Experiment 1). The following analyses were conducted on the data for 38 participants. On average, for the 38 participants, 82.74% of all trials included a reflexive saccade.

Saccade path analysis: Collapsed across fixation location. The mean saccade trajectories of the first saccades are shown in Fig 6A for each emotion, collapsed across initial fixation location; therefore, for each emotion there are 6 possible saccade targets (left and right eye, brow, left and right cheek, and the mouth). A 4 × 6 repeated measures ANOVA was run using emotion and saccade target location as factors. There was a marginal main effect of target location, F(2.12, 78.26) = 2.77, p = .066, ηp2 = .07, 90% CI [NA .16], ηG2 = .04, which indicated that first saccades were more strongly directed towards the brow (M = 0.314, SD = 0.135) than the left eye (M = 0.25, SD = 0.135), t(37) = 4.29, p < .001, dz = 0.7, 95% CI [0.34 1.05], and right eye (M = 0.244, SD = 0.135), t(37) = 3.53, p = .001, dz = 0.57, 95% CI [0.23 0.91], but not the mouth (M = 0.293, SD = 0.112; t < 1, p > .5). First saccades also tended to be directed more strongly towards the brow than the left cheek (M = 0.255, SD = 0.11), t(37) = 2.65, p = .012, dz = 0.43, 95% CI [0.1 0.76], and right cheek (M = 0.251, SD = 0.14), t(37) = 2.2, p = .034, dz = 0.36, 95% CI [0.03 0.68], and more strongly towards the mouth than the right cheek, t(37) = 2.25, p = .03, dz = 0.37, 95% CI [0.03 0.69], though not after correction for multiple comparisons (adjusted α = .0033). There was no main effect of emotion (F < 1, p > .7) and a negligible effect of the interaction (F = 1.51, p = .15, ηp2 = .04, ηG2 = .001). This analysis was also repeated with initial saccades that took place within 500 ms of face offset, as reported in the Supplementary Results and Discussion in S1 File. In that analysis, no significant main effects or interaction were found.
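The adjusted α of .0033 is consistent with a Bonferroni correction over all pairwise comparisons among the 6 saccade targets. The sketch below shows that arithmetic; it is our reading of the reported value rather than a description taken from the Methods.

```python
from itertools import combinations

TARGETS = ["left eye", "right eye", "brow", "left cheek", "right cheek", "mouth"]

# Every unordered pair of saccade targets is one pairwise comparison.
pairs = list(combinations(TARGETS, 2))
adjusted_alpha = 0.05 / len(pairs)

print(len(pairs), round(adjusted_alpha, 4))
```

With 15 comparisons, only the brow versus left eye and brow versus right eye contrasts (p < .001 and p = .001) fall below this threshold.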

Fig 6. Mean normalized saccade paths as a function of facial expression and target locations for Experiment 2a.

The normalized saccade path is a measure of the directional strength of the reflexive first saccades (executed after face offset) towards target locations of interest, in this case (a) to 6 target locations, collapsed across initial fixation location (N = 38), and from (b) the left eye, (c) the brow, (d) the right eye, (e) the left cheek, (f) the mouth, and (g) the right cheek, to the remaining 5 regions of interest (N = 38). Red circles indicate the mean value across participants and error bars indicate the 95% CIs (see Methods). The raincloud plot combines an illustration of data distribution (the ‘cloud’) with jittered individual participant means (the ‘rain’) for each condition [51]. Arrows indicate the most emotion-informative (‘diagnostic’) facial features for each emotion.

Saccade path analysis: From fixation on individual features. Separate 4 × 5 repeated measures ANOVAs were conducted for each of the 6 fixation locations, with emotion and saccade target as factors. The data are summarized in Fig 6B–6G.

For reflexive first saccades from fixation at the left eye, there was a main effect of target location, F(1.1, 41.06) = 24.08, p < .001, ηp2 = .39, 90% CI [.19 .53], ηG2 = .22. The results of follow-up pairwise comparisons are shown in S3 Table. The interaction between expression and target location failed to reach significance after Greenhouse-Geisser correction, F(3.85, 142.54) = 2.32, p = .062, ηp2 = .06, 90% CI [NA .11], ηG2 = .008. The main effect of emotion was not significant either (F < 1, p > .5).
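The fractional degrees of freedom above reflect the Greenhouse-Geisser correction, which multiplies both nominal degrees of freedom by a sphericity estimate ε. The sketch below recovers ε for the interaction term, assuming the 4 × 5 design and the 38 participants stated in the text; the variable names are illustrative and small discrepancies arise from rounding in the reported values.

```python
N_PARTICIPANTS = 38
LEVELS_EMOTION = 4
LEVELS_TARGET = 5

# Nominal (uncorrected) degrees of freedom for the emotion x target interaction.
df_effect_nominal = (LEVELS_EMOTION - 1) * (LEVELS_TARGET - 1)   # 12
df_error_nominal = df_effect_nominal * (N_PARTICIPANTS - 1)      # 444

# Epsilon implied by the reported corrected effect df of 3.85.
epsilon = 3.85 / df_effect_nominal
df_error_corrected = epsilon * df_error_nominal

print(round(epsilon, 3), round(df_error_corrected, 1))
```

The implied ε of roughly .32 reproduces the reported error degrees of freedom (142.54) to within rounding.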

For reflexive first saccades from fixation at the brow, there was no main effect of target location or of emotion, nor an effect of the interaction (Fs < 2.4, ps > .1, ηp2s < .07).

For reflexive first saccades from fixation at the right eye, there was a main effect of target location, F(1.06, 39.07) = 26.22, p < .001, ηp2 = .42, 90% CI [.21 .55], ηG2 = .24. The results of follow-up pairwise comparisons are shown in S3 Table. The main effect of emotion and the effect of the interaction were negligible (both Fs < 1.8, ps = .16, ηp2s ≤ .05).

The directional strength of reflexive first saccades from fixation on the mouth did not vary as a function of target location or emotion or their interaction (Fs < 1.1, ps > .35, ηp2s < .03). The directional strength of reflexive first saccades from fixation on the left cheek varied as a function of target location, F(1.05, 38.76) = 10.04, p = .003, ηp2 = .21, 90% CI [.05 .38], ηG2 = .14, but not as a function of emotion or of the interaction (Fs < 1.1, ps > .35, ηp2s < .03). Similarly, the directional strength of reflexive first saccades from fixation on the right cheek varied as a function of target location, F(1.04, 38.52) = 10.99, p = .002, ηp2 = .23, 90% CI [.06 .39], ηG2 = .098, but not as a function of emotion or of the interaction (Fs < 1.1, ps > .35, ηp2s < .03). The results of both sets of pairwise comparisons are shown in S3 Table. As with the analysis of saccades collapsed across initial fixation, the analysis of saccades starting within 500 ms of face offset from each initial fixation location is reported in the Supplementary Results and Discussion in S1 File. This analysis yielded similar results to those reported here.

Experiment 2b: Long-presentation paradigm

Emotion classification accuracy. The descriptive statistics for the unbiased hit rates can be seen in Fig 5B. The ANOVA on the arcsine square-root transformed unbiased hit rates revealed a main effect of emotion, F(1.53, 58.11) = 5.58, p = .011, ηp2 = .13, 90% CI [.02 .25], ηG2 = .032. Surprise (M = 0.783, SD = 0.13) was recognised better than fear (M = 0.707, SD = 0.174), t(38) = 4.77, p < .001, dz = 0.76, 95% CI [0.4 1.12], and disgust (M = 0.678, SD = 0.196), t(38) = 3.24, p = .002, dz = 0.52, 95% CI [0.18 0.85]. There was no effect of fixation location and no significant interaction between emotion and fixation location (Fs < 1, ps > .5, ηp2s < .03).

To investigate whether there were any systematic misclassifications between target expressions, we computed confusion matrices as seen in Table 5. Similar to the brief-fixation paradigm, disgust was misclassified most often as anger and fear was often misclassified as surprise. Misclassification of disgust as anger was reduced with fixation on the mouth, whereas misclassification of fear as surprise was slightly reduced with fixation on the brow.

Table 5. Confusion matrices for Experiment 2b (free-viewing paradigm).

| | Anger | Fear | Surprise | Disgust | Anger | Fear | Surprise | Disgust |
|---|---|---|---|---|---|---|---|---|
| | Eyes | | | | Brow | | | |
| Anger | 81.76 | 5.58 | 1.72 | 10.94 | 85.65 | 3.64 | 0.86 | 9.85 |
| Fear | 1.07 | 79.23 | 15.63 | 4.07 | 1.29 | 78.76 | 13.52 | 6.44 |
| Surprise | 0.21 | 5.15 | 91.85 | 2.79 | 0.43 | 7.33 | 89.22 | 3.02 |
| Disgust | 20.86 | 0.22 | 0.22 | 78.71 | 20.47 | 0.43 | 0.22 | 78.88 |
| | Cheeks | | | | Mouth | | | |
| Anger | 85.26 | 4.70 | 0.85 | 9.19 | 84.05 | 5.17 | 0.86 | 9.91 |
| Fear | 1.72 | 78.88 | 15.09 | 4.31 | 1.50 | 78.33 | 15.02 | 5.15 |
| Surprise | 0.00 | 6.00 | 90.79 | 3.21 | 0.86 | 6.25 | 90.52 | 2.37 |
| Disgust | 19.78 | 0.86 | 1.08 | 78.28 | 17.63 | 0.00 | 0.00 | 82.37 |

One confusion matrix is shown for each of the 4 initial fixation locations (eyes, brow, cheeks, mouth). The row labels indicate the presented expression and the column labels indicate the participant responses. The data are the % of trials each emotion category was given as the response to the presented expression. %s reported in bold represent the most prevalent confusions for each expression.

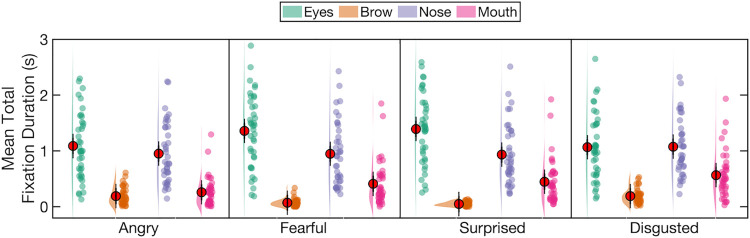

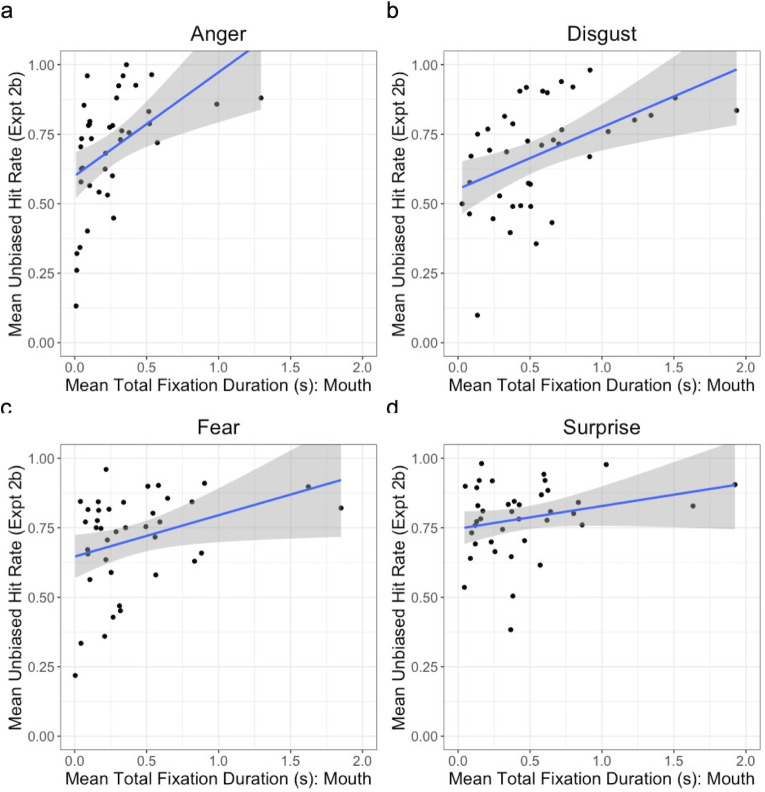

Eye movement analysis: Mean total fixation duration. To investigate whether participants spent more time fixating the informative facial features for each of the expressions in the experiment, we calculated the mean total fixation duration, up to the point of the participant’s button press, for the eyes, brow, nose and mouth ROIs per emotion per participant. Descriptive statistics can be seen in Fig 7. A repeated measures ANOVA on these total fixation durations revealed significant main effects of emotion, F(3, 114) = 70.74, p < .001, ηp2 = .651, 90% CI [.56 .7], ηG2 = .007, and region of interest, F(1.7, 64.43) = 39.97, p < .001, ηp2 = .513, 90% CI [.36 .61], ηG2 = .484. The main effect of emotion reflected that the mean total fixation durations were longer for disgusted faces (M = 724 ms, SD = 110) than for surprised (M = 706 ms, SD = 106; uncorrected p = .004, dz = 0.487), fearful (M = 697 ms, SD = 104; uncorrected p = .002, dz = 0.52) and angry (M = 622 ms, SD = 110; uncorrected p < .001, dz = 2.107) faces, and longer for surprised (uncorrected p < .001, dz = 1.639) and fearful (uncorrected p < .001, dz = 1.691) than for angry faces, but did not differ between fearful and surprised faces (uncorrected p > .2, dz = 0.184). The main effect of ROI showed that the mean total fixation durations were longer for the eyes (M = 1227 ms, SD = 605) than for the brow (M = 125 ms, SD = 88; uncorrected p < .001, dz = 1.767) and mouth (M = 420 ms, SD = 360; uncorrected p < .001, dz = 1.033) but not the nose (M = 977 ms, SD = 531; uncorrected p > .1, dz = 0.241), longer for the nose than for the brow (uncorrected p < .001, dz = 1.636) and mouth (uncorrected p < .001, dz = 0.802), and longer for the mouth than for the brow (uncorrected p < .001, dz = 0.759).
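The mean total fixation duration measure can be sketched as follows for a single participant: fixation durations are summed within each ROI on each trial and then averaged across the trials of each emotion. The fixation records are hypothetical, the function name is ours, and treating an ROI that was not fixated on a trial as 0 ms is an assumption rather than a detail stated in the text.

```python
from collections import defaultdict

ROIS = ("eyes", "brow", "nose", "mouth")

# Hypothetical records for one participant, all preceding the button press:
# (trial id, emotion, ROI, fixation duration in ms).
FIXATIONS = [
    (1, "anger", "eyes", 300), (1, "anger", "nose", 250), (1, "anger", "eyes", 200),
    (2, "anger", "mouth", 400), (2, "anger", "eyes", 350),
    (3, "disgust", "nose", 500), (3, "disgust", "mouth", 450),
]


def mean_total_fixation_duration(records):
    """Return {emotion: {roi: mean of per-trial summed fixation durations in ms}}."""
    trial_totals = defaultdict(lambda: dict.fromkeys(ROIS, 0))
    trial_emotion = {}
    for trial, emotion, roi, duration in records:
        trial_totals[trial][roi] += duration  # total time in this ROI on this trial
        trial_emotion[trial] = emotion

    trials_by_emotion = defaultdict(list)
    for trial, totals in trial_totals.items():
        trials_by_emotion[trial_emotion[trial]].append(totals)

    return {
        emotion: {roi: sum(t[roi] for t in trials) / len(trials) for roi in ROIS}
        for emotion, trials in trials_by_emotion.items()
    }


means = mean_total_fixation_duration(FIXATIONS)
print(means["anger"])
```

The per-participant means produced this way would then form the cells of the emotion × ROI repeated measures ANOVA.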

Fig 7. The mean total fixation duration per trial for the four ROIs for each emotion in Experiment 2b.

Note: Red circles indicate the mean value across participants and error bars indicate the 95% CIs (see Methods). The raincloud plot combines an illustration of data distribution (the ‘cloud’) with jittered individual participant means (the ‘rain’) for each condition [51]. The ROIs are illustrated in Fig 4.