Abstract

Our memories can differ in quality from one event to the next, and emotion is one important explanatory factor. Still, the manner in which emotion impacts episodic memory is complex: Whereas emotion enhances some aspects of episodic memory—particularly central aspects—it dampens memory for peripheral/contextual information. Extending previous work, we examined the effects of emotion on one often overlooked aspect of memory, namely, temporal context. We tested whether emotion would impair memory for when an event occurred. Participants (N = 116 adults) watched videos wherein negative and neutral images were inserted. Consistent with prior work, results showed that emotion enhanced and impaired memory, respectively, for “what” and “which.” Unexpectedly, emotion was associated with enhanced accuracy for “when”: We found that participants estimated that neutral images occurred relatively later, but there was no such bias for negative images. By examining multiple features of episodic memory, we provide a holistic characterization of the myriad effects of emotion.

Keywords: emotion, episodic memory, temporal memory, time, open data, preregistered

The relationship between memory and emotion has been a vibrant area of study for decades. Intuitively, we know that the most impactful and easily recalled memories are those that are most emotional and meaningful to us, such as the experience of a natural disaster, a wedding, or a bad grade. In agreement with such intuition, many laboratory and autobiographical studies have shown that emotion enhances memory for prior events (e.g., Cahill & McGaugh, 1995; Kensinger & Corkin, 2003; LaBar & Cabeza, 2006), often referred to in the literature as the emotional-enhancement-of-memory effect. Mechanistically, this effect is linked to augmented attentional and sensory processing of emotional material (e.g., Talmi et al., 2007; Todd et al., 2012; for a review, see Todd et al., 2020), in addition to postencoding consolidation processes (McGaugh, 2018).

However, the effects of emotion on episodic memory are not uniform. Indeed, in many studies, an important distinction is made between the impact of emotion on the central emotional content per se versus the contextual (or peripheral) information that composes an event: Whereas emotion can enhance memory for central content, it can weaken memory for peripheral information (Kensinger et al., 2007) as well as associations between central and contextual material or their coherence (in laboratory studies, this includes spatial background, adjacent content in view, or sequential presentation; Bisby & Burgess, 2014; Kensinger et al., 2007; Madan et al., 2012, 2017). If you were trekking through the woods and encountered a snake, you would likely remember that you saw a snake but might have difficulty remembering exactly where you saw it. This effect (namely, reduced associative memory) is partially attributed to attentional narrowing: Emotional content usurps attentional resources at the expense of surrounding content (Mather & Sutherland, 2011; Talmi, 2013). (Still, attention cannot fully account for this phenomenon; for a discussion, see Bisby et al., 2018.)

The above-mentioned studies focused on emotional memory for either items or associative spatial or adjacent context. Here, we aimed to extend this body of research by examining the effect of emotion (in this case, negative emotion) on another important element of an event: its temporal context. Time, like space, is an essential dimension of episodic memory (Tulving, 1972), but it has received far less attention in the literature. Thus, we asked, how is memory for when an event occurred affected by emotion? Reprising the above example, if one were to place the hiking event on a timeline, how well could one situate the encounter with the snake on that timeline? According to one hypothesis—which we tested here—negative emotion will dampen memory for temporal context. We based our hypothesis, in part, on the perspective that augmented attentional allocation to negative items at encoding may disrupt processing of temporal features of an experience (akin to the effects of emotion on other episodic associations).

In support of our hypothesis, a burgeoning literature on emotion and temporal memory suggests that its effects are indeed disruptive. For example, studies involving temporal order—one index of “when” memory—suggest that participants are more likely to recall emotionally arousing content out of order in comparison with nonarousing content (e.g., Huntjens et al., 2015; Maddock & Frein, 2009; but see Schmidt et al., 2011). Studies examining duration memory likewise demonstrate that emotion distorts memory for time; in such studies, emotional experiences, particularly when negative, are later remembered as having elapsed more slowly than they actually did (Campbell & Bryant, 2007; Johnson & MacKay, 2019; Loftus et al., 1987). Other work shows that emotion disrupts the temporal contiguity of recall (i.e., the tendency to recall items in the order in which they were encoded; Siddiqui & Unsworth, 2011). We note, however, that although the literature seems to favor an impairing effect of emotion on temporal memory, there are some studies involving list identification that show that emotion enhances temporal memory (for which list an item appeared on; also referred to as source memory; D’Argembeau & Van der Linden, 2005; Rimmele et al., 2012; but see Minor & Herzmann, 2019), and it is acknowledged that the effects of emotion on time are likely complex and nuanced.

In the present preregistered study (https://aspredicted.org/p4ci6.pdf), we built on this prior work using a novel timeline-based (“when”) paradigm (for related methodology, see Montchal et al., 2019). To contextualize our results in the broader emotional-memory literature, we simultaneously tested “what” (item recognition) and “which” (associative recognition for a spatial context) memory within the same paradigm, providing a holistic characterization of emotional episodic memories. In a second experiment, we directly replicated our findings in an independent sample. Following prior work, we hypothesized that emotion would augment memory for what emotional content was previously viewed but attenuate memory for the unfolding spatial background in which that content was placed (“which”). Our novel hypothesis was that emotion would also attenuate memory for when that content was viewed.

Statement of Relevance

Through memory, humans are able to relive the past in rich detail. Our memories are crucial to our sense of self and guide thoughts and actions. As one important factor, emotion plays a crucial role in the fidelity of memory, searing in our minds the best and worst of times. Still, experimental data show that not all the details from emotional events are preserved in memory. Here, we probed three aspects of memory for negative and neutral events: what, when, and which. We used a laboratory analogue of real-world remembering, in which participants watched videos designed to mimic aspects of an unfolding real-life experience. Emotion enhanced memory for “what” but reduced memory for “which.” Critically, emotion also altered memory for “when”; participants estimated that neutral images occurred later, but negative images were not associated with such bias. Thus, our results highlight the myriad effects of emotion on memory.

Method

Participants

All participants were University of British Columbia undergraduate students recruited via the Human Subject Pool (Sona Systems; https://sona-systems.com), tested in our laboratory, and given course credit for participation. The study was approved by the Behavioural Research Ethics Board at The University of British Columbia. Participants were required to be between the ages of 18 and 35 years and to self-report fluency in English to ensure comprehension of the task. We conducted a power analysis based on Madan et al.’s (2017) second experiment. The effect sizes for the impact of emotion on “what” (Cohen’s d = 0.44) and “which” (d = 0.52) memory indicated that sample sizes of 56 and 41, respectively, were adequate to detect an effect on these aspects of memory (α = .05, 1 − β = .90). Here, we sought to collect data from 60 participants in each cohort.
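The article does not say which software or formula produced the sample sizes above. As a rough cross-check, the sketch below assumes a two-sided paired-samples t test (the design is within-subject) and uses a normal approximation with a common z²/2 small-sample correction; the function name `approx_paired_n` is hypothetical, and exact noncentral-t calculations can differ by about one participant.

```python
from math import ceil
from statistics import NormalDist


def approx_paired_n(d: float, alpha: float = 0.05, power: float = 0.90) -> int:
    """Approximate sample size for a two-sided paired-samples t test.

    Normal approximation plus a z**2 / 2 small-sample correction; exact
    noncentral-t software may differ by about one participant.
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_power = std_normal.inv_cdf(power)          # quantile for target power
    n = ((z_alpha + z_power) / d) ** 2 + z_alpha ** 2 / 2
    return ceil(n)


# Effect sizes cited from Madan et al. (2017), Experiment 2
print(approx_paired_n(0.44))  # "what": 57
print(approx_paired_n(0.52))  # "which": 41
```

This lands within one participant of the reported 56 and 41, which is the expected precision of the approximation.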

In cohort A (n = 60; 42 female), the mean age was 20.89 years (SD = 2.94); one participant’s age was excluded from this mean because they entered an age of “2.” The study involving cohort A was preregistered. We also ran an exact replication with cohort B, which was not preregistered. In cohort B (n = 56; 44 female), the mean age was 20.64 years (SD = 1.96). We originally intended to test 60 participants in cohort B, but data collection was interrupted by the COVID-19 pandemic; we shut down all laboratory testing on March 13, 2020.

Materials

Participants completed a general health and demographics questionnaire used for all studies in our laboratory. Participants also completed a number of questionnaires that are not discussed in this article (see the Supplemental Material available online).

Video stimuli consisted of 60 video clips, which were downloaded from YouTube and later trimmed in iMovie (Apple, 2019) to 18 s each. The videos displayed everyday experiences (e.g., a walking tour, a street vendor cooking) and were selected to be neither too repetitive nor too exciting, yet interesting enough to maintain attention.

Image stimuli were selected from the Nencki Affective Picture System (NAPS) database (Marchewka et al., 2014). On the basis of the normed ratings from the database, we selected a total of 60 negative and 60 neutral images (see Table 1 and the Supplemental Material). Whereas negative and neutral stimuli significantly differed in valence and arousal (all ps < .001), they were matched for low-level visual properties (luminance, contrast, and entropy; all ps > .13; see Table 1).

Table 1.

Properties of the Images From the Nencki Affective Picture System

| Property | Negative images, M | Negative images, SD | Neutral images, M | Neutral images, SD |
|---|---|---|---|---|
| Valence | 2.48a | 0.40 | 5.50b | 0.46 |
| Arousal | 6.91a | 0.39 | 4.64b | 0.32 |
| Luminance | 121.32a | 28.86 | 113.80a | 33.57 |
| Contrast | 63.12a | 11.43 | 65.44a | 12.09 |
| Entropy | 7.60a | 0.32 | 7.53a | 0.33 |
| L*a*b-L | 50.08a | 11.53 | 46.73a | 13.47 |
| L*a*b-A | 2.66a | 4.77 | 1.35a | 4.48 |
| L*a*b-B | 7.18a | 7.82 | 5.40a | 8.84 |

Note: Within each row, means with different subscripts differ significantly (p < .001). L*a*b refers to the Commission Internationale de l’Éclairage L*a*b color space. In this system, the L dimension corresponds to luminance, whereas A and B correspond to channels that range from red to green and from blue to yellow, respectively (Tkalčič & Tasic, 2003). The mean represents the average across all pixels in the image.
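The article does not define how luminance, contrast, and entropy were computed. The sketch below uses common conventions as an assumption: mean gray level, RMS contrast as the population SD of gray levels, and Shannon entropy (in bits) of the 256-bin gray-level histogram, whose maximum of 8 bits is in the same range as the tabled values. The function names are hypothetical, and images are assumed to be flattened 8-bit grayscale pixel lists.

```python
import math
from collections import Counter
from statistics import fmean, pstdev


def mean_luminance(pixels):
    """Mean gray level of an 8-bit grayscale image (values 0-255)."""
    return fmean(pixels)


def rms_contrast(pixels):
    """RMS contrast, taken here as the population SD of gray levels."""
    return pstdev(pixels)


def shannon_entropy(pixels):
    """Shannon entropy (bits) of the gray-level histogram; at most 8 bits for 256 levels."""
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(pixels).values())


# Toy "image": half black, half white pixels (flattened)
toy = [0] * 50 + [255] * 50
print(mean_luminance(toy), rms_contrast(toy), shannon_entropy(toy))  # 127.5 127.5 1.0
```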

To create encoding trials, we inserted half of the NAPS images into the video clips (one per video) using iMovie. Each image lasted 2 s. The assignment of images to video clips was random and was counterbalanced across conditions. The time at which each image was displayed within the video was randomized across trials but restricted to the middle 16 s of the video (i.e., the image could start no earlier than 2 s and no later than 16 s into the video, with image offset no later than 18 s; for further counterbalancing information, see the Supplemental Material). Mean image-placement times were counterbalanced across the negative and neutral conditions and did not differ significantly between them (negative: M = 9.73 s, SD = 4.58; neutral: M = 9.57 s, SD = 4.88), t(58) = 0.14, p = .89. Each image was inserted into the video sequence (i.e., it was not superimposed on the video, and the video was not visible as a border around the image).
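The placement check above can be approximately reproduced from the reported summary statistics with a pooled-variance (Student) independent-samples t test. The sketch assumes 30 images per condition, which is inferred from t(58) rather than stated in the text; the function name `pooled_t` is hypothetical.

```python
from math import sqrt


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Student's independent-samples t and df from summary statistics."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))  # SE of the mean difference
    return (m1 - m2) / se, n1 + n2 - 2


# Reported placement times (s); n = 30 per condition is an assumption
t, df = pooled_t(9.73, 4.58, 30, 9.57, 4.88, 30)
print(f"t({df}) = {t:.2f}")  # t(58) = 0.13
```

The small gap from the reported 0.14 likely reflects rounding of the published means and SDs.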

Trials for the recognition-memory (“what”) phase included the 60 old NAPS images used during encoding and the remaining 60 new images. The old and new images were matched in arousal, valence, and the above-mentioned low-level visual properties (all ps > .35). To create trials for the associative-recognition (“which”) phase, we took a single screenshot from each video at a random time point (excluding the first and last 2 s of the video and the 1 s before and after image presentation); four screenshots from other viewed video clips served as lures (see the Supplemental Material).
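The screenshot constraint can be expressed as uniform sampling over an allowed window with a hole cut out around the image. A minimal sketch, assuming "random" means uniform and using the timing parameters given in the text (18-s videos, 2-s images, 2-s edges, 1-s buffer); the helper name `sample_screenshot_time` is hypothetical.

```python
import random


def sample_screenshot_time(image_onset, image_dur=2.0, video_dur=18.0,
                           edge=2.0, buffer=1.0, rng=random):
    """Draw a screenshot time (s) uniformly from the allowed window.

    Allowed: [edge, video_dur - edge], minus the image interval padded by
    `buffer` seconds on each side.
    """
    lo, hi = edge, video_dur - edge
    # Excluded window, clipped to the allowed range
    ex_lo = min(max(lo, image_onset - buffer), hi)
    ex_hi = max(min(hi, image_onset + image_dur + buffer), lo)
    segments = [(a, b) for a, b in ((lo, ex_lo), (ex_hi, hi)) if b > a]
    if not segments:
        raise ValueError("no admissible screenshot time")
    total = sum(b - a for a, b in segments)
    u = rng.uniform(0.0, total)
    for a, b in segments:
        if u <= b - a:
            return a + u
        u -= b - a
    return segments[-1][1]  # guard against floating-point round-off


rng = random.Random(0)
print(round(sample_screenshot_time(9.0, rng=rng), 2))
```

Sampling by total admissible length keeps the draw uniform across both sides of the excluded window.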

Procedure

The memory task consisted of four phases, administered in the order described below (i.e., the order of phases in the memory task was not counterbalanced).

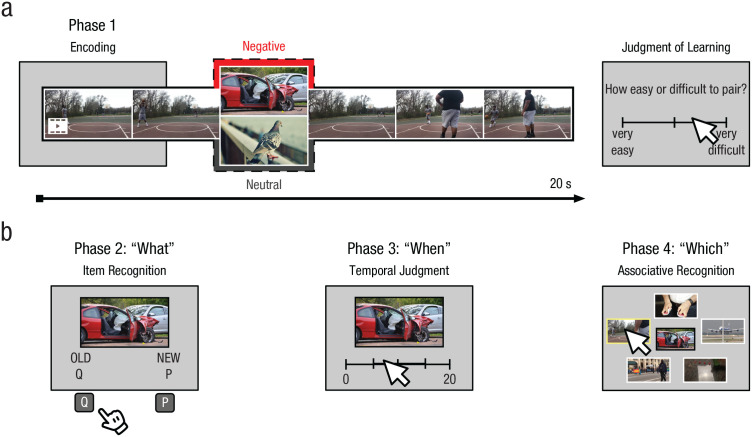

Phase 1 (encoding)

At encoding, participants were shown each video clip (with the inserted NAPS image) in a random order. To maintain participants’ focus, we asked the following question immediately after each video: “How easy or difficult do you think it will be to remember this video and image pairing?” Participants responded using a slider scale (from very easy to very difficult), which served as a judgment-of-learning rating (e.g., Caplan et al., 2019; see Fig. 1a). The slider was presented for a fixed 4 s, followed by a 2-s fixation cross. For completeness, we report analyses of these rating data in the Supplemental Material (this analysis was not preregistered). Participants then waited 10 min before starting Phase 2; during this interval, they were given a coloring sheet to fill the time (also see the Supplemental Material).

Fig. 1.

Illustration of the procedure for (a) the encoding task and (b) the three memory tasks. In Phase 1, participants saw video clips in which 2-s negative or neutral images had been inserted. Afterward, they rated how easy or difficult they thought it would be to remember which video and image were paired. In Phases 2 through 4, participants then judged whether they had seen each image before (“what”), at what point during the associated video each image had appeared (“when”), and which of five images was taken from the video that had previously appeared with the target image (“which”). Because of reproduction restrictions, substitute pictures are shown for the negative and neutral conditions, rather than those from the Nencki Affective Picture System database.

Phase 2 (item recognition; “what”)

Next, participants were shown the old and new images in a random order. Each image was displayed for 4 s, and participants judged whether the image was old or new by pressing a key (“Q” for old, “P” for new; see Fig. 1b). Each judgment was followed by a 2-s fixation cross.

Phase 3 (temporal judgment; “when”)

In Phase 3, participants saw old images (in a random order) accompanied by a timeline that ranged from 0 to 20 s with two lines marking each quarter of the timeline. Participants judged when in the video they had seen the image by clicking on the appropriate spot on the timeline (see Fig. 1b). Participants were given up to 8 s to respond, and their response was followed by a 2-s fixation cross.

Phase 4 (associative recognition; “which”)

Finally, participants saw old images (in a random order), each accompanied by five screenshots from the encoded videos presented below it. These five screenshots comprised the target and four lures (placement of choices was random). Participants were asked to select the target (i.e., the screenshot from the video that had previously been paired with the image; see Fig. 1b). Participants were given up to 8 s to respond, and a 2-s fixation cross was displayed between trials. This five-alternative forced-choice procedure for the associative-recognition test was modeled after Madan et al.’s (2017) methodology.

At the end of the task, participants were debriefed (see the Supplemental Material).

Results

All reported analyses conform to those specified in our preregistration unless noted otherwise. Two participants in cohort A and one participant in cohort B (our replication cohort) were excluded from the reported results because preliminary analyses indicated poor performance in the item-recognition task (Phase 2): Their mean accuracy, collapsed across both conditions, was more than 3 standard deviations below the mean. These three participants were also the only ones with mean accuracy below 60% (50% corresponded to chance performance). An additional participant was excluded from cohort A because the experimenter erroneously restarted the experiment after the participant had seen some of the stimuli. Although we report parametric statistics, we reran the analyses using nonparametric tests (related-samples Wilcoxon signed-rank tests, as noted in our preregistration) because some variables violated normality, and the results did not change.
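The exclusion rule can be sketched as a simple cutoff on collapsed item-recognition accuracy. The text does not specify whether the sample or population SD was used, or whether the mean included the to-be-excluded participants; this sketch assumes the sample SD over all participants. The function name and toy data are hypothetical.

```python
from statistics import fmean, stdev


def flag_low_performers(accuracy_by_id, n_sd=3.0):
    """IDs whose accuracy is more than `n_sd` sample SDs below the sample mean.

    `accuracy_by_id` maps a participant ID to item-recognition accuracy
    collapsed across the negative and neutral conditions.
    """
    values = list(accuracy_by_id.values())
    cutoff = fmean(values) - n_sd * stdev(values)
    return sorted(pid for pid, acc in accuracy_by_id.items() if acc < cutoff)


# Toy data: 19 typical participants and one near-chance participant
acc = {f"p{i:02d}": 0.85 for i in range(19)}
acc["p19"] = 0.40
print(flag_low_performers(acc))  # ['p19']
```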

Phase 2 (item recognition; “what”)

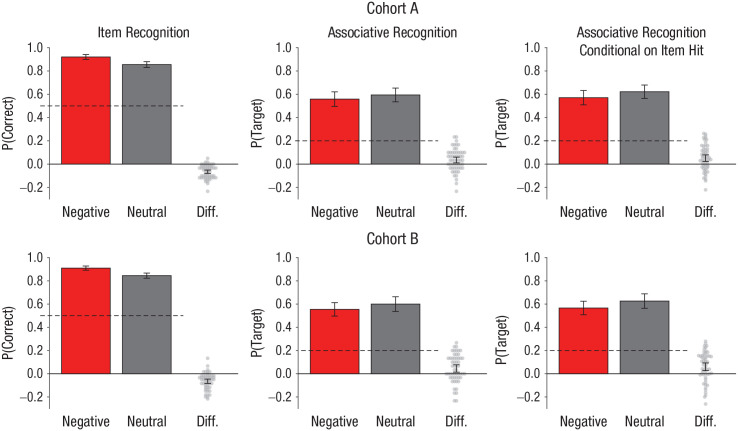

For each participant, d′ was calculated and analyzed (the same pattern of results was observed for accuracy, but we preregistered only the analysis of d′). A paired-samples t test showed that participants in cohort A performed better in the negative (M = 3.28, SD = 0.93) than the neutral (M = 2.58, SD = 0.86) condition, t(56) = 8.07, p < .001, d = 1.07, as predicted. That is, emotional images were remembered better. This effect was replicated in cohort B (negative: M = 3.02, SD = 0.83; neutral: M = 2.35, SD = 0.82), t(54) = 6.80, p < .001, d = 0.92 (see Fig. 2, which shows accuracy scores to ease interpretation).
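For readers reimplementing the analysis, d′ and the response-bias index c are standard signal-detection quantities: d′ = z(H) − z(FA) and c = −[z(H) + z(FA)]/2. The article does not say how hit or false-alarm rates of 0 or 1 were handled; the sketch assumes a log-linear correction. The function name and counts are hypothetical.

```python
from statistics import NormalDist

_z = NormalDist().inv_cdf  # inverse of the standard normal CDF


def sdt_measures(hits, misses, false_alarms, correct_rejections):
    """Return (d', c) for one participant in one condition.

    A log-linear correction (add 0.5 to each count, 1 to each total) keeps
    rates away from 0 and 1, where z is undefined.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    d_prime = _z(hit_rate) - _z(fa_rate)
    c = -(_z(hit_rate) + _z(fa_rate)) / 2  # negative c = liberal ("old") bias
    return d_prime, c


# Hypothetical counts for 30 old and 30 new images in one condition
d_prime, c = sdt_measures(hits=28, misses=2, false_alarms=3, correct_rejections=27)
print(round(d_prime, 2), round(c, 2))
```

Lower c means a greater willingness to call items "old," which is the sense of "more liberal" bias used in the text.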

Fig. 2.

Performance of cohort A (top row) and cohort B (bottom row). The proportion of negative and neutral images correctly identified as old or new in the item-recognition task (“what”) is shown on the left. The proportion of negative and neutral target images correctly selected in the associative-recognition task (“which”) is shown in the middle. Associative recognition of negative and neutral images in the “which” task conditioned on item hit in the “what” task is shown on the right. In each graph, difference scores (Diff.) for each participant were calculated by subtracting accuracy for negative images from accuracy for neutral images. The dashed lines denote chance performance (50% and 20% correct for the item- and associative-recognition tasks, respectively). The error bars represent 95% confidence intervals.

We also examined c (response bias), although this analysis was not preregistered. A paired-samples t test showed a more liberal bias (lower c) in the negative (M = 0.18, SD = 0.34) than the neutral (M = 0.41, SD = 0.38) condition in cohort A, t(56) = 4.23, p < .001, d = 0.56. This effect was replicated in cohort B (negative: M = 0.14, SD = 0.32; neutral: M = 0.30, SD = 0.31), t(54) = 3.10, p = .003, d = 0.42. Thus, participants were more likely to endorse items as old in the negative condition.
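The effect sizes reported throughout the Results are reproduced, to two decimals, by t/√n (e.g., 8.07/√57 ≈ 1.07 and 4.23/√57 ≈ 0.56), which suggests Cohen's d_z (mean difference divided by the SD of the differences). The article does not name the formula, so treat this as an inference. The function names below are hypothetical.

```python
from math import sqrt
from statistics import fmean, stdev


def paired_t_and_dz(cond_a, cond_b):
    """Paired-samples t, df, and Cohen's d_z from per-participant scores."""
    if len(cond_a) != len(cond_b):
        raise ValueError("conditions must have one score per participant")
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    dz = fmean(diffs) / stdev(diffs)  # mean difference / SD of differences
    return dz * sqrt(n), n - 1, dz    # t = d_z * sqrt(n)


def dz_from_t(t, n):
    """Recover d_z from a reported paired t and the number of participants."""
    return t / sqrt(n)


# Cohort A, response bias c: t(56) = 4.23 with n = 57
print(round(dz_from_t(4.23, 57), 2))  # 0.56
```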

Phase 3 (temporal judgment; “when”)

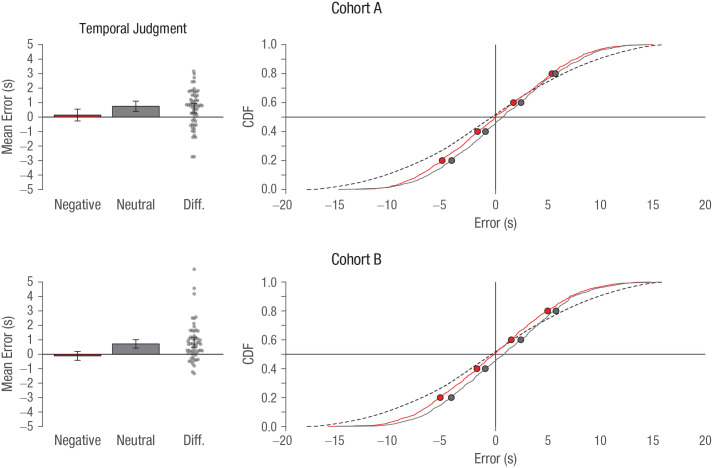

Per our preregistration, accuracy was assessed by first subtracting the actual time at which the image was placed in the video from each participant’s response along the timeline for each trial (i.e., the signed error in their estimate) and then averaging across trials for each participant. In this analysis, scores closer to zero indicate better performance, and positive scores indicate estimates later than the true time. A paired-samples t test showed that participants in cohort A performed better in the negative (M = 0.14, SD = 1.58) than in the neutral (M = 0.75, SD = 1.36) condition, t(56) = 3.62, p = .001, d = 0.48, contrary to our prediction. This effect was replicated in cohort B (negative: M = −0.12, SD = 1.16; neutral: M = 0.72, SD = 1.11), t(54) = 4.62, p < .001, d = 0.62 (see Fig. 3, left). The larger mean for the neutral condition indicates that participants estimated that neutral images occurred later than they actually did. An analysis (not preregistered) of temporal memory conditional on successful item recognition (i.e., hits) showed a similar pattern in both cohort A, t(56) = 3.61, p = .001, d = 0.48, and cohort B, t(54) = 4.33, p < .001, d = 0.58.
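The per-participant score is a mean signed error. The sketch below uses the sign convention implied by the interpretation above (response minus true onset, so positive means "judged later"); the function name and trial format are hypothetical.

```python
from collections import defaultdict
from statistics import fmean


def signed_error_by_condition(trials):
    """Mean signed temporal error (s) per condition for one participant.

    `trials` yields (condition, response_s, true_onset_s). Error is
    response minus true onset, so positive values mean "judged later".
    """
    errors = defaultdict(list)
    for condition, response_s, true_onset_s in trials:
        errors[condition].append(response_s - true_onset_s)
    return {cond: fmean(errs) for cond, errs in errors.items()}


demo = [("negative", 9.0, 9.0), ("negative", 4.0, 5.0),
        ("neutral", 12.0, 11.0), ("neutral", 7.0, 6.0)]
print(signed_error_by_condition(demo))  # {'negative': -0.5, 'neutral': 1.0}
```

Because errors are signed, early and late misses can cancel; this is why the text treats the mean as an index of bias and examines precision separately.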

Fig. 3.

Performance of cohort A and cohort B on the temporal-judgment task (“when”). The bar graphs show the mean error in participants’ estimates of when the target image had appeared in the associated video. Difference scores (Diff.) for each participant were calculated by subtracting accuracy for negative images from accuracy for neutral images. The error bars represent 95% confidence intervals. For interpreting the aggregate response distributions, we plot the empirical cumulative distribution functions (CDFs) for negative (red) and neutral (gray) stimuli (the dotted line denotes chance responses). Each circle marker corresponds to 20-percentile increments of the distribution.

To better assess these responses, we aggregated data across participants and examined the resulting empirical cumulative distribution functions (CDFs; this approach was not preregistered but was performed to clarify the results). In this analysis, precision (sensitivity) is represented by the slope of the CDF, whereas bias corresponds to a shift along the x-axis (see Fig. 3, right). Greater response variability appears as a gradual incline in the CDF, whereas high precision appears as a steep step change; a tendency to respond relatively earlier or later appears as a leftward or rightward shift in where the CDF rises. More technically, perfect precision corresponds to a sharp step increase at error = 0 (i.e., a piecewise function overlapping with the axis mark): $\mathrm{CDF}(e) = 0$ for $e < 0$ and $\mathrm{CDF}(e) = 1$ for $e \geq 0$, where $e$ is the signed error. Here, the CDF for the negative condition is centered at zero, whereas the CDF for the neutral condition is shifted rightward, corresponding to later time judgments. The chance distribution, which served as our upper bound, was estimated through simulations by comparing each trial’s correct time with responses drawn from a uniform distribution ranging from 0 s to 20 s (inclusive of each end value).
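A minimal sketch of the two ingredients described above: an empirical CDF of signed errors and a chance distribution obtained by pairing each trial's true onset with uniform guesses on the 0 to 20 s timeline. The function names, the number of simulated guesses per trial, and the seed are assumptions; the article's exact simulation settings are not given here.

```python
import bisect
import random


def ecdf(values):
    """Empirical CDF: returns F with F(x) = proportion of values <= x."""
    xs = sorted(values)
    n = len(xs)
    return lambda x: bisect.bisect_right(xs, x) / n


def simulate_chance_errors(true_onsets, n_sims=1000, timeline=(0.0, 20.0), seed=0):
    """Signed errors (guess - true onset) when guesses are uniform on the timeline."""
    rng = random.Random(seed)
    lo, hi = timeline
    return [rng.uniform(lo, hi) - onset for onset in true_onsets for _ in range(n_sims)]


# A perfectly precise, unbiased observer gives a step at error = 0
F_perfect = ecdf([0.0] * 10)
print(F_perfect(-0.01), F_perfect(0.0))  # 0.0 1.0
F_chance = ecdf(simulate_chance_errors([5.0, 9.5, 14.0]))
print(round(F_chance(0.0), 2))
```

The chance CDF rises slowly over roughly ±20 s, which is why an observed CDF that is steeper than it indicates above-chance precision.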

As can be seen in Figure 3, the neutral and negative conditions had similar slopes, and both were considerably more precise (steeper) than the chance distribution. To test this quantitatively, we conducted a bootstrapping and distribution-fitting procedure (see the Supplemental Material). In cohort A, the 95% confidence interval (CI) of the fitted shape parameter, which corresponds to the slope of the distribution, was [3.59, 3.90] for the negative condition and [3.85, 4.22] for the neutral condition. Because these intervals overlap, we failed to find a statistical difference in temporal-judgment precision between the two conditions. However, both sets of values are significantly higher than that of the simulated chance data, whose fitted shape parameter of 2.82 corresponds to a shallower slope and thus lower precision. This comparison provides statistical support for participants’ responses being more precise than chance responding. The 95% CIs for the fitted shape parameter in cohort B were very similar (negative condition: 95% CI = [3.66, 3.96]; neutral condition: 95% CI = [3.93, 4.24]); again, the intervals overlap, and values are consistently slightly higher for the neutral condition, indicating that the pattern of results is relatively robust.
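The distribution-fitting procedure itself is described only in the Supplemental Material, so it is not reproduced here. As a hedged illustration of the general idea, the sketch computes a percentile bootstrap CI for a stand-in precision statistic (the SD of signed errors, where a smaller SD corresponds to a steeper CDF) rather than the fitted shape parameter. The function name, resampling unit (trials), number of resamples, and simulated data are all assumptions.

```python
import random
from statistics import pstdev


def percentile_bootstrap_ci(data, stat, n_boot=2000, level=0.95, seed=0):
    """Percentile bootstrap CI for `stat` by resampling `data` with replacement."""
    rng = random.Random(seed)
    n = len(data)
    boots = sorted(stat(rng.choices(data, k=n)) for _ in range(n_boot))
    lo_idx = round((1 - level) / 2 * n_boot)
    hi_idx = round((1 + level) / 2 * n_boot) - 1
    return boots[lo_idx], boots[hi_idx]


# Simulated signed errors (s); SD of errors as a stand-in precision index
gen = random.Random(1)
errors = [gen.gauss(0.0, 2.0) for _ in range(200)]
print(percentile_bootstrap_ci(errors, pstdev))
```

As in the text, overlapping intervals for two conditions would not license a claim of a precision difference.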

Phase 4 (associative recognition; “which”)

The proportion of trials with correct responses (correct trials/total trials) was calculated for each participant. A paired-samples t test (preregistered) showed that participants were less accurate in associative recognition in the negative (M = 0.56, SD = 0.24) relative to the neutral (M = 0.59, SD = 0.23) condition in cohort A, t(56) = 2.85, p = .006, d = 0.38, as predicted. This effect was replicated in cohort B (negative: M = 0.55, SD = 0.22; neutral: M = 0.60, SD = 0.24), t(54) = 2.81, p = .007, d = 0.38 (see Fig. 2). (Note that chance was 20% because there were five choices.) An analysis (not preregistered) examining associative recognition that was conditional on successful item recognition (i.e., hits) demonstrated a similar pattern in cohort A, t(56) = 3.55, p = .001, d = 0.47, and cohort B, t(54) = 3.51, p = .001, d = 0.47 (see Fig. 2).
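The conditional ("given a hit") analysis restricts the associative-recognition score to trials on which the old image was correctly recognized in Phase 2. A minimal sketch; the trial field names and the function name are hypothetical.

```python
from collections import defaultdict


def assoc_accuracy_given_hit(trials):
    """Proportion correct in the 5-AFC "which" test, restricted to item hits.

    `trials` yields dicts with keys 'condition', 'item_hit' (old image
    called "old" in Phase 2), and 'assoc_correct' (target chosen in Phase 4).
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for trial in trials:
        if trial["item_hit"]:
            total[trial["condition"]] += 1
            correct[trial["condition"]] += int(trial["assoc_correct"])
    return {cond: correct[cond] / total[cond] for cond in total}


demo = [
    {"condition": "negative", "item_hit": True, "assoc_correct": True},
    {"condition": "negative", "item_hit": True, "assoc_correct": False},
    {"condition": "negative", "item_hit": False, "assoc_correct": True},
    {"condition": "neutral", "item_hit": True, "assoc_correct": True},
]
print(assoc_accuracy_given_hit(demo))  # {'negative': 0.5, 'neutral': 1.0}
```

Chance for this measure remains 1/5 = 0.20, since each trial still offers five choices.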

Exploratory analysis relating effects of emotion on performance across the three task phases

An exploratory analysis (not preregistered) in the full sample examined potential associations between performance (difference scores) across the different aspects of memory probed. Because this involved three comparisons, we used a Bonferroni-corrected alpha of .016. This analysis yielded no significant associations (all ps > .09). That is, the effect of emotion on performance in one phase did not predict its effect in any other phase.

Discussion

Here, we asked participants to study negative and neutral images in the context of a naturalistic, unfolding laboratory episode and tested their memory for what they saw, in which spatial context they saw it, and, most critically, when they saw it. Our study yielded several findings. First, we observed a robust emotional enhancement of memory in our “what” item-recognition task: Participants remembered negative images better than neutral ones (~6% enhancement in accuracy) and also showed a greater tendency to endorse negative items as old (i.e., emotion increased sensitivity and shifted bias toward a more liberal criterion). This effect replicates a well-established literature (see below) and extends it to a novel procedure. Second, we found that negative emotion hindered associative memory (for “which” spatial context): Participants were less adept at remembering the item–background video pairing for negative items than for neutral items (i.e., reduced associative recognition; ~4% difference). This associative deficit constitutes a conceptual replication of a burgeoning literature in the context of a novel paradigm. Third, and most central to our present goal, we unexpectedly found that negative emotion was associated with more accurate judgments of “when” an image occurred within an ongoing episode (qualified below). Hence, we observed support for only two of our three hypotheses.

The literature on emotion and temporal memory is limited relative to the literature on other facets of emotional episodic memory. Nonetheless, this literature largely favors the perspective that emotion reduces temporal memory, for example, in studies of order judgments and duration (as noted in the introduction; for a review, see Palombo & Cocquyt, 2020), with some exceptions (e.g., Schmidt et al., 2011). For example, Maddock and Frein (2009) compared temporal-order memory for negative pairs (vs. neutral or positive pairs) and found an impairment for negative stimuli (also see Huntjens et al., 2015). What then can account for the findings in our paradigm?

On the surface, our paradigm differs from others in the literature in many ways. First, each of our episodes contained only one negative (or neutral) item, presented against a backdrop of neutral, mundane content. Under such circumstances, is it possible that “when” was afforded greater centrality in the context of the unfolding scenario? That is, perhaps timing was encoded as an intrinsic feature of an image (Mather, 2007). By analogy, a number of studies on emotion and memory show that features intrinsic to a stimulus in other domains benefit from emotion, including stimulus color (MacKay et al., 2004), the visuospatial location of the stimulus (Costanzi et al., 2019; González-Garrido et al., 2015; Mather & Nesmith, 2008), and even objects overlaid on neutral background scenes (Madan et al., 2020). Critically, we note that in our study, negative emotion did not affect precision per se, but it did affect participants’ responding: In the neutral condition, there was a shift toward later temporal estimates. In other words, when participants made temporal judgments in the neutral condition, they tended to judge the events as having happened later. By contrast, in the negative condition, participants’ responses were not consistently biased to be either early or late. If timing was encoded as an intrinsic feature that was enhanced by negative emotion, we might have expected to see enhanced precision in the negative condition; instead, we observed comparable precision for both conditions. It is not clear what mechanism can account for the present results.

It is notable that on the “what” memory task, although participants were more accurate in the negative condition (with higher d′ scores, a measure of sensitivity), they also showed a more liberal response bias for negative items (i.e., they were more willing to endorse items at test as “old” in the negative condition). Although increased sensitivity for emotional stimuli is observed in the literature (for a review, see Bennion et al., 2013; also see Grider & Malmberg, 2008), such findings are not uniformly observed. Moreover, a more lenient response bias for emotional stimuli is often reported, either in conjunction with or in the absence of changes in sensitivity (see Bennion et al., 2013; Dougal & Rotello, 2007). Relevant to this issue, one factor often invoked to explain performance differences between negative and neutral conditions is the degree of semantic interrelatedness among items: Negative items tend to be more semantically interrelated than neutral items. Although some studies have included a categorized neutral condition matched to the emotional condition in semantic interrelatedness (e.g., Buchanan et al., 2006; Madan et al., 2012; Maratos et al., 2000; Talmi & Moscovitch, 2004), this approach has generally been confined to studies using word stimuli. Because of the richness and complexity of image stimuli, semantic relatedness is often not controlled for in studies using images (but see Barnacle et al., 2018), and it could have influenced performance on the tests used here. This is an important consideration for future research.

We performed individual-differences analyses to determine whether the emotional impact in each of the three memory tasks was associated (and failed to find such relationships). However, we caution against drawing strong conclusions from these analyses because our study was not designed to allow for strict comparison among the three tasks given that the nature of the tests differed (e.g., recall vs. recognition), the order of tasks was not counterbalanced, and the tests were not matched in difficulty.

Instead, we consider more broadly whether the effects of emotion on “what,” “when,” and “which” may engage different mechanisms, drawing on recent theoretical models that propose that memory enhancements for negative items versus the reduced binding of those items to their context are supported by somewhat distinct neurocognitive systems. For example, according to one view, the amygdala facilitates the usurping of cognitive resources (attention, perception, etc.) toward emotional content and the binding of emotion to items (see Yonelinas & Ritchey, 2015). By contrast, associative impairments akin to the one observed here in our “which” task (namely, attenuated memory for an item–background pairing) are thought to be mediated by disrupted hippocampal item-context binding (e.g., Bisby & Burgess, 2017; Yonelinas & Ritchey, 2015). Although a detailed discussion of the neuroimaging literature is beyond the scope of this article, we note that these theoretical ideas are supported by functional MRI data (e.g., Bisby et al., 2016; Kensinger & Schacter, 2006; Madan et al., 2017).

What about temporal memory? We surmised that a hippocampal binding mechanism (item-context binding) would lead to impaired temporal-context memory in the face of emotional content, in keeping with the findings reported in a burgeoning literature on temporal processing in this structure (e.g., Montchal et al., 2019) and the presence of time cells (for a review, see Eichenbaum, 2017). For example, in a recent study by Montchal and colleagues (2019), temporal precision (measured using a task that inspired ours) was associated with functional MRI activity in hippocampal subregions and lateral entorhinal cortex. Yet our finding suggests that emotion does not impair all aspects of hippocampal binding, and indeed, there is good reason to believe that item-context bindings involve different mechanisms depending on the nature of the context (e.g., Dimsdale-Zucker et al., 2018; Madan et al., 2020). Follow-up neuroimaging work probing emotional effects on temporal memory (with aligned psychometrics) is needed to further explicate how these structures may contribute to the unexpected pattern of temporal behavioral effects observed in the present study versus their contribution to the “what” and “which” aspects of emotional memory. However, as a first step, additional behavioral work is needed to better characterize the full spectrum of effects of emotion on various aspects of temporal memory using a range of paradigms (e.g., temporal order, duration, temporal distance).

Our study has some limitations. First, we did not include positive stimuli and thus cannot disentangle the effects of valence from those of arousal. Although some effects of emotion on memory appear to be arousal specific, emotional-memory phenomena can diverge by valence (Bowen et al., 2018; Clewett & Murty, 2019). Second, our paradigm has limited ecological validity. The negative stimuli were juxtaposed with completely unrelated background content (appearing and disappearing abruptly), whereas negative emotional occurrences in the real world unfold more naturally. It is therefore possible that we created an oddball effect (Sakaki et al., 2014), in which negative images were more salient than neutral ones when juxtaposed with the neutral backgrounds. Moreover, although we sought to create dynamic, unfolding experiences, the events were not robustly dynamic (e.g., a scenario involving a street vendor cooking). If one’s memory for “when” draws on what is happening in an event, participants may have had less information to draw on. In our paradigm, this may have produced heavier reliance on absolute time rather than relative time because the event per se provided less diagnostic information. Emotion in paradigms that promote reliance on relative time may yield a different pattern of results, and we highlight the importance of considering both event characteristics and the choice of temporal measure per se.

The present study and our emphasis on emotion’s effects on temporal processing are topical, particularly given recent theoretical and modeling work (e.g., Cohen & Kahana, 2020; Palombo & Cocquyt, 2020; Talmi et al., 2019) that calls attention to the need for more empirical data on how emotion affects our unfolding experiences. Our novel approach and unexpected results add important data to a burgeoning field of study and stand to further fuel theoretical models of emotional memory.

Supplemental Material

Supplemental material, sj-docx-1-pss-10.1177_0956797621991548 for Exploring the Facets of Emotional Episodic Memory: Remembering “What,” “When,” and “Which” by Daniela J. Palombo, Alessandra A. Te, Katherine J. Checknita and Christopher R. Madan in Psychological Science

Footnotes

ORCID iD: Christopher R. Madan  https://orcid.org/0000-0003-3228-6501

Supplemental Material: Additional supporting information can be found at http://journals.sagepub.com/doi/suppl/10.1177/0956797621991548

Transparency

Action Editor: Daniela Schiller

Editor: Patricia J. Bauer

Author Contributions

A. A. Te and K. J. Checknita contributed equally to this study. All the authors designed the study. A. A. Te and K. J. Checknita collected the data. All the authors analyzed the data, wrote the manuscript, and approved the final manuscript for submission.

Declaration of Conflicting Interests: The author(s) declared that there were no conflicts of interest with respect to the authorship or the publication of this article.

Funding: This work was supported by a Discovery Grant from the Natural Sciences and Engineering Research Council of Canada (RGPIN-2019-04596) and the John R. Evans Leaders Fund from the Canada Foundation for Innovation (38817).

Open Practices: All data have been made publicly available via OSF and can be accessed at https://osf.io/fcwx3. The design and analysis plans for cohort A were preregistered at https://aspredicted.org/p4ci6.pdf. Deviations from the preregistration are noted in the text. This article has received the badges for Open Data and Preregistration. More information about the Open Practices badges can be found at http://www.psychologicalscience.org/publications/badges.

References

- Apple. (2019). iMovie (Version 10.1.11) [Computer software]. https://www.apple.com/imovie/

- Barnacle G. E., Tsivilis D., Schaefer A., Talmi D. (2018). Local context influences memory for emotional stimuli but not electrophysiological markers of emotion-dependent attention. Psychophysiology, 55(4), Article e13014. 10.1111/psyp.13014

- Bennion K. A., Ford J. H., Murray B. D., Kensinger E. A. (2013). Oversimplification in the study of emotional memory. Journal of the International Neuropsychological Society, 19(9), 953–961. 10.1017/S1355617713000945

- Bisby J. A., Burgess N. (2014). Negative affect impairs associative memory but not item memory. Learning & Memory, 21(1), 21–27. 10.1101/lm.032409.113

- Bisby J. A., Burgess N. (2017). Differential effects of negative emotion on memory for items and associations, and their relationship to intrusive imagery. Current Opinion in Behavioral Sciences, 17, 124–132. 10.1016/j.cobeha.2017.07.012

- Bisby J. A., Horner A. J., Bush D., Burgess N. (2018). Negative emotional content disrupts the coherence of episodic memories. Journal of Experimental Psychology: General, 147(2), 243–256. 10.1037/xge0000356

- Bisby J. A., Horner A. J., Hørlyck L. D., Burgess N. (2016). Opposing effects of negative emotion on amygdalar and hippocampal memory for items and associations. Social Cognitive and Affective Neuroscience, 11(6), 981–990. 10.1093/scan/nsw028

- Bowen H. J., Kark S. M., Kensinger E. A. (2018). NEVER forget: Negative emotional valence enhances recapitulation. Psychonomic Bulletin & Review, 25(3), 870–891. 10.3758/s13423-017-1313-9

- Buchanan T. W., Etzel J. A., Adolphs R., Tranel D. (2006). The influence of autonomic arousal and semantic relatedness on memory for emotion words. International Journal of Psychophysiology, 61, 26–33. 10.1016/j.ijpsycho.2005.10.022

- Cahill L., McGaugh J. L. (1995). A novel demonstration of enhanced memory associated with emotional arousal. Consciousness and Cognition, 4(4), 410–421. 10.1006/ccog.1995.1048

- Campbell L. A., Bryant R. A. (2007). How time flies: A study of novice skydivers. Behaviour Research and Therapy, 45(6), 1389–1392. 10.1016/j.brat.2006.05.011

- Caplan J. B., Sommer T., Madan C. R., Fujiwara E. (2019). Reduced associative memory for negative information: Impact of confidence and interactive imagery during study. Cognition and Emotion, 33, 1745–1753. 10.1080/02699931.2019.1602028

- Clewett D., Murty V. P. (2019). Echoes of emotions past: How neuromodulators determine what we recollect. eNeuro, 6(2). 10.1523/ENEURO.0108-18.2019

- Cohen R. T., Kahana M. J. (2020). A memory-based theory of emotional disorders. bioRxiv. 10.1101/817486

- Costanzi M., Cianfanelli B., Saraulli D., Lasaponara S., Doricchi F., Cestari V., Rossi-Arnaud C. (2019). The effect of emotional valence and arousal on visuo-spatial working memory: Incidental emotional learning and memory for object-location. Frontiers in Psychology, 10, Article 2587. 10.3389/fpsyg.2019.02587

- D’Argembeau A., Van der Linden M. (2005). Influence of emotion on memory for temporal information. Emotion, 5(4), 503–507. 10.1037/1528-3542.5.4.503

- Dimsdale-Zucker H. R., Ritchey M., Ekstrom A. D., Yonelinas A. P., Ranganath C. (2018). CA1 and CA3 differentially support spontaneous retrieval of episodic contexts within human hippocampal subfields. Nature Communications, 9, Article 294. 10.1038/s41467-017-02752-1

- Dougal S., Rotello C. M. (2007). “Remembering” emotional words is based on response bias, not recollection. Psychonomic Bulletin & Review, 14, 423–429. 10.3758/BF03194083

- Eichenbaum H. (2017). On the integration of space, time, and memory. Neuron, 95, 1007–1018. 10.1016/j.neuron.2017.06.036

- González-Garrido A. A., López-Franco A. L., Gómez-Velázquez F. R., Ramos-Loyo J., Sequeira H. (2015). Emotional content of stimuli improves visuospatial working memory. Neuroscience Letters, 585, 43–47. 10.1016/j.neulet.2014.11.014

- Grider R. C., Malmberg K. J. (2008). Discriminating between changes in bias and changes in accuracy for recognition memory of emotional stimuli. Memory & Cognition, 36, 933–946. 10.3758/MC.36.5.933

- Huntjens R. J. C., Wessel I., Postma A., van Wees-Cieraad R., de Jong P. J. (2015). Binding temporal context in memory: Impact of emotional arousal as a function of state anxiety and state dissociation. Journal of Nervous and Mental Disease, 203(7), 545–550. 10.1097/NMD.0000000000000325

- Johnson L. W., MacKay D. G. (2019). Relations between emotion, memory encoding, and time perception. Cognition and Emotion, 33(2), 185–196. 10.1080/02699931.2018.1435506

- Kensinger E. A., Corkin S. (2003). Effect of negative emotional content on working memory and long-term memory. Emotion, 3(4), 378–393. 10.1037/1528-3542.3.4.378

- Kensinger E. A., Garoff-Eaton R. J., Schacter D. L. (2007). Effects of emotion on memory specificity: Memory trade-offs elicited by negative visually arousing stimuli. Journal of Memory and Language, 56(4), 575–591. 10.1016/j.jml.2006.05.004

- Kensinger E. A., Schacter D. L. (2006). Amygdala activity is associated with the successful encoding of item, but not source, information for positive and negative stimuli. The Journal of Neuroscience, 26, 2564–2570. 10.1523/JNEUROSCI.5241-05.2006

- LaBar K. S., Cabeza R. (2006). Cognitive neuroscience of emotional memory. Nature Reviews Neuroscience, 7(1), 54–64. 10.1038/nrn1825

- Loftus E. F., Schooler J. W., Boone S. M., Kline D. (1987). Time went by so slowly: Overestimation of event duration by males and females. Applied Cognitive Psychology, 1(1), 3–13. 10.1002/acp.2350010103

- MacKay D. G., Shafto M., Taylor J. K., Marian D. E., Abrams L., Dyer J. R. (2004). Relations between emotion, memory, and attention: Evidence from taboo Stroop, lexical decision, and immediate memory tasks. Memory & Cognition, 32(3), 474–488. 10.3758/BF03195840

- Madan C. R., Caplan J. B., Lau C. S. M., Fujiwara E. (2012). Emotional arousal does not enhance association-memory. Journal of Memory and Language, 66(4), 695–716. 10.1016/j.jml.2012.04.001

- Madan C. R., Fujiwara E., Caplan J. B., Sommer T. (2017). Emotional arousal impairs association-memory: Roles of amygdala and hippocampus. NeuroImage, 156(1), 14–28. 10.1016/j.neuroimage.2017.04.065

- Madan C. R., Knight A. G., Kensinger E. A., Mickley Steinmetz K. R. (2020). Affect enhances object-background associations: Evidence from behaviour and mathematical modelling. Cognition and Emotion, 34(5), 960–969. 10.1080/02699931.2019.1710110

- Maddock R. J., Frein S. T. (2009). Reduced memory for the spatial and temporal context of unpleasant words. Cognition and Emotion, 23(1), 96–117. 10.1080/02699930801948977

- Maratos E. J., Allan K., Rugg M. D. (2000). Recognition memory for emotionally negative and neutral words: An ERP study. Neuropsychologia, 38, 1452–1465. 10.1016/S0028-3932(00)00061-0

- Marchewka A., Żurawski Ł., Jednoróg K., Grabowska A. (2014). The Nencki Affective Picture System (NAPS): Introduction to a novel, standardized, wide-range, high-quality, realistic picture database. Behavior Research Methods, 46(2), 596–610. 10.3758/s13428-013-0379-1

- Mather M. (2007). Emotional arousal and memory binding: An object-based framework. Perspectives on Psychological Science, 2(1), 33–52. 10.1111/j.1745-6916.2007.00028.x

- Mather M., Nesmith K. (2008). Arousal-enhanced location memory for pictures. Journal of Memory and Language, 58(2), 449–464. 10.1016/j.jml.2007.01.004

- Mather M., Sutherland M. R. (2011). Arousal-biased competition in perception and memory. Perspectives on Psychological Science, 6(2), 114–133. 10.1177/1745691611400234

- McGaugh J. L. (2018). Emotional arousal regulation of memory consolidation. Current Opinion in Behavioral Sciences, 19, 55–60. 10.1016/j.cobeha.2017.10.003

- Minor G., Herzmann G. (2019). Effects of negative emotion on neural correlates of item and source memory during encoding and retrieval. Brain Research, 1718, 32–45. 10.1016/j.brainres.2019.05.001

- Montchal M. E., Reagh Z. M., Yassa M. A. (2019). Precise temporal memories are supported by the lateral entorhinal cortex in humans. Nature Neuroscience, 22(2), 284–288. 10.1038/s41593-018-0303-1

- Palombo D. J., Cocquyt C. (2020). Emotion in context: Remembering when. Trends in Cognitive Sciences, 24(9), 687–690. 10.1016/j.tics.2020.05.017

- Rimmele U., Davachi L., Phelps E. A. (2012). Memory for time and place contributes to enhanced confidence in memories for emotional events. Emotion, 12(4), 834–846. 10.1037/a0028003

- Sakaki M., Fryer K., Mather M. (2014). Emotion strengthens high-priority memory traces but weakens low-priority memory traces. Psychological Science, 25(2), 387–395. 10.1177/0956797613504784

- Schmidt K., Patnaik P., Kensinger E. A. (2011). Emotion’s influence on memory for spatial and temporal context. Cognition and Emotion, 25(2), 229–243. 10.1080/02699931.2010.483123

- Siddiqui A. P., Unsworth N. (2011). Investigating the role of emotion during the search process in free recall. Memory & Cognition, 39(8), 1387–1400.

- Talmi D. (2013). Enhanced emotional memory: Cognitive and neural mechanisms. Current Directions in Psychological Science, 22(6), 430–436.

- Talmi D., Lohnas L. J., Daw N. D. (2019). A retrieved context model of the emotional modulation of memory. Psychological Review, 126, 455–485. 10.1037/rev0000132

- Talmi D., Moscovitch M. (2004). Can semantic relatedness explain the enhancement of memory for emotional words? Memory & Cognition, 32, 742–751. 10.3758/BF03195864

- Talmi D., Schimmack U., Paterson T., Moscovitch M. (2007). The role of attention and relatedness in emotionally enhanced memory. Emotion, 7(1), 89–102. 10.1037/1528-3542.7.1.89

- Tkalčič M., Tasic J. F. (2003). Colour spaces: Perceptual, historical and applicational background. In Zajc B., Tkalčič M. (Eds.), Proceedings of the IEEE Region 8 EUROCON 2003: Computer as a Tool (Vol. 1, pp. 304–308). IEEE. 10.1109/EURCON.2003.1248032

- Todd R. M., Cunningham W. A., Anderson A. K., Thompson E. (2012). Affect-biased attention as emotion regulation. Trends in Cognitive Sciences, 16(7), 365–372. 10.1016/j.tics.2012.06.003

- Todd R. M., Miskovic V., Chikazoe J., Anderson A. K. (2020). Emotional objectivity: Neural representations of emotions and their interaction with cognition. Annual Review of Psychology, 71, 25–48. 10.1146/annurev-psych-010419-051044

- Tulving E. (1972). Episodic and semantic memory. In Tulving E., Donaldson W. (Eds.), Organization of memory (pp. 381–403). Academic Press.

- Yonelinas A. P., Ritchey M. (2015). The slow forgetting of emotional episodic memories: An emotional binding account. Trends in Cognitive Sciences, 19(5), 259–267. 10.1016/j.tics.2015.02.009