Abstract

What is social pressure, and how could it be adaptive to conform to others’ expectations? Existing accounts highlight the importance of reputation and social sanctions. Yet, conformist behavior is multiply determined: sometimes, a person desires social regard, but at other times she feels obligated to behave a certain way, regardless of any reputational benefit—i.e. she feels a sense of should. We develop a formal model of this sense of should, beginning from a minimal set of biological premises: that the brain is predictive, that prediction error has a metabolic cost, and that metabolic costs are prospectively avoided. It follows that unpredictable environments impose metabolic costs, and in social environments these costs can be reduced by conforming to others’ expectations. We elaborate on a sense of should’s benefits and subjective experience, its likely developmental trajectory, and its relation to embodied mental inference. From this individualistic metabolic strategy, the emergent dynamics unify social phenomena ranging from status quo biases, to communication and motivated cognition. We offer new solutions to long-studied problems (e.g. altruistic behavior), and show how compliance with arbitrary social practices is compelled without explicit sanctions. Social pressure may provide a foundation in individuals on which societies can be built.

Keywords: Allostasis, Predictive Coding, Evolution, Metabolism, Affect, Social Pressure

Nature, when she formed man for society, endowed him with an original desire to please, and an original aversion to offend his brethren. She taught him to feel pleasure in their favourable, and pain in their unfavourable regard. ….

But this desire for the approbation, and this aversion to the disapprobation of his brethren, would not alone have rendered him fit for that society for which he was made. Nature, accordingly, has endowed him, not only with a desire of being approved of, but with a desire of being what ought to be approved of …. The first desire could only have made him wish to appear to be fit for society. The second was necessary in order to render him anxious to be really fit.

Adam Smith (1790/2010, III, 2.6–2.7)

1. Introduction

How does social pressure work? And what benefit does an individual gain by conforming to others’ expectations (e.g. expectations to help others, Schwartz, 1977; expectations to hurt others, Fiske & Rai, 2014; or even innocuous expectations, like suppressing a cough in a quiet hallway)? Conformity in the face of social pressure is a well-known behavioral phenomenon (Asch, 1951, 1955; Greenwood, 2004; Milgram, 1963; Moscovici, 1976) and is multiply determined (Batson & Shaw, 1991; Deutsch & Gerard, 1955; Dovidio, 1984; Schwartz, 1977). For example, if you and a group of others were asked a question, and if all other group members gave a unanimous response (Asch, 1951, 1955), then if you copied the group’s answer, at least two sources of influence might have motivated your behavior: you might have copied them because you assumed they were knowledgeable (i.e. you experienced informational influence), or you might have copied them despite knowing they were incorrect (i.e. you experienced normative influence; Deutsch & Gerard, 1955; Dovidio, 1984; Toelch & Dolan, 2015). In this paper, our aim is to elaborate on how normative influence motivates behavior. Typically, it is assumed that normative influence motivates individuals through actual or anticipated social rewards and punishments (e.g. reputation, social approval; Cialdini et al., 1990; Constant et al., 2019; Kelley, 1952; Paluck, 2016; FeldmanHall & Shenhav, 2019; Toelch & Dolan, 2015; but see Greenwood, 2004). That is, one individual conforms to another’s expectation (or to expectations shared collectively; i.e. norms; Bicchieri, 2006; Hawkins et al., in press) to “gain or maintain acceptance” (Kelley, 1952, p. 411), to avoid “social sanctions” (Cialdini et al., 1990, p. 1015; see also, Schwartz, 1977, p. 225), to achieve “social success” (Paluck et al., 2016, p. 556), or to “signal belongingness to a group” (Toelch & Dolan, 2015, p. 580).

But this explanation cannot be complete. For one, non-conformists are frequently popular (Moscovici, 1976, Chapter 4), which implies that individuals sometimes gain acceptance or achieve social success by violating expectations and norms. But more importantly, just as conformist behavior is multiply determined (by informational and normative influence; Deutsch & Gerard, 1955), normatively motivated behavior is multiply determined too. As Adam Smith observed (among others; e.g. Asch, 1952/1962, Chapter 12; Batson & Shaw, 1991; Dovidio, 1984; Greenwood, 2004; Piliavin et al., 1981; Schwartz, 1977; Tomasello, in press), a person is motivated both by a desire for social regard, and by a sense that she should behave a certain way. If a person were only motivated by reputation (i.e. reputation-seeking), then she would only be motivated to appear norm compliant (Smith, 1790/2010, III, 2.7). People can, indeed, be motivated by reputation-seeking (e.g. when they explicitly select behaviors that will make others like them). However, in this paper we focus on Smith’s second motivation—the motivation to match one’s behavior (i.e. conform) to individual others’ expectations or to the norms of a culture, without expecting or aiming to bring about a social (e.g. reputational) or non-social (e.g. money, food) reward. We call this felt obligation to conform to others’ expectations a sense of should.

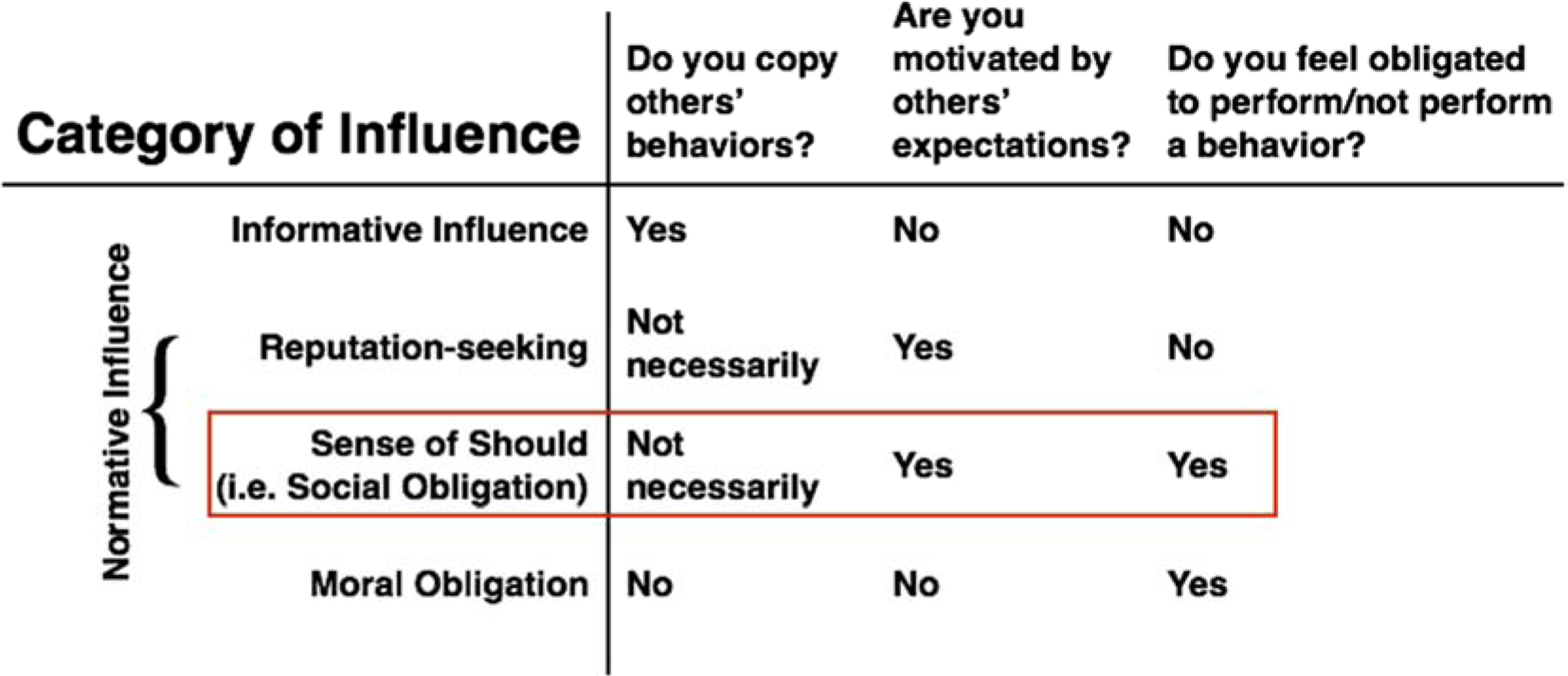

Adam Smith highlighted that reputation-seeking and obligation are separable motives; however, he and others (e.g. Tomasello, in press) do not distinguish between moral and non-moral (i.e. social) obligations (Figure 1). For present purposes, we distinguish these influences on the basis of whether others’ expectations motivate behavior. A sense of should refers to a felt social obligation to conform to others’ expectations. By contrast, following Schwartz (1977), we use moral obligation to refer to cases where an action is motivated by an internalized personal value, even when the action would violate others’ expectations—for example, a moral obligation “to tell the truth even if it is painful” (Asch, 1952/1962, p. 356) motivates an individual to violate others’ expectations, opposing a sense of should. In this paper, we are centrally concerned with how others’ expectations motivate behaviors via a sense of should, independent of reputation-seeking or internalized personal values.

Figure 1.

Diagram of relevant influences on behavior. Informative influence refers to the “wisdom of the crowd”, where an individual copies others’ behavior because she assumes they are knowledgeable (Deutsch & Gerard, 1955). Normative influence motivates compliance with others’ expectations, but it does not necessarily motivate copying their behaviors—i.e. an individual may copy others’ behavior to fit in socially (Asch, 1951; Deutsch & Gerard, 1955; Kelley, 1952), or she may help a victim because others expect her to (Schwartz & Gottlieb, 1976, 1980). Within normative influence, we distinguish reputation-seeking, where an individual explicitly aims to receive praise or avoid blame, from a sense of should, where an individual feels obligated to conform to others’ expectations. Moral obligation refers to cases where an individual feels obligated to perform a behavior, but is motivated by something besides others’ expectations (e.g. personal values; Schwartz, 1977). Note that a behavior (or category of behaviors) may be typically called “moral” (e.g. sharing) but the behavior could be motivated by any of these influences. This list of influences is also not exhaustive.

Social pressure and its subjective experience (a sense of should), then, describe something much more common than moral obligation. A sense of should may motivate you to observe arbitrary, typically unenforced social customs (e.g. wearing nail polish if female), to tolerate physical discomfort in social settings (e.g. waiting to go to the restroom during a lecture), and to follow others’ commands (e.g. passing the salt when asked). This motivation to conform to others’ expectations may be the social scaffolding that makes society possible (Foucault, 1975/2012; Greenwood, 2004; Nettle, 2018; Searle, 1995, 2010; also see Emirbayer, 1997); yet, as to why individuals conform to expectations, feel social obligations, or accept social institutions: “there does not seem to be any general answer” (Searle, 2010, p. 108). Our aim in this paper is to address this question from evolutionary and biological principles, beginning with empirical research in neuroscience and neuronal metabolism, building to a formal account of a sense of should’s function and proximate mechanisms, and ending with an outline of how this individual motivation might emergently produce social phenomena ranging from communication, to status quo biases, culture, and motivated cognition.

1.1. A biologically-based sense of should

In our framework, a sense of should refers to a felt obligation to conform to others’ expectations. We will suggest that a sense of should is learned, and is experienced as an anticipatory anxiety toward violating others’ expectations (see also, Dovidio, 1984; Piliavin et al., 1981). We hypothesize that this anticipatory anxiety stems from the unpredictable social environment (and its affective consequences) that an expectation-violating behavior is anticipated to create. That is, when you violate others’ predictions about your behavior, we hypothesize that their behavior becomes (or is anticipated to become) more difficult to predict for you.

In this paper, we ground our account of a sense of should in a biologically plausible evolutionary context. Prior research in evolutionary psychology and behavioral economics has also acknowledged motives beyond reputation-seeking, noting that behaviors can be motivated by “irrational” (typically emotional) sources, which are experienced as distinct from rational, self-interested motivations. For example, responding with anger might be a more effective deterrent against cheaters, compared to dispassionately deciding whether to retaliate (Frank, 1988). Or, self-deception might insulate your consciousness from the true motives driving your behavior, helping you more easily deceive others (Hippel & Trivers, 2011; Trivers, 1976/2016). Or, emotions that lead you to cooperate without considering costs may signal to others that you are a trustworthy partner, securing future reciprocal exchanges (M. Hoffman et al., 2015). As evolutionary models, these all provide detailed accounts of the ultimate benefits; however, they provide sparse accounts of the proximate mechanisms. For example, it is taken as a sufficient explanation that “negative emotions” (Fehr & Gächter, 2002), or “moral outrage” (Jordan et al., 2016) motivate prosocial punishment. These appeals to emotion are a route to a black box—they offer no further explanation of the proximate mechanism, only a description and a label. That is, given that decades of research have failed to identify any consistent neural architecture implementing discrete emotional experiences (Barrett, 2017a; Clark-Polner et al., 2016; Guillory & Bujarski, 2014; Westermann et al., 2007), there is no clear path to pursue proximate accounts of “negative emotions” or “moral outrage” to the biological level on which natural selection operates.

By contrast, modern accounts of emotion have suggested that emotions derive from a combination of bodily (interoceptive) sensation (signals from the body to the brain indicating, for example: heart-rate, respiration, metabolic and immunological functioning; Barrett & Simmons, 2015; Craig, 2015; Seth, 2013) and a brain capable of categorizing patterns of sensory experience (Barrett, 2006a, 2014, 2017a, 2017b; Barrett & Bliss‐Moreau, 2009; Russell, 2003; Russell & Barrett, 1999). By leveraging these advances in the study of the brain and emotional experience, we can provide a full evolutionary account, showing how, at an ultimate level, individual fitness is promoted by conforming to others’ expectations, and how, at a proximate level, this sense of should works. Importantly, this evolutionary account does not depend on the plausibility of discrete, functionally specific adaptations (i.e. modules; Cosmides & Tooby, 1992). Instead, we suggest that a sense of should is an emergent phenomenon, and could arise from domain-general developments (e.g. in the capacity for inference and memory, in combination with a social context). This domain-general account also raises the possibility that prosocial behavior in humans is not necessarily made adaptive by the long-term benefits of reciprocal altruism (Hamilton, 1964; Trivers, 1971). Rather, behaving as others expect may be adaptive as a simple consequence of the immediate biological benefits of a predictable social environment.

To explain a sense of should, we will situate our approach in the context of a biological common denominator: energy consumption (i.e. metabolics). Humans, like all organisms, are resource rational (Griffiths et al., 2015; Lieder & Griffiths, 2019): they optimize their use of critical resources, which, for living creatures, are metabolic. At a psychological level of analysis, behavior can be understood as driven by distinct motives—e.g. “self-interest” (such as reputation-seeking) vs. a sense of should. However, we suggest that social behavior may be more systematically understood by beginning at a deeper level of analysis, a level where both “self-interest” and a sense of should act as strategies for satisfying the energetic needs of the organism.

Many researchers are accustomed to considering evolutionary fitness only in terms of reproductive success (e.g. Dawkins, 1976/2016; but see, Wilkins & Bourrat, 2019); however, “at its biological core, life is a game of turning energy into offspring” (Pontzer, 2015, p. 170), meaning that for all organisms the management of metabolic resources is central—reproduction is one metabolic investment among many1. We will suggest that a sense of should, like “self-interested” motivation in the traditional sense, is adaptive because it allows humans to manage the metabolic demands imposed by their social environment. Both motives are self-interested in an ultimate sense, and provide complementary routes to the same adaptive end.

1.2. Outline.

In this paper, we use a biological framework to develop a mechanistic account of the sense of should. We address why people are motivated to conform to others’ expectations, and make our logic clear in a formal mathematical model. We begin by outlining the biological foundations of our approach (section 2), applying key insights from cybernetics (Conant & Ross Ashby, 1970; Ross Ashby, 1960a) and information theory (Shannon & Weaver, 1949/1964) to characterize the brain as a predictive, metabolically-dependent, model-based regulator of its body in the world. For humans, this world is largely social, and at the core of our approach is the hypothesis that individuals make this social environment more predictable by inferring others’ expectations and conforming to them. By conforming, an individual can regulate others’ behavior, the rate of her own learning, and the metabolic costs imposed by her social environment. We formalize the individual adaptive advantages of this strategy (section 3), then elaborate on the proximate psychological experience of a sense of should, the precursors for its development, its relationship to mental inference, and what is unique about this indirect form of influence. Finally, we explore the potential for our framework to unify disparate evolutionary, anthropological, and psychological phenomena (section 4), including status quo biases, communication, game-theoretic explanations of behavior, and the inheritance of culture and social norms. Taken together, this paper aims to begin from biological principles, and end with a unified framework to describe socially motivated behavior.

2. Biological foundations for a sense of should

The biological foundations for a sense of should involve a general account of what a brain is for and how it regulates the body’s interactions with the world. In this section, we review established work in neuroscience and introduce key concepts related to brain energetics—the metabolic processes that power neural activity. We show that organisms promote their own survival by using a predictive, regulatory model (i.e. a brain) to ensure that interactions with their environment are metabolically efficient. A logical consequence is that unpredictable environments are metabolically costly. With this foundation in place, we suggest that the human brain also regulates the metabolic costs of its social environment, via a sense of should.

To some readers, it may seem unintuitive, or even reductive to ground motivation in metabolism (but see Churchland, 2019). However, it must be remembered that Western, Educated, Industrialized, Rich, and Democratic people (i.e. W.E.I.R.D.; Henrich et al., 2010) are spoiled for resources in a way that is unprecedented among past and present human societies (let alone the animal kingdom). We (or, we who are economically secure professors and professionals) are cushioned by grocery stores, houses, and a culture that sustains them, meaning that calculations balancing fighting, fleeing, and feeding are not currently experienced as pressing concerns. These calculations may not be salient to us, but they are central to the evolutionary history of all organisms, and within behavioral ecology a gain or loss in metabolic efficiency can determine whether an individual, or even a species, survives (Brown et al., 2004; Kleiber, 1932). W.E.I.R.D. culture may buffer many metabolic concerns, but we suggest that these concerns nonetheless shaped our evolutionary history, forming the psychological processes that allowed society to emerge. If we want to understand how society is maintained—and how a life of metabolic leisure is supported—then we must begin from these biological principles.

2.1. A brain regulates a body in its environment.

As an organ common to humans, flies, rats, and worms, a brain has a common purpose, shared across species: to regulate a body in its interaction with the environment (Barrett, 2017a; Barrett & Simmons, 2015; Moreno & Mossio, 2015; Ross Ashby, 1960b; Sterling & Laughlin, 2015). Fundamentally, the brain’s job “reduces to regulating the internal milieu and helping the organism survive and reproduce” (Sterling & Laughlin, 2015, p. 11), a conjecture supported by evidence from neuroanatomy (Chanes & Barrett, 2016; Kleckner et al., 2017), and from neural physiology and electric signal processing (Sterling & Laughlin, 2015). Of course, regulation varies in its particulars—the innards and environs of worms and humans pose drastically different regulatory challenges—but the core regulatory role of the brain remains unchanged. On this account, sensation and cognition are functionally in the service of this regulation—they are the means to an end: what you see, feel, think, and so on, is all in the service of the brain regulating its body’s interactions with the world.

At the core of regulation lies the management of metabolic processes. To survive, grow, thrive, and ultimately reproduce, an organism requires a near continuous intake of energetic resources, such as glucose, water, oxygen, and electrolytes—it must be watered and fed. Resources maintain the body and fuel physical movements, movements that can acquire more resources or protect against potential threats. All actions have some metabolic cost, but to acquire more resources organisms must forage or hunt. What this means is that survival is not a matter of minimizing metabolic expenditures—instead, organisms must be efficient: they must invest energy to provide the largest metabolic return.

The brain itself is a significant energy investment. In rats, it accounts for ~5% of energy consumption; in chimpanzees ~9%; and in humans, ~20% (Clarke & Sokoloff, 1999; Hofman, 1983; and this percentage is even higher in children, see Goyal et al., 2014; Kennedy & Sokoloff, 1957). Cognitive functions, such as learning, are metabolic investments also: they require energy in the form of glucose and glycogen (e.g. Hertz & Gibbs, 2009), which are metabolized to produce neurotransmitters—e.g. glutamate (Gasbarri & Pompili, 2014)—and ATP molecules (Mergenthaler et al., 2013), the foundational energy source for the brain. In times of scarcity, learning may be a poor investment and may be limited to features that promote survival in the short term. But in times of abundance, an organism can promote its own survival by learning and exploring the environment (Burghardt, 2005), finding safer or more metabolically efficient ways to exploit it (Cohen et al., 2007). This interplay between conservation of energy during scarcity, and investment during abundance, is critical to keep in mind. In explaining a sense of should, we will be largely focused on methods of conservation; yet, exploration (including sometimes violating expectations and norms) will serve a critical role in learning (see Section 2.3.1 Constructing and Coasting).

This idea, that metabolic resources should be spent frugally and invested wisely, dates at least as far back as Darwin, who observed that “natural selection is continually trying to economize in every part of the organization” and that “it will profit the individual not to have its nutriment wasted on building up [a] useless structure” (Darwin, 1859/2001, p. 137). If a costly biological structure provides no return (i.e. it does not promote survival or reproduction) then evolution should select against it. This logic can be extended to behavior and cognition, implying that an organism’s cognition should only be as complex as is necessary for it to survive in its ecological niche (Godfrey-Smith, 1998, 2002, 2017). This observation foreshadows our hypothesis: the human ecological niche is social; and therefore, the social environment profoundly affects which behaviors and cognitions are energetically optimal.

As a good regulator, the brain facilitates survival by modeling the environment, and at the same time it must not spend more energetic resources than necessary. In the next section, we discuss how a brain promotes survival by acting as an internal model of its body and environment. In section 2.3, we discuss how a brain uses efficient, predictive processing schemes to minimize the metabolic costs of neuronal signaling. Then, in section 3, we return to the social world, demonstrating how a sense of should motivates humans to manage the metabolic costs imposed by other people.

2.2. Allostatic regulation: The brain is a predictive model.

A brain regulates its body, and in doing so it should avoid costly mistakes. For example, when threatened, a coordinated suite of fight-or-flight responses is deployed in a context-sensitive way (e.g. raising blood pressure; redirecting blood flow from kidneys, skin, and the gut to muscles; increasing synthesis of oxidative enzymes and decreasing production of immune system cells; Mason, 1971; Sterling & Eyer, 1988; Weibel, 2000; see also, Barrett & Finlay, 2018). Critically, an organism must implement these bodily changes before a predator’s teeth close around its neck—it must respond to the anticipated harm, not the harm itself. Likewise, even getting up from a chair requires a redistribution of blood pressure before you stand (i.e. a slight rocking head motion induces vestibular activity, raising sympathetic nervous activity before standing; Fridman et al., 2019), or else the error, “postural hypotension”, will cause fainting and perhaps a sprain or a broken bone (Sterling, 2012). Mistakes can be dangerous, even deadly, and a good regulator must avoid serious errors.

A core principle of cybernetics makes clear how this challenge is met: “Every good regulator of a system must be a model of that system” (Conant & Ross Ashby, 1970). Your body, in its interaction with the environment, is the system in question, and your brain is the internal model of that system—i.e. its regulator (Barrett, 2017a, 2017b; Conant & Ross Ashby, 1970; Ross Ashby, 1960b; Seth, 2015). The best models learn: they modify themselves when mistakes occur so that they can predict better in the future (Ross Ashby, 1960a). To regulate efficiently, then, the brain must regulate predictively—it must anticipate outcomes and direct behavior accordingly.

This predictive regulation is called allostasis (Schulkin, 2011; Sterling, 2012, 2018; Sterling & Eyer, 1988), where a brain anticipates the needs of the body and attempts to satisfy those needs before they arise, minimizing costly errors. For instance, organisms should be motivated to forage before vital metabolic parameters (e.g. glucose, water) run out of safe bounds (Sterling, 2012). Allostatic regulation stands in contrast to the more familiar homeostatic regulation, where parameters are kept stable around a set-point, e.g. as in a thermostat, which cools the room when it gets too hot and warms it when it gets too cold. For any living organism, homeostatic regulation is risky: it only occurs in reaction to events, meaning that it must wait for errors to occur (Conant & Ross Ashby, 1970). With a brain (i.e. a model of the system), such errors can be avoided (Barrett, 2017a; Conant & Ross Ashby, 1970; Seth, 2015): by modeling the system, organisms can adapt to environmental perturbations before they occur. Allostasis, then, is powerful because it is predictive—a model anticipates challenges and prepares the organism to meet them.
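The contrast between reactive and predictive regulation can be illustrated with a toy simulation. This is only a minimal sketch under strong simplifying assumptions, not a model drawn from the cited work: a single regulated variable, a set-point of zero, a fixed sequence of demands, and a regulator that either waits for deviations (forecasts of zero) or supplies resources ahead of each demand. The function name `total_deviation` and all numerical values are hypothetical.

```python
def total_deviation(demands, forecasts):
    """Sum the deviations from the set-point that a regulator fails to prevent.

    demands   -- the perturbation that actually arrives at each time step
    forecasts -- the amount the regulator supplies in advance at each step
                 (all zeros corresponds to a purely reactive regulator)
    """
    set_point = 0
    level = set_point
    deviation = 0
    for demand, forecast in zip(demands, forecasts):
        level += forecast                     # anticipatory (allostatic) supply
        level -= demand                       # the perturbation arrives
        deviation += abs(level - set_point)   # error experienced by the organism
        level = set_point                     # reactive correction of any residual
    return deviation


demands = [0, 3, 0, 5, 1]

# Homeostatic (reactive): nothing is supplied in advance, so every demand
# is first experienced as an error and only then corrected.
reactive = total_deviation(demands, [0] * len(demands))

# Allostatic with a perfect model: every demand is met before it arrives.
perfect = total_deviation(demands, demands)

# Allostatic with an imperfect model: only the unpredicted part is an error.
imperfect = total_deviation(demands, [0, 2, 0, 5, 1])

print(reactive, perfect, imperfect)  # 9 0 1
```

In this sketch the reactive regulator experiences every demand as an error, whereas the predictive regulator experiences only the portion of each demand that its forecasts missed.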

Evidence for allostasis is hidden in plain sight, just below the surface of familiar experimental paradigms. For instance, when shocks are delivered to rats, stress-induced physiological damage is minimized when a cue makes shocks predictable. Compared to unsignaled shocks, signaled shocks halved the size and quantity of resultant ulcers, even when the signaled shocks could not be escaped or avoided (Weiss, 1971). Further, some of the most compelling evidence for anticipatory regulation comes from Pavlov. Pavlov’s classic experiments—where dogs first salivate to the food stimulus, and later to the conditioned stimulus of the dinner bell—are commonly taken as evidence for a reactive, stimulus–response driven psychology. But Pavlov’s Nobel prize was awarded for his work in physiology, where he demonstrated that both before and during feeding, the dog’s saliva and stomach acid are prepared with the appropriate mix of secretions to facilitate digestion (Garrett, 1987; Pavlov, 1904/2018; Sterling & Laughlin, 2015). For fats, lipase is prepared in the mouth and bile in the stomach. For bread, starch-converting amylase is secreted with saliva. For meats, acid and protease accumulate in the stomach. In each case, the brain predictively coordinates a suite of bodily responses: when food enters the stomach, it meets an environment already prepared to metabolize it.

Allostasis implies that all organisms use a model to guide behavior. This conclusion may appear to conflict with recent work in reinforcement learning, which suggests that organisms switch between “model-based” (i.e. goal-directed) and “model-free” (i.e. habitual) modes of learning (Crockett, 2013; Cushman, 2013; Daw et al., 2011, 2005; Morris & Cushman, in press; but see Friston et al., 2016). Specifically, model-free learning does not create a plan to reach a goal (e.g. the “cheese” in a maze); instead, it reinforces discrete actions (e.g. move left, move right) through a repeated process of trial-and-error. But, as said above, trial-and-error strategies are inherently dangerous. Organisms will sometimes make mistakes, and when these mistakes occur, organisms should learn from them; however, organisms should never completely abandon the internal model into which they have continually invested metabolic resources, reverting to a pure trial-and-error strategy. (Of course, it would be plausible to consider model-free and model-based strategies along a spectrum, from short-term to long-term model-based strategies, in which case our point is simply that organisms never completely move to the model-free pole.) In computational simulations, model-free strategies can learn across millions of trials, but for a living organism each mistake could be fatal, bringing learning to a premature end (e.g. Yoshida, 2016).

The appeal of model-free learning typically stems from an assumption that it is computationally cheap compared to a model-based strategy. For example, it is sometimes assumed that a model-based strategy involves activating a brain-region (e.g. prefrontal cortex) and engaging in an expensive search through a goal-directed decision tree (Daw et al., 2005; Russek et al., 2017). But this perspective misunderstands how and when living organisms pay down the cost of their internal model. Learning consumes metabolic resources (Gasbarri & Pompili, 2014) to construct and modify a neural architecture. But the cost of creating this neural architecture is distributed over the course of a lifetime (Goyal et al., 2014; Kennedy & Sokoloff, 1957; Moreno & Lasa, 2003; Moreno & Mossio, 2015)—you have been investing in an internal model of your ecological niche since the day you were born. The metabolic costs of task-based neural activity are low—i.e. “engaging” in a cognitive task does not drastically increase the brain’s metabolic rate2 (Raichle & Gusnard, 2002; Sokoloff et al., 1955)—but this is because the brain is always engaged: it must constantly generate predictions and regulate the internal milieu, even when the organism is lying still in the scanner (Raichle, 2015). The costs of model-based strategies, then, do not stem from activating brain regions, or searching through a decision-tree (as a computer would do); rather, they stem from a steady metabolic investment in brain structure, and informational uptake, distributed across a lifetime. However, although the costs of task-based activation are relatively small, the overall cost of the brain remains a critical concern, especially given that it consumes approximately 20% of an adult human’s metabolic budget at rest (Clarke & Sokoloff, 1999). Any adaptation in neural design that can minimize these ongoing costs will be advantageous (Darwin, 1859/2001). 
In the next section, we explore a principle of neural design that controls the metabolic costs of signaling: predictive processing.

2.3. Metabolic costs of neuronal signaling are minimized by encoding prediction error.

An organism implements a predictive (i.e. allostatic) model to regulate its body in its interactions with an environment (Sterling, 2012). Beyond minimizing errors, a predictive model can also make neural activity metabolically efficient. This efficiency is made possible by predictive processing, a property of signal transmission that removes redundant information. Predictive processing is a core component of information theory (Shannon & Weaver, 1949/1964), a branch of mathematics and engineering that is central to biology, language, physics, and computer science, among other areas. For present purposes, the important point is simply that an incoming sensory signal that is perfectly predicted is redundant—it carries no information, meaning there is nothing to be encoded. For example, if a light is on then a predictive system only needs to take up information when the light is turned off (i.e. the system only encodes changes). In this way, the cost of neuronal signaling can be kept efficient by transmitting only unpredicted signals, i.e. by transmitting prediction error.
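The light example can be made concrete with a small sketch of change-only encoding. It rests on an assumption introduced here for illustration, namely that the receiver's prediction is simply "the next sample equals the last one"; the function names `encode_changes` and `decode_changes` are hypothetical and are not taken from the predictive processing literature.

```python
def encode_changes(signal):
    """Transmit a (time, value) message only when a sample violates the prediction.

    The assumed prediction is that each sample repeats the previous one,
    so a perfectly predicted sample generates no message at all.
    """
    messages = []
    predicted = None  # no prediction exists before the first sample
    for t, sample in enumerate(signal):
        if sample != predicted:
            messages.append((t, sample))  # prediction error: worth transmitting
            predicted = sample            # update the model with the new state
    return messages


def decode_changes(messages, length):
    """Reconstruct the full signal from the transmitted prediction errors."""
    changes = dict(messages)
    signal, current = [], None
    for t in range(length):
        if t in changes:
            current = changes[t]
        signal.append(current)
    return signal


# A light that is on for six time steps and then switched off for four.
light = [1] * 6 + [0] * 4

messages = encode_changes(light)
print(messages)                                      # [(0, 1), (6, 0)]
print(decode_changes(messages, len(light)) == light)  # True
```

Ten samples are recovered from two messages, which is the sense in which transmitting only unpredicted signals keeps signaling costs low in this toy case.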

Neuronal signaling costs account for the majority of the brain’s metabolic budget. Signaling costs account for ~75% of energy expenditures in grey matter (Attwell & Laughlin, 2001; Sengupta et al., 2010), and ~40% in white matter (Harris & Attwell, 2012). Almost all of these costs stem from the Na+/K+ pump, which restores the neuronal ion gradient, extruding 3 Na+ and importing 2 K+ ions for each ATP consumed (Attwell & Laughlin, 2001). In grey matter—which consumes approximately three times more energy than white matter at rest (Harris & Attwell, 2012; Sokoloff et al., 1977)—major contributions to the signaling budget include the maintenance of the resting gradient (~11% of the signaling budget), restoration of the gradient after action potentials (~22%) and restoration after postsynaptic activations of ion channels by glutamate (~64%; Sengupta et al., 2010). Compared to these constant costs, tissue construction is a relatively minor expense (Niven, 2016). If natural selection pressures organisms to economize their use of metabolic resources (Darwin, 1859/2001), and if adult humans devote ~13% of their energy budget at rest3 to neuronal signaling (Attwell & Laughlin, 2001; Clarke & Sokoloff, 1999), then organisms must make signaling costs efficient to survive (Bullmore & Sporns, 2012; Niven & Laughlin, 2008; Sengupta et al., 2013). Predictive processing solves this dilemma, minimizing signaling costs by transmitting only signals that the internal model did not predict.

In recent years, a coherent family of mathematically formalized accounts of neural communication have emerged, with predictive processing at their core (Barrett, 2017a, 2017b; Barrett & Simmons, 2015; Chanes & Barrett, 2016; A. Clark, 2013, 2015; Denève & Jardri, 2016; Friston, 2010; Friston et al., 2017; Hohwy, 2013; Kleckner et al., 2017; Rao & Ballard, 1999; Sengupta et al., 2013; Seth, 2015; Shadmehr et al., 2010). Among these accounts, the algorithmic and implementational specifics differ and are actively debated (see Spratling, 2017); however, the core idea—that the brain is fundamentally predictive—is old, and is consistent with the work of Islamic philosopher Ibn al-Haytham (in his 11th century Book of Optics), Kant (Kant, 1781/2003), and Helmholtz (von Helmholtz, 1867/1910) (for a brief discussion, see Shadmehr et al., 2010). Predictive processing approaches are also well-established in the motor learning literature (Shadmehr et al., 2010; Shadmehr & Krakauer, 2008; Sheahan et al., 2016; Wolpert & Flanagan, 2016), where copies of motor commands are also sent to sensory cortices (called efferent copies; Sperry, 1950; von Holst, 1954). Efferent copies modify neural activity in sensory cortices (e.g. Fee et al., 1997; Sommer & Wurtz, 2004a, 2004b; Yang et al., 2008), allowing them to anticipate the sensory consequences of motor commands (e.g. visual, visceral, somatosensory) before sensory information travels from the periphery to the brain (Franklin & Wolpert, 2011). For example, people cannot easily tickle themselves (Claxton, 1975), but when self-tickling is delayed or reoriented by a robotic hand the sensation becomes stronger (Blakemore et al., 1999). 
That is, a predictable sensation (self-tickling) is uninformative and ignored, but when the relationship between a motor command and sensory feedback is altered (by a delay or reorientation), the efferent copy no longer predicts the sensory consequences—the sensory consequences become informative, and the sensation is experienced.

Predictive processing approaches of neural organization go further, adding that the brain is loosely organized in a predictive hierarchy (Barbas, 2015; Felleman & Van Essen, 1991; Mesulam, 1998), with primary sensory neurons at the bottom and compressed, multimodal summaries at the top. This process of prediction, comparison, and transmission of prediction error is thought to occur at all levels of the hierarchy. In general, at a given level of the hierarchy, when prediction signals mismatch with incoming information (passed from a lower level), the neurons at that level have the opportunity to change their pattern of firing to capture the unexpected input. This unexpected input is prediction error. Prediction error need not be consciously attended to be processed—its propagation is a fundamental currency of neural communication. For example, in primary sensory cortices, prediction signals are compared with incoming sensory signals (e.g. frequencies of light, pressure on the skin, etc.), whereas in association cortices prediction signals are compressed multimodal summaries of sensory and motor information, and are compared with slightly less compressed summaries of this sensory and motor information (Barrett, 2017a; Chanes & Barrett, 2016; Friston, 2008). Social predictions always involve these compressed, multimodal summaries (Bach & Schenke, 2017; Baldassano et al., 2017; Koster-Hale & Saxe, 2013; Ondobaka et al., 2017; Richardson & Saxe, 2019; Theriault et al., under review).

Predictive processing approaches have the potential to radically reorganize mainstream views of cognitive science (A. Clark, 2013) and psychological science more generally (Hutchinson & Barrett, 2019). For present purposes, however, we draw two less radical conclusions: first, neuronal signaling has a metabolic cost; and second, by predicting signals (at all levels of the cortical hierarchy), and encoding only prediction error, the metabolic costs of neuronal signaling can be minimized (Sengupta et al., 2013).

2.3.1. Constructing and Coasting.

For predictive processing to be efficient, the brain must make accurate predictions in the first place. To make these predictions, the brain must encode information (i.e. encode prediction error), building on its existing model to create one that is more powerful and more generalizable. That is, to maintain metabolic efficiency in the long run, organisms must learn. They learn by exposing themselves to novelty (e.g. Burghardt, 2005; Cohen et al., 2007), paying a short-term metabolic cost to encode information and contribute to a model that can make accurate predictions in the future. The goal of a brain, then, cannot be to always minimize prediction error, or to always minimize metabolic expenditures (see the “dark room” criticism of free energy predictive processing accounts, in which the brain’s primary goal is to minimize prediction error; see also Friston et al., 2012; Seth, 2015). Instead, to survive and even thrive, organisms must invest resources wisely, managing the trade-off between the metabolic efficiency granted by their internal model’s accurate predictions, and the metabolic costs of model construction, which necessarily involves taking up information as prediction error<sup>4</sup>.
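A toy calculation can make the investment logic concrete. The sketch below is an assumption-laden illustration, not the model developed in this paper: it uses a simple error-driven update with a hypothetical `learning_rate`, treats summed absolute prediction error as a stand-in for signaling cost, and does not model any separate cost of updating the model itself.

```python
from typing import Sequence


def cumulative_error(observations: Sequence[float],
                     learning_rate: float,
                     initial_prediction: float = 0.0) -> float:
    """Sum of absolute prediction errors for a simple error-driven learner."""
    prediction = initial_prediction
    total = 0.0
    for observed in observations:
        error = observed - prediction
        total += abs(error)                  # error that must be signaled
        prediction += learning_rate * error  # encode part of the error into the model
    return total


stable_world = [1.0] * 20  # an environment that never changes

never_learns = cumulative_error(stable_world, learning_rate=0.0)
learns = cumulative_error(stable_world, learning_rate=0.5)

print(never_learns)  # error is paid in full on every time step
print(learns)        # error shrinks as the model improves
```

In this sketch the learner that encodes its early errors ends up with far less cumulative error than the one that never updates, which mirrors the claim that short-term encoding supports long-run efficiency.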

We hypothesize that behavior involves a balancing act between these concerns. At times organisms will seek novelty (i.e. seek prediction error), constructing a more generalizable model of the environment, and at other times organisms will seek (or create) predictability, coasting on the metabolically efficient predictions of their existing model. The interplay between constructing and coasting<sup>5</sup> will be critical to an understanding of how humans control their social environment (for a similar approach, see Friston et al., 2015). Our primary concern in this paper is with a sense of should, which is a strategy for coasting: we will suggest that conforming to others’ expectations creates a predictable social environment, minimizing the metabolic costs of prediction error (but see Section 3.4 for an example of construction in the context of mental inference).

2.4. Summary.

A brain implements a predictive model to regulate an organism’s body in its environment (Conant & Ross Ashby, 1970; Ross Ashby, 1960b; Sterling, 2012; Sterling & Laughlin, 2015). A brain is also a significant metabolic investment (Clarke & Sokoloff, 1999), and as organisms must be metabolically efficient to survive, the brain’s energetic costs must be regulated (especially the high costs of neuronal signaling; Attwell & Laughlin, 2001). Predictive processing satisfies this need for neuronal efficiency by limiting energy expenditures, transmitting only unpredicted signals from one level of the neural hierarchy to the next (A. Clark, 2013; Friston, 2010; Shannon & Weaver, 1949/1964). It follows, then, that prediction error carries a metabolic cost (Sengupta et al., 2013), and unpredictable environments are metabolically costly.

From this empirical foundation, we can develop our account of a sense of should. This account hinges on one additional point: you contribute to the social environment of others, and they comprise the social environment for you. Encoding information (i.e. encoding prediction error) about these other people is a metabolic demand, but this metabolic demand can be controlled. We propose that humans learn to control the behavior of others (and by extension, the metabolic demands others impose) by conforming to their expectations. This control is not coercive—that is, others are not forced to perform particular behaviors—rather, this form of control can make others’ behavior more predictable. Other people can be made more predictable when you are predictable to them.

3. A metabolic and predictive framework for modeling a sense of should

In this section, we outline our central hypothesis: that a sense of should regulates the metabolic pressures of group living, i.e. that people are motivated to conform to others’ expectations, and by conforming, they maintain a more predictable—and by extension, a more metabolically efficient—social environment. If your behavior conforms to other people’s predictions (i.e. if your behavior minimizes prediction error for them) then they will have less reason to change their behavior, making them more predictable for you.

We suggest that a sense of should serves a metabolic function, and that it should develop in nearly all humans—but we are not assuming that it is innate. On our account, there is no need to assume that a sense of should is a specialized, or domain-specific adaptation (c.f. Cosmides & Tooby, 1992). Rather, we suggest that a sense of should is an emergent product, both of domain-general capabilities (e.g. Heyes, 2018) that are exceptionally well-developed in humans (e.g. associative learning, memory), and of social context (specifically, a social context where others’ behaviors are contingent on your own). Further, the metabolic benefits of a sense of should almost certainly coexist (or conflict) with other adaptive strategies, including self-interested hedonically motivated behavior, exploration, reputation-seeking, or reciprocal altruism in repeated interactions (e.g. Axelrod, 1981; Trivers, 1971). In this section, we develop a formal model of a sense of should (using mathematical formalism to make all assumptions explicit) and in section 4, we elaborate on the implications of this model in dynamic social contexts. Our story, then, begins with metabolic frugality, but ends with the complex interplay of motivations that characterize human social life.

3.1. The metabolic benefits of conformity.

To formalize the individual adaptive benefits of conforming to others’ expectations, we use a working example: a person named Amelia. We assume that Amelia’s brain, like the brain of any organism, consumes metabolic resources to maintain her internal milieu and to move her body around the world. Amelia’s brain processes unexpected sensory information as prediction error, which is neurally communicated at a metabolic cost (section 2.3). Formally:

$$MC_{\text{total}} = MC_{\text{PE}} + MC_{\text{other}} \tag{1}$$

where

$MC_{\text{total}}$ represents Amelia’s total metabolic expenditures across some arbitrary time period,

$MC_{\text{PE}}$ represents the metabolic costs of encoding prediction error across that time period, and

$MC_{\text{other}}$ represents other metabolic costs not related to neuronal signaling.

In predictive processing models, a precision term, weighting prediction errors according to their certainty, is often included (e.g. H. Feldman & Friston, 2010), but for the sake of simplicity we omit these terms while developing our model (but see section 4.1.1).

Prediction error comes from sensory changes in the body (interoceptive sources) and sensory changes in the surrounding world (exteroceptive sources). Interoceptive prediction error refers to unexpected information about the condition of the body (signaling, for example, heart rate, respiration, metabolic and immunological functioning; Barrett & Simmons, 2015; Craig, 2015; Seth, 2013). Exteroceptive prediction error refers to unexpected information in the environment (signaled by sights, sounds, etc.). Exteroceptive prediction error, experienced by Amelia, could come from many sources, each of which could be defined as an entity<sup>6</sup> (e.g. animals, machines, inanimate objects, the weather). For the purposes of our model, the critical distinction among entities is whether a given entity does, or does not, predict Amelia’s behavior. If an entity predicts Amelia’s behavior, then the prediction error she receives from that entity is called reciprocal prediction error (our examples assume that these entities are human, but see footnote 17 for an extension to non-biological entities). If an entity does not predict Amelia’s behavior (e.g. as in weather, falling rocks, walls, ceilings), then the prediction error she receives from that entity is called non-reciprocal prediction error. Formally:

$$MC_{\text{PE}} \propto PE_{\text{intero}} + \sum_{i=1}^{n} PE_{\text{recip},i} + \sum_{j=1}^{m} PE_{\text{non-recip},j} \tag{2}$$

where

$MC_{\text{PE}}$ represents the metabolic cost (to Amelia) of encoding prediction error across a time period,

$PE_{\text{intero}}$ represents Amelia’s interoceptive prediction error,

$PE_{\text{recip},i}$ represents Amelia’s reciprocal prediction error, from n entities in the environment,

$PE_{\text{non-recip},j}$ represents Amelia’s non-reciprocal prediction error, from m entities in the environment, and

$\propto$ denotes a proportional relationship, as the exact relation between prediction error and metabolic cost is unknown.
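As a rough illustration of Equation 2, the three sources of prediction error (interoceptive, reciprocal, and non-reciprocal) can be tallied as a simple sum. The numbers and the constant `k` below are hypothetical: the model specifies only a proportional relationship, so `k` is an assumed placeholder and the result carries no real-world units.

```python
from typing import Sequence


def total_prediction_error(interoceptive: float,
                           reciprocal: Sequence[float],
                           non_reciprocal: Sequence[float]) -> float:
    """Sum interoceptive error with the error received from every entity."""
    return interoceptive + sum(reciprocal) + sum(non_reciprocal)


def metabolic_cost(interoceptive: float,
                   reciprocal: Sequence[float],
                   non_reciprocal: Sequence[float],
                   k: float = 1.0) -> float:
    """Cost proportional to total prediction error; k is an assumed constant."""
    return k * total_prediction_error(interoceptive, reciprocal, non_reciprocal)


# Hypothetical values: two entities that predict Amelia (e.g. people),
# and one entity that does not (e.g. the weather).
cost = metabolic_cost(interoceptive=0.5,
                      reciprocal=[1.0, 2.0],
                      non_reciprocal=[0.25])
print(cost)
```

Only the reciprocal terms depend on how others respond to Amelia's behavior, which is why the sections that follow focus on them.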

For Amelia, the adaptive advantage of conformity stems from regulating reciprocal prediction error.

Conforming to others’ expectations benefits Amelia by reducing the likelihood that others will change their behavior in unanticipated ways; i.e. all else being equal, conforming keeps others more predictable. This conclusion can be derived by examining reciprocal prediction error. The reciprocal prediction error experienced by Amelia is generated by multiple entities in her environment, but for now we narrow the focus to one person, named Bob. Using her internal model, Amelia predicts Bob’s behavior, and her prediction error from Bob ($PE_{\text{Amelia}}$) equals the magnitude of the difference between Bob’s behavior ($b_{\text{Bob}}$) and her prediction about his behavior ($p_{\text{Amelia}}$):

$$PE_{\text{Amelia}} = |b_{\text{Bob}} - p_{\text{Amelia}}|$$

Likewise, Bob predicts Amelia’s behavior, and his prediction error ($PE_{\text{Bob}}$) equals the magnitude of the difference between Amelia’s behavior ($b_{\text{Amelia}}$) and his prediction about her behavior ($p_{\text{Bob}}$):

$$PE_{\text{Bob}} = |b_{\text{Amelia}} - p_{\text{Bob}}|$$

When Amelia’s behavior deviates from Bob’s predictions (i.e. when $b_{\text{Amelia}} \neq p_{\text{Bob}}$) Bob receives information in the form of prediction error (Shannon & Weaver, 1949/1964). This information may cause some change to Bob’s internal, predictive model ($\Delta M_{\text{Bob}}$), proportional to the amount of information provided. Critically, if Bob encodes the prediction error (i.e. Bob learns), then these changes in Bob’s internal model may cause a proportionate change in his behavior ($\Delta b_{\text{Bob}}$). That is, on average, when Bob’s predictions are violated, Bob may change his internal model by some amount, and his behavior may change with it.

If Bob’s behavior changes, and if Amelia is unable to anticipate exactly how it will change (in the next moment, and in some number of moments following it), then prediction error will increase for Amelia<sup>7</sup>.

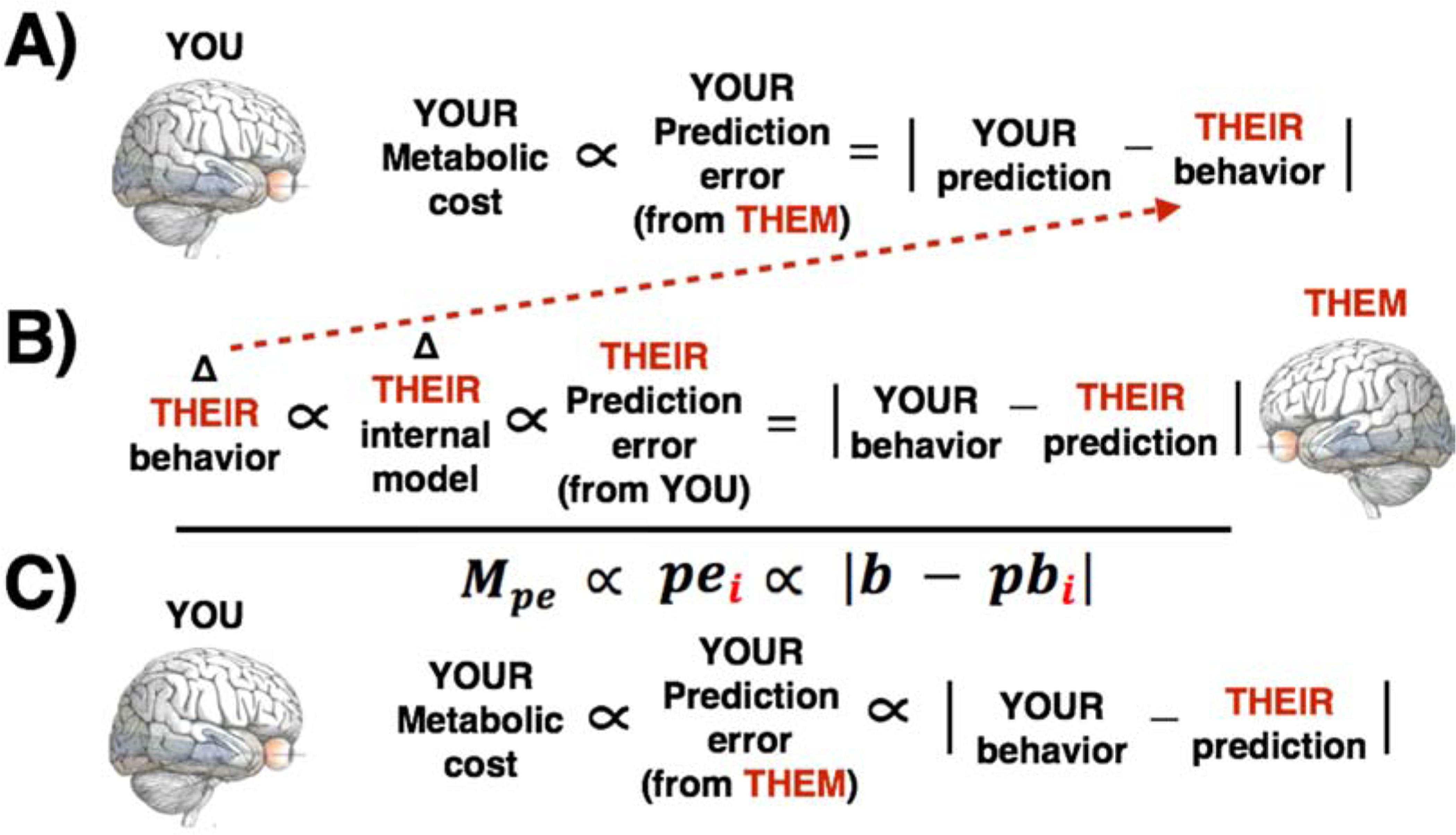

It follows, then, that the prediction error Amelia experiences from Bob ($PE_{\text{Amelia}}$) is related to the difference between her behavior and Bob’s predictions about her behavior ($|b_{\text{Amelia}} - p_{\text{Bob}}|$). This relationship is mediated by changes in Bob’s internal model and his behavior (Figure 2).

Figure 2.

Illustration of the derivation of Equation 4, modeling the control of prediction error (and its metabolic costs) by conforming to others’ predictions. A) Your prediction error equals the difference between the predicted and actual behavior of another person, and is assumed to carry a metabolic cost (section 2.3). Others’ predictions can be understood as a vector of sensory signals, and your behavior is a matched length vector. B) Prediction error for others equals the difference between your behavior and their prediction. As prediction error is informative (Shannon & Weaver, 1949/1964), prediction error produces some proportional change in others’ internal models, which in turn produces some proportional change in their behavior. C) Considered together, the relationships imply that your prediction error (and its metabolic costs) are more likely to increase when your behavior violates others’ predictions.

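Formally:

$$|b_{\text{Amelia}} - p_{\text{Bob}}| \propto \Delta M_{\text{Bob}} \propto \Delta b_{\text{Bob}} \propto PE_{\text{Amelia}} \tag{3}$$

Here $\Delta M_{\text{Bob}}$ denotes the change in Bob’s internal model and $\Delta b_{\text{Bob}}$ the change in his behavior. This reduces to:

$$PE_{\text{Amelia}} \propto |b_{\text{Amelia}} - p_{\text{Bob}}|$$

Thus, Amelia can manage the metabolic costs imposed by Bob by conforming to his expectations. In the more general case, Bob is one arbitrary entity (i):

$$MC_{\text{PE}} \propto PE_{\text{recip},i} \propto |b - p_i| \tag{4}$$

where

$MC_{\text{PE}}$ represents the metabolic cost (to Amelia) of encoding prediction error across a time period,

$PE_{\text{recip},i}$ represents Amelia’s reciprocal prediction error, from one entity (i) in the environment,

$b$ represents Amelia’s behavior, and

$p_i$ represents the prediction of one entity (i) about Amelia’s behavior.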

Thus, the prediction error experienced by Amelia, from one entity (e.g. Bob), and the metabolic costs of that prediction error, are proportional to the discrepancy between her behavior and Bob’s predictions about her behavior. In this formulation (which is intentionally presented as a simplified sketch of the core components; a full version would include, at a minimum, precision weighting terms; see section 4.1.1), Amelia’s behavior ($b$) could be treated as a binomial vector, representing all possible features of her behavior in a given instance (i.e. each entry in the vector denotes one specific feature of her behavior, marking it as present or absent). A prediction about her behavior ($p_i$) is a matched length binomial vector, meaning Bob predicts the features of Amelia’s behavior. When these two vectors are identical, both the proportional change in Bob’s behavior, and the proportional increase in Amelia’s prediction error, are minimized<sup>8</sup>. All else being equal, Amelia can regulate the metabolic costs of prediction error by conforming to others’ expectations.
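The vector formulation can be sketched directly. In the hedged example below, behavior and prediction are matched-length lists of 0/1 features, and the discrepancy is counted as the number of mismatched features. That counting rule, and the two gain parameters that stand in for the unknown proportionality constants, are assumptions made for illustration; precision weighting is omitted, as in the text.

```python
from typing import Sequence


def discrepancy(behavior: Sequence[int], prediction: Sequence[int]) -> int:
    """Count the behavioral features on which behavior and prediction differ."""
    if len(behavior) != len(prediction):
        raise ValueError("behavior and prediction must be matched-length vectors")
    return sum(1 for b, p in zip(behavior, prediction) if b != p)


def relative_prediction_error(behavior: Sequence[int],
                              prediction: Sequence[int],
                              model_gain: float = 1.0,
                              behavior_gain: float = 1.0) -> float:
    """Chain the proportional steps: discrepancy -> change in the other's
    model -> change in the other's behavior -> prediction error returned.

    Both gains are hypothetical placeholders for unknown constants.
    """
    change_in_model = model_gain * discrepancy(behavior, prediction)
    change_in_behavior = behavior_gain * change_in_model
    return change_in_behavior


bob_prediction = [1, 0, 1, 1, 0]       # features Bob expects from Amelia
conforming_behavior = [1, 0, 1, 1, 0]  # matches every expected feature
deviating_behavior = [0, 1, 1, 0, 0]   # differs on three features

print(relative_prediction_error(conforming_behavior, bob_prediction))
print(relative_prediction_error(deviating_behavior, bob_prediction))
```

Under these assumptions the conforming vector yields the minimum possible value, while each additional mismatched feature raises the expected prediction error returned to Amelia.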

A brain implements a predictive model to regulate an organism’s body in its environment (Conant & Ross Ashby, 1970; Ross Ashby, 1960b; Sterling, 2012; Sterling & Laughlin, 2015; see Section 2.2). With respect to this model, organisms must balance (at least) two pressures: they must balance constructing (e.g. disrupting their environment at some metabolic cost, but gaining information that can be added to their internal model and inform future predictions) and coasting (e.g. using their existing internal model to make accurate, metabolically efficient predictions; section 2.3.1). Broadly, a sense of should is a strategy to facilitate coasting—it maintains a predictable social environment, allowing Amelia’s internal model to continue issuing accurate predictions. To issue accurate predictions, Amelia had to invest in constructing a sufficiently accurate internal model in the first place. In this way, conforming to others’ expectations secures Amelia’s initial investment, as every time that she violates others’ expectations, she increases the likelihood that their behavior (and their internal models) will change in ways that she cannot predict. If other people changed in ways Amelia could not predict, then she would need to reinvest in model construction all over again. As long as she conforms to others’ predictions (and as long as others’ internal models don’t suddenly, and unbeknownst to Amelia, change dramatically), her predictions of others will remain relatively accurate and efficient.

This process (conforming to others’ expectations in order to coast on a predictable environment) also implies a positive feedback loop: when Amelia conforms, she makes her environment more predictable, which will make mental inference (i.e. estimating others’ predictions, $p_i$; see section 3.4) easier, which then makes conforming easier still. But this positive feedback loop cannot run forever: eventually, overconforming may come at a cost to Amelia’s survival or reproduction. That is, at an extreme, Amelia might become a doormat<sup>9</sup>. Coasting on the metabolic benefits of a sense of should, then, must be balanced with satisfying other adaptive needs for survival and reproduction. Amelia cannot only conform to social pressure; she must balance the benefits of a predictable social environment against her other needs (e.g. food, sex, safety)<sup>10</sup>.

But the predictable social environment generated by Amelia’s conformity may also help her implement other strategies, ranging from deception to reciprocal altruism. If Amelia’s social environment is predictable, and if her behavior ensures that it will remain predictable, then she can engage in long-term social planning. This social planning could be cooperative or competitive: Amelia can leverage the social predictability (that she helped create) to extend alliances with other people, or she can use social predictability to identify occasions where it is to her advantage to deceive or betray them. Thus, although a sense of should is experienced as a motivation distinct from self-interested reputation-seeking, or utility maximization (Asch, 1952/1962, Chapter 12; Batson & Shaw, 1991; Dovidio, 1984; Greenwood, 2004; Piliavin et al., 1981; Schwartz, 1977; Smith, 1790/2010; Tomasello, in press), its interplay with other motives within a social environment can make new strategies possible.

This section has shown how it could be individually adaptive to conform to others’ expectations, and how these advantages follow from the biological foundations already established in section 2. In the following sections, we develop the framework surrounding this model further, showing how a sense of should is experienced as a psychologically distinct motivation (section 3.2), how it might develop (section 3.3), how it facilitates mental inference (section 3.4), and how social influence via a sense of should differs from social influence as it is more typically considered (section 3.5).

3.2. The psychological experience of a sense of should.

We have established that a sense of should can regulate predictability in a social environment, and that this strategy is distinct from the pursuit of reputation or social reward. But Adam Smith (Smith, 1790/2010), and others (e.g. Asch, 1952/1962; Batson & Shaw, 1991; Dovidio, 1984; Greenwood, 2004; Piliavin et al., 1981; Schwartz, 1977; Tomasello, in press) go further, suggesting that a sense of should is psychologically distinct from a more general motivation to seek rewards, such as reputation. Following them, we suggest that unpredictability can be aversive in and of itself (Hogg, 2000, 2007). When Amelia violates others’ expectations, she disrupts her social environment, producing metabolic and affective consequences. When this relationship between cause and consequence is learned, Amelia’s brain should motivate her to regulate these violations of others’ expectations prospectively (i.e. allostatically; section 2.2), allowing a sense of should to emerge as an anticipatory aversion to violating others’ expectations. To provide a full account of this process, we briefly review the modern scientific understanding of affect.

Affect refers to the psychological experience of valence (i.e. pleasantness vs. unpleasantness) and arousal (i.e. alertness and bodily activation vs. sleepiness and stillness). Valence and arousal are core features of consciousness (Barrett, 2017a; Damasio, 1999; Dreyfus & Thompson, 2007; Edelman & Tononi, 2000; James, 1890/1931; Searle, 1992, 2004; Wundt, 1896), and, when intense, become the basis of emotional experience (Barrett, 2006b; Barrett & Bliss‐Moreau, 2009; Russell, 2003; Russell & Barrett, 1999; Wundt, 1896, Chapter 7). Affect is a low-dimensional transformation of interoceptive signals, which communicate the autonomic, immunological, and metabolic status of the body (Barrett, 2017a; Barrett & Bliss‐Moreau, 2009; Barrett & Simmons, 2015; Chanes & Barrett, 2016; Craig, 2015; Seth, 2013; Seth & Friston, 2016). Valence and arousal are sometimes considered to be independent dimensions of affect, but, in reality, they exhibit complex interdependencies (Barrett & Bliss‐Moreau, 2009; Francis & Oliver, 2018; Gomez et al., 2016; Kuppens et al., 2013). For present purposes, it is enough to say that arousal is not necessarily valenced, yet, context will guide its interpretation as pleasant or unpleasant (Barrett, 2017a, 2017b).

Recent work has demonstrated that prediction error is associated with the physiological correlates of arousal. For example, prediction error is associated with electrodermal, pupillary, neurochemical, and cardiovascular responses that reflect patterns of ANS (autonomic nervous system) arousal (Braem et al., 2015; Critchley et al., 2005; Crone et al., 2004; Dayan & Yu, 2006; Hajcak et al., 2003; Mather et al., 2016; Preuschoff et al., 2011; Spruit et al., 2018; Yu & Dayan, 2005). Unpredictable environments, then, including ones created by Amelia’s non-conformity, generate arousal, and this arousal will be interpreted in the context of ongoing exteroceptive and interoceptive information. Given this, we can assert that unpredictable social environments are arousing; what must also be established, is how they become aversive.

Having one’s expectations violated is sometimes pleasant, and sometimes aversive. For example, comedy often stems from incongruity and transgressing norms (M. Clark, 1970; Olin, 2016). Likewise, intentionally provoking a speaker with a pointed question may be disruptive, but their answer could be informative (and therefore useful for constructing the brain’s internal model, facilitating future predictions; section 2.3.1). Disruptions can be adaptive. However, disruptions will always involve processing prediction error, and therefore, they will always be metabolically costly (section 2.3). Given this, in the absence of some other benefit to be gained, metabolic efficiency is best served by avoiding such disruptions, i.e. coasting on the brain’s existing model. Further, violating others’ expectations is risky. For example, if Amelia tells a dirty joke, how others interpret their arousal will decide whether the joke is interpreted as hilarious or offensive. Thus, although it can occasionally be pleasant to violate others’ expectations, there is reason to expect that transgressing norms will often be stressful and unpleasant.

When Amelia violates others’ expectations, then, she invites an aversive outcome (i.e. “punishment”). But for a sense of should, the “punishment” does not come from other people, or at least, not explicitly from them—no second or third party intentionally administered it for the purpose of punishing Amelia, nor did anyone pay a cost or risk anything to censure her. Instead, for Amelia to receive the punishment, it is only necessary that others react naturally to their expectations being violated, changing their internal model, and changing their behavior with it (Equation 4; Figure 2). When others’ behaviors change, prediction error increases for Amelia, and the metabolic efficiency of her internal model temporarily suffers. She will experience arousal, which if intense or pervasive enough will often be experienced as aversive. The punishment, then, arrives as both a metabolic cost, and as the experience of negative affect. The affective experience was not something imposed on Amelia by others; rather, it stems from the way she makes meaning of her own interoceptive sensations (Barrett, 2017a, 2017b). Indeed, classic accounts of helping behavior (a special case of conforming to others’ expectations) suggest that helping arises from the combination of evoked arousal by a suffering victim, and the helper’s ability to reduce that arousal by helping (Dovidio, 1984; Piliavin et al., 1981). The categorization of interoceptive sensation was even present in classic accounts of moral development:

“Two adolescents, thinking of stealing, may have the same feeling of anxiety in the pit of their stomachs. One … interprets the feeling as ‘being chicken’ and ignores it. The other … interprets the feeling as ‘the warning of my conscience’ and decides accordingly.”

(Kohlberg, 1972; pp. 189–190).

This, then, may be what separates a “self-interested” motivation to pursue rewards and avoid punishments (Smith, 1790/2010), from a sense of should (and possibly from moral obligation, which is beyond our present scope; Figure 1). Rewards and punishments (e.g. pleasure, pain) are externally administered, whereas a sense of should necessarily involves self-caused disruptions of the social environment, and a subsequent interpretation of interoceptive sensation. It is a punishment that Amelia’s brain literally inflicts on itself.

It must be noted that Amelia experiences aversive outcomes through her interpretation of affect, but a sense of should actually motivates her to avoid such consequences prospectively. That is, we propose that a sense of should is experienced as an anticipatory aversion to violating others’ expectations<sup>11</sup>. The brain is an allostatic regulator: it anticipates the needs of the body and attempts to meet those needs before they arise, thereby avoiding errors (section 2.2). If Amelia’s brain has learned that violating others’ expectations decreases her metabolic efficiency (and consciously, Amelia experiences violating others’ expectations as aversive), then Amelia will prospectively avoid such situations and the behaviors that trigger them. In the absence of some competing goal, the most metabolically efficient option will often be to behave as others expect. A sense of should, then, is not an exceptional motivation; in social settings, a sense of should is a default. Given the metabolic importance of a predictable social niche, and given that any individual can disrupt that niche by violating others’ expectations, we hypothesize that, all else being equal, adults continuously adjust their behavior to fit others’ expectations, only rarely making a hard break from observing social norms to exclusively pursue their own interests. Indeed, if this weren’t true, group living might be impossible.

3.3. The development of a sense of should.

A sense of should is a motivation to prospectively avoid behaviors that deviate from others’ expectations in the service of metabolic efficiency. However, a sense of should does not involve conditioning avoidance of any specific behavior; rather, it involves learning a relationship. The relationship is between your behavior and others’ expectations, $|b - p_i|$. When the discrepancy between your behavior and others’ expectations is large, the social environment becomes less predictable, and metabolic and affective consequences follow. Put another way, developing a sense of should involves learning what behaviors are appropriate (i.e. expected by others) in a given context.

To learn this relationship, Amelia must accomplish at least two developmental tasks. First, she must be able to accurately predict the behaviors of social agents (as otherwise she cannot experience prediction error when her predictions are violated). Her ability to make sophisticated predictions, especially ones that extend beyond the present, will develop gradually during infancy and early childhood. Newborns live in an environment structured by their caregivers, and the predictions necessary for a newborn’s survival are largely limited to those dyads—e.g. newborns learn that crying summons a blurry shape (i.e. a parent) that relieves interoceptive discomfort by feeding, burping or hugging them (Atzil et al., 2018). As Amelia’s newborn brain develops a more sophisticated internal model, and as it begins to initiate interactions with adults and other children, it constructs increasingly sophisticated predictions about their behaviors. The more frequent and sophisticated these predictions are, the more potential there is for them to be violated, and for their metabolic/affective consequences to be experienced.

How these metabolic and affective consequences motivate behavior may also change across the lifespan. For example, in childhood the brain accounts for a larger portion of the whole body metabolic budget (Goyal et al., 2014; Kennedy & Sokoloff, 1957), meaning that, compared to adults, children may be more likely to tolerate fluctuations in the metabolic costs imposed by their environment, as these fluctuations comprise a smaller portion of the brain’s total metabolic budget. Likewise, older adults are more likely to self-select out of high-arousal situations (Sands et al., 2016; Sands & Isaacowitz, 2017), and are more likely to experience high arousal stimuli as aversive (regardless of whether the stimuli were experienced as positive or negative by younger adults; Keil & Freund, 2009) suggesting that older adults may be less likely to tolerate these metabolic costs (or the corresponding affective experiences). Further, young children (e.g. 4-year-olds) are more likely to entertain a range of predictions (i.e. an explore strategy, which would oppose a sense of should) and adults are more likely to limit predictions to the outcomes that are most likely (i.e. an exploit strategy, which a sense of should facilitates; Gopnik et al., 2015, 2017; Lucas et al., 2014; Seiver et al., 2013), potentially as a consequence of the late development of prefrontal cortex and associated processes supporting cognitive control (Thompson-Schill et al., 2009). In the context of social pressure, our framework suggests that these developmental changes (e.g. in metabolic efficiency, sensitivity to arousal, and cognitive control) may underlie changes in sensitivity to a sense of should, and that, as a social consequence of these changes, children may show less aversion to unpredictable social settings, whereas older adults may strive to maintain this social stability<sup>12</sup>.

The second developmental task is for Amelia to develop the ability to make precise inferences about others’ expectations of her. A sense of should involves learning a relationship between her behavior and others’ expectations, and Amelia must infer others’ expectations precisely enough to identify when her behavior conforms, and when it is discrepant. In the next section (section 3.4), we outline how this capability might develop. We hypothesize that Amelia is born with a minimal “toolkit” of domain-general processes (e.g. memory, associative learning; for a similar view, see Heyes, 2018), and from this foundation, develops a fine-tuned ability to make inferences about the predictions made by others’ internal models (i.e. an ability to engage in mental inference). Given this, children may experience arousal in unpredictable social settings, but it may only be later in development that they understand that the relationship between their own behavior and others’ expectations regulates this arousal, and only when they learn this contingency will they feel obligated to conform to others’ expectations (for a similar account of empathic development, see M. L. Hoffman, 1975; for review, see Dovidio, 1984).

3.4. Mental inference and a sense of should.

Inferences about others’ expectations are at the core of our approach (Equation 4; Figure 2). To select a behavior that matches others’ expectations, and that controls prediction error in the social environment, Amelia must first infer what behavior others expect of her. We use the term mental inference to stand in for all of these inferences about others’ expectations, with the caveat that others’ expectations may be formulated as high-level, abstract predictions about mental states, as low-level, concrete predictions about behaviors, or as predictions at any level of abstraction in between (Kozak et al., 2006; Vallacher & Wegner, 1987). There are many competing accounts of mental inference, and most likely a number of underlying proficiencies and/or cognitive processes that combine to facilitate it (Apperly, 2012; Gerrans & Stone, 2008; Schaafsma et al., 2015; Warnell & Redcay, 2019), but the core problem that accounts of mental inference aim to solve is this: How do people make inferences about others’ minds (i.e. predictions generated by others’ internal models)¹³, given that others’ internal models cannot be directly observed? Three prominent theoretical perspectives (simulation theories, modular theories, and ‘theory’ theory) all provide different answers. Simulation theories suggest that Amelia performs mental inference by using her own mind (i.e. her internal model) as a simulator (e.g. Goldman, 2009; Goldman & Jordan, 2013; Gordon, 1992). For example, she may feed “pretend beliefs” and “pretend desires” into her own “decision-making mechanism”, treating the “output” as the inferred mental state (Goldman & Jordan, 2013, p. 452). Modular theories propose that mental inference is made possible by innately specified cognitive mechanisms (e.g. Baillargeon et al., 2010; Baron-Cohen, 1997; Leslie, 1987; Leslie et al., 2005; Scholl & Leslie, 2001), claiming, for example, that “the concepts of belief, desire, and pretense [are] part of our genetic endowment”, and that mental inference is made possible by “a module that spontaneously and postperceptually processes behaviors that are attended, and computes the mental states that contributed to them” (Scholl & Leslie, 2001, p. 697). Finally, ‘theory’ theory proposes that mental inference is a subcategory of the more general process of inference (Gopnik, 2003; Gopnik & Wellman, 1992, 2012). That is, in mental inference, as in learning more generally, children construct theories: they “infer causal structure from statistical information, through their own actions on the world and through observations of the actions of others” [emphasis added] (Gopnik & Wellman, 2012, p. 1085). Adjudicating between these accounts is beyond the scope of this paper, but our approach can make clear how domain-general trial-and-error learning (as in ‘theory’ theory), combined with the use of prior information (as in simulation theory), might allow one brain to make inferences about the unobservable predictions of another. We suggest that mental inference is necessary to experience a sense of should, and that conversely, the interpersonal dynamics that make a sense of should possible (Equation 4; Figure 2) can be used to facilitate more precise mental inferences.

As discussed in section 3.1, if Amelia’s behavior violates the expectations of another person (e.g. Bob), then Bob’s internal model and behavior will change in proportion to the violation. This change in Bob’s behavior creates prediction error for Amelia (Equation 4; Figure 2). There is a relationship, then, between Bob’s predictions about Amelia (which are generated by his internal model), and the prediction error that Amelia receives from him. Amelia cannot infer exactly what Bob predicts, but she can identify when she has violated Bob’s predictions: when Amelia has violated Bob’s predictions, his behavior is more likely to change, increasing prediction error for her. This link, between others’ predictions and the prediction error Amelia receives when violating them, may provide a route through which Amelia can cumulatively construct a model of others’ minds. Further, by using this route in combination with her prior knowledge about Bob, or people more generally, Amelia can inform her guesses about what Bob’s predictions might be, reducing the need for metabolically expensive trial-and-error learning. We demonstrate this below, extending our model from section 3.1.

Prediction error experienced by Amelia, from one person (i), is proportional to the discrepancy between her behavior and his prediction:

$$\varepsilon_i \propto \left| b - p_i \right|$$

But Amelia has no direct access to his prediction. Instead, she must infer it. The equation can be rewritten to only include information accessible to Amelia: her prediction error, her behavior, her mental inference about what someone expects, and the error in that inference. Initially, the error in Amelia’s inference will be unknown to her, but we will suggest that she can estimate it by applying prior knowledge and engaging in a dynamic process of trial and error: forming a mental inference, enacting a behavior, then estimating her error.

$$\varepsilon_i \propto \left| b - (\hat{p}_i + e) \right| \tag{5}$$

where

$\varepsilon_i$ is the prediction error Amelia experiences from entity i, b is her behavior, and $p_i$ is entity i’s prediction about her behavior,

$\hat{p}_i$ is a vector representing Amelia’s estimate (i.e. her mental inference) of entity i’s prediction about her behavior, and

e is the error in Amelia’s estimate, such that $p_i = \hat{p}_i + e$.

For example, Amelia cannot directly confirm that her father expects her to call him on his birthday, as his expectations are not externally observable. However, she can infer, based on her prior knowledge, that he probably expects a call. In this case, Amelia can use her inference ($\hat{p}_i$) to stand in for the actual predictions her father has about her behavior ($p_i$). There is always the possibility that she is wrong (i.e. that e is large). For example, she may have accidentally offended him the day before, and he may prefer that she not call this year.
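The birthday example can be made concrete with a small Python sketch. It is an illustration under stated assumptions rather than part of the model itself: the function name `prediction_error`, the proportionality constant `k`, the coding of "call" as 1.0 and "do not call" as 0.0, and the sign convention that the actual prediction equals the estimate plus e are all hypothetical choices.

```python
import math

def prediction_error(b, p, k=1.0):
    """Prediction error proportional to the distance between behavior b
    and a prediction p. The constant k is an assumed scaling factor."""
    return k * math.dist(b, p)

# Hypothetical one-dimensional coding: 1.0 = "call", 0.0 = "do not call".
p_hat = [1.0]   # Amelia's inference: her father expects a call
e = [-1.0]      # unknown to Amelia: she offended him, so he expects no call
p_actual = [ph + err for ph, err in zip(p_hat, e)]  # assumed: actual = estimate + e

b = p_hat       # Amelia acts on her inference and calls

# Error she would receive if her inference were exactly right (e = 0)
error_if_inference_correct = prediction_error(b, p_hat)
# Error she actually receives, because e is large in this scenario
error_observed = prediction_error(b, p_actual)
```

In this sketch, acting on an accurate inference yields no prediction error, whereas acting on an inaccurate one yields error that grows with the size of e.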

Amelia had to use prior knowledge to generate her inference about her father’s birthday expectations. The prior knowledge informing her inference could come from many sources, but most obviously, it could come from her prior experience with her father. For example, if she knows he expected a call last year (or even that he is sensitive and cares about this sort of gesture in other non-birthday contexts), then she has some reason to infer that he expects a call today. To provide a formalized sketch of this route to inference, we represent Amelia’s estimate of her father’s current prediction ($\hat{p}_i$) as a Bayesian posterior, conditioned on some number (n) of prior predictions she knows he has made. In psychology, such an inference about a particular person is commonly called a dispositional inference (Heider, 1958; Jones & Davis, 1965; Kelley, 1967; for review, see Gilbert, 1998; Malle, 2011).
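One way to picture a dispositional inference as a Bayesian posterior is a Beta-Bernoulli update, sketched below in Python. This specific likelihood is an assumption chosen for simplicity, not the form used in the model; the function name `dispositional_posterior`, the uniform prior (`alpha = beta = 1`), and the example history are hypothetical.

```python
def dispositional_posterior(prior_observations, alpha=1.0, beta=1.0):
    """Posterior mean probability that one person expects a behavior now,
    given n earlier occasions with that same person.

    prior_observations: list of 0/1 values, where 1 means the person
        expected the behavior on that earlier occasion.
    alpha, beta: pseudo-counts of an assumed Beta prior (uniform by default).
    """
    k = sum(prior_observations)   # occasions on which the behavior was expected
    n = len(prior_observations)   # total number of prior occasions
    return (alpha + k) / (alpha + beta + n)

# Hypothetical history: her father expected a call on 4 of 5 past occasions.
fathers_history = [1, 1, 1, 0, 1]
p_hat_dispositional = dispositional_posterior(fathers_history)  # (1 + 4) / (2 + 5)
```

With no history at all, the estimate falls back on the prior (0.5 under the uniform assumption), which mirrors the imprecision expected early in development.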

At another extreme, Amelia could use prior knowledge from other people (aside from her father) to generate an inference about what her father expects. For example, she could infer that her father expects a phone call through her prior experience with everyone who has had a birthday. This is a complementary route to the same inference. Here, the context (i.e. it being someone’s birthday) is held constant, and Amelia infers her father’s expectation using her knowledge of others’ predictions in the same context. Again, we represent Amelia’s estimate of her father’s prediction ($\hat{p}_i$) as a Bayesian posterior, this time conditioned on the average of some number (n) of prior predictions that some number of entities (m) have made. In psychology, an inference based on what people typically do (i.e. what they do on average) within a given context is commonly called a situational inference (Gilbert, 1998; Kelley, 1967).
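A situational inference can be sketched in the same spirit. The Python example below averages each entity's n prior predictions, then combines the m entity averages with a prior through a Gaussian conjugate update. The Gaussian form, the variance parameters, the function name `situational_posterior_mean`, and the example data are all assumptions made for illustration.

```python
from statistics import fmean

def situational_posterior_mean(predictions_by_entity, prior_mean=0.5,
                               prior_var=1.0, obs_var=1.0):
    """Posterior mean of what a person expects in a given context, pooled
    over m other entities (assumed Gaussian prior and likelihood).

    predictions_by_entity: dict mapping an entity to the list of its n
        prior predictions in this context (e.g. 1 = expected a call).
    """
    # Average each entity's n prior predictions, then pool across m entities.
    entity_means = [fmean(preds) for preds in predictions_by_entity.values()]
    m = len(entity_means)
    if m == 0:
        return prior_mean  # no evidence: fall back on the prior
    pooled_mean = fmean(entity_means)
    prior_precision = 1.0 / prior_var
    data_precision = m / obs_var
    return ((prior_precision * prior_mean + data_precision * pooled_mean)
            / (prior_precision + data_precision))

# Hypothetical birthday expectations of three other people.
birthdays = {"aunt": [1, 1], "friend": [1, 0], "coworker": [1, 1]}
p_hat_situational = situational_posterior_mean(birthdays)
```

Restricting `predictions_by_entity` to a subset of entities would give an inference based on that subset only, under the same assumptions.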

As both dispositional and situational inferences use prior experience, they should be imprecise in infancy and early childhood, but gradually become more refined as children grow and accumulate experience (section 3.3)¹⁴. In this way, as Amelia’s internal model takes on more information across development, it increases the amount of prior information on which her predictions can be based, akin to the core insight of simulation theory (Goldman, 2009; Goldman & Jordan, 2013; Gordon, 1992).

This approach (and others; see Bach & Schenke, 2017) can articulate how dispositional inferences, situational inferences, and other combinations of prior knowledge are used to estimate the predictions others might make. That is, all of these forms of inference are special cases of the more general process of applying prior knowledge. For example, Amelia’s situational inference used knowledge about all entities (m) in a given context to generate an inference about her father’s predictions. She could also have used some subset of m (e.g. other fathers, other men, or other older adults). This provides a natural means of integrating stereotypes into our approach, as such subsets might also be formed on the basis of observable features (e.g. skin color, accent; Kinzler et al., 2009) or other grouping factors that Amelia’s internal model has learned to see as relevant¹⁵.

However, as an explanation of mental inference, an account that only used prior experience to guide inference could not be complete. Such an account would be circular: all means of estimating others’ expectations would require prior estimates of others’ expectations. That is, the examples of dispositional and situational inference reviewed above have required that Amelia use what others expected before to estimate what they expect now. Assuming that Amelia has no innate knowledge of what others expect, such a model cannot answer how Amelia ever formed an estimate about others’ expectations in the first place. We have arrived at the same hurdle as all other accounts of mental inference: if other minds cannot be directly observed, then how can Amelia infer their contents (i.e. their predictions)?

As alluded to at the beginning of this section, we hypothesize that Amelia can learn about others’ predictions by violating them. She cannot determine precisely what others expect of her, but she can use prior knowledge to form an estimate (even an imprecise one), enact a behavior, and then use the resultant prediction error to determine whether her estimate was accurate. Extending the analogy from ‘theory’ theory, where mental inference is built from a process similar to scientific inference (Gopnik, 2003; Gopnik & Wellman, 1992, 2012): Amelia’s estimate ($\hat{p}_i$) could be considered as a hypothesis about an entity’s expectation, her behavior (b) could be considered as an experiment, and the prediction error ($\varepsilon_i$) generated by the entity for Amelia (including her associated metabolic costs and affective experiences; section 3.2) could be considered as evidence. Through this process, Amelia can estimate the inaccuracy of her initial estimate (e). Formally, given Equation 5:

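If b and $\hat{p}_i$ (Amelia’s estimate of the entity’s prediction) are known, then e is the only unknown term in Equation 5, so the prediction error ($\varepsilon_i$) that Amelia experiences from an entity (e.g. Bob) constrains e, the error in her estimate of Bob’s prediction about her behavior (i.e. the error in her mental inference).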
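The hypothesis, experiment, and evidence loop described above can be sketched in Python for a one-dimensional case. This is a toy illustration under strong assumptions: prediction error is taken to equal a known constant `k` times the absolute discrepancy, the actual prediction is taken to equal the estimate plus e, and the names `make_probe`, `estimate_inference_error`, and `step` are hypothetical. A single trial recovers only the size of e, so the sketch uses a second, slightly different behavior to recover its sign.

```python
def make_probe(p_actual, k=1.0):
    """Simulate an entity (e.g. Bob). p_actual is hidden from Amelia;
    she only observes the prediction error returned for a behavior b."""
    def probe(b):
        return k * abs(b - p_actual)
    return probe

def estimate_inference_error(p_hat, probe, k=1.0, step=0.5):
    """Estimate e, assuming p_actual = p_hat + e and a known constant k."""
    # Experiment 1: enact the estimate itself, so the error reflects |e|.
    magnitude = probe(p_hat) / k
    if magnitude == 0.0:
        return 0.0  # the estimate was already accurate
    # Experiment 2: shift the behavior by `step` to disambiguate the sign.
    second = probe(p_hat + step) / k
    expected_if_positive = abs(step - magnitude)
    expected_if_negative = abs(step + magnitude)
    if abs(second - expected_if_positive) <= abs(second - expected_if_negative):
        return magnitude
    return -magnitude

bob = make_probe(p_actual=0.0)   # Bob actually expects 0.0 (hidden from Amelia)
p_hat = 1.0                      # Amelia's initial hypothesis
e_est = estimate_inference_error(p_hat, bob)
revised_estimate = p_hat + e_est # her updated mental inference
```

In this sketch each experiment costs Amelia some prediction error, which is consistent with the point that good prior knowledge reduces the need for expensive trial-and-error learning.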