Abstract

How do viewers interpret graphs that abstract away from individual-level data to present only summaries of data such as means, intervals, distribution shapes, or effect sizes? Here, focusing on the mean bar graph as a prototypical example of such an abstracted presentation, we contribute three advances to the study of graph interpretation. First, we distill principles for Measurement of Abstract Graph Interpretation (MAGI principles) to guide the collection of valid interpretation data from viewers who may vary in expertise. Second, using these principles, we create the Draw Datapoints on Graphs (DDoG) measure, which collects drawn readouts (concrete, detailed, visuospatial records of thought) as a revealing window into each person's interpretation of a given graph. Third, using this new measure, we discover a common, categorical error in the interpretation of mean bar graphs: the Bar-Tip Limit (BTL) error. The BTL error is an apparent conflation of mean bar graphs with count bar graphs. It occurs when the raw data are assumed to be limited by the bar-tip, as in a count bar graph, rather than distributed across the bar-tip, as in a mean bar graph. In a large, demographically diverse sample, we observe the BTL error in about one in five persons; across educational levels, ages, and genders; and despite thoughtful responding and relevant foundational knowledge. The BTL error provides a case-in-point that simplification via abstraction in graph design can risk severe, high-prevalence misinterpretation. The ease with which our readout-based DDoG measure reveals the nature and likely cognitive mechanisms of the BTL error speaks to the value of both its readout-based approach and the MAGI principles that guided its creation. We conclude that mean bar graphs may be misinterpreted by a large portion of the population, and that enhanced measurement tools and strategies, like those introduced here, can fuel progress in the scientific study of graph interpretation.

Keywords: bar graph of means, data visualization, DDoG measure, Bar-Tip Limit error, MAGI principles

Introduction

Background on bar graphs

How can one maximize the chance that visually conveyed quantitative information is accurately and efficiently received? This question is fundamental to data-driven fields, both applied (policy, education, medicine, business, engineering) and basic (physics, psychological science, computer science). Long a crux of debate on this question, “mean bar graphs”—bar graphs depicting mean values—are both widely used, for their familiarity and clean visual impact, and widely criticized, for their abstract nature and paucity of information (Tufte & Graves-Morris, 1983, p. 96; Wainer, 1984; Drummond & Vowler, 2011; Weissgerber et al., 2015; Larson-Hall, 2017; Rousselet, Pernet, & Wilcox, 2017; Pastore, Lionetti, & Altoe, 2017; Weissgerber et al., 2019; Vail & Wilkinson, 2020).

Conversely, bar graphs are considered a best-practice when conveying counts—whether raw or scaled into proportions or percentages. These “count bar graphs” are more concrete and hide less information than mean bar graphs. They are usefully extensible: bars may be stacked on top of each other to convey parts of a whole (a stacked bar graph) or arrayed next to each other to convey a distribution (a histogram). And they take advantage of a core bar graph strength: the alignment of bars at a common baseline supports rapid, precise height estimates and comparisons (Cleveland & McGill, 1984; Heer & Bostock, 2010).

When a bar represents a count, it can be thought of as a stack, and the metaphor of bars-as-stacks is relatively accessible across ages and expertise levels (Zubiaga & MacNamee, 2016). In fact, the introduction of bar graphs in elementary education is often accomplished in just this way: with manipulative stacks (Figure 1a), translated to more abstract drawn stacks (Figure 1b), and further abstracted to undivided bars (Figure 1c).

Figure 1.

Elementary progression of count bar graph instruction. (a) Children are taught first using manipulatives; (b) then they transition to drawn stacks; (c) finally, they are introduced to undivided bars.

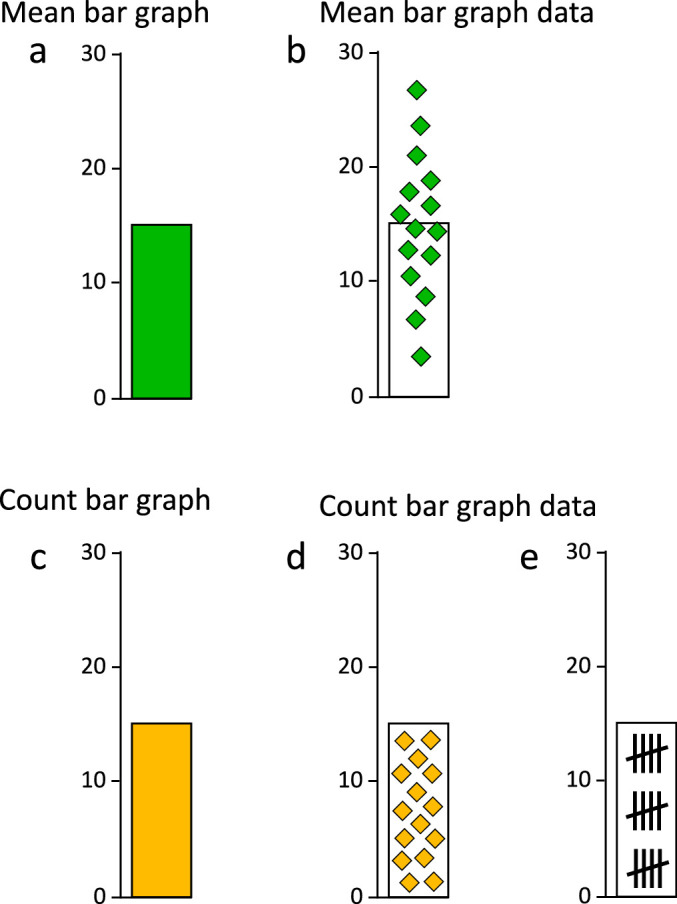

While the mean bar graph potentially borrows some virtues from the count bar graph, the use of a single visual symbol (the bar, Figures 2a, 2c) to represent two profoundly different quantities (means and counts, Figures 2b, 2d) adds inherent ambiguity to the interpretation of that visual symbol.

Figure 2.

Data distribution differs categorically between mean and count graphs. (a) Mean bar graphs and (c) count bar graphs do not differ in basic appearance, but they do depict categorically different data distributions. (b) In a mean bar graph, the bar-tip is the balanced center point, or mean, with the data distributed across it. We call this a Bar-Tip Mean distribution. (d, e) In a count bar graph, the bar-tip acts as a limit, containing the summed data within the bar. We call this a Bar-Tip Limit (BTL) distribution.

The information conveyed by these two visually identical bar graph types differs categorically, as Figure 2 shows. Because a count bar graph depicts summation, its bar-tip is the limit of the individual-level data, which are contained entirely within the bar (Figures 2d, 2e). We call this a Bar-Tip Limit (BTL) distribution. A mean bar graph, in contrast, depicts a central tendency; it uses the bar-tip as the balanced center point, or mean, and the individual-level data are distributed across that bar-tip (Figure 2b). We call this a Bar-Tip Mean distribution.

The question of mean bar graph accessibility

While the rationale for using mean bar graphs—or mean graphs more generally—varies by intended audience, a common theme is visual simplification. Potentially, the simplification of substituting a single mean value for many raw data values could ease comparison, aid pattern-seeking, enhance discriminability, reduce clutter, or remove distraction (Barton & Barton, 1987; Franzblau & Chung, 2012). Communications intended for nonexpert consumers, such as introductory textbooks, may favor mean bar graphs because their visual simplicity is assumed to yield accessibility (Angra & Gardner, 2017). In contrast, communications intended for experts, such as scholarly publications, may favor mean bar graphs because their visual simplicity is assumed to yield efficiency (Barton & Barton, 1987). In either case, the aim is to enhance communication. Yet simplification that abstracts away from the concrete underlying data could also mislead if the viewer fails to accurately intuit the nature of that underlying data.

Visual simplification can, in some cases, enhance comprehension. Yet is this the case for mean bar graphs? Basic research in vision science and psychology provides at least four theoretical causes to doubt the accessibility of mean bar graphs. First, in many domains, abstraction, per se, reduces understandability, particularly in nonexperts (Fyfe et al., 2014; Nguyen et al., 2020). Second, less-expert consumers may lack sufficient familiarity with a mean bar graph's dependent variable to accurately intuit the likely range, variability, or shape of the distribution that it represents. In this context, the natural tendency to discount variation (Moore et al., 2015) and exaggerate dichotomy (Fisher & Keil, 2018) could potentially distort interpretations. Third, the visual salience of a bar could be a double-edged sword, initially attracting attention, but then spreading it across the bar's full extent, in effect tugging one's focus away from the mean value represented by the bar-tip (Egly et al., 1994). Finally, the formal visual simplicity (i.e., low information content) of the bar does not guarantee that it is processed more effectively by the visual system than a more complex stimulus. Many high-information stimuli like faces (Dobs et al., 2019) and scenes (Thorpe et al., 1996) are processed more rapidly and accurately than low-information stimuli such as colored circles or single letters (Li et al., 2002). Relatedly, a set of dots is accurately and efficiently processed into a mean spatial location (Alvarez & Oliva, 2008), raising questions about what is gained by replacing datapoints on a graph with their mean value. Together, these theory-driven doubts provided a key source of broad motivation for the present work.

Adding to the broad concerns raised by basic vision science and psychology research are numerous specific, direct critiques of mean bar graphs in particular. Such critiques, however, are chiefly theoretical, as opposed to empirical, and focus on expert audiences, as opposed to more general audiences (Tufte & Graves-Morris, 1983, p. 96; Wainer, 1984; Drummond & Vowler, 2011; Weissgerber et al., 2015; Larson-Hall, 2017; Rousselet, Pernet, & Wilcox, 2017; Pastore, Lionetti, & Altoe, 2017; Weissgerber et al., 2019; Vail & Wilkinson, 2020). In contrast, our present work provides a direct, empirical test of the claim that mean bar graphs are accessible to a general audience (see Related works and Results for a detailed discussion of prior work of this sort).

Aim and process for current work

To assess mean bar graph accessibility, we needed an informative measure of abstract-graph interpretation; that is, a measure of how graphs that abstract away from individual-level data to present only summary-level, or aggregate-level, information, are interpreted. Over more than a decade, our lab has developed cognitive measures in areas as diverse as sustained attention, aesthetic preferences, stereoscopic vision, face recognition, visual motion perception, number sense, novel object recognition, visuomotor control, general cognitive ability, emotion identification, and trust perception (Wilmer & Nakayama, 2007; Wilmer, 2008; Wilmer et al., 2012; Degutis et al., 2013; Halberda et al., 2012; Germine et al., 2015; Fortenbaugh et al., 2015; Richler, Wilmer, & Gauthier, 2017; Deveney et al., 2018; Sutherland et al., 2020).

The decision to develop a new measure is never easy; it takes a major investment of time and energy to iteratively refine and properly validate a new measure. Yet the accessibility of abstract-graphs presented a special measurement challenge that we felt warranted such an investment. The challenge: record the viewer's conception of an invisible entity—the individual-level data underlying an abstract-graph—while neither invoking potentially unfamiliar statistical concepts nor explaining those concepts in a way that could distort the response.

Our lab's standard process for developing measures has evolved to include three components: (1) Identify guiding principles (Wilmer, 2008; Wilmer et al., 2012; Degutis et al., 2013). (2) Design and refine a measure (Wilmer & Nakayama, 2007; Wilmer et al., 2012; Halberda et al., 2012; Degutis et al., 2013; Germine et al., 2015; Fortenbaugh et al., 2015; Richler, Wilmer, & Gauthier, 2017; Deveney et al., 2018; Kerns, 2019; Sutherland et al., 2020). (3) Apply the measure to a question of theoretical or practical importance (Wilmer & Nakayama, 2007; Wilmer et al., 2012; Halberda et al., 2012; Degutis et al., 2013; Germine et al., 2015; Fortenbaugh et al., 2015; Richler, Wilmer, & Gauthier, 2017; Deveney et al., 2018; Kerns, 2019; Sutherland et al., 2020). We devoted over a year of intensive piloting to the refinement of these three components for the present project. While the parallel nature of the development process produced a high degree of complementarity between the three, each represents its own separate and unique contribution.

A turning point in the development process was our rediscovery of a group of drawing-based neuropsychological tasks that have been used to probe for pathology of perception, attention, and cognition in brain damaged patients (Landau et al., 2006; Smith, 2009). In one such task, the patient is asked to draw a clock, and pathological inattention to the left side of visual space is detected via the bunching of numbers to the right side of the clock (Figure 3). This clock drawing test exhibits a feature that became central to the present work: readout-based measurement.

Figure 3.

Readout of a clock drawn by a hemispatial neglect patient. (https://commons.wikimedia.org/wiki/File:AllochiriaClock.png) The Draw Datapoints on Graphs (DDoG) measure was inspired by readout-based neuropsychological tasks like the one that produced this distorted clock drawing. Such readout-based tasks have long been used with brain damaged patients to probe for pathology of perception, attention, and cognition (Smith, 2009).

A readout is a concrete, detailed, visuospatial record of thought. Measurement via readout harnesses the viewer's capacity to transcribe their own thoughts, with relative fullness and accuracy, when provided with a response format that is sufficiently direct, accessible, rich, and expressive. As the clock drawing task in Figure 3 seeks to read out remembered numeral positions on a clock face, our measure seeks to read out assumed datapoint positions on a graph.

Contribution 1: Identify guiding principles

Distillation of the Measurement of Abstract Graph Interpretation (MAGI) principles

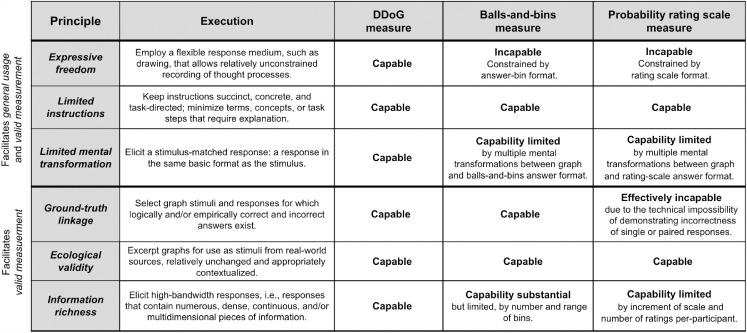

The first of this paper's three contributions is the introduction of six succinct, actionable principles to guide collection of graph interpretation data (Table 1). These principles are aimed specifically at common graph types (e.g., bar/line/box/violin) and graph markings (e.g., confidence/prediction/interquartile interval) that abstract away from individual-level data to present aggregate-level information. These Measurement of Abstract-Graph Interpretation (MAGI) principles center around two core aims, general usage and valid measurement.

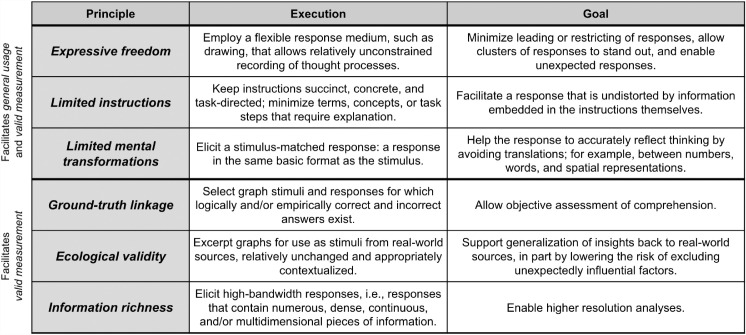

Table 1.

Measurement of Abstract Graph Interpretation (MAGI) principles.

|

General usage—that is, testing of a general population that may vary in statistical and/or content expertise—is most directly facilitated if a measure: (1) avoids constraining the response via limited options (Expressive freedom); (2) avoids priming the response via suggestive instructions (Limited instructions); and (3) avoids obscuring or hindering thinking via unnecessary interpretive or translational steps (Limited mental transformations).

Valid measurement is facilitated by all six principles. The three principles just mentioned—Expressive freedom, Limited instructions, and Limited mental transformations—all help responses to more directly reflect the viewer's actual graph interpretation. This is especially true of Limited mental transformations. As we will see below, a set of mental transformations embedded in a popular probability rating scale measure delayed for over a decade the elucidation of a phenomenon that our investigation here reveals.

Valid measurement is additionally facilitated by: (1) objective scoring, via the existence of correct answers (Ground-truth linkage); (2) real-world applicability of results, via the sourcing of real graphs (Ecological validity); and (3) a clear, high-resolution window into viewers’ thinking, via a response that has high detail and bandwidth (Information richness).

As shown in the next two sections, the MAGI principles provided a foundational conceptual basis for the creation of the Draw Datapoints on Graphs (DDoG) measure and for understanding the ease with which that DDoG measure identified the common, categorical Bar-Tip Limit (BTL) error. These principles additionally provided a structure for comparing our new measure with existing measures (Related works). In these ways, we demonstrate the utility of the MAGI principles as a metric for the creation, evaluation, and comparison of graph interpretation measures.

Contribution 2: Design and refine a measure

Creation of the Draw Datapoints on Graphs (DDoG) measure

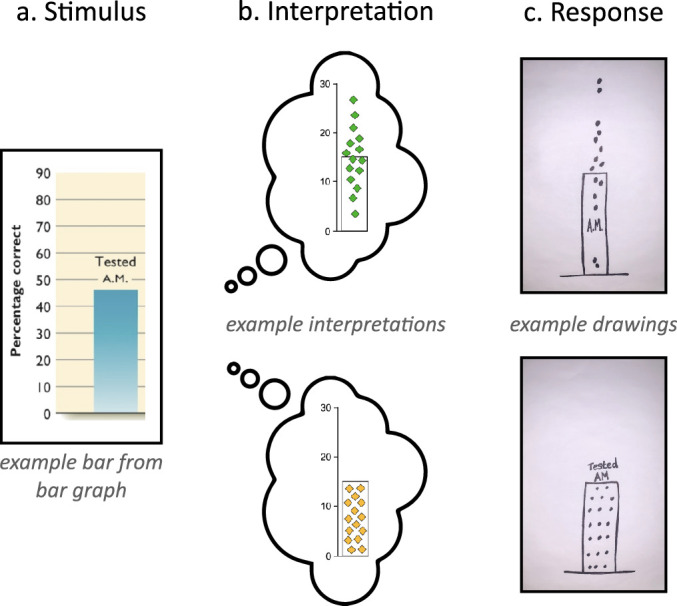

Our second contribution is the use of the MAGI principles to create a readout-based measure of graph comprehension. We call this the Draw Datapoints on Graphs (DDoG) measure. Figure 4 illustrates the DDoG measure's basic approach: it uses a graph as the stimulus (Figure 4a); the graph is interpreted (4b); and this interpretation is then recorded by sketching a version of the graph (4c). The reference-frame of the graph is retained throughout.

Figure 4.

The Draw Datapoints on Graph (DDoG) measure maintains the graph as a consistent reference frame across its three stages. (a) Participants are presented with a graph stimulus that (b) produces a mental representation of the data; (c) this interpretation is recorded by sketching a version of the graph along with hypothesized locations of individual data values. Drawings are representative examples of the two common responses seen in pilot data collection: the correct Bar-Tip Mean response (top), and the incorrect Bar-Tip Limit (BTL) response (bottom).

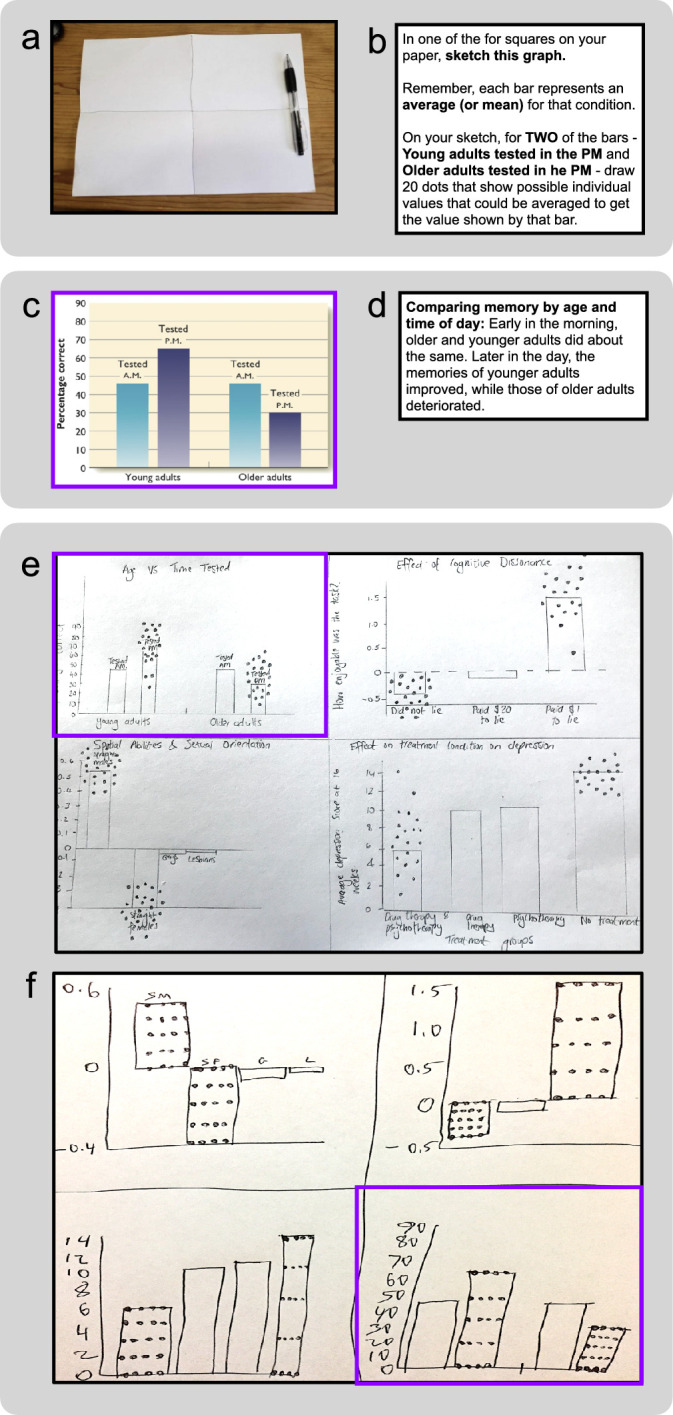

Figure 5 shows a more detailed schematic of the DDoG procedure used in the present study, which expresses the six MAGI principles (Table 1) as follows: The instructions (Figure 5b) are succinct, concrete, and task-directed (Limited instructions). The graph stimulus (5c) and its caption (5d) are taken directly from a textbook (Ecological validity). The drawing-based response medium (5a, e, f) provides the participant with flexibility to record their thoughts in a relatively unconstrained manner (Expressive freedom). The matched format between the stimulus (5c) and the response (5e, 5f, purple outline) minimizes the mental transformations required to record an interpretation (4b) (Limited mental transformations). The extent of the response—160 drawn datapoints, each on a continuous scale (5e, 5f)—provides a high-resolution window into the thought-process (Information richness). Finally, the spatial and numerical concreteness of the readout (5e, 5f), allows easy comparison to a variety of logical and empirical benchmarks of ground-truth (Ground-truth linkage). In these ways, the DDoG measure demonstrates each of the MAGI principles.

Figure 5.

The DDoG measure implements the MAGI principles. The DDoG measure collects readouts of abstract-graph interpretation. Shown are the major pieces of the DDoG measure's procedure, with the relevant MAGI principle(s) in parentheses. (a) Drawing page: showed the participant how to set up their paper for drawing (Expressive freedom, Limited instructions). (b) Instructions: explained what to draw (Limited instructions). (c) Stimulus graph: was the graph to be interpreted (Ecological validity, Ground-truth linkage, Limited mental transformations). (d) Figure caption: was presented with each stimulus graph to help clarify its content (Ecological validity). (e–f) Representative readouts: four-graph readouts from two separate participants, with readouts of graph stimulus shown in c outlined in purple (we refer to this stimulus below as AGE) (Expressive freedom, Information richness, Limited mental transformations). Representative AGE stimulus graph (c) sketches are outlined in purple. Representative readouts demonstrate (e) the correct Bar-Tip Mean response and (f) the incorrect Bar-Tip Limit (BTL) response.

While we use the DDoG measure here to record interpretations of mean bar graphs, it can easily be applied to any other abstract-graph; that is, any graph that replaces individual-level data with summary-level information.

Contribution 3: Apply the measure

Using the DDoG measure to test the accessibility of mean bar graphs

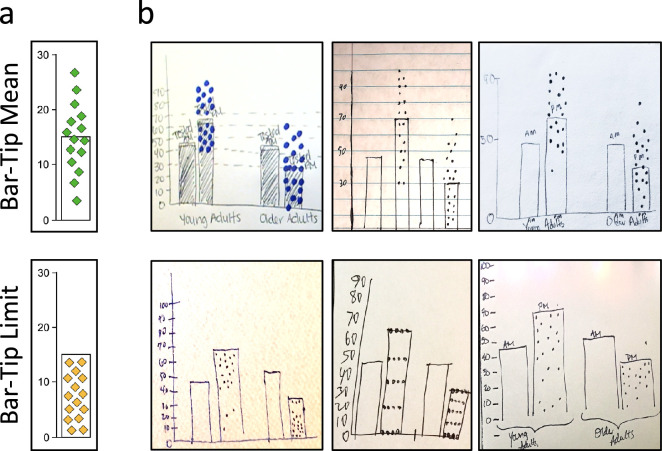

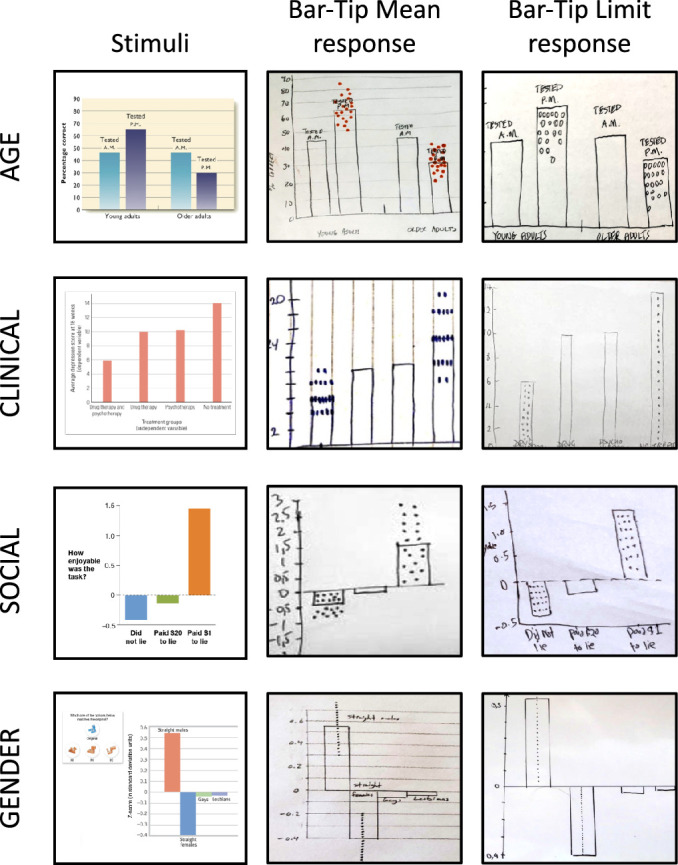

From our earliest DDoG measure pilots looking at mean bar graph accessibility, a substantial subset of participants drew a very different distribution of data than the rest (Figure 6, Figures 5e & f, and Figure 4c).

Figure 6.

The difference between Bar-Tip Mean and Bar-Tip Limit thinking is easily observable. (a) Cartoon and (b) readout examples of the two common DDoG measure responses: the correct Bar-Tip Mean response (top) and the incorrect Bar-Tip Limit (BTL) response (bottom). These readouts were all drawn for the same stimulus graph, which we refer to as AGE (see Figure 11).

When instructed to draw underlying datapoints for mean bar graphs (Figure 5b), most participants drew a (correct) distribution with datapoints balanced across the bar-tip (Figure 6ab, top). A substantial subset, however, drew most, or all, datapoints within the bar (Figure 6ab, bottom), treating the bar-tip as a limit. This minority response would have been correct for a count bar graph (as shown in Figure 2d), but it was severely incorrect for a mean bar graph (Figure 2b). We named this incorrect response pattern the Bar-Tip Limit (BTL) error.

The dataset that we examine in Results contains over three orders of magnitude more drawn datapoints than the two early-pilot drawings shown in Figure 4c: 44,000 datapoints, drawn in 551 sketches, by 149 participants. This far larger dataset yields powerful insights into the BTL error by establishing its categorical nature, high prevalence, stability within individuals, likely developmental influences, persistence despite thoughtful responding, and independence from foundational knowledge and graph content. Yet, in a testament to the DDoG measure's incisiveness, the two early pilot drawings shown in Figure 4c already substantially convey all three of the core contributions of the present paper: a severe error in mean bar graph interpretation (the BTL error), saliently revealed by a new readout-based measure (the DDoG measure) that collects high-quality graph interpretations from experts and nonexperts alike (using the MAGI principles).

Moreover, it takes only a few readouts to move beyond mere identification of the BTL error, toward elucidation of an apparent mechanism. Considering just the readouts we have seen so far, observe the stereotyped nature of the BTL error response across participants (Figure 6b), graph form, and content (Figures 5e & f). Notice the similarity of this stereotyped response to the Bar-Tip Limit data shown in Figure 2d. These clues align perfectly with a mechanism of conflation, where mean bar graphs are incorrectly interpreted as count bar graphs (Figure 2).

In retrospect, the stage was clearly set for such a conflation. The use of one graph type (bar) to represent two fundamentally different types of data (counts and means) makes it difficult to visually differentiate the two (Figure 2) and sets up the inherent cognitive conflict that is the source of this paper's title (“Two graphs walk into a bar”). Additionally, the physical stacking metaphor for count bar graphs (Figure 1) makes a Bar-Tip Limit interpretation arguably more straightforward and intuitive; and the common use of count bar graphs as a curricular launching pad to early education could easily solidify the Bar-Tip Limit idea as a familiar, well-worn path for interpretation of bar graphs (Figure 1).

Yet, remarkably, none of the many prior theoretical and empirical critiques of mean bar graphs considered that such a conflation might occur (Tufte & Graves-Morris, 1983, p. 96; Wainer, 1984; Drummond & Vowler, 2011; Weissgerber et al., 2015; Larson-Hall, 2017; Rousselet, Pernet, & Wilcox, 2017; Pastore, Lionetti, & Altoe, 2017; Weissgerber et al., 2019; Vail & Wilkinson, 2020; Newman & Scholl, 2012; Correll & Gleicher, 2014; Pentoney & Berger, 2016; Okan et al., 2018). The concrete, granular window into graph interpretation provided by DDoG measure readouts, however, elucidates both phenomenon and apparent mechanism with ease.

Related works

Here we embed our three main contributions—the Measurement of Abstract Graph Interpretation (MAGI) principles, the Draw Datapoints on Graphs (DDoG) measure, and the Bar-Tip Limit (BTL) error —into related psychological, vision science, and data visualization literatures.

Literature related to the DDoG measure

Classification of the DDoG measure

A core design feature of the DDoG measure is its “elicited graph” measurement approach whereby a graph is produced as the response. This approach can, in turn, be placed within two increasingly broad categories: readout-based measurement and graphical elicitation measurement. Like elicited graph measurement, readout-based measurement produces a detailed, visuospatial record of thought; yet its content is broader, encompassing nongraph products such as the clock discussed above (Figure 3). Graphical elicitation, broader still, encompasses any measure with a visuospatial response, regardless of detail or content (Hullman et al., 2018; a similar term, graphic elicitation, refers to visuospatial stimuli, not responses, Crilly et al., 2006). Having embedded the DDoG measure within these three nested measurement categories—elicited graph, readout-based, and graphical elicitation—we next use these categories to distinguish it from existing measures of graph cognition.

Vision science measures

Vision science has long been an important source of graph cognition measures (Cleveland & McGill, 1984; Heer & Bostock, 2010), and recent years have seen an accelerated adoption of vision science measures in studies of data visualization (hereafter datavis). Of particular interest is a recent tutorial paper by Elliott and colleagues (2020) that cataloged behavioral vision science measures with relevance to datavis. Despite its impressive breadth—laying out nine measures with six response types and 11 direct applications (Elliott et al., 2020)—not a single elicited graph, readout-based, or graphical elicitation approach was included. This fits with our own experience that such methods are rarely used in vision science.

This rarity is even more surprising given that the classic drawing tasks that helped to inspire our current readout-focused approach are well known to vision scientists (Landau et al., 2006; Smith, 2009; Figure 3); indeed, they are commonly featured in Sensation and Perception textbooks (Wolfe et al., 2020). Yet usage of such methods within vision science has been narrow; restricted primarily to studies of extreme deficits in single individuals (Wolfe et al., 2020).

It is unclear why readout-based measurement is uncommon in broader vision science research. Perhaps the relative difficulty of structuring a readout-based task to provide a consistent quantitative measurement across multiple participants has been prohibitive. Or maybe reliable collection of drawn samples from a distance was logistically untenable until the recent advent of smartphones with cameras and accessible image-sharing technologies. We hypothesize that factors such as these may have limited the use of readout-based measurement in vision science, and we believe the proof-of-concept provided by the MAGI principles (Table 1) and the DDoG measure (Figure 4) supports their broader use in the future.

Although the DDoG measure shares its readout-based approach with classic patient drawing tasks (Figure 3), it differs from them in a subtle but important way. Patient drawing tasks are typically stimulus-indifferent, using stimuli (e.g., clocks) as mere tools to reveal the integrity of a broad mental function (e.g., spatial attention). The DDoG measure, in contrast, seeks to probe the interpretation of a specific stimulus (this bar graph) or stimulus type (bar graphs in general). The focus on how the stimulus is interpreted, rather than on the integrity of a stimulus-independent mental function, distinguishes the DDoG measure from classic patient drawing tasks.

Graphical elicitation measures

Moving beyond vision science, we next examine the domain of graphical elicitation for DDoG-related measures. A birds-eye perspective is provided by a recent review of evaluation methods in uncertainty visualization (Hullman et al., 2018). Uncertainty visualization's study of distributions, variation, and summary statistics makes it an informative proxy for datavis research related to our work. Notably, that review called the method for eliciting a response “a critical design choice.” Of the 331 measures found in 86 qualifying papers, from 11 application domains (from astrophysics to cartography to medicine), only 4% elicited any sort of visuospatial or drawn response, thus qualifying as graphical elicitation (Hullman et al., 2018). None elicited either a distribution or a full graph; thus, none qualified as using an elicited graph approach. Further, though some studies elicited markings on a picture (Hansen et al., 2013) or map (Seipel & Lim, 2017), or movement of an object across a graph (Cumming, Williams, & Fidler, 2004), none sought to elicit a detailed visuospatial record of thought; thus, none qualified as using a readout-based approach. Survey methods such as multiple choice, Likert scale, slider, and text entry were the most common response elicitation methods—used 66% of the time (Hullman et al., 2018).

While readouts are rare in both vision science and datavis, there exists a broader graphical elicitation literature in which readouts are more common and elicited graphs are not unheard of (Jenkinson, 2005; O'Hagan et al., 2006; Choy, O'Leary & Mengersen, 2009). Yet still, these elicited responses tend to differ from our work in two key respects. First, they are typically used exclusively with experts, whereas the DDoG measure prioritizes general usage. Second, their aim is typically to document preexisting knowledge, and they therefore use stimuli as a catalyst or prompt, rather than as an object of study. The DDoG measure, in contrast, holds the stimulus as the object of study: we want to know how the graph was interpreted. The DDoG measure is therefore distinctive even in the broadly defined domain of graphical elicitation.

A small subset of graphical elicitation studies do elicit a visuospatial response, drawn on a graph, from a general population. There remains, however, a core difference in the way the DDoG measure utilizes the elicited response. DDoG uses the elicited response as a measure (dependent variable). The other studies, in contrast, use it as a way to manipulate engagement with, or processing of, a numerical task (as an independent variable; Stern, Aprea, & Ebner, 2003; Natter & Berry, 2005; Kim, Reinecke, & Hullman, 2017a; Kim, Reinecke, & Hullman, 2017b). While it is possible for a manipulation to be adapted into a measure, the creation of a new measure requires time and effort for iterative, evidence-based refinement and validation (e.g., Wilmer, 2008; Wilmer et al., 2012; Degutis et al., 2013). The DDoG measure is therefore distinctive in having been created and validated explicitly as a measure.

Frequency framed measures

Another way in which the DDoG measure may be distinguished from previous methods is in its use of a frequency framed response. Frequency framing is the communication of data in terms of individuals (e.g., “20 of 100 total patients” or “one in five patients”) rather than percentages or proportions (e.g., “20% of patients” or “one fifth of patients”). The DDoG measure collects frequency-framed responses in terms of individual values.

Frequency framing has long been considered a best practice for data communication to nonexperts (Cosmides & Tooby, 1996), yet it remains uncommon in the measurement of graph cognition. Illustratively, as of 2016, only a small handful of papers in uncertainty visualization had utilized frequency framing (Hullman, 2016); and in all cases, frequency framing was used in the instructions or stimulus rather than in the measured response (e.g., Hullman, Resnick, & Adar, 2015; Kay et al., 2016).

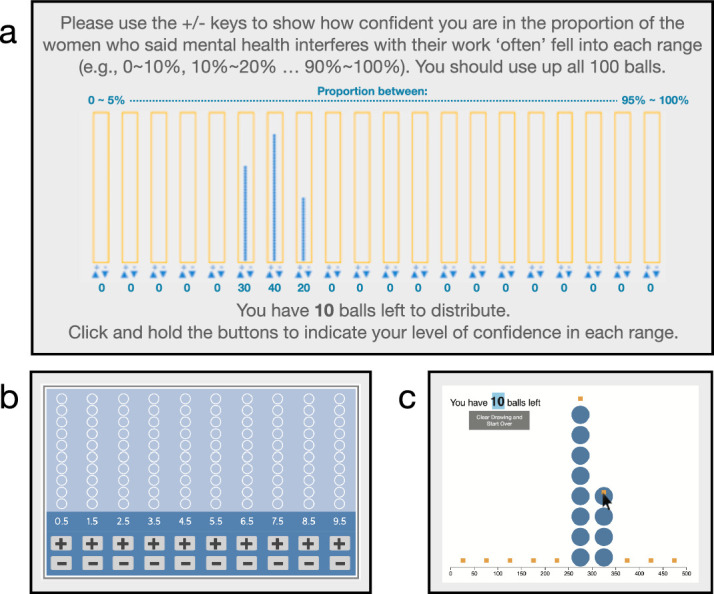

Two other, more recent, datavis studies have elicited frequency framed responses (Hullman et al., 2017; Kim, Walls, Krafft & Hullman, 2019). These studies were geared toward nonexperts and used the so-called balls-and-bins paradigm, originally developed by Goldstein and Rothschild (2014), where balls are placed in virtual bins representing specified ranges (Figure 7). The studies aimed to elicit beliefs about sampling error around summary statistics. This usage contrasts with our aim of eliciting direct interpretation of the raw, individual-level, nonaggregated data that produced a mean.

Figure 7.

The balls-and-bins approach. Three recent adaptations of the balls-and-bins approach to eliciting probability distributions. This approach was originally developed by Goldstein and Rothschild (2014). The sources of these adaptations of balls-and-bins are: (a) Kim, Walls, Kraft & Hullman, 2019; (b) Andre, 2016; (c) Hullman et al. 2018.

This difference in usage joins more substantive differences, captured by two MAGI principles: Limited mental transformations and Expressive freedom (Table 1). While the current implementation of balls-and-bins requires spatial, format, and scale translations between stimulus and response, the DDoG measure achieves Limited mental transformations by applying a stimulus-matched response: imagined datapoints are drawn directly into a sketched version of the graph itself. Similarly, while balls-and-bins predetermines key aspects of the response such as bin widths, bin heights, ball sizes, numbers of bins, and the range from lowest to highest bin, the DDoG measure achieves Expressive freedom by allowing drawn responses that are constrained only by the limits of the page. Further, the lack of built-in tracks or ranges that constrain or suggest data placement reduces the risk of the “observer effect,” whereby constraints or suggestions embedded in the measurement procedure itself alter the result.

Defining thought inaccuracies

Textual definitions of errors, biases, and confusions

To discuss the literature regarding this project's third contribution—identification of the Bar-Tip Limit (BTL) error—it is helpful to distinguish three different types of inaccurate thought processes: errors, biases, and confusions. We will do this first in words, and then numerically.

-

•

Errors are “mistakes, fallacies, misconceptions, misinterpretations” (Oxford University Press, n.d.). They are binary, categorical, qualitative inaccuracies that represent wrong versus right thinking.

-

•

Biases are “leanings, tendencies, inclinations, propensities” (Oxford University Press, n.d.). They are quantitative inaccuracies that exist on a graded scale or spectrum. They exhibit consistent direction but varied magnitude.

-

•

Confusions are “bewilderments, indecisions, perplexities, uncertainties” (Oxford University Press, n.d.). They indicate the absence of systematic thought, resulting, for example, from failures to grasp, remember, or follow instructions.

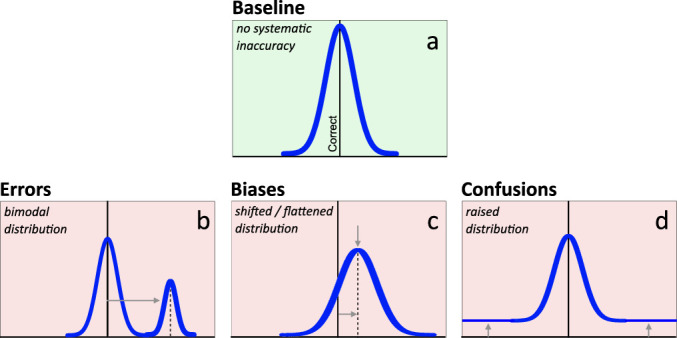

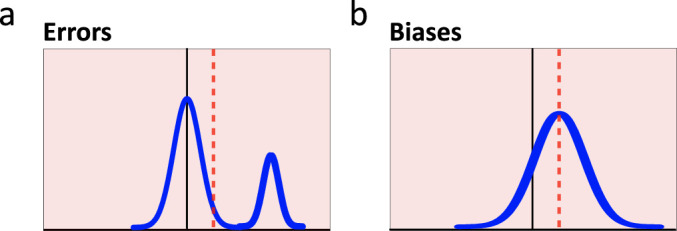

Numerical/visual definitions of errors, biases, and confusions

Let us now consider how each type of inaccurate thought process would be instantiated in data. Such depictions can act as visual definitions to compare with real datasets. In Figure 8, patterns of data distributions representing correct thoughts (8a), systematic errors (8b), biases (8c), and confusions (8d), are illustrated as frequency curves. They are numerical formalizations of the textual definitions above, providing visual cues for differentiating these four thought processes in a graphing context.

Figure 8.

Three types of inaccurate graph interpretation. Prototypical response distributions provide visual definitions for three types of inaccuracy. Top row: (a) Baseline response pattern (green) indicates no systematic inaccuracy. Responses cluster symmetrically around the correct response value, with imprecision in task input, processing, and output reflected in the width of the spread around that correct response. Bottom row: Light red backgrounds illustrate the presence of inaccurate responses. Gray arrows indicate the major change from the baseline response pattern for each type of inaccurate response. (b) Systematic error responses form their own distinct mode. (c) Systematic bias responses shift and/or flatten the baseline response distribution. (d) Confused responses—expressed as random, unsystematic responding—are uniformly distributed, thus raising the tails of the baseline distribution to a constant value without altering the mean, median, or mode.

Figure 8a, represents a baseline case: a data distribution (blue curve) that is characteristic of generally correct interpretation, absent systematic inaccuracy. This baseline case prototypically produces a symmetric distribution, with the mean, median, and mode all corresponding to a correct response. Normal imprecision, from expected variation in stimulus input, thought processes, or response output, is reflected by the width of the distribution. (If everyone answered with 100% precision, the “distribution” would be a single stack on the correct answer).

Figure 8b illustrates a subset of systematic errors within an otherwise correct dataset. In distributions, an erroneous, categorically inaccurate subset prototypically presents as a separate mode, additional to the baseline curve. Errors siphon responses from the competing baseline distribution, which remains clustered around the correct answer, retaining the correct modal response. The hallmark of an error is, therefore, a bimodal distribution of responses whose prevalence and severity are demonstrated, respectively, by the height and separation of the additional mode.

Figure 8c illustrates a subset of systematic biases within an otherwise correct dataset. Given their graded nature, a biased subset tends to flatten and shift the baseline distribution of responses. The prototypical bias curve demonstrates a single, shifted mode. To the extent that a bias reflects a pervasive, relatively inescapable aspect of human perception, cognition, or culture, there will be relatively less flattening, and more shifting, of the response distribution.

Finally, Figure 8d illustrates a subset of confusions within an otherwise correct dataset. A confused subset tends to yield random responses, which lift the tails of the response distribution to a constant, nonzero value as responses are siphoned from the competing baseline distribution.

A rough but informative way to distinguish between erroneous, biased, and confused thinking is to simply compare an observed distribution of responses directly to the blue curves in Figure 8. This visual approach may be complemented with an array of analytic approaches that provide quantitative estimates of the degree of evidence for a particular type of inaccurate thinking (e.g., Freeman & Dale, 2013; Pfister et al., 2013; Zhang & Luck, 2008). In Results, we will use both visual and analytic approaches.

The success of any approach rests upon the precision and accuracy of measurement: as measurement quality is reduced, it becomes increasingly difficult to detect clear thought patterns reflected in the data. Our results will show unequivocal bimodality in the collected data, supporting both the presence of an erroneous graph interpretation (the BTL error) and the precision and accuracy of the DDoG measure.

Literature related to the BTL error

Probability rating scale results

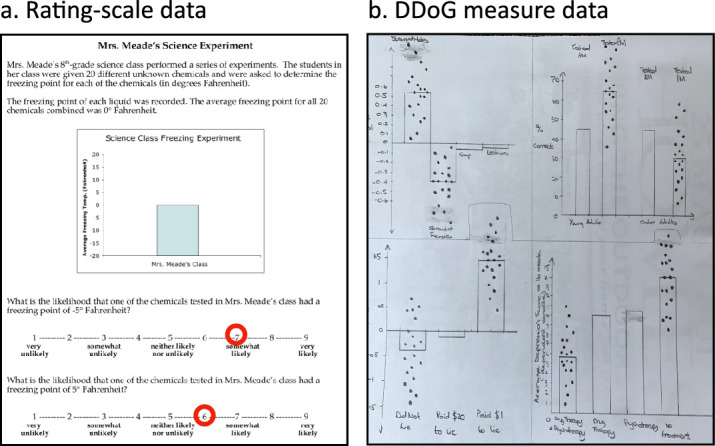

In examining prior work related to our discovery of the BTL error, studies by Newman and Scholl (2012), Correll and Gleicher (2014), Pentoney and Berger (2016), and Okan and colleagues (2018) are seminal and contain the most reproducible prior evidence against mean bar graph accessibility. Their core result is an asymmetry recorded via a probability rating scale: the average participant, when shown a mean bar graph, rated an inside-of-bar location for data somewhat more likely than a comparable outside-of-bar location (∼1 point on a 9-point probability rating scale, Figure 9a).

Figure 9.

A side-by-side comparison of a probability rating scale and a DDoG measure response. DDoG measure's more concrete, detailed, visuospatial (aka readout-based) approach may have contributed to the ease with which it identified the BTL error and its apparent conflation mechanism, which were missed by prior studies using the probability rating scale approach. (a) The probability rating scale response sheet provided to each participant in the original report of asymmetry by Newman and Scholl (2012, Study 5). These Likert-style scales, anchored by colloquial English words (from “very unlikely” to “very likely”), were used to characterize the likelihood that individual values occurred at two specific y-axis values, one within the bar (−5) and one outside the bar (+5). The red circles indicate the resulting mean ratings of 6.9 and 6.1 (from Study 5, Newman & Scholl, 2012). (b) The DDoG measure response sheet from an individual participant given four stimulus graphs and asked to sketch each graph along with 20 hypothesized datapoints for two specified bars on the graph.

The original paper, by Newman and Scholl (2012), reported five replications of this asymmetry, including in-person and online testing, varied samples (ferry commuters, Amazon Mechanical Turk recruits, Yale undergraduates), and conditions that ruled out key numerical and spatial confounds. Five independent research groups have since replicated and extended this finding. The first was Correll and Gleicher (2014), who extended the result to viewers’ predictions of future data, and who showed that graph types that were symmetrical around the mean (e.g., violin plots) did not produce the effect. Soon after, Pentoney and Berger (2016) found this asymmetry present for a bar graph with confidence intervals, but absent when the bar was removed, leaving only the confidence intervals; this replicated Correll and Gleicher's (2014) finding that the effect requires the presence of a traditional bar graph. Okan and colleagues (2018) also replicated both the core asymmetry (this time using health data) and the finding that nonbar graphs did not produce the effect; they additionally found that the asymmetry, counterintuitively, increased with graph literacy. Finally, Godau, Vogelgesang, and Gaschler (2016) and Kang and colleagues (2021) showed that the asymmetry remains in aggregate—carrying through to judgments of the grand mean of multiple bars.

Newman and Scholl's (2012) hypothesized mechanism was a well-known perceptual bias, whereby in-object locations are processed slightly more effectively than out-of-object locations. The studies that Newman and Scholl (2012) cited for this perceptual bias, for example, had on average 4% faster and 0.6% more accurate responses for in-object stimuli relative to out-of-object stimuli (Egly et al., 1994; Kimchi, Yeshurun, & Cohen-Savransky, 2007; Marino & Scholl, 2005). While subtle, this bias was believed to reflect a fundamental aspect of object processing (Newman & Scholl, 2012), and it was therefore assumed to be pervasive and inescapable. Newman and Scholl's (2012) hypothesis was that this “automatic” and “irresistible” perceptual bias had produced a corresponding “within-the-bar bias” in graph interpretation.

Probability rating scale comparison

At the end of Results, we will compare our detailed findings, obtained via our DDoG measure, to those of the probability rating scale studies reviewed just above. Here, we compare the more general capabilities of the two measurement approaches. Figure 9 shows side-by-side examples of a probability rating scale response from Newman and Scholl's (2012) Study 5 (Figure 9a), and a DDoG measure readout (Figure 9b). Using the MAGI principles (Table 1) as a basis for comparison, we can see key differences in: Limited mental transformations, Ground-truth linkage, Information richness, and Expressive freedom.

The MAGI principle of Limited mental transformations aims to facilitate an accurate readout of graph interpretation. As discussed in Frequency framed measures even a mental transformation as seemingly trivial as a translation from a ratio (one in five) to a percentage (20%) can severely distort a person's thinking (Cosmides & Tooby, 1996). The DDoG measure limits mental transformations via its stimulus-matched response (Figures 4 and 9b). Absent such a matched response, the mental transformations required by a measure may limit its accuracy.

In the example from Newman and Scholl (2012), Study 5 (Figure 9a), several mental transformations are needed for probability rating scale responses; among them, translation from numbers (−5 and 5) to graph locations (in or out of the bar, and to what degree in or out), from graph locations to probabilities (represented in whatever intuitive or numerical way a person's mind may represent them), from probabilities to the rating scale's English adjectives (e.g. “somewhat,” “very”), and, separately, from the vertical scale on the graph's y-axis to the horizontal rating scale. While it is possible that some of these mental transformations are accomplished effectively and relatively uniformly for most participants, our reanalysis below of the probability rating scale studies (Results: A reexamination of prior results) will suggest that their presence contributes a certain amount of irreducible “noise” (inaccuracy) to the response.

With regard to Ground-truth linkage, it is straightforward to evaluate a DDoG measure readout (Figure 9b) relative to multiple logical and empirical benchmarks of ground-truth. Logical benchmarks include, for example, “Is the mean of the drawn datapoints at the bar-tip?” Empirical benchmarks include “How closely does the drawn data reflect the [insert characteristic] in the actual data?” In contrast, there is no logically or empirically correct (or incorrect) response for the probability rating scale measure (Figure 9a). Even discrepant rated values for in-bar versus out-of-bar locations—though suggestive of inaccurate thinking—could be accurate in a case of a skewed raw data distribution, which could plausibly lead to more raw data in-bar than out-of-bar (or to the opposite). The probability rating scales therefore lack a conclusive Ground-truth linkage. DDoG measure responses, in contrast, specify real numerical and spatial values with a concreteness that is easier to compare to a variety of ground-truths.

The DDoG measure's precision is most directly supported by its Information richness: in the present study, each participant produces 160 drawn datapoints on a continuous scale. In contrast, the probability rating scale shown in Figure 9a yields only two pieces of information (the two ratings) per participant. Granted, the relative information-value of a single integer rating, versus a single drawn datapoint, is not easily compared. Further, as we will see below, multiple ratings at multiple graph locations can increase precision of the probability rating scale measure (Results: A reexamination of prior results). Nevertheless, it would be difficult to imagine a case where a probability rating scale measure yielded more information richness, and, in turn, higher precision, than the DDoG measure.

Finally, comparing Expressive freedom between the two measures, the rating scale measure constrained each response to a nine-point integer scale. On this scale, responses are led and constrained to a degree that disallows many types of unexpected responses and limits the capacity of distinct graph interpretations to stand out from each other. These constraints contrast sharply with the flexibility of the drawn readout that the DDoG measure produces.

The probability rating scale and the DDoG measure therefore differ in terms of four separate MAGI principles (Table 1): Limited mental transformations, Ground-truth linkage, Information richness, and Expressive freedom.

MAGI principles used as a metric

We have used the MAGI principles above to highlight notable differences between the DDoG measure and specific implementations of two other measurement approaches: balls-and-bins (Figure 7) and probability rating scale (Figure 9a). However, the question of whether these differences are integral to the measure-type, or restricted to specific implementations, remains. Table 2 models the use of the MAGI principles as a vehicle to compare, in a more structured way, potentially integral features and limitations of these same three graph interpretation methods.

Table 2.

Using MAGI principles to compare graph interpretation measures.

|

We believe that all three measures share a capability for Limited instructions and Ecological validity, though choices specific to each implementation may affect whether the principles are actually expressed (see Hullman et al. (2017) and Kim, Walls, Kraft and Hullman (2019) for implementations of the balls-and-bins method, and see Newman and Scholl (2012), Correll and Gleicher (2014), Pentoney and Berger (2016), and Okan and colleagues (2018) for implementations of probability rating scales). In contrast, the three measures appear to differ more or less intrinsically in their approaches to Expressive freedom, Limited mental transformations, and (to a lesser extent) Information richness. That said, measure development is an inherently iterative, dynamic process, and what seems intrinsic at one point in time can sometimes shift as development progresses.

Because the DDoG measure and MAGI principles were built around each other and refined in parallel, it makes sense that they are closely aligned in their optimization for assessment of abstract-graph interpretation, with a focus on general usage and valid measurement. Recognizing that measures with different aims and motivations may be best suited for different tasks, this use of MAGI principles, in table form (Table 2), provides a model of its utility for comparison and targeting of measures with a similar set of aims and motivations.

Summary of related works

In the four subsections of Related works above, we first documented and sought to better understand the relative rarity of elicited-graph, readout-based, graphical elicitation, and frequency-framed measurement in studies of graph cognition, while arguing for the value of greater usage of elicited-graph and readout-based measurement in particular. We next distinguished—in both written/verbal and numerical/visual form—three distinct types of inaccurate graph interpretation: errors, biases, and confusions. Third, we examined key results and methods from prior studies of mean bar graph inaccessibility. And, finally, we provided an illustrative example of how the Measurement of Abstract Graph Interpretation (MAGI) principles can be used to compare and contrast relevant measures.

Methods

The current investigation has two parts: (A) examine the accessibility of a set of ecologically valid mean bar graphs in an educationally diverse sample via our new Draw Datapoints on Graphs (DDoG) measure (sections Participant Data Collection through Define the Average) and (B) assess the relative frequency of mean versus count bar graphs across educational and general sources (section Prevalence of Mean Versus Count Bar Graphs). Included in the Methods sections devoted to “A” are detailed specifications for the current implementation of the DDoG measure, as well as the thinking behind that implementation, as a guide to future DDoG measure usage.

Participant data collection

Piloting

The development of the DDoG measure was highly iterative. Early piloting was performed at guest lectures, lab meetings, and in college classrooms at various levels of the curriculum by author JBW, and in several online data collection pilot studies by both authors. Early wording differed from the more refined wording used in the present investigation. Yet even the earliest pilots produced the core result of the current investigation (Figure 4): a common, severely inaccurate interpretation of mean bar graphs that we call the Bar-Tip Limit (BTL) error. The DDoG measure thus appears—at least in the context of the BTL error—robust to fairly wide variations in its wording. A constant through all DDoG measure iterations, however, was succinct, concrete, task-directed instructions: what came to be expressed as the Limited instructions MAGI principle (Table 1).

Recruitment

Data collection was completed remotely using Qualtrics online survey software. There were neither live nor recorded participant-investigator interactions, to minimize priming, coaching, leading, or other forms of experimenter-induced bias (Limited instructions principle). Participants were recruited via the Amazon Mechanical Turk (MTurk), Prolific, and TestableMinds platforms. Because no statistically robust differences were observed between platforms, data from all three were combined for the analyses reported below. Participants were paid $5 for an expected 30 minutes of work; the median time taken was 33 minutes.

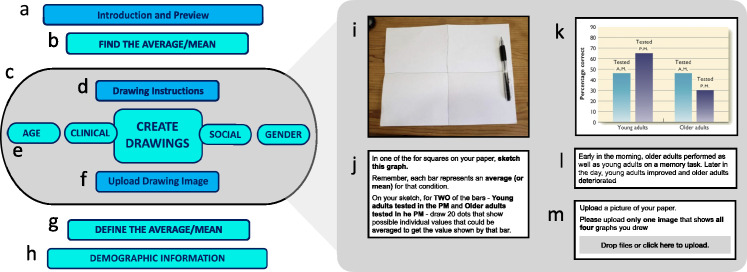

Procedure

Figure 10 provides a flowchart of the procedure. Participants (a) read an overview of the tasks along with time estimates; (b) read and recorded mean values from stimulus graphs; (c) (grey zone) completed the DDoG measure drawing task for stimulus graphs; (g) provided a definition for the average/mean value; and (h) reported age, gender, educational attainment, and prior coursework in psychology and statistics.

Figure 10.

Flowchart of study procedure. The present study consisted of five main sections (a, b, c, g, h). Sections and subsections colored teal (b, e, g, h) produced data that were analyzed for this study. Subsections on the right (i, j, k, l, m) are an expansion of section c of the flowchart, showing (i) the drawing page, (j) the drawing instructions, (k) one of the four stimulus graphs, (l) the graph caption, and (m) the upload instructions. See Methods for further procedural details.

Foundational knowledge tasks

Graph reading task: Find the average/mean

Participants were asked to “warm up” by reading mean values from a set of mean bar graphs that were later used in the DDoG measure drawing task (wording: “What is the average (or mean) value for [condition]?”) (Figure 10j). This warm-up served as a control, verifying that the participant was able to locate a mean value on the graph.

To allow for some variance in sight-reading, responses within a tolerance of two tenths of the distance between adjacent y-axis tick-marks were counted as correct (see Figure 11). The results reported in Results: Independence of the BTL error from foundational knowledge remain essentially unchanged for response-tolerances from zero (only exactly correct responses) through arbitrarily high values (all responses). No reported prevalence values dip below 19%.

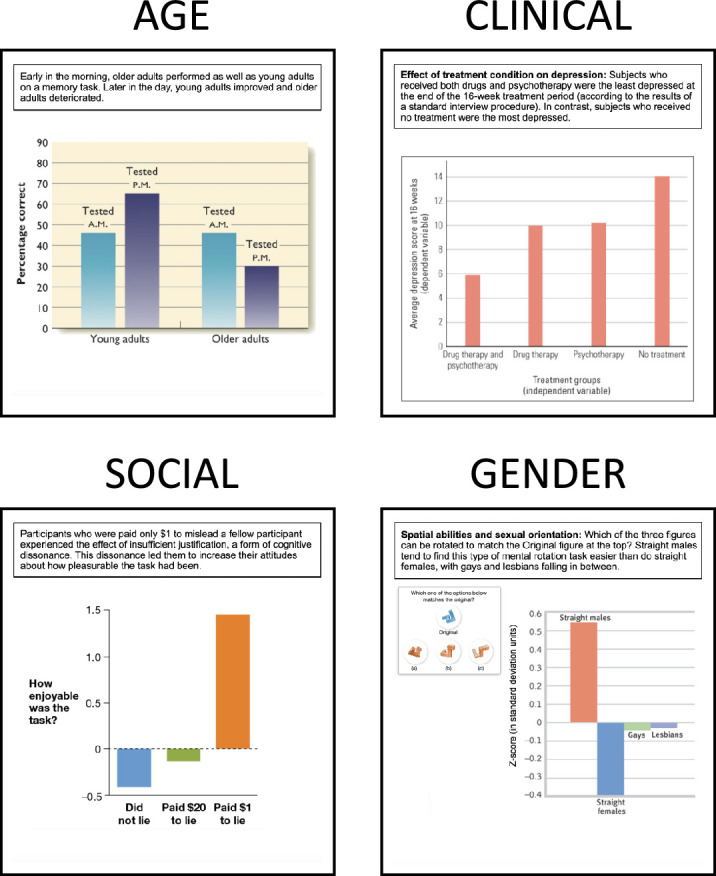

Figure 11.

The four stimulus graphs used in this study. These stimulus graphs were taken from popular Introductory Psychology textbooks to ensure the direct real-world relevance of our results (see ecological validity MAGI principle). Figure legends were adapted as necessary for comprehensibility outside the textbook. Stimulus graph textbook sources are AGE: Kalat, 2016; CLINICAL: Gray and Bjorklund, 2017; SOCIAL: Grison & Gazzaniga, 2019; and GENDER: Myers & DeWall, 2017.

Graph reading was correct for 93% of graphs. As a control for possible carryover/learning effects, 114 of the 551 total DDoG measure response drawings were completed without prior exposure to the graph (i.e., without a warm-up for that graph). No evidence of carryover/learning effects was observed.

Definition task: Define the average/mean

After completing the DDoG measure drawing task, as a control for comprehension and thoughtful responding, participants were asked to explain the concept of the average/mean. Most participants (112 of 190, or 64%) got the following version of the question: “From your own memory, what is an average (or mean)? Please just give the first definition that comes to mind. If you have no idea, it is fine to say that. Your definition does not need to be correct (so please don't look it up on the internet!)” For other versions of the question, see open data spreadsheet. No systematic differences in results based on question wording were observed. Participants were provided a text box for answers with no character limit. Eighty-three percent of responses were correct, with credit being given for responses that were correct either conceptually (e.g., “a calculated central value of a set of numbers”) or mathematically (e.g., “sum divided by the total number of respondents”).

The Draw Datapoints on Graphs (DDOG) measure: Method

Selection of DDoG measure stimulus graphs

For the present investigation, four mean bar graph stimuli, shown in Figure 11, were taken from popular Introductory Psychology textbooks. This source of graphs was selected for three main reasons. First, Introductory Psychology is among the most popular undergraduate science courses, serving two million plus students per year in the United States alone (Peterson & Sesma, 2017); and this course tends to rely heavily on textbooks, with the market for such texts estimated at 1.2 to 1.6 million sales annually (Steuer & Ham, 2008). The high exposure of these texts gives greater weight to the data visualization choices made within them. Second, Introductory Psychology attracts students with widely varying interests, skills, and prior data experiences, meaning that data visualization best practices developed in the context of Introductory Psychology may have broad applicability to other contexts involving diverse, nonexpert populations. Third, given the relevance of psychological research to everyday life, inaccurate inferences fueled by Introductory Psychology textbook data portrayal could, in and of themselves, have important negative real-world impacts.

The mean bar graph stimuli were chosen to exhibit major differences in form, and to reflect diversity of content (Figure 11). They convey concrete scientific results, rather than abstract theories or models, so that understanding can be measured relative to the ground-truth of real data (Ground-truth linkage). Introductory texts were chosen because of their direct focus on conveying scientific results to nonexperts (Ecological Validity). Stimuli were selected to show “independent groups” (aka between-participants) comparisons because these comparisons are one step more straightforward, conceptually and statistically, than “repeated-measures” (aka within-participants) comparisons.

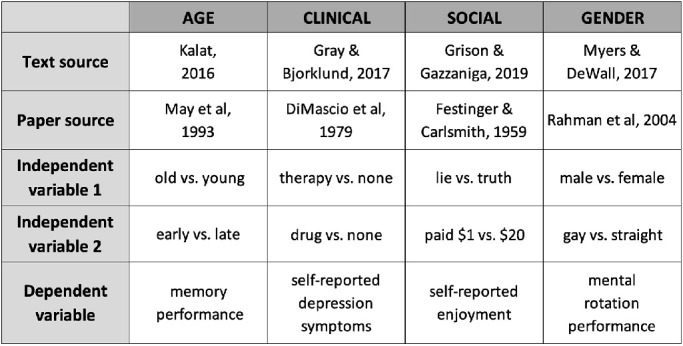

We refer to the four stimulus graphs by their independent variables: as AGE, CLINICAL, SOCIAL, and GENDER. The stimuli were selected, respectively, from texts by Kalat (2016), Gray and Bjorklund (2017), Grison and Gazzaniga (2019), and Myers and DeWall (2017), which ranked 7, 22, 3, and 1 in median Amazon.com sales rankings across eight days during March and April of 2019, among the 23 major textbooks in the Introductory Psychology market. Further details about these graphs are shown in Table 3.

Table 3.

Stimulus graph sources and variables.

|

Stimuli were chosen to represent both meaningful content differences (four areas about which individual participants might potentially have strong personal opinions), and form differences (differing relationship of bars to the baseline) to evaluate replication of results across graphs despite differences that might reasonably be expected to change graph interpretation.

The specific form difference we examined was the distinction between unidirectional bars (all bars emerge from the same side of the baseline) and bidirectional bars (bars emerge in opposite directions from the baseline). Two of the four graphs, AGE and CLINICAL were unidirectional (Figure 11, top), and the other two, SOCIAL and GENDER, were bidirectional (Figure 11, bottom). While unidirectional graphs were more common than bidirectional graphs in the surveyed textbooks, two examples of each were selected to set up an internal replication mechanism.

Replication of results could potentially be demonstrated across graphs in terms of mean values (the mean result of different graphs could be similar), or in terms of individual differences (an individual participant's result on one graph could predict their result on another graph). Both types of replication were observed.

Graph drawing task: The DDoG measure

The DDoG measure was designed using the MAGI principles (Table 1), and it was modeled after the patient drawing tasks mentioned above (Landau et al., 2006; Agrell & Dehlin, 1998). Ease of administration and incorporation into an online study were further key design considerations.

The Expressive freedom inherent in the drawing medium avoids placing artificial constraints on participant interpretation, and it has multiple potential benefits: it helps to combat the “observer effect,” whereby a restricted or leading measurement procedure impacts the observed phenomenon; it lends salience to a consistent, stereotyped pattern (such as the Bar-Tip Limit response shown in Figures 2d, 4c (bottom), 5f, 6b (bottom), and 13 (right)); and it allows unexpected responses, which render the occasional truly inattentive or confused response (Figure 8d) clearly identifiable.

Figure 13.

DDoG measure readouts illustrating the two common, categorically different response types for each of the four stimulus graphs. The left column shows the four stimulus graphs (AGE, CLINICAL, GENDER, SOCIAL). For each stimulus graph, the center column shows a representative correct response (Bar-Tip Mean), and the right column shows an illustrative incorrect response (Bar-Tip Limit).

Twenty drawn datapoints per bar was chosen as a quantity small enough to avoid noticeable impacts of fatigue or carelessness, while remaining sufficient to gain a visually and statistically robust sense of the imagined distribution of values. Recent research suggests that graphs that show 20 datapoints enable both precise readings (Kay et al., 2016) and effective decisions (Fernandes et al., 2018).

Before beginning the drawing task, participants were instructed to divide a page into quadrants, and they were shown a photographic example of a divided blank page (Figure 10i). They were then presented with task instructions (Figure 10j) with one of the four bar graph stimuli and its caption (Figure 10k, 10l). This process—instructions, graph stimulus, caption—was repeated four total times, with the order of the four graph stimuli randomized to balance order effects such as learning, priming, and fatigue. After completing the four drawings on a single page, each participant photographed and uploaded their readout (Figure 10m) via Qualtrics’ file upload feature. Few technical difficulties were reported, and only a single submitted photograph was unusable for technical reasons (due to insufficient focus). All original photographs are posted to the Open Science Framework (OSF).

DDoG measure data

Collection of DDoG measure readouts

One hundred ninety participants nominally completed the study. Of these, 149 (78%) followed the directions sufficiently to enable use of their drawings, a usable data percentage typical of online studies of this length (Litman & Robinson, 2020). Based on predetermined exclusion criteria, participant submissions were disqualified if most or all of their drawings were unusable for any of the following:

-

(1)

Zero datapoints were drawn (18 participants).

-

(2)

Datapoints were drawn but in no way reflected either the length or direction of target bars, thereby demonstrating basic misunderstanding of the task (16 participants).

-

(3)

Drawings lacked labels or bar placement information necessary to disambiguate condition or bar-tip location (five participants).

-

(4)

Photograph was insufficiently focused to allow a count (one participant).

Each of the 149 remaining readouts contained four drawn graphs, for 596 graphs total. Of these, 30 individual drawings were excluded for one or more of the above reasons, and 15 were excluded for the additional predetermined exclusion criterion of a grossly incorrect number of datapoints, defined as >25% difference from the requested 20 datapoints.

The remaining 551 drawn graphs (92.4% of the 596) from 149 participants were included in all analyses below. Ages of these 149 participants ranged from 18 to 71 years old (median 31). Reported genders were 96 male, 52 female, and one nonbinary. Locations included 26 countries and 6 continents. The most common countries were United Kingdom (n = 58), United States (n = 34), Portugal (n = 8), and Greece/Poland/Turkey (each n = 4).

Coding of DDoG measure readouts via BTL index

As a systematic quantification of datapoint placement in DDoG measure readouts, a Bar-Tip Limit (BTL) index was computed. The BTL index estimated the within-bar percentage of datapoints for each graph's two target bars by dividing within-bar datapoints (i.e., datapoints drawn on the baseline side of the bar-tip) by the total number of drawn datapoints for the two target bars and multiplying by 100. Written as a formula: [(# datapoints on baseline-side of bar-tips) / (total # datapoints)] × 100

The highest possible index (100) represents an image in which all drawn datapoints are on the baseline sides of their respective bar-tips (i.e., within the bar; a Bar-Tip Limit response). An index of 50 represents a drawing with equal numbers of datapoints on either side of the bar-tip (i.e., balanced distribution across the mean line, or a Bar-Tip Mean response). BTL index values in this study ranged from 27.5 (72.5% of points drawn outside of the bar) to 100 (100% of points drawn within the bar).

The straightforward coding procedure was designed to yield a reproducible, quantitative measure of datapoint distribution relative to the bar-tip, however, one ambiguity existed. Datapoints drawn directly on the bar-tip could reasonably be considered to either use the bar-tip as a limit (if the border is considered part of the object) or not (if a datapoint on the edge is considered outside the bar). This ambiguity was handled as follows: (1) if, at natural magnification, a drawn datapoint was clearly leaning toward one or the other side of the bar-tip, it was assigned accordingly, and (2) datapoints on the bar-tip for which no clear leaning was apparent were alternately assigned first inside the bar-tip, then outside, and continuing to alternate thereafter.

This procedure maximizes reproducibility by minimizing the need for the scorer to interpret the drawer's intent—and it was successful (see Reproducibility of BTL index coding procedure). Yet reproducibility may have come at the cost of some minor conservatism in quantifying drawings where the participant placed no datapoints beyond the bar-tip—arguably displaying a complete Bar-Tip Limit interpretation—yet placed some of the datapoints directly on the bar-tip. For example, if six of the 20 datapoints for each bar were placed on the bar-tip, the drawing would get a BTL index of only 85, when it arguably represented a pure BTL conception of the graph.

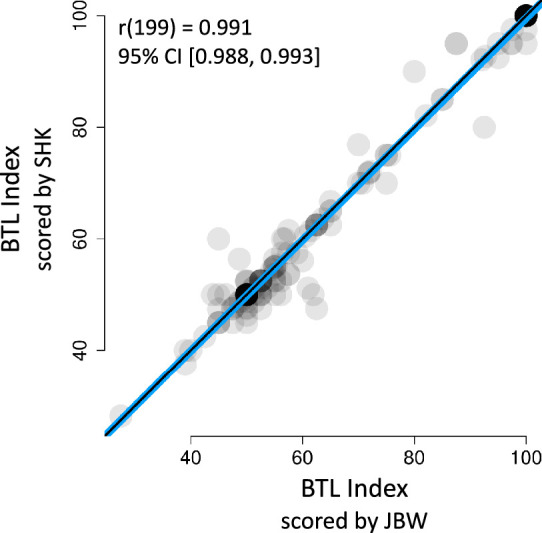

Reproducibility of BTL index coding procedure

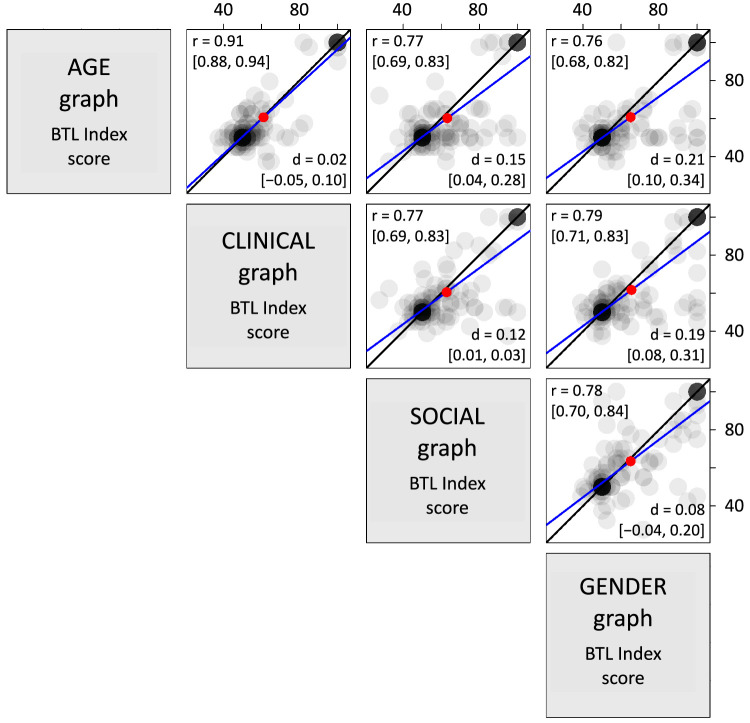

Reproducibility of the BTL index coding was evaluated by comparing independently scored BTL indices of the two coauthors for a substantial subset of 201 drawn graphs. Figure 12 shows the data from this comparison where the x coordinate is JBW's scoring and the y coordinate is SHK's scoring of each drawing. The correlation coefficient between the two sets of ratings is extremely high (r(199) = 0.991, 95% CI [0.988, 0.993]). Additionally, the blue best-fit line is nearly indistinguishable from the black line representing hypothetically equivalent indices between the two raters.

Figure 12.

Interrater reliability of Bar-Tip Limit (BTL) index coding demonstrates high repeatability of coding method. Coauthors SHK and JBW independently computed the BTL index for a subset of 201 drawn graphs (of 551 total). Each dot represents both BTL indices for a given drawn graph (y-value from SHK, x-value from JBW). Semitransparent dots code overlap as darkness. The black line is a line of equivalence, which shows x = y for reference. The line of best fit is blue. The close correspondence of these two lines, and the close clustering of dots around them, demonstrate high repeatability of the BTL index coding procedure.

The fact that the line of best fit is so closely aligned with the line of equivalence is important. In theory, even with a correlation coefficient close to 1.0, one rater might give ratings that are shifted higher/lower, or that are more expanded/compressed, compared to the other. This would show up as a best-fit line that was either vertically shifted relative to the line of equivalence, or of a different slope compared to the line of equivalence. The absence of such a vertical shift or slope difference is therefore particularly strong evidence for repeatability. This strong evidence is echoed by a high interrater reliability statistic (Krippendorf's alpha) of 0.93 (Krippendorf, 2011); Krippendorf's alpha varies from 0 to 1, and a score of 0.80 or higher is considered strong evidence of repeatability (Krippendorf, 2011). These analyses therefore demonstrate that the BTL index coding procedure is highly repeatable, and that variations in coding—a potential source of noise that could theoretically constrain the capacity of a measure to precisely capture Bar-Tip Limit interpretation—can be minimized.

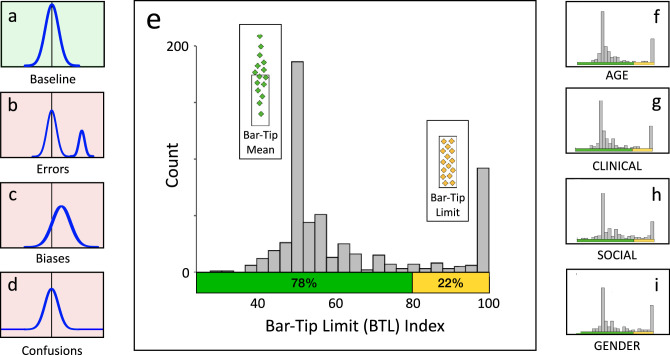

Establishing cutoffs and prevalence

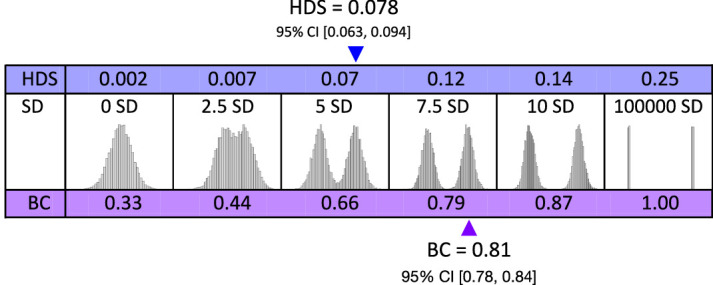

For purposes of estimating the prevalence of the BTL error in the current sample, a BTL index cutoff of 80 was selected. This was the average result when five clustering methods (k-means, median clustering, average linkage between groups, average linkage within groups, and centroid clustering) were applied via SPSS to the 551 individual graph BTL indices (cutoffs were 75, 75, 80, 85, and 85, respectively). 80 was additionally verified as reasonable via visual inspection of the data shown in Figure 14.

Figure 14.

Bimodal distribution of Bar-Tip Limit (BTL) index values reveals that the BTL error represents a categorical difference. The main graph (e) plots the distribution of BTL index values for all 551 DDoG measure drawings. The left inset graphs show visual definitions of: (a) baseline (no systematic inaccuracy), (b) errors, (c) biases, and (d) confusions (Figure 8). Note how closely e (our data) matches b (data pattern for errors). The right inset graphs (f, g, h, i) plot BTL indices by graph stimulus (AGE, CLINICAL, GENDER, SOCIAL), which provide four internal replications of the aggregate result (e). The colors on the x-axes indicate the location of the computed cutoff of 80, taken from our cluster analyses, between the two categories of responses: Bar-Tip Mean responses (green) and Bar-Tip Limit (BTL) responses (yellow).

Due to the strongly bimodal distribution of BTL index scores (see Results), only 14 of the 551 total drawings (2.5%) fell within the entire range of computed cutoffs (75 to 85). Therefore, though the computed confidence intervals around prevalence estimates, reported in Results, do not include uncertainty in selecting the cutoff, this additional source of uncertainty is small (at most, perhaps ± 1.25%).

For Pentoney and Berger's (2016) data, analyzed below in Prior work reflects the same phenomenon: Prevalence, the respective cutoffs from the same five clustering methods produced BTL error percentages of 19.5, 19.5, 19.5, 19.5, and 24.6, for an average percentage of 20.5.

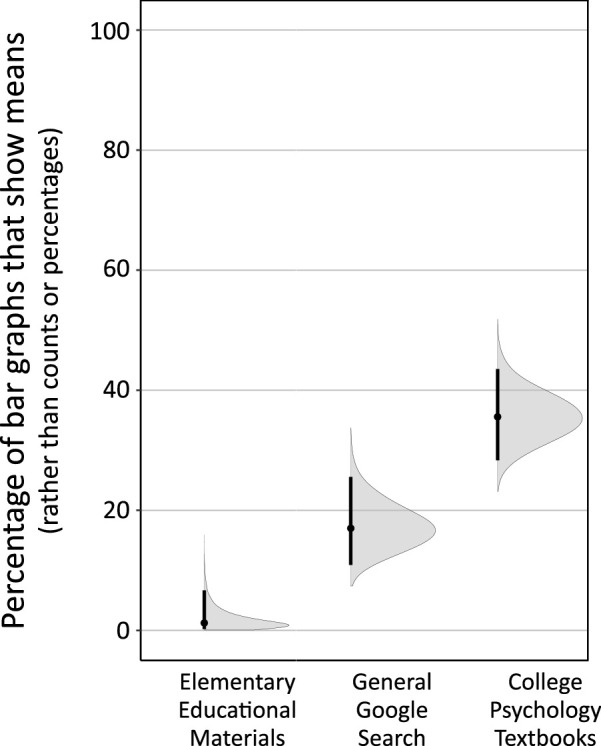

Prevalence of bar graphs of means versus bar graphs of counts

The methods discussed in this subsection pertain to Results section Ecological exposure to mean versus count bar graph. In that investigation, we assessed the likelihood of encountering mean versus count bar graphs across a set of relevant contexts by tallying the frequency of each bar graph type from three separate sources: elementary educational materials accessible via Google Image searches, college-level Introductory Psychology textbooks, and general Google Images searches. It was hypothesized that these sources would provide rough, but potentially informative, insights into the relative likelihood of exposure to each bar graph type in these areas. The methods used for each source were:

The elementary education Google image search used the phrase: “bar graph [X] grade,” with “first” to “sixth” in place of [X]. The first 50 grade-appropriate graph results, from independent internet sources, for each grade-level, were categorized as either count bar graph, mean bar graph, or other (histogram or line graph).

The college-level Introductory Psychology textbook count tallied all of the bar graphs of real data across the following eight widely-used Introductory textbooks: Ciccarelli & Berstein, 2018; Coon, Mitterer & Martini, 2018; Gazzaniga 2018; Griggs 2017; Hockenbury & Nolan, 2018; Kalat, 2016; Lilienfeld, Lynn & Namy, 2017; and Myers & DeWall, 2017. The bar graphs were then categorized as dealing with either means or counts (histograms excluded).

The general Google Image search used the phrase “bar graph.” The first 188 graphs were categorized as count bar graphs, mean graphs, or were excluded for being not bar graphs (tables, histograms), or for containing insufficient information to determine what type of bar graph they were.

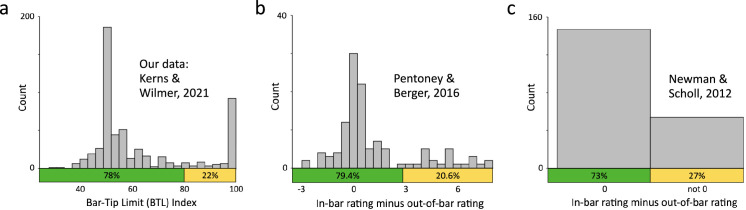

Plotting and evaluation of data from previous studies

In our reexamination of prior results, we compare previous study results to the present results. Data is replotted from Newman and Scholl (2012) and Pentoney and Berger (2016) as Figure 20. For Newman and Scholl (2012), data from Study 5 is plotted categorically as “0” (no difference between in-bar and out-of-bar rating) versus “not 0” (positive or negative difference between in-bar and out-of-bar rating). The directionality of the latter differences, while desired, are not obtainable from the information reported in the original paper. For Pentoney and Berger (2016), the data plotted in their Figure 3 are pooled across the three separate conditions whose stimuli included bar graphs. A similar pattern of bimodality is evident in all three of those conditions. We used WebPlotDigitizer to extract the raw data from Pentoney and Berger's (2016) Figure 3.

Figure 20.

A reexamination of prior results reveals both consistency with our current results and overlooked evidence for the Bar-Tip Limit (BTL) error. Shown side by side for direct comparison are plotted data from: (a) The present study using the DDoG measure; (b) Pentoney and Berger (2016) (PB); (c) Newman and Scholl (2012) Study 5 (NS5). All graphs label correct Bar-Tip Mean response values in green and computed (or inferred, in the case of NS5) Bar-Tip Limit (BTL) response values in yellow. Notably, the PB study used the exact same 9-point rating scale and graph stimulus (of hypothetical chemical freezing temperature data) as the NS5 study (Figure 9 shows the scale and stimulus) but added ratings of four additional temperatures (−15, −10, 10, 15) to NS5's original two (−5, 5). Comparing PB's results (b) to ours (a) suggests that while PB's version of the probability rating scale measure achieved a fairly high degree of accuracy and precision at values near zero, it still suffered from apparently irreducible inaccuracy and/or imprecision as values diverged from zero (see text for further discussion).

Statistical tools and packages

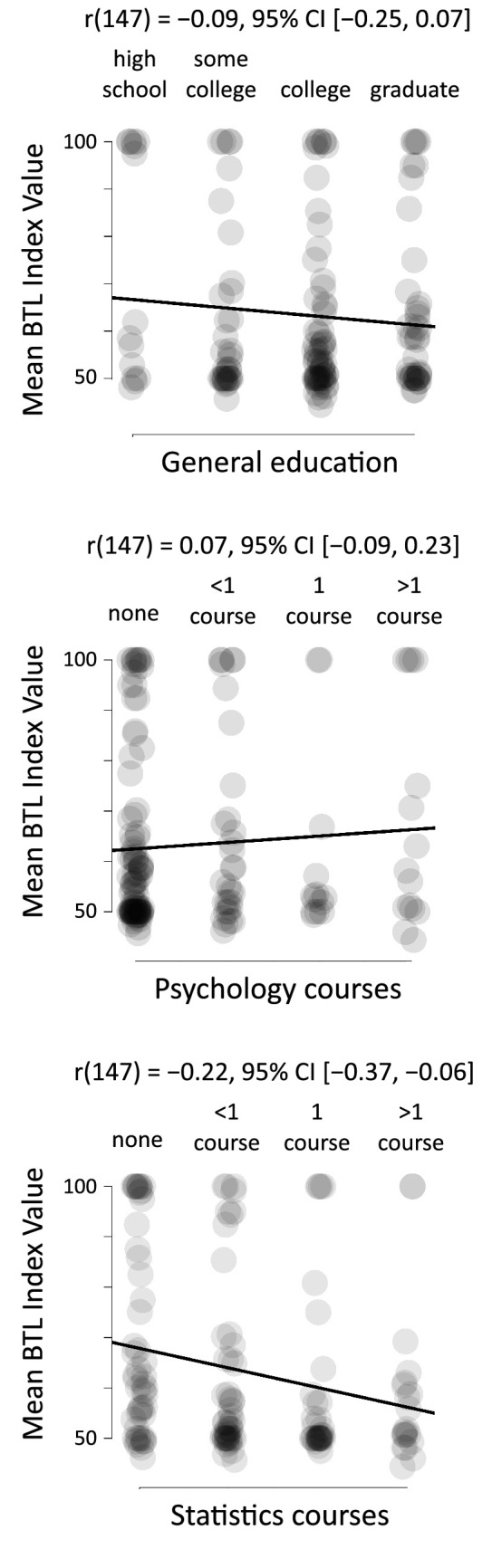

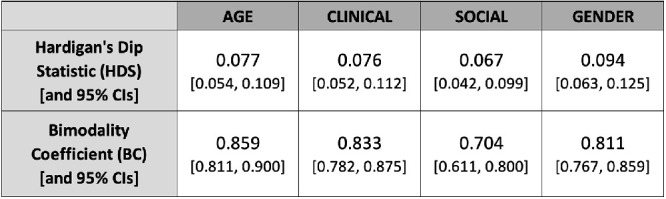

The graphs shown in Figures 12, 16, and 18 were produced by ShowMyData.org, a suite of web-based data visualization apps coded in R by the second author using the R Shiny package. The statistics and distribution graphs shown in Figures 14, 17, 19, and 20 were produced via the ESCI R package. The bimodality simulations and graphs shown in Figure 15 were produced in base R, and the numerical analyses of bimodality using Hartigan's Dip Statistic (HDS) and Bimodality Criterion (BC) (Freeman & Dale, 2013; Pfister et al., 2013) were computed via the mousetrap R package. CIs on HDS, BC, and Cohen's d were computed with resampling (10,000 draws) via the boot R package. The clustering analyses that estimated BTL error cutoffs and prevalence were conducted via SPSS.

Figure 16.

The Bar-Tip Limit (BTL) error persists across differences in graph form and content. Each of the six subplots in this figure compares individual BTL index values for two graph stimuli, one plotted as the x-value and the other as the y-value. Each gray dot represents one participant's data. Mean values are shown as red dots. Gray dots are semitransparent so that darkness indicates overlap. High overlap is observed near BTL index values of 50 (Bar-Tip Mean) and 100 (Bar-Tip Limit) for both stimulus graphs, indicating persistence of interpretation between compared stimuli, despite differences in graph form and content. This persistence is reflected numerically in the high correlations among (Pearson's r), and the small mean differences between (Cohen's d), graph stimuli. The high correlations are echoed visually by steep lines of best fit (blue), and the small differences are echoed visually by the proximity of mean values (red dots) to lines of equivalence (black lines, which show where indices are equal for the two stimuli). In brackets are the 95% CIs for r and d.

Figure 18.

The Bar-Tip Limit (BTL) error is substantially independent of education. Mean BTL indices for each participant plotted against general education level, number of psychology courses taken, and number of statistics courses taken (each reported via the four-point scale shown on the respective graph). The black line is the least-squares regression line, computed with rated responses treated as interval-scale data. Axis ranges and graph aspect ratios were chosen by ShowMyData.org such that the physical slope of each regression line equals its respective correlation coefficient (r).

Figure 17.

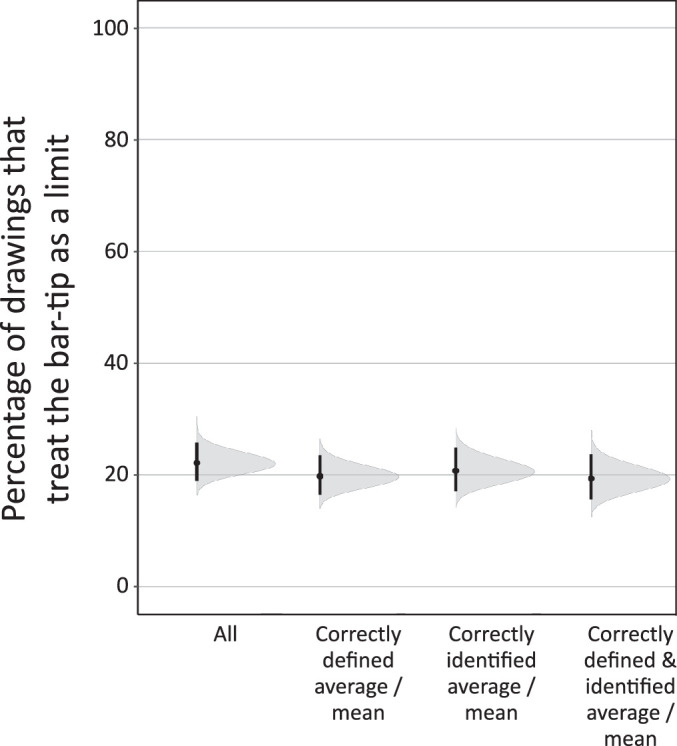

The Bar-Tip Limit (BTL) error occurs despite correctly defining “mean” and correctly locating it on the graph. Percentage of readouts that showed the BTL error (defined as a BTL index over 80). “All” is the full dataset (n = 551 readouts). “Correctly defined average/mean” is restricted to participants who produced a correct definition for the mean (n = 486 readouts). “Correctly identified average/mean” is restricted to participants who correctly identified a mean value on the same graph that produced the readout (n = 413 readouts). “Correctly defined & identified average/mean” is restricted to participants who both correctly defined the mean and correctly identified a mean value on the graph (n = 367 readouts). In all cases, the proportion of BTL errors hovers around one in five. Vertical lines show 95% CIs, and gray regions show full probability distributions for the uncertainty around the percentage values.

Figure 19.