Abstract

Social media trust and sharing behaviors have considerable implications for how risk is amplified or attenuated at early stages of pandemic outbreaks and may undermine subsequent risk communication efforts. A survey conducted in February 2020 in the United States examined factors affecting information sharing behaviors and social amplification or attenuation of risk on Twitter among U.S. citizens at the early stage of the COVID-19 outbreak. Building on the social amplification of risk framework (SARF), the study suggests the importance of factors such as online discussion, information seeking behaviors, blame and anger, trust in various types of Twitter accounts, and misinformation concerns in influencing the spread of risk information during the incipient stages of a crisis, when the publics rely primarily on social media for information. An attenuation of risk was found among the U.S. public, as indicated by the overall low sharing behaviors. Findings also imply that (dis)trust in social media sources, misinformation concerns, and inconsistencies in early risk messaging may have contributed to the attenuation of risk and low risk knowledge among the U.S. publics at the early stage of the outbreak, further problematizing subsequent risk communication efforts.

Keywords: Social amplification of risk framework, Twitter, Trust, Risk communication, COVID-19, Misinformation concerns

1. Introduction

Effective risk communication in times of crisis requires public trust and a delicate balance of risk management and messaging strategies (Reynolds & Seeger, 2014). Messaging must balance fear and efficacy so that the publics are informed of and alerted to the risk but are also aware of their own ability to deal with it, which maximizes compliance behaviors (Witte, 1996). With too little fear and attenuated risk perceptions, the publics are unlikely to take the threat seriously and may take no action. With too much fear and amplified risk perceptions, the publics may panic and perceive themselves as unable to take action (Witte, 1996).

This balance is especially crucial at the beginning of pandemic outbreaks such as COVID-19, as either amplification or attenuation of risk may undermine subsequent risk communication efforts (Kasperson et al., 1988). First discovered in China in December 2019, the new strain of coronavirus later identified as SARS-CoV-2, which causes the disease known as COVID-19, started to spread rapidly across the globe in early 2020. However, though COVID-19 was known to be highly contagious and had been spreading in China and European countries in early February, it was not until late February that the first case of community spread was reported in the United States (Schumaker, 2020). During this large window of time, the U.S. publics would learn about the threat and risk of COVID-19 only from the media, rather than from personal experience. As media are the primary risk amplification or attenuation sources (Kasperson et al., 1988), this window of time prior to the U.S. outbreak provides the ideal setting to understand COVID-19 risk amplification and attenuation, and its impact on subsequent risk communication efforts.

As people increasingly obtain and share risk information online, social media adds another layer of complexity to the situation. Publics may trust, gain and share risk information from various channels, many of which may have competing messages regarding the COVID-19 risk, further contributing to either amplification or attenuation of risk.

Therefore, the present study uses survey methodology to assess factors affecting information sharing behaviors and social amplification or attenuation of risk on Twitter among U.S. citizens from February 12 (a day after the disease produced by the novel coronavirus was officially named COVID-19, with “CO” standing for corona, “VI” for virus, and “D” for disease) to February 25, a day before the first case of suspected community spread was reported in the United States (Schumaker, 2020). This window of time, when publics would know about the threat and risk of COVID-19 only from the media (rather than from personal experience), is vital to understanding the role of social media trust and of more recent phenomena such as misinformation concerns in publics’ risk communication activities, as well as how these factors may contribute to the amplification or attenuation of risk during the emergence of an unprecedented threat.

The social amplification of risk framework (SARF) (Kasperson et al., 1988) was applied to understand how factors such as interpersonal/online discussion, information seeking behaviors, blame, emotions, trust in various Twitter sources, and misinformation concerns may affect coronavirus knowledge and information sharing behaviors. This sharing of risk information has important societal implications, as past research has documented incongruities between public opinion in the United States and the scientific community on topics ranging from vaccine safety to climate change (Scheufele & Krause, 2019). The U.S. publics’ risk sharing behaviors and the potential amplification and attenuation of risk prior to the outbreak have great implications for later COVID-19 developments in the U.S.

2. Theoretical framework

2.1. Social amplification of risk framework

The Social Amplification of Risk Framework (SARF) was proposed to understand why some risk events characterized as small may “produce massive public reactions,” while others judged as serious by experts receive little attention from the public (Kasperson et al., 1988, p. 178). Both social amplification and attenuation of risk undermine the effectiveness of risk communication. The SARF posits that, while some risk information is communicated directly, most risk messages are transmitted via some kind of social institution such as media. Risk messages communicated indirectly may be amplified or attenuated by several psychological, social, and cultural factors, thus shaping the salience of risk (Kasperson et al., 1988).

The SARF was initially developed in the traditional mass media environment and has identified amplification/attenuation factors such as frequency and volume of media coverage, dramatization of the media content, and ambiguity of the information (i.e., contradictory risk messages) (Kasperson et al., 1988). The framework was designed to be flexible and provided space for researchers to “deduct empirically testable theories and to offer a perspective to interpret and classify risk communication data” (Renn, 1991, p. 320). Studies applying SARF have focused on various contexts such as pandemic outbreaks (Lewis & Tyshenko, 2009; Wirz et al., 2018), health-related topics (Chong & Choy, 2018; Strekalova & Krieger, 2017), and disaster risks (Brenkert-Smith, Dickinson, Champ, & Flores, 2013; Jagiello & Hills, 2018).

Recent research has extended the SARF to the internet (Chong & Choy, 2018; Chung, 2011; Guo & Li, 2018) and social media (Fellenor et al., 2017; Strekalova & Krieger, 2017; Wirz et al., 2018; Zhang et al., 2017). The online and social media environment has added complexity to the risk amplification process (Chong & Choy, 2018; Chung, 2011; Fellenor et al., 2018) and was found to be more powerful than traditional media in amplifying risk (Ng, Yang, & Vishwanath, 2018).

A few factors were identified as risk amplifiers in the online and social media environment. First, spikes in online risk amplification often correspond with related offline events (Chong & Choy, 2018; Wirz et al., 2018) as well as traditional media coverage of the risk event (Fellenor et al., 2018). Online media and government agencies (e.g., the CDC) serve as social stations that transmit risk information to the general public and to certain social groups, and that shape the circulation of, and public engagement with, risk information online (Chung, 2011; Wirz et al., 2018).

Second, public engagement was found to be a major predictor of the amplification of risk online (Pidgeon, Kasperson, & Slovic, 2003). Rather than being passive consumers, lay publics actively engage in the transmission of risk messages, thus changing and shaping the narrative and story (Chung, 2011; Strekalova & Krieger, 2017). As a result, social media risk amplification is characterized as emotionally intense and time-compressed, with less authority control over risk information (Chong & Choy, 2018; Wirz et al., 2018).

In addition, certain framing of the risk event or different risk signals may also fuel the volume and propagation of risk information (Chung, 2011; Fellenor et al., 2018). For example, by framing a tunnel construction project in risk terms, environmentalists in South Korea were able to amplify the risk perceptions and eventually garner national public attention (Chung, 2011). Similarly, in examining the social amplification of ash dieback disease risk in the U.K. on Twitter, Fellenor et al. (2018) found that interactions about the risk were often framed by social group affiliations, interests, and identities.

Finally, sentiments and blame play a large role in amplifying risk online (Chong & Choy, 2018; Wirz et al., 2018). Anger was found to be the predominant emotion in online risk amplification, and the expression of anger is often associated with blame. For instance, Wirz et al. (2018) discovered that blame attribution constituted 30% of sentiments on Twitter and 71% on Facebook in the social amplification of Zika-related risk information. Zika became a politically charged topic in the U.S. as amplification of Zika risk rippled to involve debates concerning Zika-related funding (Wirz et al., 2018). In a similar vein, Chong and Choy (2018) found that risk perceptions about haze were amplified when Singaporeans expressed anger on Facebook in blaming the government for inaction. Anger was also shown to coexist with other types of emotions such as sadness (Chong & Choy, 2018).

Prior research applying SARF in social media risk communication has primarily examined the volume of public attention measured by number of visits, comments, likes and shares as proxies for social amplification (Chong & Choy, 2018; Chung, 2011; Strekalova & Krieger, 2017; Wirz et al., 2018). Therefore, the present study examines publics’ risk information sharing behaviors to indicate public attention toward the risk and social amplification of risk. Based on social media SARF literature, discussion volume (online and offline), information seeking, blame, and emotions were found to be closely associated with the social amplification of risk (i.e., risk information sharing behaviors).

2.1.1. Online and offline discussion

Discussion volume, both online and offline, is one of the major predictors of risk information amplification on social media (Binder, Scheufele, Brossard, & Gunther, 2011; Chong & Choy, 2018; Wirz et al., 2018). Research has shown that public online discussions related to risk are mostly episodic, and spikes in discussions correlate with the occurrence of unprecedented offline risk events (Chong & Choy, 2018; Wirz et al., 2018). This indicates that as publics consume risk-related news, they are also more likely to engage in discussion of the risk information and, consequently, to disseminate it. The conversations and discussions surrounding the risk event and information were often not restricted to online settings but also included offline interpersonal discussions (Binder et al., 2011). For example, interpersonal discussion frequency among community members, contingent on support attitude, was found to be positively associated with amplification of risk perception of a nearby biological research facility.

2.1.2. Information seeking behaviors

Risk events are characterized as uncertainty- and anxiety-producing. Availability of risk information was related to risk amplification on social media (Guo & Li, 2018). Heightened uncertainty regarding a risk may result in increased public concerns and conspiracy theories (Kasperson et al., 1988; Wirz et al., 2018) and may boost publics' tendencies to seek information related to the risk (Zhang, Borden, & Kim, 2018). As information seeking behaviors are closely associated with information sharing behaviors (Hilverda & Kuttschreuter, 2018; Zhang & Shay, 2019), information seeking behaviors prompted by uncertainty and lack of information may also increase social media users’ likelihood of sharing the information.

2.1.3. Blame

In addition to information seeking behaviors, the uncertainty created by risk events also prompts publics to attribute and assign responsibility for the risk event. Blame attribution has been identified as one of the most prominent themes of public sentiment in the social media risk amplification process (Fellenor et al., 2018; Chong & Choy, 2018; Wirz et al., 2018). Publics often attribute blame to government entities due to their inability or inefficiency in managing the risk events. The blaming sentiments often spike risk amplification and create “ripple effects” or secondary impacts of the initial risk event (Kasperson et al., 1988; Wirz et al., 2018). Failure to effectively respond to or manage the risk may lead to public blame and may consequently create secondary impacts such as lower levels of public trust and reluctance to accept technology related to the risk (Renn, Burns, Kasperson, Kasperson, & Slovic, 1992; Kasperson et al., 1988). For example, public blame directed at the U.S. government and legislative groups regarding Zika-related funding sparked a second peak in the Zika risk amplification process on Twitter (Wirz et al., 2018).

In the case of COVID-19, the initial outbreak created uncertainty and ambiguity, and U.S. publics may attribute the pandemic outbreak to the Chinese government's mismanagement and inability to contain the initial spread of cases (Walsh, 2020). As sentiments of blame may contribute to risk amplification and public attention (Wirz et al., 2018), blame attributed to the Chinese government may lead to a ripple effect, generating higher levels of information sharing behaviors.

2.1.4. Emotions

Emotions, especially negative emotions such as anger and fear, are strong predictors of online behaviors in social media (Berger & Milkman, 2012; Song, Dai, & Wang, 2016), risk and crisis (Zhang & Borden, 2020; Zhang et al., 2018), and social amplification of risk (Burns, Peters, & Slovic, 2012). While anger was found to be the most viral sentiment online (Berger & Milkman, 2012), high arousal negative emotions such as anger and fear were both found to be closely associated with motivational tendencies such as behavioral intentions (Zhang & Borden, 2020; Zhang et al., 2018). Similarly, using the SARF, Burns et al. (2012) suggested that negative emotions, including fear and anger, were high predictors of increased risk perceptions after an economic crisis.

2.2. Trust and misinformation concerns

Although trust was not a focal concept in the original SARF (Kasperson et al., 1988), (mis)trust in communication sources has been regarded as an important area of theoretical development for SARF (Kasperson, Kasperson, Pidgeon, & Slovic, 2003; Perko, 2011). For example, a recent study found that trust in information sources predicted agricultural advisors’ belief in climate change (Mase, Cho, & Prokopy, 2015). As social media sources play a significant role in communicating risk as information brokers and “social stations”, and as misinformation and conspiracy theories have been a concern for risk amplification on social media (Wirz et al., 2018), the present study examines the role of trust in information sources as a risk amplifier.

Distrust in news media, as measured by The Edelman Trust Barometer (2021), has increased in the United States from 48% to 55% in the past year alone. This is in part due to rising concerns about misinformation and disinformation, or what some politicians erroneously and strategically call “fake news” (Amazeen & Bucy, 2019), and has important implications during times of uncertainty and continuously emerging new information, such as the recent outbreak of the novel coronavirus. When the public no longer trusts mainstream news sources, it is more likely to seek information from alternative platforms such as social media (Tsfati & Ariely, 2014; Tsfati & Cappella, 2003), which, in the absence of gatekeepers, provides mechanisms for users to receive and amplify inaccurate information much faster than ever (Valenzuela, Halpern, Katz, & Miranda, 2019).

While the term “fake news” is an oxymoron (Ireton & Posetti, 2018) used to describe reporting that an actor finds inconvenient or disagrees with (Wardle & Derakhshan, 2018), disinformation is a concept that has attracted a lot of public alarm and scholarly attention in research examining information dissemination on social platforms (Shu, Wang, Lee, & Liu, 2020). Defined as deliberately deceptive news (Ireton & Posetti, 2018; Tandoc, Lim, & Ling, 2018), disinformation contains fabricated facts and can be motivated by either political or financial interests. Unlike disinformation, misinformation is false information that is disseminated by people who think it is accurate, rather than by malicious entities that are aware of the truth and aim to manipulate public opinion (Wardle & Derakhshan, 2018). Regardless of the actors’ intentions in spreading false information, the openness of social media has created an ideal setting for such inaccuracies to spread like fire, creating an “information disorder” (Shu et al., 2020, p. 2). Pew survey research (Barthel, Mitchell, & Holcomb, 2016) found that while Americans are concerned about misinformation, they generally feel confident they can detect it in the news, while 23% say they mistakenly shared fabricated news stories in the past. In a study of 38 countries, Newman, Fletcher, Kalogeropoulos, and Nielsen (2019) found that, compared to their Western European counterparts, Americans are twice as concerned about misinformation on the internet, with 67% reporting high levels of concern. 
While trust in a source of information and interpersonal online discussions might make social media users more confident in sharing information about health risks, concerns about misinformation might make them more hesitant to do so, especially since the number of Americans (43%) who think the public is responsible for preventing the spread of fabricated news is almost equal to those who think governments (45%) or social networking sites and search engines (42%) should shoulder the responsibility (Barthel et al., 2016).

As patients increasingly turn to social media and online sources for health information, trust in risk information available online has raised concerns among medical and mass communication scholars (Lin, Levordashka, & Utz, 2016; Song, Dai, & Wang, 2016). Even before social media were such a staple in consumers' health news diet, trust in health information on the Internet was positively associated with people's discussion of health-related topics, online health information seeking, and online information sharing (Hou & Shim, 2010). Likewise, Song, Omori, et al. (2016) found that trust in social-media health information (both experience- and expertise-based) was a significant predictor of seeking health information online. Additionally, in a recent study expanding SARF, trust in various information sources was found to be associated with beliefs about the risk and, subsequently, with behaviors regarding the risk (Mase et al., 2015).

While a recent study conducted in India (Neyazi, Kalogeropoulos, & Nielsen, 2021) found no correlation between misinformation concerns and online news sharing, concern about “fake news” and inaccurate information on social networks has been very high in the U.S. (Newman et al., 2019). Given the incipient level of the crisis, and as increased doubts and debates on facts regarding a risk event may heighten public uncertainty and may influence the credibility of information sources (Kasperson et al., 1988), the present study argues that misinformation concerns may alter the degree to which online interpersonal discussions and trusted sources affect risk amplification and information sharing behaviors. More specifically, though online discussion may increase information sharing regarding the risk, Twitter users might be more cautious about amplifying risk messages when they perceive certain platforms or content as potential misinformation land mines, thus lowering sharing behaviors. Similarly, although trust in certain Twitter accounts or sources may increase sharing behaviors, Twitter users may be reluctant to share if they perceive possible speculative information to be circulating on the social network.

2.3. The present study

The present study is especially interested in understanding the relationship between trust (in Twitter sources) and risk sharing behaviors on Twitter as well as between trust (in Twitter sources) and coronavirus knowledge. Previous SARF literature has indicated that social stations (i.e., various sources) may lead to risk amplification (Kasperson et al., 1988) and that trust in sources may affect beliefs and behaviors associated with risk (Mase et al., 2015). However, the relationships between trust in sources and risk sharing and between trust in sources and risk knowledge have not been tested in the social media environment. Therefore, the present study proposes the following research questions:

RQ1: How is trust associated with information sharing behaviors? More specifically, how does trust in different Twitter sources associate with information sharing behaviors?

RQ2: How is trust associated with coronavirus knowledge? More specifically, how does trust in different Twitter sources associate with coronavirus knowledge?

The review of literature, including the SARF and previous studies applying SARF, has suggested that a number of factors, including interpersonal discussions (offline and online), information seeking behaviors, blame, negative emotions (fear and anger) and trust, may contribute to risk sharing behaviors on social media (Binder et al., 2011; Burns et al., 2012; Hilverda & Kuttschreuter, 2018; Kasperson et al., 1988; Wirz et al., 2018). Applying these assumptions in the context of Twitter at the early stage of the pandemic outbreak in the U.S., the present study proposes the following hypotheses:

H1: Interpersonal discussions (offline and online) are positively associated with information sharing behaviors.

H2: Information seeking behaviors are positively associated with information sharing behaviors.

H3: Blame is positively associated with information sharing behaviors.

H4: Negative emotions (fear and anger) are positively associated with information sharing behaviors.

Furthermore, as discussed in the literature review section, uncertainty at the incipient stage of the pandemic may increase publics’ doubts and concerns regarding the accuracy of the risk information (Kasperson et al., 1988; Wirz et al., 2018), and Twitter users may be reluctant to share risk information if they perceive possible speculative information to be circulating on Twitter. Therefore, the present study hypothesizes that misinformation concerns may alter the degree to which online interpersonal discussions and trusted sources affect risk amplification and information sharing behaviors.

H5: Misinformation concerns moderate online interpersonal discussion's effect on information sharing behaviors.

H6: Misinformation concerns moderate trusted accounts’ effect on information sharing behaviors.
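
Moderation hypotheses such as H5 and H6 are commonly tested by adding a product term between the predictor and the moderator to a regression model. The sketch below only illustrates that general idea; the function names, the scores, and the coefficient values are hypothetical, and it is not a description of the procedure or software the study used.

```python
from statistics import mean

def center(values):
    """Mean-center scores so the product term is easier to interpret."""
    m = mean(values)
    return [v - m for v in values]

def interaction_term(predictor, moderator):
    """Product of the mean-centered predictor and moderator, one value per respondent."""
    return [x * z for x, z in zip(center(predictor), center(moderator))]

def simple_slope(b_predictor, b_interaction, moderator_value):
    """Slope of the predictor on the outcome at a given (centered) moderator value."""
    return b_predictor + b_interaction * moderator_value

# Hypothetical 5-point scores for four respondents (not data from the study).
online_discussion = [1, 2, 4, 5]
misinfo_concern = [5, 4, 4, 3]
product = interaction_term(online_discussion, misinfo_concern)

# Hypothetical coefficients: a negative interaction weakens the slope
# of online discussion as misinformation concern rises.
slope_low_concern = simple_slope(0.30, -0.10, -1.0)
slope_high_concern = simple_slope(0.30, -0.10, 1.0)
```

Under these assumed coefficients, the slope of online discussion on sharing is smaller at higher levels of misinformation concern, which is the pattern the argument leading to H5 anticipates.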

3. Method

The present study employed an online survey to examine how risk transmission may amplify or attenuate at the early stage of a pandemic outbreak in the United States. Prior to distribution, the study received approval from the Institutional Review Board on February 10, 2020. Survey participants were recruited from Amazon Mechanical Turk, a service that has been shown to produce reliable data if surveys include attention checks to reduce random answering (Rouse, 2015) and where participants are more likely to read instructions than typical undergraduate student populations (Ramsey, Thompson, McKenzie, & Rosenbaum, 2016). Several measures were taken to ensure data quality: first, reCAPTCHA was used to filter out bots; second, participants were required to have an approval rate of 99%; and finally, participants were required to be living in the United States. A total of N = 508 participants were recruited in small batches over the course of two weeks, from February 12 to February 25, 2020. After data cleaning, N = 450 were retained. When asked about Twitter usage, 87.6% of participants reported using Twitter (from rarely to regularly) and 12.4% reported never using it. Therefore, only Twitter users (N = 394) were retained in the sample and used in the analysis.

3.1. Participants

Within the sample, N = 239 (60.7%) identified as male, N = 150 (38.1%) identified as female, and N = 5 (1.3%) identified as other. Average age for the participants was M = 36.51 (SD = 11.21). As for race and ethnicity, N = 43 (10.9%) were Hispanic or Latino, N = 322 (81.7%) were white, N = 34 (8.6%) were black or African American, N = 25 (6.3%) were Asian, N = 2 (0.5%) were American Indian or Alaska Native, and N = 11 (2.8%) identified as other.

In terms of employment status, N = 344 (87.3%) were employed and N = 50 (12.7%) were not employed. As for education, most participants had a bachelor's degree (N = 167, 42.4%), followed by some college or technical training (N = 128, 32.5%), post-graduate degree (N = 55, 14.0%), high school graduate (N = 40, 10.2%), and less than high school (N = 4, 1.0%). See Table 1 for participant profile.

Table 1.

Participant profile

| Demographics | Categories | N (%) |
|---|---|---|
| Gender | Male | 239 (60.7) |
| | Female | 150 (38.1) |
| | Other | 5 (1.3) |
| Age | Mean 36.51 (Range: 18–73) | |
| Ethnicity | Hispanic or Latino | 43 (10.9) |
| Race | White | 322 (81.7) |
| | Black/African American | 34 (8.6) |
| | American Indian/Alaska Native | 2 (.5) |
| | Asian | 25 (6.3) |
| | Other (e.g., multiracial) | 11 (2.8) |
| Education | Less than high school | 4 (1.0) |
| | High school diploma | 40 (10.2) |
| | Some college or technical training | 128 (32.5) |
| | Bachelor's degree | 167 (42.4) |
| | Post-graduate work or degree | 55 (14.0) |
| Household Income | Less than $30,000 | 81 (20.6) |
| | $30,000 - less than $50,000 | 88 (22.3) |
| | $50,000 - less than $75,000 | 107 (27.2) |
| | $75,000 - less than $100,000 | 70 (17.8) |
| | $100,000 or more | 48 (12.1) |
| Employment | Not employed | 50 (12.7) |
| | Employed | 344 (87.3) |
| Marital Status | Single | 252 (64.0) |
| | Married | 124 (36.0) |
| Total | | 394 (100.0) |

In general, 99.7% of the participants had heard of the novel coronavirus and reported to be very familiar with it (M = 4.4, SD = 0.78) (1 = not at all, 5 = a great deal). While risk perceptions were at a medium level (M = 3.59/5, SD = 0.83), perceived vulnerability was relatively low in this sample (M = 2.09/5, SD = 0.98).

3.2. Procedure

After consenting to the survey, participants were first asked about their familiarity with the coronavirus, their risk perceptions and perceived vulnerability. They were then asked to recall and rate their information sharing and seeking behaviors, as well as their online and offline discussion regarding the coronavirus in the past week. Afterwards, participants answered questions measuring their trust in various information sources on Twitter, knowledge about coronavirus, misinformation concerns, negative emotions (fear and anger), their blame attribution on the Chinese government, as well as related demographic information.

3.3. Measurement

All variables were measured on 5-point Likert scales. The information sharing behaviors scale was created with six items asking participants how often they had, for example, posted or retweeted coronavirus-related news (1 = never, 5 = very frequently). The information seeking behaviors scale was adapted from Zhang and Shay (2019) with four items, such as following the CDC and seeking information on social media (1 = never, 5 = very frequently).

The knowledge variable (M = 2.26, SD = 1.05) was created as an additive scale with four items assessing participant knowledge of the coronavirus, symptoms, transmission, etc. Each item was dummy coded (1 = correct answer, 0 = incorrect answer) before the items were summed. An index for misinformation concerns was adapted from Reuter, Hartwig, Kirchner, and Schlegel (2019) with five items such as “misinformation is a really serious threat” (1 = strongly disagree, 5 = strongly agree). Face-to-face discussion about coronavirus was adapted from literature on political talk (de Zuniga, Bachmann, Hsu, & Brundidge, 2013) with eight items asking how frequently respondents talked face-to-face about the coronavirus with family/friends, acquaintances, strangers, people with similar political views, people with dissimilar political views, people from a different race or ethnicity, people from a different social class, and people who propose alternatives or policies for problem solving (1 = never, 5 = all the time). Online discussion about coronavirus used the same scale, asking participants specifically about their online discussion. Negative emotions, including fear and anger, were measured by asking participants the degree to which they experienced the emotions (1 = none at all, 5 = a great deal) (Kim & Niederdeppe, 2013; Zhang & Borden, 2020). Blame (attribution of responsibility to the Chinese government) was measured with a scale adapted from Zhang and Kim (2017) (1 = strongly disagree, 5 = strongly agree). See Table 2 for measurements, Cronbach's alphas, and means and standard deviations.
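
The additive knowledge scale described above can be sketched as follows. This is a minimal illustration assuming four multiple-choice items; the item labels and the answer key are hypothetical, because the text does not report the exact item wording or correct answers.

```python
def knowledge_score(answers, answer_key):
    """Sum of dummy-coded items: 1 for each correct answer, 0 otherwise."""
    return sum(1 if answers.get(item) == correct else 0
               for item, correct in answer_key.items())

# Hypothetical answer key (item labels and options are placeholders).
ANSWER_KEY = {"origin": "b", "symptoms": "a", "transmission": "c", "prevention": "d"}

# One hypothetical respondent who misses the transmission item.
respondent = {"origin": "b", "symptoms": "a", "transmission": "a", "prevention": "d"}
score = knowledge_score(respondent, ANSWER_KEY)  # possible range: 0 to 4
```

With four items, scores can range from 0 to 4, which gives a reference point for reading the reported mean of 2.26.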

Table 2.

Measurements.

| Variable | Reliability and descriptives | Items |
|---|---|---|
| Information sharing behaviors | Cronbach's Alpha = .88, M = 2.08, SD = .97 | (1) Seen news about coronavirus on Twitter; (2) Favorited tweets sharing news about the coronavirus; (3) Retweeted posts sharing news about the coronavirus; (4) Replied to tweets sharing news about the coronavirus; (5) Posted tweets with news or opinion about the coronavirus; (6) Dispelled recurring inaccurate information about coronavirus |
| Information seeking | Cronbach's Alpha = .86, M = 2.24, SD = 1.08 | (1) Followed relevant government agencies such as CDC's social media account for more information on the crisis; (2) Visited relevant government agencies such as CDC's website for more information on the crisis; (3) Sought information about the crisis from my friends and family on social media; (4) Sought information about the crisis from an expert (public figure) who I already follow |
| Misinformation concerns | Cronbach's Alpha = .74, M = 4.32, SD = .67 | (1) Misinformation is a really serious threat to this country; (2) I have been exposed to misinformation and I believed it (not included); (3) Others have been exposed to misinformation and believed it; (4) Misinformation can change people's attitudes; (5) Misinformation can be stopped by large tech companies (not included); (6) Social media are leading sources of misinformation |
| Face-to-face/online discussion about coronavirus | Cronbach's Alpha = .93, M = 2.23, SD = .92 (face-to-face); Cronbach's Alpha = .95, M = 2.24, SD = 1.05 (online) | (1) People I know well, like family members and close friends; (2) People I don't know very well, like acquaintances I have met in real life; (3) Strangers, or people that you have only met online; (4) People whose political views are similar to yours and generally agree with you; (5) People whose political views are different from yours and generally disagree with you; (6) People from a different race or ethnicity; (7) People from a different social class; (8) People who propose alternatives or policies for problem solving |
| Blame | Cronbach's Alpha = .95, M = 3.40, SD = 1.20 | (1) The Chinese government is highly responsible for the crisis; (2) The Chinese government should be held accountable; (3) The crisis is the fault of the Chinese government; (4) I blame the Chinese government for the crisis |
| Fear | Cronbach's Alpha = .95, M = 2.23, SD = 1.07 | I feel … scared; fearful; afraid; anxious; worried; concerned |
| Anger | Cronbach's Alpha = .93, M = 1.92, SD = 1.04 | I feel … angry; irritated; disgusted; contempt; annoyed |

Finally, the level of trust in Twitter sources of coronavirus information was adapted from Song, Omori, et al. (2016), with answers ranging from 1 = distrust to 5 = trust. Participants were asked to rate their degree of trust in Twitter accounts of national television stations (M = 3.34, SD = 1.25), local television stations (M = 3.54, SD = 1.06), newspapers (M = 3.59, SD = 1.15), cable television channels (M = 3.20, SD = 1.28), major news websites (M = 3.45, SD = 1.19), alternative news websites or blogs (M = 2.57, SD = 1.19), “people I follow (that I don't personally know)” (M = 2.70, SD = 1.13), federal agencies such as CDC (M = 4.00, SD = 1.14), local health department (M = 3.96, SD = 1.09), state health department (M = 3.95, SD = 1.11), politicians (M = 2.43, SD = 1.18), celebrities (M = 2.17, SD = 1.14), friends and families (M = 3.31, SD = 1.07), and the Chinese government (M = 2.12, SD = 1.18).
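
The reliability coefficients reported for the scales above are Cronbach's alpha values. As a minimal sketch of how such a coefficient is computed, assuming item responses are available as one list of scores per respondent (the function name and the sample responses are hypothetical and are not the study's data):

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(responses[0])
    # Sample variance of each item (column) across respondents.
    item_variances = [variance(item) for item in zip(*responses)]
    # Sample variance of each respondent's summed scale score.
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical 5-point responses from four respondents to a three-item scale.
sample = [[1, 2, 1], [3, 3, 4], [4, 5, 4], [2, 2, 3]]
print(round(cronbach_alpha(sample), 2))
```

Values closer to 1 indicate that the items vary together, which is the sense in which the scales above are described as reliable.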

4. Results

To answer RQ1 and test hypotheses 1 through 5 about factors affecting information sharing behaviors, a hierarchical regression analysis was conducted in which trust in various sources of information on Twitter was entered in the first block; interpersonal discussions, information seeking behaviors, blame, and emotions were entered in the second block; and demographics were entered in the third. Respondents who reported that they never use Twitter were excluded from the analyses, leaving a final N = 394. This sample size is adequate for linear multiple regression with 26 predictors, an effect size of f² = 0.15, power 1 − β = 0.99, and α = 0.05 (Cohen, 1988).

In answer to RQ1, which asked what sources of trust are most associated with information sharing behaviors, Table 3 shows that trust in Twitter accounts of regular people the respondents follow despite not necessarily knowing them personally (B = 0.10, p = .022), of politicians (B = 0.13, p = .003), of celebrities (B = 0.17, p = .001), and of the Chinese government (B = 0.09, p = .004) was significantly and positively related to COVID-19 information sharing behaviors. The trust variables in the first model explained 33% of the variance in the dependent variable. Only trust in accounts of the Chinese government remained significantly associated with information sharing behaviors in the second model, which added emotions, blame, interpersonal discussions, and information seeking behaviors, and in the third model, which added demographics. In the third model, trust in accounts of regular people became significant again.

Table 3.

Regression analysis with variables predicting information sharing behaviors.

| Predictor | Model 1 B | Model 1 (SE) | Model 2 B | Model 2 (SE) | Model 3 B | Model 3 (SE) |
|---|---|---|---|---|---|---|
| Trust in Twitter accounts of … | | | | | | |
| National TV | -.06 | .05 | -.02 | .04 | -.02 | .04 |
| Local TV | .01 | .05 | .02 | .04 | .04 | .04 |
| Newspapers | -.02 | .05 | .02 | .04 | .01 | .04 |
| Cable TV | -.01 | .04 | -.04 | .03 | -.05 | .03 |
| Major news websites | -.02 | .05 | -.004 | .03 | .01 | .03 |
| Blogs and alternative news sites | .07 | .04 | .01 | .02 | .02 | .02 |
| Regular people I follow | .103∗ | .04 | .05 | .03 | .07∗ | .03 |
| Friends and family | .04 | .04 | -.05 | .03 | -.05 | .03 |
| Federal agencies like the CDC | -.05 | .05 | .01 | .04 | .01 | .04 |
| Local health department | -.02 | .05 | -.05 | .04 | -.05 | .04 |
| State health department | .04 | .06 | .007 | .04 | -.01 | .04 |
| Politicians | .13∗ | .04 | .05 | .03 | .05 | .03 |
| Celebrities | .17∗∗ | .05 | .02 | .03 | .01 | .03 |
| Chinese government | .14∗∗ | .04 | .09∗ | .03 | .09∗ | .03 |
| Fear | | | -.008 | .03 | .00 | .03 |
| Anger | | | .14∗∗ | .03 | .14∗∗ | .03 |
| Blame | | | .05∗ | .02 | .05∗ | .02 |
| Face-to-face discussion | | | .07 | .05 | .04 | .05 |
| Online discussion | | | .17∗∗ | .04 | .18∗∗ | .04 |
| Information seeking | | | .36∗∗ | .03 | .35∗∗ | .03 |
| Age | | | | | -.003 | .002 |
| Gender (Male) | | | | | .09 | .05 |
| Race (White) | | | | | .07 | .07 |
| Community (Rural) | | | | | .006 | .04 |
| Education | | | | | .05∗ | .03 |
| Income | | | | | .01 | .01 |
| N | 394 | | 394 | | 394 | |
| R² | .33 | | .69 | | .70 | |
| F | 13.88∗∗ | | 42.15∗∗ | | 33.64∗∗ | |

∗p < .05, ∗∗p < .001.
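
As a rough consistency check on the model summaries in Table 3, the overall F statistic of an ordinary least squares model can be recovered from its R², the sample size, and the number of predictors. The sketch below assumes 14, 20, and 26 predictors for Models 1 to 3 (14 trust variables, then six variables added in the second block, then six demographics), which agrees with the 26 predictors cited for the power analysis; the function name is illustrative. Because the reported R² values are rounded to two decimals, the recomputed values only approximate the reported F statistics.

```python
def f_from_r2(r2, n, k):
    """Overall F statistic for an OLS model with k predictors fitted to n cases."""
    return (r2 / k) / ((1 - r2) / (n - k - 1))


N = 394
# (label, reported R², assumed number of predictors, reported F)
models = [
    ("Model 1", 0.33, 14, 13.88),
    ("Model 2", 0.69, 20, 42.15),
    ("Model 3", 0.70, 26, 33.64),
]

for label, r2, k, reported_f in models:
    recomputed = f_from_r2(r2, N, k)
    print(f"{label}: recomputed F = {recomputed:.2f}, reported F = {reported_f}")
```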

RQ2 asked how trust in various types of Twitter accounts is associated with knowledge about the coronavirus. A linear regression analysis (Table 4) was run, in which trust in each type of account was entered as an independent variable. Trust in local TV (B = −0.21, p = .003) and the state health department (B = −0.18, p = .024) was negatively associated with knowledge, and trust in the Twitter account of the CDC was positively associated with knowledge (B = 0.18, p = .012).

Table 4.

Regression analysis with trust variables predicting knowledge about the coronavirus.

| Trust in Twitter accounts of … | B | (SE) |
|---|---|---|
| National TV | .11 | .07 |
| Local TV | -.21∗ | .07 |
| Newspapers | .04 | .07 |
| Cable TV | -.03 | .05 |
| Major news websites | .10 | .06 |
| Blogs and alternative news sites | -.07 | .05 |
| Regular people I follow | -.03 | .05 |
| Friends and family | .08 | .05 |
| Federal agencies like the CDC | .18∗ | .07 |
| Local health department | .09 | .08 |
| State health department | -.18∗ | .07 |
| Politicians | -.007 | .05 |
| Celebrities | -.11 | .06 |
| Chinese government | -.08 | .05 |
| N | 394 | |
| R² | .15 | |
| F | 4.89∗∗ | |

∗p < .05, ∗∗p < .001.

H1 predicted that interpersonal discussions about the coronavirus are positively associated with information sharing behaviors. As Model 2 in Table 3 shows, only online discussion (B = 0.17, p < .001) was positively associated with the dependent variable. H1 was therefore partially supported. Online discussion remained statistically significant in the third model, which controlled for demographic variables (B = 0.17, p < .001), while face-to-face discussion was again not a significant predictor. None of the demographics made a difference in information sharing behaviors, with the exception of education, which was positively associated with the dependent variable (B = 0.07, p = .02).

H2 predicted that information seeking behaviors are positively associated with information sharing behaviors. Regression analysis (see Table 3) shows that information seeking was significantly associated with the dependent variable both in Model 2 (B = 0.36, p < .001) and in Model 3 (B = 0.35, p < .001). H2 was supported.

H3 predicted that blame is positively correlated with information sharing behaviors regarding the coronavirus. Table 3 shows that blame was a significant predictor of information sharing both in Model 2 (B = 0.05, p = .03) and in Model 3 (B = 0.05, p = .04). H3 was supported.

H4 predicted that emotions (fear and anger) will be positively associated with information sharing behaviors. The regression analysis in Table 3 (Models 2 and 3) found that fear did not make a difference in either model, but anger was positively associated with information sharing behaviors (B = 0.14, p < .001), even when controlling for demographics (B = 0.14, p < .001). Therefore, H4 was only partially supported.

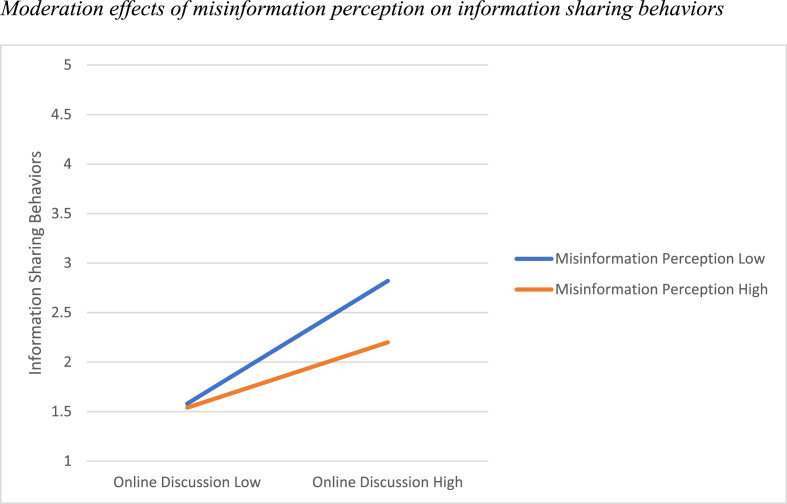

H5 predicted that misinformation concerns moderate online interpersonal discussion's effect on information sharing behaviors. To test the moderation effect, PROCESS, a regression-based approach to moderation and mediation analysis (Hayes, 2013), was employed in SPSS. A simple moderation analysis (Model 1 in PROCESS) was run with the coronavirus information sharing behavior index as the dependent (Y) variable, online discussion as the independent (X) variable, and concern about misinformation as the moderator (W) variable. Online discussion had a positive association with sharing behaviors (B = 1.21, p < .001), and its interaction with misinformation concerns was negative (B = −0.14, p = .013), meaning that the positive association weakened as misinformation concerns increased (see Table 5 and Fig. 1). The model explains 49% of the variance in the dependent variable. H5 was supported. In other words, respondents who were more concerned about misinformation were less likely to share information about the novel coronavirus even when they engaged in online conversations with others.

Table 5.

Misinformation concerns moderate online discussion's effect on information sharing behaviors.

| Information sharing behaviors | B | (SE) | t | LLCI | ULCI |
|---|---|---|---|---|---|
| Online discussion | 1.21∗∗∗ | .25 | 4.89 | .73 | 1.70 |
| Misinformation concerns | .13 | .14 | .90 | -.15 | .41 |
| (M) Online discussion x Misinformation concerns | -.14∗ | .06 | −2.48 | -.25 | -.03 |
| Conditional effects of Online discussion at the values of Misinformation concerns | | | | | |
| 3.75 | .69∗∗∗ | .05 | 14.27 | .59 | .78 |
| 4.50 | .58∗∗∗ | .03 | 16.93 | .52 | .65 |
| 5.00 | .52∗∗∗ | .05 | 10.50 | .42 | .61 |
| N | 394 | | | | |
| R² | .49 | | | | |
| F (3, 390) | 124.17∗∗∗∗ | | | | |

∗p < .05, ∗∗p < .001.

Fig. 1.

Moderation effects of misinformation perception on information sharing behaviors.
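
The conditional effects in Table 5 follow directly from the moderation model: the effect of online discussion at a given level W of misinformation concerns equals the coefficient for online discussion plus the interaction coefficient multiplied by W. A minimal sketch using the rounded coefficients reported in Table 5 (the constant and function names are illustrative); small differences from the tabled conditional effects reflect rounding of the coefficients.

```python
# Rounded coefficients as reported in Table 5.
B_ONLINE_DISCUSSION = 1.21   # effect of online discussion (X)
B_INTERACTION = -0.14        # online discussion x misinformation concerns (X * W)


def conditional_effect(misinformation_concern):
    """Effect of online discussion on sharing at a given moderator value W."""
    return B_ONLINE_DISCUSSION + B_INTERACTION * misinformation_concern


# Moderator values at which Table 5 reports conditional effects.
for w in (3.75, 4.50, 5.00):
    print(f"W = {w:.2f}: conditional effect = {conditional_effect(w):.3f}")
```

The effect stays positive at every moderator value shown but shrinks as misinformation concerns rise, which is the pattern the negative interaction term describes.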

H6 predicted that misinformation concerns moderate trusted accounts’ effect on information sharing behaviors. Because the regression analysis in Table 3 found that trust in the Twitter accounts of regular people and of the Chinese government was consistently associated with information sharing behaviors, these two variables were included in moderation models with PROCESS (Hayes, 2013). The regression-based models found no interaction effect for either trust in regular people's Twitter accounts or trust in Chinese government Twitter accounts. H6 was not supported.

5. Discussion

A survey of U.S. social media users set out to examine risk amplification and attenuation factors associated with coronavirus-related information sharing behaviors and knowledge in the incipient stages of the COVID-19 pandemic in the United States (February 11–25, 2020). Regression analyses showcased associations that have important implications for risk communication in a world where media consumers rely increasingly on alternative sources of news, such as social media.

Despite the high awareness of the novel coronavirus SARS-CoV-2 (M = 4.4 on a 5-point scale, SD = 0.78), relatively low overall sharing behaviors (M = 1.97 on a 5-point scale, SD = 0.96) may indicate an attenuation of risk on the brink of a massive pandemic outbreak in the U.S. However, this low sharing behavior might be a blessing in disguise considering the respondents’ overall mediocre level of knowledge about how the virus presented itself and was transmitted (M = 2.26 on a 4-point additive scale, SD = 1.04). Consistent with existing literature (Chung, 2011; Strekalova & Krieger, 2017), the participants in the present study were more likely to share information about COVID-19 if they engaged in discussions about the coronavirus online, but not face-to-face, with people in their social circle. This also indicates that social media discussions of risk may have a more prominent effect than offline risk discussions when it comes to risk amplification (Ng et al., 2018).

Regression analysis also found that users who actively sought information about the risk (from the CDC, public figures, and on social media) were more likely to help spread the word on their social networking sites. This has important implications for the institutions that want to increase awareness and protective actions among the larger public during the early stages of a pandemic. They should make scientific information available widely and frequently on their websites and official social media accounts to increase the likelihood that information seekers would contribute to the social amplification of the risk.

Consistent with previous literature (Chong & Choy, 2018; Wirz et al., 2018), blame attributed to the Chinese government and the negative emotion of anger were associated with higher levels of information sharing behaviors. The pandemic, though still at an incipient stage, had created uncertainty and ambiguity, leading to anger and blaming sentiments that amplify the propagation of the risk. While anger was found to be positively associated with information sharing behaviors, fear was not. This may indicate that different negative arousal emotions function differently. Anger has often been found to be associated with blame attribution, whereas fear is usually related to personal (health) safety (Song, Dai, & Wang, 2016). This is also consistent with the Extended Parallel Process Model, which holds that if people experience too much fear and not enough efficacy in a pandemic, they may engage in fear control and avoid self-protective or other behaviors (Witte, Cameron, McKeon, & Berkowitz, 1996). At the early stage of COVID-19 in the U.S., though the publics were attributing blame to the Chinese government, they either were not alert enough to perceive themselves to be at risk yet or had too much fear without any way to address or alleviate it. Neither possibility would amplify risk or lead to information sharing behaviors.

Analysis of trust in information sources on Twitter yielded interesting results. When controlling for other variables, only trust in regular people (whom social-media users follow on Twitter but don't know personally) and trust in the Chinese government were associated with increased information sharing behaviors. This may be consistent with The Edelman Trust Barometer (2019) results showing an increasing distrust in mainstream news sources and government sources in the U.S. By contrast, people may feel that information from strangers on Twitter is more reliable and shareable. To follow strangers, social-media users must be using specific criteria, such as common interests and lifestyles, rather than the heuristics related to authority or celebrity that apply to the accounts of well-established media, health, and governmental institutions. Because they relate more easily to regular people, this parasocial interaction leads social-media users to feel a sense of closeness, or what social psychology calls “ambient intimacy” (Lin, Levordashka, & Utz, 2016). As for trust in the Chinese government, those who trust the Chinese government may also have been following the outbreak in China before it was declared a pandemic in the United States. This engagement with the risk event may have led to their increased information sharing behaviors.

Furthermore, the present study found that trust in local TV and in state health departments was associated with lower, not higher, knowledge of COVID-19; only trust in the CDC was associated with greater knowledge. At the early stage of a pandemic, when expert knowledge of the virus is limited, direct communication from federal agencies such as the Centers for Disease Control and Prevention appears to be the most beneficial. Sources such as local TV and state health departments, by contrast, may report and communicate contradictory information about the pandemic, undermining the effects of health risk education. This is consistent with prior research (Jagiello & Hills, 2018) showing that, in the risk amplification/attenuation process, the more a message is transmitted, the more negative and biased it may become. This illustrates the importance of direct communication of health risk information/education from credible sources at early stages of a pandemic.

The moderation tests found that misinformation concerns moderate only online interpersonal discussion's effect on information sharing behaviors and do not moderate the effect of trust in information sources on sharing behaviors. This may suggest that, though people have misinformation concerns, those worries may be directed at distrusted sources or at general online information (such as in an online discussion). When misinformation concerns are high, people are reluctant to share information on Twitter despite engagement in online discussion. However, once an information source is considered to be credible, information sharing behaviors are not affected by misinformation perceptions. This may indicate that, if social institutions such as the government or the media wish to alert the public about potential risks and to encourage propagation of reliable information, establishing trust is crucial.

Not surprisingly, trust in the Twitter account of the CDC was associated with greater knowledge about the coronavirus. As the survey was conducted before COVID-19 became a national story, trust in celebrities, local television stations, and state health departments was associated with lower levels of knowledge about the virus, most likely because these sources were not yet sharing timely information at these early stages of the public health crisis, or perhaps because they were sharing contradictory information, as often happens during uncertain situations.

5.1. Theoretical and practical implications

The present study's theoretical contributions related to SARF are twofold. First, it expands SARF on social media by identifying risk amplification factors online. Among other factors, online discussions, information seeking behaviors, anger, blame, and trusted information sources could lead to an increase in the spread of risk information. In addition to factors identified in previous literature, the present study adds (dis)trust and misinformation perceptions to the framework as important amplification or attenuation factors on social media. Second, the study sheds light on risk amplification and risk management at the initial stage of risk events.

Findings imply that risk amplification on social media differs significantly from that of traditional media. First, the conversational and interconnected nature of social media (Sundar, Bellur, Oh, Jia, & Kim, 2016) allows risk information to propagate through public engagement. As publics engage in discussions and information seeking behaviors online, they are also actively framing and amplifying the risk. Second, sentiments of blame and the negative emotion of anger often coexist online, leading to risk sharing behaviors and contributing to risk amplification. Signs of blame and anger on social media should be monitored, as they might cause a ripple effect, spiking public attention to the risk event. Third, not all sources on social media become “social stations” or information brokers of risk information, as public trust in only a few sources may lead to information sharing behaviors, and the trusted sources are often situational or contextual. As the present study showed, only trust in other regular people and in the Chinese government, the government of the country where the outbreak originated, was associated with information sharing behaviors.

A major contribution of the present study to SARF is delineating the role of misinformation perceptions in social media risk amplification. The study showed that misinformation concerns affect the way people obtain and share information on social media, contributing to the amplification or attenuation of risk. When people engage in online discussions regarding the risk, concerns about misinformation may make them cautious and discourage them from further sharing risk information. Additionally, trust in information sources on social media may overcome concerns regarding misinformation. These findings may be a double-edged sword. On the one hand, trust may exacerbate misinformation in the risk amplification process, as it is unknown whether the information shared is accurate. People could be placing trust in regular people who may also be sharing inaccurate information, further circulating misinformation on social media. This is also evident in the result that only trust in the CDC was associated with increased knowledge of the coronavirus. On the other hand, the findings indicate the importance of increasing the availability of accurate risk information and cultivating trusted sources for the public in disseminating risk information.

Findings provide insights into risk management and communication at early stages of a serious pandemic outbreak. Despite having a large window of preparation time, the U.S. became one of the countries with the most confirmed coronavirus cases (Johns Hopkins Coronavirus Resource Center, 2020). The general attenuation of risk prior to the outbreak in the U.S., as indicated by overall low information sharing behaviors, revealed serious hidden issues in the country's preparations for and responses to the pandemic. First, the publics' (dis)trust in, and misinformation concerns about, various sources on social media prevented the scientific community from alerting and properly educating the public about the risk from the very beginning. Second, inconsistencies in the risk messaging further confused the publics, leading to an attenuation of risk. Despite early communication efforts by official risk communicators such as the CDC, the pandemic risk was given a lower priority in local media coverage and at the state level, minimizing the risk and knowledge of the risk.

Based on these findings, a few practical suggestions are offered. Public trust is the foundation of effective risk communication, and trust-building prior to crises is crucial (Reynolds & Seeger, 2014). Since it is trust in regular people that predicted increased information sharing, public health institutions such as the CDC could target opinion leaders to help spread educational information and close the knowledge gap. Consistent risk messaging across all levels (local and national) is also vital to avoid over-amplification or attenuation of risk. Risk communication efforts and messaging therefore need to be centralized. As people actively seek and create risk information on social media, and information seeking is positively associated with information sharing, public health institutions such as the CDC should make accurate risk information available as widely as possible. Ensuring consistent risk messaging at the state and local level is also essential.

6. Limitations and future research

A limitation of the present study is that it did not capture the political leaning of the respondents, especially in light of the finding that trust in politicians was an important factor in the models predicting information sharing and that the crisis was politicized in the early stages, with politicians being cited more than scientists in the initial news coverage (Hart, Chinn, & Soroka, 2020). Future studies should take political leaning into account when analyzing the following stages of the crisis, in a highly polarized society. Trust variables and misinformation concerns could be added to classic SARF studies focusing on other health or environmental crises with relatively low public risk perceptions and high likelihood for misinformation such as climate change (Leiserowitz, 2005). As climate change risk is prone to misinformation on social media (Treen, Williams, & O'Neill, 2020), future research could examine how trust in various information sources and misinformation concerns may be associated with climate change risk sharing on social media.

7. Conclusion

Overall, the present study found high levels of concern about misinformation and low levels of information sharing, suggesting an attenuation of risk in the initial stages of the COVID-19 crisis. When amplification did occur, it was predicted by key variables in the SARF literature including anger, blame, online discussion, and information seeking behaviors. A key contribution of the present study to SARF is the finding that risk amplification was also predicted by trust in Twitter accounts of non-scientific sources, such as regular people, politicians, celebrities, and the Chinese government. However, trust in local sources (local media and state health department) was negatively associated with coronavirus knowledge. Trust in federal agencies such as the CDC was the only source of trust positively associated with coronavirus knowledge. Furthermore, while misinformation concerns may deter people from sharing risk information on Twitter, trust in sources on Twitter may overcome people's misinformation concerns.

The present study adds to the SARF literature regarding risk amplification and/or attenuation on social media during initial stages of a crisis. Findings suggest that trust and misinformation concerns are crucial factors to consider when it comes to dissemination of risk information on Twitter. Trust in Twitter sources that act as “social stations” could significantly amplify or attenuate risk messages at the outbreak of a crisis. However, alarmingly, trust in the “wrong” sources may lead to either an amplification of incorrect risk information or an attenuation of risk when public alert is needed at the outbreak of a crisis. Either of these two outcomes would problematize subsequent risk communication efforts.

The present study also provides practical implications for risk management and communication on social media at early stages of a pandemic. When a need to alert the publics of an impending risk arises such as at the outbreak of a potential pandemic, it is important to cultivate trusted sources, to increase the overall availability of accurate information, and to ensure the consistency of risk information through all local and national levels of sources on Twitter.

Credit author statement

Xiaochen Angela Zhang: Conceptualization, methodology, writing (intro, SARF literature review and discussion), revision, review and editing. Raluca Cozma: Survey data collection, writing (intro, trust and misinfo concern literature review and implications), original analysis, revision.

References

- Barometer E.T. 2021. Edelman trust barometer.https://www.edelman.com/sites/g/files/aatuss191/files/2021-03/2021%20Edelman%20Trust%20Barometer.pdf Retrieved from. [Google Scholar]

- Barthel M., Mitchell A., Holcomb J. Many Americans believe fake news is sowing confusion. Pew Research Center. 2016;15:12. [Google Scholar]

- Brenkert-Smith H., Dickinson K.L., Champ P.A., Flores N. Social amplification of wildfire risk: The role of social interactions and information sources. Risk Analysis. 2013;33:800–817. doi: 10.1111/j.1539-6924.2012.01917.x. [DOI] [PubMed] [Google Scholar]

- Binder A.R., Scheufele D.A., Brossard D., Gunther A.C. Interpersonal amplification of risk? Citizen discussions and their impact on perceptions of risks and benefits of a biological research facility. Risk Analysis. 2011;31:324–334. doi: 10.1111/j.1539-6924.2010.01516.x. [DOI] [PubMed] [Google Scholar]

- Burns W.J., Peters E., Slovic P. Risk perception and the economic crisis: A longitudinal study of the trajectory of perceived risk. Risk Analysis. 2012;32:659–677. doi: 10.1111/j.1539-6924.2011.01733.x. [DOI] [PubMed] [Google Scholar]

- Chong M., Choy M. The social amplification of haze-related risks on the Internet. Health Communication. 2018;33:14–21. doi: 10.1080/10410236.2016.1242031. [DOI] [PubMed] [Google Scholar]

- Chung I.J. Social amplification of risk in the internet environment. Risk Analysis. 2011;31:1883–1896. doi: 10.1111/j.1539-6924.2011.01623.x. [DOI] [PubMed] [Google Scholar]

- Cohen J. Routledge; New York, NY: 1988. Statistical power analysis for the behavioral science. [Google Scholar]

- Fellenor J., Barnett J., Potter C., Urquhart J., Mumford J.D., Quine C.P. The social amplification of risk on Twitter: The case of ash dieback disease in the United Kingdom. Journal of Risk Research. 2018;21:1163–1183. doi: 10.1080/13669877.2017.1281339. [DOI] [Google Scholar]

- Hart P.S., Chinn S., Soroka S. Politicization and polarization in COVID-19 news coverage. Science Communication. 2020;42(5):679–697. doi: 10.1177/1075547020950735. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hayes A.F. Guilford; New York: 2013. An introduction to mediation, moderation, and conditional process analysis: A regression-based approach. [Google Scholar]

- Hilverda F., Kuttschreuter M. Online information sharing about risks: The case of organic good. Risk Analysis. 2018;38:1904–1920. doi: 10.1111/risa.12980. [DOI] [PubMed] [Google Scholar]

- Hou J., Shim M. The role of provider–patient communication and trust in online sources in Internet use for health-related activities. Journal of Health Communication. 2010;15(sup3):186–199. doi: 10.1080/10810730.2010.522691. [DOI] [PubMed] [Google Scholar]

- Ireton C., Posetti J. UNESCO; Paris: 2018. Journalism, fake news & disinformation: Handbook for journalism education and training.https://unesdoc.unesco.org/ark:/48223/pf0000265552_eng [Google Scholar]

- Jagiello R.D., Hills T.T. Bad news has wings: Dread risk mediates social amplification in risk communication. Risk Analysis. 2018;38:2193–2207. doi: 10.1111/risa.13117. [DOI] [PubMed] [Google Scholar]

- Johns Hopkins Coronavirus Resource Center . 2020. COVID-19 dashboard.https://coronavirus.jhu.edu/map.html Retrieved from. [Google Scholar]

- Kasperson J.X., Kasperson R.E., Pidgeon N., Slovic P. In: The social amplification of risk. Pidgeon N., Kaperson R., Slovic P., editors. Cambridge University Press; Cambridge: 2003. The social amplification of risk: Assessing fifteen years of research and theory; pp. 13–46. [Google Scholar]

- Kasperson R.E., Renn O., Slovic P., Brown H.S., Emel J., Goble R., et al. The social amplification of risk: A conceptual framework. Risk Analysis. 1988;8:177–187. doi: 10.1111/j.1539-6924.1988.tb01168.x. [DOI] [Google Scholar]

- Kim H.K., Niederdeppe J. The role of emotional response during an H1N1 influenza pandemic on a college campus. Journal of Public Relations Research. 2013;25(1):30–50. doi: 10.1080/1062726X.2013.739100. [DOI] [Google Scholar]

- Leiserowitz A.A. American risk perceptions: Is climate change dangerous? Risk Analysis. 2005;25:1433–1442. doi: 10.1111/j.1540-6261.2005.00690.x. [DOI] [PubMed] [Google Scholar]

- Lewis R.E., Tyshenko M.G. The impact of social amplification and attenuation of risk and the public reaction to mad cow disease in Canada. Risk Analysis. 2009;29:714–728. doi: 10.1111/j.1539-6924.2008.01188.x. [DOI] [PubMed] [Google Scholar]

- Lin R., Levordashka A., Utz S. Ambient intimacy on Twitter. Cyberpsychology. Journal of Psychosocial Research on Cyberspace. 2016;10(1) doi: 10.5817/CP2016-1-6. Article 6. [DOI] [Google Scholar]

- Lin W.Y., Zhang X., Song H., Omori K. Health information seeking in the Web 2.0 age: Trust in social media, uncertainty reduction, and self-disclosure. Computers in Human Behavior. 2016;56:289–294. doi: 10.1016/j.chb.2015.11.055. [DOI] [Google Scholar]

- Mase A.S., Cho H., Prokopy L.S. Enhancing the Social Amplification of Risk Framework (SARF) by exploring trust, the availability heuristic, and agricultural advisors' belief in climate change. Journal of Environmental Psychology. 2015;41:166–176. doi: 10.1016/j.jenvp.2014.12.004. [DOI] [Google Scholar]

- Newman N., Fletcher R., Kalogeropoulos A., Nielsen R.K. The reuters institute digital news report 2019. Reuters Institute for the Study of Journalism. 2019 https://reutersinstitute.politics.ox.ac.uk/sites/default/files/2019-06/DNR_2019_FINAL_0.pdf Retrieved from. [Google Scholar]

- Neyazi T.A., Kalogeropoulos A., Nielsen R.K. Misinformation concerns and online news participation among internet users in India. Social Media+ Society. 2021;7(2) doi: 10.1177/20563051211009013. [DOI] [Google Scholar]

- Ng Y.J., Yang J., Vishwanath A. To fear or not to fear? Applying the social amplification of risk framework on two environmental health risks in Singapore. Journal of Risk Research. 2018;21:1487–1501. doi: 10.1080/13669877.2017.1313762. [DOI] [Google Scholar]

- Pedgeon N., Kasperson R.E., Slovic P. Cambridge University Press; Cambridge, UK: 2003. The social amplification of risk. [Google Scholar]

- Perko T. Importance of risk communication during and after a nuclear accident. Integrated Environmental Assessment and Management. 2011;7(3):388–392. doi: 10.1002/ieam.230. [DOI] [PubMed] [Google Scholar]

- Ramsey S.R., Thompson K.L., McKenzie M., Rosenbaum A. Psychological research in the internet age: The quality of web-based data. Computers in Human Behavior. 2016;58:354–360. doi: 10.1016/j.chb.2015.12.049.

- Renn O. In: Communicating risks to the public. Kasperson R., Stallen P., editors. Springer; Dordrecht: 1991. Risk communication and the social amplification of risk; pp. 287–324.

- Renn O., Burns W.J., Kasperson J.X., Kasperson R.E., Slovic P. The social amplification of risk: Theoretical foundations and empirical applications. Journal of Social Issues. 1992;48(4):137–160. doi: 10.1111/j.1540-4560.1992.tb01949.x.

- Reuter C., Hartwig K., Kirchner J., Schlegel N. 14th International Conference on Wirtschaftsinformatik. 2019. Fake news perception in Germany: A representative study of people's attitudes and approaches to counteract misinformation. February 24-27, Siegen, Germany.

- Reynolds B., Seeger M. 2014. Crisis and emergency risk communication. Retrieved from: https://emergency.cdc.gov/cerc/ppt/cerc_2014edition_Copy.pdf

- Rouse S.V. A reliability analysis of Mechanical Turk data. Computers in Human Behavior. 2015;43:304–307. doi: 10.1016/j.chb.2014.11.004.

- Scheufele D.A., Krause N.M. Science audiences, misinformation, and fake news. Proceedings of the National Academy of Sciences. 2019;116(16):7662–7669. doi: 10.1073/pnas.1805871115.

- Schumaker E. ABC News; 2020. Timeline: How coronavirus got started. The outbreak spanning the globe began in December, in Wuhan, China. April 7. Retrieved from https://abcnews.go.com/Health/timeline-coronavirus-started/story?id=69435165

- Shu K., Wang S., Lee D., Liu H. Springer International Publishing; 2020. Disinformation, misinformation, and fake news in social media.

- Song Y., Dai X., Wang J. Not all emotions are created equal: Expressive behavior of the networked public on China's social media site. Computers in Human Behavior. 2016;60:525–533. doi: 10.1016/j.chb.2016.02.086.

- Song H., Omori K., Kim J., Tenzek K.E., Hawkins J.M., Lin W.Y., et al. Trusting social media as a source of health information: Online surveys comparing the United States, Korea, and Hong Kong. Journal of Medical Internet Research. 2016;18(3):e25. doi: 10.2196/jmir.4193.

- Strekalova Y.A., Krieger J.L. Beyond words: Amplification of cancer risk communication on social media. Journal of Health Communication. 2017;22:849–857. doi: 10.1080/10810730.2017.1367336.

- Sundar S.S., Bellur S., Oh J., Jia H., Kim H. Theoretical importance of contingency in human-computer interaction. Communication Research. 2016;43:595–625. doi: 10.1177/0093650214534962.

- Tandoc E.C., Jr., Lim Z.W., Ling R. Defining “fake news”. Digital Journalism. 2018;6(2):137–153. doi: 10.1080/21670811.2017.1360143.

- Treen K., Williams H., O'Neill S. Online misinformation about climate change. WIREs Climate Change. 2020;11(5). doi: 10.1002/wcc.665.

- Tsfati Y., Ariely G. Individual and contextual correlates of trust in media across 44 countries. Communication Research. 2014;41(6):760–782. doi: 10.1177/0093650213485972.

- Tsfati Y., Cappella J.N. Do people watch what they do not trust? Exploring the association between news media skepticism and exposure. Communication Research. 2003;30(5):504–529. doi: 10.1177/0093650203253371.

- Valenzuela S., Halpern D., Katz J.E., Miranda J.P. The paradox of participation versus misinformation: Social media, political engagement, and the spread of misinformation. Digital Journalism. 2019;7(6):802–823. doi: 10.1080/21670811.2019.1623701.

- Walsh N. 2020. The Wuhan files: Leaked documents reveal China's mishandling of the early stages of COVID-19. cnn.com. Retrieved from: https://www.cnn.com/2020/11/30/asia/wuhan-china-covid-intl/index.html

- Wardle C., Derakhshan H. In: Journalism, ‘fake news’ & disinformation. Ireton C., Posetti J., editors. UNESCO; Paris: 2018. Thinking about ‘information disorder’: Formats of misinformation, disinformation, and mal-information; pp. 43–54.

- Wirz C.D., Xenos M.A., Brossard D., Scheufele D., Chung J.H., Massarani L. Rethinking social amplification of risk: Social media in Zika in three languages. Risk Analysis. 2018;38(12):2599–2624. doi: 10.1111/risa.13228.

- Witte K., Cameron K.A., McKeon J.K., Berkowitz J.M. Predicting risk behaviors: Development and validation of a diagnostic scale. Journal of Health Communication. 1996;1(4):317–341. doi: 10.1080/108107396127988.

- Zhang X., Borden J. How to communicate cyber-risk? An examination of behavioral recommendations in cybersecurity crises. Journal of Risk Research. 2020;23(10):1336–1352. doi: 10.1080/13669877.2019.1646315.

- Zhang X., Borden J., Kim S. Understanding publics' post-crisis social media engagement behaviors: An examination of antecedents and mediators. Telematics and Informatics. 2018;35(8):2133–2146. doi: 10.1016/j.tele.2018.07.014.

- Zhang X., Kim S. An examination of consumer-company identification as a key predictor of consumer responses in corporate crisis. Journal of Contingencies and Crisis Management. 2017;25(4):232–243. doi: 10.1111/1468-5973.12147.

- Zhang X., Shay R. An examination of antecedents to perceived community resilience in disaster post-crisis communication. Journalism & Mass Communication Quarterly. 2019;96(1):264–287. doi: 10.1177/1077699018793612.

- de Zuniga H.G., Bachmann I., Hsu S.H., Brundidge J. Expressive versus consumptive blog use: Implications for interpersonal discussion and political participation. International Journal of Communication. 2013;7:22. https://ijoc.org/index.php/ijoc/article/view/2215