Abstract

The prelimbic and infralimbic cortices of the rodent medial prefrontal cortex mediate the effects of context and goals on instrumental behavior. Recent work from our laboratory has expanded this understanding. Results have shown that the prelimbic cortex is important for the modulation of instrumental behavior by the context in which the behavior is learned (but not other contexts), with context potentially being broadly defined (to include at least previous behaviors). We have also shown that the infralimbic cortex is important in the expression of extensively-trained instrumental behavior, regardless of whether that behavior is expressed as a stimulus-response habit or a goal-directed action. Some of the most recent data suggest that infralimbic cortex may control the currently active behavioral state (e.g., habit vs. action or acquisition vs. extinction) when two states have been learned. We have also begun to examine prelimbic and infralimbic cortex function as key nodes of discrete circuits and have shown that prelimbic cortex projections to an anterior region of the dorsomedial striatum are important for expression of minimally-trained instrumental behavior. Overall, the use of an associative learning perspective on instrumental learning has allowed the research to provide new perspectives on how these two “cognitive” brain regions contribute to instrumental behavior.

Keywords: prefrontal, prelimbic, infralimbic, instrumental, operant, renewal, reinstatement, action, habit

Instrumental (operant) conditioning is a method for studying voluntary behavior in the animal laboratory. In a typical procedure, a contingency is arranged between a behavior (response) and an outcome; performance of the behavior leads to the outcome and the behavior is reinforced by its consequences (Skinner, 1938; Thorndike, 1911). According to contemporary theories of instrumental learning (Balleine & O’Doherty, 2010; Bouton, 2017; Hogarth, Balleine, Corbit, & Killcross, 2013), instrumental behavior can be guided by any of several associations that the procedure makes available for learning. The behavior itself can enter into at least two hypothetical associations: one between the response (R) and its outcome (O) (which supports “goal-directed action”) and another between the preceding stimulus (S) and the R (which supports “habit”). For habit, contiguity between S, R, and O is thought to reinforce the S-R association, but O itself is not part of the associative structure. Habit becomes especially important after an action has received extensive repetition or practice. Habit and action are typically distinguished by outcome devaluation methods, in which the value of O is separately decreased, for example, by taste aversion conditioning or by feeding O to satiety just before the test. The aversion to O, or satiety to O, produces a reduction in R when R is motivated by a representation of O (and is thus a goal-directed action), but not when R is purely habitual (triggered by S without involving a representation of O) (Adams, 1982; Adams & Dickinson, 1981; Dickinson, 1985). A great deal of research has studied the contributions of action and habit learning to the guidance of instrumental behavior in animals and humans, as well as their ties to the striatum and prefrontal cortex (e.g., Balleine, 2019; Balleine & O’Doherty, 2010).

Instrumental behavior can also be guided by the context in which it occurs (Bouton, 2019). Context can include not just static exteroceptive stimuli such as the testing box but also recent reinforcers, prior behaviors, and interoceptive stimuli such as the passage of time, drug state, deprivation state, and stress (Bouton, 1993, 2019). Instrumental behavior is reduced by a context change after conditioning (e.g., Bouton, Todd, Vurbic, & Winterbauer, 2011; Thrailkill & Bouton, 2015a). However, the context seems especially important in controlling extinction, the reduction in responding that occurs when the rewarding O is removed after instrumental learning (Rescorla, 2001). Extinction of instrumental (or Pavlovian) behavior produces a context-specific form of inhibition; removal from the extinction context causes the extinguished instrumental behavior to return or “renew” (Bouton et al., 2011; Nakajima, Tanaka, Urishihara, & Imada, 2000; Todd, 2013; Todd, Winterbauer, & Bouton, 2012). In ABA renewal of extinguished instrumental behavior, instrumental conditioning occurs in context A, extinction occurs in context B, and the extinguished behavior renews in the training context A. In ABC renewal, instrumental conditioning occurs in context A, extinction occurs in context B, and the extinguished behavior renews in a novel, non-extinction context, context C. AAB renewal is similar, but in this case conditioning and extinction occur in the same context, context A, and the extinguished behavior renews in a novel context B. Both ABC renewal and AAB renewal show that mere removal from the extinction context, without a return to the acquisition context, can cause extinguished behavior to renew (Bouton et al., 2011). Contexts can modulate instrumental behavior through a direct S-R association with the instrumental response (e.g., Thrailkill & Bouton, 2015a) or a hierarchical S-(R-O) association with the response-outcome association (Trask & Bouton, 2014).
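The three renewal designs differ only in which of the acquisition, extinction, and test contexts coincide. The following is a minimal sketch of that labeling logic; the function name `renewal_design` and the string labels are hypothetical conveniences introduced here for illustration, not terminology or code from the studies reviewed.

```python
def renewal_design(acquisition: str, extinction: str, test: str) -> str:
    """Label a three-phase design by which contexts coincide (illustrative only)."""
    if test == extinction:
        # Testing in the extinction context is the comparison condition,
        # not a renewal test.
        return "extinction-context test"
    if acquisition == extinction:
        # Conditioning and extinction share a context; the test context is new.
        return "AAB"
    if test == acquisition:
        # The test returns the animal to the acquisition context.
        return "ABA"
    # All three contexts differ.
    return "ABC"


for phases in [("A", "B", "A"), ("A", "B", "C"), ("A", "A", "B"), ("A", "B", "B")]:
    print(phases, "->", renewal_design(*phases))
```

Note that the labels depend only on the pattern of matches, not on the letters themselves, so a design with acquisition in one context, extinction in a second, and testing in a third is labeled ABC whatever the contexts are called.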

Medial prefrontal cortex and instrumental conditioning

There is evidence that the medial prefrontal cortex plays an important role in instrumental behavior, and the research we will review here clearly implicates it in contextual control. The rodent medial prefrontal cortex (mPFC) can be divided into medial precentral cortex, anterior cingulate cortex, prelimbic cortex (PL), and infralimbic cortex (IL) based on considerations such as cytoarchitecture and connectivity (Heidbreder & Groenewegen, 2003; Ongur & Price, 2000). We focus here on PL and IL. Based on connectivity analysis, the rat PL and IL may be homologous with primate area 32 and area 25, respectively (Heilbronner, Rodriguez-Romaguera, Quirk, Groenewegen, & Haber, 2016). While PL and IL have similar projection patterns to thalamic nuclei, they have different projection patterns to a number of other brain regions (Vertes, 2004). For example, striatal projections of the IL are largely confined to the shell region of the nucleus accumbens (NAc) (Mailly, Aliane, Groenewegen, Haber, & Deniau, 2013; McGeorge & Faull, 1989; Vertes, 2004). In contrast, PL projections to the striatum form a large dorso-ventral band throughout the rostro-caudal NAc (core and shell) and dorsomedial striatum (DMS) (Mailly et al., 2013).

We based our initial work on a body of research from other laboratories supporting a role for the PL and IL in extinction and ABA renewal of instrumental conditioning. A conclusion of this work has been that PL is important for ABA renewal and IL is important for extinction. For example, pharmacological inactivation of PL prior to test reduced ABA renewal of extinguished alcohol-seeking (Palombo et al., 2017; Willcocks & McNally, 2013) and tetrodotoxin-mediated inactivation of PL prior to testing reduced ABA renewal of extinguished cocaine-seeking (Fuchs et al., 2005). In rats that had undergone extinction of cocaine-seeking, pharmacological inactivation of IL impaired extinction expression (LaLumiere, Niehoff, & Kalivas, 2010; Peters, LaLumiere, & Kalivas, 2008). However, in studies of extinction and renewal of heroin-seeking, pharmacological inactivation of IL, rather than PL, impaired ABA renewal (Bossert et al., 2011); the same effect was produced when only neurons active during context A exposure were lesioned (Bossert et al., 2011) and when IL and NAc shell were pharmacologically “disconnected” (Bossert et al., 2012). Pharmacological “disconnection” of ventral hippocampus CA1 (vCA1) and IL, or inhibition of vCA1 terminals in IL with DREADDs, also impaired ABA renewal of extinguished heroin-seeking (Wang et al., 2018).

Research from other laboratories has also supported a role for PL and IL in actions and habits, respectively. Balleine and Dickinson (1998) were the first to identify a role for PL in supporting actions when they showed that pre-training excitotoxic lesions of PL impaired action expression at test (Balleine & Dickinson, 1998). Killcross and Coutureau (2003) provided a within-subjects demonstration of the PL supporting actions and the IL supporting habits. Prior to acquisition, excitotoxic (or sham) lesions were made of the PL or IL. Rats then underwent instrumental conditioning of a lever-press response for 20 sessions in context A (high training condition). On the days of the final 5 sessions of training in context A, they also underwent conditioning of a lever-press response, for a different reinforcer, in context B (low training condition). Two tests were conducted. One test was immediately after devaluation (home cage pre-feeding) of the reinforcer associated with the high training response and the other test day was immediately after devaluation of the reinforcer associated with the low training response. Sham lesion control rats reduced responding on the low training lever (indicating action) but not the high training lever (indicating habit). PL lesioned rats did not express the low training response as an action (unlike controls) but expressed the high training response as a habit (like controls). IL lesioned rats showed the opposite pattern; these rats did express the low training response as an action (like controls) but also expressed the high training response as an action (unlike controls). Overall, the authors interpreted the findings to mean that the PL is necessary for goal-directed actions whereas the IL is necessary for habits.

Other studies have also tended to support the PL/IL action/habit functional dichotomy first identified by Killcross and Coutureau (2003). Pre-training excitotoxic lesions of PL prevented expression of actions at test (Corbit & Balleine, 2003; Ostlund & Balleine, 2005). Pharmacological inactivation of PL prior to each session of training also prevented expression of action at test (Tran-Tu-Yen, Marchand, Pape, DiScala, & Coutureau, 2009). Pre-training excitotoxic lesions of IL prevented expression of habit at test (Schmitzer-Torbert et al., 2015) and pharmacological inactivation of IL prior to test impaired habit expression (Coutureau & Killcross, 2003). Optogenetic inhibition of IL at the time of lever-presses during contingency degradation prevented habit expression (i.e., produced sensitivity to contingency degradation as shown by suppression of responding, which was not seen in control rats) (Barker, Glen, Linsenbardt, Lapish, & Chandler, 2017). Using a T-maze task, Smith and colleagues showed that optogenetic inhibition of IL impaired habit learning and expression (Smith & Graybiel, 2013; Smith, Virkud, Deisseroth, & Graybiel, 2012). Findings from Smith et al. (2012) also suggest that IL may control the expression of the most recently learned behavior and/or the suppression of a previously learned behavior.

Some work has also implicated connections between PL and the posterior dorsomedial striatum (pDMS) in action expression. Contralateral excitotoxic lesions “disconnecting” PL and pDMS impaired expression of action at test compared to ipsilateral lesions of PL and pDMS (Hart, Bradfield, & Balleine, 2018). However, this technique cannot determine whether the effect is due to direct PL-to-pDMS projections or to indirect interactions between PL and pDMS (via other structures). Using a pathway-specific application of DREADDs, Hart, Balleine and colleagues have shown that bilateral projections from PL to pDMS are important for the acquisition of goal-directed responding (Hart, Bradfield, Fok, Chieng, & Balleine, 2018). They used a dual-virus approach involving injections of AAV-Cre recombinase into pDMS and a Cre-dependent inhibitory DREADD into the PL and showed that injections of the DREADD ligand clozapine-N-oxide (CNO) prior to acquisition sessions prevented the expression of a goal-directed action at test (Hart, Bradfield, Fok, et al., 2018). They further showed that inhibition of PL projections to NAc during acquisition had no effect; rats still showed action at test (Hart, Bradfield, Fok, et al., 2018).

Context and the medial prefrontal cortex

We began our research on the role of the mPFC in instrumental conditioning with a study comparing the PL and IL in extinction and renewal (Eddy, Todd, Bouton, & Green, 2016). As suggested above, the instrumental conditioning literature on mPFC substrates of extinction and renewal has mostly used methods in which drugs have been used as reinforcers. We reasoned that a food reinforcement paradigm might be useful to examine because the associative learning processes are arguably better understood with food reinforcers vs. drug reinforcers. For example, not only ABA but also AAB and ABC renewal have all been demonstrated with food pellet reinforcers (Bouton et al., 2011; Todd, 2013). Drug reinforcers might contrastingly introduce a number of idiosyncratic factors such as withdrawal effects. Eddy et al. (2016) found that PL is important for ABA renewal of extinguished responding for a food reinforcer while IL is important for both ABA renewal and expression of extinction. We first trained rats to lever-press for food pellets in context A and then extinguished lever-pressing in context B (unless otherwise noted, the response is usually reinforced on a variable interval [VI] 30-s schedule). In separate experiments, we then infused baclofen/muscimol (B/M; GABAB/GABAA receptor agonists, respectively) into either PL (Experiment 1), IL (Experiment 2), or areas ventral to the IL (Experiment 3) prior to testing rats in context A and B in a counterbalanced order. Pharmacological inactivation of PL reduced renewal in context A (ABA renewal) but had no effect on the expression of extinction in context B. In contrast, inactivation of IL also reduced ABA renewal but, in addition, reduced expression of extinction (i.e., increased responding) in context B. Inactivation of a region ventral to IL had no effect on renewal or extinction, strengthening the conclusion that inactivation effects were specific to a given brain region. 
We also infused fluorophore-conjugated muscimol into the PL or IL of a separate set of rats to confirm that spread of B/M was likely minimal and confined to a given region with our method. All told, the results were consistent with previous results showing that PL is important for ABA renewal of extinguished cocaine- and alcohol-seeking (Fuchs et al., 2005; Willcocks & McNally, 2013) and IL is important for expression of extinguished cocaine-seeking (LaLumiere et al., 2010; Peters et al., 2008). Our results showing that inactivation of IL reduced ABA renewal of extinguished food-seeking also replicated findings of Bossert et al. (2011) for ABA renewal of extinguished heroin-seeking.

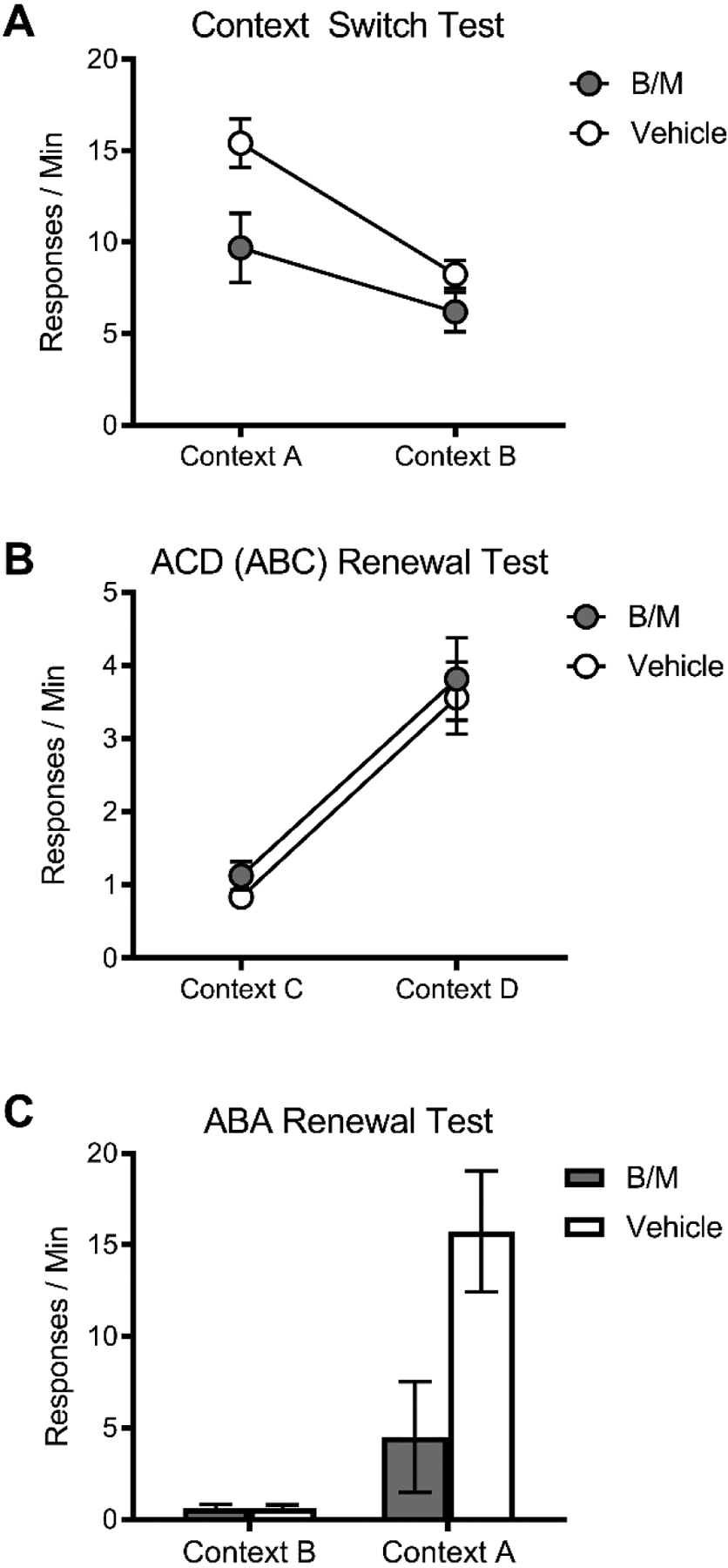

The results of Eddy et al. (2016) raised several important questions. One is whether PL involvement in ABA renewal of extinguished instrumental responding is due to the PL specifically mediating the response-enhancing effects of the acquisition context A or whether it might play a role whenever the organism shows renewal when it has merely detected that it is no longer in the extinction context B. In subsequent experiments (Trask, Shipman, Green, & Bouton, 2017), we found that PL is specifically important when responding is tested in the acquisition context; it may not modulate responding outside of the acquisition context (Figure 1). In Experiment 1, rats were trained across 6 30-min sessions to lever-press for sucrose pellets on a VI 30-s schedule in context A (Table 1). (The rats had previously been given initial magazine training in both contexts A and B.) The day after the final session of acquisition, rats underwent an intra-PL infusion of B/M or vehicle and were tested in context A and context B, counterbalanced and separated by approximately 30 minutes. As in previous studies (e.g., Bouton et al., 2011; Thrailkill & Bouton, 2015a), a context-switch effect was present in vehicle-infused rats; they emitted fewer lever presses in context B (non-trained) compared to context A (acquisition). More important, inactivation of PL significantly reduced lever-pressing in context A but had no effect on lever-pressing in context B (Figure 1A). This result suggests that PL is important for mediating the effects of the acquisition context, but not non-trained contexts, on instrumental responding. It further suggests that the reason that PL has been repeatedly shown to be important for ABA renewal may be because this form of renewal involves a return to the acquisition context.

Figure 1.

(A) PL inactivation (with B/M) before testing attenuated responding in context A (where responding had been trained), but not context B (where responding had not been trained). (B) PL inactivation before testing had no impact on “ABC” (ACD) renewal in a context where responding had not been trained, which was robust and similar between groups. (C) PL inactivation before testing attenuated renewal in context A and had no impact in context B.

Table 1.

Design of the Trask et al. (2017) experiments. Intra-PL infusion occurred prior to each of the 3 tests.

| Group | Acquisition | Context Switch Test | Reacquisition | Extinction | ACD (“ABC”) Renewal Test | Reacquisition | Extinction | ABA Renewal Test |
|---|---|---|---|---|---|---|---|---|
| B/M, VEH | A: R+ | A: R−, B: R− | A: R+ | C: R− | C: R−, D: R− | A: R+ | B: R− | A: R−, B: R− |

A, B, C, and D refer to contexts (counterbalanced). R represents lever-pressing. + and − refer to reinforced and unreinforced, respectively.

Such an idea suggested that PL would be less important in ABC renewal, where renewal occurs in a neutral context that is merely different from the extinction context. The differential involvement of PL in ABA vs. ABC renewal was confirmed in two additional experiments (Trask et al., 2017) (Table 1). Both used the same rats as in Experiment 1. In Experiment 2a, inactivation of PL had no effect on ABC renewal (Figure 1B). Rats from Experiment 1 underwent 6 days of re-training in context A, and then 4 days of extinction in context C. On the last day of the experiment, rats received an intra-PL infusion of B/M or vehicle and were tested in context C and a non-trained context, context D. (The rats had been given prior magazine training in context D.) Vehicle-infused rats exhibited low responding in the extinction context C, and renewal of extinguished responding in context D, outside of the extinction context (“ACD” renewal, equivalent to ABC renewal). Inactivation of PL had no effect on this form of renewal, supporting the idea that PL is only necessary in the acquisition context, context A. Experiment 2b, still using the same rats as in Experiments 1 and 2a, then confirmed that the null result of PL inactivation in Experiment 2a was not due to cannulae that were no longer functioning or changes in tissue caused by the infusions in Experiment 1. Here we re-trained rats in context A for one day followed by extinction in context B, and then tests in contexts A and B after intra-PL infusion of B/M or vehicle. Inactivation of PL significantly reduced ABA renewal tested at this point (Figure 1C), suggesting that the lack of effect of PL inactivation on “ABC” renewal in Experiment 2a was not due to side effects of the infusions in Experiment 1. Reduction in ABA renewal after PL inactivation also replicated our results in Eddy et al. (2016). Overall, the results of Trask et al. 
(2017) point to the conclusion that PL is necessary when the animal is in the acquisition context, but not when it is in a non-acquisition context, regardless of whether the test is performed before or after extinction.

Research on the contextual control of instrumental behavior (and also Pavlovian behavior) has suggested that many different kinds of cues can play the role of context (e.g., Bouton, 1993, 2019; Bouton, Maren, & McNally, 2021). Bouton (1993; 2019) has proposed that not only physical stimuli (like a Skinner box) but also interoceptive (e.g., hunger, stress, drug states) and even other behaviors can serve as contexts in Pavlovian and instrumental conditioning (e.g., Schepers & Bouton, 2017, 2019; Thrailkill & Bouton, 2015b; Thrailkill, Trott, Zerr, & Bouton, 2016). Is the role of PL unique to the Skinner-box context that investigators have typically manipulated? The answer appears to be no; we confirmed that PL is also involved in mediating the effects of “behavioral contexts” (Thomas, Thrailkill, Bouton, & Green, 2020). This work utilized a discriminated heterogeneous behavioral chain procedure, in which rats learn to make a sequence of two responses to obtain a food pellet reinforcer (e.g., Thrailkill & Bouton, 2016). In this method, a response (e.g., lever-press; R1) is cued by a discriminative stimulus (S1). Emitting R1 (typically satisfying a random-ratio 4 requirement) turns off S1 and turns on a second discriminative stimulus, S2. If the response associated with S2, R2, is then emitted (e.g., chain-pull, again with a random-ratio 4 requirement), S2 turns off and a food pellet reinforcer is delivered.
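The contingencies of the chain procedure can be summarized as a small state machine. The sketch below is illustrative only: the class name `ChainTrial`, its method names, and the injectable random-number source are hypothetical, and the treatment of a response on the "wrong" manipulandum as having no programmed consequence is an assumption made here for simplicity. A random-ratio 4 requirement is modeled as each response satisfying the link with probability 1/4.

```python
import random
from typing import Callable, Optional


class ChainTrial:
    """One trial of a two-link chain: S1 -> R1 -> S2 -> R2 -> outcome (illustrative)."""

    def __init__(self, ratio: int = 4, rng: Optional[Callable[[], float]] = None):
        self.p = 1.0 / ratio           # random ratio: each response succeeds with p = 1/ratio
        self.rng = rng or random.random
        self.stimulus = "S1"           # S1 is on at the start of the trial
        self.pellets = 0

    def respond(self, response: str) -> str:
        """Register one response and return the stimulus state afterwards."""
        expected = {"S1": "R1", "S2": "R2"}.get(self.stimulus)
        if response != expected:
            # Assumption: responses not cued by the current stimulus do nothing.
            return self.stimulus
        if self.rng() < self.p:
            if self.stimulus == "S1":
                self.stimulus = "S2"   # R1 turns off S1 and turns on S2
            else:
                self.stimulus = "off"  # R2 turns off S2 and delivers a pellet
                self.pellets += 1
        return self.stimulus


# Run one trial in which the simulated rat always makes the cued response.
source = random.Random(0)
trial = ChainTrial(rng=source.random)
n_r1 = n_r2 = 0
while trial.stimulus != "off":
    if trial.stimulus == "S1":
        trial.respond("R1")
        n_r1 += 1
    else:
        trial.respond("R2")
        n_r2 += 1
print(f"R1 responses: {n_r1}, R2 responses: {n_r2}, pellets: {trial.pellets}")
```

In this sketch R2 can only be reinforced after R1 has been emitted, which is the structural feature that makes R1 a candidate "context" for R2.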

Prior work had suggested that the context controlling R2 may be R1, rather than the Skinner box, which controls R1. For example, after training the S1-R1-S2-R2-O chain described above, a switch from one Skinner box context (context A) to another (context B) reduced R1, but not R2, when the two responses were tested independently (Thrailkill et al., 2016). In contrast, when R2 was tested after R1 (as part of the complete S1-R1-S2-R2 chain) in both contexts, the switch to B weakened it, because the context switch had weakened the R1 responding that preceded it. Extinction of R1 (but not the first response of a separately trained chain) likewise weakens R2 (Thrailkill & Bouton, 2015b). And perhaps most clearly consistent with a “contextual” role for R1, if R2 is extinguished outside the context of the chain, it renews when it is returned to the chain, so that R1 (but not the first response of a separately trained chain) is available and emitted before it (Thrailkill et al., 2016). Thus, R1 (but not the Skinner box) appears to have the properties of a context for R2.

Thomas et al. (2020) used the design of Thrailkill et al. (2016) to confirm that the PL not only mediates the context effects of the Skinner box on R1 (as in Trask et al., 2017), but also the context-like effects of R1 on R2 (Table 2 and Figure 2). Rats first underwent the usual S1-R1-S2-R2-O chain training in context A. Next, they received two tests in context A on separate days and in a counterbalanced order. R1 was tested by itself and, since the test took place in context A, R1 was in its acquisition context. R2 was also tested by itself, but since the test took place in the absence of R1, R2 was outside its hypothesized acquisition context. For these tests, rats received presentations of either S1 or S2 with both response manipulanda present; a response on the appropriate manipulandum turned off the S according to a random ratio 4 schedule, as in acquisition. Prior to each test, rats received an intra-PL infusion of either B/M or vehicle. PL inactivation reduced R1 (tested in its acquisition context, context A) but had no effect on R2 (tested outside its acquisition context, without R1) (Figure 2A). This is analogous to what Trask et al. (2017) found: PL inactivation reduced responding only in the acquisition context. All rats then underwent S1-R1-S2-R2-O re-training, as well as exposure to a second physical context, context B. They then received another two tests, again on separate days and in a counterbalanced order, after an intra-PL infusion of either B/M or vehicle. Both tests were conducted in context B this time. One test consisted of the opportunity to complete the entire S1-R1-S2-R2-O chain. In this test, unlike the other one, R1 was outside its acquisition context (because the test was in context B) but R2 was in its acquisition context (because of the opportunity to emit R1 first). 
Here, PL inactivation reduced R2 when tested as part of the chain it was trained with (i.e., in its acquisition context) but not R1, which was being tested outside of its acquisition context (Figure 2B). A second test showed that PL inactivation had no effect on R2 tested by itself (without R1) in context B (Figure 2C); R2 was only reduced when tested as part of the original chain. Overall, the results of Thomas et al. (2020) suggest the possibility that PL may be involved in processing even non-physical contexts; PL may thus process “acquisition context” in something like a functional, rather than a physical, sense. An important future direction might be to assess a possible PL role in internal states that appear to function like contexts. PL might function as a “hub” for bringing together these many different types of context into one functional role: identification of the state under which a behavior was first learned. A role for PL in acquisition contexts might also be the underlying explanation for why PL lesions impair various forms of reinstatement of drug-seeking (e.g., Capriles, Rodaros, Sorge, & Stewart, 2003; Rogers, Ghee, & See, 2008); reinstatement of drug-seeking in general might involve a return to a drug-state acquisition context, which the PL would be sensitive to.

Table 2.

Design of the Thomas et al. (2020) experiment. Intra-PL infusion occurred prior to each of the 4 tests.

| Group | Acquisition | Tests A.1 and A.2 | Reacquisition | Tests B.1 and B.2 |
|---|---|---|---|---|
| B/M, VEH | A:S1R1→S2R2+ | A:S1R1−, A:S2R2− | A:S1R1→S2R2+ | B:S1R1→S2R2−, B:S2R2− |

A and B refer to contexts (counterbalanced). R1 and R2 represent lever-pressing and chain-pulling (counterbalanced). + and − refer to reinforced and unreinforced, respectively.

Figure 2.

(A) PL inactivation attenuated R1 in context A (where responding had been trained) but not R2. (B) PL inactivation attenuated R2 when tested with R1 (with which it had been trained) in context B. (C) PL inactivation did not affect R2 when tested without R1 in context B.

Instrumental goals and the medial prefrontal cortex

The research reviewed above helped establish a role for PL in the antecedent (contextual) control of the instrumental response. In another, related line of research, we have examined a role for PL and IL in encoding information about the behavior’s consequence (or goal) that controls goal-directed actions. This work began as a follow-up to previous studies described earlier showing that the PL is important for acquisition (but not expression) of goal-directed actions while the IL is important for acquisition and expression of habits (Corbit & Balleine, 2003; Coutureau & Killcross, 2003; Killcross & Coutureau, 2003; Ostlund & Balleine, 2005; Tran-Tu-Yen et al., 2009). Recall that an instrumental (operant) response is defined as “goal-directed” if separate devaluation of the reinforcer, or degradation of the contingency between the response and the reinforcer, reduces responding. Typically, reinforcer devaluation occurs between acquisition and testing, either by pairing the reinforcer with an injection of lithium chloride (a control group receives equivalent reinforcer exposure and lithium chloride injections, but they are not paired) or by pre-feeding one reinforcer to satiation (a second reinforcer associated with a different response does not undergo pre-feeding). In a subsequent test conducted in extinction, rats will reduce responding associated with the devalued reinforcer if responding is goal-directed but will not reduce responding if it is habitual. One factor that typically produces a habitual response is extensive training.
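The logic of the devaluation test can be expressed as a simple descriptive index. The sketch below is an illustration under stated assumptions, not the statistical analysis used in the studies reviewed here; the function name `devaluation_index` and the example response counts are hypothetical. The index is the proportion of test responding that comes from the devalued condition, so a value near 0.5 indicates insensitivity to devaluation (consistent with habit) and a value well below 0.5 indicates that devaluation reduced responding (consistent with goal-directed action).

```python
def devaluation_index(devalued: float, nondevalued: float) -> float:
    """Share of test responding contributed by the devalued condition.

    0.5 means devaluation had no effect; values below 0.5 mean responding
    was lower when the reinforcer had been devalued.
    """
    total = devalued + nondevalued
    if total == 0:
        raise ValueError("no responding in either condition")
    return devalued / total


# Hypothetical response counts from an extinction test.
print(devaluation_index(devalued=10, nondevalued=30))  # 0.25: reduced by devaluation
print(devaluation_index(devalued=20, nondevalued=20))  # 0.5: insensitive to devaluation
```

Where the index falls between these two cases, any cutoff for calling a response an action or a habit would be arbitrary; in practice the distinction rests on a statistical comparison between devalued and non-devalued conditions.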

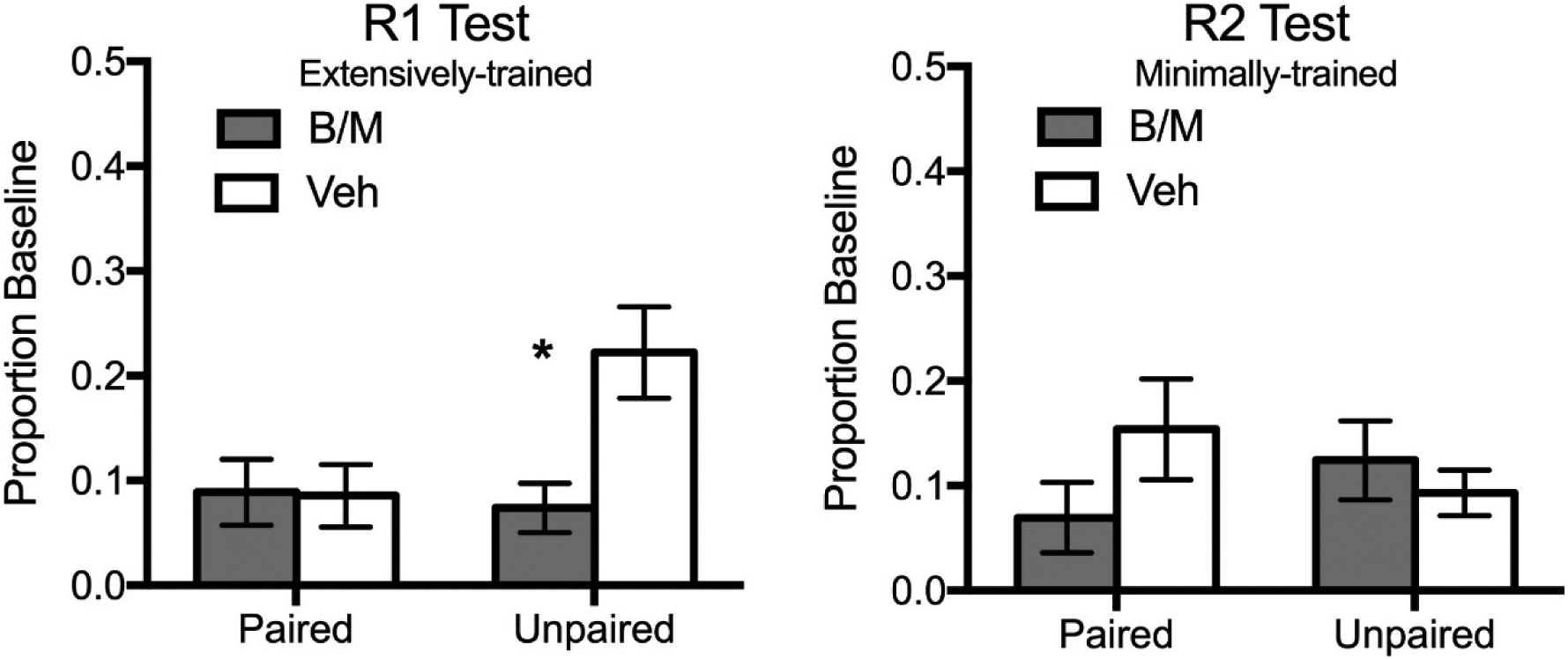

Our results suggest that the PL and IL become engaged after different amounts of training, rather than being linked to the status of the response as an action or habit per se (Shipman, Trask, Bouton, & Green, 2018). In these experiments, rats were trained on one response (R1; a lever-press or chain-pull) in context A for 24 sessions (Table 3). On the days of the final 4 sessions of R1 training, we also added and trained a second response (R2; a chain-pull or lever-press, the response not trained as R1) in context B. Thus, similar to Killcross and Coutureau (2003), at the end of training, each rat had an extensively-trained response (R1) and a minimally-trained response (R2). Rats then underwent lithium chloride-induced reinforcer devaluation or an unpaired control procedure to the point at which the experimental subjects completely rejected the reinforcer. Finally, the rats underwent intra-PL (Experiment 1) or intra-IL (Experiment 2) infusion of either B/M or vehicle. Unexpectedly, vehicle-infused control rats expressed the extensively-trained R1 as a goal-directed action at test, despite its extensive training. Inactivation of the PL reduced expression of the minimally-trained R2 but had no effect on expression of the extensively-trained R1, despite the status of both R1 and R2 as actions. This result suggests that the PL is involved in expression of actions, but not uniformly. Inactivation of the IL reduced expression of an extensively-trained action (R1, Figure 3). This result suggests that the IL is involved not only in the expression of habits (e.g., Killcross & Coutureau, 2003) but also in the expression of extensively-trained actions.

Table 3.

Design of Shipman et al. (2018), Experiment 2. Intra-IL infusion occurred prior to the test.

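| Group | Acquisition | Devaluation | Test |
|---|---|---|---|
| B/M-Paired | A: R1+ (24 sessions); B: R2+ (4 sessions) | A/B: O--LiCl | A: R1−; B: R2− |
| B/M-Unpaired | A: R1+ (24 sessions); B: R2+ (4 sessions) | A/B: O / LiCl | A: R1−; B: R2− |
| VEH-Paired | A: R1+ (24 sessions); B: R2+ (4 sessions) | A/B: O--LiCl | A: R1−; B: R2− |
| VEH-Unpaired | A: R1+ (24 sessions); B: R2+ (4 sessions) | A/B: O / LiCl | A: R1−; B: R2− |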

A and B refer to contexts (counterbalanced). R1 and R2 represent lever-pressing and chain-pulling (counterbalanced). O represents the outcome. + and − refer to reinforced and unreinforced, respectively.

Figure 3.

IL inactivation attenuated an extensively-trained, goal-directed R1 but not a minimally-trained R2.

Our subsequent work suggested that the story is even more interesting than simply the amount of training being the critical factor in engaging the PL or IL. Trask, Shipman, Green, and Bouton (2020) went on to show that the extensively-trained R1 in the Shipman et al. (2018) experiments had very likely been a habit prior to the introduction of R2 training (even though this was in another context) on the days of the final 4 sessions of R1 training. In all four experiments we reported, one group replicated the training conditions of Shipman et al. (2018), with R1 receiving extensive training in context A and R2 receiving minimal training in context B on the days of the final 4 sessions of R1 training. In Experiment 1, a second group merely received exposure to context B, with no R2 training, on the days of the final 4 sessions of R1 training (Table 4). At test, the first group expressed an action (replicating Shipman et al., 2018) but the second group expressed a habit (Figure 4), suggesting that something about concurrent R2 training on the final days of R1 training converted R1 from a habit back to an action. In Experiment 2, we asked whether the intermixing of R2 training with R1 training was necessary for R1 to be expressed as an action at test. In this experiment, the second group received its four R2 training sessions in context B after the 24 R1 training sessions in context A so that R1 and R2 were never trained on the same day. The first group again expressed an action at test (as in Shipman et al., 2018) but the second group expressed a habit. In Experiment 3, we asked whether R1 and R2 need to share the same reinforcer during intermixed training in order for an extensively-trained R1 to be expressed as an action at test. In this experiment, the second group received R2 training with a different reinforcer, O2, than R1. In this case, both groups expressed an action at test. 
Finally, in Experiment 4, we showed that simply delivering free reinforcers in context B, without making them contingent on R2, causes an extensively trained R1 to be expressed as an action at test. Overall, the results of these experiments suggest that an extensively-trained response can be converted from a habit back to an action when reinforcers are delivered in an unexpected manner. This occurs even when the unexpected reinforcers are delivered in a different context and differ in identity from the trained reinforcer, as long as the unexpected reinforcers occur on the same day(s) as the expected reinforcers. This further suggests that conversion of an action to a habit through extensive training is not a fixed endpoint; responses can be converted in the opposite direction as well, from habit back to action. This conclusion is consistent with other complementary work that has recently been conducted in our laboratory (e.g., Bouton, Broomer, Rey, & Thrailkill, 2020; Steinfeld & Bouton, 2020, 2021; see Bouton, 2021, for a review). It might also be consistent with the results of previous experimenters who have shown that when two Rs are trained concurrently, habit is not expressed after extensive training (Colwill & Rescorla, 1985, 1988; Kosaki & Dickinson, 2010).

Table 4.

Design of Trask et al. (2020), Experiment 1.

| Group | Acquisition | Devaluation | Test |
|---|---|---|---|
| R2-Paired | A: R1+ (24 sessions); B: R2+ (4 sessions) | A/B: O--LiCl | A: R1− |
| R2-Unpaired | A: R1+ (24 sessions); B: R2+ (4 sessions) | A/B: O / LiCl | A: R1− |
| Exposure-Paired | A: R1+ (24 sessions); B: ---- | A/B: O--LiCl | A: R1− |
| Exposure-Unpaired | A: R1+ (24 sessions); B: ---- | A/B: O / LiCl | A: R1− |

A and B refer to contexts (counterbalanced). R1 and R2 represent lever-pressing and chain-pulling (counterbalanced). O represents the outcome. + and − refer to reinforced and unreinforced, respectively.

Figure 4.

Concurrent training of a second response (R2) in context B at the end of extensive training of a target response (R1) in context A causes R1 to be expressed as an action rather than a habit.

Circuits, and New Thinking about the Function of the IL

The work reviewed above has begun to expand our understanding of the roles of the PL and IL in context and goal processing in instrumental learning. The results also raise a number of questions. One is about the neuroanatomy involved. The anatomical division between PL and IL is somewhat arbitrary and these regions may be better distinguished by their connections with other brain regions. Newer approaches in behavioral neuroscience allow examination of the function of two brain regions together, through the manipulation of projections from one to the other.

Hart, Balleine, and colleagues have shown that projections from PL to pDMS are important for the acquisition of goal-directed responding (Hart, Bradfield, Fok, et al., 2018). Some of our own recent work (Shipman, Johnson, Bouton, & Green, 2019) has suggested that additional projections from PL to a relatively anterior region of DMS (aDMS) are important for expression of instrumental responding. In this study, we injected an inhibitory DREADD, pAAV8-hSyn-hM4D(Gi)-mCherry, or the control construct pAAV8-hSyn-EGFP into PL and implanted bilateral guide cannulae into aDMS. After a 6–7 week incubation period, in which the DREADDs-mCherry or EGFP was expressed at PL axon terminals in aDMS (as verified by subsequent histology), rats underwent minimal instrumental conditioning (6 sessions). We then conducted two test sessions, separated by one day of retraining, in which all rats received intra-aDMS infusions of the DREADDs ligand CNO in one session and vehicle in the other (counterbalanced). Rats that expressed inhibitory DREADDs reduced their lever-pressing at test when CNO was infused into aDMS (attenuating inputs from the PL), compared to vehicle infusions; there was no effect of intra-aDMS CNO on EGFP (i.e., non-DREADDs) rats. Although we did not evaluate whether the response was a goal-directed action, it very likely was, given the minimal amount of training involved.

Our work reviewed above has also led us to ask new questions about the functions of the PL and IL in instrumental conditioning. One point worth making is that IL has been thought to influence both habit (as discussed above) and extinction learning. Bouton (2021) and Steinfeld and Bouton (2021) recently emphasized the abstract similarity between habit learning and extinction learning: Both appear to interfere with prior learning (e.g., action), and both do so in a context-specific way. That is, removal from either the habit-learning or the extinction-learning context can cause action to renew (Steinfeld & Bouton, 2020, 2021). Other authors have also linked IL to retroactive interference effects in instrumental conditioning (Barker, Taylor, & Chandler, 2014; Coutureau & Killcross, 2003; Gourley & Taylor, 2016; Mukherjee & Caroni, 2018; Smith et al., 2012). Barker et al. (2014) drew a parallel between fear acquisition and extinction on the one hand, and actions and habits on the other, suggesting that IL may actively inhibit actions to promote habit expression and actively inhibit acquisition behavior (e.g., Pavlovian fear expression) to promote extinction expression. Removal of this inhibition during habit expression would lead to action expression (i.e., a return to sensitivity to reinforcer devaluation). Removal of this inhibition during extinction expression would lead to expression of acquisition (i.e., a return of Pavlovian fear).

Some additional, as-yet unpublished recent work in our laboratory (Broomer, Green, & Bouton, unpublished observations) has therefore asked whether IL might have effects on both habit and extinction because its larger function is to switch between conflicting behavioral strategies. Expanding on Barker et al. (2014), Broomer et al. suggested that IL might be especially important in controlling the behavioral state or strategy that is currently active when two different strategies have been learned. We tested the hypothesis that IL inactivation could cause either a habit to be expressed as an action (the familiar result) or, conversely, an action that had renewed after habit learning to be expressed as a habit. Rats were given minimal instrumental training of a response in context A followed by extensive training of the same response in context B. Steinfeld and Bouton (2021) had shown that this procedure leads to expression of a habit in B and an action in A. Importantly, though, the action in A has a history, at test, of being a habit through the extra training in B. Thus, rats express a habit in B that was previously an action (in A) and an action in A that was previously a habit (in B) (Steinfeld & Bouton, 2021), allowing a test of the “switch” hypothesis in both directions (habit interfering with action and action interfering with habit). In one experiment, after moderate lever-press training in A and then more extensive (habit) training in B, the rats had reinforcer devaluation or unpaired reinforcer and lithium chloride injections in both contexts. Broomer et al. (unpublished observations) then inactivated IL in half of the rats prior to tests in A and B. Unexpectedly, lever pressing in vehicle rats was an action in both contexts (we had expected action in A and habit in B, the latter of which might have been disrupted by the infusion procedure). However, IL inactivation caused rats to express a habit in both contexts. 
This represents a return to habit from action expression. Note that previous studies have demonstrated that IL inactivation at test leads to the reverse: a return to action from habit expression (Coutureau & Killcross, 2003). The newest results provide support for the general idea that IL is not necessary for expression of habits per se (cf. Shipman et al., 2018) but instead may be necessary for switching between an action and a habit, bidirectionally. A follow-up experiment showed that when a goal-directed action had no history of ever being a habit, IL inactivation had no effect. Recall that in Eddy et al. (2016), IL inactivation attenuated both extinction performance in context B and renewal in context A. Thus, an explanation of the IL results in Eddy et al. (2016) is that IL inactivation in context A (the acquisition context) attenuated the representation of acquisition and permitted expression of extinction learning, whereas IL inactivation in context B (the extinction context) attenuated the representation of extinction and permitted expression of acquisition learning.

We predict that IL inactivation would produce the same effect whether responding is extinguished or is instead punished in context B after acquisition in context A (Bouton & Schepers, 2015); in the latter case, IL inactivation would attenuate the representation of punishment in context B, permitting the expression of acquisition learning. A few previous studies have examined a role for IL in punished instrumental responding but it is not clear whether they provide support for or against this idea. In one study, optogenetic IL inactivation did prevent a decrease in punished lever-press responding at test (Halladay et al., 2020). In another, pharmacological IL inactivation non-specifically increased responding during a choice test (rewarded vs. punished lever) (Jean-Richard-dit-Bressel & McNally, 2016). And in yet another, excitotoxic IL lesions made prior to behavioral (“seeking-taking”) chain training for cocaine did not affect suppression of the seeking response when it was subsequently punished (Pelloux, Murray, & Everitt, 2013).

Our results are consistent with a role for IL in controlling which of two conflicting behavioral states or strategies is currently selected. When an overtrained action expresses as a habit, IL inactivation creates action; in contrast, when an overtrained action expresses as an action, IL inactivation creates habit. Further consistent with this approach are some complex results reported by Smith et al. (2012). In that study, rats were given extensive training in a T-maze to choose the left arm (and receive a reward) in response to one cue and to choose the right arm (and receive a different reward) to another cue. Reinforcer devaluation of one of the rewards followed by a test session confirmed that the response was a habit (i.e., rats continued to choose the now devalued arm when cued to do so). Optogenetic inhibition of IL during test caused rats to choose the non-devalued arm over the devalued arm, even when the devalued arm was cued. Thus, IL inhibition abolished habit. Subsequently, rats underwent further training sessions with rewards again available. Rats that had undergone IL inactivation continued to choose only the non-devalued arm, even when the devalued arm was cued. After extended (but not minimal) additional training, when IL was inactivated, rats now chose the devalued arm (and even consumed the devalued reward). This surprising result was interpreted as revealing IL control of a “replacement habit” of always choosing the non-devalued arm, which was then impaired when IL was inactivated. But another possibility, first noted by Broomer (2021), is that goal-direction was actually being expressed (the rats were avoiding the averted goal, after all) and was replaced by the earlier habit; the rats thus returned to the devalued arm. The results are thus consistent with the idea that the first IL inactivation revealed an action that had been suppressed by habit, and the second IL inactivation revealed a habit that had been suppressed by an action. 
The overall pattern seems to challenge the canonical view that IL simply controls habit learning. IL may have a more general role in controlling interference processes.
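As a purely illustrative aid, the "switch" idea described above can be written as a toy rule. This is a minimal sketch of the hypothesis, not a model fitted to any data: the function name `expressed_after_il_inactivation` and the state labels are hypothetical, and the sketch assumes that at most two conflicting states have been learned and that IL inactivation simply releases whichever learned state is not currently expressed.

```python
# Toy encoding of the "switch" hypothesis (illustrative assumption, not a fitted model).
# States are plain labels, e.g. "action"/"habit" or "acquisition"/"extinction".

def expressed_after_il_inactivation(learned, expressed):
    """Return the state predicted to be expressed when IL is inactivated.

    learned   -- iterable of the (at most two) states the animal has learned
    expressed -- the state currently expressed with IL intact
    """
    learned = frozenset(learned)
    if expressed not in learned:
        raise ValueError("the expressed state must be one of the learned states")
    if len(learned) > 2:
        raise ValueError("this sketch only handles two conflicting states")
    others = learned - {expressed}
    # With no competing state in the animal's history, nothing can be released,
    # so the hypothesis predicts no change in the expressed state.
    return next(iter(others)) if others else expressed


if __name__ == "__main__":
    print(expressed_after_il_inactivation({"action", "habit"}, "habit"))
    print(expressed_after_il_inactivation({"action", "habit"}, "action"))
    print(expressed_after_il_inactivation({"action"}, "action"))
```

Under these assumptions, the rule yields a switch in either direction when both states have been learned and no change when the response has only ever been an action, which is the qualitative pattern described above. It says nothing about response rate, so it does not capture attenuation effects.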

In non-human primates, the anterior cingulate cortex (which may be homologous to the rodent cingulate, prelimbic, and infralimbic cortices as a whole; Heilbronner et al., 2016) is important for “conflict monitoring and control” (Botvinick, Braver, Barch, Carter, & Cohen, 2001). Conflict can be created by a stimulus activating two or more incompatible responses, such as in a Stroop task (Botvinick et al., 2001). To what extent is this similar to activation of two or more incompatible associations and, by the view developed here, IL engagement? The answer is not yet clear because the experimental designs for examining conflict monitoring and control appear very different from what we have discussed above. For example, Killcross and colleagues have developed a rodent version of a conflict task in which two different instrumental responses are activated and the context in which this occurs guides which of the two responses is emitted (Haddon, George, & Killcross, 2008; Haddon & Killcross, 2006a, 2006b). In this task, rats learn a biconditional discrimination: in context A, S1 (an auditory stimulus) is the cue for R1 (left lever)-O1 and S2 (a different auditory stimulus) is the cue for R2 (right lever)-O1; in context B, S3 (a visual stimulus) is the cue for R1-O2 and S4 (a different visual stimulus) is the cue for R2-O2. In test sessions in both contexts, trials consist of an auditory and a visual stimulus presented together, either “congruently” (e.g., S1/S3, both of which were associated with R1) or “incongruently” (e.g., S1/S4, one of which was associated with R1 and the other of which was associated with R2). On incongruent trials, rats use the context to guide responding (e.g., if S1/S4 is presented in context A, then S1 will guide the choice of lever). PL, but not IL, inactivation at test leads to a reduction in contextually-guided “correct” responding (Marquis, Killcross, & Haddon, 2007). 
In a variation of the basic task, many more trials are given in context A vs. B and the same O is used in both contexts (Haddon et al., 2008). In this case, on incongruent trials in the minimally-trained context B, rats will use the extensively-trained stimulus, rather than the context, to guide responding (Haddon et al., 2008; Haddon & Killcross, 2011). IL inactivation at test leads to a reduction in these “incorrect” responses, which represent removal of interference from the overtrained stimulus (Haddon & Killcross, 2011).
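The contingencies of the basic biconditional task can be laid out as a small lookup table, which may make the congruent/incongruent distinction easier to follow. The sketch below is only an illustration of the design as described above; the names `TRAINING`, `trial_type`, and `context_guided_response` are hypothetical, and the code describes the task structure, not the animals' behavior or any neural mechanism.

```python
# Training contingencies of the basic task: (context, stimulus) -> response.
# S1/S2 are auditory cues trained in context A; S3/S4 are visual cues trained in context B.
TRAINING = {
    ("A", "S1"): "R1",
    ("A", "S2"): "R2",
    ("B", "S3"): "R1",
    ("B", "S4"): "R2",
}

# Response associated with each stimulus, ignoring the training context.
STIMULUS_RESPONSE = {stim: resp for (_, stim), resp in TRAINING.items()}


def trial_type(compound):
    """Classify an audiovisual compound as 'congruent' or 'incongruent'."""
    responses = {STIMULUS_RESPONSE[stim] for stim in compound}
    return "congruent" if len(responses) == 1 else "incongruent"


def context_guided_response(context, compound):
    """Return the 'correct' response: the one cued by the element trained in the test context."""
    for stim in compound:
        if (context, stim) in TRAINING:
            return TRAINING[(context, stim)]
    raise ValueError("no element of the compound was trained in this context")


if __name__ == "__main__":
    print(trial_type(("S1", "S3")))
    print(trial_type(("S1", "S4")))
    print(context_guided_response("A", ("S1", "S4")))
    print(context_guided_response("B", ("S1", "S4")))
```

For the incongruent compound S1/S4, the lookup returns R1 in context A (guided by S1) and R2 in context B (guided by S4), matching the example given in the text.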

Summary

Overall, our results move beyond the common views of PL and IL roles in instrumental behavior which suggest, on the one hand, PL support of renewal and actions and, on the other, IL support of extinction and habits. We have shown that PL is important for the effects of acquisition context (but not other contexts) on goal-directed instrumental behavior, including non-physical contexts. We have also shown that IL does not simply mediate expression of habits but may instead function to bidirectionally switch between actions and habits (and perhaps also between acquisition and extinction performance). These results have implications for broader views of prefrontal cortex support of executive functions.

Highlights.

Prelimbic cortex for the modulation of instrumental behavior by acquisition context

Prelimbic cortex for the modulation of instrumental behavior by non-physical contexts

Infralimbic cortex for control of interference

Infralimbic cortex for bidirectional switching between actions and habits

Acknowledgments

Manuscript preparation was supported in part by Grant R01 DA 033123 from the National Institutes of Health. The authors were friends and colleagues of David Bucci since the early 2000s, when he held a faculty position in our department. We shared with him a love for learning theory and a respect for its usefulness in investigating the brain. Dave was also a dedicated mentor of students. We would like to thank the graduate students who contributed so much to our own collaborative work reviewed here: Matthew Broomer, Meghan Eddy, Megan Shipman, Callum Thomas, Travis Todd, and Sydney Trask. We also thank Matthew Broomer and Callum Thomas for their comments on an earlier draft of the manuscript.

Footnotes

Credit Author Statement

The two authors contributed equally to writing this manuscript.

References

- Adams CD (1982). Variations in the sensitivity of instrumental responding to reinforcer devaluation. Quarterly Journal of Experimental Psychology, 34B, 77–98.

- Adams CD, & Dickinson A (1981). Instrumental responding following reinforcer devaluation. Quarterly Journal of Experimental Psychology, 33B, 109–121.

- Balleine BW (2019). The meaning of behavior: Discriminating reflex and volition in the brain. Neuron, 104, 47–62.

- Balleine BW, & Dickinson A (1998). Goal-directed instrumental action: Contingency and incentive learning and their cortical substrates. Neuropharmacology, 37, 407–419.

- Balleine BW, & O’Doherty JP (2010). Human and rodent homologies in action control: Corticostriatal determinants of goal-directed and habitual action. Neuropsychopharmacology, 35, 48–69.

- Barker JM, Glen WB, Linsenbardt DN, Lapish CC, & Chandler LJ (2017). Habitual behavior is mediated by a shift in response-outcome encoding by infralimbic cortex. eNeuro, 4, 1–22.

- Barker JM, Taylor JR, & Chandler LJ (2014). A unifying model of the role of the infralimbic cortex in extinction and habits. Learning & Memory, 21, 441–448.

- Bossert JM, Stern AL, Theberge FR, , N. J., Wang HL, Morales M, & Shaham Y (2012). Role of projections from ventral medial prefrontal cortex to nucleus accumbens shell in context-induced reinstatement of heroin seeking. Journal of Neuroscience, 32, 4982–4991.

- Bossert JM, Stern AL, Theberge FR, Cifani C, Koya E, Hope BT, & Shaham Y (2011). Ventral medial prefrontal cortex neuronal ensembles mediate context-induced relapse to heroin. Nature Neuroscience, 14, 420–422.

- Botvinick MM, Braver TS, Barch DM, Carter CS, & Cohen JD (2001). Conflict monitoring and cognitive control. Psychological Review, 108, 624–652.

- Bouton ME (1993). Context, time, and memory retrieval in the interference paradigms of Pavlovian learning. Psychological Bulletin, 114, 80–99.

- Bouton ME (2017). Learning theory. In Sadock BJ, Sadock VA, & Ruiz P (Eds.), Kaplan & Sadock’s Comprehensive Textbook of Psychiatry (10th ed., Vol. 1, pp. 716–728). Philadelphia, PA: Wolters Kluwer.

- Bouton ME (2019). Extinction of instrumental (operant) learning: Interference, varieties of context, and mechanisms of contextual control. Psychopharmacology, 236, 7–19.

- Bouton ME (2021). Context, attention, and the switch between habit and goal-direction in behavior. Learning & Behavior.

- Bouton ME, Broomer MC, Rey CN, & Thrailkill EA (2020). Unexpected food outcomes can return a habit to goal-directed action. Neurobiology of Learning and Memory, 169, 107163.

- Bouton ME, Maren S, & McNally GP (2021). Behavioral and neurobiological mechanisms of Pavlovian and instrumental extinction learning. Physiological Reviews, 101, 611–681.

- Bouton ME, & Schepers ST (2015). Renewal after the punishment of free operant behavior. Journal of Experimental Psychology: Animal Learning and Cognition, 41, 81–90.

- Bouton ME, Todd TP, Vurbic D, & Winterbauer NE (2011). Renewal after the extinction of free operant behavior. Learning & Behavior, 39, 57–67.

- Broomer MC (2021). The role of the infralimbic cortex in switching between an instrumental behavior’s status as a goal-directed action or habit. (Master of Arts). University of Vermont, Burlington VT.

- Broomer MC, Green JT, & Bouton ME (unpublished observations). The role of infralimbic cortex in switching between an instrumental behavior’s status as goal-directed action or habit.

- Capriles N, Rodaros D, Sorge RE, & Stewart J (2003). A role for the prefrontal cortex in stress- and cocaine-induced reinstatement of cocaine seeking in rats. Psychopharmacology, 168, 66–74.

- Colwill RM, & Rescorla RA (1985). Instrumental responding remains sensitive to reinforcer devaluation after extensive training. Journal of Experimental Psychology: Animal Behavior Processes, 11, 520–536.

- Colwill RM, & Rescorla RA (1988). The role of response-reinforcer associations increases throughout extended instrumental training. Animal Learning & Behavior, 16, 105–111.

- Corbit LH, & Balleine BW (2003). The role of the prelimbic cortex in instrumental conditioning. Behavioural Brain Research, 146, 145–157.

- Coutureau E, & Killcross S (2003). Inactivation of the infralimbic prefrontal cortex reinstates goal-directed responding in overtrained rats. Behavioural Brain Research, 146, 167–174.

- Dickinson A (1985). Actions and habits: The development of behavioural autonomy. Philosophical Transactions of the Royal Society B: Biological Sciences, 308, 67–78.

- Eddy MC, Todd TP, Bouton ME, & Green JT (2016). Medial prefrontal cortex involvement in the expression of extinction and ABA renewal of instrumental behavior for a food reinforcer. Neurobiology of Learning and Memory, 128, 33–39.

- Fuchs RA, Evans KA, Ledford CC, Parker MP, Case JM, Mehta RH, & See RE (2005). The role of the dorsomedial prefrontal cortex, basolateral amygdala, and dorsal hippocampus in contextual reinstatement of cocaine seeking in rats. Neuropsychopharmacology, 30, 296–309.

- Gourley SL, & Taylor JR (2016). Going and stopping: dichotomies in behavioral control by the prefrontal cortex. Nature Neuroscience, 19, 656–664.

- Haddon JE, George DN, & Killcross S (2008). Contextual control of biconditional task performance: Evidence for cue and response competition in rats. Quarterly Journal of Experimental Psychology, 61, 1307–1320.

- Haddon JE, & Killcross S (2006a). Both motivational and training factors affect response conflict choice performance in rats. Neural Networks, 19, 1192–1202.

- Haddon JE, & Killcross S (2006b). Prefrontal cortex lesions disrupt the contextual control of response conflict. Journal of Neuroscience, 26, 2933–2940.

- Haddon JE, & Killcross S (2011). Inactivation of the infralimbic prefrontal cortex in rats reduces the influence of inappropriate habitual responding in a response-conflict task. Neuroscience, 199, 205–212.

- Halladay LR, Kocharian A, Piantadosi PT, Authement ME, Lieberman AG, Spitz NA, … Holmes A (2020). Prefrontal regulation of punished ethanol self-administration. Biological Psychiatry, 87, 967–978.

- Hart G, Bradfield LA, & Balleine BW (2018). Prefrontal cortico-striatal disconnection blocks the acquisition of goal-directed action. Journal of Neuroscience, 38, 1311–1322.

- Hart G, Bradfield LA, Fok SY, Chieng B, & Balleine BW (2018). The bilateral prefronto-striatal pathway is necessary for learning new goal-directed actions. Current Biology, 28, 1–12.

- Heidbreder CA, & Groenewegen HJ (2003). The medial prefrontal cortex in the rat: Evidence for a dorso-ventral distinction based upon functional and anatomical characteristics. Neuroscience and Biobehavioral Reviews, 27, 555–579.

- Heilbronner SR, Rodriguez-Romaguera J, Quirk GJ, Groenewegen HJ, & Haber SN (2016). Circuit-based corticostriatal homologies between rat and primate. Biological Psychiatry, 80, 509–521.

- Hogarth L, Balleine BW, Corbit LH, & Killcross S (2013). Associative learning mechanisms underpinning the transition from recreational drug use to addiction. Annals of the New York Academy of Sciences, 1282, 12–24.

- Jean-Richard-dit-Bressel P, & McNally GP (2016). Lateral, not medial, prefrontal cortex contributes to punishment and aversive instrumental learning. Learning & Memory, 23, 607–617.

- Killcross S, & Coutureau E (2003). Coordination of actions and habits in the medial prefrontal cortex of rats. Cerebral Cortex, 13, 400–408.

- Kosaki Y, & Dickinson A (2010). Choice and contingency in the development of behavioral autonomy during instrumental conditioning. Journal of Experimental Psychology: Animal Behavior Processes, 36, 334–342.

- LaLumiere RT, Niehoff KE, & Kalivas PW (2010). The infralimbic cortex regulates the consolidation of extinction after cocaine self-administration. Learning & Memory, 17, 168–175.

- Mailly P, Aliane V, Groenewegen HJ, Haber SN, & Deniau J-M (2013). The rat prefrontostriatal system analyzed in 3D: Evidence for multiple interacting functional units. Journal of Neuroscience, 33, 5718–5727.

- Marquis J-P, Killcross S, & Haddon JE (2007). Inactivation of the prelimbic, but not infralimbic, prefrontal cortex impairs the contextual control of response conflict in rats. European Journal of Neuroscience, 25, 559–566.

- McGeorge AJ, & Faull RLM (1989). The organization of the projection from the cerebral cortex to the striatum in the rat. Neuroscience, 29, 503–537.

- Mukherjee A, & Caroni P (2018). Infralimbic cortex is required for learning alternatives to prelimbic promoted associations through reciprocal connectivity. Nature Communications, 9, 2727.

- Nakajima S, Tanaka S, Urushihara K, & Imada H (2000). Renewal of extinguished lever-press responses upon return to the training context. Learning and Motivation, 31, 416–431.

- Ongur D, & Price JL (2000). The organization of networks within the orbital and medial prefrontal cortex of rats, monkeys and humans. Cerebral Cortex, 10, 206–219.

- Ostlund SB, & Balleine BW (2005). Lesions of the medial prefrontal cortex disrupt the acquisition but not the expression of goal-directed learning. Journal of Neuroscience, 25, 7763–7770.

- Palombo P, Leao RM, Bianchi PC, de Oliveira PEC, da Silva Planeta C, & Cruz FC (2017). Inactivation of the prelimbic cortex impairs the context-induced reinstatement of ethanol seeking. Frontiers in Pharmacology, 8, Article 725.

- Pelloux Y, Murray JE, & Everitt BJ (2013). Differential roles of the prefrontal cortical subregions and basolateral amygdala in compulsive cocaine seeking and relapse after voluntary abstinence in rats. European Journal of Neuroscience, 38, 3018–3026.

- Peters J, LaLumiere RT, & Kalivas PW (2008). Infralimbic prefrontal cortex is responsible for inhibiting cocaine seeking in extinguished rats. Journal of Neuroscience, 28, 6046–6053.

- Rescorla RA (2001). Experimental extinction. In Mowrer RR & Klein SB (Eds.), Handbook of contemporary learning theories. Mahwah, NJ: Lawrence Erlbaum Associates Inc.

- Rogers JL, Ghee S, & See RE (2008). The neural circuitry underlying reinstatement of heroin-seeking behavior in an animal model of relapse. Neuroscience, 151, 579–588.

- Schepers ST, & Bouton ME (2017). Hunger as a context: Food seeking that is inhibited during hunger can renew in the context of satiety. Psychological Science, 28, 1640–1648.

- Schepers ST, & Bouton ME (2019). Stress as a context: Stress causes relapse of inhibited food seeking if it has been associated with prior food seeking. Appetite, 132, 131–138.

- Schmitzer-Torbert N, Apostolidis S, Amoa R, O’Rear C, Kaster M, Stowers J, & Ritz R (2015). Post-training cocaine administration facilitates habit learning and requires the infralimbic cortex and dorsolateral striatum. Neurobiology of Learning and Memory, 118, 105–112.

- Shipman ML, Johnson GC, Bouton ME, & Green JT (2019). Chemogenetic silencing of prelimbic cortex to anterior dorsomedial striatum projection attenuates operant responding. eNeuro, 6, 1–10.

- Shipman ML, Trask S, Bouton ME, & Green JT (2018). Inactivation of prelimbic and infralimbic cortex respectively affects minimally-trained and extensively-trained goal-directed actions. Neurobiology of Learning and Memory, 155, 164–172.

- Skinner BF (1938). The behavior of organisms: An experimental analysis. New York: D. Appleton-Century Company, Inc.

- Smith KS, & Graybiel AM (2013). A dual operator view of habitual behavior reflecting cortical and striatal dynamics. Neuron, 79, 361–374.

- Smith KS, Virkud A, Deisseroth K, & Graybiel AM (2012). Reversible online control of habitual behavior by optogenetic perturbation of medial prefrontal cortex. Proceedings of the National Academy of Sciences, 109, 18932–18937.

- Steinfeld MR, & Bouton ME (2020). Context and renewal of habits and goal-directed actions after extinction. Journal of Experimental Psychology: Animal Learning and Cognition, 46, 408–421. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Steinfeld MR, & Bouton ME (2021). Renewal of goal direction with a context change after habit learning. Behavioral Neuroscience, 135, 79–87. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Thomas CMP, Thrailkill EA, Bouton ME, & Green JT (2020). Inactivation of the prelimbic cortex attenuates operant responding in both physical and behavioral contexts. Neurobiology of Learning and Memory, 171, 107189. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Thorndike EL (1911). Animal intelligence: Experimental studies. New York: Macmillan. [Google Scholar]

- Thrailkill EA, & Bouton ME (2015a). Contextual control of instrumental actions and habits. Journal of Experimental Psychology: Animal Learning and Cognition, 41, 69–80. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Thrailkill EA, & Bouton ME (2015b). Extinction of chained instrumental behaviors: Effects of procurement extinction on consumption responding. Journal of Experimental Psychology: Animal Learning and Cognition, 41, 232–246. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Thrailkill EA, & Bouton ME (2016). Extinction and the associative structure of heterogeneous instrumental chains. Neurobiology of Learning and Memory, 133, 61–68.

- Thrailkill EA, Trott JM, Zerr CL, & Bouton ME (2016). Contextual control of chained instrumental behaviors. Journal of Experimental Psychology: Animal Learning and Cognition, 42, 401–414.

- Todd TP (2013). Mechanisms of renewal after the extinction of instrumental behavior. Journal of Experimental Psychology: Animal Behavior Processes, 39, 193–207.

- Todd TP, Winterbauer NE, & Bouton ME (2012). Effects of the amount of acquisition and contextual generalization on the renewal of instrumental behavior after extinction. Learning & Behavior, 40, 145–157.

- Tran-Tu-Yen DAS, Marchand AR, Pape J-R, DiScala G, & Coutureau E (2009). Transient role of the rat prelimbic cortex in goal-directed behaviour. European Journal of Neuroscience, 30, 464–471.

- Trask S, & Bouton ME (2014). Contextual control of operant behavior: Evidence for hierarchical associations in instrumental learning. Learning & Behavior, 42, 281–288.

- Trask S, Shipman ML, Green JT, & Bouton ME (2017). Inactivation of the prelimbic cortex attenuates context-dependent operant responding. Journal of Neuroscience, 37, 2317–2324.

- Trask S, Shipman ML, Green JT, & Bouton ME (2020). Some factors that restore goal-direction to a habitual behavior. Neurobiology of Learning and Memory, 169, 107161.

- Vertes RP (2004). Differential projections of the infralimbic and prelimbic cortex in the rat. Synapse, 51, 32–58.

- Wang N, Ge F, Cui C, Li Y, Sun X, Sun L, … Yang M (2018). Role of glutamatergic projections from the ventral CA1 to infralimbic cortex in context-induced reinstatement of heroin seeking. Neuropsychopharmacology, 43, 1373–1384.

- Willcocks AL, & McNally G (2013). The role of the medial prefrontal cortex in extinction and reinstatement of alcohol-seeking in rats. European Journal of Neuroscience, 37, 259–268.