Abstract

Tomato is one of the most essential and widely consumed crops in the world. Tomato yield varies with how the crop is fertilized. Leaf disease is the primary factor affecting the quantity and quality of crop yield, so it is critical to diagnose and classify these diseases correctly. Several kinds of disease affect tomato production. Earlier identification of these diseases would reduce their effect on tomato plants and improve crop yield. Different innovative ways of identifying and classifying certain diseases have been used extensively. The motivation of this work is to support farmers in identifying early-stage diseases accurately and to inform them about these diseases. A Convolutional Neural Network (CNN) is used to identify and classify tomato diseases. The complete experiment is conducted on Google Colab with a dataset containing 3000 images of tomato leaves covering nine different diseases and healthy leaves. The complete process is as follows: Firstly, the input images are preprocessed, and the targeted area of each image is segmented from the original image. Secondly, the images are further processed with varying hyper-parameters of the CNN model. Finally, the CNN extracts further characteristics from the images, such as color, texture, and edges. The findings demonstrate that the proposed model’s predictions are 98.49% accurate.

Keywords: image processing, convolution neural network, plant leaf disease, deep learning, artificial intelligence

1. Introduction

Plants are an integral part of our lives because they produce food and shield us from dangerous radiation. Without plants, no life is imaginable; they sustain all terrestrial life and defend the ozone layer, which filters ultraviolet radiations. Tomato is a food-rich plant, a consumable vegetable widely cultivated [1]. Worldwide, there are approximately 160 million tons of tomatoes consumed annually [2]. The tomato, a significant contributor to reducing poverty, is seen as an income source for farm households [3]. Tomatoes are one of the most nutrient-dense crops on the planet, and their cultivation and production have a significant impact on the agricultural economy. Not only is the tomato nutrient-dense, but it also possesses pharmacological properties that protect against diseases such as hypertension, hepatitis, and gingival bleeding [1]. Tomato demand is also increasing as a result of its widespread use. According to statistics, small farmers produce more than 80% of agricultural output [2]; due to diseases and pests, about 50% of their crops are lost. The diseases and parasitic insects are the key factors impacting tomato growth, making it necessary to research the field crop disease diagnosis.

The manual identification of pests and pathogens is inefficient and expensive. Therefore, it is necessary to provide automated AI image-based solutions to farmers. Images are being used and accepted as a reliable means of identifying disease in image-based computer vision applications due to the availability of appropriate software packages or tools. They process images using image processing, an intelligent image identification technology which increases image recognition efficiency, lowers costs, and improves recognition accuracy [3].

Although plants are necessary for existence, they face numerous threats. An early and accurate diagnosis helps decrease the risk of ecological damage. Without systematic disease identification, product quality and quantity suffer. This has a further detrimental effect on a country’s economy [1]. Agricultural production must expand by 70% by 2050 to meet global food demands, according to the United Nations Food and Agriculture Organization (FAO) [2]. On the other hand, chemicals used to prevent diseases, such as fungicides and bactericides, negatively impact the agricultural ecosystem. We therefore need quick and effective disease classification and detection techniques that can help the agro-ecosystem. Advanced disease detection technology, such as image processing and neural networks, will allow the design of systems capable of early disease detection for tomato plants. As a result of such stress, plant production can be reduced by 50% [1]. Inspecting the plant is the first step in finding disease; deciding how to treat it based on prior experience is the next step [3]. This method lacks scientific consistency because farmers’ backgrounds differ, which makes the process less reliable. There is a possibility that farmers will misclassify a disease, and an incorrect treatment will damage the plant. Similarly, field visits by domain specialists are expensive. There is a need for automated, image-based disease detection and classification methods that can take the role of the domain expert.

It is necessary to tackle the leaf disease issue with an appropriate solution [4,5]. Tomato disease control is a complex process that takes constant account of a substantial fraction of production cost during the season [6,7,8,9]. Vegetable diseases (bacteria, late mildew, leaf spot, tomato mosaic, and yellow curved) are prevalent. They seriously affect plant growth, which leads to reduced product quality and quantity [10]. As per past research, 80–90% of diseases of plants appear on leaves [11]. Tracking the farm and recognizing different forms of the disease with infected plants takes a long time. Farmers’ evaluation of the type of plant disease might be wrong. This decision could lead to insufficient and counterproductive defense measures implemented in the plant. Early detection can reduce processing costs, reduce the environmental impact of chemical inputs, and minimize loss risk [12,13,14].

Many solutions have been proposed with the advent of technology. Here in this paper, the same solutions are used to recognize leaf diseases. Compared with other image regions, the main objective is to make the lesion more apparent. Problems such as (1) shifts in illumination and spectral reflectance, (2) poor input image contrast, and (3) image size and form range have been encountered. Pre-processing operations include image contrast, greyscale conversion, image resizing, and image cropping and filtering [15,16,17]. The next step is the division of an image into objects. These objects are used to determine regions of interest as infected regions in the image [18]. Unfortunately, the segmentation method has many problems:

- Color segmentation fails when the lighting conditions differ from those of the reference photographs.
- Region-based segmentation depends on the initial seed selection.
- Texture-based segmentation takes too long to process.

The next step, classification, determines which class the sample belongs to. To do so, one or more input variables of the procedure are examined. Occasionally, the method is employed to identify a particular type of input. Improving the accuracy of the classification is by far the greatest classification challenge. Finally, real data distinct from the training set are used to create datasets for validation.

The rest of the paper is organized as follows: Section 2 reviews the extant literature. Then, the material method and process are described in Section 3. Next, the results analysis and discussion are explained in Section 4. Finally, Section 5 is the conclusion.

2. Related Work

Various researchers have used cutting-edge technology such as machine learning and neural network architectures like Inception V3 net, VGG 16 net, and SqueezeNet to construct automated disease detection systems. These use highly accurate methods for identifying plant disease in tomato leaves. In addition, researchers have proposed many deep learning-based solutions in disease detection and classification, as discussed below [19–48].

A pre-trained network model for detecting and classifying tomato disease has been proposed with 94–95% accuracy [19,20]. The Tree Classification Model and Segmentation is used to detect and classify six different types of tomato leaf disease with a dataset of 300 images [21]. A technique has been proposed to detect and classify plant leaf disease with an accuracy of 93.75% [22]. The image processing technology and classification algorithm detect and classify plant leaf disease with better quality [23]. Here, an 8-mega-pixel smartphone camera is used to collect sample data and divides it into 50% healthy and 50% unhealthy categories. The image processing procedure includes three elements: improving contrast, segmenting, and extracting features. Classification processes are performed via an artificial neural network using a multi-layer feed-forward neural network, and two types of network structures are compared. The result was better than the Multilayer Perceptron (MLP) network and Radial Basis Function (RBF) network results. The quest divides the plant blade’s picture into healthy and unhealthy; it cannot detect the form of the disease. Authors used leaf diseases to identify and achieve 87.2% classification accuracy through color space analysis, color time, histogram, and color coherence [24].

AlexNet and VGG 19 models have been used to diagnose diseases affecting tomato crops using a frame size of 13,262. The model achieves 97.49% precision [25]. A transfer learning and CNN Model is used to accurately detect diseases infecting dairy crops, reaching 95% [26]. A neural network to determine and classify tomato plant leaf conditions using transfer learning as an AlexNet based deep learning mechanism achieved an accuracy of 95.75% [27,28]. ResNet-50 was designed to identify 1000 diseases of tomato leaf, i.e., a total of 3000 pictures with the name of lesion blight, late blight, and the yellow curl leaf. The Network Activation function for comparison has been amended to Leaky-ReLU, and the kernel size has been updated to 11 × 11 for the first convolution layer. The model predicts the class of diseases with an accuracy of 98.30% and a precision of 98.0% after several repetitions [29]. A simplified eight-layered CNN model has been proposed to detect and classify tomato leaf disease [30]. In this paper, the author utilized the PlantVillage dataset [31] that contains different crop datasets. The tomato leaf dataset was selected and used to perform deep learning; the author used the disease classes and achieved a better accuracy rate.

A simple CNN model with eight hidden layers has been used to identify the conditions of a tomato plant. The proposed techniques show optimal results compared to other classical models [32,33,34,35]. The image processing technique uses deep learning methods to identify and classify tomato plant diseases [36]. Here, the author used the segmentation technique and CNN to implement a complete system. A variation in the CNN model has been adopted and applied to achieve better accuracy.

LeNet has been used to identify and classify tomato diseases with minimal resource utilization in CPU processing capability. Furthermore, the automatic feature extraction technique has been employed to improve classification accuracy [37]. ResNet 50 model has been used to classify and identify tomato disease. The authors detected the diseases in multiple steps: Firstly, by segregating the disease dataset. Secondly, by adapting and adjusting the model based on the transfer learning approach, and lastly, by enhancing the quality of the model by using data augmentation. Finally, the model is authenticated by using the dataset. The model outperformed various legacy methods and achieved 97% accuracy [38]. Hyperspectral images identify rice leaf diseases by evaluating different spectral responses of leaf blade fractions and identifying Sheath blight (ShB) leaf diseases [39]. A spectral library has been created using different disease samples [40]. An improved VGG16 has been used to identify apple leaf disease with an accuracy rate of 99.01% [41].

The author employed image processing, segmentation, and a CNN to classify leaf disease. This research attempts to identify and classify tomato diseases in fields and greenhouse plants. The author used deep learning and a robot in real-time to identify plant diseases utilizing the sensor’s image. AlexNet and SqueezeNet are deep learning architectures used to diagnose and categorize plant disease [42]. The authors built convolutional neural network models using leaf pictures of healthy and sick plants. An open-source PlantVillage dataset with 87,848 images of 25 plants classified into 58 categories was used, and a model identified plant/disease pairs (or healthy plants) with a 99.53% success rate. The authors suggest constructing a real-time plant disease diagnosis system based on the proposed model [43].

In this paper, the authors reviewed all CNN variants for plant disease classification. The authors also briefed all deep learning principles used for leaf disease identification and classification. The authors mainly focused on the latest CNN models and evaluated their performance. Here, the authors summarized CNN variants such as VGG16, VGG19, and ResNet. In this paper, the authors discuss pros, cons, and future aspects of different CNN variants [44].

This work is mainly focused on investigating an optimal solution for plant leaf disease detection. This paper proposes a segmentation-based CNN to provide the best solution to the defined problem. This paper uses segmented images to train the model compared to other models trained on the complete image. The model outperformed and achieved 98.6% classification accuracy. The model was trained and tested on independent data with ten disease classes [45].

A detailed learning technique for the identification of disease in tomato leaves using enhanced CNNs is presented in this article.

The dataset for tomato leaves is built using data augmentation and image annotation tools. It consists of laboratory photos and detailed images captured in actual field situations.

The recognition of tomato leaves is proposed using a Deep Convolutional Neural Network (DCNN). Rainbow concatenation and GoogLeNet Inception V3 structure are all included.

In the proposed INAR-SSD model, the Inception V3 module and Rainbow concatenation detect these five frequent tomato leaf diseases.

The testing results show that the INAR-SSD model achieves a detection rate of 23.13 frames per second and detection performance of 78.80% mAP on the Apple Leaf Disease Dataset (ALDD). Furthermore, the results indicate that the innovative INAR-SSD (SSD with Inception module and Rainbow concatenation) model produces more accurate and faster results for the early identification of tomato leaf diseases than other methods [46].

An EfficientNet, a convolutional neural network with 18,161 plain segmented tomato leaf images, is used to classify tomato diseases. Two leaf segmentation models, U-net and Modified U-net, are evaluated. The models’ ability was examined categorically (healthy vs disease leaves and 6- and 10-class healthy vs sick leaves). The improved U-net segmentation model correctly classified 98.66% of leaf pictures for segmentation. EfficientNet-B7 surpassed 99.95% and 99.12% accuracy for binary and six-class classification, and EfficientNet-B4 classified images for ten classes with 99.89 percent accuracy [47].

Disease detection is crucial for crop output. Therefore, disease detection has led academics to focus on agricultural ailments. This research presents a deep convolutional neural network and an attention mechanism for analyzing tomato leaf diseases. The network structure has attention extraction blocks and modules. As a result, it can detect a broad spectrum of diseases. The model also forecasts 99.24% accuracy in tests, network complexities, and real-time adaptability [48].

Convolutional Neural Networks (CNNs) have revolutionized image processing, especially deep learning methods. Over the last two years, numerous potential autonomous crop disease detection applications have emerged. These models can be used to develop an expert consultation app or a screening app. These tools may help enhance sustainable farming practices and food security. The authors looked at 19 studies that employed CNNs to identify plant diseases and assess their overall utility [49].

To depict the illustrations, the authors depended on the PlantVillage dataset. The authors did not evaluate the performance of the neural network topologies using typical performance metrics such as F1-score, recall, precision, etc. Instead, they assessed the model’s accuracy and inference time. This article proposes a new deep neural network model and evaluates it using a variety of evaluation metrics.

3. Materials and Methods

In this part, cutting-edge methodologies, models, and datasets are utilized to attain outcomes.

3.1. Dataset

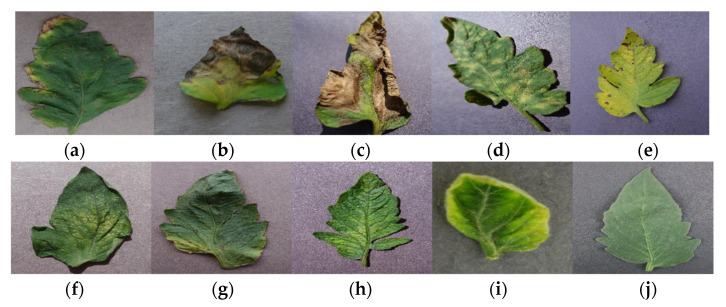

There were ten unique classes in the sample. Nine classes were tomato leaves infected by disease, and one class was healthy. We used reference photos and disease names to identify our dataset classes, as shown in Figure 1.

Figure 1.

Sample leaf image with disease and pathogen for (a) Bacterial_Spot(Xanthomonas vesicatoria), (b) Early_Blight(fungus Alternaria solani), (c) Late_Blight(Phytophthora infestans), (d) Leaf_Mold(Cladosporium fulvum), (e) Septoria_Leaf_Spot(fungus Septoria lycopersici), (f) Spider_Mites(floridana), (g) Target_Spot(fungus Corynespora), (h) Tomato_Mosaic_Virus(Tobamovirus), (i) Tomato_Yellow_Leaf_Curl_Virus(genus Begomovirus), (j) Healthy_Leaf.

In the experiment, the complete dataset was divided in the ratio of 80:20, with 80% used for training and 20% used for testing and validation.
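The 80:20 division can be illustrated with a short sketch. It assumes a simple shuffled split with a fixed seed; the paper does not say whether the split was stratified by class, and the file names and the helper `split_dataset` below are hypothetical.

```python
import random

def split_dataset(items, train_fraction=0.8, seed=0):
    """Shuffle items reproducibly and split them into train and held-out lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(len(shuffled) * train_fraction))
    return shuffled[:cut], shuffled[cut:]

# Hypothetical file names standing in for the 3000 leaf images.
image_names = [f"leaf_{i:04d}.jpg" for i in range(3000)]
train_set, held_out_set = split_dataset(image_names)
print(len(train_set), len(held_out_set))  # 2400 600
```

In practice a per-class (stratified) split is often preferred so that each of the ten classes keeps the same 80:20 proportion.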

3.2. Image Pre-Processing and Labelling

Before training the model, image pre-processing was used to modify or enhance the raw images that needed to be processed by the CNN classifier. Building a successful model requires analyzing both the design of the network and the format of input data. We pre-processed our dataset so that the proposed model could extract the appropriate features from each image. The first step was to normalize the size of each picture by resizing it to 256 × 256 pixels. The images were then converted to greyscale. This stage of pre-processing means that a considerable amount of training data are required for the explicit learning of the training data features. The next step was to group tomato leaf pictures by type and then label all images with the correct acronym for the disease. In this case, the dataset contained ten classes in both the test and training collections.
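The two pre-processing steps described above (resizing to 256 × 256 and greyscale conversion) can be sketched in plain Python. This is an illustration only: the interpolation method and greyscale weights are not given in the text, so nearest-neighbour sampling and the common ITU-R BT.601 luminance weights are assumed here, and the function names are hypothetical.

```python
def resize_nearest(image, out_h, out_w):
    """Resize a row-major image (list of rows) by nearest-neighbour sampling."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]

def to_greyscale(image):
    """Convert (r, g, b) pixels to one luminance value each (assumed BT.601 weights)."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in image
    ]

def preprocess(image, size=256):
    """Resize to size x size, then convert to greyscale."""
    return to_greyscale(resize_nearest(image, size, size))

# Synthetic 4 x 6 RGB checkerboard used only to exercise the functions.
toy = [[(255, 0, 0) if (r + c) % 2 else (0, 255, 0) for c in range(6)] for r in range(4)]
grey = preprocess(toy)
print(len(grey), len(grey[0]))  # 256 256
```

An image library would normally do both steps in one or two calls; the explicit loops are kept here so that each operation is visible.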

3.3. Training Dataset

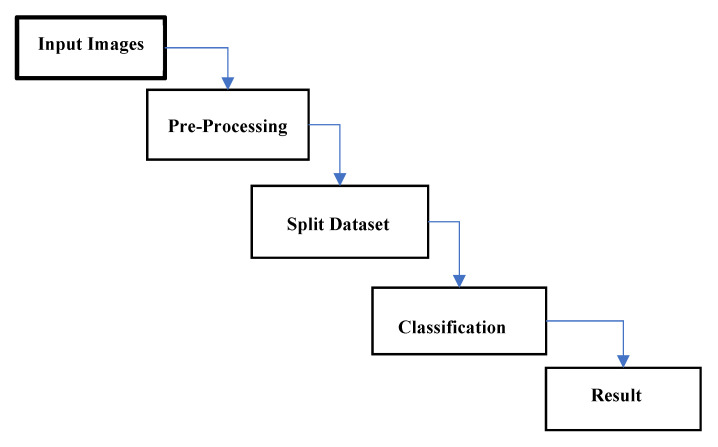

Preparing the dataset was the first stage in processing the existing dataset. The Convolutional Neural Network process was used during this step as image data input, which eventually formed a model that assessed performance. Normalization steps on tomato leaf images are shown in Figure 2.

Figure 2.

Classifier model used.

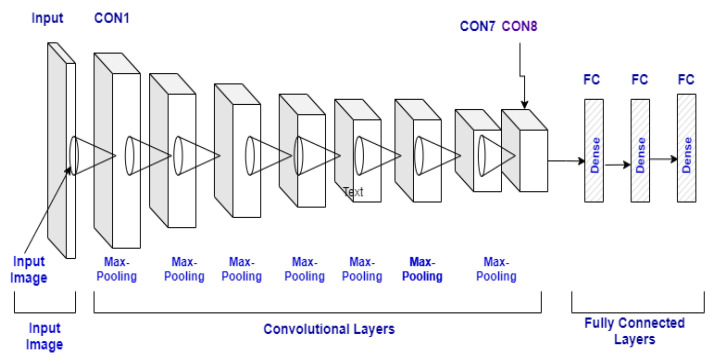

3.4. Convolutional Neural Network

The CNN is a neural network technology widely employed today to process and learn from image data. The convolution operation uses filters in matrix form to filter the pictures. For data training, the Convolutional Neural Network uses the following layers: input layer, convolution layer, pooling layer, fully connected layer, dropout layer, and finally the classification layer linked to the dataset classes. Each layer maps its input to an output through a series of calculations. The complete architecture is shown in Figure 3, and a description of the model is in Table 1.

Figure 3.

CNN model architecture.

Table 1.

Hyper-parameter of deep neural network.

| Parameter | Description |
|---|---|
| No. of Convolution Layers | 8 |
| No. of Max Pooling Layers | 8 |
| Dropout Rate | 0.5 |
| Network Weight Assigned | Uniform |
| Activation Function | ReLU |
| Learning Rates | 0.01, 0.01, 0.1 |
| Epochs | 50, 100, 150 |
| Batch Size | 36, 64, 110 |
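To make the layer counts in Table 1 concrete, the sketch below traces the spatial size of a 256 × 256 input through eight convolution and max pooling blocks. Several things are assumed because the text does not state them: the layers alternate (one convolution followed by one pooling), convolutions use stride 1 with "same" padding, and pooling uses a 2 × 2 window with stride 2.

```python
def conv_same(size):
    """A stride-1 convolution with 'same' padding keeps the spatial size (assumption)."""
    return size

def max_pool(size, window=2, stride=2):
    """Spatial size after unpadded max pooling (assumed 2 x 2 window, stride 2)."""
    return (size - window) // stride + 1

def trace_shapes(input_size=256, blocks=8):
    """Return the feature-map side length after each conv + pool block."""
    sizes, size = [], input_size
    for _ in range(blocks):
        size = max_pool(conv_same(size))
        sizes.append(size)
    return sizes

print(trace_shapes())  # [128, 64, 32, 16, 8, 4, 2, 1]
```

Under these assumptions the feature map shrinks to a single spatial position after the eighth block, so the fully connected layer would see one value per filter.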

3.4.1. Convolutional Layer

A convolution layer maps features from the preceding layer using the convolution operation. Each feature map combines several input feature maps. Convolution is an operation on two functions and constitutes the basis of CNNs. Each filter is convolved across every part of the input, generating a 2D feature map. Convolution layers contribute substantially to the complexity of the model, so their performance optimization matters. For input $z_i$ of the $i$th convolutional layer, the output $h_i$ is calculated as in Equation (1):

$$h_i = f(z_i \otimes Q_i) \qquad (1)$$

where $\otimes$ is the convolution operation, $f$ is the activation function, and $Q_i$ is the set of convolution kernels of the layer; the number of kernels in $Q_i$ is the number of feature maps the layer produces. Each kernel of $Q_i$ is a $K \times K \times L$ weight matrix, with $K$ as the window size and $L$ as the number of input channels.
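A minimal sketch of the convolution step may help: one K × K kernel slides over a single-channel input, and an activation function f is applied to each sum. The example assumes no padding, stride 1, a single input channel (L = 1), ReLU as f (the activation listed in Table 1), and the cross-correlation form that most CNN libraries use; the toy input and kernel are made up.

```python
def relu(x):
    """ReLU activation: negative values become zero."""
    return max(0.0, float(x))

def conv2d(z, q, activation=relu):
    """Unpadded, stride-1 convolution (cross-correlation form) of a single-channel
    input z with one K x K kernel q, followed by the activation function."""
    k = len(q)
    out_h, out_w = len(z) - k + 1, len(z[0]) - k + 1
    return [
        [
            activation(sum(z[r + i][c + j] * q[i][j] for i in range(k) for j in range(k)))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Toy 4 x 4 input with a vertical edge and a 2 x 2 edge-detecting kernel.
z = [[0, 0, 1, 1] for _ in range(4)]
q = [[-1, 1], [-1, 1]]
feature_map = conv2d(z, q)
print(feature_map)  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The feature map responds only in the column where the intensity changes, which is the kind of edge feature the abstract mentions.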

3.4.2. Pooling Layer

The pooling layer reduces the number of parameters to improve efficiency and precision. As a result, the size of the feature maps is reduced. The pooling layer downsamples the output of the convolution layer: it reduces the number of training parameters by summarizing each local region of a feature map with a single representative value. Max pooling passes the maximum of all $R$ activations in a pooling window to the next layer. The $m$-th max-pooled band combines $J$ related filters:

$$p_m = [p_{1,m}, \ldots, p_{J,m}] \qquad (2)$$

$$p_{j,m} = \max_{r=1}^{R} h_{j,(m-1)N+r} \qquad (3)$$

where $h_{j,k}$ is the activation of the $j$-th filter in convolution band $k$, and $N \in \{1, \ldots, R\}$ is the pooling shift, which allows for overlap between pooling zones when $N < R$. Pooling reduces the output dimensionality from $K$ convolution bands to $M = (K - R)/N + 1$ pooled bands, and the resulting layer is $p = [p_1, \ldots, p_M] \in \mathbb{R}^{M \cdot J}$.

Finally, max pooling keeps the maximum value of each pooling region (for example, of the four values in a 2 × 2 window), whereas average pooling keeps the mean.
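The pooling description can be read as a sliding maximum with window size R and shift N over the K activations of one filter. The sketch below follows that reading; it uses 0-based indexing, and the activation values are invented for illustration.

```python
def max_pool_bands(h, R, N):
    """Max-pool one filter's activations h[0..K-1] with window R and shift N."""
    K = len(h)
    M = (K - R) // N + 1  # number of pooled bands
    return [max(h[m * N : m * N + R]) for m in range(M)]

h = [1, 5, 2, 8, 3, 7, 4, 6]        # K = 8 convolution bands (made-up values)
print(max_pool_bands(h, R=2, N=2))  # [5, 8, 7, 6]  (no overlap, M = 4)
print(max_pool_bands(h, R=3, N=1))  # [5, 8, 8, 8, 7, 7]  (overlapping, M = 6)
```

With N equal to R the windows do not overlap and the output is K/R values long; with N smaller than R neighbouring windows share activations, which gives more pooled bands.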

3.4.3. Fully Connected Layer

Each layer in the fully connected network is connected with its previous and subsequent layers. Each node in the first fully connected layer is connected to every node in the last pooling layer. The many parameters in this part of the CNN model take more time to train because of the complex computation; this is the critical drawback of the fully connected layer. Reducing the number of nodes and links overcomes this limitation, and the dropout technique provides a way to remove nodes and connections.

3.4.4. Dropout

Dropout is an approach in which randomly selected neurons are ignored during training; they are “dropped out” at random. This means that their contribution to the activation of downstream neurons is temporarily removed on the forward pass, and no weight updates are applied to them on the backward pass. Thus, it avoids overfitting and speeds up the process of learning. Overfitting occurs when the model achieves an excellent score on the training data but performs noticeably worse at prediction time. Dropout can be applied to neurons in both the hidden and visible layers of the network.
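As an illustration of the idea, the sketch below applies dropout to a list of activations. It assumes the common "inverted dropout" variant, in which surviving activations are rescaled by 1 / (1 - rate) during training so that nothing needs to change at inference time; the text does not say which variant was used. The rate of 0.5 matches the dropout rate in Table 1.

```python
import random

def dropout(activations, rate=0.5, training=True, rng=random):
    """Inverted dropout (assumed variant): zero each activation with probability
    `rate` during training and rescale the survivors by 1 / (1 - rate)."""
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(42)
hidden = [1.0] * 10
print(dropout(hidden, rate=0.5, rng=rng))         # a mix of 0.0 and 2.0 values
print(dropout(hidden, rate=0.5, training=False))  # unchanged at inference time
```

Because a different random subset is dropped on every training step, no single neuron can be relied on exclusively, which is what discourages overfitting.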

Performance Evaluation Metrics. The accuracy, precision, recall, and F1-score measures are used to evaluate the model’s performance. To avoid being misled by the confusion matrix, we applied the abovementioned evaluation criteria.

Accuracy. Accuracy (Acc) is the proportion of all predictions that are classified correctly. It is calculated as follows:

$$\mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN}$$

Note that abbreviations such as “TP”, “TN”, “FP”, and “FN” stand for “true positive”, “true negative”, “false positive”, and “false negative”, respectively.

Precision. Precision (Pre) is the proportion of positive predictions that are true positives. It is calculated as follows:

$$\mathrm{Pre} = \frac{TP}{TP + FP}$$

Recall. Recall (Re) is the proportion of actual positives that were successfully detected. It is calculated as follows:

$$\mathrm{Re} = \frac{TP}{TP + FN}$$

F1-Score. The F1-Score is calculated as the harmonic mean of precision and recall and is defined as follows:

$$F1 = \frac{2 \times \mathrm{Pre} \times \mathrm{Re}}{\mathrm{Pre} + \mathrm{Re}} \qquad (4)$$
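The four metrics follow directly from the confusion-matrix counts, as the short sketch below shows using their standard definitions. The counts in the example are hypothetical and stand for one disease class evaluated one-vs-rest; they are not results from the paper.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical counts for one disease class treated one-vs-rest.
metrics = classification_metrics(tp=90, tn=880, fp=10, fn=20)
print(metrics)
```

For a ten-class problem the same function would be applied per class, and the per-class values can then be averaged.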

Proposed Algorithm: Steps involved for Disease Detection

Step 1: Input the color image IRGB of the leaf, procured from the PlantVillage dataset.

Step 2: Given IRGB, generate the mask Mveq using CNN-based segmentation.

Step 3: Overlay IRGB with Mveq to get Mmask.

Step 4: Divide the image Mmask into smaller regions Ktiles (square tiles).

Step 5: Classify the Ktiles from Mmask as tomato leaf.

Step 6: Finally, use the Ktiles leaf regions to detect disease.

Step 7: Stop.

Disease detection starts with the input image IRGB from the multiclass dataset. The mask Mveq is then segmented from IRGB using a CNN. The masked image is divided into different regions, Ktiles. Afterward, the Region of Interest (RoI) is selected and used to detect leaf disease.
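The masking and tiling steps (Steps 3 and 4, plus the selection of leaf regions) can be sketched as follows. The sketch assumes the segmentation mask is already available as a binary grid, since the CNN-based segmentation itself is outside its scope. The function names and the `min_leaf_fraction` threshold are hypothetical and are not taken from the paper.

```python
def apply_mask(image, mask):
    """Keep pixels where the mask is 1 and zero out the background."""
    return [[px if m else 0 for px, m in zip(row, mrow)] for row, mrow in zip(image, mask)]

def split_tiles(image, tile):
    """Split an image into non-overlapping tile x tile squares (row-major order)."""
    h, w = len(image), len(image[0])
    return [
        [row[c : c + tile] for row in image[r : r + tile]]
        for r in range(0, h - tile + 1, tile)
        for c in range(0, w - tile + 1, tile)
    ]

def leaf_tiles(image, mask, tile, min_leaf_fraction=0.5):
    """Return masked tiles whose share of leaf pixels reaches the (assumed) threshold."""
    mask_tiles = split_tiles(mask, tile)
    img_tiles = split_tiles(apply_mask(image, mask), tile)
    return [
        t for t, m in zip(img_tiles, mask_tiles)
        if sum(map(sum, m)) / (tile * tile) >= min_leaf_fraction
    ]

# Toy 4 x 4 greyscale image; the mask marks the left half as leaf.
image = [[9] * 4 for _ in range(4)]
mask = [[1, 1, 0, 0] for _ in range(4)]
selected = leaf_tiles(image, mask, tile=2)
print(len(selected))  # 2
```

Each selected tile would then be passed to the classifier, so that background regions do not influence the disease prediction.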

The proposed algorithm for disease detection is given below:

| Algorithm: Disease Detection |
|---|
| Input: IRGB acquired from a dataset |
| Output: Disease detection (tomato diseases) |

4. Results Analysis and Discussion

The complete experiment was performed on Google Colab. The result of the proposed method is described with different test epochs and learning rates and explained in the next sub-section.

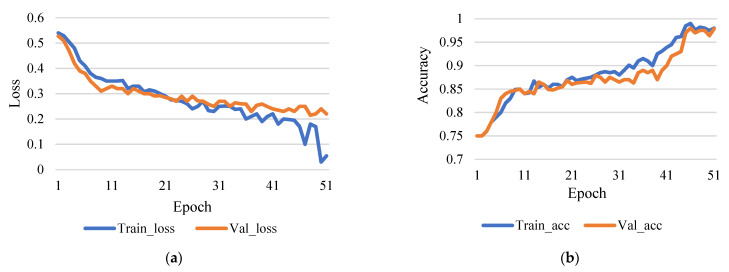

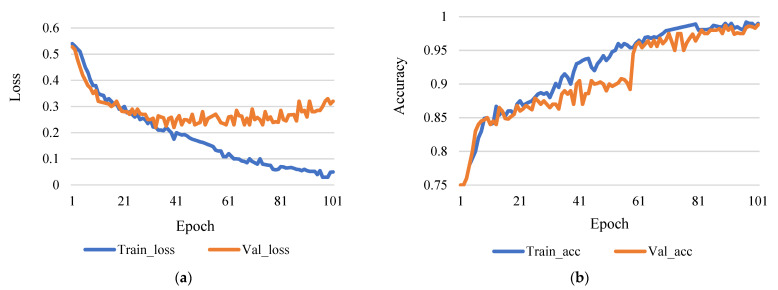

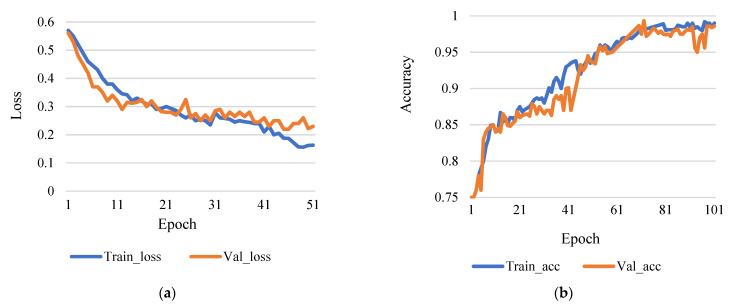

This research used 50 and 100 epochs for comparison, with a learning rate of 0.0001. Figure 4a shows the comparison between training and validation loss, and Figure 4b shows the comparison between training accuracy and validation accuracy.

Figure 4.

(a) Training loss vs validation loss (rate of learning 0.0001 and epoch 50). (b) Training accuracy vs validation accuracy (rate of learning 0.0001 and epoch 50).

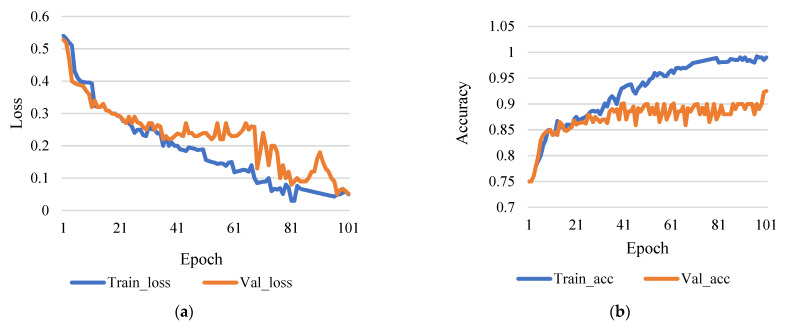

Figure 5a shows the comparison between training loss and validation loss, and Figure 5b shows training accuracy and validation accuracy. Here, Figure 5b shows that an accuracy rate of 98.43% is achieved with 100 training epochs and a learning rate of 0.0001. Therefore, based on the research technique, it is reasonable to infer that more iterations will result in higher accuracy. However, the training phase lengthens as the number of epochs increases.

Figure 5.

(a) Training loss vs validation loss (rate of learning 0.0001 and epoch 100). (b) Training accuracy vs validation accuracy (rate of learning 0.0001 and epoch 100).

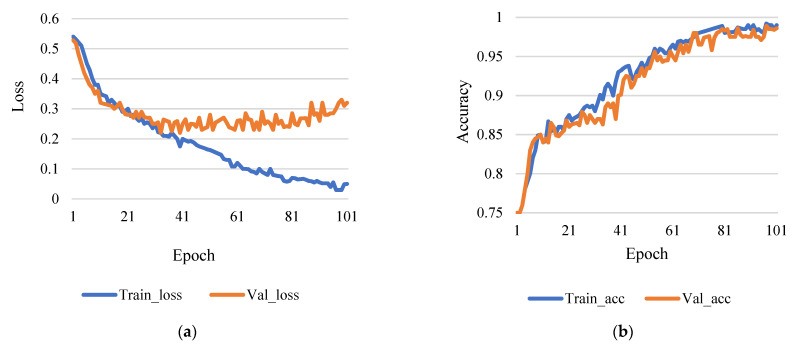

This assessment evaluates the role that the learning rate plays in the training process. The learning rate is one of the training variables used to calculate the weight correction value. This test is based on 50 and 100 epochs, with learning rates of 0.001 and 0.01 used for comparison. Figure 6a shows the comparison between training loss and validation loss, and Figure 6b shows training accuracy and validation accuracy.
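How the learning rate scales the weight correction can be seen on a toy problem. The sketch below runs plain gradient descent on the loss L(w) = w², which is an assumption made purely for illustration: the paper does not name its optimizer or loss, and only the three learning rate values come from the experiments.

```python
def gradient_descent(lr, steps, w0=1.0):
    """Minimise the toy loss L(w) = w**2 with plain gradient descent."""
    w = w0
    for _ in range(steps):
        grad = 2.0 * w     # dL/dw
        w = w - lr * grad  # weight correction scaled by the learning rate
    return w

for lr in (0.0001, 0.001, 0.01):
    # A larger learning rate moves w closer to the minimum at 0 in 100 steps.
    print(lr, gradient_descent(lr, steps=100))
```

On this toy loss the largest rate converges fastest, but on a real network a rate that is too large can overshoot, which is why several values are compared empirically.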

Figure 6.

(a) Training loss vs validation loss (rate of learning 0.001 and epoch 50). (b) Training accuracy vs validation accuracy (rate of learning 0.001 and epoch 50).

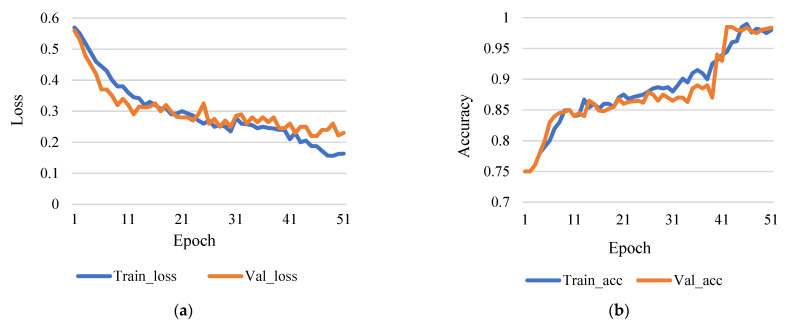

Figure 7a shows the comparison between training loss and validation loss, and Figure 7b shows training accuracy and validation accuracy. According to Figure 7b, an accuracy rate of 98.42% is obtained with 50 epochs and a learning rate of 0.001. Furthermore, Figure 8a shows the comparison between training loss and validation loss, and Figure 8b shows training accuracy and validation accuracy. Figure 8b shows that an accuracy of 98.52% is achieved. Figure 9a shows the training and validation losses, and Figure 9b shows training and validation accuracy. It also shows an accuracy rate of 98.5% with 100 epochs and a learning rate of 0.01. Based on this assessment, a greater learning rate can yield a more accurate result.

Figure 7.

(a) Training loss vs validation loss (rate of learning 0.001 and epoch 100). (b) Training accuracy vs validation accuracy (rate of learning 0.001 and epoch 100).

Figure 8.

(a) Training loss vs validation loss (rate of learning 0.01 and epoch 50). (b) Training accuracy vs validation accuracy (rate of learning 0.01 and epoch 50).

Figure 9.

(a) Training loss vs validation loss (rate of learning 0.01 and epoch 100). (b) Training accuracy vs validation accuracy (rate of learning 0.01 and epoch 100).

As shown in Table 2, the accuracy rate depends on both the learning rate and the number of epochs: the larger the epoch value, the more precise the result. The experiment was performed by varying the epoch (two values) and the learning rate (three values) to determine the detection accuracy. The accuracy for each combination is shown in Table 2.

Table 2.

Test results.

| Dataset Amount | Image Size | Epoch | Learning Rate | Accuracy (%) |
|---|---|---|---|---|
| 3000 | 256 × 256 px | 50 | 0.0001 | 98.47 |
| 3000 | 256 × 256 px | 50 | 0.001 | 98.42 |
| 3000 | 256 × 256 px | 50 | 0.01 | 98.52 |
| 3000 | 256 × 256 px | 100 | 0.0001 | 98.43 |
| 3000 | 256 × 256 px | 100 | 0.001 | 98.58 |
| 3000 | 256 × 256 px | 100 | 0.01 | 98.5 |
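A few lines of arithmetic summarize the six runs in Table 2. The values are copied from the table; the observation that their mean rounds to the 98.49% quoted elsewhere in the paper is only an observation, since the text does not state how that headline figure was derived.

```python
# (epochs, learning rate) -> accuracy in percent, copied from Table 2.
table2 = {
    (50, 0.0001): 98.47,
    (50, 0.001): 98.42,
    (50, 0.01): 98.52,
    (100, 0.0001): 98.43,
    (100, 0.001): 98.58,
    (100, 0.01): 98.50,
}

best_setting = max(table2, key=table2.get)
mean_accuracy = sum(table2.values()) / len(table2)
spread = max(table2.values()) - min(table2.values())

print(best_setting)             # (100, 0.001)
print(round(mean_accuracy, 2))  # 98.49
print(round(spread, 2))         # 0.16
```

The spread of 0.16 percentage points across all settings suggests that, within the tested ranges, accuracy is not very sensitive to either hyper-parameter.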

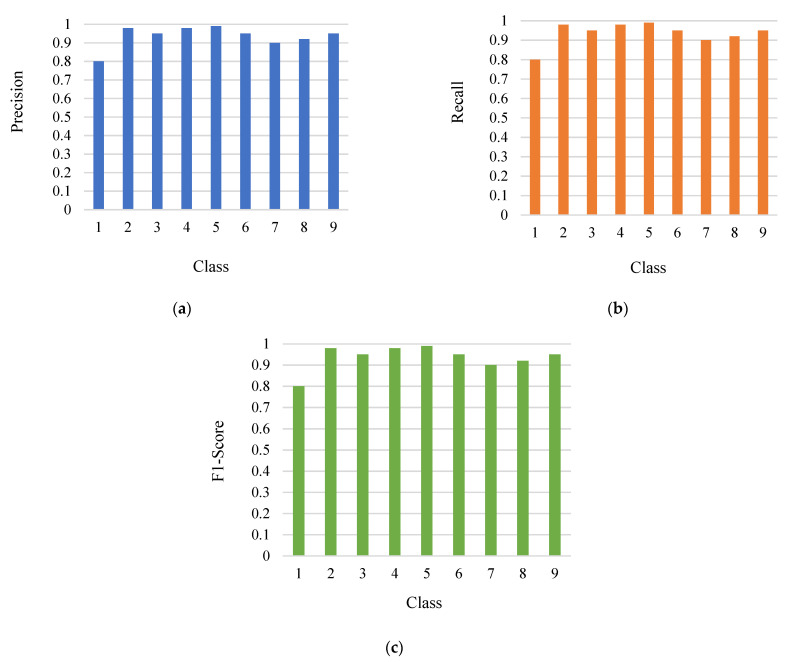

The precision, recall, and F1-score of the model are shown in Figure 10a–c. The model’s performance is measured by accuracy, but other factors such as precision, recall, and F1-score also contribute to it. These factors are computed for all the classes from their true positive, true negative, false positive, and false negative values. High precision means that few of the predicted positives are false positives. A high recall value means that most of the relevant positive samples are detected. The F1-score is the harmonic mean of precision and recall.

Figure 10.

(a) Precision; (b) Recall; (c) F1-Score.

In Table 3, the proposed approach’s performance is compared to that of three standardized models. The results show that the proposed model outperforms the other classical models using segmentation and an added extra layer in the model.

Table 3.

Comparison with other models.

| S.No. | Model | Accuracy Rate (%) | Space | Trainable Parameters | Non-Trainable Parameters |
|---|---|---|---|---|---|
| 1 | MobileNet | 66.75 | 82,566 | 18,020,552 | 455,262 |
| 2 | VGG16 | 79.52 | 85,245 | 21,000,254 | 532,654 |
| 3 | InceptionV3 | 64.25 | 90,255 | 22,546,862 | 658,644 |
| 4 | Proposed | 98.49 | 22,565 | 1,422,542 | 0 |

5. Conclusions and Future Scope

The article discussed a deep neural network model for detecting and classifying tomato plant leaf diseases into predefined categories. It also considered morphological traits such as color, texture, and leaf edges of the plant. This article introduced standard deep learning models with variants. This article discussed biotic diseases caused by fungal and bacterial pathogens, specifically blight, blast, and browns of tomato leaves. The proposed model’s detection rate was 98.49 percent accurate. With the same dataset, the proposed model was compared to VGG and ResNet versions. After analyzing the results, the proposed model outperformed other models. The proposed approach for identifying tomato disease is a ground-breaking notion. In the future, we will expand the model to include certain abiotic diseases caused by nutrient deficiencies in the crop leaf. Our long-term objective is to increase unique data collection and accumulate a vast amount of data on several diseases of plants. To improve accuracy, we will apply newer technologies in the future.

Acknowledgments

This project was funded by the Deanship of Scientific Research (DSR), King Abdulaziz University, Jeddah. The authors, therefore, gratefully acknowledge DSR’s technical and financial support.

Author Contributions

Conceptualization, N.K.T., V.G. and A.A.; methodology, H.M.A., S.G.V. and D.A.; validation, S.K. and N.G.; formal analysis, A.A. and N.G.; investigation, N.K.T. and V.G.; resources, A.A.; data curation, S.G.V. and H.M.A.; writing—original draft, N.K.T., V.G. and A.A.; writing—review and editing, S.K., H.M.A. and N.G.; supervision, S.G.V. and D.A.; project administration, H.M.A. and S.G.V. All authors have read and agreed to the published version of the manuscript.

Funding

This project was funded by the Deanship of Scientific Research (DSR), King Abdulaziz University, Jeddah, under grant No. (D438-830-1442). The authors, therefore, gratefully acknowledge DSR’s technical and financial support.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Schreinemachers P., Simmons E.B., Wopereis M.C. Tapping the economic and nutritional power of vegetables. Glob. Food Secur. 2018;16:36–45. doi: 10.1016/j.gfs.2017.09.005.

- 2. Stilwell M. The global tomato online news processing in 2018. Available online: https://www.tomatonews.com/ (accessed on 15 November 2021).

- 3. Wang R., Lammers M., Tikunov Y., Bovy A.G., Angenent G.C., de Maagd R.A. The rin, nor and Cnr spontaneous mutations inhibit tomato fruit ripening in additive and epistatic manners. Plant Sci. 2020;294:110436–110447. doi: 10.1016/j.plantsci.2020.110436.

- 4. Sengan S., Rao G.R., Khalaf O.I., Babu M.R. Markov mathematical analysis for comprehensive real-time data-driven in healthcare. Math. Eng. Sci. Aerosp. (MESA) 2021;12:77–94.

- 5. Sengan S., Sagar R.V., Ramesh R., Khalaf O.I., Dhanapal R. The optimization of reconfigured real-time datasets for improving classification performance of machine learning algorithms. Math. Eng. Sci. Aerosp. (MESA) 2021;12:43–54.

- 6. Peet M.M., Welles G.W. Greenhouse tomato production. Crop. Prod. Sci. Hortic. 2005;13:257–304.

- 7. Khan S., Narvekar M. Novel fusion of color balancing and superpixel based approach for detection of tomato plant diseases in natural complex environment. J. King Saud Univ.-Comput. Inf. Sci. 2020, in press. doi: 10.1016/j.jksuci.2020.09.006.

- 8. Kovalskaya N., Hammond R.W. Molecular biology of viroid–host interactions and disease control strategies. Plant Sci. 2014;228:48–60. doi: 10.1016/j.plantsci.2014.05.006.

- 9. Wisesa O., Andriansyah A., Khalaf O.I. Prediction Analysis for Business to Business (B2B) Sales of Telecommunication Services using Machine Learning Techniques. Majlesi J. Electr. Eng. 2020;14:145–153. doi: 10.29252/mjee.14.4.145.

- 10. Wilson C.R. Plant pathogens–the great thieves of vegetable value; Proceedings of the XXIX International Horticultural Congress on Horticulture Sustaining Lives, Livelihoods and Landscapes (IHC2014); Brisbane, Australia. 17–22 August 2014.

- 11. Zhang S.W., Shang Y.J., Wang L. Plant disease recognition based on plant leaf image. J. Anim. Plant Sci. 2015;25:42–45.

- 12. Agarwal M., Singh A., Arjaria S., Sinha A., Gupta S. ToLeD: Tomato leaf disease detection using convolution neural network. Procedia Comput. Sci. 2020;167:293–301. doi: 10.1016/j.procs.2020.03.225.

- 13. Ali H., Lali M.I., Nawaz M.Z., Sharif M., Saleem B.A. Symptom based automated detection of citrus diseases using color histogram and textural descriptors. Comput. Electron. Agric. 2017;138:92–104. doi: 10.1016/j.compag.2017.04.008.

- 14. Basavaiah J., Anthony A.A. Tomato Leaf Disease Classification using Multiple Feature Extraction Techniques. Wirel. Pers. Commun. 2020;115:633–651. doi: 10.1007/s11277-020-07590-x.

- 15. Ma J., Du K., Zheng F., Zhang L., Sun Z. A segmentation method for processing greenhouse vegetable foliar disease symptom images. Inf. Process. Agric. 2019;6:216–223. doi: 10.1016/j.inpa.2018.08.010.

- 16. Sharma P., Hans P., Gupta S.C. Classification of plant leaf diseases using machine learning and image preprocessing techniques; Proceedings of the 10th International Conference on Cloud Computing, Data Science & Engineering (Confluence); Noida, India. 29–31 January 2020.

- 17. Li G., Liu F., Sharma A., Khalaf O.I., Alotaibi Y., Alsufyani A., Alghamdi S. Research on the natural language recognition method based on cluster analysis using neural network. Math. Probl. Eng. 2021;2021:9982305. doi: 10.1155/2021/9982305.

- 18. Singh V., Misra A.K. Detection of plant leaf diseases using image segmentation and soft computing techniques. Inf. Process. Agric. 2017;4:41–49. doi: 10.1016/j.inpa.2016.10.005.

- 19. Hasan M., Tanawala B., Patel K.J. Deep learning precision farming: Tomato leaf disease detection by transfer learning; Proceedings of the 2nd International Conference on Advanced Computing and Software Engineering (ICACSE); Sultanpur, India. 8–9 February 2019.

- 20. Adhikari S., Shrestha B., Baiju B., Kumar S. Tomato plant diseases detection system using image processing; Proceedings of the 1st KEC Conference on Engineering and Technology; Lalitpur, Nepal. 27 September 2018; pp. 81–86.

- 21. Sabrol H., Satish K. Tomato plant disease classification in digital images using classification tree; Proceedings of the International Conference on Communication and Signal Processing (ICCSP); Melmaruvathur, India. 6–8 April 2016; pp. 1242–1246.

- 22. Salih T.A. Deep Learning Convolution Neural Network to Detect and Classify Tomato Plant Leaf Diseases. Open Access Libr. J. 2020;7:12. doi: 10.4236/oalib.1106296.

- 23. Ishak S., Rahiman M.H., Kanafiah S.N., Saad H. Leaf disease classification using artificial neural network. J. Teknol. 2015;77:109–114. doi: 10.11113/jt.v77.6463.

- 24. Sabrol H., Kumar S. Fuzzy and neural network-based tomato plant disease classification using natural outdoor images. Indian J. Sci. Technol. 2016;9:1–8. doi: 10.17485/ijst/2016/v9i44/92825.

- 25. Rangarajan A.K., Purushothaman R., Ramesh A. Tomato crop disease classification using pre-trained deep learning algorithm. Procedia Comput. Sci. 2018;133:1040–1047. doi: 10.1016/j.procs.2018.07.070.

- 26. Coulibaly S., Kamsu-Foguem B., Kamissoko D., Traore D. Deep neural networks with transfer learning in millet crop images. Comput. Ind. 2019;108:115–120. doi: 10.1016/j.compind.2019.02.003.

- 27. Sangeetha R., Rani M. Tomato Leaf Disease Prediction Using Transfer Learning; Proceedings of the International Advanced Computing Conference 2020; Panaji, India. 5–6 December 2020.

- 28. Mortazi A., Bagci U. Automatically designing CNN architectures for medical image segmentation; Proceedings of the International Workshop on Machine Learning in Medical Imaging; Granada, Spain. 16 September 2018; pp. 98–106.

- 29. Jiang D., Li F., Yang Y., Yu S. A tomato leaf diseases classification method based on deep learning; Proceedings of the Chinese Control and Decision Conference (CCDC); Hefei, China. 22–24 August 2020; pp. 1446–1450.

- 30. Agarwal M., Gupta S.K., Biswas K.K. Development of Efficient CNN model for Tomato crop disease identification. Sustain. Comput. Inform. Syst. 2020;28:100407–100421. doi: 10.1016/j.suscom.2020.100407.

- 31. PlantVillage. Available online: https://www.kaggle.com/emmarex/plantdisease (accessed on 3 July 2021).

- 32. Kaur P., Gautam V. Research patterns and trends in classification of biotic and abiotic stress in plant leaf. Mater. Today Proc. 2021;45:4377–4382. doi: 10.1016/j.matpr.2020.11.198.

- 33. Kaur P., Gautam V. Plant Biotic Disease Identification and Classification Based on Leaf Image: A Review; Proceedings of the 3rd International Conference on Computing Informatics and Networks (ICCIN); Delhi, India. 29–30 July 2021; pp. 597–610.

- 34. Suryanarayana G., Chandran K., Khalaf O.I., Alotaibi Y., Alsufyani A., Alghamdi S.A. Accurate Magnetic Resonance Image Super-Resolution Using Deep Networks and Gaussian Filtering in the Stationary Wavelet Domain. IEEE Access. 2021;9:71406–71417. doi: 10.1109/ACCESS.2021.3077611.

- 35. Wu Y., Xu L., Goodman E.D. Tomato Leaf Disease Identification and Detection Based on Deep Convolutional Neural Network. Intell. Autom. Soft Comput. 2021;28:561–576. doi: 10.32604/iasc.2021.016415.

- 36. Tm P., Pranathi A., SaiAshritha K., Chittaragi N.B., Koolagudi S.G. Tomato leaf disease detection using convolutional neural networks; Proceedings of the Eleventh International Conference on Contemporary Computing (IC3); Noida, India. 2–4 August 2018; pp. 1–5.

- 37. Kaushik M., Prakash P., Ajay R., Veni S. Tomato Leaf Disease Detection using Convolutional Neural Network with Data Augmentation; Proceedings of the 5th International Conference on Communication and Electronics Systems (ICCES); Coimbatore, India. 10–12 June 2020; pp. 1125–1132.

- 38. Lin F., Guo S., Tan C., Zhou X., Zhang D. Identification of Rice Sheath Blight through Spectral Responses Using Hyperspectral Images. Sensors. 2020;20:6243–6259. doi: 10.3390/s20216243.

- 39. Li Y., Luo Z., Wang F., Wang Y. Hyperspectral leaf image-based cucumber disease recognition using the extended collaborative representation model. Sensors. 2020;20:4045–4058. doi: 10.3390/s20144045.

- 40. Yan Q., Yang B., Wang W., Wang B., Chen P., Zhang J. Apple leaf diseases recognition based on an improved convolutional neural network. Sensors. 2020;20:3535–3549. doi: 10.3390/s20123535.

- 41. Ashok S., Kishore G., Rajesh V., Suchitra S., Sophia S.G., Pavithra B. Tomato Leaf Disease Detection Using Deep Learning Techniques; Proceedings of the 5th International Conference on Communication and Electronics Systems (ICCES); Coimbatore, India. 10–12 June 2020; pp. 979–983.

- 42. Durmuş H., Güneş E.O., Kırcı M. Disease detection on the leaves of the tomato plants by using deep learning; Proceedings of the 6th International Conference on Agro-Geoinformatics; Fairfax, VA, USA. 7–10 August 2017; pp. 1–5.

- 43. Ferentinos K.P. Deep learning models for plant disease detection and diagnosis. Comput. Electron. Agric. 2018;145:311–318. doi: 10.1016/j.compag.2018.01.009.

- 44. Lu J., Tan L., Jiang H. Review on Convolutional Neural Network (CNN) Applied to Plant Leaf Disease Classification. Agriculture. 2021;11:707. doi: 10.3390/agriculture11080707.

- 45. Sharma P., Berwal Y.P., Ghai W. Performance analysis of deep learning CNN models for disease detection in plants using image segmentation. Inf. Process. Agric. 2020;7:566–574. doi: 10.1016/j.inpa.2019.11.001.

- 46. De Luna R.G., Dadios E.P., Bandala A.A. Automated image capturing system for deep learning-based tomato plant leaf disease detection and recognition; Proceedings of the TENCON 2018 - 2018 IEEE Region 10 Conference; Jeju, Korea. 28–31 October 2018; pp. 1414–1419.

- 47. Chowdhury M.E., Rahman T., Khandakar A., Ayari M.A., Khan A.U., Khan M.S., Al-Emadi N., Reaz M.B., Islam M.T., Ali S.H. Automatic and Reliable Leaf Disease Detection Using Deep Learning Techniques. AgriEngineering. 2021;3:294–312. doi: 10.3390/agriengineering3020020.

- 48. Zhao S., Peng Y., Liu J., Wu S. Tomato Leaf Disease Diagnosis Based on Improved Convolution Neural Network by Attention Module. Agriculture. 2021;11:651. doi: 10.3390/agriculture11070651.

- 49. Boulent J., Foucher S., Théau J., St-Charles P.L. Convolutional neural networks for the automatic identification of plant diseases. Front. Plant Sci. 2019;10:941. doi: 10.3389/fpls.2019.00941.
