Abstract

Background: This study aimed to develop a theoretical model to explore the behavioral intentions of medical students to adopt an AI-based Diagnosis Support System.

Methods: This online cross-sectional survey used the unified theory of acceptance and use of technology (UTAUT) to examine intentions to use an AI-based Diagnosis Support System among 211 undergraduate medical students in Vietnam. Partial least squares (PLS) structural equation modeling was employed to assess the relationships between latent constructs.

Results: Effort expectancy (β = 0.201, p < 0.05) and social influence (β = 0.574, p < 0.05) were positively associated with initial trust, while no association was found between performance expectancy and initial trust (p > 0.05). Only social influence (β = 0.527, p < 0.05) was positively related to the behavioral intention.

Conclusions: This study highlights positive behavioral intentions toward using an AI-based diagnosis support system among prospective Vietnamese physicians, as well as the effect of social influence on this choice. The development of curricula that build AI competence should be considered when reforming medical education in Vietnam.

Keywords: artificial intelligence, diagnosis, theoretical model, intention, medical students

Introduction

Artificial intelligence (AI) was first introduced decades ago, but in recent years there has been increasing exploration of the utility and cost savings of this technology (1, 2). AI has great potential to change existing healthcare practice across prevention, screening, diagnosis, treatment, and care (2, 3). AI could tap into data from existing medical records, or even data from individuals' smartphones and the applications they use, including their social media posts (3, 4). By using large datasets and employing advanced techniques such as machine learning and deep learning, AI enables more precise predictions of behavioral patterns and a better understanding of existing medical conditions (3). These benefits would facilitate the clinical decision process, improve the efficacy and accuracy of diagnosis, and reduce physicians' workload. Evidence on the utility of AI in healthcare has been widely recorded in dentistry (5), primary care (6), radiology (7), ophthalmology (8), and pathology (9). AI has been recommended for inclusion in routine workflow processes (7). These studies show that the use of AI has been explored in various domains and that it is a promising technology for healthcare.

However, although many reports show the promising role of AI, its use is still at an early stage. Recent studies indicated that few physicians were familiar with AI or had had the chance to adopt it in clinical practice, even in technologically advanced nations: 5.9% in South Korea (10) and 23% in the United States (11). Many technological, social, organizational, and individual challenges to applying AI in healthcare facilities have been discussed thoroughly in the literature (2, 12–15). Nonetheless, the most important factor is physicians' attitudes and perceptions toward AI, which determine whether they want to integrate AI into their practice (13, 14, 16). In healthcare, where clinical decisions are closely tied to patients' lives, health professionals are likely to be cautious about using new technology in treatment and care; thus, it is not easy for them to trust and use a new product to support their practice.

Health systems can actively take on the roles of AI adopters and innovators. Therefore, given the rapid expansion of AI applications in healthcare, it is crucial for the future health workforce to build the capacities, as well as the positive perceptions and attitudes, needed to participate in the development of these novel tools. Prior studies reported mixed results about the attitudes and intentions to use AI in healthcare practice among medical students. For example, a study in the United States revealed that although the majority of radiology students believed in the future role of AI, they were less interested in the applications of AI in radiology (17). Another study in the United Kingdom showed that 49% of medical students were less likely to apply for a radiology career due to AI (18). Understanding the determinants of their behavioral intention to use and adopt AI in healthcare delivery is thus necessary for developing medical education curricula that build AI competence.

In Vietnam, it was reported in 2019 that more organizations (both healthcare and non-healthcare related) had started developing and utilizing AI technologies (19). In 2019, the Vietnam Ministry of Health issued Decision No. 4888/QD-BYT on the application and development of smart healthcare during 2019–2025, which underlines the importance of digital health and of strategies to integrate digital health, including AI, into routine health service delivery (20). To date, evaluation of AI in Vietnamese healthcare services remains limited. To our knowledge, there has been only one prior publication, that of Vuong et al. (21), which presents a framework for evaluating the AI readiness of the Vietnamese healthcare sector. The authors reported that the implementation of AI in healthcare in Vietnam is limited by several factors, such as the lack of funding, the lack of the necessary information infrastructure, and, most importantly, the lack of understanding and the misunderstanding of AI. Whilst the article by Vuong et al. (21) provided some insights into the challenges of AI implementation and utilization, it focused on macro-level issues and did not evaluate the perspectives of individual healthcare professionals. For AI to achieve a high uptake rate on the ground, the existing attitudes, preferences, and perspectives of future physicians need to be understood.

In healthcare, various theories have been used to understand the factors that facilitate an individual's adoption and acceptance of a novel technology. These include the theory of planned behavior, the theory of diffusion of innovations, the technology acceptance model, and the unified theory of acceptance and use of technology (UTAUT). Of these, UTAUT has been recognized as one of the most commonly used theories for examining individual adoption behavior (22–25). UTAUT was developed based on other dominant behavioral theories. Venkatesh et al. showed that UTAUT had higher explanatory power than other theories in exploring information technology adoption, accounting for 70% of the variance in behavioral intentions and 50% of the variance in actual use (26, 27). A previous study of Chinese physicians showed that initial trust and performance expectancy were significant predictors of AI adoption intentions (28). This study aimed to use UTAUT to explore the behavioral intentions of medical students to adopt an AI-based Diagnosis Support System. Understanding medical students' attitudes and perspectives would help to resolve potential barriers to adoption at the ground level, and such a survey would also help guide AI policy formulation at different levels.

Materials and Methods

In this section, we present the literature review and conceptual framework of this study, and then describe the study design, data collection method, and statistical analysis.

Literature Review and Conceptual Framework

UTAUT has been used widely in the literature to examine individuals' behavior in adopting technology. UTAUT explains an individual's behavior via four constructs: (1) performance expectancy, (2) effort expectancy, (3) social influence, and (4) facilitating conditions (26). Because AI-based Diagnosis Support Systems have not been implemented across Vietnam, we assumed that medical students would have had very little chance to use AI systems during their clerkships or university studies. Therefore, we used UTAUT to explore behavioral intention, defined as the willingness of medical students to use this system in the future if they had the opportunity. Behavioral intention is a significant predictor of actual use; thus, it is valid to determine the factors associated with the behavioral intention to use AI, which would partly reflect future AI practice (13).

First, three main constructs of the UTAUT model (i.e., performance expectancy, effort expectancy, and social influence) were included. Performance expectancy refers to “the degree to which a person believes that using a particular system would enhance his or her job performance,” effort expectancy is defined as “the degree of ease associated with the use of the system,” and social influence refers to “the degree to which an individual perceives that important others believe he or she should use the new system” (26). All of them have been shown to have positive associations with behavioral intentions in different studies of IT adoption (26). Performance expectancy has been found to be related to effort expectancy, because people are more likely to perceive a technology as useful if they find it easy to use (29). Previous studies showed that medical students believed that AI would help to enhance practice performance and would be deeply integrated into healthcare, from administrative work to clinical routine (30–33). Indeed, medical students are considered to have higher AI literacy than current health professionals. A survey in the United States indicated that medical students were more likely than their faculty to have basic knowledge about AI and to prefer using AI in patient care (31). Another survey in the United Kingdom found that medical students who were taught about AI were more likely to adopt AI in their practice (18). Social influence may also affect the intention to use AI in healthcare. Prior research among both the general public and health professionals recommended that medical students should learn and practice AI during their studies (31, 34–36). Experts have argued that future physicians should have a good understanding of AI and be able to transform it from a potential threat into a helpful assistant (37).

Based on the literature review, we also extended the model with three additional constructs: task complexity, personal innovativeness in IT, and technology characteristics. Task complexity is the level of difficulty in completing an assigned task (38); hence, technology can play different roles in different tasks. Health professionals face a variety of tasks in their daily practice, from simple to complex. If they perceive their tasks as difficult, they are more likely to accept support from an AI system to increase their performance (i.e., performance expectancy). A study in Canada showed that medical students perceived AI as useful in providing diagnoses and prognoses, developing personalized medication, and performing robotic surgery, which indicates the promising role of AI in addressing task complexity (33). Meanwhile, personal innovativeness in information technology (IT) refers to a person's willingness to try an innovation (particularly in IT) (39), while technology characteristics refer to the system, interface, and other features that allow users to complete their tasks with the technology (40). Prior studies showed potential relationships between these two constructs and effort expectancy (41, 42). Overall, we examined the association between task complexity and performance expectancy, and the associations of personal innovativeness in IT and technology characteristics with effort expectancy.

Along with these three constructs, we added the perceived substitution crisis and initial trust constructs to examine the facilitating conditions for behavioral intentions. Perceived substitution crisis served as a potential barrier to medical students adopting the technology in their future practice. Several concerns, such as the likelihood of being replaced by AI, becoming dependent on AI, becoming unemployed due to AI, and losing diagnostic capacity due to AI, would greatly affect physicians' interests. Previous research found that 17% of German medical students agreed that AI could replace health professionals (30), and 49% of English medical students stated that they did not prefer the radiology field because of AI (18). Therefore, the perceived substitution crisis was suggested for inclusion when examining the intention to use AI among health professionals (2, 12–15).

For initial trust, McKnight et al. defined trust in the field of technology as “beliefs about a technology's capability rather than its will or its motives” (43). Trust is an important determinant of technology acceptance and adoption (44–47). Physicians are likely to be cautious when adopting new technology for patient care, in order to prevent potential harm; thus, trust can help to reduce suspicion and facilitate the use of the AI system among physicians. In a previous study, lack of trust in AI was the main contributor to negative attitudes among Chinese people toward the application of AI in healthcare (34). Another study in Canada found that medical students did not believe AI could deliver personalized and empathetic care (33). Thus, we hypothesized that trust would be positively associated with the behavioral intention to use AI systems among medical students. Given that no course on AI is taught in Vietnam's medical education curriculum, we assumed that our medical students did not have any previous experience with AI or with an AI-based Diagnosis Support System. Thus, among the different stages of trust formation, we concentrated on the initial stage, i.e., initial trust, which reflects how people trust a technology with which they have no experience.

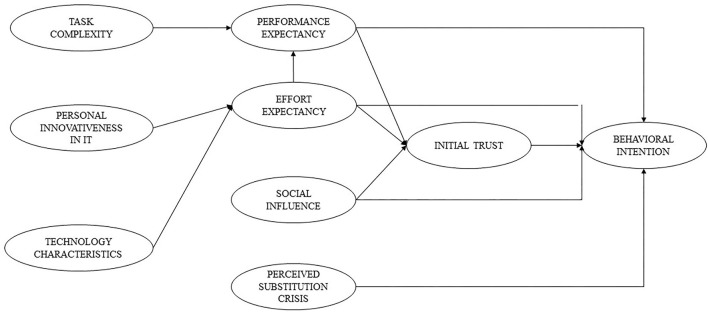

Additionally, to clarify the relationship between initial trust and behavioral intention, we developed a trust-based theoretical model to explore medical students' trust in a novel technology such as an AI-based Diagnosis Support System. We estimated the associations of performance expectancy, effort expectancy, and social influence with initial trust. As discussed above, performance expectancy and effort expectancy are two forms of technology-specific expectations, which previous studies suggest result in trust formation (48). Social influence has also been found to be associated with trust in other settings. Prior research revealed that people without any experience of a technology were more likely to depend on the opinions of people important to them, which in turn shaped their trust (48–50). The final conceptual framework used in this study is illustrated in Figure 1.

Figure 1.

A theoretical model to explore trust and intentions to use an AI-based diagnosis support system.
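As an illustration only, the hypothesized relationships described above can be written down as a list of directed paths. The sketch below is an assumption-laden summary, not the authors' specification: it lists only the paths that the text states explicitly, uses the construct abbreviations from the questionnaire (PE, EE, SI, TC, PI, TECH, PC, IT, BI), and omits any additional path that may appear only in Figure 1. The names `HYPOTHESIZED_PATHS`, `predictors_of`, and `exogenous_constructs` are hypothetical.

```python
# Hypothesized structural paths as (predictor, outcome) pairs.
# Assumption: only paths named explicitly in the text are listed here;
# Figure 1 may contain details that this sketch does not capture.
HYPOTHESIZED_PATHS = [
    ("TC", "PE"),    # task complexity -> performance expectancy
    ("PI", "EE"),    # personal innovativeness in IT -> effort expectancy
    ("TECH", "EE"),  # technology characteristics -> effort expectancy
    ("PE", "IT"),    # performance expectancy -> initial trust
    ("EE", "IT"),    # effort expectancy -> initial trust
    ("SI", "IT"),    # social influence -> initial trust
    ("PE", "BI"),    # performance expectancy -> behavioral intention
    ("EE", "BI"),    # effort expectancy -> behavioral intention
    ("SI", "BI"),    # social influence -> behavioral intention
    ("PC", "BI"),    # perceived substitution crisis -> behavioral intention
    ("IT", "BI"),    # initial trust -> behavioral intention
]


def predictors_of(outcome, paths=HYPOTHESIZED_PATHS):
    """Return the constructs hypothesized to predict `outcome`, in listed order."""
    return [source for source, target in paths if target == outcome]


def exogenous_constructs(paths=HYPOTHESIZED_PATHS):
    """Return constructs that predict others but are not themselves predicted."""
    sources = {source for source, _ in paths}
    targets = {target for _, target in paths}
    return sorted(sources - targets)


if __name__ == "__main__":
    print("Predictors of BI:", predictors_of("BI"))
    print("Predictors of IT:", predictors_of("IT"))
    print("Exogenous constructs:", exogenous_constructs())
```

Writing the model as an edge list makes it easy to see which constructs enter each of the two regressions reported later (five predictors of behavioral intention, three predictors of initial trust).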

Study Design and Data Collection

Data for this study were obtained from an Internet survey conducted from December 2019 to February 2020. The survey was built on SurveyMonkey (https://www.surveymonkey.com/), a secure online survey platform. It was sent to medical students at medical universities in Vietnam, with the following inclusion criteria: (1) aged 18 years or above; (2) currently enrolled in an undergraduate medical doctor program at a medical university in Vietnam; and (3) having a valid online account (such as email or a social network site) with which to help recruit other medical students. We used the snowball sampling technique to recruit participants. First, we sent the survey to a core group of twenty medical students from different medical universities. After completing the survey, they were asked to invite other medical students in their networks to take it. The recruitment chain stopped when no one was invited or completed the survey within 7 days. A total of 223 medical students from different provinces (Hanoi, Ho Chi Minh City, and other provinces) were enrolled in the study. We obtained their electronic informed consent before they took the survey. After excluding invalid responses, data from 211 medical students (completion rate 94.6%) were used for analysis.

Variables and Questionnaire

In this study, we developed a structured questionnaire with two parts: a demographic characteristics section (age, gender, living area, specialty, and location) and 26 items reflecting the 9 latent constructs of our theoretical model. These constructs were performance expectancy (PE), effort expectancy (EE), social influence (SI), task complexity (TC), personal innovativeness in IT (PI), technology characteristics (TECH), perceived substitution crisis (PC), initial trust (IT), and behavioral intention (BI). The items were selected based on a literature review (26, 28, 41, 42, 48). Participants responded on a 5-point Likert scale: “strongly disagree” (1), “disagree” (2), “somewhat agree” (3), “agree” (4), and “strongly agree” (5). The proposed constructs and profiles are shown in the Supplementary Material.
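To make the questionnaire structure concrete, the following minimal sketch scores one respondent by averaging the Likert answers within each construct. The item counts per construct are those reported in Table 2; everything else is an assumption for illustration: the respondent's answers are invented, the names `ITEMS_PER_CONSTRUCT`, `construct_scores`, and `example_respondent` are hypothetical, and a simple unweighted mean is used here even though PLS itself estimates weighted composites.

```python
from statistics import mean

# Number of items per construct, as reported in Table 2 (they sum to 26).
ITEMS_PER_CONSTRUCT = {
    "PE": 4, "EE": 2, "SI": 4, "PI": 4, "TC": 2,
    "TECH": 3, "PC": 4, "IT": 2, "BI": 1,
}

VALID_ANSWERS = (1, 2, 3, 4, 5)  # 5-point Likert scale


def construct_scores(responses):
    """Average the 1-5 answers of one respondent within each construct.

    `responses` maps a construct abbreviation to the list of answers
    given to that construct's items.
    """
    scores = {}
    for construct, n_items in ITEMS_PER_CONSTRUCT.items():
        answers = responses[construct]
        if len(answers) != n_items:
            raise ValueError(f"{construct}: expected {n_items} answers")
        if any(answer not in VALID_ANSWERS for answer in answers):
            raise ValueError(f"{construct}: answers must be integers 1-5")
        scores[construct] = mean(answers)
    return scores


# Hypothetical respondent, invented purely for illustration.
example_respondent = {
    "PE": [4, 4, 3, 5], "EE": [3, 4], "SI": [4, 3, 4, 3],
    "PI": [3, 3, 4, 4], "TC": [4, 4], "TECH": [3, 3, 3],
    "PC": [2, 3, 3, 4], "IT": [3, 3], "BI": [4],
}

if __name__ == "__main__":
    assert sum(ITEMS_PER_CONSTRUCT.values()) == 26
    for construct, score in construct_scores(example_respondent).items():
        print(f"{construct}: {score:.2f}")
```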

Data Analysis

Stata software version 15.0 was used to analyze the data. The measurement properties of the instrument were evaluated first. Internal consistency reliability was assessed using Cronbach's alpha, with good internal consistency defined as a Cronbach's alpha ≥0.7. Convergent, discriminant, and construct validity were then examined. Convergent validity was assessed via two criteria: factor loadings >0.70 and an average variance extracted (AVE) ≥0.5 for each construct (41). Regarding discriminant validity, we computed the variance inflation factor (VIF) to examine multicollinearity for each construct; a construct with a VIF >10 was considered inappropriate as a component of the regression analysis. The square root of the AVE of each construct was also computed, and good discriminant validity was achieved when the square root of a construct's AVE was higher than its correlations with other constructs. Given that a sample of 211 medical students might not be sufficient for conventional covariance-based structural equation modeling (SEM), we employed partial least squares (PLS) SEM, a second-generation SEM technique, to assess the relationships between latent constructs. Statistical significance was set at p < 0.05.
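For readers unfamiliar with the reliability criterion, the sketch below shows the standard Cronbach's alpha formula, alpha = k/(k − 1) × (1 − Σ item variances / variance of the summed score), applied to a small invented data set. It is not the authors' Stata code; the function name `cronbach_alpha` and the five hypothetical respondents are assumptions made for illustration.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for one construct.

    `items` is a list of item columns; each column holds one answer per
    respondent, and all columns must have the same length.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n_respondents = len(items[0])
    if any(len(column) != n_respondents for column in items):
        raise ValueError("all items need the same number of respondents")

    # Summed score of each respondent across the construct's items.
    totals = [sum(answers) for answers in zip(*items)]
    item_variance_sum = sum(variance(column) for column in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical answers of five respondents to a two-item construct.
item_1 = [4, 3, 5, 2, 4]
item_2 = [4, 3, 4, 2, 5]

if __name__ == "__main__":
    alpha = cronbach_alpha([item_1, item_2])
    print(f"Cronbach's alpha = {alpha:.3f}")
    print("meets the >= 0.7 criterion:", alpha >= 0.7)
```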

Results

Table 1 presents the demographic characteristics of our sample. The mean age of the participating medical students was 20.6 years (SD = 1.5). The majority were female (73.5%), lived in urban areas (89.1%), and were located in Ho Chi Minh City (59.7%). Most respondents were enrolled in the general physician program (57.8%).

Table 1.

Characteristics of respondents (n = 211).

| Characteristics | Value |
|---|---|
| Age, years, Mean (SD) | 20.6 (1.5) |
| Gender, n (%) | |
| Male | 55 (26.5) |
| Female | 155 (73.5) |
| Living area, n (%) | |
| Urban | 188 (89.1) |
| Rural | 23 (10.9) |
| Specialty, n (%) | |
| General physician | 122 (57.8) |
| Odonto-Stomatology | 48 (22.7) |
| Traditional medicine | 41 (19.4) |
| Location, n (%) | |
| Hanoi | 51 (24.2) |
| Ho Chi Minh city | 126 (59.7) |
| Other provinces | 34 (16.1) |

Table 2 shows that the initial trust construct had the lowest mean score at 3.0 (SD = 0.9), while TC had the highest mean score at 3.8 (SD = 0.9). Overall, the Cronbach's alpha of the constructs ranged from 0.738 to 0.909, suggesting good reliability. All item loadings were above 0.7, and all constructs' AVE values were above 0.5, indicating good convergent validity.

Table 2.

Reliability and validity of the measure (n = 211).

| Factor | No. of items | Factor loading | Mean | SD | Cronbach's alpha | AVE |
|---|---|---|---|---|---|---|
| PE | 4 | 0.847–0.915 | 3.7 | 0.8 | 0.903 | 0.775 |
| EE | 2 | 0.945–0.953 | 3.3 | 0.9 | 0.890 | 0.901 |
| SI | 4 | 0.827–0.894 | 3.4 | 0.7 | 0.880 | 0.736 |
| PI | 4 | 0.771–0.869 | 3.4 | 0.7 | 0.854 | 0.696 |
| TC | 2 | 0.879–0.901 | 3.8 | 0.9 | 0.738 | 0.791 |
| TECH | 3 | 0.824–0.916 | 3.1 | 0.8 | 0.846 | 0.765 |
| PC | 4 | 0.710–0.862 | 3.1 | 0.8 | 0.825 | 0.646 |
| IT | 2 | 0.957–0.957 | 3.0 | 0.9 | 0.909 | 0.916 |
| BI | 1 | – | 3.4 | 0.9 | – | 1.000 |

PE, performance expectancy; EE, effort expectancy; SI, social influence; PI, personal innovativeness in IT; IT, initial trust; TC, task complexity; TECH, technology characteristics; PC, perceived substitution crisis; BI, behavioral intention.
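As a worked check of the convergent validity criterion, AVE can be computed as the mean of the squared standardized loadings. For the two-item constructs in Table 2 (EE, TC, and IT), the reported loading range necessarily gives both loadings, so the AVE can be approximately recomputed from the table alone. The sketch below does this under the assumption that the rounded loadings are close enough to the originals; small differences from the reported AVE are expected from rounding. The names `average_variance_extracted`, `TWO_ITEM_LOADINGS`, and `REPORTED_AVE` are hypothetical.

```python
def average_variance_extracted(loadings):
    """AVE as the mean of the squared standardized factor loadings."""
    return sum(loading ** 2 for loading in loadings) / len(loadings)


# Loadings of the two-item constructs, read from the ranges in Table 2.
TWO_ITEM_LOADINGS = {
    "EE": (0.945, 0.953),
    "TC": (0.879, 0.901),
    "IT": (0.957, 0.957),
}

# AVE values as reported in Table 2, for comparison.
REPORTED_AVE = {"EE": 0.901, "TC": 0.791, "IT": 0.916}

if __name__ == "__main__":
    for construct, loadings in TWO_ITEM_LOADINGS.items():
        ave = average_variance_extracted(loadings)
        print(
            f"{construct}: recomputed AVE = {ave:.3f}, "
            f"reported = {REPORTED_AVE[construct]:.3f}, "
            f"meets >= 0.5: {ave >= 0.5}"
        )
```

The constructs with three or four items cannot be recomputed this way, because Table 2 reports only the minimum and maximum loading for them.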

In Table 3, regarding discriminant validity, the value of the square root of AVE per construct was higher than its correlation coefficient with other constructs. Moreover, the results of VIF analysis showed that all VIF values were below 10, suggesting no multicollinearity existed.

Table 3.

Correlation of latent variables and square root of AVE of each construct (n = 211).

| | PE | EE | SI | PI | IT | TC | TECH | PC | BI |
|---|---|---|---|---|---|---|---|---|---|
| PE | 0.8803* | | | | | | | | |
| EE | 0.6936 | 0.9492* | | | | | | | |
| SI | 0.6794 | 0.6656 | 0.8579* | | | | | | |
| PI | 0.7408 | 0.7391 | 0.7243 | 0.8343* | | | | | |
| IT | 0.4937 | 0.5586 | 0.6834 | 0.5427 | 0.9571* | | | | |
| TC | 0.6002 | 0.5015 | 0.5763 | 0.6523 | 0.3213 | 0.8894* | | | |
| TECH | 0.5527 | 0.6099 | 0.6910 | 0.5801 | 0.7728 | 0.3925 | 0.8746* | | |
| PC | 0.3873 | 0.4568 | 0.5230 | 0.4630 | 0.3192 | 0.3935 | 0.4374 | 0.8037* | |
| BI | 0.5458 | 0.5453 | 0.6856 | 0.5755 | 0.4904 | 0.4838 | 0.4686 | 0.3729 | 1.000* |

PE, performance expectancy; EE, effort expectancy; SI, social influence; PI, personal innovativeness in IT; IT, initial trust; TC, task complexity; TECH, technology characteristics; PC, perceived substitution crisis; BI, behavioral intention.

*Square root of AVE.
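The discriminant validity criterion described in the Data Analysis section (often called the Fornell-Larcker criterion) can be checked directly against the values in Table 3. The sketch below is illustrative rather than the authors' code: it stores the lower triangle of Table 3, with the square root of AVE on the diagonal, and lists any pair of constructs whose correlation is not smaller than both diagonal entries. The names `CONSTRUCTS`, `LOWER_TRIANGLE`, and `fornell_larcker_violations` are hypothetical.

```python
CONSTRUCTS = ["PE", "EE", "SI", "PI", "IT", "TC", "TECH", "PC", "BI"]

# Lower triangle of Table 3: off-diagonal entries are latent-variable
# correlations; each row ends with the square root of that construct's AVE.
LOWER_TRIANGLE = [
    [0.8803],
    [0.6936, 0.9492],
    [0.6794, 0.6656, 0.8579],
    [0.7408, 0.7391, 0.7243, 0.8343],
    [0.4937, 0.5586, 0.6834, 0.5427, 0.9571],
    [0.6002, 0.5015, 0.5763, 0.6523, 0.3213, 0.8894],
    [0.5527, 0.6099, 0.6910, 0.5801, 0.7728, 0.3925, 0.8746],
    [0.3873, 0.4568, 0.5230, 0.4630, 0.3192, 0.3935, 0.4374, 0.8037],
    [0.5458, 0.5453, 0.6856, 0.5755, 0.4904, 0.4838, 0.4686, 0.3729, 1.0000],
]


def fornell_larcker_violations(names, lower):
    """Return (row, column, correlation) for every pair whose correlation is
    not strictly below the square root of AVE of both constructs."""
    violations = []
    for i in range(len(names)):
        for j in range(i):
            correlation = abs(lower[i][j])
            if correlation >= lower[i][i] or correlation >= lower[j][j]:
                violations.append((names[i], names[j], lower[i][j]))
    return violations


if __name__ == "__main__":
    problems = fornell_larcker_violations(CONSTRUCTS, LOWER_TRIANGLE)
    if problems:
        for row, column, correlation in problems:
            print(f"{row}-{column}: r = {correlation} breaks the criterion")
    else:
        print("All pairs satisfy the criterion.")
```

The closest pair in the table is IT and TECH (r = 0.7728), which is still below the square roots of AVE of both constructs (0.9571 and 0.8746).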

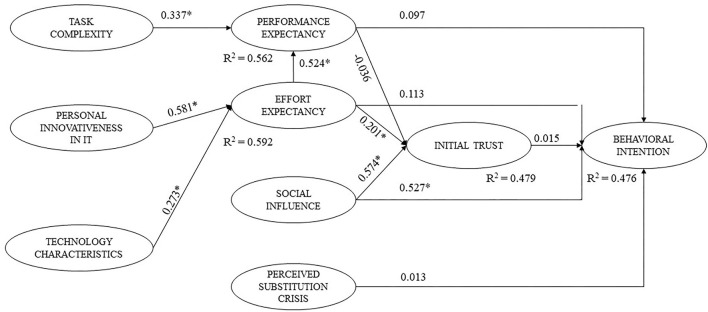

Figure 2 illustrates the path coefficients and p-values of the PLS analysis. In the behavioral intention model, only social influence (β = 0.527, p < 0.05) was positively related to behavioral intention. Other constructs, namely performance expectancy, effort expectancy, initial trust, and perceived substitution crisis, showed no association with the behavioral intention to use AI. Overall, the model with the five proposed constructs for behavioral intention (performance expectancy, effort expectancy, social influence, perceived substitution crisis, and initial trust) explained 47.6% of the variance in behavioral intention (R² = 0.476).

Figure 2.

Structural model and standardized path coefficients (n = 211). *p < 0.05.

Figure 2 also shows that effort expectancy (β = 0.201, p < 0.05) and social influence (β = 0.574, p < 0.05) were positively associated with initial trust, while no association was found between performance expectancy and initial trust (p > 0.05). The model including performance expectancy, effort expectancy, and social influence explained 47.9% of the variance of initial trust (R² = 0.479).

Discussion

Developing and adopting AI in healthcare are essential because of its great benefits in enhancing healthcare professionals' performance and efficiency. Overall, our students' perceptions of the diagnosis-related capacities of AI, the effort required to use AI, and their intention to use AI were positive. The role of AI in healthcare delivery has been widely documented: AI has shown success in the interpretation of imaging and examination data, as well as in the prediction and management of clinical outcomes (15, 51). Nonetheless, information about AI and its applications in Vietnam has been disseminated in the mainstream media but not in university settings. Our results indicated that undergraduate medical students in Vietnam had great confidence in their knowledge of their work characteristics, understood how AI could help them improve diagnostic performance, and wished to use AI when available. However, there were still some gaps between their expectations and their preparation, including awareness of technology characteristics and the capacity to use such technology. Equipping medical students with the basics, as well as a correct understanding of and attitude toward the application of AI in medicine, is crucial to the digitalization of the healthcare system. However, the medical training program in Vietnam has not yet been systematically updated in this area. AI content has mainly been shared through scientific seminars or short-term courses, without a specific program to develop AI capabilities.

This lack of preparation might also explain the finding that the majority of our sample somewhat agreed or agreed that AI would replace physicians in healthcare. This result was congruent with findings among medical students worldwide, particularly those in the radiology field (13, 14, 18, 52). Several previous studies reported contradictory results, in which medical students stated that AI could not serve as an alternative to physicians in the future (33, 53), particularly in fields that need a “sense of caring” or “art of caring,” such as psychological health or aging care (53–55). Many authors argued that AI should be treated as a virtual assistant rather than a replacement for physicians in healthcare. However, prospective physicians should acquire fundamental knowledge about mathematics, data science, and AI, as well as the ethical and legal issues related to AI (56). They should understand the systematic bias that insufficient data can introduce into AI algorithms, which might be a major cause of health equity issues in clinical decision-making (57, 58). Moreover, other humanistic skills such as communication, empathy, decision-making, and leadership should also be required (53). Acquiring these capacities would enable physicians to take advantage of AI by integrating it into their routine clinical practice. Thus, action is needed to innovate medical education programs in the digital era.

Our path analysis showed the dominance of social influence on the intention to use AI in future work among undergraduate medical students, rather than other factors such as performance expectancy or initial trust, which were identified in previous research (28). Although this result was unexpected given our hypotheses, several reasons may explain it. First, this study was conducted among undergraduate medical students, whose healthcare delivery experience and perceptions of the diagnostic process were limited. Moreover, given that AI has not yet been scaled up in Vietnam and AI-related curricula for medical students have not yet been developed, we assumed that the majority of our sample had no experience with an AI-based diagnosis support system. This limitation hinders medical students' perception of their own capacity to adopt AI and results in homogeneity in their evaluation of competency and trust. Furthermore, because of this lack of experience, it is understandable that undergraduate medical students tended to depend heavily on the experiences of senior physicians in their social networks and on information about AI gathered from social media. With the exchange and sharing of practical experience from those who have used such AI systems, students' trust and intention to use the AI system in the future would improve.

The findings of this study have several implications. First, undergraduate medical students should actively seek opportunities to keep up to date with, and become involved in, AI development and adoption in order to build the necessary AI knowledge and capacities. Self-learning ability is important for acquiring new knowledge in a context where AI curricula at medical schools have not received sufficient attention. Second, our study suggests the importance of role-model approaches in facilitating the use of AI in this group. Opportunities to gain hands-on experience in different teaching hospitals are critical. AI may be useful for diagnosing rare conditions, which are often seen only at large teaching hospitals. Finally, this study underlines the need to integrate AI curricula into current medical education, which would help medical students to build appropriate capacities for technology adoption. Further studies should measure the preference for and effectiveness of different education strategies to facilitate AI applications in healthcare among health professionals and medical students. They should also assess whether training students with AI helps or hinders their diagnostic abilities.

Some limitations of this study should be acknowledged. First, since our study was conducted among medical students who had no experience with AI-based diagnostic support systems, we could not assess whether they would actually use these systems in the future. A longitudinal follow-up study evaluating the rate of use of such systems among medical students after graduation is essential to help refine the theoretical model. Second, our research was conducted online and recruited medical students across Vietnam; however, the sample may be limited to medical students with Internet access, while other groups of medical students were not reached. In addition, the small sample size might reduce statistical power. Studies with larger samples are needed to verify our results in other groups of medical students. Third, beyond the constructs included in the theoretical model, the study did not assess the mediating effects of other factors such as age, gender, and previous training in AI use during university studies, which could affect the relationships among factors in the theoretical model.

Conclusions

This study highlights positive behavioral intentions toward using an AI-based diagnosis support system among prospective Vietnamese physicians, as well as the effect of social influence on this choice. The development of curricula that build AI competence should be considered when reforming medical education in Vietnam.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by Institutional Review Board of Youth Research Institute. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

LN: conception, design, acquisition, interpretation of data, drafting the article, and final approval of the version to be published. HN: conception, design, drafting the article, and final approval of the version to be published. AT: design, analysis, interpretation of the data, drafting the article, and final approval of the version to be published. CN: acquisition, analysis, interpretation of data, drafting the article, and final approval of the version to be published. LV and MZ: conception and design of data, revising article, and final approval of the version to be published. CH: conception and design of data, drafting the article, and final approval of the version to be published. BT, CL, and RH: conception, design, interpretation of data, drafting the article, and final approval of the version to be published. All authors contributed to the article and approved the submitted version.

Funding

This study was funded by NUS iHealthtech Other Operating Expenses (R-722-000-004-731) and NUS Department of Psychological Medicine Other Operating Expenses (R-177-000-003-001).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpubh.2021.755644/full#supplementary-material

References

- 1. Polesie S, McKee PH, Gardner JM, Gillstedt M, Siarov J, Neittaanmäki N, et al. Attitudes toward artificial intelligence within dermatopathology: an international online survey. Front Med. (2020) 7:591952. doi: 10.3389/fmed.2020.591952

- 2. Bohr A, Memarzadeh K. The rise of artificial intelligence in healthcare applications. In: Artificial Intelligence in Healthcare. (2020). p. 25–60. doi: 10.1016/B978-0-12-818438-7.00002-2

- 3. Blease C, Locher C, Leon-Carlyle M, Doraiswamy M. Artificial intelligence and the future of psychiatry: qualitative findings from a global physician survey. Digit Health. (2020) 6:2055207620968355. doi: 10.1177/2055207620968355

- 4. Barnett I, Torous J, Staples P, Sandoval L, Keshavan M, Onnela J-P. Relapse prediction in schizophrenia through digital phenotyping: a pilot study. Neuropsychopharmacology. (2018) 43:1660–6. doi: 10.1038/s41386-018-0030-z

- 5. Shan T, Tay FR, Gu L. Application of artificial intelligence in dentistry. J Dent Res. (2020) 100:232–44. doi: 10.1177/0022034520969115

- 6. Mistry P. Artificial intelligence in primary care. Br J Gen Pract. (2019) 69:422–3. doi: 10.3399/bjgp19X705137

- 7. Chong LR, Tsai KT, Lee LL, Foo SG, Chang PC. Artificial intelligence predictive analytics in the management of outpatient MRI appointment no-shows. AJR Am J Roentgenol. (2020) 215:1155–62. doi: 10.2214/AJR.19.22594

- 8. Ting DSW, Pasquale LR, Peng L, Campbell JP, Lee AY, Raman R, et al. Artificial intelligence and deep learning in ophthalmology. Br J Ophthalmol. (2019) 103:167–75. doi: 10.1136/bjophthalmol-2018-313173

- 9. Niazi MKK, Parwani AV, Gurcan MN. Digital pathology and artificial intelligence. Lancet Oncol. (2019) 20:e253–61. doi: 10.1016/S1470-2045(19)30154-8

- 10. Oh S, Kim JH, Choi S-W, Lee HJ, Hong J, Kwon SH. Physician confidence in artificial intelligence: an online mobile survey. J Med Internet Res. (2019) 21:e12422. doi: 10.2196/12422

- 11. Esmaeilzadeh P. Use of AI-based tools for healthcare purposes: a survey study from consumer's perspectives. BMC Med Inform Decis Mak. (2020) 20:170. doi: 10.1186/s12911-020-01191-1

- 12. Kelly CJ, Karthikesalingam A, Suleyman M, Corrado G, King D. Key challenges for delivering clinical impact with artificial intelligence. BMC Med. (2019) 17:195. doi: 10.1186/s12916-019-1426-2

- 13. Petitgand C, Motulsky A, Denis JL, Régis C. Investigating the barriers to physician adoption of an artificial intelligence-based decision support system in emergency care: an interpretative qualitative study. Stud Health Technol Inform. (2020) 270:1001–5.

- 14. Singh RP, Hom GL, Abramoff MD, Campbell JP, Chiang MF. Current challenges and barriers to real-world artificial intelligence adoption for the healthcare system, provider, and the patient. Transl Vis Sci Technol. (2020) 9:45. doi: 10.1167/tvst.9.2.45

- 15. Jiang F, Jiang Y, Zhi H, Dong Y, Li H, Ma S, et al. Artificial intelligence in healthcare: past, present and future. Stroke Vasc Neurol. (2017) 2:230–43. doi: 10.1136/svn-2017-000101

- 16. Sarwar S, Dent A, Faust K, Richer M, Djuric U, Van Ommeren R, et al. Physician perspectives on integration of artificial intelligence into diagnostic pathology. NPJ Digit Med. (2019) 2:28. doi: 10.1038/s41746-019-0106-0

- 17. Park CJ, Yi PH, Siegel EL. Medical student perspectives on the impact of artificial intelligence on the practice of medicine. Curr Probl Diagn Radiol. (2020) 50:614–9. doi: 10.1067/j.cpradiol.2020.06.01

- 18. Sit C, Srinivasan R, Amlani A, Muthuswamy K, Azam A, Monzon L, et al. Attitudes and perceptions of UK medical students towards artificial intelligence and radiology: a multicentre survey. Insights Imaging. (2020) 11:14. doi: 10.1186/s13244-019-0830-7

- 19. Insider SD-V. Artificial Intelligence (AI) is developing rapidly in Vietnam. (2021). Available online at: https://vietnaminsider.vn/artificial-intelligence-ai-is-developing-rapidly-in-vietnam/

- 20. Vietnam Ministry of Health. Decision No. 4888/QD-BYT Introducing the Scheme for Application and Development of Smart Healthcare Information Technology for the 2019–2025 Period. Hanoi, Vietnam: Ministry of Health; (2019).

- 21. Vuong QH, Ho MT, Vuong TT, La VP, Ho MT, Nghiem KP, et al. Artificial intelligence vs. natural stupidity: evaluating AI readiness for the Vietnamese medical information system. J Clin Med. (2019) 8:168. doi: 10.3390/jcm8020168

- 22. Kijsanayotin B, Pannarunothai S, Speedie SM. Factors influencing health information technology adoption in Thailand's community health centers: applying the UTAUT model. Int J Med Inform. (2009) 78:404–16. doi: 10.1016/j.ijmedinf.2008.12.005

- 23. AbuShanab E, Pearson JM. Internet banking in Jordan: the unified theory of acceptance and use of technology (UTAUT) perspective. J Syst Inf Technol. (2007) 9:78–97. doi: 10.1108/13287260710817700

- 24. Wang YS, Wu MC, Wang HY. Investigating the determinants and age and gender differences in the acceptance of mobile learning. Br J Educ Technol. (2009) 40:92–118. doi: 10.1111/j.1467-8535.2007.00809.x

- 25. Kim S, Lee K-H, Hwang H, Yoo S. Analysis of the factors influencing healthcare professional's adoption of mobile electronic medical record (EMR) using the unified theory of acceptance and use of technology (UTAUT) in a tertiary hospital. BMC Med Inform Decis Mak. (2015) 16:1–12. doi: 10.1186/s12911-016-0249-8

- 26. Venkatesh V, Morris MG, Davis GB, Davis FD. User acceptance of information technology: toward a unified view. MIS Quarterly. (2003) 27:425–78. doi: 10.2307/30036540

- 27. Venkatesh V, Thong JYL, Chan FKY, Hu PJ-H, Brown SA. Extending the two-stage information systems continuance model: incorporating UTAUT predictors and the role of context. Inf Syst J. (2011) 21:527–55. doi: 10.1111/j.1365-2575.2011.00373.x

- 28. Fan W, Liu J, Zhu S, Pardalos PM. Investigating the impacting factors for the healthcare professionals to adopt artificial intelligence-based medical diagnosis support system (AIMDSS). Ann Oper Res. (2020) 294:567–92. doi: 10.1007/s10479-018-2818-y

- 29. Cimperman M, Makovec Brenčič M, Trkman P. Analyzing older user's home telehealth services acceptance behavior-applying an Extended UTAUT model. Int J Med Inform. (2016) 90:22–31. doi: 10.1016/j.ijmedinf.2016.03.002

- 30. Pinto Dos Santos D, Giese D, Brodehl S, Chon SH, Staab W, Kleinert R, et al. Medical student's attitude towards artificial intelligence: a multicentre survey. Eur Radiol. (2019) 29:1640–6. doi: 10.1007/s00330-018-5601-1

- 31. Wood EA, Ange BL, Miller DD. Are we ready to integrate artificial intelligence literacy into medical school curriculum: students and faculty survey. J Med Educ Curric Dev. (2021) 8:23821205211024078. doi: 10.1177/23821205211024078

- 32. Cho SI, Han B, Hur K, Mun JH. Perceptions and attitudes of medical students regarding artificial intelligence in dermatology. J Eur Acad Dermatol Venereol. (2021) 35:e72–3. doi: 10.1111/jdv.16812

- 33. Mehta N, Harish V, Bilimoria K, Morgado F, Ginsburg S, Law M, et al. Knowledge of and attitudes on artificial intelligence in healthcare: a provincial survey study of medical students. medRxiv. (2021). doi: 10.1101/2021.01.14.21249830

- 34. Gao S, He L, Chen Y, Li D, Lai K. Public perception of artificial intelligence in medical care: content analysis of social media. J Med Internet Res. (2020) 22:e16649. doi: 10.2196/16649

- 35. Dumić-Cule I, Orešković T, Brkljačić B, Kujundžić Tiljak M, Orešković S. The importance of introducing artificial intelligence to the medical curriculum - assessing practitioner's perspectives. Croat Med J. (2020) 61:457–64. doi: 10.3325/cmj.2020.61.457

- 36. Yun D, Xiang Y, Liu Z, Lin D, Zhao L, Guo C, et al. Attitudes towards medical artificial intelligence talent cultivation: an online survey study. Ann Transl Med. (2020) 8:708. doi: 10.21037/atm.2019.12.149

- 37. Ahuja AS. The impact of artificial intelligence in medicine on the future role of the physician. PeerJ. (2019) 7:e7702. doi: 10.7717/peerj.7702

- 38. Gallupe RB, DeSanctis G, Dickson GW. Computer-based support for group problem-finding: an experimental investigation. MIS Quarterly. (1988) 12:277–96. doi: 10.2307/248853

- 39. Agarwal R, Prasad JA. Conceptual and operational definition of personal innovativeness in the domain of information technology. Inf Syst Res. (1998) 9:204–15. doi: 10.1287/isre.9.2.204

- 40. Goodhue DL, Thompson RL. Task-technology fit and individual performance. MIS Quarterly. (1995) 19:213–36. doi: 10.2307/249689

- 41. Wu I-L, Li J-Y, Fu C-Y. The adoption of mobile healthcare by hospital's professionals: an integrative perspective. Decis Support Syst. (2011) 51:587–96. doi: 10.1016/j.dss.2011.03.003

- 42. Zhou T, Lu Y, Wang B. Integrating TTF and UTAUT to explain mobile banking user adoption. Comput Human Behav. (2010) 26:760–7. doi: 10.1016/j.chb.2010.01.013

- 43. McKnight DH. Trust in Information Technology. The Blackwell Encyclopedia of Management. Oxford: Blackwell; (2005). p. 329–31.

- 44. Benbasat I, Wang W. Trust in and adoption of online recommendation agents. J Assoc Inf Syst. (2005) 6:4. doi: 10.17705/1jais.00065

- 45. Yan H, Pan K. Examining mobile payment user adoption from the perspective of trust transfer. Int J Netw Virtual Organ. (2015) 15:136–51. doi: 10.1504/IJNVO.2015.070423

- 46. Chiu C-M, Hsu M-H, Lai H, Chang C-M. Re-examining the influence of trust on online repeat purchase intention: the moderating role of habit and its antecedents. Decis Support Syst. (2012) 53:835–45. doi: 10.1016/j.dss.2012.05.021

- 47. Bansal G, Zahedi FM, Gefen D. The impact of personal dispositions on information sensitivity, privacy concern and trust in disclosing health information online. Decis Support Syst. (2010) 49:138–50. doi: 10.1016/j.dss.2010.01.010

- 48. Li X, Hess TJ, Valacich J. Why do we trust new technology? A study of initial trust formation with organizational information systems. J Strateg Inf Syst. (2008) 17:39–71. doi: 10.1016/j.jsis.2008.01.001

- 49. Li X, Hess TJ, Valacich JS. Using attitude and social influence to develop an extended trust model for information systems. SIGMIS Database. (2006) 37:108–24. doi: 10.1145/1161345.1161359

- 50. Kelman HC. Compliance, identification, and internalization: three processes of attitude change. J Conflict Resolut. (1958) 2:51–60. doi: 10.1177/00220027580020010632697142

- 51. Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med. (2019) 25:44–56. doi: 10.1038/s41591-018-0300-7

- 52. Gong B, Nugent JP, Guest W, Parker W, Chang PJ, Khosa F, et al. Influence of artificial intelligence on Canadian medical student's preference for radiology specialty: a national survey study. Acad Radiol. (2019) 26:566–77. doi: 10.1016/j.acra.2018.10.007

- 53. Johnston SC. Anticipating and training the physician of the future: the importance of caring in an age of artificial intelligence. Acad Med. (2018) 93:1105–6. doi: 10.1097/ACM.0000000000002175

- 54. Stokes F, Palmer A. Artificial intelligence and robotics in nursing: ethics of caring as a guide to dividing tasks between AI and humans. Nurs Philos. (2020) 21:e12306. doi: 10.1111/nup.12306

- 55. Kim JW, Jones KL, D'Angelo E. How to prepare prospective psychiatrists in the era of artificial intelligence. Acad Psychiatry. (2019) 43:337–9. doi: 10.1007/s40596-019-01025-x

- 56. Paranjape K, Schinkel M, Nannan Panday R, Car J, Nanayakkara P. Introducing artificial intelligence training in medical education. JMIR Med Educ. (2019) 5:e16048. doi: 10.2196/16048

- 57. Gomolin A, Netchiporouk E, Gniadecki R, Litvinov IV. Artificial intelligence applications in dermatology: where do we stand? Front Med (Lausanne). (2020) 7:100. doi: 10.3389/fmed.2020.00100

- 58. Wahl B, Cossy-Gantner A, Germann S, Schwalbe NR. Artificial intelligence (AI) and global health: how can AI contribute to health in resource-poor settings? BMJ Glob Health. (2018) 3:e000798. doi: 10.1136/bmjgh-2018-000798
