Abstract

Because of the COVID‐19 pandemic, students at higher education institutions around the world have been required to take their entire curriculum online. In this situation, it is worth investigating how students perceive their learning outcomes and their satisfaction with online teaching and learning. This study explores the role of six factors, namely system quality, course design, learner‐learner interaction, learner‐instructor interaction, learner‐content interaction, and self‐discipline, in university students' perceived learning outcomes and their satisfaction with online curricula during the COVID‐19 pandemic. A structural equation modelling technique was used to analyse 457 valid questionnaire responses from students at a public university in China. The results showed that all of these determinants except learner‐instructor interaction had a significant positive effect on both satisfaction and learning outcomes; learner‐instructor interaction had no significant effect. Learner‐content interaction was the strongest determinant of both students' satisfaction and their learning outcomes.

Keywords: COVID‐19, determinants of e‐learning, learning outcomes, satisfaction, self‐discipline

1. INTRODUCTION

The COVID‐19 pandemic is an unprecedented crisis that has brought fundamental change to countries worldwide and represents the greatest threat to humanity as a whole in the new millennium. In response to the epidemic, many countries applied stringent social distancing measures, and self‐isolation and lockdown policies led to the immediate closure of university and college campuses. Institutions therefore shifted to fully distance/online learning environments, delivered in synchronous and asynchronous formats, to replace the traditional curriculum during the COVID‐19 crisis (Baber, 2020; Bao, 2020; Longhurst et al., 2020; Sangster et al., 2020; Wargadinata et al., 2020).

Regarding students' online learning experiences, previous research has examined student satisfaction and learning outcomes in relation to students' expectations of the learning experience and the perceived value of a course in online environments (Kuo et al., 2013; Yukselturk & Yildirim, 2008). Many researchers have identified factors that affect student learning outcomes, engagement or satisfaction in online learning settings (P. S. D. Chen et al., 2010; Hong et al., 2003; A. Khan et al., 2017; Kuo et al., 2013; Liaw, 2008; Nortvig et al., 2018; Yu & Yu, 2010), such as online self‐efficacy (Al‐Azawei & Lundqvist, 2015; Joo et al., 2013; Liaw, 2008), learning styles (Al‐Azawei & Lundqvist, 2015), course structure (Baber, 2020; Cheng, 2012; S. B. Eom et al., 2006) and organization (Gray & DiLoreto, 2016), self‐regulated practices (S. B. Eom et al., 2006; Paechter et al., 2010), instructional design (Costley & Lange, 2016), instructor presence (Gray & DiLoreto, 2016), learner‐learner, learner‐instructor, or learner‐content interaction (Baber, 2020; Kang & Im, 2013; Ke & Kwak, 2013; Kuo et al., 2013; Kuo, Belland, et al., 2014), and system quality or facilitation support (Al‐Fraihat et al., 2020; AlMulhem, 2020; Goel et al., 2013; Uddin et al., 2019; Wang & Chiu, 2011; Yakubu & Dasuki, 2018). For example, S. Eom (2009) examined several antecedents (course structure, students' learning styles, and self‐motivation), their relationships with interaction, and their association with students' perceived learning outcomes and satisfaction in asynchronous online university courses. Mtebe and Raphael (2018) identified several factors, such as course quality, system quality, service quality, instructor quality, and perceived usefulness, that affect student satisfaction with the e‐learning system of the University of Dar es Salaam.
Alqurashi (2019) examined how learner‐content, learner‐instructor, and learner‐learner interaction predict student satisfaction and perceived learning in online learning environments, and found that learner‐content interaction was the strongest and most significant predictor of student satisfaction. Alqahtani and Rajkhan (2020) also examined several critical success factors from a managerial perspective, such as instructor and learner characteristics, information technology, instructional design, the e‐learning environment, and the level of collaboration, and concluded that knowledge management, assistance, student characteristics, and information technology were the most critical factors influencing the e‐learning process during the COVID‐19 pandemic.

Although previous studies have explored several variables affecting students' perceived learning and satisfaction with online learning, and a few studies have investigated student learning during the COVID‐19 outbreak (Almaiah et al., 2020; Baber, 2020; Javed et al., 2020; Li et al., 2020; Sangster et al., 2020; Saxena et al., 2020; Shah et al., 2020; Wargadinata et al., 2020), more attention should be paid to the shift towards fully online education during the pandemic in order to identify the factors that may influence perceived learning outcomes and student satisfaction (Baber, 2020). Given the sudden onset of the COVID‐19 outbreak, students had to use the platforms and tools provided by their university to complete a fully online learning program at home, review the teaching materials prepared by teachers, and take part in online discussions with teachers and fellow students. This study therefore selects system quality, course design, and interaction (learner‐learner, learner‐instructor, and learner‐content) as the main potential determinants of Chinese university students' perceived learning outcomes and satisfaction when they participated in fully online courses during the COVID‐19 pandemic. Moreover, unlike in face‐to‐face schooling, individual characteristics such as self‐discipline may also affect learning outcomes and student satisfaction in an entirely virtual learning setting; self‐discipline is therefore included as an additional predictor in this investigation.

2. LITERATURE REVIEW

2.1. System quality

Learning systems provide an online (synchronous or asynchronous) teaching and learning environment that can promote educator‐student contact, monitor students' learning progress, and facilitate the secure sharing of online course materials (Alla et al., 2013). In general, the quality of the learning system is seen as a critical factor in the success of e‐learning (Alsabawy et al., 2012; Williams & Jacobs, 2004; Yakubu & Dasuki, 2018), because it determines whether teachers and students can teach or learn effectively. Several researchers (Musa & Othman, 2012) have indicated that successful e‐learning depends on the quality of the website, technological tools and infrastructure that instructors and learners use to access the learning materials and resources of different courses (Chopra et al., 2019), and that these factors affect teachers' and students' use of e‐learning systems (Petter et al., 2008). Chou and Liu (2005) argued that students who experience greater system effectiveness when using a learning system will report greater learning satisfaction, which may in turn encourage continued use of the system. Similarly, researchers (McGill & Klobas, 2009; Waheed et al., 2016) have claimed that system quality (services, management and technological features) has positive effects on learning outcomes and satisfaction with online learning (Tajuddin et al., 2013). John and Duangekanong (2018) investigated graduate students' perceptions of e‐learning at a university in Thailand and found that e‐learning system quality positively influences students' perceived satisfaction and e‐learning effectiveness. Sarwar et al. (2020) conducted a nationwide survey of Pakistani undergraduate dentistry students on the self‐reported efficacy of online learning during the COVID‐19 pandemic and found that learning system and service quality had the biggest impact on student satisfaction with online learning.
Hence, system quality may affect university students' learning outcomes and satisfaction when they engage in online courses during the COVID‐19 pandemic. We therefore hypothesise that:

H1: System quality has a positive impact on student learning outcomes.

H2: System quality has a positive impact on student satisfaction.

2.2. Course design

Course design involves the instructor's planning and design of course content and assessment, and the selection and arrangement of effective teaching strategies that can promote student learning. Jaggars and Xu (2016) summarized several studies on online course design and argued that it involves several characteristics, such as well‐organized content, a range of opportunities for interpersonal interaction, and productive use of technology. Adeyinka and Mutula (2010) noted that students judge course design by the degree to which the e‐learning system content meets their needs, and regarded it as a key element affecting student perceptions of online courses. Students' perceptions of the overall usability of a course are likely to be associated with student satisfaction and learning outcomes (S. B. Eom et al., 2006). For example, Swan et al. (2012) noted that course design and course structure can affect the learning process and learning outcomes, especially in online courses and online teaching (Rubin & Fernandes, 2013). When online courses are planned with clear expectations and guidelines, students are more engaged in studying (Dykman & Davis, 2008; Ku et al., 2011). Some researchers (N. S. Chen et al., 2008; Liaw, 2008) reported that the design of e‐learning materials and activities had a stronger positive influence on students' satisfaction than the technical capabilities of the learning management system (LMS). Tarigan (2011) indicated that learner satisfaction is affected more by course design than by the type of technology used to deliver instruction. Similarly, Eom and Ashill (2016) emphasized that course design and structure are closely associated with learner satisfaction and perceived learning outcomes, especially when the course content is structured into logical, understandable components that are interesting and stimulate the learner's desire to learn. Thus, this study proposes the following hypotheses:

H3: Course design has a positive impact on student learning outcomes.

H4: Course design has a positive impact on student satisfaction.

2.3. Interaction

Interaction is known to be an essential aspect of successful online learning. Moore's (1989) well‐known typology of interaction in distance education comprises three types, namely learner‐learner, learner‐instructor and learner‐content interaction, and is widely used to describe communication in e‐learning and technology‐based learning environments (Garrison et al., 2003; Yildiz Durak, 2018). Learner‐instructor interaction is two‐way communication between learners and instructors that helps to enhance or sustain student engagement with the teaching material, while learner‐learner interaction occurs among learners who share knowledge or ideas about course content; it serves both cognitive and social purposes. Learner‐content interaction is the engagement between a student and the subject matter that results in changes in the learner's understanding, perspective and/or cognitive structure (Moore, 1989). Abou‐Khalil et al. (2021) argued that, because learning is a social and cognitive process, these three types of interaction form a fundamental framework that provides the minimal connections needed for effective online learning in a crisis situation. Previous research has indicated the positive influence of these interactions on student satisfaction in distance education (Bray et al., 2008; Kuo et al., 2013; Thurmond, 2003). Lu et al. (2013) also noted that learner‐instructor interaction, peer interaction, and class interaction are positively related to online learning satisfaction and increase students' e‐learning performance. In addition, online courses with high levels of interactivity lead to higher student motivation as well as improved learning and satisfaction compared with less interactive learning environments (Croxton, 2014).
We therefore assume that these three types of interaction will affect students' online learning outcomes and satisfaction. Accordingly, the following hypotheses are proposed:

H5: Learner‐learner interaction has a positive impact on student learning outcomes.

H6: Learner‐learner interaction has a positive impact on student satisfaction.

H7: Learner‐instructor interaction has a positive impact on student learning outcomes.

H8: Learner‐instructor interaction has a positive impact on student satisfaction.

H9: Learner‐content interaction has a positive impact on student learning outcomes.

H10: Learner‐content interaction has a positive impact on student satisfaction.

2.4. Self‐discipline

Self‐discipline is an individual characteristic associated with effortful perseverance on goal‐oriented tasks. Individuals with strong self‐discipline can successfully resolve conflicts between momentary, impulse‐driven goals that offer small, immediate gratification and longer‐term goals that offer greater rewards but require considerable commitment and determination (Duckworth & Gross, 2014). Previous research (Goodwin & Hein, 2016; Zimmerman & Kitsantas, 2014) demonstrated that self‐discipline is correlated with higher academic achievement even after controlling for ability‐related variables such as intelligence (Duckworth & Seligman, 2005). Lievens et al. (2009) suggested that self‐discipline is a strong predictor of long‐term academic performance. Hagger and Hamilton (2019) also noted that self‐discipline is related to the effort given to educational activities. Some scholars have argued that students need more self‐discipline in online education than in conventional classroom education (Allen & Seaman, 2007; Panigrahi et al., 2018), as online learning may require learners to take greater responsibility for their own involvement (Moore & Kearsley, 2011). Compared with face‐to‐face teaching, students participating in online learning at home during the epidemic may be exposed to more external temptations and distractions. Accordingly, we expect students' self‐discipline to have a positive effect on their learning outcomes and satisfaction with online learning. Therefore, the following hypotheses have been formulated:

H11: Self‐discipline has a positive impact on student learning outcomes.

H12: Self‐discipline has a positive impact on student satisfaction.

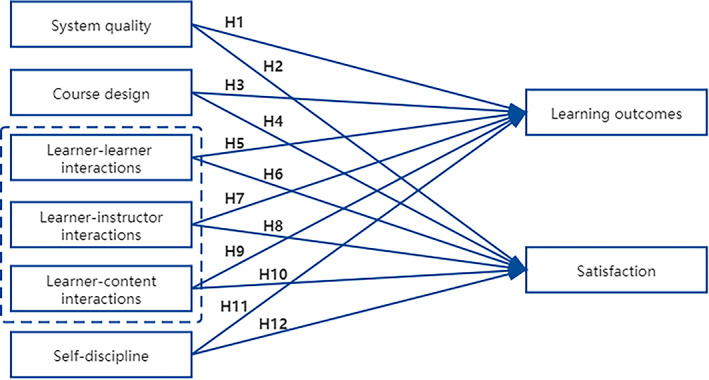

This study sought to better understand the learning outcomes and satisfaction of university students taking online curricula during the COVID‐19 epidemic. Based on the preceding literature review, the research model and hypotheses are depicted in Figure 1.

FIGURE 1.

The research model [Colour figure can be viewed at wileyonlinelibrary.com]

3. METHOD

3.1. Participants

The survey was administered as an online questionnaire at a public university in China. All target participants had taken part in a fully online learning program for one semester (16 weeks, from March to June 2020) during the COVID‐19 epidemic. A convenience (non‐random) sampling technique was used to collect university students' responses, because data are easier to collect through an online survey, especially during an epidemic. Informed consent was obtained before participants were recruited. Participation was voluntary, and the anonymity of all collected data was ensured. The initial data collection yielded 504 returned questionnaires; after removing returns with missing demographic data or incomplete responses, 457 (90.7%) valid questionnaires were retained for analysis. Table 1 presents the participants' demographic information. Of the participants, 195 (42.7%) were male and 262 (57.3%) were female. In addition, 85.1% (n = 389) were undergraduate students and 14.9% (n = 68) were graduate students. Of the undergraduates, 66 were in the first year, 158 in the second, 125 in the third, 36 in the fourth, and 4 in the fifth. Nearly half of the students (49.2%) studied science, engineering, medicine, or agronomy; 38% studied education, liberal arts, social science, or management; and the remaining 12.8% studied other fields.

TABLE 1.

Participants' characteristics

| Profile | Category | Number (n = 457) | Percentage (%) |
|---|---|---|---|
| Gender | Male | 195 | 42.7 |
| | Female | 262 | 57.3 |
| Grade | Freshman | 66 | 14.4 |
| | Sophomore | 158 | 34.6 |
| | Junior | 125 | 27.4 |
| | Senior | 36 | 7.9 |
| | Fifth year | 4 | 0.9 |
| | Graduate student | 68 | 14.9 |
| Major | Engineering | 86 | 18.8 |
| | Education | 62 | 13.6 |
| | Medicine | 54 | 11.8 |
| | Management | 51 | 11.2 |
| | Agronomy | 50 | 10.9 |
| | Science | 35 | 7.7 |
| | Social science | 30 | 6.6 |
| | Liberal arts | 30 | 6.6 |
| | Others | 59 | 12.8 |

3.2. Online learning platform and tool used

The learning system TronClass (https://tronclass.com.cn/) (see Figure 2) was used to provide an asynchronous online learning environment that supported teachers and students in various online activities, such as displaying course content (videos or PowerPoint slides), posting course notifications, online group work, and online assessments. During the COVID‐19 epidemic, approximately 10,000 online courses were offered, and 55,572 lecture videos were developed and uploaded. A real‐time conferencing tool, ‘DingTalk ZJU’ (see Figure 3), was used to support synchronous online teaching through live streaming (one‐to‐many) or online discussion (many‐to‐many) in place of traditional face‐to‐face classroom teaching. In general, teachers used the two tools together for online teaching from their homes or school offices, and students were required to use laptops or tablets to participate in online course activities. Online teaching and learning continued for 16 weeks in total.

FIGURE 2.

Screenshot of the ‘TronClass’ learning system [Colour figure can be viewed at wileyonlinelibrary.com]

FIGURE 3.

Screenshot of the real‐time conferencing software ‘DingTalk ZJU’ [Colour figure can be viewed at wileyonlinelibrary.com]

3.3. Instruments

The survey contained questions on demographics, the six predictor variables, and student satisfaction and learning outcomes. The 36 questionnaire items were based on previous research (Al‐Fraihat et al., 2020; Chang et al., 2014; Costa Jr & McCrae, 1992; S. B. Eom & Ashill, 2016; Hung & Chou, 2015; Kuo, Walker, et al., 2014), and two educational technology experts modified the questionnaire to ensure that it retained the original meanings and was suitable for university students. All items used a 5‐point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree). The following measures were used in this study:

The system quality scale was adapted from Al‐Fraihat et al. (2020) to measure students' perceptions of the quality of the learning platform and tools. It includes four items, and its Cronbach's alpha is 0.83.

The course design scale was developed by Hung and Chou (2015) to measure students' perceptions of course design in terms of course planning, structure, content arrangement and application of teaching strategies. Its Cronbach's alpha is 0.88.

The interaction measure was adapted from the instrument developed by Kuo, Walker, et al. (2014). This 15‐item scale examines students' learner‐learner, learner‐instructor and learner‐content interactions in the online learning environment. The Cronbach's alpha values for learner‐learner, learner‐instructor and learner‐content interaction are 0.93, 0.88 and 0.92, respectively.

The self‐discipline scale was adopted from Costa Jr and McCrae (1992). It contains five items to measure students' self‐discipline. Its Cronbach's coefficient alpha value is 0.85.

The learning outcomes scale is based on S. B. Eom and Ashill (2016) and consists of four items examining students' self‐reported learning outcomes.

The learning satisfaction scale (Kuo, Walker, et al., 2014) includes five items measuring students' satisfaction with their online learning experiences. Its Cronbach's alpha is 0.93.

4. DATA ANALYSIS

Partial least squares structural equation modelling (PLS‐SEM), an effective alternative to covariance‐based structural equation modelling that is particularly useful for small samples (Chin & Newsted, 1999) and non‐normally distributed data (Hair et al., 2016), was used in this study. A PLS model consists of a measurement (outer) model and a structural (inner) model; both were assessed using SmartPLS 3.0. Specifically, indicator reliability, validity, the inner model path coefficients, and the explained variance (R²) of the target endogenous variables were assessed.
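In PLS‐SEM, once the latent variable scores have been obtained, each inner‐model path is estimated by an ordinary least squares regression of an endogenous construct's scores on the scores of its predictors, and t‐values like those in Table 5 are typically obtained by bootstrapping. The Python sketch below illustrates that final stage only. It is a simplified illustration under stated assumptions, not SmartPLS's procedure: the latent scores are simulated rather than taken from the study, only the structural regression is resampled (SmartPLS re‐estimates the whole model in each bootstrap sample), the p‐value uses a normal approximation, and the helper names `standardize`, `path_coefficients`, `bootstrap_t` and `p_two_tailed` are hypothetical.

```python
import math

import numpy as np


def standardize(a):
    """Z-score each column (or a 1-D vector) using the sample standard deviation."""
    return (a - a.mean(axis=0)) / a.std(axis=0, ddof=1)


def path_coefficients(X, y):
    """Standardized OLS coefficients of y on the columns of X, and the R^2."""
    Xs, ys = standardize(X), standardize(y)
    beta, *_ = np.linalg.lstsq(Xs, ys, rcond=None)
    resid = ys - Xs @ beta
    r2 = 1.0 - (resid @ resid) / (ys @ ys)  # ys is centred, so ys @ ys is the total sum of squares
    return beta, float(r2)


def bootstrap_t(X, y, n_boot=1000, seed=0):
    """t = beta / bootstrap standard error, resampling respondents with replacement."""
    rng = np.random.default_rng(seed)
    beta, _ = path_coefficients(X, y)
    n = len(y)
    draws = np.empty((n_boot, X.shape[1]))
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)
        draws[b], _ = path_coefficients(X[idx], y[idx])
    return beta, beta / draws.std(axis=0, ddof=1)


def p_two_tailed(t):
    """Two-tailed p-value under a standard normal approximation."""
    return math.erfc(abs(t) / math.sqrt(2.0))


# Simulated latent variable scores for 457 respondents (NOT the study's data):
# predictor 1 has a true effect on the outcome, predictor 2 has none.
rng = np.random.default_rng(42)
X = rng.normal(size=(457, 2))
y = 0.4 * X[:, 0] + rng.normal(size=457)

beta, t = bootstrap_t(X, y, n_boot=500)
_, r2 = path_coefficients(X, y)
print("beta:", beta.round(3), "t:", t.round(2), "R^2:", round(r2, 3))
print("p for predictor 1:", p_two_tailed(t[0]))
```

Working with standardized scores means no intercept is needed and the coefficients are directly comparable across predictors, which is how the β values in Table 5 are reported.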

4.1. Item analysis

The validity of the original items in each construct was examined using confirmatory factor analysis. Items with factor loadings below the threshold of 0.5 were deleted (Hair et al., 2016; Hair et al., 2019). As a result, the number of items was reduced from 9 to 4 for ‘system quality,’ from 6 to 4 for ‘learner‐instructor interaction,’ from 4 to 3 for ‘learner‐content interaction,’ and from 10 to 5 for ‘self‐discipline.’
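The loading‐based pruning rule can be sketched in a few lines of Python. The item names and loading values are hypothetical; only the 0.5 cut‐off comes from the text. In practice items are usually removed one at a time and the model re‐estimated, because the remaining loadings change after each deletion; this sketch applies the rule in a single pass for clarity.

```python
LOADING_THRESHOLD = 0.5  # cut-off stated in the text (Hair et al., 2016; 2019)


def prune_items(loadings: dict[str, float], threshold: float = LOADING_THRESHOLD) -> dict[str, float]:
    """Keep only items whose standardized loading is at least the threshold."""
    return {item: lam for item, lam in loadings.items() if lam >= threshold}


# Hypothetical standardized loadings for a five-item construct (illustrative only).
example = {"SQ1": 0.82, "SQ2": 0.41, "SQ3": 0.78, "SQ4": 0.36, "SQ5": 0.85}
kept = prune_items(example)
print(sorted(kept))  # ['SQ1', 'SQ3', 'SQ5']
```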

4.2. Reliability and validity analysis of the constructs

The measurement model was further evaluated in terms of reliability and convergent validity (Gefen & Straub, 2005). As shown in Table 2, Cronbach's α values for system quality, course design, learner‐learner interaction, learner‐instructor interaction, learner‐content interaction, self‐discipline, learning outcome and satisfaction were 0.825, 0.749, 0.892, 0.725, 0.829, 0.858, 0.849 and 0.893, respectively, indicating that the constructs were reliable (Cronbach's α > 0.7) (Byrne, 2001). All outer model loadings fell between 0.701 and 0.890, above the 0.6 threshold required for convergent validity (Hulland, 1999). Bagozzi and Yi (1988) proposed that the average variance extracted (AVE) should be 0.5 or greater, and composite reliability (CR) should meet the recommended threshold of 0.7 or greater (Hair et al., 1998). All AVE values fell between 0.571 and 0.745, and CR values ranged from 0.843 to 0.921. Overall, the results indicate a high degree of confidence in the validity and reliability of the indicators used in the research model.

TABLE 2.

The convergent validity and reliability of measures

| Construct | Items | Loadings | AVE | CR | Cronbach's α |
|---|---|---|---|---|---|
| System quality | 4 | 0.776–0.858 | 0.656 | 0.884 | 0.825 |
| Course design | 3 | 0.775–0.871 | 0.654 | 0.849 | 0.749 |
| Learner‐learner interaction | 8 | 0.701–0.812 | 0.571 | 0.914 | 0.892 |
| Learner‐instructor interaction | 4 | 0.734–0.784 | 0.573 | 0.843 | 0.725 |
| Learner‐content interaction | 3 | 0.817–0.887 | 0.745 | 0.897 | 0.829 |
| Self‐discipline | 5 | 0.734–0.836 | 0.636 | 0.897 | 0.858 |
| Learning outcomes | 4 | 0.811–0.866 | 0.688 | 0.898 | 0.849 |
| Satisfaction | 5 | 0.762–0.890 | 0.702 | 0.921 | 0.893 |
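The reliability and convergent validity statistics in Table 2 follow standard formulas: AVE is the mean of the squared standardized loadings, CR is (Σλ)² / ((Σλ)² + Σ(1 − λ²)), and Cronbach's α is k/(k − 1) · (1 − Σσᵢ² / σ²_total) for k items. The Python sketch below implements these formulas and the AVE ≥ 0.5 and CR ≥ 0.7 rules cited above. The loadings and Likert responses are hypothetical or simulated, not the study's data, and the helper names are our own.

```python
import numpy as np


def ave(loadings):
    """Average variance extracted: mean of the squared standardized loadings."""
    lam = np.asarray(loadings, dtype=float)
    return float(np.mean(lam ** 2))


def composite_reliability(loadings):
    """Composite reliability from standardized loadings, taking 1 - lambda^2
    as each indicator's error variance (uncorrelated errors assumed)."""
    lam = np.asarray(loadings, dtype=float)
    num = lam.sum() ** 2
    return float(num / (num + np.sum(1.0 - lam ** 2)))


def cronbach_alpha(scores):
    """Cronbach's alpha for an (n_respondents, k_items) matrix of item scores."""
    x = np.asarray(scores, dtype=float)
    k = x.shape[1]
    item_var = x.var(axis=0, ddof=1).sum()
    total_var = x.sum(axis=1).var(ddof=1)
    return float(k / (k - 1) * (1.0 - item_var / total_var))


def meets_thresholds(loadings, ave_min=0.5, cr_min=0.7):
    """Check the AVE >= 0.5 and CR >= 0.7 rules cited in the text."""
    return ave(loadings) >= ave_min and composite_reliability(loadings) >= cr_min


# Hypothetical loadings for a four-item construct (not the study's values).
lam = [0.80, 0.75, 0.85, 0.78]
print(ave(lam), composite_reliability(lam), meets_thresholds(lam))

# Simulated 5-point Likert responses: a shared trait plus item-specific noise.
rng = np.random.default_rng(1)
trait = rng.normal(size=(200, 1))
likert = np.clip(np.rint(3 + trait + 0.7 * rng.normal(size=(200, 4))), 1, 5)
print(cronbach_alpha(likert))
```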

Both the Fornell–Larcker criterion and the heterotrait–monotrait ratio of correlations (HTMT) were used to assess discriminant validity. The Fornell–Larcker criterion requires that the square root of each construct's AVE be greater than its correlations with all other constructs in the model and not less than 0.50 (Fornell & Larcker, 1981). Table 3 shows that the square roots of the AVE of the eight constructs ranged from 0.756 to 0.863, exceeding the cut‐off value of 0.50 and each construct's correlations with the other constructs (the largest correlation being 0.710, between learning outcome and satisfaction), indicating sufficient discriminant validity. Moreover, HTMT is defined as the mean of the correlations between items measuring different constructs relative to the (geometric) mean of the average correlations among items measuring the same construct (Henseler et al., 2014). In this study, all HTMT values were below the recommended threshold of 0.85, demonstrating that discriminant validity was established for all constructs in the model (Table 4).

TABLE 3.

Discriminant validity (latent variable correlations)

| Construct | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| 1. System quality | 0.810 | | | | | | | |
| 2. Course design | 0.391 | 0.808 | | | | | | |
| 3. Learner‐learner interaction | 0.239 | 0.316 | 0.756 | | | | | |
| 4. Learner‐instructor interaction | 0.300 | 0.376 | 0.492 | 0.757 | | | | |
| 5. Learner‐content interaction | 0.398 | 0.401 | 0.409 | 0.355 | 0.863 | | | |
| 6. Self‐discipline | 0.262 | 0.170 | 0.336 | 0.265 | 0.252 | 0.798 | | |
| 7. Learning outcome | 0.416 | 0.347 | 0.343 | 0.271 | 0.467 | 0.301 | 0.829 | |
| 8. Satisfaction | 0.516 | 0.509 | 0.512 | 0.411 | 0.566 | 0.413 | 0.710 | 0.838 |

Note: Diagonals represent the square root of the average variance extracted, whereas the other matrix entries represent the correlations.
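The Fornell–Larcker comparison can be reproduced directly from the values in Table 3. The sketch below (Python with NumPy; the construct abbreviations and helper names are our own) expands the lower‐triangular table into a symmetric matrix and compares each diagonal entry, the square root of the AVE, with the largest absolute correlation in that construct's row and column.

```python
import numpy as np

NAMES = ["SQ", "CD", "LL", "LI", "LC", "SD", "LO", "SAT"]
# Lower triangle of Table 3: diagonal = sqrt(AVE), below-diagonal = correlations.
TABLE3 = [
    [0.810],
    [0.391, 0.808],
    [0.239, 0.316, 0.756],
    [0.300, 0.376, 0.492, 0.757],
    [0.398, 0.401, 0.409, 0.355, 0.863],
    [0.262, 0.170, 0.336, 0.265, 0.252, 0.798],
    [0.416, 0.347, 0.343, 0.271, 0.467, 0.301, 0.829],
    [0.516, 0.509, 0.512, 0.411, 0.566, 0.413, 0.710, 0.838],
]


def to_symmetric(rows):
    """Expand a lower-triangular list of rows into a full symmetric matrix."""
    n = len(rows)
    m = np.zeros((n, n))
    for i, row in enumerate(rows):
        for j, value in enumerate(row):
            m[i, j] = m[j, i] = value
    return m


def fornell_larcker(m, names):
    """For each construct: (sqrt(AVE), largest |correlation|, criterion met?)."""
    out = {}
    for i, name in enumerate(names):
        max_corr = float(np.max(np.abs(np.delete(m[i], i))))
        out[name] = (float(m[i, i]), max_corr, bool(m[i, i] > max_corr))
    return out


results = fornell_larcker(to_symmetric(TABLE3), NAMES)
for name, (root_ave, max_corr, ok) in results.items():
    print(f"{name:>3}: sqrt(AVE)={root_ave:.3f}  max r={max_corr:.3f}  pass={ok}")
```

Every construct passes; the tightest margin is for learning outcome and satisfaction, whose mutual correlation of 0.710 is the largest off‐diagonal value.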

TABLE 4.

Discriminant validity of Heterotrait‐Monotrait ratio of correlations (HTMT)

| Construct | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| 1. System quality | – | | | | | | | |
| 2. Course design | 0.482 | – | | | | | | |
| 3. Learner‐learner interaction | 0.275 | 0.369 | – | | | | | |
| 4. Learner‐instructor interaction | 0.383 | 0.502 | 0.602 | – | | | | |
| 5. Learner‐content interaction | 0.486 | 0.493 | 0.473 | 0.451 | – | | | |
| 6. Self‐discipline | 0.305 | 0.182 | 0.376 | 0.328 | 0.285 | – | | |
| 7. Learning outcome | 0.494 | 0.389 | 0.386 | 0.333 | 0.553 | 0.340 | – | |
| 8. Satisfaction | 0.601 | 0.582 | 0.569 | 0.497 | 0.653 | 0.460 | 0.821 | – |
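The HTMT definition given above can be made concrete with a short sketch. The function assumes a matrix of item correlations and the column indices of each construct's items; the toy matrix is hypothetical, not the study's data. Following the definition, it uses the raw correlations, which assumes all items are positively keyed. The final lines check the reported Table 4 values against the 0.85 threshold.

```python
import numpy as np


def htmt(corr, items_i, items_j):
    """Heterotrait-monotrait ratio for two constructs.

    corr     : (p, p) item correlation matrix
    items_i/j: column indices of the items measuring each construct
    """
    r = np.asarray(corr, dtype=float)
    hetero = r[np.ix_(items_i, items_j)].mean()  # correlations across the two constructs

    def mono(items):
        block = r[np.ix_(items, items)]
        upper = np.triu_indices(len(items), k=1)  # each within-construct item pair once
        return block[upper].mean()

    return float(hetero / np.sqrt(mono(items_i) * mono(items_j)))


# Toy 4-item example (hypothetical): items 0-1 measure construct A, items 2-3 construct B.
toy = np.array([
    [1.0, 0.6, 0.3, 0.3],
    [0.6, 1.0, 0.3, 0.3],
    [0.3, 0.3, 1.0, 0.5],
    [0.3, 0.3, 0.5, 1.0],
])
print(htmt(toy, [0, 1], [2, 3]))  # 0.3 / sqrt(0.6 * 0.5)

# Below-diagonal HTMT values from Table 4, checked against the 0.85 cut-off.
TABLE4 = [
    0.482,
    0.275, 0.369,
    0.383, 0.502, 0.602,
    0.486, 0.493, 0.473, 0.451,
    0.305, 0.182, 0.376, 0.328, 0.285,
    0.494, 0.389, 0.386, 0.333, 0.553, 0.340,
    0.601, 0.582, 0.569, 0.497, 0.653, 0.460, 0.821,
]
print(max(TABLE4), all(v < 0.85 for v in TABLE4))
```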

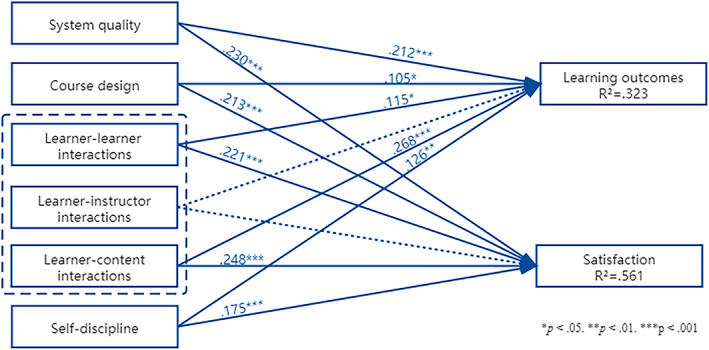

4.3. The structural model and hypotheses testing

Table 5 and Figure 4 present the path analysis results for each hypothesis. Ten of the 12 hypotheses were supported by significant path coefficients; the exceptions were H7 and H8. The explained variance (R²) was 0.323 for learning outcome and 0.561 for satisfaction. System quality had a positive effect on learning outcome (β = 0.212, p < 0.001) and satisfaction (β = 0.230, p < 0.001), supporting H1 and H2. Course design had a positive effect on learning outcome (β = 0.105, p < 0.05) and satisfaction (β = 0.213, p < 0.001), supporting H3 and H4. Learner‐learner interaction had a positive effect on learning outcome (β = 0.115, p < 0.05) and satisfaction (β = 0.221, p < 0.001), supporting H5 and H6. No significant association was found between learner‐instructor interaction and learning outcomes (β = −0.018, p = 0.729) or satisfaction (β = 0.018, p = 0.668); therefore, H7 and H8 were not supported. Learner‐content interaction had a positive effect on learning outcome (β = 0.268, p < 0.001) and satisfaction (β = 0.248, p < 0.001), supporting H9 and H10. Self‐discipline had a positive effect on learning outcome (β = 0.126, p < 0.01) and satisfaction (β = 0.175, p < 0.001), supporting H11 and H12.

TABLE 5.

Summary of hypothesis tests

| Hypotheses | Path | β | t‐value | p | Support |
|---|---|---|---|---|---|
| H1 | System quality → Learning outcomes | 0.212*** | 4.517 | 0.000*** | Yes |
| H2 | System quality → Satisfaction | 0.230*** | 6.196 | 0.000*** | Yes |
| H3 | Course design → Learning outcomes | 0.105* | 2.380 | 0.017* | Yes |
| H4 | Course design → Satisfaction | 0.213*** | 5.775 | 0.000*** | Yes |
| H5 | Learner‐learner interaction → Learning outcomes | 0.115* | 2.238 | 0.029* | Yes |
| H6 | Learner‐learner interaction → Satisfaction | 0.221*** | 5.515 | 0.000*** | Yes |
| H7 | Learner‐instructor interaction → Learning outcomes | −0.018 | 0.334 | 0.729 | No |
| H8 | Learner‐instructor interaction → Satisfaction | 0.018 | 0.426 | 0.668 | No |
| H9 | Learner‐content interaction → Learning outcomes | 0.268*** | 5.135 | 0.000*** | Yes |
| H10 | Learner‐content interaction → Satisfaction | 0.248*** | 6.341 | 0.000*** | Yes |
| H11 | Self‐discipline → Learning outcomes | 0.126** | 2.829 | 0.004** | Yes |
| H12 | Self‐discipline → Satisfaction | 0.175*** | 5.142 | 0.000*** | Yes |

Note: *p < 0.05. **p < 0.01. *** p < 0.001.
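For standardized ordinary least squares coefficients, the explained variance satisfies R² = Σⱼ βⱼ rⱼᵧ, where rⱼᵧ is the correlation between predictor j and the outcome. Applying this identity to the path coefficients in Table 5 and the latent variable correlations in Table 3 gives a quick consistency check on the explained variances. The sketch assumes that the Table 3 correlations are those of the latent variable scores used to estimate the structural model, as in PLS‐SEM; because every input is rounded to three decimals, differences in the third decimal are expected.

```python
# Predictor order: system quality, course design, learner-learner, learner-instructor,
# learner-content, self-discipline.
# Standardized path coefficients from Table 5.
BETA_LO = [0.212, 0.105, 0.115, -0.018, 0.268, 0.126]
BETA_SAT = [0.230, 0.213, 0.221, 0.018, 0.248, 0.175]
# Correlations of each predictor with the outcome, from Table 3.
R_LO = [0.416, 0.347, 0.343, 0.271, 0.467, 0.301]
R_SAT = [0.516, 0.509, 0.512, 0.411, 0.566, 0.413]


def r_squared_from_paths(betas, corrs):
    """R^2 = sum_j beta_j * r_jy, valid for standardized OLS coefficients."""
    return sum(b * r for b, r in zip(betas, corrs))


r2_lo = r_squared_from_paths(BETA_LO, R_LO)
r2_sat = r_squared_from_paths(BETA_SAT, R_SAT)
print(round(r2_lo, 3))   # ≈ 0.322
print(round(r2_sat, 3))  # ≈ 0.560
```

Up to rounding, these values agree with the 32.3% and 56.1% of explained variance for learning outcome and satisfaction reported in the Discussion.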

FIGURE 4.

Results of hypothesis testing [Colour figure can be viewed at wileyonlinelibrary.com]

5. DISCUSSION

In today's higher education climate, online learning through platforms and tools is prevalent. Although evidence suggests that multiple determinants have positive effects on learning outcomes and student satisfaction, it is also worth exploring students' perceived learning outcomes and satisfaction with platforms and tools during a crisis such as the epidemic. This study examined system quality, course design, learner‐learner interaction, learner‐instructor interaction, learner‐content interaction, and self‐discipline as predictors of university students' learning outcomes and satisfaction when using TronClass and DingTalk during the COVID‐19 epidemic. The model explained 32.3% of the variance in learning outcome and 56.1% of the variance in satisfaction, and system quality, course design, learner‐learner interaction, learner‐content interaction, and self‐discipline had significant positive effects on both. Among the tested determinants, learner‐content interaction had the strongest effect on students' learning outcome and satisfaction. These findings are consistent with previous research showing that system quality (McGill & Klobas, 2009; Tajuddin et al., 2013; Waheed et al., 2016), course design (Adeyinka & Mutula, 2010; Swan et al., 2012), learner‐learner interaction (Quadir et al., 2019), and learner‐content interaction (J. Khan & Iqbal, 2016; Lin et al., 2017; Quadir et al., 2019) positively affect students' perceptions of learning outcomes and satisfaction. Kuo et al. (2013) also demonstrated that learner‐instructor and learner‐content interaction were good predictors of student satisfaction. Similarly, Baber (2020) found that interaction, student motivation, course structure, instructor knowledge, and facilitation significantly influence students' perceived learning outcome and satisfaction.
Baber also pointed out that barriers to social interaction in online learning could reduce the effectiveness of online learning for students, particularly during the COVID‐19 pandemic (Baber, 2021). Moreover, self‐discipline significantly affected learning outcomes and satisfaction. One possible explanation is that students with high levels of self‐discipline and responsibility have higher expectations of results; self‐discipline thus appears to play a crucial role in learners' self‐management of the learning process. Octaberlina and Muslimin (2020) reported that almost all students (96%) were easily distracted by external temptations (e.g., games, YouTube) during online learning in the epidemic, although other work found that self‐discipline did not significantly predict either of two achievement measures (Zimmerman & Kitsantas, 2014). The success of online learning depends not only on technology and course design but also on student self‐discipline and responsibility, especially when a large number of face‐to‐face courses are converted to online courses in a short period. In contrast, learner‐instructor interaction had no significant predictive effect. This finding is consistent with the literature on student satisfaction (Sobaih et al., 2020), and Ramage (2002) showed that learner‐instructor interaction in synchronous media appears to have no clear effect on educational outcomes. We believe that students felt learner‐instructor interaction should occur more frequently in online learning environments during the crisis, whereas teachers may have been struggling with the online teaching curriculum and an overwhelming number of questions and enquiries (Sobaih et al., 2020).

6. THEORETICAL AND PRACTICAL IMPLICATIONS

Online learning has been found to be beneficial because it offers flexibility and convenience for learners. The COVID‐19 pandemic has increased the need for online learning and shifted learners' perspectives. It is therefore worth investigating the effectiveness of, and student satisfaction with, fully online learning under the extreme social isolation of the COVID‐19 epidemic. The present study has noteworthy theoretical and practical implications. Theoretically, it sheds light on system quality, course design, learner‐learner interaction, learner‐content interaction, and self‐discipline as factors that influence university students' learning outcomes and satisfaction, whereas interaction between instructors and students did not have a substantial effect on learning outcomes and satisfaction during the COVID‐19 epidemic in the context of this study. Nevertheless, learner‐instructor interaction remains necessary for maintaining engaging learner‐instructor sessions and making online courses more effective and productive. Our findings add to the existing literature by revealing that system quality, course design, learner‐learner interaction, learner‐content interaction, and self‐discipline are important drivers of learning outcomes and satisfaction in this specific context. These results contribute to current understanding, not only for the pandemic period but also for future applications.
In addition, the practical implications of this study can help instructors, administrators, educational institutions and other stakeholders make timely decisions and develop more detailed policies in line with these results during the COVID‐19 pandemic. For example, teachers could be encouraged to consider more closely how to build effective instructional approaches into online content, or to create more instructor‐student interaction that engages students in their online curricula. Institutions could also pay more attention to students' circumstances and learning situations, the affordability of internet access for students, and the selection of the online learning tools needed to conduct effective and efficient online teaching. System administrators or institutions should consider offering comprehensive, convenient and timely system and service support to students, which is conducive to preserving a positive learning experience. In particular, the current research is relevant for higher education institutions beginning or revisiting their e‐learning services amid the COVID‐19 pandemic, which has directly caused a paradigm shift in online learning and education. Online education may well continue to grow even after the COVID‐19 pandemic has been overcome.

7. CONCLUSIONS

Because isolation was a crucial measure for preventing the spread of COVID‐19, we investigated factors, namely system quality, course design, learner‐learner interaction, learner‐instructor interaction, learner‐content interaction, and self‐discipline, that influence Chinese university students' perceptions of their learning outcomes and satisfaction in fully online learning environments. To test the impact of these factors, we collected data during the COVID‐19 pandemic in June 2020, a unique time when schools had closed almost all in‐person classroom instruction. In this context, our findings revealed that system quality, course design, learner‐learner interaction, learner‐content interaction, and self‐discipline play significant roles in students' perceptions of their online learning outcomes and satisfaction. However, learner‐instructor interaction did not significantly influence learning outcomes or satisfaction.

Several limitations should be noted. First, students' perceptions of learning outcomes and satisfaction with online learning experiences may be affected by differences between courses or in teachers' requirements. Second, the sample was collected from students at a single public Chinese university using a convenience sampling technique, which could affect the representativeness of the sample and the generalizability of the results. This focus might limit the generalizability of our findings to students at other universities or in other regions and countries. Future research could replicate our study and compare the perceptions of diverse university students, especially students from different countries. Third, because this study used TronClass and DingTalk as learning platforms and interactive tools to investigate university students' learning outcomes and satisfaction with online learning during the COVID‐19 epidemic, caution should be taken when extending the results to other learning platforms. Finally, this study relied on a questionnaire assessing student opinions; an in‐depth qualitative investigation could reveal personal opinions and provide a more detailed exploration of the relationships between the proposed constructs. Further research could therefore test these findings by combining quantitative and qualitative methods.

CONFLICT OF INTEREST

The authors declare that they have no conflict of interest.

PEER REVIEW

The peer review history for this article is available at https://publons.com/publon/10.1111/jcal.12555.

ACKNOWLEDGMENTS

The authors thank Mr. Yixuan Chen for assistance with data collection.

Su C‐Y, Guo Y. Factors impacting university students' online learning experiences during the COVID‐19 epidemic. J Comput Assist Learn. 2021;37:1578–1590. 10.1111/jcal.12555

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available from the corresponding author, Yuqing Guo, upon reasonable request.

REFERENCES

- Abou‐Khalil, V., Helou, S., Khalifé, E., Chen, M. A., Majumdar, R., & Ogata, H. (2021). Emergency online learning in low‐resource settings: Effective student engagement strategies. Education Sciences, 11(1), 24. 10.3390/educsci11010024

- Adeyinka, T., & Mutula, S. (2010). A proposed model for evaluating the success of WebCT course content management system. Computers in Human Behavior, 26(6), 1795–1805. 10.1016/j.chb.2010.07.007

- Al‐Azawei, A., & Lundqvist, K. (2015). Learner differences in perceived satisfaction of an online learning: An extension to the technology acceptance model in an Arabic sample. Electronic Journal of E‐Learning, 13(5), 408–426. Retrieved from https://eric.ed.gov/?id=EJ1084245

- Al‐Fraihat, D., Joy, M., Masa'deh, R., & Sinclair, J. (2020). Evaluating E‐learning systems success: An empirical study. Computers in Human Behavior, 102, 67–86. 10.1016/j.chb.2019.08.004

- Alla, M. M. S. O., Faryadi, Q., & Fabil, N. B. (2013). The impact of system quality in E‐learning system. Journal of Computer Science and Information Technology, 1(2), 14–23. Retrieved from http://jcsitnet.com/journals/jcsit/Vol_1_No_2_December_2013/3.pdf

- Allen, I. E., & Seaman, J. (2007). Making the grade: Online education in the United States, 2006. ERIC. Retrieved from https://eric.ed.gov/?id=ED530101

- Almaiah, M. A., Al‐Khasawneh, A., & Althunibat, A. (2020). Exploring the critical challenges and factors influencing the E‐learning system usage during COVID‐19 pandemic. Education and Information Technologies, 25(6), 5261–5280. 10.1007/s10639-020-10219-y

- AlMulhem, A. (2020). Investigating the effects of quality factors and organizational factors on university students' satisfaction of e‐learning system quality. Cogent Education, 7(1), 1–16. 10.1080/2331186X.2020.1787004

- Alqahtani, A. Y., & Rajkhan, A. A. (2020). E‐learning critical success factors during the COVID‐19 pandemic: A comprehensive analysis of E‐learning managerial perspectives. Education Sciences, 10(9), 216. 10.3390/educsci10090216

- Alqurashi, E. (2019). Predicting student satisfaction and perceived learning within online learning environments. Distance Education, 40(1), 133–148. 10.1080/01587919.2018.1553562

- Alsabawy, A. Y., Cater‐Steel, A., & Soar, J. (2012). A model to measure e‐learning systems success. In Belkhamza Z. & Azizi Wafa S. (Eds.), Measuring organizational information systems success: New technologies and practices (pp. 293–317). IGI Global. 10.4018/978-1-4666-0170-3.ch015

- Baber, H. (2020). Determinants of students' perceived learning outcome and satisfaction in online learning during the pandemic of COVID19. Journal of Education and E‐Learning Research, 7(3), 285–292. 10.20448/journal.509.2020.73.285.292

- Baber, H. (2021). Social interaction and effectiveness of the online learning–a moderating role of maintaining social distance during the pandemic COVID‐19. Asian Education and Development Studies. 10.1108/AEDS-09-2020-0209

- Bagozzi, R. P., & Yi, Y. (1988). On the evaluation of structural equation models. Journal of the Academy of Marketing Science, 16(1), 74–94. 10.1007/BF02723327

- Bao, W. (2020). COVID‐19 and online teaching in higher education: A case study of Peking University. Human Behavior and Emerging Technologies, 2(2), 113–115. 10.1002/hbe2.191

- Bray, E., Aoki, K., & Dlugosh, L. (2008). Predictors of learning satisfaction in Japanese online distance learners. The International Review of Research in Open and Distributed Learning, 9(3), 1–24. 10.19173/irrodl.v9i3.525

- Byrne, B. M. (2001). Structural equation modeling with AMOS, EQS, and LISREL: Comparative approaches to testing for the factorial validity of a measuring instrument. International Journal of Testing, 1(1), 55–86. 10.1207/S15327574IJT0101_4

- Chang, K. E., Chang, C. T., Hou, H. T., Sung, Y. T., Chao, H. L., & Lee, C. M. (2014). Development and behavioral pattern analysis of a mobile guide system with augmented reality for painting appreciation instruction in an art museum. Computers & Education, 71, 185–197. 10.1016/j.compedu.2013.09.022

- Chen, N. S., Lin, K. M., & Kinshuk. (2008). Analysing users' satisfaction with e‐learning using a negative critical incidents approach. Innovations in Education and Teaching International, 45(2), 115–126. 10.1080/14703290801950286

- Chen, P. S. D., Lambert, A. D., & Guidry, K. R. (2010). Engaging online learners: The impact of web‐based learning technology on college student engagement. Computers & Education, 54(4), 1222–1232. 10.1016/j.compedu.2009.11.008

- Cheng, Y. (2012). Effects of quality antecedents on e‐learning acceptance. Internet Research, 22(3), 361–390. 10.1108/10662241211235699

- Chin, W. W., & Newsted, P. R. (1999). Structural equation modeling analysis with small samples using partial least squares. In Hoyle R. H. (Ed.), Statistical strategies for small sample research (pp. 307–341). Sage.

- Chopra, G., Madan, P., Jaisingh, P., & Bhaskar, P. (2019). Effectiveness of e‐learning portal from students' perspective: A structural equation model (SEM) approach. Interactive Technology and Smart Education, 16(2), 94–116. 10.1108/ITSE-05-2018-0027

- Chou, S. W., & Liu, C. H. (2005). Learning effectiveness in a web‐based virtual learning environment: A learner control perspective. Journal of Computer Assisted Learning, 21(1), 65–76. 10.1111/j.1365-2729.2005.00114.x

- Costa, P. T., Jr., & McCrae, R. R. (1992). Revised NEO personality inventory (NEO‐PI‐R) and NEO five‐factor (NEO‐FFI) inventory professional manual. PAR.

- Costley, J., & Lange, C. (2016). The effects of instructor control of online learning environments on satisfaction and perceived learning. Electronic Journal of E‐Learning, 14(3), 169–180. Retrieved from https://search.proquest.com/docview/1819069057?accountid=15198

- Croxton, R. A. (2014). The role of interactivity in student satisfaction and persistence in online learning. Journal of Online Learning and Teaching, 10(2), 314–325. Retrieved from https://jolt.merlot.org/vol10no2/croxton_0614.pdf

- Duckworth, A., & Gross, J. J. (2014). Self‐control and grit: Related but separable determinants of success. Current Directions in Psychological Science, 23(5), 319–325. 10.1177/0963721414541462

- Duckworth, A. L., & Seligman, M. E. P. (2005). Self‐discipline outdoes IQ in predicting academic performance of adolescents. Psychological Science, 16(12), 939–944. 10.1111/j.1467-9280.2005.01641.x

- Dykman, C. A., & Davis, C. K. (2008). Online education forum: Part two ‐ teaching online versus teaching conventionally. Journal of Information Systems Education, 19(2), 157–164. Retrieved from http://jise.org/volume19/n2/JISEv19n2p157.pdf

- Eom, S. (2009). Effects of interaction on students' perceived learning satisfaction in university online education: An empirical investigation. International Journal of Global Management Studies, 1(2), 60–74. Retrieved from http://search.ebscohost.com/login.aspx?direct=true&db=buh&AN=50743740&lang=zh-cn&site=eds-live

- Eom, S. B., & Ashill, N. (2016). The determinants of students' perceived learning outcomes and satisfaction in university online education: An update. Decision Sciences Journal of Innovative Education, 14(2), 185–215. 10.1111/dsji.12097

- Eom, S. B., Wen, H. J., & Ashill, N. (2006). The determinants of students' perceived learning outcomes and satisfaction in university online education: An empirical investigation. Decision Sciences Journal of Innovative Education, 4(2), 215–235. 10.1111/j.1540-4609.2006.00114.x

- Fornell, C., & Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research, 18(1), 39–50. 10.1177/002224378101800104

- Garrison, D. R., Archer, W., & Anderson, T. (2003). A theory of critical inquiry in online distance education. In Moore M. G. & Anderson W. G. (Eds.), Handbook of distance education (pp. 113–127). Lawrence Erlbaum Associates.

- Gefen, D., & Straub, D. (2005). A practical guide to factorial validity using PLS‐graph: Tutorial and annotated example. Communications of the Association for Information Systems, 16(1), 91–109. 10.17705/1CAIS.01605

- Goel, L., Johnson, N. A., Junglas, I., & Ives, B. (2013). How cues of what can be done in a virtual world influence learning: An affordance perspective. Information & Management, 50(5), 197–206. 10.1016/j.im.2013.01.003

- Goodwin, B., & Hein, H. (2016). Research says/the X factor in college success. Educational Leadership, 73(6), 77–78. Retrieved from https://eric.ed.gov/?id=EJ1092947

- Gray, J. A., & DiLoreto, M. (2016). The effects of student engagement, student satisfaction, and perceived learning in online learning environments. International Journal of Educational Leadership Preparation, 11(1), 98–119. Retrieved from https://eric.ed.gov/?id=EJ1103654

- Hagger, M. S., & Hamilton, K. (2019). Grit and self‐discipline as predictors of effort and academic attainment. British Journal of Educational Psychology, 89(2), 324–342. 10.1111/bjep.12241

- Hair, J. F., Black, W. C., Babin, B. J., & Anderson, R. E. (1998). Multivariate data analysis: A global perspective. Prentice Hall.

- Hair, J. F., Hult, G. T. M., Ringle, C. M., & Sarstedt, M. (2016). A primer on partial least squares structural equation modeling (PLS‐SEM). Sage.

- Hair, J. F., Risher, J. J., Sarstedt, M., & Ringle, C. M. (2019). When to use and how to report the results of PLS‐SEM. European Business Review, 31(1), 2–24. 10.1108/EBR-11-2018-0203

- Henseler, J., Ringle, C. M., & Sarstedt, M. (2014). A new criterion for assessing discriminant validity in variance‐based structural equation modeling. Journal of the Academy of Marketing Science, 43(1), 115–135. 10.1007/s11747-014-0403-8

- Hong, K. S., Lai, K. W., & Holton, D. (2003). Students' satisfaction and perceived learning with a web‐based course. Educational Technology & Society, 6(1), 116–124. Retrieved from https://www.jstor.org/stable/pdf/jeductechsoci.6.1.116.pdf

- Hulland, J. (1999). Use of partial least squares (PLS) in strategic management research: A review of four recent studies. Strategic Management Journal, 20(2), 195–204.

- Hung, M. L., & Chou, C. (2015). Students' perceptions of instructors' roles in blended and online learning environments: A comparative study. Computers & Education, 81, 315–325. 10.1016/j.compedu.2014.10.022

- Jaggars, S. S., & Xu, D. (2016). How do online course design features influence student performance? Computers & Education, 95, 270–284. 10.1016/j.compedu.2016.01.014

- Javed, A. I., Zahoor, Z., & Saeed, S. (2020). Effect of COVID‐19 and emerging trends of higher education in Pakistan. Research Journal of PNQAHE, 3(2), 177–183. Retrieved from http://pnqahe.org/journal/wp-content/uploads/2020/07/2020-Vol-3-Issue-1-2.pdf

- John, V. K., & Duangekanong, D. (2018, January). E‐learning adoption and e‐learning satisfaction of learners: A case study of management program in a university of Thailand. Paper presented at the ISTEL‐Winter 2018, Okinawa, Japan. Abstract. Retrieved from https://ssrn.com/abstract=3246771

- Joo, Y. J., Lim, K. Y., & Kim, J. (2013). Locus of control, self‐efficacy, and task value as predictors of learning outcome in an online university context. Computers & Education, 62, 149–158. 10.1016/j.compedu.2012.10.027

- Kang, M., & Im, T. (2013). Factors of learner‐instructor interaction which predict perceived learning outcomes in online learning environment. Journal of Computer Assisted Learning, 29(3), 292–301. 10.1111/jcal.12005

- Ke, F., & Kwak, D. (2013). Constructs of student‐centered online learning on learning satisfaction of a diverse online student body: A structural equation modeling approach. Journal of Educational Computing Research, 48(1), 97–122. 10.2190/EC.48.1.e

- Khan, A., Egbue, O., Palkie, B., & Madden, J. (2017). Active learning: Engaging students to maximize learning in an online course. Electronic Journal of E‐Learning, 15(2), 107–115. Retrieved from https://search.proquest.com/docview/1935254895?accountid=15198

- Khan, J., & Iqbal, M. J. (2016). Relationship between student satisfaction and academic achievement in distance education: A case study of AIOU Islamabad. FWU Journal of Social Sciences, 10(2), 137–145. Retrieved from http://search.ebscohost.com/login.aspx?direct=true&db=obo&AN=120697574&lang=zh-cn&site=eds-live

- Ku, H. Y., Akarasriworn, C., Rice, L. A., Glassmeyer, D. M., & Mendoza, B. (2011). Teaching an online graduate mathematics education course for in‐service mathematics teachers. Quarterly Review of Distance Education, 12(2), 135–147. Retrieved from http://search.ebscohost.com/login.aspx?direct=true&db=aph&AN=66173720&lang=zh-cn&site=ehost-live

- Kuo, Y. C., Belland, B. R., Schroder, K. E. E., & Walker, A. E. (2014). K‐12 teachers' perceptions of and their satisfaction with interaction type in blended learning environments. Distance Education, 35(3), 360–381. 10.1080/01587919.2015.955265

- Kuo, Y. C., Walker, A. E., Belland, B. R., & Schroder, K. E. E. (2013). A predictive study of student satisfaction in online education programs. The International Review of Research in Open and Distance Learning, 14(1), 16–39. 10.19173/irrodl.v14i1.1338

- Kuo, Y. C., Walker, A. E., Schroder, K. E. E., & Belland, B. R. (2014). Interaction, internet self‐efficacy, and self‐regulated learning as predictors of student satisfaction in online education courses. The Internet and Higher Education, 20, 35–50. 10.1016/j.iheduc.2013.10.001

- Li, Y., Chen, X., Chen, Y., & Zhang, F. (2020). Investigating of college students' online learning experience during the pandemic and its enlightenment: Taking Chu Kochen Honors College, Zhejiang University as a sample. Open Education Research, 26(5), 60–70. Retrieved from http://openedu.sou.edu.cn/upload/qikanfile/202009240931094816.pdf

- Liaw, S. S. (2008). Investigating students' perceived satisfaction, behavioral intention, and effectiveness of e‐learning: A case study of the Blackboard system. Computers & Education, 51(2), 864–873. 10.1016/j.compedu.2007.09.005

- Lievens, F., Ones, D. S., & Dilchert, S. (2009). Personality scale validities increase throughout medical school. Journal of Applied Psychology, 94(6), 1514–1535. 10.1037/a0016137

- Lin, C., Zheng, B., & Zhang, Y. (2017). Interactions and learning outcomes in online language courses. British Journal of Educational Technology, 48(3), 730–748. 10.1111/bjet.12457

- Longhurst, G. J., Stone, D. M., Dulohery, K., Scully, D., Campbell, T., & Smith, C. F. (2020). Strength, weakness, opportunity, threat (SWOT) analysis of the adaptations to anatomical education in the United Kingdom and Republic of Ireland in response to the Covid‐19 pandemic. Anatomical Sciences Education, 13(3), 301–311. 10.1002/ase.1967

- Lu, J., Yang, J., & Yu, C. S. (2013). Is social capital effective for online learning? Information & Management, 50(7), 507–522. 10.1016/j.im.2013.07.009

- McGill, T. J., & Klobas, J. E. (2009). A task‐technology fit view of learning management system impact. Computers & Education, 52(2), 496–508. 10.1016/j.compedu.2008.10.002

- Moore, M. G. (1989). Three types of interaction. The American Journal of Distance Education, 3(2), 1–7. 10.1080/08923648909526659

- Moore, M. G., & Kearsley, G. (2011). Distance education: A systems view of online learning (3rd ed.). Wadsworth.

- Mtebe, J. S., & Raphael, C. (2018). Key factors in learners' satisfaction with the e‐learning system at the University of Dar es Salaam, Tanzania. Australasian Journal of Educational Technology, 34(4), 107–122. 10.14742/ajet.2993

- Musa, M. A., & Othman, M. S. (2012). Critical success factor in e‐learning: An examination of technology and student factors. International Journal of Advances in Engineering & Technology, 3(2), 140–148.

- Nortvig, A. M., Petersen, A. K., & Balle, S. H. (2018). A literature review of the factors influencing e‐learning and blended learning in relation to learning outcome, student satisfaction and engagement. Electronic Journal of E‐Learning, 16(1), 46–55. Retrieved from https://search.proquest.com/docview/2041573256?accountid=15198

- Octaberlina, L. R., & Muslimin, A. I. (2020). EFL students perspective towards online learning barriers and alternatives using Moodle/Google Classroom during COVID‐19 pandemic. International Journal of Higher Education, 9(6), 1–9. 10.5430/ijhe.v9n6p1

- Paechter, M., Maier, B., & Macher, D. (2010). Students' expectations of, and experiences in e‐learning: Their relation to learning achievements and course satisfaction. Computers & Education, 54(1), 222–229. 10.1016/j.compedu.2009.08.005

- Panigrahi, R., Srivastava, P. R., & Sharma, D. (2018). Online learning: Adoption, continuance, and learning outcome ‐ a review of literature. International Journal of Information Management, 43, 1–14. 10.1016/j.ijinfomgt.2018.05.005

- Petter, S., DeLone, W., & McLean, E. (2008). Measuring information systems success: Models, dimensions, measures, and interrelationships. European Journal of Information Systems, 17(3), 236–263. 10.1057/ejis.2008.15

- Quadir, B., Yang, J. C., & Chen, N. S. (2019). The effects of interaction types on learning outcomes in a blog‐based interactive learning environment. Interactive Learning Environments. 10.1080/10494820.2019.1652835

- Ramage, T. R. (2002). The “no significant difference” phenomenon: A literature review. The e‐Journal of Instructional Science and Technology, 5(1). Retrieved from https://core.ac.uk/download/pdf/144217035.pdf

- Rubin, B., & Fernandes, R. (2013). Measuring the community in online classes. Journal of Asynchronous Learning Networks, 17(3), 115–136. 10.24059/olj.v17i3.344

- Sangster, A., Stoner, G., & Flood, B. (2020). Insights into accounting education in a COVID‐19 world. Accounting Education, 29(5), 431–562. 10.1080/09639284.2020.1808487

- Sarwar, H., Akhtar, H., Naeem, M. M., Khan, J. A., Waraich, K., Shabbir, S., … Khurshid, Z. (2020). Self‐reported effectiveness of e‐learning classes during COVID‐19 pandemic: A nation‐wide survey of Pakistani undergraduate dentistry students. European Journal of Dentistry, 14(S 01), S34–S43. 10.1055/s-0040-1717000

- Saxena, C., Baber, H., & Kumar, P. (2020). Examining the moderating effect of perceived benefits of maintaining social distance on e‐learning quality during COVID‐19 pandemic. Journal of Educational Technology Systems, 49(4), 532–554. 10.1177/0047239520977798

- Shah, S., Diwan, S., Kohan, L., Rosenblum, D., Gharibo, C., Soin, A., … Provenzano, D. A. (2020). The technological impact of COVID‐19 on the future of education and health care delivery. Pain Physician, 23(4S), S367–S380. Retrieved from https://pubmed.ncbi.nlm.nih.gov/32942794/

- Sobaih, A. E. E., Hasanein, A. M., & Abu Elnasr, A. E. (2020). Responses to COVID‐19 in higher education: Social media usage for sustaining formal academic communication in developing countries. Sustainability, 12(16), 1–18. 10.3390/su12166520

- Swan, K., Matthews, D., Bogle, L., Boles, E., & Day, S. (2012). Linking online course design and implementation to learning outcomes: A design experiment. The Internet and Higher Education, 15(2), 81–88. 10.1016/j.iheduc.2011.07.002

- Tajuddin, R. A., Baharudin, M., & Hoon, T. S. (2013). System quality and its influence on students' learning satisfaction in UiTM Shah Alam. Procedia ‐ Social and Behavioral Sciences, 90, 677–685. 10.1016/j.sbspro.2013.07.140

- Tarigan, J. (2011). Factors influencing users satisfaction on e‐learning systems. Jurnal Manajemen Dan Kewirausahaan, 13(2), 177–188. 10.9744/jmk.13.2.177-188

- Thurmond, V. A. (2003). Examination of interaction variables as predictors of students' satisfaction and willingness to enroll in future web‐based courses while controlling for student characteristics (Doctoral dissertation). Available from ProQuest Dissertations and Theses database. (UMI No. 3111497).

- Uddin, M. D. M., Ghosh, A., & Isaac, O. (2019). Impact of the system, information, and service quality of online learning on user satisfaction among public universities students in Bangladesh. International Journal of Management and Human Science, 3(2), 1–10. Retrieved from https://ejournal.lucp.net/index.php/ijmhs/article/view/784

- Waheed, M., Kaur, K., & Kumar, S. (2016). What role does knowledge quality play in online students' satisfaction, learning and loyalty? An empirical investigation in an e‐learning context. Journal of Computer Assisted Learning, 32(6), 561–575. 10.1111/jcal.12153

- Wang, H. C., & Chiu, Y. F. (2011). Assessing e‐learning 2.0 system success. Computers & Education, 57(2), 1790–1800. 10.1016/j.compedu.2011.03.009

- Wargadinata, W., Maimunah, I., Dewi, E., & Rofiq, Z. (2020). Student's responses on learning in the early COVID‐19 pandemic. Tadris: Jurnal Keguruan Dan Ilmu Tarbiyah, 5(1), 141–153. 10.24042/tadris.v5i1.6153

- Williams, J. B., & Jacobs, J. (2004). Exploring the use of blogs as learning spaces in the higher education sector. Australasian Journal of Educational Technology, 20(2), 232–247. 10.14742/ajet.1361

- Yakubu, M. N., & Dasuki, S. (2018). Assessing e‐learning systems success in Nigeria: An application of the DeLone and McLean information systems success model. Journal of Information Technology Education: Research, 17, 183–203. 10.28945/4077

- Yildiz Durak, H. (2018). Flipped learning readiness in teaching programming in middle schools: Modelling its relation to various variables. Journal of Computer Assisted Learning, 34(6), 939–959. 10.1111/jcal.12302

- Yu, T., & Yu, T. (2010). Modelling the factors that affect individuals' utilisation of online learning systems: An empirical study combining the task technology fit model with the theory of planned behaviour. British Journal of Educational Technology, 41(6), 1003–1017. 10.1111/j.1467-8535.2010.01054.x

- Yukselturk, E., & Yildirim, Z. (2008). Investigation of interaction, online support, course structure and flexibility as the contributing factors to students' satisfaction in an online certificate program. Journal of Educational Technology & Society, 11(4), 51–65. Retrieved from https://search.proquest.com/docview/1287038642?accountid=15198

- Zimmerman, B. J., & Kitsantas, A. (2014). Comparing students' self‐discipline and self‐regulation measures and their prediction of academic achievement. Contemporary Educational Psychology, 39(2), 145–155. 10.1016/j.cedpsych.2014.03.004