Abstract

The slime mould algorithm (SMA) is a population-based metaheuristic inspired by the oscillation behavior of slime mould. Although SMA is competitive with other algorithms, it suffers from an imbalance between exploitation and exploration and easily falls into local optima. To address these shortcomings, this paper proposes an improved variant of SMA named MSMA. First, a chaotic opposition-based learning strategy is used to enhance population diversity. Second, two adaptive parameter control strategies are proposed to balance exploitation and exploration. Finally, a spiral search strategy is used to help SMA escape from local optima. The superiority of MSMA is verified on 13 multidimensional test functions and 10 fixed-dimensional test functions. In addition, two engineering optimization problems are used to verify the potential of MSMA for solving real-world optimization problems. The simulation results show that the proposed MSMA outperforms the comparison algorithms in terms of convergence accuracy, convergence speed, and stability.

1. Introduction

Solving an optimization problem means finding the values of the decision variables that maximize or minimize the objective function without violating the constraints. With the continuous development of artificial intelligence technology, real-world optimization problems are becoming increasingly complex. Traditional mathematical methods struggle to solve nondifferentiable and discontinuous problems effectively and are easily trapped in local optima [1, 2]. Metaheuristic optimization algorithms can obtain optimal or near-optimal solutions within a reasonable amount of time [3]. They are therefore widely used for solving optimization problems such as mission planning [4–7], image segmentation [8–10], feature selection [11–13], and parameter optimization [14–18]. Metaheuristic algorithms search for optimal solutions by modeling physical phenomena or biological activities in nature. These algorithms can be divided into three categories: evolutionary algorithms, physics-based algorithms, and swarm-based algorithms. Evolutionary algorithms, as the name implies, simulate the laws of evolution in nature. The genetic algorithm [19], based on Darwin's theory of survival of the fittest, is one of the representatives. Other examples include differential evolution, which mimics the crossover and mutation mechanisms of genetics [20], biogeography-based optimization, inspired by natural biogeography [21], evolutionary programming [22], and evolutionary strategies [23]. Physics-based algorithms search for the optimum by simulating physical laws or phenomena. Simulated annealing (SA), inspired by the annealing process in metallurgy, is the best-known physics-based algorithm.
Apart from SA, other physics-based algorithms have been proposed, such as the gravitational search algorithm [24], sine cosine algorithm [25], black hole algorithm [26], nuclear reaction optimizer [27], and Henry gas solubility optimization [28]. Swarm-based algorithms are inspired by the social group behavior of animals or humans. Particle swarm optimization [29] and ant colony optimization [30], which simulate the foraging behavior of birds and ants, are two of the most common swarm-based algorithms. In addition, researchers have proposed many new swarm-based algorithms. The grey wolf optimizer [31] simulates the collaborative foraging of grey wolves. The salp swarm algorithm [32] is inspired by the foraging and following behavior of salps. Monarch butterfly optimization [33] is inspired by the migratory activities of monarch butterfly populations. The naked mole-rat algorithm [34] mimics the mating patterns of naked mole-rats. However, the no free lunch (NFL) theorem states that no single algorithm can solve all optimization problems well [35]. This motivates researchers to keep proposing new algorithms and improving existing ones. Recently, inspired by the oscillation of slime mould, Li et al. proposed a new population-based algorithm called the slime mould algorithm (SMA) [36]. Although SMA is competitive with other algorithms, it has some shortcomings. Because population diversity diminishes during the search, SMA easily falls into local optima [37]. The way SMA selects its update strategy weakens its exploration ability [38, 39]. As problems grow more complex, SMA converges slowly in late iterations and has difficulty maintaining a balance between exploitation and exploration [40, 41]. To further enhance the performance of SMA, and in keeping with the NFL theorem's encouragement to improve existing algorithms, this paper proposes a modified variant of SMA called MSMA.
First, a chaotic opposition-based learning strategy is used to improve population diversity: the search scope is expanded by generating opposite solutions perturbed by a chaotic operator. Second, two adaptive parameter control strategies are proposed to better balance exploitation and exploration. Finally, a spiral search strategy is introduced to enhance the global exploration ability of the algorithm and avoid falling into local optima. To verify the superiority of MSMA, 13 functions with variable dimensions and 10 functions with fixed dimensions were used for testing. The differences between the algorithms were also analyzed using the Wilcoxon test and the Friedman test. Moreover, two engineering optimization problems were used to further verify the performance of MSMA.

The remainder of this paper is organized as follows. Section 2 reviews the basic SMA. Section 3 describes the proposed MSMA in detail. In Section 4, the effectiveness of the proposed improvement strategies and the superiority of the improved algorithm are verified using classical test functions, and MSMA is then applied to two engineering design problems. The main reasons for the success of MSMA are discussed in Section 5. Finally, conclusions and future work are given in Section 6.

2. Slime Mould Algorithm

In this section, the basic procedure of SMA is described. SMA works by simulating the behavioral and morphological changes of slime mould during the foraging process. The mathematical model of the slime mould is as follows:

| (1) |

where $t$ denotes the current iteration, $X_{best}^{t}$ denotes the best individual, $X_{A}^{t}$ and $X_{B}^{t}$ are two individuals randomly selected from the population at iteration $t$, $vb$ is a parameter in the range $[-a, a]$, $vc$ is a variable decreasing from 1 to 0, and $W$ denotes the weight of the slime mould. $p$ is a variable calculated by the following formula:

| (2) |

where $S(i)$ is the fitness of the $i$th individual $X$, $NP$ is the population size, and $DF$ is the best fitness found so far.

The formula for a is as follows:

| (3) |

where tmax is the maximum number of iterations.

The formula of W is calculated as follows:

| (4) |

| (5) |

where *condition* indicates that the individual ranks in the top half of the population by fitness, and $bF$ and $\omega F$ denote the best and worst fitness in the current population, respectively.

The mathematical formula for updating the position of the slime mould is as follows:

| (6) |

where $ub$ and $lb$ are the upper and lower bounds of the search space, respectively.
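The update rules of this section can be sketched in Python as follows. This is a minimal illustration and not the authors' MATLAB implementation: it assumes the commonly published form of the basic SMA [36] for equations (1)–(6), namely $a = \operatorname{arctanh}(1 - t/t_{max})$, $p = \tanh\lvert S(i) - DF\rvert$, and logarithmic weights that reinforce the top half of the ranking. The function name `sma_step`, the restart probability `z = 0.03`, the sampling of `vc` from $[-b, b]$ with $b = 1 - t/t_{max}$, and the clipping to the bounds are assumptions made for illustration.

```python
import math
import random


def sma_step(pop, fitness, best_pos, best_fit, t, t_max, lb, ub, z=0.03, rng=None):
    """Return the next population for one basic SMA iteration (minimisation).

    t runs from 1 to t_max; z is an assumed random-restart probability.
    """
    rng = rng or random.Random()
    n, dim = len(pop), len(pop[0])
    order = sorted(range(n), key=lambda i: fitness[i])   # best individual first
    bF, wF = fitness[order[0]], fitness[order[-1]]
    span = bF - wF

    # Weights W: the top half of the ranking is reinforced, the rest damped.
    W = [[1.0] * dim for _ in range(n)]
    for rank, i in enumerate(order):
        ratio = (bF - fitness[i]) / span if span != 0 else 0.0
        for j in range(dim):
            term = rng.random() * math.log10(ratio + 1.0)
            W[i][j] = 1.0 + term if rank < n / 2 else 1.0 - term

    a = math.atanh(1.0 - t / t_max)   # range of vb, shrinks to 0 at t = t_max
    b = 1.0 - t / t_max               # range of vc, shrinks linearly to 0

    new_pop = []
    for i in range(n):
        if rng.random() < z:          # occasional random re-initialisation
            x = [lb + rng.random() * (ub - lb) for _ in range(dim)]
        else:
            p = math.tanh(abs(fitness[i] - best_fit))
            x = []
            for j in range(dim):
                vb = rng.uniform(-a, a)
                vc = rng.uniform(-b, b)
                A, B = rng.randrange(n), rng.randrange(n)
                if rng.random() < p:  # move relative to the best position
                    x.append(best_pos[j] + vb * (W[i][j] * pop[A][j] - pop[B][j]))
                else:                 # contract around the current position
                    x.append(vc * pop[i][j])
        new_pop.append([min(max(v, lb), ub) for v in x])
    return new_pop
```

Passing a seeded `random.Random` instance as `rng` makes a run reproducible, which is convenient when comparing variants of the update rule.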

3. Proposed MSMA

To overcome the shortcomings of the basic SMA, this paper proposes three improvement strategies to enhance its performance. A chaotic opposition-based learning strategy is used to enhance population diversity, a self-adaptive strategy is used to balance the exploitation and exploration abilities of the algorithm, and a spiral search strategy is used to prevent the algorithm from falling into local optima. The three improvement strategies are described in detail below.

3.1. Chaotic Opposition-Based Learning Strategy

Opposition-based learning (OBL) is a technique proposed by Tizhoosh [42] that has attracted attention in computing in recent years. It has been shown that the probability that the opposite solution is closer to the global optimum is nearly 50% higher than that of the current solution. OBL enhances population diversity mainly by generating the opposite position of each individual, evaluating both the original and the opposite individuals, and retaining the better ones in the next generation. The OBL formula is as follows:

| (7) |

where $X_{i}^{o}$ is the opposite solution corresponding to $X_{i}^{t}$.

The opposite solution generated by the basic OBL is not necessarily better than the current solution. Chaotic mapping is random and ergodic, so it can help generate new solutions and further enhance population diversity. Therefore, this paper combines chaotic mapping with OBL and proposes a chaotic opposition-based learning strategy. The mathematical model is as follows:

| (8) |

where $X_{i}^{To}$ denotes the opposite solution corresponding to the $i$th individual in the population and $\lambda_{i}$ is the corresponding chaotic mapping value.
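The two steps described in this subsection, reflecting an individual and then keeping the better of the pair, can be sketched as follows. The plain OBL reflection `lb + ub - x` is the standard form. The chaotic variant `lb + ub - lam * x` is only a hypothetical illustration of how a chaotic value $\lambda_i$ could perturb the reflection and is not claimed to be equation (8). The Sine map is taken from Table 3; the helper names and the clipping to the bounds are assumptions.

```python
import math


def opposite(x, lb, ub):
    """Plain OBL: reflect each coordinate about the centre of [lb, ub]."""
    return [lb + ub - v for v in x]


def sine_map(x0, n, a=4.0):
    """Sine map from Table 3: x_{i+1} = (a / 4) * sin(pi * x_i)."""
    seq, x = [], x0
    for _ in range(n):
        x = a / 4.0 * math.sin(math.pi * x)
        seq.append(x)
    return seq


def chaotic_opposite(x, lb, ub, lam):
    """Hypothetical chaotic OBL: scale the individual by lam before reflecting."""
    return [min(max(lb + ub - lam * v, lb), ub) for v in x]


def keep_better(x, x_opp, f):
    """Greedy selection for minimisation: retain whichever solution is fitter."""
    return x if f(x) <= f(x_opp) else x_opp


if __name__ == "__main__":
    lam = sine_map(0.7, 1)[0]                     # one chaotic value
    x = [4.0, 4.0]
    x_opp = chaotic_opposite(x, -5.0, 5.0, lam)
    print(keep_better(x, x_opp, lambda v: sum(c * c for c in v)))
```

With `lam = 1` the hypothetical chaotic variant reduces to plain OBL, so the chaotic value acts as a perturbation of the reflected point.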

3.2. Self-Adaptive Strategy

3.2.1. New Nonlinear Decreasing Strategy

During the iterative optimization of SMA, the parameter a has an important impact on the balance between exploitation and exploration. In SMA, a decreases rapidly in the early iterations and slowly in the later iterations. A small a in the early stage is not conducive to global exploration. Therefore, to better balance exploitation and exploration, strengthening global exploration in the early stage and local convergence in the late stage, a new nonlinear decreasing strategy is proposed in this paper. The new definition of parameter a is as follows:

| (9) |

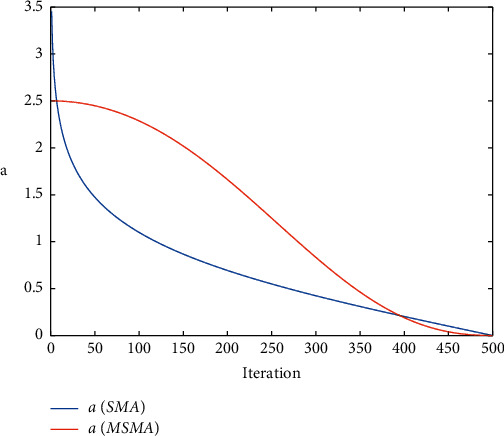

To illustrate the effect of the new strategy, we compare it with the parameter change strategy in SMA, as shown in Figure 1. The new strategy decreases slowly in the early stage, which leaves more time for global exploration. In the late iterations, it decreases faster than the original strategy, which helps SMA accelerate exploitation.

Figure 1.

Comparison of parameter a.
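The behavior of the original schedule described above can be checked numerically. The sketch below assumes that equation (3) has the form used in the original SMA [36], $a = \operatorname{arctanh}(1 - t/t_{max})$, with $t$ starting at 1; it does not reproduce the new strategy of equation (9). The function name `a_original` is hypothetical.

```python
import math


def a_original(t, t_max):
    """Original SMA schedule (assumed form): a = arctanh(1 - t / t_max), 1 <= t <= t_max."""
    return math.atanh(1.0 - t / t_max)


if __name__ == "__main__":
    t_max = 500
    for t in (1, 50, 250, 450, 500):
        print(t, round(a_original(t, t_max), 3))
    # The drop over the first 10% of iterations is much larger than over the last 10%.
    early = a_original(1, t_max) - a_original(50, t_max)
    late = a_original(450, t_max) - a_original(500, t_max)
    print(early > late)
```

Under this assumed form, most of the decrease in a happens within the first few dozen iterations, which is the behavior the new nonlinear strategy is meant to correct.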

3.2.2. Linear Decreasing Selection Range

In equation (1), the original SMA randomly selects two individuals from the whole population. This is not conducive to the convergence of the algorithm in later iterations. To enhance the convergence of SMA, the selection range in equation (1) is reduced as the number of iterations increases. The selection range parameter SR is defined as follows:

| (10) |

where SRmax and SRmin are the maximum and minimum selection ranges, respectively.
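A linearly decreasing selection range can be sketched as below. The interpolation from `sr_max` at the start to `sr_min` at `t_max` is assumed from the name of the strategy. How SR restricts the choice of the two individuals is not spelled out in the text, so `pick_pair`, which draws both indices from the best `SR` individuals of a best-first ranking, is a hypothetical reading.

```python
import random


def selection_range(t, t_max, sr_max, sr_min):
    """Linearly shrink the selection range from sr_max (t = 0) to sr_min (t = t_max)."""
    return sr_max - (sr_max - sr_min) * t / t_max


def pick_pair(order, sr, rng):
    """Pick indices A and B among the best `sr` individuals (assumed usage of SR).

    `order` lists population indices sorted from best to worst fitness.
    """
    k = max(2, min(len(order), int(round(sr))))
    return rng.choice(order[:k]), rng.choice(order[:k])


if __name__ == "__main__":
    rng = random.Random(1)
    order = list(range(30))                        # already sorted best-first
    for t in (0, 250, 500):
        sr = selection_range(t, 500, sr_max=30, sr_min=15)
        print(t, sr, pick_pair(order, sr, rng))
```

The values `sr_max=30` and `sr_min=15` in the usage example are placeholders, not settings taken from the paper.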

3.3. Spiral Search Strategy

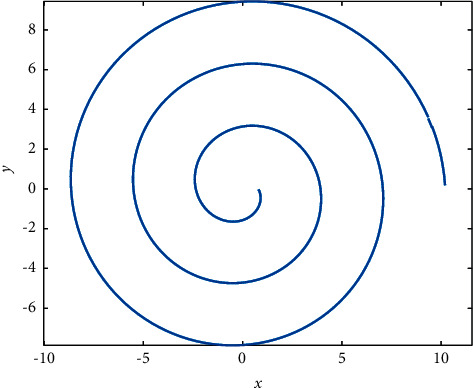

In order to better balance the exploitation and exploration of SMA, this paper introduces a spiral search strategy. The spiral search diagram is shown in Figure 2.

Figure 2.

Spiral search schematic.

As can be seen from Figure 2, the spiral search strategy can expand the search scope and better improve the global exploration performance. The mathematical formula of the spiral search strategy is shown as follows:

| (11) |

The spiral search strategy and the original strategy are chosen randomly according to the probability to update the population location. Thus, the modified position updating formula is as follows:

| (12) |
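The random choice between a spiral move and the original update can be sketched as follows. The spiral used here is the logarithmic spiral familiar from the whale optimization algorithm, swapped in purely for illustration; the exact form of equation (11) and the selection probability may differ. The names `spiral_move` and `choose_update`, the shape constant `b`, and `prob = 0.5` are assumptions.

```python
import math
import random


def spiral_move(x, best, rng, b=1.0):
    """Logarithmic spiral around `best` (illustrative, whale-optimizer style)."""
    l = rng.uniform(-1.0, 1.0)
    factor = math.exp(b * l) * math.cos(2.0 * math.pi * l)
    return [abs(bj - xj) * factor + bj for xj, bj in zip(x, best)]


def choose_update(x, best, original_update, rng, prob=0.5):
    """With probability `prob` use the spiral move, otherwise the original update."""
    if rng.random() < prob:
        return spiral_move(x, best, rng)
    return original_update(x)


if __name__ == "__main__":
    rng = random.Random(0)
    best = [0.0, 0.0]
    x = [1.0, -2.0]
    shrink = lambda v: [0.5 * c for c in v]        # stand-in for the SMA update
    print(choose_update(x, best, shrink, rng))
```

Because the spiral step scales with the distance to the best position, individuals far from the best solution take large exploratory moves while nearby individuals make small adjustments.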

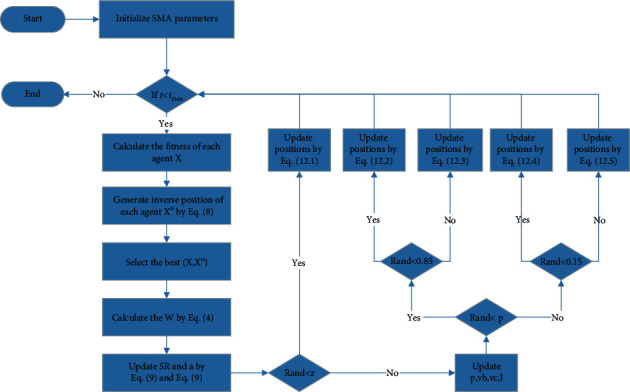

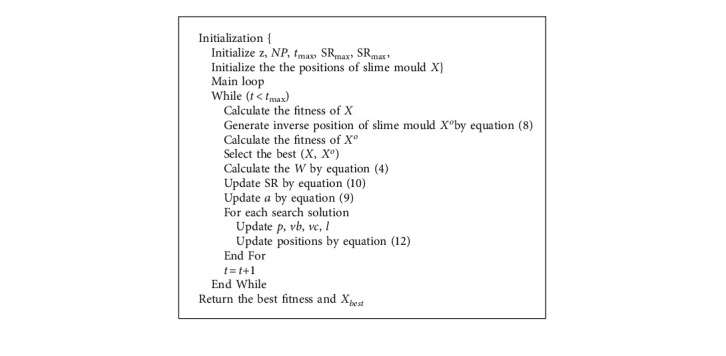

The pseudocode and flowchart of MSMA are shown in Algorithm 1 and Figure 3, respectively.

Figure 3.

Flowchart of MSMA.

3.4. Computational Complexity Analysis

MSMA mainly consists of the following components: initialization, fitness evaluation, opposite-population fitness evaluation, ranking, weight update, and position update. Let Np denote the number of slime moulds, D the dimension of the function, and T the maximum number of iterations. The computational complexity of initialization is O(D); that of fitness evaluation and opposite-population fitness evaluation is O(Np + Np); that of ranking is O(Np · log Np); that of the weight update is O(Np · D); and that of the position update is O(Np · D). Therefore, the total complexity of MSMA is O(D + T · Np · (2 + log Np + D)).

4. Numerical Experiment and Analysis

In this section, various experiments are performed to verify the performance of MSMA. The experiments mainly include twenty-three classical test functions and two engineering design optimization problems.

4.1. Benchmark Test Functions and Parameter Settings

The 23 benchmark test functions include 7 unimodal, 6 multimodal, and 10 fixed-dimension multimodal functions. The unimodal functions have only one global optimum and are usually used to verify the exploitation capability of an algorithm. The multimodal functions have multiple local optima and are therefore often used to examine an algorithm's exploration ability and its ability to escape from local optima. These benchmark functions are listed in Table 1.

Table 1.

The classic benchmark functions (M: multimodal, U: unimodal, S: separable, N: nonseparable, dim: dimension, range: limits of search space, optimum: global optimal value).

| Test function | Name | Type | Dim | Range | Optimum |
|---|---|---|---|---|---|
| $f_{01}(x)=\sum_{i=1}^{D} x_i^2$ | Sphere | US | 30 | [−100, 100] | 0 |
| $f_{02}(x)=\sum_{i=1}^{D}\lvert x_i\rvert+\prod_{i=1}^{D}\lvert x_i\rvert$ | Schwefel 2.22 | UN | 30 | [−10, 10] | 0 |
| $f_{03}(x)=\sum_{i=1}^{D}\left(\sum_{j=1}^{i} x_j\right)^2$ | Schwefel 1.2 | UN | 30 | [−100, 100] | 0 |
| $f_{04}(x)=\max_i\{\lvert x_i\rvert,\ 1\le i\le D\}$ | Schwefel 2.21 | US | 30 | [−100, 100] | 0 |
| $f_{05}(x)=\sum_{i=1}^{D-1}\left[100\left(x_{i+1}-x_i^2\right)^2+(x_i-1)^2\right]$ | Rosenbrock | UN | 30 | [−30, 30] | 0 |
| $f_{06}(x)=\sum_{i=1}^{D}\left(\lfloor x_i+0.5\rfloor\right)^2$ | Step | US | 30 | [−100, 100] | 0 |
| $f_{07}(x)=\sum_{i=1}^{D} i\,x_i^4+\mathrm{random}[0,1)$ | Quartic | US | 30 | [−1.28, 1.28] | 0 |
| $f_{08}(x)$ | Schwefel 2.26 | MS | 30 | [−500, 500] | −418.9829 × D |
| $f_{09}(x)=\sum_{i=1}^{D}\left(x_i^2-10\cos(2\pi x_i)+10\right)$ | Rastrigin | MS | 30 | [−5.12, 5.12] | 0 |
| $f_{10}(x)$ | Ackley | MS | 30 | [−32, 32] | 8.8818e−16 |
| $f_{11}(x)$ | Griewank | MN | 30 | [−600, 600] | 0 |
| $f_{12}(x)$ | Penalized | MN | 30 | [−50, 50] | 0 |
| $f_{13}(x)=0.1\left\{\sin^2(3\pi x_1)+\sum_{i=1}^{D}(x_i-1)^2\left[1+\sin^2(3\pi x_i)\right]+(x_D-1)^2\left[1+\sin^2(2\pi x_D)\right]\right\}+\sum_{i=1}^{D}u(x_i,5,100,4)$ | Penalized2 | MN | 30 | [−50, 50] | 0 |
| $f_{14}(x)=\left(\frac{1}{500}+\sum_{j=1}^{25}\frac{1}{j+\sum_{i=1}^{2}(x_i-a_{ij})^6}\right)^{-1}$ | Foxholes | MS | 2 | [−65.53, 65.53] | 0.998004 |
| $f_{15}(x)=\sum_{i=1}^{11}\left[a_i-\frac{x_1(b_i^2+b_i x_2)}{b_i^2+b_i x_3+x_4}\right]^2$ | Kowalik | MS | 4 | [−5, 5] | 0.0003075 |
| $f_{16}(x)=4x_1^2-2.1x_1^4+\frac{1}{3}x_1^6+x_1x_2-4x_2^2+4x_2^4$ | Six-Hump Camel Back | MN | 2 | [−5, 5] | −1.03163 |
| $f_{17}(x)=\left(x_2-\frac{5.1}{4\pi^2}x_1^2+\frac{5}{\pi}x_1-6\right)^2+10\left(1-\frac{1}{8\pi}\right)\cos x_1+10$ | Branin | MS | 2 | [−5, 10]×[0, 15] | 0.398 |
| $f_{18}(x)=\left[1+(x_1+x_2+1)^2(19-14x_1+3x_1^2-14x_2+6x_1x_2+3x_2^2)\right]\times\left[30+(2x_1-3x_2)^2(18-32x_1+12x_1^2+48x_2-36x_1x_2+27x_2^2)\right]$ | Goldstein Price | MN | 2 | [−5, 5] | 3 |
| $f_{19}(x)=-\sum_{i=1}^{4}c_i\exp\left(-\sum_{j=1}^{3}a_{ij}(x_j-p_{ij})^2\right)$ | Hartman 3 | MN | 3 | [0, 1] | −3.8628 |
| $f_{20}(x)=-\sum_{i=1}^{4}c_i\exp\left(-\sum_{j=1}^{6}a_{ij}(x_j-p_{ij})^2\right)$ | Hartman 6 | MN | 6 | [0, 1] | −3.32 |
| $f_{21}(x)=-\sum_{i=1}^{5}\left[(X-a_i)(X-a_i)^T+c_i\right]^{-1}$ | Langermann 5 | MN | 4 | [0, 10] | −10.1532 |
| $f_{22}(x)=-\sum_{i=1}^{7}\left[(X-a_i)(X-a_i)^T+c_i\right]^{-1}$ | Langermann 7 | MN | 4 | [0, 10] | −10.4029 |
| $f_{23}(x)=-\sum_{i=1}^{10}\left[(X-a_i)(X-a_i)^T+c_i\right]^{-1}$ | Langermann 10 | MN | 4 | [0, 10] | −10.5364 |
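To make the table concrete, three of the simpler entries (Sphere, Schwefel 2.22, and Rastrigin) can be written directly from their formulas. This is a small sketch for experimentation; the Python function names are hypothetical and the remaining functions are omitted.

```python
import math


def sphere(x):
    """f01: sum of squares; unimodal, separable, minimum 0 at the origin."""
    return sum(v * v for v in x)


def schwefel_2_22(x):
    """f02: sum of absolute values plus their product; minimum 0 at the origin."""
    return sum(abs(v) for v in x) + math.prod(abs(v) for v in x)


def rastrigin(x):
    """f09: highly multimodal; minimum 0 at the origin."""
    return sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) + 10.0 for v in x)


if __name__ == "__main__":
    origin = [0.0] * 30
    print(sphere(origin), schwefel_2_22(origin), rastrigin(origin))
```

Evaluating each function at the origin is a quick sanity check that an implementation matches the optimum listed in the table.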

The experimental results of MSMA are compared with those of eight other algorithms: MPA [43], MFO [44], SSA [32], EO [45], MRFO [46], HHO [47], GSA [24], and GWO [31]. To ensure fairness, all algorithms were run on the same platform (Windows 10, AMD R7 4800U, 16 GB RAM) and programmed in MATLAB R2016b. In the experiments, the population size NP is 30 and the maximum number of iterations tmax is 500. The results of 50 independent runs of each experiment are recorded. The parameters of the comparison algorithms were set according to the original literature, as shown in Table 2.

Table 2.

Algorithm parameter setting.

| Algorithm | Parameters |
|---|---|
| MPA | P = 0.5, FADs = 0.2 |
| MFO | a = −1 (linearly decreased over iterations) |
| SSA | P = 0.2, C = 0.2 |
| EO | $a_1$ = 2, $a_2$ = 1 |
| MRFO | S = 2 |
| HHO | - |
| GSA | $G_0$ = 100, Rnorm = 2, Rpower = 1 |
| GWO | a = 2 (linearly decreased over iterations) |

4.2. Chaotic Mapping Selection Test

The chaotic opposition-based learning strategy proposed in this paper combines chaotic mapping with the opposition-based learning mechanism. To determine which chaotic map to use, 10 chaotic maps are each combined with the opposition-based learning mechanism. The SMA using the chaotic map with ID 1 is named SMA-C1, and the SMA variants using the other chaotic maps are named similarly. The details of the chaotic maps are shown in Table 3. Table 4 lists the results of each algorithm on the benchmark test functions.

Table 3.

Ten-chaotic-mapping information.

| ID | Mapping type | Function |
|---|---|---|
| 1 | Chebyshev map | $x_{i+1}=\cos\left(i\cos^{-1}(x_i)\right)$ |
| 2 | Circle map | $x_{i+1}=\operatorname{mod}\left(x_i+b-\frac{a}{2\pi}\sin(2\pi x_i),\ 1\right)$, $a=0.5$ and $b=0.2$ |
| 3 | Gauss map | |
| 4 | Iterative map | $x_{i+1}=\sin\left(\frac{a\pi}{x_i}\right)$, $a=0.7$ |
| 5 | Logistic map | $x_{i+1}=a x_i(1-x_i)$, $a=4$ |
| 6 | Piecewise map | |
| 7 | Sine map | $x_{i+1}=\frac{a}{4}\sin(\pi x_i)$, $a=4$ |
| 8 | Singer map | $x_{i+1}=\mu\left(7.86x_i-23.32x_i^2+28.75x_i^3-13.301875x_i^4\right)$, $\mu=1.07$ |
| 9 | Sinusoidal map | $x_{i+1}=a x_i^2\sin(\pi x_i)$, $a=2.3$ |
| 10 | Tent map | |

Table 4.

Results of 10 chaotic maps on all benchmark functions.

| F(x) | Measure | SMA | SMA-C1 | SMA-C2 | SMA-C3 | SMA-C4 | SMA-C5 | SMA-C6 | SMA-C7 | SMA-C8 | SMA-C9 | SMA-C10 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| F1 | Mean | 4.25E − 317 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 |

| Std | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | |

| F2 | Mean | 3.31E − 139 | 0.00E + 00 | 1.19E − 318 | 0.00E + 00 | 6.21E − 317 | 1.78E − 288 | 0.00E + 00 | 0.00E + 00 | 2.90E − 319 | 1.60E − 311 | 0.00E + 00 |

| Std | 2.34E − 138 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | |

| F3 | Mean | 1.58E − 322 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 |

| Std | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | |

| F4 | Mean | 2.40E − 140 | 1.69E − 285 | 3.76E − 264 | 1.59E − 281 | 1.08E − 304 | 5.84E − 294 | 1.25E − 312 | 3.718E − 310 | 5.50E − 290 | 1.50E − 272 | 5.79E − 305 |

| Std | 1.70E − 139 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | |

| F5 | Mean | 5.97E + 00 | 6.86E − 01 | 6.46E − 01 | 7.08E − 01 | 1.16E − 01 | 6.26E − 01 | 1.19E + 00 | 2.28E − 01 | 1.05E − 01 | 1.19E + 00 | 1.15E − 01 |

| Std | 1.04E + 01 | 4.07E + 00 | 3.94E + 00 | 3.73E + 00 | 1.65E − 01 | 3.95E + 00 | 5.40E + 00 | 9.78E − 01 | 1.57E − 01 | 5.19E + 00 | 2.79E − 01 | |

| F6 | Mean | 5.39E − 03 | 1.10E − 04 | 1.17E − 04 | 1.13E − 04 | 1.22E − 04 | 1.14E − 04 | 1.06E − 04 | 1.12E − 04 | 1.08E − 04 | 1.13E − 04 | 1.06E − 04 |

| Std | 3.23E − 03 | 5.56E − 05 | 5.07E − 05 | 6.74E − 05 | 6.10E − 05 | 5.74E − 05 | 5.23E − 05 | 6.79E − 05 | 4.55E − 05 | 5.95E − 05 | 4.87E − 05 | |

| F7 | Mean | 1.99E − 04 | 4.01E − 05 | 2.88E − 05 | 4.32E − 05 | 3.62E − 05 | 3.56E − 05 | 2.57E − 05 | 4.28E − 05 | 3.39E − 05 | 2.78E − 05 | 4.95E − 05 |

| Std | 1.75E − 04 | 3.02E − 05 | 2.42E − 05 | 3.80E − 05 | 3.41E − 05 | 2.83E − 05 | 2.68E − 05 | 4.63E − 05 | 3.00E − 05 | 2.78E − 05 | 5.01E − 05 | |


| F8 | Mean | −1.26E + 04 | −1.26E + 04 | −1.26E + 04 | −1.26E + 04 | −1.26E + 04 | −1.26E + 04 | −1.26E + 04 | −1.26E + 04 | −1.26E + 04 | −1.26E + 04 | −1.26E + 04 |

| Std | 4.05E − 01 | 4.05E − 02 | 2.71E − 02 | 2.43E − 02 | 4.94E − 02 | 2.13E − 02 | 3.62E − 02 | 2.41E − 02 | 3.10E − 02 | 2.86E − 02 | 2.15E − 02 | |

| F9 | Mean | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 |

| Std | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | |

| F10 | Mean | 8.88E − 16 | 8.88E − 16 | 8.88E − 16 | 8.88E − 16 | 8.88E − 16 | 8.88E − 16 | 8.88E − 16 | 8.88E − 16 | 8.88E − 16 | 8.88E − 16 | 8.88E − 16 |

| Std | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | |

| F11 | Mean | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 |

| Std | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | |

| F12 | Mean | 3.33E − 03 | 1.48E − 05 | 1.39E − 04 | 1.17E − 05 | 1.08E − 05 | 9.13E − 06 | 1.19E − 05 | 1.78E − 04 | 1.15E − 05 | 1.94E − 05 | 1.54E − 05 |

| Std | 5.08E − 03 | 2.24E − 05 | 9.02E − 04 | 6.63E − 06 | 8.30E − 06 | 5.52E − 06 | 1.10E − 05 | 8.02E − 04 | 8.61E − 06 | 4.11E − 05 | 1.60E − 05 | |

| F13 | Mean | 7.07E − 03 | 9.56E − 04 | 3.75E − 04 | 1.45E − 03 | 3.80E − 04 | 3.69E − 04 | 1.43E − 03 | 1.60E − 04 | 1.70E − 03 | 1.09E − 03 | 5.73E − 04 |

| Std | 1.53E − 02 | 2.86E − 03 | 1.57E − 03 | 4.18E − 03 | 1.59E − 03 | 1.66E − 03 | 4.60E − 03 | 1.19E − 04 | 3.94E − 03 | 3.06E − 03 | 2.18E − 03 | |


| F14 | Mean | 9.98E − 01 | 9.98E − 01 | 9.98E − 01 | 9.98E − 01 | 9.98E − 01 | 9.98E − 01 | 9.98E − 01 | 9.98E − 01 | 9.98E − 01 | 9.98E − 01 | 9.98E − 01 |

| Std | 1.42E − 12 | 7.21E − 14 | 4.64E − 14 | 9.73E − 14 | 4.15E − 14 | 6.11E − 14 | 1.12E − 13 | 8.66E − 14 | 4.45E − 14 | 6.47E − 14 | 7.89E − 14 | |

| F15 | Mean | 5.85E − 04 | 4.35E − 04 | 4.20E − 04 | 4.85E − 04 | 4.58E − 04 | 4.28E − 04 | 4.34E − 04 | 4.61E − 04 | 4.44E − 04 | 4.19E − 04 | 4.31E − 04 |

| Std | 2.70E − 04 | 1.56E − 04 | 1.18E − 04 | 2.37E − 04 | 1.90E − 04 | 1.87E − 04 | 1.38E − 04 | 2.21E − 04 | 1.83E − 04 | 1.86E − 04 | 1.69E − 04 | |

| F16 | Mean | −1.03E + 00 | −1.03E + 00 | −1.03E + 00 | −1.03E + 00 | −1.03E + 00 | −1.03E + 00 | −1.03E + 00 | −1.03E + 00 | −1.03E + 00 | −1.03E + 00 | −1.03E + 00 |

| Std | 1.58E − 09 | 1.33E − 09 | 5.15E − 11 | 6.70E − 11 | 8.03E − 11 | 2.64E − 10 | 9.28E − 11 | 4.88E − 11 | 9.90E − 11 | 2.38E − 10 | 5.83E − 11 | |

| F17 | Mean | 3.98E − 01 | 3.98E − 01 | 3.98E − 01 | 3.98E − 01 | 3.98E − 01 | 3.98E − 01 | 3.98E − 01 | 3.98E − 01 | 3.98E − 01 | 3.98E − 01 | 3.98E − 01 |

| Std | 2.66E − 08 | 2.45E − 09 | 4.31E − 09 | 9.68E − 10 | 2.80E − 09 | 1.25E − 09 | 2.99E − 09 | 3.22E − 09 | 2.14E − 09 | 1.76E − 09 | 1.17E − 09 | |

| F18 | Mean | 3.00E + 00 | 3.00E + 00 | 3.00E + 00 | 3.00E + 00 | 3.00E + 00 | 3.00E + 00 | 3.00E + 00 | 3.00E + 00 | 3.00E + 00 | 3.00E + 00 | 3.00E + 00 |

| Std | 7.54E − 11 | 5.22E − 07 | 6.07E − 07 | 6.42E − 07 | 3.34E − 07 | 3.80E − 07 | 1.44E − 06 | 2.70E − 07 | 4.03E − 07 | 4.52E − 07 | 7.69E − 07 | |

| F19 | Mean | −3.86E + 00 | −3.86E + 00 | −3.86E + 00 | −3.86E + 00 | −3.86E + 00 | −3.86E + 00 | −3.86E + 00 | −3.86E + 00 | −3.86E + 00 | −3.86E + 00 | −3.86E + 00 |

| Std | 2.77E − 07 | 1.96E − 06 | 1.88E − 06 | 1.71E − 06 | 2.17E − 06 | 9.06E − 07 | 1.29E − 06 | 1.79E − 06 | 3.01E − 06 | 1.77E − 06 | 1.41E − 06 | |

| F20 | Mean | −3.24E + 00 | −3.26E + 00 | −3.25E + 00 | −3.26E + 00 | −3.26E + 00 | −3.24E + 00 | −3.24E + 00 | −3.25E + 00 | −3.25E + 00 | −3.25E + 00 | −3.23E + 00 |

| Std | 5.72E − 02 | 6.10E − 02 | 6.03E − 02 | 6.21E − 02 | 6.10E − 02 | 5.86E − 02 | 5.61E − 02 | 6.02E − 02 | 6.13E − 02 | 5.98E − 02 | 5.49E − 02 | |

| F21 | Mean | −1.02E + 01 | −1.02E + 01 | −1.02E + 01 | −1.02E + 01 | −1.02E + 01 | −1.02E + 01 | −1.02E + 01 | −1.02E + 01 | −1.02E + 01 | −1.02E + 01 | −1.02E + 01 |

| Std | 3.18E − 04 | 7.93E − 06 | 1.19E − 05 | 8.78E − 06 | 1.22E − 05 | 1.77E − 05 | 1.56E − 05 | 9.99E − 06 | 1.19E − 05 | 1.93E − 05 | 8.11E − 06 | |

| F22 | Mean | −1.04E + 01 | −1.04E + 01 | −1.04E + 01 | −1.04E + 01 | −1.04E + 01 | −1.04E + 01 | −1.04E + 01 | −1.04E + 01 | −1.04E + 01 | −1.04E + 01 | −1.04E + 01 |

| Std | 2.97E − 04 | 8.60E − 06 | 1.65E − 05 | 2.86E − 05 | 9.23E − 06 | 9.51E − 06 | 9.87E − 06 | 1.46E − 05 | 6.55E − 06 | 9.76E − 06 | 1.43E − 05 | |

| F23 | Mean | −1.05E + 01 | −1.05E + 01 | −1.05E + 01 | −1.05E + 01 | −1.05E + 01 | −1.05E + 01 | −1.05E + 01 | −1.05E + 01 | −1.05E + 01 | −1.05E + 01 | −1.05E + 01 |

| Std | 3.10E − 04 | 1.08E − 05 | 7.86E − 06 | 1.40E − 05 | 8.20E − 06 | 5.95E − 06 | 8.98E − 06 | 5.22E − 06 | 9.32E − 06 | 6.80E − 06 | 1.50E − 05 | |

As shown in Tables 4 and 5, SMA-C1 through SMA-C10 all achieve better results than SMA. This indicates that all 10 chaotic opposition-based learning strategies can improve SMA performance. SMA-C6 achieved the best rank on the unimodal functions F1–F7, which indicates that the Piecewise map best enhances the exploitation ability of SMA. On the multimodal functions F8–F13, SMA-C5 ranked first, which shows that the Logistic map can enhance the exploration ability of SMA. SMA-C4 ranked first on the fixed-dimensional functions F14–F23, which indicates that the Iterative map can enhance the ability of SMA to avoid local optima. Although SMA-C7 with the Sine map is not the best performer in any of the three categories, it ranks first overall. This indicates that the Sine map is the most effective at improving the comprehensive performance of SMA. Therefore, in this paper, the Sine map is chosen to generate the chaotic mapping values for the chaotic opposition-based learning strategy.

Table 5.

Friedman test results for ten chaotic mappings.

| F(x) | SMA-C1 | SMA-C2 | SMA-C3 | SMA-C4 | SMA-C5 | SMA-C6 | SMA-C7 | SMA-C8 | SMA-C9 | SMA-C10 | SMA |

|---|---|---|---|---|---|---|---|---|---|---|---|

| F1–F7 | 4.00 | 5.29 | 5.00 | 4.71 | 5.00 | 2.29 | 3.14 | 3.14 | 5.29 | 2.86 | 11.00 |

| F8–F13 | 4.17 | 3.17 | 2.83 | 3.00 | 1.33 | 4.00 | 3.17 | 3.83 | 4.00 | 3.00 | 6.00 |

| F14–F23 | 5.90 | 6.40 | 6.00 | 4.70 | 5.00 | 7.30 | 5.00 | 5.80 | 5.40 | 5.70 | 8.80 |

| F1–F23 | 4.87 | 5.22 | 4.87 | 4.26 | 4.04 | 4.91 | 3.96 | 4.48 | 5.00 | 4.13 | 8.74 |
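The entries of Table 5 can be read as mean ranks, and this reading can be checked with a short sketch. It assumes that, for each function, the algorithms are ranked by mean result with ties sharing the average rank, and that each row averages those ranks over the functions in its group. Under that assumption the F1–F23 row is the function-count-weighted mean of the three group rows. The helper names are hypothetical.

```python
def average_ranks(values):
    """Rank values ascending (1 = best for minimisation); ties share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1          # average of positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def overall_rank(group_ranks, group_sizes):
    """Combine per-group mean ranks into one mean rank over all functions."""
    total = sum(group_sizes)
    return sum(r * n for r, n in zip(group_ranks, group_sizes)) / total


if __name__ == "__main__":
    sizes = [7, 6, 10]                        # F1-F7, F8-F13, F14-F23
    print(round(overall_rank([3.14, 3.17, 5.00], sizes), 2))   # SMA-C7 column
    print(round(overall_rank([5.00, 1.33, 5.00], sizes), 2))   # SMA-C5 column
```

For the SMA-C7 and SMA-C5 columns this reproduces the overall values 3.96 and 4.04 reported in the last row of the table.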

4.3. Improvement Strategy Effectiveness Test

As described in Section 3, three strategies are used in this paper to improve SMA performance. To evaluate the impact of each strategy on SMA, three SMA-derived algorithms (MSMA-1, MSMA-2, and MSMA-3) are developed according to Table 6. COBL represents the chaotic opposition-based learning strategy, SA represents the self-adaptive strategy, and SS represents the spiral search strategy. Table 7 lists the results of each algorithm on the benchmark test functions.

Table 6.

MSMA variants with different improvement strategies.

| Algorithm | COBL | SA | SS |

|---|---|---|---|

| SMA | No | No | No |

| MSMA-1 | Yes | No | No |

| MSMA-2 | No | Yes | No |

| MSMA-3 | No | No | Yes |

| MSMA | Yes | Yes | Yes |

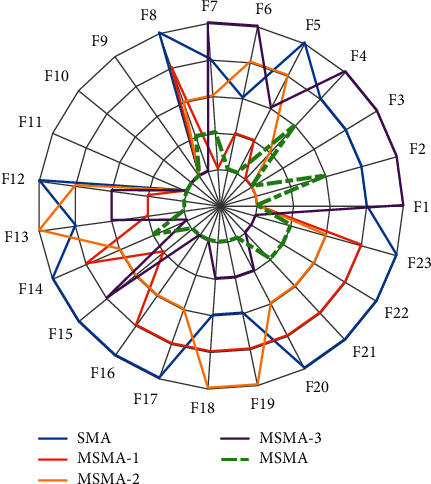

As shown in Tables 7 and 8, MSMA with all three improvement strategies performs best overall. The three SMA-derived algorithms also rank higher than SMA. According to the overall Friedman ranks in Table 8, the order from best to worst is MSMA-1 (2.52), MSMA-3 (2.57), and MSMA-2 (2.83), so the impact of the three strategies on performance, from largest to smallest, is COBL > SS > SA. Further analysis shows that MSMA-1 performs best on the unimodal functions F1–F7, which indicates that COBL can significantly improve the local search ability of SMA. MSMA-3 achieves good results on the multimodal functions F8–F13 and the fixed-dimension functions F14–F23. This shows that SS can improve the global exploration capability of SMA, allowing the algorithm to escape from local optima. MSMA-2 outperforms SMA on all three types of functions, which indicates that the adaptive strategy balances the exploitation and exploration capabilities of SMA. It is worth noting that MSMA-3 performs worse than SMA on the unimodal functions. This is because the spiral search strategy expands the search around each individual, which weakens exploitation. However, combining the three strategies significantly improves the comprehensive performance of MSMA, further illustrating the importance of balancing exploitation and exploration. Finally, to show the performance of each algorithm more visually, a radar plot is drawn based on the ranking of each algorithm. As shown in Figure 4, the smaller the area enclosed by a curve, the better the performance. MSMA has the smallest enclosed area, which means that it performs best, whereas SMA has the largest area.

Table 7.

The statistical results of MSMA-derived algorithms on classical test functions.

| F(x) | Measure | SMA | MSMA-1 | MSMA-2 | MSMA-3 | MSMA |

|---|---|---|---|---|---|---|

| F1 | Mean | 4.25E − 317 | 0.00E + 00 | 0.00E + 00 | 4.38E − 227 | 0.00E + 00 |

| Std | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | |

| F2 | Mean | 3.31E − 139 | 0.00E + 00 | 0.00E + 00 | 8.07E − 118 | 8.78E − 169 |

| Std | 2.34E − 138 | 0.00E + 00 | 0.00E + 00 | 5.68E − 117 | 0.00E + 00 | |

| F3 | Mean | 1.58E − 322 | 0.00E + 00 | 0.00E + 00 | 2.52E − 213 | 0.00E + 00 |

| Std | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | |

| F4 | Mean | 2.40E − 140 | 4.23E − 295 | 3.84E − 277 | 9.79E − 116 | 1.37E − 166 |

| Std | 1.70E − 139 | 0.00E + 00 | 0.00E + 00 | 6.90E − 115 | 0.00E + 00 | |

| F5 | Mean | 5.97E + 00 | 7.32E − 01 | 5.00E + 00 | 2.20E + 00 | 3.10E − 03 |

| Std | 1.04E + 01 | 4.10E + 00 | 1.04E + 01 | 7.36E + 00 | 8.40E − 03 | |

| F6 | Mean | 5.39E − 03 | 1.06E − 04 | 8.33E − 03 | 1.65E − 02 | 2.62E − 05 |

| Std | 3.23E − 03 | 5.69E − 05 | 2.52E − 02 | 5.78E − 02 | 1.77E − 04 | |

| F7 | Mean | 1.99E − 04 | 4.04E − 05 | 1.60E − 04 | 2.15E − 04 | 4.48E − 05 |

| Std | 1.75E − 04 | 3.94E − 05 | 1.90E − 04 | 2.77E − 04 | 4.83E − 05 | |


| F8 | Mean | −1.26E + 04 | −1.26E + 04 | −1.26E + 04 | −1.26E + 04 | −1.26E + 04 |

| Std | 4.05E − 01 | 2.77E − 02 | 1.68E − 02 | 1.44E − 03 | 1.70E − 02 | |

| F9 | Mean | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 |

| Std | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | |

| F10 | Mean | 8.88E − 16 | 8.88E − 16 | 8.88E − 16 | 8.88E − 16 | 8.88E − 16 |

| Std | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | |

| F11 | Mean | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 |

| Std | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | |

| F12 | Mean | 3.33E − 03 | 1.08E − 05 | 5.43E − 04 | 1.20E − 04 | 7.92E − 08 |

| Std | 5.08E − 03 | 6.97E − 06 | 1.54E − 03 | 6.35E − 04 | 9.56E − 08 | |

| F13 | Mean | 7.07E − 03 | 1.03E − 03 | 2.20E − 02 | 3.05E − 03 | 8.62E − 04 |

| Std | 1.53E − 02 | 3.63E − 03 | 9.07E − 02 | 6.13E − 03 | 3.64E − 03 | |


| F14 | Mean | 9.98E − 01 | 9.98E − 01 | 9.98E − 01 | 9.98E − 01 | 9.98E − 01 |

| Std | 1.42E − 12 | 4.63E − 14 | 5.84E − 16 | 3.28E − 16 | 2.21E − 16 | |

| F15 | Mean | 5.85E − 04 | 4.64E − 04 | 5.50E − 04 | 5.54E − 04 | 4.28E − 04 |

| Std | 2.70E − 04 | 1.83E − 04 | 2.72E − 04 | 3.76E − 04 | 3.26E − 04 | |

| F16 | Mean | −1.03E + 00 | −1.03E + 00 | −1.03E + 00 | −1.03E + 00 | − 1.03E + 00 |

| Std | 1.58E − 09 | 5.23E − 11 | 1.11E − 12 | 4.60E − 16 | 4.54E − 16 | |

| F17 | Mean | 3.98E − 01 | 3.98E − 01 | 3.98E − 01 | 3.98E − 01 | 3.98E − 01 |

| Std | 2.66E − 08 | 7.65E − 10 | 1.76E − 11 | 3.36E − 16 | 3.36E − 16 | |

| F18 | Mean | 3.00E + 00 | 3.00E + 00 | 3.00E + 00 | 3.00E + 00 | 3.00E + 00 |

| Std | 7.54E − 11 | 2.91E − 07 | 8.52E − 07 | 2.52E − 14 | 2.01E − 14 | |

| F19 | Mean | −3.86E + 00 | −3.86E + 00 | −3.86E + 00 | −3.86E + 00 | −3.86E + 00 |

| Std | 2.77E − 07 | 2.46E − 06 | 6.61E − 06 | 3.26E − 09 | 1.75E − 12 | |

| F20 | Mean | −3.24E + 00 | −3.25E + 00 | −3.26E + 00 | −3.26E + 00 | −3.27E + 00 |

| Std | 5.72E − 02 | 6.45E − 02 | 6.17E − 02 | 6.12E − 02 | 5.95E − 02 | |

| F21 | Mean | −1.02E + 01 | −1.02E + 01 | −1.02E + 01 | −1.02E + 01 | −1.02E + 01 |

| Std | 3.18E − 04 | 2.08E − 05 | 1.04E − 07 | 3.63E − 14 | 5.13E − 14 | |

| F22 | Mean | −1.04E + 01 | −1.04E + 01 | −1.04E + 01 | −1.04E + 01 | −1.04E + 01 |

| Std | 2.97E − 04 | 1.32E − 05 | 1.43E − 07 | 9.10E − 15 | 1.05E − 13 | |

| F23 | Mean | −1.05E + 01 | −1.05E + 01 | −1.05E + 01 | −1.05E + 01 | −1.05E + 01 |

| Std | 3.10E − 04 | 9.74E − 06 | 7.08E − 08 | 8.74E − 15 | 6.67E − 14 | |

Table 8.

Friedman test results for MSMA-derived algorithms.

| F(x) | SMA | MSMA-1 | MSMA-2 | MSMA-3 | MSMA |

|---|---|---|---|---|---|

| F1–F7 | 4.00 | 1.29 | 2.29 | 4.71 | 1.71 |

| F8–F13 | 2.83 | 1.83 | 2.50 | 1.67 | 1.17 |

| F14–F23 | 4.60 | 3.80 | 3.40 | 1.60 | 1.40 |

| F1–F23 | 3.96 | 2.52 | 2.83 | 2.57 | 1.43 |

Figure 4.

Ranking of improvement strategies.

4.4. Comparison and Analysis of Optimization Results

Tables 9–12 list the optimization results of each algorithm on F1–F13 for Dim = 30, 100, 500, and 1000, respectively. Table 13 shows the results of the ten algorithms on the fixed-dimensional functions F14–F23. MSMA achieves better results than the other algorithms on most of the test functions.

Table 9.

Comparison of results on F1–F13 with 30D.

| F(x) | Measure | GWO | GSA | HHO | MRFO | EO | SSA | MFO | MPA | SMA | MSMA |

|---|---|---|---|---|---|---|---|---|---|---|---|

| F1 | Mean | 1.29E − 27 | 2.10E − 05 | 8.36E − 96 | 0.00E + 00 | 7.66E − 41 | 2.06E − 07 | 1.61E + 03 | 1.54E − 30 | 1.29E − 288 | 0.00E + 00 |

| Std | 1.17E − 27 | 1.49E − 04 | 5.77E − 95 | 0.00E + 00 | 2.81E − 40 | 2.97E − 07 | 3.70E + 03 | 9.64E − 30 | 0.00E + 00 | 0.00E + 00 | |

| F2 | Mean | 9.44E − 17 | 1.51E − 01 | 3.66E − 49 | 2.96E − 207 | 5.93E − 24 | 2.31E + 00 | 3.59E + 01 | 9.52E − 18 | 5.27E − 147 | 2.89E − 164 |

| Std | 7.62E − 17 | 4.92E − 01 | 2.52E − 48 | 0.00E + 00 | 5.77E − 24 | 1.64E + 00 | 2.24E + 01 | 2.27E − 17 | 3.73E − 146 | 0.00E + 00 | |

| F3 | Mean | 1.19E − 05 | 1.03E + 03 | 5.16E − 77 | 0.00E + 00 | 5.04E − 09 | 1.44E + 03 | 1.91E + 04 | 1.74E − 16 | 1.43E − 293 | 0.00E + 00 |

| Std | 2.55E − 05 | 3.61E + 02 | 2.49E − 76 | 0.00E + 00 | 1.45E − 08 | 7.65E + 02 | 1.24E + 04 | 8.29E − 16 | 0.00E + 00 | 0.00E + 00 | |

| F4 | Mean | 6.03E − 07 | 6.94E + 00 | 1.68E − 48 | 4.53E − 199 | 4.75E − 10 | 1.26E + 01 | 6.96E + 01 | 5.88E − 16 | 1.32E − 143 | 6.72E − 161 |

| Std | 7.38E − 07 | 1.98E + 00 | 8.39E − 48 | 0.00E + 00 | 1.34E − 09 | 4.24E + 00 | 9.70E + 00 | 1.82E − 15 | 9.33E − 143 | 4.75E − 160 | |

| F5 | Mean | 2.70E + 01 | 5.78E + 01 | 9.11E − 03 | 2.27E + 01 | 2.54E + 01 | 3.10E + 02 | 4.81E + 06 | 2.55E + 01 | 9.10E + 00 | 2.56E − 02 |

| Std | 7.05E − 01 | 6.24E + 01 | 1.25E − 02 | 5.56E − 01 | 1.98E − 01 | 4.22E + 02 | 1.92E + 07 | 5.57E − 01 | 1.16E + 01 | 1.31E − 01 | |

| F6 | Mean | 8.33E − 01 | 2.42E − 16 | 1.62E − 04 | 7.89E − 11 | 6.81E − 06 | 2.73E − 07 | 2.82E + 03 | 1.54E − 03 | 5.00E − 03 | 7.93E − 07 |

| Std | 4.26E − 01 | 1.47E − 16 | 1.72E − 04 | 1.99E − 10 | 4.88E − 06 | 6.06E − 07 | 6.09E + 03 | 1.09E − 02 | 3.01E − 03 | 1.70E − 06 | |

| F7 | Mean | 2.22E − 03 | 8.92E − 02 | 1.16E − 04 | 1.28E − 04 | 1.48E − 03 | 1.77E − 01 | 3.50E + 00 | 1.40E − 03 | 1.88E − 04 | 4.79E − 05 |

| Std | 1.36E − 03 | 4.46E − 02 | 9.09E − 05 | 1.02E − 04 | 8.31E − 04 | 7.02E − 02 | 5.92E + 00 | 8.75E − 04 | 1.79E − 04 | 4.17E − 05 | |

| F8 | Mean | −5.96E + 03 | −2.69E + 03 | −1.24E + 04 | −8.17E + 03 | −8.96E + 03 | −7.43E + 03 | −8.66E + 03 | −9.09E + 03 | −1.26E + 04 | −1.26E + 04 |

| Std | 8.24E + 02 | 5.09E + 02 | 9.08E + 02 | 7.49E + 02 | 5.89E + 02 | 6.96E + 02 | 8.90E + 02 | 4.94E + 02 | 3.77E − 01 | 1.77E − 02 | |

| F9 | Mean | 2.90E + 00 | 2.93E + 01 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 5.24E + 01 | 1.62E + 02 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 |

| Std | 4.52E + 00 | 7.30E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 1.78E + 01 | 3.66E + 01 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | |

| F10 | Mean | 1.03E − 13 | 1.19E − 08 | 8.88E − 16 | 8.88E − 16 | 8.63E − 15 | 2.53E + 00 | 1.56E + 01 | 1.53E − 15 | 8.88E − 16 | 8.88E − 16 |

| Std | 1.69E − 14 | 2.71E − 09 | 0.00E + 00 | 0.00E + 00 | 2.23E − 15 | 6.39E − 01 | 6.60E + 00 | 3.18E − 15 | 0.00E + 00 | 0.00E + 00 | |

| F11 | Mean | 5.42E − 03 | 2.82E + 01 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 1.58E − 02 | 2.08E + 01 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 |

| Std | 1.05E − 02 | 7.27E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 1.15E − 02 | 3.76E + 01 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | |

| F12 | Mean | 4.47E − 02 | 1.92E + 00 | 1.21E − 05 | 2.80E − 12 | 6.27E − 07 | 7.79E + 00 | 4.32E + 01 | 8.04E − 05 | 4.75E − 03 | 7.58E − 08 |

| Std | 2.49E − 02 | 1.17E + 00 | 2.22E − 05 | 4.49E − 12 | 5.39E − 07 | 3.23E + 00 | 1.66E + 02 | 3.07E − 04 | 7.41E − 03 | 1.10E − 07 | |

| F13 | Mean | 6.57E − 01 | 9.94E + 00 | 1.52E − 04 | 2.25E + 00 | 1.90E − 02 | 1.49E + 01 | 8.20E + 06 | 4.90E − 02 | 7.96E − 03 | 8.61E − 04 |

| Std | 2.35E − 01 | 7.66E + 00 | 3.62E − 04 | 1.23E + 00 | 3.19E − 02 | 1.43E + 01 | 5.80E + 07 | 6.74E − 02 | 1.22E − 02 | 3.63E − 03 | |

Table 12.

Comparison of results on F1–F13 with 1000D.

| F(x) | Measure | GWO | GSA | HHO | MRFO | EO | SSA | MFO | MPA | SMA | MSMA |

|---|---|---|---|---|---|---|---|---|---|---|---|

| F1 | Mean | 6.10E − 02 | 4.74E + 03 | 1.22E − 93 | 0.00E + 00 | 7.49E − 21 | 1.03E + 04 | 4.67E + 04 | 6.72E − 25 | 0.00E + 00 | 0.00E + 00 |

| Std | 7.28E − 01 | 1.46E + 295 | 3.02E − 48 | 2.16E − 199 | 4.37E − 13 | 1.19E + 03 | 1.34E + 03 | 1.16E + 03 | 8.28E − 01 | 0.00E + 00 | |

| F2 | Mean | 4.62E − 01 | 3.12E + 01 | 1.97E − 47 | 0.00E + 00 | 2.26E − 13 | 2.77E + 01 | 3.70E + 02 | 1.49E + 02 | 3.00E + 00 | 1.50E − 01 |

| Std | 1.52E + 06 | 7.43E + 06 | 3.46E − 28 | 0.00E + 00 | 1.49E + 05 | 5.41E + 06 | 1.79E + 07 | 2.88E − 09 | 2.69E − 74 | 5.21E − 01 | |

| F3 | Mean | 2.88E + 05 | 4.35E + 06 | 2.44E − 27 | 0.00E + 00 | 1.14E + 05 | 2.88E + 06 | 3.39E + 06 | 1.49E − 08 | 1.90E − 73 | 0.00E + 00 |

| Std | 7.76E + 01 | 3.29E + 01 | 7.44E − 49 | 1.34E − 192 | 8.19E + 01 | 4.40E + 01 | 9.95E + 01 | 1.90E − 12 | 1.60E − 69 | 0.00E + 00 | |

| F4 | Mean | 3.54E + 00 | 1.54E + 00 | 4.14E − 48 | 0.00E + 00 | 1.34E + 01 | 2.16E + 00 | 1.91E − 01 | 3.78E − 12 | 1.13E − 68 | 2.65E − 159 |

| Std | 1.05E + 03 | 2.09E + 07 | 4.21E − 01 | 9.96E + 02 | 9.97E + 02 | 1.17E + 08 | 1.25E + 10 | 9.97E + 02 | 5.41E + 02 | 1.86E − 158 | |

| F5 | Mean | 2.85E + 01 | 2.10E + 06 | 5.40E − 01 | 3.97E − 01 | 9.14E − 02 | 1.10E + 07 | 3.23E + 08 | 1.70E − 01 | 4.05E + 02 | 2.46E + 01 |

| Std | 2.03E + 02 | 1.24E + 05 | 7.29E − 03 | 1.71E + 02 | 2.06E + 02 | 2.35E + 05 | 2.72E + 06 | 1.84E + 02 | 4.44E + 01 | 4.94E + 01 | |

| F6 | Mean | 2.93E + 00 | 4.93E + 03 | 1.10E − 02 | 4.29E + 00 | 1.85E + 00 | 1.02E + 04 | 5.03E + 04 | 3.31E + 00 | 6.32E + 01 | 1.48E + 00 |

| Std | 1.57E − 01 | 5.57E + 03 | 1.80E − 04 | 1.56E − 04 | 5.42E − 03 | 1.73E + 03 | 1.97E + 05 | 1.83E − 03 | 6.49E − 04 | 2.84E + 00 | |

| F7 | Mean | 3.28E − 02 | 4.96E + 02 | 1.74E − 04 | 1.32E − 04 | 2.79E − 03 | 1.94E + 02 | 5.88E + 03 | 9.24E − 04 | 5.76E − 04 | 4.72E − 05 |

| Std | −8.59E + 04 | −1.43E + 04 | −4.19E + 05 | −1.08E + 05 | −1.14E + 05 | −9.00E + 04 | −8.84E + 04 | −1.31E + 05 | −4.19E + 05 | 3.96E − 05 | |

| F8 | Mean | 1.43E + 04 | 2.81E + 03 | 3.16E + 01 | 6.45E + 03 | 6.62E + 03 | 8.66E + 03 | 6.69E + 03 | 4.36E + 03 | 3.88E + 02 | −4.19E + 05 |

| Std | 1.96E + 02 | 6.64E + 03 | 0.00E + 00 | 0.00E + 00 | 2.18E − 13 | 7.64E + 03 | 1.54E + 04 | 0.00E + 00 | 0.00E + 00 | 1.66E + 02 | |

| F9 | Mean | 4.95E + 01 | 2.03E + 02 | 0.00E + 00 | 0.00E + 00 | 5.97E − 13 | 1.70E + 02 | 2.14E + 02 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 |

| Std | 1.77E − 02 | 1.10E + 01 | 8.88E − 16 | 8.88E − 16 | 2.12E − 12 | 1.45E + 01 | 2.04E + 01 | 3.88E − 14 | 8.88E − 16 | 0.00E + 00 | |

| F10 | Mean | 2.67E − 03 | 1.62E − 01 | 0.00E + 00 | 0.00E + 00 | 1.13E − 12 | 1.74E − 01 | 2.06E − 01 | 3.40E − 14 | 0.00E + 00 | 8.88E − 16 |

| Std | 4.99E − 02 | 2.05E + 04 | 0.00E + 00 | 0.00E + 00 | 1.58E − 16 | 2.14E + 03 | 2.45E + 04 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | |

| F11 | Mean | 7.67E − 02 | 2.65E + 02 | 0.00E + 00 | 0.00E + 00 | 5.54E − 17 | 8.74E + 01 | 4.61E + 02 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 |

| Std | 1.24E + 00 | 1.23E + 05 | 1.63E − 06 | 5.39E − 01 | 8.10E − 01 | 1.09E + 07 | 3.06E + 10 | 6.16E − 01 | 5.61E − 03 | 0.00E + 00 | |

| F12 | Mean | 2.71E − 01 | 8.72E + 04 | 2.53E − 06 | 3.34E − 02 | 1.76E − 02 | 3.80E + 06 | 1.10E + 09 | 2.37E − 02 | 1.15E − 02 | 1.79E − 05 |

| Std | 5.04E + 01 | 3.00E + 06 | 4.45E − 04 | 4.97E + 01 | 4.91E + 01 | 3.58E + 07 | 2.24E + 10 | 4.85E + 01 | 1.31E + 00 | 3.38E − 05 | |

| F13 | Mean | 1.52E + 00 | 1.15E + 06 | 6.53E − 04 | 2.92E − 02 | 2.73E − 01 | 7.45E + 06 | 1.02E + 09 | 2.94E − 01 | 2.38E + 00 | 2.17E − 02 |

| Std | 1.63E + 00 | 1.02E + 06 | 1.03E − 03 | 1.28E − 02 | 2.92E − 01 | 8.94E + 06 | 1.32E + 09 | 2.65E − 01 | 4.92E + 00 | 5.43E − 02 | |

Table 13.

Comparison of results on F14–F23 with fixed dimension.

| F(x) | Measure | GWO | GSA | HHO | MRFO | EO | SSA | MFO | MPA | SMA | MSMA |

|---|---|---|---|---|---|---|---|---|---|---|---|

| F14 | Mean | 4.43E + 00 | 5.16E + 00 | 1.27E + 00 | 9.98E − 01 | 9.98E − 01 | 1.30E + 00 | 2.52E + 00 | 9.98E − 01 | 9.98E − 01 | 9.98E − 01 |

| Std | 4.15E + 00 | 3.11E + 00 | 1.04E + 00 | 1.05E − 16 | 1.79E − 16 | 6.10E − 01 | 2.03E + 00 | 1.57E − 16 | 6.40E − 13 | 3.45E − 16 | |

| F15 | Mean | 2.85E − 03 | 4.64E − 03 | 3.73E − 04 | 3.83E − 04 | 2.39E − 03 | 2.42E − 03 | 1.21E − 03 | 3.07E − 04 | 5.97E − 04 | 3.54E − 04 |

| Std | 6.54E − 03 | 2.85E − 03 | 1.35E − 04 | 2.51E − 04 | 6.06E − 03 | 5.37E − 03 | 1.10E − 03 | 5.03E − 15 | 3.14E − 04 | 1.85E − 04 | |

| F16 | Mean | −1.03E + 00 | −1.03E + 00 | −1.03E + 00 | −1.03E + 00 | −1.03E + 00 | −1.03E + 00 | −1.03E + 00 | −1.03E + 00 | −1.03E + 00 | −1.03E + 00 |

| Std | 2.25E − 08 | 4.31E − 16 | 4.15E − 09 | 2.50E − 16 | 3.14E − 16 | 1.75E − 14 | 2.24E − 16 | 6.22E − 16 | 1.23E − 09 | 4.51E − 16 | |

| F17 | Mean | 3.98E − 01 | 3.98E − 01 | 3.98E − 01 | 3.98E − 01 | 3.98E − 01 | 3.98E − 01 | 3.98E − 01 | 3.98E − 01 | 3.98E − 01 | 3.98E − 01 |

| Std | 9.38E − 07 | 3.36E − 16 | 7.42E − 06 | 3.36E − 16 | 3.36E − 16 | 9.04E − 15 | 3.36E − 16 | 3.36E − 16 | 3.17E − 08 | 3.36E − 16 | |

| F18 | Mean | 3.00E + 00 | 3.00E + 00 | 3.00E + 00 | 3.00E + 00 | 3.00E + 00 | 3.00E + 00 | 3.00E + 00 | 3.00E + 00 | 3.00E + 00 | 3.00E + 00 |

| Std | 3.52E − 05 | 4.61E − 15 | 3.63E − 07 | 1.13E − 15 | 1.37E − 15 | 2.61E − 13 | 1.57E − 15 | 2.12E − 15 | 1.36E − 10 | 2.49E − 14 | |

| F19 | Mean | −3.86E + 00 | −3.86E + 00 | −3.86E + 00 | −3.86E + 00 | −3.86E + 00 | −3.86E + 00 | −3.86E + 00 | −3.86E + 00 | −3.86E + 00 | −3.86E + 00 |

| Std | 2.67E − 03 | 2.69E − 15 | 2.65E − 03 | 3.12E − 15 | 2.90E − 15 | 7.50E − 11 | 3.14E − 15 | 2.78E − 15 | 2.47E − 07 | 3.29E − 12 | |

| F20 | Mean | −3.26E + 00 | −3.32E + 00 | −3.07E + 00 | −3.26E + 00 | −3.26E + 00 | −3.23E + 00 | −3.22E + 00 | −3.32E + 00 | −3.25E + 00 | −3.26E + 00 |

| Std | 7.69E − 02 | 3.11E − 16 | 1.27E − 01 | 6.00E − 02 | 6.58E − 02 | 5.79E − 02 | 6.39E − 02 | 1.10E − 11 | 5.92E − 02 | 6.05E − 02 | |

| F21 | Mean | −9.24E + 00 | −6.44E + 00 | −5.23E + 00 | −8.42E + 00 | −8.94E + 00 | −7.44E + 00 | −6.39E + 00 | −1.02E + 01 | −1.02E + 01 | −1.02E + 01 |

| Std | 2.14E + 00 | 3.63E + 00 | 9.15E − 01 | 2.44E + 00 | 2.50E + 00 | 3.20E + 00 | 3.36E + 00 | 4.01E − 11 | 2.08E − 04 | 1.08E − 13 | |

| F22 | Mean | −1.02E + 01 | −1.01E + 01 | −5.12E + 00 | −8.78E + 00 | −8.59E + 00 | −9.18E + 00 | −7.49E + 00 | −1.04E + 01 | −1.04E + 01 | −1.04E + 01 |

| Std | 1.05E + 00 | 1.42E + 00 | 8.74E − 01 | 2.51E + 00 | 3.01E + 00 | 2.67E + 00 | 3.39E + 00 | 2.83E − 11 | 2.88E − 04 | 5.61E − 14 | |

| F23 | Mean | −1.04E + 01 | −1.02E + 01 | −5.07E + 00 | −9.13E + 00 | −9.73E + 00 | −7.13E + 00 | −7.74E + 00 | −1.05E + 01 | −1.05E + 01 | −1.05E + 01 |

| Std | 1.15E + 00 | 1.56E + 00 | 3.83E − 01 | 2.40E + 00 | 2.22E + 00 | 3.67E + 00 | 3.51E + 00 | 4.75E − 11 | 3.33E − 04 | 7.30E − 14 |

Specifically, for the unimodal functions F1–F7, MSMA achieves satisfactory results in both low and high dimensions. MSMA stably attains the theoretical optimum of F1 and F3 in every tested dimension. In comparison, SMA does not reach the theoretical optimum on any of these functions and performs worse than MSMA. Comparing the results across dimensions, MSMA's performance degrades only slightly as the dimension increases, which indicates excellent local exploitation capability. For the multimodal functions F8–F13, MSMA steadily attains the theoretical optimal values on F9–F11 for Dim = 30, 100, 500, and 1000. In low dimensions (Dim = 30, 100), MSMA performs best on F8. As the dimension increases, MSMA ranks second on F8, behind only HHO. MSMA has the best overall performance on the multimodal functions, indicating that the improvement strategies greatly enhance the global exploration capability of SMA.

Fixed-dimensional functions are often used to test the ability of an algorithm to balance exploitation and exploration. Judged by mean and standard deviation, MSMA performs best in six of the ten functions (F14, F16, F17, and F21–F23). In addition, MSMA provides a better solution than SMA in all fixed-dimensional functions. Therefore, we conclude that the proposed MSMA balances exploitation and exploration well and is effective at avoiding local optima.

4.5. Convergence and Stability Analysis

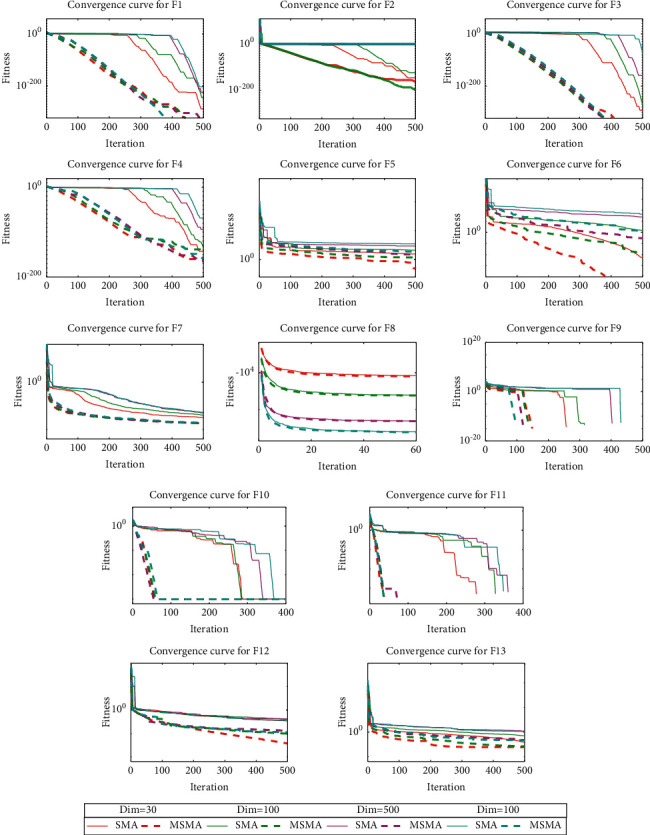

To analyze the convergence performance of MSMA, convergence curves are plotted for each dimension, as shown in Figure 5. The curves show that MSMA converges faster and to a higher accuracy than SMA in every dimensional case. In addition, neither the convergence speed nor the convergence accuracy of MSMA degrades much as the dimensionality increases. Therefore, the improvement strategies proposed in this paper effectively improve the convergence speed of SMA and yield better optimization results.

Figure 5.

Convergence curves of SMA and MSMA on 13 test functions in 4 different dimensional cases.
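As a rough illustration of how a convergence curve of this kind can be derived, the sketch below turns the best fitness recorded in each iteration into a best-so-far curve for a minimization problem. The function names and the sample values are hypothetical and are not taken from the paper's implementation.

```python
from itertools import accumulate


def convergence_curve(best_per_iteration):
    """Return the best-so-far fitness after each iteration (minimization)."""
    return list(accumulate(best_per_iteration, min))


def iterations_to_reach(curve, tolerance):
    """Return the 1-based iteration at which the curve first drops to
    `tolerance` or below, or None if it never does."""
    for iteration, value in enumerate(curve, start=1):
        if value <= tolerance:
            return iteration
    return None


# Hypothetical best fitness found in each of five iterations.
history = [5.0, 3.0, 4.0, 1.0, 2.0]
curve = convergence_curve(history)
print(curve)
print(iterations_to_reach(curve, 3.0))
```

Comparing `iterations_to_reach` for two algorithms at the same tolerance gives a simple numerical counterpart to reading convergence speed off the plotted curves.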

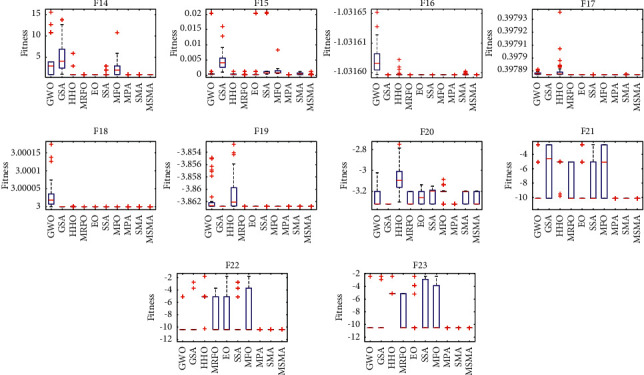

To analyze the distribution of MSMA's results on the fixed-dimensional functions, box plots were drawn. Figure 6 shows that the maximum, minimum, and median values of MSMA are almost identical in most of the test functions. In particular, MSMA produces no outliers on F14 and F17. These results indicate that MSMA is more stable than the comparison algorithms.

Figure 6.

Boxplot analysis for fixed-dimensional functions.
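The quantities read off a box plot can also be computed directly. The following is a minimal sketch, assuming the common Tukey convention in which points farther than 1.5 times the interquartile range from the quartiles count as outliers; the paper does not state which convention its plots use, and the function name and sample data are hypothetical.

```python
from statistics import median, quantiles


def box_summary(values):
    """Five-number summary plus Tukey outliers for one algorithm's runs."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return {
        "min": min(values),
        "q1": q1,
        "median": median(values),
        "q3": q3,
        "max": max(values),
        "outliers": [v for v in values if v < low_fence or v > high_fence],
    }


# Hypothetical final fitness values from nine independent runs.
runs = [1, 2, 3, 4, 5, 6, 7, 8, 100]
summary = box_summary(runs)
print(summary["median"], summary["outliers"])
```

A run set whose minimum, median, and maximum nearly coincide and whose outlier list is empty corresponds to the flat, outlier-free boxes described for MSMA.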

4.6. Statistical Test

To statistically validate the differences between MSMA and the comparison algorithms, Wilcoxon's rank-sum test [48] and Friedman test [49] were used for testing.

Table 14 presents the statistical results at a significance level of 0.05. The symbols “+/=/−” indicate that MSMA is better than, similar to, or worse than the comparison algorithm, respectively. Summed over the nine comparison algorithms, MSMA obtains 91/23/3, 96/18/3, 94/18/5, and 93/19/5 on F1–F13 with Dim = 30, 100, 500, and 1000, and 66/15/9 on F14–F23, confirming that MSMA is significantly superior to the other algorithms in most cases.

Table 14.

Statistical results of Wilcoxon's rank-sum test.

| MSMA vs. (+/=/−) | F1–F13 (Dim = 30) | F1–F13 (Dim = 100) | F1–F13 (Dim = 500) | F1–F13 (Dim = 1000) | F14–F23 |
|---|---|---|---|---|---|
| GWO | 12/1/0 | 13/0/0 | 13/0/0 | 13/0/0 | 8/2/0 |
| GSA | 12/0/1 | 13/0/0 | 13/0/0 | 13/0/0 | 6/2/2 |
| HHO | 10/3/0 | 5/5/3 | 4/5/4 | 4/5/4 | 9/1/1 |
| MRFO | 6/7/0 | 7/6/0 | 6/6/1 | 6/6/1 | 6/2/2 |
| EO | 11/2/0 | 11/2/0 | 11/2/0 | 11/2/0 | 6/3/1 |
| SSA | 12/0/1 | 13/0/0 | 13/0/0 | 13/0/0 | 10/0/0 |
| MFO | 13/0/0 | 13/0/0 | 13/0/0 | 13/0/0 | 6/2/2 |
| MPA | 8/4/1 | 11/2/0 | 11/2/0 | 11/2/0 | 6/3/1 |
| SMA | 7/6/0 | 10/3/0 | 10/3/0 | 9/4/0 | 10/0/0 |
| Sum | 91/23/3 | 96/18/3 | 94/18/5 | 93/19/5 | 66/15/9 |
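The +/=/− entries can be tallied along the following lines. This is a minimal sketch, assuming a two-sided rank-sum test with a normal approximation and no tie correction, and assuming that the direction of a significant difference is decided by comparing sample means (lower is better). The paper does not state these details, and the function names and sample data are hypothetical.

```python
import math


def rank_sum_p_value(x, y):
    """Two-sided Wilcoxon rank-sum p-value (normal approximation)."""
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y],
                    key=lambda item: item[0])
    rank_sum_x = 0.0
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        shared_rank = (i + j) / 2 + 1  # tied values share the average rank
        rank_sum_x += shared_rank * sum(
            1 for k in range(i, j + 1) if pooled[k][1] == 0)
        i = j + 1
    n, m = len(x), len(y)
    mu = n * (n + m + 1) / 2
    sigma = math.sqrt(n * m * (n + m + 1) / 12)
    z = (rank_sum_x - mu) / sigma
    return math.erfc(abs(z) / math.sqrt(2))


def verdict(msma_runs, other_runs, alpha=0.05):
    """Return '+', '=', or '-' from the point of view of MSMA."""
    if rank_sum_p_value(msma_runs, other_runs) >= alpha:
        return "="
    msma_mean = sum(msma_runs) / len(msma_runs)
    other_mean = sum(other_runs) / len(other_runs)
    return "+" if msma_mean < other_mean else "-"


# Hypothetical final errors from ten runs of each algorithm.
better = [float(v) for v in range(1, 11)]
worse = [float(v) for v in range(11, 21)]
print(verdict(better, worse), verdict(worse, better), verdict(better, better))
```

Counting the verdicts over all functions for one comparison algorithm yields one cell of the table, and summing the cells in a column yields the final row.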

Table 15 shows the Friedman test results for F1–F13 in different dimensions and for the fixed-dimensional functions F14–F23. MSMA ranks first in all cases. Therefore, MSMA can be considered to have the best overall performance among the compared algorithms.

Table 15.

Statistical results of the Friedman test.

| F(x) | Dim | Measure | GWO | GSA | HHO | MRFO | EO | SSA | MFO | MPA | SMA | MSMA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| F1–F13 | Dim = 30 | Friedman value | 7.23 | 7.85 | 3.27 | 2.96 | 4.85 | 8.31 | 9.62 | 5.08 | 3.65 | 2.19 |
| | | Friedman rank | 7 | 8 | 3 | 2 | 5 | 9 | 10 | 6 | 4 | 1 |
| | Dim = 100 | Friedman value | 7.00 | 8.54 | 2.58 | 2.96 | 5.31 | 8.62 | 9.69 | 5.15 | 3.19 | 1.96 |
| | | Friedman rank | 7 | 8 | 2 | 3 | 6 | 9 | 10 | 5 | 4 | 1 |
| | Dim = 500 | Friedman value | 7.00 | 8.31 | 2.35 | 3.04 | 5.85 | 8.54 | 9.69 | 4.69 | 3.27 | 2.27 |
| | | Friedman rank | 7 | 8 | 2 | 3 | 6 | 9 | 10 | 5 | 4 | 1 |
| | Dim = 1000 | Friedman value | 7.00 | 8.31 | 2.35 | 3.04 | 5.92 | 8.38 | 9.85 | 4.69 | 3.27 | 2.19 |
| | | Friedman rank | 7 | 8 | 2 | 3 | 6 | 9 | 10 | 5 | 4 | 1 |
| F14–F23 | Fixed dim. | Friedman value | 7.30 | 5.40 | 8.70 | 4.05 | 4.70 | 7.30 | 6.05 | 3.00 | 5.80 | 2.70 |
| | | Friedman rank | 8 | 5 | 10 | 3 | 4 | 8 | 7 | 2 | 6 | 1 |
| F1–F23 | All dim. | Friedman value | 7.24 | 7.52 | 3.99 | 3.11 | 4.83 | 8.17 | 9.14 | 4.76 | 3.94 | 2.30 |
| | | Friedman rank | 7 | 8 | 4 | 2 | 6 | 9 | 10 | 5 | 3 | 1 |
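Friedman values of this kind are average ranks: each algorithm is ranked on every function, and the ranks are then averaged per algorithm. The sketch below shows that computation under the assumption that lower results are better and that tied results share the average rank. The paper does not say which quantity it ranks, and the function names and the toy data are hypothetical.

```python
from statistics import mean


def average_ranks(values):
    """Rank values in ascending order (1 = best); ties share the mean rank."""
    order = sorted(range(len(values)), key=lambda idx: values[idx])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def friedman_mean_ranks(results):
    """results[f][a] is the result of algorithm a on function f."""
    per_function = [average_ranks(row) for row in results]
    n_algorithms = len(results[0])
    return [mean(row[a] for row in per_function) for a in range(n_algorithms)]


# Toy results for three algorithms (columns) on three functions (rows).
toy = [
    [3.0, 1.0, 2.0],
    [0.0, 0.0, 5.0],
    [9.0, 4.0, 4.0],
]
mean_ranks = friedman_mean_ranks(toy)
print(mean_ranks)
```

Sorting the algorithms by their mean rank then gives the Friedman rank row, with the smallest mean rank placed first.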

4.7. Engineering Design Problems

Engineering design optimization problems are often solved using metaheuristic algorithms. In this section, MSMA is used to solve two engineering design problems: the welded beam design problem and the tension/compression spring design problem. The results provided by MSMA are compared with those of other algorithms.

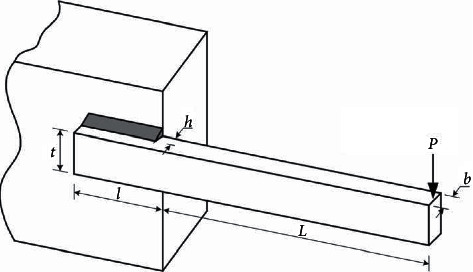

4.7.1. Welded Beam Design Problem

The welded beam design problem is a classical structural optimization problem, proposed by Coello [50]. As shown in Figure 7, the objective of this design problem is to minimize the manufacturing cost of the welded beam. The optimization variables include weld thickness h (x1), joint beam length l (x2), beam height t (x3), and beam thickness b (x4). The mathematical model of the welded beam design problem is as follows:

| (13) |

It is subject to

| (14) |

where

| (15) |

Figure 7.

Schematic of welded beam design problem.

The results of MSMA on this problem are compared with those of other algorithms in Table 16. MSMA attains the lowest cost among the compared algorithms, 1.724852, a value that IAPSO and HPSO also reach at the reported precision. The corresponding optimal variable values are [0.205729, 3.470488, 9.036623, 0.205729].

Table 16.

Comparison results of the MSMA for the welded beam design problem.

| Algorithm | x1 | x2 | x3 | x4 | Optimal cost |
|---|---|---|---|---|---|
| DDSCA [51] | 0.20516 | 3.4759 | 9.0797 | 0.20552 | 1.7305 |
| HGA [52] | 0.205712 | 3.470391 | 9.039693 | 0.205716 | 1.725236 |
| MGWO-III [53] | 0.205667 | 3.471899 | 9.036679 | 0.205733 | 1.724984 |
| IAPSO [54] | 0.205729 | 3.470886 | 9.036623 | 0.205729 | 1.724852 |
| TEO [55] | 0.205681 | 3.472305 | 9.035133 | 0.205796 | 1.725284 |
| hHHO-SCA [56] | 0.190086 | 3.696496 | 9.386343 | 0.204157 | 1.779032 |
| HPSO [57] | 0.20573 | 3.470489 | 9.036624 | 0.20573 | 1.724852 |
| CPSO [58] | 0.202369 | 3.544214 | 9.048210 | 0.205723 | 1.728024 |
| WCA [59] | 0.205728 | 3.470522 | 9.036620 | 0.205729 | 1.724856 |
| SaDN [60] | 0.2444 | 6.21787 | 8.2915 | 0.2444 | 1.9773 |
| MSMA | 0.205729 | 3.470488 | 9.036623 | 0.205729 | 1.724852 |
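The reported cost can be sanity-checked against the welded beam objective as it is commonly stated in the literature, f(x) = 1.10471 x1² x2 + 0.04811 x3 x4 (14 + x2). Because the paper's own equation is not reproduced in this text, that form is an assumption here, the function name is hypothetical, and the constraints are omitted, so the sketch checks only the cost and not feasibility.

```python
def welded_beam_cost(x):
    """Fabrication cost of the welded beam (commonly used objective).

    x = [h, l, t, b]: weld thickness, joint length, beam height,
    beam thickness. Constraints are not evaluated in this sketch.
    """
    x1, x2, x3, x4 = x
    weld_cost = 1.10471 * x1 ** 2 * x2
    bar_cost = 0.04811 * x3 * x4 * (14.0 + x2)
    return weld_cost + bar_cost


# Variable values reported for MSMA.
msma_solution = [0.205729, 3.470488, 9.036623, 0.205729]
cost = welded_beam_cost(msma_solution)
print(f"{cost:.4f}")
```

Under this assumed objective, the MSMA variable values reproduce the reported minimum cost to within rounding of the six-digit variables.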

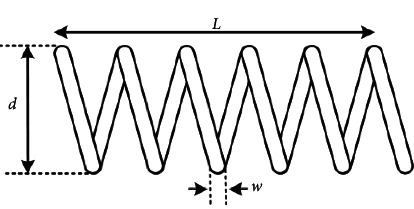

4.7.2. Tension/Compression Spring Design Problem

The tension/compression spring design problem is a mechanical engineering design optimization problem. As shown in Figure 8, the objective of this problem is to minimize the weight of the spring. It includes three optimization variables: wire diameter w (x1), average coil diameter d (x2), and the number of coils L (x3). The comparison results are shown in Table 17. The mathematical model of this problem is described below.

Figure 8.

Schematic of tension/compression spring design problem.

Table 17.

Comparison results of the MSMA for the tension/compression spring design problem.

| Algorithm | x1 | x2 | x3 | Optimal cost |
|---|---|---|---|---|
| GA3 [61] | 0.051989 | 0.363965 | 10.890522 | 0.0126810 |
| SaDN [60] | 0.051622 | 0.355105 | 11.384415 | 0.012665 |
| CPSO [58] | 0.051728 | 0.357644 | 11.244543 | 0.0126747 |
| CDE [62] | 0.051609 | 0.354714 | 11.410831 | 0.0126702 |
| DDSCA [51] | 0.052669 | 0.380673 | 10.0153 | 0.012688 |
| GSA [24] | 0.050276 | 0.323680 | 13.525410 | 0.0127022 |
| hHHO-SCA [56] | 0.054693 | 0.433378 | 7.891402 | 0.0128229 |
| AEO [63] | 0.051897 | 0.361751 | 10.879842 | 0.012667 |
| MVO [64] | 0.05251 | 0.3762 | 10.33513 | 0.012970 |
| MSMA | 0.051808 | 0.35959 | 11.210570 | 0.012665 |

| (16) |

The results showed that MSMA achieved the lowest cost of 0.012665 compared to GA3, CPSO, CDE, DDSCA, GSA, hHHO-SCA, AEO, and MVO. The corresponding values of the variables were [0.051747, 0.358090, 11.122192].

5. Discussion

In this section, the reasons for the superior performance of MSMA are discussed. The results in Table 5 demonstrate that the chaotic opposition-based learning strategy enhances the performance of SMA. The chaotic mappings give different results because each mapping generates a different sequence. The results reported in Table 8 demonstrate that all three improvement strategies proposed in this paper improve the performance of the algorithm. MSMA-1 is competitive on the unimodal functions, mainly because its chaotic mapping enhances exploitation. MSMA-3 uses a spiral search strategy to improve performance on the multimodal functions: the strategy widens each individual's search of the space around itself and improves population diversity. MSMA-2 maintains a balance between exploitation and exploration through its adaptive strategies and thus ranks in the middle on both the multimodal and unimodal functions. The best overall performance of MSMA indicates that the three strategies complement each other and maintain a good balance between exploitation and exploration. This is also supported by the results of the Friedman test in Table 15.

6. Conclusions

In this paper, three improvement strategies are proposed to improve the performance of SMA. Firstly, a chaotic opposition-based learning strategy is used to enhance population diversity. Secondly, two adaptive parameter control strategies are proposed to balance the exploitation and exploration of SMA effectively. Finally, a spiral search strategy is used to widen the search around each individual and avoid falling into local optima. To evaluate the performance of the proposed MSMA, 23 classical test functions are used, including 13 multidimensional functions (Dim = 30, 100, 500, 1000) and 10 fixed-dimensional functions.

From the experimental results and the discussion just mentioned, the following conclusions can be drawn.

The sine mapping works best in combination with the opposition-based learning mechanism. Using the chaotic opposition-based learning strategy enhances the exploitation capability of MSMA.

Using a spiral search strategy significantly enhances MSMA's exploration capability and helps it avoid becoming trapped in local optima.

The two self-adaptive strategies maintain a good balance between exploitation and exploration.

Compared with the eight advanced algorithms, MSMA has better convergence accuracy, faster convergence speed, and more stable performance. MSMA has the potential to solve real-world optimization problems.

In future work, we will use MSMA to solve the multi-UAV path planning problem and the task assignment problem. Moreover, MSMA can be extended as a multiobjective optimization algorithm.

Algorithm 1.

Pseudocode of the MSMA.

Table 10.

Comparison of results on F1–F13 with 100D.

| F(x) | Measure | GWO | GSA | HHO | MRFO | EO | SSA | MFO | MPA | SMA | MSMA |

|---|---|---|---|---|---|---|---|---|---|---|---|

| F1 | Mean | 1.33E − 12 | 4.13E + 03 | 3.86E − 90 | 0.00E + 00 | 4.47E − 29 | 1.45E + 03 | 6.16E + 04 | 1.16E − 28 | 1.74E − 246 | 0.00E + 00 |

| Std | 1.12E − 12 | 9.39E + 02 | 2.73E − 89 | 0.00E + 00 | 6.92E − 29 | 5.34E + 02 | 1.52E + 04 | 3.55E − 28 | 0.00E + 00 | 0.00E + 00 | |

| F2 | Mean | 4.01E − 08 | 1.73E + 01 | 6.85E − 50 | 1.76E − 200 | 1.58E − 17 | 4.61E + 01 | 2.41E + 02 | 8.70E − 16 | 1.80E − 124 | 2.39E − 200 |

| Std | 1.59E − 08 | 3.70E + 00 | 2.41E − 49 | 0.00E + 00 | 1.41E − 17 | 8.01E + 00 | 3.81E + 01 | 1.78E − 15 | 1.27E − 123 | 0.00E + 00 | |

| F3 | Mean | 5.43E + 02 | 1.48E + 04 | 2.22E − 58 | 0.00E + 00 | 8.10E + 00 | 5.44E + 04 | 2.33E + 05 | 1.35E − 13 | 1.82E − 276 | 0.00E + 00 |

| Std | 6.01E + 02 | 3.60E + 03 | 1.52E − 57 | 0.00E + 00 | 1.88E + 01 | 2.45E + 04 | 5.10E + 04 | 4.56E − 13 | 0.00E + 00 | 0.00E + 00 | |

| F4 | Mean | 1.01E + 00 | 1.81E + 01 | 2.84E − 48 | 1.82E − 198 | 5.57E − 02 | 2.83E + 01 | 9.30E + 01 | 2.32E − 14 | 1.54E − 134 | 1.18E − 146 |

| Std | 1.33E + 00 | 2.10E + 00 | 1.74E − 47 | 0.00E + 00 | 3.76E − 01 | 3.63E + 00 | 2.33E + 00 | 6.16E − 14 | 1.09E − 133 | 8.34E − 146 | |

| F5 | Mean | 9.78E + 01 | 1.01E + 05 | 4.49E − 02 | 9.46E + 01 | 9.66E + 01 | 1.64E + 05 | 1.67E + 08 | 9.71E + 01 | 4.07E + 01 | 2.37E + 00 |

| Std | 6.73E − 01 | 5.38E + 04 | 5.40E − 02 | 9.85E − 01 | 1.06E + 00 | 9.49E + 04 | 6.46E + 07 | 7.43E − 01 | 4.00E + 01 | 1.37E + 01 | |

| F6 | Mean | 1.01E + 01 | 3.99E + 03 | 5.07E − 04 | 8.61E − 01 | 3.78E + 00 | 1.37E + 03 | 5.98E + 04 | 3.79E + 00 | 1.47E + 00 | 1.57E − 02 |

| Std | 9.48E − 01 | 8.20E + 02 | 6.39E − 04 | 4.38E − 01 | 5.64E − 01 | 3.49E + 02 | 1.30E + 04 | 8.39E − 01 | 1.54E + 00 | 4.79E − 02 | |

| F7 | Mean | 6.57E − 03 | 3.66E + 00 | 1.79E − 04 | 1.45E − 04 | 2.49E − 03 | 2.73E + 00 | 2.70E + 02 | 1.71E − 03 | 3.13E − 04 | 4.68E − 05 |

| Std | 3.04E − 03 | 1.70E + 00 | 2.00E − 04 | 1.23E − 04 | 1.21E − 03 | 6.03E − 01 | 1.15E + 02 | 9.14E − 04 | 2.84E − 04 | 4.60E − 05 | |

| F8 | Mean | −1.65E + 04 | −4.59E + 03 | −4.19E + 04 | −2.37E + 04 | −2.59E + 04 | −2.11E + 04 | −2.35E + 04 | −2.53E + 04 | −4.19E + 04 | −4.19E + 04 |

| Std | 2.78E + 03 | 6.46E + 02 | 4.13E + 00 | 1.53E + 03 | 1.49E + 03 | 1.89E + 03 | 2.09E + 03 | 1.10E + 03 | 1.62E + 01 | 3.52E + 00 | |

| F9 | Mean | 1.03E + 01 | 1.72E + 02 | 0.00E + 00 | 0.00E + 00 | 2.27E − 15 | 2.45E + 02 | 8.68E + 02 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 |

| Std | 7.34E + 00 | 1.79E + 01 | 0.00E + 00 | 0.00E + 00 | 1.61E − 14 | 4.22E + 01 | 7.20E + 01 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | |

| F10 | Mean | 1.30E − 07 | 4.43E + 00 | 8.88E − 16 | 8.88E − 16 | 3.41E − 14 | 1.01E + 01 | 1.99E + 01 | 3.09E − 15 | 8.88E − 16 | 8.88E − 16 |

| Std | 5.07E − 08 | 6.21E − 01 | 0.00E + 00 | 0.00E + 00 | 5.55E − 15 | 1.35E + 00 | 1.29E − 01 | 2.37E − 15 | 0.00E + 00 | 0.00E + 00 | |

| F11 | Mean | 1.44E − 03 | 6.86E + 02 | 0.00E + 00 | 0.00E + 00 | 2.54E − 04 | 1.44E + 01 | 5.66E + 02 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 |

| Std | 5.78E − 03 | 4.40E + 01 | 0.00E + 00 | 0.00E + 00 | 1.80E − 03 | 3.69E + 00 | 1.20E + 02 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | |

| F12 | Mean | 2.91E − 01 | 1.00E + 01 | 3.73E − 06 | 5.30E − 03 | 3.93E − 02 | 3.33E + 01 | 3.10E + 08 | 4.82E − 02 | 1.03E − 02 | 9.55E − 06 |

| Std | 7.04E − 02 | 3.73E + 00 | 5.96E − 06 | 2.65E − 03 | 9.47E − 03 | 1.25E + 01 | 1.79E + 08 | 1.10E − 02 | 1.69E − 02 | 1.63E − 05 | |

| F13 | Mean | 6.66E + 00 | 2.06E + 03 | 1.81E − 04 | 9.87E + 00 | 5.80E + 00 | 9.59E + 03 | 6.38E + 08 | 8.92E + 00 | 1.73E − 01 | 1.22E − 03 |

| Std | 4.21E − 01 | 4.00E + 03 | 2.26E − 04 | 1.22E − 01 | 1.02E + 00 | 1.91E + 04 | 3.28E + 08 | 5.38E − 01 | 4.24E − 01 | 3.59E − 03 | |

Table 11.

Comparison of results on F1–F13 with 500D.

| F(x) | Measure | GWO | GSA | HHO | MRFO | EO | SSA | MFO | MPA | SMA | MSMA |

|---|---|---|---|---|---|---|---|---|---|---|---|

| F1 | Mean | 1.70E − 03 | 5.31E + 04 | 2.41E − 90 | 0.00E + 00 | 1.05E − 22 | 9.42E + 04 | 1.16E + 06 | 3.40E − 25 | 3.86E − 229 | 0.00E + 00 |

| Std | 4.97E − 04 | 4.22E + 03 | 1.71E − 89 | 0.00E + 00 | 1.31E − 22 | 6.70E + 03 | 3.39E + 04 | 1.32E − 24 | 0.00E + 00 | 0.00E + 00 | |

| F2 | Mean | 1.09E − 02 | 7.96E + 266 | 3.58E − 48 | 5.55E − 200 | 7.18E − 14 | 5.36E + 02 | 1.49E + 125 | 1.55E + 01 | 6.53E − 01 | 7.97E − 02 |

| Std | 1.54E − 03 | 1.35E + 116 | 1.33E − 47 | 0.00E + 00 | 4.44E − 14 | 2.08E + 01 | 1.05E + 126 | 5.01E + 01 | 2.37E + 00 | 4.94E − 01 | |

| F3 | Mean | 3.42E + 05 | 1.32E + 06 | 5.24E − 33 | 0.00E + 00 | 2.93E + 04 | 1.42E + 06 | 4.90E + 06 | 3.76E − 08 | 4.11E − 166 | 0.00E + 00 |

| Std | 9.35E + 04 | 7.64E + 05 | 3.71E − 32 | 0.00E + 00 | 3.71E + 04 | 6.85E + 05 | 8.91E + 05 | 2.60E − 07 | 0.00E + 00 | 0.00E + 00 | |

| F4 | Mean | 6.58E + 01 | 2.77E + 01 | 1.14E − 46 | 3.29E − 195 | 7.66E + 01 | 4.04E + 01 | 9.89E + 01 | 5.35E − 13 | 4.25E − 95 | 1.66E − 166 |

| Std | 5.95E + 00 | 1.74E + 00 | 7.58E − 46 | 0.00E + 00 | 1.67E + 01 | 2.87E + 00 | 4.13E − 01 | 1.09E − 12 | 3.00E − 94 | 0.00E + 00 | |

| F5 | Mean | 4.98E + 02 | 7.35E + 06 | 2.03E − 01 | 4.96E + 02 | 4.97E + 02 | 3.80E + 07 | 5.06E + 09 | 4.97E + 02 | 2.23E + 02 | 7.01E + 00 |

| Std | 3.14E − 01 | 1.18E + 06 | 1.99E − 01 | 6.47E − 01 | 3.86E − 01 | 4.87E + 06 | 2.25E + 08 | 2.60E − 01 | 2.09E + 02 | 1.40E + 01 | |

| F6 | Mean | 9.11E + 01 | 5.28E + 04 | 1.79E − 03 | 6.38E + 01 | 8.70E + 01 | 9.37E + 04 | 1.15E + 06 | 7.48E + 01 | 2.45E + 01 | 3.02E − 01 |

| Std | 2.13E + 00 | 3.62E + 03 | 2.56E − 03 | 2.71E + 00 | 1.71E + 00 | 7.19E + 03 | 4.05E + 04 | 1.96E + 00 | 2.99E + 01 | 5.49E − 01 | |

| F7 | Mean | 4.85E − 02 | 8.33E + 02 | 1.39E − 04 | 1.12E − 04 | 4.56E − 03 | 2.72E + 02 | 3.85E + 04 | 1.80E − 03 | 5.40E − 04 | 4.90E − 05 |

| Std | 1.15E − 02 | 1.34E + 02 | 1.18E − 04 | 9.88E − 05 | 1.83E − 03 | 4.42E + 01 | 2.06E + 03 | 1.18E − 03 | 4.84E − 04 | 3.96E − 05 | |

| F8 | Mean | −5.48E+04 | −1.06E+04 | −2.09E+05 | −7.44E+04 | −7.57E+04 | −6.03E+04 | −6.15E+04 | −8.44E+04 | −2.09E+05 | −2.09E+05 |

| | Std | 1.14E+04 | 1.87E+03 | 1.13E+02 | 3.87E+03 | 5.60E+03 | 6.06E+03 | 4.79E+03 | 3.49E+03 | 2.52E+02 | 1.25E+02 |

| F9 | Mean | 6.90E+01 | 2.66E+03 | 0.00E+00 | 0.00E+00 | 5.46E−14 | 3.17E+03 | 6.99E+03 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| | Std | 2.05E+01 | 1.29E+02 | 0.00E+00 | 0.00E+00 | 2.18E−13 | 1.22E+02 | 1.17E+02 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| F10 | Mean | 1.83E−03 | 1.02E+01 | 8.88E−16 | 8.88E−16 | 5.26E−13 | 1.42E+01 | 2.03E+01 | 1.69E−14 | 8.88E−16 | 8.88E−16 |

| | Std | 3.85E−04 | 2.57E−01 | 0.00E+00 | 0.00E+00 | 2.53E−13 | 2.30E−01 | 1.45E−01 | 2.01E−14 | 0.00E+00 | 0.00E+00 |

| F11 | Mean | 1.21E−02 | 8.62E+03 | 0.00E+00 | 0.00E+00 | 9.77E−17 | 8.51E+02 | 1.05E+04 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| | Std | 3.27E−02 | 1.90E+02 | 0.00E+00 | 0.00E+00 | 3.64E−17 | 5.07E+01 | 2.84E+02 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| F12 | Mean | 7.46E−01 | 9.10E+03 | 1.80E−06 | 2.86E−01 | 5.83E−01 | 1.36E+06 | 1.19E+10 | 3.95E−01 | 1.28E−02 | 3.40E−05 |

| | Std | 4.60E−02 | 1.25E+04 | 2.69E−06 | 2.81E−02 | 2.70E−02 | 6.59E+05 | 7.05E+08 | 2.86E−02 | 2.83E−02 | 6.32E−05 |

| F13 | Mean | 5.08E+01 | 2.89E+06 | 5.03E−04 | 4.97E+01 | 4.92E+01 | 3.64E+07 | 2.22E+10 | 4.85E+01 | 1.62E+00 | 2.49E−02 |

| | Std | 1.63E+00 | 1.02E+06 | 1.03E−03 | 1.28E−02 | 2.92E−01 | 8.94E+06 | 1.32E+09 | 2.65E−01 | 4.92E+00 | 6.70E−02 |

Acknowledgments

The authors acknowledge funding received from the following science foundations: the National Natural Science Foundation of China (no. 62101590) and the Science Foundation of Shanxi Province, China (2020JQ-481, 2021JM-224, and 2021JM-223).

Data Availability

The data used to support the findings of this study are included within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- 1. Wu G., Pedrycz W., Suganthan P. N., Mallipeddi R. A variable reduction strategy for evolutionary algorithms handling equality constraints. Applied Soft Computing. 2015;37:774–786. doi: 10.1016/j.asoc.2015.09.007.

- 2. Katebi J., Shoaei-parchin M., Shariati M., Trung N. T., Khorami M. Developed comparative analysis of metaheuristic optimization algorithms for optimal active control of structures. Engineering with Computers. 2020;36(4):1539–1558. doi: 10.1007/s00366-019-00780-7.

- 3. Nadimi-Shahraki M. H., Taghian S., Mirjalili S. An improved grey wolf optimizer for solving engineering problems. Expert Systems with Applications. 2021;166:113917. doi: 10.1016/j.eswa.2020.113917.

- 4. Tang A.-D., Han T., Zhou H., Xie L. An improved equilibrium optimizer with application in unmanned aerial vehicle path planning. Sensors. 2021;21(5):1814. doi: 10.3390/s21051814.

- 5. Li Y., Han T., Zhao H., Gao H. An adaptive whale optimization algorithm using Gaussian distribution strategies and its application in heterogeneous UCAVs task allocation. IEEE Access. 2019;7:110138–110158. doi: 10.1109/ACCESS.2019.2933661.

- 6. Wang X., Zhao H., Han T., Zhou H., Li C. A grey wolf optimizer using Gaussian estimation of distribution and its application in the multi-UAV multi-target urban tracking problem. Applied Soft Computing. 2019;78:240–260. doi: 10.1016/j.asoc.2019.02.037.

- 7. Wang X., Zhao H., Han T., Wei Z., Liang Y., Li Y. A Gaussian estimation of distribution algorithm with random walk strategies and its application in optimal missile guidance handover for multi-UCAV in over-the-horizon air combat. IEEE Access. 2019;7:43298–43317. doi: 10.1109/ACCESS.2019.2908262.

- 8. Abd Elaziz M., Yousri D., Al-qaness M. A. A., AbdelAty A. M., Radwan A. G., Ewees A. A. A Grunwald-Letnikov based Manta ray foraging optimizer for global optimization and image segmentation. Engineering Applications of Artificial Intelligence. 2021;98:104105. doi: 10.1016/j.engappai.2020.104105.

- 9. Wunnava A., Naik M. K., Panda R., Jena B., Abraham A. A novel interdependence based multilevel thresholding technique using adaptive equilibrium optimizer. Engineering Applications of Artificial Intelligence. 2020;94:103836. doi: 10.1016/j.engappai.2020.103836.

- 10. Salgotra R., Singh U., Singh S., Mittal N. A hybridized multi-algorithm strategy for engineering optimization problems. Knowledge-Based Systems. 2021;217:106790. doi: 10.1016/j.knosys.2021.106790.

- 11. Alweshah M., Khalaileh S. A., Gupta B. B., Almomani A., Hammouri A. I., Al-Betar M. A. The monarch butterfly optimization algorithm for solving feature selection problems. Neural Computing & Applications. 2020. doi: 10.1007/s00521-020-05210-0.

- 12. Alweshah M. Solving feature selection problems by combining mutation and crossover operations with the monarch butterfly optimization algorithm. Applied Intelligence. 2021;51(6):4058–4081. doi: 10.1007/s10489-020-01981-0.

- 13. Qasim O. S., Al-Thanoon N. A., Algamal Z. Y. Feature selection based on chaotic binary black hole algorithm for data classification. Chemometrics and Intelligent Laboratory Systems. 2020;204:104104. doi: 10.1016/j.chemolab.2020.104104.

- 14. Wei Z., Huang C., Wang X., Zhang H. Parameters identification of photovoltaic models using a novel algorithm inspired from nuclear reaction. Proceedings of the 2019 IEEE Congress on Evolutionary Computation, CEC 2019-Proceedings; June 2019; Wellington, New Zealand.

- 15. Lin X., Wu Y. Parameters identification of photovoltaic models using niche-based particle swarm optimization in parallel computing architecture. Energy. 2020;196:117054. doi: 10.1016/j.energy.2020.117054.

- 16. Abdel-Basset M., Mohamed R., Chakrabortty R. K., Sallam K., Ryan M. J. An efficient teaching-learning-based optimization algorithm for parameters identification of photovoltaic models: analysis and validations. Energy Conversion and Management. 2021;227:113614. doi: 10.1016/j.enconman.2020.113614.

- 17. Hao Q., Zhou Z., Wei Z., Chen G. Parameters identification of photovoltaic models using a multi-strategy success-history-based adaptive differential evolution. IEEE Access. 2020;8:35979–35994. doi: 10.1109/ACCESS.2020.2975078.

- 18. Gangwar S., Pathak V. K. Dry sliding wear characteristics evaluation and prediction of vacuum casted marble dust (MD) reinforced ZA-27 alloy composites using hybrid improved bat algorithm and ANN. Materials Today Communications. 2020;25:101615. doi: 10.1016/j.mtcomm.2020.101615.

- 19. Holland J. H. Adaptation in Natural and Artificial Systems. Ann Arbor, MI, USA: University of Michigan Press; 1992.

- 20. Sarker R. A., Elsayed S. M., Ray T. Differential evolution with dynamic parameters selection for optimization problems. IEEE Transactions on Evolutionary Computation. 2014;18(5):689–707. doi: 10.1109/TEVC.2013.2281528.

- 21. Ma H., Fei M., Yang Z. Biogeography-based optimization for identifying promising compounds in chemical process. Neurocomputing. 2016;174:494–499. doi: 10.1016/j.neucom.2015.05.125.

- 22. Fogel D. B. Applying evolutionary programming to selected traveling salesman problems. Cybernetics & Systems. 1993;24(1):27–36. doi: 10.1080/01969729308961697.

- 23. Beyer H.-G., Schwefel H.-P. Evolution strategies – a comprehensive introduction. Natural Computing. 2002;1(1):3–52. doi: 10.1023/A:1015059928466.

- 24. Rashedi E., Nezamabadi-pour H., Saryazdi S. GSA: a gravitational search algorithm. Information Sciences. 2009;179(13):2232–2248. doi: 10.1016/j.ins.2009.03.004.

- 25. Mirjalili S. SCA: a Sine Cosine Algorithm for solving optimization problems. Knowledge-Based Systems. 2016;96:120–133. doi: 10.1016/j.knosys.2015.12.022.

- 26. Hatamlou A. Black hole: a new heuristic optimization approach for data clustering. Information Sciences. 2013;222:175–184. doi: 10.1016/j.ins.2012.08.023.

- 27. Wei Z., Huang C., Wang X., Han T., Li Y. Nuclear reaction optimization: a novel and powerful physics-based algorithm for global optimization. IEEE Access. 2019;7:66084–66109. doi: 10.1109/ACCESS.2019.2918406.

- 28. Hashim F. A., Houssein E. H., Mabrouk M. S., Al-Atabany W., Mirjalili S. Henry gas solubility optimization: a novel physics-based algorithm. Future Generation Computer Systems. 2019;101:646–667. doi: 10.1016/j.future.2019.07.015.

- 29. Kennedy J., Eberhart R. Particle swarm optimization. Proceedings of the IEEE International Conference on Neural Networks-Conference Proceedings; December 1995; Perth, Australia.

- 30. Dorigo M., Di Caro G. Ant colony optimization: a new meta-heuristic. Proceedings of the 1999 Congress on Evolutionary Computation, CEC99; July 1999; Washington, DC, USA.

- 31. Mirjalili S., Mirjalili S. M., Lewis A. Grey wolf optimizer. Advances in Engineering Software. 2014;69:46–61. doi: 10.1016/j.advengsoft.2013.12.007.

- 32. Mirjalili S., Gandomi A. H., Mirjalili S. Z., Saremi S., Faris H., Mirjalili S. M. Salp Swarm Algorithm: a bio-inspired optimizer for engineering design problems. Advances in Engineering Software. 2017;114:163–191. doi: 10.1016/j.advengsoft.2017.07.002.

- 33. Wang G.-G., Deb S., Cui Z. Monarch butterfly optimization. Neural Computing & Applications. 2019;31(7):1995–2014. doi: 10.1007/s00521-015-1923-y.

- 34. Salgotra R., Singh U. The naked mole-rat algorithm. Neural Computing & Applications. 2019;31(12):8837–8857. doi: 10.1007/s00521-019-04464-7.

- 35. Wolpert D. H., Macready W. G. No free lunch theorems for optimization. IEEE Transactions on Evolutionary Computation. 1997;1(1):67–82. doi: 10.1109/4235.585893.

- 36. Li S., Chen H., Wang M., Heidari A. A., Mirjalili S. Slime mould algorithm: a new method for stochastic optimization. Future Generation Computer Systems. 2020;111:300–323. doi: 10.1016/j.future.2020.03.055.

- 37. Zhao S., Wang P., Heidari A. A., et al. Multilevel threshold image segmentation with diffusion association slime mould algorithm and Renyi’s entropy for chronic obstructive pulmonary disease. Computers in Biology and Medicine. 2021;134:104427. doi: 10.1016/j.compbiomed.2021.104427.

- 38. Liu Y., Heidari A. A., Ye X., Liang G., Chen H., He C. Boosting slime mould algorithm for parameter identification of photovoltaic models. Energy. 2021;234:121164. doi: 10.1016/j.energy.2021.121164.

- 39. Ewees A. A., Abualigah L., Yousri D., et al. Improved slime mould algorithm based on firefly algorithm for feature selection: a case study on QSAR model. Engineering with Computers. 2021. doi: 10.1007/s00366-021-01342-6.

- 40. Yu C., Heidari A. A., Xue X., Zhang L., Chen H., Chen W. Boosting quantum rotation gate embedded slime mould algorithm. Expert Systems with Applications. 2021;181:115082. doi: 10.1016/j.eswa.2021.115082.

- 41. Houssein E. H., Mahdy M. A., Blondin M. J., Shebl D., Mohamed W. M. Hybrid slime mould algorithm with adaptive guided differential evolution algorithm for combinatorial and global optimization problems. Expert Systems with Applications. 2021;174:114689. doi: 10.1016/j.eswa.2021.114689.

- 42. Tizhoosh H. R. Opposition-based learning: a new scheme for machine intelligence. Proceedings of the International Conference on Computational Intelligence for Modelling, Control and Automation, CIMCA 2005 and International Conference on Intelligent Agents, Web Technologies and Internet; November 2005; Vienna, Austria.

- 43. Faramarzi A., Heidarinejad M., Mirjalili S., Gandomi A. H. Marine predators algorithm: a nature-inspired metaheuristic. Expert Systems with Applications. 2020;152:113377. doi: 10.1016/j.eswa.2020.113377.

- 44. Mirjalili S. Moth-flame optimization algorithm: a novel nature-inspired heuristic paradigm. Knowledge-Based Systems. 2015;89:228–249. doi: 10.1016/j.knosys.2015.07.006.

- 45. Faramarzi A., Heidarinejad M., Stephens B., Mirjalili S. Equilibrium optimizer: a novel optimization algorithm. Knowledge-Based Systems. 2020;191:105190. doi: 10.1016/j.knosys.2019.105190.

- 46. Zhao W., Zhang Z., Wang L. Manta ray foraging optimization: an effective bio-inspired optimizer for engineering applications. Engineering Applications of Artificial Intelligence. 2020;87:103300. doi: 10.1016/j.engappai.2019.103300.

- 47. Heidari A. A., Mirjalili S., Faris H., Aljarah I., Mafarja M., Chen H. Harris hawks optimization: algorithm and applications. Future Generation Computer Systems. 2019;97:849–872. doi: 10.1016/j.future.2019.02.028.

- 48. García S., Fernández A., Luengo J., Herrera F. Advanced nonparametric tests for multiple comparisons in the design of experiments in computational intelligence and data mining: experimental analysis of power. Information Sciences. 2010;180(10):2044–2064. doi: 10.1016/j.ins.2009.12.010.