Abstract

Machine-learned force fields combine the accuracy of ab initio methods with the efficiency of conventional force fields. However, current machine-learned force fields typically ignore electronic degrees of freedom, such as the total charge or spin state, and assume chemical locality, which is problematic when molecules have inconsistent electronic states, or when nonlocal effects play a significant role. This work introduces SpookyNet, a deep neural network for constructing machine-learned force fields with explicit treatment of electronic degrees of freedom and nonlocality, modeled via self-attention in a transformer architecture. Chemically meaningful inductive biases and analytical corrections built into the network architecture allow it to properly model physical limits. SpookyNet improves upon the current state-of-the-art (or achieves similar performance) on popular quantum chemistry data sets. Notably, it is able to generalize across chemical and conformational space and can leverage the learned chemical insights, e.g. by predicting unknown spin states, thus helping to close a further important remaining gap for today’s machine learning models in quantum chemistry.

Subject terms: Cheminformatics, Computational chemistry, Method development, Quantum chemistry, Chemical physics

Current machine-learned force fields typically ignore electronic degrees of freedom. SpookyNet is a deep neural network that explicitly treats electronic degrees of freedom, closing an important remaining gap for models in quantum chemistry.

Introduction

Molecular dynamics (MD) simulations of chemical systems allow to gain insights on many intricate phenomena, such as reactions or the folding of proteins1. To perform MD simulations, knowledge of the forces acting on individual atoms at every time step of the simulation is required2. The most accurate way of deriving these forces is by (approximately) solving the Schrödinger equation (SE), which describes the physical laws governing chemical systems3. Unfortunately, the computational cost of accurate ab initio approaches4 makes them impractical when many atoms are studied, or the simulation involves thousands (or even millions) of time steps. For this reason, it is common practice to use force fields (FFs)—analytical expressions for the potential energy of a chemical system, from which forces are obtained by derivation—instead of solving the SE5. The remaining difficulty is to find an appropriate functional form that gives forces at the required accuracy.

Recently, machine learning (ML) methods have gained increasing popularity for addressing this task6–15. They allow to automatically learn the relation between chemical structure and forces from ab initio reference data. The accuracy of such ML-FFs (also known as machine learning potentials) is limited by the quality of the data used to train them and their computational efficiency is comparable to conventional FFs11,16.

One of the first methods for constructing ML-FFs for high-dimensional systems was introduced by Behler and Parrinello for studying the properties of bulk silicon17. The idea is to encode the local (within a certain cutoff radius) chemical environment of each atom in a descriptor, e.g., using symmetry functions18, which is used as input to an artificial neural network19 predicting atomic energies. The total potential energy of the system is modeled as the sum of the individual contributions, and forces are obtained by derivation with respect to atom positions. Alternatively, it is also possible to directly predict the total energy (or forces) without relying on a partitioning into atomic contributions20–22. However, an atomic energy decomposition makes predictions size extensive and the learned model applicable to systems of different size. Many other ML-FFs follow this design principle, but rely on different descriptors23–25 or use other ML methods6,8,26, such as kernel machines7,27–32, for the prediction. An alternative to manually designed descriptors is to use the raw atomic numbers and Cartesian coordinates as input instead. Then, suitable atomic representations can be learned from (and adapted to) the reference data automatically. This is usually achieved by “passing messages” between atoms to iteratively build increasingly sophisticated descriptors in a deep neural network architecture. After the introduction of the deep tensor neural network (DTNN)33, such message-passing neural networks (MPNNs)34 became highly popular and the original architecture has since been refined by many related approaches35–37.

However, atomic numbers and Cartesian coordinates (or descriptors derived from them) do not provide an unambiguous description of chemical systems38. They only account for the nuclear degrees of freedom, but contain no information about electronic structure, such as the total charge or spin state. This is of no concern when all systems of interest have a consistent electronic state (e.g., they are all neutral singlet structures), but leads to an ill-defined learning problem otherwise (Fig. 1a). Further, most ML-FFs assume that atomic properties are dominated by their local chemical environment11. While this approximation is valid in many cases, it still neglects that quantum systems are inherently nonlocal in nature, a quality which Einstein famously referred to as “spooky actions at a distance”39. For example, electrons can be delocalized over a chemical system and charge or spin density may instantaneously redistribute to specific atoms based on distant structural changes (Fig. 1b)40–44.

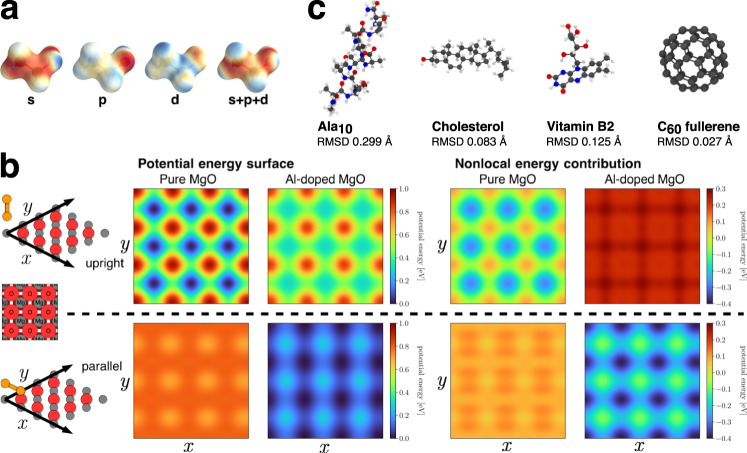

Fig. 1. Main features of SpookyNet and problems addressed in this work.

a Optimized geometries of Ag/Ag (left) and singlet/triplet CH2 (right). Without information about the electronic state (charge/spin), machine learning models are unable to distinguish between the different structures. b Au2 dimer on a MgO(001) surface doped with Al atoms (Au: yellow, Mg: gray, O: red, Al: pink). The presence of Al atoms in the crystal influences the electronic structure and affects Au2 binding to the surface, an effect which cannot be adequately described by only local interactions. c Potential energy Epot (solid black) for O–H bond dissociation in water. The asymptotic behavior of Epot for very small and very large bond lengths can be well-approximated by analytical short-ranged Esr (dotted red) and long-ranged Elr (dotted orange) energy contributions, which follow known physical laws. When they are subtracted from Epot, the remaining energy (solid blue) covers a smaller range of values and decays to zero quicker, which simplifies the learning problem. d Visualization of a random selection of learned interaction functions for SpookyNet trained on the QM7-X71 dataset. They are designed to closely resemble atomic orbitals, facilitating SpookyNet’s ability to extract chemical insight from data.

ML-FFs have only recently begun to address these issues. For example, the charge equilibration neural network technique (CENT)45 was developed to construct interatomic potentials for ionic systems. In CENT, a neural network predicts atomic electronegativities (instead of energy contributions), from which partial charges are derived via a charge equilibration scheme46–48 that minimizes the electrostatic energy of the system and models nonlocal charge transfer. Then, the total energy is obtained by an analytical expression involving the partial charges. Since they are constrained to conserve the total charge, different charge states of the same chemical system can be treated by a single model. The recently proposed fourth-generation Behler-Parinello neural network (4G-BPNN)49 expands on this idea using two separate neural networks: The first one predicts atomic electronegativities, from which partial charges are derived using the same method as in CENT. The second neural network predicts atomic energy contributions, receiving the partial charges as additional inputs, which contain implicit information about the total charge. The charge equilibration scheme used in CENT and 4G-BPNNs involves the solution of a system of linear equations, which formally scales cubically with the number of atoms, although iterative solvers can be used to reduce the complexity49. Unfortunately, only different total charges, but not spin states, can be distinguished with this approach. In contrast, neural spin equilibration (NSE)50, a recently proposed modification to the AIMNet model51, distinguishes between α and β-spin charges, allowing it to also treat different spin states. In the NSE method, a neural network predicts initial (spin) charges from descriptors that depend only on atomic numbers and coordinates. The discrepancy between predictions and true (spin) charges is then used to update the descriptors and the procedure is repeated until convergence. An alternative approach to include information about the charge and spin state is followed by the BpopNN model52. In this method, electronic information is encoded indirectly by including spin populations when constructing atomic descriptors. However, this requires running (constrained) density functional theory calculations to derive the populations before the model can be evaluated. A similar approach is followed by OrbNet53: Instead of spin populations, the atomic descriptors are formed from the expectation values of several quantum mechanical operators in a symmetry-adapted atomic orbital basis.

The present work introduces SpookyNet, a deep MPNN which takes atomic numbers, Cartesian coordinates, the number of electrons, and the spin state as direct inputs. It does not rely on equilibration schemes, which often involve the costly solution of a linear system, or values derived from ab initio calculations, to encode the electronic state. Our end-to-end learning approach is shared by many recent ML methods that aim to solve the Schrödinger equation54–56 and mirrors the inputs that are also used in ab initio calculations. To model local interactions between atoms, early MPNNs relied on purely distance-based messages33,35,37, whereas later architectures such as DimeNet57 proposed to include angular information in the feature updates. However, explicitly computing angles between all neighbors of an atom scales quadratically with the number of neighbors. To achieve linear scaling, SpookyNet encodes angular information implicitly via the use of basis functions based on Bernstein polynomials58 and spherical harmonics. Spherical harmonics are also used in neural network architectures for predicting rotationally equivariant quantities, such as tensor field networks59, Cormorant60, PaiNN61, or NequIP62. However, since only scalar quantities (energies) need to be predicted for constructing ML-FFs, SpookyNet projects rotationally equivariant features to invariant representations for computational efficiency. Many methods for constructing descriptors of atomic environments use similar approaches to derive rotationally invariant quantities from spherical harmonics25,63,64. In addition, SpookyNet allows to model quantum nonlocality and electron delocalization explicitly by introducing a nonlocal interaction between atoms, which is independent of their distance. Following previous works, its energy predictions are augmented with physically motivated corrections to improve the description of long-ranged electrostatic and dispersion interactions37,65,66. Additionally, SpookyNet explicitly models short-ranged repulsion between nuclei. Such corrections simplify the learning problem and guarantee correct asymptotic behaviour (Fig. 1c). As such, SpookyNet is a hybrid between a pure ML approach and a classical FF. However, it is much closer to a pure ML approach than methods like IPML67, which rely exclusively on parametrized functions (known from classical FFs) for modeling the potential energy, but predict environment-dependent parameters with ML methods. Further, inductive biases in SpookyNet’s architecture encourage learning of atomic representations which capture similarities between different elements and interaction functions designed to resemble atomic orbitals, allowing it to efficiently extract meaningful chemical insights from data (Fig. 1d).

Results

SpookyNet architecture

SpookyNet takes sets of atomic numbers and Cartesian coordinates , which describe the element types and positions of N atoms, as input. Information about the electronic wave function, which is necessary for an unambiguous description of a chemical system, is provided via two additional inputs: The total charge encodes the number of electrons (given by Q + ∑iZi), whereas the total angular momentum is encoded as the number of unpaired electrons . For example, a singlet state is indicated by S = 0, a doublet state by S = 1, and so on. The nuclear charges Z, total charge Q and spin state S are transformed to F-dimensional embeddings and combined to form initial atomic feature representations

| 1 |

Here, the nuclear embeddings eZ contain information about the ground state electron configuration of each element and the electronic embeddings eQ and eS contain delocalized information about the total charge and spin state, respectively. A chain of T interaction modules iteratively refines these representations through local and nonlocal interactions

| 2 |

where contains all atom indices within a cutoff distance rcut of atom i and is the relative position of atom j with respect to atom i. The local interaction functions are designed to resemble s, p, and d atomic orbitals (see Fig. 1d) and the model learns to encode different distance and angular information about the local environment of each atom with the different interaction functions (see Fig. 2a). The nonlocal interactions on the other hand model the delocalized electrons. The representations x(t) at each stage are further refined through learned functions according to and summed to the atomic descriptors

| 3 |

from which atomic energy contributions Ei are predicted with linear regression. The total potential energy is given by

| 4 |

where Erep, Eele, and Evdw are empirical corrections, which augment the energy prediction with physical knowledge about nuclear repulsion, electrostatics, and dispersion interactions. Energy-conserving forces can be obtained via automatic differentiation. A schematic depiction of the SpookyNet architecture is given in Fig. 3.

Fig. 2. Examples of chemical insights extracted by SpookyNet.

a Visualization of the learned local chemical potential for ethanol (see methods). The individual contributions of s-, p-, and d-orbital-like interactions are shown (red: low energy, blue: high energy). b Potential energy surface scans obtained by moving an Au2 dimer over an (Al-doped) MgO surface in different (upright/parallel) configurations (the positions of Mg and O atoms are shown for orientation). SpookyNet learns to distinguish between local and nonlocal contributions to the potential energy, allowing it to model changes of the potential energy surface when the crystal is doped with Al atoms far from the surface. c A model trained on small organic molecules learns general chemical principles that can be transferred to much larger structures outside the chemical space covered by the training data. Here, optimized geometries obtained from SpookyNet trained on the QM7-X database (opaque in color) are shown and compared with reference geometries obtained from ab initio calculations (transparent in gray). As indicated by the low root mean square deviations (RMSD), geometries predicted by SpookyNet are very similar to the reference.

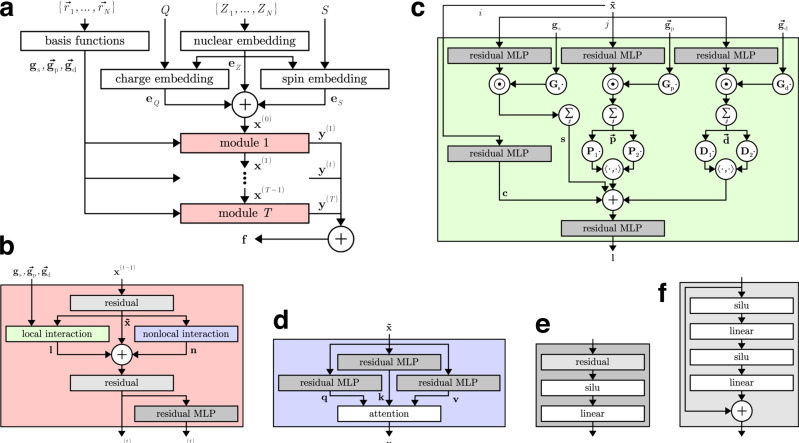

Fig. 3. Schematic depiction of the SpookyNet architecture with color-coded view of individual components.

a Architecture overview, for details on the nuclear and electronic (charge/spin) embeddings and basis functions, refer to Eqs. (9), (10), and (13), respectively. b Interaction module, see Eq. (11). c Local interaction block, see Eq. (12). d Nonlocal interaction block, see Eq. (18). e Residual multilayer perceptron (MLP), see Eq. (8). f Pre-activation residual block, see Eq. (7).

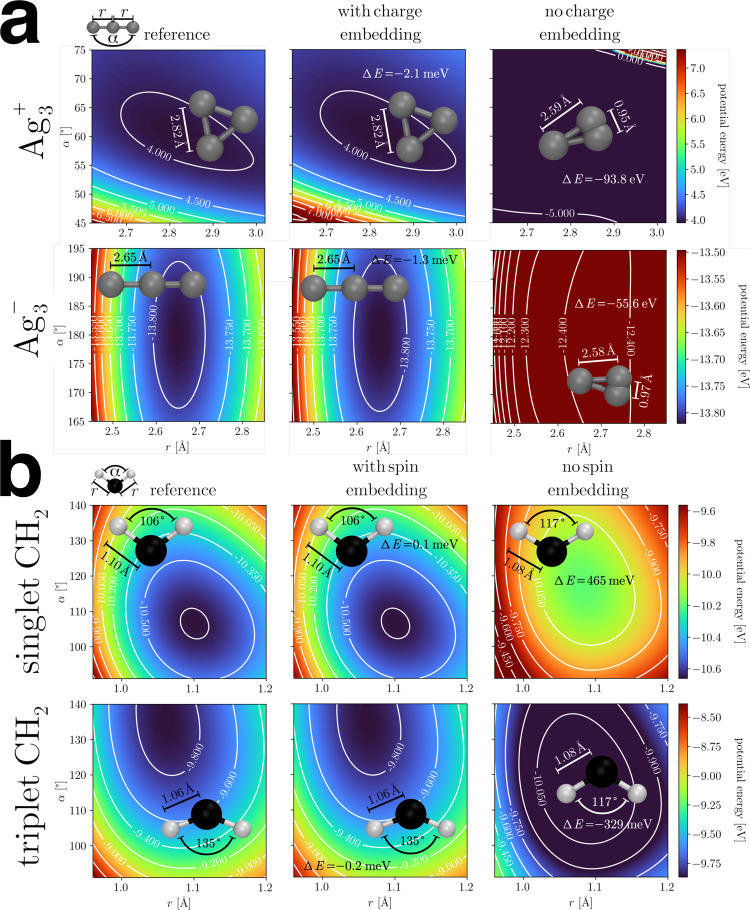

Electronic states

Most existing ML-FFs can only model structures with a consistent electronic state, e.g., neutral singlets. An exception are systems for which the electronic state can be inferred via structural cues, e.g., in the case of protonation/deprotonation37. In most cases, however, this is not possible, and ML-FFs that do not model electronic degrees of freedom are unable to capture the relevant physics. Here, this problem is solved by explicitly accounting for different electronic states (see Eq. (1)). To illustrate their effects on potential energy surfaces, two exemplary systems are considered: Ag/Ag and singlet/triplet CH2, which can only be distinguished by their charge, respectively, their spin state. SpookyNet is able to faithfully reproduce the reference potential energy surface for all systems. When the charge/spin embeddings eQ/eS (Eq. (1)) are removed, however, the model becomes unable to represent the true potential energy surface and its predictions are qualitatively different from the reference (see Fig. 4). As a consequence, wrong global minima are predicted when performing geometry optimizations with a model trained without the charge/spin embeddings, whereas they are virtually indistinguishable from the reference when the embeddings are used. Interestingly, even without a charge embedding, SpookyNet can predict different potential energy surfaces for Ag/Ag. This is because explicit point charge electrostatics are used in the energy prediction (see Eq. (4)) and the atomic partial charges are constrained such that the total molecular charge is conserved. However, such implicit information is insufficient to distinguish both charge states adequately. In the case of singlet/triplet CH2, there is no such implicit information and both systems appear identical to a model without electronic embeddings, i.e., it predicts the same energy surface for both systems, which neither reproduces the true singlet nor triplet reference.

Fig. 4. Potential energy surfaces of a Ag and Ag and b singlet and triplet CH2.

The middle and right columns show the prediction of SpookyNet with and without charge/spin embedding, respectively, whereas the reference ground truth is shown in the left column. Minimum energy structures and prediction errors (ΔE) for the minimum energy are also shown.

Models with electronic embeddings even generalize to unknown electronic states. As an example, the QMspin database68 is considered. It consists of ~13k carbene structures with at most nine non-hydrogen atoms (C, N, O, F), which were optimized either in a singlet or triplet state. For each of these, both singlet and triplet energies computed at the MRCISD+Q-F12/cc-pVDZ-F12 level of theory are reported, giving a total of ~26k energy-structure pairs in the database (see ref. 69 for more details). For the lack of other models evaluated on this dataset, SpookyNet is compared to itself without electronic embeddings. This baseline model only reaches a mean absolute prediction error (MAE) of 444.6 meV for unknown spin states. As expected, the performance is drastically improved when the electronic embeddings are included, allowing SpookyNet to reach an MAE of 68.0 meV. Both models were trained on 20k points, used 1k samples as validation set, and were tested on the remaining data. An analysis of the local chemical potential (see methods) reveals that a model with electronic embeddings learns a feature-rich internal representation of molecules, which significantly differs between singlet and tripled states (see Supplementary Fig. 3). In contrast, the local chemical potential learned by a model without electronic embeddings is almost uniform and (necessarily) identical between both states, suggesting that the electronic embeddings are crucial to extract the relevant chemistry from the data.

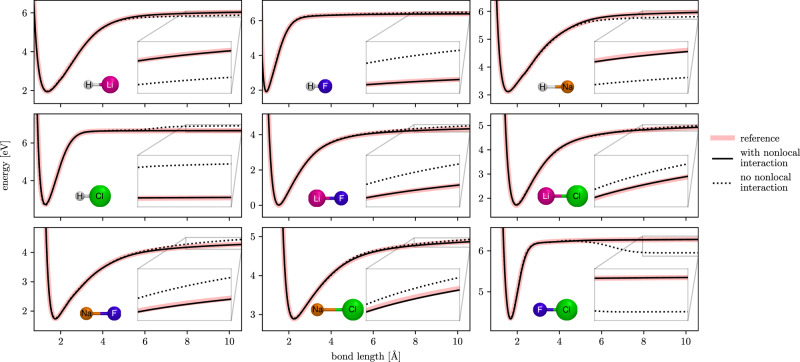

Nonlocal effects

For many chemical systems, local interactions are sufficient for an accurate description. However, there are also cases were a purely local picture breaks down. To demonstrate that nonlocal effects can play an important role even in simple systems, the dissociation curves of nine (neutral singlet) diatomic molecules made up of H, Li, F, Na, and Cl atoms are considered (Fig. 5). Once the bonding partners are separated by more than the chosen cutoff radius rcut, models that rely only on local information will always predict the same energy contributions for atoms of the same element (by construction). However, since electrons are free to distribute unevenly across atoms, even when they are separated (e.g., due to differences in their electronegativity), energy contributions should always depend on the presence of other atoms in the structure. Consequently, it is difficult for models without nonlocal interactions to predict the correct asymptotic behavior for all systems simultaneously. As such, when the nonlocal interactions are removed from interaction modules (Eq. (2)), SpookyNet predicts an unphysical “step” for large interatomic separations, even when a large cutoff is used for the local interactions. In contrast, the reference is reproduced faithfully when nonlocal interactions are enabled. Note that such artifacts—occurring if nonlocal interactions are not modeled—are problematic e.g., when simulating reactions. Simply increasing the cutoff is no adequate solution to this problem, since it just shifts the artifact to larger separations. In these specific examples, even the inclusion of long-range corrections is insufficient to avoid artifacts in the asymptotic tails (analytical corrections for electrostatics and dispersion are enabled for both models), although they can help in some cases37,70.

Fig. 5. Dissociation curves of different diatomic molecules predicted by SpookyNet with/without nonlocal interactions.

Individual panels show the dissociation curves for different species. From top-left to bottom/right: HLi, HF, HNa, HCl, LiF, LiCl, NaF, NaCl, and FCl.

More complex nonlocal effects may arise for larger structures. For example, Ko et al. recently introduced four datasets for systems exhibiting nonlocal charge transfer effects49. One of these systems consists of a diatomic Au cluster deposited on the surface of a periodic MgO(001) crystal (Au2–MgO). In its minimum energy configuration, the Au2 cluster “stands upright” on the surface on top of an O atom. When some of the Mg atoms (far from the surface) are replaced by Al (see Fig. 1b), however, the Au2 cluster prefers to “lie parallel” to the surface above two Mg atoms (the distance between the Au and Al atoms is above 10 Å). In other words, the presence of Al dopant atoms nonlocally modifies the electronic structure at the surface in such a way that a different Au2 configuration becomes more stable. This effect can be quantified by scanning the potential energy surface of Au2–MgO by moving the Au2 cluster above the surface in different configurations (see Fig. 2b). Upon introduction of dopant Al atoms, nonlocal energy contributions destabilize the upright configuration of Au2, particularly strongly above the positions of oxygen atoms. In contrast, the parallel configuration is lowered in energy, most strongly above positions of Mg atoms.

When applied to the Au2–MgO system, SpookyNet significantly improves upon the values reported for models without any treatment of nonlocal effects and also achieves lower prediction errors than 4G-BPNNs49, which model nonlocal charge transfer via charge equilibration (see Table 1). For completeness, values for the other three systems introduced in ref. 49 are also reported in Table 1, even though they could be modeled without including nonlocal interactions (as long as charge embeddings are used). For details on the number of training/validation data used for each dataset, refer to ref. 49 (all models use the same settings).

Table 1.

Root mean square errors (RMSEs) of energies (meV/atom), forces (meV Å−1) and charges (me) for the datasets introduced in ref. 49.

| 2G-BPNN | 3G-BPNN | 4G-BPNN | SpookyNet | ||

|---|---|---|---|---|---|

| C10H2/C10H | Energy | 1.619 | 2.045 | 1.194 | 0.364 |

| Forces | 129.5 | 231.0 | 78.00 | 5.802 | |

| Charges | — | 20.08 | 6.577 | 0.117 | |

| Na8/9Cl | Energy | 1.692 | 2.042 | 0.481 | 0.135 |

| Forces | 57.39 | 76.67 | 32.78 | 1.052 | |

| Charges | — | 20.80 | 15.83 | 0.111 | |

| Ag | Energy | 352.0 | 320.2 | 1.323 | 0.220 |

| Forces | 1803 | 1913 | 31.69 | 26.64 | |

| Charges | — | 26.48 | 9.976 | 0.459 | |

| Au2–MgO | Energy | 2.287 | — | 0.219 | 0.107 |

| Forces | 153.1 | — | 66.0 | 5.337 | |

| Charges | — | — | 5.698 | 1.013 |

The values for 2G-, 3G-, and 4G-BPNNs are taken from ref. 49. Best results in bold.

Generalization across chemical and conformational space

For more typical ML-FF construction tasks where nonlocal effects are negligible and all molecules have consistent electronic states, SpookyNet improves upon the generalization capabilities of comparable ML-FFs. Here, the QM7-X database71 is considered as a challenging benchmark. This database was generated starting from ~7k molecular graphs with up to seven non-hydrogen atoms (C, N, O, S, Cl) drawn from the GDB13 chemical universe72. Structural and constitutional (stereo)isomers were sampled and optimized for each graph, leading to ~42k equilibrium structures. For each of these, an additional 100 non-equilibrium structures were generated by displacing atoms along linear combinations of normal modes corresponding to a temperature of 1500 K, which leads to ~4.2M structures in total. For each of these, QM7-X contains data for 42 physicochemical properties (see ref. 71 for a complete list). For constructing ML-FFs, however, the properties Etot and Ftot, which correspond to energies and forces computed at the PBE0+MBD73,74 level of theory, are the most relevant.

Because of the variety of molecules and the strongly distorted conformers contained in the QM7-X dataset, models need to be able to generalize across both chemical and conformational space to perform well. Here, two different settings are considered: In the more challenging task (unknown molecules/unknown conformations), a total of 10,100 entries corresponding to all structures sampled for 25 out of the original ~7k molecular graphs are reserved as test set and models are trained on the remainder of the data. In this setting, all structures in the test set correspond to unknown molecules, i.e., the model has to learn general principles of chemistry to perform well. As comparison, an easier task (known molecules/unknown conformations) is constructed by randomly choosing 10,100 entries as test set, so it is very likely that the training set contains at least some structures for all molecules contained in QM7-X (only unknown conformations need to be predicted). SpookyNet achieves lower prediction errors than both SchNet35 and PaiNN61 for both tasks and is only marginally worse when predicting completely unknown molecules, suggesting that it successfully generalizes across chemical space (see Table 2). Interestingly, a SpookyNet model trained on QM7-X also generalizes to significantly larger chemical structures: Even though it was trained on structures with at most seven non-hydrogen atoms, it can be used e.g., for geometry optimizations of molecules like vitamin B2, cholesterol, or deca-alanine (see Fig. 2c). The optimized structures predicted by SpookyNet have low root mean square deviations (RMSD) from the ab initio reference geometries and are of higher quality than structures obtained from other models trained on QM7-X (see Supplementary Fig. 6). Remarkably, it even predicts the correct structures for fullerenes, although the QM7-X dataset contains no training data for any pure carbon structure. As an additional test, the trained model was also applied to structures from the conformer benchmark introduced in ref. 75, which contains structures with up to 48 non-hydrogen atoms. Here, the relative energies between different conformers are predicted with sub-kcal accuracy, although absolute energies are systematically overestimated for large structures (see the conformer benchmark in the Supplementary Discussion for details).

Table 2.

Mean absolute errors for energy (meV) and force (meV Å−1) predictions for the QM7-X71 dataset.

| task | SchNet35 | PaiNN61 | SpookyNet | |

|---|---|---|---|---|

| Known molecules/ | Energy | 50.847 | 15.691 | 10.620 (0.403) |

| Unknown conformations | Forces | 53.695 | 20.301 | 14.851 (0.430) |

| Unknown molecules/ | Energy | 51.275 | 17.594 | 13.151 (0.423) |

| Unknown conformations | Forces | 62.770 | 24.161 | 17.326 (0.701) |

Results for SpookyNet are averaged over four runs, the standard deviation between runs is given in brackets. Best results in bold.

Since the QM7-X dataset has only recently been published, the performance of SpookyNet is also benchmarked on the well-established MD17 dataset21. MD17 consists of structures, energies, and forces collected from ab initio MD simulations of small organic molecules at the PBE+TS76,77 level of theory. Prediction errors for several models published in the literature are summarized in Table 3 and compared to SpookyNet, which reaches lower prediction errors or closely matches the performance of other models for all tested molecules.

Table 3.

Mean absolute errors for energy (kcal mol−1) and force (kcal mol−1 Å−1) predictions for the MD17 benchmark.

| sGDML22 | SchNet35 | PhysNet37 | FCHL1926 | NequIP62 | PaiNN61 | SpookyNet | ||

|---|---|---|---|---|---|---|---|---|

| Aspirin | Energy | 0.19 | 0.37 | 0.230 | 0.182 | — | 0.159 | 0.151 (0.008) |

| Forces | 0.68 | 1.35 | 0.605 | 0.478 | 0.348 | 0.371 | 0.258 (0.034) | |

| Ethanol | Energy | 0.07 | 0.08 | 0.059 | 0.054 | — | 0.063 | 0.052 (0.001) |

| Forces | 0.33 | 0.39 | 0.160 | 0.136 | 0.208 | 0.230 | 0.094 (0.011) | |

| Malondialdehyde | Energy | 0.10 | 0.13 | 0.094 | 0.081 | — | 0.091 | 0.079 (0.002) |

| Forces | 0.41 | 0.66 | 0.319 | 0.245 | 0.337 | 0.319 | 0.167 (0.015) | |

| Naphthalene | Energy | 0.12 | 0.16 | 0.142 | 0.117 | — | 0.117 | 0.116 (0.001) |

| Forces | 0.11 | 0.58 | 0.310 | 0.151 | 0.096 | 0.083 | 0.089 (0.018) | |

| Salicylic acid | Energy | 0.12 | 0.20 | 0.126 | 0.114 | — | 0.114 | 0.114 (0.004) |

| Forces | 0.28 | 0.85 | 0.337 | 0.221 | 0.238 | 0.209 | 0.180 (0.040) | |

| Toluene | Energy | 0.10 | 0.12 | 0.100 | 0.098 | — | 0.097 | 0.094 (0.001) |

| Forces | 0.14 | 0.57 | 0.191 | 0.203 | 0.101 | 0.102 | 0.087 (0.014) | |

| Uracil | Energy | 0.11 | 0.14 | 0.108 | 0.104 | — | 0.104 | 0.105 (0.001) |

| Forces | 0.24 | 0.56 | 0.218 | 0.105 | 0.172 | 0.140 | 0.119 (0.021) |

Results for SpookyNet are averaged over ten random splits, the standard deviation between runs is given in brackets. All models are trained on 1000 data points (separate models are used for each molecule), best results in bold.

Discussion

The present work innovates by introducing SpookyNet, an MPNN for constructing ML-FFs, which models electronic degrees of freedom and nonlocal interactions using attention in a transformer architecture78,79. SpookyNet includes physically motivated inductive biases that facilitate the extraction of chemical insight from data. For example, element embeddings in SpookyNet include the ground state electronic configuration, which encourages alchemically meaningful representations. An analytical short-range correction based on the Ziegler-Biersack-Littmark stopping potential80 improves the description of nuclear repulsion, whereas long-range contributions to the potential energy are modeled with point charge electrostatics and an empirical dispersion correction, following previous works37,65,81–84. These empirical augmentations allow SpookyNet to extrapolate beyond the data it was trained on based on physical knowledge from data.

While the general method to combine analytical long-range contributions with the predictions of a neural network is inspired by PhysNet37, there are several differences between PhysNet and the present approach. Notably, PhysNet does not include analytical short-range corrections and uses interaction functions that rely on purely radial information (instead of incorporating higher-order angular information). In addition, PhysNet cannot model different electronic states or nonlocal effects. In contrast, SpookyNet can predict different potential energy surfaces for the same molecule in different electronic states and is able to model nonlocal changes to the properties of materials such as MgO upon introduction of dopant atoms. Further, it successfully generalizes to structures well outside the chemical and conformational space covered by its training data and improves upon existing models in different quantum chemical benchmarks. The inductive biases incorporated into the architecture of SpookyNet encourage learning a chemically intuitive representation of molecular systems (see Fig. 2a). For example, the interaction functions learned by SpookyNet are designed to resemble atomic orbitals (see Fig. 1d). Obtaining such an understanding of how ML models85, here SpookyNet, solve a prediction problem is crucially important in the sciences as a low test set error32 alone cannot rule out that a model may overfit or for example capitalize on various artifacts in data86 or show “Clever Hans” effects87

So far, most ML-FFs rely on nuclear charges and atomic coordinates as their only inputs and are thus unable to distinguish chemical systems with different electronic states. Further, they often rely on purely local information and break down when nonlocal effects cannot be neglected. The additions to MPNN architectures introduced in this work solve both of these issues, extending the applicability of ML-FFs to a much wider range of chemical systems than was previously possible and allow to model properties of quantum systems that have been neglected in many existing ML-FFs.

Remaining challenges in the construction of ML-FFs pertain to their successful application to large and heterogenuous condensed phase systems, such as proteins in aqueous solution. This is a demanding task, among others, due to the difficulty of performing ab initio calculations for such large systems, which is necessary to generate appropriate reference data. Although models trained on small molecules may generalize well to larger structures, it is not understood how to guarantee that all relevant regions of the potential energy surface, visited e.g. during a dynamics simulation, are well described. We conjecture that the inclusion of physically motivated inductive biases, which is a crucial ingredient in the SpookyNet architecture, may serve as a general design principle to improve the next generation of ML-FFs and tackle such problems.

Methods

Details on the neural network architecture

In the following, basic neural network building blocks and components of the SpookyNet architecture are described in detail (see Fig. 3 for a schematic depiction). A standard building block of most neural networks are linear layers, which take input features and transform them according to

| 5 |

where and are learnable weights and biases, and nin and nout are the dimensions of the input and output feature space, respectively (in this work, nin = nout unless otherwise specified). Since Eq. (5) can only describe linear transformations, an activation function is required to learn nonlinear mappings between feature spaces. Here, a generalized SiLU (Sigmoid Linear Unit) activation function88,89 (also known as “swish”90) given by

| 6 |

is used. Depending on the values of α and β, Eq. (6) smoothly interpolates between a linear function and the popular ReLU (Rectified Linear Unit) activation91 (see Supplementary Fig. 4). Instead of choosing arbitrary fixed values, α and β are learnable parameters in this work. Whenever the notation silu(x) is used, Eq. (6) is applied to the vector x entry-wise and separate α and β parameters are used for each entry. Note that a smooth activation function is necessary for predicting potential energies, because the presence of kinks would introduce discontinuities in the atomic forces.

In theory, increasing the number of layers should never decrease the performance of a neural network, since in principle, superfluous layers could always learn the identity mapping. In practice, however, deeper neural networks become increasingly difficult to train due to the vanishing gradients problem92, which often degrades performance when too many layers are used. To combat this issue, it is common practice to introduce “shortcuts” into the architecture that skip one or several layers93, creating a residual block94. By inverting the order of linear layers and activation functions, it is even possible to train neural networks with several hundreds of layers95. These “pre-activation” residual blocks transform input features x according to

| 7 |

Throughout the SpookyNet architecture, small feedforward neural networks consisting of a residual block, followed by an activation and a linear output layer, are used as learnable feature transformations. For conciseness, such residual multilayer perceptrons (MLPs) are written as

| 8 |

The inputs to SpookyNet are transformed to initial atomic features (Eq. (1)) via embeddings. A nuclear embedding is used to map atomic numbers to vectors given by

| 9 |

Here, is a parameter matrix that projects constant element descriptors to an F-dimensional feature space and are element-specific bias parameters. The descriptors dZ encode information about the ground state electronic configuration of each element (see Supplementary Table 3 for details). Note that the term by itself allows to learn arbitrary embeddings for different elements, but including MdZ provides an inductive bias to learn representations that capture similarities between different elements, i.e., contain alchemical knowledge.

Electronic embeddings are used to map the total charge and number of unpaired electrons to vectors , which delocalize this information over all atoms via a mechanism similar to attention78. The mapping is given by

| 10 |

where are parameters and Ψ = Q for charge embeddings, or Ψ = S for spin embeddings (independent parameters are used for each type of electronic embedding). Separate parameters indicated by subscripts ± are used for positive and negative total charge inputs Q (since S is always positive or zero, only the + parameters are used for spin embeddings). Here, all bias terms in the resmlp transformation (Eq. (8)) are removed, such that when av = 0, the electronic embedding eΨ = 0 as well. Note that ∑iai = Ψ, i.e. the electronic information is distributed across atoms with weights proportional to the scaled dot product .

The initial atomic representations x(0) (Eq. (1)) are refined iteratively by a chain of T interaction modules according to

| 11 |

Here, are temporary atomic features and represent interactions with other atoms. They are computed by local (Eq. (12)) and nonlocal (Eq. (18)) interaction blocks, respectively, which are described below. Each module t produces two outputs , where x(t) is the input to the next module in the chain and all y(t) outputs are accumulated to the final atomic descriptors f (Eq. (3)).

The features l in Eq. (11) represent a local interaction of atoms within a cutoff radius and introduce information about the atom positions . They are computed from the temporary features (see Eq. (11)) according to

| 12 |

where, is the set of all indices j ≠ i for which (with ). The parameter matrices are used to construct feature-wise interaction functions as linear combinations of basis functions , , and (see Eq. (13)), which have the same rotational symmetries as s-, p-, and d-orbitals. The features , , and encode the arrangement of neighboring atoms within the cutoff radius and describes the central atom in each neighborhood. Here, s stores purely radial information, whereas and allow to resolve angular information in a computationally efficient manner (see Supplementary Discussion for details). The parameter matrices are used to compute two independent linear projections for each of the rotationally equivariant features and , from which rotationally invariant features are obtained via a scalar product 〈⋅,⋅〉. The basis functions (see Fig. 6) are given by

| 13 |

where the radial component ρk is

| 14 |

and

| 15 |

are Bernstein polynomials (k = 0, …, K − 1). The hyper-parameter K determines the total number of radial components (and the degree of the Bernstein polynomials). For K → ∞, linear combinations of bk,K−1(x) can approximate any continuous function on the interval [0, 1] uniformly58. An exponential function maps distances r from [0, ∞) to the interval (0, 1], where is a radial decay parameter shared across all basis functions (for computational efficiency). A desirable side effect of this mapping is that the rate at which learned interaction functions can vary decreases with increasing r, which introduces a chemically meaningful inductive bias (electronic wave functions also decay exponentially with increasing distance from a nucleus)37,55. The cutoff function

| 16 |

ensures that basis functions smoothly decay to zero for r ≥ rcut, so that no discontinuities are introduced when atoms enter or leave the cutoff radius. The angular component in Eq. (13) is given by

| 17 |

where and . Note that the in Eq. (17) omit the normalization constant , but are otherwise identical to the standard (real) spherical harmonics.

Fig. 6. Visualization of basis functions.

All basis functions with K = 4 (see Eq. (13)) with different radial and angular components ρk (Eq. (14)) and (Eq. (17)) are shown.

Although locality is a valid assumption for many chemical systems11, electrons may also be delocalized across multiple distant atoms. Starting from the temporary features (see Eq. (11)), such nonlocal interactions are modeled via self-attention78 as

| 18 |

where the features n in Eq. (11) are the (transposed) rows of the matrix . The idea of attention is inspired by retrieval systems96, where a query is mapped against keys to retrieve the best-matched corresponding values from a database. Standard attention is computed as

| 19 |

where are queries, keys, and values, 1N is the all-ones vector of length N, and diag(⋅) is a diagonal matrix with the input vector as the diagonal. Unfortunately, computing attention with Eq. (19) has a time and space complexity of and 79, respectively, because the attention matrix has to be stored explicitly. Since quadratic scaling with the number of atoms N is problematic for large chemical systems, the FAVOR+ (Fast Attention Via positive Orthogonal Random features) approximation79 is used instead:

| 20 |

Here is a mapping designed to approximate the softmax kernel via f random features, see ref. 79 for details (here, f = F for simplicity). The time and space complexities for computing attention with Eq. (20) are and 79, i.e., both scale linearly with the number of atoms N. To make the evaluation of SpookyNet deterministic, the random features of the mapping ϕ are drawn only once at initialization and kept fixed afterwards (instead of redrawing them for each evaluation).

Once all interaction modules are evaluated, atomic energy contributions Ei are predicted from the atomic descriptors fi via linear regression

| 21 |

and combined to obtain the total potential energy (see Eq. (4)). Here, are the regression weights and are element-dependent energy biases.

The nuclear repulsion term Erep in Eq. (4) is based on the Ziegler-Biersack-Littmark stopping potential80 and given by

| 22 |

Here, ke is the Coulomb constant and ak, ck, p, and d are parameters (see Eqs. (12) and (16) for the definitions of and fcut). Long-range electrostatic interactions are modeled as

| 23 |

where qi are atomic partial charges predicted from the atomic features fi according to

| 24 |

Here, and are regression weights and element-dependent biases, respectively. The second half of the equation ensures that ∑iqi = Q, i.e., the total charge is conserved. Standard Ewald summation97 can be used to evaluate Eele when periodic boundary conditions are used. Note that Eq. (23) smoothly interpolates between the correct r−1 behavior of Coulomb’s law at large distances (r > roff) and a damped dependence at short distances (r < ron) via a smooth switching function fswitch given by

| 25 |

For simplicity, and , i.e. the switching interval is automatically adjusted depending on the chosen cutoff radius rcut (see Eq. (16)). It is also possible to construct dipole moments from the partial charges according to

| 26 |

which can be useful for calculating infrared spectra from MD simulations and for fitting qi to ab initio reference data without imposing arbitrary charge decomposition schemes98. Long-range dispersion interactions are modeled via the term Evdw. Analytical van der Waals corrections are an active area of research and many different methods, for example the Tkatchenko-Scheffler correction77, or many body dispersion74, have been proposed99. In this work, the two-body term of the D4 dispersion correction100 is used for its simplicity and computational efficiency:

| 27 |

Here sn are scaling parameters, is a damping function, and are pairwise dispersion coefficients. They are obtained by interpolating between tabulated reference values based on a (geometry-dependent) fractional coordination number and an atomic partial charge qi. In the standard D4 scheme, the partial charges are obtained via a charge equilibration scheme100, in this work, however, the qi from Eq. (24) are used instead. Note that the D4 method was developed mainly to correct for the lack of dispersion in density functionals, so typically, some of its parameters are adapted to the functional the correction is applied to (optimal values for each functional are determined by fitting to high-quality electronic reference data)100. In this work, all D4 parameters that vary between different functionals are treated as learnable parameters when SpookyNet is trained, i.e., they are automatically adapted to the reference data. Since Eq. (24) (instead of charge equilibration) is used to determine the partial charges, an additional learnable parameter sq is introduced to scale the tabulated reference charges used to determine dispersion coefficients . For further details on the implementation of the D4 method, the reader is referred to ref. 100.

Training and hyperparameters

All SpookyNet models in this work use T = 6 interaction modules, F = 128 features, and a cutoff radius rcut = 10 a0 (≈5.29177 Å), unless otherwise specified. Weights are initialized as random (semi-)orthogonal matrices with entries scaled according to the Glorot initialization scheme92. An exception are the weights of the second linear layer in residual blocks (linear2 in Eq. (7)) and the matrix M used in nuclear embeddings (Eq. (9)), which are initialized with zeros. All bias terms and the and parameters in the electronic embedding (Eq. (10)) are also initialized with zeros. The parameters for the activation function (Eq. (6)) start as α = 1.0 and β = 1.702, following the recommendations given in Ref. 88. The radial decay parameter γ used in Eq. (14) is initialized to and constrained to positive values. The parameters of the empirical nuclear repulsion term (Eq. (22)) start from the literature values of the ZBL potential80 and are constrained to positive values (coefficients ck are further constrained such that ∑ck = 1 to guarantee the correct asymptotic behavior for short distances). Parameters of the dispersion correction (Eq. (27)) start from the values recommended for Hartree-Fock calculations100 and the charge scaling parameter sq is initialized to 1 (and constrained to remain positive).

The parameters are trained by minimizing a loss function with mini-batch gradient descent using the AMSGrad optimizer101 with the recommended default momentum hyperparameters and an initial learning rate of 10−3. During training, an exponential moving average of all model parameters is kept using a smoothing factor of 0.999. Every 1k training steps, a model using the averaged parameters is evaluated on the validation set and the learning rate is decayed by a factor of 0.5 whenever the validation loss does not decrease for 25 consecutive evaluations. Training is stopped when the learning rate drops below 10−5 and the model that performed best on the validation set is selected. The loss function is given by

| 28 |

where , , and are separate loss terms for energies, forces and dipole moments and αE, αF, and αμ corresponding weighting hyperparameters that determine the relative influence of each term to the total loss. The energy loss is given by

| 29 |

where B is the number of structures in the mini-batch, Epot,b the predicted potential energy (Eq. (4)) for structure b and the corresponding reference energy. The batch size B is chosen depending on the available training data: When training sets contain 1k structures or less, B = 1, for 10k structures or less, B = 10, and for more than 10k structures, B = 100. The force loss is given by

| 30 |

where Nb is the number of atoms in structure b and the reference force acting on atom i in structure b. The dipole loss

| 31 |

allows to learn partial charges (Eq. (24)) from reference dipole moments , which are, in contrast to arbitrary charge decompositions, true quantum mechanical observables98. Partial charges learned in this way are typically similar in magnitude to Hirshfeld charges102 and follow similar overall trends (see Supplementary Fig. 5). Note that for charged molecules, the dipole moment is dependent on the origin of the coordinate system, so consistent conventions must be used. For some datasets or applications, however, reference partial charges obtained from a charge decomposition scheme might be preferred (or the only data available). In this case, the term in Eq. (28) is replaced by with

| 32 |

For simplicity, the relative loss weights are set to αE = αF = αμ/q = 1 in this work, with the exception of the MD17 and QM7-X datasets, for which αF = 100 is used following previous work37. Both energy and force prediction errors are significantly reduced when the force weight is increased (see Supplementary Table 4). Note that the relative weight of loss terms also depends on the chosen unit system (atomic units are used here). For datasets that lack the reference data necessary for computing any of the given loss terms (Eqs. (29)–(32)), the corresponding weight is set to zero. In addition, whenever no reference data (neither dipole moments nor reference partial charges) are available to fit partial charges, both Eele and Evdw are omitted when predicting the potential energy Epot (see Eq. (4)).

For the “unknown molecules/unknown conformations” task reported in Table 2, the 25 entries with the following ID numbers (idmol field in the QM7-X file format) were used as a test set: 1771, 1805, 1824, 2020, 2085, 2117, 3019, 3108, 3190, 3217, 3257, 3329, 3531, 4010, 4181, 4319, 4713, 5174, 5370, 5580, 5891, 6315, 6583, 6809, 7020. In addition to energies and forces, SpookyNet uses dipole moments (property D in the QM7-X dataset) to fit atomic partial charges.

Computing and visualizing local chemical potentials and nonlocal contributions

To compute the local chemical potentials shown in Fig. 2a and Supplementary Fig. 3, a similar approach as that described in Ref. 33 is followed. To compute the local chemical potential of a molecule M for an atom of type A (here, hydrogen is used), the idea is to introduce a probe atom of type A at position and let it interact with all atoms of M, but not vice versa. In other words, the prediction for M is unperturbed, but the probe atom “feels” the presence of M. Then, the predicted energy contribution of the probe atom is interpreted as its local chemical potential . This is achieved as follows: First, the electronic embeddings (Eq. (10)) for all N atoms in M are computed as if the probe atom was not present. Then, the embeddings for the probe atom are computed as if it was part of a larger molecule with N + 1 atoms. Similarly, the contributions of local interactions (Eq. (12)) and nonlocal interactions (Eq. (18)) to the features of the probe atom are computed by pretending it is part of a molecule with N + 1 atoms, whereas all contributions to the features of the N atoms in molecule M are computed without the presence of the probe atom. For visualization, all chemical potentials are projected onto the isosurface, where the sum runs over the positions of all atoms i in M.

To obtain the individual contributions for s-, p-, and d-orbital-like interactions shown in Fig. 2, different terms for the computation of l in Eq. (12) are set to zero. For the s-orbital-like contribution, both and are set to zero. For the p-orbital-like contribution, only is set to zero, and the s-orbital-like contribution is subtracted from the result. Similarly, for the d-orbital-like contribution, the model is evaluated normally and the result from setting only to zero is subtracted.

The nonlocal contributions to the potential energy surface shown in Fig. 2b are obtained by first evaluating the model normally and then subtracting the predictions obtained when setting n in Eq. (11) to zero.

SchNet and PaiNN training

The SchNet and PaiNN models for the QM7-X experiments use F = 128 features, as well as T = 6 and T = 3 interactions, respectively. Both employ 20 Gaussian radial basis function up to a cutoff of 5 Å. They were trained with the Adam optimizer103 at a learning rate of 10−4 and a batch size of 10.

Data generation

For demonstrating the ability of SpookyNet to model different electronic states and nonlocal interactions, energies, forces, and dipoles for three new datasets were computed at the semi-empirical GFN2-xTB level of theory104. Both the Ag/Ag (see Fig. 4a) and the singlet/triplet CH2 (see Fig. 4b) datasets were computed by sampling 550 structures around the minima of both electronic states with normal mode sampling23 at 1000 K. Then, each sampled structure was re-computed in the other electronic state (e.g., all structures sampled for Ag were re-computed with a negative charge), leading to a total of 2200 structures for each dataset (models were trained on a subset of 1000 randomly sampled structures).

The dataset for Fig. 5 was computed by performing bond scans for all nine shown diatomic molecules using 1k points spaced evenly between 1.5 and 20 a0, leading to a total of 9k structures. Models were trained on all data with an increased cutoff rcut = 18 a0 to demonstrate that a model without nonlocal interactions is unable to fit the data, even when it is allowed to overfit and use a large cutoff.

The reference geometries shown in Fig. 2c and Supplementary Fig. 6 were computed at the PBE0+MBD73,74 level using the FHI-aims code105,106. All calculations used “tight” settings for basis functions and integration grids and the same convergence criteria as those applied for computing the QM7-X dataset71.

Supplementary information

Acknowledgements

O.T.U. acknowledges funding from the Swiss National Science Foundation (Grant No. P2BSP2_188147). We thank the authors of ref. 107 for sharing raw data for reproducing the learning curves shown in Supplementary Fig. 2 and the geometries displayed in Supplementary Fig. 1e. K.R.M. was supported in part by the Institute of Information & Communications Technology Planning & Evaluation (IITP) grant funded by the Korea Government (No. 2019-0-00079, Artificial Intelligence Graduate School Program, Korea University), and was partly supported by the German Ministry for Education and Research (BMBF) under Grants 01IS14013A-E, 01GQ1115, 01GQ0850, 01IS18025A, and 01IS18037A; the German Research Foundation (DFG) under Grant Math+, EXC 2046/1, Project ID 390685689. Correspondence should be addressed to O.T.U. and K.R.M. We thank Alexandre Tkatchenko and Hartmut Maennel for very helpful discussions and feedback on the manuscript.

Author contributions

O.T.U. designed the SpookyNet architecture, wrote the code, and ran experiments. O.T.U., S.C., M.G., K.T.S., and H.E.S. designed the experiments. O.T.U. and K.R.M. designed the figures, all authors contributed to writing the manuscript.

Peer review information

Nature Communications thanks Jan Gerit Brandenburg and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Data availability

The singlet/triplet carbene and Ag/Ag datasets generated for this work are available without restrictions from 10.5281/zenodo.5115732108. All other datasets used in this work are publicly available from ref. 109 (see completeness test in the Supplementary Discussion), http://www.sgdml.org(MD17), ref. 110 (QM7-X), ref. 111 (datasets used in Table 1), and ref. 68 (QMspin).

Code availability

A reference implementation of SpookyNet using PyTorch112 is available from https://github.com/OUnke/SpookyNet.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Oliver T. Unke, oliver.unke@googlemail.com

Klaus-Robert Müller, Email: klaus-robert.mueller@tu-berlin.de.

Supplementary information

The online version contains supplementary material available at 10.1038/s41467-021-27504-0.

References

- 1.Warshel A. Molecular dynamics simulations of biological reactions. Acc. Chem. Res. 2002;35:385. doi: 10.1021/ar010033z. [DOI] [PubMed] [Google Scholar]

- 2.Karplus M, McCammon JA. Molecular dynamics simulations of biomolecules. Nat. Struct. Biol. 2002;9:646. doi: 10.1038/nsb0902-646. [DOI] [PubMed] [Google Scholar]

- 3.Dirac PAM. Quantum mechanics of many-electron systems. Proc. R. Soc. Lond. A. 1929;123:714. [Google Scholar]

- 4.Dykstra, C., Frenking, G., Kim, K., & Scuseria, G. (Eds.). Theory and applications of computational chemistry: the first forty years. (Elsevier 2005).

- 5.Unke OT, Koner D, Patra S, Käser S, Meuwly M. High-dimensional potential energy surfaces for molecular simulations: from empiricism to machine learning. Mach. Learn. Sci. Technol. 2020;1:013001. [Google Scholar]

- 6.Bartók AP, Payne MC, Kondor R, Csányi G. Gaussian approximation potentials: the accuracy of quantum mechanics, without the electrons. Phys. Rev. Lett. 2010;104:136403. doi: 10.1103/PhysRevLett.104.136403. [DOI] [PubMed] [Google Scholar]

- 7.Rupp M, Tkatchenko A, Müller K-R, Von Lilienfeld OA. Fast and accurate modeling of molecular atomization energies with machine learning. Phys. Rev. Lett. 2012;108:058301. doi: 10.1103/PhysRevLett.108.058301. [DOI] [PubMed] [Google Scholar]

- 8.Bartók AP, et al. Machine learning unifies the modeling of materials and molecules. Sci. Adv. 2017;3:e1701816. doi: 10.1126/sciadv.1701816. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Gastegger M, Schwiedrzik L, Bittermann M, Berzsenyi F, Marquetand P. wACSF—weighted atom-centered symmetry functions as descriptors in machine learning potentials. J. Chem. Phys. 2018;148:241709. doi: 10.1063/1.5019667. [DOI] [PubMed] [Google Scholar]

- 10.Schütt K, Gastegger M, Tkatchenko A, Müller K-R, Maurer RJ. Unifying machine learning and quantum chemistry with a deep neural network for molecular wavefunctions. Nat. Commun. 2019;10:5024. doi: 10.1038/s41467-019-12875-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Unke OT, et al. Machine learning force fields. Chem. Rev. 2021;121:10142–10186. doi: 10.1021/acs.chemrev.0c01111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.von Lilienfeld OA, Müller K-R, Tkatchenko A. Exploring chemical compound space with quantum-based machine learning. Nat. Rev. Chem. 2020;4:347. doi: 10.1038/s41570-020-0189-9. [DOI] [PubMed] [Google Scholar]

- 13.Noé F, Tkatchenko A, Müller K-R, Clementi C. Machine learning for molecular simulation. Annu. Rev. Phys. Chem. 2020;71:361. doi: 10.1146/annurev-physchem-042018-052331. [DOI] [PubMed] [Google Scholar]

- 14.Glielmo, A. et al. Unsupervised learning methods for molecular simulation data. Chem. Rev. 121, 9722–9758 (2021). [DOI] [PMC free article] [PubMed]

- 15.Keith JA, et al. Combining machine learning and computational chemistry for predictive insights into chemical systems. Chem. Rev. 2021;121:9816–9872. doi: 10.1021/acs.chemrev.1c00107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Sauceda HE, Gastegger M, Chmiela S, Müller K-R, Tkatchenko A. Molecular force fields with gradient-domain machine learning (GDML): Comparison and synergies with classical force fields. J. Chem. Phys. 2020;153:124109. doi: 10.1063/5.0023005. [DOI] [PubMed] [Google Scholar]

- 17.Behler J, Parrinello M. Generalized neural-network representation of high-dimensional potential-energy surfaces. Phys. Rev. Lett. 2007;98:146401. doi: 10.1103/PhysRevLett.98.146401. [DOI] [PubMed] [Google Scholar]

- 18.Behler J. Atom-centered symmetry functions for constructing high-dimensional neural network potentials. J. Chem. Phys. 2011;134:074106. doi: 10.1063/1.3553717. [DOI] [PubMed] [Google Scholar]

- 19.McCulloch WS, Pitts W. A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biophys. 1943;5:115. [PubMed] [Google Scholar]

- 20.Unke OT, Meuwly M. Toolkit for the construction of reproducing kernel-based representations of data: application to multidimensional potential energy surfaces. J. Chem. Inf. Model. 2017;57:1923. doi: 10.1021/acs.jcim.7b00090. [DOI] [PubMed] [Google Scholar]

- 21.Chmiela S, et al. Machine learning of accurate energy-conserving molecular force fields. Sci. Adv. 2017;3:e1603015. doi: 10.1126/sciadv.1603015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Chmiela S, Sauceda HE, Poltavsky I, Müller K-R, Tkatchenko A. sGDML: Constructing accurate and data efficient molecular force fields using machine learning. Computer Phys. Commun. 2019;240:38. [Google Scholar]

- 23.Smith JS, Isayev O, Roitberg AE. ANI-1: an extensible neural network potential with DFT accuracy at force field computational cost. Chem. Sci. 2017;8:3192. doi: 10.1039/c6sc05720a. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Zhang L, Han J, Wang H, Car R, Weinan E. Deep potential molecular dynamics: a scalable model with the accuracy of quantum mechanics. Phys. Rev. Lett. 2018;120:143001. doi: 10.1103/PhysRevLett.120.143001. [DOI] [PubMed] [Google Scholar]

- 25.Unke OT, Meuwly M. A reactive, scalable, and transferable model for molecular energies from a neural network approach based on local information. J. Chem. Phys. 2018;148:241708. doi: 10.1063/1.5017898. [DOI] [PubMed] [Google Scholar]

- 26.Christensen AS, Bratholm LA, Faber FA, Anatole von Lilienfeld O. Anatole von Lilienfeld, O. FCHL revisited: faster and more accurate quantum machine learning. J. Chem. Phys. 2020;152:044107. doi: 10.1063/1.5126701. [DOI] [PubMed] [Google Scholar]

- 27.Vapnik, V. N. The Nature of Statistical Learning Theory. (Springer, 1995).

- 28.Cortes C, Vapnik V. Support-vector networks. Mach. Learn. 1995;20:273. [Google Scholar]

- 29.Müller K-R, Mika S, Ratsch G, Tsuda K, Schölkopf B. An introduction to kernel-based learning algorithms. IEEE Trans. Neural Netw. 2001;12:181. doi: 10.1109/72.914517. [DOI] [PubMed] [Google Scholar]

- 30.Schölkopf, B. & Smola, A. J. Learning with kernels: support vector machines, regularization, optimization, and beyond. (MIT press, 2002).

- 31.Braun ML, Buhmann JM, Müller K-R. On relevant dimensions in kernel feature spaces. J. Mach. Learn. Res. 2008;9:1875. [Google Scholar]

- 32.Hansen K, et al. Assessment and validation of machine learning methods for predicting molecular atomization energies. J. Chem. Theory Comput. 2013;9:3404. doi: 10.1021/ct400195d. [DOI] [PubMed] [Google Scholar]

- 33.Schütt KT, Arbabzadah F, Chmiela S, Müller KR, Tkatchenko A. Quantum-chemical insights from deep tensor neural networks. Nat. Commun. 2017;8:13890. doi: 10.1038/ncomms13890. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Gilmer, J., Schoenholz, S. S., Riley, P. F., Vinyals, O. & Dahl, G. E. Neural message passing for quantum chemistry, in International Conference on Machine Learning, 1263–1272 https://proceedings.mlr.press/v70/gilmer17a.html (2017).

- 35.Schütt KT, Sauceda HE, Kindermans P-J, Tkatchenko A, Müller K-R. SchNet—a deep learning architecture for molecules and materials. J. Chem. Phys. 2018;148:241722. doi: 10.1063/1.5019779. [DOI] [PubMed] [Google Scholar]

- 36.Lubbers N, Smith JS, Barros K. Hierarchical modeling of molecular energies using a deep neural network. J. Chem. Phys. 2018;148:241715. doi: 10.1063/1.5011181. [DOI] [PubMed] [Google Scholar]

- 37.Unke OT, Meuwly M. PhysNet: A neural network for predicting energies, forces, dipole moments, and partial charges. J. Chem. Theory Comput. 2019;15:3678. doi: 10.1021/acs.jctc.9b00181. [DOI] [PubMed] [Google Scholar]

- 38.Friesner RA. Ab initio quantum chemistry: methodology and applications. Proc. Natl Acad. Sci. USA. 2005;102:6648. doi: 10.1073/pnas.0408036102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Born, M. & Einstein, A. The Born-Einstein Letters 1916–1955 (Macmillan, 2005).

- 40.Noodleman L, Peng C, Case D, Mouesca J-M. Orbital interactions, electron delocalization and spin coupling in iron-sulfur clusters. Coord. Chem. Rev. 1995;144:199. [Google Scholar]

- 41.Dreuw A, Weisman JL, Head-Gordon M. Long-range charge-transfer excited states in time-dependent density functional theory require non-local exchange. J. Chem. Phys. 2003;119:2943. [Google Scholar]

- 42.Duda L-C, et al. Resonant inelastic X-Ray scattering at the oxygen K resonance of NiO: nonlocal charge transfer and double-singlet excitations. Phys. Rev. Lett. 2006;96:067402. doi: 10.1103/PhysRevLett.96.067402. [DOI] [PubMed] [Google Scholar]

- 43.Bellec A, et al. Nonlocal activation of a bistable atom through a surface state charge-transfer process on Si(100)–(2 × 1):H. Phys. Rev. Lett. 2010;105:048302. doi: 10.1103/PhysRevLett.105.048302. [DOI] [PubMed] [Google Scholar]

- 44.Boström EV, Mikkelsen A, Verdozzi C, Perfetto E, Stefanucci G. Charge separation in donor–C60 complexes with real-time green functions: the importance of nonlocal correlations. Nano Lett. 2018;18:785. doi: 10.1021/acs.nanolett.7b03995. [DOI] [PubMed] [Google Scholar]

- 45.Ghasemi SA, Hofstetter A, Saha S, Goedecker S. Interatomic potentials for ionic systems with density functional accuracy based on charge densities obtained by a neural network. Phys. Rev. B. 2015;92:045131. [Google Scholar]

- 46.Rappe AK, Goddard III WA. Charge equilibration for molecular dynamics simulations. J. Phys. Chem. 1991;95:3358. [Google Scholar]

- 47.Wilmer CE, Kim KC, Snurr RQ. An extended charge equilibration method. J. Phys. Chem. Lett. 2012;3:2506. doi: 10.1021/jz3008485. [DOI] [PubMed] [Google Scholar]

- 48.Cheng Y-T, et al. A charge optimized many-body (comb) potential for titanium and titania. J. Phys. Condens. Matter. 2014;26:315007. doi: 10.1088/0953-8984/26/31/315007. [DOI] [PubMed] [Google Scholar]

- 49.Ko TW, Finkler JA, Goedecker S, Behler J. A fourth-generation high-dimensional neural network potential with accurate electrostatics including non-local charge transfer. Nat. Commun. 2021;12:398. doi: 10.1038/s41467-020-20427-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Zubatyuk, R., Smith, J.S., Nebgen, B.T. et al. Teaching a neural network to attach and detach electrons from molecules. Nat. Commun.12, 4870 (2021). [DOI] [PMC free article] [PubMed]

- 51.Zubatyuk R, Smith JS, Leszczynski J, Isayev O. Accurate and transferable multitask prediction of chemical properties with an atoms-in-molecules neural network. Sci. Adv. 2019;5:eaav6490. doi: 10.1126/sciadv.aav6490. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Xie X, Persson KA, Small DW. Incorporating electronic information into machine learning potential energy surfaces via approaching the ground-state electronic energy as a function of atom-based electronic populations. J. Chem. Theory Comput. 2020;16:4256. doi: 10.1021/acs.jctc.0c00217. [DOI] [PubMed] [Google Scholar]

- 53.Qiao Z, Welborn M, Anandkumar A, Manby FR, Miller III TF. OrbNet: Deep learning for quantum chemistry using symmetry-adapted atomic-orbital features. J. Chem. Phys. 2020;153:124111. doi: 10.1063/5.0021955. [DOI] [PubMed] [Google Scholar]

- 54.Pfau D, Spencer JS, Matthews AG, Foulkes WMC. Ab initio solution of the many-electron Schrödinger equation with deep neural networks. Phys. Rev. Res. 2020;2:033429. [Google Scholar]

- 55.Hermann, J., Schätzle, Z. & Noé, F. Deep-neural-network solution of the electronic Schrödinger equation. Nat. Chem. 12, 891–897 (2020). [DOI] [PubMed]

- 56.Scherbela, M., Reisenhofer, R., Gerard, L., Marquetand, P. & Grohs, P. Solving the electronic Schrödinger equation for multiple nuclear geometries with weight-sharing deep neural networks. arXiv preprint arXiv:2105.08351 (2021). [DOI] [PubMed]

- 57.Klicpera, J., Groß, J. & Günnemann, S. Directional message passing for molecular graphs. International Conference on Learning Representations (ICLR)https://openreview.net/forum?id=B1eWbxStPH (2020).

- 58.Bernstein S. Démonstration du théorème de Weierstrass fondée sur le calcul des probabilités. Commun. Kharkov Math. Soc. 1912;13:1. [Google Scholar]

- 59.Thomas, N. et al. Tensor field networks: Rotation-and translation-equivariant neural networks for 3d point clouds. arXiv preprint arXiv:1802.08219 (2018).

- 60.Anderson, Brandon and Hy, Truong Son and Kondor, Risi, Cormorant: Covariant Molecular Neural Networks, Advances in Neural Information Processing Systems 32, https://papers.nips.cc/paper/2019/hash/03573b32b2746e6e8ca98b9123f2249b-Abstract.html (2019).

- 61.Schütt, K. T., Unke, O. T. & Gastegger, M. Equivariant message passing for the prediction of tensorial properties and molecular spectra. Proceedings of the 38th International Conference on Machine Learning, 9377–9388 (2021).

- 62.Batzner, S. et al. SE(3)-equivariant graph neural networks for data-efficient and accurate interatomic potentials. arXiv preprint arXiv:2101.03164 (2021). [DOI] [PMC free article] [PubMed]

- 63.Bartók AP, Kondor R, Csányi G. On representing chemical environments. Phys. Rev. B. 2013;87:184115. [Google Scholar]

- 64.Thompson AP, Swiler LP, Trott CR, Foiles SM, Tucker GJ. Spectral neighbor analysis method for automated generation of quantum-accurate interatomic potentials. J. Comput. Phys. 2015;285:316. [Google Scholar]

- 65.Yao K, Herr JE, Toth DW, Mckintyre R, Parkhill J. The TensorMol-0.1 model chemistry: a neural network augmented with long-range physics. Chem. Sci. 2018;9:2261. doi: 10.1039/c7sc04934j. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Grisafi A, Ceriotti M. Incorporating long-range physics in atomic-scale machine learning. J. Chem. Phys. 2019;151:204105. doi: 10.1063/1.5128375. [DOI] [PubMed] [Google Scholar]

- 67.Bereau T, DiStasio Jr RA, Tkatchenko A, Von Lilienfeld OA. Non-covalent interactions across organic and biological subsets of chemical space: physics-based potentials parametrized from machine learning. J. Chem. Phys. 2018;148:241706. doi: 10.1063/1.5009502. [DOI] [PubMed] [Google Scholar]

- 68.Schwilk, M., Tahchieva, D. N. & von Lilienfeld, O. A. The QMspin data set: Several thousand carbene singlet and triplet state structures and vertical spin gaps computed at MRCISD+Q-F12/cc-pVDZ-F12 level of theory. Mater. Cloud Arch. 10.24435/materialscloud:2020.0051/v1 (2020a).

- 69.Schwilk, M., Tahchieva, D. N. & von Lilienfeld, O. A. Large yet bounded: Spin gap ranges in carbenes. arXiv preprint arXiv:2004.10600 (2020b).

- 70.Deringer VL, Caro MA, Csányi G. A general-purpose machine-learning force field for bulk and nanostructured phosphorus. Nat. Commun. 2020;11:1. doi: 10.1038/s41467-020-19168-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Hoja J, et al. QM7-X, a comprehensive dataset of quantum-mechanical properties spanning the chemical space of small organic molecules. Sci. Data. 2021;8:43. doi: 10.1038/s41597-021-00812-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 72.Blum LC, Reymond J-L. 970 million druglike small molecules for virtual screening in the chemical universe database GDB-13. J. Am. Chem. Soc. 2009;131:8732. doi: 10.1021/ja902302h. [DOI] [PubMed] [Google Scholar]

- 73.Adamo C, Barone V. Toward reliable density functional methods without adjustable parameters: the PBE0 model. J. Chem. Phys. 1999;110:6158. [Google Scholar]

- 74.Tkatchenko A, DiStasio Jr RA, Car R, Scheffler M. Accurate and efficient method for many-body van der Waals interactions. Phys. Rev. Lett. 2012;108:236402. doi: 10.1103/PhysRevLett.108.236402. [DOI] [PubMed] [Google Scholar]

- 75.Folmsbee D, Hutchison G. Assessing conformer energies using electronic structure and machine learning methods. Int. J. Quantum Chem. 2021;121:e26381. [Google Scholar]

- 76.Perdew JP, Burke K, Ernzerhof M. Generalized gradient approximation made simple. Phys. Rev. Lett. 1996;77:3865. doi: 10.1103/PhysRevLett.77.3865. [DOI] [PubMed] [Google Scholar]

- 77.Tkatchenko A, Scheffler M. Accurate molecular van der Waals interactions from ground-state electron density and free-atom reference data. Phys. Rev. Lett. 2009;102:073005. doi: 10.1103/PhysRevLett.102.073005. [DOI] [PubMed] [Google Scholar]

- 78.Vaswani, Ashish and Shazeer, Noam and Parmar, Niki and Uszkoreit, Jakob and Jones, Llion and Gomez, Aidan N and Kaiser, Lukasz and Polosukhin, Illia, Attention is All you Need, Advances in Neural Information Processing Systems 30, https://papers.nips.cc/paper/2017/hash/3f5ee243547dee91fbd053c1c4a845aa-Abstract.html (2017).

- 79.Choromanski, K. et al. Rethinking attention with performers. International Conference on Learning Representations https://openreview.net/forum?id=Ua6zuk0WRH (2021).

- 80.Ziegler, J. F., Littmark, U. & Biersack, J. P. The Stopping and Range of Ions in Solids (Pergamon, 1985).

- 81.Artrith N, Morawietz T, Behler J. High-dimensional neural-network potentials for multicomponent systems: applications to zinc oxide. Phys. Rev. B. 2011;83:153101. [Google Scholar]

- 82.Morawietz T, Sharma V, Behler J. A neural network potential-energy surface for the water dimer based on environment-dependent atomic energies and charges. J. Chem. Phys. 2012;136:064103. doi: 10.1063/1.3682557. [DOI] [PubMed] [Google Scholar]

- 83.Morawietz T, Behler J. A density-functional theory-based neural network potential for water clusters including van der Waals corrections. J. Phys. Chem. A. 2013;117:7356. doi: 10.1021/jp401225b. [DOI] [PubMed] [Google Scholar]

- 84.Uteva E, Graham RS, Wilkinson RD, Wheatley RJ. Interpolation of intermolecular potentials using Gaussian processes. J. Chem. Phys. 2017;147:161706. doi: 10.1063/1.4986489. [DOI] [PubMed] [Google Scholar]

- 85.Samek, W., Montavon, G., Vedaldi, A., Hansen, L. K. & Müller, K.-R. In Explainable AI: Interpreting, Explaining and Visualizing Deep Learning, vol. 11700 (Springer Nature, 2019).

- 86.Samek W, Montavon G, Lapuschkin S, Anders CJ, Müller K-R. Explaining deep neural networks and beyond: a review of methods and applications. Proc. IEEE. 2021;109:247. [Google Scholar]

- 87.Lapuschkin S, et al. Unmasking clever Hans predictors and assessing what machines really learn. Nat. Commun. 2019;10:1096. doi: 10.1038/s41467-019-08987-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 88.Hendrycks, D. & Gimpel, K. Gaussian error linear units (GELUs). arXiv preprint arXiv:1606.08415 (2016).

- 89.Elfwing S, Uchibe E, Doya K. Sigmoid-weighted linear units for neural network function approximation in reinforcement learning. Neural Netw. 2018;107:3. doi: 10.1016/j.neunet.2017.12.012. [DOI] [PubMed] [Google Scholar]

- 90.Ramachandran, P., Zoph, B. & Le, Q. V. Searching for activation functions. arXiv preprint arXiv:1710.05941 (2017).

- 91.Nair, V. & Hinton, G. E. Rectified linear units improve restricted boltzmann machines. in ICML International Conference on Machine Learning (2010).

- 92.Glorot, X. & Bengio, Y. Understanding the difficulty of training deep feedforward neural networks, in Proc. thirteenth international conference on artificial intelligence and statistics, 249–256 (JMLR Workshop and Conference Proceedings, 2010).

- 93.Srivastava, R., Greff, K. & Schmidhuber, J. Highway networks. arXiv preprint arXiv:1505.00387 (2015).

- 94.He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition, in Proc. IEEE conference on computer vision and pattern recognition 770–778 (2016).

- 95.He, K., Zhang, X., Ren, S. & Sun, J. Identity mappings in deep residual networks. in European conference on computer vision, 630–645 (Springer, 2016).

- 96.Kowalski, G. J. In Information Retrieval Systems: Theory and Implementation, Vol. 1 (Springer, 2007).

- 97.Ewald PP. Die Berechnung optischer und elektrostatischer Gitterpotentiale. Ann. der Phys. 1921;369:253. [Google Scholar]

- 98.Gastegger M, Behler J, Marquetand P. Machine learning molecular dynamics for the simulation of infrared spectra. Chem. Sci. 2017;8:6924. doi: 10.1039/c7sc02267k. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 99.Hermann J, DiStasio Jr RA, Tkatchenko A. First-principles models for van der Waals interactions in molecules and materials: concepts, theory, and applications. Chem. Rev. 2017;117:4714. doi: 10.1021/acs.chemrev.6b00446. [DOI] [PubMed] [Google Scholar]

- 100.Caldeweyher E, et al. A generally applicable atomic-charge dependent london dispersion correction. J. Chem. Phys. 2019;150:154122. doi: 10.1063/1.5090222. [DOI] [PubMed] [Google Scholar]

- 101.Reddi, S. J., Kale, S. & Kumar, S. On the convergence of Adam and beyond. International Conference on Learning Representations (2018).

- 102.Hirshfeld FL. Bonded-atom fragments for describing molecular charge densities. Theor. Chim. Acta. 1977;44:129. [Google Scholar]

- 103.Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. International Conference for Learning Representations (2015).