Abstract

A variety of deep neural network (DNN)-based image denoising methods have been proposed for use with medical images. Traditional measures of image quality (IQ) have been employed to optimize and evaluate these methods. However, the objective evaluation of IQ for the DNN-based denoising methods remains largely lacking. In this work, we evaluate the performance of DNN-based denoising methods by use of task-based IQ measures. Specifically, binary signal detection tasks under signal-known-exactly (SKE) with background-known-statistically (BKS) conditions are considered. The performance of the ideal observer (IO) and common linear numerical observers are quantified and detection efficiencies are computed to assess the impact of the denoising operation on task performance. The numerical results indicate that, in the cases considered, the application of a denoising network can result in a loss of task-relevant information in the image. The impact of the depth of the denoising networks on task performance is also assessed. The presented results highlight the need for the objective evaluation of IQ for DNN-based denoising technologies and may suggest future avenues for improving their effectiveness in medical imaging applications.

Keywords: Image denoising, task-based image quality assessment, numerical observers, ideal observer, deep learning

I. Introduction

IMAGE denoising is a classical image processing operation that is commonly employed in medical imaging applications [1]–[7]. Recently, denoising methods based on deep neural networks (DNNs) have been proposed and widely investigated [5], [6], [8]–[15]. These methods are typically trained by minimizing loss functions that quantify a distance between the denoised image and the defined target image (e.g., a noise-free or low noise image) and have demonstrated high performance in terms of traditional image quality metrics such as root mean square error (RMSE), structural similarity index metric (SSIM) [16] or peak signal-to-noise ratio (PSNR).

In medical imaging, images are often acquired for specific purposes and the use of objective measures of image quality (IQ) has been widely advocated for assessing imaging systems and image processing algorithms [17]–[22]. Despite this, the objective evaluation of modern DNN-based medical image denoising methods remains largely lacking [23]. Although DNN-based denoising methods, by conventional design, can improve traditional IQ measures, it is well-known that such measures may not always correlate with objective task-based IQ measures [24]–[28]. For example, Yu et al. [23] conducted a study in which a DNN-based denoising method was observed to reduce RMSE compared to an alternative method, but signal detectability was unimproved [23].

Even more concerning is the fact that image denoising methods can compromise the visibility of important structural details in the denoised images even though traditional measurement metrics (such as RMSE or SSIM) are improved [2], [8], [29]. While DNN-based denoising operations may succeed at lowering noise levels, the extent to which they perturb the second- and higher-order statistical properties of an image that are relevant to signal detection is not understood. Finally, according to data processing inequality [30], the performance of an ideal observer cannot be increased via image processing operations such as denoising. However, conditions under which DNN-based denoising methods can improve the performance of sub-optimal observers on detection tasks remains relatively unexplored.

The purpose of this study is to assess modern DNN-based denoising methods by use of objective IQ measures, in a preliminary attempt to address the issues described above. Three canonical DNN-based denoising methods are identified for analysis. The convolutional neural network (CNN)-based observer, the Hotelling observer, the Regularized Hotelling observer, an anthromorphic channelized Hotelling observer, and a non-prewhitening matched filter are implemented as NOs. The performances of these NOs acting on the original noisy images and the corresponding denoised images are quantified via receiver operating characteristic (ROC) analysis, and signal detection efficiencies are computed to assess the impact of the denoising operations on NO performance. The impact of the network depth of a DNN-based denoising method on NO performance is assessed to understand if the deep learning mantra “deeper is better” necessarily holds true for signal detection performance. A covariance matrix propagation analysis is also performed, to gain insights into how DNNs modify the covariance structure of image data as they are propagated through layers of a linear convolutional network. Finally, the depth of the CNN-based observer is varied to demonstrate how the benefit of the denoising operation is dependent on the specification of the NO. The presented analysis highlights the importance of objective IQ evaluation for DNN-based denoising technologies and may suggest future avenues for improving their effectiveness in medical imaging applications.

The remaining of the paper is organized as follows. Section II describes the necessary background on binary signal detection task, numerical observers, and image denoising. The numerical studies and the results of the proposed evaluations of different denoising networks are provided in Sections III and IV. Finally, the article provides a discussion of the key findings in Sec. V.

II. Background

A. Formulation of Binary Signal Detection Task

A linear digital imaging system can be described as a continuous-to-discrete (C-D) mapping process [17]:

| (1) |

where is the measured image vector, f (r) denotes the object function that is dependent on the coordinate , k ≥ 2, denotes a linear imaging operator that maps to , and denotes the measurement noise. When its spatial dependence is not important to highlight, f (r) will be denoted as f.

A binary signal detection task requires an observer to classify the measured image data g as satisfying either a signal-present hypothesis H1 or a signal-absent hypothesis H0. These two hypotheses can be described as:

| (2a) |

| (2b) |

where fs and fb denote the signal and background, respectively, and and denote the signal and background images. For the case of a signal-known-exactly (SKE) and background-known-statistically (BKS) task, s is known while b is a random vector.

To perform this task, a deterministic observer computes a test statistic that maps the measured image g to a real-valued scalar variable that is compared to a predetermined threshold τ to determine if g satisfies H0 or H1. By varying the threshold τ, a ROC curve can be formed to quantify the trade-off between the false-positive fraction (FPF) and the true-positive fraction (TPF) [17]. The area under the ROC curve (AUC) can be subsequently calculated as a figure-of-merit (FOM) for signal detection performance.

B. Numerical Observers for IQ Assessment

In preliminary assessments of medical imaging technologies, NOs have been employed to quantify task-based measures of IQ for various image-based inferences [24]. The NOs that are employed in this study to perform binary SKE/BKS signal detection tasks are described briefly below.

1). Ideal Observer (IO) and CNN-Based Observer:

The Bayesian Ideal Observer (IO) sets an upper limit of observer performance for signal detection tasks and has been advocated for use in optimizing medical imaging systems and data-acquisition designs [17]–[21]. The IO test statistic tIO(g) is any monotonic transformation of the likelihood ratio ΛLR(g):

| (3) |

where p(g|H1) and p(g|H0) are the conditional probability density functions that describe the measured data g under the hypotheses H1 and H0, respectively. Equation (3) is analytically intractable, in general, and Markov-chain Monte Carlo (MCMC) techniques have been proposed to approximate the IO test statistic [31]. In this study, an alternative method based on supervised learning is employed to approximate ΛLR(g). Specifically, this will be accomplished by use of an appropriately designed CNN-based classifier as described elsewhere [32]. The resulting NO will be referred to as the CNN-IO observer.

Please note that when a CNN-based classifier is employed as a NO but it does not possess sufficient model capacity to accurately approximate ΛLR(g), it will simply be referred to as a CNN-based observer. Therefore, the CNN-based observer is, by definition, a sub-optimal observer.

2). Hotelling Observer and Regularized Hotelling Observer:

The Hotelling Observer (HO) is the IO that is restricted to employ test statistics that are linear functions of the data [17]. The HO employs the Hotelling discriminant, which is the population equivalent of the Fisher linear discriminant, and is optimal among all linear observers in the sense that it maximizes the signal-to-noise ratio of the test statistic [17]. The HO test statistic tHO(g) is defined as:

| (4) |

where denotes the Hotelling template, denotes the difference between the ensemble mean of the measurements g under the two hypotheses H0 and H1, and . Here and denote the covariance matrices of g under the two hypotheses H0 and H1. If a linear imaging system and a SKE signal detection task are considered,. Note that the HO only employs first and second order statistical information about g, whereas the IO requires full knowledge of the image data statistics.

In some cases, the covariance matrices K0(g) and K1(g) can be ill-conditioned and therefore the Hotelling template cannot be stably computed. To address this, a regularized HO (RHO) can be employed that implements the test statistic tRHO(g):

| (5) |

where Kλ represents a low-rank approximation of Kg that is formed by keeping only the singular values greater than λσmax. Here, is the Moore–Penrose inverse of Kλ, λ is a threshold for the singular values and σmax represents the largest singular value of Kg, The value of λ can be tuned on an independent set of data and the value that leads to the best RHO performance can be selected.

3). Channelized Hotelling Observer:

When the HO is employed with a channeling mechanism to reduce the dimensionality of the image data, a channelized HO (CHO) is formed. When implemented with difference-of-Gaussian (DOG) channels and an internal noise mechansim, the CHO can be interpreted as an anthropomorphic observer [33]–[35]. Let T denote a channel matrix and v ≡ Tg the corresponding channelized image data. The CHO test statistic tCHO(g) is given by:

| (6) |

where Kv denotes the covariance matrix of the channelized data v, Kint denotes the covariance matrix of the channel internal noise, and vint is a noise vector sampled from the Gaussian distribution . Based on previous studies [35], in this work Kint will be defined as:

| (7) |

where diag(Kv) represents a diagonal matrix with diagonal elements from Kv and ϵ is the internal noise level. The parameters of the DOG channels and the internal noise level employed in this study are described below in Sec. III-C4.

4). Non-Prewhitening Matched Filter (NPWMF):

The non-prewhitening matched filter (NPWMF) is a simple NO that utilizes only first-order statistical information [36], [37]. The NPWMF test statistic tNPWMF(g) is given by:

| (8) |

where represents the difference of the means of the ensemble of measured images g under the two hypotheses H0 and H1, respectively. By design, the NPWMF will not be affected by changes to the second- and higher-order statistics of the image data.

C. DNN-Based Image Denoising

Denoising methods based on DNNs hold significant potential for medical imaging applications [1]–[8], [38], [39]. Due to their flexibility and ability to exploit image features, many such denoising methods have been proposed based on CNNs. Given a noisy image g, the action of a DNN-based denoising method can be described generically as:

| (9) |

where the mapping denotes the DNN that is parameterized by the weight vector Θ and denotes the estimated denoised image. Depending on how the target data are defined when training the DNN, can be interpreted as an estimate of the noiseless g or an estimate of g that contains a reduced noise level. When pre-training networks by use of simulated data, the former approach has been commonly employed [1]–[7], [29], [38], [39].

In addition to CNN-based methods, a variety of other approaches, including residual learning [40], have been employed for medical image denoising [5], [39]. The performance of denoising networks has commonly been evaluated by use of traditional metrics such as structural similarity index metric (SSIM) [16] and peak signal-to-noise ratio (PSNR).

III. Numerical Studies

Computer-simulation studies were conducted to objectively evaluate DNN-based denoising methods for SKE/BKS binary signal detection tasks. Three different DNNs were investigated, which were trained on simulated image data. The performances of the five different NOs reviewed in Sec. II-B on the noisy and denoised image data were analyzed under different conditions to gain insights into the potential impact of DNN-based denoising on signal detection.

A. Simulated Nuclear Medicine Images From a Parallel-Hole Collimator Imaging System

Planar scintigraphy images were simulated via an idealized linear parallel-hole collimator imaging system. The system was described by a linear C-D mapping that was specified by Gaussian point response functions [31]:

| (10) |

where [g]m denotes the mth component of g, V denotes the support of f (r), and the amplitude with the height h and width wm. The to-be-imaged objects f (r) = fb(r) + fs(r) contained a random background and a superimposed deterministic signal in the signal present case. The random background fb(r) was specified by lumpy object model [31] as:

| (11) |

where denotes the number of the lumps with denoting a Poisson distribution with the mean . The lump function l(r − rn|a, wb) was modeled by a 2D Gaussian function with lump amplitude a and lump width wb:

| (12) |

where rn denotes the center location of the nth lump that was sampled from a uniform distribution over the spatial support of the image. For the signal present cases, the signal corresponded to a Gaussian signal:

| (13) |

where As is the signal amplitude, ws is the signal width and rs is the center of signal. The images and are given by:

| (14) |

and

| (15) |

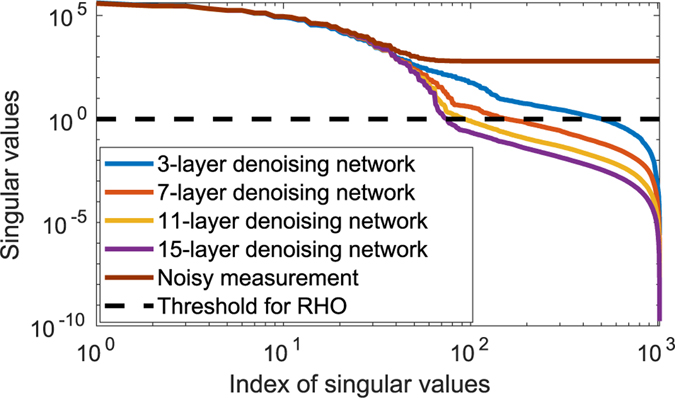

The measurement noise n was described by an uncorrelated mixed Possion-Gaussian noise model. Details regarding the signal, background and noise are provided in Sec. III-C below. Figure 1 shows an example of the signal and a noise free signal-present image along with the corresponding noisy image data g.

Fig. 1.

These images are examples that depict (a) a possible signal s, (b) a noise-free signal-present image s + b, and (c) the corresponding noisy measurement g. The dimensions of the images are 64 × 64. As described in the text, the signal amplitude was relatively small to emulate a situation where the detection task is challenging.

The relatively simple image models employed in our study provided a means by which simulated image data could be computed and degraded in a clear and controlled way, without being influenced by unknown noise sources that could potential be present in clinically acquired images.

B. DNN-Based Denoising Methods, Training, and Validation

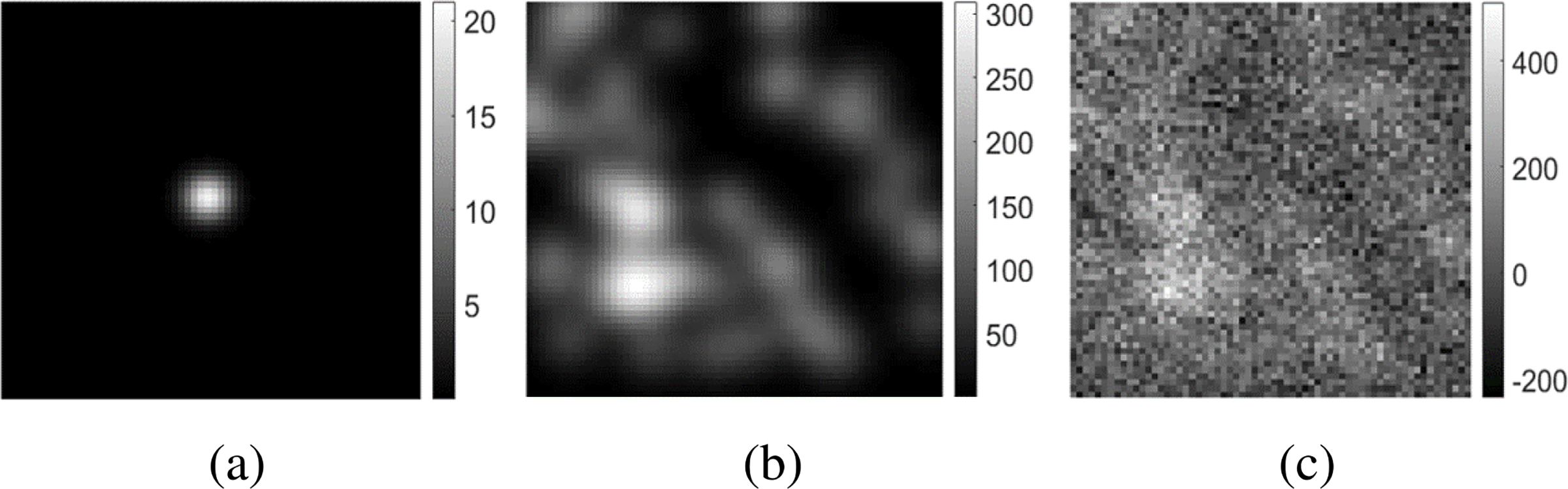

A simple linear denoising network and two nonlinear denoising networks with CNN-based or ResNet-based architectures were considered as three representative examples to be evaluated in this study. Figure 2 shows the architectures of these three networks, which are described next.

Fig. 2.

The three denoising networks evaluated in this study were based upon a (a) linear CNN, (b) non-linear CNN, and (c) non-linear ResNet denoising network, respectively. The dimensions of the input and output images are (a) 32 × 32, (b) 64 × 64, (c) 64 × 64, respectively.

1). Linear DNN-Based Denoising Method:

As depicted in Fig. 2(a), the linear DNNs include only a collection of D linear convolutional layers. Although such networks will not achieve state-of-the art performance, they are considered here because they permit the analytic propagation of covariance matrices, and hence Hotelling templates, through the different layers of the network. Therefore, preliminary insights into how DNNs perturb information relevant to binary signal detection tasks can be gained. The network input was a noisy image g of dimension 32 × 32 and the output was the estimated with the same dimensions. In the first layer of the network, 32 filters of dimension 3 × 3 × 1 were employed to generate 32 feature maps. In each of the 2nd to the (D-1)th layers, 32 filters of dimension 3 × 3 × 32 were employed. In the penultimate layer, a single filter of dimension 3 × 3 × 32 was applied to map the tensor-valued feature map to the scalar-valued output image.

As described in Eqn. (1), let denote a given ground truth (noiseless) image corresponding to either a signal absent or signal present case and let gj denote the corresponding measured noisy image. Here, the subscript j has been added to index the objects and images. Given the collection of paired training data , the linear network was trained by minimizing the mean-square-error (MSE) loss function:

| (16) |

2). Nonlinear CNN-Based Denoising Network:

As depicted in Fig. 2(b), a traditional non-linear CNN architecture of depth D was considered. The network input was a noisy image g of dimension 64 × 64 and the output was the estimated with the same dimensions. The CNN contained four types of layers. The first layer was a Conv+ReLU layer, in which 64 convolution filters of dimension 3 × 3 × 1 were applied to generate 64 feature maps. In each of the 2nd to (D-2)th Conv+BN+ReLU layers, 64 convolution filters of dimension 3 × 3 × 64 were employed and batch normalization was included between the convolution and ReLU operations. In the (D-1)th Conv+BN layer, 64 convolution filters of dimension 3 × 3 × 64 were employed and batch normalization was performed. In the last Conv layer, one single convolution filter of dimnension 3 × 3 × 64 was employed to form the final denoised image of dimension 64 × 64. The network was trained by use of the MSE-based loss function.

3). Nonlinear ResNet-Based Denoising Network:

An alternative nonlinear denoising network based on a ResNet architecture [40] was also investigated. As shown in Fig. 2(c), the ResNet architecture employs shortcut connections (the so-called skip connections) between non-adjacent convolutional layers. This network design can better address the vanishing gradient issue [40], and allows for a deeper network with more convolutional layers. In this study, skip connections were added every other layer, as depicted by the gray line in Fig. 2(c). An additional skip connection, depicted as the brown line in Fig. 2(c), was added to connect the output of the 1st layer and the input of the Dth (i.e., last) layer. Except for the skip connections, the network architecture was identical to that described above for the non-linear CNN.

Instead of using MSE-based loss, the perceptual loss was employed to train this network:

| (17) |

where ϕ(·) represents a feature extraction operator. It has been observed that denoising networks trained by use of a perceptual loss function can be effective in reducing noise while retaining image details [5].

4). Datasets and Denoising Network Training Details:

The standard convention of utilizing separate training/validation/testing datasets was adopted. The training dataset included 10,000 noisy signal-present and 10,000 noisy signal-absent measurement images along with the corresponding noise-free target images. The validation datatset included 200 signal-present images and 200 signal absent images and the corresponding noise-free target images. Finally, the testing dataset comprised 10,000 signal-present images and 10,000 signal-absent noisy images.

These datasets were computed as follows. First, lumpy background images, which were generated according to Eqn. (15), were employed as the noise-free signal-absent images. Then, a Gaussian signal was inserted to the background images to create noise-free images under the signal-present hypothesis. The signal was defined in Eqn. (14). Finally, mixed Poisson and Gaussian noise was added to the noise-free images under both hypotheses. The training, validation, or testing datasets were generated separately according to the steps described above. The statistical properties of these images varied between studies and are described below.

All the denoising networks were trained on mini-batches at each iteration by use of the Adam optimizer [41] with a learning rate of 0.0001. Each mini-batch contained 200 signal-present images and 200 signal absent images that were randomly selected from the training dataset. The network model that possessed to the best performance on the validation dataset was selected for use. Keras [42] was employed for implementing and training all networks on a single NVIDIA TITAN X GPU.

When training the nonlinear ResNet-based denoising network, the output before the first pooling layer from a pre-trained VGG19 [43] network was employed as a feature extraction operator to compute the perceptual loss in Eqn. (17). A similar feature extraction operator was utilized by Gong et al. [5]. The VGG19 network contained 16 convolutional layers, 5 max pooling layers, and 3 fully connected layers, and was trained by use of images from ImageNet [44]. A total of 64 feature maps were extracted with spatial size 64 × 64 to compute the perceptual loss.

C. Objective Evaluation of Denoising Networks

1). Studies Involving Linear Denoising Networks:

A study was implemented to assess the performance of the RHO when acting on data corresponding to the outputs of different intermediate layers in the linear denoising network. In this way, the RHO performance could be observed as it propagates through the network. The RHO was utilized because the resulting covariance matrices were generally ill-conditioned.

In the detection task, the signal defined in Eqn. (13) was employed with As = 2.5, ws = 1, and rs = [16; 16]T. The parameters of the lumpy background model defined in Eqn. (11) were , a = 5, and wb = 3. The dimensions of s, b, n, and g in Eqn. (2) were 32 × 32. The assumed parameters of the imaging system defined in Eqn. (10) were , h = 20 and wm = 2. For the mixed Possion-Gaussian noise, the Gaussian noise was sampled from a Gaussian distribution with the mean 0 and the standard deviation 25. Based on these settings, the training/validation/testing datasets were established and the linear denoising networks with depths varying from D = 2 to D = 15 were trained as described above in Sec. III-B1. Each network with different D was trained separately to achieve the optimal performance based on the defined loss function.

In order to compute the RHO acting on the tensor-valued feature data produced by each network layer, the covariance matrix Kd of the output data tensor of each layer needed to be estimated. Here, d denotes a layer index. To accomplish this, the tensor-valued data were vectorized and the associated covariance matrices corresponding to each layer were computed by propagating the covariance matrix K0 of the noisy input image through the network. Details regarding this procedure are provided in Sec. 1 of the Supplementary file.

2). Studies Involving the Non-Linear Denoising Networks:

A study was designed to investigate the performance of NOs when acting on the original noisy measurement images and the corresponding denoised images produced by the non-linear CNN and ResNet-based networks. Several parameters of the simulated images and denoising networks were varied to gain insights into the potential impact of denoising on NO performance.

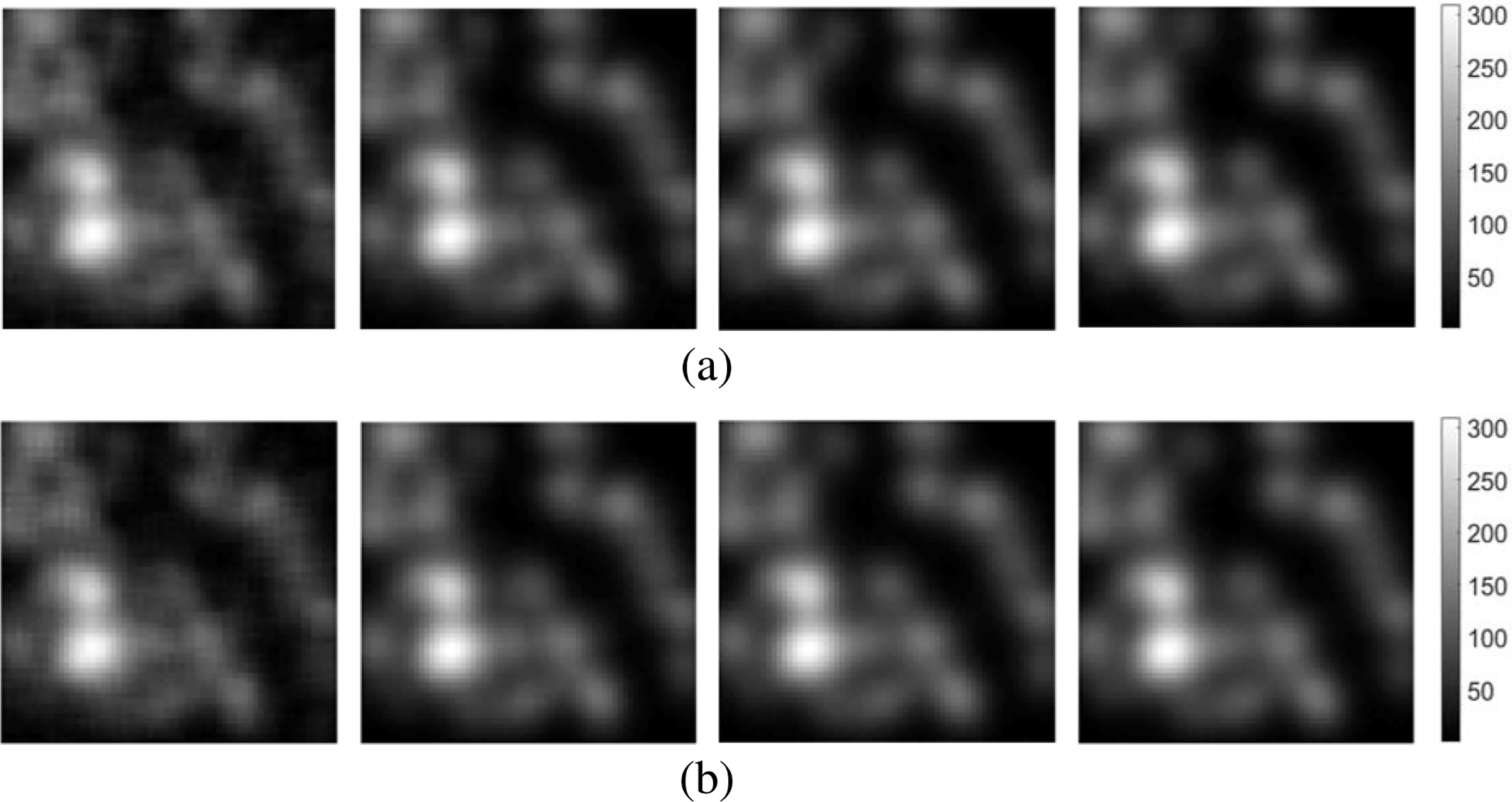

For the considered detection tasks, the signals, the lumpy object model, and the parallel-hole collimator imaging system were defined as in Sec. III-C1 but with different parameter settings. The signal possessed an amplitude As = 3, width , and center location rs = [32; 32]T. The parameters of the lumpy background model defined in Eqn. (11) were , a = 5, and wb = 3. The dimensions of s, b, and n in Eqn. (2) were 64 × 64. The parallel-hole collimator imaging system was specified as , h = 20 and wm = 2. The standard deviation of Gaussian noise was set to 75. Based on these settings, the training/validation/testing datasets were established and nonlinear denoising networks of depth D = {3, 5, 7, 9, 11, 13} were trained as described above in Sec. III-B2. Examples of denoised images produced by use of the CNN-based and ResNet-based denoising networks of different depths D are shown in Fig. 3. This study was also repeated for the case where low-noise, instead of noiseless, target images, were employed for training. Those studies are presented in Sec. 2 of the Supplementary file.

Fig. 3.

The images, from left to right, in each row represent the denoised estimates obtained by use of a) the CNN-based and b) the ResNet-based non-linear networks with varied {3, 7, 11, 13} layers, respectively. The related noise-free signal-present target image and the original noisy image g were the second and third images shown in Fig. 1. The dimensions of the images are 64 × 64.

Finally, the impact of the signal size on the performance of the RHO was investigated. Signals of width were considered. All other parameters were kept the same as that described above.

3). Observer Performance Evaluation Metrics:

To evaluate the performance of the NOs, ROC analysis was conducted and AUC values were computed and employed as a figure-of-merit. The ROC curves were fit by use of the Metz-ROC software [45] that employs the proper binormal model [46]. The error bars of the AUC values were estimated as well. Detection efficiencies for a given NO and denoising method were defined as

| (18) |

where AUCdenoised and AUCnoisy denote the AUC values corresponding to a NO acting on the denoised and original noisy image data, respectively. The detection efficiency quantifies the impact of the denoising operation on the performance of the NO. It should be noted that this definition is different from that employed elsewhere in the literature, where detection efficiency is typically referenced to an IO [47]. As such, it is possible that e > 1 when the IO is not employed. The denoised images were also assessed by use of RMSE and SSIM.

4). Numerical Observer Computation:

The CNN-IO was employed to approximate the IO test statistic [32]. Details regarding the implementation of the CNN-IO and CNN-based observers are provided in Sec. 5 of the Supplementary file.

For computing the HO and RHO test statistics, the covariance matrix Kg need to be estimated. For use in evaluating the linear denoising networks, the covariance matrix decomposition method [17], [32] was initially employed to estimate the covariance matrix of the original noisy images. To estimate the covariance matrix of the background images, 100,000 signal-present and 100,000 signal-absent noiseless images were utilized. Subsequently, to examine how task-performance propagates through the networks, the covariance matrices corresponding to the vectorized feature tensors at each network layer were computed by use of the propagation strategy described in Sec. 1 of the Supplemental file. For evaluating the nonlinear denoising networks, the covariance matrices corresponding to both the noisy and denoised images were empirically estimated by use of 100,000 signal-present and 100,000 signal-absent images.

When computing the RHO test statistic, the threshold parameter λ in Eqn. (5) was swept from 1e − 3 to 1e − 7 and the corresponding detection performance was estimated based on a separate validation dataset including 2,000 signal-present images and 2,000 signal-absent images. The value which led to the best RHO detection performance was selected. The RHO with selected parameter was then applied to the testing dataset described below and the corresponding observer performance was estimated. The NPWMF template was established by use of the same training data as employed to establish the RHO.

For computing the CHO test statistic, 2,000 signal-present and 2,000 signal-absent images were utilized to estimate the channelized covariance matrix. A set of 10 DOG channels [35] was employed with channel parameters σ0 = 0.005, α = 1.4, and Q = 1.67. The internal noise level ϵ was 2.5, which was the same value employed by Abbey et al. [35].

The performance of the NOs on the original noisy images was evaluated by use of a testing dataset with 10,000 signal-present noisy images and 10,000 signal-absent noisy images that was described above in Sec. III-B4. Subseqently, the performance of the NOs was assessed by use of the denoised testing images.

IV. Results

A. Propagation of Task-Based Information Through a Linear Denoising Network

The performance of the RHO acting on the noisy test data and on data corresponding to the outputs of different intermediate layers in the linear denoising network is summarized in Table I. The covariance matrices needed to compute the RHO test statistic corresponding to the output of each network layer were calculated by use of the propagation strategy described in Sec. 1 of the supplementary file. With the exception of the network with three layers, the RHO performance on the denoised images was lower than on the original noisy images, and the performance decreases more on the image denoised by deeper networks.

TABLE I.

RHO Signal Detection Performance Propagation Through Linear CNN-Based Denoising Network With {3, 5, 7, 9, 11, 13, 15} Layers Were Demonstrated by Use of AUC Values. The Standard Error of Each AUC Value Was the Same of 0.003

| RHO detection performance (AUC values) at the output of different layers of the linear denoising network | ||||||||

|---|---|---|---|---|---|---|---|---|

| Noisy measurements | Layer Index | The denoising network with different layers | ||||||

| 3 layers | 5 layers | 7 layers | 9 layers | 11 layers | 13 layers | 15 layers | ||

| 3 | 0.6376 | 0.6376 | 0.6376 | 0.6376 | 0.6376 | 0.6376 | 0.6376 | |

| 5 | 0.6372 | 0.6376 | 0.6376 | 0.6376 | 0.6376 | 0.6376 | ||

| 7 | 0.6316 | 0.6376 | 0.6376 | 0.6376 | 0.6376 | |||

| 0.6376 | 9 | 0.6283 | 0.6376 | 0.6376 | 0.6376 | |||

| 11 | 0.6213 | 0.6376 | 0.6376 | |||||

| 13 | 0.6188 | 0.6376 | ||||||

| 15 | 0.6158 | |||||||

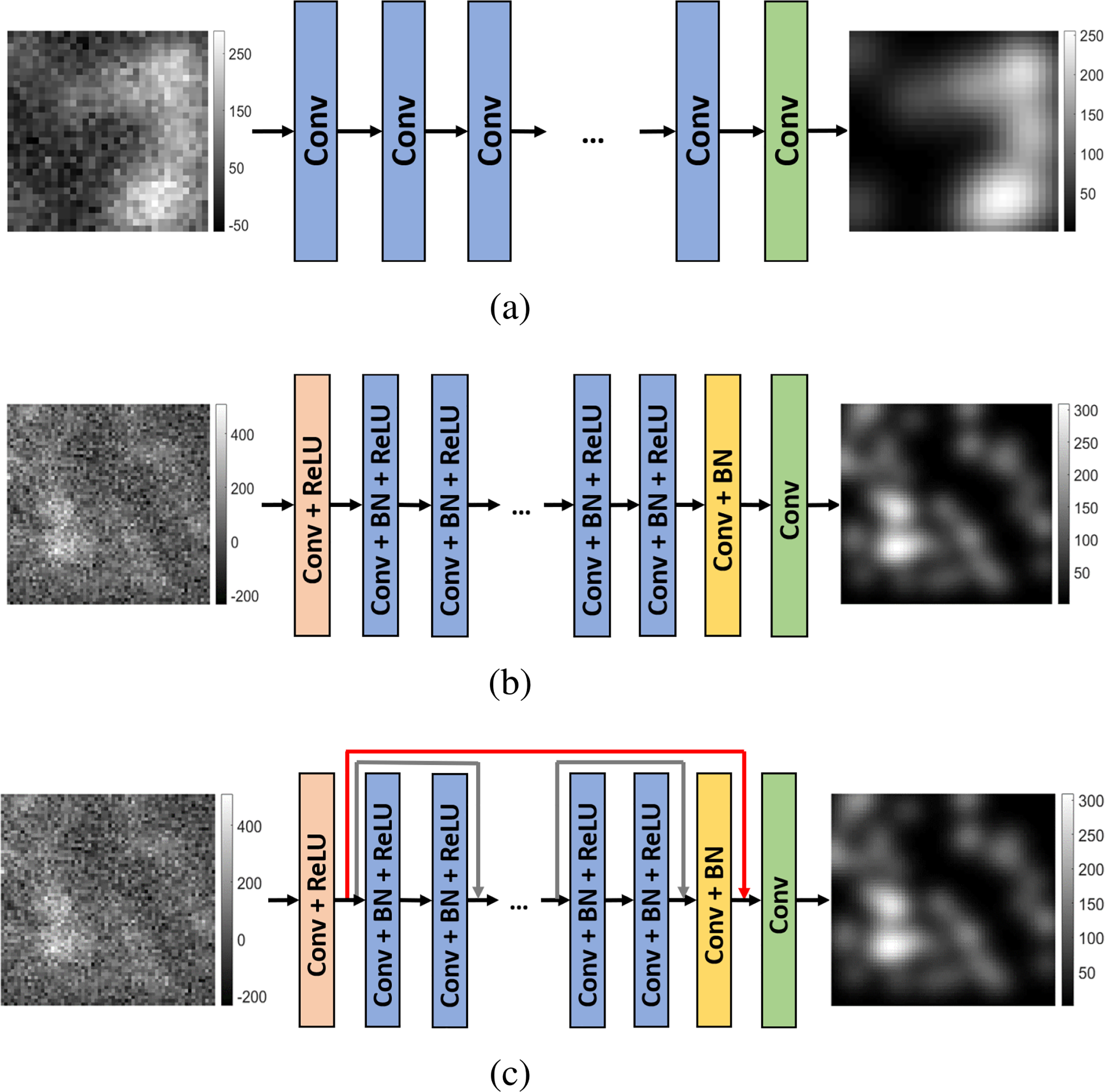

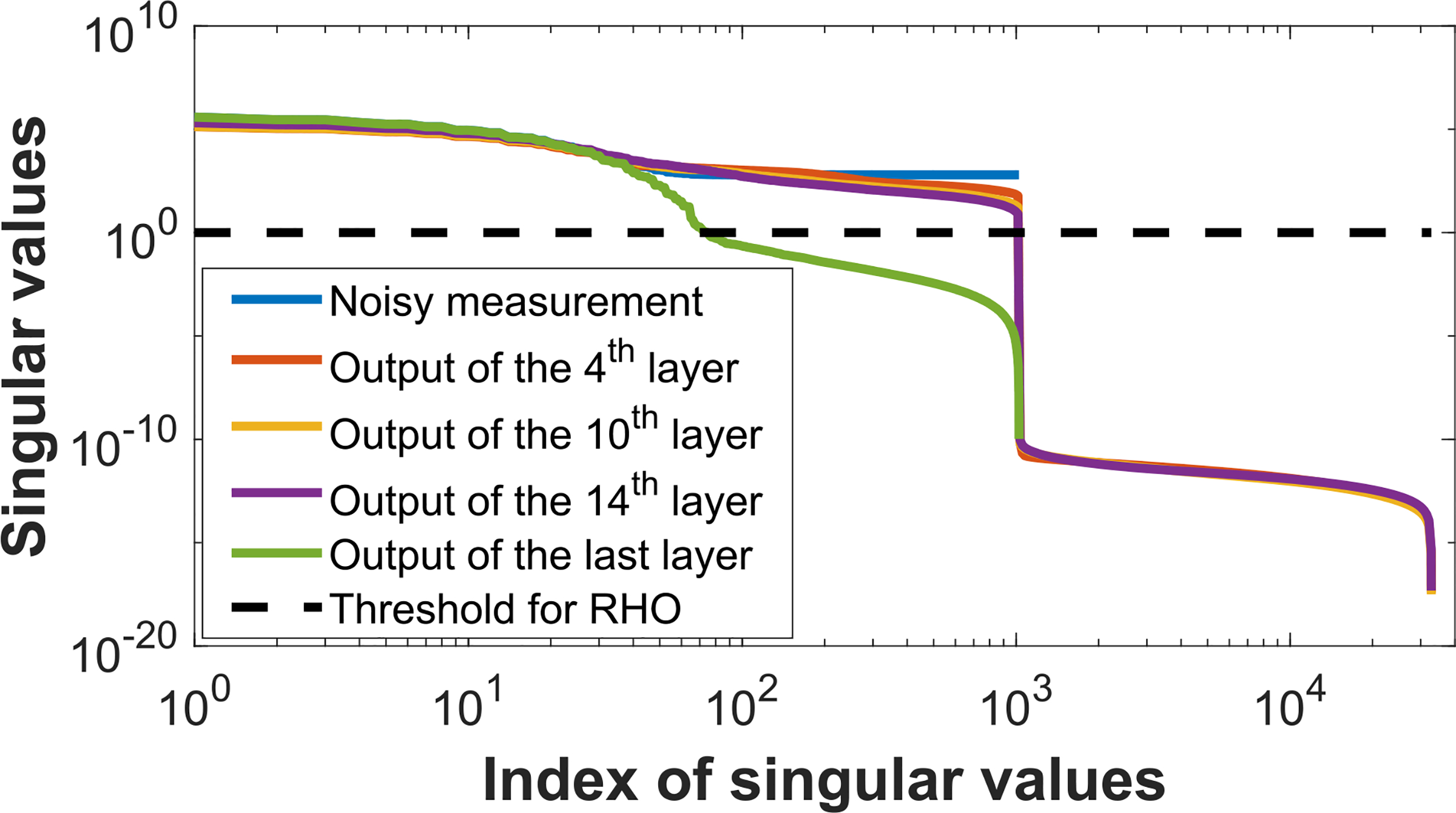

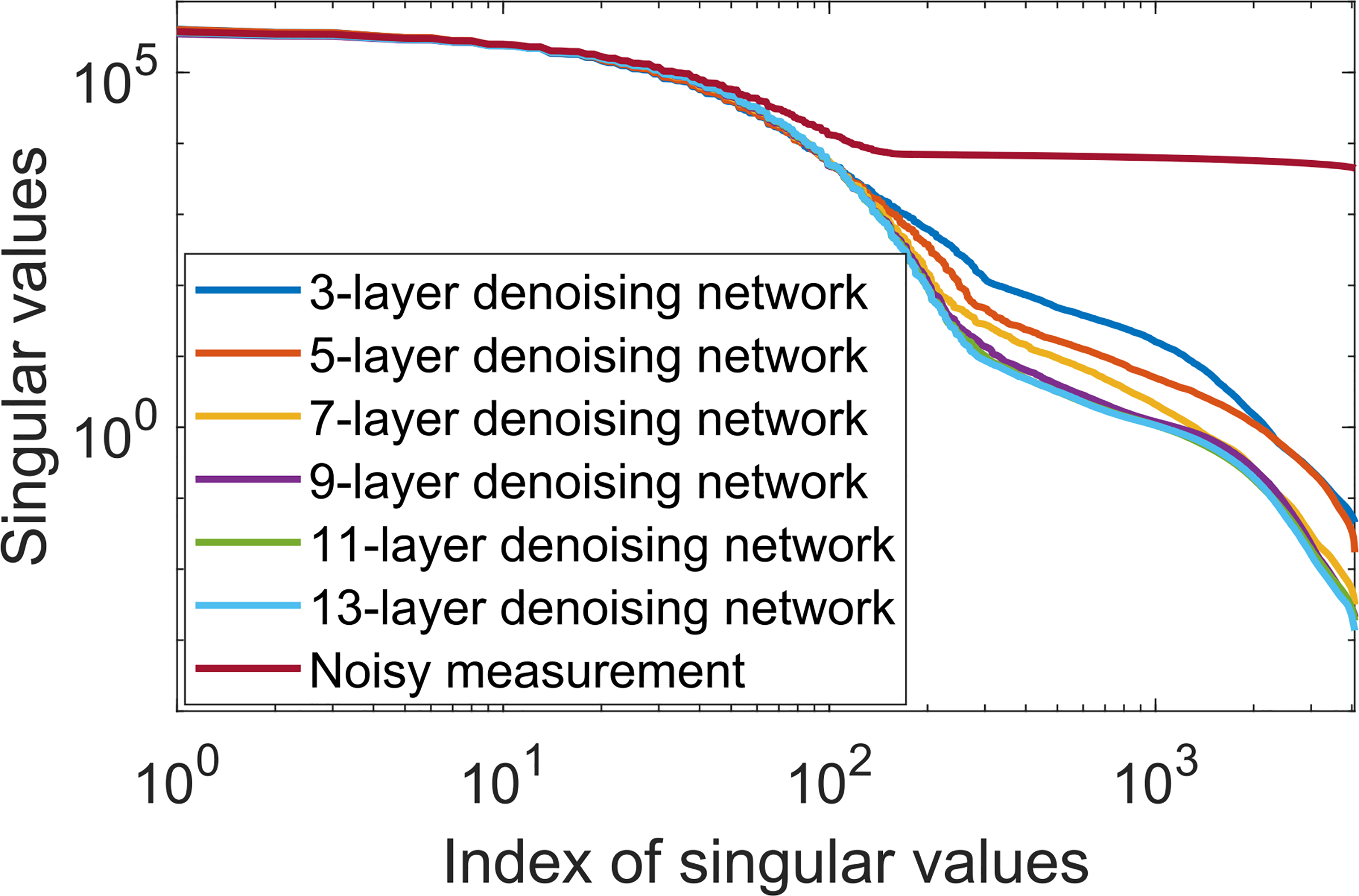

To gain insights into this behavior, the singular value spectra of the covariance matrices estimated from the original noisy images and from the images denoised by networks with varied depths were examined. The results, shown in Fig. 4, reveal that the spectra corresponding to the denoised images decay more rapidly than that corresponding to the original noisy image. Additionally, the spectra corresponding to the denoised images decayed more rapidly as the denoising network became deeper. Accordingly, the number of singular values that exceeded the value of the threshold λσmax that specified the RHO via Eqn. (5) decreased as the network depth increased. This resulted in the RHO performance to degrade as the network depth increased.

Fig. 4.

The singular value spectra of the covariance matrices corresponding to the original noisy images and the images denoised by use of the linear denoising networks with depths of {3, 7, 11, 15} were demonstrated.

The propagation of RHO performance through the networks is summarized in Table I. It was observed that the RHO performance on data produced by the intermediate denoising network layers remained approximately constant until the last layer, at which point it decreased. It should be noted that the last layer of the denoising network transforms a high-dimensional feature tensor to the denoised output image. This operation possesses a null space and is therefore noninvertible. The drop in RHO performance at the last layer suggests that some of the features that were important to task-performance resided in the null space of the learned transformation.

To understand why NO performance remained constant until the last layer, the singular value spectra of the covariance matrices estimated from the original noisy images and the feature tensors corresponding to intermediate layers of the denoising network were further analyzed for the case of the network of depth D = 15. The results, shown in Fig. 5, reveal that the spectra corresponding to the intermediate layers were similar to that corresponding to the original noisy images. Accordingly, the number of singular values that exceeded the value of the threshold λσmax that specified the RHO via Eqn. (5) at different intermediate network layers remained constant as the network depth increased. This resulted in the RHO performance remaining fixed as the network depth increased, until the last layer was reached as discussed above.

Fig. 5.

The singular value spectra of covariance matrices corresponding to the original noisy images and the outputs of different layers in a linear CNN denoising network with the depth D = 15 were illustrated.

B. Impact of Denoising Network Depth

1). Performance Changes:

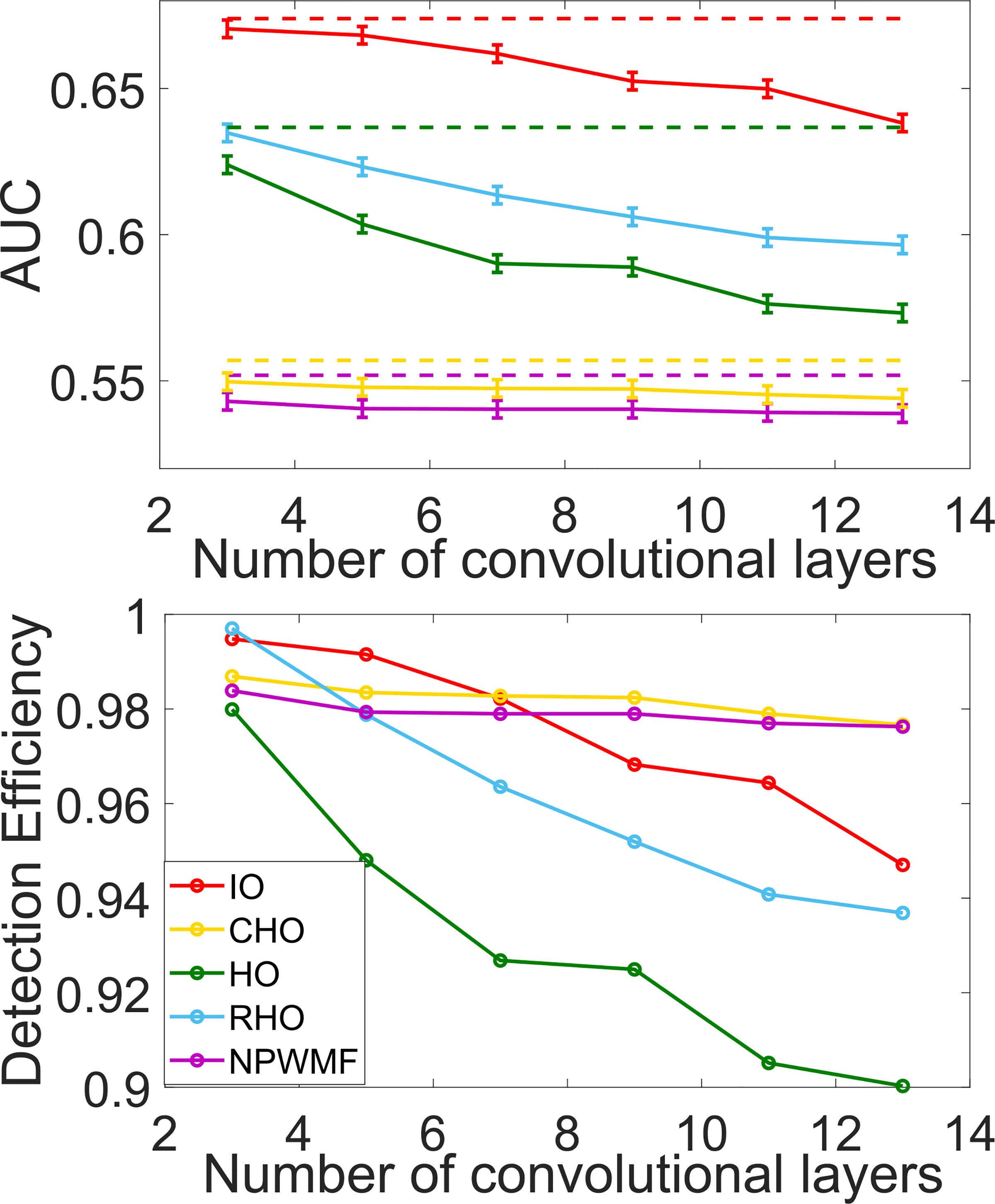

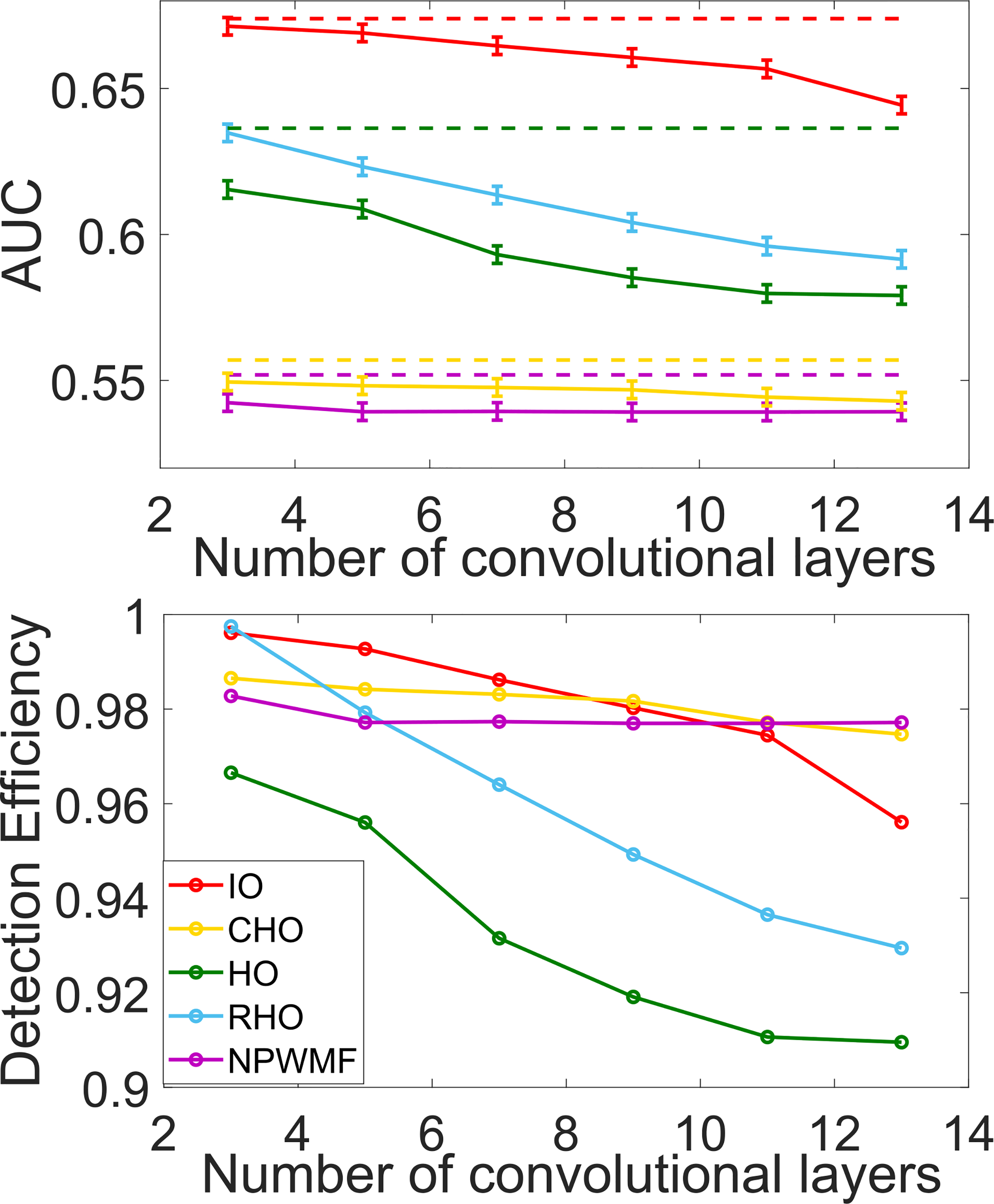

The impact of depth of the non-linear CNN and ResNet networks on the NO performance as measured by AUC and detection efficiency is shown in Fig. 6 and Fig. 7. For all cases, it was observed that the performance of the NOs on the original noisy images was higher than on the denoised images. The performance of the CNN-IO, HO and RHO on the denoised images decreased as the depth of the denoising networks increased. Contrarily, the performance of CHO and NPWMF on the denoised images was relatively insensitive to the depth of the denoising networks. These observations suggest that the second- and potentially higher-order statistical properties of the images were degraded by the denoising networks; this is confirmed below in Fig. 8. The quality of the denoised images as measured by RMSE and SSIM values for the networks of varying depth are shown in Table II. As expected, these metrics improved as the depth of the denoising networks increased. These results confirm that objective measures of IQ based on signal detection performance can show conflicting trends as compared to traditional metrics when comparing different denoising networks.

Fig. 6.

The relationships between AUC (top figure) and detection efficiency (bottom figure) and the depth (the number of convolutional layers) of a CNN-based non-linear denoising method when different NOs are employed were quantified. The two figures share the same legend that is displayed in the bottom figure. The dashed lines in the upper figure depict the performances of the NOs on the original noisy images.

Fig. 7.

The relationship between NO performance and the depth (the number of convolutional layers) of the ResNet-based non-linear denoising networks was quantified. The two figures on each panel share the same legend. The dashed lines in the upper figure represent the performance of the NOs on the noisy images.

Fig. 8.

The singular values of the covariance matrices from noisy images and images denoised by CNN-based non-linear denoising networks with {3, 5, 7, 9, 11, 13} convolutional layers were compared, respectively. The denoising operation changes the structure of data covariance matrix. The changes are more obvious for deeper networks.

TABLE II.

The RMSE and SSIM Values Associated With Noisy Images and the Output Images of Two Different Nonlinear Denoising Networks Were Compared

| Measurement Metrics | ||||

|---|---|---|---|---|

| Denoising networks | CNN + MSE | ResNet + Perceptual | ||

| RMSE | SSIM | RMSE | SSIM | |

| Noise image | 75.4161 | 0.3663 | 75.4161 | 0.3663 |

| 3 layers | 13.1478 | 0.9370 | 13.3280 | 0.9337 |

| 5 layers | 12.1819 | 0.9469 | 12.3120 | 0.9463 |

| 7 layers | 11.5499 | 0.9526 | 11.6433 | 0.9519 |

| 9 layers | 11.4584 | 0.9535 | 11.4340 | 0.9540 |

| 11 layers | 11.4563 | 0.9536 | 11.3016 | 0.9549 |

| 13 layers | 11.4548 | 0.9537 | 11.2556 | 0.9555 |

2). Changes in Covariance Matrix Induced by Denoising:

The degradation of HO performance was further analyzed by computing the SVD of the covariance matrices corresponding to the images denoised by use of the CNN-based method. The results, shown in Fig. 8, reveal that the covariance matrix corresponding to the denoised images was ill-conditioned, while that corresponding to the original noisy images was well-conditions. Moreover, the singular value spectra tended to decrease more rapidly as the depth of the denoising network was increased. Although not shown, similar observations were made in the case of the ResNet-based denoising method. These results confirm that the denoising networks changed the second-order statistical properties of the denoised images. As mentioned above, the performance of the NPWMF observer, which uses only first-order statistical information, was not strongly degraded by denoising. Together, these observations support the assertion that the reduction in performance of the NOs that were sensitive to second- and higher-order image statistics was caused by the the changes in these properties induced by the denoising operation.

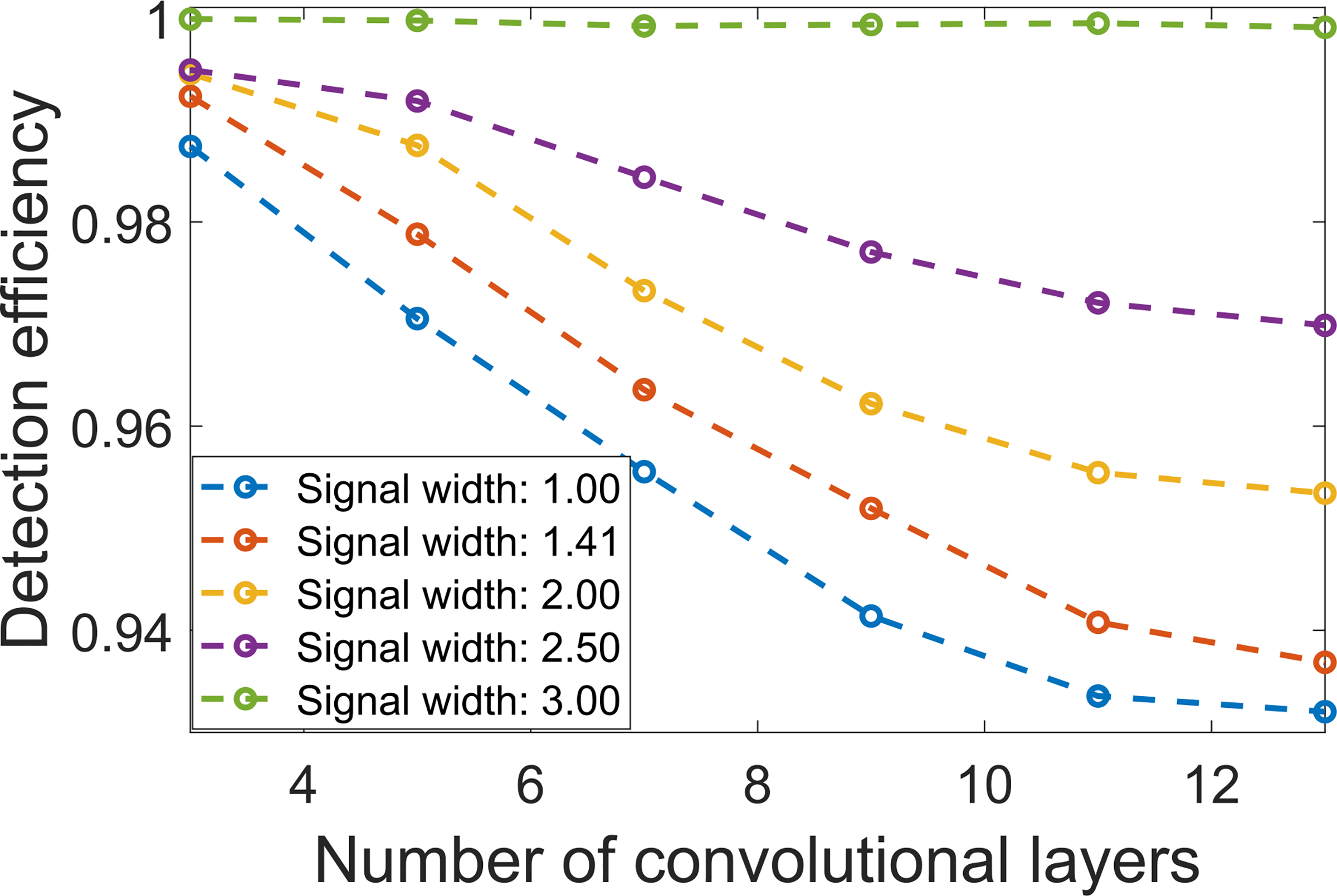

C. Detection Efficiency vs. Signal Size

The impact of signal size on RHO detection efficiency is shown in Fig. 9. Here, the width ws of the Gaussian signal in Eqn. (13) took on the values:. It was observed that, for each signal size, the detection efficiency was reduced as the denoising network depth increased. Additionally, the detection efficiency reduced more rapidly as a function of network depth for smaller signal sizes as compared to larger ones. Specifically, for ws = 3, there was no statistically significant decrease in detection efficiency as the denoising network depth increased. Moreover, the detection efficiency was close to one. This is due to the relatively large size of the signal and use of an MSE loss function to train the denoising network. An MSE loss function treats every pixel in an image equally and therefore a large signal contributes more than a small one during the network training (i.e., more task-specific information is potentially preserved).

Fig. 9.

The relationships between signal size and RHO detection efficiency were quantified. Here, the CNN-based denoising method was employed with an MSE loss and the network depth was varied: D = {3, 5, 7, 9, 11, 13}. Detection efficiency reduced more rapidly as a function of network depth when the signal size was reduced.

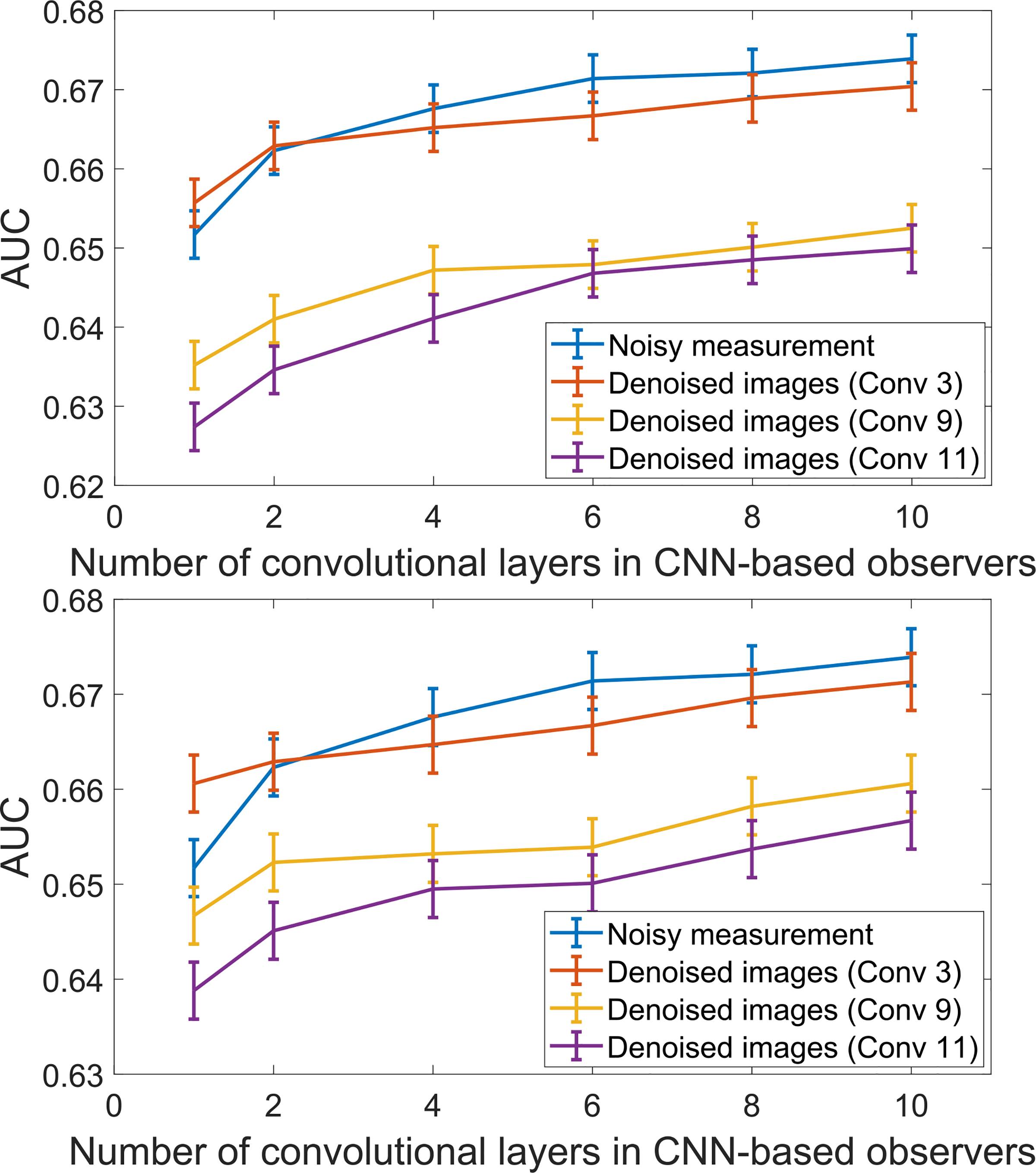

D. Situations Where Denoising Improved Detection Performance

CNN-based observers of varying depths were employed to demonstrate conditions under which the CNN- and ResNet-based denoising methods could improve signal detection performance. Detection performance was assessed on the original noisy images and the outputs of the two denoising networks with the depth of {3, 9, 11}, respectively. The evaluated CNN-based observers for this study were set with {1, 2, 4, 6, 8, 10} convolutional layers, respectively. It should be noted that the CNN-based observer with 10 layers coincided with the CNN-IO, and therefore approximated the IO for this task.

The results shown in Fig. 10 reveal, as expected, that the performance of the CNN-based observer increases with observer network depth. More interestingly, the detection performance of the shallow CNN-based observer with 3 layers on the original noisy images was worse than that on the images denoised by a non-linear denoising network that also had 3 convolutional layers. This represented a situation in which the denoising operation resulted in improved signal detection performance.

Fig. 10.

The performance of the CNN-based observers with different number of convolutional layers acting on the original noisy image and the outputs of two non-linear denoising networks were compared. The upper panel shows the results on the CNN-based nonlinear denoising networks; The lower panel shows the results on the ResNet-based nonlinear denoising networks. Note that the y-axis range is clipped for display purposes.

As observed and discussed above in Section IV-A, the use of deeper denoising networks resulted in a stronger degradation in signal detection performance as compared to use of shallower networks for the NOs considered. Additionally, according to data processing inequality [30], it is known that the performance of an IO cannot be increased via image processing operations such as denoising. As such, it is to be expected that the performance of the CNN-IO on the original noisy image data will not be improved by use of any denoising operation. These factors suggest that the extent to which a denoising operation will improve signal detection performance depends, in a complicated way on (at least) the following: 1) the extent to which the denoising operation degrades the image statistics that are employed by a given NO for a specified inference; and 2) the extent to which the NO approximates the IO.

V. Summary and Discussion

In this work, the performance of DNN-based denoising methods was evaluated by use of task-based IQ measures. Specifically, binary signal detection tasks under SKE/BKS conditions were considered. The performance of the IO and common linear NOs were quantified to assess the impact of the denoising operation on task performance. This study was motivated by the scarcity of works that have evaluated such modern denoising methods by use of objective methods.

The numerical results showed that, in the cases considered, the denoising operation can result in a loss of task-relevant information. Moreover, it was observed that while increasing the depth of the denoising network improved RMSE and SSIM, it resulted in a decrease in NO performance. This is consistent with the well-known fact that physical IQ measures may not always correlate with task-based ones [26]. This result also suggests that the mantra “deep is better” should be qualified and may not always hold true for objective IQ measures. The considered networks were analyzed to gain insights into the observed behavior and it was found that the denoising operation resulted in ill-conditioned covariance matrices. As such, denoising networks, while seeking to minimize a traditional (non-task-based) loss function, have the potential to degrade the image statistics that are important for signal detection.

Conditions under which the considered denoising operations could improve NO performance were also investigated. In the presented studies, it was observed that a shallow denoising network could improve the performance of a shallow CNN-based observer. When the depth of either the denoising or observer networks increased, the benefit of denoising was lost and NO performance was degraded. This suggests that the impact of denoising on signal detection performance depends, in a complicated way, on the specification of the denoising network, task, and the NO. As such, there is an urgent need to objectively evaluate new DNN-based denoising methods.

There remain numerous important topics for future investigation. The binary SKE detection task considered in this study is simplistic relative to many real-word clinical tasks. It will be important to consider more complicated tasks that involve signal variability and hybrid tasks that involve detection and estimation [48]. The study design presented can also readily be applied to assess alternative DNN-based denoising methods that use varying network architectures and loss functions. Ultimately, it will be critical to conduct human reader studies to assess the utility of new DNN-based denoising methods for specific clinical tasks.

Finally, the presented results will motivate the development of new approaches to establishing DNN-based denoising methods that mitigate the loss of task-relevant information by incorporating task-relevant information in the training strategy.

Supplementary Material

Acknowledgments

This work was supported in part by NIH under Award R01EB020604, Award R01EB023045, Award R01NS102213, Award R01CA233873, and Award R21CA223799.

Footnotes

This article has supplementary downloadable material available at https://doi.org/10.1109/TMI.2021.3076810, provided by the authors.

Contributor Information

Kaiyan Li, Department of Bioengineering, University of Illinois at Urbana–Champaign, Urbana, IL 61801 USA.

Weimin Zhou, Department of Electrical and Systems Engineering, Washington University in St. Louis, St. Louis, MO 63130 USA; Department of Psychological and Brain Sciences, University of California at Santa Barbara, Santa Barbara, CA 93106 USA.

Hua Li, Department of Bioengineering, University of Illinois at Urbana–Champaign, Urbana, IL 61801 USA; Carle Cancer Center, Carle Foundation Hospital, Urbana, IL 61801 USA.

Mark A. Anastasio, Department of Bioengineering, University of Illinois at Urbana–Champaign, Urbana, IL 61801 USA.

REFERENCES

- [1].Manduca A et al. , “Projection space denoising with bilateral filtering and CT noise modeling for dose reduction in CT,” Med. Phys, vol. 36, no. 11, pp. 4911–4919, October. 2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [2].Li Z et al. , “Adaptive nonlocal means filtering based on local noise level for CT denoising,” Med. Phys, vol. 41, no. 1, December. 2013, Art. no. 011908. [DOI] [PubMed] [Google Scholar]

- [3].Lin J-W, Laine AF, and Bergmann SR, “Improving PET-based physiological quantification through methods of wavelet denoising,” IEEE Trans. Biomed. Eng, vol. 48, no. 2, pp. 202–212, June. 2001. [DOI] [PubMed] [Google Scholar]

- [4].Le Pogam A, Hanzouli H, Hatt M, Le Rest CC, and Visvikis D, “Denoising of PET images by combining wavelets and curvelets for improved preservation of resolution and quantitation,” Med. Image Anal, vol. 17, no. 8, pp. 877–891, December. 2013. [DOI] [PubMed] [Google Scholar]

- [5].Gong K, Guan J, Liu C-C, and Qi J, “PET image denoising using a deep neural network through fine tuning,” IEEE Trans. Radiat. Plasma Med. Sci, vol. 3, no. 2, pp. 153–161, March. 2019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [6].Chen H et al. , “Low-dose CT denoising with convolutional neural network,” in Proc. IEEE 14th Int. Symp. Biomed. Imag. (ISBI), April. 2017, pp. 143–146. [Google Scholar]

- [7].Zhang K, Zuo W, Chen Y, Meng D, and Zhang L, “Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising,” IEEE Trans. Image Process, vol. 26, no. 7, pp. 3142–3155, July. 2017. [DOI] [PubMed] [Google Scholar]

- [8].Yang Q et al. , “Low-dose CT image denoising using a generative adversarial network with wasserstein distance and perceptual loss,” IEEE Trans. Med. Imag, vol. 37, no. 6, pp. 1348–1357, June. 2018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [9].Gondara L, “Medical image denoising using convolutional denoising autoencoders,” in Proc. IEEE 16th Int. Conf. Data Mining Workshops (ICDMW), December. 2016, pp. 241–246. [Google Scholar]

- [10].Kang E, Min J, and Ye JC, “A deep convolutional neural network using directional wavelets for low-dose X-ray CT reconstruction,” Med. Phys, vol. 44, no. 10, pp. e360–e375, October. 2017. [DOI] [PubMed] [Google Scholar]

- [11].Chen H et al. , “Low-dose CT with a residual encoder-decoder convolutional neural network,” IEEE Trans. Med. Imag, vol. 36, no. 12, pp. 2524–2535, December. 2017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [12].Liu P, El Basha MD, Li Y, Xiao Y, Sanelli PC, and Fang R, “Deep evolutionary networks with expedited genetic algorithms for medical image denoising,” Med. Image Anal, vol. 54, pp. 306–315, May 2019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [13].You X, Cao N, Lu H, Mao M, and Wanga W, “Denoising of MR images with rician noise using a wider neural network and noise range division,” Magn. Reson. Imag, vol. 64, pp. 154–159, December. 2019. [DOI] [PubMed] [Google Scholar]

- [14].Liu D, Wen B, Jiao J, Liu X, Wang Z, and Huang TS, “Connecting image denoising and high-level vision tasks via deep learning,” IEEE Trans. Image Process, vol. 29, pp. 3695–3706, 2020. [DOI] [PubMed] [Google Scholar]

- [15].Tian C, Xu Y, Fei L, and Yan K, “Deep learning for image denoising: A survey,” in Proc. Int. Conf. Genet. Evol. Comput. Singapore: Springer, 2018, pp. 563–572. [Google Scholar]

- [16].Wang Z, Bovik AC, Sheikh HR, and Simoncelli EP, “Image quality assessment: From error visibility to structural similarity,” IEEE Trans. Image Process, vol. 13, no. 4, pp. 600–612, April. 2004. [DOI] [PubMed] [Google Scholar]

- [17].Barrett HH and Myers KJ, Foundations Image Science. Hoboken, NJ, USA: Wiley, 2013. [Google Scholar]

- [18].Metz CE, Wagner RF, Doi K, Brown DG, Nishikawa RM, and Myers KJ, “Toward consensus on quantitative assessment of medical imaging systems,” Med. Phys, vol. 22, no. 7, pp. 1057–1061, July. 1995. [DOI] [PubMed] [Google Scholar]

- [19].Vennart W, “ICRU Report 54: Medical imaging—The assessment of image quality: ISBN 0–913394-53-X. April 1996, Maryland, USA,” Radiography, vol. 3, no. 3, pp. 243–244, 1997. [Google Scholar]

- [20].Wagner RF and Brown DG, “Unified SNR analysis of medical imaging systems,” Phys. Med. Biol, vol. 30, no. 6, p. 489, 1985. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [21].He X and Park S, “Model observers in medical imaging research,” Theranostics, vol. 3, no. 10, p. 774, 2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [22].Li K, Zhou W, Li H, and Anastasio MA, “Supervised learning-based ideal observer approximation for joint detection and estimation tasks,” Proc. SPIE Med. Imag., Image Perception, Observer Perform., Technol. Assessment, vol. 11599, October. 2021, Art. no. 115990F. [Google Scholar]

- [23].Yu Z et al. , “Ai-based methods for nuclear-medicine imaging: Need for objective task-specific evaluation,” J. Nucl. Med, vol. 61, no. 1, p. 575, 2020. [Google Scholar]

- [24].Barrett HH, Yao J, Rolland JP, and Myers KJ, “Model observers for assessment of image quality,” Proc. Nat. Acad. Sci. USA, vol. 90, no. 21, pp. 9758–9765, 1993. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [25].Christianson O et al. , “An improved index of image quality for task-based performance of CT iterative reconstruction across three commercial implementations,” Radiology, vol. 275, no. 3, pp. 725–734, June. 2015. [DOI] [PubMed] [Google Scholar]

- [26].Myers KJ, Barrett HH, Borgstrom MC, Patton DD, and Seeley GW, “Effect of noise correlation on detectability of disk signals in medical imaging,” J. Opt. Soc. Amer. A, Opt. Image Sci, vol. 2, no. 10, pp. 1752–1759, October. 1985. [DOI] [PubMed] [Google Scholar]

- [27].Badal A, Cha KH, Divel SE, Graff CG, Zeng R, and Badano A, “Virtual clinical trial for task-based evaluation of a deep learning synthetic mammography algorithm,” Proc. SPIE, vol. 10948, October. 2019, Art. no. 109480O. [Google Scholar]

- [28].Li K, Zhou W, Li H, and Anastasio MA, “Task-based performance evaluation of deep neural network-based image denoising,” Proc. SPIE Med. Imag. Image Perception, Observer Perform., Technol. Assessment, vol. 11599, May 2021, Art. no. 115990L. [Google Scholar]

- [29].Li S, Zhou J, Liang D, and Liu Q, “MRI denoising using progressively distribution-based neural network,” Magn. Reson. Imag, vol. 71, pp. 55–68, September. 2020. [DOI] [PubMed] [Google Scholar]

- [30].Beaudry NJ and Renner R, “An intuitive proof of the data processing inequality,” Quantum Inf. Comput, vol. 12, nos. 5–6, pp. 432–441, May 2012. [Google Scholar]

- [31].Kupinski MA, Hoppin JW, Clarkson E, and Barrett HH, “Ideal-observer computation in medical imaging with use of Markov-chain Monte Carlo techniques,” JOSA A, Opt. Image Sci. Vis, vol. 20, no. 3, pp. 430–438, 2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [32].Zhou W, Li H, and Anastasio MA, “Approximating the ideal observer and hotelling observer for binary signal detection tasks by use of supervised learning methods,” IEEE Trans. Med. Imag, vol. 38, no. 10, pp. 2456–2468, October. 2019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [33].Myers KJ and Barrett HH, “Addition of a channel mechanism to the ideal-observer model,” J. Opt. Soc. Amer. A, Opt. Image Sci, vol. 4, no. 12, pp. 2447–2457, 1987. [DOI] [PubMed] [Google Scholar]

- [34].Abbey C and Bochud F, “Modeling visual detection tasks in correlated image noise with linear model observers,” in Handbook of Medical Image Physics and Psychophysics, vol. 1, Van Metter RL, Beutel J, and Kundel HL, Eds. Bellingham, WA, USA: SPIE, 2000, pp. 629–654. [Google Scholar]

- [35].Abbey CK and Barrett HH, “Human-and model-observer performance in ramp-spectrum noise: Effects of regularization and object variability,” J. Opt. Soc. Amer. A, Opt. Image Sci, vol. 18, no. 3, pp. 473–488, 2001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [36].Wagner RF, Brown DG, and Pastel MS, “Application of information theory to the assessment of computed tomography,” Med. Phys, vol. 6, no. 2, pp. 83–94, March. 1979. [DOI] [PubMed] [Google Scholar]

- [37].Burgess AE, “Statistically defined backgrounds: Performance of a modified nonprewhitening observer model,” J. Opt. Soc. Amer. A, Opt. Image Sci, vol. 11, no. 4, pp. 1237–1242, 1994. [DOI] [PubMed] [Google Scholar]

- [38].Manjón JV, Coupé P, Buades A, Louis Collins D, and Robles M, “New methods for MRI denoising based on sparseness and self-similarity,” Med. Image Anal, vol. 16, no. 1, pp. 18–27, January. 2012. [DOI] [PubMed] [Google Scholar]

- [39].Jifara W, Jiang F, Rho S, Cheng M, and Liu S, “Medical image denoising using convolutional neural network: A residual learning approach,” J. Supercomput, vol. 75, no. 2, pp. 704–718, February. 2019. [Google Scholar]

- [40].He K, Zhang X, Ren S, and Sun J, “Deep residual learning for image recognition,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), June. 2016, pp. 770–778. [Google Scholar]

- [41].Kingma DP and Ba J, “Adam: A method for stochastic optimization,” 2014, arXiv:1412.6980. [Online]. Available: http://arxiv.org/abs/1412.6980 [Google Scholar]

- [42].Chollet F. (2015). Keras. [Online]. Available: https://keras.io

- [43].Simonyan K and Zisserman A, “Very deep convolutional networks for large-scale image recognition,” 2014, arXiv:1409.1556. [Online]. Available: http://arxiv.org/abs/1409.1556 [Google Scholar]

- [44].Deng J, Dong W, Socher R, Li L-J, Li K, and Fei-Fei L, “ImageNet: A large-scale hierarchical image database,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., June. 2009, pp. 248–255. [Google Scholar]

- [45].Metz CE, Herman BA, and Roe CA, “Statistical comparison of two ROC-curve estimates obtained from partially-paired datasets,” Med. Decis. Making, vol. 18, no. 1, pp. 110–121, 1998. [DOI] [PubMed] [Google Scholar]

- [46].Pesce LL and Metz CE, “Reliable and computationally efficient maximum-likelihood estimation of ‘proper’ binormal ROC curves,” Academic Radiol, vol. 14, no. 7, pp. 814–829, July. 2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [47].Park S, Clarkson E, Kupinski MA, and Barrett HH, “Efficiency of the human observer detecting random signals in random backgrounds,” J. Opt. Soc. Amer. A, Opt. Image Sci, vol. 22, no. 1, pp. 3–16, January. 2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [48].Clarkson E, “Estimation receiver operating characteristic curve and ideal observers for combined detection/estimation tasks,” J. Opt. Soc. Amer. A, Opt. Image Sci, vol. 24, no. 12, p. B91, 2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.