Abstract

In recent times, big data classification has become a hot research topic in various domains, such as healthcare, e-commerce, finance, etc. The inclusion of the feature selection process helps to improve the big data classification process and can be done by the use of metaheuristic optimization algorithms. This study focuses on the design of a big data classification model using chaotic pigeon inspired optimization (CPIO)-based feature selection with an optimal deep belief network (DBN) model. The proposed model is executed in the Hadoop MapReduce environment to manage big data. Initially, the CPIO algorithm is applied to select a useful subset of features. In addition, the Harris hawks optimization (HHO)-based DBN model is derived as a classifier to allocate appropriate class labels. The design of the HHO algorithm to tune the hyperparameters of the DBN model assists in boosting the classification performance. To examine the superiority of the presented technique, a series of simulations were performed, and the results were inspected under various dimensions. The resultant values highlighted the supremacy of the presented technique over the recent techniques.

Subject terms: Computer science, Information technology

Introduction

Big data is a group of information that cannot be processed, captured, and managed by means of traditional software implements in a particular interval. This can be a higher growth rate, huge and differentiated data resource1. It needs novel processing modes to have strong decision-making control, understanding, optimization capability, and discovery powers. Big data analysis is performed by many approaches, such as databases, web crawlers, analysis, data warehouses, big data mining, and visualization algorithms. Among others, web crawlers are a widespread analysis technology2. They could extract text data/numerical values from a webpage and set up it to analyze information. In addition to Python language, this technique could be executed with few software packages. The big data mining algorithm needs to manage a huge amount of information. Furthermore, the speediness of handling is deliberate. This algorithm includes optimization technologies (such as GDA and PSO algorithms), scientific modeling or other predictive technologies (such as NN, NB, roughset, DT), decision analysis technologies (such as multicriteria decision, gray decision, and so on), performance assessment technologies (for example, fuzzy comprehensive evaluation and data envelopment analyses). Since this mining information was hardly examined and big data in the historical, particularly used to engineer problems, it has is a very big problem while employing this mining information algorithm to big data3.

Clustering and classification are the two key classes of algorithms in mining information4. Nevertheless, the performances of classification and clustering methods are considerably caused by the increasing dataset dimension because the algorithm in this category operates on the dataset dimension. Additionally, the drawback of higher dimension datasets includes redundant data, higher module construct time, and degraded quality, which makes information analyses highly complicated. To resolve these problems, the selection of features is employed as a major preprocessing phase for choosing subsets of features from a large dataset and increases the accuracy of clustering and classification models, which triggers foreign, ambiguous, and noisy data elimination5. The FS method depends upon search techniques and a performance assessment of subsets. Since the preprocessing stage, feature selection was essential for removing duplications, minimizing the amount of information, and irrelevant and unnecessary characteristics. It has various techniques to select the feature that assists in choosing the actual dataset as the effective feature. Filter, embedded and wrapper are the 3 approaches of the FS model6. The selection of features should achieve 2 aims: to eliminate/reduce the amount of FS and increase the output performance. As already mentioned, meta heuristics in previous decades simulate organisms’ collective behaviors. In particular, this algorithm has generated an important development in several regions associated with optimization7. The optimal selection is made by a metaheuristic algorithm; in a rational interval, the cloud generates better solutions8. Sometimes, it is a better solution to mitigate the limitation of comprehensive time-consuming searches9. Various metaheuristic methods, alternatively, suffer from the optimum location, missing search multiplicity and imbalance among exploitative and explosive performances10. Recently, EA has shown itself to be efficient and attractive for solving challenges using optimizations. There are few approaches, such as PSO, CSA11, GA12, and ACO algorithms13. PSO was hybridized for constant search space issues in the works with another metaheuristics approach.

This study focuses on the design of a big data classification model using chaotic pigeon inspired optimization (CPIO)-based feature selection with an optimal deep belief network (DBN) model14. The proposed model is executed in the Hadoop MapReduce environment to manage big data. Initially, the CPIO algorithm is applied to select a useful subset of features. In addition, the Harris hawks optimization (HHO)-based deep belief network (DBN) model is derived as a classifier to allocate appropriate class labels. The design of the HHO algorithm to tune the hyperparameters of the DBN model assists in boosting the classification performance. To examine the superiority of the presented technique, a series of simulations were performed, and the results were inspected under various dimensions. This paper structure is defined as follows. In “Results” section, the limitation of the proposed research work was identified through a literature review. Hadoop map reduction models defined in “Discussion” section. In “Methods” section, the performance analysis of the proposed system is briefly elaborated. This is followed by conclusions and some probable future directions are recommended in “Conclusion” section. In Al-Thanoon et al., BCSA was stimulated by natural phenomena to perform the FS method. In BCSA, the flight length variable plays a significant part in the performance15. To enhance the classification performances by rationally elected features, a development of defining the flight length parameters through the concepts of the opposition-based learning method of BCSA is presented. BenSaid and Alimi proposed an OFS method that solves these problems16. The presented method named MOANOFS explores the new developments of the OML method and conflict resolution method (Automated Negotiation). MOANOFS employs a 2-decision level. Initially, deciding k(s) among the learner (OFS method) is trustful (trust value/higher confidence). This selected k learner will take part in the next phase in which this presented MANOFS technique is incorporated.

In Pooja et al., the TC-CMECLPBC method is projected. Initially, the features and data were collected from large climate databases17. The TCC model is employed to find the comparison among the features to select appropriate features through high FS precision. The clustering method consists of 2 stages, maximization (M) and expectation (E), for discovering the maximal likelihood of grouping information into clusters. Next, the clustering results are provided to linear program boosting classifiers to improve the predictive performance. Lavanya et al. examine FS techniques such as rough set and entropy on the sensor's information18. Additionally, a representative method of FW is presented that represents Twitter and sensor information effectively for additional analyses of information. Few common classifications, such as NB, KNN, SVM, and DT, are employed to validate the efficiency of the FS. An ensemble classification method is presented that is related to many advanced methods. In Sivakkolundu and Kavitha, a new BCTMP-WEABC method is presented to predict upcoming outcomes with high precision and less time consumption19. This method includes 2 models, FS and classifier, to handle a large amount of information. Baldomero-Naranjo et al. proposed a strong classification method depending upon the SVM method that concurrently handles FS and outlier discovery20. The classifiers are made to consider the ramp loss margin error and involve budget constraints for limiting the number of FSs. The search of classifiers is modeled by a mixed integer design through a large M parameter. Two distinct methods (heuristic and exact) are presented for solving this method. The heuristic method is authenticated by relating the quality of the solution given to this method using an accurate method.

Guo et al. proposed a WRPCSP method for executing the FS method. Next, the study incorporates BN and CBR systems for reasoning knowledge21. According to the possible reasoning and calculation, WRPCSP algorithms and BN permit the presented CBR scheme to work in big data. Furthermore, to solve these problems created with a large number of features, this study also proposed a GO method for assigning the computation process of big data for similar data processing. Wang et al. proposed a big data analytics approach to the FS process for obtaining each explanatory factor of CT, which will shed light on the fluctuations of CT22. Initially, the relative analyses are executed among all 2 candidate factors through mutational data metrics to construct the experiential system. Next, the system deconvolutions are explored to infer the direct dependencies among the candidate’s factors and the CT by eliminating the effect of transitive relationships from the system. In Singh and Singh, the 4-phase hybrid ensemble FS method was proposed. Initially, the datasets are separated by the cross validation process23. Next, several filter approaches that depend on the weight score are ensembles for generating a rating of features, and then the consecutive FS method is used as a wrapper method for obtaining the best subsets of features. Finally, the resultant subsets are treated for the succeeding classifier's task. López et al. proposed a distributed feature weight method that accurately estimates feature significance in a large dataset with the popular method RELIEF in smaller problems24. The solution named BELIEF integrates new redundant removal measures that generate schemes related to this entropy but with low time costs. Furthermore, BELIEF provides a smoother scale-up, while additional cases are needed to increase the accuracy of the estimation.

Results

In this study, a new big data classification model is designed in the MapReduce environment. The proposed model derived a novel CPIO-based FS technique, which extracts a useful subset of features. In addition, the HHO-DBN model receives the chosen features as input and performs the classification process. The detailed working of these processes in the Hadoop MapReduce Environment is offered in the following sections. An essential component that develops the Hadoop architecture is as follows: Hadoop Distributed File System (HDFS): Initially, it can be a Google File System. This component was a distributed file system utilized as distributed storage to data; additionally, it gives access to information with maximum throughput. Hadoop YARN (MRv2): This component has been responsible for job scheduling and managing cluster resources. Hadoop MapReduce: Initially, Google’s MapReduce, these modules are scheme dependent upon YARN to the parallel process of data.

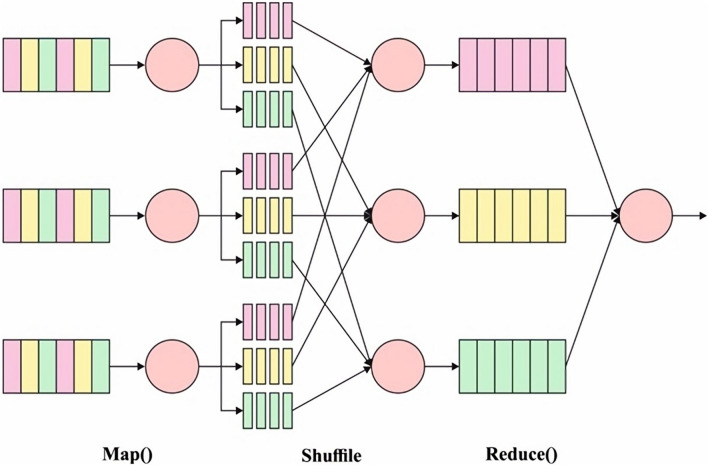

There are several studies compared with Hadoop, namely, Mahout, Hive, Hbase, and Spark. The most essential feature that describes Hadoop is which the HDFS is a maximum fault tolerance to hardware failure. Certainly, it can be capable of repeatedly handling and resolving these cases. Additionally, HDFS can interface among the nodes going to cluster to manage the data, i.e., to rebalance them25. The model of data storing the HDFS was carried out using the MapReduce structure. While Hadoop has expressed mainly in Java and C languages, it can be near several other programming languages. The MapReduce structure allows separation of nodes going to cluster the task, which is also finished. An essential disadvantage of Hadoop is the absence of execution capable of real-time tasks. However, it could not be a vital restriction because of these particular features, another technology is utilized. MapReduce automatically parallelizes and applies the program to a large cluster of commodity technologies. It mechanism by break model as to 2 stages, the map as well as reduce stage. All the stages are key-value pairs as input as well as output, this kind of that can be elected as programmer. The map and reduces operations of MapReduce are combined and determined in terms of data structured form (key, value) pairs. The calculation obtains the group of input key-value pairs and makes the group of output key-value pairs. The map as well as reduce operations in Hadoop MapReduce is the subsequent common procedure:

Design of CPIO-FS technique

At this stage, the CPIO-FS technique is applied to derive a subset of features. PIO has been a newly developed bioinspired technique extremely utilized for solving the optimized issue. It can be dependent upon social organisms of swarms that utilize the base of learning. It tried to mathematically enhance the solution quality based on the natural performance of the swarm to adapt to the position and velocity of all individuals. The homing nature of pigeons derives from 2 important functions, mapping and compassing and landmark functions. Map and compass operator: At this point, the rules were explained as the place and velocity of pigeon , and the position and velocity in the -dimensional search space were maximized at each iteration. The new place and velocity of pigeons at the -th iteration have been defined utilizing the provided function in Eqs. (1)–(2):

| 1 |

| 2 |

where stands for the mapping and compassing factors, rand demonstrates the arbitrary value in [0–1], and illustrates the present global optimum place, which is obtained in the evaluation of every location26.

Landmark operator: During this metric, the partial amount of pigeons is diminished from every generation. To accomplish the target immediately, residual pigeon flies to the destination place27. Let be the middle place of pigeons, and the place upgrading rule of pigeon at the t‐th iteration has been written as Eqs. (3)–(5):

| 3 |

| 4 |

| 5 |

where refers to the amount of pigeons, but the fitness is the cost function of pigeons. To reduce optimization, the target function has been elected from the rate of minimum.

Optimal feature selection process

The FF objective is a terminology utilized to estimate the solution. The FF evaluates the solution, which is a subset of obvious features, by means of the true positive rate (TPR), false positive rate (FPR), and number of features. The number of features comprises FF, and there are features obtainable without affecting TPR or FPR. During these cases, it can be necessary to eliminate individual features. Equation (6) schemes the function executed to estimate the fitness of the pigeon or solution.

| 6 |

where determines the number of elected features, refers to the entire feature and . The measures of weights are set as and because TPR and FPR are important. An initial method of projected PIO-FS defines the outcome or pigeon by vector with length, which is similar to feature count. As the PIO techniques fundamental approach acts on the place of pigeon regularly, the proposed PIO for FS explains the solution inference as vector of a definite length, but the measures of place and velocity vectors are arbitrary values in 0 and 1.

Usually, the velocity of pigeons is monitored by a sigmoidal function that has been utilized to transfer velocity as a binary version by utilizing Eq. (7). To resolve the binarized SI technique, the pigeon place is upgraded depending upon the sigmoid function value and the possibilities of arbitrarily uniform values in 0 and 1 by Eq. (8). The residual manner was operated similar to convention PIO except for the upgrading place of landmark operators. In addition, the sigmoid function was implemented transmission, and the velocity and place were upgraded as:

| 7 |

| 8 |

where stands for the pigeon velocities from iteration and refer to the uniformly arbitrary values.

Discussion

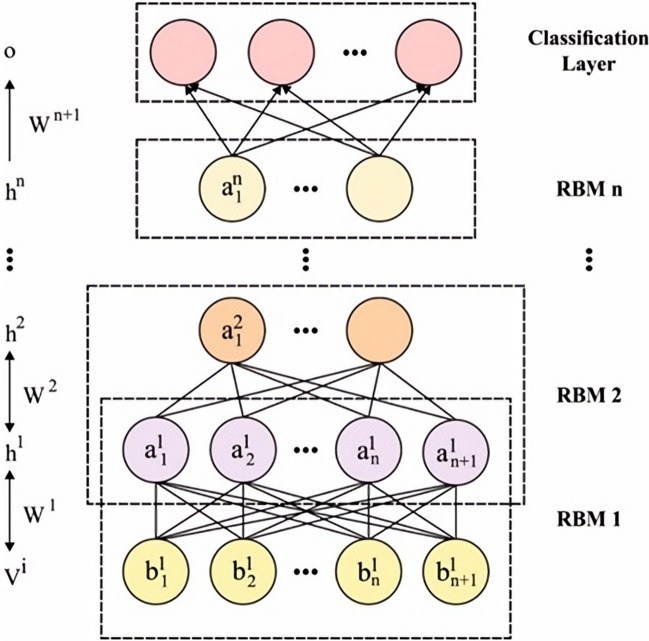

The Design of HHO-DBN Model is discussed here. The features are passed into the DBN model to perform the classification process. The DBN was a probability generation approach that is opposite the classic discriminative approach. This network is a DL technique that is stacked by RBM and trained in a greedy approach. The resultant prior layer was utilized as the input of the succeeding layer. Eventually, the DBN network has been generated. In the DBN, hierarchical learning has been simulated as the framework of the human brain. All the layers of the deep network are regarded as a logistic regression (LR) approach. The joint distribution function of and in Layer is in Eq. (9).

| 9 |

Input data of the DBN method compose the 2D vector reached in preprocessing. The RBM layers were trained one-by-one in pretrained. The following visible variable is a duplicate hidden variable from the preceding layer28. The parameter is transmitted in a layerwise approach, and the features have been learned in the preceding layer. The LR is maximum layers trained by fine‐tuning, where the cost function has been revised using BP for optimizing the weight The 2 steps are contained in the procedure of trained a DBN technique. All the RBM layers are unsupervised trained, input has been mapped as to distinct feature space, and data has been saved about feasible. Afterward, the LR layer was added on top of the DBN as supervised classification. Figure 2 demonstrates the framework of the DBN model.

Figure 1.

Framework of Mapreduce in Hadoop. It consists of map as well as reduce operations. The map stage obtains the various inputs and spilt and shuffled it. During this reduction stage, all reduce tasks procedure the input in-between data with a decrease function and create output data. Figure illustrates the block diagram of MapReduce.

Figure 2.

DBN structure. The hyperparameters of the DBN model take place using the HHO algorithm to boost the classifier results. During the HHO, the candidate solution is the Harris hawks, and an optimum/global solution has been planned prey. Therefore, HHO illustrates the exploratory and exploitative phases.

The HHO technique is simulated as the hunting performance of Harris hawk to rabbit. If the rabbits have considerable energy, the Harris hawk explores the definitional domain LB, with the subsequent formula in Eq. (10):

| 10 |

where implies the place of the th individual from the next iteration of refers to the place of an arbitrarily elected candidate at the present iteration, and and are optimum and averaged places the swarms. , and are 3 arbitrary numbers from the Gauss distribution. implies the chance of an individual following that most 2 manners, which represents which it can also be an arbitrary number29. The energy of rabbits, signified as symbol , declines linearly in the maximal value to 030–32 in Eq. (11):

| 11 |

where refers to the primary phase of energy that also fluctuates in the interval of [0, 1]. stands for the maximal permitted iteration number that is set up at the start. When , the Harris hawk is a manner for rabbits with approaches that are explained. refers the current iteration.

Soft besiege. and , the Harris hawk encloses rabbits softly and scares rabbits to run to make the rabbits tired. During this approach, the Harris hawk is upgrading its places with the subsequent formula in Eq. (12):

| 12 |

where signified the arbitrary jumps near the rabbit.

Hard besiege. , and , the rabbits were previously exhausted to minimal energy; afterward, the Harris hawk was carrying out hard besiege and made the surprised pounce in Eq. (13).

| 13 |

Soft besiege with progressive rapid dives. If and , the rabbits are sufficient energy; therefore, the Harris hawks until soft besiege is performed, but in further intelligence, one in Eqs. (14)–(16):

| 14 |

| 15 |

| 16 |

where implies the dimensionality of the issues, and refers to the arbitrary number. shows the Levy flight from dimensions in Eq. (17):

| 17 |

Hard besiege with progressive rapid dives. If and , the rabbits were tired, and Harris hawks were performed hard besiege with intelligence. The influence of the upgrading formulas is similar to Eq. (15), but the middle parameter of is altered, which exists to the averages in Eq. (18):

| 18 |

Methods

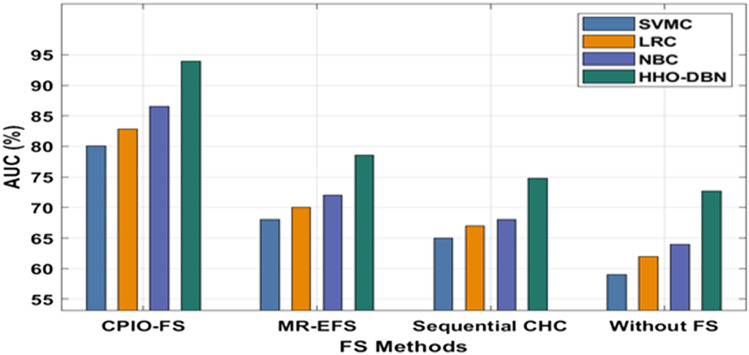

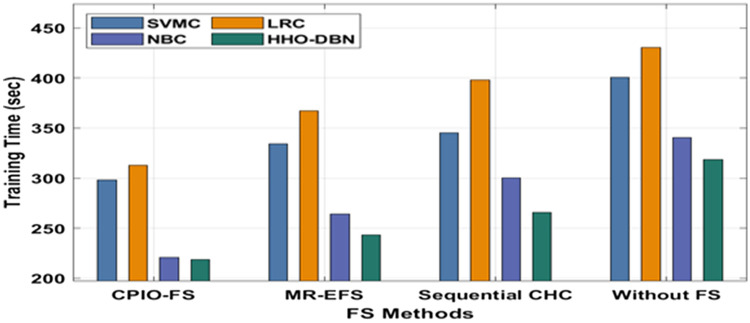

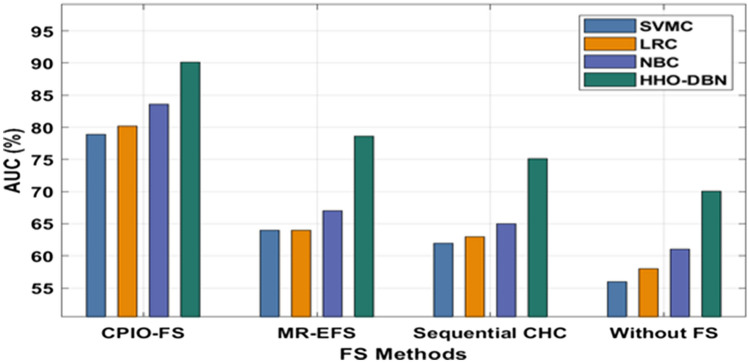

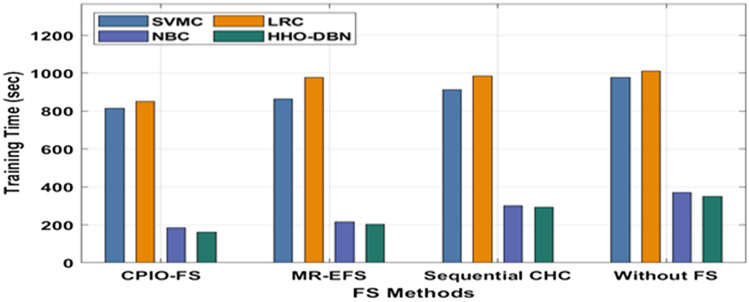

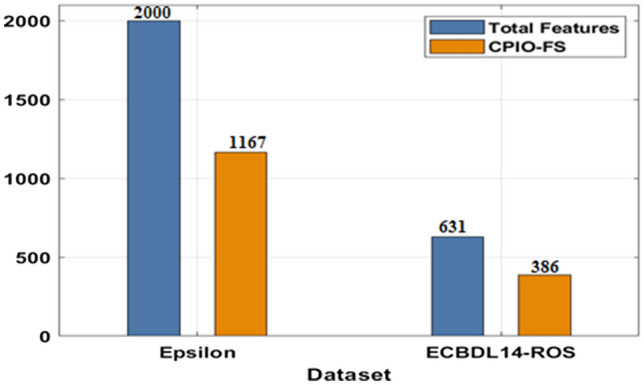

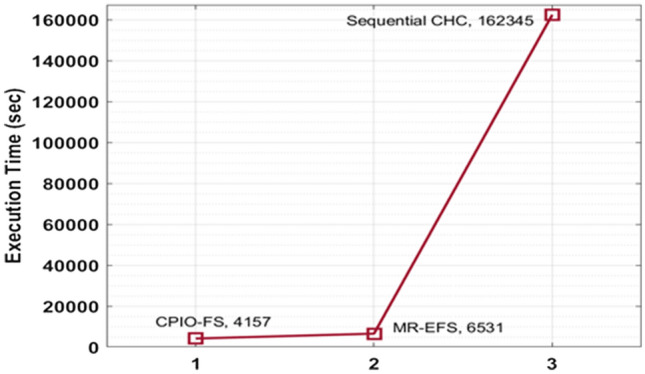

The evaluation of the experimental results of the presented technique takes place on two benchmark datasets, namely, Epsilon and ECBDL14-ROS. The former dataset has 400,000 samples, whereas the latter dataset has 65,003,913 samples33, as shown in Table 1. Figure 3 offers the FS outcome of the CIPO-FS technique. Figure 4 demonstrates the execution time analysis of the CPIO-FS technique with existing techniques with 400,000 training instances. Figure 5 investigates the AUC analysis of different classification models under different FS approaches on the epsilon dataset34,35. A comprehensive training runtime analysis of different classification models under different FS approaches on the epsilon dataset is provided in Fig. 6. A brief AUC analysis of different classification models under distinct FS methods on the ECBDL14-ROS dataset is provided in Fig. 7. A detailed training runtime analysis of different classification approaches under different FS approaches on the ECBDL14-ROS dataset is provided in Fig. 8.

Table 1.

Dataset description.

| Dataset | Training instances | Test instances | Features |

|---|---|---|---|

| Epsilon | 400,000 | 100,000 | 2000 |

| ECBDL14-ROS | 65,003,913 | 2,897,917 | 631 |

Figure 3.

FS analysis of CPIO-FS model. The results depicted that the CPIO-FS technique has chosen an optimal set of features from the applied dataset. For instance, on the test Epsilon dataset, the CIPO-FS technique selected a set of 1167 features out of 2000 features. In addition, on the applied ECBDL14-ROS technique, the CIPO-FS technique has chosen a collection of 631 features out of 386 features.

Figure 4.

Execution time analysis of CPIO-FS model. The results showed that the CPIO-FS technique obtained an effective outcome with a minimal execution time of 4157 s, whereas the MR-EFS and sequential CHC techniques achieved higher execution times of 6531 s and 162,345 s, respectively.

Figure 5.

AUC analysis of HHO-DBN model under epsilon dataset. The results ensured that the CPIO-HS with the HHO-DBN model offered the maximum outcome over the other techniques. For instance, with no FS technique, the HHO-DBN model attained a higher AUC of 72.70%, whereas the SVMC, LRC, and NBC techniques attained a lower AUC of 59%, 62%, and 64%, respectively. Following the sequential CHC approach, the HHO-DBN manner reached an increased AUC of 74.80%, whereas the SVMC, LRC, and NBC methods obtained minimal AUCs of 65%, 67%, and 68%, respectively. In line with MR-EFS, the HHO-DBN method reached a superior AUC of 78.60%, whereas the SVMC, LRC, and NBC methodologies achieved a minimal AUC of 68%, 7%, and 72%, respectively. Last, with the CPIO-FS technique, the HHO-DBN methodologies reached a superior AUC of 93.90%, whereas the SVMC, LRC, and NBC approaches attained minimal AUCs of 80.10%, 82.80%, and 86.50%, respectively.

Figure 6.

Training time analysis of the HHO-DBN model under the epsilon dataset. The results portrayed that CPIO-FS with the HHO-DBN technique resulted in the least training runtime compared to the other methods. For instance, with no FS, the HHO-DBN technique has gained a lower runtime of 318.54 s, whereas the SVMC, LRC, and NBC techniques have accomplished a higher runtime of 400.38 s, 430.48 s, and 340.42 s, respectively. Moreover, sequential CHC, the HHO-DBN approach has attained a minimal runtime of 265.98 s, whereas the SVMC, LRC, and NBC approaches have accomplished superior runtimes of 345.27 s, 398.07 s, and 300.21 s, respectively. Furthermore, MR-EFS, the HHO-DBN method has reached an increased runtime of 243.09 s, whereas the SVMC, LRC, and NBC methodologies have accomplished a higher runtime of 334.18 s, 367.29 s, and 264.26 s, respectively. Finally, CPIO-FS, the HHO-DBN method has attained a lower runtime of 218.96 s, whereas the SVMC, LRC, and NBC algorithms have accomplished superior runtimes of 298.36 s, 312.78 s, and 220.90 s, respectively.

Figure 7.

AUC analysis of HHO-DBN model under ECBDL14-ROS dataset. The outcomes demonstrated that CPIO-FS with the HHO-DBN technique resulted in the lowest training AUC compared to the other methods. For instance, with no FS, the HHO-DBN manner has reached a maximum AUC of 70.10%, whereas the SVMC, LRC, and NBC techniques have accomplished a lower AUC of 56%, 58%, and 61%, respectively. Additionally, sequential CHC and the HHO-DBN technique achieved a higher AUC of 75.14%, whereas the SVMC, LRC, and NBC techniques achieved a minimum AUC of 62%, 63%, and 65%, respectively. Likewise, MR-EFS, the HHO-DBN technique, gained an increased AUC of 78.60%, whereas the SVMC, LRC, and NBC techniques accomplished a decreased AUC of 64%, 64%, and 67%, respectively. Finally, CPIO-FS and the HHO-DBN technique achieved a higher AUC of 90.10%, whereas the SVMC, LRC, and NBC techniques achieved minimal AUCs of 78.90%, 80.15%, and 83.60%, respectively.

Figure 8.

Training time analysis of the HHO-DBN model under the ECBDL14-ROS dataset. The results portrayed that CPIO-FS with the HHO-DBN technique resulted in the least training runtime compared to the other techniques. For instance, with no FS, the HHO-DBN technique has gained a minimal runtime of 350.76 s, whereas the SVMC, LRC, and NBC methods have accomplished superior runtimes of 978.37 s, 1012.47 s, and 369.98 s, respectively. In addition, sequential CHC, the HHO-DBN approach has reached a reduced runtime of 293.87 s, whereas the SVMC, LRC, and NBC algorithms have accomplished superior runtimes of 912.40 s, 986.45 s, and 300.26 s, respectively. In addition, MR-EFS, the HHO-DBN method has reached a lesser runtime of 203.76 s, whereas the SVMC, LRC, and NBC methodologies have accomplished maximal runtimes of 864.28 s, 978.38 s, and 215.09 s, respectively. Last, CPIO-FS, the HHO-DBN manner, obtained a minimal runtime of 160.90 s, whereas the SVMC, LRC, and NBC methodologies accomplished superior runtimes of 815.89 s, 850.53 s, and 184.34 s, respectively.

Conclusion

In this study, a new big data classification model is designed in the MapReduce environment. The proposed model derived a novel CPIO-based FS technique, which extracts a useful subset of features. In addition, the HHO-DBN model receives the chosen features as input and performs the classification process. The design of the HHO-based hyperparameter tuning process assists in enhancing the classification results to a maximum extent. To examine the superiority of the presented technique, a series of simulations were performed, and the results were inspected under various dimensions. The resultant values highlighted the supremacy of the presented technique over the recent techniques. The proposed work utilized a limited number of quality service parameters for the implementation. In the future, hybrid metaheuristic algorithms and advanced DL architectures can be designed to further improve the classification outcomes of big data with an extended number of quality of service parameters.

Acknowledgements

We deeply acknowledge Taif University for supporting this study through Taif University Researchers Supporting Project Number (TURSP-2020/313), Taif University, Taif, Saudi Arabia.

Author contributions

S.R. and S.A. conceived the experiment. Y.A. and O.K. conducted the experiment and performed statistical analysis and figure generation. All authors reviewed the manuscript.

Data availability

Data are available upon reasonable request for researchers who meet the criteria for access to confidential data.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Awan MJ, Rahim MSM, Nobanee H, Khalaf OI, Ishfaq U. A big data approach to black Friday sales. Intell. Autom. Soft Comput. 2021;27:785–797. doi: 10.32604/iasc.2021.014216. [DOI] [Google Scholar]

- 2.El-Hasnony IM, Barakat SI, Elhoseny M, Mostafa RR. Improved feature selection model for big data analytics. IEEE Access. 2020;8:66989–67004. doi: 10.1109/ACCESS.2020.2986232. [DOI] [Google Scholar]

- 3.Qiu M, Kung SY, Yang Q. Editorial: IEEE transactions on sustainable computing special issue on smart data and deep learning in sustainable computing. IEEE Trans. Sustain. Comput. 2019;4:1–3. doi: 10.1109/TSUSC.2018.2880127. [DOI] [Google Scholar]

- 4.Sudhakar Sengan P, Sagar V, Khalaf OI, Dhanapal R. The optimization of reconfigured real-time datasets for improving classification performance of machine learning algorithms. Math. Eng. Sci. Aerospace. 2021;12:1–10. [Google Scholar]

- 5.Zhao W, Han S, Meng W, Sun D, Hu RQ. BSDP: Big sensor data preprocessing in multisource fusion positioning system using compressive sensing. IEEE Trans. Veh. Technol. 2019;68:8866–8880. doi: 10.1109/TVT.2019.2929560. [DOI] [Google Scholar]

- 6.Emary E, Zawbaa HM. Feature selection via lèvy antlion optimization. Pattern Anal. Appl. 2019;22:857–876. doi: 10.1007/s10044-018-0695-2. [DOI] [Google Scholar]

- 7.Dhrif H, Giraldo LGS, Kubat M, Wuchty S. A stable hybrid method for feature subset selection using particle swarm optimization with local search. Proc. Genet. Evol. Comput. Conf. 2019;1:13–21. doi: 10.1145/3321707.3321816. [DOI] [Google Scholar]

- 8.Abdulsahib GM, Khalaf OI. Comparison and evaluation of cloud processing models in cloud-based networks. Int. J. Simul. Syst. Sci. Technol. 2018;19:1–6. [Google Scholar]

- 9.Guo Y, Chung FL, Li G, Zhang L. Multilabel bioinformatics data classification with ensemble embedded feature selection. IEEE Access. 2019;7:103863–103875. doi: 10.1109/ACCESS.2019.2931035. [DOI] [Google Scholar]

- 10.Al-Khanak EN, Lee SP, Ur Rehman Khan S, Verbraeck A, van Lint H. A heuristics-based cost model for scientific workflow scheduling in cloud. Comput. Mater. Continua. 2021;67(3):3265–3282. doi: 10.32604/cmc.2021.015409. [DOI] [Google Scholar]

- 11.De Souza R. C. T., Coelho L. D. S., De Macedo C. A., & Pierezan J. A V-Shaped binary crow search algorithm for feature selection. in Proc. IEEE Congr. Evol. Comput. (CEC). 1–8 (2018).

- 12.Khan NA, Khalaf OI, Romero CAT, Sulaiman M, Bakar MA. Application of Euler neural networks with soft computing paradigm to solve nonlinear problems arising in heat transfer. Entropy. 2021;23:1053. doi: 10.3390/e23081053. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Alsufyani A, Alotaibi Y, Almagrabi AO, Alghamdi SA, Alsufyani N. Optimized intelligent data management framework for a cyber-physical system for computational applications. Compl. Intell. Syst. 2021;1:1–13. [Google Scholar]

- 14.Khan HH, Malik MN, Alotaibi Y, Alsufyani A, Algamedi S. Crowdsourced requirements engineering challenges and solutions: A software industry perspective. Comput. Syst. Sci. Eng. 2021;39:221–236. doi: 10.32604/csse.2021.016510. [DOI] [Google Scholar]

- 15.Al-Thanoon NA, Algamal ZY, Qasim OS. Feature selection based on a crow search algorithm for big data classification. Chem. Intell. Lab. Syst. 2021;212:104288. doi: 10.1016/j.chemolab.2021.104288. [DOI] [Google Scholar]

- 16.BenSaid F, Alimi AM. Online feature selection system for big data classification based on multiobjective automated negotiation. Pattern Recogn. 2021;110:107629. doi: 10.1016/j.patcog.2020.107629. [DOI] [Google Scholar]

- 17.Pooja SB, Balan RS, Anisha M, Muthukumaran MS, Jothikumar R. Techniques Tanimoto correlated feature selection system and hybridization of clustering and boosting ensemble classification of remote sensed big data for weather forecasting. Comput. Commun. 2020;151:266–274. doi: 10.1016/j.comcom.2019.12.063. [DOI] [Google Scholar]

- 18.Lavanya PG, Kouser K, Suresha M. Effective feature representation using symbolic approach for classification and clustering of big data. Expert Syst. Appl. 2021;173:114658. doi: 10.1016/j.eswa.2021.114658. [DOI] [Google Scholar]

- 19.Sivakkolundu R, Kavitha V. Bhattacharyya coefficient target feature matching based weighted emphasis adaptive boosting classification for predictive analytics with big data. Mater. Today. 2021;5:63. [Google Scholar]

- 20.Baldomero-Naranjo M, Martínez-Merino LI, Rodríguez-Chía AM. A robust SVM-based approach with feature selection and outliers detection for classification problems. Expert Syst. Appl. 2021;178:115017. doi: 10.1016/j.eswa.2021.115017. [DOI] [Google Scholar]

- 21.Guo Y, Zhang B, Sun Y, Jiang K, Wu K. Machine learning based feature selection and knowledge reasoning for CBR system under big data. Pattern Recogn. 2021;112:107805. doi: 10.1016/j.patcog.2020.107805. [DOI] [Google Scholar]

- 22.Wang J, Zheng P, Zhang J. Big data analytics for cycle time related feature selection in the semiconductor wafer fabrication system. Comput. Ind. Eng. 2020;143:106362. doi: 10.1016/j.cie.2020.106362. [DOI] [Google Scholar]

- 23.Singh N, Singh P. A hybrid ensemble-filter wrapper feature selection approach for medical data classification. Chem. Intell. Lab. Syst. 2021;1:104396. doi: 10.1016/j.chemolab.2021.104396. [DOI] [Google Scholar]

- 24.López D, Ramírez-Gallego S, García S, Xiong N, Herrera F. BELIEF: A distance-based redundancy-proof feature selection method for Big Data. Inf. Sci. 2021;558:124–139. doi: 10.1016/j.ins.2020.12.082. [DOI] [Google Scholar]

- 25.Alotaibi, Y. Automated business process modeling for analyzing sustainable system requirements engineering. in 2020 6th International Conference on Information Management (ICIM), IEEE, 157–161 (2020).

- 26.Alotaibi Y, et al. Suggestion mining from opinionated text of big social media data. Comput. Mater. Continua. 2021;68:3323–3338. doi: 10.32604/cmc.2021.016727. [DOI] [Google Scholar]

- 27.Metawa N, Nguyen PT, Nguyen QLHTT, Elhoseny M, Shankar K. Internet of things enabled financial crisis prediction in enterprises using optimal feature subset selection-based classification model. Big Data. 2021;9:331–342. doi: 10.1089/big.2020.0192. [DOI] [PubMed] [Google Scholar]

- 28.Almanaseer W, Alshraideh M, Alkadi O. A deep belief network classification approach for automatic diacritization of arabic text. Appl. Sci. 2021;11:5228. doi: 10.3390/app11115228. [DOI] [Google Scholar]

- 29.Suryanarayana G, Chandran K, Khalaf OI, Alotaibi Y, Alsufyani A, Alghamdi SA. Accurate magnetic resonance image super-resolution using deep networks and Gaussian filtering in the stationary wavelet domain. IEEE Access. 2021;9:71406–71417. doi: 10.1109/ACCESS.2021.3077611. [DOI] [Google Scholar]

- 30.Li G, et al. Research on the natural language recognition method based on cluster analysis using neural network. Math. Probl. Eng. 2021;2021:1–13. [Google Scholar]

- 31.Alotaibi Y. A new database intrusion detection approach based on hybrid meta-heuristics. Comput. Mater. Continua. 2021;66:1879–1895. doi: 10.32604/cmc.2020.013739. [DOI] [Google Scholar]

- 32.Rout R, Parida P, Alotaibi Y, Alghamdi S, Khalaf OI. Skin lesion extraction using multiscale morphological local variance reconstruction based watershed transform and fast fuzzy C-means clustering. Symmetry. 2021;13:2085. doi: 10.3390/sym13112085. [DOI] [Google Scholar]

- 33.Shafiq M, Tian Z, Bashir AK, Jolfaei A, Yu X. Data mining and machine learning methods for sustainable smart cities traffic classification: A survey. Sustain. Cities Soc. 2020;60:102177. doi: 10.1016/j.scs.2020.102177. [DOI] [Google Scholar]

- 34.Tian Z, et al. User and entity behavior analysis under urban big data. ACM/IMS Trans. Data Sci. 2020;1:1–19. doi: 10.1145/3374749. [DOI] [Google Scholar]

- 35.Luo C, Tan Z, Min G, Gan J, Shi W, Tian Z. A novel web attack detection system for internet of things via ensemble classification. IEEE Trans. Ind. Inf. 2021;17:5810–5818. doi: 10.1109/TII.2020.3038761. [DOI] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

Data are available upon reasonable request for researchers who meet the criteria for access to confidential data.