Abstract

Background

Engaging in regular physical activity requires continued complex decision-making in varied and dynamic individual, social and structural contexts. Widespread shortfalls of physical activity interventions suggests the complex underlying mechanisms of change are not yet fully understood. More insightful process evaluations are needed to design and implement more effective approaches. This paper describes the protocol for a process evaluation of the JU:MP programme, a whole systems approach to increasing physical activity in children and young people aged 5–14 years in North Bradford, UK.

Methods

This process evaluation, underpinned by realist philosophy, aims to understand the development and implementation of the JU:MP programme and the mechanisms by which JU:MP influences physical activity in children and young people. It also aims to explore behaviour change across wider policy, strategy and neighbourhood systems. A mixed method data collection approach will include semi-structured interview, observation, documentary analysis, surveys, and participatory evaluation methods including reflections and ripple effect mapping.

Discussion

This protocol offers an innovative approach on the use of process evaluation feeding into an iterative programme intended to generate evidence-based practice and deliver practice-based evidence. This paper advances knowledge regarding the development of process evaluations for evaluating systems interventions, and emphasises the importance of process evaluation.

Supplementary Information

The online version contains supplementary material available at 10.1186/s12889-021-12255-w.

Keywords: Physical activity, Process evaluation, Realist, Systems thinking, Children, Behaviour change, Qualitative, Ripple effect mapping, Network mapping

Background

Physical (in)activity and health inequalities

There is substantial evidence that social structural factors such as deprivation, ethnicity, gender and age influence health-related risk, health outcomes and mortality rates [1–3]. Physical activity (PA), which is positively related to health, wellbeing and academic outcomes [4–6], is also socially patterned [7]. Those who live in more deprived areas and/or are of ethnic minority populations are consistently reported to engage in lower levels of PA than less deprived and / or ethnic majority populations [8–10]. Social stratification of lifestyle behaviours, including PA, provides a partial explanation for the social inequalities of health, and can serve to perpetuate existing health inequalities [11].

Approaches to increasing physical activity and reducing health inequality

Increasing population levels of PA and reducing inequality is considered a public health priority [12, 13]. Until recently, PA interventions have emphasised individual-level behaviour change, which can worsen health inequalities, as they are often less accessible and effective for more deprived populations, due to lesser material resources and ‘leisure’ time [14]. Empirical evidence supports the proposal that behaviour is not solely the product of ‘intention’, but rather is influenced by multiple interacting forces at structural, environmental/neighbourhood, organisational, intrapersonal and individual levels [15, 16]. Interventions that target multiple ‘levels’, alter structures and processes, strengthen relationships between communities, and redistribute power resources, are more likely to increase PA behaviour and reduce inequality, than interventions that only target or that focus primarily on individual behaviour change [17, 18]. Hence, PA and health may be regarded as a “co-responsibility” of governments, individuals, families, organisations, and communities [19]. The International Society of Physical Activity and Health (ISPAH) has recently published a call to action outlining ‘eight investments that work for physical activity’. This resource advocates whole systems change across eight domains including schools, communities, travel, urban design, healthcare, workplaces, mass media, and sport and recreation [12].

The Bradford local delivery pilot context

Responding to the need for whole systems change, Sport England has funded 12 Local Delivery Pilots (LDPs) over a 5-year period (2019–2024), to take a whole systems, place-based approach to reduce physical inactivity and health inequalities. In Bradford, 24% of residents are under the age of 16, making it the ‘youngest’ city in the UK [20]. Bradford is an ethnically diverse city - over 20% of the total district population, and over 40% of children, are of South Asian origin [21]. Bradford falls in the most deprived quintile of the Index of Multiple Deprivation, with 60% of the population living in the poorest 20% of wards in England and Wales [20]. The Bradford LDP is led by the Born in Bradford research programme on behalf of Active Bradford, a partnership of organisations committed to improving physical activity within the district. Unpublished data from the Born in Bradford cohort study [22] indicates that, on average, children and young people in Bradford have lower levels of PA than the general UK population. Given the high numbers of children, and the inverse association between PA levels and age during childhood [23] the Bradford LDP, JU:MP (Join Us: Move. Play), is focused on reducing inactivity in the 27,000 children and young people aged 5–14, and their families residing in the Bradford LDP area. Further information on the programme is contained in “Intervention: the JU:MP Programme”.

The JU:MP programme is one of several system-wide interventions contributing to a major new prevention research programme called ActEarly. The purpose of ActEarly is to identify, implement and evaluate upstream interventions within a whole system city setting. The collective aim of these multiple, system-wide interventions (including JU:MP), enacted in one locality (i.e. Bradford), is to achieve a tipping point for better life-long health and wellbeing, and to evaluate the impact of this way of working. As such, the process evaluation of JU:MP will acknowledge the broader context in which the programme is operating, including understanding which other system-wide interventions are concurrently taking place and how these interact with JU:MP to impact upon the health and wellbeing of children and young people.

The importance of process evaluation

Randomised controlled trials and related outcome evaluations, such as quasi-experimental controlled studies, tell us whether an intervention works in a particular setting, at a particular time, with a particular group of people. In the Bradford LDP, effectiveness studies are taking place at both population and neighbourhood levels. Better understanding of the ‘how’ and ‘why’ of the JU:MP programme, to understand the processes and interlinked contextual factors influencing change, will establish a greater appreciation of the transferability of the intervention. This is especially important for evaluating complex (systems approaches, adaptive) multi-component interventions; mechanisms influencing change are likely to be more complex, varied and dynamic [24]. Complementing an outcome evaluation with a process-oriented evaluation helps uncover processes - incorporating temporal and spatial contextual influences - influencing change [25].

Understanding how intervention (in)effectiveness arises is not the only valuable question within intervention research [26]; feasibility and acceptability are important too - alongside effectiveness, they also shape the level of embeddedness of different approaches as part of a wider whole system programme. Furthermore, process evaluations involving ongoing interaction with key stakeholders can help bridge the research-practice gap [27] and can be viewed as part of the intervention ‘system’ by providing feedback and contributing to iterative programme development [28]. A growing body of evidence - in both the health and social sciences - supports conducting process evaluations of complex interventions (e.g. [25, 29]). However, few PA evaluations have captured the complexity of behaviour change systems [30]. This paper describes the protocol for a process evaluation of the development, implementation and evaluation of the JU:MP programme.

Methods

This paper focuses on the process evaluation of the JU:MP programme approach. The process evaluation will be conducted alongside a complementary effectiveness evaluation and findings from across the broader evaluation will be integrated to advance knowledge production [31].

Intervention: the JU:MP Programme

The underlying themes, framework (tool, settings and principles) and theory of change for the JU:MP programme were developed in 2018 based on community consultation and priority setting workshops, data from the Born in Bradford research programme [22, 32, 33], international peer-reviewed evidence [34, 35] and the socio-ecological model [36]. Subsequently the first iteration of the JU:MP implementation plan was designed, with projects aligned to the programme themes and content related to the theory of change. During 2019–2020 a test-and-learn phase was undertaken, ‘pathfinder’. In 2021, based on the experiences from the ‘pathfinder’ phase a second version of the implementation plan was drawn up. This included the creation of the draft JUMP model depicting 15 workstreams which will be taken forward into the delivery of the ‘accelerator phase’ (2021–2024).

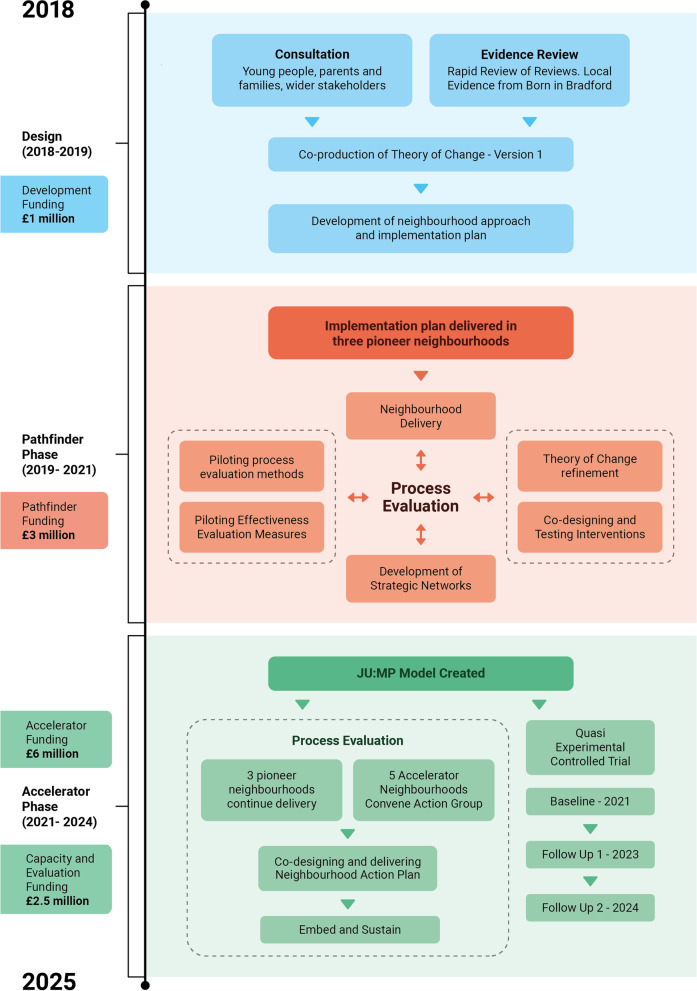

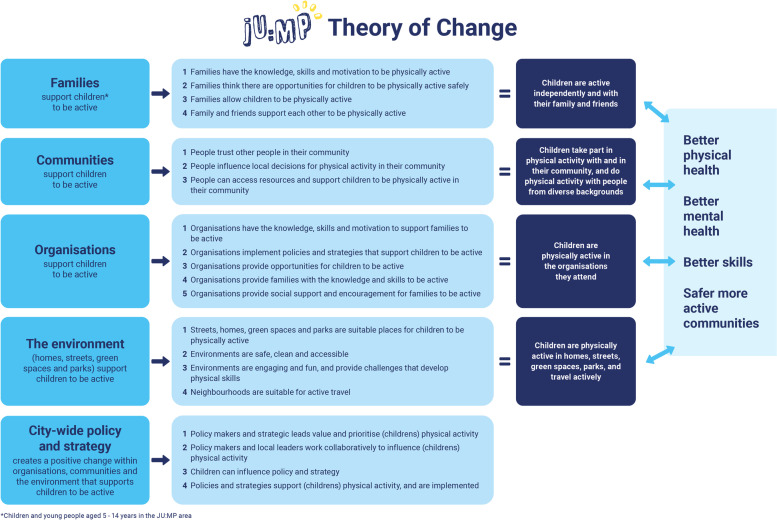

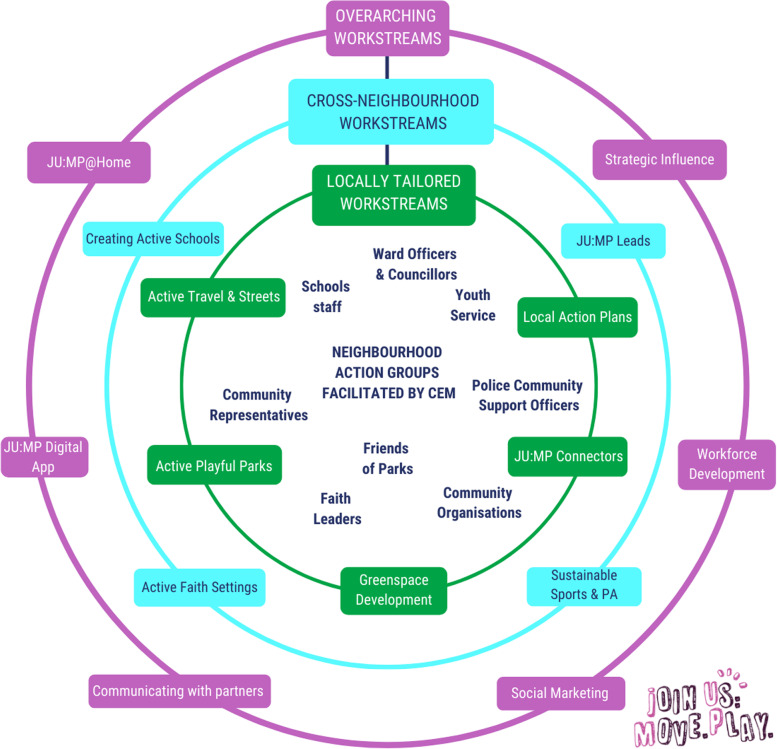

JU:MP has been designed for continuous improvement, based on process evaluation and learning. The description here reflects JU:MP as we transition from the pathfinder phase (the initial small scale test and learn period over 2019–2021) to the accelerator phase (the roll-out of the developed programme across the LDP over 2021–2024); see Fig. 1 for a timeline illustrating key milestones. JU:MP is seen as a whole system approach; the theory of change outlines five themes (family, community, organisations, environment, and policy and strategy) through which JU:MP will ‘act’ to increase PA in children aged 5–14 years, and subsequently improve wider health and social outcomes (see Fig. 2). While the underlying theory of change incorporates multiple ‘mechanisms’, it is recognised that JU:MP is both a system-based intervention and is being implemented within a complex social system, where the process of change in reality will be complex, messy and nonlinear. As such, the theory of change does not provide an exhaustive list of practice-based mechanisms. Four guiding principles underpin the JU:MP approach: i) tailored approaches to change and to link levels within a whole system; ii) community involvement at every step of the process; iii) engaged, active leaders and partners; and iv) evidence- and insight-led. The implementation plan includes 15 interacting work streams which cut across the five JU:MP themes. There are six overarching work streams that are delivered across the whole LDP area, and nine that are developed and delivered at a neighbourhood level (see Fig. 3).

Fig. 1.

JU:MP programme timeline (key milestones)

Fig. 2.

JU:MP theory of change

Fig. 3.

The draft JUMP programme model

JU:MP is being implemented within eight distinct geographic ‘neighbourhoods’ within the Bradford LDP area; see Additional file 1 for a map of the LDP neighbourhoods. Neighbourhood boundaries were based on areas having an area of green space with potential for development, at least 4–5 primary schools, and an active community organisation. This hyper-local scale of whole systems delivery aims to foster genuine collaborative working and building strong sustainable relationships. Using an asset-based community development approach, JU:MP facilitates the development of an action group within each neighbourhood, including key organisational partners, community members, and families. To allow the programme to meet local needs and facilitate longer-term behaviour change, the action group is jointly responsible for (1) co-producing local action plans and green space developments (approximately 3 months), (2) collectively delivering the local action plans, with members contributing to delivering separate work streams (e.g. school stakeholders deliver Creating Active Schools) (approximately 1 year) and (3) the ‘embed and sustain’ phase during which time JU:MP facilitation is lessened (approximately 1 year).

Initially, the neighbourhood approach was operationalised within three ‘Pioneer Neighbourhoods’ (pathfinder phase - 2019-2021) to undertake a test and learn process. Subsequently, the programme will be delivered in the five remaining neighbourhoods (2021–2024), to cover the whole LDP area. The accelerator phase neighbourhoods are further broken down into those that are directly facilitated by the JU:MP team, as in the pathfinder phase (n = 3), and those whose delivery will be externally commissioned (n = 2). The draft programme model is illustrated in Fig. 3. Additional file 2 offers a more detailed description of each work stream.

Process evaluation theory: realism, systems thinking, complexity science

A realist philosophy underpins this process evaluation. Realism posits that an objective reality exists, but that knowledge is ‘value-laden’ and as such we can only understand reality from within a particular discourse [37]. Realism holds that reality exists in an open-system, meaning that attention in programme development and evaluation perspective focuses on how context and mechanisms interact to influence outcomes [38]. Process evaluation is typically understood as “the evaluation of a process of change that an intervention attempts to bring about in order, at least in principle, to explain how outcomes are reached” [29].

Underpinned by realist principles, the role of context is prioritised in establishing intervention (in)effectiveness [29]. Examining context implies focusing on social processes to establish an understanding of how different notions of intervention feasibility, acceptability and effectiveness can be framed. Another part of realist evaluation allows the development and / or refinement of theories relating to mechanisms of change, focusing on context-mechanism-outcome configurations. This supports the iterative development of programme logic models and theories of change [39]. However, a realist approach acknowledges that people attach meaning to experiences, and meanings are implicated within causal processes [37]; behaviour therefore cannot be fully explained, as people are conscious beings that act back on the structures and processes of social life [40].

Within the complex intervention evaluation field, recent calls to embed complexity science and systems principles within process evaluation design reflect a move towards understanding how interventions are part of complex adaptive systems [24, 41, 42]. Realist methodology is consistent with systems thinking and complexity science [41]. They share a mutual belief that wider contexts are inherent within change mechanisms [39]. Yet, systems thinking necessitates taking a holistic view to examine how systems (including interventions) influence behavioural change, rather than viewing interventions in isolation. Further, complexity science is concerned with how interactions between different system elements (including interventions) create change, focusing on concepts including dynamism, nonlinearity, adaptation, feedback loops, and co-evolution [41, 42].

Realism, systems thinking, and complexity science have shaped the development of the aims, study design, data collection, and analysis of the JU:MP process evaluation. Predominantly qualitative methods have been adopted here, using a longitudinal design, to establish a fuller understanding of intervention acceptability and effectiveness, and to capture how acceptability and effectiveness change as systems evolve e.g. generate feedback loops [39, 42].

Aims, objectives and approach

The overarching aim of the process evaluation is to understand the programme implementation and the mechanisms through which JU:MP influences behaviour change across the neighbourhood, and wider policy and strategy systems that it is seeking to influence. The evaluation also facilitates dynamic system change via informing the refinement of the programme and associated theory of change. To address these aims, and in accordance with the JU:MP delivery approach, the process evaluation includes three distinct but interrelated packages of work: (1) a strategic-level evaluation, (2) a neighbourhood-level evaluation, and (3) an end-user evaluation. Table 1 provides an overview of the scope and objectives of each process evaluation work package.

Table 1.

The scope and objectives of the strategic, neighbourhood and end user-level process evaluation work packages

| Strategic-level | Neighbourhood-level | End-user level | |

|---|---|---|---|

| Scope (aims, stakeholders) | To understand the views and actions of JU:MP strategic-level stakeholders, including the core JU:MP team and executive board, stakeholders commissioned to lead on the strategic delivery of work streams, and city-wide strategic partners such as the Living Well programme strategic leads | To understand the views and actions of stakeholders involved in developing and / or implementing JU:MP within a JU:MP neighbourhood (e.g. voluntary organisation stakeholders, school leads, councillors, faith setting leads, friends of groups, families) | To understand the views and actions of the ‘end user’ recipients of JU:MP, i.e. children and young people and their families living in North Bradford |

| Objective (documentation) | To document the strategic-level design, delivery and evaluation processes of the JUMP programme, including: individual work streams and evaluation packages and interactions | To document JU:MP programme neighbourhood level design and delivery processes, including: (a) the community engagement and co-production process and (b) the design and implementation of the overarching action plan and specific interventions | n/a |

| Objective (feasibility and acceptability) | To examine the feasibility and acceptability of the strategic level design, delivery and evaluation of the JU:MP programme, by understanding the barriers, facilitators and contextual factors influencing design, delivery and evaluation, including: (a) Individual work streams and evaluation packages and interactions and (b) strategic influencing across the wider system | To examine the feasibility and acceptability of the neighbourhood level design and implementation of the JU:MP programme, by understanding the barriers, facilitators and contextual factors influencing design and delivery, including: (a) examining the feasibility and acceptability of the neighbourhood co-production approach and (b) examining the feasibility and acceptability of delivering the overarching plan and specific interventions | To examine the experience of children and families receiving JU:MP, including understanding the JU:MP ‘journey’ and acceptability of JU:MP for different users |

| Objective (impact) |

(a) To understand the impact of JU:MP across the whole system including unintended consequences, and developing an understanding of change mechanisms (what works, for whom, and in what context) from the perspective of strategic-level stakeholders (b) To understand the impact of JU:MP on strategic-level stakeholders (c) to understand the impact of JU:MP on city-wide policy and strategic working around physical activity |

(a) to understand the impact of JU:MP across and beyond the neighbourhood system including unintended consequences, and developing an understanding of change mechanisms (what works, for whom, and in what context) from the perspective of neighbourhood-level stakeholders (b) to understand the impact of JU:MP, on neighbourhood-level stakeholders |

To understand the impact of JU:MP including unintended consequences, and change mechanisms |

Strategic-level process evaluation study design

A longitudinal mixed methods design is being adopted. The study received ethical approval from Leeds Beckett University in March 2020 (ref: 69870), and will run until programme delivery ceases in 2024. The overarching objectives of the strategic-level process evaluation are to document, and understand the feasibility, acceptability and impact of the strategic-level development, delivery and evaluation of JU:MP. This includes a focus on the 15 JU:MP programme work streams (see Fig. 2), and the effectiveness, process and individual project evaluations, including interaction, synergy and tension between these different JU:MP system elements.

Stakeholders involved in the development and delivery of JU:MP at a strategic-level, i.e. beyond individual neighbourhoods, will be invited to participate. Data collection methods include surveys, semi-structured interviews, participant observations, and reflections, which will all be implemented at multiple time points throughout programme delivery; see “Process evaluation data collection methods” for further detail. The evaluation will iteratively refine as priorities surface; for example, we have recently incorporated a sub-study to provide a ‘deep dive’ into the strategic-influencing work of JU:MP to examine the wider intended and unintended impacts of city-wide policy and strategic working related to PA, following the addition of policy and strategy as a theme within the theory of change.

Neighbourhood-level process evaluation study design

A longitudinal, mixed-methods case study design is being adopted, with individual neighbourhoods being classified as ‘cases’. This study received ethical approval from the University of Bradford in November 2020 (ref: E838) and will be implemented during the ‘delivery’ phase within each JU:MP neighbourhood, which lasts approximately 3 years. “Intervention: the JU:MP Programme” provides detail on the neighbourhood delivery approach.

A minimum data-set will be collected from each neighbourhood, with additional data collection occurring within selected ‘deep dive’ neighbourhoods. These neighbourhoods will include one from the pioneer neighbourhood phase (with the primary aim of piloting and refining the data collection techniques, and to inform programme design and delivery), and the three accelerator phase neighbourhoods that are directly facilitated by the JU:MP team, in line with the neighbourhoods that are included within the neighbourhood control trial that forms part of the effectiveness evaluation of JU:MP. Aligning the ‘deep dive’ neighbourhoods with those included in the control trial will generate greater understanding and explanation of control trial findings; the trial will provide evidence of JU:MP effectiveness within the neighbourhood. The process evaluation will help explain what worked, why, when, for whom, and within what context.

Amendments to the evaluation protocol will be made following piloting and prior to implementing the study within the ‘accelerator phase’ neighbourhoods. Minimum-data data collection methods include surveys and documentary analysis, and additional methods employed in ‘deep dive’ neighbourhoods include extra surveys, process observations, semi-structured interviews, and participatory evaluation methods; see “Process evaluation data collection methods” for further detail.

End user-level process evaluation study design

The end-user process evaluation will examine the experiences and impact of JU:MP amongst children and families. This will feature focus groups with children and parents/guardians from across the accelerator direct delivery neighbourhoods, approximately 12 months and 24 months following JU:MP commencement. Additionally, in-depth longitudinal research will be conducted with approximately four local families. Citizen science methods will be adopted, which involves members of the public (non-scientists) collecting and analysing data, in collaboration with researchers [38], to foster community engagement.

Multiple and innovative methods of data collection will be employed, which could include written or video diaries, or photo-elicitation techniques, walk-and-talk interviews, but crucially, the families will be engaged in developing the research approach, collecting and analysing their own data, and making recommendations for future practice. A PhD studentship, jointly funded by Sport England (as part of the programme funding) and the University of Bradford, will develop and conduct this work, commencing in 2021. It is preemptive to give close detail of methods and analysis for an area of work that is still emerging.

Theories and models utilised within the process evaluation

Various existing theories / models / frameworks underpin the development, delivery and evaluation of the JU:MP programme. Herein, we focus on theories that are used directly, or indirectly as sensitising concepts, within the process evaluation of JU:MP, including in the development of topic guides and surveys, and analysis frameworks.

Consolidated framework for implementation research (CFIR) [43] - The CFIR was developed by synthesising implementation constructs from across 20 implementation sources and multiple scientific disciplines [43], and is a comprehensive framework designed to examine intervention implementation [44]. Five major domains comprise the CFIR: intervention characteristics, inner setting, outer setting, characteristics of individuals involved in implementation, and the implementation process [43] . The CFIR is being used as a sensitising framework within the process evaluation to understand the feasibility of implementing the JU:MP programme.

Capability, opportunity, motivation-behaviour (COM-B) [45] and the Theoretical Domains Framework (TDF) [46] - The COM-B model provides a comprehensive and evidence-based model for understanding human behaviour and behaviour change. The model proposes that behaviour is influenced by capability (physical, psychological), opportunity (physical, social) and motivation (reflective, automatic), and that all three must be present for a behaviour to occur [45]. The TDF consists of 14 ‘domains’ of influence on behaviour, developed by synthesising 33 theories of behaviour and behaviour change [46]. The Domains align to COM-B categories and can be used to develop and implement interventions and to inform understanding of the barriers and facilitators influencing behaviour change.

JU:MP programme theory of change - The programme theory (see “Intervention: the JU:MP Programme” and Fig. 1) is implicated in the evaluation of JU:MP; it will be utilised to understand impact and mechanisms of impact as well as being iteratively refined as programme delivery and evaluation progress.

Sampling and recruitment

The proposed sample for the strategic-level study includes stakeholders who are part of the strategic leadership of the JU:MP programme. This includes all members of the core JU:MP research and implementation teams, stakeholders commissioned to lead on the strategic delivery of one of the 15 work streams across the LDP, JU:MP executive board members and members of the established strategic development working group for integrating physical activity in policy and strategy across the district. The sample size will be based on the number of individuals that meet the inclusion criteria, which is expected to be around 100. The proposed sample for the neighbourhood-level study includes stakeholders who are involved in designing and delivering JU:MP within one (or more) of the participating neighbourhoods, as part of the neighbourhood action group, including for example, JU:MP connectors, Islamic Religious Setting stakeholders, and children and families; see “Intervention: the JU:MP Programme”. The sample size is based on the expected number of individuals (20) that will form the action groups within each neighbourhood, meaning there will be around 160 participants in total. As detailed in “Process evaluation data collection methods”, not all participants will take part in all aspects of data collection, for example interviews will only be conducted with approximately 20 individuals at each time point in both the strategic and neighbourhood-level studies.

All potential participants across both the strategic and neighbourhood level studies will be engaged in the design and delivery of JU:MP, and as such will already be known and identifiable to the research team, via the implementation team. Potential participants will be given an information sheet for the research, and informed consent will be obtained prior to data collection commencing. Data collection will take place at multiple time-points over a significant time-period (up to for years). At each data collection ‘point’, participants will be verbally reminded that they are taking part in the study and what it involves, and will be given a verbal reminder to let the researcher know at any time if they wish to withdraw their consent to participate.

Process evaluation data collection methods

This section provides a rationale for and description of each data collection method that is being utilised within the JU:MP process evaluation. Table 2 provides a map of when and where each method is being utilised as part of the strategic and neighbourhood evaluation work packages.

Table 2.

Data collection methods for the strategic and neighbourhood process evaluation

| Data collection method | Strategic level process evaluation | Neighbourhood level process evaluation |

|---|---|---|

| Surveys |

Participant characteristics survey: upon recruitment (All recruited participants) Influences on behaviour survey: every 6 months (All recruited participants) |

Participant characteristics survey: upon recruitment (All participants, all neighbourhoods) Influences on behaviour survey: baseline, 6-months, 12-months and 24 months (All participants, all neighbourhoods) Network mapping survey: baseline, 6-months, 12-months and 24 months (All recruited participants, all neighbourhoods) |

| Process observations |

Process observations of meetings including: Implementation team meetings: one in every four (attended by core team members such as the programme director, community engagement managers and communications officer) Other key strategic meetings identified in collaboration with the implementation team |

Process observations of action group workshops: every workshop, approximately once every 6 weeks (deep-dive neighbourhoods only) |

| Documentary analysis | Key documents for each work stream collated every 6 months (including service agreements, project plans and evaluations) |

Action group workshop notes (All neighbourhoods) Neighbourhood action plans (All neighbourhoods) |

| Semi-structured interviews |

Interviews with around 20 strategic stakeholders every 6 months (including members of the core team and one strategic lead for each workstream at each time point) Interviews with around six additional wider stakeholders every 12 months (three members of the executive board and three members of the strategic development) |

Interviews with around 20 neighbourhood stakeholders at 6 and 18 months (including key delivery stakeholders such as JU:MP connector, Islamic Religious Setting lead, school lead etc. from across deep-dive neighbourhoods only) Interviews with around two commissioned organisation stakeholders at 6 and 18 months (commissioned neighbourhoods) |

| Reflections |

Group reflections embed into key meetings: Weekly implementation team meetings: one in every four (attended by core team members such as the programme director, community engagement managers and comms officer) Weekly research team meetings: one in every four (attended by core team members such as the research directors and research fellows) |

– |

| REM | REM workshops embedded into strategic development group meetings: every 6 months | REM workshops embedded into action group meetings: every 6 months (all neighbourhoods) |

Surveys

Personal characteristics survey - a short survey related to the participants’ personal characteristics, including gender, date of birth, home postcode, ethnicity, highest qualification, employer, job role, and JU:MP role(s). This survey will enable characterisation of the sample and will aid in contextualising and interpreting qualitative data.

Influences on behaviour survey - the survey has been developed to assess factors influencing participants’ roles in supporting the design and delivery of JU:MP. The survey is an adapted version of a validated 6-item COM-B questionnaire [47]; see Additional file 3 for a copy of the survey. Draft surveys were piloted with members of the core team, and refined based on feedback. The survey will permit the identification of determinants of behaviour [48], which will highlight areas for intervention to increase the capability, opportunity and / or motivation of stakeholders to influence change and to support children to increase physical activity. Repeating the survey at baseline, 6 months, 12 months and 24 months will permit an understanding of how different influences change over time. Further exploration during interviews for some participants will aid in understanding the reasons for these changes.

Stakeholder mapping survey - this survey has been developed to facilitate a social network analysis [49]; connections between stakeholders will be mapped to understand the impact of JU:MP on relationships between parties within neighbourhood networks. Published guidance on social network analysis [50] and input from network analysis specialists informed the initial development of the survey. The survey is being refined following piloting with pioneer neighbourhood stakeholders. Repeating the survey every 6 months will permit an understanding of how relationships develop and change over the course of the JU:MP programme. See Additional file 4 for a copy of the stakeholder mapping survey.

Feedback forms - feedback forms will be administered following neighbourhood action group workshops to examine participants’ thoughts and feelings about the workshop content and process, and to understand the emerging impact of the work. The content of the forms may be adapted depending on the workshop context, however questions will typically include “What did you find most useful about the workshop?”, “What did you find least useful about the workshop?, How could it be improved?” and “What is the most significant output of JU:MP so far?”

Semi-structured interviews

Semi-structured interviews provide an opportunity for in-depth reflection on the design and delivery of JU:MP, including documenting and reflecting on progress, activity, decisions, perceptions, and challenges [51]. Understanding these processes is important for evaluation, as it helps us to understand the factors influencing whether or not the programme is successful in achieving its outcomes. The interviews will explore the capability, opportunity and motivation of the participants to support the JU:MP programme and will be tailored to their specific role within JU:MP. The opportunity to reflect on involvement via an in-depth interview can also have a positive influence on programme design and delivery via facilitating a process of continuous learning [52]. Interview guides are theoretically informed; they draw on implementation theory (CFIR), behavioural theory (COM-B and TDF), and the JU:MP theory of change. However, interview guides will be refined on an iterative basis based on project developments and prior data collected via other methods, for example, observations (see “Participant observation”).

Participant observation

Observation offers a direct view of behaviour, capturing events as they occur in their natural setting [53]. Qualitative observations, completed by a researcher, provide an independent record of activities, including developing an understanding of context, behaviours and interactions, allowing reflection on these activities [29]. Key meetings (Table 2) will be observed by a researcher. Informed by Spradley [54] and aligned with the theories and frameworks underpinning programme design and evaluation (systems thinking, JU:MP ToC, COM-B, CFIR), an observation summary sheet has been prepared to guide this collection of observational data. This guidance provides common areas of focus across observational records; Additional file 5. During the observation the researcher will record a ‘condensed account’ of the event, which will then be utilised as an aide-memoire to develop an expanded account. These expanded accounts will be included in data analysis.

Documentary analysis

Key programme documents can provide insight into the design and delivery of programmes, including information on decisions made / agreed actions and why, and implementation challenges. Meeting and workshop notes and neighbourhood action plans will be included in qualitative analyses (Table 2). Additionally, key documents such as service agreements and project plans will be requested from stakeholders prior to interviews, to aid the interview process e.g. discussing how and why plans were delivered as intended or amended.

Participatory evaluation methods

Reflections - Regularly reflecting on programme activity is important as it allows us to document progress, activity, decisions, and challenges, as they are occurring. Reflective practice can also have a positive influence on neighbourhood design and delivery via facilitating a process of continuous learning [52]. Short reflection activities are being embedded into key JU:MP meetings, with attendees being given 60–90 s each to share a key learning (what happened, why and how, context, future planning) Reflections are captured as part of documentary analysis; see “Documentary analysis”.

Ripple effects mapping (REM) - This participatory method takes a qualitative, collaborative approach to understanding wider programme impacts. Unlike traditional impact evaluation methods, which tend to focus on a small number of pre-specified outcomes, REM is designed to uncover a wider range of intended and unintended impacts stemming from a programme [55]. This may be particularly important in whole systems programmes and where interventions are co-produced, flexible and emerging. The method involves holding researcher-facilitated workshops with the participants (approximately 12 per workshop) involved in developing and delivering (an aspect of) the programme, to create a visual output of impacts [56, 57]. The workshops involve four steps: team-based conversations, mapping activities and impacts, reflecting further on impacts, and identifying the most and least significant changes. The workshops can be repeated over time to understand impact pathways and timelines (Nobles H, Wheeler J, Dunleavy-Harris K, Holmes R, Inman-Ward A, Potts A, Hall J, Redwood S, Jago R, Foster C: Ripple effects mapping: capturing the wider impacts of systems change efforts in public health, Under review). Previous research has documented that participating in the mapping process and realising the range of impacts can be motivating to stakeholders and encourage further action [55, 58]. Within the process evaluation, this method will be used to examine the impact of the strategic influencing work, and the neighbourhood programmes involved in deep-dive evaluation.

Data analysis

Qualitative data analysis

Qualitative data including semi-structured interview data, reflections, key documents including meeting notes, and process observation summaries will be analysed using a framework approach [59]. Framework analysis is a type of thematic analysis aimed at providing descriptive and/or explanatory findings clustered around themes. Uniquely, framework analysis features using a matrix to systematically reduce the data. The key steps involved include (1) familiarisation, (2) identifying a thematic framework, (3) indexing (applying the thematic framework to the data set), (4) charting (entering data into framework matrices), and (5) mapping and interpretation [55].

A framework approach was selected for a number of reasons. First, the matrix permits multiple comparisons, including between interventions, subjects, data sources and time points [60]. This is particularly important for evaluating the JU:MP programme to allow findings to be examined both within and across different interventions within the system, and over time. Second, a framework aids in consolidating data across themes, identifying broad ranging data - discussing different JU:MP interventions, via different methods, at different time points. The framework also allows the isolation of specific data from different interventions, neighbourhoods, stakeholders etc. to be analysed separately, if required. Third, the indexing and charting process allows all members of a multidisciplinary team to engage with the analysis (e.g. of a particular theme) without needing to read and code all the data [61]. Finally, the approach is suited to prolonged data collection, allowing analysis to occur alongside data collection. This allows findings to inform iterative programme development, and ‘chunks’ analysis across the timeframe of the programme.

An initial framework was developed based on theory underpinning the evaluation, the JU:MP programme structure (deductive), and inductive coding of a small number of initial interview transcripts. Over the course of the pathfinder phase, the framework was iteratively refined based on coding of data, and the development of the programme. The refined framework includes separate themes for the different work streams and evaluation work packages, as well as themes for the overarching programme development, delivery and evaluation (Additional file 6). Following coding using NVivo 12.0, framework matrices will facilitate the interpretation of data and the construction of themes. Miro will be used to visually illustrate the REM maps, while qualitative content analysis, using NVivo 12.0, will analyse the data within the REM outputs. This type of analysis will identify data patterns and quantify emerging aspects of programme outputs.

Quantitative data analysis

Data from the participant characteristics survey and influences on behaviour survey will be summarised using descriptive statistics. Univariate statistical tests will be used to examine differences between different groups of participants, and general linear models will explore any differences in influences on behaviour over time. The network mapping survey will be analysed and illustrated using social network analysis software.

Mixed-method integration and evidence-practice feedback loops

Following initial analysis as described in “Qualitative data analysis” “Quantitative data analysis” the data will be integrated to establish context-mechanism-outcome configurations, to understand what works, when, how, and in what context [39]. Ongoing analysis will inform the refinement of the programme and associated theory of change. To facilitate this process, bi-annual process learning workshops will take place with the core JU:MP research and implementation team. Emerging findings will be presented and, using Driscoll’s learning cycle [62], the team will consider the implications of the findings and agree on changes to the programme design, how the programme is delivered, and/or how the team work (together). These changes will then be captured in the ongoing evaluation as part of workshop notes and interviews, thus completing the cycle.

Discussion

This paper outlines the protocol for a process evaluation of JU:MP, the Bradford LDP, a whole systems programme for increasing PA in children and young people aged 5–14. The aim of the process evaluation is to understand the mechanisms through which JU:MP influences PA, and to examine behaviour change across the wider policy and strategy and neighbourhood systems. The evaluation also facilitates dynamic system change via informing the refinement of the programme and associated theory of change. To address these aims, evaluations are taking place at the strategic, neighbourhood, and end-user level. Mixed methods are being employed including surveys, interviews, and process observations, and participatory methods including reflections and ripple effect mapping.

Publishing a protocol for the process evaluation of the JU:MP programme is intended to both highlight the importance of process evaluations in evaluating complex interventions, and to add to the process evaluation methodology literature. While protocols of process evaluations of PA programmes are now appearing in the literature (e.g. [63–65]), typically they describe protocols for process evaluating individual interventions. In this context, our plan is a rare example that addresses a whole system physical activity programme incorporating multiple interventions. A key strength is that our approach remains flexible to iterative development of the programme; it is not constrained by requiring substantial ethical amendments, nor by pre-specified outcomes [55], while still ensuring that the protocol is clear, detailed and has fixed parameters for transparency and replicability purposes. At the same time, while the in-built processes can ensure evaluation is delivered as planned, they can also record any required adaptations. There are several examples of systems-based community interventions to childhood obesity prevention, with embedded monitoring and evaluation including survey and interview methods, from across the US, Canada and Europe, in the literature (e.g. [66–69]). This paper advances the literature by outlining a novel approach to evaluating a whole system programme, incorporating innovative participatory methods including reflections and REM, that permit iterative refinement of the programme alongside implementation [27, 28]. Given the time often required to conduct robust qualitative work, a challenge here is ensuring that the process evaluation findings remain ‘relevant’ as the JU:MP programme progresses and evolves in an agile way. It is, therefore, important to ensure that the findings are fed back in a timely manner and in an appropriate format to allow the team to ‘step back’ and engage in systematic planning. Whilst the evaluation outlined in this paper is resource-intensive, it is set up to generate a deep and rich understanding of the processes underpinning programme design, implementation and impact, and thus will be invaluable in supporting other communities to apply a similar approach and / or to learn from things that have not delivered expected successes.

An embedded research team is critical for the development of research-practice partnerships, which facilitates evidence-based practice, and the development of practice-based evidence through the JU:MP programme [70]. However, a limitation of this approach is that it reduces the impartiality of the research team and thus the independence of the evaluation [71]. Successfully negotiating a suitable balance of involvement with, and detachment from, the JU:MP programme is critical to the success of the process evaluation [72]. For example, it was imperative that the research team worked alongside the programme team to develop a protocol that aligns with and meets the needs of the programme, and involvement is also required to produce detailed and in-depth observational records that reflect participant experiences. Detachment is also required throughout the research process, for example when analysing data, to ensure that the analysis is reality-congruent and theoretically informed, rather than a reflection of the researcher’s experiences within the setting.

The process evaluation outlined within this paper forms part of a wider evaluation approach, which includes an effectiveness evaluation (neighbourhood control trial, and a before and after evaluation using the Born in Bradford birth cohort). Process evaluations can be complementary to outcome evaluations, as the approaches produce different types of knowledge about a phenomenon that can be combined to further advance knowledge [24, 73]. The JU:MP programme evaluation provides an opportunity for mixed methods evidence synthesis, combining the advantages of controlled trials in estimating intervention effects, with an in-depth understanding of participants’ experiences and the mechanisms underpinning change [25, 39]. However, in doing so, it is important that the inherent value of process evaluation is appreciated, beyond facilitating interpretation of trial findings, to avoid perpetuation of the paradigmatic hegemony existent within intervention evaluation research [74].

Despite significant efforts to address children’s physical inactivity by researchers, practitioners and policy makers, physical activity levels are socially stratified, which can serve to perpetuate health inequalities [7]. Sport England has invested significant funds in 12 LDPs to increase PA and reduce inequalities through taking a place-based, whole systems approach. Methodologically rigorous, high quality research is required to examine what works, why, for whom, and in what context, to understand both the potential of whole system approaches for increasing children’s PA, and whether and how they can be replicated in other geographical contexts. The process evaluation of the Bradford LDP aims to address this.

Supplementary Information

Additional file 1. JU:MP neighbourhood map. A geographical map of the JU:MP neighbourhood boundaries within the LDP area. File extension: png. The map depicted in Additional file 1 is adapted from QGIS Geographic Information System (2021). Open Source Geospatial Foundation Project. http://ggis.osgeo.org.

Additional file 2. JU:MP programme plan. A table providing a description of each of the 15 JU:MP work streams.

Additional file 3. Influences on behaviour questionnaire. A survey, adapted from Keyworth et al. [42], used to understand the capability, opportunity, motivation and behaviour of neighbourhood-level stakeholders related to supporting children to be physically active.

Additional file 4. Network mapping survey. A survey to map connections and relationships between different stakeholders and organisations within JU:MP neighbourhoods.

Additional file 5. Observational framework. A framework designed to guide the collection and recording of observational data.

Additional file 6. JU:MP thematic framework. The framework used to index data as part of the qualitative framework analysis.

Acknowledgements

We are grateful for the funding provided by Sport England to implement and evaluate the programme. The authors wish to thank the JU:MP team and wider stakeholders, including colleagues within Born in Bradford and ActEarly, including Dr. Laura Sheard and Dr. Bridget Lockyer, for their input in developing the process evaluation and their ongoing engagement with and openness to the evaluation process. The authors also wish to thank Aira Armanaviciute, Catherine Sadler, Dionysia Markesini, Emma Young, Isobel Steward, Lisa Ballantine, Simona Kent-Saisch, and Zubeda Khatoon for supporting the testing and refinement of the framework for analysing the qualitative data.

Abbreviations

- BCW

Behaviour Change Wheel

- COM-B

Capability, Opportunity, Motivation-Behaviour

- DD

Direct Delivery

- JU:MP

Join Us: Move. Play

- LDP

Local Delivery Pilot

- REM

Ripple Effects Mapping

Authors’ contributions

This study was conceived and designed by JH and ADS, with input and feedback from all other authors (DDB, AS, SAD, JN, JM, MB, JB, SB). The manuscript was initially drafted by JH. Subsequent drafts were commented on by all authors and revisions were made by JH. All authors have read and approved the final manuscript.

Funding

The authors’ involvement was supported by Sport England’s Local Delivery Pilot – Bradford; weblink: https://www.sportengland.org/campaigns-and-our-work/local-delivery and the National Institute for Health Research (NIHR) Yorkshire and Humber Applied Research Collaboration (ARC). Sport England is a non-departmental public body under the Department for Digital, Culture, Media and Sport (DCMS). James Nobles’ time was supported by the NIHR ARC West. The views expressed in this article are those of the author(s) and not necessarily those of Sport England, DCMS, NIHR or the Department of Health and Social Care. Sally Barbers and Maria Bryants’ time was supported by the UK Prevention Research Partnership (MR/S037527/1), an initiative funded by UK Research and Innovation Councils, the Department of Health and Social Care (England) and the UK devolved administrations, and leading health research charities. Weblink: https://mrc.ukri.org/research/initiatives/prevention-research/ukprp/.

Availability of data and materials

Not applicable as this is a protocol paper so does not report on data.

Declarations

Ethics approval and consent to participate

For the strategic-level process evaluation, ethics approval was granted from Leeds Beckett University (69870). For the neighbourhood-level process evaluation, ethics approval was granted from the University of Bradford (E838). Informed written and verbal consent will be received.

Consent for publication

Not applicable.

Competing interests

The authors report no competing interests.

Footnotes

This article has been updated to correct the funding section.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Change history

1/11/2022

A Correction to this paper has been published: 10.1186/s12889-022-12514-4

References

- 1.Landstedt E, Asplund K, Gillander GK. Understanding adolescent mental health: the influence of social processes, doing gender and gendered power relations. Soc Health Illness. 2009;31:962–978. doi: 10.1111/j.1467-9566.2009.01170.x. [DOI] [PubMed] [Google Scholar]

- 2.Marmot M, Allen J, Goldblatt P, Boyce T, McNeish D, Grady M. Fair society, healthy lives. Strategic review of health inequalities in England post-2010. 2011. https://www.instituteofhealthequity.org/resources-reports/fair-society-healthy-lives-the-marmot-review/fair-society-healthy-lives-full-report-pdf.pdf. Accessed 23 May 2021.

- 3.Nakray K. Addressing ‘well-being’ and ‘institutionalized power relations’ in health policy. J Human Dev Cap. 2011;12:595–598. [Google Scholar]

- 4.Álvarez-Bueno C, Pesce C, Cavero-Redondo I, Sánchez-López M, Garrido-Miguel M, Martínez-Vizcaíno V. Academic achievement and physical activity: a meta-analysis. Ped. 2017;140:6. doi: 10.1542/peds.2017-1498. [DOI] [PubMed] [Google Scholar]

- 5.Poitras VJ, Gray CE, Borghes MM, Carson V, Chaput JP, Janssen I, et al. Systematic review of the relationships between objectively measured physical activity and health indicators in school-aged children and youth. Appl Physiol Nutr Metab. 2016;41:197–239. doi: 10.1139/apnm-2015-0663. [DOI] [PubMed] [Google Scholar]

- 6.Warburton DE, Bredin SS. Health benefits of physical activity: a systematic review of current systematic reviews. Current Opp Cardiol. 2017;32:541–556. doi: 10.1097/HCO.0000000000000437. [DOI] [PubMed] [Google Scholar]

- 7.Kay T. Bodies of knowledge: connecting the evidence bases on physical activity and health inequalities. Int Sport Policy Politics. 2016;8:539–557. [Google Scholar]

- 8.Collings PJ, Dogra SA, Costa S, Bingham DD, Barber SE. Objectively-measured sedentary time and physical activity in a bi-ethnic sample of young children: variation by socio-demographic, temporal and perinatal factors. BMC Public Health. 2020;20:109. doi: 10.1186/s12889-019-8132-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Sawyer AD, Jones R, Ucci M, Smith L, Kearns A, Fisher A. Cross-sectional interactions between quality of the physical and social environment and self-reported physical activity in adults living in income-deprived communities. PLoS One. 2017;12:12. doi: 10.1371/journal.pone.0188962. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Williams DR, Priest N, Anderson NB. Understanding associations among race, socioeconomic status, and health: patterns and prospects. Health Psychol. 2016;35:407. doi: 10.1037/hea0000242. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Shareck M, Frohlich KL, Poland B. Reducing social inequities in health through settings-related interventions - a conceptual framework. Glob Health Promot. 2013;20:39–52. doi: 10.1177/1757975913486686. [DOI] [PubMed] [Google Scholar]

- 12.International Society for Physical Activity and Health (ISPAH). ISPAH’s Eight Investments That Work for Physical Activity. 2020. www.ISPAH.org/Resources. Accessed 21 April 2021.

- 13.World Health Organisation . Global action plan on physical activity 2018–2030: more active people for a healthier world. Geneva: World Health Organization; 2018. [Google Scholar]

- 14.MacKay K, Quigley M. Exacerbating inequalities? Health policy and the behavioural sciences. Health Care Anal. 2018;26:380–397. doi: 10.1007/s10728-018-0357-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Ogden J. Celebrating variability and a call to limit systematisation: the example of the behaviour change technique taxonomy and the behaviour change wheel. Health Psychol Rev. 2016;10:245–250. doi: 10.1080/17437199.2016.1190291. [DOI] [PubMed] [Google Scholar]

- 16.Speake H, Copeland RJ, Till SH, Breckon JD, Haake S, Hart O. Embedding physical activity in the heart of the NHS: the need for a whole system approach. Sports Med. 2016;46:939–946. doi: 10.1007/s40279-016-0488-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Golden SD, Earp JAL. Social ecological approaches to individuals and their contexts: twenty years of health education & behavior health promotion interventions. Health Ed Behav. 2012;39:364–372. doi: 10.1177/1090198111418634. [DOI] [PubMed] [Google Scholar]

- 18.Nobles JD, Radley D, Mytton OT. Whole systems obesity programme team. The Action Scales Model: a conceptual tool to identify key points for action within complex adaptive systems. Pers Public Health. 2021;11:17579139211006747. doi: 10.1177/17579139211006747. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Blacksher E, Lovasi GS. Place-focused physical activity research, human agency, and social justice in public health: taking agency seriously in studies of the built environment. Health Place. 2012;18:172–179. doi: 10.1016/j.healthplace.2011.08.019. [DOI] [PubMed] [Google Scholar]

- 20.City of Bradford Metropolitan District Council. Understanding Bradford District, Intelligence Bulletin: Poverty and Deprivation. 2020. https://ubd.bradford.gov.uk/media/1580/poverty -and-deprivation-jan-2020-update.pdf. Accessed 25 Nov 2020.

- 21.Office for National Statistics. Research report on population estimates by ethnic group and religion. 2020. https://www.ons.gov.uk/peoplepopulationandcommunity/population andmigration/populationestimates/articles/researchreportonpopulationestimatesbyethnicgroupandreligion/2019-12-04. Accessed 25 Nov 2020.

- 22.Bird PK, McEachan RR, Mon-Williams M, Small N, West J, Whincup P, et al. Growing up in Bradford: protocol for the age 7–11 follow up of the born in Bradford birth cohort. BMC Public Health. 2019;19:939. doi: 10.1186/s12889-019-7222-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Farooq MA, Parkinson KN, Adamson AJ, Pearce MS, Reilly JK, Hughes AR, et al. Timing of the decline in physical activity in childhood and adolescence: Gateshead millennium cohort study. Br J Sports Med. 2018;52:1002–1006. doi: 10.1136/bjsports-2016-096933. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Rosas S, Knight E. Evaluating a complex health promotion intervention: case application of three systems methods. Crit Public Health. 2019;29:337–352. [Google Scholar]

- 25.Moore GF, Evans RE, Hawkins J, Littlecott H, Melendez-Torres GJ, Bonell C, et al. From complex social interventions to interventions in complex social systems: future directions and unresolved questions for intervention development and evaluation. Eval. 2019;25:23–45. doi: 10.1177/1356389018803219. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Boeije HR, Drabble SJ, O’Cathain A. Methodological challenges of mixed methods intervention evaluations. Methodology. 2015;11:119–125. [Google Scholar]

- 27.Huby G, Hart E, McKevitt C, Sobo E. Addressing the complexity of health care: the practical potential of ethnography. J Health Serv Res Policy. 2007;12:193–194. doi: 10.1258/135581907782101516. [DOI] [PubMed] [Google Scholar]

- 28.Breckenridge JP, Gianfrancesco C, de Zoysa N, Lawton J, Rankin D, Coates E. Mobilising knowledge between practitioners and researchers to iteratively refine a complex intervention (DAFNE plus) pre-trial: protocol for a structured, collaborative working group process. Pilot Feas Stud. 2018;4:120. doi: 10.1186/s40814-018-0314-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Morgan-Trimmer S. Improving process evaluations of health behavior interventions: learning from the social sciences. Eval Health Prof. 2019;38:295–314. doi: 10.1177/0163278713497363. [DOI] [PubMed] [Google Scholar]

- 30.Quested E, Ntoumanis N, Thøgersen-Ntoumani C, Hagger MS, Hancox JE. Evaluating quality of implementation in physical activity interventions based on theories of motivation: current challenges and future directions. Int Rev Sport Ex Psych. 2017;10:252–269. [Google Scholar]

- 31.Zachariadis M, Scott S, Barrett M. Methodological implications of critical realism for mixed-methods research. MIS Q. 2013;1:855–879. [Google Scholar]

- 32.Dogra SA, Rai K, Barber S, McEachan RR, Adab P, Sheard L. Delivering a childhood obesity prevention intervention using Islamic religious settings in the UK: what is most important to the stakeholders? Prev Med Rep. 2021;24:101387. doi: 10.1016/j.pmedr.2021.101387. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Nagy LC, Horne M, Faisal M, Mohammed MA, Barber SE. Ethnic differences in sedentary behaviour in 6–8-year-old children during school terms and school holidays: a mixed methods study. BMC Public Health. 2019;19:152. doi: 10.1186/s12889-019-6456-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Ding D, Sallis JF, Kerr J, Lee S, Rosenberg DE. Neighborhood environment and physical activity among youth: a review. Am J Prev Med. 2011;41:442–455. doi: 10.1016/j.amepre.2011.06.036. [DOI] [PubMed] [Google Scholar]

- 35.Van Sluijs EM, Kriemler S. Reflections on physical activity intervention research in young people – dos, don’ts, and critical thoughts. IInt J Behav Nutr Phys Act. 2016;13:1–6. doi: 10.1186/s12966-016-0348-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Sallis JF, Cervero RB, Ascher W, Henderson KA, Kraft MK, Kerr J. An ecological approach to creating active living communities. Annu Rev Public Health. 2006;27:297–322. doi: 10.1146/annurev.publhealth.27.021405.102100. [DOI] [PubMed] [Google Scholar]

- 37.Sayer A. Realism and social science. London: SAGE Publications Ltd; 2000.

- 38.Shearn K, Allmark P, Piercy H, Hirst J. Building realist program theory for large complex and messy interventions. Int J Qual Methods. 2017;16:1609406917741796. [Google Scholar]

- 39.Fletcher A, Jamal F, Moore G, Evans RE, Murphy S, Bonell C. Realist complex intervention science: applying realist principles across all phases of the Medical Research Council framework for developing and evaluating complex interventions. Eval. 2016;22:286–303. doi: 10.1177/1356389016652743. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Porter S, Ryan S. Breaking the boundaries between nursing and sociology: a critical realist ethnography of the theory-practice gap. J Adv Nurs. 1996;24:413–420. doi: 10.1046/j.1365-2648.1996.19126.x. [DOI] [PubMed] [Google Scholar]

- 41.Egan M, McGill E, Penney T, Anderson de Cuevas R, Er V, Orton L, et al. NIHR SPHR Guidance on Systems Approaches to Local Public Health Evaluation. Part 1: Introducing Systems Thinking. NIHR SPHR Guidance on Systems Approaches to Local Public Health Evaluation. 2019. https://sphr.nihr.ac.uk/wp-content/uploads/2018/08/NIHR-SPHR-SYSTEM-GUIDANCE- PART-1-FINAL_SBnavy.pdf. Accessed 23 May 2021.

- 42.McGill E, Marks D, Er V, Penney T, Petticrew M, Egan M. Qualitative process evaluation from a complex systems perspective: a systematic review and framework for public health evaluators. PLoS Med. 2020;17:e1003368. doi: 10.1371/journal.pmed.1003368. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4(1):1–15. doi: 10.1186/1748-5908-4-50. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Safaeinili N, Brown-Johnson C, Shaw JG, Mahoney M, Winget M. CFIR simplified: pragmatic application of and adaptations to the consolidated framework for implementation research (CFIR) for evaluation of a patient-centered care transformation within a learning health system. Learn Health Syst. 2020;4:e10201. doi: 10.1002/lrh2.10201. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Michie S, Van Stralen MM, West R. The behaviour change wheel: a new method for characterising and designing behaviour change interventions. Implement Sci. 2011;6:1–12. doi: 10.1186/1748-5908-6-42. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Cane J, O’Connor D, Michie S. Validation of the theoretical domains framework for use in behaviour change and implementation research. Implement Sci. 2012;7:1–17. doi: 10.1186/1748-5908-7-37. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Keyworth C, Epton T, Goldthorpe J, Calam R, Armitage CJ. Acceptability, reliability, and validity of a brief measure of capabilities, opportunities, and motivations (“COM-B”) Br J Health Psychol. 2020;25:474–501. doi: 10.1111/bjhp.12417. [DOI] [PubMed] [Google Scholar]

- 48.Huijg JM, Gebhardt WA, Verheijden MW, van der Zouwe N, de Vries JD, Middelkoop BJ, et al. Factors influencing primary health care professionals’ physical activity promotion behaviors: a systematic review. Int J Behav Med. 2015;22:32–50. doi: 10.1007/s12529-014-9398-2. [DOI] [PubMed] [Google Scholar]

- 49.Monaghan S, Lavelle J, Gunnigle P. Mapping networks: exploring the utility of social network analysis in management research and practice. J Bus Res. 2017;76:136–144. [Google Scholar]

- 50.Digital Promise. Social network analysis toolkit: planning a social network analysis. 2018. https://digitalpromise.org/wp-content/uploads/2018/09/SNA-Toolkit.pdf. Accessed 21 April 2021.

- 51.Doody O, Noonan M. Preparing and conducting interviews to collect data. Nurs Res. 2013;20:5. doi: 10.7748/nr2013.05.20.5.28.e327. [DOI] [PubMed] [Google Scholar]

- 52.Heyer R. Learning through reflection: the critical role of reflection in work-based learning. J Work Appl Manage. 2015;7:15–27. [Google Scholar]

- 53.Watson TJ. Ethnography, reality, and truth: the vital need for studies of ‘how things work’ in organizations and management. J Manag Stud. 2011;48:202–217. [Google Scholar]

- 54.Spradley JP. Participant observation. Long Grove: Waveland Press; 1980.

- 55.Emery M, Higgins L, Chazdon S, Hansen D. Using ripple effect mapping to evaluate program impact: choosing or combining the methods that work best for you. J Extension. 2015;53:2. [Google Scholar]

- 56.Chazdon S, Emery M, Hansen D, Higgins L, Sero R. A field guide to ripple effects mapping. Minneapolis: University of Minnesota Libraries Publishing; 2017.

- 57.Taylor J, Goletz S, Ballard J. Assessing a rural academic-community partnership using ripple effect mapping. J Community Pract. 2020;28:36–45. [Google Scholar]

- 58.Welborn R, Downey L, Dyk PH, Monroe PA, Tyler-Mackey C, Worthy SL. Turning the tide on poverty: documenting impacts through ripple effect mapping. Community Dev. 2016;47:385–402. [Google Scholar]

- 59.Ritchie J, Spencer L. Qualitative data analysis for applied policy research. In: Bryman B, Burgess R, editors. Analyzing qualitative data. New York: Routledge; 1994. pp. 173–194. [Google Scholar]

- 60.Gale NK, Heath G, Cameron E, Rashid S, Redwood S. Using the framework method for the analysis of qualitative data in multi-disciplinary health research. BMC Med Res Methodol. 2013;13:1–8. doi: 10.1186/1471-2288-13-117. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Parkinson S, Eatough V, Holmes J, Stapley E, Midgley N. Framework analysis: a worked example of a study exploring young people’s experiences of depression. Qual Research Psych. 2016;13:109–129. [Google Scholar]

- 62.Driscoll J, Teh B. The potential of reflective practice to develop individual orthopaedic nurse practitioners and their practice. J Orthop Nurs. 2001;5:95–103. [Google Scholar]

- 63.Di Lorito C, Bosco A, Goldberg SE, Nair R, O'Brien R, Howe, et al. Protocol for the process evaluation of the promoting activity, independence and stability in early dementia (PrAISED), following changes required by the COVID-19 pandemic. BMJ Open. 2020;10:e039305. doi: 10.1136/bmjopen-2020-039305. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Jong ST, Brown HE, Croxson CH, Wilkinson P, Corder KL, van Sluijs EM. GoActive: a protocol for the mixed methods process evaluation of a school-based physical activity promotion programme for 13–14year old adolescents. Trials. 2018;19:1–11. doi: 10.1186/s13063-018-2661-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Mclaughlin M, Duff J, Sutherland R, Campbell E, Wolfenden L, Wiggers J. Protocol for a mixed methods process evaluation of a hybrid implementation-effectiveness trial of a scaled-up whole-school physical activity program for adolescents: physical activity 4 everyone (PA4E1) Trials. 2020;21:1–11. doi: 10.1186/s13063-020-4187-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Jenkins E, Lowe J, Allender S, Bolton KA. Process evaluation of a whole-of-community systems approach to address childhood obesity in western Victoria, Australia. BMC Public Health. 2020;20:1–9. doi: 10.1186/s12889-020-08576-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Amed S, Shea S, Pinkney S, Wharf Higgins J, Naylor PJ. Wayfinding the live 5-2-1-0 initiative—at the intersection between systems thinking and community-based childhood obesity prevention. Int J Environ Res Public Health. 2016;13(6):614. doi: 10.3390/ijerph13060614. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Coffield E, Nihiser AJ, Sherry B, Economos CD. Shape up Somerville: change in parent body mass indexes during a child-targeted, community-based environmental change intervention. Am J Public Health. 2015;105(2):e83–e89. doi: 10.2105/AJPH.2014.302361. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Borys JM, Le Bodo Y, Jebb SA, Seidell JC, Summerbell C, Richard D, De Henauw S, Moreno LA, Romon M, Visscher TL, Raffin S. EPODE approach for childhood obesity prevention: methods, progress and international development. Obes Rev. 2012;13(4):299–315. doi: 10.1111/j.1467-789X.2011.00950.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70.Green LW. Public health asks of systems science: to advance our evidence-based practice, can you help us get more practice-based evidence? Am J Public Health. 2006;96:406–409. doi: 10.2105/AJPH.2005.066035. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Cheetham M, Wiseman A, Khazaeli B, Gibson E, Gray P, Van der Graaf P, et al. Embedded research: a promising way to create evidence-informed impact in public health? J Public Health. 2018;40:64–70. doi: 10.1093/pubmed/fdx125. [DOI] [PubMed] [Google Scholar]

- 72.Mansfield L. Involved-detachment: a balance of passion and reason in feminisms and gender-related research in sport, tourism and sports tourism. J Sport Tour. 2007;12:115–141. [Google Scholar]

- 73.Simons L. Moving from collision to integration: reflecting on the experience of mixed methods. J Res Nurs. 2007;12:73–83. [Google Scholar]

- 74.Hesse-Biber S, Johnson RB. Coming at things differently: future directions of possible engagement with mixed methods research. J Mixed Methods Res. 2013;7:103–109. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Additional file 1. JU:MP neighbourhood map. A geographical map of the JU:MP neighbourhood boundaries within the LDP area. File extension: png. The map depicted in Additional file 1 is adapted from QGIS Geographic Information System (2021). Open Source Geospatial Foundation Project. http://ggis.osgeo.org.

Additional file 2. JU:MP programme plan. A table providing a description of each of the 15 JU:MP work streams.

Additional file 3. Influences on behaviour questionnaire. A survey, adapted from Keyworth et al. [42], used to understand the capability, opportunity, motivation and behaviour of neighbourhood-level stakeholders related to supporting children to be physically active.

Additional file 4. Network mapping survey. A survey to map connections and relationships between different stakeholders and organisations within JU:MP neighbourhoods.

Additional file 5. Observational framework. A framework designed to guide the collection and recording of observational data.

Additional file 6. JU:MP thematic framework. The framework used to index data as part of the qualitative framework analysis.

Data Availability Statement

Not applicable as this is a protocol paper so does not report on data.