Abstract

This randomized clinical trial aimed to determine feasibility, acceptability, and initial efficacy of brief Dialectical Behavior Therapy (DBT) skills videos in reducing psychological distress among college students during the COVID-19 pandemic. Over six weeks, 153 undergraduates at a large, public American university completed pre-assessment, intervention, and post-assessment periods. During the intervention, participants were randomized to receive animated DBT skills videos for 14 successive days (n = 99) or continue assessment (n = 54). All participants received 4x daily ecological momentary assessments on affect, self-efficacy of managing emotions, and unbearableness of emotions. The study was feasible and the intervention was acceptable, as demonstrated by moderate to high compliance rates and video ratings. There were significant pre-post video reductions in negative affect and increases in positive affect. There was a significant time × condition interaction on unbearableness of emotions; control participants rated their emotions as more unbearable in the last four vs. first two weeks, whereas the intervention participants did not rate their emotions as any more unbearable. Main effects of condition on negative affect and self-efficacy were not significant. DBT skills videos may help college students avoid worsening mental health. This brief, highly scalable intervention could extend the reach of mental health treatment.

Keywords: COVID-19, Dialectical behavior therapy, College students, Dissemination, Brief interventions

1. A brief, scalable intervention for mental health sequelae in college students during the COVID-19 pandemic: feasibility, acceptability, and initial efficacy

Rates of mental health problems among college students have increased significantly over the past few decades (Lipson et al., 2019). Approximately 1 in 3 college students indicate significant problems with depression, anxiety, and/or suicidal behavior (Eisenberg et al., 2013) and suicide is the second leading cause of death for this population (Lamis & Lester, 2011). Mental health needs of college students are particularly relevant given that the onset of psychiatric disorders typically occurs during early adulthood and that college can be stressful due to major life transitions, associated impairments in academic performance (Auerbach et al., 2016), and an increase in stress during the semester (Baghurst & Kelley, 2014; Center for Collegiate Mental Health, 2021). Thus, it is imperative that efforts be made to prevent mental health problems from developing as well as address problems when they occur.

The COVID-19 pandemic disrupted the college experience and its short- and long-term effects on college student mental health are only beginning to be known. Several studies have documented an increase in mental health concerns among college students due to COVID-19 (Kecojevic et al., 2020; Kleiman et al., 2020; Son et al., 2020; Wang et al., 2020). Mental health concerns have included depression and suicidal thoughts (Kecojevic et al., 2020), anxiety, sadness, desire to drink and use drugs (Kleiman et al., 2020), poor sleep and concentration, as well as fear about their loved ones and own health, reduced social interaction due to physical distancing, and academic concerns (Son et al., 2020). Moreover, students reported stress related to finances, living situations, concerns about personal/family safety and security, and race-based discrimination (American College Health Association, 2020).

The COVID-19 pandemic itself was only one source of stress for college students during 2020. While there is some research about the impact of the COVID-19 pandemic on college students' mental health, there is less research regarding the impact of other stressors that have occurred during the same time period. Public instances of police violence toward unarmed Black people, such as the killing of George Floyd on May 25, 2020, have been shown to have a deleterious effect on the mental health of Black Americans (Bor et al., 2018). Research has demonstrated that Black and Latinx college students reported PTSD and depressive symptoms that were associated with viewing online content related to police violence toward Black people (Tynes et al., 2019). The unrest following Floyd's death may have contributed to the stress during the COVID-19 pandemic, especially for Black students. In addition, the lead up to the presidential election in November 2020 was likely a stressor for college students. Previous daily diary research demonstrated that presidential elections can have a significant effect on college students' anxiety, stress, and sleep quality (Roche & Jacobson, 2019). It is possible that the 2020 presidential election had a larger effect on student mental health given the prolonged nature of the election results and the uncertainty regarding a peaceful transition of power.

Together, the stressors of 2020 plus the pre-COVID mental health concerns of college students suggested a dire need for immediate mental health resources. College counseling centers (CCCs) are often considered the first line of support; however, CCCs are frequently overloaded with short treatment lengths and waiting lists (Benton et al., 2003; LeViness et al., 2019). For example, the 2015 Center for Collegiate Mental Health (CCMH) Annual Report (2016) reported on mental health trends from 2009–2010 through 2014–2015 and indicated an increase in counseling center utilization by an average of 30%, while university enrollment increased by only 5%. Lipson and colleagues (Lipson et al., 2019) reported that 10% of the overall student population sought mental health treatment on campus. However, even though CCCs are overwhelmed with demand, the majority of college students with psychological distress still are not seeking mental health services on campus (Lipson et al., 2019). There are data indicating that this is especially pronounced among racial minorities (Lipson et al., 2018) and that first-generation college students are less likely to initiate services at CCCs (Stebleton et al., 2014).

The increase in mental health problems, coupled with increases in mental health service utilization, indicates that the time might be right for the dissemination of mental health resources that can be provided easily and without a clinician. Brief, scalable interventions that can reach more individuals, do not require individuals to attend a mental health appointment, and serve as a first line of defense for mental health problems may be an effective method for reducing distress and preventing mental health conditions from developing, with implications far beyond college student populations (Kazdin, 2017).

Dialectical Behavior Therapy (DBT) is a psychosocial treatment originally designed for severe and complex clinical presentations, including Borderline Personality Disorder (BPD) and suicidal behaviors (Linehan, 1993). DBT is based on a skills deficit model that suggests that BPD is a disorder of emotion dysregulation stemming from important deficits in interpersonal, emotion regulation, and distress tolerance skills. Its standard, comprehensive form involves weekly individual therapy, weekly skills training sessions, as-needed coaching between sessions, and weekly consultation team for therapists. Comprehensive DBT has been found to be efficacious for problems associated with BPD (Cristea et al., 2017; Kliem et al., 2010) as well as within a college student population (Pistorello et al., 2012). However, a myriad of adaptations of DBT have been developed and tested that explore ways in which to disseminate the treatment across settings and populations, including many studies that have evaluated only the skills training component (see Valentine et al., 2015 for a review). With college students specifically, a number of studies have indicated that DBT skills training is effective at helping students cope better with distress and emotional intensity (Chugani et al., 2013; Fleming et al., 2015; Muhomba et al., 2017; Rizvi & Steffel, 2014; Üstündağ-Budak et al., 2019). These studies have all evaluated standard DBT content in the context of a 90–120 min group, or class, and were primarily pilot in nature. Thus, although these studies provide promising results regarding the efficacy of standard DBT skills training for college student populations, much more work is needed.

Importantly, even skills training in its traditional format can have limitations when disseminating to college students. Skills training groups are typically offered through the CCCs which many students will not approach, are designed to fit the academic calendar (rather than necessarily the varying needs of students throughout the year) and require face-to-face contact for typically 60–120 min each week at a time that the students have to make work in their schedules. Providing DBT skills using other media, like instantly accessible brief online videos, could overcome many barriers and, if found to have efficacy, could provide a highly disseminable alternative that reduces burden on CCCs, increases access to evidence based skills, and potentially serves as a gateway to accessing comprehensive treatment when needed.

2. Aims and hypotheses

The aims of the study were to determine the feasibility, acceptability, and initial efficacy of a 14-day intervention which involved daily skills videos (less than 6 min each). We aimed to determine if the videos were effective at reducing distress (i.e., negative affect and unbearableness of emotions) and increasing self-efficacy for managing emotions among college undergraduate students during the Fall 2020 semester. Feasibility was assessed by ease of recruitment into the study as well as response rates throughout the study for both intervention and control conditions. Acceptability was assessed by ratings of the video intervention for participants in the intervention condition. Initial efficacy was determined in the short term by examining, in the intervention group, affect ratings immediately before and after video viewing, and in the longer term by comparing the two conditions over the course of the study. We hypothesized that participants receiving skills videos would rate them as likeable and relevant and would indicate reduced negative affect as a result of watching them. We further hypothesized that, compared to the control condition, students in the intervention condition would report decreased distress over the course of the study (pre- vs. post-video exposure). To our knowledge, this is the first study to examine the delivery of DBT skills via video only and has implications beyond the college student population.

3. Method

3.1. Participants

Participants were undergraduate students at a large, public university in the Northeastern United States. This university was primarily operating remotely during the Fall 2020 semester. Participants were recruited via flyer distributed through various student email listservs and social media accounts. The inclusion criteria for participation were: age 18 years or older, matriculated as an undergraduate student at the university during the Fall of 2020, currently residing in the U.S., and using an iOS or Android smartphone compatible with the MetricWire app (see below). Exclusion criteria were limited to non-English speaking or inability to understand or sign the research consent forms. The study was registered at ClinicalTrials.gov (NCT04558411), was approved by the university Institutional Review Board, and all participants provided informed consent.

Of the total sample (N = 153), 84.97% of participants (n = 130) identified as female. The average age was 20.74 (SD = 2.68; range: 18–37). Endorsement of racial identity was as follows: 5.9% Black, 45.1% White, 39.2% Asian, and 9.2% did not respond. In terms of ethnicity, 15.7% identified as Hispanic/Latino. The representation of racial identities in the sample was consistent with the broader university population. In addition, 31.4% identified as first-generation college students, and 47.1% reported currently being in therapy. Most participants identified as cisgender (94.1%) and heterosexual (64.1%). On a scale of 1 (very conservative) to 7 (very liberal), mean political affiliation rating was 5.61 (SD = 1.31, range: 1–7). There were no significant differences in demographic makeup between control and intervention conditions, though the difference between the intervention and control conditions in the percentage of female participants approached significance (80.81% vs. 92.59%, χ²(1) = 3.80, p = .051).

3.2. Measures

3.2.1. Baseline measures

Participants completed a series of measures at baseline. For the current study, relevant measures are: 1) Demographic measure that assessed characteristics including age, sexual orientation, gender identity, race, and ethnicity. The demographic survey also included questions related to mental health treatment and diagnostic history. 2) Difficulties in Emotion Regulation Scale-18 (DERS-18; Victor & Klonsky, 2016) is a short form of a self-report questionnaire that measures emotion dysregulation (Gratz & Roemer, 2004). The measure consists of 18 items assessing various dimensions of emotion regulation difficulty (e.g., “When I'm upset, I lose control over my behaviors”, “When I'm upset, I feel ashamed with myself for feeling that way”). Items are rated on a 5-point Likert scale ranging from 1 (“almost never”) to 5 (“almost always”). The DERS-18 has demonstrated good reliability and internal consistency, and has also exhibited good concurrent validity with the original DERS (Skutch et al., 2019; Victor & Klonsky, 2016). For the current study, internal consistency was α = 0.90.

3.2.2. Ecological momentary assessment (EMA)

EMA surveys included a range of questions that measured general affect and perceived ability to cope with negative emotions in the present moment. The last assessment of each night was longer and included questions that assessed perceived levels of stress across various domains (e.g., interpersonal stress, financial worry, experiences of discrimination). Questions included in the current study were based on EMA items from prior studies conducted by the last author (Kleiman et al., 2020).

Negative Emotion. We created a composite negative emotion variable consisting of the sum of 8 different affect states assessed at every EMA prompt. Specifically, this included ratings of 3 high-arousal negative affect states: (1) agitated, (2) anxious, (3) angry and 5 low-arousal negative affect states: (1) ashamed, (2) guilty, (3) hopeless, (4) lonely, and (5) sad. The pooled within-person reliability was low but not unexpected given that we would expect participants to discriminate between affect states at the same time point (pooled α = .62).

Self-Efficacy to Manage Emotions. We used a composite (mean) score of the four items assessing self-efficacy to manage emotions, delivered at each EMA prompt. These items were drawn from the Self-Efficacy for Managing Emotions – Short Form 4a measure, which is part of the PROMIS Item Bank (Cella et al., 2007). The pooled within-person reliability was sufficient (pooled α = .79).

Bearability of Emotions. We used a single item at each EMA prompt to assess the degree to which participants’ current feelings were bearable (“not at all unbearable” to “extremely unbearable”).
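The two composite scores described above can be illustrated with a minimal sketch. The item names are those listed in the text; the example ratings and the function names are hypothetical, and the response range of each item is not stated in this section, so the values below are purely illustrative.

```python
from statistics import mean

# Affect items named in the text. The example ratings further down are made up;
# the actual response scale is not specified in this section.
HIGH_AROUSAL = ("agitated", "anxious", "angry")
LOW_AROUSAL = ("ashamed", "guilty", "hopeless", "lonely", "sad")
NEGATIVE_ITEMS = HIGH_AROUSAL + LOW_AROUSAL


def negative_emotion_score(prompt):
    """Sum of the 8 negative affect ratings from one EMA prompt."""
    return sum(prompt[item] for item in NEGATIVE_ITEMS)


def self_efficacy_score(items):
    """Mean of the four self-efficacy items from one EMA prompt."""
    if len(items) != 4:
        raise ValueError("expected exactly four self-efficacy items")
    return mean(items)


# Hypothetical responses to a single prompt
example_prompt = {"agitated": 1, "anxious": 3, "angry": 0, "ashamed": 0,
                  "guilty": 1, "hopeless": 2, "lonely": 2, "sad": 1}
neg = negative_emotion_score(example_prompt)  # 1+3+0+0+1+2+2+1 = 10
se = self_efficacy_score([3, 4, 2, 3])        # 12 / 4 = 3
```

Because the negative emotion composite is a sum and the self-efficacy composite is a mean, the two scores sit on different scales, which is worth keeping in mind when comparing coefficients across outcomes.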

3.2.3. Intervention questions

The first time a participant accessed the video (see below), they were asked a number of questions about the video, including “How relevant do you think this skill is for you?” and “How much did you like the video?” Each subsequent time participants rewatched a video, they were asked to rate their positive and negative affect before and after the intervention (“How [positive/negative] do you feel right now?”), each on a 0–5 scale.

3.3. Procedures

3.3.1. Recruitment, consent, and baseline

Participants were recruited via emails to listservs (university groups, departments, student organizations) and social media posts. Efforts were made to increase the diversity of the sample (i.e., Black and Latino participants) by reaching out to specific organizations. All recruitment materials contained the following text: “Join a 12-week study to evaluate a quick way to learn how to effectively manage the many challenges as a Rutgers undergraduate in the COVID-19 era” and contained a link (or QR code) to a brief eligibility screener. The screener assessed all inclusion criteria. If participants met inclusion criteria, they were emailed a link to the study consent. If interested, the students read through the online consent and signed it. Following the consent, participants completed a baseline survey (measures described above). Individuals who completed the baseline survey were then provided instructions for downloading the MetricWire app and registering for the study. For each EMA survey completed, participants earned $0.25 in the form of an Amazon eGift Card. Payments were sent following each 2-week period. In addition, participants completed weekly surveys for which they were compensated (not part of the present paper); participants could earn up to $60 for their total participation in the study.

3.3.2. EMA phase

The EMA phase began the day after installation and study registration. Participants were prompted to complete a brief (<5 min) ecological momentary assessment (EMA) four times per day for six weeks using their smartphone. Over these six weeks, participants completed a pre-assessment period (weeks 1–2), intervention period (weeks 3–4), and post-assessment period (weeks 5–6). All surveys were delivered at random times, within pre-specified windows. The first three assessments sent each day expired after 1.5–2 h and the last assessment of each night expired after 14 h.

3.3.3. Randomization

At the end of the initial two-week EMA phase, participants were block randomized to either the intervention condition or the control condition, based on DERS-18 score (<50, >50) such that equal numbers of people with scores above and below the cutoff (50) were included in both conditions. Given the pilot nature of the trial and given that we were conducting some analyses only in the intervention group (and wanted to maximize power), we intentionally randomized with 66% probability to the intervention group.
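One way such a stratified, unequal allocation could be implemented is sketched below. This is not the trial's actual procedure: the use of permuted blocks of three (two intervention slots, one control slot), the handling of a score of exactly 50 (placed in the upper stratum here), the function name, and the example scores are all assumptions made for illustration.

```python
import random

CUTOFF = 50  # DERS-18 cutoff named in the text


def stratified_block_randomize(ders_scores, seed=0):
    """Assign participants to conditions within DERS-18 strata.

    Assumption: permuted blocks of three (2 intervention, 1 control),
    which yields roughly a 2:1 allocation inside each stratum.
    """
    rng = random.Random(seed)
    strata = {"low": [], "high": []}
    for pid, score in ders_scores.items():
        # A score of exactly 50 goes to the upper stratum (assumption).
        strata["low" if score < CUTOFF else "high"].append(pid)

    assignment = {}
    for members in strata.values():
        block = []
        for pid in members:
            if not block:  # start a freshly shuffled block once the last one is used up
                block = ["intervention", "intervention", "control"]
                rng.shuffle(block)
            assignment[pid] = block.pop()
    return assignment


# Hypothetical baseline DERS-18 totals for 12 participants (6 per stratum)
scores = {f"p{i:02d}": s for i, s in
          enumerate([31, 62, 47, 55, 70, 38, 44, 58, 66, 29, 51, 49])}
groups = stratified_block_randomize(scores)
```

With complete blocks, each stratum ends up with exactly twice as many intervention as control participants; with incomplete final blocks the ratio is only approximate, which is consistent with an observed split such as 99 versus 54.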

3.3.4. Intervention development

Brief narrated animated videos were created to describe 14 DBT skills (available at youtube.com/dbtru). These skills (and the order in which they were available) were: 1. Distract, 2. Self-Soothe, 3. IMPROVE the Moment, 4. TIP, 5. Radical Acceptance, 6. Wise Mind, 7. Mindfulness What Skills, 8. Mindfulness How Skills, 9. PLEASE, 10. Opposite Action, 11. Mindfulness of Current Emotion, 12. DEARMAN, 13. GIVE, 14. FAST (Linehan, 2014). Verbal scripts were generated by the first author, an expert in DBT. Artboards were then created to match the script by the second author, a clinical psychology doctoral student with experience in graphic design and illustration. Through an iterative process between the two authors, a final storyboard was created and passed along to a freelance animator who completed the animation, received feedback from the first and second author, and then created a final version of each video. The resulting videos ranged in length from 2 min, 51 s to 5 min, 35 s with an average length of 3 min, 59 s. See Fig. 1 for a couple of representative screenshots from the videos.

Fig. 1.

Representative screenshots from brief DBT skills videos.

3.3.5. Intervention

During the intervention phase, participants randomized to receive the intervention received one new video each day at 8pm via the smartphone app for 14 successive days. Each video was preceded by the text: “Once you watch the video, you can play it as many times as you'd like in the app” and once released, the skills video was made available through the app for the participant to review at any time. Participants did not receive compensation for viewing or completing the surveys associated with each skills video. Participants randomized to the control condition were given access to the full set of videos after the study was complete.

3.4. Data analyses

3.4.1. Response rates

We examined response rates across the study, aggregated by week and by phase of the study (pre- vs. post-watch). We were interested in overall response rates as well as potential differences in response rates. Specifically, we were interested in whether compliance (conceptualized as the total number of momentary surveys completed each week) differed as a function of week in study, period of study (pre- vs. post-watch), or condition. We also tested whether there were any interactions by condition. Because our outcome variable was a count (i.e., number of surveys completed each week), we used a series of multi-level Poisson regression models.
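As a sketch of what one such model might look like, the week × condition model can be written as follows, where $Y_{ij}$ is the number of surveys completed by participant $j$ in week $i$. The participant-level random intercept $u_j$ is an assumption; the text specifies only that the models were multi-level.

$$
\log \mathbb{E}[Y_{ij}] = \beta_0 + \beta_1\,\mathrm{week}_{ij} + \beta_2\,\mathrm{condition}_{j} + \beta_3\,(\mathrm{week}_{ij} \times \mathrm{condition}_{j}) + u_j,
\qquad u_j \sim \mathcal{N}(0, \tau^2)
$$

Exponentiating a coefficient gives the incidence rate ratio, $\mathrm{IRR}_k = \exp(\beta_k)$: the multiplicative change in the expected number of completed surveys per one-unit increase in the corresponding predictor. The period × condition model would have the same form with period in place of week.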

3.4.2. Efficacy

We examined both between- and within-participant effects. Between-participant effects involved comparing the conditions across the two study periods (before and after the period in which participants were exposed to the videos). Within-participant effects involved comparing just the individuals in the experimental condition on ratings of positive and negative affect that were collected immediately before and after watching each video.

Between-participants efficacy. We were interested in the effect of the intervention on three different outcomes: (1) negative emotion, (2) self-efficacy to manage negative emotions, and (3) how unbearable negative emotions are. Accordingly, we conducted three sets of multilevel models, differing by the outcome variable. All models had the same predictors: (1) period, a binary variable defined as the period before (vs. after) the 15th day of the study, when the videos were first delivered to participants in the experimental condition, (2) condition (i.e., experimental vs. control), and (3) the interaction between period and condition. To reduce complexity of the dataset, we created day-level averages of the three momentary outcome variables. Aggregating to the day level allowed us to clearly separate the 15th day of the study as the period when participants (in the experimental condition) received their first video. All analyses were conducted using the lme4 R package (Bates et al., 2015).
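For reference, the model described above can be sketched as a linear mixed model for the day-level average $y_{ij}$ of participant $j$ on day $i$. The participant-level random intercept is an assumption, although it is consistent with the residual and between-participant variance components reported alongside the results.

$$
y_{ij} = \beta_0 + \beta_1\,\mathrm{period}_{ij} + \beta_2\,\mathrm{condition}_{j} + \beta_3\,(\mathrm{period}_{ij} \times \mathrm{condition}_{j}) + u_j + \varepsilon_{ij},
\qquad u_j \sim \mathcal{N}(0, \tau_{00}),\quad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
$$

Under this coding, $\beta_1$ is the pre-to-post change in the reference condition, $\beta_2$ is the difference between conditions in the pre period, and $\beta_3$ is the difference between conditions in that pre-to-post change, which is the term of primary interest for efficacy.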

Within-Participants Efficacy. We were interested in changes in ratings of (1) overall negative emotion and (2) overall positive emotion from before watching the video to after. To address these two types of changes, we analyzed two models: the first model had ratings of negative emotion as the outcome variable and the second had ratings of positive emotion. Both models had time (Pre-video vs. Post-video) as the independent variable and used fixed slopes. We also tried two modified models: (1) adding video as a random factor, to see if effects differed by video type and (2) using ordinal regression instead of linear regression, because the positive and negative ratings are technically on an ordinal scale. Neither modification had an impact on the interpretation of the findings. Accordingly, for ease of interpretation and consistency with the prior analyses, we primarily focus on the linear models with fixed effects only.

4. Results

4.1. Study characteristics

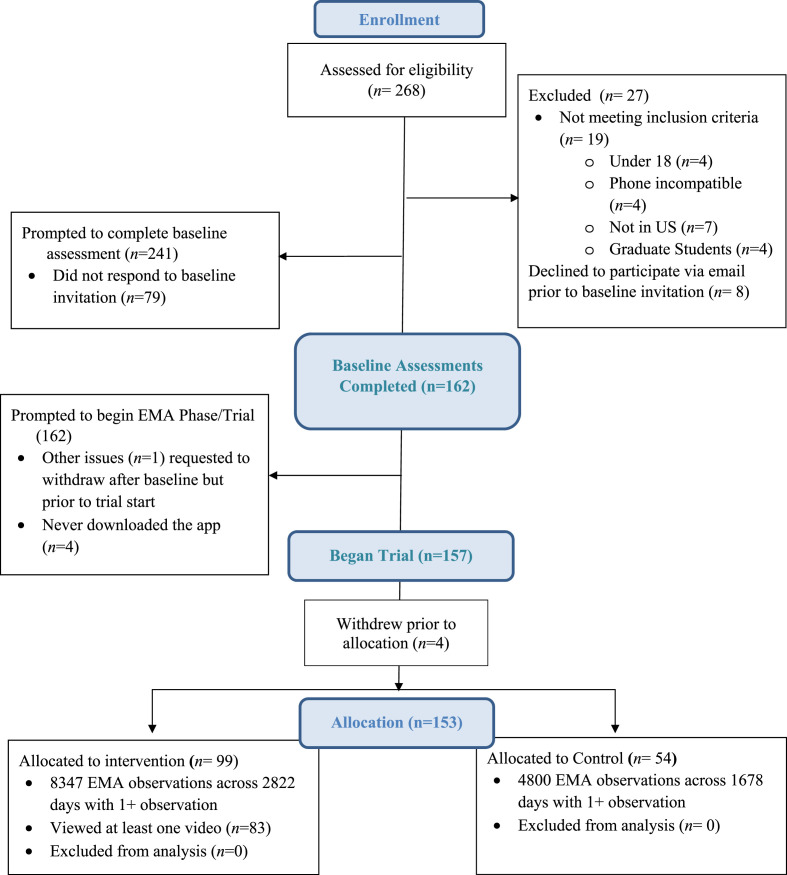

Participants were recruited during a four-week window in the fall of 2020 (9/8/2020–10/5/2020) to contain the study within one semester. A total of 268 participants were screened for inclusion/exclusion criteria. Of those, 23 were excluded based on age (n = 4), phone incompatibility (n = 4), graduate student status (n = 4), or not currently residing in the U.S. (n = 7). Of those eligible, 162 participants completed the baseline assessment; the remainder either did not respond (n = 79) or indicated that they had changed their mind prior to consent (n = 8). One participant requested to withdraw after consent, but prior to the beginning of the EMA phase. A total of 157 participants downloaded and installed the MetricWire app; however, 4 participants withdrew prior to randomization. A final sample of 153 participants was randomized to either the intervention condition (n = 99) or the control condition (n = 54) (see Table 1). Fig. 2 shows the flow of participants through the study.

Table 1.

Demographic Information at Baseline.

| | Total Sample (N = 153) | Intervention (n = 99) | Control (n = 54ᵃ) | | |
|---|---|---|---|---|---|
| | M (SD) or % | M (SD) or % | M (SD) or % | t or χ² | p |
| Age | 20.74 (2.68) | 20.69 (2.94) | 20.85 (2.16) | t(150) = .36 | .72 |
| Female | 84.97% | 80.81% | 92.59% | χ²(1) = 3.80 | .05 |
| Cisgender | 94.12% | 94% | 94.44% | χ²(1) = .01 | .92 |
| Heterosexual | 64.05% | 62.63% | 66.67% | χ²(1) = .25 | .62 |
| Hispanic/Latino | 15.69% | 17.17% | 12.96% | χ²(1) = .47 | .49 |
| Race | | | | χ²(3) = .73 | .87 |
| Black | 5.88% | 5.05% | 7.41% | | |
| White | 45.10% | 44.44% | 46.30% | | |
| Asian | 39.22% | 40.40% | 37.04% | | |
| Other race(s) | 9.15% | 10.10% | 7.41% | | |
| First-generation college student | 31.37% | 27.27% | 38.89% | χ²(1) = 2.19 | .14 |
| Receiving current therapy | 47.06% | 49.49% | 42.59% | χ²(1) = .67 | .41 |
| Psychiatric diagnosis | 24.18% | 25.25% | 22.22% | χ²(1) = .18 | .68 |
| DERS-18 – Total | 48.09 (13.67) | 48.39 (13.49) | 47.51 (14.10) | t(150) = .38 | .71 |

Note. DERS-18 = Difficulties in Emotion Regulation Scale-18.

ᵃ n = 53 for reports of age, sex, and DERS-18 due to missing data.

Fig. 2.

Participant flow.

4.2. Response rates - EMA

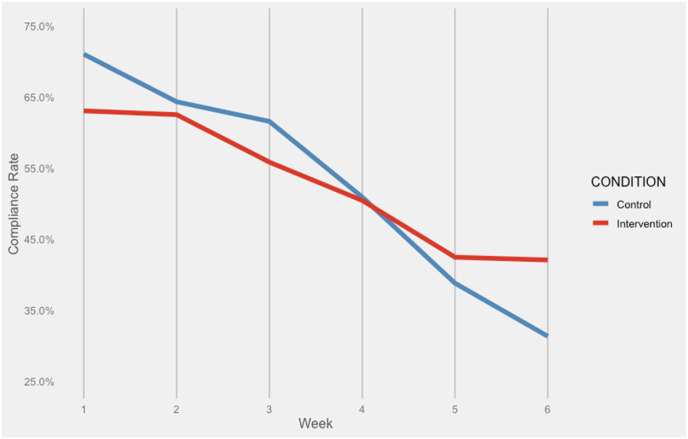

Across the study and both groups, the overall compliance rate for the momentary surveys was 53.96% (54.00% intervention, 53.91% control). However, participants completed at least one survey on 73.88% of all days. As would be expected, there was a decrease in compliance as time went on during the study, reflected both in week of study (IRR = 0.88, 95%CI = [0.87, 0.89], p < .001) and period of study (i.e., pre- vs. post-videos; IRR = 0.77, 95%CI = [0.75, 0.79], p < .001). There was no main effect of condition (IRR = 0.95, 95%CI = [0.69, 1.30], p = .736); however, there were interactions between condition and period (IRR = 1.07, 95%CI = [1.02, 1.13], p = .006) and between condition and week of study (IRR = 1.06, 95%CI = [1.04, 1.08], p < .001).

The pattern of these interactions was consistent across both the interaction examining period and the interaction examining week of study. When examining the interaction between condition and period, we found that the decrease across the study in number of total surveys was greater among the control group (IRR = 0.74, 95%CI = [0.71, 0.77], p < .001) than it was for the intervention group (IRR = 0.79, 95%CI = [0.77, 0.81], p < .001). More concretely, during the pre-watch period, the control group completed more surveys than the experimental group (control = 70.38%, experimental = 66.57%). During the post-watch period, the experimental group completed more surveys than the control group (control = 45.68%, experimental = 47.72%). When examining the interaction between condition and week in study, we again found that the decrease across the study in number of total surveys was greater among the control group (IRR = 0.84, 95%CI = [0.83, 0.86], p < .001) than it was for the intervention group (IRR = 0.89, 95%CI = [0.88, 0.91], p < .001). This interaction is visualized in Fig. 3.
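The incidence rate ratios reported above can be read as percent changes, and the group-specific IRRs can be checked against the interaction terms. The short sketch below uses only the values reported in the text; the helper function name is hypothetical, and the reading of the interaction IRR as the ratio of the two group-specific IRRs assumes a log-linear model with the control group as the reference level.

```python
def percent_change(irr):
    """Convert an incidence rate ratio into a percent change in the expected count."""
    return (irr - 1.0) * 100.0


# Values reported in the text
week_irr = 0.88
control_period_irr, intervention_period_irr = 0.74, 0.79
control_week_irr, intervention_week_irr = 0.84, 0.89

# An IRR of 0.88 corresponds to about 12% fewer completed surveys per additional week.
weekly_change = percent_change(week_irr)

# Ratio of group-specific IRRs, to compare with the reported interaction IRRs.
period_ratio = intervention_period_irr / control_period_irr
week_ratio = intervention_week_irr / control_week_irr

print(round(weekly_change, 1), round(period_ratio, 2), round(week_ratio, 2))
```

The two ratios round to 1.07 and 1.06, in line with the reported interaction IRRs for period and week, respectively.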

Fig. 3.

EMA compliance rate throughout the study, by group.

4.3. Response rates - intervention

Of the 99 participants randomized to the intervention, 83 watched at least one of the videos. Participants who watched at least one video watched a median of nine videos at least once (range 1–14). Twenty-three participants watched all 14 videos at least once. A total of 79 participants rewatched at least one video. Among those who rewatched a video at least once, participants rewatched a median of three different videos (range 1–14), a median of nine times (range 1–215 times).

Video-level statistics are shown in the left set of columns in Table 2. Specifically, this table shows the number of participants who watched each video for the first time, the number of participants who rewatched each video, and how many times each video was rewatched.

Table 2.

DBT skills videos viewing rates and ratings.

| | Frequency of watching | | | Ratings of how much participants … | |
|---|---|---|---|---|---|
| Video | Participants Watched Once | Participants Rewatched | Times Rewatched | Liked video M (SD) | Found video relevant M (SD) |
| DISTRACT | 75 | 70 | 134 | 3.78 (1.05) | 3.42 (1.30) |
| SELF SOOTHE | 65 | 38 | 243 | 3.98 (0.91) | 3.81 (1.37) |
| IMPROVE | 63 | 29 | 241 | 3.72 (1.11) | 3.39 (1.31) |
| TIP | 60 | 28 | 95 | 3.82 (0.97) | 3.40 (1.42) |
| RADICAL ACCEPTANCE | 42 | 21 | 93 | 4.05 (0.95) | 3.19 (1.35) |
| WISE MIND | 42 | 21 | 55 | 3.80 (0.97) | 3.22 (1.21) |
| MINDFUL - WHAT | 37 | 18 | 40 | 4.03 (0.90) | 3.19 (1.27) |
| MINDFUL - HOW | 37 | 32 | 178 | 3.92 (1.13) | 3.36 (1.50) |
| PLEASE | 57 | 23 | 76 | 3.79 (0.88) | 3.35 (1.33) |
| Opposite Action | 82 | 19 | 45 | 3.92 (0.88) | 3.02 (1.55) |
| Mindfulness of Current Emotion | 47 | 11 | 17 | 3.93 (0.90) | 3.30 (1.31) |
| DEARMAN | 37 | 5 | 7 | 4.06 (1.03) | 3.39 (1.52) |
| GIVE | 51 | 6 | 12 | 3.69 (0.94) | 3.04 (1.54) |
| FAST | 46 | 7 | 9 | 3.89 (0.98) | 3.02 (1.34) |

The average rating of how much participants liked the videos was 3.87 (on a scale of 0 [not at all] to 5 [very much]; SD = 0.97) and the average rating of how much participants found the skill in the video relevant was 3.30 (on a scale of 0–5; SD = 1.39). Video-level statistics are shown in the right set of columns in Table 2.

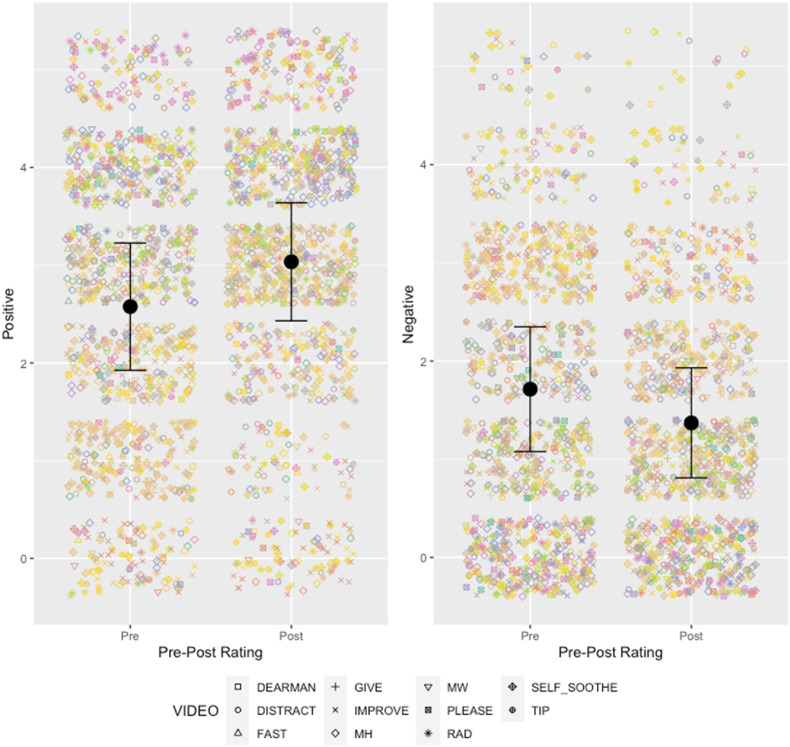

4.4. Within-Participants Efficacy

Fig. 4 shows the average effect across the sample in pre-post ratings of negative affect (left panel) and positive affect (right panel) for rewatched videos. As expected, from pre- to post-video ratings, we found significant reductions in negative affect (b = −0.35, 95%CI = [−0.43, −0.27], p < .001) and significant increases in positive affect (b = 0.46, 95%CI = [0.39, 0.54], p < .001). The interpretation of these effects, as well as the model fit, did not change when we added video type as a random effect (χ² for negative affect = 67.87, df = 65, p = .380; χ² for positive affect ≈ 1.00, df = 65, p ≈ .999). The interpretation also did not change when using ordinal regression (OR for negative affect = 0.48, 95%CI = [0.40, 0.56], p < .001; OR for positive affect = 2.74, 95%CI = [2.33, 3.23], p < .001).

Fig. 4.

Within-person comparisons of pre-post-video affect ratings.

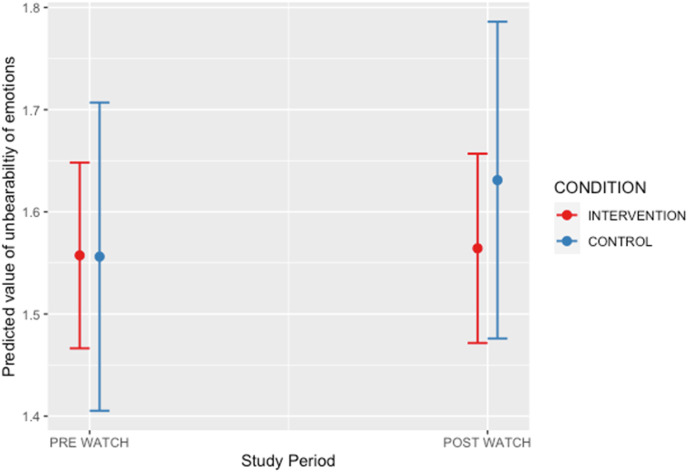

4.5. Between-participants efficacy

Table 3 shows the results of the multi-level models testing efficacy. When negative affect was the outcome variable (left set of columns), the only significant predictor was period, suggesting that participants reported more severe momentary negative affect during the last four weeks of the study compared to the first two weeks. When self-efficacy to manage emotions was the outcome variable (middle set of columns), there were no significant predictors. When bearability of emotions was the outcome variable (right set of columns), there was a significant period × condition interaction. Fig. 5 shows the plot of this interaction. When we probed the interaction, we found that participants in the experimental condition did not rate their emotions as any more unbearable in the post-video period than in the pre-video period (b = 0.01, 95%CI = −0.03 to 0.04, p = .697). We found that participants in the control condition rated their emotions as more unbearable in the comparable post-watch period than the pre-watch period (b = 0.07, 95%CI = 0.03 to 0.12, p < .001). This suggests that although those in the experimental condition did not find their emotions more bearable after watching the videos, those in the control condition found their emotions more unbearable after the comparable time.

Table 3.

Results of multilevel modeling analyses.

| | Negative Affect | | | Self-Efficacy to Manage Emotions | | | Unbearability of Emotions | | |
|---|---|---|---|---|---|---|---|---|---|
| Predictors | b | 95% CI | p | b | 95% CI | p | b | 95% CI | p |
| (Intercept) | 6.90 | 5.45 to 8.34 | <.001 | 3.29 | 3.09 to 3.49 | <.001 | 1.59 | 1.47 to 1.72 | <.001 |
| Period (ref = pre-intervention) | 0.42 | 0.16 to 0.69 | .002 | −0.03 | −0.06 to 0.01 | .123 | 0.05 | 0.02 to 0.09 | .001 |
| Condition (ref = control) | 0.38 | −1.10 to 1.86 | .617 | −0.02 | −0.22 to 0.18 | .858 | −0.03 | −0.18 to 0.11 | .659 |
| Period × Condition | −0.15 | −0.49 to 0.18 | .368 | −0.00 | −0.05 to 0.04 | .844 | −0.05 | −0.09 to −0.01 | .020 |
| Random Effects | | | | | | | | | |
| σ² | 13.13 | | | 0.22 | | | 0.20 | | |
| τ00 | 39.11 | | | 0.81 | | | 0.25 | | |
| ICC | 0.75 | | | 0.78 | | | 0.56 | | |
| N | 125 | | | 125 | | | 125 | | |
| Observations | 4296 | | | 4297 | | | 4336 | | |
| Marginal R² / Conditional R² | 0.002/0.749 | | | 0.000/0.784 | | | 0.002/0.561 | | |
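The ICC row in Table 3 can be reproduced, to within rounding, from the two variance components reported with it. The sketch below assumes random-intercept models, for which ICC = τ00 / (τ00 + σ²). The function and dictionary names are illustrative, and the inputs are the rounded values printed in the table, so small discrepancies in the second decimal are expected.

```python
def icc(tau00: float, sigma2: float) -> float:
    """Intraclass correlation for a random-intercept model.

    tau00  : between-participant (random intercept) variance
    sigma2 : within-participant (residual) variance
    """
    return tau00 / (tau00 + sigma2)


# (tau00, sigma2, ICC as reported) taken from the rounded entries in Table 3.
table3 = {
    "negative_affect": (39.11, 13.13, 0.75),
    "self_efficacy": (0.81, 0.22, 0.78),
    "unbearability": (0.25, 0.20, 0.56),
}

computed = {name: icc(tau00, sigma2) for name, (tau00, sigma2, _) in table3.items()}

for name, (_, _, reported) in table3.items():
    print(f"{name}: computed {computed[name]:.3f}, reported {reported:.2f}")
```

Read this way, the ICCs indicate that more than half of the variance in each outcome lies between participants rather than within them, which fits the pattern of near-zero marginal R² alongside much larger conditional R² values.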

Fig. 5.

Condition × period interaction on unbearability of emotions.

5. Discussion

This study aimed to determine the feasibility, acceptability, and initial efficacy of a novel intervention designed to reduce distress in undergraduates during arguably the most challenging time in the United States in decades. The results indicate that the study design and the intervention of brief DBT skills videos were largely acceptable to participants and that the intervention shows some efficacy at reducing negative affect in the moment and at preventing students from becoming more distressed (i.e., finding their emotions more unbearable) as the semester progresses. Thus, contrary to our initial hypotheses, we did not find that the video intervention led to improved outcomes over the duration of the study; rather, we found that those who received the intervention seemed to have a less negative trajectory over the study than the control group did. These results have implications for the scalability of this methodology and intervention and point to areas for improvement in future research.

In terms of feasibility of the study design and intervention, it is notable that we actively recruited and engaged our target sample size (n = 150) within a four-week period at the beginning of the semester. This engagement was achieved through emails sent to student organizations and departments by the third author, Assistant Director for Community Based Counseling at the university, who manages outreach efforts with campus organizations and embedded counselors. Although we did not test this question directly, it is likely that emails describing the study that came from a known person within student services, as opposed to an “outside” researcher, facilitated greater sharing with students. To reach a diverse undergraduate sample, we recommend that researchers partner with school administrators and/or students, in line with implementation research recommendations to consider the settings and context in which the intervention is to occur (e.g., Damschroder et al., 2009). In addition, it is notable that the flyers describing the study, which resulted in 268 individuals being screened for eligibility in a four-week period, did not mention compensation. This decision was intentional, as we wanted a sample that was not “doing it for the money” but instead was genuinely interested in participating in a study about learning coping skills. It is possible that this strategy led to a more intrinsically motivated group of participants, which may have had an impact on response rates. Comparison to other EMA studies with college undergraduates is complex given that EMA study duration has often been limited to 2 weeks or less (e.g., Bai et al., 2020; Veilleux et al., 2018). However, our overall compliance rate for the momentary surveys of 53.96%, with nearly three-quarters of participants completing at least one survey per day, was notable given the study's 42-day duration.

Although we did not specifically target individuals with mental health problems, nearly half the participants reported that they were currently in therapy and nearly one-quarter self-reported that they met criteria for a psychiatric disorder. This latter datapoint is consistent with studies of the general college student population, indicating that up to one-third of students endorse having significant mood and/or anxiety problems (Eisenberg et al., 2013). Thus, the results from the current sample can likely generalize to college students more broadly.

Acceptability of the intervention was also examined based on the number of times individuals in the intervention condition accessed the videos and their ratings of the videos they watched. In terms of the former, we found that 84% of the participants randomized to the videos watched at least one and nearly one-quarter of the participants (23.23%) watched all 14 videos. The most frequently watched videos were Opposite Action (82 participants), Distract (75), and Self-Soothe (65), and the least watched videos were Mindfulness-What, Mindfulness-How, and DEARMAN (each watched by 37 participants). Although our data cannot specifically answer why participants watched the videos that they did, it is possible that the students were drawn to more novel and unknown skills (based on the names). The near ubiquity of mindfulness in mental health centers, as well as in wellness programs more generally, may have made the mindfulness videos less enticing to watch in the context of this study. Future studies need more follow-up information and user-centered feedback on how to make all of the skills videos more acceptable.

In terms of video ratings, overall the study participants stated that they liked each video and found the information relevant to their lives. On a scale from 0 (not at all) to 5 (very much), ratings for liking the video ranged from 3.69 (the interpersonal effectiveness skill of “GIVE”) to 4.06 (the interpersonal effectiveness skill of “DEARMAN”). Ratings for finding the video relevant ranged from 3.02 (the emotion regulation skill of “Opposite Action” and the interpersonal effectiveness skill of “FAST”) to 3.81 (the distress tolerance skill of “Self-Soothe”). The ranges for both variables indicate a moderate level of likeability. It is interesting that, although these ratings were relatively high, there was still variability in the number of videos watched. Efforts were made to make the videos as brief as possible without losing essential teaching points related to the skills. In addition, the process of creating the videos involved feedback from current graduate students involved with the project, who were arguably close in age to the target undergraduate sample. This feedback helped shape the design of the videos, including the figures in the videos, to be as inclusive as possible to diverse audiences. Future studies with larger samples could identify whether different subgroups (e.g., individuals of different races, ethnicities, ability) rate the videos' acceptability differently, and new videos can be designed with such feedback. Although liking the video and finding the skill content relevant were likely facilitators of watching more videos and using the skills in everyday life, there were also likely barriers to watching the videos that need to be assessed more fully and overcome. More work is needed to identify such barriers and whether modifying the intervention delivery would help overcome them.

Our findings related to the preliminary efficacy of the intervention warrant attention. Specifically, we found that among participants in the intervention condition who elected to watch videos more than once, negative affect decreased and positive affect increased following viewing. This significant change in affect is notable given the brief length of the videos (average of 4 min). The videos were intended to teach coping skills that individuals can use to improve the quality of their everyday lives; the fact that the videos themselves helped individuals feel better in the moment of watching them seems an added benefit. Given that individuals did not complete the affect ratings the first time they watched the videos (because this viewing was not a random occurrence but instead was cued by the study design), it is possible that this effect is only found on repeated viewings or that only individuals who felt better after watching a video watched it again.

The data on between-group findings are more mixed. Specifically, we found no effect of condition on changes in negative affect and self-efficacy for managing emotions. Instead, we found that negative affect increased for participants in both conditions over the course of the trial and self-efficacy did not change. Although these findings were in contrast to hypotheses, they are consistent with other studies that have documented student stress increasing over the course of a semester (Baghurst & Kelley, 2014; Center for Collegiate Mental Health, 2021). Another interesting finding occurred with the dependent variable of ratings of how unbearable their emotions were. We found a significant interaction between condition and time. Specifically, we found that although there was no decrease in how unbearable participants in the experimental condition rated their emotions from the first two weeks to the last four weeks of the study, there was an increase in the comparable timeframe for those in the control condition. In other words, it may be that these videos served to help students avoid a potential increase in distress during a distressing period. This finding also highlights the importance of including a control condition even in pilot trials. Without the control condition in this study, we could have concluded that our intervention had no effect or led to a worsening of symptoms. Future research on interventions with college students would benefit from attending to design considerations to address this potential confound.

It is also important to comment on the historical period in which this study was conducted. In addition to the documented increases in stress over the course of the typical semester (Baghurst & Kelley, 2014; Center for Collegiate Mental Health, 2021), the fall 2020 semester in the US was marked by three significant stressors: COVID-19, a contentious presidential election, and ongoing attention to and recognition of pervasive racial injustices. Given the design of this study, it is impossible to determine the differential impacts of each of these historical stressors on level of participation and outcomes.

5.1. Limitations and future directions

There are a few study limitations important to acknowledge and address in future research. First, the order in which the 14 skills videos were delivered to participants in the experimental condition was not randomized. Because the delivery order was the same across participants, we could not control for its influence. For example, it is possible that the content of initial videos appealed to some participants but not others, and thus contributed to differential engagement in the intervention across individuals. Additionally, because of the absence of a comparison treatment (e.g., watching non-skills-related videos) in the control condition, it remains unclear whether the difference in efficacy over study period between conditions was due to skills teaching or other factors, such as watching a video every day (which could serve as behavioral activation and boost mood). Similarly, it is difficult to attribute the observed improvements in affect pre- and post-video entirely to the skills training content of the videos given their other components and characteristics (e.g., animation, soothing narrative, humor). Furthermore, likely due to the length of the study, the average EMA compliance was somewhat lower than in similar studies involving college students during the COVID-19 pandemic (e.g., 69.23% in Kleiman et al., 2020) and decreased significantly over time. The study results could be biased if missingness was not random but was instead due to higher stress or intense negative emotions. Finally, although the sample was racially and ethnically diverse, the final sample was heavily skewed on gender (85% female), which may limit the degree to which these results would generalize to male students. It is unclear why more males did not enroll in the study, although this gender skew is consistent with other recent research on college populations during COVID-19 (e.g., Kleiman et al., 2020 [77.6% female]; Wang et al., 2020 [62% female]). Future work is necessary to better understand barriers to male participation in college student studies.

Despite these limitations, this study had notable findings and paves the way for several avenues of research. First, it is important for future research to determine the longer-term effects of this brief intervention by including follow-up assessments that evaluate use of skills and mental health symptoms. This research is critical to determine whether the intervention effects are sustained. Should such research demonstrate sustained effects, there are many additional paths that future studies can take. For one, given the general acceptability of the videos among the college student population, it would be important to determine with larger samples whether the videos are equally relevant across different populations, cultures, and settings. For example, these videos may be useful for individuals who might not need or want therapy but could benefit from brief support to prevent an increase in distress. Alternatively, given the ease of dissemination and the cost-effectiveness of these brief videos, adaptive treatment designs that incorporate videos as a first-step, low-dose intervention could be conducted to determine whether some individuals use and respond to the videos and therefore do not need a higher treatment dose or longer duration. Those who do not respond to the initial videos could then be directed to a higher level of care. Research might also consider extending this study to those who do seek therapy at CCCs or community mental health clinics but face a wait to begin treatment due to limited resources, or integrating the videos into a stepped-care model. In addition, future research on these videos may wish to assess content knowledge among participants, include attention checks during the videos to better understand comprehension and its effects on outcomes, and examine whether exposure to the videos affects willingness to engage in broader mental health services. Given the brief, scalable nature of the DBT skills videos, many opportunities exist for dissemination and implementation across populations and settings, far beyond the COVID-19 era.

CRediT authorship contribution statement

Shireen L. Rizvi: Conceptualization, Methodology, Formal analysis, writing, Supervision, Funding acquisition. Jesse Finkelstein: Conceptualization, Resources, Visualization. Annmarie Wacha-Montes: Conceptualization, Resources, Project administration. April L. Yeager: Project administration, Investigation. Allison K. Ruork: Supervision, Investigation, writing. Qingqing Yin: Formal analysis, Investigation, Data curation, writing. John Kellerman: Formal analysis, Investigation, Data curation, writing. Joanne S. Kim: Formal analysis, Investigation, Data curation, writing. Molly Stern: Formal analysis, Investigation, Data curation, writing. Linda A. Oshin: Formal analysis, Investigation, Data curation, writing. Evan M. Kleiman: Conceptualization, Methodology, Formal analysis, writing, Supervision, Funding acquisition.

Declaration of competing interest

Dr. Rizvi provides consultation and training for Behavioral Tech, LLC. Drs. Rizvi and Kleiman receive external grant funding from the National Institute of Mental Health.

Acknowledgements

This work was supported by the Rutgers Center for COVID-19 Response and Pandemic Preparedness.

Footnotes

Supplementary data related to this article can be found at https://doi.org/10.1016/j.brat.2021.104015.


References

- American College Health Association. The impact of COVID-19 on college student well-being. 2020.

- Auerbach R.P., Alonso J., Axinn W.G., Cuijpers P., Ebert D.D., Green J.G., Hwang I., Kessler R.C., Liu H., Mortier P., Nock M.K., Pinder-Amaker S., Sampson N.A., Aguilar-Gaxiola S., Al-Hamzawi A., Andrade L.H., Benjet C., Caldas-de-Almeida J.M., Demyttenaere K., Bruffaerts R. Mental disorders among college students in the world health organization world mental health surveys. Psychological Medicine. 2016;46(14):2955–2970. doi: 10.1017/S0033291716001665.

- Baghurst T., Kelley B.C. An examination of stress in college students over the course of a semester. Health Promotion Practice. 2014;15(3):438–447. doi: 10.1177/1524839913510316.

- Bai S., Elavsky S., Kishida M., Dvořáková K., Greenberg M.T. Effects of mindfulness training on daily stress response in college students: Ecological momentary assessment of a randomized controlled trial. Mindfulness. 2020;11(6):1433–1445. doi: 10.1007/s12671-020-01358-x.

- Bates D., Mächler M., Bolker B., Walker S. Fitting linear mixed-effects models using lme4. Journal of Statistical Software. 2015;67(1):1–48. doi: 10.18637/jss.v067.i01.

- Benton S.A., Robertson J.M., Tseng W.-C., Newton F.B., Benton S.L. Changes in counseling center client problems across 13 years. Professional Psychology: Research and Practice. 2003;34(1):66–72. doi: 10.1037/0735-7028.34.1.66.

- Bor J., Venkataramani A.S., Williams D.R., Tsai A.C. Police killings and their spillover effects on the mental health of Black Americans: A population-based, quasi-experimental study. The Lancet. 2018;392(10144):302–310. doi: 10.1016/S0140-6736(18)31130-9.

- Cella D., Yount S., Rothrock N., Gershon R., Cook K., Reeve B., Ader D., Fries J.F., Bruce B., Rose M. The patient-reported outcomes measurement information system (PROMIS). Medical Care. 2007;45(5 Suppl 1):S3–S11. doi: 10.1097/01.mlr.0000258615.42478.55.

- Center for Collegiate Mental Health. 2015 annual report. Publication No. STA 15-108; 2016.

- Center for Collegiate Mental Health. 2020 annual report. Publication No. STA 21-045; 2021.

- Chugani C.D., Ghali M.N., Brunner J. Effectiveness of short-term Dialectical Behavior Therapy skills training in college students with Cluster B personality disorders. Journal of College Student Psychotherapy. 2013;27(4):323–336. doi: 10.1080/87568225.2013.824337.

- Cristea I.A., Gentili C., Cotet C.D., Palomba D., Barbui C., Cuijpers P. Efficacy of psychotherapies for borderline personality disorder: A systematic review and meta-analysis. JAMA Psychiatry. 2017;74(4):319–328. doi: 10.1001/jamapsychiatry.2016.4287.

- Damschroder L.J., Aron D.C., Keith R.E., Kirsh S.R., Alexander J.A., Lowery J.C. Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science. 2009;4(1):50. doi: 10.1186/1748-5908-4-50.

- Eisenberg D., Hunt J., Speer N. Mental health in American colleges and universities: Variation across student subgroups and across campuses. The Journal of Nervous and Mental Disease. 2013;201(1):60–67. doi: 10.1097/NMD.0b013e31827ab077.

- Fleming A.P., McMahon R.J., Moran L.R., Peterson A.P., Dreessen A. Pilot randomized controlled trial of Dialectical Behavior Therapy group skills training for ADHD among college students. Journal of Attention Disorders. 2015;19(3):260–271. doi: 10.1177/1087054714535951.

- Gratz K.L., Roemer L. Multidimensional assessment of emotion regulation and dysregulation: Development, factor structure, and initial validation of the Difficulties in Emotion Regulation Scale. Journal of Psychopathology and Behavioral Assessment. 2004;26(1):41–54. doi: 10.1023/B:JOBA.0000007455.08539.94.

- Kazdin A.E. Addressing the treatment gap: A key challenge for extending evidence-based psychosocial interventions. Behaviour Research and Therapy. 2017;88:7–18. doi: 10.1016/j.brat.2016.06.004.

- Kecojevic A., Basch C.H., Sullivan M., Davi N.K. The impact of the COVID-19 epidemic on mental health of undergraduate students in New Jersey, cross-sectional study. PLoS One. 2020;15(9) doi: 10.1371/journal.pone.0239696.

- Kleiman E.M., Yeager A.L., Grove J.L., Kellerman J.K., Kim J.S. Real-time mental health impact of the COVID-19 pandemic on college students: Ecological momentary assessment study. JMIR Mental Health. 2020;7(12) doi: 10.2196/24815.

- Kliem S., Kröger C., Kosfelder J. Dialectical behavior therapy for borderline personality disorder: A meta-analysis using mixed-effects modeling. Journal of Consulting and Clinical Psychology. 2010;78(6):936–951. doi: 10.1037/a0021015.

- Lamis D.A., Lester D., editors. Understanding and preventing college student suicide. Charles C Thomas Publisher; 2011.

- LeViness P., Bershad C., Gorman K., Braun L., Murray T. The association for university and college counseling center directors annual survey – public. Version 2018. 2019.

- Linehan M.M. Cognitive-behavioral treatment of borderline personality disorder. Guilford Publications; 1993.

- Linehan M.M. DBT skills training handouts and worksheets. 2nd ed. Guilford Publications; 2014.

- Lipson S.K., Kern A., Eisenberg D., Breland-Noble A.M. Mental health disparities among college students of color. Journal of Adolescent Health. 2018;63(3):348–356. doi: 10.1016/j.jadohealth.2018.04.014.

- Lipson S.K., Lattie E.G., Eisenberg D. Increased rates of mental health service utilization by U.S. College students: 10-Year population-level trends (2007–2017). Psychiatric Services. 2019;70(1):60–63. doi: 10.1176/appi.ps.201800332.

- Muhomba M., Chugani C.D., Uliaszek A.A., Kannan D. Distress tolerance skills for college students: A pilot investigation of a brief DBT group skills training program. Journal of College Student Psychotherapy. 2017;31(3):247–256. doi: 10.1080/87568225.2017.1294469.

- Pistorello J., Fruzzetti A.E., MacLane C., Gallop R., Iverson K.M. Dialectical behavior therapy (DBT) applied to college students: A randomized clinical trial. Journal of Consulting and Clinical Psychology. 2012;80(6):982–994. doi: 10.1037/a0029096.

- Rizvi S.L., Steffel L.M. A pilot study of 2 brief forms of Dialectical Behavior Therapy skills training for emotion dysregulation in college students. Journal of American College Health. 2014;62(6):434–439. doi: 10.1080/07448481.2014.907298.

- Roche M.J., Jacobson N.C. Elections have consequences for student mental health: An accidental daily diary study. Psychological Reports. 2019;122(2):451–464. doi: 10.1177/0033294118767365.

- Skutch J.M., Wang S.B., Buqo T., Haynos A.F., Papa A. Which brief is best? Clarifying the use of three brief versions of the Difficulties in emotion regulation scale. Journal of Psychopathology and Behavioral Assessment. 2019;41(3):485–494. doi: 10.1007/s10862-019-09736-z.

- Son C., Hegde S., Smith A., Wang X., Sasangohar F. Effects of COVID-19 on college students' mental health in the United States: Interview survey study. Journal of Medical Internet Research. 2020;22(9) doi: 10.2196/21279.

- Stebleton M.J., Soria K.M., Huesman R.L. First-generation students' sense of belonging, mental health, and use of counseling services at public research universities. Journal of College Counseling. 2014;17(1):6–20. doi: 10.1002/j.2161-1882.2014.00044.x.

- Tynes B.M., Willis H.A., Stewart A.M., Hamilton M.W. Race-related traumatic events online and mental health among adolescents of color. Journal of Adolescent Health. 2019;65(3):371–377. doi: 10.1016/j.jadohealth.2019.03.006.

- Üstündağ-Budak A.M., Özeke-Kocabaş E., Ivanoff A. Dialectical behaviour therapy skills training to improve Turkish college students' psychological well-being: A pilot feasibility study. International Journal for the Advancement of Counselling. 2019;41(4):580–597. doi: 10.1007/s10447-019-09379-5.

- Valentine S.E., Bankoff S.M., Poulin R.M., Reidler E.B., Pantalone D.W. The use of dialectical behavior therapy skills training as stand-alone treatment: A systematic review of the treatment outcome literature. Journal of Clinical Psychology. 2015;71(1):1–20. doi: 10.1002/jclp.22114.

- Veilleux J.C., Hill M.A., Skinner K.D., Pollert G.A., Baker D.E., Spero K.D. The dynamics of persisting through distress: Development of a Momentary Distress Intolerance Scale using ecological momentary assessment. Psychological Assessment. 2018;30(11):1468–1478. doi: 10.1037/pas0000593.

- Victor S.E., Klonsky E.D. Validation of a brief version of the Difficulties in emotion regulation scale (DERS-18) in five samples. Journal of Psychopathology and Behavioral Assessment. 2016;38(4):582–589. doi: 10.1007/s10862-016-9547-9.

- Wang X., Hegde S., Son C., Keller B., Smith A., Sasangohar F. Investigating mental health of US college students during the COVID-19 pandemic: Cross-sectional survey study. Journal of Medical Internet Research. 2020;22(9) doi: 10.2196/22817.
