Abstract

Given functional data from a survival process with time-dependent covariates, we derive a smooth convex representation for its nonparametric log-likelihood functional and obtain its functional gradient. From this we devise a generic gradient boosting procedure for estimating the hazard function nonparametrically. An illustrative implementation of the procedure using regression trees is described to show how to recover the unknown hazard. The generic estimator is consistent if the model is correctly specified; alternatively an oracle inequality can be demonstrated for tree-based models. To avoid overfitting, boosting employs several regularization devices. One of them is step-size restriction, but the rationale for this is somewhat mysterious from the viewpoint of consistency. Our work brings some clarity to this issue by revealing that step-size restriction is a mechanism for preventing the curvature of the risk from derailing convergence.

MSC2020 subject classifications: Primary 62N02; secondary 62G05,90B22

Keywords and phrases; survival analysis, gradient boosting, functional data, step-size shrinkage, regression trees, likelihood functional

1. Introduction.

Flexible hazard models involving time-dependent covariates are indispensable tools for studying systems that track covariates over time. In medicine, electronic health records systems make it possible to log patient vitals throughout the day, and these measurements can be used to build real-time warning systems for adverse outcomes such as cancer mortality [2]. In financial technology, lenders track obligors’ behaviours over time to assess and revise default rate estimates. Such models are also used in many other fields of scientific inquiry since they form the building blocks for transitions within a Markovian state model. Indeed, this work was partly motivated by our study of patient transitions in emergency department queues and in organ transplant waitlist queues [20]. For example, allocation for a donor heart in the U.S. is defined in terms of coarse tiers [23], and transplant candidates are assigned to tiers based on their health status at the time of listing. However, a patient’s condition may change rapidly while awaiting a heart, and this time-dependent information may be the most predictive of mortality and not the static covariates collected far in the past.

The main contribution of this paper is to introduce a fully nonparametric boosting procedure for hazard estimation with time-dependent covariates. We describe a generic gradient boosting procedure for boosting arbitrary weak base learners for this setting. Generally speaking, gradient boosting adopts the view of boosting as an iterative gradient descent algorithm for minimizing a loss functional over a target function space. Early work includes Breiman [6, 7, 8] and Mason et al. [21, 22]. A unified treatment was provided by Friedman [13], who coined the term “gradient boosting” which is now generally taken to be the modern interpretation of boosting.

Most of the existing boosting approaches for survival data focus on time-static covariates and involve boosting the Cox model. Examples include the popular R-packages mboost (Bühlmann and Hothorn [10]) and gbm (Ridgeway [26]) which apply gradient boosting to the Cox partial likelihood loss. Related work includes the penalized Cox partial likelihood approach of Binder and Schumacher [4]. Other important approaches, but not based on the Cox model, include L2Boosting [11] with inverse probability of censoring weighting (IPCW) [16, 18], boosted transformation models of parametric families [15], and boosted accelerated failure time models [27].

While there are many boosting methods for dealing with time-static covariates, the literature is far more sparse for the case of time-dependent covariates. In fact, to our knowledge there is no general nonparametric approach for dealing with this setting. This is because in order to implement a fully nonparametric estimator, one has to contend with the issue of identifying the gradient, which turns out to be a non-trivial problem due to the functional nature of the data. This is unlike most standard applications of gradient boosting where the gradient can easily be identified and calculated.

1.1. Time-dependent covariate framework.

To explain why this is so challenging, we start by formally defining the survival problem with time-dependent covariates. Our description follows the framework of Aalen [1]. Let T denote the potentially unobserved failure time. Conditional on the history up to time t– the probability of failing at T ∈ [t, t+dt) equals

| (1) |

Here λ(t, x) denotes the unknown hazard function, is a predictable covariate process, and Y (t) ∈ {0, 1} is a predictable indicator of whether the subject is at risk at time t.1 To simplify notation, without loss of generality we normalize the units of time so that Y (t) = 0 for t > 1.2 In other words, the subject is not at risk after time t = 1, so we can restrict attention to the time interval (0, 1].

If failure is observed at T ∈ (0, 1] then the indicator Δ = Y (T) equals 1, otherwise Δ = 0 and we set T = ∞. Throughout we assume we observe n independent and identically distributed functional data samples . The evolution of observation i’s failure status can then be thought of as a sequence of coin flips at time increments t = 0, dt, 2dt, ⋯, with the probability of “heads” at each time point given by (1). Therefore, observation i’s contribution to the likelihood is

where the limit can be understood as a product integral. Hence, if the log-hazard function is

then the (scaled) negative log-likelihood functional is

| (2) |

which we shall refer to as the likelihood risk. The goal is to estimate the hazard function λ(t, x) = eF(t, x) nonparametrically by minimizing Rn(F).

1.2. The likelihood does not have a gradient in generic function spaces.

As mentioned, our approach is to boost F using functional gradient descent. However the chief difficulty is that the canonical representation of the likelihood risk functional does not have a gradient. To see this, observe that the directional derivative of (2) equals

| (3) |

which is the difference of two different inner products 〈eF, f〉† − 〈1, f〉‡ where

Hence, (3) cannot be expressed as a single inner product of the form 〈gF, f〉 for some function gF (t, x). Were it possible to do so, gF would then be the gradient function.

In simpler non-functional data settings like regression or classification, the loss can be written as , where is the non-functional target statistical model and Y is the outcome, so the gradient is simply . The negative gradient is then approximated using a base learner from a predefined class of functions (this being either parametric; for example linear learners, or nonparametric; for example tree learners). Typically, the optimal base learner is chosen to minimize the L2-approximation error and then scaled by a regularization parameter 0 < ν ≤ 1 to obtain the updated estimate of :

Importantly, in the simpler non-functional setting the gradient does not depend on the space that belongs to. By contrast, a key insight of this paper is that the gradient of Rn(F) can only be defined after carefully specifying an appropriate sample-dependent domain for Rn(F). The likelihood risk can then be re-expressed as a smooth convex functional, and an analogous representation also exists for the population risk. These representations resolve the difficulty above, allow us to describe and implement a gradient boosting procedure, and are also crucial to establishing guarantees for our estimator.

1.3. Contributions of the paper.

A key discovery that unlocks the boosted hazard estimator is Proposition 1 of Section 2. It provides an integral representation for the likelihood risk from which several results follow, including, importantly, an explicit representation for the gradient. Proposition 1 relies on defining a suitable space of log-hazard functions defined on the time-covariate domain . Identifying this space is the key insight that allows us to rescue the likelihood approach and to derive the gradient needed to implement gradient boosting. Arriving at this framework is not conceptually trivial, and may explain the absence of boosted nonparametric hazard estimators until now.

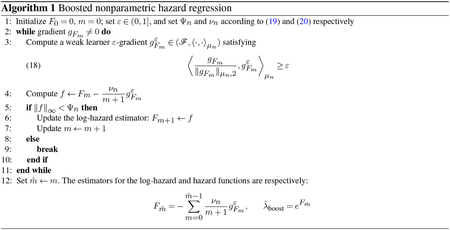

Algorithm 1 of Section 2 describes our estimator. The algorithm minimizes the likelihood risk (2) over the defined space of log-hazard functions. In the special case of regression tree learners, expressions for the likelihood risk and its gradient are obtained from Proposition 1, which are then used to describe a tree-based implementation of our estimator in Section 4. In Section 5 we apply it to a high-dimensional dataset generated from a naturalistic simulation of patient service times in an emergency department.

Section 3 establishes the consistency of the procedure. We show that the hazard estimator is consistent if the space is correctly specified. In particular, if the space is the span of regression trees, then the hazard estimator satisfies an oracle inequality and recovers λ up to some error tolerance (Propositions 3 and 4).

Another contribution of our work is to clarify the mechanisms used by gradient boosting to avoid overfitting. Gradient boosting typically applies two types of regularization to invoke slow learning: (i) A small step-size is used for the update; and (ii) The number of boosting iterations is capped. The number of iterations used in our algorithm is set using the framework of Zhang and Yu [31], whose work shows how stopping early ensures consistency. On the other hand, the role of step-size restriction is more mysterious. While [31] demonstrates that small step-sizes are needed to prove consistency, unrestricted greedy step-sizes are already small enough for classification problems [28] and also for commonly used regression losses (see the Appendix of [31]). We show in Section 3.3 that shrinkage acts as a counterweight to the curvature of the risk (see Lemma 2). Hence if the curvature is unbounded, as is the case for hazard regression, then the step-sizes may need to be explicitly controlled to ensure convergence. This important result adds to our understanding of statistical convergence in gradient boosting. As noted by Biau and Cadre [3] the literature for this is relatively sparse, which motivated them to propose another regularization mechanism that also prevents overfitting. Our work adds to this by considering the functional data setting.

Concluding remarks can be found in Section 6. Proofs not appearing in the body of the paper can be found in the Appendix.

2. The boosted hazard estimator.

In this section, we describe our boosted hazard estimator. To provide readers with concrete examples for the ideas introduced here, we will show how the quantities defined in this section specialize in the case of regression trees, which is one of a few possible ways to implement boosting.

We begin by defining in Section 2.1 an appropriate sample-dependent domain for the likelihood risk Rn(F). As explained, this key insight allows us to re-express the likelihood risk and its population analogue as smooth convex functionals, thereby enabling us to compute their gradients in closed form in Propositions 1 and 2 of Section 2.2. Following this, the boosting algorithm is formally stated in Section 2.3.

2.1. Specifying a domain for Rn(F).

We will make use of two identifiability conditions (A1) and (A2) to define the domain for Rn(F). Condition (A1) below is the same as Condition 1(iv) of Huang and Stone [19]. Assumption (A1). The true hazard function λ(t, x) is bounded between some interval [ΛL, ΛU] ⊂ (0, ∞) on the time-covariate domain .

Recall that we defined X(·) and Y(·) to be predictable processes, and so it can be shown that the integrals and expectations appearing in this paper are all well defined. Denoting the indicator function as I(·), define the following population and empirical sub-probability measures on :

and note that because the data is i.i.d. by assumption. Intuitively, μn measures the denseness of the observed sample time-covariate paths on . For any integrable f,

| (4) |

| (5) |

This allows us to define the following (random) norms and inner products

and note that ‖ · ‖μn, 1 ≤ ‖ · ‖μn, 2 ≤ ‖ · ‖∞ because .

By careful design, μn allows us to specify a natural domain for Rn(F). Let be a set of bounded functions that are linearly independent, in the sense that if and only if c1 = ⋯ = cd = 0 (when some of the covariates are discrete-valued, dx should be interpreted as the product of a counting measure and the Lebesgue measure). The span of the functions is

For example, the span of all regression tree functions that can be defined on , is ,3 which are linear combinations of indicator functions over disjoint time-covariate cubes indexed4 by j = (j0, j1,·⋯, jp):

| (6) |

Remark 1. The regions Bj are formed using all possible split points for the k-th coordinate x(k), with the spacing determined by the precision of the measurements. For example, if weight is measured to the closest kilogram, then the set of all possible split points will be {0.5, 1.5, 2.5, ⋯} kilograms. Note that these split points are the finest possible for any realization of weight that is measured to the nearest kilogram. While abstract treatments of trees assume that there is a continuum of split points, in reality they fall on a discrete (but fine) grid that is pre-determined by the precision of the data.

When is equipped with 〈·, ·〉μn, we obtain the following sample-dependent subspace of L2(μn), which is the appropriate domain for Rn(F):

Note that the elements in are equivalence classes rather than actual functions that have well defined values at each (t, x). This is a problem because the likelihood risk (2) requires evaluating F(t, x) at the points (Ti, Xi(Ti)) where Δi = 1. We resolve this by fixing an orthonormal basis {φnj(t, x)}j for , and represent each member of uniquely in the form . For example in the case of regression trees, applying the Gram-Schmidt procedure to gives

which by design have disjoint support.

The second condition we impose is for to be linearly independent in L2(μ), that is if and only if c1 = ⋯ = cd = 0. Since by construction are already linearly independent in , the condition intuitively requires the set of all possible time-covariate trajectories to be adequately dense in to intersect a sufficient amount of the support of every ϕj. This is weaker than the identifiability conditions 1(ii)-1(iii) in [19] which require X(t) to have a positive joint probability density on .

Assumption (A2). The Gram matrix is positive definite.

2.2. Integral representations for the likelihood risk.

Having deduced the appropriate domain for Rn(F), we can now recast the risk as a smooth convex functional on . Proposition 1 below provides closed form expressions for this and its gradient. We note that if the risk is actually of a certain simpler form, it might be possible to estimate its gradient empirically from our risk expression using [24].

Proposition 1. For functions F(t, x), f(t, x) of the form , the likelihood risk (2) can be written as

| (7) |

where is the function

Thus there exists ρ ∈ (0, 1) (depending on F and f) for which the Taylor representation

| (8) |

holds, where the gradient

| (9) |

of Rn(F) is the projection of eF −λn onto . Hence if gF = 0 then the infimum of Rn(F) over the span of {φnj(t, x)}j is uniquely attained at F.

For regression trees the expressions (7) and (9) simplify further because is closed under pointwise exponentiation, i.e. for . This is because the Bj’s are disjoint so and hence . Thus

| (10) |

| (11) |

| (12) |

where

is the number of observed failures in the time-covariate region Bj.

Proof of Proposition 1. Fix a realization of . Using (5) we can rewrite (2) as

We can express F in terms of the basis {φnk}k as . Hence

where the fourth equality follows from the orthonormality of the basis. This completes the derivation of (7).

By an interchange argument we obtain

the latter being positive whenever f ≠ 0; i.e., Rn(F) is convex. The Taylor representation (8) then follows from noting that gF is the orthogonal projection of eF − λn ∈ L2(μn) onto . □

The expectation of the likelihood risk also has an integral representation. A special case of the representation (13) below is proved in Proposition 3.2 of [19] for right-censored data only, under assumptions that do not allow for internal covariates. In the statement of the proposition below recall that ΛL and ΛU are defined in (A1). The constant is defined later in (28).

Proposition 2. For ,

| (13) |

Furthermore the restriction of R(F) to is coercive:

| (14) |

and it attains its minimum at a unique point . If contains the underlying log-hazard function then F* = log λ.

Remark 2. Coerciveness (14) implies that any F with expected risk R(F) less than R(0) ≤ 1 < 3 is uniformly bounded:

| (15) |

where the constant

| (16) |

is by design no smaller than 1 in order to simplify subsequent analyses.

2.3. The boosting procedure.

In gradient boosting the key idea is to update an iterate in a direction that is approximately aligned to the negative gradient. To model this direction formally, we introduce the concept of an ε-gradient.

Definition 1. Suppose gF ≠ 0. We say that a unit vector is an ε-gradient at F if for some 0 < ε ≤ 1,

| (17) |

Call a negative ε-gradient if is an ε-gradient.

Our boosting procedure seeks approximations that satisfy (17) for some pre-specified alignment value ε. The larger ε is, the closer the alignment is between the negative gradient and the negative ε-gradient, and the greater the risk reduction. In particular, −gF is the unique negative 1-gradient with maximal risk reduction. In practice, however, we find that using a smaller value of ε leads to simpler approximations that prevent overfitting in finite samples. This is consistent with other implementations of boosting: It is well known that the statistical performance of gradient descent generally improves when simpler base learners are used.

Algorithm 1 describes the proposed boosting procedure for estimating λ. For a given level of alignment ε, Line 3 finds an ε-gradient at Fm satisfying (18) at the m-th iteration, and uses its negation for the boosting update in Line 4. If the ε-gradients are regression tree learners, as is the case with the implementation in Section 4, then the trees cannot be grown in the same way as the standard boosting algorithm in Friedman [13]. This is because the standard approach grows all regression trees to a fixed depth, which may or may not ensure ε-alignment at each boosting iteration.

To ensure ε-alignment, the depth of the trees are not fixed in the implementation in Section 4. Instead, at each boosting iteration a tree is grown to whatever depth is needed to satisfy (18). This can always be done because the alignment ε is non-decreasing in the number of tree splits, and with enough splits we can recover the gradient gFm itself up to μn-almost everywhere.5 As mentioned earlier, we recommend using small values of ε, which can be determined in practice using cross-validation. This differs from the standard approach where cross-validation is used to select a common tree depth to use for all boosting iterations.

In addition to the gradient alignment ε, Algorithm 1 makes use of two other regularization parameters, Ψn and νn. The first defines the early stopping criterion (how many boosting iterations to use), while the second controls the step-sizes of the boosting updates. These are two common regularization techniques used in boosting:

- Early stopping. The number of boosting iterations is controlled by stopping the algorithm before the uniform norm of the estimator reaches or exceeds

where W(y) is the branch of the Lambert function that returns the real root of the equation zez = y for y > 0.(19) - Step-sizes. The step-size νn ≪ 1 used in gradient boosting is typically held constant across iterations. While we can also do this in our procedure,6 the role of step-size shrinkage becomes more salient if we use νn/(m+1) instead as the step-size for the m-th iteration in Algorithm 1. This step-size is controlled in two ways. First, it is made to decrease with each iteration according to the Robbins-Monro condition that the sum of the steps diverges while the sum of squared steps converges. Second, the shrinkage factor νn is selected to make the step-sizes decay with n at rate

This acts as a counterbalance to Rn(F)’s unbounded curvature:(20)

which is upper bounded by when ‖F‖∞ < Ψn and .(21)

3. Consistency.

Under (A1) and (A2), guarantees for our hazard estimator in Algorithm 1 can be derived for two scenarios of interest. The guarantees rely on the regularizations described in Section 2.3 to avoid overfitting. In the following development, recall from Proposition 2 that F* is the unique minimizer of R(F), so it satisfies the first order condition

| (22) |

for all . Recall that the span of all trees is closed under pointwise exponentiation , in which case (22) implies that is the orthogonal projection of λ onto .

- Consistency when is correctly specified. If the true log-hazard function log λ is in , then Proposition 2 asserts that F* = log λ. It will be shown in this case that is consistent:

- Oracle inequality for regression trees. If is closed under pointwise exponentiation, it follows from (22) that λ* is the best L2(μ)-approximation to λ among all candidate hazard estimators . It can then be shown that converges to this best approximation:

This oracle result is in the spirit of the type of guarantees available for tree-based boosting in the non-functional data setting. For example, if tree stumps are used for L2-regression, then the regression function estimate will converge to the best approximation to the true regression function in the span of tree stumps [9]. Similar results also exist for boosted classifiers [5].

Propositions 3 and 4 below formalize these guarantees by providing bounds on the error terms above. While sharper bounds may exist, the chief purpose of this paper is to introduce our generic estimator for the first time and to provide guarantees that apply across different implementations. More refined convergence rates may exist for a specific implementation, just like the analysis in Bühlmann and Yu [11] for L2Boosting when componentwise spline learners are specifically used. We leave this for future research.

En route to establishing the guarantees, Lemma 2 below clarifies the role played by step-size restriction in ensuring convergence of the estimator. As explained in the Introduction, explicit shrinkage is not necessary for classification and regression problems where the risk has bounded curvature. Lemma 2 suggests that it may, however, be needed when the risk has unbounded curvature, as is the case with Rn(F). Seen in this light, shrinkage is really a mechanism for controlling the growth of the risk curvature.

3.1. Strategy for establishing guarantees.

The representations for Rn(F) and its population analogue R(F) from Section 2 are the key ingredients for formalizing the guarantees. We use them to first show that converges to : Applying Taylor’s theorem to the representation for R(F) in Proposition 2 yields

| (23) |

The problem is thus transformed into one of risk minimization , for which [31] suggests analyzing separately the terms of the decomposition

| (24) |

The authors argue that in boosting, the point of limiting the number of iterations (enforced by lines 5–10 in Algorithm 1) is to prevent from growing too fast, so that (I) converges to zero as n → ∞. At the same time, is allowed to grow with n in a controlled manner so that the empirical risk in (III) is eventually minimized as n → ∞. Lemmas 1 and 2 below show that our procedure achieves both goals. Lemma 1 makes use of complexity theory via empirical processes, while Lemma 2 deals with the curvature of the likelihood risk. The term (II) will be bounded using standard concentration results.

3.2. Bounding (I) using complexity.

To capture the effect of using a simple negative ε-gradient (17) as the descent direction, we bound (I) in terms of the complexity of7

| (25) |

Depending on the choice of weak learners for the ε-gradients, may be much smaller than . For example, coordinate descent might only ever select a small subset of basis functions {ϕj}j because of sparsity. As another example if λ(t, x) is additively separable in time and also in each covariate, then regression trees might only ever select simple tree stumps (one tree split).

The measure of complexity we use below comes from empirical process theory. Define for Ψ > 0 and suppose that Q is a sub-probability measure on . Then the L2(Q)-ball of radius δ > 0 centred at some F ∈ L2(Q) is . The covering number is the minimum number of such balls needed to cover (Definitions 2.1.5 and 2.2.3 of van der Vaart and Wellner [29]), so for δ ≥ Ψ. A complexity measure for is

| (26) |

where the supremum is taken over Ψ > 0 and over all non-zero sub-probability measures. As discussed, is never greater than, and potentially much smaller than , the complexity of , which is fixed and finite.

Before stating Lemma 1, we note that the result also shows an empirical analogue to the norm equivalences

| (27) |

exists, where

| (28) |

The factor of 2 serves to simplify the presentation, and can be replaced with anything greater than 1.

Lemma 1. There exists a universal constant κ such that for any 0 < η < 1, with probability at least

an empirical analogue to (27) holds for all :

| (29) |

and for all ,

| (30) |

Remark 3. The equivalences (29) imply that equals its upper bound . That is, if , then , so c1 = ⋯ = cd = 0 because are linearly independent on .

3.3. Bounding (III) using curvature.

We use the representation in Proposition 1 to study the minimization of the empirical risk Rn(F) by boosting. Standard results for exact gradient descent like Theorem 2.1.15 of Nesterov [25] are in terms of the norm of the minimizer, which may not exist for Rn(F).8 If coordinate descent is used instead, Section 4.1 of [31] can be applied to convex functions whose infimum may not be attainable, but its curvature is required to be uniformly bounded above. Since the second derivative of Rn(F) is unbounded (21), Lemma 2 below provides two remedies: (i) Use the shrinkage decay (20) of νn to counterbalance the curvature; (ii) Use coercivity (15) to show that with increasing probability, are uniformly bounded, so the curvatures at those points are also uniformly bounded. Lemma 2 combines both to derive a result that is simpler than what can be achieved from either one alone. In doing so, the role played by step-size restriction becomes clear. The lemma relies in part on adapting the analysis in Lemma 4.1 of [31] for coordinate descent to the case for generic ε-gradients. The conditions required below will be shown to hold with high probability.

Lemma 2. Suppose (29) holds and that

Then the largest gap between F* and ,

| (31) |

is bounded by a constant no greater than , and for n ≥ 55,

| (32) |

Remark 4. The last term in (32) suggests that the role of the step-size shrinkage νn is to keep the curvature of the risk in check, to prevent it from derailing convergence. Recall from (21) that describes the curvature of Rn(Fm). Thus our result clarifies the role of step-size restriction in boosting functional data.

Remark 5. Regardless of whether the risk curvature is bounded or not, smaller step-sizes always improve the convergence bound. This can be seen from the parsimonious relationship between νn and (32). Fixing n, pushing the value of νn down towards zero yields the lower limit

However, this limit is unattainable as νn must be positive in order to decrease the risk. This effect has been observed in practical applications of boosting. Friedman [13] noted improved performance for gradient boosting with the use of a small shrinkage factor ν. At the same time, it was also noted there was diminishing performance gain as ν became very small, and this came at the expense of an increased number of boosting iterations. This same phenomenon has also been observed for L2Boosting [11] with componentwise linear learners. It is known that the solution path for L2Boosting closely matches that of lasso as ν → 0. However, the algorithm exhibits cycling behaviour for small ν, which greatly increases the number of iterations and offsets the performance gain in trying to approximate the lasso (see Ehrlinger and Ishwaran [12]).

3.4. Formal statements of guarantees.

As a reminder, we have defined the following quantities:

= , the boosted hazard estimator in Algorithm 1

λ* = , where F* is the unique minimizer of R(F) in Proposition 2

ΛL,ΛU = lower and upper bounds on λ(t, x) as defined in (A1)

= maximum gap between F* and defined in (31)

κ = a universal constant

= complexity measure (26), bounded above by

To simplify the results, we will assume that n ≥ 55 and also set the shrinkage to satisfy . Our first guarantee shows that our hazard estimator is consistent if the model is correctly specified.

Proposition 3. (Consistency under correct model specification). Suppose contains the true log-hazard function log λ. Then with probability

we have that is bounded and

Thus is consistent.

Via the tension between ε and , Proposition 3 captures the trade-off in statistical performance between weak and strong learners in gradient boosting. The advantage of low complexity (weak learners) is reflected in the increased probability of the L2(μ)-bound holding, with this probability being maximized when , which generally occurs as ε → 0. However, diametrically opposed to this, we find that the L2(μ)-bound is minimized by ε → 1, which occurs with the use of stronger learners that are more aligned with the gradient. This same trade-off is also captured by our second guarantee which establishes an oracle inequality for tree learners.

Proposition 4. (Oracle inequality for tree learners). Suppose for . Then among , λ* is the best L2(μ)-approximation to λ, that is

Moreover, converges to this best approximation λ*: With probability

we have that is bounded and

where is the smallest error one can achieve from using functions in to approximate λ.

For tree learners, λ*(t, x) is constant over each region Bj in (6), and its value equals the local average of λ over Bj,

Hence if the Bj’s are small, λ* should closely approximate λ (recall from Remark 1 that the size of the Bj’s is fixed by the data). To estimate the approximation error in terms of Bj, suppose that λ is sufficiently smooth, e.g. Hölder continuous for some b > 0. Then since ,

4. A tree-based implementation.

Here we describe an implementation of Algorithm 1 using regression trees, whereby the ε-gradient is obtained by growing a tree to satisfy (18) for a pre-specified ε.

To explain the tree growing process, first observe that the m-th step log-hazard estimator is an additive expansion of CART basis functions. Thus it can be written as

| (33) |

where Ab,l is the l-th leaf region of the b-th tree. Recall from Section 2.3 that each tree is grown until (18) is satisfied, so the number of leaf nodes Lb can vary from tree to tree. The leaf regions are typically large subsets of the time-covariate space adaptively determined by the tree growing process (to be discussed shortly). Since each leaf region can be further decomposed into the finer disjoint regions Bj in (6), Fm(t, x) can be rewritten as (33). However, many of these regions will share the same coefficient value, so (33) can be written more compactly as

where is the union of contiguous regions whose coefficient equals cm,j. This smooths the hazard estimator over , thanks to the regularization imposed by limiting the number of trees (early stopping) and also by the use of weak tree learners. This is unlike the unconstrained hazard MLE λn(t, x) defined in (10), which can take on a different value in each region Bj, making it prone to overfit the data.

To construct an ε-gradient with ε-alignment to gFm defined by (12),

the tree splits are adaptively chosen to reduce the L2(μn)-approximation error between and gFm. We implement tree splits for both time and covariates. Specifically, suppose we wish to split a leaf region into left and right daughter subregions A1 and A2, and assign values γ1 and γ2 to them. For example, a split on the k-th covariate could propose left and right daughters such as

| (34) |

or a split on time t could propose regions

| (35) |

Now note that gFm is constant within each region Bj. We denote its value by where is the centre of Bj. Hence the best split of A into A1 and A2 is the one that minimizes

| (36) |

where

represents the j-th pseudo-response, its covariate and wj = μn(Bj) its weight. Thus the splits use a weighted least squares criterion, which can be efficiently computed as usual.

We split the tree until (18) is satisfied, resulting in Lm leaf nodes (Lm − 1 splits). As discussed in Section 2.3, we can always find a deep enough tree that is an ε-gradient because with enough splits we can recover the gradient gFm itself. Recall also that a small value of ε performs best in practice, and this can be chosen by cross-validating on a set of small-sized candidates: For each one we implement Algorithm 1, and we select the one that minimizes the cross-validated risk Rn(F) defined in (11). By contrast, the standard boosting algorithm [13] uses cross-validation to select a common number of splits to use for all trees, which does not ensure that each tree is an ε-gradient.

Regarding the possible split points for the covariates (34), note that the k-th covariate x(k) = x(k)(t) is a time series that is sampled periodically. This yields a set of unique values equal to the union of all of the sampled values for the n observations. In direct analogy to non-functional data boosting, we place candidate split points in-between the sorted values in this set. In other words, splits for covariates only occur at values corresponding to the observed data just as in non-functional boosting.

The resolution for the grid of candidate time splits (35) is set equal to the temporal resolution. For example, the covariate trajectories in the simulation in Section 5 are piecewise constant and may change every 0.002 days. Placing the candidate split points at 0.002, 0.004, ⋯ days simplifies the exact computation of μn(Bj) because every covariate trajectory is constant between these points. Again, notice that the splits for time only occur at values informed by the observed data.

Putting it together, the setup above leverages our insight in (36) by transforming the survival functional data into the data values , which enables the implementation to proceed like standard gradient boosting for non-functional data. Only the pseudo-response in needs to be updated at each boosting iteration, while the other two do not change. In terms of storage it costs to store , where is the cardinality of the set of candidate time splits.9 Computationally, choosing a new tree split requires testing candidate splits.10 The space and time complexities of the implementation are reasonable given that they are for non-functional data boosting: In the functional data setting, each sample can have up to observations, so n functional data samples is akin to samples in a non-functional data setting.

5. Numerical experiment.

We now apply the boosting procedure of Section 4 to a high-dimensional dataset generated from a naturalistic simulation. This allows us to compare the performance of our estimator to existing boosting methods. The simulation is of patient service times in an emergency department (ED), and the hazard function of interest is patient service rate in the ED. The study of patient transitions in an ED queue is an important one in healthcare operations, because without a high resolution model of patient flow dynamics, the ED may be suboptimally utilized which would deny patients of timely critical care.

5.1. Service rate.

The service rate model used in the simulation is based upon a service time dataset from the ED of an academic hospital in the United States. The dataset contains information on 86,983 treatment encounters from 2014 to early 2015. Recorded for each encounter was: Age, gender, Emergency Severity Index (ESI)11, time of day when treatment in the ED ward began, day of week of ED visit, and ward census. The last one represents the total number of occupied beds in the ED ward, which varies over the course of the patient’s stay. Hence it is a time-dependent variable. Lastly, we also have the duration of the patient’s stay (service time).

The service rate function is developed from the data in the following way. First, we apply our nonparametric estimator to the data to perform exploratory analysis. We find that:

The key variables affecting the service rate (based on relative variable importance [13]) are ESI, age, and ward census. In addition, two of the most pronounced interaction terms identified by the tree splits are (AGE ≥ 34, ESI = 5) and (AGE ≥ 34, ESI ≤ 4).

Holding all the variables fixed, the shapes of the estimated service rate function resemble the hazard functions of log-normal distributions. This agrees with the queuing literature that find log-normality to be a reasonable parametric fit for service durations.

Guided by these findings, we specify the service rate λ(t, X(t)) for the simulation as a log-normal accelerated failure time (AFT) model, and estimate its parameters from data. This yields the service rate

| (37) |

where ϕl(·;m, σ) and Φl(·;m, σ) are the PDF and CDF of the log-normal distribution with log-mean m = −1.8 and log-standard deviation σ = 0.74. The function θ(x) captures the dependence of the service rate on the covariates:

| (38) |

The specification for θ(X(t)) above is a slight modification of the original estimate, with the free parameter a allowing us to study the effect of time-dependent covariates on hazard estimation. When a = 0, the service rate does not depend on time-varying covariates, but as a increases, the dependency becomes more and more significant. In the data, the ward census never exceeds 70, so we set the capacity of the simulated ED to 70 as well. The min operator caps the impact that census can have on the simulated service rate as a grows. The irrelevant covariates NUISANCE1, ⋯, NUISANCE43 are added to the data in order to assess how boosting performs in high dimensions. We explicitly include them in (38) to remind ourselves that the simulated data is high-dimensional. Forty of the irrelevant variables are generated synthetically as described in the next subsection, while the rest are variables from the original dataset not used in the simulation.

5.2. Simulation model.

Using (37) and (38), we simulate a naturalistic dataset of 10,000 patient visit histories. The value of a will be varied from 0 to 3 in order to study the impact of time-dependent covariates on hazard estimation. Each patient is associated with a 46-dimensional covariate vector consisting of:

The time-varying ward census. The initial value is sampled from its marginal empirical distribution in the original dataset. To simulate its trajectory over a patient’s stay, for every timestep advance of 0.002 days (≈3 minutes), a Bernoulli(0.02) random variable is generated. If it is one, then the census is incremented by a normal random variable with zero mean and standard deviation 10. The result is truncated if it lies outside the range [1, 70], the upper end being the capacity of the ED.

The other five time-static covariates in the original dataset. These are sampled from their marginal empirical distributions in the original dataset. Two of the variables (age and ESI) influence the service rate, while the other three are irrelevant.

An additional forty time-static covariates that do not affect the service rate (irrelevant covariates). Their values are drawn uniformly from [0, 1].

We also generate independent censoring times (rounded to the nearest 0.002 days) for each visit from an exponential distribution. For each simulation, the rate of the exponential distribution is set to achieve an approximate target of 25% censoring.

5.3. Comparison benchmarks.

When the covariates are static in time, a few software packages are available for performing hazard estimation with tree ensembles. Given that the data is simulated from a log-normal hazard, we compare our nonparametric method to two correctly specified parametric estimators:

The blackboost estimator in the R package mboost [10] provides a tree boosting procedure for fitting the log-normal hazard function. In order to apply this to the simulated data, we make ward census a time-static covariate by fixing it at its initial value.

Transformation forests [17] in the R package trtf can also fit log-normal hazards. Moreover, it allows for left-truncated and right-censored data. Since the ward census variable is simulated to be piecewise constant over time, we can treat each segment as a left-truncated and right-censored observation. Thus for this simulation, transformation forests are able to handle time-dependent covariates with time-static effects. This falls in between the static covariate/static effect blackboost estimator and our fully nonparametric one.

Since the service rate model used in the simulations is in fact log-normal, the benchmark methods above enjoy a significant advantage over our nonparametric one, which is not privy to the true distribution. In fact, when a = 0 the log-normal hazard (37) depends only on time-static covariates, so the benchmarks should outperform our nonparametric estimator. However, as a grows, we would expect a reversal in relative performance.

To compare the performances of the estimators, we use Monte Carlo integration to evaluate the relative mean squared error

The Monte Carlo integrations are conducted using an independent test set of 10,000 uncensored patient visit histories. For the test set, ward census is held fixed over time at the initial value, and we use the grid {0, 0.02, 0.04, ⋯, 1} for the time integral. The nominator above is then estimated by the average of evaluated at the 51×10,000 points of (t, x). The denominator is estimated in the same manner.

5.4. Results.

For the implementation of our estimator in Section 4, the value of ε and the number of trees are jointly determined using ten-fold cross validation. The candidate values we tried for ε are {0.003, 0.004, 0.005, 0.006, 0.007}, and we limit to no more than 1,000 trees. A wider range of values can be of course be explored for better performance (at the cost of more computations). As comparison, we run an ad-hoc version of our algorithm in which all trees use the same number of splits, as is the case in standard boosting [13]. This approach does not explicitly ensure that the trees will be ε-gradients for a pre-specified ε. The number of splits and the number of trees used in the ad-hoc method are jointly determined using ten-fold cross-validation.

In order to speed up convergence at the m-th iteration for both approaches, instead of using the step-size νn/(m+1) of Algorithm 1, we performed line-search within the interval (0, νn/(m+1)]. While Lemma 2 shows that a smaller shrinkage νn is always better, this comes at the expense of a larger and hence computation time. For simplicity we set νn = 1 for all the experiments here.

For fitting the blackboost estimator, we use the default setting of nu = 0.1 for the step-size taken at each iteration. The other hyperparameters, mstop (the number of trees) and maxdepth (maximum depth of trees), are chosen to directly minimize the relative MSE on the test set. This of course gives the blackboost estimator an unfair advantage over our estimator, which is on top of the fact that it is based on the same distribution as the true model. Transformation forest (using also the true distribution) is fit using code kindly provided by Professor T. Hothorn.12

Variable selection.

The relative importances of variables [13] for our estimator are given in Table 1 for all four cases a = 0, 1, 2, 3. The four factors that influence the service rate (38) are explicitly listed, while the irrelevant covariates are grouped together in the last column. When a = 0, the service rate does not depend on census, and we see that the importance of census and the other irrelevant covariates are at least an order of magnitude smaller than the relevant ones. As a increases, census becomes more and more important as correctly reflected in the table. Across all the cases the importance of the relevant covariates are at least an order of magnitude larger than the others, suggesting that our estimator is able to pick out the influential covariates and largely avoid the irrelevant ones.

Table 1.

Relative importances of variables in the boosted nonparametric estimator. The numbers are scaled so that the largest value in each row is 1.

| a | Time | Age | ESI | Census | All other variables |

|---|---|---|---|---|---|

| 0 | 1 | 0.21 | 0.025 | 0.0011 | <0.0010 |

| 1 | 1 | 0.22 | 0.013 | 0.46 | <0.0003 |

| 2 | 0.34 | 0.064 | 0.0020 | 1 | 0 |

| 3 | 0.11 | 0.011 | <0.0001 | 1 | 0 |

Presence of time-dependent covariates.

Table 2 presents the relative MSEs for the estimators as the service rate function (38) becomes increasingly dependent on the time-varying census variable. When a = 0 the service rate depends only on time-static covariates, so as expected, the parametric log-normal benchmarks perform the best when applied to data simulated from a log-normal AFT model.

Table 2.

Comparative performances (%MSE) as the service rate (38) becomes increasingly dependent on the time-varying ward census variable (by increasing a).

| a | blackboost (set to true log-normal distribution) | Transformation forest (set to true log-normal distribution) | Boosted hazards (ε fixed for all iterations) | Ad-hoc (# splits fixed for all iterations) |

|---|---|---|---|---|

| 0 | 5.0% | 5.0% | 7.8% | 7.1% |

| 1 | 17% | 6.1% | 4.5% | 8.1% |

| 2 | 46% | 9.7% | 5.4% | 7.0% |

| 3 | 67% | 18% | 7.2% | 7.4% |

However, as a increases, the service rate becomes increasingly dependent on census. The corresponding performances of both benchmarks deteriorate dramatically, and is handily outperformed by the proposed estimator. We note that the inclusion of just one time-dependent covariate is enough to degrade the performances of the benchmarks, despite the fact that they have the exact same parametric form as the true model.

Finally we find comparable performance among the ad-hoc boosted estimator and our proposed one, although a slight edge goes to the latter especially in the more difficult simulations with larger a. The results here demonstrate that there is a place in the survival boosting literature for fully nonparametric methods like this one that can flexibly handle time-dependent covariates.

6. Discussion.

Our estimator can also potentially be used to evaluate the goodness-of-fit of simpler parametric hazard models. Since our approach is likelihood-based, future work might examine whether model selection frameworks like those in [30] can be extended to cover likelihood functionals. For this, [10] provides some guidance for determining the effective degrees of freedom for the boosting estimator. The ideas in [32] may also be germane.

The implementation presented in Section 4 is one of many possible ways to implement our estimator. We defer the design of a more refined implementation to future research, along with open-source code.

Acknowledgements.

The review team provided many insightful comments that significantly improved the paper. We are grateful to Brian Clarke, Jack Hall, Sahand Negahban, and Hongyu Zhao for helpful discussions. Special thanks to Trevor Hastie for early formative discussions. The dataset used in Section 5 was kindly provided by Dr. Kito Lord. NC was supported by HKUST grant R9382 and NSERC Discovery Grant RGPIN-2020-04038. HI was supported by National Institutes of Health grants R01 GM125072 and R01 HL141892. DKKL was supported by a hyperplane.

APPENDIX: PROOFS

Proof of Proposition 2.

Proof. Writing

we can apply (4) to establish the first part of the integral in (13) when . To complete the representation, it suffices to show that the point process

has mean , and then apply Campbell’s formula. To this end, write N(t) = I(T ≤ t) and consider the filtration σ{X(s), Y(s),N(s) : s ≤ t}. Then N(t) has the Doob-Meyer form dN(t) = λ(t, X(t))Y(t)dt + dM(t) where M(t) is a martingale. Hence

where the last equality follows from (4). Since I[{t, X(t)} ∈ B] is predictable because X(t) is, the desired result follows if the stochastic integral is a martingale. By Section 2 of Aalen [1], this is true if M(t) is square-integrable. In fact, is bounded because λ(t, x) is bounded above by (A1). This establishes (13).

Now note that for a positive constant Λ the function ey − Λy is bounded below by both −Λy and Λy + 2Λ{1 – log 2Λ}, hence ey − Λy ≥ Λ|y| + 2Λmin{0, 1 – log 2Λ}. Since Λ min{0, 1 − log2Λ} is non-increasing in Λ, (A1) implies that

Integrating both sides and using the norm equivalence relation (27) shows that

The lower bound (14) then follows from the second inequality. The last inequality shows that R(F) is coercive on . Moreover the same argument used to derive (8) shows that R(F) is smooth and convex on . Therefore a unique minimizer F* of R(F) exists in . Since (A2) implies there is a bijection between the equivalent classes of and the functions in , F* is also the unique minimizer of R(F) in . Finally, since eF(t, x) − λ(t, x)F(t, x) is pointwise bounded below by , for all . □

Proof of Lemma 1.

Proof. By a pointwise-measurable argument (Example 2.3.4 of [29]) it can be shown that all suprema quantities appearing below are sufficiently well behaved, so outer integration is not required. Define the Orlicz norm where . Suppose the following holds:

| (39) |

| (40) |

where is the complexity measure (26), and κ′,κ″ are universal constants. Then by Markov’s inequality, (30) holds with probability at least , and

| (41) |

holds with probability at least . Since and η < 1, (30) and (41) jointly hold with probability at least . The lemma then follows if (41) implies (29). Indeed, for any non-zero , its normalization G = F/‖F‖∞ is in by construction (25). Then (41) implies that

because

where the last inequality follows from the definition of (28).

Thus it remains to establish (39) and (40), which can be done by applying the symmetrization and maximal inequality results in Sections 2.2 and 2.3.2 of [29]. Write where are independent copies of the loss

| (42) |

which is a stochastic process indexed by . As was shown in Proposition 2, . Let ζ1, ⋯ , ζN be independent Rademacher random variables that are independent of . It follows from the symmetrization Lemma 2.3.6 of [29] for stochastic processes that the left hand side of (39) is bounded by twice the Orlicz norm of

| (43) |

Now hold Z fixed so that only ζ1, ⋯ , ζn are stochastic, in which case the sum in the second line of (43) becomes a separable subgaussian process. Since the Orlicz norm of is bounded by for any constant ai, we obtain the following the Lipschitz property for any F1, :

where the second inequality follows from |ex − ey| ≤ emax(x, y)|x−y| and the last from the Cauchy-Schwarz inequality. Putting the Lipschitz constant obtained above into Theorem 2.2.4 of [29] yields the following maximal inequality: There is a universal constant κ′ such that

where the last line follows from (26). Likewise the conditional Orlicz norm for the supremum of is bounded by . Since neither bounds depend on Z, plugging back into (43) establishes (39):

where by (19). On noting that

(40) can be established using the same approach. □

Proof of Lemma 2.

Proof. For , applying (8) to yields

| (44) |

where the bound for the second term is due to (18) and the bound for the integral follows from (Definition 1 of an ε-gradient) and ‖Fm‖∞, ‖Fm+1‖∞ < Ψn for (lines 5–6 of Algorithm 1). Hence for , (44) implies that

because under (20). Since , and using our assumption in the statement of the lemma, we have

Clearly the minimizer F* also satisfies R(F*) ≤ R(0) < 3. Thus coercivity (15) implies that

so the gap defined in (31) is bounded as claimed.

It remains to establish (32), for which we need only consider the case . The termination criterion gFm = 0 in Algorithm 1 is never triggered under this scenario, because by Proposition 1 this would imply that minimizes Rn(F) over the span of {φnj(t, x)}j, which also contains F* (Remark 3). Thus either , or the termination criterion in line 5 of Algorithm 1 is met. In the latter case

| (45) |

where the inequalities follow from (29) and from . Since the sum is diverging, the inequality also holds for sufficiently large (e.g. ).

Given that F* lies in the span of {φnj(t, x)}j, the Taylor expansion (8) is valid for Rn(F*). Since the remainder term in the expansion is non-negative, we have

Furthermore for ,

Putting both into (44) gives

Subtracting Rn(F*) from both sides above and denoting δm = Rn(Fm) − Rn(F*), we obtain

Since the term inside the first parenthesis is between 0 and 1, solving the recurrence yields

where in the second inequality we used the fact that 0 ≤ 1 + y ≤ ey for |y| < 1, and the last line follows from (45).

The Lambert function (19) in Ψn = W(n1/4) is asymptotically log y −log log y, and in fact by Theorem 2.1 of [14], W(y) ≥ log y −log log y for y ≥ e. Since by assumption n ≥ 55 > e4, the above becomes

The last step is to control δ0, which is bounded by 1 − Rn(F*) because Rn(F0) = Rn(0) ≤ 1. Then under the hypothesis |Rn(F*) − R(F*)| < 1, we have

Since (14) implies R(F*) ≥ 2ΛU min{0, 1 − log(2ΛU)},

□

Proof of Proposition 3.

Proof. Let δ = log n/(4n1/4) which is less than one for n ≥ 55 > e4. Since , it follows that

| (46) |

Now define the following probability sets

and fix a sample realization from . Then the conditions required in Lemma 2 are satisfied with , so (and hence ) is bounded and (32) holds. Since Algorithm 1 ensures that , we have and therefore it also follows that . Combining (23) and (24) gives

where the second inequality follows from (32) and , and the last from (46). Now, using the inequality |ex − ey| ≤ max(ex, ey)|x − y| yields

and the stated bound follows from F* = log λ since is correctly specified (Proposition 2).

The next task is to lower bound . It follows from Lemma 1 that

Bounds on and can be obtained using Hoeffding’s inequality. Note from (2) that and for the loss l(·) defined in (42). Since and ,

By increasing the value of κ and/or replacing with if necessary, we can combine the inequalities to get a crude but compact bound:

| (47) |

Finally, since ‖F*‖∞ = ‖log λ‖∞ < max{|log ΛL|, |log ΛU|}, we can replace in the probability bound above by . □

Proof of Proposition 4.

Proof. It follows from (22) that λ* is the orthogonal projection of λ onto . Hence

where the inequality follows from |ex − ey| ≤ max(ex, ey)|x − y|. Bounding the last term in the same way as Proposition 3 completes the proof. To replace in (47) by , it suffices to show that ΛL ≤ λ*(t, x) ≤ ΛU. Since the value of λ* over one of its piecewise constant regions B is , the desired bound follows from (A1). We can also replace and with and respectively. □

Footnotes

The filtration of interest is σ{X(s), Y(s), I(T ≤ s) : s ≤ t}. If X(t) is only observable when Y(t) = 1, we can set whenever Y(t) = 0.

Since the data is always observed up to some finite time, there is no information loss from censoring at that point. For example, if T′ is the failure time in minutes and the longest duration in the data is τ′ = 60 minutes, the failure time in hours, T, is at most τ = 1 hour. The hazard function on the minute timescale, λT′(t′, X(t′)), can be recovered from the hazard function on the hourly timescale, λT (t, X(t)), via .

It is clear that said span is contained in . For the converse, it suffices to show that is also contained in the span of trees of some depth. This is easy to show for trees with p + 1 splits, because they can generate partitions of the form (−∞, t] × (−∞, x(1)]× ⋯ × (−∞, x(p)] in (Section 3 of [8]).

With a slight abuse of notation, the index j is only considered multi-dimensional when describing the geometry of Bj, such as in (6). In all other situations j should be interpreted as a scalar index.

Split the tree until each leaf node contains just one of the regions Bj in (6) with μn(Bj) > 0. Then set the value of the node equal to the value of the gradient function (12) inside Bj.

The term in condition (20) would need to be replaced by if a constant step-size is used.

For technical convenience, has been enlarged from to include the unit ball.

The infimum of Rn(F) is not always attainable: If f is non-positive and vanishes on the set {{Ti, Xi(Ti)} : Δi = 1}, then is decreasing in θ so f is a direction of recession. This is however not an issue for boosting because of early stopping.

Each is of dimension p + 3 and the number of time-covariate regions Bj with wj > 0 is at most . To show the latter, observe that Bj will only have wj = μn(Bj) > 0 if it is traversed by at least one sample covariate trajectory. Then note that each of the n sample covariate trajectories can traverse at most unique regions.

A sample covariate trajectory can have at most unique observed values for the k-th covariate x(k), so there are at most candidate splits for x(k). Thus there are candidate splits for p covariates. The number of candidate splits on time is obviously .

Level 1 is the most severe (e.g., cardiac arrest) and level 5 is the least (e.g., rash). We removed level 1 patients from the dataset because they were treated in a separate trauma bay.

In the code 100 trees are used in the forest, which takes about 700 megabytes to store the fitted object when applied to our simulated data.

REFERENCES

- [1].Aalen OO (1978). Nonparametric inference for a family of counting processes. Annals of Statistics 6 701–726. [Google Scholar]

- [2].Adelson K, Lee DKK, Velji S, Ma J, Lipka SK, Rimar J, Longley P, Vega T, Perez-Irizarry J, Pinker E and Lilenbaum R (2018). Development of Imminent Mortality Predictor for Advanced Cancer (IMPAC), a tool to predict short-term mortality in hospitalized patients with advanced cancer. Journal of Oncology Practice 14 e168–e175. [DOI] [PubMed] [Google Scholar]

- [3].Biau G and Cadre B (2017). Optimization by gradient boosting. arXiv preprint arXiv:1707.05023 [Google Scholar]

- [4].Binder H and Schumacher M (2008). Allowing for mandatory covariates in boosting estimation of sparse high-dimensional survival models. BMC Bioinformatics 9 14. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [5].Blanchard G, Lugosi G and Vayatis N (2003). On the rate of convergence of regularized boosting classifiers. Journal of Machine Learning Research 4 861–894. [Google Scholar]

- [6].Breiman L (1997). Arcing the edge. U.C. Berkeley Dept. of Statistics Technical Report 486 [Google Scholar]

- [7].Breiman L (1999). Prediction games and arcing algorithms. Neural Computation 11 1493–1517. [DOI] [PubMed] [Google Scholar]

- [8].Breiman L (2004). Population theory for boosting ensembles. Annals of Statistics 32 1–11. [Google Scholar]

- [9].Bühlmann P (2002). Consistency for L2boosting and matching pursuit with trees and tree-type basis functions. In Research report 109. Seminar für Statistik, Eidgenössische Technische Hochschule (ETH). [Google Scholar]

- [10].Bühlmann P and Hothorn T (2007). Boosting algorithms: Regularization, prediction and model fitting. Statistical Science 22 477–505. [Google Scholar]

- [11].Bühlmann P and Yu B (2003). Boosting with the L2 loss: Regression and classification. Journal of the American Statistical Association 98 324–339. [Google Scholar]

- [12].Ehrlinger J and Ishwaran H (2012). Characterizing L2Boosting. Annals of Statistics 40 1074–1101. [Google Scholar]

- [13].Friedman JH (2001). Greedy function approximation: A gradient boosting machine. Annals of Statistics 29 1189–1232. [Google Scholar]

- [14].Hoorfar A and HASSANI M (2008). Inequalities on the Lambert W function and hyperpower function. Journal of Inequalities in Pure and Applied Mathematics 9. [Google Scholar]

- [15].Hothorn T (2019). Transformation boosting machines. Statistics and Computing 1–12. [Google Scholar]

- [16].Hothorn T, Bühlmann P, Dudoit S, Molinaro A and van der Laan MJ (2006). Survival ensembles. Biostatistics 7 355–373. [DOI] [PubMed] [Google Scholar]

- [17].Hothorn T and Zeileis A (2017). Transformation forests. arXiv preprint arXiv:1701.02110 [Google Scholar]

- [18].Huang J, Ma S and Xie H (2006). Regularized estimation in the accelerated failure time model with high-dimensional covariates. Biometrics 62 813–820. [DOI] [PubMed] [Google Scholar]

- [19].Huang JZ and Stone CJ (1998). The L2 rate of convergence for event history regression with time-dependent covariates. Scandinavian Journal of Statistics 25 603–620. [Google Scholar]

- [20].Lowsky DJ, Ding Y, Lee DKK, McCulloch CE, Ross LF, Thistlethwaite JR and Zenios SA (2013). A K-nearest neighbors survival probability prediction method. Statistics in Medicine 32 2062–2069. [DOI] [PubMed] [Google Scholar]

- [21].Mason L, Baxter J, Bartlett PL and Frean MR (1999). Functional gradient techniques for combining hypotheses. In Advances in Neural Information Processing Systems 221–246. [Google Scholar]

- [22].Mason L, Baxter J, Bartlett PL and Frean MR (2000). Boosting algorithms as gradient descent. In Advances in Neural Information Processing Systems 512–518. [Google Scholar]

- [23].Meyer DM, Rogers JG, Edwards LB, Callahan ER, Webber SA, Johnson MR, Vega JD, Zucker MJ and Cleveland JC Jr (2015). The future direction of the adult heart allocation system in the United States. American Journal of Transplantation 15 44–54. [DOI] [PubMed] [Google Scholar]

- [24].Müller HG and Yao F (2010). Additive modelling of functional gradients. Biometrika 97 791–805. [Google Scholar]

- [25].Nesterov Y (2004). Introductory lectures on convex optimization: A basic course. Springer. [Google Scholar]

- [26].Ridgeway G (1999). The state of boosting. Computing Science and Statistics 31 172–181. [Google Scholar]

- [27].Schmid M and Hothorn T (2008). Flexible boosting of accelerated failure time models. BMC Bioinformatics 9 269. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [28].Telgarsky M (2013). Margins, shrinkage, and boosting. In Proceedings of the 30th International Conference on International Conference on Machine Learning-Volume 28 II–307. [Google Scholar]

- [29].van der Vaart AW and Wellner JA (1996). Weak convergence and empirical processes with applications to statistics. Springer; NY. [Google Scholar]

- [30].Vuong QH (1989). Likelihood ratio tests for model selection and non-nested hypotheses. Econometrica 57 307–333. [Google Scholar]

- [31].Zhang T and Yu B (2005). Boosting with early stopping: convergence and consistency. Annals of Statistics 33 1538–1579. [Google Scholar]

- [32].Zou H, Hastie TJ and Tibshirani R (2007). On the ‘degrees of freedom’ of the lasso. Annals of Statistics 35 2173–2192. [Google Scholar]