Abstract

The passage of the Medicare Access and CHIP Reauthorization Act (MACRA) in 2015 marked a fundamental transition in physician payment by the Centers for Medicare and Medicaid Services (CMS) from traditional fee‐for‐service to value‐based models. MACRA led to the creation of the CMS Quality Payment Program (QPP), which bases the value of physician care in large part on physician quality reporting. The QPP enabled a shift away from legacy CMS‐stewarded quality measures that had limited applicability to individual specialties toward specialty‐specific quality measures developed and stewarded by physician specialty societies using Qualified Clinical Data Registries (QCDRs). This article describes the development of the first nationally available emergency medicine QCDR as a means for emergency physicians to participate in the QPP and to measure and benchmark emergency physician quality.

Keywords: Clinical Data Registries, Electronic health record (EHR), MACRA (Medicare Access and CHIP Reauthorization Act), quality, quality measures, Quality Payment Program

1. INTRODUCTION

The passage of the Affordable Care Act (ACA) in 2010 represented a meaningful shift in healthcare payment. 1 Although physicians had long been paid via a fee‐for‐service model, the ACA formalized a transition away from the fee‐for‐service model to value‐based payments. Value‐based payment models are based on the premise that payors should pay not just for volume or intensity of services but rather for the value of care provided—which means obtaining the best possible quality at the lowest possible cost. Payors often measure the cost of care as healthcare spending, or payments, from the lens of the payor and determine the quality of care based on nationally endorsed or medical specialty society based consensus quality measures often attributed to clinicians, facilities, or populations.

The need for infrastructure to create, maintain, and report quality measures became clear following the 2015 passage of another piece of landmark legislation with even greater potential ramifications for emergency physicians. 2 Specifically, the Medicare Access and CHIP Reauthorization Act of 2015 (MACRA) mandated value‐based payment for physician reimbursement by creating the Quality Payment Program (QPP). 2 The QPP includes 2 tracks: the Merit‐based Incentive Payment System (MIPS) and Advanced Alternative Payment Models (APMs). The vast majority of emergency physicians will be evaluated under MIPS.

Although measuring both cost and quality can be quite complicated, the Centers for Medicare & Medicaid Services (CMS) has delegated most responsibility for quality measurement to clinical specialty societies, while retaining responsibility for cost measurement as the payor. 2 Under MIPS, physicians and other clinicians, such as nurse practitioners and physician assistants, develop objective measures for episodes of care relevant to their clinical practice. 3 Those quality measures are then reviewed by CMS, and a scoring system is developed. Clinicians must report on those measures through the use of certified electronic health record (EHR) technology and must participate in practice improvement activities. CMS compares performance between individuals or groups of clinicians, calculates the cost of the care provided by those clinicians, and determines an overall MIPS score based on these components. 3 The score is used to determine whether clinicians receive a potential bonus or reimbursement penalty the following year. Among the components of the MIPS score, physicians can primarily influence their score through quality measure performance, which is weighted the most heavily of the 4 categories (quality, cost, technology, and improvement activities). 3
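To make the idea of a weighted composite concrete, the following is a minimal sketch of how four category scores could be combined into a single score. The weights shown are illustrative assumptions chosen only so that quality carries the largest share; CMS sets and revises the actual weights for each performance year, and the function name `composite_score` is hypothetical.

```python
# Illustrative category weights (assumptions for this sketch, not CMS values
# taken from the article). Quality is given the largest weight.
ASSUMED_WEIGHTS = {
    "quality": 0.40,
    "cost": 0.20,
    "technology": 0.25,
    "improvement_activities": 0.15,
}


def composite_score(category_scores, weights=ASSUMED_WEIGHTS):
    """Combine per-category scores (each on a 0-100 scale) into one 0-100 score."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    missing = set(weights) - set(category_scores)
    if missing:
        raise ValueError(f"missing category scores: {sorted(missing)}")
    # Weighted sum across the four categories.
    return sum(weights[name] * category_scores[name] for name in weights)


example = composite_score(
    {"quality": 80, "cost": 50, "technology": 100, "improvement_activities": 100}
)
```

Under these assumed weights, a change of 10 points in the quality category moves the composite by 4 points, more than the same change in any other category.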

Prior CMS pay‐for‐performance programs relied primarily on claims data for quality reporting, which are limited in their ability to fully capture the value of health care provided. 4 Specifically, claims data fail to capture all patients, lack clinical information, and are not available until months after care is delivered. Qualified Clinical Data Registries (QCDRs) address all of these concerns by allowing the collection of data from all payors, using clinical data elements in EHRs, and providing participating clinicians with real‐time feedback dashboards. MACRA specifically supported the use of QCDRs as they are also able to measure multiple domains of quality (eg, safety, efficiency, care coordination), and in particular, outcome measures. More important, though, QCDRs are permitted (subject to CMS approval) to develop and maintain specialty‐specific quality measures that physicians might find more applicable; in fact, QCDRs are expected to be clinician‐led endeavors.

1.1. Development of a QCDR for emergency medicine

Emergency physicians have long participated in developing quality measures through the American College of Emergency Physicians (ACEP), the largest organization representing emergency physicians. 5 Following the passage of MACRA, many medical specialty societies, including ACEP, went through a deliberative process to establish a QCDR. This included a means to develop a QCDR, a mechanism to develop measures, and a mechanism to address current and future challenges of quality measurement.

Conceptually, a QCDR collects voluntarily provided data from emergency department records and uses that information to calculate quality measures that may then be reported to CMS. 6 The data might be sent in by a hospital or physician group from an EHR into a secured data warehouse, whose primary purpose is the reporting of quality measures to CMS. Depending on their quality and architecture, data in the data warehouse could also be used to populate other registries for research or other purposes.

Recognizing that healthcare delivery and quality measurement have been rapidly evolving toward electronic measurement systems, ACEP first arranged a technology partnership that used expertise in data matching and extraction to create the input to the registry. One of the major roles of a technology vendor is to ensure the quality and validity of the data flowing into the data warehouse. For example, to capture a single data element, one might need to search multiple places (eg, problem list, diagnosis codes, past medical history, etc) within an EHR to find and reconcile that element reliably. Determining the location of the data reliably within each local instance of a variety of EHRs is a significant challenge.
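The reconciliation problem described above can be sketched as a search across several locations in a record. This is a simplified illustration, not the vendor's actual method: the record layout, the field names (`problem_list`, `diagnosis_codes`, `past_medical_history`), and the functions `find_element` and `reconcile` are all hypothetical.

```python
# Hypothetical locations in which a single clinical data element may appear.
DEFAULT_LOCATIONS = ("problem_list", "diagnosis_codes", "past_medical_history")


def find_element(record, synonyms, locations=DEFAULT_LOCATIONS):
    """Return the locations (in search order) where any synonym appears."""
    wanted = {s.lower() for s in synonyms}
    hits = []
    for location in locations:
        entries = record.get(location, [])
        if any(str(entry).lower() in wanted for entry in entries):
            hits.append(location)
    return hits


def reconcile(record, synonyms):
    """Report whether the element is present and which locations support it."""
    hits = find_element(record, synonyms)
    return {"present": bool(hits), "sources": hits}


# Example record: the same condition is documented as free text in one place
# and as a diagnosis code in another.
record = {
    "problem_list": ["Atrial fibrillation"],
    "diagnosis_codes": ["I48.91"],
    "past_medical_history": [],
}
result = reconcile(record, ["atrial fibrillation", "I48.91"])
```

Keeping the list of supporting sources, rather than a bare yes or no, lets a reviewer see when an element is found in only one location and may need clinical confirmation.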

Data transmitted to a QCDR may be sent in by a hospital or physician (“data push”) or passively collected directly from an EHR (“data pull”). In the “data push” model, a physician group is provided with a data dictionary containing all of the elements necessary to calculate all of the proposed quality measures. Data are transmitted to the technology vendor and then checked for validity. In most circumstances, a back‐and‐forth process ensues with successive iterations improving the quality of the data until they meet the QCDR's standards. In the “data pull” model, the technology vendor is responsible for installing software within the client's EHR to pull the data into the data warehouse. However, if there are multiple fields within the EHR that might map to the requested QCDR data element, a similar back‐and‐forth process begins as clinical decisions must be made to ensure that the data provided are of the highest possible fidelity.
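As an illustration of the validity check in the “data push” model, the sketch below validates one submitted record against a small data dictionary and returns a list of problems that could be sent back to the submitting group. The element names, allowed values, and the `validate_record` function are assumptions made for this example and do not reflect the actual CEDR data dictionary.

```python
from datetime import datetime


def _is_iso_datetime(value):
    """True if value is a string in ISO 8601 form accepted by fromisoformat."""
    try:
        datetime.fromisoformat(value)
        return True
    except (TypeError, ValueError):
        return False


# Hypothetical data dictionary: element name -> (required, validator).
DATA_DICTIONARY = {
    "encounter_id": (True, lambda v: isinstance(v, str) and v != ""),
    "arrival_time": (True, _is_iso_datetime),
    "disposition": (True, lambda v: v in {"admitted", "discharged", "transferred"}),
    "lactate_mmol_per_l": (False, lambda v: isinstance(v, (int, float)) and v >= 0),
}


def validate_record(record):
    """Return a list of problems; an empty list means the record passed."""
    problems = []
    for name, (required, is_valid) in DATA_DICTIONARY.items():
        if name not in record or record[name] is None:
            if required:
                problems.append(f"{name}: missing")
        elif not is_valid(record[name]):
            problems.append(f"{name}: invalid value {record[name]!r}")
    return problems


good = {
    "encounter_id": "E-001",
    "arrival_time": "2021-03-01T14:05:00",
    "disposition": "discharged",
    "lactate_mmol_per_l": 1.8,
}
bad = {"encounter_id": "", "disposition": "home"}
good_problems = validate_record(good)
bad_problems = validate_record(bad)
```

Each round of the back‐and‐forth process would correspond to resubmitting the data and rerunning a check of this kind until the list of problems is empty.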

1.2. Quality measures

Increasingly, CMS has shifted the opportunity and burden to develop specialty‐specific quality measures to medical specialty societies. Quality measure development is an expensive, time‐consuming, and highly technical endeavor, requiring identification of quality gaps in areas of care that are amenable to improvement, followed by measure specification, validation, and testing. Over time, this process has become more rigorous and standards for endorsement and adoption have increased. 7

Historically, specialty societies such as ACEP have developed a limited number of quality measures while the majority of the clinician quality measure development work was performed by payor organizations including CMS and the National Committee for Quality Assurance (NCQA). 8 Organizations often sought endorsement at the National Quality Forum (NQF), which would include physician review and input before promulgation in national programs. 8 Quality measures developed by specialty societies often faced unique challenges including a lack of resources to perform broad‐scale validation. In fact, several emergency medicine measures that had been given time‐limited endorsement by the NQF, such as human chorionic gonadotropin testing in women presenting with abdominal pain and anticoagulation for pulmonary embolism, were never subsequently tested and validated because of lack of resources and so expired.

A second challenge often faced was a result of widespread adoption and quality improvement; measures with substantial improvement over time may demonstrate limited opportunity for additional improvement. These measures are deemed “topped out” and therefore not suitable for further use in national pay‐for‐performance programs. With fewer approved measures to report upon, emergency physicians and groups are faced with reporting on non‐specialty‐specific measures that hold less applicability and meaning in emergency care (eg, providing chronic anticoagulation therapy for atrial fibrillation, something rarely managed by emergency physicians). 8
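The notion of a “topped out” measure can be illustrated with a simple check on the distribution of performance rates. The sketch below flags a measure when the median rate across reporting groups reaches a chosen threshold. The 0.95 default and the function `is_topped_out` are assumptions for illustration; the criteria CMS applies are more detailed and depend on the measure type.

```python
from statistics import median


def is_topped_out(performance_rates, threshold=0.95):
    """Flag a measure whose median performance rate meets the threshold.

    performance_rates: rates between 0 and 1, one per reporting group.
    threshold: hypothetical cutoff chosen for this sketch.
    """
    if not performance_rates:
        raise ValueError("at least one performance rate is required")
    return median(performance_rates) >= threshold


# Most groups already perform near the ceiling, leaving little room to improve.
mature_measure = is_topped_out([0.97, 0.99, 0.96, 0.93])
# Wide variation with a lower median still leaves room for improvement.
newer_measure = is_topped_out([0.60, 0.80, 0.90])
```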

An additional challenge within emergency medicine in particular is the nature of shift work, and the difficulty of attributing quality measures to individual clinicians. Given this, emergency medicine groups report at the group taxpayer identification number (TIN) level, with all the individual clinicians within the group accepting equally the quality results of the practice group. Further, many academic groups and other emergency physician groups that are part of multispecialty practices have limited incentive to participate in a QCDR when they report under a larger TIN that can select from among a broader set of available measures to report.

1.3. The case for QCDRs and reporting measures

Because QCDRs have the ability to generate and maintain their own quality measures, they are in a unique position to improve the quality of care by directing the development and maintenance of quality measures. As an example, the Clinical Emergency Data Registry (CEDR) incorporates a number of quality measures concerning sepsis care. 9 Measures of relevance to emergency medicine were not previously available for examining this important disease process. By incorporating these measures into a QCDR, CEDR allows emergency physicians to be reimbursed based in part on the quality of their sepsis care—essentially being paid for improved performance. The QCDR structure also allows the flexibility to adapt measure specifications to evolving guidelines in sepsis care. We anticipate seeing improved performance on these measures over time. Once performance nationally on the measures has “topped out,” those measures will be necessarily retired and a new disease process might be chosen as the next focus of quality improvement.

Apart from their value in improving the quality of care and meeting CMS reporting requirements, many emergency physicians may also use QCDRs to meet additional objectives. In addition to digital dashboards that can provide detailed feedback on individual and group performance, QCDRs can also provide national, regional, and even personal benchmarking. 6 As an example, CEDR provides the ability for American Board of Emergency Medicine diplomates to satisfy Maintenance of Certification Part IV requirements, and plans are underway for reporting of clinical practice improvement activities (one of the other MIPS subcategories) as well. 9

1.4. Security

Security is an important issue for hospital‐based QCDRs. Hospitals in which physician groups work are frequently owners of the computer networks that might interface with a registry. Hospitals understandably have questions regarding data leaving their site and have concerns about data breaches and compliance with government regulations. Although many hospitals already participate in existing non‐QCDR clinical registries such as trauma registries, tumor boards, and numerous cardiovascular registries, the new emphasis on electronic clinical quality measures (eCQMs) requires a larger number of data elements than have been requested by registries in the past; this is particularly true in emergency care, given the wide variety of conditions treated. Furthermore, although “pulling” the data directly from an EHR is the most efficient means of data retrieval, hospitals are understandably reluctant to have third‐party software interacting with their EHR. The alternative, in which hospitals extract the data themselves and then “push” a report with the necessary data elements to the QCDR, is less efficient and requires some iterative communication to ensure that data capture is as accurate as possible.

1.5. Intellectual property

In addition to security, there is the question of who owns the data within the clinical record. Although the answer can be complicated as patients and clinicians concurrently produce and own EHR data, it can be even more complex in the ED, as hospitals usually own the computer systems used by emergency physicians. As the reuse of healthcare data has become increasingly of value for business purposes, there may be significant disincentives for businesses such as hospitals to readily share patient data. The richness of the data provided to a QCDR will have a direct influence on the richness of the insights the QCDR can produce for its participants.

1.6. Adoption of standards

Although the standards for EHRs continue to evolve, EHR vendors demonstrate a reluctance to incorporate an open architecture to easily share data. The most promising technology for data sharing currently is the Fast Healthcare Interoperability Resources (FHIR) 10 standard, which promises to enable easy, transportable access to healthcare data. However, full adoption of this standard by the EHR vendors has been slow. EHR vendors implement limited portions of the FHIR standard, with many key elements unavailable via the technology. This forces implementation of QCDR measures to use custom data extracts and interfaces to complete the data set required for most measures.

1.7. Multiple demands on EHR data

Many healthcare organizations experience a barrage of data requests from multiple clinical needs. Without a common data and interface standard, each one represents another project for the healthcare entity, with custom data requirements and integration efforts. This lack of reusable data integration work effort compounds the resource demands for both initial development as well as ongoing maintenance of the data exchange.

1.8. Cost

The costs of developing a QCDR are considerable, and the technical requirements are rigorous, with new requirements and restrictions added over time. This has gradually reduced the number of QCDRs approved by CMS from 150 in 2018 to 57 as of 2021. As a result, QCDR establishment and maintenance are essentially limited to large organizations. Although traditional registries, such as trauma and tumor registries, often rely on dedicated personnel at each hospital to collect and report data manually (at considerable cost), CMS offers incentives for QCDRs to use end‐to‐end electronic means of data capture in the QPP. 11 This in turn requires the necessary technology that can interface with multiple different EHRs from a diverse set of vendors. Many of these interfaces have had to be custom built owing to the lack of standard data sets.

Some medical specialty organizations created their QCDRs as a member benefit, available to members without additional cost. Several medical specialty societies have subsequently licensed their QCDR data to private investors to ensure their long‐term sustainability. 12 , 13 ACEP chose to charge a nominal fee per patient encounter for participation in CEDR, balancing the cost of providing the QCDR platform against the expected reimbursement enhancement that participants might enjoy through their participation. 14

The 21st Century Cures Act 15 has led the Office of the National Coordinator for Health Information Technology to create the United States Core Data for Interoperability standard. 16 As EHRs are required to adopt this standard, creating the interfaces necessary for end‐to‐end electronic reporting may become easier and less costly.

1.9. CEDR PRESENT STATE AND ONGOING BARRIERS

1.10. Adoption

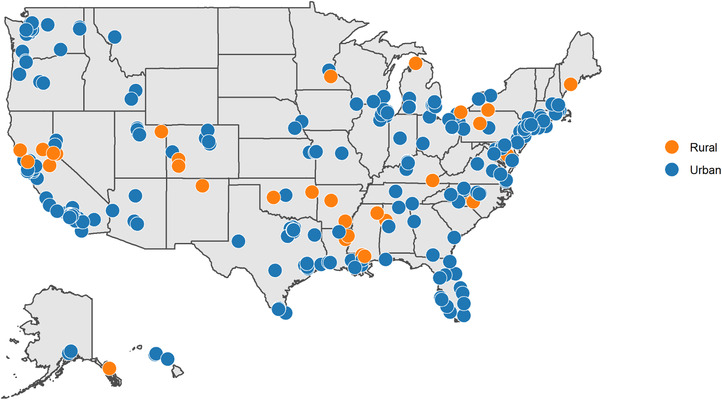

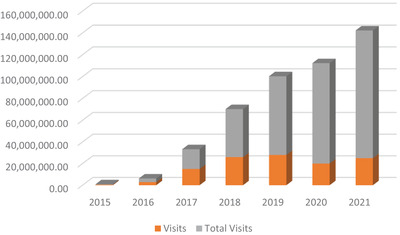

QCDRs have grown rapidly. Since enabling regulations were promulgated, >150 QCDRs have been established, including several specifically targeted to emergency care, 17 though as noted, that number has decreased recently as the operating requirements for QCDRs have increased. As the earliest emergency medicine QCDR, CEDR initially grew at an exponential pace. CEDR was established in 2015 with 14 hospitals reporting ≈500,000 patient records. By the end of 2016, 72 hospitals were reporting data, with ≈3 million patient records housed in the CEDR data warehouse. By 2021, participation exceeded 900 EDs contributing over 25 million ED visits (Figures 1 and 2). 9 It is already one of the largest clinical data registries in the country. 9 The growth of QCDRs represents an important opportunity to align and advance the quality of clinical care by leveraging the extensive data for research and surveillance purposes.

FIGURE 1. Clinical Emergency Data Registry participant sites, 2020

FIGURE 2. Clinical Emergency Data Registry growth, 2015 to 2021

1.11. Measure evolution

Over the same period, there has been increased regulatory pressure to continually advance quality by integrating measures that matter most to patients, eliminating topped out measures and harmonizing measures that focus on similar quality improvement targets, for example through the MIPS Value Pathways (MVP) announced in 2019. 18 Although these processes have the potential to improve quality, they will also require resources to develop, test, and implement new measures that meet QPP requirements, reflect the most recent clinical evidence, and demonstrate measurable variation in performance among clinicians.

Given the unique clinical environment of the ED and the limited applicability of many existing QPP measures to acute episodic care, the task of new measure development will likely need to be borne by clinical experts and leaders within emergency medicine. Although CEDR has the advantage of being a registry that supports electronic clinical quality measures or eCQMs, the data available for collection in the ED may be less likely to include outcome data in comparison to longitudinal data sets such as administrative claims. Thus, CEDR, like many other QCDRs, collects process measures more easily than outcome measures, particularly as the outcomes of high‐quality emergency care often manifest after the patient departs from the ED. The challenges of outcome measurement are not unique to eCQMs or to the specialty of emergency medicine. 8 These outcome measures require more complex risk adjustment models and often the ability to capture data beyond the ED discharge or admission. This includes the impact of various patient‐ and system‐level factors such as social determinants of health, race, ethnicity, and gender; access to care; and care coordination on outcomes. Only some of these data are reliably captured in the EHR. Despite these challenges, as the interoperability of healthcare information improves, such measures will become increasingly refined and used more often in QCDRs.

1.12. Measure harmonization

MACRA led to the proliferation of QCDRs and quality measures, leading in many instances to multiple QCDRs within a given specialty, each with its own proprietary quality measures. 19 As a result, CMS has had to adjudicate over a thousand quality measures, a volume of work that might not have been anticipated. 19 Regulatory agencies and payors increasingly expect that measures appearing in different registries be calculated in the same fashion and be comparable across registries. CMS has been requiring QCDRs with similar measures to “harmonize” and agree on a single measure. Not only does this reduce the administrative overhead of reviewing these measures, but it also allows for a quality measure to be adopted more broadly. This is true both for measures in QCDRs within a specialty and for similar measures that cross specialties.

For example, a measure for the appropriate use of coagulation studies had been collected by 2 different emergency medicine QCDRs. 9 The effort to harmonize the measure between the registries required that numerous inclusion and exclusion criteria be made similar to allow for a fair comparison of clinicians across the registries involved. Harmonization required extensive collaboration and communication between registry leaders, which ensured standardized reporting while preserving flexibility for registry participants.
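One way to picture why harmonization matters is to treat a measure as a shared specification: the same inclusion criteria, exclusion criteria, and numerator definition applied to each registry's visits. The sketch below is illustrative only. The `MeasureSpec` class, the visit fields, and the specific criteria are hypothetical and are not the actual specification of the coagulation studies measure.

```python
from dataclasses import dataclass
from typing import Callable, Optional

Visit = dict  # hypothetical visit record keyed by field name


@dataclass(frozen=True)
class MeasureSpec:
    name: str
    include: Callable[[Visit], bool]          # denominator inclusion criteria
    exclude: Callable[[Visit], bool]          # denominator exclusion criteria
    meets_numerator: Callable[[Visit], bool]  # desired care was delivered


def performance_rate(spec: MeasureSpec, visits) -> Optional[float]:
    """Share of eligible visits meeting the numerator; None if none are eligible."""
    denominator = [v for v in visits if spec.include(v) and not spec.exclude(v)]
    if not denominator:
        return None
    met = sum(1 for v in denominator if spec.meets_numerator(v))
    return met / len(denominator)


# Hypothetical harmonized specification shared by two registries.
spec = MeasureSpec(
    name="Appropriate use of coagulation studies (illustrative)",
    include=lambda v: v.get("chest_pain", False),
    exclude=lambda v: v.get("on_anticoagulant", False),
    meets_numerator=lambda v: not v.get("coag_ordered", False),
)

visits = [
    {"chest_pain": True, "on_anticoagulant": False, "coag_ordered": False},
    {"chest_pain": True, "on_anticoagulant": False, "coag_ordered": True},
    {"chest_pain": True, "on_anticoagulant": True, "coag_ordered": True},
    {"chest_pain": False, "on_anticoagulant": False, "coag_ordered": True},
]
rate = performance_rate(spec, visits)
```

If two registries applied different exclusion criteria to the same visits, their denominators would differ and their rates would not be comparable, which is the situation harmonization is meant to prevent.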

1.13. Value to participants

To date, QCDRs have been effective in fulfilling customers’ primary objective for participation: the avoidance of a negative payment adjustment through MIPS. 17 QCDRs now have an opportunity to build on this success and move from a reactive approach focused on averting losses in a single federal payment program toward a forward‐looking perspective with the goal of advancing emergency care quality and value. As the QPP continues to evolve, from MIPS to more sophisticated value‐based payment models, emergency medicine QCDRs must continue to evolve to create value for emergency physicians and help support the viability of the independent emergency medicine practice. In addition, the quality measures and national scoring available in CEDR could serve as a foundation for private payor contracting that also increasingly includes performance‐based incentives and quality tiering. In fact, use of QCDRs across both public and private payors has the concurrent benefit of supporting broader population‐based quality improvement as well as reducing the physician burden and confusion that often follow participation in multiple competing pay‐for‐performance programs.

This important mission can be achieved through (1) the ongoing development of new measures that meaningfully advance patient‐centered emergency care, (2) the continued leveraging of QCDRs both locally and nationally as a quality improvement and benchmarking tool, and (3) the examination of the vast clinical data repository that CEDR encompasses to pursue scientific questions that improve emergency care.

The last action poses an immense challenge, given the high variation in data definitions, extraction processes and completeness among participating sites, and the high standard of accuracy and precision required for clinical research. However, the generalizability and sheer size of the registry data represent a tremendous opportunity to create knowledge and improve emergency care for decades to come.

2. CONCLUSION

QCDRs are a novel means provided under MACRA for physicians to report quality and improvement activities to the CMS QPP. Since MACRA's passage, many registries have been established and have been growing rapidly. Although there are challenges to QCDR adoption, these registries provide a cornerstone to the national strategy of rewarding physicians for the value of the care they provide, rather than just the quantity of services delivered.

CONFLICT OF INTEREST

The authors declare no conflict of interest.

ACKNOWLEDGMENTS

The authors acknowledge Craig Rothenberg, MPH, and Dhruv Sharma, MS, who helped assemble figures for this work.

Epstein SK, Griffey RT, Lin MP, Augustine JJ, Goyal P, Venkatesh AK. Development of a qualified clinical data registry for emergency medicine. JACEP Open. 2021;2:e12547. 10.1002/emp2.12547

Supervising Editor: Chadd Kraus, DO, DrPH

Funding and support: By JACEP Open policy, all authors are required to disclose any and all commercial, financial, and other relationships in any way related to the subject of this article as per ICMJE conflict of interest guidelines (see www.icmje.org). The authors have stated that no such relationships exist.

REFERENCES

- 1. Kaiser Family Foundation. Summary of the Affordable Care Act. Available at: https://www.kff.org/health‐reform/fact‐sheet/summary‐of‐the‐affordable‐care‐act/ Accessed 2020.

- 2. Centers for Medicare and Medicaid Services. MACRA. Available at: https://www.cms.gov/Medicare/Quality‐Initiatives‐Patient‐Assessment‐Instruments/Value‐Based‐Programs/MACRA‐MIPS‐and‐APMs/MACRA‐MIPS‐and‐APMs. Accessed 2020.

- 3. Centers for Medicare and Medicaid Services. Participation Options Overview. Available at: https://qpp.cms.gov/mips/overview. Accessed 2020.

- 4. Centers for Medicare and Medicaid Services. Getting Started with eCQMs. Available at: https://ecqi.healthit.gov/ecqms. Accessed 2020.

- 5. Jones SS. “Meet PQRS Goals with the Measure Applicability Validation Process.” ACEP Now. 2015;34(4). Available at: https://www.acepnow.com/meet‐pqrs‐goals‐with‐the‐measure‐applicability‐validation‐process/.

- 6. Centers for Medicare and Medicaid Services. Measures Management System. Available at: https://www.cms.gov/Medicare/Quality‐Initiatives‐Patient‐Assessment‐Instruments/MMS/Downloads/A‐Brief‐Overview‐of‐Qualified‐Clinical‐Data‐Registries.pdf. Accessed 2020.

- 7. Castellucci M. “CMS seeks to reduce number of quality measures.” Modern Healthcare. 2018. Available at: https://www.modernhealthcare.com/article/20180428/NEWS/180429901/cms‐seeks‐to‐reduce‐number‐of‐quality‐measures.

- 8. Council of Medical Specialty Societies. The Measurement of Health Care Performance. 3rd ed. Available at: https://cmss.org/wp‐content/uploads/2015/07/CMSS‐Quality‐Primer‐layout.final_‐1.pdf. Accessed 2020.

- 9. American College of Emergency Physicians. Clinical Emergency Data Registry. Available at: https://www.acep.org/cedr/ Accessed 2021.

- 10. What is HL7 FHIR? Available at: https://www.healthit.gov/sites/default/files/page/2021‐04/What%20Is%20FHIR%20Fact%20Sheet.pdf. Accessed 2021.

- 11. Centers for Medicare and Medicaid Services. Quality Measures: Traditional MIPS Requirements. Available at: https://qpp.cms.gov/mips/quality‐requirements. Accessed 2021.

- 12. AAN Collaboration with Verana Health. https://www.aan.com/policy‐and‐guidelines/quality/axon‐registry2/axon‐registry/new‐aan‐collaboration‐with‐verana‐health/

- 13. American Academy of Ophthalmology, DigiSight Technologies to Drive Innovations in Eye Health Through World's Largest Specialty Clinical Database. https://www.aao.org/newsroom/news‐releases/detail/academy‐digisight‐collaboration‐news‐release. 2021.

- 14. American College of Emergency Physicians. Clinical Emergency Data Registry Frequently Asked Questions. Available at: https://www.acep.org/cedr/faqs/ Accessed 2021.

- 15. 21st Century Cures Act. Public Law 114‐255‐2016 (42 USC 201). Available at: https://www.congress.gov/114/plaws/publ255/PLAW‐114publ255.pdf. Accessed 2021.

- 16. Office of the National Coordinator for Health Information Technology. United States Core Data for Interoperability (USCDI). Available at: https://www.healthit.gov/isa/united‐states‐core‐data‐interoperability‐uscdi. Accessed 2021.

- 17. Tinloy B, Kong Darden C. Physicians, Here's How to Save Money on Quality Reporting. Vituity. Available at: https://www.vituity.com/blog/physicians‐heres‐how‐to‐save‐money‐on‐quality‐reporting/ Accessed 2020.

- 18. Centers for Medicare and Medicaid Services. MIPS Value Pathways (MVPs). Available at: https://qpp.cms.gov/mips/mips‐value‐pathways. Accessed 2020.

- 19. Avalere Health. Registries Continue to Give More Opportunities for Clinicians to Meet Reporting Requirements. Available at: https://avalere.com/press‐releases/registries‐continue‐to‐give‐more‐opportunities‐for‐clinicians‐to‐meet‐reporting‐requirements Accessed 2020.