Abstract

In the last few years, artificial intelligence (AI) research has developed rapidly in the field of dental and maxillofacial radiology. Dental radiography, which is commonly used in daily practice, provides an incredibly rich resource for AI development and has attracted many researchers to develop AI applications for various purposes. This study reviewed the applicability of AI to dental radiography based on current studies. Online searches of the PubMed and IEEE Xplore databases up to December 2020, followed by manual searches, were performed. We then categorized the applications of AI according to the similarity of their purposes: diagnosis of dental caries, periapical pathologies and periodontal bone loss; cyst and tumour classification; cephalometric analysis; screening of osteoporosis; tooth recognition and forensic odontology; dental implant system recognition; and image quality enhancement. The current development of AI methodology in each of these applications was subsequently discussed. Although most of the reviewed studies demonstrated great potential for AI application in dental radiography, further development is still needed before implementation in clinical routine because of several challenges and limitations, such as the lack of dataset size justification and unstandardized reporting formats. Considering these limitations and challenges, future AI research in dental radiography should follow standardized reporting formats in order to align research designs and enhance the impact of AI development globally.

Keywords: Artificial intelligence, machine learning, deep learning, radiography

Introduction

Artificial intelligence (AI) is defined as the capability of a machine to imitate human intelligence and behaviour to perform specific tasks.1 In the past few years, AI has achieved great success through rapid development and continues to influence people’s lifestyles. Many AI technologies, such as online search engines, image recognition and virtual assistants, assist people in their daily lives and improve their quality of life. AI development and application have also emerged in the field of medicine. Several AI tasks have been introduced and developed to assist clinicians in diagnosing and detecting diseases, analysing medical images and analysing treatment outcomes.2 AI technology has the potential to improve patient care through better diagnostic aids and fewer errors in daily practice.

Digital radiographs have greatly enhanced the development of AI in the medical and dental fields, because the radiographic images produced by X-ray irradiation are digitally coded and can be readily translated into computational language.3 Dental radiographs, that is, intraoral, panoramic, cephalometric and CT images, are collected during routine dental practice for diagnosis, treatment planning and treatment evaluation. These large datasets therefore offer an incredibly rich resource for scientific and medical research, especially for AI development. In common radiology practice, radiologists visually assess and interpret findings according to image features; however, this assessment can be subjective and time-consuming. In contrast, AI methods enable automatic recognition of complex patterns in imaging data and provide quantitative analysis.1 AI can therefore serve as an effective tool to help clinicians perform more accurate and reproducible radiological assessments. Moreover, further development could contribute to personalized dental treatment planning by analysing clinical data to improve treatment decision-making and achieve predictable treatment outcomes.4

For the reasons mentioned above, AI has gained the attention of many researchers in dentistry, especially in dental radiography. Many well-written reviews providing basic concepts of AI or radiologists’ guides to its application have been published, particularly in medical imaging, and these have attracted more dental researchers to develop AI applications in dentistry.3,5–7 The rapid technological development of recent years has also accelerated the development of various AI applications for dental radiography.8,9

This review focused on the applicability of AI for various purposes in dental radiography that could potentially be implemented in dental practice. After classifying the studies by application purpose, we discussed the current development of AI methodologies and algorithms to provide the information required to design future AI studies. Finally, we identified the limitations and challenges of current AI developments to guide further AI research in dental and maxillofacial radiology toward a better dental healthcare system.

Literature search

An online literature search was performed on the PubMed and IEEE Xplore databases up to December 2020, with no restriction on publication period. Search term combinations were constructed from “artificial intelligence,” “machine learning,” “deep learning,” “convolution neural network,” “automated,” “computer-assisted diagnosis,” “radiography,” “diagnostic imaging” and “dentistry.” In addition to the online searches, the reference lists of all included articles were manually examined for further full-text studies. This review included peer-reviewed research articles from journals and conference papers from proceedings for which full texts were available. All studies investigating the application of AI to digital dental radiography, that is, intraoral, extraoral, panoramic, CBCT and CT images, were reviewed. Studies that provided only an abstract or whose full text was not accessible were excluded. As a result, 119 relevant articles were included; these articles, along with the data extracted on study purpose and AI method, are listed in Supplementary Table 1.

AI Application in dental radiography

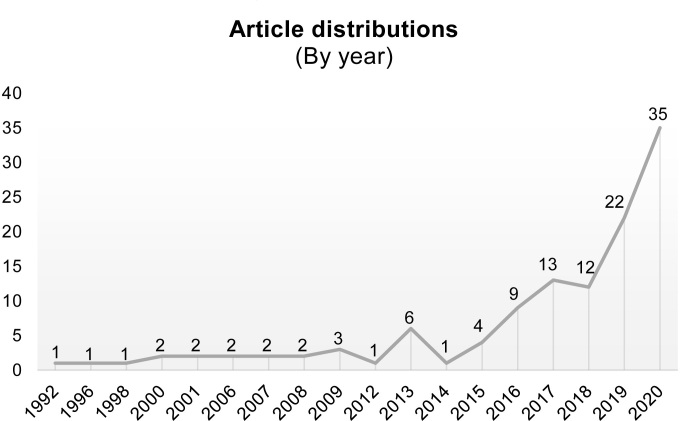

As Figure 1 shows, the number of published AI studies in dental radiography has increased every year, especially in 2020. Deep learning (DL) is the most popular AI method among the reviewed studies: most studies (59%) used DL to perform image recognition tasks in dental radiography, followed by machine learning (ML) methods (26%) and other computer vision methods.

Figure 1.

Distribution of artificial intelligence studies by year of publication.

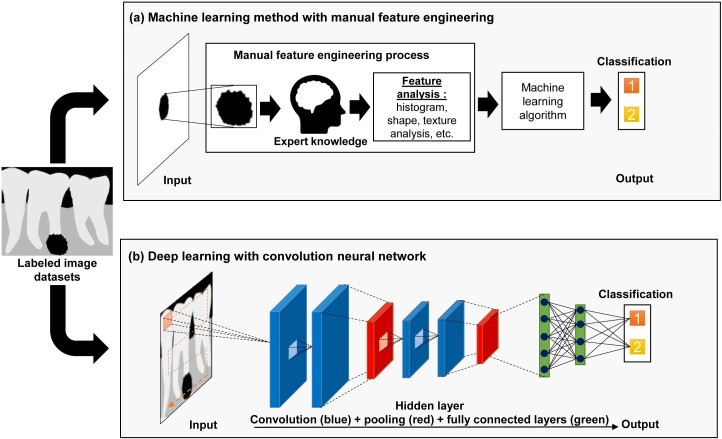

One of the main differences between ML and DL lies in feature engineering, the core process of computer vision (Figure 2). In computer vision tasks, feature engineering, also called feature extraction, reduces the complexity of the data so that patterns can be quantified by computer programs and become more amenable to learning algorithms. ML is a subfield of AI that enables predictions on unseen data; in conventional ML, handcrafted features are used as inputs to ML models that are trained to solve a specific problem.10 In contrast, DL, itself a subfield of ML, can automatically learn feature representations from data without human intervention. This data-driven approach allows more abstract feature definitions that depend on the training dataset and thus reduces manual preprocessing steps.6 Demand for DL has grown rapidly since 2012, when the deep convolutional neural network (CNN) architecture AlexNet11 achieved a breakthrough in image recognition, and it is expected to continue increasing. Because various applications of AI in digital dental radiography have been reported, the included studies were categorized according to the similarity of their application purposes. In principle, AI in dental radiography has been developed to perform image-based tasks such as classification, detection and segmentation, as shown in Figure 3.

Figure 2.

Difference between machine learning (ML) and deep learning (DL) for classification of periapical pathologies.

(a) ML relies on expert knowledge to perform feature extraction of the periapical lesions on the images, and the most robust features are fed into an ML classifier to make an accurate prediction; (b) DL, represented by a convolutional neural network, can simultaneously perform feature extraction and selection for the classification task across several hidden layers that automatically learn relevant features of the images.
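
To make the ML pipeline in panel (a) concrete, the Python sketch below extracts a few handcrafted features from small grey-level patches and classifies them with a nearest-centroid rule. It is a toy illustration under stated assumptions: the feature set (mean intensity, intensity spread, mean horizontal gradient), the classifier and all pixel values are hypothetical choices, not the features or classifiers used in the reviewed studies.

```python
import math
from statistics import mean, pstdev


def handcrafted_features(patch):
    """Hypothetical handcrafted features: [mean intensity, intensity std, mean |horizontal gradient|]."""
    pixels = [p for row in patch for p in row]
    gradients = [abs(row[i + 1] - row[i]) for row in patch for i in range(len(row) - 1)]
    return [mean(pixels), pstdev(pixels), mean(gradients)]


def fit_centroids(features, labels):
    """Nearest-centroid 'training': average feature vector for each class."""
    centroids = {}
    for label in set(labels):
        rows = [f for f, y in zip(features, labels) if y == label]
        centroids[label] = [mean(column) for column in zip(*rows)]
    return centroids


def predict(centroids, feature):
    """Assign the class whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], feature))


# Toy 3x3 patches: radiolucent (dark) periapical lesion vs. brighter healthy bone.
lesion = [[[40, 42, 41], [39, 40, 43], [41, 40, 42]],
          [[50, 48, 52], [49, 51, 50], [48, 50, 49]]]
healthy = [[[180, 170, 185], [175, 190, 172], [182, 176, 188]],
           [[200, 195, 205], [198, 202, 196], [201, 199, 203]]]
X = [handcrafted_features(p) for p in lesion + healthy]
y = ["lesion"] * 2 + ["healthy"] * 2
model = fit_centroids(X, y)
print(predict(model, handcrafted_features([[45, 47, 44], [46, 45, 48], [44, 46, 45]])))
```

In a DL pipeline, the `handcrafted_features` step would disappear, because the CNN learns its own features directly from the pixels. Real feature vectors would also normally be standardized before distances are computed; in this sketch the mean-intensity feature dominates.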

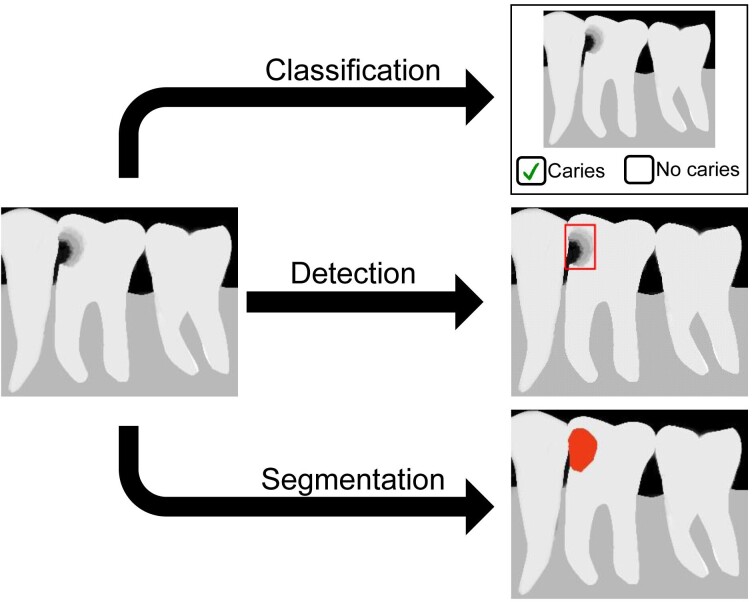

Figure 3.

Most common computer vision tasks with an example of dental caries recognition.

The classification task, which requires a labelled dataset, categorizes the entire image as showing a carious or a healthy tooth. The detection task, which requires a labelled dataset with each region of interest marked, localizes and identifies caries by drawing a bounding box around each lesion. The segmentation task, which requires a labelled dataset with precise delineation of the target object, defines the pixel-wise boundaries of the caries.
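
Because detection and segmentation outputs are boxes and masks rather than single labels, their agreement with the reference annotation is usually scored with overlap measures. The sketch below computes intersection over union (IoU) for bounding boxes and the Dice coefficient for binary masks; the box convention `(x_min, y_min, x_max, y_max)` and the tiny example masks are illustrative assumptions.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def dice(mask_a, mask_b):
    """Dice coefficient of two binary masks given as lists of 0/1 rows."""
    a = [p for row in mask_a for p in row]
    b = [p for row in mask_b for p in row]
    inter = sum(x * y for x, y in zip(a, b))
    total = sum(a) + sum(b)
    return 2 * inter / total if total else 1.0  # two empty masks agree perfectly


# Detection: predicted vs. reference caries box (hypothetical pixel coordinates).
print(box_iou((0, 0, 10, 10), (5, 5, 15, 15)))
# Segmentation: predicted vs. reference caries mask (hypothetical 2x3 masks).
print(dice([[1, 1, 0], [1, 0, 0]], [[1, 0, 0], [1, 1, 0]]))
```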

Dental caries

AI can provide additional capability to recognize pathologies, such as proximal caries and periapical pathologies, that sometimes go unnoticed by the human eye on radiographs because of image noise and/or low contrast.12 Several researchers have developed AI models that help clinicians automatically identify dental caries on radiographs. Devito et al. (2008) applied an AI model, a multilayer perceptron neural network, to improve the diagnosis of proximal caries on bitewing radiographs; the neural network yielded a 39.4% improvement in proximal caries detection.13 Using various image processing techniques followed by ML classifiers, many studies have also reported high performance (accuracy of 86 to 97%) in classifying dental caries on radiographs.12,14–17 CNN-based DL methods have also been developed to both classify and detect dental caries in periapical radiographs, with promising results. Choi et al. (2016) combined several image processing techniques with a CNN to detect proximal caries,18 and Lee et al. (2018) applied transfer learning of deep CNN architectures to the automatic detection of dental caries.19 Automatic detection of dental caries, especially in proximal regions, is useful because dentists sometimes find caries difficult to identify in certain regions owing to uneven X-ray exposure, varying sensor sensitivity, and natural variability in tooth density or thickness.18 Given these promising results, more studies are needed to optimize AI for dental caries detection and segmentation on radiographs.
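
Accuracy figures such as those quoted above are easier to interpret alongside sensitivity and specificity, because a model that misses caries can still score well on accuracy when most surfaces are sound. The sketch below derives all three from paired reference and predicted labels; the label names and the ten example surfaces are hypothetical.

```python
def diagnostic_metrics(y_true, y_pred, positive="caries"):
    """Accuracy, sensitivity and specificity for a binary labelling task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)

    def ratio(num, den):
        # Undefined ratios (e.g. no positive cases at all) are reported as NaN.
        return num / den if den else float("nan")

    return {
        "accuracy": ratio(tp + tn, len(pairs)),
        "sensitivity": ratio(tp, tp + fn),  # share of carious surfaces detected
        "specificity": ratio(tn, tn + fp),  # share of sound surfaces correctly left unflagged
    }


# Hypothetical reference labels and model predictions for ten tooth surfaces.
truth = ["caries"] * 4 + ["sound"] * 6
pred = ["caries", "caries", "caries", "sound",                      # one carious surface missed
        "caries", "sound", "sound", "sound", "sound", "sound"]      # one false alarm
print(diagnostic_metrics(truth, pred))
```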

Periapical pathologies

Periapical pathologies may co-exist with dental caries when infection spreads to the periapical tissues. They appear on radiographs as periapical radiolucencies, which may reflect an abscess, a dental granuloma or a radicular cyst. Detecting and differentiating these lesions on radiographs generally depends on the examiner’s knowledge, skill and experience,20 yet differentiating them correctly is crucial to avoid misdiagnosis. Computer-aided diagnosis has been introduced to quantify periapical lesions based on lesion size21 and severity.22 DL methods have also been used to classify periapical pathologies by severity on panoramic radiographs, ranging from mere widening of the periodontal ligament space to clearly visible lesions.23 Flores et al. (2009) and Okada et al. (2015) developed computer-aided diagnosis systems that automatically differentiate dental granulomas from radicular cysts on CBCT using ML methods.24,25 Recently, the U-net architecture, a fully convolutional network, has been used for automated detection and segmentation of periapical lesions on panoramic radiographs20 and CBCT.26 These studies found no significant difference between the performance of the AI models and manual detection by experienced radiologists and oral and maxillofacial surgeons. Further advances in AI-based computer-aided diagnostic systems may help overcome the difficulties of diagnosing periapical lesions and assist clinicians in decision-making in the near future.

Periodontal bone loss

Periodontitis is one of the most common oral diseases and can cause alveolar bone loss, tooth mobility and tooth loss.27 A diagnosis of periodontitis can be established from clinical examination of the periodontal tissues and radiographic examination of the periodontal bone.28 However, the intra- and inter-examiner reliability of detecting and analysing periodontal bone loss (PBL) on radiographs is low because of the complex anatomy and low image resolution.29 Hence, AI-based automated assistance systems for dental radiographs, that is, periapical and panoramic radiographs, could allow more reliable and accurate assessment of PBL. Lin et al. developed a computer-aided diagnosis model that automatically localizes PBL on periapical radiographs by segmenting bone loss with a hybrid feature engineering process, and then measures the degree of PBL based on the positions of the alveolar crest, cemento-enamel junction and tooth apex.28,30 CNNs have also been used for the classification of periodontal condition31 and the detection of PBL.32–34 Recently, Chang et al. (2020) developed a hybrid DL model for detecting PBL and staging periodontitis according to the criteria of the 2017 World Workshop on the Classification of Periodontal and Peri-Implant Diseases and Conditions.35 These studies demonstrated promising results, with the AI models performing comparably to, or even better than, manual analysis of PBL. With continued development of AI methods and high-quality image datasets, computer-assisted diagnosis is expected to become an effective and efficient tool in daily clinical practice, assisting in the detection, measurement and classification of PBL through automated tasks that save assessment time.
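
The landmark-based measurement and staging steps described above can be sketched as follows. This is a simplified, hypothetical implementation, not the method of Lin et al. or Chang et al.: bone loss is taken as the CEJ-to-crest distance divided by the CEJ-to-apex distance, which is one common radiographic definition (the cited studies may differ in detail), and the stage cut-offs follow the radiographic bone-loss criterion of the 2017 classification (below 15%, 15 to 33%, and extension into the middle third of the root or beyond, approximated here as more than 33%). Stages III and IV share that bone-loss criterion and are separated by clinical factors, so the sketch does not distinguish them.

```python
import math


def bone_loss_percent(cej, crest, apex):
    """Radiographic bone loss as a percentage of root length.

    Assumed definition: distance(CEJ, alveolar crest) / distance(CEJ, apex) * 100,
    with all three points given as (x, y) image coordinates on the same tooth surface.
    """
    root_length = math.dist(cej, apex)
    if root_length == 0:
        raise ValueError("CEJ and apex must be distinct points")
    return 100 * math.dist(cej, crest) / root_length


def stage_from_bone_loss(percent):
    """Simplified staging that uses only the radiographic bone-loss criterion."""
    if percent < 15:
        return "Stage I"
    if percent <= 33:
        return "Stage II"
    return "Stage III/IV"  # separated by clinical factors not modelled here


# Hypothetical landmark positions (pixels) for three teeth with the same root length.
cej, apex = (100, 50), (100, 170)
for crest in [(100, 62), (100, 80), (100, 110)]:
    pct = bone_loss_percent(cej, crest, apex)
    print(f"{pct:.1f}% -> {stage_from_bone_loss(pct)}")
```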

Tumour and cyst classification

To identify or diagnose tumours and/or cysts from radiographic images, dentists are expected to have basic skills in interpreting the intraoral and extraoral radiographs used in dental practice. The ability to recognize and interpret abnormal patterns in radiographic images is required for diagnostic reasoning, because these lesions vary in characteristics such as internal structure, shape and periphery. Biopsy and other additional examinations are normally required for a final diagnosis of a tumour and/or cyst.36 Many studies have demonstrated that AI systems are highly capable of recognizing patterns in images and performing such specific tasks. Therefore, researchers have used feature engineering processes to characterize tumours and/or cysts in order to develop automated diagnosis of various jaw cysts and/or tumours.

Several ML methods have been used to develop computer-aided classification systems for tumours and cysts based on image texture on panoramic radiographs37,38 and CBCT.39 Using CBCT imaging, Abdolali et al. (2017) developed an automatic classification system that segmented maxillofacial cysts using asymmetry analysis40 and subsequently classified them into three different lesion types using an ML classifier.41 DL methods, especially CNNs, have also been developed to detect and classify lesions into tumours and various cysts on panoramic radiographs42–45 and CBCT.46 In 2020, Kwon et al. and Yang et al. used the You Only Look Once (YOLO) network, a deep CNN model for detection tasks, to detect and classify ameloblastoma and various cysts on panoramic radiographs.46,47 Despite promising results, the performance of both ML and DL models varied across the included studies. This variability is expected, because tumour and cystic lesions can present in various forms (e.g., shape, location and internal structure) and sometimes share similar radiographic features. Further development of AI models to detect and classify tumour and cyst lesions is needed before their application in clinical practice.
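
Single-stage detectors such as YOLO typically output many overlapping candidate boxes with confidence scores, and a common post-processing step is greedy non-maximum suppression (NMS), which keeps the most confident box in each cluster of overlapping boxes of the same class. The sketch below shows this step in isolation; the IoU threshold of 0.5, the class names and the boxes are illustrative assumptions, and the sketch is not the pipeline of the cited studies.

```python
def box_iou(a, b):
    """IoU of boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections, iou_threshold=0.5):
    """Greedy per-class NMS over (score, label, box) tuples.

    Candidates are visited from highest to lowest confidence; a candidate is
    dropped if it overlaps an already-kept box of the same class by at least
    iou_threshold.
    """
    kept = []
    for score, label, box in sorted(detections, key=lambda d: d[0], reverse=True):
        if all(k_label != label or box_iou(box, k_box) < iou_threshold
               for _, k_label, k_box in kept):
            kept.append((score, label, box))
    return kept


# Hypothetical raw detector output for one panoramic radiograph.
raw = [
    (0.90, "ameloblastoma", (10, 10, 50, 50)),
    (0.75, "ameloblastoma", (12, 12, 52, 52)),   # near-duplicate of the first box
    (0.60, "radicular cyst", (100, 100, 130, 130)),
]
for det in non_max_suppression(raw):
    print(det)
```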

Cephalometric analysis

AI technology has been applied to automate cephalometric landmark identification and skeletal relationship classification. Cephalometric image analysis is commonly used in dental clinics to evaluate the skeletal anatomy of the human skull for treatment planning and treatment outcome evaluation.48 Conventional or digital cephalometric analysis generally requires manual identification of many anatomical landmarks, so various AI methods for cephalometric analysis have been developed to reduce the clinician’s burden and save time. AI methods for automating the identification of cephalometric anatomical landmarks were developed between 1998 and 2013 using knowledge-based algorithms49 and computer vision methods.50–56 In 2014, automated identification of 3D anatomical landmarks was developed using knowledge-based algorithms57,58 and computer vision methods59–63 to overcome several shortcomings of 2D image analysis, such as projection errors, magnification of objects and superimposition of structures.64 DL methods using CNN65–67 and R-CNN68,69 have also been used to develop AI models for automatic detection of anatomical landmarks on 2D lateral cephalograms, with promising results.
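
Automatic landmark detectors are commonly evaluated by the radial (Euclidean) distance between predicted and reference landmarks, summarized as a mean radial error and as a success detection rate within a tolerance such as 2 mm. The sketch below assumes pixel coordinates and a pixel spacing of 0.1 mm; both the spacing and the landmark coordinates are hypothetical.

```python
import math

MM_PER_PIXEL = 0.1  # assumed pixel spacing; take the real value from the image metadata


def radial_errors_mm(predicted, reference, mm_per_pixel=MM_PER_PIXEL):
    """Euclidean distance (mm) between each predicted landmark and its reference."""
    return [math.dist(p, r) * mm_per_pixel for p, r in zip(predicted, reference)]


def mean_radial_error(errors):
    """Average radial error over all landmarks."""
    return sum(errors) / len(errors)


def success_detection_rate(errors, radius_mm=2.0):
    """Fraction of landmarks detected within radius_mm of the reference position."""
    return sum(e <= radius_mm for e in errors) / len(errors)


# Hypothetical pixel coordinates for three landmarks on one lateral cephalogram.
reference = [(100, 100), (200, 150), (300, 220)]
predicted = [(103, 104), (200, 150), (330, 220)]
errors = radial_errors_mm(predicted, reference)
print(mean_radial_error(errors), success_detection_rate(errors))
```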

AI models have been developed not only to identify anatomical landmarks but also to perform measurements and analyses based on them. Gupta et al. (2015) developed an AI model that performed automatic cephalometric measurement using knowledge-based algorithms and found no significant difference between automatic and manual measurements.57,58 Fully automatic systems that classify anatomical types based on eight standard clinical measurements, formulated as geometrical functions of the landmark locations (such as angles or distances between cephalometric landmarks), were developed using ML70 and CNN71 models. Yu et al. (2020) constructed a multimodal CNN that provided accurate skeletal diagnosis without additional cephalometric tracing or analysis, using 5,890 lateral cephalograms and demographic data as input.67 Fully automated cephalometric analysis systems have shown the potential of DL as a cephalometric orthodontic diagnostic tool. However, further research comparing these systems with the original approach based on anatomical landmark detection is still needed.
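
As an example of a measurement formulated as a geometrical function of landmark locations, the ANB angle is the difference between the SNA and SNB angles, each measured at nasion (N) from the direction of sella (S). The sketch below computes it from 2D coordinates and maps it to a skeletal class. The Class I range of 0° to 4° (around the commonly cited norm of about 2°) and the toy landmark positions are illustrative assumptions; published studies and clinical norms use different cut-offs.

```python
import math


def angle_at(vertex, p, q):
    """Unsigned angle p-vertex-q in degrees, in [0, 180]."""
    a = math.atan2(p[1] - vertex[1], p[0] - vertex[0])
    b = math.atan2(q[1] - vertex[1], q[0] - vertex[0])
    deg = abs(math.degrees(a - b)) % 360
    return 360 - deg if deg > 180 else deg


def anb_angle(s, n, a, b):
    """ANB = SNA - SNB; positive when point A lies anterior to point B relative to S-N."""
    return angle_at(n, s, a) - angle_at(n, s, b)


def skeletal_class(anb, class_i_range=(0.0, 4.0)):
    """Map ANB (degrees) to a skeletal class; the default range is an illustrative assumption."""
    low, high = class_i_range
    if anb > high:
        return "Class II"
    if anb < low:
        return "Class III"
    return "Class I"


def point_from_nasion(angle_from_sn_deg, length):
    """Hypothetical helper for toy landmarks: N is at (0, 0) and S lies on the -x axis."""
    theta = math.radians(180 - angle_from_sn_deg)
    return (length * math.cos(theta), length * math.sin(theta))


S, N = (-70.0, 0.0), (0.0, 0.0)
A = point_from_nasion(82, 60)    # toy SNA of about 82 degrees
B = point_from_nasion(79, 100)   # toy SNB of about 79 degrees
anb = anb_angle(S, N, A, B)
print(round(anb, 1), skeletal_class(anb))
```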

Screening of osteoporosis

To date, many radiomorphometric studies have shown the potential of panoramic radiographs to detect osteoporosis based on low bone mineral density (BMD),72 which has encouraged AI applications for the automatic early detection of osteoporosis. Because panoramic radiography is commonly used as a diagnostic tool in dental practice, applying AI to these images could be very useful for initial osteoporosis screening. The outcome could also be considered when planning dental treatments affected by low BMD, for example given the increased risk of peri-implant bone loss73 and osteonecrosis of the jaws.74

Mandibular cortical bone is considered an important feature and an effective region of interest related to BMD. Automatic measurement of mandibular cortical width using an active shape model75,76 and discriminant analysis77 showed that the automatically measured width correlated significantly with BMD. Roberts et al. (2013) proposed combining mandibular cortical width and texture features as a potential biomarker for osteoporosis after showing improved performance with an ML method.78 Subsequently, several ML methods have been developed to analyse mandibular cortical width and find the optimal feature engineering process,79–83 demonstrating high diagnostic performance (AUC of 98.6%, with femoral neck BMD as the reference).83 Several CNN architectures have also been developed to classify osteoporosis on panoramic radiographs, with promising results (AUC from 86 to 99%).84–86 These computer-assisted diagnostic systems for osteoporosis have shown promising results in recent years and may become available for clinical use in the near future for the early detection of osteoporosis.
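
The AUC values quoted above summarize, across all decision thresholds, how well a model’s output separates osteoporotic from non-osteoporotic patients. One way to compute it is as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case (the Mann-Whitney formulation), sketched below with hypothetical model scores.

```python
def auc(positive_scores, negative_scores):
    """AUC as P(score of a random positive > score of a random negative); ties count 1/2."""
    if not positive_scores or not negative_scores:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in positive_scores:
        for n in negative_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positive_scores) * len(negative_scores))


# Hypothetical model scores: patients with osteoporosis vs. patients with normal BMD.
osteoporotic = [0.9, 0.8, 0.4]
normal_bmd = [0.3, 0.5, 0.1]
print(auc(osteoporotic, normal_bmd))
```

The pairwise loop is quadratic in the number of cases, which is fine for illustration; rank-based implementations scale better for large test sets.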

Tooth recognition and forensic odontology

AI methods can help achieve efficient forensic dental identification in large-scale disasters, in which many victims’ bodies are severely damaged. Dental information is useful for personal identification because dental features generally remain unaltered after death. Dental radiographs are commonly used to compare ante- and post-mortem data, because they contain essential and unique information about an individual’s dentition. However, this process is time-consuming, because each tooth, with its own anatomy, must be analysed manually before matching. Considering the capability of AI to assist the dental forensic process, DL methods such as CNN and R-CNN have been used for automatic tooth detection on periapical radiographs,87–89 panoramic radiographs90–92 and CBCT.93,94 DL methods have also been used to perform automatic tooth segmentation on panoramic radiographs, which can benefit ante- and post-mortem data matching.95–98 Beyond forensics, the promising results of AI-based tooth recognition could also be useful in daily clinical practice.

AI in forensic odontology can also be used for automatic age estimation, sex determination, skeletal morphology identification and personal identification.99–105 Deep CNNs have been used for age estimation by staging third molar development according to the Demirjian classification on panoramic radiographs.100–102 The best stage allocation accuracy, 61%, was obtained with fully manual segmentation of the third molar.102 Avuçlu et al. (2017) developed novel approaches to determine age and sex from tooth images cropped from panoramic radiographs: they used image processing techniques to segment the tooth images and fed them into multilayer perceptron neural networks for age and gender estimation.103 Patil et al. (2020) also demonstrated the potential of artificial neural networks (ANNs) for gender determination using mandibular morphometric parameters on panoramic radiographs.104 Matsuda et al. (2020) conducted a preliminary study of simple CNN architectures for personal identification by comparing 30 pairs of panoramic radiographs.105 Despite these promising results in forensic odontology, further improvement of AI models is still needed.

Dental implant

Dental implants have become one of the standard treatments for replacing missing teeth. Although dental implant treatment shows long-term success, with survival beyond 10 years in over 90% of cases, mechanical and biological complications can occur. When an implant fails and information about the implant system is unavailable, identifying the correct implant system is essential for retreatment. However, the numerous dental implant systems available, including their abutment systems and superstructure materials, make it increasingly difficult to identify the system and address specific clinical indications. In such cases, AI can help classify the dental implant system from dental radiographs, because radiographic examination is routinely performed to evaluate implant treatment in medical facilities.

In 1996, Lehmann et al. developed IDEFIX (identification of dental fixtures in intraoral X-rays), which used an ML method to classify eight different dental implant systems based on parameters such as implant diameter, length and cross-sectional area.106 Recently, many studies have used CNNs to classify implant systems on intraoral and panoramic radiographs.107–112 Kim et al. applied transfer learning to five CNN architectures to classify four different implant systems and achieved accuracy exceeding 90% with all models. The YOLO network, which specializes in object detection, can also be used to detect dental implants.108 Lee et al. used an automated deep CNN (DCNN) model to classify six different implants using 11,980 panoramic and periapical radiographs; the automated DCNN outperformed most of the participating dental professionals, including periodontists and residents.109 These results show that CNNs can be highly effective at distinguishing different implant systems with similar shapes on dental radiographs. As numerous dental implants are available on the market, further development may enable the classification of many more implant systems and thus provide valuable information for clinicians when evaluating or managing implant failure.

Image quality enhancement

CNN-based architectures can also be applied to enhance the image quality of dental radiographs. Du et al. used a CNN-based architecture to correct blur in panoramic radiographs caused by patient positioning errors. The CNN stably estimated the positioning error of the patient’s dental arch, and the corrected panoramic image reconstructed from this estimate showed reduced blur.113 Hatvani et al. applied U-net architectures to enhance the resolution of 2-D CBCT dental images, using μCT data of the same teeth as ground truth. The results demonstrated the superiority of the proposed CNN-based approaches, which allowed better detection of salient features, such as root canal features relevant to endodontic treatment.114 Owing to its strong segmentation performance, several studies have also applied the U-net architecture to metal artefact reduction (MAR) in CBCT images.115–117 Metal artefacts commonly appear in CBCT images because of the high attenuation of dense materials such as dental restorations and dental implants. Despite limitations, including the lack of ground-truth images, the results suggest that CNN-based MAR may have clinical value for CBCT images, as already demonstrated in conventional CT.
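
When a ground-truth image exists, as with the μCT reference used by Hatvani et al., enhancement can be scored with fidelity metrics. Peak signal-to-noise ratio (PSNR) is one common choice and is sketched below; we do not claim that the cited studies used this exact metric. The sketch assumes 8-bit grey levels and images already registered to the same size, and the 2×2 pixel values are hypothetical.

```python
import math


def psnr(reference, test, max_value=255):
    """PSNR in dB between two equally sized grey-level images given as lists of rows."""
    ref = [p for row in reference for p in row]
    out = [p for row in test for p in row]
    if len(ref) != len(out):
        raise ValueError("images must have the same number of pixels")
    mse = sum((r - t) ** 2 for r, t in zip(ref, out)) / len(ref)
    # Identical images have zero error, so PSNR is unbounded.
    return math.inf if mse == 0 else 10 * math.log10(max_value ** 2 / mse)


# Hypothetical 2x2 patches: ground truth, a degraded input and a restored output.
ground_truth = [[100, 100], [100, 100]]
degraded = [[90, 110], [100, 100]]
restored = [[98, 102], [100, 100]]
print(psnr(ground_truth, degraded), psnr(ground_truth, restored))
```

Higher PSNR means the output is closer to the reference; because it is a pixel-wise measure, it is often reported together with structural or task-based measures.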

Other purposes

AI has great potential in dental radiography and is therefore expected to reduce dentists’ workload and human error through automated tasks. In addition to the applications described above, AI has been developed to perform other tasks. Several ML methods have been used to develop automated diagnostic systems for various dental diseases (e.g., dental caries, cracked teeth and periodontal bone loss)118–120 and to classify various dental restorations.121 DL has been used with several dental imaging modalities to develop models for the automated diagnosis of common dental diseases122; detection of maxillary sinus pathologies123–125; identification and classification of head and neck lymph node metastasis126,127; detection and segmentation for assessing the relationship between the mandibular canal and the position of the mandibular third molar128–131; detection of vertical root fracture132,133; detection and classification of impacted maxillary supernumerary teeth134 and mandibular third molars135,136; classification of the root morphology of the mandibular first molar137; and diagnostic support in patients with Sjögren syndrome using CT images.138 Given the great potential of DL methods, their application and development are expected to increase greatly in the near future.

Limitations and challenges

Figure 1 shows an increasing number of AI studies in recent years, demonstrating the potential and promising results of AI for a wide variety of purposes. However, most of these applications are not yet readily applicable in daily clinical practice and need further development. Several factors should be considered in developing AI applications for dental radiography. The quantity and quality of data are crucial to the learning process of AI systems. The amount of imaging data and the quality of images are continuously increasing, and these data are routinely stored in medical facilities. However, not all of these data are available and accessible for AI development because of ethical issues and patient data protection policies.139,140 Despite the rapid year-on-year growth in computational power, limited data size can be a major problem in developing AI models.

For ML-based AI models, especially DL models, the training, validation and test sets should ideally be independent of one another. Additionally, the demographic and clinical characteristics of the cases in the dataset should be specified and should meet criteria in accordance with the study’s rationale, goals and anticipated impact.141 In the training dataset, class imbalance, that is, a disparity in the number of cases representing each class, should be avoided to prevent a high number of false-negative results.142 Furthermore, the relationship between the number of training images and model performance should be evaluated.5 Some studies also did not describe the rationale for their choice of reference standard, or ground truth, or the potential errors, biases and limitations of that reference. For example, the number of human annotators and their qualifications should be specified. Inter- and intrarater variability should also be measured, and any steps taken to reduce the variability of the reference standard should be described.
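
Inter-rater variability of the reference standard can be quantified with Cohen’s kappa, which corrects the raw agreement between two annotators for the agreement expected by chance. A minimal sketch with two hypothetical annotators labelling ten radiographs:

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same non-empty set of items")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of the two raters' label proportions, summed over labels.
    expected = sum(count_a[c] * count_b[c] for c in count_a.keys() | count_b.keys()) / n ** 2
    if expected == 1:
        return 1.0  # kappa is undefined (both raters used one identical label); treat as perfect
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two annotators for the same ten radiographs.
annotator_1 = ["lesion", "lesion", "none", "none", "lesion",
               "none", "none", "none", "lesion", "none"]
annotator_2 = ["lesion", "none", "none", "none", "lesion",
               "none", "none", "lesion", "lesion", "none"]
print(cohens_kappa(annotator_1, annotator_2))
```

Here the annotators agree on 8 of 10 images, but because both label 40% of images as lesions, about half of that agreement is expected by chance, so kappa is noticeably lower than the raw 80% agreement.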

Although DL has become increasingly popular in recent years, DL models require huge amounts of data; however, some of the included studies used relatively small dental radiograph datasets, especially compared with AI studies in the medical field. For example, the studies included in a review of AI applications in thoracic imaging used chest radiograph datasets ranging from 1,000 to 100,000 images.143 Although many AI studies using dental radiographs were pilot or exploratory studies, small datasets can lead to overfitted AI models and overly optimistic performance results, because the performance of DL algorithms usually scales with data.8,140 To avoid these problems, the sample size should be justified and, where applicable, statistically calculated so that the results can be generalized to a larger population.144 However, even if the sample size is statistically sufficient, the AI model may still overfit or underfit depending on the quality and quantity of the dataset. The adequacy of the training and validation sets should therefore be evaluated during training by observing the learning curve of the AI model, a graph showing the relationship between sample size and performance (e.g., accuracy). For example, when the training score is considerably higher than the validation score, adding training data will most likely improve generalization. Based on the dataset and available computational resources, the choice of algorithm and method for an AI study should be carefully assessed, and experienced dental clinicians, expert computer engineers and data scientists should be involved in choosing the methodology.
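
One way to justify the size of a diagnostic test set statistically is Buderer’s formula, which gives the number of cases needed to estimate an expected sensitivity and specificity within a chosen precision, given the disease prevalence. The sketch below implements that formula; the expected values, precision and prevalence in the example are hypothetical planning inputs. Note that it sizes the evaluation set only and does not guarantee that a DL model has enough training data.

```python
import math
from statistics import NormalDist


def buderer_sample_size(sensitivity, specificity, precision, prevalence, confidence=0.95):
    """Total cases needed to estimate sensitivity and specificity within +/- precision."""
    if not 0 < prevalence < 1:
        raise ValueError("prevalence must be strictly between 0 and 1")
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95% confidence
    # Cases with the condition drive the sensitivity estimate, cases without it the specificity.
    n_for_sensitivity = z ** 2 * sensitivity * (1 - sensitivity) / (precision ** 2 * prevalence)
    n_for_specificity = z ** 2 * specificity * (1 - specificity) / (precision ** 2 * (1 - prevalence))
    # The larger requirement governs the total number of cases to recruit.
    return math.ceil(max(n_for_sensitivity, n_for_specificity))


# Hypothetical planning values: expected sensitivity 0.90, specificity 0.85,
# precision +/-0.05 and prevalence 30%.
print(buderer_sample_size(0.90, 0.85, 0.05, 0.30))
```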

Thirty-seven of the reviewed studies did not report their validation technique, whether cross-validation or hold-out validation. Validation is crucial not only for avoiding overfitted models but also for monitoring model performance during training and for choosing the best optimized model that generalizes to an independent dataset.145 To achieve optimal generalizability, a hold-out validation (test) set is preferable to a cross-validation approach, although both methods remain methodologically acceptable. Therefore, hold-out validation with heterogeneous dental radiography datasets should be considered the preferred approach for developing AI models that overcome the generalizability issue.
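
A hold-out split only tests generalizability if the sets are truly independent; with dental radiographs, a common pitfall is placing images of the same patient in both the training and test sets. The sketch below splits by patient rather than by image. The record format, the fractions and the seed are hypothetical choices.

```python
import random


def patient_level_split(records, test_fraction=0.2, val_fraction=0.1, seed=42):
    """Split (patient_id, image_id) records so that each patient falls in exactly one set."""
    patients = sorted({pid for pid, _ in records})
    random.Random(seed).shuffle(patients)  # seeded for a reproducible split
    n_test = round(len(patients) * test_fraction)
    n_val = round(len(patients) * val_fraction)
    test_ids = set(patients[:n_test])
    val_ids = set(patients[n_test:n_test + n_val])
    split = {"train": [], "val": [], "test": []}
    for pid, image_id in records:
        if pid in test_ids:
            split["test"].append(image_id)
        elif pid in val_ids:
            split["val"].append(image_id)
        else:
            split["train"].append(image_id)
    return split


# Hypothetical dataset: 10 patients with two radiographs each.
records = [(f"p{i}", f"p{i}_img{j}") for i in range(10) for j in range(2)]
split = patient_level_split(records)
print({name: len(images) for name, images in split.items()})
```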

Finally, research methodologies need to be aligned and standardized, including concept and terminology usage, dataset size justification, performance metrics and reporting formats. A standardized reporting format should be followed, such as that described in the Checklist for Artificial Intelligence in Medical Imaging (CLAIM).141 The CLAIM guideline specifies the essential items that should be reported in an AI study, with the goal of promoting clear, transparent and reproducible scientific communication about the application of AI to medical imaging. By following a standardized reporting format, future AI research in dental radiography is expected to have a global impact and to bring substantial improvement to the field of dental and maxillofacial radiology.
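
Dataset size justification can take many forms. For a binary diagnostic task (for example, caries present or absent), one common option is Buderer's formula. It sizes a study so that sensitivity and specificity are each estimated within a chosen margin of error at an expected disease prevalence. The Python sketch below illustrates this under that assumption. The expected sensitivity, specificity, prevalence and margin are hypothetical planning values. The formula does not directly apply to segmentation, landmark-detection or image-enhancement studies.

```python
import math
from statistics import NormalDist


def buderer_sample_size(sensitivity, specificity, prevalence, margin, confidence=0.95):
    """Total number of cases (diseased + healthy) needed so that sensitivity and
    specificity are each estimated within +/- margin at the given confidence
    level, using the normal-approximation formula attributed to Buderer (1996).
    """
    for name, value in [("sensitivity", sensitivity), ("specificity", specificity),
                        ("prevalence", prevalence), ("margin", margin),
                        ("confidence", confidence)]:
        if not 0 < value < 1:
            raise ValueError(f"{name} must lie strictly between 0 and 1, got {value}")

    # Two-sided critical value, e.g. z ~ 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)

    # Cases needed in each class: z^2 * p(1 - p) / d^2.
    n_diseased = z ** 2 * sensitivity * (1 - sensitivity) / margin ** 2
    n_healthy = z ** 2 * specificity * (1 - specificity) / margin ** 2

    # Scale up by prevalence so that enough diseased and healthy cases are
    # expected in a consecutively collected sample.
    total_for_sens = math.ceil(n_diseased / prevalence)
    total_for_spec = math.ceil(n_healthy / (1 - prevalence))
    return max(total_for_sens, total_for_spec)


if __name__ == "__main__":
    # Hypothetical planning values for a caries-detection model.
    n = buderer_sample_size(sensitivity=0.90, specificity=0.85,
                            prevalence=0.30, margin=0.05)
    print(f"Required total sample size: {n}")
```

With these hypothetical inputs, the function returns 461 cases. The sensitivity term dominates because only 30% of cases are assumed to be diseased. Halving the margin roughly quadruples the requirement, which is why the target precision should be stated alongside the sample size.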

This narrative review provided an overview of AI applications in digital dental radiography for a wide variety of purposes. However, some limitations of this review should be taken into consideration. Although we tried to cover as many studies as possible through a rigorous literature search, some studies may not have been included, because the literature search and assessment did not follow a systematic protocol. Additionally, the challenges of developing AI models using dental radiography may differ depending on the application. Further systematic reviews are needed to answer specific questions about AI applications for specific purposes in dental and maxillofacial radiology.

Conclusion

AI has rapidly advanced in various medical fields and has gained attention in recent years, particularly in the radiology community. To date, AI applications in dental radiography have shown great potential for a wide variety of purposes and may play an important role in assisting clinicians with decision-making. However, current AI development in dental imaging is not yet mature and requires substantial improvement before it can be implemented in clinical routine. Future AI research in dental radiography should involve interdisciplinary researchers and follow reporting guidelines in order to align research designs and enhance the impact of AI development globally. AI applications in dental radiography are expected to transform the dental healthcare system by enabling better dental care at lower cost, thereby benefiting patients, providers and the wider society.

Footnotes

Declaration of interest: The authors declare that they have no competing financial or personal interests.

Funding: This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Contributor Information

Ramadhan Hardani Putra, Email: putra.ramadhan.hardani.s2@dc.tohoku.ac.jp.

Chiaki Doi, Email: chiaki.doi.d8@tohoku.ac.jp.

Nobuhiro Yoda, Email: nobuhiro.yoda.e2@tohoku.ac.jp.

Eha Renwi Astuti, Email: eha-r-a@fkg.unair.ac.id.

Keiichi Sasaki, Email: keiichi.sasaki.e6@tohoku.ac.jp.

REFERENCES

- 1. Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts HJWL. Artificial intelligence in radiology. Nat Rev Cancer 2018; 18: 500–10. doi: 10.1038/s41568-018-0016-5

- 2. Jiang F, Jiang Y, Zhi H, Dong Y, Li H, Ma S, et al. Artificial intelligence in healthcare: past, present and future. Stroke Vasc Neurol 2017; 2: 230–43. doi: 10.1136/svn-2017-000101

- 3. Thrall JH, Li X, Li Q, Cruz C, Do S, Dreyer K, et al. Artificial intelligence and machine learning in radiology: opportunities, challenges, pitfalls, and criteria for success. J Am Coll Radiol 2018; 15(3 Pt B): 504–8. doi: 10.1016/j.jacr.2017.12.026

- 4. Joda T, Yeung AWK, Hung K, Zitzmann NU, Bornstein MM. Disruptive innovation in dentistry: what it is and what could be next. J Dent Res 2021; 100: 448–53. doi: 10.1177/0022034520978774

- 5. Bluemke DA, Moy L, Bredella MA, Ertl-Wagner BB, Fowler KJ, Goh VJ, et al. Assessing Radiology Research on Artificial Intelligence: A Brief Guide for Authors, Reviewers, and Readers-From the Radiology Editorial Board. Radiology 2020; 294: 487–9. doi: 10.1148/radiol.2019192515

- 6. Do S, Song KD, Chung JW. Basics of deep learning: a radiologist's guide to understanding published radiology articles on deep learning. Korean J Radiol 2020; 21: 33–41. doi: 10.3348/kjr.2019.0312

- 7. Soffer S, Ben-Cohen A, Shimon O, Amitai MM, Greenspan H, Klang E. Convolutional neural networks for radiologic images: a radiologist's guide. Radiology 2019; 290: 590–606. doi: 10.1148/radiol.2018180547

- 8. Hung K, Montalvao C, Tanaka R, Kawai T, Bornstein MM. The use and performance of artificial intelligence applications in dental and maxillofacial radiology: a systematic review. Dentomaxillofac Radiol 2020; 49: 20190107. doi: 10.1259/dmfr.20190107

- 9. Nagi R, Aravinda K, Rakesh N, Gupta R, Pal A, Mann AK. Clinical applications and performance of intelligent systems in dental and maxillofacial radiology: a review. Imaging Sci Dent 2020; 50: 81–92. doi: 10.5624/isd.2020.50.2.81

- 10. Ebigbo A, Palm C, Probst A, Mendel R, Manzeneder J, Prinz F, et al. A technical review of artificial intelligence as applied to gastrointestinal endoscopy: Clarifying the terminology. Endosc Int Open 2019; 7: E1616–23. doi: 10.1055/a-1010-5705

- 11. Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. Proc 25th Int Conf Neural Inf Process Syst 2012: 1097–105.

- 12. Geetha V, Aprameya KS, Hinduja DM. Dental caries diagnosis in digital radiographs using back-propagation neural network. Health Inf Sci Syst 2020; 8: 8. doi: 10.1007/s13755-019-0096-y

- 13. Devito KL, de Souza Barbosa F, Felippe Filho WN. An artificial multilayer perceptron neural network for diagnosis of proximal dental caries. Oral Surg Oral Med Oral Pathol Oral Radiol Endod 2008; 106: 879–84. doi: 10.1016/j.tripleo.2008.03.002

- 14. Yu Y, Li Y, YJ L, Wang JM, Lin DH, WP Y. Tooth decay diagnosis using back propagation neural network. Proc 2006 Int Conf Mach Learn Cybern 2006: 3956–9.

- 15. Li W, Kuang W, Li Y, YJ L, WP Y. Clinical X-ray image-based tooth decay diagnosis using SVM. Proc Sixth Int Conf Mach Learn Cybern ICMLC 2007; 3: 1616–9.

- 16. Ali RB, Ejbali R, Zaied M. Detection and classification of dental caries in X-ray images using deep neural networks. ICSEA 2016 Elev Int Conf Softw Eng Adv 2016: 223–7.

- 17. Singh P, Sehgal P. Automated caries detection based on radon transformation and DCT. 8th Int Conf Comput Commun Netw Technol IEEE 2017: 1–6.

- 18. Choi J, Eun H, Kim C. Boosting proximal dental caries detection via combination of variational methods and Convolutional neural network. J Signal Process Syst 2018; 90: 87–97. doi: 10.1007/s11265-016-1214-6

- 19. Lee J-H, Kim D-H, Jeong S-N, Choi S-H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J Dent 2018; 77: 106–11. doi: 10.1016/j.jdent.2018.07.015

- 20. Endres MG, Hillen F, Salloumis M, Sedaghat AR, Niehues SM, Quatela O, et al. Development of a deep learning algorithm for periapical disease detection in dental radiographs. Diagnostics 2020; 10: 430. doi: 10.3390/diagnostics10060430

- 21. Mol A, van der Stelt PF. Application of computer-aided image interpretation to the diagnosis of periapical bone lesions. Dentomaxillofac Radiol 1992; 21: 190–4. doi: 10.1259/dmfr.21.4.1299632

- 22. Carmody DP, McGrath SP, Dunn SM, van der Stelt PF, Schouten E. Machine classification of dental images with visual search. Acad Radiol 2001; 8: 1239–46. doi: 10.1016/S1076-6332(03)80706-7

- 23. Ekert T, Krois J, Meinhold L, Elhennawy K, Emara R, Golla T, et al. Deep learning for the radiographic detection of apical lesions. J Endod 2019; 45: 917–22. doi: 10.1016/j.joen.2019.03.016

- 24. Flores A, Rysavy S, Enciso R, Okada K. Non-Invasive differential diagnosis of dental periapical lesions in cone-beam CT. Proc 2009 IEEE Int Symp Biomed Imaging From NANO to Macro 2009: 566–9.

- 25. Okada K, Rysavy S, Flores A, Linguraru MG. Noninvasive differential diagnosis of dental periapical lesions in cone-beam CT scans. Med Phys 2015; 42: 1653–65. doi: 10.1118/1.4914418

- 26. Orhan K, Bayrakdar IS, Ezhov M, Kravtsov A, Özyürek T. Evaluation of artificial intelligence for detecting periapical pathosis on cone-beam computed tomography scans. Int Endod J 2020; 53: 680–9. doi: 10.1111/iej.13265

- 27. Tonetti MS, Jepsen S, Jin L, Otomo-Corgel J. Impact of the global burden of periodontal diseases on health, nutrition and wellbeing of mankind: a call for global action. J Clin Periodontol 2017; 44: 456–62. doi: 10.1111/jcpe.12732

- 28. Lin PL, Huang PY, Huang PW. Automatic methods for alveolar bone loss degree measurement in periodontitis periapical radiographs. Comput Methods Programs Biomed 2017; 148: 1–11. doi: 10.1016/j.cmpb.2017.06.012

- 29. Åkesson L, Håkansson J, Rohlin M. Comparison of panoramic and intraoral radiography and pocket probing for the measurement of the marginal bone level. J Clin Periodontol 1992; 19: 326–32. doi: 10.1111/j.1600-051X.1992.tb00654.x

- 30. Lin PL, Huang PW, Huang PY, Hsu HC. Alveolar bone-loss area localization in periodontitis radiographs based on threshold segmentation with a hybrid feature fused of intensity and the H-value of fractional Brownian motion model. Comput Methods Programs Biomed 2015; 121: 117–26. doi: 10.1016/j.cmpb.2015.05.004

- 31. Lee J-H, Kim D-H, Jeong S-N, Choi S-H. Diagnosis and prediction of periodontally compromised teeth using a deep learning-based convolutional neural network algorithm. J Periodontal Implant Sci 2018; 48: 114–23. doi: 10.5051/jpis.2018.48.2.114

- 32. Kim J, Lee H-S, Song I-S, Jung K-H. DeNTNet: deep neural transfer network for the detection of periodontal bone loss using panoramic dental radiographs. Sci Rep 2019; 9: 17615. doi: 10.1038/s41598-019-53758-2

- 33. Thanathornwong B, Suebnukarn S. Automatic detection of periodontal compromised teeth in digital panoramic radiographs using faster regional convolutional neural networks. Imaging Sci Dent 2020; 50: 169–74. doi: 10.5624/isd.2020.50.2.169

- 34. Krois J, Ekert T, Meinhold L, Golla T, Kharbot B, Wittemeier A, et al. Deep learning for the radiographic detection of periodontal bone loss. Sci Rep 2019; 9: 8495. doi: 10.1038/s41598-019-44839-3

- 35. Chang H-J, Lee S-J, Yong T-H, Shin N-Y, Jang B-G, Kim J-E, et al. Deep learning hybrid method to automatically diagnose periodontal bone loss and stage periodontitis. Sci Rep 2020; 10: 7531. doi: 10.1038/s41598-020-64509-z

- 36. White S, Pharoah M. Oral Radiology: Principles and Interpretation. 7th ed. Canada: Elsevier Mosby; 2017.

- 37. Mikulka J, Gescheidtová E, Kabrda M, Peřina V. Classification of jaw bone cysts and necrosis via the processing of orthopantomograms. Radioengineering 2013; 22: 114–22.

- 38. Nurtanio I, Astuti ER, Purnama IKE, Hariadi M, Purnomo MH. Classifying cyst and tumor lesion using support vector machine based on dental panoramic images texture features. IAENG Int J Comput Sci 2013; 40: 29–37.

- 39. Yilmaz E, Kayikcioglu T, Kayipmaz S. Computer-Aided diagnosis of periapical cyst and keratocystic odontogenic tumor on cone beam computed tomography. Comput Methods Programs Biomed 2017; 146: 91–100. doi: 10.1016/j.cmpb.2017.05.012

- 40. Abdolali F, Zoroofi RA, Otake Y, Sato Y. Automatic segmentation of maxillofacial cysts in cone beam CT images. Comput Biol Med 2016; 72: 108–19. doi: 10.1016/j.compbiomed.2016.03.014

- 41. Abdolali F, Zoroofi RA, Otake Y, Sato Y. Automated classification of maxillofacial cysts in cone beam CT images using contourlet transformation and spherical harmonics. Comput Methods Programs Biomed 2017; 139: 197–207. doi: 10.1016/j.cmpb.2016.10.024

- 42. Poedjiastoeti W, Suebnukarn S. Application of convolutional neural network in the diagnosis of jaw tumors. Healthc Inform Res 2018; 24: 236–41. doi: 10.4258/hir.2018.24.3.236

- 43. Ariji Y, Yanashita Y, Kutsuna S, Muramatsu C, Fukuda M, Kise Y, et al. Automatic detection and classification of radiolucent lesions in the mandible on panoramic radiographs using a deep learning object detection technique. Oral Surg Oral Med Oral Pathol Oral Radiol 2019; 128: 424–30. doi: 10.1016/j.oooo.2019.05.014

- 44. Watanabe H, Ariji Y, Fukuda M, Kuwada C, Kise Y, Nozawa M, et al. Deep learning object detection of maxillary cyst-like lesions on panoramic radiographs: preliminary study. Oral Radiol 2020; published online 19 Sep 2020. doi: 10.1007/s11282-020-00485-4

- 45. Lee J-H, Kim D-H, Jeong S-N. Diagnosis of cystic lesions using panoramic and cone beam computed tomographic images based on deep learning neural network. Oral Dis 2020; 26: 152–8. doi: 10.1111/odi.13223

- 46. Kwon O, Yong T-H, Kang S-R, Kim J-E, Huh K-H, Heo M-S. Automatic diagnosis for cysts and tumors of both jaws on panoramic radiographs using a deep convolution neural network. Dentomaxillofac Radiol 2020; 49: 20200185. doi: 10.1259/dmfr.20200185

- 47. Yang H, Jo E, Kim HJ, Cha IH, Jung YS, Nam W. Deep learning for automated detection of cyst and tumors of the jaw in panoramic radiographs. J Clin Med 2020; 9: 1839.

- 48. Devereux L, Moles D, Cunningham SJ, McKnight M. How important are lateral cephalometric radiographs in orthodontic treatment planning? Am J Orthod Dentofacial Orthop 2011; 139: e175–81. doi: 10.1016/j.ajodo.2010.09.021

- 49. Liu JK, Chen YT, Cheng KS. Accuracy of computerized automatic identification of cephalometric landmarks. Am J Orthod Dentofacial Orthop 2000; 118: 535–40. doi: 10.1067/mod.2000.110168

- 50. Hutton TJ, Cunningham S, Hammond P. An evaluation of active shape models for the automatic identification of cephalometric landmarks. Eur J Orthod 2000; 22: 499–508. doi: 10.1093/ejo/22.5.499

- 51. Rueda S, Alcañiz M. An approach for the automatic cephalometric landmark detection using mathematical morphology and active appearance models. Med Image Comput Comput Assist Interv 2006; 9(Pt 1): 159–66. doi: 10.1007/11866565_20

- 52. Vucinić P, Trpovski Z, Sćepan I. Automatic landmarking of cephalograms using active appearance models. Eur J Orthod 2010; 32: 233–41. doi: 10.1093/ejo/cjp099

- 53. Rudolph DJ, Sinclair PM, Coggins JM. Automatic computerized radiographic identification of cephalometric landmarks. Am J Orthod Dentofacial Orthop 1998; 113: 173–9. doi: 10.1016/S0889-5406(98)70289-6

- 54. Grau V, Alcañiz M, Juan MC, Monserrat C, Knoll C. Automatic localization of cephalometric landmarks. J Biomed Inform 2001; 34: 146–56. doi: 10.1006/jbin.2001.1014

- 55. Leonardi R, Giordano D, Maiorana F. An evaluation of cellular neural networks for the automatic identification of cephalometric landmarks on digital images. J Biomed Biotechnol 2009; 2009: 717102. doi: 10.1155/2009/717102

- 56. Shahidi S, Shahidi S, Oshagh M, Gozin F, Salehi P, Danaei SM. Accuracy of computerized automatic identification of cephalometric landmarks by a designed software. Dentomaxillofac Radiol 2013; 42: 20110187. doi: 10.1259/dmfr.20110187

- 57. Gupta A, Kharbanda OP, Sardana V, Balachandran R, Sardana HK. A knowledge-based algorithm for automatic detection of cephalometric landmarks on CBCT images. Int J Comput Assist Radiol Surg 2015; 10: 1737–52. doi: 10.1007/s11548-015-1173-6

- 58. Gupta A, Kharbanda OP, Sardana V, Balachandran R, Sardana HK. Accuracy of 3D cephalometric measurements based on an automatic knowledge-based landmark detection algorithm. Int J Comput Assist Radiol Surg 2016; 11: 1297–309. doi: 10.1007/s11548-015-1334-7

- 59. Shahidi S, Bahrampour E, Soltanimehr E, Zamani A, Oshagh M, Moattari M, et al. The accuracy of a designed software for automated localization of craniofacial landmarks on CBCT images. BMC Med Imaging 2014; 14: 32. doi: 10.1186/1471-2342-14-32

- 60. Codari M, Caffini M, Tartaglia GM, Sforza C, Baselli G. Computer-Aided cephalometric landmark annotation for CBCT data. Int J Comput Assist Radiol Surg 2017; 12: 113–21. doi: 10.1007/s11548-016-1453-9

- 61. Montúfar J, Romero M, Scougall-Vilchis RJ. Automatic 3-dimensional cephalometric landmarking based on active shape models in related projections. Am J Orthod Dentofacial Orthop 2018; 153: 449–58. doi: 10.1016/j.ajodo.2017.06.028

- 62. Montúfar J, Romero M, Scougall-Vilchis RJ. Hybrid approach for automatic cephalometric landmark annotation on cone-beam computed tomography volumes. Am J Orthod Dentofacial Orthop 2018; 154: 140–50. doi: 10.1016/j.ajodo.2017.08.028

- 63. Neelapu BC, Kharbanda OP, Sardana V, Gupta A, Vasamsetti S, Balachandran R, et al. Automatic localization of three-dimensional cephalometric landmarks on CBCT images by extracting symmetry features of the skull. Dentomaxillofac Radiol 2018; 47: 20170054. doi: 10.1259/dmfr.20170054

- 64. Chien PC, Parks ET, Eraso F, Hartsfield JK, Roberts WE, Ofner S. Comparison of reliability in anatomical landmark identification using two-dimensional digital cephalometrics and three-dimensional cone beam computed tomography in vivo. Dentomaxillofac Radiol 2009; 38: 262–73. doi: 10.1259/dmfr/81889955

- 65. Nishimoto S, Sotsuka Y, Kawai K, Ishise H, Kakibuchi M. Personal computer-based cephalometric landmark detection with deep learning, using Cephalograms on the Internet. J Craniofac Surg 2019; 30: 91–5. doi: 10.1097/SCS.0000000000004901

- 66. Kunz F, Stellzig-Eisenhauer A, Zeman F, Boldt J. Artificial intelligence in orthodontics : Evaluation of a fully automated cephalometric analysis using a customized convolutional neural network. J Orofac Orthop 2020; 81: 52–68. doi: 10.1007/s00056-019-00203-8

- 67. Yu HJ, Cho SR, Kim MJ, Kim WH, Kim JW, Choi J. Automated skeletal classification with lateral cephalometry based on artificial intelligence. J Dent Res 2020; 99: 249–56. doi: 10.1177/0022034520901715

- 68. Park J-H, Hwang H-W, Moon J-H, Yu Y, Kim H, Her S-B, et al. Automated identification of cephalometric landmarks: Part 1-Comparisons between the latest deep-learning methods YOLOV3 and SSD. Angle Orthod 2019; 89: 903–9. doi: 10.2319/022019-127.1

- 69. Hwang H-W, Park J-H, Moon J-H, Yu Y, Kim H, Her S-B, et al. Automated identification of cephalometric landmarks: Part 2-Might it be better than human? Angle Orthod 2020; 90: 69–76. doi: 10.2319/022019-129.1

- 70. Lindner C, Wang C-W, Huang C-T, Li C-H, Chang S-W, Cootes TF. Fully automatic system for accurate localisation and analysis of cephalometric landmarks in lateral cephalograms. Sci Rep 2016; 6: 33581. doi: 10.1038/srep33581

- 71. Arık SÖ, Ibragimov B, Xing L. Fully automated quantitative cephalometry using convolutional neural networks. J Med Imaging 2017; 4: 014501. doi: 10.1117/1.JMI.4.1.014501

- 72. Calciolari E, Donos N, Park JC, Petrie A, Mardas N. Panoramic measures for oral bone mass in detecting osteoporosis: a systematic review and meta-analysis. J Dent Res 2015; 94(3 Suppl): 17–27. doi: 10.1177/0022034514554949

- 73. de Medeiros FCFL, Kudo GAH, Leme BG, Saraiva PP, Verri FR, Honório HM, et al. Dental implants in patients with osteoporosis: a systematic review with meta-analysis. Int J Oral Maxillofac Surg 2018; 47: 480–91. doi: 10.1016/j.ijom.2017.05.021

- 74. Aghaloo T, Pi-Anfruns J, Moshaverinia A, Sim D, Grogan T, Hadaya D. The effects of systemic diseases and medications on implant osseointegration: a systematic review. Int J Oral Maxillofac Implants 2019; 34: s35–49. doi: 10.11607/jomi.19suppl.g3

- 75. Allen PD, Graham J, Farnell DJJ, Harrison EJ, Jacobs R, Nicopolou-Karayianni K, et al. Detecting reduced bone mineral density from dental radiographs using statistical shape models. IEEE Trans Inf Technol Biomed 2007; 11: 601–10. doi: 10.1109/TITB.2006.888704

- 76. Muramatsu C, Matsumoto T, Hayashi T, Hara T, Katsumata A, Zhou X, et al. Automated measurement of mandibular cortical width on dental panoramic radiographs. Int J Comput Assist Radiol Surg 2013; 8: 877–85. doi: 10.1007/s11548-012-0800-8

- 77. Nakamoto T, Taguchi A, Ohtsuka M, Suei Y, Fujita M, Tsuda M, et al. A computer-aided diagnosis system to screen for osteoporosis using dental panoramic radiographs. Dentomaxillofac Radiol 2008; 37: 274–81. doi: 10.1259/dmfr/68621207

- 78. Roberts MG, Graham J, Devlin H. Image texture in dental panoramic radiographs as a potential biomarker of osteoporosis. IEEE Trans Biomed Eng 2013; 60: 2384–92. doi: 10.1109/TBME.2013.2256908

- 79. Kavitha MS, Asano A, Taguchi A, Kurita T, Sanada M. Diagnosis of osteoporosis from dental panoramic radiographs using the support vector machine method in a computer-aided system. BMC Med Imaging 2012; 12: 1. doi: 10.1186/1471-2342-12-1

- 80. Kavitha MS, Asano A, Taguchi A, Heo M-S. The combination of a histogram-based clustering algorithm and support vector machine for the diagnosis of osteoporosis. Imaging Sci Dent 2013; 43: 153–61. doi: 10.5624/isd.2013.43.3.153

- 81. Kavitha MS, An S-Y, An C-H, Huh K-H, Yi W-J, Heo M-S, et al. Texture analysis of mandibular cortical bone on digital dental panoramic radiographs for the diagnosis of osteoporosis in Korean women. Oral Surg Oral Med Oral Pathol Oral Radiol 2015; 119: 346–56. doi: 10.1016/j.oooo.2014.11.009

- 82. Hwang JJ, Lee J-H, Han S-S, Kim YH, Jeong H-G, Choi YJ. Strut analysis for osteoporosis detection model using dental panoramic radiography. Dentomaxillofac Radiol 2017; 46: 20170006. doi: 10.1259/dmfr.20170006

- 83. Kavitha MS, Ganesh Kumar P, Park S-Y, Huh K-H, Heo M-S, Kurita T. Automatic detection of osteoporosis based on hybrid genetic Swarm fuzzy classifier approaches. Dentomaxillofac Radiol 2016; 45: 20160076. doi: 10.1259/dmfr.20160076

- 84. Chu P, Bo C, Liang X, Yang J, Megalooikonomou V, Yang F. Using Octuplet Siamese network for osteoporosis analysis on dental panoramic radiographs. Proc 2018 Annu Int Conf IEEE Eng Med Biol Soc EMBS 2018: 2579–82.

- 85. Lee K-S, Jung S-K, Ryu J-J, Shin S-W, Choi J. Evaluation of transfer learning with deep convolutional neural networks for screening osteoporosis in dental panoramic radiographs. J Clin Med 2020; 9: 392. doi: 10.3390/jcm9020392

- 86. Lee J-S, Adhikari S, Liu L, Jeong H-G, Kim H, Yoon S-J. Osteoporosis detection in panoramic radiographs using a deep convolutional neural network-based computer-assisted diagnosis system: a preliminary study. Dentomaxillofac Radiol 2019; 48: 20170344. doi: 10.1259/dmfr.20170344

- 87. Eun H, Kim C. Oriented tooth localization for periapical dental X-ray images via convolutional neural network. Asia-Pacific Signal Inf Process Assoc Annu Summit 2016; 2016: 1–7.

- 88. Zhang K, Wu J, Chen H, Lyu P. An effective teeth recognition method using label tree with cascade network structure. Comput Med Imaging Graph 2018; 68: 61–70. doi: 10.1016/j.compmedimag.2018.07.001

- 89. Chen H, Zhang K, Lyu P, Li H, Zhang L, Wu J, et al. A deep learning approach to automatic teeth detection and numbering based on object detection in dental periapical films. Sci Rep 2019; 9: 3840. doi: 10.1038/s41598-019-40414-y

- 90. Oktay AB. Tooth detection with convolutional neural network. Med Technol Natl Congr 2017: 1–4.

- 91. Tuzoff DV, Tuzova LN, Bornstein MM, Krasnov AS, Kharchenko MA, Nikolenko SI, et al. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofac Radiol 2019; 48: 20180051. doi: 10.1259/dmfr.20180051

- 92. Mahdi FP, Motoki K, Kobashi S. Optimization technique combined with deep learning method for teeth recognition in dental panoramic radiographs. Sci Rep 2020; 10: 19261. doi: 10.1038/s41598-020-75887-9

- 93. Miki Y, Muramatsu C, Hayashi T, Zhou X, Hara T, Katsumata A, et al. Classification of teeth in cone-beam CT using deep convolutional neural network. Comput Biol Med 2017; 80: 24–9. doi: 10.1016/j.compbiomed.2016.11.003

- 94. Miki Y, Muramatsu C, Hayashi T, Zhou X, Hara T, Katsumata A. Tooth labeling in cone-beam CT using deep convolutional neural network for forensic identification. Proc SPIE Medical Imaging 2017: Computer-Aided Diagnosis 2017; 10134: 101343E.

- 95. Wirtz A, Mirashi SG, Wesarg S. Automatic teeth segmentation in panoramic X-ray images using a coupled shape model in combination with a neural network. Lecture Notes in Computer Science 2018; 11073: 712–9.

- 96. Jader G, Fontineli J, Ruiz M, Abdalla K, Pithon M, Oliveira L. Deep instance segmentation of teeth in panoramic X-ray images. Proc 31st 2018 Conf Graph Patterns Images SIBGRAPI 2018: 400–7.

- 97. Leite AF, Van Gerven AV, Willems H, Beznik T, Lahoud P, Gaêta-Araujo H. Artificial intelligence-driven novel tool for tooth detection and segmentation on panoramic radiographs. Clin Oral Investig 2020; in press.

- 98. Lee J-H, Han S-S, Kim YH, Lee C, Kim I. Application of a fully deep convolutional neural network to the automation of tooth segmentation on panoramic radiographs. Oral Surg Oral Med Oral Pathol Oral Radiol 2020; 129: 635–42. doi: 10.1016/j.oooo.2019.11.007

- 99. Niño-Sandoval TC, Guevara Pérez SV, González FA, Jaque RA, Infante-Contreras C. Use of automated learning techniques for predicting mandibular morphology in skeletal class I, II and III. Forensic Sci Int 2017; 281: 187.e1–187.e7. doi: 10.1016/j.forsciint.2017.10.004

- 100. De Tobel J, Radesh P, Vandermeulen D, Thevissen PW. An automated technique to stage lower third molar development on panoramic radiographs for age estimation: a pilot study. J Forensic Odontostomatol 2017; 35: 42–54.

- 101. Banar N, Bertels J, Laurent F, Boedi RM, De Tobel J, Thevissen P, et al. Towards fully automated third molar development staging in panoramic radiographs. Int J Legal Med 2020; 134: 1831–41. doi: 10.1007/s00414-020-02283-3

- 102. Merdietio Boedi R, Banar N, De Tobel J, Bertels J, Vandermeulen D, Thevissen PW. Effect of lower third molar segmentations on automated tooth development staging using a Convolutional neural network. J Forensic Sci 2020; 65: 481–6. doi: 10.1111/1556-4029.14182

- 103. Avuçlu E, Başçiftçi F. Novel approaches to determine age and gender from dental X-ray images by using multiplayer perceptron neural networks and image processing techniques. Chaos, Solitons & Fractals 2019; 120: 127–38. doi: 10.1016/j.chaos.2019.01.023

- 104. Patil V, Vineetha R, Vatsa S, Shetty DK, Raju A, Naik N, et al. Artificial neural network for gender determination using mandibular morphometric parameters: a comparative retrospective study. Cogent Engineering 2020; 7: 1723783. doi: 10.1080/23311916.2020.1723783

- 105. Matsuda S, Miyamoto T, Yoshimura H, Hasegawa T. Personal identification with orthopantomography using simple convolutional neural networks: a preliminary study. Sci Rep 2020; 10: 13559. doi: 10.1038/s41598-020-70474-4

- 106. Lehmann T, Schmitt W, Horn H, Hillen W. Idefix: identification of dental fixtures in intraoral X rays. Proc. SPIE: Medical Imaging 1996: Image Processing 1996; 2710: 584–95.

- 107. Kim J-E, Nam N-E, Shim J-S, Jung Y-H, Cho B-H, Hwang JJ. Transfer learning via deep neural networks for implant fixture system classification using periapical radiographs. J Clin Med 2020; 9: 1117. doi: 10.3390/jcm9041117

- 108. Takahashi T, Nozaki K, Gonda T, Mameno T, Wada M, Ikebe K. Identification of dental implants using deep learning-pilot study. Int J Implant Dent 2020; 6: 53. doi: 10.1186/s40729-020-00250-6

- 109. Lee J-H, Kim Y-T, Lee J-B, Jeong S-N. A performance comparison between automated deep learning and dental professionals in classification of dental implant systems from dental imaging: a multi-center study. Diagnostics 2020; 10: 910. doi: 10.3390/diagnostics10110910

- 110. Sukegawa S, Yoshii K, Hara T, Yamashita K, Nakano K, Yamamoto N, et al. Deep neural networks for dental implant system classification. Biomolecules 2020; 10: 984. doi: 10.3390/biom10070984

- 111. Hadj Saïd M, Le Roux M-K, Catherine J-H, Lan R. Development of an artificial intelligence model to identify a dental implant from a radiograph. Int J Oral Maxillofac Implants 2020; 36: 1077–82. doi: 10.11607/jomi.8060

- 112. Lee J-H, Jeong S-N. Efficacy of deep convolutional neural network algorithm for the identification and classification of dental implant systems, using panoramic and periapical radiographs: a pilot study. Medicine 2020; 99: e20787. doi: 10.1097/MD.0000000000020787

- 113. Du X, Chen Y, Zhao J, Xi Y. A convolutional neural network based auto-positioning method for dental arch in rotational panoramic radiography. Annu Int Conf IEEE Eng Med Biol Soc 2018; 2018: 2615–8. doi: 10.1109/EMBC.2018.8512732

- 114. Hatvani J, Horvath A, Michetti J, Basarab A, Kouame D, Gyongy M. Deep learning-based super-resolution applied to dental computed tomography. IEEE Trans Radiat Plasma Med Sci 2018; 3: 120–8. doi: 10.1109/TRPMS.2018.2827239

- 115. Liang K, Zhang L, Yang H, Yang Y, Chen Z, Xing Y. Metal artifact reduction for practical dental computed tomography by improving interpolation-based reconstruction with deep learning. Med Phys 2019; 46: e823–34. doi: 10.1002/mp.13644

- 116. Minnema J, van Eijnatten M, Hendriksen AA, Liberton N, Pelt DM, Batenburg KJ, et al. Segmentation of dental cone-beam CT scans affected by metal artifacts using a mixed-scale dense convolutional neural network. Med Phys 2019; 46: 5027–35. doi: 10.1002/mp.13793

- 117. Hegazy MAA, Cho MH, Cho MH, Lee SY. U-net based metal segmentation on projection domain for metal artifact reduction in dental CT. Biomed Eng Lett 2019; 9: 375–85. doi: 10.1007/s13534-019-00110-2

- 118. Ngan TT, Tuan TM, Son LH, Minh NH, Dey N. Decision making based on fuzzy aggregation operators for medical diagnosis from dental X-ray images. J Med Syst 2016; 40: 280. doi: 10.1007/s10916-016-0634-y

- 119. Son LH, Tuan TM, Fujita H, Dey N, Ashour AS, Ngoc VTN, et al. Dental diagnosis from X-ray images: an expert system based on fuzzy computing. Biomed Signal Process Control 2018; 39: 64–73. doi: 10.1016/j.bspc.2017.07.005

- 120. Abdolali F, Zoroofi RA, Otake Y, Sato Y. A novel image-based retrieval system for characterization of maxillofacial lesions in cone beam CT images. Int J Comput Assist Radiol Surg 2019; 14: 785–96. doi: 10.1007/s11548-019-01946-w

- 121. Abdalla-Aslan R, Yeshua T, Kabla D, Leichter I, Nadler C. An artificial intelligence system using machine-learning for automatic detection and classification of dental restorations in panoramic radiography. Oral Surg Oral Med Oral Pathol Oral Radiol 2020; 130: 593–602. doi: 10.1016/j.oooo.2020.05.012

- 122. Prajapati SA, Nagaraj R, Mitra S. Classification of dental diseases using CNN and transfer learning. 5th Int Symp Comput Bus Intell ISCBI 2017; 2017: 70–4.

- 123. Murata M, Ariji Y, Ohashi Y, Kawai T, Fukuda M, Funakoshi T, et al. Deep-learning classification using convolutional neural network for evaluation of maxillary sinusitis on panoramic radiography. Oral Radiol 2019; 35: 301–7. doi: 10.1007/s11282-018-0363-7

- 124. Kuwana R, Ariji Y, Fukuda M, Kise Y, Nozawa M, Kuwada C, et al. Performance of deep learning object detection technology in the detection and diagnosis of maxillary sinus lesions on panoramic radiographs. Dentomaxillofac Radiol 2021; 50: 20200171. doi: 10.1259/dmfr.20200171

- 125. Kim Y, Lee KJ, Sunwoo L, Choi D, Nam C-M, Cho J, et al. Deep learning in diagnosis of maxillary sinusitis using conventional radiography. Invest Radiol 2019; 54: 7–15. doi: 10.1097/RLI.0000000000000503

- 126. Kann BH, Aneja S, Loganadane GV, Kelly JR, Smith SM, Decker RH, et al. Pretreatment identification of head and neck cancer nodal metastasis and extranodal extension using deep learning neural networks. Sci Rep 2018; 8: 14036. doi: 10.1038/s41598-018-32441-y

- 127. Ariji Y, Fukuda M, Kise Y, Nozawa M, Yanashita Y, Fujita H, et al. Contrast-enhanced computed tomography image assessment of cervical lymph node metastasis in patients with oral cancer by using a deep learning system of artificial intelligence. Oral Surg Oral Med Oral Pathol Oral Radiol 2019; 127: 458–63. doi: 10.1016/j.oooo.2018.10.002

- 128. Vinayahalingam S, Xi T, Bergé S, Maal T, de Jong G. Automated detection of third molars and mandibular nerve by deep learning. Sci Rep 2019; 9: 9007. doi: 10.1038/s41598-019-45487-3

- 129. Fukuda M, Ariji Y, Kise Y, Nozawa M, Kuwada C, Funakoshi T, et al. Comparison of 3 deep learning neural networks for classifying the relationship between the mandibular third molar and the mandibular canal on panoramic radiographs. Oral Surg Oral Med Oral Pathol Oral Radiol 2020; 130: 336–43. doi: 10.1016/j.oooo.2020.04.005

- 130. Jaskari J, Sahlsten J, Järnstedt J, Mehtonen H, Karhu K, Sundqvist O, et al. Deep learning method for mandibular canal segmentation in dental cone beam computed tomography volumes. Sci Rep 2020; 10: 5842. doi: 10.1038/s41598-020-62321-3

- 131. Kwak GH, Kwak E-J, Song JM, Park HR, Jung Y-H, Cho B-H, et al. Automatic mandibular canal detection using a deep convolutional neural network. Sci Rep 2020; 10: 5711. doi: 10.1038/s41598-020-62586-8

- 132. Johari M, Esmaeili F, Andalib A, Garjani S, Saberkari H. Detection of vertical root fractures in intact and endodontically treated premolar teeth by designing a probabilistic neural network: an ex vivo study. Dentomaxillofac Radiol 2017; 46: 20160107. doi: 10.1259/dmfr.20160107

- 133. Fukuda M, Inamoto K, Shibata N, Ariji Y, Yanashita Y, Kutsuna S, et al. Evaluation of an artificial intelligence system for detecting vertical root fracture on panoramic radiography. Oral Radiol 2020; 36: 337–43. doi: 10.1007/s11282-019-00409-x

- 134. Kuwada C, Ariji Y, Fukuda M, Kise Y, Fujita H, Katsumata A, et al. Deep learning systems for detecting and classifying the presence of impacted supernumerary teeth in the maxillary incisor region on panoramic radiographs. Oral Surg Oral Med Oral Pathol Oral Radiol 2020; 130: 464–9. doi: 10.1016/j.oooo.2020.04.813

- 135. Vranckx M, Van Gerven A, Willems H, Vandemeulebroucke A, Ferreira Leite A, Politis C, et al. Artificial intelligence (AI)-driven molar angulation measurements to predict third molar eruption on panoramic radiographs. Int J Environ Res Public Health 2020; 17: 3716. doi: 10.3390/ijerph17103716

- 136. Orhan K, Bilgir E, Bayrakdar IS, Ezhov M, Gusarev M, Shumilov E. Evaluation of artificial intelligence for detecting impacted third molars on cone-beam computed tomography scans. J Stomatol Oral Maxillofac Surg 2020; in press.

- 137. Hiraiwa T, Ariji Y, Fukuda M, Kise Y, Nakata K, Katsumata A, et al. A deep-learning artificial intelligence system for assessment of root morphology of the mandibular first molar on panoramic radiography. Dentomaxillofac Radiol 2019; 48: 20180218. doi: 10.1259/dmfr.20180218

- 138. Kise Y, Ikeda H, Fujii T, Fukuda M, Ariji Y, Fujita H, et al. Preliminary study on the application of deep learning system to diagnosis of Sjögren’s syndrome on CT images. Dentomaxillofac Radiol 2019; 48: 20190019. doi: 10.1259/dmfr.20190019

- 139. Schwendicke F, Samek W, Krois J. Artificial intelligence in dentistry: chances and challenges. J Dent Res 2020; 99: 769–74. doi: 10.1177/0022034520915714

- 140. Brady AP, Neri E. Artificial intelligence in radiology: ethical considerations. Diagnostics 2020; 10: 231. doi: 10.3390/diagnostics10040231

- 141. Mongan J, Moy L, Kahn CE. Checklist for artificial intelligence in medical imaging (CLAIM): a guide for authors and reviewers. Radiol Artif Intell 2020; 2(2).

- 142. Mazurowski MA, Habas PA, Zurada JM, Lo JY, Baker JA, Tourassi GD. Training neural network classifiers for medical decision making: the effects of imbalanced datasets on classification performance. Neural Netw 2008; 21(2–3): 427–36. doi: 10.1016/j.neunet.2007.12.031

- 143. Chassagnon G, Vakalopoulou M, Paragios N, Revel M-P. Artificial intelligence applications for thoracic imaging. Eur J Radiol 2020; 123: 108775. doi: 10.1016/j.ejrad.2019.108774

- 144. Eng J. Sample size estimation: how many individuals should be studied? Radiology 2003; 227: 309–13. doi: 10.1148/radiol.2272012051

- 145. Park SH, Han K. Methodologic guide for evaluating clinical performance and effect of artificial intelligence technology for medical diagnosis and prediction. Radiology 2018; 286: 800–9. doi: 10.1148/radiol.2017171920
