Abstract

Mobile health (mHealth) systems are proving to be a popular alternative to traditional visits to healthcare providers. They can also be useful and effective in fighting the spread of infectious diseases such as COVID-19. Even though young adults are the predominant mHealth user group, the relevant literature has overlooked their intention to invest in and use mHealth services. This study investigates the predictors of young adults’ intention to invest in mHealth (IINmH), particularly during the COVID-19 crisis, using a research model that integrates the health belief model (HBM) and the expectation-confirmation model (ECM). As an extension of the integrated HBM-ECM model, this study proposes two additional predictors: mobile Internet speed and mobile Internet cost. Data were obtained through a structured questionnaire from 558 young adults in Bangladesh with experience of using mHealth. The complex causal relationships of the integrated model were examined with a multi-method analytical approach combining partial least squares structural equation modelling (PLS-SEM), fuzzy-set qualitative comparative analysis (fsQCA), and machine learning (ML). The PLS-SEM findings indicate that value-for-money, mobile Internet cost, health motivation, and confirmation of service all have a substantial impact on young adults’ IINmH during the COVID-19 pandemic. The fsQCA results further indicate that combinations of predictors, rather than any individual predictor, are significant in predicting IINmH. Among the ML methods, the XGBoost classifier outperformed the other classifiers in predicting IINmH and was then used in a sensitivity analysis to determine feature importance.
We expect this multi-method analytical approach to make a significant contribution to the mHealth domain as well as to the broader information systems literature.

Keywords: mHealth, Young adults, Integrated information systems model, Multi-method analytical approach, SEM-fsQCA-ML

1. Introduction

The COVID-19 pandemic has had catastrophic effects on both communities and governments (Hasan, 2020, Mao et al., 2020). High incidence and mortality rates, together with rapid changes in biological and epidemiological patterns, have had a major impact on healthcare services. The need for strong measures to control the pandemic, weighed against the resulting negative economic consequences, has created dilemmas for policymakers. The measures that governments should take to save lives can often be difficult to implement (Guo et al., 2021). A deep understanding of these dilemmas can help healthcare providers and policymakers identify healthcare alternatives for combating COVID-19 as well as future pandemics (Mao et al., 2020). This understanding can be critical for implementing effective measures, particularly in developing and least developed countries with high population density, weak healthcare services, and scarce resources (Shammi et al., 2020). In this regard, a combination of digital healthcare systems and remote care strategies might help prevent, control, and combat the transmission of communicable diseases (Asadzadeh & Kalankesh, 2021). Mobile health (mHealth) can offer alternative approaches to controlling a pandemic by raising awareness, providing remote consultation, minimising patient referrals, and supporting contact tracing. Together, these functions help to limit the further spread of an epidemic (Nachega et al., 2020).

These days, mHealth applications are routinely used by the general population, governments, and crisis management organisations (Guo et al., 2021). Governments and healthcare providers use mHealth technologies, such as instant messaging, patient surveillance, and contact tracing, to curb the spread of infectious diseases. For example, an mHealth app (Flu-Report) was used to monitor influenza patients through a self-reported questionnaire (Fujibayashi et al., 2018). A health-monitoring app for the Zika virus was used to continuously monitor the health status of the Spanish Olympic delegation (Rodriguez-Valero et al., 2018). A mobile system for the rapid diagnosis of Middle East respiratory syndrome (MERS) has also been developed (Shirato et al., 2020). More recently, government departments, including those responsible for healthcare and epidemic control, have used mHealth applications for several purposes: diagnosis and screening, contact tracing, recording movement, sending awareness messages, and combating misinformation (Asadzadeh & Kalankesh, 2021). Thus, by improving healthcare services, mHealth can benefit patients, healthcare professionals, and policymakers alike.

Young adults (19–34 years) are the consumer group with the greatest potential for mHealth services, showing a greater tendency to download mHealth apps than other age groups (Altmann & Gries, 2017). The flexibility and accessibility of mobile technology platforms can attract them to mHealth and thus connect them to the healthcare system (Slater et al., 2017). Using mHealth applications could be a powerful self-care management strategy for young adults. However, conventional health policies generally overlook young adults in favour of older age groups, since young adults have less contact with health information exchange and less interaction with health practitioners (Nikolaou et al., 2019). Despite this, the use of mHealth technology among young adults is increasing. For instance, in China, most mHealth users are well-educated, city-dwelling young adults (To et al., 2019). Young medical practitioners also use mHealth applications extensively as clinical resources for providing adequate healthcare services. In Africa, despite limited mHealth services, young people are making innovative and strategic use of mobile phones to obtain efficient healthcare services (Kathuria-Prakash et al., 2019). Sharpe et al. (2017) found that mHealth apps can go beyond conventional approaches to healthcare behaviour change. Furthermore, the limited resources and funds available to young adults might make mHealth an appealing alternative for accessing the healthcare system (Hampshire et al., 2015). Although long-term use of mHealth applications is beneficial, some young adults abandon the apps for financial reasons. Many users who downloaded and used an mHealth app but then uninstalled it complained that it was too expensive or offered a poor user experience (Murnane et al., 2015). However, another study found that app cost had a statistically significant positive association with younger participants’ intentions to invest more money in mHealth apps (Somers et al., 2019).
Despite widespread acceptance of mHealth and understanding of its deployment, there appears to be a dearth of evaluations of this technology, particularly in the post-adoption phase (O’Connor, Andreev, & O’Reilly, 2020). In this context, understanding and encouraging the post-adoption use of mHealth services by young adults is important for ensuring the success of mHealth policy and improving overall access to healthcare services. Prior research has attempted to determine users' intention to accept and use mHealth (Altmann and Gries, 2017, Hampshire et al., 2015, Sittig et al., 2020, Talukder et al., 2019). Only a few studies, however, have examined young adults' intentions to adopt mHealth technology. Therefore, this study addresses the following research question: “What factors influence young adults’ intention to invest in mHealth (IINmH) technology, especially during the COVID-19 pandemic?”

Recent studies have investigated intentions to use mHealth by applying various behavioural and technology acceptance models. These include the extended Unified Theory of Acceptance and Use of Technology (UTAUT2) (Hoque & Sorwar, 2017), the Technology Acceptance Model (TAM) (Alsswey & Al-Samarraie, 2020), and the Health Belief Model (HBM) (Alhalaseh et al., 2020). However, none of these studies was conducted from the perspective of young adults. This study attempts to bridge this gap by addressing the cognitive factors that might influence young adults' intention to use mHealth services. It incorporates the HBM (Alhalaseh et al., 2020, Rosenstock et al., 1988) and the Expectation Confirmation Model (ECM) (Chiu et al., 2020, Oliver, 1980) to provide a holistic, behavioural interpretation of young adults' intention to use mHealth services. Prior studies have used the HBM as a theoretical basis for understanding individuals' intentions to use different mHealth applications (Alhalaseh et al., 2020, Puspita et al., 2017). The ECM has been used to examine the factors that affect individuals' continued use of mHealth apps (Chiu et al., 2020, Tam et al., 2020). Moreover, most prior studies have employed a single model with a limited number of factors, which restricts their ability to explain users' intentions beyond a particular viewpoint. Accordingly, this paper investigates the drivers of young adults’ intention to use mHealth apps through a research model that integrates the HBM and the ECM. The two models can complement each other, and an integrated model can mitigate the drawbacks of a single-model approach, allowing a better understanding of young adults’ IINmH.

Methodologically, most prior studies on mHealth have applied a single-stage analytical approach, particularly partial least squares structural equation modelling (PLS-SEM) (Alsswey and Al-Samarraie, 2020, Chiu et al., 2020, Hoque and Sorwar, 2017, Tam et al., 2020). Lee et al. (2020) pointed out that a single-stage PLS-SEM analysis captures only linear relationships between the antecedents in a research framework. Such an approach may be insufficient for predicting complex decision-making processes in real-world problems. Others have attempted to mitigate this constraint by performing a second-stage analysis using fuzzy-set qualitative comparative analysis (fsQCA) and/or an artificial neural network (ANN). However, fsQCA has a drawback in setting the truth table's consistency threshold, since there is no universally accepted rule for choosing it. Different threshold values can yield solutions with different levels of sufficiency consistency (Roig-Tierno et al., 2017). Similarly, most ANN analyses (Alam et al., 2021, Lee et al., 2020, Talukder et al., 2020) have trained models with a single hidden layer, which is referred to as a shallow form of ANN (Lee et al., 2020). This limitation has led to growing interest in alternative methodologies, such as machine learning (ML), for generating deeper insights (Kaya et al., 2020). Given this, we contribute to the current literature by implementing a multi-stage SEM-fsQCA-ML analytical approach, whose improved learning capacity can more accurately capture non-linear and asymmetric relationships.
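To make the threshold issue concrete, the sketch below applies the standard fsQCA measures. Sufficiency consistency is the sum of min(x_i, y_i) divided by the sum of x_i, and coverage divides the same numerator by the sum of y_i. Here x_i is a case's membership in a configuration (the minimum of its condition memberships) and y_i is its membership in the outcome. The function names, the condition labels ("motivation", "low_cost", "A*B" and so on), the memberships, and the two thresholds are all hypothetical illustrations, not values from this study. The sketch shows only that moving the analyst-chosen cut-off can change which truth-table rows enter the solution.

```python
def configuration_membership(case, conditions):
    """Fuzzy-set AND: membership in a configuration is the minimum of the
    case's memberships in the conditions that make it up."""
    return min(case[c] for c in conditions)


def sufficiency_consistency(x, y):
    """Consistency of 'X is sufficient for Y': sum(min(x_i, y_i)) / sum(x_i)."""
    return sum(min(a, b) for a, b in zip(x, y)) / sum(x)


def sufficiency_coverage(x, y):
    """Coverage of Y by X: sum(min(x_i, y_i)) / sum(y_i)."""
    return sum(min(a, b) for a, b in zip(x, y)) / sum(y)


def rows_passing(consistencies, threshold):
    """Truth-table rows whose consistency reaches the analyst-chosen threshold."""
    return [row for row, score in consistencies.items() if score >= threshold]


if __name__ == "__main__":
    # Hypothetical calibrated memberships of three cases in two conditions.
    cases = [
        {"motivation": 0.9, "low_cost": 0.7},
        {"motivation": 0.6, "low_cost": 0.8},
        {"motivation": 0.2, "low_cost": 0.9},
    ]
    outcome = [0.6, 0.7, 0.4]  # hypothetical membership in "high IINmH"
    x = [configuration_membership(c, ["motivation", "low_cost"]) for c in cases]
    print(round(sufficiency_consistency(x, outcome), 3))
    print(round(sufficiency_coverage(x, outcome), 3))

    # Hypothetical truth-table consistencies: the retained rows depend on the cut-off.
    table = {"A*B": 0.92, "A*~C": 0.84, "~B*C": 0.78}
    print(rows_passing(table, 0.80))  # ['A*B', 'A*~C']
    print(rows_passing(table, 0.85))  # ['A*B']
```

With a cut-off of 0.80 two configurations survive, but at 0.85 only one does. This shows how an arbitrary threshold choice can alter the reported solution.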

The rest of this paper is organised as follows. Section 2 reviews the theoretical background, and Section 3 presents the research model and develops the hypotheses. Section 4 describes the methods used in this study, and Section 5 provides the statistical analysis and major findings. The overall results and their implications are discussed in Sections 6 and 7, respectively. Finally, Section 8 draws conclusions and discusses the limitations of the study and potential future research.

2. Theoretical background

2.1. Health belief model (HBM)

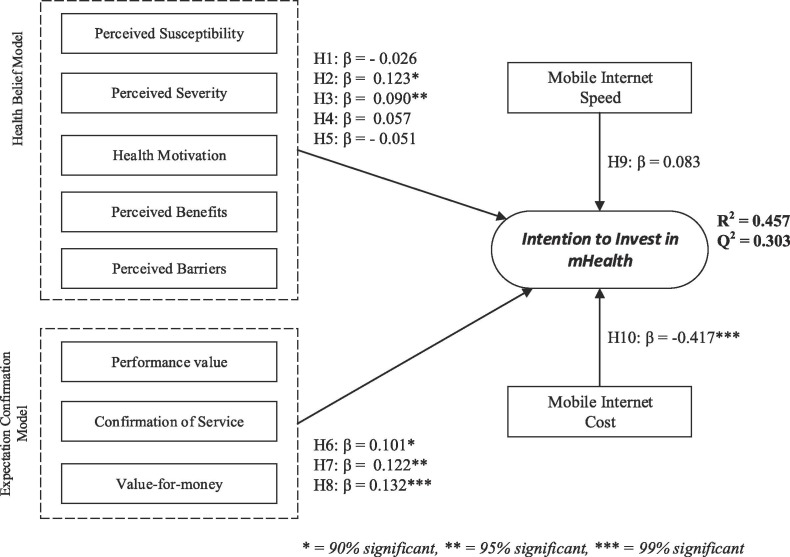

The HBM, an explanatory paradigm, was introduced in the 1950s by a team of social psychologists from the US Public Health Service and was applied to health promotion (Ataei et al., 2021). It was later refined to explain why people failed to take preventive measures against diseases (Janz & Becker, 1984). It has also been used to evaluate patients' perceptions when they make health-related decisions. The model provides a strong foundation for explaining people's intrinsic motivation to engage in health behaviour. It is also a standard paradigm in healthcare studies for understanding and predicting public health practice and preventive health behaviour. According to the HBM, individuals are more willing to take action and overcome barriers if the action leads to benefits, such as reduced vulnerability to a serious disease (C.C & Prathap, 2020). The HBM is a widely recognised framework in health behaviour research that illustrates how health-related behaviours are improved and sustained in pursuit of an optimal quality of life (Wei et al., 2020). In light of recent research, the intention to invest in healthcare may be seen as a preventive health behaviour that reduces health risks such as contracting COVID-19 (García and Cerda, 2020, Nembrini et al., 2020). Because remote healthcare facilities are needed during a pandemic, this research explores antecedents such as perceived susceptibility to COVID-19, perceived severity of COVID-19, perceived barriers, and perceived benefits. As used in this study, the HBM comprises five constructs: perceived susceptibility, perceived severity, health motivation, perceived barriers, and perceived benefits (Fig. 1). The model posits that people are more willing to make decisions about their own health practices because they want to remain healthy and believe that these practices will support their well-being (Ataei et al., 2021).

Fig. 1. Research model and hypotheses.

The HBM has proven applicable to studying the adoption of new health technologies, and numerous researchers have used it to examine technology-enabled health behaviour. For example, Ahadzadeh et al. (2015) examined health-related Internet use by integrating the HBM, TAM, and UTAUT. In investigating patients’ adoption of smartphone health technologies, Dou et al. (2017) drew on several hypotheses and theories, including the HBM, to better understand patients’ attitudes and perspectives. Shang et al. (2021) employed the HBM to explore older adults’ intentions to exchange health knowledge on social media. Daragmeh et al. (2021) examined customers’ intention to use e-wallet services during the COVID-19 pandemic, employing the HBM and Technology Continuance Theory (TCT). Therefore, the HBM is a suitable tool for understanding young adults’ investment intention regarding mHealth during the COVID-19 crisis.

2.2. Expectation confirmation model (ECM)

Over the last decade, the ECM has attracted substantial interest in the information systems (IS) domain for studying post-acceptance behaviour. The framework has become popular for explaining users' satisfaction and their willingness to use an IS repeatedly (Bhattacherjee, 2001, Venkatesh et al., 2011). The ECM was developed by Bhattacherjee (2001) and draws on expectation-confirmation theory (Oliver, 1980). That theory has been widely used in the business field to assess customer loyalty and post-purchase behaviour in terms of beliefs, consequences, and motivation. Bhattacherjee likened IS users’ continuance decisions to customers’ repurchase decisions, noting that both involve three steps: (1) an initial acceptance or purchase decision, (2) initial experience of use, and (3) a post-use decision on whether to repurchase or continue using the product or service. The degree of service confirmation is considered one of three primary predictors of users' intention to invest in mHealth technology (performance value, confirmation of service, and value-for-money) and the most influential of them (Leung & Chen, 2019). The ECM has consistently proven useful for predicting consumer behaviour in numerous studies. These include studies of the intention to purchase mobile apps (Hsu & Lin, 2015), the intention to use a mobile device in a hospital waiting room (Reychav et al., 2021), and the acceptance of smart wearable devices (Park, 2020). However, only a few mHealth studies have used the ECM; one example is an analysis of mHealth service continuance in Bangladesh (Akter et al., 2013).

Users arrive at their repurchase intentions through a sequential process. Before purchasing products or services, users form expectations about them. After using them, users form an impression of their performance and compare it with their expectations (Wu et al., 2020). Their degree of satisfaction depends on how well the perceived performance matches their expectations, and they then decide to repurchase or discontinue the service. Behavioural models such as the TAM and UTAUT have been widely used to assess technology adoption. However, these models do not explain how people use technology beyond the initial acceptance stage (Chiu et al., 2020). With the increasing prevalence of health-related apps, the ECM framework is therefore well suited to analysing users’ investment decisions in mHealth technologies. Moreover, the ECM serves as an essential framework for understanding individuals' decisions to invest in new technologies (Pee et al., 2018).

2.3. Motivation for integrating the HBM and ECM

Given the lack of vaccines and treatment plans, preventing COVID-19 outbreaks primarily involves hygienic practices and social distancing. Society is shifting to contactless healthcare systems to help people protect themselves during periods of crisis. To ensure patient safety, healthcare providers globally are preparing to implement virtual treatment strategies that eliminate the need for physical visits between patients and health professionals (Webster, 2020).

The use of mHealth technology can be seen as a preventive strategy (a form of social distancing). Beliefs about the threat of the epidemic and anxieties about one's vulnerability to outbreaks are likely to significantly affect the adoption of preventive health interventions, such as switching from a physical visit to a virtual consultation. The HBM (Rosenstock et al., 1988) is a theoretical paradigm that can guide interventions intended to promote well-being and prevent disease (Fathian-Dastgerdi et al., 2021). It can also help explain and predict changes in users' health behaviours. Previously, the HBM was employed empirically to investigate individuals’ beliefs and behaviour towards seasonal influenza and pandemic swine flu vaccinations (Santos et al., 2017). The model has also been used to predict the acceptance of, and willingness to invest in, COVID-19 treatment (Wong et al., 2021). Although the HBM helps explain mHealth investment decisions, it captures only some of the many factors involved in technology investment. Therefore, the HBM alone is insufficient to explain consumers’ willingness to invest in mHealth technologies. Moreover, a single framework with a limited number of factors may be inadequate to explain individuals’ post-adoption decisions (Chiu et al., 2020). To investigate the factors that affect mHealth technology investment decisions, this study therefore also draws on the ECM. The ECM originates in a theory of repurchase decisions (Oliver, 1980) and has been extended to the technology context. Once mHealth has been adopted, investment decisions are influenced by additional factors, such as satisfaction or monetary value, that must be investigated. Thus, this study integrates the HBM and ECM to explore the challenges of mHealth investment decision-making.
By integrating the HBM and ECM, this research provides a more comprehensive framework and extends the existing literature on consumers' investment decisions in mHealth applications.

3. Research model and hypotheses development

The research model presented in Fig. 1 incorporates the dimensions of two well-established theories: the HBM (Janz & Becker, 1984) and a modified ECM (Hsu & Lin, 2015). These theoretical models account for the factors that may affect an individual’s decision-making strategy. Their dimensions are intrinsically linked and jointly capture the decision-making process in a particular context. The following subsections develop the hypotheses based on these predictors.

3.1. Perceived susceptibility

The term “perceived susceptibility” refers to an individual's subjective assessment of the probability of suffering from a health problem (Janz & Becker, 1984). Because digital services become increasingly vital during a pandemic, the current research considers perceived susceptibility to COVID-19 as a predictor of young adults’ intention to invest in mHealth technologies (C.C & Prathap, 2020). According to several scholars, perceived susceptibility correlates significantly with healthy behaviour. Awareness of one's susceptibility motivates people to mitigate threats and make investment decisions (Huang et al., 2020). In the context of COVID-19, for example, young people who recognise their susceptibility to infection during a physical visit to a healthcare facility may be more likely to invest in effective mobile healthcare systems. Thus, we hypothesise the following:

H1: Perceived susceptibility to COVID-19 has a significant impact on investment intentions in mHealth technology.

3.2. Perceived severity

The term “perceived severity” refers to an individual's assessment of the seriousness of a particular problem (Janz & Becker, 1984), including perceptions of the adverse effects of infection (Wong et al., 2020). This assessment may lead to appropriate preventive measures. Individuals may take preventive measures against an illness if they believe it may have harmful effects (Huang et al., 2020). When the consequences are perceived as substantial, individuals develop stronger protective motivation in their investment decisions, and avoiding adverse effects is seen as more beneficial. According to Wong et al. (2020), most people believed COVID-19 to be severe, and these perceptions of severity were significantly associated with greater willingness to invest in mHealth. Thus, the following hypothesis is proposed:

H2: Perceived severity of COVID-19 has a significant impact on the intention to invest in mHealth technology.

3.3. Health motivation

Health motivation is defined as a strong desire to practise healthy behaviours in order to avoid health problems. Such behaviours include eating healthily, living in a healthy environment, and paying for health benefits. Although this factor was not part of the original HBM, Becker (1974) argued that it should be included in the model. According to Janz and Becker (1984), cues to action cover a wide variety of factors that influence an individual’s motivation for a particular activity and help shape decisions aimed at health benefits. In Becker's view, motivation for healthy living includes the willingness to be proactive about it, and he therefore included it in the HBM. A person’s motivation to undertake a healthy behaviour can be divided into three categories: individual beliefs, modifying factors, and likelihood of action (McKellar & Sillence, 2020). The last component can readily translate into concrete commitments, such as investing in healthcare technology. Therefore, we hypothesise the following:

H3: Health motivation regarding COVID-19 has a significant impact on the intention to invest in mHealth technology.

3.4. Perceived benefits

Perceived benefits refer to the beneficial effects associated with adopting healthy lifestyles (Janz & Becker, 1984). According to the HBM, potential benefits motivate people to engage with digital healthcare services. Perceived benefits typically involve individual and societal preventive health habits. These include home self-quarantine to avoid unnecessary hospital costs and social quarantine to stop transmission of the disease within the community (Fathian-Dastgerdi et al., 2021). People are far more willing to follow proactive health behaviours if they feel that the perceived benefits outweigh the perceived barriers. They must believe that the intervention will be beneficial and that, if it is taken, the adverse health condition can be avoided (Mou et al., 2016). When people believe that using mHealth technologies could alleviate a health problem or improve their current health condition, they are more willing to use these technologies. Therefore, we hypothesise:

H4: Perceived benefits have a strong, significant positive effect on the intention to invest in mHealth technology.

3.5. Perceived barriers

Perceived barriers can be defined as the discouraging aspects of health-promoting activities that may hinder acceptance of a desired action or new health behaviour (Green et al., 2020). Barriers may include any negative attribute, such as cost, risk, alarm, inconvenience, or irritation. In the current context, perceived barriers represent factors that might hinder or deter individuals from investing in mHealth technologies. Understanding and identifying possible barriers can effectively increase engagement in disease prevention initiatives (Julinawati et al., 2013). If barriers are identified and resolved, investments in emerging technologies for health benefits may be reconsidered (Wang et al., 2021). In some instances, perceived barriers negatively affect health actions and thereby discourage investment in mHealth technologies. Thus, we propose the following hypothesis:

H5: Perceived barriers have a significant negative influence on the intention to invest in mHealth technology.

3.6. Performance value

The performance of mHealth is confirmed by assessing users’ feedback and whether specific expectations for continued use of the service are fulfilled. Better performance can encourage post-adoption beliefs, such as the intention to invest in emerging technologies (Bhattacherjee, 2001). Additionally, confirmation of technology performance can be crucial in determining whether to continue using an IS. Numerous studies have examined the use of digital platforms or paid mobile apps using the ECM as a theoretical foundation (Hsu & Lin, 2015). Recent studies have also reported an association between emerging health technologies and investment intention (C.C & Prathap, 2020). More specifically, investing in mHealth applications can help reduce physical visits to medical centres and help prevent the spread of infections such as COVID-19. We therefore regard the recognition of mHealth performance as a critical prerequisite for investment decisions on mHealth. Thus, the following hypothesis is formed:

H6: mHealth performance value is strongly related to the intention to invest in mHealth technology.

3.7. Confirmation of service

Confirmation refers to the extent to which users' expectations about the effects of mHealth usage are met, that is, whether the system actually performs as expected (Bhattacherjee, 2001). According to Joo and Choi (2016), confirmation of service appears to affect the continuance intention to use an IS. Confirming customer expectations of mHealth services increases customer satisfaction, enhances user loyalty, and encourages investment in new technology. When customers' initial expectations are met or even surpassed, user engagement increases and continued service usage is promoted (Venkatesh & Goyal, 2010). Based on the ECM, this study investigates the impact of confirmation of service on mHealth technology users’ investment decisions and proposes the following hypothesis:

H7: Confirmation of mHealth service significantly influences the intention to invest in mHealth technologies.

3.8. Value-for-money

Perceived value is a useful construct for marketers because it is a multi-dimensional aspect of consumer value that includes value-for-money. Value-for-money is the utility derived from both the short-term and long-term cost savings associated with adopting emerging technologies (Shang & Wu, 2017). A positive value-for-money experience contributes to stronger behavioural intention. Chen (2008) and Rajaguru (2016) found a strong relationship between users' perceived value-for-money and their intention to purchase new services. Because mHealth use can be viewed as consumer behaviour, this research incorporates value-for-money (Sweeney & Soutar, 2001) to further clarify mHealth users’ intention to invest in the technology. Research on how the value-for-money of mHealth technologies influences customers’ behavioural intentions is limited and contested. We believe that a user’s IINmH is determined by the technology’s perceived value-for-money and hypothesise that:

H8: Value-for-money has a significant positive influence on the intention to invest in mHealth technologies.

3.9. Internet speed

Slow Internet speed is a leading cause of everyday annoyance for Bangladeshi mobile Internet users, and most rural areas have poorer Internet access than urban areas. According to Index (2021), the average mobile Internet speed in Bangladesh is 11.32 Mbps and the average broadband speed is 36.02 Mbps. Both are significantly lower than the global averages of 53.38 Mbps for mobile and 102.12 Mbps for broadband. In 2021, Bangladesh ranked 132nd of 134 countries for mobile Internet speed and 99th of 176 countries for broadband speed. Given the low speed of mobile network connectivity in Bangladesh, mHealth services face a serious obstacle to success, as users may experience buffering and delays while using mHealth technologies. There is a strong association between Internet speed and the use of Internet-based services (Chiu et al., 2017). We believe that high-quality Internet access affects mHealth acceptance and the willingness to invest in it. Therefore, we propose the following hypothesis:

H9: Internet speed has a significant positive influence on the intention to invest in mHealth technologies.

3.10. Internet cost

Internet cost is another major determinant of the adoption of, and intention to continue using, online services (Nethananthan et al., 2018). In developing and least developed countries, high Internet costs have caused online services to struggle, as overall service, set-up, and operational costs have increased (Chiu et al., 2017). Bangladesh has a large population below the poverty line, and mobile Internet is very expensive there (Dutta & Smita, 2020). In a recent survey by Islam et al. (2020) of 13,525 young participants, 55.3% belonged to lower- and middle-income households, and 88% reported using the Internet for more than two hours every day. The majority (64.2%) did not attend any online classes, and, surprisingly, 65.7% did not play online games, which might be due to high Internet costs. Consequently, cost perceptions may have a significant detrimental impact on the intention to invest in mobile Internet-based services. Thus, we hypothesise:

H10: Mobile Internet cost has a significant influence on the intention to invest in mHealth technologies.

4. Methods

4.1. Sample and data collection

The target population consists of young people in Bangladesh who are or were active users of mHealth apps during the COVID-19 pandemic. G*Power (version 3.1.9.4; Faul et al., 2009) was used to determine the required sample size. The calculation was for a multiple linear regression with ten predictors, high power (1 − β = 0.95), a significance level of α = 0.05, and a small effect size (f² = 0.02), and it indicated that a minimum of 543 responses was required. Data were collected using non-probability convenience sampling (Iqbal & Iqbal, 2020). Participants were not asked for any identifying information, the interview was completely anonymous, and the data obtained were kept strictly confidential. Each interview began with an overview of the research objective and a brief description of mHealth apps, after which participants' oral consent to participate in the study was obtained. A self-reported questionnaire containing 34 items, including demographic information, was distributed to target participants via online channels (e.g., Facebook and WhatsApp groups of medical colleges and nursing institutes). Data were also collected in person at physical exercise centres, healthcare centres, nursing training institutes, and selected medical college hospitals, as well as from young mHealth users known to the researchers. During the survey period (October 2020 to February 2021), a total of 583 responses were received. The data were first screened for fraudulent responses, and a multivariate outlier test based on the Mahalanobis distance (Hair et al., 2010) was then undertaken to detect potential outliers. After this screening, 558 valid responses were retained for further analysis. We implemented a two-step protocol to mitigate possible sampling bias. First, young mHealth users from across the country were selected as the source samples. Second, duplicate responses by the same participant were not permitted.
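The outlier screen can be sketched as follows. The squared Mahalanobis distance of a response x from the sample mean is D² = (x - mean)ᵀ S⁻¹ (x - mean), where S is the sample covariance matrix, and responses whose D² exceeds a chosen cut-off are flagged. A common convention is the chi-square critical value at p < 0.001 with degrees of freedom equal to the number of variables. That convention is an assumption here, since the study does not report its cut-off. The helper names are hypothetical, and the code uses only the Python standard library for self-containment; in practice a numerical library would normally be used.

```python
from statistics import mean


def covariance_matrix(rows):
    """Sample mean vector and covariance matrix (n - 1 denominator)."""
    n, p = len(rows), len(rows[0])
    mu = [mean(r[j] for r in rows) for j in range(p)]
    cov = [
        [sum((r[i] - mu[i]) * (r[j] - mu[j]) for r in rows) / (n - 1) for j in range(p)]
        for i in range(p)
    ]
    return mu, cov


def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    aug = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("covariance matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def mahalanobis_squared(rows):
    """Squared Mahalanobis distance of every response from the sample centroid."""
    mu, cov = covariance_matrix(rows)
    inv = invert(cov)
    p = len(mu)
    distances = []
    for r in rows:
        d = [x - m for x, m in zip(r, mu)]
        distances.append(sum(d[i] * inv[i][j] * d[j] for i in range(p) for j in range(p)))
    return distances


def flag_outliers(rows, cutoff):
    """Indices of responses whose squared distance exceeds the chosen cut-off."""
    return [i for i, d2 in enumerate(mahalanobis_squared(rows)) if d2 > cutoff]


if __name__ == "__main__":
    # Toy two-item data: a 3 x 3 grid of typical answers plus one extreme response.
    data = [[x, y] for x in (1, 2, 3) for y in (1, 2, 3)] + [[10, 10]]
    # Illustrative cut-off only: with n = 10 no D^2 can exceed (n - 1)^2 / n = 8.1,
    # so a chi-square cut-off at p < 0.001 (13.82 for 2 df) could never be reached.
    print(flag_outliers(data, cutoff=7.0))  # [9]
```

A useful sanity check is that the squared distances always sum to (n - 1)p when S uses the n - 1 denominator. In the toy data that sum is 18.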
We conducted a pilot study with the first 150 responses to assess the internal reliability of the items. The Cronbach's alpha values, which represent the reliability of a measurement, were above the recommended threshold of 0.70 (Hu & Bentler, 1998).
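Cronbach's alpha follows α = k/(k − 1) · (1 − Σσ²ᵢ / σ²ₜ), where k is the number of items, σ²ᵢ is the variance of item i, and σ²ₜ is the variance of respondents' summed scores. A minimal sketch, assuming one list of scores per item; the function name is illustrative:

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of item columns (one list of scores per item)."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's summed score
    item_var = sum(variance(col) for col in items)    # sum of the item variances
    return k / (k - 1) * (1 - item_var / variance(totals))
```

Perfectly redundant items give α = 1, while mutually uncorrelated items give α = 0.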

4.2. Measurement instruments

The survey questionnaire consists of two sections. The first section covers the demographic information of the participants, while the second includes 34 items adapted from existing theories to investigate respondents' IINmH. To understand the participants' demographic profile, we asked about age, gender, education, mHealth usage behaviour, and experience in using mHealth; we also asked about chronic disease conditions. Each construct and its corresponding items were taken from the relevant literature to ensure content validity. The survey instrument covers eleven constructs. Perceived susceptibility (three items), perceived severity (three items), and perceived barriers (three items) to COVID-19, and perceived benefits (four items) were adopted and modified from C.C and Prathap (2020), Daragmeh et al. (2021), and Janz and Becker (1984). The health motivation (three items) measurements were derived from Becker (1974). The items of the ECM framework, namely performance value (four items), confirmation of service (three items), value-for-money (four items), and intention to invest (three items), were adapted from Hsu and Lin (2015). The mobile Internet speed and mobile Internet cost scales were derived from Chiu et al. (2017) and Islam et al. (2020), respectively. Details of the items can be found in the supplementary document. To ensure equivalent interpretation, the questionnaire was initially developed in English and then translated into Bengali using the back-translation method (Brislin, 1970). Two health informatics specialists and fifteen mHealth app users then thoroughly reviewed the Bengali version of the questionnaire to ensure its readiness. Preliminary observations indicated that the participants completed the Bengali questionnaire correctly, without any confusion or concerns regarding readability.
The respondents rated each item on a 5-point Likert scale, with 1 meaning strongly disagree and 5 meaning strongly agree.

5. Results

5.1. Multivariate assumptions

Before validating the model, we tested the multivariate assumptions to verify that the research results were valid and trustworthy (Leong et al., 2019).

5.1.1. Normality test

Parametric statistics assume that the data are normally distributed, so normality must be tested. This study assessed the normality of the data using the one-sample Kolmogorov–Smirnov test (Leong et al., 2019). As shown in Table 1, all p-values of the Kolmogorov–Smirnov test statistics are < 0.05, indicating that none of the constructs in the research model is normally distributed. We therefore employed variance-based PLS-SEM, which is robust to non-normal data.
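The K–S statistics in Table 1 can be reproduced in outline with the sketch below. It assumes Python 3.8+ (for `statistics.NormalDist`) and estimates the normal mean and SD from the data, which is why the Lilliefors correction applies. Because an exact Lilliefors P-value needs tables or simulation, the sketch instead compares the statistic with Lilliefors' large-sample 5% critical value of about 0.886/√n. The function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def ks_normal(data):
    """One-sample K-S differences against a normal fitted to the data (mean, SD)."""
    x = sorted(data)
    n = len(x)
    dist = NormalDist(mean(x), stdev(x))
    cdf = [dist.cdf(v) for v in x]
    # Largest gap above (D+) and below (D-) the fitted CDF; ties are handled
    # because the maxima occur at the last/first index of each tied run.
    d_plus = max((i + 1) / n - f for i, f in enumerate(cdf))
    d_minus = max(f - i / n for i, f in enumerate(cdf))
    return {"absolute": max(d_plus, d_minus), "positive": d_plus, "negative": -d_minus}


def lilliefors_critical_05(n):
    """Approximate Lilliefors critical value at alpha = 0.05 for n > 30."""
    return 0.886 / sqrt(n)
```

For n = 558 this critical value is about 0.038, well below the smallest absolute difference (0.102) in Table 1.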

Table 1.

One-sample Kolmogorov–Smirnov test for normality assessment.

| Construct | N | Mean (a,b) | Std. Deviation (a,b) | Most extreme differences: Absolute | Most extreme differences: Positive | Most extreme differences: Negative | Kolmogorov–Smirnov Z | Asymp. Sig. (P-Value, 2-tailed) (c) |
|---|---|---|---|---|---|---|---|---|
| PSus | 558 | 3.4438 | 1.03149 | 0.157 | 0.076 | -0.157 | 0.157 | 0.000 |
| PSev | 558 | 3.2855 | 1.01853 | 0.106 | 0.067 | -0.106 | 0.106 | 0.000 |
| HM | 558 | 3.7210 | 0.74605 | 0.155 | 0.082 | -0.155 | 0.155 | 0.000 |
| PBe | 558 | 2.8920 | 0.82888 | 0.143 | 0.081 | -0.143 | 0.143 | 0.000 |
| PBa | 558 | 4.0287 | 0.76632 | 0.189 | 0.102 | -0.189 | 0.189 | 0.000 |
| CS | 558 | 3.3035 | 0.75076 | 0.102 | 0.086 | -0.102 | 0.102 | 0.000 |
| VfM | 558 | 3.3616 | 0.72341 | 0.104 | 0.053 | -0.104 | 0.104 | 0.000 |
| PeV | 558 | 3.4789 | 0.80763 | 0.104 | 0.070 | -0.104 | 0.104 | 0.000 |
| MIS | 558 | 3.5681 | 0.89273 | 0.177 | 0.119 | -0.177 | 0.177 | 0.000 |
| MIC | 558 | 3.5224 | 0.93118 | 0.158 | 0.100 | -0.158 | 0.158 | 0.000 |
| IINmH | 558 | 3.4863 | 0.71942 | 0.149 | 0.075 | -0.149 | 0.149 | 0.000 |

a. Test distribution is Normal.

b. Calculated from data.

c. Lilliefors Significance Correction.

Note: PSus = Perceived susceptibility, PSev = Perceived severity, HM = Health motivation, PBe = Perceived benefits, PBa = Perceived barriers, CS = Confirmation of service, VfM = Value-for-money, PeV = Performance value, MIS = Mobile Internet speed, MIC = Mobile Internet cost, IINmH = Intention to invest in mHealth technology.

5.1.2. Linearity test

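A deviation-from-linearity test based on analysis of variance (ANOVA) was applied to examine whether each predictor is linearly related to IINmH (Ooi et al., 2018). A P-value below 0.05 for the deviation-from-linearity component indicates a significant departure from linearity. Table 2 reveals that both linear and non-linear relationships are present in the data.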
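The deviation-from-linearity test splits the between-groups sum of squares of IINmH (grouped by each distinct predictor score) into a linear component and a remainder. The remainder, divided by k − 2 degrees of freedom (k = number of distinct predictor scores), is tested against the within-groups mean square. A minimal sketch under these assumptions follows; it returns the F statistic and degrees of freedom, and the P-value can be taken from any F-distribution routine (e.g. `scipy.stats.f.sf`). The function name is hypothetical.

```python
from statistics import mean


def deviation_from_linearity(x, y):
    """Return (F, df_deviation, df_within) for the deviation-from-linearity test."""
    groups = {}
    for xi, yi in zip(x, y):
        groups.setdefault(xi, []).append(yi)
    n, k = len(y), len(groups)
    x_bar, y_bar = mean(x), mean(y)
    # Between-groups SS: spread of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - y_bar) ** 2 for g in groups.values())
    # Linear-term SS: variation explained by a straight-line regression of y on x.
    sxy = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
    sxx = sum((a - x_bar) ** 2 for a in x)
    ss_linear = sxy ** 2 / sxx
    # Within-groups SS: residual variation around each group's own mean.
    ss_within = sum((v - mean(g)) ** 2 for g in groups.values() for v in g)
    df_dev, df_within = k - 2, n - k
    f_stat = ((ss_between - ss_linear) / df_dev) / (ss_within / df_within)
    return f_stat, df_dev, df_within
```

A construct averaged from three 5-point items has up to 13 distinct scores, hence up to 11 degrees of freedom for the deviation term. This matches the PSus and PSev rows of Table 2.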

Table 2.

Deviation from linearity test.

| Relationship | Sum of Squares | df | Mean Square | F | P-Value | Linear |
|---|---|---|---|---|---|---|
| IINmH * PSus | 8.413 | 11 | 0.765 | 1.556 | 0.108 | Yes |
| IINmH * PSev | 3.872 | 11 | 0.352 | 0.719 | 0.721 | Yes |
| IINmH * HM | 10.281 | 10 | 1.028 | 2.156 | 0.019 | No |
| IINmH * PBe | 11.693 | 15 | 0.780 | 1.535 | 0.088 | Yes |
| IINmH * PBa | 11.853 | 10 | 1.185 | 2.390 | 0.009 | No |
| IINmH * CS | 5.942 | 11 | 0.540 | 1.157 | 0.315 | Yes |
| IINmH * VfM | 10.623 | 14 | 0.759 | 1.838 | 0.031 | No |
| IINmH * PeV | 17.433 | 15 | 1.162 | 2.464 | 0.002 | No |
| IINmH * MIS | 8.918 | 7 | 1.274 | 2.773 | 0.008 | No |
| IINmH * MIC | 8.403 | 7 | 1.200 | 2.946 | 0.005 | No |

5.1.3. Common method variance (CMV)

Since the measurement scales in our study were self-reported, it is crucial to assess potential CMV to ensure that the findings are unbiased. First, Harman's single-factor test with all eleven constructs was used to check whether the data were affected by CMV (Podsakoff et al., 2003). A single factor explained 24.92% of the variance, well below the recommended threshold of 50% (Podsakoff et al., 2003), suggesting that CMV is not a serious concern in this study. Second, we conducted a collinearity test based on variance inflation factors (VIFs). A model is considered free from CMV if all VIF values are less than or equal to 3.3 (Kock, 2015). All VIF values in this study are equal to or lower than 3.3 (see Appendix A), so this test also revealed no evidence of CMV.
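Both CMV checks can be sketched with the standard library. The Harman share below is approximated by the first unrotated principal component of the item correlation matrix (an assumption; the test is also commonly run as an unrotated exploratory factor analysis). The VIFs are read off the diagonal of the inverse correlation matrix, which equals 1/(1 − R²) for each column regressed on all the others, as in a full collinearity test on latent variable scores. The function names are hypothetical.

```python
from statistics import mean, pstdev


def correlation_matrix(columns):
    """Pearson correlation matrix for equal-length, non-constant numeric columns."""
    z = []
    for col in columns:
        m, s = mean(col), pstdev(col)
        z.append([(v - m) / s for v in col])
    n = len(columns[0])
    return [[sum(a * b for a, b in zip(zi, zj)) / n for zj in z] for zi in z]


def harman_first_factor_share(columns, iters=500):
    """Share of total variance captured by the first unrotated principal component."""
    R = correlation_matrix(columns)
    p = len(R)
    v = [1.0] * p  # start vector for power iteration towards the leading eigenvector
    for _ in range(iters):
        w = [sum(R[i][j] * v[j] for j in range(p)) for i in range(p)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    Rv = [sum(R[i][j] * v[j] for j in range(p)) for i in range(p)]
    eigenvalue = sum(a * b for a, b in zip(v, Rv))  # Rayleigh quotient
    return eigenvalue / p  # the eigenvalues of a correlation matrix sum to p


def full_collinearity_vifs(columns):
    """VIF of each column regressed on all others (diagonal of the inverse of R)."""
    R = correlation_matrix(columns)
    p = len(R)
    aug = [row[:] + [1.0 if i == j else 0.0 for j in range(p)] for i, row in enumerate(R)]
    for c in range(p):  # Gauss-Jordan elimination with partial pivoting
        piv = max(range(c, p), key=lambda r: abs(aug[r][c]))
        aug[c], aug[piv] = aug[piv], aug[c]
        d = aug[c][c]
        aug[c] = [x / d for x in aug[c]]
        for r in range(p):
            if r != c:
                f = aug[r][c]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[c])]
    return [aug[i][p + i] for i in range(p)]
```

A share below 0.50 and all VIFs at or below 3.3 would correspond to the two criteria used above.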

5.1.4. Homoscedasticity

At this stage, we examined scatter plots of the standardised residuals against the dependent variable to assess whether the data were homoscedastic. Homoscedasticity, i.e., a constant residual variance across the range of the data, is an assumption of multiple regression. Fig. 2 shows that the residuals are evenly and uniformly scattered along a straight line, so we may accept our conceptual framework for SEM analysis. These findings provide evidence of homoscedasticity (Ooi et al., 2018).

Fig. 2.

Homoscedasticity test on raw data.

5.1.5. Non-response bias

Non-response bias in questionnaire surveys occurs when the people who respond differ systematically from those who do not. Following Ooi et al. (2018), we evaluated potential non-response bias by comparing early and late respondents with independent-samples t-tests, treating late respondents as proxies for non-respondents. The respondents were divided into two groups at the median day of data collection, and the construct scores of the two groups were compared. There were no significant differences between early and late responses, since all t-tests had P-values > 0.05. Thus, we found no evidence of non-response bias in this study.
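The early-versus-late comparison can be sketched as a pooled-variance independent-samples t-test. This is an illustrative sketch under stated assumptions: the study does not say whether pooled or Welch variances were used, the two-tailed P-value uses a normal approximation that is close for groups of roughly 279 respondents each, `statistics.NormalDist` requires Python 3.8+, and the function names and the median-split rule are hypothetical.

```python
from statistics import NormalDist, mean, median, variance


def split_at_median_day(days, scores):
    """Split construct scores into early (day <= median day) and late groups."""
    cut = median(days)
    early = [s for d, s in zip(days, scores) if d <= cut]
    late = [s for d, s in zip(days, scores) if d > cut]
    return early, late


def early_late_t_test(early, late):
    """Pooled-variance t statistic, degrees of freedom and approximate two-tailed P."""
    n1, n2 = len(early), len(late)
    pooled = ((n1 - 1) * variance(early) + (n2 - 1) * variance(late)) / (n1 + n2 - 2)
    t = (mean(early) - mean(late)) / (pooled * (1 / n1 + 1 / n2)) ** 0.5
    # Normal approximation to the t distribution (adequate for large groups).
    p = 2 * (1 - NormalDist().cdf(abs(t)))
    return t, n1 + n2 - 2, p
```

The test would be repeated for each construct, and P > 0.05 throughout is read as no evidence of non-response bias.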

5.2. Measurement model

The model fit should always be assessed at the beginning of the model evaluation. We considered the standardised root mean square residual (SRMR) in conjunction with the criteria for an acceptable model fit proposed by Henseler et al. (2015). To avoid model misspecification, the SRMR should be less than or equal to 0.08 (or, under a more lenient criterion, 0.10) (Hu & Bentler, 1998); our model's SRMR of 0.069 meets the stricter threshold, indicating a good fit. To validate the measurement model, we assessed convergent validity and discriminant validity. Convergent validity was evaluated through the factor loadings, Cronbach's alpha, composite reliability (CR), and average variance extracted (AVE), and discriminant validity through both the Fornell–Larcker criterion and the heterotrait-monotrait ratio (HTMT) (Hair et al., 2020). Cronbach's alpha values higher than 0.50 were used to indicate the internal reliability of the constructs (Hu & Bentler, 1998). We also assessed construct reliability using CR values > 0.70, as suggested by Fornell and Larcker (1981). Finally, the AVE must exceed 0.50 (Fornell & Larcker, 1981), indicating that the variance captured by a construct is greater than the variance due to measurement error. As shown in Table 3, the Cronbach's alpha, rho_A, CR, and AVE values are all acceptable, and the factor loadings of each item, presented in Appendix A, are also acceptable. For discriminant validity, we examined whether the Fornell–Larcker criterion (Fornell & Larcker, 1981) and the more recent HTMT criterion (Henseler et al., 2015) effectively differentiate each pair of constructs. Under the Fornell–Larcker criterion, the square root of each construct's AVE should be greater than its correlations with the other constructs (Fornell & Larcker, 1981). Table 4 shows that this holds for all constructs except perceived susceptibility, whose square root of AVE (0.825) is marginally below its correlation with perceived severity (0.829).
Finally, Table 5 shows that the HTMT ratios are at or below the 0.90 threshold (Henseler et al., 2015) for all construct pairs except PSev–PSus (0.939) and MIS–PeV (0.917), supporting discriminant validity for the remaining constructs.
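The CR and AVE criteria reduce to simple functions of the standardised outer loadings λ: CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)) and AVE = Σλ² / k. The sketch below computes both and lists Fornell–Larcker violations. The input layout (a dict of AVEs and a dict of pairwise construct correlations) and the function names are assumptions made for illustration.

```python
from math import sqrt


def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    s = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)  # error variance of each standardised item
    return s ** 2 / (s ** 2 + error)


def average_variance_extracted(loadings):
    """AVE = mean of the squared standardised loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def fornell_larcker_violations(ave, corr):
    """(construct, other) pairs where sqrt(AVE) does not exceed |r| with the other.

    `ave` maps construct -> AVE; `corr` maps (a, b) tuples -> correlation.
    """
    bad = []
    for (a, b), r in corr.items():
        for c, other in ((a, b), (b, a)):
            if sqrt(ave[c]) <= abs(r):
                bad.append((c, other))
    return bad
```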

Table 3.

Analysis of convergent validity.

| Construct | Cronbach's Alpha | rho_A | Composite Reliability | Average Variance Extracted (AVE) |
|---|---|---|---|---|
| PeV | 0.770 | 0.773 | 0.853 | 0.593 |
| HM | 0.613 | 0.680 | 0.832 | 0.714 |
| MIC | 0.704 | 0.709 | 0.871 | 0.771 |
| MIS | 0.613 | 0.623 | 0.826 | 0.704 |
| PBa | 0.837 | 0.862 | 0.900 | 0.750 |
| PBe | 0.812 | 0.840 | 0.887 | 0.723 |
| VfM | 0.684 | 0.696 | 0.824 | 0.610 |
| PSev | 0.822 | 0.878 | 0.891 | 0.732 |
| PSus | 0.785 | 0.905 | 0.863 | 0.680 |
| CS | 0.687 | 0.692 | 0.865 | 0.761 |
| IINmH | 0.605 | 0.608 | 0.835 | 0.717 |

Table 4.

Inter-correlation between the constructs and the square root of AVEs. (Fornell-Larcker Criterion).

| Construct | PeV | HM | MIC | MIS | PBa | PBe | VfM | PSev | PSus | CS | IINmH |
|---|---|---|---|---|---|---|---|---|---|---|---|
| PeV | 0.770 | | | | | | | | | | |
| HM | 0.090 | 0.845 | | | | | | | | | |
| MIC | 0.361 | 0.132 | 0.878 | | | | | | | | |
| MIS | 0.717 | 0.117 | 0.296 | 0.839 | | | | | | | |
| PBa | 0.194 | −0.009 | 0.060 | 0.034 | 0.866 | | | | | | |
| PBe | 0.085 | 0.043 | 0.080 | 0.068 | 0.049 | 0.850 | | | | | |
| VfM | 0.133 | 0.188 | 0.247 | 0.260 | −0.120 | 0.272 | 0.781 | | | | |
| PSev | 0.091 | −0.035 | −0.043 | −0.067 | 0.199 | 0.297 | −0.149 | 0.855 | | | |
| PSus | 0.049 | −0.054 | −0.030 | −0.069 | 0.157 | 0.348 | −0.074 | 0.829 | 0.825 | | |
| CS | 0.226 | 0.122 | 0.236 | 0.269 | −0.092 | 0.245 | 0.568 | −0.163 | −0.103 | 0.873 | |
| IINmH | 0.347 | 0.212 | 0.559 | 0.369 | −0.057 | 0.126 | 0.398 | −0.195 | −0.157 | 0.393 | 0.847 |

Table 5.

Heterotrait-Monotrait ratio (HTMT).

| Construct | PeV | HM | MIC | MIS | PBa | PBe | VfM | PSev | PSus | CS | IINmH |
|---|---|---|---|---|---|---|---|---|---|---|---|
| PeV | | | | | | | | | | | |
| HM | 0.133 | | | | | | | | | | |
| MIC | 0.490 | 0.199 | | | | | | | | | |
| MIS | 0.917 | 0.198 | 0.464 | | | | | | | | |
| PBa | 0.256 | 0.029 | 0.091 | 0.081 | | | | | | | |
| PBe | 0.124 | 0.092 | 0.105 | 0.127 | 0.067 | | | | | | |
| VfM | 0.192 | 0.290 | 0.347 | 0.406 | 0.176 | 0.365 | | | | | |
| PSev | 0.177 | 0.067 | 0.052 | 0.095 | 0.257 | 0.372 | 0.190 | | | | |
| PSus | 0.137 | 0.100 | 0.078 | 0.092 | 0.227 | 0.425 | 0.103 | 0.939 | | | |
| CS | 0.304 | 0.180 | 0.332 | 0.423 | 0.130 | 0.335 | 0.822 | 0.199 | 0.138 | | |
| IINmH | 0.502 | 0.336 | 0.854 | 0.615 | 0.077 | 0.174 | 0.606 | 0.264 | 0.201 | 0.607 | |
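For reference, the HTMT ratio in Table 5 is the mean correlation between the items of two different constructs divided by the geometric mean of the average within-construct item correlations. A minimal sketch, assuming an item-level correlation matrix and at least two items per construct; the function name is hypothetical:

```python
from itertools import combinations
from math import sqrt
from statistics import mean


def htmt(R, items_a, items_b):
    """Heterotrait-monotrait ratio for two constructs.

    R is an item-level correlation matrix (nested lists); items_a and items_b are
    the row/column indices of each construct's indicators (two or more each).
    """
    hetero = mean(R[i][j] for i in items_a for j in items_b)       # between constructs
    mono_a = mean(R[i][j] for i, j in combinations(items_a, 2))    # within construct A
    mono_b = mean(R[i][j] for i, j in combinations(items_b, 2))    # within construct B
    return hetero / sqrt(mono_a * mono_b)
```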

5.3. Structural model

Although the measurement model was satisfactory, we examined the structural model before drawing any conclusions. To assess the significance of the path coefficients, we applied bootstrapping with 5,000 subsamples (Hair et al., 2010). Table 6 and Fig. 3 present the results of the hypothesis tests, in which the path coefficients (β), t-statistics, and P-values were used to determine whether each hypothesis was supported. The results show that perceived severity (β = 0.123, P < 0.05), health motivation (β = 0.090, P < 0.05), performance value (β = 0.101, P < 0.05), confirmation of service (β = 0.122, P < 0.01), and value-for-money (β = 0.132, P < 0.001) have a significant positive impact on intention to invest in mHealth technology, thus supporting H2, H3, H6, H7, and H8, respectively. In contrast, mobile Internet cost (β = −0.417, P < 0.001) has a significant negative impact on the intention to invest in mHealth technology: a one-standard-deviation increase in mobile Internet cost is associated with a 0.417 standard-deviation decrease in young adults' IINmH, holding the other predictors constant. However, perceived susceptibility (β = −0.026, P > 0.05), perceived benefits (β = 0.057, P > 0.05), perceived barriers (β = −0.051, P > 0.05), and mobile Internet speed (β = 0.083, P > 0.05) did not significantly affect young adults' intentions to invest in mHealth technology, so hypotheses H1, H4, H5, and H9, respectively, were not supported.
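The bootstrap logic can be illustrated on a single standardised path: resample respondents with replacement, re-estimate the coefficient, and use the spread of the resampled estimates as its standard error (t = β / SE) and their 2.5th and 97.5th percentiles as a confidence interval. This sketch assumes a one-predictor model rather than the full PLS-SEM algorithm, and its names are hypothetical.

```python
import random
from statistics import mean, stdev


def standardised_slope(x, y):
    """Standardised coefficient of a one-predictor regression (equals Pearson r)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def bootstrap_path(x, y, n_boot=5000, seed=1):
    """Return (beta, bootstrap SE, t statistic, 95% percentile interval)."""
    rng = random.Random(seed)
    n = len(x)
    beta = standardised_slope(x, y)
    boots = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        boots.append(standardised_slope([x[i] for i in idx], [y[i] for i in idx]))
    boots.sort()
    se = stdev(boots)
    lower = boots[int(0.025 * n_boot)]
    upper = boots[int(0.975 * n_boot) - 1]
    return beta, se, beta / se, (lower, upper)
```

A path is usually judged significant at the 5% level when its interval excludes zero, which is consistent with |t| > 1.96 for a roughly normal bootstrap distribution.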

Table 6.

PLS-SEM path analysis.

| Hypothesis | Path | β | T Statistics | P Values | SE | 2.5% | 97.5% | Supported | f² |
|---|---|---|---|---|---|---|---|---|---|
| H1 | PSus → IINmH | −0.026 | 0.450 | 0.653 | 0.002 | −0.140 | 0.087 | No | 0.001 |
| H2 | PSev → IINmH | 0.123 | 1.982 | 0.048 | 0.003 | −0.240 | 0.004 | Yes | 0.008 |
| H3 | HM → IINmH | 0.090 | 2.327 | 0.020 | 0.002 | 0.010 | 0.162 | Yes | 0.014 |
| H4 | PBe → IINmH | 0.057 | 1.581 | 0.115 | 0.002 | −0.032 | 0.116 | No | 0.005 |
| H5 | PBa → IINmH | −0.051 | 1.424 | 0.155 | 0.002 | −0.103 | 0.043 | No | 0.004 |
| H6 | PeV → IINmH | 0.101 | 1.965 | 0.051 | 0.002 | −0.014 | 0.206 | Yes | 0.017 |
| H7 | CS → IINmH | 0.122 | 2.619 | 0.009 | 0.002 | 0.037 | 0.214 | Yes | 0.019 |
| H8 | VfM → IINmH | 0.132 | 3.283 | 0.001 | 0.002 | 0.050 | 0.203 | Yes | 0.008 |
| H9 | MIS → IINmH | 0.083 | 1.600 | 0.110 | 0.002 | −0.007 | 0.185 | No | 0.006 |
| H10 | MIC → IINmH | −0.417 | 9.807 | 0.000 | 0.002 | 0.320 | 0.493 | Yes | 0.262 |

Predictive Relevance: R-Square: 0.457, Q-Square: 0.303 (DV = IINmH).

Fig. 3.

Path analysis diagram.

Path coefficients alone do not establish how well the model explains and predicts the outcome, so we also examined R², Q², and f². The model explains about 45.7% of the variance in investment intentions in mHealth technology (R² = 0.457), i.e., roughly half of the total variance. The final step of model validation was to examine predictive relevance using Stone–Geisser's Q² value. A Q² value greater than zero indicates that the model has predictive relevance (Rehman Khan & Yu, 2020); with Q² = 0.303 (Table 6), our model has predictive relevance for investment intentions in mHealth technology. The contribution of each predictor to the explained variance is expressed as the effect size (f²) of its path. f² values of 0.02, 0.15, and 0.35 reflect small, medium, and large effects, respectively (Cohen, 1988). As shown in Table 6, mobile Internet cost has by far the largest effect on the intention to invest in mHealth technology (f² = 0.262), which corresponds to a medium effect under these thresholds.
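The effect size of a predictor compares R² with and without it, f² = (R²included − R²excluded) / (1 − R²included), and is then read against Cohen's thresholds quoted above. A minimal sketch with hypothetical function names:

```python
def effect_size_f2(r2_included, r2_excluded):
    """Cohen's f^2: the drop in R^2 when a predictor is omitted, relative to 1 - R^2."""
    return (r2_included - r2_excluded) / (1 - r2_included)


def cohen_label(f2):
    """Classify f^2 with Cohen's (1988) cut-offs of 0.02, 0.15 and 0.35."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"  # below the 'small' cut-off
```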

5.4. Post hoc analysis of PLS-SEM

We used importance-performance map analysis (IPMA) alongside the standard PLS-SEM analysis to better understand young adults' future investment intentions in mHealth technology. IPMA supplements the PLS-SEM findings with additional insights (Ringle and Sarstedt, 2016). Its primary purpose is to identify predictors that have a strong total effect on the target construct (high importance) but a relatively low average latent variable score (low performance) (Pisitsankkhakarn & Vassanadumrongdee, 2020). We implemented the IPMA technique following Hair et al. (2017) and Pisitsankkhakarn & Vassanadumrongdee (2020) to identify the most important predictors of IINmH. Table 7 and Fig. 4 show the IPMA results, which indicate that mobile Internet cost has the strongest effect on investment intention in mHealth technology, with a total effect of −0.417 at a performance level of 63.029. As Fig. 4 shows, a one-unit increase in the performance of mobile Internet cost is associated with a 0.417-unit decrease in overall investment intention. Similarly, value-for-money increases investment intention, with a total effect of 0.132 and an overall performance level of 54.976. Accordingly, policymakers and/or governments may be able to increase IINmH among young adults by lowering mobile Internet costs, and these findings suggest that policymakers should prioritise these predictors.
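IPMA performance values are average latent variable scores rescaled to a 0–100 range. A simplified sketch, assuming a 1–5 scale and a construct score formed as a weighted mean of its items (the weights stand in for the outer weights estimated in PLS-SEM); the function name is hypothetical:

```python
def ipma_performance(item_means, weights, scale_min=1, scale_max=5):
    """Weighted mean of item scores rescaled from [scale_min, scale_max] to 0-100."""
    rescaled = [(m - scale_min) / (scale_max - scale_min) * 100 for m in item_means]
    return sum(w * r for w, r in zip(weights, rescaled)) / sum(weights)
```

For example, a construct whose items all average 3.5 scores (3.5 − 1)/4 × 100 = 62.5.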

Table 7.

Importance-Performance Analysis.

| Constructs | Importance | Performance |
|---|---|---|
| PeV | 0.101 | 61.941 |
| HM | 0.090 | 68.462 |
| MIC | −0.417 | 63.029 |
| MIS | 0.083 | 64.242 |
| PBa | −0.051 | 76.522 |
| PBe | 0.057 | 45.425 |
| VfM | 0.132 | 54.976 |
| PSev | −0.123 | 56.905 |
| PSus | −0.026 | 60.473 |
| CS | 0.122 | 58.640 |

Fig. 4.

Importance-performance map analysis.

5.5. Asymmetric analysis (fsQCA)

fsQCA is an asymmetric modelling technique that combines fuzzy sets with fuzzy logic. Its key concepts are fuzzy set theory and Boolean algebra, which are used to investigate how multiple predictors combine, through their presence or absence, to lead to a particular outcome (Ragin, 2009). Asymmetric modelling grounded in complexity theory is valuable for several reasons. First, correlation coefficients, even when highly reliable, cannot fully account for the strength of the relationship between endogenous and exogenous factors, a problem that fuzzy sets can help resolve (Kaya et al., 2020, Pappas et al., 2017). Second, symmetric techniques such as multiple regression analysis (MRA) and SEM can overfit when collinearity confounds related effects (Olya & Altinay, 2016). Third, real-world outcomes often depend on multiple factors and combinations of predictors rather than on a single predictor. Whereas symmetric analysis implies that high values of the predictor variables are necessary and sufficient to predict the outcome variables, asymmetric analysis suggests that high predictor values are sufficient but not necessarily required (Kaya et al., 2020). In addition, fsQCA distinguishes between independent and dependent variables and identifies patterns that explain an outcome; more importantly, unlike regression, correlation, and ANOVA, it can provide multiple alternative solutions to a single problem (Pappas et al., 2017).

5.5.1. Calibration

Before performing the fsQCA analysis, the raw data must be calibrated, because the study constructs were measured on a 5-point Likert scale rather than as set memberships. As reported in Table 1, all p-values of the one-sample Kolmogorov–Smirnov test (Leong et al., 2019) for the uncalibrated data are < 0.05, confirming that none of the predictors in the research model is normally distributed. We also report the skewness and kurtosis of the raw data in Appendix A; skewness values are within ±1 and kurtosis values within ±2 (Kaya et al., 2020). Calibration transforms raw scores into set-membership scores, where 1 denotes full membership, 0.5 the crossover point, and 0 full non-membership (Fiss, 2011). The fsQCA software (version 3.1b) was used to transform the variables into calibrated sets. Each construct score was first computed as the average of its items (Pappas & Woodside, 2021), and the values at the 95th, 50th, and 5th percentiles of these scores were then used as the calibration thresholds for full membership, the crossover point, and full non-membership, respectively. The threshold values for each construct are shown in Table 8.
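The percentile thresholds are typically turned into memberships with the direct method of calibration associated with Ragin, which maps the full non-membership, crossover, and full membership thresholds to log-odds of −3, 0, and +3 and converts the result back with the logistic function. A minimal sketch assuming this log-odds variant; the function name is hypothetical, and the thresholds must be distinct:

```python
from math import exp


def calibrate(value, full_non, crossover, full):
    """Direct calibration: thresholds map to log-odds -3, 0 and +3 (~0.05, 0.5, ~0.95)."""
    if value >= crossover:
        log_odds = 3 * (value - crossover) / (full - crossover)
    else:
        log_odds = -3 * (crossover - value) / (crossover - full_non)
    return 1 / (1 + exp(-log_odds))  # logistic transform back to [0, 1]
```

With the MIS thresholds in Table 8 (2.00, 3.50, 5.00), a raw score of 4.0 maps to a membership of about 0.73.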

Table 8.

Percentile thresholds used for calibration.

| Threshold | PSus | PSev | HM | PBe | PBa | CS | VfM | PeV | MIS | MIC | IINmH |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Full non-membership (5%) | 1.67 | 1.33 | 2.33 | 1.25 | 2.67 | 2.00 | 2.00 | 2.00 | 2.00 | 2.00 | 2.33 |
| Crossover point (50%) | 3.67 | 3.33 | 4.00 | 3.00 | 4.00 | 3.33 | 3.50 | 3.50 | 3.50 | 3.50 | 3.67 |
| Full membership (95%) | 5.00 | 4.67 | 4.67 | 4.25 | 5.00 | 4.33 | 4.50 | 5.00 | 5.00 | 5.00 | 4.33 |

5.5.2. Analysis of necessary conditions

Although fsQCA focuses on identifying sufficient conditions, the necessary conditions should always be analysed first. This study examines the single endogenous variable 'IINmH' from the PLS-SEM model (Fig. 1) as the outcome. As in the SEM model, the fsQCA examines the ten predictor conditions that affect 'IINmH', testing both their presence and their absence. Consistency ranges from 0 to 1 (Ragin, 2009); values of 0.75 or below are typically considered inadequate, and values of at least 0.80 are expected. In addition, a condition is "almost always necessary" or "necessary" when its consistency value is ≥ 0.80 or ≥ 0.90, respectively (Ragin, 2000). The findings in Table 9 reveal that no predictor is necessary on its own for 'IINmH', since all consistency scores are below 0.90.
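Necessity consistency and coverage follow the standard set-theoretic formulas: consistency = Σ min(Xᵢ, Yᵢ) / Σ Yᵢ and coverage = Σ min(Xᵢ, Yᵢ) / Σ Xᵢ, with a negated condition ∼X scored as 1 − X. A minimal sketch with hypothetical function names:

```python
def necessity(condition, outcome):
    """(consistency, coverage) of `condition` as a necessary condition for `outcome`.

    Both arguments are lists of fuzzy membership scores for the same cases.
    """
    overlap = sum(min(x, y) for x, y in zip(condition, outcome))
    return overlap / sum(outcome), overlap / sum(condition)


def negate(memberships):
    """Fuzzy negation (~X): membership in the complement set."""
    return [1 - m for m in memberships]
```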

Table 9.

Analysis of necessary conditions (Outcome variable: IINmH).

| Conditions tested | Consistency | Coverage | Conditions tested | Consistency | Coverage |
|---|---|---|---|---|---|
| PSus | 0.587101 | 0.589008 | ∼PSus | 0.695186 | 0.674623 |
| PSev | 0.609482 | 0.564850 | ∼PSev | 0.661870 | 0.698008 |
| HM | 0.654241 | 0.687695 | ∼HM | 0.610788 | 0.567706 |
| PBe | 0.661761 | 0.658962 | ∼PBe | 0.635458 | 0.621174 |
| PBa | 0.645594 | 0.572746 | ∼PBa | 0.602361 | 0.669251 |
| CS | 0.727919 | 0.695066 | ∼CS | 0.542197 | 0.553275 |
| VfM | 0.746557 | 0.752767 | ∼VfM | 0.548737 | 0.529928 |
| PeV | 0.717057 | 0.666702 | ∼PeV | 0.547574 | 0.575355 |
| MIS | 0.791135 | 0.672514 | ∼MIS | 0.472624 | 0.555468 |
| MIC | 0.756765 | 0.716572 | ∼MIC | 0.529699 | 0.545434 |

5.5.3. Analysis of sufficient conditions

Generating the truth table is the first step in a sufficient condition analysis (Ragin, 2009). The truth table contains 2^k rows, where k is the number of conditions, and each row represents one possible combination of causal conditions; with ten conditions, there are 2^10 = 1,024 rows. The fsQCA software was used to generate the truth table for IINmH in this research. For large samples, Fiss (2011) recommends a frequency cut-off of 3 or higher (Pappas & Woodside, 2021), and consistency values above 0.74 indicate that the combined assessment has produced meaningful solutions (Elbaz et al., 2018). Because our sample size is 558, the frequency threshold was set at 5, and combinations with a lower frequency were excluded from further analysis. Table 10 summarises the fsQCA results for IINmH (intermediate solution). To enhance readability, black circles (●) indicate the presence of a causal condition, crossed circles (⊗) indicate its absence (negation), and empty cells indicate "don't care" conditions, whose presence or absence does not affect the outcome. Table 10 also reports the consistency of each solution, a measure analogous to the regression coefficient, and the coverage of each solution and condition, which indicates the size of the effect in hypothesis testing (Woodside & Zhang, 2012). Finally, the overall solution coverage, which is analogous to the R² value in variable-based approaches (Elbaz et al., 2018), indicates the extent to which the identified configurations explain IINmH.
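The truth-table and sufficiency calculations can be sketched as follows. A case's membership in a configuration is the minimum of its memberships in the required conditions (1 − X for a negated condition); each case is counted in the truth-table row where that membership exceeds 0.5; and sufficiency consistency = Σ min(Xᵢ, Yᵢ) / Σ Xᵢ, coverage = Σ min(Xᵢ, Yᵢ) / Σ Yᵢ. This is an illustrative sketch, not the fsQCA software's implementation, and the function names are hypothetical.

```python
from itertools import product


def configuration_membership(case, recipe):
    """Fuzzy AND (minimum) over the conditions in `recipe`.

    `case` maps condition -> membership; `recipe` maps condition -> True (present)
    or False (absent). Conditions left out of `recipe` are "don't care".
    """
    return min(case[c] if present else 1 - case[c] for c, present in recipe.items())


def sufficiency(config, outcome):
    """(consistency, coverage) of a configuration as a sufficient condition."""
    overlap = sum(min(x, y) for x, y in zip(config, outcome))
    return overlap / sum(config), overlap / sum(outcome)


def truth_table_counts(cases, conditions):
    """Number of cases with membership > 0.5 in each of the 2**k truth-table rows."""
    counts = {}
    for row in product((True, False), repeat=len(conditions)):
        recipe = dict(zip(conditions, row))
        counts[row] = sum(configuration_membership(c, recipe) > 0.5 for c in cases)
    return counts
```

Rows whose count falls below the frequency threshold (5 in this study) would then be dropped before logical minimisation.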

Table 10.

fsQCA analysis (intermediate solution).

Model: IINmH = f(PSus, PSev, HM, PBe, PBa, CS, VfM, PeV, MIS, MIC)

| Configuration | Solution 1 | Solution 2 | Solution 3 | Solution 4 | Solution 5 | Solution 6 |
|---|---|---|---|---|---|---|
| PSus | ⊗ | ● | ● | ⊗ | ⊗ | ● |
| PSev | ⊗ | ● | ● | ⊗ | ⊗ | ● |
| HM | ● | ● | ⊗ | ● | ● | |
| PBe | ● | ● | ⊗ | ⊗ | ● | |
| PBa | ⊗ | ● | ⊗ | ⊗ | | |
| CS | ● | ● | ● | ⊗ | ⊗ | ● |
| VfM | ● | ● | ● | ● | ● | ● |
| PeV | ● | ● | ● | ⊗ | ⊗ | ⊗ |
| MIS | ● | ● | ● | ⊗ | ● | ⊗ |
| MIC | ● | ● | ● | ● | ● | ● |
| Raw coverage | 0.315214 | 0.402109 | 0.396821 | 0.227046 | 0.25426 | 0.262326 |
| Unique coverage | 0.042711 | 0.0137572 | 0.0165019 | 0.0156987 | 0.0121839 | 0.0158997 |
| Consistency | 0.98597 | 0.968712 | 0.965784 | 0.943525 | 0.97975 | 0.971248 |

Solution coverage: 0.546477. Solution consistency: 0.940925.

Table 10 shows that no single predictor outperforms combinations of predictors. The fsQCA results reveal six configurations (solutions) leading to high IINmH, all with high consistency (above 0.90). In particular, the combination of low perceived susceptibility × low perceived severity × health motivation × low perceived barriers × confirmation of service × value-for-money × performance value × mobile Internet speed × mobile Internet cost (solution 1) has the highest consistency (0.98597), and its raw coverage of 0.315 indicates that it accounts for about 31.5% of the membership in high IINmH. According to solution 2, all ten predictors are equally important except perceived barriers; this solution has a raw coverage of 0.402 and a consistency of 0.968712. Similarly, solution 3 shows that every predictor except health motivation is equally important in predicting IINmH (consistency of 0.965784 and raw coverage of 0.396821). Solution 4 has a comparatively lower consistency (0.943525); it combines the presence of value-for-money and mobile Internet cost with the absence of the other seven predictors, while perceived barriers is irrelevant. The combination of low perceived susceptibility × low perceived severity × health motivation × low perceived benefits × low perceived barriers × low confirmation of service × value-for-money × low performance value × mobile Internet speed × mobile Internet cost (solution 5) is also expected to lead to high IINmH, with a consistency of 0.97975 and a raw coverage of 0.254.
Finally, solution 6 is also competitive: it combines the presence of perceived susceptibility, perceived severity, health motivation, perceived benefits, confirmation of service, value-for-money, and mobile Internet cost, while perceived barriers, performance value, and mobile Internet speed can be neglected. Together, the six solutions have an overall coverage of 0.546, i.e., they account for about 54.6% of the membership in high IINmH. In summary, the same causal predictors can lead to strong intentions to invest in mHealth through different configurations, depending on how the presence or absence of each predictor combines with the other causal factors.

5.6. Machine learning for predicting IINmH

ML classification algorithms were employed to predict IINmH from the predictors of the proposed integrated model. Eight classifiers, trained on labelled data in a supervised learning approach, were used: support vector machine (SVM), logistic regression (LR), random forest, k-nearest neighbours (KNN), Naive Bayes, AdaBoost, neural network, and XGBoost. Python 3.7 was used for modelling, training, and evaluating these prediction models. The target variable was transformed into a binary classification problem (0 = do not change IINmH, 1 = change IINmH), with the mean item score used as the threshold. The input data were normalised to the range [0, 1] using the cross-channel normalisation technique (Mezzatesta et al., 2019). In this study, 80% of the data was used for training the models and the remaining 20% for testing. Finally, sensitivity analysis was performed on the best-performing ML model to explore the association between the input predictors and the target factor (IINmH), as well as the relative importance of these predictors.
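The preprocessing steps described above can be sketched as follows. The binarisation rule (1 if a respondent's IINmH score is above the sample mean), the seeded shuffle, and the use of plain min–max scaling fitted on the training split as a stand-in for the cross-channel normalisation cited above are all assumptions. The function names are hypothetical.

```python
import random
from statistics import mean


def binarise(scores):
    """1 if a respondent's IINmH score is above the sample mean, else 0 (assumed rule)."""
    threshold = mean(scores)
    return [1 if s > threshold else 0 for s in scores]


def split_indices(n, test_fraction=0.2, seed=42):
    """Shuffle case indices and return (train, test) index lists, e.g. an 80/20 split."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_test = round(n * test_fraction)
    return idx[n_test:], idx[:n_test]


def min_max_fit(rows):
    """Per-feature (min, max) computed on the training rows only."""
    return [(min(col), max(col)) for col in zip(*rows)]


def min_max_apply(rows, ranges):
    """Scale each feature to [0, 1]; unseen values may fall slightly outside."""
    return [[(v - lo) / (hi - lo) if hi > lo else 0.0 for v, (lo, hi) in zip(r, ranges)]
            for r in rows]
```

Fitting the scaling ranges on the training split alone keeps information from the test split out of model training.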

5.6.1. Feature selection and parameter tuning

Feature selection is a widely used data pre-processing technique. Identifying the most important features for optimal model fitting in ML is challenging, but selecting them eliminates superfluous and redundant features, resulting in faster and more accurate computation. Two common families of feature selection methods are filter and wrapper methods (Hu et al., 2015); embedded methods are also popular because they are less computationally expensive than wrapper methods. For classification modelling, Hasan and Bao (2021) showed that the wrapper method outperforms other feature selection methods. Therefore, the wrapper method was used for feature selection in this study.
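The section does not specify which wrapper search was used; sequential forward selection is one common choice and is sketched below. The `score` callable is a hypothetical placeholder for, e.g., the cross-validated accuracy of a classifier trained on the candidate feature subset.

```python
def forward_selection(features, score, max_features=None):
    """Greedy wrapper-style forward selection.

    Repeatedly adds the feature whose inclusion gives the best `score(subset)`,
    stopping when no remaining feature improves the score.
    """
    selected, best = [], float("-inf")
    limit = max_features or len(features)
    while len(selected) < limit:
        candidates = [f for f in features if f not in selected]
        top_score, top_feature = max((score(selected + [f]), f) for f in candidates)
        if top_score <= best:
            break
        selected.append(top_feature)
        best = top_score
    return selected, best
```

Because each step retrains the model on every candidate subset, wrapper methods are costlier than filter methods but account for how the chosen classifier actually uses the features.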

Moreover, every ML algorithm has hyperparameters that may significantly affect its performance. A grid search was used to test many combinations of parameter values and find the most suitable parameters for each ML model (Syarif et al., 2016). The parameter ranges and best values for each ML model are shown in Table 11. A five-fold cross-validation technique was applied to avoid overfitting. Additionally, early stopping was employed for the neural network (ANN) to halt training once the loss had not improved for a given number of epochs (Zhou et al., 2021).
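Grid search with five-fold cross-validation evaluates every parameter combination on each fold and keeps the combination with the best mean validation score. The standard-library sketch below illustrates the procedure; `fit_and_score` is a hypothetical caller-supplied function, and in practice scikit-learn's `GridSearchCV` (with `cv=5`) performs the same search.

```python
from itertools import product
from statistics import mean


def k_fold_indices(n, k=5):
    """Split range(n) into k contiguous folds (shuffle the data beforehand if needed)."""
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def grid_search(param_grid, fit_and_score, n, k=5):
    """Exhaustive grid search scored by k-fold cross-validation.

    `fit_and_score(params, train_idx, val_idx)` trains a model with `params` on
    the training indices and returns its score on the validation indices.
    """
    names = list(param_grid)
    folds = k_fold_indices(n, k)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        scores = []
        for i, val_idx in enumerate(folds):
            train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
            scores.append(fit_and_score(params, train_idx, val_idx))
        if mean(scores) > best_score:
            best_params, best_score = params, mean(scores)
    return best_params, best_score
```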

Table 11.

Parameter ranges/best values.

| Classifier | Parameter ranges/best values |
|---|---|
| Support Vector Machine | C = 10, gamma = 0.001 |
| Logistic Regression | C = 10.0, penalty = l2 |
| Random Forest | Number of trees = 200, number of features for splitting = 8 |
| KNN | Number of neighbours = 8 |
| Naive Bayes | |
| AdaBoost | 'n_estimators': [100, 200]; 'learning_rate': [0.001, 0.01, 0.1, 0.2, 0.5] |
| Neural Network | 'alpha': [1e-01, 1e-02, 1e-03, 1e-04, 1e-05, 1e-06]; 'hidden_layer_sizes': [5, 6, 7, 8, 9, 10, 11]; 'max_iter': [500, 1000, 1500]; 'random_state': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9] |
| XGBoost | 'min_child_weight': [1, 5, 10]; 'gamma': [0.5, 1, 1.5, 2, 5]; 'subsample': [0.6, 0.8, 1.0]; 'colsample_bytree': [0.6, 0.8, 1.0]; 'max_depth': [3, 4, 5] |
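Early stopping for the ANN can be illustrated with scikit-learn's `MLPClassifier`, again assuming that library. The hidden-layer size, `alpha`, and `max_iter` below are picked from the Neural Network row of Table 11 (reading `hidden_layer_sizes` as a single hidden layer is an assumption), while the 10% validation split and the patience of 10 epochs (`n_iter_no_change`) are assumptions because the paper does not report them. The data are synthetic.

```python
from sklearn.datasets import make_classification
from sklearn.neural_network import MLPClassifier

# Synthetic stand-in for the survey data.
X, y = make_classification(n_samples=558, n_features=10, random_state=2)

# With early_stopping=True, 10% of the training data is held out; training
# stops once the validation score has not improved by at least `tol` for
# n_iter_no_change consecutive epochs, even if max_iter is not reached.
mlp = MLPClassifier(
    hidden_layer_sizes=(8,),  # one hidden layer of 8 units (within Table 11's range)
    alpha=1e-3,               # L2 penalty, one of Table 11's values
    max_iter=1500,            # upper bound on training epochs
    early_stopping=True,
    validation_fraction=0.1,  # assumed hold-out share
    n_iter_no_change=10,      # assumed patience
    random_state=0,
)
mlp.fit(X, y)

print("Epochs actually run:", mlp.n_iter_)
print(f"Best validation accuracy: {mlp.best_validation_score_:.3f}")
```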

5.6.2. Performance metrics and evaluation of findings

When predicting IINmH, the goal is to identify whether a user intends to invest in mHealth. Model performance was assessed using the confusion matrix shown in Table 12, from which accuracy was computed (Equation (1)). The eight classifiers were evaluated on accuracy, true positives, false positives, precision, recall, F-measure, and GMean. Accuracy (as a percentage) measures how closely a model's predictions match the actual outcomes. However, accuracy does not reveal how the correctly classified samples are distributed across the classes, which matters particularly for the positive class in classification problems: a classifier with high overall accuracy may still mislabel many positive instances as negative. Accuracy alone is therefore not an adequate measure of classification performance.

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

Table 12.

Confusion matrix for IINmH prediction.

| Actual / Predicted | Intention to invest | No intention to invest |
|---|---|---|
| Intention to invest | True positive (TP) | False negative (FN) |
| No intention to invest | False positive (FP) | True negative (TN) |

In addition to the confusion matrix and the accuracy percentage, we used five metrics commonly applied to classification problems: precision, recall, F-measure, GMean, and area under the curve (AUC). These values are calculated as follows:

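$$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{3}$$

$$F\text{-measure} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}$$

$$\text{GMean} = \sqrt{\frac{TP}{TP + FN} \times \frac{TN}{TN + FP}} \tag{5}$$

$$\text{AUC} = \int_{0}^{1} \text{TPR} \, d(\text{FPR}) \tag{6}$$

where TPR = TP/(TP + FN) is the true positive rate and FPR = FP/(FP + TN) is the false positive rate.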
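Equations (1) to (5) can be checked with a few lines of plain Python. The counts below are hypothetical, not taken from the study. The second call illustrates the point made about accuracy: a classifier that always predicts "intention to invest" on a 90/10 class split reaches 90% accuracy, yet its GMean is zero because it never recognises the negative class.

```python
import math


def classification_metrics(tp: int, fn: int, fp: int, tn: int) -> dict:
    """Compute Eqs. (1)-(5) from the four confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0        # recall of the positive class
    specificity = tn / (tn + fp) if tn + fp else 0.0   # recall of the negative class
    f_measure = (2 * precision * recall / (precision + recall)
                 if precision + recall else 0.0)
    gmean = math.sqrt(recall * specificity)
    return {"accuracy": accuracy, "precision": precision, "recall": recall,
            "f_measure": f_measure, "gmean": gmean}


# Hypothetical, reasonably balanced classifier.
balanced = classification_metrics(tp=40, fn=10, fp=5, tn=45)
print(balanced)

# Degenerate classifier that always predicts the positive class.
degenerate = classification_metrics(tp=90, fn=0, fp=10, tn=0)
print(degenerate)
```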

The F-measure is the harmonic mean of precision and recall. Precision is the proportion of positive predictions that are correct, and recall measures how well a classifier identifies positive instances. GMean evaluates the balance between the recall of the two classes: a model that is inherently biased towards one class will obtain a low GMean score. Finally, the AUC summarises the performance of a classification model across all decision thresholds; the higher the AUC, the better the model distinguishes between the two classes. Provost and Fawcett (1997) recommended using the area under the receiver operating characteristic (ROC) curve instead of the accuracy rate, and this measure has since become widely used in classification. Therefore, we used the F-measure, GMean, and the ROC curve with its AUC to evaluate the models' performance in predicting IINmH.
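The AUC in Eq. (6) is usually computed from the ROC curve obtained by sweeping the decision threshold over the predicted probabilities. A minimal sketch with scikit-learn, using hypothetical labels and scores: 14 of the 16 positive-negative pairs are ranked correctly, so the trapezoidal area under the ROC curve and `roc_auc_score` both give 14/16 = 0.875.

```python
import numpy as np
from sklearn.metrics import auc, roc_auc_score, roc_curve

# Hypothetical labels (1 = intention to invest) and predicted probabilities.
y_true = np.array([1, 1, 1, 1, 0, 0, 0, 0])
y_score = np.array([0.9, 0.8, 0.7, 0.3, 0.6, 0.4, 0.2, 0.1])

# roc_curve sweeps the threshold and returns the (FPR, TPR) points of the curve;
# auc integrates TPR over FPR with the trapezoidal rule.
fpr, tpr, thresholds = roc_curve(y_true, y_score)
area = auc(fpr, tpr)

print(f"AUC from the ROC curve: {area:.3f}")
print(f"roc_auc_score:          {roc_auc_score(y_true, y_score):.3f}")
```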

Before running the ML algorithms, we prepared two versions of the dataset: the original dataset (Ori) and the dataset with feature selection (FS). Running each version with and without hyperparameter optimisation (HPO), we computed results for four settings: the original dataset (Ori), the dataset with feature selection (FS), the dataset with FS and HPO (FS_HPO), and the original dataset with HPO (Ori_HPO). The performance results of the eight ML models are shown in Table 13. XGBoost produced the most accurate models under both Ori_HPO and FS_HPO, with accuracies of 0.900 and 0.881, respectively, whereas the neural network (NN) produced the least accurate models, with accuracies of 0.676 and 0.652 for Ori_HPO and FS_HPO, respectively.
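The four experimental settings can be organised as a small loop. This is a sketch under several assumptions not stated in the paper: logistic regression stands in for all eight classifiers, feature selection and tuning are nested inside an outer cross-validation so that neither sees the evaluation folds, and the fold counts and parameter grid are reduced to keep the example fast. The data are synthetic.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SequentialFeatureSelector
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, cross_val_score
from sklearn.pipeline import Pipeline

# Synthetic stand-in for the survey data (6 predictors, binary IINmH label).
X, y = make_classification(n_samples=558, n_features=6, n_informative=3,
                           n_redundant=1, random_state=3)


def build(use_fs: bool, use_hpo: bool):
    """Assemble the model for one setting: optional FS, optional HPO."""
    steps = []
    if use_fs:
        # Wrapper feature selection; keeping 3 of 6 features is arbitrary.
        steps.append(("fs", SequentialFeatureSelector(
            LogisticRegression(max_iter=1000), n_features_to_select=3, cv=3)))
    steps.append(("clf", LogisticRegression(max_iter=1000)))
    model = Pipeline(steps)
    if use_hpo:
        # Grid search over the regularisation strength C (reduced grid).
        model = GridSearchCV(model, {"clf__C": [0.1, 1.0, 10.0]}, cv=3)
    return model


settings = {"Ori": (False, False), "FS": (True, False),
            "FS_HPO": (True, True), "Ori_HPO": (False, True)}

results = {}
for name, (use_fs, use_hpo) in settings.items():
    # Outer five-fold cross-validation estimates accuracy for each setting.
    scores = cross_val_score(build(use_fs, use_hpo), X, y, cv=5,
                             scoring="accuracy")
    results[name] = scores.mean()
    print(f"{name:8s} mean accuracy = {results[name]:.3f}")
```

Nesting selection and tuning inside the outer folds avoids optimistic estimates; whether the original experiments were nested in this way is not reported.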

Table 13.

Performance analysis of ML models.

| Dataset | Classifier | CC (%) | TP | FP | Precision | Recall | F1-score | GMean |
|---|---|---|---|---|---|---|---|---|
| Ori | Support Vector Machine | 80.40 | 0.32 | 0.06 | 0.78 | 0.91 | 0.84 | 0.84 |
| | Logistic Regression | 79.50 | 0.32 | 0.07 | 0.78 | 0.89 | 0.83 | 0.83 |
| | Random Forest | 72.30 | 0.31 | 0.10 | 0.75 | 0.83 | 0.79 | 0.79 |
| | KNN | 78.60 | 0.28 | 0.04 | 0.75 | 0.94 | 0.83 | 0.84 |
| | Naive Bayes | 75.90 | 0.32 | 0.11 | 0.77 | 0.83 | 0.8 | 0.8 |
| | AdaBoost | 75.00 | 0.29 | 0.09 | 0.74 | 0.86 | 0.8 | 0.8 |
| | Neural Network | 72.00 | 0.28 | 0.12 | 0.73 | 0.81 | 0.77 | 0.77 |
| | XGBoost | 68.80 | 0.28 | 0.15 | 0.71 | 0.77 | 0.74 | 0.74 |
| FS | Support Vector Machine | 76.80 | 0.27 | 0.05 | 0.74 | 0.92 | 0.82 | 0.83 |
| | Logistic Regression | 77.70 | 0.30 | 0.07 | 0.76 | 0.89 | 0.82 | 0.82 |
| | Random Forest | 72.30 | 0.28 | 0.12 | 0.74 | 0.8 | 0.77 | 0.77 |
| | KNN | 75.80 | 0.28 | 0.07 | 0.74 | 0.89 | 0.81 | 0.81 |
| | Naive Bayes | 76.80 | 0.30 | 0.08 | 0.76 | 0.88 | 0.81 | 0.82 |
| | AdaBoost | 72.30 | 0.34 | 0.17 | 0.77 | 0.73 | 0.75 | 0.75 |
| | Neural Network | 69.60 | 0.24 | 0.10 | 0.69 | 0.84 | 0.76 | 0.76 |
| | XGBoost | 71.40 | 0.33 | 0.17 | 0.76 | 0.73 | 0.75 | 0.74 |
| FS_HPO (Grid Search) | Support Vector Machine | 77.80 | 0.30 | 0.07 | 0.76 | 0.89 | 0.82 | 0.82 |
| | Logistic Regression | 77.80 | 0.30 | 0.07 | 0.76 | 0.89 | 0.82 | 0.82 |
| | Random Forest | 71.00 | 0.31 | 0.15 | 0.74 | 0.77 | 0.75 | 0.75 |
| | KNN | 73.20 | 0.27 | 0.09 | 0.72 | 0.86 | 0.79 | 0.79 |
| | Naive Bayes | | | | | | | |
| | AdaBoost | 75.90 | 0.31 | 0.10 | 0.76 | 0.84 | 0.8 | 0.8 |
| | Neural Network | 65.20 | 0.26 | 0.17 | 0.68 | 0.73 | 0.71 | 0.7 |
| | XGBoost | 88.10 | 0.34 | 0.04 | 0.81 | 0.94 | 0.87 | 0.87 |
| Ori_HPO (Grid Search) | Support Vector Machine | 79.5 | 0.31 | 0.06 | 0.77 | 0.91 | 0.83 | 0.84 |
| | Logistic Regression | 79.5 | 0.32 | 0.07 | 0.78 | 0.89 | 0.83 | 0.83 |
| | Random Forest | 74.1 | 0.31 | 0.11 | 0.75 | 0.83 | 0.79 | 0.79 |
| | KNN | 75.9 | 0.31 | 0.10 | 0.76 | 0.84 | 0.8 | 0.8 |
| | Naive Bayes | | | | | | | |
| | AdaBoost | 75.0 | 0.30 | 0.10 | 0.75 | 0.84 | 0.79 | 0.79 |
| | Neural Network | 67.6 | 0.29 | 0.17 | 0.71 | 0.73 | 0.72 | 0.72 |
| | XGBoost | 90.0 | 0.30 | 0.07 | 0.82 | 0.95 | 0.88 | 0.88 |

Ori = Original dataset, FS = feature selection, HPO = hyperparameter optimisation.

CC: correctly classified (%); TP: true positive; FP: false positive.

With the FS dataset, Random Forest and AdaBoost achieved the same accuracy (72.30%). Similarly, SVM and LR achieved identical accuracy within each HPO setting (77.8% for FS_HPO and 79.5% for Ori_HPO). Notably, the neural network performed best with the full feature set: its accuracy fell after feature selection (Ori: 72%, FS: 69.6%, FS_HPO: 65.2%) and was also lower with HPO on the original data (Ori_HPO: 67.6%). Under both HPO settings, XGBoost outperformed the other ML models in terms of precision, recall, F1-score, and GMean, whereas the neural network produced the lowest values. Moreover, XGBoost achieved the highest AUC score (0.847 for Ori_HPO), followed by LR with 0.779 in both the Ori and Ori_HPO settings. Fig. 5 shows the ROC curves and AUC scores of each ML model. These results suggest that feature selection is not a major concern for a low-dimensional dataset such as this one. Compared with the other seven ML models, XGBoost performed the best. Thus, the XGBoost model was employed to further investigate the relationship between the predictors and the target variable of the proposed integrated research framework, which determines young adults' intention to invest in mHealth.

Fig. 5.

ROC curves and AUC scores for each ML model.

5.6.3. Sensitivity analysis (SA)

SA determines how much of the variability in a model's output can be attributed to variability in its input predictors. A sensitivity analysis was performed to determine the relative importance of the predictor variables for the target output. Numerous SA techniques have been described in the scientific literature. Sobol's indices (Sobol, 2001), a variance-based method, have gained prominence in recent literature for analysing surrogate models (Zhou et al., 2021). Sobol's SA quantifies the contribution of each predictor variable, and of the interactions between predictors, to the total variance of the model output (Zhang et al., 2015). Note that Sobol's SA does not identify the source of input variability; it only quantifies how strongly changes in the inputs affect the model's output.
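The paper does not describe how the Sobol indices were computed (libraries such as SALib provide ready-made estimators). As a minimal sketch, the code below implements the standard Monte Carlo pick-freeze estimators directly in NumPy: a Saltelli-style estimator for the first-order index S1 and Jansen's estimator for the total-order index ST. It assumes independent inputs uniformly distributed on [0, 1]; in the study, `model` would be the fitted XGBoost model's predicted probability of intention to invest, but here a hypothetical additive toy function with known indices is used so the result can be checked.

```python
import numpy as np


def sobol_indices(model, n_vars, n_samples=100_000, seed=0):
    """Estimate first-order (S1) and total-order (ST) Sobol indices.

    Assumes independent inputs uniform on [0, 1]. `model` maps an
    (n_samples, n_vars) array to an (n_samples,) array of outputs.
    """
    rng = np.random.default_rng(seed)
    A = rng.random((n_samples, n_vars))  # two independent sample matrices
    B = rng.random((n_samples, n_vars))
    f_A, f_B = model(A), model(B)
    var_y = np.var(np.concatenate([f_A, f_B]))  # total output variance

    s1, st = np.empty(n_vars), np.empty(n_vars)
    for i in range(n_vars):
        AB_i = A.copy()
        AB_i[:, i] = B[:, i]  # A with column i taken from B
        f_ABi = model(AB_i)
        # Saltelli-style first-order estimator.
        s1[i] = np.mean(f_B * (f_ABi - f_A)) / var_y
        # Jansen total-order estimator (includes interaction effects).
        st[i] = 0.5 * np.mean((f_A - f_ABi) ** 2) / var_y
    return s1, st


# Hypothetical additive model y = 4*x0 + 2*x1 + 0*x2. Since Var(c*U) = c**2/12,
# the analytical indices are S1 = ST = [0.8, 0.2, 0.0] (no interactions).
def toy_model(X):
    return 4 * X[:, 0] + 2 * X[:, 1] + 0 * X[:, 2]


s1, st = sobol_indices(toy_model, n_vars=3)
print("S1:", np.round(s1, 2))
print("ST:", np.round(st, 2))
```

When ST for a predictor is clearly larger than its S1, part of that predictor's influence comes through interactions with other predictors.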

Sobol's SA was therefore applied to the selected XGBoost model to investigate and quantify the relevance of the predictor variables influencing IINmH. The relative feature importance of each predictor variable is shown in Fig. 6. Value-for-money was the most important predictor of IINmH, followed by mobile Internet cost. Health motivation and perceived susceptibility were the third- and fourth-ranked predictors, respectively, and mobile Internet speed was the least important predictor. In summary, financial concerns (value-for-money and the cost of mobile Internet access) strongly influence young adults' intentions to invest in mHealth.

Fig. 6.

Relative feature importance.

6. Discussion

Understanding young adults’ IINmH is critical for the long-term viability of healthcare systems, since this digitally engaged group is likely to be the most prominent consumer of mHealth services. To better understand the mechanism of investment intentions in mHealth, this study integrates the HBM and ECM and presents a novel model based on task needs and technological functionalities. Prior research on mHealth has overlooked monetary aspects (i.e., Internet cost), network factors (i.e., Internet speed), and the possible effect of users' expectations on the decision to use mHealth, all of which may affect IINmH. Prior conclusions are also limited because they cannot fully capture the asymmetrical relationships among the predictors involved. This study therefore used an integrated research model based on the HBM and ECM to investigate the theoretical relationship between health beliefs, users' expectations, and the decision to use mHealth services. The integrated model was tested using a multi-analytical approach combining SEM, fsQCA, and ML.

The SEM findings indicated that VfM, MIC, HM, and CS substantially impact young adults’ IINmH during the COVID-19 pandemic. Surprisingly, Pba and MIS have no significant effect on IINmH. MIC has a significant negative effect on IINmH, indicating that higher mobile Internet costs reduce young adults' intention to invest in mHealth. The fsQCA findings revealed that no single predictor is a necessary condition on its own, but all predictors play an important role in predicting IINmH. The fsQCA solution analysis yielded six different solutions. In line with the SEM results, the fsQCA and the ML-based feature importance analysis also showed that VfM and MIC are the most significant predictors of IINmH. Additionally, whereas the SEM model explained around 45.7% of the variance in IINmH, the six fsQCA solutions accounted for 54.6% of the variation in IINmH. The ML-based XGBoost model, in turn, predicted users’ IINmH with 90% accuracy. These results highlight the importance of using configurational analysis in mHealth research. A multi-analytical approach (SEM-fsQCA-ML) is therefore recommended for exploring the complex causation underpinning mHealth investment intention, as it can overcome possible constraints and drawbacks of traditional statistical techniques. These findings have several implications for theory and practice, which are discussed in the next section.

7. Implications

7.1. Theoretical implications

The findings of this study offer several theoretical contributions. First, this study develops an integrated research framework for young adults' decisions to invest in mHealth. The integrated framework extends the standalone HBM and ECM, and the results suggest that integrating the HBM and ECM concepts enhances the predictive power for IINmH. The integrated model thus provides a more comprehensive understanding of young adults' IINmH. This result addresses the gap identified by Veeramootoo et al. (2018), who stated that to better comprehend behavioural approaches, researchers must implement integrated IS models and constructs rather than relying on conventional models.