A practical quantum scheme is shown to have a performance beyond the classical limits in monitoring a manufacturing process.

Abstract

We introduce a protocol addressing the conformance test problem, which consists in determining whether a process under test conforms to a reference one. We consider a process to be characterized by the set of end products it produces, which is generated according to a given probability distribution. We formulate the problem in the context of hypothesis testing and consider the specific case in which the objects can be modeled as pure loss channels. We demonstrate theoretically that a simple quantum strategy, using readily available resources and measurement schemes in the form of two-mode squeezed vacuum and photon counting, can outperform any classical strategy. We experimentally implement this protocol, exploiting optical twin beams, validating our theoretical results, and demonstrating that, in this task, there is a quantum advantage in a realistic setting.

INTRODUCTION

Substantial progress has been made in recent years in the field of quantum sensing (1, 2) both for continuous parameter estimation (3–5) and for discrimination tasks in the case of discrete variables (6–8). The use of quantum states and resources brings an advantage that has been demonstrated in various specific tasks: both phase (9–13) and loss (14–20) estimation, quantum imaging (21–23), and discrimination protocols such as target detection (24–27) and quantum reading (28, 29).

In this class of problems, a parameter of interest is encoded in some quantum states, or channels, and the values it can take belong to either discrete or continuous sets. The estimation of this parameter requires the choice of a probe, as well as a measurement scheme, i.e., a measurement strategy. Here, we consider a discrimination problem with a wide range of applications, the quantum conformance test (QCT). In this problem, one wants to assess whether a process conforms to a reference or is defective. In the general case, the process is characterized by a physical parameter distributed according to some continuous probability density distribution. We experimentally have access to the end products of this process, on which we can perform measurements. Accordingly, the measurement outcomes belong to a continuous set of possible values. However, the expected output of the procedure should be binary: conform or not conform. Such conformity tests appear frequently in many applications (30), one example being product safety testing.

Under energy constraints for the probe, quantum mechanical fluctuations set lower bounds to the probability of error that depend on the decision strategy. It is important to investigate whether, and to what extent, the use of certain quantum resources can reduce the probability of error below what is possible in the classical domain.

In this work, we introduce a formal description of the conformance test problem in the context of quantum information (31) and consider the paradigmatic example of bosonic pure loss channels probed with light. We demonstrate that, under the same energy constraints, i.e., fixing the photon number, a quantum strategy making use of entangled photons as probe states, and photon counting (PC) as a measurement strategy, can deliver better results than any classical strategy. Last, we present an experimental optical implementation of our proposed quantum protocol, which validates our theoretical model and shows that a genuine quantum advantage persists even in the presence of experimental inefficiencies.

Quantum conformance test

The QCT can be modeled as follows. We define the binary random variable x ∈ {0,1}, which corresponds to the process from which the physical system under test (SUT) was generated, 0 being the reference and 1 being the defective one. We consider the monitoring of a physical process Px, producing a quantum object (the SUT), ℰθ, which depends on a parameter θ. The process Px can be described by the ensemble {gx(θ), ℰθ}: The parameter θ is extracted from a set A, according to the probability distribution gx(θ), and it defines the physical object ℰθ. The set A can be either discrete or continuous. The conformance test consists in deciding whether an unknown process conforms to a “reference” process, P0, or should be labeled “defective,” P1, using measurements on the set of objects it produces {ℰθ}.

A QCT is performed using a probe in a generic quantum state ρ. After the probe has interacted with the SUT, the final state is measured by a positive-operator-valued measure (POVM) Π and, after classical postprocessing of the measurement results, the outcome of the procedure is a final guess on the nature of the production process, expressed by the binary variable y ∈ {0,1}.

The test is successful when y = x, i.e., when the guess is correct. On the other hand, if y ≠ x, the test fails. Two cases can be distinguished:

● False negative: In this scenario, a SUT produced by a conform process (x = 0) is labeled as defective (y = 1). In an industrial context, this kind of outcome can be seen as an economic loss for a manufacturer, as a conform process is considered defective. We will denote the probability of false negatives as p10.

● False positive: A SUT produced by a defective process (x = 1) is labeled as conform (y = 0). This outcome represents a risk since possibly unsafe products are released. The false-positive probability will be referred to as p01.

The analysis of false positives and negatives plays a central part in conformity testing, and the tolerance chosen for either error, if one is required, depends strongly on the situation considered. In a general scenario, when the energy of the probe is a limited resource and results are therefore compared at fixed energy, a relevant figure of merit for the effectiveness of the test is the total probability of error, defined as

| $p_{\mathrm{err}} = \frac{1}{2}\left(p_{01} + p_{10}\right)$ | (1) |

In Fig. 1, we present some examples of error probabilities for the conformance test. In Fig. 1 (A and B), two possible scenarios for the conform and defective processes are shown. In Fig. 1A, we consider two distributions whose overlap is negligible, whereas, in Fig. 1B, we consider significantly overlapping distributions. In Fig. 1 (C to F), we show the probability distributions for the parameter, convoluted with the noise emerging from both the measurement process and the probe state, either classical (Fig. 1, C and D) or quantum (Fig. 1, E and F). From these distributions, the outcome y must be decided. One can observe that the noise present in the state, which probes the SUT, translates into an error in the discrimination.

Fig. 1. Examples of error probabilities for the conformance test.

(A and B) Distributions of the reference process (red) P0, and defective one (blue) P1: the two rows present opposite and archetypal situations. The graphics (C to F) are obtained considering a pure loss channel of parameter θ = τ as the SUT, and a PC measurement. (C and D) Resulting photon number distributions p(n), in the case where a classical state is used as a probe. The overlaps between the two distributions, highlighted in green and blue, the two colors distinguishing the cases where the reference or the defective process is most likely, are visualizations of the conditional error probabilities, at a given value of τ, while their weighted sum gives an appreciation of the total error probability. (E and F) Case in which quantum probes are used: Quantum correlations enhance the performance of the discrimination task.

In the subsequent parts of this work, we focus on a specific class of SUTs: the bosonic pure loss channels. We show how, in this case, quantum resources can be used to notably mitigate the spurious effect of the noise and can improve the discrimination performance—evaluated in terms of total probability of error—over any classical strategy. Subsequently, we analyze the case in which a constraint is imposed on either the false-positive or false-negative probabilities. We note that the quantum reading problem, originally proposed in (28) and experimentally tested in (29), can be considered to be a special case of the conformance test (i.e., in the case where g0 and g1 are both Dirac delta functions).

RESULTS

Pure loss channels

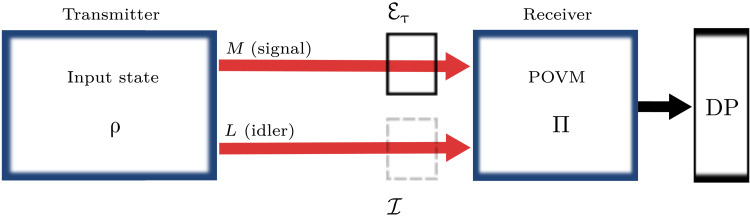

A bosonic loss channel ℰτ is characterized by its transmittance τ ∈ [0,1]. The input-output relation for such a channel is , where is the annihilation operator of the input mode (32). The annihilation operator of the mode on the second port, , acts on the vacuum, as we are considering pure losses. The configuration for a conformance test over a pure loss channel is described in Fig. 2. A transmitter irradiates an optical probe state ρ on the SUT. In a general case, the state is bipartite, having a number M of signal modes interacting with the SUT and a number L of idler ones that represent the ancillary modes. The state is measured at the receiver by a joint measurement, and its outputs are processed to obtain the final outcome.

Fig. 2. Quantum conformance test.

A probe ρ sends M signal modes through the loss channel ℰτ, representing the SUT, while L idler modes directly reach the receiver—I represents the identity operator. A POVM Π is applied to the output state. Using the result of this measurement and data processing (DP), a decision y is taken: The process generating ℰτ is identified as conform (y = 0) or defective (y = 1).

After the interaction with the SUT, an input state ρ will be mapped into either ρ0 or ρ1, with

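| $\rho_x = \mathbb{E}_{P_x}\left[(\mathcal{E}_\tau \otimes I)(\rho)\right] = \int g_x(\tau)\,(\mathcal{E}_\tau \otimes I)(\rho)\,\mathrm{d}\tau$ | (2) |

and where $\mathbb{E}_{P}[\cdot]$ represents the expectation value over the ensemble P and I is the identity operator.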

In general, the output states of the reference and defective processes, ρ0 and ρ1, will overlap. Accordingly, the discrimination between the processes will be affected by an error probability perr, which is a function of the processes considered, the input state, and the POVM, as well as the decision procedure applied to the measurement result. The optimization of the QCT protocol is achieved by a minimization of perr over all possible input states ρ and POVMs Π

| $\min_{\rho,\,\Pi}\; p_{\mathrm{err}}(\rho, \Pi)$ | (3) |

Without constraints on the energy, a trivial strategy is to let the energy of the probe system go to infinity, which, given a suitable measurement, would nullify the quantum noise and lead to the minimum possible perr permitted by the problem, i.e., the overlap between the two initial distributions g0 and g1 (see Fig. 1). Nevertheless, in several relevant cases, states of arbitrarily large energy are not available or cannot be used (e.g., in order not to damage the SUT). In the case we are considering, we fix the total energy of the probe, and the optimization problem is not easily solved directly, particularly when experimental imperfections are taken into account. Moreover, any theoretical bound that could be found would not necessarily correspond to a viable experimental implementation (feasible input state and output measurement). Our goal is more practical: Following the approaches used, for example, in (28, 33), we find the minimum probability of error that can be achieved with any classical transmitter. Then, we show that a class of quantum transmitters, namely, multimode two-mode squeezed vacuum (TMSV) states, achieves probabilities of error below the classical bound, demonstrating a quantum advantage.

Classical limit

First, we turn our attention to the derivation of the minimum error probability which can be achieved using classical input states. In the context of quantum optics, classical states can be defined as the states having a positive P-representation (34). A generic classical bipartite state can be represented as

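| $\rho^{\mathrm{cla}} = \int \mathrm{d}^{2M}\alpha\,\mathrm{d}^{2L}\beta\; P(\alpha, \beta)\,|\alpha\rangle\langle\alpha| \otimes |\beta\rangle\langle\beta|$ | (4) |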

where ∣α⟩ and ∣β⟩ are M- and L-mode coherent states, respectively (corresponding to the signal and idler channels), and P(α, β) ≥ 0 is a probability density. The energy constraint for the signal system is expressed, in terms of mean signal photon number , as

| (5) |

While the choice of imposing the energy constraint only on the signal system is arbitrary—the alternative being, for example, a constraint on the total energy—it is a natural choice for real applications, where the energy irradiated over the SUT should be limited.

Given an input state ρcla, the output states, after interaction with the SUT, are ρ0 and ρ1, calculated according to Eq. 2. In this context, the minimum probability of error for the QCT protocol with classical states is equal to the minimum probability of error in the discrimination of ρ0 and ρ1. The best performance in this task is achieved by using a POVM whose elements are the Helstrom projectors (6). This optimal discrimination procedure yields a probability of error given by

| $p_{\mathrm{err}} = \frac{1}{2}\left[1 - D(\rho_0, \rho_1)\right]$ | (6) |

where D(ρ0, ρ1) = ‖ρ0 − ρ1‖/2 is the trace distance and ‖·‖ denotes the trace norm. A lower limit for the error probability can be found by upper bounding D(ρ0, ρ1). By exploiting the convexity of the trace distance (see the Supplementary Materials for details), the minimum error probability for classical states in the QCT protocol is bounded by

| (7) |

The quantity C establishes a lower bound for the discrimination error probability when considering classical resources and an optimal measurement strategy. We note that this bound is not tight, which means that it may not be reached by any classical receiver.
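As a point of reference for Eq. 6, the Helstrom error has a simple closed form in the most basic special case: a single-mode coherent probe with mean photon number n and two fixed transmittances (g0 and g1 both Dirac deltas). A pure loss channel maps the coherent state ∣α⟩ to ∣√τ α⟩, and for two pure states the trace distance is the square root of one minus their overlap. The sketch below evaluates this case only; it is not the general bound of Eq. 7, and the function name and arguments are illustrative choices rather than notation from the text.

```python
import math

def helstrom_error_coherent(n_mean, tau0, tau1):
    """Helstrom error for telling apart two loss channels with a coherent probe.

    Assumptions (illustrative, not the general bound of Eq. 7): equal priors,
    a single-mode coherent state with mean photon number n_mean, and fixed
    transmittances tau0 and tau1 (delta-distributed processes).
    """
    # Overlap |<sqrt(tau0) a | sqrt(tau1) a>|^2 of the two output coherent states.
    overlap = math.exp(-n_mean * (math.sqrt(tau0) - math.sqrt(tau1)) ** 2)
    # For pure states the trace distance is sqrt(1 - overlap).
    trace_distance = math.sqrt(1.0 - overlap)
    # Eq. 6 with equal priors.
    return 0.5 * (1.0 - trace_distance)

# More probe photons make the two channels easier to tell apart.
error_low_energy = helstrom_error_coherent(10, 0.8, 0.7)
error_high_energy = helstrom_error_coherent(100, 0.8, 0.7)
```

When the two transmittances coincide, the overlap is 1 and the function returns 0.5, i.e., a random guess.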

Quantum strategy

In the following, we analyze a particular strategy, involving quantum states, that is able to surpass the best classical performance C. It uses a transmitter ρ consisting of K replicas of a TMSV state and a PC receiver (29), whose output is processed by a maximum likelihood decision.

The TMSV state (35) admits the following expression in the photon number basis: , where is a thermal distribution, is the mean photon number, and ∣n⟩i is the n-photon state in the i = S signal, or i = I idler mode. TMSV states can easily be produced experimentally by spontaneous parametric down-conversion (SPDC) (36–38) or four-wave mixing (39–41). A multimode TMSV state is a tensor product of K TMSV states ⊗K ∣ψ⟩. It admits a multithermal distribution for the total photon number in both the signal and idler modes , denoted as (the same notation is used for the mean photon number) and preserves perfect photon number correlation between the channels, i.e., . The result of the PC measurement is the classical random variable n = (nS, nI), whose distribution p(n∣Px) = ⟨nS, nI∣ρx∣nS, nI⟩ is conditioned on the nature x of the process, where ρx is defined in Eq. 2. Using Bayes' theorem and assuming the defective and reference processes to be equiprobable, p(Px = P0) = p(Px = P1) = 1/2, we can write the a posteriori probability p(Px∣n) as

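| $p(P_x \mid \mathbf{n}) = \dfrac{p(\mathbf{n} \mid P_x)}{p(\mathbf{n} \mid P_0) + p(\mathbf{n} \mid P_1)}$ | (8) |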

Let us first consider the action of a pure loss channel on the photon number distribution of a state. The distribution p(n∣τ) after the interaction is the composition of the joint distribution with a binomial distribution

| (9) |

since the m signal photons can be seen as undergoing a Bernoulli trial each, with probability of success τ, resulting in the binomial distribution ℬ(nS∣m, τ). Using the linearity of quantum operations, we can evaluate the effect of a loss channel with transmittance τ, p(n∣Px), as

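| $p(\mathbf{n} \mid P_x) = \mathbb{E}_{P_x}\left[p(\mathbf{n} \mid \tau)\right] = \int g_x(\tau)\, p(\mathbf{n} \mid \tau)\,\mathrm{d}\tau$ | (10) |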
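The two steps just described, binomial thinning of the signal photons by the channel and averaging over the distribution of transmittances, can be illustrated numerically. The following is a minimal sketch, assuming that gx is discretized into a finite list of (τ, weight) pairs and that exactly m photons enter the SUT; the function names and the example process are hypothetical.

```python
import math

def binomial_pmf(k, m, tau):
    """B(k | m, tau): probability that k of m photons survive the channel."""
    if k < 0 or k > m:
        return 0.0
    return math.comb(m, k) * tau ** k * (1.0 - tau) ** (m - k)

def signal_counts_given_process(m, process):
    """Distribution of detected signal photons when m photons enter the SUT.

    `process` is a hypothetical discretization of g_x: a list of
    (tau, weight) pairs whose weights sum to 1.
    """
    return [
        sum(weight * binomial_pmf(k, m, tau) for tau, weight in process)
        for k in range(m + 1)
    ]

# Example: a defective process spread over three transmittance values.
defective = [(0.6, 0.25), (0.7, 0.5), (0.8, 0.25)]
dist = signal_counts_given_process(10, defective)
mean_counts = sum(k * p for k, p in enumerate(dist))
```

In this example the mean number of detected photons equals m times the mean transmittance of the process, while the spread of τ broadens the distribution beyond that of a single binomial.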

After the measurement, the decision is made by choosing the outcome that maximizes the conditional probability: y is chosen such that p(Py∣n) ≥ p(P1−y∣n). We note that this condition for the choice of y is equivalent, due to the constant prior, to a maximum likelihood decision, i.e., choosing y such that p(n∣Py) ≥ p(n∣P1−y). The probability of error for the quantum strategy, Q, is given by

| $Q = \frac{1}{2} \sum_{\mathbf{n}} \min_{x \in \{0,1\}} p(\mathbf{n} \mid P_x)$ | (11) |
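With equal priors, a maximum likelihood decision errs with probability equal to half the summed pointwise minimum of the two outcome distributions. The sketch below evaluates this quantity for joint signal and idler counts under simplifying assumptions that are ours, not statements from the text: ideal detection, perfectly correlated twin beams, idler counts taken to be Poissonian (the large-K limit of a multithermal distribution), and each process discretized as a list of (τ, weight) pairs. All function names are illustrative.

```python
import math

def poisson_pmf(k, mean):
    """Poisson probability of k counts (mean > 0)."""
    return math.exp(k * math.log(mean) - mean - math.lgamma(k + 1))

def binomial_pmf(k, m, tau):
    """Probability that k of m photons survive a channel of transmittance tau."""
    if k < 0 or k > m:
        return 0.0
    return math.comb(m, k) * tau ** k * (1.0 - tau) ** (m - k)

def joint_counts(n_s, n_i, n_mean, process):
    """p(n_S, n_I | P_x) for perfectly correlated twin beams.

    Assumption: idler counts are Poisson with mean n_mean (large-K limit);
    `process` is a list of (tau, weight) pairs discretizing g_x.
    """
    thinning = sum(w * binomial_pmf(n_s, n_i, tau) for tau, w in process)
    return poisson_pmf(n_i, n_mean) * thinning

def ml_error_quantum(n_mean, reference, defective, n_max):
    """Maximum likelihood error with equal priors: half the sum over all
    outcomes of min(p(n | P0), p(n | P1)). Idler counts above n_max are ignored."""
    overlap = 0.0
    for n_i in range(n_max + 1):
        for n_s in range(n_i + 1):
            p0 = joint_counts(n_s, n_i, n_mean, reference)
            p1 = joint_counts(n_s, n_i, n_mean, defective)
            overlap += min(p0, p1)
    return 0.5 * overlap

# Example: sharply peaked reference against a two-valued defective process.
reference = [(0.8, 1.0)]
defective = [(0.6, 0.5), (0.7, 0.5)]
q_error = ml_error_quantum(20.0, reference, defective, n_max=80)
```

The cutoff `n_max` should sit well above the mean photon number so that the neglected tail is negligible.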

Classical states and PC

We have derived a limit C on the performance that can be achieved with an optimal classical strategy, i.e., optimal classical input states and an unspecified optimal receiver. We also defined the performance, Q, of a quantum strategy using TMSV and PC. In this section, we consider the case in which classical states are paired with a PC receiver. The bound found in Eq. 7 is not tight, meaning that it may not be possible to reach it. Moreover, even if a POVM saturating the bound could be found, it may be difficult to implement in practice. The analysis of the best classical performance achievable with the PC receiver gives a second classical benchmark, whose performance can be experimentally validated.

The analysis is analogous to the one performed for the quantum strategy. However, since the input states considered are limited to classical ones, the use of idler modes cannot improve the performance. Classical states are statistical mixtures of coherent states that are Poisson distributed in the photon number: Their variance is lower bounded by the Poisson one (34). In this scenario, the best performance is achieved using signal states having a Poisson photon number distribution. The error probability perr is proportional to the overlap of the measurement outcomes, as shown in Fig. 1, which, in the case of a PC measurement, are the photon number distributions. Using a state with a narrower photon number distribution will lead to better discrimination performances. We denote the best performance that can be achieved using classical states and PC as Cpc and write it in the form

| (12) |

where 0 ≤ qp ≤ 1. The form of the function qp depends on the distributions of the considered processes, g0 and g1. In the following, we report on the case where both the reference and defective processes have Gaussian distributions (where and σ² are the mean and the variance). The distributions that we consider are thus and for the reference and defective processes, respectively. For more general solutions and a more in-depth analysis, we refer the reader to the Supplementary Materials.
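The classical PC benchmark can also be evaluated by direct summation instead of a closed form. The following is a minimal sketch, assuming a signal probe with Poissonian photon statistics and ideal detection, so that the counts after a channel of transmittance τ are Poissonian with mean τ times the input mean, and assuming each process is discretized as a list of (τ, weight) pairs. It does not use the function qp of Eq. 12, and the names are illustrative.

```python
import math

def poisson_pmf(k, mean):
    """Poisson probability of k counts; a zero mean puts all weight on k = 0."""
    if mean == 0.0:
        return 1.0 if k == 0 else 0.0
    return math.exp(k * math.log(mean) - mean - math.lgamma(k + 1))

def classical_counts(n_s, n_mean, process):
    """p(n_S | P_x) for a Poissonian probe of mean n_mean.

    Loss keeps the statistics Poissonian with mean tau * n_mean; the result
    is averaged over `process`, a list of (tau, weight) pairs.
    """
    return sum(w * poisson_pmf(n_s, tau * n_mean) for tau, w in process)

def ml_error_classical_pc(n_mean, reference, defective, n_max):
    """Maximum likelihood error with equal priors for signal-only counting.
    Counts above n_max are ignored."""
    overlap = sum(
        min(classical_counts(n, n_mean, reference),
            classical_counts(n, n_mean, defective))
        for n in range(n_max + 1)
    )
    return 0.5 * overlap

# Example: sharply peaked reference against a two-valued defective process.
reference = [(0.8, 1.0)]
defective = [(0.6, 0.5), (0.7, 0.5)]
c_error = ml_error_classical_pc(20.0, reference, defective, n_max=80)
```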

As pointed out in previous sections, the probability of error depends on the overlap of the two measurement distributions p(P0∣nS) and p(P1∣nS). Under the assumption of Gaussian distributions for both processes, and of a large photon number in the initial probe state , the photon number distribution at the outcome can be well approximated by a Gaussian: , with (details can be found in the Supplementary Materials). An expression for the overlap can be found after the determination of the solutions of the equality p(P0∣n) = p(P1∣n). In general, this equation admits two solutions in the case where the distributions are Gaussian. If we can assume the variances to be of the same order, and , often only one of the solutions, labeled nth, will be in a range where p(P0∣n) and p(P1∣n) are not negligible. Under these conditions, we can derive a closed form for the function qp in Eq. 12 as

| (13) |

As indicated before, the expression in Eq. 13 holds for certain regimes, and a more general solution, as well as an explicit expression for nth, is reported in the Supplementary Materials.

Numerical study

In the strategies described in the previous sections, the performance of the QCT is a function of the mean photon number , as well as the form of the distributions of the processes considered, g0 and g1.

A visualization of the discrimination problem is depicted in Fig. 1, where the measurement strategy considered at the receiver is PC. In Fig. 1A, the distributions of the processes to be discriminated are shown, g0 in red and g1 in blue. In the first row, the two distributions barely overlap. Nonetheless, when a probe with finite energy is used to perform the discrimination, the intrinsic noise of the state on the photon number distribution results in a substantial amplification of the overlap of the measurement outcome distributions. This is shown in Fig. 1C, where the two possible photon number distributions, after the interaction with the SUT, and for the classical input state discussed in the previous sections, are plotted. The overlap area, highlighted in blue-green, is a visual representation of the probability of error: perr is equal to half that area. This overlap cannot be reduced using classical states without increasing the total energy used. However, using the same signal energy, an improvement can be achieved using quantum correlations. If we consider as input a TMSV state, as described earlier in the text, we can analyze the photon number distribution for the signal system, conditioned on having measured a given photon number in the idler branch. This analysis is shown in Fig. 1E, where the overlap between the two distributions is markedly reduced, leading to much more efficient discrimination. The advantage stems from the photon number correlations, which are used to greatly reduce the photon number noise of the initial state. The second row depicts a situation in which the overlap of the parameter distributions is high. In Fig. 1B, the considered distributions have the same mean value but different variances. In this case, a classical discrimination strategy performs very poorly, as shown in Fig. 1D, where the two processes are almost indistinguishable. Figure 1F shows how, once again, quantum correlations can be used to greatly improve the performance. We note that the best performances (shown in Fig. 1, E and F) are achieved in the limit of large K so that .
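The noise reduction provided by the photon number correlations can be made concrete with a short calculation. The sketch below compares the variance of the detected signal counts for a fixed transmittance τ in two idealized cases, both our simplifying assumptions: a Poissonian probe whose mean photon number equals the measured idler count, and a twin-beam probe whose signal photon number is known exactly from the idler count (unit detection efficiency). The function name is illustrative.

```python
def signal_count_variances(n_idler, tau):
    """Variance of detected signal counts for the two kinds of probe.

    Classical: Poissonian probe with mean n_idler, so the detected counts
    are Poissonian with variance tau * n_idler.
    Quantum: the signal photon number is known to equal n_idler (ideal twin
    beams), so only the binomial partition noise n_idler * tau * (1 - tau)
    remains.
    """
    classical = tau * n_idler
    quantum = n_idler * tau * (1.0 - tau)
    return classical, quantum

classical_var, quantum_var = signal_count_variances(1000, 0.8)
```

Under these assumptions the conditioned variance is smaller than the Poissonian one by a factor (1 − τ), so the narrowing is strongest for highly transmissive samples.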

From this point on, to reduce the number of considered parameters, we assume the reference process P0 to be strongly peaked around τ0 so that we can approximate it as g0(τ) = δ(τ − τ0), where δ(τ) is the Dirac delta distribution. We also consider two different noteworthy forms for g1: the Gaussian distribution , and the uniform one . Using a Gaussian probability distribution for the transmittance τ is justified by the wide range of physical phenomena it can describe. In this case, the processes are fully characterized by their mean value and variance σ². On the other hand, the uniform distribution, , is well suited to describe situations in which there is a complete lack of knowledge of the nature of the process, whose range can be limited by the physical constraints of the apparatus. Once more, the processes are characterized by two parameters only: their mean and half-width δ. To make a fair comparison of the error probabilities for Gaussian and uniform distributions, we choose their parameters such that the resulting variances are equal. In particular, we will use δ = √3 σ, since the variance of a uniform distribution of half-width δ is δ²/3.

In Fig. 3, we show how, for each of the three strategies studied (the classical optimal, C, the classical strategy with PC, Cpc, and the quantum strategy Q), the error probability depends on the reference process transmittance τ0. The number of signal photons is fixed to nS = 10⁵, as well as the mean value of the transmittance of the defective process . In Fig. 3A, the performances of the different strategies are plotted in the case of a uniformly distributed defect, while in Fig. 3B the case of a Gaussian distributed one is reported. In both cases, the results for different values of the variance of the process (denoted by different colors) are shown. In the range studied, it can be seen that the quantum strategy (solid lines) outperforms both the classical lower bound (dashed lines) and the strategy using a classical probe and PC (dotted lines). Both classical and quantum strategies have a maximum error probability when . This shared feature does not depend on the noise but on the nature of the problem. In this configuration, the overlap between the initial distributions is maximum and, as a result, the error probability is maximum as well. In both panels, we see how, in this high overlap region, the effect of the probe state’s noise is such that, when using a classical strategy, the process results are completely (perr = 0.5), or almost completely, indistinguishable, while distinguishability is recovered when quantum states with reduced noise are used.

Fig. 3. Error probabilities.

The error probabilities as functions of the mean value of the reference process are displayed, for different values of standard deviation of the defective process σ (the numerical values are reported in the legend), and for the different discrimination strategies described in the main text: the quantum strategy (solid line), the classical strategy with PC (dotted line), and the optimal classical strategy (dash-dotted line). The reference distribution is considered strongly peaked: . In (A), the defect distribution, g1(τ), is uniform; in (B), it is Gaussian. The distributions g1(τ) for different values of variance are plotted on the first row panels. In both bottom panels, the mean number of signal photons is fixed to nS = 10⁵ and the mean value of the defective process is .

Experimental results

To validate the presence of a quantum advantage for the conformance test, we experimentally implemented the protocol described in the “Quantum strategy” section, with the substantial difference that we operate with a nonideal detection efficiency η. The detection efficiency encompasses different processes: the nonunit quantum efficiency of the detectors, the losses due to the different optical elements, and, in the case of correlated photon sources, the imperfect efficiency affecting the measurement of the correlated photons. These losses cannot be distinguished from those caused by a pure loss channel. Hence, the fit with theoretical curves must be made using the substitution τ → ητ. In general, the effect is equivalent to a reduction of the probe energy by the same factor η. For the quantum strategy, however, another effect is the reduction of the degree of correlation of the state. Because of this, in general, it is expected that the quantum advantage will be reduced as η becomes lower. The setup used is thoroughly described in Materials and Methods.

Our results are presented in Fig. 4. Using a sample presenting a varying spatial transmittance, we acquired data for different values of τ. We used this experimental dataset to realize different defect distributions: In Fig. 4, we plot perr (see Eq. 1) when uniform distributions are considered with , , and . For each acquisition, the mean numbers of photons nS and nI, and the efficiencies ηS and ηI of the signal and idler channels, are estimated, as well as the electronic noise ν of the camera. These values are used to draw the theoretical curves: the quantum and classical error probabilities with PC, Q, and Cpc, as well as the classical optimal bound C, defined in Eq. 7. We display the results in Fig. 4 (D to F). As a comparison, we also plot the ideal case ηS = ηI = 1 in Fig. 4 (A to C). For the quantum error probability, we also report the confidence region at two standard deviations as a colored band around the curve. The two sets of experimental data reported in Fig. 4 correspond to the quantum and classical PC strategies, respectively. More details on the elaboration of these results and data analysis can be found in Materials and Methods.

Fig. 4. Error probabilities for the QCT.

(A to F) Error probabilities are plotted as a function of the reference parameter τ0, for different values of the defective process’s mean value and half-width δ: , , and . On the left-hand side, we show the theoretical curves (quantum and classical probability of error with PC, Q and Cpc, as well as classical bound C) in the ideal case of unit detection efficiency (ηS = ηI = 1) for different sets of parameters σ and . In the right column, we show the experimental error probabilities obtained using the quantum PC protocol (black dots, Exp Q) and the classical PC one (black circles, Exp C). The efficiencies of the channels in the experimental realization are ηS ≃ ηI ≈ 0.76, while the mean number of signal photons is nS ≈ 10⁵. The theoretical error probabilities using the estimated experimental parameters (black and light green solid lines, Q and Cpc) are plotted for both protocols, as well as the confidence interval for the quantum case (green shaded areas, ± one SD). The classical bound C is also displayed as a comparison.

For the three regimes considered, most points fall within the confidence interval. These results show that, even in the case of degraded detection efficiency, the quantum strategy always brings an advantage with respect to the classical one based on PC. However, within the range of the defect distribution, the optimal classical bound C on the error probability becomes smaller than the quantum strategy error probability in some regions. To bring this point into perspective, we point to Fig. 4 (A to C), which are constructed with the same experimental parameters but unit detection efficiency. In this case, the quantum strategy overcomes any classical one. We note that, as expected, while the classical error probabilities are little modified by the change in efficiency η, the quantum one improves substantially in case of η = 1. This effect stems from the fact that, as mentioned, spurious losses η reduce the photon number correlations between signal and idler channels.

Constrained probability of error

Up until now, the figure of merit considered was the total probability of error perr (Eq. 1). However, this quantity may not be the most relevant one to optimize, depending on the nature of the process under test. In some instances, it can be required to impose a constraint on either one of the conditional probabilities p01 or p10, instead of their sum. These two types of errors represent different outcomes, and the minimization of one can be deemed more important than that of the other. To proceed further, we use the notion of cost, which quantifies the fact that, in the mislabeling of a process, the false positives and false negatives may not be equivalent for the operator making the decision: One type of error may be more “costly” than the other. We introduce the coefficient 0 < S < 1 and define the total cost C of the conformance test as

| $C = S\,p_{10} + (1 - S)\,p_{01}$ | (14) |

From Eq. 14, it follows that, if we use the cost C as the figure of merit for the evaluation of the QCT, the minimum probability of error is achieved for S = 1/2. If S ≠ 1/2, the minimization of the cost will, in general, not minimize the total error probability perr.

In the following, we analyze how the strategies presented in the previous sections, consisting of PC and a maximum likelihood decision, for both classical and quantum probes, can be modified to minimize the cost C. In the formalism of the previous sections, we write the general term for the probabilities pij as

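| $p_{ij} = \sum_{\mathbf{n}} p(\mathbf{n} \mid P_j)\,\Theta\!\left[p(\mathbf{n} \mid P_i) - p(\mathbf{n} \mid P_j)\right]$ | (15) |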

where Θ is the step function.

The false-positive and false-negative probabilities are plotted as functions of the number of signal photons in Fig. 5A for the previous section’s strategies, in the case where perr is the optimized quantity. In both the classical (red lines) and quantum (blue lines) cases, the false-negative probability tends to be smaller than the false-positive one, meaning that the procedure is more likely to select the reference process. This imbalance comes from the fact that we consider as a reference process a distribution strongly peaked around τ0, while, for the defective process, we consider a uniform distribution. In terms of photon count probability, this means that, when an overlap is present, the most peaked process, P0, is chosen, while the defective one is selected in a range that is larger, but also less likely to be measured. This results in a bias toward P0.

Fig. 5. Cost analysis in QCT.

(A) Dependence on the signal photon number nS of the total error probability (perr), false positive (p01), and false negative (p10). The maximum likelihood postprocessing used in this case minimizes perr. The reference process is considered strongly peaked around the value τ0 = 0.8, while the defective one is chosen uniformly distributed with mean and half-width δ = 0.09. (B) A situation similar to that of (A) is considered, but we use a biased maximum likelihood postprocessing with bias coefficient b = 0.6 (see the main text for details). (C) We fix photon number to nS = 500 and analyze the dependence of the cost C on b. All the other parameters are equal to those of the previous panels. (D) Optimum value of b as a function of S.

The maximum likelihood postprocessing used up until now minimizes the probability of error by construction. To minimize the cost C, a different postprocessing is needed. We can modify the maximum likelihood condition used for the decision, p(n∣Py) ≥ p(n∣P1 − y), to make it more likely that one specific process is selected according to some parameters. We select y when

| (16) |

where

| (17) |

and where b ∈ [−1, 1] is a real constant. We call this modified postprocessing biased maximum likelihood. A positive b results in the defective process being chosen more often, while a negative b makes the selection of the reference one more likely. In other words, b can be varied to shift p01 and p10, reducing the cost function C at the expense of an increase in the total error probability. This is shown in Fig. 5B: p01 and p10 are brought closer to each other and the total error probability slightly increases, in both the quantum and classical cases. Notably, the quantum advantage obtained with maximum likelihood postprocessing is well preserved when the biased maximum likelihood one is used. The optimization of C is performed by varying the coefficient b. As an example, we consider the particular situation in which S = 1/4, meaning that each false positive is considered three times as costly as a false negative. In this situation, it is convenient to use a positive value for b, whose effect is to reduce the number of times the reference process P0 is selected overall, thus reducing the false-positive probability p01. The dependence of C on b is shown in Fig. 5C. In both the quantum and classical cases, as expected, there is a single optimum value of b, for which the cost is minimum. The dependence of the optimum value of b on S is shown in Fig. 5D.
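As a rough numerical illustration of this trade-off, the following Python sketch applies a biased decision rule to a single-mode Poisson model of a classical probe with photon counting. Several ingredients are assumptions and are not taken from the text: the bias rule `(1 + b) p(n|P1) >= (1 - b) p(n|P0)`, which only stands in for Eqs. 16 and 17; the cost `C = (1 - S) p01 + S p10`, chosen so that S = 1/4 weights a false positive three times as much as a false negative; the centering of the defective distribution on τ0; and all function names.

```python
import math
import numpy as np

def poisson_pmf(n, mean):
    """Poisson probabilities of the counts n for a given mean (log-space evaluation)."""
    n = np.asarray(n, dtype=float)
    log_fact = np.array([math.lgamma(k + 1.0) for k in n])
    return np.exp(n * math.log(mean) - mean - log_fact)

def count_distributions(n_signal=500, tau0=0.8, tau1_mean=0.8, delta=0.09, grid=201):
    """Photon-count distributions for the reference (P0) and defective (P1) processes.

    P0 is a single transmittance tau0; P1 averages over a uniform transmittance
    distribution of half-width delta centered on tau1_mean (assumed center).
    """
    n = np.arange(0, int(2 * n_signal) + 1)
    p0 = poisson_pmf(n, tau0 * n_signal)
    taus = np.linspace(tau1_mean - delta, tau1_mean + delta, grid)
    p1 = np.mean([poisson_pmf(n, t * n_signal) for t in taus], axis=0)
    return p0, p1

def biased_errors(p0, p1, b):
    """Return (p01, p10) for the assumed biased rule; b = 0 is plain maximum likelihood."""
    choose_defective = (1 + b) * p1 >= (1 - b) * p0
    p10 = float(p0[choose_defective].sum())    # reference true, defective chosen
    p01 = float(p1[~choose_defective].sum())   # defective true, reference chosen
    return p01, p10

def cost(p01, p10, S):
    """Assumed cost: false positives weighted by (1 - S), false negatives by S."""
    return (1 - S) * p01 + S * p10

p0, p1 = count_distributions()
for b in (0.0, 0.5):
    p01, p10 = biased_errors(p0, p1, b)
    print(f"b={b:+.1f}  p01={p01:.4f}  p10={p10:.4f}  C={cost(p01, p10, 0.25):.4f}")
```

Under these assumptions, a positive b lowers p01 and raises p10, and the cost-minimizing bias is b = 1 − 2S, i.e., 0.5 for S = 1/4. The optimum obtained with the actual Eqs. 16 and 17 may differ.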

The false-positive and false-negative probabilities of the classical and quantum strategies with a PC receiver can be evaluated experimentally with the same procedure as the one described in Materials and Methods for the total probability of error. The experimental points, along with the theoretical curves, are reported in Fig. 6.

Fig. 6. Theoretical and experimental conditional probabilities of error for the quantum and classical strategies with PC receiver.

The distribution is taken such that and δ = 0.001. The mean number of signal photons is ns ≈ 10⁵, and the channel efficiencies are ηS ≃ ηI ≈ 0.76. In the legend, the quantum case is denoted as qij and the classical as cij, with i, j = 0, 1.

DISCUSSION

In this work, we proposed a protocol addressing the conformance test task, exploiting specific probe states and measurement strategies. We investigated the specific scenario in which the systems under test, representing possible outputs of the unknown process, can be modeled by pure loss channels.

In this context, we found a lower bound on the error probability that can be achieved in the discrimination task, using any classical source paired with an optimal measurement. We then showed that a particular class of quantum states, namely TMSV states, can be used in conjunction with a simple receiver consisting of a PC measurement and a maximum likelihood decision to improve the performance over that of any classical strategy. This enhancement is due to the high degree of correlation (entanglement) of TMSV states, whose nature is fundamentally quantum. We also analyzed the particular case of a classical source paired with PC measurements at the receiver.

We demonstrated that the quantum advantage persists for a wide range of parameters, even in the presence of losses due to possible experimental imperfections, and we validated our results by performing an experimental realization of the protocol. We showed an experimental advantage in a realistic scenario where experimental losses amounted to more than 20%, highlighting the robustness of the proposed protocol.

We emphasize that these results are particularly important because they are achieved using states that are easily produced, as well as a receiver of simple implementation, allowing practical applications of the protocol with present technology. We found an advantage, although the bound C on the performance of classical states is not tight, meaning that the actual quantum advantage could be higher. For a more thorough study, one can consider a scenario in which a set of objects is tested instead of one. In this scenario, we found an informational limit using the Holevo bound and showed how our proposed quantum strategy, relying on independent measurements over individual systems, surpasses a more general classical strategy, where joint measurements over a collection of systems are allowed. A detailed description of this result will be presented in a forthcoming paper. The proposed QCT protocol could be used in the foreseeable future in notable problems concerning the monitoring of production processes of any object probed with quantum states. For example, our results on loss channel QCT can be used to boost the accuracy in the identification of issues in the concentration and composition of chemical products by transmittance measurement.

MATERIALS AND METHODS

Experimental setup

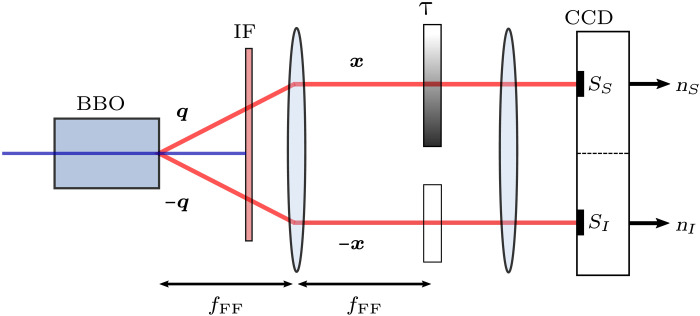

The experiment is based on SPDC and is depicted in Fig. 7. A multimode TMSV state ⊗K∣ψ⟩ is generated using a 1-cm³ type II β-barium borate crystal and a continuous-wave laser at λp = 405 nm, delivering a power of 100 mW. The down-converted photons are correlated in momentum. This correlation is mapped into spatial correlations using a lens in f-f configuration: The “far-field” lens (fFF = 1 cm) is positioned at one focal length from the output plane of the crystal. The absorption sample is positioned in the conjugated plane, which corresponds to the far field of the source. It consists of a coated glass plate, which presents regions of different transmittance, realized with depositions of varying density. A blank coated glass is inserted in the idler beam’s path to match the optical paths of both beams. The sample is imaged using a second “imaging” lens onto a charge-coupled device camera (Princeton Instruments PIXIS:400BR eXcelon), working in linear mode with high quantum efficiency (>95% at 810 nm) and low electronic noise (a few electrons per pixel per frame). Individual pixels of the camera are binned together in 12 × 12 macropixels to increase the readout signal-to-noise ratio and the acquisition speed.
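The bookkeeping of the 12 × 12 macropixel binning can be sketched in Python as a block sum over a two-dimensional frame. This is only an illustration in software: the function name and the toy frame size are hypothetical, and the sketch does not say whether the binning in the experiment is performed on the chip or in postprocessing.

```python
import numpy as np

def bin_macropixels(frame, factor=12):
    """Sum non-overlapping factor x factor blocks of a 2D frame."""
    rows, cols = frame.shape
    rows -= rows % factor   # crop edges that do not fill a whole macropixel
    cols -= cols % factor
    cropped = frame[:rows, :cols]
    blocks = cropped.reshape(rows // factor, factor, cols // factor, factor)
    return blocks.sum(axis=(1, 3))

# Toy frame (hypothetical size) with one count per pixel.
frame = np.ones((50, 62))
binned = bin_macropixels(frame)
print(binned.shape, binned[0, 0])
```

Each macropixel collects the counts of 144 physical pixels, so a uniform frame of ones gives 144 counts per macropixel.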

Fig. 7. Schematic of the experimental setup.

Pumping a β-barium borate (BBO) crystal with a laser at 405 nm, a multimode TMSV is generated. Using a lens of focal fFF, the correlation in momentum is converted into correlation in position in the sample plane, which is then imaged on the camera using a second lens. The signal beam passes through the sample of transmittance τ and is then detected in the SS region of the charge-coupled device (CCD). The idler beam goes directly to SI without interacting with the sample, although its optical path is matched with the sample’s one using a nonabsorbing glass. Integrating the signals over the two detection regions, nS and nI are collected.

The photon counts nS and nI of the signal and idler beams are obtained by integrating over two spatially correlated detection areas SS and SI, which are subparts of the “full” correlated areas. The two full correlated areas are schematically defined by the two illuminated regions on the camera, i.e., the spatial extent of the idler and signal beams. Their precise definition is made using a procedure described in (22). The total number of spatial modes collected is Ks ∼ 10³, and that of temporal modes is Kt ∼ 10¹⁰ [see (42) for more details on the estimation]. The mean photon number measured in one region Si is . Hence, the mean occupation number per mode is very small . Under these conditions, the multithermal marginal photon number distributions can be well approximated by Poisson distributions (34). When the absorptive sample is replaced with an equivalent coated glass plate without any deposition, we estimate, for each pair of regions SS and SI, the corresponding detection efficiencies ηS and ηI, by exploiting the correlations of the SPDC process (43, 44). The phase matching conditions, the interference filter, the quality of the optical alignment, and the pixels’ properties on the camera all introduce small differences in the photon number correlations that need to be accounted for. With the absorptive sample, for each pair of regions, i.e., for all the different values of τ, NDτ = 2 × 10⁴ frames are recorded (Dτ designates the experimental dataset for transmittance τ).
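The Poisson approximation can be checked with a one-line estimate. For a multithermal distribution with equally populated modes, the variance exceeds the Poissonian value by a relative amount equal to the mean occupation number per mode. The Python sketch below evaluates this excess. The mode numbers follow the orders of magnitude quoted above, while the mean photon number of 10⁵ and the equal population of the modes are assumptions, and the function name is hypothetical.

```python
def multithermal_fano(mean_photons, n_modes):
    """Fano factor (variance / mean) of a multithermal distribution with
    equally populated modes: 1 + mean occupation number per mode."""
    return 1.0 + mean_photons / n_modes

k_spatial = 1e3       # order of magnitude quoted for the spatial modes
k_temporal = 1e10     # order of magnitude quoted for the temporal modes
mean_photons = 1e5    # assumed order of magnitude for the counts in one region

occupation = mean_photons / (k_spatial * k_temporal)
excess = multithermal_fano(mean_photons, k_spatial * k_temporal) - 1.0
print(f"occupation per mode ~ {occupation:.1e}, excess over Poisson ~ {excess:.1e}")
```

With these numbers, the deviation of the variance from the Poissonian one is far below any realistic statistical or technical uncertainty.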

Data analysis

Using the experimental data, two error probabilities, p01 and p10, must be evaluated to obtain the total error probability

| (18) |

In our analysis, the reference process P0 is strongly peaked around τ0. Hence, the evaluation of p10 with experimental data is rather straightforward. To compute the experimental frequency of error f10, we use the dataset Dτ0, composed of the M measurement outcomes (ns; ni) acquired with the transmittance τ0 inserted in the signal branch. In particular, for each data pair (ns; ni) ∈ Dτ0, a label y ∈ {0,1} is assigned according to the maximum likelihood strategy described in the main text. The evaluation uses experimental parameters, such as the mean photon number of the source and the detection efficiencies, estimated in a calibration phase. f10 is then defined as the fraction of wrong decisions, which, in this case, is simply the number of occurrences of y = 1 divided by M. f10 converges to the true probability of error p10 as the number of experimental points M in the dataset becomes large.
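The evaluation of an error frequency such as f10 can be sketched in Python as follows. The decision rule `toy_decide` is a hypothetical placeholder, not the maximum likelihood rule of the main text, the counts are made up, and the binomial standard error is an addition that is not mentioned in the text.

```python
import math

def error_frequency(pairs, decide, true_label):
    """Fraction of wrong decisions over a dataset and its binomial standard error."""
    labels = [decide(ns, ni) for ns, ni in pairs]
    m = len(labels)
    wrong = sum(1 for y in labels if y != true_label)
    f = wrong / m
    return f, math.sqrt(f * (1.0 - f) / m)

def toy_decide(ns, ni):
    """Hypothetical placeholder rule: label as defective (1) when the signal
    count falls below 75% of the idler count."""
    return 1 if ns < 0.75 * ni else 0

toy_pairs = [(80, 100), (79, 101), (70, 100), (82, 99)]  # made-up (ns, ni) counts
f10, f10_err = error_frequency(toy_pairs, toy_decide, true_label=0)
print(f10, f10_err)
```

Passing a dataset acquired with the reference transmittance and `true_label=0` gives f10. The same function with `true_label=1` applies to a dataset representative of the defective process.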

On the other hand, the evaluation of p01 requires a more careful approach. One has to construct an ensemble of experimental data DP1, whose data are taken with parameters τ distributed in a way that is representative of the true probability density g1(τ). Experimentally, we acquire photon number pairs for each of the transmittances τi, i ∈ {1, …, L}, i.e., data points in total. The transmittance values τi are selected in an interval [τmin, τmax]. They may not be distributed uniformly in this interval because of the imperfect experimental control of the position of the absorption layer and the uncertainty in the estimation of τi. Starting from the experimental dataset, and dividing the interval [τmin, τmax] into K equal subintervals of size 2l [2l = (τmax − τmin)/K], we define the experimental distribution for the transmittance, g, as the normalized histogram

| (19) |

where dk is the number of experimental transmittance values τi that fall in the kth bin so that the relative number of pairs (ns; ni)k is . Θk(τ) is a step function, equal to unity in the kth bin and 0 elsewhere. In an ideal case, the experimental distribution g in Eq. 19 should be a uniform distribution in the interval [τmin, τmax], but, in practice, because of the experimental issues mentioned above, it can present substantial discrepancies with respect to it.
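A normalized histogram of this kind can be built with NumPy as in the sketch below. The normalization by the total number of values inside the interval and the bin width is an assumption that may differ in detail from Eq. 19, and the function name and the toy transmittance values are hypothetical.

```python
import numpy as np

def transmittance_histogram(taus, tau_min, tau_max, n_bins):
    """Counts d_k per bin and the piecewise-constant density built from them."""
    counts, edges = np.histogram(taus, bins=n_bins, range=(tau_min, tau_max))
    width = (tau_max - tau_min) / n_bins          # bin size, 2l in the text
    density = counts / (counts.sum() * width)     # integrates to one over the interval
    return counts, density, edges

# Made-up transmittance values, not experimental data.
toy_taus = np.array([0.71, 0.72, 0.74, 0.78, 0.80, 0.81, 0.85, 0.88, 0.89])
counts, density, edges = transmittance_histogram(toy_taus, 0.70, 0.90, n_bins=4)
print(counts, density)
```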

To approximate a general probability distribution f(t), we can multiply the coefficients in Eq. 19 by proper weights wk, with k ∈ {1, …, K}. Once the weights are determined by a proper optimization procedure, described in the Supplementary Materials, they are used to modulate the number of experimental data in each bin according to the substitution , where wk represents the fraction of data kept in bin k. The ensemble of data randomly picked from the initial set, according to the weights, defines the final set DP1. We refer to the distribution of DP1 after the reweighting procedure as gw(τ), where w = [w1, …, wK]. Since the experimental set cannot be increased, the constraint 0 ≤ wk ≤ 1 must be imposed. The procedure will, in general, reduce the number of points in the dataset, which can increase the statistical uncertainty. Thus, it may be useful to introduce a second constraint on the size of the final dataset, , in the optimization procedure to limit how much data are discarded.
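The random selection of data according to the weights can be sketched in Python as follows. The rounding of the number of points kept in each bin and the function name are assumptions, and the weights are taken as given rather than obtained from the optimization described in the Supplementary Materials.

```python
import numpy as np

def subsample_by_weights(bin_index, weights, rng):
    """Indices of the data points kept when a fraction w_k of bin k is retained."""
    bin_index = np.asarray(bin_index)
    keep = []
    for k, w in enumerate(weights):
        members = np.flatnonzero(bin_index == k)     # data points falling in bin k
        n_keep = int(round(w * members.size))        # assumed rounding of w_k * d_k
        keep.append(rng.permutation(members)[:n_keep])
    return np.sort(np.concatenate(keep))

rng = np.random.default_rng(0)
toy_bins = np.array([0] * 10 + [1] * 20 + [2] * 8)   # made-up bin assignment
kept = subsample_by_weights(toy_bins, [1.0, 0.5, 0.25], rng)
print(np.bincount(toy_bins[kept], minlength=3))
```

Because every weight lies between 0 and 1, the procedure can only discard points, which is why the size of the final dataset has to be monitored.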

For the optimization process, we define the objective function to be maximized as the Bhattacharyya coefficient between the distributions f and gw

$$T(\mathbf{w}) = \int \sqrt{f(t)\, g_{\mathbf{w}}(t)}\; dt \tag{20}$$

This quantity measures their similarity and ranges between 0 and 1. T(w) gives a quantitative measure of how closely the experimental dataset can be arranged to resemble the objective distribution. We define a threshold value 0 ≤ Tth ≤ 1, above which the approximation f ≈ gw is deemed “good enough,” i.e., T(w) ≥ Tth. In general, the optimal w and the corresponding T(w) depend on the interval [τmin, τmax], which should be chosen so that ∫ f(t) dt, evaluated over this interval, is close to unity. We note that the minimum number of data points MT and the initial distribution of the experimental data can affect the results of the optimization algorithm. For this reason, in our realization of this algorithm, we sampled the interval [τmin, τmax] with a dense array of values τi, taken equispaced within the experimental uncertainty, and we took a very large total number of data points with respect to the target MT. The approximated distribution for the data, reported in Results, had a coefficient T ∼ 1. A more formal description, as well as further details on the procedure, is reported in the Supplementary Materials.
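For binned distributions, the Bhattacharyya coefficient reduces to a sum over bins of the square root of the product of the bin probabilities. The Python sketch below computes it and pairs it with a simple ratio heuristic for the weights. This heuristic is an assumption used only for illustration: it is not the optimization procedure of the Supplementary Materials, it ignores the constraint on the minimum dataset size, and all names and numbers are hypothetical.

```python
import numpy as np

def bhattacharyya(p, q):
    """Bhattacharyya coefficient between two binned distributions."""
    p = np.asarray(p, dtype=float) / np.sum(p)
    q = np.asarray(q, dtype=float) / np.sum(q)
    return float(np.sum(np.sqrt(p * q)))

def ratio_weights(target, counts):
    """Heuristic weights in [0, 1] making w_k * d_k proportional to the target.

    Bins without data get zero weight and cannot be matched.
    """
    target = np.asarray(target, dtype=float)
    counts = np.asarray(counts, dtype=float)
    ratio = np.divide(target, counts, out=np.zeros_like(target), where=counts > 0)
    return ratio / ratio.max()

toy_counts = np.array([120, 80, 150, 100])        # made-up d_k
toy_target = np.array([0.25, 0.25, 0.25, 0.25])   # uniform target distribution
w = ratio_weights(toy_target, toy_counts)
T_before = bhattacharyya(toy_target, toy_counts)
T_after = bhattacharyya(toy_target, w * toy_counts)
print(w, T_before, T_after)
```

In this toy case every bin is populated, so the reweighted histogram matches the target and the coefficient reaches 1, at the price of keeping only 320 of the 450 points.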

Acknowledgments

Funding: This work has been funded from the EU Horizon 2020 Research and Innovation Action under Grant No. 862644 (FET-Open project: “Quantum Readout Techniques and Technologies,” QUARTET).

Author contributions: G.O. and I.R.-B. proposed the QCT protocol. G.O. developed the theoretical model and performed the theoretical and numerical analysis for both the classical limits and the quantum protocol. P.B. and E.L. acquired the experimental data, the experiment being designed by I.R.-B. P.B. and G.O. performed the data analysis with contributions from I.P.D. M.G. leads the quantum optics group at INRiM and coordinated the project. All authors contributed to the discussion of the results and the writing of the manuscript.

Competing interests: The authors declare that they have no competing interests.

Data and materials availability: All data needed to evaluate the conclusions in the paper are present in the paper and/or the Supplementary Materials. Additional data related to this paper are available at https://doi.org/10.5281/zenodo.5725385.

Supplementary Materials

This PDF file includes:

Supplementary Materials

Figs. S1 to S3

REFERENCES AND NOTES

- 1. Pirandola S., Bardhan B. R., Gehring T., Weedbrook C., Lloyd S., Advances in photonic quantum sensing. Nat. Photonics 12, 724–733 (2018).

- 2. Degen C. L., Reinhard F., Cappellaro P., Quantum sensing. Rev. Mod. Phys. 89, 035002 (2017).

- 3. Genovese M., Real applications of quantum imaging. J. Optics 18, 073002 (2016).

- 4. Berchera I. R., Degiovanni I. P., Quantum imaging with sub-Poissonian light: Challenges and perspectives in optical metrology. Metrologia 56, 024001 (2019).

- 5. Polino E., Valeri M., Spagnolo N., Sciarrino F., Photonic quantum metrology. AVS Quant. Sci. 2, 024703 (2020).

- 6. C. Helstrom, Quantum Detection and Estimation Theory (Academic Press, 1976).

- 7. Chefles A., Barnett S. M., Quantum state separation, unambiguous discrimination and exact cloning. J. Phys. A Math. Gen. 31, 10097–10103 (1998).

- 8. Chefles A., Quantum state discrimination. Contemp. Phys. 41, 401–424 (2000).

- 9. Aasi J., Abadie J., Abbott B. P., et al., Enhanced sensitivity of the LIGO gravitational wave detector by using squeezed states of light. Nat. Photonics 7, 613–619 (2013).

- 10. Ruo Berchera I., Degiovanni I. P., Olivares S., Genovese M., Quantum light in coupled interferometers for quantum gravity tests. Phys. Rev. Lett. 110, 213601 (2013).

- 11. Schäfermeier C., Ježek M., Madsen L. S., Gehring T., Andersen U. L., Deterministic phase measurements exhibiting super-sensitivity and super-resolution. Optica 5, 60–64 (2018).

- 12. Ortolano G., Ruo-Berchera I., Predazzi E., Quantum enhanced imaging of nonuniform refractive profiles. Intl. J. Quant. Inform. 17, 1941010 (2019).

- 13. Pradyumna S. T., Losero E., Ruo-Berchera I., Traina P., Zucco M., Jacobsen C., Andersen U. L., Degiovanni I. P., Genovese M., Gehring T., Twin beam quantum-enhanced correlated interferometry for testing fundamental physics. Commun. Phys. 3, 104 (2020).

- 14. Tapster P. R., Seward S. F., Rarity J. G., Sub-shot-noise measurement of modulated absorption using parametric down-conversion. Phys. Rev. A 44, 3266–3269 (1991).

- 15. Monras A., Paris M. G. A., Optimal quantum estimation of loss in bosonic channels. Phys. Rev. Lett. 98, 160401 (2007).

- 16. Adesso G., Dell’Anno F., De Siena S., Illuminati F., Souza L. A. M., Optimal estimation of losses at the ultimate quantum limit with non-Gaussian states. Phys. Rev. A 79, 040305 (2009).

- 17. Matthews J. C., Zhou X., Cable H., Shadbolt P., Saunders D., Durkin G., Pryde G., O’Brien J., Towards practical quantum metrology with photon counting. npj Quant. Inf. 2, 16023 (2016).

- 18. Moreau P.-A., Sabines-Chesterking J., Whittaker R., Joshi S. K., Birchall P. M., McMillan A., Rarity J. G., Matthews J. C. F., Demonstrating an absolute quantum advantage in direct absorption measurement. Sci. Rep. 7, 6256 (2017).

- 19. Losero E., Ruo-Berchera I., Meda A., Avella A., Genovese M., Unbiased estimation of an optical loss at the ultimate quantum limit with twin-beams. Sci. Rep. 8, 7431 (2018).

- 20. Avella A., Ruo-Berchera I., Degiovanni I. P., Brida G., Genovese M., Absolute calibration of an EMCCD camera by quantum correlation, linking photon counting to the analog regime. Opt. Lett. 41, 1841–1844 (2016).

- 21. Brida G., Genovese M., Ruo Berchera I., Experimental realization of sub-shot-noise quantum imaging. Nat. Photonics 4, 227–230 (2010).

- 22. Samantaray N., Ruo-Berchera I., Meda A., Genovese M., Realization of the first sub-shot-noise wide field microscope. Light Sci. Appl. 6, e17005 (2017).

- 23. Sabines-Chesterking J., McMillan A. R., Moreau P. A., Joshi S. K., Knauer S., Johnston E., Rarity J. G., Matthews J. C. F., Twin-beam sub-shot-noise raster-scanning microscope. Opt. Express 27, 30810–30818 (2019).

- 24. Lloyd S., Enhanced sensitivity of photodetection via quantum illumination. Science 321, 1463–1465 (2008).

- 25. Tan S.-H., Erkmen B. I., Giovannetti V., Guha S., Lloyd S., Maccone L., Pirandola S., Shapiro J., Quantum illumination with gaussian states. Phys. Rev. Lett. 101, 253601 (2008).

- 26. Lopaeva E. D., Ruo Berchera I., Degiovanni I. P., Olivares S., Brida G., Genovese M., Experimental realization of quantum illumination. Phys. Rev. Lett. 110, 153603 (2013).

- 27. Zhang Z., Mouradian S., Wong F. N. C., Shapiro J. H., Entanglement-enhanced sensing in a lossy and noisy environment. Phys. Rev. Lett. 114, 110506 (2015).

- 28. Pirandola S., Quantum reading of a classical digital memory. Phys. Rev. Lett. 106, 090504 (2011).

- 29. Ortolano G., Losero E., Pirandola S., Genovese M., Ruo-Berchera I., Experimental quantum reading with photon counting. Sci. Adv. 7, eabc7796 (2021).

- 30. Pendrill L. R., Using measurement uncertainty in decision-making and conformity assessment. Metrologia 51, S206–S218 (2014).

- 31. M. A. Nielsen, I. L. Chuang, Quantum Computation and Quantum Information (Cambridge Univ. Press, ed. 10, 2011).

- 32. Meda A., Losero E., Samantaray N., Scafirimuto F., Pradyumna S., Avella A., Ruo-Berchera I., Genovese M., Photon-number correlation for quantum enhanced imaging and sensing. J. Opt. 19, 094002 (2017).

- 33. Pirandola S., Lupo C., Giovannetti V., Mancini S., Braunstein S. L., Quantum reading capacity. New J. Phys. 13, 113012 (2011).

- 34. L. Mandel, E. Wolf, Optical Coherence and Quantum Optics (Cambridge Univ. Press, 1995).

- 35. Weedbrook C., Pirandola S., García-Patrón R., Cerf N. J., Ralph T. C., Shapiro J. H., Lloyd S., Gaussian quantum information. Rev. Mod. Phys. 84, 621–669 (2012).

- 36. Bondani M., Allevi A., Zambra G., Paris M. G. A., Andreoni A., Sub-shot-noise photon-number correlation in a mesoscopic twin beam of light. Phys. Rev. A 76, 013833 (2007).

- 37. Peřina J. J., Křepelka J., Peřina J. Jr., Bondani M., Allevi A., Andreoni A., Experimental joint signal-idler quasidistributions and photon-number statistics for mesoscopic twin beams. Phys. Rev. A 76, 043806 (2007).

- 38. Chekhova M. V., Germanskiy S., Horoshko D. B., Kitaeva G. K., Kolobov M. I., Leuchs G., Phillips C. R., Prudkovskii P. A., Broadband bright twin beams and their upconversion. Opt. Lett. 43, 375–378 (2018).

- 39. Glorieux Q., Guidoni L., Guibal S., Likforman J.-P., Coudreau T., Quantum correlations by four-wave mixing in an atomic vapor in a nonamplifying regime: Quantum beam splitter for photons. Phys. Rev. A 84, 053826 (2011).

- 40. Pooser R. C., Lawrie B., Plasmonic trace sensing below the photon shot noise limit. ACS Photonics 3, 8–13 (2016).

- 41. Wu M.-C., Schmittberger B. L., Brewer N. R., Speirs R. W., Jones K. M., Lett P. D., Twin-beam intensity-difference squeezing below 10 Hz. Opt. Express 27, 4769–4780 (2019).

- 42. Brida G., Degiovanni I., Genovese M., Rastello M. L., Ruo-Berchera I., Detection of multimode spatial correlation in PDC and application to the absolute calibration of a CCD camera. Opt. Express 18, 20572–20584 (2010).

- 43. Meda A., Ruo-Berchera I., Degiovanni I. P., Brida G., Rastello M. L., Genovese M., Absolute calibration of a charge-coupled device camera with twin beams. Appl. Phys. Lett. 105, 101113 (2014).

- 44. Brida G., Genovese M., Ruo-Berchera I., Chekhova M., Penin A., Possibility of absolute calibration of analog detectors by using parametric downconversion: A systematic study. J. Opt. Soc. Am. B 23, 2185–2193 (2006).
