Abstract

We examined the time-course of lexical activation, deactivation, and the syntactic operation of dependency linking during the online processing of object-relative sentence constructions using eye-tracking-while-listening. We explored how manipulating temporal aspects of the language input affects the tight lexical and syntactic temporal constraints found in sentence processing. The three temporal manipulations were (1) increasing the duration of the direct object noun, (2) adding the disfluency uh after the noun, and (3) replacing the disfluency with a silent pause. The findings from this experiment revealed that the disfluent and silence temporal manipulations enhanced the processing of subject and object noun phrases by modulating activation and deactivation. The manipulations also changed the time-course of dependency linking (increased reactivation of the direct object). The modulated activation dynamics of these lexical items are thought to play a role in mitigating interference and suggest that deactivation plays a beneficial role in complex sentence processing.

Keywords: Sentence processing, lexical activation, lexical deactivation, syntactic reactivation, temporal manipulation

Introduction

Successful auditory comprehension hinges on the listener’s ability to access lexical representations and integrate them into an emerging syntactic structure, moment-by-moment, as a sentence unfolds. In building a syntactic structure, lexical elements are often linked without being adjacent to one another in the sentence, forming long-distance dependencies (such as those created by reference-seeking elements like pronouns, reflexives and other structurally driven constructs). In such cases, success depends on the listener’s ability to establish a real-time link between reference-seeking elements and their structurally linked antecedents. This process has been argued to be fast-acting (without conscious reflection) yet requires a complex interplay of successive lexical and syntactic levels of processing (Love & Swinney, 1996). Of interest here is the time-course of these lexical activation dynamics, integration of lexical items into a syntactic frame, and the effect that time has on these processes.

Much of the research exploring the time-course of sentence processing consists of stimuli that have been carefully recorded to remove any interruptions or errors. This swath of research has demonstrated that lexical and syntactic processing during auditory sentence comprehension occurs rapidly and automatically. When surveying natural language samples, however, it is clear that naturally occurring utterances that listeners typically receive have numerous instances of hesitations and interruptions (Gilquin & De Cock, 2013). These interruptions to the temporal flow of speech are characterised as disfluencies, which often take the form of filled and unfilled pauses (uh and silent pauses, respectively), though there are of course other forms. Such disfluencies add a temporal delay in the on-going speech signal without adding semantic content. Although listeners are typically unaware of the multitude of interruptions that riddle spoken language, a limited amount of research has looked at the additional time that disfluencies introduce during processing and has revealed that they can enhance word recognition, cue a listener to an upcoming clause boundary, direct listeners’ attention to new lexical information, and help disambiguate structural ambiguities (e.g. garden path sentences) (Arnold et al., 2004, 2007; Bailey & Ferreira, 2003; Brennan & Schober, 2001; Collard et al., 2008; Corley et al., 2007; Corley & Hartsuiker, 2011; Ferreira et al., 2004; Ferreira & Bailey, 2004; Fox Tree, 2001). However, the immediate effect that disfluencies have on lexical level representations (activation and decay) and integration during sentence processing is less clear. In this paper we ask whether different forms of temporal manipulation enhance or disrupt lexical activation and, if so, whether they alter the time-course of lexical integration during sentence processing. Furthermore, we ask what the consequences are for later syntactic operations such as dependency linking.

We first present a brief review of structural dependency linking in sentences followed by an overview of the various ways in which researchers have described how additional time provided by disfluencies affects word recognition and structural parsing in auditory sentences.

Structural parsing: dependency linking in object relative sentence constructions

During auditory processing, the listener receives acoustic input serially and must rapidly access lexical items in a successive fashion. Additionally, sentences may require linking of linguistically dependent information in order to assign thematic roles and arrive at a final understanding. This process is commonly found in a complex sentence structure known as an object-relative construction.

The object-relative sentence below (1) is comprised of two smaller sentences: The boy was in the park and The girl pushed the boy. Both of these sentences follow the standard word order for English, meaning they observe the canonical word order pattern of subject-verb-object. These two sentences can be combined by fronting the direct object (the boy) of the verb (pushed) to the beginning of the sentence, resulting in an object-relative sentence construction. While grammatical, this word ordering is considered non-canonical as it deviates from s-v-o word order. It is also considered more complex as the displacement of the direct object creates a long-distance structural dependency (noted by the co-indexation subscript, i).

(1) The boyi that the girl pushedi was in the park.

Research has shown that upon processing a verb in an object relative construction, a listener links it to the fronted direct object the boy (Love & Swinney, 1996). In the example above, this results in the assignment of the thematic role, “theme”, allowing for the final interpretation of who is doing what to whom. The time-course of the processes described requires a tight coupling between lexical and syntactic level processing. Studies using cross-modal priming paradigms (CMP, Swinney, 1979) have shown that there is a predictable time-course of activation of the direct object noun throughout a sentence. In examples with dependency links, such as (2) below, these studies have shown immediate activation of the noun at its offset (*1), fast decay (within 400 ms, *2), and reactivation at a syntactically defined position (*3), in this case, verb offset (Love et al., 2008).

(2) The boyi *1 from next *2 door that the girl pushedi *3 was in the park.

Findings of activation followed by reactivation of displaced elements in sentences with syntactic dependencies have also been replicated using eye-tracking methods (Akhavan et al., 2020; Koring et al., 2012; Thompson & Choy, 2009).

Parsing object-relative constructions places tight temporal constraints and increases the demands on the processor. Some theories have focused on the increased processing demands mandated by long distance dependency resolution and successful comprehension. In Cue-Based Retrieval Theory (Lewis et al., 2006; Lewis & Vasishth, 2005; Van Dyke & Lewis, 2003; Van Dyke & McElree, 2006), this sentence processing difficulty is determined by the ease with which a distant dependent (i.e. a displaced direct object noun) can be retrieved (i.e. dependency completion) via an associative search. There are two components to this theory that are particularly relevant to the work presented below.

In object-relative constructions, interference could arise during retrieval of the target noun at the post-verb gap site, the direct object, if other items encountered in a sentence match in retrieval cues with the target creating competition in the form of similarity-based interference. Interference makes it harder to retrieve the direct object and has been shown to lead to increased processing times at the verb and overall comprehension difficulty (Gordon et al., 2001; Gordon et al., 2002, 2004, 2006; Van Dyke, 2007; Van Dyke & McElree, 2006). In addition to interference, another factor that can impact retrieval is activation decay (Van Dyke & Lewis, 2003). According to decay theory, an element in memory is susceptible to decay over time which lowers the degree of its activation in short-term and working memory (Brown, 1958; Lewis et al., 2006; Lewis & Vasishth, 2005). The combination of interference and decay has been argued to dictate the ease with which an item in a sentence is reactivated. Housed within the general framework of Cue-based Retrieval theory (Lewis & Vasishth, 2005), activation-based models assert that the activation level of a constituent determines retrieval latency and the probability of retrieval. Difficulty in reactivating prior constituents is thus a function of how much they have decayed and the degree of interference (Lewis & Vasishth, 2005).

The parameter of time is important when considering interference and decay during sentence processing, as both processes interact with and are affected by time. Investigations on sentence processing have shown that manipulations of time, as defined by speaking rate, can have positive or negative effects depending on how it is manipulated. When time is extended, there are benefits to sentence processing in language impaired individuals (Love et al., 2008; Montgomery, 2005). Research with unimpaired individuals has shown that when time is compressed, or speeded, sentence processing is made more difficult (Chodorow, 1979; Peelle et al., 2004; Wingfield et al., 2003).

Disfluency as a temporal parameter in lexical access

One way to capture time in lexical and sentence processing is by investigating disfluencies. Disfluencies appear in speech quite commonly as they are estimated to occur at a rate of roughly six times per 100 words (Bortfeld et al., 2001; Fox Tree, 1995). Examples of disfluencies include filled and unfilled pauses, repetitions, replacements, and restarts (Clark & Wasow, 1998). Given the ubiquity of disfluencies in spoken language, mechanisms must be in place for the comprehension system to handle such imperfections. At the lexical level, research has focused on the effect of disfluencies on recognising words in upcoming speech. Disfluent utterances have been found to speed up response times to target words in word monitoring tasks (Fox Tree, 2001) and in visual object selection tasks (Brennan & Schober, 2001; Corley & Hartsuiker, 2011), and online identification of unfamiliar referents during comprehension using eye-tracking (Arnold et al., 2004, 2007). The processing benefit provided by disfluencies during word recognition has been explained on the basis of temporal delays and heightened attention.

Using eye-tracking-while-listening in a visual world paradigm, Arnold et al. (2007) examined reference comprehension by presenting participants with graphic displays of familiar easy-to-name objects (e.g. ice cream cones) and unfamiliar difficult-to-name objects (e.g. complex shapes) while they heard either fluent auditory instructions (Click on the …) or disfluent instructions (Click on thee uh …). The results showed that disfluent expressions produced a bias toward unfamiliar objects. The increased attention to unfamiliar objects suggests that listeners show sensitivity to the kind of information that disfluencies signal, such as a speaker’s uncertainty or production difficulty, and use this information during language comprehension to bias attention towards unfamiliar objects (Arnold et al., 2007) or unmentioned objects (Arnold et al., 2004).

Corley and Hartsuiker (2011) investigated the effects of disfluent instructions on word recognition to determine whether the delays caused by disfluencies facilitate comprehension. They proposed a delay hypothesis which posits that delays in word onset facilitate recognition of lexical items. They tested three forms of delay (a disfluency um, a silent pause, and an artificial tone) in auditory word recognition tasks. Delays were placed immediately before a target word (delay condition) or earlier in the auditory sentence (control condition). Participants were faster to respond when target words were preceded by a delay, regardless of the type of manipulation. These results suggest that delays, regardless of the form, facilitate auditory word recognition. It has been reasoned that delays serve to heighten attention to upcoming linguistic information (Clark & Fox Tree, 2002; Corley & Hartsuiker, 2011). Consistent with an attentional account, Collard et al. (2008) found modulations in the amplitude of two ERP components associated with attention, mismatch negativity and P300, when participants listened to utterances containing a disfluency. When listeners were given a surprise subsequent recognition memory test, words that had been preceded by a disfluency were more likely to be remembered demonstrating that modulations in attention can have both short and longer-lasting effects. Note that these studies focus on how additional time affects upcoming word recognition but to our knowledge, no one has directly looked at how time affects post lexical selection and integration during sentence processing.

Structural parsing: disfluencies (time) as a parameter for structural parsing

Research has also shown that disfluencies can have effects on both online (automatic) processing and final (reflective) comprehension. Ferreira and colleagues (see review by Ferreira & Bailey, 2004 and Ferreira et al., 2004) have shown that the type and location of disfluencies affect the parsing and reanalysis of temporarily ambiguous (garden-path) sentences. In their 2003 paper, Bailey and Ferreira demonstrated that the parser may use disfluencies as a cue to the upcoming structure. In the example sentences below, they found when the filled-pause uh was placed before the onset of a disambiguating word told in (3), participants were more likely to mistakenly interpret the waiter as part of the object of the verb bumped and commit to that incorrect structure longer. In other words, the garden-path effect was enhanced. However, when disfluencies were placed in a position that reinforces the upcoming syntactic structure, in this case at the beginning of a clause boundary in (4), participants showed shorter garden path effects and judged the sentences as grammatical more often (Ferreira et al., 2004).

(3) Sandra bumped into the busboy and the waiter uh uh told her to be careful.

(4) Sandra bumped into the busboy and the uh uh waiter told her to be careful.

The authors propose multiple ways that disfluencies disrupt or assist in garden-path sentence processing. One posited role is that disfluencies provide a cue to the correct or incorrect structure owing to their typical distribution in language. Because disfluencies tend to occur before the onset of complex syntactic constituents (Clark & Wasow, 1998; Hawkins, 1971) listeners may use this information during processing of ambiguous sentences to predict a more complex structure (Bailey & Ferreira, 2003). Another mechanism is that disfluencies provide additional time to reinforce the chosen representation at that point in time by delaying the onset of subsequent information. This delay has important ramifications for the current structures or representations under consideration which are given time to increase in activation at the expense of alternative interpretations leading to stronger garden-path effects (Bailey & Ferreira, 2003; Ferreira et al., 2004; Ferreira & Bailey, 2004). One may extend this interpretation to lexical-level processing to say that additional time allows for longer processing of lexical representations. In other words, lexical items may gain activation in a way similar to that of structures under consideration.

Current study

As discussed above, disfluencies afford more time for unimpaired listeners to process lexical and sentence constructions. However, their effect on both lexical activation and syntactic processing during auditory sentence comprehension has not been investigated.

In addition to disfluencies, manipulations to rate of speech input have also been found to influence sentence processing (Love et al., 2008; Montgomery, 2005; Peelle et al., 2004; Wingfield et al., 2003). Of these, slowed rate of speech was accomplished by stretching the entire sentence (while maintaining pitch). This temporal manipulation was found to disrupt the normal time-course of lexical and syntactic processing in unimpaired adults (Love et al., 2008). As such, one condition in this study concentrates the temporal manipulation focally within the direct object noun itself. We believe that this would result in fewer overall lexical and structural processing hindrances.

In the current study, we seek to investigate whether additional time, via disfluencies and a focal rate manipulation, helps or hinders lexical activation and decay, and whether it affects downstream dependency linking (via modulating interference). The present study compares three forms of reduced rate of speech input on the processing of sentences containing object relative clauses. The manipulations resulted in 4 experimental conditions: control, stretch, disfluent, and silence. The control condition was the naturally recorded sentences described below in the Stimuli section. The stretch condition follows prior research showing an effect of reduced rate of input via temporal elongation on sentence level processing in aphasia (Love et al., 2008). Here though, rather than stretching the entire sentence, we look to enhance lexical processing by only reducing the speech rate of the critical noun, the direct object of the relative clause verb (RC-v). The disfluent condition seeks to provide more processing time via a natural temporal delay, with the silence condition serving as a temporal control.

We use eye-tracking to capture the time-course of lexical and syntactic parsing during auditory sentence processing. This method allows us to explore the nature of interference and the contributions that lexical activation and decay have on subsequent dependency linking during real-time processing. This paradigm has been used to successfully index interference (Akhavan et al., 2020; Sheppard et al., 2015; Sekerina et al., 2016) and allows us to explore how manipulating temporal aspects of the language input affects these processes. We acknowledge, however, that the nature of lexical decay under investigation likely differs from the memory-based decay discussed above due to the method in the current study. In this eye-tracking study, the referents mentioned in the sentences were displayed as characters on screen and thus provided visual support during language processing. The overt presence of the referents’ pictures may not directly index memory-based retrieval as it is typically viewed in the memory literature, although no study to our knowledge has explored this effect. To be conservative in our interpretation of our patterns, we will therefore refer to lexical decay as lexical deactivation.

Methods

To examine lexical activation, deactivation, and reactivation as it occurs during real-time sentence processing, this study employs Eye-tracking-While-Listening (ETL) using a visual world paradigm (Allopenna et al., 1998; Tanenhaus et al., 2000). In the current study, participants are presented with an array of four pictures while listening to sentences. Two of the pictures depict referents mentioned in the sentence and two are distractors. It is hypothesised that while listening to sentence constituents, a listener will demonstrate increased looks towards the picture of the referent recently processed indicating lexical activation, while looks away (disengagement) from a referent are indicative of deactivation. Reactivation of the displaced noun phrase should occur during critical syntactic positions that result in syntactic dependency linking (i.e. gap filling, see Akhavan et al., 2020; Sussman & Sedivy, 2003; Thompson & Choy, 2009).

As previously mentioned, Cue-based Retrieval theory posits that retrieval interference arises during dependency linking as a result of competition between the displaced noun and an intervening noun when they have similar features, in other words when the necessary cues for identifying a dependent are ambiguous (Van Dyke & McElree, 2006). For example, in the object-relative sentence below (5), the retrieval cues provided by the verb pushed select for an animate noun; however, there are two noun phrases that fit these cues, the displaced noun (the elf) and the intervening noun (the clown), which creates interference (Sekerina et al., 2016).

(5) The elf that the clown pushed was at the end of the street.

This interference has been demonstrated in eye-tracking data using a similar paradigm as an extended activation of both nouns during the post-verb time window (Akhavan et al., 2020). Specifically, the process of deactivation of the recently processed intervening noun (here, N2) coupled with the signalling to the parser to reactivate the displaced noun (here, N1) can result in overlapping gaze patterns of both nouns (Akhavan et al., 2020). Thus, this methodology gives us a detailed time-course of lexical activation, deactivation, and interference during reactivation as the auditory sentence unfolds over time. All methods described below were approved by the University of California San Diego and the San Diego State University Institutional Review Boards. Per the Center for Open Science we include this statement to confirm that what follows is a complete reporting of all measures, conditions, data exclusions and participant characteristics.

Participants

Thirty-three unimpaired students from San Diego State University were enrolled in this study. Participants were required to be native English speakers with minimal experience in a second language (i.e. any knowledge of a second language was learned after the age of six). They had to be self-reported right hand dominant with normal or corrected vision and hearing and no self-reported history of alcohol and/or drug abuse, active psychiatric illness, intellectual disability, or other significant brain disorder or dysfunction. Non-native knowledge of English was considered an exclusionary criterion and on this basis, 6 participants were excluded as they were not monolingual English speakers. In addition, 3 other participants had to be excluded due to computer errors in data collection. The resulting 24 participants (Mage = 21, SD = 3.3, range = 18–29, male = 2) were included in the analyses described below. All participants received extra credit for study participation.

Materials

Stimuli

Experimental stimuli consisted of 40 object-relative sentences which were constructed in a similar format as shown in (6) below:

| (6) | Adverbial clause | NP1 (relative clause) | NP2 (relative clause) | Verb (relative clause) | Main Verb + PP |
|---|---|---|---|---|---|
| | [Last year on Halloween], | [the elf that | the clown | pushed] | [was at the end of the street]. |

All sentences contained non-biasing contexts such that both noun phrases (NPs) were equally plausible as the subject or object of the RC-v (pushed). The role of each NP was reversed in a matched sentence design so that each NP served once as the direct-object and once as the subject (see 7 and 8 below).

(7) Last year on Halloween, the elf that the clown pushed was at the end of the street.

(8) At the costume party, the clown that the elf poked was in the backyard.

Each sentence in the pair contained RC-v’s with similar phonetic onsets and were matched in syllable length (e.g. help/hug, push/poke). Twenty matched RC-v’s were used twice to create a total of 40 experimental items. Importantly, the 2 NPs of interest were not phonologically or semantically related to one another, nor were they related on either dimension with the NPs in the matched set. Regarding the presentation of the images for the visual world portion of the ETL study, the images used to represent each NP were positioned such that across all conditions and items, both NP1 and NP2 appeared equally across all four quadrants of the visual display. The sentences described here formed the basis for the three temporal manipulations detailed in the “Temporal manipulation” section below resulting in 160 experimental items (40 per condition). Finally, an additional 40 unrelated filler sentences were created to distract participants from the object-relative construction in target sentences and thereby avoid developing strategic processing (i.e. only looking to the first noun, etc.). These were of similar length and speech rate to the experimental sentences but varied in syntactic structure. Thus, there were 200 stimuli in this experiment (experimental plus fillers).

Temporal manipulations

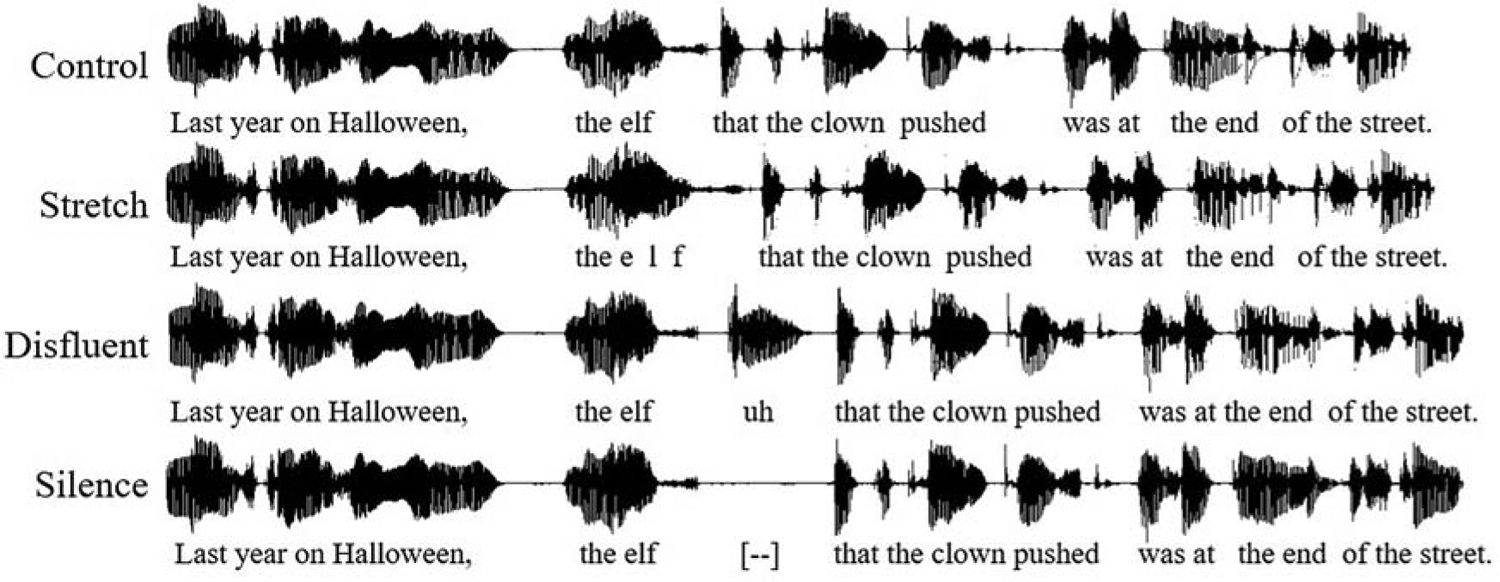

Stimuli were recorded by a female speaker in a sound-treated room. A natural recording of each sentence served as the control condition with an average rate of speech of 4.94 syllables/second (SD=.26 s). These sentences served as the base to which the time manipulations were made. Recall that 3 temporal manipulations were used: stretch, disfluent, and silence. The stretch condition was created by digitally “stretching” the waveform associated with the direct object noun (N1) using Adobe Audition CC by 150% while maintaining pitch (see Figure 1, below). This level was chosen as the maximum duration that words could be stretched without distortion so that it sounded as natural as possible as confirmed by researchers in the laboratory. This time-stretch version resulted in the addition of ~250 ms to the overall time as compared to the control recording. To create the disfluent condition (see Figure 1), sentences were recorded by the same female speaker with a disfluency (uh) embedded in them. This provided a natural recording of a disfluency in the control sentence context that was then spliced out and inserted into the original recording of the control sentence. Thus, the overall sentence contour remained the same for this condition. The average duration of each disfluency was 250.4 ms (SD=3.56 ms). These disfluencies were inserted approximately 100 ms after the offset of the N1 and 100 ms before the upcoming relativizer to allow for a natural buffer before and after the disfluency as would be heard in typical speech (Shriberg, 1999). This manipulation resulted in an average of 460.9 ms (SD = 3.8 ms) additional time. Since the placement of the disfluencies in the current experiment differs from how they are typically found in sentences (i.e. clause boundaries or word initial, for review see Ferreira et al., 2004; Shriberg, 1999) the naturalness of disfluent sentences was confirmed by five research assistants who were unaware of the aims of the study. This pretest confirmed that other than the speaker “sounding confused”, the sentences appeared natural. Finally, to create the silence condition (see Figure 1), the disfluent portion was replaced with silence in Adobe Audition CC to maintain the same timing as the disfluent condition.

Figure 1.

Example waveforms of stimuli showing the control condition and temporal manipulations (disfluent, silence, and stretch).

Visual stimuli

Recall that there were 2 NPs in each of the 20 experimental sentence pairs (see 5 above) and 1 or 2 NPs in the 40 filler sentences. Images for the NPs were coloured cartoon images created by a single artist to maintain general style. In total there were 80 images created for this study.

Pretest of visual images

In order to ensure that each drawing accurately depicted the intended NP, a naming pretest was conducted with a separate group of 19 participants (M age = 28.8, SD=16.4; male = 3). All participants were native English speakers who were right-handed, had normal or corrected vision, and no self-reported history of head injury, learning disability, or drug abuse.

Using Qualtrics, images were displayed one at a time. Participants were asked to provide a single word to name each of the 80 images. They did so by typing their responses. All data were aggregated across participants to determine the percent accuracy of semantic agreement. Any image for which there was <70% semantic agreement was removed. For example, an acceptable response for the target image weightlifter was bodybuilder or powerlifter, but not boxer. Of the 80 images tested, 8 pictures failed to produce at least 70% semantic agreement and were discarded. Of the remaining pool of 72 images, 40 were used for the experimental sentences and the remaining 32 were used in the filler sentences. We converted 8 of the 40 filler sentences from having 2 NPs to 1 NP to accommodate the loss of picture items, thus 25 filler items had 1 noun and 15 had 2 nouns.
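The 70% criterion described above can be illustrated with a minimal sketch. The function names, the set of acceptable responses, and the example responses are hypothetical and serve only to show how the cut-off operates; they are not the scoring procedure actually used.

```python
def semantic_agreement(responses, acceptable):
    """Proportion of typed responses that match an acceptable name."""
    normalised = [r.strip().lower() for r in responses]
    hits = sum(1 for r in normalised if r in acceptable)
    return hits / len(normalised)


def keep_image(responses, acceptable, threshold=0.70):
    """Images with less than 70% semantic agreement are discarded."""
    return semantic_agreement(responses, acceptable) >= threshold


# Hypothetical responses for the target image "weightlifter".
acceptable = {"weightlifter", "bodybuilder", "powerlifter"}
responses = ["Weightlifter", "bodybuilder", "boxer", "powerlifter", "weightlifter"]

agreement = semantic_agreement(responses, acceptable)  # 4 of 5 responses accepted
retained = keep_image(responses, acceptable)
```

In this invented example four of the five responses are acceptable, so the image would be retained.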

Comprehension questions

For each experimental sentence, participants heard a yes/no comprehension question at the end of the trial to ensure that they had listened to the sentence for meaning. All questions for the experimental items were in the form of “Did the NP + verb + someone?” These questions were recorded by another female speaker at a normal rate of speech. Filler sentence comprehension questions took a variety of formats so that the participant could not anticipate what to listen for. Comprehension questions were counterbalanced so half of the total questions had a correct answer of “yes”. For the experimental items, the NP used in the comprehension question (NP1 or NP2) was also counterbalanced.

Study design

In this design, participants contributed data to all conditions but never received an item more than one time. In order to ensure that all participants heard an experimental item only once in a given session, the sentences were split into four lists. Each list contained 40 experimental items (10 experimental sentences per condition) and 40 filler items for a total of 80 sentences. Participants were tested on two separate lists in two sessions spaced a minimum of one week apart, contributing data to 20 sentences per condition. List assignment was randomised and counterbalanced.
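One way to build four lists with these properties is a Latin-square rotation of conditions over items. The sketch below assumes such a rotation; the exact assignment scheme used in the study is not specified, and the item indices and function name are hypothetical.

```python
from collections import Counter

CONDITIONS = ["control", "stretch", "disfluent", "silence"]


def build_lists(n_items=40, conditions=CONDITIONS):
    """Rotate conditions over items so each list has every item exactly once."""
    n_cond = len(conditions)
    return [
        {item: conditions[(item + k) % n_cond] for item in range(n_items)}
        for k in range(n_cond)
    ]


lists = build_lists()

# Each list holds 40 experimental items, 10 per condition.
per_condition = [Counter(lst.values()) for lst in lists]
```

Under this rotation, every item appears in a different condition on each list, so a participant tested on two lists contributes 20 sentences per condition.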

Procedure

Participants were seated in front of a computer screen equipped with a Tobii X3-120 eye-tracker bar and positioned so that their eyes were approximately 65 cm away from the eye-tracker. At the beginning of each experimental session, the eye-tracker was calibrated to each participant so that both eyes were captured, and the location of the gazes was accurately measured. Reaction time (RT) data (ms, accuracy) and timed presentation of auditory and visual stimuli were controlled by E-Prime 3.0 software (Psychology Software Tools, Pittsburgh, PA).

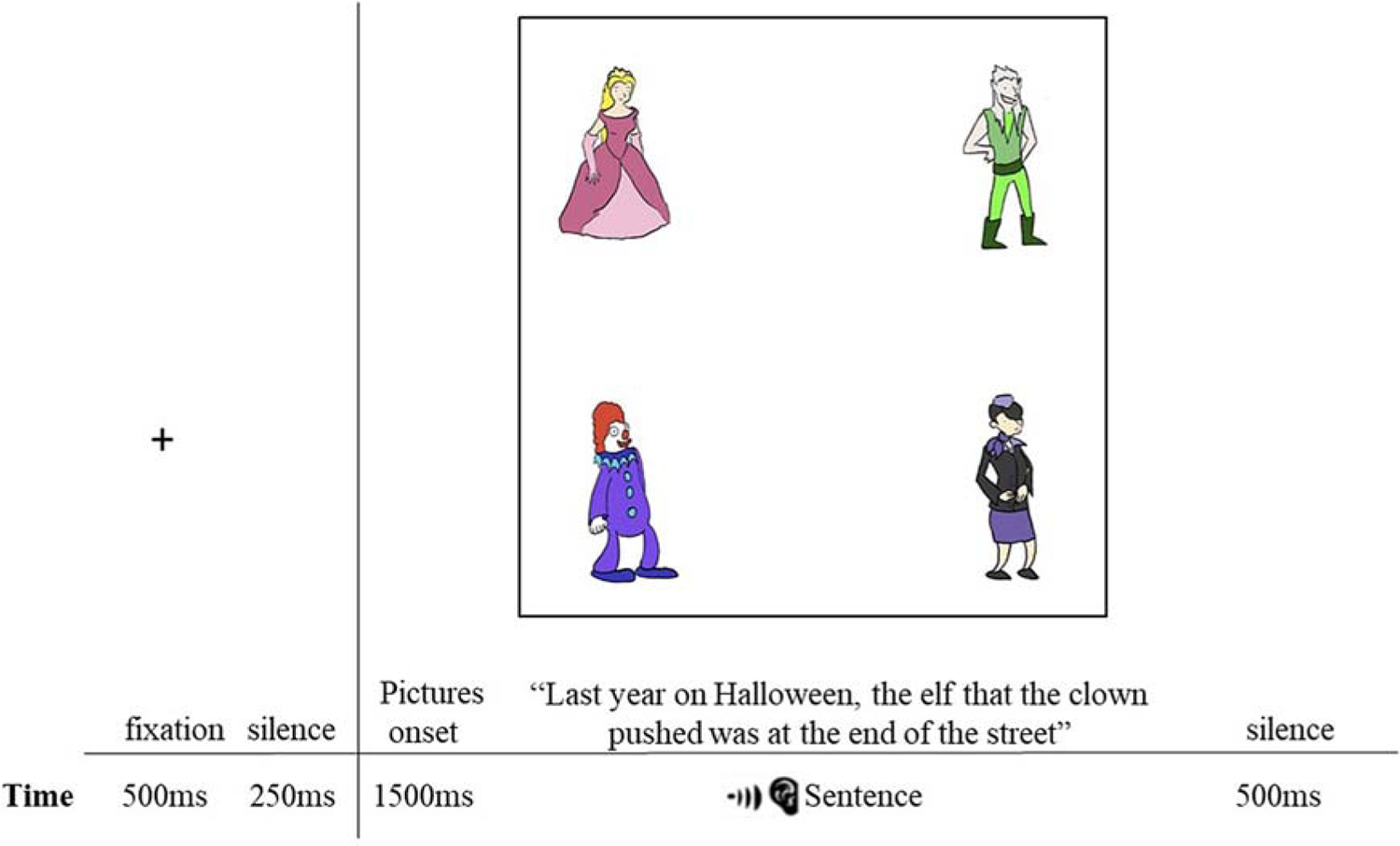

Participants were instructed to listen carefully to each sentence as they would be asked a comprehension question after each one. These questions were intended both to reinforce the need for the participants to attend to the meaning of the sentences and to maintain task engagement. As shown in Figure 2, each trial began with the presentation of a central fixation cross for 500 ms, followed by a 250 ms blank screen. The four-picture display was then presented for 1500 ms before the experimental (or filler) sentence began. As we were interested in the activation of a lexical item as it is integrated into an ongoing sentence (i.e. post lexical selection), this preview duration provided ample time to form mental representations of each lexical item. The pictures remained on the screen for 500 ms after the sentence ended. For all trials, gaze location was sampled at a rate of 120 Hz, resulting in gaze location being recorded approximately every 8 ms across each trial. As described above, each sentence was followed by an aurally presented Y/N comprehension question. Participants were instructed to respond as quickly and as accurately as possible with their right hand using a two-button response box. Comprehension accuracy and response time were also recorded by E-Prime 3.0 software. Ten practice trials were conducted prior to each experimental session to allow the experimenter to provide feedback or redirection prior to the beginning of the experiment.

Figure 2.

Procedure used in each trial of the experiment. The example display presents the direct object noun (e.g. clown), the second noun (e.g. elf), and two distractors (e.g. princess and stewardess). Auditory sentences began after a 1500 ms preview of the display.

Data analysis approach: ETL-VWP

Before providing the results of the statistical analyses, we first give an overview of the statistical approaches taken for ETL-VWP.

Pre-processing

Trackloss:

The first step in preparing the eye-tracking data for analysis was to evaluate the integrity of the data, which were collected approximately every 8 ms by the Tobii eye-tracker. Samples in which eye gaze was not registered were considered trackloss. This occurs when the eye-tracker fails to register gaze information from at least one of the participant’s eyes, either because the participant is looking off screen or outside an area of interest (AOI), or because the sample contains a blink.

To clean the data for trackloss, four AOIs were defined corresponding to the four pictures in each display. Each AOI corresponded to a 450 × 450 pixel quadrant situated in one of the four corners of the computer screen. Following Huang and Snedeker (2020), any gaze samples that contained trackloss were excluded from further analyses, resulting in roughly 13% of the samples being removed. Any trial for which fewer than half of the expected samples remained was then excluded from analyses (Huang & Snedeker, 2020). This resulted in the removal of an additional 0.4% of the data.
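The two cleaning steps can be sketched as follows. This is a minimal Python illustration, not the authors' pipeline (which used R packages). The screen resolution, the sample format, and the helper names `aoi_for` and `clean_trial` are assumptions introduced here for the example; only the 450 × 450 pixel AOI size and the half-of-expected-samples rule come from the text.

```python
# Assumed screen resolution (not stated in the text); the AOI size is from the text.
SCREEN_W, SCREEN_H = 1920, 1080
AOI_SIZE = 450  # each AOI is a 450 x 450 pixel square in one screen corner


def aoi_for(x, y):
    """Return the corner AOI containing gaze point (x, y), or None for trackloss."""
    if x is None or y is None:  # blink or unregistered gaze
        return None
    if 0 <= x < AOI_SIZE:
        col = "left"
    elif SCREEN_W - AOI_SIZE <= x < SCREEN_W:
        col = "right"
    else:
        return None  # on screen but outside every AOI, or off screen
    if 0 <= y < AOI_SIZE:
        row = "top"
    elif SCREEN_H - AOI_SIZE <= y < SCREEN_H:
        row = "bottom"
    else:
        return None
    return f"{row}-{col}"


def clean_trial(samples, expected_n):
    """Drop trackloss samples; return None if fewer than half the expected samples remain.

    samples: list of (time_ms, x, y) tuples, with x and y None when gaze was not registered.
    """
    kept = [(t, aoi_for(x, y)) for t, x, y in samples if aoi_for(x, y) is not None]
    if len(kept) < expected_n / 2:
        return None  # the whole trial is excluded
    return kept
```

A trial with four expected samples of which two fall inside an AOI is retained with those two samples; the same two samples out of six expected would lead to the trial being excluded.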

Data preparation:

Given the physiological nature of eye movements, there is inherent dependency in time-series eye-tracking data, since the location of eye gaze at one time point is likely to be influenced by gaze location at nearby time points. This type of serial dependency can lead to negative consequences such as inflated Type I error rates. One strategy to account for such dependencies is to aggregate observations across trials and group them into temporal bins. Here, for each participant within each session and condition, we grouped observations into 100 ms bins. For each bin, we then estimated the proportion of gaze within each AOI and computed the empirical logit by logit-transforming the proportions. This logistic transformation of a binary response variable (within or outside of an AOI) is argued to be the appropriate choice as it helps reduce eye-movement-based dependencies (for review, see Barr, 2008). Pre-processing and preparation of the eye-tracking data were done using the eyetrackingR (Dink & Ferguson, 2015) and gazeR (Geller et al., 2020) packages.
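The binning and transformation steps can be illustrated with a short sketch. It is written in Python for illustration only and is not the eyetrackingR or gazeR implementation. It assumes the empirical logit in the form given by Barr (2008), log((y + 0.5) / (n − y + 0.5)), where y is the number of samples inside the AOI and n the number of samples in the bin; the exact variant used in the analyses is not specified in the text. The function names and the input format are hypothetical.

```python
import math
from collections import defaultdict


def empirical_logit(y, n):
    """Empirical logit of y in-AOI samples out of n; the 0.5 terms keep it finite at 0 and n."""
    return math.log((y + 0.5) / (n - y + 0.5))


def bin_and_transform(samples, bin_ms=100):
    """Aggregate gaze samples into time bins for one participant/session/condition/AOI cell.

    samples: iterable of (time_ms, in_aoi) pairs, where in_aoi is True or False.
    Returns {bin_start_ms: {"prop": proportion in AOI, "elog": empirical logit}}.
    """
    counts = defaultdict(lambda: [0, 0])  # bin start -> [in-AOI count, total count]
    for time_ms, in_aoi in samples:
        bin_start = int(time_ms // bin_ms) * bin_ms
        counts[bin_start][0] += int(in_aoi)
        counts[bin_start][1] += 1
    return {
        b: {"prop": y / n, "elog": empirical_logit(y, n)}
        for b, (y, n) in sorted(counts.items())
    }
```

For example, a 100 ms bin holding 12 samples, 9 of them inside the AOI, yields a proportion of 0.75 and an empirical logit of log(9.5 / 3.5).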

Growth curve analyses

To explore the dynamic trajectory of lexical activation as auditory sentences unfold, we employed growth curve analyses, which are a type of multilevel regression modelling technique developed to analyse time-course data (Mirman, 2017; Mirman et al., 2008). In eye-tracking data, gaze trajectory often takes multiple patterns: linear (reflecting constant change over time) and curvilinear (reflecting fluctuations over time). In the models presented below, the time-series variables used to capture linear and curvilinear patterns were orthogonal polynomials (linear, quadratic, and cubic), similar to previous studies (Chen & Mirman, 2015; Magnuson et al., 2007; Nozari et al., 2016). Analyses were conducted in the statistical software R (R Core Team, 2019) using the lme4 package (Bates et al., 2015). Fixed effects of Condition (control, stretch, disfluent, silence) were added as an interaction on all time terms. To evaluate whether adding the fixed effect of Condition on each time term improved model fit, model comparisons were used. Changes in goodness of model fit were assessed using the likelihood ratio test with degrees of freedom equal to the number of parameters added. Tests of individual parameter estimates were used to evaluate specific condition differences on each of the time terms (i.e. whether each experimental manipulation was reliably different from the baseline control condition) using Satterthwaite’s (Kenward-Roger’s) approximations for the t-test and corresponding p-values implemented in the lmerTest package (Kuznetsova et al., 2017). The control condition was used as the baseline and separate parameters were estimated for each of the temporal manipulations for each time term. Effects on the intercept term reflect the average height of the curve (i.e. the overall magnitude of activation) throughout the time window, which is analogous to a repeated measures ANOVA. Effects on the linear term reflect differences in the slope of the gaze trajectories, effects on the quadratic term reflect the symmetric rise and fall around a central inflection point (i.e. activation and deactivation), and effects on the cubic term tend to capture effects in the tails of the curves (Mirman, 2017; Mirman et al., 2008).
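To make the time terms concrete, the sketch below builds linear, quadratic, and cubic orthogonal polynomial predictors over a set of equally spaced time bins. It is a Python illustration using Gram-Schmidt orthogonalisation and is offered only as an approximation of what a routine such as R's `poly()` produces; the function names are hypothetical and the number of bins in the example is arbitrary.

```python
import math


def dot(a, b):
    """Inner product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


def orthogonal_polynomials(n_bins, degree=3):
    """Return `degree` mutually orthogonal, unit-length time terms over n_bins bins.

    Each raw power of time (t, t^2, t^3, ...) is made orthogonal to the constant
    term and to all lower-order terms, so the time terms are uncorrelated.
    """
    t = list(range(n_bins))
    basis = [[1.0] * n_bins]  # constant column, used only for centring
    columns = []
    for d in range(1, degree + 1):
        v = [float(x) ** d for x in t]
        for b in basis:  # remove the part of v explained by earlier terms
            coef = dot(v, b) / dot(b, b)
            v = [vi - coef * bi for vi, bi in zip(v, b)]
        norm = math.sqrt(dot(v, v))
        v = [vi / norm for vi in v]
        basis.append(v)
        columns.append(v)
    return columns


linear, quadratic, cubic = orthogonal_polynomials(20)
```

Because the terms are orthogonal, an effect of Condition on the quadratic term (curvature) can be estimated without being confounded with its effect on the linear term (slope) or on the intercept (overall height).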

Windows of interest:

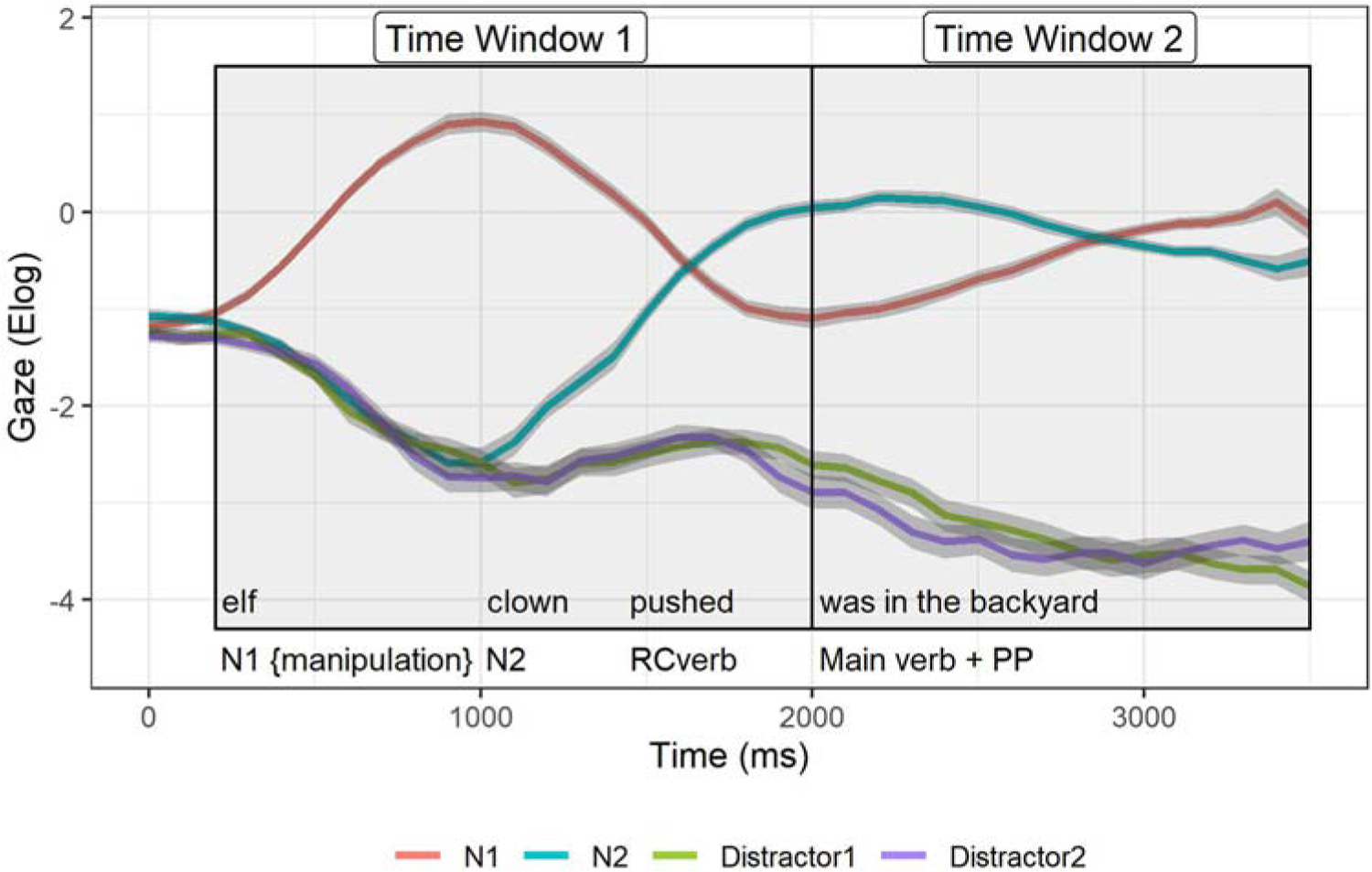

Two time windows were created to explore various aspects of lexical activation at hypothesised points during sentence processing. Time window 1 encompasses the full time-course of processing the first noun (N1), which includes lexical activation and deactivation. Time window 2 captures syntactic reactivation of N1 and resolution of interference post-verb. We are interested in how the temporal manipulations affect the overall magnitude and the pattern of looks to N1 and N2 over time. To create the time windows of interest, the data for every trial were first aligned to particular time points throughout the sentence (e.g. onset of the first noun, onset of the relativizer, and the main verb). Figure 3 shows the averaged time-course of looks to N1 and N2 from the auditory onset of N1, collapsed across conditions. Note that across conditions there were minimal looks to the distractor images throughout the sentences and therefore these are not presented in subsequent analyses or graphs.

Figure 3.

Mean gaze to N1 (the first noun), N2 (second noun), and two distractor images averaged across condition as a function of time beginning at the auditory onset of N1. Windows of interest (1 and 2) are outlined in black. Error bars (shaded areas) are within-subject 95% confidence intervals.

Initial N1 Processing (Time window 1):

Initial processing of N1 was analysed by modelling eye gaze patterns from the onset of N1 until the offset of the RC-verb. In order to account for planning and execution of an eye movement, a baseline was established by shifting the N1 window to begin 200 ms after N1 onset (Hallett, 1978). Time window 1 is shown in the box on the left in Figure 3 above and captures the change in activation and deactivation of N1 across all four conditions. Upon visual inspection, it is clear that upon hearing N1 (elf), participants direct their eye gaze towards the picture referent of the noun they just heard (red line), representing initial lexical activation. The peak of lexical activation is followed by a disengagement of looks to N1 (downward slope). We argue that this reflects deactivation and allows the participants to shift focus to the upcoming N2 (blue line). Statistical testing of these observations is detailed below. In the analysis presented below, the models include participants and condition (time manipulations) as random intercepts with random slopes on the linear and quadratic terms. Random effects of session for each subject on each time term (intercept and slopes) were also added to capture any variation resulting from the two testing sessions.

Syntactic reactivation and interference resolution (Time window 2):

Time window 2, encompassing syntactic reactivation, begins at the offset of the verb (time-locked to 0 ms) and lasts until 500 ms after the end of the sentence. The right box in Figure 3 captures the pattern of looks to N1 and N2 across all four conditions. As expected, based on prior behavioural findings, we see a decrease in looks to N2 (indicating N2 deactivation) along with an increase in looks back to N1 after the verb, constituting reactivation of this constituent (i.e. gap-filling). This is shown visually as a crossover in looks from N2 (blue line) to N1 (red line) after the verb. To statistically establish evidence of reactivation, we look to the model’s linear term in the results section below. We hypothesise that, post-verb, increased rates of reactivation will be demonstrated by a significant incline in the looks to N1, as indicated by steeper slopes.

As mentioned earlier, prior research demonstrates that similarity-based interference is evident in unimpaired processing. In order to best capture the retrieval interference process as it unfolds over time, we look at the model’s three-way interaction that includes both AOIs (N1 and N2), condition (control, stretch, disfluent and silence), and time terms (linear and quadratic) so as to simultaneously capture any effects on the deactivation of N2 (negative linear trend) and the reactivation of N1 (positive linear trend) post-verb (see Mirman, 2017 for a review of growth curve analysis methods and equations). Interference during retrieval is reflected as overlapping gaze trajectories to both nouns, which indicates the listener is processing both items. As has been found in prior eye-tracking studies, these overlapping gaze patterns reveal competition between the nouns, resulting in interference. This can be measured via slope coefficients where the two nouns show flatter, but opposing slope patterns (see for example, Akhavan et al., 2020). In cases where interference is reduced, we should expect to see stronger deactivation of N2 coupled with greater reactivation of N1 across time. In other words, there would be a stronger divergence in the gaze trajectories to each noun in the post-verb region (see cluster-based permutation analyses below). The random effects were structured similarly to those for time window 1, with the only difference being the addition of AOI to each random effect term to account for any differences in variation between the two nouns.

Cluster-based permutation analyses

The parameters of growth curve analyses describe how gaze trajectories change over time while taking into account individual differences; however, they do not directly address when in time two trajectories differ. To complement the growth curve analyses, we also conducted cluster-based permutation analyses to detect the temporal onset and duration of an effect between two time-series. This method was originally developed to account for the multiple comparison problem and autocorrelation arising in EEG and MEG data (Maris & Oostenveld, 2007), and has been increasingly applied to eye-tracking research (Barr et al., 2014; Gerhardstein & Olsen, 2020; Weighall et al., 2017). In cluster-based permutation testing, inferential tests are performed on each time-bin over the analysis window, and all adjacent, significant bins (i.e. passing a determined threshold) are grouped together into a single cluster. A cluster-level statistic is then computed whose null distribution is derived through data permutation. Finally, a nonparametric statistical test is performed by calculating a p-value under the permutation distribution and comparing it with a critical alpha-level. By grouping temporally adjacent bins into a single cluster, individual tests are replaced by a single test for the cluster, thus controlling the family-wise error rate (for more information, see Maris & Oostenveld, 2007).

For each cluster-based permutation analysis, we used the eyetrackingR divergence analysis package (Dink & Ferguson, 2015). As applied here, t-test statistics were computed for each time-bin over the analysis window and all adjacent bins whose t-value exceeded the threshold (97.5th quantile for a two-sided t-test) were grouped into a single cluster. A cluster-level statistic was then calculated by taking the sum of the t-values to create a cluster mass statistic. Here the data was iteratively sampled 1000 times to build a null distribution. Significant clusters are identified using a critical alpha-level of 0.05. As the questions of interest differ in each time window (1 and 2), we approached the cluster analyses using different comparisons (described in detail below).
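The procedure can be sketched in a few functions. The following Python code is an illustrative simplification, not the eyetrackingR implementation: it assumes a within-participant comparison summarised as one paired difference per participant per time-bin, uses random sign-flipping of each participant's differences as the permutation scheme, and takes the t threshold as an argument supplied by the caller. All function names are hypothetical.

```python
import math
import random
from statistics import mean, stdev


def paired_t(diffs):
    """One-sample t statistic of paired differences against zero."""
    sd = stdev(diffs)
    if sd == 0:
        return 0.0  # simplification: treat a zero-variance bin as uninformative
    return mean(diffs) / (sd / math.sqrt(len(diffs)))


def cluster_masses(t_values, threshold):
    """Group adjacent bins passing the threshold with the same sign; sum their t-values.

    Returns a list of (first_bin, last_bin, cluster_mass) tuples.
    """
    clusters, start, total, sign = [], None, 0.0, 0
    for i, t in enumerate(list(t_values) + [0.0]):  # trailing 0.0 closes an open run
        s = 1 if t > threshold else -1 if t < -threshold else 0
        if s != sign:
            if sign != 0:
                clusters.append((start, i - 1, total))
            start, total, sign = i, 0.0, s
        if s != 0:
            total += t
    return clusters


def cluster_permutation_test(diffs_by_bin, threshold, n_perm=1000, seed=0):
    """diffs_by_bin[b][p] is the paired difference for participant p in time-bin b.

    Returns (first_bin, last_bin, cluster_mass, p_value) for each observed cluster.
    """
    rng = random.Random(seed)
    n_part = len(diffs_by_bin[0])
    observed = cluster_masses([paired_t(d) for d in diffs_by_bin], threshold)
    null_max = []  # largest absolute cluster mass in each permuted data set
    for _ in range(n_perm):
        flips = [rng.choice((-1, 1)) for _ in range(n_part)]
        t_perm = [paired_t([f * x for f, x in zip(flips, d)]) for d in diffs_by_bin]
        masses = [abs(m) for _, _, m in cluster_masses(t_perm, threshold)]
        null_max.append(max(masses, default=0.0))
    results = []
    for first, last, mass in observed:
        p = (1 + sum(m >= abs(mass) for m in null_max)) / (n_perm + 1)
        results.append((first, last, mass, p))
    return results
```

With 100 ms bins, a returned cluster spanning bins 8 to 14 would correspond to an effect from 800 to 1500 ms after the start of the analysis window. The sign of the cluster mass depends on the order in which the two conditions are subtracted.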

Cluster-based analysis: Time window 1

In time window 1, the goal is to compare the effect of each temporal manipulation, against the control condition, on the processing of N1 (i.e. control-stretch, control-disfluent, and control-silence). To complement the results found using GCA, cluster-based analyses were conducted using the same temporal time bin resolution (100 ms), with an extension of the original time window of interest by 300 ms to determine if deactivation continues across the two conditions. Thus, the final time window began at the onset of N1 (baselined 200 ms) and ended past the offset of the RC-verb (2400 ms).

Cluster-based analysis: Time window 2

In time window 2, encompassing syntactic reactivation, the goal in using cluster-based analyses is to quantify the onset and temporal extent of interference resolution in each experimental condition. Here, for each condition, we estimate when during the time-course looks to N1 significantly diverge from looks to N2, which we take as evidence of interference resolution (Akhavan et al., 2020). Cluster-based analyses in this window were conducted using the same time bin resolution (100 ms) and the same time window of interest (1200 ms total duration; offset of the verb until 500 ms post sentence end) as used in the GCA.

Results

Offline comprehension

Although our primary analyses focused on real-time sentence processing using eye-tracking, we first report performance accuracy and RT for sentence comprehension questions. As a reminder, these served to keep participants on task and were not meant to measure overall comprehension success. As a group, participants achieved a minimum of 80% accuracy in all conditions (see Table 1, below). Accuracy was modelled with mixed effects logistic regression using the glmer function with the bobyqa optimiser in the lme4 package (Bates et al., 2015) in R (R Core Team, 2019). Random slopes for condition were added per subject and per item to achieve a maximal random effects structure (Barr et al., 2013). RT was analysed in a mixed effects linear regression model using the lme4 package (Bates et al., 2015) in R, and degrees of freedom were calculated using Satterthwaite’s (Kenward-Roger’s) approximations for the t-test and corresponding p-values in the lmerTest package (Kuznetsova et al., 2017). To evaluate RT, only correct trials were entered into analyses (84.05% of the data remained). In addition, any observations for which RT was less than 200 ms and observations greater than twice the standard deviation of that participant’s mean (per condition) were removed (83.26% of the data remained). Table 1 shows the mean accuracy and reaction times (in ms) for each of the four conditions. Logistic mixed effects models revealed no significant effects in accuracy across conditions (p > .05). Thus, participants arrived at the correct interpretation equally often across conditions. Similarly, linear mixed effects models revealed no significant reaction time effects across conditions (p > .05). The temporal manipulations did not alter the response speeds of the participants.

Table 1.

Mean (M) and standard deviations (SD) for offline comprehension. Accuracy (% correct) and reaction time (RT in ms).

| Condition | Accuracy M | Accuracy SD | RT M (ms) | RT SD (ms) |
|---|---|---|---|---|
| Control | 82% | 38% | 560.6 | 335.7 |
| Stretch | 86% | 35% | 521.1 | 305.1 |
| Disfluent | 84% | 37% | 512.3 | 299.3 |
| Silence | 84% | 37% | 527.6 | 302.7 |

ETL-VWP results

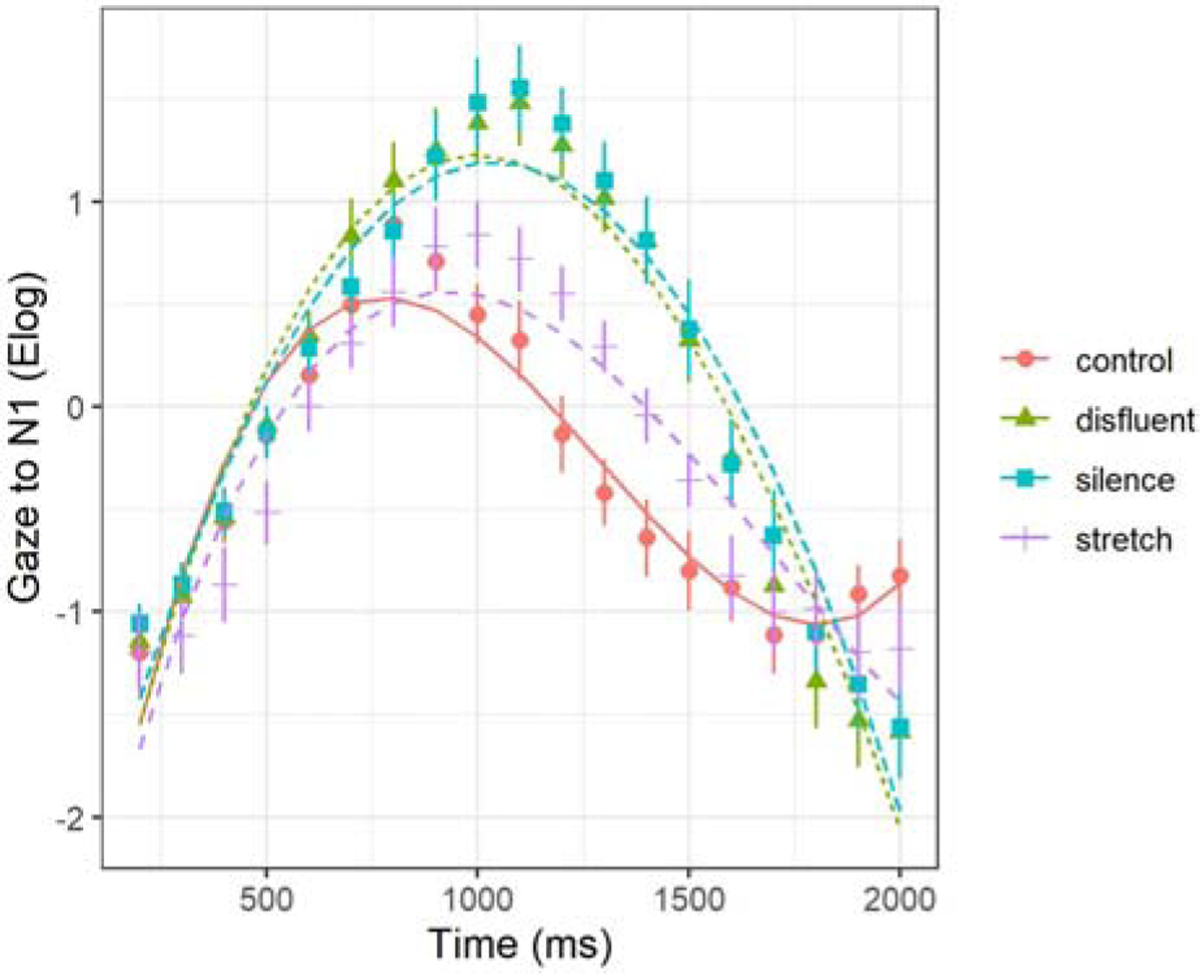

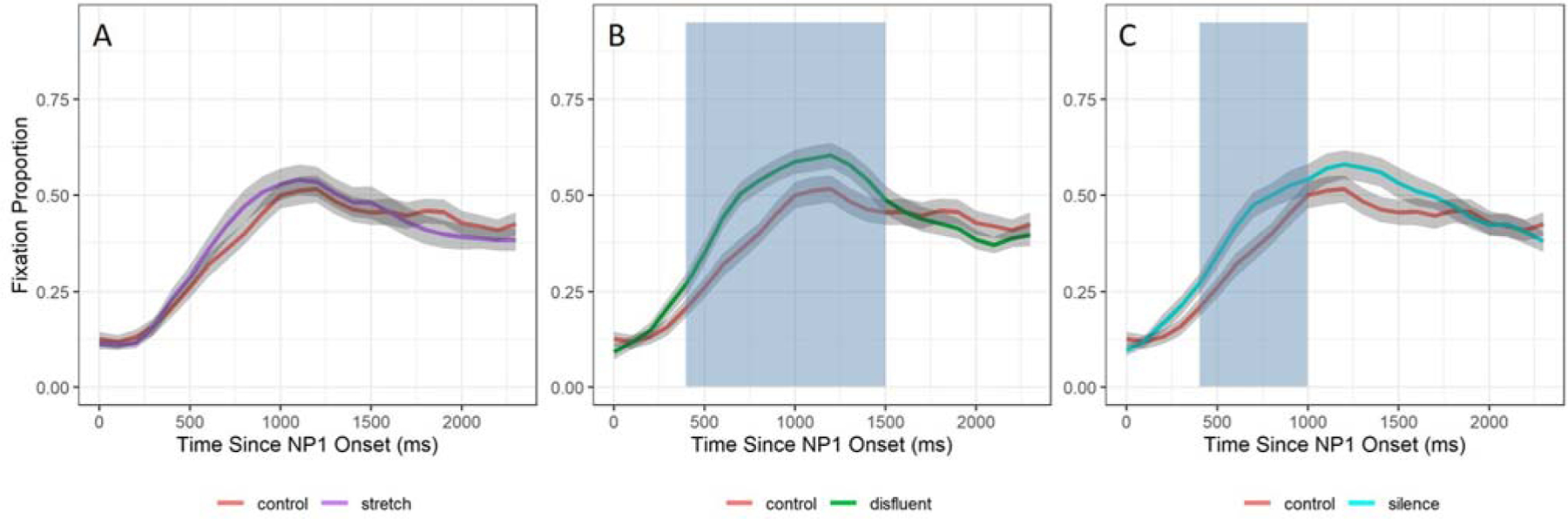

Figure 4 shows the effects of the three temporal manipulations (compared to the control condition), with time window 1 outlined in black.

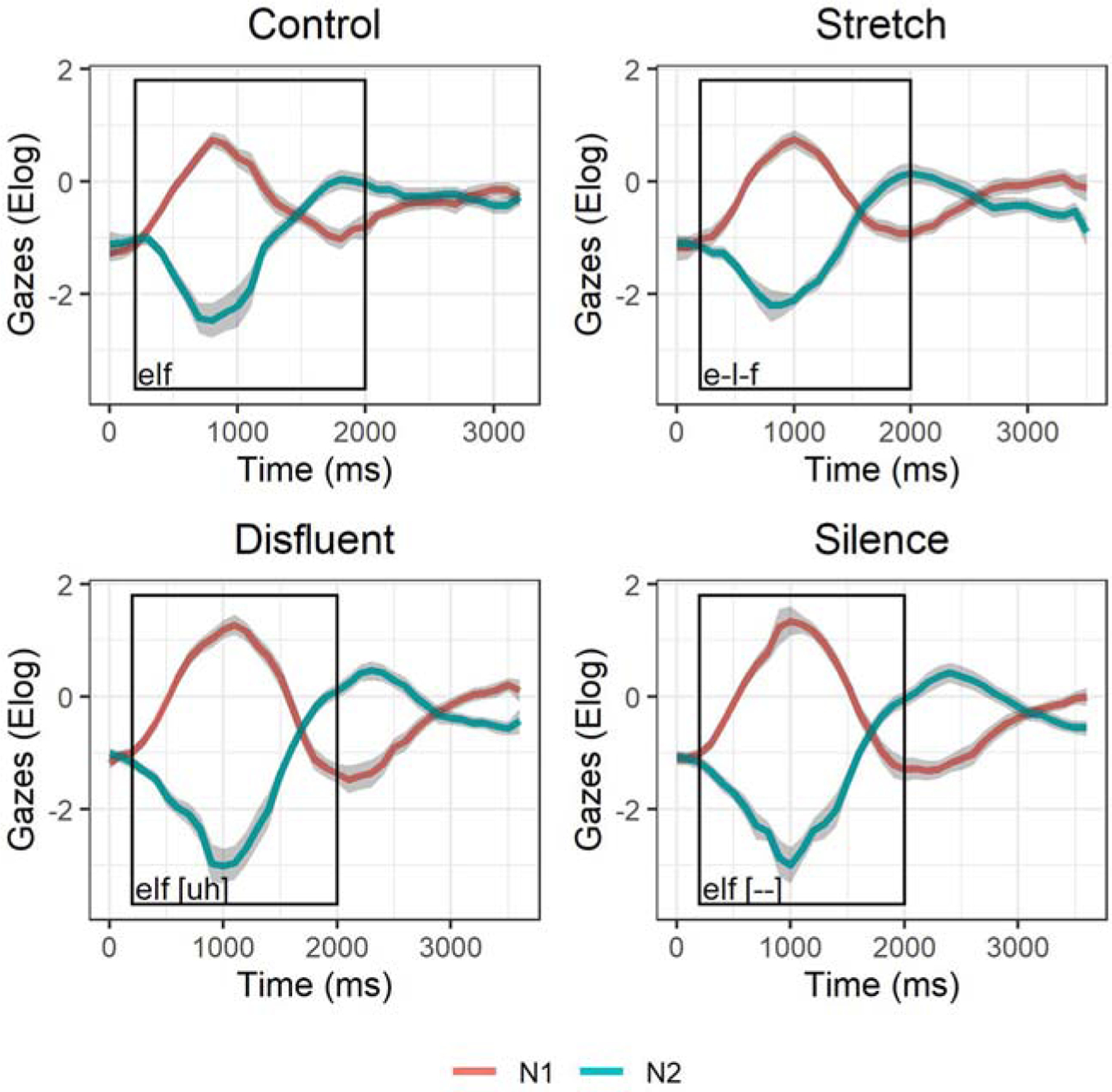

Figure 4.

Time window 1 (black box): Mean gaze trajectories and standard error (grey error ribbons) to N1 (red line) and N2 (blue line) in each condition: Control (top-left), Stretch (top-right), Disfluent (bottom-left), and Silence (bottom-right).

Initial lexical processing of N1 (Time window 1)

In the initial lexical processing window (Time window 1, see Figure 4), we tested the extent to which temporal manipulations modulated the activation and deactivation dynamics of gazes to N1. Recall from above that we begin all analyses with a base model that includes time without any modulation of condition. The addition of Condition improved model fit on all time terms except linear (intercept χ²(26) = 27.79, p < .001; linear χ²(29) = 5.41, p > .05; quadratic χ²(32) = 38.90, p < .001; cubic χ²(35) = 83.86, p < .001). Significance tests on the individual parameter estimates revealed a significant difference in the overall magnitude of N1 activation among the Conditions. Figure 5, below, shows the observed data and model fits in each condition.

Figure 5.

Initial lexical processing of the displaced object (N1). Model fit (lines) of the gaze data (shapes are means for the 4 conditions; error bars are standard errors) from the onset of N1 (Time window 1).

As can be seen in Figure 5, there was higher overall activation when N1 was followed by a disfluency or silent pause compared to no manipulation (disfluent β = .43, SE = .08, p < .001; silence β = .46, SE = .08, p < .001). There were no reliable differences in overall activation between the stretch and control conditions (stretch β = .07, SE = .08, p > .05). There were also notable effects on gaze trajectory. Significant effects of Condition were found on the quadratic term, indicating steeper curvature (rise and fall) of activation and deactivation in the disfluent, silence, and stretch conditions (disfluent β = −2.35, SE = .38, p < .001; silence β = −2.18, SE = .38, p < .001; stretch β = −.96, SE = .38, p = .01). Table 2, below, contains fixed effect model parameters, deviance statistics, and significance levels (random effects are presented in Appendix A).

Table 2.

GCA model results from initial lexical processing of N1 (Time window 1).

| Model fit | | | | |
|---|---|---|---|---|
| Term | −2LL | χ² | p | |
| Intercept | −5136.2 | 27.79 | <.001 | |
| Linear | −5134.5 | 5.41 | 0.14 | |
| Quadratic | −5114 | 38.90 | <.001 | |
| Cubic | −5072.1 | 83.86 | <.001 | |
| Parameter estimates | | | | |
| Term | Est. | SE | t | p |
| Intercept | −0.35 | 0.10 | −3.42 | 0.001 |
| Linear | −1.01 | 0.44 | −2.30 | 0.03 |
| Quadratic | −1.96 | 0.35 | −5.56 | <.001 |
| Cubic | 1.64 | 0.13 | 12.80 | <.001 |
| Disfluent*Intercept | 0.43 | 0.08 | 5.44 | <.001 |
| Silence*Intercept | 0.46 | 0.08 | 5.86 | <.001 |
| Stretch*Intercept | 0.07 | 0.08 | 0.91 | 0.37 |
| Disfluent*Linear | −0.06 | 0.41 | −0.14 | 0.89 |
| Silence*Linear | 0.19 | 0.41 | 0.46 | 0.65 |
| Stretch*Linear | 0.43 | 0.41 | 1.03 | 0.31 |
| Disfluent*Quadratic | −2.35 | 0.38 | −6.13 | <.001 |
| Silence*Quadratic | −2.18 | 0.38 | −5.70 | <.001 |
| Stretch*Quadratic | −0.96 | 0.38 | −2.52 | 0.01 |
| Disfluent*Cubic | −1.31 | 0.18 | −7.19 | <.001 |
| Silence*Cubic | −1.56 | 0.18 | −8.57 | <.001 |
| Stretch*Cubic | −0.89 | 0.18 | −4.87 | <.001 |

Note: The table provides the test of the overall interaction (model fit) as well as the test of the specific comparisons between the temporal manipulations for each term of the model (intercept, linear, quadratic, and cubic). Results in boldface are presented in the text. LL = log-likelihood.
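As a worked check on the model-fit rows, the p-value of a likelihood ratio test can be recomputed from its χ² statistic. The Python sketch below assumes that each model comparison adds three parameters (one per temporal manipulation), which is inferred from the analysis description rather than stated in the table. `chi2_sf` is a helper written for this illustration; it evaluates the upper tail of the chi-square distribution for whole-number degrees of freedom.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for a chi-square variable with integer df.

    Starts from the closed form for df = 1 or df = 2 and steps the shape
    parameter a = df / 2 upward by one (df upward by two) until the target
    is reached.
    """
    z = x / 2.0
    if df % 2 == 0:
        a, q = 1.0, math.exp(-z)  # df = 2
    else:
        a, q = 0.5, math.erfc(math.sqrt(z))  # df = 1
    while a < df / 2.0 - 1e-9:
        q += z ** a * math.exp(-z) / math.gamma(a + 1.0)
        a += 1.0
    return q


# Linear term in Table 2: chi-square = 5.41 with an assumed 3 added parameters
print(round(chi2_sf(5.41, 3), 2))  # prints 0.14
```

Under this assumption the recomputed value agrees with the p-value of 0.14 reported for the linear term.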

Syntactic reactivation and interference resolution window (Time window 2)

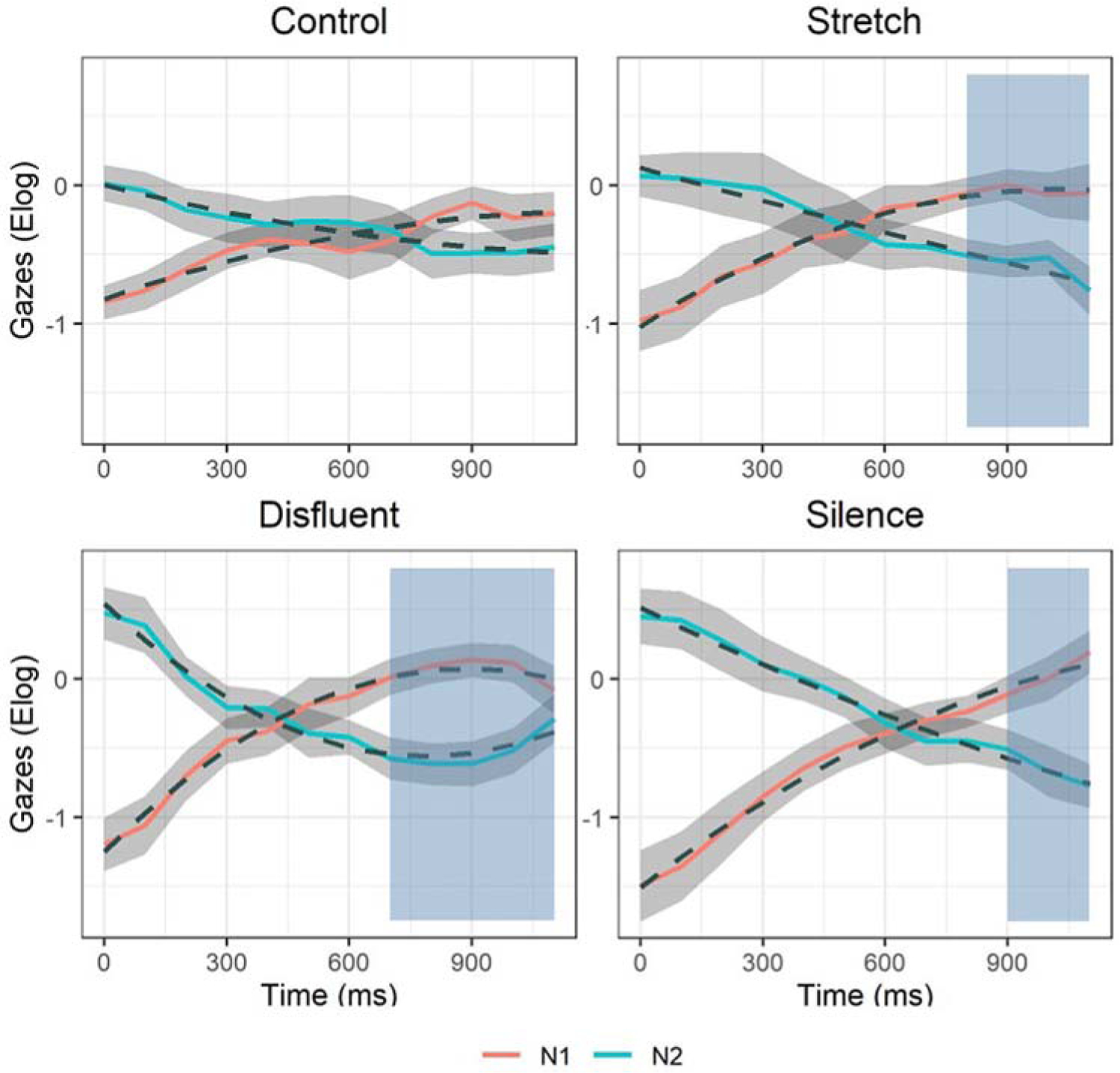

In the syntactic reactivation and interference resolution window (Time window 2, starting at verb offset), we tested the extent to which temporal manipulations modulated syntactically driven reactivation and resolution of interference. Overall model comparisons revealed that adding the interaction Condition*AOI significantly improved model fit on the linear and quadratic terms (intercept χ²(29) = 5.25, p = .63; linear χ²(36) = 68.19, p < .001; quadratic χ²(43) = 28.05, p < .001). This indicates that there were significant differences in the time-course of gaze trajectories to each noun across the disfluent and silence temporal manipulations. The observed data and model fits are shown in Figure 6, below, separated by condition.

Figure 6.

Syntactic reactivation of the displaced object (N1) and deactivation of N2. Model fit (dashed lines) of the observed gaze data (coloured lines are means; shaded ribbons are standard errors) from the onset of the main verb (Time window 2). Blue shaded regions indicate time span of significant clusters (p <.05).

To better understand the nature of the interaction of Condition*AOI*time, we first looked at the linear term and found that the slopes (i.e. rate of change) of deactivation of N2 and reactivation of N1 differed across conditions. Beginning with the deactivation of N2, the disfluent and silence temporal manipulations increased the rate with which N2 was deactivated as compared to the control condition (disfluent β = −1.16, SE = .49, p = .02; silence β = −1.92, SE = .49, p < .001). There was no difference between the stretch and control conditions (stretch β = −.77, SE = .49, p = .12). With regard to reactivation of N1, the interaction was due to significantly steeper reactivation slopes in the disfluent and silence conditions as compared to the control (disfluent β = .68, SE = .35, p = .05; silence β = 1.07, SE = .35, p < .001). No differences were found between the stretch and control conditions (stretch β = .39, SE = .35, p > .05). Table 3, below, contains fixed effect model parameters, deviance statistics, and significance levels (random effects are presented in Appendix B).

Table 3.

GCA results of the effect of temporal manipulations on N1 reactivation and N2 deactivation (Time window 2).

| Model fit | | | | |
|---|---|---|---|---|
| Term | −2LL | χ² | p | |
| Intercept | −6033.6 | 5.25 | 0.63 | |
| Linear | −5999.5 | 68.19 | <.001 | |
| Quadratic | −5988.6 | 28.05 | <.001 | |
| Parameter estimates | | | | |
| Term | Est. | SE | t | p |
| Intercept | −0.43 | 0.13 | −3.28 | <.001 |
| Linear | 0.68 | 0.31 | 2.23 | 0.03 |
| Quadratic | −0.15 | 0.20 | −0.77 | 0.44 |
| Disfluent*Intercept | 0.11 | 0.12 | 0.99 | 0.33 |
| Silence*Intercept | −0.13 | 0.12 | −1.14 | 0.26 |
| Stretch*Intercept | 0.07 | 0.12 | 0.64 | 0.52 |
| Disfluent*Linear | 0.68 | 0.35 | 1.96 | 0.05 |
| Silence*Linear | 1.07 | 0.35 | 3.09 | <.001 |
| Stretch*Linear | 0.39 | 0.35 | 1.13 | 0.26 |
| Disfluent*Quadratic | −0.46 | 0.26 | −1.79 | 0.08 |
| Silence*Quadratic | −0.12 | 0.26 | −0.46 | 0.65 |
| Stretch*Quadratic | −0.19 | 0.26 | −0.74 | 0.46 |
| Intercept*N2 | 0.14 | 0.19 | 0.75 | 0.45 |
| Linear*N2 | −1.21 | 0.43 | −2.81 | 0.01 |
| Quadratic*N2 | 0.25 | 0.28 | 0.89 | 0.37 |
| Disfluent*N2*Intercept | −0.07 | 0.16 | −0.42 | 0.67 |
| Silence*N2*Intercept | 0.25 | 0.16 | 1.55 | 0.12 |
| Stretch*N2*Intercept | −0.08 | 0.16 | −0.49 | 0.62 |
| Disfluent*N2*Linear | −1.16 | 0.49 | −2.36 | 0.02 |
| Silence*N2*Linear | −1.92 | 0.49 | −3.92 | <.001 |
| Stretch*N2*Linear | −0.77 | 0.49 | −1.56 | 0.12 |
| Disfluent*N2*Quadratic | 1.01 | 0.36 | 2.80 | 0.01 |
| Silence*N2*Quadratic | 0.12 | 0.36 | 0.32 | 0.75 |
| Stretch*N2*Quadratic | 0.10 | 0.36 | 0.29 | 0.77 |

Note: The table provides the test of the overall interaction (model fit) as well as the test of the specific comparisons between the temporal manipulations for each term of the model (intercept, linear, and quadratic). Results in boldface are presented in the text. LL = log-likelihood.

Cluster-based permutation results

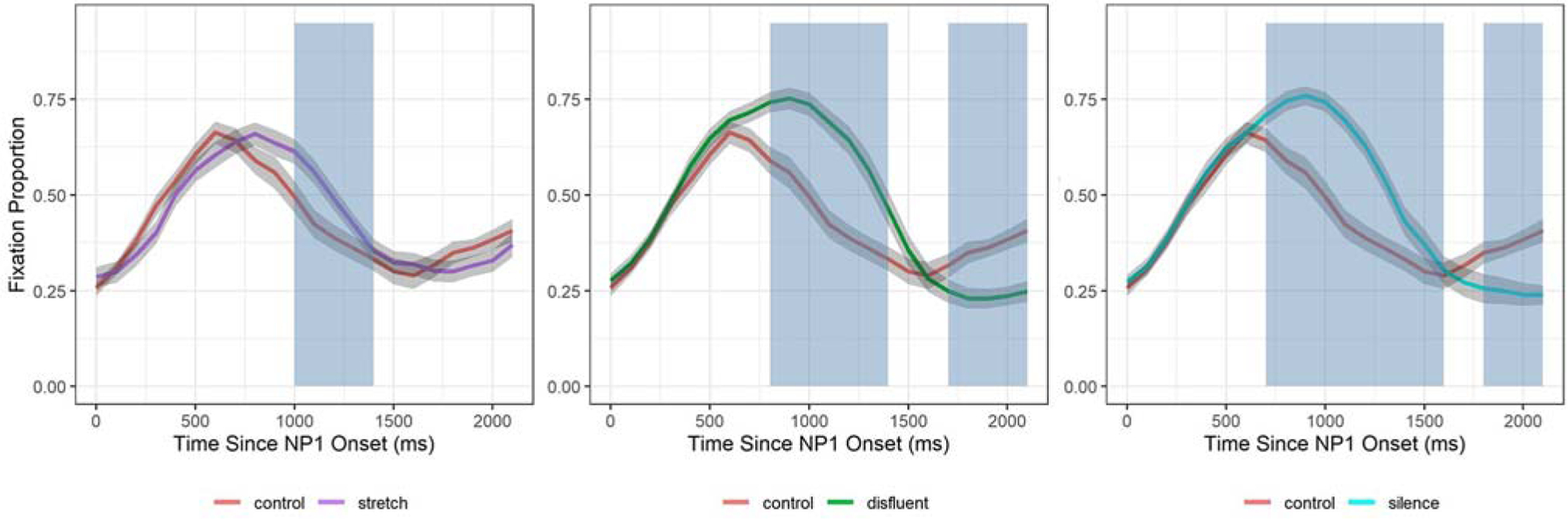

Initial lexical processing of N1 (Time window 1)

Recall that cluster-based permutation analyses were conducted to determine when in the time-course the temporal manipulations differed from the control condition. Results below report the summary statistics and the p-values of significant clusters. Note that the sign of the cluster (positive or negative) indicates the direction of the effect, such that a positive cluster indicates that gazes in the control condition are higher than in the manipulation and a negative cluster indicates that gazes in the temporal manipulation are higher than in the control condition. Shaded regions in Figure 7 depict the time points in the sentence where significant clusters occur. Analyses revealed that in the stretch-control comparison (Figure 7(A)), only one significant cluster was found, from 1000 to 1400 ms (cluster sum statistic = −15.16, p < .05). In the disfluent-control comparison (Figure 7(B)), two significant clusters were found: a negative cluster from 800 to 1500 ms (cluster sum statistic = −49.58, p < .001) and a positive cluster from 1700 to 2200 ms (cluster sum statistic = 22.29, p < .05). Two significant clusters were also found in the silence-control comparison (Figure 7(C)): a negative cluster from 700 to 1600 ms (cluster sum statistic = −54.03, p < .001) and a positive cluster from 1800 to 2200 ms (cluster sum statistic = 18.02, p < .05). Combined with the GCA results, these analyses reveal that the effects of the disfluent and silence conditions occurred in the deactivation portion of the time window (i.e. post peak). The second set of positive clusters indicates that deactivation of N1 was significantly extended in these conditions.

Figure 7.

Results of cluster-based permutation analyses on initial N1 processing. This figure shows the mean probability of fixating on N1 and standard error (grey error ribbons) in each comparison: control vs stretch (A), control vs disfluent (B), and control vs silent (C). Blue shaded regions represent the time span for each significant cluster (p <.05).

Syntactic reactivation and interference resolution window (Time window 2)

In time window 2, we used cluster-based analysis to determine when during the time-course the temporal manipulations modulated resolution of interference. Here, we are interested in when looks to N1 exceed N2, thus indicating successful interference resolution. For this reason, we only report significant positive clusters.

These analyses revealed that looks to N1 significantly diverged from looks to N2 in each of the temporal manipulations but not in the control condition. Unlike in the GCA, in the stretch condition we found a significant cluster from 800 to 1200 ms (cluster sum statistic = 10.04, p < .05). In the disfluent condition, a significant cluster was found from 700 to 1100 ms (cluster sum statistic = 11.21, p < .05), and in the silence condition, the significant cluster was from 900 to 1200 ms (cluster sum statistic = 9.19, p < .05). The significant regions of divergence are indicated by shaded areas in Figure 6.

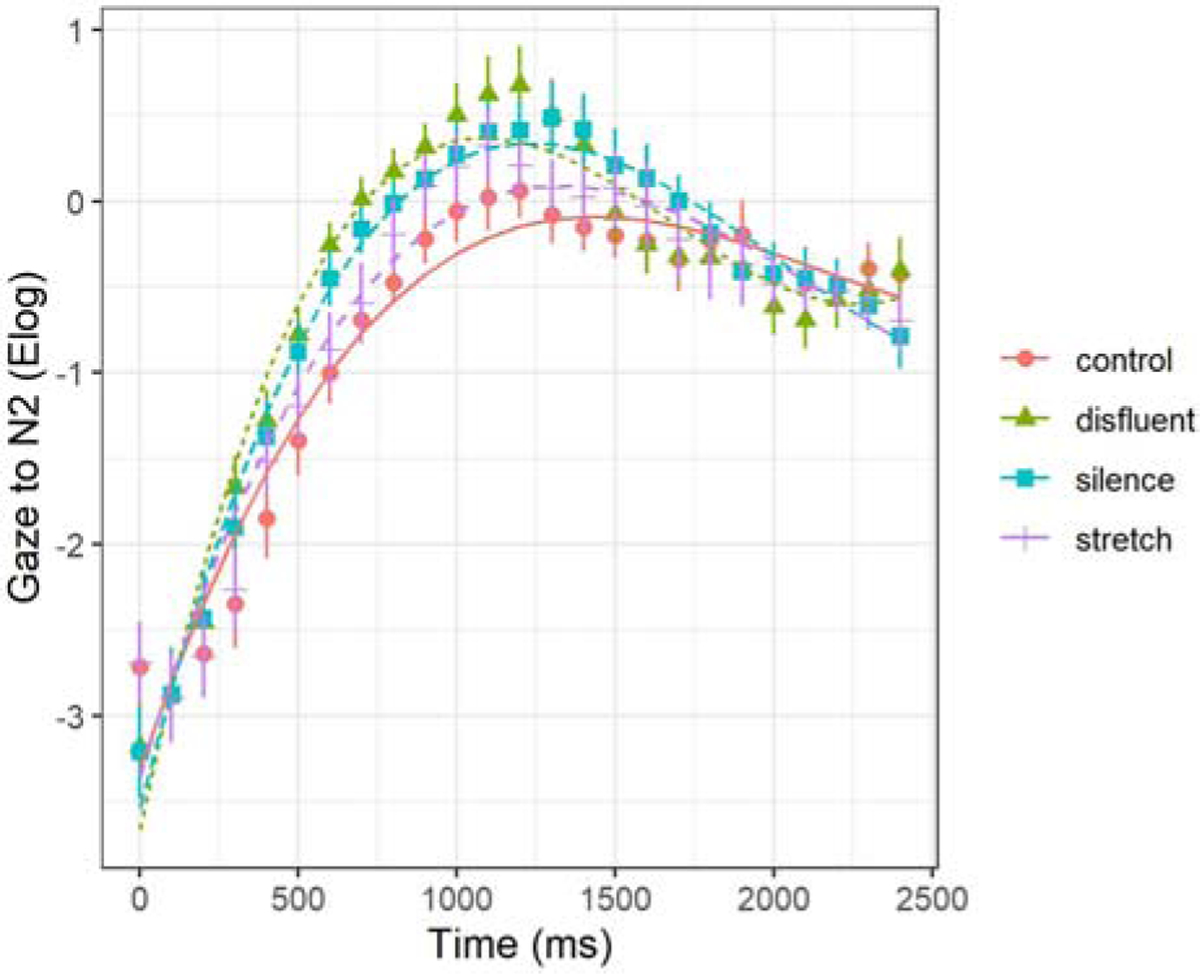

Post hoc analysis of the effect of N1 temporal manipulations on N2

An unexpected observation was the effect of the N1 temporal manipulations on the activation patterns of N2. Upon visual inspection it appeared as though there was an enhancement in the activation dynamics of N2, in the disfluent and silence conditions, similar to those found on N1. To explore this effect, a third time window was created to capture the time-course of activation and deactivation of N2. This window began at the auditory onset of N2 (time-locked to 0 ms) and lasted until the end of the sentence (total duration = 2400 ms). Data analysis followed the same approach as described above for N1, whereby orthogonal polynomial time terms (linear, quadratic, and cubic) were added to GCA models to capture linear and curvilinear patterns in gaze trajectory during this time window. The fixed effects of condition were again added to all time terms to determine model fit and follow-up comparisons of individual parameter estimates were used to evaluate specific condition differences on each of the time terms. The random effects were structured identically to those used in time window 1.

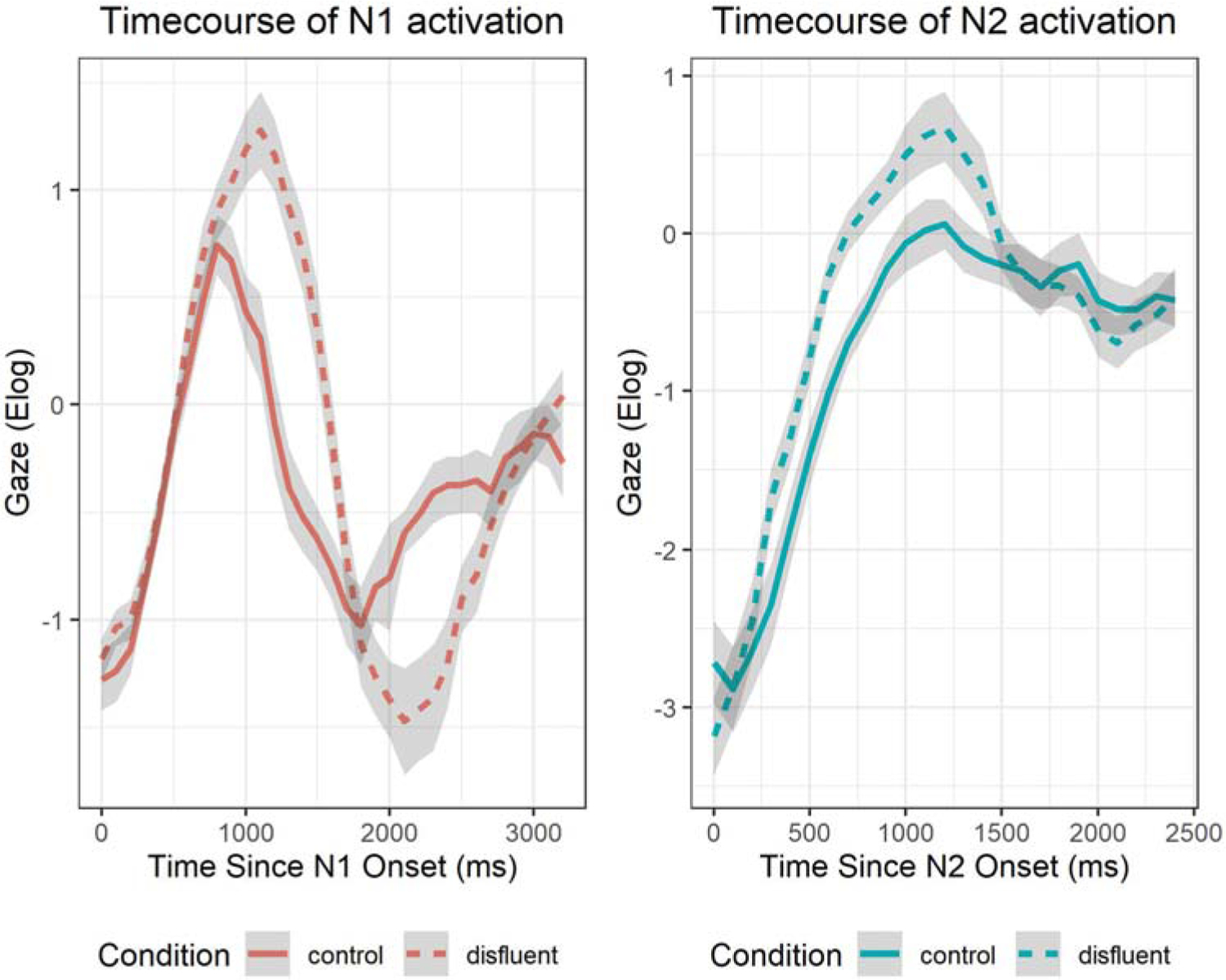

Model comparisons showed significant effects of condition when added to each time term except for the intercept (intercept χ²(26) = 4.23, p = .24; linear χ²(29) = 7.79, p = .05; quadratic χ²(32) = 9.31, p = .03; cubic χ²(35) = 37.95, p < .001). As shown in Figure 8, tests of the individual parameter estimates revealed a greater overall magnitude of looks to N2 in both the disfluent and silence conditions compared to control (disfluent β = .25, SE = .09, p = .01; silence β = .22, SE = .09, p = .01). The interaction on the quadratic term revealed that the disfluent and silence conditions (as compared to control) increased the steepness of the rise and fall of activation and deactivation (disfluent β = −1.08, SE = .42, p = .01; silence β = −1.17, SE = .42, p = .01). Table 4 contains fixed effect model parameters, deviance statistics, and significance levels (random effects are presented in Appendix C).

Figure 8.

N2 processing. Model fit (lines) of the gaze data (shapes are means for the 4 conditions; error bars are standard errors) from the onset of N2.

Table 4.

GCA results of the effect of temporal manipulations on N2 activation.

| Model fit | | | | |
|---|---|---|---|---|
| Term | −2LL | χ² | p | |
| Intercept | −7100.8 | 4.23 | 0.24 | |
| Linear | −7096.9 | 7.79 | 0.05 | |
| Quadratic | −7092.2 | 9.31 | 0.03 | |
| Cubic | −7073.3 | 37.95 | <.001 | |
| Parameter estimates | | | | |
| Term | Est. | SE | t | p |
| Intercept | −0.79 | 0.11 | −7.22 | <.001 |
| Linear | 3.33 | 0.50 | 6.61 | <.001 |
| Quadratic | −2.88 | 0.42 | −6.79 | <.001 |
| Cubic | 0.64 | 0.14 | 4.55 | <.001 |
| Disfluent*Intercept | 0.25 | 0.09 | 2.85 | 0.01 |
| Silence*Intercept | 0.22 | 0.09 | 2.54 | 0.01 |
| Stretch*Intercept | 0.09 | 0.09 | 0.97 | 0.33 |
| Disfluent*Linear | −0.86 | 0.49 | −1.74 | 0.09 |
| Silence*Linear | −0.53 | 0.49 | −1.08 | 0.28 |
| Stretch*Linear | −0.41 | 0.49 | −0.84 | 0.41 |
| Disfluent*Quadratic | −1.08 | 0.42 | −2.58 | 0.01 |
| Silence*Quadratic | −1.17 | 0.42 | −2.80 | 0.01 |
| Stretch*Quadratic | −0.59 | 0.42 | −1.42 | 0.16 |
| Disfluent*Cubic | 1.11 | 0.20 | 5.55 | <.001 |
| Silence*Cubic | 0.39 | 0.20 | 1.98 | 0.05 |
| Stretch*Cubic | 0.08 | 0.20 | 0.42 | 0.67 |

Note: The table provides the test of the overall interaction (model fit) as well as the test of the specific comparisons between the temporal manipulations for each term of the model (intercept, linear, quadratic, and cubic). Results in boldface are presented in the text. LL = log-likelihood.

We also conducted cluster-based permutation analyses to determine the timing of the effects established in the GCA. These analyses compared looks to N2 in each temporal manipulation against the control condition and used the same time-bin resolution and time window. All specifications (t-threshold, number of samples, etc.) were identical to the previous analyses in time windows 1 and 2. Consistent with the GCA results, significant clusters were only found in the control-disfluent comparison (Figure 9(B), cluster sum statistic = −37.9, p <.001) and the control-silence comparison (Figure 9(C), cluster sum statistic = −20.7, p < .05).

Figure 9.

Results of cluster-based permutation analyses on N2 processing. This figure shows the mean probability of fixating on N2 and standard error (grey error ribbons) in each comparison: control vs stretch (A), control vs disfluent (B), and control vs silent (C). Blue shaded regions represent the time span for each significant cluster (p <.05).

Discussion

The findings from this ETL experiment revealed that temporal manipulations modulated the activation dynamics of subject and object noun phrases during the auditory processing of object-relative sentences. These results provide a number of novel findings relevant to lexical activation, deactivation, and syntactically driven reactivation during sentence processing. In terms of initial lexical activation of the direct object (N1), GCA revealed that the addition of disfluencies and silent pauses increased the overall magnitude and curvature of activation of N1 (steeper rise and fall). Follow-up cluster-based analyses complemented the GCA models by revealing when deactivation was enhanced in the disfluent and silence conditions. Downstream, similar effects of these manipulations were also found on the subject noun (N2) and on the reactivation and interference resolution processes. Critically, after processing the RC-verb, looks back to N1 rose more sharply and exhibited more divergence from N2 (the competing constituent). In other words, interference was resolved more rapidly.

The effect of additional time on lexical and syntactic processing

The addition of a brief delay in the speech input (via disfluency and silent pause) after the presentation of the N1 enhanced processing of that noun, which resulted in a higher total level of activation followed by enhanced deactivation.

In our investigation of the syntactic reactivation of N1, we found significant reactivation in all conditions, which replicates prior reports of real-time dependency linking during object-relative sentence processing (Akhavan et al., 2020; Love & Swinney, 1996; Sussman & Sedivy, 2003; Thompson & Choy, 2009). In addition, these ETL data provide insight into the impact that additional time has on the post-verb reactivation process and on the extent of the interference between the two nouns.

Comparing the four conditions, there are clear differences in the onset and extent of lexical reactivation at the post-verb site. Specifically, the disfluent and silence conditions pattern together and show steeper rises in looks to N1 compared to the control condition. Accompanying these increases is a faster rate of deactivation of N2. The coupling of stronger reactivation of N1 with deactivation of N2 can be explained in terms of a greater resolution of interference, which can be visualised in the gaze patterns displayed in Figure 6. As can be seen from the figure, the interference region is greatest in the control condition (noted by an extended overlap in looks to the two nouns), whereas it is reduced in the three temporal conditions. This reduction is manifested as greater divergence of gazes between the two nouns such that interference is resolved earlier and the resolution is maintained longer, as confirmed by cluster-based analyses.

One interpretation of this pattern is that the additional time after N1 (by disfluency or silence) allowed for deeper processing of the direct object noun (N1), leading to a strengthened representation (see Karimi & Ferreira, 2016 for additional discussion on deeper processing). Prior research supports this notion and is consistent with the view that disfluencies (overt and silent) provide an additional window of time that allows the current representation to strengthen in activation, whether that be a structural interpretation, as found during the parsing of garden path sentences (Bailey & Ferreira, 2003), or, in this case, lexical items. This enhanced focus could result in sustained activation, making the noun available at verb offset. However, our results (and those previously reported in the literature) do not support a sustained activation model, as we see clear deactivation.

Another possible interpretation is that the manipulations primarily affected N2 processing. When considering how disfluencies and silence affect sentence constituents downstream from where they are presented, it is possible that the manipulations caused heightened attention to upcoming information, such as N2. Cluster analyses exploring the time-course of activation of N2 revealed a significant effect for both the disfluent and silence conditions, supporting the enhanced activation found in the GCA. This is in line with auditory recognition research, which has found that target words preceded by disfluencies were recognised faster due to modulations in attention (Collard et al., 2008; Corley et al., 2007; Corley & Hartsuiker, 2011; Fox Tree, 2001). However, this too cannot be the whole story: we find less interference at the post-verb site, yet one would expect heightened activation of the competing noun to interfere with reactivation. This leaves us to entertain our third option, which centres on lexical disengagement.

The enhancement in the activation of N1 and N2 from the manipulations co-occurred with steeper deactivation of N1 pre-verb and N2 post-verb. An example of this can be seen for the disfluent-control comparison, as shown in Figure 10. We believe that these patterns allowed for cleaner linking of the dependency elements at verb offset because interference was reduced. The finding that disengagement from a noun affected the pattern in which subsequent information is activated concerns an aspect of lexical processing that is not often investigated. These results indicate that lexical deactivation plays a beneficial role in allowing for the tight lexical and syntactic coupling needed to accomplish real-time syntactic linking and interference resolution between sentence constituents in constructions where competition exists (i.e. object-relative constructions).

Figure 10.

Overlays of the time course of activation of N1 (left) and N2 (right) for the control and disfluent conditions (solid line and dashed line, respectively) showing enhanced activation and deactivation for the disfluent condition as compared to the control condition. Grey ribbons indicate standard error.

These findings are in conceptual alignment with the functional decay theory, originally put forth by Altmann and Gray (2002), which posits that decay and interference are functionally related; that is, decay plays a functional role in memory by mitigating interference. While this theory was initially proposed for short-term memory processing, the current pattern of results fits with this schema. This theory rests on the idea that information must decay to some degree to allow the system to more efficiently retrieve the target information. With respect to language processing, this would mean that lexical representations must decay, to some degree, so that the system can process new incoming information without interference. But this does not explain how information is then retrieved and integrated into a syntactic frame when the structure requires linking of the decayed information. In fact, decay theories posit that the more decay a representation undergoes, the more difficult it is to retrieve that item at a later time (Brown, 1958). Our results show that the greater the deactivation of a noun, the more enhanced the activation and deactivation of a subsequent noun. It is this enhanced process that allows for the reduction of interference and the successful linking of the verb to the previously processed direct object noun.

Our work hints at avenues for further investigation, as the process of decay may take different forms depending on the methods used and the modality of investigation. It is possible that the ETL-VWP task used here is tapping into a different type or level of decay than the tasks typically used in memory research. As our work suggests, some level of decay or deactivation mitigates interference during complex language processing. There are instances, however, in which decay of information has been found to impede processing. One such example has been demonstrated in garden path sentence processing, when an initially chosen structural interpretation turns out to be incorrect and reanalysis must occur in order to reactivate the correct structure and arrive at a correct interpretation (i.e. final comprehension, [Van Dyke & Johns, 2012; Van Dyke & Lewis, 2003]). The logic here is that the more the required representation has decayed¹, the more difficult reanalysis is. In contrast, our findings suggest a different, beneficial role for decay: minimising interference. In order to tease apart deactivation from memory-based decay, future investigations should explore a variety of methodological approaches.

Temporal parameter for influencing lexical processing and reactivation

The patterning of the disfluent and silence conditions suggests that the additional time provided by these manipulations influenced lexical activation regardless of whether the delay was filled (via the disfluency uh) or unfilled (silence), indicating that time itself was an essential factor in influencing these processes. The stretch condition, in contrast, only had a reliable effect on the curvature of lexical activation and deactivation in the initial processing window of N1. Compared to the ~460 ms of additional time that the disfluent and silence conditions provided, the stretch condition provided less time, ~250 ms. It is possible that the ~250 ms addition was not long enough to produce any measurable effects on the initial activation of N2. Another possibility is that the location of the manipulation matters: in the disfluent and silence conditions, time was added after the noun, whereas in the stretch condition, time was added within the noun itself. This difference motivates further exploration of these approaches.

Interestingly, the GCA and cluster analyses provided discrepant findings of post-verb looks to N2. No difference between the control and stretch conditions was revealed by the GCA. However, cluster-based analyses revealed a significant positive cluster indicating divergent looks to N1 and N2. This divergent pattern supports reactivation at the post-verb position. As the lack of replication between GCA and cluster analysis weakens the argument that the stretch condition enhanced reactivation, we have taken a measured approach in our interpretation of this condition and focused instead on the clear effects from the disfluent and silence conditions.

Conclusions

The overall emerging picture points to the benefit of time on lexical and syntactic levels of processing. Based on the evidence shown above, the effects from two of the temporal manipulations (disfluent and silence) on N1 cascaded to N2 where we saw enhanced processing similar to that found for N1. Regarding these patterns of effects, we assert that the stronger deactivation of N1 allowed for greater activation of N2. When looking at the point of reactivation at the post-verb site, we found greater deactivation of N2 along with reactivation of the structurally linked object (N1) and argue that N2 deactivation led to reduced interference when N1 was reactivated.

These findings point to a beneficial aspect of decay in mitigating interference (Altmann & Gray, 2002). While these aspects of processing need further investigation, it is clear from the data presented that there is a relationship between lexical activation and deactivation and the effects on subsequent constituent processing. These patterns are clearly affected by the temporal manipulations made to the stimuli. Interestingly, while we see graded activation and disengagement across the conditions, the final gaze level reached in each condition was similar. Thus, the effect of the manipulations is on the activation dynamics, not on the final result. This is critical, as it allows us to determine that the disfluent and silence manipulations (and, to a lesser extent, the stretch condition) have a direct effect on the nature of the listener’s parsing: the additional time provided by those conditions changed the rate at which the direct object was reactivated. These findings lend support to the notion that time can enhance lexical representations and has an effect on later integration within a sentence structure.

Acknowledgments

We thank Kristi Hendrickson, Niloofar Akhavan and Noelle Abbott for their assistance in various stages of the preparation of this manuscript. We also thank all our participants and their families, as well as NIH NIDCD (T32 DC007361 and R01 DC009272), SDSU’s University Grants Program, and the Dr. David Swinney Fellowship for supporting this work.

Funding

This work was supported by NIH NIDCD [grant numbers R01 DC009272, T32 DC007361]; San Diego State University [University Grants Program]; Dr. David Swinney Fellowship.

Footnotes

Supplemental data for this article can be accessed at https://doi.org/10.1080/23273798.2021.1941147.

¹ According to the Cue-based Retrieval framework, successful retrieval of a distant dependent is largely determined by the strength of the association between the retrieval cues and the target item, while time-based decay in activation may be more relevant in repair processes associated with the processing of ambiguous sentence structures, as described in Van Dyke and Lewis (2003).

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Akhavan N, Blumenfeld HK, & Love T (2020). Auditory sentence processing in bilinguals: The role of cognitive control. Frontiers in Psychology, 11. 10.3389/fpsyg.2020.00898

- Allopenna PD, Magnuson JS, & Tanenhaus MK (1998). Tracking the time-course of spoken word recognition using eye movements: Evidence for continuous mapping models. Journal of Memory and Language, 38(4), 419–439. 10.1006/jmla.1997.2558

- Altmann EM, & Gray WD (2002). Forgetting to remember: The functional relationship of decay and interference. Psychological Science, 13(1), 27–33. 10.1111/1467-9280.00405

- Arnold JE, Kam CLH, & Tanenhaus MK (2007). If you say thee uh you are describing something hard: The on-line attribution of disfluency during reference comprehension. Journal of Experimental Psychology: Learning, Memory, and Cognition, 33(5), 914–930. 10.1037/0278-7393.33.5.914

- Arnold JE, Tanenhaus MK, Altmann RJ, & Fagnano M (2004). The old and thee, uh, new: Disfluency and reference resolution. Psychological Science, 15(9), 578–582. 10.1111/j.0956-7976.2004.00723.x

- Bailey KGD, & Ferreira F (2003). Disfluencies affect the parsing of garden-path sentences. Journal of Memory and Language, 49(2), 183–200. 10.1016/S0749-596X(03)00027-5

- Barr DJ (2008). Analyzing ‘visual world’ eyetracking data using multilevel logistic regression. Journal of Memory and Language, 59(4), 457–474. 10.1016/j.jml.2007.09.002