Abstract

(1) Background: COVID-19 computed tomography (CT) lung segmentation is critical for diagnosing COVID lung severity. Approaches proposed during 2020–2021 were semiautomated or automated, but they were not accurate, not user-friendly, and not benchmarked against industry standards. The proposed study compared the COVID Lung Image Analysis System, COVLIAS 1.0 (GBTI, Inc., and AtheroPoint™, Roseville, CA, USA, referred to as COVLIAS), against MedSeg, a web-based Artificial Intelligence (AI) segmentation tool; COVLIAS uses hybrid deep learning (HDL) models for CT lung segmentation. (2) Materials and Methods: The proposed study used 5000 Italian COVID-19-positive CT lung images collected from 72 patients (experimental data) whose infection was confirmed by the reverse transcription-polymerase chain reaction (RT-PCR) test. Two hybrid AI models from the COVLIAS system, namely, VGG-SegNet (HDL 1) and ResNet-SegNet (HDL 2), were used to segment the CT lungs. As part of the results, we compared both COVLIAS and MedSeg against two manual delineations (MD 1 and MD 2) using (i) Bland–Altman plots, (ii) correlation coefficient (CC) plots, (iii) receiver operating characteristic curves, (iv) Figure of Merit, and (v) visual overlays. A cohort of 500 Croatian COVID-19-positive CT lung images (validation data) was also used: a previously trained COVLIAS model was applied directly to these data (as part of Unseen-AI) to segment the CT lungs, and the results were compared against MedSeg. (3) Results: For the experimental data, the four CCs between COVLIAS (HDL 1) vs. MD 1, COVLIAS (HDL 1) vs. MD 2, COVLIAS (HDL 2) vs. MD 1, and COVLIAS (HDL 2) vs. MD 2 were 0.96, 0.96, 0.96, and 0.96, respectively, giving a mean of 0.96 for the COVLIAS system. The CCs between MedSeg vs. MD 1 and MedSeg vs. MD 2 were both 0.98, with a mean of 0.98. On the validation data, the CCs between COVLIAS (HDL 1) vs. MedSeg and COVLIAS (HDL 2) vs. MedSeg were 0.98 and 0.99, respectively.
For the experimental data, the mean values for COVLIAS and MedSeg differed by <2.5%, meeting the standard of equivalence. The average running times for COVLIAS and MedSeg on a single lung CT slice were ~4 s and ~10 s, respectively. (4) Conclusions: The performances of COVLIAS and MedSeg were similar; however, COVLIAS required less computing time than MedSeg.

Keywords: COVID-19, CT, lung segmentation, COVLIAS, MedSeg, AI, DL, HDL, validation, benchmark.

1. Introduction

COVID-19 lung segmentation in computed tomography (CT) scans is critical for determining lung severity [1,2,3]. According to the World Health Organization (WHO), as of 4 November 2021, more than 247 million individuals had been infected with severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2). A fraction of those infected developed Acute Respiratory Distress Syndrome (ARDS), and the disease has resulted in the deaths of 5 million people [4]. COVID-19 worsens in the presence of comorbidities and also affects other organs and conditions, such as coronary artery disease [5,6], diabetes [7], atherosclerosis [8], fetal health [9], pulmonary embolism [10], and stroke [11]. In patients with underlying comorbidities or moderate to severe disease, chest radiographs and CT [12,13,14] are used to assess ARDS severity based on the number of pulmonary opacities, such as ground-glass opacities (GGO), consolidation, and mixed opacities [2,15,16,17]. To describe the severity of COVID-19 pneumonia, most radiologists provide a semantic description of the degree and type of opacities. Such examination of pulmonary opacities is time-consuming and subjective [18,19,20,21]. CT lung segmentation is a crucial step in the COVID-19 diagnosis pipeline [1,2,3]. This is where artificial intelligence (AI) comes into play, automating this time-consuming process and providing a faster diagnosis of the disease [22,23,24,25].

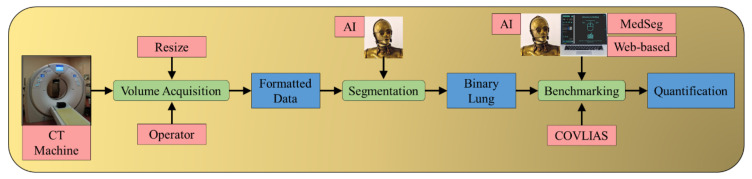

COVLIAS 1.0 (Figure 1) [1] is a global lung segmentation and evaluation system that uses AI-based segmentation models. This robust AI system (hereafter called COVLIAS) was designed with clinical acceptability in mind for the segmentation of COVID-19-affected CT lungs. The proposed study compares COVLIAS [1,2,3] with MedSeg [26], an existing AI-integrated, web-based CT image segmentation tool. The workings of COVLIAS and MedSeg are briefly described in the methodology section. The performance evaluation section compares COVLIAS and MedSeg on the Italian experimental data using (i) Bland–Altman (BA) plots, (ii) correlation coefficient (CC) plots, (iii) receiver operating characteristic (ROC) curves, and (iv) Figure of Merit (FoM). Further, we used validation data from Croatia consisting of COVID-19-positive CT lung images to compare COVLIAS vs. MedSeg. These validation data were never seen by the AI model during training. They are categorized as unseen-AI: because the dataset was taken from a different clinical setting, it is well suited for validating COVLIAS against MedSeg.

Figure 1.

Pipeline for comparing AI-based COVLIAS and MedSeg. The benchmarking stage shows the comparison between COVLIAS and MedSeg. The Italian cohort is used during experimentation, and the Croatian cohort is used during validation.

The layout of this comparative study is as follows: Section 2 discusses the background literature. Section 3 presents the methodology, where we discuss the demographics and AI architectures. The results and performance evaluation are discussed in Section 4. The comparison between our study and similar studies is presented in the benchmarking table in Section 5. The same section also discusses the strengths, weaknesses, and extensions. The study concludes in Section 6.

2. Background Literature

The use of AI to characterize diseases has been implemented in almost all areas of medical imaging. This includes locating the disease, extracting the disease's region of interest, and automatically classifying the disease into binary or multiclass categories. We chose characterization methods based on machine learning and deep learning that are compatible with ARDS frameworks. The purpose of adopting this characterization system is to carry over the ideas and innovations that have been developed for various modalities, organs, and applications. Examples of AI-based characterization can be seen in several other disease diagnosis applications, such as brain [27,28,29], stroke [30,31,32], liver [33,34,35], coronary artery [36,37], prostate [38], ovarian [39,40], diabetes [41], thyroid cancer [42], skin cancer [43,44], and heart [45,46,47]. This framework can also be extended to COVID-19.

SARS-CoV-2, the virus that causes COVID-19, is genetically similar to SARS-CoV-1 but not to the coronavirus that causes Middle East respiratory syndrome (MERS-CoV). Its incubation duration, clinical severity, and transmissibility all differ from those of SARS-CoV-1 [48]. COVID-19 has continued to spread globally despite government measures such as social distancing, mask wearing, and quarantine, as well as non-pharmacological preventive treatments for psychophysical well-being [49,50]. To describe the severity of COVID-19 pneumonia, most radiologists provide a semantic description of the degree and type of opacities. The semiquantitative assessment of pulmonary opacities is time-consuming, subjective, and labor-intensive [18,19,20,21]. In disease detection, segmentation [51,52,53,54] and classification [47,55] are the two primary components, with segmentation playing a critical role. Deep learning (DL), an extension of machine learning (ML), uses many stacked layers to automatically extract and classify important imaging characteristics [23,56,57,58,59,60]. Hybrid DL (HDL), an approach that integrates two AI systems, helps address some of the issues encountered with DL models, such as overfitting and hyperparameter optimization to remove bias [61].

3. Material and Research Methodology

3.1. Material: Patient Demographics and Image Acquisition

3.1.1. Demographics for Italian and Croatian Databases

The experimental database includes 72 adult Italian patients, 46 of whom were male and the rest female. The average height of males and females was 171 cm and 175 cm, respectively, and the average weight of males and females was 76 kg and 83 kg, respectively. A total of 60 individuals tested positive on RT-PCR, with bronchoalveolar lavage confirming 12 of them [62]. The cohort had an average GGO score of 4.1 and an average consolidation score of 2.4, which was regarded as mild. Among the 72 individuals, the percentage distribution was as follows: cough (45%), sore throat (8%), dyspnea (54%), chronic obstructive pulmonary disease (COPD) (42%), hypertension (12.5%), diabetes (11%), smoking (11%), and cancer (14%).

The validation cohort consisted of 500 CT images (validation database) taken from 7 Croatian patients with a mean age of 66 years (SD 7.988); 5 of them were male (71.4%) and the rest female. The average GGO and consolidation scores in the cohort were 2 and 1.2, respectively. All 7 patients had a cough, 85% had dyspnea, 28% had hypertension, and 14% were smokers; none had a sore throat, diabetes, COPD, or cancer. None of them were admitted to the Intensive Care Unit (ICU), and none died of COVID-19.

3.1.2. Image Acquisition for Italian and Croatian Cohorts

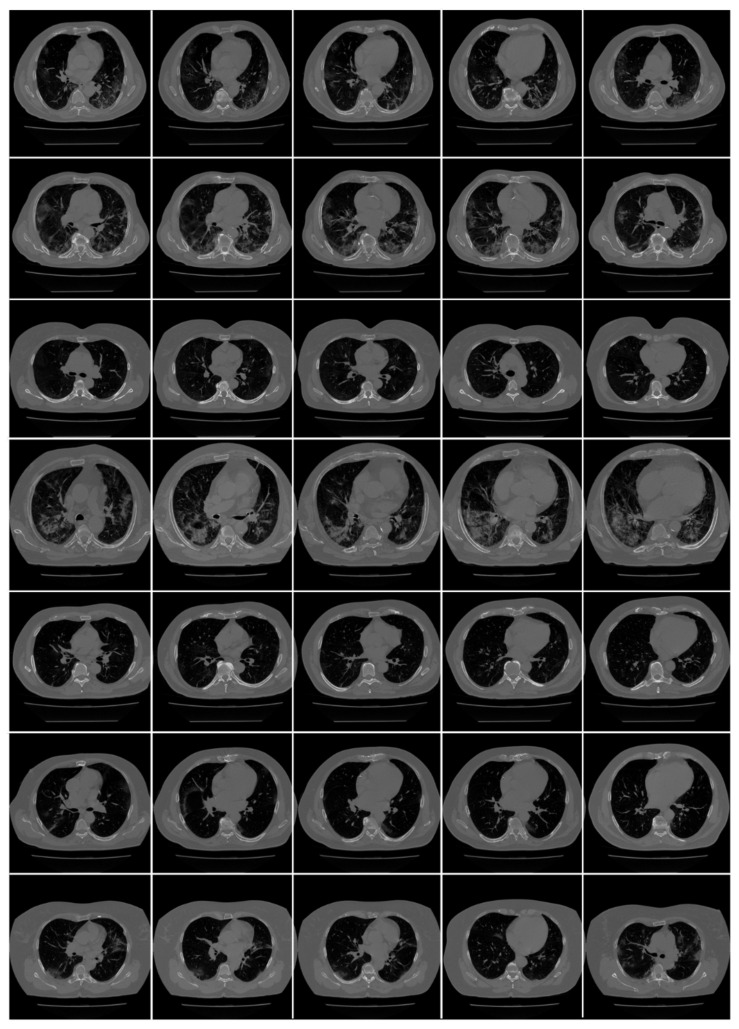

Italian cohort: All chest CT scans were performed in the supine position with a single full inspiratory breath-hold, using a 128-slice multidetector-row "Philips Ingenuity Core" CT scanner from Philips Healthcare (Netherlands). No intravenous or oral contrast media were administered. A soft tissue kernel with a 512 × 512 matrix (mediastinal window) and a lung kernel with a 768 × 768 matrix (lung window) were used to reconstruct 1-mm-thick images. The CT examinations were carried out with 120 kV, 226 mAs/slice (using Philips' automatic tube current modulation, Z-DOM), a 1.08 spiral pitch factor, a 0.5-s gantry rotation time, and a 64 × 0.625 detector configuration. The CT data of 72 COVID-positive individuals were used in the proposed investigation. CT volumes were selected based on two criteria: (i) the image quality should be reasonable, with no artifacts or blurriness due to body movement, and (ii) no metallic object should be present in the scan area. Each patient's scan consisted of approximately 200 slices, from which the radiologist [LS] selected 65–70 slices covering the visible lung region (Figure 2), yielding a total of 5000 images. These 5000 images were used to train and test the AI-based segmentation models in the COVLIAS 1.0 system.

Figure 2.

Raw CT images from the Novara (Italian) dataset [3].

Croatian cohort: A Croatian cohort of seven COVID-19-positive patients (500 images) was used to validate the AI system (COVLIAS). All chest multidetector CT (MDCT) scans were performed in the supine position with a single full inspiratory breath-hold, using an FCT Speedia HD (Fujifilm Corporation, Tokyo, Japan, 2017) 64-detector MDCT scanner to acquire images of the thorax in the craniocaudal direction. Images were acquired with a standard algorithm and viewed with Hitachi, Ltd. Whole Body X-ray CT System Supria Software (System Software Version: V2.25, Copyright Hitachi, Ltd. 2017). No intravenous or oral contrast media were administered. The scan parameters were: volume scan, large focus, tube voltage 120 kV, tube current 350 mA with automatic tube current modulation (IntelliEC mode), and rotation speed 0.75 s. The reconstruction parameters were: field of view (FOV) 350 mm, slice thickness 5 mm (0.625 × 64), table pitch 1.3281, and picture filter 32, with the multi-recon option: picture filter 22 (lung standard) with the Intelli IP Lv.2 iterative algorithm (WW1600/WL600), slice thickness 1.25 mm, recon index 1 mm; and picture filter 31 (mediastinal) with the Intelli IP Lv.3 iterative algorithm (WW450/WL45), slice thickness 1.25 mm, recon index 1 mm. CT volumes were selected based on the same two criteria: (i) the image quality should be reasonable, with no artifacts or blurriness due to body movement, and (ii) no metallic object should be present in the scan area. Figure 3 shows an example of raw CT images from the cohort.

Figure 3.

Raw CT images from the Croatian dataset.

3.1.3. Data Preparation

We follow the CT slice selection guidelines used in our previously published studies [1,63,64,65], where the focus was to exclude non-lung anatomy while preserving the largest volumetric region of the lung. The idea was to capture the entire visible lung region in each CT slice. Since the lung region occupied only ~20% of the whole CT image slice (768 × 768 px²), this led to the removal of ~32% of the CT slices from each of the top and the bottom of the CT volume, equivalent to removing about 65 CT slices from each end. Thus, the radiologist [LS] chose the remaining ~70 CT slices out of ~200 CT slices for each patient, corresponding to the visible lung region.
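The slice-count arithmetic above can be checked with a short sketch. It assumes a nominal 200-slice volume and a symmetric 32% trim from each end, as stated in the text; the helper `slices_to_keep` is hypothetical, since in the study the slices were selected manually by the radiologist.

```python
def slices_to_keep(total_slices: int, trim_fraction: float) -> tuple[int, int]:
    """Return (slices trimmed from each end, slices retained) for a symmetric trim.

    Hypothetical helper: it only reproduces the approximate arithmetic; in the
    study, the visible-lung slices were chosen manually by a radiologist.
    """
    trimmed_each_end = round(total_slices * trim_fraction)
    retained = total_slices - 2 * trimmed_each_end
    return trimmed_each_end, retained


trimmed, kept = slices_to_keep(200, 0.32)
print(trimmed, kept)  # 64 72
```

The result (64 slices trimmed per end, 72 kept) is in line with the approximate figures of ~65 and ~70 quoted above, and 72 patients × ~70 slices is consistent with the ~5000-image experimental set.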

3.2. Research Methodology and Experimental Protocol

3.2.1. AI Architecture for Two Hybrid Models

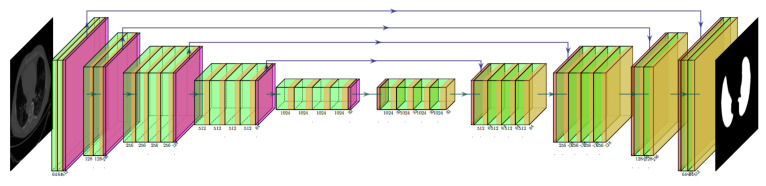

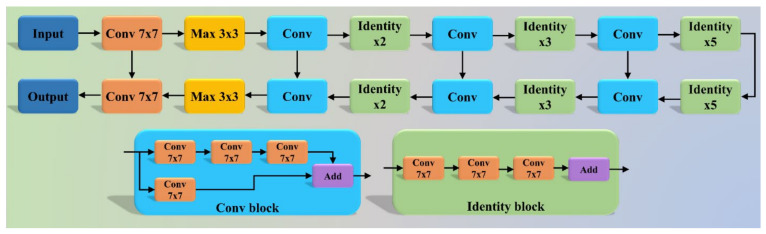

The COVLIAS system incorporates two hybrid DL (HDL) models: (a) VGG-SegNet (HDL 1) and (b) ResNet-SegNet (HDL 2). The VGG-SegNet architecture (Figure 4) employed in this study is made up of three components: an encoder, a decoder, and a final pixel-wise SoftMax classifier. Compared with the SegNet [66] architecture, it has 16 convolution (Conv) layers (VGG backbone). ResNet-SegNet (Figure 5) differs in the encoder and decoder, which are replaced with a ResNet [67] architecture. ResNet introduced a new link, known as the skip connection, that allows gradients to bypass a specific number of layers, overcoming the vanishing gradient problem.
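To make the two architectural ideas above concrete, the following NumPy sketch shows a pixel-wise SoftMax classifier turning per-pixel class scores into a binary lung mask, and a ResNet-style skip connection. It is illustrative only and is not the COVLIAS implementation: the convolution layers are replaced by a placeholder `transform` function, and the toy scores are made up.

```python
import numpy as np


def pixelwise_softmax(logits: np.ndarray) -> np.ndarray:
    """Softmax over the last (class) axis of an (H, W, C) score map."""
    shifted = logits - logits.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def residual_block(x: np.ndarray, transform) -> np.ndarray:
    """ResNet-style skip connection: ReLU(F(x) + x).

    `transform` is a placeholder for the block's convolution layers F. Because
    the identity path adds x unchanged, gradients can flow around F.
    """
    return np.maximum(transform(x) + x, 0.0)


# Toy 2 x 2 "image" with two class scores per pixel (0 = non-lung, 1 = lung).
logits = np.array([[[2.0, 0.0], [0.0, 3.0]],
                   [[1.5, 0.5], [-1.0, 2.0]]])
probs = pixelwise_softmax(logits)
mask = probs.argmax(axis=-1)  # per-pixel class with the highest probability
print(mask)
```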

Figure 4.

VGG-SegNet (HDL 1) architecture.

Figure 5.

ResNet-SegNet (HDL 2) architecture.

3.2.2. Loss Function Design

We adopted the cross-entropy (CE) loss during the training of the (a) VGG-SegNet (HDL 1) and (b) ResNet-SegNet (HDL 2) models. Let $y_i$ represent the manual delineation label 1 (lung region), so that $(1 - y_i)$ represents the manual delineation label 0 (non-lung region); let $a_i$ represent the probability output by the SoftMax function at the prediction layer of the HDL model; let $\times$ denote the product; and let $\mathcal{L}_{CE}$ denote the CE-loss function. Then $\mathcal{L}_{CE}$ is mathematically represented as shown in Equation (1).

$$\mathcal{L}_{CE} = -\left[\left(y_i \times \log a_i\right) + \left(1 - y_i\right) \times \log\left(1 - a_i\right)\right] \quad (1)$$
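The CE loss can be evaluated over all pixels of a slice as in the sketch below, which averages the per-pixel loss with NumPy. The averaging over pixels and the clipping constant `eps` (used to avoid log(0)) are assumptions rather than details given in the text; a training framework would normally supply its own numerically stable loss.

```python
import numpy as np


def cross_entropy_loss(y: np.ndarray, a: np.ndarray, eps: float = 1e-7) -> float:
    """Mean pixel-wise binary CE: -[y * log(a) + (1 - y) * log(1 - a)].

    y: manual-delineation labels (1 = lung, 0 = non-lung).
    a: predicted lung probabilities from the SoftMax layer.
    eps clips probabilities away from 0 and 1 so the logarithms stay finite.
    """
    a = np.clip(a, eps, 1.0 - eps)
    per_pixel = -(y * np.log(a) + (1.0 - y) * np.log(1.0 - a))
    return float(per_pixel.mean())


y = np.array([[1, 0], [1, 0]], dtype=float)
a = np.array([[0.9, 0.1], [0.5, 0.5]])
print(round(cross_entropy_loss(y, a), 4))
```

Confident correct pixels (0.9 for lung, 0.1 for non-lung) contribute little loss, while the uncertain 0.5 predictions dominate the average.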

3.2.3. Experimental Protocol

The AI models' accuracy was determined using the standardized cross-validation (CV) technique. Because the data comprised mild COVID-19 cases, the 5-fold (K5) CV procedure was used. In this experimental protocol, 4000 CT images (80% of the data) were used for training the two AI-based HDL models, and the remaining 1000 CT images (20%) were used to test their performance. During the K5 implementation, we ensured that every test fold was unique and mutually exclusive. Further, 10% of the training data was used to validate the AI system.
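The K5 protocol can be sketched as follows. This Python example assumes a random image-level shuffle with a fixed seed; the text does not state whether the folds were split by image or by patient, and the function name `k5_splits` is hypothetical.

```python
import random


def k5_splits(n_images: int, k: int = 5, val_fraction: float = 0.10, seed: int = 0):
    """Yield (train, val, test) index lists for k-fold cross-validation.

    Test folds are mutually exclusive; val_fraction of the remaining training
    indices is held out for validation. Assumes n_images is divisible by k.
    The shuffle, the seed, and the image-level split are assumptions.
    """
    indices = list(range(n_images))
    random.Random(seed).shuffle(indices)
    fold_size = n_images // k
    for fold in range(k):
        test = indices[fold * fold_size:(fold + 1) * fold_size]
        rest = indices[:fold * fold_size] + indices[(fold + 1) * fold_size:]
        n_val = int(len(rest) * val_fraction)
        val, train = rest[:n_val], rest[n_val:]
        yield train, val, test


folds = list(k5_splits(5000))
for train, val, test in folds:
    print(len(train), len(val), len(test))
```

With 5000 images, each fold yields 3600 training, 400 validation, and 1000 test images, and the five test folds together cover every image exactly once.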

3.2.4. Cross-Validation Accuracy

The foreground (white) region of the AI model's output represents the segmented lung. The manual delineation follows a similar setup, where the foreground (white) region represents the MD lung. In both cases, the foreground lung (white) region is represented as binary 1 and the background as binary 0. The accuracy of the HDL system is computed using the standard formula, given the 2 × 2 truth table (confusion matrix) values, namely, true-positive (TP), true-negative (TN), false-negative (FN), and false-positive (FP), as shown in Equation (2).

$$\mathrm{ACC} = \frac{TP + TN}{TP + TN + FN + FP} \quad (2)$$
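A minimal NumPy sketch of the accuracy computation follows. It assumes that the AI output and the MD are binary masks of the same shape with lung = 1; the toy masks are made up for illustration.

```python
import numpy as np


def pixel_accuracy(pred: np.ndarray, truth: np.ndarray) -> float:
    """Accuracy from the 2 x 2 truth table of two binary masks (1 = lung)."""
    pred, truth = pred.astype(bool), truth.astype(bool)
    tp = np.sum(pred & truth)    # lung in both masks
    tn = np.sum(~pred & ~truth)  # background in both masks
    fp = np.sum(pred & ~truth)   # AI says lung, MD says background
    fn = np.sum(~pred & truth)   # AI says background, MD says lung
    return float((tp + tn) / (tp + tn + fp + fn))


ai_mask = np.array([[1, 1, 0], [0, 1, 0]])
md_mask = np.array([[1, 0, 0], [0, 1, 1]])
print(pixel_accuracy(ai_mask, md_mask))
```

Here TP = 2, TN = 2, FP = 1, and FN = 1, so four of the six pixels agree.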

3.2.5. Lung Area Calculation and Figure of Merit

We calculate the area of the two balloon-shaped lungs from the foreground of the binary image produced by each AI system. Let $A(p, q)$ represent the lung area (in pixels) in image "q" segmented by model "p", where "p" can be VGG-SegNet (HDL 1) or ResNet-SegNet (HDL 2), and let $Q$ be the total number of images. We adopt a resolution factor of 0.52 to convert pixels to mm². The mean area for the HDL model "p" in mm², denoted $\bar{A}(p)$, can then be mathematically represented using Equation (3).

$$\bar{A}(p) = 0.52^{2} \times \frac{\sum_{q=1}^{Q} A(p, q)}{Q} \quad (3)$$
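A minimal sketch of the lung area computation is shown below. It assumes that the 0.52 resolution factor is the pixel spacing in mm, so each pixel covers 0.52² mm²; this interpretation and the helper names are assumptions for illustration.

```python
import numpy as np

PIXEL_SPACING_MM = 0.52  # resolution factor from the text, assumed to be mm per pixel side


def lung_area_mm2(mask: np.ndarray) -> float:
    """Area of the foreground (lung = 1) pixels of one binary mask, in mm^2."""
    return float(np.count_nonzero(mask)) * PIXEL_SPACING_MM ** 2


def mean_lung_area_mm2(masks) -> float:
    """Mean lung area over all images q segmented by one model p."""
    return float(np.mean([lung_area_mm2(m) for m in masks]))


masks = [np.ones((10, 10)), np.zeros((10, 10))]
print(mean_lung_area_mm2(masks))
```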

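We use the FoM to represent the AI system's error. Let $\bar{A}(r)$ represent the mean area of the manually delineated lungs, where "r" denotes MD 1 or MD 2, and let $\bar{A}(p)$ be the mean area for model "p" from Equation (3). The FoM for model "p" can then be mathematically represented using Equation (4).

$$\mathrm{FoM}(p) = 100 - \left[\frac{\left|\bar{A}(r) - \bar{A}(p)\right|}{\bar{A}(r)}\right] \times 100 \quad (4)$$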
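The FoM can be computed directly from the two mean areas. This sketch assumes the FoM is expressed as a percentage, namely 100 minus the relative absolute area error, which is consistent with the magnitudes reported in Table 1; the function name is illustrative.

```python
def figure_of_merit(mean_area_md: float, mean_area_ai: float) -> float:
    """FoM (%) = 100 - |A_md - A_ai| / A_md * 100; higher means closer agreement."""
    if mean_area_md <= 0:
        raise ValueError("mean MD area must be positive")
    return 100.0 - abs(mean_area_md - mean_area_ai) / mean_area_md * 100.0


# Hypothetical mean areas in mm^2: the AI under-segments by 4% relative to MD.
print(figure_of_merit(20000.0, 19200.0))
```

Because the error is taken as an absolute value, over- and under-segmentation by the same amount give the same FoM.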

3.2.6. Performance Evaluation Criteria

As part of the performance evaluation, we compare COVLIAS (HDL 1) vs. MD 1, COVLIAS (HDL 2) vs. MD 1, COVLIAS (HDL 1) vs. MD 2, COVLIAS (HDL 2) vs. MD 2, and COVLIAS vs. MedSeg using (i) BA plots, (ii) CC plots, (iii) ROC curves, and (iv) FoM. Further, we use validation data from Croatia consisting of COVID-19-positive CT lung images to compare COVLIAS vs. MedSeg.
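For reference, the two agreement statistics behind the BA and CC plots can be sketched with Python's standard library. The ±1.96 SD limits of agreement are the conventional Bland–Altman choice and are an assumption here, since the text does not state which limits were plotted; Python 3.10+ also provides `statistics.correlation` for the Pearson coefficient.

```python
import statistics


def bland_altman(ai_areas, md_areas):
    """Return (bias, lower LoA, upper LoA) for paired area measurements.

    bias = mean of (AI - MD); limits of agreement = bias +/- 1.96 * SD of the differences.
    """
    diffs = [a - m for a, m in zip(ai_areas, md_areas)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


def pearson_cc(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = statistics.mean(x), statistics.mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical per-image lung areas (mm^2) from an AI model and from MD.
ai = [100.0, 110.0, 120.0, 130.0]
md = [98.0, 112.0, 118.0, 131.0]
print(bland_altman(ai, md))
print(round(pearson_cc(ai, md), 3))
```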

3.2.7. MedSeg—A Web-Based AI Segmentation Tool

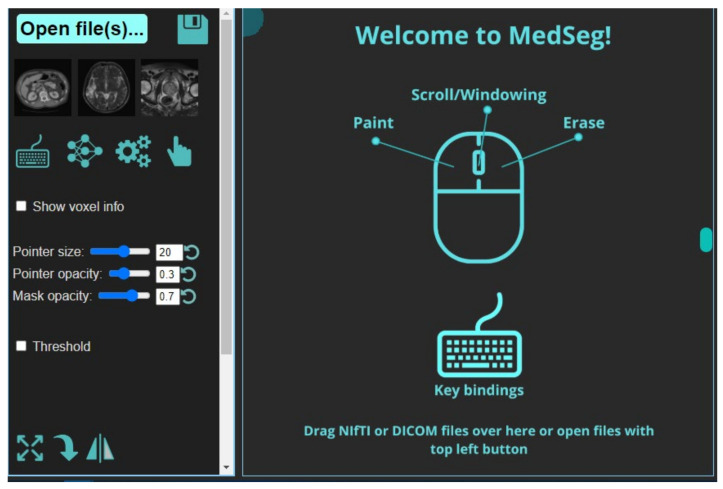

MedSeg is a web-based annotation and segmentation tool for medical organs. The segmentation steps are as follows. (i) After launching the MedSeg link, drag and drop a collection of DICOM files or a single NIfTI file into the segmentation zone; alternatively, click the upload button at the top left (Figure 6). If multiple DICOM slices are uploaded, the tool automatically stacks them in the correct order. (ii) A computer with relatively up-to-date hardware (central processing unit, CPU) gives the best experience in terms of avoiding lag. (iii) Once loaded, the image appears with a default window level/gray scaling (Figure 7). (iv) Next, select the CT lung segmentation model from the list. (v) The tool shows the message "CT Thorax lungs model loaded" and starts the segmentation process (Figure 8). (vi) Scroll through the images using the mouse wheel, or press the 'Up arrow' and 'Down arrow' keys to move one slice at a time. (vii) After the segmentation is complete, click the save icon. The final result is a NIfTI [68] file containing the annotated mask.

Figure 6.

Opening page of the MedSeg tool.

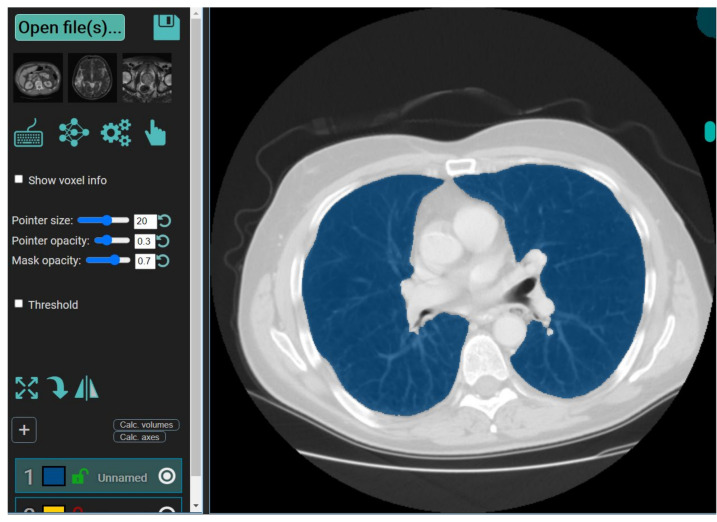

Figure 7.

Display of CT image using the MedSeg tool.

Figure 8.

Segmentation of the lung in CT slice using the MedSeg tool.

4. Results and Performance Evaluation

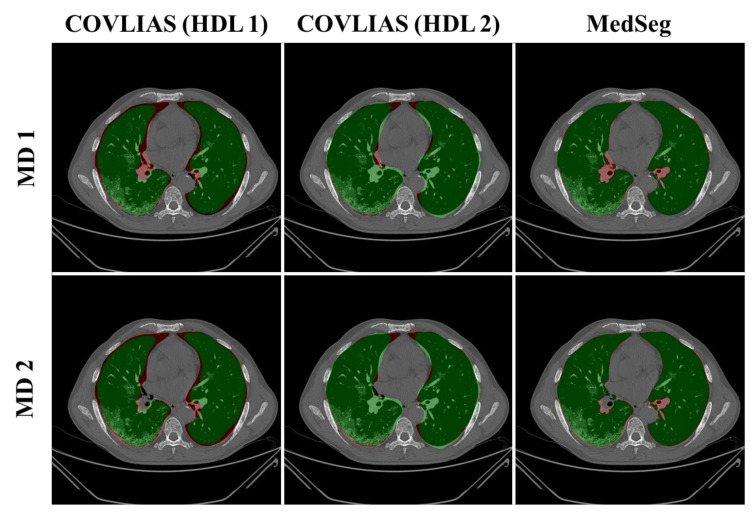

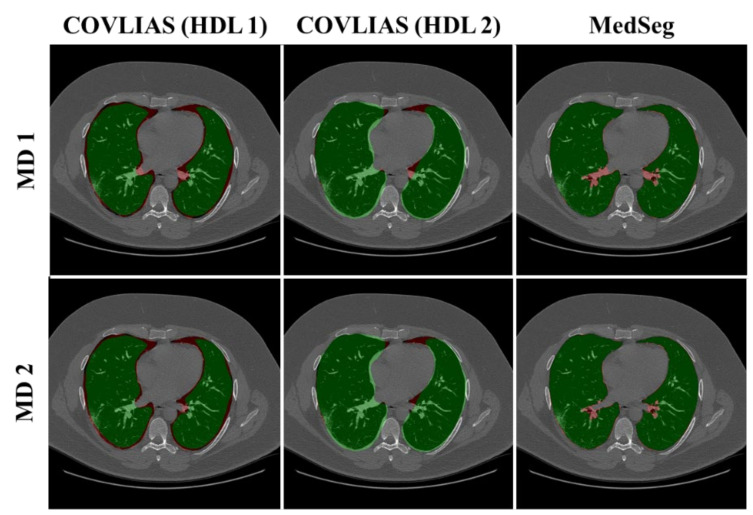

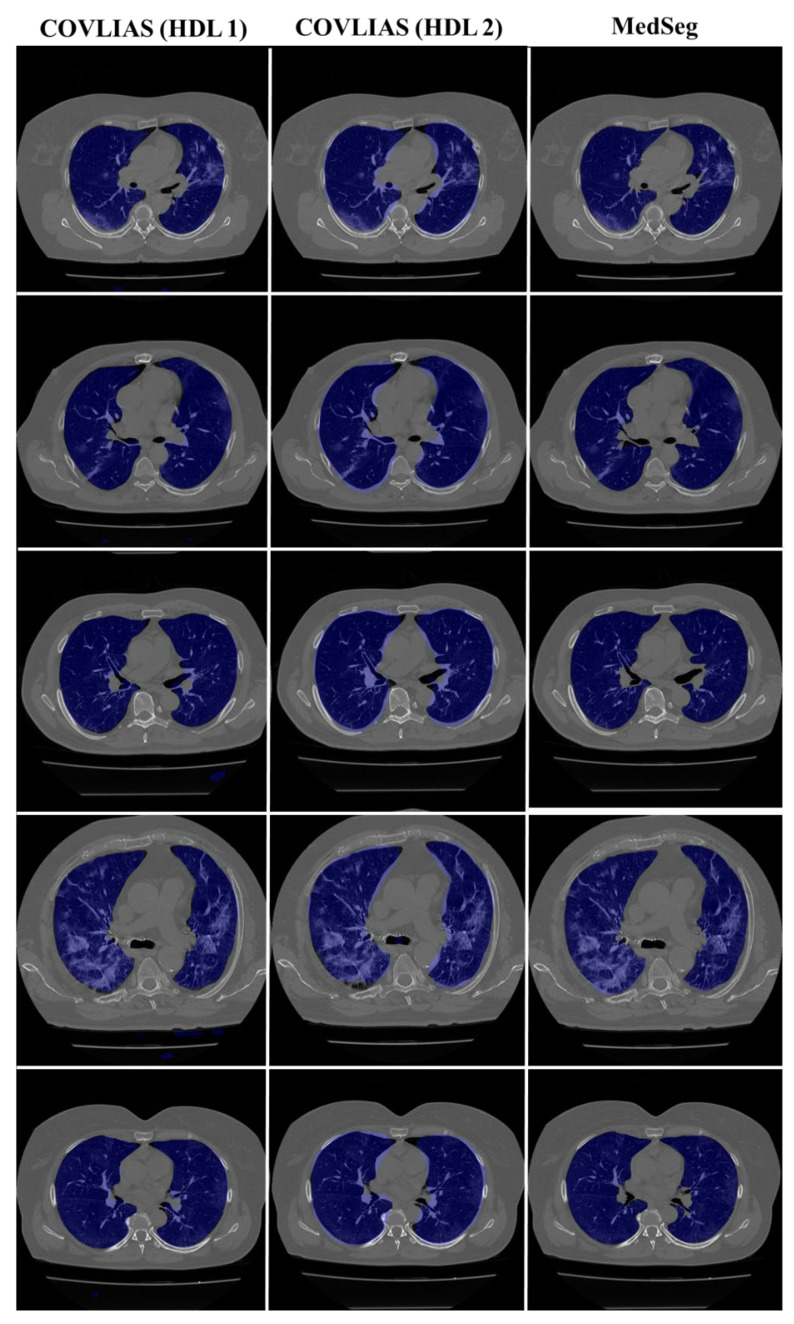

We present a comparison of CT lung segmentation for COVLIAS 1.0 vs. MedSeg using overlays of the binary masks from the two AI systems (see Figure 9 and Figure 10). Using a region-to-boundary converter, the lung masks were turned into lung boundary images, which were then overlaid on the original COVID-19 lung CT grayscale scans. We present (i) Bland–Altman (BA) plots, (ii) CC plots, (iii) receiver operating characteristic (ROC) curves, and (iv) Figure of Merit (FoM) as part of the performance evaluation for COVLIAS and MedSeg against Manual Delineation (MD).
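A region-to-boundary conversion of this kind can be sketched as a simple morphological operation: a boundary pixel is a lung pixel with at least one background neighbour. The NumPy example below assumes 4-connectivity and draws the boundary in green on an RGB copy of the grayscale slice; the converter actually used in the study is not described, so treat this as one plausible implementation.

```python
import numpy as np


def mask_to_boundary(mask: np.ndarray) -> np.ndarray:
    """Boundary pixels of a binary mask: foreground pixels with a background 4-neighbour.

    Equivalent to mask AND NOT erode(mask) with a 3 x 3 cross-shaped element;
    pixels outside the image are treated as background.
    """
    m = np.pad(mask.astype(bool), 1, mode="constant")
    core = m[1:-1, 1:-1]
    interior = (core & m[:-2, 1:-1] & m[2:, 1:-1]   # up and down neighbours
                & m[1:-1, :-2] & m[1:-1, 2:])        # left and right neighbours
    return core & ~interior


def overlay(gray: np.ndarray, boundary: np.ndarray, color=(0, 255, 0)) -> np.ndarray:
    """Paint boundary pixels onto a grayscale slice, returning an RGB uint8 image."""
    rgb = np.stack([gray] * 3, axis=-1).astype(np.uint8)
    rgb[boundary] = color
    return rgb


mask = np.zeros((5, 5), dtype=np.uint8)
mask[1:4, 1:4] = 1  # a 3 x 3 "lung" whose only interior pixel is the centre
print(mask_to_boundary(mask).astype(int))
```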

Figure 9.

COVLIAS (HDL 1) (green) in column 1; COVLIAS (HDL 2) (green) in column 2; MedSeg (green) in column 3, MD 1 in row 1 (red); MD 2 in row 2 (red).

Figure 10.

COVLIAS (HDL 1) (green) in column 1; COVLIAS (HDL 2) (green) in column 2; MedSeg (green) in column 3, MD 1 in row 1 (red); MD 2 in row 2 (red).

4.1. Performance: COVLIAS vs. MedSeg

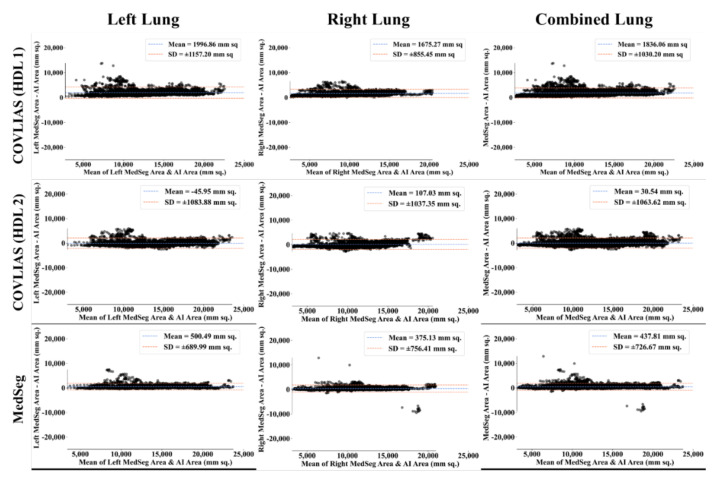

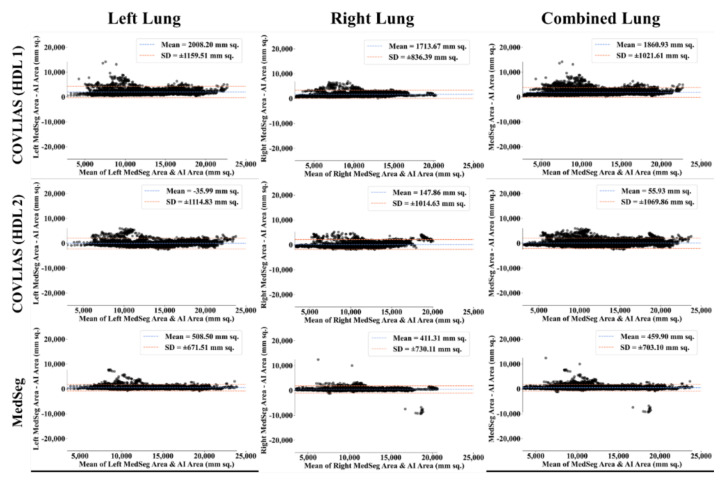

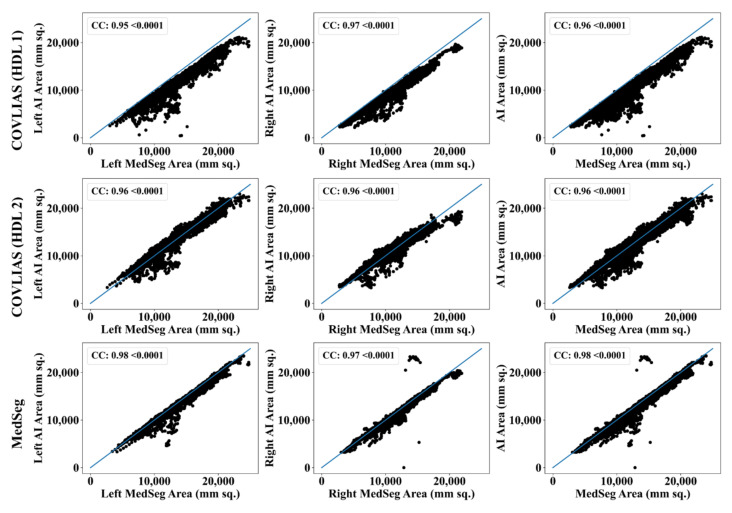

The Bland–Altman computation approach, based on our previous work [69,70], is used to show the consistency of two methods that measure the same variable. The mean and standard deviation of the lung area differences between the AI models (COVLIAS and MedSeg) and MD are shown in Figure 11 (MD 1) and Figure 12 (MD 2). Similarly, Figure 13 and Figure 14 show the CC plots for the AI models of COVLIAS and MedSeg against MD 1 and MD 2, with CC > 0.95 for all the AI models. An ROC curve shows how the diagnostic performance of an AI system changes as the discrimination threshold is varied. The ROC curves and area-under-the-curve (AUC) values for the three AI models are shown in Figure 15, with AUC > 0.95 for all three. The FoM quantifies the lung area error of each AI model relative to MD.
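One common way to obtain an ROC curve for segmentation is to treat every pixel as a sample, with the AI's lung probability as the score and the MD mask as the label. The sketch below assumes this pixel-wise formulation (the text does not state how the curves were generated) and computes the ROC points and a trapezoidal AUC with NumPy; the function names and toy values are illustrative.

```python
import numpy as np


def roc_curve_points(scores: np.ndarray, labels: np.ndarray):
    """Return (fpr, tpr) arrays from sweeping the threshold over distinct scores.

    Both classes must be present in `labels`, otherwise a rate is undefined.
    """
    order = np.argsort(-scores, kind="mergesort")  # highest score first
    s = scores[order]
    y = labels[order].astype(bool)
    # Last position of each distinct score, so tied pixels switch class together.
    last = np.r_[np.nonzero(np.diff(s))[0], len(s) - 1]
    tps = np.cumsum(y)[last]   # true positives at each threshold
    fps = np.cumsum(~y)[last]  # false positives at each threshold
    tpr = np.r_[0.0, tps / y.sum()]
    fpr = np.r_[0.0, fps / (~y).sum()]
    return fpr, tpr


def roc_auc(fpr: np.ndarray, tpr: np.ndarray) -> float:
    """Area under the ROC curve using the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))


# Pixel-wise example: AI lung probabilities vs. an MD mask, both flattened.
prob_map = np.array([[0.95, 0.80], [0.40, 0.10]])
md_mask = np.array([[1, 1], [0, 0]])
fpr, tpr = roc_curve_points(prob_map.ravel(), md_mask.ravel())
print(roc_auc(fpr, tpr))
```

Handling tied scores as a single threshold makes a constant prediction score an AUC of 0.5 rather than depending on pixel order.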

Figure 11.

Bland–Altman plots: COVLIAS (row 1 and row 2) vs. MedSeg (row 3) using MD 1. Column 1: left lung, column 2: right lung, and column 3: mean of left and right. COVLIAS (HDL 1): VGG-SegNet; COVLIAS (HDL 2): ResNet-SegNet.

Figure 12.

Bland–Altman plots: COVLIAS (row 1 and row 2) vs. MedSeg (row 3) using MD 2. Column 1: left lung, column 2: right lung, and column 3: mean of left and right. COVLIAS (HDL 1): VGG-SegNet; COVLIAS (HDL 2): ResNet-SegNet.

Figure 13.

CC plots: COVLIAS (row 1 and row 2) vs. MedSeg (row 3) using MD 1. Column 1: left lung, column 2: right lung, and column 3: mean of left and right lungs. COVLIAS (HDL 1): VGG-SegNet; COVLIAS (HDL 2): ResNet-SegNet.

Figure 14.

CC plots: COVLIAS (row 1 and row 2) vs. MedSeg (row 3) using MD 2. Column 1: left lung, column 2: right lung, and column 3: mean of left and right lungs. COVLIAS (HDL 1): VGG-SegNet; COVLIAS (HDL 2): ResNet-SegNet.

Figure 15.

ROC plot: COVLIAS vs. MedSeg. Row 1: left lung, row 2: right lung, row 3: combined lung. Left: using MD 1, Right: using MD 2.

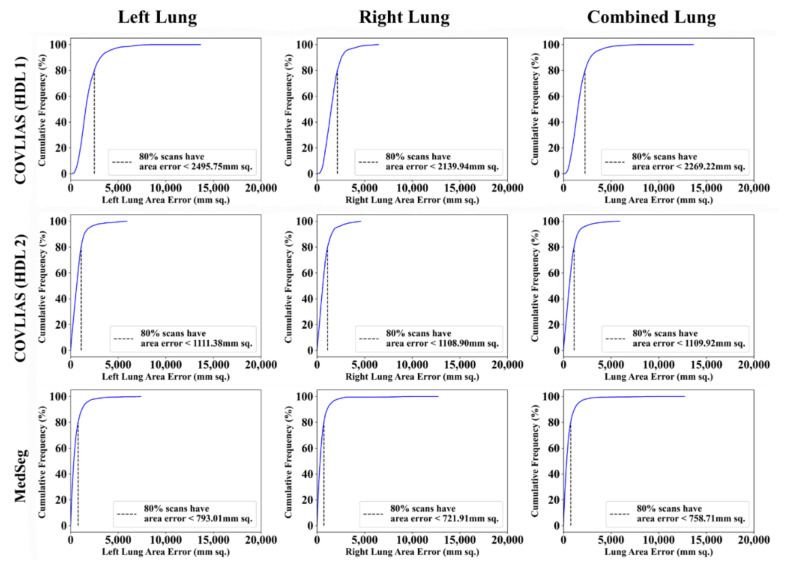

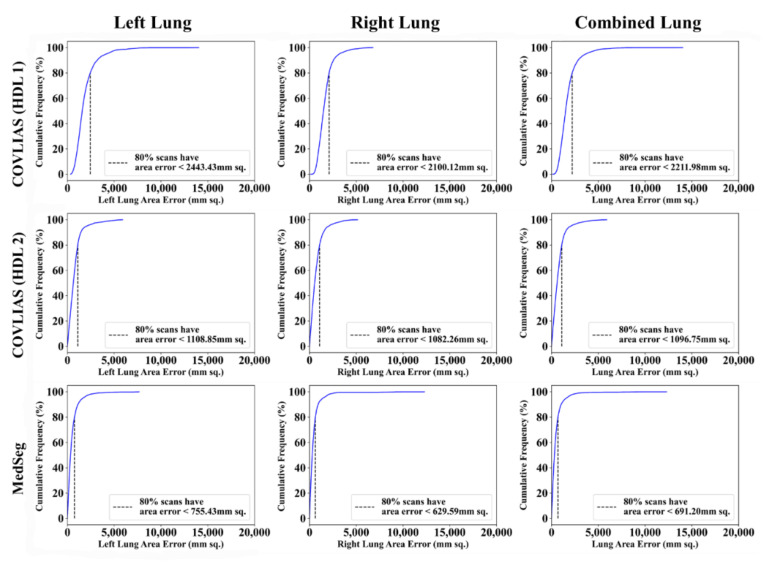

Cumulative frequency plots (Figure 16 and Figure 17) show the lung area error for the AI models of COVLIAS and MedSeg against MD 1 and MD 2, using an 80% cutoff for all the AI models. Table 1 shows the FoM values and the percentage difference for COVLIAS and MedSeg against MD.
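Reading a cumulative frequency plot at the 80% cutoff amounts to taking an empirical percentile of the per-image area errors. The sketch below assumes that the plotted error is the absolute lung area difference per image; the helper names and the toy error values are hypothetical.

```python
def cumulative_frequency(errors):
    """Pair each sorted error with the fraction of images whose error is <= it."""
    ordered = sorted(errors)
    n = len(ordered)
    return [(e, (i + 1) / n) for i, e in enumerate(ordered)]


def error_at_cutoff(errors, cutoff_pct: int = 80):
    """Smallest error e such that at least cutoff_pct % of the images have error <= e."""
    ordered = sorted(errors)
    if not ordered:
        raise ValueError("no errors supplied")
    k = (cutoff_pct * len(ordered) + 99) // 100  # integer ceiling of the required image count
    return ordered[max(k, 1) - 1]


# Hypothetical per-image absolute lung area errors (mm^2) between an AI model and MD.
errors = [5, 1, 3, 2, 4, 10, 7, 6, 8, 9]
print(error_at_cutoff(errors))  # 8
```

Integer arithmetic is used for the cutoff so that floating-point rounding cannot shift the selected image.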

Figure 16.

Cumulative frequency plots: COVLIAS (row 1 and row 2) vs. MedSeg (row 3) using MD 1. Column 1: left lung, column 2: right lung, and column 3: mean of left and right lungs. COVLIAS (HDL 1): VGG-SegNet; COVLIAS (HDL 2): ResNet-SegNet.

Figure 17.

Cumulative frequency plots: COVLIAS (row 1 and row 2) vs. MedSeg (row 3) using MD 2. Column 1: left lung, column 2: right lung, and column 3: mean of left and right lungs. COVLIAS (HDL 1): VGG-SegNet; COVLIAS (HDL 2): ResNet-SegNet.

Table 1.

FoM table for COVLIAS and MedSeg for lung area error against MD.

| Model | MD 1 Left | MD 1 Right | MD 1 Mean | MD 2 Left | MD 2 Right | MD 2 Mean | % Difference Left | % Difference Right | % Difference Mean |
|---|---|---|---|---|---|---|---|---|---|
| MedSeg | 96.42 | 96.85 | 96.61 | 96.36 | 96.55 | 96.45 | 0.1% | 0.3% | 0.2% |
| VGG-SegNet | 92.45 | 93.41 | 92.89 | 92.40 | 93.13 | 92.73 | 0.1% | 0.3% | 0.2% |
| ResNet-SegNet | 99.96 | 98.63 | 99.39 | 99.98 | 98.30 | 99.23 | 0.0% | 0.3% | 0.2% |

4.2. Statistical Tests

Standard Mann–Whitney, paired t-, and Wilcoxon tests were used to examine the system's reliability and stability. When the distribution is not normal, the Wilcoxon test is used instead of the paired t-test to assess whether enough evidence supports the hypothesis. The statistical analysis was carried out using MedCalc software (Ostend, Belgium). We evaluated all six combinations (COVLIAS (HDL 1), COVLIAS (HDL 2), and MedSeg, each against MD 1 and MD 2) to validate the system proposed in the study. The results of the Mann–Whitney, paired t-, and Wilcoxon tests are shown in Table 2.
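The analysis was run in MedCalc; for readers reproducing it in Python, the paired t statistic can be sketched with the standard library as below. Computing p-values requires the t distribution, which SciPy provides through `scipy.stats.ttest_rel`; `scipy.stats.wilcoxon` and `scipy.stats.mannwhitneyu` cover the other two tests. The sample values are made up.

```python
import math
import statistics


def paired_t_statistic(x, y):
    """Return (t, df) for paired samples: t = mean(d) / (sd(d) / sqrt(n)), d = x - y."""
    d = [a - b for a, b in zip(x, y)]
    n = len(d)
    if n < 2:
        raise ValueError("need at least two pairs")
    sd = statistics.stdev(d)  # sample SD of the paired differences
    if sd == 0:
        raise ValueError("differences have zero variance")
    return statistics.mean(d) / (sd / math.sqrt(n)), n - 1


# Hypothetical per-image lung areas from an AI model (x) and from MD (y).
t, df = paired_t_statistic([10, 12, 14, 16], [9, 11, 12, 15])
print(t, df)
```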

Table 2.

Mann–Whitney, Paired t-test, and Wilcoxon test for COVLIAS and MedSeg for combined lung area against MD.

| Comparison | Mann–Whitney | Paired t-Test | Wilcoxon |
|---|---|---|---|
| COVLIAS (HDL 1) vs. MD 1 | p < 0.0001 | p < 0.0001 | p < 0.0001 |
| COVLIAS (HDL 1) vs. MD 2 | p < 0.0001 | p < 0.0001 | p < 0.0001 |
| COVLIAS (HDL 2) vs. MD 1 | p < 0.0001 | p < 0.0001 | p < 0.0001 |
| COVLIAS (HDL 2) vs. MD 2 | p < 0.0001 | p < 0.0001 | p < 0.0001 |
| MedSeg vs. MD 1 | p < 0.0001 | p < 0.0001 | p < 0.0001 |
| MedSeg vs. MD 2 | p < 0.0001 | p < 0.0001 | p < 0.0001 |

4.3. Scientific Validation

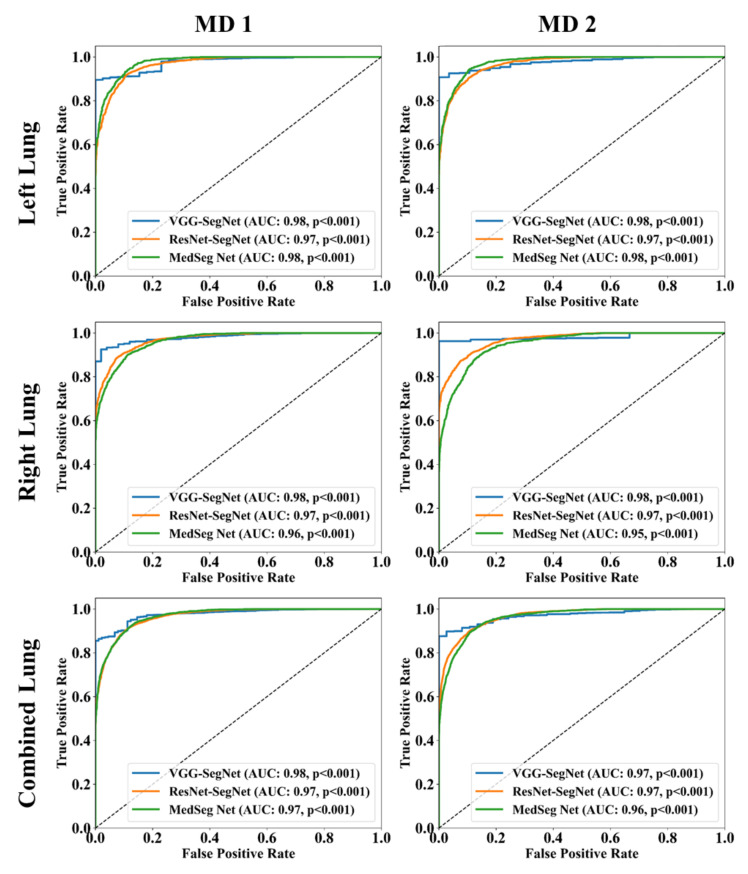

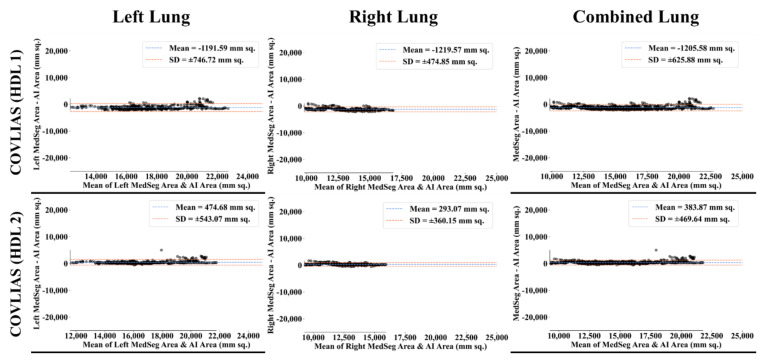

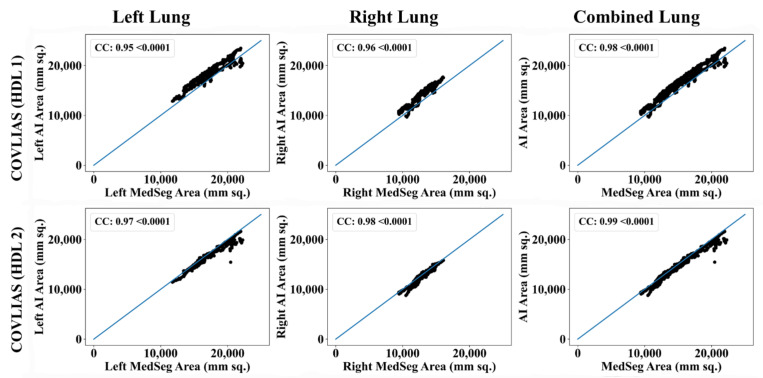

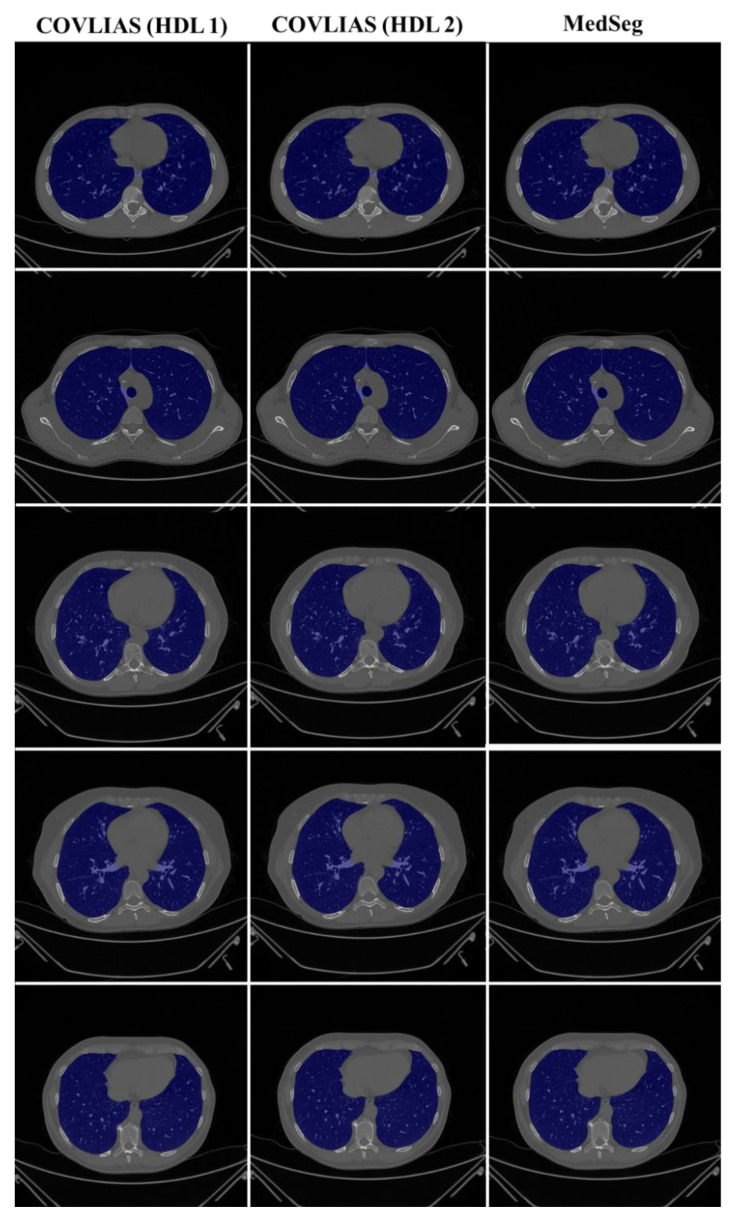

To validate the COVLIAS system and benchmark it against MedSeg, a validation cohort from Croatia was used. In this validation process, the AI models in COVLIAS were trained on the Novara (Italian) dataset of 5000 CT lung images and validated on 500 Croatian CT lung images. Figure 18 shows BA plots for COVLIAS vs. MedSeg on the Croatian data, with mean area errors of ~1205 mm² between COVLIAS (HDL 1) and MedSeg and ~383 mm² between COVLIAS (HDL 2) and MedSeg. The mean CC (Figure 19) between COVLIAS (HDL 1) and MedSeg was 0.98, and between COVLIAS (HDL 2) and MedSeg it was 0.99. Figure 20 shows the segmented binary lung overlays from the two AI systems, COVLIAS and MedSeg, on the raw CT lung image.

Figure 18.

Bland–Altman plot: COVLIAS 1.0 vs. MedSeg. Column 1: left lung, column 2: right lung, and column 3: combined lungs. COVLIAS (HDL 1): VGG-SegNet; COVLIAS (HDL 2): ResNet-SegNet.

Figure 19.

CC plot: COVLIAS 1.0 vs. MedSeg. Column 1: left lung, column 2: right lung, and column 3: combined lungs. COVLIAS (HDL 1): VGG-SegNet; COVLIAS (HDL 2): ResNet-SegNet.

Figure 20.

COVLIAS vs. MedSeg: Segmented mask (blue) on the raw CT CROATIA lung image.

5. Discussion

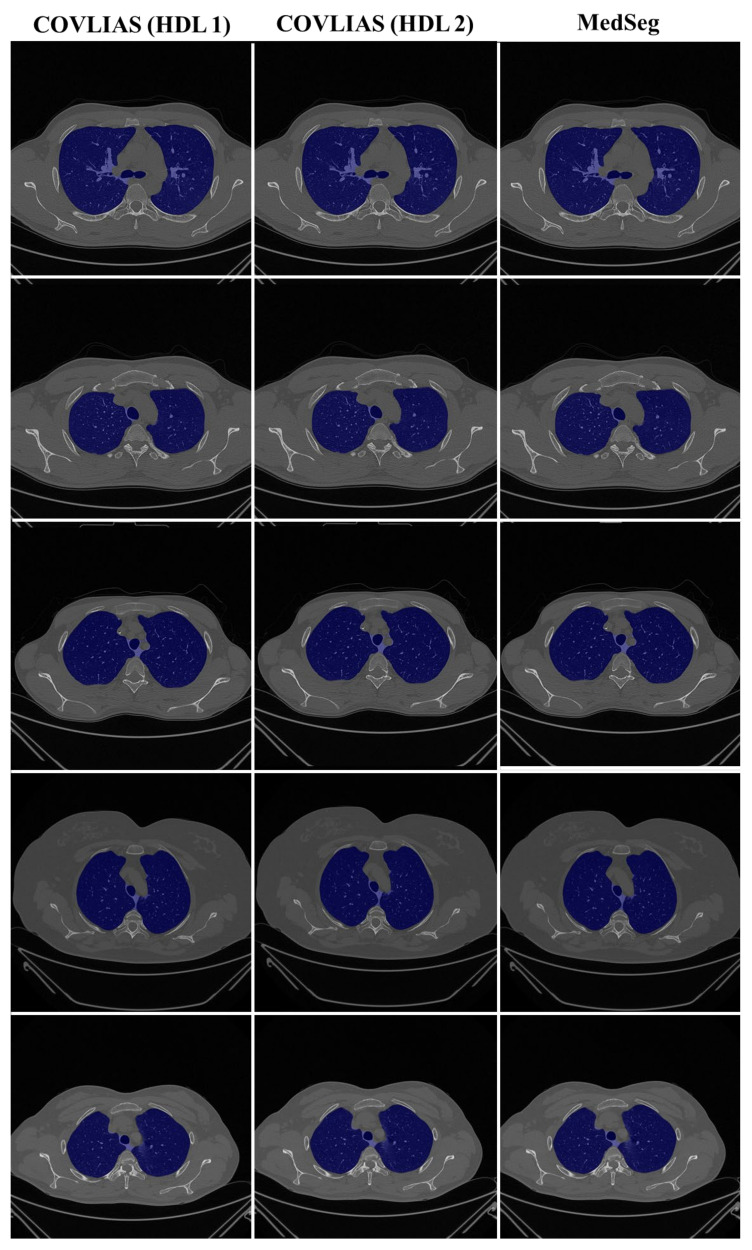

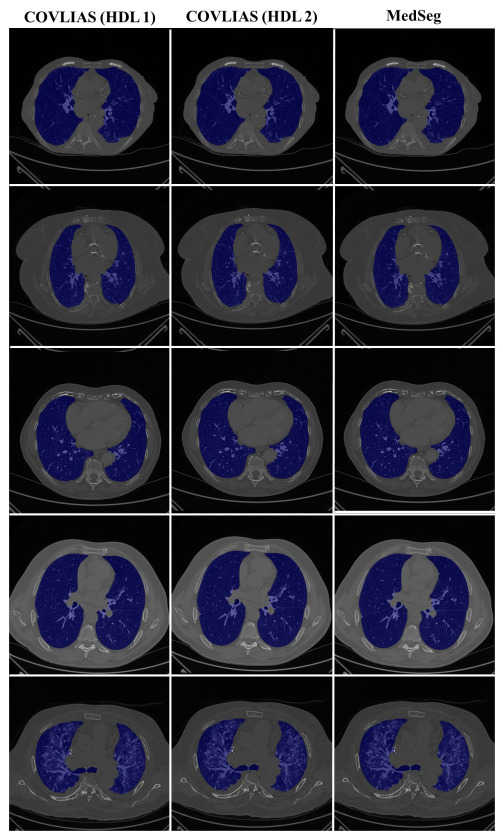

The proposed study presents a brief comparison of two AI lung segmentation tools, COVLIAS 1.0 and MedSeg. COVLIAS was trained on COVID-19 patient data using two manual delineations (MD) and the K5 protocol [1]. We demonstrated the consistency of the AI system using four performance evaluation metrics: (i) BA plots, (ii) CC plots, (iii) ROC curves, and (iv) FoM. Figure 9 and Figure 10 show visual binary mask overlays, where red represents the MD lung and green represents the output of the AI model. The overlays show that ResNet-SegNet precisely captures curves and portions of the lung that are missed by MedSeg and VGG-SegNet. In terms of the mean error of the combined lung area (Figure 11 and Figure 12), ResNet-SegNet gives the smallest errors, 30.54 mm² and 91.56 mm², compared to 437.81 mm² and 459.90 mm² for MedSeg, when using MD 1 and MD 2, respectively. COVLIAS 1.0 is an AI system that employs two HDL models (VGG-SegNet and ResNet-SegNet), while MedSeg uses its own DL model. COVLIAS was designed to work on PNG, JPEG, DICOM, and NIfTI images, while MedSeg can only work on NIfTI [68] or DICOM [71] images. For both COVLIAS and MedSeg, the binary mask images were processed to compute the lung area during performance evaluation. Figure 21 (large lung) and Figure 22 (small lung) show the segmented masks on the control patients from the Italian CT lung dataset. Similarly, Figure 23 (large lung) and Figure 24 (small lung) show the segmented masks on the non-COVID patients from the Italian CT lung dataset.

Figure 21.

COVLIAS vs. MedSeg: Segmented mask (blue) on the Control CT lung image (large lung).

Figure 22.

COVLIAS vs. MedSeg: Segmented mask (blue) on the Control CT lung image (small lung).

Figure 23.

COVLIAS vs. MedSeg: Segmented mask (blue) on the non-COVID CT lung image (large lung).

Figure 24.

COVLIAS vs. MedSeg: Segmented mask (blue) on the non-COVID CT lung image (small lung).

5.1. A Special Note on MedSeg

MedSeg is a radiology-developed web-based segmentation tool for annotating CT/MRI images. Users can review and segment their images directly in the browser, using the computer's GPU, without installing software or transferring data to an external server. The basic requirements to run this tool are an up-to-date web browser, a keyboard, a mouse, and a GPU (for more efficient segmentation). The tool accepts data in NIfTI [68] or DICOM [71] format. It is an interactive tool where the user can either drag and drop the image or select it from the directory. The next step is loading the CT thorax lung model for segmentation. MedSeg outputs the result in NIfTI format, which is then converted to PNG format. The performance evaluation (PE) metrics are computed using the PNG images. This includes (i) left and right lung separation, (ii) individual lung area calculation, and (iii) visual overlay generation.
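The text does not describe how the left and right lungs were separated in the PNG masks. One plausible approach, sketched below under that assumption, labels the connected foreground regions with a breadth-first search, keeps the two largest as the lungs, and orders them by horizontal position; the pixel counts can then be converted to areas with the per-pixel factor used for Equation (3). The function names are hypothetical.

```python
from collections import deque

import numpy as np


def connected_components(mask: np.ndarray):
    """Label 4-connected foreground regions with a breadth-first search.

    Returns a list of components, each a list of (row, col) pixel coordinates.
    """
    fg = mask.astype(bool)
    seen = np.zeros_like(fg)
    rows, cols = fg.shape
    components = []
    for r0, c0 in zip(*np.nonzero(fg)):
        if seen[r0, c0]:
            continue
        seen[r0, c0] = True
        queue, pixels = deque([(r0, c0)]), []
        while queue:
            r, c = queue.popleft()
            pixels.append((r, c))
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and fg[nr, nc] and not seen[nr, nc]:
                    seen[nr, nc] = True
                    queue.append((nr, nc))
        components.append(pixels)
    return components


def split_lungs(mask: np.ndarray):
    """Return (image-left, image-right) pixel counts of the two largest components.

    In the radiological display convention the patient's right lung appears on
    the image left, so map image sides to anatomical sides accordingly.
    """
    comps = sorted(connected_components(mask), key=len, reverse=True)[:2]
    comps.sort(key=lambda px: np.mean([c for _, c in px]))  # order by mean column
    return tuple(len(px) for px in comps)


mask = np.zeros((4, 7), dtype=np.uint8)
mask[1:3, 0:2] = 1  # small region on the image left (4 pixels)
mask[0:4, 4:7] = 1  # larger region on the image right (12 pixels)
mask[3, 2] = 1      # isolated noise pixel, discarded by the two-largest rule
print(split_lungs(mask))
```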

5.2. Benchmarking

Various studies using deep learning algorithms based on chest CT imaging to identify and segment COVID-19 cases from non-COVID-19 cases have been published [72,73,74,75]. However, most of these studies lack individual lung area estimation, transparency overlay generation, and the use of HDL. Table 3 presents the benchmarking table, which includes studies from Paluru et al. (2020) [76], Saood et al. (2021) [77], Cai et al. (2020) [78], Suri et al. (2021) [1], and Suri et al. (2021) [3], where [76,77,78] used solo DL models to segment the lungs, compared to [1,3], where both solo DL and HDL models were used. The proposed study by Suri et al. used two kinds of MD, which are benchmarked against a web-based lung segmentation tool, MedSeg. Overall, this study offers an intra-observer variability analysis of the MDs against COVLIAS and MedSeg for the segmentation of COVID-19 CT lung images.

Table 3.

Benchmarking table.

| Author (Year) | # of Patients | Gender | # of Images | # of Tracers | Variability Studies | Image Size | Comparison | Model | Solo vs. HDL | Modality | Area Error |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Cai et al. (2020) [78] | 99 | 58 males; 41 females | 6336 | 2 | ✗ | - | - | UNet | Solo | 2D | ✗ |
| Paluru et al. (2021) [76] | 69 | - | 4339 | NA | ✓ | 512 | 7 | Anam-net | Solo | 2D | ✗ |
| Saood et al. (2021) [77] | - | - | 100 | NA | ✗ | 256 | 2 | UNet, SegNet | Solo | 2D | ✗ |
| Suri et al. (2021) [1] | 72 | 46 males; 26 females | 5000 | 1 | ✗ | 768 | 4 | NIH, SegNet, VGG-SegNet, ResNet-SegNet | Both | 2D | ✓ |
| Suri et al. (2021) [3] | 72 | 46 males; 26 females | 5000 | 2 | ✓ | 768 | 13 | PSP Net, VGG-SegNet, ResNet-SegNet | Both | 2D | ✓ |
| Suri et al. (2021) Proposed | 79 | 51 males; 28 females | 5500 | 1 | ✓ | 768 | 4 | VGG-SegNet, ResNet-SegNet, MedSeg | HDL | 2D | ✓ |

#: number; HDL: Hybrid Deep Learning.

5.3. Strength, Study limitation, and Extension

The experimental data set was used to compare COVLIAS and MedSeg, and the results show close agreement between the two. Unseen-AI validation was conducted on the validation data using COVLIAS, and the results were compared against MedSeg. Overall, COVLIAS and MedSeg differed by less than 2.5%, meeting the industry standard set by MedSeg. Intra-observer analysis was conducted during these comparisons. Despite the encouraging results of COVLIAS on the experimental and validation data sets, this pilot study can be enhanced by adding a bigger validation data set and conducting inter-observer analysis. Although COVLIAS is a well-balanced HDL system, it could be extended by incorporating demographics and risk factors in a big data framework [79]. Several other models could be explored in transfer learning or ensemble frameworks [4,56,57]. It would also be interesting to explore AI segmentation of the lungs of patients with severe COVID-19. The mean GGO values of our experimental and validation data were 4.1 and 2, respectively; these are considered low to mild COVID-19 GGO values. Since our AI models were trained on mild COVID-19 CT scans, they would likely need retraining if new COVID-19 CT data have higher GGO values or exhibit consolidation or crazy paving. Different AI training models may also be required for different severity levels of COVID-19.

6. Conclusions

The study presented a segmentation comparison of two CT lung AI systems, namely, COVLIAS 1.0 (Global Biomedical Technologies, Inc., Roseville, CA, USA) and MedSeg. Our cohorts were taken from two nations, namely Italy (experimental data) and Croatia (validation data), with a total sample size of 5500 CT images. These cohorts had mild COVID-19, with mean ground-glass opacity (GGO) scores of 4.1 and 2, respectively. Two AI-based HDL models of COVLIAS, i.e., VGG-SegNet (19 layers, named HDL 1) and ResNet-SegNet (51 layers, named HDL 2), were benchmarked against MedSeg in the proposed study. The error metrics of the two HDL systems, which were designed and developed using two gold-standard manual delineations, were compared to validate the results from the AI systems. A trained radiologist annotated these manual delineations.

Our results on the experimental Italian data show that the CCs between COVLIAS (HDL 1) and MD 1, COVLIAS (HDL 2) and MD 1, COVLIAS (HDL 1) and MD 2, and COVLIAS (HDL 2) and MD 2 were 0.96, 0.96, 0.96, and 0.96, respectively, with a mean of 0.96. The CCs between (a) MedSeg and MD 1 and (b) MedSeg and MD 2 were 0.98 and 0.98, respectively, with a mean of 0.98. The difference between the mean values of COVLIAS and MedSeg was less than 2.5%. On the validation (Croatian) data, the CCs between (i) COVLIAS (HDL 1) and MedSeg and (ii) COVLIAS (HDL 2) and MedSeg were 0.98 and 0.99, respectively. As part of the validation, we also applied the two trained HDL models to (a) non-COVID Italian and (b) control Italian cohorts and compared them against MedSeg, which showed consistent lung segmentation results. To assess the system's reliability and stability, the standard Mann–Whitney, paired t-test, and Wilcoxon tests were performed. Overall, our results provide clear evidence that COVLIAS 1.0 and MedSeg perform comparably.
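
The CCs above measure how closely two segmentation sources agree on paired measurements. A minimal Python sketch of the Pearson correlation coefficient follows. It assumes the CC is computed on paired per-image lung areas (for example, AI-derived vs. manually delineated areas); the area values are hypothetical placeholders, not data from this study, and `pearson_cc` is an illustrative helper rather than part of COVLIAS or MedSeg.

```python
import math
from typing import Sequence


def pearson_cc(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two paired measurement series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired series of equal length >= 2")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    # Covariance numerator and the two standard-deviation terms (unnormalized).
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("CC is undefined for a constant series")
    return cov / (sx * sy)


# Hypothetical per-image lung areas (mm^2); NOT data from this study.
ai_areas = [10500.0, 12800.0, 9900.0, 14100.0, 11750.0]
md_areas = [10320.0, 13010.0, 9760.0, 14350.0, 11600.0]

cc = pearson_cc(ai_areas, md_areas)
print(f"CC (AI vs. MD): {cc:.3f}")
```

With these placeholder areas the CC is about 0.999, because the two series rise and fall almost in step. The Mann–Whitney, paired t-test, and Wilcoxon tests mentioned above are available in SciPy as `scipy.stats.mannwhitneyu`, `scipy.stats.ttest_rel`, and `scipy.stats.wilcoxon`, which would be a more practical choice than hand-written versions.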

Author Contributions

Conceptualization, J.S.S., N.N.K., M.M. and M.K.K.; Data curation, A.C., A.P. (Alessio Paschè), P.S.C.D., M.C., L.S., K.V., A.M., G.F., M.T., P.R.K., F.N., Z.R. and A.G.; Formal analysis, J.S.S. and M.K.K.; Investigation, J.S.S., I.M.S., P.S.C., A.M.J., S.M., J.R.L., G.P., D.W.S., P.P.S., G.T., A.P. (Athanasios Protogerou), D.P.M., V.A., J.S.T., M.A.-M., S.K.D., A.N., A.S., A.A., F.N., A.G. and M.K.K.; Methodology, J.S.S., S.A. (Sushant Agarwal) and A.B.; Project administration, J.S.S. and M.K.K.; Software, S.A. (Sushant Agarwal), S.A. (Samriddhi Agarwal), and L.G.; Supervision, J.S.S., S.N., K.I.P. and M.K.K.; Validation, J.S.S., S.A. (Sushant Agarwal), A.C., A.P. (Alessio Paschè), P.S.C.D., M.C., L.S., K.V., A.M. and M.K.K.; Visualization, S.A. (Sushant Agarwal); Writing—original draft, J.S.S. and S.A. (Sushant Agarwal); Writing—review & editing, J.S.S., A.C., A.P. (Alessio Paschè), P.S.C.D., L.S., K.V., I.M.S., M.T., P.S.C., A.M.J., N.N.K., S.M., J.R.L., G.P., M.M., D.W.S., A.B., P.P.S., G.T., A.P. (Athanasios Protogerou), D.P.M., V.A., G.D.K., M.A.-M., S.K.D., A.N., A.S., V.R., M.F., A.A., F.N., Z.R., A.G., S.N., K.I.P. and M.K.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Ethics Committee of the Azienda Ospedaliero Universitaria Maggiore della Carità di Novara (protocol code 131/20 and date of approval 25 June 2020).

Informed Consent Statement

Written informed consent, including consent for publication, was obtained from the patients.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Suri J.S., Agarwal S., Pathak R., Ketireddy V., Columbu M., Saba L., Gupta S.K., Faa G., Singh I.M., Turk M., et al. COVLIAS 1.0: Lung Segmentation in COVID-19 Computed Tomography Scans Using Hybrid Deep Learning Artificial Intelligence Models. Diagnostics. 2021;11:1405. doi: 10.3390/diagnostics11081405.

- 2. Cau R., Falaschi Z., Paschè A., Danna P., Arioli R., Arru C.D., Zagaria D., Tricca S., Suri J.S., Kalra M.K. Computed tomography findings of COVID-19 pneumonia in Intensive Care Unit-patients. J. Public Health Res. 2021;10:3. doi: 10.4081/jphr.2021.2270.

- 3. Suri J.S., Agarwal S., Elavarthi P., Pathak R., Ketireddy V., Columbu M., Saba L., Gupta S.K., Faa G., Singh I.M., et al. Inter-Variability Study of COVLIAS 1.0: Hybrid Deep Learning Models for COVID-19 Lung Segmentation in Computed Tomography. Diagnostics. 2021;11:2025. doi: 10.3390/diagnostics11112025.

- 4. Agarwal M., Saba L., Gupta S.K., Johri A.M., Khanna N.N., Mavrogeni S., Laird J.R., Pareek G., Miner M., Sfikakis P.P. Wilson disease tissue classification and characterization using seven artificial intelligence models embedded with 3D optimization paradigm on a weak training brain magnetic resonance imaging datasets: A supercomputer application. Med. Biol. Eng. Comput. 2021;59:511–533. doi: 10.1007/s11517-021-02322-0.

- 5. Saba L., Gerosa C., Fanni D., Marongiu F., La Nasa G., Caocci G., Barcellona D., Balestrieri A., Coghe F., Orru G., et al. Molecular pathways triggered by COVID-19 in different organs: ACE2 receptor-expressing cells under attack? A review. Eur. Rev. Med. Pharmacol. Sci. 2020;24:12609–12622. doi: 10.26355/eurrev_202012_24058.

- 6. Cau R., Bassareo P.P., Mannelli L., Suri J.S., Saba L. Imaging in COVID-19-related myocardial injury. Int. J. Cardiovasc. Imaging. 2021;37:1349–1360. doi: 10.1007/s10554-020-02089-9.

- 7. Viswanathan V., Puvvula A., Jamthikar A.D., Saba L., Johri A.M., Kotsis V., Khanna N.N., Dhanjil S.K., Majhail M., Misra D.P. Bidirectional link between diabetes mellitus and coronavirus disease 2019 leading to cardiovascular disease: A narrative review. World J. Diabetes. 2021;12:215. doi: 10.4239/wjd.v12.i3.215.

- 8. Fanni D., Saba L., Demontis R., Gerosa C., Chighine A., Nioi M., Suri J., Ravarino A., Cau F., Barcellona D. Vaccine-induced severe thrombotic thrombocytopenia following COVID-19 vaccination: A report of an autoptic case and review of the literature. Eur. Rev. Med. Pharmacol. Sci. 2021;25:5063–5069. doi: 10.26355/eurrev_202108_26464.

- 9. Gerosa C., Faa G., Fanni D., Manchia M., Suri J., Ravarino A., Barcellona D., Pichiri G., Coni P., Congiu T. Fetal programming of COVID-19: May the Barker hypothesis explain the susceptibility of a subset of young adults to develop severe disease? Eur. Rev. Med. Pharmacol. Sci. 2021;25:5876–5884. doi: 10.26355/eurrev_202109_26810.

- 10. Cau R., Pacielli A., Fatemeh H., Vaudano P., Arru C., Crivelli P., Stranieri G., Suri J.S., Mannelli L., Conti M., et al. Complications in COVID-19 patients: Characteristics of pulmonary embolism. Clin. Imaging. 2021;77:244–249. doi: 10.1016/j.clinimag.2021.05.016.

- 11. Kämpfer N.A., Naldi A., Bragazzi N.L., Fassbender K., Lesmeister M., Lochner P. Reorganizing stroke and neurological intensive care during the COVID-19 pandemic in Germany. Acta Bio-Med. Atenei Parm. 2021;92:e2021266. doi: 10.23750/abm.v92i5.10418.

- 12. Wu X., Hui H., Niu M., Li L., Wang L., He B., Yang X., Li L., Li H., Tian J. Deep learning-based multi-view fusion model for screening 2019 novel coronavirus pneumonia: A multicentre study. Eur. J. Radiol. 2020;128:109041. doi: 10.1016/j.ejrad.2020.109041.

- 13. Pathak Y., Shukla P.K., Tiwari A., Stalin S., Singh S. Deep transfer learning based classification model for COVID-19 disease. IRBM. 2020. doi: 10.1016/j.irbm.2020.05.003.

- 14. Saba L., Suri J.S. Multi-Detector CT Imaging: Principles, Head, Neck, and Vascular Systems. Volume 1. CRC Press; Boca Raton, FL, USA: 2013.

- 15. Gozes O., Frid-Adar M., Greenspan H., Browning P.D., Zhang H., Ji W., Bernheim A., Siegel E. Rapid AI development cycle for the coronavirus (COVID-19) pandemic: Initial results for automated detection & patient monitoring using deep learning CT image analysis. arXiv. 2020. arXiv:2003.05037.

- 16. Shalbaf A., Vafaeezadeh M. Automated detection of COVID-19 using ensemble of transfer learning with deep convolutional neural network based on CT scans. Int. J. Comput. Assist. Radiol. Surg. 2021;16:115–123. doi: 10.1007/s11548-020-02286-w.

- 17. Yang X., He X., Zhao J., Zhang Y., Zhang S., Xie P. COVID-CT-dataset: A CT scan dataset about COVID-19. arXiv. 2020. arXiv:2003.13865.

- 18. Alqudah A.M., Qazan S., Alquran H., Qasmieh I.A., Alqudah A. COVID-2019 detection using X-ray images and artificial intelligence hybrid systems. 2020; Volume 2, p. 1.

- 19. Aslan M.F., Unlersen M.F., Sabanci K., Durdu A. CNN-based transfer learning–BiLSTM network: A novel approach for COVID-19 infection detection. Appl. Soft Comput. 2021;98:106912. doi: 10.1016/j.asoc.2020.106912.

- 20. Wu Y.-H., Gao S.-H., Mei J., Xu J., Fan D.-P., Zhang R.-G., Cheng M.-M. JCS: An explainable COVID-19 diagnosis system by joint classification and segmentation. IEEE Trans. Image Process. 2021;30:3113–3126. doi: 10.1109/TIP.2021.3058783.

- 21. Xu X., Jiang X., Ma C., Du P., Li X., Lv S., Yu L., Ni Q., Chen Y., Su J. A deep learning system to screen novel coronavirus disease 2019 pneumonia. Engineering. 2020;6:1122–1129. doi: 10.1016/j.eng.2020.04.010.

- 22. Suri J.S., Agarwal S., Gupta S.K., Puvvula A., Biswas M., Saba L., Bit A., Tandel G.S., Agarwal M., Patrick A. A narrative review on characterization of acute respiratory distress syndrome in COVID-19-infected lungs using artificial intelligence. Comput. Biol. Med. 2021;130:104210. doi: 10.1016/j.compbiomed.2021.104210.

- 23. Saba L., Agarwal M., Patrick A., Puvvula A., Gupta S.K., Carriero A., Laird J.R., Kitas G.D., Johri A.M., Balestrieri A., et al. Six artificial intelligence paradigms for tissue characterisation and classification of non-COVID-19 pneumonia against COVID-19 pneumonia in computed tomography lungs. Int. J. Comput. Assist. Radiol. Surg. 2021;16:423–434. doi: 10.1007/s11548-021-02317-0.

- 24. Suri J.S., Puvvula A., Biswas M., Majhail M., Saba L., Faa G., Singh I.M., Oberleitner R., Turk M., Chadha P.S., et al. COVID-19 pathways for brain and heart injury in comorbidity patients: A role of medical imaging and artificial intelligence-based COVID severity classification: A review. Comput. Biol. Med. 2020;124:103960. doi: 10.1016/j.compbiomed.2020.103960.

- 25. Suri J.S., Puvvula A., Majhail M., Biswas M., Jamthikar A.D., Saba L., Faa G., Singh I.M., Oberleitner R., Turk M. Integration of cardiovascular risk assessment with COVID-19 using artificial intelligence. Rev. Cardiovasc. Med. 2020;21:541–560. doi: 10.31083/j.rcm.2020.04.236.

- 26. MedSeg. Available online: https://htmlsegmentation.s3.eu-north-1.amazonaws.com/index.html (accessed on 1 October 2021).

- 27. Saba L., Agarwal M., Sanagala S., Gupta S., Sinha G., Johri A., Khanna N., Mavrogeni S., Laird J., Pareek G. Brain MRI-based Wilson disease tissue classification: An optimised deep transfer learning approach. Electron. Lett. 2020;56:1395–1398. doi: 10.1049/el.2020.2102.

- 28. Tandel G.S., Biswas M., Kakde O.G., Tiwari A., Suri H.S., Turk M., Laird J.R., Asare C.K., Ankrah A.A., Khanna N. A review on a deep learning perspective in brain cancer classification. Cancers. 2019;11:111. doi: 10.3390/cancers11010111.

- 29. Saba L., Tiwari A., Biswas M., Gupta S.K., Godia-Cuadrado E., Chaturvedi A., Turk M., Suri H.S., Orru S., Sanches J.M., et al. Wilson’s disease: A new perspective review on its genetics, diagnosis and treatment. Front. Biosci. 2019;11:166–185. doi: 10.2741/E854.

- 30. Acharya U.R., Mookiah M.R.K., Sree S.V., Afonso D., Sanches J., Shafique S., Nicolaides A., Pedro L.M., Fernandes J.F.e., Suri J.S. Atherosclerotic plaque tissue characterization in 2D ultrasound longitudinal carotid scans for automated classification: A paradigm for stroke risk assessment. Med. Biol. Eng. Comput. 2013;51:513–523. doi: 10.1007/s11517-012-1019-0.

- 31. Sharma A.M., Gupta A., Kumar P.K., Rajan J., Saba L., Nobutaka I., Laird J.R., Nicolaides A., Suri J.S. A review on carotid ultrasound atherosclerotic tissue characterization and stroke risk stratification in machine learning framework. Curr. Atheroscler. Rep. 2015;17:55. doi: 10.1007/s11883-015-0529-2.

- 32. Biswas M., Kuppili V., Saba L., Edla D.R., Suri H.S., Sharma A., Cuadrado-Godia E., Laird J.R., Nicolaides A., Suri J.S. Deep learning fully convolution network for lumen characterization in diabetic patients using carotid ultrasound: A tool for stroke risk. Med. Biol. Eng. Comput. 2019;57:543–564. doi: 10.1007/s11517-018-1897-x.

- 33. Saba L., Dey N., Ashour A.S., Samanta S., Nath S.S., Chakraborty S., Sanches J., Kumar D., Marinho R., Suri J.S., et al. Automated stratification of liver disease in ultrasound: An online accurate feature classification paradigm. Comput. Methods Programs Biomed. 2016;130:118–134. doi: 10.1016/j.cmpb.2016.03.016.

- 34. Acharya U.R., Sree S.V., Ribeiro R., Krishnamurthi G., Marinho R.T., Sanches J., Suri J.S. Data mining framework for fatty liver disease classification in ultrasound: A hybrid feature extraction paradigm. Med. Phys. 2012;39:4255–4264. doi: 10.1118/1.4725759.

- 35. Biswas M., Kuppili V., Edla D.R., Suri H.S., Saba L., Marinho R.T., Sanches J.M., Suri J.S. Symtosis: A liver ultrasound tissue characterization and risk stratification in optimized deep learning paradigm. Comput. Methods Programs Biomed. 2018;155:165–177. doi: 10.1016/j.cmpb.2017.12.016.

- 36. Boi A., Jamthikar A.D., Saba L., Gupta D., Sharma A., Loi B., Laird J.R., Khanna N.N., Suri J.S. A survey on coronary atherosclerotic plaque tissue characterization in intravascular optical coherence tomography. Curr. Atheroscler. Rep. 2018;20:33. doi: 10.1007/s11883-018-0736-8.

- 37. Acharya U.R., Faust O., Kadri N.A., Suri J.S., Yu W. Automated identification of normal and diabetes heart rate signals using nonlinear measures. Comput. Biol. Med. 2013;43:1523–1529. doi: 10.1016/j.compbiomed.2013.05.024.

- 38. Pareek G., Acharya U.R., Sree S.V., Swapna G., Yantri R., Martis R.J., Saba L., Krishnamurthi G., Mallarini G., El-Baz A. Prostate tissue characterization/classification in 144 patient population using wavelet and higher order spectra features from transrectal ultrasound images. Technol. Cancer Res. Treat. 2013;12:545–557. doi: 10.7785/tcrt.2012.500346.

- 39. Acharya U.R., Molinari F., Sree S.V., Swapna G., Saba L., Guerriero S., Suri J.S. Ovarian tissue characterization in ultrasound: A review. Technol. Cancer Res. Treat. 2015;14:251–261. doi: 10.1177/1533034614547445.

- 40. Acharya U.R., Sree S.V., Kulshreshtha S., Molinari F., Koh J.E.W., Saba L., Suri J.S. GyneScan: An improved online paradigm for screening of ovarian cancer via tissue characterization. Technol. Cancer Res. Treat. 2014;13:529–539. doi: 10.7785/tcrtexpress.2013.600273.

- 41. Viswanathan V., Jamthikar A.D., Gupta D., Shanu N., Puvvula A., Khanna N.N., Saba L., Omerzum T., Viskovic K., Mavrogeni S., et al. Low-cost preventive screening using carotid ultrasound in patients with diabetes. Front. Biosci. 2020;25:1132–1171. doi: 10.2741/4850.

- 42. Acharya U.R., Swapna G., Sree S.V., Molinari F., Gupta S., Bardales R.H., Witkowska A., Suri J.S. A review on ultrasound-based thyroid cancer tissue characterization and automated classification. Technol. Cancer Res. Treat. 2014;13:289–301. doi: 10.7785/tcrt.2012.500381.

- 43. Shrivastava V.K., Londhe N.D., Sonawane R.S., Suri J.S. Computer-aided diagnosis of psoriasis skin images with HOS, texture and color features: A first comparative study of its kind. Comput. Methods Programs Biomed. 2016;126:98–109. doi: 10.1016/j.cmpb.2015.11.013.

- 44. Shrivastava V.K., Londhe N.D., Sonawane R.S., Suri J.S. A novel and robust Bayesian approach for segmentation of psoriasis lesions and its risk stratification. Comput. Methods Programs Biomed. 2017;150:9–22. doi: 10.1016/j.cmpb.2017.07.011.

- 45. Acharya U.R., Joseph K.P., Kannathal N., Lim C.M., Suri J.S. Heart rate variability: A review. Med. Biol. Eng. Comput. 2006;44:1031–1051. doi: 10.1007/s11517-006-0119-0.

- 46. Corrias G., Cocco D., Suri J.S., Meloni L., Cademartiri F., Saba L. Heart applications of 4D flow. Cardiovasc. Diagn. Ther. 2020;10:1140. doi: 10.21037/cdt.2020.02.08.

- 47. Acharya U.R., Sree S.V., Krishnan M.M.R., Krishnananda N., Ranjan S., Umesh P., Suri J.S. Automated classification of patients with coronary artery disease using grayscale features from left ventricle echocardiographic images. Comput. Methods Programs Biomed. 2013;112:624–632. doi: 10.1016/j.cmpb.2013.07.012.

- 48. Wilder-Smith A., Chiew C.J., Lee V.J. Can we contain the COVID-19 outbreak with the same measures as for SARS? Lancet Infect. Dis. 2020;20:e102–e107. doi: 10.1016/S1473-3099(20)30129-8.

- 49. Maugeri G., Castrogiovanni P., Battaglia G., Pippi R., D’Agata V., Palma A., Di Rosa M., Musumeci G. The impact of physical activity on psychological health during COVID-19 pandemic in Italy. Heliyon. 2020;6:e04315. doi: 10.1016/j.heliyon.2020.e04315.

- 50. Lesser I.A., Nienhuis C.P. The Impact of COVID-19 on Physical Activity Behavior and Well-Being of Canadians. Int. J. Environ. Res. Public Health. 2020;17:3899. doi: 10.3390/ijerph17113899.

- 51. El-Baz A., Jiang X., Suri J.S. Biomedical Image Segmentation: Advances and Trends. CRC Press; Boca Raton, FL, USA: 2016.

- 52. Suri J.S., Liu K., Singh S., Laxminarayan S.N., Zeng X., Reden L. Shape recovery algorithms using level sets in 2-D/3-D medical imagery: A state-of-the-art review. IEEE Trans. Inf. Technol. Biomed. 2002;6:8–28. doi: 10.1109/4233.992158.

- 53. El-Baz A.S., Acharya R., Mirmehdi M., Suri J.S. Multi Modality State-of-the-Art Medical Image Segmentation and Registration Methodologies: Volume 2. Springer Science & Business Media; Berlin/Heidelberg, Germany: 2011.

- 54. El-Baz A., Suri J.S. Level Set Method in Medical Imaging Segmentation. CRC Press; Boca Raton, FL, USA: 2019.

- 55. Saba L., Sanagala S.S., Gupta S.K., Koppula V.K., Johri A.M., Khanna N.N., Mavrogeni S., Laird J.R., Pareek G., Miner M. Multimodality carotid plaque tissue characterization and classification in the artificial intelligence paradigm: A narrative review for stroke application. Ann. Transl. Med. 2021;9:1206. doi: 10.21037/atm-20-7676.

- 56. Agarwal M., Saba L., Gupta S.K., Carriero A., Falaschi Z., Paschè A., Danna P., El-Baz A., Naidu S., Suri J.S. A novel block imaging technique using nine artificial intelligence models for COVID-19 disease classification, characterization and severity measurement in lung computed tomography scans on an Italian cohort. J. Med. Syst. 2021;45:1–30. doi: 10.1007/s10916-021-01707-w.

- 57. Skandha S.S., Gupta S.K., Saba L., Koppula V.K., Johri A.M., Khanna N.N., Mavrogeni S., Laird J.R., Pareek G., Miner M. 3-D optimized classification and characterization artificial intelligence paradigm for cardiovascular/stroke risk stratification using carotid ultrasound-based delineated plaque: Atheromatic™ 2.0. Comput. Biol. Med. 2020;125:103958. doi: 10.1016/j.compbiomed.2020.103958.

- 58. Tandel G.S., Balestrieri A., Jujaray T., Khanna N.N., Saba L., Suri J.S. Multiclass magnetic resonance imaging brain tumor classification using artificial intelligence paradigm. Comput. Biol. Med. 2020;122:103804. doi: 10.1016/j.compbiomed.2020.103804.

- 59. Sarker M.M.K., Makhlouf Y., Banu S.F., Chambon S., Radeva P., Puig D. Web-based efficient dual attention networks to detect COVID-19 from X-ray images. Electron. Lett. 2020;56:1298–1301. doi: 10.1049/el.2020.1962.

- 60. Sarker M.M.K., Makhlouf Y., Craig S.G., Humphries M.P., Loughrey M., James J.A., Salto-Tellez M., O’Reilly P., Maxwell P. A Means of Assessing Deep Learning-Based Detection of ICOS Protein Expression in Colon Cancer. Cancers. 2021;13:3825. doi: 10.3390/cancers13153825.

- 61. Suri J., Agarwal S., Gupta S.K., Puvvula A., Viskovic K., Suri N., Alizad A., El-Baz A., Saba L., Fatemi M. Systematic Review of Artificial Intelligence in Acute Respiratory Distress Syndrome for COVID-19 Lung Patients: A Biomedical Imaging Perspective. IEEE J. Biomed. Health Inform. 2021;25:4128–4139. doi: 10.1109/JBHI.2021.3103839.

- 62. Aggarwal D., Saini V. Factors limiting the utility of bronchoalveolar lavage in the diagnosis of COVID-19. Eur. Respir. J. 2020;56:2003116. doi: 10.1183/13993003.03116-2020.

- 63. Noor N.M., Than J.C., Rijal O.M., Kassim R.M., Yunus A., Zeki A.A., Anzidei M., Saba L., Suri J.S. Automatic lung segmentation using control feedback system: Morphology and texture paradigm. J. Med. Syst. 2015;39:1–18. doi: 10.1007/s10916-015-0214-6.

- 64. Than J.C., Saba L., Noor N.M., Rijal O.M., Kassim R.M., Yunus A., Suri H.S., Porcu M., Suri J.S. Lung disease stratification using amalgamation of Riesz and Gabor transforms in machine learning framework. Comput. Biol. Med. 2017;89:197–211. doi: 10.1016/j.compbiomed.2017.08.014.

- 65. Saba L., Banchhor S.K., Araki T., Viskovic K., Londhe N.D., Laird J.R., Suri H.S., Suri J.S. Intra- and inter-operator reproducibility of automated cloud-based carotid lumen diameter ultrasound measurement. Indian Heart J. 2018;70:649–664. doi: 10.1016/j.ihj.2018.01.024.

- 66. Badrinarayanan V., Kendall A., Cipolla R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017;39:2481–2495. doi: 10.1109/TPAMI.2016.2644615.

- 67. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Las Vegas, NV, USA. 27–30 June 2016; pp. 770–778.

- 68. Neuroimaging Informatics Technology Initiative. Available online: https://nifti.nimh.nih.gov/ (accessed on 3 November 2021).

- 69. Riffenburgh R.H., Gillen D.L. Statistics in Medicine. 4th ed. Academic Press; Cambridge, MA, USA: 2020. Contents; pp. ix–xvi.

- 70. Saba L., Than J.C., Noor N.M., Rijal O.M., Kassim R.M., Yunus A., Ng C.R., Suri J.S. Inter-observer variability analysis of automatic lung delineation in normal and disease patients. J. Med. Syst. 2016;40:142. doi: 10.1007/s10916-016-0504-7.

- 71. Onken M., Eichelberg M., Riesmeier J., Jensch P. Biomedical Image Processing. Springer; Berlin/Heidelberg, Germany: 2010. Digital imaging and communications in medicine; pp. 427–454.

- 72. Chaddad A., Hassan L., Desrosiers C. Deep CNN models for predicting COVID-19 in CT and x-ray images. J. Med. Imaging. 2021;8:014502. doi: 10.1117/1.JMI.8.S1.014502.

- 73. Gunraj H., Wang L., Wong A. COVIDNet-CT: A Tailored Deep Convolutional Neural Network Design for Detection of COVID-19 Cases From Chest CT Images. Front. Med. 2020;7:608525. doi: 10.3389/fmed.2020.608525.

- 74. Iyer T.J., Joseph Raj A.N., Ghildiyal S., Nersisson R. Performance analysis of lightweight CNN models to segment infectious lung tissues of COVID-19 cases from tomographic images. PeerJ Comput. Sci. 2021;7:e368. doi: 10.7717/peerj-cs.368.

- 75. Ranjbarzadeh R., Jafarzadeh Ghoushchi S., Bendechache M., Amirabadi A., Ab Rahman M.N., Baseri Saadi S., Aghamohammadi A., Kooshki Forooshani M. Lung Infection Segmentation for COVID-19 Pneumonia Based on a Cascade Convolutional Network from CT Images. Biomed. Res. Int. 2021;2021:5544742. doi: 10.1155/2021/5544742.

- 76. Paluru N., Dayal A., Jenssen H.B., Sakinis T., Cenkeramaddi L.R., Prakash J., Yalavarthy P.K. Anam-Net: Anamorphic Depth Embedding-Based Lightweight CNN for Segmentation of Anomalies in COVID-19 Chest CT Images. IEEE Trans. Neural Netw. Learn. Syst. 2021;32:932–946. doi: 10.1109/TNNLS.2021.3054746.

- 77. Saood A., Hatem I. COVID-19 lung CT image segmentation using deep learning methods: U-Net versus SegNet. BMC Med. Imaging. 2021;21:1–10. doi: 10.1186/s12880-020-00529-5.

- 78. Cai W., Liu T., Xue X., Luo G., Wang X., Shen Y., Fang Q., Sheng J., Chen F., Liang T. CT Quantification and Machine-learning Models for Assessment of Disease Severity and Prognosis of COVID-19 Patients. Acad. Radiol. 2020;27:1665–1678. doi: 10.1016/j.acra.2020.09.004.

- 79. El-Baz A., Suri J.S. Big Data in Multimodal Medical Imaging. CRC Press; Boca Raton, FL, USA: 2019.