Abstract

Deep learning technologies and applications represent one of the most important upcoming developments in radiology. The impact and influence of these technologies on image acquisition and reporting might change daily clinical practice. The aim of this review was to present current deep learning technologies, with a focus on magnetic resonance image reconstruction. The first part of this manuscript concentrates on the basic technical principles that are necessary for deep learning image reconstruction. The second part highlights the translation of these techniques into clinical practice. The third part outlines the different aspects of image reconstruction techniques, and presents a review of the current literature regarding image reconstruction and image post-processing in MRI. The promising results of the most recent studies indicate that deep learning will be a major player in radiology in the upcoming years. Apart from decision and diagnosis support, the major advantages of deep learning magnetic resonance imaging reconstruction techniques are related to acquisition time reduction and the improvement of image quality. The implementation of these techniques may be the solution for the alleviation of limited scanner availability via workflow acceleration. It can be assumed that this disruptive technology will change daily routines and workflows permanently.

Keywords: deep learning, DL, MRI, GRE, TSE, prostate MRI, MSK

1. Introduction

Technical progress and innovative developments have always had an enormous influence on radiology. Whereas the end of the last century was dominated by the introduction of picture archiving and communication systems, which finally replaced analog X-ray films, the dominating novelty of this century may be the implementation of machine learning (ML) and deep learning (DL) architectures and strategies. DL has the potential to be a disruptive technology that may significantly affect radiological workflows and daily clinical practice.

Convolutional neural networks (CNN) represent one of the basic principles of DL algorithms and were already described several decades ago [1]. However, in the early days, the success of these algorithms was relatively limited, due to restricted computer processing power. With the introduction of more powerful central processing units (CPU) and graphics processing units (GPU), the success story of DL algorithms truly began [1,2]. Nowadays, DL algorithms have even outperformed human readers in certain image recognition tasks [1]. Suitable applications for DL range from lesion or organ detection and segmentation to the classification of lesions [1,3,4,5]. Recent studies also suggest that DL technology can be applied to prognosis and mortality assessment in cancer and non-cancer patients, opening the perspective for DL-assisted decision support systems [6,7].

However, DL technology has not only been used for the analysis and post-processing of already acquired or reconstructed images, but also for image acquisition and image reconstruction itself, as was shown for both computed tomography (CT) and magnetic resonance imaging (MRI) [8,9,10,11,12,13].

The aim of this article was to present a review of the current DL technologies with a focus on MR image reconstruction. The first part of this article introduces the basic principles of DL image reconstruction techniques. The second part outlines its translation into clinical practice. The third part demonstrates the different fields of applications regarding MR image reconstruction, and different post-processing areas.

2. Machine Learning Reconstruction in MRI

Different types of tasks can be solved using machine learning (ML), such as image segmentation and image classification, as well as regression tasks. While image classification and segmentation assign a global or local label to the input image, MRI reconstruction can be viewed as a sensor-to-image translation task. This translation describes an image regression task if continuous predictions are assigned to every pixel in the image.

In MR image reconstruction, we generally aim to recover an image $x \in \mathbb{C}^{N}$ from the k-space signal $y \in \mathbb{C}^{M}$, which is corrupted by measurement noise $n \in \mathbb{C}^{M}$, following the equation:

$$y = Ax + n \tag{1}$$

where $A: \mathbb{C}^{N} \to \mathbb{C}^{M}$ is the linear forward operator describing the MR acquisition model, and $M$ denotes the number of measurements, i.e., the dimensionality of the underlying k-space data for the image of $N$ voxels. Depending on the imaging application and signal modelling, the operator $A$ involves Fourier transformations, sampling trajectories, coil sensitivity maps, field inhomogeneities, relaxation effects, motion, and diffusion.
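To make Eq. (1) concrete, the following is a minimal sketch under strong simplifying assumptions: a single receive coil and Cartesian sampling, so that the forward operator reduces to an orthonormal 2D Fourier transform followed by a binary sampling mask. The names `forward_op`, `adjoint_op`, and `complex_noise`, the toy random image, and the regular mask are illustrative choices and are not taken from the cited works. Coil sensitivities, field inhomogeneities, relaxation, and motion are omitted.

```python
import numpy as np

def forward_op(x, mask):
    """A: image -> under-sampled k-space (orthonormal 2D FFT followed by the sampling mask)."""
    return mask * np.fft.fft2(x, norm="ortho")

def adjoint_op(y, mask):
    """A^H: under-sampled k-space -> image (masking, then inverse orthonormal 2D FFT)."""
    return np.fft.ifft2(mask * y, norm="ortho")

def complex_noise(shape, std, rng):
    """Complex Gaussian samples with standard deviation `std` per real/imaginary part."""
    return std * (rng.standard_normal(shape) + 1j * rng.standard_normal(shape))

rng = np.random.default_rng(0)
x = complex_noise((32, 32), 1.0, rng)          # toy complex-valued "image" with N = 32 * 32 voxels
mask = np.zeros((32, 32))
mask[::2, :] = 1.0                             # keep every second phase-encoding line
n = mask * complex_noise((32, 32), 0.01, rng)  # measurement noise on the acquired samples only
y = forward_op(x, mask) + n                    # Eq. (1): y = A x + n
x_zero_filled = adjoint_op(y, mask)            # zero-filled reconstruction A^H y
```

In this simplified setting, the zero-filled reconstruction `x_zero_filled` is the aliased starting point that the learning-based methods described in the following subsections aim to improve.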

In ML frameworks, the objective is to learn the sensor-to-image mapping function $f_\theta$ with the learnable parameters $\theta$. The mapping function $f_\theta$ can be (in the case of deep learning) stated as a neural network and can be used in different ways to reconstruct an image $x$ from the measured k-space data $y$. All tasks have an image and/or k-space as the inputs to the function $f_\theta$, but this can also include further MR-specific information as meta information, e.g., trajectories and coil sensitivity maps. ML reconstruction tasks for MRI differ in terms of input and targeted application output. These reconstruction tasks are further described hereafter.

2.1. Image Denoising

Certain types of under-sampled MR acquisition techniques introduce incoherent, noise-like aliasing in the zero-filled reconstructed images. Thus, an image denoising task can be used to reduce the noise-like aliasing in the images. The function $f_\theta$ performs an image-to-image regression by predicting the output $\hat{x} = f_\theta(x_0)$ from the corrupted input image $x_0$. The input to the denoising task can be either the zero-filled (and noise-affected) MR images or reconstructed MR images that show remaining aliasing or noise amplification at high under-sampling, e.g., images reconstructed with higher parallel imaging acceleration factors. Instead of learning the denoised image, some approaches learn the residual noise that is to be removed from the noisy input [14], as shown for 2D cardiac CINE MRI [15]. The mapping $f_\theta$ only acts on the image and has no information about the acquired raw data. Hence, consistency with the measured k-space signal $y$ cannot be guaranteed. Some approaches add a k-space consistency term to the cost function [16], or enforce k-space consistency after image denoising, which was shown to improve image quality in brain MRI [17].
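The idea of enforcing k-space consistency after image denoising can be sketched as follows. This is a simplified illustration assuming a noiseless, single-coil Cartesian acquisition, in which the measured samples simply overwrite the predicted ones ("hard" data consistency). The function `toy_denoiser` is a hypothetical stand-in for a trained network, and `enforce_data_consistency` is an illustrative name, not an API from the cited works.

```python
import numpy as np

def toy_denoiser(x):
    """Hypothetical stand-in for a trained denoising network: 3-point smoothing along rows."""
    return (np.roll(x, 1, axis=0) + x + np.roll(x, -1, axis=0)) / 3.0

def enforce_data_consistency(x_denoised, y_measured, mask):
    """Replace the predicted k-space samples by the measured ones wherever data were acquired."""
    k_pred = np.fft.fft2(x_denoised, norm="ortho")
    k_dc = np.where(mask > 0, y_measured, k_pred)
    return np.fft.ifft2(k_dc, norm="ortho")

rng = np.random.default_rng(0)
x_true = rng.standard_normal((16, 16))
mask = np.zeros((16, 16))
mask[::2, :] = 1.0
y = mask * np.fft.fft2(x_true, norm="ortho")     # retrospectively under-sampled k-space
x0 = np.fft.ifft2(y, norm="ortho")               # zero-filled, aliased input image
x_hat = toy_denoiser(x0)                         # image-domain prediction, not data consistent
x_dc = enforce_data_consistency(x_hat, y, mask)  # same prediction with measured samples restored
```

After this step, the k-space of `x_dc` agrees with the measurement at all sampled positions, while the non-acquired positions keep the values predicted by the denoiser.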

2.2. Direct k-Space to Image Mapping

A different ML-based approach is to reconstruct the MR image directly from the acquired k-space data. With the so-called “direct k-space to image mapping”, the k-space data are fed directly into the mapping function to achieve $\hat{x} = f_\theta(y)$. Consequently, the mapping function approximates the inverse of the forward model. Learning a direct mapping function is especially useful if the forward model or parts of the forward model are not exactly known. In the case of fully sampled MRI under ideal conditions, the learned mapping approximates the inverse Fourier transformation [18]. However, this becomes computationally very demanding, due to the fully connected layers which are involved here. Furthermore, consistency with the acquired k-space data cannot be guaranteed.
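The computational burden of fully connected layers can be illustrated with a small sketch. It assumes the ideal, fully sampled case, where the mapping between image and k-space is a dense unitary matrix, and counts the weights a single dense layer would need for a 2D image. The helper `dense_weights_2d` is a hypothetical name introduced only for this illustration.

```python
import numpy as np

n = 16
# Unitary DFT matrix: the dense linear map relating a 1D signal to its k-space samples.
F = np.fft.fft(np.eye(n), norm="ortho")
F_inv = F.conj().T                 # inverse of a unitary matrix is its conjugate transpose

rng = np.random.default_rng(0)
x = rng.standard_normal(n)
y = F @ x                          # ideal, fully sampled "acquisition"
x_rec = F_inv @ y                  # the mapping a direct approach has to approximate

def dense_weights_2d(n_side):
    """Weights of one dense layer mapping n_side^2 k-space samples to n_side^2 pixels."""
    return (n_side * n_side) ** 2

weights_256 = dense_weights_2d(256)   # 256 x 256 image: 2**32 weights in a single layer
```

The quadratic growth of the weight count with the number of pixels is the reason why such direct mappings become demanding for clinically relevant matrix sizes.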

2.3. Physics-Based Reconstruction

Another family of ML-based MR reconstruction methods is referred to as physics-based reconstruction. These approaches integrate traditional physics-based modelling of the MR encoding with ML, ensuring consistency with the acquired data. We can distinguish two classes of problems here: (1) learning within the k-space domain, and (2) iterative optimization within the image domain with interleaved data consistency steps. The former is referred to as k-space learning, whereas the latter is known collectively as unrolled optimization methods. These two approaches can be combined into hybrid approaches that learn a neural network in both the k-space domain and the image domain.

2.4. k-Space Learning

A prominent approach for physics-based learning in the k-space domain [19] can be viewed as an extension of the linear kernel estimation in generalized autocalibrating partially parallel acquisitions (GRAPPA), which is commonly used in parallel imaging acquisitions (e.g., cardiac, musculoskeletal or abdominal MRI). A non-linear kernel, modelled by the mapping function $f_\theta$, is learned from the autocalibration signal (ACS). The missing k-space lines are then filled using this estimated non-linear kernel, and the data are transformed to the image space using an inverse Fourier transformation. The final image is obtained by a root-sum-of-squares reconstruction of the individual coil images. Applications of this approach were demonstrated for neuro and cardiac imaging, which showed a superior performance when compared to conventional imaging, especially in the case of high acceleration factors [19].
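The last two steps of this pipeline, the inverse Fourier transformation of each coil and the root-sum-of-squares combination, can be sketched as follows. The sketch assumes that the missing k-space lines have already been filled by some kernel and does not implement the kernel estimation itself. The two synthetic coil sensitivities are an assumption chosen so that their squared magnitudes sum to one.

```python
import numpy as np

def rss_combine(coil_kspace):
    """Filled multi-coil k-space (n_coils, ny, nx) -> magnitude image (ny, nx)."""
    coil_images = np.fft.ifft2(coil_kspace, axes=(-2, -1), norm="ortho")
    return np.sqrt(np.sum(np.abs(coil_images) ** 2, axis=0))

rng = np.random.default_rng(0)
image = rng.random((16, 16))                             # non-negative toy image
angle = np.linspace(0.0, np.pi / 2, 16)[None, :] * np.ones((16, 1))
smaps = np.stack([np.cos(angle), np.sin(angle)])         # toy sensitivities, cos^2 + sin^2 = 1
coil_kspace = np.fft.fft2(smaps * image, axes=(-2, -1), norm="ortho")
combined = rss_combine(coil_kspace)                      # equals the toy image in this ideal case
```

With real coils the squared sensitivities do not sum to one, so the root-sum-of-squares image carries a smooth intensity weighting.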

2.5. Hybrid Learning

Hybrid approaches, as demonstrated in brain MRI [20], combine the advantages of learning in both the k-space domain and the image domain. These networks are applied in an alternating manner to obtain the final reconstruction $\hat{x}$. When designing hybrid approaches, it is important to keep a basic property of the Fourier transformation in mind: local changes in the image domain result in global changes in the k-space domain and vice versa. Ignoring this property can lead to unexpected behavior.
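The local-to-global property of the Fourier transformation can be verified numerically with a small toy example. The 8 × 8 array size and the perturbed positions are arbitrary choices made for illustration.

```python
import numpy as np

# Change a single pixel in the image domain and inspect the change in k-space.
image = np.zeros((8, 8))
perturbed = image.copy()
perturbed[3, 5] = 1.0
delta_k = np.fft.fft2(perturbed) - np.fft.fft2(image)
n_kspace_changed = int(np.count_nonzero(np.abs(delta_k) > 1e-12))

# Change a single k-space sample and inspect the change in the image domain.
kspace = np.zeros((8, 8), dtype=complex)
kspace_perturbed = kspace.copy()
kspace_perturbed[2, 1] = 1.0
delta_x = np.fft.ifft2(kspace_perturbed) - np.fft.ifft2(kspace)
n_pixels_changed = int(np.count_nonzero(np.abs(delta_x) > 1e-12))
```

In both directions, all 64 entries of the other domain change. A convolution with a small kernel in k-space therefore has a very different effect than the same convolution in the image domain.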

2.6. Plug-and-Play Priors

Trained image denoisers can also be combined with physics-based learning or conventional iterative reconstructions and thus serve as an advanced regularization for a traditional optimization problem. To achieve this, iterative, image-wise, or patch-wise denoising is performed, followed by a subsequent data consistency step. This concept is used in plug-and-play priors [15,21,22,23], regularization by denoising [24], and image restoration [25], and has been applied in cardiac cine MRI and knee MRI.

2.7. Unrolled Optimization

Physics-based learning, which is modelled as an iterative optimization, can be viewed as a generalization of iterative SENSE [26,27] with a learned regularization in the image domain. The basic variational image reconstruction problem

$$\hat{x} \in \arg\min_{x} \; \mathcal{D}(Ax, y) + \mathcal{R}(x) \tag{2}$$

contains a data-consistency term $\mathcal{D}(Ax, y)$ and a regularization term $\mathcal{R}(x)$, which imposes prior knowledge on the reconstruction $x$. The easiest way to solve Eq. (2) is to use a gradient descent scheme to optimize for the reconstruction $x$. Alternatively, a proximal gradient scheme [28,29], variable splitting [30] or a primal-dual optimization [31] can be used for algorithm unrolling. In learning algorithms, the iterative optimization scheme is unrolled for a fixed number of iterations to obtain a solution for $\hat{x}$. Neural networks replace the gradient of the hand-crafted regularizer by a learned data-driven mapping function $f_\theta$. Training several iterations with alternating mapping functions and intermittent data consistency steps therefore reflects these unrolled optimizations [32]. These networks were shown for a multitude of applications, ranging from neurological [16,33] to cardiac [29,34] to musculoskeletal imaging [35].
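A gradient descent scheme for Eq. (2), unrolled for a fixed number of iterations, can be sketched as follows. The sketch makes several assumptions that are not stated in the text: a least-squares data term, a single-coil Cartesian forward operator, a regularization weight `lam`, and a fixed step size. The function `placeholder_reg_grad` is the gradient of a simple quadratic regularizer and only marks the place where a trained network would be inserted. It is not a learned model.

```python
import numpy as np

def forward_op(x, mask):
    return mask * np.fft.fft2(x, norm="ortho")

def adjoint_op(y, mask):
    return np.fft.ifft2(mask * y, norm="ortho")

def placeholder_reg_grad(x):
    """Gradient of R(x) = 0.5 * ||x||^2. In a learned scheme, a trained network takes this role."""
    return x

def unrolled_gradient_descent(y, mask, n_iter=10, step=1.0, lam=0.05,
                              reg_grad=placeholder_reg_grad):
    x = adjoint_op(y, mask)                                    # zero-filled initialization
    for _ in range(n_iter):                                    # fixed number of unrolled iterations
        data_grad = adjoint_op(forward_op(x, mask) - y, mask)  # A^H (A x - y)
        x = x - step * (data_grad + lam * reg_grad(x))         # gradient step on both terms
    return x

def objective(x, y, mask, lam=0.05):
    """0.5 * ||A x - y||^2 + 0.5 * lam * ||x||^2 for the placeholder regularizer."""
    data_term = 0.5 * np.sum(np.abs(forward_op(x, mask) - y) ** 2)
    return data_term + 0.5 * lam * np.sum(np.abs(x) ** 2)

rng = np.random.default_rng(0)
x_true = rng.standard_normal((16, 16))
mask = np.zeros((16, 16))
mask[::2, :] = 1.0
y = forward_op(x_true, mask)
x_rec = unrolled_gradient_descent(y, mask)
```

In a trained unrolled network, the step sizes and the regularizer gradients of the individual iterations would be learned from data, whereas the data-consistency gradient keeps its physics-based form.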

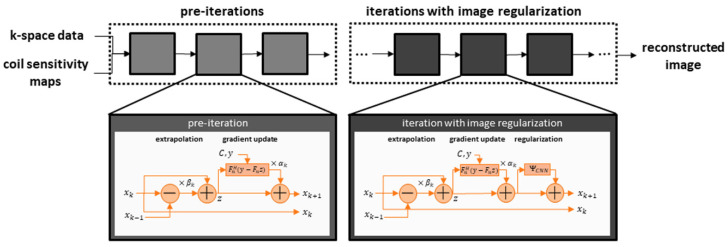

As our clinical examples shown below also employed networks which relied on unrolled optimization, an exemplary architecture is illustrated in Figure 1.

Figure 1.

The network receives conventionally determined coil sensitivity maps that specify the local sensitivity of each receiving channel, as well as the under-sampled k-space data. The reconstruction iteratively updates the image based on the gradients of the data fidelity term. In the first step, this may be done without image regularization, as the architecture focuses on generating non-acquired k-space samples which are based on the inherent parallel imaging component of the data fidelity term. As the extrapolation may still involve trainable parameters, such as the gradient step-sizes, these iterations are called pre-cascades. The main deep-learning aspect is then included in subsequent cascades that further include an image-enhancing neural network as regularization.
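The role of the coil sensitivity maps and of a pre-cascade can be sketched with a multi-coil forward operator. This is a simplified illustration and not the architecture of Figure 1: the two synthetic sensitivity maps, the regular under-sampling, and the fixed step size of 1.0 are assumptions, and the function names are hypothetical. In an actual network, the step size would be one of the trainable parameters.

```python
import numpy as np

def sense_forward(x, smaps, mask):
    """Image (ny, nx) -> under-sampled multi-coil k-space (n_coils, ny, nx)."""
    return mask * np.fft.fft2(smaps * x, axes=(-2, -1), norm="ortho")

def sense_adjoint(y, smaps, mask):
    """Multi-coil k-space -> coil-combined image."""
    coil_images = np.fft.ifft2(mask * y, axes=(-2, -1), norm="ortho")
    return np.sum(np.conj(smaps) * coil_images, axis=0)

def pre_cascade(x, y, smaps, mask, step):
    """One data-fidelity gradient step without image regularization."""
    return x - step * sense_adjoint(sense_forward(x, smaps, mask) - y, smaps, mask)

rng = np.random.default_rng(0)
ny, nx = 16, 16
x_true = rng.standard_normal((ny, nx)) + 1j * rng.standard_normal((ny, nx))
# Toy sensitivities varying along the under-sampled direction, normalized so that
# the squared magnitudes of the two coils sum to one at every voxel.
angle = np.linspace(0.0, np.pi / 2, ny)[:, None] * np.ones((1, nx))
smaps = np.stack([np.cos(angle), np.sin(angle)]).astype(complex)
mask = np.zeros((ny, nx))
mask[::2, :] = 1.0
y = sense_forward(x_true, smaps, mask)

x = np.zeros((ny, nx), dtype=complex)
residual_norms = [np.linalg.norm(sense_forward(x, smaps, mask) - y)]
for step in (1.0, 1.0, 1.0):                      # three pre-cascades
    x = pre_cascade(x, y, smaps, mask, step)
    residual_norms.append(np.linalg.norm(sense_forward(x, smaps, mask) - y))
```

Each pre-cascade reduces the data residual by exploiting the different coil sensitivities of the aliased voxels, which corresponds to the parallel imaging component mentioned above.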

3. Towards Machine Learning Reconstruction in Clinical Practice

While machine learning algorithms have revolutionized multiple fields at an unprecedented speed, and while their limits still need to be explored, the dust is settling for some applications. As data fidelity is essential for medical images, it turns out that the implementation of DL in image reconstruction has to be done very carefully to avoid, for example, false positives for non-present pathologies, or the hiding of present pathologies. Therefore, a successful strategy for improving image reconstruction is the following: conventional algorithms which consist of multiple processing steps are inspected, and steps that can be replaced by neural networks are identified. The identified steps perform mostly conventional image processing, such as apodization (i.e., optical filtering), interpolation, or denoising. This strategy keeps the conventional modelling for data consistency and parallel imaging untouched, and the introduced neural networks allow for a better tuning of the conventional, often quite simplistic, processing steps. Generally, this reduces the black-box character of the machine learning reconstruction, lowers the requirements on the training data, and mitigates issues regarding the generalization to applications that were not seen during training.

An example is given by the development from compressed sensing to variational networks. Compressed sensing relies on the sparsity and incoherence of imaging data, allowing the under-sampling of the k-space [36]. While the reconstructed compressed sensing image can be formulated as the solution to an optimization problem, state-of-the-art implementations perform iterative reconstructions that alternate between data consistency and image regularization [37]. While the data consistency is physically motivated and includes a parallel imaging component, the regularization is heuristic. Compressed sensing has introduced the notion of sparsity here, but the justification for its use for actual images is rather superficial, and the regularization essentially resembles a form of edge-preserving image denoising. However, in light of machine learning, the typically employed regularizations, which are based on wavelets, can be conceived as small convolutional networks without any trainable components [29,35]. It therefore seems sensible to extend the size of the employed network to allow a better adaptation to MR images and, furthermore, to determine the optimal parameters from a large, representative database through machine learning. From this perspective, it is not surprising that the resulting machine learning reconstruction outperforms compressed sensing, and even provides more realistic images. In this context, the specialized term ‘deep learning’ is often used, as the number of trainable parameters introduced in modern architectures is actually larger than may be anticipated. Current architectures often employ well beyond one million trainable parameters and are reported to have a large model capacity. The latter refers to the ability to adapt well to the assigned task of image reconstruction, and this must be supplemented with a suitable amount of training data.

From the Algorithm to the Scanner—Workflow of Integration

Setting up the architecture for a machine learning reconstruction is the first step. The next step is to decide on a training strategy and to generate training data. If applicable, the most successful strategy is supervised training. In that case, the training data is organized into pairs: the input data, which corresponds to what is supplied to the machine learning reconstruction in the later prospective deployment, and the ground truth data, which is the expected output of the reconstruction. Such training data can be generated through dedicated, long-lasting acquisitions that produce the desired high-quality ground truth images, and the associated input data can be obtained by retrospective under-sampling, i.e., only providing a fraction of the acquired data to the network as an input during training. The training data can be further enhanced through augmentation techniques, such as adding noise, flipping, or mimicking artifacts in the input. It is worth noting that generating training data for machine learning reconstruction seems to have practical advantages when compared to other machine learning tasks, such as segmentation or detection. First, annotation by a human expert is not necessary, as the target is the already available, high-quality image. Secondly, as the trainable aspect of the network focuses on local image enhancement, such as edges and patterns, the training is less sensitive to the morphological content of the image. The later network performance is mostly determined by a broad range of image contrasts with representative signal-to-noise ratios. For these reasons, the training data can even be acquired on healthy subjects.
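The generation of a supervised training pair by retrospective under-sampling, together with two of the augmentations mentioned above (flipping and added noise), can be sketched as follows. The function `make_training_pair`, its parameters, and the sampling pattern (regular line skipping plus a fully sampled k-space center) are illustrative assumptions for a single-coil Cartesian case.

```python
import numpy as np

def make_training_pair(kspace_full, acceleration=4, n_center=8,
                       noise_std=0.0, flip=False, rng=None):
    """Return (network_input, mask, target) from one fully sampled k-space."""
    rng = np.random.default_rng() if rng is None else rng
    target = np.fft.ifft2(kspace_full, norm="ortho")   # ground truth image
    if flip:
        target = target[:, ::-1]                       # augmentation: left-right flip
    k_full = np.fft.fft2(target, norm="ortho")
    ny, nx = k_full.shape
    mask = np.zeros((ny, nx))
    mask[::acceleration, :] = 1.0                      # regular under-sampling of lines
    half = n_center // 2
    mask[:half, :] = 1.0                               # fully sampled low frequencies;
    mask[ny - half:, :] = 1.0                          # unshifted FFT: center sits at index 0
    noise = noise_std * (rng.standard_normal(k_full.shape)
                         + 1j * rng.standard_normal(k_full.shape))
    network_input = mask * (k_full + noise)            # only a fraction of the data is provided
    return network_input, mask, target

rng = np.random.default_rng(0)
kspace_full = np.fft.fft2(rng.standard_normal((32, 32)), norm="ortho")
net_in, mask, target = make_training_pair(kspace_full, rng=rng)
net_in_aug, _, target_aug = make_training_pair(kspace_full, noise_std=0.05, flip=True, rng=rng)
```

No manual labelling is involved at any point: the target is derived directly from the fully sampled acquisition.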

The actual training is computationally intensive. It is typically performed on dedicated hardware relying on GPUs, and for actual applications training may last as long as several weeks. For supervised training, a loss function is determined that measures the deviation between the current network output for the provided input data and the ground truth. The trainable parameters of the network are incrementally updated using gradients obtained through a process termed backpropagation, which is iterated multiple times while going through the training dataset and which corresponds to an optimization of the trainable parameters on the training dataset. The process is usually tracked by analyzing the network performance on a previously separated validation dataset. This process provides a trained model that can be applied prospectively on data without a known ground truth.
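The structure of such a supervised training loop can be sketched with a deliberately small stand-in. Instead of a neural network, the sketch uses a linear model with five trainable parameters, for which the gradient that backpropagation would provide can be written analytically. The synthetic data, the learning rate, and the number of epochs are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
w_true = np.array([0.5, -1.0, 2.0, 0.0, 1.5])
inputs = rng.standard_normal((250, 5))
targets = inputs @ w_true + 0.01 * rng.standard_normal(250)   # "ground truth" outputs

# Separate a validation set before training.
x_train, t_train = inputs[:200], targets[:200]
x_val, t_val = inputs[200:], targets[200:]

def mse_loss(w, x, t):
    """Loss function: mean squared deviation between model output and ground truth."""
    return float(np.mean((x @ w - t) ** 2))

def mse_grad(w, x, t):
    """Analytic gradient of the loss with respect to the trainable parameters."""
    return 2.0 * x.T @ (x @ w - t) / len(t)

w = np.zeros(5)                       # trainable parameters
learning_rate = 0.1
val_history = []
for epoch in range(200):              # repeated passes through the training dataset
    w = w - learning_rate * mse_grad(w, x_train, t_train)   # incremental update
    val_history.append(mse_loss(w, x_val, t_val))           # track validation performance
```

The validation loss in `val_history` is computed on data that never enters the parameter update, which is what makes it suitable for monitoring the training.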

For use in clinical practice, the trained model finally has to be deployed on an actual scanner. While the training is typically implemented using freely available software libraries in Python, the hardware limitations of integrated applications often require a translation into a proprietary framework. Here, two aspects are worth mentioning: firstly, the evaluation of a trained network on prospective input data (also called inference or forward pass) is technically easier than the whole training process. Therefore, the requirements for the inference implementation are lower. Secondly, neural networks are described using a very standardized language for their architecture, such as convolutions or activations. This allows for a generic conversion of networks, so that the inference implementation does not have to be specialized to a specific application. In summary, this strategy allows for the transfer of neural networks that are trained offline into another software environment, e.g., into a routine environment on a clinical scanner.
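The idea of a generic inference implementation can be sketched as a small interpreter that evaluates a network given as a list of standardized operations. The layer description format (`"op"`, `"kernel"`), the function `run_inference`, and the two supported operations are hypothetical and do not correspond to any specific exchange format or vendor framework.

```python
import numpy as np

def run_inference(layers, signal):
    """Evaluate a forward pass for a model described as a list of standard operations."""
    out = np.asarray(signal, dtype=float)
    for layer in layers:
        if layer["op"] == "conv1d":
            out = np.convolve(out, layer["kernel"], mode="valid")
        elif layer["op"] == "relu":
            out = np.maximum(out, 0.0)
        else:
            raise ValueError(f"unsupported operation: {layer['op']}")
    return out

# Hypothetical exported model: one convolution followed by one activation.
exported_model = [
    {"op": "conv1d", "kernel": np.array([1.0, 0.0, -1.0])},
    {"op": "relu"},
]
result = run_inference(exported_model, [0.0, 1.0, 3.0, 2.0, 0.0])
```

Because the interpreter only needs to know the operations and not the application, the same inference code can evaluate networks trained for different sequences or body regions.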

4. Deep Learning Applications in Radiology

As outlined above, DL applications play an important role in radiology, not only for image reconstruction, but also for image classification and segmentation/registration, as well as for prognosis assessment and diagnosis/decision support. Therefore, the applications of DL can be divided into two groups: image acquisition/reconstruction on the scanner and post-processing at the workstation for reporting. Firstly, we would like to highlight image acquisition and reconstruction.

An important area for the application of DL is image reconstruction. It was shown in several studies that a drastic reduction of acquisition time is feasible for clinical applications using DL-based reconstruction schemes [10,11]. In particular, this approach is of high interest in turbo spin-echo (TSE) imaging. TSE imaging is of utmost importance in musculoskeletal (MSK) and pelvic imaging, due to its robustness and high image quality. However, a severe limitation of these sequences is their long acquisition time, which reduces scanner availability. In recent studies, it was shown that DL image reconstruction is able to achieve an acquisition time reduction of up to 65% in prostate TSE imaging, without any loss of image quality (Figure 2) [11,38].

Figure 2.

Figure 2 shows an example of a T2-weighted turbo spin-echo (TSE) image of the prostate in the axial plane, with standard reconstruction (SR) and deep learning reconstruction (DL) of a second, under-sampled acquisition of the same patient. SR is shown on the left, with an acquisition time of 4:19 min. The acquisition time of DL was 1:20 min in the same patient (right-hand-side image). Motion artifacts were reduced due to the shortened acquisition time.

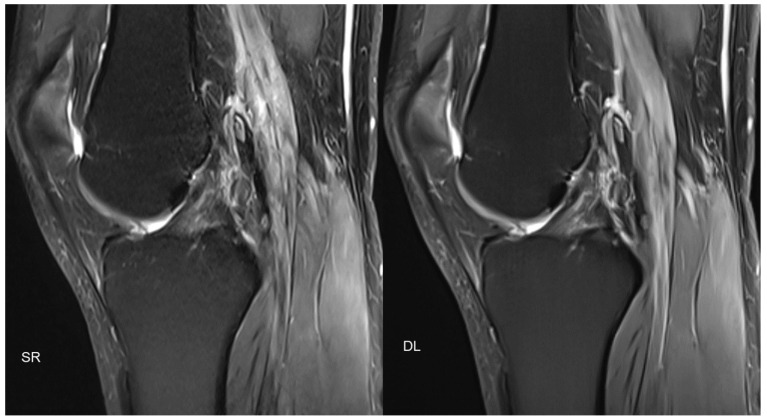

Similar approaches also exist in MSK imaging, with promising results (Figure 3).

Figure 3.

Figure 3 shows an example of fat-saturated PD-weighted turbo spin-echo (TSE) imaging of the knee in the sagittal plane, with standard reconstruction (SR; acquisition time 3:11 min) and deep learning reconstruction (DL; acquisition time 1:33 min) of the same patient. Similar to Figure 2, two different acquisitions were performed (standard acquisition for SR and conventionally under-sampled acquisition for DL).

Therefore, DL techniques that systematically reduce acquisition times might be the key to overcoming the shortage of MR scanner capacity and to improving healthcare and patient care. DL image reconstruction can be used not only for the reduction of acquisition time, but also for the improvement of image quality and patient comfort, as was presented by Wang et al. in prostate MRI [39].

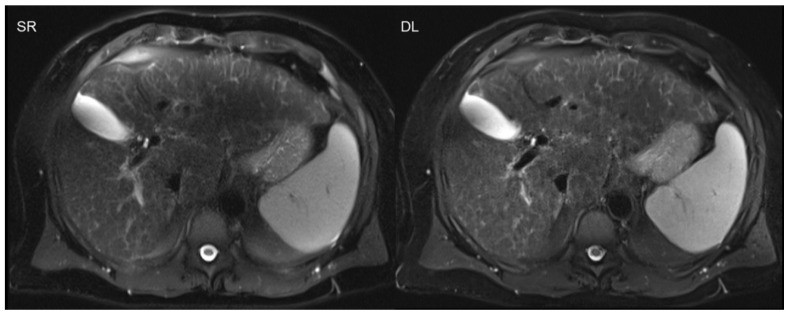

The advantages of acquisition time reduction are not limited to time-consuming sequences, such as those usually applied in MSK and pelvic imaging. Scan acceleration is especially advantageous in the imaging of the upper abdomen, e.g., liver imaging, or in cardiac imaging, due to the necessity of breath-holds [34]. Breath-holds often pose challenges in clinical practice regarding motion artifacts, especially in severely ill or elderly patients with restricted breath-holding capacities. The successful implementation of DL was recently also shown in single-shot sequences, such as T2-weighted, half-Fourier acquisition single-shot turbo spin-echo sequences in the upper abdomen (Figure 4) [12,13].

Figure 4.

Deep learning reconstruction (DL; acquisition time 0:16 min) of accelerated T2-weighted half-Fourier acquisition single-shot turbo spin-echo sequence (HASTE) of the upper abdomen using a 3T scanner is shown on the right. The left-hand side image demonstrates standard reconstruction (SR; acquisition time 1:30 min).
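The relative time savings of the individual examples in Figures 2 to 4 follow directly from the acquisition times stated in the captions. The short calculation below uses only these stated times. The helper names are illustrative, and the resulting percentages refer to the single examples shown, not to the study-level results cited in the text.

```python
def to_seconds(mm_ss):
    """Convert an acquisition time given as 'm:ss' into seconds."""
    minutes, seconds = mm_ss.split(":")
    return int(minutes) * 60 + int(seconds)

def time_reduction_percent(standard, accelerated):
    """Relative reduction of the acquisition time in percent."""
    t_std, t_acc = to_seconds(standard), to_seconds(accelerated)
    return 100.0 * (t_std - t_acc) / t_std

examples = {
    "Figure 2 (prostate T2 TSE)": ("4:19", "1:20"),
    "Figure 3 (knee PD TSE)": ("3:11", "1:33"),
    "Figure 4 (abdominal HASTE)": ("1:30", "0:16"),
}
reductions = {name: round(time_reduction_percent(*times), 1)
              for name, times in examples.items()}
# reductions: about 69.1 % (Figure 2), 51.3 % (Figure 3), and 82.2 % (Figure 4)
```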

DL-based super-resolution is another concept worth mentioning. In contrast to simple denoising algorithms, super-resolution aims to increase the spatial resolution via DL-based post-processing [40,41,42]. This concept was successfully implemented in head and neck imaging, as well as abdominal and cardiac imaging [40,43,44]. Especially fast sequences, such as gradient echo (GRE) imaging, benefit from these implementations, due to their relatively low signal-to-noise ratios. The newest algorithm developments also include partial-Fourier imaging with an acquisition time reduction, which is beneficial for the reduction of breathing artifacts (Figure 5) [45].

Figure 5.

Dynamic contrast-enhanced T1-weighted gradient echo imaging (VIBE Dixon) of the liver. Standard reconstruction is shown on the left. The right hand-side image shows the result of post-processing of the same dataset without change of acquisition parameters, using a deep learning super-resolution algorithm with improved sharpness and contrast.

Due to the increase in radiological examinations and in the number of images acquired within one examination, additional tools for automated image analysis are very helpful in daily clinical practice. DL networks, particularly CNNs, are especially suitable for the further analysis of image data. One of these areas is organ segmentation. It was previously shown that the segmentation of the prostate, as well as of the left ventricle, is feasible in MR images using DL networks [46,47]. However, DL networks have not only been applied for segmentation purposes but also for the detection of certain structures and findings. An interesting approach was demonstrated by Dou et al., who applied CNNs for the detection of cerebral microbleeds in MRI [48]. It was also previously shown that CNNs can be used for the detection and segmentation of brain metastases in MRI [49]. However, the capabilities of novel DL networks extend beyond detection to classification tasks. Wang et al. demonstrated the application of DL in prostate MRI for the classification of prostate cancer [50]. In another study, the impact of DL on the differentiation of clinically significant and indolent prostate cancer was analyzed with promising results using 3T MRI [51]. Furthermore, the potential of DL was also investigated in brain MRI for its ability to distinguish between multiple sclerosis patients and healthy subjects [52]. Further developments are related to image registration, as was demonstrated for the registration of prostate MRI and histopathology images by DL [53].

Besides lesion detection and characterization, it was also demonstrated that DL networks can be applied for the prognosis assessment of patients regarding cancer or non-cancer diseases, e.g., using chest radiographs or MR imaging [6,7]. Multiparametric MRI (mpMRI) of the prostate, in particular, is of high interest regarding prognosis assessment. It was shown that the prediction of biochemical recurrence after radical prostatectomy via novel support vector machine classification is feasible, with a better performance when compared to standard models [7]. Furthermore, it was demonstrated that machine learning can be helpful for response prediction in intensity-modulated radiation therapy of the prostate using radiomic features prior to irradiation, as well as post-irradiation [54].

5. Conclusions

This review outlined state-of-the-art DL-based technologies in radiology, with a focus on image reconstruction in MRI. The promising results of the most recent studies indicate that DL will be a major player in radiology in the upcoming years. Apart from decision and diagnosis support, the major advantages of DL MR reconstruction techniques are related to the acquisition time reduction and the improvement of image quality. Although the future might not yet be present, due to mostly experimental approaches, the next decade will be dominated by DL technologies. The implementation of these techniques may be the solution for the alleviation of limited scanner availability via workflow acceleration. It can be assumed that this disruptive technology will change daily routines and workflows permanently.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Institutional Review Board (055/2017BO2; 542/202BO; 670/202BO2) of the University Hospital Tuebingen.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Not applicable.

Conflicts of Interest

Dominik Nickel is an employee of Siemens Healthcare.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Mazurowski M.A., Buda M., Saha A., Bashir M.R. Deep learning in radiology: An overview of the concepts and a survey of the state of the art with focus on MRI. J. Magn. Reason. Imaging. 2019;49:939–954. doi: 10.1002/jmri.26534. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Shen D., Wu G., Suk H.I. Deep Learning in Medical Image Analysis. Annu. Rev. Biomed. Eng. 2017;19:221–248. doi: 10.1146/annurev-bioeng-071516-044442. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Litjens G., Kooi T., Bejnordi B.E., Setio A.A.A., Ciompi F., Ghafoorian M., van der Laak J.A.W.M., van Ginneken B., Sanchez C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017;42:60–88. doi: 10.1016/j.media.2017.07.005. [DOI] [PubMed] [Google Scholar]

- 4.Lundervold A.S., Lundervold A. An overview of deep learning in medical imaging focusing on MRI. Z. Med. Phys. 2019;29:102–127. doi: 10.1016/j.zemedi.2018.11.002. [DOI] [PubMed] [Google Scholar]

- 5.Shin H.C., Roth H.R., Gao M., Lu L., Xu Z., Nogues I., Yao J., Mollura D., Summers R.M. Deep Convolutional Neural Networks for Computer-Aided Detection: CNN Architectures, Dataset Characteristics and Transfer Learning. IEEE Trans. Med. Imaging. 2016;35:1285–1298. doi: 10.1109/TMI.2016.2528162. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Lu M.T., Ivanov A., Mayrhofer T., Hosny A., Aerts H., Hoffmann U. Deep Learning to Assess Long-term Mortality from Chest Radiographs. JAMA Netw. Open. 2019;2:e197416. doi: 10.1001/jamanetworkopen.2019.7416. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Zhang Y.D., Wang J., Wu C.J., Bao M.-L., Li H., Wang X.-N., Tao J., Shi H.-B. An imaging-based approach predicts clinical outcomes in prostate cancer through a novel support vector machine classification. Oncotarget. 2016;7:78140–78151. doi: 10.18632/oncotarget.11293. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Arndt C., Guttler F., Heinrich A., Burckenmeyer F., Diamantis I., Teichgraber U. Deep Learning CT Image Reconstruction in Clinical Practice. Rofo. 2021;193:252–261. doi: 10.1055/a-1248-2556. [DOI] [PubMed] [Google Scholar]

- 9.Akagi M., Nakamura Y., Higaki T., Narita K., Honda Y., Zhou J., Yu Z., Akino N., Awai K. Deep learning reconstruction improves image quality of abdominal ultra-high-resolution CT. Eur. Radiol. 2019;29:6163–6171. doi: 10.1007/s00330-019-06170-3. [DOI] [PubMed] [Google Scholar]

- 10.Recht M.P., Zbontar J., Sodickson D.K., Knoll F., Yakubova N., Sriram A., Murrell T., Defazio A., Rabbat M., Rybak L., et al. Using Deep Learning to Accelerate Knee MRI at 3 T: Results of an Interchangeability Study. Am. J. Roentgenol. 2020;215:1421–1429. doi: 10.2214/AJR.20.23313. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Gassenmaier S., Afat S., Nickel D., Mostapha M., Herrmann J., Othman A.E. Deep learning-accelerated T2-weighted imaging of the prostate: Reduction of acquisition time and improvement of image quality. Eur. J. Radiol. 2021;137:109600. doi: 10.1016/j.ejrad.2021.109600. [DOI] [PubMed] [Google Scholar]

- 12.Herrmann J., Gassenmaier S., Nickel D., Arberet S., Afat S., Lingg A., Kundel M., Othman A.E. Diagnostic Confidence and Feasibility of a Deep Learning Accelerated HASTE Sequence of the Abdomen in a Single Breath-Hold. Investig. Radiol. 2021;56:313–319. doi: 10.1097/RLI.0000000000000743. [DOI] [PubMed] [Google Scholar]

- 13.Herrmann J., Nickel D., Mugler J.P., III, Arberet S., Gassenmaier S., Afat S., Nikolaou K., Othman A.E. Development and Evaluation of Deep Learning-Accelerated Single-Breath-Hold Abdominal HASTE at 3 T Using Variable Refocusing Flip Angles. Investig. Radiol. 2021;56:645–652. doi: 10.1097/RLI.0000000000000785. [DOI] [PubMed] [Google Scholar]

- 14.Han Y.S., Yoo J., Ye J.C. Deep Residual Learning for Compressed Sensing CT Reconstruction via Persistent Homology Analysis. arXiv. 2016161106391 [Google Scholar]

- 15.Kofler A., Dewey M., Schaeffter T., Wald C., Kolbitsch C. Spatio-Temporal Deep Learning-Based Undersampling Artefact Reduction for 2D Radial Cine MRI with Limited Training Data. IEEE Trans. Med. Imaging. 2020;39:703–717. doi: 10.1109/TMI.2019.2930318. [DOI] [PubMed] [Google Scholar]

- 16.Yang G., Yu S., Dong H., Slabaugh G., Dragotti P.L., Ye X., Liu F., Arridge S., Keegan J., Guo Y., et al. DAGAN: Deep De-Aliasing Generative Adversarial Networks for Fast Compressed Sensing MRI Reconstruction. IEEE Trans. Med. Imaging. 2017;37:1310–1321. doi: 10.1109/TMI.2017.2785879. [DOI] [PubMed] [Google Scholar]

- 17. Hyun C.M., Kim H.P., Lee S.M., Lee S.M., Seo J.K. Deep learning for undersampled MRI reconstruction. Phys. Med. Biol. 2018;63:135007. doi: 10.1088/1361-6560/aac71a.

- 18. Zhu B., Liu J.Z., Cauley S.F., Rosen B.R., Rosen M.S. Image reconstruction by domain-transform manifold learning. Nature. 2018;555:487–492. doi: 10.1038/nature25988.

- 19. Akçakaya M., Moeller S., Weingärtner S., Uğurbil K. Scan-specific robust artificial-neural-networks for k-space interpolation (RAKI) reconstruction: Database-free deep learning for fast imaging. Magn. Reson. Med. 2019;81:439–453. doi: 10.1002/mrm.27420.

- 20. Eo T., Jun Y., Kim T., Jang J., Lee H.J., Hwang D. KIKI-net: Cross-domain convolutional neural networks for reconstructing undersampled magnetic resonance images. Magn. Reson. Med. 2018;80:2188–2201. doi: 10.1002/mrm.27201.

- 21. Ahmad R., Bouman C.A., Buzzard G.T., Chan S., Liu S., Reehorst E.T., Schniter P. Plug-and-Play Methods for Magnetic Resonance Imaging: Using Denoisers for Image Recovery. IEEE Signal Process. Mag. 2020;37:105–116. doi: 10.1109/MSP.2019.2949470.

- 22. Lempitsky V., Vedaldi A., Ulyanov D. Deep Image Prior; Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition; Salt Lake City, UT, USA. 18–23 June 2018; pp. 9446–9454.

- 23. Meinhardt T., Moeller M., Hazirbas C., Cremers D. Learning Proximal Operators: Using Denoising Networks for Regularizing Inverse Imaging Problems; Proceedings of the IEEE International Conference on Computer Vision; Venice, Italy. 22–29 October 2017; pp. 1799–1808.

- 24. Romano Y., Elad M., Milanfar P. The little engine that could: Regularization by Denoising (RED). SIAM J. Imaging Sci. 2017;10:1804–1844. doi: 10.1137/16M1102884.

- 25. Lehtinen J., Munkberg J., Hasselgren J., Laine S., Karras T., Aittala M., Aila T. Noise2Noise: Learning image restoration without clean data; Proceedings of the 35th International Conference on Machine Learning, ICML; Stockholm, Sweden. 10–15 July 2018; pp. 4620–4631.

- 26. Pruessmann K.P., Weiger M., Boernert P., Boesiger P. Advances in sensitivity encoding with arbitrary k-space trajectories. Magn. Reson. Med. 2001;46:638–651. doi: 10.1002/mrm.1241.

- 27. Pruessmann K.P., Weiger M., Scheidegger M.B., Boesiger P. SENSE: Sensitivity encoding for fast MRI. Magn. Reson. Med. 1999;42:952–962. doi: 10.1002/(SICI)1522-2594(199911)42:5<952::AID-MRM16>3.0.CO;2-S.

- 28. Aggarwal H.K., Mani M.P., Jacob M. Model Based Image Reconstruction Using Deep Learned Priors (MoDL); Proceedings of the IEEE International Symposium on Biomedical Imaging; Washington, DC, USA. 4–7 April 2018; pp. 671–674.

- 29. Schlemper J., Caballero J., Hajnal J.V., Price A.N., Rueckert D. A Deep Cascade of Convolutional Neural Networks for Dynamic MR Image Reconstruction. IEEE Trans. Med. Imaging. 2018;37:491–503. doi: 10.1109/TMI.2017.2760978.

- 30. Duan J., Schlemper J., Qin C., Ouyang C., Bai W., Biffi C., Bello G., Statton B., O’Regan D.P., Rueckert D. VS-Net: Variable Splitting Network for Accelerated Parallel MRI Reconstruction. In: Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). Springer; Berlin/Heidelberg, Germany: 2019. pp. 713–722.

- 31. Adler J., Öktem O. Learned Primal-Dual Reconstruction. IEEE Trans. Med. Imaging. 2018;37:1322–1332. doi: 10.1109/TMI.2018.2799231.

- 32. Gregor K., LeCun Y. Learning Fast Approximations of Sparse Coding; Proceedings of the International Conference on Machine Learning; Haifa, Israel. 21–24 June 2010; pp. 399–406.

- 33. Yang Y., Sun J., Li H., Xu Z. ADMM-Net: A deep learning approach for compressive sensing MRI. arXiv. 2017. arXiv:1705.06869. doi: 10.1109/TPAMI.2018.2883941.

- 34. Küstner T., Fuin N., Hammernik K., Bustin A., Qi H., Hajhosseiny R., Masci P.G., Neji R., Rueckert D., Botnar R.M., et al. CINENet: Deep learning-based 3D cardiac CINE MRI reconstruction with multi-coil complex-valued 4D spatio-temporal convolutions. Sci. Rep. 2020;10:13710. doi: 10.1038/s41598-020-70551-8.

- 35. Hammernik K., Klatzer T., Kobler E., Recht M.P., Sodickson D.K., Pock T., Knoll F. Learning a variational network for reconstruction of accelerated MRI data. Magn. Reson. Med. 2018;79:3055–3071. doi: 10.1002/mrm.26977.

- 36. Feng L., Benkert T., Block K.T., Sodickson D.K., Otazo R., Chandarana H. Compressed sensing for body MRI. J. Magn. Reson. Imaging. 2017;45:966–987. doi: 10.1002/jmri.25547.

- 37. Lustig M., Donoho D., Pauly J.M. Sparse MRI: The application of compressed sensing for rapid MR imaging. Magn. Reson. Med. 2007;58:1182–1195. doi: 10.1002/mrm.21391.

- 38. Gassenmaier S., Afat S., Nickel M.D., Mostapha M., Herrmann J., Almansour H., Nikolaou K., Othman A.E. Accelerated T2-Weighted TSE Imaging of the Prostate Using Deep Learning Image Reconstruction: A Prospective Comparison with Standard T2-Weighted TSE Imaging. Cancers. 2021;13:3593. doi: 10.3390/cancers13143593.

- 39. Wang X., Ma J., Bhosale P., Rovira J.J.I., Qayyum A., Sun J., Bayram E., Szklaruk J. Novel deep learning-based noise reduction technique for prostate magnetic resonance imaging. Abdom. Radiol. 2021;46:3378–3386. doi: 10.1007/s00261-021-02964-6.

- 40. Almansour H., Gassenmaier S., Nickel D., Kannengiesser S., Afat S., Weiss J., Hoffmann R., Othman A.E. Deep Learning-Based Superresolution Reconstruction for Upper Abdominal Magnetic Resonance Imaging: An Analysis of Image Quality, Diagnostic Confidence, and Lesion Conspicuity. Investig. Radiol. 2021;56:509–516. doi: 10.1097/RLI.0000000000000769.

- 41. Gassenmaier S., Afat S., Nickel D., Kannengiesser S., Herrmann J., Hoffmann R., Othman A.E. Application of a Novel Iterative Denoising and Image Enhancement Technique in T1-Weighted Precontrast and Postcontrast Gradient Echo Imaging of the Abdomen: Improvement of Image Quality and Diagnostic Confidence. Investig. Radiol. 2021;56:328–334. doi: 10.1097/RLI.0000000000000746.

- 42. Gassenmaier S., Herrmann J., Nickel D., Kannengiesser S., Afat S., Seith F., Hoffmann R., Othman A.E. Image Quality Improvement of Dynamic Contrast-Enhanced Gradient Echo Magnetic Resonance Imaging by Iterative Denoising and Edge Enhancement. Investig. Radiol. 2021;56:465–470. doi: 10.1097/RLI.0000000000000761.

- 43. Koktzoglou I., Huang R., Ankenbrandt W.J., Walker M.T., Edelman R.R. Super-resolution head and neck MRA using deep machine learning. Magn. Reson. Med. 2021;86:335–345. doi: 10.1002/mrm.28738.

- 44. Küstner T., Escobar C.M., Psenicny A., Bustin A., Fuin N., Qi H., Neji R., Kunze K., Hajhosseiny R., Prieto C., et al. Deep-learning based super-resolution for 3D isotropic coronary MR angiography in less than a minute. Magn. Reson. Med. 2021:2837–2852. doi: 10.1002/mrm.28911.

- 45. Afat S., Wessling D., Afat C., Nickel D., Arberet S., Herrmann J., Othman A.E., Gassenmaier S. Analysis of a Deep Learning-Based Superresolution Algorithm Tailored to Partial Fourier Gradient Echo Sequences of the Abdomen at 1.5 T: Reduction of Breath-Hold Time and Improvement of Image Quality. Investig. Radiol. 2021 doi: 10.1097/RLI.0000000000000825.

- 46. Guo Y., Gao Y., Shen D. Deformable MR Prostate Segmentation via Deep Feature Learning and Sparse Patch Matching. IEEE Trans. Med. Imaging. 2016;35:1077–1089. doi: 10.1109/TMI.2015.2508280.

- 47. Avendi M.R., Kheradvar A., Jafarkhani H. A combined deep-learning and deformable-model approach to fully automatic segmentation of the left ventricle in cardiac MRI. Med. Image Anal. 2016;30:108–119. doi: 10.1016/j.media.2016.01.005.

- 48. Dou Q., Chen H., Yu L., Zhao L., Qin J., Wang D., Mok V.C.T., Shi L., Heng P.-A. Automatic Detection of Cerebral Microbleeds From MR Images via 3D Convolutional Neural Networks. IEEE Trans. Med. Imaging. 2016;35:1182–1195. doi: 10.1109/TMI.2016.2528129.

- 49. Grovik E., Yi D., Iv M., Tong E., Rubin D., Zaharchuk G. Deep learning enables automatic detection and segmentation of brain metastases on multisequence MRI. J. Magn. Reson. Imaging. 2020;51:175–182. doi: 10.1002/jmri.26766.

- 50. Wang X., Yang W., Weinreb J., Han J., Li Q., Kong X., Yan Y., Ke Z., Luo B., Liu T., et al. Searching for prostate cancer by fully automated magnetic resonance imaging classification: Deep learning versus non-deep learning. Sci. Rep. 2017;7:15415. doi: 10.1038/s41598-017-15720-y.

- 51. Zhong X., Cao R., Shakeri S., Scalzo F., Lee Y., Enzmann D.R., Wu H.H., Raman S.S., Sung K. Deep transfer learning-based prostate cancer classification using 3 Tesla multi-parametric MRI. Abdom. Radiol. 2019;44:2030–2039. doi: 10.1007/s00261-018-1824-5.

- 52. Yoo Y., Tang L.Y.W., Brosch T., Li D.K.B., Kolind S., Vavasour I., Rauscher A., MacKay A.L., Traboulsee A., Tam R.C. Deep learning of joint myelin and T1w MRI features in normal-appearing brain tissue to distinguish between multiple sclerosis patients and healthy controls. Neuroimage Clin. 2018;17:169–178. doi: 10.1016/j.nicl.2017.10.015.

- 53. Shao W., Banh L., Kunder C.A., Fan R.E., Soerensen S.J.C., Wang J.B., Teslovich N.C., Madhuripan N., Jawahar A., Ghanouni P., et al. ProsRegNet: A deep learning framework for registration of MRI and histopathology images of the prostate. Med. Image Anal. 2021;68:101919. doi: 10.1016/j.media.2020.101919.

- 54. Abdollahi H., Mofid B., Shiri I., Razzaghdoust A., Saadipoor A., Mahdavi A., Galandooz H.M., Mahdavi S.R. Machine learning-based radiomic models to predict intensity-modulated radiation therapy response, Gleason score and stage in prostate cancer. Radiol. Med. 2019;124:555–567. doi: 10.1007/s11547-018-0966-4.

Data Availability Statement

Not applicable.