Abstract

Chatbots are software applications that simulate a conversation with a person. The effectiveness of chatbots in facilitating the recruitment of research participants, particularly among racial and ethnic minorities, is unknown. The objective of this study was to compare chatbot-based versus telephone-based recruitment for enrolling research participants from a predominantly minority patient population at an urban institution. We randomly allocated adults to receive either chatbot or telephone-based outreach regarding a study about vaccine hesitancy. The primary outcome was the proportion of participants who provided consent to participate in the study. Among 935 participants, the proportion who answered contact attempts was significantly lower in the chatbot group than in the telephone group (absolute difference −21.8%; 95% confidence interval [CI] −27.0%, −16.5%; P < 0.001). The consent rate was also significantly lower in the chatbot group (absolute difference −3.4%; 95% CI −5.7%, −1.1%; P = 0.004). However, among participants who answered a contact attempt, the difference in consent rates was not significant. In conclusion, the consent rate was lower with chatbot-based than with telephone-based outreach. The difference in consent rates was due to a lower proportion of participants in the chatbot group who answered a contact attempt.

Keywords: Chatbot, telephone outreach, recruitment, electronic consent

INTRODUCTION

Chatbots are software applications that mimic written or spoken human speech to simulate a conversation or interaction with a person. Several reports have described the use of chatbots in healthcare, including randomized trials to evaluate the effectiveness of chatbots in providing health-related communication, supporting mental health, and reducing postoperative opioid use.1–19 The effectiveness of chatbots to facilitate recruitment of research participants, however, is unclear.

Participant recruitment in research is costly and remains a major challenge in many studies involving human subjects, particularly among racial and ethnic minorities.20 In the United States, recruitment involves several activities, including adequately explaining the study to potential participants and obtaining informed consent using institutional review board (IRB)-approved procedures. Recruitment using traditional telephone contact may take days to months depending on the number of potential study participants and requires a substantial research infrastructure (eg, communications center, use of a Health Insurance Portability and Accountability Act [HIPAA]-compliant telephony system). While the development of chatbots also requires an infrastructure (eg, communication scripts and chatbot platform), chatbots can instantaneously contact far more participants than is possible by telephone. In this brief communication, we present the results of a randomized trial in a minority-serving institution in the United States to compare consent rates with chatbot versus telephone-based outreach in an IRB-approved research study about Coronavirus Disease 2019 (COVID-19) vaccine hesitancy.

METHODS

A chatbot platform developed by QliqSOFT (Dallas, TX, USA) that enables HIPAA-compliant communication was used to recruit participants for a study in which they completed a brief questionnaire about vaccine hesitancy (UIC IRB#: 2020-1157). The study was conducted at the University of Illinois Hospital & Clinics (UI Health), which includes a single 462-bed hospital, 22 outpatient clinics, and a network of 13 federally qualified health centers.21 UI Health is part of the University of Illinois Chicago (UIC), which is recognized by the US Department of Education as a minority-serving institution, that is, an educational institution that serves racial and ethnic minority populations.22

Source population

Adult participants who enrolled in an IRB-approved research registry for patients with pulmonary disorders from 2011 to 2019, all of whom were able to speak and understand English, were invited to participate in the study. The registry included self-reported demographics (date of birth, gender, race, and ethnicity) and contact information (full name and telephone number).

Study design

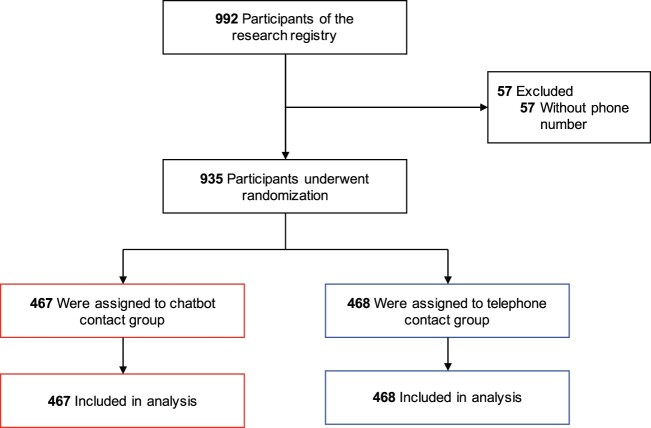

We employed a randomized, parallel-group study design (Figure 1). Participants in the registry were randomly assigned to either the chatbot or telephone group using a 1:1 allocation ratio. To prevent imbalance due to recency of contact information in the registry, we stratified randomization by year of enrollment (2011–2019) in the research registry (Supplementary Table S1). In both study groups, participants received up to 2 contact attempts over 2 consecutive business days during 1 of 3 time periods: 8:00 AM–12:00 PM, 12:01 PM–4:00 PM, or 4:01 PM–8:00 PM.
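The stratified 1:1 allocation described above can be sketched in Python as a shuffle-and-alternate procedure within each enrollment-year stratum. This is a minimal illustration and not the study's actual randomization code. The Methods do not state which algorithm or software produced the allocation. The function name `stratified_allocation`, the `(participant_id, enrollment_year)` input format, and the seed are all hypothetical.

```python
import random
from collections import defaultdict


def stratified_allocation(participants, seed=2020):
    """Allocate participants 1:1 to 'chatbot' or 'telephone' within strata.

    participants: iterable of (participant_id, enrollment_year) tuples.
    seed: hypothetical fixed seed so the allocation can be reproduced.
    Returns a dict mapping participant_id -> arm.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for pid, year in participants:
        strata[year].append(pid)  # one stratum per registry enrollment year

    allocation = {}
    for year in sorted(strata):
        ids = list(strata[year])
        rng.shuffle(ids)  # random order within the stratum
        # Randomly choose which arm takes the even positions, so the extra
        # participant in an odd-sized stratum is equally likely to go to either arm.
        arms = ["chatbot", "telephone"]
        rng.shuffle(arms)
        for i, pid in enumerate(ids):
            allocation[pid] = arms[i % 2]
    return allocation


# Usage with a toy registry spread over enrollment years 2011-2019 (not study data).
registry = [(pid, 2011 + pid % 9) for pid in range(935)]
allocation = stratified_allocation(registry)
print(sum(arm == "chatbot" for arm in allocation.values()))
```

Alternating arms down a shuffled list keeps every stratum within one participant of an exact 1:1 split. A permuted-block scheme would be a common alternative when participants are enrolled sequentially rather than allocated all at once from an existing registry.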

Figure 1.

Randomization of participants in the research registry. Among 992 participants in the research registry, 57 participants were excluded because they did not have a documented phone number. All participants who underwent randomization were included in the analysis.

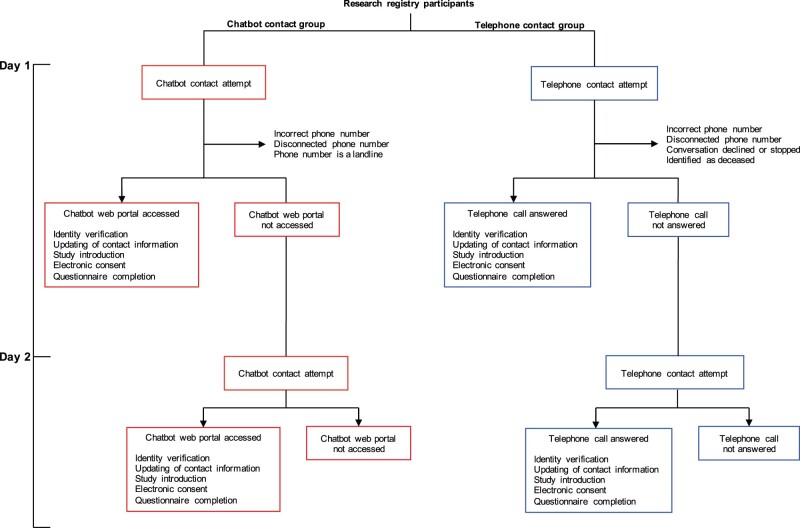

Participants assigned to the chatbot group received an SMS message with a link to a HIPAA-compliant web portal for instant messaging with the chatbot. Participants assigned to the telephone group received a telephone call from a research coordinator. The coordinator used a script nearly identical to the chatbot script, with some modifications to accommodate verbal communication (Supplementary Table S2). In both groups, participants were required to verify their identity (full name and date of birth) before providing documentation of electronic consent (e-consent) (Figure 2). After providing e-consent, participants were asked to complete an 11-item questionnaire on attitudes and practices regarding vaccination. The questionnaire was adapted from the 5-point Likert scale Vaccine Hesitancy questionnaire created by the World Health Organization.23
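The identity check that gated e-consent can be illustrated as a comparison against the registry's self-reported name and date of birth. This is a sketch under stated assumptions. The paper does not describe the matching rules, so the case- and whitespace-insensitive name comparison, the record field names (`first_name`, `last_name`, `birth_date`), and the function names are hypothetical.

```python
from datetime import date


def normalize_name(name: str) -> str:
    # Case-fold and collapse whitespace so " mary  SMITH " compares equal to "Mary Smith".
    return " ".join(name.split()).casefold()


def identity_verified(record: dict, first_name: str, last_name: str, birth_date: date) -> bool:
    """Return True only if first name, last name, and date of birth all match the registry."""
    return (
        normalize_name(record["first_name"]) == normalize_name(first_name)
        and normalize_name(record["last_name"]) == normalize_name(last_name)
        and record["birth_date"] == birth_date
    )


# Hypothetical registry record; the field names are illustrative only.
record = {"first_name": "Mary", "last_name": "Smith", "birth_date": date(1962, 3, 14)}
print(identity_verified(record, " mary", "SMITH ", date(1962, 3, 14)))  # True
print(identity_verified(record, "Mary", "Smith", date(1962, 4, 14)))    # False
```

Exact matching after normalization is deliberately strict. A deployed system would also need a policy for nicknames, hyphenated surnames, and typos, which this sketch does not attempt.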

Figure 2.

Study schema. Participants were randomized to either the chatbot or telephone contact intervention. Participants in the chatbot group who did not access the web portal by 12:00 AM the following day received a second contact attempt. Participants in the telephone group who were unavailable to speak on the phone received a second contact attempt. Participants in both groups who answered a contact attempt were asked to verify their identity by confirming their first and last name and their birth date, and to either update or confirm their contact information. Participants interested in learning more about the research study either watched a pre-recorded study overview video within the chatbot web portal or heard research coordinators present the study overview script verbally. Participants in the chatbot and telephone groups who agreed to enroll in the study were invited to provide documentation of consent electronically. Once documentation of e-consent was obtained, participants in the chatbot group were invited to complete the questionnaire using a form within the chatbot web portal. Participants in the telephone group instead completed the questionnaire verbally with a call center agent.

The telephone contact group required 5 trained call center agents working full-time over 5 days (about 60 agent-hours) to complete up to 2 contact attempts per individual. While the chatbot could complete up to 2 contact attempts for each individual instantaneously, we allocated an equal number of chatbot contact attempts over 5 days to prevent the potential for differential response rates by day of week and time of day.
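One way to implement this balancing is to give the chatbot arm the same distribution of first contact attempts across (day, window) slots as the telephone arm, in random order. The Methods do not describe the scheduling mechanism. This sketch therefore assumes the telephone arm's first attempts are already assigned to slots. The function `mirror_schedule` and the slot representation are hypothetical, and second attempts on the next business day are not modeled.

```python
import random

# The three contact windows described in the Methods.
WINDOWS = ("8:00 AM-12:00 PM", "12:01 PM-4:00 PM", "4:01 PM-8:00 PM")


def mirror_schedule(telephone_slots, chatbot_ids, seed=0):
    """Give the chatbot arm the same distribution of first contact attempts
    over (day, window) slots as the telephone arm.

    telephone_slots: one (day, window) tuple per telephone participant.
    chatbot_ids: chatbot participant IDs to schedule.
    Returns a dict mapping each chatbot ID to a (day, window) slot.
    """
    rng = random.Random(seed)  # hypothetical fixed seed for reproducibility
    slots = list(telephone_slots)
    rng.shuffle(slots)
    # If the chatbot arm is larger, reuse randomly chosen telephone slots.
    while len(slots) < len(chatbot_ids):
        slots.append(rng.choice(telephone_slots))
    # zip stops at the shorter sequence, so any surplus slots are simply unused.
    return dict(zip(chatbot_ids, slots))


# Usage: a toy telephone schedule of 31 first attempts per slot over 5 days.
telephone = [(day, w) for day in range(1, 6) for w in WINDOWS for _ in range(31)]
schedule = mirror_schedule(telephone, list(range(len(telephone))))
print(sorted(schedule.values()) == sorted(telephone))  # True: identical slot counts
```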

Outcomes

The primary outcome of the study was the proportion of participants who provided e-consent. Secondary outcomes were:

1. The proportion of participants with a delivered first or second contact attempt. A “delivered” contact attempt was defined differently in each group. In the chatbot group, it was an SMS message sent to a phone number that was in service and able to receive SMS messages. In the telephone group, it was a telephone call to a phone number in service (ie, able to receive calls).
2. The proportion of participants with an answered first or second contact attempt. An “answered” contact attempt was defined in the chatbot group as a click on the link to the chatbot application, and in the telephone group as a phone call answered by the individual.
3. The proportion of participants who verified their identity and completed the questionnaire.
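The participant-level "first or second" outcomes above can be computed as a logical OR over the 2 attempts, with every randomized participant in the denominator. The following is a minimal sketch with hypothetical class and field names. The paper does not describe how each attempt's delivered and answered status was recorded (for example, from SMS delivery reports or call logs).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Attempt:
    delivered: bool  # chatbot: SMS reached an SMS-capable number; telephone: number in service
    answered: bool   # chatbot: link clicked; telephone: call answered by the individual


@dataclass
class Participant:
    arm: str                          # "chatbot" or "telephone"
    first: Attempt
    second: Optional[Attempt] = None  # None if no second attempt was made


def delivered_either(p: Participant) -> bool:
    return p.first.delivered or (p.second is not None and p.second.delivered)


def answered_either(p: Participant) -> bool:
    return p.first.answered or (p.second is not None and p.second.answered)


def proportion(participants: List[Participant], arm: str, outcome) -> float:
    # Denominator is every participant randomized to the arm (as-randomized).
    group = [p for p in participants if p.arm == arm]
    return sum(outcome(p) for p in group) / len(group)


# Toy data, not study data.
people = [
    Participant("chatbot", Attempt(True, False), Attempt(True, True)),
    Participant("chatbot", Attempt(False, False), Attempt(False, False)),
    Participant("telephone", Attempt(True, True)),
    Participant("telephone", Attempt(False, False), Attempt(True, False)),
]
print(proportion(people, "chatbot", answered_either))     # 0.5
print(proportion(people, "telephone", delivered_either))  # 1.0
```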

Statistical analysis

Descriptive statistics included means (standard deviations) and proportions, as appropriate. The primary analysis followed the intention-to-treat (as-randomized) principle. We also conducted exploratory analyses among individuals in the chatbot and telephone groups who answered a contact attempt. We used 2-sample comparisons of means or proportions to compare demographic characteristics of registry participants who did and did not answer the chatbot or telephone contact attempts. Outcomes in the 2 groups were compared as differences in proportions with 95% confidence intervals (CIs) and P-values. Two-sided P-values <.05 were considered statistically significant. All analyses were completed using R (version 4.0.3; R Core Team, Vienna, Austria).
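The comparisons of proportions can be reproduced from the reported counts using 3 quantities: a difference in proportions, a Wald 95% CI, and a 2-sample z-test. The Methods state that R was used but do not name the function or its options. This Python sketch assumes an unpooled Wald interval and a pooled z-test without continuity correction, and with those assumptions it reproduces the reported primary-outcome interval and P-value. By contrast, R's `prop.test` applies a continuity correction by default, which would widen the interval and increase the P-value. The function name `compare_proportions` is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def compare_proportions(x1, n1, x2, n2, level=0.95):
    """Compare two independent proportions (group 1 minus group 2).

    Returns the difference, a Wald confidence interval, and a two-sided
    P-value from the pooled 2-sample z-test without continuity correction.
    """
    p1, p2 = x1 / n1, x2 / n2
    diff = p1 - p2
    # Unpooled standard error for the confidence interval.
    se = sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    z_crit = NormalDist().inv_cdf(1 - (1 - level) / 2)  # about 1.96 for 95%
    ci = (diff - z_crit * se, diff + z_crit * se)
    # Pooled standard error under the null hypothesis p1 == p2.
    pooled = (x1 + x2) / (n1 + n2)
    se0 = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = diff / se0
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return diff, ci, p_value


# Primary outcome: e-consent in the chatbot (8/467) vs telephone (24/468) group.
diff, (lo, hi), p = compare_proportions(8, 467, 24, 468)
print(f"{100 * diff:.1f}% ({100 * lo:.1f}%, {100 * hi:.1f}%), P = {p:.3f}")
# prints: -3.4% (-5.7%, -1.1%), P = 0.004
```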

RESULTS

Most participants in the research registry were enrolled between 2011 and 2015 (Supplementary Table S1). Of 935 participants randomized to the chatbot (N = 467) or telephone (N = 468) group, the mean age was 58 years, and the majority of participants were women (69%) and either African American/Black (65.6%) or Hispanic (10.5%) (Supplementary Table S3).

The proportion of participants whose first contact attempt was delivered was significantly lower in the chatbot group than in the telephone group (Table 1). The proportion of participants who answered a contact attempt was also lower in the chatbot group. Individuals who did not answer the chatbot (vs those who answered it) were slightly older (57 vs 54 years), more likely to be women (71% vs 59%) and African American/Black (66% vs 54%), and less likely to be White (28% vs 36%). However, none of these differences were significant (Supplementary Table S4). There were also no significant differences in demographics between individuals who answered and did not answer a telephone contact.

Table 1.

Outcomes of contact attempts

| Outcome | Chatbot (N = 467) | Telephone (N = 468) | Difference, % (95% CI) | P-value |
|---|---|---|---|---|
| First contact attempt, N (%) | | | | |
| Delivered | 259 (55.5) | 333 (71.2) | −15.7 (−21.8, −9.6) | <.001 |
| Answered | 42 (9.0) | 120 (25.6) | −16.6 (−21.4, −11.9) | <.001 |
| Second contact attempt, N (%) | | | | |
| Delivered | 225 (48.2) | 224 (47.9) | 0.3 (−6.1, 6.7) | .92 |
| Answered | 16 (3.4) | 50 (10.7) | −7.3 (−10.5, −4.0) | <.001 |
| First or second contact attempt, N (%) | | | | |
| Delivered | 267 (57.2) | 337 (72.0) | −14.8 (−20.9, −8.8) | <.001 |
| Answered | 58 (12.4) | 160 (34.2) | −21.8 (−27.0, −16.5) | <.001 |

Delivered and answered contact attempts were defined differently in the chatbot and telephone groups; see Methods for definitions.

As-randomized analyses indicated a smaller proportion of the chatbot group verified their identity, updated their contact information, and provided informed e-consent compared to the telephone group (1.7% vs 5.1%; absolute difference −3.4%; 95% CI −5.7%, −1.1%; P = 0.004) (Table 2). Among the subset of participants who answered a contact attempt, there were no significant differences in the proportion who verified their identity, updated their contact information, or provided informed e-consent.

Table 2.

Outcomes as-randomized and among those who answered at least 1 contact attempt

| Outcome, N (%) | Chatbot, as-randomized (N = 467) | Telephone, as-randomized (N = 468) | Difference, % (95% CI) | P-value | Chatbot, ≥1 answered attempt (N = 58) | Telephone, ≥1 answered attempt (N = 160) | Difference, % (95% CI) | P-value |
|---|---|---|---|---|---|---|---|---|
| Identity verified | 24 (5.1) | 58 (12.4) | −7.3 (−10.8, −3.7) | <.001 | 24 (41.4) | 58 (36.3) | 5.1 (−9.6, 19.8) | .49 |
| Contact information updated | 21 (4.5) | 55 (11.8) | −7.3 (−10.7, −3.8) | <.001 | 21 (36.2) | 55 (34.4) | 1.8 (−12.6, 16.2) | .80 |
| Interested in learning about study | 15 (3.2) | 40 (8.6) | −5.3 (−8.3, −2.3) | <.001 | 15 (25.9) | 40 (25.0) | 0.9 (−12.3, 14.0) | .90 |
| Provided informed e-consent | 8 (1.7) | 24 (5.1) | −3.4 (−5.7, −1.1) | .004 | 8 (13.8) | 24 (15.0) | −1.2 (−11.7, 9.3) | .82 |

Results of questionnaires among the 32 respondents suggested a favorable perspective regarding vaccination (Supplementary Figure S1); the small number of respondents precluded meaningful comparisons of responses obtained via chatbot compared to telephone.

DISCUSSION

In this parallel-group randomized trial of over 900 individuals at a US minority-serving institution, we compared chatbots with telephone outreach by trained call center agents. E-consent rates were about 3 percentage points lower with chatbots than with telephone-based recruitment (1.7% vs 5.1%). However, among participants who answered a contact attempt, there were no significant differences in e-consent between chatbot and telephone.

To our knowledge, this is the first report to examine the use of chatbots in recruitment for an IRB-approved research study that required electronic documentation of consent (e-consent). The use of chatbots as a cost-saving strategy is growing rapidly, and some reports estimate that the use of chatbots could save the healthcare, banking, and retail sectors $11 billion annually by 2023.24 Furthermore, interest in testing novel strategies for recruitment in minority populations in the United States has grown substantially during the COVID-19 pandemic, given the disproportionate share of morbidity and mortality from COVID-19.25,26 We compared the use of chatbots with telephone-based recruitment because phone calls are commonly used in research as a lower-cost alternative to traditional in-person visits. To reach all participants in the telephone group, 5 call center agents worked full-time over 5 days. By contrast, the chatbot was able to instantaneously contact a similar or greater number of participants in the chatbot group.

Overall, the proportion of participants who provided e-consent to join a minimal-risk study about attitudes and practices regarding vaccination was disappointingly low (well below 10% of those invited). It was even lower in the chatbot group than in the telephone group. However, the rate of e-consent in the chatbot group was comparable to rates of participant enrollment in studies evaluating other electronic forms of communication, such as email and patient portal messaging from electronic health records (0.17–4.4%).27,28 Differences in enrollment yield between the chatbot and telephone groups appear to be driven by a lower proportion of participants in the chatbot group who answered a contact attempt. We observed numerically (but not significantly) lower rates of answering the chatbot outreach among older individuals, women, and non-White individuals. These findings may indicate lower acceptability of chatbots in some patient subgroups, which could further exacerbate differential enrollment in studies according to sociodemographic characteristics. Future studies should consider prior notification to build trust in and awareness of chatbots before sending recruitment messages. This may be especially important for individuals who have not previously used chatbots in research-related communication. Alternatively, individuals could be asked about their preferred mode of contact (eg, chatbot or telephone), and this information could be used to tailor recruitment strategies to their preferences.
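The argument that the yield gap is driven by answering rather than by conversion can be made concrete by factoring each arm's consent yield into 2 parts: the proportion who answered, and the proportion of answerers who consented. The counts come from Tables 1 and 2. This descriptive decomposition is added for illustration and is not an analysis reported in the study. Because answering occurs after randomization, the second factor compares non-randomized subgroups and should be read as exploratory. The function name `decompose` is hypothetical.

```python
def decompose(n_randomized: int, n_answered: int, n_consented: int):
    """Return (answer rate, consent rate among answerers, overall consent yield)."""
    answer_rate = n_answered / n_randomized
    consent_if_answered = n_consented / n_answered
    # The product telescopes back to n_consented / n_randomized.
    return answer_rate, consent_if_answered, answer_rate * consent_if_answered


# Counts from Tables 1 and 2 (first or second attempt answered; e-consent provided).
arms = {"chatbot": decompose(467, 58, 8), "telephone": decompose(468, 160, 24)}
for arm, (answered, converted, overall) in arms.items():
    print(f"{arm}: answered {answered:.1%} x consented if answered {converted:.1%} = {overall:.1%}")

chat, tel = arms["chatbot"], arms["telephone"]
print(f"answer-rate ratio {chat[0] / tel[0]:.2f}, conversion ratio {chat[1] / tel[1]:.2f}")
```

The chatbot-to-telephone ratio of answer rates (about 0.36) is much further from 1 than the ratio of conversion among answerers (about 0.92). This pattern is consistent with the interpretation above.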

The rate of delivered contact attempts was also significantly lower in the chatbot group compared to the telephone group, which suggests that there may have been a disproportionate number of landlines recorded in the research registry. The Centers for Disease Control and Prevention reported that from 2011 to 2020, the percentage of all adults who live in US households using only wireless telephones increased from 30.2% to 62.5%.29,30 The majority of participants in the research registry were enrolled between 2011 and 2015, suggesting that the lower proportion of delivered contact attempts in the chatbot versus telephone groups with the first contact attempt (56% vs 71%) may in part be attributed to phone numbers associated with landlines in the research registry. This observation suggests that when deploying the chatbot in the research setting, the reach of the target population may be improved by first verifying whether telephone numbers are current, associated with wireless telephones, and able to receive SMS messages.
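The Discussion makes 2 suggestions: verify that a number is current and SMS-capable, and ask participants about their preferred mode of contact. These could be combined into a simple routing rule. The sketch below is hypothetical throughout. The `Contact` fields, the 1-year freshness threshold, and the telephone default are illustrative assumptions. How line type would be determined (for example, through a carrier lookup service) is outside its scope.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Contact:
    participant_id: int
    line_type: str                        # hypothetical: "mobile", "landline", or "unknown"
    last_verified: date                   # hypothetical: when the number was last confirmed
    preferred_mode: Optional[str] = None  # "chatbot" or "telephone", if the person was asked


def choose_mode(c: Contact, today: date, default: str = "telephone", max_age_days: int = 365) -> str:
    """Route one registry contact to chatbot or telephone outreach (illustrative rule)."""
    # Only send an SMS link when the number is a recently confirmed mobile line.
    sms_capable = c.line_type == "mobile" and (today - c.last_verified).days <= max_age_days
    wanted = c.preferred_mode or default  # a stated preference overrides the default
    if wanted == "chatbot" and sms_capable:
        return "chatbot"
    return "telephone"  # telephone wanted, or SMS delivery is doubtful


today = date(2021, 1, 15)
print(choose_mode(Contact(1, "mobile", date(2020, 12, 1), "chatbot"), today))    # chatbot
print(choose_mode(Contact(2, "landline", date(2020, 12, 1), "chatbot"), today))  # telephone
print(choose_mode(Contact(3, "mobile", date(2013, 5, 1), "chatbot"), today))     # telephone
```

Defaulting to telephone when no preference is recorded reflects the higher yield observed with telephone outreach in this population. A registry with current mobile numbers might reasonably use `default="chatbot"` instead.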

Our study has several strengths, including a randomized design in over 900 individuals, the use of e-consent, and the conduct of the study in a population that has historically been under-represented in research. The study also has several limitations related to external validity (generalizability). The research registry contained telephone numbers recorded predominantly before 2015, so comparisons with telephone-based recruitment may underestimate the yield of chatbots in populations with more up-to-date contact information. Also, the study was conducted in a largely older patient population at a single health center. The results may not apply to other patient populations who may be more likely to use smartphones or chatbots. Nevertheless, the results of our study could serve as a guide when considering chatbots for recruiting study participants.

In conclusion, the recruitment yield was slightly lower with chatbots than with telephone calls in a predominantly older, female, and non-White patient population. Additional studies are needed to improve the acceptability of chatbots in some populations and to evaluate the use of preference-based algorithms in research recruitment.

FUNDING

This work was supported by the National Center for Advancing Translational Sciences (UL1TR002003). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

AUTHOR CONTRIBUTIONS

YJK, JAD, NLS, EB, JAL, JS, and JAK significantly contributed to the conception and design of the study. JT, SD, and JS developed the chatbot platform with support from YJK, JAD, NLS, EB, JAL, and JAK. YJK, JAD, JT, and SD coordinated the implementation of the chatbot platform. SK and JT acquired the data. YJK, JAD, YC, and JAK analyzed the data. YJK, JAD, and JAK drafted the initial manuscript. All authors were involved in data interpretation and manuscript revision and approved the final version submitted for publication.

SUPPLEMENTARY MATERIAL

Supplementary materials are available at Journal of the American Medical Informatics Association online.


ACKNOWLEDGMENTS

We thank the Breathe Chicago Center, especially the Research Communication Center, for their input on the study design and work in the telephone communications for this study.

CONFLICT OF INTEREST STATEMENT

JS is an employee and owns stock in QliqSOFT. SD and JT are also employees of QliqSOFT. No other authors have competing interests to disclose.

DATA AVAILABILITY

The data underlying this article are from an IRB-approved research study (UIC IRB#: 2020-1157) and include protected health information of individuals who participated in the study. De-identified data may be shared on request to the corresponding author.

REFERENCES

- 1. Bibault J-E, Chaix B, Guillemassé A, et al. A chatbot versus physicians to provide information for patients with breast cancer: blind, randomized controlled noninferiority trial. J Med Internet Res 2019; 21 (11): e15787.

- 2. Greer S, Ramo D, Chang Y-J, et al. Use of the chatbot “Vivibot” to deliver positive psychology skills and promote well-being among young people after cancer treatment: randomized controlled feasibility trial. JMIR Mhealth Uhealth 2019; 7 (10): e15018.

- 3. Anthony CA, Rojas EO, Keffala V, et al. Acceptance and commitment therapy delivered via a mobile phone messaging robot to decrease postoperative opioid use in patients with orthopedic trauma: randomized controlled trial. J Med Internet Res 2020; 22 (7): e17750.

- 4. Hauser-Ulrich S, Künzli H, Meier-Peterhans D, et al. A smartphone-based health care chatbot to promote self-management of chronic pain (SELMA): pilot randomized controlled trial. JMIR Mhealth Uhealth 2020; 8 (4): e15806.

- 5. Martin A, Nateqi J, Gruarin S, et al. An artificial intelligence-based first-line defence against COVID-19: digitally screening citizens for risks via a chatbot. Sci Rep 2020; 10 (1): 19012.

- 6. Judson TJ, Odisho AY, Young JJ, et al. Case report: implementation of a digital chatbot to screen health system employees during the COVID-19 pandemic. J Am Med Inform Assoc 2020; 27: ocaa130.

- 7. Car LT, Dhinagaran DA, Kyaw BM, et al. Conversational agents in health care: scoping review and conceptual analysis. J Med Internet Res 2020; 22 (8): e17158.

- 8. Lai L, Wittbold KA, Dadabhoy FZ, et al. Digital triage: novel strategies for population health management in response to the COVID-19 pandemic. Healthc (Amst) 2020; 8 (4): 100493.

- 9. Yoneoka D, Kawashima T, Tanoue Y, et al. Early SNS-based monitoring system for the COVID-19 outbreak in Japan: a population-level observational study. J Epidemiol 2020; 30: 362–370.

- 10. Oh J, Jang S, Kim H, et al. Efficacy of mobile app-based interactive cognitive behavioral therapy using a chatbot for panic disorder. Int J Med Inform 2020; 140: 104171.

- 11. Gabrielli S, Rizzi S, Bassi G, et al. Engagement and effectiveness of a healthy-coping intervention via chatbot for university students during the COVID-19 pandemic: mixed methods proof-of-concept study. JMIR Mhealth Uhealth 2021; 9 (5): e27965.

- 12. Almalki M, Azeez F. Health chatbots for fighting COVID-19: a scoping review. Acta Inform Med 2020; 28 (4): 241–7.

- 13. Asensio-Cuesta S, Blanes-Selva V, Portolés M, et al. How the Wakamola chatbot studied a university community’s lifestyle during the COVID-19 confinement. Health Informatics J 2021; 27 (2): 146045822110179.

- 14. Hautz WE, Exadaktylos A, Sauter TC. Online forward triage during the COVID-19 outbreak. Emerg Med J 2021; 38 (2): 106–8.

- 15. Schubel LC, Wesley DB, Booker E, et al. Population subgroup differences in the use of a COVID-19 chatbot. NPJ Digit Med 2021; 4 (1): 30.

- 16. So R, Furukawa TA, Matsushita S, et al. Unguided chatbot-delivered cognitive behavioural intervention for problem gamblers through messaging app: a randomised controlled trial. J Gambl Stud 2020; 36 (4): 1391–407.

- 17. Morse KE, Ostberg NP, Jones VG, et al. Use characteristics and triage acuity of a digital symptom checker in a large integrated health system: population-based descriptive study. J Med Internet Res 2020; 22 (11): e20549.

- 18. Piao M, Ryu H, Lee H, et al. Use of the healthy lifestyle coaching chatbot app to promote stair-climbing habits among office workers: exploratory randomized controlled trial. JMIR Mhealth Uhealth 2020; 8 (5): e15085.

- 19. Dennis AR, Kim A, Rahimi M, et al. User reactions to COVID-19 screening chatbots from reputable providers. J Am Med Inform Assoc 2020; 27: ocaa167.

- 20. Heller C, Balls-Berry JE, Nery JD, et al. Strategies addressing barriers to clinical trial enrollment of underrepresented populations: a systematic review. Contemp Clin Trials 2014; 39 (2): 169–82.

- 21. University of Illinois Hospitals & Clinics. 2019 Community Assessment of Needs: Towards Health Equity. 2019. https://hospital.uillinois.edu/about-ui-health/community-commitment/community-assessment-of-health-needs-ui-can Accessed September 15, 2021.

- 22. U.S. Department of the Interior. Minority Serving Institutions Program. U.S. Department of the Interior. https://www.doi.gov/pmb/eeo/doi-minority-serving-institutions-program Accessed September 16, 2021.

- 23. SAGE Working Group. Report of the SAGE Working Group on Vaccine Hesitancy. 2014. https://www.who.int/immunization/sage/meetings/2014/october/1_Report_WORKING_GROUP_vaccine_hesitancy_final.pdf Accessed December 20, 2020.

- 24. Business Insider. Chatbot Market in 2021: Stats, Trends, and Companies in the Growing AI Chatbot Industry. 2021. https://www.businessinsider.com/chatbot-market-stats-trends?utm_source=copy-link&utm_medium=referral&utm_content=topbar Accessed July 27, 2021.

- 25. Webb Hooper M, Nápoles AM, Pérez-Stable EJ. COVID-19 and racial/ethnic disparities. JAMA 2020; 323 (24): 2466–7.

- 26. Chowkwanyun M, Reed AL. Racial health disparities and Covid-19—caution and context. N Engl J Med 2020; 383 (3): 201–3.

- 27. Pfaff E, Lee A, Bradford R, et al. Recruiting for a pragmatic trial using the electronic health record and patient portal: successes and lessons learned. J Am Med Inform Assoc 2019; 26 (1): 44–9.

- 28. Plante TB, Gleason KT, Miller HN, et al.; STURDY Collaborative Research Group. Recruitment of trial participants through electronic medical record patient portal messaging: a pilot study. Clin Trials 2020; 17 (1): 30–8.

- 29. Blumberg SJ, Luke JV. Wireless Substitution: Early Release of Estimates From the National Health Interview Survey, January-June 2011. Centers for Disease Control and Prevention; 2011. https://www.cdc.gov/nchs/data/nhis/earlyrelease/wireless201112.pdf Accessed September 1, 2021.

- 30. Blumberg SJ, Luke JV. Wireless Substitution: Early Release of Estimates From the National Health Interview Survey, January-June 2020. Centers for Disease Control and Prevention; 2021. https://www.cdc.gov/nchs/data/nhis/earlyrelease/wireless202102-508.pdf Accessed September 1, 2021.
