Abstract

Every year, about one million people die of diseases transmitted by mosquitoes. The infection is transmitted to a person when an infected mosquito bites, injecting its saliva into the human body. To date, the best way to prevent mosquito-borne infection is to protect people from exposure to mosquito bites. This study proposes a machine learning (ML) and deep learning based system to detect the presence of two critical disease-spreading mosquito genera, Aedes and Culex. The proposed system can aid epidemiology by supporting evidence-based policies and decisions through the analysis of risks and transmission. The study proposes an effective methodology for classifying mosquitoes using ML and convolutional neural network (CNN) models. A novel feature selection approach, RIFS, is introduced, which integrates two feature selection techniques: region of interest (ROI) based image filtering and wrapper-based forward feature selection (FFS). A comparative analysis of various ML and deep learning models has been performed to determine the most appropriate model based on performance metrics and computational needs. The results show that the extra trees classifier (ETC) outperformed all other ML models with an accuracy of 0.992, while VGG16 outperformed the other CNN models with an accuracy of 0.986.

Keywords: Disease epidemiology, Image classification, Vector mosquito, ML, CNN, RIFS, ROI

1. Introduction

Every year, mosquitoes cause considerable harm to human populations by spreading deadly infectious diseases such as yellow fever, malaria, and encephalitis (Thomas et al., 2016). An estimated half of the world's population is at risk of dengue fever, which is also spread by mosquitoes (W.H. Organization et al., 2020). There is also solid evidence of outbreaks of combined dengue and chikungunya infection in humans (Furuya-Kanamori et al., 2016). The dengue vaccine CYD-TDV by Sanofi Pasteur shows an efficacy of around 65.5% in people aged nine years and above, but its efficacy decreases to 46.6% in children under nine (Hadinegoro et al., 2015). The major vectors of dengue, chikungunya, Zika, and yellow fever include mosquitoes of the genera Aedes, Anopheles, and Culex (Roth et al., 2014, Jasinskiene et al., 1998).

Mosquitoes are very small flying insects that can hide almost anywhere, and pesticides and fogging have difficulty penetrating these hideouts. Mosquito nets offer some protection, but they are not a complete solution for reducing mosquito proliferation. Entomological characterization is the basic tool for obtaining information about mosquitoes. Aedes and Culex are two notorious genera of mosquitoes known for spreading deadly diseases (W.H. Organization, 2021), and they are common in almost every part of the world wherever weather conditions are suitable. Dengue fever is spread by Aedes albopictus and Aedes aegypti (T. center for disease control, 2021), while Culex tritaeniorhynchus and Culex annulus are infamous for spreading Japanese encephalitis (D. control center Taiwan, 2021). The study of mosquito-borne diseases therefore calls for an efficient automatic system for detecting and classifying mosquitoes.

To contribute to this critical domain of disease spread, this study addresses the need for an efficient automatic information system for detecting and classifying disease-spreading mosquito types. Such a system can help health authorities and other stakeholders check for the presence of disease-spreading mosquitoes in their areas of interest. It can aid epidemiology by supporting evidence-based policies and decisions through the analysis of risks and transmission, and it can prepare authorities to take timely preventive measures in targeted areas to control disease spread. This study proposes an effective methodology for classifying mosquitoes using ML and deep learning approaches.

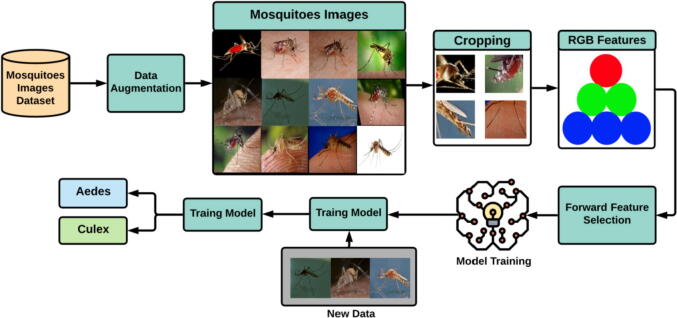

The dataset used in this study was obtained from IEEE DataPort and contains images of two types of mosquitoes (Reshma Pise, 2020). The proposed system uses several data preprocessing techniques to maximize classification performance and to explore ways of classifying the images with the lowest possible computational effort. These preprocessing techniques include data augmentation and the proposed feature selection approach, RIFS: a hybrid approach that combines image processing filters, which focus on the parts of an image that are significant for classification, with forward feature selection (FFS), which selects the best features from the ROI-based RGB feature sets.

As the first preprocessing step, data augmentation was performed to increase the size of the training data (Reshi, 2021). Feature selection was then performed using the RIFS approach to select the specific features used for model learning. ML models and convolutional neural networks (CNNs) were trained on the RIFS-generated features, and their performance was evaluated in terms of accuracy, precision, recall, and F1-score.

The key points of the study are:

- This study classifies mosquitoes (Aedes and Culex) using ML and deep learning approaches.
- Data preprocessing is performed using data augmentation and the proposed novel feature selection technique, RIFS, which improves performance and reduces the computational cost of the models.
- The proposed approach is compared extensively with baseline pre-trained CNNs and well-tuned ML models.
- The results show that ML models can also perform well in image classification if the dataset is preprocessed properly and the models are tuned well.

The rest of the paper is divided into five sections. Section 2 reviews related work; Section 3 describes the dataset, the proposed methodology, and the techniques used; Section 4 presents the experimental results; Section 5 presents the discussion; and Section 6 concludes the study.

2. Related work

Mosquito classification has attracted considerable research interest. Recent studies have proposed different classification approaches, but most of them still trade off efficiency against computational complexity. Garcia et al. (Garcia et al.) worked on the classification of mosquito larvae using the machine learning methods support vector machine (SVM) and K-means with low-level features: local binary patterns (LBP), co-occurrence matrices (CM), and Gabor-filtered features (FG2). Their study used 308 images of “Aedes” and “others”, and classification was based on the extracted features. K-means achieved an accuracy of 59% on CM, 55% on LBP, and 60% on FG2, whereas SVM achieved 67% on CM, 72% on LBP, and 79% on FG2.

Ortiz et al. (Ortiz et al., 2018) proposed a system for mosquito larva classification using a pre-trained VGG16 convolutional neural network. Their dataset consisted of two classes: Aedes and other genera. They trained with different numbers of epochs and, using pre-trained bottleneck features, achieved a highest accuracy of 97%. Such accuracy could make vector localization more accurate and fumigation more efficient.

Continuing this line of work, Sanchez-Ortiz et al. (Sanchez-Ortiz et al., 2017) used the AlexNet CNN for mosquito classification. They used images of eight segments of the larva captured with a smartphone, nearly 300 images in total. The number of training iterations was increased to improve accuracy, and after 200 epochs the network achieved an accuracy of 96.8%.

Okayasu et al. (Okayasu et al., 2019) used different types of features in their work, including handcrafted features classified with SVM. They built a dataset of 14,460 images of three mosquito species and compared conventional and deep learning methods. The conventional methods achieved a highest accuracy of 82.4%, while a residual network trained with data augmentation achieved 95.5%, showing that data augmentation helps in classifying mosquito species.

Motta et al. (Motta et al., 2019) used convolutional neural networks to perform automatic morphological classification of mosquitoes. They used LeNet, GoogLeNet, and AlexNet on a dataset of 4056 mosquito images and achieved an accuracy of 76.2% with GoogLeNet. Huang et al. (Huang et al., 2018) worked on an automatic classification system that can identify Aedes and Culex mosquitoes. They combined edge computing and deep learning and implemented the system on IoT-based devices, achieving a highest accuracy of 90.5% on test data.

Fuchida et al. (Fuchida et al., 2017) worked on vision-based perception and classification of mosquitoes. Their system can distinguish mosquitoes from other insects (bees, flies) by extracting morphological features, using variants of the SVM algorithm. SVM-II achieved an accuracy of 85.2% for mosquitoes and 97.6% for other insects, while SVM-III achieved 98.9% for mosquitoes and 92.5% for other insects.

Fuad et al. (Fuad et al., 2018) classified Aedes aegypti larvae with three different learning-rate values on 534 images and achieved a highest accuracy of 99%. They compared the training-set accuracy and cross-entropy error across the different learning rates. Minakshi et al. (Minakshi et al., 2017) proposed a learning algorithm that processes mosquito images taken with a mobile phone camera to identify the species. Their sample contained 60 images of seven species, and random forest achieved the highest accuracy, 83.3%, with good precision and recall. However, a dataset of only 60 images is too small for reliable classification.

Fanioudakis et al. (Fanioudakis et al., 2018) conducted a large-scale classification experiment based on optical recordings of six mosquito species. They studied the signal and attributes of mosquito wingbeats, using 279,566 wingbeat recordings and state-of-the-art deep learning approaches for classification, and reached an accuracy of 96%.

Akter et al. (Akter et al., 2020) used a CNN with data augmentation for mosquito classification. Their dataset contained 442 images, which grew to 36,000 after augmentation. They compared random forest, SVM, XGBoost, and a CNN (VGG16), and the CNN outperformed the other classifiers with an accuracy of 93%. Park et al. (Park et al., 2020) worked on morphological analysis of vectors and classification of mosquitoes. They collected 3600 images of eight mosquito species and achieved an accuracy of 96.6% using a CNN. Related work is summarized in Table 1.

Table 1.

Summary of the systematic analysis studies in related work.

| Study | Year | Dataset | Accuracy (method) | Classes |
|---|---|---|---|---|
| Garcia et al. (Garcia et al.) | 2017 | Self-made, 306 images | SVM: 67%, 72%, 79%; K-means: 59%, 55%, 60% (CM, LBP, FG2) | Aedes and others |
| Sanchez-Ortiz et al. (Sanchez-Ortiz et al., 2017) | 2017 | Self-made, 300 images | 96.8% (AlexNet CNN) | N/A |
| Fuchida et al. (Fuchida et al., 2017) | 2017 | 400 images | Up to 98.9% (SVM variants) | Mosquitoes and flies |
| Minakshi et al. (Minakshi et al., 2017) | 2017 | 60 images | 83.3% (random forest) | Seven species |
| Fuad et al. (Fuad et al., 2018) | 2018 | Self-made, 534 images | 99% | Aedes larvae |
| Fanioudakis et al. (Fanioudakis et al., 2018) | 2018 | 279,566 wingbeat recordings | 96% | Six species, classified from wingbeats |
| Huang et al. (Huang et al., 2018) | 2018 | Self-made | 90.5% (CNN) | Aedes and Culex |
| Ortiz et al. (Ortiz et al., 2018) | 2018 | Self-made, mobile camera | 97% (VGG16) | Aedes and others |
| Okayasu et al. (Okayasu et al., 2019) | 2019 | Self-made, 14,460 images | 95.5% (ResNet with data augmentation) | Three species |
| Motta et al. (Motta et al., 2019) | 2019 | 4056 images | 76.2% (CNN, GoogLeNet) | N/A |
| Akter et al. (Akter et al., 2020) | 2019 | Self-made, 442 images (36,000 after augmentation) | 93% (CNN, VGG-16) | N/A |
| Park et al. (Park et al., 2020) | 2020 | 3600 images, self-collected | 96.6% (CNN) | Eight species |

The proposed system uses data preprocessing techniques to maximize classification performance and to explore ways of classifying the images with minimal computational complexity. These techniques include data augmentation, region of interest (ROI) based image slicing to focus on specific body parts of the mosquito, and forward feature selection (FFS) to select the best features from the RGB feature sets. Image classification is performed with different ML and deep learning models to analyze each approach and model in terms of performance and computational complexity. The study uses the Aedes and Culex dataset obtained from IEEE DataPort.

3. Materials and methods

3.1. Dataset description

This study uses an open-access image dataset of Aedes and Culex mosquitoes (Reshma Pise, 2020) obtained from IEEE DataPort. The dataset contains a total of 1404 images: 810 of Aedes and 594 of Culex. Of the 594 Culex images, 432 are used for training and 162 for testing; of the 810 Aedes images, 591 are used for training and 219 for testing. In total, 1023 images are used for training and 381 for testing, as shown in Table 2; a sample of the dataset is shown in Fig. 1.

Table 2.

Random splitting of training and testing.

| Type | Total | Training | Testing |
|---|---|---|---|
| Culex | 594 | 432 | 162 |
| Aedes | 810 | 591 | 219 |
| Total | 1404 | 1023 | 381 |

Fig. 1.

Sample of dataset.
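Table 2 gives the per-class split sizes, but the procedure used to assign images is not described beyond "random splitting". A minimal sketch, assuming a simple shuffled split within each class; the function name, seed, and file names below are hypothetical.

```python
import random

# Per-class (training, testing) sizes from Table 2.
SPLIT_COUNTS = {"Culex": (432, 162), "Aedes": (591, 219)}


def per_class_split(paths_by_class, counts, seed=0):
    """Shuffle each class's image paths and cut them into train/test lists
    of exactly the sizes given in `counts`."""
    rng = random.Random(seed)
    train, test = [], []
    for label, paths in paths_by_class.items():
        n_train, n_test = counts[label]
        if len(paths) != n_train + n_test:
            raise ValueError(f"{label}: expected {n_train + n_test} images, got {len(paths)}")
        shuffled = list(paths)
        rng.shuffle(shuffled)
        train += [(path, label) for path in shuffled[:n_train]]
        test += [(path, label) for path in shuffled[n_train:]]
    return train, test
```

For example, with hypothetical file lists of 594 Culex and 810 Aedes paths, `per_class_split(paths, SPLIT_COUNTS)` returns 1023 training and 381 testing (path, label) pairs with no overlap between them.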

3.2. Data augmentation

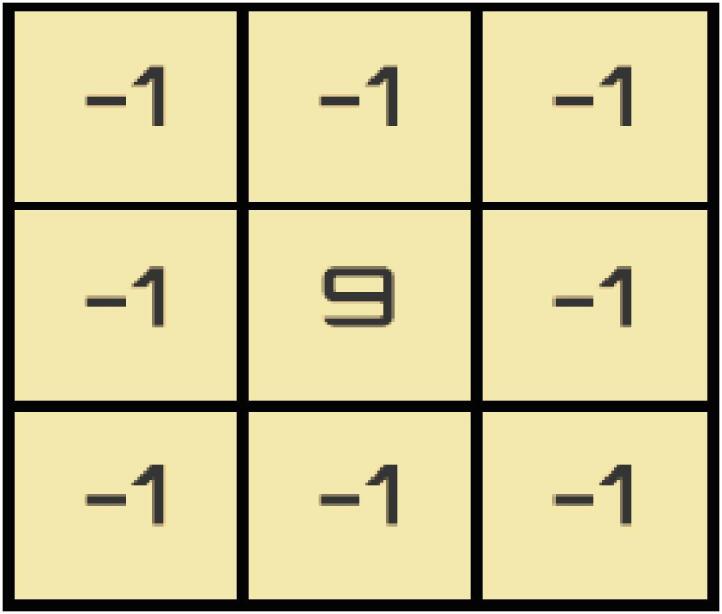

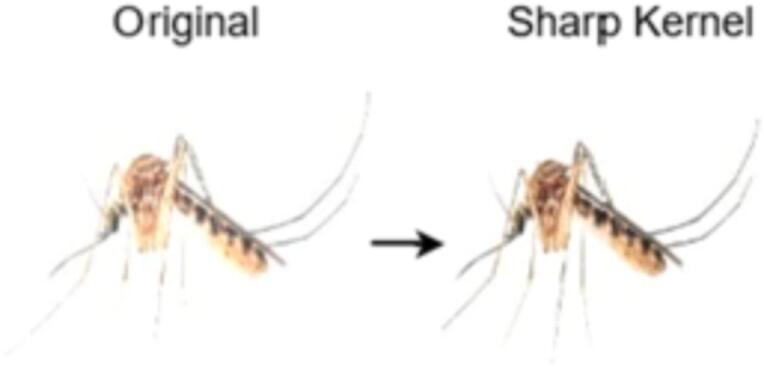

Data augmentation increases the amount of data by adding slightly modified copies of existing data or by generating new synthetic data from it. It acts as a regularizer and helps reduce overfitting when training a model (Shorten and Khoshgoftaar, 2019). Data augmentation techniques are closely related to oversampling in data analysis: they increase the diversity of the data available for training without collecting new data. In this study, a sharpening filter was used for data augmentation, generating one additional image from each original image. Data augmentation was applied only to the training set. The training image counts after augmentation are shown in Table 3, and the sharpening kernel applied is shown in Fig. 2.

Table 3.

Data augmentation.

| Type | Training Set | Sharpened Copies | Total |
|---|---|---|---|
| Culex | 432 | 432 | 864 |
| Aedes | 591 | 591 | 1182 |
| Total | 1023 | 1023 | 2046 |

Fig. 2.

Sharp filter.

Image sharpening is a feature enhancement technique whose purpose is to highlight fine details in an image. It typically uses linear filters to implement high-pass filtering, which can produce undesirable results because linear filters cannot sharpen correctly when the image is corrupted by noise. Therefore, image smoothing and filtering, which involve low-pass filters and pixel replacement, need to be carried out before sharpening. Sharpening enhances the structure and other details of an image; the lines and edges of an image can be obtained by applying a high-pass filter to it. The high-pass filter is a spatial operation that takes the difference between the current pixel and a weighted average of its neighboring pixels, implemented with small kernel matrices. The high-pass filter is then used to design a sharpening filter that produces the desired, appropriately scaled, high-pass sharpened image. The result of applying the sharpening filter is shown in Fig. 3.

Fig. 3.

Sample image before and after applying sharp filter.
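The sharpening augmentation can be sketched in NumPy. The kernel below is a common 3 × 3 sharpening kernel (identity plus a Laplacian high-pass term) and is an assumption; the kernel the study actually used is the one shown in Fig. 2. Because the kernel's weights sum to 1, flat regions are left unchanged while edges are amplified. The function names are illustrative.

```python
import numpy as np

# Assumed 3x3 sharpening kernel (identity + Laplacian high-pass). The kernel the
# study actually used is shown in Fig. 2 and may differ.
SHARPEN_KERNEL = np.array([[0, -1, 0],
                           [-1, 5, -1],
                           [0, -1, 0]], dtype=np.float32)


def sharpen(image: np.ndarray, kernel: np.ndarray = SHARPEN_KERNEL) -> np.ndarray:
    """Filter an H x W (gray) or H x W x C uint8 image with a 3x3 kernel."""
    img = image.astype(np.float32)
    if img.ndim == 2:
        img = img[..., None]  # treat grayscale as a single channel
    kh, kw = kernel.shape
    padded = np.pad(img, ((kh // 2, kh // 2), (kw // 2, kw // 2), (0, 0)), mode="edge")
    h, w = img.shape[:2]
    out = np.zeros_like(img)
    # Weighted sum of shifted copies (cross-correlation; identical to
    # convolution here because the kernel is symmetric).
    for dy in range(kh):
        for dx in range(kw):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w, :]
    out = np.clip(out, 0, 255).astype(np.uint8)
    return out[..., 0] if image.ndim == 2 else out


def augment_with_sharpening(images):
    """Originals followed by one sharpened copy of each, doubling the set size."""
    return list(images) + [sharpen(im) for im in images]
```

Applied to the 1023 training images, `augment_with_sharpening` yields 2046 images, matching Table 3.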

3.3. CNN variants used in this study

3.3.1. VGG-16

VGG-16 is a well-known convolutional network developed by the Visual Geometry Group (VGG) at the University of Oxford, from which it takes its name. Rather than relying on a large number of hyper-parameters, VGG-16 uses only convolutional layers with 3 × 3 filters, stride 1, and same padding, together with max-pooling layers of 2 × 2 filters with stride 2. It ends with three fully connected layers, the last of which feeds a softmax output. The 16 in VGG-16 refers to its 16 weight layers (13 convolutional and 3 fully connected). With approximately 138 million parameters, it is a large network. The pre-trained version of VGG-16 was trained on more than a million images from the ImageNet database (Simonyan and Zisserman) and can classify images into 1,000 object categories; as a result, it has learned rich feature representations for a wide range of images. The input image size of VGG-16 is 224 × 224 (Theckedath and Sedamkar, 2020).
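The layer and parameter counts quoted for VGG-16 can be checked with plain arithmetic. A minimal sketch, assuming the standard ImageNet configuration ("configuration D" of Simonyan and Zisserman) with a 224 × 224 × 3 input and a 1000-way output layer; biases are included.

```python
# VGG-16 ("configuration D"): integers are output channels of 3x3, stride-1,
# same-padded conv layers; "M" is 2x2 max pooling with stride 2.
VGG16_CONV = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"]
VGG16_FC = [4096, 4096, 1000]  # two hidden FC layers + 1000-way ImageNet output


def vgg16_parameter_count(input_size=224, in_channels=3):
    """Return (weight layers, conv parameters, FC parameters), biases included."""
    conv_params, channels, size, weight_layers = 0, in_channels, input_size, 0
    for layer in VGG16_CONV:
        if layer == "M":
            size //= 2  # each pooling layer halves height and width
        else:
            conv_params += 3 * 3 * channels * layer + layer
            channels = layer
            weight_layers += 1
    fc_params, fan_in = 0, channels * size * size  # 7 * 7 * 512 = 25088 inputs
    for units in VGG16_FC:
        fc_params += fan_in * units + units
        fan_in = units
        weight_layers += 1
    return weight_layers, conv_params, fc_params


layers, conv, fc = vgg16_parameter_count()
print(layers, conv, fc, conv + fc)
```

This reports 16 weight layers and 138,357,544 parameters in total, of which 14,714,688 are in the convolutional layers; most of the size therefore comes from the fully connected head, which transfer-learning setups often replace.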

3.3.2. Inception V3

Inception V3 modifies earlier Inception architectures to reduce computational cost. Compared with other Inception networks such as GoogLeNet (Inception V1), Inception V3 is more efficient in terms of memory and resources. Several techniques have been proposed in Inception V3 to optimize the network and relax its constraints so that the model is easier to adapt: factorized convolutions, dimension reduction, regularization, and parallelized computations. Thanks to factorized convolutions, Inception V3 uses fewer parameters, and replacing larger convolutions with smaller ones can also increase training speed. In Inception V3, an auxiliary classifier acts as a regularizer, and grid-size reduction is usually performed by pooling operations.
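The parameter savings from factorized convolutions can be illustrated with simple arithmetic. A sketch, assuming equal input and output channel counts and ignoring biases: a 5 × 5 convolution is replaced by two stacked 3 × 3 convolutions, and a 3 × 3 convolution by a 1 × 3 followed by a 3 × 1 convolution, the two factorizations used in the Inception V3 design. The function names are illustrative.

```python
def conv_weights(kh, kw, c_in, c_out):
    """Number of weights in a kh x kw convolution (biases ignored)."""
    return kh * kw * c_in * c_out


def factorization_savings(channels):
    """Weight ratios (factorized / original) for two Inception-style factorizations."""
    c = channels
    # 5x5 replaced by two stacked 3x3 convolutions (same receptive field).
    stacked = 2 * conv_weights(3, 3, c, c) / conv_weights(5, 5, c, c)
    # 3x3 replaced by a 1x3 convolution followed by a 3x1 convolution.
    asymmetric = (conv_weights(1, 3, c, c) + conv_weights(3, 1, c, c)) / conv_weights(3, 3, c, c)
    return stacked, asymmetric


print(factorization_savings(64))  # the ratios do not depend on the channel count
```

The ratios are 18/25 = 0.72 and 6/9 ≈ 0.667, i.e. savings of 28% and about 33% of the weights for the same receptive field.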

3.3.3. ResNet-50

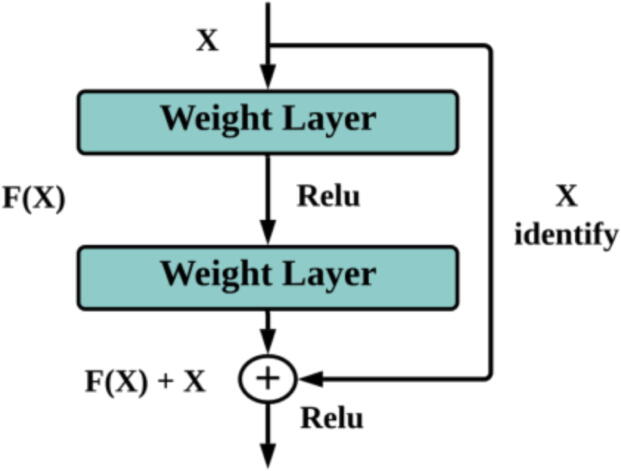

ResNet-50 is a 50-layer-deep variant of the residual network (ResNet) family of convolutional neural networks and is frequently used as a starting point for transfer learning. Residual networks popularized the skip connection. The ResNet-50 model consists of five stages, each with convolutional and identity blocks, and every convolutional block comprises three convolutional layers. ResNet-50 has over 23 million trainable parameters. Skip connections add the output of an earlier layer to the input of a later layer, which helps mitigate the vanishing gradient problem. ResNet-50 is comparable to VGG-16 except that ResNet-50 additionally uses identity mappings (Theckedath and Sedamkar, 2020), as shown in Fig. 4.

Fig. 4.

Identity mapping function in ResNet-50.

Rather than learning a full mapping $H(x)$ directly, each residual block predicts the delta $F(x) = H(x) - x$ needed to go from one layer's output to the next, so that the block outputs $F(x) + x$ (He et al., 2016).
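The identity-mapping idea can be sketched in a few lines of NumPy. This is a simplification, not ResNet-50 itself: dense weight matrices stand in for convolutions, batch normalization is omitted, and the names are illustrative. With zero residual weights the block passes non-negative inputs through unchanged, which is why identity mappings are easy for residual blocks to represent.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def residual_block(x, w1, w2):
    """Output ReLU(F(x) + x), with residual branch F(x) = W2 @ ReLU(W1 @ x).

    Dense matrices stand in for the convolutions of a real ResNet block, and
    batch normalization is omitted.
    """
    residual = w2 @ relu(w1 @ x)  # F(x): the "delta" the block learns
    return relu(residual + x)     # identity skip connection adds x back
```

With `w1 = w2 = np.zeros((3, 3))` and `x = np.array([1.0, 2.0, 3.0])`, the output equals `x`; gradients also flow directly through the `+ x` path, which is what eases the vanishing gradient problem.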

3.3.4. EfficientNet-B0

EfficientNet is a family of convolutional neural networks that is more efficient than most of its contemporaries. It consists of eight models, from B0 to B7 (Tan and Le, 2019), where each higher-numbered model has more parameters and higher accuracy. Compared with many other models, EfficientNet saves time and computational power while achieving better results, owing to its principled scaling of depth, width, and resolution. This efficiency makes deep learning practical on mobile and other edge devices. EfficientNet uses a compound scaling method in which a compound coefficient $\phi$ uniformly scales network width, depth, and resolution in a principled way:

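$$\text{depth: } d = \alpha^{\phi} \tag{1}$$

$$\text{width: } w = \beta^{\phi} \tag{2}$$

$$\text{resolution: } r = \gamma^{\phi} \tag{3}$$

$$\text{s.t.}\quad \alpha \cdot \beta^{2} \cdot \gamma^{2} \approx 2, \quad \alpha \ge 1,\ \beta \ge 1,\ \gamma \ge 1 \tag{4}$$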

where the constants α, β, and γ are determined by a small grid search and specify how additional resources are assigned to network depth, width, and resolution, respectively, and φ is a user-specified coefficient that controls how many resources are available for model scaling.
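The compound scaling rule can be evaluated numerically. A minimal sketch, using the grid-search constants Tan and Le (2019) report for the EfficientNet-B0 baseline (α = 1.2, β = 1.1, γ = 1.15) and B0's 224-pixel input; it gives idealized multipliers only, since the released B1 to B7 configurations do not follow these formulas exactly. Under the constraint, FLOPs grow by roughly 2^φ.

```python
# Constants found by grid search for EfficientNet-B0 (Tan and Le, 2019).
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15


def compound_scaling(phi, base_resolution=224):
    """Idealized depth/width/resolution multipliers for compound coefficient phi."""
    depth = ALPHA ** phi                         # Eq. (1)
    width = BETA ** phi                          # Eq. (2)
    resolution = base_resolution * GAMMA ** phi  # Eq. (3), in pixels
    # FLOPs scale roughly with depth * width^2 * resolution^2, i.e. about
    # 2**phi under the constraint in Eq. (4).
    flops = (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi
    return depth, width, resolution, flops


for phi in range(4):
    d, w, r, f = compound_scaling(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, {r:.0f} px, FLOPs x{f:.2f}")
```

Here α·β²·γ² ≈ 1.92, so each unit increase of φ roughly doubles the compute budget while spreading it across all three dimensions.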

3.4. Machine learning models

3.4.1. Extra tree classifier (ETC)

ETC builds an ensemble of unpruned decision trees using the classical top-down procedure. When splitting a node, it strongly randomizes both the choice of attribute and the choice of cut-point. In the extreme case, it builds fully randomized trees whose structures are independent of the output values of the training sample. The main differences between ETC and the well-known random forest (RF) model are:

- ETC trains each tree on the whole training set, whereas random forest trains each tree on a bootstrap replica.
- ETC draws the cut-point of each candidate feature at random and then selects the best of these random splits, whereas random forest searches for the optimal cut-point.

Because of these properties, the extra trees classifier is often less prone to overfitting and can perform better (Geurts et al., 2006).
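The split rule that distinguishes ETC from random forest can be sketched as follows, assuming the node-splitting procedure of Geurts et al. (2006): pick k candidate features, draw one cut-point uniformly between each feature's minimum and maximum in the node, and keep the candidate with the largest Gini decrease. The names `extra_trees_split` and `k` are illustrative; in practice a library implementation would be used.

```python
import numpy as np


def gini(y):
    """Gini impurity of a label array."""
    _, counts = np.unique(y, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - float(np.sum(p ** 2))


def extra_trees_split(X, y, k, rng):
    """Return (feature_index, threshold, gini_decrease) of the best random split,
    or None if every candidate feature is constant in this node."""
    n_features = X.shape[1]
    best = None
    for j in rng.choice(n_features, size=min(k, n_features), replace=False):
        lo, hi = X[:, j].min(), X[:, j].max()
        if lo == hi:
            continue  # constant feature in this node: it cannot split
        threshold = rng.uniform(lo, hi)  # random cut-point, no optimization
        left = X[:, j] < threshold
        p_left = left.mean()
        score = gini(y) - p_left * gini(y[left]) - (1 - p_left) * gini(y[~left])
        if best is None or score > best[2]:
            best = (int(j), float(threshold), float(score))
    return best
```

Random forest would instead scan all possible thresholds of each candidate feature; skipping that search is what makes extra trees cheaper to build and adds variance-reducing randomness.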

ETC can also be used to estimate feature importance. The importance of a feature $X_m$ for predicting $Y$ is obtained by summing the weighted impurity decrease $p(t)\,\Delta i(s_t, t)$ over all nodes $t$ of a tree $T$ where $X_m$ is used for the split, and then averaging over all $N_T$ trees in the forest:

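$$\mathrm{Imp}(X_m) = \frac{1}{N_T} \sum_{T} \sum_{t \in T:\, v(s_t) = X_m} p(t)\, \Delta i(s_t, t) \tag{5}$$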

where $p(t) = N_t/N$ is the proportion of the $N$ training samples that reach node $t$, and $v(s_t)$ is the feature used in split $s_t$ (Bhati and Rai, 2020). The decrease in an impurity measure $i(t)$ at node $t$ is given by:

$$\Delta i(s, t) = i(t) - p_L\, i(t_L) - p_R\, i(t_R) \tag{6}$$

where $p_L = N_{t_L}/N_t$ and $p_R = N_{t_R}/N_t$, and the split $s_t = s^*$ is the one that partitions the $N_t$ samples at node $t$ into two subsets $t_L$ and $t_R$ so as to maximize the decrease in impurity. Tree construction stops when the nodes are pure in terms of $Y$. When the Gini index is used as the impurity function, this measure is known as the Gini importance or mean decrease Gini.
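Eqs. (5) and (6) can be made concrete with a small NumPy sketch. It computes the Gini impurity decrease of a single split and accumulates $p(t)\,\Delta i(s_t, t)$ per feature over a toy forest; the tuple format used to describe nodes is a hypothetical representation chosen for illustration. Library implementations such as scikit-learn additionally normalize the resulting importances.

```python
from collections import defaultdict

import numpy as np


def gini(y):
    """Gini impurity i(t) of the labels reaching a node."""
    _, counts = np.unique(y, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - float(np.sum(p ** 2))


def impurity_decrease(y_node, left_mask):
    """Eq. (6): i(t) - p_L i(t_L) - p_R i(t_R), with p_L = N_tL / N_t."""
    y_left, y_right = y_node[left_mask], y_node[~left_mask]
    p_left = len(y_left) / len(y_node)
    return gini(y_node) - p_left * gini(y_left) - (1.0 - p_left) * gini(y_right)


def mdi_importance(forest, n_samples):
    """Eq. (5). Each tree is a list of (feature, N_t, delta_i) tuples, one per
    internal node; p(t) = N_t / N with N = n_samples."""
    totals = defaultdict(float)
    for tree in forest:
        for feature, n_node, delta_i in tree:
            totals[feature] += (n_node / n_samples) * delta_i
    return {feature: value / len(forest) for feature, value in totals.items()}
```

For labels `[0, 0, 1, 1]`, a split that separates the classes gives a decrease of 0.5 (from impurity 0.5 to two pure children), while a split that leaves each child mixed gives 0.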

Table 4 shows the hyper-parameters of the machine learning models used; these settings were obtained by tuning the models.

Table 4.

Hyper-parameters of machine learning algorithms used in our study.

| ML Algorithm | Hyper-parameters |
|---|---|
| Random Forest (RF) | n_estimators = 300, max_depth = 300, random_state = 2 |
| Logistic Regression (LR) | multi_class = 'ovr', C = 3.0, solver = 'liblinear' |
| Support Vector Machine (SVM) | C = 3.0, kernel = 'linear' |
| Extra Trees Classifier (ETC) | n_estimators = 300, max_depth = 300, random_state = 2 |
| K-Nearest Neighbor (KNN) | n_neighbors = 6, weights = 'uniform' |
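One plausible way to express Table 4 in code is with scikit-learn estimators; the library is not named in the text, so this mapping is an assumption. The LR entry omits `multi_class='ovr'` because that argument is deprecated in recent scikit-learn releases; the liblinear solver fits one-vs-rest models for multiclass problems anyway, and this task is binary.

```python
from sklearn.ensemble import ExtraTreesClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC

# Table 4 settings as scikit-learn estimators (assumed mapping).
MODELS = {
    "RF": RandomForestClassifier(n_estimators=300, max_depth=300, random_state=2),
    # liblinear is one-vs-rest by construction, matching multi_class='ovr'.
    "LR": LogisticRegression(C=3.0, solver="liblinear"),
    "SVM": SVC(C=3.0, kernel="linear"),
    "ETC": ExtraTreesClassifier(n_estimators=300, max_depth=300, random_state=2),
    "KNN": KNeighborsClassifier(n_neighbors=6, weights="uniform"),
}
```

Each model would then be trained with `MODELS[name].fit(X_train, y_train)` on the feature matrix. Note that `ExtraTreesClassifier` defaults to `bootstrap=False` (every tree sees the whole training set) while `RandomForestClassifier` defaults to `bootstrap=True`, matching the ETC and RF distinction in Section 3.4.1.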

3.5. Feature selection

Training a model on a high-dimensional dataset is generally challenging: many ML models overfit easily and usually need careful hyper-parameter tuning. Feature reduction techniques help reduce the dimensionality of a dataset (Singh et al., 2009). Feature reduction must be performed carefully, so that redundant or irrelevant features are eliminated based on their predictive power rather than at random. Feature reduction offers the following advantages:

- Training time decreases because there are fewer features.
- Reduced dimensionality lowers the chance of overfitting.
- Models become simpler and more interpretable.
- The effect of the curse of dimensionality is reduced.

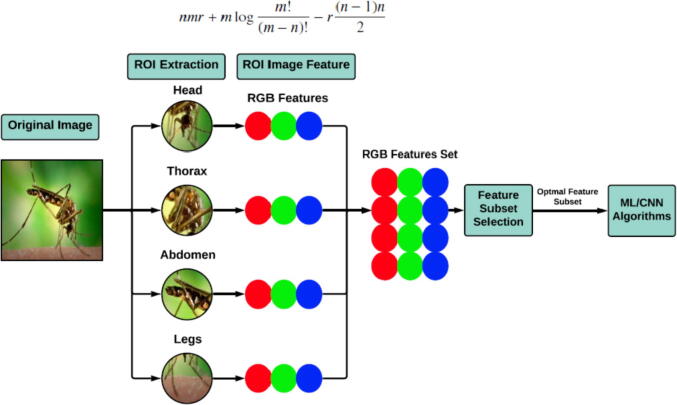

Feature selection is the process of finding the most appropriate features of a dataset (Reshi, 2021). It improves both classification accuracy and computational speed. In many classification problems, the number of features runs into the hundreds or thousands, so selecting the useful ones is a challenging task. This study introduces a hybrid feature selection method named RIFS, which combines two feature selection methods: ROI-based image filtering and the wrapper-based FFS technique. First, refined images containing the selected ROI are obtained from the primary feature set using image filters; these features are then further refined with a wrapper feature selection method, FFS. This hybrid mechanism takes advantage of both the image filters and the wrapper. The mechanism has been examined with different ML and CNN models, and the results show that a smaller, refined feature set yields better classification accuracy with lower computational complexity than feature sets with a large number of features.

ROI extraction deals with extracting the intended shape signatures by applying edge detection techniques. Edge detection is a digital image processing technique that segments an image into regions separated by discontinuities, that is, locations where intensity changes sharply. It is most commonly used in image morphology, pattern recognition, and feature extraction. In feature extraction, edge-detection-based ROI extraction makes it possible to select an intended region of the whole image, which reduces computational complexity by limiting the data that must be processed to recognize the features required for a classification task. Accordingly, edge detection was applied to the whole images to crop the regions needed for mosquito classification.
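The specific edge detector used is not stated. A minimal sketch, assuming a finite-difference gradient magnitude with a relative threshold followed by a bounding-box crop; `edge_roi_crop`, `rel_threshold`, and `margin` are hypothetical names and values. A production pipeline would more likely use an established detector such as Canny and crop individual body parts rather than a single box.

```python
import numpy as np


def edge_roi_crop(image, rel_threshold=0.2, margin=2):
    """Crop an image to the bounding box of its strong edges.

    Edges are pixels whose gradient magnitude is at least `rel_threshold`
    times the maximum magnitude; `margin` pixels are kept around the box.
    """
    gray = image.astype(np.float32)
    if gray.ndim == 3:
        gray = gray.mean(axis=2)  # crude grayscale: average of the channels
    gy, gx = np.gradient(gray)  # finite-difference derivatives along rows, columns
    magnitude = np.hypot(gx, gy)
    if magnitude.max() == 0:
        return image  # no edges found: keep the whole image
    edges = magnitude >= rel_threshold * magnitude.max()
    rows = np.flatnonzero(edges.any(axis=1))
    cols = np.flatnonzero(edges.any(axis=0))
    r0, r1 = max(rows[0] - margin, 0), min(rows[-1] + margin + 1, gray.shape[0])
    c0, c1 = max(cols[0] - margin, 0), min(cols[-1] + margin + 1, gray.shape[1])
    return image[r0:r1, c0:c1]
```

On a 20 × 20 image containing a single bright 4 × 4 square, the crop shrinks to the square plus its edge band and margin, discarding the empty background that would otherwise be fed to the classifier.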

3.5.1. FFS

Compared with an exhaustive search over all feature subsets, FFS notably reduces the number of models that need to be trained (Whitney, 1971). The technique starts with an empty (null) model and adds features one at a time. In the first step, a model is trained on each feature individually, and the feature with the best metric value is added to the selected set. In the next step, each remaining feature is paired with the selected feature, the metric is evaluated for every pair, and the feature giving the best value is added. This process repeats until the desired number n of features has been selected. Because n is a hyper-parameter, FFS also provides a practical way to tune it for optimal performance.
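The greedy selection loop can be sketched in NumPy. Assumptions: a least-squares linear classifier (labels 0/1, threshold 0.5) stands in for the linear model, accuracy on a held-out validation split is the metric, and all function names are illustrative.

```python
import numpy as np


def linear_score(X_train, y_train, X_val, y_val):
    """Validation accuracy of a least-squares linear classifier (0/1 labels)."""
    A_train = np.column_stack([X_train, np.ones(len(X_train))])  # bias column
    w, *_ = np.linalg.lstsq(A_train, y_train.astype(float), rcond=None)
    A_val = np.column_stack([X_val, np.ones(len(X_val))])
    return float(np.mean((A_val @ w >= 0.5) == y_val))


def forward_feature_selection(X_train, y_train, X_val, y_val, n_features):
    """Greedy FFS: start empty, add the single best feature at each step."""
    selected, remaining = [], list(range(X_train.shape[1]))
    while len(selected) < n_features and remaining:
        # Score every candidate subset "selected + one remaining feature".
        scores = {
            j: linear_score(X_train[:, selected + [j]], y_train,
                            X_val[:, selected + [j]], y_val)
            for j in remaining
        }
        best = max(scores, key=scores.get)  # ties go to the lowest index
        selected.append(best)
        remaining.remove(best)
    return selected
```

On synthetic data where only one column carries the label, the first feature chosen is that column; the returned indices are then reused to slice the test features.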

A linear model was mainly used within FFS to find the feature subset, because linear models train faster than non-linear ones and therefore reduce the time complexity. If there are r rows in the dataset, the time taken to run the above algorithm is:

| (7) |

which can be simplified as:

| (8) |
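As a general illustration, and not necessarily the form of Eqs. (7) and (8), the number of model fits that forward selection requires can be counted directly. Here p (the number of candidate features) and n (the number of features to select) are symbols introduced for this count: step k, for k = 0, ..., n - 1, trains one model per remaining feature, and each fit's own cost grows with the number of rows r.

$$
\sum_{k=0}^{n-1} (p - k) = np - \frac{n(n-1)}{2},
\qquad
n = p \;\Rightarrow\; \frac{p(p+1)}{2} = O(p^{2}),
$$

compared with $2^{p} - 1$ non-empty subsets for an exhaustive search, which is why FFS is feasible where exhaustive selection is not.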


Fig. 5.

RIFS Workflow diagram.

The proposed RIFS technique, shown in Fig. 5, can be defined mathematically as follows:

| (9) |

Equation (9) gives the matrix of image features, and Eq. (10) gives the RoIs extracted from the image.

| (10) |

| (11) |

Now, the final features set for learning models from the RIFS approach can be defined as:

| (12) |

3.6. Proposed methodology workflow

All experiments were performed on a machine with a 7th-generation Intel Core i7 processor, 8 GB of RAM, and a 500 GB HDD, running Windows 10. The Python code was implemented in Jupyter Notebook.

The proposed methodology uses several techniques to classify Aedes and Culex mosquitoes. As the first step in the workflow, the dataset was obtained from IEEE DataPort. The dataset was then preprocessed to improve the learning models' performance and reduce computational complexity; preprocessing consisted of data augmentation and RIFS feature selection. Data augmentation was used to increase the number of images available for training because the original dataset is too small for a good fit. A sharpening filter generated one additional image for each image in the training set, and augmentation was applied only to the training set, doubling its size, as shown in Table 5. The resulting training and testing image counts are shown in Table 6.

Table 5.

Training data size after data augmentation.

|  | Original | Augmented Images | Total |
|---|---|---|---|
| Total | 1023 | 1023 | 2046 |

Table 6.

Training and testing images count.

| Type | Training | Testing |
|---|---|---|
| Culex | 864 | 162 |
| Aedes | 1182 | 219 |
| Total | 2046 | 381 |

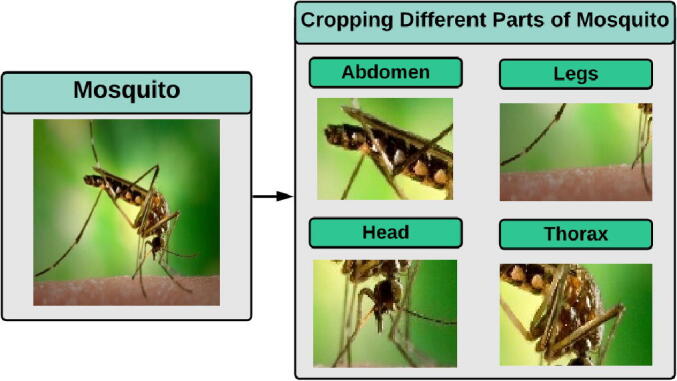

After data augmentation, RIFS was applied to extract specific parts of the mosquito body, such as the abdomen, legs, head, and thorax, because these parts are important for visually recognizing both mosquito types. The effect of the ROI step of RIFS on the images is shown in Fig. 6.

Fig. 6.

RoIs from an image.

The ROI phase of RIFS generates more specific features to learn from than whole images do. After ROI extraction, RGB features were extracted from the selected image regions and passed to forward feature selection (FFS), which selects the important features for model learning to improve performance; since not all features are correlated with the target class, only the important ones are kept. The models were trained on the resulting training set, and their performance was then evaluated on the test data using accuracy, precision, recall, F1-score, and ROC AUC. The proposed methodology is illustrated in Fig. 7.
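The exact definition of the RGB features is not given. A minimal sketch, assuming each RoI is resized to a fixed size by nearest-neighbour sampling and its pixel values are flattened, scaled to [0, 1], and concatenated into one vector per image; the 32-pixel size and the function names are hypothetical.

```python
import numpy as np


def resize_nearest(image, size):
    """Nearest-neighbour resize of an H x W x C array to size x size x C."""
    h, w = image.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return image[rows[:, None], cols[None, :]]


def rgb_features(rois, size=32):
    """Concatenate the flattened RGB pixels (scaled to [0, 1]) of each RoI."""
    vectors = [resize_nearest(roi, size).astype(np.float32).ravel() / 255.0 for roi in rois]
    return np.concatenate(vectors)
```

Resizing every RoI to the same shape keeps the feature vector length fixed across images, which the column-wise FFS step requires.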

| Algorithm 1. Proposed approach algorithm |
|---|
| 1. Begin |
| 2. Input: Input_Mosquito_Images[N] |
| 3. Augmented_Images[] = 2 N |
| 4. initialization: |
| 5. loop image in Augmented_Images[]: |
| 6. loop RoI in images: |
| 7. Images_Feature_Set.append(RGB(RoI)) |
| 8. end loop |
| 9. Images_Feature_Set(Images_Feature_Set[]) |
| 10. end loop |
| 11. Train_Model(Images_Feature_Set[]) |
| 12. Output: Evaluate_Model(New_Images[]) |
| 13. End |
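Algorithm 1 can be rendered as a Python skeleton that follows the prose of this section (augment, extract RoIs, compute RGB features, select features with FFS, train). Every processing step is passed in as a function so that concrete helpers or library equivalents can be plugged in; the signature is an illustrative assumption, not the authors' code.

```python
from typing import Callable, List, Sequence, Tuple

import numpy as np


def rifs_pipeline(
    images: Sequence[np.ndarray],
    labels: Sequence[int],
    augment: Callable[[np.ndarray], np.ndarray],
    extract_rois: Callable[[np.ndarray], List[np.ndarray]],
    rgb: Callable[[List[np.ndarray]], np.ndarray],
    select_features: Callable[[np.ndarray, np.ndarray], List[int]],
    train: Callable[[np.ndarray, np.ndarray], object],
) -> Tuple[object, List[int]]:
    """Structure of Algorithm 1: augment, RoI RGB features, FFS, train."""
    # Step 3: N originals plus one augmented copy each -> 2N images.
    augmented = list(images) + [augment(im) for im in images]
    y = np.asarray(list(labels) * 2)  # each copy keeps its original's label
    # Steps 5-10: one RGB feature vector per image, built from its RoIs.
    # Assumes every image yields a vector of the same length.
    X = np.stack([rgb(extract_rois(im)) for im in augmented])
    # FFS returns column indices; reuse them on test images at evaluation time.
    selected = select_features(X, y)
    model = train(X[:, selected], y)  # Step 11
    return model, selected
```

Returning the selected column indices alongside the model matters for Step 12: new images must go through the same RoI extraction and RGB feature computation, then be sliced to the same columns, before `Evaluate_Model` is applied.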

Fig. 7.

Proposed methodology diagram.

4. Results

This study classifies mosquito images using several deep learning and ML models and compares both the models' performance and the computational complexity of the different approaches. This section presents the results of each model on the given datasets in different scenarios.

4.1. Model performances on primary dataset

This section presents the performance of all models on the original dataset without any preprocessing. In this scenario, the models were trained on the RGB features of the original images of the primary dataset. The ML models' accuracy, precision, recall, and F1-score are presented in Table 7, and the corresponding confusion matrices are shown in Table 8.

Table 7.

Performance of machine learning models on the original dataset.

| Model | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| SVM | 0.601 | 0.540 | 0.413 | 0.468 |
| RF | 0.703 | 0.696 | 0.537 | 0.606 |
| ETC | 0.713 | 0.708 | 0.555 | 0.622 |
| LR | 0.627 | 0.589 | 0.407 | 0.481 |
| KNN | 0.706 | 0.686 | 0.567 | 0.621 |

Table 8.

Confusion matrix for all machine learning models on the original dataset (CP: correct predictions; WP: wrong predictions).

| Model | TP | TN | FP | FN | CP | WP |
|---|---|---|---|---|---|---|
| SVM | 162 | 67 | 57 | 95 | 229 | 152 |
| RF | 181 | 87 | 38 | 75 | 268 | 113 |
| ETC | 182 | 90 | 37 | 72 | 272 | 109 |
| LR | 173 | 66 | 46 | 96 | 239 | 142 |
| KNN | 177 | 92 | 42 | 70 | 269 | 112 |
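Every row is consistent with CP = TP + TN and WP = FP + FN. For ETC, for example, 182 + 90 = 272 and 37 + 72 = 109. Each row also sums to the 381 test images, so accuracy is CP / (CP + WP).

The sketch below computes these quantities together with the textbook precision, recall, and F1 definitions. Treating TP as the count for the positive class is an assumption. The paper does not say which species is the positive class, so the precision, recall, and F1 values from this sketch are not meant to reproduce the tables.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int
    tn: int
    fp: int
    fn: int

    @property
    def correct(self) -> int:
        """CP column: correct predictions."""
        return self.tp + self.tn

    @property
    def wrong(self) -> int:
        """WP column: wrong predictions."""
        return self.fp + self.fn

    def accuracy(self) -> float:
        return self.correct / (self.correct + self.wrong)

    def precision(self) -> float:
        # Assumes TP and FP are counted for the positive class.
        return self.tp / (self.tp + self.fp)

    def recall(self) -> float:
        return self.tp / (self.tp + self.fn)

    def f1(self) -> float:
        p, r = self.precision(), self.recall()
        return 2 * p * r / (p + r)


# RF on the original dataset, copied from Table 8.
rf = ConfusionCounts(tp=181, tn=87, fp=38, fn=75)
print(rf.correct, rf.wrong, f"{rf.accuracy():.3f}")  # 268 113 0.703
```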

The CNN variants have also been evaluated on the same original images, and their results are reported in Table 9. VGG16 reaches an accuracy of 0.955, outperforming all other CNN variants as well as the ML models in Table 7. This shows the value of the deep learning approach in this application domain.

Table 9.

Performance of CNN variants on the original dataset.

| Model | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| VGG16 | 0.955 | 0.981 | 0.942 | 0.961 |
| Inception V3 | 0.892 | 0.917 | 0.897 | 0.907 |
| ResNet50 | 0.910 | 0.931 | 0.914 | 0.923 |
| EfficientNetB0 | 0.905 | 0.954 | 0.889 | 0.920 |

4.2. Model performance after ROI extraction of images

In this scenario, the models have been trained on the ROI-processed dataset. ETC gives the highest performance with an accuracy of 0.986, followed closely by RF with 0.979. As Table 11 shows, all models improve when trained on this refined version of the dataset. Table 12 shows that ETC also makes the most correct predictions (376 out of 381) and the fewest false predictions of the ML models.

Table 11.

Performance of machine learning models after applying ROI extraction technique.

| Model | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| SVM | 0.968 | 0.951 | 0.975 | 0.963 |
| RF | 0.979 | 0.987 | 0.962 | 0.975 |
| ETC | 0.986 | 0.987 | 0.975 | 0.984 |
| LR | 0.965 | 0.951 | 0.969 | 0.960 |
| KNN | 0.955 | 0.909 | 0.993 | 0.949 |

Table 12.

Confusion matrix for all machine learning models after applying ROI extraction technique.

| Model | TP | TN | FP | FN | CP | WP |
|---|---|---|---|---|---|---|
| SVM | 211 | 158 | 8 | 4 | 369 | 12 |
| RF | 217 | 156 | 2 | 6 | 373 | 8 |
| ETC | 218 | 158 | 1 | 4 | 376 | 5 |
| LR | 211 | 157 | 8 | 5 | 368 | 13 |
| KNN | 203 | 161 | 16 | 1 | 364 | 17 |

Table 13 shows that the CNN variants also improve after ROI extraction. The accuracy of VGG16 increases from 0.955 to 0.986. This shows the value of ROI-based, region-specific feature learning for both machine learning and deep learning models. As discussed earlier, restricting the images to specific regions gives the models more specific features to learn from, which improves their performance.

Table 13.

Performance of CNN variants after applying the ROI extraction technique.

| Model | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| VGG16 | 0.986 | 0.990 | 0.986 | 0.988 |
| Inception V3 | 0.910 | 0.992 | 0.796 | 0.883 |
| ResNet50 | 0.960 | 0.980 | 0.925 | 0.952 |
| EfficientNetB0 | 0.934 | 0.915 | 0.932 | 0.923 |

4.3. Model performance with the proposed RIFS technique

This section presents the results after applying the RIFS technique to select the important features from images. RIFS combines ROI extraction and FFS to boost the ML models' performance, and it gives the highest results of this study. The features returned by RIFS are more strongly correlated with the target class than the features of the original images.

Compared with the original dataset, all ML models improve substantially with RIFS (Table 15). SVM scores slightly lower than with ROI alone (0.960 versus 0.968). ETC gives the highest accuracy, 0.992, which shows the value of the proposed feature selection approach. RF and LR also improve, reaching 0.984 and 0.976, respectively. Table 16 shows the confusion matrices for all ML models: ETC makes 378 correct predictions out of 381, the highest correct-prediction ratio in this study.

Table 15.

Performance of machine learning models after applying the RIFS technique.

| Model | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| SVM | 0.960 | 0.956 | 0.950 | 0.953 |
| RF | 0.984 | 0.993 | 0.969 | 0.981 |
| ETC | 0.992 | 0.993 | 0.987 | 0.990 |
| LR | 0.976 | 0.975 | 0.969 | 0.972 |
| KNN | 0.955 | 0.923 | 0.975 | 0.948 |

Table 16.

Confusion matrix for all machine learning models after applying the RIFS technique.

| Model | TP | TN | FP | FN | CP | WP |
|---|---|---|---|---|---|---|
| SVM | 212 | 154 | 7 | 8 | 366 | 15 |
| RF | 218 | 157 | 1 | 5 | 375 | 6 |
| ETC | 218 | 160 | 1 | 2 | 378 | 3 |
| LR | 215 | 157 | 4 | 5 | 372 | 9 |
| KNN | 206 | 158 | 13 | 4 | 364 | 17 |

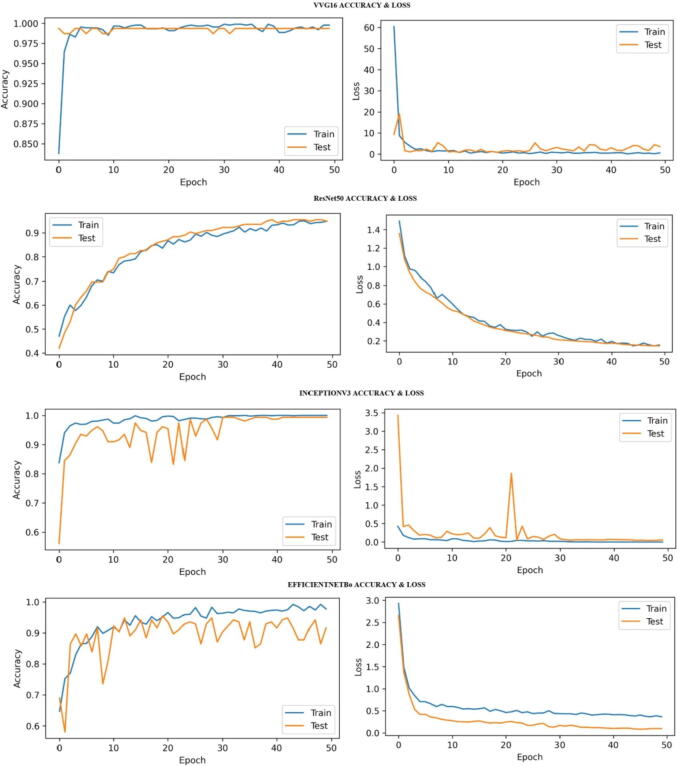

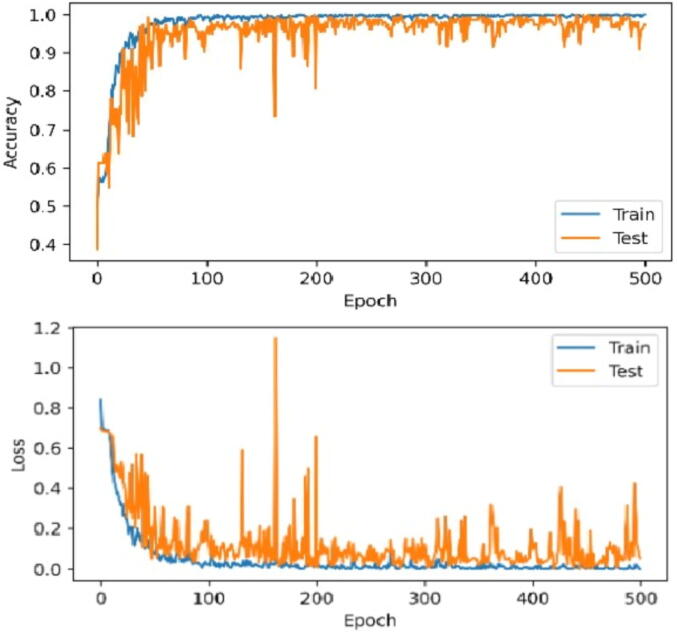

The CNN variants also improve with RIFS, which shows the value of the RIFS approach for mosquito image classification with both types of classification models. Table 17 shows that InceptionV3, ResNet-50, and EfficientNetB0 improve on their previous results. VGG16 keeps the same accuracy as with ROI-based feature extraction. According to the confusion matrices in Table 18, VGG16 performs best, with 376 correct and only five wrong classifications. The accuracy and loss curves of the CNNs are shown in Fig. 8.

Table 17.

Performance of CNN variants after applying the RIFS technique.

| Model | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| VGG16 | 0.986 | 0.995 | 0.981 | 0.988 |
| Inception V3 | 0.979 | 0.975 | 0.975 | 0.975 |
| ResNet50 | 0.981 | 0.975 | 0.981 | 0.978 |
| EfficientNetB0 | 0.947 | 0.943 | 0.932 | 0.937 |

Table 18.

Confusion matrix for all CNN variants after applying the RIFS technique.

| Model | TP | TN | FP | FN | CP | WP |
|---|---|---|---|---|---|---|
| VGG16 | 218 | 158 | 1 | 4 | 376 | 5 |
| Inception V3 | 215 | 158 | 4 | 4 | 373 | 8 |
| ResNet50 | 215 | 159 | 4 | 3 | 374 | 7 |
| EfficientNetB0 | 210 | 151 | 9 | 11 | 361 | 20 |

Fig. 8.

Training and testing accuracy and loss for the CNNs with the RIFS approach.

4.4. Comparison with other studies

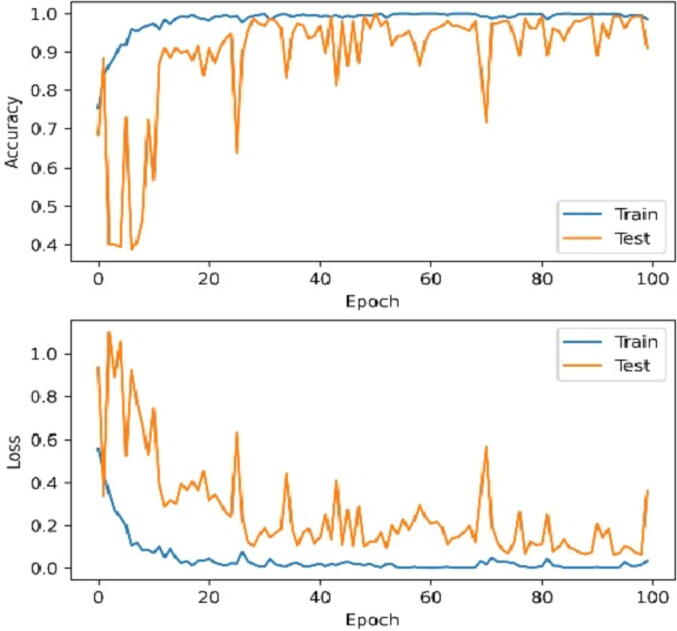

To assess the significance of this study, it has also been compared with prior studies on mosquito image classification: the models proposed in those studies have been applied to the dataset used here. The results show that the proposed approach outperforms the prior approaches. The comparison with these state-of-the-art models is shown in Tables 19 and 20, and Figs. 10 and 11 show the accuracy and loss of the compared models.

Table 19.

Comparison results with prior studies.

| Study | Model | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| Akter et al. (2020) | CNN | 0.973 | 0.958 | 0.995 | 0.975 |
| Park et al. (2020) | CNN | 0.968 | 0.990 | 0.955 | 0.973 |
| This work | ETC | 0.992 | 0.993 | 0.987 | 0.990 |

Table 20.

Confusion matrix comparison with prior studies.

| Study | Year | TP | TN | FP | FN | CP | WP |
|---|---|---|---|---|---|---|---|
| Akter et al. (2020) | 2020 | 210 | 161 | 9 | 1 | 371 | 10 |
| Park et al. (2020) | 2020 | 217 | 152 | 2 | 10 | 369 | 12 |
| This work | 2021 | 218 | 160 | 1 | 2 | 378 | 3 |

Fig. 10.

Accuracy and loss of the model from Akter et al. (2020).

Fig. 11.

Accuracy and loss of the model from Park et al. (2020).

4.5. Time complexity comparison between the proposed ETC approach and the CNN variants

To quantify the computational gain of ETC, the best-performing ML model, its training and testing times have been compared with those of the CNN models. ETC trains about 46 times faster than the fastest-training CNN model, InceptionV3 (32.2 s versus 1481.4 s). Table 21 thus shows that ETC achieves its accuracy at a much lower computational cost than the CNN variants. These results suggest that, with appropriate feature selection, ML models can match neural network models on image datasets in accuracy while requiring far less computation time.

Table 21.

Training and testing times of the proposed approach and the CNN variants.

| Model | Training Time (s) | Testing Time (s) |
|---|---|---|
| VGG16 | 4204.2 | 45.7 |
| InceptionV3 | 1481.4 | 9.3 |
| ResNet-50 | 3942.4 | 17.6 |
| EfficientNetB0 | 3259.8 | 8.1 |
| Proposed Approach (ETC) | 32.2 | 0.10 |
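The "46 times" figure is the ratio of InceptionV3's training time to that of ETC (1481.4 s / 32.2 s ≈ 46.0). The short sketch below recomputes these ratios from Table 21 for every CNN variant. The dictionary layout and the function name are illustrative only.

```python
# Training and testing times in seconds, copied from Table 21.
CNN_TIMES = {
    "VGG16": (4204.2, 45.7),
    "InceptionV3": (1481.4, 9.3),
    "ResNet-50": (3942.4, 17.6),
    "EfficientNetB0": (3259.8, 8.1),
}
ETC_TIMES = (32.2, 0.10)


def time_ratios(cnn_times, etc_times):
    """How many times longer each CNN takes than ETC, as (training, testing) ratios."""
    etc_train, etc_test = etc_times
    return {name: (train / etc_train, test / etc_test)
            for name, (train, test) in cnn_times.items()}


ratios = time_ratios(CNN_TIMES, ETC_TIMES)
fastest_cnn = min(CNN_TIMES, key=lambda name: CNN_TIMES[name][0])
for name, (train_ratio, test_ratio) in ratios.items():
    print(f"{name}: {train_ratio:.1f}x training, {test_ratio:.0f}x testing")
print(fastest_cnn, round(ratios[fastest_cnn][0]))  # InceptionV3 46
```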

5. Discussion

This study develops a methodology for classifying disease-spreading mosquitoes. Various state-of-the-art ML and CNN models, along with prior studies, have been investigated, analyzed, and applied to propose a method that is effective in both accuracy and computational time.

5.1. ML and CNN models without data preprocessing

As the results show, the ensemble model ETC outperforms all other ML models with an accuracy of 0.713. RF and KNN also perform comparatively well, with accuracies of 0.703 and 0.706, respectively. SVM and LR perform poorly, likely because in this scenario they need a more extensive feature set for learning than RF, ETC, and KNN do. ETC also gives the most correct predictions in this scenario, 272 out of 381 (Table 8).

All CNN variants outperform all the ML models. These pre-trained models use neural network architectures that automatically extract important features for learning, which boosts their accuracy. The ML models have no such mechanism, which is why they lag behind the CNN models on the raw images. VGG16 gives 364 correct predictions out of 381 and only 17 wrong predictions (Table 10), the lowest wrong-prediction ratio of all models on the original image dataset.

Table 10.

Confusion matrix for all CNN variants on the original dataset.

| Model | TP | TN | FP | FN | CP | WP |
|---|---|---|---|---|---|---|
| VGG16 | 215 | 149 | 4 | 13 | 364 | 17 |
| Inception V3 | 201 | 139 | 18 | 23 | 340 | 41 |
| ResNet50 | 204 | 143 | 15 | 19 | 347 | 34 |
| EfficientNetB0 | 209 | 136 | 10 | 26 | 345 | 36 |

5.2. ML and CNN models with ROI based data preprocessing

The image dataset has been preprocessed with ROI extraction so that only the image regions relevant to mosquito classification remain, which yields a refined version of the dataset. All ML models have then been trained on these region-of-interest images. After ROI-based feature extraction, the models performed significantly better on the test data. Their learning was based on the features most relevant to classification, because all irrelevant image regions had been cropped out, removing features the classification does not need.

VGG16 performs on par with the ML model ETC (0.986 accuracy for both), because with ROI-based preprocessing both models learn from the same important features. Most of the ML models score higher than InceptionV3, ResNet-50, and EfficientNetB0. These CNNs perform better with a large feature set, and reducing the input to the selected regions suits the ML models better than these CNN variants. VGG16 gives 376 correct predictions out of 381 (Table 14), the same number as ETC.

Table 14.

Confusion matrix for all CNN variants after applying ROI extraction technique.

| Model | TP | TN | FP | FN | CP | WP |
|---|---|---|---|---|---|---|
| VGG16 | 217 | 159 | 2 | 3 | 376 | 5 |
| Inception V3 | 218 | 129 | 1 | 33 | 347 | 34 |
| ResNet50 | 216 | 150 | 3 | 12 | 366 | 15 |
| EfficientNetB0 | 205 | 151 | 14 | 11 | 356 | 25 |

5.3. ML and CNN models with RIFS data preprocessing

The ETC model performs well in this scenario because of its ensemble architecture. It combines several decision trees through majority voting, which makes it more suitable than individual classification models.
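The ensemble idea can be illustrated with a simplified pure-Python sketch, loosely in the spirit of extremely randomized trees (Geurts et al., 2006). To keep it short, each tree is reduced to a single split (a stump) that works as follows:

1. It draws k random (feature, threshold) candidates, with each threshold drawn uniformly between that feature's minimum and maximum.
2. It keeps the candidate with the fewest training errors.

The ensemble then predicts by majority vote over all stumps. The stump depth, k = 3, the 25 estimators, and all names are assumptions for illustration, not the study's configuration. A practical ETC grows deeper trees. scikit-learn's `ExtraTreesClassifier`, for example, combines its trees by averaging their predicted class probabilities rather than by counting hard votes.

```python
import random
from collections import Counter
from typing import Callable, List, Sequence

Row = Sequence[float]


def majority(labels: List[str]) -> str:
    """Most common label; ties go to the label seen first (Counter.most_common order)."""
    return Counter(labels).most_common(1)[0][0]


def fit_random_stump(X: List[Row], y: List[str], k: int,
                     rng: random.Random) -> Callable[[Row], str]:
    """Draw k random (feature, threshold) splits, thresholds uniform between the
    feature's min and max, and keep the split with the fewest training errors."""
    best = None
    for _ in range(k):
        f = rng.randrange(len(X[0]))
        values = [row[f] for row in X]
        threshold = rng.uniform(min(values), max(values))
        left = [label for row, label in zip(X, y) if row[f] <= threshold]
        right = [label for row, label in zip(X, y) if row[f] > threshold]
        # Each side predicts its majority label; an empty side falls back to the overall majority.
        left_label = majority(left) if left else majority(y)
        right_label = majority(right) if right else majority(y)
        errors = sum(lab != left_label for lab in left) + sum(lab != right_label for lab in right)
        if best is None or errors < best[0]:
            best = (errors, f, threshold, left_label, right_label)
    _, f, threshold, left_label, right_label = best
    return lambda row: left_label if row[f] <= threshold else right_label


def fit_extra_stumps(X, y, n_estimators=25, k=3, seed=0):
    rng = random.Random(seed)
    return [fit_random_stump(X, y, k, rng) for _ in range(n_estimators)]


def predict(stumps, row: Row) -> str:
    """Ensemble prediction by majority vote over the individual stumps."""
    return majority([stump(row) for stump in stumps])


X = [[0.0, 1.0], [0.1, 0.9], [0.9, 0.1], [1.0, 0.0]]
y = ["Aedes", "Aedes", "Culex", "Culex"]
stumps = fit_extra_stumps(X, y)
print(predict(stumps, [0.0, 1.0]), predict(stumps, [1.0, 0.0]))  # Aedes Culex
```

On this toy data, the predictions for the two outermost samples do not depend on the random seed, because every possible stump routes them to the correct side. Samples nearer the class boundary can be misrouted by individual stumps, and the majority vote is what smooths out those errors.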

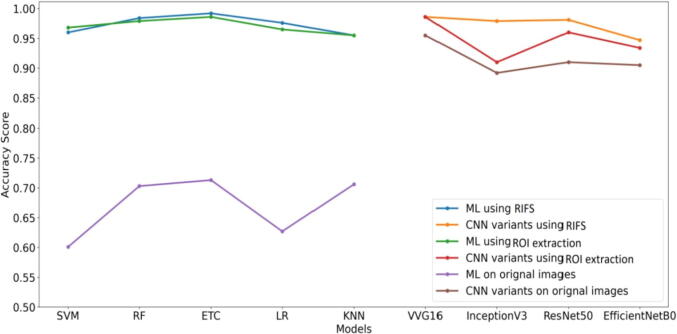

The comparison of all models shows that ROI- and RIFS-based feature extraction improve model performance substantially compared with training on the features of the original dataset. These techniques extract more specific and meaningful features for learning. Fig. 9 shows that the ML models perform poorly on the original dataset. The CNNs perform better there because they learn useful features automatically. The ML models' performance rises sharply once ROI extraction isolates the relevant parts of the mosquitoes in the images, since the models can then use the most appropriate features for classification. RIFS improves performance further for most models.

Fig. 9.

Accuracy comparison between all approaches.

Why is this study significant?

- To the best of our knowledge, the dataset used in these experiments has not been used in any previous study.
- This study proposes a novel approach in which an ML model outperforms strong CNN variants.
- This study introduces the RIFS approach, which shows that focusing on the important features can improve ML model performance while saving computation time and cost.
- The study performs a thorough comparison with well-tuned baseline models to show the significance of the proposed approach.

6. Conclusion

Many mosquito-borne diseases are deadly because antiviral and antibiotic drugs are not effective in treating infected patients. This is a primary reason for almost one million human deaths per year worldwide. The best-known current approach to preventing the spread of mosquito-borne diseases is to eliminate mosquitoes and their breeding areas with chemical and insecticide sprays. However, completely eliminating these flying insects is challenging, because heavy use of these sprays and chemicals makes mosquitoes resistant to them.

Identifying disease-transmitting mosquitoes in a potential area requires an efficient automatic system that detects and classifies particular types of mosquitoes. Such a system would help health authorities and other stakeholders check for disease-spreading mosquitoes in their areas of interest. In this study, an ML- and CNN-based methodology has been proposed for an effective system that supports epidemiology by analyzing risks and transmission, so that policies and decisions can be evidence-based. It can prepare authorities to take timely preventive measures in targeted areas to control the spread.

The methodology classifies two of the most important classes of disease-spreading mosquitoes, Aedes and Culex, using ML and CNN-based approaches. The study uses a primary image dataset of Aedes and Culex mosquito species obtained from IEEE DataPort, a well-known source of datasets.

A novel feature selection approach, RIFS, has been proposed and evaluated in this study. Its objective is to combine the efficiency of image filtering techniques with the accuracy of wrapper-based feature selection, so RIFS was designed as a hybrid feature selection preprocessing technique. The first phase eliminates irrelevant portions of the images to increase efficiency and reduce computational cost. The second phase selects the most appropriate features to improve model accuracy. In the experimental setup, two groups of models have been trained to evaluate the performance and computational cost of each model: the ML models SVM, RF, ETC, LR, and KNN, and the CNN variants VGG16, InceptionV3, ResNet-50, and EfficientNetB0.

The results show that the proposed methodology based on the novel RIFS feature selection approach is very effective in terms of both model performance and computational complexity. The methodology may also apply to other problem areas that involve feature selection. The main finding is that ETC outperformed all other ML models with an accuracy of 0.992, up from 0.713 before RIFS was applied. VGG16 attained 0.986 accuracy with RIFS. These observations suggest that, with appropriate feature selection, low-performing ML models can achieve strong performance at reduced computational effort. As the results show, the ETC model requires far less training and testing time than the CNN models.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgement

This research was supported by the Florida Center for Advanced Analytic and Data Science funded by Ernesto.Net (under the Algorithms for Good Grant). The authors extend their appreciation to the Deanship of Scientific Research at King Saud University for supporting this research work through research group no. RG-1441-455.

Footnotes

Peer review under responsibility of King Saud University.

Contributor Information

Aijaz Ahmad Reshi, Email: aijazonnet@gmail.com.

Ernesto Lee, Email: elee@broward.edu.

References

- Akter, M., Hossain, M.S., Uddin Ahmed, T., Andersson, K., 2020. Mosquito classification using convolutional neural network with data augmentation. In: 3rd International Conference on Intelligent Computing & Optimization 2020, ICO 2020.

- Bhati, B.S., Rai, C., 2020. Ensemble based approach for intrusion detection using extra tree classifier. In: Intelligent Computing in Engineering. Springer, pp. 213–220.

- Taiwan Centers for Disease Control, 2021. Japanese encephalitis information from Taiwan CDC. https://www.cdc.gov.tw/En/Category/ListContent/bg0g_VU_Ysrgkes_KRUDgQ?uaid=FCBms2B8k0PJx4io35AsOw (February 2021).

- Fanioudakis, E., Geismar, M., Potamitis, I., 2018. Mosquito wingbeat analysis and classification using deep learning. In: 26th European Signal Processing Conference (EUSIPCO). IEEE, pp. 2410–2414.

- Fuad, M.A.M., Ab Ghani, M.R., Ghazali, R., Izzuddin, T.A., Sulaima, M.F., Jano, Z., Sutikno, T., 2018. Training of convolutional neural network using transfer learning for Aedes aegypti larvae. Telkomnika 16(4), 1894–1900.

- Fuchida, M., Pathmakumar, T., Mohan, R.E., Tan, N., Nakamura, A., 2017. Vision-based perception and classification of mosquitoes using support vector machine. Appl. Sci. 7(1), 51.

- Furuya-Kanamori, L., Liang, S., Milinovich, G., Magalhaes, R.J.S., Clements, A.C., Hu, W., Brasil, P., Frentiu, F.D., Dunning, R., Yakob, L., 2016. Co-distribution and co-infection of chikungunya and dengue viruses. BMC Infect. Dis. 16(1), 1–11. doi: 10.1186/s12879-016-1417-2.

- García, Z., Yanai, K., Nakano, M., Arista, A., Cleofas, L., Perez-Meana, H., 2019. Mosquito larvae image classification based on DenseNet and Guided Grad-CAM. doi: 10.1007/978-3-030-31321-0_21.

- Geurts, P., Ernst, D., Wehenkel, L., 2006. Extremely randomized trees. Machine Learning 63(1), 3–42.

- Hadinegoro, S.R., Arredondo-García, J.L., Capeding, M.R., Deseda, C., Chotpitayasunondh, T., Dietze, R., Hj Muhammad Ismail, H.I., Reynales, H., Limkittikul, K., Rivera-Medina, D.M., Tran, H.N., Bouckenooghe, A., Chansinghakul, D., Cortés, M., Fanouillere, K., Forrat, R., Frago, C., Gailhardou, S., Jackson, N., Noriega, F., Plennevaux, E., Wartel, T.A., Zambrano, B., Saville, M., 2015. Efficacy and long-term safety of a dengue vaccine in regions of endemic disease. New England J. Med. 373(13), 1195–1206. doi: 10.1056/NEJMoa1506223.

- He, K., Zhang, X., Ren, S., Sun, J., 2016. Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778.

- Huang, L.-P., Hong, M.-H., Luo, C.-H., Mahajan, S., Chen, L.-J., 2018. A vector mosquitoes classification system based on edge computing and deep learning. In: 2018 Conference on Technologies and Applications of Artificial Intelligence (TAAI). IEEE, pp. 24–27.

- Jasinskiene, N., Coates, C.J., Benedict, M.Q., Cornel, A.J., Rafferty, C.S., James, A.A., Collins, F.H., 1998. Stable transformation of the yellow fever mosquito, Aedes aegypti, with the Hermes element from the housefly. Proc. Natl. Acad. Sci. 95(7), 3743–3747. doi: 10.1073/pnas.95.7.3743.

- Minakshi, M., Bharti, P., Chellappan, S., 2017. Identifying mosquito species using smart-phone cameras. In: 2017 European Conference on Networks and Communications (EuCNC). IEEE, pp. 1–6.

- Motta, D., Santos, A.Á.B., Winkler, I., Machado, B.A.S., Pereira, D.A.D.I., Cavalcanti, A.M., Fonseca, E.O.L., Kirchner, F., Badaro, R., 2019. Application of convolutional neural networks for classification of adult mosquitoes in the field. PLoS ONE 14(1), e0210829. doi: 10.1371/journal.pone.0210829.

- Okayasu, K., Yoshida, K., Fuchida, M., Nakamura, A., 2019. Vision-based classification of mosquito species: Comparison of conventional and deep learning methods. Appl. Sci. 9(18), 3935.

- Ortiz, A.S., Miyatake, M.N., Tünnermann, H., Teramoto, T., Shouno, H., 2018. Mosquito larva classification based on a convolution neural network. In: Proceedings of the International Conference on Parallel and Distributed Processing Techniques and Applications (PDPTA), The Steering Committee of The World Congress in Computer Science, Computer …, pp. 320–325.

- Park, J., Kim, D.I., Choi, B., Kang, W., Kwon, H.W., 2020. Classification and morphological analysis of vector mosquitoes using deep convolutional neural networks. Sci. Rep. 10(1), 1–12. doi: 10.1038/s41598-020-57875-1.

- Reshma Pise, K.P.P.C., Mayawadee Aungmaneeporn, 2020. Image dataset of Aedes and Culex mosquito species. IEEE DataPort. https://ieee-dataport.org/open-access/image-dataset-aedes-and-culex-mosquito-species (December 2020).

- Reshi, A.A., et al., 2021. An efficient CNN model for COVID-19 disease detection based on X-ray image. Complexity 2021. doi: 10.1155/2021/6621607. https://www.hindawi.com/journals/complexity/2021/6621607/

- Reshi, A.A., et al., 2021. Diagnosis of vertebral column pathologies using concatenated resampling with machine learning algorithms. PeerJ Computer Science. doi: 10.7717/peerj-cs.547.

- Roth, A., Mercier, A., Lepers, C., Hoy, D., Duituturaga, S., Benyon, E., Guillaumot, L., Souares, Y., 2014. Concurrent outbreaks of dengue, chikungunya and Zika virus infections: an unprecedented epidemic wave of mosquito-borne viruses in the Pacific 2012–2014. Eurosurveillance 19, 20929. doi: 10.2807/1560-7917.es2014.19.41.20929.

- Sanchez-Ortiz, A., Fierro-Radilla, A., Arista-Jalife, A., Cedillo-Hernandez, M., Nakano-Miyatake, M., Robles-Camarillo, D., Cuatepotzo-Jiménez, 2017. Mosquito larva classification method based on convolutional neural networks. In: 2017 International Conference on Electronics, Communications and Computers (CONIELECOMP). IEEE, pp. 1–6.

- Shorten, C., Khoshgoftaar, T.M., 2019. A survey on image data augmentation for deep learning. J. Big Data 6(1), 1–48. doi: 10.1186/s40537-019-0197-0.

- Simonyan, K., Zisserman, A., 2014. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556.

- Singh, S., Kubica, J., Larsen, S., Sorokina, D., 2009. Parallel large scale feature selection for logistic regression. In: Proceedings of the 2009 SIAM International Conference on Data Mining. SIAM, pp. 1172–1183.

- Taiwan Centers for Disease Control, 2021. Dengue fever information from Taiwan CDC. https://bit.ly/3bAswDe (February 2021).

- Tan, M., Le, Q., 2019. EfficientNet: Rethinking model scaling for convolutional neural networks. In: International Conference on Machine Learning. PMLR, pp. 6105–6114.

- Theckedath, D., Sedamkar, R., 2020. Detecting affect states using VGG16, ResNet50 and SE-ResNet50 networks. SN Computer Sci. 1(2), 1–7.

- Thomas, T., De, T.D., Sharma, P., Lata, S., Saraswat, P., Pandey, K.C., Dixit, R., 2016. Hemocytome: deep sequencing analysis of mosquito blood cells in Indian malarial vector Anopheles stephensi. Gene 585(2), 177–190. doi: 10.1016/j.gene.2016.02.031.

- World Health Organization, et al., 2020. Global strategy for dengue prevention and control 2012–2020.

- World Health Organization, 2021. Vector-borne diseases. https://www.who.int/news-room/fact-sheets/detail/vector-borne-diseases (February 2021).

- Whitney, A.W., 1971. A direct method of nonparametric measurement selection. IEEE Trans. Comput. 100(9), 1100–1103.