Abstract

Background

There has been growing interest in real-world evidence (RWE) in recent years. RWE is usually generated from data derived from routine healthcare, such as electronic healthcare records and disease registries. While RWE has many advantages, it is often open to various biases, which may distort results. Appropriate understanding and interpretation are critical to the best use of RWE in healthcare decisions.

Methods

On the basis of a literature review and empirical research experience, we summarised the concept and methodological framework of RWE, and discussed in detail methodological issues specific to routinely collected healthcare data and observational studies using such data.

Results

RWE is derived from a spectrum of data generated from the real-world setting, using two broad study designs: observational studies and pragmatic clinical trials. Real-world data are usually collected through routine practice and are sometimes actively collected for a research purpose. Observational studies using routinely collected data (RCD) are the most common type of RWE, although they are prone to biases. When planning and implementing RWE studies, coherent working steps are warranted, including definition of a clear and answerable research question, development of a research team, selection of a fit-for-purpose data source, choice of state-of-the-art study design, establishment of a database with transparent data processing, performance of multiple statistical analyses to control bias, and reporting of results in accordance with established guidelines.

Conclusions

The volume of RWE has grown over the years. The appropriate interpretation and use of such evidence require an adequate understanding of methodology. Researchers and policymakers should be aware of the methodological pitfalls when generating and interpreting RWE.

Keywords: evidence-based medicine, practice guideline, practice guidelines as topic, research design, safety

In recent years, the concept of real-world evidence (RWE) has become widely accepted. In particular, the release of the 21st Century Cures Act in the USA fuelled interest in RWE among researchers and policymakers.1 RWE may have a wide spectrum of applications, such as understanding treatment patterns, informing treatment outcomes in vulnerable populations, and assessing treatment effects in real-world practice.

However, misunderstanding or confusion is still common concerning what RWE is and how one should interpret the evidence. For example, a common misconception about RWE is that it can only be generated using data from routine clinical care and does not involve new data collection under a predefined protocol.2 Another common misconception is that RWE merely refers to evidence generated from observational studies.3

In reality, the methodological framework for RWE is more complex than that for classical clinical trials. Lack of strong methodological and statistical expertise may sometimes lead to inappropriate handling of data, thus producing unreliable or even incorrect conclusions.3 Therefore, it is important to better understand the concept and methodological issues regarding RWE.

Conceptual framework of real-world evidence

The term ‘real-world evidence’ is often used to refer to clinical evidence about utilisation (eg, treatment pattern or compliance), benefits and harms of medical products in a defined population or a subgroup population. The evidence is typically derived from analyses of healthcare data outside of classical clinical trials.2

Data sources for generating RWE usually come in two main forms: routinely collected healthcare data (RCD) and actively collected healthcare data in routine clinical practice settings.4 While RCD, such as electronic medical records, claims data and health surveillance data, are generated from routine practice for non-research purposes, actively collected healthcare data are often collected with certain research purposes. These two forms of data are important sources of real-world data, and their common feature is that the data are derived from routine clinical practice.

RWE is derived from the analyses of real-world data, and in many cases is based on observational study designs. However, such studies are susceptible to bias due to the complexity of the healthcare setting and the data, and the observational nature of the design. Both researchers and evidence users must be highly cautious about observational studies using real-world data. It is also worth noting that RWE is not synonymous with observational studies. An interventional study can also be used to generate RWE, and one such design is a pragmatic clinical trial.3 5 This study design is often prioritised where the treatment effect on a heterogeneous population is urgently needed, the optimal treatment is largely unknown in routine practice, or medical needs (typically those related to patient welfare) are insufficiently met.6 In this paper, we specifically discuss issues about observational studies using real-world data, especially routinely collected healthcare data.

Issues about data sources: focusing on routinely collected data

Routinely collected healthcare data represent the most common type of real-world data. Because these data are typically collected in routine healthcare without a priori research purposes, their quality and applicability are often matters of methodological concern.7 8

The quality of RCD may be assessed in two dimensions: completeness and accuracy.9 Completeness refers to the extent to which data needed from the research perspective are missing. For example, while information regarding cigarette smoking is important for many epidemiological studies, this information may often go unrecorded in routine practice.10 Missing data are inevitable in RCD; researchers therefore often need to understand the extent to which important variables are missing and the potential reasons for the missingness. Another important dimension is accuracy. Information in electronic medical records, such as disease codes or numerical values, may sometimes be recorded inaccurately, and the underlying reasons may vary.11 Validation of data is often needed when applying RCD for research purposes, and this frequently involves manual checking.12
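The two quality dimensions described above can be quantified in a simple way. The following is a minimal Python sketch, not a method taken from the cited literature: the records, the variable names (`smoking`, `hba1c`) and the manually verified reference values are all hypothetical and serve only to illustrate the idea.

```python
# Hypothetical extract of routinely collected records; None marks a value
# that was never recorded in routine care.
records = [
    {"patient_id": 1, "smoking": "never", "hba1c": 6.1},
    {"patient_id": 2, "smoking": None, "hba1c": 7.4},
    {"patient_id": 3, "smoking": None, "hba1c": None},
    {"patient_id": 4, "smoking": "current", "hba1c": 8.0},
]

# Hypothetical results of manual chart review for a validation subsample.
smoking_reference = {1: "never", 4: "former"}


def completeness(rows, variable):
    """Proportion of rows with a recorded (non-missing) value."""
    recorded = sum(1 for row in rows if row.get(variable) is not None)
    return recorded / len(rows)


def accuracy(rows, variable, reference):
    """Proportion of recorded values that agree with the verified reference.

    Only rows with both a recorded value and a reference value are compared.
    Returns None when no row can be compared.
    """
    compared = [
        (row[variable], reference[row["patient_id"]])
        for row in rows
        if row.get(variable) is not None and row["patient_id"] in reference
    ]
    if not compared:
        return None
    agreeing = sum(1 for recorded, verified in compared if recorded == verified)
    return agreeing / len(compared)


smoking_completeness = completeness(records, "smoking")  # 2 of 4 recorded
hba1c_completeness = completeness(records, "hba1c")      # 3 of 4 recorded
smoking_accuracy = accuracy(records, "smoking", smoking_reference)  # 1 of 2 agree
```

In practice, such summaries would be reported per key variable, alongside an assessment of why values are missing or inaccurate.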

One should also assess the relevance of data. In the generation of RWE, the choice of data should always be made according to predefined research purposes.13 For example, claims data may be more suitable for studies on health economics and treatment patterns; however, they may not provide sufficient information on patient characteristics, laboratory results or clinical endpoints, which are crucial for studies assessing treatment effects.14 In another example, spontaneous adverse event report databases may often be used for detecting a signal of adverse events or generating hypotheses, but are of limited relevance for testing a hypothesis about adverse drug reactions. In a third example, electronic health records contain abundant clinical information, such as operation, imaging and laboratory results. They are useful data sources for answering a wide spectrum of clinical questions, ranging from disease burdens to prognoses, but often lack regular follow-up visits.7

In order to enhance the use of real-world data, several guidance documents are readily available that discuss the key issues about data sources for pharmacoepidemiology studies.15–17

Observational studies using routinely collected data

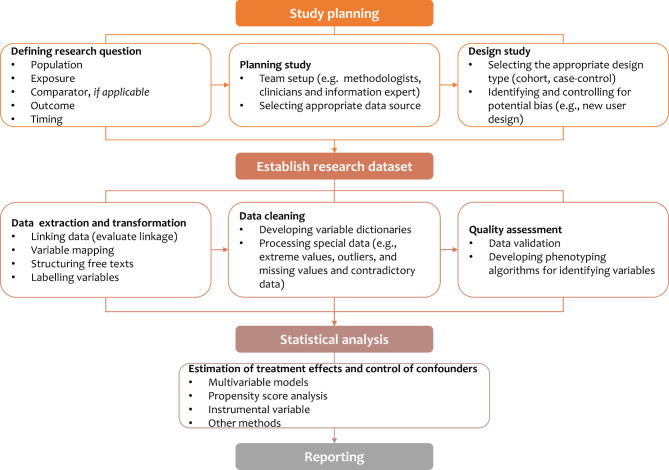

Observational studies are the most common approach to using routinely collected data. A common research flow may be used when planning and implementing such studies (figure 1).

Figure 1. Schematic flow of an observational study using routinely collected data.

Research question, study planning and design

In observational studies using RCD, the initial step is to specify a clear and answerable research question that contains the key components, including population, exposure, comparator (if applicable), outcome and timing. A multidisciplinary team is usually assembled to take responsibility for the planning, design, and implementation of the study. In the study planning, the research team needs to identify potential data sources and determine the appropriateness of the data. Data appropriateness often varies by research question. However, it may commonly be assessed in dimensions including representativeness, size of data, availability, completeness and accuracy of key research variables, and duration of database coverage.18

In observational studies using RCD, study designs may be highly variable and are typically retrospective in nature. Retrospective cohort studies, case–control studies or nested case–control studies are the most frequently chosen epidemiological designs in assessing effects of drug treatments. However, these designs are usually subject to selection bias and measurement bias, both of which may distort the estimates of drug treatment effects, and even flip the direction of the effects. Many forms of selection biases have been identified in studies using RCD,19 and indication bias is among the most common selection biases that warrant strong attention.20 Another common bias is time-dependent bias, such as immortal time bias and time-lag bias, which may derive from a wrongly defined timeframe of the exposure group (eg, a waiting time between initiation of follow-up and treatment inappropriately assigned to the exposed group).21 There is an extensive literature discussing the different forms of selection biases,22–24 and interested researchers may find it helpful in designing their studies. In general, new user design, treatment-naïve new user design or active comparator design are often desirable strategies to resolve some of these important biases.25 26 New user designs align exposure and comparator groups at the same initiation time, while active comparators can restrict participants to those with the same indications.
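The waiting-time example of immortal time bias can be made concrete with a small calculation. The sketch below is illustrative only: the four patients and their follow-up times are invented, and the simplifying assumptions (treatment always starts before the end of follow-up, and events in treated patients occur after treatment start) are stated in the comments. It compares an analysis that wrongly assigns the waiting time to the exposed group with one that assigns it to the unexposed group.

```python
# Hypothetical cohort. Times are days since cohort entry.
# treat_start is None for patients who never start treatment.
# Assumptions: treat_start < follow_up, and any event in a treated patient
# happens after treatment start.
patients = [
    {"treat_start": 100, "follow_up": 400, "event": True},
    {"treat_start": 200, "follow_up": 500, "event": False},
    {"treat_start": None, "follow_up": 50, "event": True},
    {"treat_start": None, "follow_up": 300, "event": False},
]


def rate_ratio(cohort, time_varying):
    """Event rate ratio, exposed versus unexposed.

    time_varying=False: all follow-up of ever-treated patients counts as
    exposed, including the waiting time before treatment (the biased analysis).
    time_varying=True: the waiting time counts as unexposed person-time.
    """
    person_time = {"exposed": 0, "unexposed": 0}
    events = {"exposed": 0, "unexposed": 0}
    for p in cohort:
        start = p["treat_start"]
        if start is None:
            person_time["unexposed"] += p["follow_up"]
            group = "unexposed"
        else:
            if time_varying:
                person_time["unexposed"] += start
                person_time["exposed"] += p["follow_up"] - start
            else:
                person_time["exposed"] += p["follow_up"]
            group = "exposed"
        if p["event"]:
            events[group] += 1
    exposed_rate = events["exposed"] / person_time["exposed"]
    unexposed_rate = events["unexposed"] / person_time["unexposed"]
    return exposed_rate / unexposed_rate


biased = rate_ratio(patients, time_varying=False)    # (1/900)/(1/350), about 0.39
corrected = rate_ratio(patients, time_varying=True)  # (1/600)/(1/650), about 1.08
```

In this invented cohort, misassigning the waiting time makes treatment appear protective, whereas the corrected person-time allocation shows no such benefit.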

Developing research dataset from the RCD

On completion of study planning and design, a research dataset should be established. As RCD are collected for administrative purposes, they are not usable for observational studies in their original forms. Therefore, it is necessary to transform the data into a uniform and structured format. The transformation of RCD into a research dataset may include multiple steps, such as data linkage, structuring of free text and variable labelling.
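As an illustration of the data linkage step, the following minimal Python sketch links two hypothetical source tables on a shared patient identifier and produces one structured row per patient. The table contents, field names and the derived variables (`n_prescriptions`, `first_prescription`) are assumptions made for the example; real linkage often requires probabilistic matching and governance steps that are not shown here.

```python
from collections import defaultdict

# Hypothetical source tables sharing a patient identifier.
demographics = [
    {"patient_id": "P1", "sex": "F", "birth_year": 1950},
    {"patient_id": "P2", "sex": "M", "birth_year": 1962},
]
prescriptions = [
    {"patient_id": "P1", "drug": "metformin", "date": "2019-03-01"},
    {"patient_id": "P1", "drug": "metformin", "date": "2019-06-01"},
    {"patient_id": "P3", "drug": "insulin", "date": "2019-04-15"},
]


def link(people, dispensings):
    """Deterministic linkage on patient_id, one output row per person.

    Returns the linked rows and the identifiers found only in the
    prescription table, so that unlinked records can be reported.
    """
    by_patient = defaultdict(list)
    for rx in dispensings:
        by_patient[rx["patient_id"]].append(rx)

    linked = []
    for person in people:
        rows = by_patient.get(person["patient_id"], [])
        linked.append({
            **person,
            "n_prescriptions": len(rows),
            # ISO 8601 date strings sort chronologically as plain text.
            "first_prescription": min((r["date"] for r in rows), default=None),
        })

    known_ids = {person["patient_id"] for person in people}
    unlinked = sorted(set(by_patient) - known_ids)
    return linked, unlinked


linked_rows, unlinked_ids = link(demographics, prescriptions)
```

Reporting the number of unlinked records, as the function does here, is one simple way to keep the linkage step transparent.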

Additional data cleaning is also an essential part of building a research dataset. This process often includes establishing variable dictionaries and processing special data (ie, extreme values, outliers, missing values and contradictory data). Notably, raw data, detailed cleaning rules, and data processing procedures should be kept to ensure the transparency of the study.
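One way to keep cleaning transparent is to apply explicit rules to a copy of the raw data and to log every change. The sketch below assumes a hypothetical plausibility range for systolic blood pressure and a hypothetical consistency rule for admission and discharge dates; neither the limits nor the field names come from the cited guidance.

```python
import copy

# Hypothetical plausibility limits, part of the documented cleaning rules.
PLAUSIBLE_RANGE = {"systolic_bp": (50, 300)}

raw = [
    {"patient_id": 1, "systolic_bp": 120,
     "admission_date": "2020-01-01", "discharge_date": "2020-01-05"},
    {"patient_id": 2, "systolic_bp": 1200,
     "admission_date": "2020-02-01", "discharge_date": "2020-02-03"},
    {"patient_id": 3, "systolic_bp": None,
     "admission_date": "2020-03-10", "discharge_date": "2020-03-01"},
]


def clean(raw_rows):
    """Apply the cleaning rules to a copy and return (cleaned rows, audit log)."""
    cleaned = copy.deepcopy(raw_rows)  # the raw data are never modified
    log = []
    for row in cleaned:
        # Rule 1: values outside the plausible range are set to missing.
        for variable, (low, high) in PLAUSIBLE_RANGE.items():
            value = row.get(variable)
            if value is not None and not (low <= value <= high):
                log.append({"patient_id": row["patient_id"], "variable": variable,
                            "rule": "out_of_range", "old_value": value})
                row[variable] = None
        # Rule 2: a discharge date before the admission date is contradictory.
        admitted, discharged = row["admission_date"], row["discharge_date"]
        if admitted is not None and discharged is not None and discharged < admitted:
            log.append({"patient_id": row["patient_id"], "variable": "discharge_date",
                        "rule": "contradictory_dates", "old_value": discharged})
            row["discharge_date"] = None
    return cleaned, log


cleaned_rows, audit_log = clean(raw)
```

Keeping the raw rows, the rule definitions and the audit log together allows others to retrace how the research dataset was derived.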

A specific question in using RCD is how to frame operational phenotyping algorithms (computer-executable definitions that use diagnosis codes, clinical markers, or demographic characteristics) for identifying research variables, including exposure, outcome and covariates.27 The validity and reliability of these codes or algorithms for research variables are critical.
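A phenotyping algorithm and its validation can be sketched in a few lines of Python. The rule below (an ICD-10 code beginning with E11 or an HbA1c of at least 6.5%) is a deliberately simplified, hypothetical definition of type 2 diabetes used only for illustration, and the chart review results are invented; a real algorithm would be developed and validated against local coding practice.

```python
# Hypothetical patients with diagnosis codes, a laboratory marker and the
# result of manual chart review (the reference standard).
patients = [
    {"id": 1, "codes": ["E11.9"], "hba1c": 7.2, "chart_confirmed": True},
    {"id": 2, "codes": ["I10"], "hba1c": 6.8, "chart_confirmed": True},
    {"id": 3, "codes": ["E11.9"], "hba1c": 5.4, "chart_confirmed": False},
    {"id": 4, "codes": ["I10"], "hba1c": 5.2, "chart_confirmed": False},
    {"id": 5, "codes": [], "hba1c": None, "chart_confirmed": True},
]


def has_phenotype(patient):
    """Illustrative rule: any E11 diagnosis code or HbA1c >= 6.5%."""
    coded = any(code.startswith("E11") for code in patient["codes"])
    lab = patient["hba1c"] is not None and patient["hba1c"] >= 6.5
    return coded or lab


def validate(cohort):
    """Sensitivity and positive predictive value against chart review."""
    true_pos = sum(1 for p in cohort if has_phenotype(p) and p["chart_confirmed"])
    false_pos = sum(1 for p in cohort if has_phenotype(p) and not p["chart_confirmed"])
    false_neg = sum(1 for p in cohort if not has_phenotype(p) and p["chart_confirmed"])
    return {
        "sensitivity": true_pos / (true_pos + false_neg),
        "ppv": true_pos / (true_pos + false_pos),
    }


metrics = validate(patients)  # 2 true positives, 1 false positive, 1 false negative
```

Here the algorithm misses the confirmed case with no recorded code or laboratory value, which shows how incomplete data feed directly into misclassification of research variables.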

Statistical analysis

Statistical analysis in observational studies should be mindful of controlling for confounding factors. Confounding is very common in observational studies, and many types of confounding may be present in the use of RCD for assessing drug treatment effects, for example, time-dependent confounding and unmeasured confounding. These issues may often distort the estimated treatment effects.19 20 28–30 Various methods have been developed to address confounding, such as multivariable models, propensity score analysis and instrumental variable analysis.31–33 Guidance is available for the use of sophisticated statistical methods in the analysis of RCD.34
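To show how confounding can distort a crude estimate and how a propensity score approach may address it, the sketch below uses invented counts with a single measured confounder (disease severity). The propensity score is simply the proportion treated within each severity stratum, and inverse probability of treatment weighting is applied to the aggregated counts. This is one simplified variant of propensity score analysis chosen for illustration; it does not address unmeasured or time-dependent confounding.

```python
# Hypothetical counts per severity stratum: (number of patients, events).
# Severe patients are treated more often and have more events regardless
# of treatment, so severity confounds the crude comparison.
strata = {
    "severe": {"treated": (80, 40), "untreated": (20, 10)},
    "mild": {"treated": (20, 2), "untreated": (80, 8)},
}


def crude_risk_ratio(data):
    """Risk ratio ignoring severity."""
    n_t = sum(s["treated"][0] for s in data.values())
    e_t = sum(s["treated"][1] for s in data.values())
    n_u = sum(s["untreated"][0] for s in data.values())
    e_u = sum(s["untreated"][1] for s in data.values())
    return (e_t / n_t) / (e_u / n_u)


def ipw_risk_ratio(data):
    """Risk ratio after inverse probability of treatment weighting."""
    wn_t = we_t = wn_u = we_u = 0.0
    for s in data.values():
        n_t, e_t = s["treated"]
        n_u, e_u = s["untreated"]
        ps = n_t / (n_t + n_u)  # propensity score within the stratum
        # Treated patients are weighted by 1/ps, untreated by 1/(1 - ps).
        wn_t += n_t / ps
        we_t += e_t / ps
        wn_u += n_u / (1 - ps)
        we_u += e_u / (1 - ps)
    return (we_t / wn_t) / (we_u / wn_u)


crude = crude_risk_ratio(strata)    # 0.42 / 0.18, about 2.33
weighted = ipw_risk_ratio(strata)   # 0.30 / 0.30, about 1.0
```

In these invented data the crude risk ratio suggests harm, while the weighted estimate is null because the risk within each severity stratum is identical for treated and untreated patients.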

Given these methodological challenges in observational studies, both regulatory decision-makers and academic experts are committed to developing methodological guidelines about observational studies using RCD.13 15 25 35–39 Researchers are advised to develop a research protocol for any study.25

Reporting

Complete and transparent reporting is essential for evaluating the reliability and validity of study findings. However, the reporting quality of observational studies using RCD is often suboptimal,40 especially in the elaborations of research questions, type of data sources, time frames, study designs, and statistical models.40 41 Several guidelines have been developed to enhance reporting, such as Strengthening the Reporting of Observational Studies in Epidemiology (STROBE),42 the Reporting of studies Conducted using Observational Routinely-collected Data (RECORD) statement,43 and its extension for pharmacoepidemiology studies (RECORD-PE).44 Interested researchers should always consult these guidelines for reporting of their studies.

Conclusions

In this paper, we provide a snapshot of the concepts and key methodological issues for RWE. For researchers, real-world data have provided important data sources to address a variety of questions. Nevertheless, important methodological challenges may be present, and careful planning, implementation and reporting of such studies are highly desirable. The users of RWE should also be cautious when interpreting the findings from such studies and should always be aware of the potential methodological pitfalls.

What this paper adds.

What is already known on this subject

The release of the 21st Century Cures Act in the USA has accelerated the interest in real-world evidence (RWE), especially among healthcare researchers and policymakers.

Misunderstanding and a lack of methodological know-how about RWE are common.

What this study adds

This paper summarises the conceptual framework of RWE and proposes a research flow to assist in the understanding and implementation of an RWE study.

This paper provides an overview of pitfalls inherent in RWE, especially in observational studies using routinely collected healthcare data, and offers references to guidance documents about reporting.

Footnotes

Contributors: Conceptualisation: XS. Writing – original draft: ML. Writing – review and editing: XS, ML, YQ, WW. XS is the guarantor who takes responsibility for the overall content.

Funding: This research was supported by Sichuan Youth Science and Technology Innovation Research Team (Grant No. 2020JDTD0015), 1.3.5 project for disciplines of excellence, West China Hospital, Sichuan University (Grant No. ZYYC08003), and China Medical Board (Grant No. CMB19-324).

Competing interests: None declared.

Provenance and peer review: Commissioned; internally peer reviewed.

Data availability statement

No data are available. Not applicable.

Ethics statements

Patient consent for publication

Not applicable.

References

- 1. Booth CM, Karim S, Mackillop WJ. Real-world data: towards achieving the achievable in cancer care. Nat Rev Clin Oncol 2019;16:312–25. doi:10.1038/s41571-019-0167-7

- 2. Makady A, de Boer A, Hillege H, et al. What is real-world data? A review of definitions based on literature and stakeholder interviews. Value Health 2017;20:858–65. doi:10.1016/j.jval.2017.03.008

- 3. Sherman RE, Anderson SA, Dal Pan GJ, et al. Real-world evidence - what is it and what can it tell us? N Engl J Med 2016;375:2293–7. doi:10.1056/NEJMsb1609216

- 4. Wen W, Jing T, Yan R. Real-world data studies: update and future development. Chinese Journal of Evidence-based Medicine 2020;20.

- 5. Karanicolas PJ, Montori VM, Schünemann HJ, et al. ACP Journal Club. "Pragmatic" clinical trials: from whose perspective? Ann Intern Med 2009;150:JC6-2–JC6-3. doi:10.7326/0003-4819-150-12-200906160-02002

- 6. Kalkman S, van Thiel GJMW, Grobbee DE, et al. Pragmatic clinical trials: ethical imperatives and opportunities. Drug Discov Today 2018;23:1919–21. doi:10.1016/j.drudis.2018.06.007

- 7. Corrigan-Curay J, Sacks L, Woodcock J. Real-world evidence and real-world data for evaluating drug safety and effectiveness. JAMA 2018;320:867–8. doi:10.1001/jama.2018.10136

- 8. Brennan L, Watson M, Klaber R, et al. The importance of knowing context of hospital episode statistics when reconfiguring the NHS. BMJ 2012;344:e2432. doi:10.1136/bmj.e2432

- 9. Cook JA, Collins GS. The rise of big clinical databases. Br J Surg 2015;102:e93–101. doi:10.1002/bjs.9723

- 10. Basch E, Schrag D. The evolving uses of "real-world" data. JAMA 2019;321:1359–60. doi:10.1001/jama.2019.4064

- 11. Ladha KS, Eikermann M. Codifying healthcare--big data and the issue of misclassification. BMC Anesthesiol 2015;15:179. doi:10.1186/s12871-015-0165-y

- 12. Neuman MD. The importance of validation studies in perioperative database research. Anesthesiology 2015;123:243–5. doi:10.1097/ALN.0000000000000691

- 13. Magnusson P, Palm A, Branden E, et al. Misclassification of hypertrophic cardiomyopathy: validation of diagnostic codes. Clin Epidemiol 2017;9:403–10. doi:10.2147/CLEP.S139300

- 14. Neubauer S, Kreis K, Klora M, et al. Access, use, and challenges of claims data analyses in Germany. Eur J Health Econ 2017;18:533–6. doi:10.1007/s10198-016-0849-3

- 15. U.S. Food & Drug Administration. Good pharmacovigilance practices and pharmacoepidemiologic assessment. Available: https://www.fda.gov/media/71546/download

- 16. U.S. Food & Drug Administration. General Considerations for Clinical Studies E8(R1) (draft version). Available: https://www.fda.gov/media/82664/download [Accessed 8 May 2019].

- 17. Epstein M, International Society of Pharmacoepidemiology. Guidelines for good pharmacoepidemiology practices (GPP). Pharmacoepidemiol Drug Saf 2005;14:589–95. doi:10.1002/pds.1082

- 18. Wang W, Gao P, Wu J. Technical guidance for developing research databases using existing health and medical data [in Chinese]. Chinese Journal of Evidence-based Medicine 2019;19:763–70.

- 19. Prada-Ramallal G, Takkouche B, Figueiras A. Bias in pharmacoepidemiologic studies using secondary health care databases: a scoping review. BMC Med Res Methodol 2019;19:53. doi:10.1186/s12874-019-0695-y

- 20. Kyriacou DN, Lewis RJ. Confounding by indication in clinical research. JAMA 2016;316:1818–9. doi:10.1001/jama.2016.16435

- 21. Iudici M, Porcher R, Riveros C, et al. Time-dependent biases in observational studies of comparative effectiveness research in rheumatology. A methodological review. Ann Rheum Dis 2019;78:562–9. doi:10.1136/annrheumdis-2018-214544

- 22. Haneuse S. Distinguishing selection bias and confounding bias in comparative effectiveness research. Med Care 2016;54:e23–9. doi:10.1097/MLR.0000000000000011

- 23. Nohr EA, Liew Z. How to investigate and adjust for selection bias in cohort studies. Acta Obstet Gynecol Scand 2018;97:407–16. doi:10.1111/aogs.13319

- 24. Infante-Rivard C, Cusson A. Reflection on modern methods: selection bias-a review of recent developments. Int J Epidemiol 2018;47:1714–22. doi:10.1093/ije/dyy138

- 25. U.S. Food & Drug Administration. Best practices for conducting and reporting pharmacoepidemiologic safety studies using electronic healthcare data. Available: https://www.fda.gov/media/79922/download [Accessed May 2013].

- 26. Lund JL, Richardson DB, Stürmer T. The active comparator, new user study design in pharmacoepidemiology: historical foundations and contemporary application. Curr Epidemiol Rep 2015;2:221–8. doi:10.1007/s40471-015-0053-5

- 27. Richesson RL, Sun J, Pathak J, et al. Clinical phenotyping in selected national networks: demonstrating the need for high-throughput, portable, and computational methods. Artif Intell Med 2016;71:57–61. doi:10.1016/j.artmed.2016.05.005

- 28. Groenwold RHH, Sterne JAC, Lawlor DA, et al. Sensitivity analysis for the effects of multiple unmeasured confounders. Ann Epidemiol 2016;26:605–11. doi:10.1016/j.annepidem.2016.07.009

- 29. Suissa S. Immortal time bias in pharmaco-epidemiology. Am J Epidemiol 2008;167:492–9. doi:10.1093/aje/kwm324

- 30. Haine D, Dohoo I, Dufour S. Selection and misclassification biases in longitudinal studies. Front Vet Sci 2018;5:99. doi:10.3389/fvets.2018.00099

- 31. Zhang Z, Uddin MJ, Cheng J, et al. Instrumental variable analysis in the presence of unmeasured confounding. Ann Transl Med 2018;6:182. doi:10.21037/atm.2018.03.37

- 32. Austin PC. An introduction to propensity score methods for reducing the effects of confounding in observational studies. Multivariate Behav Res 2011;46:399–424. doi:10.1080/00273171.2011.568786

- 33. Wunsch H, Linde-Zwirble WT, Angus DC. Methods to adjust for bias and confounding in critical care health services research involving observational data. J Crit Care 2006;21:1–7. doi:10.1016/j.jcrc.2006.01.004

- 34. Johnson ML, Crown W, Martin BC, et al. Good research practices for comparative effectiveness research: analytic methods to improve causal inference from nonrandomized studies of treatment effects using secondary data sources: the ISPOR Good Research Practices for Retrospective Database Analysis Task Force Report--part III. Value Health 2009;12:1062–73. doi:10.1111/j.1524-4733.2009.00602.x

- 35. U.S. Food & Drug Administration. Best practices in drug and biological product postmarket safety surveillance for FDA staff (draft). Available: https://www.fda.gov/media/130216/download [Accessed Nov 2019].

- 36. Agency for Healthcare Research and Quality. AHRQ methods for effective health care. In: Velentgas P, Dreyer NA, Nourjah P, et al., eds. Developing a protocol for observational comparative effectiveness research: a user’s guide. Rockville, MD: Agency for Healthcare Research and Quality (US), 2013.

- 37. Public Policy Committee, International Society of Pharmacoepidemiology. Guidelines for good pharmacoepidemiology practice (GPP). Pharmacoepidemiol Drug Saf 2016;25:2–10. doi:10.1002/pds.3891

- 38. Banack HR, Stokes A, Fox MP, et al. Stratified probabilistic bias analysis for body mass index-related exposure misclassification in postmenopausal women. Epidemiology 2018;29:604–13. doi:10.1097/EDE.0000000000000863

- 39. Brown J, Kreiger N, Darlington GA, et al. Misclassification of exposure: coffee as a surrogate for caffeine intake. Am J Epidemiol 2001;153:815–20. doi:10.1093/aje/153.8.815

- 40. Hemkens LG, Benchimol EI, Langan SM, et al. The reporting of studies using routinely collected health data was often insufficient. J Clin Epidemiol 2016;79:104–11. doi:10.1016/j.jclinepi.2016.06.005

- 41. Nie X, Zhang Y, Wu Z, et al. Evaluation of reporting quality for observational studies using routinely collected health data in pharmacovigilance. Expert Opin Drug Saf 2018;17:661–8. doi:10.1080/14740338.2018.1484106

- 42. Cuschieri S. The STROBE guidelines. Saudi J Anaesth 2019;13:31–4. doi:10.4103/sja.SJA_543_18

- 43. Benchimol EI, Smeeth L, Guttmann A, et al. [The REporting of studies Conducted using Observational Routinely-collected health Data (RECORD) statement]. Z Evid Fortbild Qual Gesundhwes 2016;115-116:33–48. doi:10.1016/j.zefq.2016.07.010

- 44. Langan SM, Schmidt SA, Wing K, et al. The reporting of studies conducted using observational routinely collected health data statement for pharmacoepidemiology (RECORD-PE). BMJ 2018;363:k3532. doi:10.1136/bmj.k3532
