Abstract

Fake news is spreading rapidly on social media and poses a serious threat to the COVID-19 outbreak response. This study thus aims to reveal the factors influencing the acceptance of fake news rebuttals on Sina Weibo. Drawing on the elaboration likelihood model (ELM), we used text mining and econometric methods to investigate the relationships among the central route (rebuttal's information readability and argument quality), the peripheral route (rebuttal's source credibility, including authority and influence), and rebuttal acceptance, as well as the moderating effect of the receiver's cognitive ability on these relationships. Our findings suggest, first, that source authority had a negative effect on rebuttal acceptance, while source influence had a positive effect. Second, both information readability and argument quality had positive effects on rebuttal acceptance. In addition, individuals with low cognitive abilities relied more on source credibility and argument quality to accept rebuttals, while individuals with high cognitive abilities relied more on information readability. This study can provide decision support for practitioners seeking to establish more effective fake news rebuttal strategies; it is especially valuable for reducing the negative impact of fake news related to major public health emergencies and safeguarding the implementation of anti-epidemic strategies.

Keywords: COVID-19, Fake news, Elaboration likelihood model, Rebuttal acceptance, Cognitive ability, Social media

Nomenclature

- COVID-19: Corona Virus Disease 2019
- ELM: Elaboration Likelihood Model
- LDA: Latent Dirichlet Allocation
- WHO: World Health Organization
- SDQC: Supporting, Denying, Querying, or Commenting
- BERT: Bidirectional Encoder Representations from Transformers
- RoBERTa-wwm-ext: Robustly Optimized Bidirectional Encoder Representations from Transformers Approach-Whole Word Masking-Extended Data
- RBT3: Three-Layer Robustly Optimized Bidirectional Encoder Representations from Transformers Approach-Whole Word Masking-Extended Data
- CCCPC: Central Committee of the Communist Party of China

1. Introduction

The global outbreak of Corona Virus Disease 2019 (COVID-19) in the age of social media has caused millions of people to contract the virus, and a significant number of them have lost their lives, resulting in a tremendous social and economic shock across the globe (Erku et al., 2021). Amid the growing burden of the COVID-19 pandemic, a parallel emergency, the infodemic (information + epidemic), must be tackled simultaneously: the proliferation of fake news, false rumors, and misinformation surrounding COVID-19 (Gallotti et al., 2020; World Health Organisation, 2020a). At its worst, health-related fake news has introduced people to ineffective or even potentially harmful remedies, seriously disrupting the social order. For example, the fake news “The Chinese patent medicine Shuanghuanglian oral solution can prevent COVID-19” led to an overreaction as people rushed to buy the product, indirectly causing crowds to gather. Moreover, the fake news “Smoking and drinking can kill COVID-19” led people to engage in risky behaviors that endangered their health (Song et al., 2021). Thus, the World Health Organization (WHO) warned the public that “we're not just fighting an epidemic; we're fighting an infodemic. Fake news spreads faster and more easily than this virus, and is just as dangerous” (World Health Organisation, 2020b). Studies have shown that when fake news spreads as online rumors on social media, its consequences are amplified (Pal et al., 2020). An important reason is that what used to be propagated largely by word of mouth can now go viral in a short period of time with simple clicks (Chua & Banerjee, 2018). Therefore, how to effectively refute fake news on social media has become an important and unprecedented issue.

To curtail the effect of fake news, most researchers examine methods that might be effective in refuting it. There are two main types of methods (Li et al., 2021): blocking fake news by identifying influential users (Ji et al., 2014; Zhang et al., 2018), and spreading truths through fake news rebuttals (henceforth referred to as “rebuttals”), a term referring to messages that debunk and clarify fake news; the latter is a common approach among organizations (Pal et al., 2020; Wang & Qian, 2021). Notably, using rebuttals has better long-term performance than blocking, as the openness of the Internet has made it increasingly difficult to block fake news, and the more the fake news is blocked, the more concerned and skeptical the crowd becomes (Li et al., 2021; Wen et al., 2014). Therefore, effective methods on social media increasingly rely on fake news rebuttals.

The limited studies on the effectiveness of rebuttals have found mixed results. Some studies have aimed to evaluate the effectiveness of fake news rebuttal. For example, Li et al. (2021) developed an index to measure refutation effectiveness by identifying key factors through text characteristics, including content and contextual factors. Wang et al. (2021) explored the attitude-based echo chamber effect in users' responses to rumor rebuttal for multiple topics on Sina Weibo in the early stage of the COVID-19 epidemic. Furthermore, some studies have shown that rebuttals help reduce people's belief in fake news (Bordia et al., 2000; Huang, 2017). These messages enhance individuals' critical thinking ability (Tanaka et al., 2013). Meanwhile, believing rebuttals helps correct individuals' misconceptions and reduce their anxiety (Bordia et al., 2005). However, other studies suggest that rebuttals are not always effective (DiFonzo et al., 1994; Nyhan & Reifler, 2010). Some psychology studies have shown that correcting fake news by presenting true information is not always helpful in reducing misperceptions (Nyhan & Reifler, 2010); in some cases, rebuttals can backfire and, ironically, increase misbelief (Lewandowsky et al., 2012; Nyhan & Reifler, 2010).

In addition to the mixed findings presented above, two research gaps can be identified in the related literature. First, there is a lack of research on the acceptance of fake news rebuttals during public health emergencies, and which properties of rebuttals work better, and how, have not been extensively investigated. Previous research on the factors that influence fake news rebuttal has focused on the characteristics of the source subjects (Bordia et al., 2005; DiFonzo & Bordia, 2000), content (Bordia et al., 2005), and dissemination channels (Jang et al., 2019), and is primarily based on qualitative or empirical studies (e.g., surveys or questionnaires). At the same time, the advent of the big data era has brought a large and growing volume of data generated from numerous sources; however, the big data generated by social media have not been well utilized, and few studies have been conducted on enhancing fake news refutation effectiveness (Li et al., 2021). As statements of truth, effective rebuttals are essentially powerful, persuasive messages. However, previous research has neglected the factors affecting the routes of persuasive strategies for fake news rebuttal acceptance. One promising theoretical framework for understanding fake news rebuttal acceptance is the elaboration likelihood model (ELM) of persuasion. The ELM is well constructed and clearly and simply articulates the persuasion process (Kitchen et al., 2014). In addition, the model is so descriptive that it can accommodate several different outcomes and hence can be used to support many situations (Kitchen et al., 2014). The ELM argues that information processing can act via a central or peripheral route (Petty & Cacioppo, 1986, pp. 1–24), and it has been applied to detect fake news in recent studies (Osatuyi & Hughes, 2018; Singh et al., 2020; Zhao et al., 2021); for example, Zhao et al. (2021) drew on the ELM to reveal the effective features for detecting misinformation in online health communities. However, there has been limited ELM-based research on the factors influencing the acceptance of fake news rebuttals. Therefore, based on the abundant research data available on social media platforms and drawing from the literature on the ELM, this study argues that peripheral cues (source credibility, including authority and influence) and central cues (information readability and argument quality) may influence the acceptance of fake news rebuttal on social media in China.

Second, to some extent, previous rebuttal research has ignored the individual differences in information receivers' responses to fake news rebuttal, particularly differences in psychological factors, such as individuals' cognitive abilities. Recent studies have begun to focus on the psychological factors that may make some individuals less likely to fall for fake news, such as emotional intelligence (Preston et al., 2021) and critical thinking ability (Tanaka et al., 2013). However, although the number of rebuttals has also risen in response to fake news, it appears that corrections to incorrect information only work on some individuals. In addition, previous research has noted that without a nuanced understanding of the cognitive effects of fake news on individuals, the array of possibilities against which society must guard itself becomes insuperable (Bastick, 2021). Thus, to successfully correct users’ cognitive errors, rebuttals must convince them (Sui & Zhang, 2021). The ELM posits that the ability and motivation to process the information determine which route individuals will employ—central or peripheral—and those individual attributes determine the relative effectiveness of these processes (Angst & Agarwal, 2009). For example, Bordia et al. (2005) test the effects of central cues (denial message quality) and peripheral cues (source credibility), as well as the moderating effects of personal relevance. Prior works also suggest that ability, as used in the ELM literature, is conceptualized as the cognitive ability of a recipient to process the information presented in the message; it is thus an important component of the information processing act (Angst & Agarwal, 2009). Cognitive ability refers to the ability of the human brain to process, store, and extract information (Lubinski, 2009). 
A previous study investigated the role of political interest, cognitive ability, and social network size in fake information sharing and indicated that those with lower cognitive ability are more likely to share fake information inadvertently (Ahmed, 2021). However, how individuals with different cognitive abilities make judgments via the central and peripheral routes of the ELM and go on to accept fake news rebuttals on social media has not yet been sufficiently investigated, particularly regarding the COVID-19 pandemic.

For these reasons, this study poses four research questions (RQs):

RQ1. How is the source credibility (authority and influence) of rebuttals related to rebuttal acceptance?

RQ2. How is the information readability of rebuttals related to rebuttal acceptance?

RQ3. How is the argument quality of rebuttals related to rebuttal acceptance?

RQ4. How does an individual's cognitive ability moderate the relationships between source credibility (authority and influence), information readability, and argument quality, on the one hand, and rebuttal acceptance, on the other?

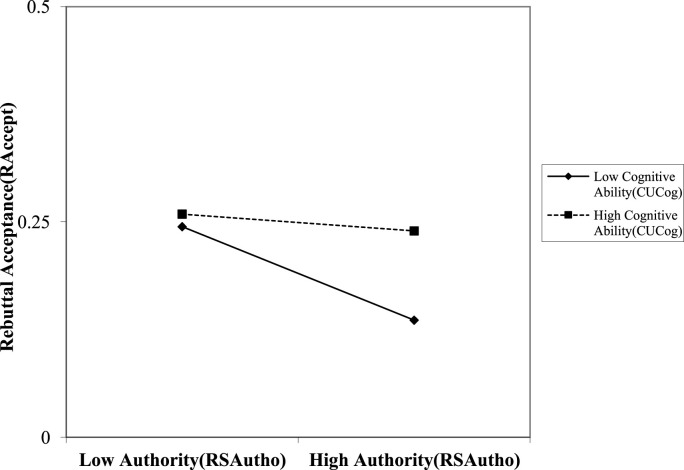

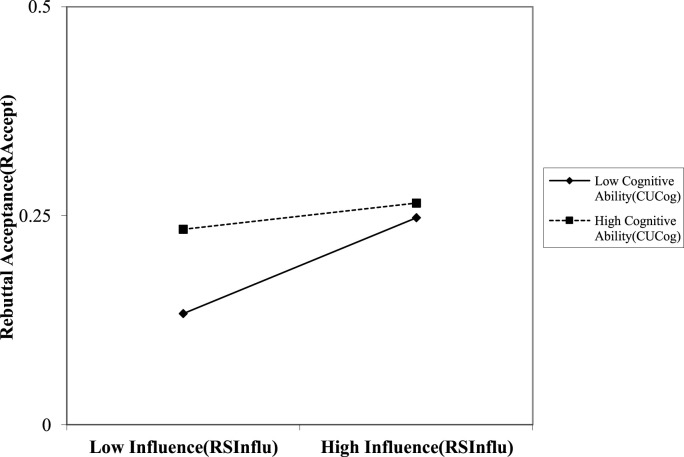

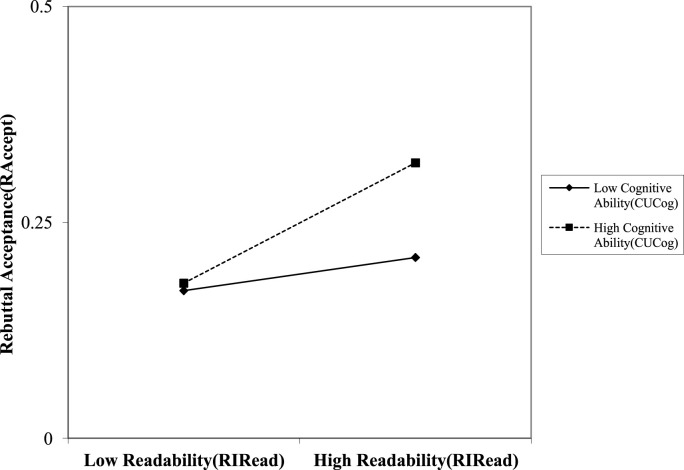

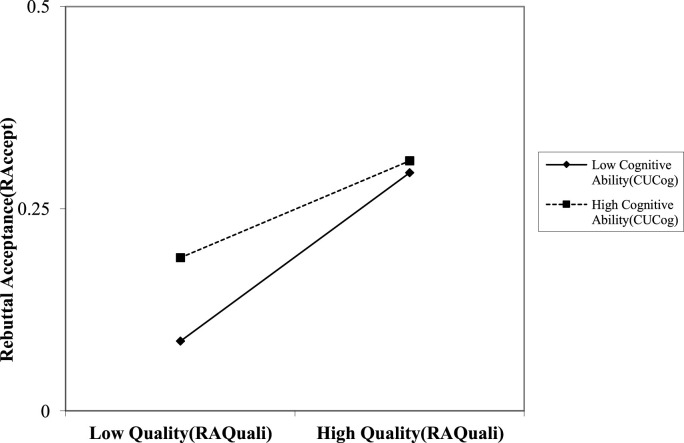

To address these questions, we collected data on five fake news events related to the COVID-19 pandemic from Sina Weibo in China from January 20, 2020, to June 28, 2020, and investigated rebuttal postings and their comments to reveal the underlying mechanisms of users' rebuttal acceptance. We used deep learning algorithms, the Latent Dirichlet Allocation (LDA) topic model, and econometric methods to investigate the relationships among a rebuttal's source credibility (authority and influence), information readability, argument quality, and rebuttal acceptance, as well as the moderating effect of users' cognitive ability on these relationships. First, we found that source credibility influenced rebuttal acceptance. Specifically, source authority had a negative effect on rebuttal acceptance, while source influence enhanced rebuttal acceptance; the positive effect was slightly stronger. Second, we found that both information readability and argument quality had a positive effect on rebuttal acceptance. More insightful was the discovery that the effect of argument quality on rebuttal acceptance was more significant than that of information readability. Third, we found that higher cognitive ability weakened the links between source authority, source influence, and argument quality and rebuttal acceptance, while it strengthened the link between information readability and rebuttal acceptance. Thus, individuals with low cognitive abilities relied more heavily on source credibility in peripheral cues and argument quality in central cues to accept rebuttals, while individuals with high cognitive abilities relied more on information readability in central cues.

This study is significant in both theory and practice. Theoretically, this study provides two insights. First, this research enriches the literature related to rebuttal by revealing the underlying mechanisms of rebuttal acceptance on social media, particularly on the Sina Weibo platform in China. To the best of our knowledge, this is one of the first studies to explore the underlying mechanism of rebuttal acceptance during the COVID-19 pandemic from a large volume of text information based on text mining (e.g., deep learning algorithms and LDA topic model) and econometric analysis technology on Chinese social media platforms. Second, the majority of applied research on ELM has been conducted in the fields of social psychology and marketing (e.g., advertising and consumer behavior) to describe how individuals process information (Kitchen et al., 2014; Morris et al., 2005). However, using the ELM theory, we propose that source credibility (authority and influence), information readability, and argument quality are factors that affect the acceptance of fake news rebuttals. This study extends the applicability of the ELM by measuring factors from a text-mining perspective and identifies pathways through which individuals with different cognitive abilities accept fake news rebuttal, which enriches the application of the ELM theory in the field of fake news rebuttal on social media.

Furthermore, this study has two practical implications. First, it highlights the source credibility (authority and influence), information readability, and argument quality features of rebuttals and shows how rebuttals can be crafted effectively for refuting fake news on social media. Our findings offer insights for news outlet professionals, social platform managers, and Chinese government regulators regarding the use of rebuttals in combating online fake news during the COVID-19 pandemic. Second, this study shows the extent to which individuals with different cognitive abilities accept fake news rebuttals in terms of source credibility, information readability, and argument quality. These results provide guidance and a reference point for fake news rebuttal practitioners to provide refuting strategies targeted at individuals with different cognitive abilities.

The remainder of this paper is organized as follows. Section 2 presents a brief overview of the prior research and its theoretical foundation. The development of the research hypotheses and the proposed model are presented in Section 3. The research methodology used in our model is explained in Section 4. The data analysis and results are presented in Section 5. Finally, in Section 6, key findings are discussed, and the paper concludes with theoretical contributions, practical implications, limitations, and future directions.

2. Theoretical background

2.1. Rumors, fake news, and rebuttals

There are numerous terms related to false information on social media; fake news, misinformation, disinformation, and rumors are the four most prominent examples (Jung et al., 2020). Scholars understand the definitions and connotations of fake news, mis- and disinformation, and rumors differently, and the terms are often used interchangeably in academic research (Wang et al., 2018; R. Wang, He, et al., 2020). Allport and Postman (1946) provided an early definition, stating that a rumor is “a specific (or topical) proposition for belief, passed along from person to person, usually by word-of-mouth, without secure standards of evidence being present”. Later, rumors were defined as information spread widely in uncertain or dangerous situations to alleviate fear and anxiety (DiFonzo & Bordia, 2007). In the Chinese context, the fake news problem often refers to online rumors (yao yan in Chinese), a type of unverified information statement widely distributed online (DiFonzo & Bordia, 2007). Similar to the online spread of rumors in Western democracies, rumors concerning politics, health care, food safety, and the environment are rampant on China's Internet (Guo, 2020). Zhang and Ghorbani (2020) state that “fake news refers to all kinds of false stories or news that are mainly published and distributed on the Internet, in order to purposely mislead, befool or lure readers for financial, political or other gains”.

Therefore, in this paper, owing to its useful scientific meaning and construction, we have retained the term “fake news” to represent our research object. It refers to false news or false rumors that statements from authorities (e.g., government agencies, state media, and other authoritative organizations) have determined to be false; the term has been used by most researchers (Grinberg et al., 2019; Lazer et al., 2018). Notably, first, we decided to disregard the politicized nature the term has developed, especially since the 2016 U.S. presidential elections. Second, we adopt a broad definition of news: any information (text, emoticons, links, etc.) posted on social media (Vosoughi et al., 2018). Third, our study does not consider the intentions of those who post information, such as their political, economic, or other interests (Zhang & Ghorbani, 2020), or the differences between social robots and people. This is because it has been confirmed that humans, not robots, are more likely to spread fake news (Vosoughi et al., 2018), which implies that containment policies should emphasize human behavioral interventions rather than interventions targeted at automated robots. In summary, we decided to use the term “fake news” in this study.

Most scholarly attention has focused on fake news on social media in terms of theoretical models of fake news diffusion (Tambuscio et al., 2015), simulation models of fake news propagation (Hui et al., 2020; Shrivastava et al., 2020; Xing et al., 2021), methods for fake news detection (Ozbay & Alatas, 2020; Zhang & Ghorbani, 2020), credibility evaluation (Ciampaglia et al., 2015; Sui & Zhang, 2021), and interventions to curtail the spread of fake news (Hui et al., 2020; Pennycook et al., 2020). Rebuttals have been a subject of growing scholarly attention as a possible approach to mitigating online fake news (Pal et al., 2020). Some studies have suggested that rebutting fake news is effective for the acceptance of rebuttals (Li et al., 2021), while others have suggested that rebuttals may not always achieve their intended effect (DiFonzo et al., 1994) and may even bring about backfire effects that reinforce the belief in fake news (Nyhan & Reifler, 2010). Due to confirmation bias from individuals' preexisting beliefs (Nickerson, 1998), rebuttals only work on some individuals, which is related to the level of individuals’ cognitive ability.

Taken together, these mixed findings suggest that further research on the underlying mechanism of rebuttal acceptance is warranted. In addition, given that the purpose of rebuttals is to refute fake news, the two antithetical messages often create complex dynamics during fake news refutation (Pal et al., 2020). This research, however, focuses on exploring the factors that influence the acceptance of fake news rebuttals and on whether that acceptance depends on an individual's level of cognitive ability. It thus seeks to enhance the competitive advantage of rebuttals by identifying factors that improve the effectiveness of rebuttal strategies, so as to better guide fake news rebuttal in today's post-factual era.

2.2. The elaboration likelihood model and rebuttal acceptance

The rebuttal of fake news belongs to the category of persuasion information, which aims to correct the public's misconceptions and provide knowledge about the truth of matters (Sui & Zhang, 2021). The ELM is among the most authoritative dual-process theories and describes the change in attitudes in the field of knowledge persuasion (Petty & Cacioppo, 1986, 2012), with “attitude” defined as an evaluation of a target object, person, behavior, or event on a scale reflecting some degree of good or bad, such as favor/disfavor, approval/disapproval, liking/disliking, or similar reactions (Eagly & Chaiken, 1993; Griffith et al., 2018). The ELM posits that the ability and motivation to process information determine which route individuals will follow: central routes or peripheral routes, which differ in the amount of thoughtful information processing or “elaboration” demanded of the information receiver (Bhattacherjee & Sanford, 2006; Petty & Cacioppo, 1986, 2012).

Petty and Cacioppo (1986) define elaboration as “the extent to which a person thinks about the content (e.g., issue-relevant arguments) contained in a message.” The ELM states that in different situations, different message recipients will vary in the extent to which they cognitively elaborate on a particular message, and these variations in the likelihood of elaboration affect the success of an influence attempt, along with other factors (Sussman & Siegal, 2003). Because of the cognitive effort involved, receivers do not elaborate on every message that they receive, and some receivers elaborate on fewer messages than others (Sussman & Siegal, 2003). According to the ELM, informational influence can occur at any degree of receiver elaboration, but it occurs as a result of very different influence processes: high levels of elaboration represent a central route to influence, while low levels result in a peripheral route (Sussman & Siegal, 2003).

Some researchers have used argument quality and source credibility to represent the central and peripheral routes in the ELM (Cheung et al., 2008; Stephenson et al., 2001; Sussman & Siegal, 2003). Mak et al. (1997) proposed that source credibility is a major peripheral cue, while argument strength is a critical factor for central route cues. Zha et al. (2018) considered information quality to be the central route and source credibility and reputation to be the peripheral route. Central and peripheral routes are operationalized using argument quality and source credibility, respectively, without considering whether source credibility was manipulated before participants read the argument (Stephenson et al., 2001); these appear to be two of the most frequently referenced constructs (Li, 2013; Rucker & Petty, 2006). Source credibility refers to the extent to which information recipients perceive a person who provides expertise to be believable, competent, and trustworthy in delivering persuasive messages (Petty & Cacioppo, 1986, pp. 1–24). Argument quality indicates argument strength, that is, the extent to which a message is embedded with strong, persuasive arguments (Eagly & Chaiken, 1993; Li, 2013). In addition, the ELM states that elaboration involves attending to the content of the message and scrutinizing and assessing its content (Sussman & Siegal, 2003). Meanwhile, in the online context, the central factors primarily consist of text-related attributes (Srivastava & Kalro, 2019). When the message is processed via the central route, in addition to the argument itself, the receivers evaluate the information embedded within the text (Srivastava & Kalro, 2019). 
Online text is less likely to be narrated in a “standard” form; rather, it combines a certain level of informative description (e.g., description of fake news events) with persuasive language (e.g., persuasive evidence to refute fake news; K.Z. Zhang, Zhao, et al., 2014). Online text is more or less unstructured, thus presenting challenges for people who read and interpret it (Cao et al., 2011). In particular, fake news and the rebuttal messages related to COVID-19 involve newer fields and specialized vocabulary, and previous studies have shown that online information about COVID-19 is difficult for the general population to read and comprehend (Szmuda et al., 2020). Thus, for the general public, the readability of this online information has an important impact on the understanding of disease (Worrall et al., 2020). Extant literature indicates that readability refers to the level of effort required to understand a text (DuBay, 2004), and it is a textual property that can be considered part of the central route (Agnihotri & Bhattacharya, 2016; Srivastava & Kalro, 2019). Previous results have validated the usefulness of the readability feature extracted from the linguistic model for fake news detection on social media (Choudhary & Arora, 2021). Studies have also validated it as an important influential factor characterizing the writing style related to fake news in empirical research (Barrón-Cedeno et al., 2019; Deng et al., 2021). Since the Internet is one of the most popular sources of information in recent times, it is crucial that people are provided with easily understandable information. Health-related news that is difficult to read and understand, in particular, may cause misinformation to spread (Daraz et al., 2018), and public panic resulting from a lack of accessible and credible information can place a greater burden on a country's healthcare system (Szmuda et al., 2020). 
Therefore, in the online fake news rebuttal field during the COVID-19 pandemic, information readability is an important factor in the central route (Agnihotri & Bhattacharya, 2016; Srivastava & Kalro, 2019; Zhou et al., 2021).

Therefore, to simplify the research model, this study operationalized the central route (argument quality and information readability) and peripheral route (source credibility) constructs as two differential influencing routes to fake news rebuttal acceptance.

Another important aspect of the ELM is the study of the moderating role of “elaboration likelihood,” which relates to an individual's abilities and motivations (Shahab et al., 2021). In the ELM, motivation refers to an individual's desire to exert a high level of mental effort, and capacity refers to an individual's ability and opportunity to think (Griffith et al., 2018). When individuals are encouraged by various factors to have the motivation and/or the ability to process arguments thoughtfully and carefully, elaboration likelihood is on the high end, and the central route to persuasion becomes salient (Zha et al., 2018). When elaboration anchors at the low end, the peripheral route to persuasion becomes salient (Zha et al., 2018).

The ELM posits that to change someone's understanding and attitude, the receiver must be motivated to consider the message and must have the ability to process (i.e., devote cognitive effort to) the message (Robert & Dennis, 2005). When using social media to communicate, the receiver must commit some portion of his or her attention to the message and will, therefore, be more likely to be motivated to hear it; this attention and motivation on the part of the receiver is the first step toward elaboration in the ELM. If individuals are motivated, they are inclined to begin the elaboration process, and the degree to which they can elaborate will depend on their ability to process the message received (Robert & Dennis, 2005). Hence, the next hurdle to elaboration is to ensure that the receiver has the ability to process the information, the final step toward elaboration (Robert & Dennis, 2005). Moreover, scholars posit that the influence of motivation in determining information processing routes may be limited, as users are already consciously engaging in deeper cognitive activity when they actively go online to search for specific details (Goh & Chi, 2017). Therefore, in this study, we focus more on the moderating role of cognitive ability.

Prior work has also suggested that ability, as used in the ELM literature, is conceptualized as the cognitive ability of a recipient to process the information presented in the message and is an important component of the act of information processing (Angst & Agarwal, 2009). The ELM explains why a given influence process may lead to different outcomes (Li, 2013). Most theories focus on either higher or lower cognitive processing separately, but the ELM is a unified model that deals with both aspects simultaneously (Shahab et al., 2021); that is, information processing can act through both a central and a peripheral route simultaneously (Eagly & Chaiken, 1993; Sussman & Siegal, 2003). Both routes signify that one's attitudes take shape or vary according to intrinsic information processing capabilities; that is, individuals can evaluate information based on their past experiences and knowledge (Sussman & Siegal, 2003). When individuals have sufficient cognitive abilities to process information, they accept the persuasion of central routes, such as paying more attention to the argument and message; in contrast, in the peripheral route, individuals exert little cognitive effort and rely on simple cues to evaluate information, such as focusing on source credibility (Petty & Cacioppo, 1986, 2012). Thus, these perspectives suggest that there are two routes of information processing based on the level of an individual's cognitive ability.

Accordingly, this study identifies two modes of information processing: central route processing, which requires higher cognitive ability to make judgments regarding related information, and peripheral route processing, which requires lower cognitive ability to make judgments involving the use of simple rules or cues (Petty & Cacioppo, 1986, 2012; Yu, 2021). The most frequently used dependent variable for the ELM is attitude (Bhattacherjee & Sanford, 2006; Chang et al., 2015). In this study, we consider using rebuttal acceptance as a measure of users’ acceptance of rebuttals when COVID-19-related fake news rebuttals have been published. Thus, this study considers both routes to explain how individuals with different cognitive abilities accept fake news rebuttals on social media.

With the ELM as its foundation, this study aims to develop and empirically validate a research model of rebuttal acceptance. The ELM was deemed appropriate because it provides a versatile theoretical lens to examine the underlying mechanism of rebuttal acceptance. That is, when individuals with different cognitive abilities encounter rebuttals, the model demonstrates how central or peripheral cue processing may influence individuals’ internal states of information processing and the extent to which they accept fake news rebuttals.

3. Research model and hypotheses

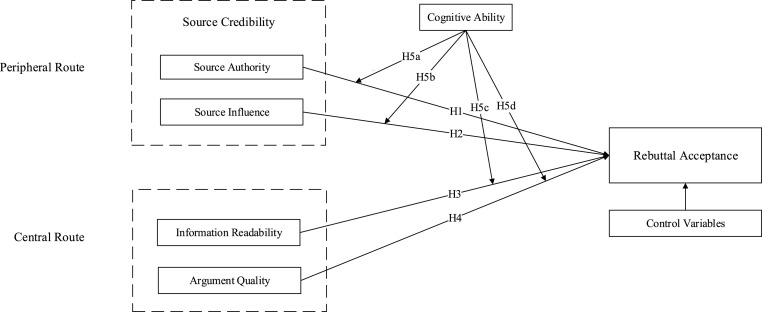

The research model is presented in Fig. 1. This research relies on the theoretical lens of the ELM to construct a research model of the factors affecting fake news rebuttal acceptance from two aspects: the peripheral route and the central route. The central route includes information readability and argument quality, while the peripheral route includes source credibility (authority and influence). Specifically, we constructed an ELM-based theoretical model that focuses primarily on the impact of a rebuttal's source credibility, information readability, and argument quality on rebuttal acceptance. In addition, we explored whether higher or lower cognitive abilities moderate the relationships between a rebuttal's source credibility, information readability, and argument quality and rebuttal acceptance. In this vein, the research model was formed, and the hypotheses are presented below.

Fig. 1. Research model.

3.1. Effects of source credibility

Source credibility refers to a message recipient's perception of the credibility of a message source, reflecting nothing about the message itself (Sussman & Siegal, 2003). Different sets of conceptual dimensions of source credibility have been identified in prior ELM-based research. The source credibility model provides a three-dimension conceptual framework to operationalize source credibility that influences recipients' acceptance of a message; these three dimensions are expertise, trustworthiness, and attractiveness (Ohanian, 1990). Recent research generally suggests that the dimensions of source credibility can be narrowed down to expertise and attractiveness (Zhu et al., 2014).

In an online environment in which communication is computer-mediated, verifying source credibility is not an easy task (Li et al., 2017). On social media, a key element of source credibility is the characteristics of the users who post (L. Zhang, Peng, et al., 2014). For instance, L. Zhang, Peng, et al. (2014) used users' authority to indicate source credibility, and Lin et al. (2016) suggested that source credibility is strongly influenced by authority. The ability to identify authority has been widely recognized as a key component of source credibility in early persuasion research (Lin et al., 2016). The concept of authority may vary across cultures and expertise fields; however, in general, people with authority are those who have acquired status through education, experience, expertise, talent, or other means (Cialdini, 2001). For instance, in the public health sphere, expert sources (e.g., government health agencies), news media, and social peers may have the potential to correct fake news about public health crises (van der Meer & Jin, 2020), and they may be regarded as authority figures or organizations, but they possess different levels of authority. Authority can assist in the evaluation of source credibility, particularly in risk and health issues, given the inequality of information flow between content producers and consumers (Lin et al., 2016). Therefore, we adopted authority as one dimension of source credibility in social media. In addition, previous literature has also shown the positive influence of a communicator's physical attractiveness on message persuasiveness (Zhu et al., 2014). This effect is independent of argument quality and source authority, regardless of whether the message receiver has read the message or whether the communicator is an authority figure or organization (Pallak et al., 1983).
In the absence of traditional social ties and face-to-face interactions, social structural information such as the number of online fans/followers can emulate physical attractiveness to enhance source credibility and influence users' attitudes toward fake news rebuttals (Zhu et al., 2014). A user's number of followers indicates the user's influence on Sina Weibo, and users with large numbers of followers are identified as users with great influence (L. Zhang, Peng, et al., 2014). Thus, we adopted influence to indicate the attractiveness of a rebuttal source on social media as one dimension of source credibility. Therefore, in this study context, we measure source credibility through two dimensions: source authority and source influence.

On the one hand, the “authority principle” refers to the tendency of individuals to comply with the recommendations or directives of authority figures, and is a fundamental social influence principle (Cialdini, 2001). In general, the authority principle functions such that the higher the level of authority, the more positive attitudes (e.g., toward fake news rebuttal acceptance) should be (Sui & Zhang, 2021). For instance, previous research suggests that individuals are more influenced by a medical expert or official authority, such as tweets generated by the American Heart Association (Westerman et al., 2014). However, under certain circumstances, authority may exert a negative influence (Jung & Kellaris, 2006). For example, individuals who resent or distrust authority react negatively to authority-based persuasion attempts (Jung & Kellaris, 2006). Previous research has indicated that methods of health persuasion often cause rejection of health messages, a phenomenon known as “reactance” (S. S. Brehm & J. W. Brehm, 2013; Hachaturyan et al., 2021). For example, Lewandowsky et al. (2012) highlighted one cognitive reason that could explain the persistence of erroneous beliefs based on misinformation: because of psychological reactance (S. S. Brehm & J. W. Brehm, 2013), people generally do not like to be told what to think and how to act and may thus reject particularly authoritative fake news rebuttals, especially if they concern complex issues such as public health crises. The COVID-19 infodemic is full of false claims, half-baked conspiracy theories, and pseudoscientific therapies regarding the diagnosis, treatment, prevention, origin, and spread of the disease (Naeem et al., 2021). 
However, in responding to the COVID-19 pandemic, considering the nature of the pandemic and the effectiveness of measures against the disease (e.g., fake news rebuttals regarding vaccines and preventive measures), many citizens worldwide do not trust their governments or health authorities; in other words, they do not consider the recommendations prescribed by authority figures or organizations as useful or trustworthy (Houdek et al., 2021, pp. 1–4). In addition, psychological reactance tends to be more pronounced when communicators are perceived as having greater authority (Invernizzi et al., 2003; Pavey et al., 2021). For example, Invernizzi et al. (2003) concluded that when participants with a strong identity as smokers were exposed to a message that targeted smokers, the intention to quit decreased when the message was attributed to a health institute, but increased when it was attributed to a neighborhood association. The neighborhood association (low authority) tended to lead smokers to make a positive change, whereas a health institute (high authority) did not. Similarly, in Gans's (2014) study on HPV vaccination, findings supported the choice of a nonexpert young girl rather than an authoritative expert as the spokesperson to deliver a pro-HPV vaccine message. In this vein, the refuter with low authority bears a greater social risk in making a recommendation and thus may elicit more positive attitudes (Jung & Kellaris, 2006), which suggests that receivers may exhibit a reverse authority effect (Coppola & Girandola, 2018; Invernizzi et al., 2003; Jung et al., 2009); that is, the higher the level of authority of the rebuttal source, the less positive receivers' attitudes toward accepting fake news rebuttals.

On the other hand, as mentioned before, users who have large numbers of followers are identified as users with great influence on social media (L. Zhang, Peng, et al., 2014). One study found that a user's number of followers had significantly positive effects on their number of postings, comments, and posting popularity (L. Zhang, Peng, et al., 2014); such users also influence others' perceptions and persuade others to follow their choices (Susarla et al., 2012). The influence of the source can enhance source credibility and positively influence users' attitudes toward fake news rebuttals (Zhu et al., 2014); that is, the recipient's decision-making process will be more affected by the provider if the rebuttal provider has a high level of influence.

In summary, as a peripheral cue, source credibility may play a crucial role in fake news debunking on social media, and a rebuttal source's authority and influence are important factors that affect the acceptance of fake news rebuttals. Therefore, with respect to the first research question (RQ1), we hypothesize the following:

H1. The authority of a rebuttal source has a negative effect on the acceptance of fake news rebuttals.

H2. The influence of a rebuttal source has a positive effect on the acceptance of fake news rebuttals.

3.2. Effects of information readability

In general terms, the concept of readability describes the effort and educational level required for a person to understand and comprehend a segment of text (DuBay, 2004). The purpose of a readability test is to provide a scale-based indication of how difficult a segment of text is for readers to comprehend based on the linguistic characteristics of that text (Korfiatis et al., 2012). Thus, a readability test can indicate how understandable a piece of rebuttal text is based on its syntactical elements and style (Korfiatis et al., 2012).

Descriptions that are easy to read should be more helpful and influential than other descriptions that are hard to read, and easy-reading text improves comprehension, retention, and reading speed (Han et al., 2018). The extent to which a reader can understand and comprehend the information embedded within a message drives its adoption and effectiveness (Srivastava & Kalro, 2019). Thus, the readability of a message is an important factor that determines how well the receiver is able to understand and adopt the information (Liu & Park, 2015). An online text that is easily readable in terms of content and presentation is deemed more helpful (Srivastava & Kalro, 2019) and draws more utility as its comprehensibility increases (Agnihotri & Bhattacharya, 2016).

In a rebuttal, the provision of information that is easy to read is necessary to facilitate a favorable response in readers to the refutation (Sui & Zhang, 2021), which indicates that providing information with high readability will effectively promote the acceptance of rebuttal messages. Thus, as a central cue, information readability is an important factor (Agnihotri & Bhattacharya, 2016; Srivastava & Kalro, 2019; Zhou et al., 2021) that can affect the acceptance of fake news rebuttals; regarding the second research question (RQ2), we hypothesize the following:

H3. The information readability of a rebuttal has a positive effect on the acceptance of fake news rebuttals.

3.3. Effects of argument quality

Although argument quality is commonly used as a central route in prior literature, its conceptualization and operationalization remain inconsistent among scholars (Angst & Agarwal, 2009). Eagly and Chaiken (2003) defined argument quality as “the strength or plausibility of persuasive argumentation”, which was similar to Petty and Cacioppo's definition (Petty & Cacioppo, 1986, pp. 1–24), which indicated that the concept pertains to perceptions of strong and convincing arguments rather than weak and unreal ones. Following this line of definitions, Bhattacherjee and Sanford (2006) operationalized argument quality by emphasizing the strength of arguments, whereas Cheung et al. (2009) employed argument strength to highlight whether received information can persuade a person to believe something or to perform a behavior. Other scholars examined argument quality from a slightly different angle, such as measuring argument quality by examining whether the information is complete, consistent, accurate, or adequate (Sussman & Siegal, 2003) or its relevance, timeliness, accuracy, and comprehensiveness (Cheung et al., 2008). In this study, we argue that argument quality is the strength of arguments, which refers to the extent to which the argument is convincing or valid in refuting fake news and shows how well a refuting position is justified based on available evidence or a set of reasons (Musuva et al., 2019).

Research demonstrates that argument strength or quality is positively related to attitude change (Stephenson et al., 2001), particularly in online environments (Cheung et al., 2009). The persuasion literature shows that high argument quality has been found to contribute to favorable decision outcomes (Angst & Agarwal, 2009; Srivastava & Kalro, 2019). If the received information is perceived to have valid arguments, that is, if the message contains powerful arguments, good evidence, sound reasoning, and so on, the receiver will develop a positive attitude toward the information; conversely, if the received information appears to have invalid arguments, that is, the message's argument reveals weak evidence, slipshod reasoning, and the like, the receiver will adopt a negative attitude toward the information (Cheung et al., 2009).

If the argument regarding fake news rebuttals is strong, it can generate favorable cognitive responses regarding arguments (Chang et al., 2015). Thus, as a central cue, argument quality may play a crucial role in fake news rebuttal on social media; regarding the third research question (RQ3), we hypothesize the following:

H4. The argument quality of a rebuttal has a positive effect on the acceptance of fake news rebuttals.

3.4. Effects of cognitive ability

The ELM outlines a process-oriented approach to persuasion, and the same variable can induce different attitude changes at different degrees of elaboration (Chen & Ku, 2013). In other words, the informational influence on attitude change is likely to occur at any degree of elaboration, but the influence processes are very different. According to the ELM, the effects of central and peripheral cues on users' decision-making are moderated by the users' ability and motivation to elaborate on the information (Petty & Cacioppo, 1986, pp. 1–24). Many prior studies have tested elaboration likelihood's positive moderating effects on the central route and its negative moderating effects on the peripheral route. For example, Chen and Ku (2013) found that personal relevance and user expertise have positive moderating effects on the central route (argument quality) and negative moderating effects on the peripheral route (source credibility). Zha et al. (2018) found that focused immersion positively moderates the effect of information quality (central route) and negatively moderates the effect of reputation (peripheral route) on informational fit-to-task.

Cognitive ability refers to the ability of the human brain to process, store, and extract information (Lubinski, 2009), including the capability to execute higher cognitive processes of reasoning, remembering, understanding, and problem-solving (Bernstein et al., 2012). As previously mentioned, ability, as used in ELM literature, is conceptualized as the cognitive ability of a subject to process the information presented in the message (Angst & Agarwal, 2009), which refers to the extent to which one possesses prior knowledge or expertise regarding the topic at hand (Bhattacherjee & Sanford, 2006). The current study examines individuals’ cognitive abilities regarding fake news rebuttals to understand the different informational influence processes underlying the effectiveness of accepting fake news rebuttals under different levels of elaboration likelihood.

Previous studies have noted that cognitive ability may moderate the relationship between political interest and inadvertent fake information-sharing behavior, and that higher news exposure may increase the likelihood of exposure to fake information (Ahmed, 2021). As such, the importance of an individual's information-processing ability is heightened under such conditions. If cognitive ability acts as a buffer against the manipulative nature of fake news, it is plausible that social media users with higher cognitive abilities regarding fake news rebuttals would accept such rebuttals. Individuals with higher cognitive abilities are known to make sound judgments when they engage with fake news (Ahmed, 2021); for example, they are skilled at discerning facts from disinformation (Pennycook & Rand, 2019) and adjust their attitudes while engaging with fake news (Roets, 2017). Previous findings also support the thesis that cognitive ability is positively related to efficient information processing (Lodge & Hamill, 1986) and better decision-making (Ahmed, 2021; Gonzalez et al., 2005).

According to ELM, individuals with higher information-related motivations and abilities are more likely to engage in central route processing (Metzger, 2007; Yu, 2021); they may thus be more likely to accept fake news rebuttal through central cues. In contrast, prior work shows that those with lower information-related motivations and abilities are more likely to engage in peripheral route processing (Metzger, 2007; Yu, 2021); they may thus be more likely to accept fake news rebuttal through peripheral cues.

Therefore, it is hypothesized that users with higher cognitive abilities will prefer to make judgments based on central cues, while those with lower cognitive abilities may be more likely to make judgments based on peripheral cues. Thus, with respect to the fourth research question (RQ4), to examine the above associations, we hypothesize the following:

H5a. The effect of source authority on the acceptance of fake news rebuttals will be negatively moderated by individuals' cognitive ability regarding fake news rebuttals such that this effect will be weakened by individuals with higher cognitive abilities.

H5b. The effect of source influence on the acceptance of fake news rebuttals will be negatively moderated by individuals' cognitive ability regarding fake news rebuttals such that this effect will be weakened by individuals with higher cognitive abilities.

H5c. The effect of information readability on the acceptance of fake news rebuttals will be positively moderated by individuals' cognitive ability regarding fake news rebuttals such that this effect will be strengthened by individuals with higher cognitive abilities.

H5d. The effect of argument quality on the acceptance of fake news rebuttals will be positively moderated by individuals' cognitive ability regarding fake news rebuttals such that this effect will be strengthened by individuals with higher cognitive abilities.
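As a reading aid, the direct and moderation hypotheses can be collected in a single moderated regression. The linear form below is only an illustrative sketch: the symbols ($Acc_i$ for rebuttal acceptance, $Auth_i$, $Inf_i$, $Read_i$, and $Arg_i$ for the four cues, $CA_i$ for cognitive ability) and the functional form are assumptions introduced here, not the estimation equation used in this study.

```latex
\begin{aligned}
Acc_i ={}& \beta_0 + \beta_1 Auth_i + \beta_2 Inf_i + \beta_3 Read_i + \beta_4 Arg_i + \beta_5 CA_i \\
&+ \gamma_1 (Auth_i \times CA_i) + \gamma_2 (Inf_i \times CA_i)
 + \gamma_3 (Read_i \times CA_i) + \gamma_4 (Arg_i \times CA_i) + \varepsilon_i
\end{aligned}
```

Under this sketch, H1 corresponds to $\beta_1 < 0$, and H2 to H4 correspond to $\beta_2, \beta_3, \beta_4 > 0$. The marginal effect of cue $k$ is $\beta_k + \gamma_k CA_i$, so H5a and H5b predict that its magnitude shrinks as $CA_i$ rises for the two peripheral cues, whereas H5c and H5d predict that it grows for the two central cues.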

4. Methodology

4.1. Data collection and preprocessing

4.1.1. Study context and data collection

Sina Weibo (Weibo, Beijing, China, http://www.weibo.com/), often referred to as “Chinese Twitter” (Twitter, San Francisco, USA, http://www.twitter.com/), is one of the most influential social network platforms in China (Rodríguez et al., 2020). As in some other microblogging systems (e.g., Twitter), users of Sina Weibo can post short messages, termed “postings.” The process of information diffusion on Sina Weibo can be traced by the analysis of original postings, reposting, comments, replies, and likes of postings. These features make this social platform highly interactive and allow for rapid and broad dissemination of information. Furthermore, in contrast to other social networks used in China such as WeChat (WeChat, Shenzhen, China, http://weixin.qq.com/), communication on Sina Weibo is almost entirely public, which generates a large amount of unstructured text data and provides data support for our research. Using the Zhiwei Data Sharing Platform (Zhiwei Data, Beijing, China, http://university.zhiweidata.com/), a well-known social media data service company in China, we collected data on COVID-19-related fake news events through the Business Application Programming Interface (API) of Sina Weibo, as outlined below.

(1) We collected original postings (i.e., non-reposted postings) regarding COVID-19-related fake news events. First, based on the fake news event list of the Zhiwei Data Sharing Platform dating from January 20, 2020, to June 28, 2020, we defined keyword combinations related to fake news events that occurred in China using multiple logical relationships (e.g., AND and OR). Then, we collected fake news event data related to the COVID-19 pandemic dating from January 20, 2020, to June 28, 2020 (these stories had been confirmed as “fake” by authoritative statements) by obtaining the original postings and corresponding comments of each event. Second, to avoid data selection bias, and following the event classification criteria of the Zhiwei Data Sharing Platform, we sampled five fake news events by number of comments, covering three fields: authority, society, and politics. These are the five fake news stories with the highest number of comments from January 20, 2020, to June 28, 2020, and they were the most widely disseminated and influential on social media platforms in China during the COVID-19 pandemic. The annotation scheme of three fields of fake news was developed through an iterative process of rounds of annotation and evaluation involving three researchers (two Ph.D. students and one expert from Zhiwei Data Sharing Platform, who are experienced in fake news research amid public health crises). Table 1 shows the annotation scheme for the three fields of fake news. Subsequently, after manual data denoising and cleaning by three expert annotators, we randomly selected 2053 postings related to fake news events. For brevity, the descriptions of the five fake news events are shown in Appendix A.

(2) We also collected all comments that replied to the 2053 original postings. For each original posting obtained as described above, we collected the associated comment conversations. To collect comments, we scraped the webpage of each original posting to retrieve the URL; if the URL was missing or invalid, we used the MID (a unique identification code for each posting on Sina Weibo) to retrieve the comments. We collected a total of 100,348 comments (either direct replies or nested replies) that replied to the 2053 original postings.
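The keyword combinations with AND/OR relationships mentioned in step (1) can be pictured with a small filter. This is a minimal sketch under stated assumptions: the query format (a list of OR-groups joined by AND), the function name `matches`, and the English keywords are all hypothetical, since the study's actual keyword lists are not reported here.

```python
from typing import List

# Hypothetical query format: a list of OR-groups that are ANDed together.
# [["A", "B"], ["C"]] means (A OR B) AND C.
Query = List[List[str]]


def matches(text: str, query: Query) -> bool:
    """Return True if every OR-group has at least one keyword present in text."""
    lowered = text.lower()
    return all(any(kw.lower() in lowered for kw in group) for group in query)


# Illustrative keywords only (assumption, not the study's keyword list).
example_query: Query = [["patient zero", "first case"], ["institute of virology"]]

postings = [
    "Rumor: a postgraduate at the Institute of Virology was patient zero",
    "Institute of Virology publishes a new statement",
    "Who was the first case? Nobody knows",
]

# Keep only postings that satisfy the whole keyword combination.
selected = [p for p in postings if matches(p, example_query)]
```

Only the first posting satisfies both groups, so it is the only one kept; substring matching is used here for simplicity and would need proper tokenization for Chinese text.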

Table 1. The annotation scheme for three fields of fake news.

| Category of fake news | Annotation scheme for category of fake news |
|---|---|
| Authority | Those slandered by the fake news are authoritative individuals or organizations in expertise fields related to COVID-19, such as Nanshan Zhong (Event 1) and the Wuhan Institute of Virology (Event 3). |
| Society | Fake news regarding social events, social problems, and social style involving people's daily lives in relation to COVID-19, especially reflecting social morality and ethics. |
| Politics | The fake news related to COVID-19 regarding politics, i.e., the activities of classes, parties, social groups, and individuals in domestic and international relations. |

Table 2 presents the basic attributes, including the type of event and the number of original postings and comments, of each fake news event. All of the information obtained on the web was written in simplified Chinese and released publicly by Sina Weibo. Because we used publicly available data, we only referred to the summarized results and did not collect any sensitive data.

Table 2. Five fake news events (translated into English from Chinese).

| No. | Event | Category | Original Postings | Comments |
|---|---|---|---|---|
| 1 | Yansong Bai dialogued with Nanshan Zhong. | Authority | 478 | 11,207 |
| 2 | Materials of Jiangsu Province aided medical team of Hubei Province were detained. | Society | 114 | 8006 |
| 3 | A postgraduate from the Wuhan Institute of Virology was the “Patient Zero.” | Authority | 676 | 49,763 |
| 4 | 80 Chinese citizens were abused while being quarantined in Russian Federation. | Politics | 258 | 15,524 |
| 5 | A car owner in Hubei Province died of COVID-19. | Society | 527 | 15,848 |

Note: a. The data collection for the postings and comments ended on September 12, 2020, at 23:59:59. b. The main objects affected by fake news in Events 1 and 3 were authoritative individuals and organizations in expertise fields, respectively.

4.1.2. Filtering of postings for fake news rebuttals

We used manual annotation to filter out postings that refuted fake news. A three-person expert panel of social media researchers also labeled the refuting postings out of the 2053 original postings. Referring to Tian et al. (2016), the specific steps are as follows:

First, two annotators with a detailed understanding of COVID-19-related fake news independently labeled postings of fake news rebuttals among all 2053 original postings.

Second, to eliminate the differences due to human factors, the two members discussed all the annotation results and re-annotated the postings to reach an agreement on the differences.

Third, the third annotator annotated the 2053 original postings to calculate inter-rater reliability. Cohen's kappa ($\kappa$) for the annotators was 0.92, indicating a good agreement among them (Cohen, 1960).

Finally, from the 2053 original postings, we obtained 1721 original postings of labeled fake news rebuttals.
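The inter-rater check in the third step relies on Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The following is a minimal sketch of the standard statistic for two raters; the function name and the toy labels (1 = rebuttal, 0 = not a rebuttal) are illustrative assumptions, not the study's data or code.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("Both raters must label the same non-empty set of items.")
    n = len(rater_a)
    # Observed agreement: share of items on which the two raters agree.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:  # both raters used a single identical label throughout
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Toy labels (assumption): 1 = posting refutes the fake news, 0 = it does not.
labels_a = [1, 1, 1, 0, 0, 1, 0, 1, 1, 0]
labels_b = [1, 1, 0, 0, 0, 1, 0, 1, 1, 1]
kappa = cohens_kappa(labels_a, labels_b)
```

In this toy example the raters agree on 8 of 10 items (observed agreement 0.80) while chance agreement is 0.52, which gives a kappa of roughly 0.58, well below the 0.92 reported above.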

4.1.3. Stance classification

Utilizing the rich information in comments that replied to the original postings on Sina Weibo, we employed SDQC stance classification (Derczynski et al., 2017) to classify the stance in user comments on fake news as one of the following: supporting [S], denying [D], querying [Q], or commenting [C]. However, most prior studies have examined SDQC stances on fake news rather than stances on fake news rebuttals (Zubiaga et al., 2018). To follow the existing criteria for classifying SDQC stances, the four stances in the comments on refuting postings studied in this paper are stances on fake news. The comments of each user were categorized according to the following four stances:

- Supporting (S): Users who commented believing that the fake news is true; that is, they think the fake news rebuttal is false.
- Denying (D): Users who commented believing that the fake news is false; that is, they think the fake news rebuttal is true.
- Querying (Q): Users who commented on the veracity of the fake news while asking for additional evidence.
- Commenting (C): Users who commented without clearly expressing whether they wanted to assess the veracity of the fake news.

To improve classification efficiency and obtain satisfactory results in the case of massive text data, we designed text classifiers with supervised learning methods to automatically identify and classify the four types of SDQC stances observed in the 100,348 comments.

Similarly, first, we asked two members of our team to label 12,000 comments (randomly selected from 100,348 comments based on the proportion of the number of comments in each event) with the corresponding SDQC stances.

Second, the third member randomly selected 2000 comments to validate the inter-annotator agreement, and the annotation process was assessed and validated using Cohen's kappa ($\kappa$; Cohen, 1960). All codes were mutually exclusive.

Third, we divided the 12,000 comments into training and testing sets in a ratio of 7:3; the label distribution is presented in Table 3.

Table 3. Label distribution of the training and testing sets.

| Dataset | Support | Deny | Query | Comment |
|---|---|---|---|---|
| Training set | 227 | 2305 | 1126 | 4742 |
| Testing set | 98 | 956 | 472 | 2074 |
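The rows of Table 3 sum to 8400 training and 3600 testing comments, i.e., a 7:3 division of the 12,000 labelled comments. A split of that size can be sketched as follows; the plain random shuffle, the seed, and the function name are assumptions, because the text does not state how the comments were assigned to the two sets.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_train_test(
    items: Sequence[T], train_fraction: float = 0.7, seed: int = 42
) -> Tuple[List[T], List[T]]:
    """Shuffle the items reproducibly and cut them into train and test parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # local RNG, global state untouched
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Stand-in for the 12,000 labelled comments (integer IDs, assumption).
labelled_ids = list(range(12_000))
train_ids, test_ids = split_train_test(labelled_ids)
```

A stratified split would keep the share of each stance identical in both sets; the per-class counts in Table 3 are close to, but not exactly, 70/30, so a simple random split is used in this sketch.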

Fourth, we trained and compared the text classifiers using deep learning methods. Because the new language representation model, the bidirectional encoder representations from transformers (BERT; developed by Google in 2018), is conceptually simple, empirically powerful, and obtains new state-of-the-art results on 11 natural language processing tasks without substantial task-specific architectural modifications, we chose BERT and its two related but improved models to train the classifiers (Devlin et al., 2018). These three models are BERT, RoBERTa-wwm-ext (robustly optimized BERT approach-whole word masking-extended data), and RBT3 (three-layer RoBERTa-wwm-ext; Cui et al., 2019; Cui et al., 2020; Liu et al., 2019). We chose accuracy and macro-F1 score to measure the performance of the classifiers (Van Asch, 2013; Zhang et al., 2015). During the fine-tuning process, we compared 72 sets of hyperparameters based on the three models to obtain the best-performing stance classification model. Table 4 presents the performances of these classifiers under different combinations of hyperparameters. For brevity, we have only shown the hyperparameter results with the best performance for each model. The detailed results of the stance classification are described in Appendix B. Finally, we chose the RoBERTa-wwm-ext model with hyperparameters (140, 16, 3e–5, 3) to predict the SDQC stance for large comment datasets, as it performed satisfactorily with an accuracy of 80.89% and a macro-F1 of 68.06%. The results of our stance classifier are presented in Table 5 .
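Because the Support class is rare, accuracy and macro-F1 can diverge considerably, which is why both are reported. The sketch below shows how the two metrics are commonly computed, with macro-F1 taken as the unweighted mean of per-class F1 scores; the function names and toy labels are illustrative assumptions, not the evaluation code used in the study.

```python
from typing import List, Sequence


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Share of items whose predicted label equals the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Unweighted mean of per-class F1 scores over all observed labels."""
    labels = sorted(set(y_true) | set(y_pred))
    f1_scores: List[float] = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        # A class that is never predicted correctly contributes F1 = 0.
        f1_scores.append(2 * tp / denom if denom else 0.0)
    return sum(f1_scores) / len(f1_scores)


# Toy SDQC labels (assumption): S = support, D = deny, Q = query, C = comment.
y_true = ["S", "D", "D", "Q", "C", "C", "C", "D"]
y_pred = ["D", "D", "D", "Q", "C", "C", "Q", "C"]
acc = accuracy(y_true, y_pred)
mf1 = macro_f1(y_true, y_pred)
```

Here the single Support comment is misclassified, so its F1 of zero pulls macro-F1 down to 0.5 even though accuracy is 0.625; the same mechanism can explain a gap between accuracy and macro-F1 on imbalanced stance data.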

Table 4. Stance detection performance of the classifiers on user comments.

| Model | Hyperparameters | Accuracy | Macro-precision | Macro-recall | Macro-F1 |
|---|---|---|---|---|---|
| BERT | (70, 16, 2e–5, 3) | 80.33% | 67.71% | 65.94% | 66.51% |
| RBT3 | (140, 32, 5e–5, 3) | 80.17% | 68.78% | 63.08% | 64.53% |
| RoBERTa-wwm-ext | (140, 16, 3e–5, 3) | 80.89% | 68.76% | 67.88% | 68.06% |

Note: Hyperparameters (x, y, z, w), where x: max_seq_length (70, 140), y: train_batch_size (16, 32), z: learning_rate (2e–5, 3e–5, 5e–5), and w: num_train_epochs (2, 3). The values in parentheses represent the hyperparameters derived from the fine-tuning process.
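The candidate values listed in the note give 2 × 2 × 3 × 2 = 24 combinations per model, and 72 across the three models, which matches the number of hyperparameter sets compared. The sketch below enumerates that grid; reading the 72 sets as a full cross-product is an assumption, and the dictionary keys simply reuse the names in the note.

```python
from itertools import product

models = ["BERT", "RBT3", "RoBERTa-wwm-ext"]
max_seq_length = [70, 140]
train_batch_size = [16, 32]
learning_rate = [2e-5, 3e-5, 5e-5]
num_train_epochs = [2, 3]

# Full cross-product of models and hyperparameter values (assumed search space).
grid = [
    {
        "model": m,
        "max_seq_length": x,
        "train_batch_size": y,
        "learning_rate": z,
        "num_train_epochs": w,
    }
    for m, x, y, z, w in product(
        models, max_seq_length, train_batch_size, learning_rate, num_train_epochs
    )
]

# The configuration reported as best in Table 4.
best = {
    "model": "RoBERTa-wwm-ext",
    "max_seq_length": 140,
    "train_batch_size": 16,
    "learning_rate": 3e-5,
    "num_train_epochs": 3,
}
```

Each entry of `grid` would correspond to one fine-tuning run, after which the runs are ranked by accuracy and macro-F1 on the testing set.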

Table 5. Comparison of the number of users and the number of comments for the SDQC stances.

| Stance | Support (unique) | Deny (unique) | Query (unique) | Comment (unique) | Mixed | Total |
|---|---|---|---|---|---|---|
| Total No. of users, n | 781 | 19,111 | 6832 | 34,694 | 6370 | 67,788 |
| Total No. of comments, n | 800 | 20,546 | 7359 | 45,337 | 26,306 | 100,348 |

Note: Unique stance means that the user has only expressed one unique stance in their comments, no matter how many times they commented. A mixed stance means that the user commented at least twice and expressed more than one type of stance.
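The unique/mixed distinction in the note can be expressed as a simple grouping over (user, stance) pairs. This is a minimal sketch assuming each classified comment is available as such a pair; the function name, user IDs, and stance strings are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def stance_profile(comments: Iterable[Tuple[str, str]]) -> Dict[str, str]:
    """Map each user to their single stance, or to 'Mixed' if they expressed several."""
    stances_by_user: Dict[str, Set[str]] = defaultdict(set)
    for user_id, stance in comments:
        stances_by_user[user_id].add(stance)
    return {
        user: next(iter(stances)) if len(stances) == 1 else "Mixed"
        for user, stances in stances_by_user.items()
    }


# Toy classified comments (assumption): (user ID, predicted SDQC stance).
classified = [
    ("u1", "Deny"),
    ("u1", "Deny"),     # repeated comments with the same stance stay unique
    ("u2", "Query"),
    ("u2", "Comment"),  # two different stances make the user mixed
    ("u3", "Support"),
]
profile = stance_profile(classified)
```

Counting the values of `profile` would yield a users row like the one in Table 5, while counting the comments of each user group would yield the comments row.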

4.1.4. Sample selection

To measure the rebuttal acceptance of fake news refuting postings, we randomly selected a subset of users who commented on refuting postings, including 3865 unique users from a total of four types of stances, and their 564,910 background postings, including 4277 comments on 343 fake news refuting postings (they may have made different comments on different refuting postings from different fake news fields).

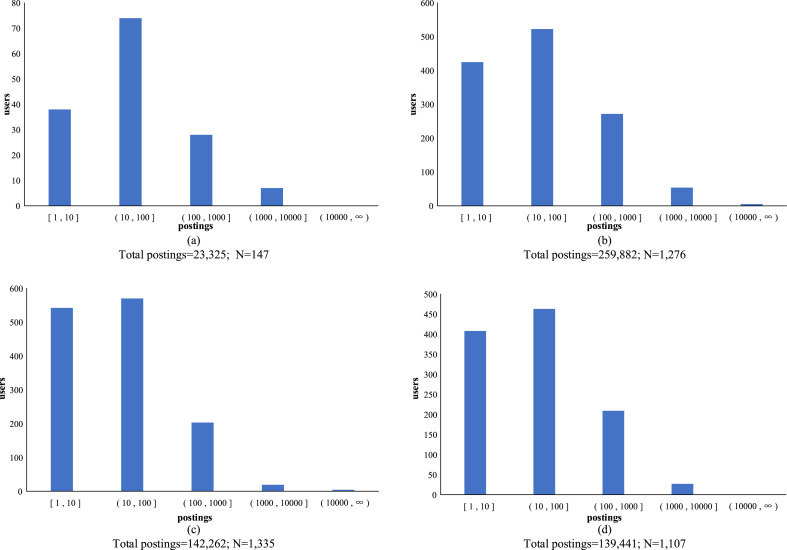

First, according to the proportion of users in the four stances in Table 5, among the users who commented on 1721 refuting postings, we randomly selected 147 unique users with a supporting stance, 1276 unique users with a denying stance, 1335 unique users with a querying stance, and 1107 unique users with a commenting stance. All of these users participated in the 4277 comments responding to the 343 fake news refuting postings.

Second, to ensure that the users' historical postings were not affected by exposure to COVID-19 fake news rebuttals, we collected the background postings of all 3865 users from the 3 months before the outbreak of COVID-19 in early December 2019 (2019.09.01 00:00:00–2019.11.30 23:59:59). In total, we collected 564,910 background postings through the users’ UIDs (a unique identification code for each user on Sina Weibo).
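The three-month background window can be applied as an inclusive timestamp filter. The sketch below assumes each posting carries a naive local timestamp in a `posted_at` field; the field names and records are hypothetical, and time-zone handling is ignored.

```python
from datetime import datetime

# Window boundaries taken from the text (inclusive on both ends).
WINDOW_START = datetime(2019, 9, 1, 0, 0, 0)
WINDOW_END = datetime(2019, 11, 30, 23, 59, 59)


def in_background_window(posted_at: datetime) -> bool:
    """True if the posting falls inside the pre-outbreak background window."""
    return WINDOW_START <= posted_at <= WINDOW_END


# Toy postings (assumption): only uid and timestamp are modelled.
postings = [
    {"uid": "u1", "posted_at": datetime(2019, 8, 31, 23, 59, 59)},
    {"uid": "u1", "posted_at": datetime(2019, 10, 15, 12, 0, 0)},
    {"uid": "u2", "posted_at": datetime(2019, 12, 1, 0, 0, 0)},
]
background = [p for p in postings if in_background_window(p["posted_at"])]
```

Only the posting from October 15, 2019, is retained, because the other two fall one second before and one second after the window, respectively.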

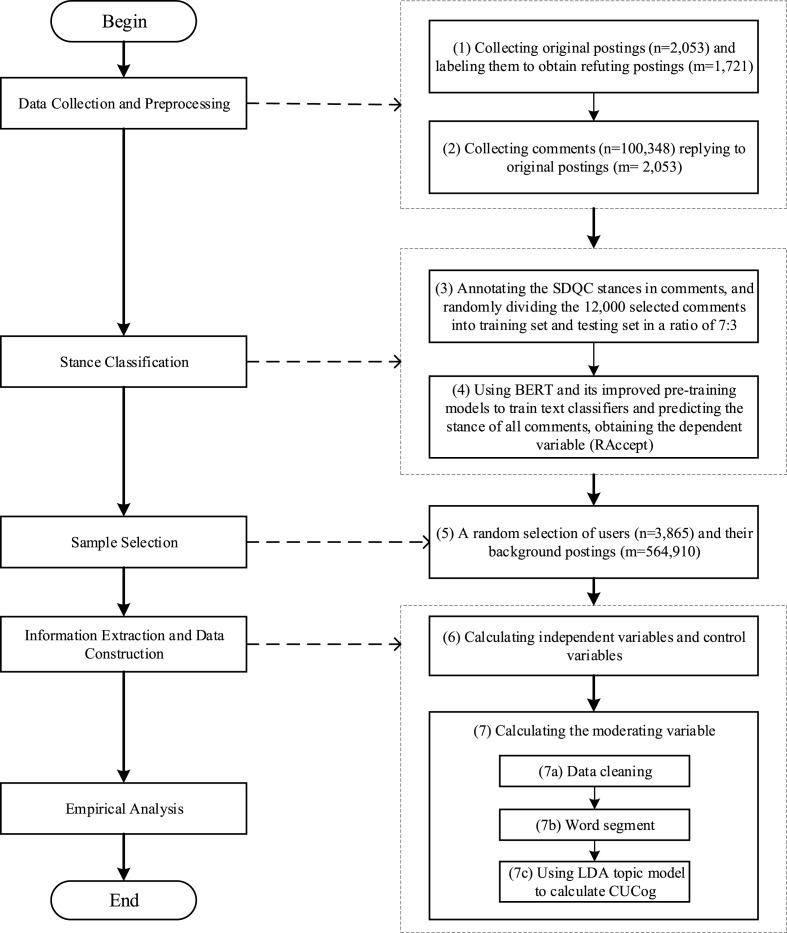

As we sampled users who commented on refuting postings, we are approximating what the users may have been exposed to regarding rebuttal postings. Users’ commenting behavior represents their behavioral decisions after they are exposed to postings and process those postings (Liu et al., 2012). Fig. 2 shows the distribution of the number of background postings of users in each stance. The process of data collection and preprocessing and the flowchart of the sample selection are shown in Fig. 4.

Fig. 2. Distribution of background postings of users with (a) supporting, (b) denying, (c) querying, (d) commenting stances.

Fig. 4. The process of the research procedures.

4.2. Operationalization of variables

4.2.1. Independent variables

The independent variables are the rebuttal source's authority, the rebuttal source's influence, the information readability of the rebuttal information, and the argument quality of the rebuttal information. These were extracted from the text information through text mining and manual scoring.

(1) Measuring the authority of a rebuttal source

To identify the authority of a rebuttal source (), following Chen et al. (2021), we scored each rebuttal source according to its homepage information and verification status on Sina Weibo as well as other information online (e.g., http://baike.baidu.com/). Scores ranged from 1 to 5, where 1 indicates that the rebuttal source is “completely not an authority” and 5 indicates that it is “completely an authority.” To score the degree of authority more accurately, we used a fuzzy expression that frees the commonly used Likert scale from its rank restriction: experts freely expressed their attitudes on a number axis from 1 to 5 based on the scoring criteria, marking the point that best indicated the degree of authority and recording the number at that point (Pennycook et al., 2020). The number indicates the expert's perceived value of authority, with larger values indicating higher authority of the account. To ensure that the manual scoring process met reliability standards, we used a three-person expert panel of social media researchers to score the authority of the accounts (two Ph.D. students and one expert from Zhiwei Data Sharing Platform, all experienced in fake news research amid public health crises). First, the three researchers iteratively discussed and confirmed the preliminary authority scoring criteria by drawing on their practical research experience, the characteristics of the sample data, and their reading of research materials. Second, two of the researchers independently scored the authority of the accounts based on the preliminary criteria, repeatedly revising and discussing the scoring details during the process, which resulted in the final version of the authority scoring criteria; ultimately, they reached a consistent scoring result for the authority of the accounts. Third, the third researcher scored the accounts independently based on the final version of the scoring criteria. Fourth, we calculated the consistency of the two scoring results; the inter-rater agreement, measured with Cohen's kappa () (), was 0.91, which indicated that the scoring process was reliable. The calculation formula is . Table 6 shows the final scoring criteria and examples of the results of our scoring of the source authority.
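The agreement check described above can be illustrated with a minimal sketch of Cohen's kappa, κ = (p_o − p_e) / (1 − p_e), where p_o is the observed agreement and p_e the agreement expected by chance. Because kappa is defined for categorical ratings, the sketch assumes that the continuous 1–5 scores are first mapped to the five score bands of Table 6; the source does not state how this step was done, so the helper `to_band` and the sample scores are hypothetical.

```python
from collections import Counter

# Upper bounds of the Table 6 bands: [1,1.5], (1.5,2], (2,3], (3,4], (4,5].
# Mapping continuous scores to these bands is an assumption of this sketch.
BAND_UPPER_BOUNDS = (1.5, 2.0, 3.0, 4.0, 5.0)


def to_band(score):
    """Map a continuous authority score in [1, 5] to a band index 0..4."""
    if not 1.0 <= score <= 5.0:
        raise ValueError("score must lie in [1, 5]")
    for index, upper in enumerate(BAND_UPPER_BOUNDS):
        if score <= upper:
            return index


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both raters must label the same non-empty item set")
    n = len(labels_a)
    # Observed agreement: share of items on which the raters agree.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of the raters' marginal label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(counts_a[k] * counts_b[k] for k in counts_a) / n ** 2
    if p_e == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (p_o - p_e) / (1.0 - p_e)


# Hypothetical scores: the consensus of the first two raters vs. the third rater.
consensus = [1.2, 1.8, 2.5, 3.5, 4.5, 4.8]
third = [1.4, 1.9, 3.5, 3.6, 4.2, 4.9]
kappa = cohens_kappa([to_band(s) for s in consensus], [to_band(s) for s in third])
```

In this toy data the raters disagree on one of six accounts, so kappa falls below 1; values close to 1, such as the 0.91 reported above, indicate strong agreement.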

Table 6.

Criteria and examples for the process of scoring the authority of a rebuttal source (translated into English from Chinese).

| Score | Description | Example |
|---|---|---|
| [1,1.5] | Ordinary users who have no authentication information, and the content of their homepage is primarily personal opinions or sharing daily life. | / |
| (1.5,2] | Personal accounts with authority in certain specific industries, such as social peers in the public health sphere. | / |
| (2,3] | Non-government-related official media accounts (i.e., official media accounts not affiliated with any government): for example, authoritative media in specific industry sectors (e.g., financial newspapers) and portal sites (e.g., Tencent, Sina). | |
| (3,4] | Official accounts of local governments, emergency services, and political groups and unions: for example, police agencies, local government official accounts (e.g., provincial, municipal governments), media accounts belonging to local governments and the accounts of their subordinate agencies (e.g., official newspaper [local government-operated]), and political groups such as the Communist Youth League, party groups, and unions. | |
| (4,5] | Official media accounts led by the Central Committee of the Communist Party of China (CCCPC) and the accounts of its subordinate agencies. | |

Note: Personal accounts involve non-public data; thus, no specific user nicknames are provided as examples.

(2) Measuring the influence of a rebuttal source

Users who have large numbers of followers on social media are identified as influential (L. Zhang, Peng, et al., 2014). On Sina Weibo, the number of followers can be used to measure the account's social media influence (Z. Wang, Liu, et al., 2020). Therefore, the influence of the rebuttal source () was measured using the number of followers of the rebuttal source accounts. The calculation formula is .

(3) Measuring the information readability of a rebuttal posting

In this study, we used information readability () to indicate the ease with which a commenting user can understand a rebuttal text (Korfiatis et al., 2012). Readability can be defined as the extent to which a written text is easy to understand, and its main determinant is the complexity of wording (Senter & Smith, 1967). Thus, for all COVID-19-related fake news rebuttals, the higher the readability of the rebuttal text, the less difficult it is for users to understand the message when the main idea of the message expressed in the rebuttal text is the same (Korfiatis et al., 2012).

In more formal terms, a readability formula is produced by applying linear regression to subjects' ratings of the reading ease of different segments of text that they had been asked to comprehend using specific instruments (Korfiatis et al., 2012). Thus, we measured the information readability of a rebuttal posting () using the readability index (). The calculation of the readability index is well established in English-language studies (Korfiatis et al., 2012). Among the existing Chinese readability formulas, the one proposed by Jing Xiyu is the most authoritative (see Equation (1)). However, the common characters used in Equation (1) come from a list of 495 commonly used traditional characters taught in Taiwan's national primary schools, which is relatively old and not suited to classifying common Chinese characters in online social media (Qing & Qin, 2020). Therefore, we chose the 1988 edition of Modern Chinese Commonly Used Characters (3500 characters) as the dictionary of commonly used Chinese characters.

| (1) |

where for any refuting posting containing at least one sentence, is the total number of characters (Chinese characters, English letters, and numbers except for spaces and punctuation marks) in a refuting posting, and indicates the average length of a sentence in a refuting posting—that is, the average number of characters in a sentence.

| (2) |

where indicates the number of sentences contained in a refuting posting, and is the percentage of common Chinese characters in a refuting posting.

| (3) |

where indicates the number of common Chinese characters that appear in a refuting posting, and indicates the total number of Chinese characters in a refuting posting.

In Equation (1), a smaller value indicates a smaller and and a larger , suggesting that the rebuttal text is easier to read; conversely, a larger value indicates a larger and and a smaller , suggesting that the rebuttal text is less readable (Qing & Qin, 2020). Therefore, to facilitate the analysis of the relationship between information readability and rebuttal acceptance in the regression model, we take the opposite value of the (see Equation (1)) to express ; a higher score indicates the ease of readability (i.e., the higher the , the easier it is to read the rebuttal text). This was calculated using the following formula:

| (4) |

Similarly, the calculation formula is .
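The readability computation described in Equations (1)–(4) can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the coefficients of Equation (1) are not reproduced in the text above, so they are passed in as the placeholder tuple `coefs`; the set `common_chars` stands in for the 3500-character dictionary; the sentence delimiters are assumed; and the share of common characters is expressed as a fraction rather than a percentage.

```python
import re

# Characters that count toward length: Chinese characters, English letters, digits.
COUNTED = re.compile(r"[\u4e00-\u9fffA-Za-z0-9]")
CHINESE = re.compile(r"[\u4e00-\u9fff]")
# Assumed sentence delimiters; the source does not list the ones it used.
SENTENCE_END = re.compile(r"[。！？!?]+")


def readability_features(text, common_chars):
    """Return (total characters, average sentence length, share of common characters)."""
    total_chars = len(COUNTED.findall(text))
    # Keep only segments that contain at least one counted character.
    sentences = [s for s in SENTENCE_END.split(text) if COUNTED.search(s)]
    if not sentences:
        raise ValueError("posting must contain at least one sentence")
    avg_sentence_len = total_chars / len(sentences)
    chinese = CHINESE.findall(text)
    share_common = (
        sum(ch in common_chars for ch in chinese) / len(chinese) if chinese else 0.0
    )
    return total_chars, avg_sentence_len, share_common


def readability(text, common_chars, coefs):
    """Negated linear readability index: higher values mean easier text.

    coefs = (intercept, b_total, b_avg_len, b_common) are placeholders that
    must be taken from Equation (1); they are not supplied by this sketch.
    """
    intercept, b_total, b_avg_len, b_common = coefs
    total_chars, avg_len, share_common = readability_features(text, common_chars)
    index = (
        intercept
        + b_total * total_chars
        + b_avg_len * avg_len
        + b_common * share_common
    )
    return -index  # sign flip as in Equation (4)


# Hypothetical posting and a toy "common character" set.
sample_text = "新冠病毒可防可控。请勿信谣！"
toy_common = set("新冠病毒可防控请勿信")
features = readability_features(sample_text, toy_common)
```

Keeping feature extraction separate from the linear combination makes it easy to swap in the published coefficients or a different common-character list without touching the counting logic.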

(4) Measuring the argument quality of a rebuttal posting

Similar to the scoring method of , referring to the previous studies in Section 3.3, which consider the quality of argument in the postings of fake news rebuttals (Bordia et al., 2005; Zubiaga et al., 2018), we also scored for the quality of the argument regarding rebuttal information (). The score ranged from 0 to 2, showing the strength of the argument contained in the rebuttal information, where 0 indicates that the rebuttal information is “a completely weak argument” and 2 indicates that it is “a completely strong argument.” The higher the score, the higher the argument quality (i.e., the stronger the arguments) provided in the respective rebuttal. The calculation formula is . Furthermore, we also conducted interrater agreement reliability tests to ensure intersubjective verifiability (). Table 7 shows an example of the results of our scoring for argument quality.

Table 7.

Criteria and examples for scoring the argument quality of a rebuttal (translated into English from Chinese).

| Score | Example | Description |
|---|---|---|
| [0,0.5] | | A flat counterstatement of fake news without providing any valid arguments, i.e., the message's argument reveals weak evidence, slipshod reasoning, and the like, indicating that the strength of the argument is weak. |
| (0.5,1] | | Refuting fake news with cited evidence and reasons that are not very good, adequate, or powerful, such as using a relevant person or an organization's response, indicating that the strength of the argument is medium. |
| (1,2] | | Refuting fake news with a combination of evidence and reasons, such as using both a person's and an organization's response; the message contains powerful arguments, good evidence, sound reasoning, and so on, indicating that the strength of the argument is strong. |

Note: a. For brevity, we only show the text that can indicate the strength of the rebuttal argument and do not list the full rebuttal postings. b. Keywords concerning the quality of the argument are italicized.

4.2.2. Dependent variable

The dependent variable in this research is rebuttal acceptance (), which indicates the user's stance reaction when commenting on the refuting posting after it is posted. Each classifier in Section 4.1.3 can output probability values of the four SDQC stances corresponding to each comment text before outputting the stance classification result for each comment text; for each comment, the sum of the four probability values is 1. Therefore, we used the results from the optimal stance classifier in Section 4.1.3 (value ranges [0,1]) to output the probability value of the fake news denying stance corresponding to each comment text to indicate the degree of acceptance of the fake news rebuttal. The higher the value, the higher the acceptance of the rebuttals by the users. The calculation formula is .
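As a rough illustration of how a per-comment acceptance score could be read off a four-way stance classifier, the sketch below converts raw classifier scores into probabilities that sum to 1 and returns the probability of the denying stance. The use of a softmax layer, the label order in `STANCES`, and the sample logits are assumptions of this sketch; the source states only that the classifier outputs four probabilities per comment.

```python
import math

# Assumed label order for the four SDQC stances; the actual order depends on
# how the classifier was trained.
STANCES = ("supporting", "denying", "querying", "commenting")


def softmax(logits):
    """Convert raw scores to probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def rebuttal_acceptance(logits):
    """Probability assigned to the fake-news-denying stance, in [0, 1]."""
    probabilities = dict(zip(STANCES, softmax(logits)))
    return probabilities["denying"]


# Hypothetical logits for one comment.
example_logits = [1.0, 3.0, 0.5, 0.2]
example_score = rebuttal_acceptance(example_logits)
```

Using the probability rather than the hard stance label keeps the dependent variable continuous, which suits the regression analysis described in this section.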

4.2.3. Moderator variable

The moderator variable in this research is the commenting users’ cognitive ability ().

Petty and Cacioppo (1986) posit that in circumstances in which individuals are motivated and can process information, they will engage in issue-relevant thinking. When this occurs, individuals are likely to attempt to access relevant associations, images, and experiences from memory and analyze and elaborate on the information presented in conjunction with such associations provided by their memory. For example, individuals may also extract inferences concerning the merits of the arguments in support of a recommendation centered on their examination and develop an overall evaluation of or attitude toward the information (Petty & Cacioppo, 1986). As the individual integrates the arguments presented with their previous knowledge, the likelihood of elaboration is high, and there will be considerable support for the allotment of cognitive effort (Petty & Cacioppo, 1986; Robert et al., 2005).

Language is inherently cognitive (Beckage & Colunga, 2016). Cognitive ability is structured around a regnant general factor (general intelligence) and supported by a number of specific factors (e.g., mathematical, spatial, and verbal abilities; Lubinski, 2009). Vocabulary tests share high variance with general intelligence and have frequently been used to assess individuals' cognitive capability (Ahmed, 2021; Brandt & Crawford, 2016). Wechsler (1958) supports the use of a vocabulary test to measure cognitive ability and argues that “contrary to lay opinion, the size of a man's vocabulary is not only an index of his schooling but also an excellent measure of his general cognitive ability. Its excellence as a test of cognitive ability may stem from the fact that the number of words a man knows is at once a measure of his learning ability, his fund of verbal information, and the general range of his ideas.”

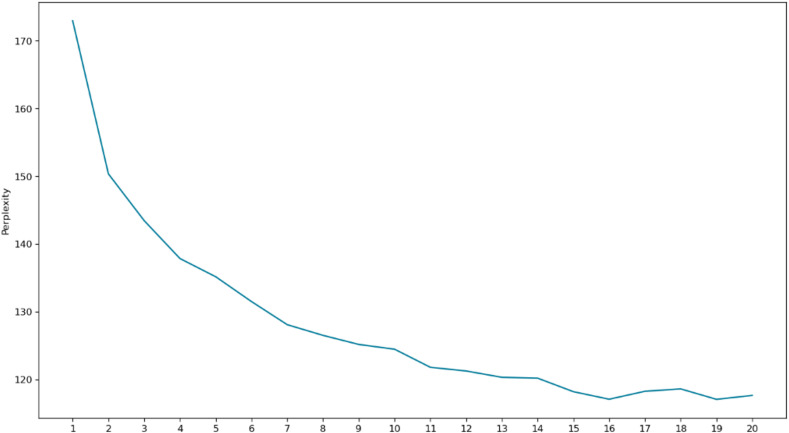

As mentioned above, an individual's cognitive ability to process a message depends on many factors, such as prior knowledge of the topic (Metzger, 2007). Context-specific knowledge gained through experience provides a cognitive foundation that enables one to engage in cognitively rigorous elaboration (Petty & Cacioppo, 1986, pp. 1–24). Therefore, we investigate the text similarity between individuals' past experiences and knowledge and the rebuttal postings that they have commented on to analyze individuals' level of cognitive ability in relation to fake news rebuttals. The more information (words, topics) related to the rebuttal message in the domain that the user's historical memory contains, the better the user can understand the meaning embedded in the rebuttal text, and the higher their cognitive ability regarding fake news rebuttals in the domain. To accomplish this, following previous research (Vosoughi et al., 2018), we used an LDA topic model, a probabilistic statistical model that discovers the underlying abstract topics in a series of documents or text data (Blei et al., 2003), to calculate the information distance between the commenting users' background postings and the refuting postings from the rebuttal source.
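To make the idea of an information distance between topic distributions concrete, the following minimal sketch compares two LDA topic distributions with the Kullback–Leibler divergence. The choice of this particular distance is an assumption made for illustration, since the paragraph above does not name the measure; the topic vectors are hypothetical and would in practice come from a fitted LDA model.

```python
import math


def kl_divergence(p, q, eps=1e-12):
    """KL divergence D(p || q) between two discrete topic distributions.

    eps guards against division by zero when q assigns no mass to a topic.
    """
    if len(p) != len(q):
        raise ValueError("distributions must cover the same topics")
    return sum(
        pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0
    )


# Hypothetical topic distributions over two topics.
user_background = [0.5, 0.5]   # inferred from a user's background postings
refuting_posting = [0.9, 0.1]  # inferred from the refuting posting
distance = kl_divergence(user_background, refuting_posting)
```

Under the reasoning above, a smaller distance would suggest that the user's prior postings overlap more with the topics of the rebuttal, which the section interprets as higher cognitive ability in that domain.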