Abstract

The present study created an artificial intelligence (AI)-automated diagnostic system for uterine cervical lesions and assessed its performance in diagnosing pathological cervical lesions from colposcopic images. A total of 463 colposcopic images were analyzed, and the traditional colposcopy diagnoses were compared with those obtained by AI image diagnosis. Next, 100 images were presented to a panel of 32 gynecologists, who independently examined each image in a blinded fashion and classified it into one of four categories: normal, cervical intraepithelial neoplasia (CIN)1, CIN2-3 or invasive cancer. The 32 gynecologists then revisited their diagnosis of each image after being informed of the AI diagnosis. Changes in the gynecologists' diagnoses and the accuracy of AI-image-assisted diagnosis (AISD) were assessed. The accuracy of AI was 57.8% for normal, 35.4% for CIN1, 40.5% for CIN2-3 and 44.2% for invasive cancer. Before learning the AI image diagnosis, the gynecologists' accuracy was 54.4% for CIN2-3 and 38.9% for invasive cancer. After learning of the AISD, their accuracy improved to 58.0% for CIN2-3 and 48.5% for invasive cancer. AI-assisted image diagnosis significantly improved the gynecologists' diagnostic accuracy for invasive cancer (P<0.01) and tended to improve their accuracy for CIN2-3 (P=0.14).

Keywords: artificial intelligence, deep learning, image diagnosis, colposcopy, cervical intraepithelial neoplasia, cervical cancer

Introduction

Every year, ~500,000 women are diagnosed with cervical cancer worldwide and ~270,000 women die of the disease (1). Cervical cancer is more frequent in low- and middle-income countries, which typically have far fewer medical resources (2). As the world's population grows and ages, the number of cervical cancer cases could increase significantly.

In the traditional diagnostic routine for cervical cancer, gynecologists observe the uterine cervix with a colposcope and decide where to take a tissue sample for detailed microscopic examination. This method has two problems. First, colposcopes are large and expensive. Second, gynecologists need a great deal of practical experience to decide correctly which part of the cervix is best to sample.

To address these shortcomings, the present study created a system of AI-assisted image diagnosis (AISD) for cervical lesions. Such a system could guide less experienced gynecologists in selecting the best biopsy sites. If AISD for cervical lesions were established in routine professional practice, biopsy might become unnecessary in many cases and be used only when absolutely needed for a definitive diagnosis. This economical and simple improvement in diagnostic capability would reduce the burden on gynecologists and could be extended to local and regional medical facilities and to low- and middle-income countries with fewer medical resources. This would help provide appropriate medical treatment and reduce the overall burden of cervical cancer (Fig. 1).

Figure 1.

Diagram of AI-image-assisted diagnosis for cervical lesions. In the traditional diagnostic routine for cervical cancer, gynecologists observe the uterine cervix with a colposcope and decide where to take a tissue sample for detailed microscopic examination. However, colposcopes are large and expensive, and gynecologists need a great deal of practical experience to decide correctly which part of the cervix is best to sample. Smartscopy is a cheap and simple alternative. The AI system can guide doctors who are not yet experienced in such decisions in selecting the best biopsy sites. It is expected to help reduce the burden on gynecologists and to be extended to medical facilities in low- and middle-income countries. AI, artificial intelligence.

Tanaka et al (3) were the first to report the use of a smartphone for diagnostic assistance (Smartscopy; Apple Inc.). They found that Smartscopy detected 90.8% of the pathological cervical lesions detectable by colposcopy, and that its sensitivity for lesions of cervical intraepithelial neoplasia (CIN)2 or greater was 92%. From this work, they concluded that the image quality of Smartscopy is adequate for diagnosis.

The ultimate aim is AISD of cervical lesions using images taken by Smartscopy. As a first step, the present study reports the performance of AISD for images of cervical lesions taken by colposcope; the system could subsequently be applied to Smartscopy images.

Materials and methods

The present study was a cooperative research project with Kyocera Corporation, a maker of advanced smartphones and AI software. The University Clinical Research Review Committee approved this research [approval no. 17257(T7)-8]. All methods were performed in accordance with the relevant guidelines and regulations.

Patients

Colposcopy and biopsy were performed on 463 patients by gynecologic oncologists at Osaka University Hospital between January 2010 and August 2019. The median age of the patients was 46 years (range, 23-82 years). This was a retrospective study in which the patient data were fully de-identified. The present study was approved by the Institutional Review Board and the Ethics Committee of the Osaka University Hospital [approval no. 17257(T7)-8]. The researchers obtained informed consent from participants in the anonymous questionnaire survey, and only those who consented to participate were included.

Images of pathological lesions

A total of 463 colposcopic images, one from each of the 463 patients, were analyzed. The images showed pathological cervical lesions after the application of acetic acid, taken prior to biopsy. The images were cropped to 224x224 pixels and saved as JPEG files, and gynecologic oncologists annotated them according to the pathological lesions (Fig. 2). The images were used retrospectively by Kyocera as the input data for deep learning. Of the 463 images, 120 were normal, 120 were CIN1, 113 were CIN2-3 and 110 were invasive cancer (Table I).

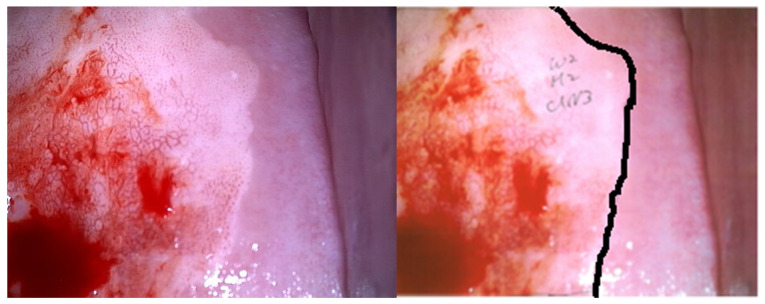

Figure 2.

Example of an annotated image. Left, a pathological cervical lesion after the application of acetic acid, prior to biopsy. Right, the image annotated by a gynecologic oncologist to mark the pathological lesion.

Table I.

The distribution of images.

| Category | Images | Training | Test |
|---|---|---|---|
| Normal | 120 | 90 | 30 |
| CIN1 | 120 | 90 | 30 |
| CIN2-3 | 113 | 85 | 28 |
| Invasive cancer | 110 | 83 | 27 |
| Total | 463 | 348 | 115 |

CIN, cervical intraepithelial neoplasia.

Preparation

A randomly selected subset of 115 of the 463 images was used as the test dataset, and the remaining 348 images were used as the training dataset (Table I). To examine the effect of training-set size, 25% of the training dataset was used in Group 1, 50% in Group 2, 75% in Group 3 and the entire training dataset in Group 4.
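The split and the four training groups can be sketched as follows. This is a minimal sketch under stated assumptions, not the procedure actually used: the article says only that the test subset was selected randomly, but the per-category counts in Table I are consistent with a per-category 75/25 split in which the training share is rounded up, and the sketch further assumes that Groups 1-3 are nested random subsets of the training dataset. The function names and the seed are hypothetical.

```python
import math
import random

# Number of images per category, from Table I.
CLASS_COUNTS = {"Normal": 120, "CIN1": 120, "CIN2-3": 113, "Invasive cancer": 110}


def stratified_split(class_counts, train_frac=0.75, seed=0):
    """Split image IDs per category; the training share is ceil(train_frac * n).

    Assumption: rounding up reproduces the training/test counts in Table I.
    """
    rng = random.Random(seed)
    train, test = [], []
    for label, n in class_counts.items():
        ids = [(label, i) for i in range(n)]  # hypothetical (category, index) IDs
        rng.shuffle(ids)
        n_train = math.ceil(train_frac * n)
        train.extend(ids[:n_train])
        test.extend(ids[n_train:])
    return train, test


def nested_subsets(train, fractions=(0.25, 0.5, 0.75, 1.0), seed=0):
    """Return Groups 1-4 as nested random subsets of the training dataset."""
    rng = random.Random(seed)
    shuffled = train[:]
    rng.shuffle(shuffled)
    return [shuffled[: round(f * len(shuffled))] for f in fractions]


train, test = stratified_split(CLASS_COUNTS)
groups = nested_subsets(train)
print(len(train), len(test), [len(g) for g in groups])  # 348 115 [87, 174, 261, 348]
```

Nesting the subsets means each larger group contains every image of the smaller ones, so differences between groups reflect added data rather than a different random draw; whether the original groups were built this way is not stated.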

The number of training images was also increased by data augmentation, as is standard practice in computer image analysis. The training dataset was tripled by adding rotated or blurred images, and quadrupled by adding images with altered hue, saturation (the purity or intensity of color) and value (brightness), i.e. HSV.
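The two augmentation schemes can be illustrated with a small, dependency-free sketch. It assumes, hypothetically, that an image is a list of rows of (r, g, b) floats in [0, 1], that "rotated" means a 90° clockwise rotation, that "blurred" means a 3x3 mean filter and that the quadrupled set consists of the original plus one hue-, one saturation- and one value-shifted copy. The article does not give the rotation angles, blur kernel or HSV offsets, so the 0.05 offset is illustrative only. Python's standard `colorsys` module handles the RGB/HSV conversion.

```python
import colorsys

# Hypothetical image format: a list of rows, each row a list of (r, g, b) floats in [0, 1].


def rotate90(img):
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def box_blur(img):
    """3x3 mean filter; neighbours that fall outside the image are skipped."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            nbrs = [img[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(tuple(sum(p[c] for p in nbrs) / len(nbrs) for c in range(3)))
        out.append(row)
    return out


def shift_hsv(img, dh=0.0, ds=0.0, dv=0.0):
    """Add offsets in HSV space: hue wraps around, saturation and value are clamped to [0, 1]."""
    def adjust(p):
        h, s, v = colorsys.rgb_to_hsv(*p)
        h = (h + dh) % 1.0
        s = min(1.0, max(0.0, s + ds))
        v = min(1.0, max(0.0, v + dv))
        return colorsys.hsv_to_rgb(h, s, v)
    return [[adjust(p) for p in row] for row in img]


def triple(dataset):
    """Original + rotated + blurred copy of each (image, label) pair: 3x the data."""
    return [(aug, label) for img, label in dataset
            for aug in (img, rotate90(img), box_blur(img))]


def quadruple(dataset, delta=0.05):
    """Original + hue-, saturation- and value-shifted copies: 4x the data."""
    return [(aug, label) for img, label in dataset
            for aug in (img, shift_hsv(img, dh=delta),
                        shift_hsv(img, ds=-delta), shift_hsv(img, dv=-delta))]


tiny = [[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
        [(0.0, 0.0, 1.0), (1.0, 1.0, 1.0)]]
data = [(tiny, "CIN1")]
print(len(triple(data)), len(quadruple(data)))  # 3 4
```

Every augmented copy keeps the label of its source image, which is why augmentation enlarges the training dataset without new annotation work.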

AI image diagnosis

A convolutional neural network based on the GoogLeNet (Inception v1) architecture (4) was used. After the network was trained by deep learning on the training dataset, the AI image diagnoses for the test dataset were compared with the traditional colposcopy diagnoses.
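The article does not describe the network's output layer. A common arrangement, assumed here, replaces GoogLeNet's 1,000-class ImageNet classifier with a four-way layer whose scores (logits) are converted to probabilities by a softmax; the category with the highest probability is then reported as the AI diagnosis. The logit values below are made up for illustration.

```python
import math

CATEGORIES = ("Normal", "CIN1", "CIN2-3", "Invasive cancer")


def softmax(logits):
    """Convert raw scores to probabilities that sum to 1."""
    m = max(logits)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits):
    """Return the most probable category and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CATEGORIES[best], probs[best]


label, prob = predict([0.2, 1.5, 0.3, -0.7])  # hypothetical network output
print(label)  # CIN1
```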

Human accuracy assisted with AI

Between October 2020 and January 2021, 100 images (25 for each pathological category) were presented to a panel of 32 gynecologists from the Osaka University Graduate School of Medicine, Niigata University Graduate School of Medicine, Kanazawa Medical University, University of Occupational and Environmental Health, Kawasaki Medical University, Hiramatsu Obstetrics and Gynecology Clinic, Saito Women Clinic, Ladies Clinic Yagi and Maki Ladies Clinic. Each gynecologist classified every image into one of the four categories and then re-diagnosed every image after the AI diagnosis was revealed. Changes in the gynecologists' diagnoses and the accuracy of AI-assisted diagnosis were assessed.

Statistical analysis

Using MedCalc (https://www.medcalc.org), differences in categorical variables between groups were analyzed with the χ2 test and logistic regression. P<0.05 was considered to indicate a statistically significant difference.
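For a single category in Table IV, the comparison reduces to a 2x2 table (initial vs. AI-assisted, correct vs. incorrect readings). The sketch below computes Pearson's χ2 statistic for such a table, with an optional Yates continuity correction, and uses the fact that for one degree of freedom the upper-tail probability is erfc(√(χ2/2)). It is an illustration, not a reproduction of the MedCalc analysis: the article does not state whether a continuity correction was applied, and the test treats all 800 readings per category as independent even though each gynecologist read 25 images, so computed P-values may differ slightly from Table IV.

```python
import math


def chi2_2x2(a, b, c, d, yates=False):
    """Pearson chi-square test for the 2x2 table [[a, b], [c, d]] (1 degree of freedom).

    Returns (statistic, p_value); for 1 df, P(X > x) = erfc(sqrt(x / 2)).
    """
    n = a + b + c + d
    row = (a + b, c + d)
    col = (a + c, b + d)
    observed = ((a, b), (c, d))
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row[i] * col[j] / n
            diff = abs(observed[i][j] - expected)
            if yates:
                diff = max(0.0, diff - 0.5)  # continuity correction
            stat += diff * diff / expected
    return stat, math.erfc(math.sqrt(stat / 2))


# Invasive cancer, Table IV: 311/800 correct initially vs. 388/800 with AI assistance.
stat, p = chi2_2x2(311, 800 - 311, 388, 800 - 388)
print(round(stat, 2), p < 0.01)
```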

Results

Performance of AI for diagnosis for pathological lesions

The overall accuracy of diagnosis of pathological lesions by AI alone was 43.5%. By category, the accuracy was 57.8% for normal, 35.4% for CIN1, 40.5% for CIN2-3 and 44.2% for invasive cancer (Table II).

Table II.

The accuracy of AI image diagnosis.

| Category | Accuracy (%) |
|---|---|
| Normal | 57.8 |
| CIN1 | 35.4 |
| CIN2-3 | 40.5 |
| Invasive cancer | 44.2 |
| Total | 43.5 |

AI, artificial intelligence; CIN, cervical intraepithelial neoplasia.
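Per-category accuracy in Table II is read here as the fraction of test images of each true category that the AI assigned to that category (per-class recall); this interpretation is an assumption. The sketch also computes overall accuracy over all images pooled, which in general differs from the unweighted mean of the per-category values; the article does not state which definition underlies the Total row. The labels are toy data.

```python
from collections import Counter


def per_class_accuracy(y_true, y_pred):
    """Fraction of images of each true category that received the correct label."""
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: hits[label] / n for label, n in totals.items()}


def overall_accuracy(y_true, y_pred):
    """Fraction of all images that received the correct label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = ["Normal", "Normal", "CIN1", "CIN2-3", "CIN2-3", "Invasive cancer"]
y_pred = ["Normal", "CIN1", "CIN1", "Invasive cancer", "CIN2-3", "Invasive cancer"]
print(per_class_accuracy(y_true, y_pred))
print(overall_accuracy(y_true, y_pred))
```

Here the per-category values average to 0.75 while the pooled accuracy is 4/6, showing why the two summaries need not agree.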

To improve accuracy, the number of images in the training dataset was varied. Table III shows the accuracy for each category. In Group 1, 25% of the training dataset was used, and the accuracy was 48.2% for normal, 19.9% for CIN1, 30.4% for CIN2-3 and 54.1% for invasive cancer. In Group 2, 50% of the training dataset was used (25 percentage points more than in Group 1), and the accuracy was 47.7% for normal, 29.7% for CIN1, 29.5% for CIN2-3 and 52.5% for invasive cancer. In Group 3, 75% of the training dataset was used, and the accuracy was 53.6% for normal, 30.1% for CIN1, 42.9% for CIN2-3 and 46.3% for invasive cancer. In Group 4, the entire training dataset was used, and the accuracy improved to 57.8% for normal, 35.4% for CIN1, 40.5% for CIN2-3 and 44.2% for invasive cancer. Increasing the training dataset beyond 25% did not lead to a significant improvement in accuracy for the four categories (Table III).

Table III.

Accuracy of AI image diagnosis of each group.

| Category | Group 1: 25% of training dataset (%) | Group 2: 50% of training dataset (%) | Group 3: 75% of training dataset (%) | Group 4: 100% of training dataset (%) |
|---|---|---|---|---|
| Normal | 48.2 | 47.7 | 53.6 | 57.8 |
| CIN1 | 19.9 | 29.7 | 30.1 | 35.4 |
| CIN2-3 | 30.4 | 29.5 | 42.9 | 40.5 |
| Invasive cancer | 54.1 | 52.5 | 46.3 | 44.2 |
| Total | 36.4 | 37.9 | 42.1 | 43.5 |

AI, artificial intelligence; CIN, cervical intraepithelial neoplasia.

Next, data augmentation, a standard practice in computer science, was investigated as a way to improve the accuracy of image diagnosis: the training dataset was tripled by adding rotated and blurred images and quadrupled by adding images with altered HSV. However, neither approach improved on the accuracy obtained with the original, non-augmented images.

AI-assisted image diagnosis

The accuracy of the gynecologists' diagnoses of cervical pathological images before they knew the AI diagnosis was 64.8% for normal, 54.4% for CIN1, 54.4% for CIN2-3 and 38.9% for invasive cancer. After they were informed of the AI diagnosis, their accuracy was 63.3% for normal, 51.1% for CIN1, 58.0% for CIN2-3 and 48.5% for invasive cancer (Table IV).

Table IV.

Significance of AI-assisted image diagnosis.

| Lesion | Initial | AI-assisted | P-value |
|---|---|---|---|
| Normal | 518/800 (64.8%) | 506/800 (63.3%) | 0.57 |
| CIN1 | 435/800 (54.4%) | 409/800 (51.1%) | 0.21 |
| CIN2-3 | 435/800 (54.4%) | 464/800 (58.0%) | 0.14 |
| Invasive cancer | 311/800 (38.9%) | 388/800 (48.5%) | <0.01 |

AI, artificial intelligence; CIN, cervical intraepithelial neoplasia.
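Each denominator in Table IV is 800 because 32 gynecologists each read 25 images per category. The pooled accuracy is the number of correct readings divided by 800. The sketch reproduces the percentages in Table IV with half-up rounding to one decimal place; Python's built-in `round` uses round-half-even and would give 63.2 rather than 63.3 for 506/800, which is why the standard `decimal` module is used.

```python
from decimal import Decimal, ROUND_HALF_UP

N_READERS = 32
IMAGES_PER_CATEGORY = 25
READINGS = N_READERS * IMAGES_PER_CATEGORY  # 800 readings per category


def pooled_accuracy(correct, total=READINGS):
    """Percentage of correct readings, rounded half-up to one decimal place."""
    pct = Decimal(correct) * 100 / Decimal(total)
    return pct.quantize(Decimal("0.1"), rounding=ROUND_HALF_UP)


# Correct readings before / after seeing the AI diagnosis (Table IV).
table_iv = {
    "Normal": (518, 506),
    "CIN1": (435, 409),
    "CIN2-3": (435, 464),
    "Invasive cancer": (311, 388),
}
for category, (before, after) in table_iv.items():
    print(category, pooled_accuracy(before), pooled_accuracy(after))
```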

Discussion

AI is being applied across various disciplines, including speech recognition, image recognition, face recognition and automated driving. AI applications are similarly expected to evolve rapidly in many medical fields (5). The medical sector faces many challenges, including a shortage of medical professionals, regional disparities in medical care, imbalances between clinical departments, excessive working hours and concerns about the safety and stability of the medical delivery system. AI could potentially reduce or resolve many of these problems.

Incorporating AI into medical practice is expected to improve the medical environment across Japan. Patients could receive safer and more appropriate medical services, the workload of medical professionals could be reduced and new methods of diagnosis and treatment could be developed.

The Japanese Ministry of Health, Labour and Welfare has selected six important areas for AI development (6): genomic medicine, diagnostic imaging, assistance with diagnosis and treatment, drug development, dementia care and surgical assistance. Among these areas, diagnostic imaging is regarded as the most practical target for rapid AI adoption, because most medical images are already digitized and established AI technology can be applied more easily.

The number of AI image-recognition software applications has increased dramatically in recent years, and AI-automated diagnosis has already been reported (Table V).

Table V.

Summary of AI reports.

| Author (year) | Subject | (Refs.) |
|---|---|---|
| Hu et al (2019) | Pioneer of automated visual evaluation of cervigrams | (7) |
| Xue et al (2020) | AI assistance in colposcopy imaging judgment | (8) |
| Yuan et al (2020) | High performance of AI diagnostic system | (9) |
| Xue et al (2020) | Automated visual evaluation on smartphones | (10) |
| Miyagi et al (2020) | AI colposcopy combined with HPV types | (11) |
| Tan et al (2021) | AI assistance in thin-prep cytological test images | (12) |

AI, artificial intelligence; HPV, human papillomavirus.

Hu et al (7), pioneers in this field, report that automated visual evaluation of cervigrams can identify precancer/cancer cases with high accuracy [area under the curve (AUC)=0.91]. Xue et al (8) report the potential of AI to relieve the colposcopy bottleneck by assisting colposcopists in colposcopic image diagnosis, the detection of underlying CINs and the guidance of biopsy sites.
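AUC, the summary metric quoted in several of these reports, equals the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case (the Mann-Whitney interpretation). The sketch computes it directly from all positive/negative pairs, counting ties as one half; the scores are made up, and the quadratic pairwise loop is chosen for clarity rather than speed.

```python
def auc(scores_pos, scores_neg):
    """AUC as P(random positive outscores random negative); ties count 0.5."""
    wins = 0.0
    for sp in scores_pos:
        for sn in scores_neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


# Hypothetical scores for three precancer/cancer cases and three negative cases.
print(auc([0.9, 0.8, 0.4], [0.3, 0.5, 0.1]))  # 8 of 9 pairs ranked correctly
```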

Yuan et al (9) report that the sensitivity, specificity and accuracy of their classification model in differentiating negative from positive cases were 85.38, 82.62 and 84.10%, respectively, with an AUC of 0.93. The recall and Sørensen-Dice coefficient of their segmentation model for segmenting suspicious lesions in acetic acid images were 84.73 and 61.64%, respectively, with an average accuracy of 95.59%. Furthermore, 84.67% of high-grade lesions were detected by the acetic detection model. Compared with colposcopists, the diagnostic system performed better on ordinary colposcopy images but was slightly unsatisfactory on high-definition images.
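The recall and Sørensen-Dice coefficient quoted for the segmentation model measure the overlap between a predicted lesion mask and the annotated mask. The sketch below represents each mask as a set of pixel coordinates, an assumed representation since the original implementation is not described here. Recall ignores extra predicted pixels, whereas Dice, 2|A∩B|/(|A|+|B|), also penalizes them, so a model that over-segments can have high recall but a lower Dice coefficient. The masks are toy data.

```python
def dice(pred, truth):
    """Sørensen-Dice coefficient 2|A∩B| / (|A| + |B|) for two sets of pixel coordinates."""
    if not pred and not truth:
        return 1.0  # convention: two empty masks agree perfectly
    return 2 * len(pred & truth) / (len(pred) + len(truth))


def recall(pred, truth):
    """Fraction of annotated lesion pixels that the model also marked."""
    return len(pred & truth) / len(truth)


truth = {(0, 0), (0, 1), (1, 0), (1, 1)}  # hypothetical annotated lesion
pred = {(0, 1), (1, 1), (2, 1)}           # hypothetical model output
print(dice(pred, truth), recall(pred, truth))
```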

Furthermore, Xue et al (10) report that automated visual evaluation by smartphones can be a useful adjunct to health-worker visual assessment with acetic acid, a cervical cancer screening method commonly used in low- and middle-resource settings. Miyagi et al (11) report the feasibility of using deep learning to classify cervical squamous epithelial lesions (SILs) from colposcopy images combined with human papillomavirus (HPV) types. The sensitivity, specificity, positive predictive value, negative predictive value and the AUC ± standard error for AI colposcopy combined with HPV types and pathological results were 0.956 (43/45), 0.833 (5/6), 0.977 (43/44), 0.714 (5/7) and 0.963±0.026, respectively.
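The four Miyagi et al fractions are mutually consistent with a single 2x2 confusion matrix of 43 true positives, 2 false negatives, 5 true negatives and 1 false positive (derived here from the quoted fractions, not taken from the original paper). The sketch recomputes the metrics from those counts with exact fractions.

```python
from fractions import Fraction


def binary_metrics(tp, fn, tn, fp):
    """Standard 2x2 diagnostic metrics as exact fractions."""
    return {
        "sensitivity": Fraction(tp, tp + fn),
        "specificity": Fraction(tn, tn + fp),
        "ppv": Fraction(tp, tp + fp),
        "npv": Fraction(tn, tn + fn),
    }


# Counts implied by 43/45, 5/6, 43/44 and 5/7.
m = binary_metrics(tp=43, fn=2, tn=5, fp=1)
print({k: round(float(v), 3) for k, v in m.items()})
# {'sensitivity': 0.956, 'specificity': 0.833, 'ppv': 0.977, 'npv': 0.714}
```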

Tan et al (12) report that computer-based deep learning methods can achieve fast, high-accuracy cancer screening using thin-prep cytological test images. Their system could classify the images and generate a test report in ~3 min with high performance (sensitivity and specificity of 99.4 and 34.8%, respectively, with an AUC of 0.67).

In all of these reports, cervical pathology was divided into two or three categories: atypical squamous cells of undetermined significance, low-grade SIL (LSIL; normal and CIN1) and high-grade SIL (HSIL; CIN2 and above). To the best of the authors' knowledge, the present study is the first to report an evaluation of AI image diagnosis using four categories. The overall accuracy was 43.5%; the accuracy for CIN2-3 (40.5%) and invasive cancer (44.2%) was lower than that for normal (57.8%), and the accuracy for CIN1 was the lowest (35.4%). To further improve AI accuracy, the training dataset was enlarged by several methods, but this was unsuccessful.

In future work, diagnosis using images captured by Smartscopy will be evaluated. It is hypothesized that AI accuracy could be improved by better control of the conditions and timing of image acquisition.

To the best of the authors' knowledge, the present study is the first to report on the integration of AI and human image diagnosis for pathological lesions of the uterine cervix. AI-assisted image diagnosis significantly improved the gynecologists' diagnostic accuracy for the invasive cancer category and tended to improve their accuracy for CIN2-3, but not for CIN1 or normal.

When the initial accuracy of human diagnoses was compared with that of AI, humans were more accurate for normal and CIN1 (64.8 and 54.4% vs. 57.8 and 35.4%, respectively), whereas AI-assisted accuracy was higher than the initial human accuracy for CIN2-3 and invasive cancer (58.0 and 48.5%, respectively).

In mammography screening, AI could increase accuracy by reducing missed cancers and false-positive results. Salim et al (13) evaluated AI computer-aided detection algorithms as independent mammography readers and assessed screening performance when the algorithms were combined with radiologists. The results indicated that AI computer-aided detection algorithms can assess screening mammograms with sufficient diagnostic performance to be further evaluated as independent readers in prospective clinical trials.

Schaffter et al (14) evaluated whether AI could overcome the limitations of human mammography interpretation in a challenge with >1,100 participants, comprising 126 teams from 44 countries. The top-performing algorithm achieved an AUC of 0.858 (United States) and 0.903 (Sweden), and a specificity of 66.2% (United States) and 81.2% (Sweden) at the radiologists' sensitivity, which was lower than the community-practice radiologists' specificity of 90.5% (United States) and 98.5% (Sweden). Combining the top-performing algorithms with US radiologist assessments resulted in a higher AUC of 0.942 and a significantly improved specificity (92.0%) at the same sensitivity.

In Japan, humans remain responsible for any AI-assisted diagnosis. At present, there is no need to ask ‘Which is better, human or AI?’ or ‘Will humans be dumped into the dustbin of medical history?’ Instead, we are looking for ways to realize the powerful potential of cooperation between humans and AI in medicine.

The present study has a limitation. The accuracy of AI-based diagnosis was in the 40% range, which is insufficient for clinical practice. This could be attributed to the evaluation of AI image diagnosis in four categories in the present study; in previous reports (7,9-12), cervical pathology was divided into two or three categories. When the present results were divided into two categories, the diagnostic accuracy was 79.4% for HSIL and 87.0% for LSIL, comparable to other reports (7,9-12). Some reports found that colposcopy detected HSIL with an accuracy of ~80-90%, a sensitivity of ~80% and a specificity of ~70% (15,16). This level of performance is considered necessary for clinical utility, and it may reflect a limitation of colposcopic diagnosis itself.
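Collapsing the four categories into two can raise accuracy because confusions between adjacent categories within the same group (for example, CIN2-3 read as invasive cancer) no longer count as errors. The sketch uses the grouping stated in the text (normal and CIN1 as LSIL; CIN2-3 and invasive cancer as HSIL) on made-up labels; it illustrates the effect and does not reproduce the 79.4 and 87.0% figures.

```python
# Grouping used in the text: normal and CIN1 -> LSIL; CIN2-3 and invasive cancer -> HSIL.
TO_TWO = {"Normal": "LSIL", "CIN1": "LSIL", "CIN2-3": "HSIL", "Invasive cancer": "HSIL"}


def collapse(labels):
    """Map four-category labels to the two-category scheme."""
    return [TO_TWO[x] for x in labels]


def class_accuracy(y_true, y_pred, label):
    """Fraction of images whose true category is `label` that were labelled correctly."""
    pairs = [(t, p) for t, p in zip(y_true, y_pred) if t == label]
    return sum(t == p for t, p in pairs) / len(pairs)


y_true = ["CIN2-3", "CIN2-3", "Invasive cancer", "Normal", "CIN1"]
y_pred = ["Invasive cancer", "CIN2-3", "CIN2-3", "CIN1", "Normal"]
print(class_accuracy(y_true, y_pred, "CIN2-3"))  # 0.5
t2, p2 = collapse(y_true), collapse(y_pred)
print(class_accuracy(t2, p2, "HSIL"), class_accuracy(t2, p2, "LSIL"))  # 1.0 1.0
```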

For four categories of cervical cancer pathology diagnosis, the accuracy of AI image diagnosis was 57.8% for normal, 35.4% for CIN1, 40.5% for CIN2-3 and 44.2% for invasive cancer. AI-assisted image diagnosis significantly improved the diagnostic accuracy of the gynecologist for invasive cancer and tended to improve slightly the gynecologist's accuracy for CIN2-3, but it did not improve the gynecologist's accuracy regarding the categories of CIN1 and normal cervix.

Acknowledgements

The authors would like to thank Dr GS Buzard (Department of Obstetrics and Gynecology, Osaka University Graduate School of Medicine) for his constructive criticism and editing of our manuscript.

Funding Statement

Funding: No funding was received.

Availability of data and materials

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Authors' contributions

YI designed the study and interpreted the results, AM wrote the manuscript, designed the study and interpreted the results, YU designed the study and interpreted the results, YT, RN, AM, MSh, TE, MSe, TE, TS, KY, HH, TN, TM, KH, JS, JY, YT and TK performed sample preparation. AM and YI confirm the authenticity of all the raw data. All authors reviewed and approved the final manuscript.

Ethics approval and consent to participate

The present study was approved by the Institutional Review Board and the Ethics Committee of the Osaka University Hospital [approval no. 17257(T7)-8]. The researchers obtained informed consent from participants in the anonymous questionnaire survey, and only those who consented to participate were included.

Patient consent for publication

Not applicable.

Competing interest

The present study was a cooperative research project with Kyocera Corporation.

References

- 1. WHO Disease and Injury Country Estimates. Available from: https://www.who.int/healthinfo/global_burden_disease/estimates_country/en/.

- 2. Hull R, Mbele M, Makhafola T, Hicks C, Wang SM, Reis RM, Mehrotra R, Mkhize-Kwitshana Z, Kibiki G, Bates DO, Dlamini Z. Cervical cancer in low and middle-income countries. Oncol Lett. 2020;20:2058–2074. doi: 10.3892/ol.2020.11754.

- 3. Tanaka Y, Ueda Y, Kakubari R, Kakuda M, Kubota S, Matsuzaki S, Okazawa A, Egawa-Takata T, Matsuzaki S, Kobayashi E, Kimura T. Histologic correlation between smartphone and coloposcopic findings in patients with abnormal cervical cytology: Experiences in a tertiary referral hospital. Am J Obstet Gynecol. 2019;221:241.e1–241.e6. doi: 10.1016/j.ajog.2019.04.039.

- 4. https://towardsdatascience.com/a-simple-guide-to-the-versions-of-the-inception-network-7fc52b863202.

- 5. Jiang F, Jiang Y, Zhi H, Dong Y, Li H, Ma S, Wang Y, Dong Q, Shen H, Wang Y. Artificial intelligence in healthcare: Past, present and future. Stroke Vasc Neurol. 2017;2:230–243. doi: 10.1136/svn-2017-000101.

- 6. WHO: The Global Health Observatory. Explore a world of health data. Available from: https://www.mhlw.go.jp/content/10601000/000568486.pdf.

- 7. Hu L, Bell D, Antani S, Xue Z, Yu K, Horning MP, Gachuhi N, Wilson B, Jaiswal MS, Befano B, et al. An observational study of deep learning and automated evaluation of cervical images for cancer screening. J Natl Cancer Inst. 2019;111:923–932. doi: 10.1093/jnci/djy225.

- 8. Xue P, Ng MT, Qiao Y. The challenges of colposcopy for cervical cancer screening in LMICs and solutions by artificial intelligence. BMC Med. 2020;18(169) doi: 10.1186/s12916-020-01613-x.

- 9. Yuan C, Yao Y, Cheng B, Cheng Y, Li Y, Li Y, Liu X, Cheng X, Xie X, Wu J, et al. The application of deep learning based diagnostic system to cervical squamous intraepithelial lesions recognition in colposcopy images. Sci Rep. 2020;10(11639) doi: 10.1038/s41598-020-68252-3.

- 10. Xue Z, Novetsky AP, Einstein MH, Marcus JZ, Befano B, Guo P, Demarco M, Wentzensen N, Long LR, Schiffman M, Antani S. A demonstration of automated visual evaluation of cervical images taken with a smartphone camera. Int J Cancer. 2020;147:2416–2423. doi: 10.1002/ijc.33029.

- 11. Miyagi Y, Takehara K, Nagayasu Y, Miyake T. Application of deep learning to the classification of uterine cervical squamous epithelial lesion from colposcopy images combined with HPV types. Oncol Lett. 2020;19:1602–1610. doi: 10.3892/ol.2019.11214.

- 12. Tan X, Li K, Zhang J, Wang W, Wu B, Wu J, Li X, Huang X. Automatic model for cervical cancer screening based on convolutional neural network: A retrospective, multicohort, multicenter study. Cancer Cell Int. 2021;21(35) doi: 10.1186/s12935-020-01742-6.

- 13. Salim M, Wåhlin E, Dembrower K, Azavedo E, Foukakis T, Liu Y, Smith K, Eklund M, Strand F. External evaluation of 3 commercial artificial intelligence algorithms for independent assessment of screening mammograms. JAMA Oncol. 2020;6:1581–1588. doi: 10.1001/jamaoncol.2020.3321.

- 14. Schaffter T, Buist DSM, Lee CI, Nikulin Y, Ribli D, Guan Y, Lotter W, Jie Z, Du H, Wang S, et al. Evaluation of combined artificial intelligence and radiologist assessment to interpret screening mammograms. JAMA Netw Open. 2020;3(e200265) doi: 10.1001/jamanetworkopen.2020.0265.

- 15. Stuebs FA, Schulmeyer CE, Mehlhorn G, Gass P, Kehl S, Renner SK, Renner SP, Geppert C, Adler W, Hartmann A, et al. Accuracy of colposcopy-directed biopsy in detecting early cervical neoplasia: A retrospective study. Arch Gynecol Obstet. 2019;299:525–532. doi: 10.1007/s00404-018-4953-8.

- 16. Fatahi MN, Meybodi NF, Karimi-Zarchi M, Allahqoli L, Sekhavat L, Gitas G, Rahmani A, Fallahi A, Hassanlouei B, Alkatout I. Accuracy of triple test versus colposcopy for the diagnosis of premalignant and malignant cervical lesions. Asian Pac J Cancer Prev. 2020;21:3501–3507. doi: 10.31557/APJCP.2020.21.12.3501.
