Abstract

Speech perception depends on the ability to generalize previously experienced input effectively across talkers. How such cross-talker generalization is achieved has remained an open question. In a seminal study, Bradlow & Bent (2008, henceforth BB08) found that exposure to just five minutes of accented speech can elicit improved recognition that generalizes to an unfamiliar talker of the same accent (N=70 participants). Cross-talker generalization was, however, only observed after exposure to multiple talkers of the accent, not after exposure to a single accented talker. This contrast between single- and multi-talker exposure has been highly influential beyond research on speech perception, suggesting a critical role of exposure variability in learning and generalization. We assess the replicability of BB08’s findings in two large-scale perception experiments (total N=640) including 20 unique combinations of exposure and test talkers. Like BB08, we find robust evidence for cross-talker generalization after multi-talker exposure. Unlike BB08, we also find evidence for generalization after single-talker exposure. The degree of cross-talker generalization depends on the specific combination of exposure and test talker. This and other recent findings suggest that exposure to cross-talker variability is not necessary for cross-talker generalization. Variability during exposure might affect generalization only indirectly, mediated through the informativeness of exposure about subsequent speech during test: similarity-based inferences can explain both the original BB08 and the present findings. We present Bayesian data analyses, including Bayesian meta-analyses and replication tests for generalized linear mixed models. All data, stimuli, and reproducible literate (R Markdown) code are shared via OSF.

Keywords: speech perception, foreign-accented speech, adaptation, cross-talker generalization, replication, Bayesian analyses

Introduction

Talkers differ in the acoustic realization of the same word due to factors such as physiology, language background, and talker identity (e.g., Allen, Miller & DeSteno, 2003; Newman, Clouse & Burnham, 2001; Peterson & Barney, 1952). These differences are an important part of the speech signal, in that they encode information about social identity (for reviews, see Eckert, 2012; Foulkes & Hay, 2015). At the same time, inter-talker variability poses substantial computational challenges for speech perception. How human listeners achieve robust speech recognition despite this variability, and how they utilize previous experience to understand unfamiliar talkers, has been a perennial puzzle for language researchers (for review, see Kleinschmidt & Jaeger, 2015).

In this context, non-native (L2-accented) speech presents a case that is of both social and theoretical relevance. Of social relevance, because negative attitudes towards L2-accented speech can result in discrimination, with sometimes substantial social and socioeconomic consequences for the speaker (e.g., Fuertes, Gottdiener, Martin, Gilbert & Giles, 2012; Lippi-Green, 2012; Munro, 2003). Of theoretical relevance, because L2-accented speech deviates from native speech (henceforth L1-accented speech) in systematic ways, depending on the talker’s language background: talkers of the same first language (e.g., Mandarin) tend to share characteristics in their pronunciations of a second language (e.g., English). Research in speech perception has drawn on this property in order to understand the conditions under which listeners generalize previous experience to subsequently encountered talkers with the same L2 accent (for review, see Baese-Berk, 2018).

When exposed to a talker with an unfamiliar L2 accent, native listeners might initially experience substantial processing difficulty (e.g., Munro & Derwing, 1995; Schmale & Seidl, 2009). This initial difficulty can dissipate quickly with exposure to the talker (e.g., Clarke & Garrett, 2004; Xie, Weatherholtz, Bainton, Rowe, Burchill, Liu & Jaeger, 2018; Weil, 2001). Such talker-specific adaptation is now well-documented (for review, see Weatherholtz & Jaeger, 2016), and similar adaptation has been observed for native dialects and regional varieties (Best, Shaw, Mulak, Docherty, Evans, Foulkes, Hay, Al-Tamimi, Mair & Wood, 2015; Smith, Holmes-Elliott, Pettinato & Knight, 2014), as well as idiosyncratic sound-specific deviations from canonical pronunciations (e.g., Kraljic & Samuel, 2005; Norris, McQueen & Cutler, 2003; Sumner, 2011).

Listeners also seem to be able to generalize previous experience to other unfamiliar talkers of the same accent. Research on the perception of L2 accents has played a key role in showcasing this ability: because deviations from L1-accented speech are in part systematic across talkers of the same L2 accent, implicit knowledge of this cross-talker variability is predicted to facilitate speech perception (Foulkes & Hay, 2015; Kleinschmidt & Jaeger, 2015). There is evidence that this is indeed the case for automatic speech recognition systems, which benefit from training on speech from different dialects and accents (e.g., Soto, Siohan, Elfeky & Moreno, 2016; Tatman, 2016). Similarly, human listeners who are familiar with a regional or L2 accent via long-term experience tend to show better comprehension of that accent than listeners who are not (Porretta, Tucker & Järvikivi, 2016; Stuart-Smith, 2008; Witteman, Weber & McQueen, 2013). Long-term cumulative experience with an accent thus seems to facilitate generalization to unfamiliar talkers of the same accent and perhaps to L2-accented speech more generally (Bent & Bradlow, 2003).

How this ability to generalize is gained via individual encounters with L2-accented talkers—i.e., how listeners incrementally and cumulatively come to improve their comprehension of an L2 accent—is, however, still largely unknown. This gap in our knowledge includes some of the basic conditions for successful cross-talker generalization, and we hope to fill this gap with the current investigation. Here, we seek to replicate a seminal study on this question (Bradlow & Bent, 2008). Both within research on speech perception and within research on learning more generally, this study is often cited as evidence for an important constraint on generalization. This constraint is variably characterized as a categorical limitation—the inability to generalize to unfamiliar talkers of an L2 accent following exposure to only a single talker of that accent—or a matter of degree—the relative advantage in generalization following exposure to multiple talkers of an accent (although these two interpretations differ, they are not always distinguished in practice). Both interpretations have been highly influential in shaping theories of speech perception and effective learning.

There are, however, reasons to revisit Bradlow and Bent’s study. These include limitations of the experimental design employed in the original study—limitations that were clearly acknowledged by the authors but have since been forgotten. These limitations are particularly important in light of a small number of more recent studies with potentially conflicting results. Despite these new findings, the original findings of Bradlow and Bent’s experiment continue to be taken as ground truth (for a recent review, see Baese-Berk, 2018, pp. 16–18). We thus present two large-scale replications of Bradlow and Bent’s experiment while removing the confounds of the original study. We address both the categorical question—whether generalization can be found after exposure to a single talker—and the gradient question—whether multi-talker exposure facilitates generalization over and above single-talker exposure. Beyond speech perception, the analysis approach and findings we present are of relevance to research on effective training, therapy, and teaching.

Bradlow and Bent (2008)

Bradlow and Bent (2008; henceforth BB08) made several important contributions to the field of speech perception. Here we focus on their Experiment 2, which investigates cross-talker generalization. In this pioneering experiment, BB08 examined listeners’ ability to generalize following initial exposure to unfamiliar L2 accents. In particular, BB08 asked how the type of exposure—multiple talkers or a single talker—affects listeners’ comprehension of unfamiliar talkers of the same L2 accent.

L1-English listeners were exposed to 160 spoken sentences from either L1 talkers of American English or Mandarin-accented talkers of English—about five minutes’ worth of speech, spread over two exposure sessions on two consecutive days. Both during exposure and the subsequent test, the participants’ task was to transcribe the sentences they heard. Performance was measured by the number of correctly transcribed words. Between-participants, BB08 manipulated the speech heard during exposure. In the control condition, participants heard five L1-accented talkers of English and were tested on the speech of a Mandarin-accented talker. In the talker-specific condition, participants heard the same Mandarin-accented talker during exposure and test. In the single-talker condition, participants heard one Mandarin-accented talker during exposure and a different Mandarin-accented talker during test. Finally, in the multi-talker condition, participants heard five different Mandarin-accented talkers, followed by a test against a different (sixth) Mandarin-accented talker.
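The exact scoring rule is not spelled out here. As an illustration only, the following Python sketch assumes strict, case-insensitive whole-word matching against a pre-specified keyword list, with no credit for morphological variants or misspellings. The function names `normalize` and `keyword_score` and the example keyword set are hypothetical; this is not BB08's or our scoring code.

```python
import re


def normalize(text: str) -> list[str]:
    """Lowercase a transcription and split it into word tokens, dropping punctuation."""
    return re.findall(r"[a-z']+", text.lower())


def keyword_score(transcript: str, keywords: list[str]) -> tuple[int, int]:
    """Return (keywords correctly transcribed, total keywords).

    Assumed rule: a keyword counts as correct if the identical word occurs in the
    transcription; each transcribed token can match at most one keyword.
    """
    tokens = normalize(transcript)
    correct = 0
    for kw in keywords:
        kw = kw.lower()
        if kw in tokens:
            tokens.remove(kw)  # consume the token so repeated keywords are not double-counted
            correct += 1
    return correct, len(keywords)


# HINT-style sentence "A boy fell from the window"; the keyword set is illustrative.
print(keyword_score("a boy fell from a window", ["boy", "fell", "window"]))  # (3, 3)
print(keyword_score("The toy fell.", ["boy", "fell", "window"]))            # (1, 3)
```

Stricter or more lenient rules (e.g., accepting homophones or regular inflections) would change only the matching step inside `keyword_score`.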

BB08 found that exposure to speech from one accented talker leads to improved transcription performance on novel sentences from the same talker during test (talker-specific adaptation): participants in the talker-specific condition scored about 10 points higher during test (92 RAU) than participants in the control condition (82 RAU; RAU are rationalized arcsine units, a transform of proportions correct that, for the present purpose, closely approximates percent correct). BB08 further found that listeners generalized this learning to unfamiliar talkers of the same L2 accent (cross-talker generalization). Critically, listeners only did so following exposure to multiple talkers (90 RAU, +8 above the control condition). No generalization was observed following exposure to only a single talker (82 RAU, ±0 above the control condition).1
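For readers unfamiliar with RAU, the sketch below implements the rationalized arcsine transform as given by Studebaker (1985). For X correct out of N scored items, θ = arcsin√(X/(N+1)) + arcsin√((X+1)/(N+1)) (in radians), and RAU = (146/π)·θ − 23. The transform stretches the scale to roughly −23 to +123 while staying close to percent correct in the mid range, which is why RAU differences can be read approximately as percentage-point differences. The function name `rau` is our own, and the 51-keyword example merely borrows the size of one of the present sentence sets.

```python
import math


def rau(correct: int, total: int) -> float:
    """Rationalized arcsine units for `correct` out of `total` scored items (Studebaker, 1985)."""
    if not 0 <= correct <= total:
        raise ValueError("correct must lie between 0 and total")
    theta = (math.asin(math.sqrt(correct / (total + 1)))
             + math.asin(math.sqrt((correct + 1) / (total + 1))))  # radians
    return (146 / math.pi) * theta - 23


# Illustrative keyword scores out of 51 keywords.
for k in (0, 26, 41, 51):
    print(f"{k:2d}/51 correct = {100 * k / 51:5.1f}% -> {rau(k, 51):6.1f} RAU")
```

A useful property for checking an implementation: scores of X and N − X correct always sum to exactly 100 RAU, and X = N/2 maps to 50 RAU.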

Both the existence of cross-talker generalization after multi-talker exposure and the complete lack of cross-talker generalization after single-talker exposure are striking findings. Together, these findings seem to suggest clear limits on generalization during speech perception. BB08 proposed that the cross-talker variability observed during multi-talker exposure allows listeners to distinguish between accent- and talker-specific characteristics, essentially learning the structure of the accent. The findings also seem to rule out alternative explanations in terms of similarity-based generalization from episodic (Goldinger, 1996, 1998) or exemplar accounts of speech perception (Johnson, 1997; Pierrehumbert, 2001). These accounts—which we return to in the general discussion—would predict that cross-talker generalization can occur after exposure to a single talker, provided that the exposure and test talkers are sufficiently similar (e.g., Eisner & McQueen, 2005; Goldinger, 1996; Reinisch & Holt, 2014; Xie & Myers, 2017). In short, listeners’ ability to generalize to unfamiliar talkers after single-talker exposure speaks directly to the mechanism supporting cross-talker generalization and the types of representations listeners maintain about previously experienced input. It is thus not surprising that this particular set of findings—generalization across talkers, but only after exposure to multiple talkers—has been influential in theoretical work on speech perception (for reviews, see Kleinschmidt & Jaeger, 2015; Sumner, 2011), second language learning (see references in Pajak, Fine, Kleinschmidt & Jaeger, 2016), and research on learning more generally (e.g., Potter & Saffran, 2017; Schmale & Seidl, 2009).

There are, however, reasons to call for caution in interpreting the findings of BB08: while the test talker for the single- and multi-talker exposure conditions in BB08 was identical, the exposure talkers differed between the two conditions. Specifically, BB08 used four different exposure talkers across four different (between-participant) single-talker conditions. The multi-talker condition employed five exposure talkers (for all participants in that condition). Critically, only two of the exposure talkers in the multi-talker condition were also used in the single-talker condition. Thus, if generalization to the test talker depends at least in part on similarities between the exposure and test talkers—as predicted by, for example, exemplar and episodic accounts—this could explain the results of BB08. The results of BB08 are thus ambiguous with regard to whether multi-talker exposure is necessary for, or even facilitates, cross-talker generalization. Yet, this alternative interpretation of BB08’s findings has not been investigated in subsequent studies.

A second, more general, reason to revisit BB08 is the use of only one test talker. This is not uncommon for studies on speech perception, including the perception of L2-accented speech (e.g., Clarke & Garrett, 2004; Reinisch, Wozny, Mitterer & Holt, 2014; Janse & Adank, 2012; including our own work, e.g., Xie et al., 2018; Xie, Earle & Myers, 2018). It raises questions, however, about the extent to which the results of studies with a single test talker generalize across the population of talkers. Indeed, a few more recent studies have returned mixed evidence for cross-talker generalization after single-talker exposure, though it is worth pointing out that these studies, too, were based on only one test talker (Xie & Myers, 2017; Xie et al., 2018; as well as unpublished thesis work in Clarke, 2003; Weil, 2001; we return to these and related studies in the general discussion).2 Reliance on only one test talker is arguably particularly problematic for investigations of cross-talker generalization because some accounts of speech perception predict that generalization depends on the similarity between the exposure and test talker (e.g., Goldinger, 1998; Johnson, 1997; Kleinschmidt & Jaeger, 2015; Kraljic & Samuel, 2007). In short, there is to this day no study with multiple test talkers that tests, and compares, cross-talker generalization after both single- and multi-talker exposure within the same experiment.3 The present study seeks to address this gap in the literature.

The need for such a replication is concisely exemplified by a recent review of the field (Baese-Berk, 2018), summarizing BB08: “[. . . ] listeners who heard a different single talker at test and training do not perform better than the individuals who were trained on the task but with native English speakers. However, [. . . ] with increased variation in number of talkers, listeners demonstrate talker-independent adaptation for Mandarin. That is, listeners exposed to multiple talkers during training were able to generalize to a novel talker from the same language background at test.” (Baese-Berk, 2018, p. 16). This is, of course, a correct summary of BB08. The question we ask here is whether these results replicate when multiple test talkers are used, all combinations of exposure and test talkers are adequately counterbalanced across conditions, and response data from a large number of participants are collected.

The present study

We present two large-scale replications of BB08 with 320 participants each. These are presented as Experiments 1a and 1b. We seek to contribute to three questions about cross-talker generalization, summarized in Table 1.

Table 1.

Three research questions about cross-talker generalization.

| | Generalization | Question |
|---|---|---|
| (1) | Multi-talker | Is generalization to an unfamiliar L2 talker possible following exposure to multiple L2 talkers of the same accent? |
| (2) | Single-talker | Is generalization to a novel L2 talker possible following exposure to a single L2 talker of the same accent? |
| (3) | Multi- vs. single-talker | Does exposure to multiple L2 talkers facilitate generalization to a novel talker of the same accent beyond exposure to a single L2 talker? |

Our replication closely follows BB08, with some exceptions, while removing the aforementioned confounds. Following BB08, we employ the same exposure-test paradigm. We compare listeners’ transcription accuracy for an L2-accented talker presented during test following exposure to L1-accented English (control condition), exposure to the same L2 talker (talker-specific condition), exposure to another L2 talker of the same accent (single-talker condition), and exposure to multiple L2 talkers of the same accent (multi-talker condition). By comparing transcription accuracy during test for the multi-talker condition against the control, we address Question 1. Of the three questions we ask here, this is the one for which there is by now the most convincing evidence (Sidaras et al., 2009; Tzeng et al., 2016; Alexander & Nygaard, 2019). By comparing accuracy for the single-talker condition against the control, we address Question 2. For this question, some studies have returned affirmative answers while other studies—including BB08—have returned a negative answer. However, all of these studies have employed a single test talker. Finally, by comparing accuracy for the multi-talker against the single-talker condition, we address Question 3.

To address the potential confounds of the original BB08 study, our experiments compare generalization of adaptation for multiple test talkers (four in each experiment) and multiple exposure talkers (six in each experiment). This results in a large number of exposure-test talker combinations—the single-talker condition in Experiments 1a and 1b contains 20 unique combinations of exposure and test talkers (compared to four in BB08; see also Table 3). This contrasts with BB08 and other previous work, which has generally assessed adaptation or generalization for only one test talker (e.g., Gass & Varonis, 1984; Wade, Jongman & Sereno, 2007; Weil, 2001; but see Sidaras et al., 2009; Tzeng et al., 2016). Unlike BB08, we also counterbalance all exposure-test talker combinations so as to hold talker identity constant across the single- and multi-talker conditions. This removes the potential confound—and thereby the alternative interpretation—of the results observed in BB08. The design of our experiments is visualized in Figure 1 and described in more detail under Procedure.
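The counterbalancing constraint can be made concrete with a short Python sketch. The talker labels `M1` to `M6`, and which four of them serve as test talkers, are hypothetical placeholders; the sketch only encodes the constraint described above, namely that for each test talker the five remaining L2 talkers serve as single-talker exposure talkers (one per list) and, jointly, as the multi-talker exposure set. The control condition, whose exposure talkers are L1 talkers, is omitted.

```python
# Hypothetical labels for the six Mandarin-accented talkers; which four are
# test talkers is a placeholder, not the actual assignment.
L2_TALKERS = ["M1", "M2", "M3", "M4", "M5", "M6"]
TEST_TALKERS = ["M1", "M2", "M3", "M4"]


def exposure_sets(test: str) -> dict[str, list[tuple[str, ...]]]:
    """Exposure talker set(s) per L2 exposure condition for one test talker."""
    others = tuple(t for t in L2_TALKERS if t != test)  # the five non-test L2 talkers
    return {
        "talker-specific": [(test,)],
        "single-talker": [(t,) for t in others],  # one list per exposure talker
        "multi-talker": [others],                 # all five talkers in one list
    }


# Every (exposure talker, test talker) pair in the single-talker condition.
single_pairs = [(exp[0], test)
                for test in TEST_TALKERS
                for exp in exposure_sets(test)["single-talker"]]
print(len(single_pairs))  # 20

# Confound check: for each test talker, the single-talker exposure talkers
# (pooled across lists) are exactly the multi-talker exposure talkers.
for test in TEST_TALKERS:
    sets = exposure_sets(test)
    assert {exp[0] for exp in sets["single-talker"]} == set(sets["multi-talker"][0])
```

In BB08's design, by contrast, the same check would fail: only two of the five multi-talker exposure talkers also appeared in the single-talker condition.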

Table 3.

Number of participants and talker pairs in each exposure condition remaining for analysis (Experiments 1a and 1b). For comparison, we list the same information for Bradlow and Bent (2008, Experiment 2). For example, in the single-talker condition, BB08 paired four possible exposure talkers with one test talker for a total of 4 talker pairs. By comparison, we paired 5 exposure talkers with each of 4 test talkers to form 20 talker pairs for each of Experiments 1a and 1b.

| Condition | BB08: exposure-test talker combinations | BB08: participants | Current study (Exp 1a and 1b): exposure-test talker combinations | Current study (Exp 1a and 1b): participants |
|---|---|---|---|---|
| Control | 1 | 10 | 4 | 80 (each) |
| Single-talker | 4 (1 test) | 40 | 20 (4 test) | 80 (each) |
| Multi-talker | 1 | 10 | 4 | 80 (each) |
| Talker-specific | 1 | 10 | 4 | 80 (each) |
| Total | | 70 | | 320 (each) |

Figure 1.

Design balancing both exposure and test talkers across participants. Unlike in BB08, the exposure talkers that occurred across participants within the single-talker condition were identical to the exposure talkers in the multi-talker condition. Each shape represents a different talker—i.e., the square is always the same talker (only two out of four test talkers are shown). The exposure talkers in the control condition are the only L1-accented talkers (of American English) in the design, and are therefore represented by shapes different from those representing Mandarin-accented talkers in the other three conditions. Background colors indicate the exposure condition. The resulting large number of lists (see Procedure) and participants (320 each in Experiments 1a and 1b) motivated the use of a web-based crowdsourcing paradigm.

We also halve the amount and duration of exposure, compared to BB08 (80 instead of 160 exposure sentences). Beyond simply saving costs, this deviation was motivated by theoretical considerations. Specifically, it allows us to rule out alternative explanations for cross-talker generalization after single-talker exposure, if observed. Bradlow and Bent propose that generalization from single talkers might be possible after prolonged exposure to one talker because of increased exposure to within-talker variability (BB08, p. 723). This, Bradlow and Bent point out, would explain an unpublished result by Weil (2001) finding generalization after three days of single-talker exposure. By using substantially less exposure than in BB08’s study, we aim to rule out a similar explanation should we observe cross-talker generalization following single-talker exposure.

Finally, we address concerns about spurious significance (Type I error) and power (Type II error) by increasing the number of participants per condition well above the minimum recommended in the statistical literature (from 10 in BB08 to 80 participants per condition in each of Experiments 1a and 1b; Simmons, Nelson & Simonsohn, 2011, recommend a minimum of 20 participants per between-participant condition). Lack of power not only increases the rate of Type II errors (failure to detect an effect); it also makes it more likely that an experiment yields effect estimates that are zero or in the opposite direction from the true effect. It is therefore possible that lack of power contributed to the observed ±0 effect of single-talker exposure in BB08 (Question 2) and, as a consequence, to the observed benefit of multi-talker over single-talker exposure (Question 3).
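To illustrate the point about wrong-sign estimates, the following Python simulation generates many hypothetical two-group experiments with a small positive true effect and records how often the estimated difference is zero or negative. The normal data-generating process and the true effect of 0.3 standard deviations are arbitrary assumptions chosen for illustration; they are not estimates from BB08 or from our data. A simple difference in means stands in for the mixed-effects models we actually fit.

```python
import random
import statistics


def wrong_sign_rate(true_diff: float, n: int, sims: int = 5000, seed: int = 1) -> float:
    """Share of simulated two-group experiments whose estimated effect is <= 0.

    Each group has `n` participants with normally distributed scores (SD = 1);
    the true group difference is `true_diff` (in SD units, assumed positive).
    """
    rng = random.Random(seed)
    wrong = 0
    for _ in range(sims):
        control = [rng.gauss(0.0, 1.0) for _ in range(n)]
        treated = [rng.gauss(true_diff, 1.0) for _ in range(n)]
        if statistics.fmean(treated) - statistics.fmean(control) <= 0:
            wrong += 1
    return wrong / sims


for n in (10, 20, 80):
    print(f"n = {n:2d} per group: estimate <= 0 in {wrong_sign_rate(0.3, n):.1%} of experiments")
```

Under these assumptions, roughly a quarter of experiments with 10 participants per group return an estimate of zero or the wrong sign, compared with only a few percent at 80 per group.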

The 320 participants we recruit in each experiment are the minimal number required to fully counter-balance the design of Experiments 1a and 1b, due to the large number of between-participant experimental lists (up to 80 per exposure condition, for a total of 128 unique lists in each experiment; see Procedure). Data collection for this large number of participants is facilitated through a web-based crowdsourcing paradigm. We have previously replicated similar lab-based speech perception paradigms over the web (Kleinschmidt & Jaeger, 2012; Kleinschmidt, Raizada & Jaeger, 2015; Liu & Jaeger, 2018; Xie et al., 2018), including paradigms that employed transcription to detect talker-specific adaptation to L2 accents (e.g., two experiments with multiple between-participant conditions in Burchill, Liu & Jaeger, 2018).4 Power analyses presented in Appendix C confirm that the present study had substantially higher statistical power than BB08.
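The stated list counts can be reproduced with simple arithmetic if one assumes that every exposure-test talker combination is crossed with four counterbalancing variants (for example, assignments of sentence sets or stimulus orders). This factor of four is our reconstruction from the totals given above (up to 80 lists per condition, 128 per experiment), not a description of the list construction, and the function name `list_counts` is hypothetical.

```python
# Assumption: each exposure-test talker combination is crossed with a fixed
# number of counterbalancing variants; 4 reproduces the totals in the text.
COMBINATIONS = {"control": 4, "talker-specific": 4, "multi-talker": 4, "single-talker": 20}
PARTICIPANTS_PER_CONDITION = 80


def list_counts(variants: int = 4) -> dict[str, int]:
    """Number of between-participant lists per exposure condition."""
    return {cond: n * variants for cond, n in COMBINATIONS.items()}


lists = list_counts()
total = sum(lists.values())
participants_per_list = {c: PARTICIPANTS_PER_CONDITION // n for c, n in lists.items()}
print(lists)                  # {'control': 16, 'talker-specific': 16, 'multi-talker': 16, 'single-talker': 80}
print(total)                  # 128
print(participants_per_list)  # {'control': 5, 'talker-specific': 5, 'multi-talker': 5, 'single-talker': 1}
```

Under this assumption, every list in the single-talker condition is seen by exactly one participant, which is why 320 participants is the smallest fully counterbalanced sample.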

We analyze our findings with Bayesian Generalized Linear Mixed Models (GLMMs). While frequentist analyses returned the same results, Bayesian analyses are particularly well-suited for the present purpose: Bayesian hypothesis tests provide coherent gradient measures of the strength of evidence for each of the three questions we seek to address (Raftery, 1995; Wagenmakers, 2007). This reduces the temptation to think about findings in categorical terms (significant vs. not; for helpful discussion, see Vasishth, Mertzen, Jäger & Gelman, 2018), a feature that we find particularly helpful in the context of replication. In addition to separate analyses of Experiments 1a and 1b, we present both a Bayesian meta-analysis and a Bayesian replication test for GLMMs (extending Verhagen & Wagenmakers, 2014). The choice of Bayesian analyses has further practical advantages, such as the ability to fit GLMMs with full random effect structures, avoiding the need for ad-hoc recipes that have become the standard in some parts of the field (see discussion in Baayen, Vasishth, Bates & Kliegl, 2016). The ability to model rich random effect structures also lets us assess the generalizability of our findings not only beyond the particular sample of stimuli and subjects, but beyond the particular sample of exposure and test talkers (following a recent call by Yarkoni, 2019).
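As an illustration of such gradient measures, one common summary for a directional hypothesis is the posterior probability that a contrast coefficient is positive, together with the posterior odds P(β > 0 | data) / P(β ≤ 0 | data). Under a prior that is symmetric around zero, the prior odds are 1, so these posterior odds equal the Bayes factor for β > 0 against β < 0. The Python sketch below computes both from posterior draws. The simulated draws are a stand-in for MCMC samples from a fitted GLMM, and the function name `directional_evidence` is hypothetical; this is not the code in our SI.

```python
import random


def directional_evidence(draws: list[float]) -> tuple[float, float]:
    """Return P(beta > 0) and the posterior odds P(beta > 0) / P(beta <= 0)."""
    p_pos = sum(d > 0 for d in draws) / len(draws)
    odds = p_pos / (1 - p_pos) if p_pos < 1 else float("inf")
    return p_pos, odds


# Hypothetical posterior draws for a condition contrast on the log-odds scale.
rng = random.Random(2020)
draws = [rng.gauss(0.25, 0.10) for _ in range(8000)]
p_pos, odds = directional_evidence(draws)
print(f"P(beta > 0) = {p_pos:.3f}; posterior odds = {odds:.1f}")
```

With a finite number of draws, very strong evidence cannot be resolved precisely: if no draw falls at or below zero, the odds are reported as infinite, and in practice one would report them as exceeding the number of draws.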

All analyses as well as additional visualizations and tables are available on OSF (Xie, Liu & Jaeger, 2020) at https://osf.io/brwx5/ (DOI 10.17605/OSF.IO/BRWX5). This includes all data as well as all R code in the form of an R Markdown document (henceforth supplementary information or SI).

Why two replications?

We present two replication experiments of identical design, both addressing Questions 1–3. Experiment 1a was conducted in 2016, and Experiment 1b was conducted in 2018, following feedback on earlier presentations of this work. Specifically, some procedural decisions—most notably, the fact that recruitment for some between-participants conditions was completed before recruitment for other conditions began—made some results of Experiment 1a vulnerable to inflated Type I errors. We thus pre-registered Experiment 1b as an exact replication of Experiment 1a and all of its analyses (OSF https://osf.io/u74vr/register/5771ca429ad5a1020de2872e). Below we present the two experiments side by side and discuss where their results differ from each other or from BB08.
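The logic of the Bayesian replication test (Verhagen & Wagenmakers, 2014) is that the posterior from the original study serves as the prior for the replication; the replication data are then asked whether they are better predicted by that informed prior or by a point null. The Python sketch below assumes normal approximations throughout: a flat prior in the original study (so its posterior for the effect is N(b₁, s₁²)) and a normally distributed replication estimate b₂ with standard error s₂. Under these assumptions both marginal likelihoods have closed forms, giving BF_r0 = N(b₂ | b₁, s₁² + s₂²) / N(b₂ | 0, s₂²). This is a simplified illustration, not the GLMM extension used in our analyses, and all names and numbers are hypothetical.

```python
import math


def normal_pdf(x: float, mean: float, sd: float) -> float:
    """Density of a normal distribution with the given mean and standard deviation."""
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2 * math.pi))


def replication_bf(b_orig: float, se_orig: float, b_rep: float, se_rep: float) -> float:
    """Replication Bayes factor BF_r0 under normal approximations.

    H_r: the effect is distributed as the original posterior, N(b_orig, se_orig^2).
    H_0: the effect is exactly zero.
    The replication estimate b_rep is assumed to be N(effect, se_rep^2).
    """
    # Marginal likelihood under H_r: the replication likelihood integrated over
    # the original posterior, which is N(b_orig, se_orig^2 + se_rep^2).
    marginal_r = normal_pdf(b_rep, b_orig, math.sqrt(se_orig ** 2 + se_rep ** 2))
    marginal_0 = normal_pdf(b_rep, 0.0, se_rep)
    return marginal_r / marginal_0


# Hypothetical coefficients (log-odds) and standard errors, for illustration only.
print(replication_bf(0.30, 0.10, 0.28, 0.09))  # replication resembles the original
print(replication_bf(0.30, 0.10, 0.00, 0.09))  # replication estimate near zero
```

Values above 1 favor the hypothesis that the replication shows the effect found in the original study; values below 1 favor the null.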

Methods

Participants

Participants were recruited on Amazon Mechanical Turk, an online crowdsourcing platform. All participants were self-reported L1 speakers of American English. Participants were paid $1.50 ($6 hourly rate) for the experiment, which was estimated to take about 15 minutes.

Experiments 1a and 1b each recruited 80 successful participants after exclusions in each of the four exposure conditions. This number allowed us to balance exposure conditions, exposure and test talkers, and stimulus order across lists (see Procedure). For Experiment 1a, we include 32 participants each for the control and talker-specific conditions from a pilot experiment that only included those two conditions (reported in Appendix B). All remaining participants were recruited in parallel. Since we found talker-specific adaptation in the pilot experiment (Appendix B), this makes the comparison of the talker-specific against the control condition anti-conservative for Experiment 1a.5 This was one of the motivations for Experiment 1b: all 320 participants in Experiment 1b were recruited in parallel across the four conditions, and no previously collected data was included. All procedures were performed in compliance with the guidelines of the University of Rochester Research Subjects Review Board.

Aggregate demographics.

The demographic distributions for Experiments 1a and 1b were comparable. Of those participants who volunteered demographic information, 42% and 48% reported being female in Experiments 1a and 1b, respectively (0% declined to report); 8% and 7% reported being of “Hispanic” ethnicity (0% declined to report); 6% and 6% reported their race as “Black or African American”, 3% and 2% as “Asian”, 0% and 2% as “American Indian/Alaska Native”, 5% and 4% as “More than one race”, and 83% and 83% as “White” (2% and 3% declined to report). The mean age of participants was 34.4 (SD = 10.0) and 35.0 (SD = 10.0) in Experiments 1a and 1b, respectively (2% and 3% declined to report). Demographic categories were determined by NIH reporting requirements. (We conducted no analyses for effects of demographic variables. Any potential confounds of such variables in our results are, however, avoided through a control predictor introduced below.)

Exclusions.

To achieve the targeted number of 320 participants each, we recruited 343 participants for Experiment 1a (6.7% exclusion rate) and 379 participants for Experiment 1b (15.6% exclusion rate). As we seek to test how participants learn regularities from an unfamiliar accent, we excluded participants from analysis who reported a high degree of familiarity with Chinese or Chinese-accented English in the post-experiment questionnaire. These participants reported that a close family member or friend spoke with a Chinese or similar sounding accent, that they heard that accent all of the time, and/or that they spoke Chinese themselves. Table 2 summarizes the exclusions.

Table 2.

Total number of participants in each exposure condition before and after exclusions. We observe a higher rate of exclusions in Experiment 1b than in 1a because more participants report having familiarity with a similar accent to the one used in our study.

| Exposure condition | Participants analyzed | Participants recruited | Participants excluded (%) |
|---|---|---|---|
| Experiment 1a | | | |
| Control | 80 | 86 | 6 (7.0%) |
| Single-talker | 80 | 86 | 6 (7.0%) |
| Multi-talker | 80 | 90 | 10 (11.1%) |
| Talker-specific | 80 | 81 | 1 (1.2%) |
| Experiment 1b | | | |
| Control | 80 | 94 | 14 (14.9%) |
| Single-talker | 80 | 95 | 15 (15.8%) |
| Multi-talker | 80 | 95 | 15 (15.8%) |
| Talker-specific | 80 | 95 | 15 (15.8%) |

We observe consistently higher rates of exclusions in Experiment 1b than in 1a because more participants reported familiarity with an accent similar to the one used in our study. We do not know the reason for this increase, but note that almost 3 years passed between the end of recruiting for Experiment 1a and the start of recruiting for Experiment 1b.
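The exclusion figures can be rechecked directly from the counts in Table 2. The short Python script below (all names are our own) recomputes per-condition and overall exclusion rates, rounded to one decimal. It reproduces the totals of 343 and 379 recruited participants, 320 analyzed participants per experiment, and the overall rates of 6.7% and 15.6%; at the level of single conditions, 15 exclusions out of 95 recruited participants correspond to 15.8%.

```python
# Recruited and excluded participants per exposure condition (Table 2).
TABLE2 = {
    "Experiment 1a": {"Control": (86, 6), "Single-talker": (86, 6),
                      "Multi-talker": (90, 10), "Talker-specific": (81, 1)},
    "Experiment 1b": {"Control": (94, 14), "Single-talker": (95, 15),
                      "Multi-talker": (95, 15), "Talker-specific": (95, 15)},
}


def exclusion_summary(table: dict) -> dict:
    """Totals and exclusion rates (percent, one decimal) per experiment and condition."""
    summary = {}
    for experiment, conditions in table.items():
        recruited = sum(r for r, _ in conditions.values())
        excluded = sum(e for _, e in conditions.values())
        summary[experiment] = {
            "recruited": recruited,
            "analyzed": recruited - excluded,
            "rate": round(100 * excluded / recruited, 1),
            "per_condition": {c: round(100 * e / r, 1) for c, (r, e) in conditions.items()},
        }
    return summary


for experiment, row in exclusion_summary(TABLE2).items():
    print(experiment, row)
```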

Materials

The materials from BB08 were not available to us because of the permissions under which they were elicited. Speech recordings were thus taken from Northwestern University’s Archive of L1 and L2 Scripted and Spontaneous Transcripts and Recordings (ALLSSTAR, Bradlow, Ackerman, Burchfield, Hesterberg, Luque & Mok, 2010). ALLSSTAR contains recordings from talkers of various L1 backgrounds performing speech production tasks in English, along with intelligibility ratings for certain talkers. This includes 11 male Mandarin-accented talkers.

As a fully counterbalanced design for all 11 talkers would have required thousands of participants, we selected a subset of test talkers suited to our purpose. Based on theoretical considerations, we expected the two cross-talker generalization conditions (single-talker, multi-talker) to yield performance that would fall between the control and talker-specific conditions (as confirmed by our results). In order to adequately power our experiments, it was thus important to select test talkers for which we could reliably detect talker-specific adaptation—otherwise a failure to detect cross-talker generalization on the test talkers would be uninformative. For example, for test talkers who are too easy to understand, ceiling effects would make it hard to detect benefits of talker-specific adaptation or cross-talker generalization.

We initially selected 6 talkers based on their intelligibility scores (provided by the ALLSSTAR database). Following Bradlow and Bent (2008), we aimed to select test talkers with mid-range intelligibility scores (between 71% and 86% transcription accuracy according to the ALLSSTAR database). Next, we conducted a pilot experiment (reported in Appendix B) to assess which of the six talkers yielded the clearest evidence for talker-specific adaptation (i.e., a benefit of talker-specific exposure compared to control exposure). We then selected the four talkers for which the pilot experiment found significant talker-specific adaptation as the four test talkers for Experiments 1a and 1b. This approach resulted in talker-specific adaptation of similar average magnitude as in BB08. Specifically, transcription accuracy for the only test talker in BB08 was about 10 percentage points higher in the talker-specific condition than in the control condition (92 vs. 82 RAU). For the four test talkers selected for the present study, transcription accuracy in the pilot experiment’s talker-specific condition was on average 11.75 percentage points higher than in the control condition (range: 7.5–16; see Figure B3). We note that such non-random selection of test talkers—the standard in the field—can inflate the effect of talker-specific adaptation, compared to randomly selecting test talkers from the population of L2-accented speakers. Here, it serves our purpose to increase the statistical power for our key questions about the relative benefit of single- and multi-talker exposure (Questions 1–3).

For each of the four test talkers, the other five L2 talkers from the original set of six served as exposure talkers in the single-talker and multi-talker conditions (i.e., across participants, all six talkers used in the pilot experiment occurred during the exposure phase of Experiments 1a and 1b). Following BB08, we also selected five male L1-accented talkers of American English to serve as the exposure talkers for the control condition. For each of these talkers, 120 hearing-in-noise test (HINT) sentence recordings were available. All sentence recordings in the database had been leveled to 65 dB. HINT sentences are simple declarative sentences containing 2–4 keywords (e.g., A boy fell from the window), making them similar in complexity and structure to the sentences used in Bradlow and Bent (2008).

We selected two sets of 16 sentences (32 total) from the HINT sentences for each talker to serve as exposure and test stimuli. Following BB08, these sentences were selected to avoid obvious disfluencies or errors (e.g., false starts, incorrect readings) in the recordings of all selected talkers. The two sets of 16 sentences contained a total of 51 and 52 keywords, respectively. One additional sentence not included in either set was selected as a practice sentence. This practice sentence was produced by a female L1-accented speaker of American English and served to familiarize participants with the task. Following BB08, all sentence stimuli were mixed with white noise at a +5 dB signal-to-noise ratio to avoid ceiling effects (speech signal: 65 dB; noise: 60 dB).
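The signal-to-noise ratio follows directly from the two levels: 65 dB − 60 dB = +5 dB. The sketch below shows one common way to mix a recording with white noise at a target SNR, namely by scaling the noise so that the ratio of root-mean-square (RMS) amplitudes matches the target. It assumes RMS-based level matching and uses a synthetic tone as a stand-in for a speech recording; the tools actually used to prepare the stimuli here and in BB08 are not specified, so `mix_at_snr` is a hypothetical helper.

```python
import math
import random

def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def mix_at_snr(speech, noise, snr_db):
    """Scale `noise` so that 20*log10(rms(speech) / rms(noise)) equals snr_db, then add it.

    Hypothetical helper; returns the mixture and the scaled noise.
    """
    target_noise_rms = rms(speech) / (10 ** (snr_db / 20))
    gain = target_noise_rms / rms(noise)
    scaled_noise = [gain * n for n in noise]
    mixed = [s + n for s, n in zip(speech, scaled_noise)]
    return mixed, scaled_noise

snr_db = 65.0 - 60.0  # speech level minus noise level, in dB
rng = random.Random(1)
# Synthetic stand-in for a recording: 0.5 s of a 220 Hz tone at a 16 kHz sampling rate
speech = [0.1 * math.sin(2 * math.pi * 220 * t / 16000) for t in range(8000)]
noise = [rng.gauss(0.0, 1.0) for _ in range(len(speech))]  # Gaussian white noise
mixed, scaled_noise = mix_at_snr(speech, noise, snr_db)
achieved_snr = 20 * math.log10(rms(speech) / rms(scaled_noise))
```

Matching RMS levels is only one convention; level-matching procedures that weight frequencies or ignore silent stretches would yield somewhat different gains.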

Procedure

We implemented a novel web-based paradigm that otherwise closely followed the procedure of BB08. Participants were told that they should complete the experiment in a quiet room while wearing headphones. Participants then transcribed two words produced by a male L1 speaker of American English in order to set their volume to a comfortable level, which they were asked to not adjust for the remainder of the experiment. Following that, participants transcribed the L1-accented practice sentence.

The main part of the experiment consisted of an exposure phase and a test phase, during both of which participants listened to and transcribed sentences. During the exposure phase, participants transcribed 80 sentences, one per trial. This constituted 50% of the exposure in BB08 and took place over the course of one day rather than two. Participants were asked to transcribe the sentence they heard to the best of their abilities. Each sentence was played only once. During test, participants transcribed 16 sentences, as in BB08. At the end of the experiment, participants completed a short questionnaire that assessed their audio equipment type and familiarity with Mandarin-accented English. The full questionnaire is provided in Appendix A.

The 80 sentence recordings during the exposure phase consisted of five repetitions of the 16 sentences from one of the two sentence sets. Presentation of the exposure stimuli was blocked by repetition: participants heard all 16 sentences k − 1 times before they heard any sentence for the kth time (where k ranged from 1 to 5). Unintentionally, this structure differs from BB08, who blocked the exposure sentences by talker (Bradlow, email communication, 2/6/2017). Available evidence suggests that this departure from BB08’s design should facilitate cross-talker generalization: ordering exposure stimuli in a way that increases trial-to-trial variability across stimuli has been found to increase cross-talker generalization (Tzeng et al., 2016). If anything, this departure should thus increase the statistical power of our design.

In the control and multi-talker conditions, participants heard all five exposure talkers within each bin of 16 sentences (four talkers three times and one talker four times). The order of the talkers was pseudo-randomized such that the same talker never produced two consecutive sentences in the same trial bin. Across all 80 sentence recordings, participants heard all 16 sentences produced by all five talkers so that each sentence-talker combination occurred exactly once. In the talker-specific and single-talker conditions, each of the six Mandarin-accented talkers served as the exposure talker across participants. This means that each participant heard 16 recordings from the same Mandarin-accented talker, each repeated five times. The ordering of the sentences was the same as in the control and multi-talker conditions.
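The constraints on talker order in the control and multi-talker conditions can all be met by a simple rotation, sketched below. This is a hypothetical scheme, not necessarily the one used to generate our lists. It assumes that every bin presents the 16 sentences in the same order and assigns the talker at position p of bin r as (p + r) mod 5. Each sentence then receives a different talker in each of the five bins, each bin contains one talker four times and the other four talkers three times, and adjacent trials never share a talker.

```python
from collections import Counter

N_TALKERS = 5  # exposure talkers in the control and multi-talker conditions
N_BINS = 5     # repetitions of the 16-sentence set

def exposure_schedule(sentence_order):
    """(bin, sentence, talker) for every exposure trial under a hypothetical rotation scheme.

    Every bin presents the sentences in the same order; the talker at position p of bin r
    is (p + r) mod 5, so talker labels rotate from one bin to the next.
    """
    return [(r, s, (p + r) % N_TALKERS)
            for r in range(N_BINS)
            for p, s in enumerate(sentence_order)]

order = [3, 7, 0, 12, 5, 9, 1, 14, 10, 2, 15, 6, 11, 4, 8, 13]  # any permutation of 16 sentences
trials = exposure_schedule(order)

pair_counts = Counter((s, t) for _, s, t in trials)  # sentence-talker combinations
bin_counts = [sorted(Counter(t for r, _, t in trials if r == b).values()) for b in range(N_BINS)]
no_consecutive_repeats = all(a[2] != b[2] for a, b in zip(trials, trials[1:]))
```

A pseudo-random variant could, for example, randomly relabel the five talkers for each list, which preserves all three properties.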

During the test phase, participants were asked to transcribe the other set of 16 sentences, produced by one Mandarin-accented talker. The task during the test phase was identical to the task during the exposure phase. Each of the four Mandarin-accented test talkers served as the test talker equally often (counterbalanced across participants) within each combination of exposure condition and list order. This resulted in 20 participants for each of the four test talkers in each of the four exposure conditions in each of the two experiments.

The fully counterbalanced assignment of exposure and test talkers within and across each of the four exposure conditions is shown in Figure 1. For participants in the control condition, the test talker was the only Mandarin-accented talker they heard in the experiment. For participants in the talker-specific condition, the test talker was the same Mandarin-accented talker as the exposure talker. For participants in the single-talker condition, the test talker was Mandarin-accented but different from the exposure talker. Across participants in the single-talker condition, all four test talkers occurred equally often with each of the remaining five Mandarin-accented talkers as exposure talker. Thus, each of the 20 unique combinations of exposure and test talker was heard by four participants in the single-talker condition (for a total of 80 participants in that condition), equally distributed over the four test talkers (as in all exposure conditions). For participants in the multi-talker condition, the five exposure talkers were the five Mandarin-accented talkers other than the test talker. By making sure that each exposure talker occurred equally often with each test talker, including across the single- and multi-talker conditions, we removed the design flaw present in BB08.

The assignment of the two sentence sets to exposure and test was counterbalanced across participants: half of all participants heard one sentence set during exposure, and half heard the other. One pseudo-random presentation order was created for exposure. This presentation order was reversed to create one additional list for each condition. The same orderings of sentences were heard in the talker-specific and control conditions; only the talkers producing the sentences changed across the two conditions. This resulted in a total of 80 lists in the single-talker condition (5 Mandarin-accented exposure talkers × 4 Mandarin-accented test talkers × 2 assignments of sentence sets to exposure vs. test × 2 presentation orders within blocks) and 16 lists each in the multi-talker, talker-specific, and control conditions (4 Mandarin-accented test talkers × 2 assignments of sentence sets to exposure vs. test × 2 presentation orders within blocks), for a total of 128 between-participant lists. One successful participant per list in the single-talker condition, and five participants per list in the other conditions, resulted in 80 participants per condition in each experiment for the fully balanced design shown in Table 3.
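The list and participant counts above can be checked with a few lines of arithmetic. The sketch below simply multiplies the counterbalancing factors described in the text; the variable names are illustrative.

```python
N_TEST_TALKERS = 4
N_SET_ASSIGNMENTS = 2       # which sentence set is used for exposure vs. test
N_ORDERS = 2                # forward vs. reversed presentation order
N_EXPOSURE_TALKERS_ST = 5   # single-talker condition: five possible exposure talkers per test talker

base_lists = N_TEST_TALKERS * N_SET_ASSIGNMENTS * N_ORDERS
lists = {
    "single-talker": N_EXPOSURE_TALKERS_ST * base_lists,
    "multi-talker": base_lists,
    "talker-specific": base_lists,
    "control": base_lists,
}
participants_per_list = {"single-talker": 1, "multi-talker": 5, "talker-specific": 5, "control": 5}

total_lists = sum(lists.values())
per_condition = {c: lists[c] * participants_per_list[c] for c in lists}
per_experiment = sum(per_condition.values())
```

This yields 128 lists, 80 participants per condition, and 320 participants per experiment (640 across Experiments 1a and 1b), with 20 participants per test talker in each condition.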

Analysis approach

We first present an overview of the analysis approach we used to address Questions 1–3 from Table 1. We then present separate analyses of Experiments 1a and 1b. Additional analyses assess the extent to which the two experiments support the same conclusions, and how much the results depend on the test talker.

Bayesian mixed-effects logistic regression.

We employed Bayesian generalized linear mixed models with a Bernoulli response distribution and a logit link, predicting whether each keyword was transcribed correctly (correct = 1 vs. incorrect = 0) as a function of exposure condition, with the maximal random effect structure justified by the design: by-participant random intercepts and by-item random intercepts and slopes for condition (for introductions to mixed-effects logistic regression, see Jaeger, 2008; Johnson, 2009). We used sliding difference coding for exposure condition, a contrast coding scheme that compares the talker-specific against the multi-talker condition, the multi-talker against the single-talker condition, and the single-talker against the control condition. We then used Bayesian hypothesis testing to address our three research questions (for further details and code, see SI, §4–5).
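For readers less familiar with sliding difference coding, the sketch below builds the contrast matrix for four ordered levels (the same values as `contr.sdif` in the R package MASS) and checks that, with these contrasts, the intercept equals the mean of the four condition means and each slope equals the difference between adjacent conditions. The cell means are hypothetical log-odds, chosen only for illustration. Question 1 (MT > CNTL) then corresponds to the sum of the first two slopes, a linear combination that a hypothesis test can evaluate directly.

```python
from fractions import Fraction

def sliding_difference_contrasts(k):
    """k x (k-1) sliding difference contrast matrix (same values as MASS::contr.sdif in R)."""
    return [[Fraction(-(k - j), k) if i <= j else Fraction(j, k) for j in range(1, k)]
            for i in range(1, k + 1)]

def implied_cell_means(intercept, slopes, contrasts):
    """Cell means implied by an intercept plus one coefficient per contrast column."""
    return [intercept + sum(c * b for c, b in zip(row, slopes)) for row in contrasts]

levels = ["CNTL", "ST", "MT", "TS"]  # ordered as in the comparisons described above
C = sliding_difference_contrasts(len(levels))

# Hypothetical cell means on the log-odds scale (illustration only)
mu = [Fraction(0), Fraction(3, 10), Fraction(1, 2), Fraction(4, 5)]

intercept = sum(mu) / len(mu)                              # grand mean of the cell means
slopes = [mu[i + 1] - mu[i] for i in range(len(mu) - 1)]   # ST-CNTL, MT-ST, TS-MT

# Grand mean + adjacent differences reproduce every cell mean, so they are the
# (unique) regression coefficients under this coding.
assert implied_cell_means(intercept, slopes, C) == mu

mt_vs_cntl = slopes[0] + slopes[1]  # Question 1 expressed as a sum of two coefficients
```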

Model fitting.

All analyses were fit using the library brms (Bürkner, 2017), which provides a Stan interface for Bayesian generalized multilevel models in R (R Core Team, 2018, version 3.5.2). Stan (Carpenter, Gelman, Hoffman, Lee, Goodrich, Betancourt, Brubaker, Guo, Li, Riddell & others, 2016) allows the efficient implementation of Bayesian data analysis through No-U-Turn Hamiltonian Monte Carlo sampling. We follow common practice and use weakly regularizing priors to facilitate model convergence. For fixed effect parameters, we use Student-t priors centered on zero with a scale of 2.5 units (following Gelman et al., 2008) and 3 degrees of freedom.6 For random effect standard deviations, we use a Cauchy prior with location 0 and scale 2, and for random effect correlations, we use an uninformative LKJ correlation prior with its only parameter set to 1 (Lewandowski, Kurowicka & Joe, 2009), which corresponds to a uniform prior over correlation matrices.

To achieve robust estimates of Bayes Factors for the Bayesian hypothesis tests (in particular, the replication test presented below), each model was fit using eight chains with 10,000 post-warmup samples per chain, for a total of 80,000 posterior samples per analysis. Each chain used 2,000 warmup samples to calibrate Stan’s No-U-Turn Sampler. All analyses reported here converged (e.g., all …). Bayesian hypothesis testing was conducted via the hypothesis function of brms (Bürkner, 2017).

How we report results.

Rather than p-values, we report Bayes Factors and posterior probabilities for each hypothesis. The Bayes Factor (henceforth BF; Jeffreys, 1961; Kass & Raftery, 1995; Wagenmakers, 2007) quantifies the odds of the hypothesis tested (e.g., that the difference between talker-specific and control exposure is > 0) against the alternative hypothesis (e.g., that this difference is < 0). BFs of 1 to 3 are considered “weak” evidence, BFs > 3 “positive” evidence, BFs > 20 “strong” evidence, and BFs > 150 “very strong” evidence (Raftery, 1995). Bayes Factors provide a coherent measure of support: if the Bayes Factor for a hypothesis is x, then the Bayes Factor against the hypothesis (and for its alternative) is 1/x. A perhaps even more intuitive measure of support is the posterior probability of the hypothesis tested (henceforth pposterior): if we assume that both hypotheses are equally likely a priori, then pposterior = BF / (1 + BF). Posterior probabilities > .95 can thus be considered the closest equivalent to the conventional significance criterion in null hypothesis significance testing in the psychological sciences (though this interpretation might be seen as not in the Bayesian spirit).
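The conversion from a Bayes Factor to pposterior, and the verbal labels above, can be written as two small functions. This is a sketch that assumes equal prior odds, as in the text; the label thresholds are those quoted from Raftery (1995), and a BF below 1 is labeled by the strength of its reciprocal.

```python
def posterior_probability(bf):
    """pposterior = BF / (1 + BF), assuming both hypotheses are equally likely a priori."""
    return bf / (1 + bf)

def raftery_label(bf):
    """Verbal label for a Bayes factor using the thresholds quoted above (Raftery, 1995)."""
    if bf <= 0:
        raise ValueError("Bayes factors are positive")
    if bf < 1:
        # Evidence against the hypothesis: label the reciprocal, which supports the alternative
        return raftery_label(1 / bf) + " (against)"
    if bf > 150:
        return "very strong"
    if bf > 20:
        return "strong"
    if bf > 3:
        return "positive"
    return "weak"

# Example: Question 2 in Experiment 1a (BF = 41.3 in Table 4)
print(raftery_label(41.3), round(posterior_probability(41.3), 3))
```

Because pposterior(x) + pposterior(1/x) = 1, the posterior probability of a hypothesis and of its alternative always sum to one, mirroring the reciprocity of Bayes Factors noted above.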

Controlling for individual differences.

All analyses further included an offset term to remove possible confounds due to differences in audio equipment, prior accent experience, task engagement, proficiency with transcription tasks, or other participant-specific differences. For any of these reasons, a participant may achieve higher transcription accuracy independent of the exposure condition. We thus estimated individual differences in performance at the onset of exposure and corrected for those differences in our analysis of the test responses. Specifically, we fit a Bayesian mixed-effects logistic regression to the exposure data from both Experiments 1a and 1b, predicting the proportion of keywords correct during exposure. Predictors included exposure condition, experiment, trial block, and all interactions. The model further included the maximal random effect structure: random intercepts by participant, by item, and by the current talker, as well as by-item random slopes for exposure condition. The by-talker random intercept captures inter-talker differences in intelligibility, as the stimuli in the first trial bin during exposure can come from different talkers, depending both on the condition and the specific list. Further details, visualization, and the full results of the exposure analysis are presented in the SI (§3). The SI also contains additional analyses that relax the assumption of linear changes in performance across trial blocks during exposure (using monotonic effects instead, §3.3.2). All results presented here replicate under those relaxed assumptions.

We coded trial bin as 0 for the first block, so that the by-participant random intercepts reflect differences in performance at the beginning of the experiment (relative to the average performance in the respective exposure condition). These by-participant intercepts were indeed highly predictive of participants’ performance during test (see SI, §3.4): participants who achieved high transcription accuracy during exposure relative to other participants in the same exposure condition also achieved comparatively high transcription accuracy during test relative to other participants in the same condition (BFs for all exposure conditions > 150, all pposterior > .9999). The magnitude of this effect was highly similar across all four exposure conditions and across both experiments. This suggests that individual differences in audio equipment, task engagement, or other factors strongly affected performance during test. For the analysis of test responses reported next, we thus include the by-participant random intercepts from the exposure analysis as an offset term (i.e., we set the coefficient for this individual-difference term to the constant 1).7
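The offset enters the linear predictor with a coefficient fixed at 1 rather than estimated. The sketch below illustrates this for a single keyword: the participant's exposure-phase intercept is added to the condition's log-odds before converting to a probability. The function names and all numbers are hypothetical.

```python
import math

def inv_logit(x):
    """Convert log-odds to a probability."""
    return 1 / (1 + math.exp(-x))

def p_correct(condition_logodds, participant_offset):
    """Probability of a correct keyword at test.

    The participant's exposure-phase random intercept is an offset: it is added to the
    linear predictor with its coefficient fixed at 1, so no parameter is estimated for it.
    """
    return inv_logit(condition_logodds + participant_offset)

# Hypothetical condition log-odds and exposure-phase intercepts for three participants
condition_logodds = 1.5
offsets = {"weaker at exposure": -0.5, "average": 0.0, "stronger at exposure": 0.5}
predictions = {who: p_correct(condition_logodds, o) for who, o in offsets.items()}
```

Fixing the coefficient at 1 means the condition effects are estimated on top of each participant's baseline, instead of letting the model decide how strongly that baseline should count.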

Results

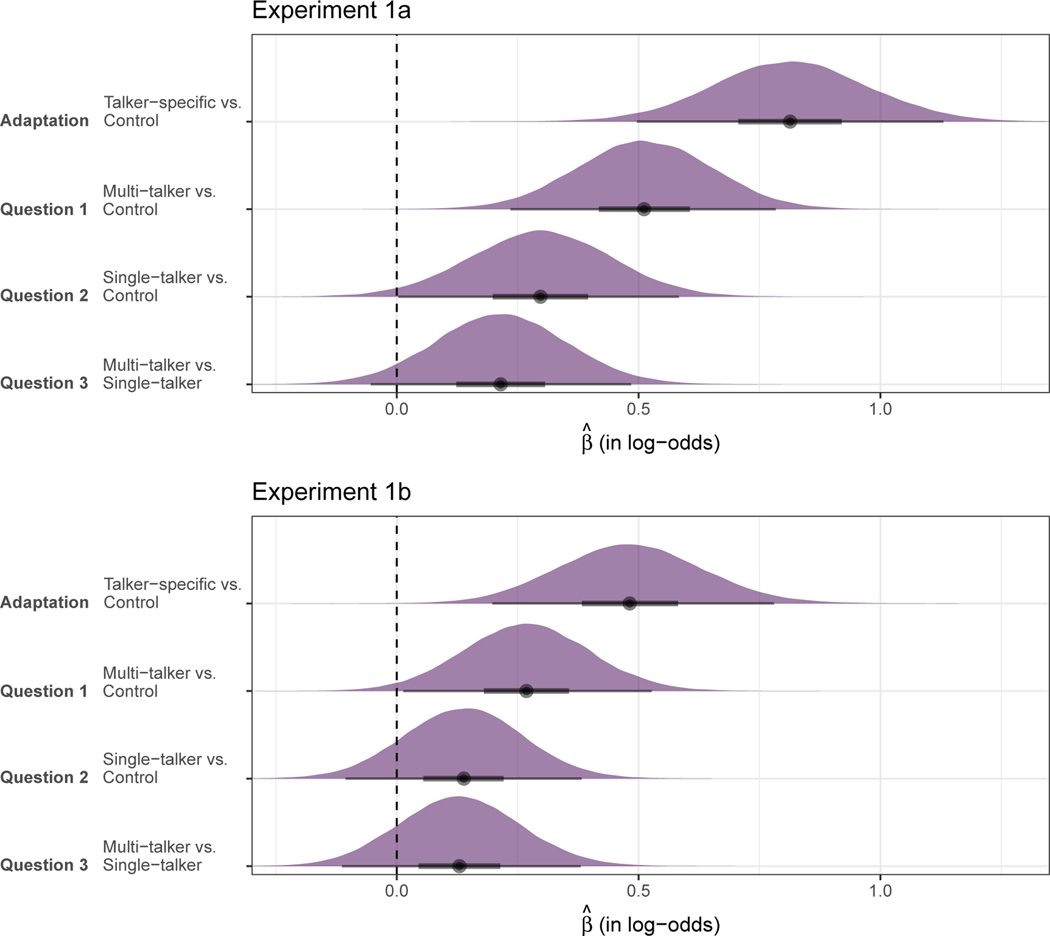

The full summaries of the mixed-effects logistic regressions are provided in the SI (§4–5). Figure 2 shows the estimated difference between conditions (in log-odds) for each comparison relevant to Questions 1–3. Table 4 summarizes the corresponding Bayesian hypothesis tests. A positive estimate in Table 4 means that participants in the first exposure condition transcribed more words correctly than participants in the second condition. We illustrate this for the comparison between the talker-specific condition and the control condition, which we include for the sake of comparison. This comparison estimates the benefit of talker-specific adaptation. In Experiment 1a, for example, the estimated median difference between the talker-specific and the control condition is .81 log-odds. The posterior probability of the hypothesis that the benefit of talker-specific exposure is larger than zero is estimated to exceed 0.9999 for Experiment 1a (and would therefore meet standards well beyond the traditional significance criterion).

Figure 2.

Posterior density estimates (over log-odds of correct transcription) for the comparisons associated with research questions 1–3 for both Experiment 1a (top) and 1b (bottom). For comparison, we also show the effect of talker-specific adaptation. Points represent the posterior medians; 50% of the posterior samples fall within the thick lines, and 95% within the thin lines.

Table 4.

Summary of Bayesian hypothesis testing for Questions 1–3 for Experiments 1a and 1b. For comparison, we include the estimates for talker-specific adaptation. CNTL: control exposure; ST: single-talker; MT: multi-talker; TS: talker-specific. The columns Est., SE, CI lower, and CI upper give the median estimate for each comparison (in log-odds), its estimated standard error, and the lower and upper bounds of its 90% credible interval (i.e., the 5th and 95th posterior percentiles, matching the one-sided tests). The evidence ratio (Bayes Factor, BF) in support of each one-sided hypothesis is given in the BF column. BFs > 1 indicate support for the hypothesis; BFs < 1 indicate support against the hypothesis. The final column gives the estimated posterior probability of the hypothesis.

(a) Experiment 1a

| Hypothesis | Est. | SE | CI lower | CI upper | BF | pposterior |
|---|---|---|---|---|---|---|
| Adaptation: TS > CNTL | 0.81 | 0.161 | 0.55 | 1.08 | >79999.0 | >0.9999 * |
| Question 1: MT > CNTL | 0.51 | 0.140 | 0.28 | 0.74 | 6665.7 | 0.999 * |
| Question 2: ST > CNTL | 0.30 | 0.147 | 0.05 | 0.54 | 41.3 | 0.976 * |
| Question 3: MT > ST | 0.21 | 0.137 | −0.01 | 0.44 | 16.3 | + |

(b) Experiment 1b

| Hypothesis | Est. | SE | CI lower | CI upper | BF | pposterior |
|---|---|---|---|---|---|---|
| Adaptation: TS > CNTL | 0.48 | 0.149 | 0.24 | 0.73 | 2050.3 | 0.999 * |
| Question 1: MT > CNTL | 0.27 | 0.131 | 0.05 | 0.49 | 49.0 | 0.988 * |
| Question 2: ST > CNTL | 0.14 | 0.124 | −0.07 | 0.34 | 6.6 | 0.868 |
| Question 3: MT > ST | 0.13 | 0.126 | −0.07 | 0.34 | 5.7 | 0.850 |

Results that meet the traditional threshold for significance (*) or marginal significance (+) are marked.
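One way to see how the interval bounds in Table 4 relate to the estimates and standard errors is to compare them with a normal approximation to each posterior. The sketch below does this for Experiment 1a, using the rounded values from the table. Under this approximation (an assumption, since the posteriors need not be exactly normal), the reported bounds lie within about 0.01 of the 5th and 95th percentiles, that is, of a 90% central interval, the interval that matches a one-sided test at the 95% level.

```python
from statistics import NormalDist

# (estimate, SE, reported lower bound, reported upper bound) for Experiment 1a, from Table 4
rows_1a = {
    "TS > CNTL": (0.81, 0.161, 0.55, 1.08),
    "MT > CNTL": (0.51, 0.140, 0.28, 0.74),
    "ST > CNTL": (0.30, 0.147, 0.05, 0.54),
    "MT > ST": (0.21, 0.137, -0.01, 0.44),
}

def normal_interval(est, se, level):
    """Central interval of a normal approximation to the posterior."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return est - z * se, est + z * se

def max_bound_error(level):
    """Largest absolute gap between the reported bounds and the normal-approximation bounds."""
    errors = []
    for est, se, lower, upper in rows_1a.values():
        lower_hat, upper_hat = normal_interval(est, se, level)
        errors += [abs(lower - lower_hat), abs(upper - upper_hat)]
    return max(errors)
```

Comparing `max_bound_error(0.90)` with `max_bound_error(0.95)` shows which interval width the reported bounds correspond to.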

There are a number of clear similarities between the two experiments. Foremost, all critical comparisons show effects in the same direction in both Experiments 1a and 1b. Support for these effects is positive or stronger in all comparisons, and the relative benefits of the three exposure conditions rank consistently across both experiments: test performance is best after talker-specific exposure, followed by multi-talker exposure and then single-talker exposure. The relative support for each of the three questions also ranks consistently across the two experiments.

Both Experiments 1a and 1b provide very strong evidence of talker-specific adaptation, replicating previous work including BB08. Both experiments also provide at least strong evidence for generalization to an unfamiliar L2 talker after exposure to multiple L2 talkers of the same accent (Question 1), again replicating BB08 and other studies showing the benefit of talker-specific or multi-talker exposure (e.g., Baese-Berk et al., 2013; Sidaras et al., 2009; Tzeng et al., 2016). Unlike BB08, both experiments provide support for generalization after exposure to a single talker of the same accent (Question 2), although the degree of support for this hypothesis differs across the two experiments: whereas Experiment 1a provides strong support, Experiment 1b provides only positive support for the hypothesis that cross-talker generalization is possible after exposure to a single talker. Finally, both experiments provide positive support for the hypothesis that multi-talker exposure facilitates cross-talker generalization beyond single-talker exposure (Question 3). This qualitatively replicates BB08, but unlike in BB08, the evidence from Experiments 1a and 1b does not reach the traditional significance criterion.

Strikingly, all differences between conditions were smaller in Experiment 1b compared to Experiment 1a. Next, we discuss possible reasons for this difference and ask what can be concluded from our data.

What can be concluded from Experiments 1a and 1b together?

We begin with a visual comparison of Experiments 1a and 1b, and then present additional Bayesian analyses that assess the extent to which the two experiments support the same conclusions. We recognize that it would be preferable to assess the degree of replication between the present experiments and the original study (BB08). However, only aggregate statistics are available from the original study, which was published before reproducibility became a more broadly recognized standard.

Figure 3 shows by-participant transcription accuracy during test side by side for both experiments (see SI, §6, for the same plots in empirical logits). This reveals a striking difference between Experiments 1a and 1b: transcription accuracy in the control condition was much higher in Experiment 1b than in Experiment 1a. That is, participants who received no exposure to the L2 accent within our experiment performed considerably better in Experiment 1b.

Figure 3.

Transcription accuracy during test following different exposure conditions (Experiments 1a and 1b). Small points show by-participant means. Solid larger points show averages across the by-participant means, and error bars represent 95% confidence intervals bootstrapped over by-participant means.
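The error bars in Figure 3 are bootstrapped over by-participant means. A minimal sketch of a percentile bootstrap follows, with hypothetical accuracies for 20 participants; the percentile method, the number of resamples, the seed, and the helper name are assumptions, not the settings used for the figure.

```python
import random
import statistics

def bootstrap_ci(values, n_boot=2000, level=0.95, seed=0):
    """Percentile bootstrap interval for the mean of by-participant means."""
    rng = random.Random(seed)
    # Resample participants with replacement and record the mean of each resample
    boot_means = sorted(
        statistics.fmean(rng.choices(values, k=len(values))) for _ in range(n_boot)
    )
    alpha = (1 - level) / 2
    lower = boot_means[round(alpha * (n_boot - 1))]
    upper = boot_means[round((1 - alpha) * (n_boot - 1))]
    return lower, upper

# Hypothetical by-participant proportions of keywords correct at test (20 participants)
by_participant = [0.78, 0.85, 0.81, 0.90, 0.74, 0.88, 0.83, 0.79, 0.86, 0.92,
                  0.80, 0.77, 0.84, 0.89, 0.82, 0.75, 0.87, 0.91, 0.76, 0.85]
group_mean = statistics.fmean(by_participant)
ci_lower, ci_upper = bootstrap_ci(by_participant)
```

Resampling participants, rather than individual keywords, keeps each participant's responses together, which matches the by-participant means plotted in the figure.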

We can only speculate as to the causes of this difference. One possibility is that, by chance, the participants recruited for the control condition in Experiment 1a performed worse than would have been representative of the population they were recruited from. Another possibility is that the participants in both experiments were representative of the population we were recruiting from at that moment in time. Experiments 1a and 1b were conducted almost three years apart, between 2015 and 2018. Although we excluded participants who reported prior familiarity with Chinese or Chinese-accented English, it is possible that overall exposure to Chinese or Chinese-accented English in the population we recruited from increased enough during that time to explain the overall increase in performance in the control condition.8

Regardless of the reasons, higher baseline performance in the control condition of Experiment 1b reduces the power to detect increases in performance in the other conditions (for a demonstration, see Dixon, 2008): the closer average performance in the baseline condition is to ceiling, the harder it is to detect facilitation. For the multi-talker and single-talker conditions, which are expected to elicit equal or poorer performance than the talker-specific condition, ceiling performance is defined by the talker-specific condition (about 90% in both Experiments 1a and 1b). For Experiment 1b, this means that there was little room for improvement above the control condition (86.7% accuracy). Indeed, the estimated effect of talker-specific adaptation in Experiment 1b is about half the size of that in Experiment 1a (see Table 4). Figure 3 suggests that this is primarily, though perhaps not completely, due to increased performance in the control condition.
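A rough illustration of the ceiling argument converts the approximate accuracies quoted above to log-odds. This back-of-the-envelope sketch uses only the rounded group accuracies (86.7% control, about 90% talker-specific) and a hypothetical lower baseline for comparison. It ignores participant and item variability, so its numbers are not directly comparable to the model-based estimates in Table 4.

```python
import math

def logit(p):
    """Log-odds of a probability."""
    return math.log(p / (1 - p))

ceiling = 0.90        # approximate talker-specific accuracy in both experiments
control_1b = 0.867    # control accuracy in Experiment 1b
control_lower = 0.80  # hypothetical lower baseline, for comparison only

room_1b_points = 100 * (ceiling - control_1b)                    # about 3.3 percentage points
room_1b_logodds = logit(ceiling) - logit(control_1b)             # about 0.32 log-odds
room_lower_logodds = logit(ceiling) - logit(control_lower)       # about 0.81 log-odds
```

With a baseline of 86.7%, the space between control and ceiling is only about a third of a log-odds unit, less than half of what a baseline of 80% would leave.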

What then should we conclude from Experiments 1a and 1b together? Bayesian data analysis provides us with two tools to address this question. The first analysis treats the two experiments as exchangeable and assesses whether each effect is present using the pooled data from Experiments 1a and 1b. The second analysis gives up the (in this case, questionable) assumption of exchangeability and instead asks whether the effect sizes found in Experiment 1a are replicated in Experiment 1b. The two analyses thus address related, but different, questions. Together they delineate what we can conclude from Experiments 1a and 1b.

Meta-analysis Bayes Factor.

When the data from both experiments are combined and analyzed with exactly the same approach as in the previous section (SI, §6.1), they provide strong evidence for affirmative answers to all of Questions 1–3. The effects are ordered in the same way as in the separate analyses of Experiments 1a and 1b. Specifically, the two experiments together provide very strong support both for talker-specific adaptation (BF > 79999.0, pposterior > 0.9999) and for cross-talker generalization after multi-talker exposure (Question 1: BF > 79999.0, pposterior > 0.9999). Support for cross-talker generalization after single-talker exposure is strong (Question 2: BF = 109.0, pposterior = 0.991), as is support for the advantage of multi-talker over single-talker exposure (Question 3: BF = 45.6, pposterior = 0.979). As in the individual analyses of Experiments 1a and 1b, support for the difference between single- and multi-talker exposure (Question 3) remains the weakest of the effects investigated here (though it still meets the traditional significance criterion).

The meta-analysis takes advantage of all available data, which helps to alleviate issues with the increased baseline performance in the control condition in Experiment 1b. However, the assumption of exchangeability is a potentially problematic assumption. Recall, for example, that we initially recruited participants for just the control and talker-specific conditions in Experiment 1a (to ascertain that the paradigm was able to detect talker-specific adaptation). Experiment 1b instead used fully random assignment of participants to any of the four exposure conditions. For the present purpose, the meta-analysis approach thus likely provides an inflated estimate of the overall available evidence (in particular, with regard to comparisons involving the talker-specific condition). This motivates the next analysis.

Replication Bayes Factor.

The Bayesian replication test we present assesses whether Experiment 1b is more likely to reflect a replication of the effect sizes observed in Experiment 1a or a null effect. This is a particularly stringent test: even when the effect in the replication study (here Experiment 1b) goes in the same direction as in the original study (Experiment 1a), the replication data need not be better explained by the original effect size than by the alternative hypothesis of a null effect.

Our approach follows Verhagen & Wagenmakers (2014). Verhagen and Wagenmakers (p. 1461) describe a Bayesian replication test that pitches the hypothesis that the effect observed in an experiment constitutes a replication of the effect observed in an original experiment, against the skeptic’s hypothesis of a null effect. The test calculates the Bayes Factor of the replication hypothesis over the null hypothesis:

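$$\mathrm{BF}_{H_{\mathrm{rep}}H_0} = \frac{p(Y_{\mathrm{rep}} \mid H_{\mathrm{rep}})}{p(Y_{\mathrm{rep}} \mid H_0)} = \frac{\int p(Y_{\mathrm{rep}} \mid \delta)\, p(\delta \mid Y_{\mathrm{orig}})\, d\delta}{p(Y_{\mathrm{rep}} \mid \delta = 0)} \qquad (1)$$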

where Yrep is the vector of responses in the replication experiment, Yorig is the original data, and δ is the size of the effect for which we seek to test replication. The numerator in Equation 1 describes the marginal likelihood of the replication data given prior beliefs (i.e., given prior uncertainty) about the effect size after having observed the original data. That is, we use the original data to estimate the posterior probability of different effect sizes, which then constitutes our prior probability of different effect sizes under the proponent’s hypothesis of replication. We then estimate the likelihood of the replication data under the different effect sizes, each weighted by its prior probability. The denominator in Equation 1 is the likelihood of the replication data if the effect is zero.

Verhagen & Wagenmakers (2014) illustrate this approach for t-tests. We apply it to generalized linear mixed models (here, with a Bernoulli response distribution, though our code can be applied to other types of GLMMs, including those with other link functions). As described in Appendix D, the approach takes into account uncertainty about all other predictors in the model when assessing replication success for a particular predictor. The test thus provides a principled quantitative measure of support for the replication hypothesis. Appendix E summarizes simulations validating the test.
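To make Equation 1 concrete, the sketch below computes a replication Bayes factor for a single effect under two simplifying assumptions that our GLMM implementation does not make: the posterior of the original effect is treated as normal, and the replication estimate is treated as a normal observation of δ with known standard error. Under these assumptions the integral in Equation 1 has a closed form, and the result can be checked against the ratio of prior to posterior density at δ = 0 (the Savage-Dickey density ratio). The inputs are hypothetical, and `replication_bf` is an illustrative helper, not part of our shared code.

```python
from statistics import NormalDist

def replication_bf(orig_mean, orig_sd, rep_est, rep_se):
    """Replication Bayes factor BF_rep,0 under normal approximations (illustrative sketch).

    Prior under H_rep: the original posterior, delta ~ N(orig_mean, orig_sd^2).
    Replication data: rep_est ~ N(delta, rep_se^2).
    Returns the BF computed two ways: as the ratio of marginal likelihoods in Equation 1,
    and as the ratio of prior to posterior density at delta = 0 (Savage-Dickey).
    """
    # Equation 1: integrating the normal likelihood over the normal prior gives a normal
    # marginal whose variance is the sum of the two variances
    marginal_rep = NormalDist(orig_mean, (orig_sd ** 2 + rep_se ** 2) ** 0.5).pdf(rep_est)
    marginal_null = NormalDist(0.0, rep_se).pdf(rep_est)
    bf_marginal = marginal_rep / marginal_null

    # Conjugate normal update of the original posterior with the replication estimate
    post_precision = 1 / orig_sd ** 2 + 1 / rep_se ** 2
    post_mean = (orig_mean / orig_sd ** 2 + rep_est / rep_se ** 2) / post_precision
    post_sd = post_precision ** -0.5
    bf_savage_dickey = (NormalDist(orig_mean, orig_sd).pdf(0.0)
                        / NormalDist(post_mean, post_sd).pdf(0.0))
    return bf_marginal, bf_savage_dickey

# Hypothetical inputs: a replication estimate close to the original effect, and one near zero
bf_eq1, bf_sd = replication_bf(orig_mean=0.5, orig_sd=0.15, rep_est=0.4, rep_se=0.15)
bf_near_null, _ = replication_bf(orig_mean=0.5, orig_sd=0.15, rep_est=0.0, rep_se=0.15)
```

The two computations agree, which is why the replication Bayes factor can also be read off as the ratio of the prior and posterior ordinates at zero.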

We use this test to ask whether Experiment 1b replicates the specific answers to Questions 1–3 provided by Experiment 1a. The results are shown in Figure 5. With a replication Bayes factor (BFHrepH0) of 584.4, there is very strong evidence for talker-specific adaptation effects (talker-specific exposure vs. control) across both Experiments 1a and 1b (i.e., pposterior > 0.998). The Bayes Factor suggests that it is over 500 times more likely to observe the data of Experiment 1b under the replication hypothesis than under the hypothesis of a null effect.

Figure 5.

Results of the replication test assessing whether the effects observed in Experiment 1b are more likely under the hypothesis of replication or under the hypothesis of a null effect. Panels on the right zoom in on the region around δ = 0. The colored vertical lines indicate the ordinates of the prior and posterior at the skeptic’s null hypothesis that the effect size is zero. The Bayes factor for the replication test, BFHrepH0, is identical to the ordinate of the prior divided by the ordinate of the posterior (Verhagen & Wagenmakers, 2014). BFHrepH0 thus indicates how much less likely the null hypothesis is after seeing the data from Experiment 1b than before (values > 1 indicate a decrease). Note that the limits of the y-axis vary across the rows of the plot (not shown), so as to facilitate comparison of the prior and posterior within each row.

There is strong support for a replication with regard to Question 1 (multi-talker exposure vs. control, BFHrepH0 = 24.5, pposterior = 0.96). This result confirms that Experiments 1a and 1b consistently yield generalization after multi-talker exposure. For Questions 2 and 3, the evidence is non-decisive. For Question 2, there is positive evidence for a replication of cross-talker generalization following single-talker exposure (BFHrepH0 = 4.4, pposterior = 0.81). For Question 3, there is only weak evidence for the hypothesis that Experiment 1b replicates Experiment 1a (BFHrepH0 = 1.6, pposterior = 0.62).

Both the meta-analysis and the replication test agree that all of Questions 1–3 have affirmative answers. At first blush, it might be surprising that the replication test does not return more convincing evidence of replication for Questions 2–3 despite a total of 640 participants. However, it is important to keep in mind that the replication test, too, is affected by the high baseline performance in Experiment 1b. Recall that the statistical power to detect facilitation decreases as baseline performance approaches ceiling. Similarly, the ability to find decisive evidence that Experiment 1b replicates Experiment 1a also decreases as baseline performance in Experiment 1b approaches ceiling. This is confirmed by computational analyses presented in Appendix E. Put differently, the replication test has low sensitivity for data like those of Experiment 1b.

Altogether, our results suggest that cross-talker generalization can occur after both single- and multi-talker exposure, while also suggesting that the specific sizes of these effects vary substantially across participants (see also Figure 3). This is a clear signal that the field should maintain uncertainty about the effects of exposure on cross-talker generalization, uncertainty that is often lost when data are summarized in terms of significance tests (e.g., Kruschke, 2014; Gelman, Carlin, Stern, Dunson, Vehtari & Rubin, 2013; Vasishth et al., 2018; Wagenmakers, 2007). To further highlight the consequences of this uncertainty, we explore our results across the four test talkers.

To what extent do the results depend on the test talker?

Unlike BB08 (and most work on speech perception), Experiments 1a and 1b employed multiple test talkers. Following suggestions by reviewers, we present post-hoc comparisons of exposure effects for these different talkers. We emphasize, however, that even 320 participants per experiment are likely not enough to expect reliable results at the by-talker level. Only 20 participants per experiment were available for analysis when each unique combination of exposure condition and test talker is considered—barely meeting the suggested minimum for between-participant tests (Simmons et al., 2011). The results presented here should thus be interpreted with caution.9

To investigate effects by test talker, we refit the analyses reported above, adding random effects by test talker (intercepts and slopes for exposure condition) to the random effects by participant and by item already included in the analysis. Compared to the alternative approach of separate analyses by test talker, this approach reduces, but does not eliminate, the risk of over-fitting (which would exaggerate differences between talkers). The random effects by test talker act as a regularizing prior that ‘pulls’ differences across test talkers toward zero, i.e., it pulls the estimates for individual talkers toward the mean across talkers (sometimes referred to as “shrinkage”; see e.g., Kliegl, Masson & Richter, 2010, Figure 5 for visualization). Conceptually, this regularization implements a form of Occam’s razor.
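The shrinkage described above can be illustrated with the textbook normal-normal model, in which each talker's estimate is a precision-weighted average of that talker's own mean and the mean across talkers. In the sketch below the variance parameters are fixed and all numbers are hypothetical. In our mixed-effects models the by-talker variance is estimated from the data, so this only illustrates the principle.

```python
def partial_pooling(talker_means, n_per_talker, sigma, tau):
    """Shrink each talker's mean toward the mean across talkers (normal-normal model).

    sigma: SD of single observations around a talker's true mean (assumed known).
    tau:   SD of true talker means around the overall mean (assumed known).
    """
    overall = sum(talker_means) / len(talker_means)
    data_precision = n_per_talker / sigma ** 2   # precision of one talker's sample mean
    prior_precision = 1 / tau ** 2               # precision of the between-talker distribution
    weight = data_precision / (data_precision + prior_precision)  # weight on the talker's own mean
    return [weight * m + (1 - weight) * overall for m in talker_means]

# Hypothetical per-talker effects (log-odds) for four test talkers, 20 observations each
raw = [0.9, 0.6, 0.4, 0.1]
shrunk = partial_pooling(raw, n_per_talker=20, sigma=1.0, tau=0.2)
```

With only 20 observations per talker and a small between-talker SD, each talker's estimate moves substantially toward the overall mean, while their ordering is preserved.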

Figure 6 summarizes the posterior distribution of effect sizes for Questions 1–3 obtained from the updated analysis, separately by test talker. The full model summaries are reported in the SI (§7.4). Overall, Experiments 1a and 1b continue to show a remarkable degree of agreement even at the level of individual test talkers. As can be seen by comparing the first three rows of each panel, the four exposure conditions rank identically across all four test talkers in both experiments: talker-specific exposure always provides at least as much benefit as multi-talker exposure, which provides at least as much benefit as single-talker exposure, which provides at least as much benefit as control exposure (all median estimates for Question 2 are larger than zero). Bayesian hypothesis tests confirm that for both experiments, support for effects of exposure was strongest for test talkers 032 and 035, and weakest for test talker 043 (see BFs in Figure 6; for details, see SI §7.1). Additionally, the support for an affirmative answer to the three research questions ranks consistently across all four test talkers and both experiments, with one exception (the relative ordering of Questions 2 and 3 for test talker 037 in Experiment 1b).

Figure 6.

Same as Figure 2, but split by test talker, based on the analysis that additionally contained by-test-talker random effects. Posterior density estimates for the comparisons associated with research questions 1–3. Points represent the medians of the estimates; 50% of the estimates fall within the thick lines, and 95% within the thin lines. BFs quantify the support for the hypothesis that the effect is larger than zero.

Talker-specific adaptation is the only effect for which there is at least strong support (BFs ≥ 34.7, p_posterior ≥ .972) for all four test talkers in both experiments. Indeed, talker-specific adaptation is the only effect that receives at least strong support for any test talker in Experiment 1b. Cross-talker generalization after multi-talker exposure receives strong support for three test talkers in Experiment 1a (Question 1: BFs ≥ 20.7, p_posterior ≥ .954); support is positive for the remaining test talker in Experiment 1a (Talker 043, BF = 14.0, p_posterior = .93) and for all test talkers in Experiment 1b (BFs ≥ 5.8, p_posterior ≥ .853). Support for cross-talker generalization after single-talker exposure is strong for two test talkers in Experiment 1a (Question 2: BFs ≥ 36.7, p_posterior ≥ .974); support is positive for the remaining two test talkers in Experiment 1a and for all test talkers in Experiment 1b (BFs ≥ 4.2, p_posterior ≥ .809), except for Talker 037, for which support is weak (BF = 1.4, p_posterior = .575). Finally, support for the hypothesis that multi-talker exposure facilitates generalization beyond single-talker exposure is not strong for any test talker in either experiment (all BFs < 20). The strongest support comes from Talker 037 in Experiment 1b (BF = 17.8, p_posterior = .947). As Figure 6 shows, this reflects the very small estimated effect of single-talker exposure for this test talker in Experiment 1b.10
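Across the values reported above, each Bayes Factor equals the corresponding posterior probability divided by its complement (e.g., .972 / .028 ≈ 34.7; .954 / .046 ≈ 20.7). This is the relation one would expect if the BFs are evidence ratios for one-sided hypotheses (effect > 0 vs. effect < 0), computed as posterior odds under a prior that is symmetric around zero, as reported, for instance, by the `hypothesis()` function of the R package brms. That computational route is our assumption rather than something stated in this section. The sketch below converts between the two quantities and attaches the conventional verbal categories of Kass and Raftery (1995), which the wording above appears to follow; the function names are hypothetical.

```python
"""Convert between one-sided posterior probabilities and Bayes Factors.

Assumption: BF = posterior odds p / (1 - p), i.e., prior odds of 1 for
"effect > 0" vs. "effect < 0" (a prior symmetric around zero).
"""


def bf_from_posterior(p):
    """Evidence ratio for H: effect > 0, given posterior probability p of H."""
    if not 0 < p < 1:
        raise ValueError("p must lie strictly between 0 and 1")
    return p / (1 - p)


def posterior_from_bf(bf):
    """Inverse of bf_from_posterior: posterior probability implied by a BF."""
    if bf <= 0:
        raise ValueError("bf must be positive")
    return bf / (1 + bf)


def verbal_label(bf):
    """Kass & Raftery (1995) categories for evidence in favor of H."""
    if bf < 1:
        return "negative (favors effect < 0)"
    if bf < 3:
        return "weak"
    if bf < 20:
        return "positive"
    if bf < 150:
        return "strong"
    return "very strong"


print(round(bf_from_posterior(0.972), 1))  # 34.7
print(round(posterior_from_bf(5.8), 3))    # 0.853
print(verbal_label(14.0), verbal_label(1.4), verbal_label(20.7))  # positive weak strong
```

Because the mapping is monotone, ranking effects by BF and by posterior probability gives the same order; the BF scale simply stretches differences near a posterior probability of 1.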

The four test talkers in Experiments 1a and 1b were selected to have similar baseline intelligibility and large effects of talker-specific adaptation (in the pilot experiment reported in Appendix B). It is thus encouraging, but not entirely surprising, that we find a fair amount of agreement across test talkers, even with just 20 participants per combination of exposure condition and test talker. However, even for this rather homogeneous set of test talkers, it is clear that we could have arrived at different conclusions had Experiments 1a and 1b employed only one test talker. For example, there is little evidence for any difference between single- and multi-talker exposure for Talker 043 in either experiment (see Figure 6). This contrasts with Talker 035, for which both experiments find a consistent advantage of multi-talker over single-talker exposure. Similarly, we could have been ‘unlucky’ had we relied solely on test talker 037, for which results are less consistent across the two experiments, an inconsistency that, as it turns out, is due to substantially larger by-participant variability for this talker in both experiments (see footnote 10). In the Bayesian analyses presented here, these differences between test talkers show up as varying degrees of support (the Bayes Factors). Under the common practice of summarizing results in terms of null hypothesis significance tests, however, the same differences could have produced seemingly qualitative differences in significance (see also the discussion of the “significance filter” in Vasishth et al., 2018).

General Discussion

We set out to replicate key findings of an influential study on cross-talker generalization during speech perception (Bradlow & Bent, 2008). This seminal study by Bradlow and Bent established listeners’ ability to generalize previously experienced speech input across talkers of the same accent, while also suggesting clear limits to this ability. Bradlow and Bent found that exposure to multiple L2 talkers facilitated comprehension of L2-accented speech from a different talker of the same accent. Participants’ transcription accuracy for the unfamiliar test talker after multi-talker exposure was, in fact, indistinguishable from their performance after the same amount of exposure to that specific test talker (talker-specific adaptation). This ability to generalize to an unfamiliar talker after multi-talker exposure stands in stark contrast to BB08’s findings for single-talker exposure. After exposure to the same total number of trials from just a single L2 talker, BB08 did not observe any facilitation for the unfamiliar test talker. Bradlow and Bent interpreted these results as evidence that listeners can learn talker-independent representations of an L2 accent, provided they are exposed to sufficient variability (as in the multi-talker condition). Both in its categorical form (multi-talker exposure as a requirement for generalization) and in more gradient interpretations (multi-talker exposure as an additional benefit beyond single-talker exposure), this hypothesis has been influential within research on speech perception (e.g., Kleinschmidt & Jaeger 2015; Baese-Berk 2018) and beyond (e.g., Schmale, Seidl & Cristia 2015; Potter & Saffran 2017; Paquette-Smith, Cooper & Johnson 2020).

The design of the original study did, however, contain a critical confound: while all between-participant conditions employed the same L2 test talker, the single- and multi-talker conditions employed different L2 exposure talkers. Because of this confound, there is an alternative explanation for the lack of cross-talker generalization after single-talker exposure in BB08. As we detail below, this alternative explanation focuses on the objective similarity of talkers’ speech (specifically, the talker-specific mappings from linguistic categories, like phonological segments or words, onto the speech signal) and thus appeals to different mechanisms and theoretical constructs than the explanation advanced in BB08. Under this alternative explanation, the lack of cross-talker generalization in BB08’s single-talker condition is a consequence of the particular talkers employed in that condition, and of the fact that they differed from the talkers in the multi-talker condition. Finally, further complicating the interpretation of BB08’s findings is the fact that the study employed a small number of participants (10 per condition), following the standards of the field at that time.

The present large-scale replications with a total of 640 participants aimed to closely follow BB08’s paradigm while avoiding the confound of the original study. Across the participants in our replications, the single- and multi-talker conditions employed the exact same exposure talkers. We further aimed to assess the replicability of the key findings across a variety of combinations of exposure and test talkers. Experiments 1a and 1b each employed four different test talkers, with fully balanced designs within each test talker, including 20 unique combinations of exposure and test talkers in the single-talker condition.
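The logic of removing the confound can be sketched as a design check: for each test talker, the set of talkers heard across single-talker participants should be exactly the set heard by each multi-talker participant. The sketch below assumes five exposure talkers per test talker (20 combinations divided by 4 test talkers, under a fully balanced design); the exposure-talker labels and function names are hypothetical placeholders, not the talkers or code actually used.

```python
"""Sketch of the deconfounded single- vs. multi-talker exposure design.

Assumptions (illustrative only): within each experiment, each test talker is
paired with a pool of five exposure talkers (20 combinations / 4 test
talkers), and the exposure-talker labels are hypothetical placeholders.
"""

TEST_TALKERS = ["032", "035", "037", "043"]  # test talker IDs from the text
EXPOSURE_TALKERS_PER_TEST = 5  # assumed: 20 combinations / 4 test talkers


def exposure_pool(test_talker):
    """Hypothetical labels for the exposure talkers paired with a test talker."""
    return [f"exp{i}_for_{test_talker}"
            for i in range(1, EXPOSURE_TALKERS_PER_TEST + 1)]


def single_talker_combinations():
    """Each single-talker participant hears one talker from the pool."""
    return [(exp, test) for test in TEST_TALKERS for exp in exposure_pool(test)]


def multi_talker_exposure(test_talker):
    """Each multi-talker participant hears every talker from the same pool."""
    return set(exposure_pool(test_talker))


combos = single_talker_combinations()
print(len(combos))  # 20 unique exposure-test combinations

# The confound is removed if, for every test talker, the single-talker
# condition draws on exactly the talkers heard in the multi-talker condition.
print(all(
    {exp for exp, test in combos if test == t} == multi_talker_exposure(t)
    for t in TEST_TALKERS
))  # True
```

In BB08, by contrast, this check would fail by design, since the single- and multi-talker conditions drew on different exposure talkers.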

Replicating both BB08 and other studies across a variety of paradigms, we find clear support for the finding that exposure to a few dozen sentences of L2-accented speech facilitates subsequent comprehension of speech from the same talker (talker-specific adaptation to an L2 talker; e.g., Clarke & Garrett 2004; Weil 2001; Xie et al. 2018; Paquette-Smith et al. 2020; Gordon-Salant, Yeni-Komshian, Fitzgibbons & Schurman 2010; for review, see Baese-Berk 2018). This result received strong support for all four test talkers in both Experiments 1a and 1b. With regard to our three research questions about cross-talker generalization, we replicate BB08 and find clear support for cross-talker generalization after exposure to multiple talkers of the same L2 accent (Question 1; see also Alexander & Nygaard 2019; Sidaras et al. 2009; Tzeng et al. 2016). We replicate this result for all four test talkers in both Experiments 1a and 1b, though the strength of the support varied across test talkers and experiments. Unlike BB08, we also find support for cross-talker generalization after exposure to a single talker (Question 2). We find evidence of cross-talker generalization for all four test talkers, though the strength of the support again varied across test talkers and experiments. Overall, support for this finding was weaker than for Question 1. As we discuss below, the degree of cross-talker generalization seems to depend on the specific combination of exposure and test talker. Finally, also unlike BB08, we find less clear evidence that multi-talker exposure facilitates cross-talker generalization beyond exposure to a single talker (Question 3). While multi-talker exposure led to better transcription accuracy than single-talker exposure for all four test talkers in both Experiments 1a and 1b, these effects were often very small.

Both differences between the present replications and the original BB08 study relate to participants’ performance after single-talker exposure. In the remainder of this discussion, we focus on this difference and its relevance for theories of speech perception. We first discuss procedural differences between our replications and BB08 (other than the intended difference in design). We conclude that procedural differences are unlikely to account for the difference in results. We then return to the most likely explanation for the different findings: the removal of the design confound coupled with increased statistical power. We discuss findings since BB08’s seminal study that speak to the mechanisms underlying cross-talker generalization—both from research on the perception of L2-accented speech and other lines of work (e.g., Kraljic & Samuel, 2006; Reinisch & Holt, 2014; Xie & Myers, 2017; Xie et al., 2018). Drawing on these works, we discuss an account in terms of similarity-based generalization, and contrast it with the previous focus on variability as the explanatory variable in generalization. We lay out how similarity-based generalization can explain both BB08’s failure to find cross-talker generalization after single-talker exposure and the results of our replication experiments. The same account also offers explanations for the relative performance in the talker-specific vs. multi-talker conditions (identical in BB08, but different in our replications), and the differences between the 20 unique exposure-test talker combinations in the single-talker condition in our experiments (which we visualize below). Finally, the proposed interpretation of our results has consequences for the theoretical role of variability in learning, training, teaching, and therapy. We thus close with a brief discussion of why variability during learning often seems to facilitate generalization beyond the specific training.

Procedural differences between original and replication studies

Experiments 1a and 1b follow the general design and procedure of BB08 closely. Like BB08, we used an exposure-test paradigm with four exposure conditions manipulated between participants. We used the same target language (American English), and the L2-accented talkers had the same L1 background (L1 Mandarin). The task on each trial was the same as in BB08 (transcription), and the stimuli were of similar complexity (short sentences of 3–4 content words with uninformative context), presented with the same level of noise masking. Like BB08, we held the test talker(s) constant across exposure conditions and used the same number of test tokens. Also like BB08, all exposure and test talkers were male.