Abstract

The multiple response structure can underlie several different technology-enhanced item types. With the increased use of computer-based testing, multiple response items are becoming more common. This response type holds the potential for being scored polytomously for partial credit. However, there are several possible methods for computing raw scores. This research evaluates several approaches found in the literature by examining how each incorporates the selection/nonselection of both relevant and irrelevant information into scoring, extending Wilson’s approach. Results indicated all methods have potential, but the plus/minus and true/false methods seemed the most promising for items using the “select all that apply” instruction set. Additionally, these methods showed a large increase in information per time unit over the dichotomous method.

Keywords: technology-enhanced items, multiple response items, polytomous scoring

With the increasing use of technology-enhanced items (TEIs) in computer-based testing (CBT), testing programs are confronted with item and response types that move beyond dichotomous multiple-choice (MC) items. Many of these TEIs provide an opportunity to have multiple score points that assess partial knowledge and thus provide greater information about examinee knowledge or ability. A number of studies over the years (Bock, 1972; DeMars, 2008; Frary, 1989; Haladyna & Kramer, 2005; Samejima, 1969, 1981; Thissen & Steinberg, 1984; Warrens et al., 2007) have proposed and/or compared item scoring models for MC items in an attempt to elicit greater information than the standard right/wrong dichotomy. The multiple response (MR) item type opened the potential for obtaining greater information through evaluating partial credit methods. However, because there are many methods of assigning partial credit, selecting the most suitable one is challenging.

When identifying different levels of partial knowledge or understanding, Wilson (2005) outlines a theoretical framework building on the structure of learning outcomes work by Biggs and Collis (1982). The BEAR taxonomy (Wilson, 2005) provides for a generic outcome space capable of organizing information into levels of partial knowledge. The outcome space spans from the prestructural, where responses only consist of irrelevant information, up to the extended abstract, which encompasses not only all the relevant information provided in the stimulus but also information that goes beyond what was given.

A useful aspect of this generic space is the implied hierarchy associated with increasing integration of information into the response. With selected response items, this approach integrates nicely with polytomous scoring models where a range of scores can be mapped to selection of more relevant information; see, for example, Wilson’s (2005) use of the Rasch model for polytomous items using partial credit (Wright & Masters, 1982). If one individual identifies more relevant information than another, then that individual should score higher in the generic outcome space, indicating they have selected more relevant information in providing a response.

However, an open question within this space relates to how to incorporate irrelevant and relevant information when applying a scoring methodology. For instance, the “semirelational” level (Wilson, 2005) refers to a situation where some, but not all, of the relevant pieces of information are identified and related together. Given the nature of the outcome space, it would be presumed that an individual scoring in this response space would have a higher score than one in the response space just below in the “multistructural” level. However, how does a scoring method apply when two individuals respond in the same manner, for example, both at the “semirelational” level, but one of the individuals also responds with a greater level of irrelevant information? The generic method easily accounts for incrementally greater responses of relevant information but does not provide a framework for incorporating increasing levels of irrelevant information within the same space. This research evaluates methods for handling both aspects of the outcome space with respect to applying raw scoring rules that incorporate both relevant and irrelevant information, which can then be calibrated using an item response method like the Rasch model (Masters, 1982; Wright & Masters, 1982).

Evaluating raw scoring methods is a fundamental aspect of psychometrics and is highly relevant today with the increase in TEIs found in educational and licensure/certification examinations using CBT. The application of a raw scoring method is a necessary component within the context of a principled assessment design (Nichols et al., 2016) or an evidence-centered design framework (Riconscente et al., 2016) and more generally important to the validity inferences from those scores (American Educational Research Association, American Psychological Association, & National Council on Measurement in Education, 2014). Therefore, providing evidence of the scoring method is a vital part of assessment validity, and it justifies the use and interpretation of scores (Kane, 2016). In the sections that follow, we describe a common approach to administering MR items and outline several different raw scoring methods. Each of the methods uses a different approach to handling relevant and irrelevant information in scoring.

Multiple Response Items

One of the more frequently used response types that allows for multiple keys is the MR structure. This response structure has also been referred to as multiple multiple-choice (Cronbach, 1941), multiple-answer multiple-choice (Duncan & Milton, 1978), multiple-mark (Pomplun & Omar, 1997), or multiple answer (Dressel & Schmid, 1953). This type of selected response structure (Sireci & Zenisky, 2006) allows for items to have multiple correct keys within a set of selectable options. The utility of this response type is that it is easily implemented with both CBT and paper-and-pencil exams, it is easily understood by examinees, and it underlies several different types of item designs for TEIs.

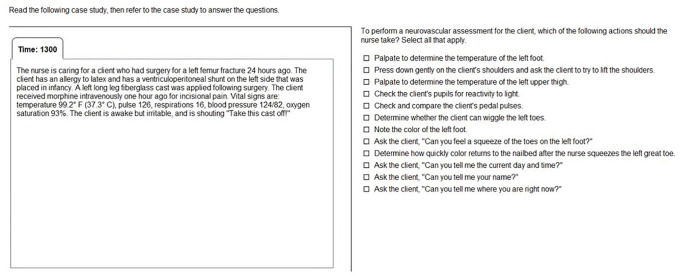

For instance, Figure 1 shows an example of a simple MR item that uses check boxes. This is the classic example in which an individual responds to the question by selecting multiple options with the check boxes. Figure 2 shows a variation on highlighting within text to select all tokenized phrases that apply. Figure 3 is similar to Figure 2 but allows for highlighting rows in a table. Figure 4 is an example of a drag-and-drop item that specifies selecting a specific number of responses, “select N” rather than “select all that apply” (SATA). Although the response format of the items is different, they all have the same underlying response structure, that is, the potential to have more than a single key and to allow for selection of more than one option.

Figure 1.

Classic multiple response type item using basic check boxes.

Figure 2.

Within text highlighting item: Select all tokenized phrases.

Figure 3.

Within table selecting multiple rows: Select all rows that apply.

Figure 4.

Drag-and-drop item with “select N” that apply.

Multiple Response Item Instructions

There are generally two main instruction sets for completing MR items. The choice between them increases or decreases the utility of the possible scoring methods. One type of instruction asks examinees to SATA, which allows for open-ended option selection (Koch, 1993; Parshall et al., 1996). This approach directs the examinees to select as many of the options as they believe answer the question, allowing for the possibility to over- or underselect options. For example, an examinee can complete a SATA MR item by choosing as many options as are believed to satisfy the question prompt, which could result in either selecting more options than keys (overselecting) or selecting fewer options than keys (underselecting).

An alternative instruction set for MR items is the “select N options,” whereby examinees must select a specific and fixed number of response options, “N” (Bauer et al., 2011; Eggen & Lampe, 2011; O’Neil & Folk, 1996). Unlike the SATA method, instructing examinees to select the exact number of options simplifies the task. Consequently, this can reduce the fidelity of items because real-world tasks and decisions are usually not constrained to a specific number. For example, recognizing the significant abnormal cues of a patient in the emergency room is open ended; one must recognize all the important cues without reference to some external marker of how many to look for.

However, informing examinees how many options to select has the potential to reduce construct-irrelevant variance and focus on a specific amount of information. For example, the item in Figure 4 asks for the “four most important findings.” So, while all options might have some level of importance, it focuses the respondent on prioritizing and ranking those findings to select only the top four. Allowing for over- or underresponding could also have unintended consequences on examinee responding behavior and result in an unintended response set, for example, risk-taking or risk-averse response sets (Cronbach, 1946). Context of the construct being measured should help guide the use of the different instruction sets. For example, assessing an examinee’s ability to recognize cues in a clinical environment might suggest SATA instructions, whereas identifying the top N possible diagnoses could better use the “select N.” This research will focus on the use of SATA MR items.

Multiple Response Scoring Methods

Frequently MR items are scored dichotomously in practice. One must endorse all keys correctly to get full credit. If one endorses a single distractor (an incorrect option) or fails to endorse any one of the keys (the correct options), they receive no points. This type of scoring obfuscates any potential for increasing information by allowing for the assessment of partial knowledge for partial credit. In the context of Wilson’s (2005) taxonomy, there would be no intermediary outcomes between prestructural and relational responses (with the TEI MR structure, the “extended abstract” response would not be appropriate unless the item also allowed for an individual to add free text responses that could extend the correct options beyond what was provided). Individuals with partial knowledge will be swept into the “all wrong” scoring category rather than allowing for separation between individuals with different levels of partial knowledge.

This scoring method can also produce items that appear to be quite difficult. The rationale for this can be seen in the context of a graded response model (Samejima, 1969). Treating an MR item as dichotomous suggests that the difficulty of the item is the point on the ability scale at which being in the highest score category (all and only all keys chosen) is as likely as being in any of the lower score categories. Grouping response patterns into “all correct” or “all incorrect” when individuals might have been able to select some relevant information occludes the potential to discriminate between levels of partial knowledge in the outcome space.

Given that multiple correct options, keys, are allowed by the MR response structure, it seems potentially useful to investigate partial credit scoring for these items. However, assigning multiple raw score points is not as straightforward as basic MC where there is a single correct answer. MR response formats allow for individuals to respond to some combination of correct and incorrect options. There is no straightforward method for handling these situations.

One possibility is to simply evaluate each of the different response patterns that can be found based on the item structure. For example, take the item in Figure 1. There are 12 response options, which implies 2^12 = 4,096 possible response patterns. Under the dichotomous scoring routine, only one of those possible patterns is correct, which simplifies the analysis because every other pattern receives a score of zero. However, evaluating every pattern has the obvious disadvantage of requiring extremely large sample sizes to ensure that all possible patterns are observed. Additionally, with methods that rely on response score patterns (e.g., Bock, 1972), it becomes extremely difficult to ensure that all possible patterns are accounted for in the data for calibration purposes. However, there are several other alternatives that have been investigated in the research.
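The growth in response patterns can be illustrated with a minimal sketch. The function name `enumerate_patterns` and the key pattern used below are hypothetical, chosen only for illustration; they are not taken from the item in Figure 1.

```python
from itertools import product


def enumerate_patterns(n_options):
    """Return every select (1) / not-select (0) pattern for n_options options."""
    return list(product((0, 1), repeat=n_options))


patterns = enumerate_patterns(12)
n_patterns = len(patterns)  # 2 ** 12 patterns, including the empty selection

# Hypothetical key pattern: assume the first three options are the keys.
key_pattern = (1, 1, 1) + (0,) * 9

# Under dichotomous scoring, exactly one pattern earns credit.
n_full_credit = sum(1 for p in patterns if p == key_pattern)
print(n_patterns, n_full_credit)  # 4096 1
```

Each additional option doubles the count, which is why pattern-level calibration quickly demands very large samples.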

Early research on MR response type highlighted the potential for evaluating partial knowledge and found positive results (Collet, 1971; Coombs et al., 1956; Cronbach, 1939, 1941; Dressel & Schmid, 1953; Morgan, 1979). Likewise, more recent research explored alternative scoring solutions that focused on assigning raw score values to option response sets (Bauer et al., 2011; Becker & Soni, 2013; Betts, 2013, 2018; Clyne, 2015; Couch et al., 2018; Domnich et al., 2015; Dressel & Schmid, 1953; Eggen & Lampe, 2011; Hohensinn & Kubinger, 2011; Hsu et al., 1984; Jiao et al., 2012; Jorion et al., 2019; Kao & Betts, 2019; Kim et al., 2018; Lorié, 2014; Muckle et al., 2011; Muntean & Betts, 2015, 2016a, 2016b; Muntean et al., 2019). These studies have generally shown positive results for a broad range of partial credit scoring models. However, the prior research was done with a diverse methodology for scoring MR items. Additionally, much of the research was done with limited samples of both individuals and items. The current research seeks to provide an analysis of several promising approaches to applying raw scores for assessing partial knowledge in MR items using the SATA instruction set. This work attempts to organize results across several of the above research endeavors to provide evidence for numerous raw scoring methods that have shown reasonable results in the prior studies.

Scoring Methods Evaluated

Dichotomous

One approach to scoring MR items that is used quite often in practice is to simply score the items in a dichotomous (DI) method. For a correct score of 1, an individual must select all the keys and only the keys to obtain a correct status. Any selection of irrelevant information, that is, selecting a distractor, or not selecting the entire set of relevant information, that is, failure to select any key, results in a raw score of 0. This method applies a very strict penalty for both the selection of irrelevant information and failure to select all relevant information. This results in two groups, “all right” or “all wrong”; however, individuals in the “all wrong” group could actually have responded to some subset of the relevant information that suggests the “all wrong” category might not be an accurate description. Therefore, the opportunity to glean partial information from individuals with partial knowledge is lost.
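As a minimal sketch of the DI rule described above, assuming each response and key set is represented as a collection of option labels (the function name `score_dichotomous` is illustrative):

```python
def score_dichotomous(selected, keys):
    """Return 1 only when the selected options are exactly the key set, else 0."""
    return 1 if set(selected) == set(keys) else 0


# Example item with keys "ABC" (distractors would be "DEFG").
print(score_dichotomous("ABC", "ABC"))   # 1: all keys and only the keys
print(score_dichotomous("AB", "ABC"))    # 0: one key was not selected
print(score_dichotomous("ABCD", "ABC"))  # 0: a distractor was selected
```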

Subset

Another raw scoring method is the use of a proper subset (SU) criterion. This method provides a positive score for correctly selecting any subset of the key set. The score applied is the cardinality of this subset. Thus, some responding to relevant information in the outcome space without selecting any irrelevant information provides a basis for assigning partial credit. However, the selection of a single irrelevant feature results in assigning a score of 0 and places the examinee in the “all incorrect” grouping. Take, for example, an item with three keys “ABC” and four distractors “DEFG.” An examinee who endorses “A” and “B” will obtain a score of 2. However, if a single distractor is selected, then the item is scored as incorrect. Thus, if an examinee chose “A,” “B,” and “E,” then the result would be a score of 0 as a distractor was selected.
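The SU rule can be sketched as follows. This is an illustrative implementation under the assumption that selecting the full key set also earns credit (the maximum SU score reported later for a three-key item is 3), so the subset check here is non-strict; the function name is hypothetical.

```python
def score_subset(selected, keys):
    """Score the number of keys selected, or 0 if any distractor is selected."""
    selected, keys = set(selected), set(keys)
    if selected <= keys:      # every selected option is a key
        return len(selected)  # cardinality of the selected subset
    return 0                  # "trapdoor": any distractor nullifies the score


print(score_subset("AB", "ABC"))   # 2
print(score_subset("ABE", "ABC"))  # 0
```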

This method has also been referred to as the “polytomous trapdoor” (Muckle et al., 2011). While this method has a significant penalty for any distractor selection, that is, setting the score on the item to 0, it also is slightly more lenient than the DI method. This leniency is due to allowing for partial credit for any response string that is a proper subset of the key set. In this case, the selection of irrelevant information still nullifies the score, but one does not have to select all the correct responses in order to obtain some points, which would reflect different levels in the outcome space.

One thing to note about this scoring method is that the type of instructions can have a potentially unintended consequence. For instance, in the SATA situation, this method might encourage individuals to underselect, that is, select fewer options than the number of keys, because of the open-ended nature of the instructions and the strict penalty for selecting irrelevant information. Additionally, an examinee could implement a cautious strategy to avoid marking incorrect responses.

Ripkey

The Ripkey (RI) method has been discussed in the context of the “select N” instruction set for MR items with promising results for partial credit scoring (Ripkey et al., 1996). The scoring for this method proceeds by providing a single point for each correct answer and no points for an incorrect selection. However, if an individual overresponded, that is, selected more options than the N stated, a score of 0 was assigned. Using the example above with keys “ABC,” if an individual selected “ACE” they would receive a score of 2 for not overresponding (no penalty), selecting “A” and “C” from the key set (2 points), and selecting “E” from the distractor set (0 points). However, if one selected “ABCD,” then the score results in 0 points because the individual selected more options than keys.

If the examinee overselects, then one is substantially penalized by assigning a total score of 0. This means that for an item with three correct keys, individuals selecting two correct and one incorrect option would get a score of 2, whereas individuals selecting all three correct keys but additionally selecting an incorrect response would get a score of 0. So the inclusion of irrelevant information within a response set that is no larger than the key set has no effect on the score. For this reason, the RI method is seemingly more lenient than the SU method because it can allow for partial credit even when irrelevant information is selected. In contrast, the SU method treats any selection of irrelevant information as nullifying the response. In the context of SATA, this scoring method could induce a conservative approach to responding similar to the SU method. This is because the individual does not know what the exact “N” is, though it is more lenient by not penalizing irrelevant information selections without overresponding. This type of scoring could be more appropriate to the “select N” instruction set.
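A minimal sketch of the RI rule, assuming that under SATA instructions the unstated “N” is taken to be the number of keys, as in the worked example above (the function name is illustrative):

```python
def score_ripkey(selected, keys):
    """One point per key selected; 0 if more options are selected than keys."""
    selected, keys = set(selected), set(keys)
    if len(selected) > len(keys):  # overresponding nullifies the item
        return 0
    return len(selected & keys)    # distractors within the limit cost nothing


print(score_ripkey("ACE", "ABC"))   # 2
print(score_ripkey("ABCD", "ABC"))  # 0
```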

Plus/Minus

One approach that provides a slightly less strenuous penalty to selection of any distractor is the plus/minus (PM) method. This method was introduced as the “n from 5” format by Morgan (1979) to indicate that the examinee needed to select “n” options from the “5” options provided. While originally computed as a percentage of the total number of correct and incorrect alternatives, this method can be used more simply at the item level to provide a single integer score. This method has also been referred to as the “negative scoring” method (Muckle et al., 2011) and has been utilized in the Pearson Test of English (Pearson Education, 2018).

This method scores any selection of a key as 1 point and any selection of a distractor as a negative point. The total score on the item is then the sum of the response set, that is, adding the +1s and the −1s. If the sum is negative, the score is set to 0. While previous methods can result in a 0 score for the selection of a single incorrect answer or not selecting all the keys, this method cancels out selections of correct responses with selections of incorrect responses. In effect, the selection of irrelevant information only nullifies the selection of the same number of relevant selections. Applying this to the example above, the individual that answered two correct keys, “AB,” would get 2 points. If they selected one incorrect, “F,” they would get minus 1 point, resulting in a final raw score of 1 point. Another individual with three correct responses (+3) and one incorrect (−1) would have a score of 2.

The PM method is more lenient than the DI method because overresponding does not result in a complete nullification of selections of relevant information. Rather than resulting in a totally incorrect item when a distractor is selected, this method cancels out one of the correctly selected keys. This method incorporates irrelevant information in a one-for-one negation of relevant information. It also rounds all negative scores up to 0 when more irrelevant than relevant information is selected, which implies that it does not try to differentiate between different levels of irrelevant information selection. Additionally, it should be possible to assign different integer values to options if there was a clear rationale. For instance, if there were a distractor that was so bad that its selection indicated a serious deficiency of understanding, for example, selecting a response on a nursing exam that would potentially harm or kill a patient, then one could assign a negative value to that response that outweighs all the correct responses. This in effect could provide a “trapdoor” scoring approach that applies a significant penalty for certain distractor selections. Likewise, it could also be possible to assign greater positive point values to some keys, if there was a valid rationale.
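The PM rule, including the optional weighting idea discussed above, might be sketched as follows. The `weights` argument is a hypothetical extension for illustration (a mapping from option label to point magnitude, defaulting to 1); it is not a feature reported for any operational implementation.

```python
def score_plus_minus(selected, keys, weights=None):
    """Add points for selected keys, subtract for selected distractors, floor at 0."""
    keys = set(keys)
    weights = weights or {}  # hypothetical per-option magnitudes, default 1
    total = 0
    for option in set(selected):
        magnitude = weights.get(option, 1)
        total += magnitude if option in keys else -magnitude
    return max(0, total)     # negative sums are set to 0


print(score_plus_minus("ABF", "ABC"))   # 1: two keys (+2), one distractor (-1)
print(score_plus_minus("ABCD", "ABC"))  # 2: three keys (+3), one distractor (-1)
# A heavily weighted distractor can act as a "trapdoor":
print(score_plus_minus("ABCG", "ABC", weights={"G": 3}))  # 0
```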

Multiple True/False

The multiple true/false (TF; Cronbach, 1939, 1941, 1946) method utilizes scoring for both selection of relevant information and nonselection of irrelevant information. This method has also been referred to as the “multiple true/false” method (Muckle et al., 2011) or the “true/false testlet” model (Muntean & Betts, 2015; Muntean et al., 2016a). This method does not assess a penalty for incorrect responses; rather it simply provides no score for either incorrectly not selecting a key or selecting a distractor. The above research and others (Couch et al., 2018; Frisbie & Sweeney, 1982; Tsai & Suen, 1993) have found positive and encouraging evidence to support this scoring approach.

This method gives a point for the selection of any key and for the nonselection of distractors. Therefore, a point is awarded for each option with the selection of relevant information and for the nonselection of irrelevant information. If a key is not selected or a distractor is selected, then there are no points for that option. The score on the item is determined by accumulating all the points achieved across the options. Following the example above with key set “ABC” and distractor set “DEFG,” applying the TF scoring rule gives a maximum of seven achievable points, when only “ABC” are selected (+3) and “DEFG” are not selected (+4). If an individual were to select “A,” “B,” and “D,” they would obtain 2 points for selecting “A” and “B” and 3 points for not selecting “E,” “F,” and “G,” thus obtaining a score of 5 (2 + 3). In contrast, for the same item the maximum score for the DI method is a single point, and is 3 points for the RI, SU, and PM methods. With the TF scoring method, if an individual chose “ABFG,” then they would get 2 points for endorsing the “A” and “B” keys and 2 points for not endorsing “D” and “E,” which results in a score of 4.
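A minimal sketch of the TF rule, treating each option as its own true/false judgment. The function name and the explicit `options` argument (the full list of option labels) are illustrative assumptions.

```python
def score_true_false(selected, keys, options):
    """One point per option handled correctly: key selected or distractor left blank."""
    selected, keys = set(selected), set(keys)
    return sum(1 for opt in options if (opt in selected) == (opt in keys))


options = "ABCDEFG"  # keys "ABC", distractors "DEFG"
print(score_true_false("ABC", "ABC", options))   # 7: maximum score
print(score_true_false("ABD", "ABC", options))   # 5
print(score_true_false("ABFG", "ABC", options))  # 4
```

Under this sketch, selecting every option yields 3 points and selecting nothing yields 4, so no blanket strategy earns a score near the maximum on this example item.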

This method incorporates irrelevant information by providing points for accurately identifying information as irrelevant and not selecting it. There is no penalty for selection of irrelevant information or the nonselection of relevant information. It is expected that this should be the most lenient of the methods investigated. One possible implication with this method could be that it encourages individuals with little knowledge to simply select all responses and thus accumulate points for the selection of the keys. A positive aspect is that, with no penalty for selection or nonselection, issues related to risk taking or anxiety could be negated.

One interesting use of this method has been found in the context of item response theory and signal-detection framework (DeCarlo, 1998, 2011). In this context, it has provided a useful approach to evaluating the ability of an examinee to select target information from nontarget information and more generally recognizing pertinent cues from irrelevant cues (Betts & Muntean, 2017; Muntean & Betts, 2015; Muntean, Betts, Kao, et al., 2018; Muntean, Betts, Luo, et al., 2018; Muntean et al., 2016a, 2016b; Woo et al., 2014). In addition to having a high level of correspondence with item response parameters, it also provides an additional parameter that allows for measuring the response bias for examinees (Muntean & Betts, 2015; Muntean, Betts, Kao, et al., 2018; Muntean, Betts, Luo, et al., 2018; Woo et al., 2014).

Research Questions

As all the raw scoring methods uniquely account for irrelevant information in the scoring, measures of item fit and difficulty are of interest in this study. As the items are assigned scores based on differences in incorporating irrelevant information, with some being stricter than others, it is likely that differences in item difficulty will materialize. It was hypothesized that the following order would be found with respect to the most difficult to least difficult: DI, SU, RI, PM, TF. The rationale for this hypothesis was related to the decreasing “penalty” applied for the selection of irrelevant information.

One of the methods associated with the construct mapping methodology (Wilson, 2005) is the use of “fit” statistics for evaluating the goodness of fit between the data and the intended model. If raw scoring methods are appropriate, they should show reasonable levels of fit between the model and the data generated. Of interest in this study are both measures of “fit” called mean square infit and outfit (Wright & Masters, 1982). The “fit” metrics provide a reference for evaluating the fit between the different raw scoring methods.

Additional practical “fit” metrics were used to explore the potential differential effects of the raw scoring methods. These were based on practical considerations of calibrating useful items for testing. The percentage of items with missing categories was computed. Normally for a useful item all score categories are populated and needed for calibration. If one method leads to larger amounts of missing categories, it might suggest that the method would not be useful for practical purposes. Additionally, it is assumed that the raw scores are ordinal, with higher raw scores related to higher ability estimates, and thus the thresholds representing the transition from the lower score category to the higher would also be ordered in that same fashion, for example, the threshold for transitioning from score category 0 to 1 should be lower, easier, than the threshold for moving from 1 to 2, and so on. While this is not a necessity of the partial credit model (PCM), it is a practical consideration when making a validity argument. Exploration of the different fit statistics was thought of as exploratory; however, it was expected that the TF method would result in a large number of items with missing score categories as it theoretically generates the highest number of score categories and may be quite difficult to get a score of 0 on many items.

Another important consideration with TEIs has to do with information efficiency (Wan & Henly, 2012). The metric is used to evaluate the relative trade-off in information to response time for TEIs when compared with other types of items, for example, MC. Jodoin (2003) compared item formats on information, a measure of reliability in item response theory, using data from a computer software certification test. He found that TEIs provided more information across ability levels compared with MC items. However, TEI formats required more testing time, which resulted in lower efficiency (i.e., information per unit time). Similar patterns of results were obtained in the studies that compared MC items with TEI formats such as figural response (Wan & Henly, 2012) and fill in the blank (Qian et al., 2017). It is expected that all the partial credit methods will prove more efficient than DI.

Method

Comparisons of scoring methods were based on over 2 years of data collected from a variable-length, computerized adaptive test for licensure examination using the Rasch model. Exam lengths could range from a minimum of 60 scored, operational items to a maximum of 250. All SATA items were randomly seeded into each examinee’s operational exam. Items looked exactly like other items in the operational pool to ensure best effort. These types of items are used on the operational exam but are scored dichotomously. Each MR item was scored using all the proposed raw scoring methods.

To compare results across items and samples, the final ability estimate from the operational exam, theta, was used to anchor all item parameter estimates to the same, common scale using the fixed-person calibration method (Stocking, 1988). To make comparisons and evaluate differences between methods, it was necessary to put all items on the same scale to allow for direct comparisons. The final theta was computed using all responses to all items from the operational exam. This person-anchoring approach has been shown to provide reliably consistent estimates of item difficulty (Meng & Han, 2017). The PCM (Masters, 1982) was used for calibrating all items and raw scoring methods using Winsteps (Linacre, 2020).

Analysis

For this study, the average difficulty of items was calculated as the average of the thresholds estimated from the PCM. This provided an indication of the relative difficulty of the items under the different scoring methods. It was expected that there would be differences in difficulty between the methods due to the differences in the way the methods handle scoring of irrelevant information. Therefore, the general linear model (Kutner et al., 2005) was used to evaluate the significance of differences in difficulty.

To evaluate the fit to the model, the mean square infit and outfit measures from Winsteps (Linacre, 2020) were used. Infit is the information-weighted fit, which is more sensitive to the pattern of responses to items targeted on the examinee. Outfit is the outlier-sensitive fit, which is more sensitive to responses to items with difficulty far from a person. As this is exploratory research, the focus is on the relative fit of the scoring methods. This research used the guidelines outlined in Linacre (2020) suggesting that values between 0.5 and 1.5 could be considered as providing good fit to the model. Infit and outfit were averaged across all items for each method and compared against this guideline. Scoring methods with averages falling inside that range might be considered potentially useful methods for further investigation or use in practice.
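For readers unfamiliar with these statistics, the following sketch uses the standard mean square definitions (Wright & Masters, 1982): outfit is the average squared standardized residual, and infit is the sum of squared residuals divided by the sum of model variances. It is an illustration only and is not the Winsteps implementation; the function names and the input layout (one observed score, model-expected score, and model variance per examinee for a single item) are assumptions.

```python
def mean_square_fit(observed, expected, variance):
    """Return (infit, outfit) mean squares for one item across examinees."""
    squared_residuals = [(x - e) ** 2 for x, e in zip(observed, expected)]
    # Outfit: unweighted mean of squared standardized residuals.
    outfit = sum(r / w for r, w in zip(squared_residuals, variance)) / len(observed)
    # Infit: information-weighted, so well-targeted responses dominate.
    infit = sum(squared_residuals) / sum(variance)
    return infit, outfit


def within_guideline(value, low=0.5, high=1.5):
    """Check a mean square against the 0.5 to 1.5 guideline used in this study."""
    return low <= value <= high


# Hypothetical data: four examinees, each with expected score 0.5 and variance 0.25.
infit, outfit = mean_square_fit([1, 0, 1, 0], [0.5] * 4, [0.25] * 4)
print(infit, outfit)  # 1.0 1.0
```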

The percentage of items showing missing score categories was computed by identifying all items within each method that showed at least one missing category and dividing by the total number of items in the analysis set. Similarly, the percentage of items with disordered thresholds was computed by dividing the total number of items that had at least one disordered pair of thresholds by the total number of items. Additionally, a follow-up analysis was done with only the disordered items. A bubble sort algorithm (Knuth, 1998) was used to measure the total number of pairwise swaps that were needed to take a set of disordered thresholds and put them in ascending order. Methods having a greater number of internally disordered thresholds would result in larger numbers of swaps, suggesting a poorer fit of the response data to the model and to the expectation of dominance ordering. Because only items identified as disordered were evaluated, this metric has a lower bound of 1. This truncation can result in positive skew, and therefore the median number of swaps was preferred over the mean for comparison.
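The swap-count metric can be sketched as below. The threshold values and item names are hypothetical and serve only to illustrate the computation of the percentage of disordered items and the median number of swaps among them.

```python
from statistics import median


def count_bubble_sort_swaps(thresholds):
    """Count adjacent swaps needed by bubble sort to put thresholds in ascending order."""
    values = list(thresholds)  # copy so the caller's data is untouched
    swaps = 0
    for end in range(len(values) - 1, 0, -1):
        for i in range(end):
            if values[i] > values[i + 1]:
                values[i], values[i + 1] = values[i + 1], values[i]
                swaps += 1
    return swaps


# Hypothetical PCM thresholds for four items under one scoring method.
items = {
    "item1": [-1.0, 0.0, 1.0],      # ordered: 0 swaps
    "item2": [0.5, -0.2, 1.0],      # 1 swap
    "item3": [1.0, 0.5, -0.2],      # 3 swaps
    "item4": [0.3, 0.1, 0.9, 0.6],  # 2 swaps
}
swap_counts = [count_bubble_sort_swaps(t) for t in items.values()]
disordered = [s for s in swap_counts if s > 0]
pct_disordered = 100 * len(disordered) / len(items)
median_swaps = median(disordered)
print(pct_disordered, median_swaps)  # 75.0 2
```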

This research provides a more direct assessment of the relative trade-off of information to time. To evaluate this, the total information for each item was computed for each raw scoring method. Information is computed as the sum of the inverse of the square of the standard error of measurement (Lord, 1980) and can be evaluated across the ability scale to show the shape of the information function at different points of ability. Unlike previous research, this research applied the different scoring methods to the same item responses; thus, the item response time and sample were fixed across scoring methods. Differences in item information as a function of scoring method were therefore based on a common, fixed amount of time. As a result, information per unit of time could be compared directly across the scoring methods, and scoring methods with greater information would have greater efficiency.
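As a worked statement of this reasoning, the standard relationship between information and the standard error, and the resulting efficiency comparison, can be written as below. The symbols are assumptions introduced here for illustration: $I_i^{(m)}(\theta)$ is the information of item $i$ under scoring method $m$, $n$ is the number of items, and $T$ is the common response time.

```latex
I^{(m)}(\theta) = \sum_{i=1}^{n} I_i^{(m)}(\theta),
\qquad
\mathrm{SE}^{(m)}(\hat{\theta}) = \frac{1}{\sqrt{I^{(m)}(\theta)}}
\;\Longleftrightarrow\;
I^{(m)}(\theta) = \frac{1}{\bigl[\mathrm{SE}^{(m)}(\hat{\theta})\bigr]^{2}}

\frac{I^{(A)}(\theta)/T}{I^{(B)}(\theta)/T} = \frac{I^{(A)}(\theta)}{I^{(B)}(\theta)}
```

Because $T$ is identical for two methods $A$ and $B$ applied to the same responses, it cancels, and the ratio of information per unit of time reduces to the ratio of the information functions themselves.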

Item Development and Deployment

All items (N = 2,243) were developed using the standard operational item development process and designed to look exactly like operational MR items in the current bank. All items had either five (N = 1,982) or six (N = 261) options and from one to five keys. In the standard process, a panel of subject matter experts (SMEs) writes all items, a sensitivity panel then reviews them, and finally another panel of SMEs reviews them to verify the item content and the accuracy of the keys. After the items passed all reviews, they were placed into a pretest pool of an operational exam and were randomly seeded into candidates’ exams. On average, there were about 888 responses for each item (SD = 182).

Item Scoring Methods

Each item was scored using all the scoring approaches outlined above: DI, SU, RI, PM, and TF. The process for each is described below.

Dichotomous

The item was scored 1 if the examinee selected all of the keys and no distractors. Otherwise, a score of 0 was applied.

Subset

The examinee was given credit only for subsets of the answer key set. The cardinality of the selected subset was the examinee’s score; that is, a single point was given for each correct element, with the total score being the number of correct elements in the subset. If the examinee selected any incorrect option, the total score for the item was 0. Thus, the selection of any irrelevant information, that is, a distractor, would result in a score of 0 for the item. Partial credit was awarded only when relevant information alone, that is, keys, was selected.

Ripkey

The examinee was given 1 point for each key selected and 0 points for each distractor selected. The sum of these points was the final score. However, if an examinee selected more options than there were keys, the item score was 0. In this case, selection of irrelevant information affects the score only when the individual overselects.

Plus/Minus

Each correct selection of a key was counted as 1 point. When an examinee selected an incorrect option, a distractor, that response was assigned a value of −1. The score on the item was the sum of the values of the selected responses; if that sum was negative, a score of 0 was applied. This model enforced a one-to-one trade-off between relevant and irrelevant selection.

True/False

For this approach, each option was scored as if it were a TF-type item. A point was given for each selection of a key or nonselection of a distractor. Otherwise, if a distractor was selected or a key was not selected, 0 points were assigned. The score on the item was the sum across the option scores. This was considered the most lenient model as selection of irrelevant information does not affect the overall score.
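The five rules described above can be sketched side by side. This is a minimal illustration rather than the operational scoring code: the function names, the use of Python sets, and the option labels are all hypothetical, and the sketch assumes that selecting the complete key set with no distractors earns the maximum score under SU.

```python
from typing import Dict, Set


def score_dichotomous(selected: Set[str], keys: Set[str]) -> int:
    """DI: 1 only when the selection matches the key set exactly."""
    return 1 if selected == keys else 0


def score_subset(selected: Set[str], keys: Set[str]) -> int:
    """SU: number of keys selected, but 0 if any distractor is selected."""
    return len(selected) if selected <= keys else 0


def score_ripkey(selected: Set[str], keys: Set[str]) -> int:
    """RI: number of keys selected, but 0 if more options than keys are selected."""
    if len(selected) > len(keys):
        return 0
    return len(selected & keys)


def score_plus_minus(selected: Set[str], keys: Set[str]) -> int:
    """PM: +1 per key selected, -1 per distractor selected, floored at 0."""
    return max(0, len(selected & keys) - len(selected - keys))


def score_true_false(selected: Set[str], keys: Set[str], options: Set[str]) -> int:
    """TF: 1 point per key selected plus 1 point per distractor left unselected."""
    distractors = options - keys
    return len(selected & keys) + len(distractors - selected)


def score_all(selected: Set[str], keys: Set[str], options: Set[str]) -> Dict[str, int]:
    """Apply every rule to the same response, as in the study design."""
    return {
        "DI": score_dichotomous(selected, keys),
        "SU": score_subset(selected, keys),
        "RI": score_ripkey(selected, keys),
        "PM": score_plus_minus(selected, keys),
        "TF": score_true_false(selected, keys, options),
    }


# Hypothetical five-option item with three keys.
options = {"A", "B", "C", "D", "E"}
keys = {"A", "B", "C"}
print(score_all({"A", "B", "D"}, keys, options))
```

For the response {A, B, D}, the rules give DI = 0, SU = 0, RI = 2, PM = 1, and TF = 3, which shows how the same selection of one distractor is treated differently by each method.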

Results

Table 1 displays descriptive results from the PCM analyses. The correlations between methods are in the upper rows of the table, and the mean and SD of the item difficulty for each method are in the bottom rows. All correlations were significant, p < .0001, and most were greater than .80. The exceptions were the correlation between the DI and RI methods (r = .63) and the correlations between the TF method and both SU and RI (r = .77 and .58, respectively). Overall, the results suggested that the methods ordered examinees in a similar way.

Table 1.

Descriptive Statistics on PCM Analyses (Correlations—Upper Rows; Means and Standard Deviation—Bottom Rows).

| Method | DI | SU | RI | PM | TF |
|---|---|---|---|---|---|
| SU | .84 | | | | |
| RI | .63 | .91 | | | |
| PM | .82 | .92 | .82 | | |
| TF | .83 | .77 | .58 | .82 | |
| Mean difficulty | 1.10 | 0.39 | −0.03 | −0.39 | −1.05 |
| SD | 1.41 | 0.76 | 0.79 | 0.96 | 0.60 |

Note. All correlations are significant, p < .0001. PCM = partial credit model; SU = subset; RI = Ripkey; PM = plus/minus; TF = true/false; DI = dichotomous.

On the difficulty scale, larger numbers indicate more difficult items. The results (see Table 1) show the hypothesized pattern, with the DI method the most difficult (mean difficulty = 1.10), followed by SU at 0.39, down to the TF method with the lowest difficulty (mean difficulty = −1.05). Analysis using the general linear model indicated that the omnibus test was significant, F(4, 11213) = 1609.34, p < .0001, R² = .36. Follow-up pairwise comparisons with Bonferroni adjustments indicated that all conditions significantly differed from one another, ps < .0001. These results indicated not only that the order of difficulty was as expected but also that the differences were significant.

Table 2 provides descriptive statistics for the fit results. The RI method had the fewest items with missing score categories at 3.7%, and PM was next lowest with 4.7%. These results were markedly different from those for SU and TF, with 20.4% and 62.6%, respectively. Additionally, PM had the fewest items with disordered thresholds at 25.8%, whereas almost every item under the SU method (98.5%) had at least one disordering. The median number of swaps needed to order the disordered items was one for the PM and TF methods and two for the SU and RI methods.

Table 2.

Fit Metrics and Statistics for Each Method.

| Method | Missing category (%) | Disordered (%) | Mdn swaps | Infit M | Infit SD | Outfit M | Outfit SD |
|---|---|---|---|---|---|---|---|
| DI | n/a | n/a | n/a | 1.03 | 0.04 | 1.12 | 0.47 |
| SU | 20.4 | 98.5 | 2 | 1.37 | 0.21 | 2.70 | 2.07 |
| RI | 3.7 | 87.5 | 2 | 1.32 | 0.20 | 1.88 | 1.37 |
| PM | 4.7 | 25.8 | 1 | 1.16 | 0.10 | 1.33 | 0.84 |
| TF | 62.6 | 45.8 | 1 | 1.24 | 0.15 | 1.40 | 0.83 |

Note. DI = dichotomous; SU = subset; RI = Ripkey; PM = plus/minus; TF = true/false.

For both infit and outfit, the SU method had the highest mean fit statistics (infit = 1.37, outfit = 2.70). Among the polytomous scoring methods, the PM method yielded the lowest average infit (1.16) and outfit (1.33). Additionally, both the PM and TF methods showed narrower variability, as reflected by lower standard deviations in both infit and outfit, than the other partial credit scoring methods. All methods had mean infit values within the expected range. However, SU and RI had mean outfit values greater than the guideline of 1.5. Overall, the PM method appeared to provide the best fit among the polytomous methods, with good infit and outfit values along with few missing score categories and little disordering of item thresholds.

Fit statistics were also computed for each of the score categories for each method. Table 3 shows the mean and standard deviation of category infit, outfit, and root mean square residuals (Linacre, 2020). The PM method had the lowest mean root mean square residual at 1.06, followed by SU and RI at 1.11 each. Standard deviations were similar across those three methods, whereas the TF method had both a higher mean and a higher standard deviation. The category infit and outfit results indicated that the PM method showed the best fit of all methods, with means of 1.16 and 1.24, respectively. Additionally, the PM method showed the lowest variation in fit statistics, with standard deviations of 0.09 and 0.19, respectively.

Table 3.

Category Fit Statistics for Raw Scoring Methods.

| Method | RMSR M | RMSR SD | Infit M | Infit SD | Outfit M | Outfit SD |
|---|---|---|---|---|---|---|
| SU | 1.11 | 0.61 | 1.29 | 0.28 | 2.14 | 1.25 |
| RI | 1.11 | 0.68 | 1.27 | 0.25 | 1.71 | 0.76 |
| PM | 1.06 | 0.66 | 1.16 | 0.09 | 1.24 | 0.19 |
| TF | 1.49 | 0.96 | 1.29 | 0.24 | 1.42 | 0.47 |

Note. SU = subset; RI = Ripkey; PM = plus/minus; TF = true/false; RMSR = root mean square residual.

Finally, the overall information provided by the different scoring methods was analyzed. The sum of item information across all 2,243 items is presented in Figure 5. Item information is plotted across the entire range of the ability distribution (x-axis), binned from a minimum of −4.0 logits to a maximum of +4.0 logits in widths of 0.5 logits. All polytomous scoring methods provide more information in the middle of the score scale than DI, as expected; however, the SU method appears similar to DI at the ends of the ability scale but provides the most information of all methods around the middle of the scale. Both the TF and PM methods also show high levels of information in the center. For abilities less than −0.9 and greater than 0.6, the TF method provides more information than the other methods. Because these values are computed on the same set of items under different scoring methods, the time to complete any one item is the same across methods; therefore, it appears that applying a partial credit scoring approach provides substantially more information per unit of time than the DI method, especially over the ability range from −2.0 to +2.0.

Figure 5.

Total information for each scoring method across the ability (theta) scale.
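A curve of this kind could be produced along the following lines. The sketch assumes the partial credit model, under which an item's information at a given ability equals the conditional variance of its score; the threshold values are hypothetical, and evaluating the function at the 0.5-logit grid points from −4.0 to +4.0 is one assumed reading of the binning described above.

```python
import math
from typing import List, Sequence


def pcm_category_probs(theta: float, thresholds: Sequence[float]) -> List[float]:
    """Category probabilities for one PCM item with the given step thresholds."""
    # Cumulative sums of (theta - delta_j); category 0 has an empty sum of 0.
    logits = [0.0]
    for delta in thresholds:
        logits.append(logits[-1] + (theta - delta))
    peak = max(logits)  # subtract the maximum for numerical stability
    weights = [math.exp(v - peak) for v in logits]
    total = sum(weights)
    return [w / total for w in weights]


def pcm_item_information(theta: float, thresholds: Sequence[float]) -> float:
    """Item information, computed as the variance of the item score at theta."""
    probs = pcm_category_probs(theta, thresholds)
    mean = sum(k * p for k, p in enumerate(probs))
    return sum((k - mean) ** 2 * p for k, p in enumerate(probs))


def total_information(theta: float, items: Sequence[Sequence[float]]) -> float:
    """Sum of item information over all items at one ability value."""
    return sum(pcm_item_information(theta, t) for t in items)


# Grid from -4.0 to +4.0 logits in steps of 0.5 (17 points).
theta_grid = [-4.0 + 0.5 * i for i in range(17)]

# Hypothetical thresholds: one dichotomous item and two polytomous items.
items = [[0.0], [-0.5, 0.5], [-1.0, 0.0, 1.0]]
curve = [total_information(t, items) for t in theta_grid]
print(max(curve), theta_grid[curve.index(max(curve))])
```

Running the same items through each scoring method's estimated thresholds and overlaying the resulting curves would give a comparison of the kind shown in the figure.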

Discussion

Given the rapid advancement of TEIs being incorporated into CBT, this research sought to evaluate different scoring methods for a very flexible response type, MR, using the SATA instruction set. Not only does this response structure underlie several different types of item designs for CBT, but it is also just as easily implemented in a paper-and-pencil format. MR items provide a direct method for evaluating partial knowledge and/or assigning partial credit to responses. This research provides some initial results from exploring how different raw scoring methods incorporate irrelevant information.

Significant differences in item difficulty were found between the methods; however, with the large sample sizes, the tests were quite powerful. The DI method produced the most difficult items, whereas the TF method produced the least difficult items overall. Additionally, item fit statistics indicated that the TF and PM methods fit well on both infit and outfit, whereas both the SU and RI methods had higher levels of outfit than expected. For practical fit metrics, the PM method appeared to have the best results overall; however, all methods resulted in a large number of items with disordered thresholds, with PM being the lowest at about 26% of the items. All methods provided substantially more information around the center of the scale than the DI method and were deemed more efficient, with more information per unit of time. Overall, the PM method fared particularly well across all the metrics used in this study.

Although this research was undertaken with respect to a single licensure program domain, application of these methods in any testing program is feasible. It is suggested that programs interested in evaluating scoring approaches to MR items apply all available methods to help identify the most useful one for each case. For instance, Muckle et al. (2011) showed the potential for differential results depending on the type of program from which the data are derived. Additionally, thinking about how different scoring methods incorporate irrelevant information could help inform the selection of scoring methods to employ.

This research was undertaken using the SATA instruction set. Results might not be applicable under the “select N” instruction set. Additionally, some of the methods might not make sense with this alternative instruction set. For example, using the PM method, the only way to obtain a score of N − 1 would be for an individual to select only N − 1 options and get them all correct. If an individual selected N options and got one of them incorrect, the score would be N − 2, so an examinee who follows the instruction could never obtain a score of N − 1. Additionally, the RI method would seem to be a better candidate for the “select N” instruction set, especially in CBT, where a constraint can be employed to prevent candidates from selecting more than N options. However, if following the directions is itself important, then the “penalty” for overselection might be a rationale for not placing this constraint on items.
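The PM example can be checked by enumeration. The sketch below assumes that the examinee selects exactly N options, where N is the number of keys, and that the item has a given number of distractors; the function name is hypothetical.

```python
from typing import List


def pm_scores_when_exactly_n_selected(n_keys: int, n_distractors: int) -> List[int]:
    """List the PM scores reachable when exactly n_keys options are selected."""
    scores = set()
    for correct in range(n_keys + 1):
        incorrect = n_keys - correct  # remaining selections must be distractors
        if incorrect <= n_distractors:  # only feasible if enough distractors exist
            scores.add(max(0, correct - incorrect))
    return sorted(scores)


# Hypothetical five-option item with three keys and two distractors.
print(pm_scores_when_exactly_n_selected(3, 2))
```

With three keys and two distractors the reachable scores are 0, 1, and 3. Each distractor selected both forfeits a key and subtracts a point, so scores move in steps of two and the N − 1 category stays empty.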

Future research could focus on evaluating results when item instructions, SATA versus “select N,” are crossed with different information about how items will be scored. For instance, pairing a “select N” instruction set with examinees knowing they will be scored with the SU method might produce different results than pairing it with the RI method. Allowing individuals to select up to N responses but no more and using RI scoring might make individuals more willing to take risks by selecting options about which they are unsure, as no “penalty” would be applied. However, SU scoring might show more underresponding, as individuals might choose not to chance the selection of a questionable response that they might have selected under RI scoring.

Moreover, future research might seek out situations that explore the utility of specific scoring methods when coupled with specific cognitive tasks. For example, the TF scoring was used specifically in a signal detection–related task of cue recognition within the context of nursing clinical judgment (Betts, 2013; Betts & Muntean, 2017; Muntean & Betts, 2015, 2016a). This type of scoring method is well structured for this type of cognitive task. Other types of tasks could be identified that align well with the proposed scoring methods. For instance, the PM method was also found to be useful when evaluating the construct of “taking action” within a nursing clinical judgment task (Kim et al., 2018). Here, taking correct actions was seen as positive, whereas taking incorrect actions was seen as negative for patient care.

Another potential line of research could evaluate differential methods of applying penalties to the selection of irrelevant information. For instance, some irrelevant information might not affect the outcome of the task being evaluated, whereas other irrelevant information might. In a case like this, one might investigate having no penalty for selecting an inconsequential irrelevant response but applying a penalty for selecting one that would have a negative effect. Moreover, one might identify responses that, when selected, would have a detrimental effect on the outcome or task being evaluated and apply an even harsher penalty to them. For example, in the medical field, the selection of a distractor that could seriously harm or kill a patient might be scored with a heavy penalty, whereas selecting a distractor that has no effect on the situation might carry no penalty or a very low one.
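One way such a differential penalty could be expressed is as a per-distractor weight in a PM-style rule. The sketch below is purely illustrative and was not evaluated in this study: the function name, the integer penalty values, and the choice to floor the score at 0 (borrowed from the PM rule) are all assumptions.

```python
from typing import Dict, Set


def score_weighted_penalty(selected: Set[str],
                           keys: Set[str],
                           penalties: Dict[str, int]) -> int:
    """+1 per key selected, minus a distractor-specific penalty, floored at 0.

    penalties maps a distractor label to a non-negative integer penalty;
    distractors missing from the mapping are treated as carrying no penalty.
    """
    gained = len(selected & keys)
    lost = sum(penalties.get(option, 0) for option in selected - keys)
    return max(0, gained - lost)


# Hypothetical item: C is inconsequential, D is harmful, E is dangerous.
keys = {"A", "B"}
penalties = {"C": 0, "D": 1, "E": 3}
for response in ({"A", "B", "C"}, {"A", "B", "D"}, {"A", "B", "E"}):
    print(sorted(response), score_weighted_penalty(response, keys, penalties))
```

Setting every penalty to 1 gives the same scores as the PM rule, so a scheme like this can be viewed as a generalization of PM.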

Overall, these results suggest several useful methods for scoring MR items to extract partial credit when using a SATA instruction set. MR items are flexible in that they can underlie many different item presentations and be used on both CBT and paper-and-pencil exams. Each of the methods evaluated here had its own approach to incorporating the selection of irrelevant information into the raw score. The methods showed significant differences in difficulty from one another and various degrees of fit. For the most part, they provided more information per unit of time than scoring items dichotomously. Overall, the PM method seemed to hold the most promise, as it had relatively better fit metrics and a good spread of information across the ability continuum. Further studies should seek to replicate these scoring methods across different types of examinations and assessments with different populations to see how robust the results are as general scoring methods.

Footnotes

Declaration of Conflicting Interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) received no financial support for the research, authorship, and/or publication of this article.

ORCID iD: Joe Betts https://orcid.org/0000-0002-0378-132X

References

- American Educational Research Association, American Psychological Association, & National Council on Measurement in Education. (2014). Standards for educational and psychological testing (6th ed.). American Educational Research Association.

- Bauer D., Holzer M., Kopp V., Fischer M. (2011). Pick-N multiple choice-exams: A comparison of scoring algorithms. Advances in Health Sciences Education, 16(2), 211-221. https://doi.org/10.1007/s10459-010-9256-1

- Becker K. A., Soni H. (2013, April 28-30). Improving psychometric feedback for innovative test items [Paper presentation]. National Council on Measurement in Education annual meeting, San Francisco, CA, United States.

- Betts J. (2013, April 27–May 1). Exploring innovative item types on computer-based testing [Paper presentation]. National Conference of the American Educational Research Association, San Francisco, CA, United States.

- Betts J. (2018, April 12-16). Measuring clinical judgment in nursing: Integrating technology enhanced items [Paper presentation]. Annual Conference of the National Council on Measurement in Education, New York, NY, United States.

- Betts J., Muntean W. (2017, April 28-30). Using signal detection theory and IRT methods with multiple response items [Paper presentation]. Annual Conference of the National Council on Measurement in Education, San Antonio, TX, United States.

- Biggs J. B., Collis K. F. (1982). Evaluating the quality of learning: The SOLO taxonomy. Academic Press.

- Bock R. D. (1972). Estimating item parameters and latent ability when responses are scored in two or more nominal categories. Psychometrika, 37(1), 29-51. https://doi.org/10.1007/BF02291411

- Clyne C. M. (2015). The effects of different scoring methodologies on item and test characteristics of technology-enhanced items [Unpublished doctoral dissertation]. University of Kansas. https://kuscholarworks.ku.edu/bitstream/handle/1808/21675/Clyne_ku_0099D_14314_DATA_1.pdf?sequence=1

- Collet L. S. (1971). Elimination scoring: An empirical evaluation. Journal of Educational Measurement, 8(3), 209-214. https://doi.org/10.1111/j.1745-3984.1971.tb00927.x

- Coombs C. H., Milholland J. E., Womer J. F. (1956). The assessment of partial knowledge. Educational and Psychological Measurement, 16(1), 13-37. https://doi.org/10.1177/001316445601600102

- Couch B. A., Hubbard J. K., Brassil C. E. (2018). Multiple-true-false questions reveal the limits of the multiple-choice format for detecting students with incomplete understandings. BioScience, 68(6), 455-463. https://doi.org/10.1093/biosci/biy037

- Cronbach L. J. (1939). Note on the multiple true-false test exercise. Journal of Educational Psychology, 30(8), 628-631. https://doi.org/10.1037/h0058247

- Cronbach L. J. (1941). An experimental comparison of multiple true-false and multiple multiple-choice tests. Journal of Educational Psychology, 32(7), 533-543. https://doi.org/10.1037/h0058518

- Cronbach L. J. (1946). Response sets and test validity. Educational and Psychological Measurement, 6(4), 475-494. https://doi.org/10.1177/001316444600600405

- DeCarlo L. T. (1998). Signal detection theory and generalized linear models. Psychological Methods, 3(2), 186-205. https://doi.org/10.1037/1082-989X.3.2.186

- DeCarlo L. T. (2011). Signal detection theory with item effects. Journal of Mathematical Psychology, 55(3), 229-239. https://doi.org/10.1016/j.jmp.2011.01.002

- DeMars C. E. (2008, March 25-27). Scoring multiple choice items: A comparison of IRT and classical polytomous and dichotomous methods [Paper presentation]. Annual Meeting of the National Council on Measurement in Education, New York, NY, United States.

- Domnich A., Panatto D., Arata L., Bevilacqua I., Apprato L., Gasparini R., Amicizia D. (2015). Impact of different scoring algorithms applied to multiple-mark survey items on outcome assessment: An in-field study on health-related knowledge. Journal of Preventive Medicine and Hygiene, 56(4), E162-E171.

- Dressel P. L., Schmid J. (1953). Some modifications of the multiple-choice item. Educational and Psychological Measurement, 13(4), 574-595. https://doi.org/10.1177/001316445301300404

- Duncan G. T., Milton E. O. (1978). Multiple-answer multiple-choice test items: Responding and scoring through Bayes and minimax strategies. Psychometrika, 43(1), 43-57. https://doi.org/10.1007/BF02294088

- Eggen T. J. H. M., Lampe T. T. M. (2011). Comparison of the reliability of scoring methods of multiple-response items, matching items, and sequencing items. Cadmo, 19(2), 85-104.

- Frary R. B. (1989). Partial-credit scoring methods for multiple-choice tests. Applied Measurement in Education, 2(1), 79-96. https://doi.org/10.1207/s15324818ame0201_5

- Frisbie D. A., Sweeney D. C. (1982). The relative merits of multiple true-false achievement tests. Journal of Educational Measurement, 19(1), 29-35. https://doi.org/10.1111/j.1745-3984.1982.tb00112.x

- Haladyna T. M., Kramer G. (2005, April 11-15). An empirical investigation of poly-scoring of multiple-choice item responses [Paper presentation]. Annual Meeting of the National Council on Measurement in Education, Montreal, Quebec, Canada.

- Hohensinn C., Kubinger K. D. (2011). Applying item response theory methods to examine the impact of different response formats. Educational and Psychological Measurement, 71(4), 732-746. https://doi.org/10.1177/0013164410390032

- Hsu T.-C., Moss P. A., Khampalikit C. (1984). The merits of multiple-answer items as evaluated by using six scoring formulas. Journal of Experimental Education, 52(3), 152-158. https://doi.org/10.1080/00220973.1984.11011885

- Jiao H., Liu J., Hynie K., Woo A., Gorham J. (2012). Comparison between dichotomous and polytomous scoring on innovative items in a large-scale computerized adaptive test. Educational and Psychological Measurement, 72(3), 493-509. https://doi.org/10.1177/0013164411422903

- Jodoin M. G. (2003). Measurement efficiency of innovative item formats in computer-based testing. Journal of Educational Measurement, 40(1), 1-15. https://doi.org/10.1111/j.1745-3984.2003.tb01093.x

- Jorion N., Betts J., Kim D., Muntean W. (2019, April 4-8). Evaluating clinical judgment items: Field test results [Paper presentation]. Annual Conference of the National Council on Measurement in Education, Toronto, Ontario, Canada.

- Kane M. (2016). Validation strategies: Delineating and validating proposed interpretations and uses of test scores. In Lane S., Raymond M. R., Haladyna T. M. (Eds.), Handbook of test development (2nd ed., pp. 64-80). Routledge.

- Kao S.-c., Betts J. (2019, April 4-8). Exploring item scoring methods for technology-enhanced items in computerized adaptive tests [Paper presentation]. Annual Conference of the National Council on Measurement in Education, Toronto, Ontario, Canada.

- Kim D., Woo A., Betts J., Muntean W. (2018, April 12-16). Evaluating scoring models to align with proposed cognitive constructs underlying item content [Paper presentation]. Annual Conference of the National Council on Measurement in Education, New York, NY, United States.

- Knuth D. E. (1998). The art of computer programming: Sorting and searching (Vol. 3, 2nd ed.). Addison-Wesley.

- Koch D. A. (1993). Testing goes graphical. Journal of Interactive Instruction Development, 5, 14-21.

- Kutner M. K., Nachtsheim C. J., Neter J., Li W. (2005). Applied linear statistical models (5th ed.). McGraw-Hill Irwin.

- Linacre J. M. (2020). Winsteps® (Version 4.5.2) [Computer software]. Winsteps.com.

- Lord F. M. (1980). Applications of item response theory to practical testing problems. Lawrence Erlbaum.

- Lorié W. (2014, April 4-6). Application of a scoring framework for technology-enhanced items [Paper presentation]. 2014 Annual Meeting of the National Council for Measurement in Education, Philadelphia, PA, United States.

- Masters G. N. (1982). A Rasch model for partial credit scoring. Psychometrika, 47(2), 149-174. https://doi.org/10.1007/BF02296272

- Meng H., Han C. (2017, August 18-21). Comparison of pretest item calibration methods in CAT [Paper presentation]. 2017 International Association for Computerized Adaptive Testing, Niigata, Japan.

- Morgan M. (1979). MCQ: An interactive computer program for multiple-choice self-testing. Biochemical Education, 7(3), 67-69. https://doi.org/10.1016/0307-4412(79)90049-9

- Muckle T. J., Becker K. A., Wu B. (2011, April 7-8). Investigating the multiple answer multiple choice item format [Paper presentation]. 2011 Annual Meeting of the National Council on Measurement in Education, New Orleans, LA, United States.

- Muntean W., Betts J. (2015, April 15-19). Analyzing multiple response data through a signal-detection framework [Paper presentation]. National Conference of the National Council on Measurement in Education, Chicago, IL, United States.

- Muntean W., Betts J. (2016a, April 7-11). Investigating sequential item effects in a testlet model [Paper presentation]. National Conference of the National Council on Measurement in Education, Washington, DC, United States.

- Muntean W., Betts J. (2016b, July 1-4). Developing and pretesting technology enhanced items: Issues and outcomes [Paper presentation]. International Conference of the International Testing Commission, Vancouver, British Columbia, Canada.

- Muntean W., Betts J., Kao S.-c., Woo A. (2018, April 12-16). A hierarchical framework for response times and signal detection theory [Paper presentation]. Annual Conference of the National Council on Measurement in Education, New York, NY, United States.

- Muntean W., Betts J., Kim D. (2016a, May 26-29). Exploring the similarities and differences between item response theory and item-level signal detection theory [Poster presentation]. Annual Conference of the Association for Psychological Science, Chicago, IL, United States.

- Muntean W., Betts J., Kim D. (2016b, July 12-15). Exploring the similarities and differences between item response theory and item-level signal detection theory [Paper presentation]. International Conference of the Psychometric Society, Asheville, NC, United States.

- Muntean W., Betts J., Kim D., Jorion N. (2019, April 4-8). Scaling clinical judgment items using polytomous and super-polytomous models [Paper presentation]. Annual Conference of the National Council on Measurement in Education, Toronto, Ontario, Canada.

- Muntean W., Betts J., Luo X., Kim D., Woo A. (2018, April 12-16). Using signal-detection theory to enhance IRT Methods: A clinical judgment example [Paper presentation]. Annual Conference of the National Council on Measurement in Education, New York, NY, United States.

- Nichols P. D., Kobrin J. L., Lai E., Koepfler J. (2016). The role of theories of learning and cognition in assessment design and development. In Rupp A. A., Leighton J. P. (Eds.), The handbook of cognition and assessment: Frameworks, methodologies, and applications (pp. 15-40). Wiley Blackwell.

- O’Neil K., Folk V. (1996, April 9-11). Innovative CBT item formats in a teacher licensing program [Paper presentation]. Annual Meeting of the National Council on Measurement in Education, New York, NY, United States.

- Parshall C., Stewart R., Ritter J. (1996, April 9-11). Innovations: Sound, graphics, and alternative response modes [Paper presentation]. Annual Meeting of the National Council on Measurement in Education, New York, NY, United States.

- Pearson Education. (2018). Pearson PTE Academic: Score guide, Version 11. https://pearsonpte.com/wp-content/uploads/2019/08/Job_-Score-Guide-2019_-V.11-2019.pdf

- Pomplun M., Omar M. H. (1997). Multiple-mark items: An alternative objective item format? Educational and Psychological Measurement, 57(6), 949-962. https://doi.org/10.1177/0013164497057006005

- Qian H., Woo A., Kim D. (2017). Exploring the psychometric properties of innovative items in computerized adaptive testing. In Hong J., Lissitz R. W. (Eds.), Technology enhanced innovative assessment: Development, modeling, and scoring from an interdisciplinary perspective (pp. 97-118). Information Age.

- Riconscente M. M., Mislevy R. J., Corrigan S. (2016). Evidence-centered design. In Lane S., Raymond M. R., Haladyna T. M. (Eds.), Handbook of test development (2nd ed., pp. 40-63). Routledge.

- Ripkey D. R., Case S. M., Swanson D. B. (1996). A “new” item format for assessing aspects of clinical competence. Academic Medicine, 71(10), 534-536. https://doi.org/10.1097/00001888-199610000-00037

- Samejima F. (1969). Estimation of latent ability using a response pattern of graded scores (Psychometric Monograph No. 17). Psychometric Society. https://www.psychometricsociety.org/sites/main/files/file-attachments/mn17.pdf?1576606975

- Samejima F. (1981). Efficient methods of estimating the operating characteristics of item response categories and challenge to a new model for the multiple choice item. Final report. Office of Naval Research.

- Sireci S. G., Zenisky A. L. (2006). Innovative item formats in computer-based testing: In pursuit of improved construct representation. In Downing S. M., Haladyna T. M. (Eds.), Handbook of test development (pp. 329-347). Routledge.

- Stocking M. L. (1988). Scale drift in on-line calibration (Research Report RR-88-28-ONR). Educational Testing Service.

- Thissen D., Steinberg L. (1984). A response model for multiple choice items. Psychometrika, 49(4), 501-519. https://doi.org/10.1007/BF02302588

- Tsai F., Suen H. (1993). A brief report on the comparison of six scoring methods for multiple true-false items. Educational and Psychological Measurement, 53(2), 399-404. https://doi.org/10.1177/0013164493053002008

- Wan L., Henly G. (2012). Measurement properties of two innovative item formats in a computer-based test. Applied Measurement in Education, 25(1), 58-78. https://doi.org/10.1080/08957347.2012.635507

- Warrens M. J., de Gruijter D. N. M., Heiser W. J. (2007). A systematic comparison between classical optimal scaling and the two-parameter IRT model. Applied Psychological Measurement, 31(2), 106-120. https://doi.org/10.1177/0146621605287912

- Wilson M. (2005). Constructing measures: An item response modeling approach. Lawrence Erlbaum.

- Woo A., Muntean W., Betts J. (2014, July 2-5). Using signal-detection theory to measure cue recognition in multiple response items [Paper presentation]. International Conference of the International Testing Commission, San Sebastian, Spain.

- Wright B. D., Masters G. N. (1982). Rating scale analysis. MESA Press.