Abstract

The onset of motor symptoms in Parkinson disease (PD) is typically unilateral. Previous work has suggested that laterality of motor symptoms may also influence non-motor symptoms including cognition and emotion perception. In line with hemispheric differences in emotion processing, we tested whether left side/right brain motor onset was associated with worse expression of facial affect when compared to right side/left brain motor onset. We evaluated movement changes associated with facial affect in 30 patients with idiopathic PD (15 left-sided motor onset, 15 right-sided motor onset) and 20 healthy controls. Participants were videotaped while posing three facial expressions: fear, anger, and happiness. Expressions were digitized and analyzed using software that extracted three variables: two measures of dynamic movement change (total entropy and entropy percent change) and a measure of time to initiate facial expression (latency). The groups did not differ in overall amount of movement change or percent change. However, left-sided onset PD patients were significantly slower in initiating anger and happiness facial expressions than were right-sided onset PD patients and controls. Our results indicated PD patients with left-sided symptom onset had greater latency in initiating two of three facial expressions, which may reflect laterality effects in intentional behavior.

Introduction

Most patients with Parkinson disease initially present with motor symptoms on one side of the body, left or right. This initial motor asymmetry reflects differential disruption of contralateral basal ganglia-frontal lobe dopaminergic systems. With disease progression, symptoms become more bilateral, though some degree of motor asymmetry often persists (Lee et al., 1995). A variety of studies have reported that laterality of symptom onset in Parkinson disease (PD) may also influence non-motor symptoms, such as cognitive processing in a material-specific manner (for reviews, see Steinbach et al., 2021; Verreyt et al., 2011). Some, but not all, studies report worse language/verbal memory processing with left brain onset and worse visual processing/memory with right brain onset (Verreyt et al., 2011). A recent meta-analysis indicated that left-sided PD motor symptom onset (right brain) was also associated with greater difficulties in identifying social and emotional cues in others (Coundouris et al., 2019). What remains unknown is whether laterality of symptom onset in PD also affects facial expressivity. Blunted facial expressivity, or the ‘masked face,’ is a characteristic feature of PD that can lead to misattribution of mood and emotion (e.g., depression, apathy) by family members, caretakers, and clinicians.

The mechanisms underlying the masked face in PD are not fully understood, but have been linked to disruption of cortical and basal ganglia systems for both intentional (voluntary) and spontaneous (involuntary) expression of emotions. Elegant electrophysiology mapping studies by Morecraft and colleagues (2001) have identified five cortical “face representation areas” in macaques, including two in the anterior cingulate region linked to spontaneous emotional expressions. These five face representation areas are interconnected, though each independently sends corticobulbar projections to the facial nucleus in the brainstem. For intentional expression, abnormal output from the basal ganglia may result in reduced activation of primary motor and pre-motor areas in frontal cortical regions, potentially leading to diminished intentional facial movements (Bologna et al., 2013; Marsili et al., 2014). Similarly, for involuntary expression, abnormal basal ganglia output may alter activation of anterior cingulate regions via parallel circuitry outlined by Alexander, DeLong, and Strick (1986). Taken together, such observations provide an anatomic basis for how dopaminergic disruption of striatal-frontal circuitry in PD can influence facial movement. Turning to the stroke literature, several studies have described worse facial expressivity, both voluntary and involuntary, following unilateral hemispheric strokes of the right relative to the left hemisphere (Borod et al., 1986; Kent et al., 1988), particularly frontal lobe regions. Additionally, studies in split-brain patients (i.e., those who have undergone surgical resection of the corpus callosum) have found that the right hemisphere may be superior in discriminating emotional facial expressions (Stone et al., 1986) and that the right hemisphere in particular is associated with an autonomic response from emotional stimuli (Làdavas et al., 1993).

One interpretation of these stroke and split-brain findings pertains to hemispheric differences in the processing of emotions. Two major theories of cerebral lateralization of emotion processing have been proposed: the right hemisphere hypothesis and the valence-specific hypothesis (for review, see Gainotti, 2019). According to the right hemisphere hypothesis, the right cortical hemisphere is dominant for mediating all emotional behavior, regardless of affective valence (Borod et al., 1998; Heilman et al., 2003). In contrast, the valence-specific hypothesis posits that both hemispheres process emotion, but that the right hemisphere is more dominant in processing negative emotions while the left hemisphere is dominant in processing positive emotions (Davidson, 1992). An expansion of the valence-specific hypothesis was proposed by Heller (1993) who suggested that there are two distinct neural systems, one involved in the modulation of emotional valence in the frontal lobes (with right frontal activity linked to negative valence and left frontal activity linked to positive valence) and the other system involved in the modulation of autonomic arousal (located in the right parieto-temporal region). Although the exact nature of this lateral division of emotion remains a topic of debate, several recent studies examining the processing of emotional facial expressions in neurotypical populations have revealed a right hemisphere bias for several emotions (Hausmann et al., 2021; Innes et al., 2016). These findings, coupled with research from hemispheric strokes, led us to adopt the right hemisphere hypothesis as the theoretical framework for the present study.

The goal of the present study was to test the hypothesis that laterality of motor symptom onset in PD might influence the intentional/voluntary production of facial emotions. We predicted that individuals with left symptom onset (right brain) would exhibit slower and less intense facial emotions than those with right symptom onset (left brain). This hypothesis and predictions were based on the view that there is greater involvement of right hemisphere systems in emotional behavior. To examine these predictions, we used a semi-automated computer program to quantify the amount and timing of movement over the face during production of intentional facial emotions (see Bowers et al., 2006). Individuals with PD and healthy controls were videotaped while posing facial expressions, and each video frame was analyzed for dynamic movement changes across the face. Our approach was based on a method originally developed by Leonard and colleagues (1991) which relies on light reflectance patterns occurring over the moving face to compute the speed and amplitude of a facial expression.

Method

Participants

Participants included 30 patients with idiopathic PD and 20 healthy controls recruited from a larger parent study on treatment of facial expressivity (NCT00350402). All PD patients had an established diagnosis of idiopathic PD based on the UK Brain Bank Criteria. Laterality of symptom onset (right or left) was based on self-report and medical record review. Exclusion criteria for all participants were suspected dementia (DRS total score < 130), current or past history of major psychiatric disturbance (e.g., substance abuse, bipolar disorder), history of neurologic illness (other than PD), unstable medication regimen, neurosurgery, and presence of orofacial dyskinesias. The final PD sample included 15 patients with right-sided motor symptom onset (RSO-PD) and 15 patients with left-sided motor symptom onset (LSO-PD). Informed consent was obtained according to university and federal guidelines. Demographic information and scores on standard motor, cognitive, and mood measures are depicted in Table 1. The groups did not significantly differ across these indices based on parametric (ANOVA, t-tests) and nonparametric (chi-square) statistics.

Table 1.

Participant Demographics and Clinical Characteristics

| Characteristic | RSO-PD | LSO-PD | Controls | Significance |
|---|---|---|---|---|
| N | 15 | 15 | 20 | n/a |
| Age, mean (SD), years | 66.9 (6.9) | 69.9 (7.1) | 66.0 (8.9) | ns |
| Education, mean (SD), years | 16.7 (2.8) | 16.1 (2.6) | 15.7 (2.6) | ns |
| Sex Ratio (male:female) | 9:6 | 10:5 | 12:8 | ns |
| Race and Ethnicity | | | | ns |
| White | 13 | 14 | 19 | |
| Hispanic | 0 | 1 | 0 | |
| Black | 2 | 0 | 1 | |
| Handedness (right:left) | 13:2 | 12:3 | 18:2 | ns |
| DRS-II total score, mean (SD) | 140.7 (2.9) | 137.4 (5.6) | 140.4 (3.8) | ns |
| Symptom duration, mean (SD), years | 7.8 (5.0) | 7.3 (3.7) | n/a | ns |
| UPDRS total motor, mean (SD), on medication | 21.1 (7.6) | 25.8 (9.9) | n/a | ns |
| BDI-II, total score, mean (SD) | 6.1 (3.8) | 9.2 (7.8) | 4.6 (5.5) | ns |
| AS, total score, mean (SD) | 9.1 (4.8) | 12.5 (6.7) | 9.0 (4.1) | ns |

Note. RSO-PD: Right-sided motor symptom onset; LSO-PD: Left-sided motor symptom onset; SD: Standard Deviation; DRS-II: Dementia Rating Scale-II; UPDRS: Unified Parkinson Disease Rating Scale (motor scale); BDI-II: Beck Depression Inventory-II; AS: Apathy Scale; ns: non-significant

Face Digitizing Methods

Procedure for Collecting Facial Expression.

During testing, participants were seated in a quiet room with their head positioned in a CIVCO Vac-Lok head stabilization system. Participants were asked to pose three different emotional expressions (happiness, anger, fear) so that others would be able to discern how they felt. They were videotaped using a black-and-white Pulnix camera (TM-7CN) and Sony video recorder (SLV-R1000). Indirect lighting was produced by reflecting two 150-watt tungsten light bulbs onto two white photography umbrellas positioned about three feet from the face. Lighting on each side of the face was balanced within one lux of brightness using a Polaris light meter. Participants wore a Velcro headband across the top of the forehead. Attached to the headband were two light emitting diodes that were synchronized with a buzzer whose onset signaled that the participant should make a target facial expression. Because sound was not recorded, onset of the diodes provided an index of trial onset during subsequent image processing.

For each of the three expressions (i.e., happiness, anger, fear), participants were instructed: “without moving your head, show the most intense expression you can make when you hear the buzzer.” Participants were asked not to blink and to look straight into the camera. At onset of each trial, participants were told the “target” emotion they should pose, but instructed not to produce it until they heard an auditory cue (i.e., buzzer) that would occur a few moments later (range from 2 to 4 seconds). After making the expression, participants closed their eyes and relaxed their face for approximately 10 seconds. Each of the three emotion expressions was produced twice in order to optimize the possibility that one expression would be free of motion artifact. Participants completed a single testing session that lasted approximately 90 minutes in total. In addition to time required for informed consent and completion of questionnaires/cognitive measures, this also included time to stabilize the head, ensure equivalent lighting levels across the two sides of the face, and complete the face expression trials. Two independent raters selected the expression with least motion artifact for analysis. When no artifact was detected, the first expression was used. The PD participants were tested after taking their first dose of dopaminergic medication in the morning.

Quantification Methods.

To quantify intensity and timing of facial movements, we used custom software and methods as described by Bowers et al. (2006). For post-image processing, separate video sequences were extracted for the three emotions. These video segments, time-locked to the onset of the tone cue, were digitized using EyeView software. Each segment consisted of 90 digitized images or ‘frames’ (single frame = 30.75 ms; 640 × 480 pixel array at 256 levels of gray scale). Custom software written in PV-WAVE (D. Gokcay) automatically extracted the face region and computed differences in intensity from each pixel in adjacent frames, following onset of the trial cue. These summed changes in pixel intensity represented the dependent variable of ‘entropy’ and directly corresponded to changes in light reflectance patterns that occurred over the face during the facial expression movement. The formula for deriving entropy was:

$$E_i(t) = -\sum_{j=0}^{255} \frac{n_j(t)}{N_i}\,\log\frac{n_j(t)}{N_i}$$

where i = index associated with the face region of interest; j = index associated with individual gray level intensities (j = 0, …, 255); N_i = total number of pixels in the face region; n_j(t) = number of pixels with gray level intensity j on the image obtained by subtracting frame t − 1 from frame t; and E_i(t) = entropy of the face region at time t.

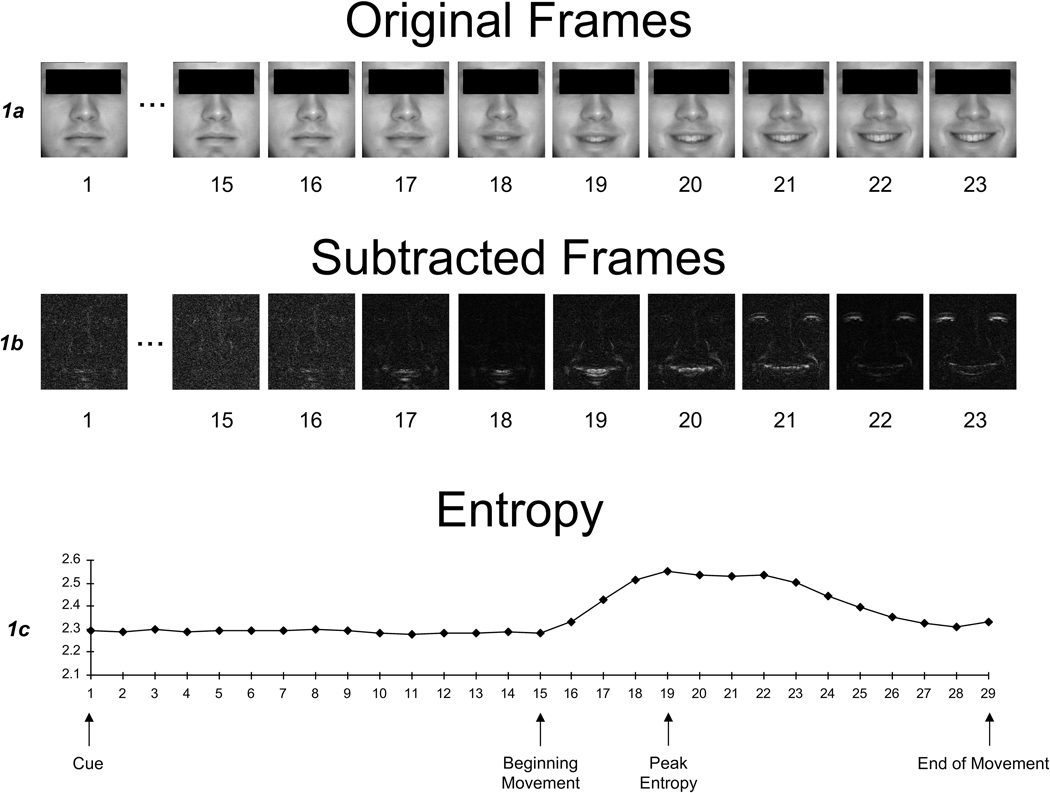
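As a rough illustration of the entropy formula, the following Python sketch computes the entropy of a single difference image. It is not the authors' PV-WAVE code. The function name `frame_entropy`, the use of absolute pixel differences, the natural logarithm, and the assumption that both frames are already cropped to the face region are illustrative choices that the text does not specify.

```python
import numpy as np


def frame_entropy(prev_frame, frame, levels=256):
    """Entropy of the gray-level histogram of the difference image (frame t minus frame t-1)."""
    # ASSUMPTION: absolute differences keep values in 0..255; the text does not
    # say how negative differences were handled.
    diff = np.abs(frame.astype(np.int16) - prev_frame.astype(np.int16))
    # n_j(t): number of pixels at each gray level j of the difference image.
    counts = np.bincount(diff.ravel(), minlength=levels)
    # n_j(t) / N_i, skipping empty bins so that log(0) is never evaluated.
    p = counts[counts > 0] / diff.size
    # ASSUMPTION: natural logarithm; the base is not stated in the text.
    return float(-(p * np.log(p)).sum())


# Two toy 4 x 4 "face regions": half of the pixels change by 10 gray levels.
still = np.zeros((4, 4), dtype=np.uint8)
moved = still.copy()
moved[:2, :] = 10
print(frame_entropy(still, still), frame_entropy(still, moved))
```

With no movement, every pixel of the difference image falls in a single gray level and the entropy is zero. The more widely the pixel changes are spread across gray levels, the larger the value.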

For each expression, three outcome variables were computationally derived, based in part on research by Leonard and colleagues (1991). The first was total entropy, which was the total amount of movement change during a dynamic expression. The second was entropy percent change. This value corresponded to the percent change in entropy from baseline (prior to initiation of expression) to peak entropy, which is the point of most rapid movement change that occurs during the course of a facial expression. We chose this index based on previous findings by Leonard et al. (1991) that the categorical perception of a facial expression occurred at the point of greatest movement change (i.e., entropy) in the ongoing temporal sequence of the facial expression. This interpretation was based on a study whereby raters were shown a series of still images of faces that depicted the temporal evolution of smiles in 30 ms increments. Raters were asked to select the image where they could identify the ‘message.’ Those images that had sharp increases in entropy (i.e., rapid changes in movement signal) compared to the prior image in the sequence were most likely to be identified as the onset of a smile. Based on these observations, we selected entropy percent change as a potentially meaningful outcome that likely corresponds to how rapidly the facial expression could be first identified by observers. Finally, our third outcome variable was latency, which was the initiation time between tone cue and facial movement. Figure 1 depicts the entropy curve and temporal points along the curve for a prototypic expression.
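The three outcome variables can be pictured with a small Python sketch that reads them off an entropy curve. The text does not give the operational rules for detecting movement onset or defining baseline, so everything here is a hypothetical stand-in: the function name `expression_metrics`, the baseline window of five frames, the onset threshold of baseline plus `k` standard deviations, and summing entropy above baseline as "total entropy". Only the 30.75 ms frame duration comes from the text.

```python
import numpy as np

FRAME_MS = 30.75  # frame duration reported in the text


def expression_metrics(entropy, n_baseline=5, k=3.0):
    """Derive latency, percent change, and total entropy from one entropy curve."""
    e = np.asarray(entropy, dtype=float)
    # ASSUMPTION: the first n_baseline frames after the cue represent baseline.
    base = e[:n_baseline]
    baseline = base.mean()
    # ASSUMPTION: movement starts at the first frame exceeding baseline + k SD.
    threshold = baseline + k * base.std()
    above = np.flatnonzero(e[n_baseline:] > threshold)
    if above.size == 0:
        return None  # no detectable movement in this segment
    onset = n_baseline + int(above[0])
    peak = onset + int(np.argmax(e[onset:]))
    return {
        # Time from cue (frame 0) to movement onset, in seconds.
        "latency_s": onset * FRAME_MS / 1000.0,
        # Percent change from baseline to peak entropy (baseline must be non-zero).
        "percent_change": 100.0 * (e[peak] - baseline) / baseline,
        # Summed entropy above baseline from onset to the end of the segment.
        "total_entropy": float((e[onset:] - baseline).clip(min=0).sum()),
    }


curve = [1.0] * 10 + [1.0, 2.0, 4.0, 2.0, 1.0]
metrics = expression_metrics(curve)
print(metrics)
```

In this toy curve the movement starts at frame 11 and peaks one frame later. A real analysis would need the thresholds actually used by the original software.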

Figure 1.

Original frames, subtracted frames, and entropy values during the emergence of a smile (adapted from Bowers et al., 2006). Figure 1a shows the original frames from the course of a facial expression from a neutral (baseline) expression through a smile. Figure 1b shows the subtracted frames which were derived by subtracting corresponding pixel intensities of adjacent images. Figure 1c shows a plot of the summed pixel difference changes (entropy) as the expression appears over time, with labels marking the tone cue, beginning of facial movement, peak entropy (most rapid movement change), and end of facial movement.

Results

For all outcome variables, data were inspected visually and statistically for outliers. Using three standard deviations as our criterion for outliers, we identified and removed several outliers, resulting in the following sample sizes across facial expressivity variables: total entropy: RSO-PD = 14, LSO-PD = 15, Controls = 20; entropy percent change: RSO-PD = 14, LSO-PD = 14, Controls = 20; initiation time: RSO-PD = 15, LSO-PD = 12, Controls = 20. Outliers were not removed via listwise deletion because cases meeting outlier criteria for one variable (e.g., slowed latency) did not necessarily qualify as outliers on other variables (e.g., still produced a facial expression with movement change that was within normal limits despite being slow to initiate). Table 2 depicts the mean values for each of the facial expressivity variables by group. Repeated measures ANOVAs were conducted for each of the outcome variables (total entropy, entropy percent change, initiation time), with group (RSO-PD, LSO-PD, Controls) as the between-subject variable and emotion type (anger, fear, happiness) as the within-subject variable. For facial movement, the three groups did not differ in overall total entropy [F(2, 46) = 1.83, p = .171, ηp² = .07] or entropy percent change [F(2, 45) = .40, p = .68, ηp² = .02]. As such, there were no differences in entropy indices of overall or peak facial movement as a function of laterality. Producing a happiness expression resulted in greater facial movement than fear or anger. This occurred regardless of group, with Bonferroni-adjusted post-hoc analyses revealing that happiness expressions induced greater total entropy [F(2, 92) = 7.40, p = .001, ηp² = .14; M = 1.42, SD = 0.97] and entropy percent change [F(2, 90) = 6.50, p = .002, ηp² = .13; M = 11.10, SD = 7.21] than fear (total entropy: M = .85, SD = .89, p = .004; entropy percent change: M = 7.52, SD = 7.18, p = .023) and anger (total entropy: M = .90, SD = .83, p = .021; entropy percent change: M = 6.72, SD = 6.20, p = .007). Anger and fear did not differ in total entropy or entropy percent change (ps > .05). There were no interaction effects between emotion type and participant group for either total entropy [F(4, 92) = 1.46, p = .222, ηp² = .06] or entropy percent change [F(4, 90) = .831, p = .509, ηp² = .04].
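The variable-by-variable outlier screen described above (as opposed to listwise deletion) might look like the following Python sketch. The function name `mask_outliers` and the toy data are hypothetical. Whether the three-standard-deviation criterion was applied within each group or across the whole sample is not stated in the text, so the function simply screens whatever array it is given.

```python
import numpy as np


def mask_outliers(values, n_sd=3.0):
    """Return a copy in which values more than n_sd SDs from the mean are set to NaN."""
    x = np.asarray(values, dtype=float)
    # Sample standard deviation (ddof=1); existing NaNs are ignored.
    z = (x - np.nanmean(x)) / np.nanstd(x, ddof=1)
    out = x.copy()
    out[np.abs(z) > n_sd] = np.nan
    return out


# Toy data for 20 participants: the last one is very slow to initiate
# but shows ordinary movement change.
latency = np.array([1.0] * 19 + [50.0])
total_entropy = np.array([0.8, 1.2] * 10)

clean_latency = mask_outliers(latency)
clean_entropy = mask_outliers(total_entropy)
print(np.isnan(clean_latency).sum(), np.isnan(clean_entropy).sum())
```

Because each variable is screened on its own, the slow participant is dropped from the latency analysis but retained for total entropy, which is why the group sizes differ across outcome variables.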

Table 2.

Facial expressivity scores across group.

| Measure and group | Anger, mean (SD) | Fear, mean (SD) | Happiness, mean (SD) |
|---|---|---|---|
| Total entropy | | | |
| RSO-PD (N = 14) | .95 (.92) | .39 (.35) | 1.08 (1.08) |
| LSO-PD (N = 15) | .97 (.86) | .86 (.80) | 1.50 (.88) |
| Controls (N = 20) | .82 (.77) | 1.17 (1.08) | 1.60 (.94) |
| Entropy percent change | | | |
| RSO-PD (N = 14) | 7.20 (5.90) | 6.00 (6.36) | 9.62 (7.76) |
| LSO-PD (N = 14) | 7.39 (6.41) | 6.64 (6.57) | 10.98 (6.63) |
| Controls (N = 20) | 5.93 (6.48) | 9.20 (8.07) | 12.22 (7.36) |
| Latency (s) | | | |
| RSO-PD (N = 15) | 2.42 (1.50) | 1.52 (1.54) | 1.35 (1.20) |
| LSO-PD (N = 12) | 4.69 (2.80) | 1.97 (1.39) | 2.90 (1.92) |
| Controls (N = 20) | 1.55 (1.51) | 1.37 (1.05) | 1.29 (1.36) |

Note. RSO-PD: Right-sided motor symptom onset; LSO-PD: Left-sided motor symptom onset

For the temporal variable, a significant main effect for group emerged for latency to initiate a facial expression [F(2, 44) = 12.21, p < .001, ηp² = .36]. Post-hoc analyses (Bonferroni) revealed that the LSO-PD group (M = 3.19 s, SD = 1.25) was significantly slower at initiating facial movements than the RSO-PD group (M = 1.76 s, SD = 0.70, p = .002) and Controls (M = 1.40 s, SD = 1.04, p < .001). The RSO-PD group and Controls did not differ (p > .05). Additionally, across all groups, anger expressions were slower to initiate (i.e., higher latency) than happiness or fear [F(2, 88) = 9.13, p < .001, ηp² = .17; anger M = 2.63 s, SD = 2.26; fear M = 1.57 s, SD = 1.30, p = .002; happiness M = 1.72 s, SD = 1.60, p = .011]. The happiness and fear expressions were not significantly different (p > .05). Finally, there was a group × emotion interaction effect [F(4, 88) = 2.83, p = .029, ηp² = .114]. The LSO-PD group was significantly slower at initiating facial movements than the RSO-PD group and Controls for both the anger and happiness expressions (anger: LSO-PD M = 4.69 s, SD = 2.80; RSO-PD M = 2.42 s, SD = 1.50, p = .011; Controls M = 1.55 s, SD = 1.51, p < .001; happiness: LSO-PD M = 2.90 s, SD = 1.92; RSO-PD M = 1.35 s, SD = 1.20, p = .03; Controls M = 1.29 s, SD = 1.36, p = .014). However, there were no group differences in initiation time for the fear expression (LSO-PD M = 1.97 s, SD = 1.39; RSO-PD M = 1.52 s, SD = 1.54, p > .05; Controls M = 1.37 s, SD = 1.05, p > .05). The RSO-PD group and Controls did not differ across any emotion expression (ps > .05).
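For readers who want to check the reported effect sizes, partial eta squared can be recovered from an F statistic and its degrees of freedom using the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The short Python sketch below applies it to the three latency effects reported above. The function name `partial_eta_squared` is illustrative and is not part of the original analysis.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Latency effects as reported in the text: (F, df_effect, df_error).
reported = {
    "group": (12.21, 2, 44),
    "emotion": (9.13, 2, 88),
    "group x emotion": (2.83, 4, 88),
}
effect_sizes = {name: partial_eta_squared(*stats) for name, stats in reported.items()}
for name, value in effect_sizes.items():
    print(f"{name}: {value:.3f}")
```

The recovered values agree with the reported effect sizes of .36, .17, and .114 after rounding.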

Separate linear regression analyses were conducted to learn whether scores on mood measures (Beck Depression Inventory-II, Apathy Scale) would be associated with the three facial expression outcome variables: total entropy, entropy percent change, and initiation time. Data from the three participant groups were combined (without outliers), and data from the three emotion types (anger, fear, happiness) were averaged across facial expression variables. As shown in Table 3, neither depression nor apathy predicted any of the facial expression outcome variables (all ps > .05). Additional regression analyses were conducted to examine whether PD motor symptoms (UPDRS motor scale) predicted facial expression variables. Data from the two PD groups were combined, and data from the three emotion types were averaged across facial expression variables. As shown in Table 4, motor symptom severity was not related to any of the facial expression variables (all ps > .05).

Table 3.

Linear regression analysis of mood variables and facial expressivity in total sample

| Outcome and predictor | F | R² | β | Significance |
|---|---|---|---|---|
| Total entropy (N = 49) | .21 | .009 | | |
| BDI-II | | | −.11 | ns |
| AS | | | .08 | ns |
| Entropy percent change (N = 48) | .40 | .017 | | |
| BDI-II | | | −.14 | ns |
| AS | | | .02 | ns |
| Latency (N = 47) | .66 | .029 | | |
| BDI-II | | | .06 | ns |
| AS | | | .14 | ns |

Note. BDI-II: Beck Depression Inventory-II; AS: Apathy Scale; ns: non-significant

Table 4.

Linear regression analysis of UPDRS motor variables and facial expressivity in Parkinson groups

| Outcome and predictor | F | R² | β | Significance |
|---|---|---|---|---|
| Total entropy (N = 29) | .29 | .011 | | |
| UPDRS motor scale | | | −.10 | ns |
| Entropy percent change (N = 28) | .19 | .007 | | |
| UPDRS motor scale | | | −.08 | ns |
| Latency (N = 27) | .03 | .001 | | |
| UPDRS motor scale | | | −.03 | ns |

Note. UPDRS: Unified Parkinson Disease Rating Scale; ns: non-significant
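The F and R² values in Tables 3 and 4 are linked by the standard identity R² = (F × k) / (F × k + N − k − 1), where k is the number of predictors. The Python sketch below uses it as a consistency check. It assumes that the two mood measures were entered together in each Table 3 model (k = 2) and that the UPDRS motor scale was the only predictor in each Table 4 model (k = 1). These model specifications are inferred from the table layout rather than stated in the text, and the function name `r_squared_from_f` is illustrative.

```python
def r_squared_from_f(f_value, n_predictors, n_cases):
    """R squared of a linear regression recovered from its overall F test."""
    df_error = n_cases - n_predictors - 1
    return (f_value * n_predictors) / (f_value * n_predictors + df_error)


# (F, number of predictors, N) for each outcome, as read from the tables.
table3 = {"total entropy": (0.21, 2, 49), "percent change": (0.40, 2, 48), "latency": (0.66, 2, 47)}
table4 = {"total entropy": (0.29, 1, 29), "percent change": (0.19, 1, 28), "latency": (0.03, 1, 27)}

r2_table3 = {name: round(r_squared_from_f(*row), 3) for name, row in table3.items()}
r2_table4 = {name: round(r_squared_from_f(*row), 3) for name, row in table4.items()}
print(r2_table3)
print(r2_table4)
```

Under these assumptions the recovered values match the tabled R² values to three decimals. In every model the predictors account for less than 3% of the variance in the facial expression measures.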

Discussion

This study used sophisticated digitizing methods to learn whether PD patients with left motor symptom onset would exhibit less facial movement than those with right symptom onset when voluntarily depicting target emotions. This question was based, in part, on views that left and right hemisphere systems make different contributions to emotional behavior and the assumption that asymmetric disruption of basal ganglia systems might result in reduced activation of the right hemisphere. Specifically, we had hypothesized that individuals with left motor symptom onset would have less intense emotional facial expressions due to reduced efficiency and/or activation in right frontal cortical regions, thereby altering the right hemisphere’s capacity to process emotions (right hemisphere hypothesis). In brief, we found no evidence to support this view in individuals with PD. Indeed, the overall amount of movement change during the course of a facial expression did not differ based on laterality of PD symptom onset. In addition, neither depression nor apathy predicted the amount or timing of facial movement, nor did motor severity. These observations are limited to intentionally-produced facial expressions, as spontaneous facial expressions were not examined.

Our findings contrast with those from the stroke literature where decreased facial expressivity, both intentional and spontaneous, has been linked more so to focal right versus left hemisphere lesions (Borod et al., 1986; Kent et al., 1988). Rather, they parallel those of Blonder and colleagues (1989) who examined a different variant of emotional communication (i.e., prosody) and found that both perception and expression of emotional prosody were similarly disrupted by left and right hemiparkinsonism. However, other research examining facial and prosodic social perception found an effect of motor symptom onset, with greater impairment in PD patients with left-sided onset (Coundouris et al., 2019).

A second major finding of the current study was a ‘laterality’ effect in the initiation time for producing anger and happiness facial expressions, with slower initiation of facial expressions by LSO-PD than RSO-PD and control groups. This implies that the speed of accessing and/or activating motor cortical areas important for facial expressions, as identified by Morecraft et al. (2001), was slower for the LSO-PD group. Previous research has indicated that right hemisphere dysfunction is associated with action-intention deficits: patients with right hemisphere lesions often present with slower reaction times and akinesia on motor tasks (Coslett and Heilman, 1989; Howes and Boller, 1975). This suggests that the right hemisphere may play an important role in mediating intention (response readiness). It is possible that our findings may be better explained by a deficit in intention among LSO-PD patients. Future research should test this hypothesis by examining non-emotional facial movement and determining whether a laterality effect in initiation time is still observed.

It is important to note, however, that this slower initiation time by the LSO-PD group only occurred for the anger and happiness expressions, but not fear. It is not totally clear why this difference in latency would be limited to just these two emotional expressions. From an evolutionary/adaptive standpoint, the fear facial expression may be particularly important because it signals that the expresser is in immediate distress and may elicit a prosocial response such as sympathy or desire to help (Marsh and Ambady, 2007). Some research also suggests that when individuals pose a facial expression of fear, they can increase the scope of their visual field and speed of eye movements, which would aid in identifying threats in their environment if in a fear-eliciting situation (Susskind et al., 2008). In terms of processing, facial expressions of fear have been hypothesized to be processed via more rapid subcortical pathways involving the amygdala (Vuilleumier et al., 2003). Taken together, one potential hypothesis is that fear is an evolutionarily-important facial expression that is rapidly processed and is less susceptible to potential mediation of response readiness by the right hemisphere, which is why we did not find a laterality effect in initiation time for this particular emotion. However, this proposed hypothesis is speculative and does not fully explain the present findings.

In addition, the current study focused on voluntary (posed) facial expressions. As such, it remains unknown whether posed expressions are equivalent to involuntary (spontaneous) expressions in terms of overall movement, rate, and timing. Even so, one may hypothesize that discrete facial expressions are similar in terms of movement characteristics, regardless of how they are elicited. Neuroanatomic mapping studies with macaques (Morecraft et al., 2001) have identified five cortical face representation areas in each hemisphere, including two in the cingulate area. Each of these five ‘face areas’ is interconnected with the others (cortico-cortical connections), and each also sends direct corticobulbar projections to the facial nucleus in the brain stem, which then innervates the facial nerves and facial musculature. Although the location of face areas may vary by type of facial expression, with cingulate face areas (M3, M4) associated with involuntary “emotional” facial expressions and face areas in the lateral prefrontal cortex linked to voluntary facial expressions, the overall motor programs for both types of expressions are analogous. Given this, the depiction of facial expressions (i.e., amount of facial movement) may not necessarily differ. We do believe that there would be differences, at least in PD, in the frequency of elicitation. For example, within a given timespan, an individual with PD who is more emotionally expressive would elicit more spontaneous expressions than someone who is less emotionally expressive (e.g., someone with a flat affect). In terms of laterality, right hemisphere focal lesions have resulted in impairment in both spontaneous and voluntarily-posed facial expressions, and it is possible that the present finding of latency to initiate a facial expression may also apply to spontaneous facial expressions, which should be investigated in future studies.

This study had several limitations. First, we did not have an index of current extent of asymmetric basal ganglia disruption (e.g., DAT scan) and relied on motor onset asymmetry at time of diagnosis. As PD progresses, symptoms appear bilaterally. However, they typically remain worse on the initial side of onset (Lee et al., 1995), suggestive of a differential degenerative process within the two hemispheres. Second, the sample was small and primarily White, limiting power and generalizability. Third, use of posed expressions may have limited the degree to which genuine emotion was evoked. Finally, the PD patients were examined when they were fully on dopaminergic therapy with the goal of revealing any noticeable differences in patients’ normal functioning. While this improved ecological validity, it may have diminished potential differences.

In summary, our results demonstrate that Parkinson disease patients who have left-sided motor symptom onset may display greater latency in initiating intentional facial expressions. This may be due to greater dysfunction in the right hemisphere leading to difficulties with intentional behavior in left-sided onset PD patients. Our findings may have implications for individuals’ ability to communicate their emotion to others. If similar findings apply to spontaneous facial expressions, even a delay of just a few seconds in forming expressions could affect social communication. Future work should examine spontaneous facial expressivity, as well as action-intention deficits in PD.

Acknowledgments

Funding

This work was supported in part by the NIH under Grants R01-MH62639, R01-NS50633, and NINDS T32-NS082168 (AR, FL) and by the University of Florida Opportunity Fund.

Footnotes

Disclosure of Interest: The authors report no conflict of interest.

The data for the work described in this article are available at OSF (https://osf.io/h96e8/).

References

- Alexander GE, DeLong MR, Strick PL, 1986. Parallel organization of functionally segregated circuits linking basal ganglia and cortex. 9(1), 357–381. 10.1146/annurev.ne.09.030186.002041 [DOI] [PubMed] [Google Scholar]

- Blonder LX, Gur RE, Gur RC, 1989. The effects of right and left hemiparkinsonism on prosody. Brain Lang. 36(2), 193–207. 10.1016/0093-934x(89)90061-8 [DOI] [PubMed] [Google Scholar]

- Bologna M, Fabbrini G, Marsili L, Defazio G, Thompson PD, Berardelli A, 2013. Facial bradykinesia. J. Neurol.Neurosurg. Psychiatry 84(6), 681–685. 10.1136/jnnp-2012-303993 [DOI] [PubMed] [Google Scholar]

- Borod JC, Cicero BA, Obler LK, Welkowitz J, Erhan H, Santschi C, Grunwald IS, Agosti RM, Whalen JR, 1998. Right hemisphere emotional perception: Evidence across multiple channels. Neuropsychology, 12(3), 446–458. 10.1037//0894-4105.12.3.446 [DOI] [PubMed] [Google Scholar]

- Borod JC, Koff E, Perlman Lorch M, Nicholas M, 1986. The expression and perception of facial emotion in brain-damaged patients. Neuropsychologia 24(2), 169–180. 10.1016/0028-3932(86)900503 [DOI] [PubMed] [Google Scholar]

- Bowers D, Miller K, Bosch W, Gokcay D, Pedraza O, Springer U, Okun M, 2006. Faces of emotion in Parkinsons disease: micro-expressivity and bradykinesia during voluntary facial expressions. J. Int. Neuropsychol. Soc. 12(6), 765–773. 10.1017/S135561770606111X

- Coslett HB, Heilman KM, 1989. Hemihypokinesia after right hemisphere stroke. Brain Cogn. 9(2), 267–278. 10.1016/0278-2626(89)90036-5

- Coundouris SP, Adams AG, Grainger SA, Henry JD, 2019. Social perceptual function in Parkinson’s disease: A meta-analysis. Neurosci. Biobehav. Rev. 104, 255–267. 10.1016/j.neubiorev.2019.07.011

- Davidson RJ, 1992. Anterior cerebral asymmetry and the nature of emotion. Brain Cogn. 20(1), 125–151. 10.1016/0278-2626(92)90065-T

- Gainotti G, 2019. A historical review of investigations on laterality of emotions in the human brain. Journal of the History of the Neurosciences 28(1), 23–41. 10.1080/0964704X.2018.1524683

- Hausmann M, Innes BR, Birch YK, Kentridge RW, 2021. Laterality and (in)visibility in emotional face perception: Manipulations in spatial frequency content. Emotion 21(1), 175–183. 10.1037/emo0000648

- Heilman KM, Blonder LX, Bowers D, 2003. Emotional disorders associated with neurologic disease, in: Heilman KM, Valenstein E (Eds.), Clinical Neuropsychology, fourth ed. Oxford University Press, New York, pp. 447–476.

- Heller W, 1993. Neuropsychological mechanisms of individual differences in emotion, personality, and arousal. Neuropsychology 7(4), 476–489. 10.1037/0894-4105.7.4.476

- Howes D, Boller F, 1975. Simple reaction time: evidence for focal impairment from lesions of the right hemisphere. Brain 98, 317–332. 10.1093/brain/98.2.317

- Innes BR, Burt DM, Birch YK, Hausmann M, 2016. A leftward bias however you look at it: Revisiting the emotional chimeric face task as a tool for measuring emotion lateralization. Laterality 21(4–6), 643–661. 10.1080/1357650X.2015.1117095

- Kent J, Borod JC, Koff E, Welkowitz J, Alpert M, 1988. Posed facial emotional expression in brain-damaged patients. Int. J. Neurosci. 43(1–2), 81–87. 10.3109/00207458808985783

- Làdavas E, Cimatti D, Del Pesce M, Tuozzi G, 1993. Emotional evaluation with and without conscious stimulus identification: Evidence from a split-brain patient. Cognition & Emotion 7(1), 95–114. 10.1080/02699939308409179

- Lee CS, Schulzer M, Mak E, Hammerstad JP, Calne S, Calne DB, 1995. Patterns of asymmetry do not change over the course of idiopathic parkinsonism: implications for pathogenesis. Neurology 45(3 Pt 1), 435–439. 10.1212/wnl.45.3.435

- Leonard CM, Voeller KKS, Kuldau JM, 1991. When’s a smile a smile? Or how to detect a message by digitizing the signal. Psychol. Sci. 2(3), 166–172. 10.1111/j.1467-9280.1991.tb00125.x

- Marsili L, Agostino R, Bologna M, Belvisi D, Palma A, Fabbrini G, Berardelli A, 2014. Bradykinesia of posed smiling and voluntary movement of the lower face in Parkinson’s disease. Parkinsonism Relat. Disord. 20(4), 370–375. 10.1016/j.parkreldis.2014.01.013

- Marsh AA, Ambady N, 2007. The influence of the fear facial expression on prosocial responding. Cogn. Emot. 21(2), 225–247. 10.1080/02699930600652234

- Morecraft RJ, Louie JL, Herrick JL, Stilwell-Morecraft KS, 2001. Cortical innervation of the facial nucleus in the non-human primate: a new interpretation of the effects of stroke and related subtotal brain trauma on the muscles of facial expression. Brain 124(1), 176–208. 10.1093/brain/124.1.176

- Steinbach MJ, Campbell RW, DeVore BB, Harrison DW, 2021. Laterality in Parkinson’s disease: a neuropsychological review. Appl. Neuropsychol. Adult. 10.1080/23279095.2021.1907392

- Stone VE, Nisenson L, Eliassen JC, Gazzaniga MS, 1996. Left hemisphere representations of emotional facial expressions. Neuropsychologia 34(1), 23–29. 10.1016/0028-3932(95)00060-7

- Susskind JM, Lee DH, Cusi A, Feiman R, Grabski W, Anderson AK, 2008. Expressing fear enhances sensory acquisition. Nat. Neurosci. 11(7), 843–850. 10.1038/nn.2138

- Verreyt N, Nys GMS, Santens P, Vingerhoets G, 2011. Cognitive differences between patients with left-sided and right-sided Parkinson’s disease. A review. Neuropsychol. Rev. 21, 405–424. 10.1007/s11065-011-9182-x

- Vuilleumier P, Armony JL, Driver J, Dolan RJ, 2003. Distinct spatial frequency sensitivities for processing faces and emotional expressions. Nat. Neurosci. 6, 624–631. 10.1038/nn1057