Abstract

Aims

It is increasingly recognized that tools are required for assessing and benchmarking quality of care in order to improve it. The European Society of Cardiology (ESC) is developing a suite of quality indicators (QIs) to evaluate cardiovascular care and support the delivery of evidence-based care. This paper describes the methodology used for their development.

Methods and results

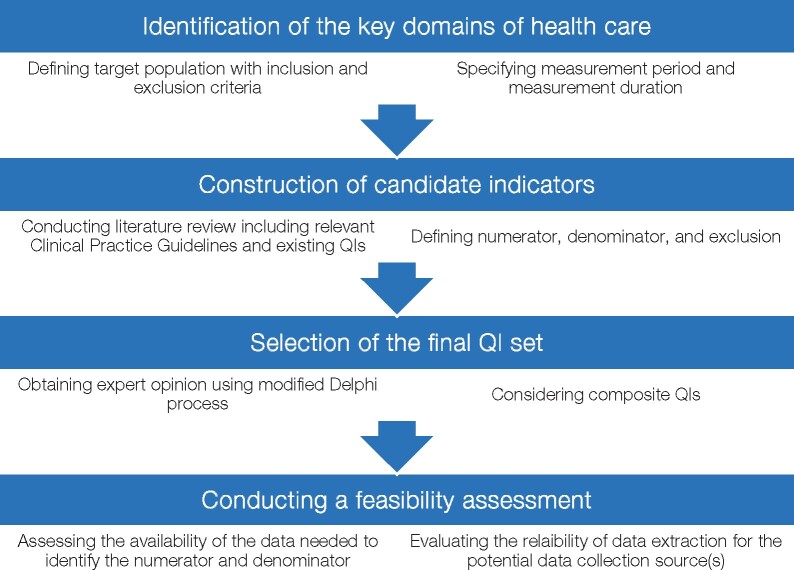

We propose a four-step process for the development of the ESC QIs. For a specific clinical area with a gap in care delivery, the QI development process includes: (i) the identification of key domains of care by constructing a conceptual framework of care; (ii) the construction of candidate QIs by conducting a systematic review of the literature; (iii) the selection of a final set of QIs by obtaining expert opinions using the modified Delphi method; and (iv) the undertaking of a feasibility assessment by evaluating different ways of defining the QI specifications for the proposed data collection source. For each of the four steps, key methodological areas need to be addressed to inform the implementation process and avoid misinterpretation of the measurement results.

Conclusion

Detailing the methodology for constructing the ESC QIs enables healthcare providers to develop valid and feasible metrics to measure and improve the quality of cardiovascular care. In this way, high-quality evidence may be translated into clinical practice and the ‘evidence-practice’ gap closed.

Keywords: Quality indicators, Cardiovascular disease, Quality improvement, Clinical practice guidelines

Introduction

There is substantial variation in the delivery of care for cardiovascular disease (CVD) which is reflected in variation in disease outcomes. Data from health surveys, administrative records, cohort studies, and registries show persisting geographic and social variation in CVD treatments and mortality across Europe.1,2 Moreover, the potential to reduce premature cardiovascular death has not been fully realized.3 The European Society of Cardiology (ESC) recognizes the variation in CVD burden and delivery of care across its 57 member countries, as well as the need to invest in closing the ‘evidence-practice gap’.2

There is an increasing emphasis on the need to measure and report both processes and outcomes of care, and on a better understanding of how analytical tools can facilitate quality improvement initiatives.4,5 For example, the quantification and public reporting of hospital times to reperfusion for the management of patients with ST-segment elevation myocardial infarction has been associated with improvements in patient outcomes.6 Similar successes have been achieved in the surgical management of congenital heart disease, where the implementation of structural measures, such as regionalization of care and setting standards for minimum surgical volume, has been associated with reductions in perioperative mortality.7

It has been proposed that quality indicators (QIs) may serve as a mechanism for stimulating the delivery of evidence-based medicine, through quality improvement, benchmarking of care providers, accountability, and pay-for-performance programs.8 Consequently, the use of indicators of quality is expanding and is of interest to a range of stakeholders including health authorities, professional organizations, payers, and the public.9

In the UK, the National Institute for Health and Care Excellence (NICE) has, for some time, endorsed certain NICE quality indicators, which are typically used by commissioners to ensure that the services they commission are driving up quality. The introduction of such indicators has been shown to improve outcomes,10 and their withdrawal to negatively influence quality of care.11 Notably, the production of NICE indicators follows a structured process, which includes the identification of a topic for indicator development and the evaluation of a proposed set of indicators by an ‘indicator advisory committee’ that includes patient representatives.12 Other organizations, such as the American College of Cardiology (ACC) and the American Heart Association (AHA), have developed Performance Measures for a variety of cardiovascular conditions, also using a structured development process.13,14 However, the approach by which QIs are developed is heterogeneous, and establishing a uniform framework for the construction of QIs for health care should increase their acceptance and perceived trustworthiness.15

In addition, the lack of widely agreed definitions for data variables hampers the development of QIs and their integration with clinical registries.16 Initiatives, such as the European Unified Registries On Heart Care Evaluation and Randomized Trials (EuroHeart), are fundamental to QI development and implementation.17 EuroHeart aims to harmonize data standards for CVD and establish a platform for continuous data collection. Moreover, EuroHeart will provide the means to evaluate cardiovascular care through QIs which are underpinned by standardized data collection and definitions.

This document outlines the process by which the ESC develops its QIs for CVD and provides a standardized methodology which may be used by all stakeholders to ensure the QIs are clinically relevant, scientifically justified, feasible, and usable.18 The ESC anticipates that this process will enable the prioritization of areas for QI development and improve the utility of the developed QIs. Thus, the ESC QIs may be implemented with reasonable cost and effort, interpreted in a context of quality improvement, and reported in a scientifically credible, yet user-friendly format.

Methods

Definition of quality indicators

The ESC uses the term QI to describe, in a specific clinical situation, aspects of the process of care that are recommended (or not recommended) to be performed. Although the terms are often used interchangeably, a distinction has been drawn between QIs and performance (or quality) measures.15 QIs can be expressed in an ‘if-then’ format: ‘if’ a patient has a given condition and satisfies the relevant criteria, ‘then’ he or she should (or should not) be offered a given intervention. Different performance (or quality) measures may then be derived from the same QI, depending on several factors, including the definition of the respective data variables and the sources of data.15 The ESC QIs comprise main and secondary indicators, according to whether they represent a major or a complementary component of an aspect of health care. Secondary QIs may be used instead of the main ones in situations where missing data and/or limited resources preclude measurement of the main QIs.
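The ‘if-then’ structure can be made concrete as a small data type. The sketch below is illustrative only: the `QualityIndicator` class, its fields, and the patient-record keys (`ami`, `lvef`, `acei_or_arb_at_discharge`) are hypothetical and not part of any ESC specification. Keeping the ‘if’ clause separate from the ‘then’ clause mirrors the distinction drawn above: the same QI can yield different performance measures depending on how each clause is mapped onto a particular data source.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Optional

# Hypothetical flat patient record: field name -> value.
Patient = Dict[str, Any]


@dataclass(frozen=True)
class QualityIndicator:
    """An 'if-then' QI: if `applies(patient)`, then `action_taken(patient)` is expected."""
    name: str
    applies: Callable[[Patient], bool]       # the 'if' clause (condition + criteria)
    action_taken: Callable[[Patient], bool]  # the intervention named in the 'then' clause
    should_be_done: bool = True              # False for 'should not be offered' QIs

    def assess(self, patient: Patient) -> Optional[bool]:
        """True/False if the QI applies and is met/not met; None if it does not apply."""
        if not self.applies(patient):
            return None
        return self.action_taken(patient) == self.should_be_done


# Example modelled on the ACEI/ARB indicator in Table 3 (field names are hypothetical).
acei_arb = QualityIndicator(
    name="ACEI/ARB at discharge after AMI with LVEF < 0.40",
    applies=lambda p: p["ami"] and p["lvef"] < 0.40,
    action_taken=lambda p: p["acei_or_arb_at_discharge"],
)

print(acei_arb.assess({"ami": True, "lvef": 0.35, "acei_or_arb_at_discharge": True}))   # True
print(acei_arb.assess({"ami": True, "lvef": 0.55, "acei_or_arb_at_discharge": False}))  # None
```

Returning `None` for patients to whom the QI does not apply keeps them out of both numerator and denominator, rather than counting them as failures.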

Types of quality indicators

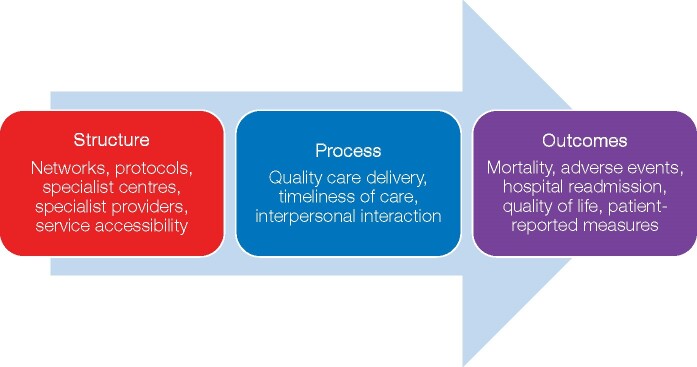

The ESC QIs are expressed as structural, process, and outcome indicators. Structural QIs describe organizational aspects of care, such as physical facilities, human resources, and available protocols or networks. Process QIs capture actions taken by healthcare providers or patients, such as adherence to established guidelines or recommended therapies. On the other hand, outcome QIs concern the effects of health care on patients, populations, or societies. Outcome QIs may also include patient-reported outcome measures (PROMs), such as health-related quality of life.19

High-quality evidence is more often available to support process QIs than structural or outcome QIs.14 However, the inclusion of outcome indicators provides a more comprehensive performance evaluation,20 although adjustment for differences in patient characteristics is necessary to evaluate whether variation in outcomes reflects true differences in quality of care.21 Thus, risk-adjusted outcome QIs form one element of the ESC QIs. In this document, we do not consider the statistical methods for interpreting outcome measurement results, and we acknowledge that different methods may yield differing conclusions about quality of care.22
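Risk adjustment methods are outside the scope of this document, and different methods can rank providers differently. Purely to illustrate what ‘adjustment for differences in patient characteristics’ means, the sketch below computes an observed-to-expected (O/E) ratio, one common form of indirect standardization. It assumes that each patient's predicted risk has already been produced by a validated case-mix model; the function names and the example figures are hypothetical.

```python
from typing import Sequence


def observed_to_expected(outcomes: Sequence[int], predicted_risk: Sequence[float]) -> float:
    """O/E ratio for one provider (indirect standardization).

    outcomes: 1 if the patient had the outcome (e.g. death within 30 days), else 0.
    predicted_risk: each patient's expected probability of the outcome from a
        case-mix model fitted on a reference population (assumed to exist already).
    """
    if not outcomes or len(outcomes) != len(predicted_risk):
        raise ValueError("need one predicted risk per patient")
    expected = sum(predicted_risk)
    if expected <= 0:
        raise ValueError("expected number of events must be positive")
    return sum(outcomes) / expected


def risk_adjusted_rate(
    outcomes: Sequence[int], predicted_risk: Sequence[float], reference_rate: float
) -> float:
    """Scale the O/E ratio by the crude outcome rate of the reference population."""
    return observed_to_expected(outcomes, predicted_risk) * reference_rate


# Hypothetical provider: 40 patients, 4 deaths, each with a predicted risk of 12.5%.
deaths = [1] * 4 + [0] * 36
risks = [0.125] * 40
print(observed_to_expected(deaths, risks))  # 0.8: fewer deaths than expected for this case mix
```

An O/E ratio below 1 means fewer events than the case mix predicts; whether that difference is meaningful still depends on its uncertainty, which this sketch does not estimate.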

PROMs complement other outcome measures, such as mortality and rehospitalization rates. Although many PROMs are not yet supported by strong guideline recommendations, they capture the patient’s perspective on health outcomes and thus allow patient-centred ill-health to be measured.23 Given that many patients value quality of life and survival equally following an illness,24 improving perceived health and well-being should be an aim of all contemporary cardiovascular interventions, in addition to reducing major cardiovascular events and mortality.25

Operational framework

Quality indicators committee

The ESC established a QI Committee (QIC) whose members have a range of clinical, statistical, and quality improvement expertise. The aim of the QIC is to develop QIs for ESC Clinical Practice Guidelines by working collaboratively with:

small groups of specialists in the topic of interest (Advisory Committees). Ideally, Advisory Committees would include members (or chairs) of the respective ESC Clinical Practice Guideline Task Forces and

wider teams of domain experts, practising clinicians and patient representatives (Working Groups) for each clinical area.

The major objectives of the ESC QIC are to:

build an explicit, standardized, and transparent methodology for QI development, and ensure that the methodology is followed within agreed timelines and standards of quality;

identify clinical areas for QI development on the basis of prevalence, association with morbidity, mortality and/or healthcare utilization, and availability of effective interventions. These clinical areas may include, but are not limited to, the ESC Clinical Practice Guidelines;

support the process of translating evidence or Practice Guideline recommendations into explicitly defined, specific QIs;

determine the specifications needed for operationalizing the developed QIs, according to potential data sources;

support the development and maintenance of means to measure QIs, such as the EurObservational Research Programme; and

facilitate the periodic evaluation, revision, and updating of the ESC QIs as more data and/or new recommendations become available.

Advisory committees

The main role of a QI Advisory Committee is to identify the domains of health care that affect the quality of care and subsequent outcomes. This is achieved by drawing upon the evidence to construct a conceptual framework that articulates the dimensions of measurement and the pathways by which processes of care are linked to desired outcomes. The structure-process-outcome model illustrated in Figure 1 is a simple and commonly used framework.19 It helps to identify the interplay between different aspects of health care and allows patient and environmental factors to be included.26 Because this framework was used previously by the ESC to develop QIs for acute myocardial infarction (AMI),27 it is recommended over other available methods.28

Figure 1.

Conceptual framework of the dimensions of health care based on the Donabedian model.

Working groups

Working Groups are the wider teams responsible for selecting the final set of QIs. Ideally, Working Groups should comprise a wide range of stakeholders including domain experts, practising clinicians, researchers and commissioners as well as members of the public, healthcare consumers, and patients.

Patient engagement is important so that professional scientific knowledge is complemented by the patient perspective on receiving care and on meaningful outcomes. This may be achieved through a ‘co-productive partnership’29 with patients that seeks their insights into quality assessment and improvement. The ESC has established the ESC Patient Forum, whose members are involved in the development of the ESC Clinical Practice Guidelines and the accompanying educational products.30

Clinical practice guideline task forces

Close collaboration with members of the ESC Clinical Practice Guidelines Task Force is integral to the development of QIs. This ensures not only that QIs are comprehensive and cover broad aspects of care, but also that they are harmonized with the corresponding Clinical Practice Guideline recommendations. Furthermore, writing and/or updating QIs simultaneously with the ESC Clinical Practice Guidelines facilitates seamless incorporation of QIs within the respective documents and enhances their dissemination and, therefore, their uptake into clinical practice.

The four-step process

We propose that the development of the ESC QIs follows a four-step process consisting of: identification of the key domains of health care; construction of candidate indicators; selection of a final QI set; and undertaking of a feasibility assessment (Figure 2). For each step, published evidence and consensus expert opinion are used to inform the development, implementation, and interpretation of QIs (Table 1).

Figure 2.

Process for the development of the ESC quality indicators for cardiovascular disease. ESC, European Society of Cardiology; QIs, quality indicators.

Table 1.

Process for the development of the European Society of Cardiology quality indicators for cardiovascular disease

| Task | Description |
|---|---|
| Step 1: identifying domains of care | |
| Defining the target population | Define the cohort of patients for whom the set of QIs is intended. This may include age, sex, and ethnicity bounds, or any other relevant patient characteristics that help to identify the sample of interest. |
| Specifying the measurement period | Specify the period during which the process of care being measured would be expected to occur. The measurement period should be chosen carefully so that the data needed for measurement are readily available and reliably extractable with reasonable cost and effort. |
| Specifying the measurement duration | Specify the time frame during which a sufficient number of cases can be collected to provide a reliable assessment of care quality. |
| Specifying the inclusion and exclusion criteria | Specify subgroups of the target population that should be excluded from the measurement when clinically appropriate and/or when data cannot be reliably obtained. |
| Step 2: constructing candidate indicators | |
| Conducting a literature review | Conduct a systematic review of the literature, including the relevant Clinical Practice Guidelines and existing QIs. Candidate QIs synthesized from the literature review should meet the ESC criteria for QIs (Table 2). |
| Defining candidate QIs | Define the numerator, which is the subset of patients who have met the indicator. |
| | Define the denominator, which is the subset of patients within the target population who are eligible for the measurement. |
| | Define the exclusions, which are a comprehensive list of potential medical-, patient-, or system-related reasons for not meeting the measurement. |
| Step 3: selecting the final QI set | |
| Obtaining expert opinion | Use the RAND/UCLA appropriateness method and a modified Delphi process. Conduct at least two rating rounds, with an interposed meeting. Ratings should be structured, anonymous, and categorical, with instructions provided to voting panellists detailing the selection criteria. |
| Considering composite QIs | Combine two or more QIs into a single measure that yields a single score. Select the individual QIs according to the intention, development, and scoring method of the composite QI. |
| Step 4: conducting feasibility assessment | |
| Identifying the numerator and denominator | Assess whether the numerator and denominator can be (or should be) identified using data that are readily available in routine medical records. |
| Assessing burden of data collection | Assess whether the data needed to identify the numerator and denominator can be extracted with reasonable time and effort. |
| Evaluating data completeness and reliability | Evaluate inter-rater reliability, response rate, frequency of assessments, and timeliness of reporting. |

ESC, European Society of Cardiology; QI, quality indicator; UCLA, University of California–Los Angeles.

Step 1: identifying domains of care

It is important to define the domains of care for which the QIs are being developed. By mapping the journey of a patient with a given condition, the QI Advisory Committee can identify important aspects of the care process. For example, the suite of QIs for the management of AMI developed by the ESC Association for Acute Cardiovascular Care (ACVC), formerly the Acute Cardiovascular Care Association, comprises the following seven domains: centre organization, reperfusion/invasive strategy, in-hospital risk assessment, antithrombotic treatment during hospitalization, secondary prevention discharge treatments, patient satisfaction, and risk-adjusted 30-day mortality.27 Identifying the domains of care entails the following four tasks:

Defining the target population

The target population is the cohort of patients for whom the set of QIs is intended. An unambiguous and concise definition of the target population allows simple inclusion and exclusion criteria to be specified and facilitates QI development.13 Target population definitions may include, but are not limited to, the age, sex, and ethnicity of the patients to whom the set of QIs applies. Other characteristics might specify, for instance, patients with a given disease (e.g. heart failure), patients undergoing a particular treatment (e.g. percutaneous coronary intervention [PCI]), or patients at risk of developing a certain condition (e.g. sudden cardiac death).

Specifying the measurement period

The ‘measurement period’ is the interval during which the component of care of interest is measured. For instance, the prescription of angiotensin converting enzyme inhibitors or angiotensin receptor blockers (ACEI/ARB) for patients with left ventricular systolic dysfunction (LVSD) immediately after AMI can be assessed at the time of hospital discharge, which is, in this example, the ‘measurement period’. In other cases, continuous monitoring of the target population may be needed, such as when assessing adherence to guideline-recommended therapies up to 6 months after AMI.13

It is necessary to consider data sources when specifying the measurement period, as they determine which components of care can be assessed. In the example above, relevant data may be obtained from hospital records, national registries, or patient surveys. These potential sources will not only have different degrees of reliability but will also provide different samples of patients.15 Defining a measurement period during which an important component of care delivery can be captured reliably with minimal effort is fundamental to developing QIs.

Specifying measurement duration

Measurement duration is the time frame over which sufficient data may be collected to provide a reliable assessment of care. For example, a measurement duration of 12 months for a given QI implies that cases occurring during this time frame will be used in the assessment of quality. The measurement duration determines the number of cases obtained and, like the measurement period, determines which components of care can be assessed. The number of cases may vary between providers according to workload and/or resources. Too short a measurement duration may prevent the collection of sufficient cases,31 while too long a duration may reduce the relevance of the data collected.13
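The trade-off between measurement duration and the number of cases can be quantified. Assuming a hypothetical provider that sees about 10 eligible patients per month with 80% adherence, the sketch below uses the Wilson score interval (one standard choice for a binomial proportion; the ESC does not prescribe it) to show how the uncertainty around the estimated adherence shrinks as the measurement duration grows.

```python
import math
from typing import Tuple


def wilson_interval(met: int, eligible: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval (about 95% for z = 1.96) for an adherence proportion."""
    if eligible <= 0 or not 0 <= met <= eligible:
        raise ValueError("need 0 <= met <= eligible and eligible > 0")
    p = met / eligible
    z2 = z * z
    denom = 1 + z2 / eligible
    centre = (p + z2 / (2 * eligible)) / denom
    half_width = z * math.sqrt(p * (1 - p) / eligible + z2 / (4 * eligible ** 2)) / denom
    return centre - half_width, centre + half_width


# Hypothetical provider: 10 eligible cases per month, 8 of which meet the QI.
for months in (3, 12):
    eligible = 10 * months
    met = 8 * months
    low, high = wilson_interval(met, eligible)
    print(f"{months:>2} months: {met}/{eligible} -> {low:.2f} to {high:.2f}")
```

Quadrupling the number of cases roughly halves the width of the interval, because the standard error of a proportion falls with the square root of the sample size; a longer duration buys precision at the cost of timeliness.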

Specifying inclusion and exclusion criteria

Certain subgroups of the target population may need to be excluded from the measurement when clinically appropriate. Additionally, a comprehensive list of the alternative therapies that may be considered equivalent to the intervention of interest should be specified. Returning to the example above (prescribing ACEI/ARB for patients with LVSD), exclusion criteria may include low blood pressure, intolerance, or a contraindication to ACEI and ARB, while alternative therapies may include the sacubitril/valsartan combination. Other reasons for exclusion may be patient-related (e.g. patient preference) or system-related (e.g. limited resources).14

Step 2: constructing candidate quality indicators

The goal of this step is to construct a preliminary list of QIs (candidate QIs) for the domains of care identified in Step 1. This is accomplished by systematically reviewing the literature, including relevant Clinical Practice Guidelines and existing QIs already in use. Since adherence to QIs implies the delivery of optimal care to patients, an extensive review of the medical literature is an important part of their development. When conducting the literature review, and to ensure that candidate QIs are directly associated with improved quality of care and outcomes, one should consider:

The applicability (and relevance) of the data to the target population for which the indicator is being developed.

The strength of evidence supporting the indicator based on the assigned level of evidence (LOE).

The degree to which adherence to the indicator is associated with clinically meaningful benefit (or harm) based on the assigned class of recommendation.

The clinical significance of the outcome most likely to be achieved by adherence to the indicator, as opposed to a statistical significance with little clinical value (see below).

Literature review

A systematic review of the literature conducted according to a standardized methodology is needed to ensure that QIs are both clinically meaningful and evidence-based. Initially, a scoping search may be performed to map the literature and identify existing QIs from professional organizations. This preliminary search guides the development of a more comprehensive systematic search strategy focused on addressing gaps in care delivery. It is recommended that a range of medical subject heading (MeSH) terms and online databases (e.g. Embase, Ovid MEDLINE, and PubMed) be used to capture published, peer-reviewed randomized controlled trials. The search should identify clinically important outcomes for a given condition and the processes of care that correlate with improvements in these outcomes. To this end, large observational studies may be included in the search to support the identification of clinically meaningful outcomes.

Defining ‘clinically important’ outcomes may be challenging and involves considering the magnitude of the treatment effect, as well as the importance and frequency of the outcome. In contrast to the established guidance on statistical significance thresholds in clinical trials, no rigorous standards exist to define a ‘clinically significant’ difference.32 High-quality evidence is usually derived from large randomized studies with large treatment effects or from individual-patient meta-analyses.32 However, such evidence may be lacking for certain aspects of care delivery, even though adherence to them reflects optimal care.9 For example, patient preference and shared decision-making (e.g. the heart team) may not be underpinned by strong guideline recommendations, yet they are, from a philosophical viewpoint, important aspects of optimal care.33

Clinical Practice Guidelines

The ESC Clinical Practice Guidelines should serve as a basis for the development of QIs. However, the ESC QIs are not simply a reflection of the strongest Guideline recommendations. They should also consider areas where there are gaps in care or room for improvement, and where longitudinal outcomes data may be available from existing registries. In addition, recommendations for care issued by other professional organizations may be considered as a potential source of QIs. Reviewing Clinical Practice Guidelines to develop QIs involves identifying the recommendations with the strongest evidence of benefit or harm, and evaluating these recommendations against predetermined criteria to assess their suitability for quality measurement.
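A first-pass screen of guideline recommendations by class of recommendation and level of evidence (LOE) can be sketched as below. ESC guidelines grade recommendations as Class I, IIa, IIb, or III and LOE A, B, or C; the choice to keep only Class I and III items with LOE A or B is an assumption made for illustration, not an ESC rule, and the recommendation texts are placeholders. As stated above, the ESC QIs are not simply the strongest recommendations, so this screen only proposes candidates.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class Recommendation:
    text: str
    cls: str  # ESC class of recommendation: "I", "IIa", "IIb", or "III"
    loe: str  # ESC level of evidence: "A", "B", or "C"


def shortlist(
    recommendations: Iterable[Recommendation],
    strong_classes: Tuple[str, ...] = ("I", "III"),  # recommended / not recommended
    accepted_loe: Tuple[str, ...] = ("A", "B"),      # assumed cut-off, not an ESC rule
) -> List[Recommendation]:
    """First-pass screen of guideline recommendations for QI development.

    Recommendations kept here are only candidates: each must still be judged
    against the Table 2 criteria, and areas with known gaps in care may be
    added back even if they fail this screen.
    """
    return [r for r in recommendations if r.cls in strong_classes and r.loe in accepted_loe]


recs = [
    Recommendation("hypothetical recommendation 1", "I", "A"),
    Recommendation("hypothetical recommendation 2", "IIa", "B"),
    Recommendation("hypothetical recommendation 3", "III", "B"),
    Recommendation("hypothetical recommendation 4", "I", "C"),
]
print([r.text for r in shortlist(recs)])
```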

The ESC has developed criteria to aid the development and evaluation of its QIs. These criteria (Table 2) aim to assess the clinical importance of a given set of QIs, their evidence base, validity, reliability, and feasibility.34,35 Moreover, the criteria aim to ensure that developed QIs can be clearly defined, easily interpreted by healthcare providers, and that the result of the assessment may positively influence current practice. The ESC criteria for QIs will be complemented by expert clinical advice and should form the foundation for the ESC QI development.

Table 2.

Criteria for the development and evaluation of the European Society of Cardiology quality indicators for cardiovascular disease

| Domain | Criteria |
|---|---|
| Importance | QI reflects a clinical area that is of high importance (e.g. common, a major cause of morbidity, mortality, and/or impairment of health-related quality of life). |
| | QI relates to an area where there are disparities or suboptimal care. |
| | QI implementation will result in an improvement in patient outcomes. |
| | QI may address the appropriateness of medical interventions. |
| Evidence base | QI is based on acceptable evidence consistent with contemporary knowledge. |
| | QI aligns with the respective ESC Clinical Practice Guideline recommendations. |
| Specification | QI has a clearly defined patient group to whom the measurement applies (denominator), including explicit exclusions. |
| | QI has clearly defined accomplishment criteria (numerator). |
| Validity | QI correctly assesses what it is intended to assess, adequately distinguishes between good and poor quality of care, and compliance with the indicator would confer health benefits. |
| Reliability | QI is reproducible even when data are extracted by different people, and estimates of performance based on available data are likely to be reliable and unbiased. |
| Feasibility | QI can be identified and implemented with reasonable cost and effort. |
| | Data needed for the assessment are (or should be) readily available and easily extracted within an acceptable time frame. |
| Interpretability | QI is interpretable by healthcare providers, so that practitioners can understand the results of the assessment and act accordingly. |
| Actionability | QI can influence current practice, because a large proportion of the determinants of adherence to the QI are under the control of healthcare providers. |
| | This influence of QIs on behaviour will likely improve care delivery. |
| | QI is unlikely to cause negative unintended consequences. |

ESC, European Society of Cardiology; QI, quality indicator.

Existing quality indicators

The goal of reviewing existing QIs is to avoid duplication of reporting and to incorporate available information about the validity and/or feasibility of existing indicators. Conceptual issues underlying the endorsement and validation of existing QIs have been described.12,18 As with Clinical Practice Guidelines, reviewing existing QIs involves identifying pertinent indicators and evaluating them against the ESC criteria for QIs (Table 2). Two considerations are whether existing QIs are endorsed by other professional societies, and whether any validation and/or feasibility data are available, as this information may influence the use (or adaptation) of the existing QIs.

Defining candidate quality indicators

Following the synthesis of candidate QIs from the literature search, the numerator and denominator of each candidate QI should be defined. With an explicit definition of each indicator, the Working Group will be able to evaluate it against the ESC criteria (Table 2) and specify appropriate exclusions from the measurement.

Defining the numerator

The numerator of a QI is the group of patients who have fulfilled the QI. Table 3 provides an example in which a QI to assess the prescription of an ACEI/ARB to patients with LVSD following AMI is developed.27 In this example, the numerator definition determines what ‘counts’ as being prescribed an ACEI/ARB and at which time point in relation to the AMI event.

Table 3.

Target population characteristics, measurement period, and definition of an example quality indicator for the use of an angiotensin converting enzyme inhibitor or angiotensin receptor blocker for patients with hospitalized acute myocardial infarction

| Quality indicator | Proportion of patients with LVEF <0.40 who are discharged from hospital on an ACEI (or an ARB if intolerant of ACEI) | |
|---|---|---|
| Target population | Age | ≥18 years |
| | Sex | Any |
| | Primary diagnosis | Survivors of hospitalized acute myocardial infarction |
| | Subgroup | Patients with left ventricular ejection fraction <0.40 |
| Measurement period | At the time of hospital discharge | |
| Numerator | Patients with acute myocardial infarction who have an LVEF <0.40 and are prescribed an ACEI or ARBᵃ at the time of hospital discharge | |
| Denominator | Patients with acute myocardial infarction who have an LVEF <0.40, are alive at the time of hospital discharge, and are eligibleᵇ for an ACEI or ARB | |
| Exclusion | Contraindications to ACEI and ARB, such as allergy, intolerance, angioedema, hyperkalaemia, hypotension, renal artery stenosis, or worsening renal function | |

ACEI, angiotensin converting enzyme inhibitor; ARB, angiotensin receptor blocker; LVEF, left ventricular ejection fraction.

ᵃPatients prescribed medications that contain an ACEI or ARB as part of a combination therapy, such as sacubitril/valsartan, meet the numerator criteria.
ᵇEligibility criteria include willingness to take an ACEI/ARB, being clinically appropriate, and having no contraindications or intolerance to ACEI and ARB.

Defining the denominator

Patients within the target population who are eligible for the assessment of each QI form the denominator. In the example provided in Table 3, the denominator represents the subset of the target population eligible for an ACEI/ARB. Here, the eligibility criteria include being clinically appropriate, having no contraindications or intolerance to both ACEI and ARB, and being willing to take an ACEI/ARB. Providing specifications on how to identify (or validate) the target condition (AMI in the example above), as well as potential data sources for the assessment, enhances the implementation and feasibility of the indicator.

For some structural QIs, no denominator is needed because the assessment is binary. In such cases, the numerator may be the healthcare centre and the assessment may be whether or not a given measure is available at the centre.

Defining the exclusions

It is important to provide an extensive list of potential exclusions for each candidate QI. Using exclusions enables a fairer assessment, particularly when the QI is intended for accountability, pay-for-performance, or public reporting.33,36 In the ACEI/ARB example provided in Table 3, patients with low blood pressure, hyperkalaemia, or severe renal impairment should not be prescribed an ACEI/ARB and are therefore excluded from the assessment (see ‘Specifying inclusion and exclusion criteria’ in Step 1).
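Putting the numerator, denominator, and exclusions of Table 3 together, the indicator can be computed from admission-level records. This is a minimal sketch under stated assumptions: the `Admission` fields and contraindication codes are hypothetical, ‘being clinically appropriate’ (footnote b of Table 3) cannot be captured by a simple flag and is omitted, and admissions with a missing LVEF are simply left out here, whereas a real specification would need to say how such cases are handled and reported.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Optional, Tuple

# Exclusions taken from Table 3 (the identifiers themselves are hypothetical codes).
CONTRAINDICATIONS = frozenset({
    "allergy", "intolerance", "angioedema", "hyperkalaemia",
    "hypotension", "renal_artery_stenosis", "worsening_renal_function",
})


@dataclass(frozen=True)
class Admission:
    age: int
    ami: bool                        # primary diagnosis of acute myocardial infarction
    alive_at_discharge: bool
    lvef: Optional[float]            # ejection fraction as a fraction; None if not measured
    contraindications: FrozenSet[str]
    declined_acei_arb: bool          # patient unwilling to take an ACEI/ARB
    acei_or_arb_at_discharge: bool   # includes combinations such as sacubitril/valsartan


def in_denominator(a: Admission) -> bool:
    """Target population, subgroup, measurement period, and exclusions from Table 3."""
    return (
        a.age >= 18
        and a.ami
        and a.alive_at_discharge
        and a.lvef is not None  # assumption: missing LVEF is left out (should be reported)
        and a.lvef < 0.40
        and not (a.contraindications & CONTRAINDICATIONS)
        and not a.declined_acei_arb
    )


def acei_arb_qi(admissions: Iterable[Admission]) -> Tuple[int, int, Optional[float]]:
    """Return (numerator, denominator, proportion); proportion is None if nobody is eligible."""
    denominator = [a for a in admissions if in_denominator(a)]
    numerator = [a for a in denominator if a.acei_or_arb_at_discharge]
    rate = len(numerator) / len(denominator) if denominator else None
    return len(numerator), len(denominator), rate


cohort = [
    Admission(70, True, True, 0.35, frozenset(), False, True),                    # meets the QI
    Admission(65, True, True, 0.30, frozenset(), False, False),                   # eligible, not met
    Admission(80, True, True, 0.32, frozenset({"hyperkalaemia"}), False, False),  # excluded
    Admission(55, True, True, 0.55, frozenset(), False, False),                   # LVEF >= 0.40
    Admission(60, True, False, 0.25, frozenset(), False, False),                  # died in hospital
]
print(acei_arb_qi(cohort))  # (1, 2, 0.5)
```

Applying exclusions before the numerator check is what keeps the patient with hyperkalaemia from counting against the provider.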

Step 3: selecting the final quality indicator set

To derive the final set of QIs from the list of candidate indicators, a structured selection process is recommended. This process is underpinned by the ESC criteria for QIs (Table 2) combined with consensus expert opinion. Consensus panels (Working Groups) should include a wide range of stakeholders, such as domain experts, practising clinicians, researchers, commissioners, and patients, to provide the breadth and depth of expertise needed to address aspects of care quality. To reduce difficulties with implementation, efforts should be made to select the minimum number of QIs for each domain.

Consensus methods for obtaining and combining group judgement exist37 and provide reliable and valid means for the assessment and improvement of quality of care.34 The ESC QIC recommends the use of the RAND/University of California–Los Angeles (UCLA) appropriateness method and modified Delphi process,38,39 which are reliable and have content, construct, and predictive validity for QI development.40 The modified Delphi technique involves structured, anonymous, iterative, and categorical surveys, with interposed face-to-face (or video/teleconference) meetings to reach consensus. An example of how to obtain, combine, and analyse expert opinion is provided in the Supplementary material online.40
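The RAND/UCLA method typically has panellists rate each candidate on a 1-9 scale, with the panel median and the spread of ratings determining the result. The sketch below is a hypothetical illustration, not the ESC procedure described in the Supplementary material: the ‘one-third in each extreme’ disagreement rule generalizes the commonly cited rule for nine-member panels and is an assumption, as are the function name and example ratings.

```python
import math
import statistics
from typing import Sequence


def rate_candidate_qi(ratings: Sequence[int]) -> str:
    """Classify one candidate QI from one round of 1-9 panel ratings.

    Assumed decision rule:
    - 'disagreement': at least a third of panellists rate 1-3 AND at least
      a third rate 7-9 (for a 9-member panel: >= 3 in each extreme);
    - median 7-9 without disagreement -> 'retain';
    - median 1-3 without disagreement -> 'reject';
    - anything else -> 'uncertain' (discuss at the interposed meeting, re-rate).
    """
    if not ratings or any(r not in range(1, 10) for r in ratings):
        raise ValueError("ratings must be integers from 1 to 9")
    threshold = math.ceil(len(ratings) / 3)
    low = sum(1 for r in ratings if r <= 3)
    high = sum(1 for r in ratings if r >= 7)
    if low >= threshold and high >= threshold:
        return "uncertain"  # disagreement overrides the median
    median = statistics.median(ratings)
    if median >= 7:
        return "retain"
    if median <= 3:
        return "reject"
    return "uncertain"


# Hypothetical nine-member panel, first rating round.
print(rate_candidate_qi([8, 9, 7, 8, 7, 9, 8, 6, 7]))  # retain
print(rate_candidate_qi([1, 2, 2, 9, 9, 8, 5, 5, 5]))  # uncertain (disagreement)
print(rate_candidate_qi([2, 3, 1, 2, 4, 3, 2, 5, 1]))  # reject
```

In a two-round process, candidates classified as ‘uncertain’ after the first round would be the natural focus of the interposed meeting before re-rating.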

Step 4: feasibility assessment

The feasibility assessment aims to determine whether translating each developed QI into an actual measure of care quality is (or should be) achievable using available data sources. It also entails assessing the cost and effort required for data extraction, as well as the reliability of these data. When the data used for quality assessment include patient perspectives, such as health-related quality of life, the response rates and the timing of these responses in relation to the index event need to be evaluated.13 Thus, a feasible set of QIs is one for which the data needed to estimate performance are available in the medical records, are likely to be unbiased, and can be obtained without significant recording or reporting delays.13

The feasibility assessment may require different expertise from that needed for QI development (such as clinical coding experts and clinical informaticians). It is an iterative process that involves operationalizing the QI for the potential data source15 and evaluating: (i) the different methods of defining the numerator and denominator for the data source to be used (e.g. a national registry), and (ii) the interrater reliability of extracting the necessary data. If these parameters cannot be defined with reasonable effort and acceptable reliability, excluding the QI from the final set should be considered.
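Interrater reliability can be quantified in several ways. Cohen's kappa is one common choice when two abstractors independently re-code the same records, although the text does not prescribe a specific statistic. The Python sketch below implements kappa under that assumption, using hypothetical abstraction data.

```python
import math
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa: agreement between two abstractors beyond that expected by chance."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both abstractors must code the same non-empty set of records")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of each abstractor's marginal proportions, summed over categories.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a.keys() | counts_b.keys()) / (n * n)
    if expected == 1:
        return math.nan  # kappa is undefined when both abstractors used one identical category
    return (observed - expected) / (1 - expected)


# Hypothetical re-abstraction of "ACEI/ARB prescribed" (yes/no) for ten records.
abstractor_1 = ["yes", "yes", "no", "no", "yes", "no", "yes", "yes", "no", "yes"]
abstractor_2 = ["yes", "no", "no", "no", "yes", "no", "yes", "yes", "yes", "yes"]
print(round(cohens_kappa(abstractor_1, abstractor_2), 2))  # 0.58
```

Here the abstractors agree on 80% of records, but because both code "yes" most of the time, 52% agreement would be expected by chance alone. Kappa therefore reports a more modest value than raw agreement.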

Defining composite quality indicators

Composite QIs (CQIs) combine two or more individual indicators into a single measure that yields a single score. Such CQIs may encapsulate broader aspects of care delivery (such as overall quality) or take a focused perspective (such as adherence to a specific set of guidelines). They serve as a tool for benchmarking providers, reducing data collection burden, and providing a more comprehensive assessment of performance.41 When developed according to a structured methodology, CQIs for AMI have been shown to be inversely associated with mortality.42,43 The intention of a CQI, and the methodology used to develop it, determine the selection of the individual QIs within the composite and should be stated alongside the proposed scoring method (e.g. all-or-none, opportunity-based, or empirically weighted).
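Two of the scoring methods named above can be sketched in Python as follows. The sketch assumes that each patient's record states, for every QI, whether it was met, missed, or not applicable. The record layout and QI names are hypothetical, and empirically weighted composites, which require a fitted weighting model, are not shown.

```python
from typing import Optional

# Hypothetical layout: per patient, QI name -> True (met), False (eligible, not met),
# or None (not eligible or excluded for that QI).
PatientRecord = dict[str, Optional[bool]]


def opportunity_based(records: list[PatientRecord]) -> float:
    """Total QIs met divided by total QI opportunities across all patients."""
    met = sum(v is True for r in records for v in r.values())
    opportunities = sum(v is not None for r in records for v in r.values())
    if opportunities == 0:
        raise ValueError("no eligible opportunities")
    return met / opportunities


def all_or_none(records: list[PatientRecord]) -> float:
    """Share of patients who received every QI for which they were eligible."""
    eligible = [r for r in records if any(v is not None for v in r.values())]
    if not eligible:
        raise ValueError("no eligible patients")
    perfect = sum(all(v is not False for v in r.values()) for r in eligible)
    return perfect / len(eligible)


records = [
    {"qi_a": True, "qi_b": True, "qi_c": None},
    {"qi_a": True, "qi_b": False, "qi_c": True},
    {"qi_a": None, "qi_b": True, "qi_c": True},
    {"qi_a": False, "qi_b": None, "qi_c": None},
]
print(opportunity_based(records))  # 0.75
print(all_or_none(records))        # 0.5
```

The same records give 0.75 under opportunity-based scoring but only 0.5 under all-or-none scoring, because a single missed process counts as a failure for the whole patient in the latter. This is one reason the scoring method should be stated alongside the composite.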

Discussion

This document describes the ESC methodology for developing QIs to quantify cardiovascular care and outcomes. Cardiovascular disease is one of the major causes of morbidity and mortality worldwide,1 and although Clinical Practice Guidelines exist, gaps in care remain a major challenge. The recommended approach should bring together scientific evidence, Clinical Practice Guidelines, consensus expert opinion, and patient involvement in a structured manner to inform the construction of QIs. By developing domain-specific QIs relating to ESC Clinical Practice Guidelines, it is hoped that local, regional, national, and international quality improvement initiatives will be promoted, so that geographic variation in care delivery and outcomes is addressed and premature death from cardiovascular disease is reduced.

The ESC recognizes the need to improve the quality of care across its member countries to reduce the burden of CVD. As such, and in addition to publishing its Clinical Practice Guidelines, the ESC delivers a suite of international registries of cardiovascular disease and treatments under the auspices of the EurObservational Research Programme. Furthermore, the ESC recently launched the EuroHeart project, which provides the means for quality improvement, observational research, and randomized trials.17 Healthcare centres may implement QIs developed using this methodology in their local quality assessment systems to evaluate clinical practice, or participate in wider quality assurance programmes aiming to improve quality of care and clinical outcomes for our patients.

Quality assessment provides the mechanisms to identify areas where improvements in care are most needed and to evaluate the effectiveness of implemented interventions and initiatives.44 Quantifying measures of healthcare performance and implementing measures to improve them have been associated with improved prognosis.8,10 Although adherence to guideline-recommended therapies for the management of cardiovascular disease improves outcomes,45 substantial variation in care across countries suggests there is room for improvement.3

The ESC QIs are tools that may be used to assess and improve cardiovascular care quality in light of ESC Clinical Practice Guideline recommendations, and may therefore be considered a step towards determining the degree to which these recommendations are being implemented. The QIs will serve as specific, quantifiable, and actionable measures that facilitate the rapid incorporation of the best evidence into practice. They are not intended for ranking or pay-for-performance, but rather for quality improvement and performance measurement through meaningful surveillance, and for integration within registries, cohort studies, and clinical audits.

Clinical Practice Guidelines are also developed through expert consensus, using the best available evidence, to standardize care. There are, however, important differences between the ESC Clinical Practice Guidelines and ESC QIs. First, guidelines tend to be comprehensive and cover almost all aspects of care, whereas QIs are targeted to specific clinical circumstances. Second, the ESC Clinical Practice Guidelines are usually prescriptive recommendations intended to influence subsequent behaviour. By contrast, QIs are generally applied retrospectively to distinguish between good- and poor-quality care (although they may improve guideline implementation). Third, guidelines provide flexible recommendations that intentionally leave room for clinical judgement, while QIs are precise measures that can be applied systematically to available data to ensure comparability.40 Finally, QIs are intended for a more narrowly defined population than Clinical Practice Guidelines. The target population for a QI should include only patients (or subsets of patients) for whom good evidence supporting the intervention exists, taking into account patient preference and health status.33

A number of unintended consequences of QIs have been described in the literature.36 These may arise because performance measurement by itself does not improve quality. Performance measurement may also miss areas where evidence is not available, and important aspects of care quality may not be readily or reliably quantifiable.9 Thus, by providing this methodology statement, the ESC anticipates that the developed QIs will be associated with favourable outcomes and will be seen as one tool within a broader quality improvement strategy that encompasses multiple dimensions of quality, follows its own ‘learn-adapt’ cycle, and adjusts both the QIs themselves and how they are used.9

This approach to QI development is not without limitations. Because the QIs are developed on a condition-specific basis, provider-level assessment may also become condition-specific and may therefore affect care in other areas not captured by the assessment. This challenge may be addressed by combining broad sets of QIs into an integrated system of quality assessment. Furthermore, when assessed in national and international registries, QIs for AMI developed using a similar approach27 were inversely associated with mortality.46 This methodology has been, and is being, used to develop QIs for other cardiovascular domains, including atrial fibrillation and heart failure.

Another limitation is the reliance on expert panel opinion. Although different panels may select different QIs, the proposed development process is based on a robust literature review, explicit selection criteria, and the modified Delphi technique. Previous QIs developed using a relatively similar methodology were found to be highly valid and feasible, and were inversely related to mortality.46 In addition, including a wide range of stakeholders in the rating rounds, such as practitioners, researchers, members of the respective Clinical Practice Guidelines Task Force, commissioners, and patients, should ensure reasonable representation of the important aspects of care delivery.

Conclusion

The provision of tools for measuring care quality is a necessary next step towards reducing the burden of cardiovascular disease and closing the ‘evidence-practice gap’. By means of a transparent methodological approach to constructing valid and feasible QIs, a suite of ESC QIs will be developed for a wide range of cardiovascular conditions and interventions. These will provide the underpinning framework that enables healthcare professionals and their organizations to improve care, and therefore clinical outcomes, systematically.

Supplementary material

Supplementary material is available at European Heart Journal – Quality of Care and Clinical Outcomes online.


Acknowledgements

We thank Ebba Bergman (Uppsala Clinical Research Center, Sweden) and Catherine Reynolds (Leeds Institute of Cardiovascular & Metabolic Medicine, UK) for their contribution to the EuroHeart project. We also thank Inga Drossart (Patient Engagement Officer, ESC) for providing a wider perspective on the quality indicators development.

Contributor Information

Suleman Aktaa, Leeds Institute of Cardiovascular and Metabolic Medicine, University of Leeds, Leeds LS2 9JT, UK; Leeds Institute for Data Analytics, University of Leeds, Leeds LS2 9JT, UK; Department of Cardiology, Leeds Teaching Hospitals NHS Trust, Leeds LS1 3EX, UK.

Gorav Batra, Department of Medical Sciences, Cardiology and Uppsala Clinical Research Center, Uppsala University, Uppsala 751 85, Sweden.

Lars Wallentin, Department of Medical Sciences, Cardiology and Uppsala Clinical Research Center, Uppsala University, Uppsala 751 85, Sweden.

Colin Baigent, MRC Population Health Research Unit, Nuffield Department of Population Health, University of Oxford, Oxford OX3 7LF, UK.

David Erlinge, Department of Cardiology, Clinical Sciences, Lund University, Lund SE-221 85, Sweden.

Stefan James, Department of Medical Sciences, Cardiology and Uppsala Clinical Research Center, Uppsala University, Uppsala 751 85, Sweden.

Peter Ludman, Institute of Cardiovascular Sciences, University of Birmingham, Birmingham B15 2TT, UK.

Aldo P Maggioni, National Association of Hospital Cardiologists Research Center (ANMCO), Florence 50121, Italy.

Susanna Price, Department of Adult Intensive Care, Royal Brompton & Harefield NHS Foundation Trust, Royal Brompton Hospital, National Heart & Lung Institute, Imperial College, London SW3 6NP, UK.

Clive Weston, Department of Cardiology, Hywel Dda University Health Board, Wales SA6 6NL, UK.

Barbara Casadei, Division of Cardiovascular Medicine, Radcliffe Department of Medicine, University of Oxford, Oxford OX3 9DU, UK.

Chris P Gale, Leeds Institute of Cardiovascular and Metabolic Medicine, University of Leeds, Leeds LS2 9JT, UK; Leeds Institute for Data Analytics, University of Leeds, Leeds LS2 9JT, UK; Department of Cardiology, Leeds Teaching Hospitals NHS Trust, Leeds LS1 3EX, UK.

Funding

B.C. is funded by the British Heart Foundation and by the NIHR Oxford Biomedical Research Centre.

Conflict of interest: none declared.

Data availability

The data underlying this article are available in the article and in its online supplementary material.

References

- 1. Wang H, Naghavi M, Allen C, Barber RM, Bhutta ZA, Carter A et al. Global, regional, and national life expectancy, all-cause mortality, and cause-specific mortality for 249 causes of death, 1980–2015: a systematic analysis for the Global Burden of Disease Study 2015. Lancet 2016;388:1459–1544.

- 2. Timmis A, Townsend N, Gale CP, Torbica A, Lettino M, Petersen SE, European Society of Cardiology et al. European Society of Cardiology: cardiovascular disease statistics 2019. Eur Heart J 2020;41:12–85.

- 3. Chung SC, Sundstrom J, Gale CP, James S, Deanfield J, Wallentin L et al. Comparison of hospital variation in acute myocardial infarction care and outcome between Sweden and United Kingdom: population based cohort study using nationwide clinical registries. BMJ 2015;351:h3913.

- 4. Pronovost PJ, Nolan T, Zeger S, Miller M, Rubin H. How can clinicians measure safety and quality in acute care? Lancet 2004;363:1061–1067.

- 5. Randell R, Alvarado N, McVey L, Greenhalgh J, West RM, Farrin A et al. How, in what contexts, and why do quality dashboards lead to improvements in care quality in acute hospitals? Protocol for a realist feasibility evaluation. BMJ Open 2020;10:e033208.

- 6. Park J, Choi KH, Lee JM, Kim HK, Hwang D, Rhee TM et al.; The KAMIR-NIH (Korea Acute Myocardial Infarction Registry–National Institutes of Health) Investigators. Prognostic implications of door-to-balloon time and onset-to-door time on mortality in patients with ST-segment-elevation myocardial infarction treated with primary percutaneous coronary intervention. J Am Heart Assoc 2019;8:e012188.

- 7. Backer CL, Pasquali SK, Dearani JA. Improving national outcomes in congenital heart surgery. Circulation 2020;141:943–945.

- 8. Song Z, Ji Y, Safran DG, Chernew ME. Health care spending, utilization, and quality 8 years into global payment. N Engl J Med 2019;381:252–263.

- 9. Raleigh VS, Foot C. Getting the Measure of Quality: Opportunities and Challenges. London: King's Fund; 2010.

- 10. Sutton M, Nikolova S, Boaden R, Lester H, McDonald R, Roland M. Reduced mortality with hospital pay for performance in England. N Engl J Med 2012;367:1821–1828.

- 11. Minchin M, Roland M, Richardson J, Rowark S, Guthrie B. Quality of care in the United Kingdom after removal of financial incentives. N Engl J Med 2018;379:948–957.

- 12. NICE indicator process guide, 2019. https://www.nice.org.uk/media/default/Get-involved/Meetings-In-Public/indicator-advisory-committee/ioc-process-guide.pdf (24 March 2020).

- 13. Spertus JA, Eagle KA, Krumholz HM, Mitchell KR, Normand S-LT. American College of Cardiology and American Heart Association methodology for the selection and creation of performance measures for quantifying the quality of cardiovascular care. Circulation 2005;111:1703–1712.

- 14. Spertus JA, Chan P, Diamond GA, Drozda JP, Kaul S, Krumholz HM et al.; Writing Committee Members. ACCF/AHA new insights into the methodology of performance measurement. Circulation 2010;122:2091–2106.

- 15. Shekelle PG. Quality indicators and performance measures: methods for development need more standardization. J Clin Epidemiol 2013;66:1338–1339.

- 16. Bhatt DL, Drozda JP, Shahian DM, Chan PS, Fonarow GC, Heidenreich PA et al. ACC/AHA/STS statement on the future of registries and the performance measurement enterprise. J Am Coll Cardiol 2015;66:2230–2245.

- 17. Wallentin L, Gale CP, Maggioni A, Bardinet I, Casadei B. EuroHeart: European Unified Registries on heart care evaluation and randomized trials. Eur Heart J 2019;40:2745–2749.

- 18. National Quality Forum. Measure evaluation criteria. http://www.qualityforum.org/Measuring_Performance/Submitting_Standards/Measure_Evaluation_Criteria.aspx#comparison (25 March 2020).

- 19. Donabedian A. An Introduction to Quality Assurance in Health Care, 1st ed., Vol. 1. New York, NY: Oxford University Press; 2003.

- 20. Krumholz HM, Normand S-LT, Spertus JA, Shahian DM, Bradley EH. Measuring performance for treating heart attacks and heart failure: the case for outcomes measurement. Health Aff 2007;26:75–85.

- 21. National Quality Forum. Risk adjustment for sociodemographic factors. https://www.qualityforum.org/Publications/2014/08/Risk_Adjustment_for_Socioeconomic_Status_or_Other_Sociodemographic_Factors.aspx (28 April 2020).

- 22. Shahian DM, Wolf RE, Iezzoni LI, Kirle L, Normand S-LT. Variability in the measurement of hospital-wide mortality rates. N Engl J Med 2010;363:2530–2539.

- 23. Sajobi TT, Wang M, Awosoga O, Santana M, Southern D, Liang Z et al. Trajectories of health-related quality of life in coronary artery disease. Circ Cardiovasc Qual Outcomes 2018;11:e003661.

- 24. Thompson DR, Yu C-M. Quality of life in patients with coronary heart disease-I: assessment tools. Health Qual Life Outcomes 2003;1:42.

- 25. Lewis E, Zile MR, Swedberg K, Rouleau JL, Claggett B, Lefkowitz MP et al. Health-related quality of life outcomes in PARADIGM-HF. Circulation 2015;132(Suppl_3):A17912.

- 26. Coyle YM, Battles JB. Using antecedents of medical care to develop valid quality of care measures. Int J Qual Health Care 1999;11:5–12.

- 27. Schiele F, Gale CP, Bonnefoy E, Capuano F, Claeys MJ, Danchin N et al. Quality indicators for acute myocardial infarction: a position paper of the Acute Cardiovascular Care Association. Eur Heart J Acute Cardiovasc Care 2017;6:34–59.

- 28. Klassen A, Miller A, Anderson N, Shen J, Schiariti V, O'Donnell M. Performance measurement and improvement frameworks in health, education and social services systems: a systematic review. Int J Qual Health Care 2010;22:44–69.

- 29. Batalden M, Batalden P, Margolis P, Seid M, Armstrong G, Opipari-Arrigan L et al. Coproduction of healthcare service. BMJ Qual Saf 2016;25:509–517.

- 30. Fitzsimons D. Patient engagement at the heart of all European Society of Cardiology activities. Cardiovasc Res 2019;115:e99–e101.

- 31. Dimick JB, Welch HG, Birkmeyer JD. Surgical mortality as an indicator of hospital quality: the problem with small sample size. JAMA 2004;292:847–851.

- 32. Kaul S, Diamond GA. Trial and error. How to avoid commonly encountered limitations of published clinical trials. J Am Coll Cardiol 2010;55:415–427.

- 33. Walter LC, Davidowitz NP, Heineken PA, Covinsky KE. Pitfalls of converting practice guidelines into quality measures: lessons learned from a VA performance measure. JAMA 2004;291:2466–2470.

- 34. Normand S-LT, McNeil BJ, Peterson LE, Palmer RH. Eliciting expert opinion using the Delphi technique: identifying performance indicators for cardiovascular disease. Int J Qual Health Care 1998;10:247–260.

- 35. MacLean CH, Kerr EA, Qaseem A. Time out—charting a path for improving performance measurement. N Engl J Med 2018;378:1757–1761.

- 36. Werner RM, Asch DA. The unintended consequences of publicly reporting quality information. JAMA 2005;293:1239–1244.

- 37. Jones J, Hunter D. Consensus methods for medical and health services research. BMJ 1995;311:376–380.

- 38. Brook RH, McGlynn EA, Cleary PD. Quality of health care. Part 2: measuring quality of care. N Engl J Med 1996;335:966–970.

- 39. Brook RH. The RAND/UCLA appropriateness method. In: McCormack KA, Moore SR, Siegel RA, eds. Clinical Practice Guideline Development: Methodology Perspectives. Rockville, MD: Agency for Healthcare Research and Policy; 1994.

- 40. Mangione-Smith R, DeCristofaro AH, Setodji CM, Keesey J, Klein DJ, Adams JL et al. The quality of ambulatory care delivered to children in the United States. N Engl J Med 2007;357:1515–1523.

- 41. Peterson ED, Delong ER, Masoudi FA, O’Brien SM, Peterson PN, Rumsfeld JS et al. ACCF/AHA 2010 Position Statement on Composite Measures for Healthcare Performance Assessment: a report of the American College of Cardiology Foundation/American Heart Association Task Force on Performance Measures (Writing Committee to develop a position statement on composite measures). Circulation 2010;121:1780–1791.

- 42. Zusman O, Bebb O, Hall M, Dondo TB, Timmis A, Schiele F et al. International comparison of acute myocardial infarction care and outcomes using quality indicators. Heart 2019;105:820–825.

- 43. Rossello X, Medina J, Pocock S, Van de Werf F, Chin CT, Danchin N et al. Assessment of quality indicators for acute myocardial infarction management in 28 countries and use of composite quality indicators for benchmarking. Eur Heart J Acute Cardiovasc Care 2020;2048872620911853. doi: 10.1177/2048872620911853.

- 44. Berwick DM. Era 3 for medicine and health care. JAMA 2016;315:1329–1330.

- 45. Hall M, Dondo TB, Yan AT, Goodman SG, Bueno H, Chew DP et al. Association of clinical factors and therapeutic strategies with improvements in survival following non-ST-elevation myocardial infarction, 2003–2013. JAMA 2016;316:1073–1082.

- 46. Bebb O, Hall M, Fox KAA, Dondo TB, Timmis A, Bueno H et al. Performance of hospitals according to the ESC ACCA quality indicators and 30-day mortality for acute myocardial infarction: National Cohort Study using the United Kingdom Myocardial Ischaemia National Audit Project (MINAP) register. Eur Heart J 2017;38:974–982.
