Abstract

Scientists conducting affective research often use emotional visual images to examine the mechanisms of defensive responses to threatening and dangerous events and objects. Many studies use the rich emotional images from the International Affective Picture System (IAPS) to facilitate affective research. While IAPS images can be classified into emotional categories such as fear or disgust, the number of images per discrete emotional category is limited. We developed the Open Biological Negative Image Set (OBNIS), consisting of 200 creature images, each provided in colour and greyscale and categorized as disgusting, fearful or neither. Participants in Experiment 1 (N = 210) evaluated the images' valence and arousal and classified them as disgusting, fearful or neither. In Experiment 2, other participants (N = 423) rated the disgust and fear levels of the images. As a result, the OBNIS provides valence, arousal, disgust and fear ratings and the emotional category (‘disgusting’, ‘fearful’ or ‘neither’) for each image. These images are available to download on the Internet (https://osf.io/pfrx4/?view_only=911b1be722074ad4aab87791cb8a72f5).

Keywords: affect rating, disgust, fear, open-access

1. Introduction

Human beings experience various emotions. In particular, negative emotions such as fear and disgust are essential mechanisms for avoiding dangerous objects and events in our daily lives. Strong negative emotions can also cause phobias. Research has suggested that fear and anxiety play essential roles in causing and maintaining phobias (for a review, see [1]). More recently, it has been suggested that disgust and fear play crucial roles in causing specific phobias. For example, Olatunji et al. [2] reported that disgust and fear interact in predicting blood injection phobia. Also, many studies have reported that disgust of spiders is the primary emotion in spider phobia ([3–5], but not [6–9]).

Several behavioural and neuroscience studies designed to evaluate defensive responses to threatening and dangerous stimuli have used various types of negative images, including images of snakes, traffic accidents, spiders and dirty toilets. These negative images are catalogued in rich image databases that contain a large amount of image data. Such databases include the International Affective Picture System (IAPS), a standardized set of emotional images [10]; the Geneva Affective Picture Database, another standardized collection of emotional images [11]; the Nencki Affective Picture System (NAPS), an image set of realistic and high-quality emotional images [12]; the basic-emotion normative ratings for the Nencki Affective Picture System, a standardized image set of NAPS images with additional information on discrete emotions [13]; the Open Affective Standardized Image Set, an open-access set of online emotional images [14]; the Set of Fear Inducing Pictures, an image set of phobia-relevant pictures [15]; and the DIsgust-RelaTed-Images database (DIRTI), a standardized set of disgusting pictures [16].

The IAPS has been widely used in affective research, and many studies have relied on this database. However, since the IAPS is based on the dimensional approach to emotions and focuses on valence and arousal [12,14], it does not map onto discrete emotions such as disgust [16]. Although several studies have classified the IAPS images into discrete emotional categories (e.g. [17–19]), the number of images per emotion is somewhat limited, especially for disgust. Haberkamp et al. [16] have provided 240 disgust-related images in the DIRTI (e.g. animal carcasses, rotten food and blood) for researchers interested in studying different facets of disgust. However, the DIRTI database provides only disgust-related images. Thus, researchers interested in comparing the effects of discrete emotions such as fear and disgust must gather images from several image databases, making it difficult to select images based on a standardized rating.

Also, the images of most databases do not contain complete objects (e.g. an image shows a snake's face but not the whole body). Additionally, researchers often remove unnecessary backgrounds from images and use the trimmed object as experimental stimuli to ensure a pure effect (e.g. [20,21]). Almost none of the image databases, including the IAPS, have isolated, full object images. Therefore, many researchers in affective science need to collect object images from scratch. In particular, although some image databases provide images of disgusting objects (e.g. images in the DIRTI, [16]), many disgusting images cause disgust through an understanding of the context of the whole scene (e.g. dirty toilets, dead animals) and thus, the total number of disgusting object images is quite small.

The current study's goal was to develop a database of negative emotional objects to address the issues with current databases and reduce the need for detailed, time-consuming and labour-intensive image adjustments. We collected 200 images depicting negative and neutral objects from open-access resources by extracting the images of the object from the background scene. In particular, the current study focused on images associated with disgust and fear and used images that could evoke these emotions by themselves in developing an image database. This database was expected to facilitate investigations of the effects of disgust and fear on behaviour, including phobias. The participants were asked to rate these images in terms of affective values. The images were presented in greyscale or colour. The reason for utilizing both colour and greyscale images was that none of the current databases provide rating scores for the greyscale images. Greyscale images are often required in experiments in which the physical characteristics of images must be controlled. The participants reported the valence and arousal scores for the colour or greyscale images and categorized each image as disgusting, fearful or neither. We calculated mean values and standard deviations of valence and arousal ratings for each image. The probability of classifying each image into disgusting, fearful or neither categories was calculated using each participant's image categorization.

Moreover, we focused on the extent to which people could distinguish between disgust and fear in each image. For example, several studies have suggested that people have a culturally acquired disgust of specific animals and insects associated with contamination and disease, such as spiders, and that these animals and insects have become fear-relevant [22,23]. Moreover, Woody & Teachman [24] reported that people tend to confuse disgust and fear when the intensity of the elicited emotions is moderate [24,25]. Therefore, we expected that asking participants to label many visual images as ‘disgusting’ or ‘fearful’ would allow us to assess the extent to which fear and disgust are combined in a particular image and how precisely people can separate negative feelings into the two categorical emotions of fear and disgust. We performed a cluster analysis on each image's probability of being classified as ‘disgusting’ or ‘fearful’ and grouped the images according to their emotional content.

2. Experiment 1

2.1. Methods

2.1.1. Participants

Participants were recruited through a crowdsourcing service (Crowd Works) and were paid 300 yen for rating the images. Two hundred and ten workers (112 females, 98 males, mean age = 40.31 years, age range = 20–68 years) participated. Because we used a crowdsourcing service based in Japan, we assume that nearly all participants in this study were Japanese or could understand Japanese. All participants gave their informed consent online before participating in the study. Ethical approval for the study was obtained from the Waseda University's Ethics Review Committee on Research with Human Subjects.

2.1.2. Materials

Two hundred images were collected from Google Images (https://images.google.com). All images were chosen to represent emotionally negative (e.g. snakes, wasps, spiders and cockroaches) or neutral objects (e.g. cats, flowers). The image search was limited to images classified as available for reuse with modification. We cut the objects out of the scene images and resized them to 500 × 500 pixels in Adobe Photoshop CC 2015. A greyscale version of every image was also created. In total, 400 images were prepared for the study: 200 colour images (100 emotionally negative and 100 emotionally neutral) and their 200 greyscale counterparts.

2.1.3. Procedure

The participants were randomly assigned to one of two groups. All images were presented in colour to one group (colour condition; N = 100) and in greyscale to the other (greyscale condition; N = 110). The 200 images were presented one at a time in random order in a browser window using the Qualtrics platform (Qualtrics, Provo, UT). Participants were instructed to rate each image on valence and arousal dimensions (see appendix A). Valence was assessed on a 9-point scale ranging from 1 (extremely negative) to 9 (extremely positive). Arousal was assessed on a 9-point scale ranging from 1 (low arousal) to 9 (high arousal). Participants were also instructed to categorize each image as ‘disgusting’, ‘fearful’ or ‘neither’, according to whether they predominantly felt disgusted or fearful when seeing the image. Participants were asked to select ‘neither’ if they felt neither disgust nor fear. Each image remained on the screen until all responses had been given. It took approximately 45 min to complete the experimental session.

2.2. Results and discussion

2.2.1. Univariate distributions

Figure 1 shows the relationship between the means and standard deviations of the valence and arousal ratings. Visual inspection suggests that the variability of the valence and arousal ratings was approximately normally distributed in both the colour and greyscale conditions. Each image's valence rating ranged from 1.70 to 7.63 in the colour condition and from 1.72 to 7.56 in the greyscale condition. The mean valence rating was 4.40 in the colour condition and 4.32 in the greyscale condition. Thus, the midpoint of the observed range of valence ratings was lower than the theoretical midpoint of the scale (5) in each presentation mode. The arousal rating per image ranged from 2.66 to 6.94 in the colour condition and from 2.22 to 6.92 in the greyscale condition. The mean arousal rating was 4.84 in the colour condition and 4.67 in the greyscale condition.

Figure 1.

Relationship between the mean value and standard deviation of the valence (a,b) and arousal ratings (c,d). (a,c) show the colour condition. (b,d) display the greyscale condition.

We evaluated the face validity of the valence and arousal ratings by inspecting the images with the most positive valence, the most negative valence, the highest arousal and the lowest arousal in each presentation mode. In the colour condition, the image with the most positive valence was that of a cat (image no. 108; mean = 7.63, s.d. = 1.46), and the image with the most negative valence was that of a cockroach (image no. 1; mean = 1.70, s.d. = 1.14). The image with the highest arousal was that of a snake (image no. 60; mean = 6.94, s.d. = 2.30), and the image with the lowest arousal was that of a leaf (image no. 119; mean = 2.66, s.d. = 1.76). In the greyscale condition, the image with the most positive valence was that of a cat (image no. 108; mean = 7.55, s.d. = 1.44), and the image with the most negative valence (mean = 1.72, s.d. = 0.94) and the most arousing image was that of a centipede (image no. 23; mean = 6.92, s.d. = 2.13). The least arousing image was that of a leaf (image no. 121; mean = 2.22, s.d. = 1.62). The content of these extreme images matches their ratings (e.g. a cat as the most pleasant image and a cockroach or centipede as the most unpleasant), which supports the face validity of the valence and arousal scores.
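The per-image summaries described above can be sketched in a few lines. This is only an illustration, not the authors' analysis script: the data layout (a mapping from image ID to a list of individual ratings on the 1 to 9 scale), the function names and the toy numbers are all assumptions, as is the use of the sample standard deviation.

```python
from statistics import mean, stdev


def summarise_ratings(ratings_by_image):
    """Return {image_id: (mean, sample s.d.)} for lists of 1-9 ratings."""
    return {
        image_id: (mean(ratings), stdev(ratings))
        for image_id, ratings in ratings_by_image.items()
    }


def extreme_images(summary):
    """Return the IDs of the images with the lowest and highest mean rating."""
    lowest = min(summary, key=lambda image_id: summary[image_id][0])
    highest = max(summary, key=lambda image_id: summary[image_id][0])
    return lowest, highest


# Hypothetical valence ratings from four raters (not OBNIS data).
toy_valence = {
    "cockroach": [1, 2, 1, 3],
    "leaf": [5, 5, 6, 4],
    "cat": [8, 7, 9, 8],
}
toy_summary = summarise_ratings(toy_valence)
most_negative, most_positive = extreme_images(toy_summary)
```

Applying the same two helpers to arousal ratings would give the most and least arousing images.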

2.2.2. Relationships between valence and arousal

Next, we examined the relationship between valence and arousal ratings for images in each presentation mode. We found that the mean valence ratings of images were negatively correlated with the mean arousal ratings of images in both presentation modes (colour condition: r = −0.87 (−0.90; −0.83), t198 = −24.41, p < 0.001; greyscale condition: r = −0.85 (−0.88; −0.81), t198 = −22.71, p < 0.001). The Open Biological Negative Image Set (OBNIS) contains both emotionally negative and neutral (or relatively positive) images. Because visual inspection suggests no correlation among the neutral-valence images in the OBNIS, the strong negative correlation between valence and arousal ratings observed in this study can be attributed mainly to the emotionally negative images.
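The statistics reported with each correlation (a t value on n − 2 degrees of freedom and an interval around r) can be approximated from r and the number of images alone. The sketch below assumes the interval is a 95% confidence interval based on the Fisher z transformation; the source does not state how it was computed, and the function name is hypothetical.

```python
import math


def correlation_summary(r, n, z_crit=1.959964):
    """Return (t, df, ci_low, ci_high) for a Pearson r computed from n pairs.

    The confidence interval uses the Fisher z transformation (an assumption).
    """
    df = n - 2
    t = r * math.sqrt(df) / math.sqrt(1.0 - r * r)
    z = math.atanh(r)                # Fisher z transform of r
    se = 1.0 / math.sqrt(n - 3)      # standard error of z
    ci_low = math.tanh(z - z_crit * se)
    ci_high = math.tanh(z + z_crit * se)
    return t, df, ci_low, ci_high


# Colour condition: rounded r = -0.87 across the 200 images.
t_stat, df, ci_low, ci_high = correlation_summary(-0.87, 200)
```

With the rounded r of −0.87, this gives an interval that agrees with the reported (−0.90; −0.83) to two decimals and a t of roughly −24.8, close to the reported −24.41; the small gap is presumably due to rounding of r.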

2.2.2.1. Gender differences

Previous studies suggest gender differences in affective processing [26–28]. Therefore, we explored whether gender modulated the valence and arousal ratings. Figure 2 shows the relationship between valence and arousal ratings by gender. In the colour condition, a correlation analysis indicated that images' valence ratings were negatively correlated with arousal ratings in both genders (women: r = −0.90 (−0.92; −0.87), t198 = −29.17, p < 0.001; men: r = −0.79 (−0.83; −0.73), t198 = −17.88, p < 0.001), and the correlation was stronger in women than in men (z = 4.12, p < 0.001). These results, similar to Bradley et al. [26], indicate that gender modulated the relationship between images’ valence and arousal ratings. Conversely, the correlations between valence and arousal ratings in the greyscale condition did not differ between genders (women: r = −0.85 (−0.88; −0.80), t198 = −22.56, p < 0.001; men: r = −0.84 (−0.88; −0.80), t198 = −21.98, p < 0.001; z = 0.22, p = 0.83). These findings suggest that the effect of gender on the relationship between valence and arousal depends on whether an image is presented in colour. The majority of the participants in this study were Japanese. Therefore, the extent to which these findings apply to samples from other countries and cultures should be examined in the future.
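The comparison of two correlation coefficients can be illustrated with a Fisher z test for independent correlations. The source does not name the test that produced the z values, so this choice is an assumption, as are the function name and the treatment of each correlation as based on 200 images. The order of subtraction (men minus women) is chosen only so that the sign matches the reported value.

```python
import math


def compare_independent_correlations(r1, n1, r2, n2):
    """Fisher z test for the difference between two independent correlations.

    Returns (z, two-sided p) under a standard normal reference distribution.
    """
    z_diff = math.atanh(r1) - math.atanh(r2)
    se = math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))
    z = z_diff / se
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided tail probability
    return z, p


# Colour condition, rounded coefficients: men r = -0.79, women r = -0.90.
z_stat, p_value = compare_independent_correlations(-0.79, 200, -0.90, 200)
```

With the rounded coefficients this yields z of about 3.98 and p < 0.001, in line with the reported z = 4.12; unrounded coefficients would be needed to reproduce the value exactly.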

Figure 2.

Relationship between valence and arousal ratings by gender. (a) shows the colour condition. (b) displays the greyscale condition.

2.2.3. Emotion categorization

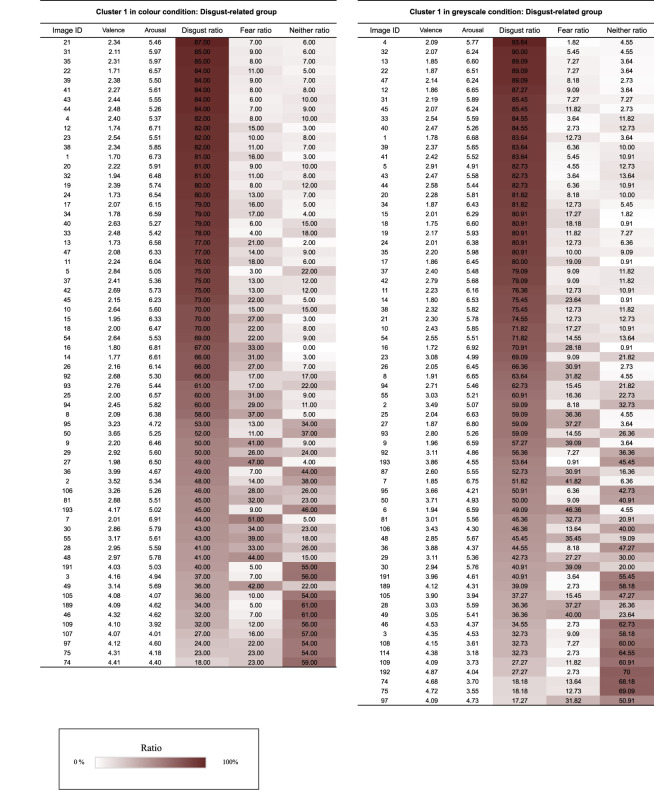

We calculated each image's probability of being classified into each category (i.e. disgust, fear or neither) and submitted these probabilities to a cluster analysis with NbClust [29]. NbClust is an R package that provides 30 indices for determining the number of clusters and proposes the optimal number based on the majority rule across these indices. In the present study, the NbClust analysis was conducted on the 200 images in each presentation mode using three variables (the probabilities of being categorized as disgust, fear or neither) and the ‘ward.D2’ method [30]. Three clusters were derived from the cluster analysis in each of the two presentation conditions. Table 1 displays, for each cluster, the probability of its images being assigned to each category. Cluster 1 in the colour condition was named the ‘disgust-related group’ because the most frequently labelled category for images within this cluster was ‘disgust’ (figure 3; disgust mean ratio: 61.70%, fear mean ratio: 18.52%, neither mean ratio: 19.79%). Cluster 2 was named the ‘fear-related group’, since the most frequently labelled category for images within this cluster was ‘fear’ (figure 3; disgust mean ratio: 15.68%, fear mean ratio: 64.05%, neither mean ratio: 20.27%). Cluster 3 was named the ‘neither group’ because the most frequently labelled category for images within this cluster was ‘neither’ (figure 3; disgust mean ratio: 4.05%, fear mean ratio: 1.62%, neither mean ratio: 94.32%). Using the same strategy in the greyscale condition, cluster 1 was named the ‘disgust-related group’ (figure 3; disgust mean ratio: 62.55%, fear mean ratio: 15.51%, neither mean ratio: 21.95%), cluster 2 was named the ‘fear-related group’ (figure 3; disgust mean ratio: 15.87%, fear mean ratio: 62.21%, neither mean ratio: 21.91%) and cluster 3 was named the ‘neither group’ (figure 3; disgust mean ratio: 5.10%, fear mean ratio: 1.84%, neither mean ratio: 93.07%).

The images assigned to the disgust-related and fear-related groups were judged as disgusting or fearful, respectively, with a relatively high probability across participants. Thus, the participants were able to distinguish relatively well between ‘disgust’ and ‘fear’ as the emotion they experienced in response to the images. However, some images in the disgust-related group were assigned to ‘disgust’ and ‘fear’ with almost the same probability (i.e. a difference of 10% or less; e.g. in the colour condition: Image ID 9, 27, 7, 30, 55, 28, 48, 49, 97, 75 and 74; in the greyscale condition: Image ID 6, 30, 28, 49, 74, 75 and 97). Thus, it should be noted that participants might confuse feelings of disgust and fear when viewing such images.
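The grouping step can be illustrated with a small hand-written agglomerative clustering that uses Ward's minimum-variance criterion, the criterion that the ‘ward.D2’ option is intended to implement. This sketch is not NbClust: it does not compute any of the indices used to choose the number of clusters, so k = 3 is fixed by assumption. The image IDs, probabilities and function names are hypothetical.

```python
CATEGORIES = ("disgust", "fear", "neither")


def ward_clusters(points, k):
    """Merge points bottom-up with Ward's criterion until k clusters remain.

    points: {image_id: (p_disgust, p_fear, p_neither)}
    Returns a list of (member_ids, centroid) pairs.
    """
    clusters = [([image_id], list(p)) for image_id, p in points.items()]
    while len(clusters) > k:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                (members_a, centre_a), (members_b, centre_b) = clusters[a], clusters[b]
                n_a, n_b = len(members_a), len(members_b)
                dist_sq = sum((x - y) ** 2 for x, y in zip(centre_a, centre_b))
                # Increase in within-cluster sum of squares if a and b merge.
                cost = n_a * n_b / (n_a + n_b) * dist_sq
                if best is None or cost < best[0]:
                    best = (cost, a, b)
        _, a, b = best
        (members_a, centre_a), (members_b, centre_b) = clusters[a], clusters[b]
        n_a, n_b = len(members_a), len(members_b)
        merged_centre = [
            (n_a * x + n_b * y) / (n_a + n_b) for x, y in zip(centre_a, centre_b)
        ]
        merged = (members_a + members_b, merged_centre)
        clusters = [c for i, c in enumerate(clusters) if i not in (a, b)] + [merged]
    return clusters


def name_cluster(centroid):
    """Name a cluster after the category with the highest mean probability."""
    return CATEGORIES[max(range(len(CATEGORIES)), key=lambda i: centroid[i])]


# Hypothetical classification probabilities (disgust, fear, neither).
toy_probabilities = {
    "img_a": (0.70, 0.15, 0.15),
    "img_b": (0.60, 0.25, 0.15),
    "img_c": (0.10, 0.70, 0.20),
    "img_d": (0.20, 0.65, 0.15),
    "img_e": (0.05, 0.02, 0.93),
    "img_f": (0.03, 0.01, 0.96),
}
named = {
    name_cluster(centroid): sorted(members)
    for members, centroid in ward_clusters(toy_probabilities, 3)
}
```

Each centroid is the mean classification probability of the images in a cluster, which corresponds to the ‘mean ratio’ used above to name the clusters.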

Table 1.

Each table lists the valence, the arousal and the probabilities of assigning images in each cluster to the ‘disgust’, ‘fear’ or ‘neither’ categories. Image ID refers to an ID assigned to each image. The closer the cell colour is to white, the closer the probability of the image being assigned to the ‘disgust’, ‘fear’ or ‘neither’ category is to 0%; the closer the cell colour is to red, the closer that probability is to 100%.


Figure 3.

Relationship between valence and arousal ratings for images in each subgroup (disgust-related, fear-related, or neither group). (a) shows the colour condition. (b) shows the greyscale condition.

Figure 5.

Relationship between fear and disgust ratings by gender. (a) shows the colour condition, and (b) displays the greyscale condition.

We also examined whether the subgroup to which each image was assigned differed between the colour and greyscale conditions (table 2). Seventy images were assigned to the disgust-related group in the greyscale condition. Of these, 65 were also assigned to the disgust-related group in the colour condition, whereas two were assigned to the fear-related group and three to the neither group. The 39 images assigned to the fear-related group in the greyscale condition were all assigned to the fear-related group in the colour condition. Ninety-one images were assigned to the neither group in the greyscale condition; 90 of these were also assigned to the neither group in the colour condition, and one was assigned to the disgust-related group. These results indicate that each subgroup of greyscale images was very similar to the corresponding subgroup in the colour condition.

Table 2.

Number of images per subgroup in the colour condition (rows) and the greyscale condition (columns).

| colour condition | greyscale condition: cluster 1 (disgust-related group) | greyscale condition: cluster 2 (fear-related group) | greyscale condition: cluster 3 (neither group) | total |
|---|---|---|---|---|
| cluster 1 (disgust-related group) | 65 | 0 | 1 | 66 |
| cluster 2 (fear-related group) | 2 | 39 | 0 | 41 |
| cluster 3 (neither group) | 3 | 0 | 90 | 93 |
| total | 70 | 39 | 91 | 200 |
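The overlap shown in table 2 can be condensed into a single proportion. The short calculation below is an illustration added here and was not part of the original analysis; the variable names are hypothetical, and the counts are those of table 2.

```python
# Rows: colour-condition clusters; columns: greyscale-condition clusters
# (order in both: disgust-related, fear-related, neither), as read from table 2.
table2 = [
    [65, 0, 1],
    [2, 39, 0],
    [3, 0, 90],
]

total = sum(sum(row) for row in table2)
same_group = sum(table2[i][i] for i in range(len(table2)))
agreement = same_group / total  # share of images in the same subgroup in both modes

colour_totals = [sum(row) for row in table2]
greyscale_totals = [sum(column) for column in zip(*table2)]
```

Here 194 of the 200 images (97%) fall into the same subgroup in both presentation modes.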

2.2.3.1. Valence and arousal ratings

Studies have suggested that emotional valence and arousal levels differ among discrete emotional categories of images [31–34]. Thus, we calculated the mean valence and arousal ratings per subgroup (disgust-related, fear-related and neither groups) in colour and greyscale to determine whether the valence and arousal ratings differed among the subgroups of images. First, the mean valence ratings for colour images were subjected to a one-way analysis of variance (ANOVA) with image group (disgust-related, fear-related and neither groups) as the independent variable and the valence ratings as the dependent variable. The main effect of image group was significant, F2,197 = 408.07, p < 0.001. Post hoc comparisons using the Holm method indicated that the images in the neither group (mean = 6.15, s.d. = 0.69) were rated as less negative than the images in the disgust-related group (mean = 2.72, s.d. = 0.81; t197 = 26.25, p < 0.001, d = 4.53) or the fear-related group (mean = 3.13, s.d. = 1.03; t197 = 19.89, p < 0.001, d = 3.45). Moreover, the images in the disgust-related group were rated as more negative than the images in the fear-related group, t197 = 2.50, p = 0.01, d = 0.44. These results indicate that the valence ratings differed among the colour image subgroups.

We also observed a main effect of image group on the mean valence ratings for greyscale images, F2,197 = 346.98, p < 0.001. As with the colour images, the greyscale images in the neither group (mean = 5.96, s.d. = 0.63) were rated as less negative than the images in the disgust-related group (mean = 2.79, s.d. = 0.89; t197 = 24.58, p < 0.001, d = 4.12) or the images in the fear-related group (mean = 3.21, s.d. = 1.03; t197 = 17.72, p < 0.001, d = 3.24). Moreover, the images in the disgust-related group were rated as more negative than the images in the fear-related group (t197 = 2.58, p = 0.01, d = 0.44). These findings suggest that, in both the colour and greyscale conditions, the images in the disgust-related group were evaluated as the most negative of the three groups. This result is consistent with Alarcão et al. [31], who also reported that disgusting images were rated more negatively than fearful images.
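The two ingredients of this analysis, a one-way ANOVA across image groups and a Holm correction of the pairwise p values, can be sketched as follows. The function names and toy numbers are assumptions. The sketch computes the F ratio and, as one common effect-size measure that is an addition of this sketch, eta squared. It does not compute p values or the pairwise t tests themselves; the reported df of 197 suggests these used the pooled ANOVA error term, which is an inference rather than something the text states.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within, eta_squared) for lists of scores."""
    all_scores = [x for g in groups for x in g]
    grand_mean = mean(all_scores)
    group_means = [mean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)
    return f_value, df_between, df_within, eta_squared


def holm_adjust(p_values):
    """Holm step-down adjustment; returns adjusted p values in input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[i])
        running_max = max(running_max, candidate)  # keep adjusted values monotone
        adjusted[i] = running_max
    return adjusted


# Hypothetical mean ratings for three small image groups (not OBNIS data).
toy_groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_value, df_between, df_within, eta_squared = one_way_anova(toy_groups)

# Hypothetical unadjusted p values for three pairwise comparisons.
adjusted_p = holm_adjust([0.01, 0.04, 0.03])
```

With 200 images in three groups, the same function would return the degrees of freedom 2 and 197 that appear in the reported F tests.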

We conducted a one-way ANOVA with image group (disgust-related, fear-related and neither groups) as the independent variable and the mean arousal ratings for colour images as the dependent variable. The main effect of image group was significant, F2,197 = 358.66, p < 0.001. Post hoc comparisons showed that the images in the neither group (mean = 3.70, s.d. = 0.46) were rated as less arousing than the images in the disgust-related group (mean = 5.65, s.d. = 0.74; t197 = 21.18, p < 0.001, d = 3.16) or the fear-related group (mean = 6.15, s.d. = 0.49; t197 = 21.18, p < 0.001, d = 5.20). Moreover, the images in the fear-related group were rated as more arousing than the images in the disgust-related group, t197 = 4.41, p < 0.001, d = 0.80. These findings suggest that the mean arousal ratings differed among the image subgroups. The main effect of image group was also significant for the mean arousal ratings of greyscale images, F2,197 = 270.68, p < 0.001. A post hoc test of arousal ratings among image subgroups indicated that images in the neither group (mean = 3.45, s.d. = 0.51) were rated as less arousing than the images in the disgust-related group (mean = 5.50, s.d. = 0.94; t197 = 18.92, p < 0.001, d = 2.71) or in the fear-related group (mean = 5.99, s.d. = 0.44; t197 = 19.47, p < 0.001, d = 5.34). Moreover, the images in the fear-related group were rated as more arousing than the images in the disgust-related group, t197 = 3.59, p < 0.001, d = 0.67. These findings suggest that the mean arousal ratings of images in the fear-related group were the highest among the image groups under both the colour and the greyscale conditions.
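The effect sizes (d) attached to the post hoc comparisons can be approximated from the reported group means and standard deviations. The sketch below assumes Cohen's d with a pooled standard deviation and takes the group sizes (41 fear-related and 93 neither images in the colour condition) from table 2; the text does not state the exact formula, and the function name is hypothetical.

```python
import math


def cohens_d(mean_1, sd_1, n_1, mean_2, sd_2, n_2):
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    pooled_var = ((n_1 - 1) * sd_1 ** 2 + (n_2 - 1) * sd_2 ** 2) / (n_1 + n_2 - 2)
    return (mean_1 - mean_2) / math.sqrt(pooled_var)


# Colour condition, arousal: fear-related group versus neither group.
d_fear_vs_neither = cohens_d(6.15, 0.49, 41, 3.70, 0.46, 93)
```

Under these assumptions the result is about 5.22, close to the reported d = 5.20; the remaining difference may reflect rounding of the summary statistics or a different standardizer.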

Figure 3 shows the relationship between valence and arousal ratings of images in each subgroup. The images in the disgust-related and fear-related groups in colour and greyscale conditions appear to be rated as more negative and more arousing than the images in the neither group. Moreover, the distribution of images in the disgust-related group appears to be more negative and less arousing than the distribution of images in the fear-related group.

We conducted a correlation analysis between valence and arousal ratings of the images in the disgust-related and fear-related groups to examine differences in valence and arousal ratings between the groups. As can be seen in figure 3, the mean valence ratings of the images were negatively correlated with the mean arousal ratings of the images in the fear- and disgust-related groups in both presentation modes (fear-related group in colour condition: r = −0.78 (−0.88; −0.63), t39 = −7.89, p < 0.001; disgust-related group in colour condition: r = −0.90 (−0.94; −0.84), t64 = −16.58, p < 0.01; fear-related group in greyscale condition: r = −0.72 (−0.84; −0.52), t37 = −6.28, p < 0.001; disgust-related group in greyscale condition: r = −0.94 (−0.97; −0.91), t68 = −23.67, p < 0.001). Moreover, the correlations between valence and arousal ratings in the disgust-related group were stronger than those in the fear-related group in both presentation modes (colour condition, z = 2.04, p = 0.04; greyscale condition, z = 4.22, p < 0.001), suggesting that the strength of the relationship between valence and arousal might differ between discrete emotions such as disgust and fear.

The results of Experiment 1 demonstrated the probability of each image being categorized as disgusting or fearful. However, the extent to which participants felt disgust or fear for each image was unclear. Therefore, in Experiment 2, we examined the level of disgust and fear felt for each image by asking participants to rate the degree of disgust or fear they felt in response to each image.

3. Experiment 2

3.1. Methods

We recruited 423 workers (134 females and 289 males, mean age = 46.38 years, age range = 18–78 years) through another crowdsourcing service (Yahoo! Crowdsourcing). They participated in Experiment 2 and evaluated the images. Similar to Experiment 1, most of the participants were Japanese and/or understood Japanese. All participants gave their informed consent online prior to participating in the study. Ethical approval for this study was obtained from the Ethical Review Committee for Research Involving Human Subjects at Waseda University.

The procedure of Experiment 2 was identical to that of Experiment 1, except for the following. The participants were randomly assigned to the fear-rating group or the disgust-rating group. Participants in each group were further assigned to a colour image group (fear-rating and colour condition N = 103; disgust-rating and colour condition N = 102) and a greyscale image group (fear-rating and greyscale condition N = 105; disgust-rating and greyscale condition N = 113). They were instructed to rate each image on disgust or fear dimensions (appendix B). Disgust was assessed by a 9-point scale ranging from 1 (Low disgust) to 9 (High disgust), and fear was also assessed by a 9-point scale ranging from 1 (Low fear) to 9 (High fear).

3.2. Results and discussion

3.2.1. Univariate distributions

Figure 4 shows the relationships between the means and standard deviations of the fear and disgust ratings. The fear ratings for the images ranged from 1.05 to 8.18 (mean = 4.54) in the colour condition and from 1.05 to 7.95 (mean = 4.28) in the greyscale condition. Disgust ratings ranged from 1.35 to 8.43 (mean = 4.64) in the colour condition and from 1.50 to 8.19 (mean = 4.54) in the greyscale condition.

Figure 4.

Relationships between the mean values and standard deviations of fear (a,b) and disgust ratings (c,d). (a,c) show the colour condition, and (b,d) display the greyscale condition.

The face validity of the fear and disgust ratings for the images was assessed by probing the most fearful, most disgusting, least fearful and least disgusting images for each presentation mode. In the colour condition, the most fearful image was a snake (image no. 76; mean fear = 8.18, s.d. = 1.36), and the most disgusting image was a millipede (image no. 13; mean disgust = 8.43, s.d. = 0.85), whereas the least fearful (image no. 160, 163; mean fear = 1.05, s.d. = 0.32), and least disgusting image (image no. 161; mean disgust = 1.35, s.d. = 0.95) was an orange. In the greyscale condition, the most fearful (image no. 16, mean = 7.95, s.d. = 1.46) and the most disgusting image (image no. 16, mean = 8.19, s.d. = 1.24) was a centipede, whereas the least fearful image was an orange (image no. 163; mean fear = 1.05, s.d. = 0.21), and the least disgusting image was an apple (image no. 160; mean disgust = 1.50, s.d. = 1.09).

3.2.2. Relationship between disgust and fear ratings

We examined the relationship between disgust and fear ratings for images under each presentation mode. The results showed that the mean fear ratings of the images were positively correlated with the mean disgust ratings under colour and greyscale conditions (colour condition: r = 0.94 (0.92; 0.95), t198 = 37.75, p < 0.001; greyscale condition: r = 0.94 (0.92; 0.95), t198 = 37.91, p < 0.001), suggesting that, in general, the more disgusting an OBNIS image was, the more fearful it was.

3.2.2.1. Gender differences

We also found high positive correlations between disgust and fear ratings in both genders (figure 5; women in the colour condition: r = 0.95 (0.94; 0.96), t198 = 44.21, p < 0.001; men in the colour condition: r = 0.93 (0.90; 0.94), t198 = 34.46, p < 0.001; women in the greyscale condition: r = 0.95 (0.94; 0.96), t198 = 43.84, p < 0.001; men in the greyscale condition: r = 0.93 (0.91; 0.95), t198 = 34.96, p < 0.001). The correlation between fear and disgust ratings was stronger in women than men under both presentation modes (colour condition: z = −2.32, p = 0.02; greyscale condition: z = −2.11, p = 0.03).

3.2.2.2. Cluster differences

Figure 6 shows relationships between fear and disgust in the subgroups categorized by the cluster analysis of Experiment 1 (i.e. disgust-related, fear-related and neither groups). We tested the relationships between fear and disgust in the disgust-related and fear-related groups. A correlation analysis indicated that the mean fear ratings of the images were positively correlated with the mean disgust ratings of the images in all image subgroups (disgust-related group in colour condition: r = 0.93 (0.89; 0.96), t64 = 20.28, p < 0.001; fear-related group in colour condition: r = 0.76 (0.59; 0.87), t39 = 7.36, p < 0.001; neither group in colour condition: r = 0.88 (0.82; 0.92), t91 = 17.56, p < 0.001; disgust-related group in greyscale condition: r = 0.95 (0.93; 0.97), t68 = 26.12, p < 0.001; fear-related group in greyscale condition: r = 0.83 (0.70; 0.91), t37 = 9.22, p < 0.001; neither group in greyscale condition: r = 0.81 (0.72; 0.87), t89 = 12.95, p < 0.001). Moreover, the correlation between fear and disgust ratings was stronger in the disgust-related than the fear-related group under both presentation modes (colour condition: z = 3.20, p < 0.01; greyscale condition: z = 3.23, p < 0.01). A visual inspection indicated that the fear-related group contained more images that induced less disgust and more fear than the disgust-related group. These images might have caused the weaker correlation between fear and disgust ratings in the fear-related compared with the disgust-related group. Less disgusting images in the fear-related group mainly included images of carnivorous mammals, including tigers and lions, whereas the more disgusting images in the fear-related group mainly included images of reptiles and insects such as snakes and wasps. The possible differences in the relationship between disgust and fear ratings among different animal species, even among images that are frequently categorized as ‘fearful’, need to be further investigated in the future.

Figure 6.

Relationship between fear and disgust ratings of images in each subgroup (disgust-related, fear-related or neither group). (a) shows the colour condition, and (b) shows the greyscale condition. The colour and shape represent the subgroups of images in Experiment 1.

4. General discussion

We collected 200 images to provide a comprehensive database for investigating visually elicited negative emotions, which we call the ‘Open Biological Negative Image Set’. The OBNIS includes valence, arousal, disgust and fear levels, and the emotional category (disgust, fear or neither) of each image. The images and their ratings are fully available on the Internet (https://osf.io/pfrx4/?view_only=911b1be722074ad4aab87791cb8a72f5). Similar to the IAPS, the variability of the valence and arousal ratings for the OBNIS images formed a nearly uniform distribution. IAPS images show a boomerang-shaped relationship between the mean valence and arousal ratings, such that extremely positive and extremely negative images have the highest arousal scores. Conversely, the OBNIS showed a linear relationship between the mean valence and arousal ratings. This difference could be because the OBNIS mainly contains emotionally negative images. As a result, the variation of arousal ratings at positive valence might be small. Furthermore, the images in the OBNIS were relatively well divided into three groups (disgust-related, fear-related and neither group), regardless of the presentation mode. However, for specific images, especially in the disgust-related group, the probability of ‘fear’ was as high as the probability of ‘disgust’. Thus, it is possible that disgust and fear tend to be confused, especially when viewing images associated with disgusting objects. Indeed, in Experiment 2, the images classified into the disgust-related group showed a strong correlation between disgust and fear ratings, indicating that even when images are classified as disgusting, people sometimes feel both fearful and disgusted.

In conclusion, the OBNIS provides valence, arousal, disgust and fear ratings, together with an emotional category (‘disgust’, ‘fear’ or ‘neither’), for each of 200 images. Moreover, the OBNIS provides colour and greyscale versions of the images. We believe that the OBNIS will be a useful image resource for studying visually evoked negative emotions such as disgust and fear in affective research.

Supplementary Material

Appendix A—Instructions

[Question 1—valence]

How strong is your negative or positive emotion when you see this image? Please choose a number ranging from 1 (Very negative) to 9 (Very positive) that reflects your feelings most closely.

* Please make your evaluation based on your own feelings, rather than on how people generally feel.

[Question 2—arousal]

How aroused do you feel when you see the image? Please choose a number ranging from 1 (Low arousal) to 9 (High arousal) that reflects your feelings most closely.

* ‘How aroused do you feel?’ means how much excitement, surprise, agitation or pounding of the heart you feel. 1 (Low arousal) means very calm and 9 (High arousal) means very excited, with higher numbers expressing greater arousal. The degree of arousal is independent of the response to Question 1. Therefore, the degree of arousal might be high (close to 9) or low (close to 1) regardless of the image's emotional valence.

[Question 3—categorization]

Which emotion do you feel, ‘disgust’ or ‘fear’, when you see this image? Please select ‘neither’ if you do not feel either of these two emotions.

* ‘Disgust’ describes repugnance, irritability and a feeling of heartburn. ‘Fear’, on the other hand, describes a feeling of imminent danger, distress or anxiety. For example, people are expected to feel disgusted when they see an image of dirty toilets and fearful when they see an image of unexploded shells. If you feel neither ‘disgust’ nor ‘fear’, please select ‘neither’. Please evaluate fear or disgust based on how you feel, rather than on how people generally feel.
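Responses to Question 3 can be summarized per image as the proportion of participants choosing each category. The Python snippet below is a minimal sketch of that tabulation under assumed inputs; the function name and the example responses are hypothetical, and the sketch is not the grouping procedure used in this study.

```python
from collections import Counter

CATEGORIES = ("disgust", "fear", "neither")


def category_proportions(responses):
    """Return the share of each category among one image's responses."""
    if not responses:
        raise ValueError("no responses for this image")
    unknown = set(responses) - set(CATEGORIES)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    counts = Counter(responses)
    total = len(responses)
    # Counter returns 0 for categories nobody selected.
    return {c: counts[c] / total for c in CATEGORIES}


# Hypothetical responses from ten participants to a single image.
responses = ["disgust"] * 5 + ["fear"] * 4 + ["neither"]
props = category_proportions(responses)
```

An image such as this hypothetical one, where the ‘disgust’ and ‘fear’ proportions are close, illustrates the kind of ambiguity between the two categories noted in the general discussion.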

Appendix B—Instructions

[Question—disgust]

How strong is your disgust when you see this image? Please choose a number from 1 (Low disgust) to 9 (High disgust) that most closely reflects your feelings.

* ‘Disgust’ describes repugnance, irritability and a feeling of heartburn. Please make your evaluation based on your own feelings rather than how people generally feel.

[Question—fear]

How strong is your fear when you see this image? Please choose a number from 1 (Low fear) to 9 (High fear) that most closely reflects your feelings.

* ‘Fear’ describes a feeling of imminent danger, distress or anxiety. Please make your evaluation based on your own feelings rather than how people generally feel.
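Ratings collected with the 9-point questions above are typically summarized per image as a mean and standard deviation. The following Python snippet is a minimal sketch of such a summary, including a check that every rating lies on the 1 to 9 scale; the image identifiers, the dictionary layout and the function name are assumptions for illustration and not the format of the published data files.

```python
from statistics import mean, stdev

SCALE_MIN, SCALE_MAX = 1, 9


def summarize_ratings(ratings_by_image):
    """Map image id -> (mean, sample SD, n) after range-checking each rating."""
    summary = {}
    for image_id, ratings in ratings_by_image.items():
        for r in ratings:
            if not SCALE_MIN <= r <= SCALE_MAX:
                raise ValueError(f"{image_id}: rating {r} is outside the 1-9 scale")
        # The sample SD needs at least two ratings; report 0.0 for a single one.
        sd = stdev(ratings) if len(ratings) > 1 else 0.0
        summary[image_id] = (mean(ratings), sd, len(ratings))
    return summary


# Hypothetical image ids and ratings, NOT OBNIS data.
disgust_ratings = {"img_001": [7, 8, 9], "img_002": [2, 2, 2]}
summary = summarize_ratings(disgust_ratings)
```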

Data accessibility

The data and materials for our experiment are available at https://osf.io/pfrx4/?view_only=911b1be722074ad4aab87791cb8a72f5. This experiment was not preregistered.

Authors' contributions

R.S. designed the study, conducted the online experiments and performed the analyses; K.W. designed and coordinated the study. All authors contributed to the writing of the final manuscript. All authors gave final approval for publication and agreed to be held accountable for the work performed therein.

Competing interests

We have no conflicts of interest to report regarding the findings of this study.

Funding

This work was supported by JSPS KAKENHI Grant no. JP20J00838; KAKENHI Grant nos. 17H06344 and 17H00753; JST-CREST JPMJCR16E1; JST-MIRAI program Grant no. JPMJMI20D8; JST-Moonshot Research and Development Grant no. JPMJMS2012.

References

- 1. Çavuşoğlu M, Dirik G. 2011. Fear or disgust? The role of emotions in spider phobia and blood-injection-injury phobia. Turk. J. Psychiatry 22, 115-122.

- 2. Olatunji BO, Williams NL, Sawchuk CN, Lohr JM. 2006. Disgust, anxiety and fainting symptoms associated with blood-injection-injury fears: a structural model. J. Anxiety Disord. 20, 23-41. (doi:10.1016/j.janxdis.2004.11.009)

- 3. Webb K, Davey GC. 1992. Disgust sensitivity and fear of animals: effect of exposure to violent or revulsive material. Anxiety Stress Coping 5, 329-335. (doi:10.1080/10615809208248369)

- 4. Merckelbach H, de Jong PJ, Arntz A, Schouten E. et al. 1993. The role of evaluative learning and disgust sensitivity in the etiology and treatment of spider phobia. Adv. Behav. Res. Ther. 15, 243-255. (doi:10.1016/0146-6402(93)90011-P)

- 5. de Jong PJ, Andrea H, Muris P. 1997. Spider phobia in children: disgust and fear before and after treatment. Behav. Res. Ther. 35, 559-562. (doi:10.1016/S0005-7967(97)00002-8)

- 6. Tolin DF, Lohr JM, Sawchuk CM, Lee TC. et al. 1997. Disgust and disgust sensitivity in blood-injection-injury and spider phobia. Behav. Res. Ther. 35, 949-953. (doi:10.1016/S0005-7967(97)00048-X)

- 7. Thorpe SJ, Salkovskis PM. 1998. Studies on the role of disgust in the acquisition and maintenance of specific phobias. Behav. Res. Ther. 36, 877-893. (doi:10.1016/S0005-7967(98)00066-7)

- 8. Sawchuk CN, Lohr JM, Westendorf DH, Meunier SA, Tolin DF. 2002. Emotional responding to fearful and disgusting stimuli in specific phobias. Behav. Res. Ther. 40, 1031-1046. (doi:10.1016/S0005-7967(01)00093-6)

- 9. Edwards S, Salkovskis PM. 2006. An experimental demonstration that fear but not disgust, is associated with return of fear in phobias. J. Anxiety Disord. 20, 58-71. (doi:10.1016/j.janxdis.2004.11.007)

- 10. Lang PJ, Bradley MM, Cuthbert BN. 1997. International affective picture system (IAPS): technical manual and affective ratings. NIMH Cent. Study Emot. Atten. 1, 39-58.

- 11. Dan-Glauser ES, Scherer KR. 2011. The Geneva affective picture database (GAPED): a new 730-picture database focusing on valence and normative significance. Behav. Res. Methods 43, 468. (doi:10.3758/s13428-011-0064-1)

- 12. Marchewka A, Żurawski Ł, Jednoróg K, Grabowska A. 2014. The Nencki Affective Picture System (NAPS): Introduction to a novel, standardized, wide-range, high-quality, realistic picture database. Behav. Res. Methods 46, 596-610. (doi:10.3758/s13428-013-0379-1)

- 13. Riegel M, Żurawski Ł, Wierzba M, Moslehi A, Klocek Ł, Horvat M, Marchewka A. 2016. Characterization of the Nencki Affective Picture System by discrete emotional categories (NAPS BE). Behav. Res. Methods 48, 600-612. (doi:10.3758/s13428-015-0620-1)

- 14. Kurdi B, Lozano S, Banaji MR. 2017. Introducing the open affective standardized image set (OASIS). Behav. Res. Methods 49, 457-470. (doi:10.3758/s13428-016-0715-3)

- 15. Michałowski JM, Droździel D, Matuszewski J, Koziejowski W, Jednoróg K, Marchewka A. 2017. The Set of Fear Inducing Pictures (SFIP): development and validation in fearful and nonfearful individuals. Behav. Res. Methods 49, 1407-1419. (doi:10.3758/s13428-016-0797-y)

- 16. Haberkamp A, Glombiewski JA, Schmidt F, Barke A. 2017. The DIsgust-RelaTed-Images (DIRTI) database: validation of a novel standardized set of disgust pictures. Behav. Res. Ther. 89, 86-94. (doi:10.1016/j.brat.2016.11.010)

- 17. Barke A, Stahl J, Kröner-Herwig B. 2012. Identifying a subset of fear-evoking pictures from the IAPS on the basis of dimensional and categorical ratings for a German sample. J. Behav. Ther. Exp. Psychiatry 43, 565-572. (doi:10.1016/j.jbtep.2011.07.006)

- 18. Davis WJ, et al. 1995. Properties of human affect induced by static color slides (IAPS): dimensional, categorical and electromyographic analysis. Biol. Psychol. 41, 229-253. (doi:10.1016/0301-0511(95)05141-4)

- 19. Libkuman TM, Otani H, Kern R, Viger SG, Novak N. 2007. Multidimensional normative ratings for the international affective picture system. Behav. Res. Methods 39, 326-334. (doi:10.3758/BF03193164)

- 20. Soares SC, Lindström B, Esteves F, Öhman A. 2014. The hidden snake in the grass: superior detection of snakes in challenging attentional conditions. PLoS ONE 9, e114724. (doi:10.1371/journal.pone.0114724)

- 21. Gomes N, Soares SC, Silva S, Silva CF. 2018. Mind the snake: fear detection relies on low spatial frequencies. Emotion 18, 886-895. (doi:10.1037/emo0000391)

- 22. Davey GCL. 1991. Characteristics of individuals with fear of spiders. Anxiety Research 4, 299-314. (doi:10.1080/08917779208248798)

- 23. Davey GCL. 1994. The 'disgusting' spider: the role of disease and illness in the perpetuation of fear of spiders. Soc. Anim. 2, 17-25. (doi:10.1163/156853094X00045)

- 24. Woody SR, Teachman BA. 2000. Intersection of disgust and fear: normative and pathological views. Clin. Psychol.: Sci. Pract. 7, 291-311. (doi:10.1093/clipsy.7.3.291)

- 25. Cisler JM, Olatunji BO, Lohr JM, Williams NL. 2009. Attentional bias differences between fear and disgust: implications for the role of disgust in disgust-related anxiety disorders. Cogn. Emot. 23, 675-687. (doi:10.1080/02699930802051599)

- 26. Bradley M, Codispoti M, Cuthbert B, Lang P. 2001. Emotion and motivation I: defensive and appetitive reactions in picture processing. Emotion 1, 276-298. (doi:10.1037/1528-3542.1.3.276)

- 27. Sabatinelli D, Flaisch T, Bradley MM, Fitzsimmons JR, Lang PJ. 2004. Affective picture perception: gender differences in visual cortex? Neuroreport 15, 1109-1112. (doi:10.1097/00001756-200405190-00005)

- 28. Wrase J, Klein S, Gruesser SM, Hermann D, Flor H, Mann K, Braus DF, Heinz A. 2003. Gender differences in the processing of standardized emotional visual stimuli in humans: a functional magnetic resonance imaging study. Neurosci. Lett. 348, 41-45. (doi:10.1016/S0304-3940(03)00565-2)

- 29. Charrad M, Ghazzali N, Boiteau V, Niknafs A. 2014. NbClust: an R Package for determining the relevant number of clusters in a data set. J. Stat. Softw. 61, 1-36. (doi:10.18637/jss.v061.i06)

- 30. Murtagh F, Legendre P. 2014. Ward’s hierarchical agglomerative clustering method: which algorithms implement Ward’s criterion? J. Classif. 31, 274-295. (doi:10.1007/s00357-014-9161-z)

- 31. Alarcão SM, Fonseca MJ. 2018. Identifying emotions in images from valence and arousal ratings. Multimed. Tools Appl. 77, 17 413-17 435. (doi:10.1007/s11042-017-5311-8)

- 32. Javela JJ, Mercadillo RE, Ramírez JM. 2008. Anger and associated experiences of sadness, fear, valence, arousal, and dominance evoked by visual scenes. Psychol. Rep. 103, 663-681. (doi:10.2466/pr0.103.3.663-681)

- 33. Barrett LF. 2012. Emotions are real. Emotion 12, 413. (doi:10.1037/a0027555)

- 34. Lindquist KA, Wager TD, Kober H, Bliss-Moreau E, Barrett LF. 2012. The brain basis of emotion: a meta-analytic review. Behav. Brain Sci. 35, 121. (doi:10.1017/S0140525X11000446)
