Abstract

The application of closed-loop approaches in systems neuroscience and therapeutic stimulation holds great promise for revolutionizing our understanding of the brain and for developing novel neuromodulation therapies to restore lost functions. Neural prostheses capable of multi-channel neural recording, on-site signal processing, rapid symptom detection, and closed-loop stimulation are critical to enabling such novel treatments. However, existing closed-loop neuromodulation devices are too simplistic and lack sufficient on-chip processing and intelligence. In this paper, we first discuss both commercial and investigational closed-loop neuromodulation devices for brain disorders. Next, we review state-of-the-art neural prostheses with on-chip machine learning, focusing on application-specific integrated circuits (ASICs). System requirements, performance and hardware comparisons, design trade-offs, and hardware optimization techniques are discussed. To facilitate a fair comparison and guide design choices among various on-chip classifiers, we propose a new energy-area (E-A) efficiency figure of merit that evaluates hardware efficiency and multi-channel scalability. Finally, we present several techniques to improve the key design metrics of tree-based on-chip classifiers, both in the context of ensemble methods and oblique structures. A novel Depth-Variant Tree Ensemble (DVTE) is proposed to reduce processing latency (e.g., by 2.5× on a seizure detection task). We further develop a cost-aware learning approach to jointly optimize power and latency. We show that algorithm-hardware co-design enables energy- and memory-optimized tree-based models while preserving high accuracy and low latency. Furthermore, we show that our proposed tree-based models feature a highly interpretable decision process, which is essential for safety-critical applications such as closed-loop stimulation.

Keywords: Neural prostheses, closed-loop neuromodulation, on-chip machine learning, symptom detection, decision trees

I. Introduction

Developing novel non-pharmacological treatments such as neurostimulation is becoming increasingly important for treating some of the most prevalent and intractable neurological disorders. Brain stimulation is currently the most common surgical treatment for movement disorders and has shown promise in epilepsy, neuropsychiatric disorders, memory, chronic pain, and traumatic brain injury, with new applications rapidly emerging. Despite promising proof-of-concept results, current clinical neurostimulators are limited in many respects. For example, while deep-brain stimulation (DBS) can effectively control motor symptoms in most patients with Parkinson’s disease (PD), it causes persistent side effects (e.g., speech impairment and cognitive symptoms) [1], [2]. These side effects are widely attributed to the conventional ‘open-loop’ approach, which delivers constant high-frequency (~130 Hz) stimulation regardless of the patient’s clinical state. In addition, open-loop stimulation increases power consumption and hence the need for surgical battery replacement. This simplistic open-loop approach is also a key limiting factor in designing clinically effective stimulation for more complex disorders such as depression [3], Alzheimer’s disease [4], and stroke [5], [6], among others [5], [7], [8].

To further leverage the benefits of stimulation and address the aforementioned limitations, closed-loop neuromodulation techniques have recently been explored, such as responsive neurostimulation for epilepsy [9] and PD [10], with promising results. In this approach, stimulation is dynamically controlled according to a patient’s clinical state, using either a continuous (i.e., adaptive) or an on-off (i.e., on-demand) strategy. Through feedback from relevant biomarkers of a neurological symptom (e.g., a seizure event, tremor episode, or mood change), closed-loop stimulation can titrate the charge delivered to the brain, thus reducing side effects and the total amount of stimulation while enhancing therapeutic efficacy and battery life compared to open-loop counterparts [2]. However, several critical challenges must be addressed to fully exploit the potential of closed-loop therapies for neurological disorders. Existing closed-loop devices mainly rely on a simple comparison of a pre-selected biomarker (typically from 1 out of 4 channels) against a fixed threshold. Such simplistic approaches are known to be suboptimal in predictive accuracy, resulting in low sensitivity and high false alarm rates, and may even exacerbate other symptoms [8]. Multiple biomarkers and control loops may be necessary to reliably improve symptoms, which increases design complexity.
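The two control strategies can be illustrated with a minimal Python sketch. It assumes that a single scalar biomarker value (e.g., a band power) is computed for each analysis window; the function names, threshold, gain, and amplitude limits are hypothetical placeholders, not parameters of any device discussed here. The on-demand function also mirrors the fixed-threshold comparison used by existing devices.

```python
def on_demand_amplitude(biomarker: float, threshold: float, amp_on: float) -> float:
    """On-off (on-demand) control: fixed amplitude while the biomarker exceeds a fixed threshold."""
    return amp_on if biomarker > threshold else 0.0


def adaptive_amplitude(biomarker: float, target: float, gain: float,
                       amp_min: float, amp_max: float) -> float:
    """Continuous (adaptive) control: amplitude grows with the biomarker's excess over a
    target and is clamped to [amp_min, amp_max] (a hypothetical safety range)."""
    amp = gain * (biomarker - target)
    return min(max(amp, amp_min), amp_max)


# Hypothetical biomarker trace (arbitrary units), one value per analysis window.
trace = [0.5, 1.2, 1.8, 3.0, 0.9]
on_off = [on_demand_amplitude(b, threshold=1.0, amp_on=3.0) for b in trace]
adaptive = [adaptive_amplitude(b, target=1.0, gain=2.0, amp_min=0.0, amp_max=3.0)
            for b in trace]
print(on_off)  # prints [0.0, 3.0, 3.0, 3.0, 0.0]
```

In the on-demand case every threshold crossing toggles stimulation fully on or off; the adaptive case gives a graded response, with the clamp acting as an illustrative bound on delivered amplitude.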

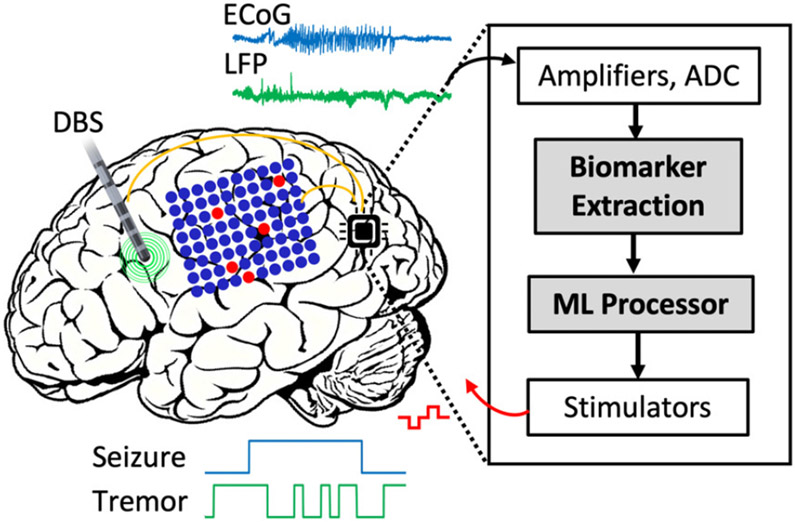

A promising solution to this challenge is to implement a machine learning (ML) algorithm directly on the implant or wearable to predict the onset or severity of neurological symptoms, an approach that has gained significant interest in recent years [11]-[18]. Real-time symptom control can be achieved through on-chip biomarker extraction and ML-based disease state detection, followed by a closed-loop intervention (e.g., electrical, magnetic, or optical stimulation, or drug delivery) to suppress the abnormal activity, as illustrated in Fig. 1. This approach offers significant advantages over conventional methods based on wireless transmission and external processing [19], [20], which suffer from long feedback-loop latency, high power consumption due to continuous telemetry, and security and privacy concerns [21], [22]. A number of clinical trials have recently shown the advantage of machine learning-based control for closed-loop stimulation in movement disorders, epilepsy, and memory [4], [23]. In addition, machine learning systems have been developed to forecast the onset of neurological symptoms during the preictal phase, allowing sufficient time before seizure manifestation (e.g., on the order of several minutes) to warn patients and caregivers [24]-[26]. In closed-loop neural prostheses, however, both the ML decoder and the neurostimulator are integrated on the implant, eliminating the need for excessively long symptom prediction horizons [27]. Therefore, most closed-loop devices train the classifier to differentiate ictal from interictal epochs, so that seizure onset can be detected several seconds before the onset of clinical symptoms [28]. Such systems detect the onset and termination (i.e., offset) of neurological symptoms to precisely control the delivery of stimulation [13].

Fig. 1:

Schematic view of a closed-loop neural prosthesis. Multi-channel neural signals such as ECoG and LFP are recorded by cortical and deep-brain electrodes and sent to the implantable microchip. The on-chip biomarker extraction and ML processor detect the onset of symptoms and trigger a therapeutic neurostimulator.

Despite the benefits of using machine learning for closed-loop intervention, strict power and area requirements on an implantable or wearable device pose critical challenges for hardware realization of ML algorithms, particularly in the form of a miniaturized ASIC. The choice of learning algorithm and neural biomarkers affects the prediction accuracy and latency. Moreover, the prediction accuracy depends on the spatial resolution of the recording system and the number of input channels. Thus, there is a crucial need to develop high-performance, energy- and area-efficient biomarker extraction and ML solutions that are scalable to high channel counts and satisfy the implantable/wearable power budget and form factor.

In this paper, we review the state-of-the-art neural prostheses with embedded biomarker extraction and machine learning. We first discuss the closed-loop system components, requirements for the next-generation smart neural prostheses, their clinical applications, hardware techniques and trade-offs. Commercial and investigational closed-loop neuromodulation devices and a comparison of previously reported system-on-chips (SoCs) for neural signal classification are presented. In the second part of this paper, we discuss an emerging class of machine learning algorithms based on decision trees [12], [22], [29]-[31], including tree ensembles and oblique trees, that are particularly suitable for energy- and area-constrained platforms such as brain implants and wearables. We introduce novel techniques to improve the accuracy-latency trade-off in tree ensembles. A new class of tree-based models that effectively combine decision trees (DTs) with neural networks is further discussed. After presenting various techniques for energy, latency, and memory-efficient realization of oblique trees, we present the results of testing these models on two neural signal classification tasks relevant to closed-loop stimulation (epilepsy and PD).
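As background for the tree-based classifiers discussed in this paper, the sketch below shows generic inference for an axis-aligned tree (one feature compared per node), an oblique tree (a weighted sum of features compared per node), and a majority-vote ensemble. It is a textbook formulation written for illustration, not the DVTE or cost-aware models proposed here, and the `Node` layout is a hypothetical choice. Inference visits only the nodes on a single root-to-leaf path, so its cost scales with tree depth rather than total tree size, which is one reason trees suit resource-constrained hardware.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class Node:
    """Hypothetical node layout: leaves set `label`; internal nodes set either
    `feature` (axis-aligned split) or `weights` (oblique split), plus `threshold`."""
    label: Optional[int] = None
    feature: Optional[int] = None
    weights: Optional[List[float]] = None
    threshold: float = 0.0
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def predict(node: Node, x: Sequence[float]) -> int:
    """Walk one root-to-leaf path and return the leaf's class label."""
    while node.label is None:
        if node.weights is not None:
            score = sum(w * xi for w, xi in zip(node.weights, x))  # oblique: w . x
        else:
            score = x[node.feature]  # axis-aligned: a single feature
        node = node.left if score <= node.threshold else node.right
    return node.label


def predict_ensemble(trees: Sequence[Node], x: Sequence[float]) -> int:
    """Strict-majority vote over binary (0/1) tree outputs; ties resolve to 0."""
    votes = sum(predict(t, x) for t in trees)
    return int(2 * votes > len(trees))


# Hypothetical toy trees over a 2-feature vector (e.g., two normalized band powers).
axis_tree = Node(feature=0, threshold=0.5, left=Node(label=0), right=Node(label=1))
oblique_tree = Node(weights=[1.0, 1.0], threshold=1.0, left=Node(label=0), right=Node(label=1))
print(predict_ensemble([axis_tree, oblique_tree, axis_tree], [0.8, 0.0]))  # prints 1
```

An oblique node costs a dot product instead of a single comparison, but can separate classes with fewer levels, which is the kind of accuracy, latency, and hardware trade-off the later sections examine.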

It should be noted that closed-loop neural prostheses with on-chip intelligence are also being explored in the context of fully implantable brain-machine interfaces (BMIs) [30], [32]-[35]. Such BMI systems can provide sensory feedback to the brain and/or control prosthetic devices to restore lost motor or sensory function in paralyzed patients. However, this paper focuses on neural prostheses that directly record and modulate brain activity to treat neurological disorders; motor neuroprosthetics (i.e., BMIs or brain-computer interfaces, BCIs), as well as peripheral [36] and spinal cord prostheses [37] (e.g., EMG-based interfaces), are beyond its scope. Furthermore, we limit our review to systems that focus on ASIC implementation of neurological symptom detection algorithms (either validated on, or with potential for, closed-loop stimulation), owing to their similar design requirements. Thus, FPGA-based systems are not included in this review. While this review focuses on CMOS-based edge machine learning specifically for neural prostheses, a comprehensive review of embedded hardware (FPGA, neuromorphic, CMOS) for neural networks in biomedical applications can be found in [38].

This paper extends our conference paper [22], which presented a brief survey of closed-loop neural interface systems with on-chip machine learning, and makes the following contributions:

A comprehensive review of the latest developments in technology design for closed-loop stimulation, including novel electrodes for sensing and stimulation, emerging clinical applications, and commercial, research-based, and investigational closed-loop stimulation devices.

A detailed review of the reported neural interface SoCs with on-chip machine learning for neurological disease detection, either as a stand-alone chip or as part of a closed-loop system (implantable and wearable).

Future directions for next-generation closed-loop neural prostheses, including the integration of advanced design techniques, support for high channel counts, and the need for online learning.

Novel algorithm-hardware co-design techniques for next-generation energy-efficient neural prostheses. Specifically, we present a range of methods for cost-aware implementation of tree-based classifiers in brain implants and validate them on human neurophysiological datasets.

II. Closed-loop Neural Prostheses: Recent Trends, System Requirements, and Trade-offs

In a closed-loop neural prosthesis (Fig. 1), neurostimulation is triggered to suppress the impending signs of a neurological disease. Research on closed-loop neurostimulation has gained momentum in recent years, particularly with the success of proof-of-concept studies in epilepsy [43] and PD [1], [44], [45]. Closed-loop approaches are now being explored to treat a variety of medication-refractory brain disorders for which open-loop stimulation has been less effective. Yet, major technological challenges have limited their efficacy and clinical translation. These challenges include the low channel count of current devices, the effect of stimulation artifacts on the sensing circuits, the need for miniaturization and improved energy efficiency, and the need for more advanced control algorithms [2], [8], [22]. Next-generation closed-loop neuromodulation systems will require significant improvements over existing devices. For instance, more recording and stimulation channels will be necessary for disorders that require multi-site neural recording and manipulation. More sophisticated processing algorithms and complex stimulation patterns will help improve therapeutic outcomes. However, they will also increase design complexity, the on-chip resources required for symptom detection and stimulation, and the processing time. Better localization of target regions for effective stimulation and improved stimulation artifact cancellation are also critical for bidirectional neural prostheses. In this paper, we discuss the major challenges and review the most recent advances in the field, with a particular focus on machine learning-embedded implantable and wearable systems.

A. Sensing and Stimulation

High-density neural recording and multi-site neurostimulation with low-power, miniaturized circuits are crucial for next-generation closed-loop neural prostheses. In particular, complex disorders such as depression and Alzheimer’s disease (AD) require multi-site rather than single-site recording, which calls for more intelligent, data-driven closed-loop systems with high-density sensing and stimulation capabilities.

1). Conventional and Emerging Electrodes for Sensing and Stimulation:

In a neural prosthesis, the electrophysiological activity of the brain can be recorded through various noninvasive, minimally-invasive, or invasive electrodes such as scalp EEG, subscalp EEG [39], electrocorticography (ECoG), also known as intracranial EEG (iEEG), stereo-EEG (sEEG) [41], [46], and deep-brain leads, providing various degrees of spatial and temporal resolution (Fig. 2). In some cases and predominantly in implantable prostheses, the same electrode can be used for delivering electrical stimulation to the brain to suppress disease symptoms.

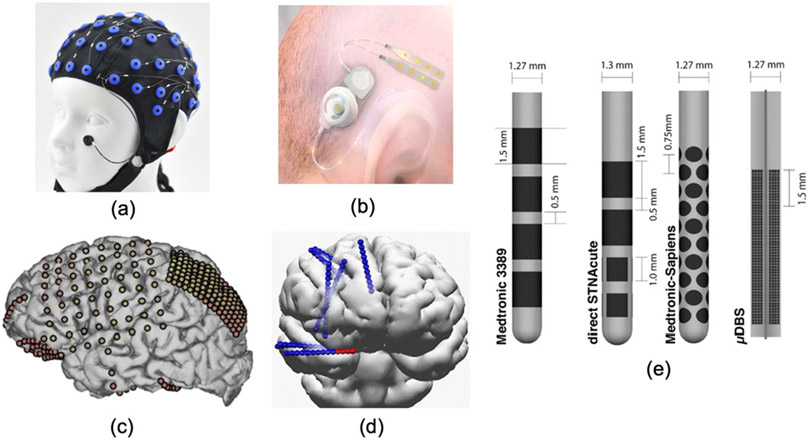

Fig. 2:

Standard and emerging electrodes for neural recording and stimulation via noninvasive, minimally-invasive, and invasive technologies; (a) Standard scalp-EEG electrodes. (b) The Epios subscalp EEG device for chronic epilepsy monitoring [39]. (c) Standard and high-density ECoG [40]. (d) Stereo-EEG leads [41]. (e) Clinical DBS (Medtronic’s FDA-approved 3389, left), emerging directional DBS leads (8-channel direct STNAcute and 40-channel Medtronic-Sapiens, middle) and the Willsie and Dorval 1760-contact micro-DBS lead (right) [42].

EEG electrodes are noninvasive and spaced centimeters apart. Both scalp and subscalp EEG are suitable for wearable settings, with electrodes placed either above (scalp EEG) or under the scalp (subscalp EEG). Subscalp electrodes are particularly suitable for chronic (i.e., longer than one month) EEG recording in a home environment, and require minimally invasive surgery under general anesthesia to implant the subcutaneous electrodes [39]. The subscalp approach eliminates the need for constant electrode care (i.e., no EEG cap or adhesive electrodes), providing a stable recording modality that is less obtrusive than conventional EEG, Fig. 2(b). Furthermore, subscalp EEG has been shown to attenuate several types of artifacts and to improve (or at least maintain) signal quality compared to scalp EEG. However, like scalp EEG, it has lower temporal and spatial resolution than ECoG (i.e., a bandwidth below 100 Hz vs. several hundred Hz) and cannot monitor deep-brain structures. A number of subscalp EEG systems are currently certified or in development for long-term epilepsy monitoring (Section III).

The spacing of ECoG electrodes (epidural or subdural) is typically in the millimeter range, while state-of-the-art ECoG interfaces enable denser arrays for high-spatial-resolution recording of cortical activity [47]. For instance, high-density μECoG with a 400 μm pitch has been shown to outperform lower-density grids in classifying cognitive tasks in humans [48], highlighting its potential for future high-performance neuroprosthetic applications. High-frequency electrophysiological activity relevant to seizure prediction or epileptic focus localization can be captured by high-resolution ECoG from submillimeter-scale cortical regions [47], [49]-[51]. However, these novel electrodes have not yet been adopted in diagnostic or closed-loop devices.

While ECoG provides precise mapping at the level of the cortical surface, stereo-EEG (sEEG) [41] is an alternative minimally-invasive method for identifying the seizure onset zone in medically refractory focal epilepsy. Placement of stereo-EEG electrodes (typically 5–15 cylindrical shafts) requires only small, localized burr holes to insert depth electrodes into the brain. Stereo-EEG thus sparsely samples localized brain regions and avoids the relatively large craniotomy required for strip/grid ECoG implantation [41].

The electrodes on a deep-brain lead (e.g., the Medtronic 3387/3389 deep-brain stimulation lead with four cylindrical contacts) are spaced several millimeters or even hundreds of micrometers apart to capture local field potential (LFP) activity (up to several hundred Hz) [42]. The leads employed in sEEG are similar to those used for deep-brain stimulation (DBS). DBS is widely used to treat essential tremor, PD, and dystonia, with emerging applications in epilepsy, major depression, obsessive-compulsive disorder (OCD), and Tourette’s syndrome. While DBS leads are primarily used for electrical stimulation, their chronic efficacy and stability suggest that long-term sEEG could be used for sensing in closed-loop prostheses [41]. In rare cases, single-unit activity captured by μDBS leads (100 μm spacing [42]) or penetrating microelectrodes such as the Utah array can be used to detect spike-based biomarkers (e.g., neuronal firing rates correlating with cognitive functions) for disease state prediction and for guiding neurostimulation therapy [52], [53].

For stimulation, recent DBS electrodes employ directional leads with a higher number of smaller contacts (e.g., 16, 40, or 1760) instead of the four cylindrical contacts of traditional leads [8], [42], Fig. 2(e). Such directional leads with segmented electrodes can steer stimulation back toward a missed target structure without exciting non-target regions and inducing adverse effects. Moreover, recent studies report that temporal patterns delivered via multiple contacts can enhance plasticity and symptom relief [8], [42], highlighting the benefits of high-channel-count stimulation.

2). Concurrent Sensing and Stimulation:

Accuracy and latency can be improved by measuring the evolving disease state even while therapeutic stimulation is applied. This motivates a new class of circuit and system techniques that can detect weak electrophysiological signals of interest in the presence of stimulus artifacts that are orders of magnitude stronger. Measuring weak signals in the presence of extreme self-interference is a general challenge for modern mixed-signal circuits in various sensing and communication applications. Next-generation ‘full-duplex’ neuromodulation devices must support simultaneous sensing and stimulation for truly closed-loop operation.

The most common electrical approach is ‘blanking’ [54], [55], in which the recording amplifiers are disconnected from the electrode during and immediately after stimulation, and reconnected once the stimulation artifact no longer saturates the amplifier. Recent improvements, based on a mixed-signal realization of the analog front-end (AFE), allow the amplifier to be reconnected immediately after stimulation [56]. However, this method still cannot record during stimulation, which is especially limiting for complex, multi-electrode stimulation patterns in which extended stimulation blocks recording for much longer periods.
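Blanking is performed by switches in the AFE, but its effect on the recorded data can be emulated digitally, as in the sketch below. It assumes that stimulation onset indices are known from the stimulator and that the output holds the last pre-stimulus sample while the amplifier is disconnected; the hold behavior, function name, and durations are hypothetical choices made for illustration.

```python
from typing import List, Sequence


def blank_stimulation(signal: Sequence[float], stim_onsets: Sequence[int],
                      stim_len: int, recovery_len: int) -> List[float]:
    """Digitally emulate blanking: samples during each pulse and the following recovery
    period are discarded and replaced by the last pre-stimulus sample (sample-and-hold)."""
    blanked = [False] * len(signal)
    for onset in stim_onsets:
        for n in range(onset, min(onset + stim_len + recovery_len, len(signal))):
            blanked[n] = True
    out: List[float] = []
    held = 0.0  # assumed output before any valid sample has been seen
    for n, sample in enumerate(signal):
        if not blanked[n]:
            held = sample
        out.append(held)
    return out


# Hypothetical trace with a large artifact at samples 2-4 (1-sample pulse + 2-sample recovery).
raw = [1.0, 2.0, 100.0, -90.0, 50.0, 3.0, 4.0]
clean = blank_stimulation(raw, stim_onsets=[2], stim_len=1, recovery_len=2)
print(clean)  # prints [1.0, 2.0, 2.0, 2.0, 2.0, 3.0, 4.0]
```

Every blanked sample is invisible to the detector, so the amount of lost recording grows with pulse rate and recovery time, which is the limitation noted above for dense multi-electrode patterns.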

An alternative approach uses a high-dynamic-range (DR) AFE, incorporating amplifiers and analog-to-digital converters (ADCs), that can reliably record the neural signal along with persistent artifacts without saturation [57], [58]. Alternatively, the design in [59] proposes a linear-interpolation-based artifact cancellation implemented on an FPGA. Another approach employs a front-end cancellation technique that avoids the need for a high-DR AFE [60]. However, this method requires a long convergence time, which makes it impractical for closed-loop systems. In general, artifact cancellation imposes additional hardware overhead on the AFE and on the back-end for digital cancellation, which limits the area and energy efficiency of the closed-loop system.
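The general idea of linear-interpolation-based artifact removal can be sketched as replacing each artifact window with a straight line between the clean samples on either side. This is a generic illustration, not the FPGA design of [59]; it assumes non-overlapping artifact windows whose sample ranges are known in advance (e.g., from stimulator timing), and the function name is hypothetical.

```python
from typing import List, Sequence, Tuple


def interpolate_artifacts(signal: Sequence[float],
                          windows: Sequence[Tuple[int, int]]) -> List[float]:
    """Replace samples start..end-1 of each artifact window with a straight line
    between the clean samples at start-1 and end. Windows must not overlap."""
    out = list(signal)
    for start, end in windows:
        if start < 1 or end >= len(out):
            raise ValueError("each window needs a clean sample on both sides")
        left, right = out[start - 1], out[end]
        span = end - start + 1  # number of steps from sample start-1 to sample end
        for n in range(start, end):
            out[n] = left + (n - start + 1) / span * (right - left)
    return out


raw = [0.0, 99.0, 99.0, 99.0, 4.0]
print(interpolate_artifacts(raw, [(1, 4)]))  # prints [0.0, 1.0, 2.0, 3.0, 4.0]
```

Unlike blanking, the output keeps a continuous sample stream, but any neural activity inside the window is still replaced by the interpolated line.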

B. Disease Biomarkers and Machine Learning

While artificial intelligence and machine learning can contribute to various aspects of neurotechnology (e.g., optimizing the programming of stimulation to activate target regions, offline analysis of chronic neural recordings, understanding the underlying disease mechanism), our focus in this paper is on real-time on-device disease state prediction using machine learning. This focus is motivated by the unique potential of ML techniques for classifying high-dimensional electrophysiological signals, where they typically outperform conventional methods [2], [11], [12], [61]-[64]. Accurate and timely detection of symptoms in brain disorders is critical to enable closed-loop neuromodulation, and it typically requires biomarkers (i.e., features) that correlate with an underlying disease state, together with a machine learning algorithm. The widely used features in electrophysiological studies include the spectral power (or bandpower) in frequency bands relevant to the neurological symptom of interest; time-domain and statistical features (e.g., line-length [65], the Hjorth parameters of activity, mobility, and complexity [2], [63], [66], number of peaks, peak-to-peak amplitude, and peak latency [63]); biomarkers that measure connectivity between brain regions, such as phase-amplitude coupling and phase locking value [2], [64], [67]-[69]; and the correlation structure of multi-channel neural data [70].
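To make three of these features concrete, the following pure-Python sketch computes line-length, the Hjorth parameters (using first differences as the discrete derivative, a common convention assumed here), and band power from a direct DFT. It is an illustrative floating-point reference under those assumptions, not an on-chip implementation; a hardware design would likely use fixed-point arithmetic and more efficient spectral estimation. The sampling rate and test tone are hypothetical.

```python
import cmath
import math
from typing import Sequence, Tuple


def line_length(x: Sequence[float]) -> float:
    """Sum of absolute differences between consecutive samples in the window."""
    return sum(abs(b - a) for a, b in zip(x, x[1:]))


def _var(x: Sequence[float]) -> float:
    """Population variance."""
    mean = sum(x) / len(x)
    return sum((v - mean) ** 2 for v in x) / len(x)


def hjorth(x: Sequence[float]) -> Tuple[float, float, float]:
    """Hjorth activity, mobility, and complexity, with first differences as the derivative."""
    dx = [b - a for a, b in zip(x, x[1:])]
    ddx = [b - a for a, b in zip(dx, dx[1:])]
    activity = _var(x)
    mobility = math.sqrt(_var(dx) / activity)
    complexity = math.sqrt(_var(ddx) / _var(dx)) / mobility
    return activity, mobility, complexity


def band_power(x: Sequence[float], fs: float, f_lo: float, f_hi: float) -> float:
    """Periodogram-style power |X[k]|^2 / N summed over non-negative-frequency DFT bins
    in [f_lo, f_hi] Hz. Direct O(N^2) DFT for clarity; use for relative comparisons."""
    n = len(x)
    total = 0.0
    for k in range(n // 2 + 1):
        if f_lo <= k * fs / n <= f_hi:
            xk = sum(x[m] * cmath.exp(-2j * math.pi * k * m / n) for m in range(n))
            total += abs(xk) ** 2 / n
    return total


# Usage: a 1 s, 10 Hz test tone sampled at a hypothetical 256 Hz.
fs = 256.0
tone = [math.sin(2 * math.pi * 10 * n / fs) for n in range(256)]
print(line_length([0.0, 1.0, 0.0, 1.0]))  # prints 3.0
```

For a pure tone, Hjorth complexity is close to 1 and nearly all band power falls in the bins around 10 Hz, which gives a quick sanity check before applying the features to real recordings.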

Some initial steps have been taken recently toward embedding biomarkers and machine learning algorithms on brain implants or wearables for disease monitoring and closed-loop therapy, and in investigational neuromodulation systems such as Medtronic’s Summit RC+S [71] and Percept PC systems, as summarized in the next sections.

1). Classifier requirements – High accuracy, low latency:

Symptom detection requires high accuracy and low latency. The classification algorithm must also be robust to the small amount of training data typically available in such applications, owing to the lack of chronic recordings. In some cases, the recording length may be limited to the duration of the device implantation surgery (e.g., up to 30 minutes for DBS surgery in PD) or of a hospital stay (e.g., several days for epilepsy patients undergoing pre-surgical evaluation). With increasing interest in devices with chronic recording capability (e.g., the NeuroPace RNS and Medtronic Percept), more long-term human data are expected to become available in the near future, enabling data-driven algorithm and hardware development.

Depending on the distribution of classes in a neurophysiological dataset, an appropriate performance metric should be chosen to evaluate the classifier. Sensitivity (i.e., true positive rate), specificity (i.e., selectivity or true negative rate), accuracy, F1 score, the area under the ROC curve (AUC), and the false alarm rate (FAR) are among the metrics commonly used in ML studies on neural datasets. The F1 score (i.e., the harmonic mean of sensitivity and precision, 2 × (precision × sensitivity)/(precision + sensitivity), where precision is the positive predictive value) is particularly useful for imbalanced datasets (i.e., datasets with a non-uniform distribution of classes), such as EEG or iEEG recordings in epilepsy [12]. Balanced accuracy (i.e., the average of sensitivity and specificity) is another metric used for imbalanced datasets [64].
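The sketch below computes these metrics from confusion-matrix counts. The counts in the usage example are hypothetical, chosen to mimic an imbalanced seizure dataset in which accuracy looks excellent even though the F1 score is much lower.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    accuracy: float
    sensitivity: float        # true positive rate
    specificity: float        # true negative rate
    precision: float          # positive predictive value
    f1: float                 # harmonic mean of precision and sensitivity
    balanced_accuracy: float  # mean of sensitivity and specificity


def classification_metrics(tp: int, fp: int, tn: int, fn: int) -> Metrics:
    """Window-level metrics from confusion-matrix counts (assumes no zero denominators)."""
    sens = tp / (tp + fn)
    spec = tn / (tn + fp)
    prec = tp / (tp + fp)
    f1 = 2 * prec * sens / (prec + sens)
    acc = (tp + tn) / (tp + fp + tn + fn)
    return Metrics(acc, sens, spec, prec, f1, (sens + spec) / 2)


# Hypothetical imbalanced test set: 10 ictal windows, 990 non-ictal windows.
m = classification_metrics(tp=8, fp=5, tn=985, fn=2)
print(f"accuracy={m.accuracy:.3f}, F1={m.f1:.3f}")
```

Here accuracy is about 0.99 while F1 is about 0.70, because the many correctly rejected non-ictal windows dominate accuracy but do not enter the F1 score.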

Most closed-loop systems rely on external computing for feature extraction and classification, which suffers from long loop latency and thus jeopardizes real-time feedback. On-chip integration of ML can significantly speed up closed-loop therapy and enable feedback loops with millisecond-range latency. If the feedback is too slow, the detector may miss the window of opportunity to trigger or adjust stimulation, resulting in poor therapeutic outcomes. More sophisticated processing algorithms may improve decoding accuracy at the cost of increased processing latency.

While ‘latency’ has been used in the literature to denote various types of processing delay (e.g., feature extraction and classification delays resulting from window-based processing), the detection latency of a closed-loop system is typically defined as the delay between the electrographic, expert-marked, or externally labeled symptom onset and the onset declared by the on-chip processor, for instance when detecting seizures in epilepsy [12], [61], [72]-[76] or tremor onset in PD [2], [68], [77]. In disorders such as epilepsy, clinical symptoms may begin several seconds (in some cases, up to 30 seconds [28]) after the earliest detectable changes in neural activity. Therefore, therapeutic feedback within that time frame can still benefit patients. In other cases, e.g., movement disorders with more rapid changes in electrophysiological state, a low or even negative latency (i.e., a lead [2]) is preferred to enable closed-loop stimulation.
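One way to compute detection latency from per-window classifier decisions is sketched below. It assumes that each decision is time-stamped at the end of its analysis window and that onset is declared after a hypothetical number of consecutive positive windows (`persistence`); negative results correspond to a lead. Both conventions are illustrative assumptions, since published systems define them in different ways.

```python
import math
from typing import Optional, Sequence


def detection_latency(times_s: Sequence[float], decisions: Sequence[int], onset_s: float,
                      persistence: int = 1,
                      search_start_s: float = -math.inf) -> Optional[float]:
    """Return declared onset minus labeled onset (seconds), or None if never detected.

    `times_s[i]` is the end time of window i and `decisions[i]` its 0/1 output.
    Onset is declared at the window completing `persistence` consecutive positives;
    windows before `search_start_s` are ignored. Negative values indicate a lead.
    """
    run = 0
    for t, d in zip(times_s, decisions):
        if t < search_start_s:
            continue
        run = run + 1 if d else 0
        if run >= persistence:
            return t - onset_s
    return None


times = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
decisions = [0, 0, 1, 1, 1, 0]
print(detection_latency(times, decisions, onset_s=2.5, persistence=2))  # prints 1.5
print(detection_latency(times, decisions, onset_s=3.5))                 # prints -0.5
```

Raising `persistence` suppresses isolated false alarms but adds one window length of latency per extra window, which is the accuracy-latency trade-off discussed above.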

2). Classifier requirements – Low power and small area:

To enable efficient local processing in a brain implant, silicon-realizable ML algorithms that can precisely predict a neurological symptom are essential. Neural prostheses with on-device ML do not require continuous wireless telemetry. Yet, low-power realization of machine learning algorithms is crucial to avoid excessive power dissipation. Optimized use of memory and computational resources and compact silicon area are further required to process multiple channels. The computational complexity of the classifier (and features) could set a limit on the number of input channels, thus hindering its application in more complex disorders.

The conventional implementation of most classification algorithms is so resource-intensive that devices in existence today [43] sacrifice classification accuracy and latency to meet power and size constraints [12]. Some limited processing is embedded in recently developed neuromodulation devices, but only for 1–4 channels, so external classifiers are still required for more accurate symptom detection [71]. There is a crucial need for energy- and area-efficient machine learning algorithms through algorithm-hardware co-design, as discussed in the next sections.

3). Neurophysiological Datasets:

In contrast to computer vision tasks that benefit from standard datasets for direct benchmarking of machine learning models, the electrophysiological datasets used in disease prediction tasks are diverse and not directly comparable. Furthermore, these datasets include different numbers of patients with various levels of symptom detection complexity, making it challenging to compare classifiers evaluated on the same dataset but on different patients. Another critical challenge is the lack of data sharing and open-source datasets in emerging applications beyond epilepsy (e.g., movement disorders, depression, Alzheimer’s disease), which greatly limits the development of biomarkers and ML solutions and subsequent device implementation for such disorders.

III. Commercial and Investigational Closed-loop Devices

One of the few platforms currently available for closed-loop stimulation is NeuroPace’s Responsive Neurostimulator (RNS) for medication-refractory epilepsy (Fig. 3(a)). RNS, which is currently in clinical use, continuously analyzes cortical activity from 4 channels and detects seizure events by comparing a simple pre-selected feature (signal intensity, line-length, or half-wave) against a threshold, then stimulates to halt them [43], [78]. RNS supports both cortical and deep-brain stimulation (8 channels). The recently published results of a nine-year, multi-center study of the RNS device in 230 patients at 34 epilepsy centers [79] showed significant reductions in seizure rates: a 75% median reduction, with at least a 50% reduction in 73% of patients. The rate of sudden unexpected death in epilepsy (SUDEP) was also significantly reduced. Responsive neurostimulation was well tolerated, with a safety profile similar to that of other epilepsy procedures.
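A simplified single-channel detector in the spirit of this threshold-based scheme is sketched below. It computes line-length over non-overlapping windows and requires a hypothetical number of consecutive supra-threshold windows before flagging a detection. The window length, threshold, and persistence count are placeholders; this is not the RNS detection algorithm, whose settings are not given here.

```python
from typing import List, Sequence


def threshold_detector(x: Sequence[float], win: int, threshold: float,
                       min_windows: int) -> List[bool]:
    """Per-window detection flags for a single channel and a single feature.

    Line-length is computed over non-overlapping windows of `win` samples and compared
    with a fixed threshold; a detection is flagged once `min_windows` consecutive
    windows exceed it.
    """
    flags: List[bool] = []
    run = 0
    for start in range(0, len(x) - win + 1, win):
        seg = x[start:start + win]
        ll = sum(abs(b - a) for a, b in zip(seg, seg[1:]))
        run = run + 1 if ll > threshold else 0
        flags.append(run >= min_windows)
    return flags


# Hypothetical trace: quiet, two high-amplitude oscillatory windows, quiet again.
x = [0, 0, 0, 0, 0, 5, 0, 5, 0, 5, 0, 5, 0, 0, 0, 0]
print(threshold_detector(x, win=4, threshold=10.0, min_windows=2))
# prints [False, False, True, False]
```

Because the decision rests on one feature from one channel, a single threshold must balance sensitivity against false alarms, which is the limitation of simple detectors noted in Section I.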

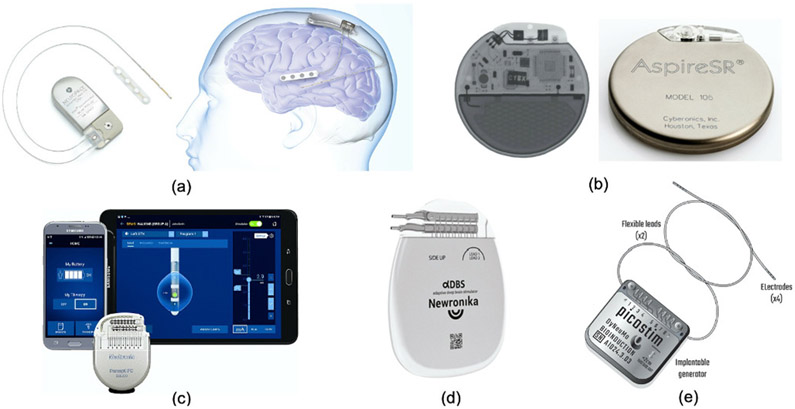

Fig. 3:

Existing clinical or research-based closed-loop neuromodulation devices (with or without on-device ML); (a) The NeuroPace RNS device for epilepsy. (b) The AspireSR (Cyberonics, now known as LivaNova) device for epilepsy. (c) The Medtronic Percept PC device for movement disorders. (d) The Newronika AlphaDBS system for Parkinson’s disease. (e) The DyNeuMo Mk-1 system for movement disorders.

Similarly, Medtronic’s investigational Activa PC+S, Summit RC+S [71], and Percept PC systems (Fig. 3(c)) are capable of sensing and closed-loop stimulation for movement disorders such as essential tremor and PD. Compared to RNS, the Medtronic devices implement slightly more complex spectral analysis and a linear classifier, but rely on only 4 sensing channels with 2–8 features in total, and 8–16 stimulation channels. For both RNS and the Medtronic devices, external algorithms with advanced machine learning capabilities may be necessary for more accurate symptom tracking [71], [80], at the cost of long loop latency and the high power demands of continuous wireless streaming [12], [31].
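For illustration, a two-class linear classifier can be trained with Fisher’s linear discriminant, sketched below in pure Python. The choice of Fisher LDA, the ridge term, and the feature values are assumptions made for this example and are not taken from the Medtronic devices; the point is that, once trained, inference costs only one dot product and one comparison per decision.

```python
from typing import List, Sequence, Tuple

Vector = List[float]


def _mean(rows: Sequence[Sequence[float]]) -> Vector:
    """Column-wise mean of a list of feature vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def _solve(a: List[List[float]], b: Vector) -> Vector:
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))  # pivot row
        m[c], m[p] = m[p], m[c]
        for r in range(n):
            if r != c:
                f = m[r][c] / m[c][c]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[c])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_lda(x0: Sequence[Sequence[float]], x1: Sequence[Sequence[float]],
            ridge: float = 1e-6) -> Tuple[Vector, float]:
    """Two-class Fisher LDA: w = Sw^-1 (mu1 - mu0), boundary midway between the means."""
    mu0, mu1 = _mean(x0), _mean(x1)
    d = len(mu0)
    sw = [[0.0] * d for _ in range(d)]  # pooled within-class covariance
    for rows, mu in ((x0, mu0), (x1, mu1)):
        for row in rows:
            diff = [v - mv for v, mv in zip(row, mu)]
            for i in range(d):
                for j in range(d):
                    sw[i][j] += diff[i] * diff[j]
    dof = len(x0) + len(x1) - 2
    for i in range(d):
        for j in range(d):
            sw[i][j] /= dof
        sw[i][i] += ridge  # small ridge keeps Sw invertible with few training windows
    w = _solve(sw, [a - b for a, b in zip(mu1, mu0)])
    bias = -sum(wi * (a + b) / 2 for wi, a, b in zip(w, mu0, mu1))
    return w, bias


def lda_predict(w: Vector, bias: float, x: Sequence[float]) -> int:
    """Return 1 (e.g., symptomatic state) if w . x + bias > 0, else 0."""
    return int(sum(wi * xi for wi, xi in zip(w, x)) + bias > 0)


# Hypothetical 2-feature windows (e.g., two band powers) for each class.
x0 = [[1.0, 1.0], [1.2, 0.9], [0.8, 1.1], [1.1, 1.2]]
x1 = [[3.0, 3.1], [3.2, 2.9], [2.8, 3.0], [3.1, 3.2]]
w, b = fit_lda(x0, x1)
print([lda_predict(w, b, row) for row in x0 + x1])
```

Training requires a matrix solve, but it can be done offline; only `w` and `bias` would need to be stored on the device in this sketch.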

The AspireSR 106 (LivaNova) is an implantable vagus nerve stimulator (VNS) with an optional AutoStim mode, in which VN stimulation can be adjusted in response to ictal heart rate changes potentially associated with an impending seizure (Fig. 3(b)) [81]. In a study on the efficacy of open-loop VNS in 5554 patients [82], seizure freedom increased progressively after therapy, with 49% of patients responding to treatment (i.e., >50% seizure frequency reduction) 0–4 months after implantation. The efficacy of the closed-loop AspireSR versus the preceding open-loop device was recently studied: 4 of 11 patients who were less responsive to open-loop VNS achieved >50% seizure reduction [83]. Of note, there have been reports of U.S. Food and Drug Administration (FDA) recalls of several VNS models due to concerns about device malfunctions.
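The idea of triggering stimulation from heart rate changes can be sketched as a comparison between a recent and a baseline heart rate computed from R-R intervals. The window lengths and the relative-increase threshold below are hypothetical, and this sketch does not reproduce the AutoStim detection algorithm.

```python
from typing import List, Sequence


def hr_trigger(rr_s: Sequence[float], baseline_beats: int, recent_beats: int,
               rel_increase: float) -> List[bool]:
    """Per-beat flags: True when the recent heart rate exceeds the preceding baseline
    heart rate by more than `rel_increase` (e.g., 0.2 for +20%).

    Heart rate in beats/min is 60 / (mean R-R interval in seconds).
    """
    flags: List[bool] = []
    for i in range(len(rr_s)):
        end = i + 1
        if end < baseline_beats + recent_beats:
            flags.append(False)  # not enough history yet
            continue
        recent = rr_s[end - recent_beats:end]
        baseline = rr_s[end - recent_beats - baseline_beats:end - recent_beats]
        hr_recent = 60.0 / (sum(recent) / len(recent))
        hr_base = 60.0 / (sum(baseline) / len(baseline))
        flags.append(hr_recent > (1.0 + rel_increase) * hr_base)
    return flags


# Hypothetical R-R series: 60 bpm at rest, then an abrupt rise to 100 bpm.
rr = [1.0] * 10 + [0.6] * 5
flags = hr_trigger(rr, baseline_beats=8, recent_beats=3, rel_increase=0.2)
print(flags.index(True))  # prints 11
```

The first flag appears one beat after the rate change rather than immediately, because the recent-rate window must first fill with enough short intervals to cross the relative threshold.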

In addition to the devices described above, there is an increasing effort to develop novel closed-loop stimulation devices for a variety of brain disorders. One example is the AlphaDBS™ system [84], which recently received CE mark approval for treating Parkinson’s disease (Fig. 3(d)). This closed-loop system, developed by Newronika S.p.A. (Milan, Italy), can record deep-brain local field potentials and adjust the stimulation amplitude and frequency. DyNeuMo (Bioinduction, Bristol, UK) is a closed-loop neuromodulation research device that can titrate stimulation according to the current motor state (e.g., posture and activity) [85] (Fig. 3(e)). The device uses off-the-shelf consumer technology, embeds three-axis accelerometer sensors and 8-channel programmable neurostimulators, and is currently in preparation for first-in-human research trials.

Minimally-invasive signal modalities such as subscalp EEG are also being considered for long-term epilepsy monitoring. For instance, the Epios device (Wyss Center for Bio and Neuroengineering, Geneva, Switzerland) [39] enables both focal recording and full-montage coverage using subscalp EEG for chronic seizure analysis and forecasting (Fig. 2(b)). The EEG data are wirelessly transmitted to a wearable unit and temporarily stored, with support for multimodal ECG, audio, and accelerometry recording. The signals are then transmitted to the cloud for long-term data analysis and visualization. The Epios device is currently being prepared for clinical trials. The Minder device (Epi-Minder, Melbourne, Australia) [39] implants an electrode lead across the skull to cover both hemispheres (Fig. 3(g)). This subscalp system provides continuous long-term EEG measurement for chronic epilepsy diagnosis and monitoring (clinical trial in progress). Alternatively, in the EASEE system by Precisis (Heidelberg, Germany), five subscalp electrodes are implanted above the seizure focus for sensing and closed-loop neurostimulation with personalized settings (clinical trial in progress) [39].

IV. Neural Prostheses with On-chip ML

In recent years, the application of machine learning techniques in closed-loop neuromodulation and its CMOS implementation have received considerable interest. Machine learning has been used to more accurately predict optimal stimulation times [2], [13], [16], [64], [86] and several clinical studies have shown the advantage of ML-based closed-loop therapy in movement disorders [23], epilepsy [87], and memory [4]. The most prominent benefits of integrating machine learning algorithms on a brain implant include:

Eliminating the need for excessive wireless transmission for external processing, thus allowing design miniaturization, lower power dissipation, and higher mobility.

Increasing patient independence and alleviating security concerns by avoiding the transmission of private data.

Improving symptom prediction accuracy and latency.

The last of these advantages largely depends on the number of sensing channels, the quality of the neural signal (e.g., its sampling rate and signal-to-noise ratio), the choice of machine learning algorithm and neural biomarkers, and the chronic robustness of the algorithm. As mentioned in the previous section, current clinical devices offer insufficient embedded biomarker extraction and ML, and rely on telemetry and cloud-based processing for accurate symptom prediction.

Various hardware implementations of machine learning algorithms have been reported for neurological symptom detection, as discussed below. Here, we limit our review to state-of-the-art neural prostheses with an ASIC implementation, validated on animal or human datasets (acute and/or chronic, either diagnostic only or closed-loop).

A. Implants and Wearables for Epilepsy

The most common application of on-chip classification in a neural prosthesis is seizure detection for medically refractory epilepsy, where a supervised ML algorithm is typically used to detect the onset of seizure events from multi-channel neural recordings. Neurostimulation offers an attractive treatment for intractable epilepsy, which affects approximately one third of patients with epilepsy. Owing to the severity of refractory epilepsy, open-source epileptic EEG datasets (both scalp and intracranial) are widely available, as are established animal models for device validation and preclinical studies. Therefore, several groups have integrated various biomarkers and machine learning algorithms on ASICs for automated seizure detection [11], [12], [14], [73], [90]-[95] and for controlling an on-chip stimulator [13], [16], [17], [29], [88], [89].

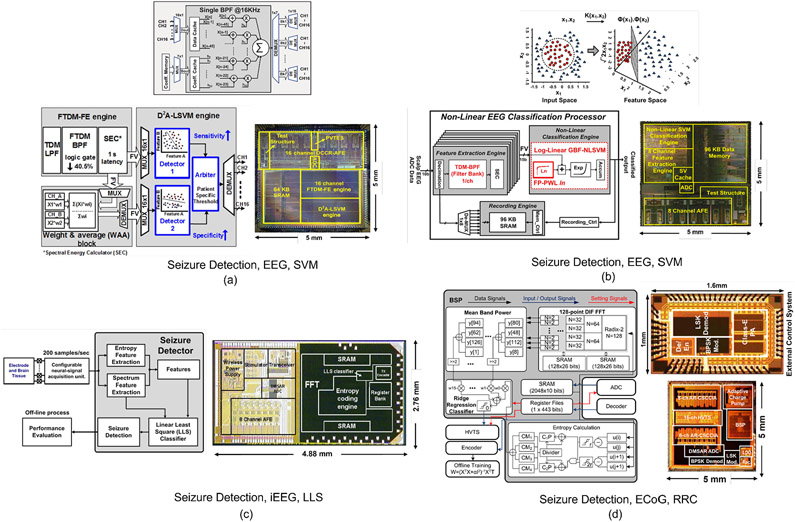

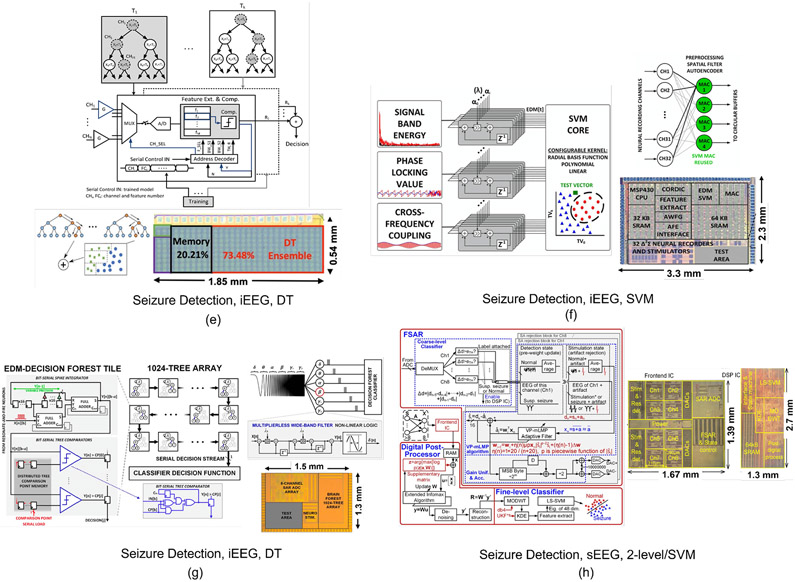

Most ML-embedded SoCs for epilepsy have adopted classifiers based on support vector machines (SVMs), as shown in Fig. 4 and Fig. 5(a). Several SVM kernels, including linear, second-order polynomial, and radial basis function (RBF) kernels, have been reported for on-chip implementation. An SVM classifier computes weighted sums using multiply-and-accumulate (MAC) blocks and separates samples into classes via linear or non-linear decision boundaries. For example, [11] reported an 8-channel linear SVM classifier with digital bandpower features, implemented using a distributed quad-LUT architecture, Fig. 5(a). The system was verified on the CHB-MIT scalp EEG database (Children’s Hospital Boston and MIT), available on PhysioNet. This dataset includes 906 hours of recordings from 24 patients with epilepsy with ~190 annotated seizures, and is commonly used in EEG-based seizure detection SoCs (Table I). Alternatively, the design in [14] implemented a Gaussian basis function (GBF) SVM classifier to account for linearly non-separable seizure patterns, Fig. 4(b). A natural log operator was employed to linearize the GBF equation and replace multiplications with additions. Time-division multiplexing was used to implement the bandpower features in an area- and energy-efficient manner. However, a non-linear SVM typically requires a sufficient number of seizure examples for training, which might be impractical for patients with limited training data. To address this limitation, a combination of two linear SVMs was introduced in [13], Fig. 4(a). The two SVMs were trained separately to achieve high sensitivity and specificity, and their outputs were combined to generate the final decisions. This noninvasive closed-loop SoC integrates a transcranial electrical stimulator (tES) to suppress impending seizures. The classification performance and ASIC specifications are summarized in Table I.
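To make the MAC-versus-kernel distinction concrete, the following Python sketch contrasts the decision functions of a linear SVM and a Gaussian-kernel SVM. It is a minimal illustration under stated assumptions, not the circuits of [11], [13], or [14]: the function names are hypothetical, the features are assumed to be precomputed (e.g., bandpower values), and `alphas` is assumed to hold the signed dual coefficients α_i·y_i. The log-domain comment only shows why taking the logarithm of a Gaussian kernel turns per-feature products into sums; the exact linearization used in [14] is not reproduced.

```python
import math
from typing import Sequence


def linear_svm_score(x: Sequence[float], w: Sequence[float], b: float) -> float:
    """Linear SVM decision value f(x) = w . x + b (one MAC per feature)."""
    acc = b
    for xi, wi in zip(x, w):
        acc += wi * xi  # multiply-and-accumulate
    return acc


def gaussian_svm_score(x: Sequence[float], support_vectors, alphas, gamma: float,
                       b: float) -> float:
    """Gaussian-kernel SVM: f(x) = sum_i alpha_i * exp(-gamma * ||x - s_i||^2) + b.

    `alphas` are assumed to be the signed dual coefficients alpha_i * y_i.
    """
    total = b
    for s, a in zip(support_vectors, alphas):
        # ln K(x, s) = -gamma * sum_j (x_j - s_j)^2: in the log domain the
        # per-feature kernel factors combine by addition, not multiplication.
        log_k = -gamma * sum((xj - sj) ** 2 for xj, sj in zip(x, s))
        total += a * math.exp(log_k)
    return total


def is_seizure(score: float) -> bool:
    """A positive decision value is labeled as seizure."""
    return score > 0
```

The linear score costs one MAC per feature, whereas the Gaussian score repeats a distance computation and an exponential for every support vector, which is why non-linear kernels are more expensive on chip.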

Fig. 4:

Hardware architectures and chip micrographs of ML-embedded neural prostheses for epilepsy: (a) linear dual-detector SVM classifier and closed-loop transcranial neurostimulator [13], (b) non-linear SVM-based seizure detector [14], (c) linear least-squares (LLS) classifier and closed-loop stimulator [17], (d) ridge regression classifier (RRC) and closed-loop stimulator [88], (e) gradient-boosted tree ensemble for seizure detection [12], (f) exponentially decaying-memory SVM and closed-loop stimulator [16], (g) AdaBoost decision tree classifier and closed-loop stimulator [29], (h) two-level coarse/fine classifier and closed-loop stimulator [89].

Fig. 5:

Hardware architectures and chip micrographs of ML-embedded neural prostheses for various applications: (a) linear SVM for epilepsy [11], (b) ANN for migraine state detection [18], (c) DNN for emotion detection in autism [100], (d) CNN for emotion detection [101].

TABLE I:

Performance Summary of Machine Learning SoCs for Epilepsy

| Parameter | JETCAS’18 [12] | JSSC’13 [17] | ISSCC’20 [29] | JSSC’18 [88] | ISSCC’18 [16] | ISSCC’20 [89] | TBCAS’16 [14] | JSSC’13 [11] | JSSC’15 [13] | This Work |
|---|---|---|---|---|---|---|---|---|---|---|
| Process | 65 nm | 180 nm | 65 nm | 180 nm | 130 nm | 180 nm | 180 nm | 180 nm | 180 nm | 65 nm |
| Classifier | XGB DT | LLS | AdaBoost DT | RRC | EDM-SVM | coarse/fine SVM | Non-Lin SVM | Lin-SVM | Dual-LSVM | DVTE+ |
| Features | LLN, Pow, Var, BPF | Ent., Spec. | RAF-BPF | FFT, ApEn | PLV, CFC, BPF | MODWT-KDE | TDM-BPF | BPF | FTDM-BPF | LLN, Var, BPF |
| Signal Modality | iEEG | iEEG | iEEG | ECoG | iEEG | Stereo-EEG | EEG | EEG | EEG | iEEG |
| Closed-loop | N | Y | Y | Y | Y | Y | N | N | Y | N |
| # of Sensing Channels | 32 | 8 | 8 | 16 | 32† | 8 | 8 | 8 | 16 | 32 |
| ML Energy Efficiency | 41.2 nJ/class. | 77.9 μJ/class. | 36 nJ/class. | 62.5 μJ/class. | 168.6 μJ/class. | 14.2 μJ/class. | 1.31 μJ/class.†† | 1.49 μJ/class.†† | 1.85 μJ/class. | 5.6 nJ/class. |
| ML Power | 206.4 μW | 882 μW‡ | 9.6 μW†* | 2.5 mW‡ | 674.4 μW | 1.16 μW | 156.6 μW‡ | 193.8 μW‡ | 216.7 μW‡ | 2.8 μW |
| Total Area (ML Area) | 1 (1) mm² | 13.47 (4.85*) mm² | 1.95 (0.42) mm² | 25 (2.52*) mm² | 7.59 (3.32) mm² | 5.83 (3.51) mm² | 25 (5.55*) mm² | 25 (7.37*) mm² | 25 (7.47*) mm² | 0.31 (0.31) mm² |
| Sampling Rate/Ch. | 5 kS/s | 62.5 kS/s | 256 S/s | 2 kS/s | 256 S/s | 1 kS/s** | 128 S/s†+ | 128 S/s†+ | 128 S/s†+ | 500 S/s |
| Sensitivity | 83.7% | 92%¶ | 96.7% | 97.8%¶ | 97.7% | 97.8% | 95.1% | 82.7%¶¶ | 95.7% | 91.1% |
| Specificity | 88.1% | N.A. | 0.8 FAR*+ | N.A. | 0.185 FAR*+ | 99.7% | 0.27 FAR§ | 4.5% FPR | 98% (0.27 FAR*+) | 96% |
| Dataset (# patients) | iEEG.org (26) | Rats | EU-iEEG | ECoG (5) | EU-iEEG (4) | CHB-MIT §(23) | CHB-MIT (24) | CHB-MIT (24) | CHB-MIT (23) | iEEG.org (11) |
| Latency | 1.1 s | 0.8 s | N.A. | 0.76 s | <0.1 s§§ | <0.3 s§§ | 2 s | <2 s++ | 1 s§§ | 0.52 s§§ |
| ML Energy/Ch. | 1.29 nJ/S | 1.76 nJ/S | 4.69 nJ/S | 78.1 nJ/S | 82.3 nJ/S | 0.145 nJ/S | 153 nJ/S | 189 nJ/S | 106 nJ/S | 0.175 nJ/S |
| ML Area/Ch. | 0.031 mm² | 0.606 mm² | 0.053 mm² | 0.157 mm² | 0.104 mm² | 0.439 mm² | 0.694 mm² | 0.921 mm² | 0.467 mm² | 0.01 mm² |
| ML E-A FoM | 40.3 pJ·mm²/S | 1.07 nJ·mm²/S | 248 pJ·mm²/S | 12.3 nJ·mm²/S | 8.5 nJ·mm²/S | 63.6 pJ·mm²/S | 106.1 nJ·mm²/S | 174.3 nJ·mm²/S | 49.4 nJ·mm²/S | 1.7 pJ·mm²/S |

ML (feature extractor and classifier) power consumption estimated from power breakdown

ML dynamic power (static power not reported)

Also applicable to Parkinson tremor detection. Post place-and-route results.

Variable (256, 1k, 125kS/s)

Seizure detection rate

With 2000 seizure samples generated by synthetic minority oversampling technique

Rapid eye blink detection

4-channel post dimensionality reduction

As reported in [13]

ML (feature extractor and classifier) area estimated from chip micrograph

Accuracy metric

Number of false alarms per hour

Processing (system) latency

After on-chip decimation

A 32-channel closed-loop neuromodulation system integrating frequency- and phase-domain features, a 32-to-4 autoencoder for dimensionality reduction, and an exponentially decaying-memory SVM (EDM-SVM) was proposed for seizure control [16], Fig. 4(f). This system was validated on 500 hours of iEEG data (4 patients, 44 seizures) from the EU iEEG dataset. The design proposed in [89] is an 8-channel closed-loop neuromodulation system for DBS that was verified using stereo-EEG (sEEG) electrodes. Its classifier is a two-level coarse/fine detector, in which the DSP chip (separate from the core sensing chip) is activated only when the coarse detector flags a suspected seizure. In this mode, maximal overlap discrete wavelet transform (MODWT) and kernel density estimation (KDE) features are computed and classified by a least-squares SVM (LS-SVM) for fine classification, Fig. 4(h). Furthermore, [90] reported a configurable SVM processor with various kernels (RBF, polynomial, linear), validated on the CHB-MIT EEG dataset.
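The duty-cycling idea behind a two-level detector such as [89] can be sketched as follows. The coarse stage here is a line-length threshold and the fine stage is an arbitrary callable; both are hypothetical stand-ins, since the coarse detector of [89] is not specified in the text and its MODWT/KDE/LS-SVM fine stage is not reproduced. The point is only that the expensive stage runs on windows flagged by the cheap one.

```python
from typing import Callable, List, Sequence, Tuple


def line_length(window: Sequence[float]) -> float:
    """Sum of absolute sample-to-sample differences (a cheap activity measure)."""
    return sum(abs(b - a) for a, b in zip(window, window[1:]))


def two_level_detect(
    windows: Sequence[Sequence[float]],
    coarse_threshold: float,
    fine_classifier: Callable[[Sequence[float]], bool],
) -> Tuple[List[bool], int]:
    """Run the fine classifier only on windows that the coarse stage flags.

    Returns the per-window decisions and the number of fine-stage activations.
    """
    decisions: List[bool] = []
    fine_calls = 0
    for w in windows:
        if line_length(w) < coarse_threshold:
            decisions.append(False)  # fine stage stays idle for this window
            continue
        fine_calls += 1  # suspected seizure: wake the fine stage
        decisions.append(fine_classifier(w))
    return decisions, fine_calls
```

Because seizures are sparse, `fine_calls` stays small relative to the number of windows, and the average power approaches that of the coarse stage alone.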

It should be noted that, in addition to the machine learning processor, the feature extraction circuits can be highly power- and area-demanding, particularly in systems with many input channels. Minimizing the number of extracted features and their hardware complexity without jeopardizing classification accuracy is essential to reduce the overall energy consumption and area. The computational resources required by an SVM scale linearly with the number of neural channels, which makes such optimizations even more critical in practice.

An 8-channel closed-loop iEEG-based seizure control SoC was presented in [17], which computes frequency-spectrum and time-domain entropy features and classifies them with a linear least-squares (LLS) classifier, Fig. 4(c). This system was acutely verified in Long-Evans rats. Similarly, the closed-loop 16-channel design in [88] integrated a biosignal processor to extract approximate entropy (ApEn) and FFT-based bandpower features, which are passed to a ridge regression classifier (RRC). The system was verified on ECoG data from five patients (duration not reported) and acutely for closed-loop seizure suppression in mini-pigs, Fig. 4(d).
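A ridge regression classifier can be trained in closed form by solving the regularized normal equations (XᵀX + λI)w = Xᵀy with ±1 labels, and applied by thresholding w·x at zero; setting λ = 0 corresponds to an ordinary least-squares classifier. The pure-Python sketch below is a minimal illustration under these assumptions, not the training flow of [17] or [88]: the helper names and toy data are hypothetical, a constant-1 column serves as the bias term (and is regularized along with the weights for simplicity), and on-chip designs would add fixed-point details.

```python
from typing import List, Sequence


def ridge_fit(X: Sequence[Sequence[float]], y: Sequence[float],
              lam: float) -> List[float]:
    """Solve (X^T X + lam*I) w = X^T y by Gauss-Jordan elimination."""
    d = len(X[0])
    # Augmented normal-equation matrix [X^T X + lam*I | X^T y].
    A = []
    for i in range(d):
        row = [sum(r[i] * r[j] for r in X) + (lam if i == j else 0.0)
               for j in range(d)]
        row.append(sum(r[i] * t for r, t in zip(X, y)))
        A.append(row)
    for col in range(d):
        # Partial pivoting: bring the largest remaining entry to the diagonal.
        pivot = max(range(col, d), key=lambda k: abs(A[k][col]))
        A[col], A[pivot] = A[pivot], A[col]
        p = A[col][col]
        A[col] = [v / p for v in A[col]]
        for r in range(d):
            if r != col:
                f = A[r][col]
                A[r] = [a - f * c for a, c in zip(A[r], A[col])]
    return [A[i][d] for i in range(d)]


def ridge_predict(x: Sequence[float], w: Sequence[float]) -> int:
    """Return +1 (seizure) if w . x >= 0, else -1."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) >= 0 else -1


# Toy data: one feature plus a constant-1 column acting as the bias term.
X = [[0.1, 1.0], [0.2, 1.0], [0.9, 1.0], [1.0, 1.0]]
y = [-1, -1, 1, 1]
w = ridge_fit(X, y, lam=0.01)
print([ridge_predict(x, w) for x in X])
```

With λ > 0 the system matrix is positive definite, so the elimination never hits a zero pivot; inference then needs one MAC per feature, like a linear SVM.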

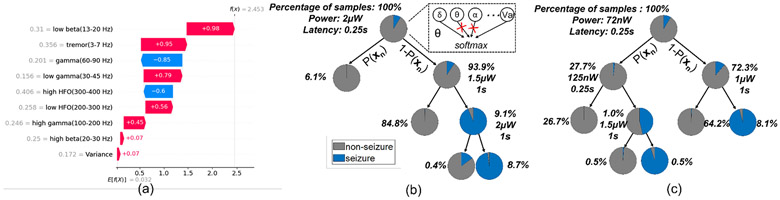

In addition to the above models, machine learning algorithms that exploit decision trees, either as base estimators in ensemble methods such as bagging and boosting [12], [29], [96] or as stand-alone classifiers [31] have been used in neural signal classification tasks. While Random Forests [97] apply a bagging technique to DTs in order to reduce variance, boosting is a bias reduction technique in which individual trees are incrementally added to the ensemble to correct the previously misclassified samples. Popular implementations of boosting methods include gradient boosting [98] and AdaBoost [99]. Both bagging and AdaBoost use classifiers as base estimators, while gradient boosting requires regressors. Particularly, ensembles of gradient-boosted DTs have recently emerged as an accurate [24], yet hardware-efficient [12], [92], [96] machine learning solution for neural SoC platforms and for applications with limited training sets. DT ensembles avoid hardware-intensive MAC operations and enable low-complexity hardware architectures for neural prosthesis applications.
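Because inference only compares features to thresholds and adds leaf values, a gradient-boosted ensemble can be evaluated without any multiplications. The sketch below assumes a hypothetical tuple encoding of trees (a leaf is a float; an internal node is `(feature_index, threshold, left, right)`); it is not the memory layout or training procedure of [12], [29], or [96].

```python
from typing import Sequence, Union

# Hypothetical encoding: a leaf is a float; an internal node is a tuple
# (feature_index, threshold, left_subtree, right_subtree).
Tree = Union[float, tuple]


def tree_output(tree: Tree, x: Sequence[float]) -> float:
    """Follow one root-to-leaf path using threshold comparisons only."""
    while isinstance(tree, tuple):
        feature, threshold, left, right = tree
        tree = left if x[feature] <= threshold else right
    return tree


def boosted_score(trees: Sequence[Tree], x: Sequence[float],
                  base_score: float = 0.0) -> float:
    """Gradient boosting: add up the leaf values of all regression trees."""
    return base_score + sum(tree_output(t, x) for t in trees)


trees = [
    (0, 0.5, -1.0, 1.0),                 # depth-1 tree (stump)
    (1, 2.0, (0, 0.2, -0.5, 0.3), 0.8),  # depth-2 tree
]
print(boosted_score(trees, [0.7, 1.5]))  # leaf values 1.0 + 0.3
```

A positive ensemble score can then be mapped to the seizure class. Bagging (e.g., Random Forests) would instead average or vote over independently trained trees rather than summing sequentially fitted corrections.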

In [12], a gradient-boosted DT ensemble achieved a record energy efficiency (41.2 nJ/class, 32 channels) and a compact area (1 mm²) for seizure detection, Fig. 4(e). The system was validated on iEEG from 26 epilepsy patients (3074 hours, 393 seizures) available on the iEEG portal [102], a collaborative platform for sharing large iEEG datasets. An on-demand feature extraction approach was adopted, in which a single feature extraction unit is used sequentially in each tree, substantially reducing the number of extracted features and the overall hardware cost of inference. Whereas other classifiers compute all features for each input channel, this unique property of DTs allows the classifier to selectively extract only the limited number of features needed to minimize the loss function, thus accommodating a higher number of input channels (Table I). Another CMOS implementation of tree-based models used AdaBoost with 1024 trees of depth one for seizure detection and closed-loop stimulation [29], Fig. 4(g). Thanks to a bit-serial processing scheme, this SoC reported state-of-the-art energy efficiency (36 nJ/class) for 8-channel iEEG classification. Recent work replaced axis-aligned splits with logistic regression to construct powerful oblique trees, an efficient combination of neural networks and DTs [31] (Section VI), for epileptic seizure and PD tremor detection.
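The on-demand idea can be sketched as lazy, cached feature evaluation: a (channel, feature) pair is computed only when a node on the traversed path actually tests it. The extractor names (`line_length`, `variance`), the tree encoding, and the caching policy below are illustrative assumptions, not the hardware scheduling of [12].

```python
import statistics
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical feature extractors applied to one channel's samples.
EXTRACTORS: Dict[str, Callable[[Sequence[float]], float]] = {
    "line_length": lambda s: sum(abs(b - a) for a, b in zip(s, s[1:])),
    "variance": lambda s: statistics.pvariance(s),
}


class OnDemandFeatures:
    """Compute a (channel, feature) value only when a tree node requests it."""

    def __init__(self, window: List[Sequence[float]]):
        self.window = window  # one list of samples per channel
        self.cache: Dict[Tuple[int, str], float] = {}

    def get(self, channel: int, name: str) -> float:
        key = (channel, name)
        if key not in self.cache:  # extract once per window, then reuse
            self.cache[key] = EXTRACTORS[name](self.window[channel])
        return self.cache[key]


def tree_output_on_demand(tree, feats: OnDemandFeatures) -> float:
    """Leaf = float; node = (channel, feature_name, threshold, left, right)."""
    while isinstance(tree, tuple):
        channel, name, threshold, left, right = tree
        tree = left if feats.get(channel, name) <= threshold else right
    return tree


window = [[0.0, 3.0, 0.0, 3.0], [1.0, 1.0, 1.0, 1.0], [0.0, 0.0, 0.0, 0.0]]
tree = (0, "line_length", 5.0, -1.0, (2, "variance", 1.0, 0.5, 2.0))
feats = OnDemandFeatures(window)
print(tree_output_on_demand(tree, feats), len(feats.cache))
```

In this example, only two of the six possible channel-feature pairs are computed for the window, which is the property that lets tree-based classifiers scale to more channels.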

B. Implants for Movement Disorders

Multiple feasibility studies using closed-loop DBS devices such as Medtronic’s Percept and Summit have demonstrated additional benefits of closed-loop over open-loop DBS in movement disorders [103], [104]. Closed-loop DBS in PD [1], [10], [45] has led to improved tremor control, reduced stimulation time and power consumption, and fewer speech side effects compared to open-loop DBS. However, wider adoption of this approach awaits advances in implantable hardware, control algorithms, and chronic validation. Current systems predominantly use single-biomarker thresholding, which precludes optimized tremor control.

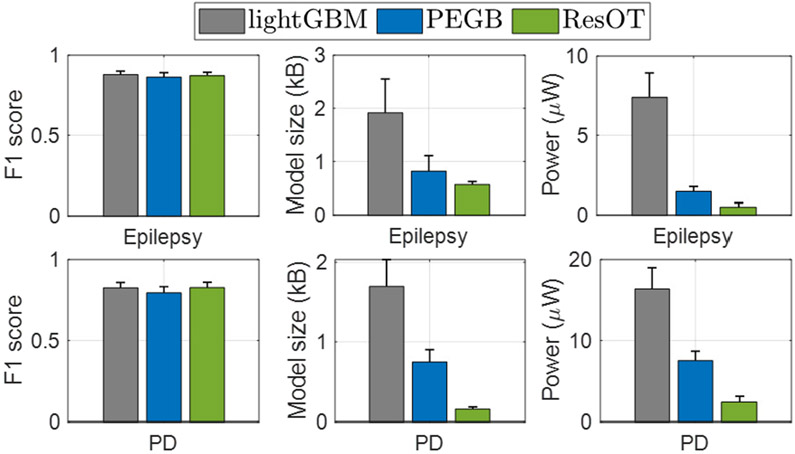

Recently, ML approaches have been used to detect motor symptoms (e.g., tremor) in patients with PD and essential tremor [2], [23], [68], [77], [105], [106] in order to control DBS in closed loop. An approach based on feature engineering and tree boosting [2], [68] used various tremor-correlated features, such as bandpower in multiple frequency bands, the ratio of high-frequency oscillations, phase-amplitude coupling, and tremor power, to detect the onset of rest tremor episodes in PD. Using only five selected features, the system predicted tremor with 89.2% sensitivity and a detection lead of 0.52 s in 12 patients, significantly better than the conventional beta-thresholding approach. Fixed-point quantization and power-aware inference were later used to enable low-power gradient boosting, achieving a 55.4% energy reduction compared to a conventional tree ensemble [105]. A method based on resource-efficient oblique trees (ResOT) was recently applied to PD tremor detection, enabling significant energy and memory reduction through various hardware-algorithm co-design techniques [31]. A similar study was recently conducted in patients with essential tremor (ET) [23], who suffer from tremor during voluntary movements. Using a binary classifier, postural tremor and voluntary movements were detected from LFP features recorded via the DBS lead, achieving an average sensitivity of 80% in 7 patients with ET. Such machine learning techniques hold promise for accurate symptom detection in closed-loop neural prostheses for various movement disorders, and further developments in SoC design for these applications are expected in the near future.
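Fixed-point quantization replaces floating-point thresholds and features with integers, so that every node test becomes an integer comparison. The sketch below illustrates the idea with a hypothetical word format (8 fractional bits) and a hypothetical tuple tree encoding; it is not the quantization or power-aware inference scheme of [105], and leaf values are kept in floating point only to keep it short.

```python
from typing import Sequence


def to_fixed(value: float, frac_bits: int) -> int:
    """Quantize a real number to a signed fixed-point integer (round to nearest)."""
    return round(value * (1 << frac_bits))


def quantize_tree(tree, frac_bits: int):
    """Quantize every split threshold of a (feature, threshold, left, right) tree.

    Leaf values are left in floating point in this sketch.
    """
    if not isinstance(tree, tuple):
        return tree
    feature, threshold, left, right = tree
    return (feature, to_fixed(threshold, frac_bits),
            quantize_tree(left, frac_bits), quantize_tree(right, frac_bits))


def tree_output_fixed(tree, x_fixed: Sequence[int]):
    """Inference on integer features with integer comparisons only."""
    while isinstance(tree, tuple):
        feature, threshold, left, right = tree
        tree = left if x_fixed[feature] <= threshold else right
    return tree


FRAC_BITS = 8  # hypothetical word format: 8 fractional bits
tree = (0, 0.5, -1.0, (1, 0.25, 0.2, 0.9))
q_tree = quantize_tree(tree, FRAC_BITS)
x_fixed = [to_fixed(v, FRAC_BITS) for v in (0.75, 0.1)]
print(q_tree, x_fixed, tree_output_fixed(q_tree, x_fixed))
```

Fewer fractional bits shrink the comparators and threshold memory, but features that fall close to a threshold may be routed differently after rounding, which is why the bit width is a co-design parameter rather than a free choice.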

C. Implants for Neuropsychiatric Disorders and Memory

Neuromodulation, particularly invasive technologies such as DBS, has recently been explored for treating psychiatric disorders such as major depressive disorder (MDD) and obsessive-compulsive disorder (OCD) [8], [107]. However, despite promising early results, high-profile clinical trials have shown inconsistent effects. One major limiting factor is the open-loop approach used in conventional DBS, which has been shown to be inefficient in engaging target brain regions in complex disorders such as depression and OCD [3]. While neurostimulation has made a significant impact on the lives of patients with movement disorders, major advances are needed to treat more prevalent conditions such as depression. Closed-loop, patient-specific stimulation appears to be the most viable solution.

The development of algorithms for automated detection of emotional states and shifts in arousal, vigilance, and wakefulness has received considerable attention in EEG-based human studies, with some recent reports on SoC design. For example, a deep neural network (DNN) classifier was implemented for emotion detection in children with autism [100]. Binary valence and arousal classifications by the 4-layer DNN were used to detect four emotional states. Energy consumption was reduced through a pipelined DNN architecture with a central arithmetic logic unit, Fig. 5(c). This DNN processor analyzes two EEG channels with an accuracy of 85.2% and an energy efficiency of 10.1 μJ/class. In another design, a convolutional neural network (CNN) with an online training feature was proposed for emotion detection [101], Fig. 5(d). To minimize the area and memory overhead of batch processing, hardware reuse and mini-batch data were employed for training and acceleration, at the expense of longer training time. Using an external feature extraction engine, this system obtained an 83.36% accuracy in binary classification of emotions (Table II). Machine learning has also been explored for sleep stage classification [108], task engagement [86], and mental fatigue prediction [69] to potentially trigger a neurostimulation therapy.
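Two binary decisions suffice to address four states, since each (valence, arousal) pair selects one quadrant of the valence-arousal plane. This minimal sketch assumes the quadrant labels; the exact state definitions used in [100] are not given in the text.

```python
def emotion_quadrant(high_valence: bool, high_arousal: bool) -> str:
    """Combine two binary classifier outputs into one of four emotion states.

    The label strings are hypothetical names for the four quadrants.
    """
    valence = "high-valence" if high_valence else "low-valence"
    arousal = "high-arousal" if high_arousal else "low-arousal"
    return f"{valence}/{arousal}"


print(emotion_quadrant(True, False))
```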

TABLE II:

Comparison of Machine Learning SoCs

| Parameter | TCAS-II’21 [18] | JETCAS’19 [101] | CICC’20 [100] |
|---|---|---|---|
| Process | 180 nm | 28 nm | 180 nm |
| Classifier | Multi-ANN+ | CNN | DNN |
| Application | Migraine Detection | Emotion Detection | Emotion Detection |
| Features | HFO, BPF, Peak latency | Off-chip | ZCD, SK |
| Signal Modality | SEP | EEG | EEG |
| Closed-loop | N | N | N |
| # of Sensing Channels | 1 | 6 | 2 |
| ML Energy Efficiency | N.A. | N.A. | 10.13 μJ/class. |
| ML Power | 249 μW | 76.61 mW | N.A. |
| Total Area (ML Area) | 0.5 (0.5) mm² | 3.35 (3.35) mm² | 16 (6.02*) mm² |
| Sampling Rate/Ch. | 5 kS/s | 250 S/s | N.A. |
| Accuracy | 76% | 83.4%** | 85.2% |
| Dataset (# patients) | MI, MII (42), HV (15) | DEAP (32) | DEAP (32), SEED |
| Latency | 50 ms† | 0.45 s† | <1 min† |
| ML Energy/Ch. | 49.8 nJ/S | 51.1 μJ/S | N.A. |
| ML Area/Ch. | 0.5 mm² | 0.558 mm² | 3.01 mm² |
| ML E-A FoM | 24.9 nJ·mm²/S | 29 μJ·mm²/S | N.A. |

Post place-and-route results.

ML (feature extractor and classifier) area estimated from chip micrograph

Accuracy metric

Processing (system) latency

Disorders such as Alzheimer’s disease exhibit network abnormalities, necessitating multi-site electrophysiological recordings. The closed-loop stimulation approach in [4] used a patient-specific logistic regression classifier to decode brain-wide electrocorticography (ECoG) signals and triggered stimulation in response to predicted periods of poor memory encoding in order to enhance memory. The results suggest that increased high-frequency activity and decreased low-frequency activity are predictive of successful memory recall, and that responsive neuromodulation of the lateral temporal cortex could improve recall performance. Further developments in neural prosthesis design for mental and memory disorders are expected in the coming years.
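A closed-loop trigger of this kind can be sketched as a logistic regression over spectral features followed by a probability threshold. The two features (high- and low-frequency power), the weights, and the 0.5 threshold below are hypothetical values chosen for illustration, not the patient-specific model of [4]; the weight signs merely mirror the reported association of increased high-frequency and decreased low-frequency activity with good recall.

```python
import math
from typing import Sequence


def p_poor_encoding(x: Sequence[float], w: Sequence[float], b: float) -> float:
    """Logistic regression: probability that the current epoch is a poor-encoding state."""
    z = b + sum(wi * xi for wi, xi in zip(w, x))
    return 1.0 / (1.0 + math.exp(-z))


def should_stimulate(x: Sequence[float], w: Sequence[float], b: float,
                     threshold: float = 0.5) -> bool:
    """Trigger stimulation only when poor encoding is predicted."""
    return p_poor_encoding(x, w, b) > threshold


# Hypothetical features: [high-frequency power, low-frequency power].
W = [-1.0, 1.0]  # low HF and high LF power push toward "poor encoding"
print(should_stimulate([0.2, 1.5], W, 0.0), should_stimulate([1.5, 0.2], W, 0.0))
```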

D. Wearables for Migraine

While most current devices have been developed for epilepsy and movement disorders, there is an increasing demand for novel therapeutic devices for other medication-resistant neurological disorders. Migraine, for instance, is one of the most common neurological disorders, affecting millions of people around the world. Migraine patients suffer from episodic headaches lasting hours to days and often progress from a stage of low-frequency attacks to chronic migraine. Diagnosis mainly relies on patient diaries and clinical interviews [63], [109]. As an emerging alternative, neurophysiological monitoring techniques have been shown to be beneficial in assessing migraine progression [109]. Automated detection of the migraine state from continuous brain recordings could enable earlier and more effective treatment, with either medication or neurostimulation.

A machine learning approach was recently proposed for noninvasive migraine state detection from somatosensory evoked potential (SEP) biomarkers in 42 migraine patients, as described in [63]. The results suggest the potential use of SEP as a feedback signal for migraine attack prediction. Based on this idea, [18] reported a low-power feature extraction and ML processor for migraine state prediction, using single-channel SEP as input. Multiple features such as bandpower, time-domain and statistical features of high-frequency oscillations [63] were integrated with a multi-class artificial neural network (ANN), achieving a predictive accuracy of 76%, Fig. 5(b) (chip layout post place-and-route).

E. Implants for Stroke and Traumatic Brain Injury

Neurostimulation can be used to facilitate post-stroke plasticity and functional recovery. Compared to noninvasive methods such as transcranial magnetic or direct-current stimulation (TMS, tDCS), invasive tools such as direct cortical stimulation offer higher temporal and spatial resolution. However, current cortical stimulation approaches for stroke are limited by poor localization of stimulation targets and open-loop operation [110], underscoring the need for advanced data analysis and machine learning techniques.

In addition, patients with severe-to-moderate traumatic brain injury (smTBI) suffer from persistent cognitive dysfunction and chronic mental fatigue that significantly impact all aspects of their functioning. Despite extensive efforts to develop rehabilitation and medication-based therapies, there are no effective therapeutic options for these patients. In a breakthrough study, therapeutic DBS of the central thalamus (CT-DBS) was shown to restore executive function, fluent communication, and motor control in a patient who had remained in a minimally conscious state for six years following a TBI [111]. Similar improvements have been observed in individuals with chronic mental fatigue. In a recent study, ECoG activity recorded from two healthy non-human primates (NHPs) during a sustained attention task was used to predict the onset of mental fatigue [64], [69]. Using spectrotemporal and connectivity biomarkers and a tree ensemble classifier, the decline in the animals’ performance was predicted seconds before their behavioral response. This approach could potentially be used for closed-loop neurostimulation in patients with TBI.

In a proof-of-concept study [53], a closed-loop neural SoC was used to facilitate recovery in a rat model of brain injury. Action potentials detected in the premotor cortex were used to trigger neurostimulation in the somatosensory cortex over several weeks. This spike-triggered stimulation significantly improved reaching and grasping functions and enhanced the functional connectivity between the two brain regions. These findings motivate the design of novel closed-loop neural prostheses to treat brain injury and similar neurological indications.

F. Comparison of ML-embedded SoCs

Table I (epilepsy) and Table II (other applications) compare the hardware specifications and classification performance of state-of-the-art neural prostheses with on-chip machine learning. When comparing the performance and hardware cost of different ML SoCs, one should consider the various factors that affect overall predictive performance and design complexity, such as the input signal modality and dataset, the number of analyzed patients, the recording duration and seizure count, and the metrics used to evaluate algorithm and hardware performance (e.g., accuracy, F1 score, sensitivity, power vs. energy efficiency, detection vs. system latency). In addition, the number of processed channels should be taken into account to fairly compare architectures and assess their scalability.
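As an example of why metric definitions matter, the sketch below computes window-level sensitivity, specificity, and false alarms per hour from the same predictions. It is a simplified, hypothetical scoring scheme: event-based scoring (counting a seizure as detected if any of its windows is flagged, and merging consecutive false positives into one alarm) would yield different numbers for the same classifier output.

```python
from typing import Sequence, Tuple


def detection_metrics(y_true: Sequence[int], y_pred: Sequence[int],
                      hours: float) -> Tuple[float, float, float]:
    """Window-level sensitivity and specificity, plus false alarms per hour.

    Labels are 1 (seizure) or 0 (non-seizure); each false-positive window is
    counted as one false alarm (a simplification).
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    sensitivity = tp / (tp + fn) if tp + fn else float("nan")
    specificity = tn / (tn + fp) if tn + fp else float("nan")
    return sensitivity, specificity, fp / hours


print(detection_metrics([1, 1, 0, 0, 0, 0], [1, 0, 0, 1, 0, 0], hours=2.0))
```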

Energy efficiency has been a common metric for comparing ML-embedded biomedical SoCs in the literature. However, energy efficiency is not reported in a unified manner (e.g., total power consumption divided by sampling rate [11]-[14], [29], or total power consumption divided by classification rate [16], [17], [88]), which may hinder appropriate design choices. Furthermore, this metric does not take the number of channels into account, which is particularly important in modern neural prostheses. Here, we define a new energy-area efficiency figure of merit (E-A FoM) as follows:

$$\text{E-A FoM} = \frac{P_{Ch}}{f_s} \times A_{Ch} \tag{1}$$

where $P_{Ch}$ and $A_{Ch}$ denote the per-channel power and area of the ML SoC, respectively, and $f_s$ is the per-channel sampling rate of the signal processing circuits; the ratio $P_{Ch}/f_s$ is thus the per-channel energy per sample (ML Energy/Ch. in the tables). Similar FoMs have been used in AFE and ADC design for multi-channel neural recording [112]. The E-A FoM captures the energy-area efficiency of the system while also factoring in multi-channel scalability. Other performance metrics such as accuracy and latency are excluded, as they can vary among datasets and applications. Tables I and II report the E-A FoM of state-of-the-art neural prostheses along with their per-channel area and energy consumption. Only the power and area of the ML processor (i.e., feature extractor, classifier, and memory for parameter storage) have been considered. This FoM indicates that tree-based models achieve orders-of-magnitude better energy-area efficiency than SVM classifiers, while providing comparable classification accuracy and latency. With cost-aware hardware-algorithm co-design, we aim to improve the efficiency of tree-based classifiers even further, as discussed in Sections VI-VII.
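As a worked check of the E-A FoM definition, the sketch below recomputes the FoM from total ML power, ML area, channel count, and per-channel sampling rate. The helper name is hypothetical; the inputs are the Table I values for [12] (206.4 μW, 1 mm², 32 channels, 5 kS/s) and for this work (2.8 μW, 0.31 mm², 32 channels, 500 S/s).

```python
def ea_fom(ml_power_w: float, ml_area_mm2: float, n_channels: int,
           fs_per_channel: float) -> float:
    """Return (P_Ch / f_s) * A_Ch in J*mm^2 per sample."""
    p_ch = ml_power_w / n_channels             # per-channel ML power (W)
    a_ch = ml_area_mm2 / n_channels            # per-channel ML area (mm^2)
    energy_per_sample = p_ch / fs_per_channel  # per-channel energy (J/sample)
    return energy_per_sample * a_ch


fom_12 = ea_fom(206.4e-6, 1.0, 32, 5e3)     # JETCAS'18 [12]
fom_this = ea_fom(2.8e-6, 0.31, 32, 500.0)  # This Work
print(f"{fom_12 * 1e12:.1f} pJ*mm^2/S, {fom_this * 1e12:.1f} pJ*mm^2/S")
```

This prints `40.3 pJ*mm^2/S, 1.7 pJ*mm^2/S`, matching the last row of Table I. Because both power and area are normalized by the channel count, the FoM falls quadratically when a design adds channels without increasing total ML power or area.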

The predictive power and hardware efficiency of different SoCs are greatly affected by their choice of ML algorithm. For example, DT-based models feature a lightweight inference scheme in which feature values are simply compared to thresholds to route samples through the tree. In contrast, inference with a kernelized SVM involves vector multiplications and kernel evaluations between the input and every support vector, which partially explains the E-A superiority of DTs over SVMs in Table I. Moreover, inspired by the recent success of deep learning, there is increasing interest in deploying CNNs and DNNs on neural SoCs [18], [100], [101]. However, compared to conventional approaches, deep learning models generally require more training data and consume more power [113]. The benefits of using deep learning in neural SoCs need further investigation.
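A rough operation count makes this contrast explicit. The sketch below assumes a worst-case root-to-leaf path per tree and an RBF kernel evaluated against every support vector; the counting convention (e.g., a squared difference as one multiplication) and any sizes passed to the functions are illustrative assumptions, not figures from the designs in Table I.

```python
from typing import Dict


def tree_ensemble_cost(n_trees: int, depth: int) -> Dict[str, int]:
    """Worst case: one threshold comparison per level of every tree."""
    return {"multiplications": 0, "exponentials": 0,
            "comparisons": n_trees * depth}


def rbf_svm_cost(n_support_vectors: int, n_features: int) -> Dict[str, int]:
    """Per support vector: n_features squared differences, one gamma scaling,
    one exp(), and one alpha weighting; then one final threshold comparison."""
    return {"multiplications": n_support_vectors * (n_features + 2),
            "exponentials": n_support_vectors,
            "comparisons": 1}


print(tree_ensemble_cost(8, 4), rbf_svm_cost(100, 16))
```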

G. Limitations of the Current SoCs and Future Directions

High-density electrode arrays have shown promise in both neurophysiological monitoring [48] and therapeutic neurostimulation [114]. However, the channel count of state-of-the-art ML SoCs is limited to 32, which could hinder their clinical application. The most critical challenges to realizing high-channel-count ML-embedded neural prostheses lie in the AFE, the back-end signal processing, and the memory for parameter storage. Over the past years, the field has witnessed a growth in the channel count of neural prostheses, such as Neuralink’s BMI platform with 3072 channels [115]. Recently, a 1024-channel closed-loop BMI SoC was presented with a successful demonstration of motor intention decoding (performed offline) in a macaque monkey [116]. Novel area- and power-efficient AFE design techniques (such as mixed-signal [117] and time-division multiplexing [118], [119] approaches) should continue to be explored. This will enable advanced neural prostheses with high resolution, reduced invasiveness, longer lifetime, and minimized heat-induced tissue damage. In addition to the area-power constraints on the AFE, the burden of back-end signal processing (i.e., feature extraction and classification) is a major bottleneck for next-generation high-channel-count prostheses. The amount of computation in current ML SoCs grows linearly with channel count, posing a major challenge for energy consumption. The on-demand feature computation scheme in [12] could be a viable solution for realizing a scalable ML SoC: only relevant features from a subset of channels are computed in each processing window, achieving a substantial reduction in hardware cost. Similar techniques will pave the way for the integration of novel high-density electrodes (Section II-A) in future diagnostic and closed-loop devices. Another on-demand processing approach was adopted in an SVM-based two-level (coarse/fine) classifier to reduce system power consumption [89]. Exploiting the sparseness of seizures, the otherwise power-demanding SVM classifier (fine stage) in a separate chip is activated only upon seizure declaration by the coarse detector. The two-level SVM classifier performs 266 classifications/hour with 1.16 μW average power, a >135× improvement over a conventional SVM. Single-chip integration and multi-channel scalability have yet to be addressed with this approach.

In addition, most classifiers currently integrated on neural prostheses use an offline training scheme with fixed parameters, thus neglecting the non-stationary dynamics of neural signals. Next-generation ML-embedded neural SoCs are expected to perform active, incremental learning to account for previously unseen changes in neurological patterns. In online machine learning, the model parameters are updated as data arrive sequentially, dynamically adapting to new signal patterns. Online learning algorithms have shown promise for stable chronic neural decoding [120]. Yet, deploying such models on ASICs with minimal area and power consumption remains an open problem. Current on-chip systems based on SVM [91], [121] are highly energy- and memory-demanding, while off-chip recalibration poses security risks and reduces patient independence. More developments in this area are expected in the near future.
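A minimal example of online learning is a logistic regression whose weights receive one stochastic-gradient step per labeled sample, so no training set needs to be stored. The class below is a hypothetical sketch of that general technique, not the decoder of [120] or the on-chip SVM training of [91], [121]; the learning rate and feature dimension are assumptions.

```python
import math
from typing import List, Sequence


class OnlineLogisticRegression:
    """Logistic regression updated one labeled sample at a time (plain SGD)."""

    def __init__(self, n_features: int, lr: float = 0.1):
        self.w: List[float] = [0.0] * n_features
        self.b = 0.0
        self.lr = lr

    def predict_proba(self, x: Sequence[float]) -> float:
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, x))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, x: Sequence[float], y: int) -> None:
        """One gradient step on the log-loss for a label y in {0, 1}."""
        err = self.predict_proba(x) - y
        self.w = [wi - self.lr * err * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * err


model = OnlineLogisticRegression(n_features=1, lr=0.5)
for _ in range(200):  # a stream of labeled samples arriving one by one
    model.update([1.0], 1)
    model.update([-1.0], 0)
print(round(model.predict_proba([1.0]), 3), round(model.predict_proba([-1.0]), 3))
```

Each `update` call costs one forward pass and one weight update, so memory stays constant as data arrive; on chip, the same rule would additionally need fixed-point arithmetic and a source of reliable labels.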

ML-embedded neural prostheses, like other edge AI devices in IoT and healthcare, may greatly benefit from developments in algorithm and circuit design that lead to higher performance, lower energy, and more compact area. For instance, future ML SoCs are expected to benefit from emerging CMOS design techniques such as analog, mixed-signal [122], and approximate computing, as well as in-memory computing. In particular, in-memory computing has shown the potential to achieve remarkable improvements over conventional digital implementations [32], [38], [123]. Unlike current SoCs, neuromorphic hardware integrates spiking neural networks (SNNs) with in-memory computing to avoid the communication overhead between processor and memory, and allows unsupervised online learning via spike-timing-dependent plasticity (STDP). As discussed in [38], memristor-based designs are currently rarely used in the biomedical domain. Moreover, the decoding performance of SNNs remains relatively low owing to the immaturity of their training algorithms [38]. Deploying high-performance SNN and memristor-based designs in neural prostheses remains a future direction.

V. Hardware-Algorithm Co-Design of Decision Tree Ensembles

Designing machine learning models that consume little energy and area, while providing a high classification accuracy and low detection latency is essential to the next-generation smart neural prostheses. As discussed in Section IV, decision trees are widely used in edge applications and neural decoding tasks thanks to their low inference complexity, easy and fast training, as well as high predictive power in ensemble methods or oblique structures [12], [22], [24], [29], [31]. These advantages are essential for extremely resource-constrained platforms such as a brain implant or wearable with high channel counts. In this section, we present novel approaches to optimize the key design metrics of an on-chip DT ensemble, including the power consumption and processing latency, in the context of neural signal classification tasks. Some of these techniques are broadly applicable to other machine learning algorithms for various implantable and edge applications.

A. Depth-Variant Tree Ensemble for Latency Reduction

In a decision tree, a test sample traverses a single root-to-leaf path during inference [31], [124]. Although this single-path scheme is lightweight and area-efficient, it requires conditional computation: a node can be evaluated only after its parent's comparison has selected it, so nodes are processed in sequential order [125]. As a result, the latency of DT-based classifiers increases proportionally with the length of the decision path. Early symptom detection, however, is critical for effectively treating neurological disorders, and it is directly affected by the processing latency. Previous work reduced seizure detection latency either by using shorter windows [126] or by replacing the widely used bandpower biomarkers with new features such as neuronal potential similarity [127]. However, such methods may suffer from degraded classification performance, since low-frequency features that require longer windows can be critical for symptom detection [126], or from poor generalizability due to their reliance on specific biomarkers [127]. To the best of our knowledge, this study is the first to address latency reduction from an algorithmic perspective.
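The sequential dependence can be illustrated with a minimal Python sketch that follows one root-to-leaf path and counts the internal nodes it visits. The `Node` class and the `traverse` and `path_latency` functions are hypothetical names, and the fixed per-node time `t_node` is a simplifying assumption; in practice the time spent at a node depends on the window of the feature it tests.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class Node:
    """One node of an axis-aligned decision tree (hypothetical layout)."""
    feature: Optional[int] = None   # feature index tested here; None marks a leaf
    threshold: float = 0.0          # go left if x[feature] <= threshold
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    value: float = 0.0              # leaf weight (used only at leaves)

    def is_leaf(self) -> bool:
        return self.feature is None


def traverse(root: Node, x: Sequence[float]) -> Tuple[float, int]:
    """Follow a single root-to-leaf path; return (leaf value, internal nodes visited)."""
    node, visited = root, 0
    while not node.is_leaf():
        # Each comparison depends on the previous one, so nodes are evaluated one by one.
        visited += 1
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node.value, visited


def path_latency(root: Node, x: Sequence[float], t_node: float) -> float:
    """Latency assuming every internal node costs the same time t_node (an assumption)."""
    _, visited = traverse(root, x)
    return visited * t_node
```

With a depth-2 tree and `t_node = 0.25`, a sample that reaches a depth-2 leaf takes 0.5, while one that exits at depth 1 takes 0.25, so latency scales with path length rather than with tree size.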

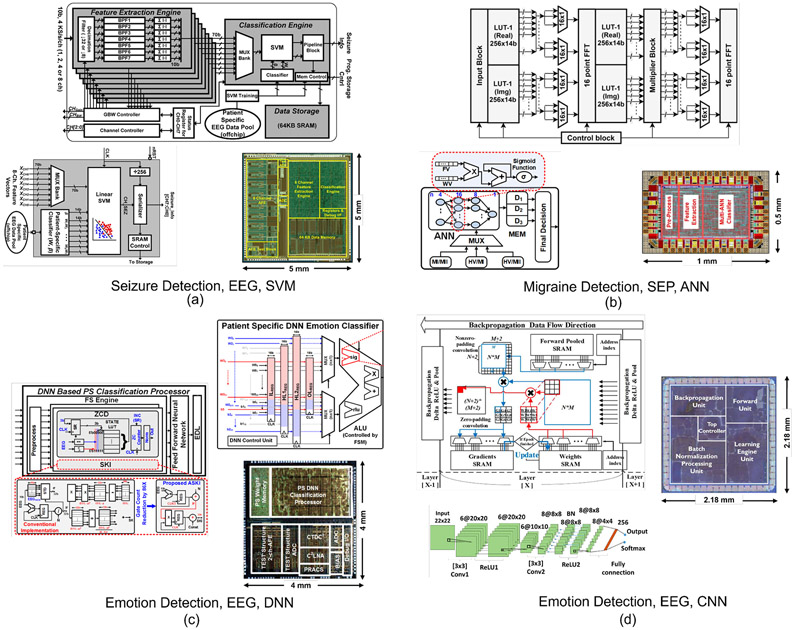

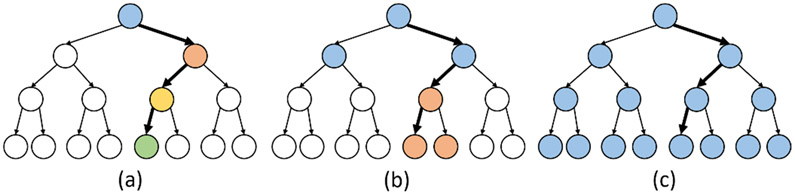

Tree ensembles have shown promise in various neural classification tasks [2], [12], [24], [31]. However, conventional ensembles impose a uniform maximum-depth constraint on all base estimators, so the latency is similar across trees. In this work, we propose the Depth-Variant Tree Ensemble (DVTE), a novel low-latency variant of conventional ensemble methods. As shown in Fig. 6, a DVTE consists of decision trees with different maximum depths, resulting in non-uniform latencies across trees. In a DVTE, shallow trees perform fast inference to reduce system latency, while deep trees are trained to compensate for the misclassification errors of the shallow trees.

Fig. 6:

A DVTE with eight decision trees. Unlike conventional tree ensembles that uniformly set the maximum depth on all trees, the maximum depths in a DVTE are different (1–4). The internal and leaf nodes are shown in blue and black, respectively.

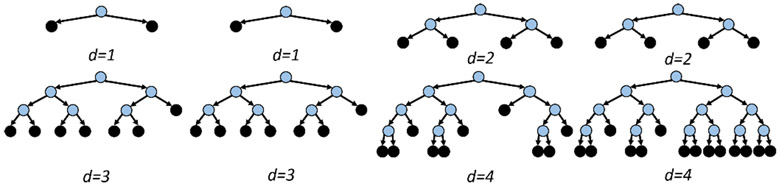

We trained the proposed DVTE model using the popular gradient boosting framework [98], [128]. In the first two boosting rounds, we initialized the ensemble with decision stumps (i.e., decision trees with a single internal node). In the third and fourth rounds, two DTs with a maximum depth of two were trained to compensate for the residual errors from previous rounds. Deeper trees were gradually added to the DVTE in later boosting rounds to better fit the training data. During inference, all decision trees in a DVTE run freely in parallel, with no need for synchronization. Therefore, shallow trees can update their decision outcome more frequently than deeper trees. If the current inference in a deep tree is incomplete (i.e., the test sample has not yet reached a leaf node), the most recent output of that tree is used. The final prediction of the DVTE is calculated as the sum of the outputs of all trees in the ensemble and can be updated at the same rate as the shallowest tree (i.e., d = 1). While shallow trees make predictions with low latency (trees with d = 1 in Fig. 7), they often have limited predictive power and may not fit the training data well. To tackle this problem and achieve the best trade-off between latency and classification accuracy, DVTE incorporates deeper trees in the gradient boosting framework to reduce bias.
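The free-running inference described above can be simulated on a discrete time grid. In this sketch (hypothetical function and parameter names), every root-to-leaf path of a tree with maximum depth d is assumed to take exactly d time steps, each tree latches its most recent completed output (initialized to 0 before the first completion), and the ensemble output is the sum of the latched outputs at every step.

```python
from typing import Callable, List, Sequence


def simulate_dvte(depths: Sequence[int],
                  tree_fns: Sequence[Callable[[int], float]],
                  n_steps: int) -> List[float]:
    """Free-running DVTE inference on a discrete time grid.

    Assumptions (illustrative only):
      * a root-to-leaf path of tree k always takes exactly depths[k] steps;
      * tree_fns[k](t0) returns the leaf value tree k reaches for an inference
        that started at step t0 (i.e., using the data available at that time).
    Returns the ensemble output (sum of latched tree outputs) at steps 1..n_steps.
    """
    latched = [0.0] * len(depths)   # most recent completed output of each tree
    started = [0] * len(depths)     # step at which each tree's current inference began
    ensemble = []
    for t in range(1, n_steps + 1):
        for k, d in enumerate(depths):
            if t - started[k] == d:                  # tree k has just reached a leaf
                latched[k] = tree_fns[k](started[k])
                started[k] = t                       # restart at the root, no synchronization
        # Deep trees that are still mid-path contribute their previous output.
        ensemble.append(sum(latched))
    return ensemble
```

For example, with `depths=[1, 4]` and two trees that both output +1 for inferences started at step 2 or later (and -1 before), the depth-1 tree flips its contribution at step 3, whereas the depth-4 tree does so only at step 8; the ensemble output therefore starts to respond well before the deep tree finishes its path.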

Fig. 7:

The outputs of decision trees in a DVTE. Latency is defined as the time difference between the expert-marked seizure onset and the state change of each tree’s output. d is the maximum depth of each tree.
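The latency definition used in Fig. 7 can be written as a short helper. This is a sketch under the assumption that each output is a binary state sampled at known time stamps; the function name and the `None` return for an output that never changes are illustrative choices.

```python
from typing import Optional, Sequence


def detection_latency(times: Sequence[float], states: Sequence[int],
                      onset: float) -> Optional[float]:
    """Time from the expert-marked onset to the first change of an output state.

    times:  increasing time stamps (s) at which the output was sampled
    states: binary output state at each time stamp (0 = non-seizure, 1 = seizure)
    Returns None if the state never changes after the onset.
    """
    before = states[0]
    for t, s in zip(times, states):
        if t <= onset:
            before = s          # state at (or just before) the onset
        elif s != before:
            return t - onset    # first state change after the onset
    return None
```

The same helper can be applied to each tree's output or to the thresholded ensemble output.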

Unlike DVTE, which combines shallow and deep trees in a gradient boosting ensemble to jointly optimize latency and accuracy, previous work either used a few deep trees (e.g., 8 trees with a maximum depth of 4 [12]), which raises the latency concerns discussed above, or implemented a large number of shallow trees (1024 decision stumps in [29]), which requires many parallel feature processing units. DVTE aims to benefit from both shallow and deep trees and to enable low-latency inference with a small tree ensemble. This is particularly critical in time-sensitive classification tasks with strict latency requirements, such as PD tremor detection.

As an example, we built a DVTE with 8 trees whose maximum depths vary from 1 to 4 (Fig. 6). This model was benchmarked against a conventional ensemble (8 trees, maximum depth of 4 [12]). We used a learning rate of 0.3 for both models and implemented them with the LightGBM library in Python [128]. We tested the classifiers on epileptic seizure detection using iEEG recordings (11 patients, 106 annotated seizures over 1255 hours). The number of channels varied from 47 to 128. This dataset can be accessed via the iEEG portal [102]. Handcrafted features were extracted over various window lengths, as detailed in Table III. Note that both EEG and iEEG have been widely used in on-chip seizure detectors [11]-[14], [16], [17], [29], [88], [89]. However, iEEG is more commonly used in closed-loop prostheses, as it can be easily combined with invasive neuromodulation techniques for improved symptom control [16], [17], [29], [88]; we therefore use iEEG in this study.
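The depth-variant boosting schedule can be sketched in pure Python. This is a simplified stand-in for the LightGBM models used here, not their implementation: it fits least-squares regression trees to the negative gradient of the logistic loss and uses plain leaf means, whereas LightGBM uses second-order leaf values and histogram-based splits. The learning rate of 0.3 follows the text; the schedule (1, 1, 2, 2, 3, 3, 4, 4) is an assumed continuation of the described rounds, and all function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple, Union

# A tree is either a leaf value or (feature index, threshold, left subtree, right subtree).
Tree = Union[float, Tuple[int, float, "Tree", "Tree"]]


def _sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def _sse(vals: Sequence[float]) -> float:
    m = sum(vals) / len(vals)
    return sum((v - m) ** 2 for v in vals)


def fit_tree(X: List[List[float]], r: List[float], depth: int) -> Tree:
    """Least-squares regression tree (at most `depth` levels) fitted to residuals r."""
    mean = sum(r) / len(r)
    if depth == 0 or len(r) < 2:
        return mean
    best = None  # (sse, feature, threshold)
    for j in range(len(X[0])):
        for thr in sorted({x[j] for x in X})[:-1]:  # keeps both sides non-empty
            left = [v for x, v in zip(X, r) if x[j] <= thr]
            right = [v for x, v in zip(X, r) if x[j] > thr]
            sse = _sse(left) + _sse(right)
            if best is None or sse < best[0]:
                best = (sse, j, thr)
    if best is None or best[0] >= _sse(r) - 1e-12:  # no useful split: make a leaf
        return mean
    _, j, thr = best
    in_left = [x[j] <= thr for x in X]
    XL = [x for x, m in zip(X, in_left) if m]
    rL = [v for v, m in zip(r, in_left) if m]
    XR = [x for x, m in zip(X, in_left) if not m]
    rR = [v for v, m in zip(r, in_left) if not m]
    return (j, thr, fit_tree(XL, rL, depth - 1), fit_tree(XR, rR, depth - 1))


def predict_tree(tree: Tree, x: Sequence[float]) -> float:
    while isinstance(tree, tuple):
        j, thr, left, right = tree
        tree = left if x[j] <= thr else right
    return tree


def _shrink(tree: Tree, lr: float) -> Tree:
    """Scale every leaf by the learning rate so trees can simply be summed later."""
    if isinstance(tree, tuple):
        j, thr, left, right = tree
        return (j, thr, _shrink(left, lr), _shrink(right, lr))
    return lr * tree


def train_dvte(X, y, depths=(1, 1, 2, 2, 3, 3, 4, 4), lr=0.3) -> List[Tree]:
    """Gradient boosting for labels y in {0, 1}, one tree per entry of `depths`."""
    F = [0.0] * len(y)  # raw ensemble scores (log-odds)
    trees: List[Tree] = []
    for d in depths:
        residuals = [yi - _sigmoid(fi) for yi, fi in zip(y, F)]  # negative gradient
        tree = _shrink(fit_tree(X, residuals, d), lr)
        trees.append(tree)
        F = [fi + predict_tree(tree, x) for fi, x in zip(F, X)]
    return trees


def predict_dvte(trees: List[Tree], x: Sequence[float]) -> int:
    """Final decision from the summed tree outputs (1 = seizure)."""
    return int(sum(predict_tree(t, x) for t in trees) > 0.0)


def tree_depth(tree: Tree) -> int:
    if isinstance(tree, tuple):
        return 1 + max(tree_depth(tree[2]), tree_depth(tree[3]))
    return 0
```

Each boosting round receives its own depth limit, so the early rounds can only produce stumps, and later, deeper trees fit what the stumps leave behind.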

TABLE III:

Epilepsy features and their power and latency costs

| Feature | Description | Power (nW) | Latency (s) |
|---|---|---|---|
| Delta (δ) | Bandpower over 1-4 Hz | 250.6 | 1 |
| Theta (θ) | Bandpower over 4-8 Hz | 250.6 | 0.5 |
| Alpha (α) | Bandpower over 8-13 Hz | 250.6 | 0.5 |
| Beta (β) | Bandpower over 13-30 Hz | 250.6 | 0.25 |
| Low-Gamma (γ1) | Bandpower over 30-50 Hz | 250.6 | 0.25 |
| Gamma (γ2) | Bandpower over 50-80 Hz | 250.6 | 0.25 |
| High-Gamma (γ3) | Bandpower over 80-150 Hz | 250.6 | 0.25 |
| Ripple | Bandpower over 150-250 Hz | 250.6 | 0.25 |
| Line-Length (LLN) | Time-domain feature | 7.4 | 0.25 |
| Variance (Var) | Time-domain feature | 21.6 | 0.25 |

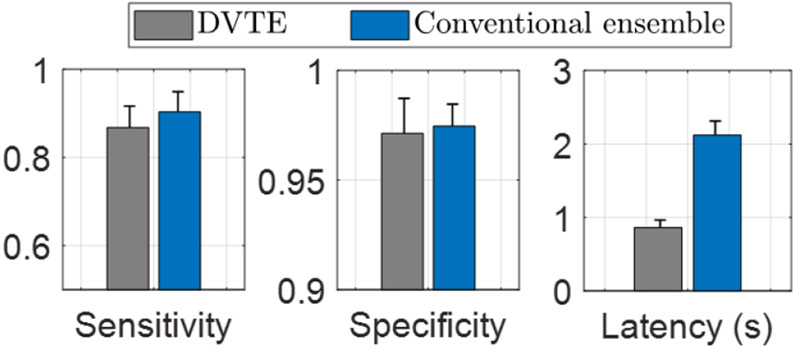

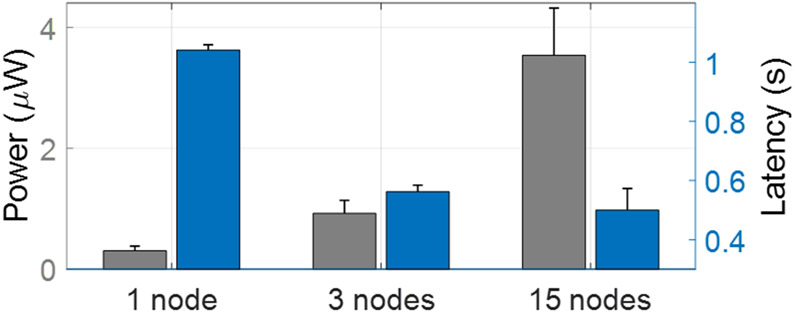

Figure 8 compares the proposed DVTE and the conventional ensemble method in terms of classification performance (sensitivity, specificity) and latency. Performance was evaluated using bit-accurate classifier models in MATLAB and Python. We estimated the processing latency as the average time to traverse a root-to-leaf decision path in the trees. Compared to the conventional ensemble, DVTE caused only a marginal performance reduction (<3% in sensitivity and <1% in specificity). On the other hand, DVTE achieved an average latency of 0.86 s, significantly lower than that of the conventional ensemble (2.12 s), a 2.5× reduction.

Fig. 8:

Performance comparison of DVTE and conventional tree ensemble with a maximum depth of 4. DVTE reduced the latency by 2.5× with a marginal performance reduction (<3% in sensitivity and <1% in specificity). Error bars indicate the standard errors across patients.

B. Cost-Aware Learning for Latency and Power Reduction

Inference in tree-based models is relatively simple. In an axis-aligned decision tree, each internal node compares a feature value to a threshold to select a child node, and each leaf node holds a constant weight indicating the prediction result. Because inference itself is so lightweight, the hardware cost (e.g., power, latency) of tree-based models is largely determined by the feature extraction process [12].

Table III summarizes the biomarkers used in our seizure detection task and their power and latency costs. We implemented digital feature extraction hardware in a TSMC 65 nm LP process using Synopsys Design Compiler and Cadence Innovus. The power cost of each feature was simulated under a 1.2-V supply using Synopsys PrimeTime. Line-length, a widely used feature in epilepsy studies, is hardware-friendly and low-power. Bandpower features, on the other hand, consume more power since they require an FIR filtering stage. The latency associated with a feature depends on the window size used to compute it. Long windows were used to extract low-frequency bandpowers, while short windows were used for time-domain features and high-frequency bandpowers. Specifically, we used 1 s windows to extract Delta (δ), 0.5 s windows for Theta (θ) and Alpha (α), and 0.25 s windows for all other features.
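The two classes of features in Table III can be sketched as follows. Line length and variance use their standard definitions; for bandpower, a 32-tap FIR bandpass (matching the filter length of the hardware implementation) is followed by the mean squared output. The Hamming windowed-sinc coefficient design, the 'valid-only' convolution, and all function names are assumptions, not the coefficients or datapath of the chip. The sketch also shows why the costs differ: bandpower needs one multiply-accumulate per tap per sample, whereas line length needs only a subtraction and an absolute value per sample.

```python
import math
from typing import List, Sequence

FS = 500.0  # sampling rate in S/s, as in the hardware implementation


def line_length(x: Sequence[float]) -> float:
    """Sum of absolute differences between consecutive samples."""
    return sum(abs(b - a) for a, b in zip(x[:-1], x[1:]))


def variance(x: Sequence[float]) -> float:
    m = sum(x) / len(x)
    return sum((v - m) ** 2 for v in x) / len(x)


def fir_bandpass(f_lo: float, f_hi: float, n_taps: int = 32, fs: float = FS) -> List[float]:
    """Hamming-windowed-sinc bandpass taps (an assumed design method)."""
    mid = (n_taps - 1) / 2.0

    def ideal_lowpass(fc: float, n: int) -> float:
        w = 2.0 * fc / fs          # cutoff normalized to Nyquist
        t = n - mid
        return w if t == 0 else math.sin(math.pi * w * t) / (math.pi * t)

    taps = []
    for n in range(n_taps):
        hamming = 0.54 - 0.46 * math.cos(2.0 * math.pi * n / (n_taps - 1))
        taps.append((ideal_lowpass(f_hi, n) - ideal_lowpass(f_lo, n)) * hamming)
    return taps


def bandpower(x: Sequence[float], taps: Sequence[float]) -> float:
    """Mean squared filter output over the window (fully overlapped samples only)."""
    n = len(taps)
    y = [sum(taps[k] * x[i - k] for k in range(n)) for i in range(n - 1, len(x))]
    return sum(v * v for v in y) / len(y)
```

At 500 S/s, the 1 s, 0.5 s, and 0.25 s windows correspond to 500, 250, and 125 samples, respectively.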

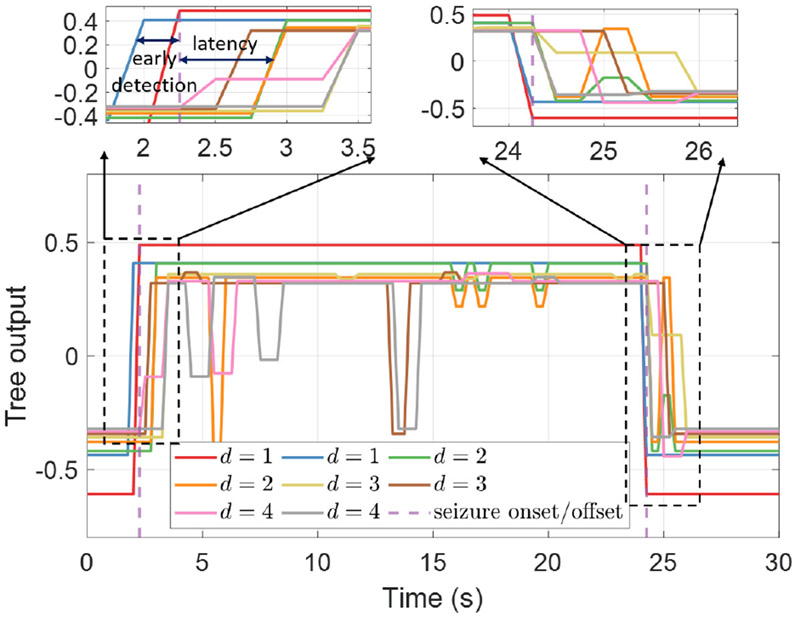

We apply the cost-aware learning approach to tree-based classifiers (e.g., DVTE) to reduce the inference hardware cost. Specifically, we use the total power consumption and latency along the decision path as a regularization term in the training process. The training of cost-aware decision trees attempts to minimize the following expression:

$$\sum_{i} L\big(y_i, f(x_i)\big) + C\,\big(\Psi_{\mathrm{pow}} + \Psi_{\mathrm{lat}}\big) \qquad (2)$$

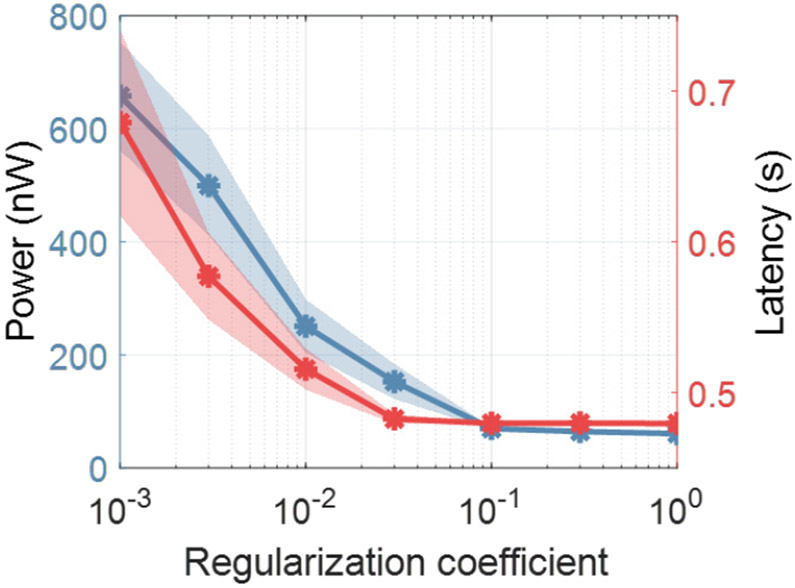

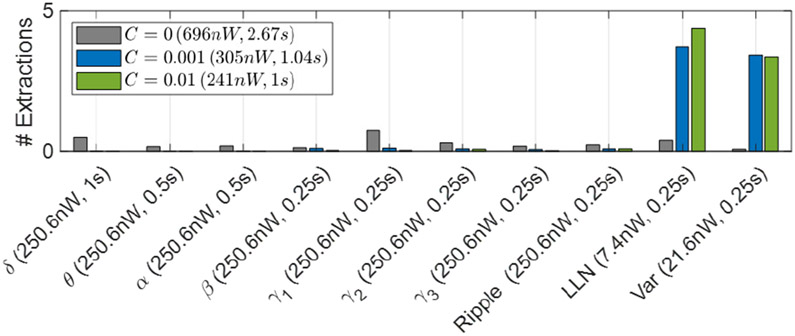

where L(yi, f(xi)) is the loss function that measures the misclassification error as the difference between the ground truth yi and the prediction f(xi), Ψpow and Ψlat are the estimated power consumption and latency along the decision path, respectively, and C is the regularization coefficient that determines the trade-off between hardware cost and performance. Since power and latency span different ranges, we standardized both costs by subtracting their mean values and scaling them to unit variance. The effect of varying C on latency and power in DVTE is shown in Fig. 9: the larger the regularization coefficient, the lower the hardware cost of the cost-aware decision trees.
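One simple way to apply such a regularizer during tree growth is to add the standardized cost of each candidate feature to its split loss, so that the penalties accumulated along a path approximate C(Ψpow + Ψlat). The sketch below assumes this per-node formulation and standardizes over the ten features, which may differ from the exact training procedure used here. The cost values come from Table III; the function names and short feature keys are hypothetical.

```python
import statistics
from typing import Dict, Tuple

# Per-feature costs from Table III: (power in nW, latency in s).
TABLE_III: Dict[str, Tuple[float, float]] = {
    "delta": (250.6, 1.0), "theta": (250.6, 0.5), "alpha": (250.6, 0.5),
    "beta": (250.6, 0.25), "gamma1": (250.6, 0.25), "gamma2": (250.6, 0.25),
    "gamma3": (250.6, 0.25), "ripple": (250.6, 0.25),
    "LLN": (7.4, 0.25), "Var": (21.6, 0.25),
}


def standardized_costs(table: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """z-score power and latency separately, then add them into one cost per feature."""
    pows = [p for p, _ in table.values()]
    lats = [t for _, t in table.values()]
    mp, sp = statistics.mean(pows), statistics.pstdev(pows)
    ml, sl = statistics.mean(lats), statistics.pstdev(lats)
    return {f: (p - mp) / sp + (t - ml) / sl for f, (p, t) in table.items()}


def pick_split_feature(losses: Dict[str, float], costs: Dict[str, float], C: float) -> str:
    """Choose the candidate feature minimizing split loss + C * standardized cost."""
    return min(losses, key=lambda f: losses[f] + C * costs[f])
```

With the Table III values, LLN receives the lowest standardized cost and Delta the highest, so increasing C shifts splits toward cheap, short-window features; a slightly better split on Delta wins only when C is small.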

Fig. 9:

Hardware cost as a function of the regularization coefficient C in DVTE. Large C imposes strong regularization and reduces the power/latency cost. The power cost was calculated as the average power consumption to extract features along the decision path. Latency was estimated as the average time to traverse a root-to-leaf decision path in the tree.

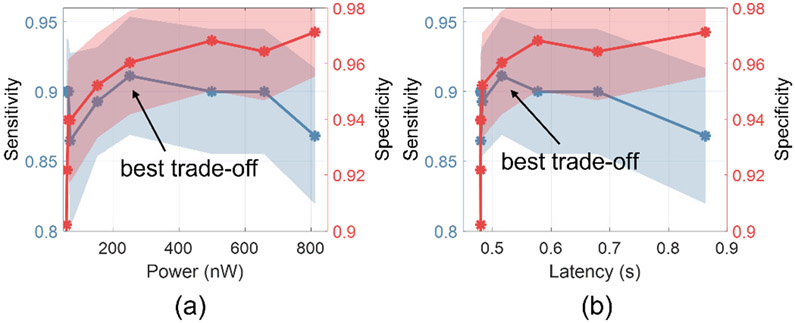

We applied the cost-aware learning approach to DVTE to reduce both power and latency on the seizure detection task. Figure 10 shows the classification performance (sensitivity, specificity) as a function of the cost metrics (power, latency). We adjusted the regularization coefficient C to obtain different trade-offs between power/latency and performance. For both the low-power (Fig. 10(a)) and low-latency (Fig. 10(b)) DVTEs, the best trade-off lies at the point where the largest reduction in power or latency is achieved with only a marginal performance loss.
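The "largest reduction with marginal loss" rule can be expressed as a constrained selection over trained models. This is a sketch only: the tolerance `max_drop` and the single scalar performance value (e.g., sensitivity or specificity) are hypothetical, since no numeric threshold is specified, and the function name is illustrative.

```python
from typing import Sequence, Tuple


def pick_tradeoff(points: Sequence[Tuple[float, float, float]], max_drop: float) -> float:
    """Pick the regularization coefficient C with the lowest hardware cost whose
    performance is at most `max_drop` below that of the C = 0 model.

    points: (C, performance, cost) for each trained model; must include C = 0.
    """
    baseline = next(perf for c, perf, _ in points if c == 0)
    admissible = [(cost, c) for c, perf, cost in points if baseline - perf <= max_drop]
    return min(admissible)[1]   # C = 0 is always admissible, so the list is never empty
```

Tightening `max_drop` moves the selection toward smaller C, and loosening it admits more aggressive regularization.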

Fig. 10:

Seizure detection performance as a function of (a) power consumption and (b) latency. Shaded areas indicate the standard errors across patients. The experiment used a DVTE with 8 trees and maximum depths varying from 1 to 4.

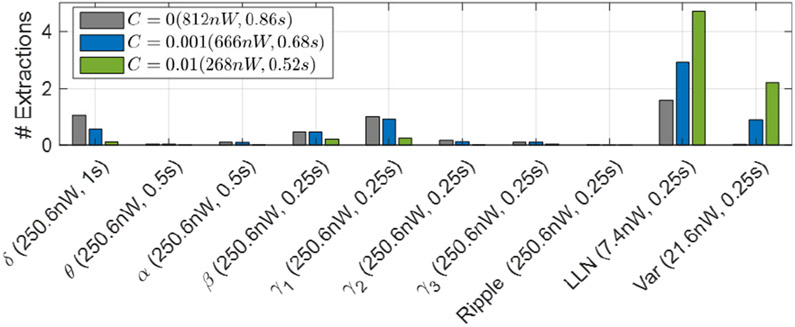

Figure 11 shows the number of extracted features for the cost-aware DVTE. The number of feature extractions is normalized to each 0.25 s window; since each tree extracts at most one feature per window, the normalized feature count is upper bounded by the number of trees. For C > 0, the hardware cost regularizes the model, so the DVTE is trained to minimize inference power and latency. As the regularization coefficient increases, the model penalizes inefficient features more heavily. With C = 0.01, we achieved the best trade-off between performance and hardware cost (Fig. 10), reducing power by 3× and latency by 1.7× compared to DVTE without cost-aware learning.

Fig. 11:

The number of extracted features in DVTE for different regularization coefficients. With greater C, the cost-aware model tends to use hardware-friendly features (e.g., LLN, Var). Features with longer windows (δ, θ, α) are also penalized in the cost-aware model. The power cost and latency for each C are shown in the legend, while the X-axis shows individual feature costs. For C = 0.01, we achieved an average power cost of 268 nW and a latency of 0.52 s.

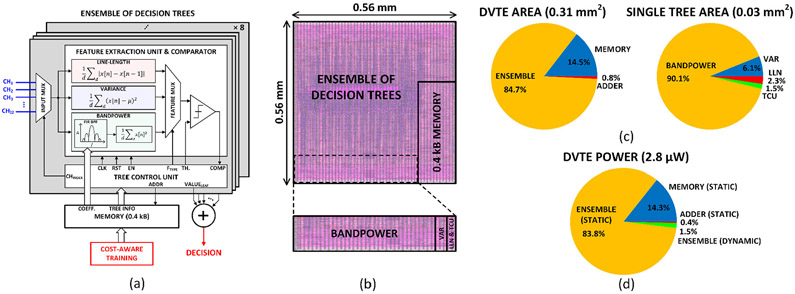

C. Hardware Implementation of DVTE Classifier

We implemented the DVTE classifier in hardware to demonstrate the efficacy of the proposed cost-aware learning approach. Figure 12(a) presents the system architecture of the DVTE classifier, which supports 32 channels of 10-bit input data sampled at 500 S/s. Each of the 8 decision trees consists of a feature extraction unit (FEU), a comparator, and a tree control unit (TCU). A 32-tap programmable FIR bandpass filter was implemented to extract the bandpower in a selected frequency band, and a single FIR coefficient memory is shared among the 8 trees. The FEU extracts only one feature during each window, which allows unused feature blocks to be clock- and data-gated for dynamic power saving. The extracted feature is then compared to a threshold to select the next node on the decision path. The TCU reads the trained tree information (i.e., feature type, channel index, threshold, and leaf value) from memory and controls the FEU based on the current node information and the comparison result. When a leaf node is reached, the tree outputs its leaf value and restarts from the root node. Leaf values from the 8 trees are summed to make the final decision. The proposed lightweight DVTE classifier uses 0.4 kB of on-chip memory.
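To illustrate how the tree information read by the TCU could be stored, the sketch below packs one node into a memory word and unpacks it again. The field list follows the text (feature type, channel index, threshold, leaf value), but the leaf flag, the field widths, and the sharing of one field between threshold and leaf value are hypothetical choices, not the chip's documented layout. The depth schedule 1, 1, 2, 2, 3, 3, 4, 4 used in the size estimate is likewise an assumption.

```python
from typing import NamedTuple, Sequence

# Hypothetical field widths; the text lists the fields but not their sizes.
FEAT_BITS = 4    # enough for the 10 feature types in Table III
CHAN_BITS = 5    # 32 input channels
VAL_BITS = 16    # threshold (internal node) or leaf value (leaf), two's complement


class NodeWord(NamedTuple):
    is_leaf: bool
    feature: int   # feature type index
    channel: int   # channel index
    value: int     # threshold for internal nodes, leaf value for leaves


def pack(node: NodeWord) -> int:
    """Pack one node into a (1 + FEAT_BITS + CHAN_BITS + VAL_BITS)-bit memory word."""
    assert 0 <= node.feature < (1 << FEAT_BITS) and 0 <= node.channel < (1 << CHAN_BITS)
    word = int(node.is_leaf)
    word = (word << FEAT_BITS) | node.feature
    word = (word << CHAN_BITS) | node.channel
    return (word << VAL_BITS) | (node.value & ((1 << VAL_BITS) - 1))


def unpack(word: int) -> NodeWord:
    """Inverse of pack(), sign-extending the value field."""
    raw = word & ((1 << VAL_BITS) - 1)
    value = raw - (1 << VAL_BITS) if raw >> (VAL_BITS - 1) else raw
    word >>= VAL_BITS
    channel = word & ((1 << CHAN_BITS) - 1)
    word >>= CHAN_BITS
    feature = word & ((1 << FEAT_BITS) - 1)
    return NodeWord(bool(word >> FEAT_BITS), feature, channel, value)


def memory_bits(depths: Sequence[int]) -> int:
    """Bits needed if every tree is stored as a full binary tree of its max depth."""
    word_bits = 1 + FEAT_BITS + CHAN_BITS + VAL_BITS
    return sum(2 ** (d + 1) - 1 for d in depths) * word_bits
```

Under these assumed widths, full trees for the assumed depth schedule need 112 words of 26 bits, i.e., 2912 bits (364 bytes), excluding the shared FIR coefficient memory. This is only an order-of-magnitude check against the 0.4 kB figure, not the actual memory organization of the chip.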

Fig. 12:

Hardware implementation of the proposed DVTE classifier: (a) system architecture, (b) layout, (c) area breakdown of the DVTE processor and a single decision tree, and (d) system power breakdown.