Abstract

Metacognition is awareness and control of thinking for learning. Strong metacognitive skills have the power to impact student learning and performance. While metacognition can develop over time with practice, many students struggle to meaningfully engage in metacognitive processes. In an evidence-based teaching guide associated with this paper (https://lse.ascb.org/evidence-based-teaching-guides/student-metacognition), we outline the reasons metacognition is critical for learning and summarize relevant research on this topic. We focus on three main areas in which faculty can foster students’ metacognition: supporting student learning strategies (i.e., study skills), encouraging monitoring and control of learning, and promoting social metacognition during group work. We distill insights from key papers into general recommendations for instruction, as well as a special list of four recommendations that instructors can implement in any course. We encourage both instructors and researchers to target metacognition to help students improve their learning and performance.

INTRODUCTION

Supporting the development of metacognition is a powerful way to promote student success in college. Students with strong metacognitive skills are positioned to learn more and perform better than peers who are still developing their metacognition (e.g., Wang et al., 1990). Students with well-developed metacognition can identify concepts they do not understand and select appropriate strategies for learning those concepts. They know how to implement strategies they have selected and carry out their overall study plans. They can evaluate their strategies and adjust their plans based on outcomes. Metacognition allows students to be more expert-like in their thinking and more effective and efficient in their learning. While collaborating in small groups, students can also stimulate metacognition in one another, leading to improved outcomes. Ever since metacognition was first described (Flavell, 1979), enthusiasm for its potential impact on student learning has remained high. In fact, as of today, the most highly cited paper in CBE—Life Sciences Education is an essay on “Promoting Student Metacognition” (Tanner, 2012).

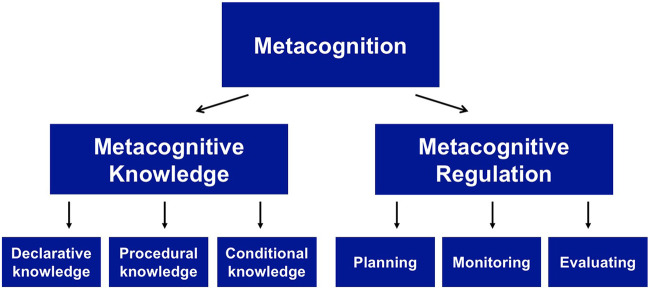

Despite this enthusiasm, instructors face several challenges when attempting to harness metacognition to improve their students’ learning and performance. First, metacognition is a term that has been used so broadly that its meaning may not be clear (Veenman et al., 2006). We define metacognition as awareness and control of thinking for learning (Cross and Paris, 1988). Metacognition includes metacognitive knowledge, which is your awareness of your own thinking and approaches for learning. Metacognition also includes metacognitive regulation, which is how you control your thinking for learning (Figure 1). Second, metacognition includes multiple processes and skills that are named and emphasized differently in the literature from various disciplines. Yet upon examination, the metacognitive processes and skills from different fields are closely related, and they often overlap (see Supplemental Figure 1). Third, metacognition consists of a person’s thoughts, which may be challenging for that person to describe. The tacit nature of metacognitive processes makes it difficult for instructors to observe metacognition in their students, and it also makes metacognition difficult for researchers to measure. As a result, classroom intervention studies of metacognition—those that are necessary for making the most confident recommendations for promoting student metacognition—have lagged behind foundational and laboratory research on metacognitive processes and skills.

FIGURE 1.

Metacognition framework commonly used in biology education research (modified from Schraw and Moshman, 1995). This theoretical framework divides metacognition into two components: metacognitive knowledge and metacognitive regulation. Metacognitive knowledge includes what you know about your own thinking and what you know about strategies for learning. Declarative knowledge involves knowing about yourself as a learner, the demands of the task, and what learning strategies exist. Procedural knowledge involves knowing how to use learning strategies. Conditional knowledge involves knowing when and why to use particular learning strategies. Metacognitive regulation involves the actions you take in order to learn. Planning involves deciding what strategies to use for a future learning task and when you will use them. Monitoring involves assessing your understanding of concepts and the effectiveness of your strategies while learning. Evaluating involves appraising your prior plan and adjusting it for future learning.

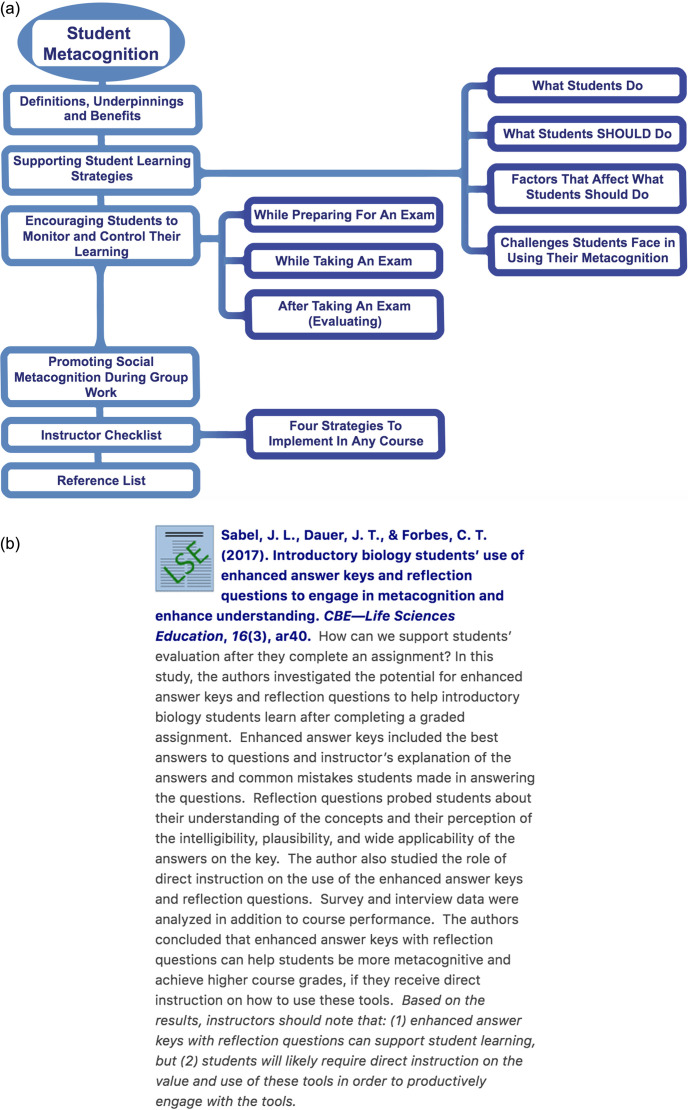

We have created a teaching guide to address these challenges so that instructors can readily provide their students with evidence-based opportunities for practicing metacognition (Figure 2). In an interdisciplinary collaboration, we drew heavily on robust metacognitive research from cognitive science, as well as studies from higher education and discipline-based education research. We worked to align the common aspects of two major metacognition frameworks (Nelson and Narens, 1990; Schraw and Moshman, 1995) to guide our selections (see Figure 1 and Supplemental Figure 1). Our goal was to offer unifying terminology and the reasoning behind metacognition’s benefits to allow instructors to capitalize on the most promising findings from several disciplines. In this essay, we highlight the features of our interactive guide, which can be accessed at: https://lse.ascb.org/evidence-based-teaching-guides/student-metacognition. We also point to some of the important open questions in metacognition in each section. For example, as we think about metacognition generally, we encourage researchers to continue investigating the following questions:

How do undergraduate students develop metacognitive skills?

To what extent do active learning and generative work¹ promote metacognition?

To what extent do increases in metacognition correspond to increases in achievement in science courses?

FIGURE 2.

(A) Landing page for the Student Metacognition guide. The landing page provides a map with sections an instructor can click on to learn more about how to support students’ metacognition. (B) Example paper summary showing instructor recommendations. At the end of each summary in our guide, we used italicized text to point out what instructors should know based on the paper’s results.

The organization of this essay reflects the organization of our evidence-based teaching guide. In the guide, we first define terms and provide important background from papers that highlight the underpinnings and benefits of metacognition (https://lse.ascb.org/evidence-based-teaching-guides/student-metacognition/benefits-definitions-underpinnings). We then explore metacognition research by summarizing both classic and recent papers in the field and providing links for readers who want to examine the original studies. We consider three main areas related to metacognition: 1) student strategies for learning, 2) monitoring and control of learning, and 3) social metacognition during group work.

SUPPORTING STUDENTS TO USE EFFECTIVE LEARNING STRATEGIES

What Strategies Do Students Use for Learning?

First, our teaching guide examines metacognition in the context of independent study (https://lse.ascb.org/evidence-based-teaching-guides/student-metacognition/supporting-student-learning-strategies). When students transition to college, they have increased responsibility for directing their learning, which includes making important decisions about how and when to study. Students rely on their metacognition to make those decisions, and they also use metacognitive processes and skills while studying on their own. Empirical work has confirmed what instructors observe about their own students’ studying: many students rely on passive strategies for learning. Students focus on reviewing material as it is written or presented, as opposed to connecting concepts and synthesizing information to make meaning. Some students use approaches that engage their metacognition, but they often do so without a full understanding of the benefits of these approaches (Karpicke et al., 2009). Students also tend to study based on exam dates and deadlines, rather than planning out when to study (Hartwig and Dunlosky, 2012). As a result, they tend to cram, which is also known in the literature as massing their study. Students continue to cram because this approach is often effective for boosting short-term performance, although it does not promote long-term retention of information.

Which Strategies Should Students Use for Learning?

Here, we make recommendations about what students should do to learn, as opposed to what they typically do. In our teaching guide, we highlight three of the most effective strategies for learning: 1) self-testing, 2) spacing, and 3) interleaving (https://lse.ascb.org/evidence-based-teaching-guides/student-metacognition/supporting-student-learning-strategies/#whatstudentsshould). These strategies are not yet part of many students’ metacognitive knowledge, but students should know about them and be encouraged to use them while metacognitively regulating their learning. Students self-test when they use flash cards and answer practice questions in an attempt to recall information. Self-testing provides students with opportunities to monitor their understanding of material and identify gaps in their understanding. Self-testing also allows students to activate relevant knowledge and encode prompted information so it can be more easily accessed from their memory in the future (Dunlosky et al., 2013).

Students space their studying when they spread their learning of the same material over multiple sessions. This approach requires students to intentionally plan their learning instead of focusing only on what is “due” next. Spacing can be combined with retrieval practice, which involves recalling information from memory. For example, self-testing is a form of retrieval practice. Retrieval practice with spacing encourages students to actively recall the same content across several study sessions, which is essential for consolidating information from prior study periods (Dunlosky et al., 2013). Importantly, when students spread their learning over multiple sessions, they are less susceptible to superficial familiarity with concepts, which can mislead them into thinking they have learned concepts based on recognition alone (Kornell and Bjork, 2008).

Students interleave when they alternate studying of information from one category with studying of information from another category. For example, when students learn categories of amino acid side groups, they should alternate studying nonpolar amino acids with polar amino acids. This allows students to discriminate across categories, which is often critical for correctly solving problems (Rohrer et al., 2020). Interleaving between categories also supports student learning because it usually results in spacing of study.

Most research has focused on what strategies students select and use for learning, but more work is needed to understand how students use those strategies (Kuhbandner and Emmerdinger, 2019), and why they use them, which involves both metacognitive knowledge and metacognitive regulation (Figure 1). The ways students enact the same learning strategy can differ greatly. For example, two students may report reading the textbook. The first student may be passively rereading entire textbook chapters, whereas the second student may be selectively reading passages of the text to clarify areas of confusion. Using open-ended instruments for collecting data will allow researchers to resolve contradictory findings on whether certain learning strategies support academic achievement. Three open research questions are:

How are students enacting specific learning strategies, and do different students enact them in different ways?

To what extent do self-testing, spacing, and interleaving support achievement in the context of undergraduate science courses?

What can instructors do to increase students’ use of effective learning strategies?

What Factors Affect the Strategies Students Should Use to Learn?

Next, we examined the factors that affect what students should do to learn. Although we recommend three well-established strategies for learning, other appropriate strategies can vary based on the learning context. For example, the nature of the material, the type of assessment, the learning objectives, and the instructional methods can render some strategies more effective than others (Scouller, 1998; Sebesta and Bray Speth, 2017). Strategies for learning can be characterized as deep if they involve extending and connecting ideas or applying knowledge and skills in new ways (Baeten et al., 2010). Strategies can be characterized as surface if they involve recalling and reproducing content. While surface strategies are often viewed negatively, there are times when these approaches can be effective for learning (Hattie and Donoghue, 2016). For example, when students have not yet gained background knowledge in an area, they can use surface strategies to acquire the necessary background knowledge. They can then incorporate deep strategies to extend, connect, and apply this knowledge. Importantly, surface and deep strategies can be used simultaneously for effective learning. The use of surface and deep strategies ultimately depends on what students are expected to know and be able to do, and these expectations are set by instructors. Openly discussing these expectations with students can enable them to more readily select effective strategies for learning.

What Challenges Do Students Face in Using Their Metacognition to Enact Effective Strategies?

Students may encounter challenges in using metacognition to inform their learning. For instance, students may believe that evidence-based strategies do not work for them personally. Students can be provided with data showing increased performance after use of evidence-based strategies; however, instructors should note that the belief that evidence-based strategies do not work may persist even in the face of a student’s own data (Roediger and Karpicke, 2006). In other cases, students continue to use approaches for learning that they know are not currently effective, especially if those approaches brought them success in the past. Students may be willing to change how they study, but they may need to develop accurate procedural knowledge, which involves knowing how to enact a strategy, or they may need to develop conditional knowledge, which involves knowing when and why a strategy is appropriate for a learning task (Stanton et al., 2015). To help students develop metacognitive knowledge in the form of conditional and procedural knowledge, instructors can model strategies that align with a learning task and give students opportunities to practice those strategies. In other cases, students may know how, when, and why they should use effective strategies, but they may decide not to use them because those strategies cause them discomfort (Dye and Stanton, 2017). For example, self-testing may cause students discomfort because it requires greater cognitive effort compared with passively reviewing material for familiarity. Self-testing can also reveal gaps in understanding, which can cause stress for students who do not want to be confronted with what they do not know. Two important open questions are:

How can students address challenges they will face when using effective—but effortful—strategies for learning?

What approaches can instructors take to help students overcome these challenges?

ENCOURAGING STUDENTS TO MONITOR AND CONTROL THEIR LEARNING FOR EXAMS

Metacognition can be investigated in the context of any learning task, but in the sciences, metacognitive processes and skills are most often investigated in the context of high-stakes exams. Because exams are a form of assessment common to nearly every science course, in the next part of our teaching guide, we summarized some of the vast research focused on monitoring and control before, during, and after an exam (https://lse.ascb.org/evidence-based-teaching-guides/student-metacognition/encouraging-students-monitor-control-learning). In the following section, we demonstrate the kinds of monitoring and control decisions learners make by using an example of introductory biology students studying for an exam on cell division. The students’ instructor has explained that the exam will focus on the stages of mitosis and cytokinesis, and the exam will include both multiple-choice and short-answer questions.

How Should Students Use Metacognition while Preparing for and Taking an Exam?

As students prepare for an exam, they can use metacognition to inform their learning. Students can consider how they will be tested, set goals for their learning, and make a plan to meet their goals. It is expected that students who set specific goals while planning for an exam will be more effective in their studying than students who do not make specific goals. For example, a student who sets a specific goal to identify areas of confusion each week by answering end-of-chapter questions each weekend is expected to do better than a student who sets a more general goal of staying up-to-date on the material. Although some studies include goal setting and planning as one of many metacognitive strategies introduced to students, the influence of task-specific goal setting on academic achievement has not been well studied on its own in the context of science courses.

As students study, it is critical that they monitor both their use of learning strategies and their understanding of concepts. Yet many students struggle to accurately monitor their own understanding (de Carvalho Filho, 2009). In the example we are considering, students may believe they have already learned mitosis because they recognize the terms “prophase,” “metaphase,” “anaphase,” and “telophase” from high school biology. When students read about mitosis in the textbook, processes involving the mitotic spindle may seem familiar because of their exposure to these concepts in class. As a result, students may inaccurately predict that they will perform well on exam questions focused on the mitotic spindle, and their overconfidence may cause them to stop studying the mitotic spindle and related processes (Thiede et al., 2003). Students often rate their confidence in their learning based on their ability to recognize, rather than recall, concepts.

Instead of focusing on familiarity, students should rate their confidence based on how well they can retrieve relevant information to correctly answer questions. Opportunities for practicing retrieval, such as self-testing, can improve monitoring accuracy. Instructors can help students monitor their understanding more accurately by encouraging students to complete practice exams and giving students feedback on their answers, perhaps in the form of a key or a class discussion (Rawson and Dunlosky, 2007). Returning to the example, if students find they can easily recall the information needed to correctly answer questions about cytokinesis, they may wisely decide to spend their study time on other concepts. In contrast, if students struggle to remember information needed to answer questions about the mitotic spindle, and they answer these questions incorrectly, then they can use this feedback to direct their efforts toward mastering the structure and function of the mitotic spindle.

While taking a high-stakes exam, students can again monitor their performance on a single question, a set of questions, or an entire exam. Their monitoring informs whether they change an answer, with students tending to change answers they judge as incorrect. Accordingly, the accuracy of their monitoring will influence whether their changes result in increased performance (Koriat and Goldsmith, 1996). In some studies, changing answers on an exam has been shown to increase student performance, in contrast to the common belief that a student’s first answer is usually right (Stylianou-Georgiou and Papanastasiou, 2017). Changing answers on an exam can be beneficial if students return to questions they had low confidence in answering and make a judgment on their answers based on the ability to retrieve the information from memory, rather than a sense of familiarity with the concepts. Two important open questions are:

What techniques can students use to improve the accuracy of their monitoring, while preparing for an exam and while taking an exam?

How often do students monitor their understanding when studying on their own?

How Should Students Use Metacognition after Taking an Exam?

After completing any learning task, such as an exam, students can use the metacognitive regulation skill of evaluation, which entails assessing the effectiveness of their individual strategies and their overall plans for learning. Students generally do not need to evaluate in high school because they are able to perform well in many of their classes without studying (McGuire, 2006). College science courses provide opportunities for developing evaluation skills because students use metacognition when they find learning tasks both challenging and important (Carr and Taasoobshirazi, 2008). Undergraduates evaluate in response to novel challenges that occur when they encounter new learning experiences (Dye and Stanton, 2017). For example, life science students report that non-math-based problem solving in organic chemistry courses caused them to carefully consider their strategies and plans for learning. When it comes to evaluating individual strategies for learning, senior-level students may use their knowledge of how people learn to evaluate their strategies (e.g., they may refer to neuroscience research to explain strategy effectiveness), whereas introductory students tend to evaluate strategies based on the similarity of study tools to exam questions (Stanton et al., 2019). When it comes to evaluating overall study plans, students tend to evaluate their plans based solely on their performance, rather than considering how well their plans met other goals they had for learning (e.g., being able to connect concepts). Providing students with answer keys that include explanations of the correct ideas and reflection questions can support students in evaluating their learning (Sabel et al., 2017). Students also tend to use their feelings of confidence or preparedness to evaluate their plans, but these feelings are subject to distortion (Koriat and Bjork, 2005). Providing students with specific questions to answer about their study plans can help them focus on other aspects of effectiveness. Three open questions include:

How do students develop metacognitive regulation skills such as evaluation?

To what extent does the ability to evaluate affect student learning and performance?

When students evaluate the outcome of their studying and believe their preparation was lacking, to what degree do they adopt more effective strategies for the next exam?

PROMOTING SOCIAL METACOGNITION DURING GROUP WORK

Next, our teaching guide covers a relatively new area of inquiry in the field of metacognition called social metacognition, which is also known as socially shared metacognition (https://lse.ascb.org/evidence-based-teaching-guides/student-metacognition/promoting-social-metacognition-group-work). Science students are expected to learn not only on their own, but also in the context of small groups. Understanding social metacognition is important because it can support effective student learning during collaborations both inside and outside the classroom. While individual metacognition involves awareness and control of one’s own thinking, social metacognition involves awareness and control of others’ thinking. For example, social metacognition happens when students share ideas with peers, invite peers to evaluate their ideas, and evaluate ideas shared by peers (Goos et al., 2002). Students also use social metacognition when they assess, modify, and enact one another’s strategies for solving problems (Van De Bogart et al., 2017). While enacting problem-solving strategies, students can evaluate their peers’ hypotheses, predictions, explanations, and interpretations. Importantly, metacognition and social metacognition are expected to positively affect one another (Chiu and Kuo, 2009).

Students are likely to need structured guidance from instructors on how to be socially metacognitive while collaborating with their peers. Scripts for guiding collaboration provide students with metacognitive questions and prompts to support their work in groups. These scripts have been developed for undergraduate computer science and social science courses (Miller and Hadwin, 2015). Yet, because social metacognition is context dependent, additional work is needed to evaluate the degree to which these scripts are effective in science courses, and if they are not effective, how to improve their efficacy. Given that social metacognition is a relatively new area of research, several open questions remain. For example,

How do social metacognition and individual metacognition affect one another?

How can science instructors help students to effectively use social metacognition during group work?

CONCLUSIONS

We encourage instructors to support students’ success by helping them develop their metacognition. Our teaching guide ends with an Instructor Checklist of actions instructors can take to include opportunities for metacognitive practice in their courses (https://lse.ascb.org/wp-content/uploads/sites/10/2020/12/Student-Metacognition-Instructor-Checklist.pdf). We also provide a list of the most promising approaches instructors can take, called Four Strategies to Implement in Any Course (https://lse.ascb.org/wp-content/uploads/sites/10/2020/12/Four-Strategies-to-Foster-Student-Metacognition.pdf). We not only encourage instructors to consider using these strategies, but given that more evidence for their efficacy is needed from classroom investigations, we also encourage instructors to evaluate and report how well these strategies are improving their students’ achievement. By exploring and supporting students’ metacognitive development, we can help them learn more and perform better in our courses, which will enable them to develop into lifelong learners.

Acknowledgments

We are grateful to Cynthia Brame, Kristy Wilson, and Adele Wolfson for their insightful feedback on this paper and the guide. This material is based upon work supported in part by the National Science Foundation under grant number 1942318 (to J.D.S.). Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

Footnotes

¹ Generative work “involves students working individually or collaboratively to generate ideas and products that go beyond what has been presented to them” (Andrews et al., 2019, p. 2). Generative work is often stimulated by active-learning approaches.

REFERENCES

- Andrews, T. C., Auerbach, A. J. J., Grant, E. F. (2019). Exploring the relationship between teacher knowledge and active-learning implementation in large college biology courses. CBE—Life Sciences Education, 18(4), ar48.

- Baeten, M., Kyndt, E., Struyven, K., Dochy, F. (2010). Using student-centred learning environments to stimulate deep approaches to learning: Factors encouraging or discouraging their effectiveness. Educational Research Review, 5(3), 243–260.

- Carr, M., Taasoobshirazi, G. (2008). Metacognition in the gifted: Connections to expertise. In Shaughnessy, M. F., Veenman, M., Kleyn-Kennedy, C. (Eds.), Meta-cognition: A recent review of research, theory and perspectives (pp. 109–125). Hauppauge, NY: Nova Science Publishers.

- Chiu, M. M., Kuo, S. W. (2009). Social metacognition in groups: Benefits, difficulties, learning and teaching. In Larson, C. B. (Ed.), Metacognition: New research developments (pp. 117–136). Hauppauge, NY: Nova Science Publishers.

- Cross, D. R., Paris, S. G. (1988). Developmental and instructional analyses of children’s metacognition and reading comprehension. Journal of Educational Psychology, 80(2), 131–142.

- de Carvalho Filho, M. K. (2009). Confidence judgments in real classroom settings: Monitoring performance in different types of tests. International Journal of Psychology, 44(2), 93–108.

- Dunlosky, J., Rawson, K. A., Marsh, E. J., Nathan, M. J., Willingham, D. T. (2013). Improving students’ learning with effective learning techniques: Promising directions from cognitive and educational psychology. Psychological Science in the Public Interest, 14(1), 4–58.

- Dye, K. M., Stanton, J. D. (2017). Metacognition in upper-division biology students: Awareness does not always lead to control. CBE—Life Sciences Education, 16(2), ar31.

- Flavell, J. H. (1979). Meta-cognition and cognitive monitoring—New area of cognitive-developmental inquiry. American Psychologist, 34(10), 906–911.

- Goos, M., Galbraith, P., Renshaw, P. (2002). Socially mediated metacognition: Creating collaborative zones of proximal development in small group problem solving. Educational Studies in Mathematics, 49(2), 193–223.

- Hartwig, M. K., Dunlosky, J. (2012). Study strategies of college students: Are self-testing and scheduling related to achievement? Psychonomic Bulletin & Review, 19(1), 126–134.

- Hattie, J. A., Donoghue, G. M. (2016). Learning strategies: A synthesis and conceptual model. Nature Partner Journal Science of Learning, 1(1), 1–13.

- Karpicke, J. D., Butler, A. C., Roediger, H. L., III. (2009). Metacognitive strategies in student learning: Do students practise retrieval when they study on their own? Memory, 17(4), 471–479.

- Koriat, A., Bjork, R. A. (2005). Illusions of competence in monitoring one’s knowledge during study. Journal of Experimental Psychology: Learning, Memory, and Cognition, 31(2), 187–194.

- Koriat, A., Goldsmith, M. (1996). Monitoring and control processes in the strategic regulation of memory accuracy. Psychological Review, 103(3), 490–517.

- Kornell, N., Bjork, R. A. (2008). Learning concepts and categories: Is spacing the “enemy of induction”? Psychological Science, 19(6), 585–592.

- Kuhbandner, C., Emmerdinger, K. J. (2019). Do students really prefer repeated rereading over testing when studying textbooks? A reexamination. Memory, 27(7), 952–961.

- McGuire, S. Y. (2006). The impact of supplemental instruction on teaching students how to learn. New Directions for Teaching and Learning, 2006(106), 3–10.

- Miller, M., Hadwin, A. F. (2015). Scripting and awareness tools for regulating collaborative learning: Changing the landscape of support in CSCL. Computers in Human Behavior, 52, 573–588.

- Nelson, T., Narens, L. (1990). Metamemory: A theoretical framework and new findings. Psychology of Learning and Motivation, 26, 125–141.

- Rawson, K. A., Dunlosky, J. (2007). Improving students’ self-evaluation of learning for key concepts in textbook materials. European Journal of Cognitive Psychology, 19(4–5), 559–579.

- Roediger, H. L., III, Karpicke, J. D. (2006). Test-enhanced learning: Taking memory tests improves long-term retention. Psychological Science, 17(3), 249–255.

- Rohrer, D., Dedrick, R. F., Hartwig, M. K., Cheung, C.-N. (2020). A randomized controlled trial of interleaved mathematics practice. Journal of Educational Psychology, 112(1), 40.

- Sabel, J. L., Dauer, J. T., Forbes, C. T. (2017). Introductory biology students’ use of enhanced answer keys and reflection questions to engage in metacognition and enhance understanding. CBE—Life Sciences Education, 16(3), ar40.

- Schraw, G., Moshman, D. (1995). Metacognitive theories. Educational Psychology Review, 7(4), 351–371.

- Scouller, K. (1998). The influence of assessment method on students’ learning approaches: Multiple choice question examination versus assignment essay. Higher Education, 35(4), 453–472.

- Sebesta, A. J., Bray Speth, E. (2017). How should I study for the exam? Self-regulated learning strategies and achievement in introductory biology. CBE—Life Sciences Education, 16(2), ar30.

- Stanton, J. D., Dye, K. M., Johnson, M. S. (2019). Knowledge of learning makes a difference: A comparison of metacognition in introductory and senior-level biology students. CBE—Life Sciences Education, 18(2), ar24.

- Stanton, J. D., Neider, X. N., Gallegos, I. J., Clark, N. C. (2015). Differences in metacognitive regulation in introductory biology students: When prompts are not enough. CBE—Life Sciences Education, 14(2), ar15.

- Stylianou-Georgiou, A., Papanastasiou, E. C. (2017). Answer changing in testing situations: The role of metacognition in deciding which answers to review. Educational Research and Evaluation, 23(3–4), 102–118.

- Tanner, K. D. (2012). Promoting student metacognition. CBE—Life Sciences Education, 11(2), 113–120.

- Thiede, K. W., Anderson, M., Therriault, D. (2003). Accuracy of metacognitive monitoring affects learning of texts. Journal of Educational Psychology, 95(1), 66–73.

- Van De Bogart, K. L., Dounas-Frazer, D. R., Lewandowski, H., Stetzer, M. R. (2017). Investigating the role of socially mediated metacognition during collaborative troubleshooting of electric circuits. Physical Review Physics Education Research, 13(2), 020116.

- Veenman, M. V. J., van Hout-Wolters, B. H., Afflerbach, P. (2006). Metacognition and learning: Conceptual and methodological considerations. Metacognition and Learning, 1(1), 3–14.

- Wang, M. C., Haertel, G. D., Walberg, H. J. (1990). What influences learning? A content analysis of review literature. Journal of Educational Research, 84(1), 30–43.
