Abstract

Background

Multi-parametric remote measurement technologies (RMTs) comprise smartphone apps and wearable devices for both active and passive symptom tracking. They hold potential for understanding current depression status and predicting future depression status. However, the promise of using RMTs for relapse prediction is heavily dependent on user engagement, which is defined as both a behavioral and experiential construct. A better understanding of how to promote engagement in RMT research through various in-app components will aid in providing scalable solutions for future remote research, higher quality results, and applications for implementation in clinical practice.

Objective

The aim of this study is to provide the rationale and protocol for a 2-armed randomized controlled trial to investigate the effect of insightful notifications, progress visualization, and researcher contact details on behavioral and experiential engagement with a multi-parametric mobile health data collection platform, Remote Assessment of Disease and Relapse (RADAR)–base.

Methods

We aim to recruit 140 participants upon completion of their participation in the RADAR Major Depressive Disorder study in the London site. Data will be collected using 3 weekly tasks through an active smartphone app, a passive (background) data collection app, and a Fitbit device. Participants will be randomly allocated at a 1:1 ratio to receive either an adapted version of the active app that incorporates insightful notifications, progress visualization, and access to researcher contact details or the active app as usual. Statistical tests will be used to assess the hypotheses that participants using the adapted app will complete a higher percentage of weekly tasks (behavioral engagement: primary outcome) and score higher on self-awareness measures (experiential engagement).

Results

Recruitment commenced in April 2021. Data collection was completed in September 2021. The results of this study will be communicated via publication in 2022.

Conclusions

This study aims to understand how best to promote engagement with RMTs in depression research. The findings will help determine the most effective techniques for implementation in both future rounds of the RADAR Major Depressive Disorder study and, in the long term, clinical practice.

Trial Registration

ClinicalTrials.gov NCT04972474; http://clinicaltrials.gov/ct2/show/NCT04972474

International Registered Report Identifier (IRRID)

DERR1-10.2196/32653

Keywords: app, engagement, major depressive disorder, remote measurement technologies, research, mobile phone

Introduction

Background

The last decade has seen a significant increase in the use of mobile technology in health care (mobile health [mHealth]) research and clinical practice [1]. One such application of mHealth is the use of remote measurement technologies (RMTs), which provide real-time, longitudinal health tracking using a combination of smartphone apps for active symptom reporting tasks (active RMT [aRMT]) and mobile or wearable sensors for passive data collection (passive RMT [pRMT]) [2]. Multi-parametric RMT data have the potential to inform about current clinical state by reflecting patients’ daily experiences in situ. They may also offer predictions by detecting subtle shifts in physiological, behavioral, or environmental variables that occur before a change in clinical state [3,4].

RMTs may be particularly relevant in recurrent conditions. Major depressive disorder (MDD) is a mental health disorder characterized by persistent low mood and anhedonia, often following a trajectory of remission and relapse over time [5]. The economic burden of MDD is currently estimated at US $326 billion [6], with increased risks of comorbidities and health care use associated with high relapse rates [7]. RMTs can collect information about a wide range of factors associated with MDD (mood variability, sociability, activity, cognition, and sleep) [2]. Raw, passive sensor data can be translated into low-level features, higher-level behavioral markers, and ultimately clinical state [8]. Previous work has found ambulatory self-reporting of mood symptoms [9] and multi-parametric RMT measures of location, device use, and sleep across a 30-day period [10] to be clinically valid assessments of individual depression trajectories.

The benefits of using RMTs for MDD symptom tracking are 2-fold. First, given the suggested biases [11] toward mood-congruent information in symptom reporting in depression, such data present a more accurate picture of symptom variability. Second, continuous monitoring of symptom recurrence could provide the temporal resolution needed to detect indicators of future depressive episodes [4]. Therefore, the use of RMTs in MDD could hold great potential for understanding current and predicting future depressive states.

Remote Assessment of Disease and Relapse in MDD

Remote Assessment of Disease and Relapse in MDD (RADAR-MDD) is a longitudinal, multi-site, prospective cohort study that is investigating the feasibility and predictive validity of RMT data in identifying predictors of MDD relapse [2]. It is part of the wider RADAR-CNS program [12] and uses the open-source mHealth platform, RADAR-base [13], to collect aRMT data (fortnightly tracking of mood, self-esteem, and speech using an active smartphone app), pRMT data (GPS, Bluetooth interactions, and ambient noise and light using a passive smartphone app and heart rate and step count from a wrist-worn wearable), and 3-monthly outcome assessments (web-based) in participants with MDD. The core research team provided the initial enrollment session and support throughout the 2-year remote follow-up period. Data were collected from 623 participants across the London, Amsterdam, and Barcelona sites, and the study was concluded in April 2021. The results will explore whether multi-parametric RMTs can feasibly provide clinically relevant information and, if so, pave the way for translation of the platform into routine clinical practice and self-management of MDD.

Engagement With RMTs in Research

The promise of research such as RADAR-MDD depends heavily on user engagement. Engagement with mHealth technologies can be defined as (1) a behavioral construct measured by objective completion statistics and (2) an experiential construct measured by focused attention and interest when interacting with the technology [14]. Qualitative studies suggest that service users endorse the use of RMTs in mental health care [15,16]. Successful recruitment into the RADAR-MDD study also suggests widespread interest in using remote symptom tracking for research [17]. However, past studies have reported varying rates of behavioral engagement during follow-up. Studies using app-based symptom tracking in cohorts with depression have reported low rates of data completion [18,19]. A wider review of RMT for health management found large variations in aRMT and pRMT use times [20]. Preliminary data from RADAR-MDD indicate that participants completed a median of 21 (IQR 9-31) out of a possible 52 aRMT questionnaires, and 52.3% (326/623) provided wearable data for over 75% of the participating days [21]. Iterative work on the RADAR-base platform has also addressed the challenges of distinguishing between low user engagement and technical issues with the technology [22].

Behavioral engagement with RMTs in research is vital in reducing data missingness and bias and enhancing quality [23,24]. However, an understanding of experiential engagement with RMTs and the act of symptom tracking itself could prove of equal benefit for data completeness and long-term adherence. In a study using multi-parametric RMTs in bipolar disorder, experiential engagement measures (self-awareness of emotional health and learning about symptoms) positively correlated with increased behavioral engagement with symptom tracking using a smartphone app and Fitbit [25]. A holistic approach to measuring engagement is therefore necessary both for understanding the current lack of engagement with RMT studies and for promoting engagement in future studies.

Several methods are available to promote engagement within the RMTs themselves. In addition to the presence of a contactable research team, which has been previously associated with increased engagement [17,24], in-app components work remotely within the technology. Push notifications are prompts that appear on the smartphone screen and can vary according to content and timing [26]. Following the Fogg behavioral model [27], notifications provide a trigger to perform a behavior, such as completing tasks on a manual food logging app [28]. Adding theoretically informed notification content, such as insights or tips for using self-monitoring, can further motivate the completion of mood scales [29]. The effects of notification frequency on engagement show mixed results [26,30,31]. Data visualization is a common technique used in mood monitoring apps [32]. Visually displaying data completion allows users to revisit progress and may prompt the action of continued data input [33]. This might be especially effective given that anticipatory pleasure is thought to predict motivation for reward in individuals with depression [34]. It is unclear which combination of in-app features can promote behavioral and experiential engagement with a multi-parametric symptom-tracking app in depression. Findings in this field would provide scalable solutions for engagement in RMT studies, higher quality results, and applications for implementation in clinical practice.

Study Aims and Objectives

This study aims to test the effect of in-app components in a multi-parametric RMT platform on engagement with active and passive symptom tracking in MDD. A 2-armed randomized controlled trial will be used to compare the RADAR-base active app with an adapted app with insightful notifications and progress visualization aimed at promoting behavioral and experiential engagement. Engagement will be measured as provision of symptom tracking data collected through RMT over the 12-week study period and the degree to which participants feel experientially engaged with symptom tracking via the platform. It is hypothesized that participants using the adapted app will be better engaged in monitoring their symptoms, as measured by both behavioral engagement (completion of mood questionnaires) and experiential engagement (measures of attention, aesthetic appeal, and self-awareness). Process evaluation measures will also reveal participant experience with the engagement strategies used.

Methods

Ethics Committee Approval

This study was approved by the Psychiatry, Nursing and Midwifery Research Ethics Subcommittee at King’s College London (reference number: RESCM-20/21-21083) and registered as a clinical trial (reference number: NCT04972474).

Study Design

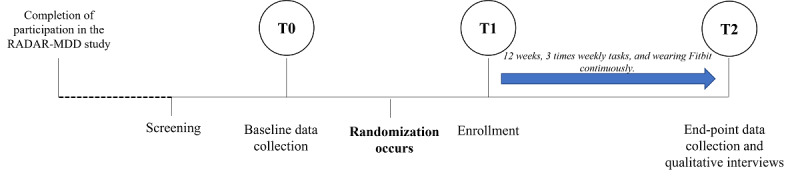

This study is a 12-week, 2-arm randomized controlled trial with 1:1 randomization. A summary of the study design is presented in Figure 1. A 12-week period was chosen to align with the original structure of the RADAR-MDD study [2]. Participants will be recruited from RADAR-MDD, will provide baseline data (T0), and will be randomized at enrollment (T1) to 1 of 2 arms: an adapted app that includes insightful notifications and progress visualization (active arm) or active app as usual (control arm). Both the control and active arms will be delivered through the RADAR-base active app, which collects data in combination with a passive data collection app and a Fitbit Charge device [13]. In both arms, participants will be asked to complete 3 tasks each week via the app and wear the Fitbit device throughout the study. The primary outcome is the percentage of weekly tasks completed over 12 weeks of follow-up.

Figure 1.

Study design from screening to follow-up end point, including time points 0, 1, and 2. RADAR-MDD: Remote Assessment of Disease and Relapse in Major Depressive Disorder.

Upon completion of the study, 20 participants (n=10 from each arm) will also be randomly invited to complete a qualitative interview about their experience of participating.

The study will be conducted using a combination of the RADAR-base platform, including the management portal web application [13] and REDCap (Research Electronic Data Capture) [35]. Owing to the COVID-19 pandemic, participation will be fully remote.

Eligibility Criteria

Inclusion Criteria

Participants will be included if they (1) participated in RADAR-MDD and gave consent for future research contact, (2) experienced at least one episode of MDD in the 2 years preceding RADAR-MDD enrollment, (3) are willing and able to continue to use an Android smartphone and Fitbit Charge device for a 12-week period (both provided for use in RADAR-MDD), and (4) feel comfortable completing an enrollment session remotely either via email instructions or video calls.

Exclusion Criteria

Participants will be excluded if they have been diagnosed with a comorbid psychiatric disorder since their enrollment into RADAR-MDD: bipolar disorder, schizophrenia, psychosis, schizoaffective disorder, or dementia. This will be checked with the participant via email during the recruitment process.

Recruitment

Participants will be recruited through the RADAR-MDD database of the London site. Contact details will be extracted from the RADAR-MDD REDCap system for those who have provided consent to be contacted for future research. This will include any participant who enrolled in the study during the 31 months of recruitment (November 2017 to June 2020; n=345).

Participants will be invited to participate via an email that will explain the study and provide the participant information sheet. Interested participants will respond via email. They will then be asked the eligibility questions via email. If eligible, participant details will be entered into the study REDCap system, which will initiate the sending of a personalized link to the web-based consent form and baseline questionnaires (T0). Once these have been completed, the participant will receive a second link to a web-based booking system, where they can book a time slot for an enrollment session. Enrollments will be conducted between April and May 2021. On the day of enrollment, participants will receive an email (or a video call, depending on their preference) outlining instructions for downloading the study apps and unique QR codes to register them to the platform (T1).

Interventions

Overview

Upon enrollment into the study, participants will be asked to complete 3 tasks per week via the active app, allow the passive app to run in the background on their smartphones, and wear the Fitbit device as much as possible. The active app tasks are as follows: (1) Patient Health Questionnaire-8 (PHQ-8) [36], an 8-item questionnaire assessing the variability of depressive symptoms over the last week; (2) Rosenberg Self-Esteem Scale [37], a 10-item questionnaire assessing variations in self-esteem; and (3) a speech task, during which the participant records themselves reading aloud a short paragraph.

In both arms, the weekly questionnaire tasks, the passive app, and the Fitbit device remain the same. The study is designed such that the enrollment process is identical for both arms to ensure that participants are not primed to the study arm to which they are assigned and that both arms are comparable with RADAR-MDD.

Control Condition

Participants in the control arm will receive a notification at each of 3 time points (9 AM, 10 AM, and 11:30 AM) on the day that a questionnaire task is due, which reads “Questionnaire Time. Won’t usually take longer than 3 minutes.” They will not be able to view any of their data aside from those available through the Fitbit app.

Active Condition

Development of the Adapted App

The design of RADAR-MDD, including the active app, was heavily informed by service user involvement [38]. This study used behavior change theory and further patient and public involvement work to inform its design.

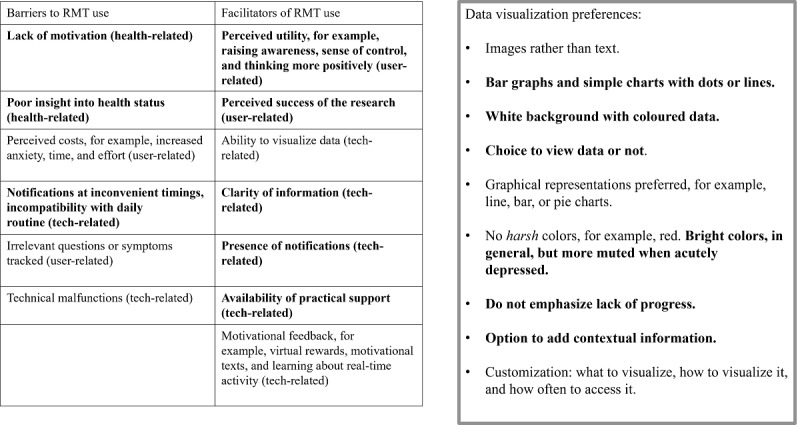

To establish how best to promote the behavior of symptom tracking, it is useful to draw on theories and models of behavior change. The Behavior Change Wheel [39] presents a framework for the development of strategies to promote a target behavior. Previous work was used to identify key health-, user-, and technology-related barriers to engaging with symptom tracking in MDD [15,20,38] (Figure 2). Following the capability, opportunity, motivation, and behavior (COM-B) model, the most pertinent barriers were psychological capabilities (such as lack of symptom insight and of perceived utility of the research), automatic motivations (related to motivational difficulties and low mood), and physical opportunities (such as the inability to answer questionnaires at a specific time and uncertainty about whether the data have been logged). Following the Behavior Change Wheel, suitable intervention functions thus included education, incentivization, and enablement [39]. Therefore, it was decided that an engaging app should include reminder notifications with information on the potential impacts of symptom tracking from a credible source. It should also include incentivizing feedback on behavior in the form of data visualization. Finally, users should be provided with researcher contact details to report technical issues or receive support.

Figure 2.

Service user involvement in the design of the adapted app. RMT: remote measurement technology.

The progress visualization component was further informed by service user involvement [40] (Figure 2). Simple, clear graphical representations of data were preferred, presented on a white background with colored data points. Users expressed an interest in positive reinforcement based on reaching achievements, for example, step count goals or simply the entering of data, coupled with a visual representation of completion, for example, a change in color. They also requested the choice to view or hide visualizations. Therefore, the visualization component was designed to comprise a separate section of the app that users can choose to view with a simple, colored graph showing completion or noncompletion at each weekly time point. Completion is denoted by a green dot and noncompletion, by a red dot.

In-App Components

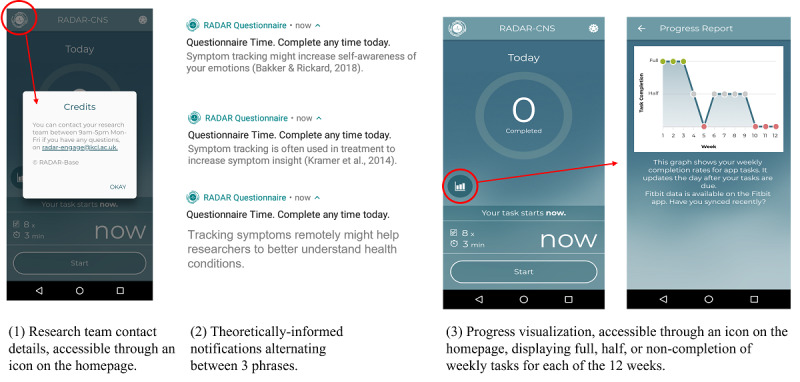

Participants in the active arm will receive notifications at the same time points as those in the control arm, along with the following additional content (Figure 3):

Figure 3.

Screenshots of the in-app components included in the adapted app.

Theoretically informed notifications: additional sentences included in the notifications covering the proposed benefits of remote symptom monitoring for emotional self-awareness, clinical practice, and research, along with a reminder that the questionnaire can be completed any time today.

Progress visualization: participants will be able to view their questionnaire task completion through the app visualized as a graph that is accessible from the main app home page.

Research team contact details: additional text on the app home page will provide a contact phone number, email address, and contact times for the research team.

Data Collection and Follow-up Procedure

A summary of measures and data collection time points is outlined in Table 1.

Table 1.

A summary of measures and data collection points across the 12-week follow-up period.

| Measures | Baseline (T0) | End point (T2) | Weekly | Continuously |
| --- | --- | --- | --- | --- |
| REDCap^a survey | | | | |
| Consent | ✓ | | | |
| Contact information | ✓ | | | |
| Study devices | ✓ | | | |
| Sociodemographics | ✓ | | | |
| Social environment | ✓ | | | |
| Medical history | ✓ | | | |
| Lifetime Depression Assessment Self-report [41] | ✓ | | | |
| Inventory of Depressive Symptomatology [42] | ✓ | ✓ | | |
| The World Health Organization Composite International Diagnostic Interview Short-Form [43] | ✓ | ✓ | | |
| Generalized Anxiety Disorder-7 [44] | ✓ | ✓ | | |
| Work and Social Adjustment Scale [45] | ✓ | ✓ | | |
| Brief Illness Perception Questionnaire [46] | ✓ | ✓ | | |
| Life events [47] | ✓ | ✓ | | |
| Client Service Receipt Inventory [48] | ✓ | ✓ | | |
| User Engagement Scale (adapted for mHealth^b use) [49] | ✓ | ✓ | | |
| Emotional Self-Awareness Questionnaire [50] | ✓ | ✓ | | |
| mHealth App Usability Questionnaire [51] | ✓ | ✓ | | |
| Active app measures | | | | |
| Patient Health Questionnaire-8 [36] | | | ✓ | |
| Rosenberg Self-Esteem Scale [37] | | | ✓ | |
| Speech task | | | ✓ | |
| Passive app measures | | | | |
| GPS, Bluetooth, and ambient noise and light | | | | ✓ |
| Fitbit | | | | |
| Heart rate and step count | | | | ✓ |
| Process evaluation | | | | |
| App use metrics | | | | ✓ |
| Qualitative interviews | | ✓^c | | |

^a REDCap: Research Electronic Data Capture.

^b mHealth: mobile health.

^c Only a select few participants will be asked to interview.

Baseline questionnaires will comprise questions on contact information, sociodemographics, recent service use, physical and mental health history, and comorbidities, including the presence of depression and recent life events. The research team will also manually pull data pertaining to participation in RADAR-MDD for each participant, for example, participation length and completion rates. At the 12-week postbaseline follow-up, participants will receive a personalized link to repeat several baseline questionnaires. Responses received more than 3 weeks after baseline or follow-up will not be recorded.

The principal investigator (KMW) will monitor incoming data streams to ensure that the app is functioning correctly. Participants will not be contacted by the team once enrollment is complete, aside from a check-in email at the 6-week point to ensure that the app is functioning correctly. Participants will not be withdrawn from the study based on nonengagement; however, participants will be made aware that they can withdraw at any point.

Suicidal ideation will also be monitored at baseline (T0) and at follow-up (T2). Participants who report ideation or intent at either time point will be contacted via phone call, advised to contact their treating physician, and emailed a list of appropriate signposts.

Upon completion of the study, participants will view a debriefing page explaining that the study aimed to test the effectiveness of notifications and progress visualizations on engagement with the platform. Both arms will be outlined, identifying arm assignments and end point instructions.

Outcome Measures

Primary Outcome Measure

The primary outcome measure will be behavioral engagement with the RADAR-base system, measured as the percentage of weekly PHQ-8 questionnaires completed over the 12-week follow-up period. A PHQ-8 task is considered complete when all 8 questions have been answered.
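The definition above can be illustrated with a minimal Python sketch. It assumes one PHQ-8 task is scheduled per week, so the denominator is fixed at 12; the function names and the use of `None` for an unanswered item are hypothetical and are not part of the RADAR-base platform.

```python
from typing import Optional, Sequence

PHQ8_ITEMS = 8    # questions per PHQ-8 task (from the protocol)
STUDY_WEEKS = 12  # follow-up length in weeks (from the protocol)


def phq8_task_complete(responses: Sequence[Optional[int]]) -> bool:
    """A task counts as complete only if all 8 questions were answered."""
    return len(responses) == PHQ8_ITEMS and all(r is not None for r in responses)


def percent_phq8_completed(weekly_responses: Sequence[Sequence[Optional[int]]]) -> float:
    """Percentage of the 12 scheduled weekly PHQ-8 tasks that were completed.

    A week with no submission can be passed as an empty sequence or omitted;
    the denominator is always the 12 scheduled tasks (an assumption).
    """
    completed = sum(phq8_task_complete(week) for week in weekly_responses[:STUDY_WEEKS])
    return 100.0 * completed / STUDY_WEEKS


# Illustrative data only: 9 complete weeks, 1 partial week, 2 missed weeks.
example_weeks = [[0] * 8] * 9 + [[1, 2, None, 0, 0, 0, 0, 0]] + [[]] * 2
example_percent = percent_phq8_completed(example_weeks)
```

Under this sketch, a partially answered questionnaire contributes nothing to the numerator, which matches the all-8-questions definition of completion.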

Secondary Outcome Measures

Secondary outcome measures will be as follows:

Experiential engagement with the RADAR-base platform measured with the User Engagement Scale (UES) [52] adapted to mHealth use [49]. The UES is a 30-item questionnaire measuring 4 factors of experiential engagement with mHealth apps: focused attention, perceived usability, aesthetic appeal, and reward. The UES has been widely adopted and shows good reliability and construct validity [53].

Experiential engagement with the RADAR-base platform measured by the Emotional Self-Awareness Questionnaire (ESQ) [50]. The ESQ is a 33-item questionnaire measuring recognition, contextualization, and decision-making in relation to one’s own emotions. The ESQ has a reliability of 0.92 and shows significant positive correlations with the Emotional Intelligence Test [50].

System usability measured using the mHealth App Usability Questionnaire (MAUQ) for stand-alone apps used by patients [51]. This will be assessed at T2 only, asking participants to reflect solely on their experiences over the last 12 weeks. The MAUQ comprises 18 questions relating to the immediate and long-term usability of the app, including health care management (overall Cronbach α=.914).

Combined adherence to active and passive (Fitbit) components of the system will be measured as follows: adherence rate for the active app measured as the proportion of participants with over 50% of completed data across all 3 weekly questionnaire tasks and adherence rate for the passive Fitbit measured as the proportion of participants with over 50% of study days with any recorded data. These measures were chosen to align with data availability reporting in RADAR-MDD [21], previous literature [25], and the minimum amount of data sufficient for performing predictive analyses.
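As a sketch of how these two adherence rates might be derived, the Python example below applies the same "more than 50%" rule to a list of completion flags per participant. Pooling the 3 weekly tasks into a single list of 36 flags is our reading of the definition and is an assumption; the function names and data layout are hypothetical.

```python
from typing import Mapping, Sequence


def is_adherent(flags: Sequence[bool]) -> bool:
    """True if strictly more than 50% of the scheduled units have data.

    For the active app, `flags` holds one entry per scheduled task
    (3 tasks x 12 weeks = 36, pooled across tasks: an assumption).
    For the Fitbit, `flags` holds one entry per study day.
    """
    return len(flags) > 0 and 2 * sum(flags) > len(flags)


def adherence_rate(cohort: Mapping[str, Sequence[bool]]) -> float:
    """Proportion of participants who meet the >50% threshold."""
    if not cohort:
        raise ValueError("cohort is empty")
    return sum(is_adherent(flags) for flags in cohort.values()) / len(cohort)


# Illustrative data only (hypothetical participant identifiers).
example_cohort = {
    "p1": [True] * 20 + [False] * 16,  # 20/36 completed: adherent
    "p2": [True] * 18 + [False] * 18,  # exactly 50%: not adherent
    "p3": [False] * 36,                # no data: not adherent
}
example_rate = adherence_rate(example_cohort)
```

The integer comparison `2 * sum(flags) > len(flags)` avoids floating-point edge cases at exactly 50%.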

Additional Data Collection

Passive data through the RADAR-base passive app will also be collected; however, these will not be analyzed as part of this trial. These additional data will be collected for 2 reasons: (1) to emulate RADAR-MDD as closely as possible and (2) for use in future analyses. The passive app collects information on phone location, battery level, Bluetooth devices, and background noise and light. Participants can opt out of using any of the study apps during their participation.

Process Evaluation Measures

Process evaluation measures will also be collected to further understand the interaction with the RADAR-base system. Quantitative measures will be obtained regarding app use: notification interaction, app initialization, specific module viewing, and viewing time. These will be available from the back end of the RADAR-base platform.

At the end of the follow-up period, 20 participants will be randomly invited to participate in a semistructured telephone interview with a member of the research team, discussing their experiences of participating in the study. Discussions will comprise perceptions of the arm to which the participant was randomized, experiences of the in-app techniques used, suggestions for further improvements for engagement with the system, and views on engagement with RMT systems for symptom tracking in research, clinical care, and self-management (Multimedia Appendix 1).

Sample Size

Power calculations were performed based on preliminary data from RADAR-MDD. A total of 132 participants are required to detect a difference of 25% in completion of PHQ-8 tasks between the control and active arms, with 80% power at a 5% significance level at the 12-week end point. Allowing for 10% attrition (based on previous research [21] but accounting for a much shorter follow-up period in this study), we will aim to recruit 140 participants. A total of 345 participants will be available to be contacted from the RADAR-MDD study cohort; assuming 50% acceptance of invitation (given the recruitment from a previously motivated cohort), a target of 140 participants should be feasible.
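For readers who wish to see the general form of such a calculation, the sketch below uses the standard normal-approximation formula for comparing 2 means. The SD of completion percentage used in the protocol's calculation is not reported here, so the value of 50 in the example is a placeholder and is not expected to reproduce the figure of 132; the function names are hypothetical. The recruitment feasibility check uses only the numbers stated above.

```python
from math import ceil
from statistics import NormalDist


def n_per_arm(delta: float, sd: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Normal-approximation sample size per arm for a 2-sided, 2-sample
    comparison of means: n = 2 * ((z_{1-alpha/2} + z_{power}) * sd / delta)^2.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return ceil(2 * ((z_alpha + z_power) * sd / delta) ** 2)


def expected_acceptances(n_contactable: int, acceptance: float) -> int:
    """Expected number of invitees who agree to take part (rounded down)."""
    return int(n_contactable * acceptance)


# Placeholder SD of 50 percentage points: an assumption, not a study value.
example_n = n_per_arm(delta=25, sd=50)

# Feasibility check with the protocol's figures: 345 contactable, 50% acceptance.
example_pool = expected_acceptances(345, 0.50)
target_is_feasible = example_pool >= 140
```

A t-distribution-based calculation, as implemented in most statistical packages, would give a slightly larger number per arm than this approximation.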

Randomization

Randomization will occur after baseline data collection when the REDCap randomization module initiates the generation of a QR code from the RADAR-base management portal assigned to the participant identifier. Each participant will be randomly allocated at a 1:1 ratio to either the control or the active arm. Simple randomization will be used, in which an allocation table with a random sequence of 1,2 will be generated and uploaded to REDCap. This will be carried out by a team member external to the core research team (YR) and therefore be concealed from the principal investigator (KMW) before enrollment.
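A simple randomization sequence of this kind could be generated as in the following Python sketch. The mapping of code 1 to the control arm and code 2 to the active arm, the seed, and the function name are assumptions made for illustration; the format that REDCap expects for an uploaded allocation table is not shown.

```python
import random
from typing import List, Optional

# Which code denotes which arm is an assumption made for this example.
ARM_LABELS = {1: "control", 2: "active"}


def simple_allocation_table(n_participants: int, seed: Optional[int] = None) -> List[int]:
    """Return a random sequence of 1s and 2s, one entry per participant.

    Each entry is drawn independently with probability 0.5 (simple
    randomization), so the 2 arms are not guaranteed to be equal in size.
    """
    rng = random.Random(seed)  # a fixed seed makes the table reproducible
    return [rng.choice((1, 2)) for _ in range(n_participants)]


# Illustrative call: 140 rows for the recruitment target, hypothetical seed.
example_table = simple_allocation_table(140, seed=2021)
example_counts = {ARM_LABELS[code]: example_table.count(code) for code in (1, 2)}
```

Because each allocation is independent, the realized split may deviate from exactly 70:70; blocked randomization would be needed to guarantee balance, which is a different technique from the simple randomization described here.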

Blinding

Individual participants will have previously used the RADAR-MDD app and therefore cannot be blinded because they might recognize new features of the app. However, arm assignments will not be explicitly revealed to the participants until the study debrief.

The principal investigator (KMW) will be unblinded to allocation to ensure that remote enrollments have been carried out correctly. All measures are conducted using the app or web-based REDCap system to avoid detection bias in assessments [54]. The trial data manager (DL) will be blinded to arm allocation. No other individuals will have access to the data set for data monitoring or analysis purposes; all tasks will be carried out by the principal investigator (KMW).

Data Management

All data collected via the Fitbit device and smartphone apps will be encrypted and uploaded to a secure server maintained by King’s College London in accordance with the process cited by Ranjan et al [13]. The REDCap system sits on the King’s College London Rosalind server. Only members of the RADAR-Engage team will have access to identifiable data. Qualitative interview data will be temporarily stored on the King’s College London server, transcribed anonymously, and subsequently deleted.

Statistical Analysis Plan

Overview

All data, including those from withdrawn participants (unless they request that their data be deleted), will be included in the final analysis. Demographic and clinical characteristics at baseline and follow-up will be summarized by arm using appropriate summary statistics, for example, mean and SD for continuous variables and counts and percentages for categorical variables. Data completeness for all measures and outcomes will be summarized.

The primary outcome will be analyzed using 2-sample t tests (2-tailed) to assess whether the mean percentage of PHQ-8 completion in each arm is statistically different.
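The test statistic for this comparison can be sketched in Python as follows. The sketch assumes the equal-variance (Student) form of the 2-sample t test, which the protocol does not specify, and returns only the t statistic and degrees of freedom; the 2-tailed P value would be obtained from the t distribution in a statistical package. The function name and the example values are hypothetical.

```python
from math import sqrt
from statistics import mean, variance
from typing import Sequence, Tuple


def two_sample_t(arm_a: Sequence[float], arm_b: Sequence[float]) -> Tuple[float, int]:
    """Student's 2-sample t statistic with pooled variance.

    Returns (t, degrees of freedom). Each arm needs at least 2 values.
    """
    n_a, n_b = len(arm_a), len(arm_b)
    df = n_a + n_b - 2
    # Pooled variance weights each arm's sample variance by its df.
    pooled_var = ((n_a - 1) * variance(arm_a) + (n_b - 1) * variance(arm_b)) / df
    standard_error = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(arm_a) - mean(arm_b)) / standard_error, df


# Illustrative PHQ-8 completion percentages only, not study data.
active_arm = [50.0, 60.0, 70.0]
control_arm = [30.0, 40.0, 50.0]
example_t, example_df = two_sample_t(active_arm, control_arm)
```

If the variances in the 2 arms differ markedly, Welch's unequal-variance form would be the usual alternative.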

For the secondary outcomes, experiential engagement (as measured by the UES and ESQ) will be collected at T0 and T2 and will thus be calculated as a change from baseline. This will be assessed using repeated measures mixed modeling to explore whether experiential engagement is statistically different between the 2 arms. App usability scores (MAUQ) and overall system adherence rates will also be compared. Complete case analyses will be used; if <20% of responses to each questionnaire are missing, mean imputation will be used to provide a total score.
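The missing-data rule for questionnaire totals can be sketched in Python as follows. The sketch assumes that "mean imputation" replaces each missing item with the mean of the same participant's answered items on that questionnaire; the protocol does not state whether the person mean or the sample item mean is intended. The function name and the use of `None` for a missing item are hypothetical.

```python
from typing import Optional, Sequence

MAX_MISSING_PERCENT = 20  # impute only if fewer than 20% of items are missing


def total_score(items: Sequence[Optional[float]]) -> Optional[float]:
    """Questionnaire total with person-mean imputation of missing items.

    Returns None when 20% or more of the items are missing (or when there
    are no items), so that the response drops out of a complete case analysis.
    """
    answered = [x for x in items if x is not None]
    n_missing = len(items) - len(answered)
    # Integer comparison avoids floating-point error at exactly 20%.
    if not items or n_missing * 100 >= MAX_MISSING_PERCENT * len(items):
        return None
    if n_missing == 0:
        return float(sum(answered))
    person_mean = sum(answered) / len(answered)
    return sum(answered) + person_mean * n_missing


# Illustrative item responses only.
score_with_one_missing = total_score([1, 2, 3, 4, 5, 6, 7, 8, 9, None])  # 10% missing
score_too_sparse = total_score([1, None, 3, 4, None])                    # 40% missing
```

With person-mean imputation, the imputed total is equivalent to prorating the sum of the answered items to the full questionnaire length.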

All analyses will be conducted using the intention-to-treat principle. The threshold for statistical significance was set at P=.05.

Process Evaluation Analyses

Qualitative interviews will be transcribed and coded using NVivo software [55]. Grounded theory thematic analysis will provide an exploration of participant experiences across the 2 arms and with the additional in-app components. Descriptive statistics will be reported for app use statistics.

Dissemination

This study will be reported following the CONSORT (Consolidated Standards of Reporting Trials) checklist [56]. The results of this study will be disseminated via publication.

Results

This study will begin recruiting and enrolling participants in April and May 2021. Data collection will be completed by September 2021. Data analysis will commence in 2022. The results of this study will be communicated via publication in mid-2022.

Discussion

Principal Findings

The use of RMTs for symptom tracking in MDD research holds great potential for relapse prediction and personalized health care. Understanding current and promoting future engagement with RMTs in research studies is of utmost importance for producing high-quality results, and this is only amplified by the shift to remote health care monitoring during the COVID-19 pandemic [57-59]. Although previous studies have explored the impact of specific in-app components in encouraging data completion [25,26,32,33], to our knowledge, this study is one of the first to explore the promotion of engagement with a multi-parametric RMT system for MDD symptom tracking. Within the framework of the RADAR-base system, this study uses the questionnaire app as a participant-facing conduit to promote behavioral and experiential engagement with active and passive RMT in a large-scale research study incorporating theoretical notifications and progress visualization.

The findings of this study will, first, provide some understanding about how best to promote engagement in subsequent rounds of the RADAR-MDD study. The ability to collect sufficient data remotely by relying less heavily on a core research team while also minimizing burden on the user is a highly valuable asset for RMT research. This study also represents the first attempt to recruit and follow up with participants completely remotely using RADAR-base and, if successful, will pave the way for fully remote recruitment across a range of conditions. Second, this work sheds light on experiential engagement with RMT symptom tracking. The findings here could uncover new methods for measuring and promoting engagement in MDD research. Third, studying behavioral and experiential engagement in a research context can act as a proxy for understanding engagement in a clinical context [60]. Taken together, these findings could have wider implications for RMT research studies across health conditions, alongside the implementation of RMT data collection in clinical settings.

Strengths and Limitations

A key strength of this study is its grounding in a previous research project, using a system that has already been well-documented, designed, and developed for the purpose of RMT data collection [21,61,62]. It also takes an additional theory-driven and user-centered approach to adapting components of the system to promote optimal user engagement. However, this study has 3 main limitations. First, our ability to recruit and retain enough participants to achieve adequate statistical power may be hindered by participation fatigue, given that many will have completed up to 2 years in the previous study. In addition, the effects of the COVID-19 pandemic on participants’ willingness to engage in research studies are unclear. Second, recruiting from an existing study cohort with prior understanding of the system could create a ceiling effect for engagement, such that participants are already highly motivated to engage in symptom tracking. App literacy has also been noted as a key facilitator of mHealth app engagement [63]. Nonetheless, there is good reason to believe that the new in-app components can encourage engagement over and above the moderate data availability reported in RADAR-MDD [21]. Third, although concerted efforts were made to include health-, user-, and technology-related barriers to engagement in the app development process, we acknowledge that this list is not all-encompassing. Certain aspects of depressive symptomatology, for example, low mood or motivation [34], could affect engagement with the RADAR-base system in ways that might not be mitigated by theoretical notifications or progress visualization. Therefore, we have also included process evaluation measures to further understand how participants interact with the components and gain insight for future improvements.

Acknowledgments

The RADAR-CNS project has received funding from the Innovative Medicines Initiative 2 Joint Undertaking under grant agreement No 115902. This Joint Undertaking receives support from the European Union’s Horizon 2020 research and innovation programme and EFPIA. This communication reflects the views of the RADAR-CNS consortium and neither IMI nor the European Union and EFPIA are liable for any use that may be made of the information contained herein. The funding bodies have not been involved in the design of the study, the collection or analysis of data, or the interpretation of data. This paper also represents independent research part funded by the National Institute for Health Research (NIHR) Biomedical Research Centre at South London and Maudsley NHS Foundation Trust and King’s College London. The views expressed are those of the authors and not necessarily those of the NHS, the NIHR or the Department of Health and Social Care. This research was reviewed by a team with experience of mental health problems and their carers who have been specially trained to advise on research proposals and documentation through the Feasibility and Acceptability Support Team for Researchers (FAST-R): a free, confidential service in England provided by the National Institute for Health Research Maudsley Biomedical Research Centre via King’s College London and South London and Maudsley NHS Foundation Trust. Finally, the authors would like to thank all members of the RADAR-CNS patient advisory board who all have experience of living with or supporting those who are living with depression, epilepsy, or multiple sclerosis.

Abbreviations

- aRMT: active remote measurement technology

- CONSORT: Consolidated Standards of Reporting Trials

- ESQ: Emotional Self-Awareness Questionnaire

- MAUQ: mHealth App Usability Questionnaire

- MDD: major depressive disorder

- mHealth: mobile health

- PHQ-8: Patient Health Questionnaire-8

- pRMT: passive remote measurement technology

- RADAR-MDD: Remote Assessment of Disease and Relapse in Major Depressive Disorder

- REDCap: Research Electronic Data Capture

- RMT: remote measurement technology

- UES: User Engagement Scale

Semistructured qualitative interview schedule (N=20).

Footnotes

Conflicts of Interest: MH is the principal investigator of the RADAR-CNS consortium, a private-public pre-competitive consortium with research funding from Janssen, UCB, MSD, Biogen and Lundbeck. No further conflicts are declared.

References

- 1. Liao Y, Thompson C, Peterson S, Mandrola J, Beg MS. The future of wearable technologies and remote monitoring in health care. Am Soc Clin Oncol Educ Book. 2019 Jan;39:115–21. doi: 10.1200/EDBK_238919. https://ascopubs.org/doi/10.1200/EDBK_238919?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed

- 2. Matcham F, Barattieri di San Pietro C, Bulgari V, de Girolamo G, Dobson R, Eriksson H, Folarin AA, Haro JM, Kerz M, Lamers F, Li Q, Manyakov NV, Mohr DC, Myin-Germeys I, Narayan V, Penninx BWJH, Ranjan Y, Rashid Z, Rintala A, Siddi S, Simblett SK, Wykes T, Hotopf M, RADAR-CNS consortium, et al. Remote assessment of disease and relapse in major depressive disorder (RADAR-MDD): a multi-centre prospective cohort study protocol. BMC Psychiatry. 2019 Feb 18;19(1):72. doi: 10.1186/s12888-019-2049-z. https://bmcpsychiatry.biomedcentral.com/articles/10.1186/s12888-019-2049-z

- 3. Jones M, Johnston D. Understanding phenomena in the real world: the case for real time data collection in health services research. J Health Serv Res Policy. 2011 Jul;16(3):172–6. doi: 10.1258/jhsrp.2010.010016.

- 4. Naslund JA, Marsch LA, McHugo GJ, Bartels SJ. Emerging mHealth and eHealth interventions for serious mental illness: a review of the literature. J Ment Health. 2015;24(5):321–32. doi: 10.3109/09638237.2015.1019054. http://europepmc.org/abstract/MED/26017625

- 5. Verduijn J, Verhoeven JE, Milaneschi Y, Schoevers RA, van Hemert AM, Beekman AT, Penninx BW. Reconsidering the prognosis of major depressive disorder across diagnostic boundaries: full recovery is the exception rather than the rule. BMC Med. 2017 Dec 12;15(1):215. doi: 10.1186/s12916-017-0972-8. https://bmcmedicine.biomedcentral.com/articles/10.1186/s12916-017-0972-8

- 6. Greenberg PE, Fournier A-A, Sisitsky T, Simes M, Berman R, Koenigsberg SH, Kessler RC. The economic burden of adults with major depressive disorder in the United States (2010 and 2018). Pharmacoeconomics. 2021 Jun;39(6):653–65. doi: 10.1007/s40273-021-01019-4. http://europepmc.org/abstract/MED/33950419

- 7. Gauthier G, Mucha L, Shi S, Guerin A. Economic burden of relapse/recurrence in patients with major depressive disorder. J Drug Assess. 2019;8(1):97–103. doi: 10.1080/21556660.2019.1612410. http://europepmc.org/abstract/MED/31192030

- 8. Mohr DC, Zhang M, Schueller SM. Personal sensing: understanding mental health using ubiquitous sensors and machine learning. Annu Rev Clin Psychol. 2017 May 08;13:23–47. doi: 10.1146/annurev-clinpsy-032816-044949. http://europepmc.org/abstract/MED/28375728

- 9. Burchert S, Kerber A, Zimmermann J, Knaevelsrud C. Screening accuracy of a 14-day smartphone ambulatory assessment of depression symptoms and mood dynamics in a general population sample: comparison with the PHQ-9 depression screening. PLoS One. 2021 Jan 6;16(1):e0244955. doi: 10.1371/journal.pone.0244955. https://dx.plos.org/10.1371/journal.pone.0244955

- 10. Moshe I, Terhorst Y, Opoku Asare K, Sander LB, Ferreira D, Baumeister H, Mohr DC, Pulkki-Råback L. Predicting symptoms of depression and anxiety using smartphone and wearable data. Front Psychiatry. 2021 Jan 28;12:625247. doi: 10.3389/fpsyt.2021.625247.

- 11. Wells JE, Horwood LJ. How accurate is recall of key symptoms of depression? A comparison of recall and longitudinal reports. Psychol Med. 2004 Aug;34(6):1001–11. doi: 10.1017/s0033291703001843.

- 12. RADAR-CNS: Remote Assessment of Disease and Relapse – Central Nervous System. RADAR-CNS. [2021-12-05]. https://www.radar-cns.org/

- 13. Ranjan Y, Rashid Z, Stewart C, Conde P, Begale M, Verbeeck D, Boettcher S, Hyve, Dobson R, Folarin A, RADAR-CNS Consortium. RADAR-Base: open source mobile health platform for collecting, monitoring, and analyzing data using sensors, wearables, and mobile devices. JMIR Mhealth Uhealth. 2019 Aug 01;7(8):e11734. doi: 10.2196/11734. https://mhealth.jmir.org/2019/8/e11734/

- 14. Perski O, Blandford A, West R, Michie S. Conceptualising engagement with digital behaviour change interventions: a systematic review using principles from critical interpretive synthesis. Transl Behav Med. 2017 Jun;7(2):254–67. doi: 10.1007/s13142-016-0453-1. http://europepmc.org/abstract/MED/27966189

- 15. Simblett S, Matcham F, Siddi S, Bulgari V, Barattieri di San Pietro C, Hortas López J, Ferrão J, Polhemus A, Haro JM, de Girolamo G, Gamble P, Eriksson H, Hotopf M, Wykes T, RADAR-CNS Consortium. Barriers to and facilitators of engagement with mHealth technology for remote measurement and management of depression: qualitative analysis. JMIR Mhealth Uhealth. 2019 Jan 30;7(1):e11325. doi: 10.2196/11325. https://mhealth.jmir.org/2019/1/e11325/

- 16. Girolamo G, Barattieri di San Pietro C, Bulgari V, Dagani J, Ferrari C, Hotopf M, Iannone G, Macis A, Matcham F, Myin‐Germeys I, Rintala A, Simblett S, Wykes T, Zarbo C. The acceptability of real‐time health monitoring among community participants with depression: a systematic review and meta‐analysis of the literature. Depression Anxiety. 2020 Apr 27;37(9):885–97. doi: 10.1002/da.23023. https://onlinelibrary.wiley.com/doi/abs/10.1002/da.23023

- 17. A Framework for Recruiting into a Remote Measurement Technologies (RMTs) study: experiences from a major depressive disorder cohort. Center for Open Science. [2021-12-05].

- 18. Anguera JA, Jordan JT, Castaneda D, Gazzaley A, Areán PA. Conducting a fully mobile and randomised clinical trial for depression: access, engagement and expense. BMJ Innov. 2016 Jan;2(1):14–21. doi: 10.1136/bmjinnov-2015-000098. http://europepmc.org/abstract/MED/27019745

- 19. Arean PA, Hallgren KA, Jordan JT, Gazzaley A, Atkins DC, Heagerty PJ, Anguera JA. The use and effectiveness of mobile apps for depression: results from a fully remote clinical trial. J Med Internet Res. 2016 Dec 20;18(12):e330. doi: 10.2196/jmir.6482. https://www.jmir.org/2016/12/e330/

- 20. Simblett S, Greer B, Matcham F, Curtis H, Polhemus A, Ferrão J, Gamble P, Wykes T. Barriers to and facilitators of engagement with remote measurement technology for managing health: systematic review and content analysis of findings. J Med Internet Res. 2018 Jul 12;20(7):e10480. doi: 10.2196/10480. https://www.jmir.org/2018/7/e10480/

- 21. Remote Assessment of Disease and Relapse in Major Depressive Disorder (RADAR-MDD): recruitment, retention, and data availability in a longitudinal remote measurement study. Research Square. [2021-12-05].

- 22. Ranjan Y, Folarin AA, Stewart C, Conde P, Dobson R, Rashid Z. Proceedings of the 2019 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2019 ACM International Symposium on Wearable Computers; UbiComp '19: The 2019 ACM International Joint Conference on Pervasive and Ubiquitous Computing; Sep 9 - 13, 2019; London United Kingdom. 2019.

- 23. Teague S, Youssef GJ, Macdonald JA, Sciberras E, Shatte A, Fuller-Tyszkiewicz M, Greenwood C, McIntosh J, Olsson CA, Hutchinson D, SEED Lifecourse Sciences Theme, et al. Retention strategies in longitudinal cohort studies: a systematic review and meta-analysis. BMC Med Res Methodol. 2018 Nov 26;18(1):151. doi: 10.1186/s12874-018-0586-7. https://bmcmedresmethodol.biomedcentral.com/articles/10.1186/s12874-018-0586-7

- 24. Druce KL, Dixon WG, McBeth J. Maximizing engagement in mobile health studies: lessons learned and future directions. Rheum Dis Clin North Am. 2019 May;45(2):159–72. doi: 10.1016/j.rdc.2019.01.004. https://linkinghub.elsevier.com/retrieve/pii/S0889-857X(19)30004-3

- 25. Van Til K, McInnis MG, Cochran A. A comparative study of engagement in mobile and wearable health monitoring for bipolar disorder. Bipolar Disord. 2020 Mar;22(2):182–90. doi: 10.1111/bdi.12849. http://europepmc.org/abstract/MED/31610074

- 26. Bidargaddi N, Almirall D, Murphy S, Nahum-Shani I, Kovalcik M, Pituch T, Maaieh H, Strecher V. To prompt or not to prompt? A microrandomized trial of time-varying push notifications to increase proximal engagement with a mobile health app. JMIR Mhealth Uhealth. 2018 Nov 29;6(11):e10123. doi: 10.2196/10123. https://mhealth.jmir.org/2018/11/e10123/

- 27. Fogg BJ. Persuasive technology: using computers to change what we think and do. Ubiquity. 2002 Dec;2002(December):2. doi: 10.1145/764008.763957.

- 28. Bentley F, Tollmar K. The power of mobile notifications to increase wellbeing logging behavior. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; CHI '13: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; Apr 27 - May 2, 2013; Paris, France. 2013.

- 29. Bidargaddi N, Pituch T, Maaieh H, Short C, Strecher V. Predicting which type of push notification content motivates users to engage in a self-monitoring app. Prev Med Rep. 2018 Sep;11:267–73. doi: 10.1016/j.pmedr.2018.07.004. https://linkinghub.elsevier.com/retrieve/pii/S2211-3355(18)30117-7

- 30. Morrison D, Mair FS, Yardley L, Kirby S, Thomas M. Living with asthma and chronic obstructive airways disease: using technology to support self-management - An overview. Chron Respir Dis. 2017 Nov;14(4):407–19. doi: 10.1177/1479972316660977. https://journals.sagepub.com/doi/10.1177/1479972316660977?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed

- 31. Freyne J, Yin J, Brindal E, Hendrie G, Berkovsky S, Noakes M. Push notifications in diet apps: influencing engagement times and tasks. Int J Human Comput Interact. 2017 Feb 06;33(10):833–45. doi: 10.1080/10447318.2017.1289725.

- 32. Ptakauskaite N, Cox AL, Berthouse N. Knowing what you're doing or knowing what to do: how stress management apps support reflection and behaviour change. Proceedings of the CHI Conference on Human Factors in Computing Systems; CHI '18: CHI Conference on Human Factors in Computing Systems; Apr 21 - 26, 2018; Montreal, QC, Canada. 2018.

- 33. Li I, Dey AK, Forlizzi J. Understanding my data, myself: supporting self-reflection with ubicomp technologies. Proceedings of the 13th international conference on Ubiquitous computing; Ubicomp '11: The 2011 ACM Conference on Ubiquitous Computing; Sep 17 - 21, 2011; Beijing, China. 2011.

- 34. Sherdell L, Waugh CE, Gotlib IH. Anticipatory pleasure predicts motivation for reward in major depression. J Abnorm Psychol. 2012 Feb;121(1):51–60. doi: 10.1037/a0024945. http://europepmc.org/abstract/MED/21842963

- 35. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009 Apr;42(2):377–81. doi: 10.1016/j.jbi.2008.08.010. https://linkinghub.elsevier.com/retrieve/pii/S1532-0464(08)00122-6

- 36. Kroenke K, Strine TW, Spitzer RL, Williams JB, Berry JT, Mokdad AH. The PHQ-8 as a measure of current depression in the general population. J Affect Disord. 2009 Apr;114(1-3):163–73. doi: 10.1016/j.jad.2008.06.026.

- 37. Rosenberg M. Society and the Adolescent Self-Image. Princeton: Princeton University Press; 1965.

- 38. Matcham F, Barattieri di San Pietro C, Bulgari V, de Girolamo G, Dobson R, Eriksson H, Folarin AA, Haro JM, Kerz M, Lamers F, Li Q, Manyakov NV, Mohr DC, Myin-Germeys I, Narayan V, Penninx BWJH, Ranjan Y, Rashid Z, Rintala A, Siddi S, Simblett SK, Wykes T, Hotopf M, RADAR-CNS consortium, et al. Remote assessment of disease and relapse in major depressive disorder (RADAR-MDD): a multi-centre prospective cohort study protocol. BMC Psychiatry. 2019 Feb 18;19(1):72. doi: 10.1186/s12888-019-2049-z. https://bmcpsychiatry.biomedcentral.com/articles/10.1186/s12888-019-2049-z

- 39. Michie S, Atkins L, West R. The Behaviour Change Wheel: A Guide to Designing Interventions. United Kingdom: Silverback; 2014.

- 40. Polhemus AM, Novák J, Ferrao J, Simblett S, Radaelli M, Locatelli P, Matcham F, Kerz M, Weyer J, Burke P, Huang V, Dockendorf MF, Temesi G, Wykes T, Comi G, Myin-Germeys I, Folarin A, Dobson R, Manyakov NV, Narayan VA, Hotopf M. Human-centered design strategies for device selection in mHealth programs: development of a novel framework and case study. JMIR Mhealth Uhealth. 2020 May 07;8(5):e16043. doi: 10.2196/16043. https://mhealth.jmir.org/2020/5/e16043/

- 41. Bot M, Middeldorp CM, de Geus EJ, Lau HM, Sinke M, van Nieuwenhuizen B, Smit JH, Boomsma DI, Penninx BW. Validity of LIDAS (LIfetime Depression Assessment Self-report): a self-report online assessment of lifetime major depressive disorder. Psychol Med. 2017 Jan;47(2):279–89. doi: 10.1017/S0033291716002312.

- 42. Rush AJ, Gullion CM, Basco MR, Jarrett RB, Trivedi MH. The Inventory of Depressive Symptomatology (IDS): psychometric properties. Psychol Med. 1996 May;26(3):477–86. doi: 10.1017/s0033291700035558.

- 43. Kessler RC, Andrews G, Mroczek D, Ustun B, Wittchen H. The World Health Organization Composite International Diagnostic Interview short-form (CIDI-SF). Int J Method Psychiat Res. 2006 Nov;7(4):171–85. doi: 10.1002/mpr.47.

- 44. Spitzer RL, Kroenke K, Williams JB, Löwe B. A brief measure for assessing generalized anxiety disorder: the GAD-7. Arch Intern Med. 2006 May 22;166(10):1092–7. doi: 10.1001/archinte.166.10.1092.

- 45. Mundt JC, Marks IM, Shear MK, Greist JM. The Work and Social Adjustment Scale: a simple measure of impairment in functioning. Br J Psychiatry. 2002 May;180:461–4. doi: 10.1192/bjp.180.5.461.

- 46. Broadbent E, Petrie KJ, Main J, Weinman J. The brief illness perception questionnaire. J Psychosom Res. 2006 Jun;60(6):631–7. doi: 10.1016/j.jpsychores.2005.10.020.

- 47. Brugha T, Bebbington P, Tennant C, Hurry J. The List of Threatening Experiences: a subset of 12 life event categories with considerable long-term contextual threat. Psychol Med. 1985 Feb;15(1):189–94. doi: 10.1017/s003329170002105x.

- 48. Beecham J, Martin K. Measuring Mental Health Needs. London: Gaskell/Royal College of Psychiatrists; 1992. Costing psychiatric interventions.

- 49. Holdener M, Gut A, Angerer A. Applicability of the user engagement scale to mobile health: a survey-based quantitative study. JMIR Mhealth Uhealth. 2020 Jan 03;8(1):e13244. doi: 10.2196/13244. https://mhealth.jmir.org/2020/1/e13244/

- 50. Killian KD. Development and validation of the Emotional Self-Awareness Questionnaire: a measure of emotional intelligence. J Marital Fam Ther. 2012 Jul;38(3):502–14. doi: 10.1111/j.1752-0606.2011.00233.x.

- 51. Zhou L, Bao J, Setiawan IM, Saptono A, Parmanto B. The mHealth App Usability Questionnaire (MAUQ): development and validation study. JMIR Mhealth Uhealth. 2019 Apr 11;7(4):e11500. doi: 10.2196/11500. https://mhealth.jmir.org/2019/4/e11500/

- 52. O'Brien HL, Toms EG. The development and evaluation of a survey to measure user engagement. J Am Soc Inf Sci. 2009 Oct 19;61(1):50–69. doi: 10.1002/asi.21229.

- 53. O’Brien HL, Cairns P, Hall M. A practical approach to measuring user engagement with the refined user engagement scale (UES) and new UES short form. Int J Human Comput Stud. 2018 Apr;112:28–39. doi: 10.1016/j.ijhcs.2018.01.004.

- 54. Probst P, Grummich K, Heger P, Zaschke S, Knebel P, Ulrich A, Büchler MW, Diener MK. Blinding in randomized controlled trials in general and abdominal surgery: protocol for a systematic review and empirical study. Syst Rev. 2016 Mar 24;5:48. doi: 10.1186/s13643-016-0226-4. https://systematicreviewsjournal.biomedcentral.com/articles/10.1186/s13643-016-0226-4

- 55. Unlock insights in your data with powerful analysis. NVivo. [2021-12-05]. https://www.qsrinternational.com/nvivo-qualitative-data-analysis-software/home

- 56. Rennie D. CONSORT revised--improving the reporting of randomized trials. JAMA. 2001 Apr 18;285(15):2006–7. doi: 10.1001/jama.285.15.2006.

- 57. Behar JA, Liu C, Kotzen K, Tsutsui K, Corino VD, Singh J, Pimentel MA, Warrick P, Zaunseder S, Andreotti F, Sebag D, Kopanitsa G, McSharry PE, Karlen W, Karmakar C, Clifford GD. Remote health diagnosis and monitoring in the time of COVID-19. Physiol Meas. 2020 Nov 10;41(10):10TR01. doi: 10.1088/1361-6579/abba0a.

- 58. Najam S. Multidisciplinary research priorities for the COVID-19 pandemic. Lancet Psychiatry. 2020 Jul;7(7):e34. doi: 10.1016/s2215-0366(20)30238-8.

- 59. Owens AP, Ballard C, Beigi M, Kalafatis C, Brooker H, Lavelle G, Brønnick KK, Sauer J, Boddington S, Velayudhan L, Aarsland D. Implementing remote memory clinics to enhance clinical care during and after COVID-19. Front Psychiatry. 2020;11:579934. doi: 10.3389/fpsyt.2020.579934.

- 60. Torous J, Lipschitz J, Ng M, Firth J. Dropout rates in clinical trials of smartphone apps for depressive symptoms: a systematic review and meta-analysis. J Affect Disord. 2020 Feb 15;263:413–9. doi: 10.1016/j.jad.2019.11.167.

- 61. Zhang Y, Folarin AA, Sun S, Cummins N, Ranjan Y, Rashid Z, Conde P, Stewart C, Laiou P, Matcham F, Oetzmann C, Lamers F, Siddi S, Simblett S, Rintala A, Mohr DC, Myin-Germeys I, Wykes T, Haro JM, Penninx BW, Narayan VA, Annas P, Hotopf M, Dobson RJ. Predicting depressive symptom severity through individuals' nearby bluetooth device count data collected by mobile phones: preliminary longitudinal study. JMIR Mhealth Uhealth. 2021 Jul 30;9(7):e29840. doi: 10.2196/29840. https://mhealth.jmir.org/2021/7/e29840/

- 62. Remote smartphone-based speech collection: acceptance and barriers in individuals with major depressive disorder. ArXiv.org. 2021. [2021-12-05]. https://arxiv.org/abs/2104.08600

- 63. Szinay D, Jones A, Chadborn T, Brown J, Naughton F. Influences on the uptake of and engagement with health and well-being smartphone apps: systematic review. J Med Internet Res. 2020 May 29;22(5):e17572. doi: 10.2196/17572. https://www.jmir.org/2020/5/e17572/
