Abstract

Background

The COVID-19 pandemic has forced medical schools to create educational material to compensate for the anticipated and observed decrease in clinical experiences during clerkships. An online learning by concordance (LbC) tool was developed to overcome students’ limited exposure to clinical cases. However, knowledge about the instructional design of an LbC tool is scarce, especially regarding the perspectives of the collaborators involved in its design: (1) educators who write the vignette questions and (2) practitioners who constitute the reference panel by answering the LbC questions. The aim of this study was to describe the key elements that supported the pedagogical design of an LbC tool from the perspectives of educators and practitioners.

Methods:

A descriptive qualitative research design was used. Data were collected through online questionnaires and analyzed descriptively.

Results:

Six educators and 19 practitioners participated in the study. In designing the LbC tool, the educators considered the following to be important: prevalent or high-stake situations, theoretical knowledge, professional situations experienced, and perceived difficulties among students. They also reported that the preceding workshop promoted peer discussion and helped solidify the writing process. For practitioners, standards of practice and consensus among experts were important. However, they were uncertain of the educational value of their feedback, considering the ambiguity of the situations included in the LbC tool.

Conclusions:

The LbC tool is a relatively new training tool in medical education. Further research is needed to refine our understanding of the design of such a tool and ensure its content validity to meet the pedagogical objectives of the clerkship.

Abstract

Contexte :

Face à la pandémie de la COVID-19, les facultés de médecine ont été contraintes à créer du matériel pédagogique pouvant pallier la diminution prévue et avérée de l’exposition clinique pendant les stages d’externat. Un outil numérique de formation par concordance (FpC) a été développé pour combler le manque d’exposition à des cas cliniques. Cependant, les connaissances sur la conception pédagogique des outils de FpC sont limitées, en particulier en ce qui concerne les perspectives des collaborateurs participant à leur réalisation : 1 – les éducateurs qui rédigent les questions des vignettes et 2 – les praticiens composant le groupe d’experts qui fournissent les réponses de référence aux questions de FpC. L’objectif de cette étude était de décrire les éléments clés qui ont étayé la conception pédagogique d’un outil FpC du point de vue des éducateurs et des praticiens.

Méthodes :

Il s’agit d’une recherche qualitative de type descriptif, pour laquelle on s’est servi de questionnaires en ligne et d’une méthode d’analyse descriptive.

Résultats :

Six éducateurs et 19 praticiens ont participé à l’étude. Dans la conception de l’outil FpC, les éducateurs ont attribué une importance particulière aux situations courantes ou à enjeu élevé, aux connaissances théoriques, aux situations professionnelles vécues par les étudiants et aux difficultés qu’ils ont perçues chez eux. Ils ont également tenu à faire en sorte que l’atelier qui précédait la conception favorise le débat entre pairs et contribue à solidifier le processus de rédaction. Les praticiens ont privilégié les normes de pratique et l’existence d’un consensus entre experts. Cependant, ils doutaient de la valeur pédagogique de leurs commentaires, compte tenu de l’ambiguïté des situations décrites dans l’outil FpC.

Conclusions :

Les outils FpC sont relativement nouveaux en éducation médicale. Des recherches plus poussées sont nécessaires pour affiner notre compréhension de la conception d’un tel outil et pour nous assurer de sa validité de contenu, pour bien répondre aux objectifs pédagogiques de l’externat.

Introduction

The COVID-19 pandemic has forced medical schools to create educational material to compensate for the anticipated and observed decrease in clinical experiences during clerkships. In these exceptional circumstances, we developed a learning by concordance (LbC) tool to overcome the limitation of clinical experiences. Using a descriptive research design, this article reports on the design of an LbC tool intended to enhance the development of medical students’ clinical reasoning (CR). Our objective was to describe the key elements that supported the pedagogical design of this learning tool from the perspectives of both educators and practitioners.

As a core competency of medical practice, CR encompasses all the cognitive and metacognitive mental processes that enable the resolution of clinical problems and situations.1 An interesting and innovative way to allow students to learn how to reason in common clinical situations and to help them develop CR is the LbC tool.2,3 The LbC tool is a new online educational modality that presents simulated clinical situations containing complex or incomplete information.2-5 After each situation, three to four hypotheses are presented, each followed by new information. Students are expected to judge the effect of the new information on the suggested hypothesis. The format of the questions is the same as in script concordance testing (SCT), which aims to assess CR in the context of uncertainty and ambiguity.6-10 Both tools (SCT and LbC) are derived from script theory, which assumes that CR is based on the continuous development of scripts.3 Scripts are rich knowledge networks that are elaborated and structured in long-term memory. In clinical practice, clinicians use scripts to judge the impact of new information on their hypothesis by comparing the patient presentation and history with their existing scripts and recognizing patterns or dissimilarities in the patient presentation through CR.11,12

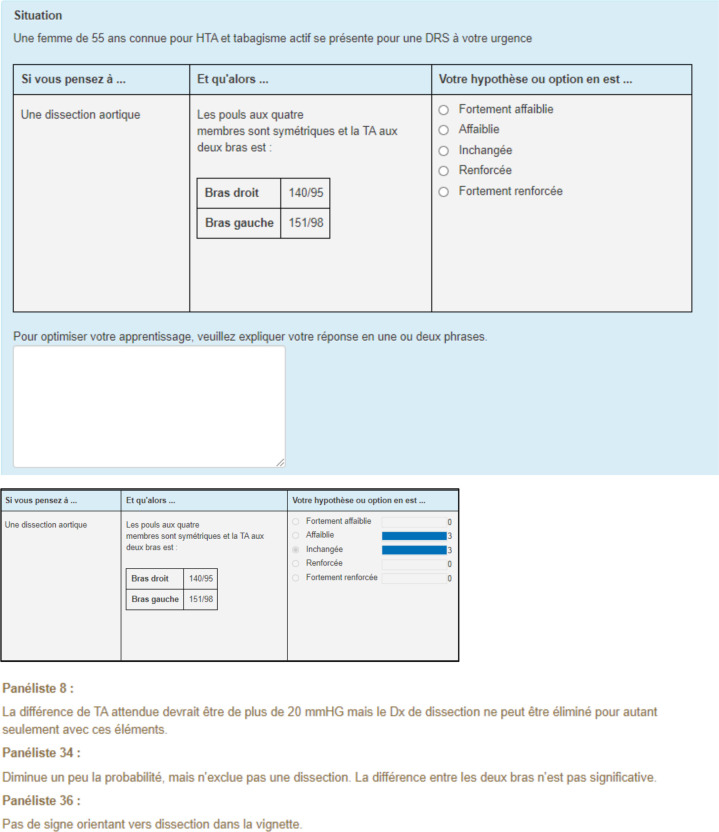

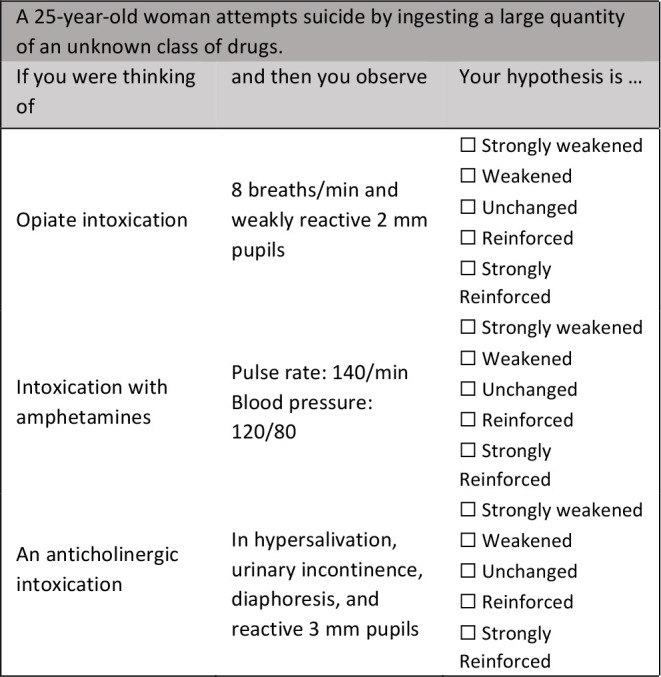

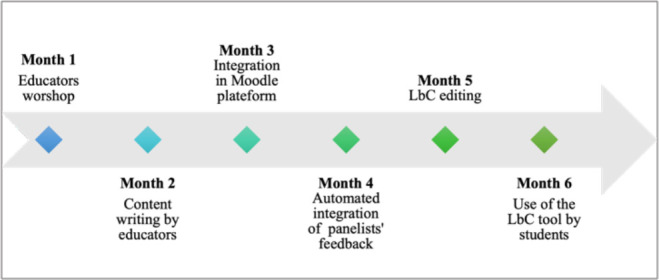

Figure 1 presents an example of a vignette where a clinical situation is presented in the header. In the first column, hypotheses are proposed (If you were thinking of …), followed by new information in the second column (and then…). The additional information may take various forms, such as the findings of a physical or mental assessment or new signs or symptoms. The third column proposes a five-point Likert scale to trigger a judgment, i.e., to assess whether the new information weakens, strengthens, or has no effect on the entertained hypothesis. Panelists (specialists in the disciplines assessed) individually complete the LbC tool questions before the tool is submitted to students. They answer the vignettes’ questions and write a short rationale to explain their response choices. When completing the LbC tool, students answer the questions by making judgments and then read the automated feedback. The first feedback presents the panelists’ response choices, while the second presents the rationale for their response choices. The final feedback provides an educational synthesis (key learning points) of the clinical vignette and references to consult, such as articles, clinical guidelines, or hyperlinks.
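The structure of a vignette question described above can be illustrated with a minimal Python sketch. This is not the implementation of the Moodle plugins used in the study; the class and method names (`Question`, `PanelistAnswer`, `choice_distribution`, `rationales`) are hypothetical, and the panelist answers are placeholders invented for illustration. Only the scale labels, the hypothesis, and the new information are taken from the example vignette in Figure 1.

```python
from collections import Counter
from dataclasses import dataclass, field

# Scale labels copied from the example vignette in Figure 1.
SCALE = (
    "Strongly weakened",
    "Weakened",
    "Unchanged",
    "Reinforced",
    "Strongly Reinforced",
)


@dataclass
class PanelistAnswer:
    choice: str     # one of SCALE
    rationale: str  # short written justification


@dataclass
class Question:
    hypothesis: str       # "If you were thinking of ..."
    new_information: str  # "... and then you observe ..."
    answers: list[PanelistAnswer] = field(default_factory=list)

    def add_answer(self, choice: str, rationale: str) -> None:
        """Record one panelist's response choice and rationale."""
        if choice not in SCALE:
            raise ValueError(f"Unknown response choice: {choice!r}")
        self.answers.append(PanelistAnswer(choice, rationale))

    def choice_distribution(self) -> dict[str, int]:
        """First feedback: how many panelists selected each scale point."""
        counts = Counter(answer.choice for answer in self.answers)
        return {label: counts[label] for label in SCALE}

    def rationales(self) -> list[str]:
        """Second feedback: the panelists' written rationales."""
        return [answer.rationale for answer in self.answers]


question = Question(
    hypothesis="Opiate intoxication",
    new_information="8 breaths/min and weakly reactive 2 mm pupils",
)
# Placeholder panel answers, invented for illustration only.
question.add_answer("Reinforced", "placeholder rationale A")
question.add_answer("Strongly Reinforced", "placeholder rationale B")
question.add_answer("Reinforced", "placeholder rationale C")

print(question.choice_distribution())
print(question.rationales())
```

Keeping the full distribution of panelists' choices, rather than a single correct answer, reflects the idea that students compare their judgment with the range of responses given by the panel.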

Figure 1.

Example of a vignette

Authors: Véronique Castonguay, MD and Amélie Frégeau, MD.

Construction of the LbC tool used in this study began in spring 2020. Two researchers in the field (BC and MFD) trained medical educators (n = 17) during a three-hour virtual workshop. The educators were clinical teachers and residents who were chosen for their particular interest in pedagogy. At the beginning of the workshop, the researchers conducted a synchronous virtual conference where examples of vignettes were presented in relation to the objectives of clerkships (45-60 min). Then, the educators were divided into seven discipline-based subgroups (60-90 min), still in synchronous mode, to write the vignettes. Finally, a plenary concluded the workshop (15-30 min). The educators had six weeks to 1) write 10 to 15 vignettes per medical discipline; 2) identify the objective pursued for each vignette; and 3) create an educational synthesis (key learning point) for each vignette, which should be in line with the learning objectives. The digital learning environment allowed educators to integrate text, images, and audio recordings in the vignettes. In total, 85 vignettes comprising 272 questions were written in seven medical disciplines (family medicine, emergency, psychiatry, pediatrics, musculoskeletal and rheumatology, surgery, and gyneco-obstetrics), addressed to 280 clerkship students (see Appendix A).

The content of the learning tool was then integrated into MEDCours (Moodle), the e-learning environment used at the faculty. Three new open-source Moodle plugins, created at our institution, made it possible to create concordance reasoning questions and to collect, in an automated way, panelists’ response choices and comments (see Appendix B). The educators involved in the construction of the LbC questions proposed panelists. Those panelists were either residents or medical staff members and were chosen for their expertise in each discipline. Three to four panelists per discipline were deemed sufficient3 to meet the students’ learning objectives. Ultimately, thirty-five panelists responded to the vignette questions and provided a rationale for their response choices. The panelists were asked to provide a short bibliography and a photo if desired. They had six to eight weeks to answer the vignettes’ questions. Once the panelists had completed their feedback and the training content had been edited, the LbC tool was ready to be used by clerkship students (see Figure 2).

Figure 2.

Chronology of the development of the LbC tool

Designing an LbC tool is challenging. It requires clinical and pedagogical expertise and sustained coordination of all the collaborators involved.13 The existing published literature offers several guidelines for SCT designers.9,10,14 However, studies addressing the pedagogical design of the LbC tool, especially those describing the perspectives of educators and practitioners involved in its development, are rare. Supported by the principle of coherence,15 pedagogical design represents the relevant link between the content, the resources to be mobilized (knowledge, learning strategies, and pedagogical material) and the level of competency development, which allows a coherent transcription of the learning tool on a digital medium.16 A better understanding of the process underlying the pedagogical design of an LbC tool could provide support for designers in academic or clinical settings. In this study, our objective was to describe the key elements that supported the pedagogical design of this learning tool from the perspectives of both educators and practitioners.

Methods

Study design

A descriptive research design17,18 was used to describe aspects of a particular phenomenon, in this case the key elements of the pedagogical design of the LbC tool. Our aim was to describe the design of an LbC tool from the collaborators’ perspectives (educators and panelists) to better understand how the tool is constructed to enhance the development of students’ CR. The research question was the following: what are the key elements of the pedagogical design of an LbC tool to foster students’ CR from the perspectives of both educators and practitioners?

Setting and participants

The study took place in a Canadian faculty of medicine. The participants in this study were (1) the educators who wrote the vignettes’ questions and key learning points and (2) the panelists who answered the vignettes’ questions. E-mail invitations were sent to solicit educators’ and panelists’ participation in the study. The educators were recruited from among the 17 educators who had previously participated in the construction of the vignettes, through a network sampling process.19 The panelists were recruited from among the 35 panelists who had previously responded to the vignettes’ questions, also through a network sampling process.

Data collection

Online questionnaires were used to identify the key elements guiding the design of an LbC tool aiming to support the development of students’ CR. Online surveys were cost-effective and allowed maximal participation in a short period of time without geographic barriers.20 In addition, online surveys increased the feasibility of the project in the context of the pandemic. Two questionnaires were designed based on the established principles for the design of an LbC tool.3 The two questionnaires were pretested by four volunteer educators to ensure readability. The online questionnaire for educators contained questions about the clinical situations chosen, the hypotheses, the new information and the key learning points (see Appendix C). The online questionnaire for panelists contained questions about their educational feedback (response choices and the rationale for their response choices) (see Appendix D). All participants had the opportunity to provide written comments to describe the key elements guiding their contribution to the construction of the LbC tool. An open-ended question gave participants the opportunity to list the facilitating components and difficulties experienced. Finally, an online sociodemographic questionnaire was used to obtain, among other things, data on participants' prior knowledge of the LbC tool and their teaching experience.

Data analysis

Descriptive analyses of the frequency of response choices were conducted for all the questions included in the questionnaires. Content analysis of the written comments was also conducted.21 Excerpts from the written comments were used to exemplify the conclusions drawn, to ensure the transparency and credibility of the data reported, and to facilitate comparisons between investigators.18,21 Discussions between investigators confirmed the interpretations of the data. Finally, the sociodemographic data were analyzed using descriptive statistics.
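As an illustration of this kind of descriptive frequency analysis, the following minimal Python sketch computes counts and percentages per response category. It is not the analysis code used in the study; the function name `frequency_table` is hypothetical. The example reuses the age counts reported in Table 1 (n = 25) and formats each cell as a count with the percentage in parentheses.

```python
from collections import Counter


def frequency_table(responses, categories):
    """Return {category: (count, percentage)} for a list of responses.

    Percentages are rounded to two decimals; categories that were never
    selected are reported with a count of zero.
    """
    counts = Counter(responses)
    total = len(responses)
    table = {}
    for category in categories:
        n = counts.get(category, 0)
        pct = round(100 * n / total, 2) if total else 0.0
        table[category] = (n, pct)
    return table


# Age categories and counts as reported in Table 1 (n = 25).
age_categories = ["21-30", "31-40", "41-50", "51-60", "61 and above"]
age_counts = [3, 8, 6, 5, 3]

# Expand the counts into one response per participant.
responses = [
    category
    for category, n in zip(age_categories, age_counts)
    for _ in range(n)
]

age_table = frequency_table(responses, age_categories)
for category, (n, pct) in age_table.items():
    print(f"{category}: {n} ({pct:g})")
```

Passing the full list of categories, rather than only those observed, keeps empty categories (such as a category selected by no participant) visible in the resulting table.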

Ethical considerations

The study was approved by the scientific committee of the Faculty of Medicine and the research ethics board of the Canadian university (CEREP-20-152-D).

Results

Sociodemographic data

Of the 52 collaborators involved, 25 (48%), namely, six educators and 19 panelists, participated in the study. Most participants were between 31 and 50 years old (56%). Their years of teaching experience, their medical discipline and their prior knowledge of the LbC tool varied (see Table 1). More than half of the participants (52%) had less than 10 years of teaching experience.

| A 25-year-old woman attempts suicide by ingesting a large quantity of an unknown class of drugs. | | |
|---|---|---|
| If you were thinking of | and then you observe | Your hypothesis is … |
| Opiate intoxication | 8 breaths/min and weakly reactive 2 mm pupils | ◻ Strongly weakened ◻ Weakened ◻ Unchanged ◻ Reinforced ◻ Strongly Reinforced |
| Intoxication with amphetamines | Pulse rate: 140/min; blood pressure: 120/80 | ◻ Strongly weakened ◻ Weakened ◻ Unchanged ◻ Reinforced ◻ Strongly Reinforced |
| An anticholinergic intoxication | Hypersalivation, urinary incontinence, diaphoresis, and reactive 3 mm pupils | ◻ Strongly weakened ◻ Weakened ◻ Unchanged ◻ Reinforced ◻ Strongly Reinforced |

Educators’ perspectives

Table 2 documents, in terms of frequency, the different resources mobilized by educators while writing the vignettes (clinical situations, hypotheses and new information) and what underpinned the content of the key learning points at the end of each vignette.

Table 1.

The sociodemographic data of the participants (n = 25)

| Characteristic | Category | Value |
|---|---|---|
| Role in the LbC construction | Educator | 6 (24) |
| | Panelist | 19 (76) |
| Age | 21-30 | 3 (12) |
| | 31-40 | 8 (32) |
| | 41-50 | 6 (24) |
| | 51-60 | 5 (20) |
| | 61 and above | 3 (12) |
| Years of teaching experience | 0-5 | 10 (40) |
| | 6-10 | 3 (12) |
| | 11-15 | 3 (12) |
| | 16-20 | 3 (12) |
| | 21-25 | 0 |
| | 26 and above | 6 (24) |
| Participants’ perception of their level of prior knowledge of the LbC tool (/5) | Educators | 2.3/5 |
| | Panelists | 4/5 |
| Discipline | Family Medicine | 6 (24) |
| | Emergency | 4 (16) |
| | Surgery | 2 (8) |
| | Musculoskeletal and rheumatology | 4 (16) |
| | Pediatrics | 4 (16) |
| | Gyneco-obstetrics | 4 (16) |
| | Psychiatry | 1 (4) |

Note: Data are presented in frequency distribution; the percentages are in parentheses.

Panelist perspectives

The panelists reported that the time taken to respond to 10 to 15 vignettes (including 30-50 questions) was less than 30 min (n = 4, 21%), between 30 and 90 min (n = 11, 58%), or more than 90 min (n = 4, 21%). The panelists were asked to judge the authenticity of the vignettes previously written by the educators as well as the degree of uncertainty of the situations. The responses to the online questionnaire also provided a description of the resources mobilized by the panelists. In their case, the questions focused on what supported their writing of the rationale for their response choices (see Table 3).

Table 2.

Resources that guided the medical educators (n = 6) while writing the vignettes and the key learning points

| Resources mobilized, in terms of frequency, while writing the vignettes | ||||||

|---|---|---|---|---|---|---|

| Never | Very rarely | Sometimes | Frequently | Nearly always | Always | |

| Academic sources (clerkship objectives) | 1(16.67) | 2(33.33) | 3(50) | |||

| Professional situations experienced | 1(16.67) | 1(16.67) | 2(33.33) | 2(33.33) | ||

| Prevalent or high-stake situations | 2(33.33) | 4(66.66) | ||||

| Situations to which students are less frequently exposed | 1(16.67) | 1(16.67) | 1(16.67) | 2(33.33) | 1(16.67) | |

| Theoretical knowledge | 1(16.67) | 2(33.33) | 4(66.67) | |||

| Difficulties noticed in students’ CR | 1(16.67) | 1(16.67) | 3(50) | 1(16.67) | ||

| Difficulties noticed in physicians’ CR | 1(16.67) | 1(16.67) | 1(16.67) | 1(16.67) | 1(16.67) | 1(16.67) |

| Resources underlying, in terms of frequency, the key learning points at the end of each vignette | ||||||

| Experiential knowledge | 3(50) | 3(50) | ||||

| Best practice guidelines† | 1(16.67) | 4 (80) | ||||

Note: Data are presented in frequency distribution; the percentages are in parentheses. †: Missing data: one participant (n =1; 16.67%)

Finally, Table 4 presents the facilitating components and difficulties or challenges identified by the educators and panelists.

Table 3.

Key elements that the panelists (n = 19) noted while reading the vignettes and resources mobilized while answering the questions

| Key elements noted by practitioners, in terms of frequency, while reading the vignettes | ||||||

|---|---|---|---|---|---|---|

| Never | Very rarely | Sometimes | Frequently | Nearly always | Always | |

| Vignettes that mirrored frequent questioning of clinical practice | 4(21.05) | 11(57.89) | 4(21.05) | |||

| Uncertain situations where varied hypotheses are plausible † | 1(5.26) | 3(15.79) | 8(42.11) | 5(26.32) | 1(5.26) | |

| Resources mobilized by practitioners, in terms of frequency, while answering questions (response choices and rationale) | ||||||

| Real practice situations experienced | 3(15.79) | 10(52.63) | 3(15.79) | 3(15.79) | ||

| Standards of practice and consensus among experts | 1(5.26) | 6(31.58) | 12(63.16) | |||

| Opportunities/constraints in my clinical setting | 1(5.26) | 7(36.84) | 6(31.58) | 3(15.79) | 2(10.53) | |

Note: Data are presented in frequency distribution; the percentages are in parentheses. †: Missing data: one participant (n = 1; 5.26%)

Table 4.

Facilitating components and difficulties

| Facilitating components | Difficulties |
|---|---|
| Educators | |
| “The preworkshop was essential and interesting.” “The discussions and exchanges within the large group and within the subgroups of disciplines encouraged the emergence of questions, challenges, limits and strengths of this approach” “Numerous easily accessible practice guides” “Cohesion and collegiality of the group” | “The objectives of some clerkships rotation were not always available and accessible. It could help to emulate the situations.” “Need to spend more time than expected on the whole process of creating vignettes, hypotheses, and discussions among colleagues to resolve some clinical ambiguities.” |
| Panelists | |
| “Simple vignette, not very complex” “Short, well-constructed vignettes on a variety of topics that are common in practice” “The situations submitted were very relevant” “Online platform easy to use and well done” “Clinical experience and theoretical knowledge helped me” | “Adequately justify a response, despite some gray areas and uncertainty about the appropriate course of action” “Determine my level of agreement with the statement” “Give precise and clear comments” “Make decisions despite the absence of certain discriminatory information that I would have sought in a real clinical situation” “Sometimes several answers seemed possible to me” “Some clinical situations were outside my area of expertise” “The vagueness left by the clinical situations allowing a significant interpretation according to the answers given” |

Discussion

The results show that educators mobilized several resources to design the vignettes’ questions and the key learning points in an LbC tool: prevalent or high-stake situations, theoretical knowledge, professional situations experienced and perceived difficulties among students. The educators also identified the importance of addressing situations that are less frequently encountered during clerkships but are considered essential for students’ learning. In fact, the educators used didactic questioning in conjunction with the construction of the LbC tool, i.e., they considered the theoretical knowledge to be taught, the characteristics of the students concerned, the material to be used, and the cognitive learning strategies to be solicited.

However, a new question emerges from educators' comments in this study: how do we teach a competency, such as CR, in a context of uncertainty? In this regard, educators mentioned the essential contribution and relevance of the virtual workshop, which was seen as an effective way to promote peer discussion and ultimately help to solidify the educational writing process. These results are similar to those of the Deschênes and Goudreau13 study, where intensive coaching of the educators involved in the LbC tool was necessary to capture the cognitive effort involved in writing clinical situations and questions aiming to solicit undergraduate students’ scripts. In our study, the panelists were invited to judge the vignettes previously written by the educators. They found them to be similar to clinical practice, but with less uncertainty. This comment should be explored in more depth. In other words, the following questions remain: What is the level of complexity expected to trigger students’ CR? Are we sufficiently challenging students? This comment may also reflect the consistency of the educators' choices in relation to the level of competency development of clerkship students, of which the panelists may be less aware.

While it can be rewarding, the panelists' experience is not easy in the context of designing an LbC tool. The panelists attempt to balance the salient elements to be written in their comments to make the tool as educational as possible. When asked what guided their rationale, the panelists agreed that standards of practice and a consensus among experts underpinned their feedback. However, in terms of considering the opportunities or constraints of their clinical practice environments, their thoughts were much more varied. These findings are somewhat similar to those of Lineberry et al.,22 who explored the validity of the process of expert responses to SCT questions using an online questionnaire. In the study by Lineberry et al.,22 the experts indicated that they considered the possibilities and constraints of their practice settings when answering questions related to the simulated situations proposed in the SCT. The results showed that some experts had not considered the hypotheses presented, suggesting that the scripts used by these experts would not be the same as those used in an SCT. Further research is needed to better understand how the context of clinical practice influences the CR of panelists and their feedback while responding to the vignette questions of an LbC tool.

The following comments from panelists illustrate the incompleteness of their judgments and thus of the feedback provided: “Give precise and clear comments” and “Make decisions despite the absence of certain discriminatory information that I would have sought in a real clinical situation.” This situation needs to be normalized, as it reflects the fundamental incompleteness of the situations in the vignettes. In their review examining the causes of errors in CR, Norman et al.23 state that “ambiguity is a constant in clinical practice; it is inevitable that some errors will arise simply because there is insufficient information to make a definitive diagnosis” (p. 28). In the context of using the LbC tool, the response choices and rationale from panelists exemplify that the CR process is not finalized; it is rather embryonic. In summary, the panelists’ CR is not settled. Furthermore, the decisions to be made in most clinical situations are not readily apparent, suggesting that uncertainty is present in medical practice.6,24 Uncertainty arises when there is insufficient information in a given situation, thus preventing the confirmation of medical hypotheses. Reflecting a dynamic state of self-reassessment of CR processes by clinicians, uncertainty also implies that more than one hypothesis must be considered for a clinical situation and that doubt can always remain, despite the presence of evidence or conclusive information.6

Despite efforts to recruit panelists with expertise, some perceived that certain clinical situations were outside of their area of expertise. This comment may indicate the need for panelists with a more general practice in their discipline rather than subspecialized doctors. In this case, it could be better to have panelists with fewer years of experience or with a more general practice. Peyrony et al.25 explored the SCT scores of undergraduate medical students (n = 985) according to the experience level of the reference panel. A panel of 75 experts was divided into three groups: 31 residents, 21 non-experienced physicians and 23 experienced physicians. The possibility of having panelists with less than five years of experience was well accepted when the examinees were undergraduate medical students.25 When the SCT was designed for more experienced examinees, such as residents in a broad specialty like general surgery or internal medicine, it could be better to have subspecialty-specific experts.26 This latter recommendation was based on the results of a study by Petrucci et al.26 aiming to determine whether a specialty-specific scoring key improves the validity of the SCT. While Peyrony et al.25 and Petrucci et al.26 focused on SCT, further studies probing the variety of panelist profiles would help to better understand their educational contribution to an LbC tool.

Conclusion

The pandemic has forced the implementation of new online training modalities to support the development of students' CR. A better understanding of the process underlying the pedagogical design of an LbC tool provides substantial support for any designers. In our study, the educators addressed didactic questions and the panelists were uncertain of the educational value of their feedback, considering the ambiguity of the situations. Furthermore, more than half of the participants (52%), both educators and practitioners, had less than 10 years of teaching experience, which may influence findings regarding pedagogical comfort in addressing uncertainty while responding to the questions of the LbC tool.

In our study, educators found the workshop helpful for writing vignettes in the LbC tool, whereas the practitioners (panelists) did not have this support and instead received written or verbal instructions. Panelists could be given support similar to that offered to educators. The goal should not be to reach a consensus in the panelists' response choices and rationales,3 but to normalize the uncertainty of situations in the LbC tool. We therefore recommend that the educators and panelists involved in an LbC tool be trained beforehand to understand the educational modality based on script concordance. A workshop to promote peer-to-peer discussion is also strongly suggested to enhance the quality of the LbC tool construction and the experience of the collaborators involved.

However, the study is highly contextualized. The data collected relate to the experience of a limited number of educators and panelists. The study was also conducted in a single medical school, and further studies are needed to obtain more generalizable results. Other data collection tools, such as individual or group interviews, are also suggested to more deeply probe the specific contributions of the collaborators involved in the construction of the LbC tool. For example, it seems strange that there was one person in each of the response categories for the question on difficulties noticed in physicians’ CR as a resource mobilized in writing the situations in the LbC tool. This question seems to have caused some confusion among the educators, despite prior pretesting of the questionnaires. A validation with more collaborators would be necessary to ensure readability of the questionnaire.

Further research is also needed to better understand how the context of clinical practice influences the CR of the panelists and how their professional profiles influence the quality of their educational feedback. From a pragmatic perspective, we recruited enough panelists to meet clerkship training objectives in the pandemic context.3 However, it would be interesting to explore, among other things, years of clinical practice and the number of clinical situations experienced that are similar to those presented in the LbC tool to determine the influence of these characteristics on panelists' experience and responses. The level of uncertainty in the situations would also be relevant to explore. A second Likert scale could be added to the LbC tool to capture the participants' degree of uncertainty regarding their response choices and rationales.

Finally, our experience has made it possible to create an online educational modality that will be sustainable over the years as a support to the clerkships of medical students. The LbC tool aims to support the development of CR early in medical education programs while also providing a promising tool that approximates the uncertainty of clinical practice. This pedagogical approach could also support graduates' academic progression in further education. The LbC tool is a relatively new training tool in medical education. Further research is needed to refine our understanding of the design of such a tool and ensure its content validity to meet the pedagogical objectives of the clerkship.

Appendix A.

Number of vignettes and questions per medical clerkship discipline developed for the LbC tool.

| Discipline | Vignettes (n) | Questions (n) |
|---|---|---|
| Emergency | 15 | 50 |
| Family Medicine | 14 | 42 |
| Gyneco-obstetrics | 8 | 24 |
| Musculoskeletal and rheumatology | 13 | 54 |
| Pediatrics | 11 | 33 |
| Psychiatry | 12 | 36 |
| Surgery | 12 | 36 |
| Total | 85 | 272 |

Appendix B.

Example of a vignette question (discipline: emergency) on the Moodle platform, followed by the response choices of the panelists and some of the rationales for their choices.

Appendix C.

Online questionnaire for educators

For each statement, select an answer choice that best describes, in terms of frequency, your experience while writing the LbC tool content.

| 1. While writing the vignettes (situations, hypotheses, and new information), you relied on … | Never | Very rarely | Sometimes | Frequently | Nearly always | Always |
|---|---|---|---|---|---|---|
| Academic sources (clerkship objectives) | ◻ | ◻ | ◻ | ◻ | ◻ | ◻ |
| Professional situations experienced | ◻ | ◻ | ◻ | ◻ | ◻ | ◻ |
| Situations prevalent or with high stakes | ◻ | ◻ | ◻ | ◻ | ◻ | ◻ |
| Situations to which students are less frequently exposed | ◻ | ◻ | ◻ | ◻ | ◻ | ◻ |
| Theoretical knowledge | ◻ | ◻ | ◻ | ◻ | ◻ | ◻ |
| Difficulties noticed in students’ CR | ◻ | ◻ | ◻ | ◻ | ◻ | ◻ |
| Difficulties noticed in physicians’ CR | ◻ | ◻ | ◻ | ◻ | ◻ | ◻ |
| Other? If yes, please specify | ◻ | ◻ | ◻ | ◻ | ◻ | ◻ |

| 2. What underpinned the content of the key learning points written at the end of each vignette? | Never | Very rarely | Sometimes | Frequently | Nearly always | Always |
|---|---|---|---|---|---|---|
| Experiential knowledge | ◻ | ◻ | ◻ | ◻ | ◻ | ◻ |
| Best practice guidelines | ◻ | ◻ | ◻ | ◻ | ◻ | ◻ |
| Other? If yes, please specify | ◻ | ◻ | ◻ | ◻ | ◻ | ◻ |

3. What were the facilitating components and/or the difficulties during this experience? Explain

Facilitating components:

________________________________________________________________________________

Difficulties:

________________________________________________________________________________

4. Do you have any other comments? Explain ...

________________________________________________________________________________

Appendix D.

Online questionnaire for practitioners

For each statement, select an answer choice that best describes, in terms of frequency, your experience while answering the LbC tool content.

| 1. When you read the vignettes, did you recognise: | Never | Very rarely | Sometimes | Frequently | Nearly always | Always |
|---|---|---|---|---|---|---|
| Situations that mirrored frequent questioning of clinical practice | ◻ | ◻ | ◻ | ◻ | ◻ | ◻ |
| Uncertain situations where varied hypotheses are plausible | ◻ | ◻ | ◻ | ◻ | ◻ | ◻ |

| 2. When selecting response choices and writing rationales, you relied on: | Never | Very rarely | Sometimes | Frequently | Nearly always | Always |
|---|---|---|---|---|---|---|
| Real-life practice situations experienced | ◻ | ◻ | ◻ | ◻ | ◻ | ◻ |
| Standards of practice and consensus among experts | ◻ | ◻ | ◻ | ◻ | ◻ | ◻ |
| Opportunities/constraints in my clinical setting | ◻ | ◻ | ◻ | ◻ | ◻ | ◻ |
| Other? If yes, please specify | ◻ | ◻ | ◻ | ◻ | ◻ | ◻ |

3. How long did it take to complete the online LbC tool (including answer choices and comments)?

◻ 30 minutes ◻ 30-60 minutes ◻ 60-90 minutes ◻ 90-120 minutes ◻ More than 120 minutes


4. What were the facilitating components and/or the difficulties during this experience? Explain

Facilitating components:

__________________________________________________________________________________________________________________________________________________________________________________________________________

Difficulties:

__________________________________________________________________________________________________________________________________________________________________________________________________________

5. Do you have any other comments? Explain ...

_______________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________

Conflicts of Interest

The authors have no conflict of interest to declare.

Funding

No funding was sought or received for this study.

References

1. Charlin B, Lubarsky S, Millette B, et al. Clinical reasoning processes: unravelling complexity through graphical representation. Med Educ. 2012;46(5):454-463. doi:10.1111/j.1365-2923.2012.04242.x

2. Fernandez N, Foucault A, Dubé S, et al. Learning-by-Concordance (LbC): introducing undergraduate students to the complexity and uncertainty of clinical practice. Can Med Educ J. 2016;7(2):e104-e113. doi:10.36834/cmej.36690

3. Charlin B, Deschênes M-F, Fernandez N. Learning by concordance (LbC) to develop professional reasoning skills: AMEE Guide No. 141. Med Teach. 2021:1-14. doi:10.1080/0142159X.2021.1900554

4. Foucault A, Dubé S, Fernandez N, Gagnon R, Charlin B. Learning medical professionalism with the online concordance-of-judgment learning tool (CJLT): a pilot study. Med Teach. 2015;37(10):955-960. doi:10.3109/0142159X.2014.970986

5. Deschênes MF, Goudreau J, Fernandez N. Learning strategies used by undergraduate nursing students in the context of a digital educational strategy based on script concordance: A descriptive study. Nurse Educ Today. 2020;95:104607. doi:10.1016/j.nedt.2020.104607

6. Cooke S, Lemay JF. Transforming medical assessment: integrating uncertainty into the evaluation of clinical reasoning in medical education. Acad Med. 2017;92(6):746-751. doi:10.1097/ACM.0000000000001559

7. Cooke S, Lemay JF, Beran T. Evolutions in clinical reasoning assessment: the evolving script concordance test. Med Teach. 2017;39(8):828-835. doi:10.1080/0142159X.2017.1327706

8. Power A, Lemay JF, Cooke S. Justify your answer: the role of written think aloud in script concordance testing. Teach Learn Med. 2017;29(1):59-67. doi:10.1080/10401334.2016.1217778

9. Dory V, Gagnon R, Vanpee D, Charlin B. How to construct and implement script concordance tests: insights from a systematic review. Med Educ. 2012;46(6):552-563. doi:10.1111/j.1365-2923.2011.04211.x

10. Lubarsky S, Dory V, Duggan P, Gagnon R, Charlin B. Script concordance testing: from theory to practice: AMEE guide no. 75. Med Teach. 2013;35(3):184-193. doi:10.3109/0142159X.2013.760036

11. Charlin B, Tardif J, Boshuizen HPA. Scripts and medical diagnostic knowledge: Theory and applications for clinical reasoning instruction and research. Acad Med. 2000;75(2):182-190. doi:10.1097/00001888-200002000-00020

12. Lubarsky S, Dory V, Audétat MC, Custers E, Charlin B. Using script theory to cultivate illness script formation and clinical reasoning in health professions education. Can Med Educ J. 2015;6(2):e61-e70. doi:10.36834/cmej.36631

13. Deschênes MF, Goudreau J. L’apprentissage du raisonnement clinique infirmier dans le cadre d’un dispositif éducatif numérique basé sur la concordance de scripts. Pédagogie Médicale. 2020;21:143-157. doi:10.1051/pmed/2020041

14. Fournier JP, Demeester A, Charlin B. Script concordance tests: guidelines for construction. BMC Med Inform Decis Mak. 2008;8(1):8-18. doi:10.1186/1472-6947-8-18

15. Lasnier F. Les compétences de l’apprentissage à l’évaluation. Guérin; 2015.

16. Emin V, Pernin JP, Guéraud V. Scénarisation pédagogique dirigée par les intentions. Sciences et Technologies de l’Information et de la Communication pour l’Éducation et la Formation. 2011;18:1-22.

17. Creswell JW. Qualitative inquiry & research design: choosing among five approaches. 3 ed. Sage Publications; 2013.

18. Bradshaw C, Atkinson S, Doody O. Employing a qualitative description approach in health care research. Global Qualitative Nursing Research. 2017;4:1-8. doi:10.1177/2333393617742282

19. Portney LG, Watkins MP. Foundations of Clinical Research: Applications to Evidence-Based Practice. 3 ed. Pearson/Prentice Hall; 2009.

20. Wyatt JC. When to use web-based surveys. J Am Med Inform Assoc. 2000;7(4):426-429. doi:10.1136/jamia.2000.0070426

21. Miles MB, Huberman AM. Analyse des données qualitatives. 2 ed. De Boeck Supérieur; 2003.

22. Lineberry M, Hornos E, Pleguezuelos E, Mella J, Brailovsky C, Bordage G. Experts’ responses in script concordance tests: a response process validity investigation. Med Educ. 2019;53:710-722. doi:10.1111/medu.13814

23. Norman GR, Monteiro SD, Sherbino J, Ilgen JS, Schmidt HG, Mamede S. The causes of errors in clinical reasoning: cognitive biases, knowledge deficits, and dual process thinking. Acad Med. 2017;92(1):23-30. doi:10.1097/acm.0000000000001421

24. Belhomme N, Jego P, Pottier P. Gestion de l’incertitude et compétence médicale : une réflexion clinique et pédagogique. La Revue de Médecine Interne. 2019;40(6):361-367. doi:10.1016/j.revmed.2018.10.382

25. Peyrony O, Hutin A, Truchot J, et al. Impact of panelists’ experience on script concordance test scores of medical students. BMC Med Educ. 2020;20(1). doi:10.1186/s12909-020-02243-w

26. Petrucci A, Nouh T, Boutros M, Gagnon R, Meterissian S. Assessing clinical judgment using the Script Concordance test: the importance of using specialty-specific experts to develop the scoring key. Am J Surg. 2012;205(2):137-140. doi:10.1016/j.amjsurg.2012.09.002