Abstract

The future of radiology is disproportionately linked to the applications of artificial intelligence (AI). Recent exponential advancements in AI are already beginning to augment the clinical practice of radiology. Driven by a paucity of review articles in the area, this article aims to discuss applications of AI in non-oncologic IR across procedural planning, execution, and follow-up along with a discussion on the future directions of the field. Applications in vascular imaging, radiomics, touchless software interactions, robotics, natural language processing, post-procedural outcome prediction, device navigation, and image acquisition are included. Familiarity with AI study analysis will help open the current ‘black box’ of AI research and help bridge the gap between the research laboratory and clinical practice.

Keywords: artificial intelligence, machine learning, radiomics, deep learning, interventional radiology

Introduction

Discussions on the future of radiology often center on the anticipated impact of artificial intelligence (AI). AI advancements, including the applications of machine learning and, more recently, deep learning, constitute a large section of research in radiology. It is important to distinguish between AI and machine learning (ML), terms that are often incorrectly used interchangeably. AI is broadly defined as the science of making intelligent machines, using varying techniques including statistical analysis and ML.1 ML, a subset of AI, is defined as the set of techniques used to design and train algorithms that learn from available data and improve their performance.1 The implementation of ML was previously limited by the scarcity of high-quality healthcare data. However, the recent explosion of digital healthcare data (in terms of clinical data from electronic health records, real-time clinical monitoring signals, genetic sequencing, and imaging data) has led to large data sets, well-suited for the training of ML algorithms.2–4 These large data sets are available to researchers and can be customized to study different topics via a set of inclusion and exclusion criteria. There are some caveats, however: the data is usually single-institutional and is not easily accessible to the non-clinician researcher. The data available for ML algorithms has also increased with the advent of genomic sequencing and increased imaging utilization by the healthcare system.5 With research advancing at such a staggering pace, review articles have been necessary to inform the practicing radiologist about the development of clinically relevant research that allows for predictive clinical recommendations and personalized medicine. Increasing utilization of complex AI algorithms has also been enabled by the increased availability of inexpensive high-performance computing.
AI has the potential to transform every step of the clinical process including diagnosis, therapeutic decision choice, intra-procedural guidance, and follow-up imaging. Work is also advancing in non-interpretive uses of AI in radiology including for scanner utilization, workflow, and billing.6,7

Much of the literature in the field of interventional radiology (IR) has centered on applications of AI in interventional oncology. These studies (including review articles) primarily focus on optimizing patient identification and selecting prognostic predictors of IR procedure efficacy for treating cancers.8–13 Research in AI has transformed the prevailing paradigm by using large data sets to uncover hidden associations and build predictive models that would be very difficult to do via the traditional technique of conducting prospective clinical trials. While clinicians are often familiar with the intricacies of logistic regression and hazard ratios, AI research has inherent complexity and specialized nomenclature that makes it difficult for many clinicians to critically analyze AI literature. Without some background, clinicians may be unable to determine the research’s potential utility or clinical impact. Also, it can be difficult to explain the functioning of AI methods – they appear to be ‘black boxes,’14 – and this can further widen the gap between the AI research laboratory and clinical practice.

The purpose of this article is to firstly provide an overview and brief definitions of some terminology involving ML, including recent advances in the field. Next, applications of AI in non-oncologic IR across procedural planning, execution, and follow-up, are described. Finally, potential future applications of AI in the field are proposed.

Overview of Machine Learning Concepts

Generalizations about what ML is ‘under the hood’ are sometimes made, comparing it to a combination of ‘if-statements’ and fancy statistics. In reality, ML is an umbrella that covers many different techniques, and learning is a property that emerges from a combination of several disparate branches of mathematics that can vary based on the approach used. The usefulness of ML comes from its ability to serve as a tool to solve problems using data by having a computer extrapolate patterns from this data, rather than explicitly creating instructions for the machine to follow. Machine learning allows machines to learn how to perform tasks that they were not explicitly programmed to execute.15 Applications of ML have been incorporated into every major industry since it can revolutionize any task involving data analysis/predictions. Some everyday examples include email spam filters, social media advertising, image recognition, and fraud detection.

Much of the recent excitement for machine learning has been due to developments in the realm of ‘deep learning’ brought on by the promising results neural networks have yielded when faced with increasingly abstract tasks. Inspired loosely by our own biology, neural networks consist of many ‘neurons’ connected into layers. Each neuron performs a very simple computation: a weighted sum followed by an activation function. There are input neurons, output neurons, and neurons in between, found in layers called ‘hidden layers.’ Neurons in layers adjacent to one another are connected, with each connection having a weight. These weights are learned in a training process using data inputs where the correct output is known. The input data features propagate through the hidden layers and are output by the final layer. The strength of activation of each neuron in this structure depends on the degree of activation of the neurons in the layer before it, combined through their weighted sum. When an input passes through a particular layer, it leads to a web of activations in these neurons that propagate layer-by-layer through the entire network. At the output layer, a final neuron’s activation represents the network’s response to a given input. While each neuron is simple, complex behavior arises due to the use of many neurons in many layers. It is difficult to explain a network’s exact behavior in response to an input in terms of its connection weights. Instead, its performance can be probed in several ways that characterize the network’s strengths and weaknesses and the importance of the input features, reducing the perception that the network is a black box.16
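The layer-by-layer propagation described above can be illustrated with a minimal sketch. The network size, the logistic activation function, and all weight values below are assumptions chosen purely for illustration; in a real network the weights would be learned during training rather than set by hand.

```python
import math

def sigmoid(z):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """One neuron: weighted sum of its inputs, then an activation function."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(weighted_sum)

def layer(inputs, weight_rows, biases):
    """A layer is a list of neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

def forward(inputs, network):
    """Propagate the input layer by layer; each layer's activations feed the next."""
    activations = inputs
    for weight_rows, biases in network:
        activations = layer(activations, weight_rows, biases)
    return activations

# Hypothetical, hand-picked weights: 2 inputs -> 3 hidden neurons -> 1 output neuron.
network = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[0.7, -0.5, 0.2]], [0.05]),                                # output layer
]

output = forward([1.0, 2.0], network)
print(output)  # a single activation between 0 and 1
```

Even in this toy example, the output is not easily explained by reading the individual weights, which hints at why larger networks are perceived as black boxes.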

There are many variants of neural networks, but two of the most relevant at present are Convolutional Neural Networks (CNNs) and Long Short-Term Memory networks (LSTMs). CNNs have revolutionized computer vision,17 and in doing so have become broadly applicable to medicine, especially via computer-aided detection (CAD) systems in radiology.18 LSTM networks, on the other hand, are best applied to inputs that vary in time, such as in natural language processing.19 In the following sections, applications of both types of neural networks in IR will be presented.

It is important to understand, however, that whatever reasoning the network generates reflects the training data provided to it. The accuracy of the model in completing its assigned task is directly related to its training data being complete, unbiased, and representative of the testing set. This means that if the data is sparse, then the model itself will be incomplete and bound to miss cases that were unlike those included in its training data. Furthermore, if the data is biased, then the model will provide answers in line with these biases instead of being a reflection of the truth.20 To combat these pitfalls, the training data needs to be large and varied so that the model makes robust predictions for cases that may be considered fringe while remaining as generalizable as possible. It is also important for researchers to be aware of the limitations of their data, and thus only use their trained models for tasks they were adequately trained to complete. Used responsibly, ML methods such as neural networks will likely revolutionize the healthcare sector in many ways such as allowing for personalized medicine, testing the efficacy of health policies and clinical trials, and integrating clinical care with the behavioral determinants of health.21

Overview of AI-led advances of IR interventions:

Radiomics

First described in 2012, radiomics is the high throughput extraction and analysis of data from computed tomography (CT), positron emission tomography (PET), or magnetic resonance (MR) imaging.22,23 Radiomics data consists of quantitative imaging features in a form that lends itself to building models that relate these imaging features to gene and protein signatures and phenotypes. Thus, by treating medical images as more than visual aids, these methods harness their potential to provide diagnostic and prognostic information.22 The process of radiomics consists of several universal steps, each with unique challenges. These steps are image acquisition, identification of the volume of interest, segmentation, extraction, and quantification of features, populating databases with these features, and mining the data to develop classifier models.22 Image acquisition through CT, PET, and MR modalities allows for a wide variation in the acquisition protocol, making it difficult to standardize images across institutions, machinery, and patient populations. This presents a challenge in radiomics, as it is crucial to extract data that can be directly compared (Figure 1).

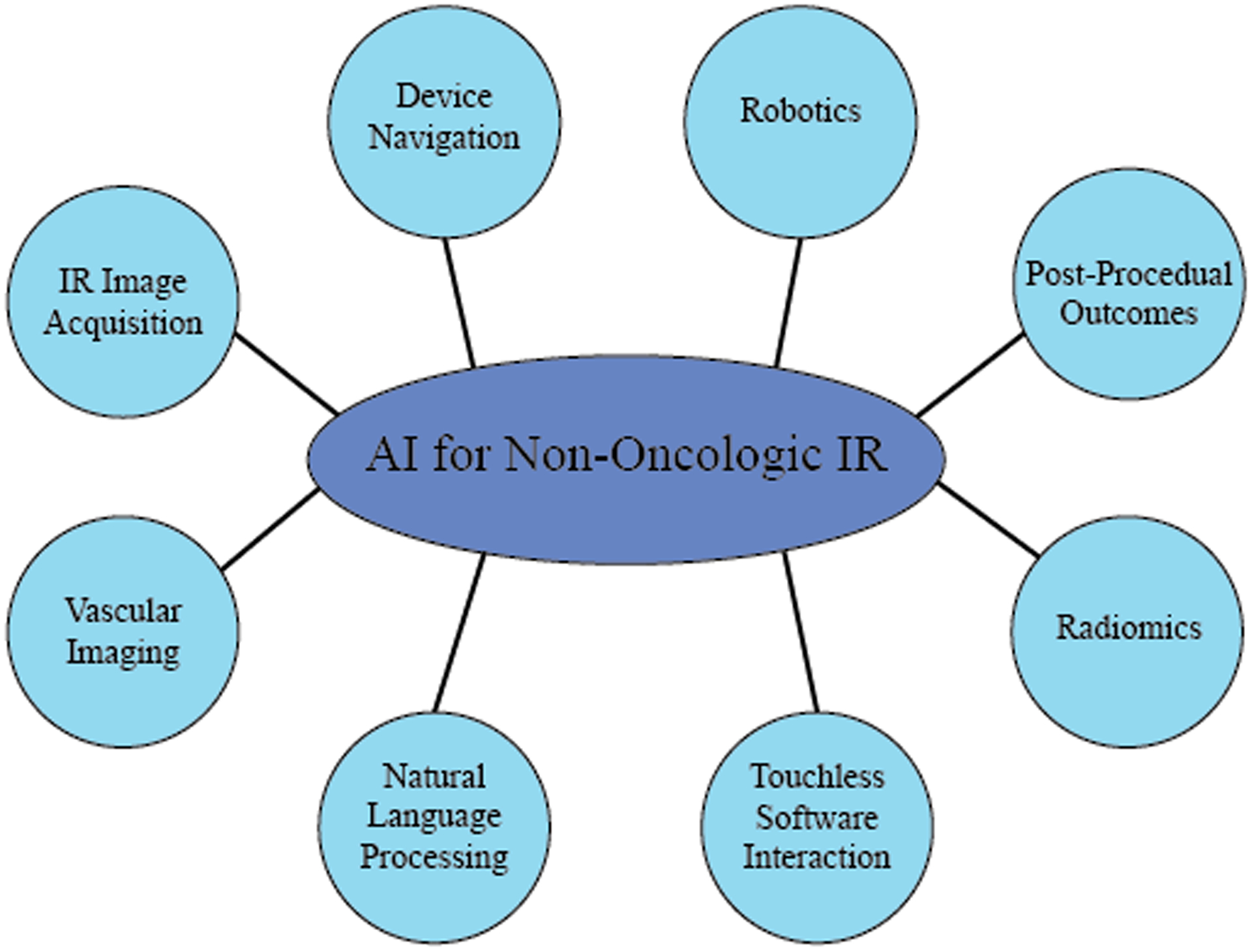

Figure 1:

Schematic showing the different applications of artificial intelligence in non-oncologic interventional radiology

Based on the literature, different aspects of the applications of artificial intelligence in non-oncologic interventional radiology were identified and illustrated schematically using a circle-spoke diagram.

In radiomics, ‘volumes of interest’ often refer to tumors and suspected tumors. Radiomics also allows for the identification of sub-volumes within these regions, which gives radiomics its high prognostic potential.24 Segmentation of volumes of interest can be challenging, as these volumes can be indistinct. It is generally agreed upon that computer-aided border detection and manual fine-tuning is the best approach to accurate segmentation. The actual features that are extracted can be either semantic or agnostic. Semantic features include size, shape, vascularity, etc. while agnostic features include mathematical, quantitative descriptors such as histograms, textures, and Laplacian transforms.23,24 The larger a radiomics dataset, the more power it has because the mining process involves discovering patterns across a large amount of data. This process can involve machine learning approaches, statistical approaches, neural networks, and Bayesian networks.24
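As an illustration of what an ‘agnostic’ feature can look like, the sketch below computes a few histogram-based (first-order) descriptors from the voxel intensities of a segmented volume of interest. The function name, the bin count, and the intensity values are hypothetical and chosen only for this example; published radiomics pipelines extract far larger feature sets with standardized definitions.

```python
import math
from collections import Counter

def first_order_features(voxels, n_bins=4):
    """Simple histogram-based descriptors for the intensities in a volume of interest."""
    n = len(voxels)
    mean = sum(voxels) / n
    variance = sum((v - mean) ** 2 for v in voxels) / n
    lo, hi = min(voxels), max(voxels)
    width = (hi - lo) / n_bins or 1.0  # fall back to 1.0 for a uniform region
    # Assign each voxel to a histogram bin; the maximum value goes in the last bin.
    bins = Counter(min(int((v - lo) / width), n_bins - 1) for v in voxels)
    probs = [count / n for count in bins.values()]
    # Shannon entropy of the intensity histogram, in bits.
    entropy = -sum(p * math.log2(p) for p in probs)
    return {"mean": mean, "variance": variance, "entropy": entropy}

# Hypothetical intensities from a small segmented region (arbitrary units).
voxels = [10, 10, 20, 20, 30, 30, 40, 40]
features = first_order_features(voxels)
print(features)
```

Each volume of interest is thereby reduced to a row of numbers, which is the form in which features populate a radiomics database for later mining.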

Radiomics is most widely applied in the field of oncology, largely owing to support from the National Cancer Institute.25 The use of radiomics in oncology has led to advancements in the ability to diagnose cancer, determine tumor prognosis, and assess treatment options.24 While radiomics has mostly been used for interventional oncological applications at this time, its use in image segmentation and diagnoses bodes well for future applications in non-oncologic IR as well.24

Touchless Software Interaction

In the operating room and the interventional radiology suite, physicians face challenges interacting with computers during procedures within the confines of a sterile environment. Touchless human-computer interaction technologies have the potential to decrease the possibility of error and inefficiency during procedures by offering physicians an alternative, smoother method for interacting with medical software in sterile environments.

Machine learning has emerged as an important approach for streamlining these touchless software-assisted interactions using voice and gesture commands. Machine learning frameworks can be trained to classify voice commands and gestures that physicians may employ during a procedure. These methods can contribute to better recognition rates of these actions by cameras, speakers, and other touchless devices.26,27

In one study, inertial sensors worn on the head and body produced data that was collected and classified as low-dimensional body gestures.28 These sensors eliminate the problems associated with cameras such as ensuring adequate illumination or the need to perform the gestures in the camera’s line-of-sight.29 These are both common problems in a dimly lit interventional suite with multiple moving parts. These gestures were then learned and parameterized by machine learning software, which produced a 90% recognition rate.28 Another study extended this technique to include the option of voice-based or switch-based activation of gesture recognition, with both options achieving a precision of within 2–4% of the parameter range.29

Such gesture recognition principles have also been applied to IR procedures, such as percutaneous nephrostomies.30 In this application, the physician interacts with ultrasound visualization software to determine a needle entry and exit trajectory into the renal pelvis. Physicians were asked to produce five fixed gestures, and angular information for each gesture was manually determined to train and optimize the algorithm to recognize these gestures with 92–100% accuracy. Furthermore, the mean placement error in selecting the target on the ultrasound snapshot was 0.55 ± 0.30 mm.30

Natural Language Processing

Natural Language Processing (NLP) is broadly defined as the field of ML dedicated to the analysis, manipulation, restructuring, and generation of spoken and written language. NLP has special applications in the field of diagnostic radiology to generate standardized reports from the automated extraction of large amounts of clinically relevant information found in the radiologist’s reports.31 Owing to the increased diversity of NLP applications in clinical medicine, it naturally impacts how IR is practiced as well.

With the rise of widely available devices such as the Microsoft Kinect and the Google Home, a new wave of research is aimed at re-deploying these pre-fabricated, relatively cheap devices to the procedural suite.27,32 In one particular application for device sizing and compatibility in IR, a Google Home speaker was outfitted with an NLP implementation.27 Input queries were processed and sent to the cloud, which contained data on 475 IR devices for sizing and compatibility (e.g. what size sheath is required for a particular Amplatzer plug).27 Once the solution was determined, it was played back to the operator using the smart speaker, with technically satisfactory outcomes.27 Expanding on prior work on the use of ML in touchless software interaction, standardized machine learning frameworks have allowed for the use of LSTMs (as mentioned previously) for multimodal gesture recognition that outperforms other state-of-the-art methods.33

NLP can revolutionize IR outside of the procedural suite as well. In one study, scientists developed a virtual text-based radiology assistant/consultant.34 It was developed to serve as the communications point-of-contact between referring providers and IR. The program simulates conversation on a traditional text-based platform. Once queries are entered into the system, relevant information (in the form of infographics and websites) is sent back to the provider.34 This tool allows clinicians to focus on patient care and reduces the time spent on repetitive communications tasks.34 On the referring provider’s end, this tool allows them to obtain information quickly without waiting for a provider to be available. Further quality control needs to be performed to make sure the system reliably forwards queries to the provider when it receives questions outside of the data it was trained on.

Post-Procedural Outcomes

One of the most basic examples of ML is the decision tree, well known even outside of computer science. Originally used in the field of statistics to help predict outcomes using decisional probabilities, decision trees have influenced an entire branch of ML. This approach’s branching logic is simple enough to be followed and recreated by hand. A robust example of decision trees in ML, known as the Random Forest, is an ‘ensemble’ or collection of many decision trees working together. The notion is that many weak learners (or weak predictors) can be combined to create a stronger one.35,36 A technique like the random forest can find relationships in high dimensional data that are likely beyond the scope of what an individual researcher could find with traditional statistical methods. The random forest, and other similar methods, can incorporate all the measurable data available for a patient. These methods also capture complex nonlinear interactions between these variables and use this information to support decision making and to solve unique problems in patient care, ranging from advanced prediction of patient outcomes to more personalized plans of care.35,36
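The idea of combining many weak learners into a stronger one can be sketched in a few lines. The example below is a deliberate simplification and not the method used in the cited studies: each ‘tree’ is only a single-split decision stump trained on a bootstrap sample with one randomly chosen feature, whereas a full random forest grows deeper trees and samples features at every split. The data set is synthetic and all function names are hypothetical.

```python
import random
from collections import Counter

def majority(labels):
    """Most common label in a list."""
    return Counter(labels).most_common(1)[0][0]

def train_stump(rows, labels, feature):
    """Weak learner: a one-split decision tree on a single feature."""
    best = None
    for threshold in sorted({r[feature] for r in rows}):
        left = [y for r, y in zip(rows, labels) if r[feature] <= threshold]
        right = [y for r, y in zip(rows, labels) if r[feature] > threshold]
        if not left or not right:
            continue
        l_lab, r_lab = majority(left), majority(right)
        errors = sum(y != l_lab for y in left) + sum(y != r_lab for y in right)
        if best is None or errors < best[0]:
            best = (errors, threshold, l_lab, r_lab)
    if best is None:  # no usable split: always predict the majority class
        label = majority(labels)
        return (feature, float("inf"), label, label)
    _, threshold, l_lab, r_lab = best
    return (feature, threshold, l_lab, r_lab)

def predict_stump(stump, row):
    """Follow the single branch of a stump to a predicted label."""
    feature, threshold, l_lab, r_lab = stump
    return l_lab if row[feature] <= threshold else r_lab

def train_forest(rows, labels, n_trees=25, seed=0):
    """Train many stumps, each on its own bootstrap sample and random feature."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]  # sample with replacement
        feature = rng.randrange(len(rows[0]))           # random feature for this tree
        forest.append(train_stump([rows[i] for i in idx],
                                  [labels[i] for i in idx], feature))
    return forest

def predict_forest(forest, row):
    """The ensemble prediction is the majority vote of all trees."""
    return majority([predict_stump(stump, row) for stump in forest])

# Synthetic data: two numeric features per case, binary outcome (0 or 1).
rows = [(1, 10), (2, 12), (3, 11), (4, 13), (6, 20), (7, 22), (8, 21), (9, 23)]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
forest = train_forest(rows, labels)
print(predict_forest(forest, (0, 5)), predict_forest(forest, (10, 30)))
```

Because each stump sees a different resampling of the data, individual stumps may be poor predictors, but their majority vote tends to be more stable than any single one.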

In IR, this approach has been successfully utilized to predict iatrogenic pneumothorax after CT-guided transthoracic biopsy (TTB), in-hospital mortality after transjugular intrahepatic portosystemic shunt (TIPS), and a length of stay >3 days after uterine artery embolization (UAE).36 These applications are particularly enabled by the availability of large sets of patient-associated clinical data from the adoption of electronic health records.36 One of the major strengths of this study is the fact that all of the variables that are input to the model are pre-admission values.36 This method would allow for patient-care optimization based on risk-stratification performed at the time of admission.

Vascular Imaging

The field of vascular imaging has been significantly improved by the applications of AI. These advances extend both to peripheral arterial disease (PAD),37 as well as to neuro-interventional radiology (NIR).38–40

In PAD, an ML-based model outperformed a traditional statistical model using stepwise logistic regression both for the identification of PAD (p=0.03) and predicting future mortality (p=0.10).37 Limitations of this study included a definition of PAD based only on clinical metrics (ankle-brachial indices) rather than symptom metrics, and a short follow-up time. The ability to seemingly ‘throw’ a series of metrics at an ML algorithm and allow it to construct a model that outperforms statistical techniques is a hallmark of ML-based research. This allows for risk stratification that is not possible with other techniques. In the setting of acute ischemic stroke, advanced age is associated with higher morbidity and mortality. One study utilized an ML algorithm to optimize the selection of elderly patients who would benefit the most from an endovascular thrombectomy (ET).40 This study was particularly important, given the fact that elderly patients have been under-represented in clinical trials on ET. ML techniques have also been used to predict the outcomes for endovascular embolization of brain arteriovenous malformations.38

Device Navigation

In medicine, there has been a general move towards minimally invasive surgery to reduce patient morbidity and mortality. Percutaneous procedures particularly rely on a high degree of accuracy to ensure appropriate placement of devices inside the body, and to guarantee that the surrounding tissue is left undamaged. This level of precision is provided by accurate image-guidance. For this task, 2-D fluoroscopic guidance, as well as 3-D guidance using intra-procedural cone beam CT, are utilized.41 The primary advantages secured by utilizing AI include automated landmark recognition, compensation for motion artifact, and generation/validation of a safe needle trajectory.41 Fritz et al. have shown the efficacy of an augmented reality system for a variety of IR procedures performed on cadavers, including spinal injection and MR-guided vertebroplasty.42,43

Auloge et al. performed a 20-patient randomized clinical study to test the efficacy of AI-guided percutaneous vertebroplasty for patients with vertebral compression fractures.41 Patients were randomized to 2 groups: procedures performed with standard fluoroscopy and procedures augmented with AI-guidance.41 The metrics studied included trocar placement accuracy, complications, trocar deployment time, and fluoroscopy time. All procedures in both groups were successful with no complications observed in either group. No statistically significant differences in accuracy were observed between the groups. Fluoroscopy time was lower in the AI-guided group while deployment time was lower in the standard-fluoroscopy group.41 Further research needs to be performed to be able to generalize these results to the whole spectrum of percutaneous procedures.

Robotics

The increased utilization of robotic assistance in IR has been reviewed in the literature.44,45 The greatest impact offered by existing robotic surgery is increased precision and accuracy, along with an increase in degrees of freedom.44 In IR, the use of robotics also allows for radiation protection by limiting the operator’s exposure to radiation during procedures.44 Applications of computer vision can reduce the tissue trauma prevalent in both traditional open surgery, as well as endoscopic procedures. Using sensors located at the catheter tip, Fagogenis et al. created an autonomous robotic navigation system with haptic (touch-based) vision, for utilization in an aortic paravalvular leak closure.46 ML and image processing algorithms used the sensor data to provide information on the type of tissue that the catheter tip is touching (e.g. blood, cardiac tissue, valve) and the level of force applied. Autonomous control was found to be faster than joy-stick controlled robotic navigation.46 In an article published in Nature Machine Intelligence, Chen et al. described an autonomous robotic guidance system for obtaining vascular access.47 This deep learning system utilizes near-infrared and duplex ultrasound imaging to steer needles or catheters into submillimeter vessels with minimal supervision.47 This system can autonomously compensate for the patient’s arm motion, as well as identify the success or failure of the cannulation attempt based on ultrasound and force feedback.47

Image Acquisition

The goal of this collection of techniques is to optimize image acquisition without a loss of image quality. Metrics to be reduced include radiation,48 contrast dose,49 and image-processing time.50 Altered image appearance and artifacts have limited the utilization of this technology in the past. However, deep learning algorithms have been shown to perform well in terms of structural fidelity and are faster than competing state-of-the-art methods. Because imaging is routinely obtained as a part of IR procedures, its optimization can improve the clinical practice of IR while reducing the risk to the patient from contrast injection and radiation.

One study used a deep convolutional neural network to map low-dose CT images to their respective normal-dose images in a patch-by-patch fashion.50 This method enables the algorithm to minimize artifacts while preserving the structure of the image. In the field of nuclear imaging, deep learning has been used to reduce radiotracer requirements for amyloid PET/MRI without sacrificing diagnostic quality.48 This model used low-dose PET images and simultaneously acquired multimodal MRI sequences as input. This technique allows for ultra-low-dose imaging, which reduces patient radiation exposure to levels close to those found during a transcontinental flight.48

Future Directions and Conclusion

AI has already been applied in a variety of ways in IR, ranging from procedural optimization to workflow optimization. In this section, ways in which AI can further impact the field are proposed.

Operator inefficiency is often caused by the need for repetitive verbal communication between the proceduralist and the circulating nurse for the manipulation of the hardware or software in the room. One study successfully used a voice recognition interface to adjust various parameters during laparoscopic surgeries.51 These include hardware-related tasks such as the initial set-up of the light sources and the camera, as well as procedural steps such as the activation of the insufflator.51 In IR, a similarly significant delay is caused by the proceduralist having to wait for the circulator to manipulate the C-arm as well as reposition the patient on the table. A voice-recognition based system would allow for significantly shorter delays during procedures. Furthermore, studies have used eye-tracking to optimize training during surgical procedures.52 A tangentially related use of this technology could be applied for fluoroscopy beam collimation in IR. An algorithm’s analysis of operator eye fixation and gaze patterns could automate collimation, thereby narrowing the fluoroscopy beam and reducing the total radiation dose to which the patient is exposed. This technique could automate the process instead of relying on communication between the operator and circulating nurse for a task that is often treated as an afterthought.

With the increasing clinical nature of IR, ML applied to widely available data from electronic health records can be used to manage complications. Contrast-induced acute kidney injury (AKI) is a well-documented cause of nephropathy after interventional procedures that utilize contrast media.53 The extent of renal impairment is often difficult to assess due to limited prior laboratory values. Without these values, it is difficult to obtain a sense of the acuteness of the changes in the patient’s creatinine. ML has been successfully applied in the intensive care unit (ICU) setting to accurately predict pre-admission hemoglobin and creatinine in patients.54 The model uses clinical data commonly available within the first few hours of ICU stay, with predictions averaging within 0.3–0.4 mg/dl of actual creatinine values.54 Having accurate predictions of a patient’s baseline creatinine would be indispensable to guide the modality as well as the intensity of treatment.

An important issue in the use of AI to inform the clinical practice of radiology is the potential propagation of bias inherent in the data.55 An initially equitable algorithm can be made biased by prejudiced data/human decisions.56 One suggestion to prevent this bias from affecting AI performance is to make it a fundamental requirement that the output of every clinical AI system can be explained and interpreted, eliminating the ‘black box’.55 Additionally, transparency about removing existing biases in raw data used in an algorithm and avoiding adding new biases should be included in the model description.55

The use of ML in robotics and augmented reality systems has also been studied for use in trainee education, and has been proposed for future use in IR.13 For example, researchers in urology have utilized ML to process procedural data in robot-assisted radical prostatectomy to evaluate performance and predict outcomes.57 This study collected automated performance metrics (obtained from the surgical robot system) such as camera idle time, dominant instrument moving time, and camera moving time. These metrics were successfully used to predict an extended length-of-stay for patients post-procedure, utilizing a random forest model.57 Furthermore, the predicted values of certain metrics, such as surgery time and Foley catheter duration, were also significantly associated with the actual measured values.57 One way ML could be used in robotics in IR is through biomechanical algorithms that predict an interventionalist’s motion based on posture and weight distribution. The use of lead aprons for radiation protection is known to cause a variety of musculoskeletal issues.58 The use of predictive human biomechanics would allow for a weight-bearing exoskeleton to better predict and replicate the movements of an interventionalist in real-time. This would allow for weight offloading and thereby reduce stresses on the operator’s body.

With further advancement of the field, there is significant room for AI to further optimize practice in IR, from trainee education to risk stratification, and to fundamentally alter the clinical paradigm of the field.

Funding:

Research reported in this publication was supported by the National Cancer Institute of the National Institutes of Health under award number R01CA206180. The total cost was covered by this grant. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Footnotes

Disclaimer:

Financial remuneration to authors and family members related to the subject of this article: None. JC reports grant support from the Society of Interventional Oncology, Guerbet Pharmaceuticals, Philips Healthcare, Boston Scientific, Yale Center for Clinical Investigation, and the NIH R01CA206180 outside the submitted work.

IRB:

This study does not involve human subjects and is IRB-exempt

Prepared for:

Digestive Disease Interventions

References

- 1.Artificial Intelligence and Machine Learning in Software as a Medical Device Software as a Medical Device (SaMD) https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-software-medical-device#whatis. Accessed 10/20, 2020.

- 2.Sailer AM, Tipaldi MA, Krokidis M. AI in Interventional Radiology: There is Momentum for High-Quality Data Registries. Cardiovasc Intervent Radiol. 2019;42(8):1208–1209. [DOI] [PubMed] [Google Scholar]

- 3.Xu C, Jackson SA. Machine learning and complex biological data. Genome Biol. 2019;20(1):76. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Shah P, Kendall F, Khozin S, et al. Artificial intelligence and machine learning in clinical development: a translational perspective. NPJ Digit Med. 2019;2:69. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Hendee WR, Becker GJ, Borgstede JP, et al. Addressing overutilization in medical imaging. Radiology. 2010;257(1):240–245. [DOI] [PubMed] [Google Scholar]

- 6.Lakhani P, Prater AB, Hutson RK, et al. Machine Learning in Radiology: Applications Beyond Image Interpretation. J Am Coll Radiol. 2018;15(2):350–359. [DOI] [PubMed] [Google Scholar]

- 7.Richardson ML, Garwood ER, Lee Y, et al. Noninterpretive Uses of Artificial Intelligence in Radiology. Acad Radiol. 2020. [DOI] [PubMed] [Google Scholar]

- 8.Abajian A, Murali N, Savic LJ, et al. Predicting Treatment Response to Intra-arterial Therapies for Hepatocellular Carcinoma with the Use of Supervised Machine Learning-An Artificial Intelligence Concept. J Vasc Interv Radiol. 2018;29(6):850–857 e851. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Letzen B, Wang CJ, Chapiro J. The Role of Artificial Intelligence in Interventional Oncology: A Primer. J Vasc Interv Radiol. 2019;30(1):38–41 e31. [DOI] [PubMed] [Google Scholar]

- 10. Murali N, Kucukkaya A, Petukhova A, Onofrey J, Chapiro J. Supervised Machine Learning in Oncology: A Clinician’s Guide. Digestive Disease Interventions. 2020;04(01):73–81.

- 11. Riyahi S, Choi W, Liu CJ, et al. Quantifying local tumor morphological changes with Jacobian map for prediction of pathologic tumor response to chemo-radiotherapy in locally advanced esophageal cancer. Phys Med Biol. 2018;63(14):145020.

- 12. Iezzi R, Goldberg SN, Merlino B, Posa A, Valentini V, Manfredi R. Artificial Intelligence in Interventional Radiology: A Literature Review and Future Perspectives. J Oncol. 2019;2019:6153041.

- 13. Meek RD, Lungren MP, Gichoya JW. Machine Learning for the Interventional Radiologist. American Journal of Roentgenology. 2019;213(4):782–784.

- 14. Handelman GS, Kok HK, Chandra RV, et al. Peering Into the Black Box of Artificial Intelligence: Evaluation Metrics of Machine Learning Methods. AJR Am J Roentgenol. 2019;212(1):38–43.

- 15. Samuel AL. Some Studies in Machine Learning Using the Game of Checkers. IBM Journal of Research and Development. 1959;3(3):210–229.

- 16. Dayhoff JE, DeLeo JM. Artificial neural networks. Cancer. 2001;91(S8):1615–1635.

- 17. Khan S, Rahmani H, Shah SAA, Bennamoun M. A Guide to Convolutional Neural Networks for Computer Vision. Medioni G, Dickinson S, eds. Morgan & Claypool; 2018.

- 18. Castellino RA. Computer aided detection (CAD): an overview. Cancer Imaging. 2005;5:17–19.

- 19. Liu Z, Yang M, Wang X, et al. Entity recognition from clinical texts via recurrent neural network. BMC Med Inform Decis Mak. 2017;17(Suppl 2):67.

- 20. Mehrabi N, Morstatter F, Saxena N, Lerman K, Galstyan A. A Survey on Bias and Fairness in Machine Learning. ArXiv. 2019;abs/1908.09635.

- 21. Vayena E, Dzenowagis J, Brownstein JS, Sheikh A. Policy implications of big data in the health sector. Bull World Health Organ. 2018;96(1):66–68.

- 22. Kumar V, Gu Y, Basu S, et al. Radiomics: the process and the challenges. Magn Reson Imaging. 2012;30(9):1234–1248.

- 23. Verma V, Simone CB 2nd, Krishnan S, Lin SH, Yang J, Hahn SM. The Rise of Radiomics and Implications for Oncologic Management. J Natl Cancer Inst. 2017;109(7).

- 24. Gillies RJ, Kinahan PE, Hricak H. Radiomics: Images Are More than Pictures, They Are Data. Radiology. 2016;278(2):563–577.

- 25. Clarke LP, Nordstrom RJ, Zhang H, et al. The Quantitative Imaging Network: NCI’s Historical Perspective and Planned Goals. Transl Oncol. 2014;7(1):1–4.

- 26. Mewes A, Hensen B, Wacker F, Hansen C. Touchless interaction with software in interventional radiology and surgery: a systematic literature review. Int J Comput Assist Radiol Surg. 2017;12(2):291–305.

- 27. Seals K, Al-Hakim R, Mulligan P, et al. Abstract No. 38: The development of a machine learning smart speaker application for device sizing in interventional radiology. Journal of Vascular and Interventional Radiology. 2019;30(3):S20.

- 28. Schwarz LA, Bigdelou A, Navab N. Learning Gestures for Customizable Human-Computer Interaction in the Operating Room. Paper presented at: Medical Image Computing and Computer-Assisted Intervention – MICCAI 2011; 2011; Berlin, Heidelberg.

- 29. Bigdelou A, Schwarz L, Navab N. An adaptive solution for intra-operative gesture-based human-machine interaction. Proceedings of the 2012 ACM international conference on Intelligent User Interfaces; 2012; Lisbon, Portugal.

- 30. Kotwicz Herniczek S, Lasso A, Ungi T, Fichtinger G. Feasibility of a touch-free user interface for ultrasound snapshot-guided nephrostomy. Vol 9036: SPIE; 2014.

- 31. Tang A, Tam R, Cadrin-Chenevert A, et al. Canadian Association of Radiologists White Paper on Artificial Intelligence in Radiology. Can Assoc Radiol J. 2018;69(2):120–135.

- 32. Suelze B, Agten R, Bertrand PB, et al. Waving at the Heart: Implementation of a Kinect-based real-time interactive control system for viewing cineangiogram loops during cardiac catheterization procedures. Paper presented at: Computing in Cardiology 2013; 22–25 Sept. 2013.

- 33. Nishida N, Nakayama H. Multimodal Gesture Recognition Using Multi-stream Recurrent Neural Network. Revised Selected Papers of the 7th Pacific-Rim Symposium on Image and Video Technology - Volume 9431; 2015; Auckland, New Zealand.

- 34. Seals K, Dubin B, Leonards L, et al. Utilization of deep learning techniques to assist clinicians in diagnostic and interventional radiology: Development of a virtual radiology assistant. Journal of Vascular and Interventional Radiology. 2017;28(2):S153.

- 35. Wongvibulsin S, Wu KC, Zeger SL. Clinical risk prediction with random forests for survival, longitudinal, and multivariate (RF-SLAM) data analysis. BMC Medical Research Methodology. 2019;20(1):1.

- 36. Sinha I, Aluthge DP, Chen ES, Sarkar IN, Ahn SH. Machine Learning Offers Exciting Potential for Predicting Postprocedural Outcomes: A Framework for Developing Random Forest Models in IR. J Vasc Interv Radiol. 2020;31(6):1018–1024 e1014.

- 37. Ross EG, Shah NH, Dalman RL, Nead KT, Cooke JP, Leeper NJ. The use of machine learning for the identification of peripheral artery disease and future mortality risk. J Vasc Surg. 2016;64(5):1515–1522 e1513.

- 38. Asadi H, Kok HK, Looby S, Brennan P, O’Hare A, Thornton J. Outcomes and Complications After Endovascular Treatment of Brain Arteriovenous Malformations: A Prognostication Attempt Using Artificial Intelligence. World Neurosurg. 2016;96:562–569 e561.

- 39. Bentley P, Ganesalingam J, Carlton Jones AL, et al. Prediction of stroke thrombolysis outcome using CT brain machine learning. Neuroimage Clin. 2014;4:635–640.

- 40. Alawieh A, Zaraket F, Alawieh MB, Chatterjee AR, Spiotta A. Using machine learning to optimize selection of elderly patients for endovascular thrombectomy. J Neurointerv Surg. 2019;11(8):847–851.

- 41. Auloge P, Cazzato RL, Ramamurthy N, et al. Augmented reality and artificial intelligence-based navigation during percutaneous vertebroplasty: a pilot randomised clinical trial. Eur Spine J. 2020;29(7):1580–1589.

- 42. Fritz J, U-Thainual P, Ungi T, et al. Augmented reality visualisation using an image overlay system for MR-guided interventions: technical performance of spine injection procedures in human cadavers at 1.5 Tesla. Eur Radiol. 2013;23(1):235–245.

- 43. Fritz J, U-Thainual P, Ungi T, et al. MR-guided vertebroplasty with augmented reality image overlay navigation. Cardiovasc Intervent Radiol. 2014;37(6):1589–1596.

- 44. Tacher V, de Baere T. Robotic assistance in interventional radiology: dream or reality? Eur Radiol. 2020;30(2):925–926.

- 45. Cleary K, Melzer A, Watson V, Kronreif G, Stoianovici D. Interventional robotic systems: applications and technology state-of-the-art. Minim Invasive Ther Allied Technol. 2006;15(2):101–113.

- 46. Fagogenis G, Mencattelli M, Machaidze Z, et al. Autonomous Robotic Intracardiac Catheter Navigation Using Haptic Vision. Sci Robot. 2019;4(29).

- 47. Chen AI, Balter ML, Maguire TJ, Yarmush ML. Deep learning robotic guidance for autonomous vascular access. Nature Machine Intelligence. 2020;2(2):104–115.

- 48. Chen KT, Gong E, de Carvalho Macruz FB, et al. Ultra-Low-Dose (18)F-Florbetaben Amyloid PET Imaging Using Deep Learning with Multi-Contrast MRI Inputs. Radiology. 2019;290(3):649–656.

- 49. Gong E, Pauly JM, Wintermark M, Zaharchuk G. Deep learning enables reduced gadolinium dose for contrast-enhanced brain MRI. J Magn Reson Imaging. 2018;48(2):330–340.

- 50. Chen H, Zhang Y, Zhang W, et al. Low-dose CT via convolutional neural network. Biomed Opt Express. 2017;8(2):679–694.

- 51. El-Shallaly GE, Mohammed B, Muhtaseb MS, Hamouda AH, Nassar AH. Voice recognition interfaces (VRI) optimize the utilization of theatre staff and time during laparoscopic cholecystectomy. Minim Invasive Ther Allied Technol. 2005;14(6):369–371.

- 52. Merali N, Veeramootoo D, Singh S. Eye-Tracking Technology in Surgical Training. J Invest Surg. 2019;32(7):587–593.

- 53. Pattharanitima P, Tasanarong A. Pharmacological strategies to prevent contrast-induced acute kidney injury. Biomed Res Int. 2014;2014:236930.

- 54. Dauvin A, Donado C, Bachtiger P, et al. Machine learning can accurately predict pre-admission baseline hemoglobin and creatinine in intensive care patients. NPJ Digit Med. 2019;2:116.

- 55. Chapiro J. The Role of Artificial Intelligence. Endovascular Today. 2020;19(10):78–79. https://evtoday.com/pdfs/et1020_F5_Disparities.pdf. Accessed 10/30/2020.

- 56. DeCamp M, Lindvall C. Latent bias and the implementation of artificial intelligence in medicine. J Am Med Inform Assoc. 2020.

- 57. Hung AJ, Chen J, Che Z, et al. Utilizing Machine Learning and Automated Performance Metrics to Evaluate Robot-Assisted Radical Prostatectomy Performance and Predict Outcomes. J Endourol. 2018;32(5):438–444.

- 58. Orme NM, Rihal CS, Gulati R, et al. Occupational health hazards of working in the interventional laboratory: a multisite case control study of physicians and allied staff. J Am Coll Cardiol. 2015;65(8):820–826.
