Abstract

Chest radiography (X-ray) is the most common diagnostic method for pulmonary disorders. A trained radiologist is required for interpreting the radiographs. But sometimes, even experienced radiologists can misinterpret the findings. This leads to the need for computer-aided detection diagnosis. For decades, researchers were automatically detecting pulmonary disorders using the traditional computer vision (CV) methods. Now the availability of large annotated datasets and computing hardware has made it possible for deep learning to dominate the area. It is now the modus operandi for feature extraction, segmentation, detection, and classification tasks in medical imaging analysis. This paper focuses on the research conducted using chest X-rays for the lung segmentation and detection/classification of pulmonary disorders on publicly available datasets. The studies performed using the Generative Adversarial Network (GAN) models for segmentation and classification on chest X-rays are also included in this study. GAN has gained the interest of the CV community as it can help with medical data scarcity. In this study, we have also included the research conducted before the popularity of deep learning models to have a clear picture of the field. Many surveys have been published, but none of them is dedicated to chest X-rays. This study will help the readers to know about the existing techniques, approaches, and their significance.

Keywords: Deep convolutional neural network; Computer vision; Lung segmentation; Multiclass classification; Nodule, TB, COVID-19, Pneumothorax detection; GAN

Introduction

The medical science branch can be categorized into two classes: anatomy and physiology. Information based on visual appearance comes under anatomy, while physiological information might not be visible, for example, diet, age, a parameter from the blood test. Medical imaging comes under the anatomy class. Magnetic resonance imaging (MRI), computed tomography (CT) scan, X-rays are few clinical examinations used for probing pulmonary disorders. X-rays hold a valued position and is a primary diagnostic tool for many medical conditions because of its ease to use and low cost.

The first X-ray of small animals is reported back in 1895 [113]. It is an imaging technique that uses radiations to produce images of organ tissues and bones. It is a very common clinical examination used by the radiologist for diagnosing pulmonary diseases. The chest radiograph is captured by passing the X-ray beam through the body. It is done in two ways: in posterior–anterior (PA) the beam is passed from back to front, and anterior–posterior (AP) where the beam is passed from the front to back. It appears black and white depending on the X-ray absorption because of the different density body parts. Bones are of high density so it appears white, while muscles and fat appear gray. The air in the lungs appears black because of its low density. Chest X-ray captures the lungs, heart, rib cage, airways, and blood vessels. It is a common diagnosis for pneumonia, tuberculosis (TB), pulmonary nodule, lung tissue scarring called fibrosis, and others. It provides the thorough examination of the patient’s chest but requires interpretation by a qualified radiologist. Variation in shape-size and overlapping of organs such as lung fields with the rib cage, fuzzy intensity transitions near the boundary of heart and lung, makes the interpretation difficult even for an expert. Therefore, the discrepancy is reported in the interpretation of X-rays among radiologists and physicians in an emergency [4, 129]. Further, the rapid rise in workload and complexity increases the chances of wrong interpretation.

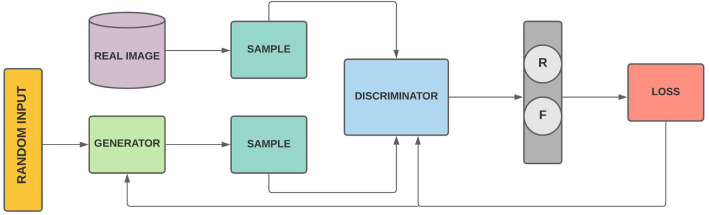

In the 1960s, with modern digital computers, X-rays were getting analyzed. Almost two decades later, the research community was focusing on CAD to assist the radiologist [45]. In the late 90s, using training data for the development of automated supervised system were becoming popular. From deformable models (used in segmentation) to statistical classifiers were developed for diagnosing medical conditions. There are four main components of CAD systems: image pre-processing, segmentation, extraction of the region of interest (ROI), and classification. In medical imaging, pre-processing steps like enhancement technique (histogram equalization) and rib cage suppression can help in the identification of abnormality in the chest. Lung segmentation helps in removing the non-lung part from the computation as ROI lies inside it and helps in better analysis of clinical parameters. Segmentation may decrease the false positive (FP), as any knowledge outside the lung is irrelevant. Lung segmentation is a challenging task as there is variation in shape, size (due to age), gender, and the overlapping of clavicles and rib cage. Many different segmentation methods were developed such as simple rule-based [28, 40, 94] to adapting deformable models [32, 82], but since the popularity of complex deep learning architectures [12, 141], most of the segmentation studies are using them. The convolutional neural network (CNN) is the most used technique for image analysis. The first CNN model was Neocognitron [41]. CNN was used for the first time in lung nodule detection [100]. LeNet [91] was the first real-world CNN application where it recognized the handwritten digits, but CNN gained popularity with AlexNet [87] which is a deep CNN. Many research studies for multi-class pulmonary classification [53, 185], lung nodule detection [93], TB detection [49] using deep CNN are published. Apart from the medical diagnosis, CNN can be used on handwritten text segmentation [69], facial emotion [2, 80], and face detection [138]. Further, GAN models are used for generating synthetic imaging data that can reduce the dependency for collecting medical image datasets, as it involves legal and privacy issues. In recent years, a significant research on segmentation and classification involving GAN has been published.

With the resurgence of DL in CV from 2012, most of the research work published in medical imaging is using it. DL models require a large dataset for the training. Research labs and groups working in the field have collected and annotated large medical image datasets. These groups have made datasets public for research in the deep learning field. Some publicly available chest X-ray datasets are Japanese Society of Radiological Technology (JSRT) [151], Chest X-ray-14 (CXR-14) [175], CheXpert [71]. Advancement in hardware such as graphical processing unit (GPU) and release of large annotated medical datasets has increased the pace of work in the medical imaging field. Many surveys have been published on medical image analysis and deep neural networks. One of the most recent and detailed are [98] and [50], respectively.

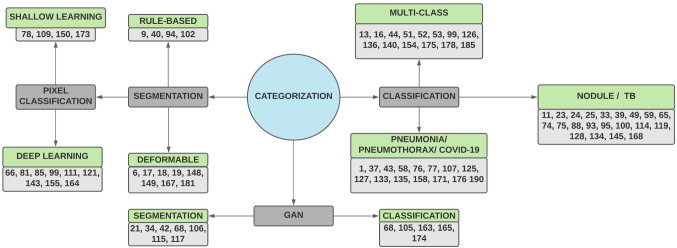

In this study, we have tried to obtain insight into the field of deep learning in medical image analysis. The intention is to collect and present the research done in the last three decades on concerning topics. An attempt has also been made to collect and discuss the publicly available chest X-ray datasets. To the best of knowledge, no survey has been published which together studied the segmentation, classification, and GAN model application involving chest X-rays. Surveys on these topics are published, but none of them is dedicated to chest X-rays only. These surveys are mostly conducted for CT scans [104, 187, 191]. This paper is categorized into eight sections. Section 2 discusses the most commonly diagnosed pulmonary disorders with X-rays. Section 3 provides a brief overview of the deep neural networks. Section 4 lists some of the publicly available datasets of chest X-rays. Section 5 discusses the work done in lung segmentation, and Sect. 6 deals with pulmonary disease detection along with multi-class classification. Section 7 followed by Sect. 6 discusses the segmentation and classification work done on X-rays using the GAN models. And Sect. 8 concludes the whole exercise. The categorization of the paper is given in Fig. 1.

Fig. 1.

Categorization of the paper

Diagnosis with chest radiographs

Chest X-rays can help in the diagnosis of different pulmonary disorders such as nodules, tuberculosis (TB), pneumothorax and many others. Pulmonary disorders can be life-threatening if not diagnosed at an early stage. In the following, we have given a brief overview of the diseases that can be diagnosed with chest X-rays.

TB is a deadly disease that is responsible for a million death according to World Health Organization Global Tuberculosis report [124]. Mantoux tuberculin skin tests (TST) or TB blood tests are conducted to detect it, but these tests are expensive. Chest X-rays are cheap and fast that can help in pulmonary TB detection. A lung nodule is another life-threatening condition that can cause cancer if not detected at an early stage. The five-year survival rate of the cancer patient is very low [153]. CT scans are most useful for early detection [57], but the low cost associated with the chest X-rays makes it popular for nodule detection.

Other pulmonary conditions that can be diagnosed with X-rays are pneumonia, atelectasis, cardiomegaly, pneumothorax, consolidation, emphysema, fibrosis, hernia and COVID-19. Atelectasis is a collapse of complete or partial lung area because alveoli within the lung is filled with alveolar fluid or becomes deflated. The airspace between the lung and the chest wall causes pneumothorax. Cardiomegaly is due to the enlargement of the heart. It can be the result of stress on the body, abnormal heart rhythms, or kidney-related diseases. On the other hand, emphysema is a chronic obstructive pulmonary condition that results in chronic cough and difficulty in breathing. The viral/bacterial infection can be the reason of pneumonia. While COVID-19 is also a kind of pneumonia caused by coronavirus. Chest X-rays can help in early detection and isolation of patients.

Overview of deep learning

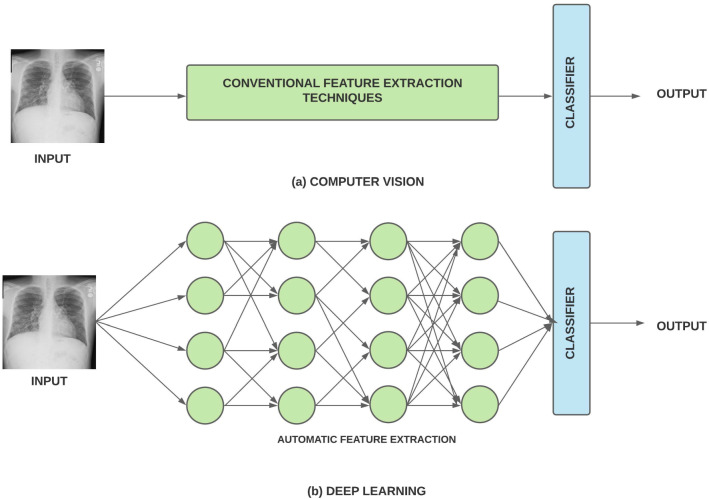

DL algorithms are a subset of machine learning that are designed to recognize or extract the patterns. It consists of artificial neurons that store the weight and help in extracting the unseen patterns. DL performs much better than traditional CV algorithms, but it is data-hungry and also requires more computational power. Improvement in computing power and availability of large annotated datasets has increased the acceptability of DL models. Unlike traditional CV algorithms that use conventional feature extraction techniques, DL models extract the feature vectors directly from the data. The workflow of CV and DL models is described in Fig. 2.

Fig. 2.

Workflow of a Computer Vision and b Deep Learning

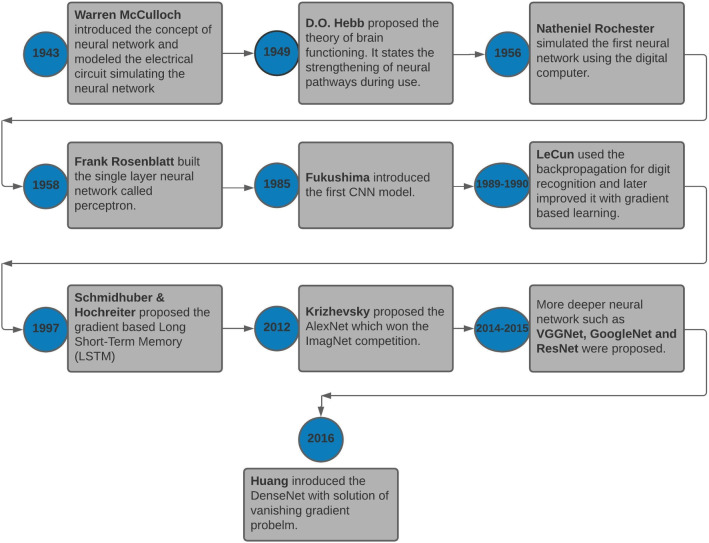

Evolution of neural network to deep learning

The neural networks can be traced back to 1943 when neuro-physiologist Warren McCulloch and mathematician Walter Pitts wrote about possible neuron functioning. Then, Rochester et al. [139] and Rosenblatt [142] simulated the first neural network and proposed the concept of perceptron, respectively. After the 1960s, research on neural networks partially halted, but later in the 1980s Fukushima et al. [41] proposed the first CNN model, and LeCun et al. [90] presented the first CNN application for digit recognition. Later, many advanced research models and techniques were published [56, 70, 87, 156]. A timeline of events from the proposed theory of neural network to the advanced deep learning architecture is given in Fig. 3.

Fig. 3.

Event from the theoretical neural network to Deep Learning models

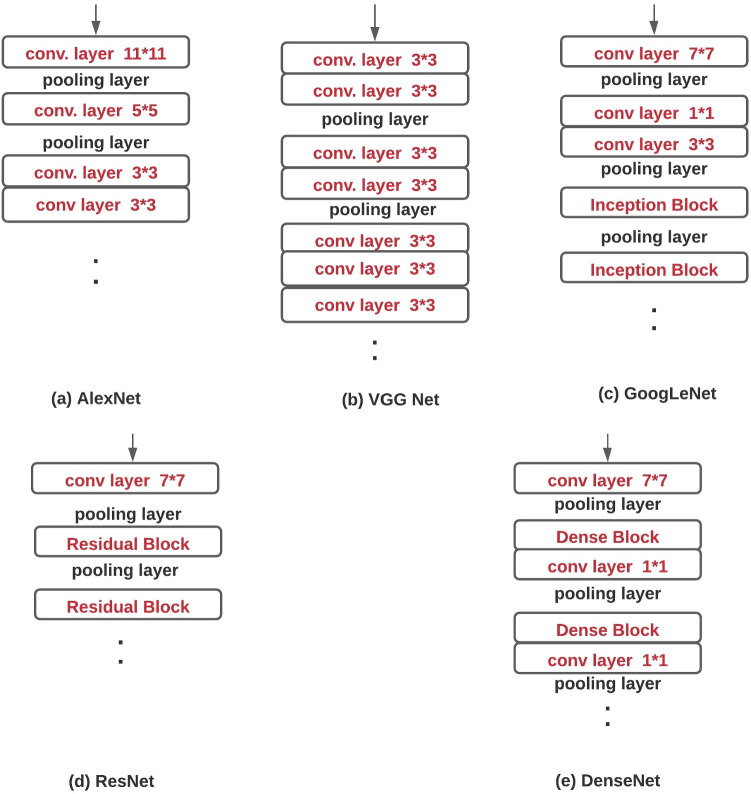

Overview of deep CNN architectures

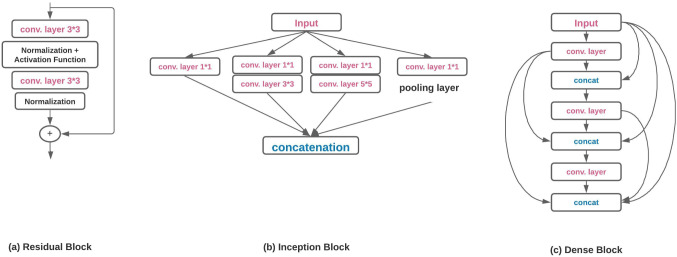

CNN was introduced with Neocognitron [41], but AlexNet revolutionizes the CV field after winning the ILSVRC-12 challenge. LeNet and AlexNet have the same design methodology but have different network depth, activation, and pooling function. Architectures proposed in later years are the AlexNet variations but are effective in classification results on the ImageNet dataset. In ILSVRC-12, AlexNet achieved 84.7% and in ILSVRC-17, NASNet [192] achieved 96.2% top-5 accuracy which is quite impressive. In this sub-section, a brief discussion of popular deep CNN architectures is presented. Figure 4 shows the basic building blocks of few popular deep learning architectures.

Fig. 4.

Basic structure of different popular deep CNN architectures

AlexNet

Krizhevsky et al. [87] proposed the eight-layer CNN for the image dataset classification. It showed that the features obtained by automatic learning could surpass the conventional methods used in the traditional CV domain. In the proposed network, there are eight stacked weighted layers out of which five are convolutional layers followed by fully connected layers. It used the dropout, data augmentation, local response normalization, and overlapped pooling for the first time. It also replaced the tanh activation function used in LeNet with the ReLU [116] activation function.

GoogLeNet

GoogLeNet [159] won the ILSVRC-14 challenge with 6.67% top-5 error. It introduced a new block called Inception (Fig. 5(b)). It uses padding to give the same height and width to the input/output. There are nine inception blocks in the architecture and at the end; fully connected (FC) layers are replaced by Global Average Pooling(GAP)[97]. The replacement of FC layers with GAP reduces the trainable parameters.

Fig. 5.

Basic diagram of a Residual Block b Inception Block c Dense Block

VGGNet

Visual Geometry Group from Oxford University introduced the VGG [152] in the ILSVRC-14 challenge. It became popular for the introduction of the repetitive blocks consisting of two/three convolution layers with ReLU activation function, followed by max-pooling layers. Unlike the AlexNet, VGGNet has only 33 convolutional layers. It achieved significant improvement in the results by increasing the network depth to 16-19 layers.

ResNet

He et al. [56] introduced the ResNet model in the ILSVRC-15 challenge and achieved 3.57% top-5 error. The plain network introduced in the original paper was inspired by the VGG nets where all convolutional layers were stacked. However, residual learning (shortcut connections) was introduced which turns the plain network into a residual version (Fig. 5(a)). The introduction of shortcut connection improved the performance of the network.

DenseNet

Huang et al. [61] proposed the Dense Convolutional Network (DenseNet) where the layers are connected in a feed-forward manner. The proposed network ensures the maximum information flow between all the connected layers (Fig. 5(c)). Except the first layer, all the layers take input from the preceding layers and pass the feature maps to successive layers.

EfficientNet

Most of the proposed CNN architectures are scaled by depth, width, or dimension. VGG [152], ResNet [56], and DenseNet [61] are few architectures that are scaled by depth. Gpipe [63] uses 557 million parameters for the image classification task. Due to large number of parameters, it needs a parallel pipeline library and more than one accelerator for training. To provide the solution for compound scaling of the network, Tan et al. [162] introduced the EfficientNet. It is the first work in the DL, to empirically quantify the relationship among scaling dimensions. With compound scaling, they have proposed the eight variants of EfficientNet (B0-B7).

Chest X-ray datasets

Deep learning models require a large dataset for the training. Collecting such a large medical dataset is a hectic process as there are legal and privacy issues. However, some research groups have collected and annotated the chest X-rays and released them for the research studies. Labeling can be done in two ways: manual or automatic. Manual labeling requires experience and expertise which is not a feasible or practical solution for a large dataset. Automatic annotation is fast but error-prone. Many researchers rely on these datasets for their work. A brief details about these datasets are given in Table 1.

Table 1.

Publicly available datasets

| S. no. | Dataset | Year | Modality | Instances | Resolution | Projection | Publicly available |

|---|---|---|---|---|---|---|---|

| 1 | JSRT | 2000 | X-Ray | 247 | 2048 2048 | PA | Yes |

| 2 | MC | 2014 | X-Ray | 138 | 4020 4892 | PA | Yes |

| 3 | Shenzhen | 2014 | X-Ray | 762 | 3000 3000 | AP | Yes |

| 4 | Indiana | 2016 | X-Ray | 7470 | – | PA | Yes |

| 5 | CXR-14 | 2017 | X-Ray | 112,120 | 1024 1024 | PA/AP | Yes |

| 6 | CheXpert | 2019 | X-Ray | 224,316 | – | – | Yes |

| 7 | MIMIC | 2019 | X-Ray | 371,920 | – | – | No |

| 8 | COVID-19 | 2020 | X-ray | 679 | 224224 | PA/AP | Yes |

JSRT [151] is the most used dataset for nodule detection and lung segmentation. It has 247 posterior X-rays collected from fourteen institutions in Japan and the USA. Montgomery County (MC) dataset [73] is available on the National library of medicine. It consists of 138 PA radiographs of which 80 are normal and 58 are infected with TB. Another such dataset is Shenzhen Hospital X-ray [73] which consists of 326 normal and 336 abnormal radiographs showing TB. A COVID-19 dataset [31] comprising more than 600 frontal X-rays collected from 412 people in 26 countries is released recently.

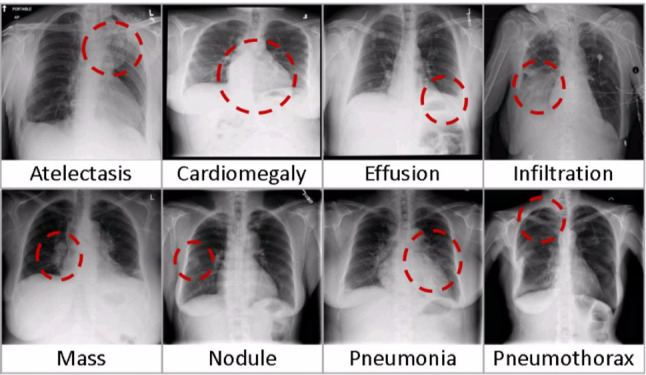

In 2017, CXR-14 [175] was made public for the research community by the National Library of Medicine, National Institutes of Health (NIH), USA. Initially, it was labeled with eight pulmonary diseases, but later, updated to 14 diseases. CheXpert dataset released by the Stanford ML Group is another large dataset. It is labeled with fourteen diseases namely pneumonia, atelectasis, lung nodule, lung opacity, edema, pleural effusion, and others. Figure 6 shows the common eight pulmonary conditions that can be examined in chest X-rays.

Fig. 6.

Diseases diagnosis with chest X-rays [175]

MIMIC-CXR dataset [79] contains 371,920 x-ray images from 60,000 patients. However, this dataset is not publicly available. Indiana dataset [35] contains 7470 images with 3955 radiology reports. The dataset is text-mined with eight disease labels (each image can have multi-labels). It is collected from the various hospital associated with Indiana University.

ImageNet dataset [36] is important while discussing deep learning in medical image analysis. DL models require a large dataset and enormous computation power for training [89]. If the model is not trained enough, then there are chances of overfitting. Therefore, many researchers have released pre-trained deep learning models. These pre-trained models are used for further fine-tuning the models. This technique is known as transfer learning. With no other medical dataset available of ImageNet size, transfer learning is extensively applied in the medical image domain.

Segmentation

Extracting the information from an image with a computer can be termed image processing. Dissecting the image, depending on the objects or regions present in it is termed image segmentation. It helps in locating and identifying the boundaries of different objects. Object detection helps to differentiate among objects but does not help in identifying the boundaries of objects. It simply puts the square box around the object to identify it. In chest X-rays, accurate and automatic segmentation is a challenging problem due to variation in size of the lung, edges at the rib cage, and clavicle. Segmentation in chest X-rays is performed for segregation or separation of lungs, heart, and clavicles. Many methods are proposed for lung segmentation to determine the ROI for specific abnormalities such as lung nodules, pulmonary TB, cavities, pulmonary opacity, and consolidations. Usually, the whole X-ray is used for training purposes, but the presence of unnecessary organs can contribute to noise and false positive (FP). Ginneken et al. [169, 170] classified the segmentation methods into four categories (i) rule-based, (ii) pixel classification-based, (iii) deformable-based, and (iv) hybrid methods. This categorization is generally followed in the academia for writing research or survey papers. Table 2 lists the below-mentioned rule-based and deformable methods, and Table 3 lists the pixel classification methods for lung segmentation. In the tables, if the author had calculated the result for both lungs separately, then the average score is mentioned. Further, if the author has used more than one dataset for the performance evaluation, then the best score is reported.

Table 2.

Quantitative comparison of rule and deformable model-based lung segmentation methods. Accuracy, dice similarity coefficient (DSC), jaccard index (JI), and inference time are reported for comparison

| Methods | Dataset | Technique | Results(%) | Time(s) | ||

|---|---|---|---|---|---|---|

| Accuracy | DSC | JI | ||||

| Intensity based [9] | Private | Thresholding, Filtering, Contour smoothing | 79.0 | – | – | – |

| Edge based [40] | Private | Edge tracing algorithm | 95.85 | – | – | 0.84 |

| Edge based [94] | Private | Derivative function, Iterative contour smoothing | 95.6 | – | – | 0.775 |

| Knowledge based [102] | Private | Robust snake model, Textual, Spatial and Shape characteristics | 80.0 | – | – | – |

| Parametric model [167] | Private | ASM, Adaptive gray-level appearance model, kNN | – | – | 95.5 | 4.1 |

| Parametric model [149] | JSRT | SIFT, Optimization algorithm | – | – | 94.9 | 75 |

| Geometric model [6] | Private | Level set energy, Equalized histogram, Canny edge detector, Otsu thresholding | – | 88.0 | – | 7 |

| Geometric model [17] | JSRT | Lung model calculation, Graph cut segmentation | 91.0 | 91.0 | – | 8 |

| Geometric model [19] | JSRT | Adaptive parameter learning, Graph cut segmentation | – | – | 91.1 | 8 |

| Geometric model [18] | JSRT, MC | Image retrieval, SIFT flow, Graph cut optimization | 95.4 | – | – | 20–25 |

| Hybrid model [148] | JSRT | Robust shape initialization, Local sparse shape composition, Local appearance model, Hierarchical deformable segmentation framework | – | 97.2 | 94.6 | 35.2 |

| Hybrid method [181] | JSRT | Modified gradient vector flow-ASM | – | – | 89.1 | – |

Table 3.

Quantitative comparison of shallow and deep learning models. Accuracy (Acc.), dice similarity coefficient (DSC), jaccard index (JI), and time are reported for comparison. If the time is in second(s), then it is the inference time; otherwise, it is the model training time. SGD is the abbreviation for stochastic gradient descent, ReLu for rectified linear unit, lr for learning rate, and AF for activation function

| Methods | Optimizer | AF | LR scheduling | Images size | Pre-processing step | Dataset | Technique | Acc. | DSC | JI | Time |

|---|---|---|---|---|---|---|---|---|---|---|---|

| SL [150] | – | – | – | 440 440 | Image resizing | JSRT | Gaussian kernel distance matrix, FCM | 97.8 | – | – | – |

| SL [78] | – | – | – | 2048 2048, 4020 4892 | No pre-processing is performed | JSRT, MC, CXR-14 | FCM, Level set algorithm | – | 97.6 | 95.6 | 25–30(s) |

| SL [109] | – | – | – | – | No pre-processing is performed | Private | Linear discriminant, kNN, Neural Network, gray level thresholding | 76.0 | – | – | – |

| SL [173] | – | – | – | 1024 1024 | Images are resized using the bilinear interpolation | Private | Markov random field classifier, Iterated conditional modes | 94.8 | – | – | – |

| DL [66] | SGD | – | lr = 0.1 and it decreased to 0.01 after training 70 epochs | 256 256 | Image resizing | JSRT; MC | Residual learning, atrous convolution layers, network wise training | – | 98.0 | 96.1 | – |

| DL [121] | Adam | ELU, Sigmoid | lr = 0.00001 with = 0.9 and = 0.999 | 128 128, 256 256 | Image resizing | JSRT | UNet, ELU, Highly restrictive regularization | – | 97.4 | 95.0 | 33.0(hr) |

| DL [99] | Adam | ReLu | lr = 0.001 | 256 256 | Image resizing and data augmentation by affine transformations | JSRT | UNet, cross-validation | – | 98.0 | 97.0 | – |

| DL [155] | SGD | ReLu, Softmax | lr = 0.01 | 512 512, 128 128 | Image resizing and scaling | MC | AlexNet, ResNet-18, Patch classification, Reconstruction of lungs | 96.9 | 94.0 | 88.07 | – |

| DL [81] | SGD | ReLu, Softmax | – | 2048 2048 | Histogram equalization and local contrast normalization is applied | JSRT | Modified SegNet | 96.2 | 95.0 | – | 3.0(hr) |

| DL [143] | – | ReLu, Softmax | – | 2048 2048 | No pre-processing is performed | JSRT | SegNet | – | 95.9 | – | – |

| DL [111] | Adam | ReLu, Softmax | lr = 0.0001 with = 0.9 and = 0.999 | 224 224 | Image resizing and data augmentation by flipping, rotating, and cropping | JSRT, MC | Modified SegNet (Lf-SegNet) | 98.73 | – | 95.10 | – |

| DL [164] | SGD | – | lr = 0.02 with poly learning rate policy | 512 512 | Image resizing and data augmentation by image to image translation | JSRT, MC, NIH | ResNet-101, dilated convolution, CCAM, MUNIT | – | 97.6 | – | – |

| DL [85] | SGD | ReLu, Sigmoid | lr = 0.01 with decrease in by factor 10 when validation accuracy is not improved | 512 512 | Image resizing | JSRT, MC, Shenzhen | ResNet-101, UNet, self-attention modules | – | 97.2 | – | 1.4(s) |

Rule-based segmentation

Rule-based segmentation uses prior information such as shape, position, texture, lung intensity, and imaging features to formulate the rules. Rule-based segmentation is done using thresholding, edge detection, and knowledge-based rules.

Intensity thresholding identifies the lung or non-lung region based on the threshold value, but the challenge is to find the suitable value. A low value can result in insufficient lung contour and a high value can merge the contour between the lung and non-lung region. Using a single gray-level threshold value based on knowledge of X-ray, Cheng et al. [28] segmented the left and right lung separately, while Armato et al. [9] proposed the method based on the combination of iterative gray-level thresholding (global and local), contour smoothing using the morphological, and rolling-ball techniques. Iterative thresholding improves the segmentation but makes the process computationally insufficient and slow, while the use of static threshold values can result in poor segmentation performance with different contrast.

Edge detection depends on the pixel intensity difference in the lung and non-lung regions. There are sharp changes in intensity near the lung edges. Edge detection in lung segmentation does not require any prior knowledge of the X-ray. Duryea et al. [40] used a heuristic edge-tracing approach with validation against hand-drawn lung fields. The proposed algorithm is capable of segmenting both lungs separately. The proposed lung detection algorithm took 673 seconds to determine both lungs for 802 images (0.84 second/image). Powell et al. [131] first used the derivative for rib cage boundary detection that was later improved by Xu et al. [182, 183]. Both have used the second derivative for contour detection. The first-order derivative produces the thicker edges, while the second-order derivative generates fine details such as thin lines, isolated points, and noise.

Later, Li et al.[94] used the first derivative which includes costal and mediastinal edges. The first derivative is sensitive to noise, thus is unreliable for edge detection, but Li et al. [94] successfully used it for lung segmentation. Rib structure hindrance and false contour problem are successfully eliminated which remained in Duryea et al. [40]. It took 0.775 second per image to determine the lung contours.

In intensity and edge-based segmentation, it is possible, not to get the desired results for a deformed anatomical structure. Prior knowledge of the lung’s spatial, texture, and shape characteristics can help with this issue. Brown et al. [15] proposed a knowledge-based approach where anatomical structure information is used for extracting the edges. Another study which used the knowledge-based approach is Luo et al. [102]. It used the lung characteristics for constructing an object-oriented hierarchical model for lung regions. After defining the initial lung contour with knowledge-based rules, it is further improved with a robust snake model [103].

Deformable methods

The deformable models define the geometric shape of the object. The object’s shape can change under internal forces, external forces, or user-defined constraints. These models have been used extensively in motion tracking, edge detection, segmentation, and shape modeling. Initially, they were used in geometric applications but later found scope in medical imaging. These models can be categorized into two types: parametric and geometric deformable models. Lung segmentation applications using the parametric and geometric deformable models are discussed in the following sub-sections.

Parametric deformable

Cootes et al. [32] introduced the Active Shape Model (ASM) in 1995. The method is similar to the Active Contour Model (ACM) [82] but different in global shape constraint. Many researchers improved it later [167, 189]. Yuan et al. [189] proposed the gradient vector flow driven active shape model (GV-ASM). However, this algorithm is not applied to medical datasets. Ginneken et al. [167] extended the ASM and test the method for lung segmentation in chest X-ray using the gray-level appearance model. It moves the points along the lung contour to a better location during optimization. Pixel classification inside or outside of the lung is determined through feature selection and a kNN classifier. One advantage that the original ASM has over the proposed scheme is the speed. The total time for segmentation is 4.1 seconds for the proposed method, while with original ASM is 0.17 seconds. Gleason et al. [46] proposed the deformable model that optimizes the objective function generated by global shape and local gray-level characteristics, simultaneously. The local shape characteristics that are obtained in the training are used for boundary detection. Shi et al. [149] proposed the deformable model using the population-based and patient-specific shape (PBPS) statistics trained using hierarchical principal component analysis (PCA). Further, they used the scale-invariant feature transform (SIFT) to improve the segmentation accuracy. The proposed method took 75 seconds, while the standard ASM took 30 seconds for one chest radiograph segmentation. The limitation of the ASM is that it only considers the shape of an object, not texture or gray-level appearance. This limitation is removed by the active appearance model (AAM) that is an extension of ASM. It took both shape and appearance into account for segmentation but is computationally expensive.

Geometric deformable

Caselles et al. [20] proposed the geometric deformable model to overcome the parametric model limitations. These models are based on the level set method and curve evolution theory [86, 146]. Geometric measures evolve the curve and surfaces that are independent of the parameterization. This results in the smooth handling of the automatic topology changes. In parametric methods, evolution is mapped with the image data for recovering the contour.

Annangi et al. [6] used the active contour with the prior shape knowledge for the lung segmentation. Lungs are segmented with otsu thresholding and level set minimal energy that uses extracted edge/corner feature with region-based data. Extracted features along with region-based data help in the handling of local minima issues. The proposed algorithm took seven second per image for segmenting both lungs.

Graph cut is another segmentation method where the objective function is similarly minimized as the level set method. Candemir et al. [17] proposed the segmentation model based on graph cut and later expanded the work using multi-class regularization to detect lung boundary [19] in chest X-rays. The work done in [17, 19] was extended in [18]. Candemir et al. [17] proposed the graph-cut-based lung segmentation model. It consists of two stages: in first, the average lung shape model is calculated, and in second, the lung boundary is calculated using graph-cut. It took eight second for image segmentation of 1024 1024 resolution. The drawback of graph cut segmentation is that it produces the static shape model and the chest X-rays have variable lung shape [17]. Another drawback of the graph cut method is high computation time. In [19], adaptive parameter learning methodology is described to improve the segmentation process using a multi-class classifier approach. The proposed method takes eight second for segmentation using graph cut. Candemir et al. [18] improved the lung segmentation method by using the anatomical atlases with non-registration. In this work, lung contour is detected using non-rigid registration by applying an image retrieval-based patient-specific adaptive lung model. The execution time for the proposed method is 20–25 seconds on 256 256 resolution.

Segmentation methods (rule-based, parametric) reported good results for the lung segmentation, but the hybrid approach can do better. Shao et al. [148] combined the active shape and active appearance model. In the proposed method, three models are used for robust and accurate segmentation. First, the shape initialization model is used to detect the salient landmarks on the lung boundary, and then the local sparse appearance model is used to learn local sparse shapes. Finally, a local appearance model is built for capturing the local appearance characteristics. The computation time for the proposed method is 35.2 seconds. Xu et al.[181] proposed a modified gradient vector flow-based ASM (GVF-ASM) for lung segmentation in X-rays. The experimental results are 3-5% better than the ASM technique.

Pixel classification-based segmentation

Lung segmentation in radiographs allows quantitative analysis of clinical parameters. It is the first step of the CAD diagnosis. Classifiers such as kNN or support vector machine (SVM) can extract pixel spatial and texture information from gray-scale values, and according to it, the pixel is assigned to the corresponding anatomical structure. Segmentation is one of the most common topic for researchers applying deep learning on medical images [98]. With the best of knowledge, the earliest work for lung segmentation on chest radiography using convolutional neural networks and neural networks is Hasegawa et al. [55] and McNittGray et al. [109], respectively. Hasegawa et al.[55] used downsampled chest X-rays as input for shift-invariant convolutional neural network, and then post-processing methods, such as adaptive thresholding, noise reduction, were applied for lung field segmentation.

Pixel classification can be sub-divided into shallow and deep learning (DL). DL models outperform the shallow learning models as it extracts the features automatically from raw data, while later uses the conventional methods. The disadvantage with DL models is that they need large annotated datasets and have high computational complexity. Few deep learning architectures have been proposed for the segmentation of medical images only. One such architecture is UNet proposed by Ronneberger et al. [141] for biomedical image segmentation. The main highlight of the architecture is an equal number of upsampling and downsampling layers along with skip connections. Milletari et al. [110] proposed the variant of UNet called VNet. It performed the 3D medical image segmentation using the 3D convolution layers. Another encoder–decoder-based architecture that is quite popular for image segmentation is SegNet [12]. The encoder architecture is similar to that of VGG-16 except fully connected layers were replaced by the decoder. One major difference between UNet and SegNet is that U-Net does not use the pooling indices and transfers the whole feature map to the corresponding decoding layer [111]. In the following, lung segmentation in chest X-rays using shallow and deep learning-based segmentation models is discussed.

Shallow learning

In shallow learning (SL), the features is extracted with conventional methods and the challenge is to find a suitable class for the extracted features robustly. McNittGray et al. [109] were first to propose lung segmentation using a neural network. With kNN, linear discriminant analysis (LDA), and feed-forward neural network classifiers (FFNNC), chest X-ray pixels are classified into anatomical classes. Each classifier is trained using local texture, gray-level-based features. Among all three classifiers, the neural network outperformed the other two and predicted more than 76% pixels correctly on the test set. Vittitoe et al. [173] identified lung region in X-rays using spatial and texture characteristics with Markov random field modeling (MRFM). The proposed method classified 94.8% pixels correctly in comparison with 76% pixels in [109]. For medical image segmentation, the fuzzy c-means clustering algorithm (FCM) is often used. It permits a single data point to belong to many clusters. It is used in pattern recognition problems. Many research studies have used the FCM for the MRI image segmentation [3, 26, 27]. The basic difference between MRI and chest X-ray is that in X-ray there is the possibility of ribs and nodule overlap, but in MRI both are separated. Shi et al. [150] proposed an algorithm for lung segmentation in X-rays using FCM. The proposed algorithm is based on Gaussian kernel-based fuzzy clustering. The original c-means algorithm is modified by altering the objective function using the Gaussian kernel-induced distance metric. Jangam et al. [78] proposed an algorithm based on a firefly optimized fuzzy c-means clustering algorithm. The model combines spatial fuzzy clustering and a level set algorithm for lung segmentation. Lung region pixels are classified using a fuzzy clustering algorithm, and then the outcome is applied to the level set algorithm for segmentation. The computation time for the proposed method is 25–30 seconds.

Deep learning

Loss of a lung region while segmentation can be disastrous. So, the need for an algorithm for accurate segmentation is very important. The shallow learning-based method relies on conventional feature extraction methods, while deep learning models extract the features automatically. So, the deep learning feature extraction method has a huge advantage over shallow learning-based methods. To the best of knowledge, Long et al. [101] was the first study to use the deep fully convolutional neural network for pixel classification in images. The encoder–decoder architecture is extensively used for semantic segmentation [120] in which an image is processed for multiple segmentation. The encoder works as CNN for feature extraction, while the decoder is used for upsampling operations to get the final segmentation results. The studies on lung segmentation can be classified according to whether the network uses transfer learning or is trained from scratch. The pre-trained network has the advantage of knowledge transfer from a large image classification dataset.

In the following, the studies that have used transfer learning are discussed. Tang et al. [164] use the pre-trained ResNet-101 [56] along with the criss-cross attention module (CCAM) [64]. CCAM captures the contextual information to improve the pixel-wise representation. Further, to deal with the insufficient data, the image-to-image translation is proposed using the MUNIT [62]. This is the only study that has performed cross-dataset generalization. The proposed model has trained on JSRT and MC dataset and tested it on NIH dataset [164]. Kim et al. [85] proposed the self-attention module to capture the global features in input X-ray images. The proposed attention module is applied to the UNet for lung segmentation. They have used the pre-trained ResNet-101 [56] as UNet backbone. The training time of UNet is 4 hours approximately, but when attention modules are added in the architecture, the training time dropped to 2.5 hours. While the inference time is 1.4 second per chest X-ray image.

In the following, we have discussed the studies that have used the training from scratch strategy. One such study is Hwang et al. [66] that proposed the model for chest X-ray segmentation based on residual learning [56] with atrous convolution layers. The global receptive field of the network can be increased by stacking the convolutional layer for a larger context. But this can increase the computational complexity, so the atrous convolution is used with a dilation rate of 3 in the last two residual layers. The proposed method uses the multi-stage training strategy called network-wise training. In network-wise training, the output of the pre-stage network is used as input. The proposed model has 120,672 parameters which are less than the UNet model. Novikov et al. [121] performed the clavicles, lungs, and heart segmentation. The proposed modified UNet (InvertedNet) architecture outperformed the radiologist for lungs and heart segmentation. In the modified architecture, Gaussian dropout is added after every convolutional layer. The delayed sub-sampling has been introduced in the contraction part of the network, and the exponential linear units (ELU) [30] are used instead of ReLu to speed up training. The method performed better even after with ten times fewer parameters compared to the state of art methods. The proposed InvertedNet took 33 hours to train and have 3,140,771 parameters. Other studies have also used the UNet architecture, and one such is Liu et al. [99] that modified the architecture for the lung segmentation. The false-positive (FP) region is dealt with using post-processing techniques after segmentation. External air or intestinal gas can cause the FP area to appear. The actual segmented lung is found by measuring the distance between the picture center and the segmented area centroid.

SegNet [12] is another popular encoder–decoder architecture for the image segmentation. Kalinovsky et al. [81] modified the SegNet and segmented the lungs on 354 radiographs with 96.2% accuracy. They used the max-pool index from the corresponding encoder layer to upsample feature map in the decoding layer which is not clearly defined in the SegNet. The proposed network took nearly three hours for the training. Another study that uses the SegNet for lung segmentation is Saidy et al. [143]. The model is tested on 35 unseen images and a dice coefficient (DC) or DSC value of 96.0% is achieved. Similarly, Mittal et al. [111] performed the lung segmentation using the encode-decoder network, named as LF-SegNet. They performed the segmentation on the JSRT and MC dataset and achieved 98.73% accuracy and 95.10% Jaccard Index value.

In Souza et al. [155] the weights are initialized using normal initialization [47]. The proposed method performs lung segmentation using the reconstruction technique. Initial segmentation is done using AlexNet [87] on lung patches to generate a segmentation mask. Many false positives generated in the resultant segmentation mask during the plotting of lung patches are removed with morphological operations. In healthy radiographs where no abnormality is present, the initial segmentation can produce good results, but the lung regions severely affected by opacities or consolidations can result in poor segmentation. To provide a general solution, a second CNN (ResNet-18) is trained for lung reconstruction. The initial segmentation and reconstruction outputs are combined with binary OR operation for the final segmentation.

Most of the lung segmentation models in chest X-rays have used the UNet and SegNet architecture or have proposed their modified variants, but in the following, we have mentioned some recurrent neural network (RNN) models that have been used for medical image segmentation. The RNN models have not been exploited for lung segmentation in chest X-rays. One such model is Xie et al. [180] that uses the spatial clockwork RNN for the segmentation of the histopathology images, while Stollenga et al. [157] use the 3D LSTM-RNN for membrane and brain segmentation. Another model that has used RNN is Andermatt et al. [5]. It has used the 3D RNN for brain segmentation, while Chen et al. [22] used the bi-directional LSTM-RNN and U-Net architecture together for tubular fungus and neuronal structures segmentation in 3D images.

Discussion

A clear boundary between the lung and non-lung parts is needed for lung segmentation. Variation in lung shape and ambiguity in boundary makes it difficult for the rule-based methods. Later many other methods such as deformable methods were proposed, and they have shown better results in comparison with rule-based segmentation. The problem with deformable models is that they are knowledge-based and do not work accurately on different datasets. Deep learning methods have given the state of the art results in several image domains. In deep learning models, the features are automatically extracted, they do not use the handcraft features or conventional methods. After the introduction of UNet, SegNet models, many research studies have proposed their variant architectures for medical image segmentation. In the above-discussed paper, we can analyze that transfer learning and cross-dataset generalization has not been exploited for lung segmentation in comparison with image classification. Further, to get the insight of the field, Ginneken et al.[170] have conducted a comparative study between ASM, AAM, and pixel classification methods for lung segmentation and Terzopoulos et al.[108] have done a detailed survey on deformable models in medical image analysis. Recently, Tajbakhsh et al. [161] have conducted a detailed survey addressing the techniques to handle the scarcity of segmentation datasets for deep learning models.

Classification

Lung diseases are the primary cause of death in most countries. The chest X-rays, CT scans, and MRI scans are few available diagnosis methods for detecting and analyzing the disease severity. Among the diagnoses stated, X-ray is the most commonly used modality. The use of medical imaging with deep learning for classification is the current academic research direction. After segmentation, classification is the most common topic for research in medical image analysis [98]. The deep learning model requires a large dataset for training. It is hard to acquire a large dataset due to privacy issues. Labeling or annotation is another issue related to the medical datasets. Many research groups have collected datasets for their study and made them available publicly. The size of these datasets is small, ranging from a few hundred to thousands. CXR-14 [175] is the largest X-ray dataset that is released publicly in 2017. They have collected more than 100,000 chest X-rays from nearly 30,000 patients. Most of the multi-class classification studies using deep learning have used this dataset. In recent years, some new datasets have been made publicly available. For example, the CheXpert dataset is publicly available, whereas MIMIC-CXR is available only for the credential authors. Other chest X-ray datasets (e.g., JSRT, Indiana, etc.) are publicly available, and many studies have evaluated their models on these datasets. In the below sub-sections, we have discussed the studies that have used automatic models to detect pulmonary conditions.

Disease detection

Nodule detection

Commonly referred to as a ‘spot on the lung’, a nodule is a dense round area in comparison with normal tissue. They appear as white shadows in the chest X-rays. A lung nodule is an early stage of lung cancer. According to WHO, 9.6 million people have died from cancer in 2018. The nodule of size 5-10 mm is visible in X-rays, and less than that is not likely to be detected. Nodule detection is also difficult sometimes because it may be hidden by strong ribs or clavicle [10, 147]. There are no symptoms of the pulmonary nodule. It can be diagnosed only through screening. In Table 4, we have enlisted the nodule detection work reported on X-rays.

Table 4.

Quantitative comparison of nodule detection methods. Accuracy, sensitivity for false positive per image (FPPI), and inference time are reported for comparison. For [33] training time is reported. SGD is the abbreviation for stochastic gradient descent, ReLu for rectified linear unit, lr for learning rate, and AF for activation function

| Method | Optimizer | AF | LR scheduling | Images size | Pre-processing step | Dataset | Technique | Accuracy | Sensitivity | Time(s) |

|---|---|---|---|---|---|---|---|---|---|---|

| ANN [100] | – | – | – | 32 32 | Background removal for nodule enhancement and contrast enhancement | Private | CNN with fuzzy training, circular background subtraction technique | – | – | 15 |

| ANN [33] | Gradient-descent | – | lr = 0.1 | 128 128, 512 512 | Lung field segmentation using deformable models (snakes) | JSRT, Private | Neural network, LoG, gabor kernel | 95.7-98.0(4-10) | 60.0-75.0(4-10) | 500 |

| CAD [145] | – | – | – | 256 256 | Deformable model (ASM) is used for lung field segmentation and local normalization is performed to achieve the global contrast equalization | JSRT | ASM, local normalization (LN) filtering, Lindeberg detector, Gaussian filter bank | 51.0(2), 67.0(4) | – | – |

| CAD [25] | – | – | – | 2048 2048 | Lung segmentation using multi-segment ASM, gray-level morphological operators for enhancing nodules and rib suppression | JSRT, Private | ASM, watershed algorithm, leave-one out cross-validation, SVM with a Gaussian kernel | – | 77.1(2), 83.3(5) | 70 |

| ANN [24] | – | – | – | 2048 2048 | Lung segmentation using multi-segment ASM, gray-level morphological operators for enhancing nodules and rib suppression using MTANNs | JSRT, Private | VDE, MTANN, morphological filtering, support vector classifier | – | 85.0(5) | 115 |

| DL [93] | SGD | ReLu | – | 229 229 | Unsharp mask sharpening technique | JSRT | Unsharp mask sharpening, E-CNN, five-fold cross-validation | – | 84.0(2), 94.0(5) | – |

| DL [95] | SGD | ReLu | lr = 0.001 which drops 0.00001 after every iteration | 224 224 | Random rotation and mirroring, image enhancement with gray-level stretching and histogram matching, lung field segmentation and rib suppression using ASM and PCA, respectively | JSRT, Shenzhen | ASM, PCA, dense blocks, fivefold cross-validation | 99.0(0.2) | – | – |

| DL [23] | SGD | ReLu, Softmax | lr = 0.001 | 229 229 | Horizontal inversion, angle rotation and flipping, lung field segmentation using multi-segment ASM | JSRT | ASM, watershed algorithm, GoogLeNet | – | 91.4(2), 97.1(5) | – |

Lo et al. [100] is the first study to apply CNN for nodule detection. Here, the proposed CNN is the simplified version of Neocognitron [41]. The results on the test set demonstrated the effective use of CNN in nodule detection. Further, the fuzzy training method is used with CNN to improve the obtained results. The processing time taken by the proposed model for nodule detection is 15 seconds on a DEC Alpha workstation. Coppini et al. [33] proposed an approach based on multi-scale processing and artificial neural network (ANN) for detecting lung nodules. The feed-forward ANN uses prior knowledge such as the shape and size of lung nodules. The proposed method works in twofold: first, the network narrows down the regions of possibly having the nodule with high true positive. Second, evaluating the region for the abnormality presence to detect the nodule. It helps in reducing the false positive and increasing the detection rate. The Laplacian of Gaussian (LoG) and Gabor kernels are used to amplify the features of X-rays. The average training time for the proposed method is 500 seconds.

Schilham et al. [145] proposed a method for nodule detection and selection to reduce the FP for candidate classification. To avoid nodules candidates outside the lungs, lung segmentation is performed using ASM before nodule detection. The detection of lung nodules is a multi-scale problem since nodule size varies. The bright spots help in blob detection, so the authors use the local maxima to find the candidate nodule locations with the corresponding radius. The detected blob is then segmented to separate the nodule from the background with ray casting procedure [54]. After the candidate blob detection, features from a multi-scale Gaussian filter bank are used to classify the nodule with a kNN classifier. It is employed to locate the k closest candidates in the database.

The detection of lung nodules is challenging since their size varies, and if they’re under the ribs, they are easily overlooked. So, Chen et al. [25] proposed the multi-stage method to improve the nodule detection and to reduce the false positive. First, the lungs are segmented using the multi-segment ASM. Then, a gray-level morphological operation is applied to enhance the nodule, and a line-structure suppression technique to detect the nodules overlapping the rib cage and clavicles. At the third stage, in a nodule likelihood map obtained from the second stage, noise is reduced by using the Gaussian filter to further improve the performance. After, the candidate nodule detection from the images, the improved watershed algorithm is applied for nodule segmentation. The features such as texture, shape, gray-level, etc are extracted, and then a nonlinear SVM with a Gaussian kernel is used lung nodule classification. The proposed method took 70 seconds to detect the nodule in a image.

In their other work, Chen et al. [24] also suppress ribs to reduce the FP. The same method is applied for the lung segmentation, but for the ribs and clavicle suppression, they have used the virtual dual-energy (VDE) X-ray images with massive training artificial neural network (MTANNs) instead of subtracting the rib pattern from the enhanced image. The ribs and clavicles in X-rays can be significantly repressed with this approach, while soft tissues like lung nodules and vessels remain preserved. The candidate nodules are detected by using morphological filtering techniques and the nonlinear support vector classifier. The processing time of the proposed method for each image is 115 seconds. In comparison with [25], sensitivity is improved, but processing time is increased.

All the above-mentioned work has produced good sensitivity with decreasing false positive per image (FPPI), but none of the above-mentioned research has used deep learning. Li et al. [93] in their proposed work have used the deep ensemble network for nodule detection and FPPI reduction. The proposed method uses an ensemble convolutional neural network (E-CNN) and does not employ the lung segmentation procedure as in the above-mentioned methods. The nodule is amplified using the unsharp mask image sharpening technique. Patches are cut from the processed image with the slide-window method before feeding to the CNN. Three different CNN models with dissimilar input sizes and layers are trained, and the results of all models are fused using the logical AND operator. The proposed ensemble network has outperformed the other state-of-the-art methods. In their another study, Li et al. [95] have done segmentation and rib suppression using the modified ASM and PCA, respectively. Image enhancement is performed using the efficient gray-level stretching operation and histogram matching. Then, three CNN with dense blocks is fused with four varying strategies to find the probability of the selected candidate nodule. Further, the FP is removed using morphological operations in post-processing steps.

Chen et al. [23] proposed the CAD scheme using the CNN for nodule detection. They have used the classical method for candidate detection and the CNN for FP reduction. Authors termed the methodology as balanced CNN with classic candidate detection. First, pulmonary parenchyma is segmented by using the multi-segment ASM. Then, the gray-scale morphological enhancement technique is applied to improve the visibility of the nodule. After selecting the nodule from enhanced images, the watershed algorithm is applied to segment the candidate nodule. At last, a pre-trained CNN model (GoogLeNet) is used for the classification of nodule candidates. On analyzing Table 4, it can be seen that the deep learning models provide better sensitivity and accuracy in comparison with shallow learning methods.

Tuberculosis detection

TB is the main reason for morbidity and mortality worldwide. It is the second disease after HIV that has resulted in million death alone in 2010 [124]. Chest X-rays are the most common diagnosis for TB examination [92]. With an increasing number of cases and a handful of trained professionals to interpret the radiographs, there is a need for automatic detection. Many research studies are available that has exploited the deep learning models for tuberculosis detection. These models need minimum human intervention and give accurate results. We have also discussed few shallow methods for result comparison with deep models. Table 5 enlists the work done for TB detection on chest X-rays.

Table 5.

Quantitative comparison of TB detection methods. Accuracy, sensitivity, and area under curve (AUC) are reported for comparison. SGD is the abbreviation for stochastic gradient descent, ReLu for rectified linear unit, lr for learning rate, and AF for activation function

| Method | Optimizer | AF | LR scheduling | Images size | Pre-processing step | Dataset | Technique | Accuracy | Sensitivity | AUC |

|---|---|---|---|---|---|---|---|---|---|---|

| CAD [168] | – | – | – | 932 932 | Lung field segmentation using ASM | Private | ASM, Gaussian derivative filter, kNN algorithm | – | 86.0 | 82.0 |

| CAD [74] | – | – | – | 2048 2048, 1023 1005 | Lung field segmentation using intensity, lung model and Log Gabor masks | JSRT, MC | lung model, intensity and LoG mask, SVM, leave-one out evaluation | 75.0 | – | 83.12 |

| CAD [75] | – | – | – | 2048 2048 | Lung field segmentation using graph cut method | JSRT, MC, Shenzhen | Graph cut segmentation, ODI, CBIR, SVM | 84.0 | – | 90.0 |

| DL [65] | SGD | ReLu, Softmax | lr = 0.01 and it is decreased by a factor of 2 for every 30 epochs | 520 520 | Images resizing and data augmentation | MC, Shenzhen | AlexNet, transfer learning, dataset augmentation, threefold cross-validation | 90.3 | – | 96.4 |

| DL [88] | SGD | – | Training from scratch, lr = 0.01, pre-trained network, lr = 0.001 | 256 256 | Data augmentation and histogram equalization | MC, Shenzhen | GoogLeNet, AlexNet, transfer learning, data augmentation | – | – | 99.0 |

| DL [59] | Adam | ReLu, Softmax | lr = 0.0001 | 224 224 | No pre-processing is performed | MC, Shenzhen | CNN | 94.73 | – | – |

| DL [49] | Nesterov ADAM | ReLu | lr = 0.001 and decreased by factor of 10 when validation loss stops improving | 224 224 | Image resizing, normalization, and data augmentation | MC, Shenzhen, CXR-14 | DenseNet121, transfer learning, meta data | – | – | 93.7 |

| DL [128] | Adam | ReLu, Softmax | lr = 0.001 | 512 512 | Image cropping, resizing, and normalization | MC, Shenzhen | CNN, grad-CAM, cross-validation | 86.2 | – | 92.5 |

| DL [134] | SGD | ReLu, Softmax | lr = 0.001 | 224 224 | Image normalization using Z-score, lung segmentation and data augmentation | MC, Shenzhen | ResNet18, ResNet-101, VGG-19, InceptionV3, UNet, transfer learning, score-CAM | 98.6 | 98.56 | – |

| DL [114] | SGD | ReLu | lr = 0.01 with rate decay of 0.5 | 224 224 | Unsharp Masking, High-Frequency Emphasis Filtering, and Contrast Limited Adaptive Histogram Equalization, cropping, image normalization | Shenzhen | ResNet-18, ResNet-50, EfficientNet-B4, UM, HEF, CLAHE, transfer learning | 89.92 | – | 94.8 |

| DL [11] | – | ReLu | – | 300 300, 229 229 | Image normalization and resizing | MC, Shenzhen | Inception-v3, MobileNet, ResNet50, Gabor filter, cross-validation | 97.59 | – | 99.0 |

| DL [39] | – | ReLu, Softmax | – | 224 224, 320 320 | Image resizing and data augmentation | MC, Shenzhen, COVID-19 | Transformer model, EfficientNetB0, EfficientNetB1, transfer learning | 97.72 | – | 100 |

| DL [119] | – | – | – | 512 512 | Image resizing, normalization, and data augmentation | MC, Shenzhen, Private | ResNext, UNet | 91.0 | 85.7 | 91.0 |

Ginneken et al. [168] were the first to propose an automatic method for TB detection. The lungs are initially segmented using ASM in the suggested method. Then, the segmented lung fields are divided into several regions. The texture features are extracted from each region using the multi-scale filter bank and the analysis of these texture features results in feature vectors. At last, kNN is applied to classify the feature vector. The proposed method obtained an AUC of 0.82 and a sensitivity of 0.86. Jaegar et al. [74] proposed the automatic method for TB detection. Initially, they used three different masks, namely intensity mask, lung model mask, and Log Gabor mask for lung segmentation. Then, the shape and texture feature descriptors are used for finding normal/abnormal patterns. At last, the SVM classifier is trained to classify the images into normal and abnormal. The overall accuracy reached 75.0% when all three masks are used together. In their other work [75], they used the graph cut method for lung segmentation. The object detection inspired (ODI) and content-based image retrieval (CBIR) based texture and shape features are applied to the segmented lung fields for describing normal and abnormal patterns. At last, the SVM classifier categorizes the computed features into abnormal or normal classification.

Deep learning is not employed for feature extraction in any of the above-mentioned three papers. The features are extracted using conventional image processing methods in these research papers. These strategies have the drawback of making the model domain-specific, while deep learning models are not domain-specific since they can automatically extract significant characteristics. Hwang et al. [65] were the first to propose TB detection using the deep learning model. The pre-trained AlexNet is used for the feature extraction, and at the end, Softmax is used for the classification. The method is validated with the cross-dataset generalization technique. Lakhani et al. [88] detected the TB using the pre-trained CNN with data augmentation techniques such as rotation and histogram equalization. They evaluated the three training strategy, i.e., pre-trained and untrained networks, pre-trained and untrained networks with data augmentation, and ensemble pre-trained networks with augmented data. Among all the different training methods, an ensemble pre-trained network reported the best AUC of 99.0%.

Gozes et al. [49] employed DenseNet-121 network trained on CXR-14 dataset for classification. The proposed model (MetaChexnet) is trained in two phases. In the first phase, a pre-trained DenseNet-121 learns the dataset-specific features with the CXR-14 dataset and meta-data. Then, the network is fine-tuned for TB detection with Shenzhen and MC dataset. The proposed model is compared with the ChexNet and pre-trained DenseNet-121 for the classification. The proposed model gives a comparable result with the ChexNet [135] and outperforms the pre-trained DenseNet-121. Rahman et al. [134] evaluated the nine different pre-trained CNN models for TB detection. They used two strategies for training the model. In the first strategy, the lung region is extracted with UNet architecture [141] and then it is fed as input to the CNN models. In the second strategy, full X-rays are used as input to CNN models. Among all the pre-trained models, DenseNet-201 achieved the highest accuracy for TB detection on the segmented lungs.

Hooda et al. [59] proposed the CNN with 7 convolutional layers, 7 activation layers, 2 dropout layers, and 3 fully connected layers for the TB detection. They used the MC and Shenzhen datasets to validate the proposed model. The proposed CNN does not use transfer learning. Similar to Hooda et al. [59], Pasa et al. [128] also proposed the model that does not use transfer learning. The proposed model is faster and efficient for TB detection compared to the state-of-the-art deep learning models. With a depth of 5 blocks, the proposed method reported good classification performance. The proposed network has only 230,000 parameters and took approximately one hour for the training. The inference time of the proposed model is five-six milliseconds.

The deep learning models require high-quality images for training, and the low contrast chest X-ray may impact the results. Munadi et al. [114] evaluated the effect of image enhancement for TB detection in chest X-rays. They used the Unsharp Masking (UM) and High-Frequency Emphasis Filtering (HEF) technique for image enhancement. They used enhanced images to fine-tune ResNet-18, ResNet-50, and EfficientNet-B4. EfficientNet-B4 outperformed the other models on UM enhanced images. Training time for the proposed models was 14 minutes approximately. Ayaz et al. [11] proposed the CAD for early TB detection using chest X-rays. They have applied hybrid learning approach that uses the handcraft feature extraction (Gabor filter) and deep feature learning (CNN) technique. They ensembles several classifiers through logistic regression to achieve the best classification accuracy. The proposed methodology was evaluated on Shenzhen and MC datasets with K-fold cross-validation.

Transformer models are mostly used in natural language processing and their application in images are very limited. Duong et al. [39] proposed the hybrid deep learning model consisting of EfficientNet [162] and Transformer model [38] for TB detection. Moreover, three different learning strategies are considered for training the proposed model to prevent overfitting. The proposed model outperformed the other state-of-the-art models with 97.72% accuracy and 100% AUC. The proposed model has 94,814,915 parameters. All the research papers discussed above performed the image classification for TB detection, while Nijiati et al. [119] proposed the model to detect the TB affected regions in the lungs to assist the radiologist. For the same, TB-UNet model is proposed that uses the ResNext [179] as the encoder for UNet architecture [141]. Further, they have compared the performance of radiologists with and without the proposed model assistance for TB diagnosis in patients. The obtained results show that the radiologist performance is improved with the help of the proposed model.

Many research studies have been proposed for detecting respiratory illnesses such as pneumonia, COVID-19, and pneumothorax. Table 6 and Table 7 enlist the few works for these pulmonary conditions on chest X-rays. In the following, we have discussed the deep learning models proposed for pneumothorax, COVID-19, and pneumonia.

Table 6.

Quantitative comparison of pneumonia and pneumothorax detection methods. Dice similarity coefficient (DSC), F1-score, and the area under curve (AUC) are reported for comparison. SGD is the abbreviation for stochastic gradient descent, ReLu for rectified linear unit, lr for learning rate, and AF for activation function

| Method | Optimizer | AF | LR Scheduling | Images size | Pre-processing step | Dataset | Technique | DSC | F1-Score | AUC |

|---|---|---|---|---|---|---|---|---|---|---|

| DL [135] | Adam | Sigmoid | lr = 0.001 that is decreased by factor of 10 when validation loss is not improved | 224 224 | Image normalization | CXR-14 | DenseNet-121, transfer learning | – | 43.5 | – |

| DL [77] | SGD | – | lr = 0.00105 | 512 512 | Random scaling, shift in coordinate space, brightness and contrast adjustment, blurring with Gaussian blur | RSNA | ResNet50, ResNet101, mask-RCNN, data augmentation | – | – | – |

| DL [133] | Gradient Descent | ReLu, Softmax | lr = 0.0003 | 224 224, 227 227 | Image resize and augmentation | Kaggle [112] | AlexNet, ResNet-18, DenseNet-201, SqueezeNet, transfer learning, data augmentation, cross-validation | – | 93.5 | 95.0 |

| DL [43] | Adam | – | lr = 0.00001 with learning rate decrease factor of 0.2 | 512 512 | Image resizing and data augmentation techniques such as scaling, shear and rotation | CXR-14 | single-shot detector RetinaNet with Se-ResNext101, cross-validation | – | – | – |

| DL [37] | – | ReLu, Softmax | lr = 0.00001 | 227 227 | Image resizing | CXR-14 | VGG-19, CWT, DWT, GLCM, transfer learning, SVM-linear, SVM-RBF, KNN classifier, RF, DT | – | 92.15 | – |

| DL [158] | Adam | ReLu, Sigmoid | lr = 0.001 with = 0.9 and = 0.999 | 224 224 | Image normalization, resizing, cropping and data augmentation | CheXpert | DenseNet-122, transfer learning | – | – | 70.8 |

| DL [176] | Adam | ReLu, Softmax | lr = 0.0005 with = 0.9, = 0.999 | 768 768, 1024 1024 | Image normalization and data augmentation with random Gamma correction, random brightness and contrast change, CLAHE, motion blur, median blur, horizontal flip, random shift, random scale, and random rotation | SIIM-ACR, MC | UNet, SE-Resnext-101, EfficientNet-B3, transfer learning | 88.0 | – | – |

| DL [1] | Adam | ReLu, Sigmoid | lr = 0.001 which is relatively dropped per epoch using the cosine annealing learning rate technique | 256 256, 512 512 | Image resizing, normalization and data augmentation using horizontal flip, one of random contrast, random gamma, and random brightness, one of elastic transform, grid distortion, and optical distortion | SIIM-ACR | UNet, ResNet-34, transfer learning, stochastic weight averaging | 83.56 | – | – |

Table 7.

Quantitative comparison of COVID-19 detection methods. Accuracy (Acc.), F1-score, and the area under curve (AUC) are reported for comparison. SGD is the abbreviation for stochastic gradient descent, ReLu for rectified linear unit, lr for learning rate, and AF for activation function

| Method | Optimizer | AF | LR Scheduling | Images size | Pre-processing step | Dataset | Technique | Acc. | F1-Score | AUC |

|---|---|---|---|---|---|---|---|---|---|---|

| DL [125] | Adam | ReLu | lr = 0.0001 | 224 224 | Image resizing and data augmentation with rotation | COVID-19 | VGG-16, transfer learning | 97.97 | – | – |

| DL [107] | Adam | ReLu, Softmax | lr = 0.0001 and it reduces when there is no improvement for continuous three epochs | 224 224 | Image resizing, scaling, and data augmentation | COVID-19 | EfficientNet-B4, transfer learning, cross-validation | 96.70 | 97.11 | 96.66 |

| DL [76] | Adam | LeakyReLU, Softmax | lr = 0.001 | 128 128 | Image resizing, and data augmentation such as rotation and zoom | COVID-19, Kaggle [112] | InceptionNet-V3, XceptionNet, ResNext, transfer learning | 97.0 | 95.0 | – |

| DL [58] | Adam | ReLu | lr = 0.00001 with rate decay of 0.1 | 224 224 | Image resizing and data augmentation | COVID-19, Kermany [83] | SE-ResNext-50, transfer learning | 97.55 | – | – |

| DL [190] | Adam | ReLu | lr = 0.0001 | 224 224 | Image resizing, normalization, and data augmentation technique such as random rotation, width shift, height shift, horizontal flip | COVID-19, Kaggle[112] | VGG16, ResNet50, EfficientNetB0, synthetic image generation, cross-validation | 96.8 | – | – |

| DL [127] | Adam | – | lr = 0.0001 | 512 512 | Image normalization and resizing | CheXpert, Private | DenseNet-121, PCAM, Vision Transformer | 86.4 | – | 94.1 |

| DL [171] | Adam | ReLu, Softmax | lr = 0.000001 for 20 epochs and then 0.0000001 | 224 224 | Image resizing, normalization and data augmentation | COVID-19, RSNA, CheXpert, MC | ResNeXt-50, Inception-v3, DenseNet-161, transfer learning | 98.1 | – | – |

Pneumonia

Pneumonia can be life-threatening if it is not diagnosed at the time. It nearly killed 800,000 children under the age of five-year worldwide in 2018. The main reason for pneumonia is viral/bacterial infections or fungi that result in inflammation of the lungs. It is normally found in small children or old age people (above 65). Chest X-rays can help the radiologist to identify white spots (infection) or fluid surrounding the lungs. In the following, we have discussed research studies that have used deep learning models for pneumonia detection.

Rajpurkar et al. [135] deployed DenseNet-121 for pneumonia detection which exceed the radiologist interpretation. They compared the test set with the annotation of four radiologists. The proposed method performed (0.435) better than the radiologist (0.387) on the F1 metric. Jaiswal et al. [77] experimented with a deep neural network based on mask-region CNN (RCNN) that does pixel classification for pneumonia detection. It incorporates global and local features for pixel-wise segmentation. ResNet-101 and Resnet-50 are used as backbone detectors in masked-RCNN. They also experimented with YOLO3 [137] and UNet [141], but both models failed to give better predictions results on the test set. Rahman et al. [133] used the different pre-trained deep learning models for the classification of normal, bacterial, and viral pneumonia. They trained the AlexNet, ResNet18, DenseNet201, and SqueezeNet [67] on the augmented dataset to deal with overfitting. The DenseNet-201 achieved the highest accuracy for pneumonia detection.

Gabruseva et al. [43] proposed the model for automatic pneumonia detection using the deep learning. The proposed model uses a single-shot detector RetinaNet with SE-ResNext101 [60] encoder that had been pre-trained on the ImageNet dataset. On the ImageNet dataset, the SE-ResNext architectures performed best, with a good trade-off between accuracy and complexity [14]. The images are resized to 512 512 as the proposed model on 256 256 resolution yielded the poor performance and 1024 1024 increased the computational complexity. Dey et al. [37] proposed the deep learning system for early pneumonia detection using chest X-rays. They used the ensemble feature scheme (EFS) for the feature extraction. They combined the features extracted with the assistance of Complex Wavelet Transform (CWT), Discrete Wavelet Transform (DWT), and Gray-Level Co-occurrence Matrix (GLCM) and deep learning models. They used the pre-trained VGG-19 for the deep feature extraction. Further, they tested the different classifiers such as SVM-linear, SVM-RBF, kNN classifier, Random-Forest (RF), and Decision-Tree (DT) to obtain better accuracy. At last, VGG-19 with a random-forest classifier achieved the highest pneumonia detection accuracy. They also compared their proposed model with ResNet50, VGG-16, and AlexNet.

COVID-19

COVID-19 is a kind of pneumonia caused by coronavirus. This disease has killed more than a million people, with 58 million confirmed cases worldwide. RT-PCR and antigen tests are recommended for the diagnosis but are expensive. The increasing load of patients can crumble down the medical infrastructure. Chest X-rays can help in the early detection and isolation of COVID-19 patients. Deep learning can assist the medical community with automatic detection. Many research studies are proposed for COVID19 detection in chest X-rays, and in the following, we have discussed a few studies.

Panwar et al. [125] proposed the deep learning-based nCOVnet for detecting the COVID-19. The model contains 23 layers in which 18 are of pre-trained VGG-16 model and the rest are fine-tuned on COVID-19 and other datasets. The total number of trainable parameters in the proposed models is 14,846,520. Another work reported on COVID-19 is Marques et al. [107] that have used the pre-trained EfficientNetB4 for detection. The proposed model is validated through 10-fold stratified cross-validation. The total number of trainable parameters in the proposed models is 17,913,755. Jain et al. [76] evaluated the three different models namely, InceptionNet-V3 [160], XceptionNet [29] and ResNext [179] model for COVID-19 diagnosis. The images are downsampled to 128 128 for faster training. Further, data augmentation is used to prevent the models from overfitting. Among all three models, XceptionNet outperformed the other two models for accurately detecting the COVID-19.

Hira et al. [58] evaluated the nine pre-trained models for the COVID-19 detection. Among all the models, SE-ResNeXt-50 achieved the highest accuracy for binary and multi-class classification. The total number of parameters in the SE-ResNext-50 is 27.56 million. Vantaggiato et al. [171] proposed the ensemble-CNN for the three-class and five-class COVID-19 classification. They ensemble pre-trained ResNeXt-50 [179], Inception-v3 [160], and DenseNet-161 [61] networks for the classification. The proposed ensemble network achieves the higher performance for COVID-19 detection in comparison with the other CNN architectures. Again in Zebin et al. [190] three pre-trained CNN models (VGG16 [152], ResNet50 [56], EfficientNetB0 [162]) are used for COVID-19 detection. In this paper, synthetic images are generated with CycleGAN to augment the minority COVID-19 class. Among all these three CNN models, EfficientNetB0 achieves the best accuracy for COVID-19 detection. The number of parameters in EfficientNetB0 architecture is 5.3M, while in ResNet50 is 26M.

The transformer is a deep neural network based on a self-attention mechanism that produces very wide receptive fields. It was first introduced in the field of natural language processing (NLP) [172]. Because it allows modeling long-range dependency inside images, it has spurred the vision community to examine its applications in computer vision after attaining spectacular results in NLP. Park et al. [127] proposed the Vision Transformer model for diagnosing the COVID-19 in chest X-rays. In the proposed model, the transformer uses the low-level chest X-ray feature corpus obtained from the backbone network trained to extract aberrant chest X-ray features. The DenseNet-121 is used as backbone network along with probabilistic class activation map poling is used to extract low-level features from an image. The proposed model outperformed the state-of-the-art models for the COVID-19 detection.

Pneumothorax

Pneumothorax can be life-threatening if not diagnosed at a time. The airspace between the lung and the chest wall causes pneumothorax. It can be diagnosed by X-rays, but the interpretation requires an expert radiologist. This can be time-consuming, so Sze-To et al. [158] proposed a tChexNet for the automatic detection. The proposed model is 122 layers deep and uses a training strategy to transfer knowledge learned in CheXNet [135] to detect the pneumothorax. The AUC obtained by the tCheXnet on the test set is 10% better than CheXNet.