Abstract

To slow the spread of COVID-19, most countries implemented stay-at-home orders, social distancing, and other nonpharmaceutical mitigation strategies. To understand individual preferences for mitigation strategies, we piloted a web-based Respondent Driven Sampling (RDS) approach to recruit participants from four universities in three countries to complete a computer-based Discrete Choice Experiment (DCE). Use of these methods, in combination, can serve to increase the external validity of a study by enabling recruitment of populations underrepresented in sampling frames, thus allowing preference results to be more generalizable to targeted subpopulations. A total of 99 students or staff members were invited to complete the survey, of whom 72% started the survey (n = 71). Sixty-three participants (89% of starters) completed all tasks in the DCE. A rank-ordered mixed logit model was used to estimate preferences for COVID-19 nonpharmaceutical mitigation strategies. The model estimates indicated that participants preferred mitigation strategies that resulted in lower COVID-19 risk (i.e., sheltering-in-place more days a week), financial compensation from the government, fewer health (mental and physical) problems, and fewer financial problems. The high response rate and survey engagement provide proof of concept that RDS and DCE can be implemented as web-based applications, with the potential for scale-up to produce nationally representative preference estimates.

Keywords: Discrete choice experiment, Respondent driven sampling, COVID-19, Nonpharmaceutical interventions

Introduction

In the initial phases of efforts to lessen the spread and mortality of the COVID-19 pandemic, countries around the world enacted nonpharmaceutical mitigation strategies such as social distancing, stay-at-home orders, closure of non-essential businesses, and mask use in public (Flaxman et al. 2020, Lyu and Wehby 2020, Medline et al. 2020, Teslya et al. 2020, The Economist 2020). Research suggested that doing so could save millions of lives and decrease burdens on overstretched health care systems (Walker et al. 2020a, b; Pei et al. 2020).

Although COVID-19 vaccines are being administered in some countries, COVID-19 cases and mortality remain high (Anderson et al. 2020; Zimmer et al. 2020; Center for Systems Science and Engineering (CSSE) at Johns Hopkins University 2021). Therefore, nonpharmaceutical mitigation strategies will remain key to reducing disease spread and may be the ‘new normal’ while vaccination infrastructure is scaled up globally. However, the effectiveness of nonpharmaceutical mitigation strategies depends on population adherence, which in turn is partially driven by individual preferences. The impact of the COVID-19 pandemic on the quality of life and mental health of selected populations, such as health workers, has been previously studied. However, there are limited data on the quality of life impact and tradeoffs within the general population (Young et al. 2020; Zhang and Ma 2020).

To understand individual preferences for varying levels of mitigation strategies across a diverse population, discrete choice experiments (DCEs) could be combined with respondent driven sampling to promote representative sampling targeting specific populations. In a DCE, respondents are presented with a choice that consists of two or more discrete (i.e., mutually exclusive) scenarios with various combinations of alternatives. The respondents choose the scenario which best aligns with their preferences (Hensher et al. 2005; Train 2009). An example of a scenario is choosing whether to wear (or not wear) a mask in public during the COVID-19 pandemic. In the mask use example, alternatives could include wearing a mask ‘none of the time’, ‘some of the time’ or ‘all of the time’. DCEs were originally developed for economic and market research and are an established tool for eliciting individual preferences (Ryan et al. 2001; de Bekker-Grob et al. 2012). Increasingly, DCEs have been used in health economics to inform healthcare decision making (de Bekker-Grob et al. 2015; Flynn 2010; Ghijben et al. 2014; Ryan et al. 2006; Wilson et al. 2014). Results from a DCE can be used to estimate which preferences increase or decrease utility, a concept used to model worth or value (Hensher et al. 2005; Train 2009). Because of the need to limit in-person contact during the COVID-19 pandemic, web-based DCEs could be utilized to remotely survey participants.

In an effort to make the preference results representative of a diverse population, web-based respondent-driven sampling (RDS) could be used to target different demographic characteristics during recruitment. RDS is a probability sampling method that uses participant-driven referral for recruitment of hard-to-reach populations for which a sampling frame may not exist (e.g., persons employed in sex work or persons who inject drugs) (Abdul-Quader et al. 2006; Bengtsson et al. 2012; Heckathorn 1997; Hequembourg and Panagakis 2019; Jennings Mayo-Wilson et al. 2020; Magnani et al. 2005; Salganik and Heckathorn 2004; Wang et al. 2007, 2005). Study samples recruited using RDS are usually more heterogeneous than populations recruited using other sampling strategies, and as a result can be more generalizable to a population of interest (Kendall et al. 2008). RDS is traditionally done in-person, and participants are issued unique referral coupons which are used to trace recruitment patterns (Heckathorn 2007; Jennings Mayo-Wilson et al. 2020). Due to COVID-19 stay-at-home orders and travel restrictions, web-based RDS could be utilized in place of in-person recruitment. Web-based RDS has been used to recruit populations that are hard to reach using in-person methods or for well-networked but hard-to-identify populations (e.g., persons with substance use disorder, in financial distress, or sexual minorities) (Bauermeister et al. 2012; Bengtsson et al. 2012; Hildebrand et al. 2015; Wejnert and Heckathorn 2008). Also, web-based RDS has demonstrated an ability to overcome temporal and physical barriers to traditional in-person RDS by allowing participants to refer a peer using features on social networking sites, such as a wall post, status update, or personal message (Hildebrand et al. 2015).

Despite the ability of DCEs to assess whether a scenario results in an increase or decrease in utility and of RDS to recruit diverse study samples, there is currently limited published evidence on the feasibility of using both techniques together. Therefore, the primary objective of this paper is to show the feasibility, measured via overall response rate, of using these two methodologies in combination. The secondary objective is to report preliminary findings of preferences for COVID-19 nonpharmaceutical mitigation strategies from a small sample of university students and staff members at four universities in three countries.

Methods

Study settings and populations

Participants were recruited from universities in countries representative of the three World Bank income groups: high income (U.S.), upper-middle income (Mexico), and lower-middle income (Kenya). Participants meeting the following criteria were eligible for inclusion: 18 years or older; staff member or enrolled as a student at Brown University, Purdue University, Moi University or National Institute of Public Health (INSP); resided in the same country as their university (either U.S., Kenya, or Mexico) regardless of legal status or citizenship, from April 2020–June 2020 (the time frame mitigation measures were initially implemented in all three countries); and able to read English in the U.S., English or Kiswahili in Kenya, and Spanish in Mexico. Eligibility was confirmed using institution-provided emails and screening questions.

Respondent driven sampling design and recruitment

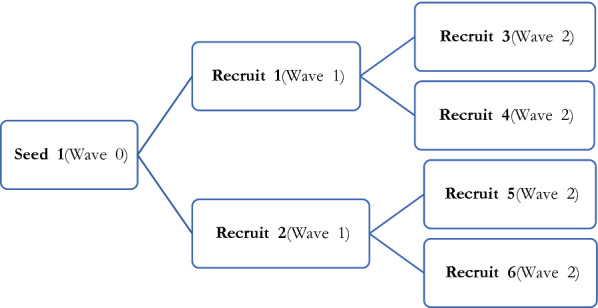

This pilot study aimed to recruit approximately 60 participants (i.e., 10–20 individuals per university) using RDS methodology as illustrated in Fig. 1 to provide an adequate sample of participants to demonstrate feasibility. To begin RDS recruitment, five ‘seed’ individuals per institution were invited to complete the survey (wave 0, n ≤ 5 per site). Eligible seeds were 18 years or older, affiliated with one of the respective universities, and willing to invite 2 additional people in their network to participate in the survey. After survey completion, each seed was asked to invite 2 eligible recruits to complete the survey (wave 1, n ≤ 10 per site). A seed was considered productive if their invitees completed the survey, and unproductive if the seed or their invitee did not complete the survey. Emails were sent to recruit additional seeds in order to replace unproductive seeds. Post-survey completion, wave 1 recruits were asked to invite another 2 eligible recruits (wave 2, n ≤ 20 per site). Participants were given up to three email reminders if they had not responded to the survey link.

Fig. 1.

Illustrative RDS Recruitment Process
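The recruitment ceilings described above (five seeds per site, each participant inviting up to two peers, two waves beyond the seeds) can be reproduced with a short calculation. The following is a minimal sketch; the function name `max_wave_sizes` is illustrative and not part of the study's tooling.

```python
def max_wave_sizes(seeds: int, recruits_per_person: int, waves: int) -> list[int]:
    """Upper bound on participants per wave if every participant recruits fully."""
    # Wave 0 holds the seeds; each later wave can be at most
    # recruits_per_person times larger than the wave before it.
    return [seeds * recruits_per_person ** w for w in range(waves + 1)]


# Design parameters stated in the text: 5 seeds, 2 invitations each, waves 0-2.
sizes = max_wave_sizes(seeds=5, recruits_per_person=2, waves=2)
print(sizes)       # per-wave ceilings for one site
print(sum(sizes))  # ceiling on total participants for one site
```

Under these assumptions each site could enroll at most 35 participants, which comfortably covers the target of 10–20 individuals per university even when some recruitment chains stall.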

With the exception of one site (INSP), participants received small incentives ($5–10) for survey completion in the form of a gift card, internet or airtime. Incentives were received for survey completion only (Brown University) or both survey completion and successful recruitment (Moi University and Purdue University). Successful recruitment meant that the recruiter’s recruit completed the survey. However, a decision not to recruit did not prevent a participant from receiving an incentive for survey completion. To reduce potential for repeat enrollments, institutional emails were required for enrollment and tracked for duplications by survey programmers, and the completion of incentives was accounted for by administrative personnel at each institution.

Respondent driven sampling measurements

RDS process measures (Jennings Mayo-Wilson et al. 2020) were used to assess the RDS recruitment process (Table 1). RDS process measures included: the individual’s self-reported social network size (participant was asked ‘How many currently-enrolled students or staff members are there that you know by name and that know you by name?’), recruiter-recruit relationship (length of the relationship [years], how met), number of recruiter reminders to complete the survey, and nature (friendly, aggressive, exciting, worrisome, other) of the invitation from their recruiter. Participants were also asked their willingness to recruit peers on a scale from 0 to 10 (0 = not willing, 10 = very willing).

Table 1.

RDS (Respondent-Driven Sampling) Process Measures

| Total Respondents (n = 63) | |

|---|---|

| # Respondents | |

| Brown University | 15 |

| Purdue University | 25 |

| Moi University | 8 |

| National Institute of Public Health (INSP) | 15 |

| Members in network, Mean (SD) | 56.6 (71.8) |

| Peer network size, # (%) | |

| 1–25 | 31 (49.2) |

| 26 – 50 | 12 (19.0) |

| 51 – 100 | 6 (9.5) |

| > 100 | 11 (17.5) |

| NR | 3 (4.8) |

| Recruiter-recruit relationship, # (%) | |

| Friend | 49 (77.8) |

| Other | 14 (22.2) |

| # of years knowing the recruiter, Mean (SD) | 3.8 (4.4) |

| Where first met recruiter, # (%) | |

| In the community | 6 (9.5) |

| At School | 36 (57.1) |

| At work | 7 (11.1) |

| Other | 14 (22.2) |

| # of times reminded to participate by recruiter, Median | 1 |

| Nature of invitation, # (%) | |

| Exciting | 4 (6.3) |

| Friendly | 54 (85.7) |

| Other | 2 (3.2) |

| NR | 3 (4.8) |

| Willingness to recruit, Mean (SD) | 8.1 (3.0) |

SD standard deviation, NR not recorded

Discrete choice experiment design

The DCE was designed to elicit participants’ preferences for nonpharmaceutical COVID-19 mitigation strategies, and identify preferences that were associated with increases or decreases in utility. DCE creation was an iterative process based on the established literature which aimed to ensure that attributes were generalizable across countries, described individual level characteristics (e.g., mask ease of use, ability to work from home) not societal level characteristics (e.g., capacity of indoor locations, stay-at-home orders), and were not too numerous as to impose a cognitive burden to survey responders. The DCE was adapted from questions in the Understanding Society- UK Household Longitudinal Study COVID-19 Survey (University of Essex Institute for Social and Economic Research 2020). In addition to the DCE, participants completed a survey that assessed depression using the Patient Health Questionnaire-2 (PHQ-2) (Kroenke et al. 2003), health related quality of life using the EQ-5D-3L (The EuroQol Group 1990), socioeconomic status, health (i.e., presence of chronic conditions), and sociodemographic characteristics. The survey was designed to take about 30 min to complete, and was tested by the study investigators prior to finalization and distribution to participants.

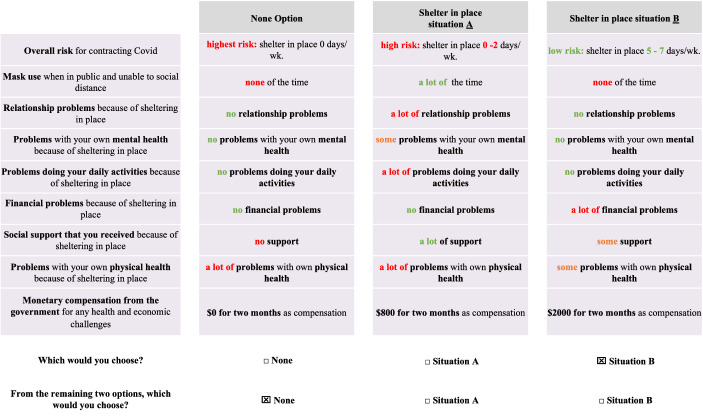

Figure 2 is an example of the DCE that was administered to participants. The DCE consisted of nine individual-level attributes based on experiences during the initial three months (April 2020–June 2020) of social distancing and stay-at-home orders: (1) risk of contracting COVID-19 due to frequency of sheltering-in-place, (2) frequency of mask use in public, (3) relationship problems, (4) mental health problems, (5) physical health problems, (6) problems performing daily activities, (7) financial problems, (8) level of support received, and (9) financial compensation received from the government (Table 2). These attributes represented both subjective and objective components of quality of life (Baker and Intagliata 1982; Caron 2012; Fleury et al. 2013), and were applicable across countries. Each attribute was described using a ‘level’ (Hensher et al. 2005). For example, the attribute ‘reduction in COVID-19 risk due to days per week sheltering-in-place’ consisted of three levels: ‘high risk (0–2 days per week sheltering in place)’, ‘medium risk (3–4 days per week sheltering in place)’, and ‘low risk (5–7 days per week sheltering in place)’ (Table 2). Eight of the attributes had three levels. One attribute, ‘financial compensation received from the government’, had 7 levels because of the wide variation in compensation across countries. The final DCE generated a large number of scenarios (S) per country ($S = 3^{8} \times 7^{1} = 45{,}927$), which made a full factorial design infeasible. Thus, we used the dcreate procedure (Stata SE, College Station, TX) (Hole 2015) to implement a D-efficient partial factorial design (Carlsson and Martinsson 2003).
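The size of the full factorial design follows directly from the attribute structure: eight attributes with three levels each and one attribute with seven levels. A minimal sketch of that count is shown below; the variable names are illustrative.

```python
from math import prod

# Eight three-level attributes plus the seven-level compensation attribute.
levels_per_attribute = [3] * 8 + [7]

# A full factorial design contains one profile per combination of levels.
n_profiles = prod(levels_per_attribute)
print(n_profiles)
```

Because every additional level multiplies the number of profiles, a fractional (here D-efficient) design is the usual way to keep the number of tasks per respondent manageable.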

Fig. 2.

Examples of computer-based choice scenarios from Discrete Choice Experiment

Table 2.

Discrete Choice Experiment Attributes and Levels

| DCE Attribute | Attribute Level | Coding scheme | # of Levels |
|---|---|---|---|
| COVID-19 risk due to frequency of sheltering-in-place | High (0–2 days); Medium (3–4 days); Low (5–7 days) | 0 = High; 1 = Medium; 2 = Low | 3 |
| Frequency of mask use in public | None of the time; Some of the time; A lot of the time | 0 = None; 1 = Some; 2 = A lot | 3 |
| Relationship problems due to sheltering-in-place | No problems; Some problems; A lot of problems | 0 = None; 1 = Some; 2 = A lot | 3 |
| Mental health problems due to sheltering-in-place | No problems; Some problems; A lot of problems | 0 = None; 1 = Some; 2 = A lot | 3 |
| Physical health problems due to sheltering-in-place | No problems; Some problems; A lot of problems | 0 = None; 1 = Some; 2 = A lot | 3 |
| Problems performing daily activities due to sheltering-in-place | No problems; Some problems; A lot of problems | 0 = None; 1 = Some; 2 = A lot | 3 |
| Financial problems due to sheltering-in-place | No problems; Some problems; A lot of problems | 0 = None; 1 = Some; 2 = A lot | 3 |
| Level of support received when sheltering-in-place | No support; Some support; A lot of support | 0 = None; 1 = Some; 2 = A lot | 3 |
| Financial compensation received from the government during April–September 2020 | Country dependent* | 0 = Lowest compensation; 7 = Highest compensation | 7 |

*Compensation ranges (min to max) in local currency: United States: USD 0–5000; Kenya: KES 0–5000; Mexico: MEX 0–5000

During the DCE, participants completed choice tasks, each of which involved choosing their preferred mitigation strategy from the randomly presented options. Each task comprised three choice profiles: Shelter in Place Situation A, Shelter in Place Situation B, or None (Fig. 2). The None option represented the pre-COVID-19 status quo (opt-out) option (which explicitly allowed for higher risk preferences). Each profile comprised the nine attributes listed in Table 2. To ascertain preference, participants were presented with tasks that contained different combinations of the levels of each of the nine attributes (Hensher et al. 2005). Participants were presented with 10 tasks twice, resulting in 20 choice tasks. The first presentation asked participants to choose the best option from the three choice profiles (Shelter in Place Situation A, Shelter in Place Situation B, or None), and the second asked them to choose the best option from the remaining two choice profiles (best-best analysis) (Ghijben et al. 2014; Lancsar et al. 2017). Since the study had three alternatives, use of a best-best DCE allowed for full preference ranking of the choice sets (Ghijben et al. 2014; Lancsar et al. 2017). The number of tasks was chosen in alignment with the literature to prevent participant fatigue, limit cognitive overload, and ensure participants were able to consider all attributes (Coast and Horrocks 2007; Mangham et al. 2008; Clark et al. 2014).
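With three alternatives, two sequential "best" choices are enough to recover a complete ranking, because the profile that is never chosen must be ranked last. The sketch below illustrates this logic; the function `full_ranking` and the short profile labels are hypothetical and are not taken from the survey instrument.

```python
ALTERNATIVES = ("Situation A", "Situation B", "None")


def full_ranking(first_best: str, second_best: str) -> list[str]:
    """Turn a best-best response into a full ranking of the three profiles."""
    if first_best not in ALTERNATIVES or second_best not in ALTERNATIVES:
        raise ValueError("unknown alternative")
    if first_best == second_best:
        raise ValueError("second choice must come from the remaining two profiles")
    # The single profile not picked in either round is ranked last.
    worst = next(a for a in ALTERNATIVES if a not in (first_best, second_best))
    return [first_best, second_best, worst]


print(full_ranking("Situation B", "None"))
```

This is why a best-best format with three profiles yields rank-ordered data without asking respondents a separate "worst" question.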

Discrete choice experiment administration

Participants received the survey via email link sent from the study team. The survey was administered using Qualtrics (Seattle, WA) (Weber 2019). It was translated from English into Spanish and Kiswahili; and provided in English in the U.S., English or Kiswahili in Kenya, and Spanish in Mexico. Use of Qualtrics allowed for the tracking of recruiter-recruit relationship using automated identification numbers that identified the RDS lineage. Time stamps and minimum time requirements were programmed into the DCE in order to ensure participants were taking time to read responses.
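Tracking recruiter-recruit links with identification numbers makes it possible to reconstruct each participant's recruitment wave. The following is a minimal sketch of that idea only; the identifiers, the `recruiter_of` mapping, and the function `wave_of` are hypothetical and do not describe the actual Qualtrics configuration used in the study.

```python
from typing import Optional


def wave_of(participant: str, recruiter_of: dict[str, Optional[str]]) -> int:
    """Count referral steps from a participant back to the seed (wave 0)."""
    wave = 0
    seen = {participant}
    while recruiter_of.get(participant) is not None:
        participant = recruiter_of[participant]
        if participant in seen:
            # A participant cannot appear twice in one recruitment chain.
            raise ValueError("cycle detected in recruitment chain")
        seen.add(participant)
        wave += 1
    return wave


# Hypothetical lineage: seed S1 recruits R1 and R2; R1 recruits R3.
recruiter_of = {"S1": None, "R1": "S1", "R2": "S1", "R3": "R1"}
waves = {pid: wave_of(pid, recruiter_of) for pid in recruiter_of}
print(waves)
```

Storing only the immediate recruiter for each participant is sufficient, since the full chain can always be rebuilt by walking the links back to the seed.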

Sample size

The minimum sample size needed for a DCE in order to calculate main effects is estimated based on the number of tasks (t), alternatives (a) per task, and number of analysis cells (c): N ≥ (500 × c)/(t × a), with ‘c’ equal to the largest number of levels for any of the attributes (de Bekker-Grob et al. 2015). The minimum required sample size for this DCE was 58 participants (solving with t = 20, a = 3, c = 7). Therefore, the final sample of 63 participants (with complete data) exceeded the minimum requirement. (For future research, larger sample sizes will be required to allow for subgroup analyses and heterogeneity.)
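The rule of thumb above can be evaluated directly. The sketch below plugs in the values given in the text (t = 20, a = 3, c = 7); the function name is illustrative. The expression evaluates to roughly 58.3, in line with the reported minimum of 58.

```python
def min_dce_sample_size(tasks: int, alternatives: int, max_levels: int) -> float:
    """Rule-of-thumb lower bound N >= (500 * c) / (t * a) for main effects."""
    return 500 * max_levels / (tasks * alternatives)


n_min = min_dce_sample_size(tasks=20, alternatives=3, max_levels=7)
print(round(n_min, 1))

# The analyzed sample of 63 completers sits above this bound.
print(63 > n_min)
```

Because the bound scales with the largest number of levels, the seven-level compensation attribute is what drives the requirement here.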

Data analysis

A mixed rank-ordered logit model with normally distributed random parameters was used to estimate preferences for COVID-19 nonpharmaceutical mitigation strategies (Lancsar et al. 2017). The mixed rank-ordered logit is an extension of the conditional logit that takes into account ranked choices, and is expressed as the product of the logit formulas (Galárraga et al. 2020; Ghijben et al. 2014; Lancsar et al. 2017). Normally distributed random parameters were included to allow for comparison of unobserved preference heterogeneity for a chosen social distancing scenario versus no social distancing (Galárraga et al. 2020; Lancsar and Louviere 2008). Since this was a best-best analysis, the first choice set contained data representing the three alternatives (i.e., Shelter in Place Situation A, or Shelter in Place Situation B, or None), with the dependent variable (choice) = 1 for the first-best and = 0 for the remaining alternatives. A dummy variable controlling for block effects was included in the model to ensure that the block a participant answered had no effect on model results (Lancsar and Louviere 2008).
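The phrase "product of the logit formulas" can be made concrete: the probability of an observed ranking is the probability of the first-best choice among all alternatives, multiplied by the probability of the second-best choice among those remaining. The following is a minimal sketch of that fixed-coefficient kernel only. It omits the normally distributed random parameters of the mixed model, and the utility values are made up for illustration rather than taken from the study.

```python
import itertools
import math


def rank_ordered_logit_prob(utilities_in_rank_order: list[float]) -> float:
    """Probability of a ranking as a product of sequential logit choices."""
    remaining = list(utilities_in_rank_order)
    prob = 1.0
    while len(remaining) > 1:
        shift = max(remaining)  # subtract the max for numerical stability
        denom = sum(math.exp(u - shift) for u in remaining)
        # Logit probability that the top-ranked remaining option is chosen.
        prob *= math.exp(remaining[0] - shift) / denom
        remaining = remaining[1:]
    return prob


# Hypothetical utilities for the three profiles in one task.
utilities = {"Situation A": 1.0, "Situation B": 0.2, "None": -0.5}

# Probabilities over all six possible rankings should sum to one.
total = sum(
    rank_ordered_logit_prob([utilities[a] for a in order])
    for order in itertools.permutations(utilities)
)
print(total)
```

In the mixed version, this kernel would be averaged over draws of the random parameters, which is typically done by simulation in the estimation software.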

To check for robustness and due to possible correlation between attributes, a Bonferroni-corrected p value was included to test statistical significance of the coefficients (VanderWeele and Mathur 2018). The Bonferroni-corrected threshold was calculated by dividing the nominal significance level (α = 0.05) by the number of tests/attributes, 0.05/9 = 0.0056. The distribution (level balance) of the nine attributes was checked across participants’ first and second choices, to ensure that the properties of the logit model were satisfied (Albert and Anderson 1984; Cook et al. 2018), and that the frequencies of the attribute levels exceeded the rule of thumb of 10 events per variable (EPV) for logistic regression (de Jong et al. 2019). RDS process measures were reported descriptively using means, standard deviations, and percentages. All analyses were performed using Stata SE (College Station, TX, version 16).
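The Bonferroni adjustment is a simple division, and applying it to the attribute p values reported in Table 5 shows which coefficients remain significant. This is a minimal sketch: entries reported as "< 0.001" are entered as 0.001, which is an assumed upper bound rather than the exact value, and the short attribute labels are abbreviations introduced here.

```python
ALPHA = 0.05
N_TESTS = 9  # one test per DCE attribute
threshold = ALPHA / N_TESTS
print(round(threshold, 4))

# p values as reported in Table 5; 0.001 stands in for "< 0.001" (assumption).
reported_p = {
    "COVID-19 risk": 0.018,
    "Mask use": 0.418,
    "Relationship problems": 0.012,
    "Mental health problems": 0.001,
    "Daily activity problems": 0.001,
    "Financial problems": 0.001,
    "Support received": 0.445,
    "Physical health problems": 0.001,
    "Government compensation": 0.001,
}

# An attribute survives the correction if its p value is below the threshold.
survives = {name: p < threshold for name, p in reported_p.items()}
for name, keep in survives.items():
    print(f"{name}: {'significant' if keep else 'not significant'}")
```

The correction is conservative when attributes are correlated, which is one reason to report both the nominal and the corrected significance levels.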

Results

Study population

Data collection was conducted from September through November 2020 and lasted an average of 17.5 days at each institution. A total of 99 people were invited to complete the survey, of whom 71 started the DCE (72% overall response rate, Table 3). Of starters, 63 out of the 71 completed all tasks in the DCE (89% engagement rate). For DCE completers, mean age was 26.4 years (SD 7.6), 64% were assigned female at birth, and 49% did not have a partner (Table 4). Approximately 65% of participants worked full or part time, and 56% were unable to telecommute or work from home. The participants’ preferences are shown in Table 5.

Table 3.

Study Recruitment and Survey Response

| | Total | Brown University | Purdue University | Moi University | National Institute of Public Health (INSP) |

|---|---|---|---|---|---|

| Participants recruited | 99 | 22 | 32 | 20 | 25 |

| Survey starters | 71 | 15 | 25 | 13 | 18 |

| Survey completers | 63 | 15 | 25 | 8 | 15 |

| # Seeds (wave 0) | 22 | 6 | 5 | 4 | 7 |

| # Recruits (wave 1) | 25 | 6 | 9 | 3 | 7 |

| # Recruits (wave 2) | 16 | 3 | 11 | 1 | 1 |
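The overall response and engagement rates can be recomputed from the counts in Table 3, and the same calculation gives site-level rates. The sketch below uses the table's figures; the dictionary keys are shortened site names introduced for illustration.

```python
# Counts from Table 3, by site.
recruited = {"Brown": 22, "Purdue": 32, "Moi": 20, "INSP": 25}
starters = {"Brown": 15, "Purdue": 25, "Moi": 13, "INSP": 18}
completers = {"Brown": 15, "Purdue": 25, "Moi": 8, "INSP": 15}


def pct(numerator: int, denominator: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * numerator / denominator)


# Response rate: starters / invited. Engagement rate: completers / starters.
overall_response = pct(sum(starters.values()), sum(recruited.values()))
overall_engagement = pct(sum(completers.values()), sum(starters.values()))
print(overall_response, overall_engagement)

for site in recruited:
    print(site, pct(starters[site], recruited[site]), pct(completers[site], starters[site]))
```

The site-level figures come from small denominators, so they are best read as descriptive rather than as a basis for comparing institutions.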

Table 4.

Demographic characteristics

| Respondents n = 63 | |

|---|---|

| Age in years, Mean (SD) | 26.4 (7.6) |

| Female sex assigned at birth, # (%) | 40 (63.5) |

| Marital status | |

| Never married and never lived together | 42 (66.7) |

| Married or living together | 14 (22.2) |

| Other | 7 (11.1) |

| Partnered, # (%) | |

| No | 31 (49.2) |

| Yes, and share a household | 13 (20.6) |

| Yes, and do not share a household | 9 (14.3) |

| NR | 10 (15.9) |

| Paid work, # (%) | |

| No | 19 (30.2) |

| Full-time (36 h or more per week) | 20 (31.7) |

| Part-time (< 36 h per week) | 21 (33.3) |

| No Response | 3 (4.8) |

| Ability to work from home (telecommute), # (%) | |

| No | 35 (55.6) |

| Yes | 24 (38.1) |

| NR | 4 (6.3) |

SD standard deviation, NR not recorded

Table 5.

DCE Pilot Results-Participant Preferences for Nonpharmaceutical COVID-19 Mitigation Scenarios in Kenya, Mexico and the US

| Attributes | Coefficients (Robust standard error) | P value |

|---|---|---|

| Reducing COVID-19 risk due to higher frequency of sheltering-in-place | 0.230* (0.097) | 0.018 |

| Frequency of mask use in public | 0.079 (0.097) | 0.418 |

| Relationship problems | −0.239*(0.095) | 0.012 |

| Mental health problems | −0.726***(0.120) | < 0.001† |

| Problems performing daily activities | −0.295***(0.087) | < 0.001† |

| Financial problems | −0.520***(0.112) | < 0.001† |

| Level of support received | 0.056 (0.073) | 0.445 |

| Physical health problems | −0.436***(0.100) | < 0.001† |

| Financial compensation from government | 0.097***(0.026) | < 0.001† |

| Block * Constant | −0.104 (0.177) | 0.556 |

| Constant | 3.330***(0.612) | < 0.001† |

| ‡N total observations | 2520 | |

*p < 0.05, **p < 0.01, ***p < 0.001

†Statistically significant using Bonferroni-correction, p < 0.0056

‡Total number of tasks done by 63 individuals

Positive coefficient values suggest increased utility/preference

Negative coefficient values suggest decreased utility/preference

Attributes and levels are listed in Table 2 and example scenarios shown in Fig. 2

Respondent driven sample process measures

Twenty seeds were recruited, five seeds per institution. Half (10) of these original seeds were unproductive, meaning the seed or their invitee did not complete the survey, and 8 replacement seeds were recruited (Appendix Table 6). The final sample consisted of 28 seeds, of which 17 were productive (10 original seeds and 7 replacement seeds) and 11 were unproductive.

Table 6.

Seed RDS (Respondent-Driven Sampling) Process Measures

| | Total | Brown University | Purdue University | Moi University | National Institute of Public Health (INSP) |

|---|---|---|---|---|---|

| Seeds | |||||

| # original seeds at start of study | 20 | 5 | 5 | 5 | 5 |

| # Original productive¹ seeds | 10 | 3 | 2 | 2 | 3 |

| # Original unproductive² seeds | 10 | 2 | 3 | 3 | 2 |

| # Replacement seeds | 8 | 1 | 3 | 1 | 3 |

| # Productive replacement seeds | 7 | 1 | 3 | 1 | 2 |

| # Unproductive replacement seeds | 1 | 0 | 0 | 0 | 1 |

| Total # productive seeds (original + replacement) | 17 | 4 | 5 | 3 | 6 |

| #Recruitment waves (excluding seeds) | 2 | 2 | 2 | 2 | 2 |

¹ Productive if seed and invitee completed the survey

² Unproductive if seed or invitee did not complete the survey

Data on RDS process measures were collected from the 63 individuals who completed the survey (Table 1). The mean network size of participants was 57 members (standard deviation [SD] 72). Most participants were friends with their recruiter (78%), had met them at school (57%), and had known them an average of 3.8 years (SD 4.4). Approximately 86% of participants described the invitation from their recruiter as being “friendly”, and participants received a median of one follow-up reminder from their recruiter to complete the survey. No participants reported safety concerns related to their participation in the study. Mean willingness to share the survey with others was 8.1 (SD 3.0) on a scale from 0 to 10 (0 = not willing, 10 = very willing).

Preferences

The DCE results, preferences for COVID-19 nonpharmaceutical mitigation strategies, are reported in Table 5. The attributes were evenly distributed across first (Appendix Table 7) and second choices (Appendix Table 8), meaning that the DCE was balanced and the properties of the logit model were satisfied.

Table 7.

First Choice: Level Balance of Attributes Across Choice Categories

| | Total | Not Chosen (Choice = 0) | Chosen (Choice = 1) |

|---|---|---|---|

| | N = 2,520 | N = 2,016 | N = 504 |

| COVID-19 risk due to frequency of sheltering-in-place | |||

| High (0–2 days) | 894 (35.5%) | 780 (38.7%) | 114 (22.6%) |

| Medium (3–4 days) | 845 (33.5%) | 690 (34.2%) | 155 (30.8%) |

| Low (5–7 days) | 781 (31.0%) | 546 (27.1%) | 235 (46.6%) |

| Frequency of mask use in public | |||

| None of the time | 894 (35.5%) | 780 (38.7%) | 114 (22.6%) |

| Some of the time | 798 (31.7%) | 628 (31.2%) | 170 (33.7%) |

| A lot of the time | 828 (32.9%) | 608 (30.2%) | 220 (43.7%) |

| Relationship problems due to sheltering-in-place | |||

| No problems | 894 (35.5%) | 780 (38.7%) | 114 (22.6%) |

| Some problems | 751 (29.8%) | 570 (28.3%) | 181 (35.9%) |

| A lot of problems | 875 (34.7%) | 666 (33.0%) | 209 (41.5%) |

| Mental health problems due to sheltering-in-place | |||

| No problems | 894 (35.5%) | 780 (38.7%) | 114 (22.6%) |

| Some problems | 748 (29.7%) | 488 (24.2%) | 260 (51.6%) |

| A lot of problems | 878 (34.8%) | 748 (37.1%) | 130 (25.8%) |

| Physical health problems due to sheltering-in-place | |||

| No problems | 894 (35.5%) | 780 (38.7%) | 114 (22.6%) |

| Some problems | 779 (30.9%) | 550 (27.3%) | 229 (45.4%) |

| A lot of problems | 847 (33.6%) | 686 (34.0%) | 161 (31.9%) |

| Problems performing daily activities due to sheltering-in-place | |||

| No problems | 894 (35.5%) | 780 (38.7%) | 114 (22.6%) |

| Some problems | 796 (31.6%) | 584 (29.0%) | 212 (42.1%) |

| A lot of problems | 830 (32.9%) | 652 (32.3%) | 178 (35.3%) |

| Financial problems due to sheltering-in-place | |||

| No problems | 894 (35.5%) | 780 (38.7%) | 114 (22.6%) |

| Some problems | 677 (26.9%) | 486 (24.1%) | 191 (37.9%) |

| A lot of problems | 949 (37.7%) | 750 (37.2%) | 199 (39.5%) |

| Level of support received when sheltering-in-place | |||

| No support | 894 (35.5%) | 780 (38.7%) | 114 (22.6%) |

| Some support | 793 (31.5%) | 596 (29.6%) | 197 (39.1%) |

| A lot of support | 833 (33.1%) | 640 (31.7%) | 193 (38.3%) |

| Financial compensation received from the government during April–September 2020 | |||

| 0 = Lowest compensation | 894 (35.5%) | 780 (38.7%) | 114 (22.6%) |

| 1 | 174 (6.9%) | 142 (7.0%) | 32 (6.3%) |

| 2 | 204 (8.1%) | 142 (7.0%) | 62 (12.3%) |

| 3 | 254 (10.1%) | 198 (9.8%) | 56 (11.1%) |

| 4 | 281 (11.2%) | 234 (11.6%) | 47 (9.3%) |

| 5 | 231 (9.2%) | 168 (8.3%) | 63 (12.5%) |

| 6 | 260 (10.3%) | 174 (8.6%) | 86 (17.1%) |

| 7 = Highest compensation | 222 (8.8%) | 178 (8.8%) | 44 (8.7%) |

The coefficients on reduction in COVID-19 risk and financial compensation from the government were statistically significant and positive, meaning that participants preferred to shelter in place more days a week in order to have a lower COVID-19 risk (0.230) and preferred to receive financial compensation from the government (0.097). Attributes that were less preferred (statistically significant with negative coefficients) included relationship problems (−0.239), mental health problems (−0.726), problems performing daily activities (−0.295), financial problems (−0.520), and physical health problems (−0.436) due to sheltering in place. The coefficient of the random intercept (‘constant’ in Table 5) was statistically different from zero (3.330, p < 0.001), indicating there was significant heterogeneity in preference for social distancing scenarios, but participants preferred social distancing scenarios over no social distancing. Since the block effect (‘block * constant’ in Table 5) was not significant (p = 0.556), the random version of the survey (i.e., variation of the levels) that the participant responded to had no effect on preference answers or model results. Under the Bonferroni-corrected threshold (p < 0.0056), reducing COVID-19 risk due to higher frequency of sheltering-in-place and relationship problems were no longer statistically significant predictors of respondents’ preferences for COVID-19 nonpharmaceutical mitigation strategies. Due to small sample sizes, comparisons between countries could not be calculated. (Similarly, heterogeneity by subgroup, such as race/ethnicity, age, gender, or region, will be the subject of future research with larger samples.)

Discussion

This study used web-based respondent driven sampling (RDS) to recruit participants from four universities in three countries to complete a web-based Discrete Choice Experiment (DCE) on preferences for COVID-19 nonpharmaceutical mitigation strategies. The overall response rate was 72%, and engagement with the DCE was 89%. This compares favorably with both RDS and DCE methods (Watson et al. 2017). Participants preferred strategies that resulted in lower COVID-19 risk (e.g., more days per week sheltering-in-place) and financial compensation from the government. The former result may be driven by the fact that 55.6% of respondents reported not being able to work from home/telecommute; the latter may be explained by the fact that about a third of respondents reported working a full-time job while also attending school full-time.

Also, participants preferred scenarios that caused fewer health (mental and physical), interpersonal, or financial problems. Interpreting coefficients from the DCE that are not statistically significant (i.e., neither more nor less preferred) presents a challenge. For example, the lack of strong preferences for or against mask use may indicate ambivalence about mask wearing or that there is heterogeneity across respondents’ preferences for mask use (e.g., half of the respondents may be strongly against mask use, while the other half are strongly supportive of mask use). Given the small sample size (N = 63), these are preliminary findings. Nevertheless, to our knowledge, this is the first instance of RDS and DCE being used in combination.

Use of these methodologies can serve to increase the external validity of an experiment by ensuring preference results are more generalizable to a specific target population (e.g., college students, university staff members, or racial/ethnic minorities) regardless of what country they are in. DCEs assessing population-level interventions can lack generalizability because participants are commonly recruited from persons receiving services in clinics, databases of willing research participants, disease registries, or convenience samples, or by using time-and-place sampling or snowball sampling (Galárraga et al. 2014; Ghijben et al. 2014; Hobden et al. 2019; Lokkerbol et al. 2019; Sharma et al. 2020; Vallejo-Torres et al. 2018). By using well-networked individuals, RDS enabled the creation of a heterogeneous study population, representing perspectives of diverse participants. Heterogeneity is especially important in the context of COVID-19 mitigation, since impact varies widely by country and within countries, and relationships have emerged between COVID-19 morbidity/mortality and race, age, socioeconomic status, and health.

Lessons learned from this pilot can be used to collect useful and more representative data at larger scale. Prior engagement with ‘seeds’, use of small incentives (gift cards), and broad inclusion criteria contributed to successful pilot implementation. While there were decreases in the number of subsequent recruits in waves 1 and 2, study sites that provided participants incentives to both complete the survey and recruit additional participants were most successful. Such recruitment incentives are traditionally a feature of RDS and will be used when scaling up the study. Additional challenges included managing computer timeout issues during peak times of internet use (Kenya) and misunderstanding of referral expectations (Mexico). Because the studies were rolled out at different times (Brown University and Moi University were first), we were able to refine the directions and expectations for the ‘seeds’, which may explain the more successful wave 1 and wave 2 recruitment at Purdue University. Future work should explore heterogeneity in preferences by field of study or occupation: responses may differ between students or staff members in health-related departments (e.g., medicine, pharmacy, or public health) and those in other fields (e.g., business, administration, or culinary arts).

While DCEs have an average of 7 attributes and the literature suggests 10 attributes as ideal (de Bekker-Grob et al. 2012; Mangham et al. 2008), our DCE had 9 attributes. In future versions of this DCE, ‘physical health problems’ may be removed, since ‘problems performing daily activities’ is an established quality of life metric used in the EQ-5D (The EuroQol Group 1990) and physical health problems would likely preclude performance of daily activities. Also, ‘level of support received’ may be removed, since attributes related to relationship, mental health, and financial problems encompass similar themes.

Strengths

We created a DCE with attributes that are globally relevant and applicable to participants in high-, middle-, and lower-middle-income countries. Additionally, we successfully carried out recruitment and administration of the DCE over the internet, and we believe our current infrastructure can be reused to recruit much larger and more diverse samples. Response rates were generally high, and not drastically lower in sites that did not issue incentives at all or in sites that only issued incentives for survey completion. The topic of COVID-19 is currently very relevant and may remain relevant for years to come. Furthermore, these methods can also be used to examine the ways in which other public health interventions impact preferences and risk mitigation behaviors.

Limitations

The final sample size for this pilot was slightly above the minimum requirement for a DCE, but the study is not powered to disaggregate results by country (or other characteristics), and the results imply associations only at the general level. Recall bias is possible, since we asked participants in September–November 2020 to recall preferences and feelings from April–June 2020. It is possible that a participant’s current emotional state could influence their preference (Lerner et al. 2015). The ever-changing COVID-19 news cycle, school/administrative work, and other concurrent global events may have influenced how participants felt and responded to our pilot. Participants needed access to an internet-enabled device to participate in the pilot; therefore, when expanding this study, steps need to be taken to ensure individuals without internet access can participate.

Conclusion

Our pilot project on nonpharmaceutical COVID-19 mitigation preferences among university students and staff members has shown that using web-based respondent-driven sampling (RDS) to recruit participants for a web-based discrete choice experiment (DCE) from multiple sites across three countries is feasible. The combination of these techniques is promising because it can enable recruitment of hard-to-reach populations that are underrepresented in sampling frames, allow higher-risk populations to participate in research, and be completed anywhere in the world with access to the internet or a smartphone.

Acknowledgements

We would like to thank the members of the study team who made this work possible. Computer-assisted DCE programming and data management were done by Timothy Souza, Suzanne Sales, and Michelle Loxley at Brown University. Project management and research assistance were provided by Marta Wilson-Barthes at Brown, and project management was provided by Kathryn Rodenbach at Purdue. Design and analytical guidance was provided by a team of advisers including Juddy Wachira, Stavroula Chrysanthopoulou, Fernando Alarid-Escudero, and Jasmine Gonzalvo.

Appendix

Appendix Tables 6 and 7 show the level balance of the 9 attributes included in the DCE, across choice categories, for the participants’ first choice. The ‘Total’ column shows the frequency with which the particular level of an attribute appeared in the experiment. The column ‘Chosen (Choice = 1)’ shows how many times the particular level of an attribute was chosen, and the column ‘Not Chosen (Choice = 0)’ shows how many times the particular level of an attribute was not chosen. For example, for the attribute ‘COVID-19 risk due to frequency of sheltering-in-place’, the attribute levels (high, medium, low) each appeared about one third of the time, showing that the DCE was balanced. For the ‘High (0–2 days)’ risk due to sheltering-in-place category, we see that the option was presented 894 times (‘Total’ column) in the experiment, and was chosen 114 times (‘Chosen (Choice = 1)’ column) and not chosen 780 times (‘Not Chosen (Choice = 0)’ column).

Appendix Table 8 shows the level balance of the 9 attributes included in the DCE, across choice categories, for the participants’ second choice. The ‘Total’ column shows the frequency with which the particular level of an attribute appeared in the experiment. The column ‘Chosen (Choice = 1)’ shows how many times the particular level of an attribute was chosen, and the column ‘Not Chosen (Choice = 0)’ shows how many times the particular level of an attribute was not chosen. For example, for the attribute ‘COVID-19 risk due to frequency of sheltering-in-place’, the attribute levels (high, medium, low) each appeared about one third of the time, showing that the DCE was balanced. For the ‘High (0–2 days)’ risk due to sheltering-in-place category, we see that the option was presented 894 times (‘Total’ column) in the experiment, and was chosen 270 times (‘Chosen (Choice = 1)’ column) and not chosen 624 times (‘Not Chosen (Choice = 0)’ column).

Table 8.

Second Choice: Level Balance of Attributes Across Choice Categories

| | Total | Not Chosen (Choice = 0) | Chosen (Choice = 1) |
|---|---|---|---|
| | N = 2520 | N = 1512 | N = 1008 |
| COVID-19 risk due to frequency of sheltering-in-place | | | |
| High (0–2 days) | 894 (35.5%) | 624 (41.3%) | 270 (26.8%) |
| Medium (3–4 days) | 845 (33.5%) | 413 (27.3%) | 432 (42.9%) |
| Low (5–7 days) | 781 (31.0%) | 475 (31.4%) | 306 (30.4%) |
| Frequency of mask use in public | | | |
| None of the time | 894 (35.5%) | 624 (41.3%) | 270 (26.8%) |
| Some of the time | 798 (31.7%) | 398 (26.3%) | 400 (39.7%) |
| A lot of the time | 828 (32.9%) | 490 (32.4%) | 338 (33.5%) |
| Relationship problems due to sheltering-in-place | | | |
| No problems | 894 (35.5%) | 624 (41.3%) | 270 (26.8%) |
| Some problems | 751 (29.8%) | 397 (26.3%) | 354 (35.1%) |
| A lot of problems | 875 (34.7%) | 491 (32.5%) | 384 (38.1%) |
| Mental health problems due to sheltering-in-place | | | |
| No problems | 894 (35.5%) | 624 (41.3%) | 270 (26.8%) |
| Some problems | 748 (29.7%) | 400 (26.5%) | 348 (34.5%) |
| A lot of problems | 878 (34.8%) | 488 (32.3%) | 390 (38.7%) |
| Physical health problems due to sheltering-in-place | | | |
| No problems | 894 (35.5%) | 624 (41.3%) | 270 (26.8%) |
| Some problems | 779 (30.9%) | 425 (28.1%) | 354 (35.1%) |
| A lot of problems | 847 (33.6%) | 463 (30.6%) | 384 (38.1%) |
| Problems performing daily activities due to sheltering-in-place | | | |
| No problems | 894 (35.5%) | 624 (41.3%) | 270 (26.8%) |
| Some problems | 796 (31.6%) | 412 (27.2%) | 384 (38.1%) |
| A lot of problems | 830 (32.9%) | 476 (31.5%) | 354 (35.1%) |
| Financial problems due to sheltering-in-place | | | |
| No problems | 894 (35.5%) | 624 (41.3%) | 270 (26.8%) |
| Some problems | 677 (26.9%) | 375 (24.8%) | 302 (30.0%) |
| A lot of problems | 949 (37.7%) | 513 (33.9%) | 436 (43.3%) |
| Level of support received when sheltering-in-place | | | |
| No support | 894 (35.5%) | 624 (41.3%) | 270 (26.8%) |
| Some support | 793 (31.5%) | 471 (31.2%) | 322 (31.9%) |
| A lot of support | 833 (33.1%) | 417 (27.6%) | 416 (41.3%) |
| Financial compensation received from the government during April–September 2020 | | | |
| 0 = Lowest compensation | 894 (35.5%) | 624 (41.3%) | 270 (26.8%) |
| 1 | 174 (6.9%) | 100 (6.6%) | 74 (7.3%) |
| 2 | 204 (8.1%) | 112 (7.4%) | 92 (9.1%) |
| 3 | 254 (10.1%) | 142 (9.4%) | 112 (11.1%) |
| 4 | 281 (11.2%) | 157 (10.4%) | 124 (12.3%) |
| 5 | 231 (9.2%) | 121 (8.0%) | 110 (10.9%) |
| 6 | 260 (10.3%) | 156 (10.3%) | 104 (10.3%) |
| 7 = Highest compensation | 222 (8.8%) | 100 (6.6%) | 122 (12.1%) |
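
The level-balance percentages in Table 8 can be recomputed from the raw counts. The sketch below does so for the ‘COVID-19 risk due to frequency of sheltering-in-place’ attribute; the counts are copied from the table, while the function and variable names are ours and are used only for illustration (the study's own analysis was carried out in Stata).

```python
# Counts copied from Table 8 (second choice) for the COVID-19 risk attribute.
risk_levels = {
    "High (0-2 days)": {"not_chosen": 624, "chosen": 270},
    "Medium (3-4 days)": {"not_chosen": 413, "chosen": 432},
    "Low (5-7 days)": {"not_chosen": 475, "chosen": 306},
}


def level_balance(levels):
    """Return each level's share (%) of all presented alternatives, plus the grand total."""
    totals = {name: c["not_chosen"] + c["chosen"] for name, c in levels.items()}
    grand_total = sum(totals.values())
    shares = {name: round(100 * t / grand_total, 1) for name, t in totals.items()}
    return shares, grand_total


shares, grand_total = level_balance(risk_levels)
print("Alternatives presented:", grand_total)
for name, share in shares.items():
    print(f"{name}: {share}%")
```

Shares close to one third for each of the three levels correspond to the balance described in the appendix text.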

Funding

This work was partially funded by the Population Studies and Training Center at Brown University, which receives funding from the NIH (P2C HD041020) for general support, and internal funding at Purdue University.

Declarations

Conflict of interest

The authors declare no conflicts of interest.

Code availability

Stata code available upon request.

Consent to participate

Electronic informed consent was obtained from all individuals that participated in the study.

Ethics approval

All human subjects activities were approved locally by ethical review committees at Brown University (Protocol #2008002772), Purdue University (IRB-2020–1188), Moi University (IREC approval #0003635), and Mexico National Institute of Public Health (Proyecto CI: 1698).

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Courtney A. Johnson, Email: courtney_a_johnson@brown.edu

Dan N. Tran, Email: tran.nk.tina@temple.edu

Ann Mwangi, Email: annwsum@gmail.com

Sandra G. Sosa-Rubí, Email: sandra.sosa.rubi@gmail.com

Carlos Chivardi, Email: krloschivardi@gmail.com

Martín Romero-Martínez, Email: martin.romero.martinez@gmail.com

Sonak Pastakia, Email: spastaki@purdue.edu

Elisha Robinson, Email: robin378@purdue.edu

Larissa Jennings Mayo-Wilson, Email: ljmayowi@iu.edu

Omar Galárraga, Email: omar_galarraga@brown.edu

References

- Abdul-Quader AS, Heckathorn DD, Sabin K, Saidel T. Implementation and analysis of respondent driven sampling: lessons learned from the field. J. Urban Health Bull. New York Acad. Med. 2006;83(6 Suppl):i1–i5. doi: 10.1007/s11524-006-9108-8.

- Albert A, Anderson JA. On the existence of maximum likelihood estimates in logistic regression models. Biometrika. 1984;71(1):1–10. doi: 10.2307/2336390.

- Anderson RM, Vegvari C, Truscott J, Collyer BS. Challenges in creating herd immunity to SARS-CoV-2 infection by mass vaccination. The Lancet. 2020;396(10263):1614–1616. doi: 10.1016/S0140-6736(20)32318-7.

- Baker F, Intagliata J. Quality of life in the evaluation of community support systems. Eval. Program. Plann. 1982;5(1):69–79. doi: 10.1016/0149-7189(82)90059-3.

- Bauermeister JA, Zimmerman MA, Johns MM, Glowacki P, Stoddard S, Volz E. Innovative recruitment using online networks: lessons learned from an online study of alcohol and other drug use utilizing a web-based, respondent-driven sampling (webRDS) strategy. J. Stud. Alcohol Drugs. 2012;73(5):834–838. doi: 10.15288/jsad.2012.73.834.

- Bengtsson L, Xin Lu, Nguyen QC, Camitz M, Le Hoang N, Nguyen TA, Liljeros F, Thorson A. Implementation of web-based respondent-driven sampling among men who have sex with men in Vietnam. PLoS ONE. 2012;7(11):e49417. doi: 10.1371/journal.pone.0049417.

- Carlsson F, Martinsson P. Design techniques for stated preference methods in health economics. Health Econ. 2003;12(4):281–294. doi: 10.1002/hec.729.

- Caron J. Predictors of quality of life in economically disadvantaged populations in Montreal. Soc. Indic. Res. 2012;107(3):411–427. doi: 10.1007/s11205-011-9855-0.

- Center for Systems Science and Engineering (CSSE) at Johns Hopkins University. “COVID-19 Dashboard” (2021). https://coronavirus.jhu.edu/map.html. Accessed 23 Sept

- Clark MD, Determann D, Petrou S, Moro D, de Bekker-Grob EW. Discrete choice experiments in health economics: a review of the literature. Pharmacoeconomics. 2014;32(9):883–902. doi: 10.1007/s40273-014-0170-x.

- Coast J, Horrocks S. Developing attributes and levels for discrete choice experiments using qualitative methods. J. Health Serv. Res. Pol. 2007;12(1):25–30. doi: 10.1258/135581907779497602.

- Cook SJ, Niehaus J, Zuhlke S. A warning on separation in multinomial logistic models. Res. Pol. 2018;5(2):2053168018769510. doi: 10.1177/2053168018769510.

- de Bekker-Grob EW, Donkers B, Jonker MF, Stolk EA. Sample size requirements for discrete-choice experiments in healthcare: a practical guide. The Patient Patient Cent. Outcomes Res. 2015;8(5):373–384. doi: 10.1007/s40271-015-0118-z.

- de Bekker-Grob EW, Ryan M, Gerard K. Discrete choice experiments in health economics: a review of the literature. Health Econ. 2012;21(2):145–172. doi: 10.1002/hec.1697.

- de Jong VMT, Eijkemans MJC, van Calster B, Timmerman D, Moons KGM, Steyerberg EW, van Smeden M. Sample size considerations and predictive performance of multinomial logistic prediction models. Stat. Med. 2019;38(9):1601–1619. doi: 10.1002/sim.8063.

- Flaxman, Seth, Swapnil Mishra, Axel Gandy, H. Juliette T. Unwin, Thomas A. Mellan, Helen Coupland, Charles Whittaker, Harrison Zhu, Tresnia Berah, Jeffrey W. Eaton, Mélodie Monod, Pablo N. Perez-Guzman, Nora Schmit, Lucia Cilloni, Kylie E. C. Ainslie, Marc Baguelin, Adhiratha Boonyasiri, Olivia Boyd, Lorenzo Cattarino, Laura V. Cooper, Zulma Cucunubá, Gina Cuomo-Dannenburg, Amy Dighe, Bimandra Djaafara, Ilaria Dorigatti, Sabine L. van Elsland, Richard G. FitzJohn, Katy A. M. Gaythorpe, Lily Geidelberg, Nicholas C. Grassly, William D. Green, Timothy Hallett, Arran Hamlet, Wes Hinsley, Ben Jeffrey, Edward Knock, Daniel J. Laydon, Gemma Nedjati-Gilani, Pierre Nouvellet, Kris V. Parag, Igor Siveroni, Hayley A. Thompson, Robert Verity, Erik Volz, Caroline E. Walters, Haowei Wang, Yuanrong Wang, Oliver J. Watson, Peter Winskill, Xiaoyue Xi, Patrick G. T. Walker, Azra C. Ghani, Christl A. Donnelly, Steven Riley, Michaela A. C. Vollmer, Neil M. Ferguson, Lucy C. Okell, Samir Bhatt, and Covid-Response Team Imperial College. Estimating the effects of non-pharmaceutical interventions on COVID-19 in Europe. Nature. 2020;584(7820):257–261. doi: 10.1038/s41586-020-2405-7.

- Fleury M-J, Grenier G, Bamvita J-M, Tremblay J, Schmitz N, Caron J. Predictors of quality of life in a longitudinal study of users with severe mental disorders. Health Qual. Life Outcomes. 2013;11(1):92. doi: 10.1186/1477-7525-11-92.

- Flynn TN. Using conjoint analysis and choice experiments to estimate QALY values. Pharmacoeconomics. 2010;28(9):711–722. doi: 10.2165/11535660-000000000-00000.

- Galárraga O, Kuo C, Mtukushe B, Maughan-Brown B, Harrison A, Hoare J. iSAY (incentives for South African youth): Stated preferences of young people living with HIV. Soc. Sci. Med. 2020;265:113333. doi: 10.1016/j.socscimed.2020.113333.

- Galárraga O, Sosa-Rubí SG, Infante C, Gertler PJ, Bertozzi SM. Willingness-to-accept reductions in HIV risks: conditional economic incentives in Mexico. The Eur. J. Health Econ. HEPAC Health Econ. Prev. Care. 2014;15(1):41–55. doi: 10.1007/s10198-012-0447-y.

- Ghijben P, Lancsar E, Zavarsek S. Preferences for oral anticoagulants in atrial fibrillation: a best-best discrete choice experiment. Pharmacoeconomics. 2014;32(11):1115–1127. doi: 10.1007/s40273-014-0188-0.

- Heckathorn DD. Respondent-driven sampling: a new approach to the study of hidden populations. Soc. Probl. 1997;44(2):174–199. doi: 10.2307/3096941.

- Heckathorn DD. Extensions of respondent-driven sampling: analyzing continuous variables and controlling for differential recruitment. Sociol. Methodol. 2007;37(1):151–207. doi: 10.1111/j.1467-9531.2007.00188.x.

- Hensher DA, Rose JM, Greene WH. Applied Choice Analysis: A Primer. Cambridge: Cambridge University Press; 2005.

- Hequembourg AL, Panagakis C. Maximizing respondent-driven sampling field procedures in the recruitment of sexual minorities for health research. SAGE Open Med. 2019;7:2050312119829983. doi: 10.1177/2050312119829983.

- Hildebrand J, Burns S, Zhao Y, Lobo R, Howat P, Allsop S, Maycock B. Potential and challenges in collecting social and behavioral data on adolescent alcohol norms: comparing respondent-driven sampling and web-based respondent-driven sampling. J. Med. Internet Res. 2015;17(12):e285. doi: 10.2196/jmir.4762.

- Hobden B, Turon H, Bryant J, Wall L, Brown S, Sanson-Fisher R. Oncology patient preferences for depression care: a discrete choice experiment. Psychooncology. 2019;28(4):807–814. doi: 10.1002/pon.5024.

- Hole, A. R.: “DCREATE: stata module to create efficient designs for discrete choice experiments”. Statistical Software Components S458059, Boston College Department of Economics (2015)

- Jennings Mayo-Wilson, Larissa, Muthoni Mathai, Grace Yi, Margaret O. Mak’anyengo, Melissa Davoust, Massah L. Massaquoi, Stefan Baral, Fred M. Ssewamala, Nancy E. Glass, and Nahedo Study Group. Lessons learned from using respondent-driven sampling (RDS) to assess sexual risk behaviors among Kenyan young adults living in urban slum settlements: a process evaluation. PLoS ONE. 2020;15(4):e0231248. doi: 10.1371/journal.pone.0231248.

- Kendall C, Kerr LRFS, Gondim RC, Werneck GL, Macena RHM, Pontes MK, Johnston LG, Sabin K, McFarland W. An empirical comparison of respondent-driven sampling, time location sampling, and snowball sampling for behavioral surveillance in men who have sex with men, Fortaleza, Brazil. AIDS Behav. 2008;12(1):97. doi: 10.1007/s10461-008-9390-4.

- Kroenke, K., Spitzer, R.L., Williams, J.B.W.: The patient health questionnaire-2: validity of a two-item depression screener. Med. Care 41 (11) (2003)

- Lancsar E, Fiebig DG, Hole AR. Discrete choice experiments: a guide to model specification, estimation and software. Pharmacoeconomics. 2017;35(7):697–716. doi: 10.1007/s40273-017-0506-4.

- Lancsar E, Louviere J. Conducting discrete choice experiments to inform healthcare decision making. Pharmacoeconomics. 2008;26(8):661–677. doi: 10.2165/00019053-200826080-00004.

- Lerner JS, Li Ye, Valdesolo P, Kassam KS. Emotion and decision making. Ann. Rev. Psychol. 2015;66(1):799–823. doi: 10.1146/annurev-psych-010213-115043.

- Lokkerbol J, van Voorthuijsen JM, Geomini A, Tiemens B, van Straten A, Smit F, Risseeuw A, van Balkom A, Hiligsmann M. A discrete-choice experiment to assess treatment modality preferences of patients with anxiety disorder. J. Med. Econ. 2019;22(2):169–177. doi: 10.1080/13696998.2018.1555403.

- Lyu W, Wehby GL. Shelter-in-place orders reduced COVID-19 mortality and reduced the rate of growth in hospitalizations. Health Aff. (Millwood). 2020;39(9):1615–1623. doi: 10.1377/hlthaff.2020.00719.

- Magnani R, Sabin K, Saidel T, Heckathorn D. Review of sampling hard-to-reach and hidden populations for HIV surveillance. AIDS. 2005;19:S67–S72. doi: 10.1097/01.aids.0000172879.20628.e1.

- Mangham LJ, Hanson K, McPake B. How to do (or not to do) … Designing a discrete choice experiment for application in a low-income country. Health Pol. Plan. 2008;24(2):151–158. doi: 10.1093/heapol/czn047.

- Medline A, Hayes L, Valdez K, Hayashi A, Vahedi F, Capell W, Sonnenberg J, Glick Z, Klausner JD. Evaluating the impact of stay-at-home orders on the time to reach the peak burden of Covid-19 cases and deaths: Does timing matter? BMC Pub. Health. 2020;20(1):1750. doi: 10.1186/s12889-020-09817-9.

- Pei S, Kandula S, Shaman J. Differential effects of intervention timing on COVID-19 spread in the United States. Sci. Adv. 2020. doi: 10.1126/sciadv.abd6370.

- Ryan M, Bate A, Eastmond CJ, Ludbrook A. Use of discrete choice experiments to elicit preferences. Qual. Health Care QHC. 2001. doi: 10.1136/qhc.0100055.

- Ryan M, Netten A, Skåtun D, Smith P. Using discrete choice experiments to estimate a preference-based measure of outcome—an application to social care for older people. J. Health Econ. 2006;25(5):927–944. doi: 10.1016/j.jhealeco.2006.01.001.

- Salganik MJ, Heckathorn DD. Sampling and estimation in hidden populations using respondent-driven sampling. Sociol. Methodol. 2004;34(1):193–240. doi: 10.1111/j.0081-1750.2004.00152.x.

- Sharma M, Ong JJ, Celum C, Terris-Prestholt F. Heterogeneity in individual preferences for HIV testing: a systematic literature review of discrete choice experiments. Eclin. Med. 2020;29–30:100653. doi: 10.1016/j.eclinm.2020.100653.

- Teslya A, Pham TM, Godijk NG, Kretzschmar ME, Bootsma MCJ, Rozhnova G. Impact of self-imposed prevention measures and short-term government-imposed social distancing on mitigating and delaying a COVID-19 epidemic: a modelling study. PLoS Med. 2020;17(7):e1003166. doi: 10.1371/journal.pmed.1003166.

- The Economist: Covid-19 is now in 50 countries, and things will get worse. accessed January 19 (2020). https://www.economist.com/briefing/2020/02/29/covid-19-is-now-in-50-countries-and-things-will-get-worse

- The EuroQol Group. EuroQol - a new facility for the measurement of health-related quality of life. Health Pol. 1990;16(3):199–208. doi: 10.1016/0168-8510(90)90421-9.

- Train, K.E.: Discrete choice methods with simulation: Cambridge university press (2009)

- University of Essex Institute for Social and Economic Research. 2020. Understanding Society: COVID-19 Study, 2020. [data collection]

- Vallejo-Torres L, Melnychuk M, Vindrola-Padros C, Aitchison M, Clarke CS, Fulop NJ, Hines J, Levermore C, Maddineni SB, Perry C, Pritchard-Jones K, Ramsay AIG, Shackley DC, Morris S. Discrete-choice experiment to analyse preferences for centralizing specialist cancer surgery services. BJS (Br. J. Surg.). 2018;105(5):587–596. doi: 10.1002/bjs.10761.

- VanderWeele TJ, Mathur MB. Some desirable properties of the Bonferroni correction: Is the Bonferroni correction really so bad? Am. J. Epidemiol. 2018;188(3):617–618. doi: 10.1093/aje/kwy250.

- Walker, P., Whittaker, C., Watson, O., Baguelin, M., Ainslie, K., Bhatia, S., Bhatt, S., Boonyasiri, A., Boyd, O., Cattarino, L., Cucunuba Perez, Z., Cuomo-Dannenburg, G., Dighe, A., Donnelly, C., Dorigatti, I., Van Elsland, S., Fitzjohn, R., Flaxman, S., Fu, H., Gaythorpe, K., Geidelberg, L., Grassly, N., Green, W., Hamlet, A., Hauck, K., Haw, D., Hayes, S., Hinsley, W., Imai, N., Jorgensen, D., Knock, E., Laydon, D., Mishra, S., Nedjati Gilani, G., Okell, L., Riley, S., Thompson, H., Unwin, H., Verity, R., Vollmer, M., Walters, C., Wang, H., Wang, Y., Winskill, P., Xi, X., Ferguson, N. and Ghani, A.: Report 12: The global impact of COVID-19 and strategies for mitigation and suppression (2020a)

- Walker PGT, Whittaker C, Watson OJ, Baguelin M, Winskill P, Hamlet A, Djafaara BA, Cucunubá Z, Mesa DO, Green W, Thompson H, Nayagam S, Ainslie KEC, Bhatia S, Bhatt S, Boonyasiri A, Boyd O, Brazeau NF, Cattarino L, Cuomo-Dannenburg G, Dighe A, Donnelly CA, Dorigatti I, van Elsland SL, FitzJohn R, Han Fu, Gaythorpe KAM, Geidelberg L, Grassly N, Haw D, Hayes S, Hinsley W, Imai N, Jorgensen D, Knock E, Laydon D, Mishra S, Nedjati-Gilani G, Okell LC, Juliette Unwin H, Verity R, Vollmer M, Walters CE, Wang H, Wang Y, Xi X, Lalloo DG, Ferguson NM, Ghani AC. The impact of COVID-19 and strategies for mitigation and suppression in low- and middle-income countries. Science. 2020;369(6502):413. doi: 10.1126/science.abc0035.

- Wang J, Carlson RG, Falck RS, Siegal HA, Rahman A, Li L. Respondent-driven sampling to recruit MDMA users: a methodological assessment. Drug Alcohol Depend. 2005;78(2):147–157. doi: 10.1016/j.drugalcdep.2004.10.011.

- Wang J, Falck RS, Li L, Rahman A, Carlson RG. Respondent-driven sampling in the recruitment of illicit stimulant drug users in a rural setting: findings and technical issues. Addict. Behav. 2007;32(5):924–937. doi: 10.1016/j.addbeh.2006.06.031.

- Watson V, Becker F, de Bekker-Grob E. Discrete choice experiment response rates: a meta-analysis. Health Econ. 2017;26(6):810–817. doi: 10.1002/hec.3354.

- Weber S. A step-by-step procedure to implement discrete choice experiments in Qualtrics. Soc. Sci. Comput. Rev. 2019. doi: 10.1177/0894439319885317.

- Wejnert C, Heckathorn DD. Web-based network sampling: efficiency and efficacy of respondent-driven sampling for online research. Sociol. Methods Res. 2008;37(1):105–134. doi: 10.1177/0049124108318333.

- Wilson L, Loucks A, Bui C, Gipson G, Zhong L, Schwartzburg A, Crabtree E, Goodin D, Waubant E, McCulloch C. Patient centered decision making: use of conjoint analysis to determine risk–benefit trade-offs for preference sensitive treatment choices. J. Neurol. Sci. 2014;344(1):80–87. doi: 10.1016/j.jns.2014.06.030.

- Young KP, Kolcz DL, O’Sullivan DM, Ferrand J, Fried J, Robinson K. Health care workers’ mental health and quality of life during COVID-19: results from a mid-pandemic, national survey. Psychiatr. Serv. 2020;72(2):122–128. doi: 10.1176/appi.ps.202000424.

- Zhang Y, Ma ZF. Impact of the COVID-19 pandemic on mental health and quality of life among local residents in Liaoning Province, China: a cross-sectional study. Int. J. Environ. Res. Pub. Health. 2020. doi: 10.3390/ijerph17072381.

- Zimmer C., Corum J., Wee S.-L.: Coronavirus vaccine tracker (2020). https://www.nytimes.com/interactive/2020/science/coronavirus-vaccine-tracker.html. Accessed 19 Jan