Abstract

There is an evolving and increasing need to use emerging cellular, molecular, and in silico technologies and novel approaches for the safety assessment of food, drugs, and personal care products. Convergence of these emerging technologies is also enabling rapid advances and approaches that may impact regulatory decisions and approvals. Although the development of emerging technologies may allow rapid advances in regulatory decision making, there is concern that these new technologies have not been thoroughly evaluated to determine if they are ready for regulatory application, singly or in combination. The magnitude of these combined technical advances may outpace the ability to assess fitness for purpose and to allow routine application of these new methods for regulatory purposes. There is a need to develop strategies to evaluate the new technologies to determine which ones are ready for regulatory use. Applying these potentially faster, more accurate, and cost-effective approaches in regulatory use remains an important goal. However, without a clear strategy to evaluate emerging technologies rapidly and appropriately, the value of these efforts may go unrecognized or may take longer to be realized. It is important for the regulatory science field to keep up with the research in these technically advanced areas and to understand the science behind these new approaches. Regulators must understand the critical quality attributes of these novel approaches and learn from each other's experience so that workforces can be trained to prepare for emerging global regulatory challenges. Moreover, it is essential that the regulatory community work with the technology developers to harness collective capabilities towards developing a strategy for evaluating these novel assessment tools.

Keywords: Emerging technologies, biomarkers, regulatory science, risk assessment, bioimaging, bioinformatics

Impact statement

Emerging technologies will play a major role in regulatory science in the future. One could argue that new approaches have been adopted and incorporated from the very beginning of the safety assessment process. As the pace of development of novel approaches escalates, it is evident that the readiness of these new approaches for incorporation into the assessment process must itself be assessed. Examination of the areas of Artificial Intelligence (AI) and Machine Learning (ML); Omics, Biomarkers, and Precision Medicine; Microphysiological Systems and Stem Cells; Bioimaging; and the Microbiome has revealed clear examples of how to assess the reproducibility, reliability, and robustness of these new technologies. Collectively, there is a call for product developers, regulators, and academic researchers to work together to develop strategies to verify the utility of these novel approaches to predict impact on human health.

Introduction

The organizing committee of the Global Summit on Regulatory Science (GSRS20) recruited a world-class set of authors carefully selected to address the theme of Emerging Technologies and Their Impact on Regulatory Science. Although the development of emerging technologies may allow rapid advances in regulatory decision making, there is concern that these new technologies have not been thoroughly evaluated to determine if they are ready for regulatory application. The number and diversity of these alternate approaches may make it challenging to determine whether the approach is ready for routine use in the regulatory environment. There is a need to develop strategies to evaluate the new technologies to determine their reliability, reproducibility and robustness as applied to regulatory decision making. The new approaches need to be evaluated to determine if these potentially faster, more accurate, and cost-effective approaches are ready for regulatory use. A clear strategy needs to be developed to evaluate emerging technologies rapidly and appropriately. In addition, regulatory scientists need to work with the novel approaches to understand the strengths and limitations of these new approaches. The regulatory field must set acceptable quality standards for these novel approaches and share this information with others working in the field of regulatory science. It is critical that the regulatory community work with the technology developers to harness the full value of the new technology and develop a strategy for evaluation of these novel assessment approaches.

The carefully selected authors at the GSRS20 provide a full understanding of the scope and status of global accomplishment in the application of emerging technologies to regulatory science. The GSRS20 organizing committee under the leadership of the Global Coalition for Regulatory Science Research (GCRSR) assembled an outstanding set of international science thought leaders to address the promise of emerging technologies and their application to regulatory science.

A series of introductory contributions from global research/regulatory leaders is provided to set the stage and introduce the needs for and contributions of emerging technologies and their impact on regulatory science. The first presentation is from the director of America's National Institutes of Health, Dr. Francis Collins, a proven world leader in the development of new technology and in applying emerging technologies to improve public health. Dr. Collins’ comments are followed by those of Dr. Bernhard Url, Executive Director of the European Food Safety Authority (EFSA), European Union (EU), a world leader in assessing safety. He emphasizes three challenges in the safety assessment of the world’s food supply: speed, complexity, and preparedness. Next, Dr. Elke Anklam, principal advisor on life sciences at the Joint Research Centre of the European Commission, provides comments about emerging technologies and their huge potential impact on the risk assessment of drugs and chemicals. From the National Institute of Health Sciences, Japan, Dr. Masamitsu Honma, deputy director general, addresses the regulatory sciences from his perspective in Asia. Following that, Dr. Margaret Hamburg, foreign secretary for America's National Academy of Medicine, provides some historical background concerning the regulatory sciences and the GSRS20.

Next to provide comment is RADM Denise Hinton, the FDA Chief Scientist. She describes FDA’s regulatory science and innovation initiatives. Dr. Primal Silva, the Chief Science Operating Officer at the Canadian Food Inspection Agency, provides his perspective on technologies and regulatory science, as does Dr. Anand Shah, the former Deputy Commissioner for Medical and Scientific Affairs at the U.S. FDA. Dr. George Kass, from the EFSA, introduces the developments in regulatory science in food safety from an EU perspective.

Following these opening addresses, there are several presentations within the topic of Track A, Artificial Intelligence for Drug Development and Research Assessment. This segment is introduced by Weida Tong from NCTR/FDA and Arnd Hoeveler from the Joint Research Centre of the European Commission (EC-JRC). Next, Track B, focused on Omics, Biomarkers, and Precision Medicine, is introduced by Neil Vary and Primal Silva from the Canadian Food Inspection Agency, Richard Beger from NCTR/FDA, and Susan Sumner from UNC, Chapel Hill. Track C, focused on Microphysiological Systems and Stem Cells, is introduced by William Slikker, Jr., NCTR/FDA, and Elke Anklam from EC-JRC. Track D is focused on bioimaging research in regulatory science and is described by Drs. Serguei Liachenko from the NCTR/FDA and John Waterton from the University of Manchester, U.K. Finally, the study of the microbiome in regulatory science is described by George Kass and Reinhilde Schoonjans, from the EFSA.

Global thought leaders’ opening contributions

Francis S. Collins, MD, PhD, Director, National Institutes of Health, USA

This year's summit focuses on emerging technologies and their application to regulatory science. I want to give special thanks to all the presenters, and to Dr. Slikker, Dr. Tagle, and all of the others who have worked hard to put this together, even in the face of the global COVID-19 pandemic and the need to carry this out in a virtual format.

Emerging technologies are playing critical roles in the development of new approaches to address the safety of foods, drugs, and healthcare products. And their importance this year—and our collective charge to ensure their responsible application in regulatory science—is certainly highlighted by what's happening with COVID-19. This pandemic has taken on a remarkable priority in all our lives and in our work and it is why we're having this global summit in a virtual workspace, instead of being all together in one place.

NIH is proud to have played a role in the development of all the emerging technologies that are being featured in this summit. And I think these technologies, which are finding their way into all kinds of applications, are some of the greatest hits that I have seen developed during my more than a quarter century at NIH. So, it is highly appropriate that this summit focuses on trying to make sure that we are doing everything possible to advance such technologies and to use regulatory science to implement them in ways that are safe and effective for the public.

Those technologies that we're talking about include MPSs, otherwise known as tissue chips, that are being used for preclinical safety and efficacy studies, as well as toxicology studies. NIH is a world leader in developing this technology and has worked closely with FDA along the way.

A second area is AI and ML. Everybody is busy figuring out ways to apply these technologies. We in the life sciences are finding some remarkable moments of opportunity there, including in drug development.

Thirdly, we have -omics, all of those -omics: genomics, transcriptomics, metabolomics, and so on. These technologies have many valuable applications, including for the discovery of biomarkers for advancing precision medicine and for gaining insights into details of how cells do what they do, how disease happens, and what we can do about it.

We also need to acknowledge the role of the microbiome in health and disease. Increasingly, we are recognizing that we humans are not an organism, we're a superorganism. So, we must think, in many instances, about interactions between ourselves and the microbes that live on us and in us, which can either help us stay healthy or, if things get out of balance, cause illness. Obviously, that has important implications for regulatory science as well.

All of these things are a good fit for what we are asking you all to address during this virtual summit. Getting into questions about regulatory decision-making does demand rigorous assessments of what we know, and what we don't know, and that may take us a bit beyond the traditional academic lab. I'm glad that we're having this gathering that mixes various perspectives together in useful ways. It's not just about mixing representatives of particular scientific communities, it's about countries as well. Certainly, the effort to try to harmonize regulatory decisions needs to be global and to depend upon close interactions between research funding and regulatory agencies.

Thinking back about the efforts that we have conducted since I've been an NIH director, which is now a little over 11 years, I'm particularly pleased that we were able, early on, to set up an NIH-FDA Leadership Council. This has provided an opportunity for the senior leadership of NIH and FDA to identify areas in which we could work together particularly effectively. A past example of this is what's been done with tissue chips. A current example is the development of next-generation sequencing tests, and, of course, AI and ML are in that space as well. We are counting on that leadership council to continue to be a valuable forum for figuring out ways that we can work together. Frankly, I think this summit will provide some valuable ideas for our leadership council about new challenges that we ought to take on or about existing areas that we might steer in a somewhat different direction. We're counting on learning from this gathering.

Coming back to COVID-19, certainly all of the emerging technologies that you're going to be talking about have opportunities to provide hope in these pandemic times. By advancing the cause of science, including regulatory science, we have opportunities to find real solutions at a pace that needs to be as rapid as it possibly can. We have this wonderful relationship with the FDA, along with industry partners and other government agencies, that is called ACTIV, which stands for Accelerating COVID-19 Therapeutic Interventions and Vaccines. That effort, started in April 2020, has accelerated the pace of designing master protocols, identifying clinical trial capacity, focusing on vaccines and therapeutics, and focusing also on preclinical efforts in ways that have never been done before. In the past, you might have contemplated putting together such a public-private partnership in a two-year period. But ACTIV was put together in about two weeks and continues to be a remarkable contributor to the fact that we are as far along as we are now, in terms of testing out and prioritizing therapeutics, vaccines, and other approaches. Certainly, FDA has been a critical part of that whole enterprise.

In the diagnostic arena, we also have very important issues. We recognize that testing for COVID-19, while it's come a long way, would be more advantageous if we had even more capabilities for doing point-of-care testing. That's what the RADx program, standing for Rapid Acceleration of Diagnostics, aims to do by bringing new platforms forward, getting them validated, going to FDA, and seeking Emergency Use Authorization (EUA) approval of those so they can be deployed. Of course, we count on FDA to be rigorous in those analyses, so that we're sure that what we're offering the public happens quickly, but also in a way that can be trusted.

The need for the kind of conversation we're having at this GSRS20 summit can hardly be overemphasized. In the midst of everything else that's swirling around us, I really appreciate people taking the time to come and make presentations, and others to listen carefully and engage in discussion and to see if there are specific actions coming out of this that could be a useful contribution to the critical path forward.

That means celebrating accomplishments, but also looking closely and honestly at weaknesses to figure out ways that we could collectively address those. So, all of that's on the table. Again, I am sorry not to be able to look out over a sea of faces of people who are going to be engaged in this enterprise. I will have to satisfy myself by knowing you're there and being confident in the leadership that has put this agenda together. Some really good science will get presented and talked about, and some actions will be identified. I look forward to hearing what actions are moving forward from the leadership.

So, to all the presenters, organizers, and participants, I want to say thank you to everybody and may you have a wonderfully productive GSRS20.

Bernhard Url, PhD, Executive Director, European Food Safety Authority

EFSA is tasked to provide scientific advice to the EU institutions and Member States about risks in the food and feed chain. EFSA deals with animal health, animal welfare, plant health, nutrition and maybe in the future with sustainability.

I envision common challenges (and opportunities) in EFSA’s core business of finding, selecting, appraising, and integrating diverse streams of evidence to answer risk questions. I identify three challenges: speed, complexity, preparedness:

Speed: the pace of innovation in the outside world is faster than innovation in our work procedures, which means there is a lag in method development and in keeping safety dossiers up to date, and a lack of speed in delivering scientific advice.

Complexity: the food system is increasingly complex, both in the physical material that is moved across the globe and in the exponential growth of data and evidence. Society demands to be more protected while at the same time not trusting experts that much.

Preparedness: how can science-based bodies master the paradox of having enough process stability to deliver efficiently and enough fluidity to absorb outside complexities?

On all three challenges the work of the GCRSR is valuable as it aims to develop methods which use 21st century science for regulatory purposes. This touches data sciences, exposure sciences as well as new insights in toxicology and epidemiology.

I propose to further deepen collaboration between EFSA and the GCRSR towards a regulatory ecosystems approach. An ecosystem is an agile and co-evolving community of diverse actors who constantly refine their collaboration, and sometimes also competition, to create more value. I conclude by stating that I am convinced that this deep collaboration will help organizations to stay relevant, now as well as in the future.

Elke Anklam, PhD, Principal Advisor at the Joint Research Centre, European Commission (JRC/EU)

The JRC is the European Commission's in-house scientific service and supports EU policymakers through providing multidisciplinary scientific and technical support.

One of the focuses of the JRC is on the harmonization of analytical and toxicological methodologies that can be used, for example, in risk assessment of chemicals including nanomaterials. Therefore, the JRC is a proud member of the GCRSR. Together with EFSA and the European Medicines Agency (EMA), the JRC represents the EU in this important global coalition.

The annual global summits organized by the Global Coalition for Regulatory Science Research aim to provide a platform for regulators, policymakers, and scientists to exchange views on innovative technologies, methods, and regulatory assessments.

The JRC had the honor of hosting the ninth annual global summit that took place in September 2019 in Stresa, Italy. The topic of GSRS19 was the scientific and regulatory challenges related to nanotechnology and nanoplastics. 1

Emerging technologies have, among other things, huge potential in the risk assessment of drugs and chemicals. It is important to understand, among other things, the interaction of living cells and their environment. I stress that there is an urgent need to develop and harmonize strategies and to be faster in the evaluation of new technologies, and I conclude that this is only possible when working together at a global level. Therefore, I look forward to the contributions of the renowned scientists of GSRS20.

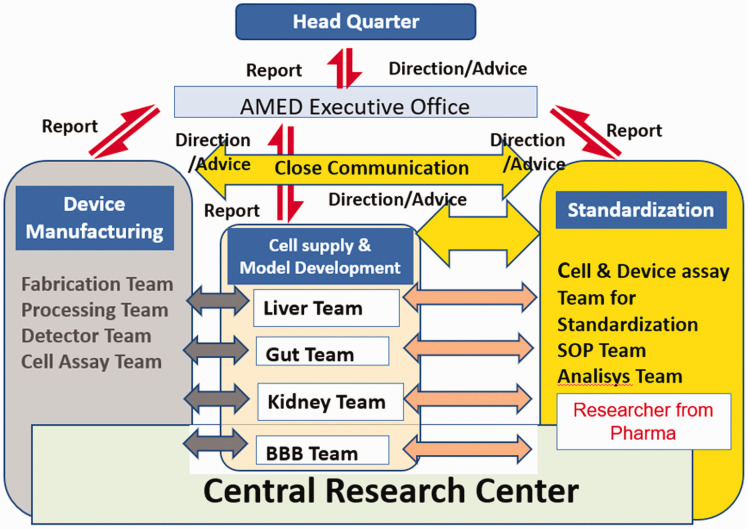

Masamitsu Honma, PhD, Deputy Director General, National Institute of Health Sciences, Japan

I will describe how regulatory science influences the medical field in Japan. Regulatory science has contributed to new developments in the prevention, diagnosis, and treatment of diseases and has established a system for facilitating the practical use of pharmaceuticals and medical devices as quickly as possible. This has promoted life innovation (i.e., the realization of a healthy and long-lived society through innovative medicines and medical devices originating in Japan). Four institutions promote regulatory science in Japan. The Ministry of Health, Labour and Welfare (MHLW) decides and organizes basic policy. The Pharmaceuticals and Medical Devices Agency (PMDA) is in charge of drug review and consultation. The National Institute of Health Sciences (NIHS) is responsible for developing official tests and guidelines as well as conducting basic research. Finally, the Japan Agency for Medical Research and Development (AMED) provides funding to facilitate research and development.

In 2018, the PMDA established a Regulatory Science Center to act as a command center; this center plays a critical central role in the incorporation of innovation in the regulatory system. This has led to the utilization of clinical trial data and electronic health records for advanced reviews and safety measures and has promoted innovative approaches to develop advancements in therapy and technologies. The Regulatory Science Center comprises three offices: the Office of Medical Informatics and Epidemiology, the Office of Research Promotion, and the Office of Advanced Evaluation with Electronic Data. These work closely with other offices to support new drug reviews and safety measures, such as advanced analysis of clinical trial data based on modeling and simulation, as well as pharmaco-epidemiological investigations using real-world data.

One innovative approach that the PMDA has been focused on is horizon scanning, which is aimed at detecting signs of emerging technology in very early stages. Previously, the PMDA did not assess emerging technology until after it was applied to product development. With the emergence of innovations, PMDA is now looking a step ahead and has prepared the means to assess new technologies properly.

I will introduce emerging technologies used by the NIHS to assess the quality, safety, and efficacy of pharmaceuticals, foods, and numerous chemicals. We mainly focused on four technologies:

In silico/deep learning/AI

Omics; toxicogenomics technology

MPS/body-on-chip

Desorption electrospray ionization–mass spectrometry (DESI-MS)

The NIHS has started to develop a large chemical safety database and AI platform to support efficient and reliable human safety assessment of pharmaceuticals, food, and household chemicals, where large-scale and reliable toxicity test data of the NIHS are integrated with expert knowledge beyond the regulatory framework. The aim is to support the introduction of a reliable management standard for pharmaceuticals, to prevent side effects, and to set safety evaluation criteria for food and household chemicals to avoid overdose and exposure. A success of this project has been the development of a chemical mutagenicity prediction model using quantitative structure-activity relationship (QSAR) and AI. 2

Research on toxicogenomics has been promoted since 2000. For example, the Percellome project was initiated to develop a comprehensive gene network for mechanism-based predictive toxicology. This project focuses on building and maintaining the Percellome database for single-dose toxicity; this is one of the largest transcriptome databases accessible via the Internet.

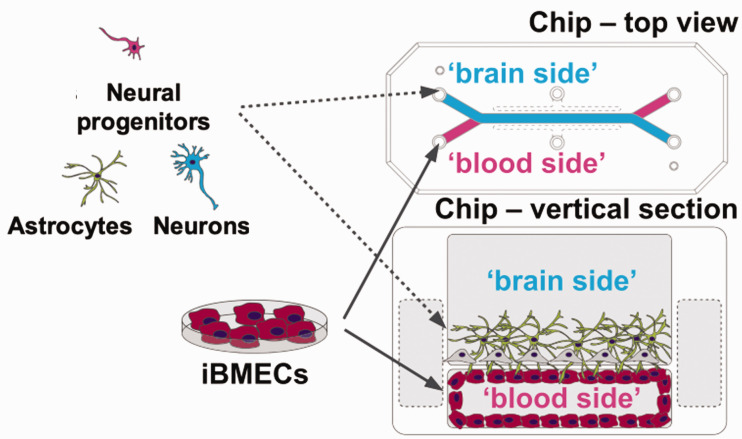

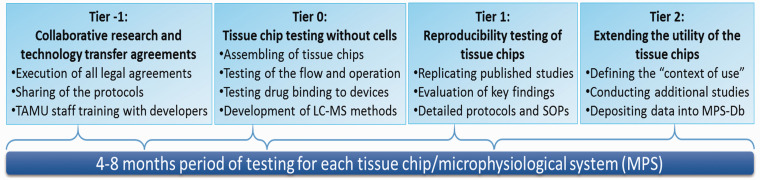

An MPS is a chip that introduces cells onto a microfluidic device with the aim of reproducing tissues and physiological functions that are difficult to obtain with conventional cell cultures and experimental methods. An MPS enables a more accurate evaluation of the effects and toxicity of drugs on humans.

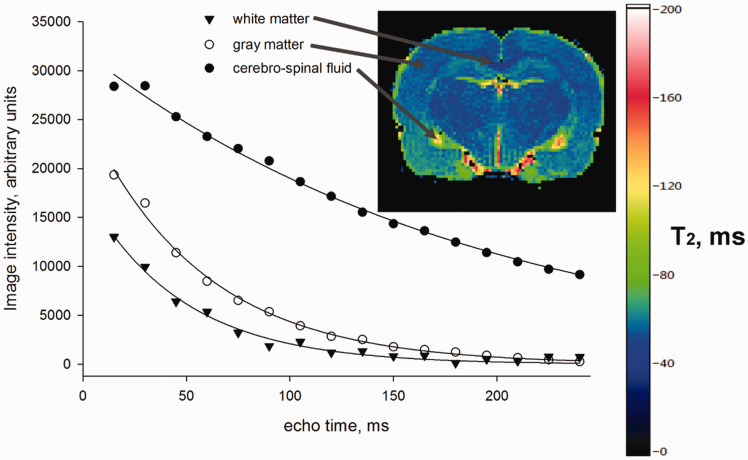

DESI-MS is an ambient ionization technique that can be coupled with mass spectrometry for chemical analysis of samples under atmospheric conditions. The charged droplets generated by the electrospray impact the surface, where the analyte is dissolved into the electrically charged droplets. The secondary droplets ejected from the surface are subsequently collected into an ion-transfer tube and are analyzed by mass spectrometry. This technique allows the distribution of the administered drugs and metabolites in tissue sections to be visualized through the detection of individual mass images. 3

Margaret Hamburg, MD, Foreign Secretary, National Academy of Medicine

It's a great pleasure to help launch GSRS20, the 10th global summit. This international conference represents a very special event bringing together scientists from government, industry, and academic research from around the world to explore critical advances in science, technology, and innovation and to strengthen and extend partnerships needed to enhance translation of these scientific advances into regulatory applications; applications that result in much-needed products, and applications that can be applied within a global context. This year's theme, emerging technologies and their application to regulatory science, is exciting and exceedingly timely.

Ten years ago, when this global summit first began, I was serving as Commissioner of the U.S. Food and Drug Administration (FDA). At the FDA I had the good fortune to work closely with Bill Slikker, who's been the vision and energy behind this summit from the very beginning. I was very excited by the possibility of what a summit such as this could offer, but whether the summit idea would last one year or a decade or more was uncertain. We suspected that such a convening could have real and enduring value and I think there's no question about that now. So, I certainly want to thank Bill and the team that has put together this meeting, as well as all of those who have helped to develop and to host these meetings over the past 10 years. I also want to welcome and thank all of you for participating in this global summit. Your work matters.

At FDA I came to understand how truly vital regulatory science is to the overall scientific enterprise. It is what enables us to harness the power of science and technology in the service of people and of progress. It speeds innovation, streamlines research and development, improves regulatory decision-making, and strengthens our ability to get the safe and effective products necessary to address unmet public health needs and medical care needs.

To be effective, regulatory science must ground itself on several things including strong science, partnerships, and the ability to work across disciplines, sectors, and borders. This regulatory summit has from the beginning been committed to doing just those things. It has offered a really valuable and somewhat unique platform where regulators, policymakers, and bench scientists from a wide range of countries can come together to discuss how to develop, validate, and apply innovative methodologies and approaches for product development and regulatory assessments within their own countries, as well as how to harmonize and align strategies through shared, collaborative global engagement.

Now in the midst of a global pandemic, I think we all appreciate the value of this more profoundly than ever. In fact, I think the whole world is looking to regulatory science to facilitate the swift but scientifically robust development, review, approval, and availability of essential medical products from personal protective gear to diagnostics, drugs, and vaccines. Too often regulation has been viewed as a barrier to progress, but in fact it represents a crucial partner in achieving the shared goals of developing innovative, safe, and effective products as effectively and efficiently as possible. This is what all stakeholders want and expect. As desperate as we are for drugs, vaccines, and new improved diagnostics for COVID-19, the public and patients along with the medical community, policy makers, and government leaders all must have trust and confidence in the process and the products.

In many ways regulatory science represents the bridge to both translate the science into the products we so desperately need and to ensure a robust process based on science. Rigorous science does not have to mean rigid, inflexible, and slow. And speed does not have to mean cutting corners. In fact, applying innovative regulatory science enables us to apply more modern and adaptable tools to the R&D and regulatory review, approval, and oversight process including such things as biomarkers, predictive toxicology, MPSs for drug development, nanotechnology-driven applications, innovative clinical trial designs, and AI- and IT-driven strategies for product development, risk assessment, and regulatory monitoring.

Not surprisingly, I see that you'll be addressing many of these areas of science in this meeting as you think about emerging technologies and their applications to regulatory science. We are living in a time when the advances in science and technology are unfolding with unprecedented speed. Yet the challenges we need to address, with the best possible science, are also unprecedented and profound. That is why the work each of you does is so important and why coming together now to deepen understanding, gain new insights, and strengthen collaboration will enable us to better define and implement the best science-driven regulatory practices that can support the public health imperatives of a global pandemic such as COVID-19, but can also assure systems in which the best discoveries in science can be translated as quickly and appropriately as possible to deliver the kinds of innovative, safe, and effective products that patients and consumers expect and deserve each and every day.

Denise M. Hinton, RADM, Chief Scientist, FDA, USA

The 2020 GSRS20 highlights Emerging Technologies for Regulatory Application, a Global Perspective. The topic is certainly appropriate, given the current state of affairs—an unprecedented pandemic. And yet, never has there been a greater need for regulatory science to embrace and address the interconnection among people, animals, plants, and their shared environment—a timeless concept that we call One Health.

Globalization, new science, and emerging technologies have been the accelerators that are bringing us together, literally and now virtually, to address both their inherent opportunities and their challenges. FDA has long valued collaboration in leveraging broad expertise to tackle a problem. It is even clearer what the confluence of these efforts has demanded we do if we want to successfully protect, promote, and advance the public health.

We must continue to find new ways of collaborating with all our stakeholders in the regulatory science enterprise. The current and potential regulatory science applications of exciting technologies highlighted in this global summit include, but are not limited to AI, MPSs, the microbiome, biomarkers, and precision medicine, all of which are evidence of this essential collaborative approach and action.

Just a decade ago, the Office of the Chief Scientist was established at FDA to forge many of the intramural and extramural 1,2 (https://www.fda.gov/science-research/advancing-regulatory-science/centers-excellence-regulatory-science-and-innovation-cersis; https://www.fda.gov/science-research/advancing-regulatory-science/regulatory-science-extramural-research-and-development-projects) collaborations that are now helping to advance these technologies, and to support a global network of partners who can help ensure the safety of our medical products and our food supply. For instance, FDA's cross-agency scientific work is focused on developing and fostering opportunities for promising innovative technologies with the goal of advancing new tools as well as new areas of science.

For example, FDA's Alternative Methods Working Group 3 is focused on supporting methods that are alternatives to traditional toxicity and efficacy testing that will be used across FDA's various product areas. This working group is serving as a catalyst to the development and potential application of alternative systems in vitro, in vivo, and in silico, and using systems toxicology and modeling to inform FDA decision-making and regulatory toxicology. To that end, the Alternative Methods Working Group has launched a webinar series 4 (https://www.fda.gov/science-research/about-science-research-fda/fda-webinar-series-alternative-methods-showcasing-cutting-edge-technologies-disease-modeling) for developers to share their cutting-edge technologies with FDA scientists.

Well-established collaborations with our stakeholders at home and abroad are enabling FDA to work expeditiously in support of the application of these new technologies to the development of therapeutics and vaccines for COVID-19. By leveraging nimble funding mechanisms like the OCS-led Advancing Regulatory Science Broad Agency Announcement (BAA), FDA has been able to solicit innovative ideas and approaches to evaluating FDA-regulated products and build on long-standing relationships with our collaborators.

At no time have the benefits of these existing partnerships with world-class institutions been more apparent than during this pandemic. For example, the Office of Counterterrorism and Emerging Threats (OCET) in OCS is using BAA-funded contracts to support FDA medical countermeasure research on Ebola and Zika.

One study, with Public Health England, 5 (https://www.fda.gov/emergency-preparedness-and-response/mcm-regulatory-science/developing-toolkit-assess-efficacy-ebola-vaccines-and-therapeutics) has been expanded to leverage technology used in developing a toolkit to assess efficacy of Ebola vaccines and therapeutics to gather vital information about COVID-19 infections. This project is generating reagents that are being shared with FDA scientists and external partners to support COVID-19 research. A BAA project with Stanford University 6 (https://www.fda.gov/emergency-preparedness-and-response/mcm-regulatory-science/survivor-studies-better-understanding-ebolas-after-effects-help-find-new-treatments) that is evaluating the after-effects of Ebola in survivors, and how to treat these patients' chronic health problems more effectively, has also been extended to aid the development of rapid diagnostics, therapeutics, and vaccines for COVID-19 and to inform FDA evaluation of these products.

And, most recently, in partnership with the National Institute of Allergy and Infectious Diseases, OCET has awarded a new BAA contract to the University of Liverpool 7 (https://www.fda.gov/emergency-preparedness-and-response/mcm-regulatory-science/fda-and-global-partners-analyze-coronavirus-samples) which, together with a global consortium, will sequence and analyze samples from humans and animals to create profiles of various coronaviruses, including SARS-CoV-2. The investigation will also use in vitro coronavirus models like organs-on-a-chip to help inform development and evaluation of medical countermeasures for COVID-19. In another example of building on existing collaborative research, FDA and its partners at NIH have recently modified a crowdsourcing application co-developed by FDA and the Johns Hopkins CERSI to enable the clinical community to share their COVID-19 treatment experiences. Our UCSF/Stanford CERSI has begun research on a rapid query model that is enabling us to examine COVID-19 questions using electronic health record data.

The “all-hands-on-deck” response to the COVID-19 pandemic has brought home not only the criticality of worldwide collaboration and cooperation in combating this global scourge, but also the recognition that the health of people, animals, and the ecosystem are interdependent. In 2019, FDA launched its One Health Platform 8 (https://www.fda.gov/animal-veterinary/animal-health-literacy/one-health-its-all-us) to further public and global health and break down silos.

Working at the nexus of the three One Health domains of human, animal, and environmental health, FDA has been encouraging the expansion of multidisciplinary and intersectoral networks and partnerships to improve our scientific knowledge through information-sharing, pooling of skills and resources, and monitoring and surveillance, with the goal of generating more beneficial health outcomes.

NCTR, Jefferson Labs, is surveying SARS-CoV-2 in wastewater, a research project that exemplifies the One Health approach, as a complementary tool for estimating the viral spread of COVID-19 in the central Arkansas area. The goals are to establish a method to extract and quantify SARS-CoV-2 in wastewater samples; monitor the temporal dynamics of the SARS-CoV-2 titer in the wastewater as a proxy for the presence and prevalence of COVID-19 in Arkansas communities; and estimate the number of clinical cases based on the titers of SARS-CoV-2 in the wastewater samples.

This kind of early detection and continuous monitoring of COVID in the community may help federal and local agencies respond more quickly to halt the spread of COVID-19 and decrease the burden of patient admissions in healthcare facilities in the event of future pandemic waves—a direct public health impact.
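The third goal above, estimating case numbers from wastewater titers, is commonly approached in wastewater-based epidemiology with a simple mass balance: the viral load arriving at the treatment plant per day divided by the amount one infected person sheds per day. The following is a minimal sketch of that idea only, not the NCTR method; the function name and every numeric value are hypothetical placeholders, and real estimates would also need to account for RNA decay, recovery efficiency, and variability in shedding.

```python
def estimate_infected(conc_copies_per_l, flow_l_per_day, shed_copies_per_person_day):
    """Mass-balance estimate of the number of people shedding virus.

    conc_copies_per_l          -- measured viral RNA concentration in wastewater
    flow_l_per_day             -- daily wastewater flow through the plant
    shed_copies_per_person_day -- assumed average shedding per infected person
    """
    if shed_copies_per_person_day <= 0:
        raise ValueError("shedding rate must be positive")
    daily_load = conc_copies_per_l * flow_l_per_day  # copies reaching the plant per day
    return daily_load / shed_copies_per_person_day


# Hypothetical inputs chosen only to illustrate the arithmetic.
infected = estimate_infected(
    conc_copies_per_l=1e4,
    flow_l_per_day=5e7,
    shed_copies_per_person_day=1e9,
)
print(f"Estimated number of infected individuals: {infected:.0f}")
```

Because the estimate scales inversely with the assumed shedding rate, uncertainty in that single parameter dominates the result, which is one reason such figures are usually tracked as trends over time rather than read as absolute counts.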

Let this pandemic, with its clear evidence of the interconnection of plant, animal, and human life, serve as a plan of action for future public health emergencies. FDA's 2021 Focus Areas of Regulatory Science 9 (2021 Advancing Regulatory Science at FDA: Focus Areas of Regulatory Science (FARS). 2020. https://www.fda.gov/media/145001/download) reflects the need for a nimbler approach that will enable us to respond swiftly to the increasingly rapid evolution of science and technology.

In building a robust scientific infrastructure and training programs for scientists, FDA is laying the foundational elements to tackle innovations in our regulatory portfolio and public health preparedness and response. What is needed is a preparedness mindset and the global drive to develop and implement the interventions that will make us resilient in the face of challenges to come in our increasingly interconnected world.

Anand Shah, MD, former Deputy Commissioner of Medical and Scientific Affairs, FDA, USA

This year's global summit with a focus on emerging technologies and their impact on regulatory science emphasizes an issue that has always been important given how the relentless nature of scientific progress requires regulators to continually improve and innovate their regulatory processes to ensure the safety of consumer products.

However, this theme is particularly salient in 2020 given the ongoing COVID-19 pandemic, with the virus infecting more than 23 million people and claiming the lives of over 800,000 individuals worldwide. Given that SARS-CoV-2 is a novel pathogen, we didn't have any medical products designed to diagnose or treat this specific virus when the outbreak began.

Over the past eight months, the innovation from the scientific and medical communities has been simply tremendous. We've seen an array of timely and innovative tests, from highly accurate PCR-based diagnostics to tests that use saliva to increase speed and convenience. In terms of therapeutics, we've seen an incredibly diverse pipeline of potential treatments emerge, with hundreds of clinical trials initiated worldwide for antiviral drugs, immunomodulators, neutralizing antibodies, and more.

And of course, as the world works to chart a roadmap to recovery, there's been an increasing focus on COVID-19 vaccines with tens of thousands of individuals volunteering across the world to participate in clinical trials over the past few months. These emerging technologies are exciting, highlight the ingenuity of the research community, and offer hope to patients and consumers that there's light at the end of the tunnel of this pandemic.

However, as regulators, the crisis and associated emerging medical technologies have created a unique series of challenges. During emergency situations, it's important for us to be responsive to the evolving public health need and be adaptive so that our regulatory decisions are made on the latest and highest quality evidence.

The public and medical providers have tremendous faith in the decisions of regulators. And it's important that we, in turn, demonstrate our commitment to safety and scientific integrity. Consider the case of COVID-19 vaccines. While we celebrate the unprecedented collaboration and scientific focus on developing these new products, as public health officials we're concerned by the reports of growing vaccine hesitancy in the population. Vaccines are foundational to public health and have played a critical role in reducing morbidity and mortality from infectious diseases for over 200 years.

For COVID-19, vaccines that are shown to be safe and effective through rigorous clinical testing can help us safely achieve herd immunity and return to normal life. It's important for the public to know that regulators are committed to following unwavering regulatory safeguards for COVID-19 vaccines.

Commissioner Hahn, Dr. Peter Marks, and I recently reaffirmed this commitment in an article in JAMA, where we outline some of the key steps the agency has taken, such as providing guidance to vaccine developers and committing to seek input from the FDA vaccine advisory committee. These steps help ensure that the public is clear about FDA's expectations for data to support safety and effectiveness, and that the required regulatory standards will be met, so that people can be comfortable receiving any vaccine to prevent COVID-19.

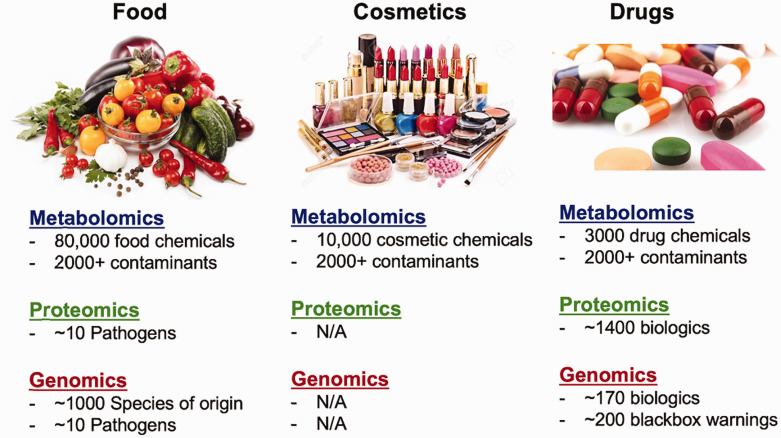

While COVID-19 is our number one priority right now, it's important to recognize that the challenges and lessons for regulatory science during this pandemic are applicable to a whole host of other ongoing and forthcoming public health issues. As all attendees of this summit are aware, there's a continuing evolution in the use of emerging technologies and novel approaches for the safety assessment of food, drugs, and personal care products. Prominent examples include the application of genomic, proteomic, and metabolomic technologies. These technologies have served as the foundation for precision medicine and for improved food safety and traceback procedures.

For example, genomic analysis of pathogens is becoming increasingly common. This is a welcome innovation, as genomic analysis can help us improve surveillance for the public health threat of antimicrobial resistance and support consumers by advancing the movement towards personalized medicine. However, as these technologies advance, regulators need to be informed and aware of the best practices for verifying the evidence and validating the performance of these products.

Another example is proteomics which has significant potential for improving food safety by enabling screenings for foodborne pathogens or allergens with high sensitivity and specificity. These advances would help improve the traceability and quality of the food system—a commitment the FDA recently affirmed in its blueprint for the new era of smarter food safety.

Other emerging technologies which will be featured at this year's conference include the use of AI and bioinformatics in predictive systems and the use of MPS such as “organ-on-a-chip” technologies which can help bridge the gap between in vitro and in vivo. While each of these technologies offers tremendous potential, it's important that regulators possess the tools and capacity needed to systematically examine the strengths and weaknesses of each approach.

Furthermore, given the increasingly interconnected nature of today's world, it's also important for regulators to share experiences and best practices with one another so that we can harmonize our standards where possible and prepare our workforce to meet the challenges of emerging technologies. That's why forums like today's GSRS20 are so important. By convening the world's leading researchers, regulators, and experts on these technologies, we can help activate our collective capabilities and support the development of a strategy for evaluating these novel assessment tools.

Primal Silva, PhD, Chief Science Operating Officer, Canadian Food Inspection Agency, Canada

I will focus on how the Canadian Food Inspection Agency (CFIA) is using emerging technologies to expand and enhance its capabilities in carrying out its regulatory function.

The CFIA is an organization in Canada that is tasked with many different functions. It has a mandate to prevent and manage food safety risks in Canada. It is also tasked with plant health and animal health responsibilities in the country, as well as contributing to consumer protection and facilitating market access for Canada's food, animals, and plants. CFIA is the largest science-based regulatory agency in Canada and relies on technology and on well-trained scientists and their expertise to carry out its function. It is supported by 13 laboratories spread across the country that use cutting-edge technologies to deliver on its broad mandate.

In terms of some of the technologies, I want to describe a new program in Canada, Innovative Solutions Canada, where government departments engage with small and medium-sized enterprises with innovative new ideas to see how they can help deliver government services. We have partnered with several companies using this program to bring about the best available science and technologies to help us carry out regulatory functions. For example, with the current COVID-19 pandemic, we are validating a ONETest CoronaVirus test that can test for the presence of the virus, not only in human samples, but across some of the animal species given the broad host range and the zoonotic nature of this virus.

The other area where the CFIA has done a lot of work is in applying genomics to help regulate food: whole genome sequencing is now fully integrated into the Agency's testing for foodborne pathogens as well as for antimicrobial resistance. Recently in Canada, we switched over from pulsed-field gel electrophoresis to whole genome sequencing technology to make the link between human foodborne pathogen samples and food isolates. 4,5

Similar advances have been made in the plant health area. 6 For example, we are using whole genome sequencing to detect plant viruses of quarantine significance as a way to reduce the time and improve precision in permitting release of new plant varieties in Canada. Likewise, new genomic technologies play a very big part in animal health diagnostics, e.g., for chronic wasting disease, rabies, avian influenza, and African swine fever. These new technologies are often used in conjunction with the classical tests that are already established as the gold standard tests for diagnostics. 7

In terms of advancing regulatory application of genomics, it has been crucial that we work with international partners for the validation of these technologies. Addressing key questions, such as how to standardize genomics-based results and how to apply genomics in the regulatory context for decision making, is important in this context.

I want to acknowledge the important role the GCRSR has played in developing international consensus on scientific matters. As global regulators, we have a very important role in promoting science and evidence-based international standards and action.

George Kass, PhD, Lead Expert at the European Food Safety Authority, EU

I will address emerging technologies for regulatory applications with a focus on the EU food safety perspective. EFSA is the EU agency that is responsible for providing independent scientific advice and for communicating on existing and emerging risks associated with the food chain in the EU. 8 Its advice forms the basis for European policies and legislation and its remit covers food and feed safety, nutrition, animal health and welfare, plant protection, and plant health. Furthermore, EFSA also considers, through environmental risk assessments, the possible impact of the food chain on the biodiversity of plant and animal habitats.

Many food- and feed-related products require a scientific assessment to evaluate their safety before they are authorized on the EU market. These so-called regulated products include substances used in food and feed (such as additives, enzymes, flavorings, and nutrient sources), novel foods, food contact materials and pesticides, genetically modified organisms, food-related processes, and processing aids, and these require a specific risk assessment to be performed. These products may be new or already on the market. In addition, EFSA is dealing with other types of substances that may be found unintentionally in the food chain, such as contaminants.

For regulated products, the type and amount of data provided to EFSA depend very much on the data requirements, the latter being specified either in the prevailing EU legislation or in the appropriate guidance documents developed by EFSA. Often the amount and type of information requested depend on the application domain and use levels but also follow EU legislation when it comes to the minimization and optimization of the use of animal data, the so-called 3R principles, in accordance with Directive 2010/63/EU. The type of data typically received by EFSA consists of in vivo and in vitro studies but also includes in silico data. The in vivo studies, which predominate, include studies on absorption, distribution, metabolism, and excretion (ADME), aiming at information on the behavior of a substance in a complex biological system, as well as toxicological studies to identify and characterize the potential toxicity of this substance. In vitro studies are also evaluated by EFSA, but these are mainly genotoxicity studies.

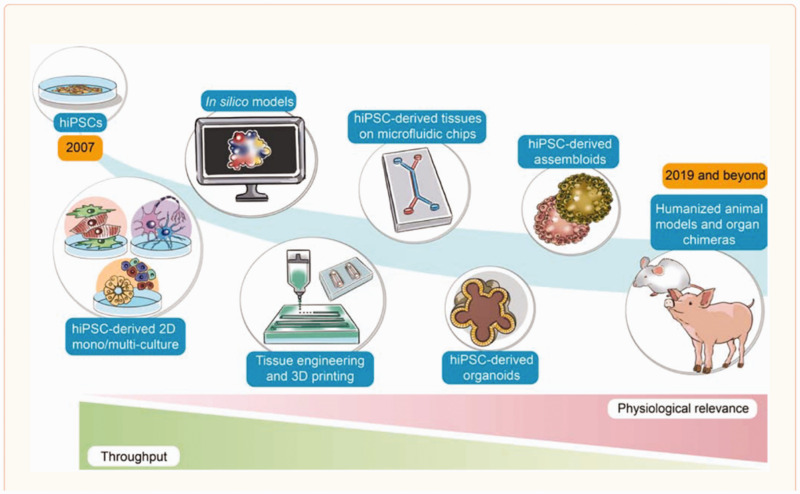

The development of new technologies, methodologies and tools for chemical risk assessment presents both challenges and opportunities for an organization like EFSA. The types of new methodologies that EFSA is facing are often referred to as New Approach Methodologies (NAMs) (European Chemicals Agency, 2016; https://echa.europa.eu/documents/10162/22816069/scientific). The overarching goal of NAMs is to enable the replacement of animal testing through a combination of predictive in silico models and in vitro assays. On the in silico side, these can be tools to predict the physico-chemical properties of chemicals or the behavior of chemicals in biological systems. The latter include (Q)SAR and read-across tools as well as PBTK models to predict the kinetics of a chemical across species and across biological systems (e.g., QIVIVE models to link external and internal exposure). More sophisticated and elaborate systems, such as virtual organ models and virtual organisms, are also currently under development. Better performing in vitro tools are being developed at an equally rapid pace to overcome the limitations of 2D culture systems based on transformed cell lines. For instance, stem cell differentiation protocols are becoming mature enough to produce human cell models that not only are more biologically relevant than experimental animal-derived cells but also are amenable to greater complexity using in vivo-like architectures such as organoids or MPSs on chip that mimic multi-organ systems or even the whole-body architecture.
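The link between external and internal exposure mentioned above can be illustrated with the simplest form of reverse dosimetry used in QIVIVE: assuming linear kinetics, the steady-state blood concentration is proportional to the dose rate, so an in vitro active concentration can be scaled back to an equivalent external dose. The sketch below uses a one-compartment simplification rather than a full PBTK model; the function names, parameter names, and numeric values are all hypothetical and chosen only to show the arithmetic.

```python
def steady_state_conc(dose_rate_mg_per_kg_day, clearance_l_per_kg_day, bioavailability=1.0):
    """Steady-state concentration (mg/L) under constant dosing.

    Assumes a one-compartment model with linear (first-order) clearance:
    Css = F * dose_rate / CL.
    """
    if clearance_l_per_kg_day <= 0:
        raise ValueError("clearance must be positive")
    return bioavailability * dose_rate_mg_per_kg_day / clearance_l_per_kg_day


def oral_equivalent_dose(in_vitro_conc_mg_per_l, clearance_l_per_kg_day, bioavailability=1.0):
    """External dose (mg/kg/day) predicted to give Css equal to the in vitro concentration."""
    # Css produced by a unit dose of 1 mg/kg/day; linearity lets us scale from it.
    css_per_unit_dose = steady_state_conc(1.0, clearance_l_per_kg_day, bioavailability)
    return in_vitro_conc_mg_per_l / css_per_unit_dose


# Hypothetical values: an assay is active at 0.5 mg/L and clearance is 2 L/kg/day.
dose = oral_equivalent_dose(in_vitro_conc_mg_per_l=0.5, clearance_l_per_kg_day=2.0)
print(f"Oral equivalent dose: {dose:.2f} mg/kg/day")
```

A PBTK model replaces the single clearance term with organ-level compartments and blood flows, but the scaling step from in vitro concentration to external dose follows the same logic.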

One consequence of many of these new methodologies is the quantity of data generated, the so-called big data, which can come from high throughput screening of multiple chemicals or from high content screening of individual substances. Whole genome sequencing also produces a considerable amount of data, as do other omics approaches. With this challenge comes the need for new tools and skills to handle big data, not only for their collection, curation, and storage but also for their analysis. While progress is being made in these areas, it is encouraging to note that rapid developments are being seen in AI and ML to help in the prediction of toxicity and in risk assessment.

When assessing the regulatory landscape for food safety, it becomes evident that the great majority of biological and toxicological data requested by legislation involve in vivo studies that are expected to be conducted using the appropriate test guidelines of the Organization for Economic Co-operation and Development (OECD) and following the OECD principles of Good Laboratory Practice (GLP). In the case where EFSA develops its own sector-specific guidance documents, these equally describe the types of data and studies that should be performed to support an appropriate risk assessment. It is important to stress that the same requirements for following OECD test guidelines and the principles of GLP apply. However, where possible, new methodologies are referred to and incorporated, but under the condition that such methodologies are sufficiently robust, reproducible, and accepted for regulatory decision making. EFSA’s policy to update its guidance documents on a regular basis also provides an opportunity for new methodologies to be incorporated as they become accepted in the regulatory arena.

NAMs carry several advantages, including the use of human relevant models. They can produce mechanistic data, too, that informs the risk assessor on adverse outcome pathways and modes of action. Furthermore, the information generated can be much more human disease-relevant than from animal studies and can be produced at a pace and cost that support a more rapid risk assessment. Yet, we also see hurdles in their use in the regulatory context. The new methodologies need to be standardized, be reproducible and need to be validated in order to gain the confidence of the risk assessor. Moreover, they also need to be accepted by the wider community. For these reasons, EFSA is very much engaged with organizations that deal with the issues of promoting, developing and validating NAMs. We work very closely with the European Centre for the Validation of Alternative Methods (ECVAM) at the JRC but also with the European Chemicals Agency (ECHA). On the EU research and innovation front for regulatory sciences, it is also important to highlight the development of the new Horizon Europe framework program that will be launched in 2021 and that includes the co-creation of the Partnership for the Assessment of Risks from Chemicals (PARC) (https://ec.europa.eu/info/horizon-europe/european-partnerships-horizon-europe_en). Together with still ongoing Horizon 2020 programs such as EUToxRisk (https://www.eu-toxrisk.eu/), such initiatives are expected to help promote the uptake and use of NAMs in regulatory sciences. In addition to its engagement with such initiatives, EFSA also has its own vehicle of promoting and funding research into areas directly pertinent to new methodologies, such as its newly created Science Studies and Project Identification & Development Office (SPIDO) under which, among others, NAM case studies have been launched. 
At an international level beyond the EU, EFSA collaborates with the World Health Organization, with OECD, with the GCRSR, and other food safety agencies outside the EU. EFSA is also actively involved in programs such as the international government-to-government initiative, Accelerating the Pace of Chemical Risk Assessment (APCRA), and has established its own platforms such as the International Liaison Group for Methods on Risk Assessment of Chemicals in Food (ILMERAC) to interact with various organizations in its ambition to contribute to the harmonization of chemical risk assessment to support food and feed safety.

Christopher Austin, PhD, former Director of the National Center for Advancing Translational Sciences (NCATS) at the National Institutes of Health, USA

Of the 27 institutes of the NIH, NCATS is the newest, and our job is closely related to the purpose of this meeting focused on emerging technologies. That is, to develop new ways, new methods, new technologies, and new paradigms to make the process of developing and deploying interventions that improve human health better, faster, and we hope, cheaper. So, we work on the problem of how therapeutics, diagnostics, and medical procedures are developed and how to improve that efficiency, and regulatory science is a big part of that.

So, how do we define translational science? Like any other science, it is a field of investigation that seeks to understand general principles, in this case the underlying principles of the translational process, with the ultimate goal of prediction. I think about regulatory science as largely a subset of translational science.

The problems we work on are the major rate-limiting steps to translational efficiency. A lot of you will find these familiar, as ones that you run into every day and that bedevil every therapeutic area. And you'll notice, if you look down this list, that a very large number of them are regulatory issues. Not all, of course, because many interventions are not subject to regulatory approval, behavioral interventions being one example.

So, the first thing I want to tell you about, which I hope you find useful in your own work, is something that we did with a group at the National Academy of Medicine a couple of years ago, to address a problem that is familiar to all of you: that many stakeholders, if not most, are unaware of the complexity of the process by which interventions that improve human health are developed. We felt that there was a need for an accurate portrayal of what is involved, not only as an educational tool, but also for use by translational and regulatory scientists to determine where they should apply their efforts, based on knowledge of the most problematic steps in terms of failure, time, or cost. We published process maps for small molecules and biologics in a couple of papers a couple years ago that you might want to look at.

The group took as its starting place the most commonly used portrayal of therapeutic development, the linear chevron diagram. It is unfortunately a terribly inaccurate and misleading portrayal since it makes the process appear straightforward: drug development in six easy steps! So, we broke out each of the stages of the chevron into a “neighborhood” of dozens of interconnected and recursive steps. Since the publication of the map in static form in 2018, we have converted it into an interactive electronic form that you can find on the NCATS website (https://ncats.nih.gov/translation/maps), with the ability to zoom in and out, see where the problem areas are, and go to resources which might help you through them.

NCATS’s longest running regulatory science initiative is the Toxicology in the 21st Century (Tox21) program, a collaboration among NCATS, FDA, EPA, and the National Toxicology Program which is developing innovative test systems, data, and algorithms that better predict human toxicity. Current goals of the program include implementing technologies that overcome some of the traditional limitations of in vitro testing systems, such as metabolic capability; adopting testing systems that didn't exist when we started Tox21, such as induced pluripotent stem (iPS) cells, 3D cellular organoids, and tissue chips; utilizing transcriptional readouts in addition to biochemical or imaging ones; and working via the adverse outcome pathway paradigm that I think you're all familiar with.

In addition to generating unprecedented amounts of in vitro screening and follow-up mechanistic and organ-based data, we are curating legacy data from the NTP and EPA, integrating it with Tox21 data, and making all data and analyses publicly available. We take great care in validating assay performance and compound identity and purity and are beginning to utilize these data in physiologically based pharmacokinetic (PBPK) modeling.

Complementing Tox21’s systems biology approach are efforts to develop more predictive translational models and assays. At NCATS we utilize and support development of the full range of human cell-based assay platforms. We're all familiar with the traditional 2D cell line culture systems that many of us have spent our careers using, but in the last 10 years, it has become possible to test the activities of compounds in human primary cells, iPSC-derived cells, multicellular spheroids, multi-cell type organoids, 3D printed tissues, or microphysiological systems (MPS), otherwise known as tissue chips or organs-on-a-chip. Each system is useful and complements the others, given the inverse relationship of throughput and physiological complexity. At one end of the spectrum, cell line based small volume multi-well (384- or 1536-well plate) HTS assays have low physiological complexity but can test up to hundreds of thousands of compounds a day. At the other extreme, tissue chips can be very physiologically complex but can test only a few compounds a day.

MPS merit particular mention since they are the newest platform for compound testing and so may be less familiar. This field has developed over just the last 10 years, through the leadership of NCATS and a number of other institutes at NIH, DARPA, and FDA. MPS are multi-human cell type bioreactors that mimic the structure and function of human tissues. MPS have been developed for over a dozen individual human organs as well as linked arrays of up to 10 different human organs. MPS are being used to study normal and disease physiology as well as to test for effects of xenobiotics on human tissues, currently as a complement to, but we hope eventually in partial replacement of, animal testing.

Population clinical data from humans is also a rich source of regulatory science-relevant insights, as FDA's Sentinel Initiative and many epidemiological studies have shown. However, there has to date not been an appropriately scaled, nationwide, publicly accessible resource of EHR data that is broadly representative of U.S. population demographics. NCATS and its Clinical and Translational Science Award (CTSA) grantees began creating the foundation of such a resource in 2018, and when the urgent need for such data became evident in the early days of the COVID-19 pandemic, the platform was rapidly instantiated to gather, harmonize, and make available in a secure privacy-protected federal enclave longitudinal EHR data on millions of patients with COVID-19 symptoms and diagnoses.

The National COVID Cohort Collaborative (N3C) currently has information on hundreds of thousands of patients from academic health centers across the country and will have approximately six million COVID-19 patients’ records by early 2021. The purpose is to transform the clinical information that's in those electronic health records into a format that can be queried by individual investigators and have AI or ML programs applied to it in ways that are impossible with current federated methods. The kinds of questions that one would be able to ask include: What are the risk factors that indicate a better or worse prognosis? What are all the treatments that have been used for these patients that might make patients better or worse? The N3C (https://covid.cd2h.org/) will launch shortly and you can apply for access if you’re a qualified investigator, with projects approved through a data access committee. Though the platform is focused on COVID-19 for now, it is completely generic in methodology, so once COVID-19 is over we hope to expand the platform’s purview to other diseases and regulatory science applications.

Lastly, I want to tell you about CURE-ID (https://cure.ncats.io/), a different clinical data resource that I hope many of you will use and contribute to. Two years ago, scientists at NCATS began working with colleagues at the FDA to develop a mobile application that would allow health workers, particularly in the more remote parts of the country or the world, to share their clinical experiences using approved drugs to treat diseases other than the ones for which they're regulatorily indicated on the label. The project was initially focused on the neglected tropical diseases of the developing world. CURE-ID was launched in December 2019, and of course later that month the first cases of COVID-19 were reported. A COVID-19 module was immediately added to CURE-ID, and rapidly became an invaluable resource for clinician experience sharing, particularly in the difficult early days of the pandemic. The platform currently covers over 100 infectious diseases, and we anticipate that it will be increasingly useful for both clinical and regulatory science applications.

Track A: Artificial Intelligence (AI) and Machine Learning (ML) for Regulatory Science Research, Risk Assessment and Public Health (Weida Tong, PhD, National Center for Toxicological Research/FDA, USA and Arnd Hoeveler, PhD, Joint Research Centre, European Commission, EU)

Artificial Intelligence has impacted a broad range of scientific disciplines and plays an increasing role in regulatory science, risk assessment and public health. AI is a broad concept of training machines to think and behave like humans. It consists of a wide range of statistical and machine learning approaches that learn from existing data and information to predict future outcomes. Some newer AI methodologies, such as deep learning, offer advanced algorithms and improved accuracy for extracting complex patterns from new data streams and formats (e.g., image and graphic data), and they are increasingly used in the regulatory context. Data connectivity, computational resources, and new and advanced algorithms fuel the rise of AI, which gives new insight into underlying mechanisms and biology and is thus significant for regulatory application. Although the concept of AI was introduced back in the 1950s and has been actively pursued in the research community, its critical role in regulatory application concerning food and drug safety has yet to be realized. Understanding that AI offers both opportunities and challenges to global regulatory agencies, with questions such as (1) how to assess and evaluate AI-based products and (2) how to develop and implement AI-based applications to improve the agencies’ functions, GSRS20 hosted a special track to discuss the basic concepts and methodologies of AI applied in regulatory science, risk assessment and public health with real-world examples. In particular, the current thinking and ongoing efforts of various agencies in applying AI in these fields were discussed, with a specific focus on drug and food safety, clinical application (e.g., prognosis and diagnosis), precision medicine, biomarker development, natural language processing (NLP) for regulatory documents, and regulatory science application.

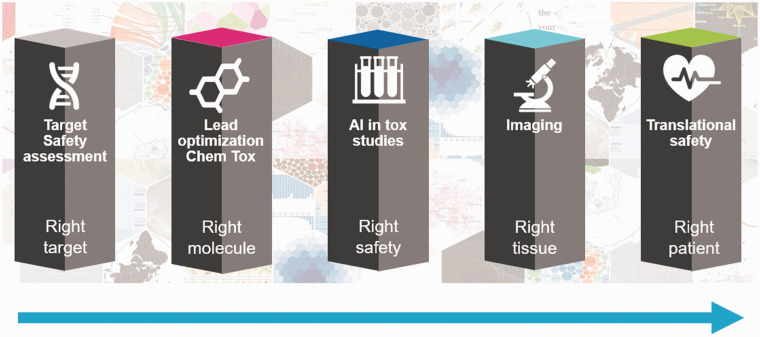

One important question raised by many presenters was where the highest potential for applying AI in a regulatory agency lies. It was well acknowledged that AI-based NLP could play a major role in extracting useful information from both regulatory documents and literature data to facilitate regulatory science research and improve the product review process. One of the most important AI advancements in recent years has been in the area of NLP. Early efforts in this area treated a document as a “bag-of-words”, where the content of the document is characterized by the frequency of its words. Recognizing that the context of a document is better represented by the co-occurrence of multiple words, probabilistic graphical models were introduced and have gained traction in the community as a way to understand the “topics” of a document. More recently, advanced AI in NLP offers an unprecedented opportunity to analyze entire sentences, beyond individual words, by using language models to perform a broad range of NLP tasks such as question answering, sentiment analysis, information retrieval and text classification. Dr. Henry Kautz from the National Science Foundation presented examples of analyzing social media data for foodborne illness surveillance and illness prediction. The role of NLP in drug target safety assessment was also discussed by Dr. Stefan Platz from AstraZeneca (AZ) as part of five pillars of AI for safety assessment: (1) right target (target safety assessment), (2) right molecule (lead optimization, chemical toxicity), (3) right safety (toxicological study), (4) right tissue (digital pathology), and (5) right patient (translational safety).
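The limitation of the bag-of-words representation mentioned above can be illustrated with a minimal Python sketch. The two example sentences and the `bag_of_words` helper are hypothetical and were not part of the track presentations; the sketch only shows that word-frequency counts discard word order, so two sentences with opposite meanings can look almost identical.

```python
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Count word frequencies, ignoring word order and sentence structure."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return Counter(tokens)


# Two invented sentences with opposite meanings.
doc_a = "The drug caused liver injury; the injury was severe."
doc_b = "Severe injury was not caused by the drug."

bow_a = bag_of_words(doc_a)
bow_b = bag_of_words(doc_b)

# Words that appear in both documents.
shared = set(bow_a) & set(bow_b)

print("Counts for doc_a:", dict(bow_a))
print("Counts for doc_b:", dict(bow_b))
print("Shared vocabulary:", sorted(shared))
```

Most of the vocabulary is shared between the two sentences even though one asserts and the other denies a causal link, which is the kind of sentence-level context that language models are meant to capture.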

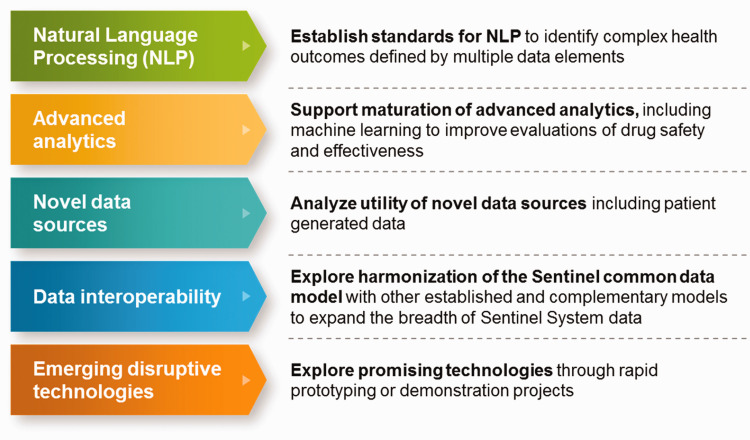

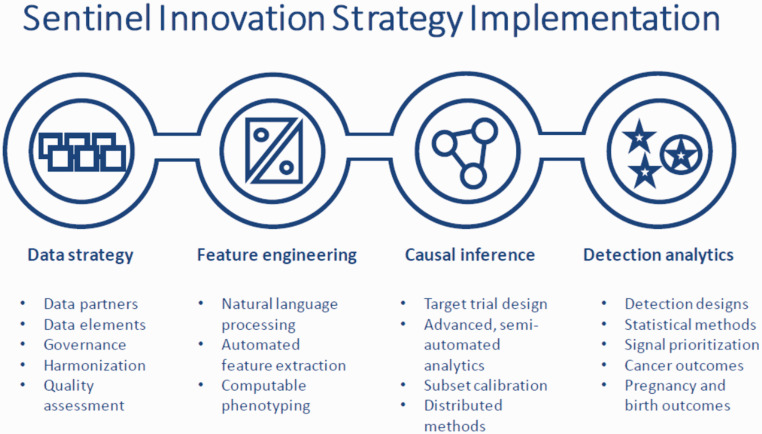

Regulatory agencies throughout the world routinely generate a variety of documents during regulated product review and decision-making, which has led to a large inventory of review documentation containing a broad array of information. For example, guidance documents, drug labeling, and FDA Adverse Event Reporting System (FAERS) reports are among the regulatory documents that the U.S. FDA makes available. Meanwhile, the regulatory process also requires curating literature data for science-based decision-making. Unfortunately, most of these tasks are still performed manually, which is time-consuming and labor-intensive and results in longer review times. Dr. Robert Ball from the U.S. FDA emphasized AI-based NLP as an important piece of the modernization of the FDA Sentinel system. Both Dr. Philippe Girard from Swissmedic of Switzerland and Dr. Blanka Halamoda Kenzoui from EC-JRC provided specific examples of improving regulatory efficiency with text mining coupled with machine learning. Besides discussing AI-based NLP of literature data for safety assessment in Japan, Dr. Akihiko Hirose from the National Institute of Health Sciences (NIHS) of Japan also discussed how advanced AI methodologies improve some traditional techniques routinely used for risk assessment, such as quantitative structure-activity relationships (QSARs), read-across and toxicogenomics. He provided a comprehensive review of various regulatory-driven applications of AI, including the development of a large chemical safety database and an AI platform to support human safety assessment of pharmaceuticals, foods and household chemicals, and deep learning models for Ames mutagenicity and hepatotoxicity.

Another question touched on by several speakers was the role of AI in public health, ranging from clinical diagnosis and prognosis to drug and food safety, disease prevention, precision medicine and nutrition. The COVID-19 crisis has boosted the adoption and use of AI in health care, which raises the question of what the concerns and challenges are and how to overcome them. Drs. May Wang and Li Tong from Georgia Tech and Emory University, USA, discussed five opportunities and challenges in AI for public health: (1) data integrity, (2) data integration, (3) causal inference, (4) real-time decision-making, and (5) metrics and validation for explainable AI. Dr. Peng Li discussed the regulatory science of medical devices and AI in China, with an example of applying AI-based image analysis to lung cancer and precision medicine. Dr. Kautz (NSF) described nEmesis, a foodborne illness surveillance system that uses a semi-supervised learning approach to analyze Twitter data (ML epidemiology: real-time detection of foodborne illness at scale, Nature Digital Medicines, 1, 26, 2018), and another AI system that extracts information from social media to predict low self-esteem. Dr. Yinyin Yuan from the Institute of Cancer Research, United Kingdom, discussed AI for digital pathology in clinical diagnosis and prognosis. She touched on the important topic of reproducible AI for digital pathology. It was acknowledged that reproducibility takes on multiple meanings depending on the area of study, but in the context of digital pathology, controlling key parameters and measures is required to improve reproducibility.

Weida Tong, PhD, National Center for Toxicological Research, FDA, USA

I propose a framework to consider for the effective and efficient use of AI and ML technologies. The acronym of the framework is TRIAL, which stands for Transparency, Reproducibility, Interpretability, Applicability and Liability. Distinct from similar frameworks from academia or other government agencies, the TRIAL framework is intended to serve as a reference point for AI applications that could be adopted across the global regulatory community.

What are the elements of TRIAL, and what are their interconnections and complementary features? Specifically,

Transparency: An appropriate level of clarity for explaining algorithmic and data output of life-cycle AI performance allows assessment of AI by end-users and trust in the technology.

Reproducibility: A set of well-defined measures ensures the trustworthiness of an AI model.

Interpretability: An AI model can not only be explained in human terms, but the causal relationship between the underlying driving parameters and the model performance can also be established with scientific support.

Applicability: The context of use and application domain of an AI model need to be established including defining best practices, and whether AI can complement or replace other technologies.

Liability: To prevent misuse of AI, ethical rules and policies should be established that define the boundaries of application and responsibility while promoting AI innovation in FDA.

To illustrate how the TRIAL framework would facilitate and guide the process of AI deployment in the regulatory setting, I provide the following steps: (1) State the problem that the AI algorithms will address, (2) Describe the data to collect in order to develop and validate AI results, (3) Replicate the results using different data sets if possible, (4) Design an AI platform for use in FDA or industry and test its feasibility in a pilot study, and (5) Implement the AI algorithm system-wide, monitor progress and update the algorithm based on new data and results.

Stefan Platz, PhD, AstraZeneca, United Kingdom

Integrating AI in drug safety has the potential to unlock many benefits to enable us to enhance our capability, increase automation and accelerate our delivery.

I summarize my vision for AI as: “AI will not replace toxicologists, but those who don't use AI, will be replaced by those who do”. This is an important statement because there’s anxiety that we will replace all toxicologists’ work with a computer, which is far from the truth. But the toxicologist’s role needs to evolve so people understand data more and use AI to find answers or collect insight that makes a more significant impact on our discovery and development.

There are three areas that have reached a level of maturity that enables us to maximize the momentum of AI:

Computational power—with more powerful central processing units (CPUs) and Graphics processing units (GPUs), we can now manage and analyze huge amounts of data, which is key if we are to benefit from deep learning.

Data connectivity—maximizing cloud-based systems, we can now access a wealth of data from different sources and from any location.

Algorithmic development—combining intelligent algorithms with computational power and data connectivity, we can now access new insights into complex biology using multi-dimensional datasets.

Within AI there are three key areas: robotics, NLP and ML. ML has been available for a long time and includes linear regression, random forests and Bayesian approaches, all of which are therefore part of AI.

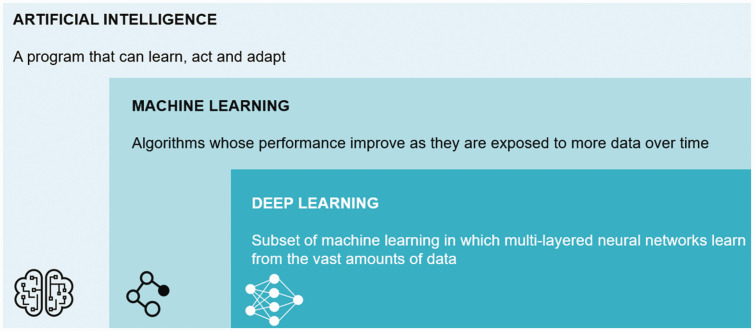

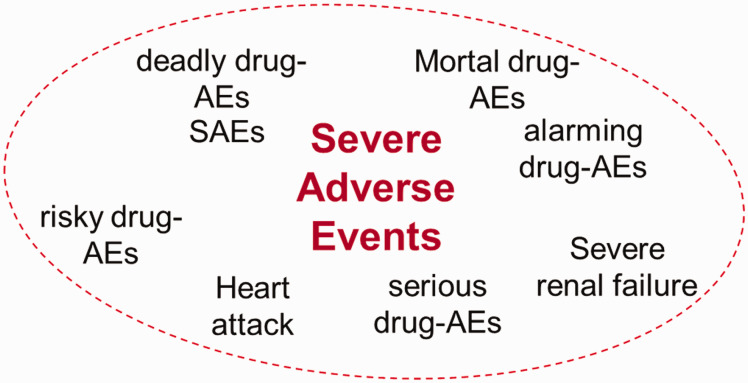

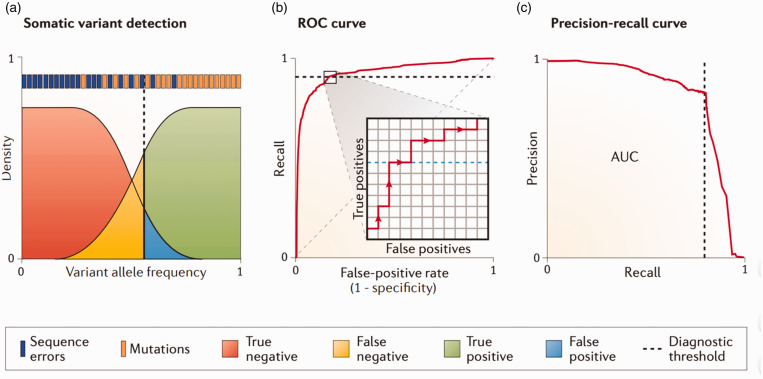

Many people have now been discussing deep learning, a subset of ML, where we take a different approach through multi-layered neural networks, which require a vast amount of data (Figure 1).

Figure 1. Definition of machine learning and deep learning.

How do we differentiate ML from deep learning? With the former, you define features (for example, to look for a triangle or a circle), set up rules and develop decision trees. You then cluster the information to reach an outcome. Despite the benefits of this approach, there are downsides: the system will only look for the specific features you have defined, and it will ignore all the other information underlying the data.
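The feature-based approach described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not a method presented in the talk: the shape records, the `extract_features` helper and the corner-count rule are all hypothetical. It shows that a classifier built on hand-defined features uses only those features and ignores everything else in the data.

```python
def extract_features(shape: dict) -> dict:
    """Hand-defined feature extraction: only the corner count is kept."""
    return {"corners": len(shape["vertices"])}


def classify(shape: dict) -> str:
    """Rule-based decision on the predefined feature (a one-level decision tree)."""
    features = extract_features(shape)
    if features["corners"] == 3:
        return "triangle"
    if features["corners"] == 0:
        return "circle"
    return "unknown"


# Hypothetical inputs: the "texture" field is present in the raw data
# but is never seen by the classifier because no feature was defined for it.
smooth_triangle = {"vertices": [(0, 0), (1, 0), (0, 1)], "texture": "smooth"}
rough_triangle = {"vertices": [(0, 0), (5, 0), (0, 5)], "texture": "rough"}
circle = {"vertices": [], "texture": "smooth"}

for shape in (smooth_triangle, rough_triangle, circle):
    print(classify(shape), "| ignored texture:", shape["texture"])
```

Both triangles receive the same label regardless of texture or size, because only the corner count was defined as a feature.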

Deep learning takes this approach one step further and is very similar to how the human brain functions. Instead of feeding the system with “features” (for instance, a triangle or circle), we build and train an artificial neural network with many thousands of reference images. The system learns to “discriminate” by exploring different layers of analysis and setting up rules from all the raw image data. The key benefits are that you don't need to specify the features upfront, and you use all the information encoded in the data, which can be extrapolated with transfer learning. Essentially, you're going deeper and deeper in every layer, which is known as the multi-layered approach, and by connecting all the data, the computer delivers an outcome.

One of the hot topics in deep learning is the causality of prediction models—what is the relationship between cause and effect? Are the predictions of our models truly based on biological data?

We now have access to a huge amount of data, both internal and external, but it’s important to balance quantity and quality; having a huge volume of data doesn’t always equate to relevant, high-quality information. It’s important to structure your data and define ontologies, as you may have to merge internal and external data, and take extracts from multiple sources, such as medical data, product data, genomics data, imaging data and in vivo data. You can then consolidate data sources, some with their defined ontology and semantics, into a next-generation sequencing platform.

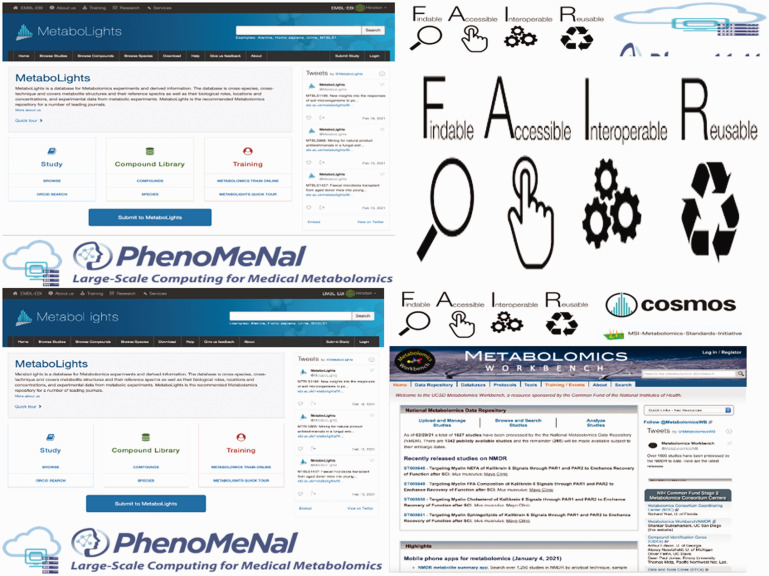

Once you have structured your data, you need to connect the information into data mechanisms, such as data marts, knowledge graphs, data warehouses or data lakes. From that point, you use AI technology in these data applications to extract information. We use the acronym FAIR to describe the quality and usability of our data: Findable, Accessible, Interoperable and Reusable. The quality of your AI algorithms heavily depends on the data you feed in and how structured the data are.

1. Targeted safety assessment

The first example is targeted safety assessment. In phase 2 clinical trials we see attrition that is fairly high on safety but also on efficacy. There is an opportunity for AI to help increase understanding of a target you have picked in the lead identification (LI) space, very early in discovery, and to determine the connections of that target with other targets.

Within target safety assessment, we aim to develop a type of database called knowledge graphs which takes information from chemistry, pharmacology, clinical trial, genomics, multi-omics and biomarkers. In its simplest form, the knowledge graph connects two endpoints—a drug with a target—for example, gene X translates into protein X, and the graph provides some weighting to the strength of that connection. Extrapolating this can create a huge network which provides you with key information about connections through other targets and through other pathways. This may allow you to predict which target and off-target effects to expect.
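A weighted knowledge graph of the kind described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the database discussed in the talk: the entity names, relations and weights are invented, and scoring an indirect connection as the product of edge weights along a path is an assumed convention chosen for simplicity.

```python
from collections import defaultdict

# Hypothetical edges: (source, relation, target, weight in [0, 1]).
EDGES = [
    ("drug_A", "binds", "protein_X", 0.9),
    ("gene_X", "encodes", "protein_X", 1.0),
    ("protein_X", "interacts_with", "protein_Y", 0.6),
    ("protein_Y", "participates_in", "pathway_P", 0.8),
    ("drug_A", "binds", "protein_Z", 0.2),
]


def build_graph(edges):
    """Store the directed graph as {source: [(target, relation, weight), ...]}."""
    graph = defaultdict(list)
    for source, relation, target, weight in edges:
        graph[source].append((target, relation, weight))
    return graph


def connection_strengths(graph, start, max_hops=3):
    """Return {node: strongest path score} for nodes within max_hops of start.

    A path score is the product of its edge weights (an assumed scoring rule).
    """
    best = {start: 1.0}
    frontier = {start: 1.0}
    for _ in range(max_hops):
        next_frontier = {}
        for node, strength in frontier.items():
            for target, _relation, weight in graph.get(node, []):
                score = strength * weight
                if score > best.get(target, 0.0):
                    best[target] = score
                    next_frontier[target] = score
        frontier = next_frontier
    del best[start]
    return best


graph = build_graph(EDGES)
scores = connection_strengths(graph, "drug_A")
for node, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(f"{node}: {score:.3f}")
```

In this toy graph, the direct drug-to-target edge scores highest, while indirect connections through other proteins and pathways receive lower scores, mirroring how a larger network might be used to flag possible off-target effects for follow-up.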

2. Lead optimization in chemical toxicology

Another example is lead optimization in chemical toxicity. The question is, how rapidly can we optimize the structure and progress it to a point where we can test it as a medicine in humans? In tox studies, can AI help to make predictions, for example, of a chronic tox study from acute tox studies? Target organ toxicities? Pathologists? Imaging?