Abstract

Background

There is often a lack of transparency in research using online panels related to recruitment methods and sample derivation. The purpose of this study was to describe the recruitment and participation of respondents from two disparate surveys derived from the same online research panel using quota sampling.

Methods

A commercial survey sampling and administration company, Qualtrics, was contracted to recruit participants and implement two internet-based surveys. The first survey targeted adults aged 50–75 years old and used sampling quotas to obtain diversity with respect to household income and race/ethnicity. The second focused on women aged 18–49 years and utilized quota sampling to achieve a geographically balanced sample.

Results

A racially and economically diverse sample of older adults (n=419) and a geographically diverse sample of younger women (n=530) were acquired relatively quickly (within 12 and 4 days, respectively). With the exception of the highest income level, quotas were implemented as requested. Recruitment of older adults took longer than recruitment of younger women. Although survey completion rates were reasonable in both studies, there were inconsistencies in the proportions of incomplete survey responses and failed quality checks.

Conclusions

Cancer researchers – and researchers in general – should consider ways to leverage the use of online panels for future studies. To optimize novel and innovative strategies, researchers should proactively ask questions about panels and carefully consider the strengths and drawbacks of online survey features including quota sampling and forced response.

Impact

Results provide practical insights for cancer researchers developing future online surveys and recruitment protocols.

Keywords: Survey Research, Internet-based Research, Online Surveys, Online Research Panels, Sampling Procedures

Background

Increased use of online (internet) surveys has led to a rise in commercial survey and market research platforms. Companies such as Qualtrics (www.qualtrics.com), Survey Monkey (www.surveymonkey.com), and Amazon’s Mechanical Turk (MTurk; www.mturk.com) allow researchers to develop, test, and distribute surveys online. In addition to creating electronic surveys for distribution through typical sample outlets, these companies enable researchers to purchase access to existing pools of potential participants that have agreed to be solicited for survey recruitment. Utilizing online research panels for sample acquisition and data collection is quick and efficient. Compared to traditional survey modes (e.g., mail and telephone), online surveys are typically less expensive [1], require less time to complete [2], and more readily provide access to unique populations [3, 4].

Innovations in the collection of data using mobile and online platforms are transforming the conduct of survey research [5]. For example, crowdsourcing (i.e., the practice of soliciting contributions from large groups of people) has been applied to other health-related research and is in the early stages of adoption in cancer research. A systematic review identified 12 studies that applied crowdsourcing to cancer research in a range of capacities, including identifying candidate gene sequences for investigation, developing cancer prognosis models, and assessing cancer knowledge, beliefs, and behaviors [6]. Within the broad field of cancer, other recent studies have drawn from panel samples to conduct survivorship [7], risk communication [8], message testing [9], and behavioral [10] research. With increasing use of new technologies for data collection, the use of commercial research panels will become more prevalent in cancer research. Furthermore, large cohorts of individuals open to participating in research are being established for longitudinal epidemiologic research, including the Growth from Knowledge (GfK) KnowledgePanel and National Institutes of Health (NIH) All of Us panel. Future use of panel surveys for collecting health data and biospecimens holds promise for accelerating cancer research across the cancer continuum.

Although online panels may not be representative of the US population [11], growing evidence suggests samples recruited through online panels can be as representative of the population as traditional recruitment methods [12–14]. Yet, the greatest advantage of online research panels may be their ability to produce samples targeting specific groups, such as respondents who meet a specific condition of interest to the researcher. The use of quota sampling (i.e., a non-probability sampling technique) in online panel research can help researchers obtain survey participants matching specified criteria, such as young adult cancer survivors or mammography screening age-eligible adults. Although online panels provide some advantages over traditional sampling methods, questions about the validity of commercially derived online panel samples have been raised [15–17]. These concerns may arise due to a lack of transparency in the recruitment of panelists and insufficient details on how samples are derived from online panels.

Online panel members are recruited from a variety of sources [12] and therefore, precise information on how sampling frames are constructed is usually not available. Because researchers generally lack control over sample acquisition procedures, an in-depth characterization of how panel participants are recruited is needed to inform researchers on what to expect when administering an online survey and recruiting participants from online sample panels. The purpose of this study was to describe the recruitment and participation of respondents from two disparate online surveys using quota sampling and administered by the same commercial research platform.

Methods

Qualtrics, a commercial survey sampling and administration company, was contracted to recruit participants and implement two internet-based surveys. Samples were acquired from existing pools of research panel participants who have agreed to be contacted for research studies. The Qualtrics network of participant pools, referred to as the Marketplace, consists of hundreds of suppliers with a diverse set of recruitment methodologies [18]. The compilation of sampling sources helps to ensure that the overall sampling frame is not overly reliant or dependent on any particular demographic or segment of the population. Respondents can be sourced from a variety of methods depending on the individual supply partner, including the following: ads and promotions across various digital networks, word of mouth and membership referrals, social networks, online and mobile games, affiliate marketing, banner ads, TV and radio ads, and offline mail-based recruitment campaigns.

Recruitment targeted potential survey respondents who were likely to qualify based on the demographic characteristics reported in their user profiles (e.g., race and age). Panelists were invited to participate and opted in by activating a survey link directing them to the study consent page and survey instrument. Ineligible respondents were immediately exited from the survey upon providing a response that did not meet inclusion criteria or exceeded set quotas (i.e., a priori quotas for race or household income group already met).

To ensure data quality, surveys featured (1) attention checks (i.e., survey items that instructed respondents to provide a specific response); and (2) speeding checks (i.e., respondents with survey duration of ≤ one-third of the median duration of survey). Respondents who failed either quality check were excluded from the final samples. The two surveys were approximately equivalent in terms of survey duration and participant remuneration. Qualtrics charged investigators $6.50 for each completed survey response requested. The data reported in this study were collected according to separate IRB-approved protocols and in accordance with recognized ethical guidelines. Written informed consent was obtained from each participant.
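The speeding rule described above (duration at or below one-third of the median) can be sketched as follows. This is a minimal illustration, not the procedure Qualtrics ran: the function name `flag_speeders` and the example durations are hypothetical, and durations are assumed to be in minutes.

```python
from statistics import median


def flag_speeders(durations_min, fraction=1 / 3):
    """Flag responses whose duration is <= `fraction` of the median duration."""
    cutoff = fraction * median(durations_min)
    flags = [d <= cutoff for d in durations_min]
    return cutoff, flags


# Hypothetical completion times in minutes (illustrative only, not study data).
durations = [26, 30, 8, 45, 22, 27, 19]
cutoff, flags = flag_speeders(durations)
print(f"cutoff = {cutoff:.1f} min; speeders flagged = {sum(flags)}")
```

Because the cutoff scales with the median, a rule of this kind only catches respondents who are much faster than the typical respondent, which is one reason to pair it with attention checks.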

Study Design and Survey Administration

Sample One.

The first sample was obtained as part of a pre-post parallel trial designed to examine the effects of providing colorectal cancer risk feedback to average-risk adults who are age-eligible for colorectal cancer screening. The survey contained 133 items with each item requiring a response (i.e., forced response). The target enrollment was 400 eligible respondents. To be eligible to participate, respondents had to report being aged 50–75 years, have no personal or family history of colorectal cancer or other predisposing condition, be able to read and comprehend English, and reside in the contiguous United States (US). Sampling quotas were implemented for race and annual household income. Specifically, balanced proportions of respondents with non-Hispanic White/Caucasian, non-Hispanic Black/African American, and Hispanic/Latino/Spanish origin racial/ethnic identities and diversity in reported household income (approximately 20% less than 20k, 30% between 20–49k, 20% between 50–74k, 10% between 75–99k, and 20% greater than or equal to 100k) were requested. Respondents identifying as some other race, ethnicity or origin were not eligible to participate.
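To make the income quotas concrete, the requested proportions can be converted into approximate target counts for the planned enrollment of 400. The sketch below is illustrative only: the helper names (`quota_targets`, `is_over_quota`) are hypothetical, and the way the vendor actually enforced quota cells is not described in this study.

```python
TARGET_N = 400  # target enrollment for sample one

# Requested income distribution in whole percentages (approximate, per the text).
INCOME_QUOTA_PCT = {
    "<20k": 20,
    "20-49k": 30,
    "50-74k": 20,
    "75-99k": 10,
    ">=100k": 20,
}


def quota_targets(n, quota_pct):
    """Convert percentage quotas into target counts for n respondents."""
    return {group: n * pct // 100 for group, pct in quota_pct.items()}


def is_over_quota(filled, targets, group):
    """True if the group's quota cell is already full (assumed screening rule)."""
    return filled.get(group, 0) >= targets[group]


targets = quota_targets(TARGET_N, INCOME_QUOTA_PCT)
print(targets)
```

Under these assumptions, the highest income cell would need roughly 80 respondents, which gives a sense of how many panelists reporting an income of at least 100k were being sought.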

Sample Two.

Sample two was acquired during a previously described study testing whether specific message types and various psychosocial variables affect future Zika vaccine uptake intent among women of childbearing age [19]. The survey contained 105 items and did not utilize forced response. Target enrollment was 500 respondents. To be eligible to participate in this study, respondents had to report being female, between the ages 18 and 49 years, able to read and comprehend English, and a resident of the contiguous US. Sampling quotas were implemented to achieve a geographically varied sample across the four census regions (i.e., Northeast, South, West, and Midwest) with oversampling in the Southern region due to the heightened risk of Zika in this area.

Results

Data Collection

For the first sample, completed survey duration ranged from 10 to 1,922 minutes, with a median duration of 26 minutes. Data collection occurred over a period of 12 days in June 2017. Completed survey duration for the second sample ranged from 8 to 390 minutes, with a median duration of 27 minutes. Data collection occurred over a period of four days in March 2017.

Survey Recruitment and Participant Flow

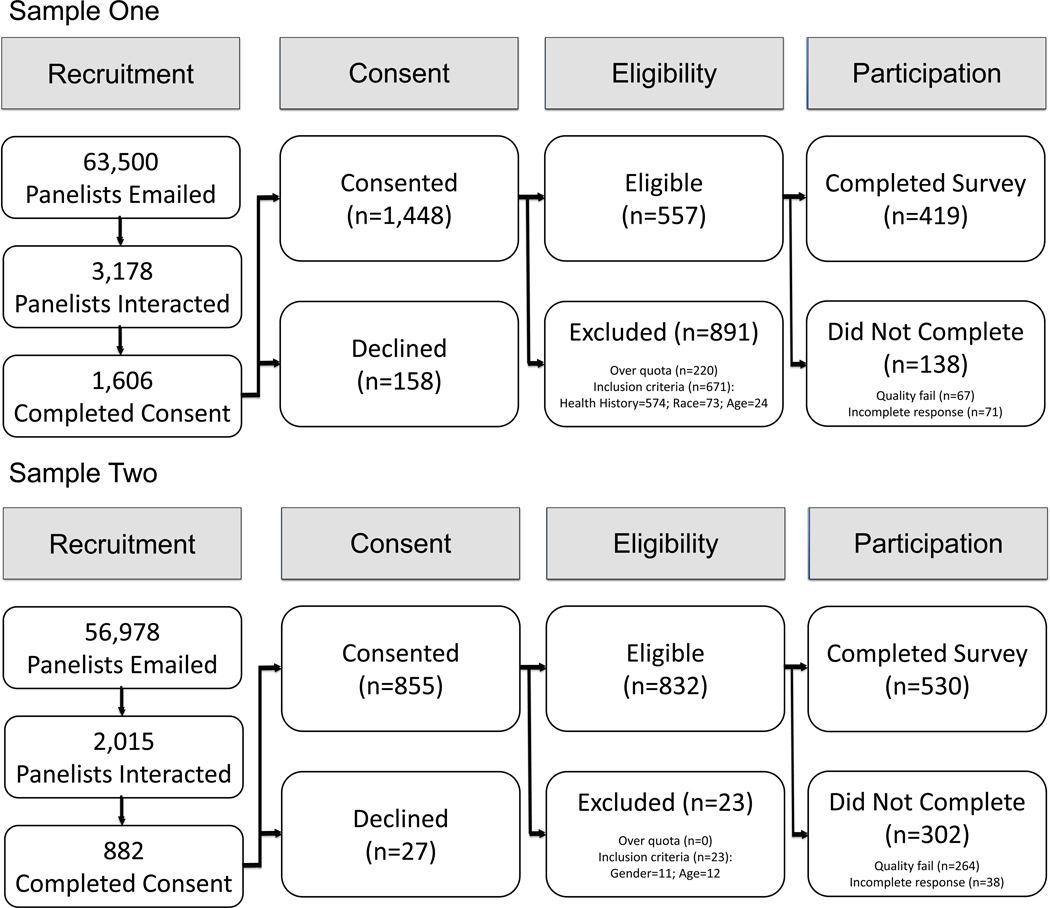

Survey recruitment and participant flow for each sample are depicted in Figure 1.

Figure 1. Depiction of recruitment and participation within each sample.

Sample One.

Based on target demographics, approximately 63,500 panelists were invited to participate in this survey by email and other methods (e.g., messaging through online portals, text message, and in-app advertisements). Of those contacted, 3,178 panelists interacted with the survey by opening the survey invitation and/or survey link and 1,606 completed the consent page. One hundred and fifty-eight panelists did not consent (9.8%). Of the panelists who consented, 671 did not meet eligibility criteria (46.3%), the majority of whom were ineligible due to health history (n=574 reported a history of colorectal cancer or other predisposing condition). Two hundred and twenty respondents were excluded due to quota sampling (15.2%). Seventy-one respondents did not complete the survey entirely (4.9%) and an additional 67 were removed from the study for failing an attention check (i.e., one of three survey items that required specific responses) (4.6%). A total of n=419 panelists completed the survey (75.2% of those who agreed to participate and were eligible).

The average age of respondents who completed the survey was 58.5 years (sd=6.3). The sample consisted of n=279 females (66.6%) and as requested, an equal proportion of non-Hispanic White/Caucasian, non-Hispanic Black/African American, and Hispanic/Latino/Spanish respondents (33% each). However, the a priori income quotas proposed for this study were not fully implemented. Due to difficulties acquiring participants who reported an annual household income of ≥100k, we elected to eliminate the income quota after two weeks of data collection. To acquire an adequate sample size to ensure statistical power for the parent study (i.e., n=400), we used natural probability sampling to obtain the remaining number of participants. Ultimately, income levels were within 5% of the proportions requested, except respondents with a reported income of ≥100k were 10.5% of the final sample instead of the 20% initially proposed.

Sample Two.

The survey for sample two was distributed via email to approximately 56,978 panelists based on target demographics. A total of 2,015 panelists interacted with the survey (i.e., clicked on the survey invitation and/or survey link) and 882 went on to complete the consent page. Three percent of these panelists did not consent (n=27). Among those who consented, 23 (2.7%) did not meet eligibility criteria (due to age and gender). No respondents were screened out due to being over quota. Thirty-eight respondents did not complete the survey entirely (4.4%) and an additional 264 were removed from the study for failing an attention check (i.e., one of three survey items that required specific responses) (30.9%). A total of n=530 panelists completed the survey (63.7% of those who consented and were eligible).

As intended, all respondents who completed the survey were female. On average, respondents were 33.9 years old (sd=7.9). Respondents were predominantly White/Caucasian (73.5%), 9.4% were Black/African American, 8.8% Hispanic, 5.0% Asian, 1.2% American Indian, and 2.1% were some other race/ethnicity. Thirty-nine percent of respondents were from the Southern US. Roughly one-quarter (24.5%) were residents of the Western region and the remaining respondents were from the Midwest (20.9%) and Northeast (15.6%).
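The participant flow reported for both samples can be reproduced with simple subtraction. The sketch below uses only counts stated in the text; the function name `participant_flow` and its field names are hypothetical, and "eligible" here means consented, meeting inclusion criteria, and not over quota.

```python
def participant_flow(consent_page, declined, ineligible, over_quota,
                     incomplete, failed_check):
    """Derive consented, eligible, and completed counts from reported exclusions."""
    consented = consent_page - declined
    eligible = consented - ineligible - over_quota
    completed = eligible - incomplete - failed_check
    return {
        "consented": consented,
        "eligible": eligible,
        "completed": completed,
        "completion_rate_pct": round(100 * completed / eligible, 1),
    }


sample_one = participant_flow(1606, 158, 671, 220, 71, 67)
sample_two = participant_flow(882, 27, 23, 0, 38, 264)
print(sample_one)
print(sample_two)
```

The derived completer counts and completion rates match the reported values (419 and 75.2% for sample one; 530 and 63.7% for sample two).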

Conclusions

This study described the samples resulting from two online surveys that recruited participants using quota sampling through the same commercial research panel. A thorough description of how quota sampling was used to obtain targeted samples – one racially and economically diverse sample of older adults and another geographically dispersed sample of younger adult women – via an online panel was provided. Survey recruitment and participant flow within each sample were examined. Taken together, results provide context and considerations for future cancer researchers – and researchers in general – contemplating the use of commercially administered, online research surveys.

The level of transparency regarding recruitment and participant flow reported in this study (e.g., number of panelists contacted, number of panelists that interacted with the survey, analysis of over quota exclusions, etc.) is greater than that typically reported in other recent studies using online research panels [20, 21]. The information outlined indicates that commercial research platforms have access to large panels of research participants. In both cases, approximately 60,000 panelists were sent a survey invitation. About one-half (50.5% and 43.8% in samples one and two, respectively) of those who interacted with the survey invitation ultimately completed the consent page of the survey. Although the traditional calculation of response for each of these samples was very low (i.e., 3% to 7% of panelists interacted with the survey), these results are consistent with prior research examining response across multiple panel vendors [22] but lower than another Qualtrics panel study reporting an 18.7% response rate [10]. However, among those who consented and were eligible for participation, most completed the survey (75.2% in sample one and 63.7% in sample two). For internet-derived samples, this ‘completion rate’ (i.e., the proportion of survey completers out of all eligible respondents who initiate the survey) is frequently reported [7, 9, 23]. Our completion rates compare favorably to the typical response rates of epidemiologic studies, which have been declining for the past several decades [24]. A review of case-control cancer studies conducted in 2001–2010 revealed median response rates of 75.6% for cases, 78.0% for medical source controls, and 53.0% for population controls [25]. The median response rate of the 2017 Behavioral Risk Factor Surveillance System (BRFSS) survey was approximately 45% [26].
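The two recruitment metrics discussed in this paragraph differ only in their denominators, which a short calculation makes explicit. The sketch below uses the approximate invitation counts and the interaction and consent-page counts reported in the Results; the metric labels (`interaction_rate_pct`, `consent_page_rate_pct`) are hypothetical names chosen for illustration.

```python
def pct(numerator, denominator):
    """Percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)


# Counts as reported in the Results (invitation counts are approximate).
samples = {
    "sample one": {"invited": 63500, "interacted": 3178, "consent_page": 1606},
    "sample two": {"invited": 56978, "interacted": 2015, "consent_page": 882},
}

metrics = {
    name: {
        # Share of invited panelists who opened the invitation or survey link.
        "interaction_rate_pct": pct(c["interacted"], c["invited"]),
        # Share of interacting panelists who completed the consent page.
        "consent_page_rate_pct": pct(c["consent_page"], c["interacted"]),
    }
    for name, c in samples.items()
}
print(metrics)
```

Computed this way, the interaction rates are about 5.0% and 3.5%, and the consent-page rates reproduce the 50.5% and 43.8% cited above.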

Study-specific inclusion criteria were the primary reason for ineligibility within both samples, including more than 500 consented panelists with a history of colorectal cancer excluded in sample one. This presence of colorectal cancer survivors suggests Qualtrics may be a potentially promising but overlooked platform for recruiting cancer survivors. Additional panelists in the first survey were screened out due to quota sampling (n=220), while no panelists were excluded from survey two for being over quota. This difference is not entirely surprising given that no specific limits were set for the quotas on geographic regions in sample two. That the desired geographic variation was achieved without defining precise proportions represents an important consideration for future study designs as having less stringent quotas could save time (faster sample acquisition), reduce expenses (complex quotas increase sample cost), and potentially, reduce selection bias (by retaining otherwise eligible respondents).

Despite initially contacting higher numbers of potential participants relative to traditional methods, online panelists meeting specific demographic criteria can be effectively targeted based on information contained in panelists’ profiles. There were few exclusions based on sociodemographic characteristics. Survey completers in both studies were consistent with the study inclusion criteria and set quotas (except for the highest income level). Therefore, it may be difficult to acquire more affluent participants. It should also be noted that sample one (with no inclusion or sampling criteria related to gender) had a relatively high proportion of female respondents (66.6%) while sample two (with no race/ethnicity quota) yielded a predominantly White/Caucasian sample. These results support the use of quotas when demographic characteristics are germane to study aims. For example, cancer researchers may use quotas in case-control studies to identify controls that match on specific criteria (e.g., smoking history). However, large panels would be needed to target exposures with low prevalence. Researchers should carefully weigh the benefits and potential drawbacks of using quota sampling.

Several additional practical implications can be gleaned from the examination of these online surveys. First, researchers received more completed survey responses than were purchased (19 and 30 additional responses in samples one and two, respectively) and samples were acquired more quickly than is typical of traditional samples. Although both samples were acquired in a short period of time, it should be noted that sample one took three times as long as sample two, likely due to the older target population. Second, the proportion of eligible respondents who did not complete the survey was relatively low in both samples, but lower in the sample of younger adult women (i.e., 4.6% in sample two vs. 12.7% in sample one). Therefore, forced item response may not be necessary to promote complete data and may contribute to the higher incomplete rate observed in sample one. In addition, only one “speeder” (i.e., respondent with a total survey duration of ≤ one-third of the median duration of survey) was identified. Thus, researchers should be encouraged to use additional quality checks, such as attention checks, to safeguard against cheaters (i.e., respondents who rush through the survey and threaten data quality). In this analysis, the younger respondents in sample two were more likely than older respondents (sample one) to fail attention checks (31.7% and 12.0%, respectively). This finding may suggest that older respondents are more conscientious, or alternatively, the forced response of items in sample one may underlie the difference.
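The incomplete and attention-check percentages in this paragraph use eligible respondents as the denominator, whereas the Results report the same counts as a share of all consenting panelists. The sketch below shows both calculations side by side; the consented and eligible totals (1,448 and 557; 855 and 832) are derived here from the reported exclusions rather than stated directly in the text, and the helper name `rates` is hypothetical.

```python
counts = {
    "sample one": {"consented": 1448, "eligible": 557,
                   "incomplete": 71, "failed_attention": 67},
    "sample two": {"consented": 855, "eligible": 832,
                   "incomplete": 38, "failed_attention": 264},
}


def rates(c, denominator_key):
    """Incomplete and attention-fail percentages for the chosen denominator."""
    d = c[denominator_key]
    return {
        "incomplete_pct": round(100 * c["incomplete"] / d, 1),
        "failed_attention_pct": round(100 * c["failed_attention"] / d, 1),
    }


by_consented = {name: rates(c, "consented") for name, c in counts.items()}
by_eligible = {name: rates(c, "eligible") for name, c in counts.items()}
print(by_consented)
print(by_eligible)
```

The difference is largest for sample one, where many consenting panelists were screened out: 71 incomplete responses are 4.9% of consenting panelists but 12.7% of eligible respondents.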

The present study highlights the relative ease of obtaining diverse samples (i.e., one racially and economically diverse and the other geographically dispersed) via quota sampling and online recruitment methods. Implementing sampling quotas for race/ethnicity represents a major advantage over traditional sampling methods that often consist of predominantly White/Caucasian participants [27–29]. Researchers who seek racial/ethnic diversity should utilize available representative samples whenever possible. When access to minorities is limited, however, online panel sampling using quota sampling for race may be a valuable approach for reaching minority participants, as demonstrated in sample one and other panel samples [30, 31].

Finally, this study is one of the first to examine in-depth how studies using online panels function in terms of sampling, initial recruiting, and participation. Our results provide practical insights for cancer researchers – and researchers in general – to be cognizant of when designing future online surveys and recruitment strategies. In order to optimize novel and innovative strategies, researchers should carefully consider the strengths and drawbacks of online survey features including quota sampling and forced response. Our goal in documenting the methodologic aspects of recruitment and participation from two different study populations from online panels was to help build transparency in reporting online panel samples, as well as to provide a basis for comparison across different commercially available research panels. Like many other studies conducted using online panels, we were unable to fully ascertain how the panel was created or describe those who did not interact with the survey. According to Qualtrics [18], additional information related to these panelists (e.g., number of invalid emails and non-respondent characteristics) is not currently tracked. Another limitation of using commercial participant panels is that their overall size and the demographics of the underlying user populations are dynamic and often not available. Future studies should be proactive in negotiating additional information and addressing unknowns in panel recruitment procedures. In doing so, researchers could raise the bar on the standards of information provided by suppliers of commercial panels. These are important steps towards strengthening survey methodologies in the rapidly changing landscape of “citizen science” where the public actively engages in participatory research projects, such as online panels and scientific registries.

In summary, online research panels and quota sampling techniques provide new opportunities for the acquisition of traditionally underrepresented individuals or participants who meet narrowly specific inclusion criteria – an advantage over traditional sampling methods. Our results support leveraging online panels for cancer research. Future epidemiologic research using these methods to perform recruitment of targeted populations (e.g., cancer survivors (cases) and individuals (controls) residing in specific geographic areas, such as colorectal cancer “hotspots”) could alleviate the need for time- and cost-intensive methods such as mail-based and in-person correspondence. In comparison, participant incentives and administrative costs for web-based surveys are substantially lower [32]. In conclusion, the use of panel participants could be leveraged to reach specific population groups, maximize limited research budgets, and therefore, enable novel cancer research focused on health disparities and cancer communication that is currently not feasible in either traditional small intervention or large population studies.

Acknowledgments

Financial support was provided in part to the corresponding author (CAM) by a Graduate Training in Disparities Research award GTDR14302086 from Susan G. Komen® and a National Cancer Institute T32 award (2T32CA093423).

Footnotes

Conflict of interest statement:

The authors declare no potential conflicts of interest.

References

- 1. Dillman DA, Smyth JD, and Christian LM, Internet, phone, mail, and mixed-mode surveys: the tailored design method. 2014: John Wiley & Sons.

- 2. Van Selm M and Jankowski NW, Conducting online surveys. Quality & Quantity, 2006. 40(3): p. 435–456.

- 3. Wright KB, Researching Internet-based populations: Advantages and disadvantages of online survey research, online questionnaire authoring software packages, and web survey services. Journal of Computer-Mediated Communication, 2005. 10(3): p. JCMC1034.

- 4. Andrews D, Nonnecke B, and Preece J, Electronic survey methodology: A case study in reaching hard-to-involve Internet users. International Journal of Human-Computer Interaction, 2003. 16(2): p. 185–210.

- 5. Link MW, et al., Mobile technologies for conducting, augmenting and potentially replacing surveys: Executive summary of the AAPOR task force on emerging technologies in public opinion research. 2014. 78(4): p. 779–787.

- 6. Lee YJ, Arida JA, and Donovan HS, The application of crowdsourcing approaches to cancer research: a systematic review. Cancer Medicine, 2017. 6(11): p. 2595–2605.

- 7. Arch JJ and Carr AL, Using Mechanical Turk for research on cancer survivors. Psycho-Oncology, 2017. 26(10): p. 1593–1603.

- 8. Lipkus IM, et al., Reactions to online colorectal cancer risk estimates among a nationally representative sample of adults who have never been screened. Journal of Behavioral Medicine, 2018. 41(3): p. 289–298.

- 9. Porter RM, et al., Cancer-salient messaging for Human Papillomavirus vaccine uptake: A randomized controlled trial. 2018. 36(18): p. 2494–2500.

- 10. Cataldo JK, High-risk older smokers’ perceptions, attitudes, and beliefs about lung cancer screening. Cancer Medicine, 2016. 5(4): p. 753–759.

- 11. Guo X, et al., Volunteer participation in the Health eHeart Study: A comparison with the US population. Scientific Reports, 2017. 7(1): p. 1956.

- 12. Farrell D and Petersen JC, The growth of internet research methods and the reluctant sociologist. Sociological Inquiry, 2010. 80(1): p. 114–125.

- 13. Heen M, Lieberman JD, and Miethe TD, A comparison of different online sampling approaches for generating national samples. Center for Crime Justice Policy, 2014. 1: p. 1–8.

- 14. Paolacci G, Chandler J, and Ipeirotis PG, Running experiments on Amazon Mechanical Turk. Judgment Decision Making, 2010. 5(5): p. 411–419.

- 15. Rouse SV, A reliability analysis of Mechanical Turk data. Computers in Human Behavior, 2015. 43: p. 304–307.

- 16. Callegaro M, et al., Online panel research: A data quality perspective. 2014: John Wiley & Sons.

- 17. Baker R, et al., Research synthesis: AAPOR report on online panels. Public Opinion Quarterly, 2010. 74(4): p. 711–781.

- 18. Taylor A, Personal Communication. October 7, 2019.

- 19. Guidry J, Designing Effective Messages to Promote Future Zika Vaccine Uptake. 2017.

- 20. Dunwoody PT and McFarland SG, Support for anti-Muslim policies: The role of political traits and threat perception. Political Psychology, 2018. 39(1): p. 89–106.

- 21. Guidry JP, et al., Framing and visual type: Effect on future Zika vaccine uptake intent. Journal of Public Health Research, 2018. 7(1).

- 22. Craig BM, et al., Comparison of US panel vendors for online surveys. 2013. 15(11): p. e260.

- 23. Callegaro M and DiSogra C, Computing response metrics for online panels. Public Opinion Quarterly, 2008. 72(5): p. 1008–1032.

- 24. Galea S and Tracy M, Participation rates in epidemiologic studies. Annals of Epidemiology, 2007. 17(9): p. 643–653.

- 25. Xu M, et al., Response rates in case-control studies of cancer by era of fieldwork and by characteristics of study design. 2018. 28(6): p. 385–391.

- 26. Behavioral Risk Factor Surveillance System (BRFSS), 2017 Summary Data Quality Report. 2018, Centers for Disease Control and Prevention: Atlanta, GA.

- 27. Lipkus IM, et al., Reactions to online colorectal cancer risk estimates among a nationally representative sample of adults who have never been screened. Journal of Behavioral Medicine, 2018. 41(3): p. 289–298.

- 28. Molisani AJ, Evaluation and Comparison of Theoretical Models’ Abilities to Explain and Predict Colorectal Cancer Screening Behaviors. 2015.

- 29. Ferrer RA, et al., An affective booster moderates the effect of gain- and loss-framed messages on behavioral intentions for colorectal cancer screening. Journal of Behavioral Medicine, 2012. 35(4): p. 452–461.

- 30. Umbach PD, Web surveys: Best practices. New Directions for Institutional Research, 2004. 121: p. 23–38.

- 31. Hovick SR, et al., User perceptions and reactions to an online cancer risk assessment tool: a process evaluation of Cancer Risk Check. Journal of Cancer Education, 2017. 32(1): p. 141–147.

- 32. Greenlaw C and Brown-Welty S, A comparison of web-based and paper-based survey methods: testing assumptions of survey mode and response cost. Evaluation Review, 2009. 33(5): p. 464–480.