Abstract

Purpose:

Recent studies have demonstrated the ability to rapidly produce large field of view X-ray diffraction images, which provide rich new data relevant to the understanding and analysis of disease. However, work on algorithms that maximize performance on decision-making and diagnostic tasks has only just begun. In this study, we implement and compare rules-based and machine learning classifiers on X-ray diffraction images of medically relevant phantoms to explore the potential for improved classification performance.

Methods:

Medically relevant phantoms were used to provide well-characterized ground truths for comparing classifier performance. Water and polylactic acid (PLA) plastic served as surrogates for cancerous and healthy tissue, respectively, and phantoms were created with varying levels of spatial complexity and biologically relevant features for quantitative testing of classifier performance. Our previously developed X-ray scanner was used to acquire co-registered X-ray transmission and diffraction images of the phantoms. We compared two rules-based classifiers (cross-correlation, or matched filtering, and linear least-squares unmixing) and two machine learning classifiers (support vector machines and shallow neural networks). Reference X-ray diffraction spectra (measured by a commercial diffractometer) were provided to the rules-based algorithms, while 60% of the measured X-ray diffraction pixels were used to train the machine learning algorithms. The area under the receiver operating characteristic curve (AUC) was used to compare the classification algorithms, along with the accuracy of each classifier at its midpoint threshold.

Results:

The AUC values for material classification were 0.994 (cross-correlation, CC), 0.994 (least-squares, LS), 0.995 (support vector machine, SVM), and 0.999 (shallow neural network, SNN). Setting the classification threshold to the midpoint for each classifier resulted in accuracies of CC=96.48%, LS=96.48%, SVM=97.36%, and SNN=98.94%. When considering only pixels within ±3 mm of water-PLA boundaries (where partial volume effects can occur due to imaging resolution limits), the classification accuracies were CC=89.32%, LS=89.32%, SVM=92.03%, and SNN=96.79%. This demonstrates an even larger improvement from the machine learning algorithms in spatial regions critical for imaging tasks. Classification using transmission data alone produced an AUC of 0.773 and an accuracy of 85.45%, well below the performance of any of the classifiers applied to X-ray diffraction image data.

Conclusions:

We demonstrated that machine learning-based classifiers outperformed rules-based approaches in terms of overall classification accuracy and improved the spatially resolved classification performance on X-ray diffraction images of medical phantoms. In particular, the machine learning algorithms demonstrated considerably improved performance whenever multiple materials existed in a single voxel. The quantitative performance gains demonstrate an avenue to extract and harness X-ray diffraction imaging data to improve material analysis for research, industrial, and clinical applications.

Keywords: X-ray diffraction imaging, machine learning, medical phantoms, multimodal imaging, X-ray contrast, coded aperture

Introduction

During the onset of various types of cancer, changes occur at the cellular level that affect structures including collagen, extracellular matrices, and bone-mineral compositions1,2. X-ray diffraction (XRD) is a technology capable of detecting these changes by measuring the interference patterns of X-rays scattered by molecular-scale structures3–6. While prior XRD measurement and analysis methods have proven useful for studying diseases7–10, they have typically been limited to analyzing only a few discrete locations at the surface of a prepared sample or specimen.

XRD imaging approaches have been developed that seek to circumvent sample preparation, which is often destructive to samples and their spatial correlations, while enabling faster measurements with larger fields of view11–15. These imaging methods include energy-dispersive and angular-dispersive raster scanning measurements, coherent scattering computed tomography, and coded aperture systems. They seek to measure the XRD signatures of materials throughout a large field of view within reasonable scan times while maintaining adequate spatial resolution for imaging applications. While simulation studies are often conducted to design and optimize these novel imaging architectures16–19, experimental validations on biologically relevant phantoms20–23 and real biospecimens3,6,8,13,15,24 have also been performed. These results have shown that tissues can be differentiated by spatially resolved XRD measurements. However, the resulting rich spatio-spectral datasets require automated data processing and classification techniques to parse the large data volume.

Conventional XRD classifiers depend on matching scatter peak locations or correlating measured spectra with databases, but more advanced algorithms have the potential to outperform these simpler methods25. Studies have implemented multivariate analysis (e.g., principal component analysis) for tissue classification26. More advanced algorithms, particularly convolutional neural networks (CNNs) and their variants, have been applied extensively to crystalline XRD data27–31. Beyond algorithms focused on XRD spectra, methods have also been developed to classify materials from their raw 2D scatter measurements32–36. In contrast, our coded aperture XRD imaging systems generate large amounts of data in the form of XRD images that contain a full XRD spectrum within each voxel of an object. Our previous success in developing CNN-based classification algorithms37, combined with the many other approaches discussed above, indicates that machine learning (ML) classifiers offer advantages over rules-based classifiers when applied to XRD data.

In this study, we compare classifier performance using medical XRD phantoms with different geometries that are intended to model cancerous and adipose breast tissue. These phantoms provide well-characterized ground truths that allow us to investigate the performance of multiple classification algorithms operating on data-rich XRD images. Our previously developed X-ray fan beam coded aperture imaging system15,19 is used to obtain XRD images that are fed into the classification algorithms for analysis. For all phantoms, four classification algorithms are compared: cross-correlation (CC), least-squares (LS) unmixing, support vector machines (SVM), and shallow neural networks (SNN).

Materials & Methods

Although the medical phantoms and material discrimination approaches discussed in this study are agnostic to XRD imaging architecture, we briefly describe the operation of our imaging system for the sake of completeness. After presenting the imaging system, we discuss our designed medical phantoms for XRD imaging and their purposes. Lastly, we outline the classification algorithms explored in this study for comparing rules-based and ML-based algorithms.

X-ray Fan Beam Coded Aperture Imaging System Data Acquisition

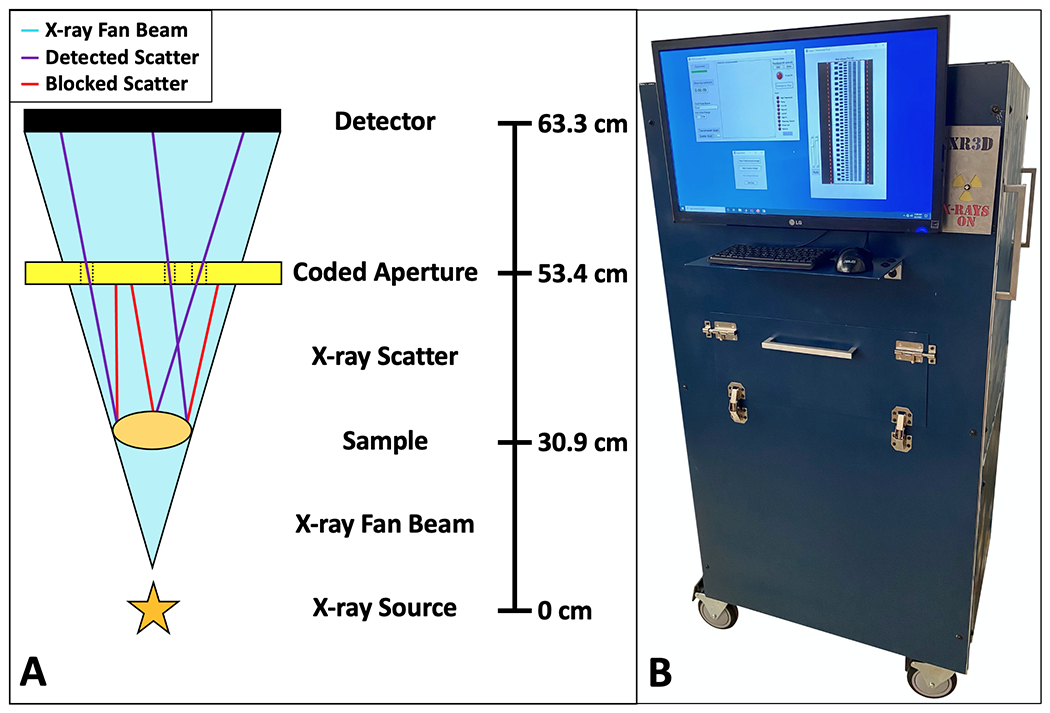

Our previously developed X-ray fan beam coded aperture imaging system15,19 allows for reduced measurement time by making multiplexed measurements of an object’s XRD data. The fan beam measures a 150×1 mm² region through a single step-and-shoot exposure, while the coded aperture and a physics-based forward model of the system enable us to determine the origin of scattered X-rays along the fan beam extent38–40.

This X-ray imaging system was used to record the co-registered X-ray transmission and diffraction images used for training and testing in this study. As shown in Fig. 1 and discussed in depth in prior work15,19, the system consists of a spectrally-filtered X-ray tube (XRB Monoblock, Spellman HV, NY) that is spatially collimated to produce a fan beam at the sample plane. The sample is illuminated by the primary fan beam, and both the transmitted and XRD signals are measured at the detector (Xineos-2329, Teledyne Dalsa, CA). The system (Fig. 1B) is capable of transmission and XRD imaging of a 15×15 cm² field of view within minutes. This rapid imaging makes it possible to generate large datasets that can be used in ML algorithm development.

Fig 1.

The X-ray fan beam coded aperture transmission and diffraction imaging system schematic and prototype. A) Schematic of the X-ray fan beam coded aperture transmission and diffraction system, where a collimated X-ray source produces a fan beam that illuminates a sample while signal is acquired at the detector. Scatter produced by the sample passes through the 2D coded aperture and is encoded prior to its measurement at the detector (purple = encoded scatter reaching the detector, red = scatter blocked by the coded aperture, teal = primary fan beam) (online version only). Scatter data are acquired sequentially after transmission scans. Denoted distances are from the X-ray source focal spot to the X-ray entrance of each object. B) Photo of the constructed X-ray fan beam coded aperture imaging system, including a built-in monitor, computer, and lead shielding for operation, along with GUI apps for data acquisition and display.

During transmission imaging, the system operates the X-ray generator at 80 kVp and 6 mA with 100 ms exposures per fan slice. Once the system has acquired fan beam data over the full extent of the scanned object, all slices are stitched together (adjacent fan acquisitions overlap by 50 μm, which is corrected in post-processing). Outer air scans are then used to display the result as the logarithm of transmitted intensity, as is standard for radiographs. The system has a transmission spatial resolution of ≈0.6 mm², which is limited primarily by the X-ray source focal spot size. After acquiring the X-ray transmission data for each phantom, we acquire XRD data in a similar manner, with the X-ray generator operated at 160 kVp and 3 mA with 15 s exposures per fan slice. The XRD spectrum at each pixel in the object is determined from the raw scatter data using a post-acquisition reconstruction algorithm38,40,41. With this approach, the current system’s XRD spatial resolution is ≈1.4 mm² and its momentum transfer (q) resolution15 is 0.01 Å⁻¹. For this study, we consider only geometrically thin (i.e., 5–10 mm) samples and focus on phantoms relevant to imaging biospecimens. In this study, q is defined as

$$q = \frac{E}{hc}\sin\left(\frac{\theta}{2}\right),$$

where h is Planck’s constant, c is the speed of light, E is the X-ray energy, and θ is the scattering angle.
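The Python sketch below is a quick numerical check of this definition. It converts between photon energy, scattering angle, and q. It assumes that θ is the full scattering angle in degrees, that energies are in keV, and that hc ≈ 12.398 keV·Å. The 60 keV, 4° case is a hypothetical illustration and is not a measured operating point.

```python
import math

# hc in keV·Å (h = Planck's constant, c = speed of light)
HC_KEV_ANGSTROM = 12.398419843


def momentum_transfer(energy_kev: float, theta_deg: float) -> float:
    """q = (E / hc) * sin(theta / 2) in 1/Å; theta_deg is the full scattering angle."""
    return (energy_kev / HC_KEV_ANGSTROM) * math.sin(math.radians(theta_deg) / 2.0)


def scattering_angle(energy_kev: float, q_inv_angstrom: float) -> float:
    """Invert the definition: full scattering angle (degrees) for a given q and E."""
    s = q_inv_angstrom * HC_KEV_ANGSTROM / energy_kev
    if not 0.0 <= s <= 1.0:
        raise ValueError("this q is not reachable at this energy")
    return math.degrees(2.0 * math.asin(s))


# Hypothetical example: a 60 keV photon scattered through 4 degrees
q_example = momentum_transfer(60.0, 4.0)
print(f"q = {q_example:.4f} 1/Å")  # q = 0.1689 1/Å

# Scattering angles that span the study's 0.09 to 0.3 1/Å range at 60 keV
for q_edge in (0.09, 0.3):
    print(q_edge, round(scattering_angle(60.0, q_edge), 2))
```

At 60 keV, the 0.09 to 0.3 Å⁻¹ range corresponds to scattering angles of only about 2° to 7°. This illustrates that the signal of interest is small-angle forward scatter.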

Designed Phantoms for Quantifying Material Classification Performance

To test the performance of classification algorithms on XRD image data, it is important to know the correct ground-truth labels of the materials within each measured pixel. However, human tissues can have complex variations in their XRD spectra due to differences in patient genetics and mixing of tissue types within measured pixels. In addition, there are currently no standard methods for performing large field of view (≥10×10 cm²) XRD imaging. It is therefore prudent for empirical investigations of classification algorithms to start with manufactured phantoms that have known materials in all locations. Such phantoms allow a direct, quantitative comparison between the perfectly known ground truth and the recovered XRD spectra at each location within the phantom.

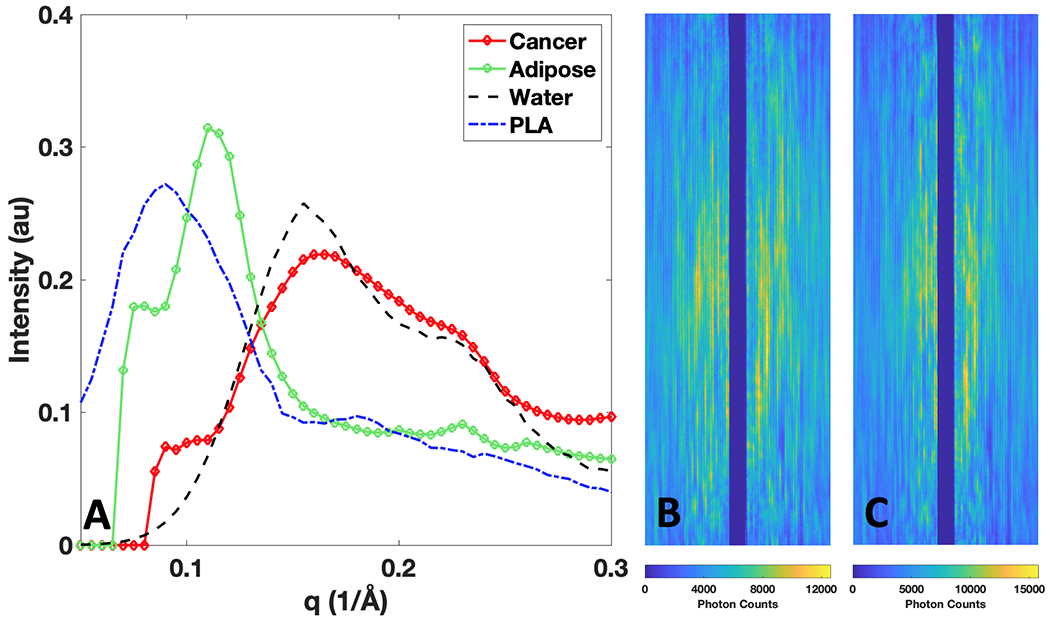

It is important to design phantoms that are consistent with the intended application. Here we focus on the ability of XRD imaging to correctly classify cancerous and benign tissues based on their XRD spectra. Therefore, water and polylactic acid (PLA) plastic were identified as viable simulants for cancer42 and adipose (fat)43–45 tissue, respectively. Fig. 2A shows the XRD spectra of breast cancer and adipose tissue1, along with XRD spectra of water and PLA that we measured with a commercial diffractometer (D2 Phaser, Bruker, MA). Adipose is typically the most prevalent breast tissue by volume. The average XRD spectra for adipose and breast cancer reported in the literature1 show that adipose and PLA have narrower, higher-intensity peaks at lower q values, while breast cancer and water have broader, lower-intensity peaks spread out at higher q values. For this reason, water and PLA were selected as simulants: within the XRD domain, water’s relation to PLA is comparable to breast cancer’s relation to adipose. This choice of phantom materials agrees with previous XRD studies46,47. Additionally, water and PLA have densities similar to those of cancer and adipose (≈1 g/cm³), producing sufficiently similar scatter intensities.

Fig 2.

X-ray diffraction (XRD) spectra (in intensity vs momentum transfer (q) units) of breast tissues and simulant materials, along with raw 2D scatter measurements taken by the X-ray fan beam coded aperture system for water and PLA. A) XRD spectra of breast cancer and adipose1, along with their simulants, water and PLA. B) Raw X-ray fan beam coded aperture measurement of water’s scattered X-rays along the whole fan extent. C) Raw X-ray fan beam coded aperture measurement of PLA’s scattered X-rays along the whole fan extent.

It is worth noting that, while we focused on water and PLA in this study, this approach can be generalized to other materials (e.g., using other 3D-printed materials and/or soft materials2,22 to fill the empty space). In addition, more than two materials can be used while still maintaining perfect spatial and material labeling at each pixel of the object.

To visualize the raw scatter measurements of these simulants, Fig. 2B and 2C show the encoded scatter X-ray measurements of water and PLA, acquired when each material occupied the full extent of the fan beam (i.e., for the phantom shown in Fig. 3A). Water’s higher mean q value leads to a wider distribution of coherently scattered X-rays along the horizontal axis in Fig. 2B. PLA’s lower mean q value generates a narrower distribution perpendicular to the fan beam extent. PLA’s higher peak intensity is reflected in its maximum of ≈15,000 photon counts within a detector pixel, compared with ≈12,000 counts for water. This is expected given PLA’s density of 1.24 g/cm³ relative to water’s density of 1 g/cm³.

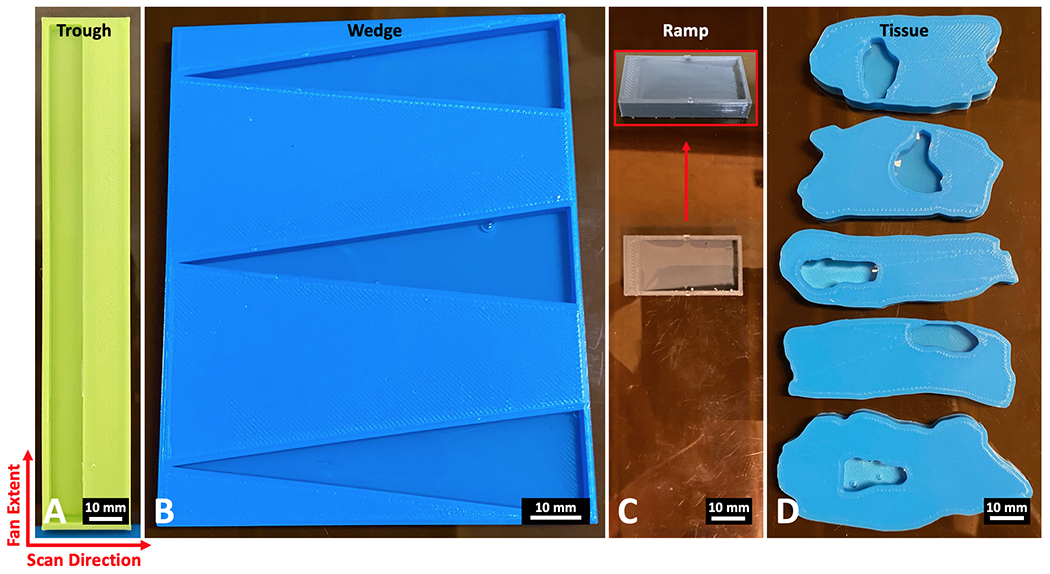

Fig 3.

Phantoms composed of water and PLA plastic (6 mm thick) for testing material classification with the X-ray fan beam coded aperture imaging system. A) A trough phantom containing rectangular regions of water and PLA. B) A wedge phantom with three triangular water wells. C) A ramp phantom that transitions from 6 mm PLA (left side) to 5.5 mm water (right side) via a ramp that changes the ratio of the materials through the axial dimension. D) Tissue phantoms modeled after real lumpectomy tissue slices, with water wells placed at the locations of the tumors within the real samples. For imaging, the fan extends along the vertical axis of these images, and scanning occurs along the horizontal axis (together forming the transverse plane). The axis through the thickness of the phantoms represents the axial dimension.

With the simulant materials selected, we next designed phantoms (6 mm thick) with specific spatial-material configurations to test different aspects of the system’s material classification performance (see Fig. 3). A trough phantom was designed with a 150×10×5.5 mm³ well for holding water on the left side of a 154×24×6 mm³ block of PLA (see Fig. 3A). This object allows testing of measurement and classification performance when a single material primarily occupies the full extent of the X-ray fan beam during a measurement. It can also be used to check the quality and uniformity of the measured XRD spectra across fan beam locations and over time. The wedge phantom shown in Fig. 3B was designed as a more complex test. In this phantom, the width of water along the X-ray fan beam extent grows from a point to 20 mm across an 80 mm extent, with wells uniformly 5.5 mm deep. Because it contains a continuum of water widths, this object reveals the resolution for detecting water embedded within PLA. Three wells were created within the wedge phantom (total phantom volume of 102×82×6 mm³) as an additional check of water detection resolution in the top, middle, and bottom regions of the X-ray fan beam extent. The ramp phantom shown in Fig. 3C allows testing of material classification performance as the amount of each material varies through the thickness of the object (analogous to how tissue composition can vary within a 6 mm thick region). The overall volume of this phantom is 17×33×6 mm³. On the left side of the ramp phantom is a 3 mm wide ledge of 6 mm thick PLA, which ramps down across a 25 mm width to a 3 mm wide ledge of 0.5 mm thick PLA. Water covers this ramp, creating regions that vary from majority PLA to majority water through the phantom thickness.

The final phantoms, shown in Fig. 3D, are tissue phantoms modeled after real malignant breast lumpectomy slices (based on digital slides provided for modeling by Duke Hospital Surgical Pathology, as shown in supplemental Fig. S1). These phantoms represent a biologically relevant test: the spatial distribution of PLA represents healthy tissue, while the water wells (all 5.5 mm deep) represent the locations of malignant tumors. Unlike the previous phantoms, the tissue phantoms are not composed of rectangles or triangles. Instead, they have curved boundaries between air, PLA, and water, providing a more biologically realistic spatial distribution of simulant materials. This final set of phantoms seeks to characterize performance on the specific task of classifying cancerous and healthy tissue in excised breast lumpectomy specimens. These phantoms simplify the real biological scenario. Tissue heterogeneity through a 6 mm slice would in fact cause mixtures along the thickness of the scanned material, with fibroglandular and other tissue types present. However, the phantoms are a reasonable approximation for the task of identifying primary tumors a few millimeters in diameter. Scans of the trough, wedge, ramp, and tissue phantoms covered planar areas of 150×33 mm², 150×89 mm², 150×41 mm², and 150×66 mm², with total scan times of 8.25, 22.25, 10.25, and 16.5 minutes, respectively. Having presented these phantoms, we next outline the classification algorithms under consideration.
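The reported scan times appear consistent with one 15 s XRD exposure for each 1 mm fan-beam step along the scan direction. This is our inference from the 150×1 mm² fan footprint and the 15 s XRD exposures stated earlier; the text does not say it. The calculation ignores the 100 ms transmission exposures and any stage-motion overhead. The sketch below reproduces the four reported times under that assumption.

```python
import math

# Inferred, not stated in the text: one XRD exposure per 1 mm fan-beam step
XRD_EXPOSURE_S = 15.0
STEP_MM = 1.0


def xrd_scan_minutes(scan_length_mm, step_mm=STEP_MM, exposure_s=XRD_EXPOSURE_S):
    """Total XRD exposure time (minutes) for a step-and-shoot scan."""
    n_steps = math.ceil(scan_length_mm / step_mm)
    return n_steps * exposure_s / 60.0


# Scan-direction extents taken from the 150 x N mm^2 planar areas above
scan_lengths_mm = {"trough": 33, "wedge": 89, "ramp": 41, "tissue": 66}
for name, length_mm in scan_lengths_mm.items():
    print(f"{name}: {xrd_scan_minutes(length_mm)} min")
# trough: 8.25 min, wedge: 22.25 min, ramp: 10.25 min, tissue: 16.5 min
```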

Material Classification Algorithms

We considered both rules-based and ML-based approaches for classifying the measured XRD spectra. Rules-based approaches can take advantage of previously known differences, in the form of a database of XRD spectra that can be used for matched filtering48 or simple unmixing approaches49. ML approaches, by contrast, can learn additional relevant features50–52. Each XRD spectrum in this study is composed of 43 intensity values sampled over a q range of 0.09 to 0.3 Å⁻¹, providing a sufficient number of features for ML training. This study seeks to evaluate the performance of both approaches. Rules-based methods have well-established performance bounds and are straightforward to interpret given their non-learned nature. ML approaches have the potential for higher performance by overcoming noise and non-linearities, at the cost of requiring more training data and having less well understood behavior.

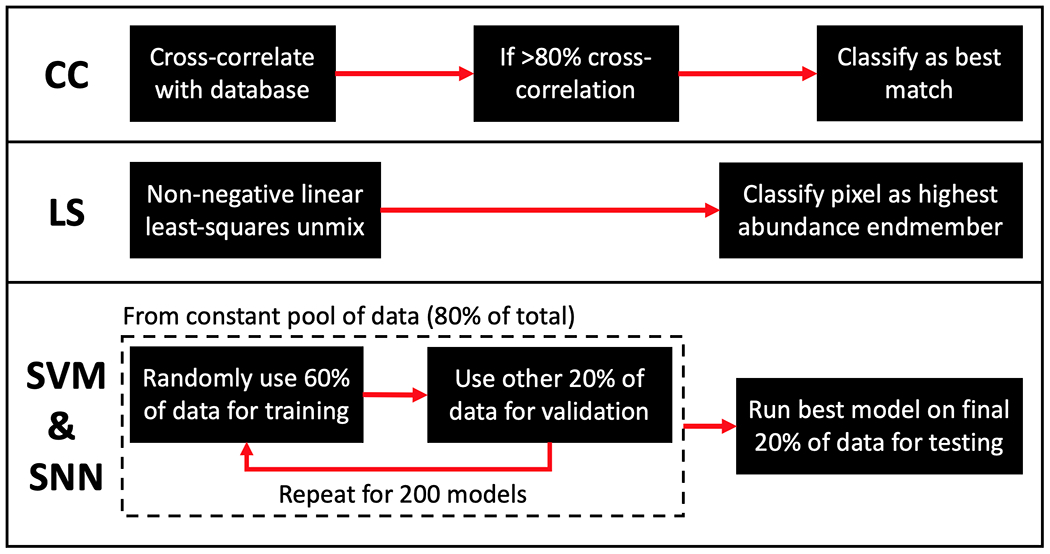

For rules-based approaches, the first method considered is CC of each measured XRD spectrum with the commercial diffractometer measurements of water and PLA. To remove slight variations in material density as a factor, all database and measured spectra are normalized so that their sum of squares equals 1 for all classifiers. For a spectrum x with intensities x_i, the normalization is

$$\hat{\mathbf{x}} = \frac{\mathbf{x}}{\sqrt{\sum_i x_i^{2}}}.$$

For CC, this normalization allows the inner product to serve as the match score, ranging from 0 (effectively orthogonal) to 1 (identical). Pixels are classified as the database material with which they show the greatest match. A threshold excludes measurements that do not belong to any member of the database: any pixel whose best CC match is below 80% is assigned to a null class. This null threshold is well above water’s 60% match with PLA. The null class was added to prevent poor matches from being classified as a database material. However, no measurements in this study fell into the null class, owing to the quality of the measurements and the fact that the phantoms contained only the two known materials.

Another rules-based approach explored in this study is linear LS unmixing. This method estimates the abundance of each material within a pixel by finding the mixture of database spectra that best matches the data. We use MATLAB’s “lsqnonneg()” function to perform non-negative linear LS unmixing based on a previously developed algorithm53. As with the CC approach, the reference database and measured spectra are normalized by their sum of squares. Each pixel is then classified as its highest-abundance material, for comparison with the other binary classification methods considered. Any exact 50:50 mixture is assigned to the cancer simulant, water.
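The two rules-based classifiers can be sketched in Python as follows. This is a minimal illustration under stated assumptions and is not the study’s MATLAB code. The reference spectra are hypothetical Gaussian peaks (a broad one standing in for water, a narrow lower-q one for PLA) on an assumed uniform 43-point q grid. `nnls_small` is a hypothetical helper that solves non-negative least squares exactly by enumerating active sets, which is practical only for a handful of endmembers. For general use, scipy.optimize.nnls is a counterpart to MATLAB’s lsqnonneg.

```python
import itertools

import numpy as np


def normalize(spectra):
    """Scale each spectrum (last axis) to unit sum of squares."""
    spectra = np.asarray(spectra, dtype=float)
    return spectra / np.linalg.norm(spectra, axis=-1, keepdims=True)


def cc_classify(measured, refs, null_threshold=0.8):
    """Matched filter: label = best-matching reference, -1 = null class."""
    scores = normalize(measured) @ normalize(refs).T  # (n_pixels, n_refs), in [0, 1]
    labels = np.argmax(scores, axis=1)
    labels[scores.max(axis=1) < null_threshold] = -1
    return labels, scores


def nnls_small(A, b):
    """Exact non-negative least squares by enumerating candidate supports.
    Hypothetical helper; cost grows as 2**k, so keep k (endmembers) small."""
    k = A.shape[1]
    best_x, best_res = np.zeros(k), np.linalg.norm(b)
    for r in range(1, k + 1):
        for support in itertools.combinations(range(k), r):
            cols = list(support)
            coef, *_ = np.linalg.lstsq(A[:, cols], b, rcond=None)
            if np.all(coef >= 0):  # keep only feasible (non-negative) fits
                x = np.zeros(k)
                x[cols] = coef
                res = np.linalg.norm(A @ x - b)
                if res < best_res:
                    best_x, best_res = x, res
    return best_x


def ls_classify(measured, refs, water=0):
    """Unmix each pixel; label by highest abundance, two-material ties -> water."""
    A = normalize(refs).T  # (n_q, n_refs)
    abund = np.array([nnls_small(A, b) for b in normalize(measured)])
    labels = np.argmax(abund, axis=1)
    labels[np.isclose(abund[:, 0], abund[:, 1])] = water
    return labels, abund


# Hypothetical references on an assumed uniform 43-point q grid (1/Å)
q = np.linspace(0.09, 0.3, 43)


def peak(center, width):
    return np.exp(-0.5 * ((q - center) / width) ** 2)


water_ref, pla_ref = peak(0.16, 0.04), peak(0.11, 0.015)  # broad vs narrow
refs = np.vstack([water_ref, pla_ref])  # index 0 = water, 1 = PLA
pixels = np.vstack([water_ref, pla_ref, 0.7 * water_ref + 0.3 * pla_ref])
cc_labels, cc_scores = cc_classify(pixels, refs)
ls_labels, abundances = ls_classify(pixels, refs)
print(cc_labels, ls_labels)
```

Enumerating supports gives the exact answer for two reasons. The non-negative least-squares optimum equals the unconstrained least-squares fit restricted to its own support. With two materials, there are only three candidate supports to check.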

For ML approaches, SVMs and SNNs were identified as suitable candidates for working with moderate amounts of training data52. When developing machine learning models, it is standard practice to divide the available data into three sets: a “training” dataset for fitting the model, a “validation” dataset for preventing overfitting, and a “test” dataset for quantifying performance. In this study, we use the common 60:20:20 split into training, validation, and test groups54 for the SVM and SNN. We therefore begin training the SVM and SNN models by randomly selecting 80% of the pixels, along with their ground-truth labels, as a training/validation pool. The remaining 20% are held out as an independent test set. To identify the ML models that generalized best for classifying water and PLA, 60% of the pixels (3/4 of the training/validation pool) were randomly selected for training. The remaining 20% (1/4 of the pool) were used for validation. This training and validation process was repeated for 200 SVM and SNN models on the same 80% pool. Pixels were randomly reshuffled between the training and validation groups each time to assess sensitivity to the split and to find the best observed generalization. The SVM and SNN models that performed best on the validation group, in terms of receiver operating characteristic (ROC) area under the curve (AUC), were saved for comparison between classifiers. Finally, the best SVM and SNN models were run on the test data (i.e., the 20% of pixels held out from the training/validation process) to quantify performance on a separate dataset. The schematic in Fig. 4 illustrates the classification approaches taken by the explored algorithms.
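The split-and-reselect procedure can be sketched in Python with NumPy. The study trained MATLAB SVM and SNN models. Here, `fit_placeholder` is a hypothetical stand-in that scores pixels by projecting them onto the difference of the class means. It lets the resampling logic and AUC-based model selection be shown without extra dependencies; in practice one would substitute an RBF-kernel SVM or a shallow network. The AUC uses the rank-sum (Mann-Whitney) formula, which gives tied scores half credit. The synthetic data are hypothetical.

```python
import numpy as np


def roc_auc(scores, labels):
    """ROC AUC via the rank-sum (Mann-Whitney) statistic; ties get half credit."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    order = np.argsort(scores, kind="mergesort")
    _, first, counts = np.unique(scores[order], return_index=True, return_counts=True)
    ranks = np.empty(scores.size)
    ranks[order] = np.repeat(first + (counts + 1) / 2.0, counts)  # 1-based average ranks
    n_pos, n_neg = labels.sum(), (~labels).sum()
    return (ranks[labels].sum() - n_pos * (n_pos + 1) / 2.0) / (n_pos * n_neg)


def split_pool(n_pixels, rng, test_frac=0.2):
    """Hold out a fixed test set; return (train/validation pool, test) indices."""
    perm = rng.permutation(n_pixels)
    n_test = int(round(test_frac * n_pixels))
    return perm[n_test:], perm[:n_test]


def resample_train_val(pool, rng, val_frac=0.25):
    """Reshuffle the pool into training (3/4) and validation (1/4) indices."""
    perm = rng.permutation(pool)
    n_val = int(round(val_frac * pool.size))
    return perm[n_val:], perm[:n_val]


def fit_placeholder(X, y):
    """Hypothetical stand-in classifier: project onto the class-mean difference."""
    w = X[y].mean(axis=0) - X[~y].mean(axis=0)

    def score(Z):
        return Z @ w

    return score


def select_best(X, y, n_repeats=200, seed=0):
    """Keep the model with the best validation AUC; report its held-out test AUC."""
    rng = np.random.default_rng(seed)
    pool, test = split_pool(len(y), rng)
    best_auc, best_model, val_aucs = -np.inf, None, []
    for _ in range(n_repeats):
        train, val = resample_train_val(pool, rng)
        model = fit_placeholder(X[train], y[train])
        auc = roc_auc(model(X[val]), y[val])
        val_aucs.append(auc)
        if auc > best_auc:
            best_auc, best_model = auc, model
    return best_model, np.array(val_aucs), roc_auc(best_model(X[test]), y[test])


# Hypothetical data: 1000 "pixels" x 43 q-bins, True = water (positive class)
rng = np.random.default_rng(1)
y = rng.random(1000) < 0.5
X = rng.normal(size=(1000, 43)) + 0.5 * y[:, None]
model, val_aucs, test_auc = select_best(X, y, n_repeats=20)
print(len(val_aucs), round(test_auc, 3))
```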

Fig 4.

Schematic of the algorithmic flow for the four classification methods explored. CC) The deterministic matched-filter approach taken by the cross-correlation method. LS) The classification method of the non-negative linear least-squares approach using known endmember XRD spectra. SVM & SNN) The training approach for the support vector machine and shallow neural network classifiers.

The SVM models were trained with MATLAB’s “fitcsvm()” function, which uses sequential minimal optimization55. A radial basis function kernel was selected because it allows a non-linear classification boundary56. For the SNN models, the amount of data is not large enough to feasibly train a generalizable deep neural network. Instead, an SNN with one hidden layer of 10 neurons was identified as optimal for our XRD image dataset, by training and validating networks with varying numbers of hidden layers and neurons. A MATLAB “patternnet()” model was created and trained using Bayesian regularization backpropagation57,58.
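For readers without MATLAB, the 43-input, 10-hidden-neuron architecture can be sketched in NumPy. This is a simplified stand-in for patternnet with Bayesian regularization, not a reproduction of it. It uses tanh hidden units and a two-class softmax output. MATLAB’s trainbr adaptively tunes the regularization; here that is replaced by a fixed L2 weight-decay term (the `weight_decay` value is hypothetical) optimized with plain full-batch gradient descent. The synthetic training data are also hypothetical.

```python
import numpy as np


def train_snn(X, y, n_hidden=10, weight_decay=1e-3, lr=0.1, epochs=500, seed=0):
    """Train a one-hidden-layer network (tanh hidden, 2-class softmax output)
    by full-batch gradient descent on cross-entropy plus an L2 penalty."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    Y = np.eye(2)[y.astype(int)]  # one-hot targets; column 1 = water
    W1 = rng.normal(0.0, 1.0 / np.sqrt(d), (d, n_hidden))
    b1 = np.zeros(n_hidden)
    W2 = rng.normal(0.0, 1.0 / np.sqrt(n_hidden), (n_hidden, 2))
    b2 = np.zeros(2)

    def forward(Z):
        H = np.tanh(Z @ W1 + b1)
        logits = H @ W2 + b2
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        P = np.exp(logits)
        return H, P / P.sum(axis=1, keepdims=True)

    for _ in range(epochs):
        H, P = forward(X)
        G = (P - Y) / n  # gradient of mean cross-entropy w.r.t. logits
        dW2 = H.T @ G + weight_decay * W2
        db2 = G.sum(axis=0)
        dH = (G @ W2.T) * (1.0 - H ** 2)  # backpropagate through tanh
        dW1 = X.T @ dH + weight_decay * W1
        db1 = dH.sum(axis=0)
        # In-place updates so the forward() closure sees the new weights
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2

    def predict_water_prob(Z):
        return forward(Z)[1][:, 1]

    return predict_water_prob


# Hypothetical separable data: 400 "pixels" x 43 q-bins, True = water
rng = np.random.default_rng(0)
y = rng.random(400) < 0.5
X = 0.5 * rng.normal(size=(400, 43)) + 0.5 * y[:, None]
predict = train_snn(X, y)
acc = np.mean((predict(X) >= 0.5) == y)
print(round(float(acc), 3))
```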

It should be noted that, in the current study, the X-ray transmission data acquired by the imaging system are used to identify locations where no object is present (i.e., to mask out regions consisting only of air). For this study with 6 mm thick objects, pixels are marked as air if their attenuation is less than that of 0.5 mm of water. The transmission data resolve the air-material boundary at higher resolution than the XRD data (≈0.6 mm² vs ≈1.4 mm²). Beyond removing air pixels, X-ray transmission data are used to visualize XRD data. Combining the transmission data with each pixel’s mean q value produces a single 2D image that shows both attenuation and average coherent scatter angle15. An example of this colorized image is shown later in Fig. 6. This visualization is used for display purposes only and does not affect the classifier training data or results.
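The air-masking rule can be sketched in Python as a threshold on log-normalized transmission, following the Beer-Lambert law: a pixel is air when its attenuation line integral, −ln(I/I_air), is below μ_water × 0.5 mm. The effective attenuation coefficient `mu_water_per_mm` is an assumption. The value 0.02 mm⁻¹ is roughly water’s linear attenuation coefficient near 60 keV. For a polychromatic 80 kVp beam, the threshold would more reliably be calibrated by imaging 0.5 mm of water directly. The image values below are hypothetical.

```python
import numpy as np


def log_attenuation(raw, air):
    """Attenuation line integral -ln(I / I_air) per pixel (Beer-Lambert)."""
    raw = np.clip(np.asarray(raw, dtype=float), 1e-12, None)  # avoid log(0)
    return -np.log(raw / air)


def air_mask(raw, air, mu_water_per_mm=0.02, water_mm=0.5):
    """True where attenuation is below that of `water_mm` of water (i.e., air).
    mu_water_per_mm is an assumed effective value, not one from the text."""
    return log_attenuation(raw, air) < mu_water_per_mm * water_mm


# Hypothetical 2x2 transmission image (counts) and open-beam air level
air_counts = 1000.0
raw = np.array([[1000.0, 995.0],
                [900.0, 800.0]])
mask = air_mask(raw, air_counts)
print(mask)

# Keep only material pixels of a co-registered XRD cube (rows, cols, n_q)
xrd_cube = np.random.default_rng(0).random((2, 2, 43))
material_spectra = xrd_cube[~mask]  # shape (n_material_pixels, 43)
```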

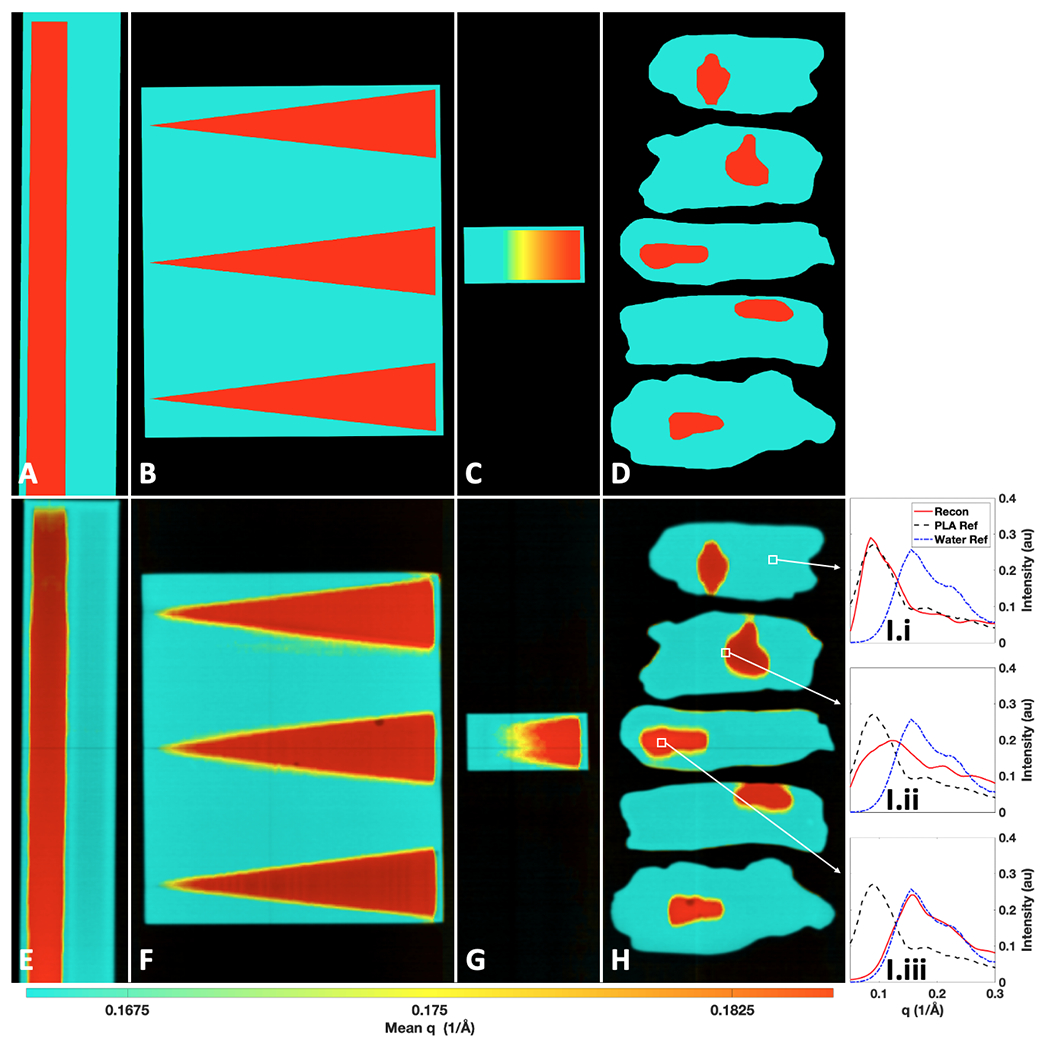

Fig 6.

Ground truth and measured X-ray transmission + momentum transfer (q) colorization (TQC) images of the water-PLA phantoms. Ground truth TQC images of the trough (A), wedge (B), ramp (C), and tissue (D) phantoms. Measured TQC images of the trough (E), wedge (F), ramp (G), and tissue (H) phantoms. Water and PLA correspond to red and teal coloring, respectively, on a continuum (online version only). I) The full reconstructed XRD spectra from marked regions in H containing PLA (I.i), a mixture of PLA and water (I.ii), and water (I.iii), compared against XRD spectra of water and PLA measured in a commercial diffractometer.

To obtain a robust comparison between the material classification algorithms, ROC curves were generated. These curves show the trade-off between true positives and false positives at decision thresholds beyond the initially explored midpoint thresholds. Water is treated as the positive class since it is the simulant for cancer. For the CC algorithm, continuous scores were generated using a CC metric19 that ranges from 0 (PLA) to 1 (water) based on the material match. For LS, the estimated abundance was taken as the continuous score. For SVM and SNN, the models’ native continuous outputs were used. The ROC AUC was computed for each curve to quantitatively compare overall performance. To quantify global and material-boundary performance, accuracy, ROC curves, and AUC values were computed for the entire area of all phantoms and for only the regions surrounding water-PLA boundaries. A region of ±3 mm around each boundary was selected for the latter analysis. This region is slightly larger than the spatial resolution of the XRD system and will therefore include partial volume effects at boundaries between materials.
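The boundary-restricted accuracy can be sketched in Python as follows. A pixel counts as a boundary pixel if one of its 4-connected neighbours holds the other material. The ±3 mm region is every material pixel within that distance of a boundary pixel. The pixel pitch, the label encoding (1 = water, 0 = PLA, −1 = air), and the 0.5 midpoint for scores scaled to [0, 1] are assumptions. The brute-force distance computation suits only small images; scipy.ndimage.distance_transform_edt with its `sampling` argument would scale to full scans.

```python
import numpy as np


def touches(mask):
    """True where any 4-connected neighbour of a pixel is set in `mask`."""
    p = np.pad(mask, 1, constant_values=False)
    return p[:-2, 1:-1] | p[2:, 1:-1] | p[1:-1, :-2] | p[1:-1, 2:]


def boundary_region(label_map, radius_mm=3.0, pixel_mm=1 / 3):
    """Material pixels within radius_mm of a water-PLA boundary.

    label_map: 2D int array, 1 = water, 0 = PLA, -1 = air (always excluded).
    pixel_mm is an assumed pixel pitch.
    """
    water, pla = label_map == 1, label_map == 0
    boundary = (water & touches(pla)) | (pla & touches(water))
    by, bx = np.nonzero(boundary)
    if by.size == 0:
        return np.zeros(label_map.shape, dtype=bool)
    yy, xx = np.indices(label_map.shape)
    # Brute-force squared pixel distance to every boundary pixel: O(pixels x boundary)
    d2 = (yy[..., None] - by) ** 2 + (xx[..., None] - bx) ** 2
    near = d2.min(axis=-1) <= (radius_mm / pixel_mm) ** 2
    return near & (label_map >= 0)


def midpoint_accuracy(scores, truth, region=None, threshold=0.5):
    """Accuracy of `scores >= threshold` (water = positive) within `region`."""
    if region is None:
        region = np.ones(truth.shape, dtype=bool)
    pred = scores >= threshold
    return float(np.mean(pred[region] == truth[region]))


# Hypothetical 12 x 12 map: PLA on the left, water on the right, 0.25 mm pixels
labels = np.zeros((12, 12), dtype=int)
labels[:, 6:] = 1
near = boundary_region(labels, radius_mm=1.0, pixel_mm=0.25)
truth = labels == 1
scores = truth.astype(float)
scores[:, 6] = 0.2  # water pixels touching the boundary are missed
print(midpoint_accuracy(scores, truth), midpoint_accuracy(scores, truth, near))
```

In this toy case, the same 12 errors cost about 8% of accuracy over the whole map but 10% within the boundary region. This mirrors, qualitatively, why the boundary-restricted metric separates classifiers more sharply.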

Results

X-ray Transmission and Diffraction Images of the Water-PLA Phantoms

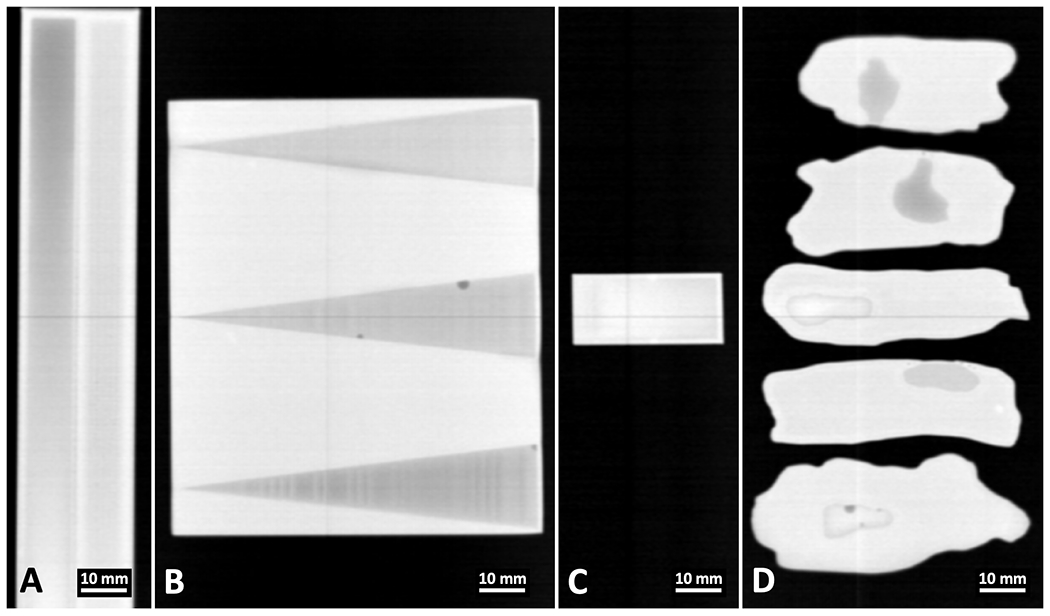

X-ray transmission images were acquired by the X-ray fan beam coded aperture system for all four sets of water-PLA phantoms, as shown in Fig. 5. Differences in attenuation typically help highlight regions of PLA versus wells filled with another material (water in this study). However, these data alone do not uniquely identify the materials as water and PLA. This is clearly visible in Fig. 5A and Fig. 5B, where a tilt relative to gravity or the presence of bubbles causes apparent differences in attenuation that are attributable to thickness rather than material composition. Similarly, in Fig. 5C, the transmitted intensity looks almost perfectly uniform across the phantom, even though the ratio of water to PLA varies greatly. Lastly, an effect similar to that in the trough phantom can be observed in Fig. 5D. Surface tension and variable water thickness in the wells create a range of attenuation intensities for pixels that all contain water, some more and others less attenuating than the surrounding PLA. Beyond this qualitative assessment, supplemental Fig. S2 demonstrates a quantitative classification based on the log of the transmission intensities, which achieves an ROC AUC of 0.773 and an accuracy of 85.45%. Despite the transmission scans’ deficiencies in differentiating water from PLA, the ≈0.6 mm² spatial resolution of this system’s transmission images15 provides fine resolution for masking out air pixels in the XRD images before material classification.

Fig 5.

X-ray transmission scans of the water-PLA phantoms taken by the X-ray fan beam coded aperture imaging system: A) trough phantom, B) wedge phantom, C) ramp phantom, and D) tissue phantoms.

Shown in Fig. 6 are the ground truth and measured X-ray transmission + q colorization (TQC) images created by combining XRD data with the transmission images. While each pixel does contain a full XRD spectrum (as an example, XRD spectra from Fig. 6H are shown within Fig. 6I.i–iii), colorizing the transmission scan by an intensity-weighted mean q value from the XRD spectra allows for visual separation of materials that have differences in their mean q values. Here red pixels contain water and teal pixels contain PLA, with a continuum of colors providing information about the mean q value (i.e., mean atomic spacing of the material present at a given location)15.
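The colorization step can be sketched in Python in three steps. First, compute each pixel’s intensity-weighted mean q, q̄ = Σ q_i I_i / Σ I_i. Next, window q̄ to [0, 1]. Finally, map that value to a hue running from teal/cyan at low q to red at high q, with intermediate values passing through green and yellow. The window limits, the hue path, and the use of normalized transmission as brightness are assumptions for illustration, not the published TQC mapping. Air pixels (all-zero spectra) should be masked before calling `mean_q`, because their division by zero produces NaN.

```python
import colorsys

import numpy as np


def mean_q(spectra, q):
    """Intensity-weighted mean q of each spectrum (last axis); mask air first."""
    spectra = np.clip(np.asarray(spectra, dtype=float), 0.0, None)
    return spectra @ q / spectra.sum(axis=-1)


def tqc_image(transmission, spectra, q, q_window=(0.11, 0.16)):
    """RGB image: hue from windowed mean q, brightness from transmission in [0, 1].
    The window and hue path are illustrative assumptions."""
    t = np.clip((mean_q(spectra, q) - q_window[0]) / (q_window[1] - q_window[0]), 0.0, 1.0)
    hue = 0.5 * (1.0 - t)  # 0.5 = cyan/teal (low q), 0.0 = red (high q)
    value = np.clip(np.asarray(transmission, dtype=float), 0.0, 1.0)
    rgb = [colorsys.hsv_to_rgb(h, 1.0, v) for h, v in zip(hue.ravel(), value.ravel())]
    return np.array(rgb).reshape(hue.shape + (3,))


# Hypothetical 1x2 image: one low-q pixel and one high-q pixel
q = np.linspace(0.09, 0.3, 43)
spectra = np.zeros((1, 2, 43))
spectra[0, 0, 0] = 1.0   # all intensity at q = 0.09
spectra[0, 1, -1] = 1.0  # all intensity at q = 0.3
img = tqc_image(np.ones((1, 2)), spectra, q)
print(img)
```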

It should be emphasized that the ground truth images in Fig. 6A–D do not completely separate PLA from water. Every well has a 0.5 mm thick PLA floor beneath the water to hold the liquid. Relative to the signal from 5.5 mm of water, this PLA signal is believed to have a negligible impact and to fall below the system’s sensitivity, as observed in the average water spectrum in supplemental Fig. S3. Further, Fig. 6C shows the most complex phantom, given the mixing of water and PLA through its thickness. Its visible gradient demonstrates the expected change in mean q colorization with the abundance of each material along the ramp. Beyond this visualization of the ground truths’ mean q colorization, the ground truth classification labels are treated as binary. Each pixel is labeled as the most abundant material present within a 1/3 mm² measurement sampling. For this study’s focus on classifying pixels that contain a majority of water or PLA, this simplification is acceptable.

As shown in Fig. 6E–H, PLA has a lower intensity-weighted mean q value than water (also observable in Fig. 2). This allows the TQC images to differentiate water and PLA visually better than transmission data alone, especially compared with the range of attenuation values in Fig. 5 that all correspond to water. A windowing of mean q to teal and red was selected for these two materials. For other materials of interest, a color map with a wider range of colors and/or colorization based on other spectral properties (e.g., median q, peak location, spectrum shape) could be used. Within Fig. 6E–H, yellow pixels appear at the boundaries between water and PLA. This shift arises from spectral mixing in the XRD measurements, caused by the ≈1.4 mm² XRD spatial resolution of the imaging system. This mixing is shown in Fig. 6I.ii: the XRD spectrum of an object pixel at a water-PLA boundary has intensities that appear as a weighted combination of the two materials. The coded aperture measurements from regions predominantly containing PLA and water (Fig. 6I.i and 6I.iii) agree with the commercial diffractometer measurements of water and PLA. This agreement is encouraging and suggests that the main challenge for accurate material classification will arise at pixels containing mixtures of materials. For most phantoms, these mixtures occur only in the transverse dimension, the plane defined by the fan extent and scan direction in Fig. 3. In Fig. 6G, however, the mean q colorization shifts gradually from teal to red down the ramp. This occurs because the abundance of both materials changes through the phantom thickness (along the axial dimension) and our measurements integrate along depth. See supplemental Fig. S4 for a comparison between the ramp phantom’s ground truth abundance and the LS-estimated abundance of water.

Performance of Material Classification Algorithms on the Water-PLA Phantoms

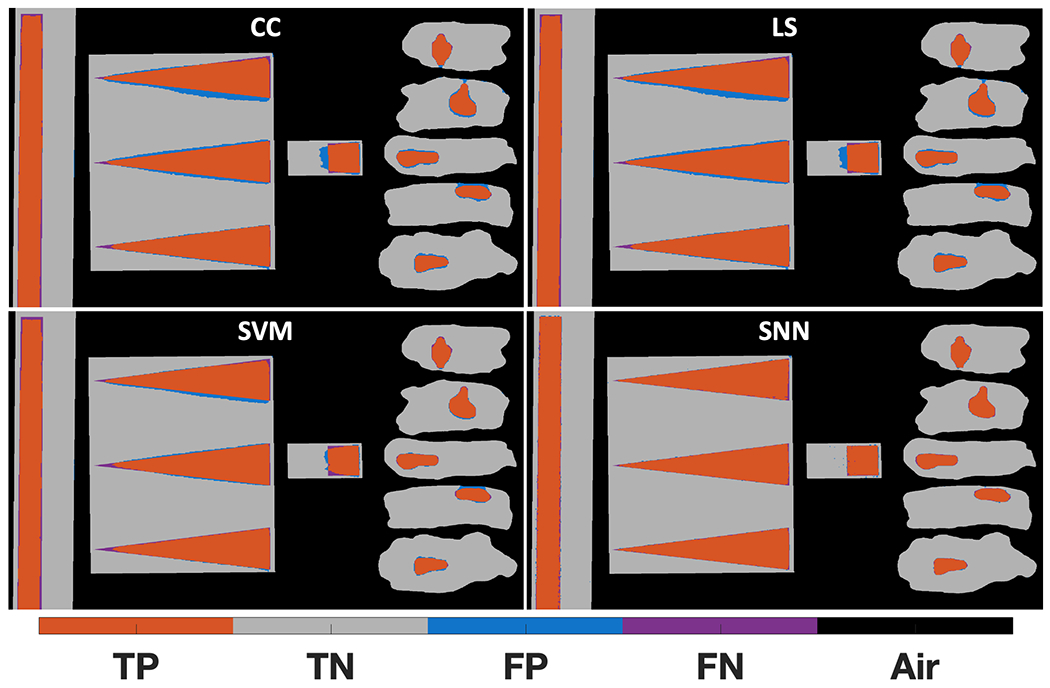

With the X-ray transmission and XRD data collected by the X-ray fan beam coded aperture imaging system (159,808 material pixels measured), we next evaluated the four material classification algorithms under consideration. The ML models were trained as previously described; supplemental Fig. S5 shows the distribution of validation set AUC values, along with the training, validation, and test dataset ROC curves for the best SVM and SNN models. Fig. 7 visualizes the performance of each classifier across all phantoms when operated at its midpoint classification threshold. The rules-based CC and LS methods underperform the ML approaches (SVMs and SNNs). While the CC and LS approaches classify many pixels correctly, they misclassify more pixels than the SNN at the boundaries between water and PLA and as a function of water depth (in the ramp phantom). The SVM classifier reduces misclassifications in regions where the ≈1.4 mm² XRD spatial resolution appears to have affected the CC and LS approaches. The SNN classifier reduces misclassifications at water-PLA boundaries further than the SVM; however, a few misclassifications occur in some homogeneous material regions (see supplemental Fig. S6 for additional insight into the XRD spectra of these pixels as classified by the SNN).

Fig 7.

Material classifier performance on the XRD data of the water-PLA phantoms. Sub-plots are shown for the cross-correlation (CC), linear least-squares (LS), support vector machine (SVM), and shallow neural network (SNN) classifiers. With water as the cancerous simulant, correctly identified water pixels are orange true positives, correctly identified PLA pixels are grey true negatives, and blue false positives and purple false negatives represent misclassified PLA and water pixels, respectively (online version only).

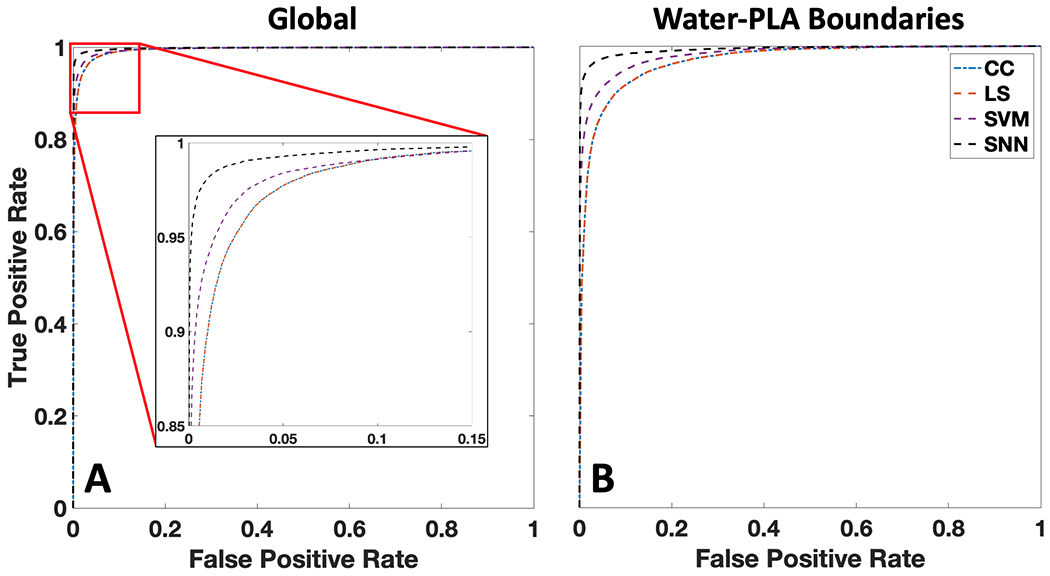

While the results shown in Fig. 7 represent binary classification of each pixel with its best match, the classification approaches provide predictions that exist on a continuum between 0 (PLA) and 1 (water). To complement these spatial representations of classification performance, we also quantitatively analyze the overall classification performance for all pixels across a range of different thresholds and show the resulting ROC curves in Fig. 8.
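One way the two rules-based methods can yield a continuous water score is sketched below, assuming the two reference spectra are sampled on the same q grid as the measurement. The score definitions are our assumptions for illustration: the CC score is the water share of the two zero-lag normalized cross-correlations (cosine similarities), and the LS score is the water share of unconstrained least-squares abundances with negative values clipped to zero; the paper's exact normalization may differ. In both cases 0.5 is the midpoint threshold.

```python
import numpy as np


def ncc(a: np.ndarray, b: np.ndarray) -> float:
    """Zero-lag normalized cross-correlation (cosine similarity) of two spectra."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def cc_score(y: np.ndarray, r_water: np.ndarray, r_pla: np.ndarray) -> float:
    """Water score in [0, 1]: above 0.5 exactly when y correlates better with water."""
    c_w, c_p = ncc(y, r_water), ncc(y, r_pla)
    return c_w / (c_w + c_p)


def ls_score(y: np.ndarray, r_water: np.ndarray, r_pla: np.ndarray) -> float:
    """Water abundance fraction from linear least-squares unmixing y ~ a_w*r_w + a_p*r_p."""
    A = np.column_stack([r_water, r_pla])
    coeffs, *_ = np.linalg.lstsq(A, y, rcond=None)
    coeffs = np.clip(coeffs, 0.0, None)  # crude non-negativity, applied after the fit
    total = coeffs.sum()
    return float(coeffs[0] / total) if total > 0 else 0.5


q = np.linspace(0.05, 0.35, 301)
r_pla = np.exp(-(((q - 0.12) / 0.02) ** 2))    # hypothetical reference spectra
r_water = np.exp(-(((q - 0.20) / 0.03) ** 2))
y = 0.7 * r_water + 0.3 * r_pla                 # noise-free 70:30 mixture
print(round(ls_score(y, r_water, r_pla), 3), cc_score(y, r_water, r_pla) > 0.5)
```

On noise-free mixtures of the two references, LS recovers the mixing weight exactly, while the CC score only orders pixels by which reference they resemble more. With two references and a midpoint threshold, both reduce to picking the better match, which is consistent with the near-identical CC and LS results reported here.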

Fig 8.

Receiver operating characteristic (ROC) curves for the explored material classification algorithms for global and material boundary pixels within the water-PLA phantoms. A) ROC curves for all pixels within the 4 phantoms (global). Cross-correlation (CC) and least-squares unmixing (LS) have near-identical performance, while support vector machine (SVM) and shallow neural network (SNN) improve performance. B) ROC curves generated from pixels within 3 mm of water-PLA boundaries for all four phantoms.

Fig. 8 shows that the ML approaches (SVM and SNN) outperform the rules-based CC and LS algorithms at all detection thresholds across all pixels. This improvement is further accentuated when only the regions surrounding material boundaries are considered, which is particularly important for clinically relevant tasks such as margin assessment and identifying the extent of disease surrounded by healthy tissue. To quantify the data shown in Figs. 7–8, Table 1 lists the percentage of correctly classified material pixels (using a predicted score of 0.5 as the positive-class threshold, as in Fig. 7) along with the AUC of each classifier's ROC curve. These metrics are provided for all pixels ('global') and for the water-PLA boundary pixels ('boundary').
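The threshold sweep behind ROC curves and the midpoint accuracy can be sketched with NumPy as follows, assuming each classifier outputs a water score in [0, 1] and labels are 1 (water) and 0 (PLA) with air pixels already excluded. This is an illustrative implementation of the standard ROC/AUC computation, not the authors' code; library routines such as scikit-learn's `roc_curve` and `roc_auc_score` serve the same purpose.

```python
import numpy as np


def accuracy_at(scores: np.ndarray, labels: np.ndarray, threshold: float = 0.5) -> float:
    """Fraction of material pixels whose thresholded prediction matches the label."""
    pred = (scores >= threshold).astype(int)
    return float(np.mean(pred == labels))


def roc_points(scores: np.ndarray, labels: np.ndarray):
    """(FPR, TPR) at every distinct score used as a threshold, from strictest to loosest."""
    thresholds = np.unique(scores)[::-1]
    pos, neg = scores[labels == 1], scores[labels == 0]
    tpr = np.array([np.mean(pos >= t) for t in thresholds])
    fpr = np.array([np.mean(neg >= t) for t in thresholds])
    # Start the curve at the origin; the loosest threshold already reaches (1, 1)
    return np.concatenate([[0.0], fpr]), np.concatenate([[0.0], tpr])


def auc(fpr: np.ndarray, tpr: np.ndarray) -> float:
    """Area under the ROC curve by the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))


labels = np.array([0, 0, 1, 1])
scores = np.array([0.10, 0.40, 0.35, 0.80])
fpr, tpr = roc_points(scores, labels)
print(accuracy_at(scores, labels), auc(fpr, tpr))  # 0.75 0.75
```

Restricting `scores` and `labels` to a boundary mask before calling these functions gives boundary-only metrics in the same way.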

Table 1.

Comparison of material pixel classification accuracy and ROC AUC values for all algorithms applied to the XRD images of the water-PLA phantoms. Accuracy was computed as (number of material pixels identified correctly) / (total number of material pixels), excluding air pixels from the metric. Accuracy and AUC values are reported for all phantom pixels (global) and for pixels within 3 mm of water-PLA boundaries (boundary). The commercial diffractometer measurements of water and PLA are the two "database cases" provided to the cross-correlation and least-squares algorithms, while the 95,885 cases are the 60% of all material pixels on which the SVM and SNN were trained.

| Classifier | No. of Database or Training Spectra | Global Accuracy | Global AUC | Boundary Accuracy | Boundary AUC |
|---|---|---|---|---|---|
| Cross-Correlation | 2 | 96.48% | 0.9941 | 89.32% | 0.9683 |
| Least Squares | 2 | 96.48% | 0.9941 | 89.32% | 0.9682 |
| Support Vector Machine | 95,885 | 97.36% | 0.9952 | 92.03% | 0.9760 |
| Shallow Neural Network | 95,885 | 98.94% | 0.9989 | 96.79% | 0.9938 |

Table 1 shows that cross-correlation against a known database and classification by the largest estimated abundance (after LS unmixing) yield the same global accuracy (96.48%). While minor per-pixel differences between their classifications can be observed in Fig. 7, neither classifier offers a distinct advantage over the other for this binary classification task. The SVM approach was the second-best performer, with a global accuracy of 97.36%, while the SNN approach performed best, with a global accuracy of 98.94%. This ranking is consistent across metrics (accuracy and AUC, for both global and boundary regions), suggesting that the ML approaches improve material classification performance for any selected positive-class threshold. While the increase in global accuracy from the worst to the best algorithm is small (2.46 percentage points), the increase within the material boundary regions is larger (7.47 percentage points), demonstrating improved classification of the primary material in regions containing mixtures.
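The boundary subset (pixels within 3 mm of a water-PLA interface) could be selected as in the following sketch. It assumes a 2-D label image (1 = water, 0 = PLA, -1 = air) and a hypothetical pixel pitch in mm. Interface pixels are taken as water or PLA pixels that are 4-connected to the other material, and distances are computed by brute force between pixel centers. This is adequate for small images, but a distance transform such as `scipy.ndimage.distance_transform_edt` (with its `sampling` argument) would normally replace it. The authors' exact boundary definition may differ.

```python
import numpy as np


def boundary_mask(labels: np.ndarray, pixel_mm=(1.0, 1.0), radius_mm: float = 3.0) -> np.ndarray:
    """Material pixels lying within radius_mm of a water-PLA interface.

    labels: 2-D array, 1 = water, 0 = PLA, -1 = air.
    pixel_mm: (row pitch, column pitch) in mm; hypothetical defaults.
    """
    water, pla = labels == 1, labels == 0
    # Interface pixels: water with a PLA 4-neighbor, or PLA with a water 4-neighbor
    edge = np.zeros(labels.shape, dtype=bool)
    for a, b in ((water, pla), (pla, water)):
        edge[:-1, :] |= a[:-1, :] & b[1:, :]
        edge[1:, :] |= a[1:, :] & b[:-1, :]
        edge[:, :-1] |= a[:, :-1] & b[:, 1:]
        edge[:, 1:] |= a[:, 1:] & b[:, :-1]
    if not edge.any():
        return np.zeros(labels.shape, dtype=bool)
    # Physical coordinates (mm) of every pixel center, flattened to an (N, 2) array
    rows, cols = np.indices(labels.shape)
    pts = np.stack([rows * pixel_mm[0], cols * pixel_mm[1]], axis=-1).reshape(-1, 2)
    edge_pts = pts[edge.ravel()]
    # Distance from each pixel to its nearest interface pixel (brute force, O(N * E))
    dist = np.sqrt(((pts[:, None, :] - edge_pts[None, :, :]) ** 2).sum(axis=-1)).min(axis=1)
    # Keep only material pixels (air is excluded from the accuracy metric)
    return (dist.reshape(labels.shape) <= radius_mm) & (labels >= 0)


row = np.array([[0, 0, 0, 0, 0, 1, 1, 1, 1, 1]])
print(np.flatnonzero(boundary_mask(row, radius_mm=1.0)))  # [3 4 5 6]
```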

The ML model performance in Table 1, particularly the ROC AUC value, which agrees with the test set AUC value shown in supplemental Fig. S5, suggests that the ML approaches are superior to rules-based classification for this task. As an additional demonstration of the SNN's generalizability, Fig. 9 shows that the SNN maintains a 2.04 percentage point accuracy improvement relative to CC for a spatially complex, variable-thickness phantom that was not part of the internal training, validation, and test datasets.

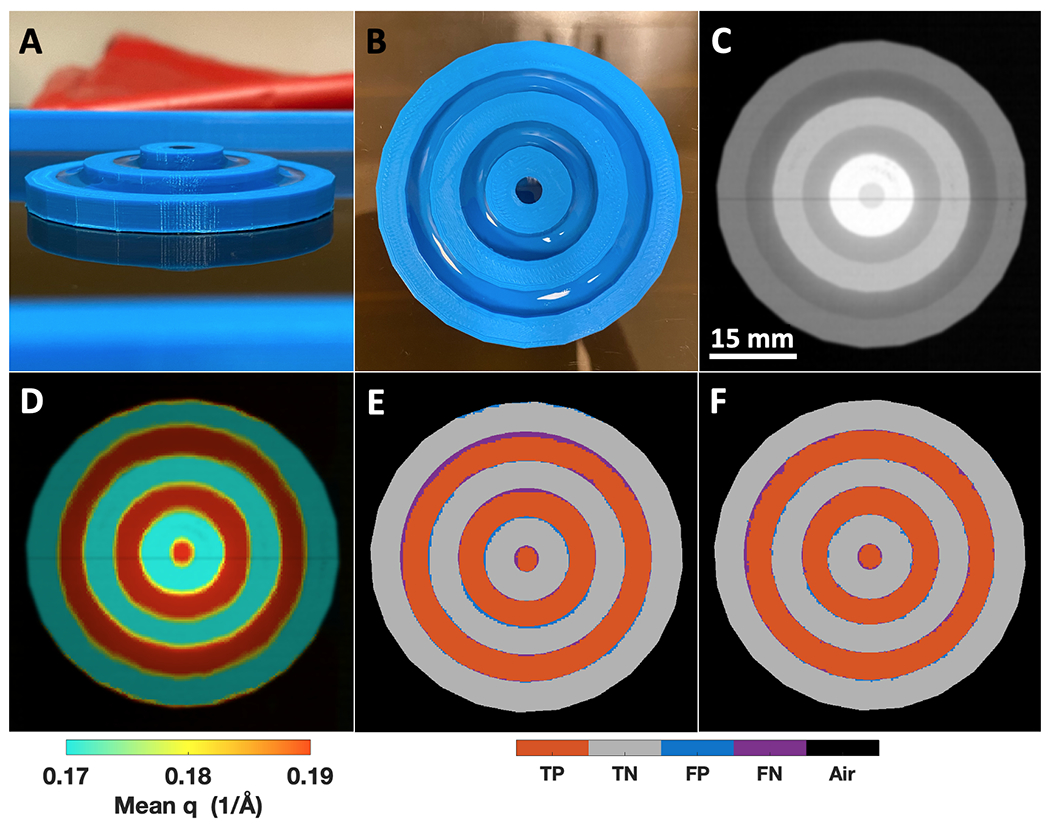

Fig 9.

A target phantom made of circular PLA wells of different heights containing water, used to study the impact of variable thickness when running the CC and SNN classifiers on a new external test object. A) A side-view photograph of the target phantom with water in each of the PLA wells. B) A top-view photograph of the target phantom, showing the uniform water within each PLA ring. C) X-ray transmission scan of the target phantom. D) A transmission + q colorization (TQC) image of the target phantom. E) The target phantom confusion matrix image for the CC classifier previously shown in Fig. 7. F) Same as E, but for the SNN classifier, with fewer blue false positive and purple false negative pixels (online version only).

The target phantom's material heights, from the outer diameter to the center, are 5, 7.5, and 10 mm, with PLA walls and inner wells each 5 mm wide, for an overall phantom diameter of 55 mm (Fig. 9A). All water regions have 0.5 mm of PLA at the bottom of the well, giving water thicknesses of 4.5, 7, and 9.5 mm. As shown in Fig. 9C, PLA is slightly more attenuating than water at each thickness, while the attenuation values of both materials (the intensity in this image) increase with thickness toward the center of the phantom. As in Fig. 6E–H, the TQC image of the target phantom adds visual contrast between the PLA and water regions (Fig. 9D). Applying the same CC classifier used in Fig. 7 to the target phantom produces the confusion matrix image shown in Fig. 9E, with an accuracy of 94.93%; PLA-water boundary pixels account for most of the false positive and false negative pixels, and a few false positive pixels are observable at the PLA-air boundary. The SNN classifier developed on the previous phantoms achieves an accuracy of 96.97% (Fig. 9F), a 2.04 percentage point increase over the CC rules-based classifier. Applied to the target phantom, the SNN generalized to objects of variable thickness (5–10 mm, compared with the 6 mm thick phantoms it was trained on), eliminating misclassifications at the PLA-air boundary and reducing misclassifications at the PLA-water boundaries. The accuracies of CC and SNN on this phantom are ≈1.5–2 percentage points lower than each classifier's performance on the phantoms used for training (94.93% vs 96.48% for CC and 96.97% vs 98.94% for SNN). This was anticipated, because the target phantom was designed with many more PLA-water boundaries (the most difficult regions to classify) than any of the other phantoms. The results of Fig. 9 suggest that variable object thickness from 5 to 10 mm does not adversely affect XRD-based classifier performance for this study's combination of imaging system and classifier algorithms. Additionally, the performance predicted by the SNN test set in supplemental Fig. S5 is realized on a new phantom that was not used in the SNN model's initial training, validation, and testing process.
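One plausible reason the classifiers tolerate variable thickness is that spectral shape, rather than absolute intensity, drives classification. The sketch below assumes, as a simplification, that a change in water thickness mainly rescales the measured XRD spectrum by a scalar. It ignores q-dependent self-attenuation and changes in the scatter depth distribution. Under that assumption, the sum-of-squares normalization noted for the SNN inputs in supplemental Fig. S6 removes the thickness dependence, whereas transmission μL grows in proportion to thickness by Beer–Lambert. The spectrum shape is a hypothetical stand-in.

```python
import numpy as np


def normalize_ss(spectrum: np.ndarray) -> np.ndarray:
    """Scale a spectrum to unit sum of squares (unit L2 norm)."""
    return spectrum / np.sqrt(np.sum(spectrum ** 2))


# Target phantom: material heights minus the 0.5 mm PLA floor give the water thickness
heights_mm = np.array([5.0, 7.5, 10.0])
water_mm = heights_mm - 0.5  # 4.5, 7.0, 9.5 mm

q = np.linspace(0.05, 0.35, 301)
water_shape = np.exp(-(((q - 0.20) / 0.03) ** 2))  # hypothetical water-like spectrum

# Simplified model (assumption): scatter intensity scales with thickness, shape does not
spectra = [t * water_shape for t in water_mm]
normalized = [normalize_ss(s) for s in spectra]
print(all(np.allclose(n, normalized[0]) for n in normalized))  # True
```

In real measurements, attenuation also reshapes the spectrum slightly with thickness, so this shows only why a scale change alone should not affect a shape-based classifier.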

Discussion

This research has demonstrated the first application of ML-based classification to X-ray diffraction imaging data of non-crystalline, biologically relevant phantoms. In particular, we found that ML algorithms outperformed conventional rules-based approaches, especially where spectral mixing occurred. Classification algorithms based on CC, LS, SVM, and SNN identified water and PLA with 96.48%, 96.48%, 97.36%, and 98.94% global accuracy, respectively, when operated at the midpoint classification threshold. The corresponding ROC curves had AUC values of 0.994, 0.994, 0.995, and 0.999. We also showed that co-registered, high-resolution X-ray transmission projection data, while achieving a classification accuracy of only 85.45% and an ROC AUC of 0.773 on its own, is helpful for identifying regions containing only air. The combination of water and PLA is of interest because their XRD spectra and scatter intensities are similar to those of breast cancer and adipose tissue, respectively. This suggests that this approach and level of performance could translate to medically focused imaging tasks and improve diagnostic and decision-based procedures, provided it is shown to identify cancer surrounded by any type of real tissue (see supplemental Fig. S7 for how simulants of additional tissue types could be created).

The ability of ML algorithms to outperform a matched filter, which is the optimal linear filter for detecting a known signal in additive white noise, suggests a variety of opportunities for future improvement. For example, it suggests that such nonlinear classifiers can help overcome issues that are typically inherent in computational imaging systems, such as non-Gaussian and object-dependent noise in the XRD imaging measurement, errors in the physics models used for multiplexed measurement reconstruction, and mismatches between the spectral library and measured spectra. In addition, we find that the ML classification process, which nonlinearly maps the XRD spectra into the material subspace, yields a classification spatial resolution that is superior to the imaging spatial resolution. This result is analogous to nonlinear optical super-resolution techniques59, except that here the improved resolution is realized purely as a data processing step with task-specific applicability. Another potential benefit of improved classifiers is better performance at a fixed signal-to-noise ratio (SNR), or comparable performance at reduced SNR, allowing faster scan times. In the future, information theory methods will be explored to evaluate whether classifiers reach their optimal performance at the various signal-to-noise levels produced by different XRD imaging systems60 and scan durations.

While the phantoms in this study consisted of two materials, future studies should consider phantoms with multiple materials and material mixtures. This situation arises in nearly all biological and medical imaging applications, where a variety of different materials are present in various concentrations and with a morphology that might vary at spatial scales smaller than the imaging system resolution. Future studies will explore how the classification methods shown here, combined with improved transverse and axial resolution, perform in the presence of more complex material distributions. In addition, studies involving the classification of mixed materials or thicker objects with variable X-ray attenuation will likely benefit from using our co-registered X-ray transmission information to further inform and constrain the reconstruction and classification process. Finally, the construction of new XRD imaging systems that take advantage of photon-counting detectors60 or more advanced filtration methods61 could further improve upon the performances demonstrated.

There are a variety of imaging tasks towards which the approaches demonstrated within this study can be adapted. For example, this imaging capability could be implemented in surgical pathology for identifying key tissue slices to turn into H&E slides for pathology assessment, while allowing for a reduction in the total number of tissue slices analyzed by this time-consuming process. Beyond medical applications, one could imagine applying this technology and classification approach to material composition analysis of raw materials and quality assurance of foods and pills—all via classification by rapidly acquired XRD images. To adapt this study’s presented ML classification of XRD images for more complex applications, it will be important to account for materials with higher variability in XRD signatures that still belong to the same class. This need could be addressed by conducting model training on simulated data that accurately accounts for the potential mixtures, scatter texturing, and measurement system noise that will be present in real measurements. Additionally, for tasks including tissue classification with known variation throughout the volume of the samples, it will be important to understand the composition throughout the sample thickness.

Conclusion

Medically relevant phantoms were created and imaged with an X-ray fan beam coded aperture system to obtain XRD images, and ML algorithms were shown to improve the classification of these images. Combining XRD images with ML classification algorithms improved material classification accuracy and classification spatial resolution beyond those achieved by the rules-based algorithms. Future work will seek to apply these methods to more complex tasks involving objects with greater degrees of mixing and spectral variation. The presented phantom structures and classification approaches can be implemented in a variety of XRD imaging systems and applications, leading to improved capabilities in research, industrial analysis, and medical tasks.

Supplementary Material

Fig S1 Example breast tissue lumpectomy slice and pathologist annotation that were used to create water-PLA tissue phantoms. A) Photograph of a 5 mm thick breast lumpectomy sample containing cancer, adipose, and fibroglandular tissue, which are not readily distinguishable in the photograph. B) A hematoxylin and eosin (H&E) stained slide of a 10 μm section cut from the surface of the tissue in A after paraffin embedding, on which a senior pathologist marked in red the region containing invasive ductal carcinoma (white regions are typically adipose tissue and pink regions primarily fibroglandular tissue). C) The water-PLA phantom used in this study, based on the pathologist annotation in B: the approximate shape of the sample was traced and made of PLA, while the approximate shape of the cancerous region was turned into a 5.5 mm deep well to be filled with the cancer simulant, water.

Fig S2 Classification of water-PLA phantoms based on X-ray transmission intensity. A) Histograms of transmission intensity expressed as the product of the linear attenuation coefficient (μ) and the X-ray path length (L) through the phantom thickness. B) Receiver operating characteristic (ROC) curve generated by varying the μL classification threshold for identifying the positive class of water (cancer simulant), with an area under the curve (AUC) of 0.773. C) Confusion matrix image generated when operating at a μL classification threshold of 0.1281 (marked by the red vertical line in A and the red circle in B), with an overall accuracy of 85.45% for correctly classifying water and PLA. The wider distribution of water μL values can be attributed to some wells containing less water (underfilled) or more water (surface tension allowing the water to rise above the PLA height). While volumetric measurements (e.g., CT scans) could potentially help overcome this effect of variable thickness, it should be noted that for the ramp phantom, in which the water is level with the top of the PLA walls, the water μL values are inseparable from those of PLA (as shown by the primarily FN square in C, as well as by the uniform intensity shown in Fig. 5). These results demonstrate that transmission intensity alone can fail to robustly separate materials of interest (compared with Fig. 7), motivating this study's exploration of X-ray diffraction data together with advanced machine learning classifiers.
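The transmission-only classifier in panel C can be sketched as a single threshold on μL = -ln(I/I0). This is a minimal illustration that assumes flat-field intensities I0 are available. It also assumes that water (the positive class) falls below the threshold, because PLA attenuates slightly more than water at comparable thickness. The direction of the comparison, the helper names, and the synthetic intensities are our assumptions. To trace an ROC curve with a "higher score means positive" convention, -μL can be used as the score.

```python
import numpy as np


def mu_L(intensity: np.ndarray, flat_field: np.ndarray) -> np.ndarray:
    """Attenuation line integral from Beer-Lambert: mu*L = -ln(I / I0)."""
    return -np.log(intensity / flat_field)


def classify_transmission(muL: np.ndarray, threshold: float = 0.1281) -> np.ndarray:
    """1 = water (positive class), 0 = PLA; assumes water lies below the threshold."""
    return (muL < threshold).astype(int)


I0 = np.ones(4)
I = np.exp(-np.array([0.10, 0.12, 0.14, 0.20]))  # synthetic transmitted intensities
print(classify_transmission(mu_L(I, I0)))  # [1 1 0 0]
```

A single scalar per pixel cannot separate the two materials whenever thickness variations shift μL across the threshold, which is the failure mode described for the ramp phantom.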

Fig S3 X-ray diffraction (XRD) spectra of water and PLA measured by a Bruker D2 Phaser, compared against the mean ± standard deviation of all water and PLA pixels measured by the X-ray fan beam coded aperture imaging system, where the deviation reflects pixel-to-pixel variation. A) The water XRD spectrum measured by the D2 Phaser (red) compared against the mean ± standard deviation spectrum (blue) of the reconstructed spectra of all pixels containing a majority of water across the study's four medical phantoms. The cross-correlation (CC) between the commercial diffractometer measurement (used by the rules-based algorithms) and the measured mean water spectrum is 99.99974%. B) Same as A, but for the PLA spectrum measured by the D2 Phaser (red) and the mean ± standard deviation spectrum (blue) measured in this study for pixels containing a majority of PLA, with a CC of 99.99989%. These matches demonstrate that the commercial diffractometer and the X-ray fan beam coded aperture imaging system used in this study produce near-identical spectra for water and PLA, suggesting that our prototype imaging system introduces no inherent skew that would require using the prototype itself to generate the database/reference spectra for the rules-based approaches.

Fig S5 The distribution of validation-group ROC AUC values for the 200 trained models, and the ROC curves for the training, validation, and test dataset groups of the best SVM and SNN models (zoomed to a TPR of 0.9–1 and an FPR of 0–0.1). A) Histogram of ROC AUC values for all 200 SVM models; the model with the best validation-group AUC is saved as the best model for the final test against the 20% of data held out from the training process, which measures performance on new, independent measurements. Creating 200 models while randomly shuffling the training/validation examples allows identification of the model that generalizes best owing to a more favorable random split of the data (while also quantifying the variability in model performance over random instantiations); the best model's performance is then measured on the 20% test data it has never seen. Training multiple instances of the same model while varying the training/validation split has been used previously to identify feature/model stability and best generalization62,63. B) Same as A, but for the 200 SNN models. C) ROC curves for all dataset groups of the best SVM model, with AUC values within a range of 0.9949–0.9963 (differing by less than 0.002), suggesting that the model did not overfit and that the representation of XRD cases in each dataset (split 60:20:20) was sufficient to create a generalizable model. D) Same as C, but for the best SNN model. Unlike for the SVM, slightly better performance for the training dataset ROC can be observed in the top left, showing that the SNN performs slightly better on the pixels it learned from than on the validation and test groups. The SNN AUC values are all within the range of 0.9987–0.9989, showing even more consistent performance between datasets than the SVM.
Because all dataset groups for both the SVM and SNN have near-identical AUC values (within each model), the ROC curves presented in main text Fig. 8 are computed over all pixels (not just the test group) when comparing against the CC and LS classifiers.
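The repeated-split model selection described for panels A and B can be sketched as follows. It is a minimal NumPy illustration, assuming a feature matrix X of normalized spectra and binary labels y. The stand-in classifier (projection onto the difference of class centroids) is a hypothetical placeholder for the SVM or SNN, and the function names and seed handling are ours. A fixed 20% test set is held out once. For every model, the remaining 80% is reshuffled into training (60% of all data) and validation (20%) sets. The model with the best validation AUC is kept, and only that model is scored on the test set.

```python
import numpy as np


def pairwise_auc(scores: np.ndarray, labels: np.ndarray) -> float:
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 1/2."""
    diff = scores[labels == 1][:, None] - scores[labels == 0][None, :]
    return float(np.mean((diff > 0) + 0.5 * (diff == 0)))


def fit_centroid_model(X: np.ndarray, y: np.ndarray):
    """Hypothetical stand-in for the SVM/SNN: score = projection on the centroid difference."""
    w = X[y == 1].mean(axis=0) - X[y == 0].mean(axis=0)
    return lambda Z: Z @ w


def select_best_model(X, y, n_models=200, seed=0):
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(y))
    n_test = int(round(0.2 * len(y)))
    test, dev = order[:n_test], order[n_test:]  # test set is held out once
    n_val = int(round(0.25 * len(dev)))        # 25% of the remaining 80% = 20% overall
    best_auc, best_model = -np.inf, None
    for _ in range(n_models):
        shuffled = rng.permutation(dev)        # new training/validation split per model
        val, train = shuffled[:n_val], shuffled[n_val:]
        model = fit_centroid_model(X[train], y[train])
        val_auc = pairwise_auc(model(X[val]), y[val])
        if val_auc > best_auc:
            best_auc, best_model = val_auc, model
    # Only the selected model ever sees the held-out test data
    test_auc = pairwise_auc(best_model(X[test]), y[test])
    return best_model, best_auc, test_auc


rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0.0, 1.0, (100, 5)), rng.normal(2.0, 1.0, (100, 5))])
y = np.repeat([0, 1], 100)
_, val_auc, test_auc = select_best_model(X, y, n_models=20)
print(round(val_auc, 3), round(test_auc, 3))
```

Comparing the selected model's validation AUC with its test AUC mirrors the consistency check described in panels C and D.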

Fig S4 Water abundance estimation in the ramp phantom by the least-squares (LS) classification algorithm compared against ground truth water abundance. A) Side-profile photograph of the ramp phantom from main text Fig. 3C, shown again to highlight the phantom's composition (through the thickness) transitioning from PLA plastic (left) to water (right). B) LS-estimated water abundance from the X-ray diffraction (XRD) spectrum of each pixel, for the central region of the ramp phantom (excluding the outer PLA-only walls). C) Mean LS-estimated water abundance (per column in B) vs ground truth abundance for each column, shown as a red scatter plot; the trend is in general agreement with the dashed black line representing perfect agreement. Ledges on the left and right of the scatter plot suggest that the LS water abundance estimate is insensitive to 10–20% of water or PLA when the remainder is the other material. Additionally, the LS-estimated water abundance reaches 50% when the ground truth abundance is 45%; this overestimation is visible in the LS panel of main text Fig. 7, where blue false positive pixels appear to the left of the column at which water becomes the majority material, where the correct binary label is PLA.

Fig S6 Mean XRD spectra with standard deviation bars for all phantom pixels, sorted by the confusion matrix groups of the SNN used in the main text. These XRD data are from all pixels of the four phantoms shown in the "SNN" subpanel of Fig. 7, with the confusion matrix group determined by the agreement or disagreement between the ground truth and the SNN-predicted class. Unlike supplemental Fig. S3, which reports the average PLA and water spectra measured by the system based solely on the ground truth classification, these results further subdivide the measurements by whether they were correctly (TP, TN) or incorrectly (FP, FN) classified by the top-performing SNN classifier. These results offer insight into which XRD spectral shapes the SNN has learned from the XRD spectra (normalized by sum of squares): the average TP and TN spectra closely match the XRD spectra of water and PLA in Fig. S3, while the FP and FN spectra present as a mix of the two materials' pure XRD spectra. The average FP measurements have higher intensities around 0.2 Å⁻¹ in q space (closer to the spectral shape of water), while the average FN measurements have higher intensities around 0.12 Å⁻¹ (closer to the spectral shape of PLA). These are understandable errors for the SNN to make, suggesting both that the model has learned reasonable definitions for each material and that the few misclassified cases result from spectral mixing at material boundaries on a spatial scale below the ≈1.4 mm XRD spatial resolution of the X-ray fan beam coded aperture imaging system. While the SNN has improved this spatial resolution from a classification-task standpoint, there are still cases (FP, FN) at this boundary where the binary ground truth may be unreasonable, given that the XRD spectra are close to a 50:50 mixture of PLA and water.
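The grouping behind this figure can be sketched as below. Each spectrum is normalized to unit sum of squares. Pixels are then split into TP, TN, FP, and FN groups from the ground truth and predicted labels (1 = water, 0 = PLA), and a per-group mean and standard deviation spectrum is computed. The array layout (one spectrum per row), the function names, and the toy two-bin spectra are assumptions for illustration.

```python
import numpy as np


def confusion_groups(truth: np.ndarray, pred: np.ndarray) -> dict:
    """Boolean masks for each confusion-matrix group (water = positive class)."""
    return {
        "TP": (truth == 1) & (pred == 1),
        "TN": (truth == 0) & (pred == 0),
        "FP": (truth == 0) & (pred == 1),
        "FN": (truth == 1) & (pred == 0),
    }


def group_spectra(spectra: np.ndarray, truth: np.ndarray, pred: np.ndarray) -> dict:
    """Mean and standard deviation of sum-of-squares-normalized spectra per group."""
    norm = spectra / np.sqrt((spectra ** 2).sum(axis=1, keepdims=True))
    stats = {}
    for name, mask in confusion_groups(truth, pred).items():
        if mask.any():  # skip empty groups
            stats[name] = (norm[mask].mean(axis=0), norm[mask].std(axis=0))
    return stats


spectra = np.array([[3.0, 4.0], [1.0, 0.0], [0.0, 2.0], [5.0, 5.0]])
truth = np.array([1, 0, 0, 1])
pred = np.array([1, 0, 1, 0])
stats = group_spectra(spectra, truth, pred)
print(sorted(stats))  # ['FN', 'FP', 'TN', 'TP']
```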

Fig S7 A phantom made of PLA plastic wells containing mixtures of water and ethanol, used to study the impact of homogeneous mixtures on X-ray diffraction (XRD) spectra measured by the X-ray fan beam coded aperture system. A) Photograph of the phantom with wells filled (from top to bottom) with 100:0, 73.3:26.7, 46.7:53.3, and 20:80 water:ethanol mixtures. B) X-ray transmission scan of the water-ethanol phantom, showing that ethanol attenuates less than water. While this change in attenuation is observable, the identity of the material in each well is not immediately clear. C) A transmission + q colorization (TQC) image coloring each pixel by its average scattering angle, highlighting a greater difference between the wells while still not conveying all of the information contained in each pixel's full XRD spectrum. D.i–iv) Example XRD measurements, colored to match the wells they originated from, along with reference spectra from a commercial diffractometer (D2 Phaser, Bruker) for the water and ethanol mixtures used in each well. These data demonstrate that for homogeneous mixtures it is possible to detect shifts in XRD spectra caused by variations in the concentration of each material within a given XRD image pixel. The combination of imaging system resolution (both spatial and momentum transfer) and classification algorithm sensitivity will dictate the smallest amount of a given material within a pixel mixture that can be detected. Mixing homogeneous materials to obtain a desired XRD spectrum could be used in future studies to simulate fibroglandular tissue, whose spectrum lies between those of adipose and cancer1 (shown here to be a close XRD spectral match to ≈50:50 water:ethanol in D.iii), testing the performance of classification algorithms in more complex cases.

Acknowledgements

This work was supported in part by the North Carolina Biotechnology Center under award 2018-BIG-6511 and by the National Cancer Institute of the National Institutes of Health under award 1R33CA256102.

Footnotes

Conflict of Interest Statement

JG and AK are co-inventors on a provisional patent filed by Duke University (USPA #20200225371) for “Systems and methods for tissue discrimination via multi-modality coded aperture X-ray imaging”. JG and AK have financial interest in Quadridox, Inc. SS declares no competing interests.

Data Availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

- 1.Kidane G, Speller RD, Royle GJ, Hanby AM. X-ray scatter signatures for normal and neoplastic breast tissues. Phys Med Biol. 1999;44(7):1791–1802. doi: 10.1088/0031-9155/44/7/316 [DOI] [PubMed] [Google Scholar]

- 2.Batchelar DL, Davidson MTM, Dabrowski W, Cunningham IA. Bone-composition imaging using coherent-scatter computed tomography: assessing bone health beyond bone mineral density. Med Phys. 2006;33(4):904–915. doi: 10.1118/1.2179151 [DOI] [PubMed] [Google Scholar]

- 3.Poletti ME, Gonçalves OD, Mazzaro I. Coherent and incoherent scattering of 17.44 and 6.93 keV x-ray photons scattered from biological and biological-equivalent samples: characterization of tissues. X-Ray Spectrometry. 2002;31(1):57–61. doi: 10.1002/xrs.538 [DOI] [Google Scholar]

- 4.Alsharif AM, Sani SFA, Moradi F. X-ray diffraction method to identify epithelial to mesenchymal transition in breast cancer tissue. IOP Conf Ser: Mater Sci Eng. 2020;785:012044. doi: 10.1088/1757-899X/785/1/012044 [DOI] [Google Scholar]

- 5.Lewis RA, Rogers KD, Hall CJ, et al. Breast cancer diagnosis using scattered X-rays. J Synchrotron Rad. 2000;7(5):348–352. doi: 10.1107/S0909049500009973 [DOI] [PubMed] [Google Scholar]

- 6.Moss RM, Amin AS, Crews C, et al. Correlation of X-ray diffraction signatures of breast tissue and their histopathological classification. Scientific Reports. 2017;7(1):12998. doi: 10.1038/s41598-017-13399-9 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Leclair RJ, Johns PC. A semianalytic model to investigate the potential applications of x-ray scatter imaging. Medical Physics. 1998;25(6):1008–1020. doi: 10.1118/1.598279 [DOI] [PubMed] [Google Scholar]

- 8.Conceição ALC, Poletti ME. Identification Of Molecular Structures Of Normal And Pathological Human Breast Tissue Using Synchrotron Radiation. AIP Conference Proceedings. 2010;1266(1):72–77. doi: 10.1063/1.3478202 [DOI] [Google Scholar]

- 9.Royle GJ, Speller RD. Quantitative x-ray diffraction analysis of bone and marrow volumes in excised femoral head samples. Phys Med Biol. 1995;40(9):1487–1498. doi: 10.1088/0031-9155/40/9/008 [DOI] [PubMed] [Google Scholar]

- 10.Uvarov V, Popov I, Shapur N, et al. X-ray diffraction and SEM study of kidney stones in Israel: quantitative analysis, crystallite size determination, and statistical characterization. Environ Geochem Health. 2011;33(6):613–622. doi: 10.1007/s10653-011-9374-6 [DOI] [PubMed] [Google Scholar]

- 11.Zhu Z, Ellis RA, Pang S. Coded cone-beam x-ray diffraction tomography with a low-brilliance tabletop source. Optica, OPTICA. 2018;5(6):733–738. doi: 10.1364/OPTICA.5.000733 [DOI] [Google Scholar]

- 12.Sosa C, Malezan A, Poletti ME, Perez RD. Compact energy dispersive X-ray microdiffractometer for diagnosis of neoplastic tissues. Radiation Physics and Chemistry. 2017;137:125–129. doi: 10.1016/j.radphyschem.2016.11.005 [DOI] [Google Scholar]

- 13.Escudero RO, Cabral MC, Valladares M, Franco MA, Perez RD. Breast cancer analysis by confocal energy dispersive micro-XRD. Anal Methods. 2020;12(9):1250–1256. doi: 10.1039/C9AY02183C [DOI] [Google Scholar]

- 14.Kern K, Peerzada L, Hassan L, MacDonald C. Design for a coherent-scatter imaging system compatible with screening mammography. J Med Imaging (Bellingham). 2016;3(3). doi: 10.1117/1.JMI.3.3.030501 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Stryker S, Greenberg JA, McCall SJ, Kapadia AJ. X-ray fan beam coded aperture transmission and diffraction imaging for fast material analysis. Scientific Reports. 2021;11(1):10585. doi: 10.1038/s41598-021-90163-0 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Marticke F, Montémont G, Paulus C, Michel O, Mars JI, Verger L. Simulation study of an X-ray diffraction system for breast tumordetection. Nuclear Instruments and Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment. 2017;867:20–31. doi: 10.1016/j.nima.2017.04.026 [DOI] [Google Scholar]

- 17.LeClair RJ, Ferreira AC, McDonald NE, Laamanen C, Tang RY zheng. Model predictions for the wide-angle x-ray scatter signals of healthy and malignant breast duct biopsies. JMI. 2015;2(4):043502. doi: 10.1117/1.JMI.2.4.043502 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Hassan L, MacDonald CA. Coherent scatter imaging Monte Carlo simulation. J Med Imaging (Bellingham). 2016;3(3). doi: 10.1117/1.JMI.3.3.033504 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Stryker S, Kapadia AJ, Greenberg JA. Simulation based evaluation of a fan beam coded aperture x-ray diffraction imaging system for biospecimen analysis. Phys Med Biol. 2021;66(6):065022. doi: 10.1088/1361-6560/abe779

- 20.Westmore MS, Fenster A, Cunningham IA. Tomographic imaging of the angular-dependent coherent-scatter cross section. Med Phys. 1997;24(1):3–10. doi: 10.1118/1.597917

- 21.Albanese K, Morris R, Lakshmanan M, Greenberg J, Kapadia A. Tissue Equivalent Material Phantom to Test and Optimize Coherent Scatter Imaging for Tumor Classification. Medical Physics. 2015;42(6Part30):3575–3575. doi: 10.1118/1.4925454

- 22.Feldman V, Tabary J, Paulus C, Hazemann JL. Novel geometry for X-Ray diffraction mammary imaging: experimental validation on a breast phantom. In: Medical Imaging 2019: Physics of Medical Imaging. Vol 10948. International Society for Optics and Photonics; 2019:109485O. doi: 10.1117/12.2511460

- 23.Feldman V, Paulus C, Tabary J, Monnet O, Gentet MC, Hazemann JL. X-ray diffraction setup for breast tissue characterization: Experimental validation on beef phantoms. Nuclear Instruments and Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment. 2020;972:164075. doi: 10.1016/j.nima.2020.164075

- 24.Alaroui DF, Johnston EM, Farquharson MJ. Comparison of two X-ray detection systems used to investigate properties of normal and malignant breast tissues. Nuclear Instruments and Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment. 2020;954:161900. doi: 10.1016/j.nima.2019.02.027

- 25.Bai J, Lai Z, Yang R, Xue Y, Gregoire J, Gomes C. Imitation Refinement for X-ray Diffraction Signal Processing. In: ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE; 2019:3337–3341. doi: 10.1109/ICASSP.2019.8683723

- 26.Farquharson MJ, Al-Ebraheem A, Cornacchi S, Gohla G, Lovrics P. The use of X-ray interaction data to differentiate malignant from normal breast tissue at surgical margins and biopsy analysis. X-Ray Spectrometry. 2013;42(5):349–358. doi: 10.1002/xrs.2455

- 27.Oviedo F, Ren Z, Sun S, et al. Fast and interpretable classification of small X-ray diffraction datasets using data augmentation and deep neural networks. npj Computational Materials. 2019;5(1):1–9. doi: 10.1038/s41524-019-0196-x

- 28.Vecsei PM, Choo K, Chang J, Neupert T. Neural network based classification of crystal symmetries from x-ray diffraction patterns. Phys Rev B. 2019;99(24):245120. doi: 10.1103/PhysRevB.99.245120

- 29.Lee JW, Park WB, Lee JH, Singh SP, Sohn KS. A deep-learning technique for phase identification in multiphase inorganic compounds using synthetic XRD powder patterns. Nature Communications. 2020;11(1):86. doi: 10.1038/s41467-019-13749-3

- 30.Maffettone PM, Banko L, Cui P, et al. Crystallography companion agent for high-throughput materials discovery. Nature Computational Science. 2021;1(4):290–297. doi: 10.1038/s43588-021-00059-2

- 31.Wang H, Xie Y, Li D, et al. Rapid Identification of X-ray Diffraction Patterns Based on Very Limited Data by Interpretable Convolutional Neural Networks. J Chem Inf Model. 2020;60(4):2004–2011. doi: 10.1021/acs.jcim.0c00020

- 32.Park WB, Chung J, Jung J, et al. Classification of crystal structure using a convolutional neural network. IUCrJ. 2017;4(4):486–494. doi: 10.1107/S205225251700714X

- 33.Ziletti A, Kumar D, Scheffler M, Ghiringhelli LM. Insightful classification of crystal structures using deep learning. Nat Commun. 2018;9(1):2775. doi: 10.1038/s41467-018-05169-6

- 34.Souza A, Oliveira LB, Hollatz S, et al. DiffraNet: Automatic Classification of Serial Crystallography Diffraction Patterns. Published online September 27, 2018. Accessed May 27, 2021. https://openreview.net/forum?id=BkfxKj09Km

- 35.Jha D, Kusne AG, Al-Bahrani R, et al. Peak Area Detection Network for Directly Learning Phase Regions from Raw X-ray Diffraction Patterns. In: 2019 International Joint Conference on Neural Networks (IJCNN). IEEE; 2019:1–8. doi: 10.1109/IJCNN.2019.8852096

- 36.Moeck P. On Classification Approaches for Crystallographic Symmetries of Noisy 2D Periodic Patterns. IEEE Transactions on Nanotechnology. 2019;18:1166–1173. doi: 10.1109/TNANO.2019.2946597

- 37.Brumbaugh K, Royse C, Gregory C, Roe K, Greenberg JA, Diallo SO. Material classification using convolution neural network (CNN) for x-ray based coded aperture diffraction system. In: Anomaly Detection and Imaging with X-Rays (ADIX) IV. Vol 10999. International Society for Optics and Photonics; 2019:109990B. doi: 10.1117/12.2519983

- 38.Hassan M, Greenberg JA, Odinaka I, Brady DJ. Snapshot fan beam coded aperture coherent scatter tomography. Opt Express. 2016;24(16):18277–18289. doi: 10.1364/OE.24.018277

- 39.MacCabe K, Krishnamurthy K, Chawla A, Marks D, Samei E, Brady D. Pencil beam coded aperture x-ray scatter imaging. Opt Express. 2012;20(15):16310–16320. doi: 10.1364/OE.20.016310

- 40.Greenberg JA, Krishnamurthy K, Brady D. Snapshot molecular imaging using coded energy-sensitive detection. Opt Express. 2013;21(21):25480–25491. doi: 10.1364/OE.21.025480

- 41.Greenberg JA, Wolter S. X-ray Diffraction Imaging Technology and Applications. In: Devices, Circuits, and Systems Series. CRC Press; 2019.

- 42.Paternò G, Cardarelli P, Gambaccini M, Taibi A. Comprehensive data set to include interference effects in Monte Carlo models of x-ray coherent scattering inside biological tissues. Phys Med Biol. 2020;65(24):245002. doi: 10.1088/1361-6560/aba7d2

- 43.Kiarashi N, Nolte AC, Sturgeon GM, et al. Development of realistic physical breast phantoms matched to virtual breast phantoms based on human subject data. Medical Physics. 2015;42(7):4116–4126. doi: 10.1118/1.4919771

- 44.He Y, Liu Y, Dyer BA, et al. 3D-printed breast phantom for multi-purpose and multi-modality imaging. Quant Imaging Med Surg. 2019;9(1):63–74. doi: 10.21037/qims.2019.01.05

- 45.Georgiev TP, Kolev I, Dukov N, Mavrodinova S, Yordanova M, Bliznakova K. Development of an inkjet calibration phantom for x-ray imaging studies. Scripta Scientifica Medica. 2021;0(0). doi: 10.14748/ssm.v0i0.7410

- 46.King BW, Landheer KA, Johns PC. X-ray coherent scattering form factors of tissues, water and plastics using energy dispersion. Phys Med Biol. 2011;56(14):4377–4397. doi: 10.1088/0031-9155/56/14/010

- 47.Nisar M, Johns PC. Coherent scatter x-ray imaging of plastic/water phantoms. In: Armitage JC, Lessard RA, Lampropoulos GA, eds.; 2004:445. doi: 10.1117/12.567128

- 48.Horner JL, Gianino PD. Phase-only matched filtering. Appl Opt. 1984;23(6):812–816. doi: 10.1364/AO.23.000812

- 49.Ayhan B, Kwan C, Vance S. On The Use of a Linear Spectral Unmixing Technique For Concentration Estimation of APXS Spectrum. 2015;2(9):6.

- 50.Cruz JA, Wishart DS. Applications of Machine Learning in Cancer Prediction and Prognosis. Cancer Inform. 2006;2:117693510600200030. doi: 10.1177/117693510600200030

- 51.Royse C, Wolter S, Greenberg JA. Emergence and distinction of classes in XRD data via machine learning. In: Anomaly Detection and Imaging with X-Rays (ADIX) IV. Vol 10999. International Society for Optics and Photonics; 2019:109990D. doi: 10.1117/12.2519500

- 52.Zhao B, Wolter S, Greenberg JA. Application of machine learning to x-ray diffraction-based classification. In: Anomaly Detection and Imaging with X-Rays (ADIX) III. Vol 10632. International Society for Optics and Photonics; 2018:1063205. doi: 10.1117/12.2304683

- 53.Lawson CL, Hanson RJ. Solving Least-Squares Problems. Prentice Hall; 1974. Chapter 23, p. 161.

- 54.Lever J, Krzywinski M, Altman N. Points of Significance: Model selection and overfitting. Nature Methods. 2016;13(9):703–705. doi: 10.1038/nmeth.3968

- 55.Fan RE, Chen PH, Lin CJ. Working Set Selection Using Second Order Information for Training Support Vector Machines. Journal of Machine Learning Research. 2005;6(63):1889–1918.

- 56.Prajapati GL, Patle A. On Performing Classification Using SVM with Radial Basis and Polynomial Kernel Functions. In: 2010 3rd International Conference on Emerging Trends in Engineering and Technology; 2010:512–515. doi: 10.1109/ICETET.2010.134

- 57.MacKay DJC. Bayesian Interpolation. Neural Computation. 1992;4(3):415–447. doi: 10.1162/neco.1992.4.3.415

- 58.Dan Foresee F, Hagan MT. Gauss-Newton approximation to Bayesian learning. In: Proceedings of International Conference on Neural Networks (ICNN’97). Vol 3; 1997:1930–1935. doi: 10.1109/ICNN.1997.614194

- 59.Hell SW, Wichmann J. Breaking the diffraction resolution limit by stimulated emission: stimulated-emission-depletion fluorescence microscopy. Opt Lett. 1994;19(11):780–782. doi: 10.1364/OL.19.000780

- 60.Pang S, Zhu Z, Wang G, Cong W. Small-angle scatter tomography with a photon-counting detector array. Phys Med Biol. 2016;61(10):3734–3748. doi: 10.1088/0031-9155/61/10/3734

- 61.Beath SR, Cunningham IA. Pseudomonoenergetic x-ray diffraction measurements using balanced filters for coherent-scatter computed tomography. Medical Physics. 2009;36(5):1839–1847. doi: 10.1118/1.3108394

- 62.Parmar C, Grossmann P, Bussink J, Lambin P, Aerts HJWL. Machine Learning methods for Quantitative Radiomic Biomarkers. Sci Rep. 2015;5(1):13087. doi: 10.1038/srep13087

- 63.Yu L, Ding C, Loscalzo S. Stable feature selection via dense feature groups. In: Proceedings of the 14th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. KDD ’08. Association for Computing Machinery; 2008:803–811. doi: 10.1145/1401890.1401986


Supplementary Materials

Fig S1 Example breast lumpectomy tissue slice and the pathologist annotation used to create the water-PLA tissue phantoms. A) Photograph of a 5 mm thick breast lumpectomy sample containing cancerous, adipose, and fibroglandular tissue, which cannot be readily distinguished in the photograph. B) Hematoxylin and eosin (H&E) stained slide of a 10 μm section cut from the surface of the tissue in A after paraffin embedding. A senior pathologist marked the region containing invasive ductal carcinoma in red; white regions are typically adipose tissue, and pink regions are primarily fibroglandular tissue. C) The water-PLA phantom used in this study, based on the pathologist annotation in B. The approximate outline of the sample was traced and made of PLA, and the approximate outline of the cancerous region was made into a 5.5 mm thick well, which was filled with water as the cancer simulant.

Fig S2 Classification of water-PLA phantoms based on X-ray transmission intensity. A) Histograms of transmission intensity, expressed as the product μL of the linear attenuation coefficient (μ) and the X-ray path length (L) through the phantom thickness. B) Receiver operating characteristic (ROC) curve generated by varying the μL classification threshold, with water (cancer simulant) as the positive class; the area under the curve (AUC) is 0.773. C) Confusion matrix obtained at a μL classification threshold of 0.1281 (marked by the red vertical line in A and the red circle in B), with an overall accuracy of 85.45% for classifying water and PLA. The wider distribution of water μL values can be attributed to wells that contain less water (underfilling) or more water (surface tension allowing the water to rise above the PLA height). Volumetric measurements (e.g., CT scans) could potentially compensate for this variable thickness. However, in the ramp phantom, where the water is level with the top of the PLA walls, the water μL values are inseparable from those of PLA. This is shown by the predominantly false-negative (FN) square in C and by the uniform intensity in Fig. 5. These results demonstrate that transmission intensity alone can fail to robustly separate the materials of interest (compare Fig. 7). This motivates the study's exploration of X-ray diffraction data combined with advanced machine learning classifiers.
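The transmission-only baseline in Fig S2 reduces to three steps. First, intensity is converted to μL. Second, an ROC curve is scored by sweeping a threshold. Third, a confusion matrix is tallied at one operating point.

The sketch below is a minimal illustration of those steps, not the authors' analysis code. It makes several assumptions:
- μL is obtained through the Beer–Lambert relation μL = −ln(I/I₀).
- A higher score means "more likely water". Negate μL if the class ordering in the data is the reverse.
- The helper names (`mu_l_from_intensity`, `roc_auc`, `confusion_at_threshold`) and the toy values are hypothetical.

The AUC is computed as the Mann–Whitney statistic, which equals the area under the empirical ROC curve traced by sweeping the threshold.

```python
import numpy as np


def mu_l_from_intensity(intensity, open_beam_intensity):
    """Convert transmitted intensity to mu*L via Beer-Lambert: I = I0 * exp(-mu*L).

    Assumption: a simple monoenergetic Beer-Lambert model (hypothetical helper).
    """
    return -np.log(np.asarray(intensity, dtype=float) / open_beam_intensity)


def roc_auc(scores, labels):
    """Area under the ROC curve, computed as the probability that a randomly
    chosen positive sample scores higher than a randomly chosen negative one
    (ties count 1/2). Assumes higher scores indicate the positive class.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("need at least one positive and one negative sample")
    # Pairwise comparison: O(n_pos * n_neg) memory, fine for a small example.
    wins = np.sum(pos[:, None] > neg[None, :])
    ties = np.sum(pos[:, None] == neg[None, :])
    return (wins + 0.5 * ties) / (pos.size * neg.size)


def confusion_at_threshold(scores, labels, threshold):
    """Classify score >= threshold as positive; return (TP, FP, FN, TN, accuracy)."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pred = scores >= threshold
    tp = int(np.sum(pred & labels))
    fp = int(np.sum(pred & ~labels))
    fn = int(np.sum(~pred & labels))
    tn = int(np.sum(~pred & ~labels))
    return tp, fp, fn, tn, (tp + tn) / labels.size


if __name__ == "__main__":
    # Toy, hypothetical values (not measured data); label 1 = water, 0 = PLA.
    scores = [0.30, 0.20, 0.10, 0.15, 0.05, 0.00]
    labels = [1, 1, 1, 0, 0, 0]
    print(roc_auc(scores, labels))                         # 8/9, about 0.889
    print(confusion_at_threshold(scores, labels, 0.1281))  # (2, 1, 1, 2, 0.666...)
```

The threshold 0.1281 is reused in the toy call only for illustration; here it yields one false negative and one false positive. The rank-based AUC is used because it avoids building the ROC curve explicitly. For large pixel counts, a sort-based implementation would be preferable to the pairwise comparison shown.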