Abstract

Objective

Many employers have introduced rewards programs as a new benefit design in which employees are paid $25–$500 if they receive care from lower‐priced providers. Our goal was to assess the impact of the rewards program on procedure prices and choice of provider and how these outcomes vary by length of exposure to the program and patient population.

Study Setting

A total of 87 employers from across the nation, with 563,000 employees and dependents, that introduced the rewards program in 2017 or 2018.

Study Design

Difference‐in‐difference analysis comparing changes in average prices and market share of lower‐priced providers among employers who introduced the reward program to those that did not.

Data Collection Methods

We used claims data for 3.9 million enrollees of a large health plan.

Principal Findings

Introduction of the program was associated with a 1.3% reduction in prices during the first year and a 3.7% reduction in the second year of access. Use of the program and price reductions are concentrated among magnetic resonance imaging (MRI) services, for which 30% of patients engaged with the program, 5.6% of patients received an incentive payment in the first year, and 7.8% received an incentive payment in the second year. MRI prices were 3.7% and 6.5% lower in the first and second years, respectively. We did not observe differential impacts related to enrollment in a consumer‐directed health plan or the degree of market‐level price variation. We also did not observe a change in utilization.

Conclusions

The introduction of financial incentives to reward patients for receiving care from lower‐priced providers is associated with modest price reductions, and savings are concentrated among MRI services.

Keywords: price shopping, price variation, rewards programs

What is known on this topic

The wide variation in private insurance prices for common health services creates a potential opportunity for savings if programs can shift patients from higher‐priced to lower‐priced providers.

Rewards programs, which offer financial incentives to patients who receive care from designated lower‐priced providers, have grown in popularity among employer‐sponsored insurance plans.

What this study adds

Using data on 3.9 million enrollees of a large health plan, we find that introduction of the program among employer‐sponsored insurance plans was associated with modest reductions in prices.

Price reductions from selecting lower‐priced providers were 1.3% during the first year of the program and 3.7% during the second year of the program and were concentrated among magnetic resonance imaging services.

Rewards incentive programs result in modest savings, but the savings are less than what has been observed in other benefit design changes such as reference pricing.

1. INTRODUCTION

There is wide variation in health care prices within the United States among the commercially insured population 1 , 2 , 3 , 4 , 5 both across markets and within markets. This price variation for common “shoppable” services presents a substantial opportunity for savings. For example, if patients shifted their care from higher‐priced magnetic resonance imaging (MRI) providers to those at the 25th percentile within the same market, overall health care spending would be reduced by 1.1%. However, shifting patient demand to lower‐priced providers has proven challenging in health care. Popular policies such as price transparency initiatives and high‐deductible health plans have been ineffective in encouraging price shopping. 6 , 7 , 8 , 9 , 10

In contrast, benefit design choices such as reference pricing have been more effective. Under reference pricing, patients pay the full difference between a provider's price and a predefined price threshold. Reference pricing has led to price reductions ranging from 10% to 32% for services that are in the reference pricing program. 11 , 12 However, reference pricing has been ineffective in reducing total health care spending because it has been applied to a small number of services and few employers have implemented reference pricing. Many employers are reluctant to use reference pricing because it exposes their employees to potential out‐of‐pocket costs in the thousands of dollars. 13 , 14

Rewards programs are essentially the flip side of reference pricing. To encourage the use of lower‐priced providers, the rewards program we study pays patients who select lower‐priced providers a financial reward, ranging from $25 to $500 depending on the service and the chosen provider. The rewards incentives serve as a “nudge” to address information barriers to price shopping. These programs have grown rapidly in the last several years. 15

To help examine the impacts of these programs, this paper studies how patients responded to a financial rewards program implemented by a large insurer, the Health Care Service Corporation (HCSC). In an earlier paper, we evaluated the impact of a pilot program among 35 employers and found it led to a 2.1% reduction in spending among services eligible for rewards. 16 Following the pilot program, the rewards program was expanded to hundreds of other employers.

In this paper, we build on our earlier analysis of the pilot program in three ways. First, we examined the impact across a broader set of employers to assess whether the savings observed in the pilot program were generalizable. Second, we assessed whether savings increase or decrease over time. Patient populations may learn how to navigate these programs over time, which could increase the impact, or they may lose interest after an initial implementation period, which would decrease the impact. Third, taking advantage of the larger study population, we studied how the rewards program intersects with other health plan and market characteristics. The impact of the rewards program may differ among those enrolled in a high‐deductible health plan. Specifically, existing studies find that high deductibles do not lead to meaningful price shopping, although there is a modest impact on prices for laboratory tests. 17 However, the impact of the interaction between consumer‐directed health plans (CDHPs), which pair high deductibles with tax‐advantaged health spending accounts, and the rewards program is unknown. The deductible may increase the incentives to price shop, and enrollees in high‐deductible plans may have more experience with price shopping and thus may be more engaged in the rewards program. We also compared the impact of the rewards program in markets with wide variation in prices versus markets with less variation. While the program is tailored to specific markets, more variation in prices provides more opportunities for rewards and, therefore, greater potential impact.

Conceptually, the impact of rewards programs on prices and savings is unclear. The financial incentive of direct payments to the patient obviously can increase patient willingness to price shop. Rewards programs may also decrease utilization because the rewards program exposes patients to information about prices prior to receiving care. Upon learning about prices, some patients might view even low‐priced providers as too expensive and may decide not to receive care. This concern may be especially relevant for patients in high‐deductible health plans, given the rewards incentives are lower than mean deductibles.

However, rewards programs could drive increased utilization. For example, a patient on the margin of having a procedure may choose to do so because of the potential reward, which lowers the net price faced by patients (out‐of‐pocket costs minus any rewards). A rewards program may perversely encourage patients to choose higher‐priced providers because patients may equate a lower provider price with lower provider quality. 18 The rewards make it easier for patients to identify lower‐priced providers. Because insurance coverage decreases patient sensitivity to provider prices, 19 patients may be unconcerned that higher prices lead to higher out‐of‐pocket costs.

Rewards programs may simply be ineffective because patients may be unaware that the programs exist. Even if patients are aware of these programs, they may not have information on provider prices and thus may not be able to navigate these programs. 20 To give patients access to information on provider prices, price transparency tools have been created, but use of these tools has been low and savings have been minimal. 3 , 7 , 8 , 21 , 22 , 23 One explanation is that patients cannot act on the price information. For example, many services require physician referrals, which limit the agency of patients to price shop, even for “commodity” health care services. 24 Physicians employed by a hospital or health system have incentives to refer patients to their employing hospital, which tends to be higher‐priced. 24 , 25

The key empirical question this paper seeks to address is whether these incentives are sufficiently high‐powered to change patient choice of provider and how the effect of these incentives varies by procedure, length of exposure to the program, among patients with alternative incentive programs, and based on market characteristics.

2. METHODS

2.1. Setting and program

HCSC is the fourth‐largest insurer in the United States and the BlueCross BlueShield affiliate for Illinois, New Mexico, Montana, Oklahoma, and Texas. For its employer‐sponsored insurance plans, HCSC offers the Member Rewards program, which is administered by Vitals. Vitals is responsible for determining the providers eligible for rewards incentives and paying the rewards incentives. The Member Rewards program was piloted among 35 preselected employers in 2017 and was expanded as an option to all HCSC employers in 2018. For 135 select services or procedures (full list in Appendix Table 1), the Member Rewards program designates less‐expensive providers. Patients who receive care from these providers are eligible for a financial incentive that ranges from $25 to $500, depending on the procedure and the relative price of the provider chosen. Average incentive payments range from $42 for mammograms to $301 for major procedures (Appendix Table 2).

To be eligible for a rewards incentive, patients are required to use the HCSC online price transparency tool or the phone‐based support line to search for price information prior to receiving the procedure. The search must be for the same procedure type that the patient receives but does not have to be for the exact same procedure (e.g., a search for a computed tomography scan enables rewards payments for MRIs). This step is designed to limit giving rewards to patients who would have gone to lower‐priced providers in the absence of the rewards program. If a patient receives the same service within a year of a price transparency search, they do not need to search prices again to be eligible for a rewards payment. However, after a year, the enrollee must again do a price transparency search if they want to receive a reward.
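The search requirement described above can be sketched as a simple rule check. This is a minimal illustration, not the program's actual logic: the procedure-to-type mapping, the function name, and the reading of "within a year" as 365 days are all assumptions made for the example. It covers only the search requirement, not whether the chosen provider is rewards‐eligible.

```python
from datetime import date, timedelta

# Hypothetical mapping from procedure to procedure type; the program's real
# grouping is not reproduced in the text.
PROCEDURE_TYPE = {"ct_scan": "imaging", "mri": "imaging", "colonoscopy": "endoscopy"}

SEARCH_VALIDITY = timedelta(days=365)  # assumption: "within a year" = 365 days


def is_search_eligible(searches, procedure, service_date):
    """Return True if a prior price search satisfies the search requirement.

    searches: list of (search_date, searched_procedure) tuples for one patient.
    """
    target_type = PROCEDURE_TYPE[procedure]
    for search_date, searched in searches:
        # The search must be for the same procedure type, not the same procedure.
        same_type = PROCEDURE_TYPE.get(searched) == target_type
        # The search must precede the service and be no more than a year old.
        in_window = timedelta(0) <= service_date - search_date <= SEARCH_VALIDITY
        if same_type and in_window:
            return True
    return False
```

Under these assumptions, a CT scan search in January 2017 would satisfy the requirement for an MRI in March 2017 but not for an MRI in March 2018.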

2.2. Data

We used 2016–2018 HCSC claims data that include procedure‐level information on the provider that performed the procedure, prices, patient cost‐sharing, patient demographics (e.g., age, gender, zip code), and patient diagnoses. We categorized the 135 individual procedures into eight procedure categories using the groupings in Appendix Table A1. Additionally, we linked data on rewards incentive payments to claims. Thus, we can observe which patients received a reward and the corresponding provider choice that led to that reward. For each of the relevant procedures, Vitals determines the set of providers for which patients are eligible to receive a rewards incentive payment if they go to that provider. This determination is based on the price distribution within the market and the availability of lower‐priced providers. While we do not have access to Vitals' designation on which providers are rewards‐eligible, because we have data on which procedures resulted in rewards, we can approximate the set of rewards‐eligible providers.

2.3. Pilot treatment group

In the pilot program, 35 self‐insured employers implemented the Member Rewards program starting in January 2017. As in our previous evaluation of the pilot program, we excluded six employers that lacked preperiod data. An additional seven employers with approximately 30,000 individuals either left HCSC or discontinued the rewards program. For the remaining 22 employers, which account for approximately 240,000 employees and their dependents, we have 2 years of postintervention data.

2.4. Expanded treatment group

The rewards program has proved to be popular among employers, and the number of employees and dependents covered has grown rapidly each year (Appendix Figure 1). In our expanded treatment group, we focused our analyses on self‐insured employers given their larger size. In the second year (start date January 2018), a total of 142 self‐insured employers covering 691,000 lives joined the program. Of these, we excluded 59 employers, covering approximately 325,000 lives, that were not HCSC clients for the entire 2016–2018 study period. For the remaining 65 employers, covering approximately 341,000 lives, we have 1 year of postintervention data.

2.5. Control population

Our control population consists of all self‐insured employers with HCSC that did not implement the Member Rewards program and were continuously HCSC customers during the 2016–2018 period. This population includes 1135 employer accounts and covers 3.4 million lives.

2.6. Outcomes

We focused our analyses on the 135 select services or procedures eligible for rewards which included mammograms. In 2018, HCSC discontinued reimbursement for both digital and analog mammograms and only reimbursed for computer‐aided detection mammograms. To avoid contamination between changes in prices and changes in type of mammogram available to patients, we excluded mammograms from this analysis.

Our primary outcome is the log‐transformed procedure price. We used the negotiated price, often referred to as the “allowed amount,” which captures the total transacted amount paid by a combination of the employer (e.g., employer/insurer payments) and the patient (e.g., patient copays, coinsurance, deductible payments). We used the log‐transformed procedure price to limit the influence of outliers. Our secondary outcome was a binary indicator for use of a lower‐priced provider. To define lower‐priced providers, we would ideally simply include providers for which patients are eligible to receive a rewards payment. Unfortunately, as noted above, we did not have access to this set of providers. To approximate this set, we examined the price distribution for each service within geographic markets, defined at the Metropolitan Statistical Area (MSA) level, and looked for the cutoff below which patients received rewards payments. 26 This point varied across services and markets, ranging from the 33rd price percentile for major procedures to the 40th price percentile for minor surgical procedures. For simplicity, we used the 35th price percentile across all services as our definition of lower‐priced providers.
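The 35th‐percentile definition can be illustrated with a short sketch. The field names, the nearest‐rank percentile rule, and the choice to flag at the claim level (rather than first averaging prices by provider) are assumptions made for the example; the text does not specify these details.

```python
import math
from collections import defaultdict


def percentile(values, pct):
    """Nearest-rank percentile (one of several common definitions)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def flag_lower_priced(claims, pct=35):
    """Return copies of the claims with a 0/1 'lower_priced' flag added.

    claims: list of dicts with keys 'msa', 'service', 'price' (hypothetical names).
    A claim is flagged when its price is at or below the pct-th percentile
    of prices for the same service in the same MSA.
    """
    groups = defaultdict(list)
    for c in claims:
        groups[(c["msa"], c["service"])].append(c["price"])
    cutoffs = {key: percentile(prices, pct) for key, prices in groups.items()}
    return [
        {**c, "lower_priced": int(c["price"] <= cutoffs[(c["msa"], c["service"])])}
        for c in claims
    ]
```

Computing the cutoff within each MSA and service keeps the comparison local, so a price that is low in one market is not automatically low in another.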

Another secondary outcome was the annual utilization of care for each procedure. As noted above, the impacts on utilization are ambiguous.

2.7. Analyses

We estimated the impact of the program using a difference‐in‐differences study design. We separated the first‐ and second‐year impacts of the program by estimating separate treatment effects for the two cohorts, using the following regression:

$$y_{ijkt} = \alpha + \beta_1\,(\text{Pilot}_j \times 2017_t) + \beta_2\,(\text{Pilot}_j \times 2018_t) + \beta_3\,(\text{Expanded}_j \times 2018_t) + \gamma X_{it} + \theta_k + \delta_j + \tau_t + \varepsilon_{ijkt} \tag{1}$$

In this model, the dependent variable $y_{ijkt}$ measures each of the outcomes (log procedure price, log employer payment, log patient payment, choice of lower‐priced provider, any service utilization, and annual spending) for patient $i$ who receives benefits from employer $j$ for procedure $k$ during time period $t$. We included controls $X_{it}$ for patient age (in 10‐year categories), gender, and CDHP enrollment. We also included fixed effects for procedure ($\theta_k$), employer ($\delta_j$), and time period ($\tau_t$), which we measured as the interaction of year and calendar quarter. When estimating the utilization effects, we included service category rather than procedure‐fixed effects and included year‐fixed effects instead of the year‐by‐quarter‐fixed effects.

The term $\text{Pilot}_j$ denotes the first‐year treatment program and $\text{Expanded}_j$ is for the second‐year treatment program. The interaction of $\text{Pilot}_j$ with 2017 estimates the impact of the first year of the program, while the 2018 interaction estimates the effects in the second year. For the $\text{Expanded}_j$ treatment group, the 2018 interaction estimates the effects in the first year of the program. The time‐ and employer‐fixed effects negate the need for separate treatment and post main effects. We estimated this model for all combined procedures to estimate the overall impacts of the program and separately for each service category to assess differences across procedure types. We estimated this model using ordinary least squares. As a sensitivity test, we estimated separate regressions for the two cohorts. We likewise tested the sensitivity of our regression model to the included controls by iteratively adding employer‐fixed effects, MSA‐fixed effects, and interactions between state and year (Appendix Table 5). In an additional sensitivity test, we used the log‐transformed sum of the procedure price and any rewards incentive payments as a dependent variable. Including rewards incentive payments accounts for the costs of changing patient behavior.
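The core difference‐in‐differences comparison can be illustrated with a deliberately simplified sketch. It is a 2×2 comparison of mean log prices (treated versus control, before versus after), not the fixed‐effects regression with covariates described above, and the function name and input layout are assumptions made for the example.

```python
import math
from statistics import mean


def did_log_price(rows):
    """2x2 difference-in-differences on mean log price.

    rows: iterable of (treated: bool, post: bool, price: float).
    Returns (treated post - treated pre) - (control post - control pre),
    measured in log points.
    """
    cells = {(t, p): [] for t in (True, False) for p in (True, False)}
    for treated, post, price in rows:
        cells[(treated, post)].append(math.log(price))
    m = {key: mean(vals) for key, vals in cells.items()}
    return (m[(True, True)] - m[(True, False)]) - (m[(False, True)] - m[(False, False)])
```

Subtracting the control group's change removes price trends common to both groups, which is the role the time‐fixed effects play in the full regression.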

In this model, the main identification assumption is that there are no contemporaneous events or policies that influence our outcomes of interest. We believed the decision to implement or not implement the program likely occurs quasi‐randomly at the employer level. A potential concern is that contemporaneous programs implemented by the employer could influence employee decisions. An example of such a program would be the implementation of a high‐deductible plan or other changes to benefit design. For this reason, we included controls for CDHP plans, and as shown in Table 2, trends in CDHP growth are similar between the two treatment groups and the control group. As a sensitivity test, we also estimated a specification that interacts the market‐ and year‐fixed effects. These interactions capture any unobserved changes at the market level that might impact our outcomes (e.g., provider entry or exit). As shown in the Appendix, iteratively adding these fixed effects does not meaningfully change the results.

To test the parallel trends assumption inherent in the difference‐in‐difference design, we used an event study approach. As in the main regression, we estimated the event study for the combined prices and separately for prices for each procedure. We also separated the two treatment populations. This approach allows us to examine trends in each outcome between both treatment groups and the control group, relative to the difference in the fourth quarter of 2016, which we use as the reference period. This approach also allows us to examine postimplementation trends, which can inform how patients learn about the program. These results are presented in the Appendix. For both treatment populations, the differences in preimplementation price trends are not statistically significant.
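To make the event study setup concrete, the sketch below shows one way to index calendar quarters relative to the fourth quarter of 2016 and build the corresponding indicator variables. The function names and the dictionary representation are assumptions made for the example, not the authors' code.

```python
REFERENCE = (2016, 4)  # reference period named in the text: 2016 Q4


def event_time(year, quarter, reference=REFERENCE):
    """Quarters elapsed since the reference quarter (negative = earlier)."""
    return (year - reference[0]) * 4 + (quarter - reference[1])


def event_dummies(year, quarter, periods):
    """One 0/1 indicator per event time, omitting the reference period (0)."""
    k = event_time(year, quarter)
    return {p: int(p == k) for p in periods if p != 0}
```

With quarterly data for 2016 through 2018, the event times run from −3 (2016 Q1) to 8 (2018 Q4). Interacting these indicators with a treatment indicator yields one coefficient per quarter, each measured relative to the omitted reference quarter.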

2.8. Sub‐group analyses

The efficacy of the rewards program we study may be influenced by both patient‐ and market‐level differences. In particular, many enrollees are in CDHPs that have high deductibles. As a sensitivity test, we estimated a triple‐differences regression model that interacts CDHP enrollment with each of the three difference‐in‐difference treatment terms. We again included employer‐fixed effects in these models. Thus, the heterogeneity in treatment effects is driven by plans that switch to CDHPs. We did not have information on the type of CDHP (e.g., whether the employer provided Health Reimbursement Arrangement or Health Savings Account contributions) or the timing of when the patient met their deductible. Overall, there was an approximately 10 percentage point increase in the share of CDHP plans over the 3 years.

Likewise, the wide variation in market prices creates a potential for heterogeneous responses across markets. Markets with wide variation in provider prices offer larger potential savings than markets with less price variation. To examine this dimension of heterogeneity, we calculated the 75th and 25th price percentiles for each procedure category, year, and MSA combination. We then calculated the procedure category‐specific ratio of the 75th to the 25th price percentile within each MSA and year. Similar to the CDHP test, we interacted this 75th/25th price percentile ratio with each treatment variable in a triple‐differences regression. This regression measures the differential impacts of the program across markets based on the level of price variation in each market.
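The price variation measure can be sketched as follows. The field names and the nearest‐rank percentile rule are assumptions made for the example; the text specifies only that the ratio is computed by procedure category, year, and MSA.

```python
import math
from collections import defaultdict


def percentile(values, pct):
    """Nearest-rank percentile (one of several common definitions)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def price_variation(claims):
    """Map (msa, category, year) to the ratio of the 75th to 25th percentile price.

    claims: list of dicts with keys 'msa', 'category', 'year', 'price'
    (hypothetical names).
    """
    groups = defaultdict(list)
    for c in claims:
        groups[(c["msa"], c["category"], c["year"])].append(c["price"])
    return {k: percentile(v, 75) / percentile(v, 25) for k, v in groups.items()}
```

A ratio of 1 indicates no spread between the 25th and 75th percentiles, and larger values indicate more room for savings from switching providers.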

3. RESULTS

In 2018, there were approximately 241,000 individuals in the pilot treatment group, 361,000 in the expanded treatment group, and 3.4 million in the control group (Table 1). The majority of all enrollees in these three groups come from the South and Midwest regions. Across the three groups, age and gender distributions are similar. The share of enrollees in a CDHP during 2016 was 26.3% for the pilot treatment group, 37.6% for the expanded treatment group, and 29.4% for the control group. Given these differences, our regression models control for CDHP enrollment.

TABLE 1.

Descriptive characteristics of three cohorts preintervention (2016)

| | Pilot cohort employers | Expanded treatment cohort | Control employers |
|---|---|---|---|
| Total # of unique enrollees | 222,224 | 341,247 | 3,350,375 |
| Employers | 22 | 65 | 1135 |
| Enrollees per employer | 10,101 | 5250 | 2952 |
| Region of country | | | |
| South | 47.8% | 36.1% | 42.8% |
| Midwest | 31.4% | 37.6% | 34.6% |
| West | 12.7% | 11.7% | 17.3% |
| Northeast | 8.2% | 14.6% | 5.4% |
| Average months enrolled | 11.2 | 11.2 | 11.2 |
| Avg. age | 33 | 32.5 | 34 |
| % Male | 45.9% | 50.3% | 51.1% |
| % of CDHP (high‐deductible plan) | 26.3% | 37.6% | 29.4% |
| # Enrollees with procedure | 35,511 | 60,325 | 515,907 |
| % of Enrollees with procedure | 16.0% | 17.7% | 15.4% |
| Mean procedures per person ᵃ,ᵇ | 2.0 | 2.0 | 2.0 |
| Mean procedure price ᵃ | $1320 | $1337 | $1370 |
| Mean patient payment | $273 | $219 | $216 |

Abbreviation: CDHP, consumer‐directed health plan.

ᵃ Limited to rewards‐eligible procedures.

ᵇ Among patients with any procedure.

3.1. Fraction of procedures for which patients price shopped

For the pilot treatment cohort, the fraction of rewards‐eligible services for which the patient used the price shopping tool in the 12 months prior to the service increased to 9.3% by the end of the first year and 13.4% by the end of the second year. In the expanded treatment cohort, this rate was lower, at 7.3% by the end of the first year.

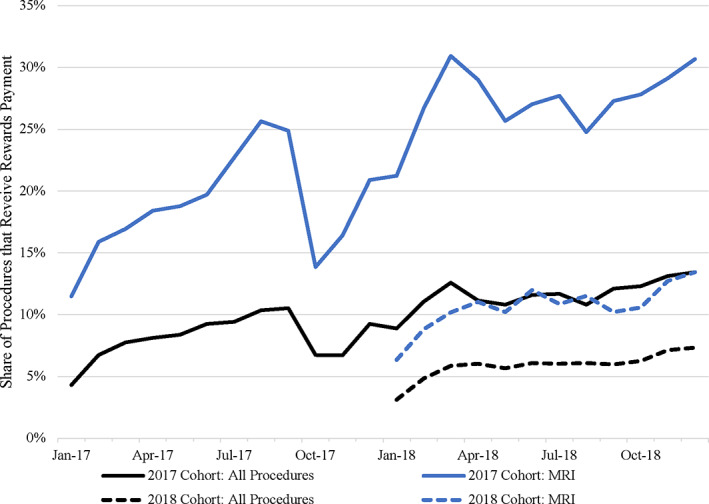

Across different types of services, MRIs had the highest engagement with the price transparency tools (Figure 1). In the pilot group, the fraction of MRIs for which the patient used the price transparency tools reached 26.0% at the end of the first year and increased further to 30.7% at the end of the second year. Among the expanded cohort, 13.4% of MRI procedures were preceded by a price comparison by the end of the first year.

FIGURE 1.

Rewards program engagement trends. This figure presents the share of procedures for which patients used the online and/or telephone tools to view price information, for all procedures (black line), magnetic resonance imaging (MRI) procedures (blue line), which had the highest rate of engagement with the program, and minor procedures (red line), which had the lowest rate of engagement. For each procedure category, the solid line indicates the cohort of 22 pilot employers that implemented the rewards program in 2017, and the dashed line indicates the cohort of 65 employers that implemented the program in 2018.

The share of rewards‐eligible procedures that received a reward payment ranged from 0.3% for minor procedures to 7.8% for MRIs (Appendix Table 3). Thus, approximately 25% of the patients who engaged with the rewards program through viewing the price transparency resources received a rewards payment for selecting a lower‐priced provider.
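As a rough check on the size of this conversion rate, the MRI figures reported above for the pilot cohort can be combined. This pairing of the 7.8% payment rate with the 30.7% engagement rate is an illustrative assumption, since the text does not state exactly which figures underlie the 25% estimate.

```latex
\[
\frac{\text{share of MRIs with a reward payment}}{\text{share of MRIs with a price search}}
= \frac{7.8\%}{30.7\%} \approx 0.254 \approx 25\%
\]
```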

3.2. Procedure prices and choice of providers

Compared to controls, we estimated there was a 1.3% reduction in prices for all rewards‐eligible services during the first year in both the pilot and the expanded treatment cohorts (Table 2). In the second year of the program, there was a 3.7% reduction in overall prices among the pilot cohort. Based on baseline spending and use of procedures covered by the rewards program, the 3.7% overall price reduction translates to a $15.15 reduction in annual medical spending per eligible member.

TABLE 2.

Association between implementation of rewards program and procedure price and provider price

| | (1) All procedures | (2) CT scan | (3) MRI | (4) Ultrasound | (5) Other imaging | (6) Endoscopy | (7) Minor procedure | (8) Moderate procedure | (9) Major procedure |
|---|---|---|---|---|---|---|---|---|---|
| Panel A: Procedure price (% change in price) | | | | | | | | | |
| Pilot cohort × 2017 | −1.27%*** | −0.98% | −3.73%*** | −0.20% | −0.80% | −1.26% | −2.00% | 1.53% | 1.61% |
| Pilot cohort × 2018 | −3.59%* | −4.29% | −6.47%** | −2.67%* | −3.76% | −0.74% | −7.55%*** | −0.64% | −1.32% |
| Expanded cohort × 2018 | −1.31%*** | 0.050% | −3.05%*** | −0.92% | −2.92%** | −0.83% | −1.61% | −1.80%* | 2.02% |
| Panel B: Probability of selecting lower‐priced provider (percentage points) | | | | | | | | | |
| Pilot cohort × 2017 | 0.83** | 0.69 | 2.10*** | 0.10 | −0.6 | 1.62* | 1.44 | −0.34 | −2.65** |
| Pilot cohort × 2018 | 2.19** | 2.42 | 3.70** | 1.60* | 4.14** | 1.46 | 2.00** | −0.05 | −2.23 |
| Expanded cohort × 2018 | 1.07*** | 0.47 | 1.90*** | 0.48 | 3.62*** | 2.00*** | 0.96 | 0.65 | −2.20* |

Note: This table presents the procedure‐level difference‐in‐difference regression results that estimate the impact of the rewards program on procedure prices (Panel A) and use of lower‐priced providers (Panel B). Prices are log‐transformed. Within each outcome, the first row presents the first‐year effects for the pilot cohort that implemented the rewards program in 2017, the second row presents second‐year effects for the pilot cohort, and the third row presents first‐year effects for the expanded cohort that implemented the rewards program in 2018. All regressions include controls for age, gender, and consumer‐directed health plan (CDHP) enrollment and fixed effects for year, month, employer, Metropolitan Statistical Area (MSA), and specific procedure. Standard errors clustered at employer level. ***p < 0.01, **p < 0.05, and *p < 0.

Abbreviations: CT, computed tomography; MRI, magnetic resonance imaging.

Among MRI procedures, there was a 3.8% and 3.1% reduction in prices in the first year for the pilot and expanded treatment cohorts, respectively, and a 6.7% reduction in the second year for the pilot cohort. During the second year of implementation, we observed a 2.7% reduction in prices for ultrasounds and an 8.0% reduction in prices for minor procedures. We did not find any clear impact on other types of services. The impact of implementation of the program on prices is reduced when rewards incentive payments are included in procedure prices (Appendix Table 4).
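Because the primary outcome is the log‐transformed price, it can help to recall how a coefficient on a log outcome maps to a percent change. The sketch below shows the standard conversion. The function names and the example coefficient are illustrative assumptions, not values taken from the study's regression output.

```python
import math


def log_points_to_percent(beta):
    """Convert a coefficient on a log outcome to a percent change."""
    return (math.exp(beta) - 1) * 100


def percent_to_log_points(pct):
    """Inverse conversion: percent change to a log-point coefficient."""
    return math.log(1 + pct / 100)


# Illustrative coefficient only (hypothetical, not from the study).
example_beta = -0.038
example_pct = log_points_to_percent(example_beta)
```

For the hypothetical coefficient of −0.038, the implied change is roughly −3.7%. For effects of the size reported here, the log‐point and percent figures are close but not identical, and the gap widens as effects grow.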

We found similar results when we examined what fraction of services is provided by a lower‐priced provider (Table 2). The first year of the program was associated with 0.8 and 1.0 percentage point increases in choice of lower‐priced providers for the pilot and expanded treatment cohorts, respectively. During the second year of implementation in pilot employers, this association increased to a 2.2 percentage point increase. When compared to the baseline mean rate of 35%, these absolute changes range from 2.3% to 6.3% relative increases in the selection of lower‐priced providers. Among MRIs, there was a 2.1 and 1.9 percentage point increase in the fraction of procedures performed by a lower‐priced provider during the first year for the pilot and expanded cohorts, respectively. In the second year, there was a 3.7 percentage point increase among the pilot cohort, a relative increase of 10.6%.
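The relative increases quoted above follow from dividing each percentage point change by the 35% baseline rate:

```latex
\[
\frac{0.8}{35} \approx 2.3\%, \qquad
\frac{2.2}{35} \approx 6.3\%, \qquad
\frac{3.7}{35} \approx 10.6\%
\]
```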

3.3. Sub‐group analyses

We did not observe any meaningful differences in treatment effects in CDHP versus non‐CDHP plans (Table 3). In markets with more variation, implementation of the rewards program was associated with larger reductions in procedure prices (Table 3). For example, the MRI results show that, relative to markets with no price variation, a one‐unit increase in the 75th/25th price percentile ratio leads to an additional 3.5% decrease in prices for the pilot cohort's first year and a 2.7% decrease for the expanded cohort's first year.

TABLE 3.

Differences in association between implementation of rewards program and procedure prices by consumer‐directed health plan (CDHP) enrollment and market‐level price variation

| | (1) All procedures | (2) CT scan | (3) MRI | (4) Ultrasound | (5) Other imaging | (6) Endoscopy | (7) Minor procedure | (8) Moderate procedure | (9) Major procedure |
|---|---|---|---|---|---|---|---|---|---|
| Panel A: Differences by CDHP enrollment | | | | | | | | | |
| CDHP plan enrollment | 0.84%** | 0.92% | 0.40% | 0.49% | −0.09% | 1.06%** | 2.63%*** | 0.098% | 0.86% |
| Pilot cohort × 2017 × CDHP enrollment | −1.32% | −2.24% | −1.47% | 0.042% | 4.38% | −1.06% | −2.77% | −3.41% | 3.35% |
| Pilot cohort × 2018 × CDHP enrollment | −0.47% | 0.09% | −2.03% | −0.37% | 0.27% | 0.84% | −0.57% | 1.32% | 2.84% |
| Expanded cohort × 2018 × CDHP enrollment | 0.49% | 2.29% | 0.68% | −0.53% | 3.51%* | −2.41%** | 0.40% | 1.15% | 2.49% |
| Panel B: Differences by market‐level price variation | | | | | | | | | |
| Price variation (ratio of 75th and 25th price percentiles) | 3.29%*** | −2.75%*** | 0.047% | 1.25% | 0.050% | 0.28% | 0.93%* | −2.43%*** | −0.81% |
| Pilot cohort × 2017 × variation | −0.07% | −0.55% | −3.49%*** | −1.53%* | −0.01% | −4.12%** | −1.58%** | −1.65% | 0.21% |
| Pilot cohort × 2018 × variation | 0.27% | 0.23% | 0.47% | −1.68%* | −0.49% | −2.00% | 0.25% | −0.97% | −4.03%* |
| Expanded cohort × 2018 × variation | −0.16% | −0.30% | −2.67%*** | −0.348% | 0.23% | 0.03% | 1.32%* | −0.41% | −0.94% |

Note: This table presents the procedure‐level difference‐in‐difference‐in‐differences regression results that estimate differences in the impact of the rewards program on procedure prices based on consumer‐directed health plan (CDHP) enrollment (Panel A) and market‐level price variation (Panel B). Procedure prices are log‐transformed. Market‐level price variation is defined as the ratio of the 75th and 25th price percentiles at the Metropolitan Statistical Area (MSA), procedure category, and year level. Within each panel, the first row presents the main effect for CDHP plans/the 75th/25th price percentile ratio. The second, third, and fourth rows interact the 2017 pilot cohort, 2018 pilot cohort, and 2018 expanded cohort indicators with the CDHP/price variation measures. All regressions include controls for age, gender, and fixed effects for year, month, employer, MSA, and specific procedure. Standard errors clustered at employer‐level. ***p < 0.01, **p < 0.05, and *p < 0.

Abbreviations: CT, computed tomography; MRI, magnetic resonance imaging.

3.4. Utilization of rewards‐eligible procedures

We did not find an overall change in the probability of having a rewards‐eligible service following implementation of the program (Table 4). Among MRIs, there was a 0.6 percentage point reduction in the likelihood of receiving a procedure in the second year for the pilot cohort, but this was not seen in either cohort in the first year. We did not find statistically significant associations between implementation of the rewards program and procedure use for other procedures. Thus, the reduction in spending is equivalent to the reductions in procedure prices.

TABLE 4.

Association between implementation of rewards program and any claim during year

| | (1) All procedures | (2) CT scan | (3) MRI | (4) Ultrasound | (5) Other imaging | (6) Endoscopy | (7) Minor procedure | (8) Moderate procedure | (9) Major procedure |
|---|---|---|---|---|---|---|---|---|---|
| Pilot cohort × 2017 | −0.28 | −0.12 | −0.06 | −0.05 | −0.10*** | 0.06 | −0.07** | −0.05 | 0.03 |
| Pilot cohort × 2018 | −0.51 | −0.37* | −0.59*** | 0.02 | −0.05 | 0.00 | −0.03 | 0.00 | −0.01 |
| Expanded cohort × 2018 | 0.04 | −0.06 | 0.00 | 0.03 | 0.02 | 0.05 | 0.01 | −0.01 | 0.01 |
| Baseline mean | 21.4% | 7.0% | 6.1% | 7.7% | 1.7% | 4.5% | 1.0% | 2.5% | 0.5% |

Note: This table presents the annual‐level difference‐in‐difference regression results that estimate the impact of the rewards program on the annual use of any rewards‐eligible procedure, measured in percentage points. The first row presents the first‐year effects for the pilot cohort that implemented the rewards program in 2017, the second row presents second‐year effects for the pilot cohort, and the third row presents first‐year effects for the expanded cohort that implemented the rewards program in 2018. All regressions include controls for age, gender, and consumer‐directed health plan (CDHP) enrollment and fixed effects for year, month, employer, Metropolitan Statistical Area (MSA), and specific procedure. Standard errors clustered at employer‐level are in parentheses. ***p < 0.01, **p < 0.05, and *p < 0.

Abbreviations: CT, computed tomography; MRI, magnetic resonance imaging.
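The logic of a difference‐in‐difference comparison can be sketched with a two‐group, two‐period example. This is a simplified illustration under stated assumptions, not the regression reported in Table 4: it omits the covariates, fixed effects, and employer‐level clustering described in the table note, and the function name `did_estimate` and all data are hypothetical.

```python
from statistics import mean


def did_estimate(rows):
    """Two-by-two difference-in-differences of group means.

    rows: iterable of (treated, post, outcome), where outcome is 1 if the
    person had any rewards-eligible claim in the year and 0 otherwise.
    """
    cells = {}
    for treated, post, outcome in rows:
        cells.setdefault((treated, post), []).append(outcome)
    m = {key: mean(values) for key, values in cells.items()}

    change_treated = m[(True, True)] - m[(True, False)]
    change_control = m[(False, True)] - m[(False, False)]
    return change_treated - change_control


def make_cell(treated, post, n_with_claim, n_total):
    """Build hypothetical person-level rows for one group and period."""
    outcomes = [1] * n_with_claim + [0] * (n_total - n_with_claim)
    return [(treated, post, y) for y in outcomes]


# Hypothetical data: 10 people per cell.
rows = (
    make_cell(True, False, 2, 10)     # program employers, before: 20% with a claim
    + make_cell(True, True, 1, 10)    # program employers, after: 10%
    + make_cell(False, False, 2, 10)  # comparison employers, before: 20%
    + make_cell(False, True, 2, 10)   # comparison employers, after: 20%
)

effect_pp = 100 * did_estimate(rows)  # expressed in percentage points
print(round(effect_pp, 1))
```

The estimate subtracts the change among comparison employers from the change among program employers, so trends common to both groups cancel out.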

4. DISCUSSION

While many employers have implemented rewards programs to encourage their employees to switch their care to lower‐priced providers, there has been little assessment of their impact. We examined the impacts of a financial rewards program implemented by a large insurer and designed to encourage patients to choose lower‐priced providers. Overall, we find that the incentive program is associated with modest increases in the choice of lower‐priced providers and modest reductions in prices. This impact roughly doubled from the first to the second year of the program. By the second year of the program, the estimated savings translate to a $15 reduction in annual medical spending. We were unable to observe the administrative costs required to implement the program, but employers and purchasers that consider these programs should compare these costs to our estimated savings. The savings observed after the program was expanded to a larger set of employers were only slightly smaller than the savings observed among the pilot group of employers. The impact of the program was not clearly different among enrollees in high‐deductible health plans or in markets with greater price variation.

We observed a rough doubling of the effect from the first year to the second year of the program among the pilot employers. We believe this reflects greater familiarity and engagement with the program among employees. However, it is important to emphasize that overall engagement with the program was relatively modest in all cohorts and all years. This could be due to several institutional features unique to health care markets. First, many patients may not be sensitive to provider prices, or there could be substantial heterogeneity in the share of patients who are price sensitive. Relatedly, there could be behavioral responses: patients may be loss averse and may respond more to the adverse consequences of high cost sharing in programs like reference pricing than to the positive incentives of the rewards program. 27 Patients may require even larger incentives to change behaviors. Finally, even if patients are price sensitive, the program may not sufficiently address nonfinancial barriers to price shopping, such as restrictive referral networks and patient reluctance to override the recommendation of their providers on where to get care. Many services require physician referrals, which limit the agency of patients to price shop, even for “commodity” health care services. 24 In addition, physicians employed by a hospital or health system have incentives to refer patients to their employing hospital, which tends to be higher‐priced, instead of to independent providers, which tend to be lower‐priced. 24, 25 Together, these factors make it difficult for patients to “shop” for these services in the same way that consumers shop for other undifferentiated products and services.

The savings we observe are concentrated in imaging services, in particular MRIs. MRIs are also where price transparency 3, 7 and reference pricing programs 12, 28, 29 have had some impact. One potential reason is that many patients may consider imaging services a commodity and may therefore be more willing to switch providers. For other procedures, patients are more likely to “shop” for a physician based on reputation and quality, and it may be more difficult to switch given that the patient must obtain another preoperative appointment. We did not find differences by patient enrollment in CDHP plans. We also found inconsistent effects based on market‐level price variation. The program is tailored to specific markets, and so the lack of findings suggests that some market‐specific price variation may be incorporated into the design of the rewards program.

This study is not without limitations. First, we did not observe the amount paid to implement and administer the program and so cannot estimate whether the program is overall cost saving. Second, we observe 2 years of program implementation and observe increased savings in each year. As patients continue to learn about the program, savings may also increase in future years. Finally, we only examined the financial impacts of the program but did not consider nonfinancial impacts. Some patients may value the care navigation and information about providers. Other patients may consider this program an additional burden that further complicates navigating the US health care system.

Despite these limitations, there continues to be innovation in benefit design to encourage enrollees to switch to lower‐priced providers. Relatively few employers have been enthusiastic about reference pricing (“the stick”), and many are instead embracing the “carrot” of rewards programs. We find that rewards programs do reduce prices and spending, and this impact increases over time. However, the resulting savings are modest and are unlikely to meaningfully reduce health care spending. Despite the rapid adoption among employers, savings from the program are minimal, and this is driven by low patient engagement. To be successful, future innovations will have to both be commonly used by patients and lead to behavioral changes.

Supporting information

ACKNOWLEDGMENTS

This research was supported by NIA 1K01AG061274 (Whaley) and a grant from the Laura and John Arnold Foundation. The views presented here are those of the authors and not necessarily those of the Laura and John Arnold Foundation, its directors, officers, or staff. We thank Jeffrey Kling and seminar participants at 2019 ASHEcon and Clemson University for helpful comments. This project was pre‐registered on the Open Science Framework: https://osf.io/d6jv9/

Whaley C, Sood N, Chernew M, Metcalfe L, Mehrotra A. Paying patients to use lower‐priced providers. Health Serv Res. 2022;57(1):37‐46. 10.1111/1475-6773.13711

Funding information Laura and John Arnold Foundation; National Institute on Aging, Grant/Award Number: K01 AG061274

REFERENCES

- 1. Baker L, Bundorf MK, Royalty A. Private insurers payments for routine physician office visits vary substantially across the United States. Health Aff. 2013;32(9):1583‐1590. 10.1377/hlthaff.2013.0309

- 2. Franzini L, White C, Taychakhoonavudh S, Parikh R, Zezza M, Mikhail O. Variation in inpatient hospital prices and outpatient service quantities drive geographic differences in private spending in Texas. Health Serv Res. 2014;49(6):1944‐1963. 10.1111/1475-6773.12192

- 3. Whaley C, Chafen Schneider J, Pinkard S, et al. Association between availability of health service prices and payments for these services. JAMA. 2014;312(16):1670‐1676. 10.1001/jama.2014.13373

- 4. Cooper Z, Craig SV, Gaynor M, Van Reenen J. The price ain't right? Hospital prices and health spending on the privately insured. Q J Econ. 2019;134(1):51‐107. 10.1093/qje/qjy020

- 5. NHE. National Health Expenditures Fact Sheet. Washington DC: Centers for Medicare & Medicaid Services; 2017. https://www.cms.gov/research-statistics-data-and-systems/statistics-trends-and-reports/nationalhealthexpenddata/nhe-fact-sheet.html. Accessed April 9, 2017.

- 6. Sood N, Wagner Z, Huckfeldt P, Haviland AM. Price shopping in consumer‐directed health plans. Forum Health Econ Policy. 2013;16(1):35‐53. http://www.degruyter.com/view/j/fhep.2013.16.issue-1/fhep-2012-0028/fhep-2012-0028.xml Accessed January 16, 2014.

- 7. Desai S, Hatfield LA, Hicks AL, et al. Offering a price transparency tool did not reduce overall spending among California public employees and retirees. Health Aff. 2017;36(8):1401‐1407. 10.1377/hlthaff.2016.1636

- 8. Desai S, Hatfield L, Hicks A, Chernew M, Mehrotra A. Association between availability of a price transparency tool and outpatient spending. JAMA. 2016;315(17):1874‐1881. 10.1001/jama.2016.4288

- 9. Haviland AM, Eisenberg MD, Mehrotra A, Huckfeldt PJ, Sood N. Do consumer‐directed health plans bend the cost curve over time? J Health Econ. 2016;46:33‐51. 10.1016/j.jhealeco.2016.01.001

- 10. Brot‐Goldberg ZC, Chandra A, Handel BR, Kolstad JT. What does a deductible do? The impact of cost‐sharing on health care prices, quantities, and spending dynamics. Q J Econ. 2017;132(3):1261‐1318. 10.1093/qje/qjx013

- 11. Robinson J, Brown T, Whaley C. Reference pricing changes the consumer choice architecture of health care. Health Aff. 2017;36(3):524‐530.

- 12. Sinaiko AD, Mehrotra A. Association of a national insurer's reference‐based pricing program and choice of imaging facility, spending, and utilization. Health Serv Res. 2020;55(3):348‐356. 10.1111/1475-6773.13279

- 13. Sinaiko AD, Shehnaz A, Mehrotra A. Why aren't more employers implementing reference‐based pricing benefit design? Am J Manag Care. 2019;25(2):85‐88.

- 14. Scanlon DP. If reference‐based benefit designs work, why are they not widely adopted? Insurers and administrators not doing enough to address price variation. Health Serv Res. 2020;55(3):344. 10.1111/1475-6773.13284

- 15. Arndt R. Encouraging patients to shop around amid high healthcare prices. Mod Healthc. 2018. https://www.modernhealthcare.com/article/20181103/TRANSFORMATION04/181039995/encouraging-patients-to-shop-around-amid-high-healthcare-prices Accessed October 11, 2020.

- 16. Whaley CM, Vu L, Sood N, Chernew ME, Metcalfe L, Mehrotra A. Paying patients to switch: impact of a rewards program on choice of providers, prices, and utilization. Health Aff. 2019;38(3):440‐447. 10.1377/hlthaff.2018.05068

- 17. Zhang X, Haviland A, Mehrotra A, Huckfeldt P, Wagner Z, Sood N. Does enrollment in high‐deductible health plans encourage price shopping? Health Serv Res. 2018;53:2718‐2734. 10.1111/1475-6773.12784

- 18. Hussey PS, Wertheimer S, Mehrotra A. The association between health care quality and cost: a systematic review. Ann Intern Med. 2013;158(1):27‐34. 10.7326/0003-4819-158-1-201301010-00006

- 19. Whaley CM, Guo C, Brown TT. The moral hazard effects of consumer responses to targeted cost‐sharing. J Health Econ. 2017;56:201‐221. 10.1016/j.jhealeco.2017.09.012

- 20. Arrow KJ. Uncertainty and the welfare economics of medical care. Am Econ Rev. 1963;53(5):941‐973.

- 21. Lieber EMJ. Does it pay to know prices in health care? Am Econ J Econ Policy. 2017;9(1):154‐179.

- 22. Brown ZY. Equilibrium effects of health care price information. Rev Econ Stat. 2018;101(4):699‐712. 10.1162/rest_a_00765

- 23. Desai SM, Shambhu S, Mehrotra A. Online advertising increased New Hampshire residents' use of provider price tool but not use of lower‐price providers. Health Aff. 2021;40(3):521‐528. 10.1377/hlthaff.2020.01039

- 24. Chernew M, Cooper Z, Larsen‐Hallock E, Morton FS. Are Health Care Services Shoppable? Evidence from the Consumption of Lower‐limb MRI Scans. National Bureau of Economic Research; 2018. 10.3386/w24869

- 25. Whaley C, Zhao X, Richards MR, Damberg C. Higher Medicare spending on imaging and lab services after physician practice vertical integration. Health Aff. 2021;40(5):702‐709.

- 26. Fulton BD. Health care market concentration trends in the United States: evidence and policy responses. Health Aff. 2017;36(9):1530‐1538. 10.1377/hlthaff.2017.0556

- 27. Kahneman D, Tversky A. Choices, Values, and Frames. Cambridge: Cambridge University Press; 2000.

- 28. Robinson JC, Whaley C, Brown TT. Reference pricing, consumer cost‐sharing, and insurer spending for advanced imaging tests. Med Care. 2016;54(12):1050‐1055. 10.1097/MLR.0000000000000605

- 29. Whaley C, Brown T, Robinson J. Consumer responses to price transparency alone versus price transparency combined with reference pricing. Am J Health Econ. 2019;5(2):227‐249.
