Abstract

Human activity recognition (HAR) has multifaceted applications due to its worldly usage of acquisition devices such as smartphones, video cameras, and its ability to capture human activity data. While electronic devices and their applications are steadily growing, the advances in Artificial intelligence (AI) have revolutionized the ability to extract deep hidden information for accurate detection and its interpretation. This yields a better understanding of rapidly growing acquisition devices, AI, and applications, the three pillars of HAR under one roof. There are many review articles published on the general characteristics of HAR, a few have compared all the HAR devices at the same time, and few have explored the impact of evolving AI architecture. In our proposed review, a detailed narration on the three pillars of HAR is presented covering the period from 2011 to 2021. Further, the review presents the recommendations for an improved HAR design, its reliability, and stability. Five major findings were: (1) HAR constitutes three major pillars such as devices, AI and applications; (2) HAR has dominated the healthcare industry; (3) Hybrid AI models are in their infancy stage and needs considerable work for providing the stable and reliable design. Further, these trained models need solid prediction, high accuracy, generalization, and finally, meeting the objectives of the applications without bias; (4) little work was observed in abnormality detection during actions; and (5) almost no work has been done in forecasting actions. We conclude that: (a) HAR industry will evolve in terms of the three pillars of electronic devices, applications and the type of AI. (b) AI will provide a powerful impetus to the HAR industry in future.

Supplementary Information

The online version contains supplementary material available at 10.1007/s10462-021-10116-x.

Keywords: Human activity recognition, Sensor-based, Vision-based, Radio frequency-based identification, Device-free, Imaging, Deep learning, Machine learning, And hybrid models

Introduction

Human activity recognition (HAR) can be referred to as the art of identifying and naming activities using Artificial Intelligence (AI) from the gathered activity raw data by utilizing various sources (so-called devices). Examples of such devices include wearable sensors (Pham et al. 2020), electronic device sensors like smartphone inertial sensor (Qi et al. 2018; Zhu et al. 2019), camera devices like Kinect (Wang et al. 2019a; Phyo et al. 2019), closed-circuit television (CCTV) (Du et al. 2019), and some commercial off-the-shelf (COTS) equipment’s (Ding et al. 2015; Li et al. 2016). The use of diverse sources makes HAR important for multifaceted applications domains, such as healthcare (Pham et al. 2020; Zhu et al. 2019; Wang et al. 2018), surveillance (Thida et al. 2013; Deep and Zheng 2019; Vaniya and Bharathi 2016; Shuaibu et al. 2017; Beddiar et al. 2020) remote care to elderly people living alone (Phyo et al. 2019; Deep and Zheng 2019; Yao et al. 2018), smart home/office/city (Zhu et al. 2019; Deep and Zheng 2019; Fan et al. 2017), and various monitoring application like sports, and exercise (Ding et al. 2015). The widespread use of HAR is beneficial for the safety and quality of life for humans (Ding et al. 2015; Chen et al. 2020).

The existence of devices like sensors, video cameras, radio frequency identification (RFID), and Wi-Fi are not new, but the usage of these devices in HAR is in its infancy. The reason for HAR’s evolution is the fast growth of techniques such as AI, which enables the use of these devices in various application domains (Suthar and Gadhia 2021). Therefore, we can say that there is a mutual relationship between the AI techniques or AI models and HAR devices. Earlier these models were based on a single image or a small sequence of images, but the advancements in AI have provided more opportunities. According to our observations (Chen et al. 2020; Suthar and Gadhia 2021; Ding et al. 2019), the growth of HAR is directly proportional to the advancement of AI which thrives the scope of HAR in various application domains.

The introduction of deep learning (DL) in the HAR domain has made the task of meaningful feature extraction from the raw sensor data. The evolution of DL models such as (1) convolutional neural networks (CNN) (Tandel et al. 2020), (2) extending the role of transfer weighting schemes (it allows the knowledge reusability where the recognition model is trained on a set of data and the same trained knowledge can then be used by a different testing dataset) such as Inception (Szegedy et al. 2015, 2016, 2017), VGG-16 (Simonyan and Zisserman 2015), and Residual Neural Network (Resents)-50 (Nash et al. 2018), (3) series of hybrid DL models such as fusion of CNN with long short-term memory (LSTM), Inception with ResNets (Yao et al. 2017, 2019, 2018; Buffelli and Vandin 2020), (4) loss function designs such entropy, Kaulback Liberal divergence, and Tversky (Janocha and Czarnecki 2016; Wang et al. 2020a), (5) optimization paradigms such as cross-entropy, stochastic gradient descent (SGD) (Soydaner 2020; Sun et al. 2020) has made the task of HAR-based design plug-and-play based. Even though it is getting black-box oriented, it requires better understanding to actually ensure that the 3-legged stool is stable and effective.

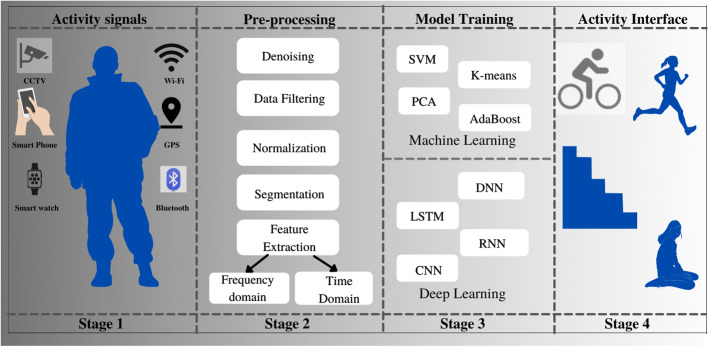

Typically, HAR consists of four stages (Fig. 1) including (1) capturing of signal activity, (2) data pre-processing, (3) AI-based activity recognition, and (4) the user interface for the management of HAR. Each stage can be implemented using several techniques bringing the HAR system to have multiple choices. Thus, the choice of the application domain, the type of data acquisition device, and the processing of artificial intelligence (AI) algorithms for activity detection makes the choices even more challenging.

Fig. 1.

Four stages of HAR process (Hx et al. 2017)

Numerous reviews in HAR have been published, but our observations show that most of the studies are associated with either vision-based (Beddiar et al. 2020; Dhiman Chhavi 2019; Ke et al. 2013) or sensor-based (Carvalho and Sofia 2020; Lima et al. 2019), while very few have considered RFID-based and device-free HAR. Further, there is no AI review article that covers the detailed analysis of all the four device types that includes all four types of devices such as sensor-based (Yao et al. 2017, 2019; Hx et al. 2017; Hsu et al. 2018; Xia et al. 2020; Murad and Pyun 2017), vision-based (Feichtenhofer et al. 2018; Simonyan and Zisserman 2014; Newell Alejandro 2016; Crasto et al. 2019), RFID-based (Han et al. 2014), and device-free (Zhang et al. 2011).

An important observation to note here is that technology has advanced in the field of AI, i.e., deep learning (Agarwal et al. 2021; Skandha et al. 2020; Saba et al. 2021) and machine learning methods (Hsu et al. 2018; Jamthikar et al. 2020) and is revolutionizing the ability to extract deep hidden information for accurate detection and interpretation. Thus, there is a need to understand the role of these new paradigms that are rapidly changing HAR devices. This puts the requirement to consider a review inclined to address simultaneously changing AI and HAR devices. Therefore, the main objective of this study is to better understand the HAR framework while integrating devices and application domains in the specialized AI framework. What types of devices can fit in which type of application, and what attributes of the AI can be considered during the design of such (Agarwal et al. 2021) a framework are some of the issues that need to be explored. Thus, this review is going to illustrate how one can select such a combination by first understanding the types of HAR devices, and then, the knowledge-based infrastructure in the fast-moving world of AI, knowing that some of such combinations can be transformed into different applications (domains).

The proposed review is structured as follows: Sect. 2 covers the search strategy, and literature review with statistical distributions of HAR attributes. Section 3 illustrates the description of the HAR stages, HAR devices, and HAR application domains in the AI framework. Section 4 illustrates the role of emerging AI as the core of HAR. Section 5 presents performance evaluation criteria in the HAR and integration of AI in HAR devices. Section 6 consists of a critical discussion on factors influencing HAR, benchmarking of the study against the previous studies, and finally, the recommendations. Section 7 finally concludes the study.

Search strategy and literature review

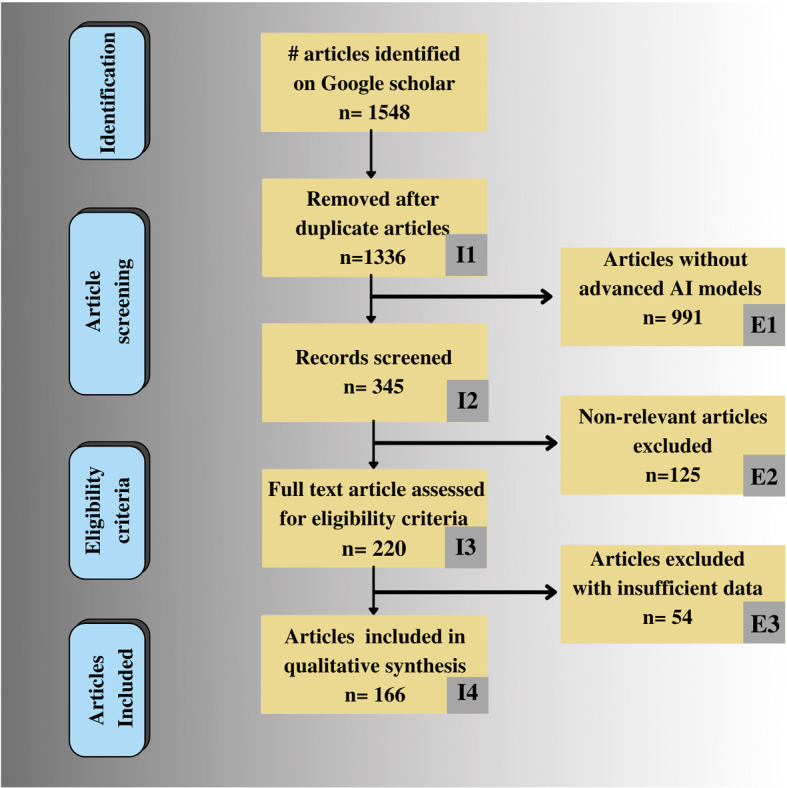

“Google Scholar” is used for searching articles published between the periods of 2011-present. The search included the keywords “human activity recognition” or “HAR” in combination with terms “machine learning”, “deep learning”, “sensor-based”, “vision-based”, “RFID-based” and, “device-free”. Figure 2 shows the PRISMA diagram showing the criteria for the selection of HAR articles. We identified around 1548 articles in the last 10 years period, which were then short-listed to 175 articles based on three major assessment criteria: AI models used, target application domain, and data acquisition devices which are the three main pillars of the proposed review. In the proposed review we have formed two clusters of attributes based on three major assessment criteria. Cluster 1 includes 7 HAR devices and applications-based attributes, and cluster 2 includes 7 AI attributes. HAR devices and application-based attributes are: data source, #activities, datasets, subjects, scenarios, total #actions and performance evaluation, while the AI attributes includes: #features, feature extraction, ML/DL model, architecture, metrics, validation and hyperparameters/optimizer/loss function. The description of HAR devices and applications-based attributes is given in Sect. 3.2. Further, the Tables A.1, A.2, A.3 and A.4 of "Appendix 1" illustrate these attributes for various studies considered in the proposed review. The cluster 2’s AI attributes are discussed in Sect. 4.2 and Table 3, 4, 5 and 6 illustrate the insight about AI models adapted by researchers in their HAR model. Apart from three major criteria, three exclusion, and four inclusion criteria were also followed in research articles selection. Excluded (1) articles with traditional and older AI techniques, (2) non-relevant articles, and (3) articles with insufficient data. These exclusion criteria consisted of 991, 125, and 54 articles (marked as E1, E2, and E3 in PRISMA flowchart) that lead to the finalization of the 175 articles. Included (1) non-redundant articles, (2) articles with the detailed screening of abstract and conclusion, (3) articles based on eligibility criteria assessment which includes advanced AI techniques, target domain, and device-type, and (4) article’s qualitative synthesisation including impact factor of journal, and author’s contribution in HAR domain; (marked as I1, I2, I3, and I4 in PRISMA flowchart).

Fig. 2.

PRISMA model for the study selection

Table A.1.

Sensor-based HAR

| C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | |

|---|---|---|---|---|---|---|---|---|

| DS& | Activities | Datasets | Subjects | Scenarios | Total # actions | Performance evaluation | CIT* | |

| R1 | WS | ADL: 10, Sports: 11 | Proprietary dataset | 13- 10 M and 3 F | RT, Sports | 1300/1100 | 99.65%, 99.92% | Hsu et al. (2018) |

| R2 | SPS | ADL: 6, 5 | UCI-HAR, Weakly labelled (WL) | 30 subs (UCI), 7 participants (WL) | Waist mounted SPS | 76,157 | UCIHAR- 93.41%, WL- 93.83% | Wang et al. (2019a) |

| R3 | SPS | ADL: 7 | Proprietary dataset | 100 participants | Texting, handheld, trouser pocket, backpack | 235 977 Samples | CNN-7–95.06%, Ensemble-96.11% | Zhu et al. (2019) |

| R4 | SPS | ADL: 4 | Proprietary dataset | Active: 147, inactive: 99, walking: 200 and driving: 120. Total 574 | Lab env | 4,99,276 | Mean accuracy-74.39% | Garcia-Gonzalez et al. (2020) |

| R5 | SPS | ADL: 6 | HHAR | 9 subs | Biking, walking, stairs | 4,39,30,257 | 96.79% | Sundaramoorthy and Gudur (2018) |

| R6 | SPS | ADL: 6, 8, 9 | HHAR, RWHAR, MobiAct | 9, 15 (M-8, F-7), 57 (M-42, F-15) | Indoor and outdoor | 4,39,30,257/2500 | F1-measure on three datasets | Gouineua et al. (2018) |

| R7 | SPS | ADL: 8 | RWHAR | 15 subs | Experimental setup | 8,85,360 | F1-Score:0.94 | Lawal and Bano 2020) |

| R8 | SPS | ADL: 6, 9, 14 | HHAR, PAMAP, USC-HAD | 9 (HHAR), 15 (RWHAR) | Waist mounted SPS, Experimental setup | 4,39,30,257/38,50,505 | F1-score: 0.848, 0.723, 0.702 | Buffelli and Vandin (2020) |

| R9 | SPS | ADL: 6 | HHAR | 9 subs | Biking, walking, stairs | 4,39,30,257 | 98% | Yao et al. (2019) |

| R10 | SPS | ADL: 6 | HHAR, carTrack | 9 subs | Biking, walking, stairs | 4,39,30,257 | 94.2% | Yao et al. (2017) |

| R11 | WS, SPS | ADL: 6, 7, 17 | UCIHAR, WISDM, OPPORTUNITY | 30 (UCI-HAR), 4 (OPPORTUNITY), 51 (WISDM) | Waist mounted SPS, Experimental setup, Biking, walking, stairs | 76,157/2,551/15,630,426 | F1-score: 92.63%, 95.85%, 95.78% | Xia et al. (2020) |

| R12 | SPS | ADL: 13, 5, 6 | UniMibShar, MobiAct | 57 (MobiAct) | Fall scenario | 11,771/2500 | 87.30 | Ferrari et al. (2020) |

| R13 | SPS | ADL: 50 | Vaizman dataset | 60 subs | Indoor, running (Phone in pocket) | 300 k | 92.80 | Fazli et al. (2021) |

| R14 | WS | 19 (ADL) | 19 NonSense | 13 subs (5 Female 8 male) of age (19–45 years) | 9 activities indoor and 9 outdoor | _ | Precision: 93.41%, recall: 93.16% | Pham et al. (2020) |

| R15 | WS | ADL: (6, 12,6,11), GC | UCI-HAR, DaphNet, OPPORTUNITY, Skoda | 30 (UCI-HAR), 4 (OPPORTUNITY) | ADL (UCI-HAR & Opportunity) Skoda: GC | 76,157/15,630,426 | 96.7%, 97.8%, 92.5%, 94.1%, 92.6% | Murad and Pyun (2017) |

| R16 | WS | 18 ADL | 19 NonSense | 13 subs of age (19–45 years) | 9 indoor and 9 outdoor | – | F1-score: 77.7 | Pham et al. (2017) |

*CIT citation

&DS data source

WS wearable sensor, SPS smartphone sensor, Acc accelerometer, Gyro gyroscope, Mag magnetometer, ADL activities of daily living, RT Rrutine tasks, GC gestures in car maintenance

Table A.2.

Vision-based HAR

| C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | |

|---|---|---|---|---|---|---|---|---|

| DS& | Activities | Datasets | Subjects | Scenarios | # Actions | Performance evaluation | CIT* | |

| R1 | DC | 10, 10 | UTKinect Action dataset, and CAD-60 | 10 (30 joints), 4 | ADL: Home, kitchen, bedroom, bathroom, living room | 60 | 97%, 96.5% | Phyo et al. (2019) |

| R2 | DC | 14, 20, 16 | CAD-60, MSR Action3D and MSR Daily Activity 3D | 4 (2 M-2F), 10, 10 | Indoor | 60/1000/567 | 94.12%, 86.81%, 68.75% | Qi et al. (2018) |

| R3 | Vid | 10 | Weizmann | 9 | Non uniform background, walk | 90 | 95.90% | Deep and Zheng (2019) |

| R4 | Vid | 101,51 | UCF-101 HMDB-51 | YT, GV, MC | UCF-101: H–O, ADL, RT. HMDB-51: FE, H–O, H–H, ADL | 13,320/7000 | 92.5%,65.4% | Feichtenhofer et al. (2016) |

| R5 | Vid | 101, 51 | UCF-101 HMDB-51 | YT, GV, MC | UCF-101: H–O, ADL, RT. HMDB-51: FE, H–O, H–H, ADL | 13,320/7000 | UCF 101: 93.4%, HMDB 51: 66.4% | Feichtenhofer et al. (2017) |

| R6 | Vid | 400, 600 | AVA, Kinetics 400, Kinetics-600, charades | MC and YT | H–O, H–H, ADL, RT | 57,600/3,00,000/5,00,000 | Kinetics:75.6 and 92.1, Kinetics 600: 81.8, 95.1 | Feichtenhofer et al. (2018) |

| R7 | Vid | - | AVA | Movie data | H–O, H–H, ADL, RT | 57,600 | Feichtenhofer and Ai (2019) | |

| R8 | Vid | 101, 51, 400, 174 | UCF-101, HMDB-51, kinetics-400, Something Something v1 | YT, GV, MC | H–O, ADL, RT | 13,320/7000/240 k/86,017 | 74.9%, 98.1%, 80.9%, 53.0% | Crasto et al. (2019) |

| R9 | Vid | Proposed HVU dataset (with YT-8 M), Kinetics-600 and HACS dataset | MC, YT | H–O, H–H | 572 k videos—9 M annotation | _ | Diba et al. (2020) | |

| R10 | Vid | 101 | UCF 101 | YT | H–O, H–H, playing musical instruments, and sports | 13,320 | 90.2% | Diba et al. (2016) |

| R11 | Vid | – | UCSD: Ped1 and Ped 2, Avenue, Subway: entrance and exit | SVF | Crowd data from surveillance camera | _ | 90.5%, 88.9%, 90.3%, 91.6%, 98.4% | Wang et al. (2018) |

| R12 | Vid | 101, 51 | UCF-101, HMDB-51 | YT, GV, MC | UCF-101: H–O, ADL, RT. HMDB-51: FE, H–O, H–H, ADL | 13,320/7000 | 98% | Simonyan and Zisserman (2014) |

| R13 | Vid | 174, 27, 101, 51, 400 | Something something v1 and v2, Jester, UCF-101, HMDB-51 and Kinetics-400 | Actions while using objects | V1, v2: H–O, Jester: crowd acted, | 108,499/220,887/148,092/300,000/13,320/6766 | 80.4, 89.8%, 99.9%, 91.6%,96.2%, 72.2% | Jiang et al. (2019) |

| R14 | Vid | UCSD Ped1, ped2 | SVF | Crowd data of surveillance camera | 70/28 | 97–98% accuracy on UMN dataset | Thida et al. (2013) | |

| R15 | Vid | 10, 6, 8 | Weizmann, KTH, Ballet | ADL, Ballet dance | Weizmann: walk pattern from different angles, Ballet: DVD | 90/6 × 100/44 | _ | Vishwakarma and Singh (2017) |

| R16 | DC | 17 (15 J), 4 Walk (20 T) | SPHERE, DGD: Gait Dataset | 9 and 7 subjects | SPHERE: with three anomalies (short stop, stairs with right and stairs with left leg, DGD: four gait types | 48/56 | F1-score: SPHERE: 1, DGD: 0.98 | Chaaraoui (2015) |

| R17 | DC | Full body poses | FLIC, MPII | Elbow and wrist action images | FLIC: images from films, MPII: daily activity pose images | 5003/25 k images | FLIC: 99% (elbow), 97% (wrist), MPII: 91.2% (elbow), 87.1% (wrist) | Newell Alejandro (2016) |

| R18 | DC | 20 | Proprietary dataset | 10 (9 M–1F) | Daily activities | 6220 frames | 90.91% | Xia et al. (2012) |

*CIT citation, &DS data source, DC depth camera, Vid video, J joints, ADL activities of daily living, H–H Human to human interaction, H–O Human to object interaction, RT routine tasks, YT YouTube, MC Movie clip, GV Google video

Table A.3.

RFID-based HAR

| C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | |

|---|---|---|---|---|---|---|---|---|

| Data source | Activities | Datasets | Subjects | Scenarios | # Actions | Accuracy | CIT* | |

| R1 | RFID passive tags | 10 free weight exercise | Proprietary dataset | 15 (10 exercise) | Two weeks in a gym environment | Exercise Data 15 subs for 10 activities | 90% | Ding et al. (2015) |

| R2 | RFID passive tags | 10 actions and 5 phases of resuscitation | Proprietary dataset | RSS during 16 actual trauma resuscitation | Realtime resuscitation | 16 Trauma resuscitation data | 80.2% | Li et al. (2016) |

| R3 | RFID passive tags | 23 orientation sensitive activities | Proprietary dataset | 6 (5 F, 1 M) | Lab environment | 23 actions performed by each subject for 120 secs | 96% | Yao et al. (2018) |

| R4 | RFID passive tags | 10 ADL activities | Replaced sensors with RFID tags in Ordonez dataset | 2 users’ data of 21 days | Indoor environment, H–O | 2747 instances | 78.3% | Du et al. (2019) |

| R5 | Smart Wall (passive rfid tags) | 12 simple & complex ADL activities | Proprietary dataset | 4 | 12 activities performed by4 volunteers in mock room with SmartWall | _ | 97.9%, | Oguntala et al. (2019) |

| R6 | RFID tags | 4 ADL | Proprietary dataset | 10 | Indoor | _ | 83.17% | Fan et al. (2017) |

H–O human–object interaction, M male, F female, ADL activities of daily living, RSS received signal strength

* CIT citation

Table A.4.

Device-free HAR

| C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | |

|---|---|---|---|---|---|---|---|---|

| Data source | Activities | Datasets | Subs | Scenarios | # Actions | Accuracy | CIT* | |

| R1 | TL-WDR6500 AP Tx and Intel 5300 NIC card RP Rx | 10 daily activities | Proprietary dataset | 6 volunteers (3 male, 3 female) | Meeting room and lab room | 4400 | 94.20% | Yan et al. (2020) |

| R2 | Intel 5300 NIC | 27 multi variation activities, Gaits: walk 10 m of volunteers | Proprietary dataset | 5 volunteers (multi variation activities), 10 volunteers (of 10 walk gait data) | Lab environment | 50 groups data for each volunteer | 95% | Fei et al. (2020) |

| R3 | Intel 5300 NIC | 8 activities divided into 2 groups: torso based and gesture based | Proprietary dataset | Test model performance for 6 volunteers’ data | Training in lab while testing in big hall, apartment and small office | 5760 | 96% | Wang et al. (2019d) |

NIC network interface card, Tx transmitter, Rx receiver, M male, F female

*CIT citation

Table 3.

Sensor-based HAR models

| C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | |

|---|---|---|---|---|---|---|---|---|

| # Features | Feature extraction | ML or DL model | Architecture | Metrics | Validation | Hyper-parameters/optimizer/loss function | CIT* | |

| R1 | 6/Time domain | Hand-crafted | SVM | SVM classifier for different kernels (polynomial, Radial basis function and linear) | F1-score, accuracy | tenfold | C, ℽ & degree in grid search | Garcia-Gonzalez et al. (2020) |

| R2 | Spatial features | Automatic | CNN | C (32) − C (64) − C (128) − P − C (128) − P − C (128) − P – FC (128) – SM | Accuracy | 10% data for validation | LR: 0.001, BS: 50/Adam | Wang et al. (2019a) |

| R3 | Frequency domain | Automatic | CNN | 3C with MP and dropout, 2 FC with dropout and SM | F1-score, Precision, recall | CV | LR: 0.01, DO/Adam | Lawal and Bano (2020) |

| R4 | Time domain | Automatic | CNN-RNN with attention mechanism | TRASEND: C1- C2- C3)- flatten and concat, merge layer- temporal information extractor using a 8-headed self-attention mechanism RNN, o/p layer | F1-score | Leave one user out and CV | LR: {0.001, 0.0001,,00,001}/Adam/Cross Entropy | Buffelli and Vandin (2020) |

| R5 | Spatial, Temporal | Automatic | LSTM-CNN | 2 LSTM layer (32 neurons), CNN (64), Max pooling, CNN (128), GAP, BN, o/p layer(softmax) | F1-score, accuracy | _ | LR: 0.001/Adam/Cross entropy | Xia et al. (2020) |

| R6 | 18/Time & Frequency domain | Hand-crafted | AdaBoost, AdaBoost-CNN, CNN-SVM | For AdaBoost CNN- 4C, AP, FC, SM | Accuracy | Sub-out validation | Experiment with and without personalization similarity | Ferrari et al. (2020) |

| R7 | 225 sensory features | Automatic | DNN | Layer 1(256), layer2 (512), layer 3 (128), O/p (softmax) | Accuracy, F1-score, Specificity, Sensitivity | 5% training data is used | No. of layers, no. of nodes per layer, appropriate regularization function | Fazli et al. (2021) |

| R8 | Time domain | Automatic | CNN- CapsNet architecture | SenseCapsNet: I/p, 1D C (K = 5, S = 1), Primary caps: C2(K = 5, S = 2) and squash, Activity caps where k is kernel size and S is strides | Precision, recall | tenfold CV | mini batches:64,LR: 0.01, DO/SGD | Pham et al. (2020) |

CV cross validation, LOSO leave one subject out, C convolution, P pooling, AP average pooling, MP max pooling, FC fully connected, SM softmax, BN batch normalization layer, LR learning rate, DO dropout, BS batch size, SGD stochastic gradient descent, concat concatenation, Spec. specificity, Sens sensitivity, TL transfer learning, CIT citations

Table 4.

Vision-based HAR models

| C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | |

|---|---|---|---|---|---|---|---|---|

| # Features | Feature extraction | ML/DL model | Architecture | Metrics | Validation | Hyper-parameters/optimizer/loss function | CIT* | |

| R1 | T. domain | Hand-crafted | 3DCNN on Color-skl-MHI and RJI | I/p layer with skeletal joints, Color-skl-MHI followed by 3D-DCNN, RJI followed by 3D-DCNN, decision fusion, o/p | Accuracy | Cross validation | DO ratios for the three hidden layers (0.1%, 0.2%,0.3%)/SGD | Phyo et al. (2019) |

| R2 | Spatio temporal | Automatic | VGG-16, VGG-19, inception v3 | 224 × 224 is image is input and features from fc1 layer are extracted which gives 4096-dimensional vector for per image | Accuracy, precision, recall, F1-score | 10% data is used for validation | All 3 CNNs trained on imageNet then trained on Weizmann reusing same weights | Deep and Zheng (2019) |

| R3 | Spatio temporal | Automatic | ResNet-50 | C1, MP, C2-C5, AP, FC (2048), SM | Accuracy | Evaluates UCF-101 and HMDB-51 | BS- 128, DO, LR: and /SGD | Feichtenhofer et al. (2017) |

| R4 | Spatio temporal | Automatic | ResNet-50 | Raw clip i/P C1- P- C2- C3- C4- C5- GAP- FC- No. of classes. Pre-train on Kinetics-400, Kinetics-600 and kinetics-700 | mAPS, GFLOPS | Evaluate model performance on AVA dataset | LR, WD: , Batch normalization/SGD | Feichtenhofer and Ai (2019) |

| R5 | Spatio temporal | Automatic | MERS model with ResNeXt-101 | MERS: Train using flow, freeze weights, train with RGB using MSE loss. MARS: Train using privileged flow n/w, freeze weights, use RGB frames during test phase | top-1 mean accuracy | Kinetics 40: 20 k, MiniKinetics: 5 k | WD = 0.0005, LR = 0.1, momentum = 0.9 and LR = 0.1 for 64f-clips/SGD/Cross entropy | Crasto et al. (2019) |

| R6 | Spatio temporal | Automatic | HATnet based on ResNet-50 and STCnet | 2D ConvNets: to extract spatial structure, 3DConv: to deal with interaction in frames. Both 2D and 3D use ResNet-50 | Top-1 mAPS | Kinetics 400 and 600 | Fine tune on UCF-101 & HMDB-51/Cross entropy | Diba et al. (2020) |

| R7 | Spatio-temporal | Automatic | 2D ResNet 50 with STM blocks | Video frames i/p, C1, C2x, C3x, C4x, C5x, FC, o/p. Replace all residual block with STM block (1 × 1 2D conv, followed by CMM and CSTM blocks, then 1 × 1 2D Conv) | top-1, top-5 accuracy | Kinetics 400: 19,095 | LR = 0.01, LR = 0.001 for 25 epochs, momentum = 0.9, WD = 2.5 /SGD | Jiang et al. (2019) |

T time, F frequency, CV cross validation, LOSO leave one subject out, C convolution, P pooling, AP average pooling, MP max pooling, FC fully connected, SM softmax, BN batch normalization layer, LR learning rate, DO dropout, BS batch size, SGD stochastic gradient descent, mAPs mean average precision, GFLOP giga floating point operations per second, Spec specificity, Sens sensitivity, AUC area under curve, EER equal error rate, TL transfer Learning

*CIT citations

Table 5.

RFID-based HAR models

| C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | |

|---|---|---|---|---|---|---|---|---|

| # features/ | Feature extraction | ML/DL model | Architecture | Metrics | Validation | Hyper-parameters/Optimizer Loss Function | CIT* | |

| R1 | 84 features for F-statistics, Relief-F, Fisher | Hand-crafted | Canonical Correlation Analysis (CCA) | Divide RSSI stream into segments, CCA for extracting features by computing canonical correlation for each feature pair, activity specific dictionary is formed: Sparse coding and dictionary is updated sequentially using K-SVD | F1-score | One sub out validation strategy | _ | Yao et al. (2018) |

| R2 | Frequency | Automatic | LSTM | I/p layer, two hidden layers and o/p layer | Precision, accuracy | _ | Timestep, neuron in hidden layers/Adam/Cross entropy | Du et al. (2019) |

| R3 | Frequency | Hand-crafted | Multi variate Gaussian Approach | ADL activity data gathering, score each activity with gaussian pdf, Human Activity Recognition Based on Maximum likelihood Estimation, Activity classification | Accuracy, precision, recall, F1-score, root mean square error (RMSE) | _ | _ | Oguntala et al. (2019) |

*CIT citations, DTW dynamic time wraping, DO dropout, LR learning rate, SVM support vector machine, LSTM long short-term memory

Table 6.

Device-free HAR models

| C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | |

|---|---|---|---|---|---|---|---|---|

| # Features | Feature extraction | ML/DL model | Architecture | Metrics | Validation | Hyper-parameters/optimizer loss function | CIT* | |

| R1 | Frequency | Hand-crafted | ELM | AACA: using the difference between the activity and the stationary parts in the signal variance feature. For recognition use 3-layer ELM with an i/p layer with 200 neurons, an o/p (10) and hidden layer (40) neurons | Accuracy | Tested the performance on 1100 samples | No. of hidden layer neurons (after 400 becomes stable), different users, impact of total no. of samples | Yan et al. (2020) |

| R2 | Frequency CSI | Hand-crafted | DTW: by comparing similarity b/w waveforms | CP decomposition: decompose the CSI signals with CP and each rank-one tensor after decomposition is regarded as the feature. With DTW, we can compare the similarity between 2 waveforms and identify action | Accuracy | Recognition of gaits using MARS | Impact of nearby people, test for system delay | Fei et al. (2020) |

| R3 | CSI (time & amplitude) | Automatic | LSTM | CNN extracts spatial features from multiple antenna pairs, then CNN o/p is given to LSTM followed by FC | FPR, precision, recall, F1-score | DO, Layer size, recognition method | Wang et al. (2019d) |

*CIT citations, DTW dynamic time wraping, FPR false positive rate, DO dropout, ELM extreme learning machine, CSI channel state information

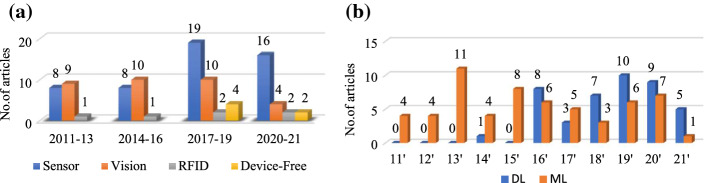

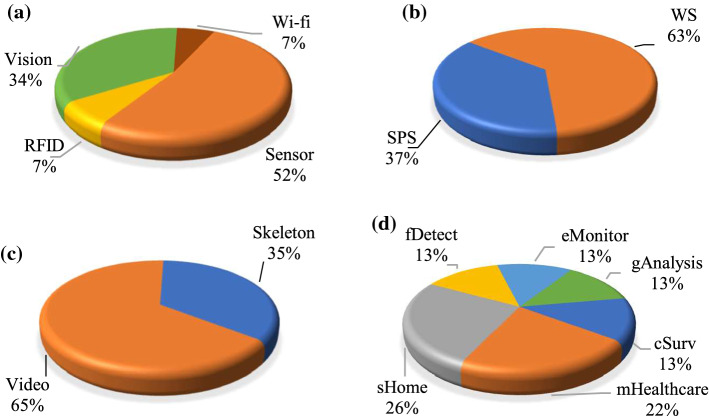

In the proposed review, we performed a rigorous analysis of the HAR framework in terms of AI techniques, device types, and application domain. One of the major observations of the proposed study is the existence of a mutual relationship among HAR device types and AI techniques. First, the analysis on HAR devices is presented in Fig. 3a which is based on the articles considered between the periods of 2011 to 2021. It shows the changing pattern of HAR devices over time. Secondly, the growth of ML and DL techniques is presented in Fig. 3b which shows that the HAR is trending towards the use of DL-based techniques. The HAR devices distribution is elaborated more in Fig. 4a, in Fig. 4b we have shown the further categorization of sensor-based HAR into the wearable sensor (WS) and smartphone sensor (SPS). Figure 4c shows the division of vision-based HAR into video and skeleton-based models. Further, Fig. 4d shows the types of HAR application domains.

Fig. 3.

a Changing pattern of HAR devices over time, b distribution of machine learning (ML) and deep learning (DL) articles in last decade

Fig. 4.

a Types of HAR devices, b sensor-based devices, c vision-based devices, d HAR applications. WS: wearable sensors, SPS: smartphone sensor, sHome: smart home, mHealthcare: health care monitoring, cSurv: crowd surveillance, fDetect: fall detection, eMonitor: exercise monitoring, gAnalysis: gait analysis

Observation 1

In Fig. 3a, according to the device-wise analysis vision-based HAR was popular between the period 2011–2016. But from the year 2017 sensor-based models’ growth is more prominent and this is the same time period when DL techniques entered the HAR domain (Fig. 3b). In the period 2017–2021, Wi-Fi devices evolved as one of the data sources for gathering activity.

Observation 2

Figure 3b shows the year-wise distribution of articles published using ML and DL techniques. The key observation is the transition of AI techniques from ML to DL. From the year 2011–2016, the HAR models with ML framework were popular, while the HAR models using DL techniques started to evolve from the year 2014. In the last 3 years, this growth has increased significantly. Therefore, after analysing graphs of Fig. 3a, b thoroughly, we can say that the HAR devices are evolving, as the trend is shifting towards the DL framework. This combined analysis verifies our claim of the existence of the mutual relationship between AI and device types.

Devices used in the HAR paradigm are the premier component of HAR by which HAR can be classified. We observed a total of 9 review articles arranged in chronological order (see Table 1). These reviews focused mainly on three sets of devices such as sensor-based (marked in light shade color) (Carvalho and Sofia 2020; Lima et al. 2019; Wang et al. 2016a, 2019b; Lara and Labrador 2013; Hx et al. 2017; Demrozi et al. 2020; Crasto et al. 2019; De-La-Hoz-Franco et al. 2018) or vision-based (marked with dark shade color) (Beddiar et al. 2020; Dhiman Chhavi 2019; Ke et al. 2013; Obaida and Saraee 2017; Popoola and Wang 2012), device-free HAR (Hussain et al. 2020). Table 1 summarizes the nine articles based on the focus area, keywords, number of keywords, research period, and #citations. Note that sensor-based HAR captures activity signals using ambient and embedded sensors, vision-based HAR involves 3-dimensional (3D) activity data gathering using a 3D camera or depth camera. In device-free HAR, activity data is captured using Wi-fi transmitter–receiver units.

Table 1.

Nine review articles published between 2013 and 2020

| S. no. | Citation | Year | Focus area | Keywords | #K* | Period | #CI$ |

|---|---|---|---|---|---|---|---|

| 1 | Wang et al. (2016a) | 2020 | Device-free HAR | HAR, gesture recognition, motion detection, Device-free, dense sensing, IoT, RFID, human object interaction | 7 | 2011–2017 | 212 |

| 2 | Wang et al. (2020) | 2020 | Sensor-based HAR | Mobile sensing, human-behaviour, human behaviour inference, cloud-edge computing, activity recognition, context awareness | 6 | 2007–2018 | 136 |

| 3 | Demrozi et al. (2019b) | 2020 | Sensor-based HAR | HAR, DL, ML, available datasets, accelerometer, sensors | 6 | 2015–2019 | 219 |

| 4 | Beddiar et al. (2020) | 2020 | Vision-based | HAR, behaviour understanding, Action representation, action detection, computer vision | 4 | 2010–2019 | 237 |

| 5 | Dhiman Chhavi (2019) | 2019 | Vision-based recognition | Two-dimension anomaly detection, three-dimensional anomaly detection, crowd anomaly, skeleton based fall detection, AAL | 5 | 2006–2018 | 226 |

| 6 | Lima et al. (2019) | 2019 | Sensor-based HAR | HAR, smartphones, feature extraction, inertial sensors | 4 | 2006–2017 | 149 |

| 7 | Lara and Labrador (2013) | 2018 | Sensor-based HAR | AAL, HAR, ADL, activity recognition system (ARS), dataset | 6 | 2003–2017 | 134 |

| 8 | Hx et al. (2017) | 2017 | Sensor-based HAR | DL, activity recognition, pervasive computing, pattern recognition | 4 | 2011–2017 | 80 |

| 9 | Ke et al. (2013) | 2013 | Video-based activity recognition | HAR, segmentation, feature representation, health monitoring, security surveillance, human computer interface | 5 | 2003– 2012 | 145 |

#K keywords, $#CI #citations, AAL ambient assistive living, ADL activity of daily living

HAR process, HAR devices, and HAR applications in AI framework

The objective of developing HAR models is to provide information about human actions which helps in analyzing the behavior of a person in a real environment. It allows computer-based applications to help users in performing tasks and to improve their lifestyle such as remote care to the elderly living alone, and posture monitoring during exercise. This section presents about HAR framework that includes HAR stages, HAR devices, and target application domains.

HAR process

There are four main stages in the HAR process: data acquisition, pre-processing, model training, and performance evaluation (Figure S.1(a) in supporting document). In stage 1, depending on the target application, a HAR device is selected. For example, in surveillance application involving multiple persons, the HAR device for data collection is the camera. Similarly, for applications where a person's daily activity monitoring is involved, the data acquisition source is sensor preferably. One can use a camera also, but it breaches the user's privacy and needs high computational cost. Table 2 illustrates the variation in HAR devices according to the application domains. It elaborates the description of diverse HAR applications in terms of various data sources and AI techniques. Note that sometimes the acquired data suffer from noise or other unwanted signals, and therefore offers challenges in post-processing AI-based systems. Thus, it is very important to have a robust feature extraction system with a robust network for better prediction. In stage 2, data cleaning is performed, which involves low-pass or high-pass filters for noise suppression or image enhancement (Suri 2013; Sudeep et al. 2016). This data undergoes regional and boundary segmentation (Multi Modality State-of-the-Art Medical Image Segmentation and 2011; Suri et al. 2002; Suri 2001). Our group has published several dedicated monograms on segmentation paradigms and are available as ready reference (Suri 2004, 2005; El-Baz and Jiang 2016; El-Baz and Suri JS 2019). This segmented data can now be used for model training. Stage 3 involves the training of HAR model using ML or DL techniques. When using hand-crafted features, one can use ML-based techniques (Maniruzzaman et al. 2017). For automated feature extraction, one can use the DL framework. Apart from automatic feature learning, DL offers knowledge reusability by providing transfer learning models, exploration of huge datasets (Biswas et al. 2018), and hybrid DL models usage which allows spatial as well as temporal features identification and learning. After stage 3, the HAR model is ready to be used for an application or prediction. Stage 4 is the most challenging part since the model is applied to the real data, whose behavior varies depending on physical factors like age, physique, and an approach for performing a task. An HAR model is efficient if its performance is independent of physical factors.

Table 2.

Multifaceted HAR application with varying HAR devices and AI

| App | Application | HAR device | Activity-type | AI model | Architecture | *CIT |

|---|---|---|---|---|---|---|

| cSurv | Crowd surveillance | Subway camera video footage | Group | DL | Laplacian eigenmap feature extraction and k-means clustering-based recognition | Thida et al. (2013) |

| Video data from mobile clips | ADL | DL | Transfer learning-based model using VGG-16 and InceptionV3 | Deep and Zheng (2019) | ||

| mHealthcare | Health monitoring | Smartphone sensor | ADL | DL | CNN (two variants CNN-2 and CNN-7) | Zhu et al. (2019) |

| Smartphone sensor | ADL | DL | Hierarchical model-based on DNN | Fazli et al. (2021) | ||

| On-body sensor (Watch and shoe) | ADL | DL | CapSense (a CNN capsule n/w) | Pham et al. (2020) | ||

| RFID data collection in an actual trauma room | Single person | DL | CNN model based on data collected from passive RFID tags for trauma resuscitation | Li et al. (2016) | ||

| sHome | Smart home/Smart cities | Smartphone sensor | ADL | DL | CNN (two variants CNN-2 and CNN-7) | Zhu et al. (2019) |

| Depth sensor | ADL | DL | 3DCNN (Color-skl-MHI and RJI) for elderly care in smart home | Phyo et al. (2019) | ||

| Wireless sensor | AAL | ML | Child activity monitoring based HAR model | Nam and Park (2013) | ||

| Video | Daily routine | ML | Disabled care HAR model | Jalal et al. (2012) | ||

| Wireless sensor | Forget and Repeat (AAL) | DL | RNN based smart home HAR model for dementia suffering patients | Arifoglu and Bouchachia (2017) | ||

| fDetect | Fall detection | Smartphone accelerometer | ADL | ML and DL | AdaBoost-HC, AdaBoost-CNN, SVM-CNN | Ferrari et al. (2020) |

| eMonitor | Exercise monitoring | Weizmann, KTH with ADL, Ballet: from DVD | ADL and Ballet dance moves | ML | SVM-KNN with PCA | Vishwakarma and Singh (2017) |

| Free weight exercise data recorded with RFID tags | Free weight exercise | RF | FEMO with Doppler shift profile | Ding et al. (2015) | ||

| gAnalysis | Gait analysis | SPHERE, DGD: DAI Gait | Gait pattern | ML | JMH feature and BagofKeyPoses recognition | Chaaraoui (2015) |

*CIT citation, RF conventional RF profiling, ML machine learning and DL deep learning, ADL activities of daily living, FEMO free weight exercise monitoring, Color-skl-MHI color skeleton motion history images, and RJI relative joint image

HAR devices

The HAR device type depends on the target application. Figure S.1(b) (Supporting document) presents the different sources for activity data: sensors, video cameras, RFID systems, and Wi-Fi devices.

Sensors

The sensors-based approaches can be categorized into wearable sensors and device sensors. In wearable sensor-based approach, a body-worn sensor module is designed which includes inertial sensors, environmental sensors units (Pham et al. 2017, 2020; Hsu et al. 2018; Xia et al. 2020; Murad and Pyun 2017; Saha et al. 2020; Tao et al. 2016a, b; Cook et al. 2013; Zhou et al. 2020; Wang et al. 2016b; Attal et al. 2015; Chen et al. 2021; Fullerton et al. 2017; Khalifa et al. 2018; Tian et al. 2019). Sometimes the wearable sensor devices can be stressful for the user, therefore the solution is the use of smart-device sensors. In device sensor approach data is captured using smartphone inertial sensors (Zhu et al. 2019; Yao et al. 2018; Wang et al. 2016a, 2019b; Zhou et al. 2020; Li et al. 2019; Civitarese et al. 2019; Chen and Shen 2017; Garcia-Gonzalez et al. 2020; Sundaramoorthy and Gudur 2018; Gouineua et al. 2018; Lawal and Bano 2019; Bashar et al. 2020). The most commonly used sensor for HAR is accelerometer and gyroscope. Table A.1 of “Appendix 1”, shows the types of data acquisition devices, activity classes, and scenarios in earlier sensor-based HAR models.

Video camera

It can be further classified into two types: 3D camera and depth camera. 3D camera-based HAR models uses closed-circuit television (CCTV) cameras in the user's environment for monitoring the actions performed by the user. Usually, the monitoring task is performed by humans or some innovative recognition model. Numerous HAR models were proposed by researchers, which can process and evaluate the activity video or image data and recognize the performed activities (Wang et al. 2018; Feichtenhofer et al. 2018, 2017, 2016; Diba et al. 2016, 2020; Yan et al. 2018; Chong and Tay 2017). The accuracy of activity recognition of 3D camera data depends on physical factors such as lighting and background color. The solution to this issue can be provided by using a depth camera (like Kinect). The Kinect camera consists of different data streams such as depth, RGB, and audio. Depth stream captures body joint coordinates, and based on joint coordinates, a skeleton-based HAR model can be developed. The skeleton-based HAR models have applications in domains that involve posture recognition (Liu et al. 2020; Abobakr et al. 2018; Akagündüz et al. 2016). Table A.2 of “Appendix 1” provides an overview of earlier vision-based HAR models. Apart from 3D and depth cameras, one can use thermal cameras but it can be expensive.

RFID tags and readers

By installing RFID passive tags in close proximity of the user, the activity data can be collected using RFID readers. As compared to active RFID tags, passive tags have more operational life as they do not need a separate battery. Rather it uses the reader's energy and converts it into an electrical signal for operating its circuitry. But the range of active tags is more than passive tags. They both can be used for HAR models (Du et al. 2019; Ding et al. 2015; Li et al. 2016; Yao et al. 2018; Zhang et al. 2011; Xia et al. 2012; Fan et al. 2019). The further description of existing RFID-based HAR models is provided in Table A.3 of “Appendix 1”.

Wi-Fi device: In the last 5 years, the device-free HAR has gained popularity. Researchers have explored the possibility of capturing activity signals using Wi-Fi devices. Channel state information (CSI) from the wireless signal is used to acquire activity data. Many models were developed for fall detection and gait recognition using CSI (Yao et al. 2018; Wang et al. 2019c, d, 2020b; Zou et al. 2019; Yan et al. 2020; Fei et al. 2020). The description of some popular existing Wi-Fi device-based HAR is provided in Table A.4 of “Appendix 1”.

Summary of challenges in HAR devices

There are almost four types of HAR devices, and researchers have proposed various HAR models with advanced AI techniques. Gradually, the usage of electronic devices for gathering activity data in HAR domain is increasing, but with this growth, the challenges are also evolving: (1) Video camera-based application involves data gathering using a video camera, which results in the invasion of user’s privacy. It also requires high power systems to process large data produced by video cameras, (2) In sensors-based HAR models, the use of wearable devices is stressful and inconvenient for the user, therefore smartphone sensors are more preferable. But the use of smartphone and smartwatch is limited to simple activities recognition such as walking, sitting, and going upstairs, (3) In RFID tags and reader-based HAR models, the usage of RFID in activity capturing is limited to indoor only. (4) Wi-Fi-based HAR models are new in the HAR industry, but there are few issues with it. Moreover, it can capture activities performed within the Wi-Fi range but cannot identify the movement in blind spot areas.

HAR applications using AI

In the last decade, researchers have developed various HAR models for different domains. “What type of HAR device is suitable for which application domain and what is the suitable AI methodology” is the biggest question that pops into the mind, once developing the HAR framework. The description of diverse HAR applications with data sources and AI techniques is illustrated in Table 2. It shows the variation in HAR devices and AI techniques depending on the application domain. The pie chart in Fig. 4d shows the distribution of applications based on existing articles. HAR is used in fields like:

Crowd surveillance (cSurv): Crowd pattern monitoring and detecting panic situations in the crowd.

Health care monitoring (mHealthcare): Assistive care to ICU patients, Trauma resuscitation.

Smart home (sHome): Care to elderly or dementia patients and child activity monitoring.

Fall detection (fDetect): Detection of abnormality in action which results in a person's fall.

Exercise monitoring (eMonitor): Pose estimation while doing exercise.

Gait analysis (gAnalysis): Analyze gait patterns to monitor health problems.

HAR applications with different activity-types

There is no predefined set of activities, rather the human activity type varies according to the application domain. Figure S.2 (Supporting document) shows the activity type involved in human activity recognition.

Single person activity

Here the action is performed by a person. Figure S.3 (Supporting document) shows examples of single-person activities (jumping jack, baby crawling, punching the boxing bag, and handstand walking). Single person action can be divided into the following categories:

Behavior: The goal of behavior recognition is to recognize a person’s behavior from activity data, and it is useful in monitoring applications: dementia patient & children behavior (Han et al. 2014; Nam and Park 2013; Arifoglu and Bouchachia 2017).

Gestures: It has application in sign language recognition for differently-abled persons. Wearable sensor-based HAR models are more suitable (Sreekanth and Narayanan 2017; Ohn-Bar and Trivedi 2014; Xie et al. 2018; Kasnesis et al. 2017; Zhu and Sheng 2012).

Activity of daily living (ADL) and Ambient assistive living (AAL): ADL activities are performed in an indoor environment cooking, sleeping, and sitting. In smart home, ADL monitoring for dementia patients can be performed using wireless sensor-based HAR models (Nguyen et al. 2017; Sung et al. 2012) or RFID tags based HAR models (Ke et al. 2013; Oguntala et al. 2019; Raad et al. 2018; Ronao and Cho 2016). AAL-based models help elderly and disabled people by providing remote care, medication reminder, and management (Rashidi and Mihailidis 2013). CCTV cameras are an ideal choice but they have privacy issues (Shivendra shivani and Agarwal 2018). Therefore, sensor or RFID-based HAR models (Parada et al. 2016; Adame et al. 2018) or wearable sensor-based models are more suitable (Azkune and Almeida 2018; Ehatisham-Ul-Haq et al. 2020; Magherini et al. 2013).

Multiple person activity

The action is performed by a group of persons. Multiple person movement is illustrated in Figure S.4 (Supporting document), depicts the normal human movement on a pedestrian pathway and anomalous activity of cyclist and truck in a pedestrian pathway. It can belong to the following categories.

Interaction: There are human–object (cooking, reading a book) (Kim et al. 2019; Koppula et al. 2013; Ni et al. 2013; Xu et al. 2017) and human–human (handshake) activities (Weng et al. 2021). A human–object interaction-based free weight exercise monitoring (FEMO) model using RFID devices that monitors exercise by installing a tag on dumbbells (Ding et al. 2015).

Group: It involves monitoring people's count in an indoor environment like a museum or crowd pattern monitoring (Chong and Tay 2017; Xu et al. 2013). To check the number of people in an area, we can use Wi-Fi units. Received signal strength can be used for counting people as it is user-sensitive.

Observation 3: Vision-based HAR has broad application domains, but they have limitations like privacy and the need for more resources (such as GPUs). These issues can be overcome with sensor-based HAR but their applications domain is currently limited to single-person activity monitoring.

Core of the HAR system design: emerging AI

The foremost goal of HAR is to predict the movement or action of a person based on the action data collected from a data acquisition device. These movements include activities like walking, exercising, and cooking. It is challenging to predict movements, as it involves huge amounts of unlabelled sensor data, and video data which suffer from conditions like lights, background noise, and scale variation. To overcome these challenges AI framework offers numerous ML, and DL techniques.

Artificial intelligence models in HAR

ML architectures: ML is a subset of AI, which aims at developing an intelligent model which involves the extraction of unique features, that helps in recognizing patterns in the input data (Maniruzzaman et al. 2018). There are two types of ML approaches: supervised and unsupervised. In supervised approach, a mathematical model is created based on the relationship between raw input data and output data. The idea behind the unsupervised approach is to detect patterns in raw input data without prior knowledge of output. Figure S.5 (Supporting document) illustrates the popular ML techniques used in recognizing human actions (Qi et al. 2018; Yao et al. 2019; Multi Modality State-of-the-Art Medical Image Segmentation and 2011). Several applications of ML models in handling different diseases have been developed by our group such as diabetes man(Maniruzzaman et al. 2018) liver cancer (Biswas et al. 2018), thyroid cancer (Rajendra Acharya et al. 2014), ovarian cancer (Acharya et al. 2013a, 2015), prostate (Pareek et al. 2013) breast (Huang et al. 2008), skin (Shrivastava et al. 2016), arrhythmia classification (Martis et al. 2013), and recently in cardiovascular (Acharya et al. 2012; Acharya et al. 2013b). In the last 5 years, the researchers' focus has been shifted to semi-supervised learning where the HAR model is trained on labelled as well as unlabelled data. The semi-supervised approach aims to label unlabelled data using the knowledge gained from the set of labelled data. In a semi-supervised approach, the HAR model is trained on popular labelled datasets and the new users' unlabelled test data and classified into activity classes according to the knowledge gained from training data (Mabrouk et al. 2015; Cardoso and Mendes Moreira 2016).

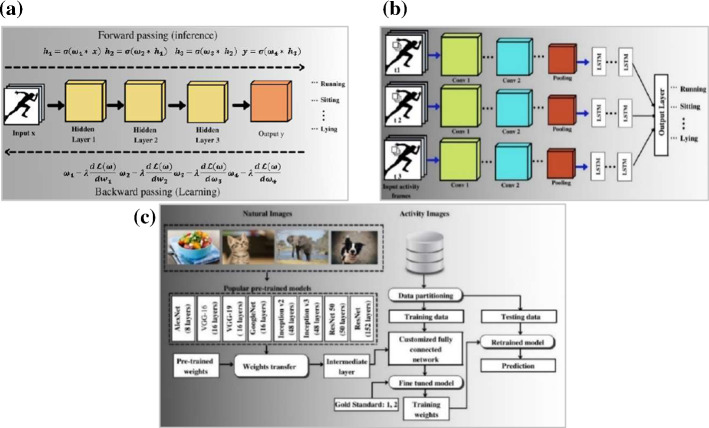

DL/TL Architectures: In recent years, DL has become quite popular due to its capability of learning high-level features and its superior performance (Saba et al. 2019; Biswas et al. 2019). The basic idea behind DL is data representation, which enables it to produce optimal features. It learns unknown patterns from raw data without human intervention. The DL techniques used in HAR can be divided into three parts such as deep neural networks (DNN), hybrid deep learning (HDL) models, and transfer learning (TL) based models (Agarwal et al. 2021). (Shown in Figure S.5 of Supporting document) The DNN includes the models like convolutional neural networks (CNN) (Deep and Zheng 2019; Liu et al. 2020; Zeng et al. 2014), recurrent neural networks (RNN) (Murad and Pyun 2017) and RNN variants which include long short-term memory (LSTM) and gated recurrent unit (GRU) (Zhu et al. 2019; Du et al. 2019; Fazli et al. 2021). In hybrid HAR models, the combination of CNN and RNN models is trained on spatio-temporal data. Researchers have proposed various hybrid models in the last 5 years, such as DeepSense (Yao et al. 2017) and DeepConvLSTM (Wang et al. 2019a). Apart from hybrid AI models, there are various transfer learning-based HAR models which involves pre-trained DL architectures like ResNet-50, Inceptionv3, VGG-16 (Feichtenhofer et al. 2018; Newell Alejandro 2016; Crasto et al. 2019; Tran et al. 2019; Feichtenhofer and Ai 2019). However, the role of TL in sensor-based HAR is still evolving (Deep and Zheng 2019).

Figure 5a depicts a representative CNN architecture for HAR, which shows the two convolution layers followed by a pooling layer for feature extraction for the activity image, leading to dimensionality reduction. This is then followed by a fully connected (FC) layer for iterative weight computations and a softmax layer for binary or granular decision making. After that, the input image is classified into an activity class. Figure 5b presents the representative TL-based HAR model, which includes pretrained models such as VGG-16, inception V3, and ResNet. The pre-trained model is trained on a large dataset of natural images such as man, cat, dog, and food. These pre-trained weights are applied to the training data of the sequence of images using an intermediate layer. It forms the customized fully connected layer. Further, the training weights are fine-tuned using the optimizer function. Next the retrained model is applied to testing data for the classification of the activity into an activity class.

Fig. 5.

a CNN model for HAR . , b TL-based model for HAR, and c hybrid HAR model (CNN-LSTM)

Miniaturized mobile devices are handy to use and offer a set of physiological sensors that can be used for capturing activity signals. But the problem is the complex structure and strong inner correlation in captured data. The deep learning models which are the combination of both CNN and RNN offer benefits to explore this complex data and identify detailed features for activity recognition. One such model offered by Ordonez et al. was DeepConvLSTM (Ordóñez and Roggen 2016), where CNN works as feature extractor and represent the sensor input data as feature maps, and LSTM layer explores the temporal dynamics of feature maps. Yao et al. have proposed similar model named as DeepSense in which two convolution layers (individual and merge conv layers) and stacked GRU layers were used as main building blocks (Yao et al. 2017). Figure 5c shows the representative hybrid HAR model with CNN-LSTM frameworks.

Loss function

DL model learns by means of loss function. It evaluates how well an algorithm models the applied data. If it deviates largely from actual output, the value of the loss function will be very large. The loss function with the help of optimization function learns gradually to reduce the prediction error. Mostly used loss functions in HAR models are mean squared loss and cross-entropy (Janocha and Czarnecki 2016; Wang et al. 2020a).

- Mean absolute error (δ): is calculated as the average sum of absolute differences between predicted and actual . N is the number of training samples

- Mean squared error (): is calculated as the average of the squared difference between the predicted and actual output . N is the number of training samples

- Cross-entropy loss (): evaluates the performance of a model whose output probability ranges between 0 and 1. The loss increases if predicted probability diverges from actual output .

- Binary cross-entropy loss: predict the probability between two activity classes.

- Multiclass cross-entropy loss: Multi-class CEL is the generalization of binary CEL where each class is assigned a unique integer value range between 0 to n −1 (n is a number of classes).

- Kullback Lieblar-divergence (KL-divergence): is a measure of how a probability distribution diverges from another distribution. For the probability distribution of P(x) and Q(x), KL-divergence is defined as the logarithmic difference between P(x) and Q(x) with respect to P(x).

Hyper-parameters and optimization

Drop-out rate: regularization technique where few neurons are dropped to avoid overfitting.

Learning rate: it defines how fast parameters are updated in a network.

Momentum: it helps in the next step direction based on knowledge gained in previous steps.

Number of hidden layers: number of hidden layers between input and output layers.

Optimization

It is a method used for changing the parameters of neural networks. DL provides a wide range of optimizers: gradient descent (GD), stochastic gradient descent (SGD), RMSprop, and Adam optimizers. GD is a first-order optimization that relies on the first-order derivative of the loss function. SGD is the variant of GD, which involves frequent variation in a model’s parameter. It computes the loss for each training sample and alters the model’s parameters. Further, the RMSprop optimizer lies in the domain of adaptive learning. RMSprop deals with the vanishing/exploding gradient issue by using a moving average of squared gradients to normalize the gradient. The most powerful optimizer is the Adam optimizer which has the strength of momentum of GD to hold the gained knowledge of updates, adaptive learning of RMSprop optimizer, offers two new hyper-parameters beta and beta 2 (Soydaner 2020; Sun et al. (2020).

Validation

The most common validation strategies are K-fold cross validation and leave one subject out (LOSO). In k-fold, the k-onefold is used for training and the remaining is used for validation. A similar pattern is followed in k-fold variants such as twofold, threefold, and tenfold cross-validation. In LOSO, out of whole dataset, the data of one subject is kept for validation and the rest is used for training.

AI models adapting HAR devices

There are various HAR devices for capturing human activity signals. The goal of HAR devices is to capture activity signals with minimal distortion. For providing deeper insight into the existing HAR models, we have identified seven AI attributes and used tabular representation for better understanding. It consists of attributes such as #features, feature extraction, AI model architecture, metrics, validation, hyper-parameters/optimizer/loss function. For in-depth description of recent HAR models between 2019 and 2021, we have made four tables for each HAR device: Table 3 (sensor), Table 4 (vision), Table 5 (RFID), and Table 6 (device-free).

In Table 3, we have provided insight into AI techniques adopted in sensor-based HAR models in the last two years. Apart from recent sensor-based HAR models, knowledge about previous sensor-based HAR models published between 2011–2018 is provided in Table S.1 (Supporting document) (Zhu et al. 2019; Ding et al. 2019; Yao et al. 2017, 2019; Hsu et al. 2018; Murad and Pyun 2017; Sundaramoorthy and Gudur 2018; Lawal and Bano 2019). The sensor-based HAR is more dominated by DL techniques especially CNN or the CNN's combination with RNN or its variants. In sensor-based HAR the most used hyper-parameters are learning rate, batch size, #layers, and drop out. Adam optimizer, cross-entropy loss, and k-fold validation are dominant in sensor-based HAR. For example, Table 3’s (R2, C4) presents the 3D CNN-based HAR model which includes 3 convolutional layers of size (32, 64,128) followed by a pooling layer, then an FC layer of size (128) and a softmax layer. Entry (R2, C6) illustrate the validation strategy (10% data was used for validation) and entry (R2, C7) illustrates the hyperparameters (i.e., LR = 0.001, batch size = 50) and selected optimiser (Adam) for performance fine-tuning. Table 4 illustrates the AI framework in vision-based HAR models published in recent 2 years. Further, description of earlier vision-based HAR models published between 2011–2018 are provided in Table S.2 (Supporting document) (Qi et al. 2018; Wang et al. 2018; Thida et al. 2013; Feichtenhofer et al. 2018, 2017; Simonyan and Zisserman 2014; Newell Alejandro 2016; Diba et al. 2016; Xia et al. 2012; Vishwakarma and Singh 2017; Chaaraoui 2015). Initial vision-based HAR models were dominated by ML algorithms such as support vector machine (SVM), k-means clustering with principal component analysis (PCA)-based feature extraction. In the last few years, researchers have shifted to DL paradigm and the most dominant DL techniques such as multi-dimensional CNN, LSTM, and a combination of both. In video camera-based HAR models, the incoming data is video stream which needs more resources and processing time. This issue gives rise to the usage of TL in vision-based HAR approaches. The hyper-parameters used in vision-based HAR are drop-out rate, learning rate, weight decay, and batch normalization. The mean square loss and cross-entropy loss are the most used loss functions, while RMSProp and SGD are the most dominant optimizers in vision-based HAR. For example, Table 4’s (R1, C3) illustrates the description of 3DCNN based HAR model which includes input layer with skeletal joints information split into coloured skeleton motion history images (Color-skl-MHI), and relative joint images (RJI) followed by 3DCNN, then a fusion layer to combine the o/p of both 3DCNN layers and last is the output layer. Table 5 shows the recognition models using RFID devices published in the last 2 years, while details of the earlier RFID-based HAR models are provided in Table S.3 (Supporting document) (Ding et al. 2015; Li et al. 2016; Fan et al. 2017). RFID-based HAR is mostly dominated by ML algorithms like SVM, sparse coding, and dictionary learning. Very few researchers have used DL techniques. Some RFID-based HAR models used traditional approach in which received signal strength indicator (RSSI) is used for data gathering and recognition task is performed by calculating the similarity in dynamic time warping (DTW). Table 6 provides the overview of device-free HAR models where Wi-Fi devices are used for collecting activity data. The recognition approach is similar to RFID-based HAR. Further, ML approaches are more dominant than DL.

Impact of DL on miniaturized mobile and wireless sensing HAR devices

A visible growth of DL in vision-based HAR devices is observed in terms of existing HAR models mentioned in Table 4 where most of the recent work is done using advanced DL techniques like TL using VGG-16, VGG-19, and ResNet-50. Apart from these TL-based models, there are hybrid models using autoencoders as shown in row R8 of Table 4 which includes CNN, LSTM, and autoencoder-based HAR model for extracting deep features from enormous volumed video datasets. But the impact of advanced DL techniques in sensors-based HAR and device-free HAR is not very powerful. Due to the compact size and versatility of miniaturized and wireless sensing devices, they are progressing to become the next revolution in the HAR framework, and the key to their progress is the emerging DL framework. The data gathered from these devices is unlabelled, complex, and has strong inter-correlation. DL offers (1) advanced algorithms like TL, and unsupervised learning techniques such as generative adversarial networks (GAN) and variational autoencoders (VAE), (2) fast optimization techniques such as SGD, Adam, and (3) dedicated DL libraries like TensorFlow, (Py) Torch, and Theano to handle complex data.

Observation 4: DL techniques are still in an evolving stage. Minimal work has been done using TL in sensor-based HAR models. Most of the approaches are discriminative where supervised learning is used for training HAR models. Generative models like VAE and GAN have evolved in the computer vision domain but they are still new in the HAR domain.

Performance evaluation in HAR and integration of AI in HAR devices

Performance evaluation

Researchers have adopted different metrics for evaluating the performance of HAR models, and the most popular evaluation metric is accuracy. The most used metrics in sensor-based HAR include accuracy, sensitivity, specificity, and F1-score. The evaluation metrics used in existing vision-based HAR models were accuracy i.e., top-1, top-5, and mean average precision (mAPS). Metrics used in RFID-based HAR include accuracy, F1-score, recall, and precision. The metrics used in Device-free HAR include F1-score, precision, recall, and accuracy. “Appendix 2” shows the mathematical representations of the performance evaluation metrics used in the HAR framework.

Integration of AI in HAR devices

In the last few years, a significant growth can be seen in the usage of DL in the HAR framework, but there are challenges associated with DL models such as (1) Overfitting/Underfitting: When the amount of activity data is limited, the HAR model learns too well during training that it learns the irregularities and random noise as part of data. As a result, it negatively impacts the model’s generalization ability. Underfitting is another negative condition where the HAR model neither models the new data nor generalizes to new unseen data. Both overfitting and underfitting result in lower performance. By selecting the appropriate optimizer, we can overcome the overfitting condition by tuning the right hyperparameters or by increasing the size of training data, or using k-fold cross validation. The challenge is to select the correct range of hyperparameters that can work well during training and testing protocols and works well when the HAR model is used in real-life applications. (2) Hardware integration in HAR devices: In the last 10 years various HAR models with high performance came into the picture, but the question is “how well they can be used in real-environment without integrating specialized hardware like graphics processing units (GPUs), and extra memory”. Therefore, the objective for designing a HAR model is to design a robust and lightweight model which can run in real-environment without the need for specialized hardware. For applications with huge data such as videos, we need GPUs for training the model. Python offers libraries (such as Keras, TensorFlow) for implementing AI framework on a general-purpose CPU processor. For working on GPUs, one needs to explore special libraries for implementing AI models. Sometimes, it may result in specialized hardware integration need in the target application which makes it expensive. Processing power and costs are interrelated i.e., one needs to pay more for extra power.

Critical discussion

In the proposed review, we made four observations based on the tri-stool of HAR which includes HAR devices, AI techniques, and applications. Based on these observations and challenges highlighted in Sects. 3 and 4, we have made three claims and four recommendations.

Claims based on in-depth analysis of HAR devices, AI, and HAR applications

(i) Mutual relationship among HAR devices and AI framework: Our first claim is based on the observation 1 and 2 where we illustrate that the advancement in AI directly affects the growth of HAR devices. In Sect. 2, Fig. 3a presents the growth of HAR devices in the last 10 years. Further, Fig. 3b illustrates the advancement in AI, which shows how researchers have shifted to the DL paradigm from ML in the last 5 years. Therefore, from observations 1 and 2, we can rationalize that the advancement in AI is resulting in the growth of HAR devices. Most of the earlier HAR models were dependent on cameras or customized wearable sensors data but in the last 5 years more devices like embedded sensors, Wi-Fi devices came into the picture as prominent HAR sources.

(ii) Growth in HAR devices increases the scope of HAR in various application domains: Claim 2 is based on observation 3, where we have shown that for the best results how a target application is depending on a HAR device. For applications like crowd monitoring, if we use sensor devices for gathering the activity data it will not be able to give prominent results because sensors are best for single person applications. Similarly, if we use a camera in a smart home environment, it will not be a good choice because cameras invade user’s privacy and require a high computational cost.

Therefore, we can conclude that multi-person applications like surveillance video cameras are proven best. However, for single-person monitoring applications smart device sensors are more suitable.

(iii) HAR devices, AI, and target application domains are three pillars in HAR framework: From all four observations and claims (1 and 2), we have proved that HAR devices, AI, and application domains are three pillars in the success of a HAR model.

Benchmarking: comparison between different HAR reviews

The objective of the proposed review is to provide a complete and comprehensive review of HAR based on the three pillars i.e., device-type, AI techniques, and application domains. Table 7 provides the benchmarking of the proposed review with existing studies.

Table 7.

Previous reviews versus proposed review

| C1 | C2 | C3 | C4 | C5 | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Citation | Year | HAR devices | AI type | Dataset (HAR-devices) | ||||||||

| Sensor | Vision | RFID | DFp | ML | DL | Sensor | Vision | RFID | DFp | |||

| R1 | Hussain et al. (2020) | 2020 | ✓ | ✓ | ✓ | ✓ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ |

| R2 | Carvalho and Sofia (2020) | 2020 | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ |

| R3 | Demrozi et al. (2020) | 2020 | ✓ | ✗ | ✗ | ✗ | ✓ | ✓ | ✓ | ✗ | ✗ | ✗ |

| R4 | Beddiar et al. (2020) | 2020 | ✗ | ✓ | ✗ | ✗ | ✓ | ✓ | ✗ | ✓ | ✗ | ✗ |

| R5 | Dhiman Chhavi (2019) | 2019 | ✗ | ✓ | ✗ | ✗ | ✓ | ✓ | ✗ | ✓ | ✗ | ✗ |

| R6 | Lima et al. (2019) | 2019 | ✓ | ✗ | ✗ | ✗ | ✓ | ✓ | ✓ | ✗ | ✗ | ✗ |

| R7 | De-La-Hoz-Franco (2018) | 2018 | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ |

| R8 | Hx et al. (2017) | 2017 | ✓ | ✗ | ✗ | ✗ | ✗ | ✓ | ✓ | ✗ | ✗ | ✗ |

| R9 | Ke et al. (2013) | 2013 | ✗ | ✓ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ |

| R10 | Proposed | 2021 | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

DFp Device-free

A short note on HAR datasets

The narrative review surely needs a special note on types of HAR datasets. (1) Sensor-based: Researchers have proposed many popular sensor-based datasets. In Table A.5 (“Appendix 1”), the description of sensor-datasets is illustrated with attributes such as data source, #factors, sensor location, and activity type. It includes wearable sensor-based datasets (Alsheikh et al. 2016; Asteriadis and Daras 2017; Zhang et al. 2012; Chavarriaga et al. 2013; Munoz-Organero 2019; Roggen et al. 2010; Qin et al. 2019), as well as smart-device sensor-based datasets (Ravi et al. 2016; Cui and Xu 2013; Weiss et al. 2019; Miu et al. 2015; Reiss and Stricker 2012a, b; Lv et al. 2020; Gani et al. 2019; Stisen et al. 2015; Röcker et al. 2017; Micucci et al. 2017) Apart from datasets mentioned in Table A.5, there are few more datasets worth mentioning such as Kasteren dataset (Kasteren et al. 2011; Chen et al. 2017), which is also very popular. (2) Vision-based HAR: Devices for collecting 3D data are CCTV cameras (Koppula and Saxena 2016; Devanne et al. 2015; Zhang and Parker 2016; Li et al. 2010; Duan et al. 2020; Kalfaoglu et al. 2020; Gorelick et al. 2007; Mahadevan et al. 2010), depth cameras (Cippitelli et al. 2016; Gaglio et al. 2015; Neili Boualia and Essoukri Ben Amara 2021; Ding et al. 2016; Cornell Activity Datasets: CAD-60 & CAD-120 2021), and videos from public domains like YouTube and Hollywood movie scenes (Gu et al. 2018; Soomro et al. 2012; Kuehne et al. 2011; Sigurdsson et al. 2016; Kay et al. 2017; Carreira et al. 2018; Goyal et al. 2017). The reason behind using public domain videos is that they have no privacy issue, unlike with cameras. Table A.6 (“Appendix 1” illustrates the description of vision-based datasets which includes data source, #factors, sensor location, and activity type. Apart from datasets mentioned in Table A.6, there are few more publicly available datasets such as MCDS (Magnetic wall chess board video) datasets (Tanberk et al. 2020), NTU-RGBD datasets (Yan et al. 2018; Liu et al. 2016), VIRAT 1.0 (3 hour person vehicle interaction), and VIRAT 2.0 (8 hour surveillance scene of school parking) (Wang and Ji 2014). (3) RFID-based: RFID-based HAR is mostly used for smart home applications, where actions performed by the user are monitored by RFID tags. To the best of our knowledge, there is hardly a public dataset available for RFID-based HAR. Researchers have developed their own datasets for their respective applications. One such dataset was developed by Ding et al. (Ding et al. 2015) in 2015 which includes data of 10 exercises performed by 15 volunteers for 2 weeks with a total duration of 1543 min. Similarly, Li et al. developed the dataset for trauma resuscitation including the 10 activities and 5 resuscitation phases (Li et al. 2016). A similar strategy was followed by Du et al. (2019), Fan et al. (2017), Yao et al. (2017, 2019), Wang et al. (2019d). (4) Device-free: There are not many popular datasets that are publicly available. However, researchers followed the same strategy which is adopted in RFID-based HAR. Yan et al. included the data of 6 volunteers with 440 actions in their dataset with a total of 4400 samples (Wang et al. 2019c). Similarly, Yan et al. (2020), Fei et al. (2020), Wang et al. (2019d) have proposed their own datasets.

Table A.5.

Sensor datasets

| C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 | ||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Dataset name/Publicly available | Year | Source | # Classes | # Actors | Sensor location | Activity type | Single/multiple person | Size | ||||||

| FE | H–O | H–H | ADL | G | RT | |||||||||

| R1 | Skoda/Pub | 2008 | WS | 10 gestures in car maintenance | 1 subject | 19 sensors on both arms | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ | Single | _ |

| R2 | USC-HAD/Pub | 2012 | IMU with Acc, Gyro, Mag | 12 activities | 17 (7 M, 7 F) | Front right hip | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | Single | _ |

| R3 | PAMAP/Pub | 2012 | WS (IMU, 3 Colibri), HR monitor | 18 activities | 9 subjects | _ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | Single | 3,850,505 (52 attributes) |

| R4 | Opportunity/Pub | 2012 | WS:(7 IMU, 12 Acc,7 Loc), OS (12), AS (21) | 6 runs per subject (5 ADL and 6th for drill) | 4 subjects | Upper body, hip and leg | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | Single | 2551 (242 attributes) |

| R5 | UCI-HAR/Pub | 2012 | SPS (Acc, Gyro) | 6 activities | 30 (19–48 years) | Samsung galaxy SII mounted on waist | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | Single | 10,299 (561 attributes) |

| R6 | Heterogeinity (HHAR)/Pub | 2015 | SPS and SW Acc, Gyro | 6 activities | 9 users | 8 SPS & 4 SW | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | Single | 43,930,257 (16 attributes) |

| R7 | MobiAct/Pub | 2016 | SP (Acc, Gyro) | 9 ADL activities and 4 types of falls | 57 subjects (42 M, 15F) of (20–57 years) | Samsung Galaxy S3 SP in trousers’ pocket | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | Single | 2500 |

| R8 | UniMibShar/Pub | 2017 | SPS | 17 activities (9 ADL and 8 fall) | 30 of (18- 60 years) | _ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | Single | 11,771 samples |

| R9 | WISDM/Pub | 2019 | SPS and SW’s Acc, Gyro | 18 activities | 51 | _ | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | Single | 15,630,426 |

| R10 | 19NonSense/Pri | 2020 | e-Shoe, Samsung gear SW | 18 activities (9 indoor and 9 outdoor sports) | 13 (5F, 8 M) of (19–45 years) | Foot, arm | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | Single | Not public dataset |

* CIT citations, SPS smartphone sensor, WS wearable sensor, SP smartphone, Acc accelerometer, Gyro gyroscope, Loc location, SW smartwatch, ADL activities of daily living, M male, F female, Pub publicly available, Prop proprietary

Table A.6.

Video datasets

| C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 | ||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Dataset name/Publicly available | Year | Source | # Classes | # Actor | Body part involved | Activity type | Single/multiple person | Size | ||||||

| FE | H–O | H–H | ADL | G | RT | |||||||||

| R1 | CAD-60/Pub | 2009 | Kinect | 5 (environments) 12 (activities) | 4 (2 M, 2F) | Whole body joint | ✗ | ✓ | ✗ | ✗ | ✗ | ✓ | Single | 60 videos |

| R2 | CAD-120/Pub | 2009 | Kinect | 10 (High level) 10 (sub activity labels) 12 (sub affordance labels) | 4 (2 M, 2F) | Whole body joint | ✗ | ✓ | ✗ | ✗ | ✗ | ✓ | Single | 120 videos |

| R3 | MSR Action 3D/Pub | 2009 | DC | 10 subjects, 20 action, 20 3D joints) | 10 | Whole body | ✗ | ✗ | ✗ | ✓ | ✓ | ✗ | Single | 336 action files |

| R4 | UT Kinect/Pub | 2012 | Kinect | 10 actions | 10 | Whole body | ✗ | ✗ | ✗ | ✓ | ✗ | ✓ | Single | 1.79 GB |

| R5 | AVA/Pub | 2018 | MC | 80 atomic visual actions | 192 movies | Whole body | ✓ | ✓ | ✓ | ✓ | ✗ | ✓ | Both | 57,600 videos |

| R6 | UCF-101/Pub | 2012 | YT | 101 actions | 2,500 videos | Whole body | ✗ | ✓ | ✓ | ✓ | ✗ | ✓ | Both | 13 K clips 27 h |

| R7 | HMDB51/Pub | 2011 | YT, GV, MC | 51 action classes | 3,312 videos | Whole body | ✗ | ✓ | ✓ | ✓ | ✗ | ✗ | Both | 6,766 clips of 2 GB |

| R8 | Charades/Pub | 2016 | ADL | 157 classes | 267 | Whole body | ✗ | ✓ | ✓ | ✓ | ✗ | ✗ | Single | 4855 KB |

| R9 | Kinetics 400/Pub | 2017 | YT | 400 | 400–1000 clips/class | Whole body | ✗ | ✓ | ✓ | ✓ | ✗ | ✗ | Both | 3,00,000 |

| R10 | Kinetics 600/Pub | 2018 | YT | 600 | 600–1000 clips/class | Whole body | ✗ | ✓ | ✓ | ✓ | ✗ | ✗ | Both | 5,00,000 |

| R11 | SomethingSomething/Pub | 2018 | Objects Actions | 174 classes | H-I actions | Whole body | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | H–O interaction | 108,499 videos |

| R12 | Weizmann/Pub | 2005 | ADL | 10 action classes | 2 subs | Whole body | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | Single | 90 videos |

| R13 | UCSD/Pub | 2013 | camera | Peds1 and Peds2 | Subway | People group | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | Surveillance data | Peds1: 60 & Peds2: 28 |

*CIT citations, ADL activities of daily living, M male, F female, YT YouTube, MC movie clip, DC depth camera, Pub publicly available, Prop proprietary

Strengths and limitations

Strengths