Abstract

High-resolution functional 2-photon microscopy of neural activity is a cornerstone technique in current neuroscience. It enables, for instance, image-based analysis of how the spatial organization of local neuron populations relates to their temporal activity patterns. Interpreting local image intensity as a direct quantitative measure of neural activity presumes, however, a consistent relationship between image intensity and neural activity, both within and across images, and illumination artifacts can disturb this relationship. In particular, the so-called vignetting artifact, i.e., the decrease of image intensity toward the edges of an image, is currently widely neglected in functional microscopy analyses of neural activity, although it can introduce a substantial center-periphery bias into derived functional measures. In the present report, we propose a straightforward protocol for single image-based vignetting correction. Using immediate-early gene-based 2-photon microscopic neural image data of the mouse brain, we show that correcting both image brightness and contrast is necessary to improve within- and across-image intensity consistency, and we demonstrate the plausibility of the resulting functional data.

Keywords: vignetting correction, functional microscopic imaging, neural activity, image analysis, imaging artifacts

Introduction

Modern microscopic imaging techniques provide unique insights into the structure and functioning of complex biological neural systems. For many applications, ranging from whole-brain imaging (Gao et al., 2019) to single-cell isolation (Brasko et al., 2018), illumination correction, that is, the removal of uneven illumination of a scene and specimen, has been shown to facilitate image interpretation and to improve the visibility of fine structures in particular. It is also a pivotal pre-processing step for subsequent image analysis (Smith et al., 2015). Illumination correction is, therefore, often an essential component of microscopy imaging setups (Model, 2014; Khaw et al., 2018) and of image post-processing (Ljosa and Carpenter, 2009; Caicedo et al., 2017; Todorov and Saeys, 2019).

A common and major illumination artifact in microscopic images is the vignetting artifact, a (usually radial) decrease of image brightness, contrast, or saturation from the image center toward the periphery (Leong et al., 2003). Correction approaches can be divided into prospective methods that exploit reference images (Young, 2000), retrospective multiple image-based correction (Singh et al., 2014; Smith et al., 2015; Peng et al., 2017), and single image-based strategies. Reference and multiple image-based methods are considered most reliable (Smith et al., 2015) but are not always applicable due to application-specific constraints (e.g., data availability). In such cases, single image-based correction strategies are required (Leong et al., 2003; Zheng et al., 2008).

In the present report, we focus on high-resolution functional microscopic imaging and its application to studying the functioning of biological neural systems. Techniques such as calcium indicator-based (O’Donovan et al., 1993; Svoboda et al., 1997; Wachowiak and Cohen, 2001; Stosiek et al., 2003; Birkner et al., 2017; Tischbirek et al., 2019; Yildirim et al., 2019) or immediate early gene (IEG)-based imaging (Barth et al., 2004; Wang et al., 2006, 2019; Barth, 2007; Xie et al., 2014; Franceschini et al., 2020) in principle allow local image intensity to be read out as neural activity. Although a quantitative comparison of neural activity, for example between local neuron populations, is intuitively susceptible to illumination artifacts, illumination correction has so far been widely neglected, or at least not reported, in such studies. One potential reason is that established reference- and multiple image-based correction methods apply the same correction at a given location to all microscopic images. This, in turn, may compromise the analysis of interrelations between neural activity patterns by inducing artificial correlations between the corrected images.

To overcome this issue,

- we develop and propose a straightforward single image-based vignetting correction protocol, and
- we demonstrate the value of the vignetting correction using single-channel IEG microscopic images of the mouse brain.

Due to the application-inherent lack of ground truth data to evaluate the effect of the illumination correction, we focus on data plausibility: In the presence of a vignetting artifact, the functional data contain a significant center-periphery bias of neural activity measures that, for the performed experiments, cannot be accounted for by the underlying biology. Considering the reduction of the center-periphery bias in neural activity as well as consistency of absolute and relative activity changes over time as proxies of success, we demonstrate that the vignetting correction substantially improves data plausibility. Going beyond the standard approach of only taking into account the brightness of the image background (Leong et al., 2003), we further show that the largest improvement in data plausibility is achieved by correction of both image brightness and contrast.

Materials and Methods

Imaging and Image Data

The present study is based on single-channel 2-photon imaging data of the mouse brain as detailed in Xie et al. (2014). The mouse strain was BAC-EGR-1-EGFP [Tg(Egr1-EGFP)GO90Gsat/Mmucd from the Gensat project, distributed by Jackson Laboratories]. Animal care was in accordance with the institutional guidelines of Tsinghua University and with the experimental protocol approved by Tsinghua University. To allow for in vivo imaging, a cranial window was implanted between the ears of 3- to 5-month-old mice. Data recording started 1 month later. EGFP fluorescence intensity was imaged using an Olympus Fluoview 1200MPE with pre-chirp optics and a fast AOM, mounted on an Olympus BX61WI upright microscope and coupled with a 25× water immersion lens (numerical aperture 1.05, 2 mm working distance). The animals were anesthetized 1 h after they had explored a multisensory environment. Under the given experimental conditions, anesthesia is known to have very little effect on protein expression (Bunting et al., 2016), and protein expression is known to reflect neural activity (Xie et al., 2014). To showcase our analysis, we randomly selected one image stack with measurements acquired on two days, 5 days apart. The stack belonged to the primary visual area (VISp). It contained 350 slices in z-direction with a spacing of 2 μm; each in-plane slice had 512 × 512 pixels with a pixel edge length of 0.996 μm. Neuron segmentation was performed as described in Xie et al. (2014). Segmentation was performed on the uncorrected image data to avoid neuron position mismatches between the uncorrected and the corrected image data. The neuron center positions were used to evaluate neural activity.

Illumination Correction Pipeline

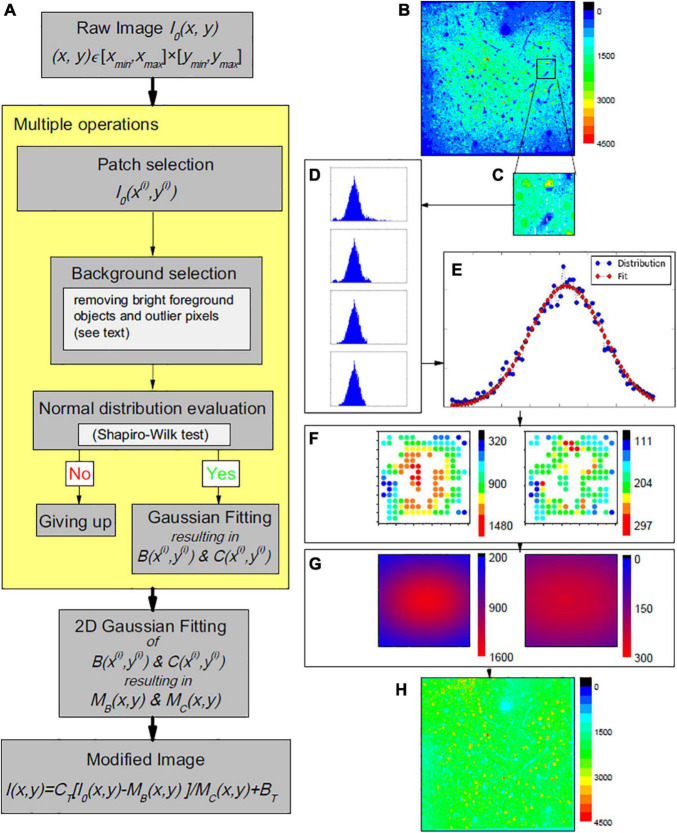

Our proposed single image-based pipeline for vignetting correction in 2-photon functional microscopy data is outlined in Figure 1A. Let a raw (i.e., measured and potentially post-processed) single-channel microscopic image (see Figure 1B for an example) be denoted by I0(x,y), with (x,y) ∈ [xmin,xmax]×[ymin,ymax] =: Ω ⊂ ℝ². In the following, we approximate the relationship between I0 and the sought true image I, which represents neural activity, by

FIGURE 1.

(A) Working pipeline. (B–H) Example of the proposed vignetting correction of a 2-photon microscopy image of mouse area VISp at layer II/III depth (200 μm). (B) The original image, i.e., before correction. (C) Example patch selection. (D) Histograms of the pixel intensities of a patch, illustrating the effect of the performed outlier reduction. From top to bottom: histogram of all pixels in the patch; after removing the long tail; after removing data beyond certain values at both ends; after removing an additional certain amount of data at both ends (details given in the source code). For the final histogram, the similarity to a normal distribution, estimated by the Shapiro-Wilk test statistic, is 0.996, which is larger than the threshold used (0.98). (E) Corresponding Gaussian fit of the background intensity of the patch pixels. (F) Brightness B(x(i),y(i)) (left panel) and contrast C(x(i),y(i)) (right panel) at the supporting points (i.e., the patch centers). (G) The estimated global brightness and contrast distributions MB(x,y) and MC(x,y) (left and right panel, respectively). (H) The final corrected image.

$$ I(x,y) = C_T \, \frac{I_0(x,y) - M_B(x,y)}{M_C(x,y)} + B_T \qquad (1) $$

with MC(x,y) and MB(x,y) as a spatially varying gain or contrast distribution and a spatially varying background brightness, respectively, that are to be estimated and compensated during the illumination correction. BT and CT are pre-defined additive and multiplicative constants to bring the corrected image into a desired value range (Piccinini et al., 2012), and are not part of the correction process. With CT = 1 and BT = 0, Equation 1 corresponds to the standard notation as, for instance, used by Smith et al. (2015).
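As a minimal NumPy sketch of the final correction step, the function below applies Equation 1 under the assumption that it takes the form I = CT·(I0 − MB)/MC + BT, i.e., background subtraction followed by division by the gain map. The function name `correct_vignetting` and the synthetic radial maps are hypothetical; in the pipeline, MB and MC would come from [STEP 2].

```python
import numpy as np


def correct_vignetting(i0, m_b, m_c, c_t=1.0, b_t=0.0):
    """Assumed form of Equation 1: I = C_T * (I0 - M_B) / M_C + B_T."""
    i0 = np.asarray(i0, dtype=float)
    m_b = np.asarray(m_b, dtype=float)
    m_c = np.asarray(m_c, dtype=float)
    if np.any(m_c <= 0):
        raise ValueError("contrast map M_C must be strictly positive")
    return c_t * (i0 - m_b) / m_c + b_t


# Synthetic check: a zero-mean, unit-STD "true" background is degraded by a
# radial gain M_C and a radial additive background M_B (hypothetical shapes).
rng = np.random.default_rng(0)
yy, xx = np.mgrid[0:128, 0:128]
r2 = (xx - 64.0) ** 2 + (yy - 64.0) ** 2
m_c = 10.0 * np.exp(-r2 / (2 * 60.0**2))
m_b = 100.0 * np.exp(-r2 / (2 * 60.0**2))
true = rng.normal(0.0, 1.0, size=(128, 128))
raw = m_c * true + m_b

corrected = correct_vignetting(raw, m_b, m_c, c_t=20.0, b_t=50.0)
```

Because the synthetic raw image is generated exactly as MC·I + MB, the corrected image equals CT·I + BT up to floating-point rounding; with real data, MB and MC are only estimates.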

The proposed correction approach follows the common assumption that vignetting effects can be approximated by Gaussian functions. Leong et al. (2003), for instance, described the uneven illumination as an additive low-frequency signal and approximated it by an isotropic Gaussian distribution with a large standard deviation. The distribution parameters were, however, chosen ad hoc for each image (Leong et al., 2003). Here, we also model MB(x,y) and MC(x,y) by 2-dimensional (2D) Gaussian functions, but estimate the distribution parameters from I0(x,y) itself.

To efficiently cope with potentially large image data sets, we employ a patch-based approach consisting of two main steps:

[STEP 1] patch-based robust estimation of local background brightness and contrast for a sufficient number of supporting points distributed across I0(x,y), and

[STEP 2] estimation of MB(x,y) and MC(x,y) based on the supporting point brightness and contrast values.
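One possible patch sampling strategy (the text notes that any strategy can be used) is a non-overlapping tiling. The sketch below, with the hypothetical helper `patch_grid`, enumerates patch origins and centers; for a 512 × 512 slice and 32 × 32 patches it yields 256 candidate supporting points before the Shapiro-Wilk filtering of [STEP 1].

```python
def patch_grid(height, width, patch_h, patch_w):
    """Return (row0, col0, center_y, center_x) for non-overlapping patches.

    Patches tile the image from the top-left corner; trailing rows/columns
    that do not fill a whole patch are ignored in this sketch.
    """
    patches = []
    for r0 in range(0, height - patch_h + 1, patch_h):
        for c0 in range(0, width - patch_w + 1, patch_w):
            # Center in pixel coordinates (fractional for even patch sizes).
            cy = r0 + (patch_h - 1) / 2.0
            cx = c0 + (patch_w - 1) / 2.0
            patches.append((r0, c0, cy, cx))
    return patches


grid = patch_grid(512, 512, 32, 32)
print(len(grid))  # 256 candidate supporting points
print(grid[0])    # (0, 0, 15.5, 15.5)
```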

STEP 1: Patch-Based Estimation of Local Brightness and Contrast

In line with most existing methods in the given context, we use the image background to estimate the vignetting functions (Leong et al., 2003; Charles et al., 2008; Chalfoun et al., 2015). Let the i-th patch of I0 have the center (x(i),y(i)) ∈ Ω and the domain [x(i)−λx/2, x(i)+λx/2]×[y(i)−λy/2, y(i)+λy/2] =: Ωi ⊂ Ω, with λx and λy as the side lengths of the patches (Figure 1C). In the following, we assume that an ordered sequence of patches covering I0 is given; the proposed approach is, however, applicable to any patch sampling strategy. The patch centers serve as supporting points for [STEP 2]; the associated brightness and contrast values were computed from a histogram analysis of the intensity values of the patch pixels. Starting with the original histogram of a given patch, contributions of high intensity, that is, of foreground objects such as highly active neurons that lead to a long-tailed intensity distribution, were removed following the approach of Clauset et al. (2009) and Alstott et al. (2014), which determines the minimum intensity value above which a power law optimally fits the right tail of the pixel intensity distribution. Histogram data points above this intensity value were discarded from further analysis. Remaining extreme high and low intensity values were then removed by restricting the analysis to the central part of the histogram. The intensity distribution of the remaining data points was tested for normality using the Shapiro-Wilk test (Shapiro and Wilk, 1965); only patches whose test statistic exceeded a predefined threshold were considered valid supporting points for [STEP 2] (Figure 1D). The corresponding local brightness and contrast values B(x(i),y(i)) and C(x(i),y(i)) were approximated by the mean and the standard deviation of the fitted normal distribution (Figure 1E).
For further details and the parameter values used in our study, we refer to the source code (see Data Availability).
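A simplified sketch of [STEP 1] for a single patch, using SciPy, is given below. It is not the authors' implementation: the power-law tail fit of Clauset et al. (2009) is replaced by a simpler, plainly different stand-in (discarding pixels above the median plus three normal-scaled median absolute deviations), and the central-part trimming keeps the 1st to 99th percentile; both choices are assumptions. The Shapiro-Wilk threshold of 0.98 is the value reported in this study, and `patch_background_stats` is a hypothetical name.

```python
import numpy as np
from scipy import stats


def patch_background_stats(patch, tail_k=3.0, trim=(1.0, 99.0), w_threshold=0.98):
    """Estimate background brightness B and contrast C of one patch.

    Returns (B, C, W, valid), where W is the Shapiro-Wilk statistic and
    valid is True if W exceeds w_threshold.
    """
    values = np.asarray(patch, dtype=float).ravel()

    # 1) Stand-in for the power-law tail cut: drop bright foreground pixels
    #    above median + tail_k * MAD (MAD scaled to the normal STD).
    med = np.median(values)
    mad = stats.median_abs_deviation(values, scale="normal")
    values = values[values <= med + tail_k * mad]

    # 2) Keep the central part of the remaining distribution.
    lo, hi = np.percentile(values, trim)
    values = values[(values >= lo) & (values <= hi)]

    # 3) Shapiro-Wilk normality test; only the statistic W is used.
    w_stat, _ = stats.shapiro(values)

    # 4) B and C: mean and STD of the fitted normal distribution (ML fit).
    b, c = stats.norm.fit(values)
    return b, c, w_stat, bool(w_stat > w_threshold)


rng = np.random.default_rng(1)
patch = rng.normal(100.0, 10.0, size=(32, 32))  # synthetic background
patch[:4, :12] = 300.0                           # 48 bright "neuron" pixels
b, c, w, valid = patch_background_stats(patch)
```

Note that trimming both tails slightly underestimates the background standard deviation (here roughly 9.3 instead of 10); since the same bias applies to every patch, it mainly rescales MC.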

STEP 2: Approximation of MB(x,y) and MC(x,y)

Based on the patches with indices i ∈ I ⊆ {1,…,n} whose intensity distributions passed the Shapiro-Wilk test, the corresponding patch estimates B(x(i),y(i)) and C(x(i),y(i)) (Figure 1F) were used to fit 2D Gaussian functions, yielding the sought distributions MB(x,y) and MC(x,y) (Figure 1G).
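[STEP 2] can be sketched with `scipy.optimize.curve_fit`. The axis-aligned Gaussian with an additive constant offset is an assumed parameterization (the text only specifies 2D Gaussian functions), and `gauss2d` and `fit_gauss2d` are hypothetical names. The same fit would be run once on the B values and once on the C values of the valid supporting points; the coefficient of determination R² is returned as a goodness-of-fit measure.

```python
import numpy as np
from scipy.optimize import curve_fit


def gauss2d(xy, amp, x0, y0, sx, sy, offset):
    """Axis-aligned 2-D Gaussian plus constant offset, evaluated at xy = (x, y)."""
    x, y = xy
    return offset + amp * np.exp(-((x - x0) ** 2 / (2 * sx**2) + (y - y0) ** 2 / (2 * sy**2)))


def fit_gauss2d(x, y, values):
    """Fit gauss2d to supporting-point values; return parameters and R^2."""
    x, y, values = (np.asarray(a, dtype=float) for a in (x, y, values))
    # Initial guess: peak at the brightest supporting point, broad widths.
    k = np.argmax(values)
    p0 = [values.max() - values.min(), x[k], y[k],
          (x.max() - x.min()) / 2, (y.max() - y.min()) / 2, values.min()]
    params, _ = curve_fit(gauss2d, (x, y), values, p0=p0, maxfev=10000)
    residuals = values - gauss2d((x, y), *params)
    r2 = 1.0 - np.sum(residuals**2) / np.sum((values - values.mean()) ** 2)
    return params, r2


# Synthetic brightness values at the centers of 32 x 32 patches of a 512 x 512 slice.
rng = np.random.default_rng(4)
centers = np.arange(16) * 32 + 15.5
cx, cy = np.meshgrid(centers, centers)
cx, cy = cx.ravel(), cy.ravel()
b_points = gauss2d((cx, cy), 80.0, 250.0, 260.0, 180.0, 150.0, 20.0)
b_points = b_points + rng.normal(0.0, 1.0, size=b_points.shape)
params, r2 = fit_gauss2d(cx, cy, b_points)
```

Evaluating `gauss2d` with the fitted parameters on the full pixel grid would then give a dense estimate of MB(x,y) (or MC(x,y) when fitted to the contrast values).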

Experiments and Evaluation

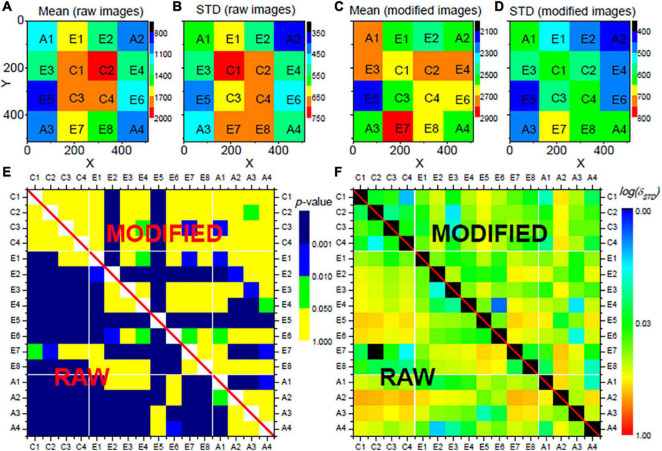

To evaluate the plausibility of the image correction, each slice of the image stack (see section “Imaging and Image Data”) was evenly divided into 4 × 4 = 16 regions (see Figures 2A–D): four center regions (C1 to C4), eight edge regions (E1 to E8), and four angle (i.e., corner) regions (A1 to A4). If a vignetting effect exists, a comparison of the neuronal activity derived for the different regions shows a significant bias: activity is highest in the center regions and decreases radially, resulting in the largest activity differences between the C and the A regions. Correspondingly, after successful correction of the vignetting effect, this bias should be significantly reduced. The slices were further grouped into four laminar compartments: layer II/III (95 slices in our selected stack), layer IV (41 slices), layer V (94 slices), and layer VI (97 slices). The layers were assigned manually.
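Assigning a neuron center to one of the 16 regions and to its region type can be sketched as follows. `region_type` is a hypothetical helper, and the numbering of C1 to C4, E1 to E8, and A1 to A4 is not reproduced because the text does not specify it.

```python
from collections import Counter


def region_type(x, y, width=512, height=512):
    """Map pixel coordinates to a cell of the 4 x 4 grid and its type.

    Returns (row, col, kind) with kind 'C' (center), 'E' (edge) or
    'A' (angle, i.e., corner).
    """
    col = min(int(x * 4 // width), 3)
    row = min(int(y * 4 // height), 3)
    border_row, border_col = row in (0, 3), col in (0, 3)
    if border_row and border_col:
        kind = "A"
    elif border_row or border_col:
        kind = "E"
    else:
        kind = "C"
    return row, col, kind


# One representative point per cell: 4 center, 8 edge and 4 corner regions.
counts = Counter(region_type(c * 128 + 64, r * 128 + 64)[2]
                 for r in range(4) for c in range(4))
print(counts)
```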

FIGURE 2.

Evaluation results for the proposed vignetting correction for the neural activities Xl in layers II/III of a mouse VISp area. The mouse was located in its home cage. The image stack was evenly divided into 16 regions in the x-y plane, as shown in (A–D). (A) shows the mean activity of all neurons within each region, sampled from the raw images; (B) shows the corresponding standard deviations. (C,D) show the mean values and the standard deviations sampled from the corrected images. (E) shows the comparison of the neural activities between each pair of regions, where colors indicate the p-values of the respective t-test with Bonferroni correction. (F) shows the comparison of the distributions of the neural activities between each pair of regions, where colors indicate δSTD on a logarithmic scale. In (E,F), the lower triangles, i.e., the values below the diagonal, show the comparisons for the original images; the upper triangles show the corresponding data for the corrected images. All parameters for the vignetting correction are the same as in Figure 1.

Motivated by functional neuroimaging studies (O’Donovan et al., 1993; Svoboda et al., 1997; Wachowiak and Cohen, 2001; Stosiek et al., 2003; Barth et al., 2004; Wang et al., 2006, 2019; Barth, 2007; Xie et al., 2014; Bunting et al., 2016; Birkner et al., 2017; Moeyaert et al., 2018; Tischbirek et al., 2019; Yildirim et al., 2019), we considered the following measures to analyze the impact of the vignetting effect and the reduction thereof:

- Neural activity: Based on the segmentation of the neurons (see section “Imaging and Image Data”), the activity Xl of the l-th detected neuron (l = 1,…,nl) is computed as the intensity of the center pixel of its segmentation mask.
- Absolute changes of neural activity over time: Neural activity measurements were available for two different days, denoted as day0 and day1, 5 days apart. In addition to the neural activity itself, we evaluated the absolute activity changes ΔXl = Xl(day1) − Xl(day0).
- Relative changes of neural activity: We further evaluated the relative activity changes δXl = [Xl(day1) − Xl(day0)]/[Xl(day1) + Xl(day0)].
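The three measures can be computed directly from the definitions above. In this sketch, `neural_activity` and `activity_changes` are hypothetical helpers, and the same neuron center positions are assumed for both days (segmentation was done on the uncorrected data); the 4 × 4 "images" are toy values.

```python
import numpy as np


def neural_activity(image, centers):
    """X_l: intensity at the (row, col) center pixel of each segmented neuron."""
    img = np.asarray(image, dtype=float)
    rows, cols = np.asarray(centers, dtype=int).T
    return img[rows, cols]


def activity_changes(x_day0, x_day1):
    """Absolute change dX_l = X(day1) - X(day0) and relative change
    deltaX_l = (X(day1) - X(day0)) / (X(day1) + X(day0))."""
    x0 = np.asarray(x_day0, dtype=float)
    x1 = np.asarray(x_day1, dtype=float)
    return x1 - x0, (x1 - x0) / (x1 + x0)


day0 = np.full((4, 4), 80.0)
day0[0, 1], day0[3, 3] = 100.0, 50.0
day1 = day0.copy()
day1[0, 1], day1[2, 2] = 150.0, 20.0
centers = [(0, 1), (3, 3), (2, 2)]   # hypothetical neuron centers
x0 = neural_activity(day0, centers)  # [100, 50, 80]
x1 = neural_activity(day1, centers)  # [150, 50, 20]
abs_change, rel_change = activity_changes(x0, x1)
# abs_change -> [50, 0, -60]; rel_change -> [0.2, 0, -0.6]
```

For non-negative intensities, δXl is bounded to [−1, 1], which makes it less sensitive than ΔXl to global intensity scaling.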

The three measures Xl, ΔXl and δXl were separately evaluated for the 16 regions (C1-C4, E1-E8, A1-A4) and the four laminar compartments (II/III, IV, V, VI) before and after vignetting correction. Results are given as mean and standard deviation (STD) of the measures for the individual regions and laminar compartments. Significant differences between the measures of two different regions were evaluated separately for the different laminar compartments, applying t-tests with Bonferroni correction of the p-values. Moreover, for each pair of regions, the relative difference δSTD of the standard deviations of the considered measure values within the individual regions was computed for the different laminar compartments. Thus, for each laminar compartment, in total nc = 6 center-center (C-C) comparisons, nc = 28 edge-edge (E-E) comparisons, nc = 6 angle-angle (A-A) comparisons, nc = 32 center-edge (C-E) comparisons, nc = 32 edge-angle (E-A) comparisons, and nc = 16 center-angle (C-A) comparisons were performed.
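A sketch of the region-pair testing with SciPy follows. Two details are assumptions not stated in the text: the use of Student's two-sample t-test (`scipy.stats.ttest_ind` with its default equal variances) and a Bonferroni factor equal to the 120 region pairs of one measure and laminar compartment. The helper names are hypothetical, and the random placeholder values serve only to check the pair-type counts listed above.

```python
from itertools import combinations

import numpy as np
from scipy import stats

TYPE_ORDER = "CEA"  # order used for pair labels such as "C-A" or "E-A"


def region_kind(row, col):
    """'A' (corner), 'E' (edge) or 'C' (center) for a cell of the 4 x 4 grid."""
    border_r, border_c = row in (0, 3), col in (0, 3)
    if border_r and border_c:
        return "A"
    return "E" if (border_r or border_c) else "C"


def pairwise_region_tests(values_by_region):
    """Two-sample t-tests for all region pairs, Bonferroni-corrected.

    values_by_region maps (row, col) -> 1-D array of one measure for the
    neurons of that region in one laminar compartment.
    Returns {pair type, e.g. 'C-A': list of corrected p-values}.
    """
    pairs = list(combinations(sorted(values_by_region), 2))
    n_tests = len(pairs)  # 120 for a 4 x 4 grid
    results = {}
    for a, b in pairs:
        _, p = stats.ttest_ind(values_by_region[a], values_by_region[b])
        kinds = sorted((region_kind(*a), region_kind(*b)), key=TYPE_ORDER.index)
        results.setdefault("-".join(kinds), []).append(min(1.0, p * n_tests))
    return results


rng = np.random.default_rng(5)
regions = {(r, c): rng.normal(100.0, 10.0, size=200) for r in range(4) for c in range(4)}
res = pairwise_region_tests(regions)
counts = {k: len(v) for k, v in res.items()}
print(counts)  # C-C: 6, E-E: 28, A-A: 6, C-E: 32, E-A: 32, C-A: 16
```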

To obtain further insight into a potential vignetting-associated center-periphery bias before image correction, we also evaluated, on a region-type level (i.e., pooling all C-C, E-E, A-A, C-A, C-E, etc. comparisons), the fraction of region pairs with non-significant differences (p ≥ 0.01 after Bonferroni correction) for the different measures. Let cp = nc(p≥0.01)/nc denote the ratio of the number nc(p≥0.01) of non-significant comparisons to the total number nc of region type-specific comparisons (e.g., nc = 16 for C-A comparisons). The ratio ΔC = cp/⟨cp⟩same was then computed, with ⟨cp⟩same as the average of the cp values of the C-C, E-E, and A-A comparisons. The hypothesis was that, in the presence of a vignetting effect, ΔC values for, for example, the C-E and C-A comparisons are considerably smaller than one, and that they move closer to one after successful correction. In particular, we expected the comparison of the ΔC values for C-A and E-E comparisons before and after correction to indicate the presence and the successful correction of the vignetting effect.

Similar to ΔC, we also evaluated differences of the relative standard deviations δSTD on a region-type level. With ⟨δSTD⟩ as the average δSTD value over all pairs of two of the region types C, E, or A for a specific laminar compartment, we computed ΔSTD = ⟨δSTD⟩/⟨δSTD⟩same, with ⟨δSTD⟩same denoting the average value of ⟨δSTD⟩ for the C-C, E-E, and A-A comparisons. A correction of a vignetting effect would, for instance, lead to a significant reduction of ΔSTD for C-A comparisons, especially when compared to ΔSTD for E-E comparisons.
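Both region-type ratios divide a type-level statistic by the mean of the within-type (C-C, E-E, A-A) statistics. In the sketch below, `delta_c` computes ΔC as defined above; by analogy, applying the hypothetical helper `normalize_by_same` to type-level averages of δSTD would give ΔSTD, but the δSTD formula itself is not given here and is therefore not implemented. The p-values are toy numbers, not data from this study.

```python
import numpy as np

SAME_TYPES = ("C-C", "E-E", "A-A")


def normalize_by_same(stat_by_type):
    """Divide each type-level statistic by its mean over C-C, E-E and A-A."""
    same = np.mean([stat_by_type[k] for k in SAME_TYPES])
    return {k: v / same for k, v in stat_by_type.items()}


def delta_c(pvals_by_type, alpha=0.01):
    """Delta_C = c_p / <c_p>_same, with c_p the fraction of comparisons of a
    region-pair type whose Bonferroni-corrected p-value is >= alpha."""
    cp = {k: float(np.mean(np.asarray(v) >= alpha)) for k, v in pvals_by_type.items()}
    return normalize_by_same(cp)


toy = {
    "C-C": [0.5] * 6,                  # c_p = 1.0
    "E-E": [0.5] * 21 + [0.001] * 7,   # c_p = 0.75
    "A-A": [0.5] * 3 + [0.001] * 3,    # c_p = 0.5
    "C-A": [0.5] * 4 + [0.001] * 12,   # c_p = 0.25
}
dc = delta_c(toy)
# <c_p>_same = 0.75, so dc["C-A"] = 0.25 / 0.75 = 1/3, far below one
```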

All measures were evaluated for the original image data as well as after vignetting correction according to Equation 1. In addition, we also applied a brightness-only correction, using

$$ I(x,y) = C_T \, \big(I_0(x,y) - M_B(x,y)\big) + B_T \qquad (2) $$

as well as a contrast-only correction,

$$ I(x,y) = C_T \, \frac{I_0(x,y)}{M_C(x,y)} + B_T \qquad (3) $$

to illustrate the respective effects.
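The sketch below applies three correction variants to a synthetic vignetted image to illustrate why brightness-only and contrast-only corrections can leave a residual bias. The assumed forms are CT·(I0 − MB)/MC + BT (full), CT·(I0 − MB) + BT (brightness only), and CT·I0/MC + BT (contrast only). These forms and the map shapes are assumptions, and `correct` and `center_corner_stats` are hypothetical names.

```python
import numpy as np


def correct(i0, m_b, m_c, mode="both", c_t=1.0, b_t=0.0):
    """Assumed correction forms.

    'both':       C_T * (I0 - M_B) / M_C + B_T
    'brightness': C_T * (I0 - M_B) + B_T         (M_C left uncorrected)
    'contrast':   C_T * I0 / M_C + B_T           (M_B left uncorrected)
    """
    if mode == "both":
        return c_t * (i0 - m_b) / m_c + b_t
    if mode == "brightness":
        return c_t * (i0 - m_b) + b_t
    if mode == "contrast":
        return c_t * i0 / m_c + b_t
    raise ValueError(f"unknown mode: {mode}")


def center_corner_stats(img):
    """Mean difference and STD ratio between a central and a corner block."""
    center, corner = img[96:160, 96:160], img[0:64, 0:64]
    return center.mean() - corner.mean(), center.std() / corner.std()


# Synthetic vignetting with differently shaped gain and background maps.
rng = np.random.default_rng(2)
yy, xx = np.mgrid[0:256, 0:256]
r2 = (xx - 128.0) ** 2 + (yy - 128.0) ** 2
m_c = 1.0 + 4.0 * np.exp(-r2 / (2 * 80.0**2))
m_b = 100.0 * np.exp(-r2 / (2 * 150.0**2))
raw = m_c * rng.normal(0.0, 1.0, size=r2.shape) + m_b

for mode in ("both", "brightness", "contrast"):
    gap, ratio = center_corner_stats(correct(raw, m_b, m_c, mode))
    print(f"{mode:>10}: mean gap {gap:7.2f}, STD ratio {ratio:5.2f}")
```

In this toy setting, brightness-only correction equalizes the mean but not the spread between center and corner, whereas contrast-only correction leaves a residual background gradient MB/MC.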

Results

The following results are based on 14,662 automatically segmented neurons. Within each region of the laminar compartments, on average 229 (STD: 78; range: 50–376) neurons were segmented. An example of an image slice before and after vignetting correction is shown in Figures 1B,H, respectively. In the original data, a strong decrease of image intensity from the center toward the periphery is visible. This gradient, in turn, leads to a larger number of significant differences of the measures derived for the different regions and region types, indicated by blue and green fields in the lower triangles of Figure 2E (neural activity Xl), Supplementary Figure 1 (absolute changes ΔXl of neural activity), and Supplementary Figure 2 (relative changes δXl of neural activity) for layers II/III of the considered VISp area. Corresponding representations for layers IV, V, and VI are shown in Supplementary Figures 3–17. Analogously, large δSTD values are present for the original data (see the lower triangle of Figure 2F and Supplementary Figures 1–17). In particular, the C-A comparisons exhibit more significant differences and larger δSTD values than the within-type comparisons, that is, the C-C, E-E, and A-A comparisons, with the C-E and E-A values lying in between. This finding clearly indicates the existence of vignetting effects in the original data.

After applying the proposed image correction procedure, these effects are substantially diminished: the number of significant region-to-region differences of the evaluated measures is considerably reduced, and the δSTD values are more consistent between regions of different types (e.g., C-A and E-A; see the upper triangles in Figures 2E,F and Supplementary Figures 1–11). This effect, however, is much less pronounced if only a brightness or only a contrast correction is applied (Supplementary Figures 12–17).

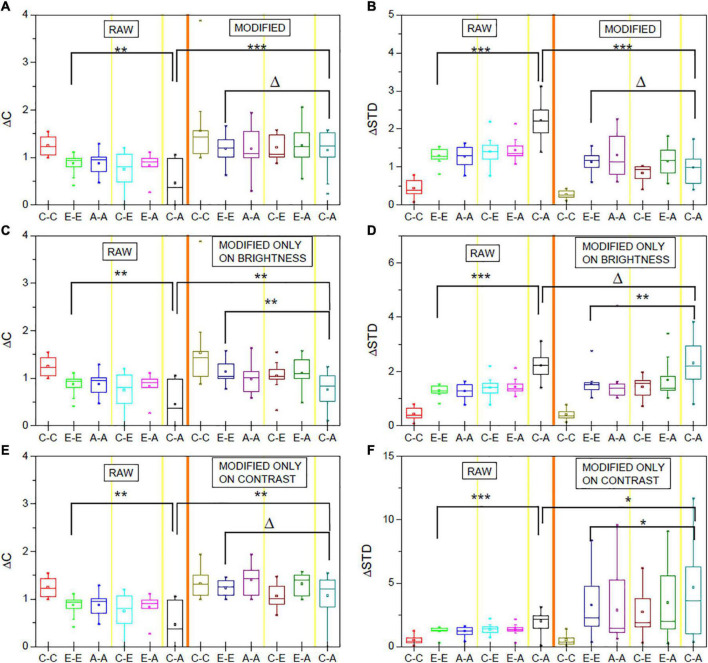

In line with these observations and the hypothesis described in section “Experiments and Evaluation,” before vignetting correction, the ΔC values for the C-A comparisons are significantly lower (p < 0.01) and the ΔSTD values significantly higher (p < 0.001) than the corresponding values for the E-E comparisons. After image correction, these differences are no longer significant, owing to a significant increase of ΔC and a significant decrease of ΔSTD (both p < 0.001) for the C-A comparisons (Figures 3A,B).

FIGURE 3.

ΔC and ΔSTD values for the original (RAW) data and the corresponding values after vignetting correction. (A,B) show the effect of correcting both brightness and contrast. In (C,D) (right sides), only brightness was corrected. Similarly, (E,F) show the data for contrast-only correction. The shown data are averaged over the normalized data obtained for the three studied measures (neural activity, absolute differences of neural activity, relative differences) and the different laminar compartments. Δp > 0.05, *p < 0.05, **p < 0.01, ***p < 0.001, t-test.

Figures 3C–F further reveal (similar to Supplementary Figures 12–17) that brightness-only or contrast-only correction does not sufficiently correct for the vignetting effect. If only a brightness correction is applied, ΔC values are, for instance, still significantly lower for C-A comparisons than for E-E comparisons. If only a contrast correction is applied, the C-A ΔSTD values are still significantly higher compared to the E-E values (p < 0.05).

Discussion

Center-Periphery Bias and Data Plausibility

Correction of uneven illumination, and in particular correction of the vignetting effect, has become a standard procedure in structural neural microscopy image processing (Brasko et al., 2018; Gao et al., 2019). In contrast, the application of such corrections to functional neural microscopy images is rarely reported, and the influence and effects of the respective procedures remain unclear. This lack of studies may in part be due to the lack of ground truth data for evaluation purposes. However, we hypothesize that the intrinsic consistency and plausibility of the data are appropriate indicators of both the existence of vignetting effects and the success of their correction. While neural microscopy data and commonly derived measures, such as neural activity and absolute or relative activity changes, vary over time and certainly exhibit spatially varying distributions, there is no convincing physiological explanation for a center-periphery bias in the distribution of these measures, which is exactly the pattern a vignetting effect produces. A reduction, and ideally the elimination, of a center-periphery bias can therefore be used to assess the success of a correction in functional microscopic neural images.

In this work, we implemented a straightforward single image-based correction method, and used IEG 2-photon microscopic neural image data of the mouse brain to demonstrate that such data are affected by vignetting effects (see Figure 1B), and that the proposed method corrects for the resulting center-periphery bias of the image intensity and derived measures (see, e.g., Figure 2). We further illustrated that the most effective correction of the vignetting effect requires correction of both the brightness and the contrast of the image background. The corresponding code is publicly available to allow re-use of the proposed solutions (see section “Data Availability Statement”).

Effect of Patch Size

The patch size is a key parameter of the proposed vignetting correction protocol. Each patch should contain enough background pixels to allow for histogram fitting of sufficient quality (as evaluated by the Shapiro-Wilk test). At the same time, the patches should be sufficiently small compared to the entire slice to provide enough supporting points for the subsequent 2D Gaussian fitting and estimation of MB(x,y) and MC(x,y).

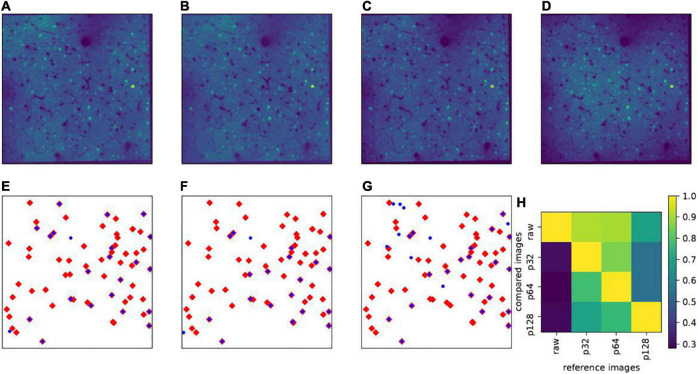

Thus, the selection of the patch size is a trade-off that depends on the size of the entire slice. Correction results for different patch sizes are illustrated in Figure 4 (Figure 4A: patch size 32×32 pixels; Figure 4B: 64×64 pixels; Figure 4C: 128×128 pixels; Figure 4D: raw image). Figures 4E–G illustrate the influence of the patch size on the data analysis. Throughout this work, including the example in Figure 4, the Shapiro-Wilk test threshold was set to 0.98, and the slice size was 512×512 pixels. For these parameters, a patch size of 32×32 pixels appeared visually sufficient for the correction process and substantially reduced the vignetting effect (see Figures 4A,D). A smaller patch size of 16×16 pixels usually led to failure of the Shapiro-Wilk test. A patch size of 64×64 pixels (Figure 4B) yielded results very similar to those for 32×32 pixel patches. However, for patch sizes of 128×128 pixels and larger, a residual vignetting effect is visible (Figure 4C).
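The trade-off can be made concrete by counting patches, assuming a non-overlapping tiling of a 512 × 512 slice: larger patches provide more pixels per histogram but fewer supporting points for the Gaussian fits. `n_patches` is a hypothetical helper.

```python
def n_patches(slice_size, patch_size):
    """Number of non-overlapping square patches that fit into a square slice."""
    per_axis = slice_size // patch_size
    return per_axis * per_axis


for p in (16, 32, 64, 128):
    # patch edge length, number of supporting points, pixels per patch
    print(p, n_patches(512, p), p * p)
# 16 1024 256
# 32 256 1024
# 64 64 4096
# 128 16 16384
```

With 128 × 128 patches, at most 16 supporting points remain, even before any are rejected by the Shapiro-Wilk test.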

FIGURE 4.

Effect of patch size. Upper panels show the same example slice (in layers II/III) corrected with different patch sizes: (A) 32×32 pixels (denoted as p32); (B) 64×64 pixels (p64); (C) 128×128 pixels (p128); and (D) raw image without vignetting correction. In (E–G), the blue dots show the projections onto the x-y plane of all highly active cells (δXl larger than the mean value plus three times the standard deviation) in layers II/III, calculated based on p32, p64, and p128, respectively; the red diamonds represent the highly active cells calculated based on the raw images. (H) shows the overlap rates (|Hi∩Hj|/|Hi|) of highly active cells between each pair of the aforementioned images, where Hi denotes the set of highly active cells from the reference image, Hj the set from the image used for comparison, and |⋅| the cardinality of a set.

These considerations may be particularly relevant for identifying highly active cells, which are used to define so-called memory traces (Xie et al., 2014). Examples of such highly active cells (δXl larger than the mean plus three times the standard deviation) are given in the lower panels of Figure 4. These panels show that, for different but appropriate patch sizes (32×32 pixels versus 64×64 pixels), the sets of highly active cells overlap strongly. In addition, in this example, the highly active cells calculated from the corrected images with appropriate patch sizes roughly constitute a subset of those obtained from the raw images; that is, the cells calculated from the raw images include many apparent false positives (Figure 4H). This phenomenon may not always be present, as it is sensitive to the mathematical definition of highly active cells, but it is conspicuous enough to suggest the existence of false positive detections in uncorrected images.
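Selecting highly active cells (δXl above the mean plus three standard deviations) and computing the overlap rate |Hi∩Hj|/|Hi| of Figure 4H can be sketched as follows. The use of the population standard deviation (NumPy's default) is an assumption, the helper names are hypothetical, and the δXl values are a deterministic toy example.

```python
import numpy as np


def highly_active(ids, rel_change, k=3.0):
    """IDs of cells whose deltaX exceeds mean + k * STD (k = 3 in the text)."""
    rel_change = np.asarray(rel_change, dtype=float)
    threshold = rel_change.mean() + k * rel_change.std()
    return {i for i, v in zip(ids, rel_change) if v > threshold}


def overlap_rate(h_ref, h_cmp):
    """|H_i & H_j| / |H_i| with H_i the reference set, as in Figure 4H."""
    return len(h_ref & h_cmp) / len(h_ref) if h_ref else float("nan")


vals = np.linspace(-0.1, 0.1, 1000)  # toy deltaX values
vals[:5] = 0.9                       # five strongly activated cells
h_corrected = highly_active(range(1000), vals)
print(sorted(h_corrected))                           # [0, 1, 2, 3, 4]
print(overlap_rate(h_corrected, {0, 1, 7, 8, 9}))    # 0.4
```

Note that the rate is asymmetric: it is normalized by the size of the reference set Hi, which is why Figure 4H reports it for ordered image pairs.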

Multiple Image- vs. Single Image-Based Correction

As described in the Introduction, vignetting correction approaches can be divided into two main groups: multiple image-based vs. single image-based correction. A prerequisite of multiple image-based vignetting correction is, obviously, the availability of multiple images. Multiple image correction is often considered more reliable than single image correction, but also comes with some potential disadvantages. One aspect is that multiple image-based correction typically exploits the intensity distribution of pixels at the same spatial position but in different images. This relationship, in turn, implicitly assumes and induces a (potentially artificial) correlation between the images that are processed. The artificial correlation may not be of utmost relevance when processing structural microscopy data. In functional microscopy neural data analysis, however, existence or absence of a correlation of signals from different images is often at the core of the given research question (Xie et al., 2014; Wang et al., 2019). Thus, it has to be ensured that the observed correlations are of a biological nature, rather than artificial.

This requirement, in turn, renders single image-based correction particularly useful for functional microscopic neural data analysis; it is applicable even if the exact form of the vignetting effect varies from day to day. For functional neural microscopy imaging, such day-to-day variations have several causes. For instance, blood vessel volume and blood flow can vary, with a substantial impact on light intensity (Shih et al., 2012). It is, in principle, technically possible to adjust the light intensity at the brain surface to a certain degree by tuning the laser parameters, but this adjustment only eliminates effects caused by vessels on the brain surface. Since far more unpredictable components influence the light intensity inside the brain, for instance, the irregular distribution of blood vessels and the dynamic locations of glia or immune cells, it is hardly possible to pre-set appropriate parameters at different depths that unify the intensities across days for both superficial and deeper slices at the same time. In addition, daily shifts of the position of the mouse brain in the microscope introduce further sources of error. These are corrected by slightly rotating the images after scanning, which, however, inevitably also changes the appearance of the vignetting effect. Moreover, the optical pathways of the laser cannot be guaranteed to remain sufficiently stable over long time scales; in long-lasting experiments, this may be another reason for day-to-day variations of the observed vignetting effects.

Furthermore, from a methodological perspective, the underlying mechanisms of multiple and single image-based correction approaches are often similar to a certain degree. For example, Smith et al. (2015) simultaneously modified image brightness and contrast, similar to our proposed approach. In both approaches, the image modification is based on the analysis and transformation of a pixel intensity distribution, with the difference that Smith et al. (2015) determined the distribution of pixels at the same spatial position across different images. In contrast, we focus on the pixels of patches within a single image; in addition, we exclude bright objects (here: mainly pixels of high activity) to ensure an unbiased estimation of the background intensity distributions.

Thus, for single image-based vignetting correction, the prior information provided by multiple images is essentially replaced by the hypothesis that the image background intensity and the image contrast distribution can be approximated by 2D Gaussian distributions. Fitting a 2D Gaussian to the image background for single image-based vignetting correction was already proposed by Leong et al. (2003). In the present work, we extended their idea by additionally considering the image background contrast. The described results indicate that both brightness and contrast correction are essential. In addition, the high R² values of the fits support the underlying assumption (R² values of 0.9 and 0.75 for brightness and contrast fitting, respectively, given more than approximately 170 valid patches; see Supplementary Figure 18).

Unifying the Brightness and Contrast in the Superior-Inferior Direction

The constants BT and CT can, in principle, be chosen arbitrarily; they can be used to unify the mean background intensity and contrast across the slices of a three-dimensional image stack, that is, along the superior-inferior direction, which is also considered important for the analysis of such data (Gaffling et al., 2014; Yayon et al., 2018). In our experiments, we did so and set the constants to identical values for all slices. It should, however, be noted that the resulting intensity levels should not be over-interpreted in terms of neuroscientific conclusions, for instance, by comparing absolute intensity values across cortical layers. As with the potential day-to-day variations of the vignetting effect, the number of synapses, glia cells, vessels, etc., and, thus, the light intensity vary across slices. In turn, owing to the similar cellular composition of slices within a specific laminar compartment, it appears reasonable to compare intensity values within a laminar compartment. For a reliable comparison across laminar compartment borders, however, supporting evidence is lacking.
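How identical constants BT and CT unify brightness and contrast across slices can be illustrated with a correction of the assumed form I' = (I - B) * CT / C + BT, where B and C denote the fitted brightness and contrast surfaces of a slice. This form is an assumption consistent with the description above: it maps a background with local mean B and local standard deviation C to mean BT and standard deviation CT at every position. The exact equation of the published protocol may differ, and the function names and default target values are hypothetical.

```python
import numpy as np


def correct_slice(image, brightness, contrast, b_target=100.0, c_target=10.0):
    """Standardise the background brightness and contrast of one slice.

    brightness, contrast: per-pixel surfaces B(x, y) and C(x, y), e.g. fitted
    Gaussians. Assumed correction: I' = (I - B) * C_T / C + B_T, with
    b_target = B_T and c_target = C_T (hypothetical defaults).
    """
    image = np.asarray(image, dtype=float)
    return (image - brightness) * (c_target / contrast) + b_target


def correct_stack(stack, brightness_maps, contrast_maps,
                  b_target=100.0, c_target=10.0):
    """Apply identical B_T and C_T to every slice of a (z, y, x) stack."""
    return np.stack([
        correct_slice(img, b, c, b_target, c_target)
        for img, b, c in zip(stack, brightness_maps, contrast_maps)
    ])
```

Using the same targets for every slice places all corrected backgrounds on a common scale; as noted above, this does not by itself justify comparing absolute intensities across laminar compartments.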

Application to Calcium Imaging Data

The present approach may also be relevant for further imaging techniques such as Ca²⁺ imaging (O’Donovan et al., 1993; Svoboda et al., 1997; Wachowiak and Cohen, 2001; Stosiek et al., 2003; Birkner et al., 2017; Tischbirek et al., 2019; Yildirim et al., 2019; Chen et al., 2020). Compared to the IEG-based images used for illustration in this work, applying the proposed vignetting correction protocol to calcium indicator-based imaging will face several challenges. Factors such as a different signal-to-noise ratio and the spatial position of the vignetting center should, in theory, have little impact on the applicability of the protocol. However, an irregular spatial distribution of background noise represents a major obstacle: with current calcium imaging techniques, with only a few exceptions (Chen et al., 2020), the background is disturbed by irregularly distributed axons and dendrites. In addition, the increased computational time required for processing large calcium imaging data sets has to be kept in mind when applying the proposed correction protocol to such data. Nonetheless, the present image correction approach may have potential for a variety of further structural and functional imaging techniques (Kherlopian et al., 2008; Yao and Wang, 2014).

Data Availability Statement

The datasets presented in this study can be found in an online repository: https://github.com/IPMI-ICNS-UKE/VignettingCorrection.

Ethics Statement

The animal study was reviewed and approved by Tsinghua University, Beijing, China.

Author Contributions

J-SG and CH designed the research. DL and GW initialized the illumination correction idea. DL and RW developed the illumination correction and evaluation methodology. GW and HX worked on the animal experiments and data preparation. DL, RW, J-SG, and CH wrote the manuscript. All authors approved the manuscript.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Funding

This work was funded by the German Research Foundation (Deutsche Forschungsgemeinschaft, DFG) and the National Natural Science Foundation of China in the Project Cross-modal Learning, DFG TRR-169/NSFC (61621136008)-A2 to CH and J-SG, DFG SPP2041 as well as HBP/SGA2, SGA3, DFG SFB-936-A1, Z3 to CH, NSFC (31671104), NSFC (31970903) as well as Shanghai Ministry of Science and Technology (no. 19ZR1477400) to J-SG, and DFG SFB-1328-A2 (project no. 335447717) to RW.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fninf.2021.674439/full#supplementary-material

References

- Alstott J., Bullmore E., Plenz D. (2014). powerlaw: a python package for analysis of heavy-tailed distributions. PLoS One 9:e85777. 10.1371/journal.pone.0085777

- Barth A. L. (2007). Visualizing circuits and systems using transgenic reporters of neural activity. Curr. Opin. Neurobiol. 17 567–571. 10.1016/j.conb.2007.10.003

- Barth A. L., Gerkin R. C., Dean K. L. (2004). Alteration of neuronal firing properties after in vivo experience in a FosGFP transgenic mouse. J. Neurosci. 24 6466–6475. 10.1523/JNEUROSCI.4737-03.2004

- Birkner A., Tischbirek C. H., Konnerth A. (2017). Improved deep two-photon calcium imaging in vivo. Cell Calcium 64 29–35. 10.1016/j.ceca.2016.12.005

- Brasko C., Smith K., Molnar C., Farago N. (2018). Intelligent image-based in situ single-cell isolation. Nat. Commun. 9:226. 10.1038/s41467-017-02628-4

- Bunting K. M., Nalloor R. I., Vazdarjanova A. (2016). Influence of isoflurane on immediate-early gene expression. Front. Behav. Neurosci. 9:363. 10.3389/fnbeh.2015.00363

- Caicedo J. C., Cooper S., Heigwer F., Warchal S., Qiu P., Molnar C. (2017). Data-analysis strategies for image-based cell profiling. Nat. Methods 14 849–863. 10.1038/nmeth.4397

- Chalfoun J., Majurski M., Bhadriraju K., Lund S., Bajcsy P., Brady M. (2015). Background intensity correction for terabyte-sized time-lapse images. J. Microsc. 257 226–237. 10.1111/jmi.12205

- Charles J., Kuncheva L. I., Wells B., Lim I. S. (2008). “Background segmentation in microscopy images,” in Proceedings of the Third International Conference on Computer Vision Theory and Applications, (Setúbal: SciTePress).

- Chen Y., Jang H., Spratt P. W. E., Kosar S., Taylor D. E., Essner R. A. (2020). Soma-targeted imaging of neural circuits by ribosome tethering. Neuron 107 454–469.e456. 10.1016/j.neuron.2020.05.005

- Clauset A., Shalizi C. R., Newman M. E. (2009). Power-law distributions in empirical data. SIAM Rev. 51 661–703. 10.1137/070710111

- Franceschini A., Costantini I., Pavone F. S., Silvestri L. (2020). Dissecting neuronal activation on a brain-wide scale with immediate early genes. Front. Neurosci. 14:1111. 10.3389/fnins.2020.569517

- Gaffling S., Daum V., Steidl, Maier A., Kostler H., Hornegger J. (2014). A gauss-seidel iteration scheme for reference-free 3-D histological image reconstruction. IEEE Trans. Med. Imaging 34 514–530. 10.1109/TMI.2014.2361784

- Gao R., Asano S. M., Upadhyayula S., Pisarev I., Milkie D. E., Liu T. L. (2019). Cortical column and whole-brain imaging with molecular contrast and nanoscale resolution. Science 363:eaau8302. 10.1126/science.aau8302

- Khaw I., Croop B., Tang J., Möhl A., Fuchs U., Han K. Y. (2018). Flat-field illumination for quantitative fluorescence imaging. Opt. Express 26 15276–15288. 10.1364/OE.26.015276

- Kherlopian A. R., Song T., Duan Q., Neimark M. A., Po M. J., Gohagan J. K. (2008). A review of imaging techniques for systems biology. BMC Syst. Biol. 2:74. 10.1186/1752-0509-2-74

- Leong F. W., Brady M., McGee J. O. D. (2003). Correction of uneven illumination (vignetting) in digital microscopy images. J. Clin. Pathol. 56 619–621. 10.1136/jcp.56.8.619

- Ljosa V., Carpenter A. E. (2009). Introduction to the quantitative analysis of two-dimensional fluorescence microscopy images for cell-based screening. PLoS Comput. Biol. 5:e1000603. 10.1371/journal.pcbi.1000603

- Model M. (2014). Intensity calibration and flat-field correction for fluorescence microscopes. Curr. Protoc. Cytometry 68:10.14.1-10. 10.1002/0471142956.cy1014s68

- Moeyaert B., Holt G., Madangopal R., Perez-Alvarez A., Fearey B. C., Trojanowski N. F., et al. (2018). Improved methods for marking active neuron populations. Nat. Commun. 9:4440. 10.1038/s41467-018-06935-2

- O’Donovan M. J., Ho S., Sholomenko G., Yee W. (1993). Real-time imaging of neurons retrogradely and anterogradely labelled with calcium-sensitive dyes. J. Neurosci. Methods 46 91–106. 10.1016/0165-0270(93)90145-h

- Peng T., Thorn K., Schroeder T., Wang L., Theis F. L., Marr C. (2017). A BaSiC tool for background and shading correction of optical microscopy images. Nat. Commun. 8:14836. 10.1038/ncomms14836

- Piccinini F., Lucarelli E., Gherardi A., Bevilacqua A. (2012). Multi-image based method to correct vignetting effect in light microscopy images. J. Microsc. 248 6–22. 10.1111/j.1365-2818.2012.03645.x

- Shapiro S. S., Wilk M. B. (1965). An analysis of variance test for normality (complete samples). Biometrika 52 591–611. 10.2307/2333709

- Shih A. Y., Driscoll J. D., Drew P. J., Nishimura N., Schaffer C. B., Kleinfeld D. (2012). Two-photon microscopy as a tool to study blood flow and neurovascular coupling in the rodent brain. J. Cereb. Blood Flow Metab. 32 1277–1309. 10.1038/jcbfm.2011.196

- Singh S., Bray M. A., Jones T., Carpenter A. (2014). Pipeline for illumination correction of images for high-throughput microscopy. J. Microsc. 256 231–236. 10.1111/jmi.12178

- Smith K., Li Y., Piccinini F., Csucs G., Balazs C., Bevilacqua A. (2015). CIDRE: an illumination-correction method for optical microscopy. Nat. Methods 12 404–406. 10.1038/nmeth.3323

- Stosiek C., Garaschuk O., Holthoff K., Konnerth A. (2003). In vivo two-photon calcium imaging of neuronal networks. Proc. Natl. Acad. Sci. 100 7319–7324. 10.1073/pnas.1232232100

- Svoboda K., Denk W., Kleinfeld D., Tank D. W. (1997). In vivo dendritic calcium dynamics in neocortical pyramidal neurons. Nature 385 161–165. 10.1038/385161a0

- Tischbirek C. H., Noda T., Tohmi M., Brinker A., Nelken I., Konnerth A. (2019). In vivo functional mapping of a cortical column at single-neuron resolution. Cell Rep. 27 1319–1326.e1315. 10.1016/j.celrep.2019.04.007

- Todorov H., Saeys Y. (2019). Computational approaches for high-throughput single-cell data analysis. FEBS J. 286 1451–1467. 10.1111/febs.14613

- Wachowiak M., Cohen L. B. (2001). Representation of odorants by receptor neuron input to the mouse olfactory bulb. Neuron 32 723–735. 10.1016/s0896-6273(01)00506-2

- Wang G., Xie H., Wang L., Luo W., Wang Y., Jiang J. (2019). Switching from fear to no fear by different neural ensembles in mouse retrosplenial cortex. Cereb. Cortex 29 5085–5097. 10.1093/cercor/bhz050

- Wang K. H., Majewska A., Schummers J., Farley B., Hu C., Sur M., et al. (2006). In vivo two-photon imaging reveals a role of arc in enhancing orientation specificity in visual cortex. Cell 126 389–402. 10.1016/j.cell.2006.06.038

- Xie H., Liu Y., Zhu Y., Ding X., Yang Y., Guan J. S. (2014). In vivo imaging of immediate early gene expression reveals layer-specific memory traces in the mammalian brain. Proc. Natl. Acad. Sci. 111 2788–2793. 10.1073/pnas.1316808111

- Yao J., Wang L. V. (2014). Photoacoustic brain imaging: from microscopic to macroscopic scales. Neurophotonics 1:011003. 10.1117/1.NPh.1.1.011003

- Yayon N., Dudai A., Vieler N., Amsalem O., London M., Soreq H., et al. (2018). Intensify3D: normalizing signal intensity in large heterogenic image stacks. Sci. Rep. 8:4311. 10.1038/s41598-018-22489-1

- Yildirim M., Sugihara H., So P. T., Sur M. (2019). Functional imaging of visual cortical layers and subplate in awake mice with optimized three-photon microscopy. Nat. Commun. 10:117. 10.1038/s41467-018-08179-6

- Young I. T. (2000). Shading correction: compensation for illumination and sensor inhomogeneities. Curr. Protoc. Cytometry 14 2–11. 10.1002/0471142956.cy0211s14

- Zheng Y., Lin S., Kambhamettu C., Yu J., Kang S. B. (2008). Single-image vignetting correction. IEEE Trans. Pattern Anal. Mach. Intell. 31 2243–2256. 10.1109/tpami.2008.263
