Abstract

Molecular dynamics (MD) simulations are a powerful tool to follow the time evolution of biomolecular motions at atomistic resolution. However, the high computational demand of these simulations limits the timescales of motions that can be observed. To resolve this issue, so-called enhanced sampling techniques have been developed, which extend conventional MD algorithms to speed up the simulation process. Here, we focus on techniques that apply global biasing functions. We provide a broad overview of established enhanced sampling methods and promising new advances. As the ultimate goal is to retrieve unbiased information from biased ensembles, we also discuss the benefits and limitations of common reweighting schemes. In addition to concisely summarizing critical assumptions and implications, we highlight the general application opportunities as well as the uncertainties of global enhanced sampling.

Global enhanced sampling techniques bias the potential energy surface of biomolecules to overcome high energy barriers. In this way, they aim to capture extensive conformational ensembles at comparatively low computational cost.

1. General introduction

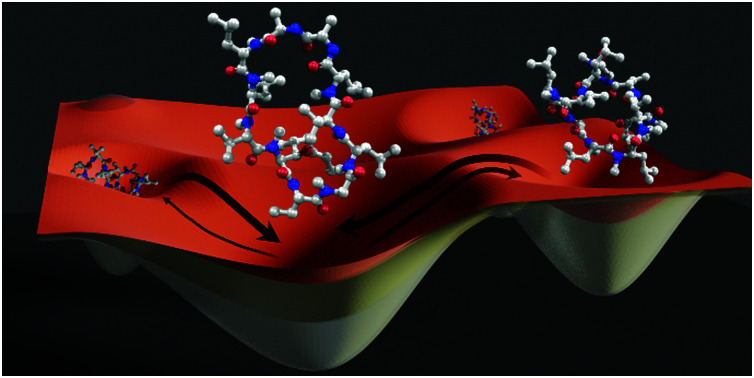

Biomolecules in solution constantly fluctuate within an ensemble of conformational states with varying probability.1 Each of these conformational states exhibits (slightly) altered biophysical properties and provides different opportunities for interactions with surrounding molecules.2–5 The dynamic nature of biomolecules is thus essential to both fundamental and applied research, e.g. for drug discovery and lead optimization.1,3,6 However, even after several decades of research in this area, the complexity and long timescales of the conformational rearrangements of biomolecules still pose substantial challenges for computational and experimental methods.4,7 The ideal technique would be a “molecular camera”, which records the time evolution of the motion of a single molecule at atomic resolution. Despite massive progress in the field of experimental integrative modeling, the tool that currently comes closest to this ideal is molecular dynamics (MD) simulation.8,9 Both the theoretical framework and the implementation of MD simulations are built on numerous approximations that reduce the computational cost to a tractable level, which limits the accuracy of the resulting dynamic models.10–12 The resulting limitations can be broadly divided into two categories: (i) inaccuracies in the underlying force field, and (ii) uncertainties due to limited phase-space exploration. Within this review, we focus on the latter, which is traditionally referred to as the “sampling problem”.

A typical biomolecular system is characterized by a myriad of degrees of freedom, resulting in practically innumerable conformational and configurational states. This complex phase space translates into a free-energy surface that is vast and rugged. MD simulations offer the possibility to explore such free-energy landscapes at atomic spatial and femtosecond temporal resolution. However, in practice the system often gets trapped in a (local) minimum, as high barriers to neighboring configurational states impose slow transition rates. With dedicated state-of-the-art hardware or exascale cloud-computing infrastructure, motions on the millisecond timescale can be observed for biomolecular systems of considerable size.13–15 Unfortunately, general access to such supercomputing systems is limited. An alternative to brute-force high-performance computing is to speed up the sampling process using enhanced sampling strategies.

Many different methodologies that fall into the category of enhanced sampling have been developed over the past decades (for previous reviews, we refer the reader to ref. 16 and 17). Some of the most popular enhanced sampling strategies are pathway-dependent, meaning that they rely on the definition of low-dimensional order parameters, also called reaction coordinates or collective variables (CVs). Methods such as local elevation18 or metadynamics,19 umbrella sampling20,21 or targeted MD22 increase the sampling efficiency of a simulation by applying a bias along the selected CV. Consequently, identifying representative CVs is critical to the success of pathway-dependent enhanced sampling techniques, but reducing the complex dynamics of biomolecules to a few interpretable dimensions is far from trivial.23 Substantial research efforts are currently invested in optimizing and automating the selection of appropriate CVs, e.g. with the aid of machine learning.24–26 Given relevant CVs, pathway-dependent methodologies can perform strikingly well, for example in modelling the activation of voltage-sensing domains of ion channels,27,28 the estimation of ligand koff rates,29 or membrane permeation probability calculations.30,31

Despite these successes, for many interesting biomolecular systems it is not straightforward to derive a small number of representative observables as CVs. For example, when we simulate cyclic peptides in apolar environments, we usually observe one (or a few) well-defined “closed” structures. These closed conformational states can often be easily represented, e.g., via intramolecular hydrogen bond formation.32,33 However, when we study the same system in a polar environment, defining a unique representation immediately becomes more difficult. The ensembles of cyclic peptides in polar environments are generally much more diverse, and observables such as intramolecular hydrogen bonds or the radius of gyration fail to distinguish the conformational states. Other scenarios that are challenging for CV-based pathway-dependent methods include studies with the specific aim of identifying the most flexible domains of a biomolecule34–36 or of discovering novel cryptic or allosteric binding sites.37–39 Whenever the goal is to explore and compare local flexibility patterns within one biomolecular system, a pathway-dependent bias should be avoided, as it inherently steers the results towards the user-defined reaction coordinate.

Fortunately, pathway-independent enhanced sampling techniques that do not require the definition of CVs have also been developed. Methods following the principles of hyperdynamics40 or parallel tempering41,42 add global biasing energies that act on the entire system simultaneously. Here, we provide an overview of currently available pathway-independent enhanced sampling methods, which we broadly categorize by whether the bias is defined via the potential or the kinetic energy function. For each methodology, we describe in the following sections promising results that have been reported for various scientific problems. However, each approach also has its limitations. As we summarize benefits and pitfalls, we explain what can and cannot be expected from the different methods. Furthermore, we discuss the general and central challenge of extracting unbiased thermodynamic and kinetic information from biased ensembles. This process, typically referred to as reweighting, is in practice often decisive for the applicability of biasing methods.

2. Turning up the heat: biasing the kinetic energy

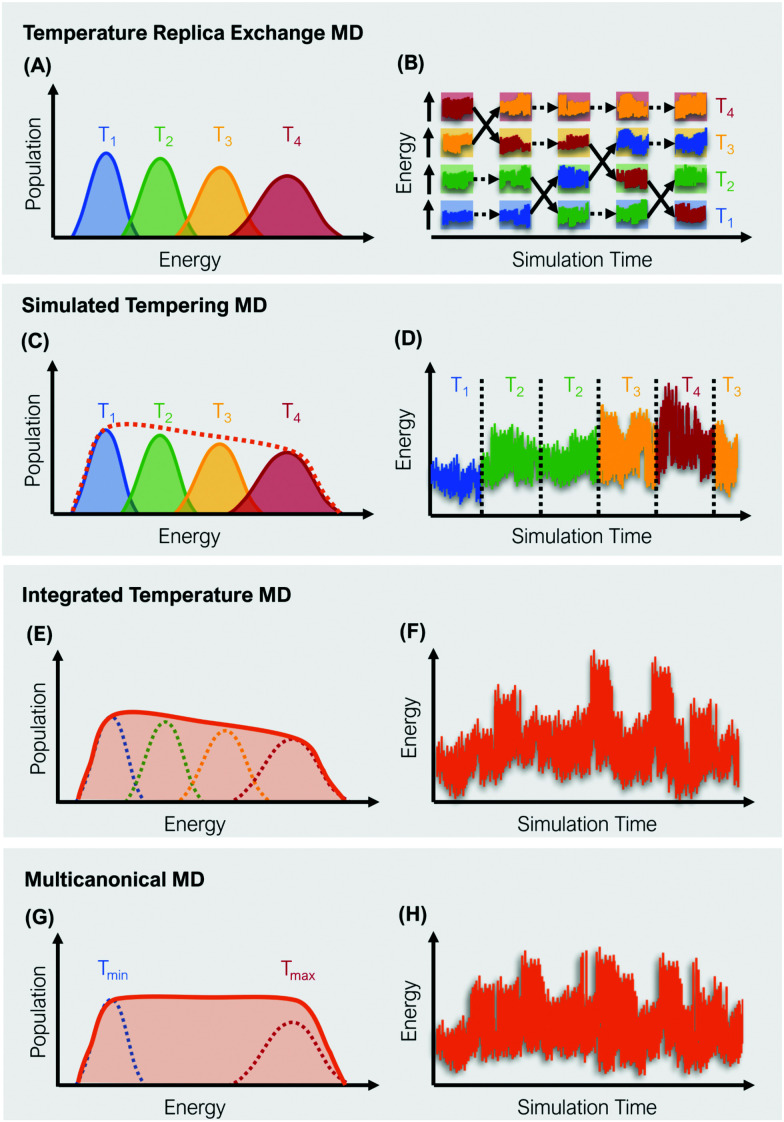

A straightforward way to accelerate motions in a simulated system is to raise its temperature, i.e., to introduce a kinetic bias. At higher temperatures, the transition rates between local minima increase; thus, a larger phase space can be explored in less computational time. Simulations at room temperature, on the other hand, ensure thorough sampling within the minima.43,44 The tempering approaches described below follow the same fundamental idea: simulations at high and low temperatures are combined based on an energetic criterion that retains the canonical ensemble (Fig. 1). In this way, conformational sampling is achieved more efficiently and more reliably than with conventional, single-temperature MD simulations. The practical implementation of this idea, however, differs greatly between the individual enhanced sampling strategies, which we outline in detail in the following paragraphs.

Fig. 1. Schematic representation of four global enhanced sampling techniques, where the bias is defined via the kinetic energy of the system: Temperature replica exchange MD (A and B), simulated tempering MD (C and D), integrated temperature MD (E and F) and multicanonical MD (G and H). The left column (A, C, E and G) illustrates the sampled energy distributions, while the right column (B, D, F and H) displays the energy as a function of the simulation time.

2.1. Temperature replica exchange MD

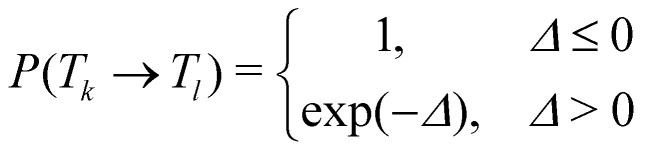

In temperature replica exchange MD (T-REMD), also referred to as parallel tempering, multiple simulations of identical systems are performed simultaneously at different temperatures.41,42 Exchanges between neighboring replicas are attempted at defined time intervals and accepted or rejected based on an energetic criterion that retains the canonical distribution (Fig. 1A and B).45 Typically, this routine is based on a Metropolis criterion, where the exchange probability P(Tk → Tl) depends on the reference temperatures of the two replicas (Tk, Tl) and their potential energies (Vk, Vl):46,47

$$P(T_k \rightarrow T_l) = \min\left(1, e^{\Delta}\right), \tag{1}$$

$$\Delta = (\beta_k - \beta_l)(V_k - V_l), \tag{2}$$

with βk = 1/(kBTk) and βl = 1/(kBTl) being the inverse temperatures, i.e. the inverse of the product of the Boltzmann constant kB and the temperatures Tk and Tl. The nature of this approach thus requires a computational setup where multiple simulations can be performed in parallel with sufficiently fast and frequent communication between the computing nodes.43 While parallelization posed a limitation for the applicability of the approach in the past, parallel computing is nowadays widely available in modern computing environments. However, the challenge increases for large biomolecular systems, as the number of required replicas is estimated to increase with √N for a system with N degrees of freedom.48 Nevertheless, assuming that the required high-performance computing environment is available, the main remaining question is how to choose the number and spacing of the replicas and the overall temperature range. Various, mostly iterative schemes have been proposed to optimize these parameters.49,50 A popular tool to estimate the replica distribution is the temperature generator for REMD simulations, introduced and hosted by David van der Spoel and co-workers.47,51 Their algorithm estimates the number and spacing of T-REMD replicas based on system size, temperature range, and a user-defined exchange probability (as a practical rule of thumb, the acceptance rate should be above 0.2 to 0.3).52 These predictions are, however, based on a specific test setup and should be re-evaluated for the user-specific combination of MD engine and force field. For a detailed practical guide on how to run T-REMD simulations, we refer the interested reader to ref. 52.
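The exchange step can be sketched in a few lines of Python. This is a minimal illustration, assuming energies in kJ mol⁻¹ and the acceptance rule min(1, e^Δ) with Δ as in eqn (2), which follows from detailed balance for swapping the two configurations. The function names are hypothetical, and the geometric temperature ladder is a common heuristic used here for illustration only; it is not the van der Spoel generator mentioned above.

```python
import math

K_B = 0.008314462618  # Boltzmann constant in kJ mol^-1 K^-1 (assumed energy unit: kJ/mol)


def exchange_probability(t_k, t_l, v_k, v_l):
    """Metropolis probability of swapping the configurations held at t_k and t_l.

    v_k and v_l are the potential energies of the configurations that are
    currently simulated at t_k and t_l, respectively.
    """
    beta_k = 1.0 / (K_B * t_k)
    beta_l = 1.0 / (K_B * t_l)
    delta = (beta_k - beta_l) * (v_k - v_l)  # Delta as in eqn (2)
    return math.exp(min(0.0, delta))  # identical to min(1, exp(delta))


def geometric_ladder(t_min, t_max, n_replicas):
    """Geometrically spaced temperatures (illustrative heuristic, not an optimizer)."""
    ratio = (t_max / t_min) ** (1.0 / (n_replicas - 1))
    return [t_min * ratio**i for i in range(n_replicas)]
```

For example, if the colder replica currently holds the higher-energy configuration, Δ is positive and `exchange_probability` returns 1, so the swap is always accepted.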

Compared to serial simulated tempering (ST) simulations, T-REMD simulations have been found to converge more slowly and at a higher computational cost,53 although, depending on the studied system and simulation setup, T-REMD simulations could theoretically outperform ST simulations in terms of wall time. In practice, T-REMD is notably more popular. This can most likely be attributed to its straightforward implementation and the fact that no weighting factors need to be optimized.53 Furthermore, T-REMD simulations yield extensive sampling at different temperatures, which can provide valuable information beyond enhanced conformational sampling. Some of the most remarkable studies employing T-REMD include the first study of Sugita and Okamoto on the folding of Met-enkephalin,42 which was followed by numerous works using T-REMD to further elucidate the protein folding problem.54–58 Moreover, T-REMD has greatly aided the interpretation of experimental data from various sources.59–61 T-REMD simulations have also shown promising results in the area of cyclic peptides, generating ensembles that agree well with NMR interproton distances.62 In the study by Wakefield et al.,62 the authors were furthermore able to rationalize the varying binding affinities of the cyclic peptides through conformational pre-organization captured in T-REMD. In a different study, solvent-induced conformational changes could be observed for cyclic tetrapeptides using T-REMD.63 Additionally, T-REMD simulations have proven valuable in the parametrization of residue-specific force fields.64

2.2. Simulated tempering

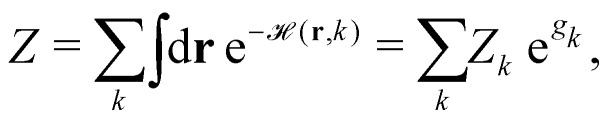

ST simulations, also called serial tempering,44 follow a sampling strategy similar to that of T-REMD. In ST simulations, temperature switches are likewise accepted or rejected based on an energetic criterion. The major difference in the ST setup, however, is that only one continuous simulation is performed, in which the Hamiltonian becomes dependent on the system's reference temperature (Fig. 1C and D).65,66 Consider a system where H(r) is the Hamiltonian of configuration r. Choosing a discrete set of temperatures T1 < … < TK, we define a generalized Hamiltonian44 to run the ST simulation:

$$\tilde{H}(r,k) = \beta_k H(r) - g_k, \tag{3}$$

Here, the index k labels the temperatures and thus takes values from 1 to K. The logarithmic weight corresponding to the temperature Tk is denoted as gk. Consequently, the generalized partition function Z can be written as

$$Z = \sum_{k=1}^{K} \int e^{-\tilde{H}(r,k)}\,\mathrm{d}r = \sum_{k=1}^{K} e^{g_k} Z_k, \tag{4}$$

with Zk being the partition function at βk. This notation highlights that the generalized ensemble combines the canonical ensembles at each temperature using the weighting coefficients {gk}. At a user-defined frequency, attempts are made during the simulation to change the system temperature Tk to a new trial temperature Tl, which is taken from the discrete set of selected temperatures. Whether or not the change is accepted is evaluated based on an energetic criterion, which is specifically designed to maintain the canonical distribution. Similar to T-REMD simulations, the acceptance probability for k → l is given by min(1, exp[−Δk→l(r)]),66 where

$$\Delta_{k\rightarrow l}(r) := \tilde{H}(r,l) - \tilde{H}(r,k) = (\beta_l - \beta_k)\,H(r) - (g_l - g_k). \tag{5}$$

Consequently, ST (like T-REMD) collects unbiased statistics at each temperature, which do not need additional reweighting if analyzed individually. Achieving uniform sampling across the selected set of temperatures critically depends on the choice of the weighting coefficients gk. Optimizing these weights (and automating this optimization) is thus the main challenge in working with ST simulations.67 In practice, this is often a tedious task that requires numerous short trial simulations, as the weights are not known a priori.44,68 Nonetheless, ST simulations have been found to be quite robust across various computing environments, as they only require a single computing node. The approach has already facilitated several studies exploring the free-energy landscapes of biomolecules (e.g. BPTI, Villin, Trp-cage, or fast-folding WW-domain peptides) at low computational cost, with speedups of several orders of magnitude.68,69 Further prominent examples of ST applications include the folding dynamics of multiple mini-proteins in explicit solvent68,69 and Alzheimer's-related peptide aggregation.67
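A single ST temperature move based on eqn (5) can be sketched as follows. The sketch assumes β in reciprocal energy units and H(r) in the matching energy unit, and all function names are hypothetical. The weight estimate `initial_weights` is our own illustrative assumption, not the procedure of the cited works: it integrates d ln Zk/dβ = −⟨H⟩k with the trapezoidal rule, so that the terms e^{gk}Zk in eqn (4) become roughly equal, and it would still require refinement by the trial simulations described above.

```python
import math
import random


def st_acceptance(beta_k, beta_l, g_k, g_l, energy):
    """Acceptance probability min(1, exp(-Delta)) for a move k -> l, eqn (5)."""
    delta = (beta_l - beta_k) * energy - (g_l - g_k)
    return math.exp(min(0.0, -delta))


def initial_weights(betas, mean_energies):
    """Trapezoidal first guess for g_k from average energies <H>_k at each beta_k.

    g_{k+1} - g_k = (beta_{k+1} - beta_k) * (<H>_k + <H>_{k+1}) / 2, with g_1 = 0,
    which makes exp(g_k) * Z_k approximately constant across temperatures.
    """
    g = [0.0]
    for k in range(1, len(betas)):
        mean_h = 0.5 * (mean_energies[k] + mean_energies[k - 1])
        g.append(g[-1] + (betas[k] - betas[k - 1]) * mean_h)
    return g


def attempt_move(k, betas, g, energy, rng=random):
    """Propose a neighboring temperature index and return the resulting index."""
    l = k + rng.choice((-1, 1))
    if l < 0 or l >= len(betas):
        return k  # proposal outside the ladder: stay at the current temperature
    if rng.random() < st_acceptance(betas[k], betas[l], g[k], g[l], energy):
        return l
    return k
```

Proposals that fall outside the temperature ladder are simply rejected, which keeps the symmetric ±1 proposal scheme consistent with detailed balance.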

2.3. Integrated temperature sampling

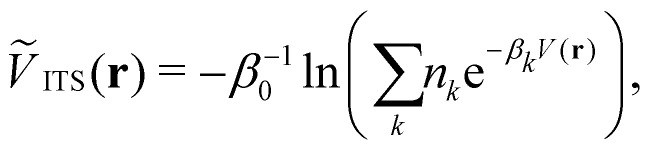

Integrated temperature sampling (ITS) introduces a sum-over-temperature non-Boltzmann factor, which is essentially a linear combination of Boltzmann distributions at different temperatures.70,71 This means that the simulation is performed on a temperature-biased effective potential Ṽ(r), which is defined in the following manner for each configuration r:

$$\tilde{V}(r) = -\frac{1}{\beta_0}\ln \sum_{k} n_k\, e^{-\beta_k V(r)}, \tag{6}$$

where β0 corresponds to the desired inverse reference temperature and {βk} denotes the selected set of inverse temperatures. {nk} are the weighting coefficients, which determine the contribution of the potential energy V(r) at each inverse temperature βk. Typically, it is best to sample the full temperature range uniformly. However, in theory, it is straightforward to focus the sampling on selected temperature regions using {nk}.72 Hence, ITS bears similarities to enveloping distribution sampling,73 as it combines multiple potentials (at different temperatures) to sample one generalized (non-Boltzmann) distribution. The resulting effective potential thus facilitates efficient phase-space exploration across a chosen temperature range within a single simulation (Fig. 1E and F).45,72 The main challenge in ITS is to identify suitable coefficients {nk}, which can be estimated in an iterative procedure during the simulation.72,74 This central step is fast for comparatively simple systems, but can become much more difficult for large-scale biomolecular systems.70 This potential limitation is balanced by the advantageous features of the method, namely that the convergence behavior of ITS has been observed to be superior to that of other global enhanced sampling techniques and that it is computationally substantially more efficient than the related T-REMD in terms of CPU time.45
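To make eqn (6) concrete, the Python sketch below evaluates the ITS effective potential for a single value of V(r), together with the factor dṼ/dV by which the physical forces are rescaled (since F̃ = −∂Ṽ/∂r = (dṼ/dV)·F). It assumes that the coefficients are passed as ln nk and uses a log-sum-exp to avoid overflow; the function names are illustrative and not taken from any ITS implementation.

```python
import math


def _log_terms(v, betas, log_n):
    """ln(n_k) - beta_k * V for every temperature in the set."""
    return [ln_k - b * v for ln_k, b in zip(log_n, betas)]


def its_effective_potential(v, beta0, betas, log_n):
    """V_eff = -(1/beta0) * ln sum_k n_k exp(-beta_k V), eqn (6)."""
    terms = _log_terms(v, betas, log_n)
    m = max(terms)
    log_sum = m + math.log(sum(math.exp(t - m) for t in terms))
    return -log_sum / beta0


def its_force_scale(v, beta0, betas, log_n):
    """dV_eff/dV = sum_k beta_k w_k / (beta0 * sum_k w_k), w_k = n_k exp(-beta_k V)."""
    terms = _log_terms(v, betas, log_n)
    m = max(terms)
    w = [math.exp(t - m) for t in terms]  # common factor exp(m) cancels in the ratio
    return sum(b * wk for b, wk in zip(betas, w)) / (beta0 * sum(w))
```

With all weight on βk = β0, the scale factor is 1 and plain MD is recovered; weight on higher temperatures (smaller βk) lowers the factor and thereby flattens the effective landscape.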

2.4. Multicanonical molecular dynamics

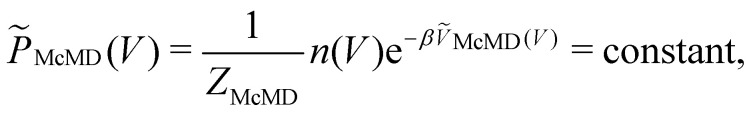

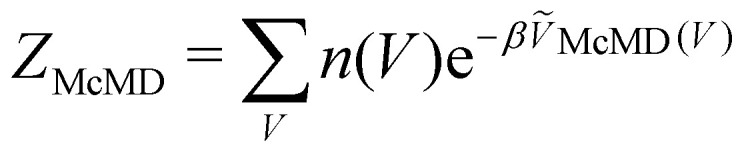

Multicanonical MD (McMD) simulations aim to uniformly sample the potential-energy surface (PES) between high temperature and low temperature regions (Fig. 1G and H).75,76 In other words, the aim is to derive a flat probability distribution function P̃McMD of the potential energy V:75

$$\tilde{P}_{\mathrm{McMD}}(V) = \frac{n(V)\, e^{-\beta_0 \tilde{V}_{\mathrm{McMD}}(V)}}{Z_{\mathrm{McMD}}} = \text{const.}, \tag{7}$$

where the partition function in the multicanonical ensemble is defined as ZMcMD = ∫ n(V) exp[−β0ṼMcMD(V)] dV, n(V) is the density of states, β0 = 1/(kBT0), and ṼMcMD(V) is the effective potential of the McMD simulation. ṼMcMD(V) is not known a priori and needs to be determined from a preceding conventional MD simulation as a function of its unbiased potential energy V, i.e.,77

$$\tilde{V}_{\mathrm{McMD}}(V) = V + k_B T_0 \ln P(V, T_0), \tag{8}$$

with P(V, T0) being the probability distribution of the unbiased potential energy V at the selected temperature T0. This initial temperature T0 is typically set to a comparatively high value to cover a sufficiently broad energy range. However, the resulting ṼMcMD(V) usually cannot be used as the final effective potential for production McMD runs. Rather, it serves as the first input for an iterative refinement scheme that optimizes ṼMcMD(V) for the particular system under study.77 The aim of this refinement is to converge to a flat probability distribution within a chosen temperature range [Tmin, Tmax], where Tmin is generally chosen to be slightly below room temperature and Tmax is in the range of 700 K to 1000 K.78 Since its first introduction by Berg and Neuhaus in 1992, who applied the multicanonical algorithm to study the two-dimensional Potts model,79 the multicanonical approach has found broad usage in the biomolecular simulation community.77,80 In particular, for investigations of the dynamics of (bio-)pharmaceutical systems such as antibodies81,82 and cyclic peptides,76,83 McMD has been successfully applied to bypass high energy barriers.
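The first step of the McMD protocol, eqn (8), amounts to histogramming the potential energies of the preliminary run. The Python sketch below does this with a fixed, user-chosen bin width; the function name and the kJ mol⁻¹ unit are assumptions. Bins that were never visited are simply absent from the table, since ln 0 is undefined, which illustrates why the iterative refinement described above is needed before production runs.

```python
import math
from collections import Counter

K_B = 0.008314462618  # Boltzmann constant in kJ mol^-1 K^-1 (assumed energy unit: kJ/mol)


def mcmd_initial_potential(energies, t0, bin_width):
    """Tabulate V_McMD(V) = V + k_B*T0*ln P(V, T0) at the bin centers, eqn (8)."""
    counts = Counter(math.floor(e / bin_width) for e in energies)
    n = len(energies)
    table = {}
    for b, c in sorted(counts.items()):
        v_center = (b + 0.5) * bin_width
        density = c / (n * bin_width)  # normalized histogram estimate of P(V, T0)
        table[v_center] = v_center + K_B * t0 * math.log(density)
    return table
```

Only differences between table entries matter for the dynamics, so the normalization of P(V, T0) merely adds a constant offset.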

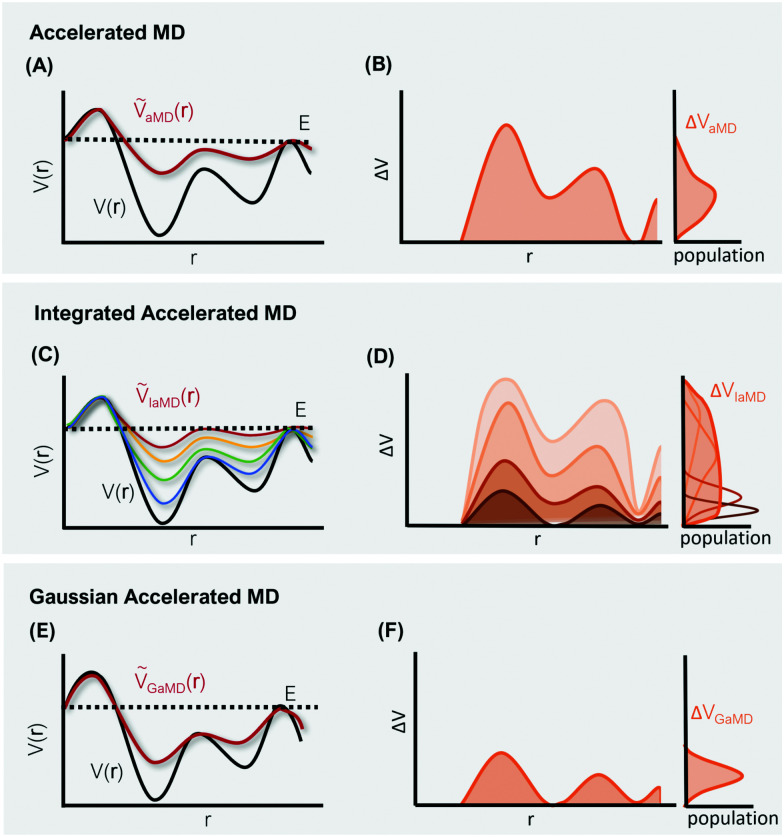

3. Flooding valleys and shaving peaks: biasing the potential energy

More common than changing the kinetic component of the Hamiltonian is the introduction of a bias to the potential energy. Different algorithms have been developed, which decrease the barriers between conformational states either by “filling up” the minima or flattening the maxima of the PES. The varying benefits and limitations of the approaches described in the following paragraphs mostly stem from differences in the functional form of the implemented bias.

3.1. Hyperdynamics

The general idea of distorting the PES with a global biasing potential was first introduced by Arthur Voter in 1997.40 The initial implementation of the approach required the calculation of the Hessian matrix to identify transition states, which inherently limited its applicability to relatively small systems. Later, Hamelberg et al.84 reformulated the approach for large solvated biomolecular systems using a simpler biasing potential. Since then, the approach has been known as accelerated MD (aMD).

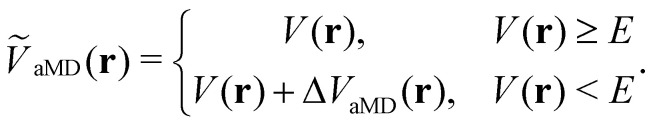

In aMD simulations, a biasing term ΔVaMD(r) is added to the potential energy V(r) whenever the latter is below a certain threshold energy E. The effective potential ṼaMD(r) can thus be written as

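$$\tilde{V}_{\mathrm{aMD}}(r) = \begin{cases} V(r), & V(r) \geq E,\\ V(r) + \Delta V_{\mathrm{aMD}}(r), & V(r) < E. \end{cases} \tag{9}$$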

In the initial formulation of aMD, the biasing term ΔVaMD(r) – typically referred to as boosting potential – itself is defined as,

$$\Delta V_{\mathrm{aMD}}(r) = \frac{\left(E - V(r)\right)^2}{\alpha + E - V(r)}, \tag{10}$$

where α is the so-called tuning or acceleration parameter, which determines the smoothness of the effective potential ṼaMD(r) (Fig. 2). The magnitude of the added biasing potential thus increases with the difference between the threshold energy E and the unbiased potential V(r). This means that the minima are elevated, which in turn decreases the height of the barriers between neighboring conformational states. From a comparison to a millisecond trajectory of bovine pancreatic trypsin inhibitor (BPTI), it has been estimated that aMD can achieve an impressive speed-up of three orders of magnitude.85,86 This speed-up, however, depends on the system size and, most importantly, on the chosen acceleration parameters E and α.
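Eqns (9) and (10) translate directly into code. The Python sketch below also returns the factor by which the physical force is scaled below the threshold: differentiating eqn (10) gives dṼaMD/dV = α²/(α + E − V)², so F̃ = F·α²/(α + E − V)². The function names are hypothetical, and any consistent energy unit can be used.

```python
def amd_boost(v, e_thresh, alpha):
    """Boosting potential of eqn (10); zero at or above the threshold, eqn (9)."""
    if v >= e_thresh:
        return 0.0
    d = e_thresh - v
    return d * d / (alpha + d)


def amd_effective_potential(v, e_thresh, alpha):
    """Effective potential V_aMD(r) = V(r) + Delta V_aMD(r)."""
    return v + amd_boost(v, e_thresh, alpha)


def amd_force_scale(v, e_thresh, alpha):
    """Factor dV_aMD/dV that multiplies the unbiased force."""
    if v >= e_thresh:
        return 1.0
    return alpha**2 / (alpha + e_thresh - v) ** 2
```

The two limits illustrate the role of α: for α → 0, the effective potential below E becomes completely flat (ṼaMD = E), whereas for large α, the boost vanishes and the unbiased PES is recovered.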

Fig. 2. Schematic representation of three different hyperdynamics implementations: accelerated MD (A and B), integrated accelerated MD (C and D), Gaussian accelerated MD (E and F). The left column (A, C and E) illustrates the smoothing of the PES along a selected reaction coordinate r. The right column (B, D and F) displays the corresponding distributions of the boosting potential ΔV for each implementation.

Since their introduction in 2004, aMD simulations have been employed to study a broad range of biomolecules. Some of the most notable applications include the work of Miao et al.,87,88 in which the free-energy surface of G-protein-coupled receptors was elucidated, as well as the investigation from Markwick et al.,89 where aMD predicted motions in protein GB3 on the millisecond timescale in agreement with NMR measurements. Generally, aMD has shown great potential in predicting, complementing, and refining NMR data for numerous biomolecular systems.86,90–94 In particular, aMD simulations have also been shown to produce reliable ensembles of macrocycles and cyclic peptides,94 which can be further utilized for drug optimization.95,96

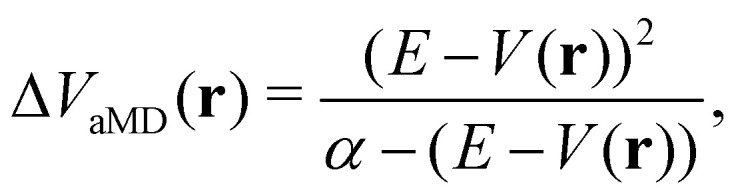

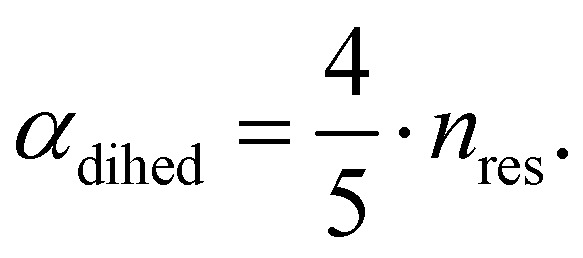

The central challenge when working with aMD is to select appropriate values for the two acceleration parameters E and α. In double-boost aMD,97 probably the most widely used variant of aMD for biomolecules, all particles in the system are biased together, with an extra boost on the dihedral terms. For this setup, four parameters need to be defined, i.e. the threshold energies and smoothing parameters for both the dihedral terms (Edihed and αdihed) and the total potential energy (Etotal and αtotal). These values are typically derived from short conventional MD simulations using empirical formulae that take into account the average total energy 〈Vtotal〉, the average dihedral energy 〈Vdihed〉, and the number of atoms natom and residues nres, respectively.85,98 The dihedral biasing parameters are commonly obtained from the following equations:

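$$E_{\mathrm{dihed}} = \langle V_{\mathrm{dihed}} \rangle + 4\, n_{\mathrm{res}}, \tag{11}$$

$$\alpha_{\mathrm{dihed}} = \frac{4\, n_{\mathrm{res}}}{5}. \tag{12}$$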

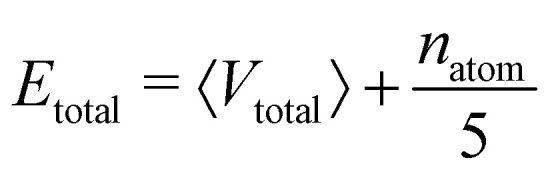

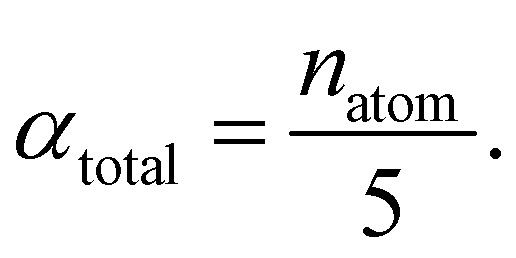

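The parameters of the total-potential boost are calculated via

$$E_{\mathrm{total}} = \langle V_{\mathrm{total}} \rangle + 0.16\, n_{\mathrm{atom}}, \tag{13}$$

$$\alpha_{\mathrm{total}} = 0.16\, n_{\mathrm{atom}}. \tag{14}$$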
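The rules of thumb in eqns (11)–(14) can be wrapped in a small helper. This is a sketch under the assumption that energies are in kcal mol⁻¹ and that the commonly quoted constants of 4 kcal mol⁻¹ per residue and 0.16 kcal mol⁻¹ per atom apply; both the constants and the hypothetical function name should be checked against refs. 85 and 98 and the documentation of the MD engine before use.

```python
def dual_boost_parameters(v_dihed_avg, v_total_avg, n_res, n_atom,
                          per_residue=4.0, per_atom=0.16):
    """Return (E_dihed, alpha_dihed, E_total, alpha_total), eqns (11)-(14).

    v_dihed_avg and v_total_avg are averages from a short conventional MD run.
    The default per-residue and per-atom constants (kcal/mol) are assumptions.
    """
    e_dihed = v_dihed_avg + per_residue * n_res   # eqn (11)
    alpha_dihed = per_residue * n_res / 5.0       # eqn (12)
    e_total = v_total_avg + per_atom * n_atom     # eqn (13)
    alpha_total = per_atom * n_atom               # eqn (14)
    return e_dihed, alpha_dihed, e_total, alpha_total
```

Exposing the constants as keyword arguments makes it easy to scan several parameter sets, in line with the practice of running multiple short aMD simulations discussed below.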

Despite these rules of thumb, choosing appropriate values for the acceleration parameters is far from trivial, as the introduced bias should ideally increase the sampling speed substantially without flattening the PES too severely. In our experience, it is usually worthwhile to start multiple short aMD simulations with different sets of parameters to assess the impact of the bias on the observed motions. If the PES becomes too distorted, the simulation mostly visits irrelevant high-energy states, which for proteins typically means irreversible unfolding. The latter scenario is probably one of the most dreaded risks of enhanced sampling in general, although it is seldom discussed in the literature. To circumvent the issue of manually selecting optimal parameters, a combination of aMD and replica exchange simulations (RE-aMD) has been proposed.99,100 Despite its advantages, RE-aMD has not been widely adopted by the community. This might be because RE-aMD requires replicas to be simulated in parallel, whereas one of the main selling points of the original aMD algorithm was that it can be carried out on a single computing node. This was a particularly attractive feature in the early 2000s, when access to high-performance computing facilities was scarcer. Although advances in computing power since then have favored parallel simulation techniques, the full potential of the RE-aMD approach has not yet been exploited, probably also due to several other promising advancements of aMD.

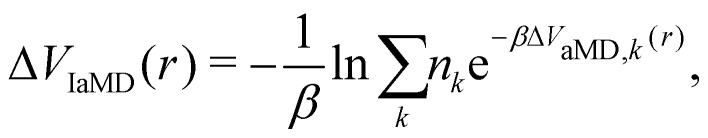

One of these more recent adaptations of the methodology is called integrated aMD (IaMD), which combines multiple aMD simulations with varying acceleration parameters into one trajectory by following the principle of ITS (Fig. 2).101–103 Here, the total boosting potential is defined as,

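$$\Delta V_{\mathrm{IaMD}}(r) = -\frac{1}{\beta_0}\ln \sum_{k} n_k\, e^{-\beta_0 \Delta V_{\mathrm{aMD},k}(r)}, \tag{15}$$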

where ΔVaMD,k(r) is the standard aMD boosting potential as defined in eqn (10) for the kth set of acceleration parameters. The main challenge with IaMD is that, similar to ITS, the weighting coefficients {nk} need to be optimized in addition to the parameters {E} and {α}. A highly compelling argument for IaMD, however, is the convergence speed-up of up to three orders of magnitude compared to aMD simulations, which has been reported for different fast-folding proteins.45,102 This advantage arises from combining multiple biasing potentials into one, which circumvents the problem of oversampling high-energy states. For large biomolecular systems, such as the RNase P holoenzyme in complex with pre-tRNA, IaMD simulations have also provided valuable mechanistic insights.104 Further variations of the aMD approach include adaptive aMD,105,106 where the threshold energy is re-evaluated and adjusted on the fly during the simulation, and “lowering-barrier” aMD,107 where the biasing function acts directly on the maxima instead of the minima of the PES.
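Assuming that the IaMD boost takes the ITS-like form of eqn (15), in which aMD boosts rather than temperatures are mixed, the combined boost can be computed as below. The sketch reuses the aMD boost of eqn (10); parameter sets are given as (E, α) pairs, the coefficients are passed as ln nk, and all names are illustrative.

```python
import math


def amd_boost(v, e_thresh, alpha):
    """aMD boosting potential of eqn (10); zero at or above the threshold."""
    if v >= e_thresh:
        return 0.0
    d = e_thresh - v
    return d * d / (alpha + d)


def iamd_boost(v, beta0, params, log_n):
    """-(1/beta0) * ln sum_k n_k exp(-beta0 * DeltaV_aMD,k), via log-sum-exp.

    params: list of (E, alpha) pairs; log_n: matching list of ln(n_k).
    """
    terms = [ln_k - beta0 * amd_boost(v, e, a)
             for (e, a), ln_k in zip(params, log_n)]
    m = max(terms)
    return -(m + math.log(sum(math.exp(t - m) for t in terms))) / beta0
```

When the nk sum to one, the combined boost always lies between the smallest and the largest of the individual aMD boosts, so adding a mildly boosted component keeps part of the sampling close to the low-energy states.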

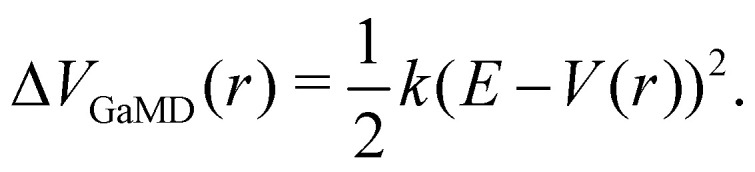

Probably the most notable advancement of the aMD approach is Gaussian aMD (GaMD).108 The central idea in GaMD simulations is to restrict the boosting potential in such a way that its distribution is approximately Gaussian (Fig. 2),

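$$\Delta V_{\mathrm{GaMD}}(r) = \frac{1}{2}\,k\,\left(E - V(r)\right)^2, \tag{16}$$

where k is a harmonic force constant.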

Again, this boosting potential is only applied when the system's potential energy is below a defined threshold energy E (see eqn (9)). In standard aMD simulations, the boosting potential is often distributed over a large energy range, from tens to hundreds of kJ mol−1, in a non-Gaussian manner.109 This can lead to slow convergence, as low-energy states are not sufficiently sampled, and additionally introduces severe difficulties for the subsequent reweighting procedure (see Section 4).45 By restricting the distribution of the boosting potential in GaMD, both of these issues can be circumvented. Nevertheless, GaMD simulations also require the definition of several parameters. These can again be optimized in an automated manner based on a short simulation preceding the production run. In this parameter optimization scheme, the threshold energy E is typically set to the system's maximum potential energy (whereas it can be freely chosen in aMD).108 The average boosting potential as well as its standard deviation have been shown to be lower than in standard aMD.108 With the aid of GaMD simulations, large biomolecular systems have been studied, such as the CRISPR-Cas9 system,110 allergen–antibody complexes,111 or T-cell receptors binding to peptide–MHC complexes.112 For more details on the methodology and applications of GaMD, we recommend a recent and comprehensive review from the Palermo and Miao groups (ref. 113).
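The GaMD boost of eqn (16) and one automated choice of k can be sketched as follows. The force-constant rule corresponds to the "E = Vmax" branch as we understand it from the original GaMD work (ref. 108): k = k0/(Vmax − Vmin) with k0 = min(1, (σ0/σV)(Vmax − Vmin)/(Vmax − Vavg)), where σ0 is a user-defined upper limit for the standard deviation of the boost and Vmin, Vmax, Vavg and σV are statistics of the preparatory run. This rule should be verified against ref. 108 before use, and the function names are hypothetical.

```python
def gamd_force_constant(v_min, v_max, v_avg, sigma_v, sigma0):
    """Harmonic force constant k for the threshold choice E = V_max (assumed rule)."""
    k0 = min(1.0, (sigma0 / sigma_v) * (v_max - v_min) / (v_max - v_avg))
    return k0 / (v_max - v_min)


def gamd_boost(v, e_thresh, k):
    """Boosting potential of eqn (16); zero at or above the threshold E."""
    if v >= e_thresh:
        return 0.0
    return 0.5 * k * (e_thresh - v) ** 2
```

A smaller σ0 yields a smaller k and hence a narrower, weaker boost, which is the handle GaMD uses to keep the boost distribution compact enough for the reweighting discussed in Section 4.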

3.2. Hamiltonian replica exchange

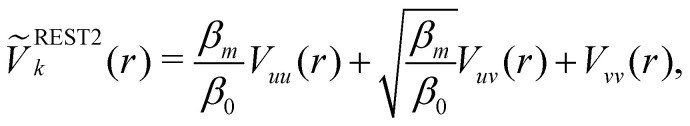

Hamiltonian replica exchange MD (H-REMD) in principle also includes T-REMD, as temperature scaling inherently affects the full Hamiltonian.48 However, the term H-REMD is typically used to refer to methods that alter the Hamiltonian via the potential-energy contribution. The replica exchange mechanism works as described for T-REMD, yet there is more freedom in the form of the implemented bias. This bias can act on selected force-field terms114–117 or on the full energy function.100,118,119 One prominent example of an H-REMD approach is replica exchange with solute scaling (REST2).120 In the preceding version of this approach, replica exchange with solute tempering (REST1), both the temperature and the potential energy vary between replicas.120,121 REST2 simulations, on the other hand, are carried out at a constant temperature T0, while the potential energy of each replica m is scaled via

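$$V_m(r) = \frac{\beta_m}{\beta_0}\, V_{uu}(r) + \sqrt{\frac{\beta_m}{\beta_0}}\, V_{uv}(r) + V_{vv}(r), \tag{17}$$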

where Vuu(r) is the solute–solute interaction energy, Vuv(r) is the solute–solvent interaction energy, Vvv(r) is the solvent–solvent interaction energy, βm = 1/(kBTm) with Tm being the effective solute temperature of replica m, and β0 = 1/(kBT0). The main advantage of REST2 over REST1 and T-REMD is that it requires fewer replicas and converges faster, as shown for the test systems Trp-cage and a β-hairpin.120 In our own work, we have used H-REMD with scaled dihedral potentials to generate diverse conformational ensembles of cyclic peptides.32,122,123 Other implementations that truly act on the full potential-energy function include the above-mentioned RE-aMD99,100 as well as RE-GaMD.119 In both cases, the replica exchange setup bypasses the issue of choosing optimal acceleration parameters. These approaches promise enhanced sampling of all degrees of freedom while retaining sufficient sampling of low-energy states. Example applications include the folding dynamics of mini-peptides100 and the dynamics of HIV protease.100,119 Practical challenges shared by all these H-REMD schemes, however, are the setup with parallel replicas (i.e. considerable computational demand) as well as the choice of the range and distribution of the replicas.
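The REST2 scaling of eqn (17) and the corresponding exchange test can be sketched as below. Because all replicas run at the same temperature T0, a swap of the configurations held by replicas m and n is accepted with min(1, exp(−β0[Vm(rn) + Vn(rm) − Vm(rm) − Vn(rn)])), the standard H-REMD criterion. Energy components are assumed to be in kJ mol⁻¹, and the function names are hypothetical.

```python
import math

K_B = 0.008314462618  # Boltzmann constant in kJ mol^-1 K^-1 (assumed energy unit: kJ/mol)


def rest2_energy(components, t0, t_m):
    """Scaled potential of replica m, eqn (17); components = (V_uu, V_uv, V_vv)."""
    v_uu, v_uv, v_vv = components
    lam = t0 / t_m  # equals beta_m / beta_0
    return lam * v_uu + math.sqrt(lam) * v_uv + v_vv


def hremd_exchange_probability(comp_m, comp_n, t0, t_m, t_n):
    """Swap the configuration held by replica m (T_m) with that of replica n (T_n).

    comp_m and comp_n are the energy components of the two configurations.
    """
    beta0 = 1.0 / (K_B * t0)
    before = rest2_energy(comp_m, t0, t_m) + rest2_energy(comp_n, t0, t_n)
    after = rest2_energy(comp_n, t0, t_m) + rest2_energy(comp_m, t0, t_n)
    return math.exp(min(0.0, -beta0 * (after - before)))
```

Since the solvent–solvent term Vvv is not scaled, it cancels in the exchange criterion, which is why REST2 needs fewer replicas than T-REMD for solvated systems.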

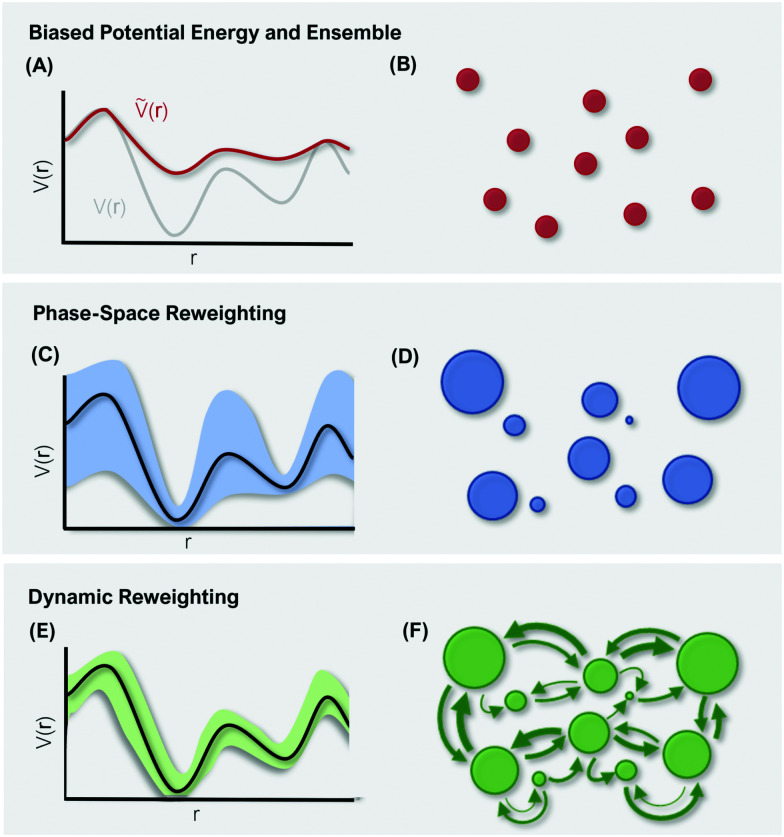

4. Reweighting

The purpose of MD simulations is typically to model the dynamic behavior of a system at experimental or physiological conditions. Yet, the bias introduced with enhanced sampling techniques, be it on the kinetic or the potential energy, distorts the free-energy landscape and consequently prevents a direct comparison with experimental data.124 However, as the form and extent of the biasing potential are known at every simulation step, the unbiased information can be retrieved using reweighting schemes.125,126

Thermodynamic quantities (e.g. free-energy differences or stationary distributions) are usually more easily accessible than kinetic information (e.g. transition rates), which is particularly challenging to recover.127,128 Therefore, different reweighting approaches have been developed that either focus on the reconstruction of thermodynamic quantities or additionally reweight the system's kinetics. In the following, we distinguish between these two objectives as “phase-space reweighting” and “dynamic reweighting”. We provide a condensed overview of both types of reweighting approaches; for a more detailed discussion, we refer the interested reader to dedicated reviews and the original literature.124,127–129

4.1. Phase-space reweighting

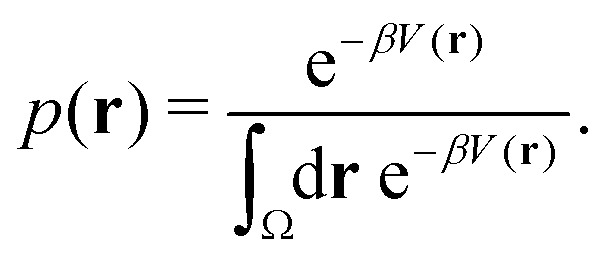

Studies that leverage the sampling efficiency of a global biasing potential typically focus on the system's thermodynamics. For methods such as aMD, the most common way to reweight the trajectory data to the unbiased ensemble is a Boltzmann-type reweighting (see the discussion in refs. 84 and 129). With β = 1/(kBT), the probability of a configuration r on the unbiased PES V(r) is given by

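$$p(\mathbf{r}) = \frac{e^{-\beta V(\mathbf{r})}}{\int e^{-\beta V(\mathbf{r}')}\,\mathrm{d}\mathbf{r}'} \tag{18}$$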

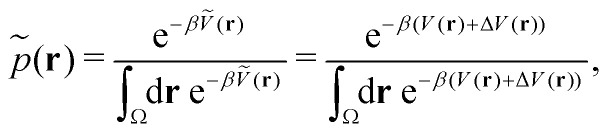

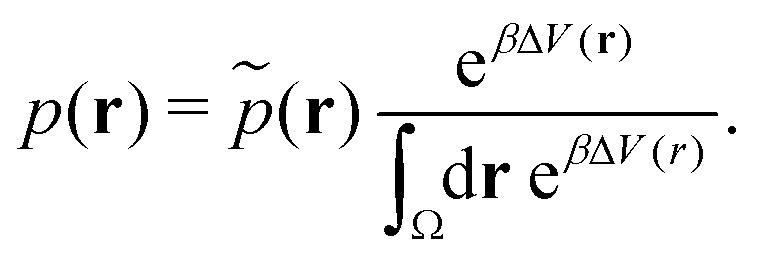

Accordingly, the probability of configuration r on the biased PES Ṽ(r) is,

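$$\tilde{p}(\mathbf{r}) = \frac{e^{-\beta \tilde{V}(\mathbf{r})}}{\int e^{-\beta \tilde{V}(\mathbf{r}')}\,\mathrm{d}\mathbf{r}'} = \frac{e^{-\beta [V(\mathbf{r}) + \Delta V(\mathbf{r})]}}{\int e^{-\beta [V(\mathbf{r}') + \Delta V(\mathbf{r}')]}\,\mathrm{d}\mathbf{r}'} \tag{19}$$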

where ΔV(r) = Ṽ(r) − V(r) is the difference between the biased and unbiased PES. Thus, in theory, the probability distribution on the original PES can be reconstructed from the biased distribution by multiplication with the Boltzmann factor of the biasing potential and subsequent renormalization,

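$$p(\mathbf{r}) = \frac{\tilde{p}(\mathbf{r})\, e^{\beta \Delta V(\mathbf{r})}}{\int \tilde{p}(\mathbf{r}')\, e^{\beta \Delta V(\mathbf{r}')}\,\mathrm{d}\mathbf{r}'} \tag{20}$$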

In practice, however, the distribution of the biasing potential is often very broad, which means that the simulation spends a substantial amount of time sampling high-energy states. As these high-energy states do not contribute significantly to the ensemble average, the reweighting is effectively based on a relatively small number of frames from the free-energy minima.124 Because of the exponential, the reweighting procedure works well only for comparably narrow distributions of the biasing potential (around 20 kBT) and is known to be fairly inaccurate for large systems with broad bias distributions.129 This limitation has been circumvented by approximating the exponential term with either a Maclaurin series or a cumulant expansion.129 The latter has been shown to provide the most reliable results, but is only applicable when the biasing potential follows a Gaussian distribution (which is enforced in the gaMD approach). Still, particularly around the transition regions, the biasing potentials are, by design, comparably small and in practice remain buried in the statistical noise (Fig. 3C). Consequently, relative free-energy differences can typically be reweighted to the unbiased ensemble with robust accuracy, whereas accurate estimates of barrier heights (i.e. kinetics) remain difficult or even inaccessible (Fig. 3).
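To make the difference between the two estimators concrete, the following Python sketch computes a one-dimensional PMF from a biased run, either with the exponential average of eq. (20) or with the second-order cumulant expansion ln⟨e^(βΔV)⟩ ≈ β⟨ΔV⟩ + (β²/2)σ²(ΔV), applied bin by bin along a collective variable. It is a simplified illustration, not the reference implementation of ref. 129; the function name, the binning, and the synthetic input data are assumptions, and bins without frames are returned as infinite free energy.

```python
import numpy as np


def reweighted_pmf(cv, delta_v, beta, edges, method="cumulant2"):
    """PMF (units of 1/beta) along a collective variable from a biased run.

    method="exp"       : exponential average <exp(beta*dV)> per bin
    method="cumulant2" : second-order cumulant expansion of the same average
    """
    cv = np.asarray(cv, dtype=float)
    dv = np.asarray(delta_v, dtype=float)
    idx = np.digitize(cv, edges) - 1          # bin index of each frame
    pmf = np.full(len(edges) - 1, np.inf)
    for j in range(len(edges) - 1):
        d = dv[idx == j]
        if d.size == 0:
            continue                          # unsampled bin stays at +inf
        if method == "exp":
            a = beta * d
            log_avg = a.max() + np.log(np.mean(np.exp(a - a.max())))
        elif method == "cumulant2":
            log_avg = beta * d.mean() + 0.5 * beta**2 * d.var()
        else:
            raise ValueError(f"unknown method: {method}")
        # ln p_j = ln(biased count in bin j) + ln<exp(beta*dV)>_j + const
        pmf[j] = -(np.log(d.size) + log_avg) / beta
    return pmf - pmf[np.isfinite(pmf)].min()  # shift the global minimum to zero


rng = np.random.default_rng(1)
cv = rng.uniform(0.0, 1.0, size=5000)
dv = rng.normal(2.0, 1.5, size=5000)   # hypothetical Gaussian boost energies in kT
edges = np.linspace(0.0, 1.0, 11)
print(np.round(reweighted_pmf(cv, dv, 1.0, edges, "exp"), 2))
print(np.round(reweighted_pmf(cv, dv, 1.0, edges, "cumulant2"), 2))
```

Because ΔV is Gaussian and independent of the coordinate in this synthetic example, both profiles should be close to flat; the exponential estimate is typically the noisier of the two, since each bin is dominated by its few frames with the largest boost.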

Fig. 3. Schematic representation of differences in reweighting a biased PES (A) and a biased ensemble (B). Phase-space reweighting (C and D) reliably recovers the system's thermodynamics, while dynamic reweighting (E and F) additionally provides robust kinetic estimates. The colored bands in (C and E) represent the uncertainty of the reweighted energy profile. The circles in (B, D, and F) represent the system's conformational states, with the size corresponding to their thermodynamic weight.

A widely used approach to reweight T-REMD simulations is the weighted histogram analysis method (WHAM).130 WHAM was originally formulated for the joint analysis of independent simulations in the canonical ensemble and is similar to the Bennett acceptance ratio.131 Subsequent developments of the methodology now allow multiple biased ensembles to be combined in general to retrieve a system's unbiased thermodynamics, e.g. from T-REMD simulations.132,133 Most notably, Chodera et al.133 introduced an extended WHAM workflow that explicitly accounts for the time correlation between configurations sampled in each replica. The WHAM uncertainty estimate is then corrected by using the effective number of independent samples instead of the raw number of frames.
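The self-consistent WHAM equations can be sketched in a few lines of Python. For simplicity, the sketch below uses the umbrella-sampling form, in which K simulations with known bias energies Uk(x) are histogrammed on a common grid; the T-REMD variant, which combines replicas through temperature-dependent Boltzmann factors of the potential energy, is not shown. Function and argument names are hypothetical, and the histogram counts are assumed to be uncorrelated samples.

```python
import numpy as np


def wham(counts, bias, beta, tol=1e-10, max_iter=100_000):
    """Self-consistent WHAM for K biased simulations on a common grid of M bins.

    counts : (K, M) histogram counts N_kj of simulation k in bin j
    bias   : (K, M) bias energy U_k(x_j) of simulation k at bin j
    Returns the unbiased bin probabilities p_j and the dimensionless free
    energies f_k of the simulations (f_0 fixed to 0).
    """
    counts = np.asarray(counts, dtype=float)
    boltz = np.exp(-beta * np.asarray(bias, dtype=float))   # exp(-beta U_kj)
    n_k = counts.sum(axis=1)                                # samples per simulation
    total = counts.sum(axis=0)                              # sum_k N_kj

    def probabilities(f):
        # p_j = sum_k N_kj / sum_k n_k exp(f_k - beta U_kj)
        denom = (n_k[:, None] * np.exp(f)[:, None] * boltz).sum(axis=0)
        p = total / denom
        return p / p.sum()

    f = np.zeros(len(n_k))
    for _ in range(max_iter):
        # exp(-f_k) = sum_j p_j exp(-beta U_kj)
        f_new = -np.log(boltz @ probabilities(f))
        f_new -= f_new[0]                                   # fix the arbitrary offset
        converged = np.max(np.abs(f_new - f)) < tol
        f = f_new
        if converged:
            break
    return probabilities(f), f
```

Because the free energies fk are only defined up to a common constant, the sketch pins f0 = 0; this does not affect the normalized probabilities.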

The reweighting methods discussed above assume that the input data (i.e. the trajectory frames) are uncorrelated samples. However, in biomolecular systems with typically long correlation times, this assumption does not necessarily hold.134 In particular, simulations of complex biomolecules often do not sample the equilibrium between different states reversibly. Even with enhanced sampling, transitions between conformational states may be observed only rarely or in only one direction. Consequently, the equilibrium distribution is not properly sampled and a critical assumption of WHAM is violated.
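The degree of correlation can be quantified with the statistical inefficiency g, from which an effective sample size N/g follows; this is the kind of correction applied by Chodera et al.133 The Python sketch below estimates g from the normalized autocorrelation function, truncated at its first non-positive value. The truncation rule, the function name, and the synthetic test series are simplifying assumptions rather than the exact procedure of ref. 133.

```python
import numpy as np


def statistical_inefficiency(x):
    """g = 1 + 2 * sum_t (1 - t/N) C(t), summed until C(t) first drops to <= 0."""
    x = np.asarray(x, dtype=float)
    n = x.size
    dx = x - x.mean()
    var = dx.var()
    if var == 0.0:
        return 1.0
    g = 1.0
    for t in range(1, n - 1):
        c = np.dot(dx[:n - t], dx[t:]) / ((n - t) * var)   # normalized autocorrelation
        if c <= 0.0:
            break
        g += 2.0 * c * (1.0 - t / n)
    return max(g, 1.0)


rng = np.random.default_rng(0)
iid = rng.normal(size=100_000)
blocky = np.repeat(rng.normal(size=10_000), 10)   # each value repeated 10 times
for name, series in (("uncorrelated", iid), ("block-correlated", blocky)):
    g = statistical_inefficiency(series)
    print(f"{name}: g = {g:.1f}, effective samples = {series.size / g:.0f}")
```

For the block-correlated series, the autocorrelation falls off linearly over ten frames, so g should come out close to 10, i.e. only about one frame in ten carries independent information.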

In summary, phase-space reweighting methods are straightforward to implement and quickly produce an estimate of the system's thermodynamics. In recent extensions of GaMD (namely Ligand-GaMD135 and Peptide GaMD136), kinetic information was also retrieved directly using Kramers' rate theory. The resulting (un)binding kinetics were found to be of the same order of magnitude as the experimental reference. However, to recover both thermodynamics and kinetics more accurately, the more costly dynamic reweighting methods need to be employed (Fig. 3E and F).
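Kramers' rate theory in its high-friction form, k ≈ ωmin·ωbar/(2πγ)·exp(−βΔF), can be evaluated directly from a reweighted one-dimensional free-energy profile. The Python sketch below is a minimal illustration under stated assumptions: a uniform grid, harmonic approximations around the minimum and the barrier top obtained by finite differences, an effective mass set to 1, and a friction coefficient γ supplied externally. How Ligand-GaMD and Peptide GaMD estimate these quantities is described in refs. 135 and 136 and may differ from this sketch; the double-well profile is hypothetical.

```python
import numpy as np


def curvature(x, F, i):
    """Second derivative of F at grid index i (uniform grid, central differences)."""
    dx = x[1] - x[0]
    return (F[i + 1] - 2.0 * F[i] + F[i - 1]) / dx**2


def kramers_rate(x, F, i_min, i_barrier, gamma, beta, mass=1.0):
    """High-friction Kramers rate for escaping the well at i_min over i_barrier."""
    omega_min = np.sqrt(abs(curvature(x, F, i_min)) / mass)       # well frequency
    omega_bar = np.sqrt(abs(curvature(x, F, i_barrier)) / mass)   # barrier frequency
    dF = F[i_barrier] - F[i_min]                                  # barrier height
    return omega_min * omega_bar / (2.0 * np.pi * gamma) * np.exp(-beta * dF)


# Hypothetical double-well profile F(x) = h (x^2 - 1)^2 in reduced units.
h = 5.0
x = np.linspace(-2.0, 2.0, 4001)
F = h * (x**2 - 1.0) ** 2
i_min = int(np.argmin(np.abs(x - 1.0)))
i_bar = int(np.argmin(np.abs(x)))
k = kramers_rate(x, F, i_min, i_bar, gamma=10.0, beta=1.0)
print(f"escape rate: {k:.3e} (reduced units)")
```

Since the barrier height enters exponentially, an error of 1 kBT in the reweighted barrier changes the estimated rate by a factor of about e, which is one reason why such estimates are usually compared with experiment only at the order-of-magnitude level.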

4.2. Dynamic reweighting

For unbiased MD simulations, the currently most widely used approach to obtain robust estimates of a system's kinetics and thermodynamics is to construct a Markov state model (MSM).137–140 The same information can be obtained from biased simulation data using dynamic reweighting methods.141 The main advantage of MSMs is that the condition of local equilibrium within the simulation data is inherently enforced. The entire approach is based on transitions between states, and in theory only motions that show reversible exchange between states are taken into account. Consequently, MSMs provide an ideal theoretical framework for dynamic reweighting strategies.
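The core MSM estimation step can be sketched in Python as follows. The sketch counts transitions at a lag time τ in a discretized trajectory, enforces detailed balance by simply symmetrizing the count matrix (a crude stand-in for the reversible maximum-likelihood estimators used in dedicated MSM packages), and derives the stationary distribution and the implied timescales ti = −τ/ln λi. The function name is hypothetical, and every state is assumed to be visited at least once.

```python
import numpy as np


def estimate_msm(dtraj, n_states, lag):
    """Reversible MSM from one discrete trajectory via symmetrized counts."""
    dtraj = np.asarray(dtraj, dtype=int)
    counts = np.zeros((n_states, n_states))
    np.add.at(counts, (dtraj[:-lag], dtraj[lag:]), 1.0)   # C_ij: i at t, j at t + lag
    sym = 0.5 * (counts + counts.T)                        # enforce detailed balance
    row = sym.sum(axis=1)
    T = sym / row[:, None]                                 # row-stochastic transition matrix
    pi = row / row.sum()                                   # stationary distribution of T
    evals = np.sort(np.linalg.eigvals(T).real)[::-1]
    slow = evals[1:][(evals[1:] > 0.0) & (evals[1:] < 1.0)]
    timescales = -lag / np.log(slow)                       # implied timescales t_i
    return T, pi, timescales


dtraj = [0, 0, 0, 1, 1, 1, 0, 0, 0, 1, 1, 1]
T, pi, its = estimate_msm(dtraj, n_states=2, lag=1)
print(T)
print(pi, its)
```

For a symmetric count matrix, the normalized row sums are exactly the stationary distribution of the resulting transition matrix, so no eigenvector calculation is needed for π in this simplified estimator.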

In recent years, two main classes of dynamic reweighting methods have emerged: path-based and energy-based. The more recently developed path-based reweighting schemes include Weber–Pande reweighting142 and Girsanov reweighting.143 While their implementation is far from trivial, both have shown reliable results in reweighting dynamics, e.g. from umbrella sampling simulations.142,143 The main challenge with path-based dynamic reweighting methods is that they are integrator-dependent, and in practice the reweighting needs to be performed on-the-fly rather than as a post-processing step.128 Energy-based reweighting algorithms, on the other hand, are agnostic to the simulation engine, as they only require the bias energy of each analyzed conformation. This simpler handling, however, comes at the price of accuracy: energy-based reweighting does not explicitly account for the possibility that different paths can contribute to the same transition probability. This issue becomes critical whenever the energy profile of the biased PES varies substantially between different pathways. Such behavior is typically inherent to pathway-dependent local biasing methodologies and is less pressing for global enhanced sampling techniques. However, particularly in studies of ligand (un)binding or protein folding mechanisms, different pathways can lead to substantial deviations in the associated transition rates.104,144 Hence, path-based reweighting might yield more accurate estimates even when a global bias is applied. Prominent examples of energy-based reweighting schemes include the dynamic histogram analysis method (DHAM),145 the transition-based reweighting analysis method (TRAM),134 and extensions thereof.146
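The energy-based idea can be illustrated with a DHAM-style estimator in Python. The sketch assumes, following our reading of the DHAM ansatz (ref. 145), that a transition i → j observed in biased simulation k is related to the unbiased transition probability by the symmetric factor exp[−β(ukj − uki)/2], which preserves detailed balance with respect to the biased distribution; combining all simulations then gives Mij ∝ Σk Ckij / Σk nki·exp[−β(ukj − uki)/2]. The row-stochastic convention, the function names, and the array layout are assumptions, and the original paper should be consulted for the exact formulation. Only the bias energies at the bins enter, not the paths taken between them, which is precisely the limitation of energy-based schemes discussed above.

```python
import numpy as np


def dham_like_msm(counts, bias, beta):
    """Unbiased transition matrix from K biased simulations (DHAM-style sketch).

    counts : (K, M, M) transition counts C^k_ij from bin i to bin j at lag tau
    bias   : (K, M) bias energy u^k_i of simulation k at bin i
    """
    counts = np.asarray(counts, dtype=float)
    u = beta * np.asarray(bias, dtype=float)
    n = counts.sum(axis=2)                                  # n^k_i: transitions leaving i
    # factor[k, i, j] = exp(-(u^k_j - u^k_i) / 2)
    factor = np.exp(-0.5 * (u[:, None, :] - u[:, :, None]))
    numerator = counts.sum(axis=0)                          # sum_k C^k_ij
    denominator = (n[:, :, None] * factor).sum(axis=0)      # sum_k n^k_i factor^k_ij
    M = numerator / denominator
    return M / M.sum(axis=1, keepdims=True)                 # renormalize rows


def stationary(M):
    """Left eigenvector of M for eigenvalue 1, normalized to a distribution."""
    evals, evecs = np.linalg.eig(M.T)
    v = np.abs(evecs[:, np.argmax(evals.real)].real)
    return v / v.sum()


# Synthetic check: bias a hypothetical unbiased matrix with the same ansatz.
M_true = np.array([[0.90, 0.10, 0.00],
                   [0.05, 0.90, 0.05],
                   [0.00, 0.20, 0.80]])
u = np.array([0.0, 1.0, 2.0])                               # hypothetical bias in kT
biased = M_true * np.exp(-0.5 * (u[None, :] - u[:, None]))
biased /= biased.sum(axis=1, keepdims=True)
counts = (np.array([1000.0, 600.0, 300.0])[:, None] * biased)[None]
M_est = dham_like_msm(counts, u[None], beta=1.0)
print(np.round(M_est, 3), stationary(M_est))
```

With a single simulation and counts generated from the same ansatz, the estimator recovers the unbiased matrix exactly; with several simulations and real trajectories, it becomes a weighted combination whose accuracy depends on how well the ansatz holds along the sampled pathways.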

While these dynamic reweighting methods were developed and implemented independently by different groups, we recently demonstrated the relationship between them.128 In this work by Linker et al., we show that the path-independent DHAM equation is a special case of path-dependent dynamic reweighting. Additionally, we show that both families of dynamic reweighting methods can be connected by introducing a path-correction term into the energy-based method. In doing so, the strongly limiting integrator dependence of path-based reweighting is avoided, while high accuracy and low parameter sensitivity are retained.128

5. Conclusion

Enhanced sampling with global biasing functions has massively advanced the field of biomolecular simulations. The constant optimization and extension of promising algorithms has provided our community with the means to simulate large biomolecular complexes, and has opened the door to study conformational changes in the millisecond range. Nevertheless, a common theme for all methodologies is the challenge of choosing optimal parameters with as little pre-processing effort as possible. First attempts towards automated parameter optimization are already being developed, but will need further efficiency improvements for global enhanced sampling to reach its full potential in the study of large biomolecular systems.

As important as the methods for enhanced phase-space exploration are the tools to reweight the biased simulation data to the unbiased canonical ensemble. The development of enhanced sampling techniques therefore goes hand in hand with that of reweighting methods. Over the past decade, multiple algorithms have been proposed that recover not only thermodynamic but also kinetic information from biased simulations. Most of these methods will require further refinement based on “real-world” complex biomolecular systems.

A general question in the application of the methods discussed above is how to validate the insights extracted from the simulations. Direct comparison with experiment is often challenging, as techniques such as NMR, cryo-EM, or X-ray crystallography only provide ensemble-averaged data and cannot resolve the high-energy states observed in MD simulations. Furthermore, they provide only limited information on a system's kinetics. While NMR (e.g. relaxation dispersion experiments147,148) can provide dynamic information, the accessible timescales (hundreds of microseconds to milliseconds) are typically too long even for enhanced sampling MD simulations. One promising strategy to generate reference data for the validation of global enhanced sampling techniques may be the combination of experimental data and MD simulations in integrative structural modeling studies.4 Information derived from experiments can be used to augment and guide MD simulations, which in turn provide a structural and dynamic explanation for the measured data.149,150

Conflicts of interest

There are no conflicts to declare.

Supplementary Material

Acknowledgments

The authors gratefully acknowledge financial support by the Swiss National Science Foundation (Grant Number 200021-178762), the Scholarship Fund of the Swiss Chemical Industry, the German National Academic Foundation PhD scholarship (granted to S. M. L.), and the Austrian Science Fund (Erwin Schrödinger fellowship no. J-4568 granted to A. S. K.).

Biographies

Biography

Anna S. Kamenik

Anna Sophia Kamenik received her doctoral degree in Chemistry from the University of Innsbruck, Austria (UIBK), under the supervision of Prof. Klaus Liedl (UIBK) and Prof. Brian Shoichet (UCSF). She is currently a postdoctoral fellow in the group of Prof. Sereina Riniker at ETH Zürich. Her research is driven by an excitement for the molecular dynamics of (bio)pharmaceutically relevant systems, in particular cyclic peptides and macrocycles.

Biography

Stephanie M. Linker

Stephanie M. Linker obtained her degree in Biochemistry and Biophysics at the University of Frankfurt and the Max Planck Institute of Biophysics. After research stays at the Computational Chemistry department of Boehringer Ingelheim and the European Bioinformatics Institute in Cambridge, UK, she started her PhD in the lab of Sereina Riniker (ETH Zurich) in 2018. In her PhD, she develops reweighting methods for enhanced sampling simulations and studies the interactions between cyclic peptides and biological membranes.

Biography

Sereina Riniker

Sereina Riniker completed her Master's degree in chemistry at ETH Zurich in 2008. After an internship in the research department of Givaudan AG and a research stay at the University of California, Berkeley, she returned to ETH Zurich in 2009 to obtain a PhD in molecular dynamics simulations. From 2012 to 2014, she held a postdoctoral position in cheminformatics at the Novartis Institutes for BioMedical Research in Basel, Switzerland, and Cambridge, Massachusetts. In 2014, Sereina Riniker started as Assistant Professor (with tenure track) of Computational Chemistry at the Department of Chemistry and Applied Biosciences at ETH Zurich. She was promoted to Associate Professor in 2020.

References

- Henzler-Wildman K. Kern D. Nature. 2007;450:964–972. doi: 10.1038/nature06522.

- Bhabha G. Biel J. T. Fraser J. S. Acc. Chem. Res. 2015;48:423–430. doi: 10.1021/ar5003158.

- Fischer M. Coleman R. G. Fraser J. S. Shoichet B. K. Nat. Chem. 2014;6:575–583. doi: 10.1038/nchem.1954.

- Van Den Bedem H. Fraser J. S. Nat. Methods. 2015;12:307–318. doi: 10.1038/nmeth.3324.

- Wei G. Xi W. Nussinov R. Ma B. Chem. Rev. 2016;116:6516–6551. doi: 10.1021/acs.chemrev.5b00562.

- Pitsawong W. Buosi V. Otten R. Agafonov R. V. Zorba A. Kern N. Kutter S. Kern G. Pádua R. A. Meniche X. Kern D. eLife. 2018;7:e36656. doi: 10.7554/eLife.36656.

- Fischer M. Shoichet B. K. Fraser J. S. ChemBioChem. 2015;16:1560. doi: 10.1002/cbic.201500196.

- Hansson T. Oostenbrink C. van Gunsteren W. Curr. Opin. Struct. Biol. 2002;12:190–196. doi: 10.1016/S0959-440X(02)00308-1.

- van Gunsteren W. F. Berendsen H. J. Angew. Chem., Int. Ed. Engl. 1990;29:992–1023. doi: 10.1002/anie.199009921.

- Huang W. Lin Z. van Gunsteren W. F. J. Chem. Theory Comput. 2011;7:1237–1243. doi: 10.1021/ct100747y.

- Riniker S. J. Chem. Inf. Model. 2018;58:565–578. doi: 10.1021/acs.jcim.8b00042.

- van Gunsteren W. F. Daura X. Hansen N. Mark A. E. Oostenbrink C. Riniker S. Smith L. J. Angew. Chem., Int. Ed. 2018;57:884–902. doi: 10.1002/anie.201702945.

- Lindorff-Larsen K. Piana S. Dror R. O. Shaw D. E. Science. 2011;334:517–520. doi: 10.1126/science.1208351.

- Zimmerman M. I. Porter J. R. Ward M. D. Singh S. Vithani N. Meller A. Mallimadugula U. L. Kuhn C. E. Borowsky J. H. Wiewiora R. P. Hurley M. F. D. Harbison A. M. Fogarty C. A. Coffland J. E. Fadda E. Voelz V. A. Chodera J. D. Bowman G. R. Nat. Chem. 2021;13:651–659. doi: 10.1038/s41557-021-00707-0.

- Kohlhoff K. J. Shukla D. Lawrenz M. Bowman G. R. Konerding D. E. Belov D. Altman R. B. Pande V. S. Nat. Chem. 2014;6:15–21. doi: 10.1038/nchem.1821.

- Miao Y. McCammon J. A. Mol. Simul. 2016;42:1046–1055. doi: 10.1080/08927022.2015.1121541.

- Bernardi R. C. Melo M. C. Schulten K. Biochim. Biophys. Acta, Gen. Subj. 2015;1850:872–877. doi: 10.1016/j.bbagen.2014.10.019.

- Huber T. Torda A. E. Van Gunsteren W. F. J. Comput.-Aided Mol. Des. 1994;8:695–708. doi: 10.1007/BF00124016.

- Laio A. Parrinello M. Proc. Natl. Acad. Sci. U. S. A. 2002;99:12562–12566. doi: 10.1073/pnas.202427399.

- Torrie G. M. Valleau J. P. Chem. Phys. Lett. 1974;28:578–581. doi: 10.1016/0009-2614(74)80109-0.

- Torrie G. M. Valleau J. P. J. Comput. Phys. 1977;23:187–199. doi: 10.1016/0021-9991(77)90121-8.

- Schlitter J. Engels M. Krüger P. Jacoby E. Wollmer A. Mol. Simul. 1993;10:291–308. doi: 10.1080/08927029308022170.

- Noé F. Clementi C. Curr. Opin. Struct. Biol. 2017;43:141–147. doi: 10.1016/j.sbi.2017.02.006.

- Ribeiro J. M. L. Bravo P. Wang Y. Tiwary P. J. Chem. Phys. 2018;149:072301. doi: 10.1063/1.5025487.

- Sultan M. M. Pande V. S. J. Chem. Phys. 2018;149:094106. doi: 10.1063/1.5029972.

- Wang Y. Ribeiro J. M. L. Tiwary P. Nat. Commun. 2019;10:3573. doi: 10.1038/s41467-019-11405-4.

- Delemotte L. Kasimova M. A. Klein M. L. Tarek M. Carnevale V. Proc. Natl. Acad. Sci. U. S. A. 2015;112:124–129. doi: 10.1073/pnas.1416959112.

- Fernández-Quintero M. L. El Ghaleb Y. Tuluc P. Campiglio M. Liedl K. R. Flucher B. E. eLife. 2021;10:e64087. doi: 10.7554/eLife.64087.

- Tiwary P. Limongelli V. Salvalaglio M. Parrinello M. Proc. Natl. Acad. Sci. U. S. A. 2015;112:E386–E391. doi: 10.1073/pnas.1424461112.

- Sugita M. Sugiyama S. Fujie T. Yoshikawa Y. Yanagisawa K. Ohue M. Akiyama Y. J. Chem. Inf. Model. 2021;61:3681–3695. doi: 10.1021/acs.jcim.1c00380.

- Badaoui M. Kells A. Molteni C. Dickson C. J. Hornak V. Rosta E. J. Phys. Chem. B. 2018;122:11571–11578. doi: 10.1021/acs.jpcb.8b07442.

- Witek J. Wang S. Schroeder B. Lingwood R. Dounas A. Roth H.-J. Fouché M. Blatter M. Lemke O. Keller B. Riniker S. J. Chem. Inf. Model. 2018;59:294–308. doi: 10.1021/acs.jcim.8b00485.

- Rezai T. Yu B. Millhauser G. L. Jacobson M. P. Lokey R. S. J. Am. Chem. Soc. 2006;128:2510–2511. doi: 10.1021/ja0563455.

- Fuchs J. E. von Grafenstein S. Huber R. G. Wallnoefer H. G. Liedl K. R. Proteins. 2014;82:546–555. doi: 10.1002/prot.24417.

- Weiß R. G. Losfeld M.-E. Aebi M. Riniker S. J. Phys. Chem. B. 2021;125:9467–9479. doi: 10.1021/acs.jpcb.1c04279.

- Winter P. Stubenvoll S. Scheiblhofer S. Joubert I. A. Strasser L. Briganser C. Soh W. T. Hofer F. Kamenik A. S. Dietrich V. Michelini S. Laimer J. Lackner P. Horejs-Hoeck J. Tollinger M. Liedl K. R. Brandstetter J. Huber C. G. Weiss R. Front. Immunol. 2020;11:1824. doi: 10.3389/fimmu.2020.01824.

- Markwick P. R. Peacock R. B. Komives E. A. Biophys. J. 2019;116:49–56. doi: 10.1016/j.bpj.2018.11.023.

- Smith R. D. Carlson H. A. J. Chem. Inf. Model. 2021;61:1287–1299. doi: 10.1021/acs.jcim.0c01002.

- Sztain T. Amaro R. McCammon J. A. J. Chem. Inf. Model. 2021;61:3495–3501. doi: 10.1021/acs.jcim.1c00140.

- Voter A. F. Phys. Rev. Lett. 1997;78:3908. doi: 10.1103/PhysRevLett.78.3908.

- Hansmann U. H. Chem. Phys. Lett. 1997;281:140–150. doi: 10.1016/S0009-2614(97)01198-6.

- Sugita Y. Okamoto Y. Chem. Phys. Lett. 1999;314:141–151. doi: 10.1016/S0009-2614(99)01123-9.

- Earl D. J. Deem M. W. Phys. Chem. Chem. Phys. 2005;7:3910–3916. doi: 10.1039/B509983H.

- Park S. Pande V. S. Phys. Rev. E: Stat., Nonlinear, Soft Matter Phys. 2007;76:016703. doi: 10.1103/PhysRevE.76.016703.

- Yang L. Shao Q. Gao Y. Q. J. Chem. Phys. 2009;130:124111. doi: 10.1063/1.3097129.

- Okabe T. Kawata M. Okamoto Y. Mikami M. Chem. Phys. Lett. 2001;335:435–439. doi: 10.1016/S0009-2614(01)00055-0.

- Patriksson A. van der Spoel D. Phys. Chem. Chem. Phys. 2008;10:2073–2077. doi: 10.1039/B716554D.

- Fukunishi H. Watanabe O. Takada S. J. Chem. Phys. 2002;116:9058–9067. doi: 10.1063/1.1472510.

- Kone A. Kofke D. A. J. Chem. Phys. 2005;122:206101. doi: 10.1063/1.1917749.

- Rathore N. Chopra M. de Pablo J. J. J. Chem. Phys. 2005;122:024111. doi: 10.1063/1.1831273.

- Temperature generator for REMD-simulations, http://virtualchemistry.org//remd-temperature-generator/index.php, accessed: 03.12.2021

- Qi R. Wei G. Ma B. Nussinov R. Methods Mol. Biol. 2018;1777:101. doi: 10.1007/978-1-4939-7811-3_5.

- Zhang C. Ma J. J. Chem. Phys. 2008;129:134112. doi: 10.1063/1.2988339.

- Rao F. Caflisch A. J. Chem. Phys. 2003;119:4035–4042. doi: 10.1063/1.1591721.

- Beck D. A. White G. W. Daggett V. J. Struct. Biol. 2007;157:514–523. doi: 10.1016/j.jsb.2006.10.002.

- Jiang P. Yasar F. Hansmann U. H. J. Chem. Theory Comput. 2013;9:3816–3825. doi: 10.1021/ct400312d.

- Jiang F. Wu Y.-D. J. Am. Chem. Soc. 2014;136:9536–9539. doi: 10.1021/ja502735c.

- Jain K. Ghribi O. Delhommelle J. J. Chem. Inf. Model. 2020;61:432–443. doi: 10.1021/acs.jcim.0c01278.

- Chen J. Won H.-S. Im W. Dyson H. J. Brooks C. L. J. Biomol. NMR. 2005;31:59–64. doi: 10.1007/s10858-004-6056-z.

- Gnanakaran S. Hochstrasser R. M. García A. E. Proc. Natl. Acad. Sci. U. S. A. 2004;101:9229–9234. doi: 10.1073/pnas.0402933101.

- Jas G. S. Kuczera K. Biophys. J. 2004;87:3786–3798. doi: 10.1529/biophysj.104.045419.

- Wakefield A. E. Wuest W. M. Voelz V. A. J. Chem. Inf. Model. 2015;55:806–813. doi: 10.1021/ci500768u.

- Merten C. Li F. Bravo-Rodriguez K. Sanchez-Garcia E. Xu Y. Sander W. Phys. Chem. Chem. Phys. 2014;16:5627–5633. doi: 10.1039/C3CP55018D.

- Geng H. Jiang F. Wu Y.-D. J. Phys. Chem. Lett. 2016;7:1805–1810. doi: 10.1021/acs.jpclett.6b00452.

- Marinari E. Parisi G. Europhys. Lett. 1992;19:451. doi: 10.1209/0295-5075/19/6/002.

- Rosta E. Hummer G. J. Chem. Phys. 2010;132:034102. doi: 10.1063/1.3290767.

- Nguyen P. H. Okamoto Y. Derreumaux P. J. Chem. Phys. 2013;138:061102. doi: 10.1063/1.4792046.

- Zhang T. Nguyen P. H. Nasica-Labouze J. Mu Y. Derreumaux P. J. Phys. Chem. B. 2015;119:6941–6951. doi: 10.1021/acs.jpcb.5b03381.

- Pan A. C. Weinreich T. M. Piana S. Shaw D. E. J. Chem. Theory Comput. 2016;12:1360–1367. doi: 10.1021/acs.jctc.5b00913.

- Shao Q. Shi J. Zhu W. J. Chem. Theory Comput. 2017;13:1229–1243. doi: 10.1021/acs.jctc.6b00967.

- Yang Y. I. Shao Q. Zhang J. Yang L. Gao Y. Q. J. Chem. Phys. 2019;151:070902. doi: 10.1063/1.5109531.

- Gao Y. Q. J. Chem. Phys. 2008;128:064105. doi: 10.1063/1.2825614.

- Christ C. D. van Gunsteren W. F. J. Chem. Phys. 2007;126:184110. doi: 10.1063/1.2730508.

- Shao Q. J. Phys. Chem. B. 2014;118:5891–5900. doi: 10.1021/jp5043393.

- Nakajima N. Nakamura H. Kidera A. J. Phys. Chem. B. 1997;101:817–824. doi: 10.1021/jp962142e.

- Ono S. Naylor M. R. Townsend C. E. Okumura C. Okada O. Lokey R. S. J. Chem. Inf. Model. 2019;59:2952–2963. doi: 10.1021/acs.jcim.9b00217.

- Higo J. Ikebe J. Kamiya N. Nakamura H. Biophys. Rev. 2012;4:27–44. doi: 10.1007/s12551-011-0063-6.

- Higo J. Nishimura Y. Nakamura H. J. Am. Chem. Soc. 2011;133:10448–10458. doi: 10.1021/ja110338e.

- Berg B. A. Neuhaus T. Phys. Rev. Lett. 1992;68:9. doi: 10.1103/PhysRevLett.68.9.

- Bekker G.-J. Fukuda I. Higo J. Fukunishi Y. Kamiya N. Sci. Rep. 2021;11:5046. doi: 10.1038/s41598-021-84488-z.

- Shirai H. Nakajima N. Higo J. Kidera A. Nakamura H. J. Mol. Biol. 1998;278:481–496. doi: 10.1006/jmbi.1998.1698.

- Bekker G.-J. Fukuda I. Higo J. Kamiya N. Sci. Rep. 2020;10:1406. doi: 10.1038/s41598-020-58320-z.

- Ono S. Naylor M. R. Townsend C. E. Okumura C. Okada O. Lee H.-W. Lokey R. S. J. Chem. Inf. Model. 2021;61:5601–5613. doi: 10.1021/acs.jcim.1c00771.

- Hamelberg D. Mongan J. McCammon J. A. J. Chem. Phys. 2004;120:11919–11929. doi: 10.1063/1.1755656.

- Pierce L. C. Salomon-Ferrer R. de Oliveira C. A. F. McCammon J. A. Walker R. C. J. Chem. Theory Comput. 2012;8:2997–3002. doi: 10.1021/ct300284c.

- Kamenik A. S. Kahler U. Fuchs J. E. Liedl K. R. J. Chem. Theory Comput. 2016;12:3449–3455. doi: 10.1021/acs.jctc.6b00231.

- Miao Y. Nichols S. E. Gasper P. M. Metzger V. T. McCammon J. A. Proc. Natl. Acad. Sci. U. S. A. 2013;110:10982–10987. doi: 10.1073/pnas.1309755110.

- Miao Y. Nichols S. E. McCammon J. A. Phys. Chem. Chem. Phys. 2014;16:6398–6406. doi: 10.1039/C3CP53962H.

- Markwick P. R. Bouvignies G. Blackledge M. J. Am. Chem. Soc. 2007;129:4724–4730. doi: 10.1021/ja0687668.

- Markwick P. R. McCammon J. A. Phys. Chem. Chem. Phys. 2011;13:20053–20065. doi: 10.1039/C1CP22100K.

- Bucher D. Grant B. J. Markwick P. R. McCammon J. A. PLoS Comput. Biol. 2011;7:e1002034. doi: 10.1371/journal.pcbi.1002034.

- Fuglestad B. Gasper P. M. Tonelli M. McCammon J. A. Markwick P. R. Komives E. A. Biophys. J. 2012;103:79–88. doi: 10.1016/j.bpj.2012.05.047.

- Markwick P. R. Nilges M. Chem. Phys. 2012;396:124–134. doi: 10.1016/j.chemphys.2011.11.023.

- Kamenik A. S. Lessel U. Fuchs J. E. Fox T. Liedl K. R. J. Chem. Inf. Model. 2018;58:982–992. doi: 10.1021/acs.jcim.8b00097.

- Engelhardt H. Boese D. Petronczki M. Scharn D. Bader G. Baum A. Bergner A. Chong E. Doebel S. Egger G. Engelhardt C. Ettmayer P. Fuchs J. E. Gerstberger T. Gonnella N. Grimm A. Grondal E. Haddad N. Hopfgartner B. Kousek R. Krawiec M. Kriz M. Lamarre L. Leung J. Mayer M. Patel N. D. Peric Simov B. Reeves J. T. Schnitzer R. Schrenk A. Sharps B. Solca F. Stadtmüller H. Tan Z. Wunberg T. Zoephel A. McConnell D. B. J. Med. Chem. 2019;62:10272–10293. doi: 10.1021/acs.jmedchem.9b01169.

- Kamenik A. S. Kraml J. Hofer F. Waibl F. Quoika P. K. Kahler U. Schauperl M. Liedl K. R. J. Chem. Inf. Model. 2020;60:3508–3517. doi: 10.1021/acs.jcim.0c00280.

- Hamelberg D. de Oliveira C. A. F. McCammon J. A. J. Chem. Phys. 2007;127:10B614. doi: 10.1063/1.2789432.

- Wereszczynski J. and McCammon J. A., in Accelerated Molecular Dynamics in Computational Drug Design, ed. R. Baron, Springer New York, New York, NY, 2012, pp. 515–524

- Fajer M. Hamelberg D. McCammon J. A. J. Chem. Theory Comput. 2008;4:1565–1569. doi: 10.1021/ct800250m.

- Roe D. R. Bergonzo C. Cheatham III T. E. J. Phys. Chem. B. 2014;118:3543–3552. doi: 10.1021/jp4125099.

- Tworowski D. Feldman A. V. Safro M. G. J. Mol. Biol. 2005;350:866–882. doi: 10.1016/j.jmb.2005.05.051.

- Peng X. Zhang Y. Li Y. Liu Q. Chu H. Zhang D. Li G. J. Chem. Theory Comput. 2018;14:1216–1227. doi: 10.1021/acs.jctc.7b01211.

- Wang A. Zhang D. Li Y. Zhang Z. Li G. J. Phys. Chem. Lett. 2019;11:325–332. doi: 10.1021/acs.jpclett.9b03399.

- Lan P. Tan M. Zhang Y. Niu S. Chen J. Shi S. Qiu S. Wang X. Peng X. Cai G. Cheng H. Wu J. Li G. Lei M. Science. 2018;362:eaat6678. doi: 10.1126/science.aat6678.

- Markwick P. R. Pierce L. C. Goodin D. B. McCammon J. A. J. Phys. Chem. Lett. 2011;2:158–164. doi: 10.1021/jz101462n.

- Gao N. Yang L. Gao F. Kurtz R. West D. Zhang S. J. Phys.: Condens. Matter. 2017;29:145201. doi: 10.1088/1361-648X/aa574b.

- Sinko W. de Oliveira C. A. F. Pierce L. C. McCammon J. A. J. Chem. Theory Comput. 2012;8:17–23. doi: 10.1021/ct200615k.

- Miao Y. Feher V. A. McCammon J. A. J. Chem. Theory Comput. 2015;11:3584–3595. doi: 10.1021/acs.jctc.5b00436.

- Miao Y. and McCammon J. A., Annual Reports in Computational Chemistry, Elsevier, 2017, vol. 13, pp. 231–278

- Palermo G. Miao Y. Walker R. C. Jinek M. McCammon J. A. Proc. Natl. Acad. Sci. U. S. A. 2017;114:7260–7265. doi: 10.1073/pnas.1707645114.

- Fernández-Quintero M. L. Loeffler J. R. Waibl F. Kamenik A. S. Hofer F. Liedl K. R. Protein Eng., Des. Sel. 2019;32:513–523. doi: 10.1093/protein/gzaa014.

- Sibener L. V. Fernandes R. A. Kolawole E. M. Carbone C. B. Liu F. McAffee D. Birnbaum M. E. Yang X. Su L. F. Yu W. Dong S. Gee M. H. Jude K. M. Davis M. M. Groves J. T. Goddard III W. A. Heath J. R. Evavold B. D. Vale R. D. Garcia K. C. Cell. 2018;174:672–687. doi: 10.1016/j.cell.2018.06.017.

- Wang J. Arantes P. R. Bhattarai A. Hsu R. V. Pawnikar S. Huang Y.-M. M. Palermo G. Miao Y. Wiley Interdiscip. Rev.: Comput. Mol. Sci. 2021:e1521. doi: 10.1002/wcms.1521.

- Kwak W. Hansmann U. H. Phys. Rev. Lett. 2005;95:138102. doi: 10.1103/PhysRevLett.95.138102.

- Liu P. Huang X. Zhou R. Berne B. J. J. Phys. Chem. B. 2006;110:19018–19022. doi: 10.1021/jp060365r.

- Affentranger R. Tavernelli I. Di Iorio E. E. J. Chem. Theory Comput. 2006;2:217–228. doi: 10.1021/ct050250b.

- Itoh S. G. Okumura H. Okamoto Y. J. Chem. Phys. 2010;132:134105. doi: 10.1063/1.3372767.

- Voter A. F. Germann T. C. MRS Proc. 1998;528:221. doi: 10.1557/PROC-528-221.

- Huang Y.-M. M. McCammon J. A. Miao Y. J. Chem. Theory Comput. 2018;14:1853–1864. doi: 10.1021/acs.jctc.7b01226.

- Wang L. Friesner R. A. Berne B. J. J. Phys. Chem. B. 2011;115:9431–9438. doi: 10.1021/jp204407d.

- Liu P. Kim B. Friesner R. A. Berne B. Proc. Natl. Acad. Sci. U. S. A. 2005;102:13749–13754. doi: 10.1073/pnas.0506346102.

- Witek J. Keller B. G. Blatter M. Meissner A. Wagner T. Riniker S. J. Chem. Inf. Model. 2016;56:1547–1562. doi: 10.1021/acs.jcim.6b00251.

- Witek J. Mühlbauer M. Keller B. G. Blatter M. Meissner A. Wagner T. Riniker S. ChemPhysChem. 2017;18:3309–3314. doi: 10.1002/cphc.201700995.

- Shen T. Hamelberg D. J. Chem. Phys. 2008;129:034103. doi: 10.1063/1.2944250.

- Zwanzig R. W. J. Chem. Phys. 1954;22:1420–1426. doi: 10.1063/1.1740409.

- Ferrenberg A. M. Swendsen R. H. Phys. Rev. Lett. 1989;63:1195. doi: 10.1103/PhysRevLett.63.1195.

- Kieninger S. Donati L. Keller B. G. Curr. Opin. Struct. Biol. 2020;61:124–131. doi: 10.1016/j.sbi.2019.12.018.

- Linker S. M. Weiß R. G. Riniker S. J. Chem. Phys. 2020;153:234106. doi: 10.1063/5.0019687.

- Miao Y. Sinko W. Pierce L. Bucher D. Walker R. C. McCammon J. A. J. Chem. Theory Comput. 2014;10:2677–2689. doi: 10.1021/ct500090q.

- Kumar S. Rosenberg J. M. Bouzida D. Swendsen R. H. Kollman P. A. J. Comput. Chem. 1992;13:1011–1021. doi: 10.1002/jcc.540130812.

- Shirts M. R. Chodera J. D. J. Chem. Phys. 2008;129:124105. doi: 10.1063/1.2978177.

- Gallicchio E. Andrec M. Felts A. K. Levy R. M. J. Phys. Chem. B. 2005;109:6722–6731. doi: 10.1021/jp045294f.

- Chodera J. D. Swope W. C. Pitera J. W. Seok C. Dill K. A. J. Chem. Theory Comput. 2007;3:26–41. doi: 10.1021/ct0502864.

- Wu H. Paul F. Wehmeyer C. Noé F. Proc. Natl. Acad. Sci. U. S. A. 2016;113:E3221–E3230. doi: 10.1073/pnas.1525092113.

- Miao Y. Bhattarai A. Wang J. J. Chem. Theory Comput. 2020;16:5526–5547. doi: 10.1021/acs.jctc.0c00395.

- Wang J. Miao Y. J. Chem. Phys. 2020;153:154109. doi: 10.1063/5.0021399.

- Schütte C. Fischer A. Huisinga W. Deuflhard P. J. Comput. Phys. 1999;151:146–168.

- Swope W. C. Pitera J. W. Suits F. J. Phys. Chem. B. 2004;108:6571–6581. doi: 10.1021/jp037421y.

- Prinz J.-H. Wu H. Sarich M. Keller B. Senne M. Held M. Chodera J. D. Schütte C. Noé F. J. Chem. Phys. 2011;134:174105. doi: 10.1063/1.3565032.

- Chodera J. D. Noé F. Curr. Opin. Struct. Biol. 2014;25:135–144. doi: 10.1016/j.sbi.2014.04.002.

- Chodera J. D. Swope W. C. Noé F. Prinz J.-H. Shirts M. R. Pande V. S. J. Chem. Phys. 2011;134:06B612. doi: 10.1063/1.3592152.

- Weber J. K. Pande V. S. J. Chem. Theory Comput. 2015;11:2412–2420. doi: 10.1021/acs.jctc.5b00031.

- Donati L. Hartmann C. Keller B. G. J. Chem. Phys. 2017;146:244112. doi: 10.1063/1.4989474.

- Plattner N. Noé F. Nat. Commun. 2015;6:7653. doi: 10.1038/ncomms8653.

- Rosta E. Hummer G. J. Chem. Theory Comput. 2015;11:276–285. doi: 10.1021/ct500719p.

- Stelzl L. S. Kells A. Rosta E. Hummer G. J. Chem. Theory Comput. 2017;13:6328–6342. doi: 10.1021/acs.jctc.7b00373.

- Loria J. P. Rance M. Palmer A. G. J. Am. Chem. Soc. 1999;121:2331–2332. doi: 10.1021/ja983961a.

- Akke M. Palmer A. G. J. Am. Chem. Soc. 1996;118:911–912. doi: 10.1021/ja953503r.

- Olsson S. Wu H. Paul F. Clementi C. Noé F. Proc. Natl. Acad. Sci. U. S. A. 2017;114:8265–8270. doi: 10.1073/pnas.1704803114.

- Bottaro S. Lindorff-Larsen K. Science. 2018;361:355–360. doi: 10.1126/science.aat4010.